3 Sampling the Imaginary

If you would like to know the probability someone is a vampire given they test positive to the blood-based vampire test, you compute

\[ \Pr(\text{vampire}|\text{positive}) = \frac{\Pr(\text{positive}|\text{vampire})\Pr(\text{vampire})}{\Pr(\text{positive})}. \]

We’ll do so within a tibble.

library(tidyverse)

tibble(pr_positive_vampire = .95,

pr_positive_mortal = .01,

pr_vampire = .001) %>%

mutate(pr_positive = pr_positive_vampire * pr_vampire + pr_positive_mortal * (1 - pr_vampire)) %>%

mutate(pr_vampire_positive = pr_positive_vampire * pr_vampire / pr_positive) %>%

glimpse()## Rows: 1

## Columns: 5

## $ pr_positive_vampire <dbl> 0.95

## $ pr_positive_mortal <dbl> 0.01

## $ pr_vampire <dbl> 0.001

## $ pr_positive <dbl> 0.01094

## $ pr_vampire_positive <dbl> 0.08683729Here’s the other way of tackling the vampire problem, this time using the frequency format.

tibble(pr_vampire = 100 / 100000,

pr_positive_vampire = 95 / 100,

pr_positive_mortal = 999 / 99900) %>%

mutate(pr_positive = 95 + 999) %>%

mutate(pr_vampire_positive = pr_positive_vampire * 100 / pr_positive) %>%

glimpse()## Rows: 1

## Columns: 5

## $ pr_vampire <dbl> 0.001

## $ pr_positive_vampire <dbl> 0.95

## $ pr_positive_mortal <dbl> 0.01

## $ pr_positive <dbl> 1094

## $ pr_vampire_positive <dbl> 0.08683729The posterior distribution is a probability distribution. And like all probability distributions, we can imagine drawing samples from it. The sampled events in this case are parameter values. Most parameters have no exact empirical realization. The Bayesian formalism treats parameter distributions as relative plausibility, not as any physical random process. In any event, randomness is always a property of information, never of the real world. But inside the computer, parameters are just as empirical as the outcome of a coin flip or a die toss or an agricultural experiment. The posterior defines the expected frequency that different parameter values will appear, once we start plucking parameters out of it. (McElreath, 2020a, p. 50, emphasis in the original)

3.1 Sampling from a grid-approximate posterior

Once again, here we use grid approximation to generate samples.

# how many grid points would you like?

n <- 1000

n_success <- 6

n_trials <- 9

(

d <-

tibble(p_grid = seq(from = 0, to = 1, length.out = n),

# note we're still using a flat uniform prior

prior = 1) %>%

mutate(likelihood = dbinom(n_success, size = n_trials, prob = p_grid)) %>%

mutate(posterior = (likelihood * prior) / sum(likelihood * prior))

)## # A tibble: 1,000 × 4

## p_grid prior likelihood posterior

## <dbl> <dbl> <dbl> <dbl>

## 1 0 1 0 0

## 2 0.00100 1 8.43e-17 8.43e-19

## 3 0.00200 1 5.38e-15 5.38e-17

## 4 0.00300 1 6.11e-14 6.11e-16

## 5 0.00400 1 3.42e-13 3.42e-15

## 6 0.00501 1 1.30e-12 1.30e-14

## 7 0.00601 1 3.87e-12 3.88e-14

## 8 0.00701 1 9.73e-12 9.74e-14

## 9 0.00801 1 2.16e-11 2.16e-13

## 10 0.00901 1 4.37e-11 4.38e-13

## # … with 990 more rowsOur d data contains all the components in McElreath’s R code 3.2 block. Do note we’ve renamed his prob_p and prob_data as prior and likelihood, respectively. Now we’ll use the dplyr::slice_sample() function to sample rows from d, saving them as sample.

# how many samples would you like?

n_samples <- 1e4

# make it reproducible

set.seed(3)

samples <-

d %>%

slice_sample(n = n_samples, weight_by = posterior, replace = T)

glimpse(samples)## Rows: 10,000

## Columns: 4

## $ p_grid <dbl> 0.5645646, 0.6516517, 0.5475475, 0.5905906, 0.5955956, 0.7877878, 0.7267267, 0.…

## $ prior <dbl> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1…

## $ likelihood <dbl> 0.224559942, 0.271902722, 0.209666553, 0.244608692, 0.247990921, 0.191887140, 0…

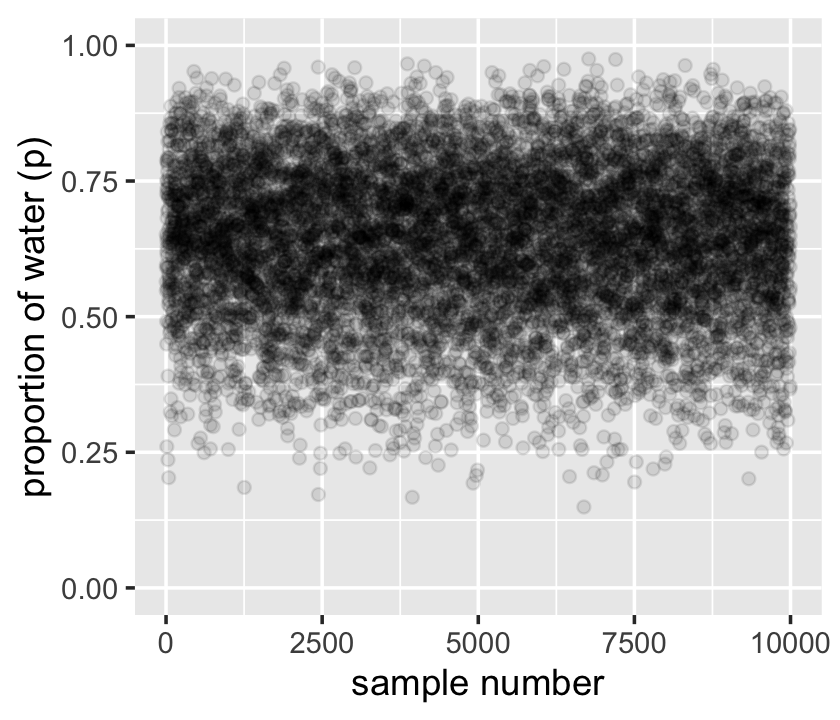

## $ posterior <dbl> 2.247847e-03, 2.721749e-03, 2.098764e-03, 2.448535e-03, 2.482392e-03, 1.920792e…Now we can plot the left panel of Figure 3.1 with geom_point(). But before we do, we’ll need to add a variable numbering the samples.

samples %>%

mutate(sample_number = 1:n()) %>%

ggplot(aes(x = sample_number, y = p_grid)) +

geom_point(alpha = 1/10) +

scale_y_continuous("proportion of water (p)", limits = c(0, 1)) +

xlab("sample number")

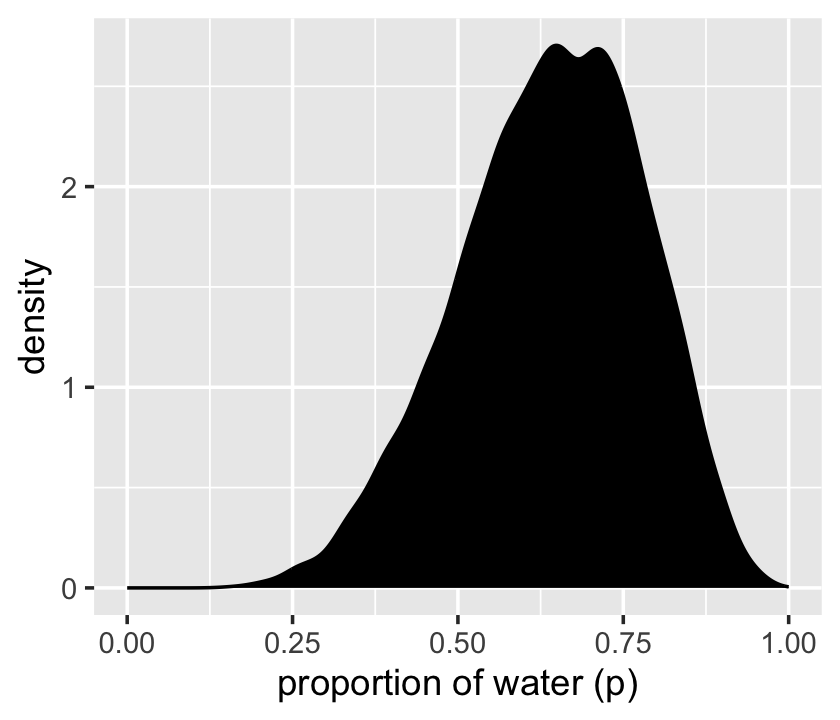

We’ll make the density in the right panel with geom_density().

samples %>%

ggplot(aes(x = p_grid)) +

geom_density(fill = "black") +

scale_x_continuous("proportion of water (p)", limits = c(0, 1))

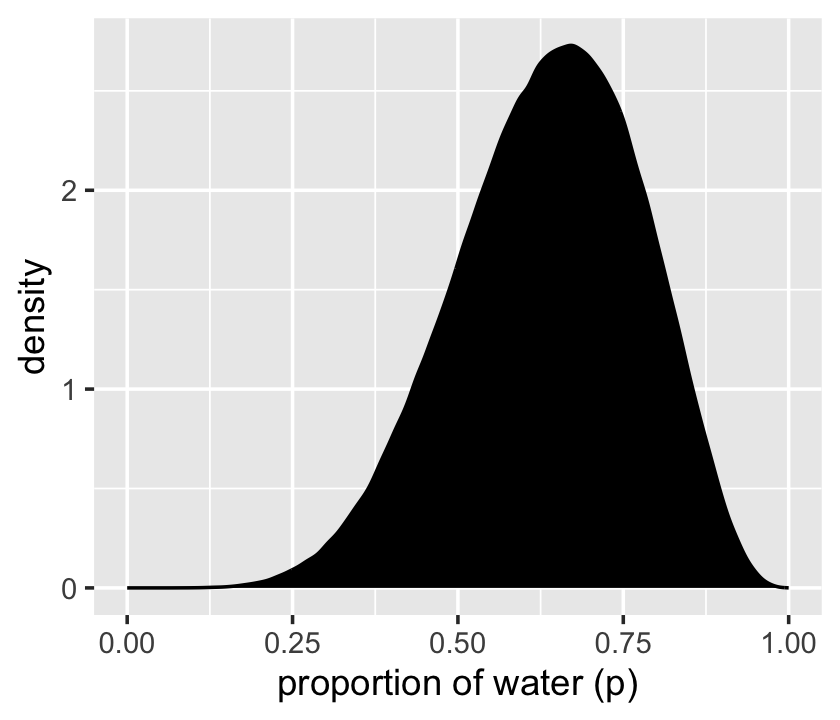

That was based on 1e4 samples. On page 53, McElreath said the density would converge on the idealized shape if we keep increasing the number of samples. Here’s what it looks like with 1e6.

set.seed(3)

d %>%

slice_sample(n = 1e6, weight_by = posterior, replace = T) %>%

ggplot(aes(x = p_grid)) +

geom_density(fill = "black") +

scale_x_continuous("proportion of water (p)", limits = c(0, 1))

Yep, that’s more ideal.

3.2 Sampling to summarize

“Once your model produces a posterior distribution, the model’s work is done. But your work has just begun. It is necessary to summarize and interpret the posterior distribution. Exactly how it is summarized depends upon your purpose” (p. 53).

3.2.1 Intervals of defined boundaries.

To get the proportion of water less than some value of p_grid within the tidyverse, you might first filter() by that value and then take the sum() within summarise().

d %>%

filter(p_grid < .5) %>%

summarise(sum = sum(posterior))## # A tibble: 1 × 1

## sum

## <dbl>

## 1 0.172To learn more about dplyr::summarise() and related functions, check out Baert’s Data wrangling part 4: Summarizing and slicing your data and Section 5.6 of R4DS (Grolemund & Wickham, 2017).

If what you want is a frequency based on filtering by samples, then you might use n() within summarise().

samples %>%

filter(p_grid < .5) %>%

summarise(sum = n() / n_samples)## # A tibble: 1 × 1

## sum

## <dbl>

## 1 0.163A more explicit approach for the same computation is to follow up count() with mutate().

samples %>%

count(p_grid < .5) %>%

mutate(probability = n / sum(n))## # A tibble: 2 × 3

## `p_grid < 0.5` n probability

## <lgl> <int> <dbl>

## 1 FALSE 8371 0.837

## 2 TRUE 1629 0.163An even trickier approach for the same is to insert the logical statement p_grid < .5 within the mean() function.

samples %>%

summarise(sum = mean(p_grid < .5))## # A tibble: 1 × 1

## sum

## <dbl>

## 1 0.163Much like McElreath discussed in the Overthinking: Counting with sum box, this works “because R internally converts a logical expression, like samples < 0.5, to a vector of TRUE and FALSE results, one for each element of samples, saying whether or not each element matches the criterion” (p. 54). When we inserted that vector of TRUE and FALSE values within the mean() function, they were then internally converted to a vector of 1’s and 0’s, the mean of which was the probability. Tricky!

To determine the posterior probability between 0.5 and 0.75, you can use & within filter().

samples %>%

filter(p_grid > .5 & p_grid < .75) %>%

summarise(sum = n() / n_samples)## # A tibble: 1 × 1

## sum

## <dbl>

## 1 0.606Just multiply that value by 100 to get a percent.

samples %>%

filter(p_grid > .5 & p_grid < .75) %>%

summarise(sum = n() / n_samples,

percent = n() / n_samples * 100)## # A tibble: 1 × 2

## sum percent

## <dbl> <dbl>

## 1 0.606 60.6And, of course, you can do that with our mean() trick, too.

samples %>%

summarise(percent = 100 * mean(p_grid > .5 & p_grid < .75))## # A tibble: 1 × 1

## percent

## <dbl>

## 1 60.63.2.2 Intervals of defined mass.

It is more common to see scientific journals reporting an interval of defined mass, usually known as a confidence interval. An interval of posterior probability, such as the ones we are working with, may instead be called a credible interval. We’re going to call it a compatibility interval instead, in order to avoid the unwarranted implications of “confidence” and “credibility.” What the interval indicates is a range of parameter values compatible with the model and data. The model and data themselves may not inspire confidence, in which case the interval will not either. (p. 54, emphasis in the original)

As a part of this block quote, McElreath linked to endnote 54, which reads: “I learned this term from Sander Greenland and his collaborators. See Amrhein et al. (2019) and Gelman and Greenland (2019)” (p. 560).

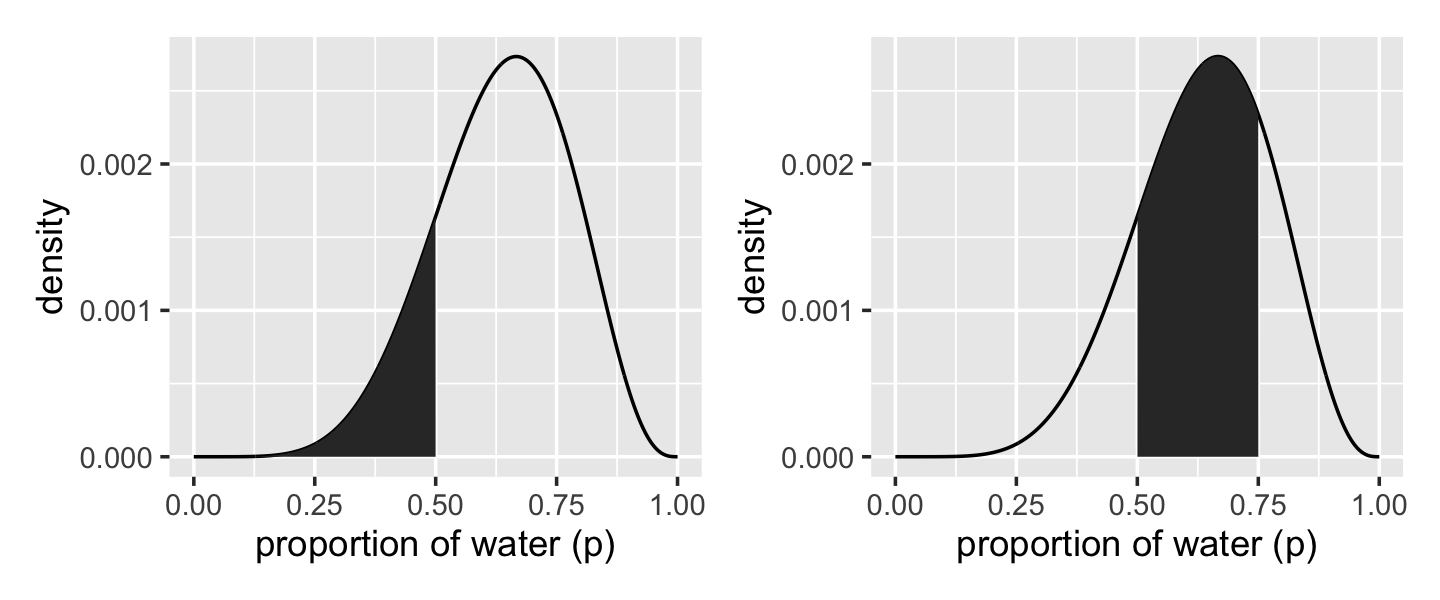

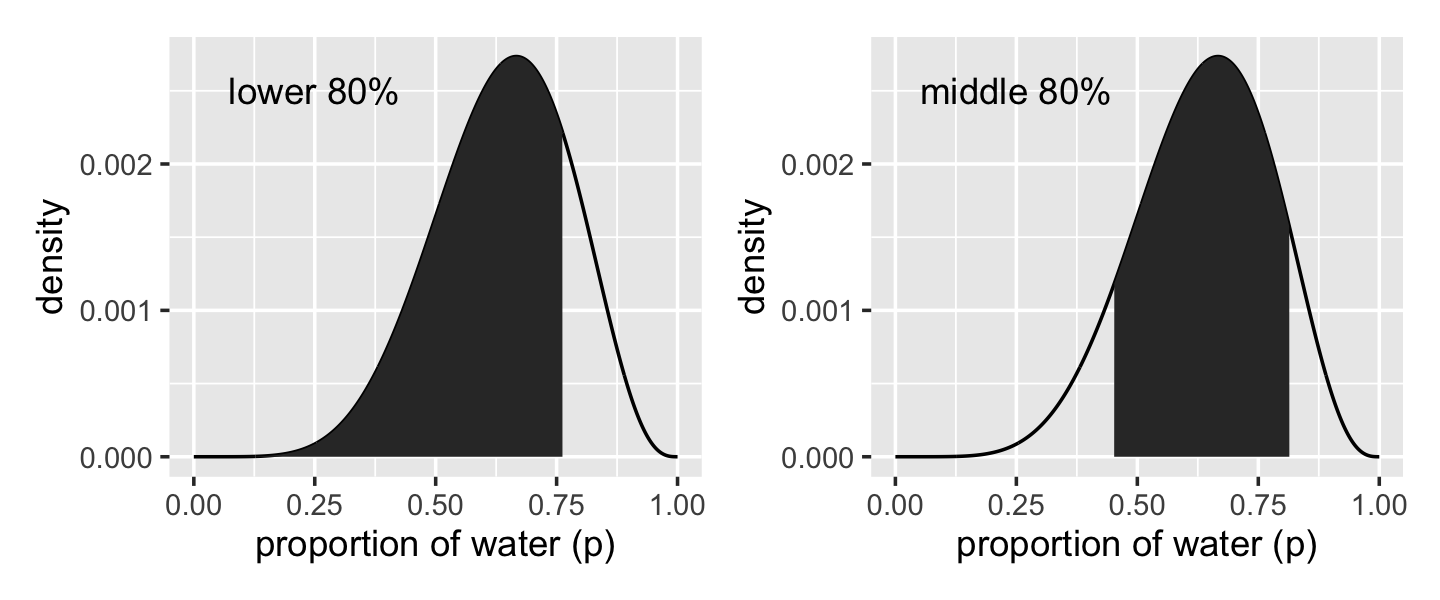

We’ll create the upper two panels for Figure 3.2 with geom_line(), geom_area(), some careful filtering, and a little patchwork syntax.

# upper left panel

p1 <-

d %>%

ggplot(aes(x = p_grid, y = posterior)) +

geom_line() +

geom_area(data = d %>% filter(p_grid < .5)) +

labs(x = "proportion of water (p)",

y = "density")

# upper right panel

p2 <-

d %>%

ggplot(aes(x = p_grid, y = posterior)) +

geom_line() +

# note this next line is the only difference in code from the last plot

geom_area(data = d %>% filter(p_grid < .75 & p_grid > .5)) +

labs(x = "proportion of water (p)",

y = "density")

# combine

library(patchwork)

p1 + p2

We’ll come back for the lower two panels in a bit.

Since we saved our p_grid samples within the well-named samples tibble, we’ll have to index with $ within quantile.

(q_80 <- quantile(samples$p_grid, prob = .8))## 80%

## 0.7627628That value will come in handy for the lower left panel of Figure 3.2. For an alternative approach, we could select() the samples vector, extract it from the tibble with pull(), and then pump it into quantile().

samples %>%

pull(p_grid) %>%

quantile(prob = .8)## 80%

## 0.7627628We might also use quantile() within summarise().

samples %>%

summarise(`80th percentile` = quantile(p_grid, p = .8))## # A tibble: 1 × 1

## `80th percentile`

## <dbl>

## 1 0.763Here’s the summarise() approach with two probabilities.

samples %>%

summarise(`10th percentile` = quantile(p_grid, p = .1),

`90th percentile` = quantile(p_grid, p = .9))## # A tibble: 1 × 2

## `10th percentile` `90th percentile`

## <dbl> <dbl>

## 1 0.451 0.815The tidyverse approach is nice in that that family of functions typically returns a data frame. But sometimes you just want your values in a numeric vector for the sake of quick indexing. In that case, base R quantile() shines.

(q_10_and_90 <- quantile(samples$p_grid, prob = c(.1, .9)))## 10% 90%

## 0.4514515 0.8148148Now we have our cutoff values saved as q_80 and q_10_and_90, we’re ready to make the bottom panels of Figure 3.2.

# lower left panel

p1 <-

d %>%

ggplot(aes(x = p_grid, y = posterior)) +

geom_line() +

geom_area(data = d %>% filter(p_grid < q_80)) +

annotate(geom = "text",

x = .25, y = .0025,

label = "lower 80%") +

labs(x = "proportion of water (p)",

y = "density")

# lower right panel

p2 <-

d %>%

ggplot(aes(x = p_grid, y = posterior)) +

geom_line() +

geom_area(data = d %>% filter(p_grid > q_10_and_90[1] & p_grid < q_10_and_90[2])) +

annotate(geom = "text",

x = .25, y = .0025,

label = "middle 80%") +

labs(x = "proportion of water (p)",

y = "density")

# combine

p1 + p2

Now we follow along with McElreath’s R code 3.11 to compute a highly skewed posterior. We’ve already defined p_grid and prior within d, above. Here we’ll reuse them and update the rest of the columns.

# here we update the `dbinom()` parameters

n_success <- 3

n_trials <- 3

# update `d`

d <-

d %>%

mutate(likelihood = dbinom(n_success, size = n_trials, prob = p_grid)) %>%

mutate(posterior = (likelihood * prior) / sum(likelihood * prior))

# make the next part reproducible

set.seed(3)

# here's our new samples tibble

(

samples <-

d %>%

slice_sample(n = n_samples, weight_by = posterior, replace = T)

)## # A tibble: 10,000 × 4

## p_grid prior likelihood posterior

## <dbl> <dbl> <dbl> <dbl>

## 1 0.717 1 0.368 0.00147

## 2 0.652 1 0.277 0.00111

## 3 0.548 1 0.164 0.000656

## 4 1 1 1 0.00400

## 5 0.991 1 0.973 0.00389

## 6 0.788 1 0.489 0.00195

## 7 0.940 1 0.830 0.00332

## 8 0.817 1 0.545 0.00218

## 9 0.955 1 0.871 0.00348

## 10 0.449 1 0.0908 0.000363

## # … with 9,990 more rowsThe rethinking::PI() function works like a nice shorthand for quantile().

quantile(samples$p_grid, prob = c(.25, .75))## 25% 75%

## 0.7087087 0.9349349rethinking::PI(samples$p_grid, prob = .5)## 25% 75%

## 0.7087087 0.9349349Now’s a good time to introduce Matthew Kay’s (2022) tidybayes package, which offers an array of convenience functions for summarizing Bayesian models of the type we’ll be working with in this project. For all the brms-related deets, see Kay’s (2021) vignette, Extracting and visualizing tidy draws from brms models. Here we start simple.

library(tidybayes)

median_qi(samples$p_grid, .width = .5)## y ymin ymax .width .point .interval

## 1 0.8428428 0.7087087 0.9349349 0.5 median qiThe tidybayes package contains a family of functions that make it easy to summarize a distribution with a measure of central tendency accompanied by intervals. With median_qi(), we asked for the median and quantile-based intervals–just like we’ve been doing with quantile(). Note how the .width argument within median_qi() worked the same way the prob argument did within rethinking::PI(). With .width = .5, we indicated we wanted a quantile-based 50% interval, which was returned in the ymin and ymax columns. The tidybayes framework makes it easy to request multiple types of intervals. E.g., here we’ll request 50%, 80%, and 99% intervals.

median_qi(samples$p_grid, .width = c(.5, .8, .99))## y ymin ymax .width .point .interval

## 1 0.8428428 0.7087087 0.9349349 0.50 median qi

## 2 0.8428428 0.5705706 0.9749750 0.80 median qi

## 3 0.8428428 0.2562563 0.9989990 0.99 median qiThe .width column in the output indexed which line presented which interval. The value in the y column remained constant across rows. That’s because that column listed the measure of central tendency, the median in this case.

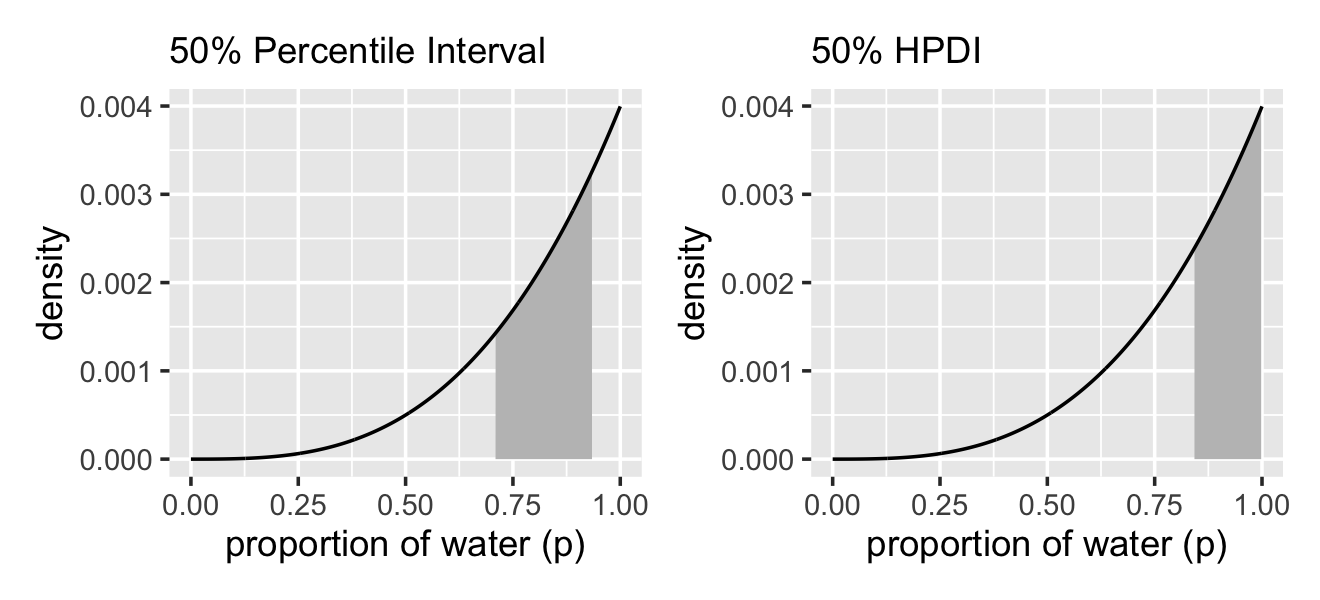

Now let’s use the rethinking::HPDI() function to return 50% highest posterior density intervals (HPDIs).

rethinking::HPDI(samples$p_grid, prob = .5)## |0.5 0.5|

## 0.8418418 0.9989990The reason I introduce tidybayes now is that the functions of the brms package only support percentile-based intervals of the type we computed with quantile() and median_qi(). But tidybayes also supports HPDIs.

mode_hdi(samples$p_grid, .width = .5)## y ymin ymax .width .point .interval

## 1 0.955384 0.8418418 0.998999 0.5 mode hdiThis time we used the mode as the measure of central tendency. With this family of tidybayes functions, you specify the measure of central tendency in the prefix (i.e., mean, median, or mode) and then the type of interval you’d like (i.e., qi or hdi).

If all you want are the intervals without the measure of central tendency or all that other technical information, tidybayes also offers the handy qi() and hdi() functions.

qi(samples$p_grid, .width = .5)## [,1] [,2]

## [1,] 0.7087087 0.9349349hdi(samples$p_grid, .width = .5)## [,1] [,2]

## [1,] 0.8418418 0.998999These are nice in that they return simple numeric vectors, making them particularly useful to use as references within ggplot2 plots. Now we have that skill, we can use it to make Figure 3.3.

# lower left panel

p1 <-

d %>%

ggplot(aes(x = p_grid, y = posterior)) +

# check out our sweet `qi()` indexing

geom_area(data = d %>%

filter(p_grid > qi(samples$p_grid, .width = .5)[1] &

p_grid < qi(samples$p_grid, .width = .5)[2]),

fill = "grey75") +

geom_line() +

labs(subtitle = "50% Percentile Interval",

x = "proportion of water (p)",

y = "density")

# lower right panel

p2 <-

d %>%

ggplot(aes(x = p_grid, y = posterior)) +

geom_area(data = . %>%

filter(p_grid > hdi(samples$p_grid, .width = .5)[1] &

p_grid < hdi(samples$p_grid, .width = .5)[2]),

fill = "grey75") +

geom_line() +

labs(subtitle = "50% HPDI",

x = "proportion of water (p)",

y = "density")

# combine!

p1 | p2

In the geom_area() line for the HPDI plot, did you notice how we replaced d with .? When using the pipe (i.e., %>%), you can use the . as a placeholder for the original data object. It’s an odd and handy trick to know about. Go here to learn more.

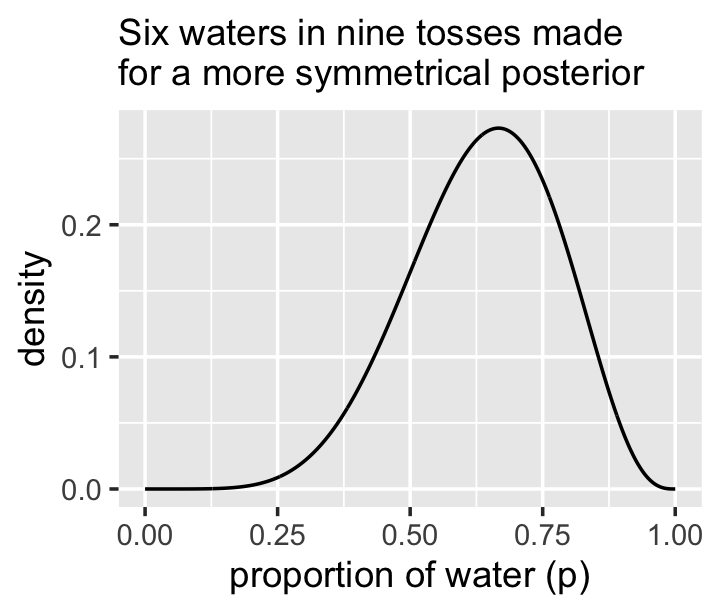

So the HPDI has some advantages over the PI. But in most cases, these two types of interval are very similar. They only look so different in this case because the posterior distribution is highly skewed. If we instead used samples from the posterior distribution for six waters in nine tosses, these intervals would be nearly identical. Try it for yourself, using different probability masses, such as

prob=0.8andprob=0.95. When the posterior is bell shaped, it hardly matters which type of interval you use. (p. 57)

Let’s try it out. First we’ll update the simulation for six waters in nine tosses.

# "six waters in nine tosses"

n_success <- 6

n_trials <- 9

new_d <-

d %>%

mutate(likelihood = dbinom(n_success, size = n_trials, prob = p_grid)) %>%

mutate(posterior = (likelihood * prior) / sum(posterior))

set.seed(3)

new_samples <-

new_d %>%

slice_sample(n = n_samples, weight_by = posterior, replace = T)Here are the intervals by .width and type of .interval.

bind_rows(mean_hdi(new_samples$p_grid, .width = c(.8, .95)),

mean_qi(new_samples$p_grid, .width = c(.8, .95))) %>%

select(.width, .interval, ymin:ymax) %>%

arrange(.width) %>%

mutate_if(is.double, round, digits = 2)## .width .interval ymin ymax

## 1 0.80 hdi 0.49 0.84

## 2 0.80 qi 0.45 0.81

## 3 0.95 hdi 0.37 0.89

## 4 0.95 qi 0.35 0.88We didn’t need that last mutate_if() line. It just made it easier to compare the ymin and ymax values. Anyway, McElreath was right. This time the differences between the HPDIs and QIs were trivial. Here’s a look at the posterior.

new_d %>%

ggplot(aes(x = p_grid)) +

geom_line(aes(y = posterior)) +

labs(subtitle = "Six waters in nine tosses made\nfor a more symmetrical posterior",

x = "proportion of water (p)",

y = "density")

Be warned:

The HPDI also has some disadvantages. HPDI is more computationally intensive than PI and suffers from greater simulation variance, which is a fancy way of saying that it is sensitive to how many samples you draw from the posterior. It is also harder to understand and many scientific audiences will not appreciate its features, while they will immediately understand a percentile interval, as ordinary non-Bayesian intervals are typically interpreted (incorrectly) as percentile intervals (pp. 57–58, emphasis in the original)

For convenience, we’ll primarily stick to the PI-based intervals in this ebook.

3.2.2.1 Rethinking: What do compatibility intervals mean?

At the start of this section, McElreath poked a little at frequentist confidence intervals. For an introduction to confidence intervals from the perspective of a frequentist, you might check out Cumming’s (2014) The new statistics: Why and how and the works referenced therein. Though their definition isn’t the most intuitive, I usually use confidence intervals when I wear my frequentist hat.

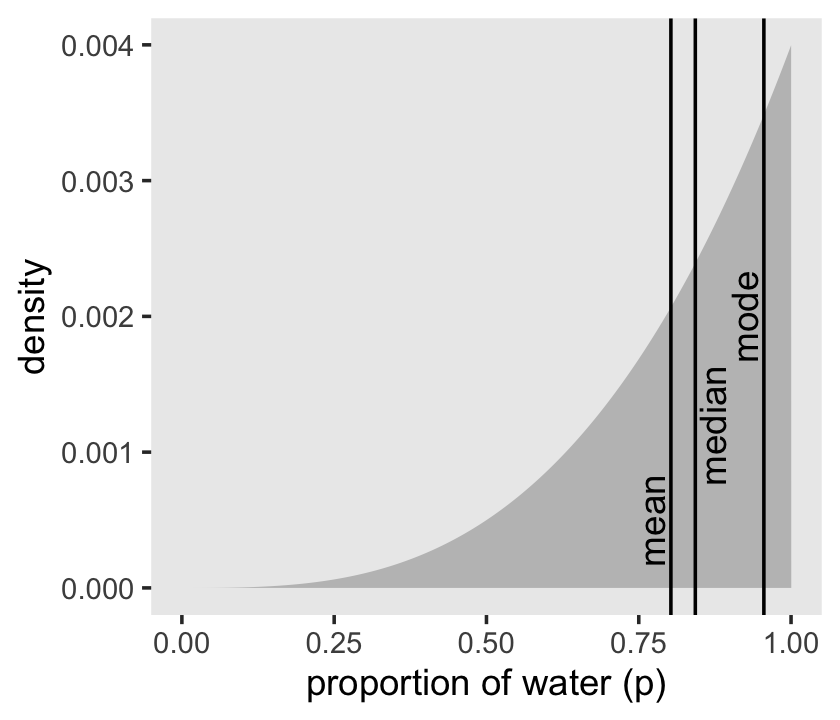

3.2.3 Point estimates.

We’ve been calling point estimates measures of central tendency. If we arrange() our d tibble in descending order by posterior, we’ll see the corresponding p_grid value for its MAP estimate.

d %>%

arrange(desc(posterior))## # A tibble: 1,000 × 4

## p_grid prior likelihood posterior

## <dbl> <dbl> <dbl> <dbl>

## 1 1 1 1 0.00400

## 2 0.999 1 0.997 0.00398

## 3 0.998 1 0.994 0.00397

## 4 0.997 1 0.991 0.00396

## 5 0.996 1 0.988 0.00395

## 6 0.995 1 0.985 0.00394

## 7 0.994 1 0.982 0.00392

## 8 0.993 1 0.979 0.00391

## 9 0.992 1 0.976 0.00390

## 10 0.991 1 0.973 0.00389

## # … with 990 more rowsTo emphasize it, we can use slice() to select the top row.

d %>%

arrange(desc(posterior)) %>%

slice(1)## # A tibble: 1 × 4

## p_grid prior likelihood posterior

## <dbl> <dbl> <dbl> <dbl>

## 1 1 1 1 0.00400We can get the mode with mode_hdi() or mode_qi().

samples %>% mode_hdi(p_grid)## # A tibble: 1 × 6

## p_grid .lower .upper .width .point .interval

## <dbl> <dbl> <dbl> <dbl> <chr> <chr>

## 1 0.955 0.475 1 0.95 mode hdisamples %>% mode_qi(p_grid)## # A tibble: 1 × 6

## p_grid .lower .upper .width .point .interval

## <dbl> <dbl> <dbl> <dbl> <chr> <chr>

## 1 0.955 0.399 0.994 0.95 mode qiThose returned a lot of output in addition to the mode. If all you want is the mode itself, you can just use tidybayes::Mode().

Mode(samples$p_grid)## [1] 0.955384Medians and means are typical measures of central tendency, too.

samples %>%

summarise(mean = mean(p_grid),

median = median(p_grid))## # A tibble: 1 × 2

## mean median

## <dbl> <dbl>

## 1 0.803 0.843We can inspect the three types of point estimate in the left panel of Figure 3.4. First we’ll bundle the three point estimates together in a tibble.

(

point_estimates <-

bind_rows(samples %>% mean_qi(p_grid),

samples %>% median_qi(p_grid),

samples %>% mode_qi(p_grid)) %>%

select(p_grid, .point) %>%

# these last two columns will help us annotate

mutate(x = p_grid + c(-.03, .03, -.03),

y = c(.0005, .0012, .002))

)## # A tibble: 3 × 4

## p_grid .point x y

## <dbl> <chr> <dbl> <dbl>

## 1 0.803 mean 0.773 0.0005

## 2 0.843 median 0.873 0.0012

## 3 0.955 mode 0.925 0.002Now plot.

d %>%

ggplot(aes(x = p_grid)) +

geom_area(aes(y = posterior),

fill = "grey75") +

geom_vline(xintercept = point_estimates$p_grid) +

geom_text(data = point_estimates,

aes(x = x, y = y, label = .point),

angle = 90) +

labs(x = "proportion of water (p)",

y = "density") +

theme(panel.grid = element_blank())

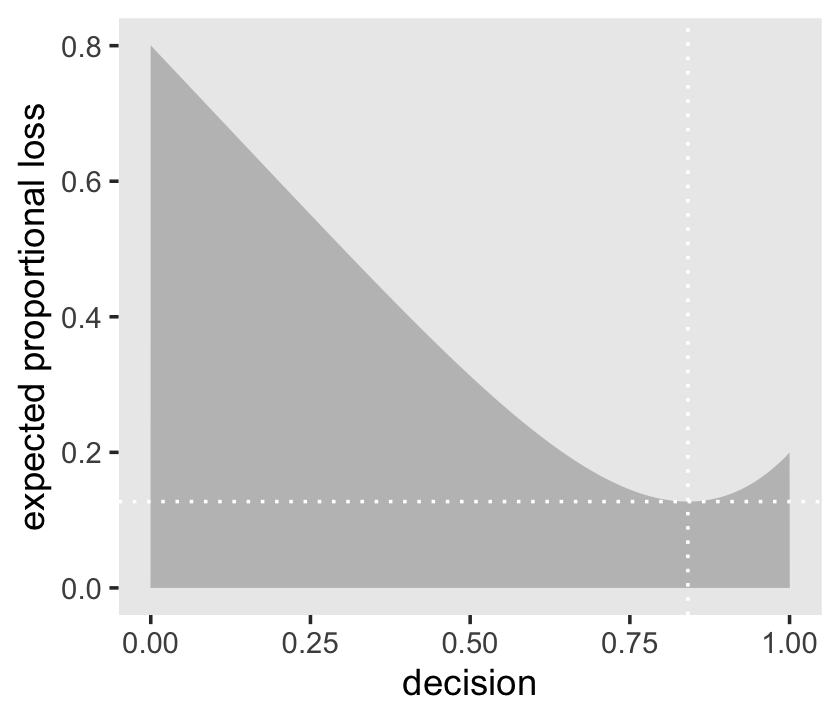

As it turns out “different loss functions imply different point estimates” (p. 59, emphasis in the original).

Let \(p\) be the proportion of the Earth covered by water and \(d\) be our guess. If McElreath pays us $100 if we guess exactly right but subtracts money from the prize proportional to how far off we are, then our loss is proportional to \(d - p\). If we decide \(d = .5\), we can compute our expected loss.

d %>%

summarise(`expected loss` = sum(posterior * abs(0.5 - p_grid)))## # A tibble: 1 × 1

## `expected loss`

## <dbl>

## 1 0.313What McElreath did with sapply(), we’ll do with purrr::map(). If you haven’t used it, map() is part of a family of similarly-named functions (e.g., map2()) from the purrr package (Henry & Wickham, 2020), which is itself part of the tidyverse. The map() family is the tidyverse alternative to the family of apply() functions from the base R framework. You can learn more about how to use the map() family here or here or here.

make_loss <- function(our_d) {

d %>%

mutate(loss = posterior * abs(our_d - p_grid)) %>%

summarise(weighted_average_loss = sum(loss))

}

(

l <-

d %>%

select(p_grid) %>%

rename(decision = p_grid) %>%

mutate(weighted_average_loss = purrr::map(decision, make_loss)) %>%

unnest(weighted_average_loss)

)## # A tibble: 1,000 × 2

## decision weighted_average_loss

## <dbl> <dbl>

## 1 0 0.800

## 2 0.00100 0.799

## 3 0.00200 0.798

## 4 0.00300 0.797

## 5 0.00400 0.796

## 6 0.00501 0.795

## 7 0.00601 0.794

## 8 0.00701 0.793

## 9 0.00801 0.792

## 10 0.00901 0.791

## # … with 990 more rowsNow we’re ready for the right panel of Figure 3.4.

# this will help us find the x and y coordinates for the minimum value

min_loss <-

l %>%

filter(weighted_average_loss == min(weighted_average_loss)) %>%

as.numeric()

# the plot

l %>%

ggplot(aes(x = decision, y = weighted_average_loss)) +

geom_area(fill = "grey75") +

geom_vline(xintercept = min_loss[1], color = "white", linetype = 3) +

geom_hline(yintercept = min_loss[2], color = "white", linetype = 3) +

ylab("expected proportional loss") +

theme(panel.grid = element_blank())

We saved the exact minimum value as min_loss[1], which is 0.8408408. Within sampling error, this is the posterior median as depicted by our samples.

samples %>%

summarise(posterior_median = median(p_grid))## # A tibble: 1 × 1

## posterior_median

## <dbl>

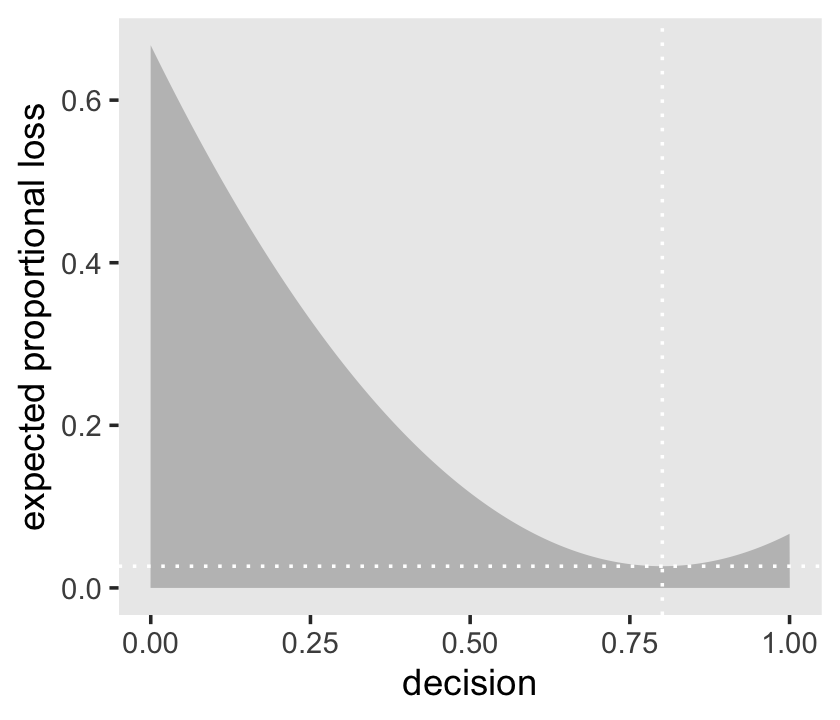

## 1 0.843The quadratic loss \((d - p)^2\) suggests we should use the mean instead. Let’s investigate.

# amend our loss function

make_loss <- function(our_d) {

d %>%

mutate(loss = posterior * (our_d - p_grid)^2) %>%

summarise(weighted_average_loss = sum(loss))

}

# remake our `l` data

l <-

d %>%

select(p_grid) %>%

rename(decision = p_grid) %>%

mutate(weighted_average_loss = purrr::map(decision, make_loss)) %>%

unnest(weighted_average_loss)

# update to the new minimum loss coordinates

min_loss <-

l %>%

filter(weighted_average_loss == min(weighted_average_loss)) %>%

as.numeric()

# update the plot

l %>%

ggplot(aes(x = decision, y = weighted_average_loss)) +

geom_area(fill = "grey75") +

geom_vline(xintercept = min_loss[1], color = "white", linetype = 3) +

geom_hline(yintercept = min_loss[2], color = "white", linetype = 3) +

ylab("expected proportional loss") +

theme(panel.grid = element_blank())

Based on quadratic loss \((d - p)^2\), the exact minimum value is 0.8008008. Within sampling error, this is the posterior mean of our samples.

samples %>%

summarise(posterior_meaan = mean(p_grid))## # A tibble: 1 × 1

## posterior_meaan

## <dbl>

## 1 0.803Usually, research scientists don’t think about loss functions. And so any point estimate like the mean or MAP that they may report isn’t intended to support any particular decision, but rather to describe the shape of the posterior. You might argue that the decision to make is whether or not to accept an hypothesis. But the challenge then is to say what the relevant costs and benefits would be, in terms of the knowledge gained or lost. Usually it’s better to communicate as much as you can about the posterior distribution, as well as the data and the model itself, so that others can build upon your work. Premature decisions to accept or reject hypotheses can cost lives. (p. 61)

In the endnote (62) linked to the end of that quote in the text, McElreath wrote: “See Hauer (2004) for three tales from transportation safety in which testing resulted in premature incorrect decisions and a demonstrable and continuing loss of human life” (p. 561).

3.3 Sampling to simulate prediction

McElreath’s five good reasons for simulation were

- model design

- model checking,

- software validation,

- research design, and

- forecasting.

3.3.1 Dummy data.

Dummy data for the globe tossing model arise from the binomial likelihood. If you let \(w\) be a count of water and \(n\) be the number of tosses, the binomial likelihood is

\[\operatorname{Pr} (w|n, p) = \frac{n!}{w!(n - w)!} p^w (1 - p)^{n - w}.\]

Letting \(n = 2\), \(p(w) = .7\), and \(w_\text{observed} = 0 \text{ through }2\), the densities are:

tibble(n = 2,

`p(w)` = .7,

w = 0:2) %>%

mutate(density = dbinom(w, size = n, prob = `p(w)`))## # A tibble: 3 × 4

## n `p(w)` w density

## <dbl> <dbl> <int> <dbl>

## 1 2 0.7 0 0.09

## 2 2 0.7 1 0.42

## 3 2 0.7 2 0.49If we’re going to simulate, we should probably set our seed. Doing so makes the results reproducible.

set.seed(3)

rbinom(1, size = 2, prob = .7)## [1] 2Here are ten reproducible draws.

set.seed(3)

rbinom(10, size = 2, prob = .7)## [1] 2 1 2 2 1 1 2 2 1 1Now generate 100,000 (i.e., 1e5) reproducible dummy observations.

# how many would you like?

n_draws <- 1e5

set.seed(3)

d <- tibble(draws = rbinom(n_draws, size = 2, prob = .7))

d %>%

count(draws) %>%

mutate(proportion = n / nrow(d))## # A tibble: 3 × 3

## draws n proportion

## <int> <int> <dbl>

## 1 0 9000 0.09

## 2 1 42051 0.421

## 3 2 48949 0.489As McElreath mused in the text (p. 63), those simulated proportion values are very close to the analytically calculated values in our density column a few code blocks up.

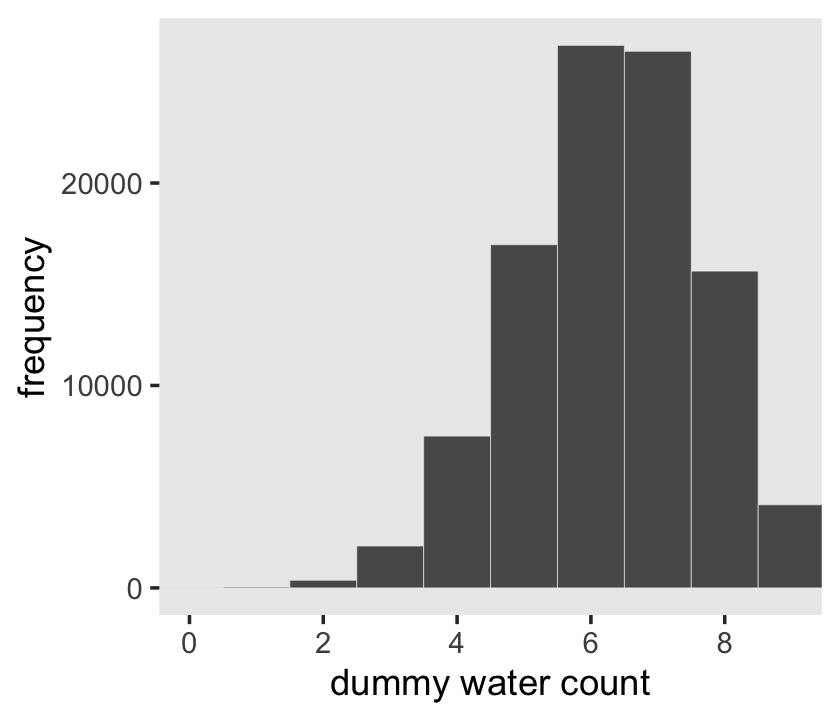

Here’s the simulation updated so \(n = 9\), which we plot in our version of Figure 3.5.

set.seed(3)

d <- tibble(draws = rbinom(n_draws, size = 9, prob = .7))

# the histogram

d %>%

ggplot(aes(x = draws)) +

geom_histogram(binwidth = 1, center = 0,

color = "grey92", linewidth = 1/10) +

scale_x_continuous("dummy water count", breaks = 0:4 * 2) +

ylab("frequency") +

coord_cartesian(xlim = c(0, 9)) +

theme(panel.grid = element_blank())

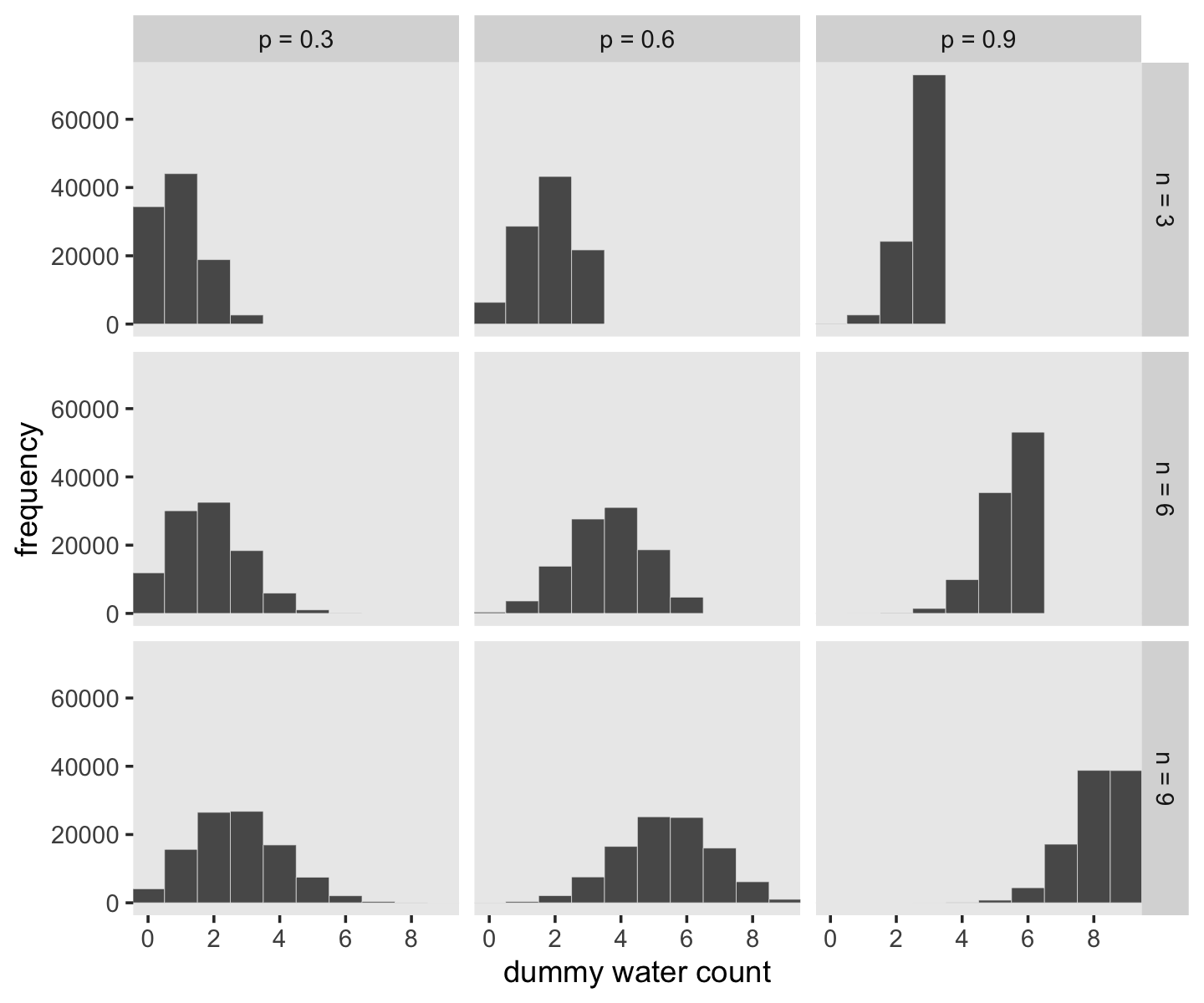

McElreath suggested we play around with different values of size and prob. With the next block of code, we’ll simulate nine conditions.

n_draws <- 1e5

simulate_binom <- function(n, probability) {

set.seed(3)

rbinom(n_draws, size = n, prob = probability)

}

d <-

crossing(n = c(3, 6, 9),

probability = c(.3, .6, .9)) %>%

mutate(draws = map2(n, probability, simulate_binom)) %>%

ungroup() %>%

mutate(n = str_c("n = ", n),

probability = str_c("p = ", probability)) %>%

unnest(draws)

head(d)## # A tibble: 6 × 3

## n probability draws

## <chr> <chr> <int>

## 1 n = 3 p = 0.3 0

## 2 n = 3 p = 0.3 2

## 3 n = 3 p = 0.3 1

## 4 n = 3 p = 0.3 0

## 5 n = 3 p = 0.3 1

## 6 n = 3 p = 0.3 1Let’s plot the simulation results.

d %>%

ggplot(aes(x = draws)) +

geom_histogram(binwidth = 1, center = 0,

color = "grey92", linewidth = 1/10) +

scale_x_continuous("dummy water count", breaks = 0:4 * 2) +

ylab("frequency") +

coord_cartesian(xlim = c(0, 9)) +

theme(panel.grid = element_blank()) +

facet_grid(n ~ probability)

3.3.2 Model checking.

If you’re new to applied statistics, you might be surprised how often mistakes arise.

3.3.2.1 Did the software work?

Let this haunt your dreams: “There is no way to really be sure that software works correctly” (p. 64).

If you’d like to dive deeper into these dark waters, check out one my favorite talks from StanCon 2018, Esther Williams in the Harold Holt Memorial Swimming Pool, by the ineffable Dan Simpson. If Simpson doesn’t end up drowning you, see Gabry and Simpson’s talk at the Royal Statistical Society 2018, Visualization in Bayesian workflow, a follow-up blog called Maybe it’s time to let the old ways die; or We broke R-hat so now we have to fix it, and that blog’s associated pre-print by Vehtari et al. (2019), Rank-normalization, folding, and localization: An improved \(\widehat R\) for assessing convergence of MCMC.

3.3.2.2 Is the model adequate?

The implied predictions of the model are uncertain in two ways, and it’s important to be aware of both.

First, there is observation uncertainty. For any unique value of the parameter \(p\), there is a unique implied pattern of observations that the model expects. These patterns of observations are the same gardens of forking data that you explored in the previous chapter. These patterns are also what you sampled in the previous section. There is uncertainty in the predicted observations, because even if you know \(p\) with certainty, you won’t know the next globe toss with certainty (unless \(p = 0\) or \(p = 1\)).

Second, there is uncertainty about \(p\). The posterior distribution over \(p\) embodies this uncertainty. And since there is uncertainty about \(p\), there is uncertainty about everything that depends upon \(p\). The uncertainty in \(p\) will interact with the sampling variation, when we try to assess what the model tells us about outcomes.

We’d like to propagate the parameter uncertainty–carry it forward–as we evaluate the implied predictions. All that is required is averaging over the posterior density for \(p\), while computing the predictions. For each possible value of the parameter \(p\), there is an implied distribution of outcomes. So if you were to compute the sampling distribution of outcomes at each value of \(p\), then you could average all of these prediction distributions together, using the posterior probabilities of each value of \(p\), to get a posterior predictive distribution. (pp. 64–65, emphasis in the original)

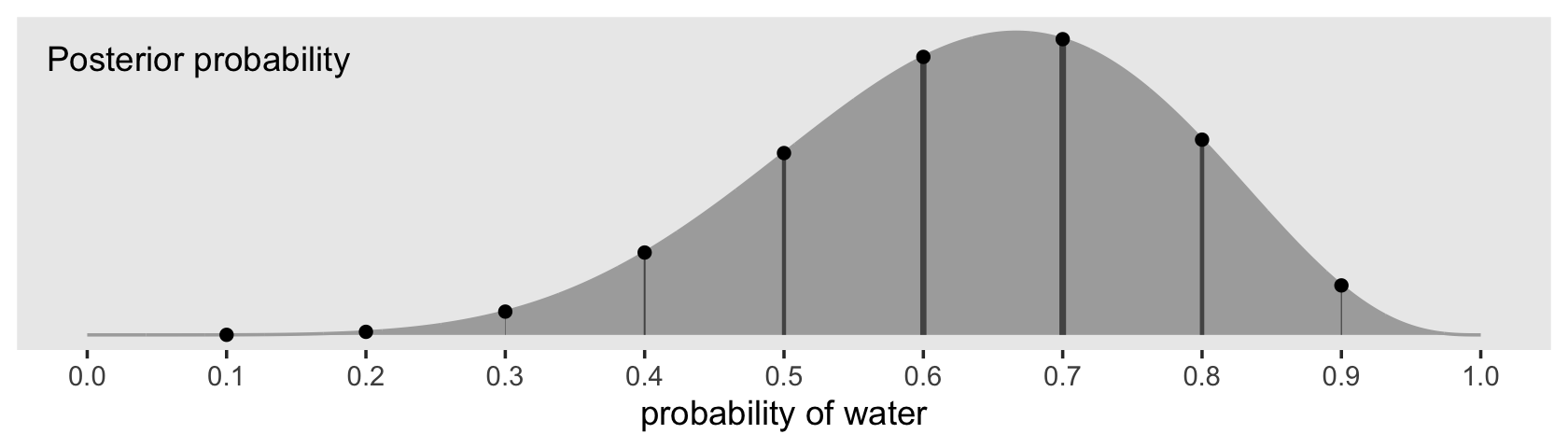

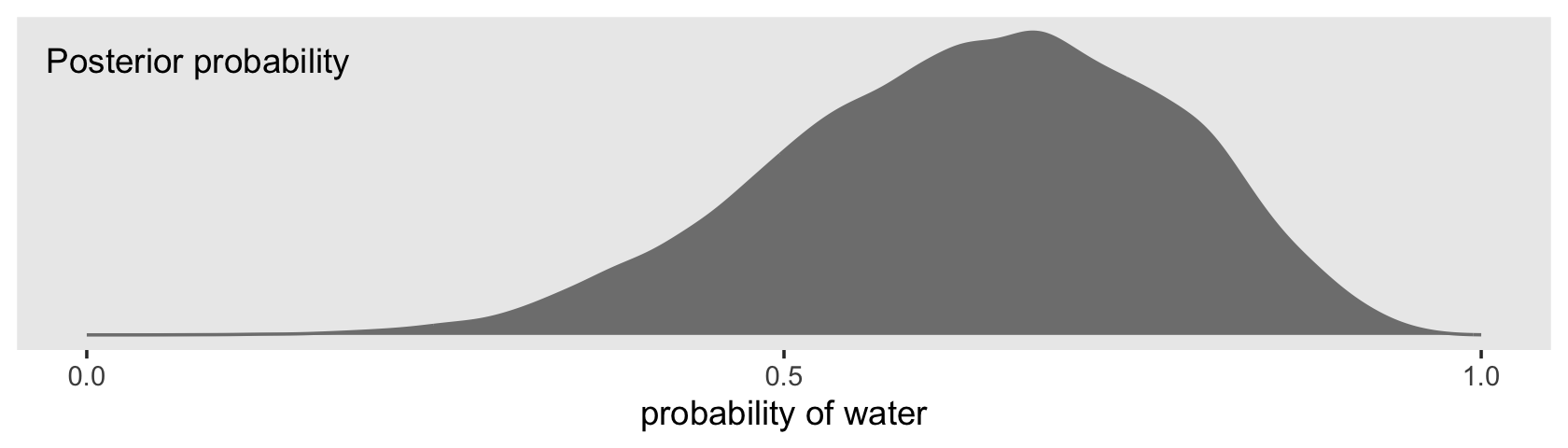

All this is depicted in Figure 3.6. To get ready to make our version, let’s first refresh our original grid approximation d.

# how many grid points would you like?

n <- 1001

n_success <- 6

n_trials <- 9

(

d <-

tibble(p_grid = seq(from = 0, to = 1, length.out = n),

# note we're still using a flat uniform prior

prior = 1) %>%

mutate(likelihood = dbinom(n_success, size = n_trials, prob = p_grid)) %>%

mutate(posterior = (likelihood * prior) / sum(likelihood * prior))

)## # A tibble: 1,001 × 4

## p_grid prior likelihood posterior

## <dbl> <dbl> <dbl> <dbl>

## 1 0 1 0 0

## 2 0.001 1 8.37e-17 8.37e-19

## 3 0.002 1 5.34e-15 5.34e-17

## 4 0.003 1 6.07e-14 6.07e-16

## 5 0.004 1 3.40e-13 3.40e-15

## 6 0.005 1 1.29e-12 1.29e-14

## 7 0.006 1 3.85e-12 3.85e-14

## 8 0.007 1 9.68e-12 9.68e-14

## 9 0.008 1 2.15e-11 2.15e-13

## 10 0.009 1 4.34e-11 4.34e-13

## # … with 991 more rowsWe can make our version of the top of Figure 3.6 with a little tricky filtering.

d %>%

ggplot(aes(x = p_grid, y = posterior)) +

geom_area(color = "grey67", fill = "grey67") +

geom_segment(data = . %>%

filter(p_grid %in% c(seq(from = .1, to = .9, by = .1), 3 / 10)),

aes(xend = p_grid, yend = 0, linewidth = posterior),

color = "grey33", show.legend = F) +

geom_point(data = . %>%

filter(p_grid %in% c(seq(from = .1, to = .9, by = .1), 3 / 10))) +

annotate(geom = "text",

x = .08, y = .0025,

label = "Posterior probability") +

scale_linewidth_continuous(range = c(0, 1)) +

scale_x_continuous("probability of water", breaks = 0:10 / 10) +

scale_y_continuous(NULL, breaks = NULL) +

theme(panel.grid = element_blank())

Note how we weighted the widths of the vertical lines by the posterior density.

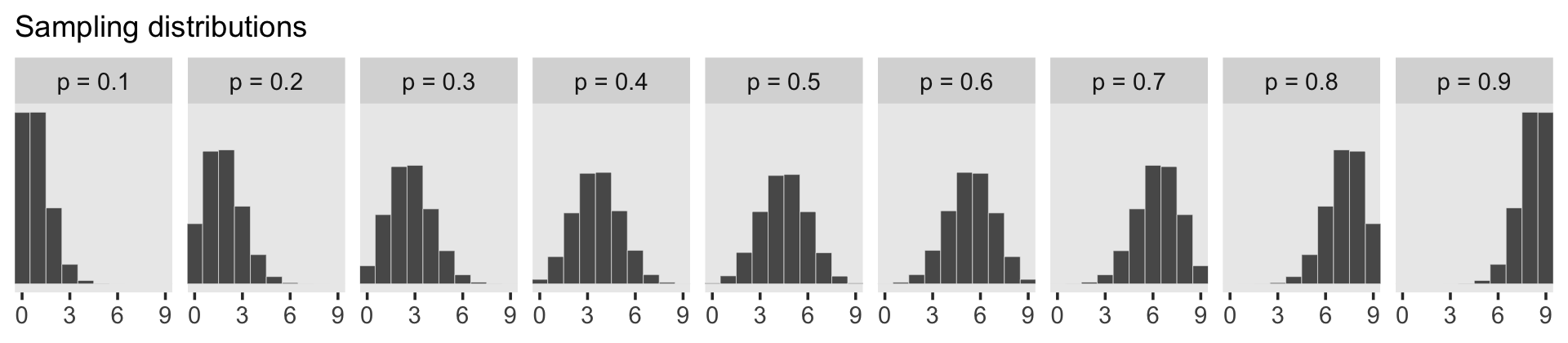

We’ll need to do a bit of wrangling before we’re ready to make the plot in the middle panel of Figure 3.6.

n_draws <- 1e5

simulate_binom <- function(probability) {

set.seed(3)

rbinom(n_draws, size = 9, prob = probability)

}

d_small <-

tibble(probability = seq(from = .1, to = .9, by = .1)) %>%

mutate(draws = purrr::map(probability, simulate_binom)) %>%

unnest(draws) %>%

mutate(label = str_c("p = ", probability))

head(d_small)## # A tibble: 6 × 3

## probability draws label

## <dbl> <int> <chr>

## 1 0.1 0 p = 0.1

## 2 0.1 2 p = 0.1

## 3 0.1 0 p = 0.1

## 4 0.1 0 p = 0.1

## 5 0.1 1 p = 0.1

## 6 0.1 1 p = 0.1Now we’re ready to plot.

d_small %>%

ggplot(aes(x = draws)) +

geom_histogram(binwidth = 1, center = 0,

color = "grey92", linewidth = 1/10) +

scale_x_continuous(NULL, breaks = 0:3 * 3) +

scale_y_continuous(NULL, breaks = NULL) +

labs(subtitle = "Sampling distributions") +

coord_cartesian(xlim = c(0, 9)) +

theme(panel.grid = element_blank()) +

facet_wrap(~ label, ncol = 9)

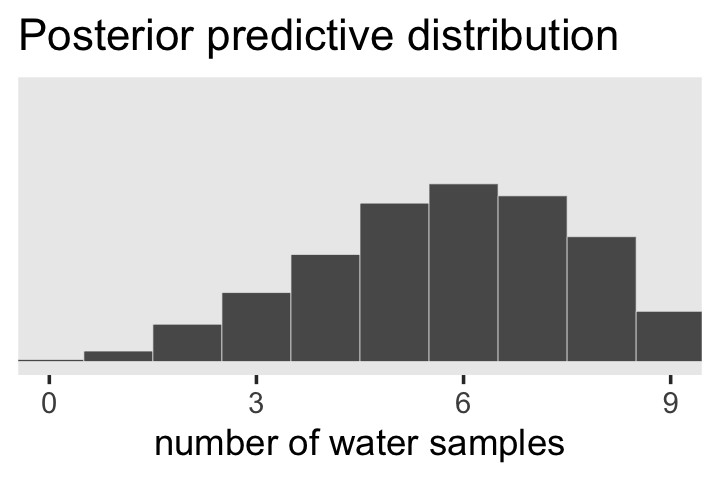

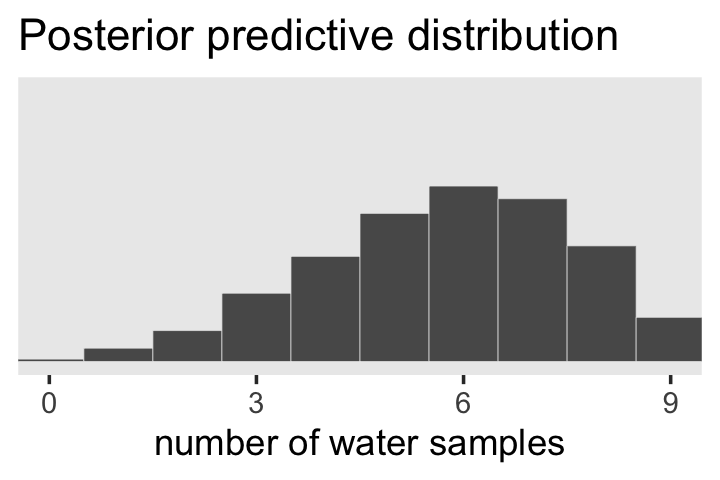

To make the plot at the bottom of Figure 3.6, we’ll redefine our samples, this time including the w variable (see the R code 3.26 block in the text).

# how many samples would you like?

n_samples <- 1e4

# make it reproducible

set.seed(3)

samples <-

d %>%

slice_sample(n = n_samples, weight_by = posterior, replace = T) %>%

mutate(w = purrr::map_dbl(p_grid, rbinom, n = 1, size = 9))

glimpse(samples)## Rows: 10,000

## Columns: 5

## $ p_grid <dbl> 0.564, 0.651, 0.487, 0.592, 0.596, 0.787, 0.727, 0.490, 0.751, 0.449, 0.619, 0.…

## $ prior <dbl> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1…

## $ likelihood <dbl> 0.224085305, 0.271795022, 0.151288232, 0.245578315, 0.248256678, 0.192870804, 0…

## $ posterior <dbl> 2.240853e-03, 2.717950e-03, 1.512882e-03, 2.455783e-03, 2.482567e-03, 1.928708e…

## $ w <dbl> 4, 7, 3, 3, 7, 6, 8, 2, 6, 4, 5, 5, 8, 6, 4, 6, 8, 2, 6, 9, 9, 7, 4, 8, 9, 8, 6…Here’s our histogram.

samples %>%

ggplot(aes(x = w)) +

geom_histogram(binwidth = 1, center = 0,

color = "grey92", linewidth = 1/10) +

scale_x_continuous("number of water samples",

breaks = 0:3 * 3) +

scale_y_continuous(NULL, breaks = NULL) +

ggtitle("Posterior predictive distribution") +

coord_cartesian(xlim = c(0, 9),

ylim = c(0, 3000)) +

theme(panel.grid = element_blank())

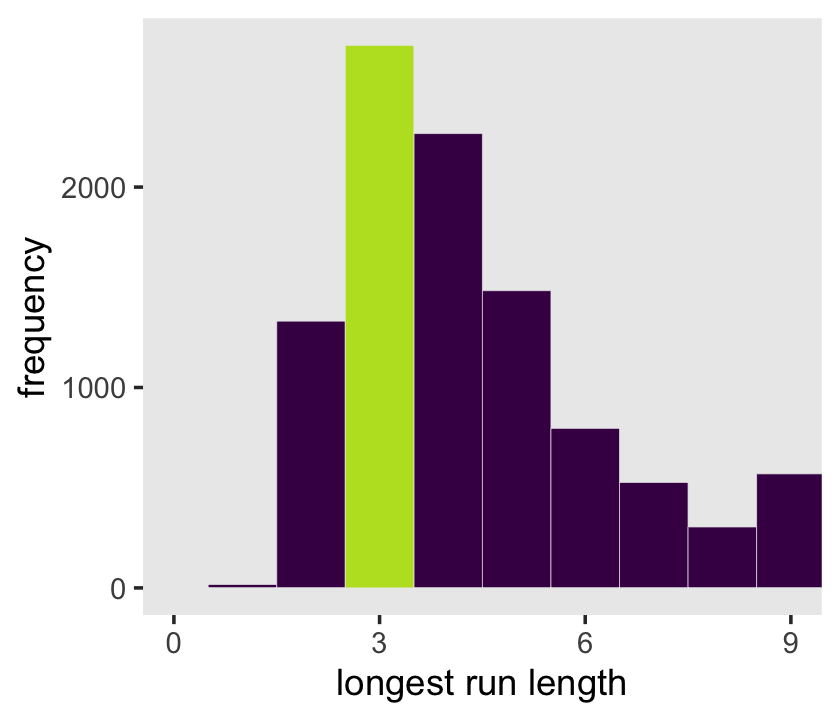

In Figure 3.7, McElreath considered the longest sequence of the sample values. We’ve been using rbinom() with the size parameter set to 9 for our simulations. E.g.,

rbinom(10, size = 9, prob = .6)## [1] 7 5 6 8 7 5 6 3 3 4Notice this collapsed (i.e., aggregated) over the sequences within the individual sets of 9. What we need is to simulate nine individual trials many times over. For example, this

rbinom(9, size = 1, prob = .6)## [1] 0 1 1 1 0 0 0 0 0would be the disaggregated version of just one of the numerals returned by rbinom() when size = 9. So let’s try simulating again with un-aggregated samples. We’ll keep adding to our samples tibble. In addition to the disaggregated draws based on the \(p\) values listed in p_grid, we’ll also want to add a row index for each of those p_grid values–it’ll come in handy when we plot.

# make it reproducible

set.seed(3)

samples <-

samples %>%

mutate(iter = 1:n(),

draws = purrr::map(p_grid, rbinom, n = 9, size = 1)) %>%

unnest(draws)

glimpse(samples)## Rows: 90,000

## Columns: 7

## $ p_grid <dbl> 0.564, 0.564, 0.564, 0.564, 0.564, 0.564, 0.564, 0.564, 0.564, 0.651, 0.651, 0.…

## $ prior <dbl> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1…

## $ likelihood <dbl> 0.2240853, 0.2240853, 0.2240853, 0.2240853, 0.2240853, 0.2240853, 0.2240853, 0.…

## $ posterior <dbl> 0.002240853, 0.002240853, 0.002240853, 0.002240853, 0.002240853, 0.002240853, 0…

## $ w <dbl> 4, 4, 4, 4, 4, 4, 4, 4, 4, 7, 7, 7, 7, 7, 7, 7, 7, 7, 3, 3, 3, 3, 3, 3, 3, 3, 3…

## $ iter <int> 1, 1, 1, 1, 1, 1, 1, 1, 1, 2, 2, 2, 2, 2, 2, 2, 2, 2, 3, 3, 3, 3, 3, 3, 3, 3, 3…

## $ draws <int> 1, 0, 1, 1, 0, 0, 1, 1, 0, 1, 1, 1, 1, 1, 0, 0, 1, 0, 1, 0, 0, 0, 0, 0, 0, 1, 1…The main action is in the draws column.

Now we have to count the longest sequences. The base R rle() function will help with that. Consider McElreath’s sequence of tosses.

tosses <- c("w", "l", "w", "w", "w", "l", "w", "l", "w")You can plug that into rle().

rle(tosses)## Run Length Encoding

## lengths: int [1:7] 1 1 3 1 1 1 1

## values : chr [1:7] "w" "l" "w" "l" "w" "l" "w"For our purposes, we’re interested in lengths. That tells us the length of each sequences of the same value. The 3 corresponds to our run of three ws. The max() function will help us confirm it’s the largest value.

rle(tosses)$lengths %>% max()## [1] 3Now let’s apply our method to the data and plot.

samples %>%

group_by(iter) %>%

summarise(longest_run_length = rle(draws)$lengths %>% max()) %>%

ggplot(aes(x = longest_run_length)) +

geom_histogram(aes(fill = longest_run_length == 3),

binwidth = 1, center = 0,

color = "grey92", linewidth = 1/10) +

scale_fill_viridis_d(option = "D", end = .9) +

scale_x_continuous("longest run length", breaks = 0:3 * 3) +

ylab("frequency") +

coord_cartesian(xlim = c(0, 9)) +

theme(legend.position = "none",

panel.grid = element_blank())

Let’s look at rle() again.

rle(tosses)## Run Length Encoding

## lengths: int [1:7] 1 1 3 1 1 1 1

## values : chr [1:7] "w" "l" "w" "l" "w" "l" "w"We can use the length of the output (i.e., 7 in this example) as the numbers of switches from, in this case, “w” and “l”.

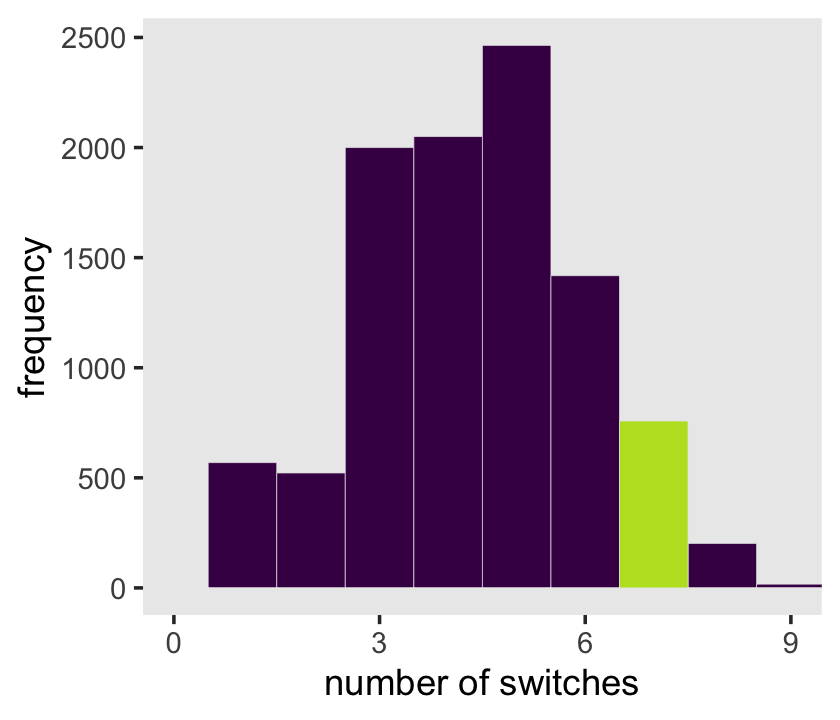

rle(tosses)$lengths %>% length()## [1] 7With that new trick, we’re ready to make the right panel of Figure 3.7.

samples %>%

group_by(iter) %>%

summarise(longest_run_length = rle(draws)$lengths %>% length()) %>%

ggplot(aes(x = longest_run_length)) +

geom_histogram(aes(fill = longest_run_length == 7),

binwidth = 1, center = 0,

color = "grey92", linewidth = 1/10) +

scale_fill_viridis_d(option = "D", end = .9) +

scale_x_continuous("number of switches", breaks = 0:3 * 3) +

ylab("frequency") +

coord_cartesian(xlim = c(0, 9)) +

theme(legend.position = "none",

panel.grid = element_blank())

3.4 Summary Let’s practice with brms

Open brms.

library(brms)With brms, we’ll fit the primary model of \(w = 6\) and \(n = 9\) much like we did at the end of Chapter 2.

b3.1 <-

brm(data = list(w = 6),

family = binomial(link = "identity"),

w | trials(9) ~ 0 + Intercept,

# this is a flat prior

prior(beta(1, 1), class = b, lb = 0, ub = 1),

iter = 5000, warmup = 1000,

seed = 3,

file = "fits/b03.01")We’ll learn more about the beta distribution in Chapter 12. But for now, here’s the posterior summary for b_Intercept, the probability of a “w”.

posterior_summary(b3.1)["b_Intercept", ] %>%

round(digits = 2)## Estimate Est.Error Q2.5 Q97.5

## 0.64 0.14 0.35 0.87As we’ll fully cover in the next chapter, Estimate is the posterior mean, the two Q columns are the quantile-based 95% intervals, and Est.Error is the posterior standard deviation.

Much like the way we used the samples() function to simulate probability values, above, we can do so with the brms::fitted() function. But we will have to specify scale = "linear" in order to return results in the probability metric. By default, brms::fitted() will return summary information. Since we want actual simulation draws, we’ll specify summary = F.

f <-

fitted(b3.1,

summary = F,

scale = "linear") %>%

data.frame() %>%

set_names("p")

glimpse(f)## Rows: 16,000

## Columns: 1

## $ p <dbl> 0.6878563, 0.5386513, 0.7030050, 0.6889854, 0.4738290, 0.5879676, 0.5843004, 0.7014505, …By default, we have a generically-named vector of 4,000 samples. We’ll explain the defaults in later chapters. For now, notice we can view these in a density.

f %>%

ggplot(aes(x = p)) +

geom_density(fill = "grey50", color = "grey50") +

annotate(geom = "text", x = .08, y = 2.5,

label = "Posterior probability") +

scale_x_continuous("probability of water",

breaks = c(0, .5, 1),

limits = 0:1) +

scale_y_continuous(NULL, breaks = NULL) +

theme(panel.grid = element_blank())

Looks a lot like the posterior probability density at the top of Figure 3.6, doesn’t it? Much like we did with samples, we can use this distribution of probabilities to predict histograms of w counts. With those in hand, we can make an analogue to the histogram in the bottom panel of Figure 3.6.

# the simulation

set.seed(3)

f <-

f %>%

mutate(w = rbinom(n(), size = n_trials, prob = p))

# the plot

f %>%

ggplot(aes(x = w)) +

geom_histogram(binwidth = 1, center = 0,

color = "grey92", linewidth = 1/10) +

scale_x_continuous("number of water samples", breaks = 0:3 * 3) +

scale_y_continuous(NULL, breaks = NULL, limits = c(0, 5000)) +

ggtitle("Posterior predictive distribution") +

coord_cartesian(xlim = c(0, 9)) +

theme(panel.grid = element_blank())

As you might imagine, we can use the output from fitted() to return disaggregated batches of 0’s and 1’s, too. And we could even use those disaggregated 0’s and 1’s to examine longest run lengths and numbers of switches as in the analyses for Figure 3.7. I’ll leave those as exercises for the interested reader.

Session info

sessionInfo()## R version 4.2.2 (2022-10-31)

## Platform: x86_64-apple-darwin17.0 (64-bit)

## Running under: macOS Big Sur ... 10.16

##

## Matrix products: default

## BLAS: /Library/Frameworks/R.framework/Versions/4.2/Resources/lib/libRblas.0.dylib

## LAPACK: /Library/Frameworks/R.framework/Versions/4.2/Resources/lib/libRlapack.dylib

##

## locale:

## [1] en_US.UTF-8/en_US.UTF-8/en_US.UTF-8/C/en_US.UTF-8/en_US.UTF-8

##

## attached base packages:

## [1] stats graphics grDevices utils datasets methods base

##

## other attached packages:

## [1] brms_2.18.0 Rcpp_1.0.9 tidybayes_3.0.2 patchwork_1.1.2 forcats_0.5.1 stringr_1.4.1

## [7] dplyr_1.0.10 purrr_1.0.1 readr_2.1.2 tidyr_1.2.1 tibble_3.1.8 ggplot2_3.4.0

## [13] tidyverse_1.3.2

##

## loaded via a namespace (and not attached):

## [1] readxl_1.4.1 backports_1.4.1 plyr_1.8.7 igraph_1.3.4

## [5] splines_4.2.2 svUnit_1.0.6 crosstalk_1.2.0 TH.data_1.1-1

## [9] rstantools_2.2.0 inline_0.3.19 digest_0.6.31 htmltools_0.5.3

## [13] rethinking_2.21 fansi_1.0.3 magrittr_2.0.3 checkmate_2.1.0

## [17] googlesheets4_1.0.1 tzdb_0.3.0 modelr_0.1.8 RcppParallel_5.1.5

## [21] matrixStats_0.63.0 sandwich_3.0-2 xts_0.12.1 prettyunits_1.1.1

## [25] colorspace_2.0-3 rvest_1.0.2 ggdist_3.2.1 haven_2.5.1

## [29] xfun_0.35 callr_3.7.3 crayon_1.5.2 jsonlite_1.8.4

## [33] lme4_1.1-31 survival_3.4-0 zoo_1.8-10 glue_1.6.2

## [37] gtable_0.3.1 gargle_1.2.0 emmeans_1.8.0 distributional_0.3.1

## [41] pkgbuild_1.3.1 rstan_2.21.8 shape_1.4.6 abind_1.4-5

## [45] scales_1.2.1 mvtnorm_1.1-3 DBI_1.1.3 miniUI_0.1.1.1

## [49] viridisLite_0.4.1 xtable_1.8-4 HDInterval_0.2.4 stats4_4.2.2

## [53] StanHeaders_2.21.0-7 DT_0.24 htmlwidgets_1.5.4 httr_1.4.4

## [57] threejs_0.3.3 arrayhelpers_1.1-0 posterior_1.3.1 ellipsis_0.3.2

## [61] pkgconfig_2.0.3 loo_2.5.1 farver_2.1.1 sass_0.4.2

## [65] dbplyr_2.2.1 utf8_1.2.2 tidyselect_1.2.0 labeling_0.4.2

## [69] rlang_1.0.6 reshape2_1.4.4 later_1.3.0 munsell_0.5.0

## [73] cellranger_1.1.0 tools_4.2.2 cachem_1.0.6 cli_3.6.0

## [77] generics_0.1.3 broom_1.0.2 evaluate_0.18 fastmap_1.1.0

## [81] processx_3.8.0 knitr_1.40 fs_1.5.2 nlme_3.1-160

## [85] projpred_2.2.1 mime_0.12 xml2_1.3.3 compiler_4.2.2

## [89] bayesplot_1.10.0 shinythemes_1.2.0 rstudioapi_0.13 gamm4_0.2-6

## [93] reprex_2.0.2 bslib_0.4.0 stringi_1.7.8 highr_0.9

## [97] ps_1.7.2 Brobdingnag_1.2-8 lattice_0.20-45 Matrix_1.5-1

## [101] nloptr_2.0.3 markdown_1.1 shinyjs_2.1.0 tensorA_0.36.2

## [105] vctrs_0.5.1 pillar_1.8.1 lifecycle_1.0.3 jquerylib_0.1.4

## [109] bridgesampling_1.1-2 estimability_1.4.1 httpuv_1.6.5 R6_2.5.1

## [113] bookdown_0.28 promises_1.2.0.1 gridExtra_2.3 codetools_0.2-18

## [117] boot_1.3-28 colourpicker_1.1.1 MASS_7.3-58.1 gtools_3.9.4

## [121] assertthat_0.2.1 withr_2.5.0 shinystan_2.6.0 multcomp_1.4-20

## [125] mgcv_1.8-41 parallel_4.2.2 hms_1.1.1 grid_4.2.2

## [129] minqa_1.2.5 coda_0.19-4 rmarkdown_2.16 cmdstanr_0.5.3

## [133] googledrive_2.0.0 shiny_1.7.2 lubridate_1.8.0 base64enc_0.1-3

## [137] dygraphs_1.1.1.6