13 Models With Memory

Multilevel models… remember features of each cluster in the data as they learn about all of the clusters. Depending upon the variation among clusters, which is learned from the data as well, the model pools information across clusters. This pooling tends to improve estimates about each cluster. This improved estimation leads to several, more pragmatic sounding, benefits of the multilevel approach. (McElreath, 2020a, p. 400, emphasis in the original)

These benefits include:

- better estimates for repeated sampling (i.e., in longitudinal data),

- better estimates when there are imbalances among subsamples,

- estimates of the variation across subsamples, and

- avoiding simplistic averaging by retaining variation across subsamples.

All of these benefits flow out of the same strategy and model structure. You learn one basic design and you get all of this for free.

When it comes to regression, multilevel regression deserves to be the default approach. There are certainly contexts in which it would be better to use an old-fashioned single-level model. But the contexts in which multilevel models are superior are much more numerous. It is better to begin to build a multilevel analysis, and then realize it’s unnecessary, than to overlook it. And once you grasp the basic multilevel strategy, it becomes much easier to incorporate related tricks such as allowing for measurement error in the data and even modeling missing data itself (Chapter 15). (p. 400)

I’m totally on board with this. After learning about the multilevel model, I see it everywhere. For more on the sentiment it should be the default, check out McElreath’s blog post, Multilevel regression as default.

13.1 Example: Multilevel tadpoles

Let’s load the reedfrogs data (see Vonesh & Bolker, 2005) and fire up brms.

library(brms)

data(reedfrogs, package = "rethinking")

d <- reedfrogs

rm(reedfrogs)Go ahead and acquaint yourself with the reedfrogs.

library(tidyverse)

d %>%

glimpse()## Rows: 48

## Columns: 5

## $ density <int> 10, 10, 10, 10, 10, 10, 10, 10, 10, 10, 10, 10, 10, 10, 10, 10, 25, 25, 25, 25, 25, 25, 25,…

## $ pred <fct> no, no, no, no, no, no, no, no, pred, pred, pred, pred, pred, pred, pred, pred, no, no, no,…

## $ size <fct> big, big, big, big, small, small, small, small, big, big, big, big, small, small, small, sm…

## $ surv <int> 9, 10, 7, 10, 9, 9, 10, 9, 4, 9, 7, 6, 7, 5, 9, 9, 24, 23, 22, 25, 23, 23, 23, 21, 6, 13, 4…

## $ propsurv <dbl> 0.9000000, 1.0000000, 0.7000000, 1.0000000, 0.9000000, 0.9000000, 1.0000000, 0.9000000, 0.4…Making the tank cluster variable is easy.

d <-

d %>%

mutate(tank = 1:nrow(d))Here’s the formula for the un-pooled model in which each tank gets its own intercept:

\[\begin{align*} \text{surv}_i & \sim \operatorname{Binomial}(n_i, p_i) \\ \operatorname{logit}(p_i) & = \alpha_{\text{tank}[i]} \\ \alpha_j & \sim \operatorname{Normal} (0, 1.5) & \text{for } j = 1, \dots, 48, \end{align*}\]

where \(n_i\) is indexed by the density column. Its values are distributed like so.

d %>%

count(density)## density n

## 1 10 16

## 2 25 16

## 3 35 16Now fit this simple aggregated binomial model much like we practiced in Chapter 11.

b13.1 <-

brm(data = d,

family = binomial,

surv | trials(density) ~ 0 + factor(tank),

prior(normal(0, 1.5), class = b),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 13,

file = "fits/b13.01")We don’t need a depth=2 argument to discover we have 48 different intercepts. The default print() behavior will do.

print(b13.1)## Family: binomial

## Links: mu = logit

## Formula: surv | trials(density) ~ 0 + factor(tank)

## Data: d (Number of observations: 48)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## factortank1 1.71 0.76 0.35 3.32 1.00 5965 2690

## factortank2 2.41 0.90 0.77 4.38 1.00 4901 2558

## factortank3 0.76 0.63 -0.40 2.04 1.00 6090 2689

## factortank4 2.41 0.89 0.81 4.36 1.00 6258 3082

## factortank5 1.71 0.77 0.34 3.36 1.00 4940 2499

## factortank6 1.72 0.77 0.39 3.38 1.00 6048 3061

## factortank7 2.41 0.91 0.88 4.40 1.00 5595 2867

## factortank8 1.72 0.76 0.35 3.37 1.00 5475 2615

## factortank9 -0.36 0.60 -1.56 0.82 1.00 6327 2826

## factortank10 1.72 0.78 0.34 3.40 1.00 5799 2853

## factortank11 0.76 0.62 -0.42 2.01 1.00 5468 2858

## factortank12 0.35 0.63 -0.87 1.61 1.00 5135 3110

## factortank13 0.76 0.65 -0.44 2.10 1.00 6180 2641

## factortank14 0.01 0.59 -1.16 1.20 1.00 5360 3277

## factortank15 1.72 0.79 0.31 3.41 1.00 6555 2823

## factortank16 1.74 0.78 0.34 3.37 1.00 5036 2706

## factortank17 2.55 0.69 1.36 4.06 1.00 4771 2989

## factortank18 2.14 0.62 1.05 3.48 1.00 5676 2708

## factortank19 1.82 0.55 0.84 3.00 1.00 6579 2789

## factortank20 3.09 0.81 1.67 4.89 1.00 5513 2629

## factortank21 2.16 0.63 1.06 3.56 1.00 4868 2438

## factortank22 2.13 0.61 1.03 3.45 1.00 5751 2526

## factortank23 2.14 0.58 1.11 3.36 1.00 5814 2941

## factortank24 1.55 0.52 0.62 2.69 1.00 5605 2728

## factortank25 -1.11 0.46 -2.05 -0.25 1.01 5549 2758

## factortank26 0.07 0.41 -0.73 0.90 1.00 5359 2584

## factortank27 -1.55 0.50 -2.60 -0.63 1.00 5139 2886

## factortank28 -0.54 0.41 -1.35 0.24 1.00 5784 2878

## factortank29 0.08 0.39 -0.67 0.84 1.00 5350 3120

## factortank30 1.30 0.46 0.46 2.24 1.00 5680 2730

## factortank31 -0.72 0.41 -1.58 0.04 1.00 5584 2848

## factortank32 -0.39 0.41 -1.21 0.39 1.00 5756 3148

## factortank33 2.84 0.65 1.70 4.18 1.00 4915 2760

## factortank34 2.45 0.57 1.41 3.67 1.00 5083 2773

## factortank35 2.48 0.59 1.41 3.73 1.00 6234 3034

## factortank36 1.91 0.48 1.03 2.92 1.00 6060 3296

## factortank37 1.91 0.48 1.03 2.91 1.00 5465 2841

## factortank38 3.36 0.78 1.99 5.05 1.00 5907 2908

## factortank39 2.44 0.59 1.40 3.73 1.00 6060 2850

## factortank40 2.15 0.52 1.21 3.27 1.00 5156 2828

## factortank41 -1.90 0.48 -2.93 -1.02 1.00 6241 2527

## factortank42 -0.63 0.35 -1.34 0.03 1.00 6080 2929

## factortank43 -0.52 0.34 -1.20 0.15 1.00 5482 2523

## factortank44 -0.39 0.34 -1.08 0.25 1.00 6723 2985

## factortank45 0.51 0.35 -0.16 1.20 1.00 6431 3238

## factortank46 -0.63 0.35 -1.33 0.06 1.00 6302 2560

## factortank47 1.91 0.49 1.04 2.93 1.00 5036 2543

## factortank48 -0.06 0.34 -0.73 0.63 1.00 4893 3202

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

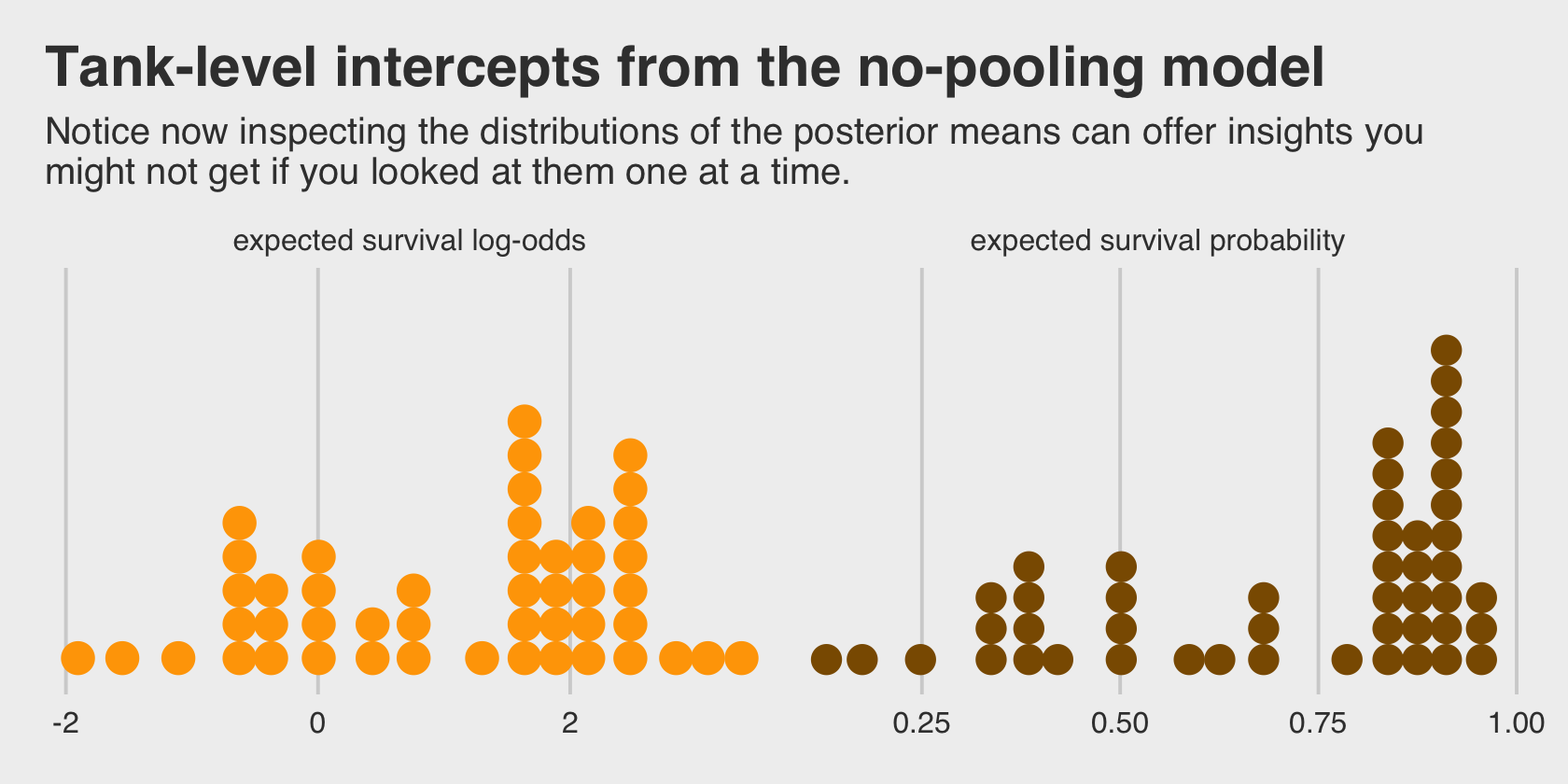

## scale reduction factor on split chains (at convergence, Rhat = 1).This is much like the models we’ve fit in earlier chapters using McElreath’s index approach, but on steroids. It’ll be instructive to take a look at distribution of the \(\alpha_j\) parameters in density plots. We’ll plot them in both their log-odds and probability metrics.

For kicks and giggles, let’s use a FiveThirtyEight-like theme for this chapter’s plots. An easy way to do so is with help from the ggthemes package.

library(ggthemes)

library(tidybayes)

# change the default

theme_set(theme_gray() + theme_fivethirtyeight())

tibble(estimate = fixef(b13.1)[, 1]) %>%

mutate(p = inv_logit_scaled(estimate)) %>%

pivot_longer(estimate:p) %>%

mutate(name = if_else(name == "p", "expected survival probability", "expected survival log-odds")) %>%

ggplot(aes(x = value, fill = name)) +

stat_dots(size = 0) +

scale_fill_manual(values = c("orange1", "orange4")) +

scale_y_continuous(breaks = NULL) +

labs(title = "Tank-level intercepts from the no-pooling model",

subtitle = "Notice now inspecting the distributions of the posterior means can offer insights you\nmight not get if you looked at them one at a time.") +

theme(legend.position = "none",

panel.grid = element_blank()) +

facet_wrap(~ name, scales = "free_x")

Even though it seems like we can derive important insights from how the tank-level intercepts are distributed, that information is not explicitly encoded in the statistical model. Keep that in mind as we now consider the multilevel alternative. Its formula is

\[\begin{align*} \text{surv}_i & \sim \operatorname{Binomial}(n_i, p_i) \\ \operatorname{logit}(p_i) & = \alpha_{\text{tank}[i]} \\ \alpha_j & \sim \operatorname{Normal}(\color{#CD8500}{\bar \alpha}, \color{#CD8500} \sigma) \\ \color{#CD8500}{\bar \alpha} & \color{#CD8500} \sim \color{#CD8500}{\operatorname{Normal}(0, 1.5)} \\ \color{#CD8500} \sigma & \color{#CD8500} \sim \color{#CD8500}{\operatorname{Exponential}(1)}, \end{align*}\]

where

the prior for the tank intercepts is now a function of two parameters, \(\bar \alpha\) and \(\sigma\). You can say \(\bar \alpha\) like “bar alpha.” The bar means average. These two parameters inside the prior is where the “multi” in multilevel arises. The Gaussian distribution with mean \(\bar \alpha\) standard deviation \(\sigma\) is the prior for each tank’s intercept. But that prior itself has priors for \(\bar \alpha\) and \(\sigma\). So there are two levels in the model, each resembling a simpler model. (p. 403, emphasis in the original)

With brms, you might specify the corresponding multilevel model like this.

b13.2 <-

brm(data = d,

family = binomial,

surv | trials(density) ~ 1 + (1 | tank),

prior = c(prior(normal(0, 1.5), class = Intercept), # alpha bar

prior(exponential(1), class = sd)), # sigma

iter = 5000, warmup = 1000, chains = 4, cores = 4,

sample_prior = "yes",

seed = 13,

file = "fits/b13.02")The syntax for the varying effects follows the lme4 style, ( <varying parameter(s)> | <grouping variable(s)> ). In this case (1 | tank) indicates only the intercept, 1, varies by tank. The extent to which parameters vary is controlled by the prior, prior(exponential(1), class = sd), which is parameterized in the standard deviation metric. Do note that last part. It’s common in multilevel software to model in the variance metric, instead. For technical reasons we won’t really get into until Chapter 14, Stan parameterizes this as a standard deviation.

Let’s compute the WAIC comparisons.

b13.1 <- add_criterion(b13.1, "waic")

b13.2 <- add_criterion(b13.2, "waic")

w <- loo_compare(b13.1, b13.2, criterion = "waic")

print(w, simplify = F)## elpd_diff se_diff elpd_waic se_elpd_waic p_waic se_p_waic waic se_waic

## b13.2 0.0 0.0 -100.3 3.7 21.2 0.8 200.7 7.3

## b13.1 -7.4 1.9 -107.7 2.4 26.0 1.3 215.5 4.7The se_diff is small relative to the elpd_diff. If we convert the \(\text{elpd}\) difference to the WAIC metric, the message stays the same.

cbind(waic_diff = w[, 1] * -2,

se = w[, 2] * 2)## waic_diff se

## b13.2 0.0000 0.000000

## b13.1 14.8186 3.746732Here are the WAIC weights.

model_weights(b13.1, b13.2, weights = "waic") %>%

round(digits = 2)## b13.1 b13.2

## 0 1I’m not going to show it here, but if you’d like a challenge, try comparing the models with the PSIS-LOO. You’ll get some great practice with high pareto_k values and the moment matching for problematic observations (Paananen, Piironen, et al., 2020; see Paananen, Bürkner, et al., 2020).

But back on track, McElreath commented on the number of effective parameters for the two models. This, recall, is listed in the column for \(p_\text{WAIC}\).

w[, "p_waic"]## b13.2 b13.1

## 21.18811 25.97493And indeed, even though out multilevel model (b13.2) technically had two more parameters than the conventional single-level model (b13.1), its \(p_\text{WAIC}\) is substantially smaller, due to the regularizing level-2 \(\sigma\) parameter. Speaking of which, let’s examine the model summary.

print(b13.2)## Family: binomial

## Links: mu = logit

## Formula: surv | trials(density) ~ 1 + (1 | tank)

## Data: d (Number of observations: 48)

## Draws: 4 chains, each with iter = 5000; warmup = 1000; thin = 1;

## total post-warmup draws = 16000

##

## Group-Level Effects:

## ~tank (Number of levels: 48)

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sd(Intercept) 1.61 0.21 1.25 2.09 1.00 4092 7855

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 1.35 0.26 0.86 1.88 1.00 2783 5362

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

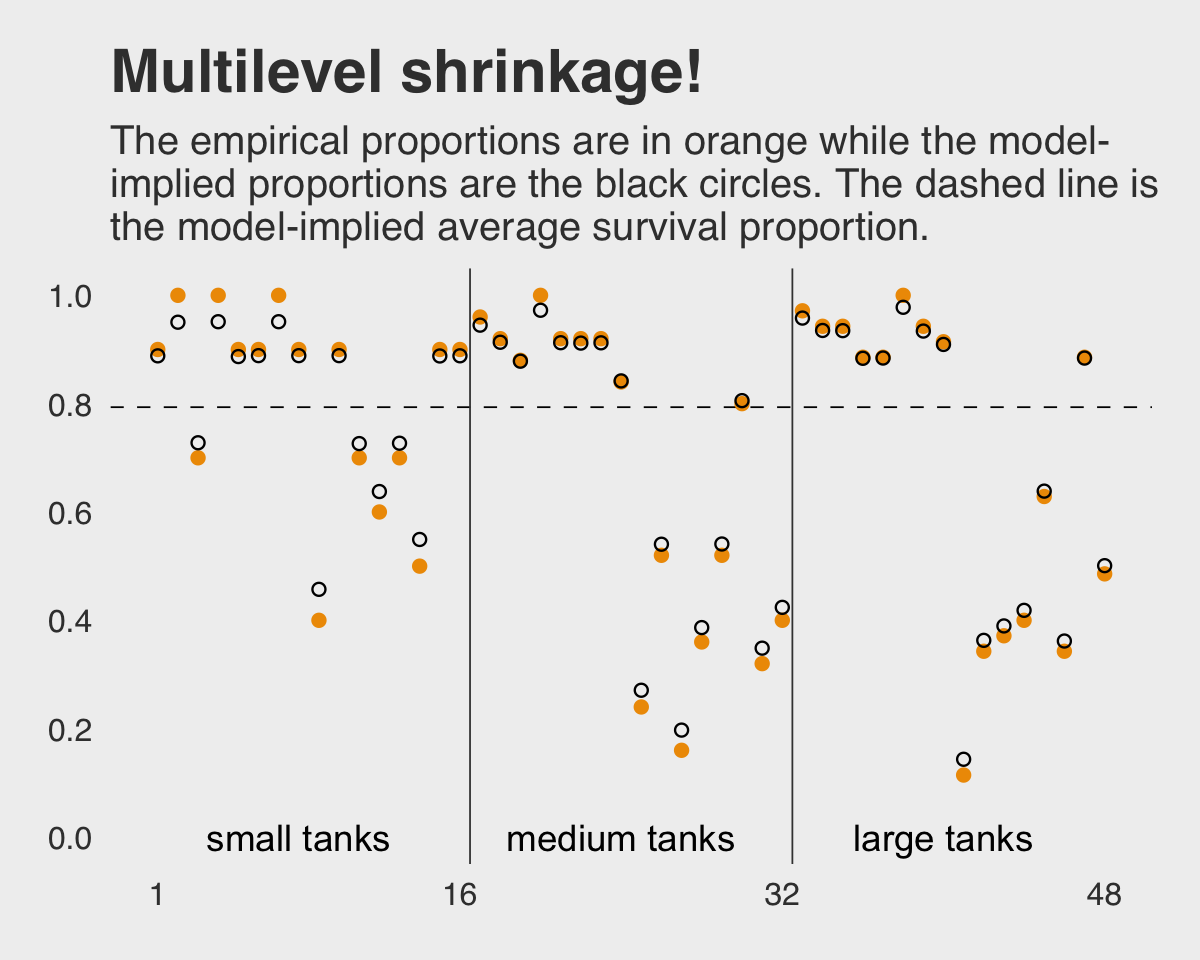

## scale reduction factor on split chains (at convergence, Rhat = 1).This time we don’t get a list of 48 separate tank-level parameters. However, we do get a description of their distribution in terms of \(\bar \alpha\) (i.e., Intercept) and \(\sigma\) (i.e., sd(Intercept)). If you’d like the actual tank-level parameters, don’t worry; they’re coming in Figure 13.1. We’ll need to do a little prep work, though.

post <- as_draws_df(b13.2)

post_mdn <-

coef(b13.2, robust = T)$tank[, , ] %>%

data.frame() %>%

bind_cols(d) %>%

mutate(post_mdn = inv_logit_scaled(Estimate))

head(post_mdn)## Estimate Est.Error Q2.5 Q97.5 density pred size surv propsurv tank post_mdn

## 1 2.0731215 0.8185642 0.6201200 3.998769 10 no big 9 0.9 1 0.8882631

## 2 2.9522208 1.0424981 1.1786419 5.522017 10 no big 10 1.0 2 0.9503683

## 3 0.9835817 0.6671749 -0.2498358 2.404096 10 no big 7 0.7 3 0.7278183

## 4 2.9720105 1.0587864 1.2239606 5.531913 10 no big 10 1.0 4 0.9512935

## 5 2.0597013 0.8409981 0.5896150 4.085309 10 no small 9 0.9 5 0.8869242

## 6 2.0770989 0.8566703 0.6281105 4.051723 10 no small 9 0.9 6 0.8886573Here’s the ggplot2 code to reproduce Figure 13.1.

post_mdn %>%

ggplot(aes(x = tank)) +

geom_hline(yintercept = inv_logit_scaled(median(post$b_Intercept)), linetype = 2, linewidth = 1/4) +

geom_vline(xintercept = c(16.5, 32.5), linewidth = 1/4, color = "grey25") +

geom_point(aes(y = propsurv), color = "orange2") +

geom_point(aes(y = post_mdn), shape = 1) +

annotate(geom = "text",

x = c(8, 16 + 8, 32 + 8), y = 0,

label = c("small tanks", "medium tanks", "large tanks")) +

scale_x_continuous(breaks = c(1, 16, 32, 48)) +

scale_y_continuous(breaks = 0:5 / 5, limits = c(0, 1)) +

labs(title = "Multilevel shrinkage!",

subtitle = "The empirical proportions are in orange while the model-\nimplied proportions are the black circles. The dashed line is\nthe model-implied average survival proportion.") +

theme(panel.grid.major = element_blank())

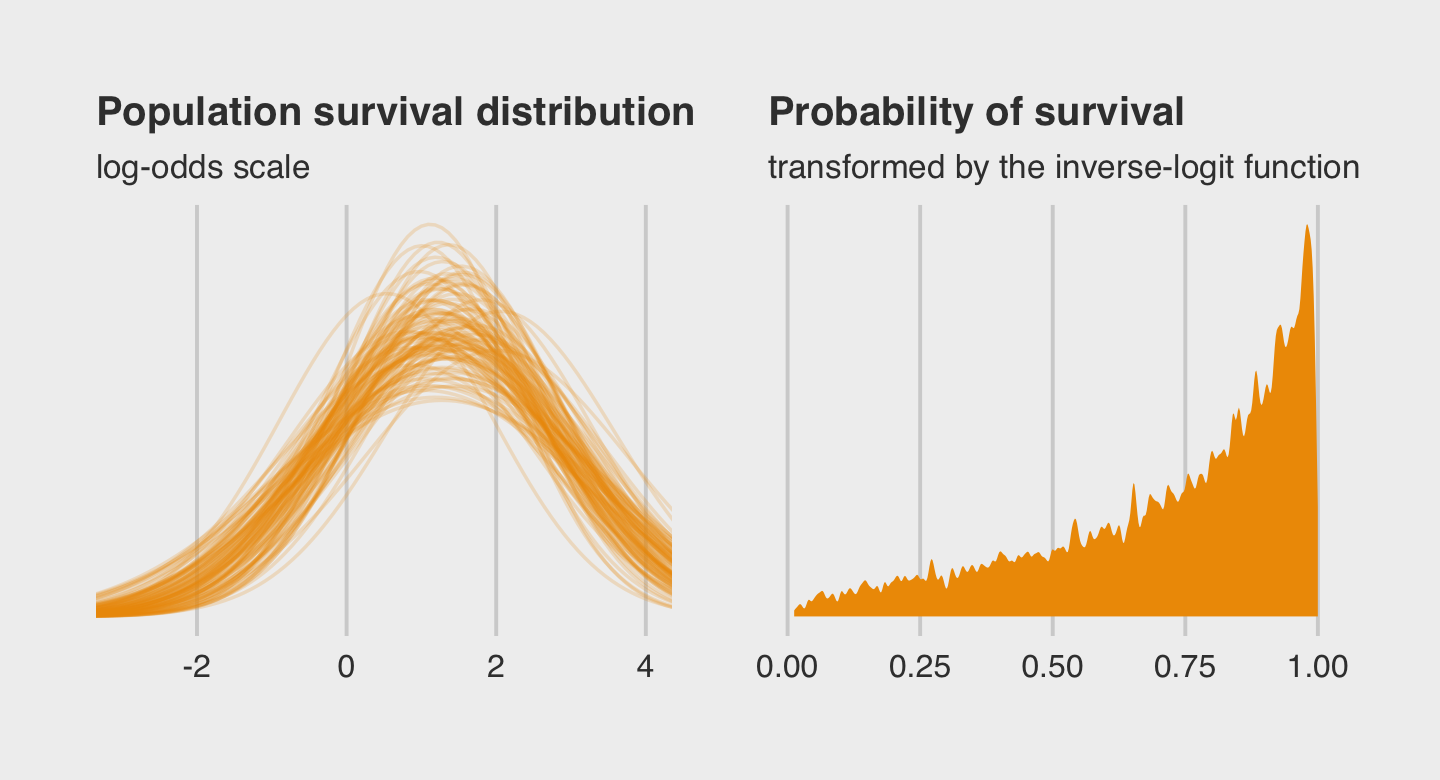

Here is the code for our version of Figure 13.2.a, where we visualize the model-implied population distribution of log-odds survival (i.e., the population distribution yielding all the tank-level intercepts).

# this makes the output of `slice_sample()` reproducible

set.seed(13)

p1 <-

post %>%

slice_sample(n = 100) %>%

expand_grid(x = seq(from = -4, to = 5, length.out = 100)) %>%

mutate(density = dnorm(x, mean = b_Intercept, sd = sd_tank__Intercept)) %>%

ggplot(aes(x = x, y = density, group = .draw)) +

geom_line(alpha = .2, color = "orange2") +

scale_y_continuous(NULL, breaks = NULL) +

labs(title = "Population survival distribution",

subtitle = "log-odds scale") +

coord_cartesian(xlim = c(-3, 4))Now we make our Figure 13.2.b and then bind the two subplots with patchwork.

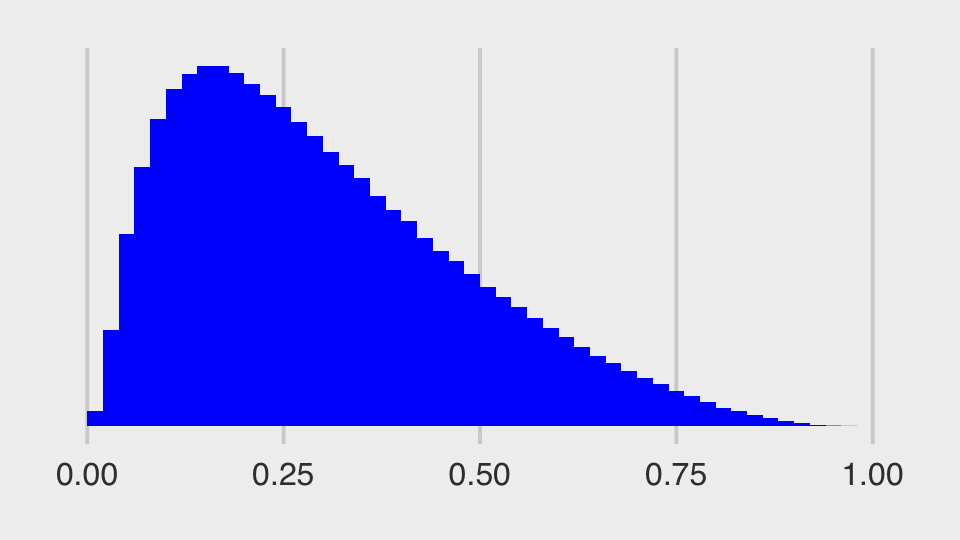

set.seed(13)

p2 <-

post %>%

slice_sample(n = 8000, replace = T) %>%

mutate(sim_tanks = rnorm(n(), mean = b_Intercept, sd = sd_tank__Intercept)) %>%

ggplot(aes(x = inv_logit_scaled(sim_tanks))) +

geom_density(linewidth = 0, fill = "orange2", adjust = 0.1) +

scale_y_continuous(NULL, breaks = NULL) +

labs(title = "Probability of survival",

subtitle = "transformed by the inverse-logit function")

library(patchwork)

(p1 + p2) &

theme(plot.title = element_text(size = 12),

plot.subtitle = element_text(size = 10))

Both plots show different ways in expressing the model uncertainty in terms of both location \(\alpha\) and scale \(\sigma\).

13.1.0.1 Rethinking: Varying intercepts as over-dispersion.

In the previous chapter (page 369), the beta-binomial and gamma-Poisson models were presented as ways for coping with over-dispersion of count data. Varying intercepts accomplish the same thing, allowing count outcomes to be over-dispersed. They accomplish this, because when each observed count gets its own unique intercept, but these intercepts are pooled through a common distribution, the predictions expect over-dispersion just like a beta-binomial or gamma-Poisson model would. Multilevel models are also mixtures. Compared to a beta-binomial or gamma-Poisson model, a binomial or Poisson model with a varying intercept on every observed outcome will often be easier to estimate and easier to extend. (p. 407, emphasis in the original)

13.1.0.2 Overthinking: Prior for variance components.

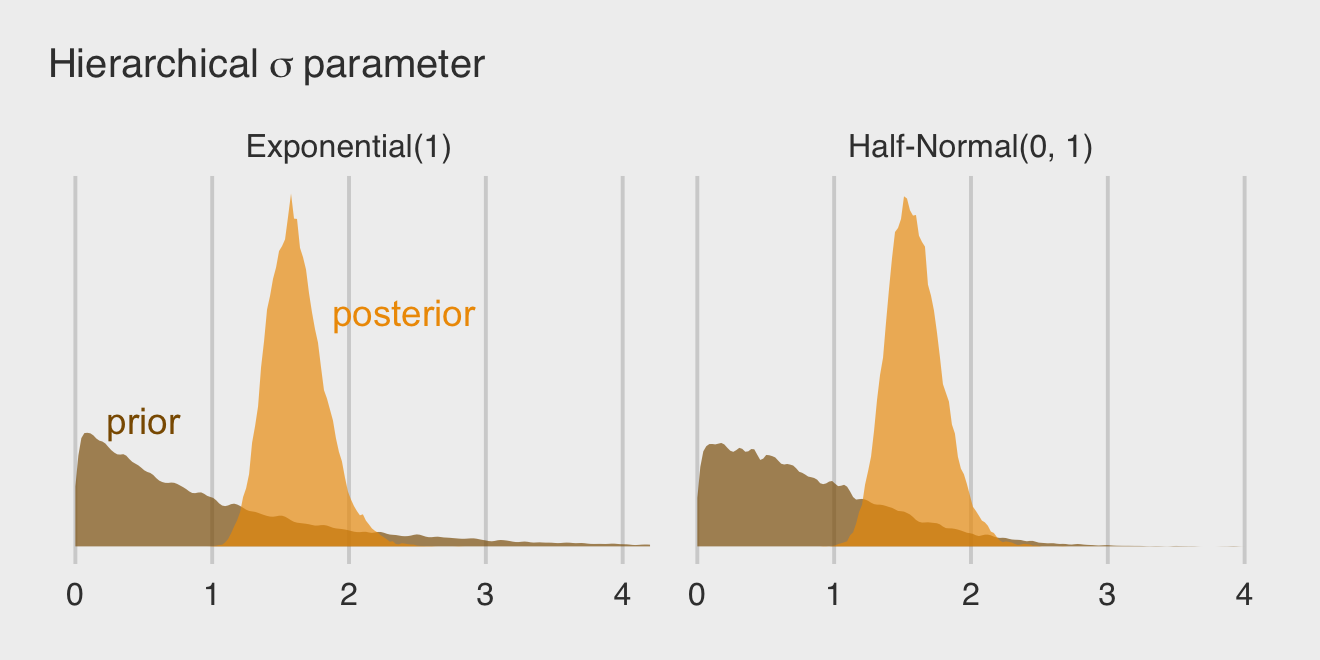

Yep, you can use the half-Normal distribution for your priors in brms, too. Here it is for model b13.2.

b13.2b <-

update(b13.2,

prior = c(prior(normal(0, 1.5), class = Intercept),

prior(normal(0, 1), class = sd)),

iter = 5000, warmup = 1000, chains = 4, cores = 4,

sample_prior = "yes",

seed = 13,

file = "fits/b13.02b")McElreath mentioned how one might set a lower bound at zero for the half-Normal prior when using rethinking::ulam(). There’s no need to do so when using brms::brm(). The lower bounds for priors of class = sd are already set to zero by default.

Check the model summary.

print(b13.2b)## Family: binomial

## Links: mu = logit

## Formula: surv | trials(density) ~ 1 + (1 | tank)

## Data: d (Number of observations: 48)

## Draws: 4 chains, each with iter = 5000; warmup = 1000; thin = 1;

## total post-warmup draws = 16000

##

## Group-Level Effects:

## ~tank (Number of levels: 48)

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sd(Intercept) 1.59 0.20 1.23 2.04 1.00 4579 6766

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 1.34 0.25 0.85 1.85 1.00 3404 6761

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).If you’re curious how the exponential and half-Normal priors compare to one another and to their posteriors, you might just plot.

# for annotation

text <-

tibble(value = c(0.5, 2.4),

density = c(1, 1.85),

distribution = factor(c("prior", "posterior"), levels = c("prior", "posterior")),

prior = "Exponential(1)")

# gather and wrangle the prior and posterior draws

tibble(`prior_Exponential(1)` = prior_draws(b13.2) %>% pull(sd_tank),

`posterior_Exponential(1)` = as_draws_df(b13.2) %>% pull(sd_tank__Intercept),

`prior_Half-Normal(0, 1)` = prior_draws(b13.2b) %>% pull(sd_tank),

`posterior_Half-Normal(0, 1)` = as_draws_df(b13.2b) %>% pull(sd_tank__Intercept)) %>%

pivot_longer(everything(),

names_sep = "_",

names_to = c("distribution", "prior")) %>%

mutate(distribution = factor(distribution, levels = c("prior", "posterior"))) %>%

# plot!

ggplot(aes(x = value, fill = distribution)) +

geom_density(linewidth = 0, alpha = 2/3, adjust = 0.25) +

geom_text(data = text,

aes(y = density, label = distribution, color = distribution)) +

scale_fill_manual(NULL, values = c("orange4", "orange2")) +

scale_color_manual(NULL, values = c("orange4", "orange2")) +

scale_y_continuous(NULL, breaks = NULL) +

labs(subtitle = expression(Hierarchical~sigma~parameter)) +

coord_cartesian(xlim = c(0, 4)) +

theme(legend.position = "none") +

facet_wrap(~ prior)

By the way, this is why we set iter = 5000 and sample_prior = "yes" for the last two models. Neither were necessary to fit the models, but both helped us out with this plot.

13.2 Varying effects and the underfitting/overfitting trade-off

Varying intercepts are just regularized estimates, but adaptively regularized by estimating how diverse the clusters are while estimating the features of each cluster. This fact is not easy to grasp….

A major benefit of using varying effects estimates, instead of the empirical raw estimates, is that they provide more accurate estimates of the individual cluster (tank) intercepts. On average, the varying effects actually provide a better estimate of the individual tank (cluster) means. The reason that the varying intercepts provide better estimates is that they do a better job of trading off underfitting and overfitting. (p. 408)

In this section, we explicate this by contrasting three perspectives:

- complete pooling (i.e., a single-\(\alpha\) model),

- no pooling (i.e., the single-level \(\alpha_{\text{tank}[i]}\) model), and

- partial pooling [i.e., the multilevel model for which \(\alpha_j \sim \operatorname{Normal} (\bar \alpha, \sigma)\)].

To demonstrate [the magic of the multilevel model], we’ll simulate some tadpole data. That way, we’ll know the true per-pond survival probabilities. Then we can compare the no-pooling estimates to the partial pooling estimates, by computing how close each gets to the true values they are trying to estimate. The rest of this section shows how to do such a simulation. (p. 409)

13.2.1 The model.

The simulation formula should look familiar.

\[\begin{align*} \text{surv}_i & \sim \operatorname{Binomial}(n_i, p_i) \\ \operatorname{logit}(p_i) & = \alpha_{\text{pond}[i]} \\ \alpha_j & \sim \operatorname{Normal}(\bar \alpha, \sigma) \\ \bar \alpha & \sim \operatorname{Normal}(0, 1.5) \\ \sigma & \sim \operatorname{Exponential}(1) \end{align*}\]

13.2.2 Assign values to the parameters.

Here we follow along with McElreath and “assign specific values representative of the actual tadpole data” (p. 409). Because he included a set.seed() line in his R code 13.8, our results should match his exactly.

a_bar <- 1.5

sigma <- 1.5

n_ponds <- 60

set.seed(5005)

dsim <-

tibble(pond = 1:n_ponds,

ni = rep(c(5, 10, 25, 35), each = n_ponds / 4) %>% as.integer(),

true_a = rnorm(n = n_ponds, mean = a_bar, sd = sigma))

head(dsim)## # A tibble: 6 × 3

## pond ni true_a

## <int> <int> <dbl>

## 1 1 5 0.567

## 2 2 5 1.99

## 3 3 5 -0.138

## 4 4 5 1.86

## 5 5 5 3.91

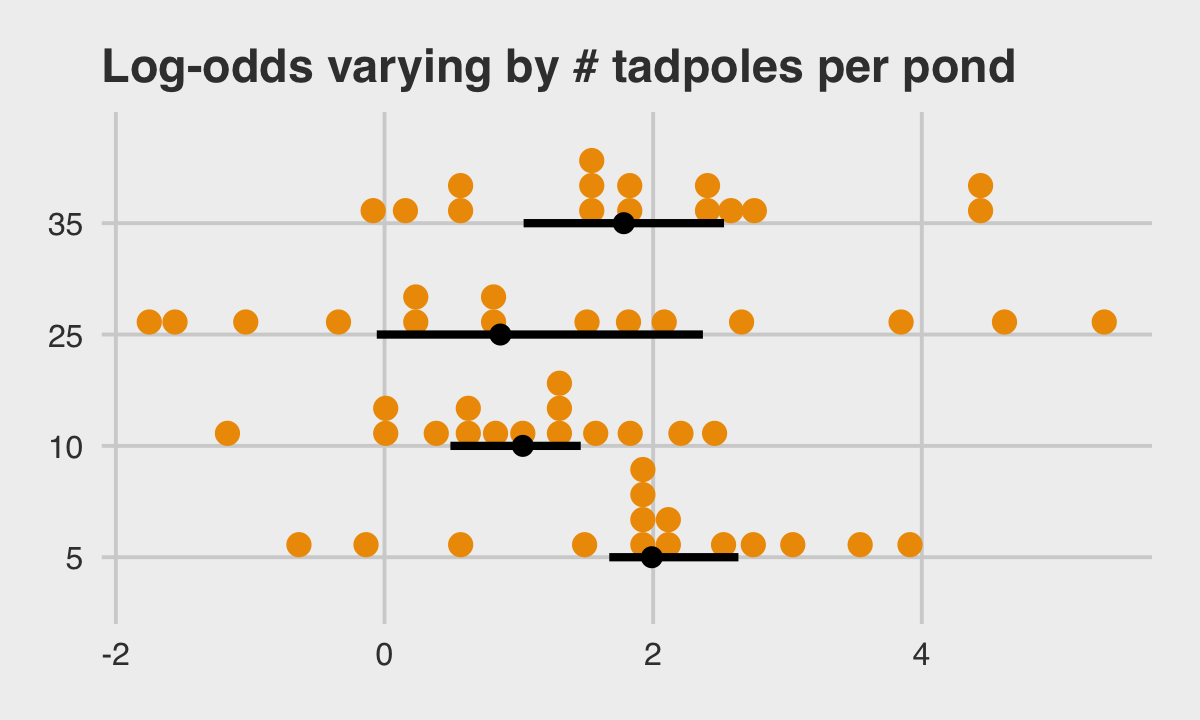

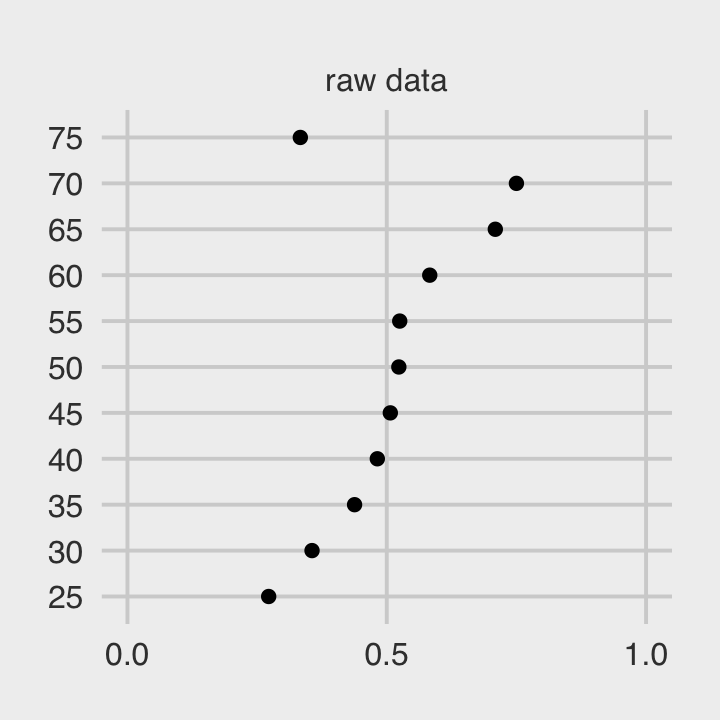

## 6 6 5 1.95McElreath twice urged us to inspect the contents of this simulation. In addition to looking at the data with head(), we might well plot.

dsim %>%

mutate(ni = factor(ni)) %>%

ggplot(aes(x = true_a, y = ni)) +

stat_dotsinterval(fill = "orange2", slab_size = 0, .width = .5) +

ggtitle("Log-odds varying by # tadpoles per pond") +

theme(plot.title = element_text(size = 14))

13.2.3 Sumulate survivors.

Each pond \(i\) has \(n_i\) potential survivors, and nature flips each tadpole’s coin, so to speak, with probability of survival \(p_i\). This probability \(p_i\) is implied by the model definition, and is equal to:

\[p_i = \frac{\exp (\alpha_i)}{1 + \exp (\alpha_i)}\]

The model uses a logit link, and so the probability is defined by the [

inv_logit_scaled()] function. (p. 411)

Although McElreath shared his set.seed() number in the last section, he didn’t share it for this bit. We’ll go ahead and carry over the one from last time. However, in a moment we’ll see this clearly wasn’t the one he used here. As a consequence, our results will deviate a bit from his.

set.seed(5005)

(

dsim <-

dsim %>%

mutate(si = rbinom(n = n(), prob = inv_logit_scaled(true_a), size = ni))

)## # A tibble: 60 × 4

## pond ni true_a si

## <int> <int> <dbl> <int>

## 1 1 5 0.567 4

## 2 2 5 1.99 4

## 3 3 5 -0.138 3

## 4 4 5 1.86 5

## 5 5 5 3.91 5

## 6 6 5 1.95 4

## 7 7 5 1.49 4

## 8 8 5 2.52 4

## 9 9 5 2.18 3

## 10 10 5 2.05 4

## # … with 50 more rows13.2.4 Compute the no-pooling estimates.

The no-pooling estimates (i.e., \(\alpha_{\text{tank}[i]}\)) are the results of simple algebra.

(

dsim <-

dsim %>%

mutate(p_nopool = si / ni)

)## # A tibble: 60 × 5

## pond ni true_a si p_nopool

## <int> <int> <dbl> <int> <dbl>

## 1 1 5 0.567 4 0.8

## 2 2 5 1.99 4 0.8

## 3 3 5 -0.138 3 0.6

## 4 4 5 1.86 5 1

## 5 5 5 3.91 5 1

## 6 6 5 1.95 4 0.8

## 7 7 5 1.49 4 0.8

## 8 8 5 2.52 4 0.8

## 9 9 5 2.18 3 0.6

## 10 10 5 2.05 4 0.8

## # … with 50 more rows“These are the same no-pooling estimates you’d get by fitting a model with a dummy variable for each pond and flat priors that induce no regularization” (p. 411). That is, these are the same kinds of estimates we got back when we fit b13.1.

13.2.5 Compute the partial-pooling estimates.

Fit the multilevel (partial-pooling) model.

b13.3 <-

brm(data = dsim,

family = binomial,

si | trials(ni) ~ 1 + (1 | pond),

prior = c(prior(normal(0, 1.5), class = Intercept),

prior(exponential(1), class = sd)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 13,

file = "fits/b13.03")Here’s our standard brms summary.

print(b13.3)## Family: binomial

## Links: mu = logit

## Formula: si | trials(ni) ~ 1 + (1 | pond)

## Data: dsim (Number of observations: 60)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Group-Level Effects:

## ~pond (Number of levels: 60)

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sd(Intercept) 1.50 0.20 1.15 1.94 1.00 1483 2348

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 1.47 0.23 1.04 1.93 1.00 1175 1587

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).I’m not aware that you can use McElreath’s depth=2 trick in brms for summary() or print(). However, you can get most of that information and more with the Stan-like summary using the $fit syntax.

b13.3$fit## Inference for Stan model: 1c3cbb692fbcf75336f23ce8df587b71.

## 4 chains, each with iter=2000; warmup=1000; thin=1;

## post-warmup draws per chain=1000, total post-warmup draws=4000.

##

## mean se_mean sd 2.5% 25% 50% 75% 97.5% n_eff Rhat

## b_Intercept 1.47 0.01 0.23 1.04 1.32 1.47 1.62 1.93 1152 1

## sd_pond__Intercept 1.50 0.01 0.20 1.15 1.35 1.49 1.63 1.94 1486 1

## r_pond[1,Intercept] 0.08 0.01 0.95 -1.64 -0.58 0.05 0.69 2.05 6235 1

## r_pond[2,Intercept] 0.11 0.01 0.93 -1.58 -0.55 0.08 0.72 2.06 6709 1

## r_pond[3,Intercept] -0.68 0.01 0.82 -2.24 -1.23 -0.70 -0.15 0.99 6474 1

## r_pond[4,Intercept] 1.15 0.01 1.18 -0.93 0.31 1.06 1.89 3.69 6639 1

## r_pond[5,Intercept] 1.16 0.01 1.14 -0.87 0.36 1.08 1.89 3.64 5988 1

## r_pond[6,Intercept] 0.09 0.01 0.93 -1.63 -0.55 0.04 0.68 1.96 7062 1

## r_pond[7,Intercept] 0.09 0.01 0.93 -1.62 -0.52 0.02 0.66 1.97 7251 1

## r_pond[8,Intercept] 0.07 0.01 0.91 -1.67 -0.55 0.04 0.67 1.92 6336 1

## r_pond[9,Intercept] -0.69 0.01 0.86 -2.33 -1.28 -0.72 -0.15 1.11 6323 1

## r_pond[10,Intercept] 0.10 0.01 0.97 -1.71 -0.57 0.06 0.73 2.15 6668 1

## r_pond[11,Intercept] 1.14 0.02 1.15 -0.92 0.33 1.07 1.84 3.68 5652 1

## r_pond[12,Intercept] -1.35 0.01 0.81 -2.99 -1.88 -1.35 -0.77 0.20 5675 1

## r_pond[13,Intercept] 1.13 0.01 1.11 -0.82 0.38 1.05 1.80 3.57 6128 1

## r_pond[14,Intercept] 0.07 0.01 0.92 -1.63 -0.55 0.02 0.64 1.98 5343 1

## r_pond[15,Intercept] 1.13 0.02 1.17 -0.93 0.30 1.04 1.87 3.57 5861 1

## r_pond[16,Intercept] -0.85 0.01 0.66 -2.16 -1.29 -0.85 -0.41 0.47 5604 1

## r_pond[17,Intercept] -1.96 0.01 0.67 -3.31 -2.41 -1.95 -1.51 -0.71 4592 1

## r_pond[18,Intercept] -1.22 0.01 0.63 -2.43 -1.66 -1.22 -0.81 0.07 5016 1

## r_pond[19,Intercept] -1.22 0.01 0.64 -2.47 -1.65 -1.22 -0.81 0.07 4989 1

## r_pond[20,Intercept] -0.44 0.01 0.68 -1.68 -0.91 -0.46 -0.01 0.97 5310 1

## r_pond[21,Intercept] -1.59 0.01 0.64 -2.87 -2.00 -1.60 -1.17 -0.34 4995 1

## r_pond[22,Intercept] 0.67 0.01 0.87 -0.90 0.06 0.61 1.24 2.56 5610 1

## r_pond[23,Intercept] 1.55 0.02 1.05 -0.26 0.81 1.48 2.20 3.86 4417 1

## r_pond[24,Intercept] -0.85 0.01 0.68 -2.16 -1.32 -0.86 -0.41 0.58 4938 1

## r_pond[25,Intercept] 0.06 0.01 0.78 -1.38 -0.49 0.02 0.57 1.70 7130 1

## r_pond[26,Intercept] 0.66 0.01 0.89 -0.91 0.03 0.61 1.24 2.57 6817 1

## r_pond[27,Intercept] 0.03 0.01 0.73 -1.32 -0.48 0.01 0.51 1.56 5237 1

## r_pond[28,Intercept] -0.44 0.01 0.69 -1.75 -0.91 -0.46 0.00 0.98 4853 1

## r_pond[29,Intercept] -0.86 0.01 0.64 -2.07 -1.29 -0.86 -0.44 0.41 4713 1

## r_pond[30,Intercept] -0.44 0.01 0.68 -1.71 -0.90 -0.46 0.01 0.96 4631 1

## r_pond[31,Intercept] 1.39 0.01 0.76 0.04 0.85 1.35 1.86 3.04 5124 1

## r_pond[32,Intercept] 0.54 0.01 0.59 -0.52 0.13 0.51 0.93 1.74 4408 1

## r_pond[33,Intercept] 1.41 0.01 0.78 0.04 0.87 1.36 1.86 3.18 3972 1

## r_pond[34,Intercept] -0.44 0.01 0.49 -1.40 -0.77 -0.45 -0.11 0.51 3748 1

## r_pond[35,Intercept] -0.96 0.01 0.46 -1.86 -1.26 -0.97 -0.65 -0.04 3349 1

## r_pond[36,Intercept] 2.10 0.01 0.95 0.49 1.45 1.99 2.69 4.14 5174 1

## r_pond[37,Intercept] -3.38 0.01 0.62 -4.68 -3.77 -3.35 -2.95 -2.27 5087 1

## r_pond[38,Intercept] -2.07 0.01 0.47 -3.03 -2.37 -2.06 -1.75 -1.17 3446 1

## r_pond[39,Intercept] -0.97 0.01 0.46 -1.87 -1.28 -0.97 -0.66 -0.09 3269 1

## r_pond[40,Intercept] 2.09 0.01 0.94 0.50 1.41 2.02 2.69 4.14 5006 1

## r_pond[41,Intercept] 2.13 0.01 0.95 0.51 1.48 2.05 2.68 4.26 4495 1

## r_pond[42,Intercept] 0.55 0.01 0.60 -0.55 0.12 0.52 0.95 1.81 4620 1

## r_pond[43,Intercept] -1.75 0.01 0.45 -2.66 -2.05 -1.74 -1.45 -0.87 3627 1

## r_pond[44,Intercept] -0.63 0.01 0.48 -1.53 -0.96 -0.66 -0.32 0.35 3125 1

## r_pond[45,Intercept] -2.63 0.01 0.52 -3.67 -2.98 -2.62 -2.28 -1.65 4289 1

## r_pond[46,Intercept] -1.45 0.01 0.40 -2.23 -1.71 -1.45 -1.19 -0.69 2670 1

## r_pond[47,Intercept] 2.32 0.01 0.92 0.81 1.64 2.22 2.87 4.35 4778 1

## r_pond[48,Intercept] 2.33 0.01 0.94 0.81 1.67 2.25 2.89 4.41 4591 1

## r_pond[49,Intercept] 0.15 0.01 0.48 -0.77 -0.17 0.14 0.46 1.13 3984 1

## r_pond[50,Intercept] 0.15 0.01 0.49 -0.76 -0.19 0.13 0.47 1.13 3815 1

## r_pond[51,Intercept] 0.16 0.01 0.50 -0.77 -0.19 0.15 0.48 1.16 3503 1

## r_pond[52,Intercept] -1.45 0.01 0.40 -2.24 -1.71 -1.44 -1.19 -0.67 2999 1

## r_pond[53,Intercept] 0.14 0.01 0.49 -0.76 -0.20 0.13 0.46 1.13 3852 1

## r_pond[54,Intercept] 2.33 0.01 0.94 0.70 1.67 2.25 2.89 4.49 4192 1

## r_pond[55,Intercept] -0.88 0.01 0.41 -1.69 -1.16 -0.88 -0.60 -0.06 2839 1

## r_pond[56,Intercept] 1.67 0.01 0.75 0.35 1.14 1.62 2.16 3.33 4787 1

## r_pond[57,Intercept] -0.76 0.01 0.41 -1.54 -1.04 -0.77 -0.48 0.05 2572 1

## r_pond[58,Intercept] 1.22 0.01 0.66 0.03 0.76 1.20 1.64 2.64 4432 1

## r_pond[59,Intercept] 0.36 0.01 0.50 -0.58 0.01 0.34 0.67 1.40 3423 1

## r_pond[60,Intercept] 1.22 0.01 0.64 0.06 0.79 1.18 1.60 2.61 4893 1

## lprior -3.32 0.01 0.28 -3.95 -3.49 -3.29 -3.13 -2.84 1711 1

## lp__ -185.66 0.24 7.59 -201.63 -190.51 -185.11 -180.34 -171.94 969 1

##

## Samples were drawn using NUTS(diag_e) at Fri Sep 23 07:21:42 2022.

## For each parameter, n_eff is a crude measure of effective sample size,

## and Rhat is the potential scale reduction factor on split chains (at

## convergence, Rhat=1).As an aside, notice how this summary still reports the old-style n_eff values, rather than the updated Bulk_ESS and Tail_ESS values. I suspect this will change sometime soon. In the meantime, here’s a thread on the Stan Forums featuring members of the Stan team discussing how.

Let’s get ready for the diagnostic plot of Figure 13.3. First we add the partially-pooled estimates, as summarized by their posterior means, to the dsim data. Then we compute error values.

# we could have included this step in the block of code below, if we wanted to

p_partpool <-

coef(b13.3)$pond[, , ] %>%

data.frame() %>%

transmute(p_partpool = inv_logit_scaled(Estimate))

dsim <-

dsim %>%

bind_cols(p_partpool) %>%

mutate(p_true = inv_logit_scaled(true_a)) %>%

mutate(nopool_error = abs(p_nopool - p_true),

partpool_error = abs(p_partpool - p_true))

dsim %>%

glimpse()## Rows: 60

## Columns: 9

## $ pond <int> 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24…

## $ ni <int> 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 10, 10, 10, 10, 10, 10, 10, 10, 10, 10, …

## $ true_a <dbl> 0.56673123, 1.99002317, -0.13775688, 1.85676651, 3.91208800, 1.95414869, 1.48963805, …

## $ si <int> 4, 4, 3, 5, 5, 4, 4, 4, 3, 4, 5, 2, 5, 4, 5, 6, 3, 5, 5, 7, 4, 9, 10, 6, 8, 9, 8, 7, …

## $ p_nopool <dbl> 0.80, 0.80, 0.60, 1.00, 1.00, 0.80, 0.80, 0.80, 0.60, 0.80, 1.00, 0.40, 1.00, 0.80, 1…

## $ p_partpool <dbl> 0.8250615, 0.8297859, 0.6882019, 0.9321608, 0.9326275, 0.8261283, 0.8260882, 0.824133…

## $ p_true <dbl> 0.6380086, 0.8797456, 0.4656151, 0.8649196, 0.9803934, 0.8758983, 0.8160239, 0.925812…

## $ nopool_error <dbl> 0.161991419, 0.079745589, 0.134384860, 0.135080387, 0.019606594, 0.075898310, 0.01602…

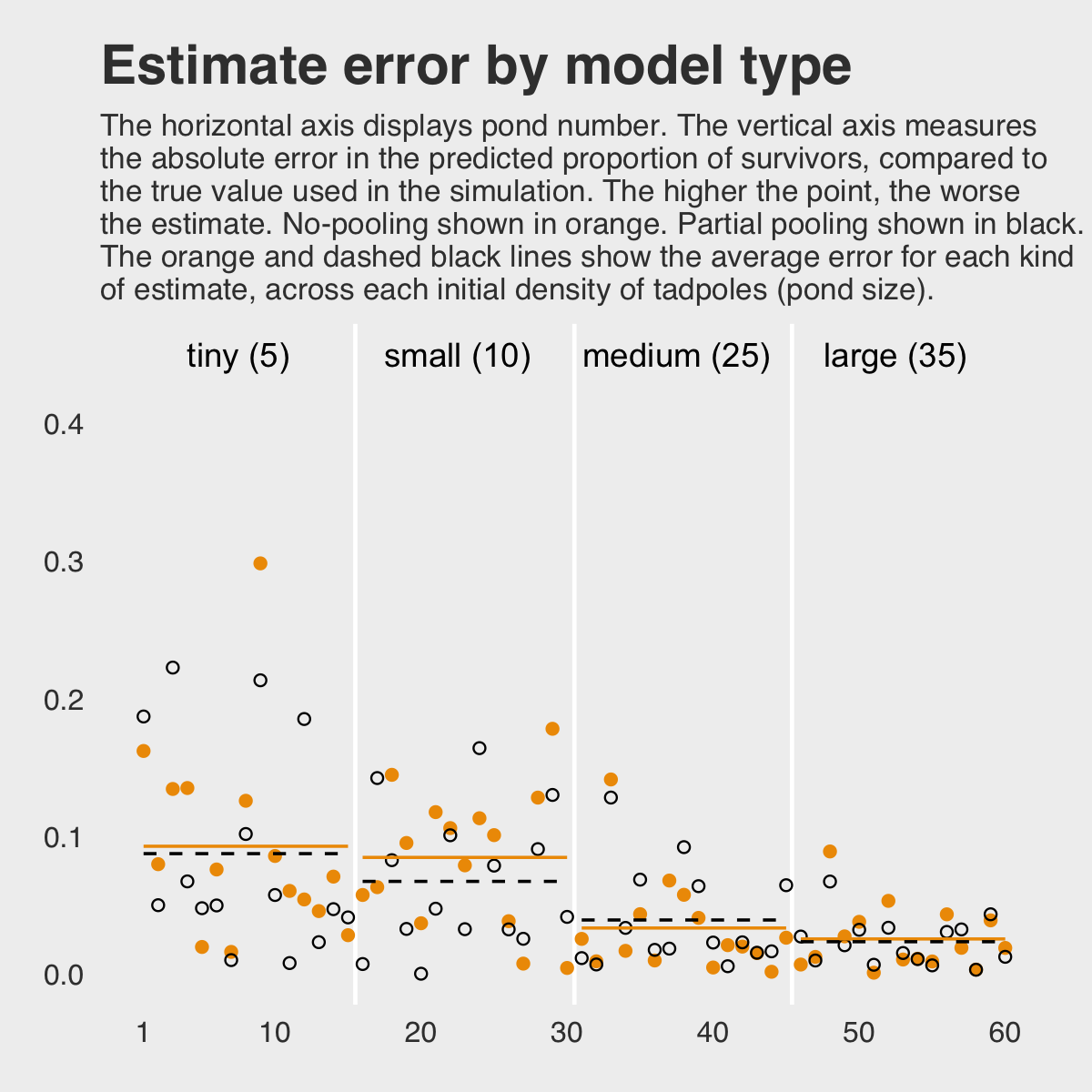

## $ partpool_error <dbl> 0.1870529111, 0.0499597344, 0.2225867530, 0.0672412307, 0.0477658592, 0.0497699776, 0…Here is our code for Figure 13.3. The extra data processing for dfline is how we get the values necessary for the horizontal summary lines.

dfline <-

dsim %>%

select(ni, nopool_error:partpool_error) %>%

pivot_longer(-ni) %>%

group_by(name, ni) %>%

summarise(mean_error = mean(value)) %>%

mutate(x = c( 1, 16, 31, 46),

xend = c(15, 30, 45, 60))

dsim %>%

ggplot(aes(x = pond)) +

geom_vline(xintercept = c(15.5, 30.5, 45.4),

color = "white", linewidth = 2/3) +

geom_point(aes(y = nopool_error), color = "orange2") +

geom_point(aes(y = partpool_error), shape = 1) +

geom_segment(data = dfline,

aes(x = x, xend = xend,

y = mean_error, yend = mean_error),

color = rep(c("orange2", "black"), each = 4),

linetype = rep(1:2, each = 4)) +

annotate(geom = "text",

x = c(15 - 7.5, 30 - 7.5, 45 - 7.5, 60 - 7.5), y = .45,

label = c("tiny (5)", "small (10)", "medium (25)", "large (35)")) +

scale_x_continuous(breaks = c(1, 10, 20, 30, 40, 50, 60)) +

labs(title = "Estimate error by model type",

subtitle = "The horizontal axis displays pond number. The vertical axis measures\nthe absolute error in the predicted proportion of survivors, compared to\nthe true value used in the simulation. The higher the point, the worse\nthe estimate. No-pooling shown in orange. Partial pooling shown in black.\nThe orange and dashed black lines show the average error for each kind\nof estimate, across each initial density of tadpoles (pond size).",

y = "absolute error") +

theme(panel.grid.major = element_blank(),

plot.subtitle = element_text(size = 10))

If you wanted to quantify the difference in simple summaries, you might execute something like this.

dsim %>%

select(ni, nopool_error:partpool_error) %>%

pivot_longer(-ni) %>%

group_by(name) %>%

summarise(mean_error = mean(value) %>% round(digits = 3),

median_error = median(value) %>% round(digits = 3))## # A tibble: 2 × 3

## name mean_error median_error

## <chr> <dbl> <dbl>

## 1 nopool_error 0.059 0.042

## 2 partpool_error 0.054 0.033Although many years of work in statistics have shown that partially pooled estimates are better, on average, this is not always the case. Our results are an example of this. McElreath addressed this directly:

But there are some cases in which the no-pooling estimates are better. These exceptions often result from ponds with extreme probabilities of survival. The partial pooling estimates shrink such extreme ponds towards the mean, because few ponds exhibit such extreme behavior. But sometimes outliers really are outliers. (p. 414)

I originally learned about the multilevel in order to work with longitudinal data. In that context, I found the basic principles of a multilevel structure quite intuitive. The concept of partial pooling, however, took me some time to wrap my head around. If you’re struggling with this, be patient and keep chipping away.

When McElreath lectured on this topic in 2015, he traced partial pooling to statistician Charles M. Stein. Efron and Morris (1977) wrote the now classic paper, Stein’s paradox in statistics, which does a nice job breaking down why partial pooling can be so powerful. One of the primary examples they used in the paper was of 1970 batting average data. If you’d like more practice seeing how partial pooling works–or if you just like baseball–, check out my blog post, Stein’s paradox and what partial pooling can do for you.

13.2.5.1 Overthinking: Repeating the pond simulation.

Within the brms workflow, we can reuse a compiled model with update(). But first, we’ll simulate new data.

a_bar <- 1.5

sigma <- 1.5

n_ponds <- 60

set.seed(1999) # for new data, set a new seed

new_dsim <-

tibble(pond = 1:n_ponds,

ni = rep(c(5, 10, 25, 35), each = n_ponds / 4) %>% as.integer(),

true_a = rnorm(n = n_ponds, mean = a_bar, sd = sigma)) %>%

mutate(si = rbinom(n = n(), prob = inv_logit_scaled(true_a), size = ni)) %>%

mutate(p_nopool = si / ni)

glimpse(new_dsim)## Rows: 60

## Columns: 5

## $ pond <int> 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, …

## $ ni <int> 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 10, 10, 10, 10, 10, 10, 10, 10, 10, 10, 10, 10…

## $ true_a <dbl> 2.5990087, 1.4432554, 3.3045137, 3.7047030, 1.7005354, 2.2797409, 0.6759270, -0.2784119, -0…

## $ si <int> 4, 4, 5, 4, 4, 4, 2, 4, 3, 5, 4, 5, 2, 2, 5, 10, 8, 10, 10, 9, 10, 9, 5, 10, 10, 6, 7, 7, 8…

## $ p_nopool <dbl> 0.80, 0.80, 1.00, 0.80, 0.80, 0.80, 0.40, 0.80, 0.60, 1.00, 0.80, 1.00, 0.40, 0.40, 1.00, 1…Fit the new model.

b13.3_new <-

update(b13.3,

newdata = new_dsim,

chains = 4, cores = 4,

seed = 13,

file = "fits/b13.03_new")print(b13.3_new)## Family: binomial

## Links: mu = logit

## Formula: si | trials(ni) ~ 1 + (1 | pond)

## Data: new_dsim (Number of observations: 60)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Group-Level Effects:

## ~pond (Number of levels: 60)

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sd(Intercept) 1.32 0.19 1.00 1.74 1.00 1299 2026

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 1.63 0.20 1.24 2.04 1.00 1455 2090

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

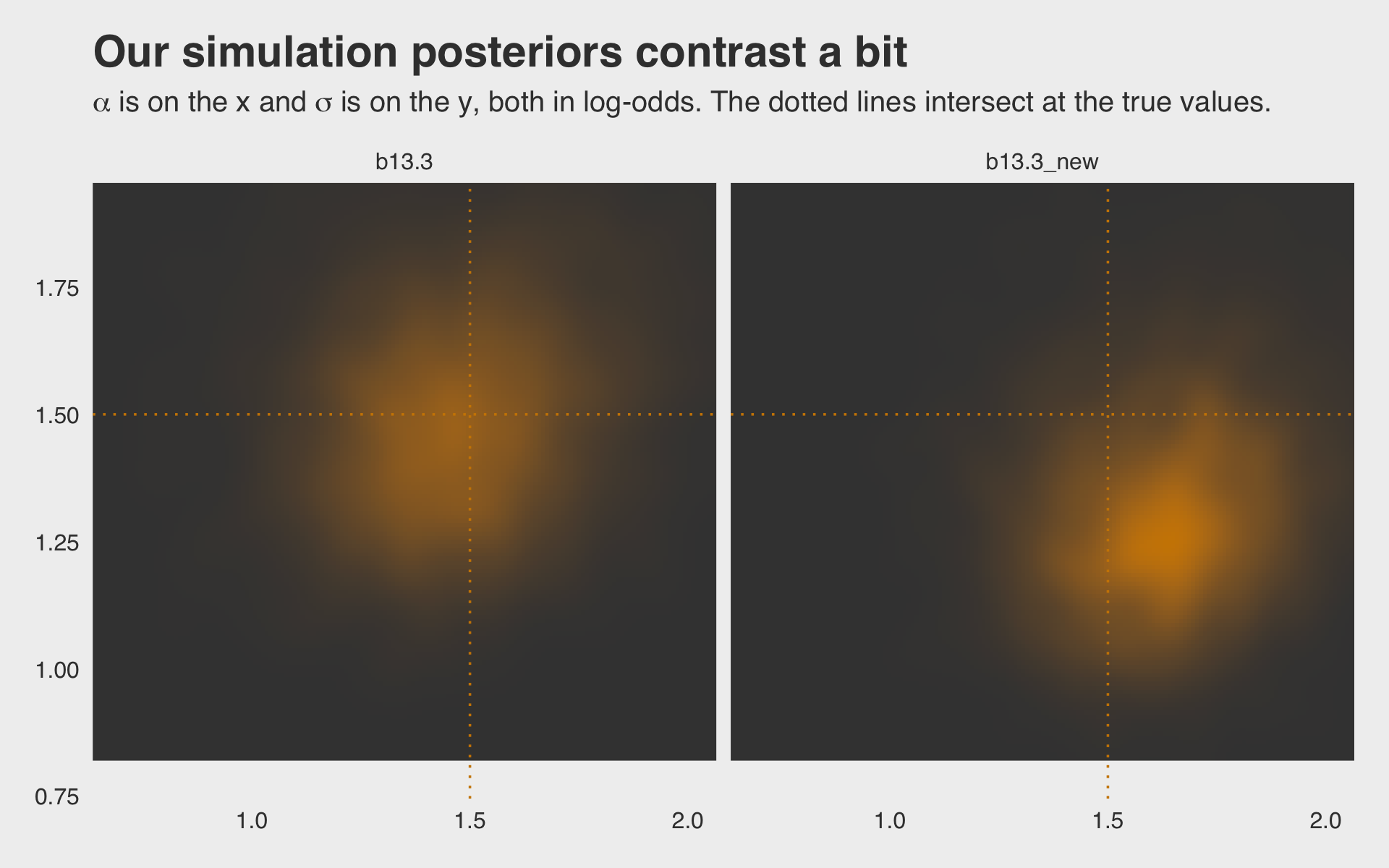

## scale reduction factor on split chains (at convergence, Rhat = 1).Why not plot the first simulation versus the second one?

bind_rows(as_draws_df(b13.3),

as_draws_df(b13.3_new)) %>%

mutate(model = rep(c("b13.3", "b13.3_new"), each = n() / 2)) %>%

ggplot(aes(x = b_Intercept, y = sd_pond__Intercept)) +

stat_density_2d(geom = "raster",

aes(fill = after_stat(density)),

contour = F, n = 200) +

geom_vline(xintercept = a_bar, color = "orange3", linetype = 3) +

geom_hline(yintercept = sigma, color = "orange3", linetype = 3) +

scale_fill_gradient(low = "grey25", high = "orange3") +

ggtitle("Our simulation posteriors contrast a bit",

subtitle = expression(alpha*" is on the x and "*sigma*" is on the y, both in log-odds. The dotted lines intersect at the true values.")) +

coord_cartesian(xlim = c(.7, 2),

ylim = c(.8, 1.9)) +

theme(legend.position = "none",

panel.grid.major = element_blank()) +

facet_wrap(~ model, ncol = 2)

If you’d like the stanfit portion of your brm() object, subset with $fit. Take b13.3, for example. You might check out its structure via b13.3$fit %>% str(). Here’s the actual Stan code.

b13.3$fit@stanmodel## S4 class stanmodel '1c3cbb692fbcf75336f23ce8df587b71' coded as follows:

## // generated with brms 2.18.0

## functions {

## }

## data {

## int<lower=1> N; // total number of observations

## int Y[N]; // response variable

## int trials[N]; // number of trials

## // data for group-level effects of ID 1

## int<lower=1> N_1; // number of grouping levels

## int<lower=1> M_1; // number of coefficients per level

## int<lower=1> J_1[N]; // grouping indicator per observation

## // group-level predictor values

## vector[N] Z_1_1;

## int prior_only; // should the likelihood be ignored?

## }

## transformed data {

## }

## parameters {

## real Intercept; // temporary intercept for centered predictors

## vector<lower=0>[M_1] sd_1; // group-level standard deviations

## vector[N_1] z_1[M_1]; // standardized group-level effects

## }

## transformed parameters {

## vector[N_1] r_1_1; // actual group-level effects

## real lprior = 0; // prior contributions to the log posterior

## r_1_1 = (sd_1[1] * (z_1[1]));

## lprior += normal_lpdf(Intercept | 0, 1.5);

## lprior += exponential_lpdf(sd_1 | 1);

## }

## model {

## // likelihood including constants

## if (!prior_only) {

## // initialize linear predictor term

## vector[N] mu = rep_vector(0.0, N);

## mu += Intercept;

## for (n in 1:N) {

## // add more terms to the linear predictor

## mu[n] += r_1_1[J_1[n]] * Z_1_1[n];

## }

## target += binomial_logit_lpmf(Y | trials, mu);

## }

## // priors including constants

## target += lprior;

## target += std_normal_lpdf(z_1[1]);

## }

## generated quantities {

## // actual population-level intercept

## real b_Intercept = Intercept;

## }

## 13.3 More than one type of cluster

“We can use and often should use more than one type of cluster in the same model” (p. 415).

13.3.0.1 Rethinking: Cross-classification and hierarchy.

The kind of data structure in

data(chimpanzees)is usually called a cross-classified multilevel model. It is cross-classified, because actors are not nested within unique blocks. If each chimpanzee had instead done all of his or her pulls on a single day, within a single block, then the data structure would instead be hierarchical. However, the model specification would typically be the same. So the model structure and code you’ll see below will apply both to cross-classified designs and hierarchical designs. (p. 415, emphasis in the original)

13.3.1 Multilevel chimpanzees.

The initial multilevel update from model b11.4 from Section 11.1.1 follows the statistical formula

\[\begin{align*} \text{left_pull}_i & \sim \operatorname{Binomial}(n_i = 1, p_i) \\ \operatorname{logit} (p_i) & = \alpha_{\text{actor}[i]} + \color{#CD8500}{\gamma_{\text{block}[i]}} + \beta_{\text{treatment}[i]} \\ \beta_j & \sim \operatorname{Normal}(0, 0.5) \;\;\; , \text{for } j = 1, \dots, 4 \\ \alpha_j & \sim \operatorname{Normal}(\bar \alpha, \sigma_\alpha) \;\;\; , \text{for } j = 1, \dots, 7 \\ \color{#CD8500}{\gamma_j} & \color{#CD8500} \sim \color{#CD8500}{\operatorname{Normal}(0, \sigma_\gamma) \;\;\; , \text{for } j = 1, \dots, 6} \\ \bar \alpha & \sim \operatorname{Normal}(0, 1.5) \\ \sigma_\alpha & \sim \operatorname{Exponential}(1) \\ \color{#CD8500}{\sigma_\gamma} & \color{#CD8500} \sim \color{#CD8500}{\operatorname{Exponential}(1)}. \end{align*}\]

⚠️ WARNING ⚠️

I am so sorry, but we are about to head straight into a load of confusion. If you follow along linearly in the text, we won’t have the language to parse this all out until Section 13.4. In short, our difficulties will have to do with what are called the centered and the non-centered parameterizations for multilevel models. For the next several models in the text, McElreath used the centered parameterization. As we’ll learn in Section 13.4, this often causes problems when you use Stan to fit your multilevel models. Happily, the solution to those problems is often the non-centered parameterization, which is well known among the Stan team. This issue is so well known, in fact, that Bürkner only supports the non-centered parameterization with brms (see here). To my knowledge, there is no easy way around this. In the long run, this is a good thing. Your brms models will likely avoid some of the problems McElreath highlighted in this part of the text. In the short term, this also means that our results will not completely match up with those in the text. If you really want to reproduce McElreath’s models m13.4 through m13.6, you’ll have to fit them with the rethinking package or directly in Stan. Our models b13.4 through b13.6 will be the non-centered brms alternatives. Either way, the models make the same predictions, but the nuts and bolts and gears we’ll use to construct our multilevel golems will look a little different. With all that in mind, here’s how we might express our statistical model using the non-centered parameterization more faithful to the way it will be expressed with brms::brm():

\[\begin{align*} \text{left_pull}_i & \sim \operatorname{Binomial}(n_i = 1, p_i) \\ \operatorname{logit} (p_i) & = \bar \alpha + \beta_{\text{treatment}[i]} + \color{#CD8500}{z_{\text{actor}[i]} \sigma_\alpha + x_{\text{block}[i]} \sigma_\gamma} \\ \bar \alpha & \sim \operatorname{Normal}(0, 1.5) \\ \beta_j & \sim \operatorname{Normal}(0, 0.5) \;\;\; , \text{for } j = 1, \dots, 4 \\ \color{#CD8500}{z_j} & \color{#CD8500}\sim \color{#CD8500}{\operatorname{Normal}(0, 1)} \\ \color{#CD8500}{x_j} & \color{#CD8500}\sim \color{#CD8500}{\operatorname{Normal}(0, 1)} \\ \sigma_\alpha & \sim \operatorname{Exponential}(1) \\ \sigma_\gamma & \sim \operatorname{Exponential}(1). \end{align*}\]

If you jump ahead to Section 13.4.2, you’ll see this is just re-write of the formula on the top of page 424. For now, let’s load the data.

data(chimpanzees, package = "rethinking")

d <- chimpanzees

rm(chimpanzees)Wrangle and view.

d <-

d %>%

mutate(actor = factor(actor),

block = factor(block),

treatment = factor(1 + prosoc_left + 2 * condition))

glimpse(d)## Rows: 504

## Columns: 9

## $ actor <fct> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, …

## $ recipient <int> NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA,…

## $ condition <int> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, …

## $ block <fct> 1, 1, 1, 1, 1, 1, 2, 2, 2, 2, 2, 2, 3, 3, 3, 3, 3, 3, 4, 4, 4, 4, 4, 4, 5, 5, 5, 5, 5, …

## $ trial <int> 2, 4, 6, 8, 10, 12, 14, 16, 18, 20, 22, 24, 26, 28, 30, 32, 34, 36, 38, 40, 42, 44, 46,…

## $ prosoc_left <int> 0, 0, 1, 0, 1, 1, 1, 1, 0, 0, 0, 1, 0, 1, 0, 1, 1, 0, 1, 0, 0, 0, 1, 1, 0, 0, 1, 1, 0, …

## $ chose_prosoc <int> 1, 0, 0, 1, 1, 1, 0, 0, 1, 1, 0, 0, 0, 1, 1, 1, 0, 1, 1, 0, 0, 1, 1, 0, 1, 0, 0, 0, 1, …

## $ pulled_left <int> 0, 1, 0, 0, 1, 1, 0, 0, 0, 0, 1, 0, 1, 1, 0, 1, 0, 0, 1, 1, 1, 0, 1, 0, 0, 1, 0, 0, 0, …

## $ treatment <fct> 1, 1, 2, 1, 2, 2, 2, 2, 1, 1, 1, 2, 1, 2, 1, 2, 2, 1, 2, 1, 1, 1, 2, 2, 1, 1, 2, 2, 1, …Even when using the non-centered parameterization, McElreath’s m13.4 is a bit of an odd model to translate into brms syntax. To my knowledge, it can’t be done with conventional syntax. But we can fit the model with careful use of the non-linear syntax, which might look like this.

b13.4 <-

brm(data = d,

family = binomial,

bf(pulled_left | trials(1) ~ a + b,

a ~ 1 + (1 | actor) + (1 | block),

b ~ 0 + treatment,

nl = TRUE),

prior = c(prior(normal(0, 0.5), nlpar = b),

prior(normal(0, 1.5), class = b, coef = Intercept, nlpar = a),

prior(exponential(1), class = sd, group = actor, nlpar = a),

prior(exponential(1), class = sd, group = block, nlpar = a)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 13,

file = "fits/b13.04")The b ~ 0 + treatment part of the formula is our expression of what we wrote above as \(\beta_{\text{treatment}[i]}\). There’s a lot going on with the a ~ 1 + (1 | actor) + (1 | block) part of the formula. The initial 1 outside of the parenthesis is \(\bar \alpha\). The (1 | actor) and (1 | block) parts correspond to \(z_{\text{actor}[i]} \sigma_\alpha\) and \(x_{\text{block}[i]} \sigma_\gamma\), respectively.

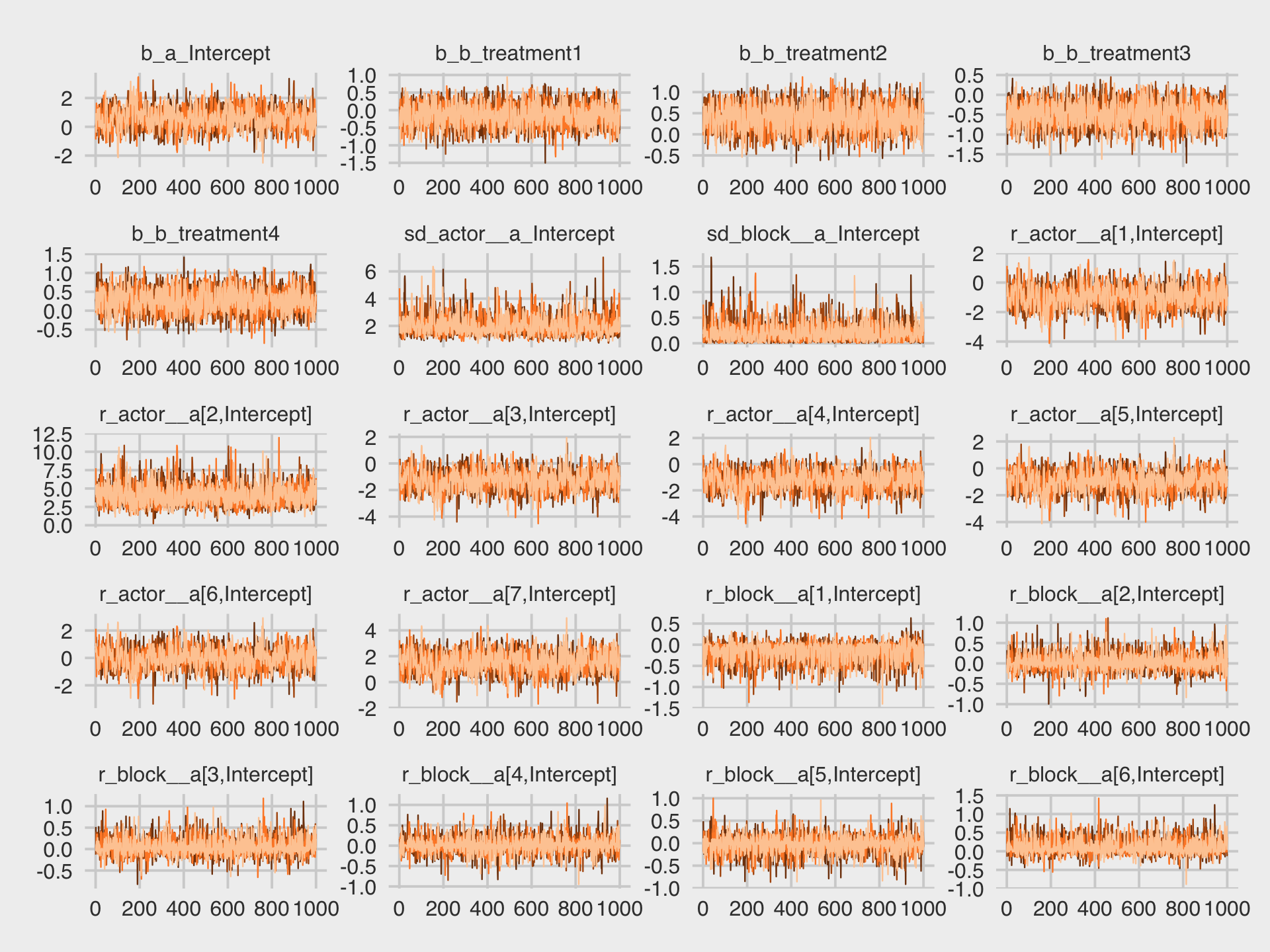

Check the trace plots.

library(bayesplot)

color_scheme_set("orange")

as_draws_df(b13.4) %>%

mcmc_trace(pars = vars(b_a_Intercept:`r_block__a[6,Intercept]`),

facet_args = list(ncol = 4),

linewidth = 0.15) +

theme(legend.position = "none")

They all look fine. In the text (e.g., page 416), McElreath briefly mentioned warnings about divergent transitions. We didn’t get any warnings like that. Keep following along and you’ll soon learn why.

Here’s a look at the summary when using print().

print(b13.4)## Family: binomial

## Links: mu = logit

## Formula: pulled_left | trials(1) ~ a + b

## a ~ 1 + (1 | actor) + (1 | block)

## b ~ 0 + treatment

## Data: d (Number of observations: 504)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Group-Level Effects:

## ~actor (Number of levels: 7)

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sd(a_Intercept) 2.00 0.67 1.08 3.65 1.00 1262 1735

##

## ~block (Number of levels: 6)

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sd(a_Intercept) 0.21 0.17 0.01 0.66 1.00 1605 1746

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## a_Intercept 0.61 0.72 -0.83 2.01 1.00 901 1339

## b_treatment1 -0.14 0.31 -0.77 0.46 1.00 2069 2895

## b_treatment2 0.39 0.31 -0.23 0.97 1.00 2079 2871

## b_treatment3 -0.48 0.31 -1.10 0.12 1.00 2034 2646

## b_treatment4 0.27 0.31 -0.33 0.86 1.00 1980 2583

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).When you use the (1 | <group>) syntax within brm(), the group-specific parameters are not shown with print(). You only get the hierarchical \(\sigma_\text{<group>}\) summaries, shown here as the two rows for sd(a_Intercept). However, you can get a summary of all the parameters with the posterior_summary() function.

posterior_summary(b13.4) %>% round(digits = 2)## Estimate Est.Error Q2.5 Q97.5

## b_a_Intercept 0.61 0.72 -0.83 2.01

## b_b_treatment1 -0.14 0.31 -0.77 0.46

## b_b_treatment2 0.39 0.31 -0.23 0.97

## b_b_treatment3 -0.48 0.31 -1.10 0.12

## b_b_treatment4 0.27 0.31 -0.33 0.86

## sd_actor__a_Intercept 2.00 0.67 1.08 3.65

## sd_block__a_Intercept 0.21 0.17 0.01 0.66

## r_actor__a[1,Intercept] -0.96 0.73 -2.39 0.48

## r_actor__a[2,Intercept] 4.03 1.33 1.97 7.14

## r_actor__a[3,Intercept] -1.27 0.73 -2.71 0.20

## r_actor__a[4,Intercept] -1.27 0.73 -2.71 0.17

## r_actor__a[5,Intercept] -0.96 0.73 -2.41 0.50

## r_actor__a[6,Intercept] -0.03 0.73 -1.47 1.45

## r_actor__a[7,Intercept] 1.51 0.77 0.00 3.07

## r_block__a[1,Intercept] -0.16 0.21 -0.68 0.15

## r_block__a[2,Intercept] 0.04 0.18 -0.32 0.46

## r_block__a[3,Intercept] 0.05 0.18 -0.28 0.48

## r_block__a[4,Intercept] 0.01 0.18 -0.35 0.40

## r_block__a[5,Intercept] -0.03 0.18 -0.42 0.30

## r_block__a[6,Intercept] 0.11 0.19 -0.18 0.59

## lprior -6.34 1.25 -9.38 -4.47

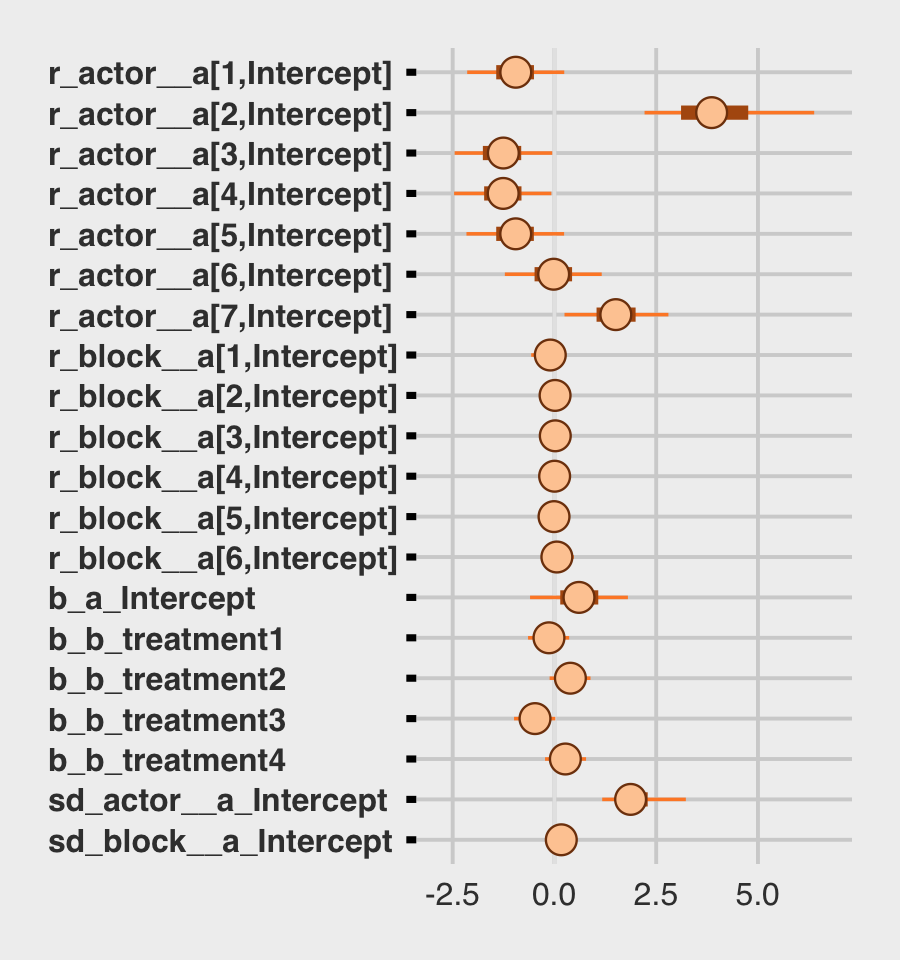

## lp__ -287.08 3.93 -295.62 -280.33We might make the coefficient plot of Figure 13.4.a with bayesplot::mcmc_plot().

b13.4 %>%

mcmc_plot(variable = c("^r_", "^b_", "^sd_"), regex = T) +

theme(axis.text.y = element_text(hjust = 0))

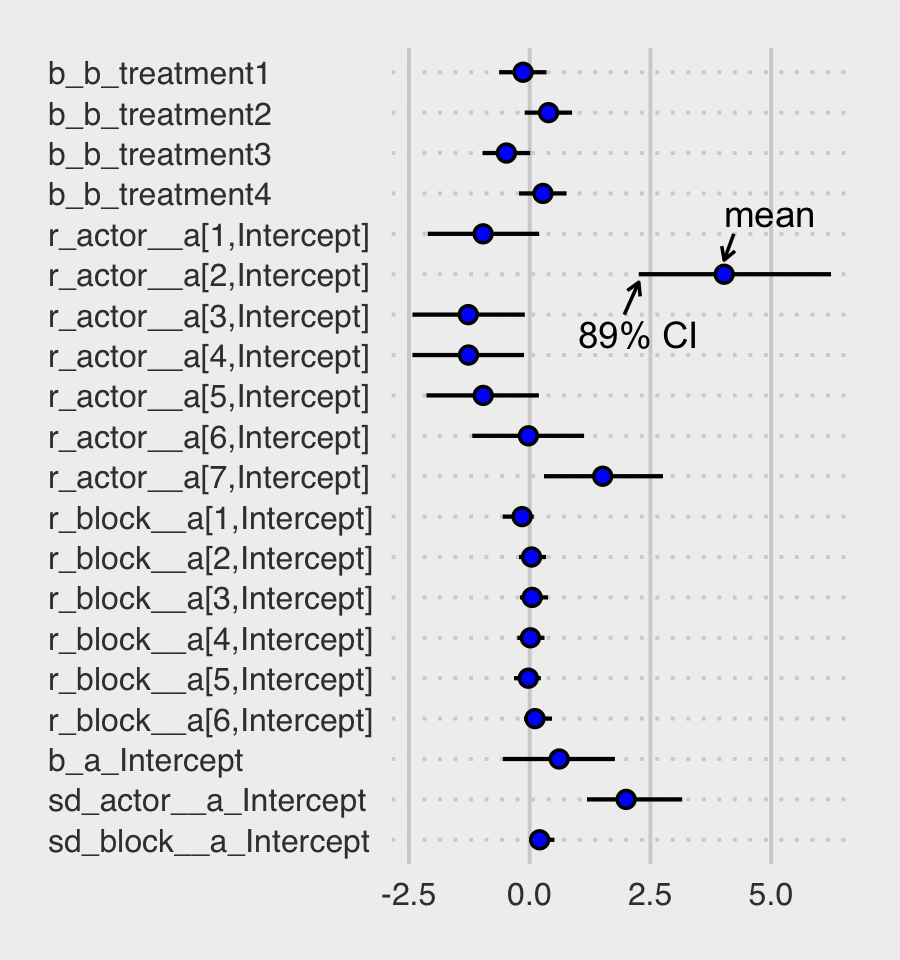

For a little more control, we might switch to a tidybayes-oriented approach.

# extract the posterior draws

post <- as_draws_df(b13.4)

# this is all stylistic fluff

levels <-

c("sd_block__a_Intercept", "sd_actor__a_Intercept",

"b_a_Intercept",

str_c("r_block__a[", 6:1, ",Intercept]"),

str_c("r_actor__a[", 7:1, ",Intercept]"),

str_c("b_b_treatment", 4:1))

text <-

tibble(x = posterior_summary(b13.4, probs = c(0.055, 0.955),)["r_actor__a[2,Intercept]", c(3, 1)],

y = c(13.5, 16.5),

label = c("89% CI", "mean"),

hjust = c(.5, 0))

arrow <-

tibble(x = posterior_summary(b13.4, probs = c(0.055, 0.955),)["r_actor__a[2,Intercept]", c(3, 1)] + c(- 0.3, 0.2),

xend = posterior_summary(b13.4, probs = c(0.055, 0.955),)["r_actor__a[2,Intercept]", c(3, 1)],

y = c(14, 16),

yend = c(14.8, 15.35))

# here's the main event

post %>%

pivot_longer(b_a_Intercept:`r_block__a[6,Intercept]`)%>%

mutate(name = factor(name, levels = levels)) %>%

ggplot(aes(x = value, y = name)) +

stat_pointinterval(point_interval = mean_qi,

.width = .89, shape = 21, size = 1, point_size = 2, point_fill = "blue") +

geom_text(data = text,

aes(x = x, y = y, label = label, hjust = hjust)) +

geom_segment(data = arrow,

aes(x = x, xend = xend,

y = y, yend = yend),

arrow = arrow(length = unit(0.15, "cm"))) +

theme(axis.text.y = element_text(hjust = 0),

panel.grid.major.y = element_line(linetype = 3))

Regardless of whether we use a bayesplot- or tidybayes-oriented workflow, a careful look at our coefficient plots will show the parameters are a little different from those McElreath reported. Again, this is because of the subtle differences between our non-centered parameterization and McElreath’s centered parameterization. This will all make more sense in Section 13.4.

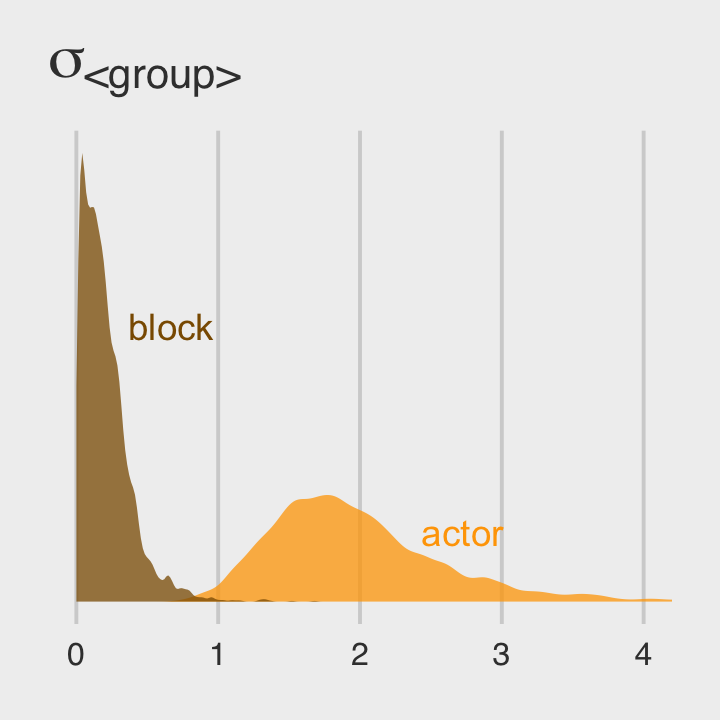

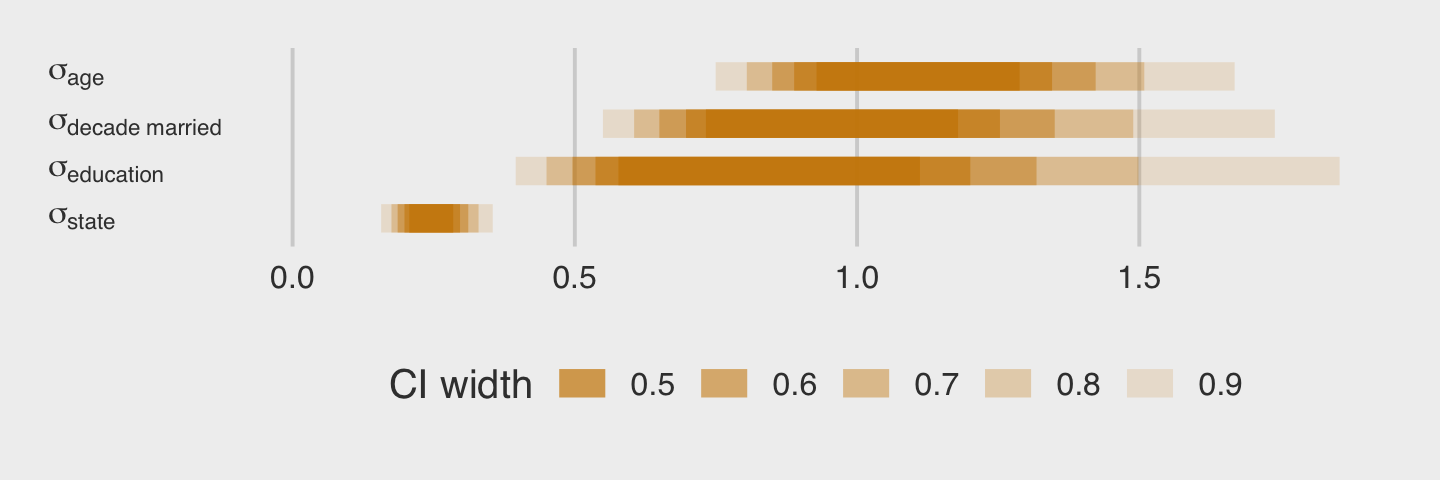

Now use post to compare the group-level \(\sigma\) parameters as in Figure 13.4.b.

post %>%

pivot_longer(starts_with("sd")) %>%

ggplot(aes(x = value, fill = name)) +

geom_density(linewidth = 0, alpha = 3/4, adjust = 2/3, show.legend = F) +

annotate(geom = "text", x = 0.67, y = 2, label = "block", color = "orange4") +

annotate(geom = "text", x = 2.725, y = 0.5, label = "actor", color = "orange1") +

scale_fill_manual(values = str_c("orange", c(1, 4))) +

scale_y_continuous(NULL, breaks = NULL) +

ggtitle(expression(sigma["<group>"])) +

coord_cartesian(xlim = c(0, 4))

Since both the coefficient plots and the density plots indicate there is much more variability among the actor parameters than in the block parameters, we might fit a model that ignores the variation among the levels of block.

b13.5 <-

brm(data = d,

family = binomial,

bf(pulled_left | trials(1) ~ a + b,

a ~ 1 + (1 | actor),

b ~ 0 + treatment,

nl = TRUE),

prior = c(prior(normal(0, 0.5), nlpar = b),

prior(normal(0, 1.5), class = b, coef = Intercept, nlpar = a),

prior(exponential(1), class = sd, group = actor, nlpar = a)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 13,

file = "fits/b13.05")We might compare our models by their WAIC estimates.

b13.4 <- add_criterion(b13.4, "waic")

b13.5 <- add_criterion(b13.5, "waic")

loo_compare(b13.4, b13.5, criterion = "waic") %>%

print(simplify = F)## elpd_diff se_diff elpd_waic se_elpd_waic p_waic se_p_waic waic se_waic

## b13.5 0.0 0.0 -265.5 9.6 8.5 0.4 531.1 19.2

## b13.4 -0.8 0.8 -266.3 9.7 10.7 0.6 532.6 19.4model_weights(b13.4, b13.5, weights = "waic") %>%

round(digits = 2)## b13.4 b13.5

## 0.32 0.68The two models yield nearly-equivalent WAIC estimates. Just as in the text, our p_waic column shows the models differ by about 2 effective parameters due to the shrinkage from the multilevel partial pooling. Yet recall what McElreath wrote:

There is nothing to gain here by selecting either model. The comparison of the two models tells a richer story… Since this is an experiment, there is nothing to really select. The experimental design tells us the relevant causal model to inspect. (pp. 418–419)

13.3.2 Even more clusters.

We can extend partial pooling to the treatment conditions, too. With brms, it will be more natural to revert to the conventional formula syntax.

b13.6 <-

brm(data = d,

family = binomial,

pulled_left | trials(1) ~ 1 + (1 | actor) + (1 | block) + (1 | treatment),

prior = c(prior(normal(0, 1.5), class = Intercept),

prior(exponential(1), class = sd)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 13,

file = "fits/b13.06")Recall that with brms, we don’t have a coeftab() like with McElreath’s rethinking. For us, one approach would be to compare the relevent rows from fixef(b13.4) to the relevant elements from ranef(b13.6).

tibble(parameter = str_c("b[", 1:4, "]"),

`b13.4` = fixef(b13.4)[2:5, 1],

`b13.6` = ranef(b13.6)$treatment[, 1, "Intercept"]) %>%

mutate_if(is.double, round, digits = 2)## # A tibble: 4 × 3

## parameter b13.4 b13.6

## <chr> <dbl> <dbl>

## 1 b[1] -0.14 -0.11

## 2 b[2] 0.39 0.38

## 3 b[3] -0.48 -0.44

## 4 b[4] 0.27 0.27Like in the text, “these are not identical, but they are very close” (p. 419). We might compare the group-level \(\sigma\) parameters with a plot.

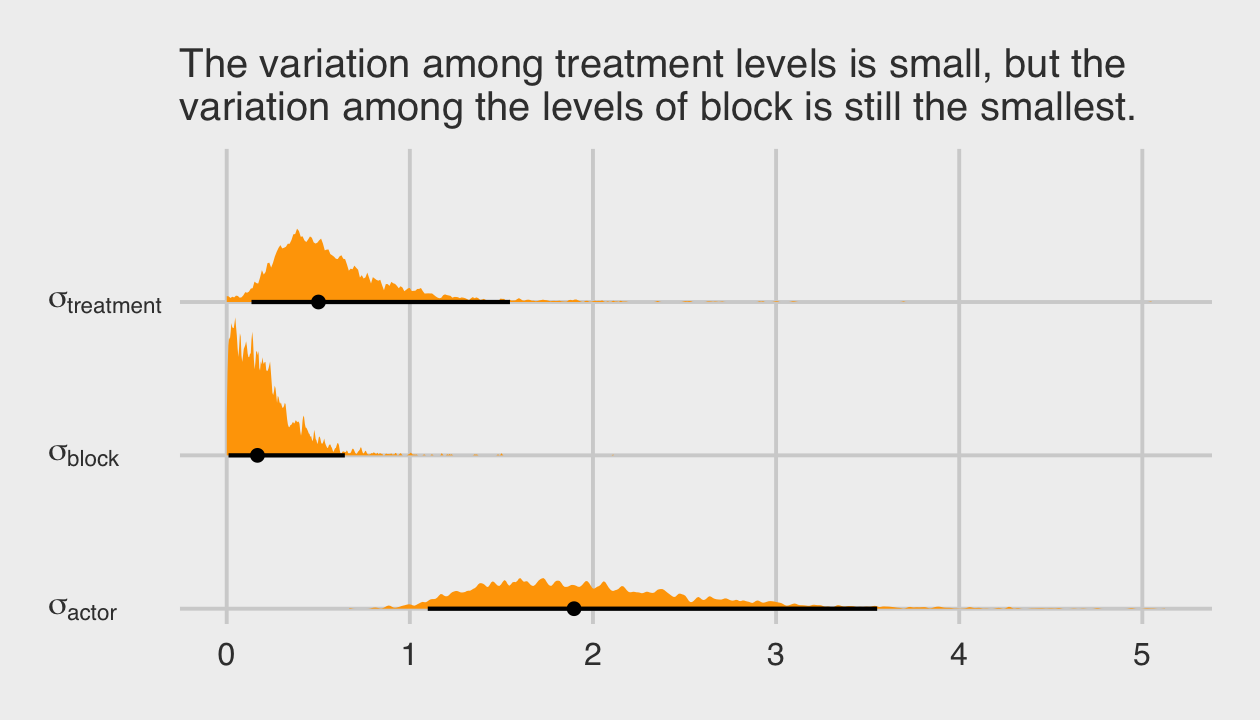

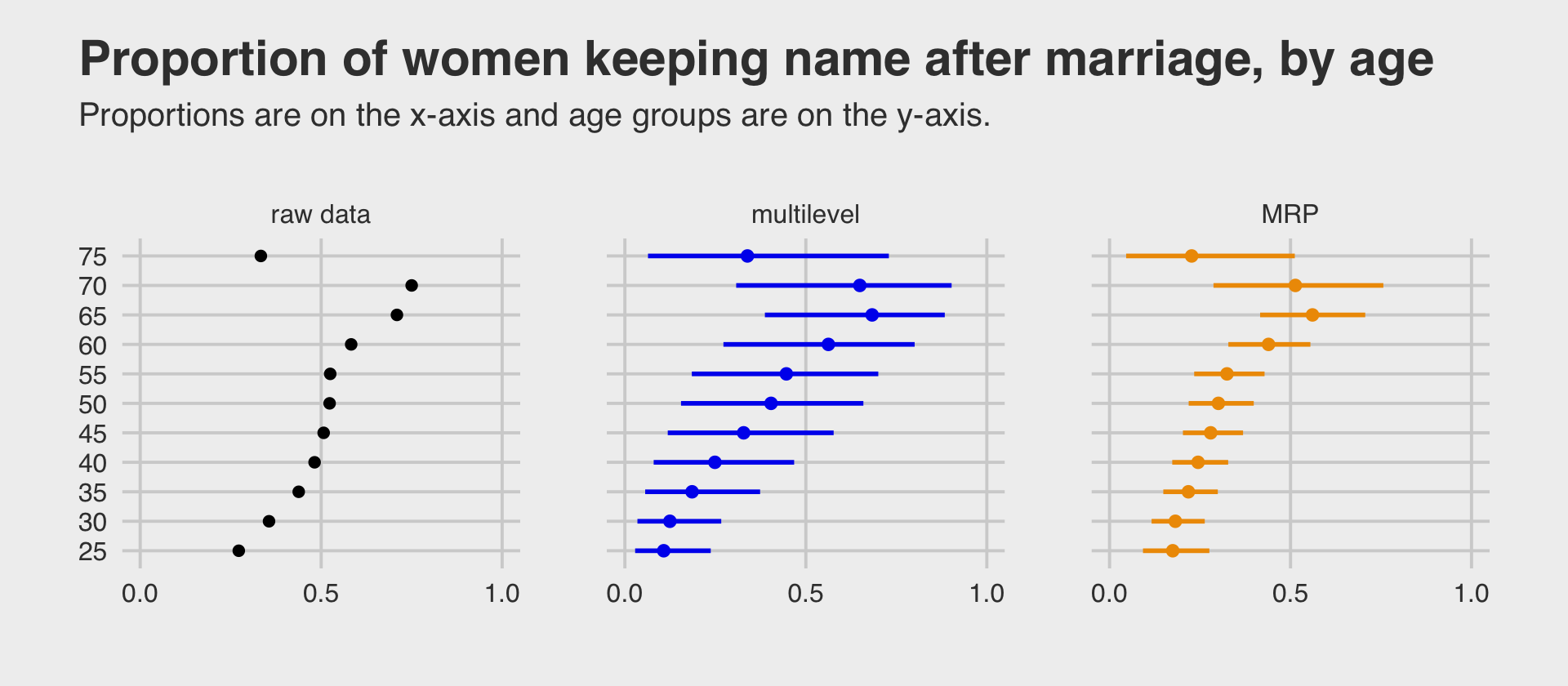

as_draws_df(b13.6) %>%

pivot_longer(starts_with("sd")) %>%

mutate(group = str_remove(name, "sd_") %>% str_remove(., "__Intercept")) %>%

mutate(parameter = str_c("sigma[", group,"]")) %>%

ggplot(aes(x = value, y = parameter)) +

stat_halfeye(.width = .95, size = 1, fill = "orange", adjust = 0.1) +

scale_y_discrete(labels = ggplot2:::parse_safe, expand = expansion(add = 0.1)) +

labs(subtitle = "The variation among treatment levels is small, but the\nvariation among the levels of block is still the smallest.") +

theme(axis.text.y = element_text(hjust = 0))

Among the three \(\sigma_\text{<group>}\) parameters, \(\sigma_\text{block}\) is the smallest. Now we’ll compare b13.6 to the last two models with the WAIC.

b13.6 <- add_criterion(b13.6, "waic")

loo_compare(b13.4, b13.5, b13.6, criterion = "waic") %>%

print(simplify = F)## elpd_diff se_diff elpd_waic se_elpd_waic p_waic se_p_waic waic se_waic

## b13.5 0.0 0.0 -265.5 9.6 8.5 0.4 531.1 19.2

## b13.4 -0.8 0.8 -266.3 9.7 10.7 0.6 532.6 19.4

## b13.6 -1.1 0.8 -266.6 9.6 10.9 0.6 533.2 19.3model_weights(b13.4, b13.5, b13.6, weights = "loo") %>%

round(digits = 2)## b13.4 b13.5 b13.6

## 0.25 0.56 0.19The models show little difference “on purely predictive criteria. This is the typical result, when each cluster (each treatment here) has a lot of data to inform its parameters” (p. 419). Unlike in the text, we didn’t have a problem with divergent transitions. We’ll see why in the next section.

Before we move on, this section just hints at a historical software difficulty. In short, it’s not uncommon to have a theory-based model that includes multiple sources of clustering (i.e., requiring many ( <varying parameter(s)> | <grouping variable(s)> ) parts in the model formula). This can make for all kinds of computational difficulties and result in software error messages, inadmissible solutions, and so on. One of the practical solutions to difficulties like these has been to simplify the statistical models by removing some of the clustering terms. Even though such simpler models were not the theory-based ones, at least they yielded solutions. Nowadays, Stan (via brms or otherwise) is making it easier to fit the full theoretically-based model. To learn more about this topic, check out this nice blog post by Michael Frank, Mixed effects models: Is it time to go Bayesian by default?. Make sure to check out the discussion in the comments section, which includes all-stars like Bürkner and Douglas Bates. You can get more context for the issue from Barr et al. (2013), Random effects structure for confirmatory hypothesis testing: Keep it maximal.

13.4 Divergent transitions and non-centered priors

Although we did not get divergent transitions warnings in from our last few models the way McElreath did with his, the issues is still relevant for brms.

One of the best things about Hamiltonian Monte Carlo is that it provides internal checks of efficiency and accuracy. One of these checks comes free, arising from the constraints on the physics simulation. Recall that HMC simulates the frictionless flow of a particle on a surface. In any given transition, which is just a single flick of the particle, the total energy at the start should be equal to the total energy at the end. That’s how energy in a closed system works. And in a purely mathematical system, the energy is always conserved correctly. It’s just a fact about the physics.

But in a numerical system, it might not be. Sometimes the total energy is not the same at the end as it was at the start. In these cases, the energy is divergent. How can this happen? It tends to happen when the posterior distribution is very steep in some region of parameter space. Steep changes in probability are hard for a discrete physics simulation to follow. When that happens, the algorithm notices by comparing the energy at the start to the energy at the end. When they don’t match, it indicates numerical problems exploring that part of the posterior distribution.

Divergent transitions are rejected. They don’t directly damage your approximation of the posterior distribution. But they do hurt it indirectly, because the region where divergent transitions happen is hard to explore correctly. (p. 420, emphasis in the original)

Two primary ways to handle divergent transitions are by increasing the adapt_delta parameter, which we’ve already done a few times in previous chapters, or reparameterizing the model. As McElreath will cover in a bit, switching from the centered to the non-centered parameterization will often work when using multilevel models.

13.4.1 The Devil’s Funnel.

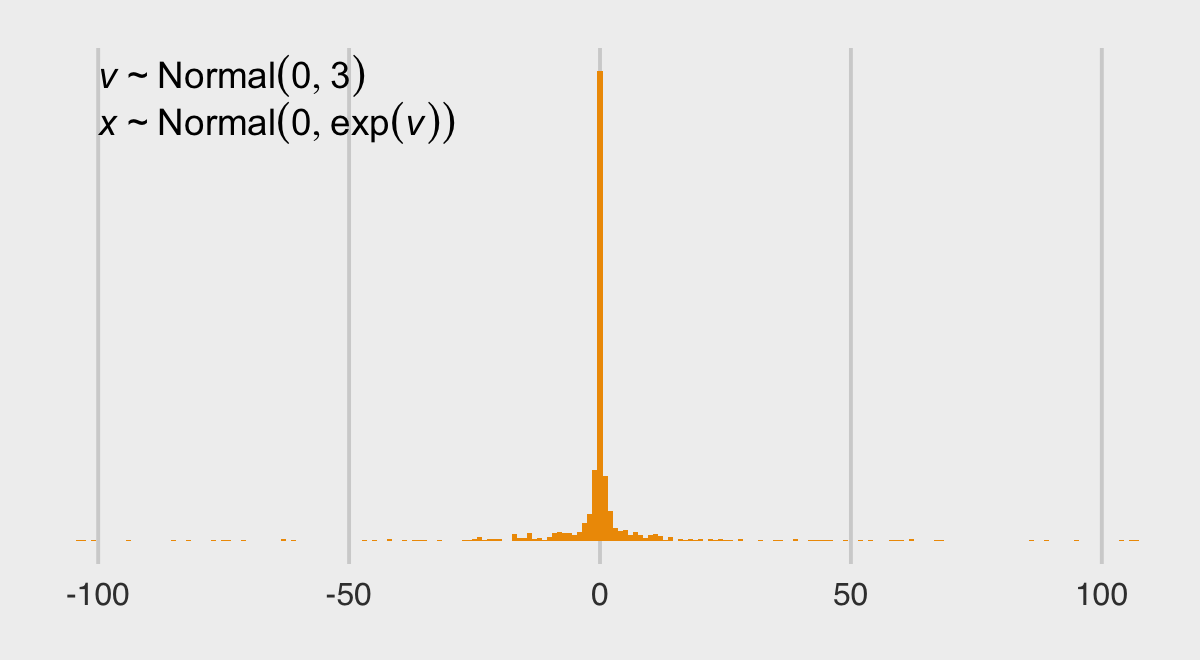

McElreath posed a joint distribution

\[\begin{align*} v & \sim \operatorname{Normal}(0, 3) \\ x & \sim \operatorname{Normal}(0, \exp(v)), \end{align*}\]

where the scale of \(x\) depends on another variable, \(v\). In R code 13.26, McElreath then proposed fitting the following model with rethinking::ulam().

m13.7 <-

ulam(

data = list(N = 1),

alist(

v ~ normal(0, 3),

x ~ normal(0, exp(v))

),

chains = 4

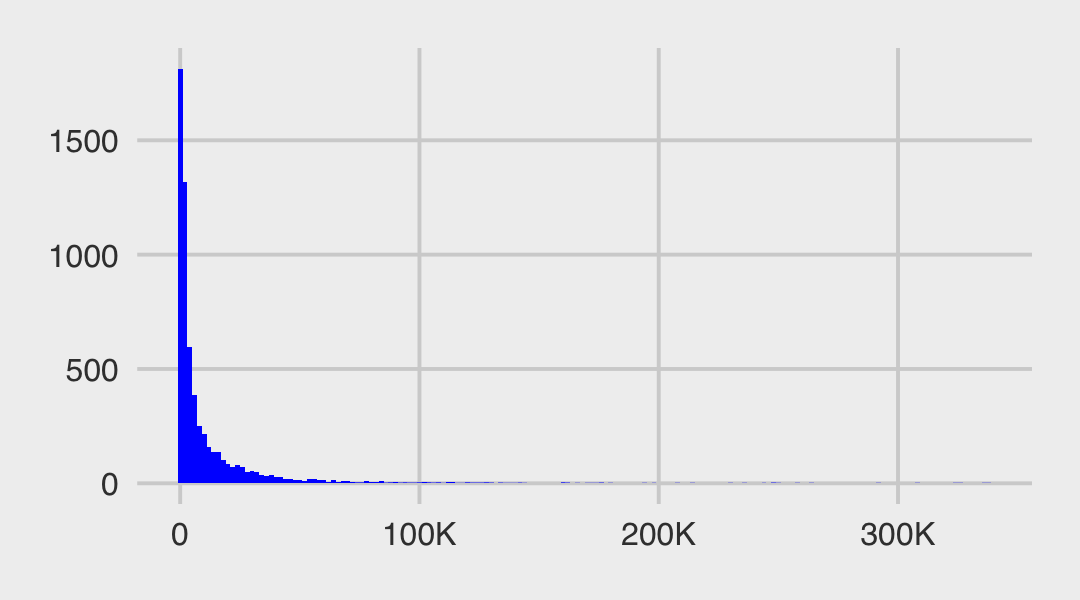

)I’m not aware that you can do something like this with brms. If you think I’m in error, please share your solution. We can at least get a sense of the model by simulating from the joint distribution and plotting.

set.seed(13)

tibble(v = rnorm(1e3, mean = 0, sd = 3)) %>%

mutate(x = rnorm(1e3, mean = 0, sd = exp(v))) %>%

ggplot(aes(x = x)) +

geom_histogram(binwidth = 1, fill = "orange2") +

annotate(geom = "text",

x = -100, y = 490, hjust = 0,

label = expression(italic(v)%~%Normal(0, 3))) +

annotate(geom = "text",

x = -100, y = 440, hjust = 0,

label = expression(italic(x)%~%Normal(0, exp(italic(v))))) +

coord_cartesian(xlim = c(-100, 100)) +

scale_y_continuous(breaks = NULL)

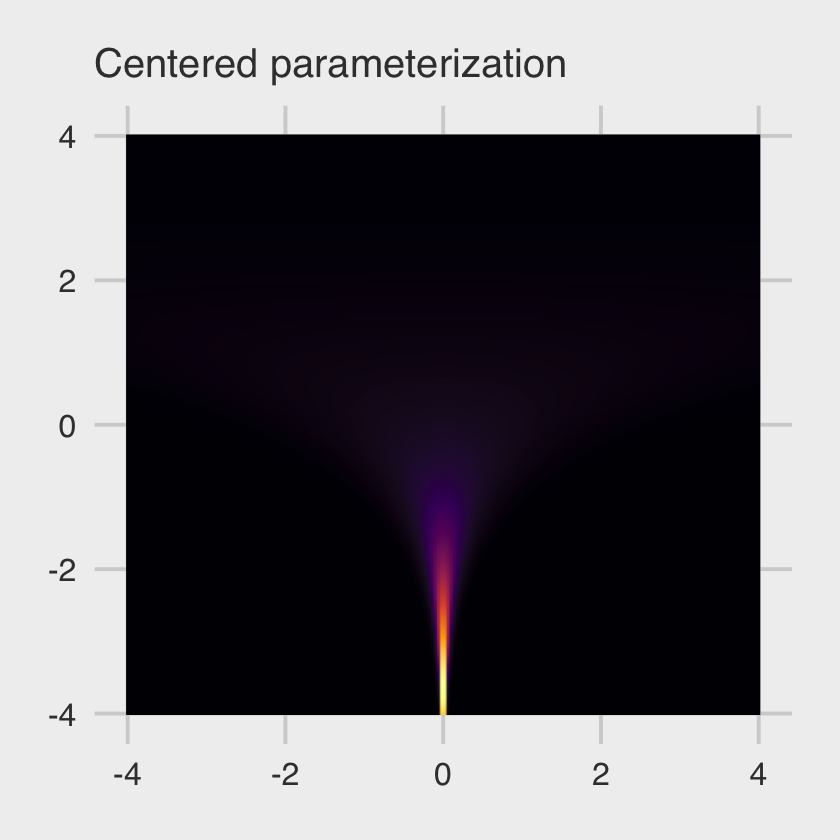

The distribution looks something like a Student-\(t\) with a very low \(\nu\) parameter. We can express the joint likelihood of \(p(v, x)\) as

\[p(v, x) = p(x | v)\ p(v).\]

Here that is in a plot.

# define the parameter space

parameter_space <- seq(from = -4, to = 4, length.out = 200)

# simulate

crossing(v = parameter_space,

x = parameter_space) %>%

mutate(likelihood_v = dnorm(v, mean = 0, sd = 3),

likelihood_x = dnorm(x, mean = 0, sd = exp(v))) %>%

mutate(joint_likelihood = likelihood_v * likelihood_x) %>%

# plot!

ggplot(aes(x = x, y = v, fill = joint_likelihood)) +

geom_raster(interpolate = T) +

scale_fill_viridis_c(option = "B") +

labs(subtitle = "Centered parameterization") +

theme(legend.position = "none")

This ends up as a version of McElreath’s Figure 13.5.a.

At low values of \(v\), the distribution of \(x\) contracts around zero. This forms a very steep valley that the Hamiltonian particle needs to explore. Steep surfaces are hard to simulate, because the simulation is not actually continuous. It happens in discrete steps. If the steps are too big, the simulation will overshoot. (p. 421)

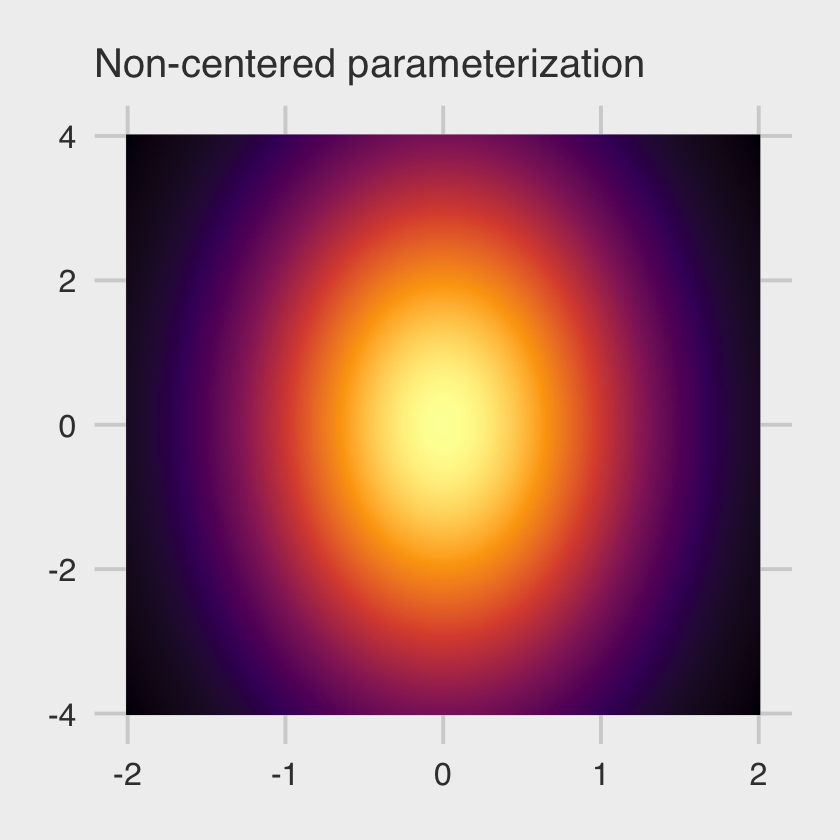

To avoid the divergent transitions than can arise from steep valleys like this, we can switch from our original formula to a non-centered parameterization, such as:

\[\begin{align*} v & \sim \operatorname{Normal}(0, 3) \\ z & \sim \operatorname{Normal}(0, 1) \\ x & = z \exp(v), \end{align*}\]

where \(x\) is now the product of two independent distributions, \(v\) and \(z\). With this parameterization, we can express the joint likelihood \(p(v, z)\) as

\[p(v, z) = p(z) \ p(v),\]

where \(p(z)\) is not conditional on \(v\) and \(p(v)\) is not conditional on \(z\). Here’s what that looks like in a plot.

# simulate

crossing(v = parameter_space,

z = parameter_space / 2) %>%

mutate(likelihood_v = dnorm(v, mean = 0, sd = 3),

likelihood_z = dnorm(z, mean = 0, sd = 1)) %>%

mutate(joint_likelihood = likelihood_v * likelihood_z) %>%

# plot!

ggplot(aes(x = z, y = v, fill = joint_likelihood)) +

geom_raster(interpolate = T) +

scale_fill_viridis_c(option = "B") +

labs(subtitle = "Non-centered parameterization") +

theme(legend.position = "none")

This is our version of the right-hand panel of McElreath’s Figure 13.5. No nasty funnel–just a friendly glowing likelihood orb.

13.4.2 Non-centered chimpanzees.

At the top of the section, McElreath reported the rethinking::ulam() default is to set adapt_delta = 0.95. Readers should be aware that the brms::brm() default is adapt_delta = 0.80. A consequence of this difference is rethinking::ulam() will tend to take smaller step sizes than brms::brm(), at the cost of slower exploration of the posterior. I don’t know that one is inherently better than the other. They’re just defaults.

Recall that due to how brms only supports the non-centered parameterization, we have already fit our version of McElreath’s m13.4nc. We called it b13.4. Here is the model summary, again.

print(b13.4)## Family: binomial

## Links: mu = logit

## Formula: pulled_left | trials(1) ~ a + b

## a ~ 1 + (1 | actor) + (1 | block)

## b ~ 0 + treatment

## Data: d (Number of observations: 504)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Group-Level Effects:

## ~actor (Number of levels: 7)

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sd(a_Intercept) 2.00 0.67 1.08 3.65 1.00 1262 1735

##

## ~block (Number of levels: 6)

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sd(a_Intercept) 0.21 0.17 0.01 0.66 1.00 1605 1746

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## a_Intercept 0.61 0.72 -0.83 2.01 1.00 901 1339

## b_treatment1 -0.14 0.31 -0.77 0.46 1.00 2069 2895

## b_treatment2 0.39 0.31 -0.23 0.97 1.00 2079 2871

## b_treatment3 -0.48 0.31 -1.10 0.12 1.00 2034 2646

## b_treatment4 0.27 0.31 -0.33 0.86 1.00 1980 2583

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

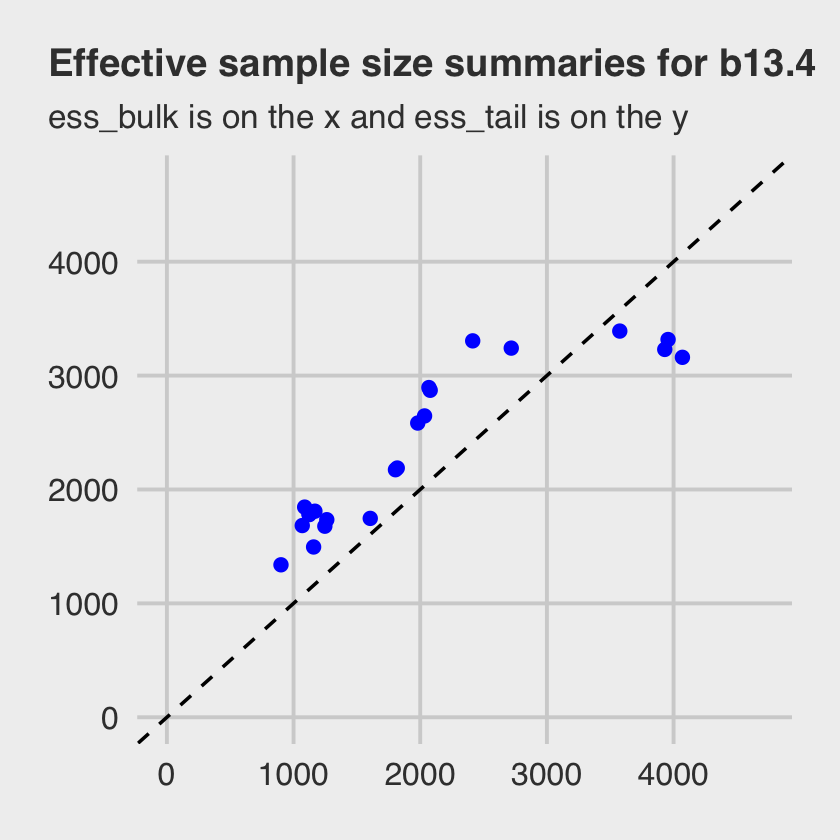

## scale reduction factor on split chains (at convergence, Rhat = 1).Because we only fit this model using the non-centered parameterization, we won’t be able to fully reproduce McElreath’s Figure 13.6. But we can still plot our effective sample sizes. Recall that unlike the way rethinking only reports n_eff, brms now reports both Bulk_ESS and Tail_ESS (see Vehtari et al., 2019). At the moment, brms does not offer a convenience function that allows users to collect those values in a data frame. However you can do so with help from the posterior package. For our purposes, the function of interest is summarise_draws(), which will take the output from as_draws_df() as input.

library(posterior)

as_draws_df(b13.4) %>%

summarise_draws()## # A tibble: 22 × 10

## variable mean median sd mad q5 q95 rhat ess_bulk ess_tail

## <chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 b_a_Intercept 0.610 0.603 0.722 0.694 -0.599 1.80 1.00 901. 1339.

## 2 b_b_treatment1 -0.137 -0.134 0.309 0.308 -0.648 0.362 1.00 2069. 2895.

## 3 b_b_treatment2 0.390 0.392 0.306 0.309 -0.118 0.886 1.00 2079. 2871.

## 4 b_b_treatment3 -0.483 -0.477 0.306 0.308 -0.992 0.0215 1.00 2034. 2646.

## 5 b_b_treatment4 0.275 0.268 0.305 0.300 -0.231 0.776 1.00 1980. 2583.

## 6 sd_actor__a_Intercept 2.00 1.87 0.671 0.532 1.17 3.23 1.00 1262. 1735.

## 7 sd_block__a_Intercept 0.205 0.168 0.174 0.147 0.0171 0.529 1.00 1605. 1746.

## 8 r_actor__a[1,Intercept] -0.965 -0.950 0.727 0.681 -2.14 0.241 1.00 1158. 1495.

## 9 r_actor__a[2,Intercept] 4.03 3.86 1.33 1.21 2.21 6.38 1.00 1804. 2173.

## 10 r_actor__a[3,Intercept] -1.27 -1.26 0.727 0.689 -2.45 -0.0548 1.00 1069. 1683.

## # … with 12 more rowsNote how the last three columns are the rhat, the ess_bulk, and the ess_tail. Here we summarize those two effective sample size columns in a scatter plot similar to Figure 13.6, but based only on our b13.4, which used the non-centered parameterization.

as_draws_df(b13.4) %>%

summarise_draws() %>%

ggplot(aes(x = ess_bulk, y = ess_tail)) +

geom_abline(linetype = 2) +

geom_point(color = "blue") +

xlim(0, 4700) +

ylim(0, 4700) +

ggtitle("Effective sample size summaries for b13.4",

subtitle = "ess_bulk is on the x and ess_tail is on the y") +

theme(plot.subtitle = element_text(size = 10),

plot.title = element_text(size = 11.5),

plot.title.position = "plot")

Both measures of effective sample size are fine.

So should we always use the non-centered parameterization? No. Sometimes the centered form is better. It could even be true that the centered form is better for one cluster in a model while the non-centered form is better for another cluster in the same model. It all depends upon the details. Typically, a cluster with low variation, like the blocks in

m13.4, will sample better with a non-centered prior. And if you have a large number of units inside a cluster, but not much data for each unit, then the non-centered is also usually better. But being able to switch back and forth as needed is very useful. (p. 425)

I won’t argue with McElreath, here. But if you run into a situation where you’d like to use the centered parameterization, you will have to use rethinking or fit your model directly in Stan. brms won’t support you, there.

13.5 Multilevel posterior predictions

Every model is a merger of sense and nonsense. When we understand a model, we can find its sense and control its nonsense. But as models get more complex, it is very difficult to impossible to understand them just by inspecting tables of posterior means and intervals. Exploring implied posterior predictions helps much more….

The introduction of varying effects does introduce nuance, however.

First, we should no longer expect the model to exactly retrodict the sample, because adaptive regularization has as its goal to trade off poorer fit in sample for better inference and hopefully better fit out of sample. That is what shrinkage does for us. Of course, we should never be trying to really retrodict the sample. But now you have to expect that even a perfectly good model fit will differ from the raw data in a systematic way.

Second, “prediction” in the context of a multilevel model requires additional choices. If we wish to validate a model against the specific clusters used to fit the model, that is one thing. But if we instead wish to compute predictions for new clusters, other than the ones observed in the sample, that is quite another. We’ll consider each of these in turn, continuing to use the chimpanzees model from the previous section. (p. 426)

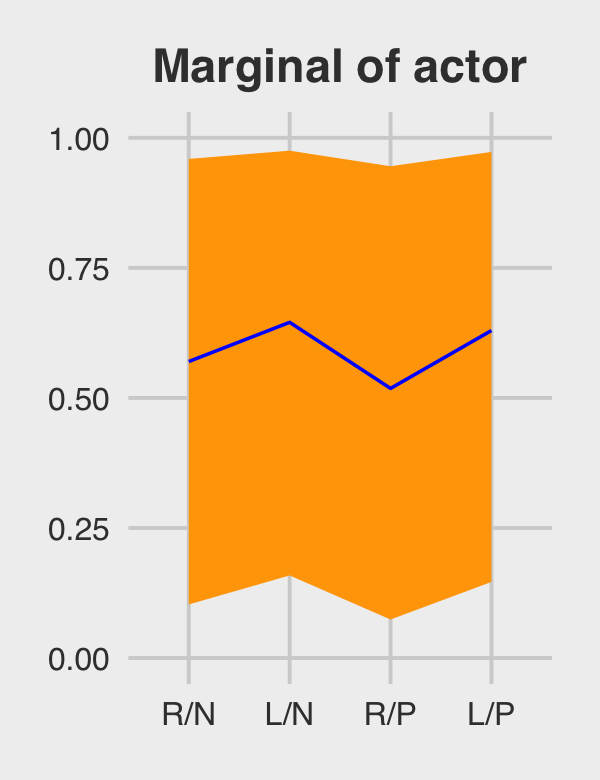

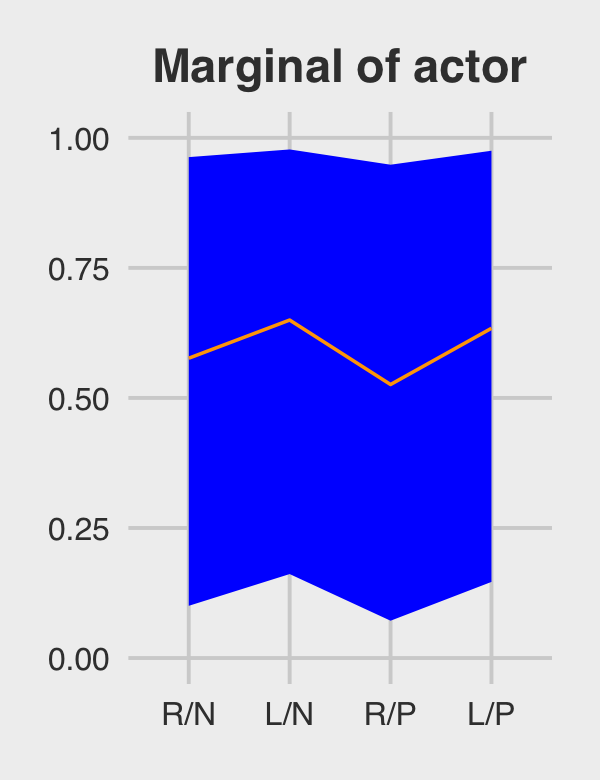

13.5.1 Posterior prediction for same clusters.

Like McElreath did in the text, we’ll do this two ways. Recall we use brms::fitted() in place of rethinking::link().

chimp <- 2

nd <-

d %>%

distinct(treatment) %>%

mutate(actor = chimp,

block = 1)

labels <- c("R/N", "L/N", "R/P", "L/P")

f <-

fitted(b13.4,

newdata = nd) %>%

data.frame() %>%

bind_cols(nd) %>%

mutate(treatment = factor(treatment, labels = labels))

f## Estimate Est.Error Q2.5 Q97.5 treatment actor block

## 1 0.9783742 0.02066066 0.9226771 0.9993302 R/N 2 1

## 2 0.9869666 0.01273507 0.9534004 0.9996419 L/N 2 1

## 3 0.9701555 0.02734177 0.8968877 0.9990598 R/P 2 1

## 4 0.9853764 0.01423009 0.9466887 0.9995752 L/P 2 1Here are the empirical probabilities computed directly from the data (i.e., the no-pooling model).

(

chimp_2_d <-

d %>%

filter(actor == chimp) %>%

group_by(treatment) %>%

summarise(prob = mean(pulled_left)) %>%

ungroup() %>%

mutate(treatment = factor(treatment, labels = labels))

)## # A tibble: 4 × 2

## treatment prob

## <fct> <dbl>

## 1 R/N 1

## 2 L/N 1

## 3 R/P 1

## 4 L/P 1McElreath didn’t show the corresponding plot in the text. It might look like this.

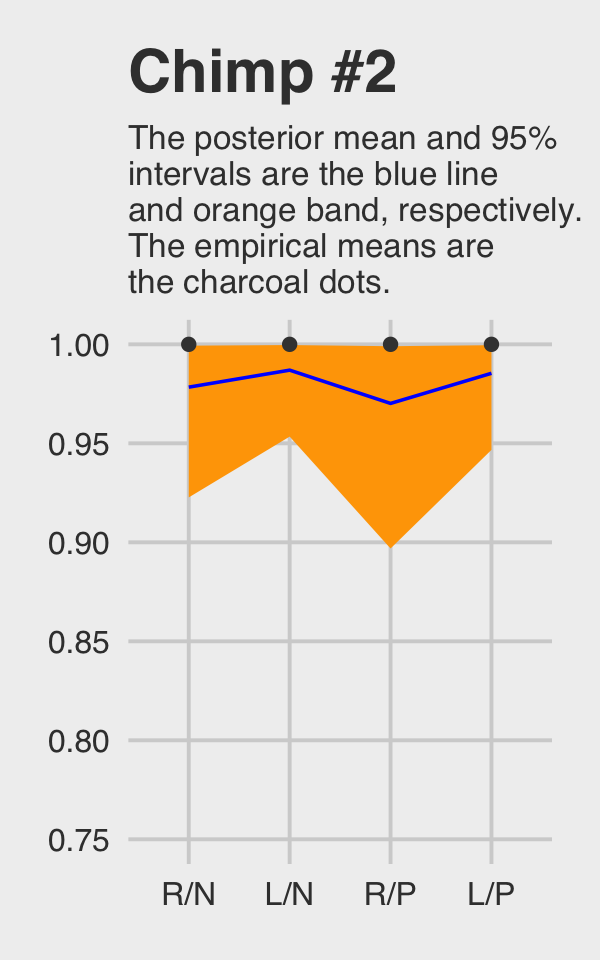

f %>%

# if you want to use `geom_line()` or `geom_ribbon()` with a factor on the x-axis,

# you need to code something like `group = 1` in `aes()`

ggplot(aes(x = treatment, y = Estimate, group = 1)) +

geom_ribbon(aes(ymin = Q2.5, ymax = Q97.5), fill = "orange1") +

geom_line(color = "blue") +

geom_point(data = chimp_2_d,

aes(y = prob),

color = "grey25") +

ggtitle("Chimp #2",

subtitle = "The posterior mean and 95%\nintervals are the blue line\nand orange band, respectively.\nThe empirical means are\nthe charcoal dots.") +

coord_cartesian(ylim = c(.75, 1)) +

theme(plot.subtitle = element_text(size = 10))

Do note how severely we’ve restricted the \(y\)-axis range. But okay, now let’s do things by hand. We’ll need to extract the posterior draws and look at the structure of the data.

post <- as_draws_df(b13.4)

glimpse(post)## Rows: 4,000

## Columns: 25

## $ b_a_Intercept <dbl> -0.01253738, 0.27715021, -0.60972821, -0.15498266, -0.61317791, 0.80178514…

## $ b_b_treatment1 <dbl> -0.18520107, -0.17970889, -0.09769529, 0.22813833, 0.11551463, 0.08631149,…

## $ b_b_treatment2 <dbl> 0.52203229, 0.26206450, 0.40831981, 0.57389197, 0.87561966, 0.72394865, 0.…

## $ b_b_treatment3 <dbl> -0.36627775, -0.38881273, -1.24405532, -0.27001046, 0.03480524, -0.1556004…

## $ b_b_treatment4 <dbl> 0.303189000, 0.010369854, 0.006293823, 0.444938619, 0.980032736, 0.6944412…

## $ sd_actor__a_Intercept <dbl> 1.946228, 2.455028, 1.356452, 1.793645, 2.747367, 2.203897, 1.337697, 1.55…

## $ sd_block__a_Intercept <dbl> 0.50254469, 0.14974609, 0.02880073, 0.10875859, 0.07914902, 0.01498366, 0.…

## $ `r_actor__a[1,Intercept]` <dbl> -0.82504414, -0.84244964, 0.86017331, -0.37891829, -0.50212580, -1.4478446…

## $ `r_actor__a[2,Intercept]` <dbl> 3.126632, 5.609742, 4.924606, 3.681224, 5.914519, 4.229041, 2.554125, 3.25…

## $ `r_actor__a[3,Intercept]` <dbl> -0.7817855, -0.7026046, 0.2986054, -0.8145322, -1.1316879, -2.0276635, -2.…

## $ `r_actor__a[4,Intercept]` <dbl> -0.7384717, -0.7404704, 0.3122383, -1.0387072, -0.4004929, -1.5632378, -1.…

## $ `r_actor__a[5,Intercept]` <dbl> -0.23706378, 0.09097625, -0.03719888, -0.35148078, -0.28176217, -1.2832069…

## $ `r_actor__a[6,Intercept]` <dbl> 0.707364409, 0.573295265, 1.499676717, 0.528135011, 0.760294540, -0.321259…

## $ `r_actor__a[7,Intercept]` <dbl> 2.176938019, 1.549886945, 2.823354699, 1.962122386, 2.512776792, 1.2687953…

## $ `r_block__a[1,Intercept]` <dbl> -0.359242102, 0.014307299, -0.057766295, 0.184269279, 0.057038880, -0.0268…

## $ `r_block__a[2,Intercept]` <dbl> 0.325677004, 0.105730090, -0.004867564, 0.035314959, -0.043653112, 0.01032…

## $ `r_block__a[3,Intercept]` <dbl> 0.516119746, 0.017709876, 0.012122285, -0.038462960, -0.061588322, 0.01764…

## $ `r_block__a[4,Intercept]` <dbl> 0.166978130, 0.083781821, -0.004410712, 0.058228024, 0.051474567, -0.00801…

## $ `r_block__a[5,Intercept]` <dbl> 0.489088261, -0.075775127, 0.027695632, -0.244153031, -0.091435589, 0.0210…

## $ `r_block__a[6,Intercept]` <dbl> 0.408815456, 0.154832006, -0.007034443, 0.048713863, 0.111826940, -0.01466…