Chapter 5 R Lab 4 - 02/05/2023

In this lecture we will learn how to implement classification trees and their extensions (bagging and random forest). Also, we will introduce the ROC curve and the AUC, useful in order to evaluate classification algorithms.

The following packages are required: tidymodels,rpart.plot, randomForest, tidyverse, pROC and discrim.

library(tidyverse)

library(tidymodels)

library(randomForest)

library(rpart.plot) #for plotting CART

library(pROC)## Type 'citation("pROC")' for a citation.##

## Attaching package: 'pROC'## The following objects are masked from 'package:stats':

##

## cov, smooth, varlibrary(discrim) #QDA5.1 Credit data and classification tree

The file credit.csv contains information about the default status (yes or no) of more than 500 individuals. Together with the response variable named default, 4 regressors are available. Here below the preview of the data:

credit = read.csv("./files/credit.csv")

glimpse(credit)## Rows: 522

## Columns: 5

## $ months_loan_duration <int> 48, 42, 24, 36, 30, 12, 48, 12…

## $ percent_of_income <int> 2, 2, 3, 2, 4, 3, 3, 1, 4, 2, …

## $ years_at_residence <int> 2, 4, 4, 2, 2, 1, 4, 1, 4, 4, …

## $ age <int> 22, 45, 53, 35, 28, 25, 24, 22…

## $ default <chr> "yes", "no", "yes", "no", "yes…We transform the response variable into a factor and check the names of the categories:

credit$default = factor(credit$default)

levels(credit$default) # fine, we don't have to change them## [1] "no" "yes"The percentage distribution of the response variable is the following:

credit %>%

count(default) %>%

mutate(perc = n/sum(n)*100)## default n perc

## 1 no 291 55.74713

## 2 yes 231 44.25287As usual let’s try to summarise the data with tables and plots:

summary(credit)## months_loan_duration percent_of_income years_at_residence

## Min. : 6.00 Min. :1.000 Min. :1.000

## 1st Qu.:12.00 1st Qu.:2.000 1st Qu.:2.000

## Median :18.00 Median :3.000 Median :3.000

## Mean :21.34 Mean :2.946 Mean :2.826

## 3rd Qu.:26.75 3rd Qu.:4.000 3rd Qu.:4.000

## Max. :72.00 Max. :4.000 Max. :4.000

## age default

## Min. :19.00 no :291

## 1st Qu.:26.00 yes:231

## Median :31.50

## Mean :34.89

## 3rd Qu.:41.00

## Max. :75.00credit %>%

ggplot()+

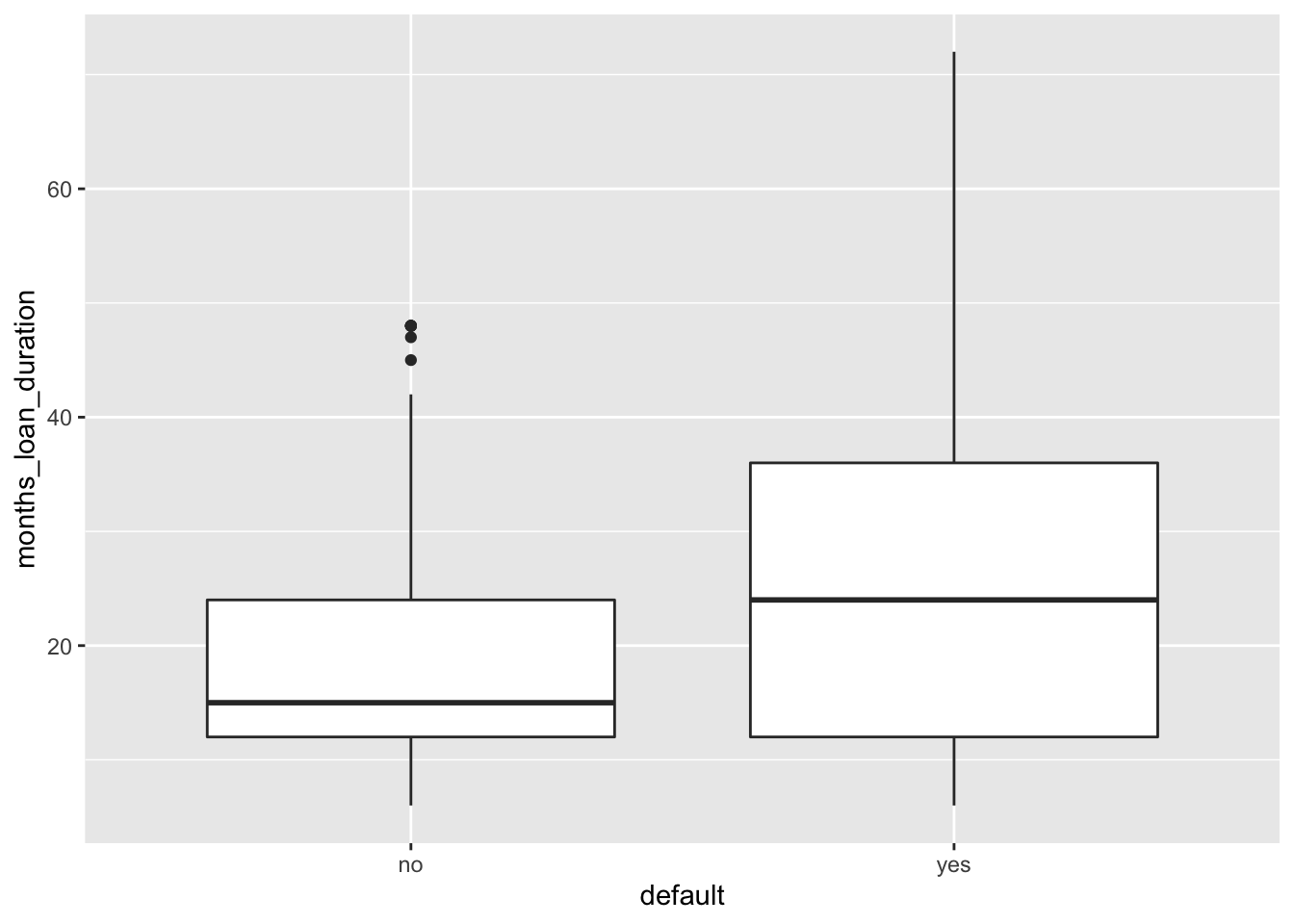

geom_boxplot(aes(x=default, y=months_loan_duration))

# higher median duration value in case of default

credit %>%

ggplot()+

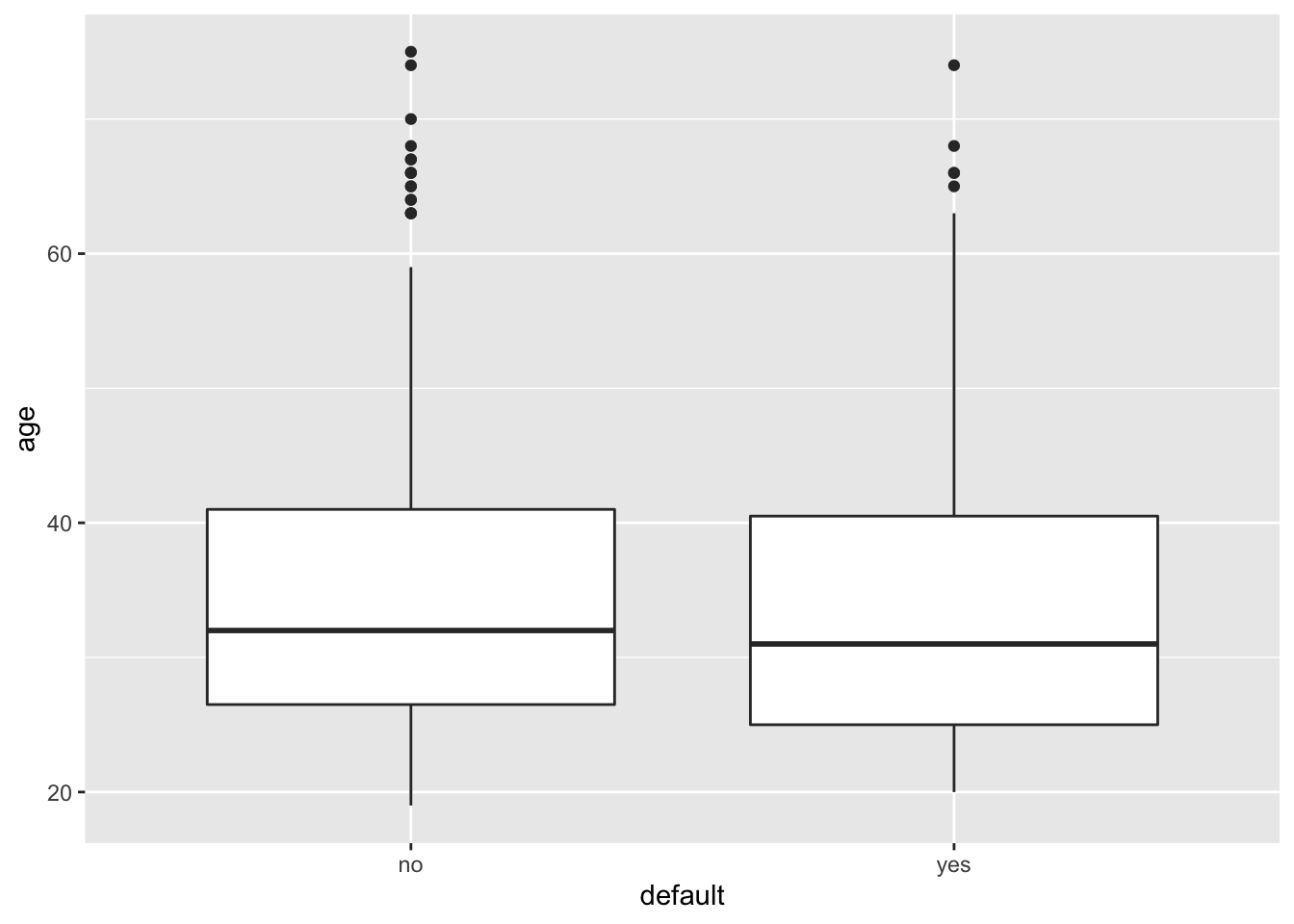

geom_boxplot(aes(x=default, y=age))

# similar distribution between the two classes for age

credit %>%

ggplot()+

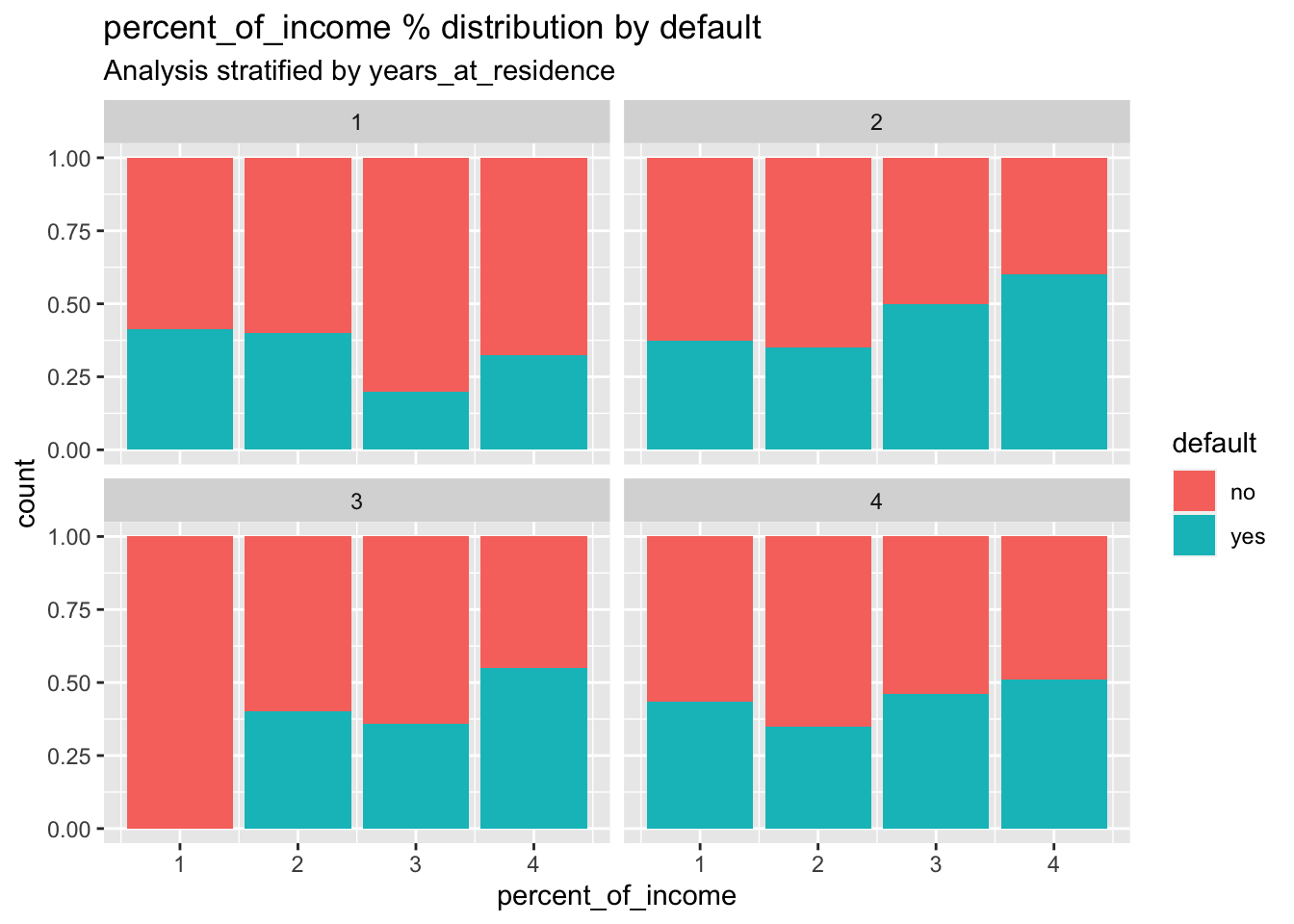

geom_bar(aes(x=percent_of_income,fill=default),

position="fill") +

facet_wrap(~years_at_residence)+

labs(title = "percent_of_income % distribution by default",

subtitle = "Analysis stratified by years_at_residence ")

# some small differences in the distribution of the default are visible across the different categories of percent_of_income and years_at_residenceWe split the dataset in training (70%) and test set (30%) by using the approach described in Section 3.3:

# Create a vector of indexes with an 70% random sample

set.seed(13, sample.kind="Rejection")

train_index = sample(1:nrow(credit), 0.7*nrow(credit))

# Subset the credit data frame to training indexes only

credit_train = credit[train_index, ]

credit_train %>%

count(default) %>%

mutate(n/sum(n))## default n n/sum(n)

## 1 no 204 0.5589041

## 2 yes 161 0.4410959# Exclude the training indexes to create the test set

credit_test = credit[-train_index, ] 5.2 Classification tree

To estimate the classification tree we use the decision_tree already used before for the grade data (see R lab 3). Here the selected mode is classification as we are dealing with a classification problem. The Gini index will be used for splitting:

set.seed(13, sample.kind="Rejection")

credit_tree = decision_tree() %>%

set_engine('rpart') %>%

set_mode('classification') %>%

fit(default ~ . , data = credit_train)

credit_tree## parsnip model object

##

## n= 365

##

## node), split, n, loss, yval, (yprob)

## * denotes terminal node

##

## 1) root 365 161 no (0.5589041 0.4410959)

## 2) months_loan_duration< 47.5 336 137 no (0.5922619 0.4077381)

## 4) months_loan_duration< 17 159 50 no (0.6855346 0.3144654)

## 8) months_loan_duration< 7.5 33 5 no (0.8484848 0.1515152) *

## 9) months_loan_duration>=7.5 126 45 no (0.6428571 0.3571429)

## 18) months_loan_duration>=9.5 106 35 no (0.6698113 0.3301887) *

## 19) months_loan_duration< 9.5 20 10 no (0.5000000 0.5000000)

## 38) age>=32.5 9 2 no (0.7777778 0.2222222) *

## 39) age< 32.5 11 3 yes (0.2727273 0.7272727) *

## 5) months_loan_duration>=17 177 87 no (0.5084746 0.4915254)

## 10) age>=26.5 126 57 no (0.5476190 0.4523810)

## 20) months_loan_duration>=37.5 8 1 no (0.8750000 0.1250000) *

## 21) months_loan_duration< 37.5 118 56 no (0.5254237 0.4745763)

## 42) percent_of_income< 3.5 59 24 no (0.5932203 0.4067797) *

## 43) percent_of_income>=3.5 59 27 yes (0.4576271 0.5423729)

## 86) age< 30.5 21 9 no (0.5714286 0.4285714)

## 172) months_loan_duration< 22.5 7 1 no (0.8571429 0.1428571) *

## 173) months_loan_duration>=22.5 14 6 yes (0.4285714 0.5714286) *

## 87) age>=30.5 38 15 yes (0.3947368 0.6052632)

## 174) months_loan_duration>=25.5 11 4 no (0.6363636 0.3636364) *

## 175) months_loan_duration< 25.5 27 8 yes (0.2962963 0.7037037) *

## 11) age< 26.5 51 21 yes (0.4117647 0.5882353) *

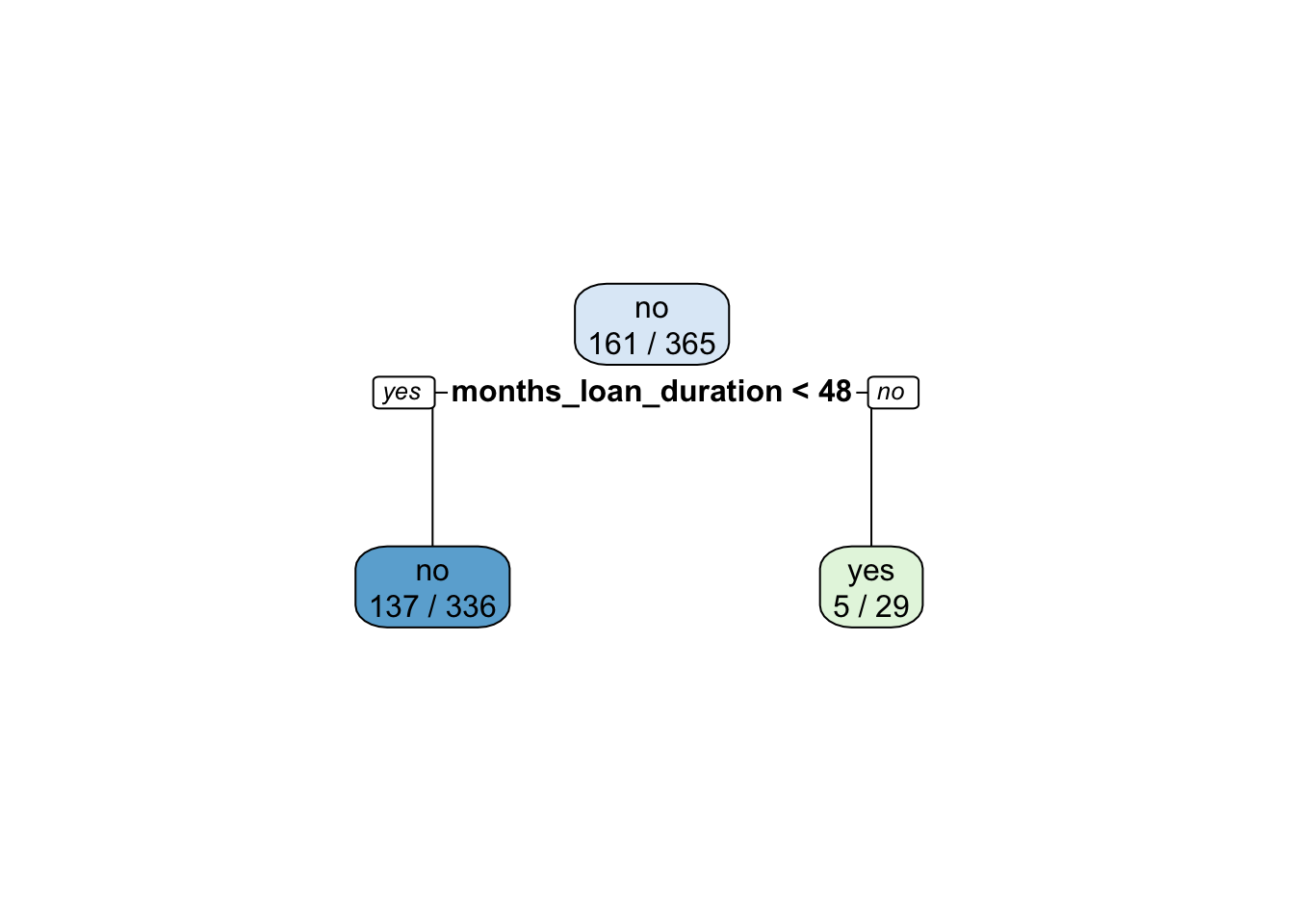

## 3) months_loan_duration>=47.5 29 5 yes (0.1724138 0.8275862) *It is possible to obtain the size of the tree by counting the number of nodes which are classified as leaf nodes:

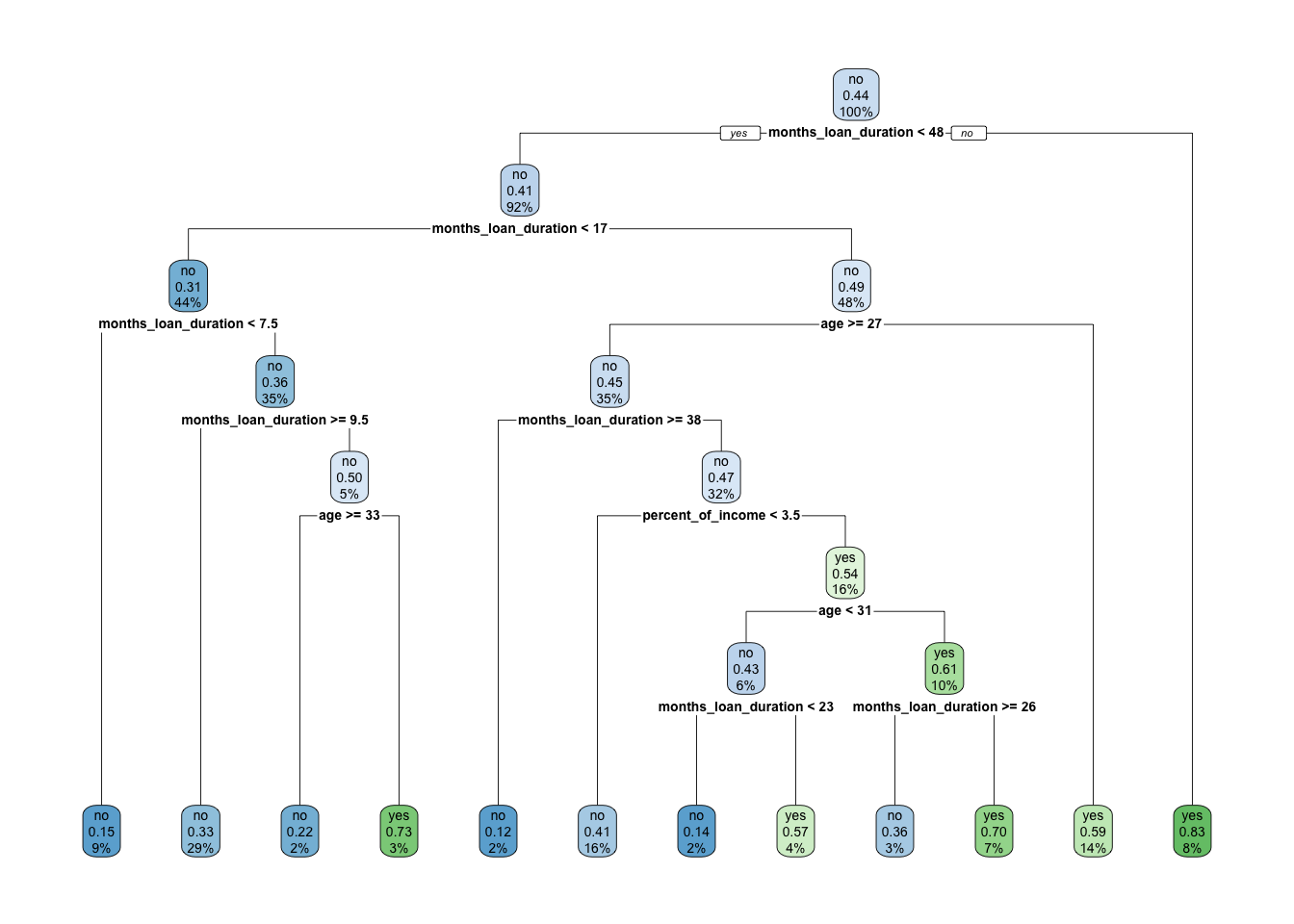

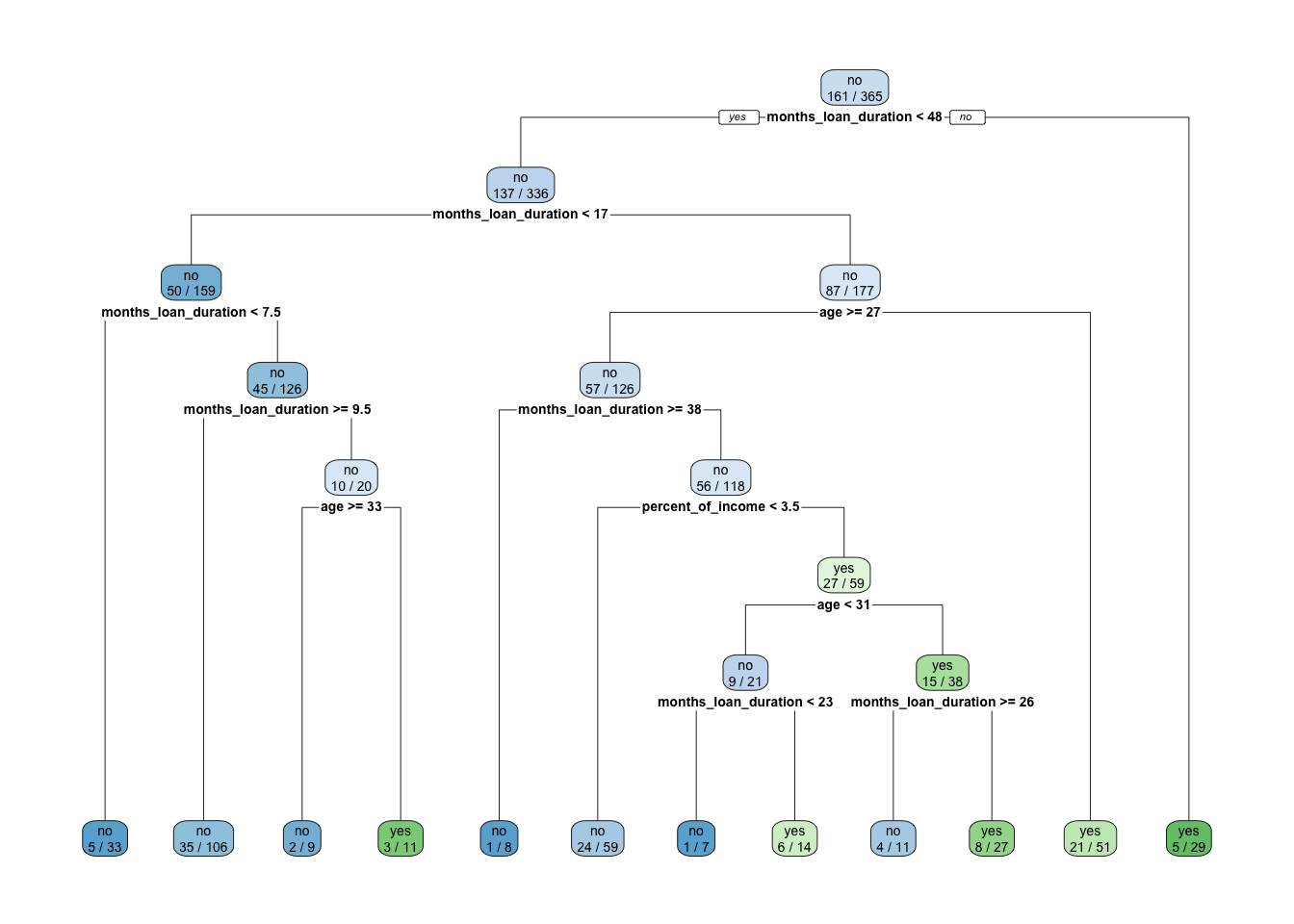

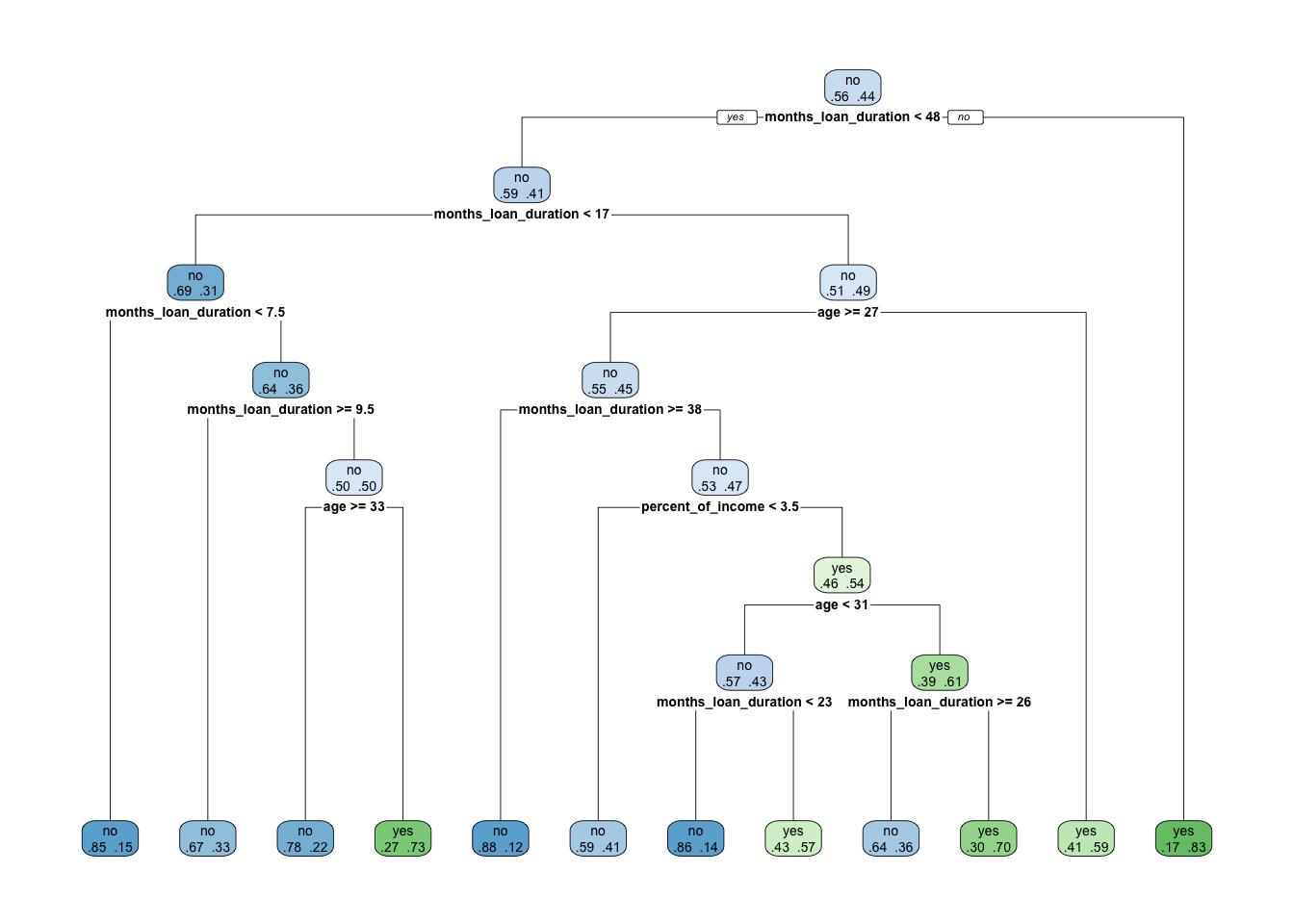

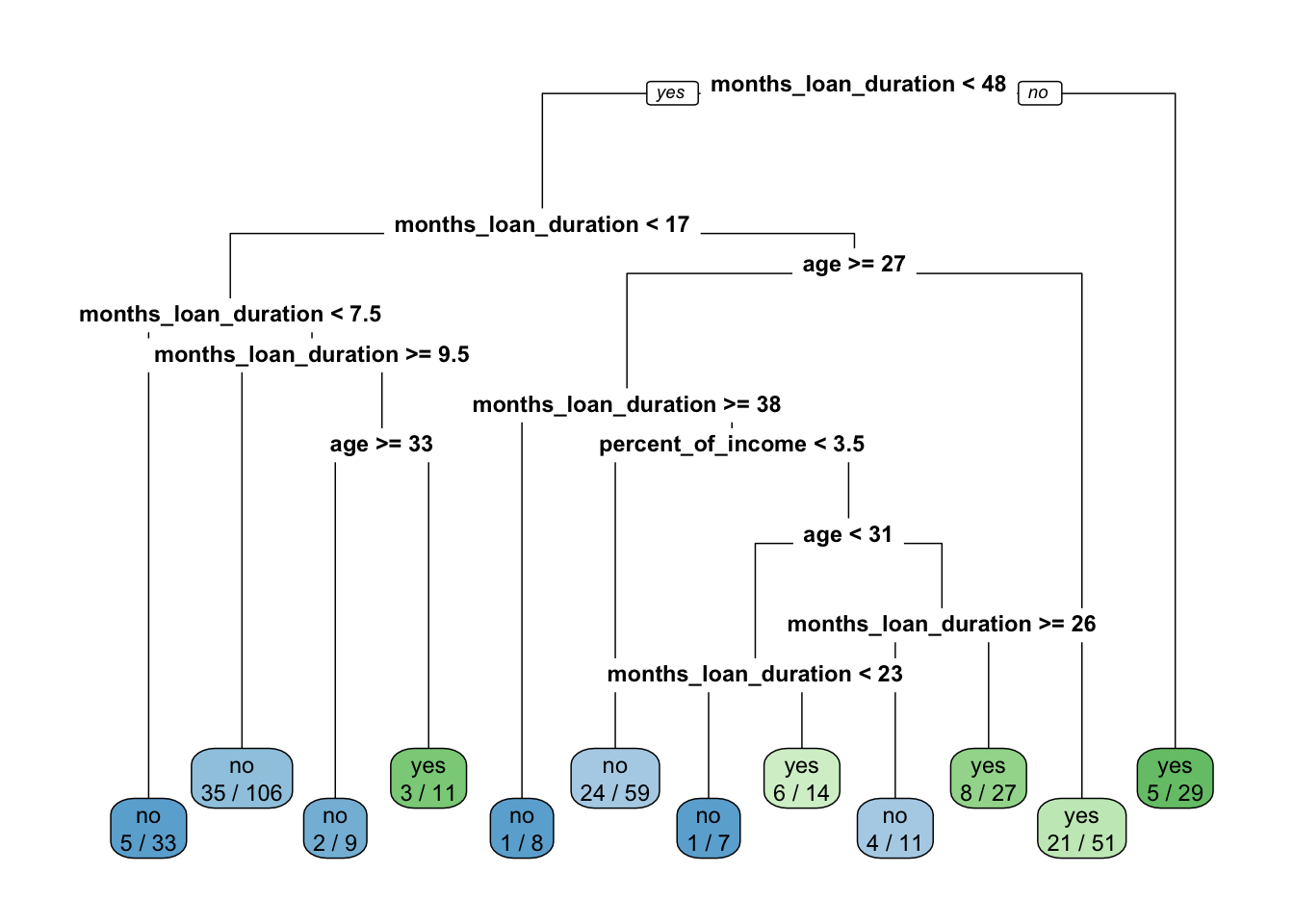

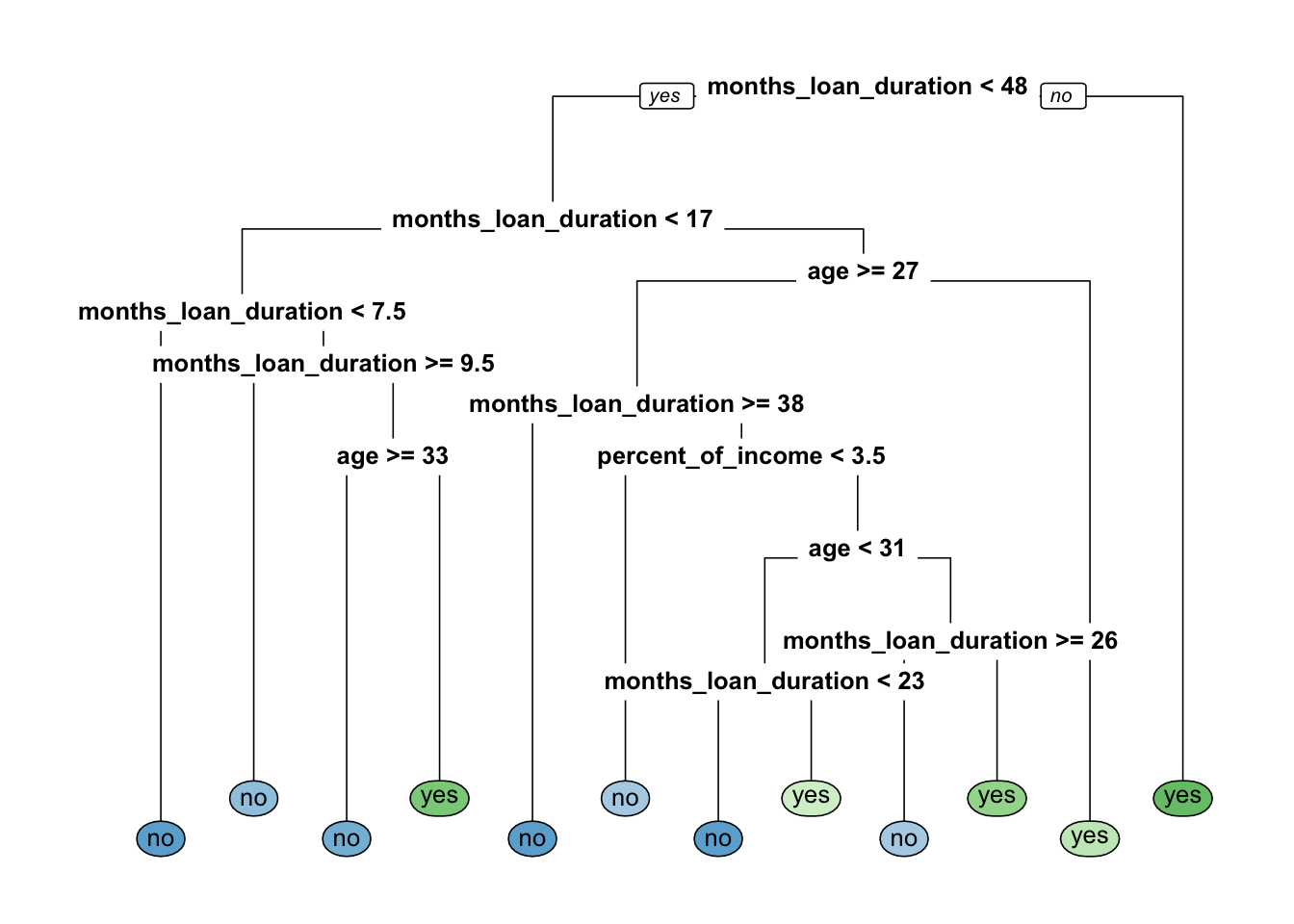

sum(credit_tree$fit$frame$var=="<leaf>")## [1] 12Here below we plot the classification tree by trying also different option of the rpart.plot function (see also http://www.milbo.org/rpart-plot/prp.pdf):

rpart.plot(x = credit_tree$fit, roundint=FALSE)

rpart.plot(x = credit_tree$fit, roundint=FALSE, extra=3)

rpart.plot(x = credit_tree$fit, roundint=FALSE, extra=4)

rpart.plot(x = credit_tree$fit, roundint=FALSE, type=0, extra=3)

rpart.plot(x = credit_tree$fit, roundint=FALSE, type=0, extra=0) #super simplified version

We compute now the predicted category for the observations in the test set:

set.seed(13, sample.kind="Rejection")

credit_treepred = predict(credit_tree,

new_data = credit_test,

type = "class")

head(credit_treepred)## # A tibble: 6 × 1

## .pred_class

## <fct>

## 1 no

## 2 no

## 3 no

## 4 no

## 5 yes

## 6 noGiven the predicted category, it is possible to create the confusion matrix and to compute the performance indexes as described in Section 3.2. Here we compute only the accuracy for the sake of simplicity:

# Calculate the confusion matrix for the test set

table(credit_treepred$.pred_class,

credit_test$default) ##

## no yes

## no 65 38

## yes 22 32acctree = mean(credit_treepred$.pred_class==credit_test$default)

acctree## [1] 0.6178344We see that 61.78% of the test observations are correctly classified. This is quite a poor performance.

As described in Section 4.3, we evaluate the possibility of pruning the tree by using the information contained in the cptable:

cptab = data.frame(credit_tree$fit$cptable)

cptab## CP nsplit rel.error xerror xstd

## 1 0.11801242 0 1.0000000 1.0000000 0.05891905

## 2 0.02795031 1 0.8819876 0.9192547 0.05826241

## 3 0.01552795 3 0.8260870 0.9440994 0.05849777

## 4 0.01242236 7 0.7577640 0.9627329 0.05865475

## 5 0.01035197 8 0.7453416 0.9440994 0.05849777

## 6 0.01000000 11 0.7142857 0.9440994 0.05849777bestcp = cptab$CP[which.min(cptab$xerror)]

bestcp## [1] 0.02795031In this case the minimum value of the cross-validation error (xerror) corresponds to a very small number of splits (only 1 split). In this case there is no possibility to simplify further the tree, unless we chosse nsplit=0, a choice which is not interesting for us because it means that we are not using any predictors for the credit model. Thus, in this specific case it doesn’t make sense to implement the 1-SE approach described in Section 4.3. We consider directly the value of CP corresponding to the lowest value of xerror and to nsplit=1.

Let’s prune the tree by using the chosen value of CP:

credit_tree_prun = decision_tree(cost_complexity = bestcp,

mode = 'classification',

engine = 'rpart') %>%

fit(default ~ . , data = credit_train)

rpart.plot(x = credit_tree_prun$fit,roundint=FALSE, extra=3)

We can now compute the accuracy for the pruned tree:

credit_treepred_prun = predict(credit_tree_prun,

new_data = credit_test,

type = "class")

table(credit_treepred_prun$.pred_class,

credit_test$default) ##

## no yes

## no 87 62

## yes 0 8acctree_prun = mean(credit_treepred_prun$.pred_class==credit_test$default)

acctree_prun## [1] 0.6050955acctree## [1] 0.6178344The pruned tree is basically a stump and has an accuracy which is basically very similar to the original single tree. Given the similar accuracy, we prefer the pruned tree because it has the advantage of being simpler (or less complex).

We now compute for the selected tree the predicted probabilities that will be required later for the ROC curve:

# Calculate predicted probabilities

credit_treepred_prun_prob = predict(credit_tree_prun,

new_data = credit_test,

type = "prob")

head(credit_treepred_prun_prob)## # A tibble: 6 × 2

## .pred_no .pred_yes

## <dbl> <dbl>

## 1 0.592 0.408

## 2 0.592 0.408

## 3 0.592 0.408

## 4 0.592 0.408

## 5 0.592 0.408

## 6 0.592 0.408Note that in this case we obtain a tibble (i.e. a dataframe) with 2 columns (one for each category) and a number of rows given by the number of observations in the test set. For each row the prediction (also reported in the vector credit_treepred_prun$.pred_class) is given by the majority category (i.e. the category with the highest probability).

5.3 Bagging

Bagging has already been described in Section 4.4 for the case of a regression tree. It works similarly for a classification tree:

set.seed(1, sample.kind="Rejection")

credit_bag = rand_forest(mtry = ncol(credit_train)-1,#minus the response

trees = 500) %>% #default value

set_engine('randomForest',importance = T) %>% #to estimate predictors importance

set_mode('classification') %>%

fit(default ~ ., data = credit_train)

credit_bag## parsnip model object

##

##

## Call:

## randomForest(x = maybe_data_frame(x), y = y, ntree = ~500, mtry = min_cols(~ncol(credit_train) - 1, x), importance = ~T)

## Type of random forest: classification

## Number of trees: 500

## No. of variables tried at each split: 4

##

## OOB estimate of error rate: 46.58%

## Confusion matrix:

## no yes class.error

## no 126 78 0.3823529

## yes 92 69 0.5714286The output provides the out-of-bag (OOB) estimate of the error rate (an unbiased estimate of the test set error) and the corresponding confusion matrix. The former could be used for discriminating between different random forest classifiers (estimated, for example, with different values of trees).

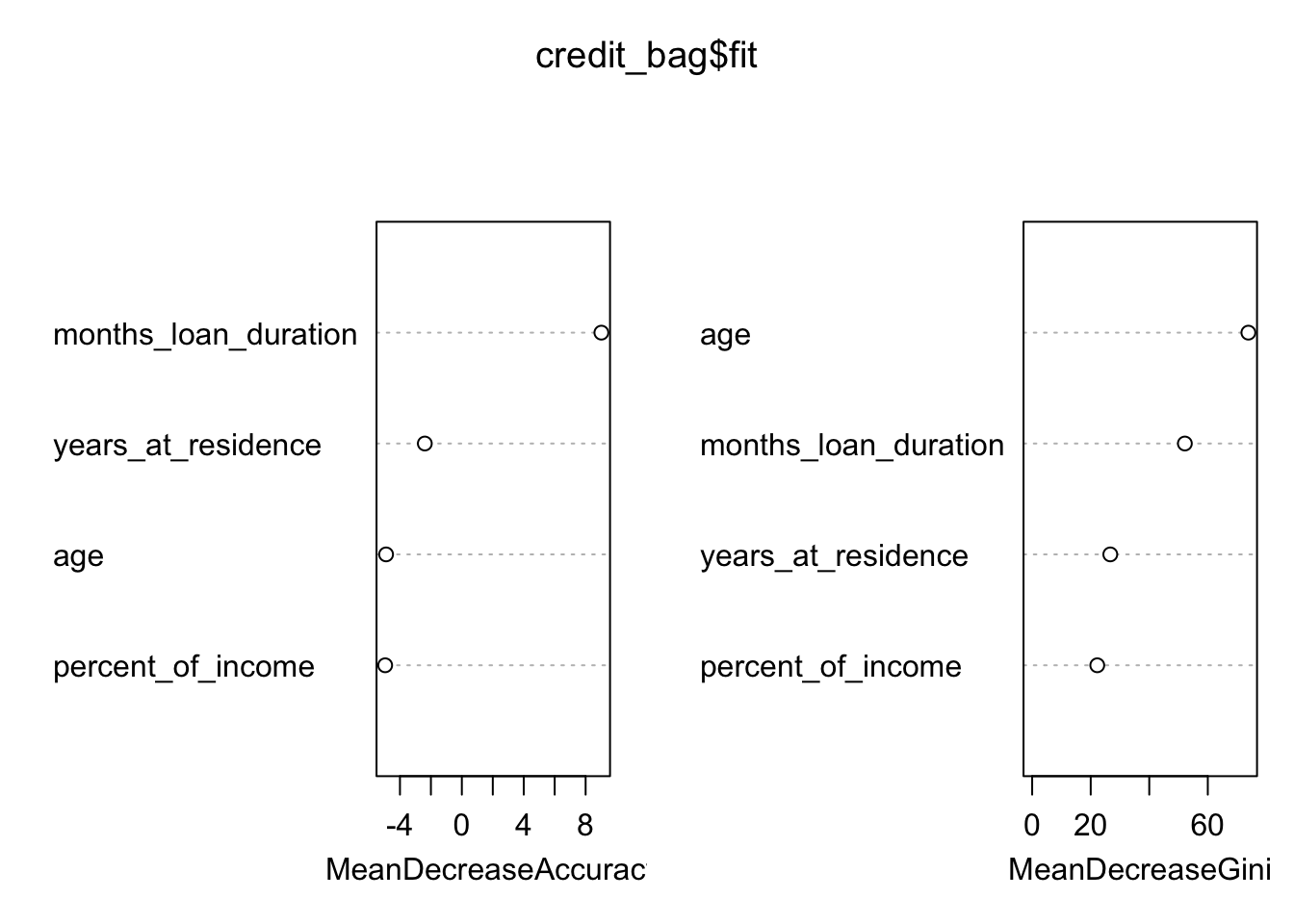

We analyze now graphically the importance of the regressors (this output is obtained by setting importance = T during the training phase):

credit_bag$fit$importance## no yes

## months_loan_duration 0.012860651 0.032233995

## percent_of_income -0.010234180 -0.003931482

## years_at_residence -0.014133589 0.009019865

## age -0.006074422 -0.014632452

## MeanDecreaseAccuracy MeanDecreaseGini

## months_loan_duration 0.021215043 52.16202

## percent_of_income -0.007402118 22.23831

## years_at_residence -0.003786219 26.68510

## age -0.009869710 73.86159varImpPlot(credit_bag$fit)

The figure displays:

- in the left plot the mean decrease (across all \(B\) trees) of accuracy in prediction on the OOB samples when a given variable is excluded. This measures how much accuracy the model losses by excluding each variable.

- in the right plot the mean decrease (across all \(B\) trees) in node purity on the OOB samples when a given variable is excluded. For classification, the node impurity is measured by the Gini index. This measures how much each variable contributes to the homogeneity of the nodes in the resulting random forest.

In general the higher the value of the mean decrease accuracy/Gini, the higher the importance of the variable in the model.

For the considered case we see that months_loan_duration and age are the two most important regressors (the former was used also in the single pruned tree).

We compute now the predictions and the test confusion matrix and accuracy given by bagging:

credit_bag_pred = predict(credit_bag,

new_data = credit_test,

type = "class")

head(credit_bag_pred)## # A tibble: 6 × 1

## .pred_class

## <fct>

## 1 no

## 2 no

## 3 no

## 4 no

## 5 yes

## 6 noaccbag = mean((credit_test$default == credit_bag_pred$.pred_class))

acctree_prun## [1] 0.6050955accbag## [1] 0.5605096By comparing accuracy, bagging performs slightly worse with respect to the single prune tree.

We also compute the predicted probabilities that will be needed later for ROC:

credit_bag_pred_prob = predict(credit_bag,

new_data = credit_test,

type = "prob")5.4 Random forest

Random forest is very similar to bagging. The only difference is in the specification of the mtry argument. For a classification problem we consider a number of regressors to be selected randomly to be equal to \(\sqrt{p}\):

set.seed(1, sample.kind="Rejection")

credit_rf = rand_forest(mtry = sqrt(ncol(credit_train)-1),

trees = 500) %>%

set_engine('randomForest',importance = T) %>%

set_mode('classification') %>%

fit(default ~ ., data = credit_train)

print(credit_rf)## parsnip model object

##

##

## Call:

## randomForest(x = maybe_data_frame(x), y = y, ntree = ~500, mtry = min_cols(~sqrt(ncol(credit_train) - 1), x), importance = ~T)

## Type of random forest: classification

## Number of trees: 500

## No. of variables tried at each split: 2

##

## OOB estimate of error rate: 46.03%

## Confusion matrix:

## no yes class.error

## no 131 73 0.3578431

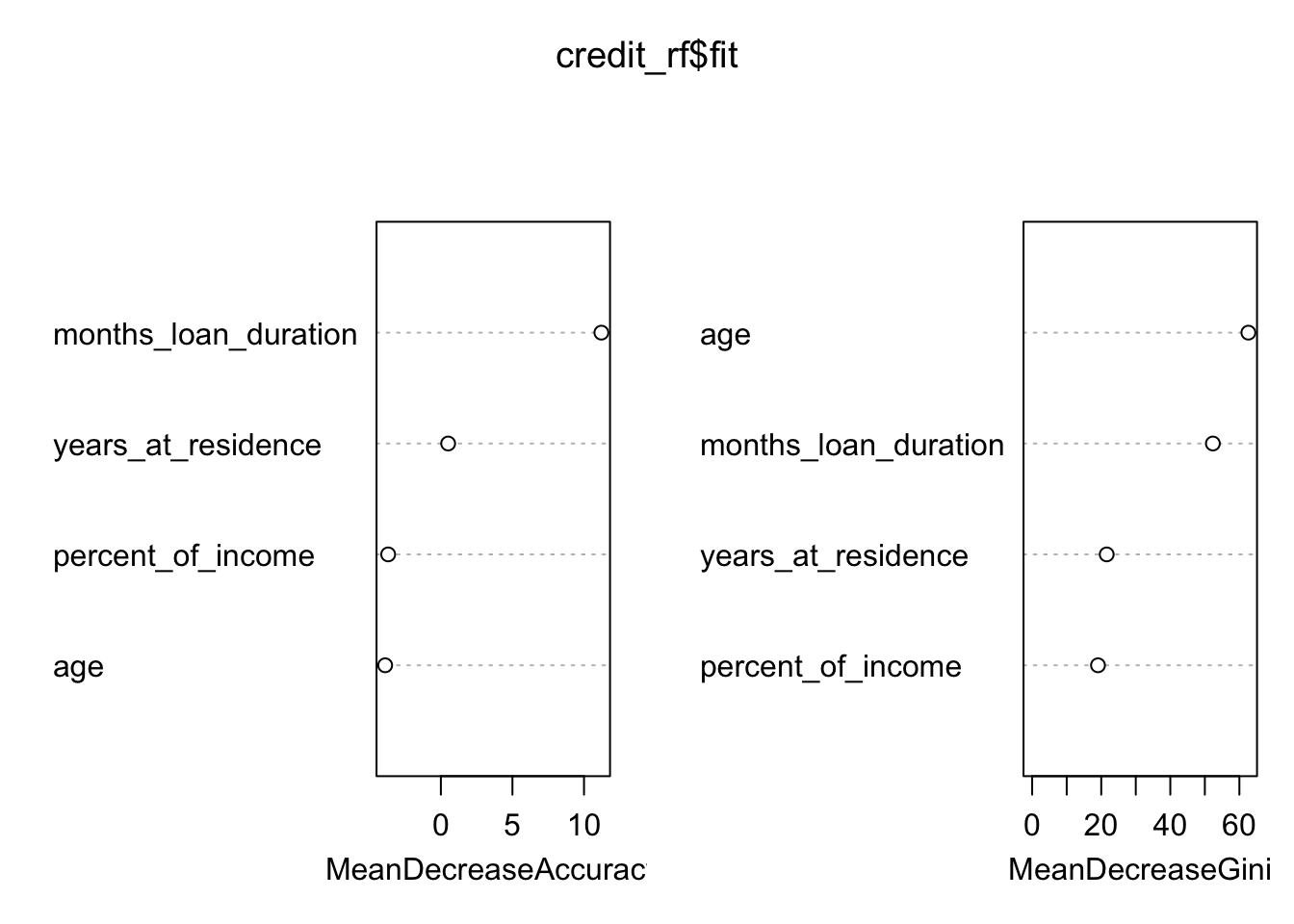

## yes 95 66 0.5900621As before we plot the measure for the importance of the regressors:

varImpPlot(credit_rf$fit)

Finally, we compute the predictions of default by using the random forest model:

credit_rfpred = predict(object = credit_rf,

new_data = credit_test,

type = "class")

# Calculate the confusion matrix for the test set

table(credit_test$default, credit_rfpred$.pred_class)##

## no yes

## no 65 22

## yes 42 28accrf = mean((credit_test$default == credit_rfpred$.pred_class))

acctree_prun #pruned## [1] 0.6050955accbag #bagged ## [1] 0.5605096accrf #random forest## [1] 0.5923567# Compute predicted probabilities

credit_rf_pred_prob = predict(object = credit_rf,

new_data = credit_test,

type = "prob")By comparing accuracy, we conclude the random forest performs better than bagging and similarly to the single pruned tree. However, all the accuracies are very low.

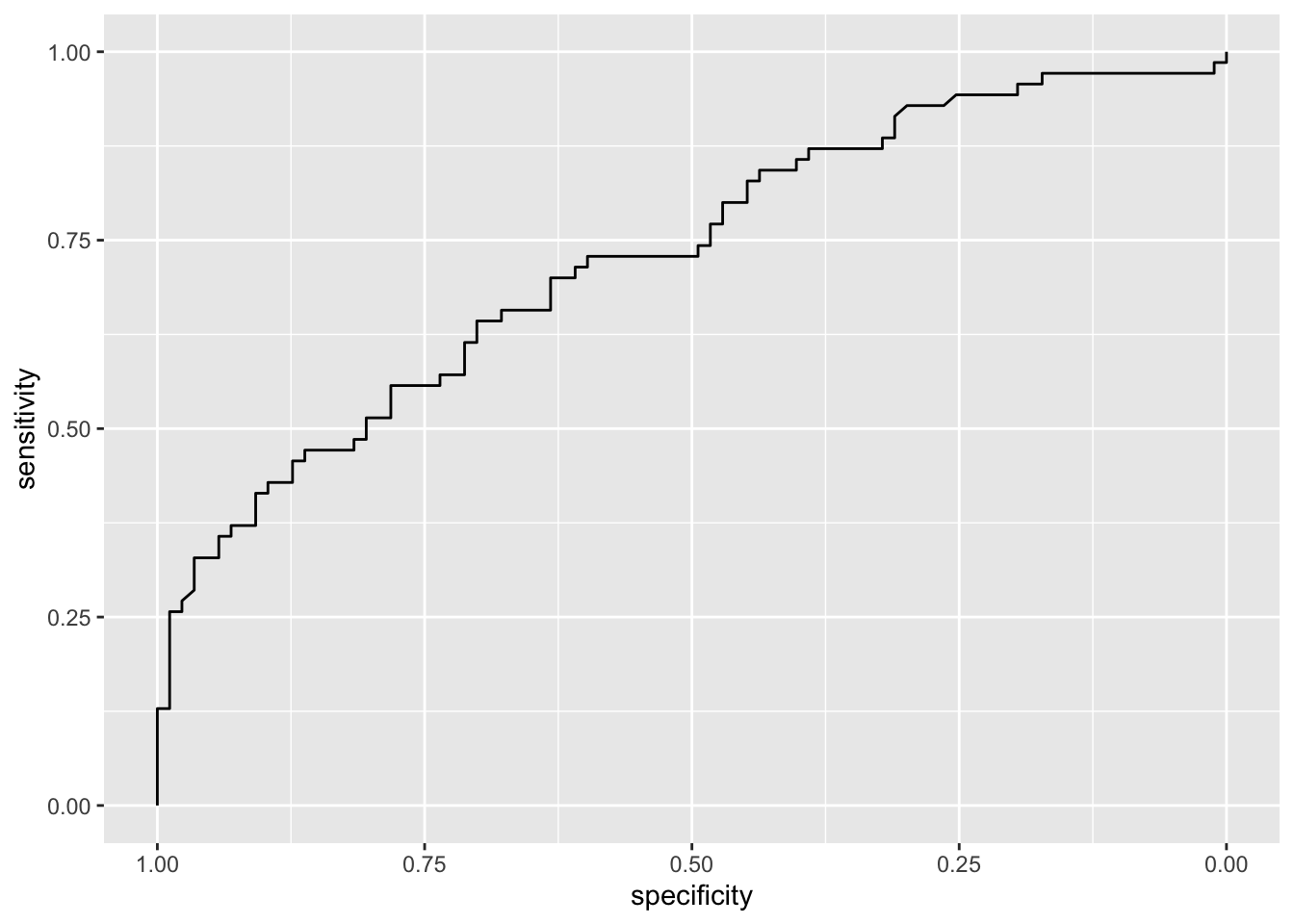

5.5 Model comparison by using the ROC curves

To obtain the ROC curve we use the function roc contained in the pROC package. It is necessary to specify as arguments the vector of observed categories (response) and the vector of the probabilities for the “Yes” category (predictor).

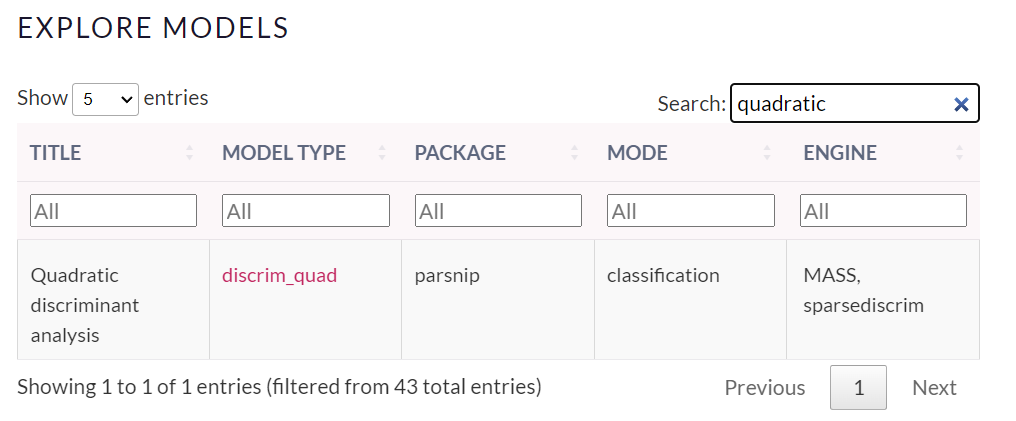

By using the ROC curve introduced in Section ??, we compute the implemented models for the credit problem. For the sake of comparison, we will include another classification algorithm, QDA. This can be useful in order to check if the CART methodology is working good enough with our data.

qdamod = discrim_quad() %>%

set_engine('MASS') %>%

set_mode('classification') %>%

fit(default ~ . , data = credit_train)

credit_qda_pred <- predict(qdamod,

new_data = credit_test,

type = 'prob')We are interested in credit_qda_pred$.pred_yes object. It contains the predicted probabilities of the positive class for each observation in the credit_test dataset.We need these probabilities to create an ROC curve.

Given the ROC curve it is possible to plot it and to compute the area under the curve (auc). The higher the AUC, the better is the performance of our algorithm. On the other side, the higher the AUC, the nearer our algorithm performances are to the (utopic) best performance algorithm, that has both sensitivity and specificity equal to 1.

roc_qda = roc(credit_test$default,

credit_qda_pred$.pred_yes)## Setting levels: control = no, case = yes## Setting direction: controls < casesggroc(roc_qda)

auc(roc_qda)## Area under the curve: 0.729Now let’s plot together all the ROC curves, in order to have a look at their different performances. First of all, we need to create different “ROC” objects, one for each trained algorithm:

roc_tree = roc(credit_test$default,

credit_treepred_prun_prob$.pred_yes)## Setting levels: control = no, case = yes## Setting direction: controls < casesroc_bag = roc(credit_test$default,

credit_bag_pred_prob$.pred_yes)## Setting levels: control = no, case = yes

## Setting direction: controls < casesroc_rf = roc(credit_test$default,

credit_rf_pred_prob$.pred_yes) ## Setting levels: control = no, case = yes

## Setting direction: controls < casesThen we should plot them using a list. Use some interactivity in order to help yourself in the analysis:

ggroc(list(qda= roc_qda,

tree = roc_tree,

bag = roc_bag,

rf = roc_rf)) %>% plotly::ggplotly()Note that the roc curve for the single tree is practically a straight line. This is basically due to the fact that the single tree is a stump and specificity/sensitivity (computed using predictions) don’t change that much by modification of the probability threshold. The bagging and random forest roc curves are very similar. At the same time, if QDA statistical requirements hold, it would be the algorithm of our choice.

Finally we compute the area under the curve (AUC) for the four methods:

auc(roc_qda)## Area under the curve: 0.729auc(roc_tree)## Area under the curve: 0.5571auc(roc_bag)## Area under the curve: 0.6071auc(roc_rf)## Area under the curve: 0.6099All the AUC values are very similar and all very low. They denote a poor performance of all the classifiers. I think this is a problem mainly related to the regressors that are used in the analysis which are not informative enough for describing the pattern of the default variable.

Extra homework for you: Starting from this point, why don’t you try to evaluate other classification algorithms (as LDA, KNN and logistic)?

5.6 Exercises Lab 4

5.6.1 Exercise 1

We use the OJ dataset which is part of the ISLR library. The data refers to 1070 purchases where the customer either purchased Citrus Hill (CH) or Minute Maid Orange Juice (MM). The response variable is Purchase. A number of characteristics of the customers are recorded (see ?OJ).

library(ISLR)

library(tidyverse)

dim(OJ)## [1] 1070 18glimpse(OJ)## Rows: 1,070

## Columns: 18

## $ Purchase <fct> CH, CH, CH, MM, CH, CH, CH, CH, CH, …

## $ WeekofPurchase <dbl> 237, 239, 245, 227, 228, 230, 232, 2…

## $ StoreID <dbl> 1, 1, 1, 1, 7, 7, 7, 7, 7, 7, 7, 7, …

## $ PriceCH <dbl> 1.75, 1.75, 1.86, 1.69, 1.69, 1.69, …

## $ PriceMM <dbl> 1.99, 1.99, 2.09, 1.69, 1.69, 1.99, …

## $ DiscCH <dbl> 0.00, 0.00, 0.17, 0.00, 0.00, 0.00, …

## $ DiscMM <dbl> 0.00, 0.30, 0.00, 0.00, 0.00, 0.00, …

## $ SpecialCH <dbl> 0, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, …

## $ SpecialMM <dbl> 0, 1, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, …

## $ LoyalCH <dbl> 0.500000, 0.600000, 0.680000, 0.4000…

## $ SalePriceMM <dbl> 1.99, 1.69, 2.09, 1.69, 1.69, 1.99, …

## $ SalePriceCH <dbl> 1.75, 1.75, 1.69, 1.69, 1.69, 1.69, …

## $ PriceDiff <dbl> 0.24, -0.06, 0.40, 0.00, 0.00, 0.30,…

## $ Store7 <fct> No, No, No, No, Yes, Yes, Yes, Yes, …

## $ PctDiscMM <dbl> 0.000000, 0.150754, 0.000000, 0.0000…

## $ PctDiscCH <dbl> 0.000000, 0.000000, 0.091398, 0.0000…

## $ ListPriceDiff <dbl> 0.24, 0.24, 0.23, 0.00, 0.00, 0.30, …

## $ STORE <dbl> 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, …- Create a training set containing a random sample of 800 observations (use as seed the value 4455) and a test set containing the remaining observations.

- Consider

Purchaseas response variable. Provide and plot its frequency distribution for the training observations. - Fit a tree to the training data for

Purchase. Use all the other variables as predictors.Plot the tree. Which is the most important variable? Try different specifications of the

extraandtypeargument of therpart.plotfunction (see?rpart.plot)Extract all the information available for observation n. 146 in the training data set. Which is the

Purchaseprediction for this observation using the estimated tree?Predict the response for the test data and produce a confusion matrix. What is the test errorr rate?

- Do you think is it convenient to prune the tree? If yes, estimate the pruned tree and plot it.

- If you decided to prune the tree, compute the test error rate for the pruned tree. Compare the test error rate of the pruned and unpruned tree. Which is better?

5.6.2 Exercise 2

Consider the BreastCancer dataset included in the mlbench library.

library(mlbench)

data(BreastCancer)

glimpse(BreastCancer)## Rows: 699

## Columns: 11

## $ Id <chr> "1000025", "1002945", "1015425", "1…

## $ Cl.thickness <ord> 5, 5, 3, 6, 4, 8, 1, 2, 2, 4, 1, 2,…

## $ Cell.size <ord> 1, 4, 1, 8, 1, 10, 1, 1, 1, 2, 1, 1…

## $ Cell.shape <ord> 1, 4, 1, 8, 1, 10, 1, 2, 1, 1, 1, 1…

## $ Marg.adhesion <ord> 1, 5, 1, 1, 3, 8, 1, 1, 1, 1, 1, 1,…

## $ Epith.c.size <ord> 2, 7, 2, 3, 2, 7, 2, 2, 2, 2, 1, 2,…

## $ Bare.nuclei <fct> 1, 10, 2, 4, 1, 10, 10, 1, 1, 1, 1,…

## $ Bl.cromatin <fct> 3, 3, 3, 3, 3, 9, 3, 3, 1, 2, 3, 2,…

## $ Normal.nucleoli <fct> 1, 2, 1, 7, 1, 7, 1, 1, 1, 1, 1, 1,…

## $ Mitoses <fct> 1, 1, 1, 1, 1, 1, 1, 1, 5, 1, 1, 1,…

## $ Class <fct> benign, benign, benign, benign, ben…Remove missing values and the variable named

Id(identifier), which is useless and would confuse the machine learning algorithms.Partition the data set for 80% training and 20% evaluation (seed=2).

Use the training data for growing a tree for predicting

Classas a function of all the other variables. Plot the tree.Compute the predictions (classes and probabilities, separately) for the test observations. Compute the accuracy.

Implement bagging and compare its performance (in terms of accuracy) with the full classification tree implemented before. Compute also probabilities that will be used at the end for the AUC.

Implement random forest and compare its performance (in terms of accuracy) with the methods implemented before. Compute also probabilities that will be used at the end for the AUC.

For all the considered models compute the AUC and plot the ROCs.