Chapter 7 Linear Regression

7.1 Objectives

After completing this chapter, the readers are expected to

- understand the concept of simple and multiple linear regression

- perform simple linear regression

- perform multiple linear regression

- perform model fit assessment of linear regression models

- present and interpret the results of linear regression analyses

7.2 Introduction

Linear regression is one of the most common statistical analyses in medical and health sciences. Linear regression models the linear (i.e. straight line) relationship between:

- outcome: numerical variable (e.g. blood pressure, BMI, cholesterol level).

- predictors/independent variables: numerical variables and categorical variables (e.g. gender, race, education level).

In simple words, we might be interested in knowing the relationship between the cholesterol level and its associated factors, for example gender, age, BMI and lifestyle. This can be explored by a Linear regression analysis.

7.3 Linear regression models

Linear regression is a type of Generalized linear models (GLMs), which also includes other outcome types, for example categorical and count. In subsequent chapters, we will cover these outcome types in form of logistic regression and Poisson regression. Basically, the relationship between the outcome and predictors in a linear regression is structured as follows,

\[\begin{aligned} numerical\ outcome = &\ numerical\ predictors \\ & + categorical\ predictors \end{aligned}\]

More appropriate forms of this relationship will explained later under simple and multiple linear regressions sections.

7.4 Prepare R Environment for Analysis

7.4.1 Libraries

For this chapter, we will be using the following packages:

- haven: for reading SPSS and STATA datasets

- tidyverse: a general and powerful package for data transformation

- gtsummary: for coming up with nice tables for results and plotting the graphs

- ggplot2, ggpubr, GGally: for plotting the graphs

- rsq: for getting \(R^2\) value from a GLM model

- broom: for tidying up the results

These are loaded as follows using the function library(),

7.4.2 Dataset

We will use the coronary.dta dataset in STATA format. The dataset contains the total cholesterol level, their individual characteristics and intervention groups in a hypothetical clinical trial. The dataset contains 200 observations for nine variables:

- id: Subjects’ ID.

- cad: Coronary artery disease status (categorical) {no cad, cad}.

- sbp : Systolic blood pressure in mmHg (numerical).

- dbp : Diastolic blood pressure in mmHg (numerical).

- chol: Total cholesterol level in mmol/L (numerical).

- age: Age in years (numerical).

- bmi: Body mass index (numerical).

- race: Race of the subjects (categorical) {malay, chinese, indian}.

- gender: Gender of the subjects (categorical) {woman, man}.

The dataset is loaded as follows,

We then look at the basic structure of the dataset,

## Rows: 200

## Columns: 9

## $ id <dbl> 1, 14, 56, 61, 62, 64, 69, 108, 112, 134, 139, 142, 159, 168, 1…

## $ cad <fct> no cad, no cad, no cad, no cad, no cad, no cad, cad, no cad, no…

## $ sbp <dbl> 106, 130, 136, 138, 115, 124, 110, 112, 138, 104, 126, 170, 146…

## $ dbp <dbl> 68, 78, 84, 100, 85, 72, 80, 70, 85, 70, 82, 96, 88, 86, 96, 92…

## $ chol <dbl> 6.5725, 6.3250, 5.9675, 7.0400, 6.6550, 5.9675, 4.4825, 5.4725,…

## $ age <dbl> 60, 34, 36, 45, 53, 43, 44, 50, 43, 48, 48, 53, 50, 53, 51, 51,…

## $ bmi <dbl> 38.90000, 37.80000, 40.50000, 37.60000, 40.30000, 37.60000, 34.…

## $ race <fct> indian, malay, malay, malay, indian, malay, malay, chinese, chi…

## $ gender <fct> woman, woman, woman, woman, man, man, man, woman, woman, man, m…7.5 Simple Linear Regression

7.5.1 About Simple Linear Regression

Simple linear regression (SLR) models linear (straight line) relationship between:

- outcome: numerical variable.

- ONE predictor: numerical/categorical variable.

Note: When the predictor is a categorical variable, this is typically analyzed by one-way ANOVA. However, SLR can also handle a categorical variable in the GLM framework.

We may formally represent SLR in form of an equation as follows,

\[numerical\ outcome = intercept + coefficient \times predictor\] or in a shorter form using mathematical notations,

\[\hat y = b_0 + b_1x_1\] where \(\hat y\) (pronounced y hat) is the predicted value of the outcome y.

7.5.2 Data exploration

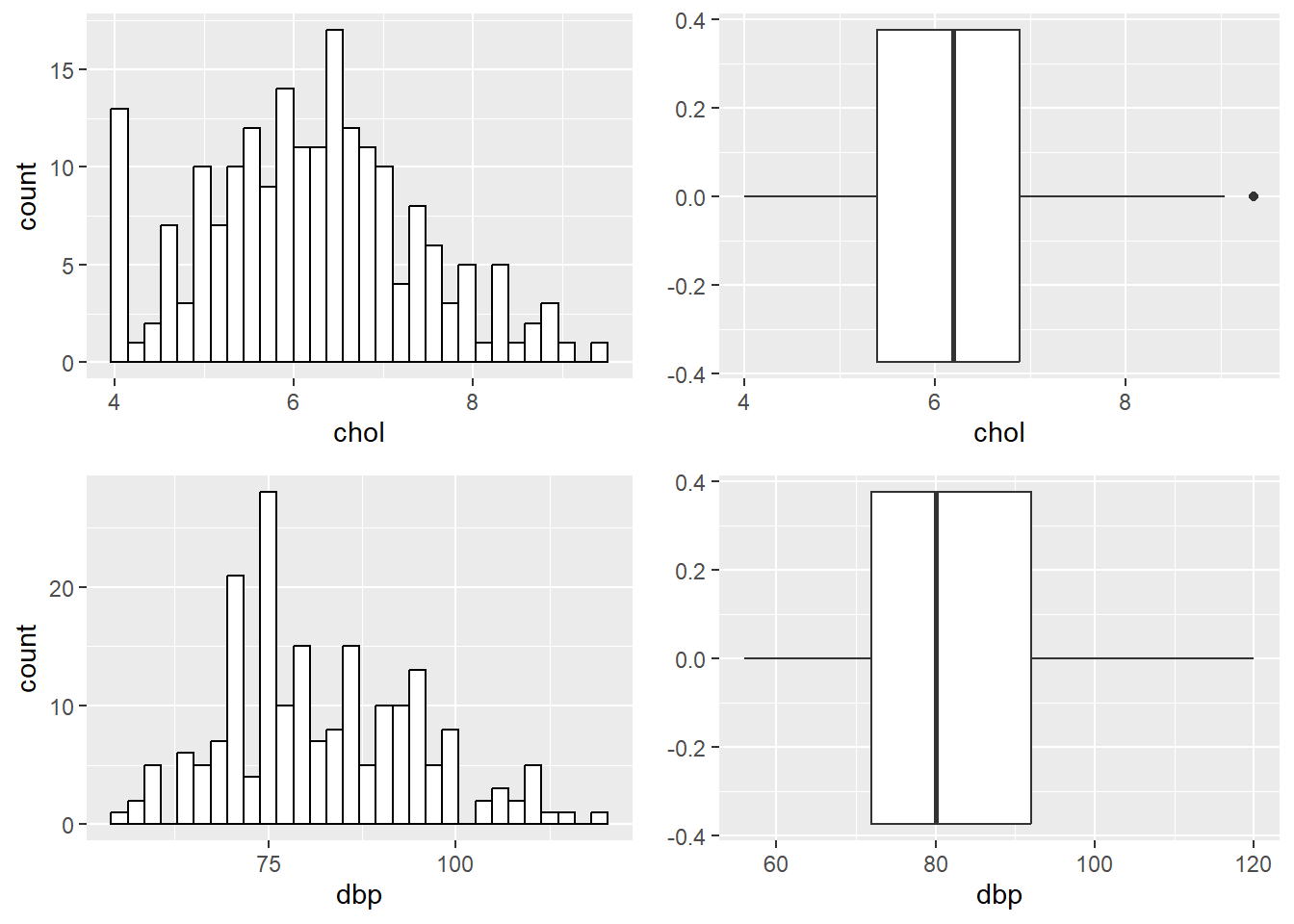

Let say, for the SLR we are interested in knowing whether diastolic blood pressure (predictor) is associated with the cholesterol level (outcome). We explore the variables by obtaining the descriptive statistics and plotting the data distribution.

We obtain the descriptive statistics of the variables,

| Characteristic | N = 2001 |

|---|---|

| chol | 6.19 (5.39, 6.90) |

| dbp | 80 (72, 92) |

| 1 Median (Q1, Q3) | |

and the histograms and box-and-whiskers plots,

hist_chol <-

ggplot(coronary, aes(chol)) +

geom_histogram(color = "black", fill = "white")

hist_dbp <-

ggplot(coronary, aes(dbp)) +

geom_histogram(color = "black", fill = "white")

bplot_chol <-

ggplot(coronary, aes(chol)) +

geom_boxplot()

bplot_dbp <-

ggplot(coronary, aes(dbp)) +

geom_boxplot()

ggarrange(hist_chol, bplot_chol, hist_dbp, bplot_dbp)

FIGURE 7.1: Histograms and box-and-whiskers plots for chol and dbp.

7.5.3 Univariable analysis

For the analysis, we fit the SLR model, which consists of only one predictor (univariable). Here, chol is specified as the outcome, and dbp as the predictor.

In lm, the formula is specified as outcome ~ predictor. Here, we specify chol ~ dbp as the formula in lm.

We fit and view the summary information of the model as,

##

## Call:

## lm(formula = chol ~ dbp, data = coronary)

##

## Residuals:

## Min 1Q Median 3Q Max

## -1.9967 -0.8304 -0.1292 0.7734 2.8470

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 2.995134 0.492092 6.087 5.88e-09 ***

## dbp 0.038919 0.005907 6.589 3.92e-10 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.075 on 198 degrees of freedom

## Multiple R-squared: 0.1798, Adjusted R-squared: 0.1757

## F-statistic: 43.41 on 1 and 198 DF, p-value: 3.917e-10We can tidy up the glm output and obtain the 95% confidence interval (CI) using tidy() from the broom package,

## # A tibble: 2 × 7

## term estimate std.error statistic p.value conf.low conf.high

## <chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 (Intercept) 3.00 0.492 6.09 5.88e- 9 2.02 3.97

## 2 dbp 0.0389 0.00591 6.59 3.92e-10 0.0273 0.0506From the output above, we pay attention at these results:

- coefficients, \(b\) – column

estimate. - 95% CI – columns

conf.lowandconf.high. - P-value – column

p.value.

7.5.4 Model fit assessment

It is important to assess to what extend the SLR model reflects the data. First, we can assess this by \(R^2\), which is the percentage of the variance for the outcome that is explained by the predictor. In simpler words, to what extend the variation in the values of the outcome is caused/explained by the predictor. This ranges from 0% (the predictor does not explain the outcome at all) to 100% (the predictor explains the outcome perfectly). Here, we obtain the \(R^2\) values,

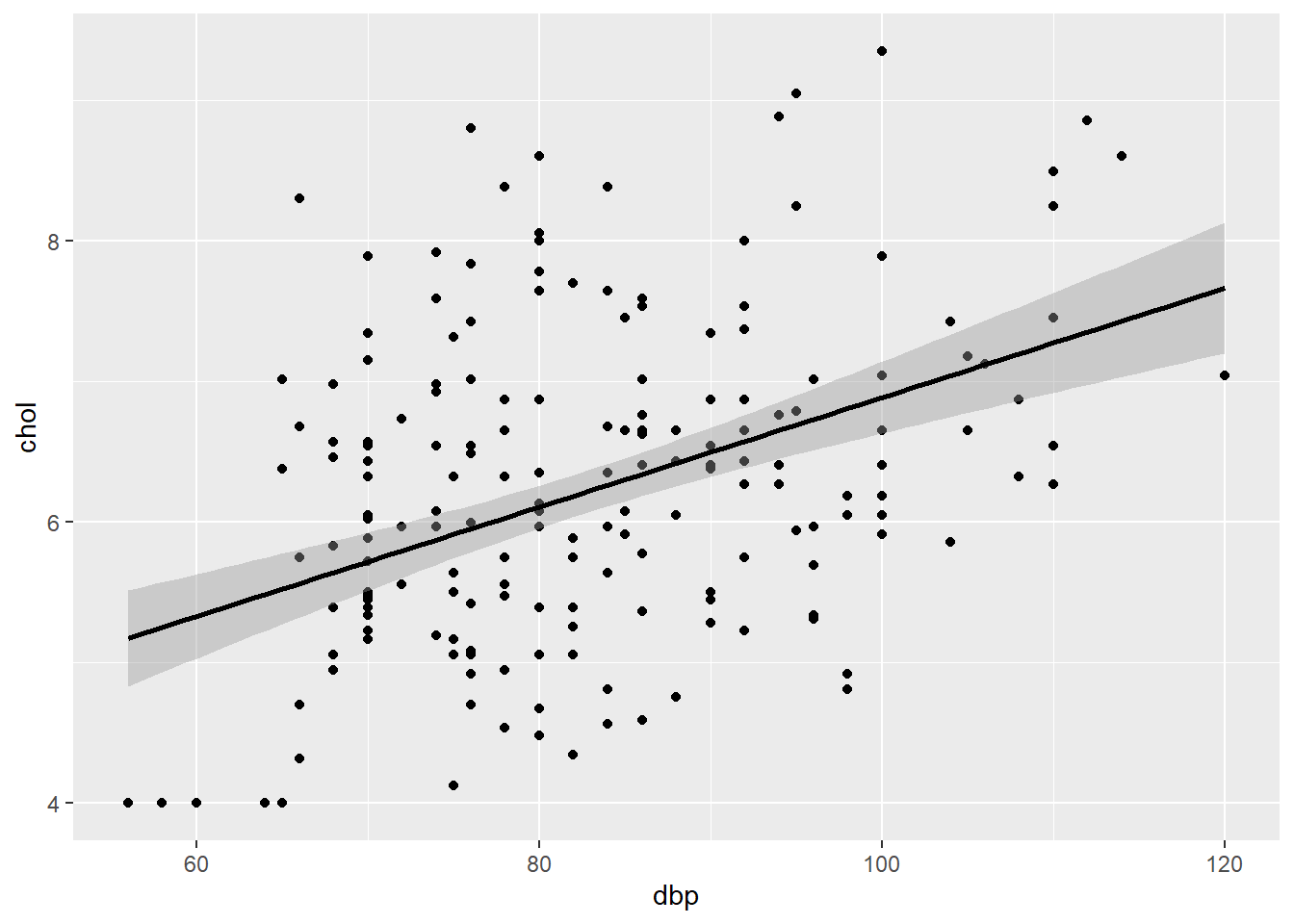

## [1] 0.1798257Next, we can assess the model fit by a scatter plot,

plot_slr <-

ggplot(coronary, aes(x = dbp, y = chol)) +

geom_point() + geom_smooth(method = lm)

plot_slr

FIGURE 7.2: Scatter plot of chol (outcome) vs dbp (predictor).

This plot allows the assessment of normality, linearity and equal variance assumptions. We expect an elliptical/oval shape (normality) and equal scatter of dots above and below the prediction line (equal variance). These aspects indicate a linear relationship between chol and dbp (linearity).

7.5.5 Presentation and interpretation

To present the result, we can use tbl_regression() to come up with a nice table. We use slr_chol of the lm output with tbl_regression() in the gtsummary package.

| Characteristic | Beta | 95% CI1 | p-value |

|---|---|---|---|

| dbp | 0.04 | 0.03, 0.05 | <0.001 |

| 1 CI = Confidence Interval | |||

It is also very informative to present the model equation,

\[chol = 3.0 + 0.04\times dbp\]

where we obtain the intercept value from summary(slr_chol).

Based on the \(R^2\) (which was 0.18), table and model equation, we may interpret the results as follows:

- 1mmHg increase in DBP causes 0.04mmol/L increase in cholesterol level.

- DBP explains 18% of the variance in cholesterol level.

7.6 Multiple Linear Regression

7.6.1 About Multiple Linear Regression

Multiple linear regression (MLR) models linear relationship between:

- outcome: numerical variable.

- MORE than one predictors: numerical and categorical variables.

We may formally represent MLR in form of an equation,

\[\begin{aligned} numerical\ outcome = &\ intercept \\ & + coefficients \times numerical\ predictors \\ & + coefficients \times categorical\ predictors \end{aligned}\] or in a shorter form,

\[\hat y = b_0 + b_1x_1 + b_2x_2 + ... + b_px_p\] where we have p predictors.

Whenever the predictor is a categorical variable with more than two levels, we use dummy variable(s). There is no issue with binary categorical variable. For the variable with more than two levels, the number of dummy variables (i.e. once turned into several binary variables) equals number of levels minus one. For example, whenever we have four levels, we will obtain three dummy (binary) variables. As we will see later, glm will automatically do this for factor variable and provide separate estimates for each dummy variable.

7.6.2 Data exploration

Now, for the MLR we are no longer restricted to one predictor. Let’s say, we are interested in knowing the relationship between blood pressure (SBP and DBP), age, BMI, race and render as the predictors and the cholesterol level (outcome). As before, we explore the variables by the descriptive statistics,

| Characteristic | N = 2001 |

|---|---|

| sbp | 126 (115, 144) |

| dbp | 80 (72, 92) |

| chol | 6.19 (5.39, 6.90) |

| age | 47 (42, 53) |

| bmi | 37.80 (36.10, 39.20) |

| 1 Median (Q1, Q3) | |

| Characteristic | N = 2001 |

|---|---|

| race | |

| malay | 73 (37%) |

| chinese | 64 (32%) |

| indian | 63 (32%) |

| gender | |

| woman | 100 (50%) |

| man | 100 (50%) |

| 1 n (%) | |

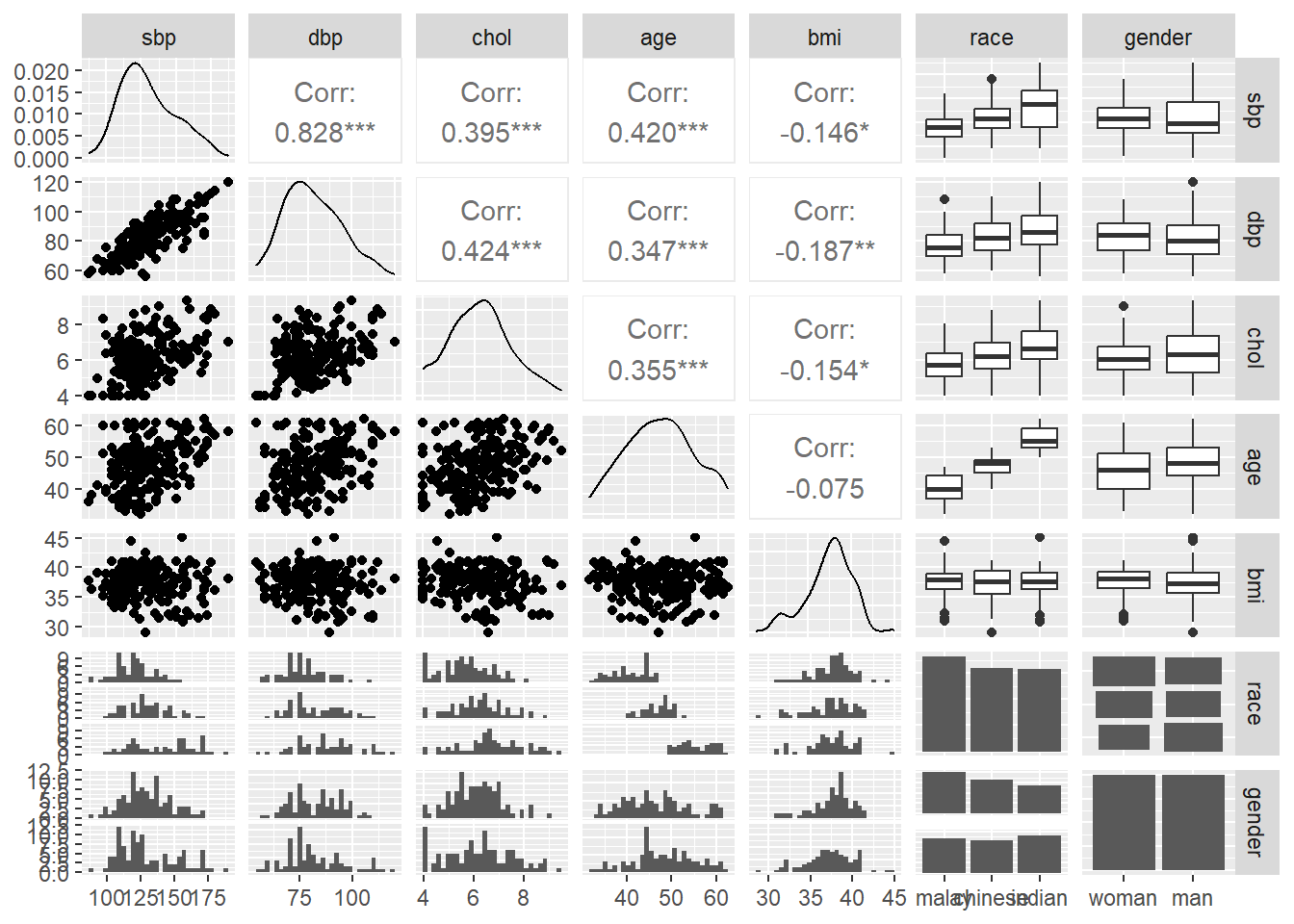

and the pairs plot, where we focus on the distribution of the data by histograms and box-and-whiskers plots. The pairs plot also includes information on the bivariate correlation statistics between the numerical variables.

FIGURE 7.3: Pairs plot for all variables.

7.6.3 Univariable analysis

For the univariable analysis in the context of MLR, we aim to select variables that are worthwhile to be included in the multivariable model.

In the context of exploratory research, we want to choose only variables with P-values < 0.25 to be included in MLR. To obtain the P-values, you may perform separate SLRs for each of the predictors (on your own). However, obtaining P-value for each predictor is easy by add1() function. Here, we use likelihood ratio test (LRT) using test = "LRT" option to obtain the P-values. We start with an intercept only model slr_chol0 using chol ~ 1 formula specification in the glm followed by add1(). add1() will test each predictor one by one.

slr_chol0 <- lm(chol ~ 1, data = coronary) # intercept only model

add1(slr_chol0, scope = ~ sbp + dbp + age + bmi + race + gender,

test = "F")## Single term additions

##

## Model:

## chol ~ 1

## Df Sum of Sq RSS AIC F value Pr(>F)

## <none> 278.77 68.417

## sbp 1 43.411 235.36 36.562 36.5200 7.377e-09 ***

## dbp 1 50.131 228.64 30.769 43.4121 3.917e-10 ***

## age 1 35.097 243.68 43.505 28.5178 2.534e-07 ***

## bmi 1 6.600 272.17 65.625 4.8015 0.0296 *

## race 2 37.099 241.68 43.855 15.1204 7.780e-07 ***

## gender 1 1.323 277.45 69.465 0.9443 0.3324

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1From the output, all variables are important with P < .25 except gender. These variables, excluding gender, are candidates in this variable selection step.

However, please keep in mind that in the context of confirmatory research, the variables that we want to include are not merely based on P-values alone. It is important to consider expert judgement as well.

7.6.4 Multivariable analysis

Multivariable analysis involves more than one predictors. In the univariable variable selection, we decided on several potential predictors. For MLR, we (judiciously) included these variables in an MLR model. In the present dataset, we have the following considerations:

- including both SBP and DBP is redundant, because both represent the blood pressure. These variables are also highly correlated. This is indicated by the correlation value, r = 0.828 and scatter plot for the SBP-DBP pair in the pairs plot in the data exploration step.

genderwas not sigficant, thus we may exclude the variable.- let say, as advised by experts in the field, we should exclude

agein the modelling.

Now, given these considerations, we perform MLR with the selected variables,

##

## Call:

## lm(formula = chol ~ dbp + bmi + race, data = coronary)

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.18698 -0.73076 -0.01935 0.63476 2.91524

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 4.870859 1.245373 3.911 0.000127 ***

## dbp 0.029500 0.006203 4.756 3.83e-06 ***

## bmi -0.038530 0.028099 -1.371 0.171871

## racechinese 0.356642 0.181757 1.962 0.051164 .

## raceindian 0.724716 0.190625 3.802 0.000192 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.041 on 195 degrees of freedom

## Multiple R-squared: 0.2418, Adjusted R-squared: 0.2263

## F-statistic: 15.55 on 4 and 195 DF, p-value: 4.663e-11From the output above, for each variable, we focus these results:

- coefficients, \(b\)s – column

estimate. - 95% CIs – columns

conf.lowandconf.high. - P-values – column

p.value.

Note that for a categorical variable with more than two categories, the estimates are obtained for each dummy variable. In our case, race consists of Malay, Chinese and Indian. From the output, the dummy variables are racechinese representing Chinese vs Malay and raceindian representing Indian vs Malay dummy variables, where Malay is set as the baseline comparison group.

We also notice that some variables are not significant at significance level of 0.05, namely bmi and racechinese. As for racechinese dummy variable, because this forms part of the race variable, we accept the variable because it is marginally insignificant (0.0512 vs 0.05) and the other dummy variable raceindian is significant.

Stepwise automatic variable selection

We noted that not all variables included in the model are significant. In our case, we may remove bmi because it is not statistically significant. But in exploratory research where we have hundreds of variables, it is impossible to select variables by eye-ball judgement. So, in this case, how to perform the variable selection? To explore the significance of the variables, we may perform stepwise automatic selection. It is important to know stepwise selection is meant for exploratory research. For confirmatory analysis, it is important to rely on expert opinion for the variable selection. We may perform forward, backward or both forward and backward selection combined.

Forward selection starts with an intercept only or empty model without variable. It proceeds by adding one variable after another. In R, Akaike information criterion (AIC) is used as the comparative goodness-of-fit measure and model quality. In the stepwise selection, it seeks to find the model with the lowest AIC iteratively and the steps are shown in the output. More information about AIC can be referred to Hu (2007) and Wikipedia (2022).

# forward

mlr_chol_stepforward <- step(slr_chol0, scope = ~ dbp + bmi + race,

direction = "forward")## Start: AIC=68.42

## chol ~ 1

##

## Df Sum of Sq RSS AIC

## + dbp 1 50.131 228.64 30.769

## + race 2 37.099 241.68 43.855

## + bmi 1 6.600 272.17 65.625

## <none> 278.77 68.417

##

## Step: AIC=30.77

## chol ~ dbp

##

## Df Sum of Sq RSS AIC

## + race 2 15.2428 213.40 20.971

## <none> 228.64 30.769

## + bmi 1 1.6045 227.04 31.360

##

## Step: AIC=20.97

## chol ~ dbp + race

##

## Df Sum of Sq RSS AIC

## <none> 213.40 20.971

## + bmi 1 2.0381 211.36 21.051Backward selection starts with a model containing all variables. Then, it proceeds by removing one variable after another, of which it aims to find the model with the lowest AIC.

## Start: AIC=21.05

## chol ~ dbp + bmi + race

##

## Df Sum of Sq RSS AIC

## - bmi 1 2.0381 213.40 20.971

## <none> 211.36 21.051

## - race 2 15.6764 227.04 31.361

## - dbp 1 24.5188 235.88 41.002

##

## Step: AIC=20.97

## chol ~ dbp + race

##

## Df Sum of Sq RSS AIC

## <none> 213.40 20.971

## - race 2 15.243 228.64 30.769

## - dbp 1 28.275 241.68 43.855Bidirectional selection, as implemented in R, starts as with the model with all variables. Then, it proceeds with removing or adding variables, which combines both forward and backward selection methods. It stops once it finds the model with the lowest AIC.

## Start: AIC=21.05

## chol ~ dbp + bmi + race

##

## Df Sum of Sq RSS AIC

## - bmi 1 2.0381 213.40 20.971

## <none> 211.36 21.051

## - race 2 15.6764 227.04 31.361

## - dbp 1 24.5188 235.88 41.002

##

## Step: AIC=20.97

## chol ~ dbp + race

##

## Df Sum of Sq RSS AIC

## <none> 213.40 20.971

## + bmi 1 2.0381 211.36 21.051

## - race 2 15.2428 228.64 30.769

## - dbp 1 28.2747 241.68 43.855Preliminary model

Let say, after considering the P-value, stepwise selection (in exploratory research) and expert opinion, we decided that our preliminary model is,

chol ~ dbp + race

and we fit the model again to view basic information of the model,

##

## Call:

## lm(formula = chol ~ dbp + race, data = coronary)

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.1378 -0.7068 -0.0289 0.5997 2.7778

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 3.298028 0.486213 6.783 1.36e-10 ***

## dbp 0.031108 0.006104 5.096 8.14e-07 ***

## racechinese 0.359964 0.182149 1.976 0.049534 *

## raceindian 0.713690 0.190883 3.739 0.000243 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.043 on 196 degrees of freedom

## Multiple R-squared: 0.2345, Adjusted R-squared: 0.2228

## F-statistic: 20.01 on 3 and 196 DF, p-value: 2.335e-11and the r-squared is

## [1] 0.23450377.6.5 Interaction

Interaction is the combination of predictors that requires interpretation of their regression coefficients separately based on the levels of the predictor. For example, we need separate interpretation of the coefficient for dbp depending on race group: Malay, Chinese or Indian. This makes interpreting our analysis complicated as we can no longer interpret each coefficient on its own. So, most of the time, we pray not to have interaction in our regression model. We fit the model with a two-way interaction term,

##

## Call:

## glm(formula = chol ~ dbp + race + dbp:race, data = coronary)

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 2.11114 0.92803 2.275 0.024008 *

## dbp 0.04650 0.01193 3.897 0.000134 ***

## racechinese 1.95576 1.28477 1.522 0.129572

## raceindian 2.41530 1.25766 1.920 0.056266 .

## dbp:racechinese -0.02033 0.01596 -1.273 0.204376

## dbp:raceindian -0.02126 0.01529 -1.391 0.165905

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for gaussian family taken to be 1.087348)

##

## Null deviance: 278.77 on 199 degrees of freedom

## Residual deviance: 210.95 on 194 degrees of freedom

## AIC: 592.23

##

## Number of Fisher Scoring iterations: 2From the output, there is no evidence that suggests the presence of interaction because the included interaction term was insignificant. In R, it is easy to fit interaction by *, e.g. dbp * race will automatically includes all variables involved. This is equal to specifiying glm(chol ~ dbp + race + dbp:race, data = coronary), where we can use : to include the interaction.

7.6.6 Model fit assessment

For MLR, we assess the model fit by \(R^2\) and histogram and scatter plots of residuals. Residuals, in simple term, are the discrepancies between the observed values (dots) and the predicted values (by the fit MLR model). So, the lesser the discrepancies, the better is the model fit.

Percentage of variance explained, \(R^2\)

First, we obtain the \(R^2\) values. In comparison to the \(R^2\) obtained for the SLR, we include adj = TRUE here to obtain an adjusted \(R^2\). The adjusted \(R^2\) here is the \(R^2\) with penalty for the number of predictors p. This discourages including too many variables, which might be unnecessary.

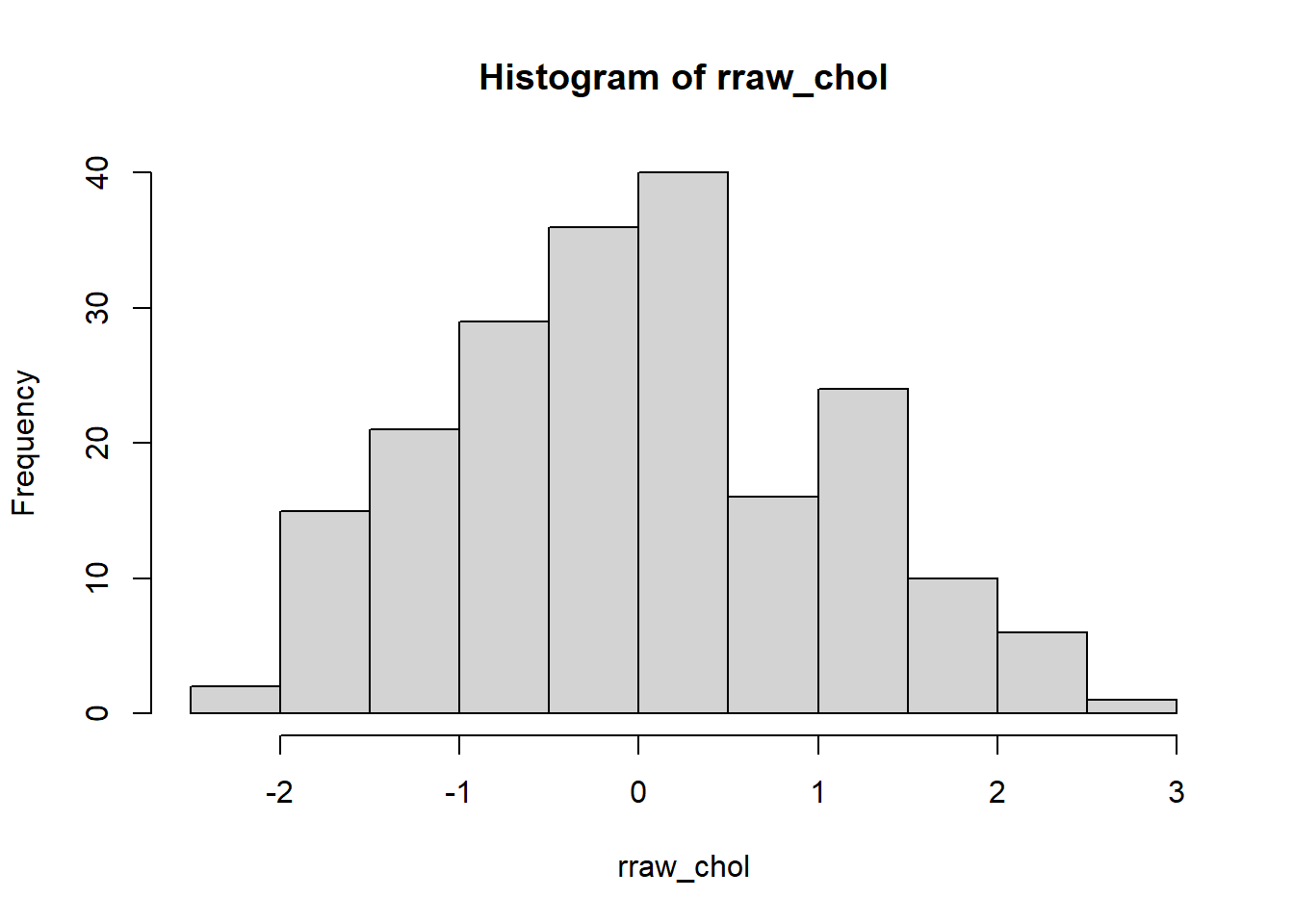

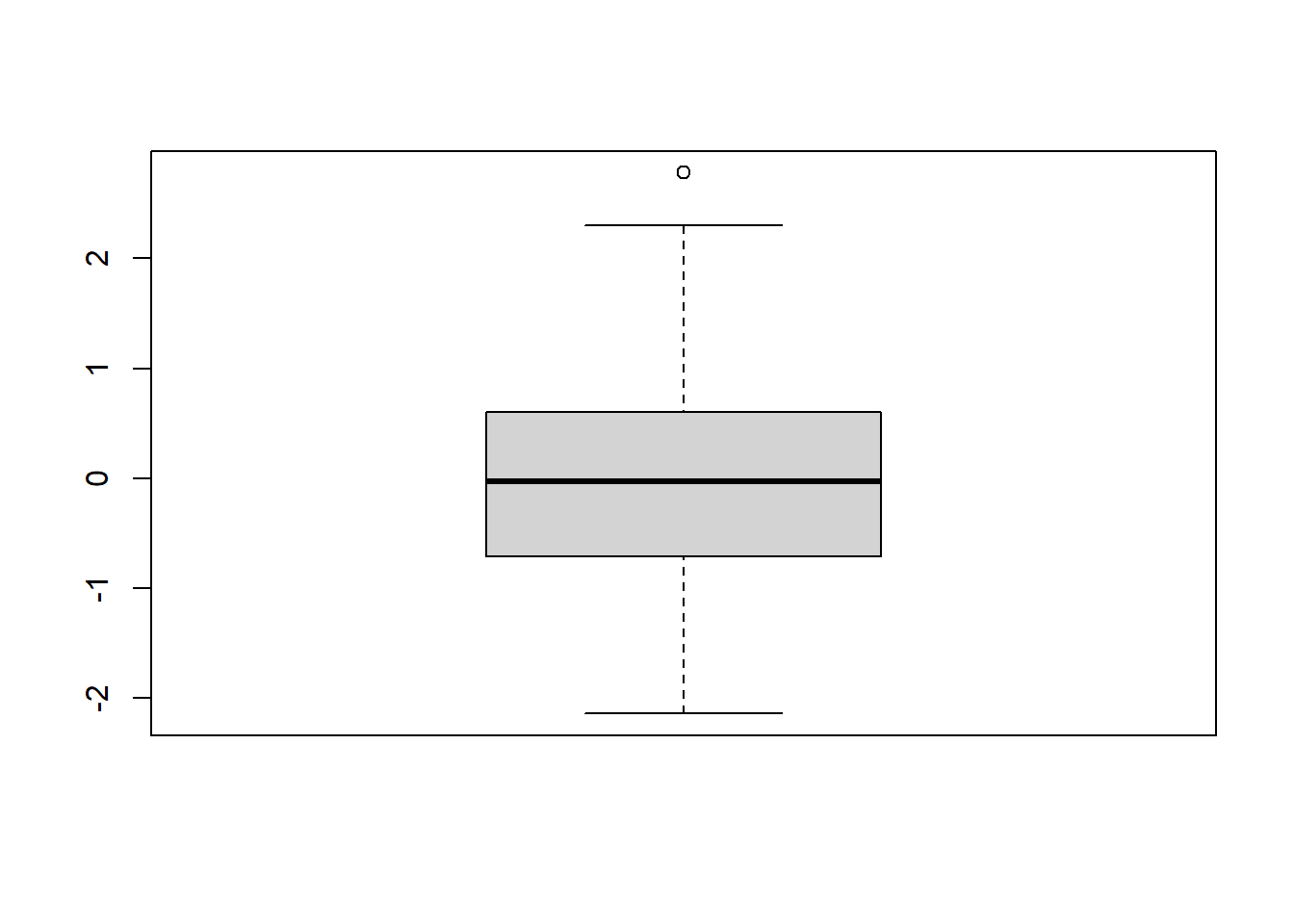

## [1] 0.2227869Histogram and box-and-whiskers plot

Second, we plot a histogram and a box-and-whiskers plot to assess the normality of raw/unstandardized residuals of the MLR model. We expect normally distributed residuals to indicate a good fit of the MLR model. Here, we have a normally distributed residuals.

FIGURE 7.4: Histogram of raw residuals.

FIGURE 7.5: Box-and-whiskers plot of raw residuals.

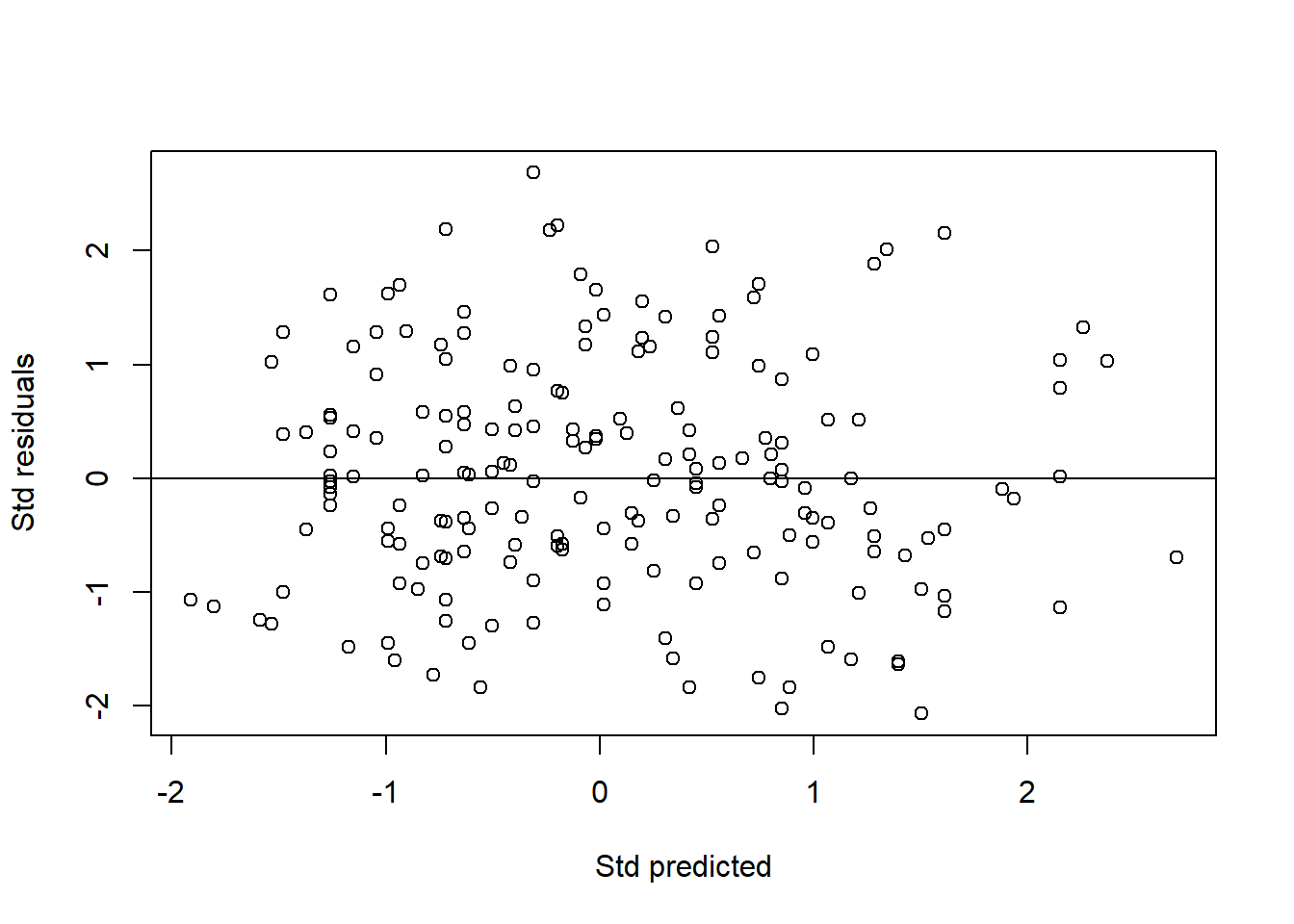

Scatter plots

Third, we plot a standardized residuals (Y-axis) vs standardized predicted values (X-axis). Similar to the one for SLR, this plot allows assessment of normality, linearity and equal variance assumptions. The dots should form elliptical/oval shape (normality) and scattered roughly equal above and below the zero line (equal variance). Both these indicate linearity. Our plot below shows that the assumptions are met.

rstd_chol <- rstandard(mlr_chol_sel) # standardized residuals

pstd_chol <- scale(predict(mlr_chol_sel)) # standardized predicted values

plot(rstd_chol ~ pstd_chol, xlab = "Std predicted", ylab = "Std residuals")

abline(0, 0) # normal, linear, equal variance

FIGURE 7.6: Scatter plot of standardized residuals vs standardized predicted values

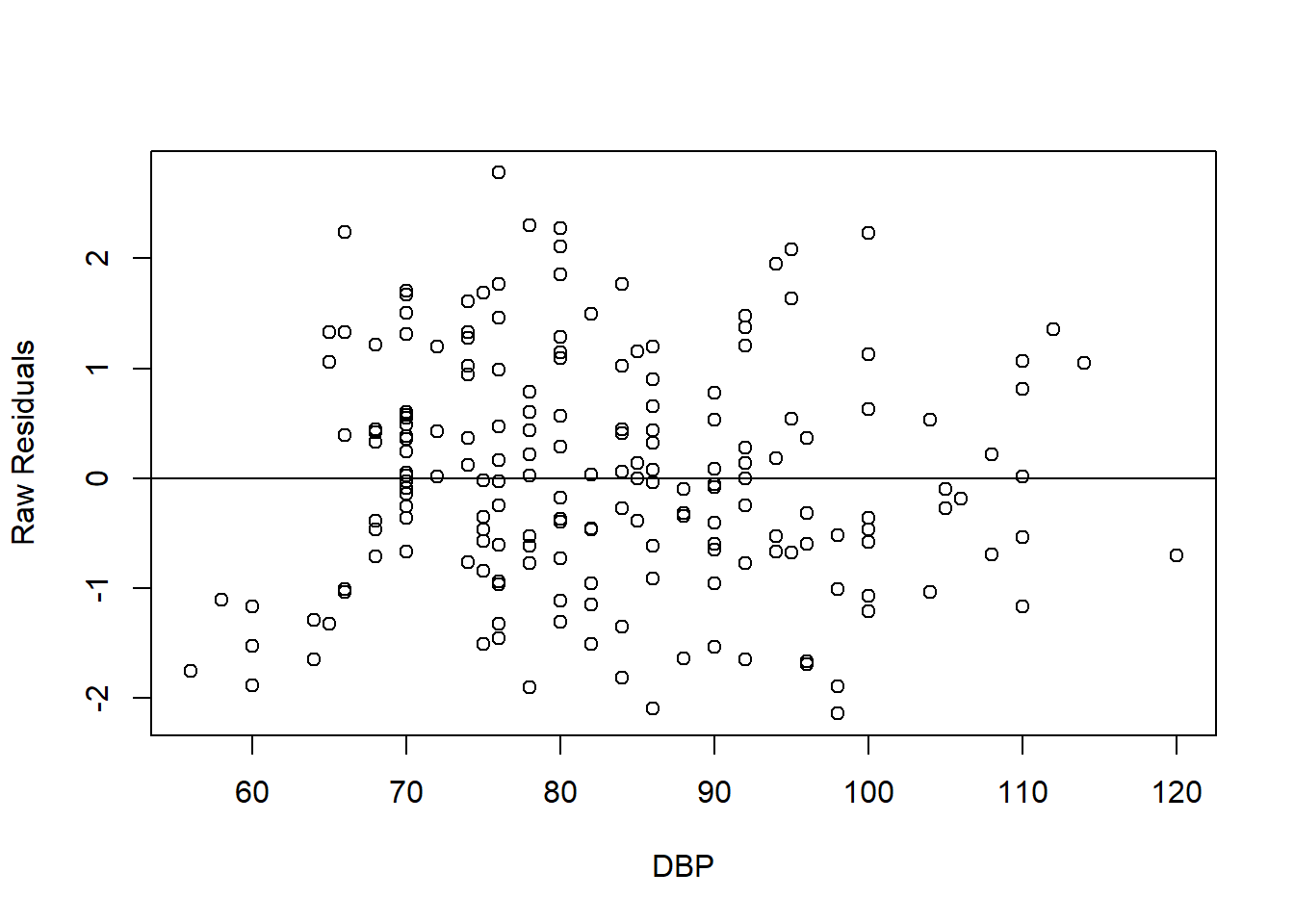

In addition to the standardized residuals vs standardized predicted values plot, for numerical predictors, we assess the linear relationship between the raw residuals and the observed values of the numerical predictors. We plot the raw residuals vs numerical predictor below. The plot interpreted in similar way to the standardized residuals vs standardized predicted values plot. The plot shows good linearity between the residuals and the numerical predictor.

FIGURE 7.7: Scatter plot of raw residuals vs DBP (numerical predictor).

7.6.7 Presentation and interpretation

After passing all the assumption checks, we may now decide on our final model. We may rename the preliminary model mlr_chol_sel to mlr_chol_final for easier reference.

Similar to SLR, we use tbl_regression() to come up with a nice table to present the results.

| Characteristic | Beta | 95% CI1 | p-value |

|---|---|---|---|

| dbp | 0.03 | 0.02, 0.04 | <0.001 |

| race | |||

| malay | — | — | |

| chinese | 0.36 | 0.00, 0.72 | 0.050 |

| indian | 0.71 | 0.34, 1.1 | <0.001 |

| 1 CI = Confidence Interval | |||

It will be useful to be able to save the output in the spreadsheet format for later use. We can use tidy() function in this case and export it to a .csv file,

Then, we present the model equation. Cholesterol level in mmol/L can be predicted by its predictors as given by,

\[chol = 3.30 + 0.03\times dbp + 0.36\times race\ (chinese) + 0.71\times race\ (indian)\] Based on the adjusted \(R^2\), table and model equation, we may interpret the results as follows:

- 1mmHg increase in DBP causes 0.03mmol/L increase in cholesterol, while controlling for the effect of race.

- Likewise, 10mmHg increase in DBP causes 0.03 x 10 = 0.3mmol/L increase in cholesterol, while controlling for the effect of race.

- Being Chinese causes 0.36mmol/L increase in cholesterol in comparison to Malay, while controlling for the effect of DBP.

- Being Indian causes 0.71mmol/L increase in cholesterol in comparison to Malay, while controlling for the effect of DBP.

- DBP and race explains 22.3% variance in cholesterol.

For each of this interpretation, please keep in mind to also consider the 95% CI of each of coefficient. For example, being Indian causes 0.71mmol/L increase in cholesterol in comparison to Malay, where this may range from 0.34mmol/L to 1.1mmol/L based on the 95% CI.

7.7 Prediction

In some situations, it is useful to use the SLR/MLR model for prediction. For example, we may want to predict the cholesterol level of a patient given some clinical characteristics. We can use the final model above for prediction. For starter, let us view the predicted values for our sample,

## # A tibble: 6 × 10

## id cad sbp dbp chol age bmi race gender pred_chol

## <dbl> <fct> <dbl> <dbl> <dbl> <dbl> <dbl> <fct> <fct> <dbl>

## 1 1 no cad 106 68 6.57 60 38.9 indian woman 6.13

## 2 14 no cad 130 78 6.32 34 37.8 malay woman 5.72

## 3 56 no cad 136 84 5.97 36 40.5 malay woman 5.91

## 4 61 no cad 138 100 7.04 45 37.6 malay woman 6.41

## 5 62 no cad 115 85 6.66 53 40.3 indian man 6.66

## 6 64 no cad 124 72 5.97 43 37.6 malay man 5.54Compare the predicted values with the observed cholesterol level. Recall that we already checked this for the model fit assessment before.

It is more useful to predict for newly observed data. Let us try predicting the cholesterol level for an Indian patient with DBP = 90mmHg,

## 1

## 6.811448Now, we also do so the data with many more patients,

new_data <- data.frame(dbp = c(90, 90, 90),

race = c("malay", "chinese", "indian"))

predict(mlr_chol_final, new_data)## 1 2 3

## 6.097758 6.457722 6.811448## dbp race pred_chol

## 1 90 malay 6.097758

## 2 90 chinese 6.457722

## 3 90 indian 6.8114487.8 Summary

In this chapter, we went through the basics about SLR and MLR. We performed the analysis for each and learned how to assess the model fit for the regression models. We learned how to nicely present and interpret the results. In addition, we also learned how to utilize the model for prediction. Excellent texts on regression includes Biostatistics: Basic Concepts and Methodology for the Health Sciences (Daniel and Cross 2014) and the more advanced books such as Applied Linear Statistical Models (Neter et al. 2013) and Epidemiology: Study Design and Data Analysis (Woodward 2013).