Chapter 3 Exploratory Analyses

In the Chapter 1.3.3 discussion of the 5A Method, we describe three types of data analysis: exploratory, inferential, and predictive. The next three chapters of the text dive deeper into each of these analyses. Exploratory data analysis primarily seeks to describe the distributions within and associations between variables. Often we hope to generate hypotheses regarding the parameters of a distribution to be tested in an inferential analysis. In some cases, we wish to identify associations between variables for later use in a predictive analysis. Other times, the exploration is purely to describe the data at hand, without any intention of extending insights to a broader context. Regardless, we have a vast collection of methods and tools at our disposal to explore data.

Exploratory analyses rely heavily on the capacity to summarize variable values in numerical and visual form. Consequently, we open the chapter with an introduction to descriptive statistics and data graphics. We then apply numerical and visual methods to explore the distributions of both categorical and numerical variables. The chapter concludes with an exploration of both linear and nonlinear associations. In each section, we provide a brief introduction to the focus area before demonstrating the solution to an exemplar problem using the 5A Method. Let’s start exploring!

3.1 Data Summary

Many modern data sets consist of thousands, if not millions, of observations. With so many individual values, how could we possibly describe the distributions and associations of a given variable? The answer is that we summarize a variable’s values using statistics and visualizations. It is much easier for analysts and stakeholders to consume a few numerical values and visual graphics compared to a table with thousands of rows. Yet, certain statistics and graphics are better suited for particular variable types and applications. As a result, it is critical that data scientists choose the appropriate summary tools and avoid misleading conclusions.

3.1.1 Descriptive Statistics

In Chapter 2.3.2, we briefly introduced a few statistics to facilitate the use of the summarize() function. Now we dig deeper into the primary types of statistics and their use in exploratory analyses. Summary statistics are employed to describe the distribution of a variable’s values based on frequency, centrality, and dispersion. Statistics can also describe the association between two variables’ values based on conditional frequency and correlation. We define these characteristics below and present the most common statistics within each category.

Throughout the section we demonstrate statistics using the popular iris data set. The data was originally collected by Edgar Anderson in 1935 (Anderson 1935) and is included in the base R installation. It comprises petal and sepal measurements for three different species of iris flowers. We purposely suppress the code required to compute the descriptive statistics in order to focus on definitions and interpretations. The associated code and applications are reserved for the subsequent case studies.

Frequency

Frequency refers to how often particular variable values occur. For a categorical variable, frequency typically consists of counts of the number of observations within each category. For example, if we wanted to summarize the frequency of each iris species within our data set we might construct the table below.

| species | count |

|---|---|

| setosa | 50 |

| versicolor | 50 |

| virginica | 50 |

Rather than attempting to review 150 rows of data, Table 3.1 offers a quick summary of the species frequency. It is clear that there are an equal number of each iris species in the data. That fact would be difficult to discern without a summary statistic.

For continuous, numerical variables it is often not useful to summarize the frequency of individual values. Depending on the level of fidelity (i.e., decimal places), it’s possible that none of the values are repeated. Instead we determine the frequency within ranges of values. With the iris data, suppose we wanted to summarize the frequency of petal lengths (in centimeters). Table 3.2 displays the appropriate statistics.

| length | count | proportion |

|---|---|---|

| 1-1.9 | 50 | 0.33 |

| 2-2.9 | 0 | 0.00 |

| 3-3.9 | 11 | 0.07 |

| 4-4.9 | 43 | 0.29 |

| 5-5.9 | 35 | 0.23 |

| 6-6.9 | 11 | 0.07 |

Using the frequency table, we quickly determine that none of the flowers have petals between 2 and 2.9 centimeters. Without such a summary, we would be left to manually search the values for petal length. The table also shows another type of statistic for describing frequency, which is a proportion.

One downside to using counts as a frequency measure is that they only having meaning relative to a total. If we tell someone that 50 flowers have a petal length less than 2 centimeters, it is not clear whether that represents a lot or a little. If there are 1,000 flowers total, then 50 is not as significant compared to when there are only 150 flowers total. A proportion (i.e., relative frequency) does not suffer this problem. If we know that 33% of the flowers are less than 2 centimeters, then we can comprehend the significance without knowing the total.

Upon summarizing the frequency of particular variable values, we might be interested in knowing the most frequent value. The most frequent value (or range of values) for a variable is a statistic known as the mode. When a variable has a single mode, we refer to it as unimodal. In the unique case of all values occurring with equal frequency (i.e., uniform) we also label the variable unimodal. Table 3.1 is a clear example of this case, since all species occur with equal frequency. However, it is more often the case that unimodal variables have one distinct, most frequent value (or range of values) and all other values occur much less frequently.

If a variable has more than one distinct, most frequent value, then we refer to it as multimodal. To be clear, the frequencies of the multiple modes do not need to be exactly the same. There simply must exist a distinctly larger frequency among the other neighboring values. The petal length frequencies in Table 3.2 are a good example of this phenomenon. While lengths between 1 and 1.9 centimeters are the most common overall, lengths between 4 and 4.9 centimeters also stand out among adjacent values. For this bimodal variable, we would refer to a major mode (1-1.9 cm) and a minor mode (4-4.9 cm).

Centrality

Centrality, or central tendancy, refers loosely to the middle of a variable’s values. For some unimodal variables, the middle might coincide with the mode. In this case, we can think of a variable’s center as meaning its most common, or expected, value. However, this is not always the case. For bimodal variables in particular, the most commonly occurring values are not always in the literal middle. Even for unimodal variables, extreme values might skew the definition of middle. We investigate these concepts further with two well-known statistics.

The median of a variable is literally the middle value. In other words, half of the variable values are larger and half are smaller. However, this requires some sense of ordering to the values. Thus, for categorical variables, we can only compute the median when they are ordinal. For numerical variables, we compute the median by sorting the values in ascending order and selecting the middle value. Of course, if there are an even number of observations, then there is no middle value! After sorting the petal lengths for the 150 irises in our data set, the 75th value is 4.3 and the 76th value is 4.4. Which should we choose as the middle? In this case, we choose 4.35 since it is halfway between the two values.

The other most common statistic to describe centrality is the arithmetic mean. There is no sense of a mean for categorical variables, because the calculation requires summation and division. For numerical variables, we sum all of the values and divide by the number of values. The result is what we generally refer to as the average. The average value for a variable invokes the idea of the most common or expected value. However, we once again must be careful. The mean petal length for all 150 irises is 3.76 centimeters. Yet, we know that the modes are 1-1.9 and 4-4.9 centimeters. This apparent discrepancy is easily explained by splitting our statistics according to species.

| species | count | mean | median |

|---|---|---|---|

| setosa | 50 | 1.46 | 1.50 |

| versicolor | 50 | 4.26 | 4.35 |

| virginica | 50 | 5.55 | 5.55 |

Table 3.3 reveals that setosa irises have petals much shorter than the other two species. The 50 setosas “pull” the mean petal length below the median (middle) length of 4.35. When the mean and median of a variable differ greatly, we refer to the values as skewed. By contrast, when the mean and median are equal (or nearly equal) we describe the values as symmetric. In the case of a symmetric, unimodal variable it is possible for the mean, median, and mode to all be equal. Modality and skew are variable characteristics much more easily displayed in a graphic than described with a statistic. We demonstrate this extensively in future sections of the chapter. For now, we transition to the final type of descriptive statistic.

Dispersion

Dispersion, or spread, refers to how a variable’s values differ from one another. Are most of the values close to one another? Are they close to the center or accumulated near one (or both) of the extremes? These questions can be answered with a few common descriptive statistics.

The range of a variable is the difference between its maximum and minimum values. A larger range indicates a wider dispersion of values. Table 3.4 provides the range of petal lengths for each iris species.

| species | minimum | maximum | range |

|---|---|---|---|

| setosa | 1.0 | 1.9 | 0.9 |

| versicolor | 3.0 | 5.1 | 2.1 |

| virginica | 4.5 | 6.9 | 2.4 |

Versicolor and virginica irises display a broader range of petal lengths compared to setosas. Thus, the petal lengths for those two species appear to be more dispersed or spread out. One downside to describing dispersion with the overall range is its sensitivity to extreme values, which we call outliers. If one or two extraordinary flowers happen to have very long or short petals, then we may not want them to skew our overall perception of petal lengths for all flowers. A different statistic partially addresses this concern.

The interquartile range (IQR) describes the dispersion for the middle half of a variable’s values. Here we mean “middle half” literally. Rather than the difference between the maximum and minimum, IQR is the difference between the 75th percentile (3rd quartile) and 25th percentile (1st quartile). Since one-quarter of values are greater than the 75th percentile and one-quarter of values are less than the 25th percentile, the difference captures the middle half.

| species | Q1 | Q3 | IQR |

|---|---|---|---|

| setosa | 1.4 | 1.575 | 0.175 |

| versicolor | 4.0 | 4.600 | 0.600 |

| virginica | 5.1 | 5.875 | 0.775 |

Table 3.5 lists the dispersion for the middle half of petal lengths for each species. We still observe much less variability in petal lengths for setosas. But we can be more confident that this statistic is not driven by a few extreme flowers.

While ranges answer the question of how far values are from one another, they do not clearly indicate how far they are from the measures of center. A well-known statistic for this task is the standard deviation. We can think of the standard deviation as the average distance of each value from the mean. Thus, a larger standard deviation indicates a variable with values that tend to be further from center. Similar to the range, the standard deviation can be very sensitive to outliers. Table 3.6 provides all three types of descriptive statistics for the distribution of petal length: frequency, centrality, and dispersion.

| species | count | mean | stdev |

|---|---|---|---|

| setosa | 50 | 1.46 | 0.17 |

| versicolor | 50 | 4.26 | 0.47 |

| virginica | 50 | 5.55 | 0.55 |

We now have a clear summary of the distribution of petal lengths for the three species of iris. From a frequency standpoint, we know that the observations are evenly split between species. With regard to centrality, we observe that setosa irises tend to have petals much shorter than the other two species. Finally, we see that the variability in petal lengths is larger for versicolor and virginica irises. That said, the standard deviations are between 10 and 12% of the mean for all species. So, from a percentage standpoint, the dispersion of petal lengths is relatively similar.

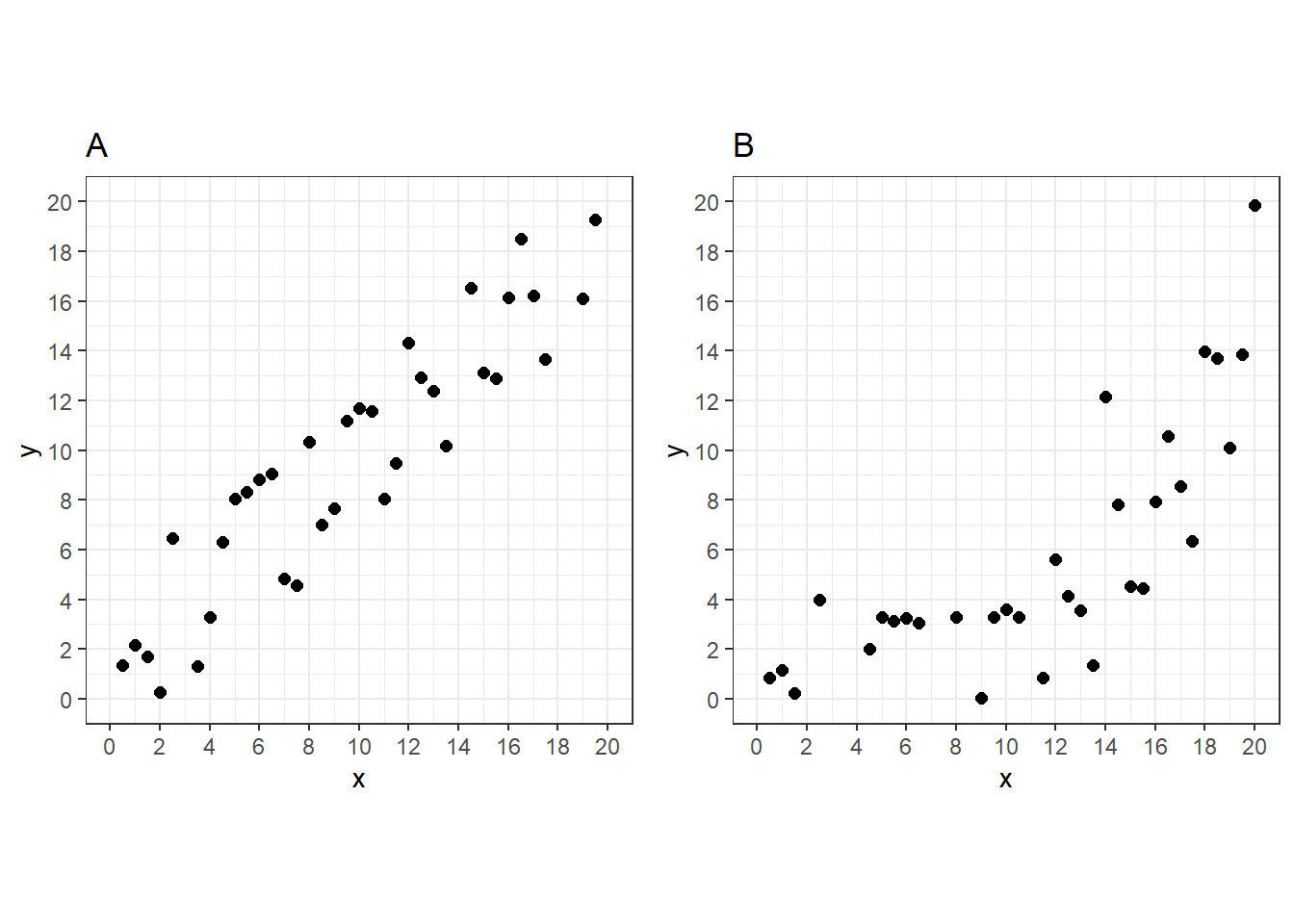

Correlation

Descriptive statistics for frequency, centrality, and dispersion all offer valuable insights regarding the distribution of a single variable’s values. However, we are often interested in the association between the values of two variables. By association we mean a consistent relationship between changes in the value of one variable and the value of a second variable. Perhaps when one variable increases, the other variable tends to decrease. When such a relationship exists between two variables, we say they are correlated. We can measure the strength of this correlation using descriptive statistics.

The appropriate statistic for measuring correlation depends on the type of variables under consideration. If the two variables are categorical, then we cannot use the same statistics compared to when they are numerical. When one variable is categorical and the other is numerical, we require different metrics as well. Here we briefly introduce two common descriptive statistics and save a deeper discussion for the case studies.

When both variables are categorical, we can describe their correlation using a conditional proportion. Here we are trying to determine if the frequency of values for one variable changes across the values of the other variable. Suppose for a moment that the petal length variable had instead been presented as categorical with sizes small (1-2.9 cm), medium (3-4.9 cm), and large (5-6.9 cm). With this categorization, we can produce Table 3.7.

| species | small | medium | large |

|---|---|---|---|

| setosa | 50 | 0 | 0 |

| versicolor | 0 | 48 | 2 |

| virginica | 0 | 6 | 44 |

Now we ask the question, are species and petal length correlated with one another? We can answer this question by computing a proportion for each size conditioned on the species. For example, given an iris is one of the 50 virginicas the size proportions are 0% small, 12% medium, and 88% large. By contrast, the size proportions for the 50 setosas are 100% small, 0% medium, and 0% large. If there was no correlation between species and petal length, then we would expect the size proportions to be roughly the same. Instead, they are dramatically different across species. Consequently, we find that there is a strong correlation between species and petal length.

When both variables are numerical, we describe their association using a correlation coefficient. There are multiple types of coefficients for particular applications and we describe their differences further in the case studies. But a common feature among them is that their values must be between -1 and +1. A negative sign indicates a decreasing association. In other words, as one variable increases in value the other tends to decrease. A positive sign suggests an increasing association. As one variable increases in value the other also increases. The closer the coefficient is to 1 (in absolute value) the stronger the correlation. A coefficient near zero indicates there is no association between the two variables.

Referring to the iris data, we might be interested in the correlation between a petal’s length and its width. It makes intuitive sense that wider petals might tend to also be longer. We can describe this intuition in a quantitative manner using a correlation coefficient. The computation of correlation coefficients is a bit complex, so we reserve it for later discussion. For now, suffice it to say that the correlation coefficient between petal length and width is +0.96. Because this value is so close to 1, we label the association as very strong. Thus, as petals get wider there is a very strong tendancy for them to also get longer. A common categorization of correlation strength based on the coefficient’s absolute value is:

- Strong: 1 to 0.75

- Moderate: 0.75 to 0.50

- Weak: 0.50 to 0.25

- None: 0.25 to 0

An often-repeated phrase on this topic is that correlation does not imply causation. We are not claiming that a petal’s width is causing its length. In fact, in this case, both dimensions are likely caused by the biology of the flower. Instead, we are simply saying that we observe a tendancy for wider petals to also be longer.

In this subsection of the chapter we introduced a wide variety of descriptive statistics to help characterize the distributions of and associations between variables. While a collection of one-number summaries is informative, it is often more effective to depict concepts such as frequency, centrality, dispersion, and correlation in a visual graphic. Throughout the rest of the chapter, we demonstrate the use of visual summaries within case studies and indicate the most appropriate graphic for each application. Prior to that, we must first introduce the key features of data graphics in general.

3.1.2 Data Graphics

The use of data visualization is pervasive throughout the analysis process. Not only do analysts construct visual graphics for internal evaluation, but they also present them to stakeholders as part of the advising step of the 5A Method. We introduce a wide variety of such graphics in the case studies throughout this chapter. However, there are important overarching characteristics of all visualizations that warrant prior consideration. Specifically, we describe four key features of all graphics: visual cues, coordinate systems, scales, and context.

Once again, we demonstrate the concepts within the section using the iris data set and suppress the R code required to generate the visualizations. For now, the focus is on decomposing the key features of an existing graphic. In subsequent sections we provide the code to construct our own tailored graphics.

Visual Cues

Visual cues are the primary building blocks of data visualizations. We think of visual cues as the way a graphic transforms data into pictures. Some of the most common visual cues are position, height, area, shape, and color but there are many others. When constructing a data graphic, we assign each variable to an appropriate visual cue. Let’s begin our discussion with a simple bar chart that displays the number of irises of each species.

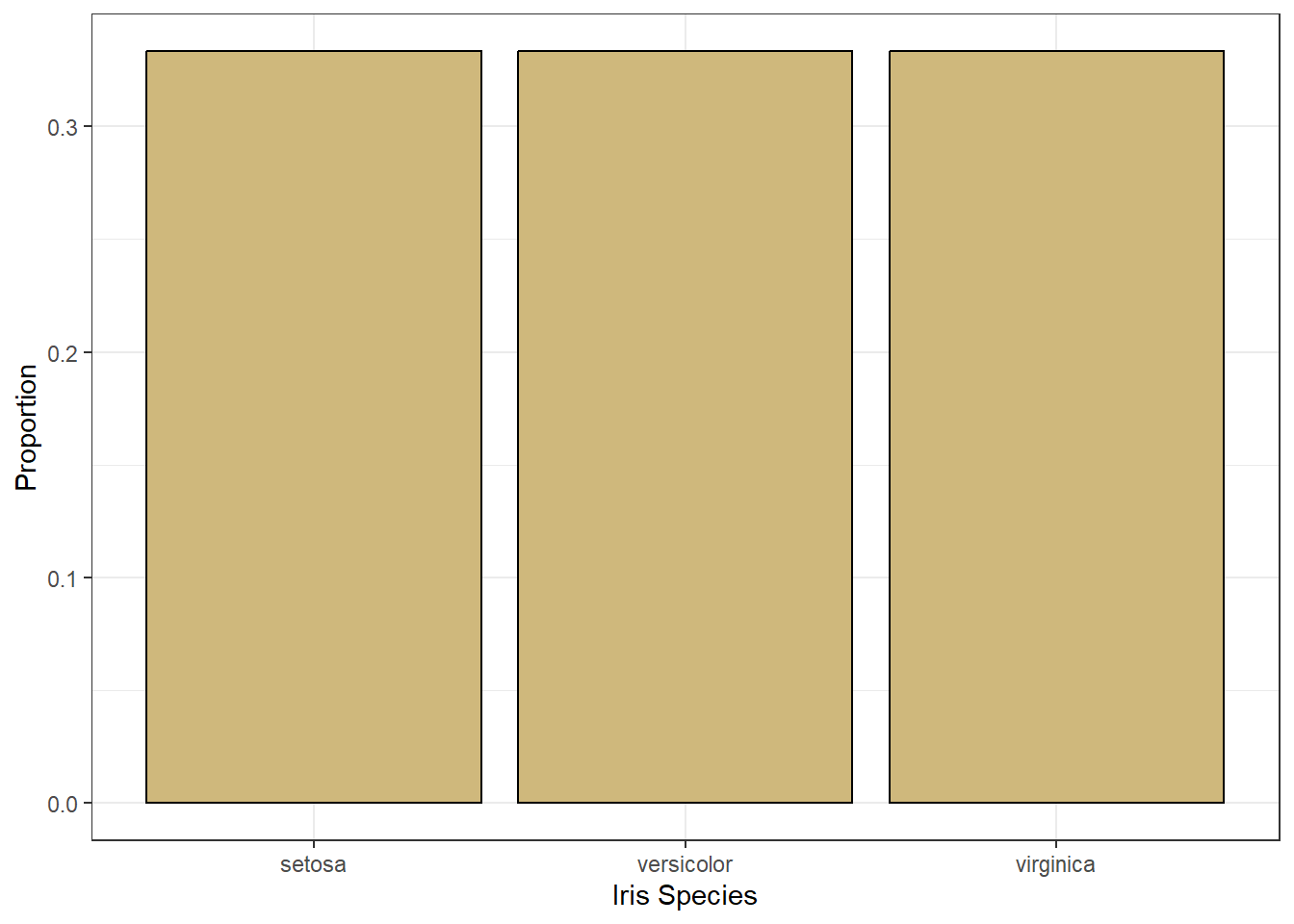

Figure 3.1: Bar Chart of Iris Species

Figure 3.1 suggests the data includes an equal number of irises of each species. There are two variables depicted in the bar chart: species and number (as a proportion). So, what visual cue is assigned to each of these variables? The cue for species is the position of the bar. The left-most bar is for setosa, the middle bar is for versicolor, and the right-most bar is for virginica. Meanwhile, the cue for number is the height of the bar. All of the bars are the same height, which indicates there is an equal number of each species.

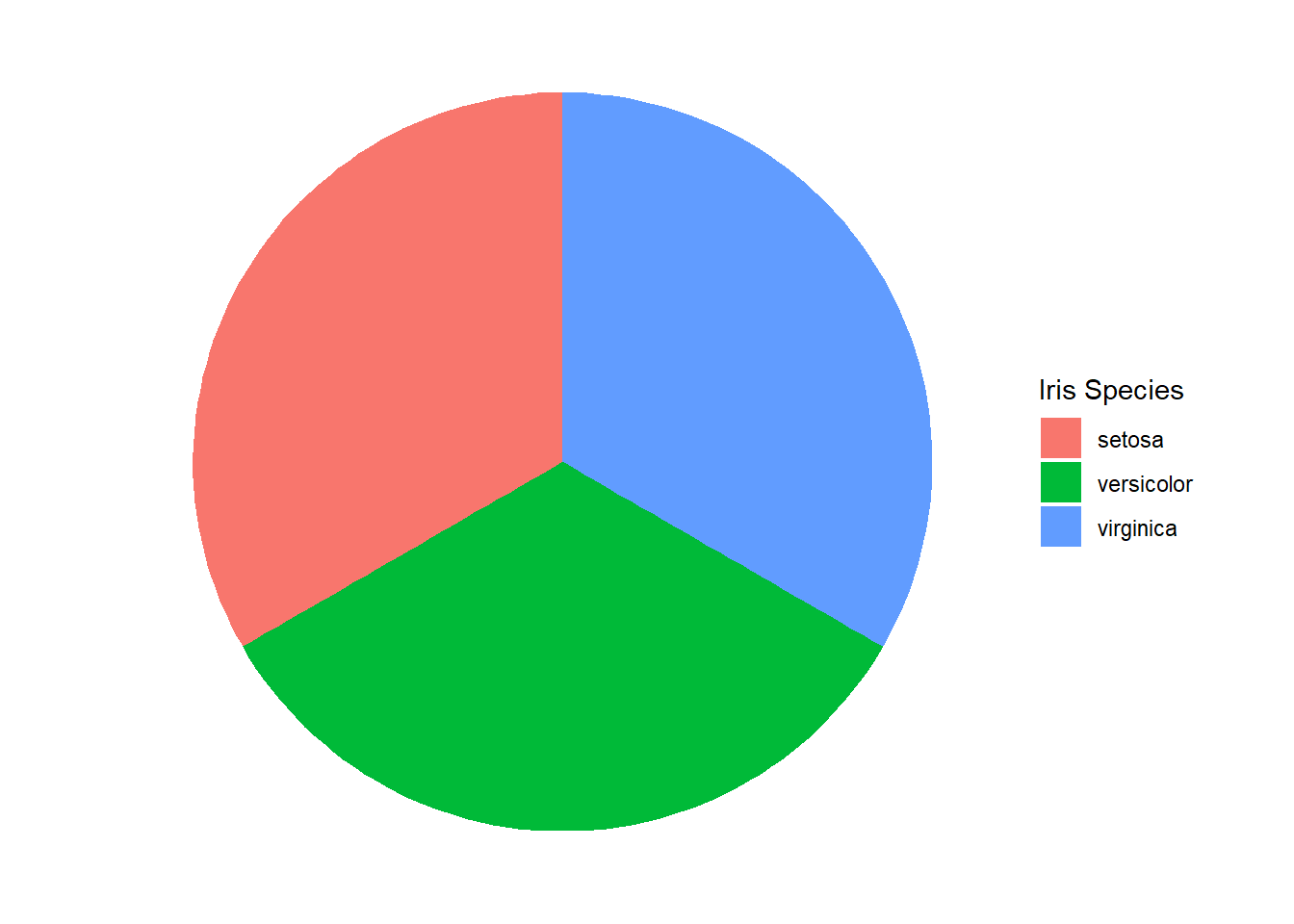

Although the bar chart is the most common graphic for depicting frequency, it is not the only option. By choosing different visual cues, we obtain different graphics. Suppose we choose color as the cue for species and area as the cue for number (again as a proportion). In this case, we might construct the graphic in Figure 3.2.

Figure 3.2: Pie Chart of Iris Species

Nothing changed about the data between the two graphics. Both represent the exact same variable values. However, the choice of visual cue dramatically changes the appearance of the graphic. Some would argue that it also changes the viewer’s capacity to interpret the associated data. In fact, research suggests that pie charts are inefficient and inaccurate in conveying frequency data compared to bar charts (Siirtola 2019). This is particularly true when the graphic summarizes a large number of categories.

Throughout the remaining chapters of the book, we suggest effective visual cues for different variable types. The simple example here demonstrates that the choice can be very important in terms of the information conveyed to the viewer. Another important choice in designing visual graphics is the coordinate system.

Coordinate Systems

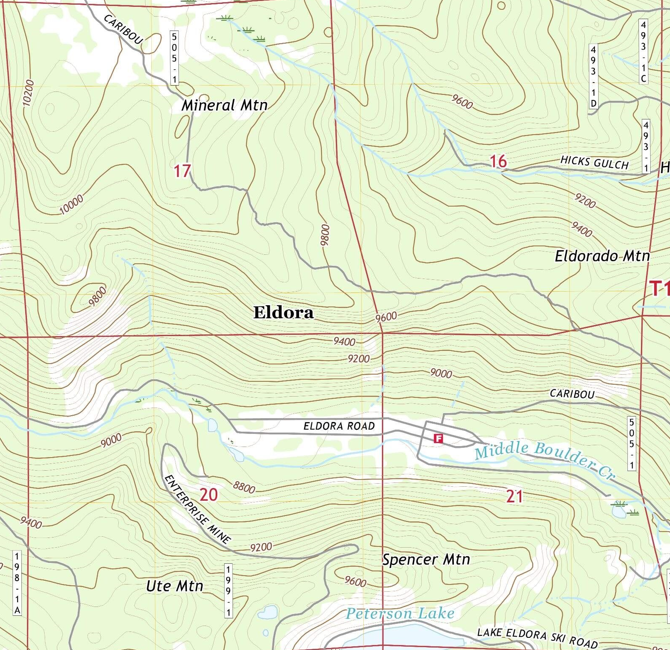

The coordinate system defines how the data is organized within the graphic. The most common coordinate systems are Cartesian, polar, and geographic. The Cartesian coordinate system uses location in the standard \(x\)-\(y\) plane. Polar coordinates instead use angle and radius to place data points in the plane. Finally, geographic coordinates use latitude and longitude to locate data points.

Typically the type of data defines the appropriate coordinate system, but sometimes there are multiple options available. Our bar and pie charts of iris species provide a perfect example. The bar chart uses the Cartesian coordinate system. The position on the \(x\)-axis is used for the species, while the height along the \(y\)-axis is used for the number. By contrast, the pie chart uses the polar coordinate system. The angle and radius of the boundary lines define the area assigned to the number of irises. The fill color of that area determines the species.

The need for geographic coordinates is generally more obvious. When dealing with physical location data and maps, geographic coordinates are often the correct choice. However, even this is not a perfect rule. The Earth is (roughly) a sphere, so we can represent locations on its surface using polar coordinates rather than geographic. In some cases, the domain experts or stakeholders generating research questions have preferences for which coordinate system to employ. Thus, careful collaboration can assist with the choice of system.

As with visual cues, we present a variety of coordinate systems within the case studies and recommend appropriate choices. After choosing a coordinate system, options also exist for the scale with which we display values.

Scales

Scale is how a graphic converts data values into visual cues within the coordinate system. This conversion is generally driven by the type of variable: numerical or categorical. Numerical data appears on scales that are linear, logarithmic, or percentage. Special care must be afforded time-based numerical variables given the various units of measurement (seconds, hours, days, years, etc.) and cyclic nature. The scales for categorical variables align with their sub-types: nominal and ordinal.

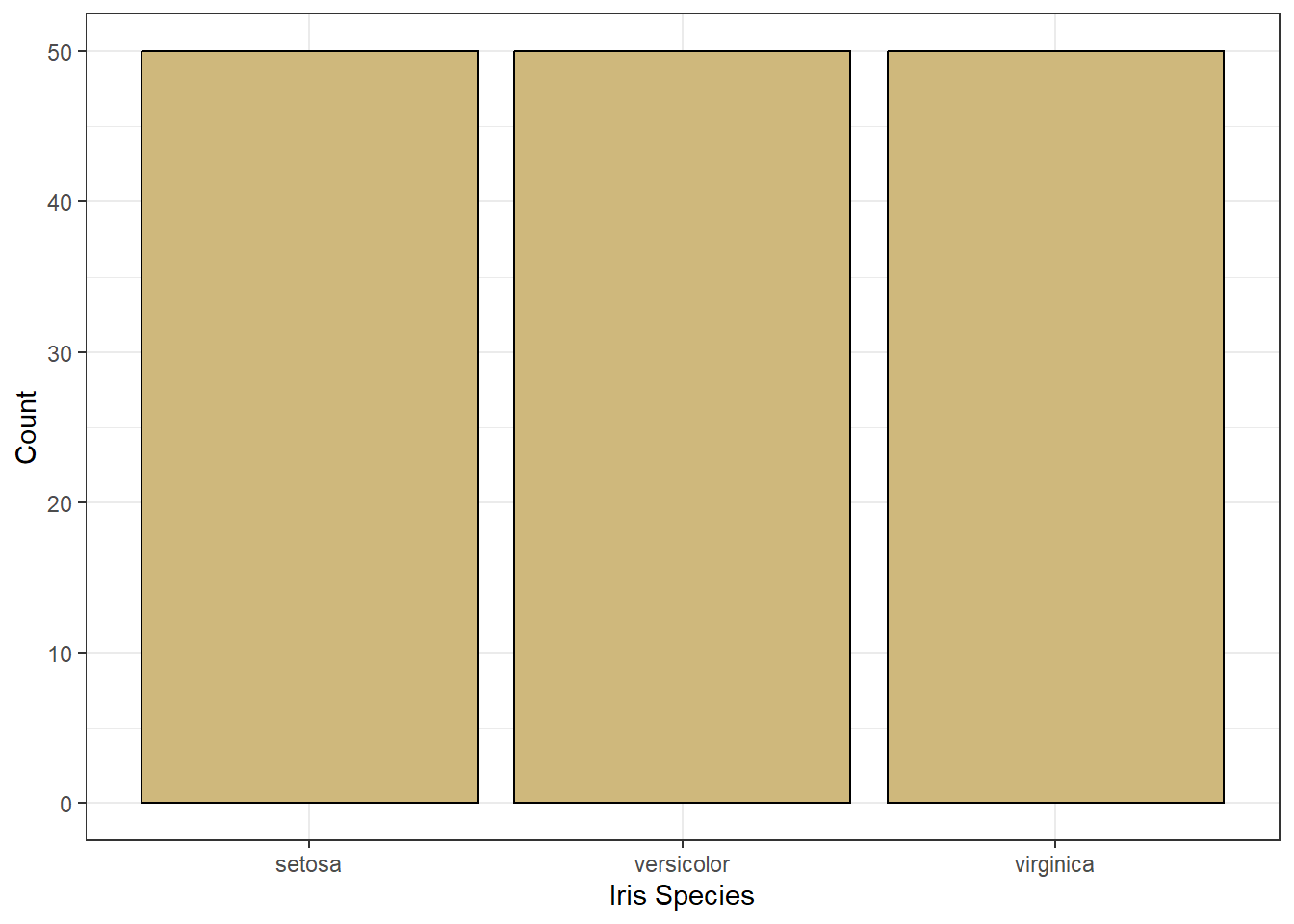

In reference to Figure 3.1, the scale on the \(x\)-axis for the categorical variable is nominal because there is no natural order to the species. The scale on the \(y\)-axis for the numerical variable is percentage because a proportion is a ratio. That said, we can easily change the scale of the \(y\)-axis to linear.

Figure 3.3: Bar Chart of Iris Species

Figure 3.3 uses the same visual cues and coordinate system, but a different scale for the number of irises. Now the scale is linear because a one unit increase in height (visual cue) suggests a one unit increase in the number of irises. This is not the case for a percentage scale. A one percentage-point increase in height is not the same as one additional iris (unless there happens to be exactly 100 of them!).

Logarithmic scales often occur in situations where we transform nonlinear relationships between variables to make them linear. A real-world example of a logarithmic scale is the Richter scale used to describe the intensity of earthquakes. With logarithmic scales, a one unit increase in visual cue suggests an order of magnitude increase in the variable value. Thus a two unit increase on the Richter scale represents an earthquake 100 times stronger (two orders of magnitude).

After selecting appropriate visual cues, coordinate systems, and scales our graphics are technically sound. However, they could be lacking in practical clarity without sufficient explanations of domain-specific information. We refer to such explanations as the context of the graphic and describe the implementation in the next subsection.

Context

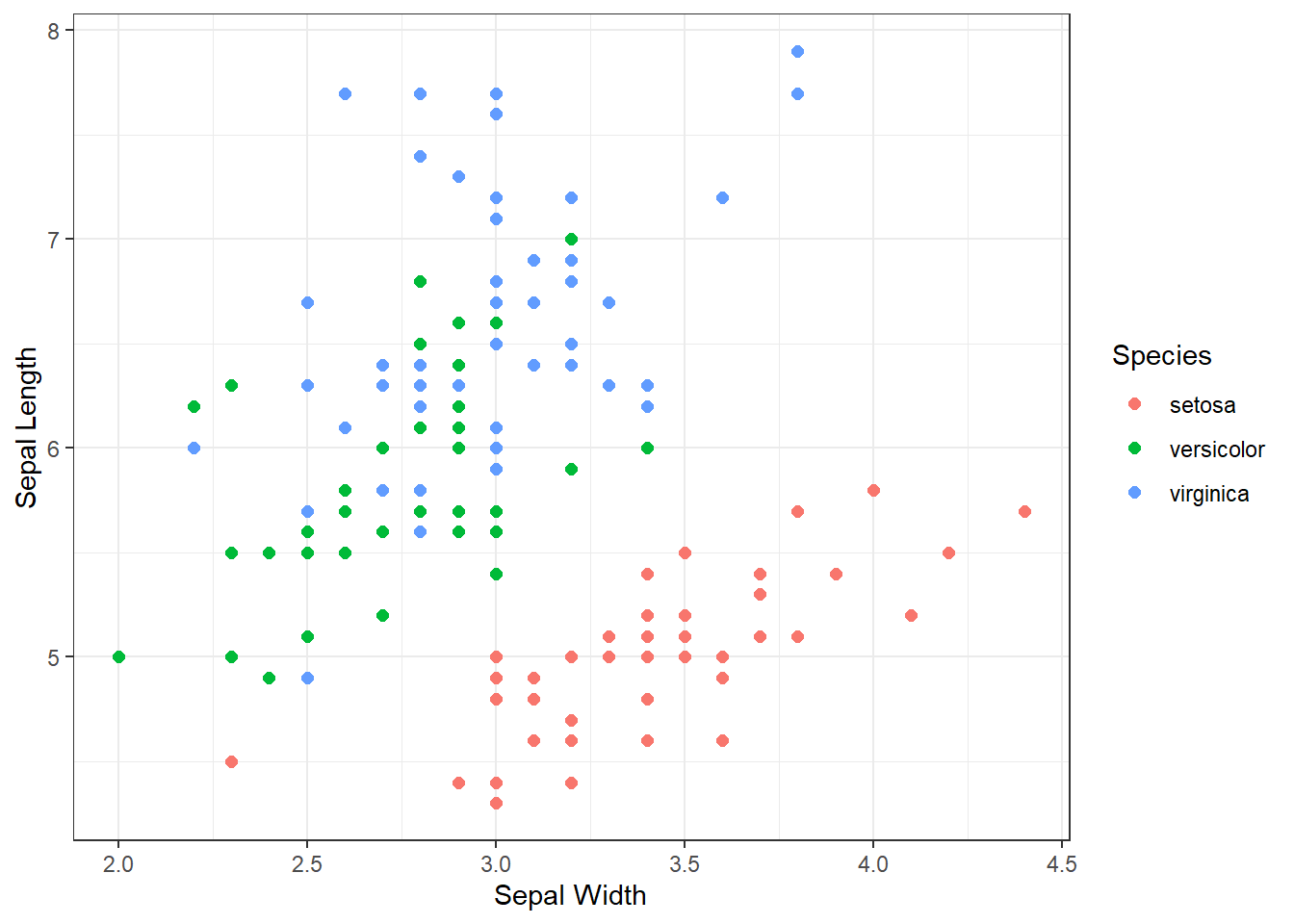

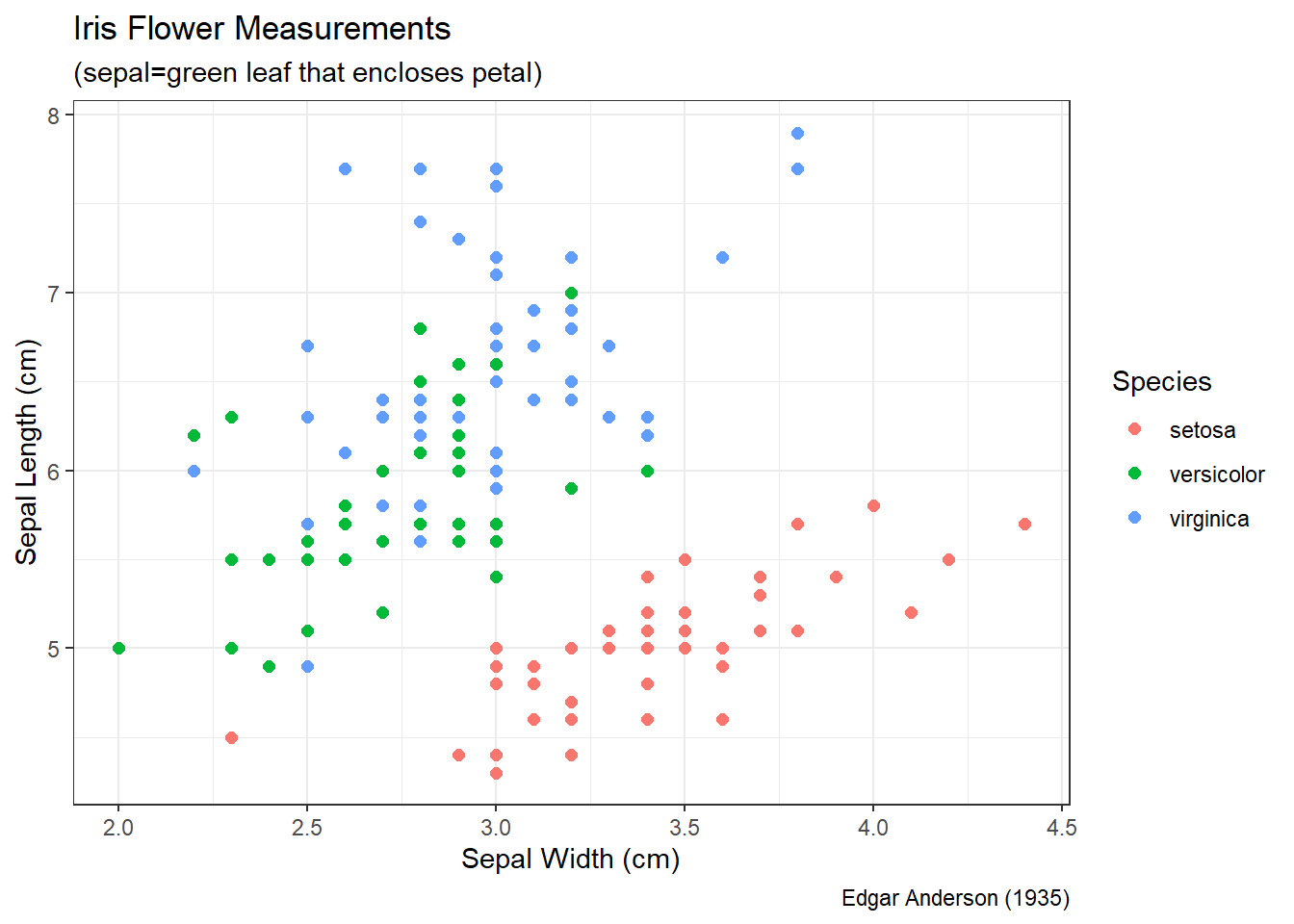

Without proper context a viewer cannot extract meaningful insights from a graphic. Context refers to the real-world meaning of the data and the purpose for which the graphic was created. Graphic titles, labels, captions, and annotations (e.g., text or arrows) are common ways to clearly convey context to a viewer. However, when creating visualizations, we should always weigh context against cluttering up the graphic. Let’s construct a scatter plot of sepal length versus width for each species of iris.

Figure 3.4: Scatter Plot of Sepal Length and Width

Prior to the discussion of context, we decompose the visual cues, coordinate system, and scales within Figure 3.4. There are three variables depicted in the graphic: sepal length, sepal width, and species. The visual cues for sepal width and length are position on the \(x\) and \(y\) axes, respectively. The visual cue for species is the color of the point. Given the use of \(x\) and \(y\) axes, we have employed the Cartesian coordinate system. The scale for both axes is linear because a one unit increase in distance along an axis represents a one unit increase in the sepal measurement. Finally, the color scale for species is nominal because there is no clear order to the color choice.

Although the scatter plot is comprised of clearly-defined cues, coordinates, and scales it lack a great deal of context. If we were to show this graphic to a viewer unfamiliar with the data, they might have a number of questions. What is a sepal? What sort of person, place, or thing has a sepal? What are the units of measurement? Where and when was this data collected and by whom? These questions are clear indicators that the graphic lacks context. Ideally, a data visualization should stand on its own with little-to-no human intervention of explanation. Let’s update the scatter plot to include context obtained from the help menu (?iris).

Figure 3.5: Scatter Plot of Sepal Length and Width

Figure 3.5 answers our viewer’s questions on its own. It is now clear that Edgar Anderson measured iris flowers in 1935. The part of the flower he measured (in centimeters) is the sepal, which is the green leaf that protects the petal. Regardless of species, it appears that irises with wider sepals also have longer sepals. This makes intuitive sense, because we would generally expect a larger flower to be larger in all dimensions.

Overall, this section provides a primer on the key features of all data visualizations. Clear and precise graphics include properly-matched visual cues, coordinate systems, and scales along with sufficient context for the viewer to independently draw valuable insights. For the remainder of the chapter we leverage descriptive statistics and data graphics to explore distributions and associations, and apply the terminology presented in this introduction to reinforce its importance.

3.2 Distributions

The term distribution broadly refers to the frequency, centrality, and dispersion of values for a variable. In other words, what values can a variable produce and how often do each of those values occur. In Chapter 2.2.3 we briefly introduced the concept of distributions in the context of simulated data. If the result of rolling a six-sided die is our variable, then the possible values are the integers 1 through 6 and each value occurs with equal frequency. For named distributions (e.g., Uniform) the possible values and their frequencies are clearly defined. However, not all variables follow a named distribution. In many cases, we must describe a distribution based on observed values of the variable. This is where visualization offers a stellar tool.

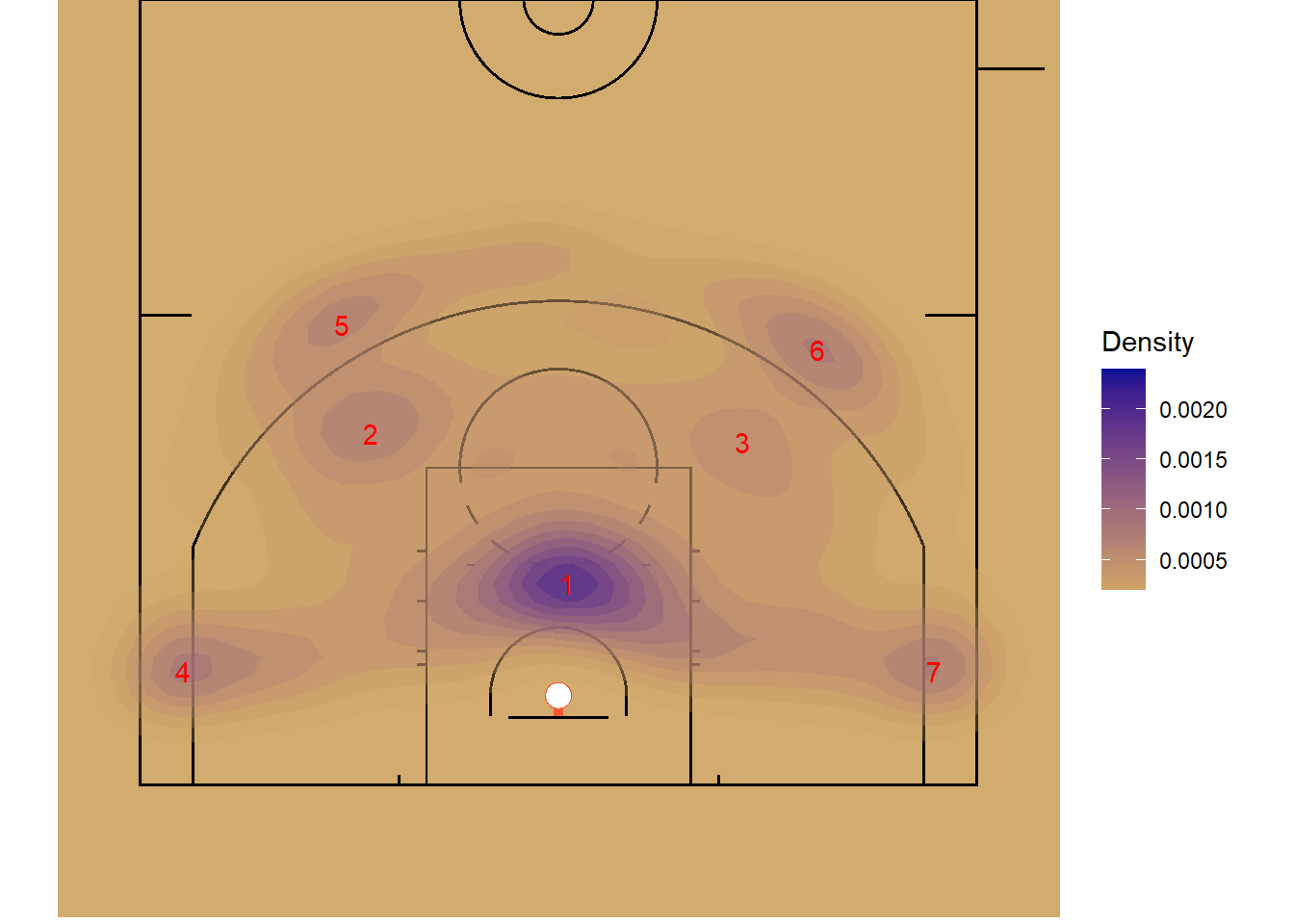

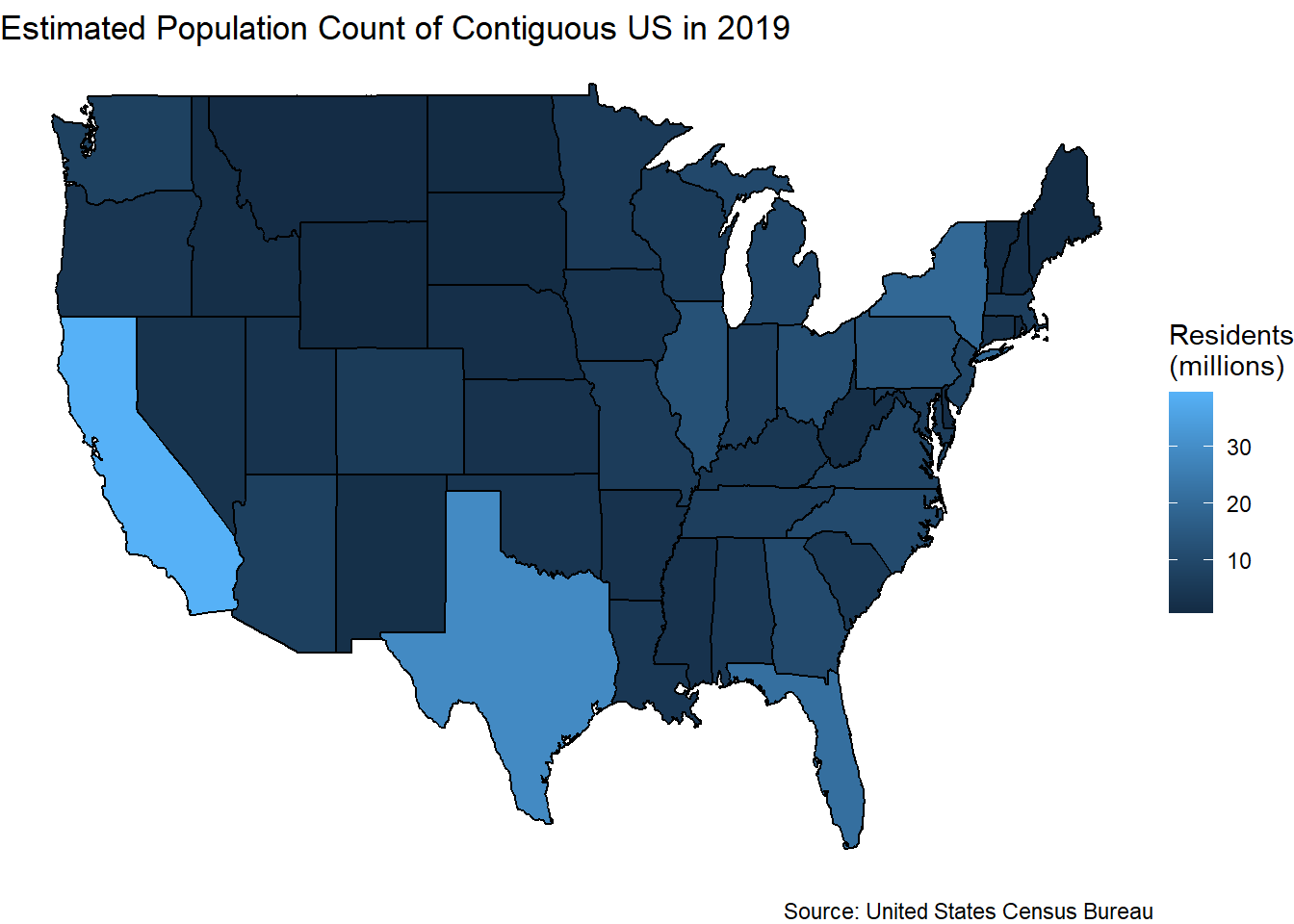

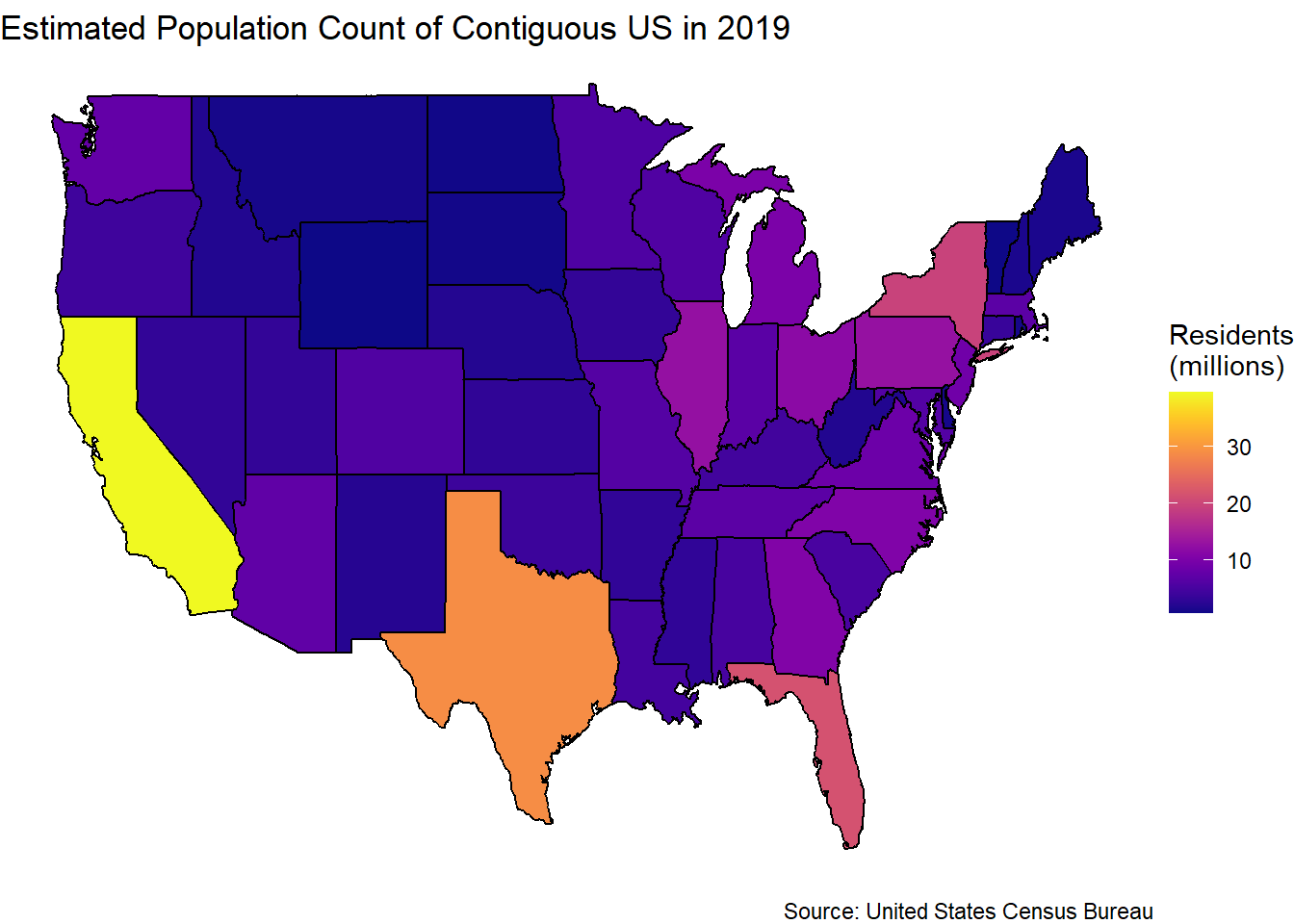

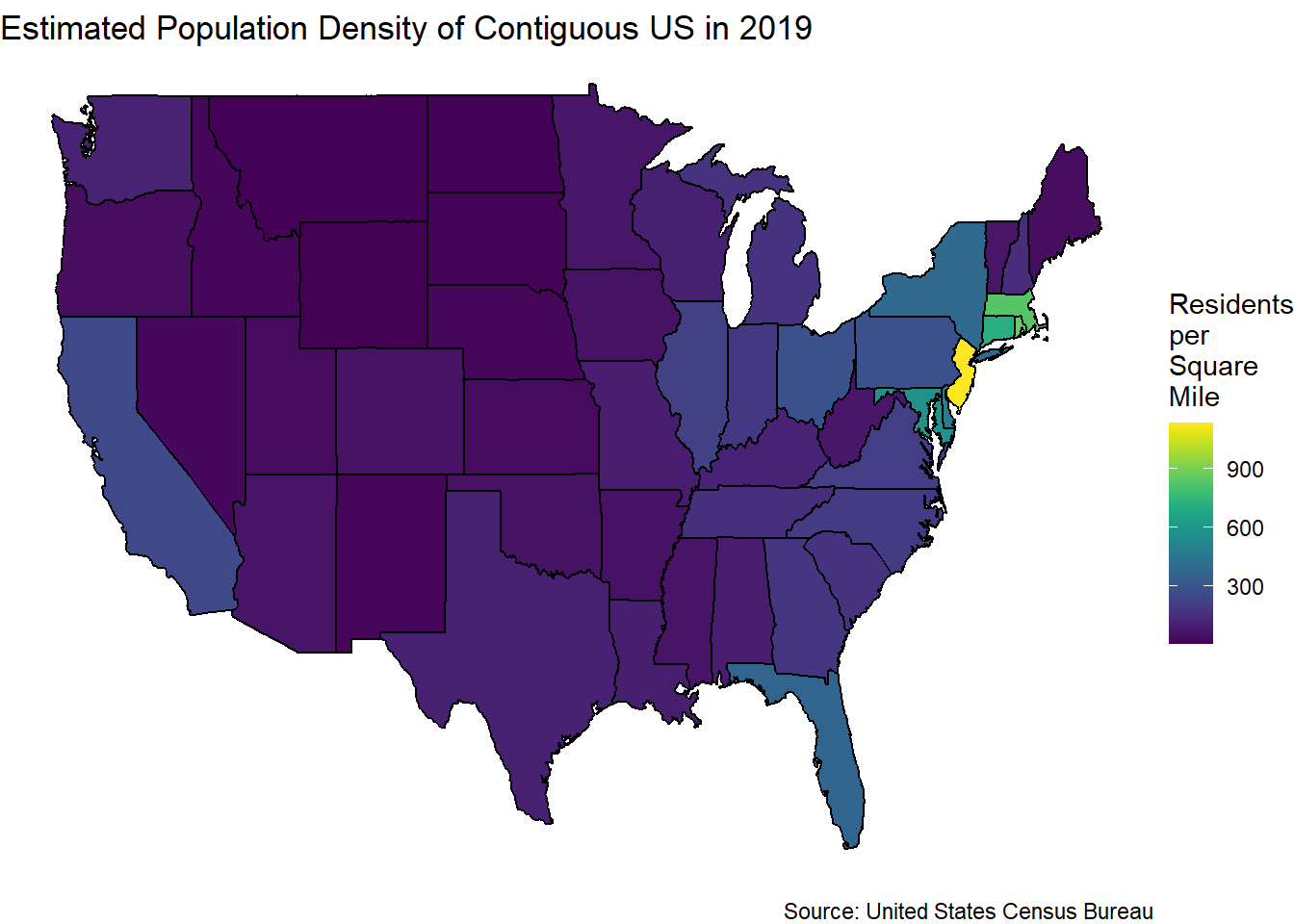

In the next seven sections, we introduce common graphics for exploring the distributions of variables. We begin with bar charts and word clouds for the distributions of categorical variables. Next, we present histograms and box plots for the distributions of numerical variables. Then we depict multi-variate distributions using heat maps and contour plots. This presentation includes a special type of geospatial heat map called a choropleth map. Each graphic is applied to a real-world example by following the 5A Method.

3.2.1 Bar Charts

We start our exploration with a discussion of distributions for categorical variables. In Chapter 2.1.1 we distinguish between types of categorical variables. Ordinal variables possess some natural order among the categories, while nominal variables do not. When categorical variables have a repeated structure that permits grouping, we often refer to them as factors. On the other hand, nominal variables are sometimes comprised of free text that does not easily permit grouping. For this case study, we focus on factors and save free text for the next section.

Figure 3.1 provides a simple example of a bar chart for a factor. The possible values of the factor are indicated by the individual bars: setosa, versicolor, and virginica. We refer to the values of a factor as the levels. In this case, the levels occur in equal frequency since the bars in the chart are all of equal height. Thus, a bar chart is a sensible graphic for depicting the distribution of a factor. The bars represent the possible values of the variable and their heights represent the frequency.

Given our focus on factors, we leverage functions from the forcats library throughout the case study. We also employ the dplyr library for wrangling and ggplot2 for visualization. All three of these packages are part of the tidyverse suite, so the associated functions are accessible after loading the library. Now let’s demonstrate an exploratory analysis involving factors using bar charts and the 5A Method.

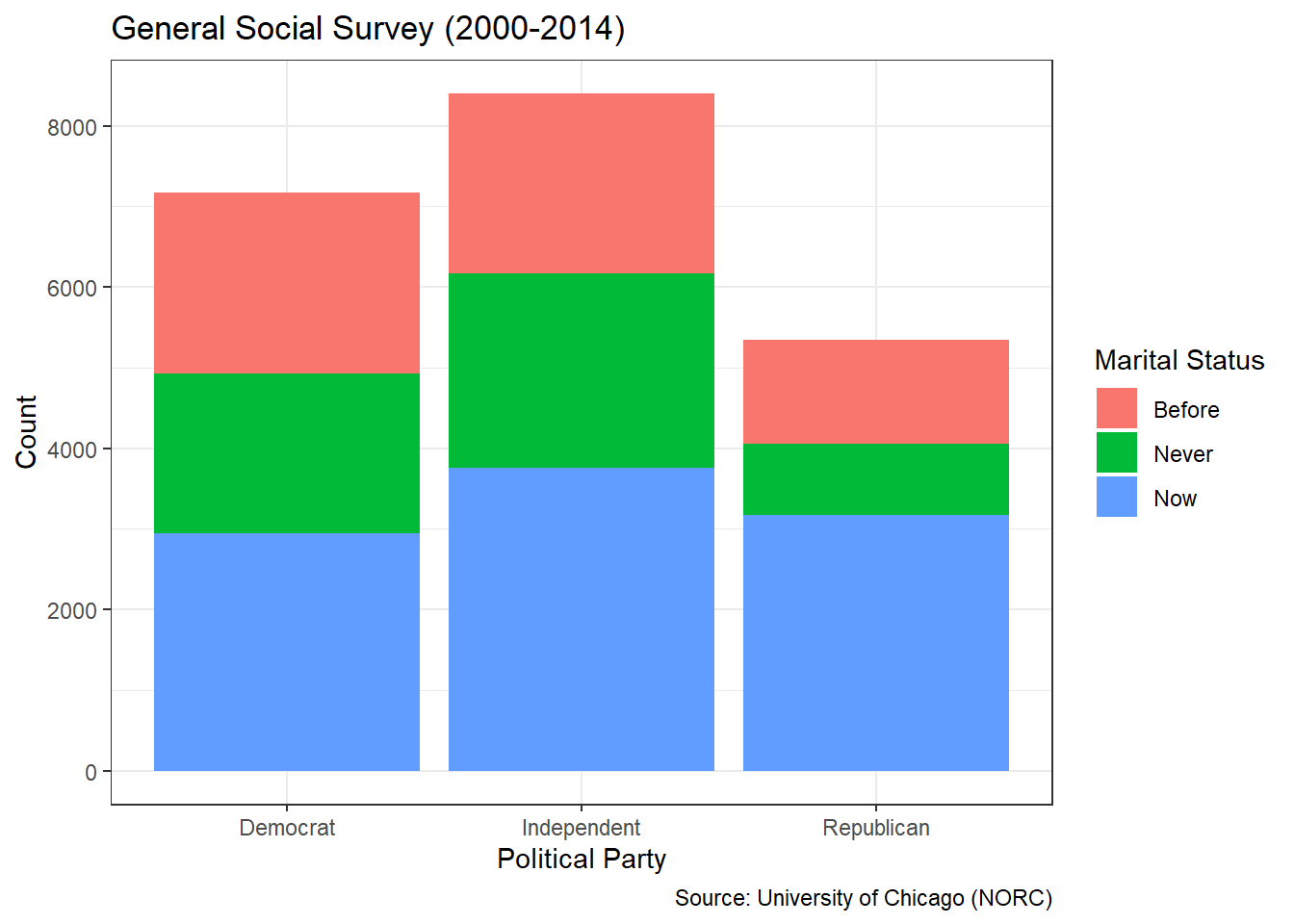

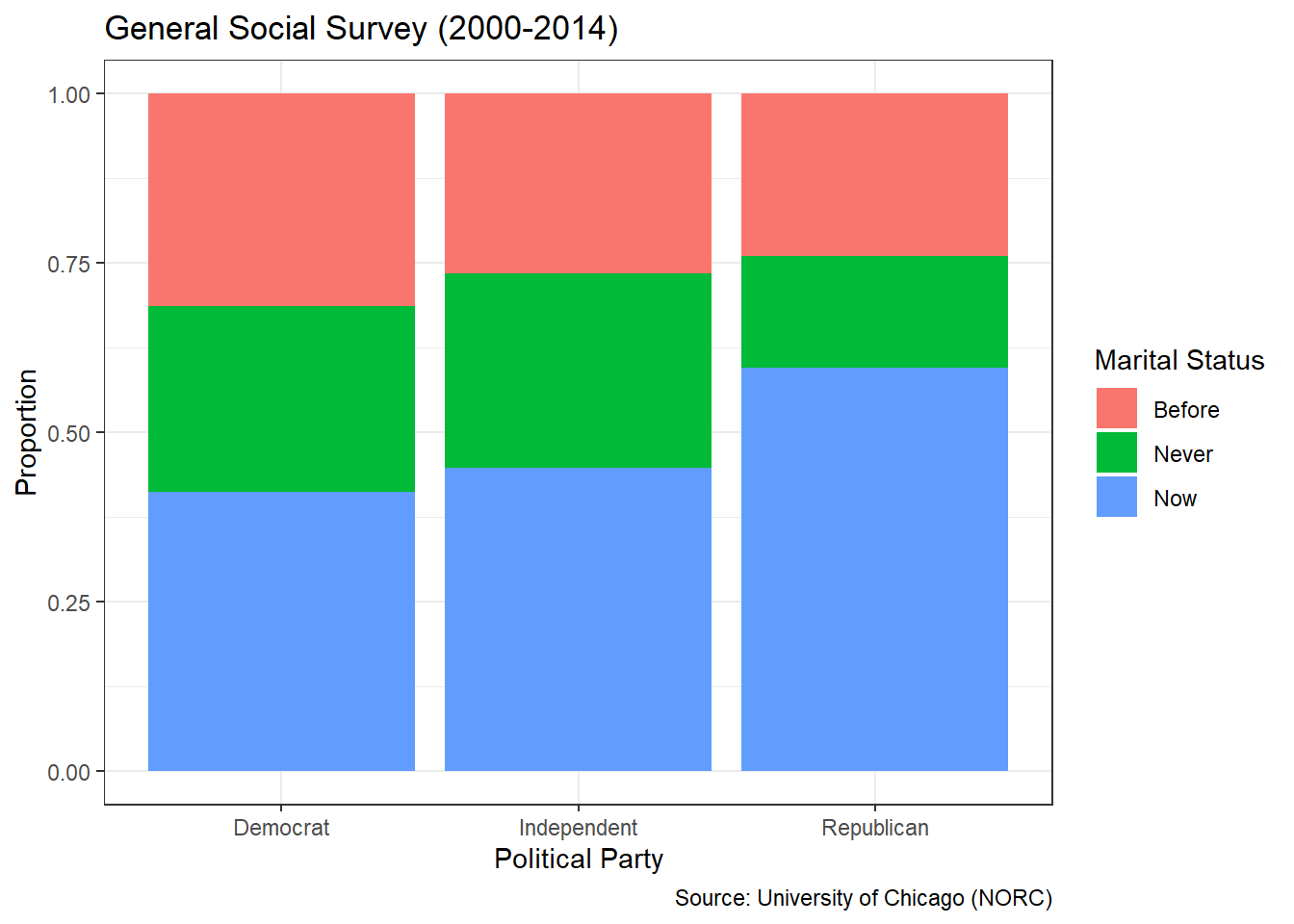

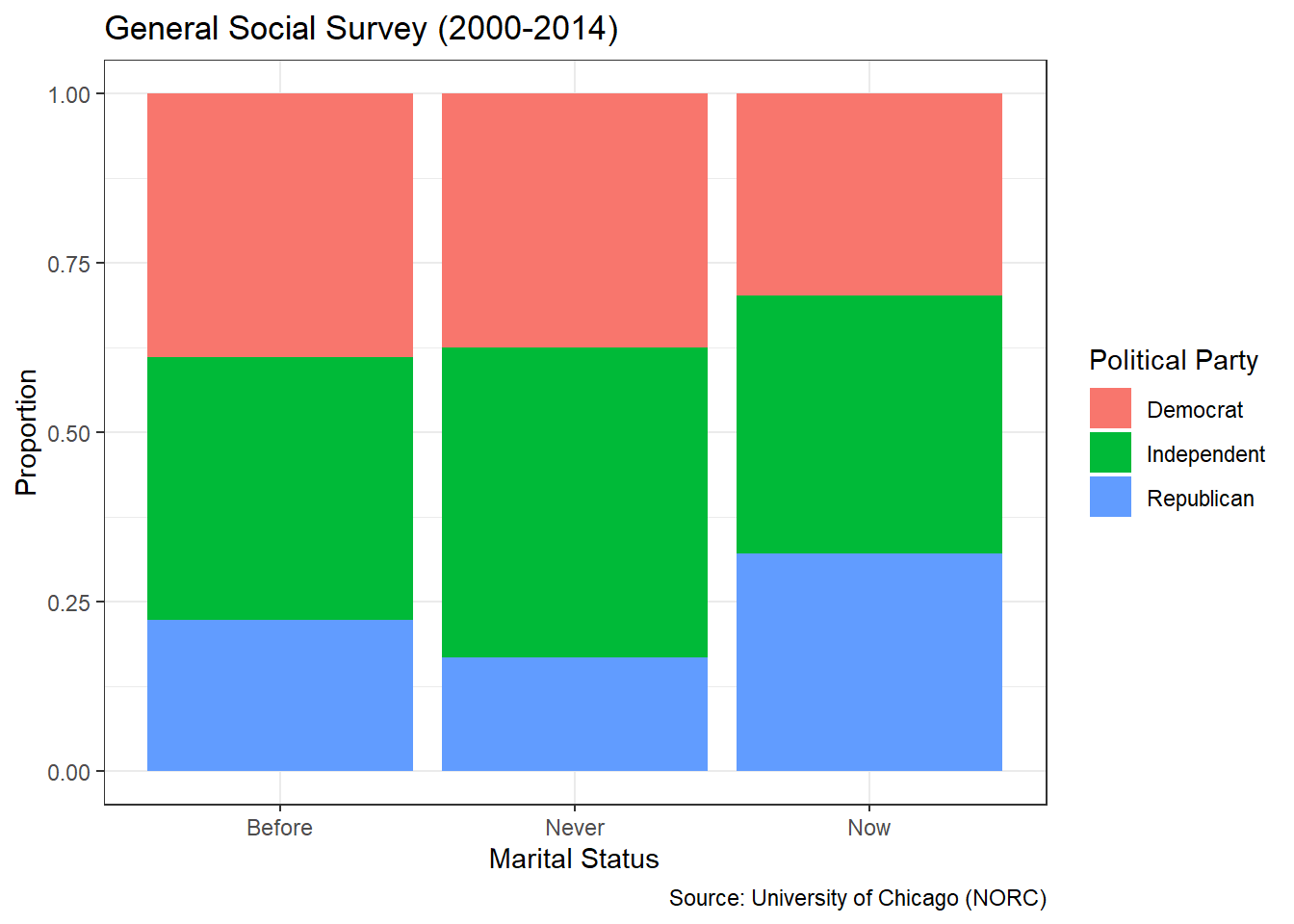

Ask a Question

The National Research Opinion Center (NORC) at the University of Chicago has administered the General Social Survey (GSS) since 1972. The purpose of the survey is to monitor societal change and study the growing complexity of American society. Personal descriptors include age, race, sex, gender, marital status, income, education, political party, and religious affiliation among others. Suppose we are asked the following specific question: What was the distribution of marital status and income among those who responded to the GSS between 2000 and 2014?

This question has almost certainly already been answered! But for our academic purposes, we will pretend it has not. Based on a review of credentials at this link, the NORC appears to be a reputable source that makes its data openly available. Any time we are dealing with demographic or personally-identifiable information, we should be cognizant of ethical considerations regarding privacy and discrimination. So, we keep this in mind throughout the process.

Acquire the Data

The GSS website makes all of the survey data publicly available. So, we could access it directly. But the forcats library offers a built-in data set called gss_cat which includes GSS results between the years 2000 and 2014. Let’s load this easily-accessible data set and check its structure.

## tibble [21,483 × 9] (S3: tbl_df/tbl/data.frame)

## $ year : int [1:21483] 2000 2000 2000 2000 2000 2000 2000 2000 2000 2000 ...

## $ marital: Factor w/ 6 levels "No answer","Never married",..: 2 4 5 2 4 6 2 4 6 6 ...

## $ age : int [1:21483] 26 48 67 39 25 25 36 44 44 47 ...

## $ race : Factor w/ 4 levels "Other","Black",..: 3 3 3 3 3 3 3 3 3 3 ...

## $ rincome: Factor w/ 16 levels "No answer","Don't know",..: 8 8 16 16 16 5 4 9 4 4 ...

## $ partyid: Factor w/ 10 levels "No answer","Don't know",..: 6 5 7 6 9 10 5 8 9 4 ...

## $ relig : Factor w/ 16 levels "No answer","Don't know",..: 15 15 15 6 12 15 5 15 15 15 ...

## $ denom : Factor w/ 30 levels "No answer","Don't know",..: 25 23 3 30 30 25 30 15 4 25 ...

## $ tvhours: int [1:21483] 12 NA 2 4 1 NA 3 NA 0 3 ...The data consists of a tidy data frame with 21,483 rows (observations) and 9 columns (variables). Each observation represents a person who answered the survey and the variables describe that person. Three of the variables are integers, while the remaining six variables are factors. The output provides the number of unique levels (categories) for each factor, some of which appear to be “No Answer”, “Don’t Know”, or “Other”. We’ll keep these non-answers in mind during the analysis step. As always, if we have questions about the variables included in the data, we simply call the help menu with ?gss_cat.

For the research question at hand, we reduce our focus to two of the factors using the select() function. We also remove any rows that are missing responses using the na.omit() function and then save the new data frame as gss_cat2. However, na.omit() only removes rows for which the response is literally blank (NA) and does not remove responses such as “No answer”. We address these non-answers separately.

#select two columns and omit rows with missing data

gss_cat2 <- gss_cat %>%

select(marital,rincome) %>%

na.omit()Based on the manner in which the data was collected, there is no way for us to check for accidental duplicates. Ideally, each person would be assigned a unique identifier to distinguish between duplicated respondents and distinct respondents that happen to provide the same answers to the survey. Without unique identifiers, we are left to assume there are no duplicates. On the other hand, the lack of unique identifiers does help protect the identity of each respondent.

When dealing with factors, an important data cleaning procedure is to check the named levels (categories) for synonyms, misspellings, or other errors. For ordinal factors, we also want to check for proper ordering of the levels. We obtain a list of all the levels of a factor using the levels() function. Let’s begin with marital status.

## [1] "No answer" "Never married" "Separated" "Divorced"

## [5] "Widowed" "Married"There do not appear to be any mistaken repeats or other errors. The levels are unique and well-defined. We need not be concerned with order, because marital status is a nominal variable. There is no clear ordering to the levels. One might argue some sort of temporal ordering from never married, to married, to divorced, but where would we place separated and widowed? Since there is no obvious answer, we label the variable nominal. Next, we’ll investigate the reported income factor.

## [1] "No answer" "Don't know" "Refused" "$25000 or more"

## [5] "$20000 - 24999" "$15000 - 19999" "$10000 - 14999" "$8000 to 9999"

## [9] "$7000 to 7999" "$6000 to 6999" "$5000 to 5999" "$4000 to 4999"

## [13] "$3000 to 3999" "$1000 to 2999" "Lt $1000" "Not applicable"What a mess! Some of the dollar levels have dashes and some have the word “to”. The range of dollar values within each level are not consistent and there are multiple non-answer levels. Additionally, income level is an ordinal variable that should be displayed ascending. Instead, the order is descending with non-answers mixed in. We remedy all of these issues using functions from the forcats library. Let’s begin by combining and renaming levels into sensible categories using the fct_collapse function.

#combine and rename income levels

gss_cat3 <- gss_cat2 %>%

mutate(rincome2=fct_collapse(rincome,

missing=c("No answer","Don't know","Refused","Not applicable"),

under_5=c("Lt $1000","$1000 to 2999","$3000 to 3999","$4000 to 4999"),

from_5to10=c("$5000 to 5999","$6000 to 6999","$7000 to 7999","$8000 to 9999"),

from_10to15="$10000 - 14999",

from_15to20="$15000 - 19999",

from_20to25="$20000 - 24999",

over_25="$25000 or more")) %>%

select(-rincome)

#show new levels of income

levels(gss_cat3$rincome2)## [1] "missing" "over_25" "from_20to25" "from_15to20" "from_10to15"

## [6] "from_5to10" "under_5"We combine all of the non-answers into a single level called “missing”. We also combine and rename the dollar-value levels into consistent categories with a range of $5 thousand dollars each. After creating this new income variable rincome2, we remove the original rincome variable to avoid confusion. However, when we observe the new list of levels, we see they are still in descending order. For reordering, we employ the fct_relevel() function.

#reorder income levels

gss_cat4 <- gss_cat3 %>%

mutate(rincome2=fct_relevel(rincome2,

c("missing","under_5","from_5to10","from_10to15","from_15to20",

"from_20to25","over_25")))

#show new categories of income

levels(gss_cat4$rincome2)## [1] "missing" "under_5" "from_5to10" "from_10to15" "from_15to20"

## [6] "from_20to25" "over_25"Finally, we have sensible, ordered levels. Notice, we save a new data frame (gss_cat2, gss_cat3, gss_cat4) each time we change the data. This isn’t strictly necessary. We could overwrite the original table each time we make changes. But this can make it difficult to keep track of changes when learning. So, for educational purposes, we keep changes separated. That said, we now have a clean data set that is ready for analysis.

Analyze the Data

When conducting an exploratory analysis, a logical first step is simply to summarize the variables in tables. For factors, this consists of level counts that are easily obtained with the summary() function.

## marital rincome2

## No answer : 17 missing :8468

## Never married: 5416 under_5 :1183

## Separated : 743 from_5to10 : 970

## Divorced : 3383 from_10to15:1168

## Widowed : 1807 from_15to20:1048

## Married :10117 from_20to25:1283

## over_25 :7363For marital status, only 17 respondents provided no answer. Of those who did respond, over 10,000 declared being married and less than 1,000 claimed separation. With regard to annual income, the most frequent answers were none at all or the highest income level. The remaining respondents were relatively equally-distributed across income levels.

Rather than raw counts, we might like to determine the distribution across levels as proportions. Counts can be converted to proportions using the following pipeline.

#compute income level percentages

gss_cat4 %>%

group_by(rincome2) %>%

summarize(count=n()) %>%

mutate(prop=count/sum(count)) %>%

ungroup()## # A tibble: 7 × 3

## rincome2 count prop

## <fct> <int> <dbl>

## 1 missing 8468 0.394

## 2 under_5 1183 0.0551

## 3 from_5to10 970 0.0452

## 4 from_10to15 1168 0.0544

## 5 from_15to20 1048 0.0488

## 6 from_20to25 1283 0.0597

## 7 over_25 7363 0.343In this case, we group the data by annual income level and compute the counts using the n() function. Then we divide by the total count to obtain a proportion for each level. It is good practice to ungroup() data after achieving the desired results to avoid unintentionally-grouped calculations later on. With the counts in the form of proportions, it is much easier to provide universal insights that do not rely on knowledge of the total number of respondents. For example, we can convey that each of the income levels between $0 and $25 thousand captures around 5 to 6% of respondents. Whereas more than 34% of respondents are included in the greater than $25 thousand per year level.

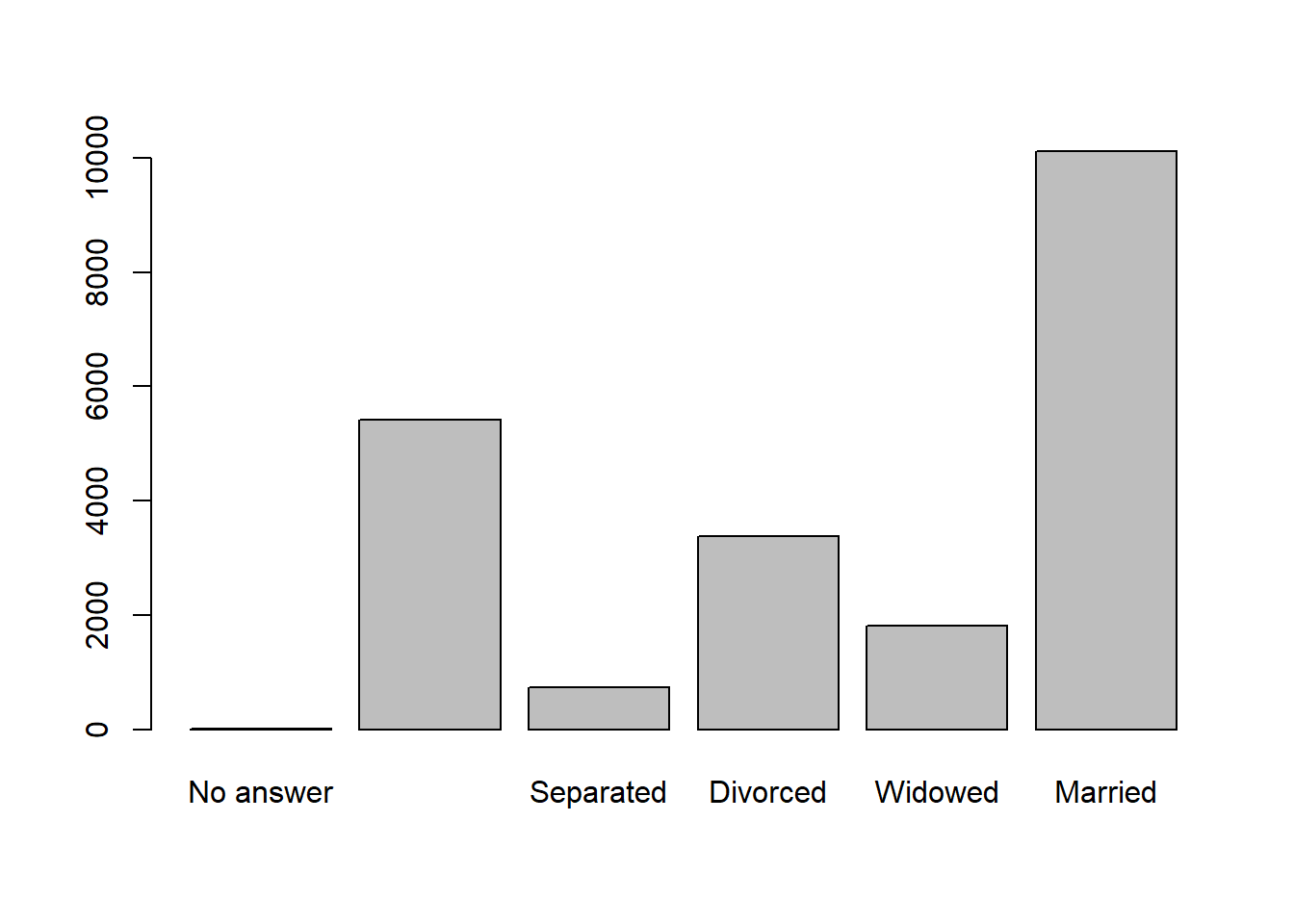

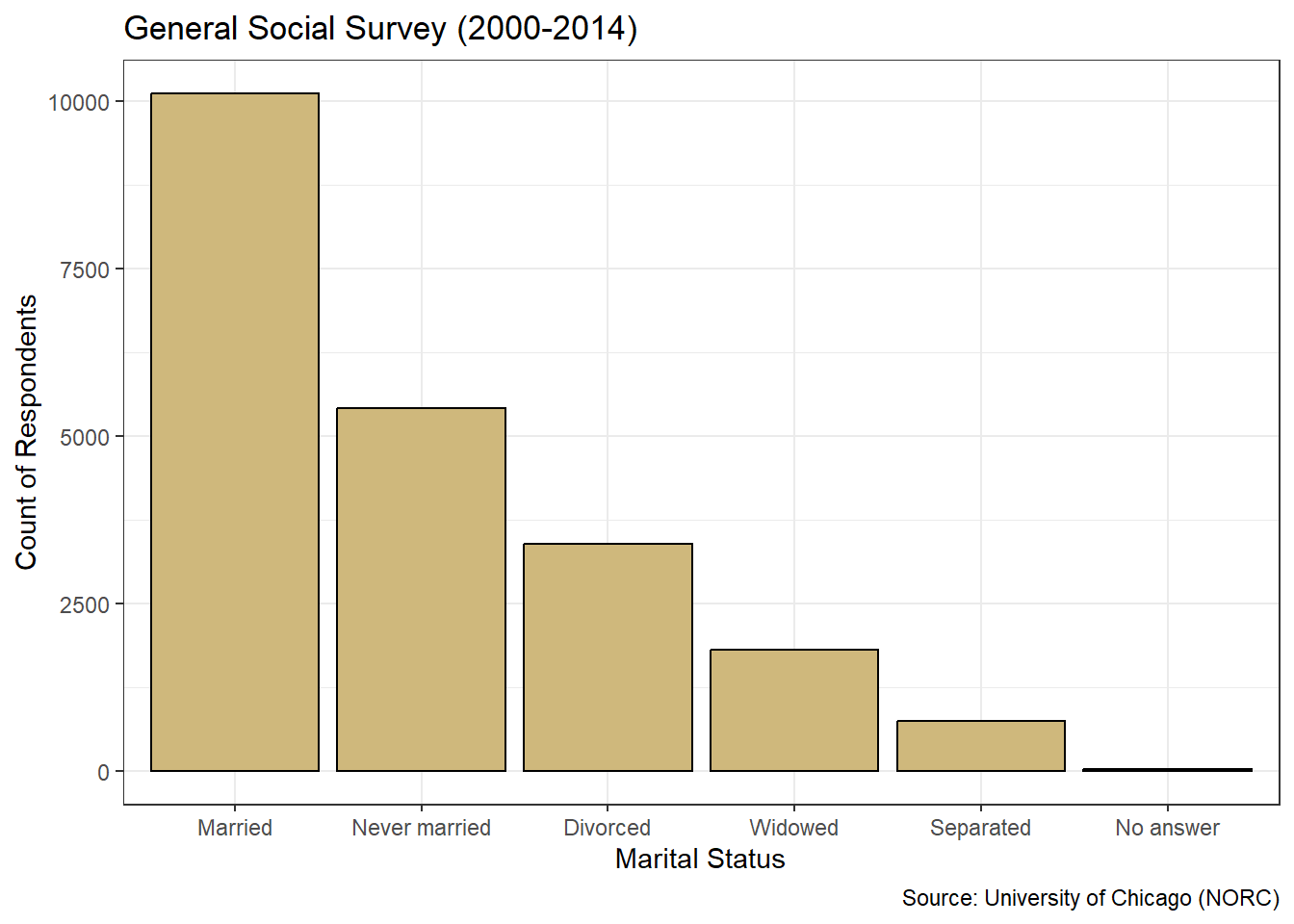

While tables of counts and proportions certainly provide the distribution of respondents, they are not as easy to consume as visualizations. The most common visual graphic for counts of categorical data is the bar chart. The plot() function in the base R language automatically detects the variable type and chooses the appropriate graphic, as seen in Figure 3.6 below.

Figure 3.6: Basic Bar Chart of Marital Status

This basic plot leaves a lot to be desired in terms of clearly conveying insights. There are no titles, axis labels, units, or references, and the “Never Married” level is missing its label (likely due to spacing). For a quick, internal visualization, this might be fine. But for external presentation, the ggplot2 library offers much more control over the construction and appearance of graphics. Let’s create the same bar chart using ggplot2 layering.

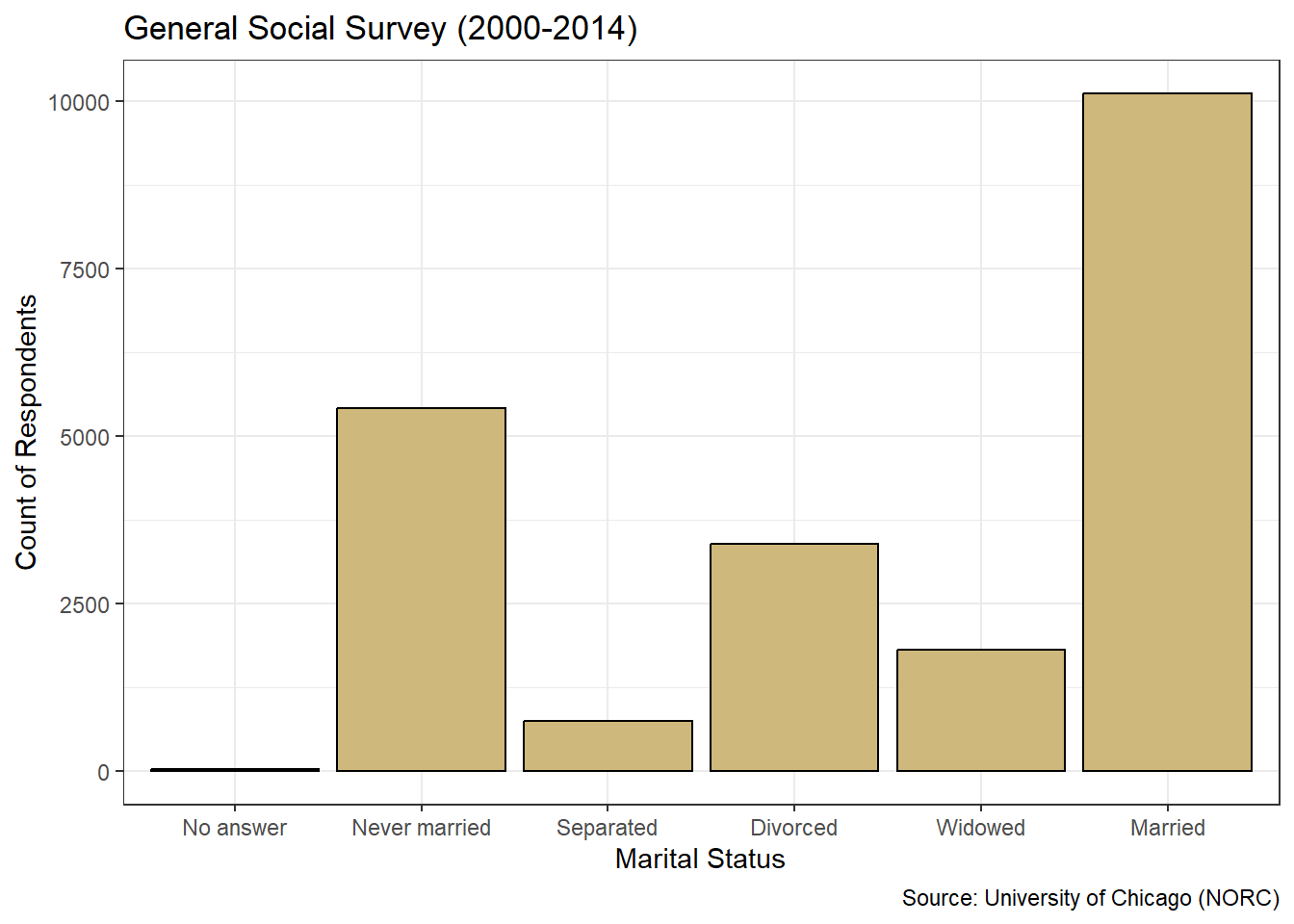

#plot ggplot2 bar chart of marital status

ggplot(data=gss_cat4,aes(x=marital)) +

geom_bar(color="black",fill="#CFB87C") +

labs(title="General Social Survey (2000-2014)",

x="Marital Status",

y="Count of Respondents",

caption="Source: University of Chicago (NORC)") +

theme_bw()

Figure 3.7: ggplot2 Bar Chart of Marital Status

Figure 3.7 is a dramatic improvement over the basic bar chart in terms of appearance. Within the ggplot() function, we specify the data location and the aesthetic connection between variable and axis using aes(). Then we pick the type of graphic and its coloring using the geom_bar() function. Colors can be listed by name (e.g., black) or using six-digit hex codes (e.g., #CFB87C). Next we assign labels for the chart, axes, and caption using the labs() function. Finally, we choose the black-and-white theme from among many different theme options. Each of these functions is layered onto the previous using the plus sign. We continue to add to our ggplot2 repertoire in future case studies, but this is a great start.

In Chapter 3.1 we describe key features of clear and precise data visualizations. To reinforce those concepts, we revisit them here. There are two variables depicted in the graphic: marital status and number of respondents. The visual cue for status is position on the \(x\)-axis, while the visual cue for number is height on the \(y\)-axis. Given the use of \(x\) and \(y\) axes, the coordinate system is Cartesian. The scale on the \(x\)-axis is nominal, while the scale on the \(y\)-axis is linear. Finally, sufficient context regarding the purpose and source of the data is provided in the title and caption.

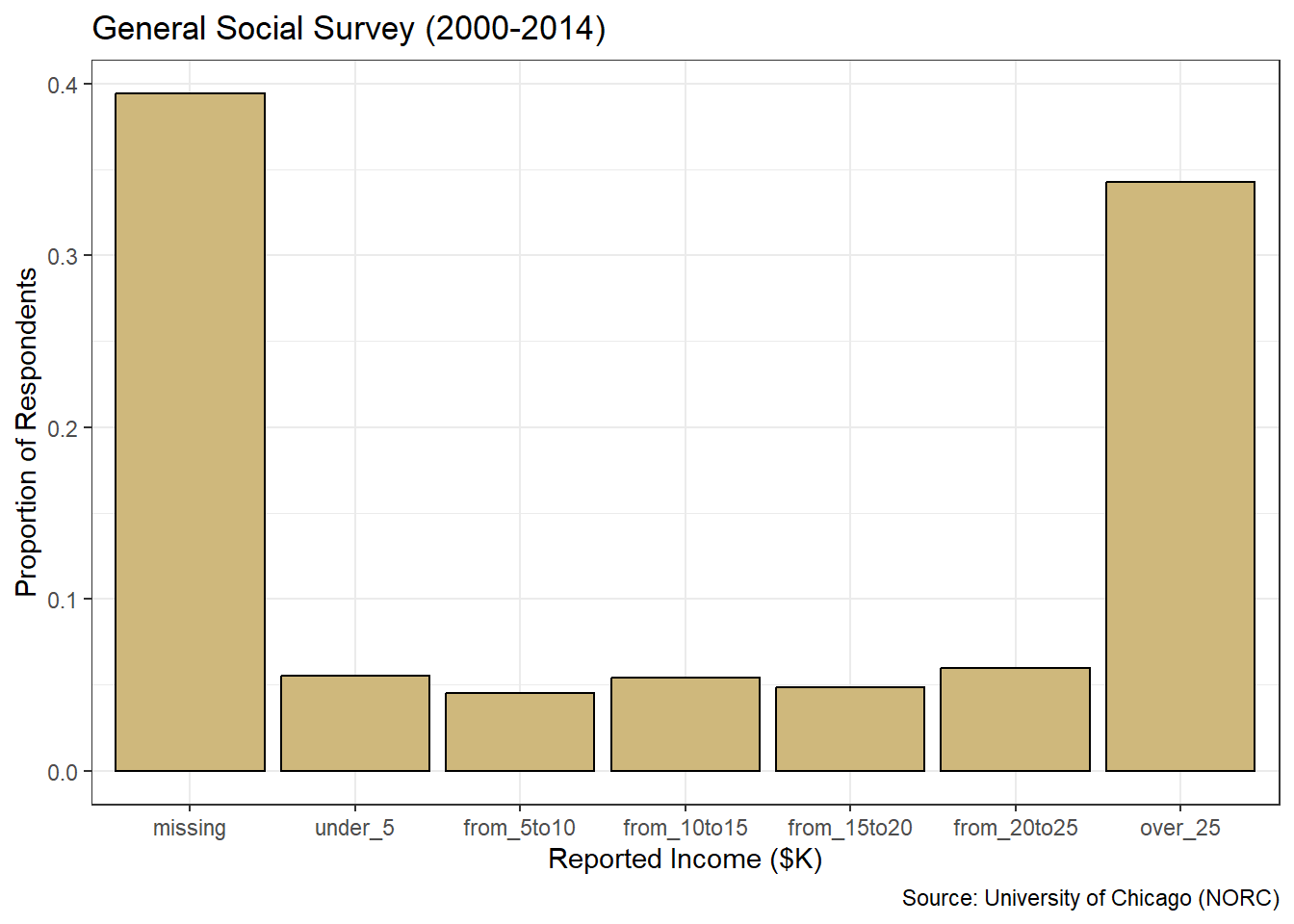

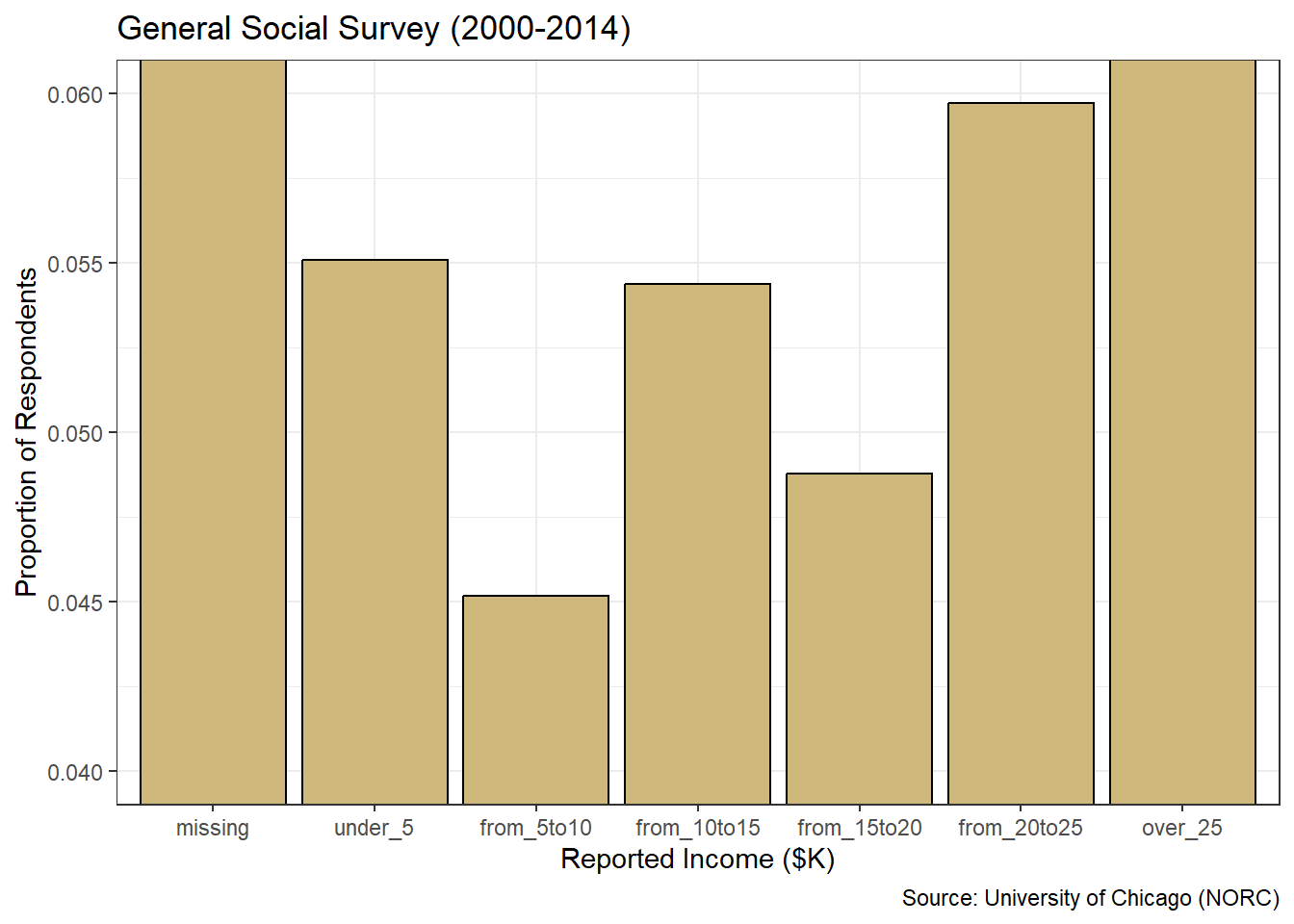

Let’s construct a similar bar chart for the reported income factor using ggplot2 layering. However, for this graph we convert the \(y\)-axis to a proportion with an additional aesthetic declaration in the geom_bar() function.

#plot ggplot2 bar chart of income

ggplot(data=gss_cat4,aes(x=rincome2)) +

geom_bar(aes(y=..prop..,group=1),

color="black",fill="#CFB87C") +

labs(title="General Social Survey (2000-2014)",

x="Reported Income ($K)",

y="Proportion of Respondents",

caption="Source: University of Chicago (NORC)") +

theme_bw()

Figure 3.8: Proportions Bar Chart of Income

Figure 3.8 conveys the same insights as the table of level proportions, but in a format that is much easier to rapidly glean. The missing income level and the highest income level stand out dramatically at roughly 40% and 35%, respectively. It is also much easier to observe the equal-distribution around 5% of respondents across the remaining levels. The graphics generated with ggplot2 are well-suited for presentation to the stakeholder(s) who asked the original question.

Advise on Results

For the relatively simple question at hand, the process of advising stakeholders on analytic insights might just consist of providing and explaining the bar charts. Of course, our focus is on only two demographic variables considered one at a time. A more thorough analysis would include all of the variables and the associations between them. We’ll explore such concepts in future sections. The focus of this section is the summary and visualization of individual categorical variables.

The simplicity of this case study affords the opportunity to point out a few best practices and common pitfalls in constructing bar charts for stakeholders. One best practice for bar charts of nominal variables is to present the bars in ascending or descending order of frequency. Note, this is not the same as ordinal variable levels being naturally ordered based on innate characteristics. Instead, we order the levels based on the counts within them. We demonstrate this for marital status using the fct_infreq() function from the forcats library.

#plot descending bar chart of marital status

ggplot(data=gss_cat4,aes(x=fct_infreq(marital))) +

geom_bar(color="black",fill="#CFB87C") +

labs(title="General Social Survey (2000-2014)",

x="Marital Status",

y="Count of Respondents",

caption="Source: University of Chicago (NORC)") +

theme_bw()

Figure 3.9: Descending Bar Chart of Marital Status

Nothing has changed about the data in Figure 3.9 compared to Figure 3.7. We simply made it easier for the viewer to quickly establish a precedence of frequency among the levels. Most respondents are married, then the next most have never been married, and so on. Ordering by frequency works well with nominal variables, but we should not sort the bars this way for ordinal variables. Otherwise we might disrupt the natural ordering of the levels and confuse the viewer.

A common pitfall with bar charts is the manipulation of the range of the \(y\)-axis. All of the bar charts we’ve presented so far begin at zero and extend beyond the highest frequency. Let’s look at the same bar chart as in Figure 3.8, but with the \(y\)-axis zoomed in on a smaller range of values using the coord_cartesian() function.

#plot misleading bar chart of income

ggplot(data=gss_cat4,aes(x=rincome2)) +

geom_bar(aes(y=..prop..,group=1),

color="black",fill="#CFB87C") +

labs(title="General Social Survey (2000-2014)",

x="Reported Income ($K)",

y="Proportion of Respondents",

caption="Source: University of Chicago (NORC)") +

theme_bw() +

coord_cartesian(ylim = c(0.04,0.06))

Figure 3.10: Misleading Bar Chart of Income

If our stakeholder were to ignore the \(y\)-axis labels and focus solely on the bar heights, they might draw the inaccurate conclusion that dramatically more people make between $20 and $25 thousand per year versus $5 to $10 thousand. The bar appears four times as tall! However, we know this is not true. It is an artifact of raising the minimum value on the \(y\)-axis. Similarly, the tops of the outer-most bars are cut off. For some viewers, this might lead to the conclusion that the over $25 thousand level is very similar in frequency to its adjacent level. But this too is far from the truth.

By zooming in on the \(y\)-axis, we created a very misleading graphic. It may be technically correct, given the \(y\)-axis is labeled, but it is poorly constructed and ethically questionable. Bar charts should always start at zero and extend beyond the most frequent level. With these best-practices in mind, we can finally answer the original research question.

Answer the Question

How should we describe the marital status and income level of the survey respondents? Based on Figure 3.9, we see that the vast majority of the people who answered the survey are married. Of those who are not married, most have never been married or are divorced. A relatively small number of respondents are separated or widowed. Referring to Figure 3.8, we see that roughly 40% of people did not report an income, while about 35% reported an income greater than $25 thousand per year. The remaining 25% of people were fairly evenly spread across the five income levels between $0 and $25 thousand.

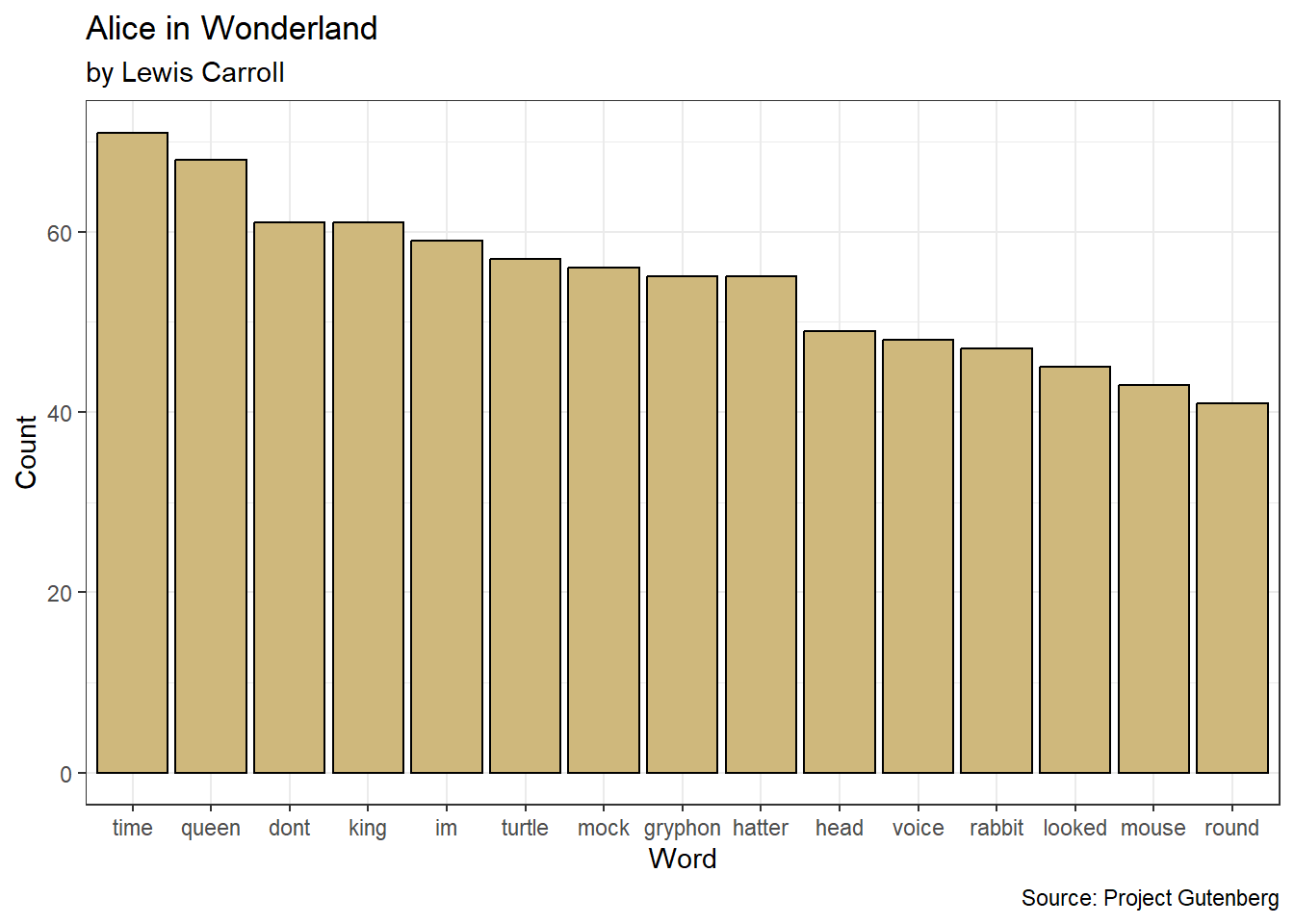

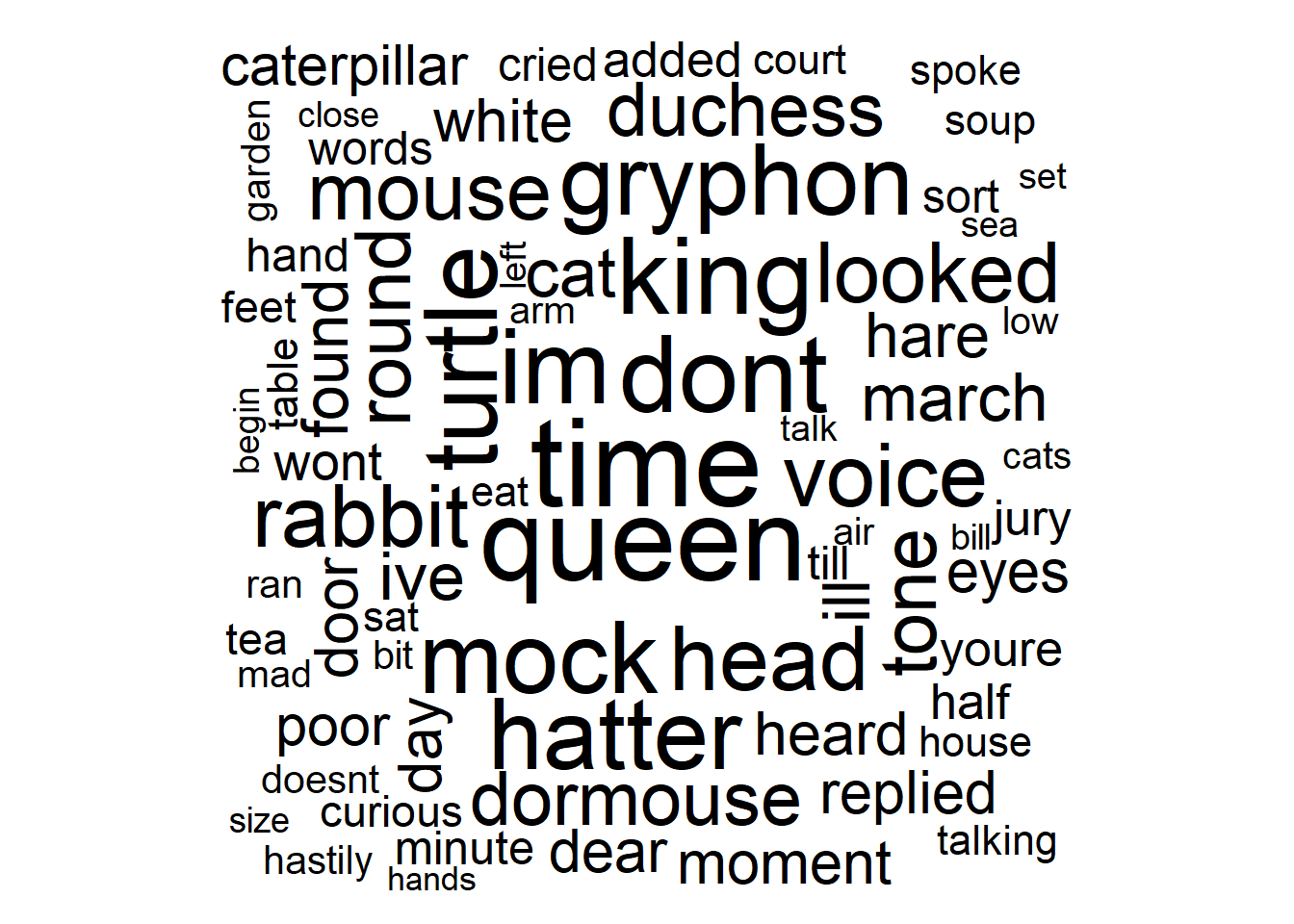

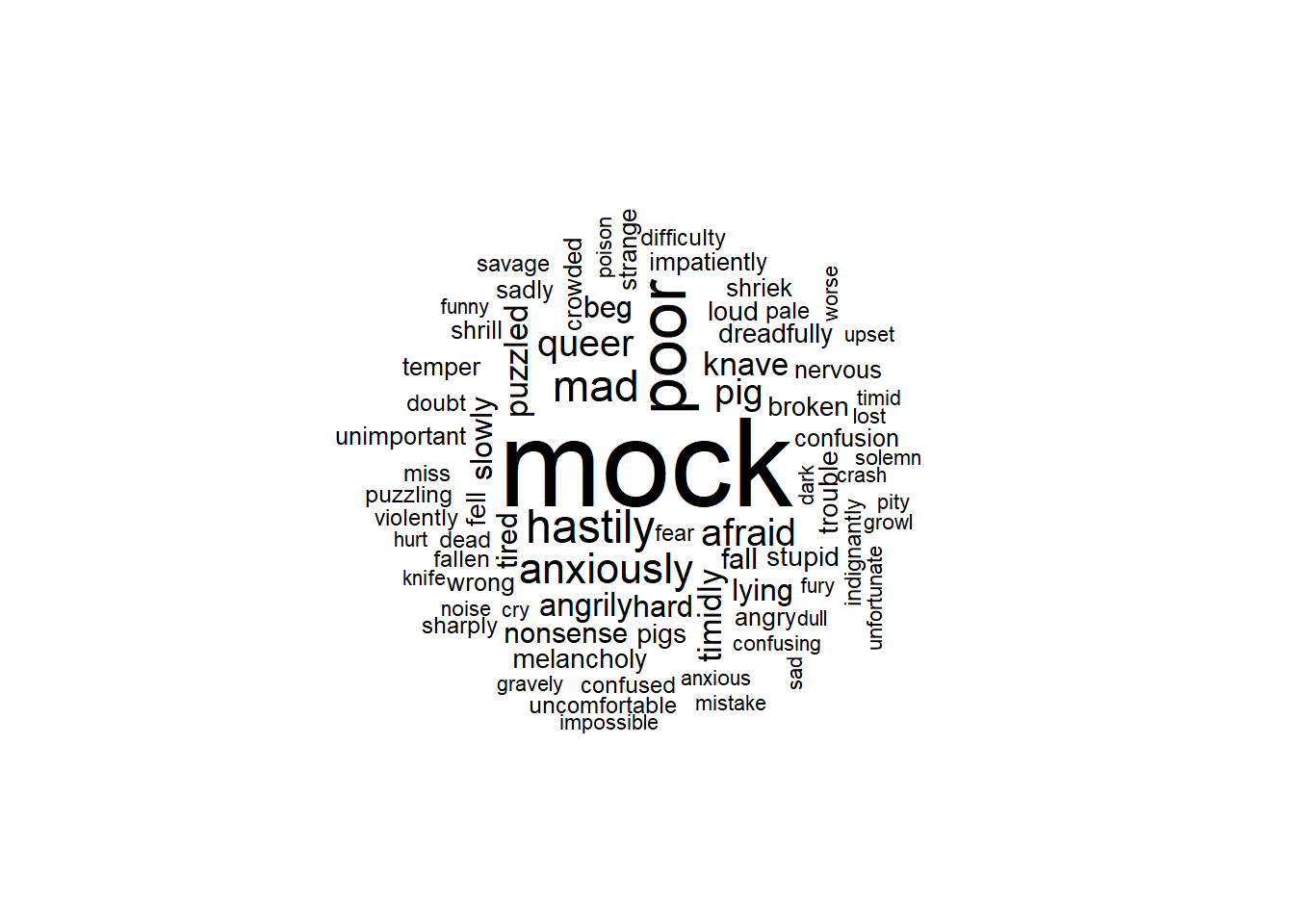

In this presentation of bar charts, we explored the distributions of factors. However, not all categorical variables lend themselves to grouping into factor levels. In many cases, the values of a categorical variable are free text that may not be repeated. Moreover, our text data may not even be structured in a tidy format with observations and variables. In Chapter 3.2.6, we explore just such a case and introduce word clouds as another visualization tool.

When exploring categorical variables, bar charts and word clouds are valuable visualization tools. However, they should not be used to depict the distribution of numerical variables. In the next two sections, we introduce histograms and box plots as the appropriate graphical tools for exploring numerical variables.

3.2.2 Histograms

In Chapter 2.1.1 we distinguish between types of numerical variables. Discrete variables typically involve counting, while continuous variables involve measuring. Though the distinction between discrete and continuous variables may be clear in theory, it can be hazy in practice. Frequently, we discretize continuous variables via rounding to ease presentation. Other times we treat discrete variables as continuous in order to apply mathematical techniques that require continuity. In either situation, we should carefully consider and explain the implications of simplifying assumptions.

Often, continuous variables measure some sense of space or time. In other cases, they might measure efficiency based on proportions or percentages. Regardless, a continuous variable can (in theory) take on infinitely-many values. Between any two values we choose, there are infinitely more possible values. Thus, a traditional bar chart makes no sense because we could never have enough bars! We avoid this issue by allowing each bar to represent a range of possible values, while the height still indicates frequency. This special type of bar chart is known as a histogram.

Throughout this case study we employ the dplyr library for wrangling and ggplot2 for visualization. Since both of these packages are part of the tidyverse suite, we need only load one library. Now let’s demonstrate an exploratory analysis involving continuous variables using histograms and the 5A Method.

Ask a Question

Major League Baseball (MLB) has organized the sport of profession baseball in America since 1871. Consequently, baseball provides one of the oldest sources of sports data available. In fact, the sports analytics industry gained popularity largely due to the events described in Michael Lewis’s book Moneyball (Lewis 2004), which was later adapted into a major motion picture. As a brief introduction to such analyses, suppose we are asked the following question: What was the distribution of opportunity and efficiency among major league batters between 2010 and 2020?

The concepts of opportunity and efficiency are fundamental to sports analytics. Opportunity comprises the number of occasions a player or team has to perform. Efficiency refers to how well the player or team performs per occasion. For batters in baseball, opportunity is generally measured by the statistic at-bats. A common metric for performance in batting is hits. When we divide hits by at-bats, we obtain a popular measure of efficiency called the batting average. As with any sport, baseball is rife with unique terminology and abbreviations. A glossary of common baseball terms can be found at this link.

Opportunity and efficiency are of such great concern to baseball analysts, that our research question has likely been answered. However, we’re here to develop our problem solving skills based on a broad range of interesting questions and domains. So, we treat our question as unanswered. In terms of availability, MLB statistics have been dutifully collected for decades. The challenge is to find a single reliable source for the time frame of interest. We describe one such source in the next step of the problem solving process.

Acquire the Data

The Society of American Baseball Research (SABR) is a worldwide community of people with a love for baseball. One of its key leaders, Sean Lahman, maintains a massive database of publicly-available baseball statistics housed in the Lahman library in R. Let’s load the library and review the available data sets.

After executing the empty data() function, we obtain a tab in our work space called “R data sets”. This tab depicts all of the built-in data sets currently available, based on the loaded libraries. Scrolling down to the Lahman package, we see a wide variety of data tables. Given our focus on batting statistics, we import the Batting data table and inspect its structure.

## Rows: 112,184

## Columns: 22

## $ playerID <chr> "abercda01", "addybo01", "allisar01", "allisdo01", "ansonca01…

## $ yearID <int> 1871, 1871, 1871, 1871, 1871, 1871, 1871, 1871, 1871, 1871, 1…

## $ stint <int> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1…

## $ teamID <fct> TRO, RC1, CL1, WS3, RC1, FW1, RC1, BS1, FW1, BS1, CL1, CL1, W…

## $ lgID <fct> NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, N…

## $ G <int> 1, 25, 29, 27, 25, 12, 1, 31, 1, 18, 22, 1, 10, 3, 20, 29, 1,…

## $ AB <int> 4, 118, 137, 133, 120, 49, 4, 157, 5, 86, 89, 3, 36, 15, 94, …

## $ R <int> 0, 30, 28, 28, 29, 9, 0, 66, 1, 13, 18, 0, 6, 7, 24, 26, 0, 0…

## $ H <int> 0, 32, 40, 44, 39, 11, 1, 63, 1, 13, 27, 0, 7, 6, 33, 32, 0, …

## $ X2B <int> 0, 6, 4, 10, 11, 2, 0, 10, 1, 2, 1, 0, 0, 0, 9, 3, 0, 0, 1, 0…

## $ X3B <int> 0, 0, 5, 2, 3, 1, 0, 9, 0, 1, 10, 0, 0, 0, 1, 3, 0, 0, 1, 0, …

## $ HR <int> 0, 0, 0, 2, 0, 0, 0, 0, 0, 0, 3, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0…

## $ RBI <int> 0, 13, 19, 27, 16, 5, 2, 34, 1, 11, 18, 0, 1, 5, 21, 23, 0, 0…

## $ SB <int> 0, 8, 3, 1, 6, 0, 0, 11, 0, 1, 0, 0, 2, 2, 4, 4, 0, 0, 3, 0, …

## $ CS <int> 0, 1, 1, 1, 2, 1, 0, 6, 0, 0, 1, 0, 0, 0, 0, 4, 0, 0, 1, 0, 0…

## $ BB <int> 0, 4, 2, 0, 2, 0, 1, 13, 0, 0, 3, 1, 2, 0, 2, 9, 0, 0, 4, 1, …

## $ SO <int> 0, 0, 5, 2, 1, 1, 0, 1, 0, 0, 4, 0, 0, 0, 2, 2, 3, 0, 2, 0, 2…

## $ IBB <int> NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, N…

## $ HBP <int> NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, N…

## $ SH <int> NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, N…

## $ SF <int> NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, N…

## $ GIDP <int> 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 2, 0, 1, 2, 0, 0, 0, 0, 3…The data is stored in the form of a data frame with 110,495 rows (observations) and 22 columns (variables). Each row represents a unique combination of player, season, and team. Each column provides descriptors and performance data for the player within the listed season and team. All of the performance data is labeled as integer because it counts discrete events such as games, at-bats, and hits. As with any sport, baseball statistics involve a lot of abbreviations! If we are unsure of the meaning for a given variable, we can call the help menu from the console with ?Batting.

The batting table includes far more information than we require to answer the research question, so some wrangling is in order. Let’s filter on the desired seasons and summarize the statistics of interest using functions from the dplyr library.

#wrangle batting data

Batting2 <- Batting %>%

filter(yearID>=2010,yearID<=2020) %>%

group_by(playerID,yearID) %>%

summarize(tAB=sum(AB),

tH=sum(H)) %>%

ungroup() %>%

filter(tAB>=80) %>%

mutate(BA=tH/tAB)In the first filter() we isolate the appropriate decade. Using group_by() and summarize() we obtain the total at-bats and hits per season for each player. With the second filter() we eliminate any players with fewer than 80 at-bats. Finally, we apply mutate() to create a new variable for batting average.

Why did we impose the requirement of 80 at-bats per season? Firstly, there are many players with zero at-bats in a season and we cannot have a zero in the denominator of the batting average calculation. Secondly, we are typically interested in the performance of players who had ample opportunity. Other than the oddity of the COVID-19 year, MLB teams played 162 games per season during the time frame of interest. So, we impose a somewhat arbitrary threshold of opportunity at about half of these games. In practice, stakeholders might request a threshold better tailored to their needs, but 80 is sufficient for our purposes here.

Before beginning an analysis, we address the issue of data cleaning. Without more intimate knowledge of the game of baseball, it may be difficult to identify unlikely or mistaken values in this data set. However, one obvious quality check is to compare the total at-bats and hits for each player and season combination. It’s not possible for a player to achieve more hits than at-bats. This would imply a batting average greater than one. We check for this error by summing the total occurrences.

## [1] 0The inequality within the parentheses returns TRUE (1) or FALSE (0), so summing results in the total number of observations for which the inequality is TRUE. The resulting total of zero suggests there are no cases where a player has more hits than at-bats. Though there could be more subtle errors that we are unaware of, at least this obvious error is not present. Let’s move on to an analysis of the resulting data.

Analyze the Data

As with categorical variables, a sensible place to start an exploratory analysis of numerical variables is with descriptive statistics. Measures of centrality attempt to capture the average or most common values for a variable. Central statistics include the mean and median. Measures of dispersion describe the variability of individual values from each other or the average. Dispersion statistics include standard deviation and interquartile range (IQR). For this case study, we spotlight the mean and standard deviation, and reserve median and IQR for the next section. Let’s compute the mean and standard deviation for at-bats.

## # A tibble: 1 × 3

## count mean stdev

## <int> <dbl> <dbl>

## 1 4916 326. 168.For the 4,916 players in the data set, the average number of at-bats (opportunity) per season is around 326. The standard deviation of at-bats is about 168. One way to think about standard deviation is how far individual values typically are from the mean value. In this case, individual players vary above and below the mean of 326 by about 168 at-bats, on average. Relatively speaking, that’s quite a bit of variation! Now let’s calculate the same statistics for batting average.

## # A tibble: 1 × 3

## count mean stdev

## <int> <dbl> <dbl>

## 1 4916 0.250 0.0372The mean batting efficiency over the time frame of interest is 25%. In other words, the average player achieved a hit on only one-quarter of their at-bats. Hitting a ball traveling at up to 100 miles-per-hour is difficult! The standard deviation for batting average is nearly 4 percentage-points. Over 100 at-bats, this represents only 4 additional (or fewer) hits compared to the mean. Intuitively, this seems like a relatively small variation.

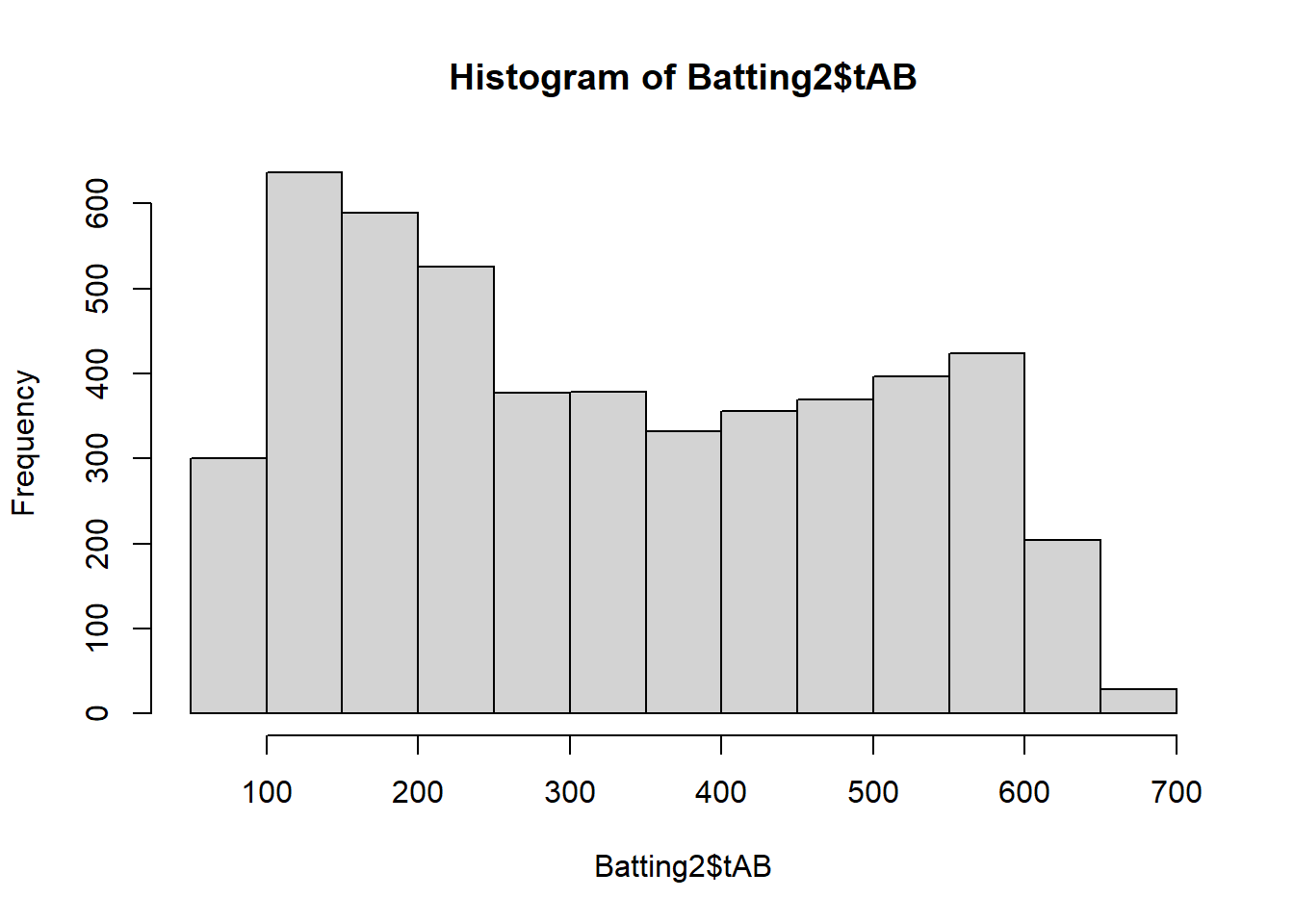

In terms of visualization, a bar chart is not appropriate for numerical variables. There are no existing levels to depict counts. However, we can create our own categories (called “bins”) and display them in a histogram. As with bar charts, the base R language includes a function to produce simple histograms.

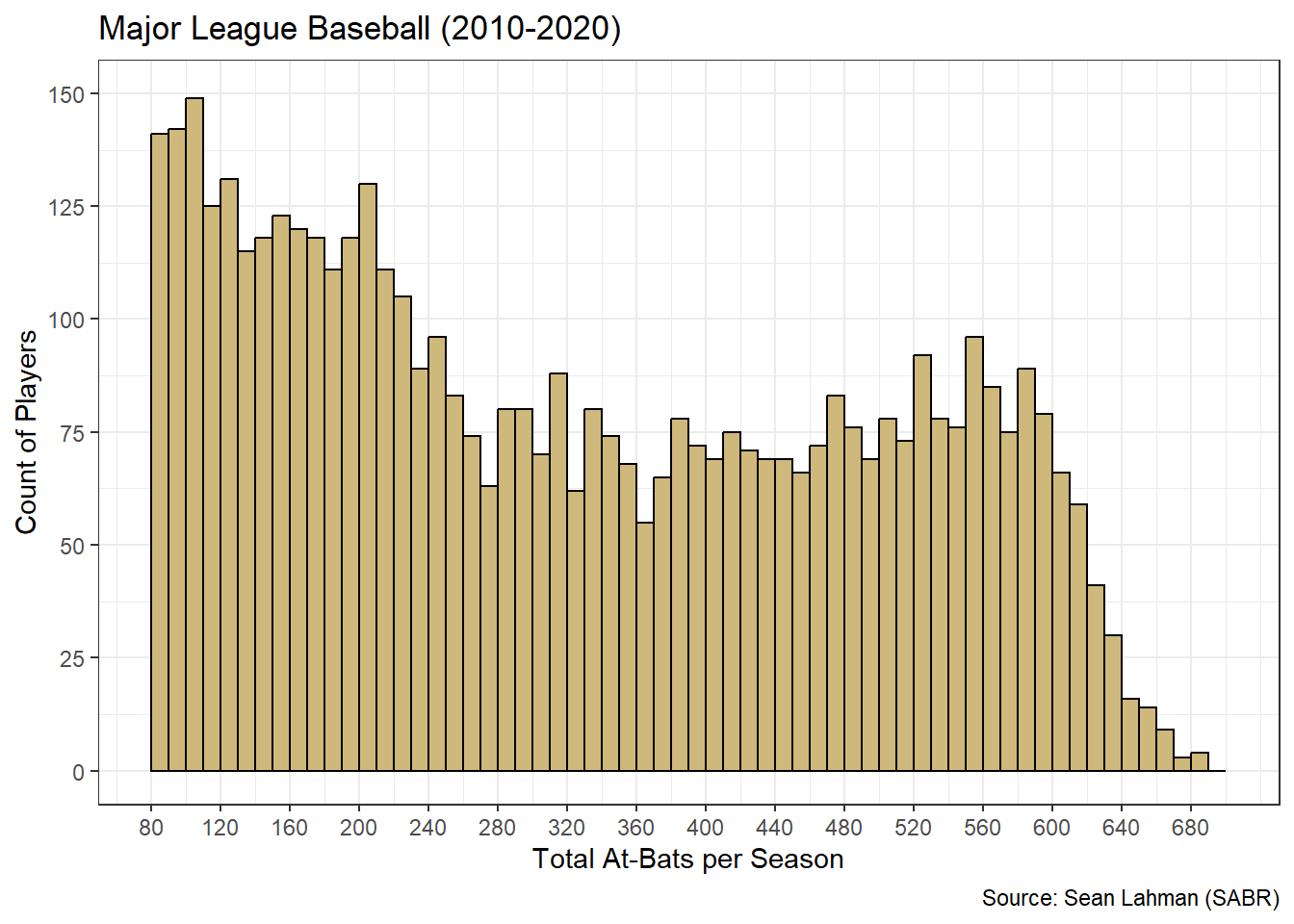

Figure 3.11: Basic Histogram of At-Bats

The hist() function quickly produces a simple histogram, but Figure 3.11 is not appropriate for external presentation. The titles are in coding syntax rather than plain language and we have no control over the categorization (binning). Let’s leverage ggplot2 layering to produce a much better graphic.

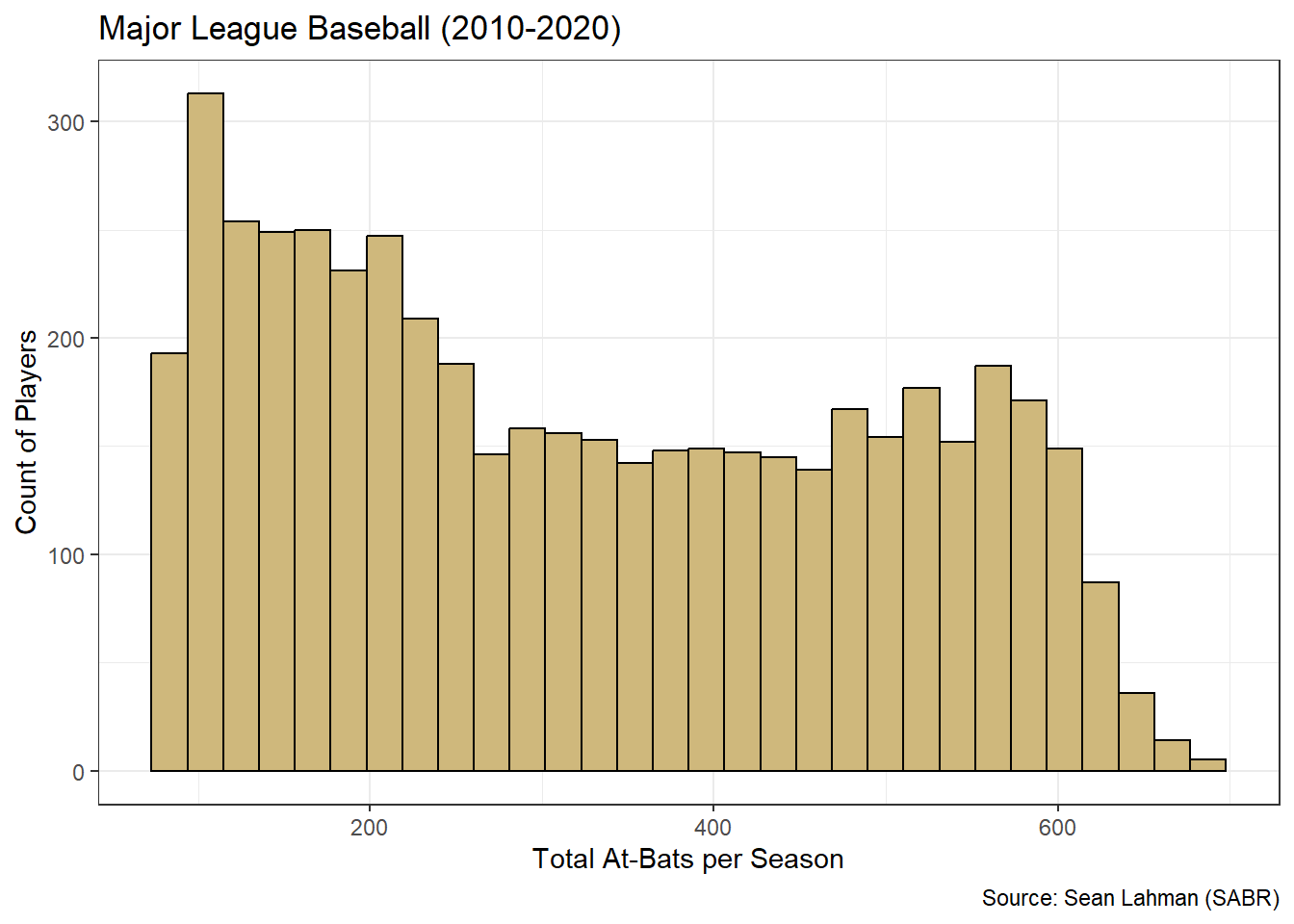

#plot ggplot2 histogram of at-bats

ggplot(data=Batting2,aes(x=tAB)) +

geom_histogram(color="black",fill="#CFB87C") +

labs(title="Major League Baseball (2010-2020)",

x="Total At-Bats per Season",

y="Count of Players",

caption="Source: Sean Lahman (SABR)") +

theme_bw()

Figure 3.12: ggplot2 Histogram of At-Bats

Figure 3.12 remedies the issues with titles and labels. However, it is unclear what range of values is included in each bin (bar). The ggplot2 structure offers us complete control over the binning, but we must first decide how many bins or what bin width is appropriate. These choices can be as much art as science. That said, a good place to start for the number of bins is the square-root of the number of observations. We then evenly distribute the bins over the range of values for the variable. We execute this method for at-bats below.

#determine bin number

bins <- sqrt(nrow(Batting2))

#determine range of at-bats

range <- max(Batting2$tAB)-min(Batting2$tAB)

#determine bin widths

range/bins## [1] 8.614518The nrow() function counts the observations in the data frame and then we determine the square root with sqrt(). The total range of at-bat values is computed as the difference between the maximum (max()) and minimum (min()) values. Finally, we calculate bin width as the ratio of the range and number of bins. A bin width of 8.6 is not very intuitive for a viewer. But this width is close to 10, which is broadly intuitive. So, we choose a bin width of 10 and add this to the geom_histogram() function below.

#create tailored histogram of at-bats

atbats <- ggplot(data=Batting2,aes(x=tAB)) +

geom_histogram(binwidth=10,boundary=80,closed="left",

color="black",fill="#CFB87C") +

labs(title="Major League Baseball (2010-2020)",

x="Total At-Bats per Season",

y="Count of Players",

caption="Source: Sean Lahman (SABR)") +

theme_bw() +

scale_x_continuous(limits=c(80,700),breaks=seq(80,700,40)) +

scale_y_continuous(limits=c(0,150),breaks=seq(0,150,25))

#display histogram

atbats

Figure 3.13: Tailored Histogram of At-Bats

We include a few other improvements besides the bin width. Within the geom_histogram() function, the boundary parameter sets the starting value for all bins and the closed parameter determines whether each bin includes its left or right endpoint. The scale_?_continuous() functions establish value limits and break-points for each axis. The result is a much more clear and precise graphic. Now we know, for example, that the first bin includes at-bat values between [80,90), the second bin includes [90,100), and so on.

How should we interpret the histogram in Figure 3.13? There appear to be two distinct “peaks” in the distribution. We refer to such distributions as bimodal. The first peak is at the far left where the chart begins. This is not uncommon in situations where we impose an artificial threshold. Recall that many batters had a small number of at-bats, if any at all, in a given season. This could be due to poor performance or injury. Regardless, the frequency is high for at-bat values below the threshold of 80 and decreases steadily until values around 360. The second peak occurs around 550 at-bats. These values likely represent players who stayed healthy and performed well enough to be included in the batting lineup for most games.

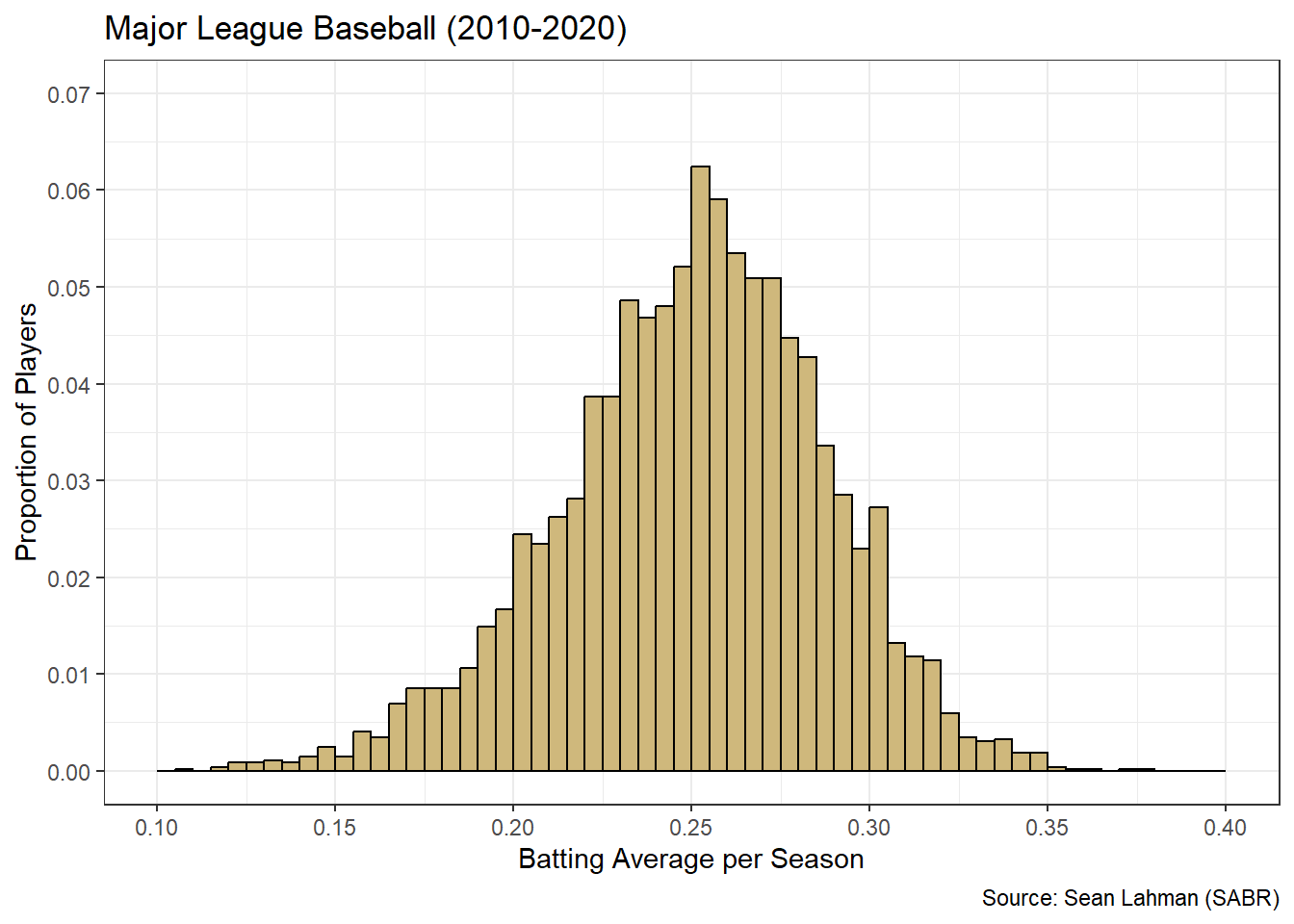

Just as with bar charts, the frequency in histograms can be represented as raw counts or proportions. When it includes proportions, we often refer to the graphic as a relative frequency histogram. Let’s construct a relative frequency histogram for batting average.

#create tailored histogram of batting average

batavg <- ggplot(data=Batting2,aes(x=BA)) +

geom_histogram(aes(y=0.005*..density..),

binwidth=0.005,boundary=0,closed="left",

color="black",fill="#CFB87C") +

labs(title="Major League Baseball (2010-2020)",

x="Batting Average per Season",

y="Proportion of Players",

caption="Source: Sean Lahman (SABR)") +

theme_bw() +

scale_x_continuous(limits=c(0.1,0.4),breaks=seq(0.1,0.4,0.05)) +

scale_y_continuous(limits=c(0,0.07),breaks=seq(0,0.07,0.01))

#display histogram

batavg

Figure 3.14: Tailored Histogram of Batting Average

Unlike at-bats, the batting average histogram in Figure 3.14 is unimodal. There is a single peak right at the average value of 25%. While batting opportunity seems to be split into two distinct groups, batting efficiency is well-represented by a single grouping. Based on the bin widths and the conversion to relative frequency, we know that over 6% of all players had a batting average between 25% and 25.5%. This is the highest frequency bin in the center. The remaining bins to the left and right decrease in frequency, creating a bell-shaped distribution. This is a very important shape in probability and statistics, and we investigate it much more in Chapter 4. For now, we move on to advising stakeholders on the insights offered by the histograms.

Advise on Results

When advising stakeholders on the distribution of numerical variables, it is important to appropriately blend the insights available from summary statistics and visual graphics. Sometimes the two types of exploratory tools are well-aligned and other times they are not. The histograms for batting opportunity and efficiency demonstrate these two different situations based on their dissimilar modality.

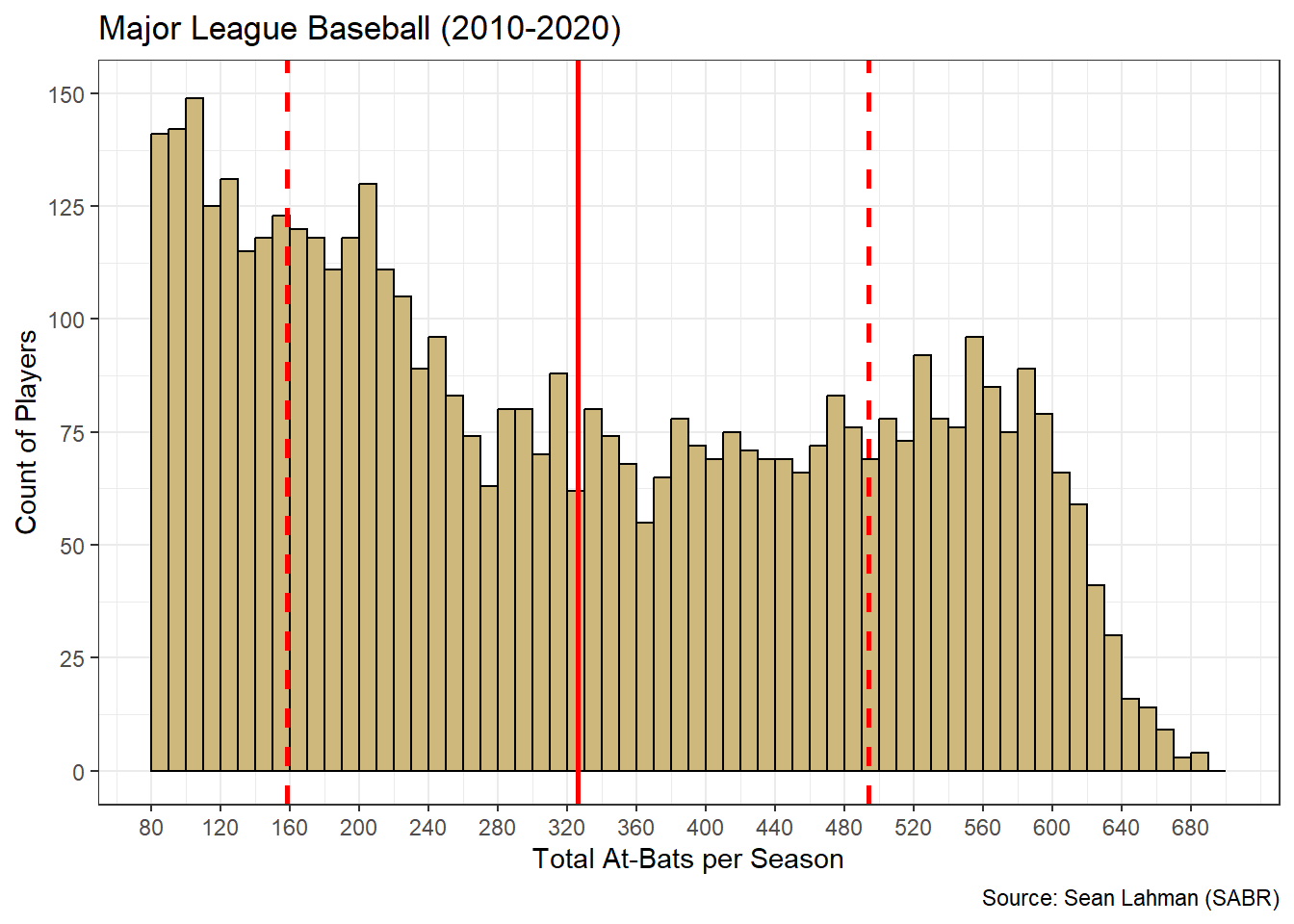

Given the bimodal nature of at-bats, the summary statistics and histogram tell different stories. If we solely reported summary statistics, stakeholders might believe that most players are afforded around 326 at-bats per season (based on the mean). But the histogram suggests this is not the case at all. Let’s overlay the mean (solid line) and standard deviations (dashed lines) on the at-bats histogram.

#display at-bats histogram with stats

atbats +

geom_vline(xintercept=326,color="red",size=1) +

geom_vline(xintercept=c(158,494),color='red',size=1,linetype="dashed")

Figure 3.15: Histogram and Stats for At-Bats

Figure 3.15 demonstrates why it is so important to include the full distribution of values in addition to summary statistics. While we often think of the mean as the average or most common value, this interpretation can be misleading. In this case, relatively few players were afforded the mean number of at-bats. Additionally, while many players were within one standard deviation of the mean, none of those players were included in the two peak frequencies on each end of the distribution. This is a result of the mathematics of the mean. The average of many low numbers and many high numbers is something in between.

When presenting a histogram like Figure 3.15 to stakeholders, it is important to describe the limitations of the mean as a metric for describing “common” behavior. The bimodal nature of the distribution limits the usefulness of the mean. That said, the at-bats histogram exemplifies an opportunity for collaboration with domain experts. A data scientist may not initially understand why the distribution is bimodal, but baseball experts might quickly identify the two distinct groups of low and high-performing players. In this case, the data scientist could gain as much insight as they offer!

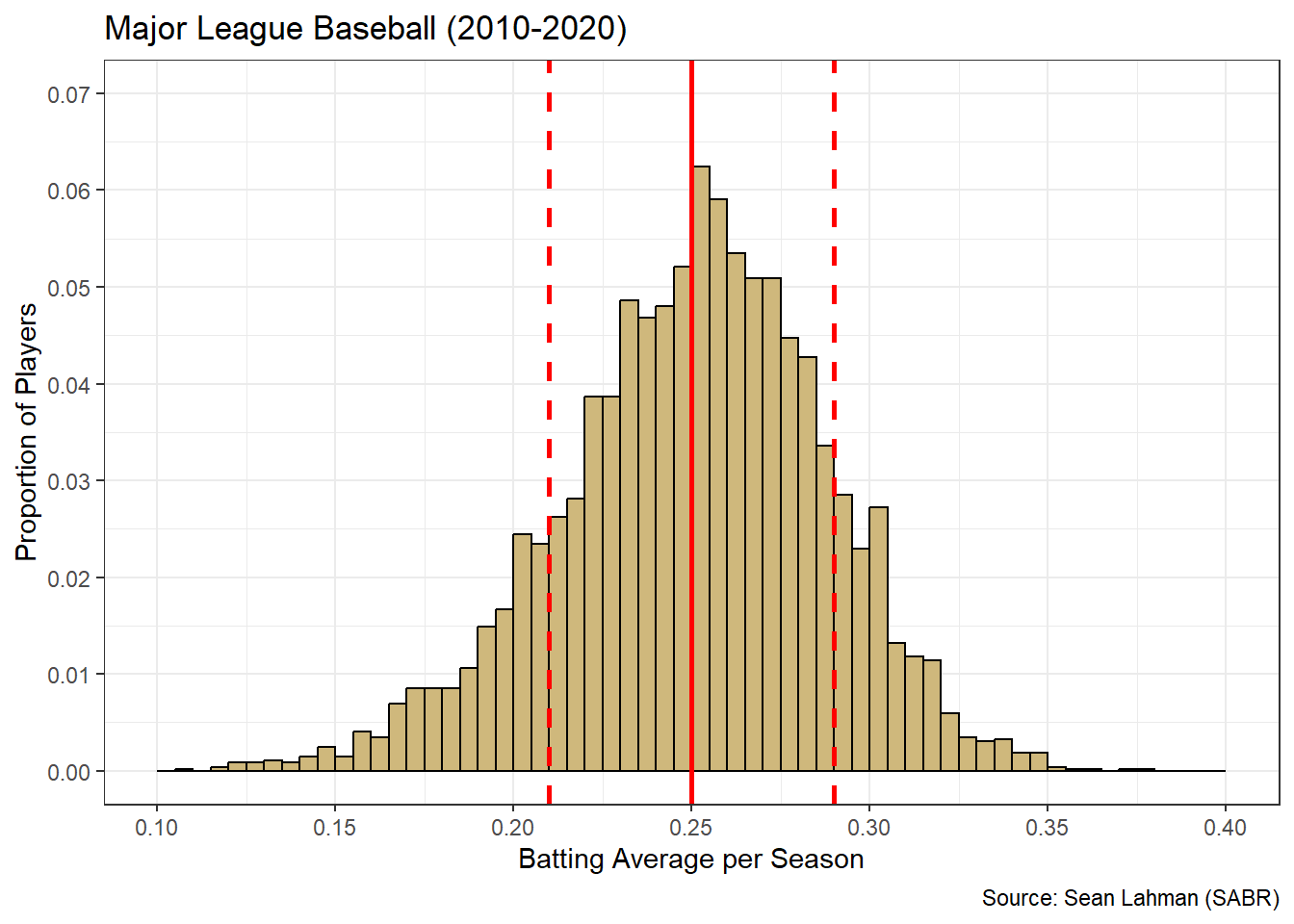

In stark contrast to at-bats, when we overlay batting average statistics on the unimodal histogram we observe a more consistent story.

#display batting average histogram with stats

batavg +

geom_vline(xintercept=0.25,color="red",size=1) +

geom_vline(xintercept=c(0.21,0.29),color="red",size=1,linetype="dashed")

Figure 3.16: Histogram and Stats for Batting Average

The mean batting efficiency is coincident with the most frequent values. Also, most players are within one standard deviation of these most frequent values. Unlike the at-bats histogram, it is uncommon for a player to be outside one standard deviation of the mean batting efficiency. Thus, for batting efficiency, the summary statistics align well with the common understanding of “average” performance. As a result, Figure 3.16 is likely much easier to interpret for stakeholders. We are now prepared to answer the research question.

Answer the Question

How should we describe the batting opportunity and efficiency of MLB players? In terms of opportunity, there appears to be two distinct groups of batters. Either due to injury or poor performance, the first group of players are afforded relatively few at-bats. A second grouping appears to have stayed healthy and/or performed well enough to witness many at-bats. The peak frequency among this group is around 550 at-bats, spread over a 162 game season. With regard to efficiency, the mean batting average is 25% with lower or higher values being less and less frequent. Relatively few batters have a season with an efficiency below 20% or above 30%.

With two well-designed histograms and the thoughtful presentation of insights, we are able to answer an interesting question regarding batting opportunity and efficiency in Major League Baseball. When exploring continuous numerical variables, a histogram is the appropriate visualization tool. In the next section, we introduce box plots as a useful graphic for describing numerical variables grouped by the levels of a factor. We also discuss the identification and resolution of extreme values called outliers.

3.2.3 Box Plots

In Chapter 2.3.5 we briefly mention the identification of extreme of unlikely values as part of data cleaning. Such values are often referred to as outliers. If an outlying value is determined to be an error, due to poor collection practices or some other mistake, then it should be removed or corrected. However, not all outliers are mistakes. Some values are extreme but legitimate (i.e., possible) and their removal limits the broader understanding of the data. In general, we should not remove an outlying observation from the data frame unless it is a clear error. But how do we identify outliers in the first place?

In plain language, an outlier is any value that is “far away” from most of the other possible values. Of course, the context of the “other values” matters. A seven-foot tall person would almost certainly be considered an outlier in the general population. But among professional basketball players, it is not as uncommon to be seven feet tall. In that context, the person may not be an outlier. This distinction points toward the need to visualize the distribution of the data in question. Otherwise, there is no objective way to define “other values” and “far away”. Though histograms are an acceptable graphic for this purpose, box plot are specifically-designed to display outliers.

In this section, we continue to employ the dplyr and ggplot2 libraries from the tidyverse suite. Let’s explore the identification and resolution of outliers using box plots and the 5A Method.

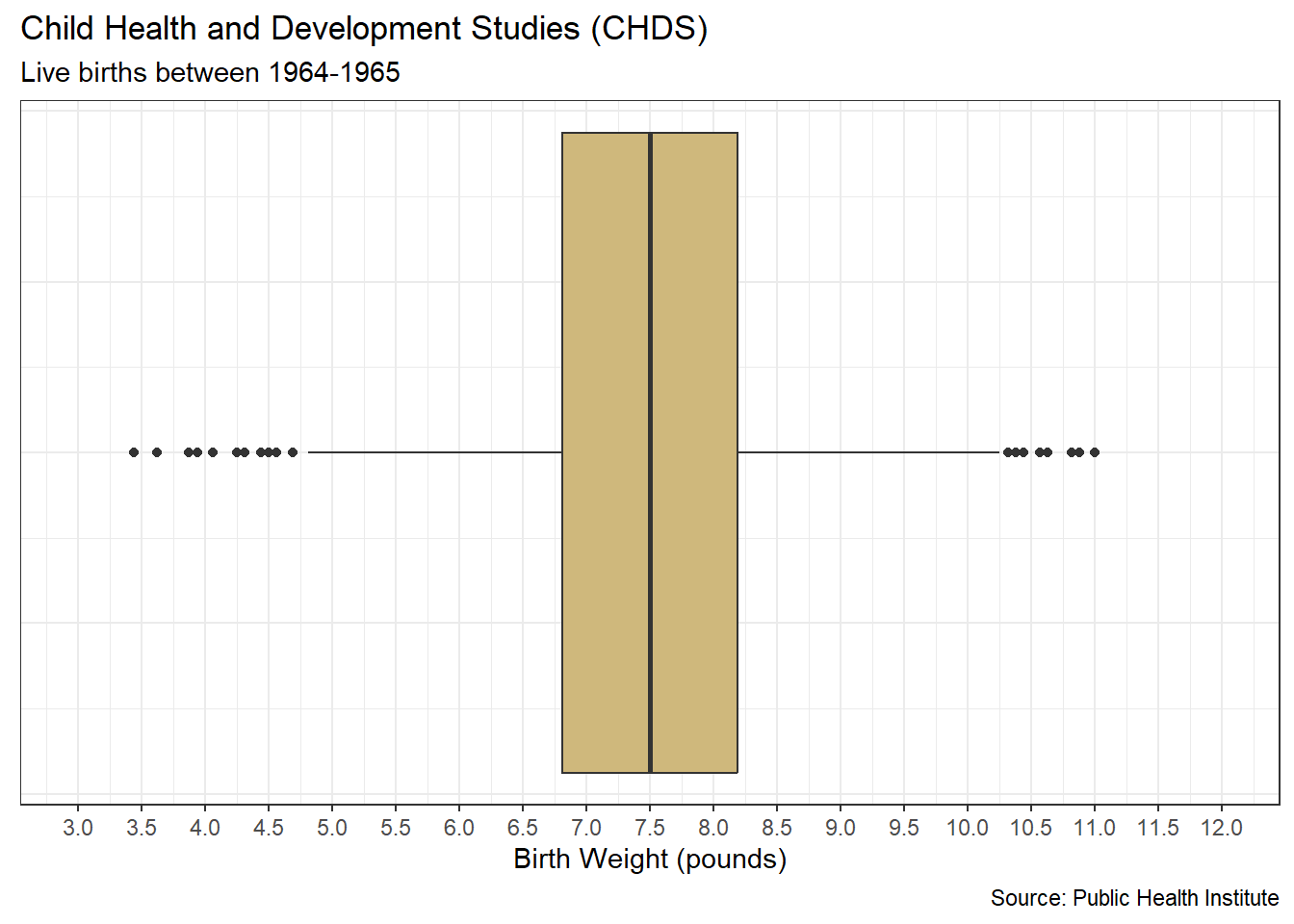

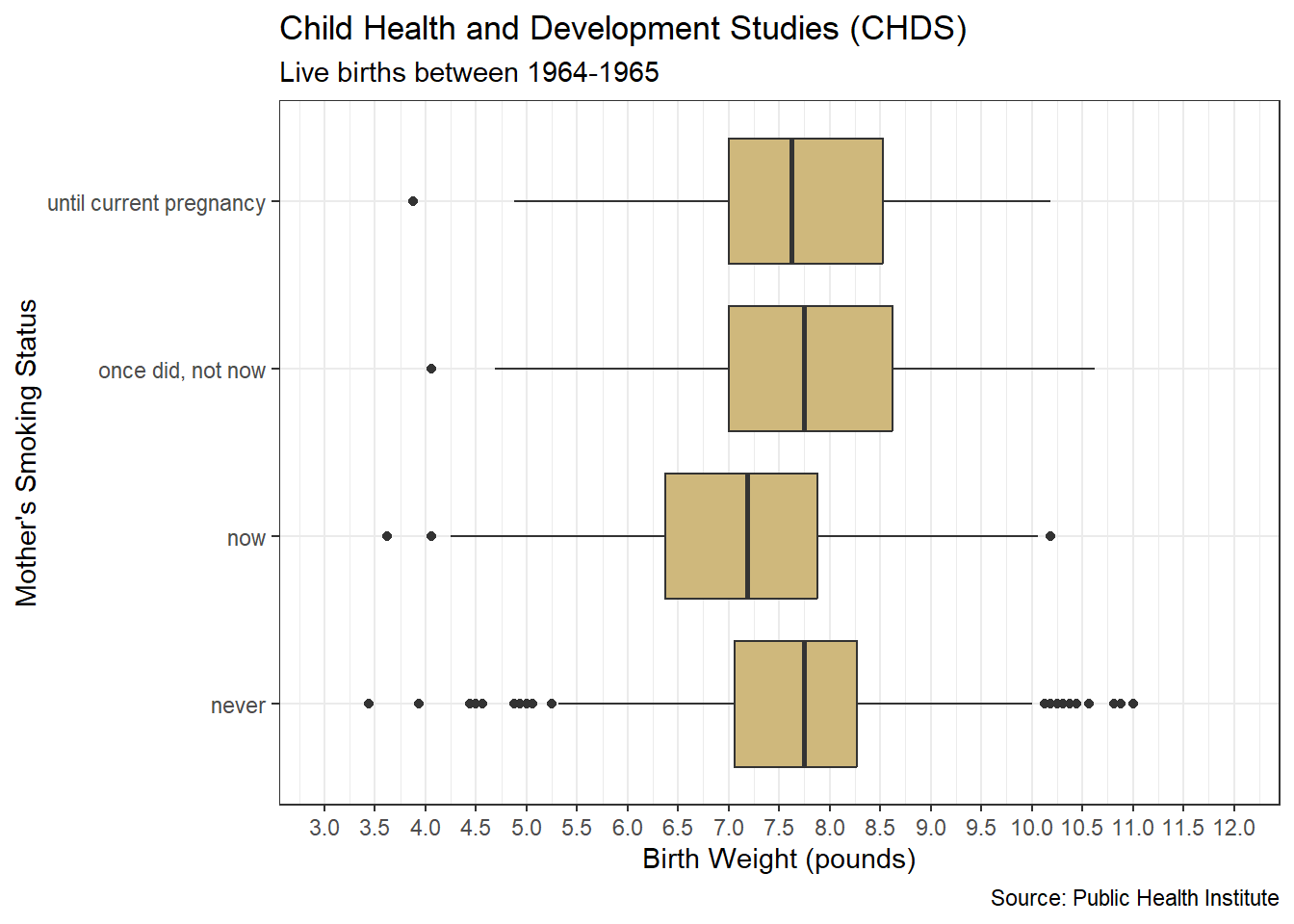

Ask a Question

The Child Health and Development Studies (CHDS) have investigated how health and disease are passed between generations since 1959. Their researchers focus on not only genetic predisposition, but also social and environmental factors. As part of a long-term study, the CHDS collected the demographics and smoking habits of expectant parents. Suppose we are asked the following question: As part of the CHDS project, were there any extreme or unlikely birth weights for babies of mothers who did and did not smoke during pregnancy?

Since the existence of outliers is specific to a given data set, this question may or may not have been answered. That said, the impact of smoking on unborn children has been researched extensively. It is certainly of concern for obstetricians or those planning a pregnancy. Doctors and expectant parents should be aware of common minimum and maximum birth weights, as well as uncommon cases. The CHDS is a project of the Public Health Institute, which appears to be a trustworthy organization based on information at this link. They publish research funded by national institutes and make their data and results widely available. Thus, the question is likely answerable.

This inquiry does warrant special consideration in terms of ethics. On the one hand, it could be critically important to distinguish between common and uncommon birth weights for unborn children. On the other, if smoking is associated with uncommon birth weights, then there is the potential for parents in the study to be shamed or ostracized for such behavior. Depending on the form of the acquired data, we must carefully protect any personally-identifiable information to avoid unintentional disclosure.

Acquire the Data

While the data is likely available directly from the CHDS, the mosaicData package includes a related table named Gestation. We load the library, import the data, and review the structure of the table below.

#load library

library(mosaicData)

#import gestation data

data(Gestation)

#display structure of data

glimpse(Gestation)## Rows: 1,236

## Columns: 23

## $ id <dbl> 15, 20, 58, 61, 72, 100, 102, 129, 142, 148, 164, 171, 175, …

## $ pluralty <chr> "single fetus", "single fetus", "single fetus", "single fetu…

## $ outcome <chr> "live birth", "live birth", "live birth", "live birth", "liv…

## $ date <date> 1964-11-11, 1965-02-07, 1965-04-25, 1965-02-12, 1964-11-25,…

## $ gestation <dbl> 284, 282, 279, NA, 282, 286, 244, 245, 289, 299, 351, 282, 2…

## $ sex <chr> "male", "male", "male", "male", "male", "male", "male", "mal…

## $ wt <dbl> 120, 113, 128, 123, 108, 136, 138, 132, 120, 143, 140, 144, …

## $ parity <dbl> 1, 2, 1, 2, 1, 4, 4, 2, 3, 3, 2, 4, 3, 5, 3, 4, 3, 3, 2, 3, …

## $ race <chr> "asian", "white", "white", "white", "white", "white", "black…

## $ age <dbl> 27, 33, 28, 36, 23, 25, 33, 23, 25, 30, 27, 32, 23, 36, 30, …

## $ ed <chr> "College graduate", "College graduate", "HS graduate--no oth…

## $ ht <dbl> 62, 64, 64, 69, 67, 62, 62, 65, 62, 66, 68, 64, 63, 61, 63, …

## $ wt.1 <dbl> 100, 135, 115, 190, 125, 93, 178, 140, 125, 136, 120, 124, 1…

## $ drace <chr> "asian", "white", "white", "white", "white", "white", "black…

## $ dage <dbl> 31, 38, 32, 43, 24, 28, 37, 23, 26, 34, 28, 36, 28, 32, 42, …

## $ ded <chr> "College graduate", "College graduate", "8th -12th grade - d…

## $ dht <dbl> 65, 70, NA, 68, NA, 64, NA, 71, 70, NA, NA, 74, NA, NA, NA, …

## $ dwt <dbl> 110, 148, NA, 197, NA, 130, NA, 192, 180, NA, NA, 185, NA, N…

## $ marital <chr> "married", "married", "married", "married", "married", "marr…

## $ inc <chr> "2500-5000", "10000-12500", "5000-7500", "20000-22500", "250…

## $ smoke <chr> "never", "never", "now", "once did, not now", "now", "until …

## $ time <chr> "never smoked", "never smoked", "still smokes", "2 to 3 year…

## $ number <chr> "never", "never", "1-4 per day", "20-29 per day", "20-29 per…The data frame comprises 1,236 observations (rows) and 23 variables (columns). According to information obtained from the help menu (?Gestation), each observation represents the live birth of a biologically male baby and each variable describes the baby and parents. It is unclear from the available information why the data only includes male births. The documentation states the parents participated in the CHDS in 1961 and 1962. Our variable of primary interest is the birth weight (wt), which is listed in ounces. We are also interested in the smoke variable, which indicates if the mother smoked during the pregnancy.

Upon review of the data, there appears to be a mismatch between the years listed in the documentation and the years shown in the output. The help menu says the years are 1961 and 1962, but we see 1964 and 1965 in the example values. Let’s check the bounds of the date variable.

## [1] "1965-09-10"## [1] "1964-09-11"In fact, this data frame only includes births between September 1964 to September 1965. The year of birth is not the focus of our research question, but this apparent mistake in documentation could be important. We should always divulge such issues when presenting results.

Given our limited focus, some wrangling is appropriate. We begin by reducing the variables to complete observations with a birth weight and smoking status. Birth weight is appropriately typed numeric, but we convert it from ounces to pounds since that is the commonly-reported unit. The smoking status includes repeated categorical values, so it should be a factor rather than character type. We convert smoking status to a factor and then review its levels.

#wrangle gestation data

Gestation2 <- Gestation %>%

transmute(weight=wt/16,

smoke=as.factor(smoke)) %>%

na.omit()

#display smoke levels

levels(Gestation2$smoke)## [1] "never" "now"

## [3] "once did, not now" "until current pregnancy"The levels are not perfectly exclusive. The “once did, not now” and “until current pregnancy” levels seem to have some logical overlap. The meaning of these levels is not crystal clear. Does “once” mean literally a single time or during a past time period? By contrast, “never” and “now” are much clearer and non-overlapping. With greater access to the CHDS researchers, we might be able to clarify the differences in status. For now, we leave the levels as they are and move on to the analysis.

Analyze the Data

The research question suggests exploring the data to determine if any birth weights are extreme or unlikely. We cannot do that until we understand what birth weights are likely. Thus, we must describe the centrality and dispersion of the data. A simple place to begin is with the summary() function.

## weight smoke

## Min. : 3.438 never :544

## 1st Qu.: 6.812 now :484

## Median : 7.500 once did, not now :103

## Mean : 7.470 until current pregnancy: 95

## 3rd Qu.: 8.188

## Max. :11.000The summary statistics for birth weight include what are known as quartiles. The 1st quartile comprises the smallest 25% of the data. In this case, 25% of birth weights are less than 6.812 pounds. The 2nd quartile, also known as the median, splits the data in half. Specifically, 50% of birth weights are less than 7.500 pounds. Finally, the 3rd quartile bounds the lower 75% of values. So, 75% of birth weights are less than 8.188 pounds.

The terms quartile, quantile, and percentile are all very closely related. For example, the 1st quartile, 0.25 quantile, and 25th percentile are all equivalent. Although not a common label, the minimum value is equivalent to the 0th quartile, the 0.00 quantile, and the 0th percentile. Similarly, the maximum value is equivalent to the 4th quartile, the 1.00 quantile, and the 100th percentile. If we want to compute any of these values individually, we apply the quantile() function.

## 25%

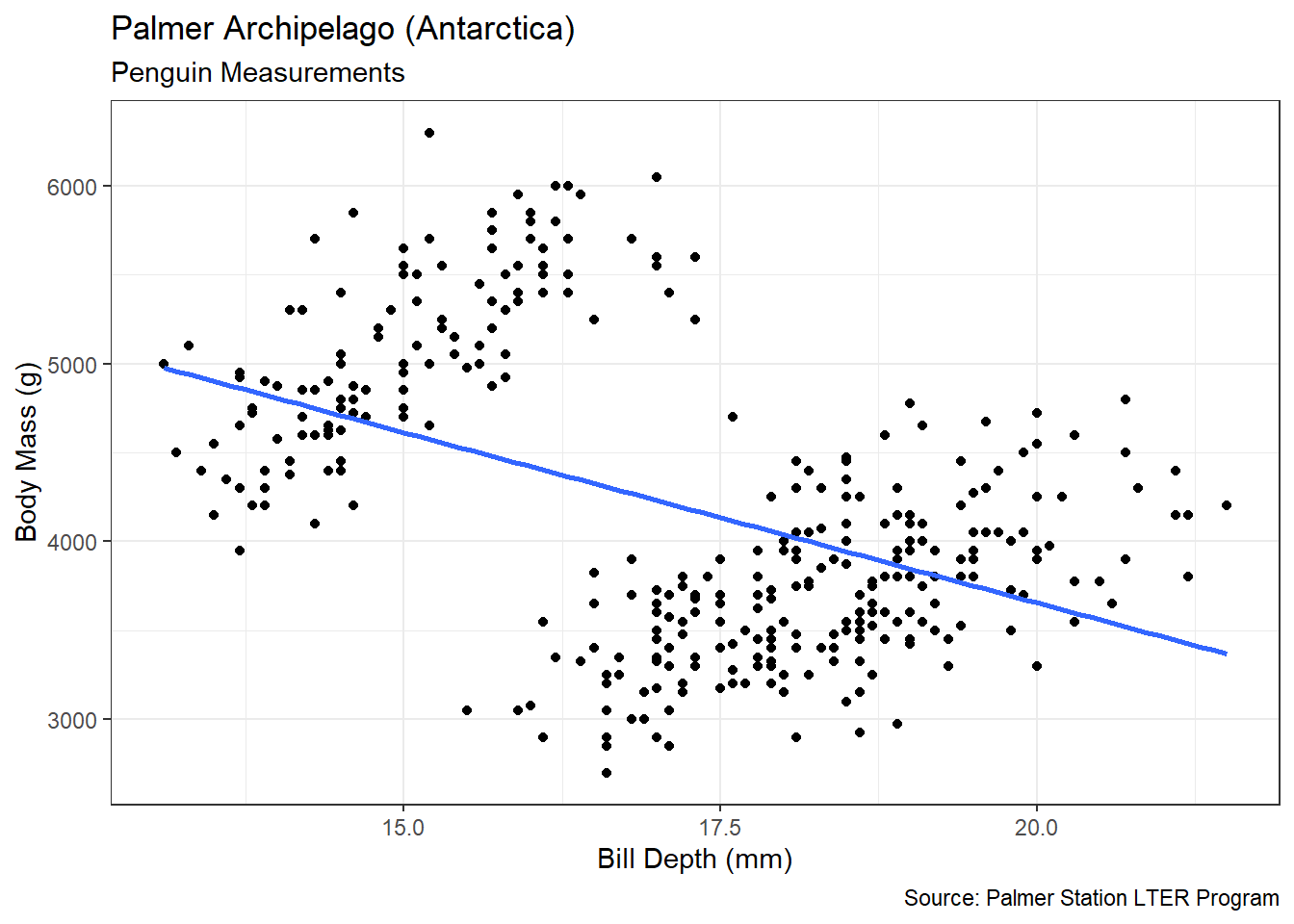

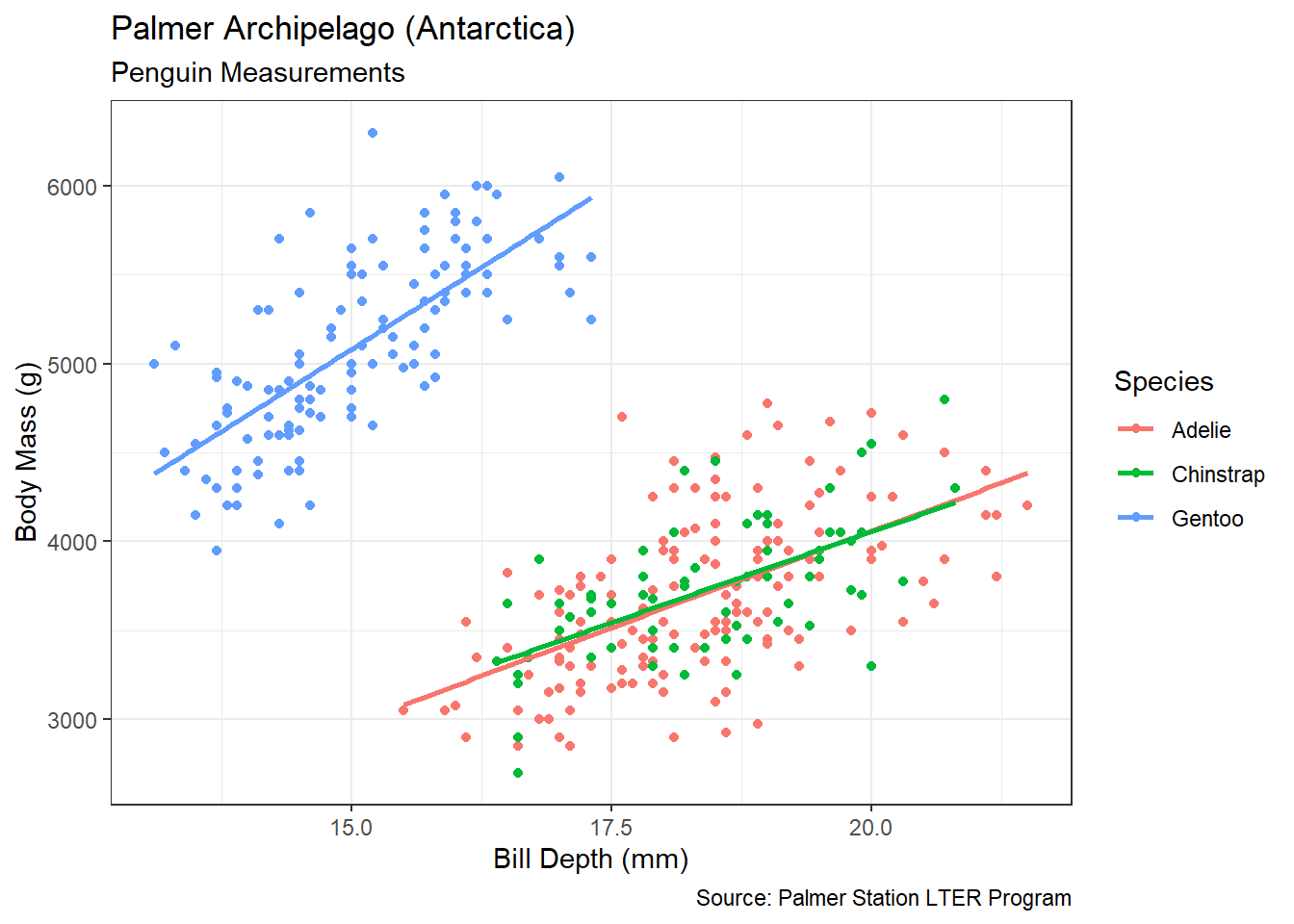

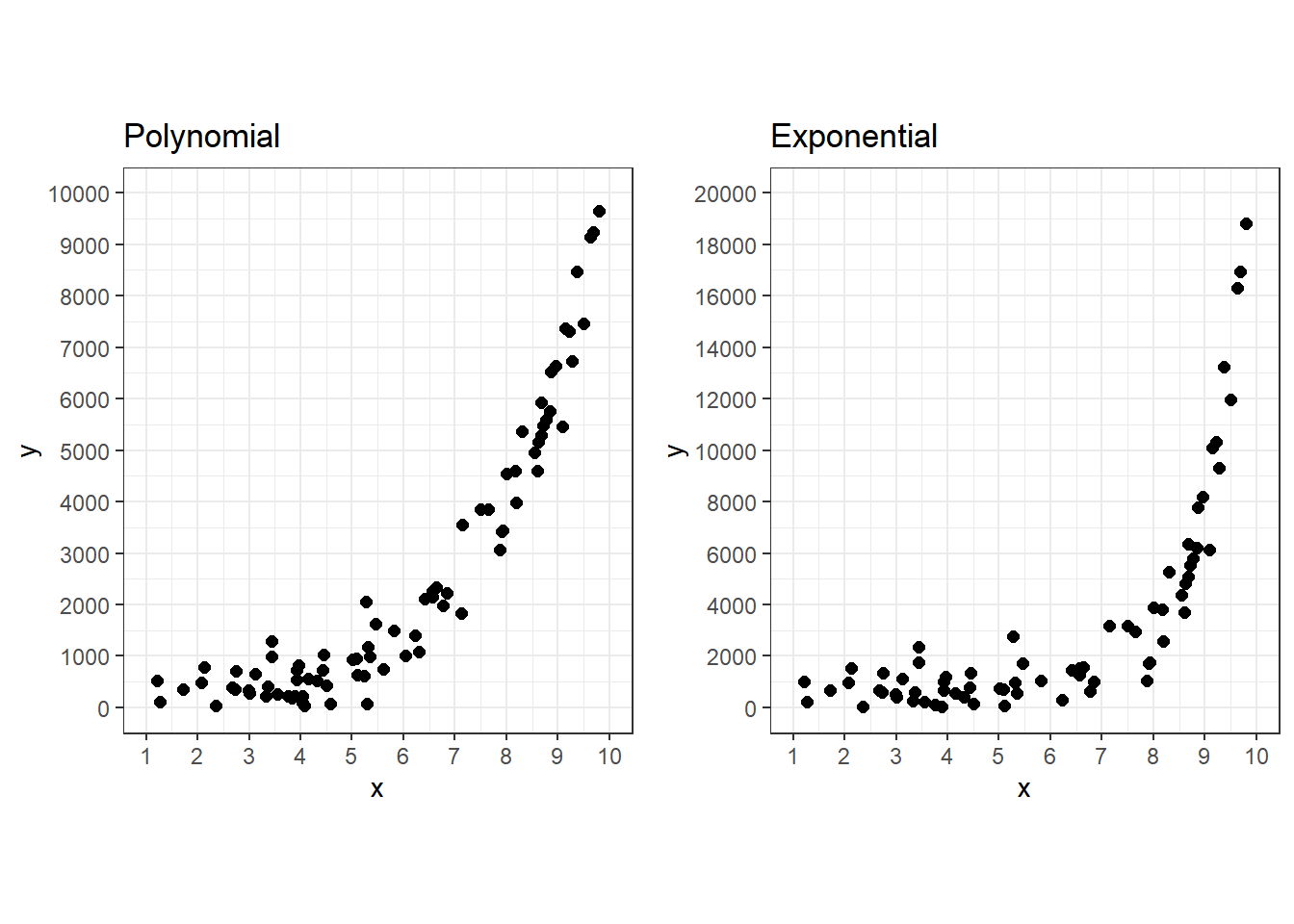

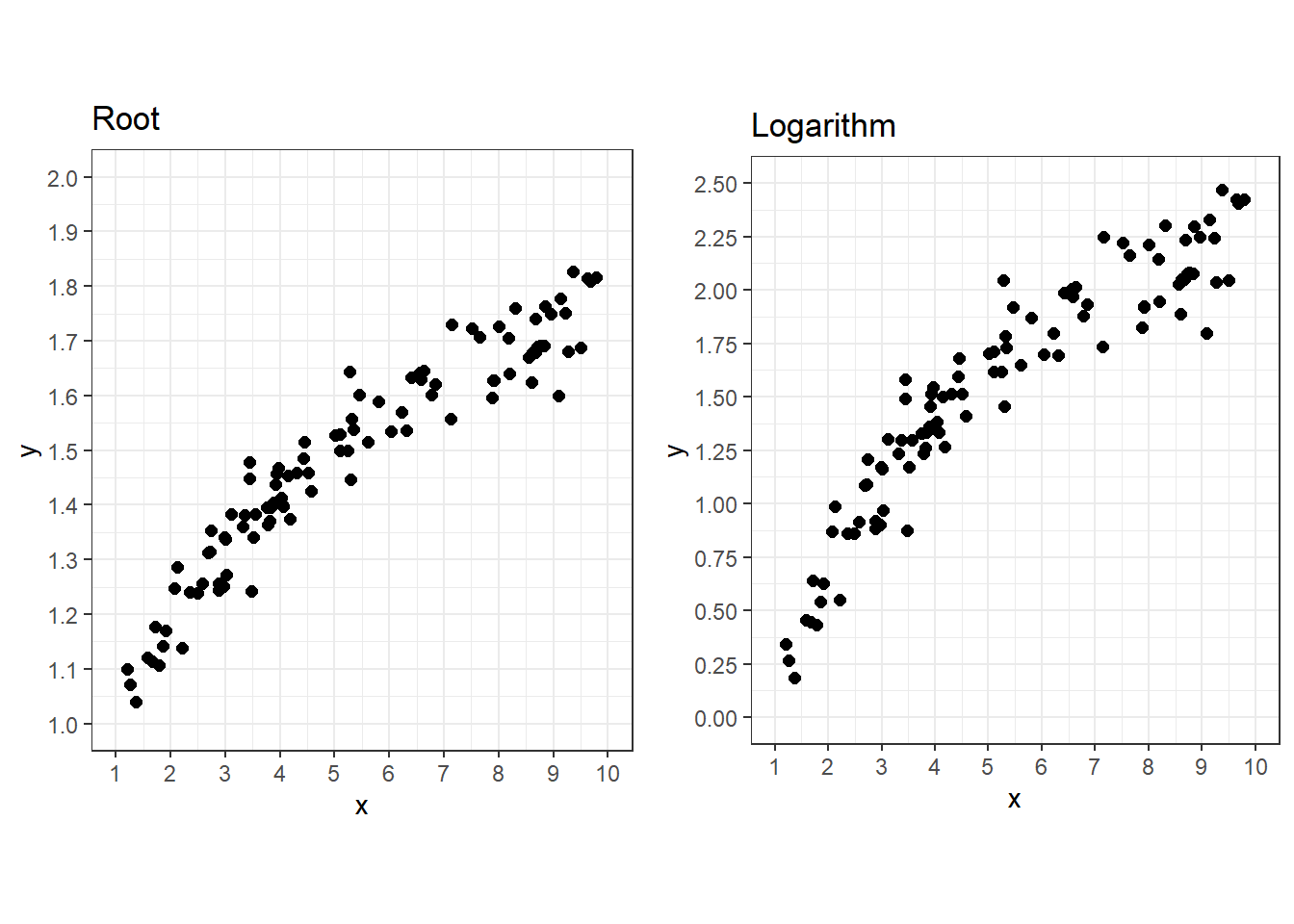

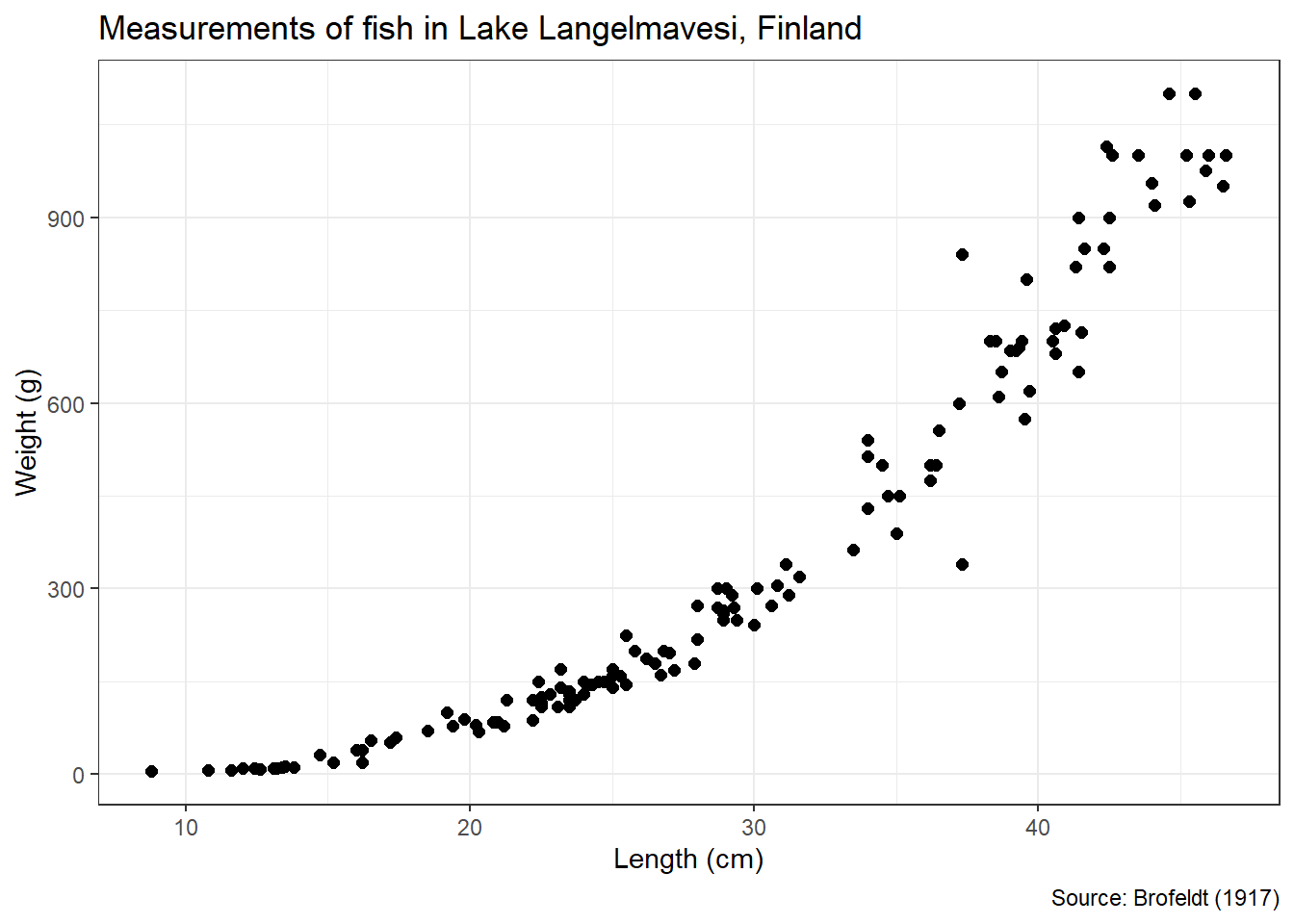

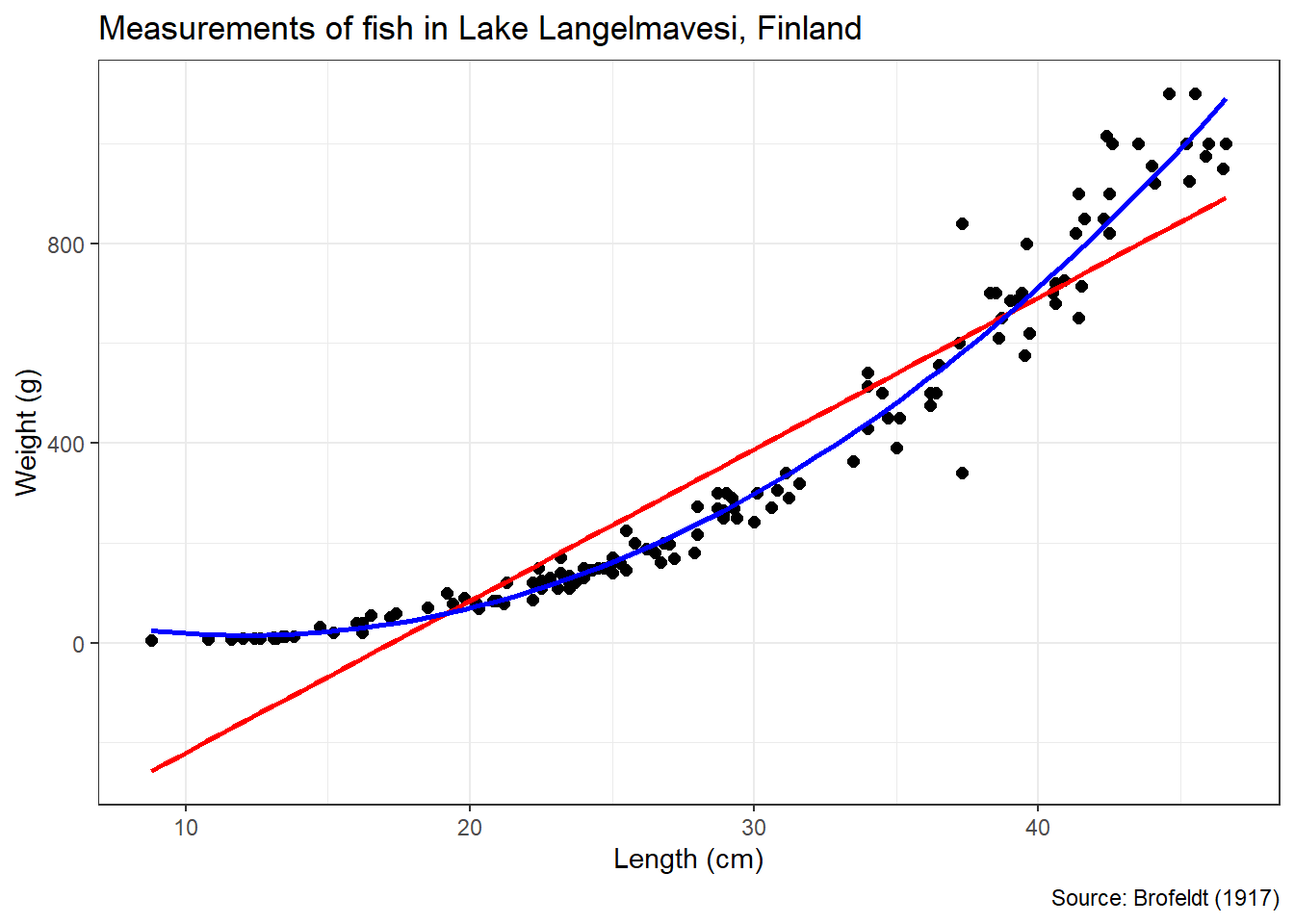

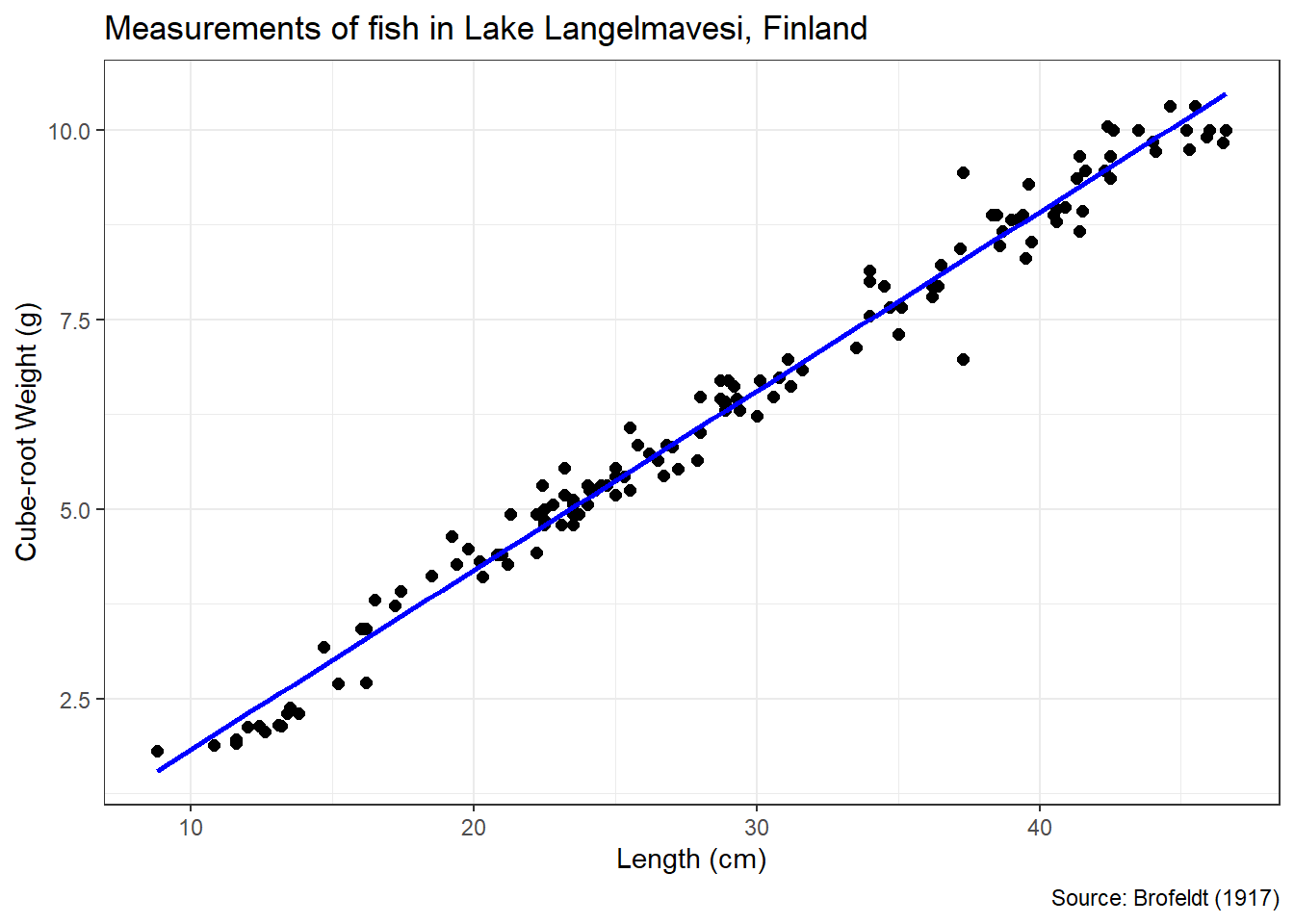

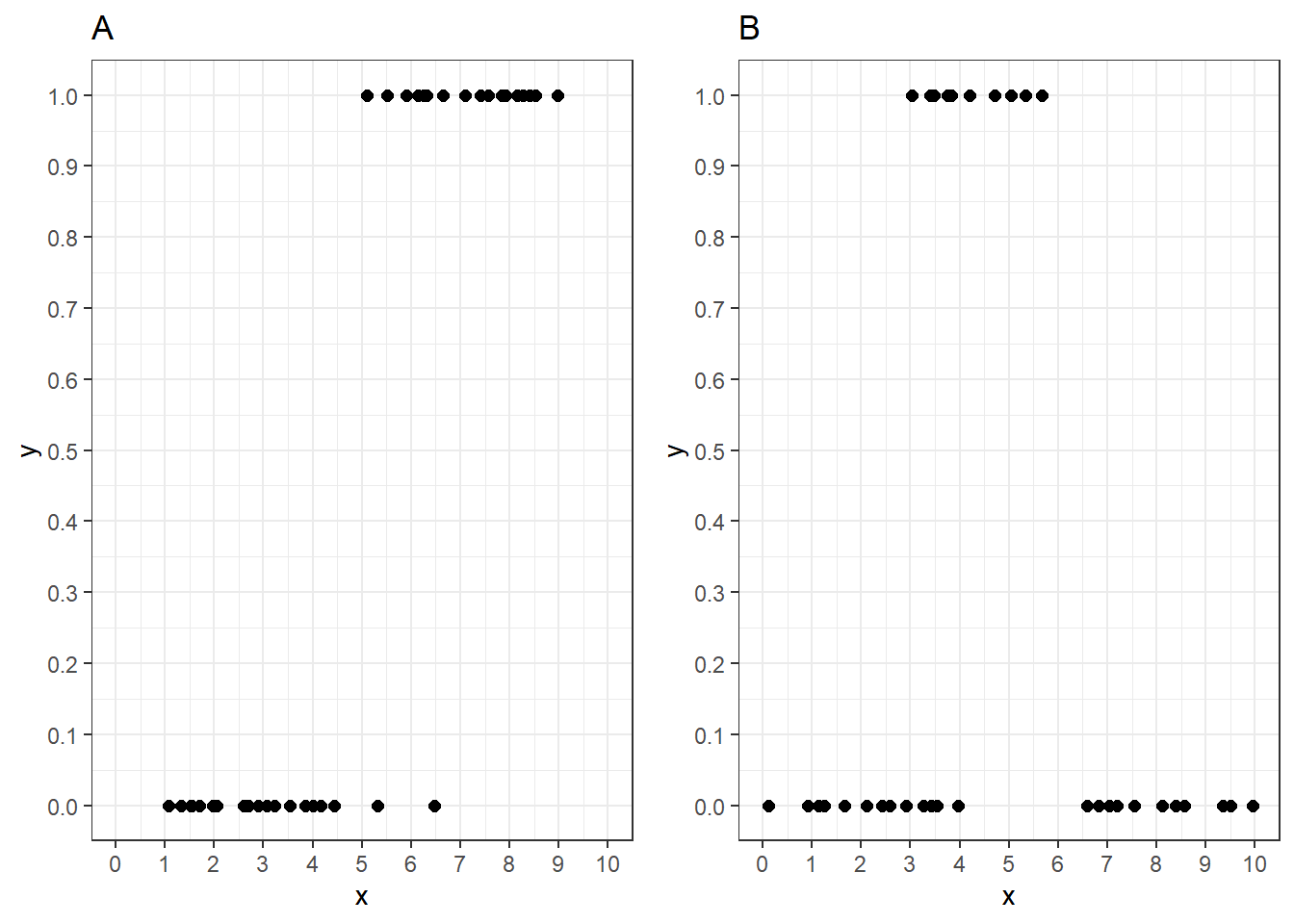

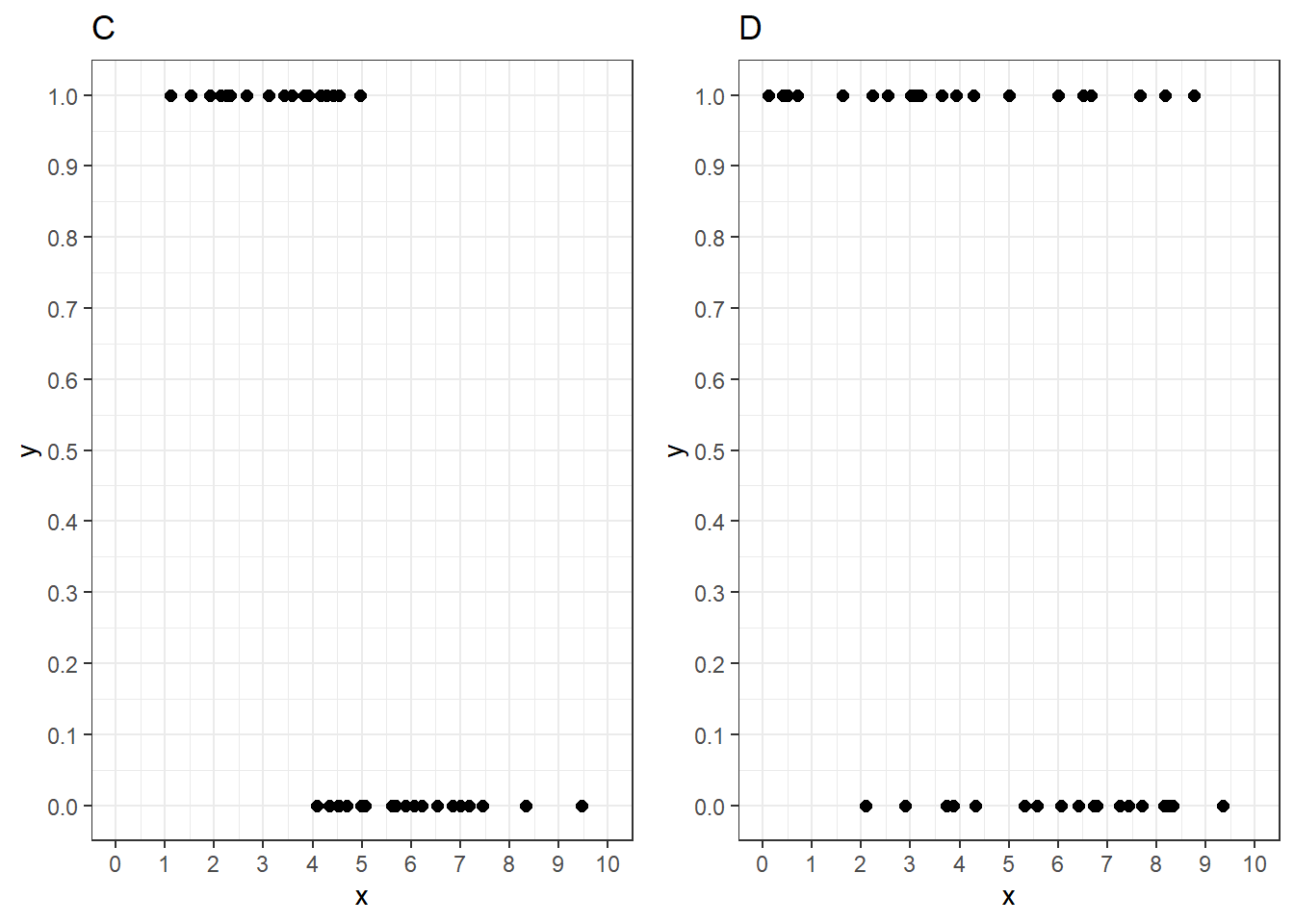

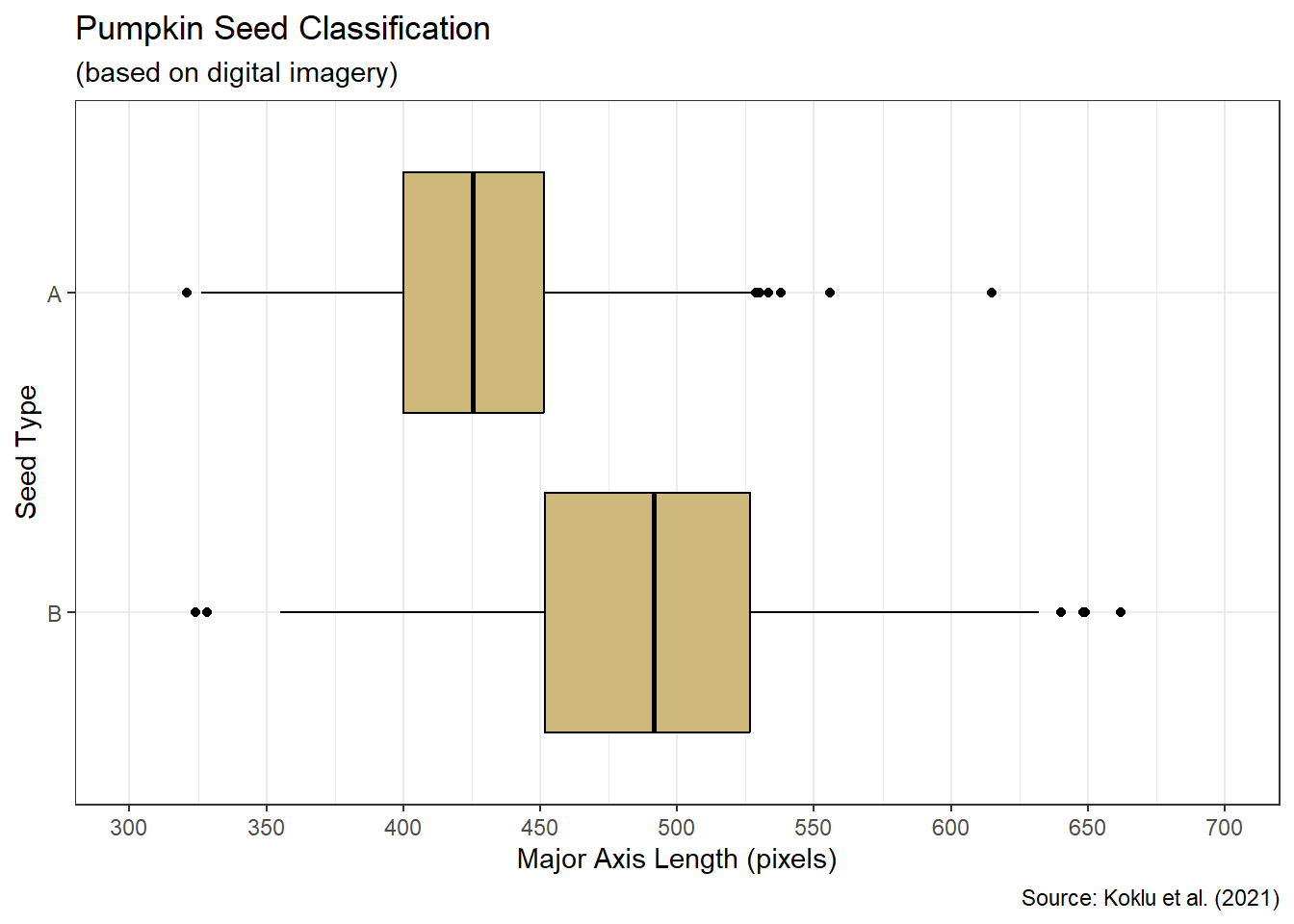

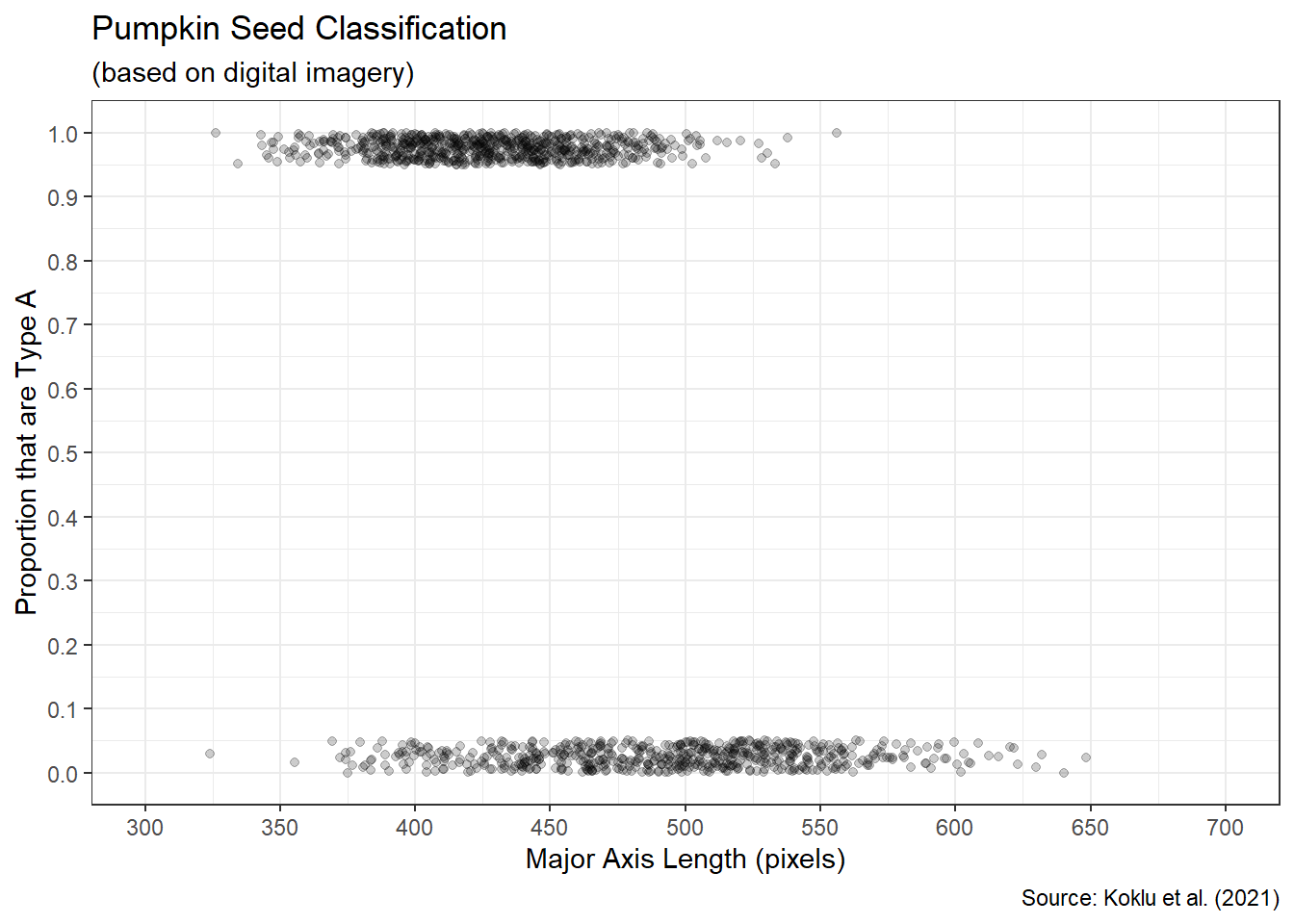

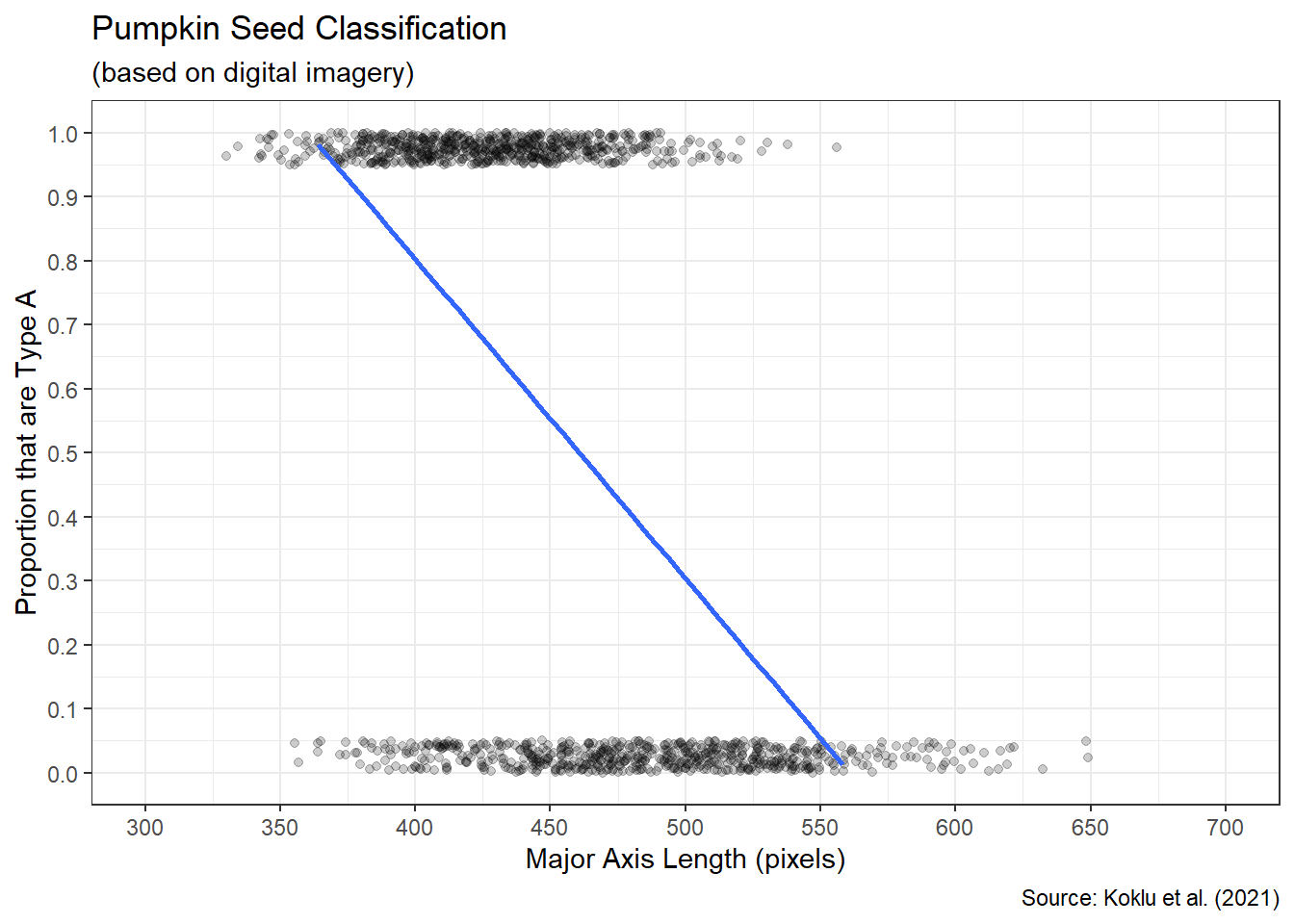

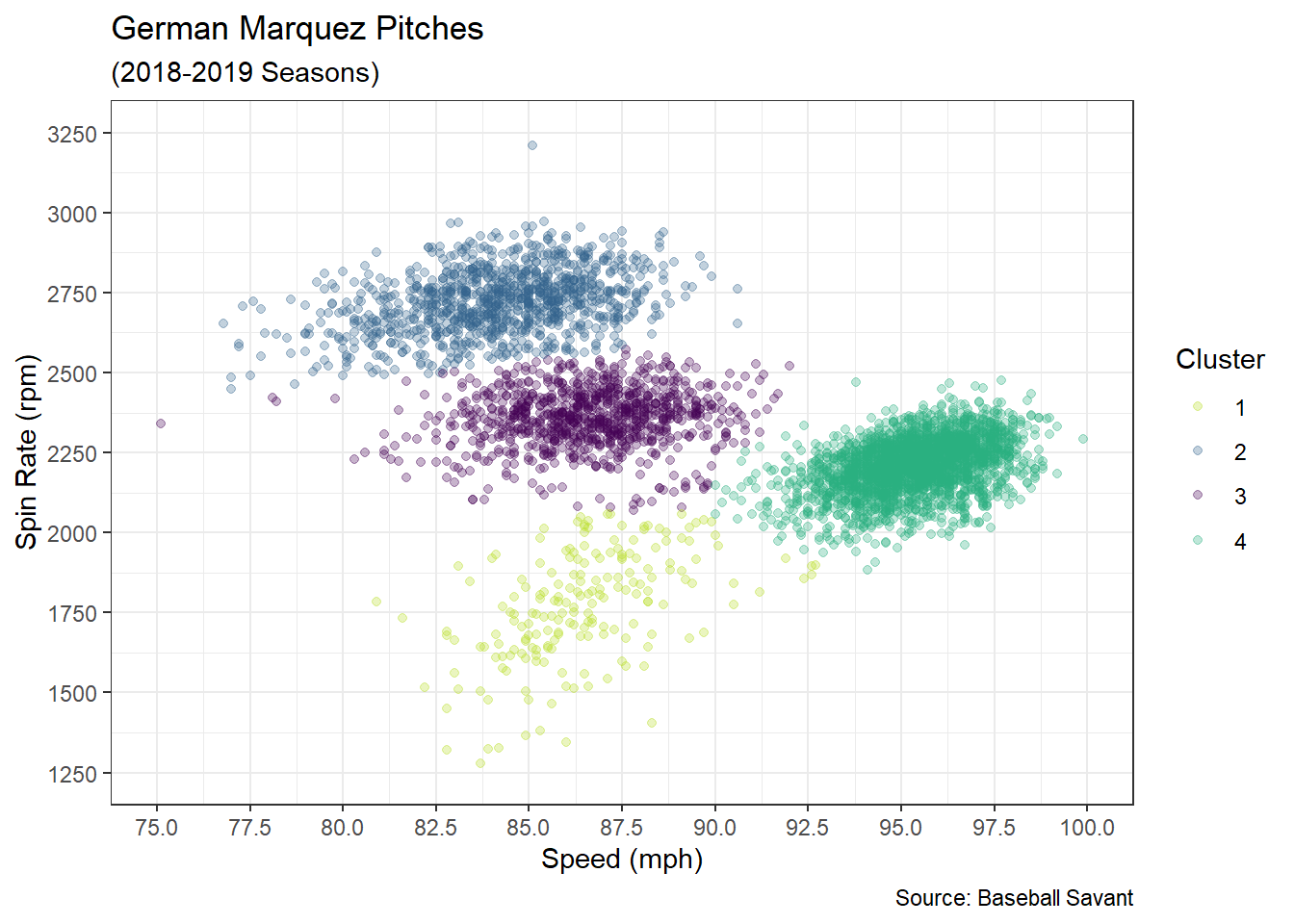

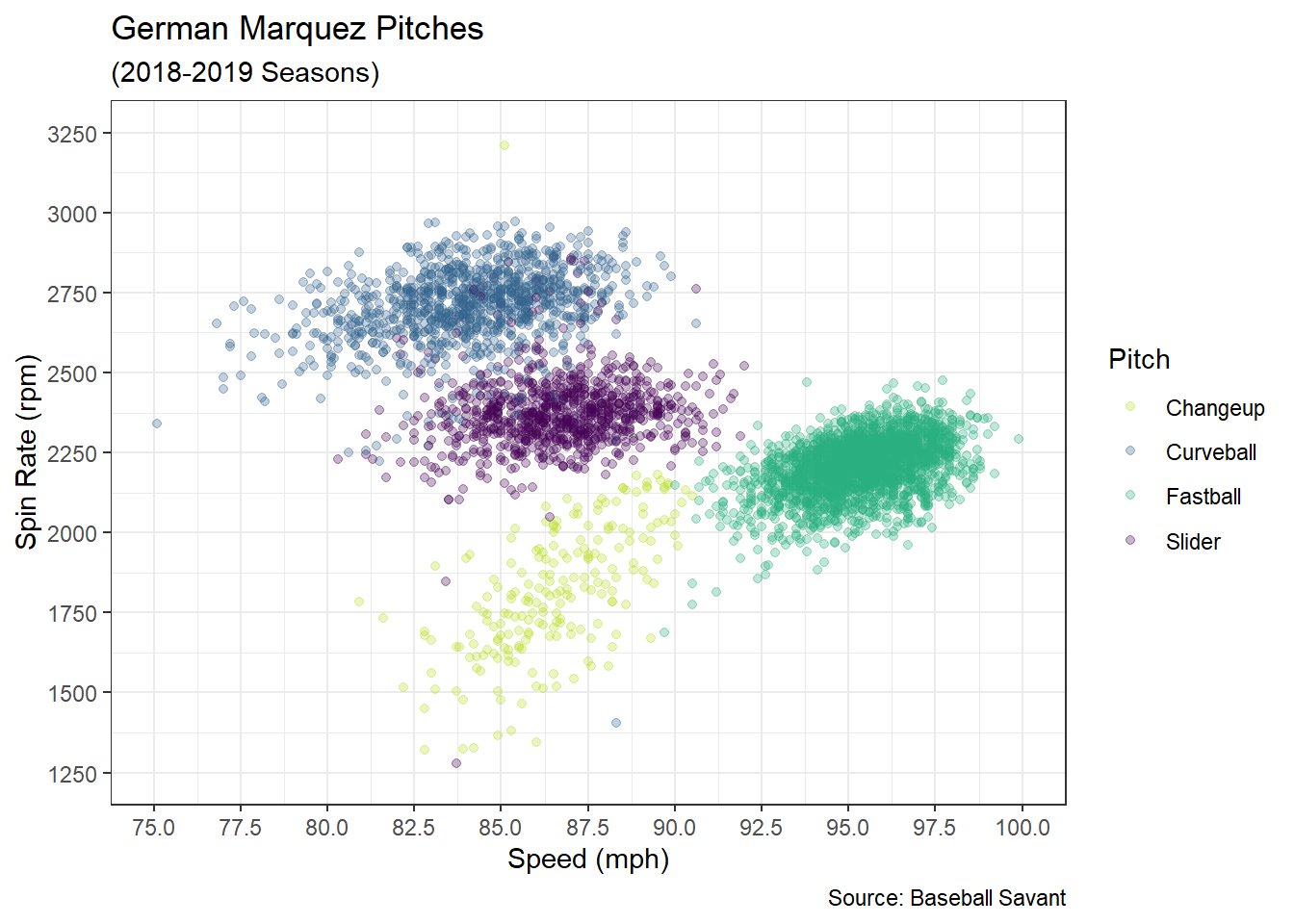

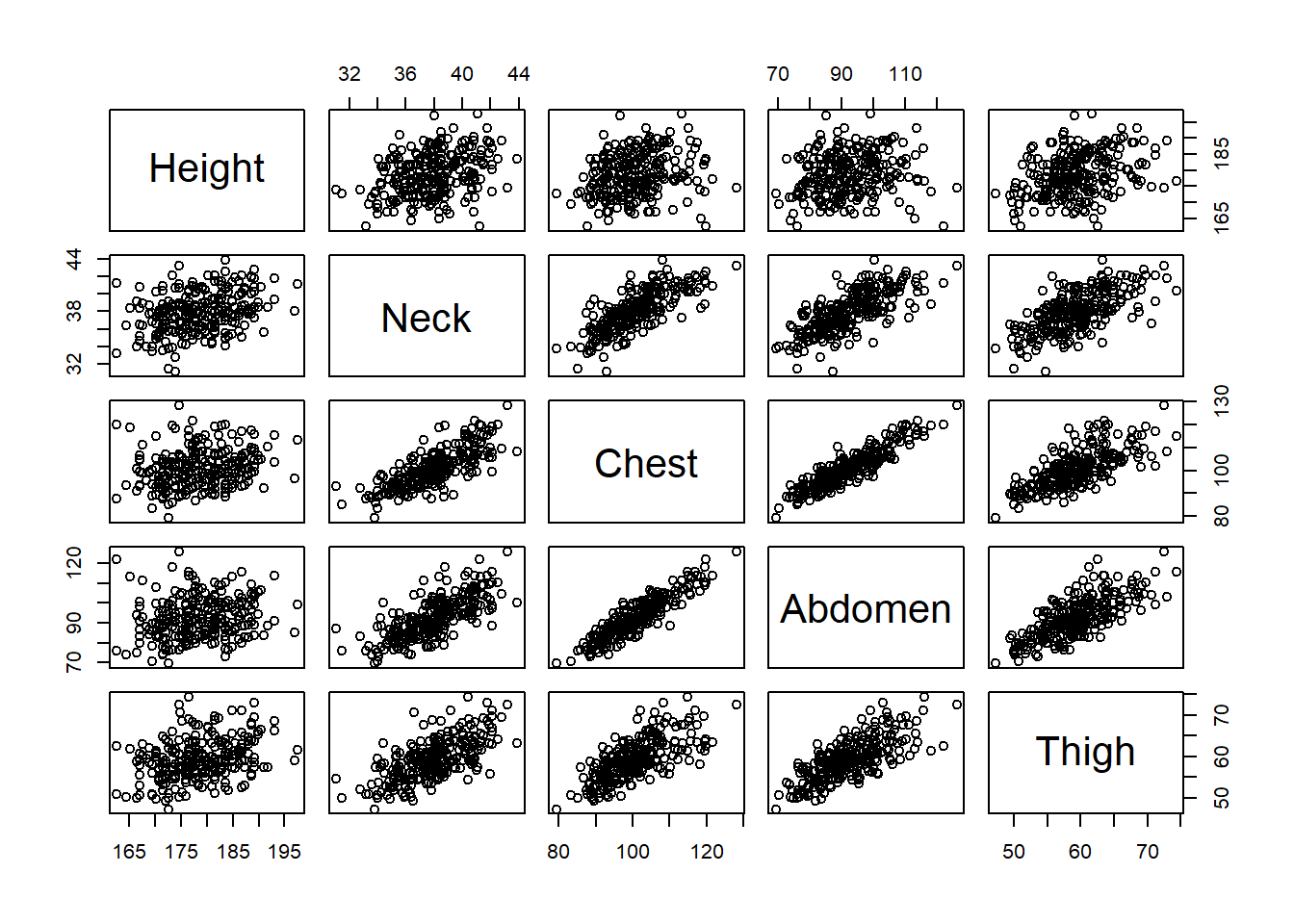

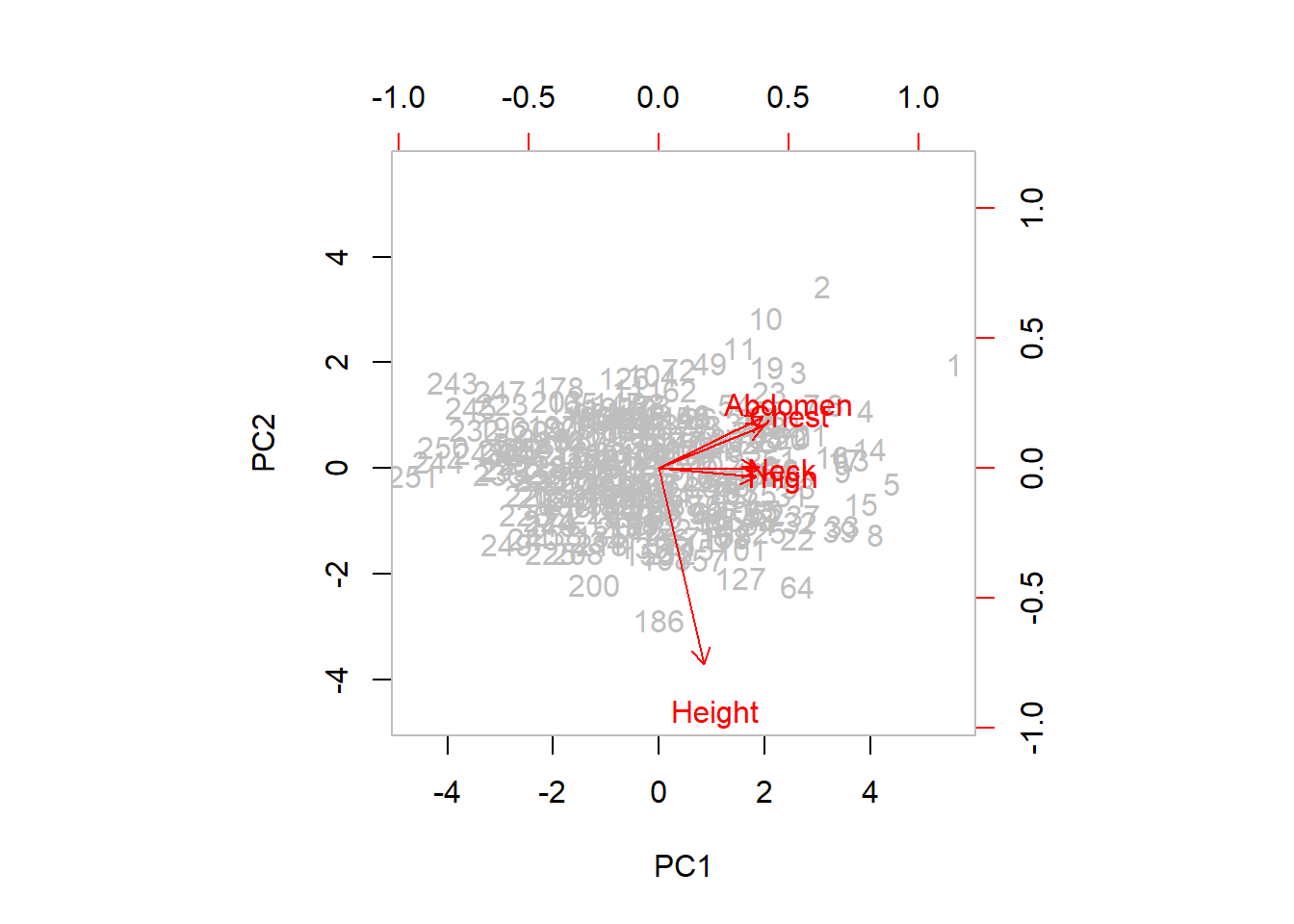

## 6.8125## 75%