Chapter 5 Predictive Analyses

The third and final type of analysis conducted by data scientists involves predictive modeling. As we might expect from the title, this approach involves predicting the unknown value of one variable using known values of other variables. In the context of modeling, we refer to the response and the predictors. The response variable represents the output or dependent variable in a model. The predictors represent the inputs or independent variables. Predictive modeling differs from inferential statistics primarily in its use of predictor variables. Inferential techniques, such as confidence intervals and hypothesis tests, provide reasonable estimates of the mean response, but they do not leverage potentially-valuable information provided by associated predictors. By contrast, predictive models are designed to identify and employ associated predictors and produce a better estimate of the response than the mean alone.

There exist a wide variety of methods for modeling data. Consequently, we open this chapter by decomposing various model types and the associated terminology. Specifically, we distinguish supervised from unsupervised models, regression from classification, and finally parametric from nonparametric models. Each combination of model types has its own unique purpose, methods, and tools. While we provide introductory applications for many models, additional course work is required to master the full suite of options. After establishing the foundations of modeling, we focus on the types of variables we hope to predict.

We construct models to predict two types of response variables: numerical and categorical. Models designed to predict numerical variable values are referred to as regression models. Thus, response variables such as height, weight, cost, duration, and distance lend themselves well to regression modeling. On the other hand, we predict categorical variables using classification models. In the context of factors (grouped categorical variables), we can think of this as predicting the appropriate level for the response. Factors such as test results (pass/fail), game outcomes (win/lose), and logical conclusions (true/false) require classification models. While categorical response variables are not limited to binary outcomes (e.g., pass/fail), that is our focus in this text. More advanced multinomial classification is reserved for other offerings.

5.1 Data Modeling

In general, a model is a mathematical representation of a real-world system or process. When that model is constructed based on random sample data from a stochastic process, we refer to it as a statistical model. Further still, we use the label statistical learning model, because the associated algorithms “learn” distributions and associations from the sample data. Let’s investigate the primary types of models data scientists construct.

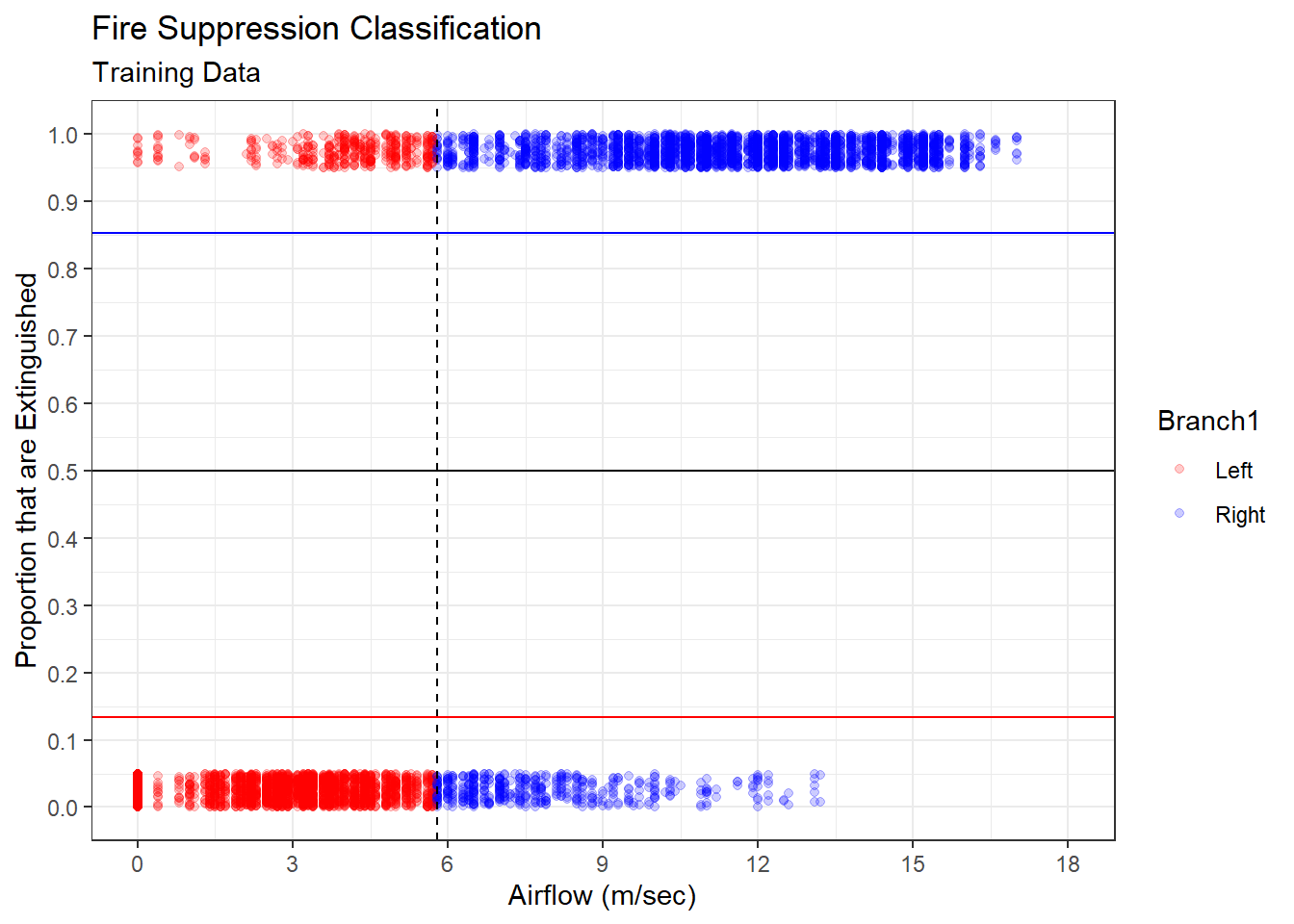

5.1.1 Model Types

While there are many types of statistical learning models, we normally identify them based on the three criteria described in the subsections that follow.

Supervised versus Unsupervised

The first major division of statistical learning models identifies whether they are supervised or unsupervised. Put simply, supervised models include a response variable and unsupervised models do not. When a response variable is present, its values “supervise” the associated algorithm to produce an accurate model. Since the objective of this chapter is to predict a response, all of the models are supervised. Common supervised statistical learning methods include:

- Linear Regression

- Logistic Regression

- Discriminant Analysis

- Smoothing Splines

- \(K\)-Nearest Neighbors

- Decision Trees

When our analysis does not include an identified response variable, we instead construct unsupervised models. Unsupervised models lend themselves well to exploratory analyses. When we have no response variable to explain or predict, what remains to explore? We seek to understand relationships between the independent variables. For example, there may exist inherent clusters of observations based on combinations of independent variable values. We introduced this unsupervised approach in Chapter 3.3.6. Another common point of interest is whether independent variables are associated with one another. In these cases, we can distill the behavior of many independent variables down into a smaller number of features that exhibit similar behavior. We described this process of dimension reduction in Chapter 3.3.7. Typical unsupervised learning methods include:

- Cluster Analysis

- Principal Components Analysis

If the appropriate modeling approach is supervised (i.e., includes a response variable), then the next division of methods regards the type of response variable.

Regression versus Classification

A response variable can be numerical (quantitative) or categorical (qualitative). Numerical variables can be discrete (counts) or continuous (measures), while categorical variables can be ordinal (ranks) or nominal (names). Numerical response variables require regression modeling, while categorical response variables require classification modeling.

When the response variable is numerical, we choose from among a suite of regression methods. Most regression algorithms assume the numerical response is a continuous variable, even if the observed values are discrete (e.g., integer). Thus, data scientists must be careful in accurately interpreting the explanations or predictions of a discrete response when treating it as continuous. Drawing from the previous list of supervised learning methods, regression approaches include:

- Linear Regression

- Smoothing Splines

- \(K\)-Nearest Neighbors

- Decision Trees

For categorical response variables, we apply classification methods. By far, the most common form is binary classification. Such problems distinguish True from False, Win from Lose, and Success from Failure. However, in all such cases we typically simplify the two categories to 1 and 0. That said, multinomial methods also exist for the classification of more than two categories. Sometimes the distinction between a discrete numerical response and an ordinal categorical response is unclear. For example, the categories January, February, and March might easily be replaced with the numbers 1, 2, and 3 for month. Both are valid, but the choice could impact the modeling approach. Common classification methods include:

- Logistic Regression

- Discriminant Analysis

- \(K\)-Nearest Neighbors

- Decision Trees

This list highlights two interesting points. Firstly, logistic regression is listed as a method for classification. This naming choice is unfortunate given our desire to clearly distinguish between modeling approaches. The second point of interest is that \(K\)-Nearest Neighbors and Decision Trees are on both lists. This is not a mistake. Some supervised learning methods offer the flexibility to conduct either regression or classification. Once we have identified the type of response we wish to predict, we must decide on the structure with which to model the response. In this regard, we have the choice between parametric or nonparametric methods.

Parametric versus Nonparametric

For certain problems we have much more information about the structure of the stochastic process that generates response data. We might know, for example, that a response variable is linearly associated with a certain predictor. At the very least, we might be able to safely assume such an association based on what we observe in a sample. In other cases, the functional form of the association between two variables may not be so clear. Not only might linearity be inappropriate, but we might have no clear idea of any functional form to apply. This knowledge (or lack thereof) regarding the structure of associations is what distinguishes parametric from nonparametric models.

With parametric models, we apply a specific functional form to the association between the predictors and the response. That form could be linear, log-linear, polynomial, or any other function that specifies a particular structure. The problem is thus reduced to estimating the parameters within that structure. For example, if we decide two variables \(x\) and \(y\) are linearly associated, then the model can be represented as \(y=\beta_0+\beta_1 x\). We then apply a statistical learning algorithm to estimate the intercept and slope parameters (\(\beta_0\) and \(\beta_1\)). Popular parametric modeling approaches include:

- Linear Regression

- Logistic Regression

- Discriminant Analysis

In each of the parametric methods listed above, we assume a specific functional form. When using nonparametric methods, there is no need to make such assumptions. Rather than presuming the data is generated from a process with a strict functional form, nonparametric methods estimate behaviors and derive associations directly from the data. The benefit of nonparametric methods is that they avoid potential mis-identification of structure. For instance, what if the association we think is linear is actually not linear? We can avoid this by not assuming linearity (or any other form) in the first place. However, the cost is that nonparametric approaches generally require much more data in order to identify unstructured associations. Common nonparametric methods include:

- Smoothing Splines

- \(K\)-Nearest Neighbors

- Decision Trees

When selecting a statistical modeling approach, we choose between supervised or unsupervised, regression or classification, and parametric or nonparametric based on the research question, the variable types, and the data-generating process. We describe a few common modeling combinations in the sections that follow. But first we must discuss the appropriate methods for validating a model’s performance.

5.1.2 Model Validation

There are two primary stages in the development of a statistical learning model. First, we must construct a functional relationship between the predictors and the response. This might involve estimating the parameters of an equation or tailoring the stages of an algorithm, depending on the type of model. Regardless, we refer to this stage as training the model. During training, the model learns how best to associate the predictors with the response. After constructing the model, we need to evaluate its performance. We would not want to deploy a model in a real-world scenario without knowing how accurately it can predict the response. We refer to this stage as testing the model. In testing, we learn what to expect from the model prior to deployment. But we must be careful which portion of the sample data we use to train the model versus test the model. This concern leads to the need for a validation set.

A validation set is a portion of the sample data we hold out specifically for evaluating the accuracy of the model. In this way, the data we use to train the model is distinct from the data we use to test the model. The validation set approach tends to result in better estimates of a model’s prediction accuracy because the testing data plays the role of “future” data that was not available when designing the model. Think of this like the difference between practice problems and exam problems in a class. If the practice problems are exactly the same as the exam problems, then a student may score well simply because they memorized the answers. However, if the exam problems are slightly different from the practice problems, then a high score is a much more accurate reflection of genuine understanding. In the same way, when a model is tested on the same data with which it was trained, it may appear artificially accurate. We call this phenomenon overfitting.

When a model is overfit it has been tailored too specifically to one data set and will not typically perform as well on other data. By splitting a sample into training and testing sets, we can avoid overfitting. But what proportion of the sample should be included in each set? If the training set is too large, then the assessment of accuracy on a small testing set will be highly variable. On the other hand, if the training set is too small, then the model will be poorly estimated and introduce bias. A common compromise between these two issues is to select between 70 and 80% of the sample for training. This choice affords sufficient data for the model to learn the associations between predictors and the response, while also reserving enough testing data to compute a reliable accuracy estimate.

The methods for computing the accuracy of a model against a testing set depend on whether we are pursuing regression or classification. The remainder of the chapter distinguishes these two model types and provides example applications of the validation set approach.

5.2 Regression Models

In Chapters 3.3.2 and 4.2.4, respectively, we explored associations and computed inferential statistics for linear regression models. Regression was the appropriate method because the variables of interest were numerical. However, in neither chapter did we attempt to predict a response. With exploratory analysis, the goal was simply to describe the association between numerical variables with a linear equation. We then produced an interval estimate for the slope of that equation using inferential techniques. In this chapter, our objective is to predict the response variable (\(y\)) in a linear equation using the predictor variable (\(x\)).

5.2.1 Simple Linear Regression

By far the most common supervised, parametric, regression model for predicting a numerical response value \(y\) is the simple linear regression model. The qualifier simple indicates there is only one predictor variable \(x\). In later sections we address the case of multiple predictor variables. After estimating the intercept (\(\beta_0\)) and slope (\(\beta_1\)) of a simple linear regression model using the least-squares algorithm, we obtain the function shown in Equation (5.1).

\[\begin{equation} \hat{y} = \hat{\beta}_0+\hat{\beta}_1 x \tag{5.1} \end{equation}\]

The “hat” symbols above the intercept and slope acknowledge that the parameters were estimated from sample data. Consequently, when we input a value for \(x\), the predicted response \(\hat{y}\) is also an estimate. We demonstrate this estimation process in an example below.

Ask a Question

The National Oceanic and Atmospheric Administration (NOAA) seeks to better understand and predict changes in the Earth’s climate and weather. This mission includes a variety of scientific research related to tropical storms in the Atlantic Ocean. One of the many devastating characteristics of a tropical storm is the maximum sustained wind speed, particularly when the storm reaches the classification of a hurricane. Based on the physics of hurricanes, there is a strong relationship between the low pressure center (“eye”) of a storm and the wind speeds produced around its perimeter (“wall”). Consequently, we should be able to predict a storm’s wind speed based on its air pressure. Let’s investigate with the following specific question: What maximum sustained wind speed would we predict for a tropical storm with a central air pressure of 950 millibars?

There is nothing unique about the choice of the value 950. We aim to demonstrate the use of a predictive model for a specific input and this air pressure value is as good as any. The techniques we discuss here are appropriate for a much broader range of air pressures. As a result, our research question is likely of concern to anyone interested in the science or destructive capacity of a tropical storm. A better understanding of the associations between various physical characteristics of a storm could improve our ability to predict the danger to the local populace. That said, the correlation between air pressure and wind speed is widely understood by the scientific community, so we likely won’t reveal anything groundbreaking here! Regardless, the process of answering our question is a valuable academic exercise.

Acquire the Data

The NOAA website offers open access to a number of data tools related to climate and weather. But there is also a convenient data set built into the dplyr package called storms. The data is from NOAA and includes attributes for tropical storms in the Atlantic Ocean from 1975 through 2021. Import, wrangle, and review the data using the code below.

#import storm data

data(storms)

#wrangle storm data

storms2 <- storms %>%

select(wind,pressure) %>%

na.omit()

#review data structure

glimpse(storms2)## Rows: 19,066

## Columns: 2

## $ wind <int> 25, 25, 25, 25, 25, 25, 25, 30, 35, 40, 45, 50, 50, 55, 60, 6…

## $ pressure <int> 1013, 1013, 1013, 1013, 1012, 1012, 1011, 1006, 1004, 1002, 1…Each of the over 19 thousand observations refers to the measurement of a storm’s maximum sustained wind speed (in knots) and the air pressure (in millibars) inside the eye at the start of every six-hour time interval. The word “knot” is short-hand for nautical miles per hour, where a nautical mile is equivalent to 1.15 standard miles. As described in Chapter 5.1.2, we must randomly split this sample data into training and testing sets prior to estimating a model. In the code chunk below, we randomly select 75% of the observations for training and reserve the remaining 25% for testing.

#randomly select rows from sample data

set.seed(303)

rows <- sample(x=1:nrow(storms2),

size=floor(0.75*nrow(storms2)),

replace=FALSE)

#split sample into training and testing

training <- storms2[rows,]

testing <- storms2[-rows,]The original sample consists of 19,066 rows. We randomly select 75% of these rows to be part of the training set. But 75% of 19,066 is 14,299.5, so we round down to an integer using the floor() function. We could just as easily have rounded in other ways. The goal is to define an integer number of rows as close as possible to the desired percentage. The resulting 14,299 random row numbers are assigned to the training set. The remaining 4,767 row numbers are assigned to the testing set. With our sample data properly prepared for predictive analysis, we can determine the best model.

Analyze the Data

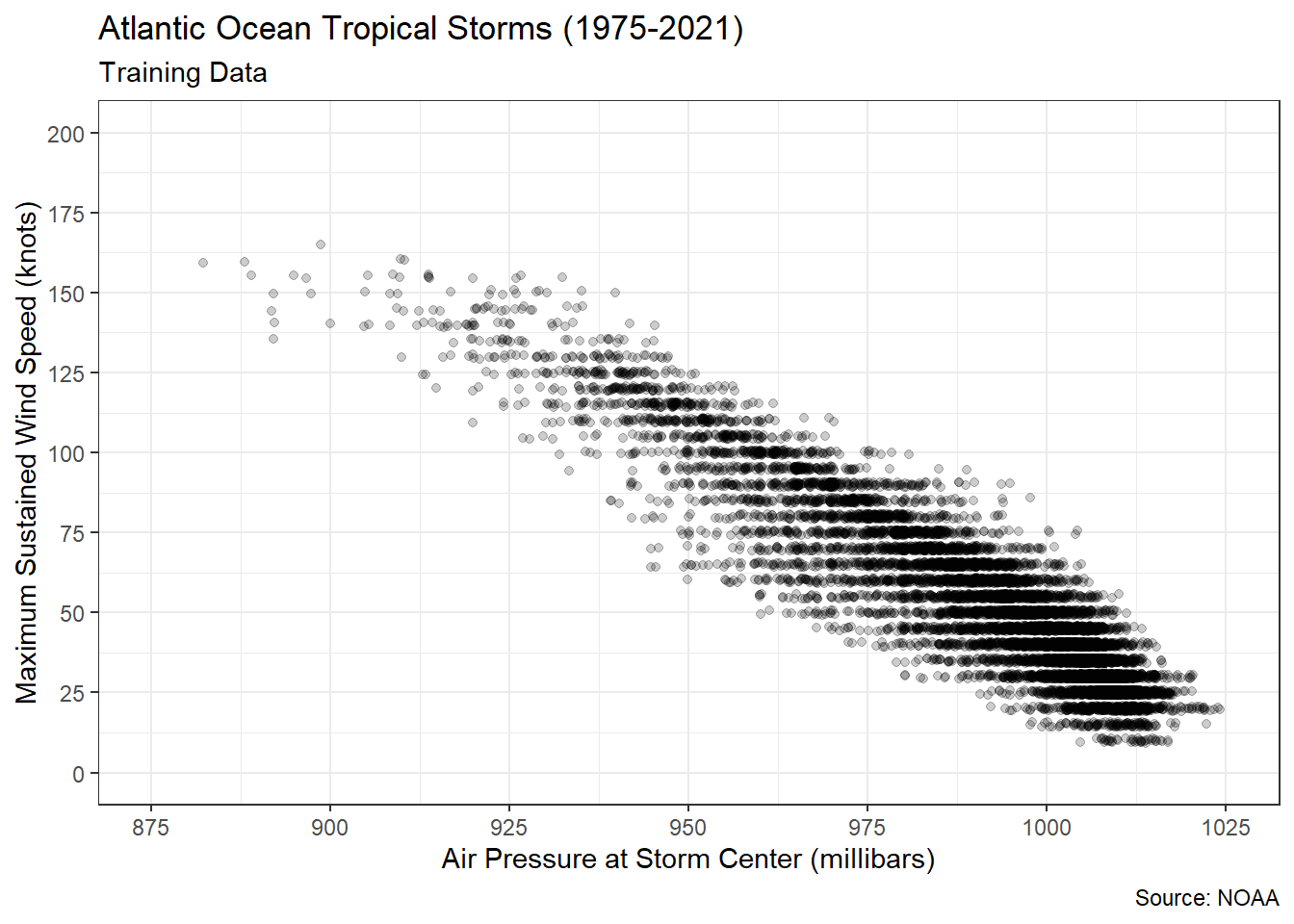

As always, before estimating a linear model we should ensure that the association between our predictor and response is plausibly linear. Figure 5.1 displays the relationship between air pressure and wind speed in the training data.

#create scatter plot

ggplot(data=training,

mapping=aes(x=pressure,y=wind)) +

geom_point(position="jitter",alpha=0.2) +

labs(title="Atlantic Ocean Tropical Storms (1975-2021)",

subtitle="Training Data",

x="Air Pressure at Storm Center (millibars)",

y="Maximum Sustained Wind Speed (knots)",

caption="Source: NOAA") +

scale_x_continuous(limits=c(875,1025),breaks=seq(875,1025,25)) +

scale_y_continuous(limits=c(0,200),breaks=seq(0,200,25)) +

theme_bw()

Figure 5.1: Linear Association between Air Pressure and Wind Speed

There appears to be a very strong, decreasing, linear association between the variables. As the air pressure inside the storm center increases, the maximum sustained wind speed appears to decrease. This aligns with what scientists know about pressure gradients. Wind moves from areas of high pressure to areas of low pressure. The larger the difference (gradient) between the two pressures, the faster the wind moves. Thus, very low pressure storm centers draw very high wind speeds. We can confirm our visual evidence by computing a Pearson correlation coefficient.

## [1] -0.9275745A coefficient value of -0.93 confirms a very strong, negative correlation. Based on both visual and numerical evidence, we can feel confident that a linear model is appropriate. Figure 5.2 adds the best-fit line to the scatter plot.

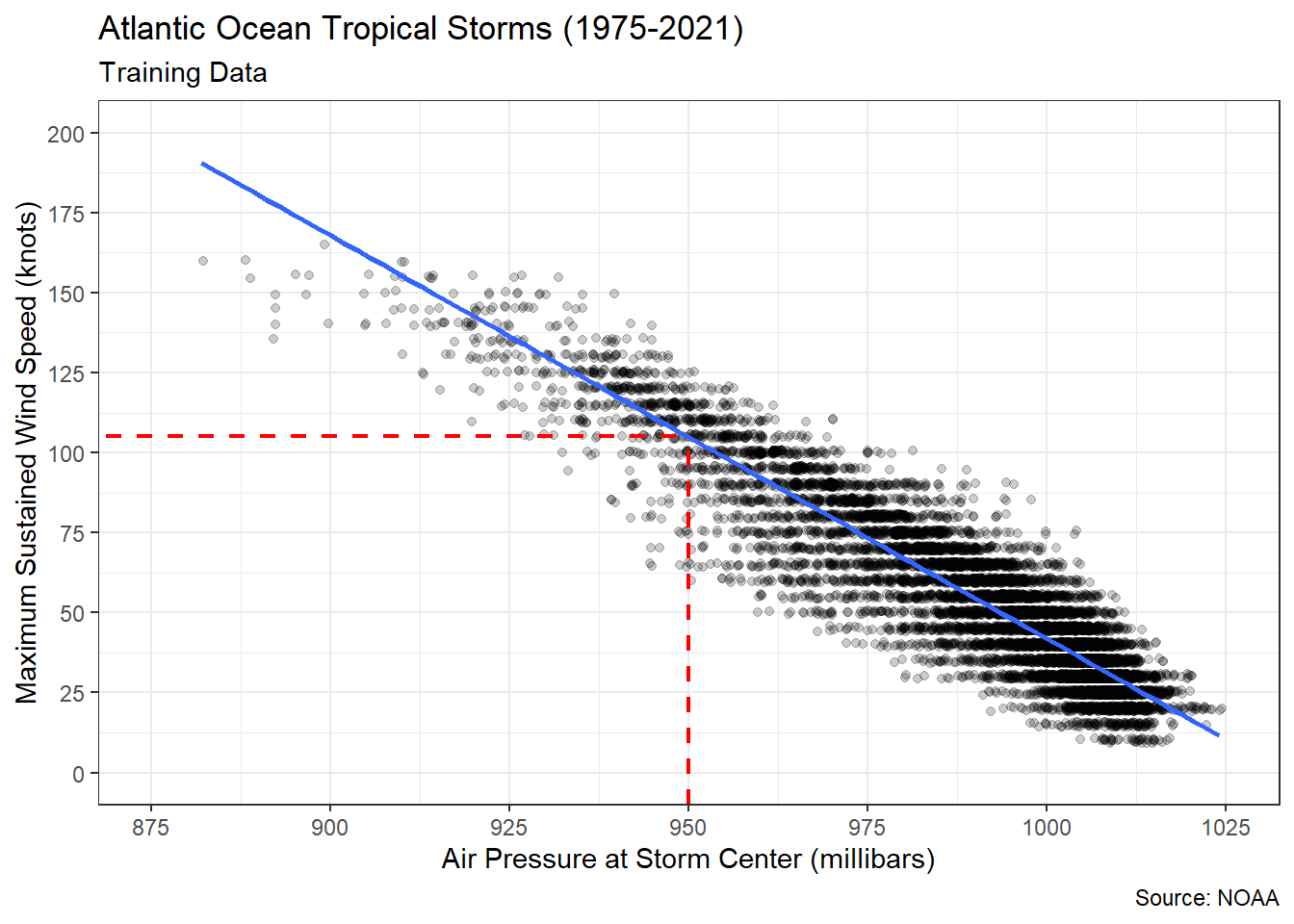

#create scatter plot

ggplot(data=training,

mapping=aes(x=pressure,y=wind)) +

geom_point(position="jitter",alpha=0.2) +

geom_smooth(method="lm",formula=y~x) +

geom_segment(x=950,xend=950,y=-10,yend=105,

linewidth=0.75,linetype="dashed",color="red") +

geom_segment(x=860,xend=950,y=105,yend=105,

linewidth=0.75,linetype="dashed",color="red") +

labs(title="Atlantic Ocean Tropical Storms (1975-2021)",

subtitle="Training Data",

x="Air Pressure at Storm Center (millibars)",

y="Maximum Sustained Wind Speed (knots)",

caption="Source: NOAA") +

scale_x_continuous(limits=c(875,1025),breaks=seq(875,1025,25)) +

scale_y_continuous(limits=c(0,200),breaks=seq(0,200,25)) +

theme_bw()

Figure 5.2: Linear Association between Air Pressure and Wind Speed

The best-fit (blue) line offers our first chance to try and answer the research question. Purely based on a visual inspection (dashed red line), it appears a storm with a central air pressure of 950 millibars should produce wind speeds around 105 knots. That said, there are observations in the scatter plot that vary between 60 to 125 knots even when the air pressure is fixed at 950 millibars. We’ll address this variability soon enough. First, let’s determine the equation of the best-fit line.

#estimate simple linear regression model

model <- lm(wind~pressure,data=training)

#review parameter estimates

coefficients(summary(model))## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 1301.535105 4.216796087 308.6550 0

## pressure -1.259642 0.004243529 -296.8382 0The least-squares algorithm estimated an intercept of \(1301.54\) knots and a slope of \(-1.26\) knots/millibar. In other words, for every one millibar increase in air pressure we expect a reduction in maximum wind speed of 1.26 knots. Returning to the functional form from the introduction to this section, we can write Equation (5.2).

\[\begin{equation} \hat{y}=1301.54 - 1.26 x \tag{5.2} \end{equation}\]

Using this equation, we can obtain a predicted wind speed (\(\hat{y}\)) for any air pressure (\(x\)) of interest. If we input \(x=950\), then we obtain \(\hat{y}=1301.54-1.26 \cdot 950=104.54\). That is very close to our visual estimate of 105 millibars! But there is no reason to perform the arithmetic manually. Instead, we use the predict() function.

## 1

## 104.8755Within the predict() function we reference the name of the linear model and the new data we want to input. The result is a similar prediction that varies only due to rounding. In one sense we could stop here and answer our research question. The answer appears to be 105 millibars. However, we must recall the lessons of Chapter 4. The prediction \(\hat{y}=105\) is merely a point estimate. If we had sampled different storms, then we would likely obtain a different model and a different prediction. We would prefer to have a plausible range of values for the prediction and a measure of confidence. Luckily, we can employ the same bootstrap resampling techniques we already learned.

#initiate empty data frame

results_boot <- data.frame(wind=rep(NA,1000))

#resample 1000 times and save predictions

for(i in 1:1000){

set.seed(i)

storms_i <- sample_n(training,size=nrow(training),replace=TRUE)

model_i <- lm(wind~pressure,data=storms_i)

results_boot$wind[i] <- predict(model_i,newdata=data.frame(pressure=950))

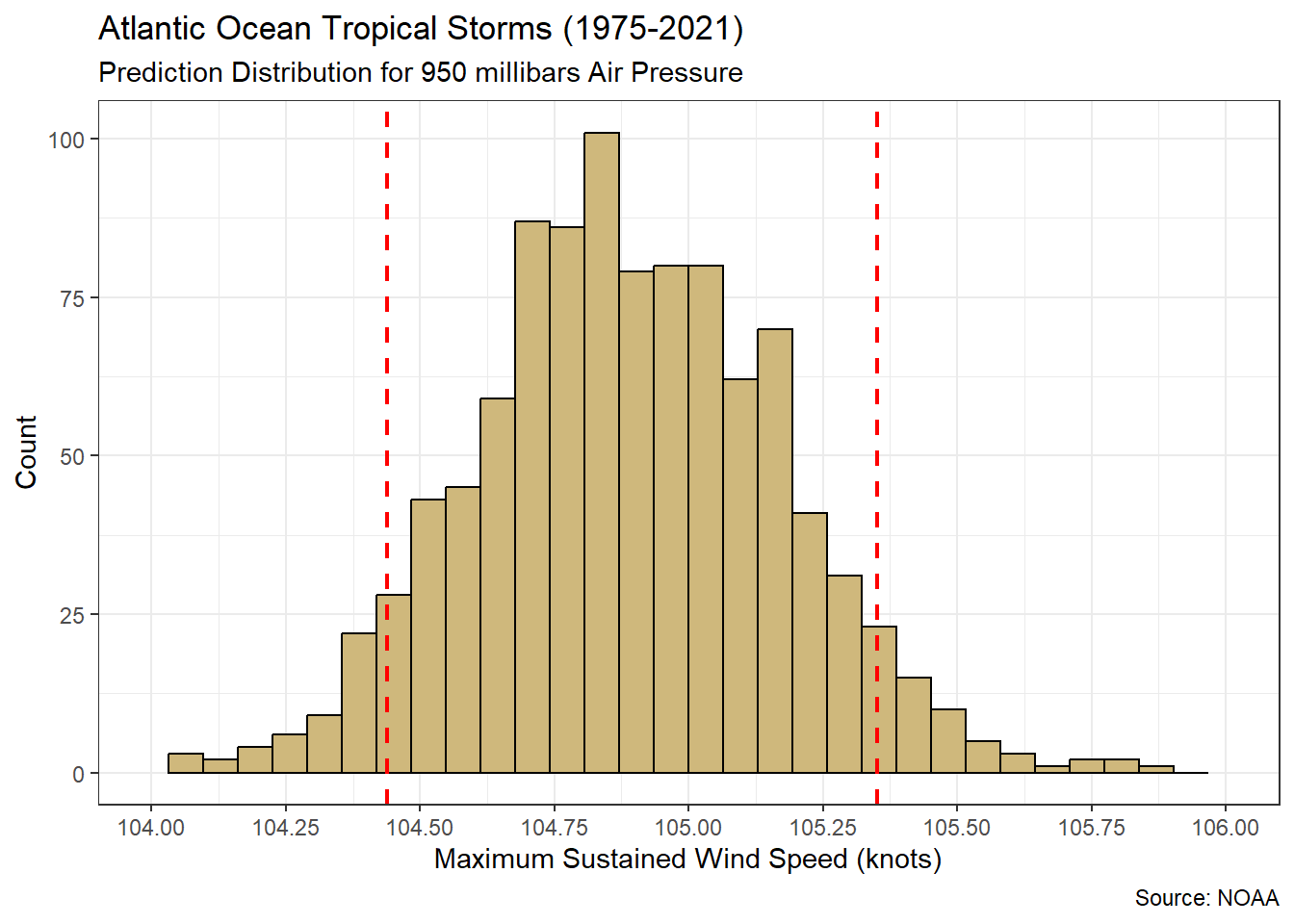

}In the previous code chunk, we repeatedly resample the training set with replacement. This process simulates gathering new storm measurements from the population. After each resample, we fit a simple linear regression model and predict the wind speed for the 950 millibar air pressure. Each time we obtain a slightly different prediction. We can review the distribution of these predictions in Figure 5.3.

#plot resampling distribution

ggplot(data=results_boot,

mapping=aes(x=wind)) +

geom_histogram(color="black",fill="#CFB87C",bins=32) +

geom_vline(xintercept=quantile(results_boot$wind,0.05),

color="red",linetype="dashed",linewidth=0.75) +

geom_vline(xintercept=quantile(results_boot$wind,0.95),

color="red",linetype="dashed",linewidth=0.75) +

labs(title="Atlantic Ocean Tropical Storms (1975-2021)",

subtitle="Prediction Distribution for 950 millibars Air Pressure",

x="Maximum Sustained Wind Speed (knots)",

y="Count",

caption="Source: NOAA") +

scale_x_continuous(limits=c(104,106),breaks=seq(104,106,0.25)) +

theme_bw()

Figure 5.3: Distribution for predicted maximum wind speed (knots) when air pressure is 950 millibars

Over 1,000 simulated training sets, our prediction of the maximum wind speed varies between 104 and 106 knots. A 90% confidence interval (red dashed lines) ranges between 104.44 and 105.35 knots. Hence, when the central air pressure of a storm is 950 millibars we are 90% confident that the maximum sustained wind speed will be between 104.44 and 105.35 knots, on average. As usual, the confidence interval is far superior to a single point estimate.

Advise on Results

A key point when advising stakeholders on the results of a predictive analysis regards the proper interpretation of the predicted response (\(\hat{y}\)). Importantly, \(\hat{y}\) represents the average (mean) response value given a particular predictor value \(x\). It was not an accident that we included the phrase “on average” at the end of our confidence interval interpretation. When we predict a wind speed of 105 knots, we are not suggesting that the next storm with a pressure of 950 millibars will produce winds of exactly 105 knots. We are saying that the next 1,000 storms (for example) with a pressure of 950 millibars will produce an average wind speed of 105 knots.

The same careful interpretation applies to the interval estimates. Our 90% confidence interval refers to the average wind speed of all storms that have a central air pressure of 950 millibars. If we wanted to predict the wind speed of the next storm that has a pressure of 950 millibars, then the 90% confidence interval would be much wider. It is much more difficult to precisely predict the behavior of a single event than the average behavior of many events. This is a relatively foundational concept in statistics. We can observe the difference in the two intervals using the predict() function.

#compute 90% confidence interval

predict(model,newdata=data.frame(pressure=950),

interval="confidence",level=0.9)## fit lwr upr

## 1 104.8755 104.5447 105.2063#compute 90% prediction interval

predict(model,newdata=data.frame(pressure=950),

interval="prediction",level=0.9)## fit lwr upr

## 1 104.8755 89.21584 120.5352By adding two optional terms in the predict() function we transform a point estimate into an interval estimate. In this case, R is relying on mathematical theory to produce the lower and upper interval bounds. We will not belabor the details here. Our current purpose is to provide some intuition regarding the precision of our predictions. When we choose the option interval="confidence" and set the level to 90%, we are mimicking the bootstrap resampling from the previous section. The minor differences in the bounds are due to applying theory rather than computation. We refer to this estimate as a confidence interval for the mean response.

The second estimate is known as a prediction interval. A prediction interval seeks to estimate the next response value rather than the average response value. Notice the bounds are much wider. The next storm with a pressure of 950 millibars could plausibly produce winds anywhere from 89.22 to 120.54 knots. A single storm could vary greatly and we observed that first-hand in the scatter plot in Figure 5.2. But averaging tends to reduce variability. When we average the wind speeds of the next 1,000 storms with a pressure of 950 millibars, we obtain a much more precise range of plausible values.

Both types of intervals are incredibly value, but it is important for data scientists to apply them appropriately. When reporting the 90% confidence interval to a stakeholder, it is critical they understand that the estimate refers to the mean response. Imagine you provide the precise confidence interval of 104.54 to 105.21 knots and the next storm with a pressure of 950 millibars produces winds of 120 knots. A stakeholder might think your analysis is questionable, even though this wind speed is included in the prediction interval. Thus, it is important to clearly communicate this distinction.

Answer the Question

Based on a training set of 14,299 Atlantic storm measurements between 1975 and 2021, we predict an average maximum sustained wind speed between 104.44 and 105.35 knots when the air pressure of the eye is 950 millibars. This prediction is only valid for tropical storms in the Atlantic Ocean and the model should only be applied to central air pressures between 882 and 1,024 millibars. Other air pressure values are outside the range of the training data and require extrapolation. Further research is required to test a broader range of air pressures.

While the prediction of an individual input value is useful, we prefer to predict all the values in the testing set and to determine the overall accuracy of the model. After all, prediction models are only valuable if we can expect them to be consistently close to the actual response for a variety of input values. That said, there are many different measures for the proximity of predictions and observed values. In the next section, we describe one of the most common measure of regression accuracy.

5.2.2 Regression Accuracy

The primary metric for evaluating the quality of a prediction model is accuracy. However, the concept of accuracy has a slightly different meaning depending on whether we are predicting a numerical or categorical (factor) variable. When predicting the levels of a factor, we simply count the number of times the predictions are correct or incorrect. But with a continuous numerical variable, our predictions for the response will seldom (if ever) be exactly correct. As a result, we instead measure accuracy in terms of distance from the correct value. A common metric for the aggregate distance of all predictions from the correct numerical value is root mean squared error (RMSE).

The calculation for RMSE proceeds exactly how the name implies. First we compute the error as the distance between the true value and the predicted value. Because those distances can be positive or negative, depending on whether we over or under-estimate the true value, we square the error. In order to aggregate the squared errors for all predictions made by the model, we compute the mean. However, that mean is in squared units which is not very intuitive in real-world context. Thus, we take the square root to return to the original units of the response variable. In mathematical notation, RMSE is represented as follows:

\[\begin{equation} \text{RMSE}=\sqrt{\frac{\sum (y-\hat{y})^2}{n}} \tag{5.3} \end{equation}\]

In Equation (5.3), \(y\) is the actual response value, \(\hat{y}\) is the predicted response value, and \(n\) is the number of predictions. In a sense, RMSE is the average distance of our predictions from the actual response values. In general, an accurate regression model is one that produces a small RMSE. We can avoid overfitting by splitting our sample data into training and testing sets. Then we estimate the parameters of the model using the training data and evaluate the model’s accuracy using the testing data. We demonstrate this process below using the tidyverse and a familiar example.

Ask a Question

We once again return to the penguins of the Palmer Archipelago in Antarctica. In Chapters 3.3.2 and 4.2.4 we explored the linear association between a penguin’s flipper length and its body mass. As part of an exploratory analysis, we established a strong, positive, linear association between the two variables. Then we applied inferential statistics to demonstrate the significance of that association and an interval estimate for the slope parameter. Now we turn our attention to the development of a predictive model and ask the following question: How accurately can we predict the body mass of a Palmer penguin based on its flipper length?

An accurate predictive model for body mass could be incredibly useful for the scientists at Palmer Station. Perhaps flipper length is much easier than body mass to collect for new specimens. If so, then a predictive model could avoid the need for weighing or help calibrate the required scales. A predictive model could also assist with diagnosing penguins that have a weight dramatically different from what would be expected for their size. At the very least, such a model would provide validation for real-world measurements.

Acquire the Data

As with our previous two encounters, we import the data from the palmerpenguins package and isolate the variables of interest.

#load palmerpenguin library

library(palmerpenguins)

#import penguin data

data(penguins)

#wrangle penguin data

penguins2 <- penguins %>%

select(flipper_length_mm,body_mass_g) %>%

na.omit()For this example, we randomly select 75% of the observations for training. The randomness of selection is critical because we want the training and testing sets to have similar distributions. We implement the split in the code below.

#randomly select rows from sample data

set.seed(303)

rows <- sample(x=1:nrow(penguins2),size=floor(0.75*nrow(penguins2)),replace=FALSE)

#split sample into training and testing

training <- penguins2[rows,]

testing <- penguins2[-rows,]The original sample consists of 342 rows (penguins). We randomly select 75% of these rows to be part of the training set. But 75% of 342 is 256.5, so we round down using the floor() function. The resulting 256 row numbers are assigned to the training set. The remaining 86 row numbers are assigned to the testing set. Just as a quality check on the two data sets, let’s compute the mean body mass of the penguins in each set.

## [1] 4200.098## [1] 4206.686The average body mass of penguins in the training and testing sets are within 7 grams of one another. Given the average penguin weighs over 4,000 grams, we feel relatively confident that the random split maintained similar distributions. We are now ready to estimate a regression model using the training data and validate its accuracy using the testing data.

Analyze the Data

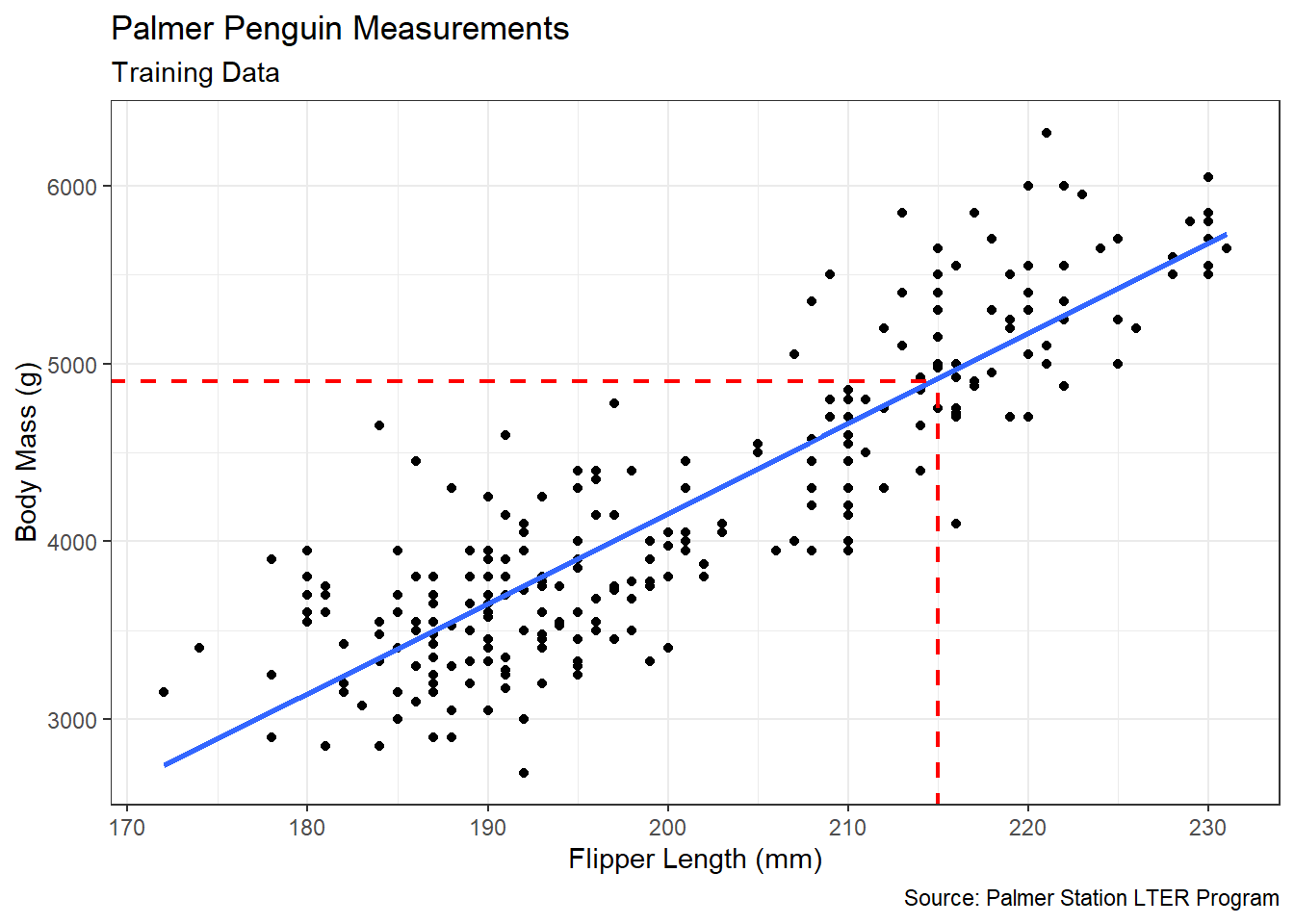

For consistency, we re-create the scatter plot and best-fit line from previous chapters. However, in this case we only use the training data. We do not permit the model to consider the testing data until the end of our analysis.

#create scatter plot

ggplot(data=training,aes(x=flipper_length_mm,y=body_mass_g)) +

geom_point() +

geom_smooth(method="lm",formula=y~x,se=FALSE) +

geom_segment(x=215,xend=215,y=2500,yend=4900,

linewidth=0.75,linetype="dashed",color="red") +

geom_segment(x=169,xend=215,y=4900,yend=4900,

linewidth=0.75,linetype="dashed",color="red") +

labs(title="Palmer Penguin Measurements",

subtitle="Training Data",

x="Flipper Length (mm)",

y="Body Mass (g)",

caption="Source: Palmer Station LTER Program") +

theme_bw()

Figure 5.4: Linear Association between Flipper Length and Body Mass

Figure 5.4 displays the same strong, positive, linear association we observe in previous sections. But our intended use for the best-fit line is very different than before. We now hope to use the best-fit line to estimate an unknown body mass based on a known flipper length. If, for example, we are asked to predict the body mass of a penguin with 215 mm flippers, we might consult the dashed red line in the scatter plot. It appears the average penguin with 215 mm flippers has a body mass of about 4,900 grams. Yet, there is no need to estimate this value visually. We instead estimate the parameters of the best-fit line using the training data and compute the prediction directly.

#estimate simple linear regression model

model <- lm(body_mass_g~flipper_length_mm,data=training)

#review parameter estimates

coefficients(summary(model))## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -5974.51250 354.319942 -16.86191 2.555754e-43

## flipper_length_mm 50.65933 1.759747 28.78784 6.108672e-82We consult the Estimate column for the intercept and slope of the best-fit line. Thus, the blue line in Figure 5.4 has the following equation:

\[\begin{equation} \text{Body Mass}=50.66 \cdot \text{Flipper Length}-5974.51 \tag{5.4} \end{equation}\]

Based on Equation (5.4), the average penguin with 215 mm flippers is predicted to have a body mass of 4,917.39 grams. Our visual estimate was very close! But the algebraic prediction is more precise. We can avoid rounding and obtain an even more precise estimate using the predict() function.

## 1

## 4917.244The predict() function requires two arguments. The first is the model with which to make the prediction. The second is a data frame of input values to predict. In this case, we only use the single value of 215. Of course, we have no idea how accurate this individual prediction might be for a given penguin. Upon review of the scatter plot, penguins with 215 mm flippers have a variety of body masses. To assess the overall prediction accuracy of the model, we now apply the entire testing set.

#estimate body mass for entire testing set

testing_pred <- testing %>%

mutate(pred_body_mass_g=predict(model,newdata=testing))

#review structure of predictions

glimpse(testing_pred)## Rows: 86

## Columns: 3

## $ flipper_length_mm <int> 181, 195, 190, 197, 194, 183, 184, 181, 190, 179, 19…

## $ body_mass_g <int> 3625, 4675, 4250, 4500, 4200, 3550, 3900, 3300, 4600…

## $ pred_body_mass_g <dbl> 3194.827, 3904.057, 3650.761, 4005.376, 3853.398, 32…After predicting the entire testing set, we have real and predicted body masses. Remember, the model does not have access to these 86 observation when we estimate the parameters. It is as though someone brought along 86 new penguins and asked us to predict the body masses. If we let \(y\) be the true body mass and \(\hat{y}\) be the predicted body mass, then we compute RMSE using Equation (5.3).

#compute RMSE for model

testing_pred %>%

summarize(RMSE=sqrt(mean((body_mass_g-pred_body_mass_g)^2)))## # A tibble: 1 × 1

## RMSE

## <dbl>

## 1 376.If we employ the best-fit line from the training data to predict new testing data, we find a RMSE of about 376. In context, this suggests we can predict the body mass of a Palmer penguin to within 376 grams, on average, if we know its flipper length. This is our best estimate of the model’s performance on new data (penguins). Whether this accuracy is acceptable depends on the opinions of domain experts.

Advise on Results

When interpreting the results of this analysis for domain experts, we might simply provide the equation of the best-fit line along with instructions for its use. Multiply a penguin’s flipper length by 50.66 and subtract 5,974.51. The resulting body mass estimate is accurate to within 376 grams, on average. The domain expert may or may not find this model valuable. Perhaps they are capable of estimating a penguin’s body mass more accurately based on experience alone. If a more accurate model is required, we may consider including additional predictor variables (e.g., bill length). We investigate these multiple linear regression models in the next section. On the other hand, the domain expert may not know whether this accuracy is “good” or not. In that case, it helps if we provide a frame of reference in the form of a null model.

In predictive analysis, the null model represents the prediction we might make with no information at all. Typically, this involves predicting the average response value. We know from previous work that the mean body mass of penguins in the training set is 4,200.10 grams. What would be the RMSE if we predicted the testing set by always guessing 4,200.10 for the body mass?

## # A tibble: 1 × 1

## RMSE

## <dbl>

## 1 728.If we use no other information about the penguins and simply guess the mean body mass for all of them, we are off by an average of nearly 728 grams. By using a single piece of information, namely the penguin’s flipper length, we cut that error nearly in half! Comparisons to a null model such as this often assist in describing the value of a trained model to domain experts. If we are asked whether the flipper length model is “good” we can reply that it cuts the error in half compared to no model at all. Of course, we still don’t know how much more accurate the model could be if additional predictors are included.

In terms of limitations, a word of caution regarding extrapolation is warranted. The training set includes penguins with flipper lengths ranging between 172 and 231 mm. The model parameters are estimated based solely on data in that range. It is possible that the association between flipper length and body mass changes for penguins with extremely short or long flippers. Thus, the model should not be applied to such situations. If domain experts desire a more robust model, then a more diverse set of sample data is required.

Answer the Question

Based on a sample of 342 penguins from the Palmer Archipelago in Antarctica, it appears we can predict a penguin’s body mass to within 376 grams, on average, if we know its flipper length. This accuracy, and the associated model, only apply to the Adelie, Chinstrap, and Gentoo species with flipper lengths between 172 and 231 mm. Further study is required to determine if a more accurate model is achievable using additional characteristics of the resident penguins. In order to develop a model with multiple predictors, we require the methods presented in the next section.

5.2.3 Multiple Linear Regression

Previously, we estimated a simple linear regression model using training data and validated its accuracy using testing data. Though the resulting accuracy was superior to simply guessing the mean response (i.e., the null model), it is possible that additional predictor variables improve the model’s accuracy. A linear model with a continuous numerical response and more than one predictor is referred to as a multiple linear regression model. In general, a model with \(p\) predictor variables is defined as in Equation (5.5).

\[\begin{equation} y = \beta_0+\beta_1 x_1+\beta_2 x_2+\cdots+\beta_p x_p \tag{5.5} \end{equation}\]

Similar to the simple linear regression equation, there is a single intercept parameter (\(\beta_0\)). However, there are now multiple slope parameters (\(\beta_1,\beta_2,...,\beta_p\)). The slope for a given predictor represents the change in the response when all other predictors are held constant. That said, estimating and interpreting the value of each slope parameter is primarily in the realm of inference. Our current focus is prediction, so we want to know whether the presence of each predictor increases the accuracy of the model. We investigate the value of multiple predictors using the tidyverse in the example below.

Ask a Question

In Chapter 2.3.4 we demonstrate data cleaning with a sample of player performance values from the National Football League (NFL) scouting combine. With regard to missing values, we notice that some players decline to compete in certain events. Yet, this missing information could be valuable for team executives trying to determine whether to draft a particular player. One solution is to develop a predictive model for the missing event based on the player’s performance in the other events. As an example, we pose the following question: How accurately can we predict an offensive lineman’s forty-yard dash time based on the other five events?

The answer to this question could be of very high interest to teams considering drafting an offensive lineman. The draft value of this position was made famous, in part, by the 2009 movie The Blind Side which highlighted the early career of offensive tackle Michael Oher. Though the facts of the film have more recently been brought into question, the value of the position to football teams remains unquestionably high. In this case study, we assume the forty-yard dash is the missing event and the offensive linemen participate in the other five events. However, the process described here remains the same for other missing events.

Acquire the Data

The data for NFL combines in the years 2000 to 2020 is made available in the file nfl_combine.csv. The offensive line of a football team consists of five players. The center (C) is placed in the middle of the line with offensive guards (OG) to the left and right. Outside of the two guards are offensive tackles (OT) on the far left and far right of the line. Sometimes players are capable of performing in multiple positions and thus are referred to generically as offensive linemen (OL). Below, we isolate these positions and wrangle the combine data.

#import NFL Combine data

combine <- read_csv("nfl_combine.csv") %>%

filter(Pos %in% c("C","OG","OT","OL")) %>%

transmute(Forty=`40YD`,Vertical,Bench=BenchReps,

Broad=`Broad Jump`,Cone=`3Cone`,Shuttle) %>%

na.omit()

#review data structure

glimpse(combine)## Rows: 451

## Columns: 6

## $ Forty <dbl> 4.85, 5.32, 5.23, 5.57, 5.22, 5.24, 5.08, 5.08, 4.91, 5.37, 5…

## $ Vertical <dbl> 36.5, 29.0, 25.5, 27.0, 30.5, 30.0, 30.5, 33.0, 29.5, 25.0, 3…

## $ Bench <dbl> 24, 26, 23, 23, 21, 34, 24, 28, 24, 22, 26, 26, 20, 28, 25, 2…

## $ Broad <dbl> 121, 110, 102, 97, 109, 107, 107, 113, 110, 93, 113, 109, 106…

## $ Cone <dbl> 7.65, 8.26, 7.88, 8.07, 7.58, 8.03, 7.57, 7.91, 7.76, 8.17, 8…

## $ Shuttle <dbl> 4.68, 5.07, 4.76, 4.98, 4.66, 4.87, 4.69, 4.64, 4.62, 5.11, 4…The resulting data frame includes 451 offensive linemen who performed in all six events. Though our goal is to predict forty-yard dash times when they are not present, we need training and testing data where these values are present. Otherwise, we cannot estimate and validate a predictive model. We next randomly split the sample data into 75% training and 25% testing.

#randomly select rows from sample data

set.seed(303)

rows <- sample(x=1:nrow(combine),size=floor(0.75*nrow(combine)),replace=FALSE)

#split sample into training and testing

training <- combine[rows,]

testing <- combine[-rows,]As always, our goal when splitting the sample is to have each data set maintain the same distribution. One quality check in this regard is to compute the mean response within the training and testing sets.

## [1] 5.255296## [1] 5.216814The average forty-yard dash times for offensive linemen in the training and testing sets are 5.255 and 5.217 seconds, respectively. Though we would not expect the two values to be exactly the same, the random approach to splitting the data sets should ensure close proximity. In this case, the mean response for both sets is relatively close. Our data is now ready for analysis.

Analyze the Data

When constructing predictive models, we require a baseline accuracy for comparison. Otherwise, it is difficult to assess the value of the model. Typically, the baseline is derived from the null model which consists of predicting the mean response for all observations. In our case, the null model suggests every offensive lineman runs the forty-yard dash in 5.255 seconds. Let’s compute the accuracy of this model against the testing set.

## # A tibble: 1 × 1

## RMSE

## <dbl>

## 1 0.175If we always predict a forty-yard dash time of 5.255 seconds for offensive lineman, we will be off by an average of 0.175 seconds. Can we do better by including information from the other events? Let’s find out by investigating the association between events.

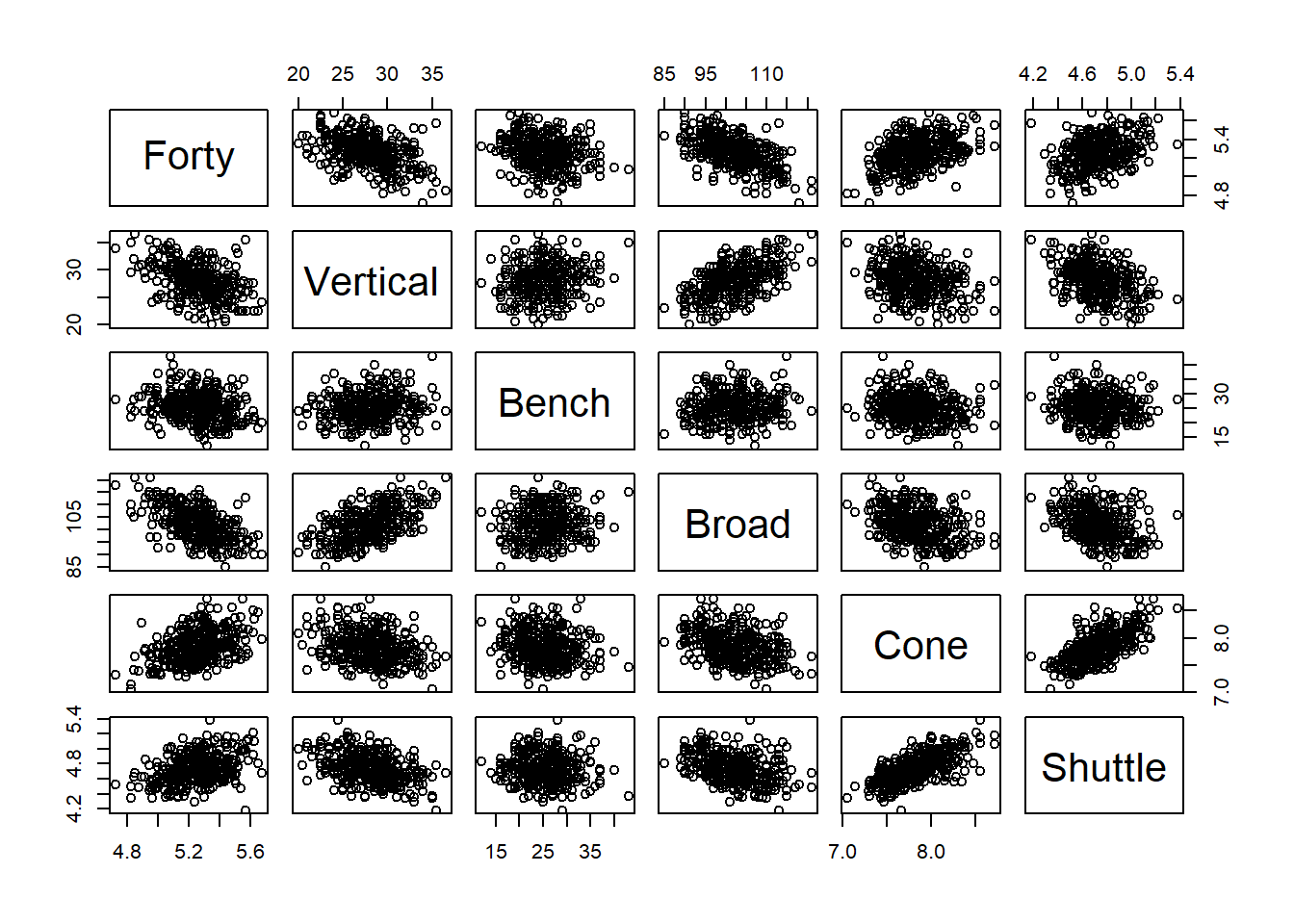

Figure 5.5: Linear Associations between Events

Upon reviewing the top row of the scatter plot matrix in Figure 5.5, we find some clear associations between forty-yard dash time and the other events. The vertical jump, bench press, and broad jump all appear to have negative, linear associations with forty-yard dash time. In other words, the higher or farther a player jumps, the lower (faster) the forty-yard dash time. Similarly, the more repetitions a player bench presses, the faster the time. Meanwhile, the associations with the cone and shuttle drills appear to be positive and linear. The longer a player takes to complete each drill, the longer it takes them to complete the forty-yard dash. Overall, it appears the other five events could provide some predictive value for forty-yard dash time.

As a starting point, we estimate a simple linear regression model with only vertical jump height as the predictor. The model is fit to the training data and evaluated on the testing data. Does this model outperform the null model?

#estimate simple linear regression model

model1 <- lm(Forty~Vertical,data=training)

#estimate accuracy of model

testing %>%

mutate(pred_Forty=predict(model1,newdata=testing)) %>%

summarize(RMSE=sqrt(mean((Forty-pred_Forty)^2)))## # A tibble: 1 × 1

## RMSE

## <dbl>

## 1 0.155The answer is yes! By applying vertical jump height we reduce the average error of the model from 0.175 to 0.155 seconds. Can we do even better by adding another predictor? We add bench press repetitions to the linear model with a plus sign.

#estimate multiple linear regression model

model2 <- lm(Forty~Vertical+Bench,data=training)

#estimate accuracy of model

testing %>%

mutate(pred_Forty=predict(model2,newdata=testing)) %>%

summarize(RMSE=sqrt(mean((Forty-pred_Forty)^2)))## # A tibble: 1 × 1

## RMSE

## <dbl>

## 1 0.149Again, the answer is yes! When we use a player’s vertical jump and bench press we can predict forty-yard dash time to within 0.149 seconds, on average. Notice the improvement is smaller than when we transitioned from the null model to the vertical jump model. Based on the scatter plot matrix, this should not be too surprising. The correlation between bench press and forty-yard dash appears weaker than that of vertical jump and forty-yard dash. Yet, we still witness improvement with the additional predictor. What happens to our accuracy if we include all five events?

#estimate multiple linear regression model

model_all <- lm(Forty~.,data=training)

#estimate accuracy of model

testing %>%

mutate(pred_Forty=predict(model_all,newdata=testing)) %>%

summarize(RMSE=sqrt(mean((Forty-pred_Forty)^2)))## # A tibble: 1 × 1

## RMSE

## <dbl>

## 1 0.137We achieve even further reduction in error down to 0.137 seconds. Notice we use a short-cut in the lm() function this time. The period in the syntax Forty~. includes every other variable in the data frame as a predictor. By using the information provided by the other five events, we can predict an offensive lineman’s forty-yard dash time to within 0.137 seconds. This represents a 22% reduction in error compared to the null model.

In order to provide an actionable prediction model to domain experts, we require estimates for the model parameters. We could simply use the estimated parameters from the training data model. However, common practice to obtain even better parameter estimates is to fit the final model to all of the data.

#estimate parameter values

model_final <- lm(Forty~.,data=combine)

#review parameter estimates

coefficients(summary(model_final))## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 5.169997868 0.262512406 19.694299 1.494712e-62

## Vertical -0.006555840 0.002549351 -2.571572 1.044743e-02

## Bench -0.004435510 0.001296457 -3.421256 6.808712e-04

## Broad -0.008877147 0.001218900 -7.282916 1.495156e-12

## Cone 0.130625002 0.029466497 4.433001 1.171945e-05

## Shuttle 0.055273159 0.046433750 1.190366 2.345372e-01Given the values in the Estimate column, the final multiple linear regression model with five predictors is written as in Equation (5.6).

\[\begin{equation} \text{Forty}=5.170-0.007 \cdot \text{Vertical}-0.004 \cdot \text{Bench}-0.009 \cdot \text{Broad}+\\ 0.131 \cdot \text{Cone}+0.055 \cdot \text{Shuttle} \tag{5.6} \end{equation}\]

Though we base the parameter estimates on the entire sample, the reported accuracy of the model is still based on the training and testing split. This subtle distinction is important! We validate the accuracy of a model by testing it on data that it did not “see” during training. Once we decide which predictors are included in the most accurate model, we estimate the parameters using all available data. We do not compute the RMSE for this final model, because we have no remaining hold-out data. Instead, we rely on the RMSE from the validation process.

Advise on Results

Our predictive model provides a method for NFL team executives to estimate an offensive lineman’s forty-yard dash time when that player declines to participate in the event. The domain expert need only “plug in” the values for the five other events into the multiple linear regression equation and complete the arithmetic to obtain an estimated forty-yard dash time. That estimate is accurate to within 0.137 seconds, on average. Whether this accuracy is sufficient for use in the NFL draft is up to the domain experts. If a more accurate model is required, then we might attempt more advanced non-linear or non-parametric approaches. However, the majority of these approaches are outside the scope of the text.

An important limitation of the model is the assumption that players participate in all five other events. If a player skips the shuttle drill, for example, it is not appropriate to use our current model and simply leave out the shuttle term. The model was trained in the presence of shuttle drill data and that can influence the parameter estimates for the other predictors. If we want a model that excludes the shuttle drill, then we must repeat our training and testing process without it.

#estimate multiple linear regression model

model4 <- lm(Forty~.-Shuttle,data=training)

#estimate accuracy of model

testing %>%

mutate(pred_Forty=predict(model4,newdata=testing)) %>%

summarize(RMSE=sqrt(mean((Forty-pred_Forty)^2)))## # A tibble: 1 × 1

## RMSE

## <dbl>

## 1 0.137We use another short-cut in the lm() function to exclude shuttle drill from the model. By preceding a predictor with the minus sign, we remove it from the model. The four-variable model without shuttle drill predicts forty-yard dash time to within an average of just over 0.137 seconds. We removed a predictor and witnessed almost no increase in error. One way to interpret this is that the shuttle drill offers almost no additional predictive value when we already have the other four events included. What are the new parameter estimates when we fit the four-variable model to all of the data?

#estimate parameter values

model_final2 <- lm(Forty~.-Shuttle,data=combine)

#review parameter estimates

coefficients(summary(model_final2))## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 5.288807793 0.242911355 21.772584 3.911342e-72

## Vertical -0.007269022 0.002479108 -2.932112 3.539881e-03

## Bench -0.004407247 0.001296845 -3.398438 7.385550e-04

## Broad -0.008962307 0.001217368 -7.362039 8.803704e-13

## Cone 0.152420633 0.023097960 6.598879 1.177380e-10While some of the parameters changed very little, the intercept and the cone drill parameter estimates changed quite a bit. This is due, in part, to the very strong correlation between the cone and shuttle drills depicted in Figure 5.5. With such a strong dependence between the two predictors, the removal of one impacts the estimate of the other. Thus, in a situation where the domain expert is missing the shuttle drill, they should employ this new regression equation rather than the five-variable equation.

Answer the Question

Based on a sample of 451 offensive linemen, it appears we can predict a player’s forty-yard dash time to within 0.137 seconds if we know their performance in the other five events. Even if we are missing the shuttle drill time, we can still predict the forty-yard dash with roughly the same accuracy. Further study is necessary to determine if other factors, such as the player’s height and weight, provide additional predictive value.

A logical extension to this study is to determine which combination of predictors provides the most accurate model. Adding more predictors does not always increase the accuracy of a model. If predictor variables are not correlated with the response, then including them in the model may just introduce “noise” that decreases the model’s effectiveness. Thus, we prefer to find the smallest number of predictors necessary to obtain a sufficiently accurate model. This process of choosing predictors is known as feature selection or variable selection. There are a wide variety of techniques for determining the optimal combination of predictors, such as subset selection, regularization, and dimension reduction. But these approaches are reserved for more advanced texts.

5.2.4 Regression Trees

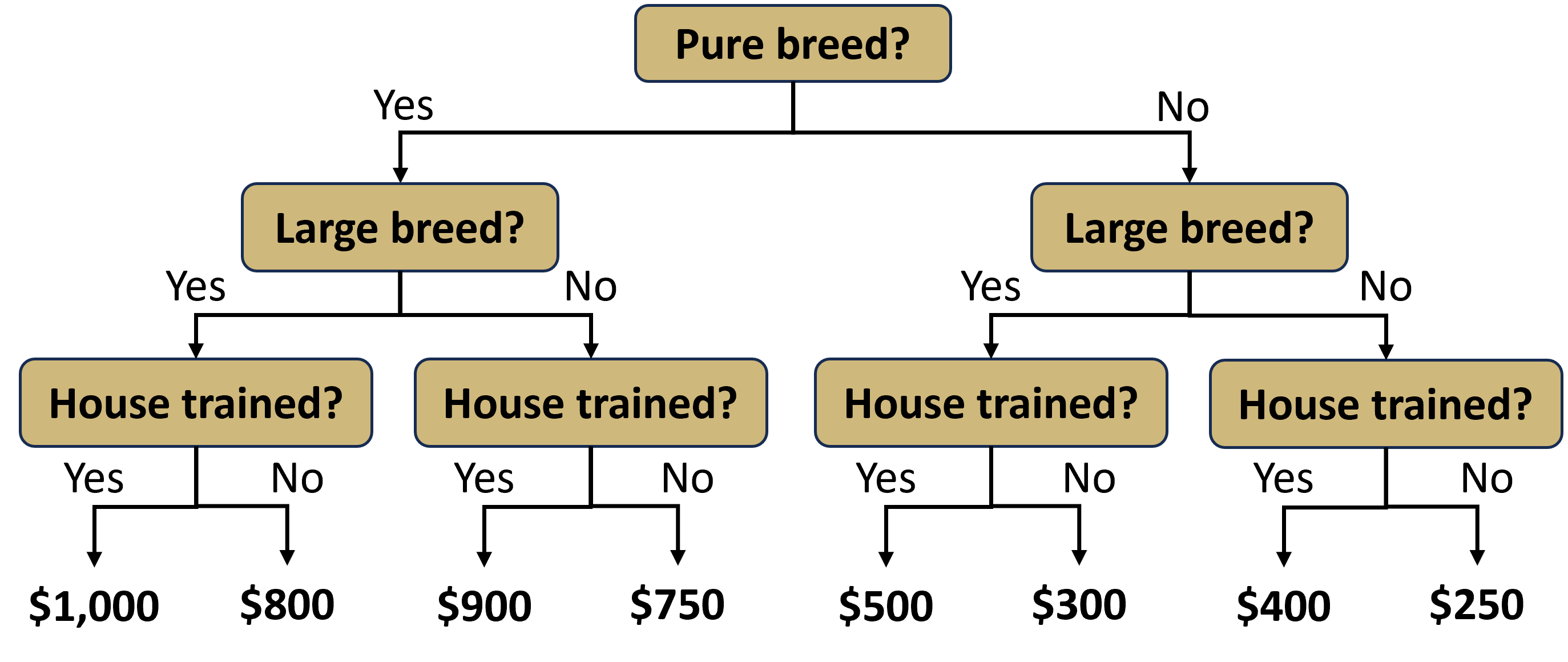

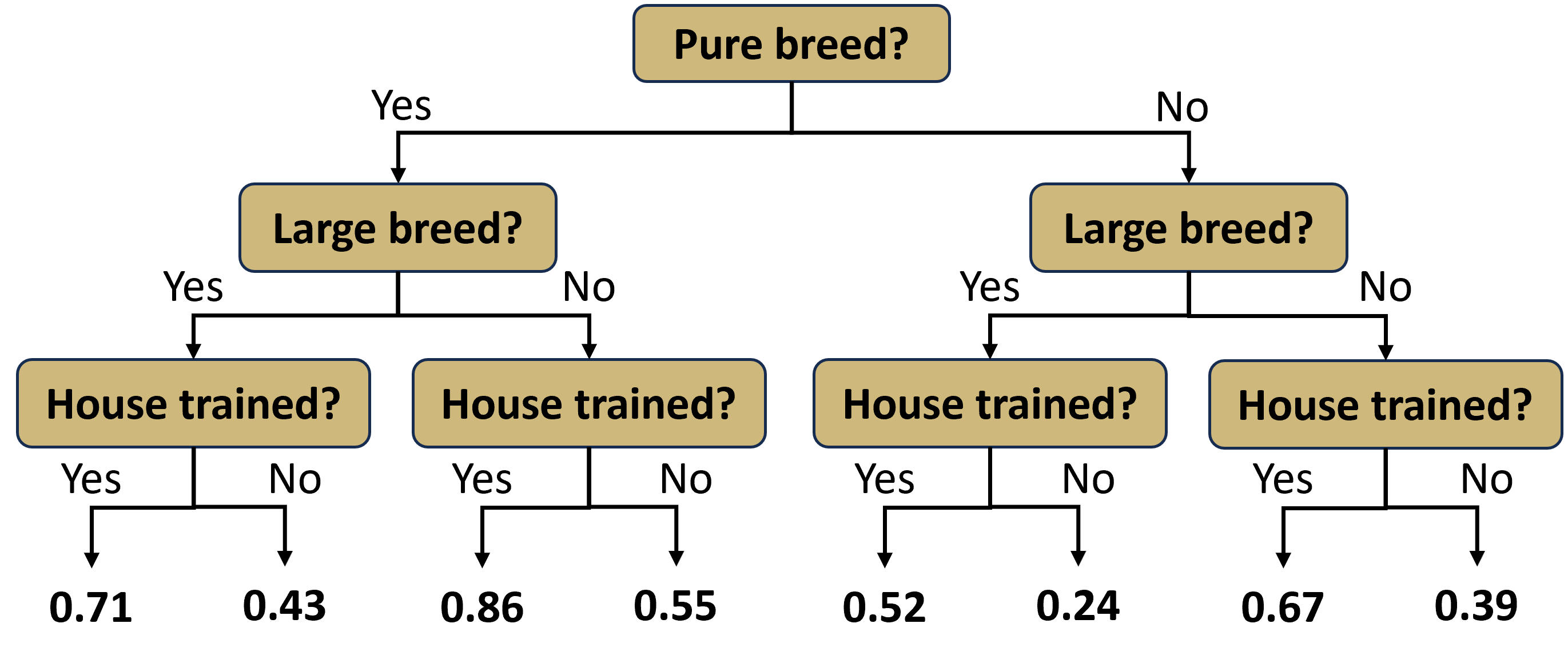

Thus far we have only considered parametric models for predicting a numerical response. Multiple linear regression is a parametric approach because it consists of estimating parameters (\(\beta_0,\beta_1,\cdots,\beta_p\)) in a linear equation. However, a whole host of non-parametric regression models also exist. Non-parametric models have no particular functional form and instead derive predictions directly from the data. One common example of a non-parametric model is a decision tree. When the response is a numerical variable, we refer to the model as a regression tree. Regression trees consist of a sequence of binary decisions that eventually end with the prediction of a numerical response. Consider the simple example of adopting a new dog. If we want to predict the cost, we might employ a regression tree like that in Figure 5.6.

Figure 5.6: Regression Tree to Predict Dog Adoption Cost

Though presented upside-down, the diagram does roughly resemble a tree. At each level of the tree, there exists a binary decision that creates a branch. At the first level, we must decide whether we care to have a pure breed dog or not. If we do not want a pure breed dog, then we take the right branch. At the next level, we are asked whether we want a large dog. Suppose we do want a large breed and take the left branch. Finally, we must decide whether we want the dog to be house trained. If the answer is yes, then we take the left branch again. Having made all of the decisions, we arrive at a terminal leaf of the tree. The associated value of $500 is our prediction for the cost to adopt the dog.

Notice this model requires no calculations to produce a prediction. There are no parameters or equations, just a simple visual diagram. But where did the predicted costs at the bottom of the tree come from? We demonstrate the answer to this question in an example.

Ask a Question

In Chapter 5.2.3, we constructed a multiple linear regression model to predict the forty-yard dash time of an offensive lineman based on their performance in the other scouting combine events. The associated parametric model includes estimates for the coefficients on each predictor value in a linear equation. As a point of comparison, we now construct a non-parametric version of the same model in the form of a regression tree. Rather than coefficient estimates in an equation, the model consists of a sequence of branches in a tree. After navigating the branches of the tree, we arrive at an answer to the following question: How accurately can we predict an offensive lineman’s forty-yard dash time based on the other five events?

Acquire the Data

The performance data for players in the years 2000 to 2020 National Football League (NFL) scouting combine are available in the file nfl_combine.csv. In the code chunk below, we import and wrangle the data before splitting it into training and testing sets.

#import NFL Combine data

combine <- read_csv("nfl_combine.csv") %>%

filter(Pos %in% c("C","OG","OT","OL")) %>%

transmute(Forty=`40YD`,Vertical,Bench=BenchReps,

Broad=`Broad Jump`,Cone=`3Cone`,Shuttle) %>%

na.omit()

#randomly select rows from sample data

set.seed(303)

rows <- sample(x=1:nrow(combine),size=floor(0.75*nrow(combine)),replace=FALSE)

#split sample into training and testing

training <- combine[rows,]

testing <- combine[-rows,]

#compute mean response

mean(training$Forty)## [1] 5.255296The average forty-yard dash time for the 338 offensive linemen in the training set is 5.255 seconds. We use this value in the null model as we initiate our analysis.

Analyze the Data

Prior to developing a prediction model, we require a frame of reference for improvements in accuracy based on root mean squared error (RMSE). In this case, our reference point is the null model consisting of the mean response.

## # A tibble: 1 × 1

## RMSE

## <dbl>

## 1 0.175If we predict 5.255 seconds for every lineman in the testing set, regardless of performance in the other events, we are off by an average of 0.175 seconds. Our goal is to improve this accuracy as much as possible by constructing a decision tree. Conceptually, a decision tree is based on the idea of repeatedly branching (i.e., splitting) the training set into two groups based on the predictor values. For example, suppose we decide to branch on a lineman’s broad jump distance. First we need a broad jump value to branch on. One logical option is to use the mean.

## [1] 102.1568The average lineman jumps 102.157 inches. By branching on this value, we split the linemen into two groups: below average and above average. We often refer to these groups as the left and right branches. Let’s compute the mean forty-yard dash time for our two branches.

#add branch assignments

training_branch <- training %>%

mutate(Branch1=if_else(Broad<102.157,"Left","Right"))

#compute branch means

training_branch %>%

group_by(Branch1) %>%

summarize(avg=mean(Forty)) %>%

ungroup()## # A tibble: 2 × 2

## Branch1 avg

## <chr> <dbl>

## 1 Left 5.33

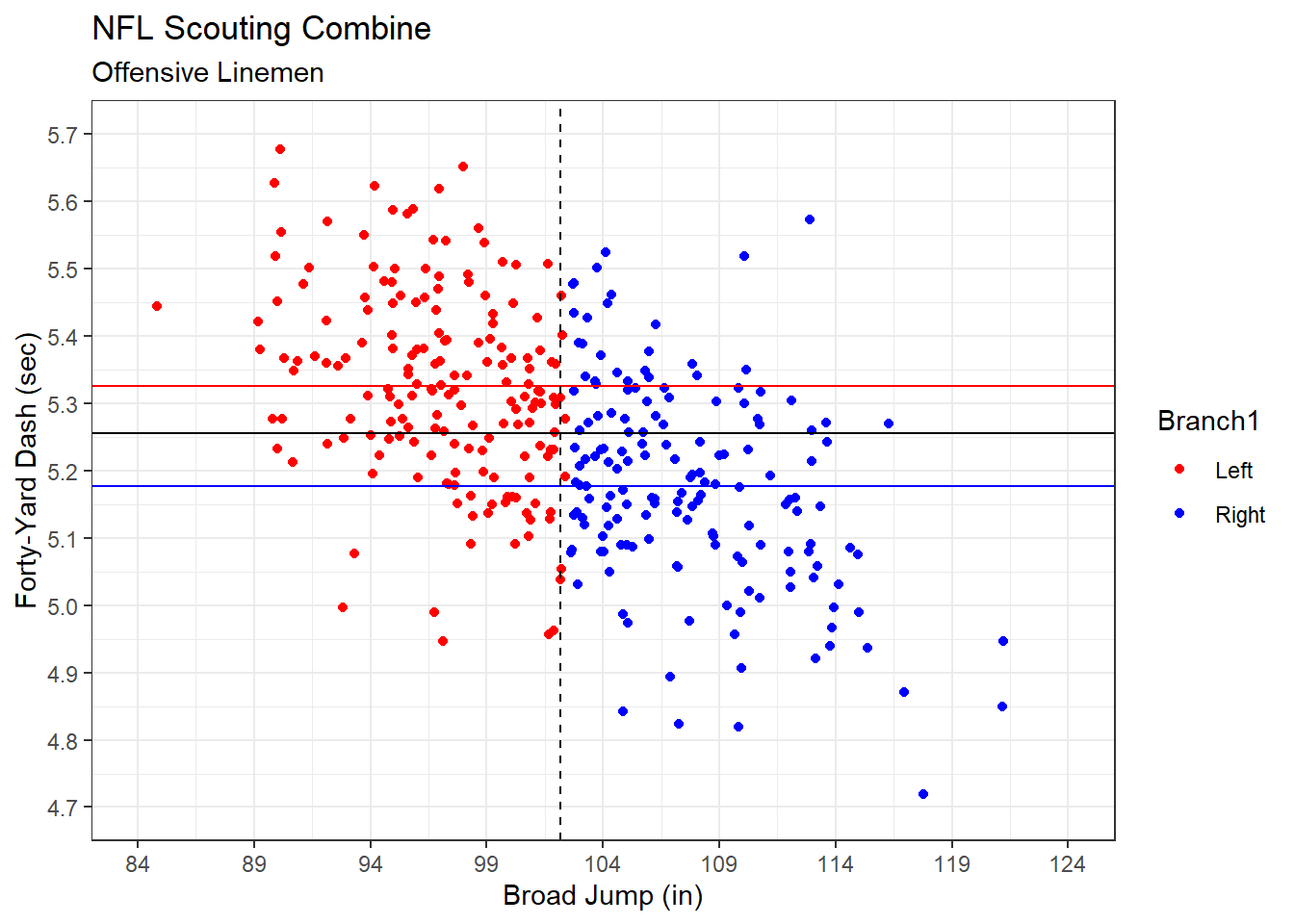

## 2 Right 5.18Now we see a difference in forty-yard dash performance between linemen with below and above average broad jumping ability. Players that jump shorter distances take longer to complete the forty-yard dash. The opposite is true for players that jump longer distances. We visualize this difference in a scatter plot of broad jump distance versus forty-yard dash time.

#display scatter plot

ggplot(data=training_branch,aes(x=Broad,y=Forty,color=Branch1)) +

geom_point(position="jitter") +

geom_hline(yintercept=5.326,color="red") +

geom_hline(yintercept=5.255) +

geom_hline(yintercept=5.177,color="blue") +

geom_vline(xintercept=102.157,linetype="dashed") +

labs(title="NFL Scouting Combine",

subtitle="Offensive Linemen",

x="Broad Jump (in)",

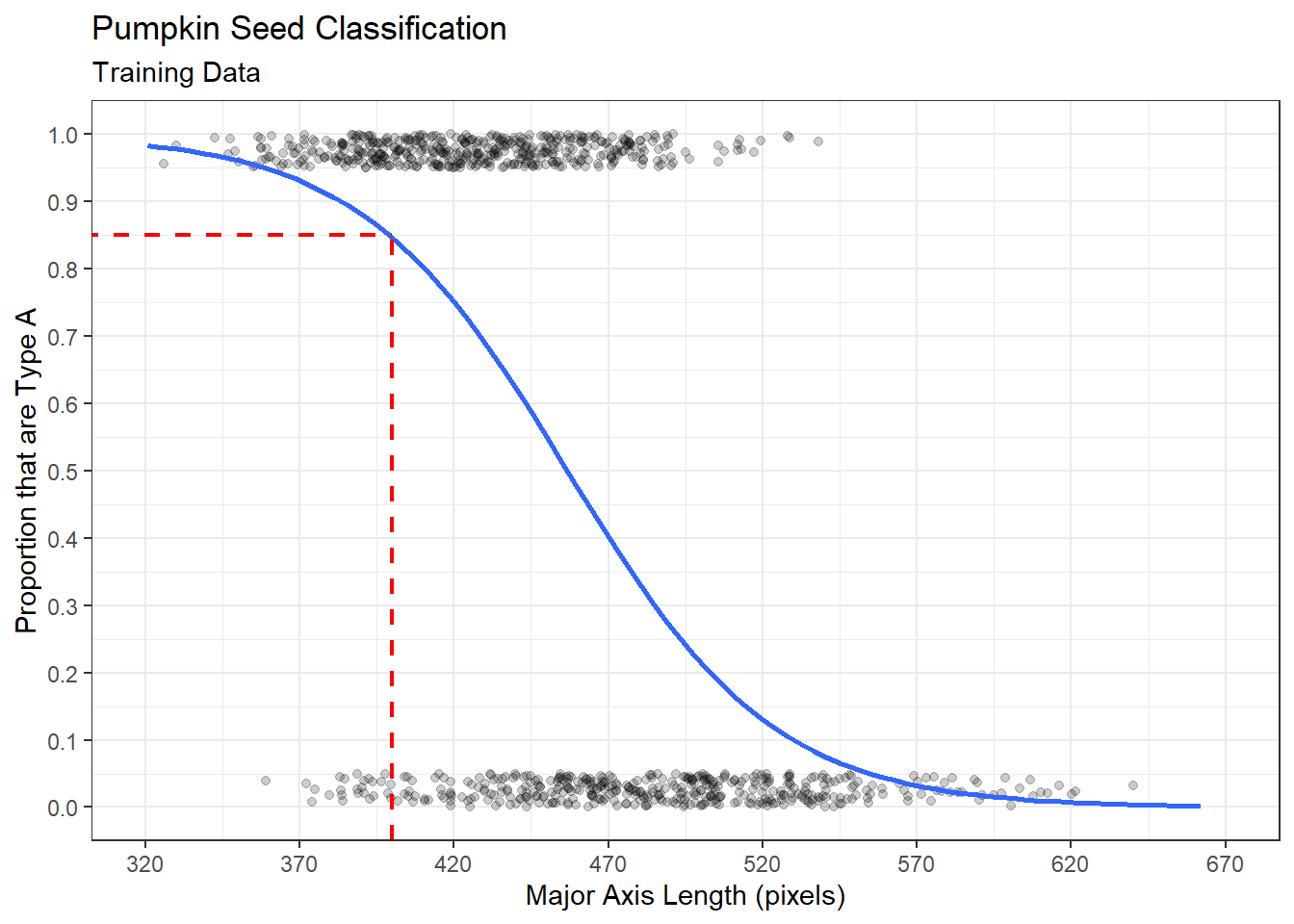

y="Forty-Yard Dash (sec)") +

scale_x_continuous(limits=c(84,124),breaks=seq(84,124,5)) +

scale_y_continuous(limits=c(4.7,5.7),breaks=seq(4.7,5.7,0.1)) +

scale_color_manual(values=c("red","blue")) +

theme_bw()

Figure 5.7: Forty-Yard Dash Time Branched on Broad Jump Distance

The horizontal black line in Figure 5.7 indicates the overall mean forty-yard dash time. This is the prediction for our null model that ignores broad jump distance. By instead branching on broad jump distance (vertical dashed line) we achieve more accurate predictions for each branch. The left branch has a greater mean forty-yard dash time (horizontal red line) than that of the right branch (horizontal blue line). So, rather than using a generic prediction for forty-yard dash time, we tailor it to each branch according to the broad jump performance. This should produce more accurate predictions in the testing set.

#compute RMSE for tree model

testing %>%

mutate(Forty_pred=if_else(Broad<102.157,5.326,5.177)) %>%

summarize(RMSE=sqrt(mean((Forty-Forty_pred)^2)))## # A tibble: 1 × 1

## RMSE

## <dbl>

## 1 0.158Simply by branching on broad jump distance, we reduce our prediction error from 0.175 to 0.158 seconds. What if we branch again? Can we achieve even greater accuracy? We investigate this question by branching on the mean cone drill time.

#compute mean cone drill for each branch

training_branch %>%

group_by(Branch1) %>%

summarize(avg=mean(Cone)) %>%

ungroup()## # A tibble: 2 × 2

## Branch1 avg

## <chr> <dbl>

## 1 Left 7.90

## 2 Right 7.76The left branch (below average broad jump) has an average cone drill time of 7.90 seconds. After splitting on this new predictor we obtain a left-left branch and left-right branch. The right branch (above average broad jump) has an average cone drill time of 7.76 seconds. When we split on this value, we create right-left and right-right branches. Let’s label each lineman in the training set according to these branches.

#add branch assignments

training_branch2 <- training_branch %>%

mutate(Branch2=case_when(

Branch1=="Left" & Cone<7.901 ~ "LL",

Branch1=="Left" & Cone>=7.901 ~ "LR",

Branch1=="Right" & Cone<7.759 ~ "RL",

Branch1=="Right" & Cone>=7.759 ~ "RR"

))

#compute branch means

training_branch2 %>%

group_by(Branch2) %>%

summarize(avg=mean(Forty)) %>%

ungroup()## # A tibble: 4 × 2

## Branch2 avg

## <chr> <dbl>

## 1 LL 5.28

## 2 LR 5.38

## 3 RL 5.14

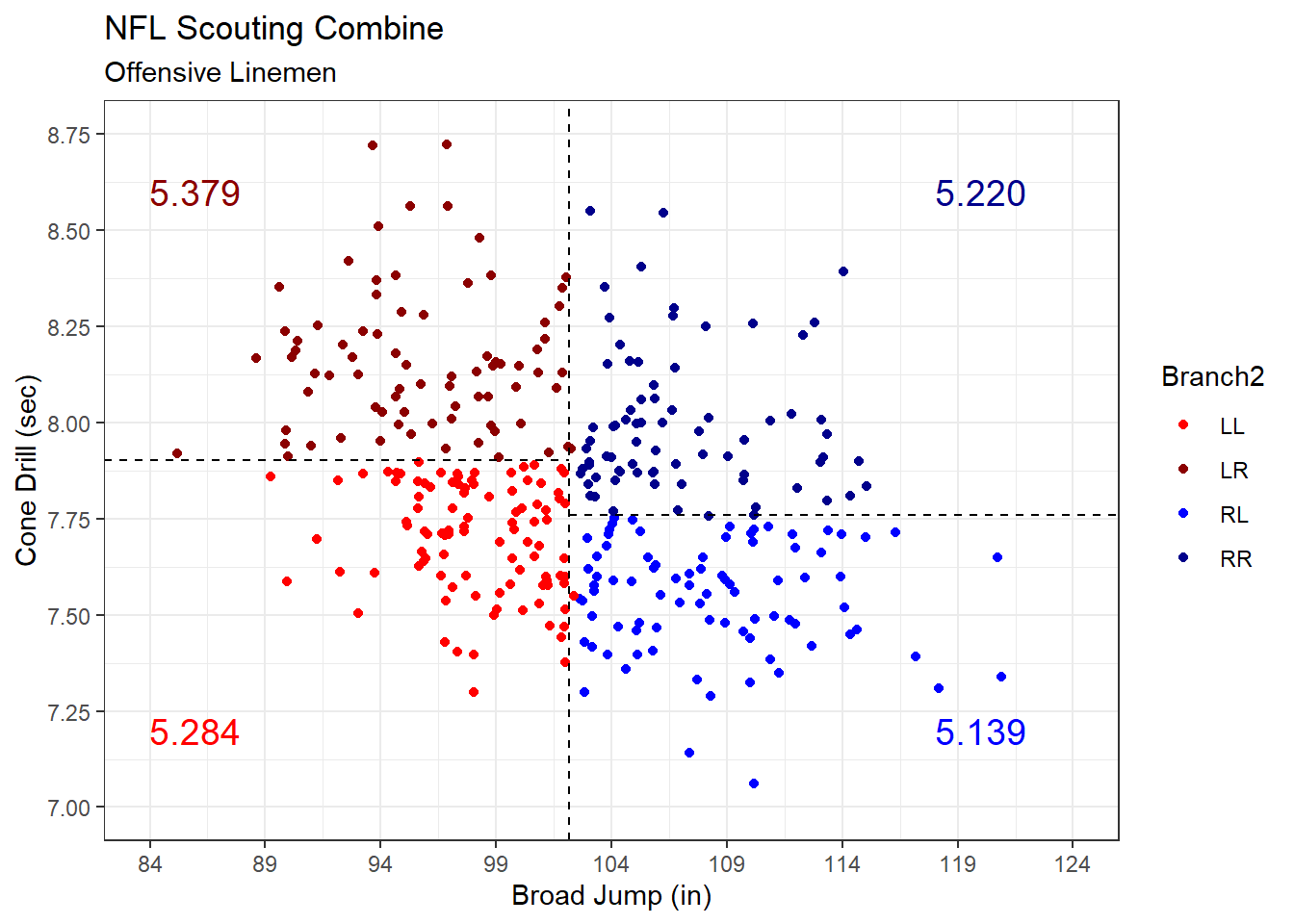

## 4 RR 5.22Now we have segmented the linemen into four groups. The left-right (LR) branch represents linemen with below average jump distance and above average cone time. In other words, they performed poorly in both events. Consequently, that group has the slowest average forty-yard dash time. By contrast, the right-left (RL) branch includes linemen with above average jump distance and below average cone time. These players can jump far and move fast. Not surprisingly, they have the fastest mean forty-yard dash time. We visualize all four groups in a scatter plot of broad jump versus cone drill.

#display scatter plot

ggplot(data=training_branch2,aes(x=Broad,y=Cone,color=Branch2)) +

geom_point(position="jitter") +

geom_vline(xintercept=102.157,linetype="dashed") +

geom_segment(x=82,xend=102.157,y=7.901,yend=7.901,linetype="dashed",color="black") +

geom_segment(x=102.157,xend=126,y=7.759,yend=7.759,linetype="dashed",color="black") +

labs(title="NFL Scouting Combine",

subtitle="Offensive Linemen",

x="Broad Jump (in)",

y="Cone Drill (sec)") +

scale_x_continuous(limits=c(84,124),breaks=seq(84,124,5)) +

scale_y_continuous(limits=c(7,8.75),breaks=seq(7,8.75,0.25)) +

scale_color_manual(values=c("red","dark red","blue","dark blue")) +

annotate("text",x=86,y=8.6,label="5.379",color="dark red",size=5) +

annotate("text",x=86,y=7.2,label="5.284",color="red",size=5) +

annotate("text",x=120,y=8.6,label="5.220",color="dark blue",size=5) +

annotate("text",x=120,y=7.2,label="5.139",color="blue",size=5) +

theme_bw()

Figure 5.8: Forty-Yard Dash Time Branched on Broad Jump Distance and Cone Drill Time

After branching on two predictors, we have four groups of linemen in Figure 5.8. Given the limitations of visualizing three dimensions, we list the mean forty-yard dash time for each group in text. Once again, by tailoring our forty-yard dash predictions more and more specifically to each sub-group of linemen, we should achieve greater accuracy.

#compute RMSE for tree model

testing %>%

mutate(Forty_pred=case_when(

Broad<102.157 & Cone<7.901 ~ 5.284,

Broad<102.157 & Cone>=7.901 ~ 5.379,

Broad>=102.157 & Cone<7.759 ~ 5.139,

Broad>=102.157 & Cone>=7.759 ~ 5.220

)) %>%

summarize(RMSE=sqrt(mean((Forty-Forty_pred)^2)))## # A tibble: 1 × 1

## RMSE

## <dbl>

## 1 0.153Though not as dramatic as the first branch, our second branch does reduce the error from 0.158 to 0.153 seconds. We could continue branching on the remaining predictors in a similar manner and ideally achieve a more accurate model. However, there is no need to continue the process manually. The tree package includes functions for growing decision trees that ease the process. In fact, the tree() function works just like the lm() function in terms of notation. Below we use the training data to build a regression tree to predict forty-yard dash time based on the other events. We then display the results in a tree diagram.

#load tree library

library(tree)

#build decision tree

model <- tree(Forty~.,data=training)

#plot decision tree

plot(model)

text(model,cex=0.8,pretty=TRUE)

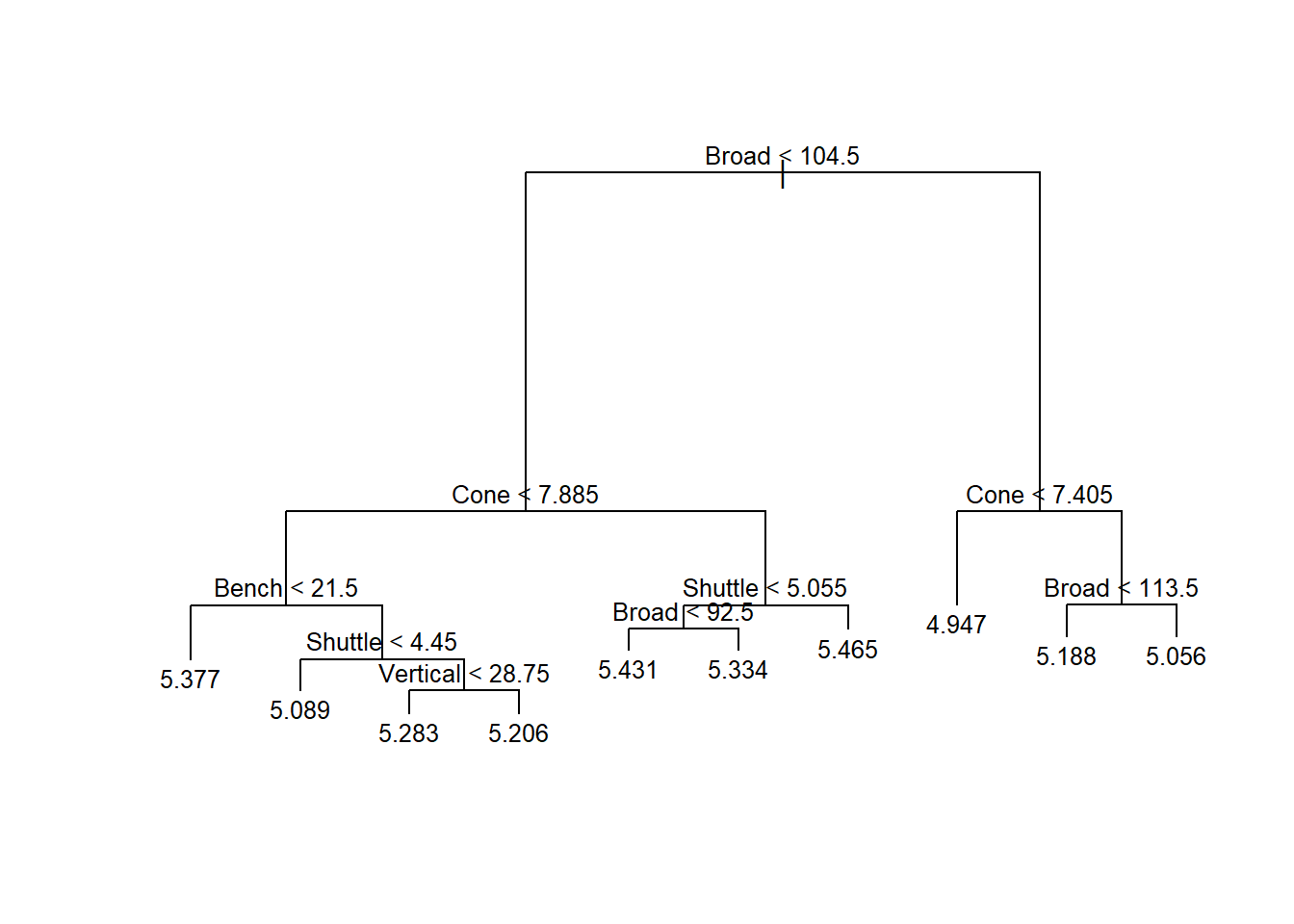

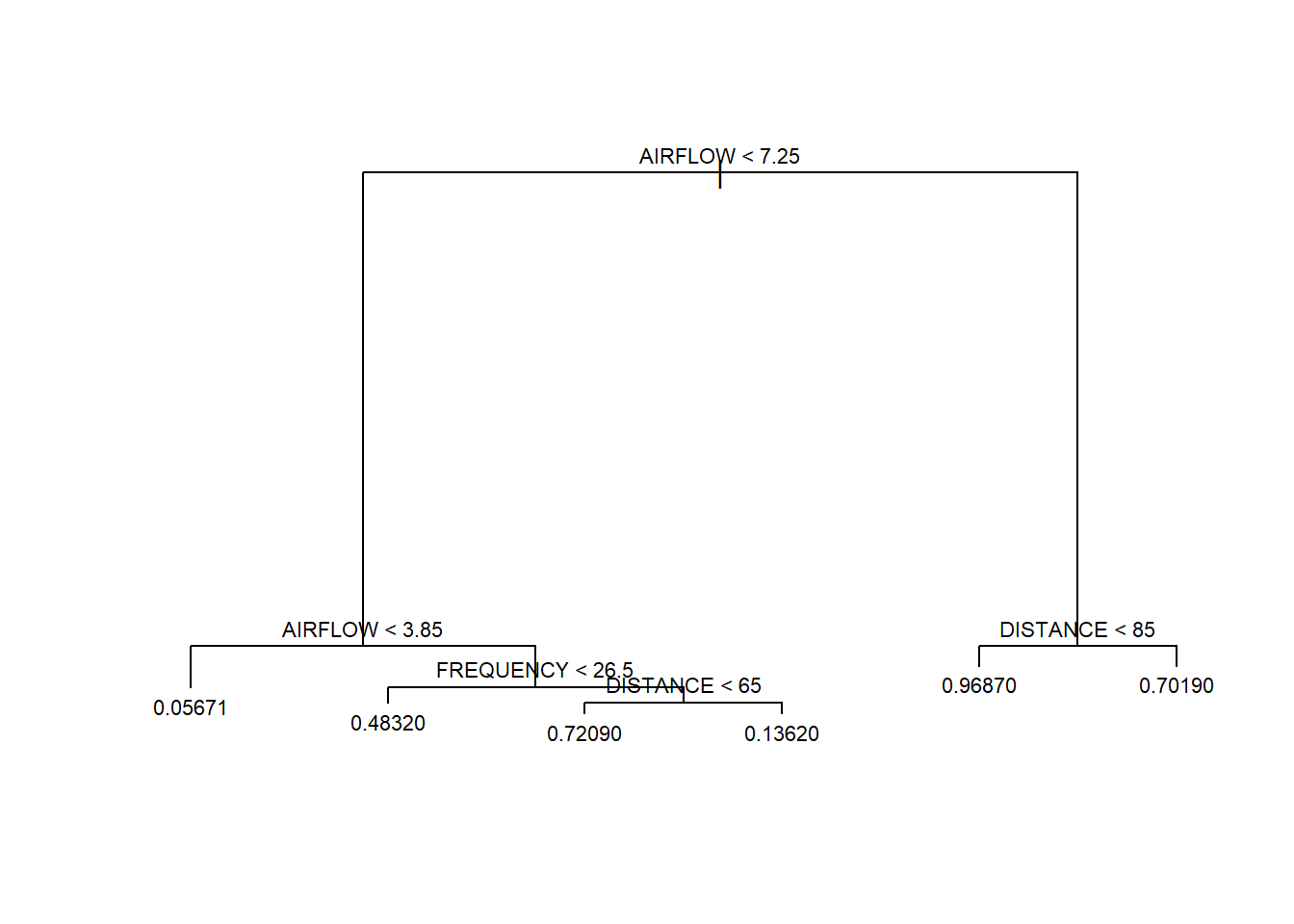

Figure 5.9: Regression Tree to Predict Forty-Yard Dash Time

Figure 5.9 finally displays our model in the form of a tree. However, the algorithm employed to grow the tree is more sophisticated than our manual approach. Rather than branching on the mean value of a randomly-selected predictor, the algorithm uses recursive binary splitting. This method chooses the predictor and branch value that provides the maximum reduction in error at each level of the tree. For the first branch, the algorithm chooses broad jump but splits on 104.5 inches because that value provides a greater reduction in error than the mean value of 102.2 inches. By default, the labels on each branch indicate the left branch. Further down the tree we notice that branches on the same level are not required to split on the same predictor. In fact, the third level of the tree includes bench press, shuttle run, and broad jump again. Such behavior is permitted with recursive binary splitting, because the algorithm chooses the branch that provides the greatest improvement in accuracy.

At the bottom of the tree, the leaves indicate the mean forty-yard dash time for the associated group. After splitting our linemen into 10 distinct groups, let’s see what accuracy the model achieves. As with linear regression models, we simply apply the predict() function to the testing set and compute the RMSE.

#compute RMSE for tree model

testing %>%

mutate(Forty_pred=predict(model,newdata=testing)) %>%

summarize(RMSE=sqrt(mean((Forty-Forty_pred)^2)))## # A tibble: 1 × 1

## RMSE

## <dbl>

## 1 0.150Even with the more sophisticated algorithm, we only achieve another modest decrease in error compared to our manual approach. Using the regression tree, we can predict the forty-yard dash time of an offensive lineman to within 0.150 seconds, on average. Should we recommend this model or our previous multiple linear regression model to stakeholders?

Advise on Results

As with any modeling approach, regression trees have their pros and cons. Compared to multiple linear regression models, decision trees are often less accurate. The linear model of forty-yard dash time presented earlier provides a RMSE of 0.137 seconds. Thus, the decision tree’s RMSE of 0.150 may be rightly perceived as inferior. However, decision trees are far easier to explain and implement for most stakeholders. Imagine a coach wants to predict the forty-yard dash time for the following player.

## # A tibble: 1 × 5

## Vertical Bench Broad Cone Shuttle

## <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 27 23 97 8.07 4.98The coach needs nothing other than Figure 5.9. Based on the lineman’s performance in the five events, we navigate the branches of the tree by choosing left, right, left, right and arrive at 5.334 seconds. For that particular player, we don’t even need to know their vertical jump or bench press performance. Compare this to the linear model that requires the coach to find a calculator and compute the following:

\[ 5.170-0.007\cdot27-0.004\cdot23-0.009\cdot97+0.131\cdot8.07+0.055\cdot4.98=5.347 \]

To be fair, nearly any stakeholder will be capable of performing the required arithmetic for the linear model with a calculator. However, the tree model is clearly faster and easier to implement. The question is whether the overall reduction in accuracy is unacceptable to the stakeholder. If the difference between a 5.334 second lineman and a 5.347 second lineman is important, then the linear model may be preferred.

Answer the Question

Based on a sample of 451 offensive linemen from the years 2000 to 2020, we can predict forty-yard dash time to within 0.150 seconds based on the performance in the other five events. This accuracy is based on a very intuitive decision tree model that can be implemented without calculations, directly from a diagram. If a greater level of accuracy is required, a multiple linear regression model can reduce the error to 0.137 seconds. However, implementation does require some basic computations using a calculator.

Given the inferior performance of regression trees compared to other modeling approaches, there exist a variety of extensions that can greatly improve prediction accuracy. In practice, many data scientists employ ensemble methods that consist of growing and aggregating multiple decision trees. If we average the predictions from multiple trees, then we tend to get more accurate results. Ensemble methods such as bagging, boosting, and random forests can dramatically decrease the prediction error compared to individual decision trees. However, they represent advanced techniques beyond the scope of this text.

5.2.5 Categorical Predictors

So far in this chapter we have described linear regression models with numerical predictors. In other words, the predictor variables (\(x_1,x_2,\cdots,x_p\)) take on continuous, numerical values. However, we often want to use categorical predictor variables as well. This presents a problem because the levels of a factor are typically words rather than numbers. How do we use words to predict a numerical response in a linear regression model? The answer is to assign a binary numerical variable to each level of the factor. Specifically, if we want to predict numerical response \(y\) based on a categorical predictor \(z\) with \(k\) levels, then we define linear regression model in Equation (5.7).

\[\begin{equation} y = \beta_0+\beta_1 z_1+\beta_2 z_2+\cdots+\beta_{k-1} z_{k-1} \tag{5.7} \end{equation}\]

The predictor \(z_1\) is a binary variable that is set equal to 1 if an observation is assigned the first level of the factor and 0 otherwise. The same is true for \(z_2\) through \(z_{k-1}\). We do not need a binary variable for the last level (\(k\)) of the factor. By process of elimination, if an observation is not assigned one of the first \(k-1\) levels, then is must be assigned the last level. Because a given observation is assigned one and only one level, we know that \(z_1+z_2+\cdots+z_{k-1}\leq1\).

Another way to think about this formulation for categorical predictors is that it simply shifts the intercept (\(\beta_0\)) of the best-fit line. For example, if an observation is assigned the first level, then \(z_1=1\) and \(z_2=z_3=\cdots=z_{k-1}=0\). In that case, Equation (5.7) is reduced to \(y=\beta_0+\beta_1\). Thus, the intercept is shifted by a value of \(\beta_1\). Since the categorical variable is the only predictor in this example, there is no slope parameter. However, we can mix numerical and categorical predictors so that the regression equation includes both intercept and slope parameters. We demonstrate this with a case study using the tidyverse below.

Ask a Question

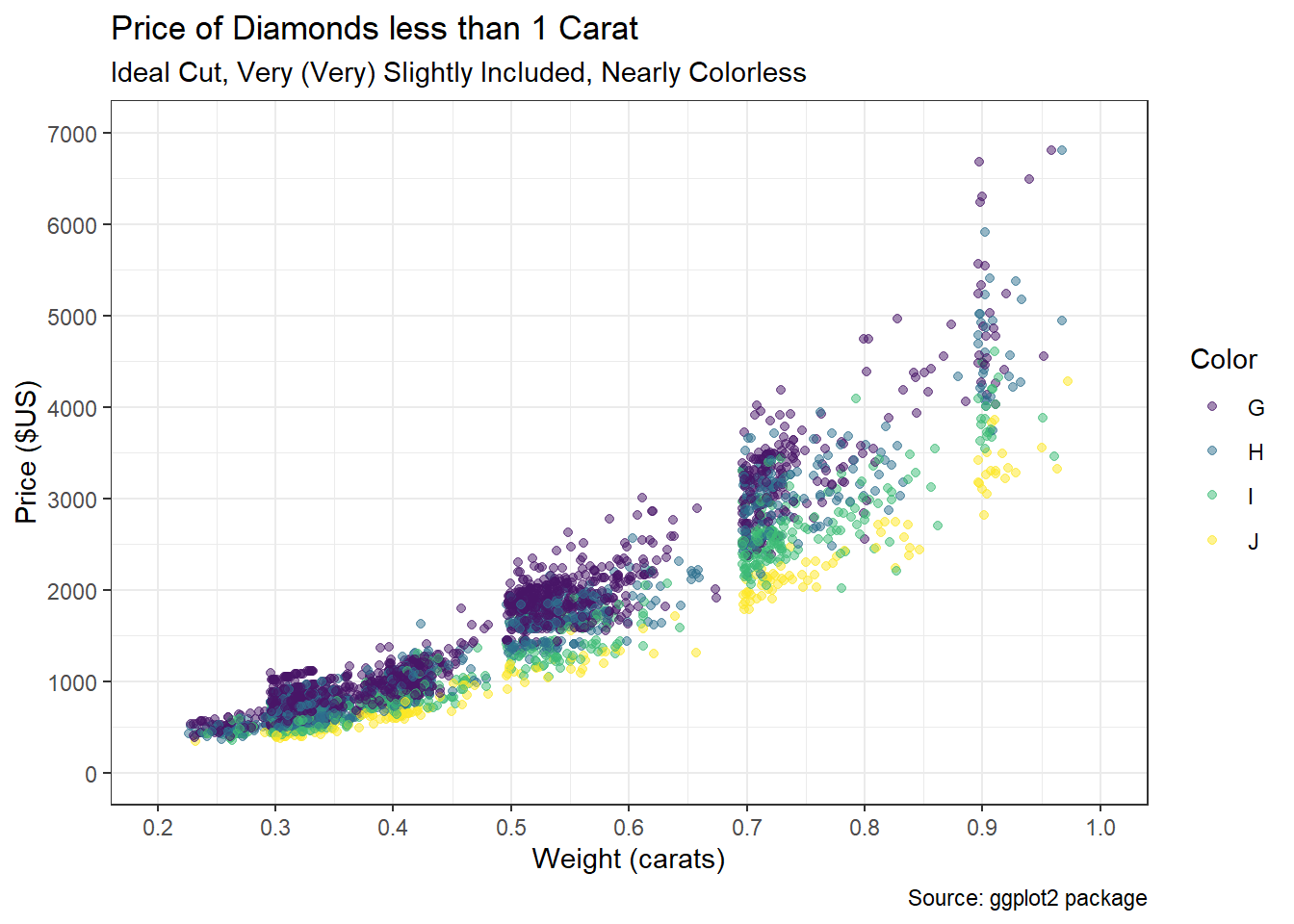

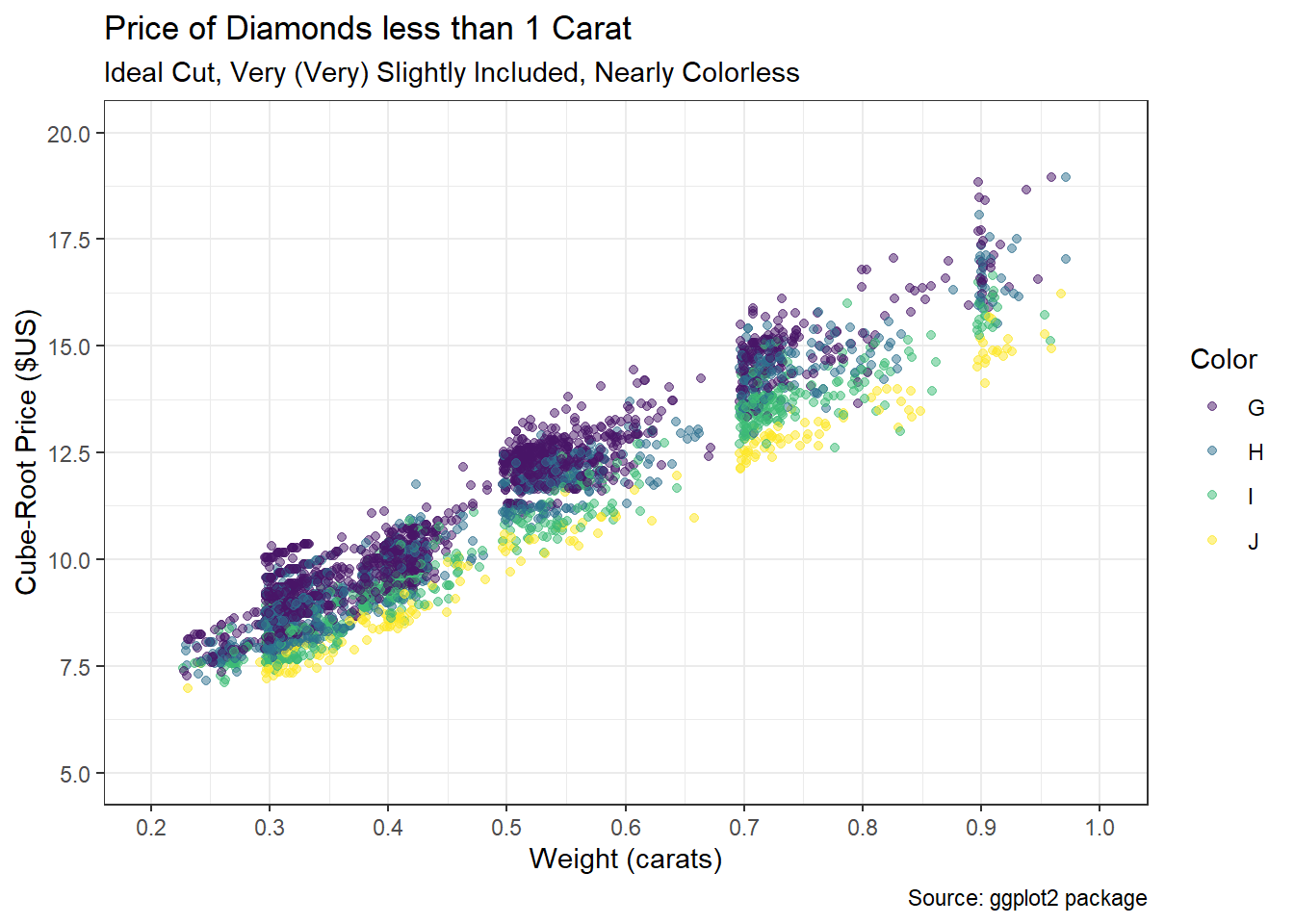

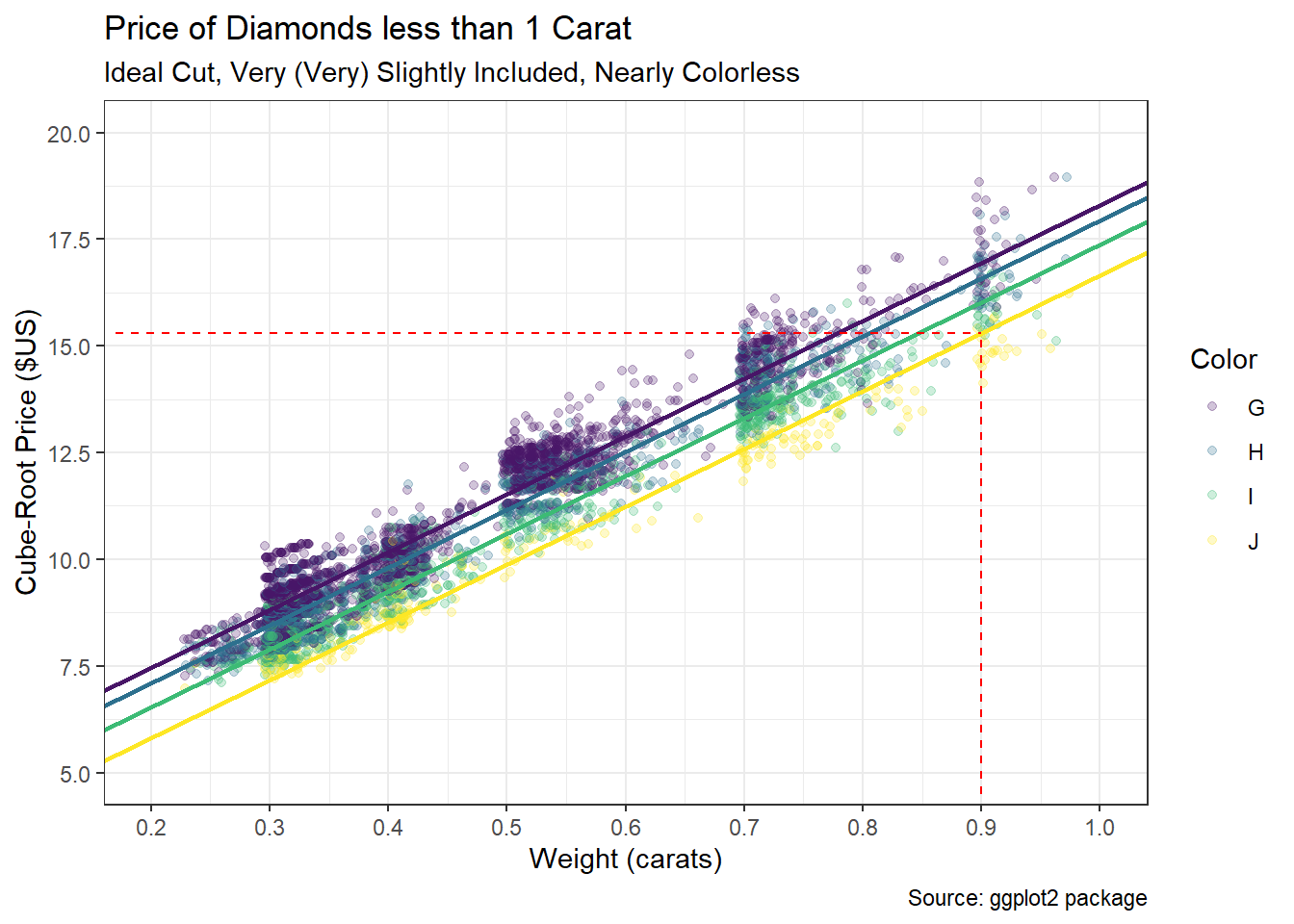

The ggplot2 package includes a data set called diamonds which describes the appearance, size, and price of over 50 thousand round cut diamonds. Our goal is to predict the price of a diamond based on what are commonly referred to as the 4Cs: carat, cut, color, and clarity. While carat is a numerical measure of a diamond’s weight (1 carat equals 0.2 grams), the other three measures are categorical assignments determined by experts. The cut of a diamond is ranked on a scale from fair to ideal. The color is assigned a letter between Z and D that corresponds to a range between light yellow and colorless. Finally, the clarity of a diamond is ranked on an alpha-numeric scale between I1 and IF that aligns with descriptors from imperfect to flawless. A large, ideal cut, colorless, flawless diamond can be incredibly expensive given its rarity. For our case study, we isolate two of the Cs and investigate the following question: How accurately can we predict the price of a diamond based on its carat and color?

For academic purposes, we increase the specificity of this question even further. We consider only ideal cut, nearly colorless diamonds of less than one carat that are assigned a clarity between VS2 and VVS1. Though this may appear overly-specific, we will be left with more than enough diamonds in our sample. Reducing the scope of the problem decreases some of the variability that otherwise exists in pricing and facilitates the demonstration of our modeling approach. The selected characteristics also represent diamonds that are not too large, but still exhibit very high quality. We begin by acquiring the built-in data.

Acquire the Data

The data we seek is stored in the file diamonds as part of the tidyverse package. We import the data below and isolate the characteristics of interest.

#import diamond data

data(diamonds)

#wrangle diamond data

diamonds2 <- diamonds %>%

filter(carat<1,

cut=="Ideal",

color %in% c("G","H","I","J"),

clarity %in% c("VS2","VS1","VVS2","VVS1")) %>%

transmute(price,carat,

color=factor(color,levels=c("G","H","I","J"),ordered=FALSE)) %>%

droplevels()

#summarize data frame

summary(diamonds2)## price carat color

## Min. : 340 Min. :0.2300 G:2265

## 1st Qu.: 689 1st Qu.:0.3200 H:1232

## Median :1008 Median :0.4100 I: 818

## Mean :1432 Mean :0.4684 J: 278

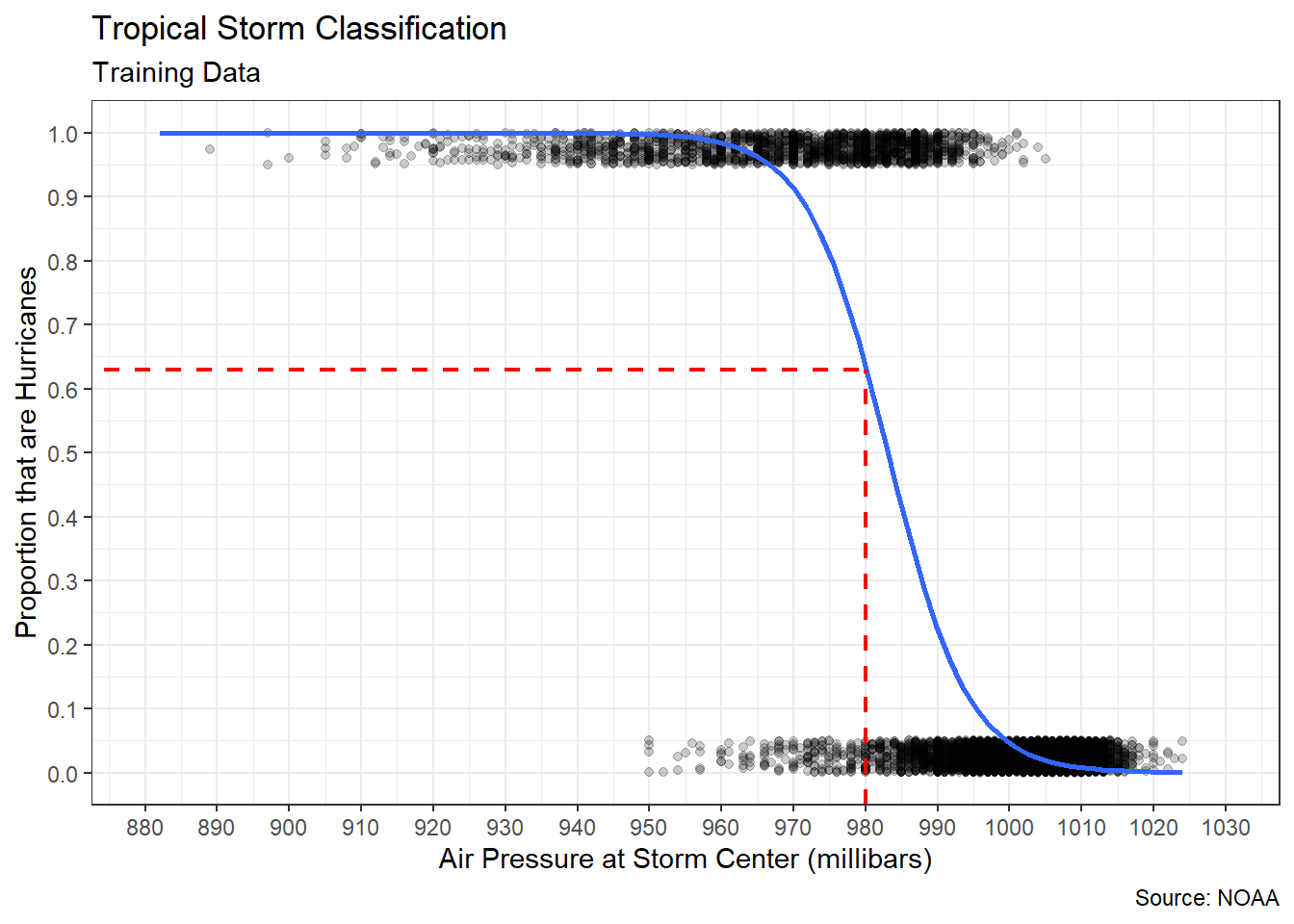

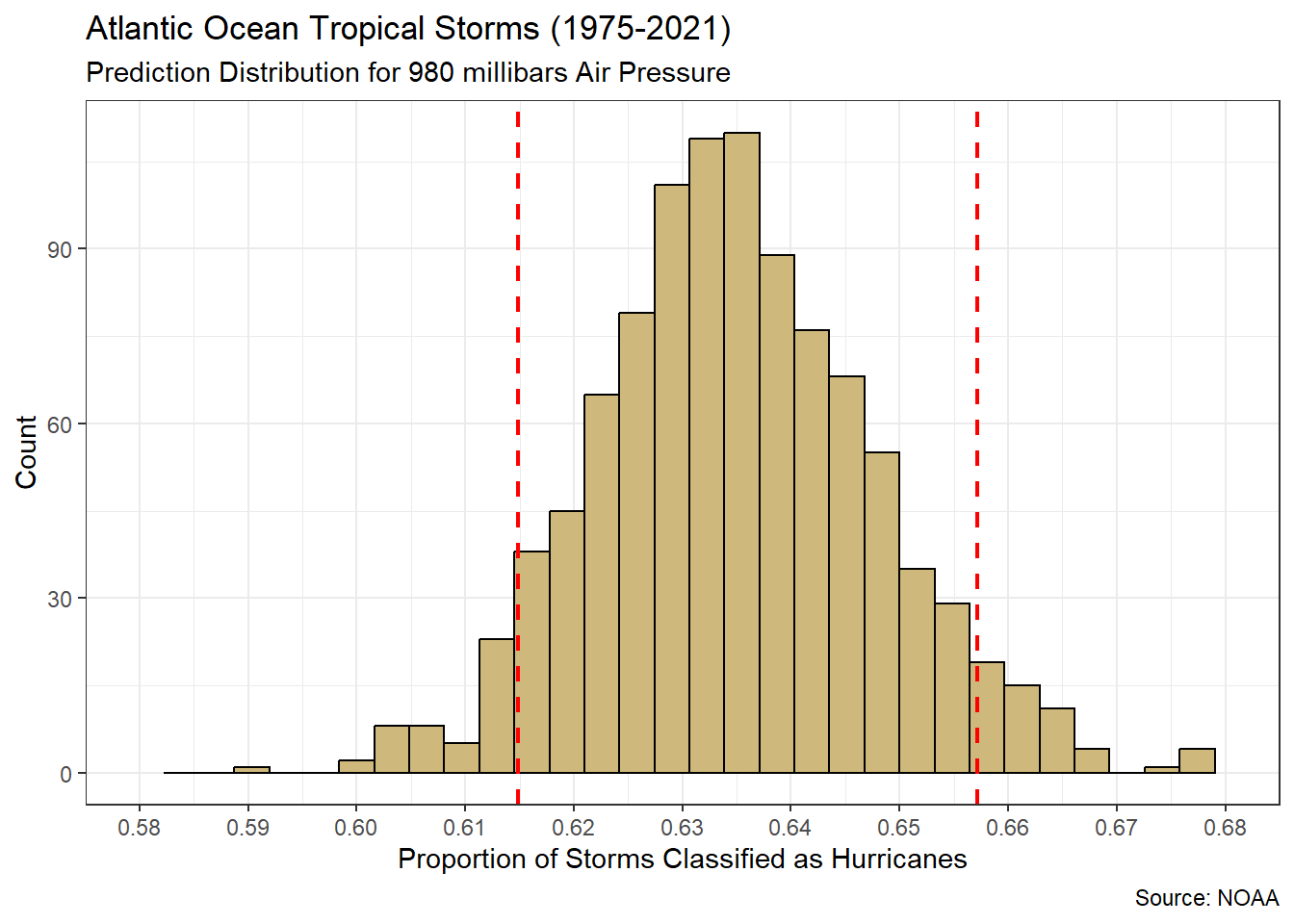

## 3rd Qu.:1922 3rd Qu.:0.5600