Chapter 4 Inferential Analyses

The second type of analysis conducted by data scientists leverages inferential statistics. The related methods and tools differ from exploratory analysis in their intended use. The results of an exploratory analysis are intended to describe the distributions of and associations between variables within the acquired data set. With inferential analysis we seek to extend the results beyond the acquired data. Specifically, we employ estimates from sample data to make claims about the distributions and associations for variables in the larger population from which the data is acquired.

As an example, imagine we are asked to determine the proportion of American adults who are obese based on body mass index (BMI). It would be nearly impossible to acquire BMI values for every adult in the population. Instead, we gather a representative sample of the population and compute the obesity proportion within the sample. Suppose we acquire BMI values for one thousand of the 260 million adults in the United States. Within the sample, we find that 420 (42%) of the adults are obese. The true obesity proportion is called the population parameter and its value is unknown. But we estimate the true proportion with the sample statistic of 42%. Thus, inference consists of estimating population parameters using sample statistics.

Even though we cannot know the true obesity proportion in the population of 260 million adults, we can obtain a defensible estimate using a representative sample of one thousand adults. This highlights a key characteristic of samples: they should be representative of the population. Ideally, when we calculate a statistic from a sample we get a number that is close to what we would get for the parameter in the population. If the sample of one thousand Americans represents the population well, then the obesity proportion in the entire population should be close to 42%. When a sample does not represent the population well, we say it is biased.

After obtaining a representative sample, the two most common analytic tools in inferential analyses are confidence intervals and hypothesis tests. Confidence intervals provide a margin of error around our estimates. For example, we might determine that the obesity proportion for American adults is 42% plus or minus 2 percentage-points. By providing a range of plausible values we acknowledge the variability associated with estimating a population parameter from a sample statistic. Rather than computing a range of likely values for a parameter, hypothesis tests assess the plausibility of a particular value. If, for example, someone claims that 45% of Americans are obese, then we evaluate the claim using a hypothesis test. Depending on the variability of obesity, it is possible a population with a rate of 45% could produce a sample with a rate of 42%. The majority of this chapter is devoted to computing confidence intervals and conducting hypothesis tests for various types of parameters. But we begin with a discussion of random sampling techniques and the statistics we typically compute from unbiased samples.

4.1 Data Sampling

In order to infer a plausible value, or range of values, for a population parameter the associated statistic should be calculated from a representative sample. There are a variety of methods to obtain a representative sample, but the gold standard is random sampling. A sample is considered random if every possible combination of observations of a certain size has the same chance of being selected from the population. Often we refer to the individual observations in a random sample as being independent and identically distributed (iid). Independence exists when the variable values of each observation have no influence on the values of the other observations. Random variable values are identically distributed when there is no change to the data-generating process during sampling. We present the most common forms of random sampling in the first subsection.

The risk imposed by not sampling randomly is the potential introduction of bias. A biased sample is likely to produce biased statistics. A statistic is biased when its value consistently differs from the value of its associated parameter. Since the true parameter value is unknown in most applications, bias can be difficult to detect. The best approach is to avoid bias by employing random sampling techniques that have been shown to produce representative samples. In the next subsection, we introduce common sources of bias that are minimized by random sampling.

Prior to discussing sampling methods and bias, it is worth noting the importance of sample size. While the method of gathering the sample impacts its bias, the size of the sample affects its variance. When the number of observations in a sample is too small, statistical estimates can vary wildly from one sample to the next. For example, if we only sampled five adults from the entire population and recorded their weights, then we might witness a 100% obesity rate simply by chance. Another sample of five adults might return a 0% obesity rate, again purely by chance. Very large samples make it much less likely we will witness such extreme fluctuations in statistics. Two separate samples of one thousand adults might produce obesity rates of 40% and 44%, but this represents much less variability. While there is no one “magic” number for the minimum sample size across all analytic techniques, more is generally better.

4.1.1 Sampling Methods

After defining the population of interest, researchers obtain a sample via the random selection of observations. Random sampling is conducted in a variety of forms. The preferred approach is often driven by the researcher’s access to the population and/or the parameter of interest. Regardless of approach, the goal is to acquire a subset of data that represents the population well. Below we briefly introduce four common random sampling methods.

Simple random sampling is the most straight-forward method for collecting an unbiased sample. In a simple random sample, every individual observation in the population has an equal chance of being selected for inclusion. Imagine writing the names of all 260 million American adults on individual pieces of paper, mixing them up in a bag, and then blindly drawing one thousand names out of the bag. In theory, this would provide each adult in the population an equal chance of being included in the sample. In practice we use a random number generator, rather than pieces of paper, but the concept of simple random sampling is the same.

Systematic sampling involves selecting observations at fixed intervals. In a systematic sample, researchers generate a random starting point and then select the observations that occur at the appropriate increments after the starting point. Imagine we plan to gather a sample of adults entering a department store. We might randomly generate a starting time each day and then survey every 5th adult that comes in the store until closing. Alternatively, we could choose to survey one adult every 5 minutes after the starting time. In either case, if the starting point and arrivals are random then the sample is considered random.

Stratified sampling involves splitting the population into sub-groups prior to sampling. Stratified sampling is often employed when researchers want to ensure that certain sub-groups appear in the sample in the same proportion as in the population. Typical sub-groups in human research include sex, race, socioeconomic status, etc. Once the population is split into these sub-groups (strata), observations are randomly sampled within each group. In the obesity example, researchers might want to ensure a proportionate number of biological males and females in the sample. If the ratio in the population is 51% female and 49% male, then the researchers might choose to randomly sample 510 females and then randomly sample 490 males. Without the strata, there is no guarantee that the sample includes males and females in the correct proportion.

Cluster sampling is similar to stratified sampling but there are key differences. In cluster sampling, the population is also split into sub-groups. However, each sub-group should itself be representative of the entire population. Then a set number of sub-groups (clusters) are randomly sampled and every observation in the selected clusters is included in the sample. Suppose we identify zip codes in America as the clusters. Then we randomly sample a certain number of zip codes. Finally, every person who lives in the selected zip codes gets included in the sample. If each cluster truly represents the population, then the collection of clusters should as well. For the obesity example, zip code may not be the best clustering rule since certain regions of the country have obesity rates well above or below the national average.

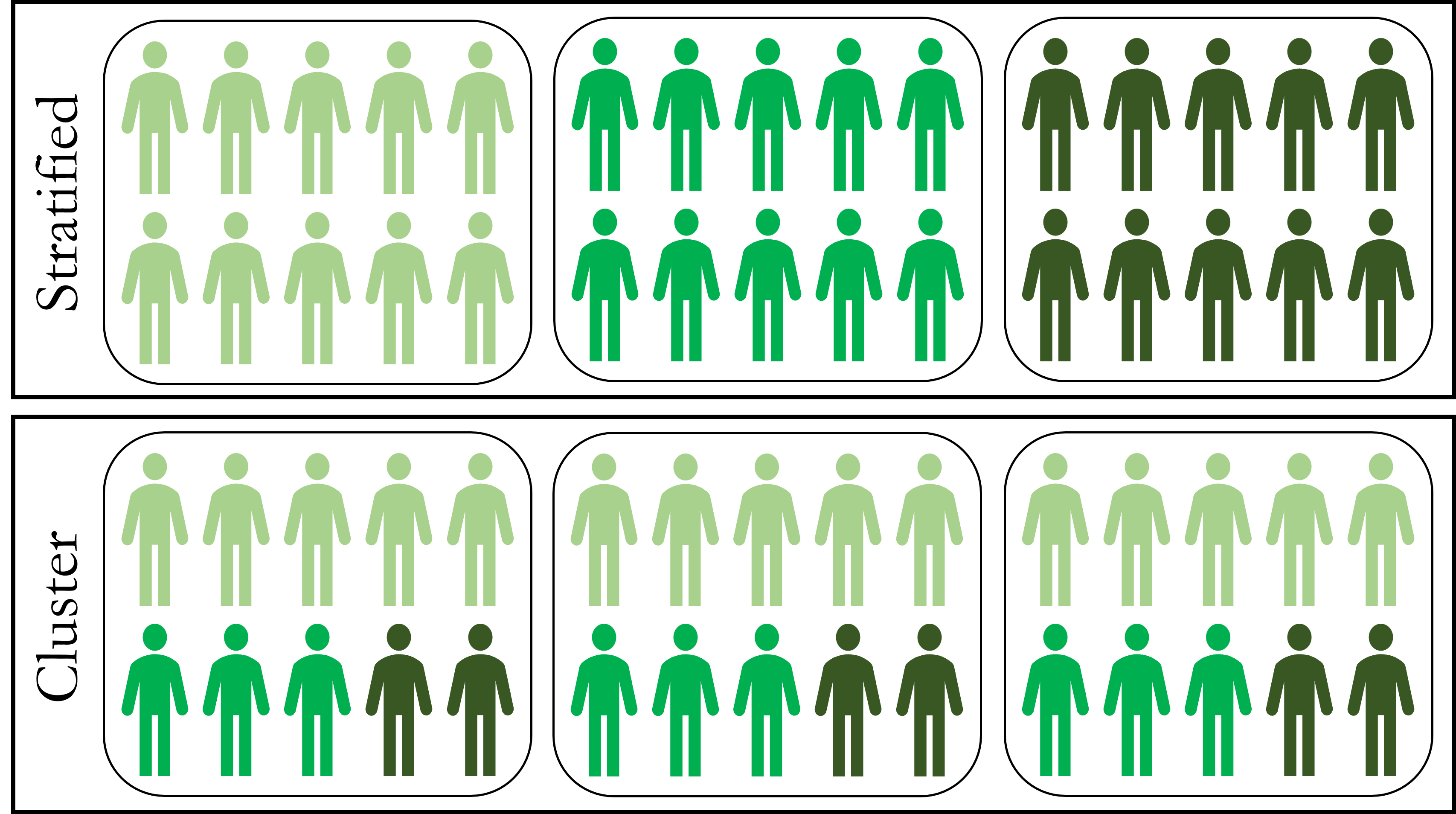

Given that stratified and cluster sampling both involve sub-grouping the population, they can be easily confused. Figure 4.1 depicts the critical difference in how the sub-groups are created.

Figure 4.1: Stratified versus Cluster Sampling

Suppose researchers want to ensure that low, medium, and high income adults are represented in the sample in the same proportions as in the population. Stratified sampling splits the population into the three income strata first. Then researchers randomly sample the desired number of observations (based on proportion in the population) from each strata. By contrast, cluster sampling splits the population into clusters that already include the proper proportions of income status. Then researchers randomly select entire clusters for inclusion in the sample. If executed properly, either method can ensure an unbiased sample.

If a sample is not collected randomly, then it may suffer from bias. A biased sample does not represent the population and subsequent statistics poorly estimate the associated parameter. Below we list common sources of bias that emerge in samples that are not collected randomly.

Self-selection bias occurs when the observational units have control over whether to be included in the sample or not. One example is when data is collected using a voluntary survey. If a certain subset of people are more likely to choose to respond, then their answers may not be reflective of the entire population. If American adults are asked to participate in a survey regarding obesity, members may not be equally likely to volunteer due to social pressures regarding body image. Consequently, the study may underestimate the proportion of obese adults in the population.

Undercoverage bias happens when observations in the sample are not collected in proportion to the population. Often this happens when researchers only gather easily-accessible data (convenience sampling). Imagine if researchers only gathered BMI information from randomly selected patients at a local clinic. Given the demonstrated connections between obesity and other health issues, a sample of clinic patients may overestimate the obesity proportion in the population. On the other hand, a sample gathered from members of a local gym might underestimate the obesity proportion. Both examples suffer from undercoverage of the population.

Survivorship bias occurs when a sample only includes data from a subset of the population that meets a certain criteria. As an example, consider a health survey sent out to active members of a local gym. The survey does not consider the health of members who quit going to the gym, so the results will likely be overly optimistic. Survivorship could also be an issue of mobility. If a study consists of body measurements collected in person, then the sample may underestimate the obesity proportion due to an inability or unwillingness to physically report.

Even when the observations in a sample are gathered randomly, it is possible for bias to emerge due to how the data is collected. Issues such as faulty equipment, administrative errors, and lack of cooperation by participants can all bias the data. Below we list sources of bias due to data collection methods.

Non-response bias happens when observational units refuse to contribute to part of the sample data. Unlike self-selection, the sample may include participants in the correct proportions. But certain observations may be missing variable values due to non-response. One example might be a survey that requests sensitive information like political affiliation, religious beliefs, or sexual preference. In the obesity example, some respondents may refuse to provide body weight as part of the survey. In that case, the analysis could misrepresent the true proportion of obese adults even if the correct proportion participates in the survey.

Observer bias occurs when the researcher’s personal opinions influence the data collection process. This type of bias can occur intentionally or unintentionally. An obvious case of this type of bias would occur if a researcher were asked to determine a person’s obesity status based on sight, rather than measured values. Another case might be a researcher who is not familiar with the measurement equipment and uses it incorrectly. Though some observer biases are quite obvious, there are other more subtle ways that researchers allow their personal viewpoints or expectations to be reflected in the data collection.

Recall bias occurs when the researcher or subject is more or less likely to remember the correct data value. This can happen when there is a time lag between when the data occurred and when it was collected. For example, a subject may not accurately remember their own body weight if they have not recently measured it. Similarly, the researcher may forget the correct measured weight if they do not record it immediately. Recall bias can also occur when the context of the research is unique to the study subject. Someone who has struggled with weight loss may be much more likely to have recently weighed themselves and remember the value. These examples highlight why data should be collected in an objective, repeatable manner whenever possible.

4.1.2 Inferential Statistics

In Chapter 3.1.1 we discuss descriptive statistics as a method of data summary. In the context of exploratory analysis, a statistic is descriptive because we only aim to summarize variable values within the acquired data. We are describing what we observe. On the other hand, inferential statistics are intended to summarize variable values beyond the acquired data. Specifically, we want to estimate a population parameter using a sample statistic. Our goal when computing sample statistics remains to characterize the frequency, centrality, dispersion, and correlation of variable values. We also use similar metrics such as the proportion, mean, standard deviation, and correlation coefficient. The difference lies in the application of each statistic. In an inferential analysis, we infer the unknown value of a parameter in the entire population based on the known value of a statistic computed from a representative sample.

In order to distinguish between an unknown parameter and a known statistic, it is useful to introduce a bit of notation. Often, but not always, we represent population parameters using Greek letters. For example, the population mean is typically denoted as \(\mu\). The associated sample statistic adds a “hat” to the symbol to indicate an estimate of the unknown value. Hence, the sample mean would appear as \(\hat{\mu}\). Unfortunately, this is not entirely consistent across textbooks and other resources. The sample mean, for example, is frequently labeled with the symbol \(\bar{x}\) in a variety of contexts. As a result, it is critical to clearly define and consistently apply notation. In this text, we employ the “hat” methodology throughout.

Random sampling and objective data collection help avoid bias. With an unbiased sample, data scientists can compute inferential statistics that accurately estimate population parameters. These sample statistics are referred to as point estimates because they offer a one-number approximation of the associated parameter. But the precision of such estimates cannot be determined from a single value. Instead, we prefer a range of values that plausibly capture the population parameter. Such ranges of values are referred to as confidence intervals.

4.2 Confidence Intervals

Inferential statistics are often sensitive to the data that happens to be randomly selected for the sample. Different samples produce different estimates for the population parameter of interest. Hence, a single point estimate provides no information about the variability across samples. We remedy this concern by constructing an interval of estimates within which we are confident the population parameter lies. We call this range of values a confidence interval. In order to construct confidence intervals, we must first carefully describe the distribution of a statistic.

4.2.1 Sampling Distributions

The observed value of a statistic depends on which members of the population are selected for the sample. Since population members are selected randomly, different samples are likely to produce different statistics. A sampling distribution captures the range and frequency of values we can expect to observe for a statistic. Rather than basing the estimation of a parameter on a single point, a sampling distribution describes the entire range of possible values. We demonstrate this concept with an example.

True Distribution

Suppose our population of interest is every song that appeared on the Billboard Top 100 music chart in the year 2000. Furthermore, imagine the parameter we want to estimate is the median ranking of a song in its first week on the charts. The tidyverse package happens to include the entire population in the data set called billboard. In the following code chunk, we import and wrangle the data to isolate the first week.

#import billboard data

data(billboard)

#wrangle data to only include week 1

billboard_pop <- billboard %>%

select(artist,track,wk1)

#review data structure

glimpse(billboard_pop)## Rows: 317

## Columns: 3

## $ artist <chr> "2 Pac", "2Ge+her", "3 Doors Down", "3 Doors Down", "504 Boyz",…

## $ track <chr> "Baby Don't Cry (Keep...", "The Hardest Part Of ...", "Kryptoni…

## $ wk1 <dbl> 87, 91, 81, 76, 57, 51, 97, 84, 59, 76, 84, 57, 50, 71, 79, 80,…The population consists of 317 songs, along with their first week ranking. Typically, we do not have access to the entire population of interest. If we did, there would be no reason to estimate a parameter. We could simply compute it directly! In this case, the median first-week ranking for a song in the year 2000 is 81 (see below).

## [1] 81Thus, half of all songs in the population have a first-week ranking greater than 81 out of 100. However, as previously stated, we typically will not know this value because we will not have access to the entire population. Instead, we draw a random sample from the population and compute the sample median as a point estimate. Let’s extract a random sample of 50 songs and compute the sample median ranking.

#set randomization seed

set.seed(1)

#select 50 random song rankings

billboard_samp1 <- sample(x=billboard_pop$wk1,size=50,replace=FALSE)The sample() function randomly selects size=50 rows from the population of all song rankings. We do so without replacement (replace=FALSE) because we do not want the same song to appear more than once. The result is a sample of 50 randomly selected song rankings in billboard_samp1. From the sample, we compute the point estimate for median first-week ranking.

## [1] 81.5Based on the sample of 50 songs, our best estimate for the true median first-week ranking is 81.5 out of 100. Here we use “true” median interchangeably with “population” median. Remember, in practice we will not know that the true median is 81. We will only know the sample median of 81.5. As result, we will not know whether 81.5 is an accurate estimate or not. Even more concerning, we will not know what estimate would result from a different sample. For this academic exercise, let’s see what happens with a different randomization seed.

#set randomization seed

set.seed(1000)

#select 50 random song rankings and compute the mean

median(sample(x=billboard_pop$wk1,size=50,replace=FALSE))## [1] 80Changing the randomization seed simulates drawing a different 50 songs from the population. For simplicity, we skip the step of defining the sample vector and directly compute the median. Had we sampled these 50 songs, our point estimate for median first-week ranking would be 80 out of 100. Both point estimates are relatively close to the true parameter value, but we have no way of knowing that in practice because we will not have access to the whole population.

Next, we take this exercise of repeated sampling a step further. Imagine we had the capability to randomly sample 50 songs from the population 1,000 times. Each time we compute the sample median ranking of the 50 songs as in the previous two cases. In order to expedite the sampling process, we employ a for() loop.

#initiate empty data frame

results <- data.frame(med=rep(NA,1000))

#repeat sampling process 1000 times and save median

for(i in 1:1000){

set.seed(i)

results$med[i] <- median(sample(x=billboard_pop$wk1,size=50,replace=FALSE))

}Prior to initiating the loop, we define an empty data frame called results to hold all 1,000 sample medians. We then fill the empty data frame one sample median at a time within the loop. The result is a vector of 1,000 median first-week rankings. Rather than attempt to view these values directly, we depict them in a density plot.

#plot sampling distribution

ggplot(data=results,aes(x=med)) +

geom_density(color="black",fill="#CFB87C",adjust=3) +

geom_vline(xintercept=true_median,color="red",linewidth=1) +

labs(title="Billboard Top 100 for the Year 2000",

x="Sample Median Week 1 Ranking",

y="Density") +

scale_x_continuous(limits=c(72,90),breaks=seq(72,90,2)) +

theme_bw()

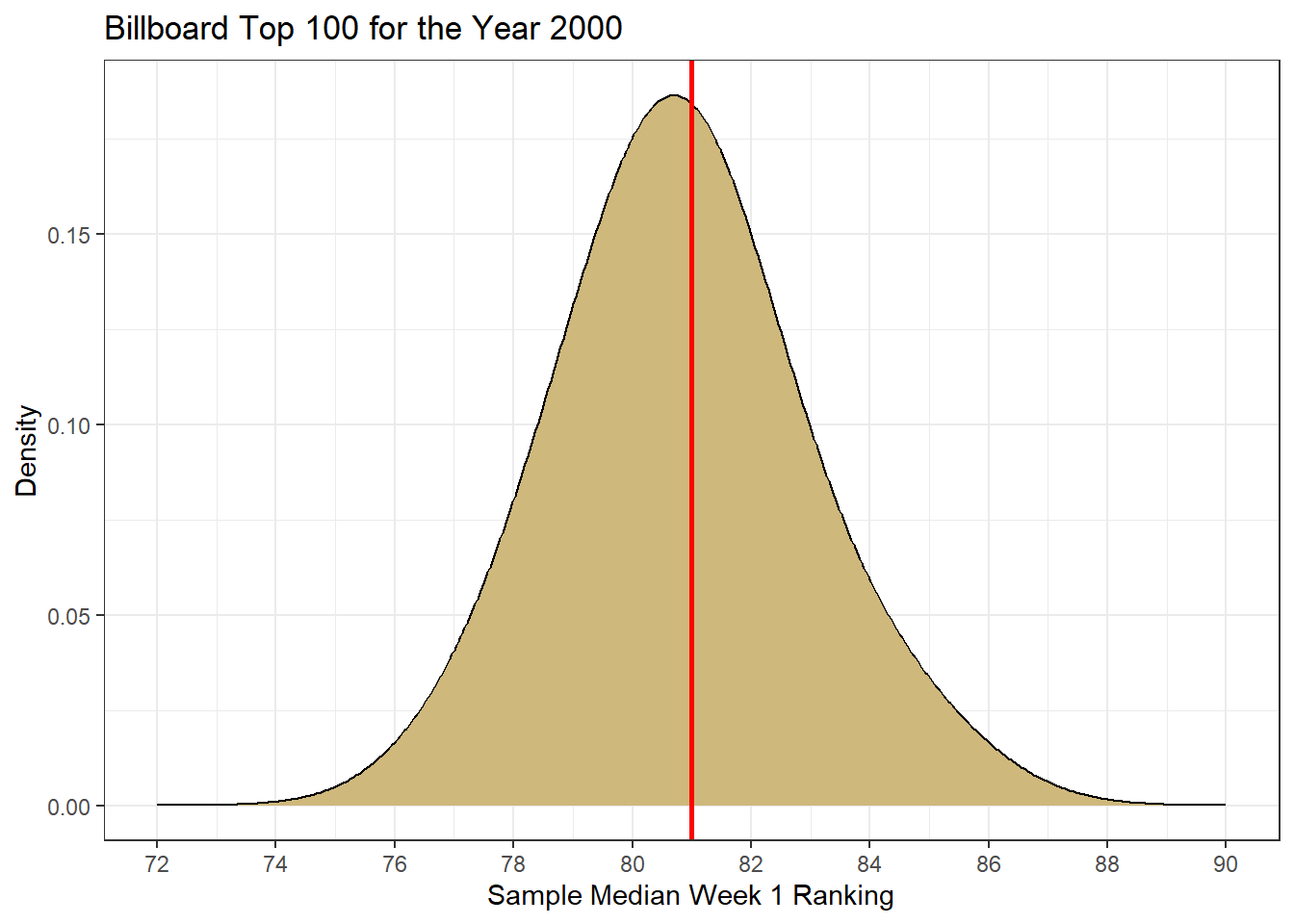

Figure 4.2: Sampling Distribution for Median Week 1 Ranking

The density plot depicted in Figure 4.2 represents the true sampling distribution for median first-week ranking. It provides us an indication of the range and frequency of values we can expect to observe for the median. In this case, we see that the median first-week ranking for a song on the Billboard Top 100 ranges between about 72 and 90 out of 100. However, those extreme values are very unlikely. Instead, we expect to see median rankings close to 81 where the density is much greater. This also happens to be near the true median ranking (red line).

What we learn from this exercise is that sample medians tend to be very close to the population median. In general, the larger our sample size the more likely the sample median will be close to the population median. With a sample size of 50 songs, the sample median rarely differs more than six ranks from the true median. The vast majority of the samples produce a median within two ranks of the population median.

This simulated example of repeatedly sampling from a population provides the intuition regarding the variability of a statistic. However, this is not how inferential statistics is conducted in practice. Due to limitations of time, money, and resources we seldom have the luxury of more than one sample from the population. So, how can we construct a sampling distribution if we only have a single sample and thus a single point estimate?

Bootstrapped Distribution

Initially it may seem impossible to create a distribution of many statistics out of a single sample. In fact, this seeming impossibility is the origin of the name for the method we use: bootstrapping. At some point around the late-1800s the phrase “pull yourself up by your bootstraps” originated. The literal interpretation suggests someone lift themselves off the ground by pulling on the loops attached to their own boots. But the figurative use encourages someone to be self-sufficient in the face of a difficult, if not impossible, task.

Bootstrapping, also known as bootstrap resampling, is a method for constructing a distribution of multiple statistics from a single sample. In essence, we treat the single sample as though it were the entire population. Then we sample from that “population” repeatedly just as we demonstrated in the previous subsection. Two important differences are that we perform the resampling with replacement and our sample size is the same as the “population” size.

Resampling with replacement permits observations to appear in each sample more than once. At first this may seem counter-intuitive. Won’t including the same values in the sample multiple times compromise the accuracy of our statistic? The answer is no and the reason is due to the randomness of the resampling. Values that appear in greater frequency in the original sample (“population”) will generally appear in greater frequency in the resample (“sample”). This is exactly how we want the resampling to behave. Furthermore, since the resample size is the same as the original sample size the only way to obtain different values for the statistic is to allow replacement.

To demonstrate the resampling process, we return to the Billboard Top 100 example. The first sample we drew from the entire population is named billboard_samp1. We now assume these 50 songs are the only sample we have access to. The 50-song sample is treated as the “population” from which we resample. Once again we leverage a for() loop, but now we set the parameter replace=TRUE.

#initiate empty data frame

results_boot <- data.frame(med=rep(NA,1000))

#repeat sampling process 1000 times and save sample medians

for(i in 1:1000){

set.seed(i)

results_boot$med[i] <- median(sample(x=billboard_samp1,size=50,replace=TRUE))

}The bootstrap resampling results in 1,000 sample medians. Each one estimates the true median first-week ranking for songs in the Billboard 100. From the resampled statistics, we produce a histogram of sample medians. Here we choose a histogram, rather than a density plot, to emphasize that the bootstrap sampling distribution is intended to estimate the true sampling distribution.

#plot resampling distribution

ggplot(data=results_boot,aes(x=med)) +

geom_histogram(color="black",fill="#CFB87C",bins=32) +

geom_vline(xintercept=quantile(results_boot$med,0.05),

color="red",linetype="dashed",linewidth=1) +

geom_vline(xintercept=quantile(results_boot$med,0.95),

color="red",linetype="dashed",linewidth=1) +

labs(title="Billboard Top 100 for the Year 2000",

subtitle="Sampling Distribution",

x="Sample Median Week 1 Ranking",

y="Count") +

scale_x_continuous(limits=c(72,90),breaks=seq(72,90,2)) +

theme_bw()

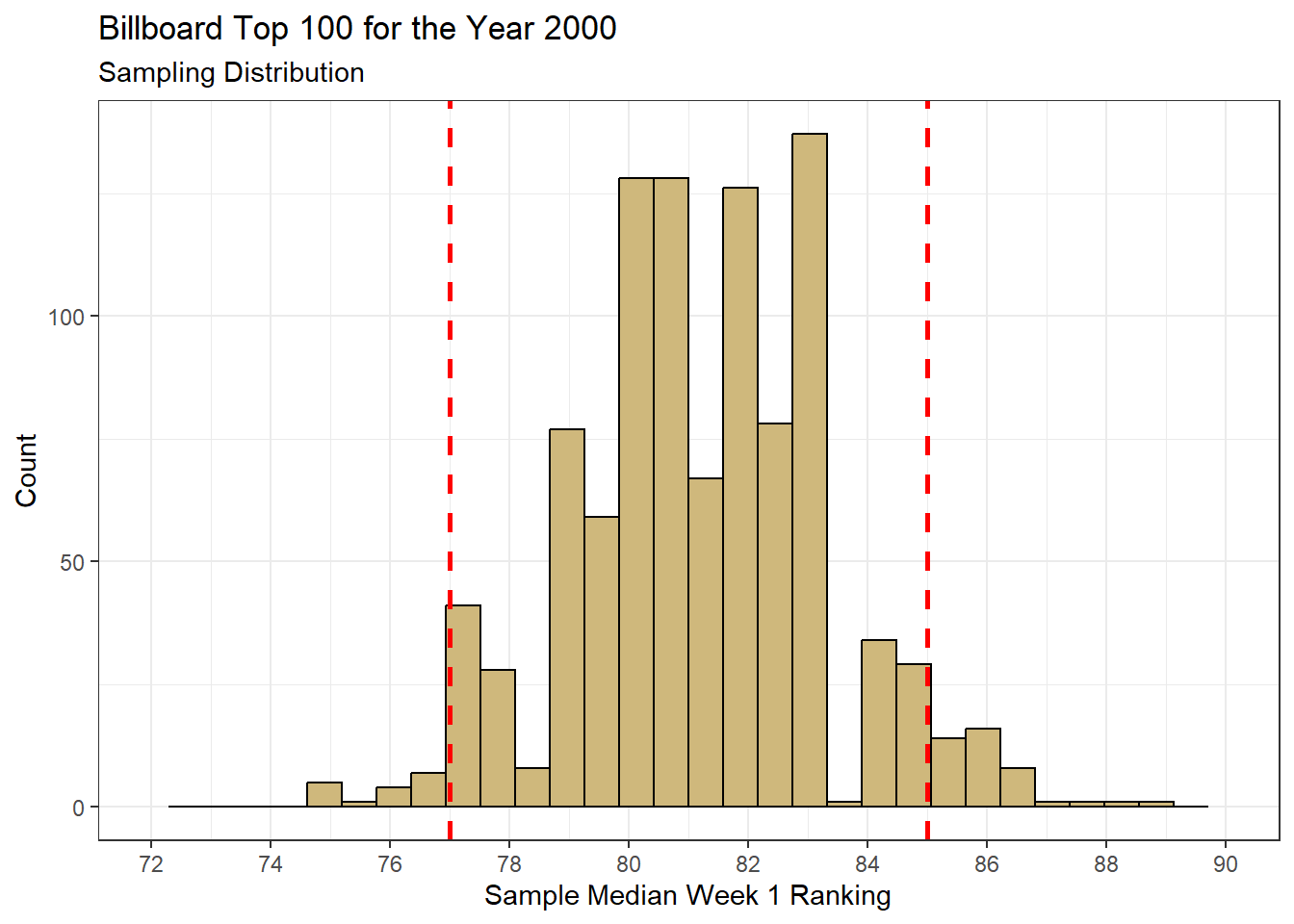

Figure 4.3: Bootstrap Resampling Distribution for Median Week 1 Ranking

The shape and range of the bootstrap sampling distribution in Figure 4.3 is similar to the true sampling distribution in Figure 4.2. However, we notice its center is shifted slightly to the right. This is because the resampled statistics are computed from an original sample with a median of 81.5. Remember, we have no idea in this case that the true median is 81. We simply know that the bootstrap distribution represents a plausible range of values for the true median.

The concept of “plausible range” of values finally brings us to the construction of a confidence interval. Based on the bootstrap distribution, we might expect the true median song ranking to be anywhere from 77 to 85. But the benefit of a confidence interval is in the assignment of a confidence level. How sure are we that a given range of estimated values captures the true value? Perhaps we want to be 90% confident that we capture the true median. We determine the bounds associated with 90% confidence using the quantiles of the bootstrap distribution. The dashed red lines in Figure 4.3 are set at the 0.05 and 0.95 quantiles. These values are equivalent to the 5th and 95th percentiles. They are computed using the quantile() function.

## 5%

## 77## 95%

## 85We see that 5% of the observed sample medians are less than 77 and 5% are greater than 85. The sample median rankings in between these quantiles represent the middle 90%. Consequently, we can say that we are 90% confident that the true median first-week ranking of songs is between 77 and 85 out of 100. This statement represents a confidence interval. As it turns out, the true median of 81 is in the interval but we will never know this with certainty. What we do know is that 90% of our resamples resulted in median rankings between the bounds of the confidence interval. Thus, it is likely we captured the true median ranking.

In practice, common confidence levels range between 90% and 99%. Higher confidence levels result in wider intervals for the same data. If we want to be more confident we captured the true parameter value, we have to provide a wider interval. Think of this relationship using the analogy of fishing. If we want to be more confident that we’ll catch a fish, then we have to cast a wider net. Regardless of the interval width or confidence level, the combination is superior to a single point estimate. After all, it is much easier to catch a fish with a net than with a spear!

We continue to demonstrate the use of confidence intervals for real-world problems in future sections. In all cases, we seek a plausible range of values for an unknown population parameter using sample statistics. For this introductory text in data science, we focus on computational approaches (i.e., bootstrap resampling) for constructing confidence intervals. More advanced or mathematically-focused texts traditionally construct confidence intervals using theoretical sampling distributions. However, the application of theory typically requires more restrictive technical conditions than those imposed by the computational methods presented here.

4.2.2 Population Proportion

Provided a sufficiently large random sample, we can construct confidence intervals for any population parameter. By far the most commonly investigated parameters are the proportion, mean, and slope. In this section, we conduct inference on a population proportion. Afterward, we pursue similar analyses for the mean of a population and slope of a regression line.

Proportions are frequently computed from the levels of a factor (i.e., grouped categorical variable). Imagine we gather a random sample of 100 dogs from kennels across our local area, along with their rabies vaccination status. In this case our factor has two levels: vaccinated and not vaccinated. We might be interested in the true proportion of dogs that have been vaccinated for rabies in our local area. We estimate the population proportion (\(p\)) based on the sample proportion (\(\hat{p}\)) among our 100 dogs. This is the essence of inferential analyses. We want to apply the proportion of vaccinated dogs in the sample to the entire population of dogs in the local area. Different samples of 100 dogs would likely result in different sample proportions. Consequently, we prefer to determine a range of plausible proportions within the population in the form of a confidence interval.

In this section, we return to the 5A Method for solving data-driven problems. We begin with a problem requiring a confidence interval for a single proportion. As usual, we leverage the tidyverse throughout the problem-solving process.

Ask a Question

The New York City (NYC) Department of Health and Mental Hygiene (DOHMH) maintains an open data site at the following link. The available data includes health inspections at restaurants throughout the city and indicators for any violations. When a restaurant suffers a “critical” violation it means inspectors discovered issues that are likely to result in food-borne illness among customers. Imagine you are considering a vacation in NYC and want to answer the following question: What proportion of restaurants in Manhattan are likely to make me sick?

A wide variety of people are likely concerned with the answer to this question. Locals and tourists alike should be informed of the risks associated with eating at Manhattan’s restaurants. In this case, we are specifically focused on the risk of food-borne illness. To be fair, someone could fall ill after eating at a restaurant due to a contagious customer or something else unrelated to health code violations. Our focus is solely on risks associated with critical health code violations. Thanks to the DOHMH’s transparency with their data, we have the capacity to answer this question.

Acquire the Data

The DOHMH website offers an API to access the results of all planned and completed health inspections. The site includes a wide variety of metadata explaining the meaning, structure, and limitations of the data tables. For example, only restaurants in an active status are included. Any permanently-closed restaurants are removed. Newly-registered restaurants that have not yet been inspected are indicated with an inspection date in the year 1900. Inspected restaurants include the inspection date and whether or not there was a violation. Multiple violations during the same inspection result in multiple rows in the table.

Let’s access the API and import inspection results specifically for the borough of Manhattan. We can filter the results of an API call using the query=list() option. We find the variable names (e.g., boro) to filter on using the site’s metadata. As described in Chapter 2.2.2, the httr and jsonlite packages ease the import of data from APIs.

#load libraries

library(httr)

library(jsonlite)

#request data from API

violations <- GET("https://data.cityofnewyork.us/resource/43nn-pn8j.json",

query=list(boro="Manhattan"))

#parse response from API

violations2 <- content(violations,"text")

#transform json to data frame

violations3 <- fromJSON(violations2)The final result of our query in violations3 is the 1,000 most recent records in the DOHMH database. It is common for APIs to limit the amount of returned data in order to prevent overloading servers. Although we could obtain additional data by conducting multiple queries, we have more than enough for our purposes. Next, we perform a variety of data wrangling tasks.

First we remove records for restaurants that have not yet been inspected. These restaurants have an inspection date of January 1, 1900 according to the DOHMH website. Since our statistic of interest is based on inspection results, we cannot include restaurants that have not been inspected. Another task is to limit the data to only the most recent inspection for each restaurant. Inspections from years past are not relevant to the current status of a restaurant, since conditions may have changed. After sorting and grouping, we extract the most recent inspection using the slice_head() function. Finally, we simplify the inspection results to a binary indicator of whether the inspectors found a critical violation.

#identify most recent restaurant inspections

inspections <- violations3 %>%

filter(inspection_date!="1900-01-01T00:00:00.000") %>%

select(camis,inspection_date,critical_flag) %>%

distinct() %>%

na.omit() %>%

arrange(camis,desc(inspection_date),critical_flag) %>%

group_by(camis) %>%

slice_head(n=1) %>%

ungroup() %>%

transmute(restaurant=camis,

date=as_date(inspection_date),

critical=ifelse(critical_flag=="Critical",1,0))

#review data structure

glimpse(inspections)## Rows: 564

## Columns: 3

## $ restaurant <chr> "40365166", "40365726", "40365942", "40368752", "40372971",…

## $ date <date> 2022-03-31, 2022-10-26, 2023-08-24, 2025-01-08, 2024-09-12…

## $ critical <dbl> 0, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 1, 1, 0, 1, 0, 0, 1, 0, 0,…After wrangling, we are left with a sample of unique restaurants, the most recent inspection date, and whether inspectors found a critical violation. This cleaned and organized sample is ready for analysis.

Analyze the Data

The ultimate goal of our analysis is a confidence interval for the true proportion of Manhattan restaurants with a critical health violation. But we begin with a point estimate. Given that the critical violation variable is binary, we can compute the sample proportion using the mean() function.

## [1] 0.5342067Our sample statistic is \(\hat{p}=0.53\). This means 53% of the inspected restaurants in the sample have critical health code violations. That is disconcerting! However, this point estimate is based on a single sample. Depending on sampling variability, we might obtain higher or lower proportions in a different sample. This is why we prefer to provide a range of likely values along with a confidence level. Let’s generate multiple sample proportions using bootstrap resampling, as presented earlier in the chapter.

#initiate empty data frame

results_boot <- data.frame(prop=rep(NA,1000))

#resample 1000 times and save sample proportions

for(i in 1:1000){

set.seed(i)

results_boot$prop[i] <- mean(sample(x=inspections$critical,

size=nrow(inspections),

replace=TRUE))

}The previous code simulates 1,000 samples from the population of restaurants using resampling with replacement. The result is a vector of sample proportions we depict in a histogram.

#plot resampling distribution

ggplot(data=results_boot,aes(x=prop)) +

geom_histogram(color="black",fill="#CFB87C",bins=32) +

geom_vline(xintercept=quantile(results_boot$prop,0.05),

color="red",linetype="dashed",linewidth=1) +

geom_vline(xintercept=quantile(results_boot$prop,0.95),

color="red",linetype="dashed",linewidth=1) +

labs(title="NYC Manhattan Restaurant Inspections",

subtitle="Sampling Distribution",

x="Proportion of Restaurants with Critical Violations",

y="Count",

caption="Source: NYC DOHMH") +

theme_bw()

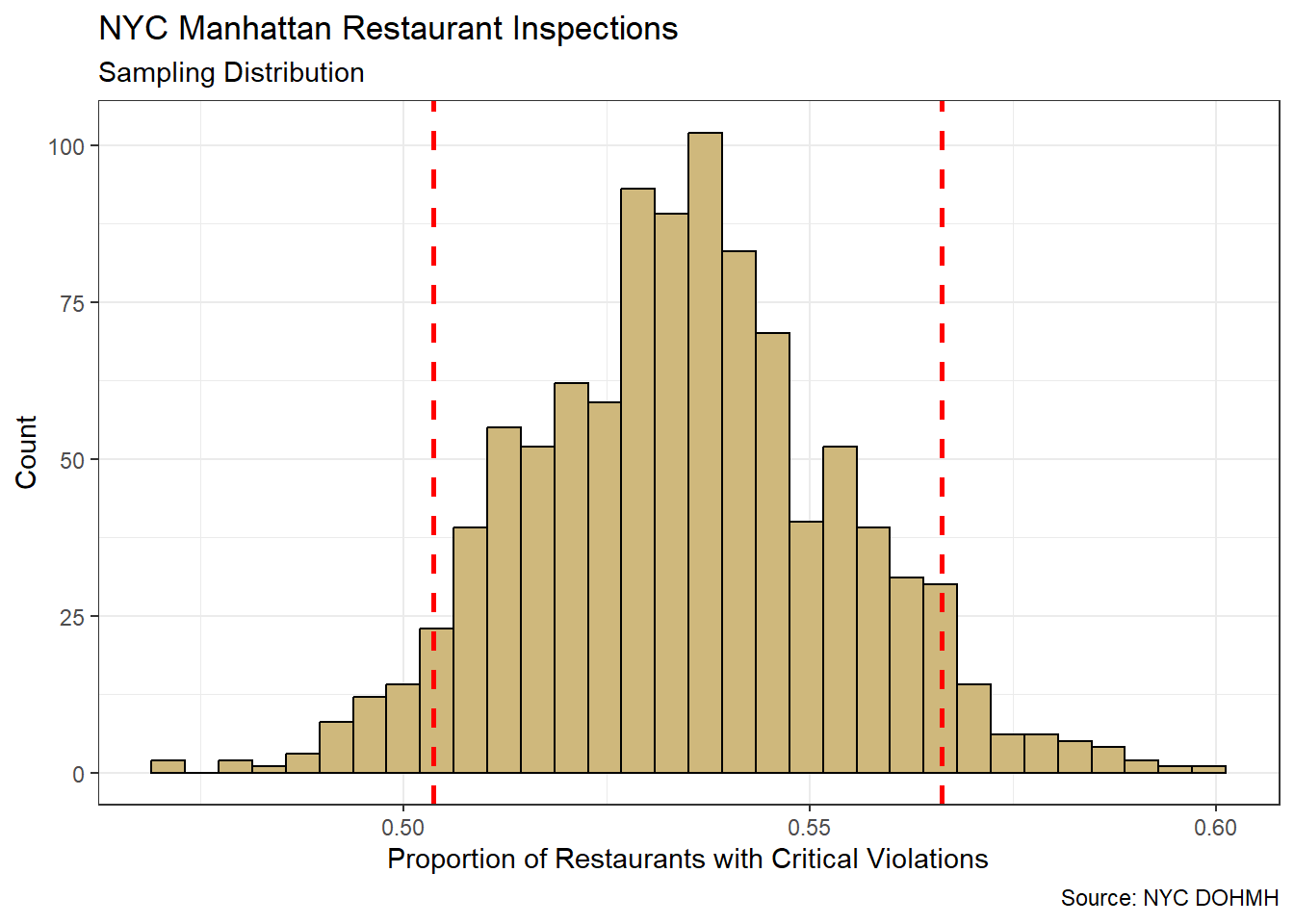

Figure 4.4: Bootstrap Resampling Distribution for Sample Proportion

The bootstrap resampling distribution in Figure 4.4 displays the range of sample proportions we might expect to observe with repeated sampling from the population. The symmetric, bell-shaped appearance of the distribution is not a coincidence. Mathematical theory tells us that the sampling distribution for a proportion follows the Normal distribution. However, our computational approach to confidence intervals does not require this assumption of a named distribution. Instead, we simply rely on the quantiles of the unnamed bootstrap distribution.

The quantile boundaries (dashed red lines) in Figure 4.4 depict the middle 90% of sample proportions. We compute these boundaries directly with the quantile() function.

## 5% 95%

## 0.503639 0.566230Based on this analysis, we are 90% confident that the true proportion of Manhattan restaurants with critical health code violations is between 0.504 and 0.566. In terms of notation, we write this as \(p \in (0.504,0.566)\). In other words, we are confident the population proportion is in this interval. If we require a greater level of confidence in our results, then we must accept a wider interval. Let’s construct a 95% confidence interval.

## 2.5% 97.5%

## 0.4978166 0.5720888As expected, this interval is wider. Specifically, we are 95% confident that the true proportion of Manhattan restaurants with critical health code violations is between 0.498 and 0.572. Often the appropriate confidence level is determined in collaboration with domain experts. With that in mind, we move to the advising step of the 5A Method.

Advise on Results

When presenting confidence intervals to domain experts, it is common to express them in terms of margin of error. Rather than listing the bounds of the interval, this presentation method provides the center and spread. For example, we might say we are 95% confident that the true proportion of Manhattan restaurants with critical health code violations is 0.535 plus or minus 0.037. The value of 0.037 acts as the margin of error for the point estimate. Splitting the interval in half like this is only appropriate when the sampling distribution is symmetric around its center. However, due to mathematical theory, the sampling distribution for the proportion will always be symmetric when the sample size is large.

A common mistake when interpreting the results of an inferential analysis is to describe the confidence level as a probability. It is not accurate, for example, to say there is a 95% probability the true proportion is between 0.498 and 0.572. This interpretation implies that the population proportion is a random value. That is not the case! Though unknown, the true proportion of violating restaurants is a fixed constant. There is no randomness involved. The randomness lies in our estimate of the confidence interval bounds, not in the true value. This is why we always use the word “confidence” rather than “probability” when presenting intervals.

In addition to proper interpretation, we should also be careful to describe the limitations of the results. Our entire analysis assumes that the most-recently-inspected restaurants are representative of the entire population of restaurants in Manhattan. In collaboration with domain experts, we might discover biases that challenge this assumption. Perhaps restaurants that have previous violations get inspected more frequently or with a higher priority. If this is the case, then our sample might be biased toward restaurants more likely to have a critical violation. On the other hand, we also know that the data excludes restaurants that have permanently closed. If a large number of these restaurants closed due to health code violations, then the remaining restaurants may be biased in the opposite direction. In practice, it can be difficult to obtain a perfectly unbiased sample. Regardless, it is important to identify and resolve issues of bias in our data.

The quality control of the data collection can also compromise our analysis. The DOHMH site states:

“Because this dataset is compiled from several large administrative data systems, it contains some illogical values that could be a result of data entry or transfer errors. Data may also be missing.”

Missing data and errors may or may not invalidate our conclusions. The impact largely depends on the magnitude of the issues and their prevalence with violating versus non-violating restaurants. We removed missing values during our data wrangling process, but it is difficult to identify errors without direct access to those who collected the data. Regardless, all such limitations should be revealed to stakeholders along with the results. Once all of the data issues are resolved we can answer the research question.

Answer the Question

Based on a large sample of recently-inspected restaurants in Manhattan it appears more likely than not that a customer is at risk of food-borne illness. We can say with 95% confidence that the proportion of restaurants with critical health code violations is between 0.498 and 0.572. Said another way, 53.5% of restaurants are likely to make you sick (margin of error 3.7%). Consequently, patrons may want to conduct more careful research on current health code status before selecting a restaurant.

Regarding the reproducibility of these results, the connection to the API ensures the most current data. When repeating the data acquisition and analysis presented here, there is no guarantee of obtaining exactly the same results. A different sample of inspections could result in different proportions and intervals. However, if the inspections included in our sample are representative of those we might expect in the future, then our results remain relevant.

4.2.3 Population Mean

In the previous section, we present confidence intervals for a population proportion. This approach lends itself well to conducting inference on categorical variables. Specifically, it facilitates the estimation of frequency within the levels of a factor. However, we also require methods for estimating numerical variables. Rather than frequency, we are often interested in the central tendancy of numerical variables. By far the most common measure of centrality is the arithmetic mean (i.e., the average). Hence we now demonstrate the estimation of a population mean (\(\mu\)) based on the sample mean (\(\hat{\mu}\)) and its margin of error.

In this section, we construct confidence intervals for a single population mean. However, we introduce a different type of interval compared to the previous section. Rather than two-sided confidence intervals, we present one-sided confidence bounds. The vast majority of the process for constructing intervals remains the same. But the formulation and interpretation of the analysis differs. We highlight these important differences throughout the section. We begin by loading the tidyverse and offering an exemplar research question.

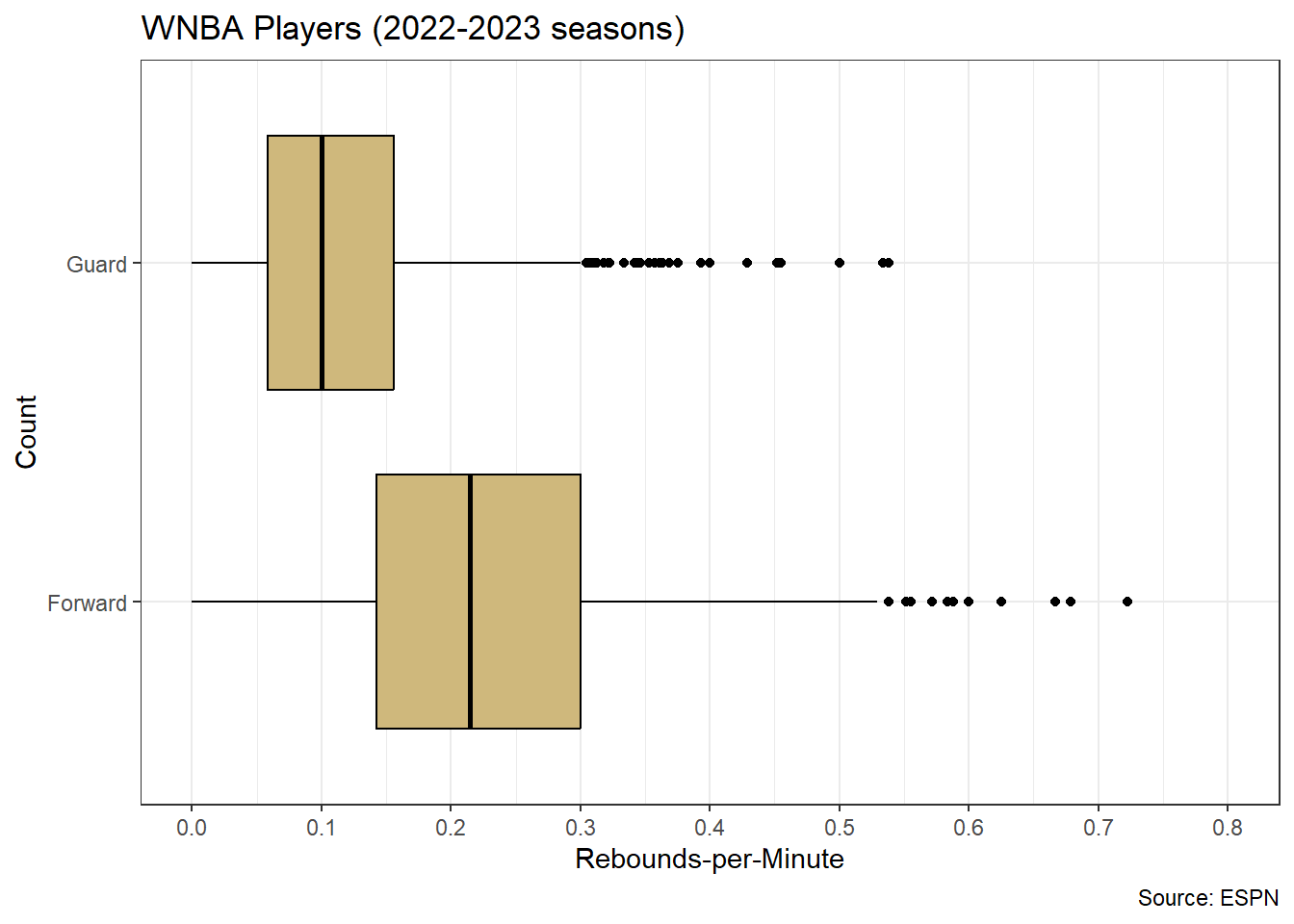

Ask a Question

The Women’s National Basketball Association (WNBA) was founded in 1996 and currently consists of 12 professional teams. Each team plays a total of 40 games in a season and each game consists of four 10-minute quarters. As with any basketball league, the team that has the most points when times expires wins the game. Since a team’s points are comprised of individual players’ points, the scoring efficiency of each player on the court is critically important. By efficiency, we mean the number of points scored given the number of minutes on the court. On this note, imagine we are asked the following question: What is a lower bound for the average scoring efficiency of players in the WNBA?

While the Men’s NBA has historically received more attention, the WNBA recently witnessed its most-watched season in 21 years according to ESPN. As a result, the interest in answering our research question is likely on the rise. However, our question could benefit from greater specificity. Does “players” refer to anyone who has ever played in the WNBA or current players? What makes a lower bound “reasonable” in this context? For our purposes, we assume the interest is in current players and therefore recent seasons. In terms of the bound, we choose to focus on players who are afforded a reasonable opportunity to score, in terms of time on the court. Here we impose a minimum of 10 minutes per game, but this is easily adjusted. Without any such limit, an obvious and uninteresting lower bound is zero. Next we seek a source for our player data.

Acquire the Data

In almost all cases, we prefer to obtain our data directly from the primary source. The WNBA offers an API for player statistics, but rather than accessing it directly we leverage functions from the wehoop package. The library of available functions provides a more intuitive and efficient method for obtaining the desired data by scraping the Entertainment and Sports Programming Network (ESPN) website. After installing the package for the first time, we load the library and import the two most-recent seasons using the load_wnba_player_box() function.

#load wehoop library

library(wehoop)

#import 2022-2023 seasons

wnba <- load_wnba_player_box(seasons=c(2022,2023))The resulting data frame includes over 5,300 player-game combinations described by 57 variables. Most of the variables are not of interest for our current research question or those in subsequent sections. So, we wrangle the data to isolate the desired information.

#wrangle data

wnba2 <- wnba %>%

transmute(season,date=game_date,team=as.factor(team_name),

location=as.factor(home_away),player=athlete_display_name,

position=as.factor(athlete_position_name),

minutes,points,assists,rebounds,turnovers,fouls) %>%

na.omit() %>%

filter(minutes>=10)

#review data structure

glimpse(wnba2)## Rows: 3,828

## Columns: 12

## $ season <int> 2022, 2022, 2022, 2022, 2022, 2022, 2022, 2022, 2022, 2022, …

## $ date <date> 2022-09-18, 2022-09-18, 2022-09-18, 2022-09-18, 2022-09-18,…

## $ team <fct> Aces, Aces, Aces, Aces, Aces, Aces, Sun, Sun, Sun, Sun, Sun,…

## $ location <fct> away, away, away, away, away, away, home, home, home, home, …

## $ player <chr> "A'ja Wilson", "Kiah Stokes", "Chelsea Gray", "Kelsey Plum",…

## $ position <fct> Forward, Center, Guard, Guard, Guard, Guard, Forward, Forwar…

## $ minutes <dbl> 40, 27, 37, 33, 37, 22, 36, 40, 29, 36, 29, 18, 36, 24, 33, …

## $ points <int> 11, 2, 20, 15, 13, 17, 12, 11, 13, 17, 6, 11, 19, 2, 11, 17,…

## $ assists <int> 1, 0, 6, 3, 8, 0, 2, 11, 0, 1, 3, 1, 3, 0, 7, 6, 2, 1, 0, 5,…

## $ rebounds <int> 14, 5, 5, 1, 5, 2, 8, 10, 8, 6, 1, 0, 4, 7, 2, 6, 2, 0, 0, 6…

## $ turnovers <int> 1, 1, 2, 5, 1, 1, 2, 4, 1, 2, 0, 3, 0, 0, 4, 3, 2, 1, 1, 2, …

## $ fouls <int> 2, 3, 3, 2, 2, 2, 5, 1, 4, 3, 1, 3, 1, 3, 0, 1, 3, 1, 1, 1, …After reducing and renaming the variables of interest, eliminating observations with missing data, and filtering to isolate cases for which a player had at least 10 minutes on the court, we are left with a clean and sufficient data set. But does this data represent a random sample? After all, we extracted every player-game combo for two seasons and artificially imposed a 10-minute requirement for minutes played. Perhaps a more relevant question is whether this data is representative of the population of interest. Since we are interested in the scoring efficiency of current players who are afforded sufficient opportunity, in terms of minutes played, this sample is likely representative. With that said, we proceed to our analysis.

Analyze the Data

Our population parameter of interest is the true mean scoring efficiency of WNBA players. Scoring efficiency is computed by dividing points and minutes to obtain points-per-minute. Since we limit our observations to cases with at least 10 minutes of court time, we have no concern for division by zero. After adding this new variable, we depict the distribution of points-per-minute in a histogram.

#compute points-per-minute

wnba3 <- wnba2 %>%

mutate(ppm=points/minutes)

#display distribution of points-per-minute

ggplot(data=wnba3,aes(x=ppm)) +

geom_histogram(color="black",fill="#CFB87C",

boundary=0,closed="left",binwidth=0.05) +

geom_vline(xintercept=mean(wnba3$ppm),color="red",

linewidth=0.75) +

labs(title="WNBA Players (2022-2023 seasons)",

x="Points-per-Minute",

y="Count",

caption="Source: ESPN") +

scale_x_continuous(limits=c(0,1.5),breaks=seq(0,1.5,0.1)) +

theme_bw()

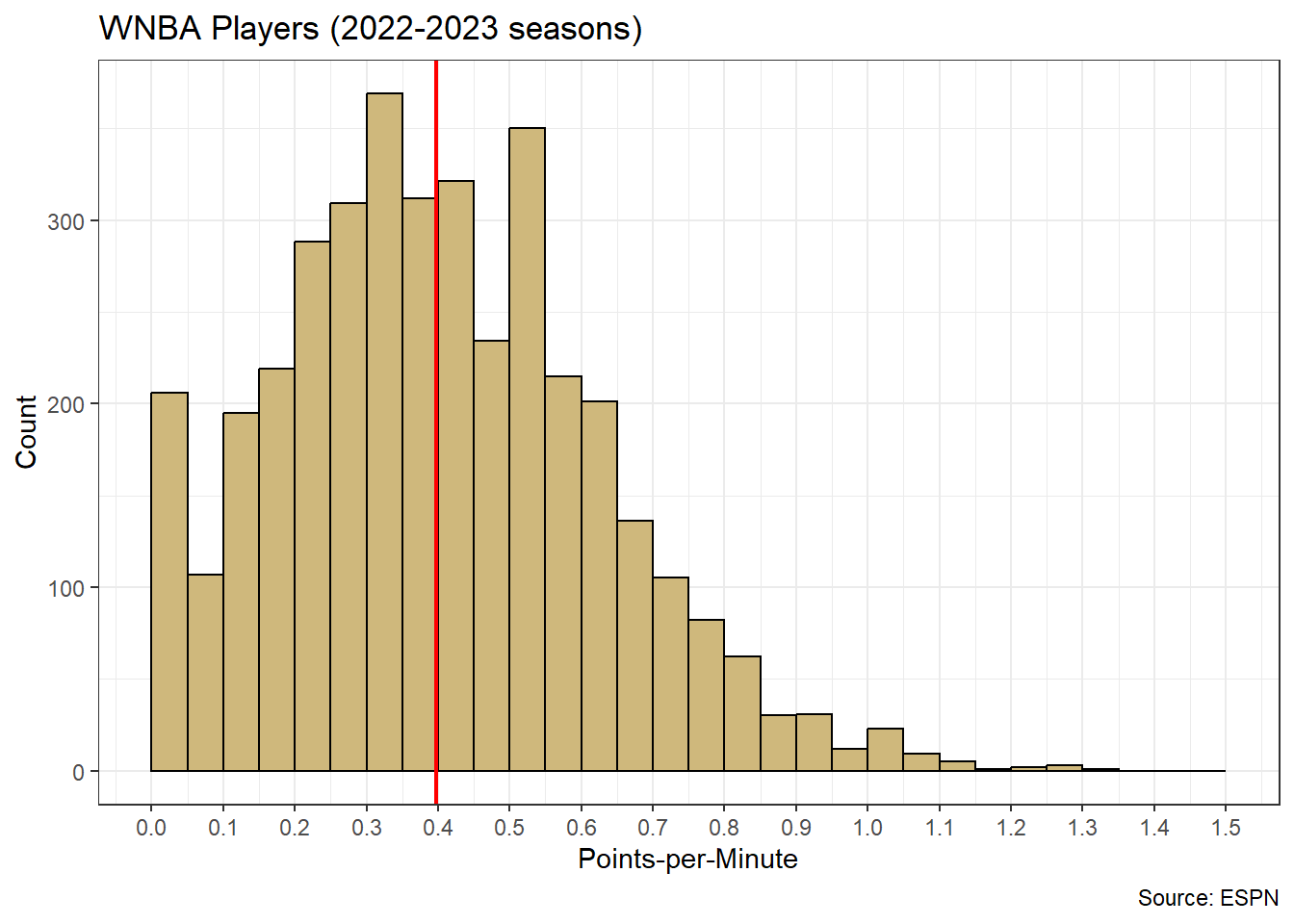

Figure 4.5: Distribution for Individual Player Points-per-Minute

Figure 4.5 displays the distribution of points-per-minute for individual players. We observe an obvious right skew, with most players around the sample mean (red line) of \(\hat{\mu}=0.4\) points-per-minute and a few players with efficiencies as high as 1.3 points-per-minute. Right-skewed distributions are not uncommon for variables with a natural minimum. In this case, it is impossible for a player to have a scoring efficiency below zero. Note, this is not a sampling distribution for average points-per-minute. To obtain that distribution we first conduct bootstrap resampling.

#initiate empty data frame

results_boot <- data.frame(avg=rep(NA,1000))

#resample 1000 times and save sample means

for(i in 1:1000){

set.seed(i)

results_boot$avg[i] <- mean(sample(x=wnba3$ppm,

size=nrow(wnba3),

replace=TRUE))

}

#view summary of sample means

summary(results_boot$avg)## Min. 1st Qu. Median Mean 3rd Qu. Max.

## 0.3854 0.3947 0.3972 0.3973 0.3999 0.4097The bootstrapped sample means vary between efficiencies of 0.385 and 0.410. So, while individual scoring efficiency may vary between 0 and 1.3, the average efficiency varies across a much smaller range. This reduction in variability is a very common phenomenon caused by averaging. Let’s view the resampled means in a histogram.

#plot resampling distribution

ggplot(data=results_boot,aes(x=avg)) +

geom_histogram(color="black",fill="#CFB87C",bins=32) +

geom_vline(xintercept=quantile(results_boot$avg,0.05),

color="red",linetype="dashed",linewidth=0.75) +

labs(title="WNBA Players (2022-2023 seasons)",

subtitle="Sampling Distribution",

x="Average Points-per-Minute",

y="Count",

caption="Source: ESPN") +

scale_x_continuous(limits=c(0.385,0.41),breaks=seq(0.385,0.41,0.005)) +

theme_bw()

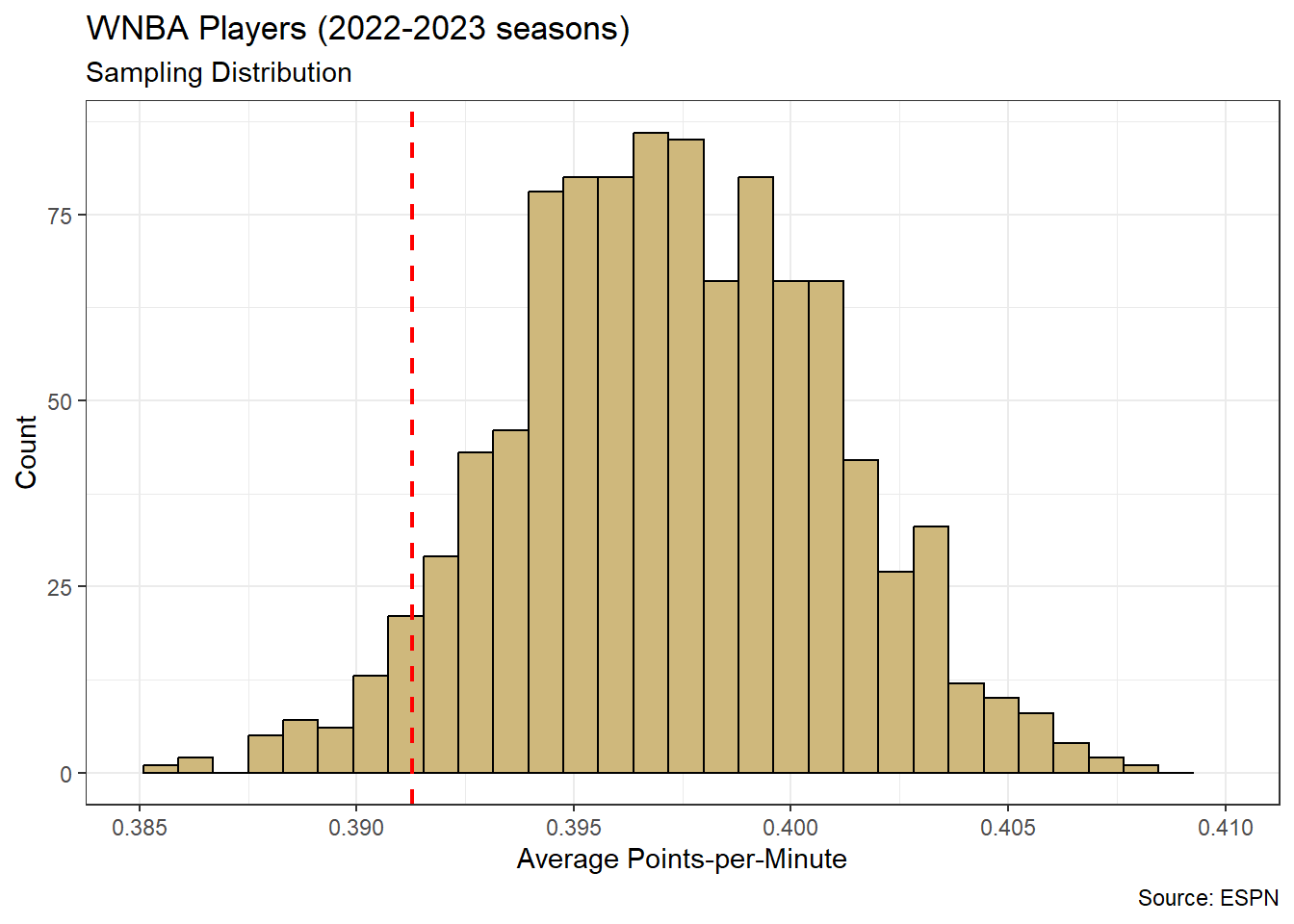

Figure 4.6: Distribution for Average Player Points-per-Minute

The sampling distribution for average points-per-minute appears relatively symmetric. Mathematical theory tells us that the sample mean follows the t-distribution, which is similar to the Normal distribution. However, we do not need to name the sampling distribution in order to compute a lower bound for the mean. The red dashed line in Figure 4.6 represents a 95% lower confidence bound. Of the 1,000 resampled means, 95% are greater than this threshold. We compute the bound directly using the quantile() function.

## 5%

## 0.3912825Thus, we are 95% confident that the true mean scoring efficiency for a WNBA player is at least 0.391 points-per-minute. In mathematical notation we would write \(\mu \in (0.391,\infty)\), because we are confident the population mean is in this interval. If we require greater confidence, then we must move the lower bound to the left. A smaller lower bound captures a larger range of values and permits greater confidence.

Advise on Results

When interpreting our confidence bound, it is important to distinguish between the performance of individual players and the average player. In Figure 4.5, we notice that it is possible for individual players to achieve scoring efficiencies as low as 0 points-per-minute. However, such values are nearly impossible for the average player in Figure 4.6. Not a single one of our hypothetical average players had an efficiency below 0.38, let alone 0. This helps speak to the use of the word “reasonable” in our research question. Although 0 is a valid lower bound for scoring efficiency, it is not informative regarding the average player. Some individual players will certainly have games with no points, even with at least 10 minutes on the court. But the average player will seldom perform worse than 0.391 points-per-minute.

In reference to the 10 minute time restriction, this assumption presents a great opportunity for collaboration with domain experts. Perhaps 10 minutes is too restrictive in the eyes of those who have a more nuanced understanding of the game of basketball. Coaches or managers may consider 5 minutes, for example, a “reasonable” opportunity to score. Data scientists should facilitate discussions regarding the costs and benefits of adjusting this restriction. If the time on the court is too low, then the data may be biased toward a scoring efficiency of zero. Does this truly reflect a player’s ability to score or their lack of opportunity? On the other hand, if the time limit is increased too high, then there may not be sufficient data in the sample. For example, only a little over 25% of the players in the sample were on the court for more than 30 minutes. Are we willing to ignore 75% of the data in order to impose this higher restriction? By collaborating effectively to answer these questions, data scientists and domain experts can identify the appropriate threshold. Assuming the current value of 10 minutes is acceptable, we are prepared to answer our research question.

Answer the Question

Based on a recent sample of over 3,800 player-game combinations, a reasonable lower bound for average scoring efficiency in the WNBA is 0.391 points-per-minute (95% confidence). This estimate is valid for players who are afforded the equivalent of one quarter (10 minutes) on the court. While it is possible for a given player to score zero points in a game, regardless of time on the court, the average player will seldom perform worse than our lower bound of 0.391 point-per-minute.

By providing all of the code for acquiring and analyzing the data, we have rendered our work reproducible for others. If stakeholders determine that more seasons should be considered, then they can easily be added in the API call. If domain experts determine that the time on the court restriction should be lowered to 5 minutes, then this can be quickly adjusted and the analysis re-run. The same is true for the confidence level, if there is a desire to change it. The value of reproducible analysis also lies in the capacity of others to check our work and expand on it. While some research in industry must understandably protect proprietary data and/or techniques, we should always strive for transparency whenever possible.

4.2.4 Population Slope

So far, we have discussed statistical inference for population proportions and means. These two parameter types are by far the most common summaries of categorical and numerical variables. However, we can pursue inference for any population parameter of interest using the computational approaches demonstrated here. Often we are interested in estimating the values of parameters within a statistical model. For example, in Chapter 3.3.2 we introduced the linear regression model in Equation (4.1).

\[\begin{equation} y = \beta_0+\beta_1 x \tag{4.1} \end{equation}\]

If a variable \(y\) is linearly associated with another variable \(x\), then the slope parameter \(\beta_1\) describes the direction and size of the effect. From the standpoint of inferential statistics, \(\beta_1\) is a population parameter. It represents the true association between the variables \(y\) and \(x\) in the population. Just as with any parameter, we estimate the true value of \(\beta_1\) based on a statistic computed from a random sample. In order to fit a linear regression model to sample data, we employ the least squares method and produce a point estimate denoted \(\hat{\beta}_1\). This sample statistic represents the average change in \(y\) we expect to observe when there is a one unit increase in \(x\). But, as always, we prefer a plausible range of values for our parameter rather than a single point estimate. Thus, in this section we present methods for constructing confidence intervals for slope parameters in the context of an example.

Ask a Question

In Chapter 3.3.2, we explore linear association between numerical variables using sample data from the Palmer Station Long Term Ecological Research (LTER) Program. Recall, the program studies various penguin species on the Palmer Archipelago in Antarctica. Based on visual evidence, we identify a linear association between a penguin’s body mass and its flipper length. Given the exploratory focus, we limit our insights to the observed data. If we wish to extend those insights to the entire population of Palmer penguins, then we require more formal inferential techniques. Hence, we ask the following question: What is the association between body mass and flipper length for all penguins on the Palmer Archipelago?

In part, we already have an answer to this question. We computed a point estimate for the association between body mass and flipper length in the previous chapter. However, we have no idea of the precision around that estimate. There could be a great deal of variability in the association from one penguin to the next. Thus, the range of plausible slope values remains unanswered.

Acquire the Data

We have access to a sample of body measurements for Palmer penguins in the palmerpenguins package. We import and wrangle the sample data below.

#load palmerpenguins library

library(palmerpenguins)

#import penguin data

data(penguins)

#wrangle penguin data

penguins2 <- penguins %>%

select(flipper_length_mm,body_mass_g) %>%

na.omit()

#review data structure

glimpse(penguins2)## Rows: 342

## Columns: 2

## $ flipper_length_mm <int> 181, 186, 195, 193, 190, 181, 195, 193, 190, 186, 18…

## $ body_mass_g <int> 3750, 3800, 3250, 3450, 3650, 3625, 4675, 3475, 4250…Our sample consists of the flipper length (in millimeters) and body mass (in grams) for 342 different penguins. From previous exploration, we know the sample consists of three different penguin species. However, our research question does not indicate an interest in distinguishing species. Thus, we pursue our analysis independent of this factor.

Analyze the Data

Prior to computing a point estimate or constructing a confidence interval for our slope parameter, we display the linear association between our numerical variables in a scatter plot.

#create scatter plot

ggplot(data=penguins2,aes(x=flipper_length_mm,y=body_mass_g)) +

geom_point() +

geom_smooth(method="lm",formula=y~x,se=FALSE) +

labs(title="Palmer Penguin Measurements",

x="Flipper Length (mm)",

y="Body Mass (g)",

caption="Source: Palmer Station LTER Program") +

theme_bw()

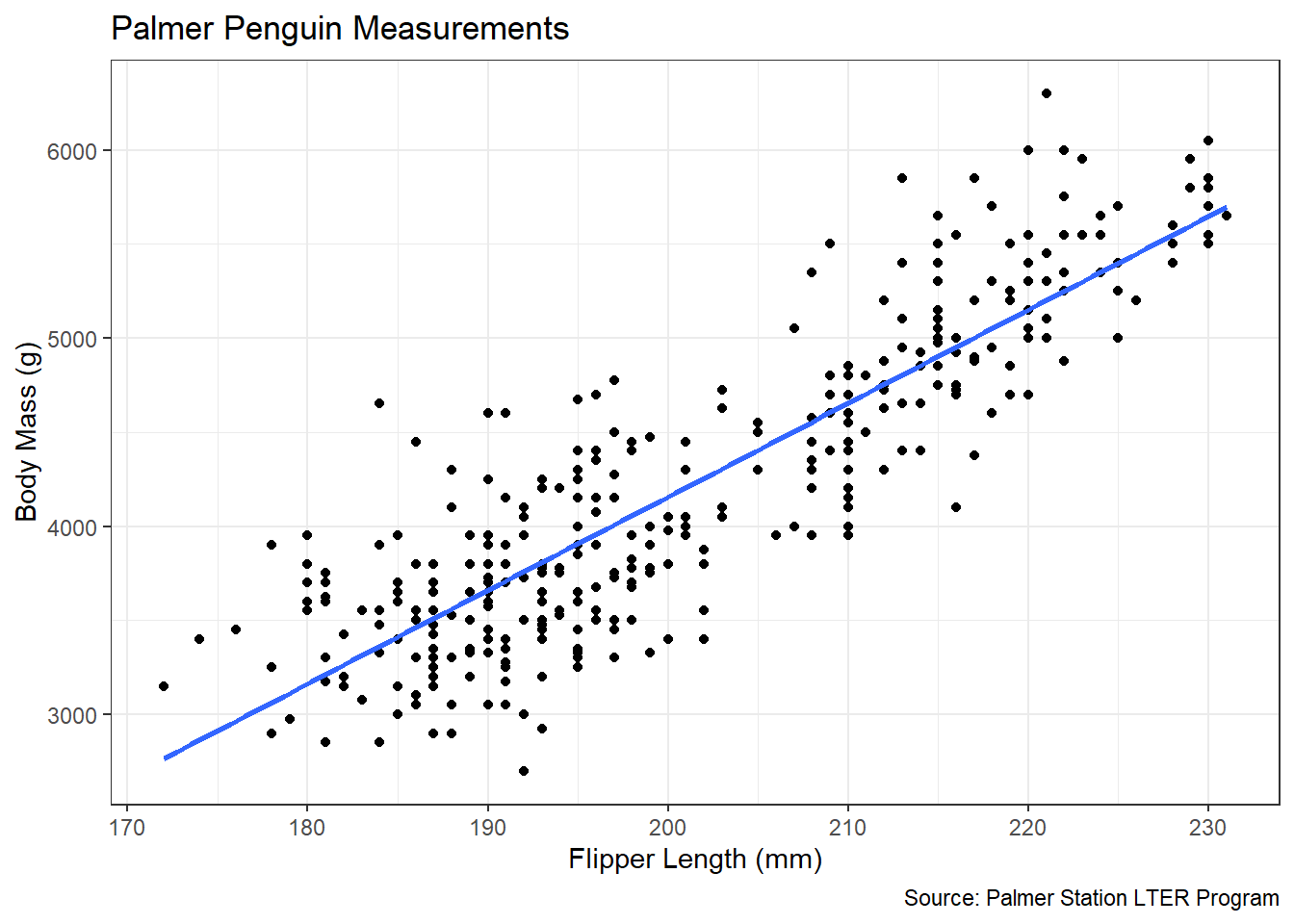

Figure 4.7: Linear Association between Flipper Length and Body Mass

Figure 4.7 suggests a strong, positive, linear association between a penguin’s flipper length and its body mass. This relationship makes intuitive sense, because we would expect a penguin with longer flippers to be larger in general and thus weigh more. The blue line in the scatter plot represents the best-fit line resulting from the least squares method. The focus of our analysis is the true slope (\(\beta_1\)) of this line. Keep in mind, the observed slope in the scatter plot represents a point estimate (\(\hat{\beta}_1\)) based on the sample data. Our interest is in using this estimate to make inferences about the slope for the entire population of penguins. To begin, we need the numerical value for the estimated slope.

#fit simple linear regression

model <- lm(body_mass_g~flipper_length_mm,data=penguins2)

#extract estimated coefficients

coefficients(summary(model))## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -5780.83136 305.814504 -18.90306 5.587301e-55

## flipper_length_mm 49.68557 1.518404 32.72223 4.370681e-107In the previous code, we fit a simple linear regression model using the lm() function. We then extract the estimated coefficients \(\hat{\beta}_0\) and \(\hat{\beta}_1\) from the summary output. The majority of the summary output is beyond the scope of this text, so we limit our focus to the Estimate column. The values in this column indicate an estimated intercept of \(\hat{\beta}_0=-5780.83\) and an estimated slope of \(\hat{\beta}_1=49.69\). Since our current interest is the slope, we isolate this value in the second row and first column of the table.

## [1] 49.68557For every one millimeter increase in flipper length, we expect a 49.69 gram increase in average body mass. But, as with proportions and means, this represents a point estimate of the slope based on a single sample. Were we to obtain a new sample of penguin measurements, the estimated slope would likely be different. Thus, we need a sampling distribution for the estimated slope to determine the range of plausible values. We once again accomplish this with bootstrap resampling. However, we must be careful to resample both flipper length and body mass for a given penguin. Otherwise, we cannot fit a simple linear regression model and extract the estimated slope. We use the sample_n() function to ensure both columns of our data frame are included in the resamples.

#initiate empty data frame

results_boot <- data.frame(beta=rep(NA,1000))

#resample 1000 times and save sample slopes

for(i in 1:1000){

set.seed(i)

penguins_i <- sample_n(penguins2,size=nrow(penguins2),replace=TRUE)

model_i <- lm(body_mass_g~flipper_length_mm,data=penguins_i)

results_boot$beta[i] <- coefficients(summary(model_i))[2,1]

}The thousand bootstrapped resamples result in a variety of estimated slopes that associate body mass with flipper length. Let’s view the resampled slopes in a histogram.

#plot resampling distribution

ggplot(data=results_boot,aes(x=beta)) +

geom_histogram(color="black",fill="#CFB87C",bins=32) +

geom_vline(xintercept=quantile(results_boot$beta,0.025),

color="red",linetype="dashed",linewidth=0.75) +

geom_vline(xintercept=quantile(results_boot$beta,0.975),

color="red",linetype="dashed",linewidth=0.75) +

labs(title="Palmer Penguin Measurements",

subtitle="Sampling Distribution",

x="Estimated Slope",

y="Count",

caption="Source: Palmer Station LTER Program") +

theme_bw()

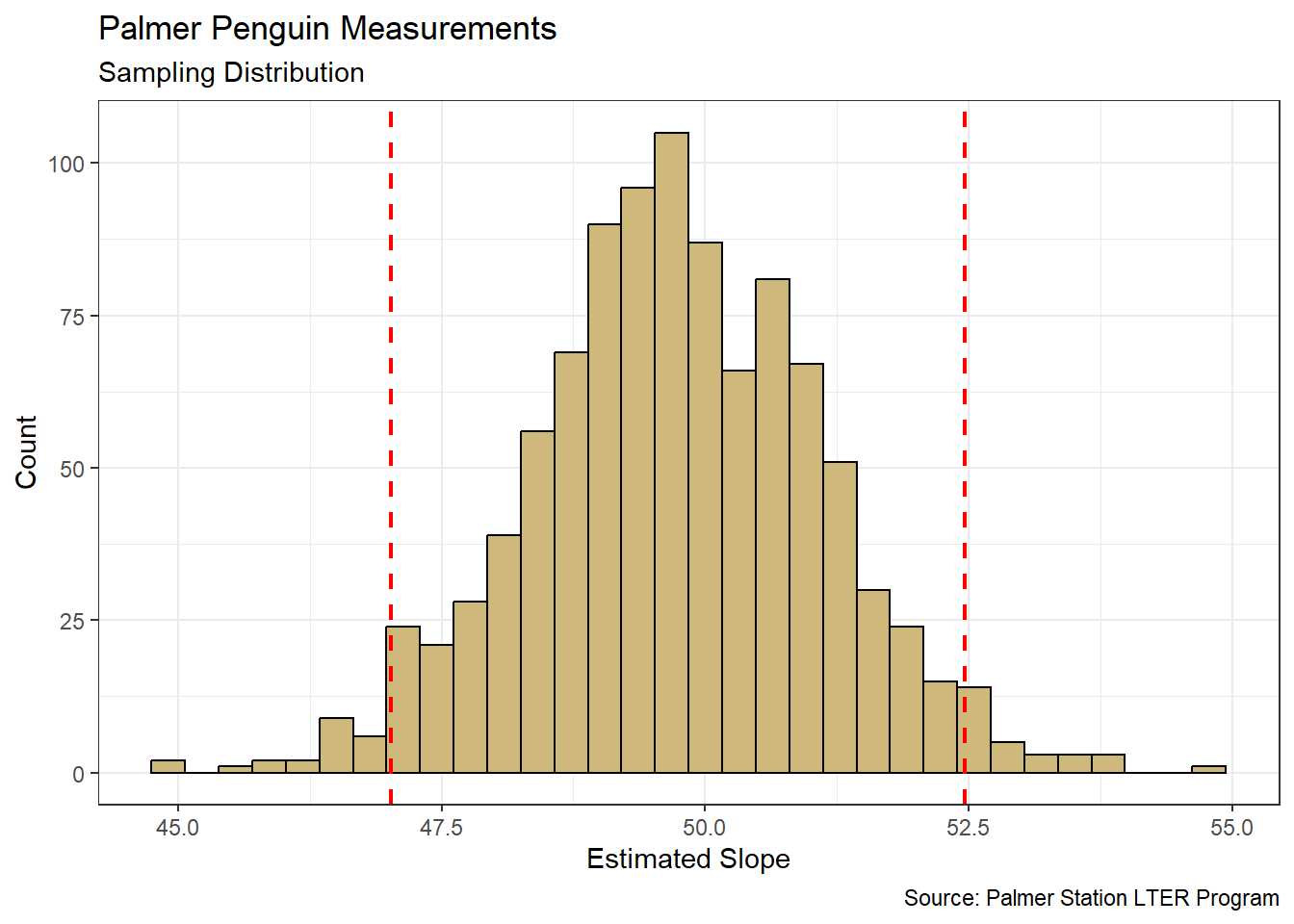

Figure 4.8: Distribution for average increase in Body Mass per unit increase in Flipper Length

Based on the sampling distribution in Figure 4.8, it appears we might observe slopes anywhere between 45 and 55 grams per millimeter. Similar to the sample mean, theory indicates that the sample slope follows the symmetric t-distribution. But that assumption is not required here. A plausible range of values is represented by the bounds (dashed red lines) of a 95% confidence interval. We compute these bounds directly using the quantile() function.

## 2.5% 97.5%

## 47.01807 52.46620We are 95% confident that the true slope parameter that associates body mass with flipper length is between 47.02 and 52.47 g/mm (i.e., \(\beta_1 \in (47.02,52.47)\)). Said another way, for every one millimeter increase in flipper length we are 95% confident that the average body mass increases by between 47.02 and 52.47 grams.

Advise on Results

When interpreting these results for stakeholders, we must be careful to identify the population to which we refer. The sample data only includes three species of penguin on the Palmer Archipelago.

## Adelie Chinstrap Gentoo

## 152 68 124We can confidently state that our estimated association between body mass and flipper length applies to Adelie, Chinstrap, and Gentoo penguins. But if other species of penguin exist on the Palmer Archipelago, we would not want to extrapolate our results to those species. It’s possible that the association between body mass and flipper length varies dramatically for other species, so we should not assume that our estimate applies universally.

An important value that is not included in our confidence interval is zero. If zero was in our interval, then it would be plausible that there is no association between body mass and flipper length. In other words, body mass and flipper length would be independent. Such results can be challenging to convey to a broad audience when a point estimate suggests a non-zero slope. Imagine for a moment that our original point estimate for the slope was 3 g/mm, but the confidence interval was -1 to 7 g/mm. The point estimate indicates a positive association between body mass and flipper length, but the confidence interval suggests there could be no association at all. In such cases, we must explain that the positive association in the original sample is simply due to the randomness of sampling. The evidence of an association in the sample is not strong enough to believe that it exists in the entire population.

Answer the Question

Based on a sample of 342 penguins from the Palmer Archipelago, there appears to be a strong, positive, linear association between body mass and flipper length. Specifically, we can say with 95% confidence that the average increase in body mass for a one millimeter increase in flipper length is between 47.02 and 52.47 grams. While these results should only be applied to Adelie, Chinstrap, and Gentoo penguins, it makes intuitive sense that penguins with longer flippers are larger in general and thus weigh more.

The preceding sections presented methods for computing a plausible range of values for a single population parameter. However, we are sometimes interested in the difference between two population parameters. Given sample data for two independent populations, we can construct confidence intervals for the difference in parameters using the same bootstrapping approach. We demonstrate such confidence intervals in the next section.

4.2.5 Two Populations

Another common method of inference regards the difference between two population parameters. Returning to the kennel example, imagine we are interested in how the proportion of vaccinated dogs in our local area compares to that of a neighboring city. In this case there are two distinct populations of dogs: our local area and the neighboring area. Consequently, there are two parameters of interest: the true proportion of vaccinated dogs in our area (\(p_1\)) and the true proportion in the neighboring area (\(p_2\)). We can estimate the difference between these two parameters (\(p_1-p_2\)) by building a confidence interval via bootstrap resampling.

Given we are interested in two different populations, we require two independent samples. We then resample (with replacement) from each sample, compute separate statistics, and finally calculate a difference in statistics (\(\hat{p}_1-\hat{p}_2\)). By repeating this bootstrapping process, we obtain a sampling distribution for the difference. Differences in proportions or means are the most common, but we can compare any statistic we choose. In this section, we demonstrate a difference in proportions using the New York City health inspection data.

Ask a Question

The New York City (NYC) Department of Health and Mental Hygiene (DOHMH) website offers data on all five boroughs in the city. In this context, we might consider all active restaurants within a borough as a population. Thus, our problem includes five populations from which we obtain a sample of restaurant inspections. Using these samples, we compare the proportion of restaurants with critical health code violations for two different boroughs. Suppose we ask the following question: What is the difference in the proportion of restaurants likely to make me sick in the Bronx versus Manhattan?

Given the focus on the physical health of patrons, we should pause to consider the potential ethical implications of answering this question. On the one hand, answering this question could potentially prevent patrons from suffering food-borne illness at NYC restaurants. On the other hand, if there is a significant difference, then there could be financial impacts for owners of non-violating restaurants in the “worse” borough. Patrons might choose to avoid all restaurants in a certain borough if our analysis suggests a higher proportion. Is this fair to those business owners? Do the health benefits outweigh the financial costs? These are challenging questions that should be considered prior to acquiring the data.

Acquire the Data

We acquire the data for both boroughs using the same methodology as in Chapter 4.2.2. The only difference is that we execute two separate API calls, one for each borough. Then, we combine the two data frames into one and perform the same wrangling tasks to obtain only the most recent inspections for each restaurant. For convenience, we have completed all of these steps ahead of time and saved the results in a file called restaurants.csv. This file actually includes data for all five boroughs, but we filter on the two boroughs of interest.

#import and filter inspection data

inspections <- read_csv("restaurants.csv") %>%

filter(borough %in% c("Manhattan","Bronx"))

#review data structure

glimpse(inspections)## Rows: 1,414

## Columns: 4

## $ restaurant <dbl> 30075445, 40364296, 40364443, 40364956, 40365726, 40365942,…

## $ borough <chr> "Bronx", "Bronx", "Manhattan", "Bronx", "Manhattan", "Manha…

## $ date <date> 2023-08-01, 2022-01-03, 2022-08-08, 2022-05-02, 2022-10-26…

## $ critical <dbl> 0, 1, 1, 1, 1, 0, 0, 0, 0, 1, 1, 1, 0, 0, 1, 1, 1, 1, 0, 0,…After all of our data wrangling tasks, we are left with inspection records for 1,414 restaurants across the two boroughs. We now have a clean, organized sample of restaurant inspections to support our analysis.

Analyze the Data

The ultimate goal of our inferential analysis is to construct a confidence interval for the difference in proportions. Prior to inference, we often conduct a brief exploratory analysis. Let’s compute the sample proportion of violating restaurants in each borough.

#compute sample statistics

inspections %>%

group_by(borough) %>%

summarize(count=n(),

prop=mean(critical)) %>%

ungroup()## # A tibble: 2 × 3

## borough count prop

## <chr> <int> <dbl>

## 1 Bronx 727 0.583

## 2 Manhattan 687 0.534Based on the sample, 58% of restaurants in the Bronx have critical violations (\(\hat{p}_1=0.58\)). This is true for only 53% of the restaurants in Manhattan (\(\hat{p}_2=0.53\)). So, we might be inclined to believe that there is a 5 percentage-point difference between the two boroughs. But we must resist drawing conclusions based purely on a single point estimate. The benefit of a confidence interval is the consideration for the variability in results across multiple samples and multiple point estimates. In order to obtain a distribution of point estimates (i.e., differences in proportions) we employ bootstrap resampling.

#initiate empty vector

results_boot <- data.frame(diff=rep(NA,1000))

#extract samples for each borough

manhat <- inspections %>%

filter(borough=="Manhattan") %>%

pull(critical)

bronx <- inspections %>%

filter(borough=="Bronx") %>%

pull(critical)

#repeat bootstrapping process 1000 times

for(i in 1:1000){

set.seed(i)

manhat_prop <- mean(sample(x=manhat,

size=length(manhat),

replace=TRUE))

bronx_prop <- mean(sample(x=bronx,

size=length(bronx),

replace=TRUE))

results_boot$diff[i] <- bronx_prop-manhat_prop

}

#review data structure

glimpse(results_boot)## Rows: 1,000

## Columns: 1

## $ diff <dbl> 0.09949164, 0.03954358, 0.01334270, 0.02314551, 0.03567131, 0.014…As usual, we begin the bootstrapping process by defining an empty vector to hold our statistics. However, we include a new step to account for the two different populations. Using the pull() function within a dplyr pipeline, we convert the violation column for each borough into a vector. Then, within the for loop, we resample from each vector with replacement and calculate the proportion of violations. Finally, we compute the difference between boroughs and save the result in our empty vector.

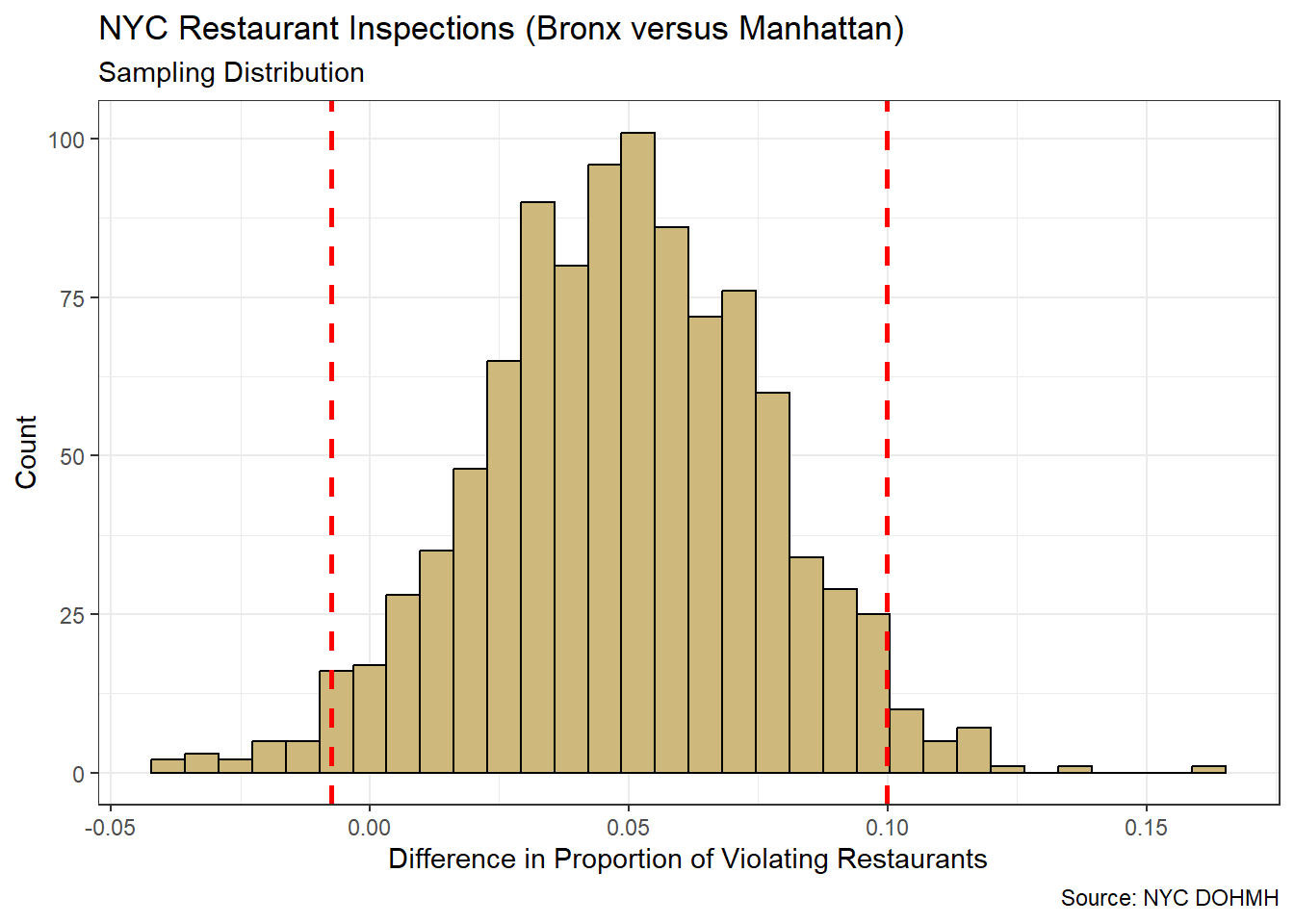

Even in the first few bootstrap results, we see a variety of differences between the boroughs ranging from 1 to 10 percentage-points. We listed the Bronx first in the difference, so positive values indicate a higher proportion in the Bronx. In Figure 4.9, we depict the distribution of all the differences. The dashed red lines represent the bounds of a 95% confidence interval.

#plot sampling distribution and bounds

ggplot(data=results_boot,aes(x=diff)) +

geom_histogram(color="black",fill="#CFB87C",bins=32) +

geom_vline(xintercept=quantile(results_boot$diff,0.025),

color="red",linewidth=1,linetype="dashed") +

geom_vline(xintercept=quantile(results_boot$diff,0.975),

color="red",linewidth=1,linetype="dashed") +

labs(title="NYC Restaurant Inspections (Bronx versus Manhattan)",

subtitle="Sampling Distribution",

x="Difference in Proportion of Violating Restaurants",

y="Count",

caption="Source: NYC DOHMH") +

theme_bw()

Figure 4.9: Bootstrap Sampling Distribution for Difference in Proportions

The majority of differences depicted in the sampling distribution are positive. A positive difference suggests the Bronx has a higher proportion of violating restaurants than Manhattan. However, there are some negative differences as well. In fact, some of them are within the 95% confidence interval.

## 2.5% 97.5%

## -0.007452212 0.099830013Based on the quantiles of the sampling distribution, we are 95% confident that the difference in true proportions between the Bronx and Manhattan is between -0.007 and +0.100. In other words, we are confident that \(p_1-p_2 \in (-0.007,0.100)\). Of critical importance is the fact that zero is in the interval. A value of zero suggests there is no difference between the two boroughs. So, even though the majority of our resamples suggest the Bronx is “worse” in terms of restaurants, we cannot rule out the possibility that Manhattan is just as bad.

Advise on Results

When conducting inference, it is important to always solicit the desired confidence level from the stakeholders before initiating the analysis. This is a matter of ethics in the scientific process. In certain cases, changing the confidence level can change the answer to the question. For example, if a particular stakeholder was intent on making the Bronx look worse, then they could simply lower the confidence level to 90%.

## 5% 95%

## 0.003296933 0.093925506At the 90% confidence level, zero is no longer in the interval. Now a stakeholder could claim that the proportion of violating restaurants in the Bronx appears to be higher. By contrast, if a different stakeholder was adamant that the boroughs are the same, then they could strengthen their argument by raising the confidence level to 99%.

## 0.5% 99.5%

## -0.02727474 0.11744549At the 99% level, zero is even further from the boundary of the interval. Such gaming of the process to support a preconceived notion is unethical. Stakeholders and data scientists should carefully collaborate prior to the analysis in order to select the appropriate level of confidence in the results. Afterward, the results speak for themselves. They should not be changed to accommodate a desired outcome.

Ethical concerns aside, the fact that our conclusion changes when the confidence level changes is troublesome. We prefer our analytic results to be robust to reasonable changes in confidence level. If a difference of zero were in the interval, regardless of confidence level, then we could feel secure that the boroughs are likely the same. Similarly, if zero was consistently outside (below) the interval, then we could be convinced the Bronx is worse. The variability in results weakens our conclusions. Still, we should prefer an ethical yet weak answer to our research questions over unethical answers of any strength.

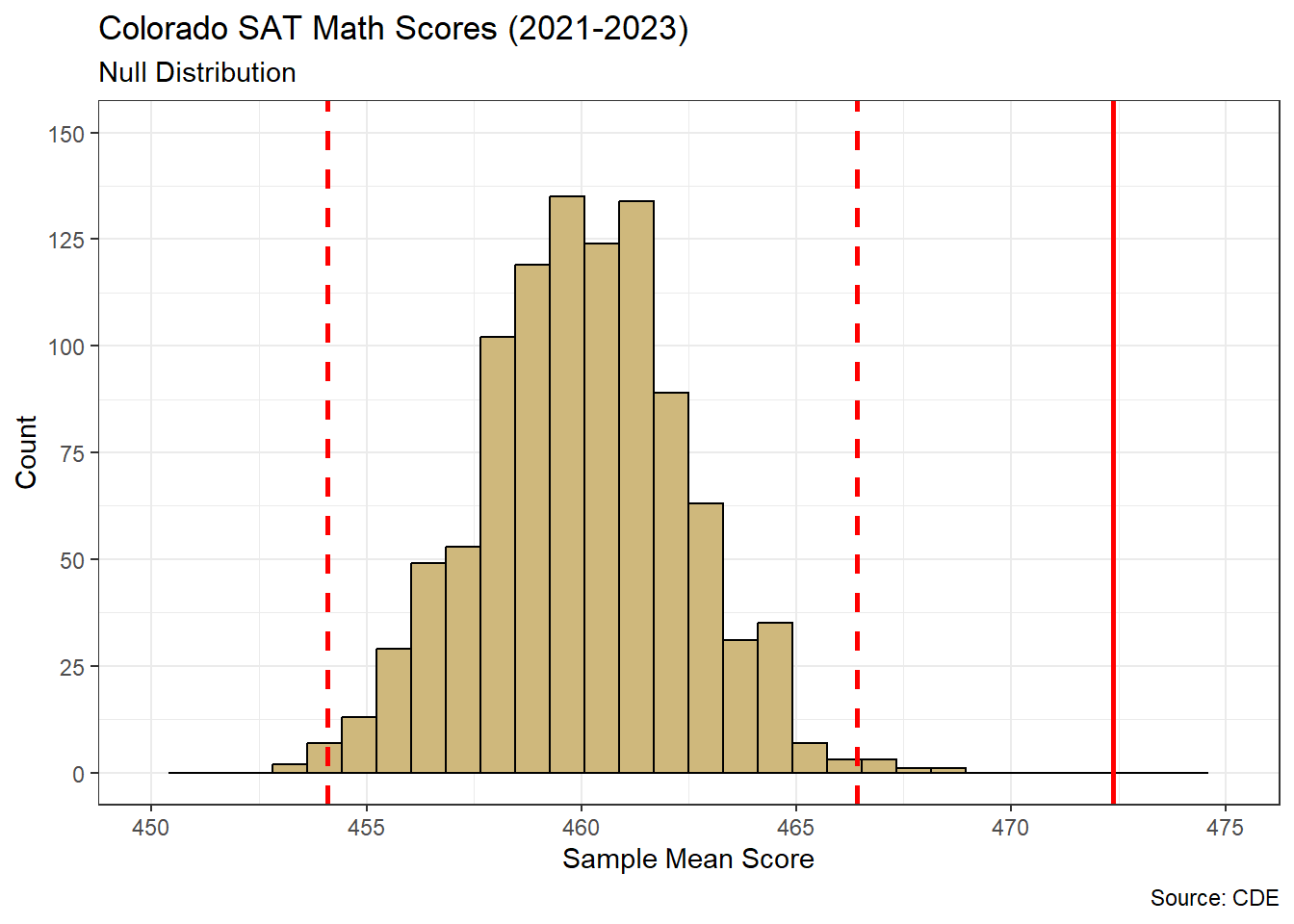

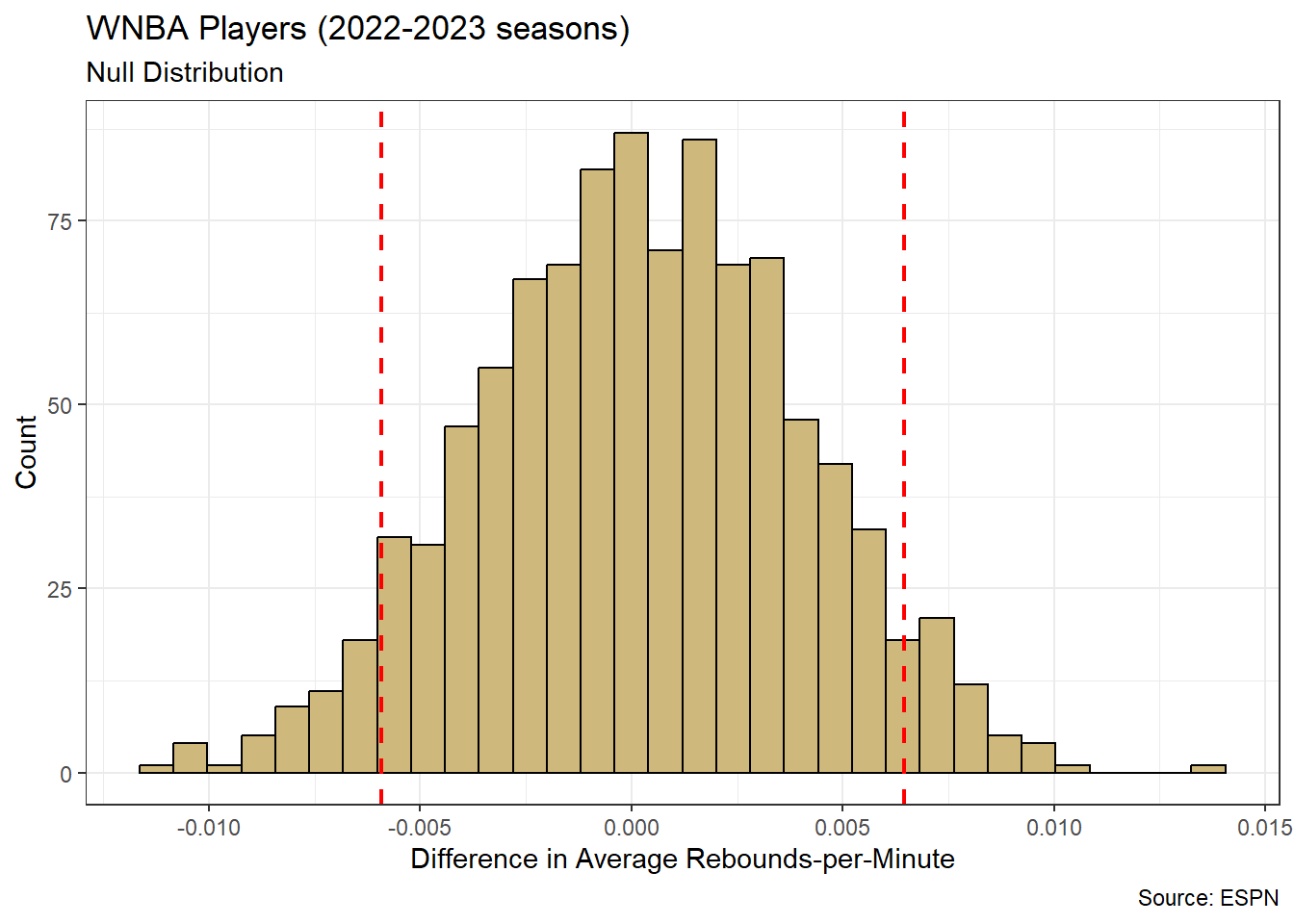

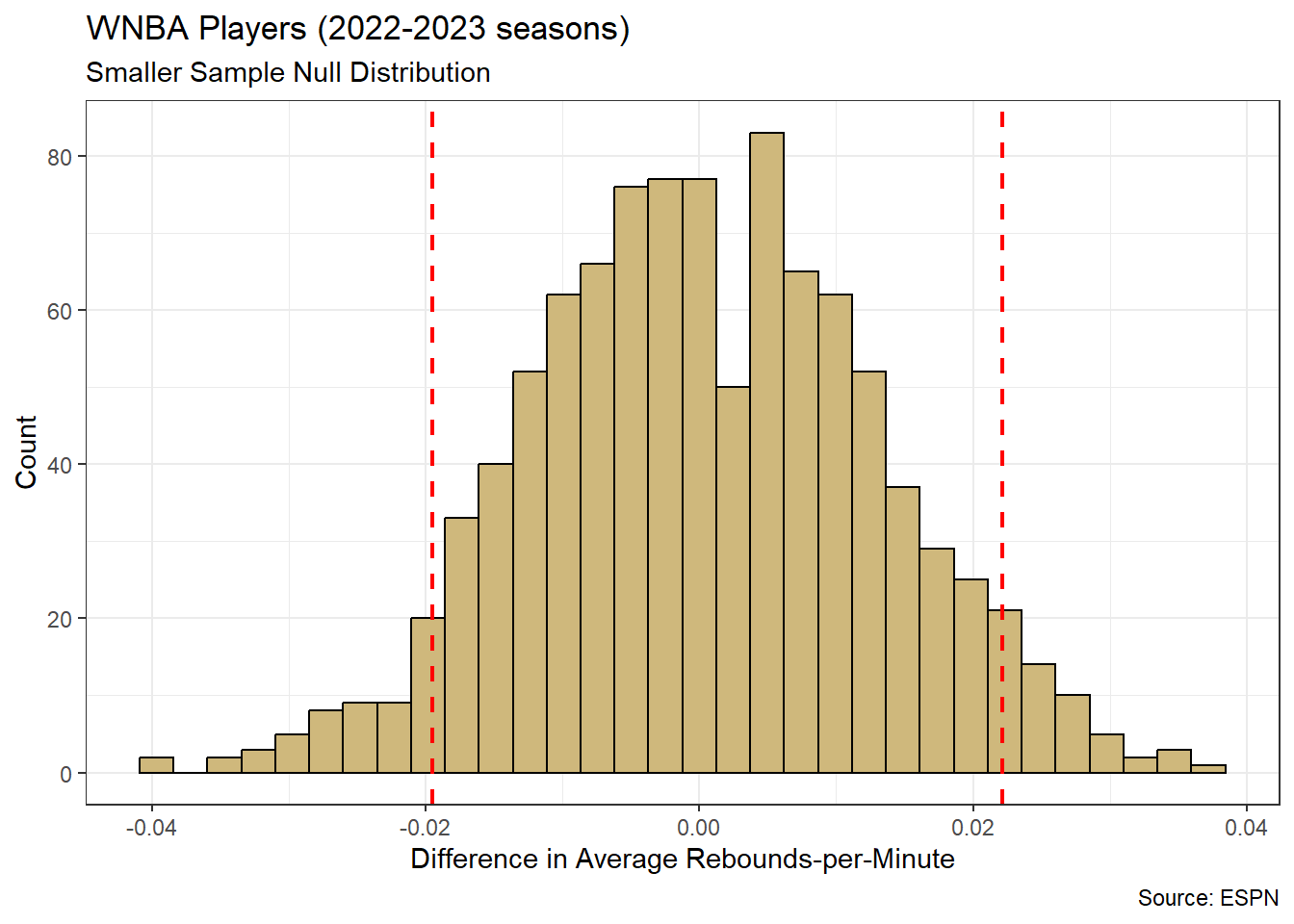

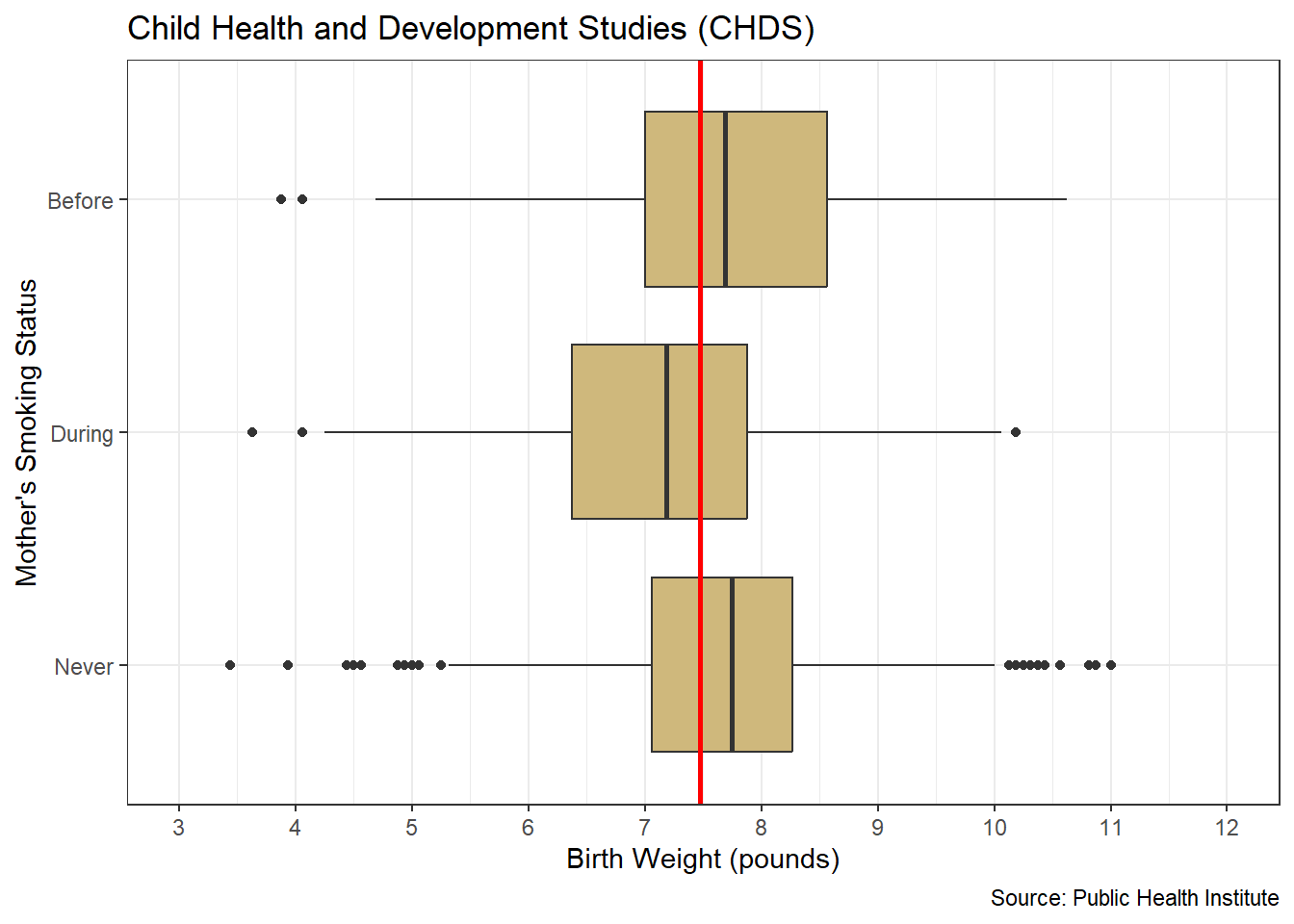

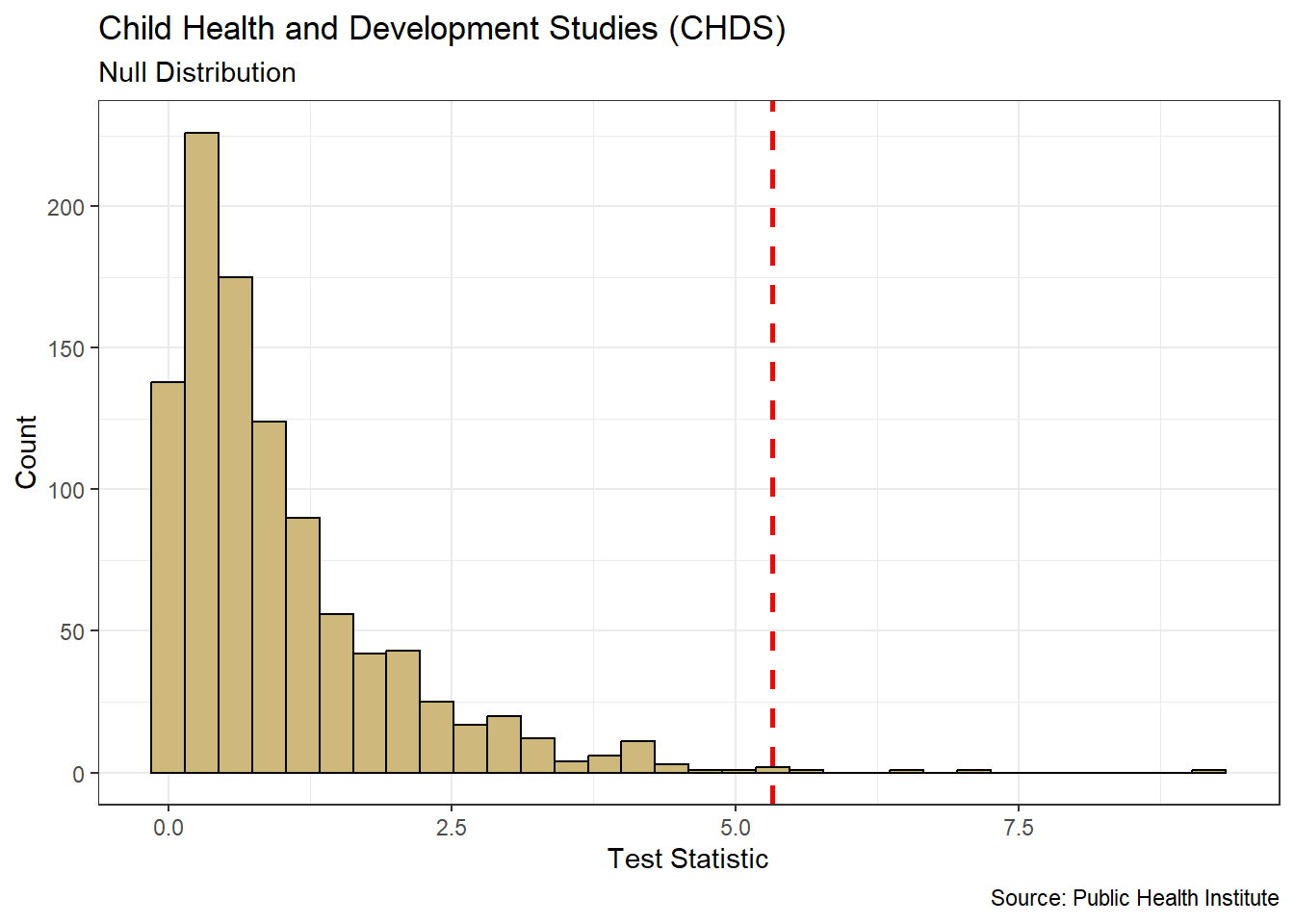

Answer the Question