9 Hierarchical Models

As Kruschke put it, “There are many realistic situations that involve meaningful hierarchical structure. Bayesian modeling software makes it straightforward to specify and analyze complex hierarchical models” (p. 221). IMO, brms makes it even easier than JAGS.

9.1 A single coin from a single mint

Recall from the last chapter that our likelihood is the Bernoulli distribution,

\[y_i \sim \operatorname{Bernoulli} (\theta).\]

We’ll use the Beta density for our prior distribution for \(\theta\),

\[\theta \sim \operatorname{Beta} (\alpha, \beta).\]

And we can re-express \(\alpha\) and \(\beta\) in terms of the mode \(\omega\) and concentration \(\kappa\), such that

\[\alpha = \omega(\kappa - 2) + 1 \textrm{ and } \beta = (1 - \omega)(\kappa - 2) + 1.\]

The consequence of this is that we can re-express \(\theta\) as

\[\theta \sim \operatorname{Beta} (\omega(\kappa - 2) + 1, (1 - \omega)(\kappa - 2) + 1).\]

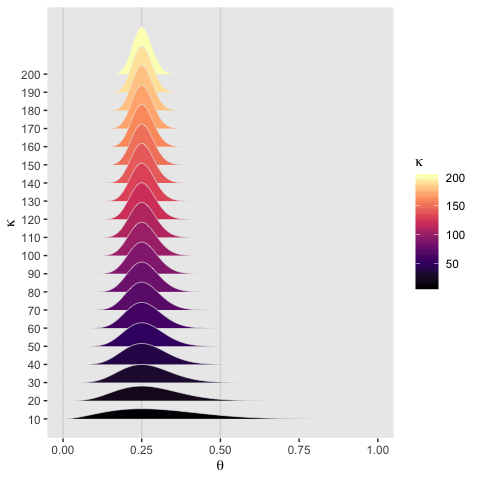

On page 224, Kruschke wrote: “The value of \(\kappa\) governs how near \(\theta\) is to \(\omega\), with larger values of \(\kappa\) generating values of \(\theta\) more concentrated near \(\omega\).” To give a sense of that, we’ll simulate 20 beta distributions, all with \(\omega = .25\) but with \(\theta\) increasing from 10 to 200, by 10.

library(tidyverse)

library(ggridges)

beta_by_k <- function(k) {

w <- .25

tibble(x = seq(from = 0, to = 1, length.out = 1000)) %>%

mutate(theta = dbeta(x = x,

shape1 = w * (k - 2) + 1,

shape2 = (1 - w) * (k - 2) + 1))

}

tibble(k = seq(from = 10, to = 200, by = 10)) %>%

mutate(theta = map(k, beta_by_k)) %>%

unnest(theta) %>%

ggplot(aes(x = x, y = k,

height = theta,

group = k, fill = k)) +

geom_vline(xintercept = c(0, .25, .5), color = "grey85", size = 1/2) +

geom_ridgeline(size = 1/5, color = "grey92", scale = 2) +

scale_fill_viridis_c(expression(kappa), option = "A") +

scale_y_continuous(expression(kappa), breaks = seq(from = 10, to = 200, by = 10)) +

xlab(expression(theta)) +

theme(panel.grid = element_blank())

Holding \(\omega\) constant, the density gets more concentrated around \(\omega\) as \(\kappa\) increases.

9.1.1 Posterior via grid approximation.

Given \(\alpha\) and \(\beta\), we can compute the corresponding mode \(\omega\). To foreshadow, consider \(\text{Beta} (2, 2)\).

## [1] 0.5That is, the mode of \(\text{Beta} (2, 2) = .5\).

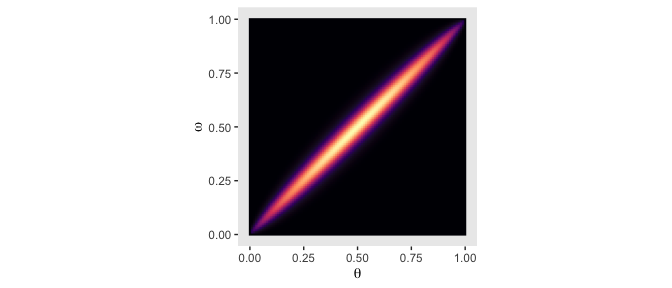

We won’t be able to make the wireframe plots on the left of Figure 9.2, but we can make the others. We’ll make the initial data following Kruschke’s (p. 226) formulas.

\[p(\theta, \omega) = p(\theta | \omega) p(\omega) = \operatorname{Beta} (\theta | \omega (100 - 2) + 1, (1 - \omega) (100 - 2) + 1) \operatorname{Beta} (\omega | 2, 2)\]

First, we’ll make a custom function, make_prior() based on the formulas.

make_prior <- function(theta, omega, alpha, beta, kappa) {

# p(theta | omega)

t <- dbeta(x = theta,

shape1 = omega * (kappa - 2) + 1,

shape2 = (1 - omega) * (kappa - 2) + 1)

# p(omega)

o <- dbeta(x = omega,

shape1 = alpha,

shape2 = beta)

# p(theta, omega) = p(theta | omega) * p(omega)

return(t * o)

}Next we’ll define the parameter space as a tightly-spaced sequence of values ranging from 0 to 1.

Now we’ll use parameter_space to define the ranges for the two variables, theta and omega, which we’ll save in a tibble. We’ll then sequentially feed those theta and omega values into our make_prior() while manually specifying the desired values for alpha, beta, and kappa.

d <-

# here we define the grid for our grid approximation

crossing(theta = parameter_space,

omega = parameter_space) %>%

# compute the joint prior

mutate(prior = make_prior(theta, omega, alpha = 2, beta = 2, kappa = 100)) %>%

# convert the prior from the density metric to the probability metric

mutate(prior = prior / sum(prior))

head(d)## # A tibble: 6 x 3

## theta omega prior

## <dbl> <dbl> <dbl>

## 1 0 0 0

## 2 0 0.01 0

## 3 0 0.02 0

## 4 0 0.03 0

## 5 0 0.04 0

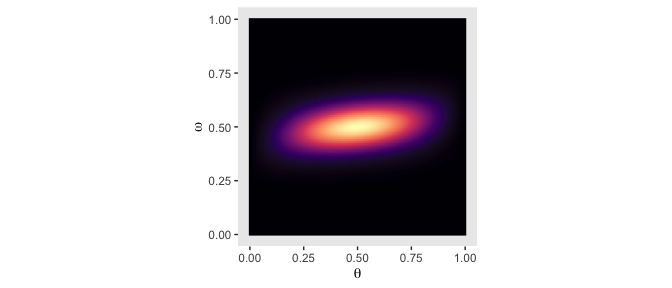

## 6 0 0.05 0Now we’re ready to plot the top middle panel of Figure 9.2.

d %>%

ggplot(aes(x = theta, y = omega, fill = prior)) +

geom_raster(interpolate = T) +

scale_fill_viridis_c(option = "A") +

labs(x = expression(theta),

y = expression(omega)) +

coord_equal() +

theme(panel.grid = element_blank(),

legend.position = "none")

Note, you could also make this with geom_tile(). But geom_raster() with the interpolate = T argument smooths the color transitions.

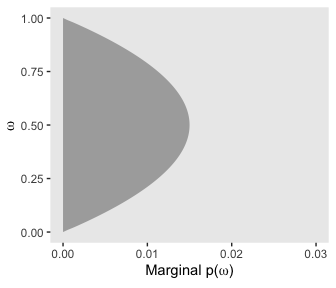

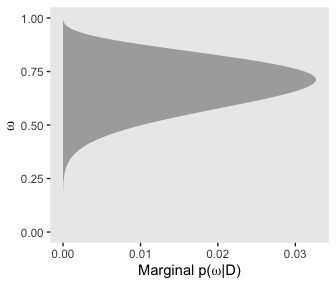

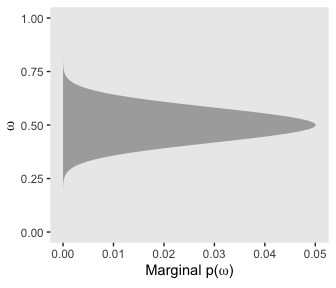

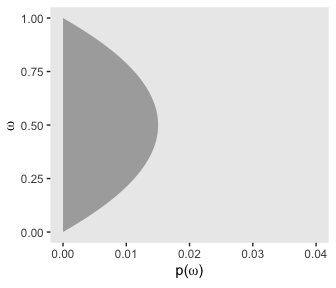

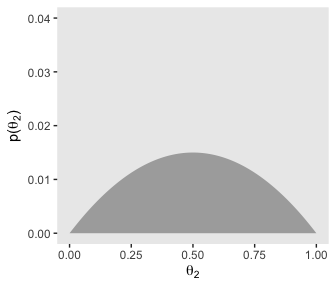

If we collapse “the joint prior across \(\theta\)” (i.e., group_by(omega) and then sum(prior)), we plot the marginal distribution for \(p(\omega)\) as seen in the top right panel.

d %>%

group_by(omega) %>%

summarise(prior = sum(prior)) %>%

ggplot(aes(x = omega,

ymin = 0,

ymax = prior)) +

geom_ribbon(fill = "grey67") +

labs(x = expression(omega),

y = expression(paste("Marginal p(", omega, ")"))) +

coord_flip(ylim = c(0, .03)) +

theme(panel.grid = element_blank())

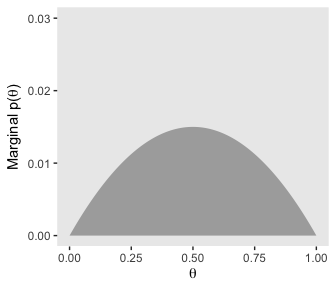

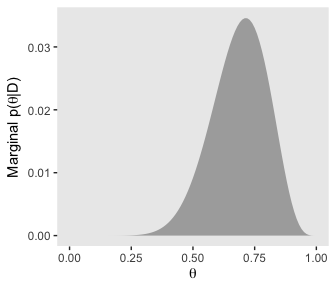

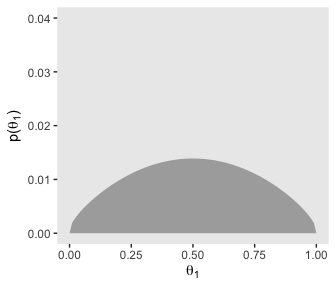

We’ll follow a similar procedure to get the marginal probability distribution for theta.

d %>%

group_by(theta) %>%

summarise(prior = sum(prior)) %>%

ggplot(aes(x = theta,

ymin = 0,

ymax = prior)) +

geom_ribbon(fill = "grey67") +

labs(x = expression(theta),

y = expression(paste("Marginal p(", theta, ")"))) +

coord_cartesian(ylim = c(0, .03)) +

theme(panel.grid = element_blank())

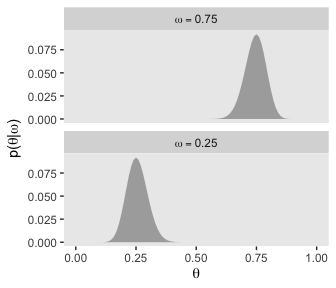

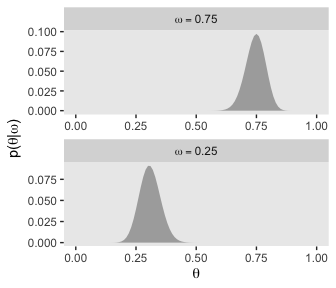

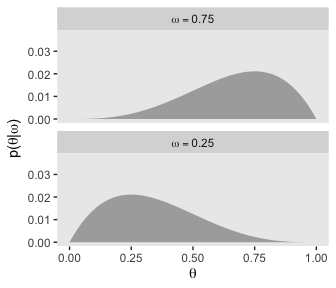

We’ll use the filter() function to take the two slices from the posterior grid. Since we’re taking slices, we’re no longer working with the joint probability distribution. As such, our two marginal prior distributions for theta no longer sum to 1, which means they’re no longer in a probability metric. No worries. After we group by omega, we can simply divide prior by the sum() of prior which renormalizes the two slices “so that they are individually proper probability densities that sum to 1.0 over \(\theta\)” (p. 226).

d %>%

filter(omega %in% c(.25, .75)) %>%

group_by(omega) %>%

mutate(prior = prior / sum(prior)) %>%

mutate(label = factor(str_c("omega == ", omega),

levels = c("omega == 0.75", "omega == 0.25"))) %>%

ggplot(aes(x = theta,

ymin = 0,

ymax = prior)) +

geom_ribbon(fill = "grey67") +

labs(x = expression(theta),

y = expression(paste("p(", theta, "|", omega, ")"))) +

coord_cartesian() +

theme(panel.grid = element_blank()) +

facet_wrap(~label, ncol = 1, labeller = label_parsed)

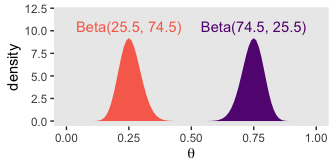

As Kruschke pointed out at the top of page 228, these are indeed Beta densities. Here’s proof.

# we'll want this for the annotation

text <-

tibble(theta = c(.75, .25),

y = 10.5,

label = c("Beta(74.5, 25.5)", "Beta(25.5, 74.5)"),

omega = letters[1:2])

# here's the primary data for the plot

tibble(theta = rep(parameter_space, times = 2),

alpha = rep(c(74.5, 25.5), each = 101),

beta = rep(c(25.5, 74.5), each = 101),

omega = rep(letters[1:2], each = 101)) %>%

# the plot

ggplot(aes(x = theta, fill = omega)) +

geom_ribbon(aes(ymin = 0, ymax = dbeta(x = theta, shape1 = alpha, shape2 = beta))) +

geom_text(data = text,

aes(y = y, label = label, color = omega)) +

scale_fill_viridis_d(option = "A", begin = .3, end = .7) +

scale_color_viridis_d(option = "A", begin = .3, end = .7) +

labs(x = expression(theta),

y = "density") +

coord_cartesian(ylim = 0:12) +

theme(panel.grid = element_blank(),

legend.position = "none")

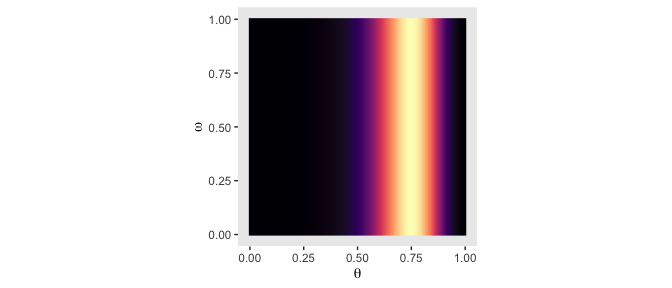

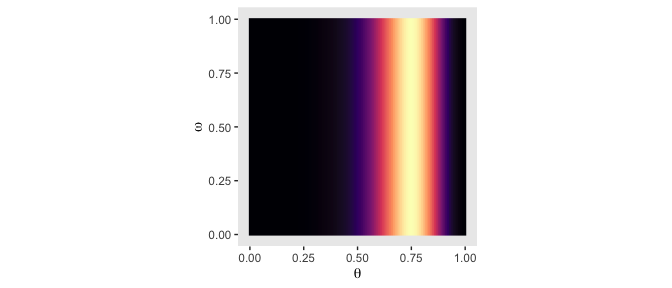

But back on track, we need the Bernoulli likelihood function for the lower three rows of Figure 9.2.

bernoulli_likelihood <- function(theta, data) {

n <- length(data)

z <- sum(data)

return(theta^z * (1 - theta)^(n - sum(data)))

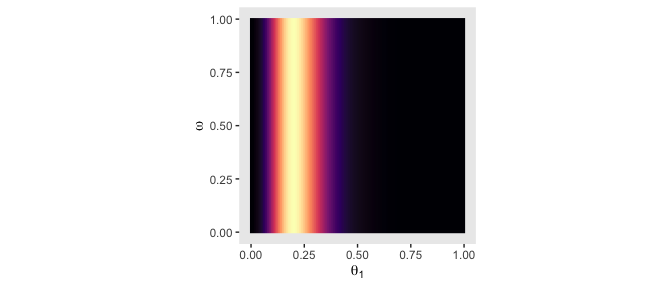

}Time to feed theta and our data into the bernoulli_likelihood() function, which will allow us to make the 2-dimensional density plot in the middle of Figure 9.2.

# define the data

n <- 12

z <- 9

trial_data <- rep(0:1, times = c(n - z, z))

# compute the likelihood

d <-

d %>%

mutate(likelihood = bernoulli_likelihood(theta = theta,

data = trial_data))

# plot

d %>%

ggplot(aes(x = theta, y = omega, fill = likelihood)) +

geom_raster(interpolate = T) +

scale_fill_viridis_c(option = "A") +

labs(x = expression(theta),

y = expression(omega)) +

coord_equal() +

theme(panel.grid = element_blank(),

legend.position = "none")

Note how this plot demonstrates how the likelihood is solely dependent on \(\theta\); it’s orthogonal to \(\omega\). This is the visual consequence of Kruschke’s Formula 9.6,

\[\begin{align*} p (\theta, \omega | y) & = \frac{p (y | \theta, \omega) \; p (\theta, \omega)}{p (y)} \\ & = \frac{p (y | \theta) \; p (\theta | \omega) \; p (\omega)}{p (y)}. \end{align*}\]

That is, in the second line of the equation, the probability of \(y\) was only conditional on \(\theta\). But the reason we call this a hierarchical model is because the probability of \(\theta\) itself is conditioned on \(\omega\). The prior itself had a prior.

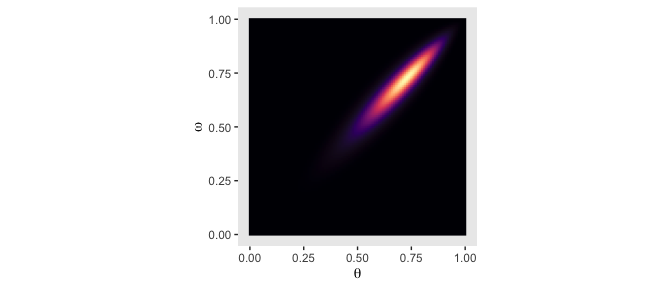

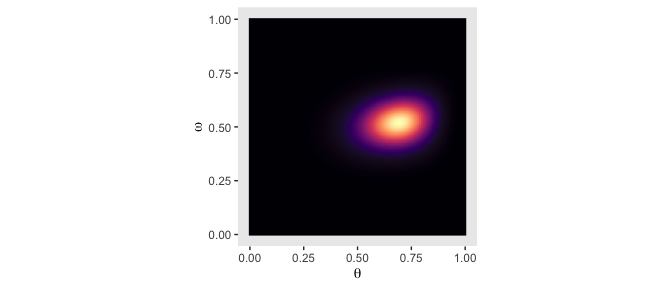

From Formula 9.1, the posterior \(p(\theta, \omega | D)\) is proportional to \(p(D | \theta) \; p(\theta | \omega) \; p(\omega)\). Divide that by the normalizing constant and we’ll have it in a proper probability metric. Recall that we’ve already saved the results of \(p(\theta | \omega) \; p(\omega)\) in the prior column. So we just need to multiply prior by likelihood and divide by their sum.

Our first depiction will be the middle panel of the second row from the bottom.

d <-

d %>%

mutate(posterior = (likelihood * prior) / sum(likelihood * prior))

d %>%

ggplot(aes(x = theta, y = omega, fill = posterior)) +

geom_raster(interpolate = T) +

scale_fill_viridis_c(option = "A") +

labs(x = expression(theta),

y = expression(omega)) +

coord_equal() +

theme(panel.grid = element_blank(),

legend.position = "none")

Although the likelihood was orthogonal to \(\omega\), conditioning the prior for \(\theta\) on \(\omega\) resulted in a posterior that was conditioned on both \(\theta\) and \(\omega\).

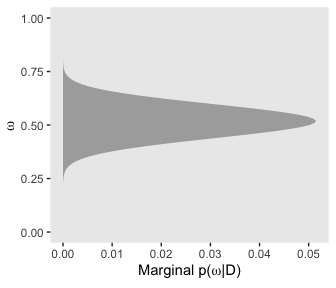

Making the marginal plots for posterior is much like when making them for prior, above.

# for omega

d %>%

group_by(omega) %>%

summarise(posterior = sum(posterior)) %>%

ggplot(aes(x = omega,

ymin = 0,

ymax = posterior)) +

geom_ribbon(fill = "grey67") +

labs(x = expression(omega),

y = expression(paste("Marginal p(", omega, "|D)"))) +

coord_flip() +

theme(panel.grid = element_blank())

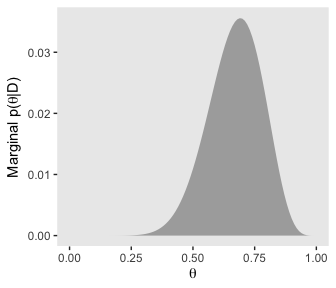

# for theta

d %>%

group_by(theta) %>%

summarise(posterior = sum(posterior)) %>%

ggplot(aes(x = theta,

ymin = 0,

ymax = posterior)) +

geom_ribbon(fill = "grey67") +

labs(x = expression(theta),

y = expression(paste("Marginal p(", theta, "|D)"))) +

coord_cartesian() +

theme(panel.grid = element_blank())

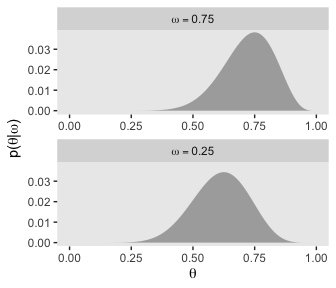

Note that after we slice with filter(), the next two wrangling lines renormalize those posterior slices into probability metrics. That is, when we take a slice through the joint posterior at a particular value of \(\omega\), and renormalize by dividing the sum of discrete probability masses in that slice, we get the conditional distribution \(p(\theta | \omega, D)\).

d %>%

filter(omega %in% c(.25, .75)) %>%

group_by(omega) %>%

mutate(posterior = posterior / sum(posterior)) %>%

mutate(label = factor(str_c("omega == ", omega),

levels = c("omega == 0.75", "omega == 0.25"))) %>%

ggplot(aes(x = theta,

ymin = 0,

ymax = posterior)) +

geom_ribbon(fill = "grey67") +

labs(x = expression(theta),

y = expression(paste("p(", theta, "|", omega, ")"))) +

theme(panel.grid = element_blank()) +

facet_wrap(~label, ncol = 1, labeller = label_parsed, scales = "free")

To repeat the process for Figure 9.3, we’ll first compute the new joint prior.

d <-

crossing(theta = parameter_space,

omega = parameter_space) %>%

mutate(prior = make_prior(theta, omega, alpha = 20, beta = 20, kappa = 6)) %>%

mutate(prior = prior / sum(prior))Here’s the initial data and the 2-dimensional density plot for the prior, the middle plot in the top row of Figure 9.3.

d %>%

ggplot(aes(x = theta, y = omega, fill = prior)) +

geom_raster(interpolate = T) +

scale_fill_viridis_c(option = "A") +

labs(x = expression(theta),

y = expression(omega)) +

coord_equal() +

theme(panel.grid = element_blank(),

legend.position = "none")

That higher certainty in \(\omega\) resulted in a two-dimensional density plot where the values on the y-axis were concentrated near .5. This will have down-the-road consequences for the posterior. But before we get there, we’ll average over omega and theta to plot their marginal prior distributions.

# for omega

d %>%

group_by(omega) %>%

summarise(prior = sum(prior)) %>%

ggplot(aes(x = omega,

ymin = 0,

ymax = prior)) +

geom_ribbon(fill = "grey67") +

labs(x = expression(omega),

y = expression(paste("Marginal p(", omega, ")"))) +

coord_flip() +

theme(panel.grid = element_blank())

# for theta

d %>%

group_by(theta) %>%

summarise(prior = sum(prior)) %>%

ggplot(aes(x = theta,

ymin = 0,

ymax = prior)) +

geom_ribbon(fill = "grey67") +

labs(x = expression(theta),

y = expression(paste("Marginal p(", theta, ")"))) +

coord_cartesian(ylim = c(0, .03)) +

theme(panel.grid = element_blank())

Here are the two short plots in the right panel of the second row from the top of Figure 9.3.

d %>%

filter(omega %in% c(.25, .75)) %>%

group_by(omega) %>%

mutate(prior = prior / sum(prior)) %>%

mutate(label = factor(str_c("omega == ", omega),

levels = c("omega == 0.75", "omega == 0.25"))) %>%

ggplot(aes(x = theta,

ymin = 0,

ymax = prior)) +

geom_ribbon(fill = "grey67") +

labs(x = expression(theta),

y = expression(paste("p(", theta, "|", omega, ")"))) +

coord_cartesian(ylim = c(0, .0375)) +

theme(panel.grid = element_blank()) +

facet_wrap(~label, ncol = 1, labeller = label_parsed)

Now we’re ready for the likelihood.

# compute

d <-

d %>%

mutate(likelihood = bernoulli_likelihood(theta = theta,

data = trial_data))

# plot

d %>%

ggplot(aes(x = theta, y = omega, fill = likelihood)) +

geom_raster(interpolate = T) +

scale_fill_viridis_c(option = "A") +

labs(x = expression(theta),

y = expression(omega)) +

coord_equal() +

theme(panel.grid = element_blank(),

legend.position = "none")

Now on to the posterior. Our first depiction will be the middle panel of the second row from the bottom of Figure 9.3. This will be \(p(\theta, \omega | y)\).

# compute the posterior

d <-

d %>%

mutate(posterior = (likelihood * prior) / sum(likelihood * prior))

# plot

d %>%

ggplot(aes(x = theta, y = omega, fill = posterior)) +

geom_raster(interpolate = T) +

scale_fill_viridis_c(option = "A") +

labs(x = expression(theta),

y = expression(omega)) +

coord_equal() +

theme(panel.grid = element_blank(),

legend.position = "none")

Here are the marginal plots for the two dimensions in our posterior.

# for omega

d %>%

group_by(omega) %>%

summarise(posterior = sum(posterior)) %>%

ggplot(aes(x = omega,

ymin = 0,

ymax = posterior)) +

geom_ribbon(fill = "grey67") +

labs(x = expression(omega),

y = expression(paste("Marginal p(", omega, "|D)"))) +

coord_flip() +

theme(panel.grid = element_blank())

# for theta

d %>%

group_by(theta) %>%

summarise(posterior = sum(posterior)) %>%

ggplot(aes(x = theta,

ymin = 0,

ymax = posterior)) +

geom_ribbon(fill = "grey67") +

labs(x = expression(theta),

y = expression(paste("Marginal p(", theta, "|D)"))) +

coord_cartesian() +

theme(panel.grid = element_blank())

And we’ll finish off with the plots of Figure 9.3’s lower right panel.

d %>%

filter(omega %in% c(.25, .75)) %>%

group_by(omega) %>%

mutate(posterior = posterior / sum(posterior)) %>%

mutate(label = factor(str_c("omega == ", omega),

levels = c("omega == 0.75", "omega == 0.25"))) %>%

ggplot(aes(x = theta,

ymin = 0,

ymax = posterior)) +

geom_ribbon(fill = "grey67") +

labs(x = expression(theta),

y = expression(paste("p(", theta, "|", omega, ")"))) +

coord_cartesian(ylim = c(0, .0375)) +

theme(panel.grid = element_blank()) +

facet_wrap(~label, ncol = 1, labeller = label_parsed, scales = "free")

9.2 Multiple coins from a single mint

What if we collect data from more than one coin created by the mint? If each coin has its own distinct bias \(\theta_s\), then we are estimating a distinct parameter value for each coin, and using all the data to estimate \(\omega\). (p. 230)

9.2.1 Posterior via grid approximation.

Now we have two coins,

the full prior distribution is a joint distribution over three parameters: \(\omega\), \(\theta_1\), and \(\theta_2\). In a grid approximation, the prior is specified as a three-dimensional (3D) array that holds the prior probability at various grid points in the 3D space. (p. 233)

So we’re going to have to update our make_prior() function. It was originally designed to handle two dimensions, \(\theta\) and \(\omega\). But now we have to update it to handle our three dimensions.

make_prior <- function(theta1, theta2, omega, alpha, beta, kappa) {

# p(theta_1 | omega)

t1 <- dbeta(x = theta1,

shape1 = omega * (kappa - 2) + 1,

shape2 = (1 - omega) * (kappa - 2) + 1)

# p(theta_2 | omega)

t2 <- dbeta(x = theta2,

shape1 = omega * (kappa - 2) + 1,

shape2 = (1 - omega) * (kappa - 2) + 1)

# p(omega)

o <- dbeta(x = omega,

shape1 = alpha,

shape2 = beta)

# p(theta1, theta2, omega) = p(theta1 | omega) * p(theta2 | omega) * p(omega)

return(t1 * t2 * o)

}Let’s make our new data object, d.

d <-

crossing(theta_1 = parameter_space,

theta_2 = parameter_space,

omega = parameter_space) %>%

mutate(prior = make_prior(theta_1, theta_2, omega, alpha = 2, beta = 2, kappa = 5)) %>%

# here we normalize

mutate(prior = prior / sum(prior))

glimpse(d)## Observations: 1,030,301

## Variables: 4

## $ theta_1 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

## $ theta_2 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

## $ omega <dbl> 0.00, 0.01, 0.02, 0.03, 0.04, 0.05, 0.06, 0.07, 0.08, 0.…

## $ prior <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…Unlike what Kruschke said in the text, we’re not using a 3D data array. Rather, we’re just using a tibble with which prior has been expanded across all possible dimensions of the three indexing variables: theta_1, theta_2, and omega. As you can see from the ‘Observations’ count, above, this makes for a very long tibble.

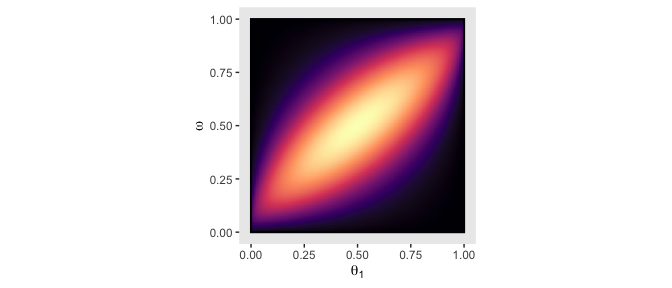

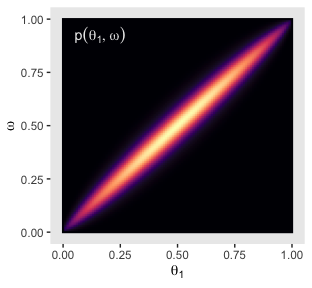

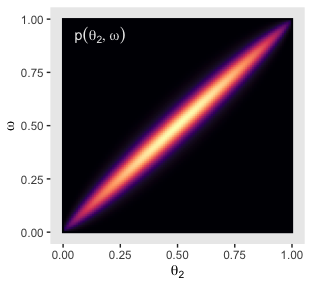

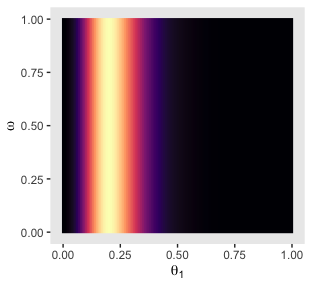

“Because the parameter space is 3D, a distribution on it cannot easily be displayed on a 2D page. Instead, Figure 9.5 shows various marginal distributions” (p. 234). The consequence of that is when we marginalize, we’ll have to group by the two variables we’d like to retain for the plot. For example, the plots in the left and middle columns of the top row are the same save for their indices. So let’s just do the plot for theta_1. In order to marginalize over theta_2, we’ll need to group_by(theta_1, omega) and then summarise(prior = sum(prior)).

d %>%

group_by(theta_1, omega) %>%

summarise(prior = sum(prior)) %>%

ggplot(aes(x = theta_1, y = omega, fill = prior)) +

geom_raster(interpolate = T) +

scale_fill_viridis_c(option = "A") +

labs(x = expression(theta[1]),

y = expression(omega)) +

coord_equal() +

theme(panel.grid = element_blank(),

legend.position = "none")

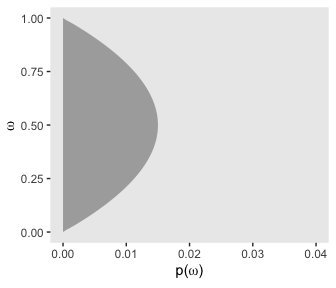

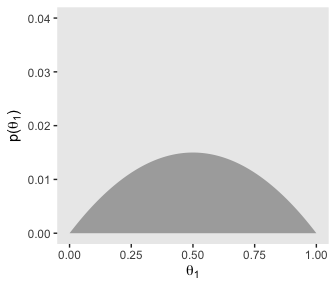

But we just have to average over omega and theta_1 to plot their marginal prior distributions.

# for omega

d %>%

group_by(omega) %>%

summarise(prior = sum(prior)) %>%

ggplot(aes(x = omega,

ymin = 0,

ymax = prior)) +

geom_ribbon(fill = "grey67") +

labs(x = expression(omega),

y = expression(paste("p(", omega, ")"))) +

coord_flip(ylim = c(0, .04)) +

theme(panel.grid = element_blank())

# for theta

d %>%

group_by(theta_1) %>%

summarise(prior = sum(prior)) %>%

ggplot(aes(x = theta_1,

ymin = 0,

ymax = prior)) +

geom_ribbon(fill = "grey67") +

labs(x = expression(theta[1]),

y = expression(paste("p(", theta[1], ")"))) +

coord_cartesian(ylim = c(0, .04)) +

theme(panel.grid = element_blank())

Before we make the plots in the middle row of Figure 9.5, we need to add the likelihoods. Recall that we’re presuming the coin flips contained in \(D_1\) and \(D_2\) are independent. Kruschke explained in Chapter 7, section 4.1, that

independence of the data across the two coins means that the data from coin 1 depend only on the bias in coin 1, and the data from coin 2 depend only on the bias in coin 2, which can be expressed formally as \(p(y_1 | \theta_1, \theta_2) = p(y_1 | \theta_1)\) and \(p(y_2 | \theta_1, \theta_2) = p(y_2 | \theta_2)\). (p. 164)

The likelihood function for our two series of coin flips is then

\[p(D | \theta_1, \theta_2) = \Big ( \theta_1^{z_1} (1 - \theta_1) ^ {N_1 - z_1} \Big ) \Big ( \theta_2^{z_2} (1 - \theta_2) ^ {N_2 - z_2} \Big ).\]

The upshot is we can compute the likelihoods for \(D_1\) and \(D_2\) separately and just multiply them together.

# D1: 3 heads, 12 tails

n <- 15

z <- 3

trial_data_1 <- rep(0:1, times = c(n - z, z))

# D2: 4 heads, 1 tail

n <- 5

z <- 4

trial_data_2 <- rep(0:1, times = c(n - z, z))

d <-

d %>%

mutate(likelihood_1 = bernoulli_likelihood(theta = theta_1,

data = trial_data_1),

likelihood_2 = bernoulli_likelihood(theta = theta_2,

data = trial_data_2)) %>%

mutate(likelihood = likelihood_1 * likelihood_2)

head(d)## # A tibble: 6 x 7

## theta_1 theta_2 omega prior likelihood_1 likelihood_2 likelihood

## <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 0 0 0 0 0 0 0

## 2 0 0 0.01 0 0 0 0

## 3 0 0 0.02 0 0 0 0

## 4 0 0 0.03 0 0 0 0

## 5 0 0 0.04 0 0 0 0

## 6 0 0 0.05 0 0 0 0Now after a little group_by() followed by summarise() we can plot the two marginal likelihoods, the two plots in the middle row of Figure 9.5.

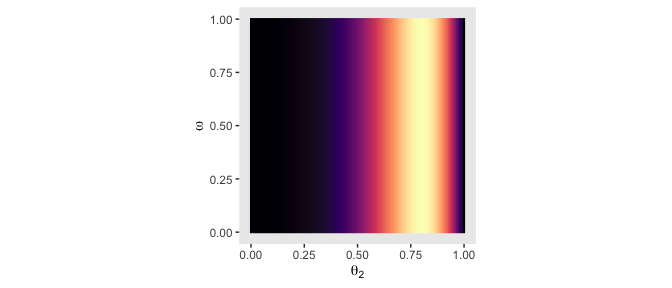

# likelihood_1

d %>%

group_by(theta_1, omega) %>%

summarise(likelihood = sum(likelihood)) %>%

ggplot(aes(x = theta_1, y = omega, fill = likelihood)) +

geom_raster(interpolate = T) +

scale_fill_viridis_c(option = "A") +

labs(x = expression(theta[1]),

y = expression(omega)) +

coord_equal() +

theme(panel.grid = element_blank(),

legend.position = "none")

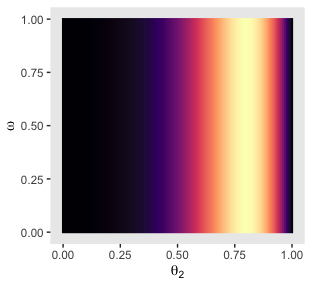

# likelihood_2

d %>%

group_by(theta_2, omega) %>%

summarise(likelihood = sum(likelihood)) %>%

ggplot(aes(x = theta_2, y = omega, fill = likelihood)) +

geom_raster(interpolate = T) +

scale_fill_viridis_c(option = "A") +

labs(x = expression(theta[2]),

y = expression(omega)) +

coord_equal() +

theme(panel.grid = element_blank(),

legend.position = "none")

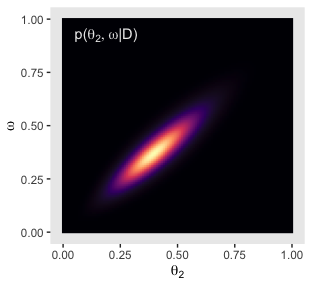

The likelihoods look good. Next we compute the posterior in the same way we’ve done before: multiply the prior and the likelihood and then divide by their sum in order to convert the results to a probability metric.

# compute

d <-

d %>%

mutate(posterior = (prior * likelihood) / sum(prior * likelihood))

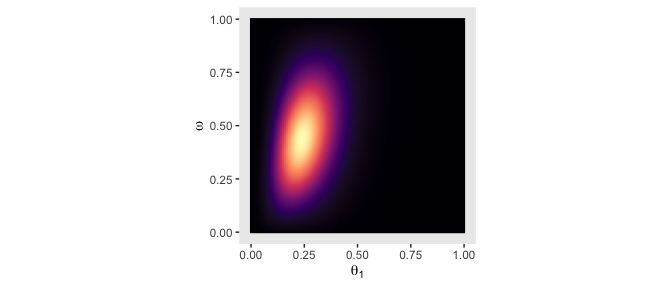

# posterior_1

d %>%

group_by(theta_1, omega) %>%

summarise(posterior = sum(posterior)) %>%

ggplot(aes(x = theta_1, y = omega, fill = posterior)) +

geom_raster(interpolate = T) +

scale_fill_viridis_c(option = "A") +

labs(x = expression(theta[1]),

y = expression(omega)) +

coord_equal() +

theme(panel.grid = element_blank(),

legend.position = "none")

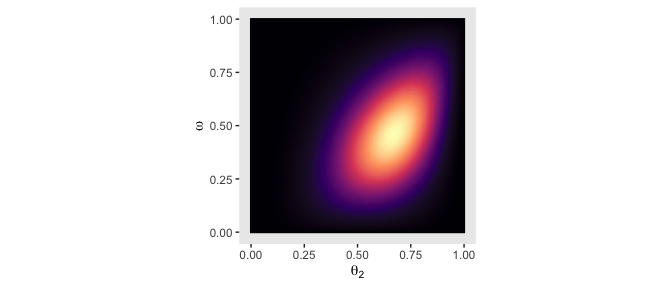

# posterior_2

d %>%

group_by(theta_2, omega) %>%

summarise(posterior = sum(posterior)) %>%

ggplot(aes(x = theta_2, y = omega, fill = posterior)) +

geom_raster(interpolate = T) +

scale_fill_viridis_c(option = "A") +

labs(x = expression(theta[2]),

y = expression(omega)) +

coord_equal() +

theme(panel.grid = element_blank(),

legend.position = "none")

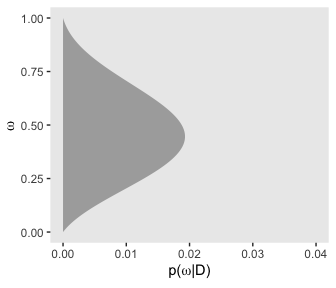

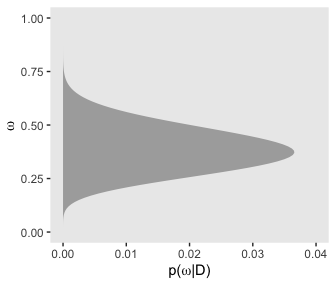

Here’s the right plot on the second row from the bottom, the posterior distribution for \(\omega\).

# for omega

d %>%

group_by(omega) %>%

summarise(posterior = sum(posterior)) %>%

ggplot(aes(x = omega,

ymin = 0,

ymax = posterior)) +

geom_ribbon(fill = "grey67") +

labs(x = expression(omega),

y = expression(paste("p(", omega, "|D)"))) +

coord_flip(ylim = c(0, .04)) +

theme(panel.grid = element_blank())

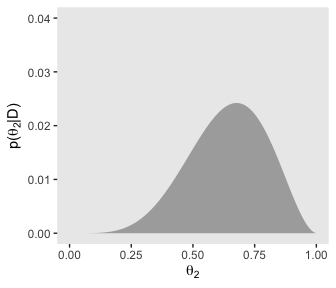

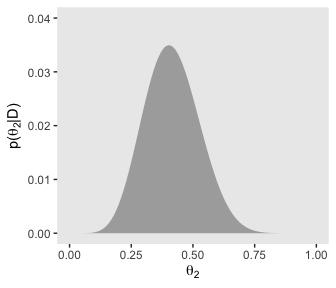

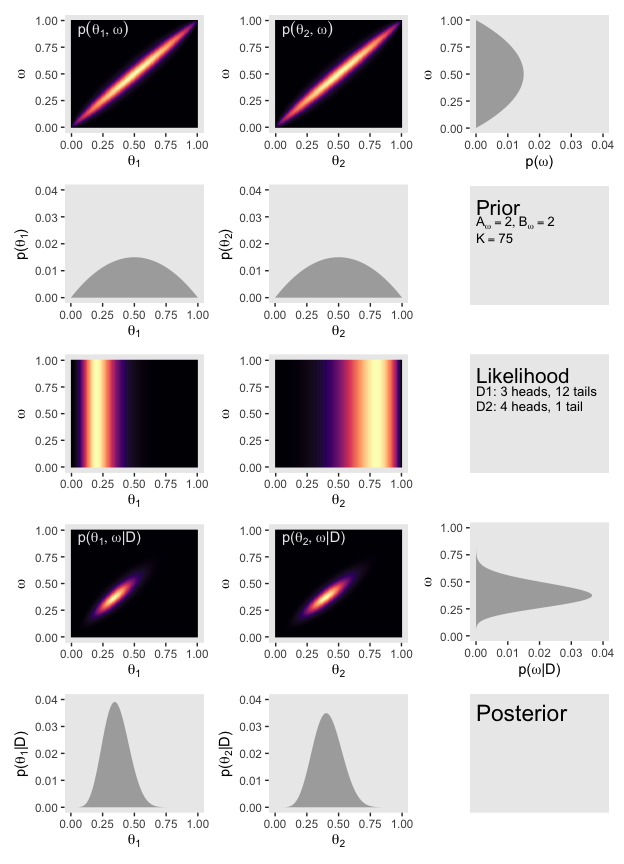

Now here are the marginal posterior plots on the bottom row of Figure 9.5.

# for theta_1

d %>%

group_by(theta_1) %>%

summarise(posterior = sum(posterior)) %>%

ggplot(aes(x = theta_1,

ymin = 0,

ymax = posterior)) +

geom_ribbon(fill = "grey67") +

labs(x = expression(theta[1]),

y = expression(paste("p(", theta[1], "|D)"))) +

coord_cartesian(ylim = c(0, .04)) +

theme(panel.grid = element_blank())

# for theta_2

d %>%

group_by(theta_2) %>%

summarise(posterior = sum(posterior)) %>%

ggplot(aes(x = theta_2,

ymin = 0,

ymax = posterior)) +

geom_ribbon(fill = "grey67") +

labs(x = expression(theta[2]),

y = expression(paste("p(", theta[2], "|D)"))) +

coord_cartesian(ylim = c(0, .04)) +

theme(panel.grid = element_blank())

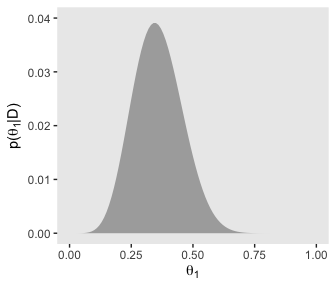

We’ll do this dog and pony one more time for Figure 9.6, which uses different priors on the same data. First, we make our new data object, d.

d <-

crossing(theta_1 = parameter_space,

theta_2 = parameter_space,

omega = parameter_space) %>%

mutate(prior = make_prior(theta_1, theta_2, omega, alpha = 2, beta = 2, kappa = 75)) %>%

mutate(prior = prior / sum(prior))Note how the only thing we changed from the last time was increasing kappa to 75. Also like last time, the plots in the left and middle columns of the top row are the same save for their indices. But unlike last time, we’ll make both in preparation for a grand plotting finale. You’ll see.

p11 <-

d %>%

group_by(theta_1, omega) %>%

summarise(prior = sum(prior)) %>%

ggplot(aes(x = theta_1, y = omega, fill = prior)) +

geom_raster(interpolate = T) +

annotate(x = .05, y = .925, label = "p(list(theta[1], omega))",

geom = "text", parse = T, color = "grey92", hjust = 0) +

scale_fill_viridis_c(option = "A") +

labs(x = expression(theta[1]),

y = expression(omega)) +

theme(panel.grid = element_blank(),

legend.position = "none")

p12 <-

d %>%

group_by(theta_2, omega) %>%

summarise(prior = sum(prior)) %>%

ggplot(aes(x = theta_2, y = omega, fill = prior)) +

geom_raster(interpolate = T) +

annotate(x = .05, y = .925, label = "p(list(theta[2], omega))",

geom = "text", parse = T, color = "grey92", hjust = 0) +

scale_fill_viridis_c(option = "A") +

labs(x = expression(theta[2]),

y = expression(omega)) +

theme(panel.grid = element_blank(),

legend.position = "none")

p11

Now we’ll average over omega and theta to plot their marginal prior distributions.

# for omega

p13 <-

d %>%

group_by(omega) %>%

summarise(prior = sum(prior)) %>%

ggplot(aes(x = omega,

ymin = 0,

ymax = prior)) +

geom_ribbon(fill = "grey67") +

labs(x = expression(omega),

y = expression(paste("p(", omega, ")"))) +

coord_flip(ylim = c(0, .04)) +

theme(panel.grid = element_blank())

# for theta_1

p21 <-

d %>%

group_by(theta_1) %>%

summarise(prior = sum(prior)) %>%

ggplot(aes(x = theta_1,

ymin = 0,

ymax = prior)) +

geom_ribbon(fill = "grey67") +

labs(x = expression(theta[1]),

y = expression(paste("p(", theta[1], ")"))) +

coord_cartesian(ylim = c(0, .04)) +

theme(panel.grid = element_blank())

# for theta_2

p22 <-

d %>%

group_by(theta_2) %>%

summarise(prior = sum(prior)) %>%

ggplot(aes(x = theta_2,

ymin = 0,

ymax = prior)) +

geom_ribbon(fill = "grey67") +

labs(x = expression(theta[2]),

y = expression(paste("p(", theta[2], ")"))) +

coord_cartesian(ylim = c(0, .04)) +

theme(panel.grid = element_blank())

p13

Let’s get those likelihoods in there and plot.

# D1: 3 heads, 12 tails

n <- 15

z <- 3

trial_data_1 <- rep(0:1, times = c(n - z, z))

# D2: 4 heads, 1 tail

n <- 5

z <- 4

trial_data_2 <- rep(0:1, times = c(n - z, z))

# compute the likelihoods

d <-

d %>%

mutate(likelihood_1 = bernoulli_likelihood(theta = theta_1,

data = trial_data_1),

likelihood_2 = bernoulli_likelihood(theta = theta_2,

data = trial_data_2)) %>%

mutate(likelihood = likelihood_1 * likelihood_2)

# plot likelihood_1

p31 <-

d %>%

group_by(theta_1, omega) %>%

summarise(likelihood = sum(likelihood)) %>%

ggplot(aes(x = theta_1, y = omega, fill = likelihood)) +

geom_raster(interpolate = T) +

scale_fill_viridis_c(option = "A") +

labs(x = expression(theta[1]),

y = expression(omega)) +

theme(panel.grid = element_blank(),

legend.position = "none")

# plot likelihood_2

p32 <-

d %>%

group_by(theta_2, omega) %>%

summarise(likelihood = sum(likelihood)) %>%

ggplot(aes(x = theta_2, y = omega, fill = likelihood)) +

geom_raster(interpolate = T) +

scale_fill_viridis_c(option = "A") +

labs(x = expression(theta[2]),

y = expression(omega)) +

theme(panel.grid = element_blank(),

legend.position = "none")

p31

Compute the posterior and make the left and middle plots of the second row to the bottom of Figure 9.6.

d <-

d %>%

mutate(posterior = (prior * likelihood) / sum(prior * likelihood))

# posterior_1

p41 <-

d %>%

group_by(theta_1, omega) %>%

summarise(posterior = sum(posterior)) %>%

ggplot(aes(x = theta_1, y = omega, fill = posterior)) +

geom_raster(interpolate = T) +

annotate(x = .05, y = .925, label = expression(paste("p(", list(theta[1], omega), "|D)")),

geom = "text", parse = T, color = "grey92", hjust = 0) +

scale_fill_viridis_c(option = "A") +

labs(x = expression(theta[1]),

y = expression(omega)) +

theme(panel.grid = element_blank(),

legend.position = "none")

# posterior_2

p42 <-

d %>%

group_by(theta_2, omega) %>%

summarise(posterior = sum(posterior)) %>%

ggplot(aes(x = theta_2, y = omega, fill = posterior)) +

geom_raster(interpolate = T) +

annotate(x = .05, y = .925, label = expression(paste("p(", list(theta[2], omega), "|D)")),

geom = "text", parse = T, color = "grey92", hjust = 0) +

scale_fill_viridis_c(option = "A") +

labs(x = expression(theta[2]),

y = expression(omega)) +

theme(panel.grid = element_blank(),

legend.position = "none")

p41

Here’s the right plot on the same row, the posterior distribution for \(\omega\).

# for omega

p43 <-

d %>%

group_by(omega) %>%

summarise(posterior = sum(posterior)) %>%

ggplot(aes(x = omega,

ymin = 0,

ymax = posterior)) +

geom_ribbon(fill = "grey67") +

labs(x = expression(omega),

y = expression(paste("p(", omega, "|D)"))) +

coord_flip(ylim = c(0, .04)) +

theme(panel.grid = element_blank())

p43

Finally, here are the marginal posterior plots on the bottom row of Figure 9.6.

# for theta_1

p51 <-

d %>%

group_by(theta_1) %>%

summarise(posterior = sum(posterior)) %>%

ggplot(aes(x = theta_1,

ymin = 0,

ymax = posterior)) +

geom_ribbon(fill = "grey67") +

labs(x = expression(theta[1]),

y = expression(paste("p(", theta[1], "|D)"))) +

coord_cartesian(ylim = c(0, .04)) +

theme(panel.grid = element_blank())

# for theta_2

p52 <-

d %>%

group_by(theta_2) %>%

summarise(posterior = sum(posterior)) %>%

ggplot(aes(x = theta_2,

ymin = 0,

ymax = posterior)) +

geom_ribbon(fill = "grey67") +

labs(x = expression(theta[2]),

y = expression(paste("p(", theta[2], "|D)"))) +

coord_cartesian(ylim = c(0, .04)) +

theme(panel.grid = element_blank())

p51

Did you notice how we saved each of plot from this last batch as objects? For our grand finale for this subsection, we’ll be stitching all those subplots together using syntax from the patchwork package. But before we do, we need to define three more subplots: the subplots with the annotation.

text <-

tibble(x = 1,

y = 10:8,

label = c("Prior", "list(A[omega]==2, B[omega]==2)", "K==75"),

size = c(2, 1, 1))

p23 <-

text %>%

ggplot(aes(x = x, y = y, label = label)) +

geom_text(aes(size = size),

parse = T, hjust = 0, show.legend = F) +

scale_size_continuous(range = c(3.5, 5.5)) +

coord_cartesian(xlim = 1:2,

ylim = 4:11) +

theme(panel.grid = element_blank(),

text = element_text(color = "white"),

axis.text = element_text(color = "white"),

axis.ticks = element_blank())

text <-

tibble(x = 1,

y = 10:8,

label = c("Likelihood", "D1: 3 heads, 12 tails", "D2: 4 heads, 1 tail"),

size = c(2, 1, 1))

p33 <-

text %>%

ggplot(aes(x = x, y = y, label = label)) +

geom_text(aes(size = size),

hjust = 0, show.legend = F) +

scale_size_continuous(range = c(3.5, 5.5)) +

coord_cartesian(xlim = 1:2,

ylim = 4:11) +

theme(panel.grid = element_blank(),

text = element_text(color = "white"),

axis.text = element_text(color = "white"),

axis.ticks = element_blank())

p53 <-

ggplot() +

annotate(x = 1, y = 10, label = "Posterior",

geom = "text", size = 6, hjust = 0) +

coord_cartesian(xlim = 1:2,

ylim = 3:11) +

theme(panel.grid = element_blank(),

text = element_text(color = "white"),

axis.text = element_text(color = "white"),

axis.ticks = element_blank())Okay, let’s make the full version of Figure 9.6.

library(patchwork)

(p11 / p21 / p31 / p41 / p51) | (p12 / p22 / p32 / p42 / p52) | (p13 / p23 / p33 / p43 / p53)

Oh mamma!

The grid approximation displayed in Figures 9.5 and 9.6 used combs of only [101] points on each parameter (\(\omega\), \(\theta_1\), and \(\theta_2\)). This means that the 3D grid had [1013 = 1,030,301] points, which is a size that can be handled easily on an ordinary desktop computer of the early 21st century. It is interesting to remind ourselves that the grid approximation displayed in Figures 9.5 and 9.6 would have been on the edge of computability 50 years ago, and would have been impossible 100 years ago. The number of points in a grid approximation can get hefty in a hurry. If we were to expand the example by including a third coin, with its parameter \(\theta_3\), then the grid would have [1014 = 104,060,401] points, which already strains small computers. Include a fourth coin, and the grid contains over [10 billion] points. Grid approximation is not a viable approach to even modestly large problems, which we encounter next. (p. 235)

In case you didn’t catch it, we used different numbers of points to evaluate each parameter. Whereas Kruschke indicated in the text he only used 50, we used 101. That value of 101 came from how we defined our parameter_space with the code seq(from = 0, to = 1, by = .01). The reason we used a more densely-packed parameter space was to get smoother-looking 2D density plots.

9.2.2 A realistic model with MCMC.

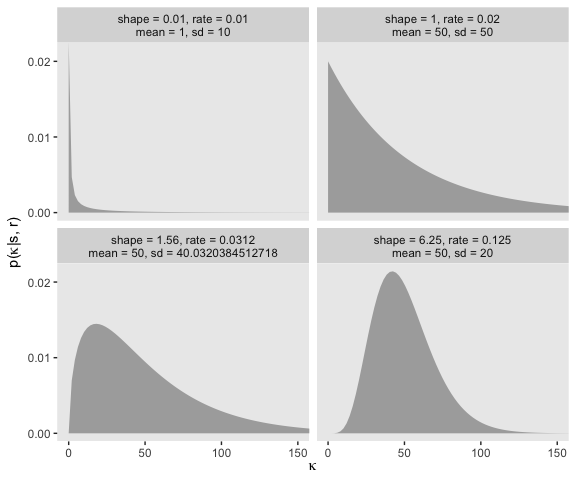

“Because the value of \(\kappa − 2\) must be non-negative, the prior distribution on \(\kappa − 2\) must not allow negative values” (p. 237). Gamma is one of the distributions with that property. The gamma distribution is defined by two parameters, its shape and rate. To get a sense of how those play out, here’ a look at the gamma densities of Figure 9.8.

# how many points do you want in your sequence of x values?

length <- 100

# wrangle

tibble(shape = c(.01, 1.56, 1, 6.25),

rate = c(.01, .0312, .02, .125)) %>%

expand(nesting(shape, rate),

x = seq(from = 0, to = 200, length.out = length)) %>%

mutate(mean = shape * 1 / rate,

sd = sqrt(shape * (1 / rate)^2)) %>%

mutate(label = str_c("shape = ", shape, ", rate = ", rate,

"\nmean = ", mean, ", sd = ", sd)) %>%

# plot

ggplot(aes(x = x,

ymin = 0,

ymax = dgamma(x = x, shape = shape, rate = rate))) +

geom_ribbon(fill = "grey67") +

scale_y_continuous(breaks = c(0, .01, .02)) +

coord_cartesian(xlim = 0:150) +

labs(x = expression(kappa),

y = expression(paste("p(", kappa, "|s, r)"))) +

theme(panel.grid = element_blank()) +

facet_wrap(~label)

You can find the formulas for the mean and \(SD\) for a given gamma distribution here. We used those formulas in the second mutate() statement for the data-prep stage of that last figure.

Using \(s\) for shape and \(r\) for rate, Kruschke’s equations 9.7 and 9.8 are as follows:

\[ s = \frac{\mu^2}{\sigma^2} \;\; \text{and} \;\; r = \frac{\mu}{\sigma^2} \;\; \text{for mean} \;\; \mu > 0 \\ s = 1 + \omega r \;\; \text{where} \;\; r = \frac{\omega + \sqrt{\omega^2 + 4\sigma^2}}{2\sigma^2} \;\; \text{for mode} \;\; \omega > 0. \]

With those in hand, we can follow Kruschke’s DBDA2E-utilities.R file to make a couple convenience functions.

gamma_s_and_r_from_mean_sd <- function(mean, sd) {

if (mean <= 0) stop("mean must be > 0")

if (sd <= 0) stop("sd must be > 0")

shape <- mean^2 / sd^2

rate <- mean / sd^2

return(list(shape = shape, rate = rate))

}

gamma_s_and_r_from_mode_sd <- function(mode, sd) {

if (mode <= 0) stop("mode must be > 0")

if (sd <= 0) stop("sd must be > 0")

rate <- (mode + sqrt(mode^2 + 4 * sd^2)) / (2 * sd^2)

shape <- 1 + mode * rate

return(list(shape = shape, rate = rate))

}They’re easy to put to use:

## $shape

## [1] 0.01

##

## $rate

## [1] 0.001## $shape

## [1] 1.105125

##

## $rate

## [1] 0.01051249Here’s a more detailed look at the structure of their output.

## List of 2

## $ shape: num 1.11

## $ rate : num 0.01059.2.3 Doing it with JAGS brms.

Unlike JAGS, the brms formula will not correspond as closely to Figure 9.7. You’ll see in just a bit.

9.2.4 Example: Therapeutic touch.

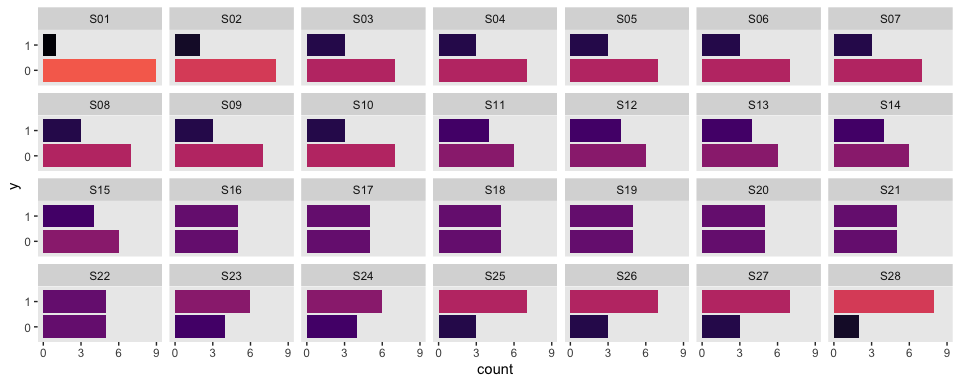

Load the data from the TherapeuticTouchData.csv file.

## Observations: 280

## Variables: 2

## $ y <dbl> 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 1, 0, 0, 0, 0,…

## $ s <chr> "S01", "S01", "S01", "S01", "S01", "S01", "S01", "S01", "S01",…Here are what the data look like:

my_data %>%

mutate(y = y %>% as.character()) %>%

ggplot(aes(x = y)) +

geom_bar(aes(fill = stat(count))) +

scale_y_continuous(breaks = seq(from = 0, to = 9, by = 3)) +

scale_fill_viridis_c(option = "A", end = .7) +

coord_flip() +

theme(legend.position = "none",

panel.grid = element_blank()) +

facet_wrap(~s, ncol = 7)

And here’s our Figure 9.9.

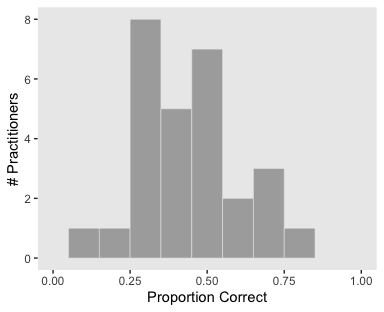

my_data %>%

group_by(s) %>%

summarize(mean = mean(y)) %>%

ggplot(aes(x = mean)) +

geom_histogram(color = "grey92", fill = "grey67",

size = .2, binwidth = .1) +

coord_cartesian(xlim = 0:1) +

labs(x = "Proportion Correct",

y = "# Practitioners") +

theme(panel.grid = element_blank())

Let’s open brms.

In applied statistics, the typical way to model a Bernoulli variable is with logistic regression. Instead of going through the pain of setting up a model in brms that mirrors the one in the text, I’m going to set up a hierarchical logistic regression model, instead.

Note the family = bernoulli(link = logit) argument. In work-a-day regression with vanilla Gaussian variables, the prediction space is unbounded. But when we want to model the probability of a success for a Bernoulli variable (i.e., \(\theta\)), we need to constrain the model to only produce predictions between 0 and 1. With logistic regression, we use a link function to do just that. The consequence is that instead of modeling the probability, \(\theta\), we’re modeling the logit probability.

In case you’re curious, the logit of \(\theta\) follows the formula

\[\operatorname{logit} (\theta) = \log \big (\theta/(1 - \theta) \big ).\]

But anyway, we’ll be doing logistic regression using the logit link. Kruschke will cover this in detail in Chapter 21.

The next new part of our syntax is (1 | s). As in the popular frequentist lme4 package, you specify random effects or group-level parameters with the (|) syntax in brms. On the left side of the |, you tell brms what parameters you’d like to make random (i.e., vary by group). On the right side of the |, you tell brms what variable you want to group the parameters by. In our case, we want the intercepts to vary over the grouping variable s.

fit1 <-

brm(data = my_data,

family = bernoulli(link = logit),

y ~ 1 + (1 | s),

prior = c(prior(normal(0, 1.5), class = Intercept),

prior(normal(0, 1), class = sd)),

iter = 20000, warmup = 1000, thin = 10, chains = 4, cores = 4,

seed = 9)As it turns out, the \(N(0, 1.5)\) prior is flat in the probability space for the intercept in a logistic regression model. We’ll explore that a little further down. The \(N(0, 1)\) prior for the random effect is actually a half Normal. That’s because brms defaults to bound \(SD\) parameters to zero and above. The half Normal prior for a hierarchical \(SD\) parameter in a logistic regression model is weakly regularizing and is conservative in the sense that it presumes some pooling is preferable to no pooling. If you wanted to take a lighter approach, you might use something like a cauchy(0, 5), instead. See the prior wiki by the Stan team for more ideas on priors.

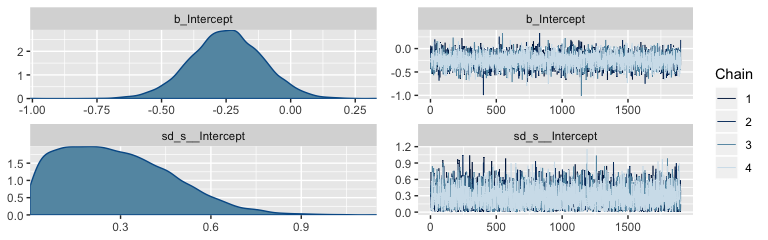

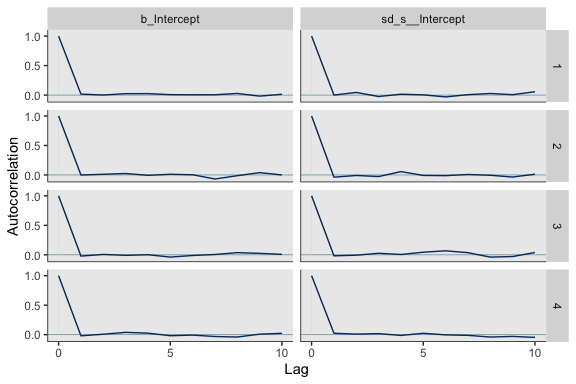

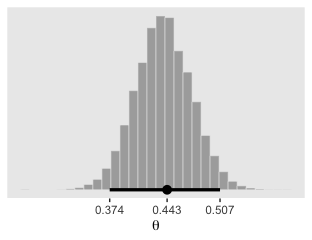

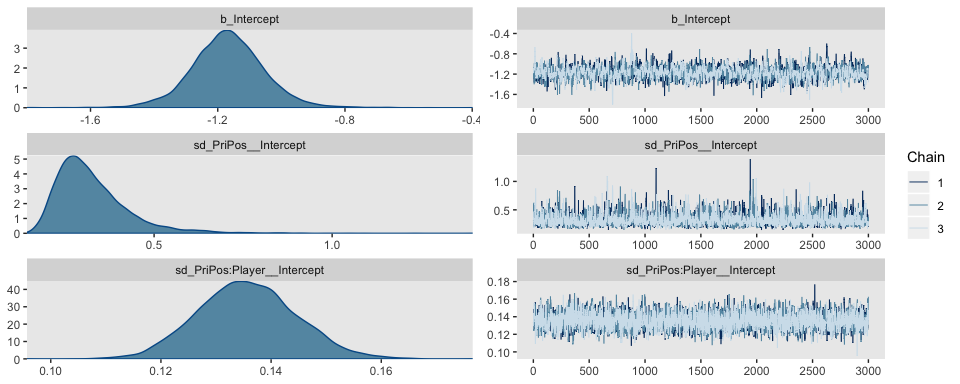

Here are the trace plots and posterior densities of the main parameters.

The trace plots indicate no problems with convergence. We’ll need to extract the posterior samples and open the bayesplot package before we can examine the autocorrelations.

One of the nice things about bayesplot is it returns ggplot2 objects. As such, we can amend their theme settings to be consistent with our other ggplot2 plots. The theme_set() function will make that particularly easy. And since I prefer to plot without gridlines, we’ll slip in a line on panel.grid to suppress them by default for the remainder of this chapter.

Now we’re ready for bayesplot::mcmc_acf().

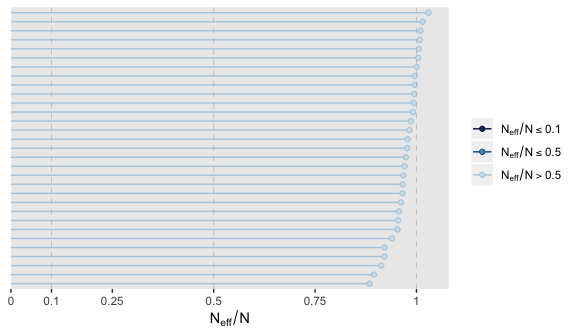

It appears fit1 had very low autocorrelations. Here we’ll examine the \(N_{eff}/N\) ratio.

The \(N_{eff}/N\) ratio values for our model parameters were excellent. And if you really wanted them, you could get the parameter labels on the y-axis by tacking + yaxis_text() on at the end of the plot block.

Here’s a numeric summary of the model.

## Family: bernoulli

## Links: mu = logit

## Formula: y ~ 1 + (1 | s)

## Data: my_data (Number of observations: 280)

## Samples: 4 chains, each with iter = 20000; warmup = 1000; thin = 10;

## total post-warmup samples = 7600

##

## Group-Level Effects:

## ~s (Number of levels: 28)

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sd(Intercept) 0.28 0.18 0.02 0.68 1.00 7438 7638

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept -0.25 0.14 -0.53 0.02 1.00 7237 7507

##

## Samples were drawn using sampling(NUTS). For each parameter, Eff.Sample

## is a crude measure of effective sample size, and Rhat is the potential

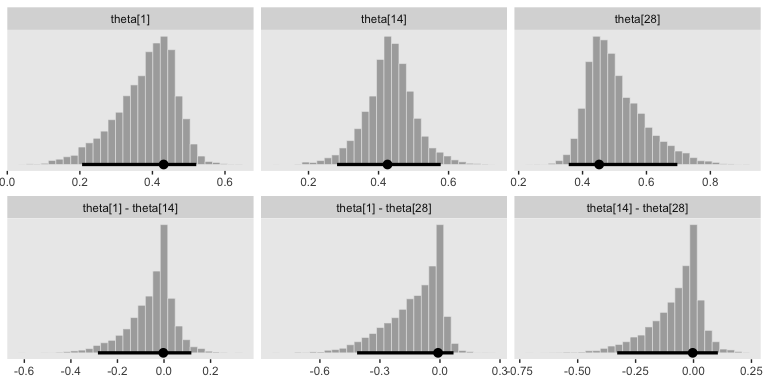

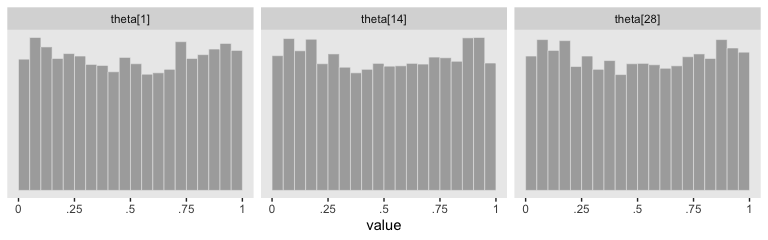

## scale reduction factor on split chains (at convergence, Rhat = 1).We’ll need brms::inv_logit_scaled() to convert the model parameters to predict \(\theta\) rather than \(\operatorname{logit} (\theta)\). After the conversions, we’ll be ready to make the histograms in the lower portion of Figure 9.10.

library(tidybayes)

post_small <-

post %>%

# convert the linter model to the probability space with `inv_logit_scaled()`

mutate(`theta[1]` = (b_Intercept + `r_s[S01,Intercept]`) %>% inv_logit_scaled(),

`theta[14]` = (b_Intercept + `r_s[S14,Intercept]`) %>% inv_logit_scaled(),

`theta[28]` = (b_Intercept + `r_s[S28,Intercept]`) %>% inv_logit_scaled()) %>%

# make the difference distributions

mutate(`theta[1] - theta[14]` = `theta[1]` - `theta[14]`,

`theta[1] - theta[28]` = `theta[1]` - `theta[28]`,

`theta[14] - theta[28]` = `theta[14]` - `theta[28]`) %>%

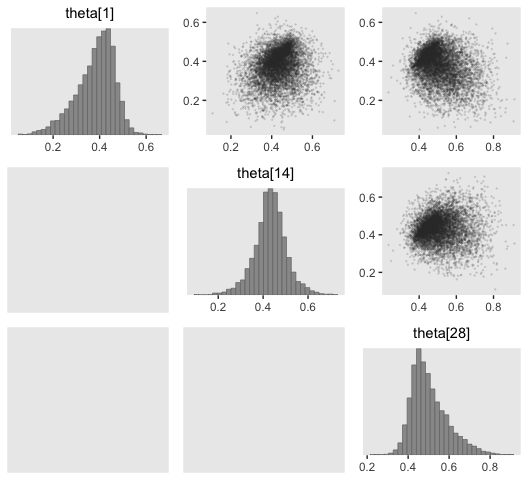

select(starts_with("theta"))

post_small %>%

gather() %>%

# this line is unnecessary, but will help order the plots

mutate(key = factor(key, levels = c("theta[1]", "theta[14]", "theta[28]",

"theta[1] - theta[14]", "theta[1] - theta[28]", "theta[14] - theta[28]"))) %>%

ggplot(aes(x = value)) +

geom_histogram(color = "grey92", fill = "grey67",

size = .2, bins = 30) +

stat_pointintervalh(aes(y = 0),

point_interval = mode_hdi, .width = .95) +

scale_y_continuous(NULL, breaks = NULL) +

xlab(NULL) +

facet_wrap(~key, scales = "free", ncol = 3)

If you wanted the specific values of the posterior modes and 95% HDIs, you could execute this.

post_small %>%

gather() %>%

group_by(key) %>%

mode_hdi(value) %>%

mutate_if(is.double, round, digits = 3)## # A tibble: 6 x 7

## key value .lower .upper .width .point .interval

## <chr> <dbl> <dbl> <dbl> <dbl> <chr> <chr>

## 1 theta[1] 0.431 0.206 0.521 0.95 mode hdi

## 2 theta[1] - theta[14] -0.003 -0.284 0.117 0.95 mode hdi

## 3 theta[1] - theta[28] -0.01 -0.414 0.068 0.95 mode hdi

## 4 theta[14] 0.426 0.282 0.578 0.95 mode hdi

## 5 theta[14] - theta[28] -0.004 -0.329 0.105 0.95 mode hdi

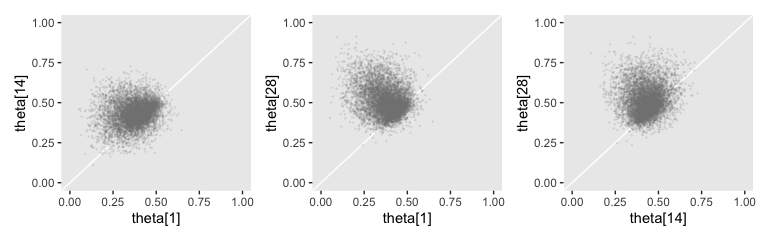

## 6 theta[28] 0.452 0.357 0.697 0.95 mode hdiAnd here are the Figure 9.10 scatter plots.

p1 <-

post_small %>%

ggplot(aes(x = `theta[1]`, y = `theta[14]`)) +

geom_abline(linetype = 1, color = "white") +

geom_point(color = "grey50", size = 1/8, alpha = 1/8) +

coord_cartesian(xlim = 0:1,

ylim = 0:1)

p2 <-

post_small %>%

ggplot(aes(x = `theta[1]`, y = `theta[28]`)) +

geom_abline(linetype = 1, color = "white") +

geom_point(color = "grey50", size = 1/8, alpha = 1/8) +

coord_cartesian(xlim = 0:1,

ylim = 0:1)

p3 <-

post_small %>%

ggplot(aes(x = `theta[14]`, y = `theta[28]`)) +

geom_abline(linetype = 1, color = "white") +

geom_point(color = "grey50", size = 1/8, alpha = 1/8) +

coord_cartesian(xlim = 0:1,

ylim = 0:1)

p1 + p2 + p3

This is posterior distribution for the population estimate for \(\theta\), which roughly corresponds to the upper right histogram of \(\omega\) in Figure 9.10.

# this part makes it easier to set the break points in `scale_x_continuous()`

labels <-

post %>%

transmute(theta = b_Intercept %>% inv_logit_scaled()) %>%

mode_hdi() %>%

select(theta:.upper) %>%

gather() %>%

mutate(label = value %>% round(3) %>% as.character) %>%

slice(1:3)

post %>%

mutate(theta = b_Intercept %>% inv_logit_scaled()) %>%

ggplot(aes(x = theta)) +

geom_histogram(color = "grey92", fill = "grey67",

size = .2, bins = 30) +

stat_pointintervalh(aes(y = 0),

point_interval = mode_hdi, .width = .95) +

scale_x_continuous(breaks = labels$value,

labels = labels$label) +

scale_y_continuous(NULL, breaks = NULL) +

xlab(expression(theta))

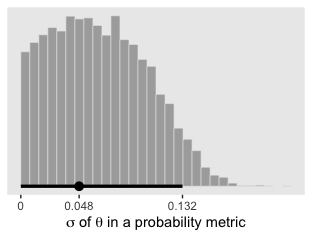

I’m not aware there’s a straight conversion to get \(\sigma\) in a probability metric. As far as I can tell, you have to first use coef() to “extract [the] model coefficients, which are the sum of population-level effects and corresponding group-level effects” (p. 43 of the brms Reference manual, version 2.10.0). With the model coefficient draws in hand, you can index them by posterior iteration, group them by that index, compute the iteration-level \(SD\)s, and then plot the distribution of the \(SD\)s.

# the tibble of the primary data

sigmas <-

coef(fit1, summary = F)$s %>%

as_tibble() %>%

mutate(iter = 1:n()) %>%

group_by(iter) %>%

gather(key, value, -iter) %>%

mutate(theta = inv_logit_scaled(value)) %>%

summarise(sd = sd(theta))

# this, again, is just to customize `scale_x_continuous()`

labels <-

sigmas %>%

mode_hdi(sd) %>%

select(sd:.upper) %>%

gather() %>%

mutate(label = value %>% round(3) %>% as.character) %>%

slice(1:3)

# the plot

sigmas %>%

ggplot(aes(x = sd)) +

geom_histogram(color = "grey92", fill = "grey67",

size = .2, bins = 30, boundary = 0) +

stat_pointintervalh(aes(y = 0),

point_interval = mode_hdi, .width = .95) +

scale_x_continuous(breaks = labels$value,

labels = labels$label) +

scale_y_continuous(NULL, breaks = NULL) +

xlab(expression(paste(sigma, " of ", theta, " in a probability metric")))

And now you have a sense of how to do all those by hand, bayesplot::mcmc_pairs() offers a fairly quick way to get a good portion of Figure 9.10.

color_scheme_set("gray")

coef(fit1, summary = F)$s %>%

inv_logit_scaled() %>%

as_tibble() %>%

rename(`theta[1]` = S01.Intercept,

`theta[14]` = S14.Intercept,

`theta[28]` = S28.Intercept) %>%

select(`theta[1]`, `theta[14]`, `theta[28]`) %>%

mcmc_pairs(off_diag_args = list(size = 1/8, alpha = 1/8))

Did you see how we slipped in that color_scheme_set("gray") line? When we used theme_set(), earlier, that changed the global theme settings for our ggplot2 plots. The color_scheme_set() function is specific to bayesplot plots and it sets the color palette within them. Setting the color palette “gray” changed the colors depicted in the dots and bars of the mcmc_pairs()-based scatter plots and histograms, respectively.

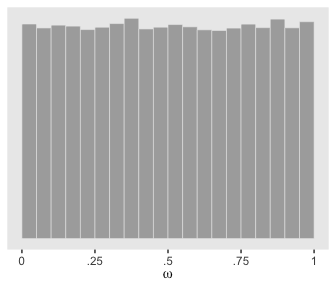

Kruschke used a \(\operatorname{Beta} (1, 1)\) prior for \(\omega\). If you randomly draw from that prior and plot a histogram, you’ll see it was flat.

set.seed(1)

tibble(prior = rbeta(n = 1e5, 1, 1)) %>%

ggplot(aes(x = prior)) +

geom_histogram(color = "grey92", fill = "grey67", size = .2,

binwidth = .05, boundary = 0) +

scale_x_continuous(expression(omega), labels = c("0", ".25", ".5", ".75", "1")) +

scale_y_continuous(NULL, breaks = NULL) +

coord_cartesian(xlim = 0:1) +

theme(legend.position = "none")

You’ll note that plot corresponds to the upper right panel of Figure 9.11.

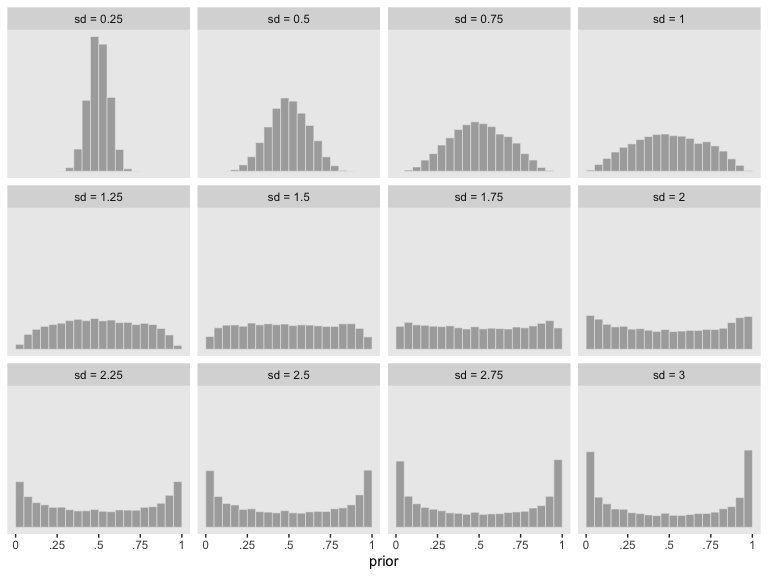

Recall that we used a logistic regression model with a normal(0, 1.5) prior on the intercept. If you sample from normal(0, 1.5) and then convert the draws using brms::inv_logit_scaled(), you’ll discover that our normal(0, 1.5) prior was virtually flat on the probability scale. Here we’ll show the consequence of a variety of zero-mean Gaussian priors for the intercept of a logistic regression model:

# define a function

r_norm <- function(i, n = 1e4) {

set.seed(1)

rnorm(n = n, mean = 0, sd = i) %>%

inv_logit_scaled()

}

# simulate and wrangle

tibble(sd = seq(from = .25, to = 3, by = .25)) %>%

group_by(sd) %>%

mutate(prior = map(sd, r_norm)) %>%

unnest(prior) %>%

ungroup() %>%

mutate(sd = str_c("sd = ", sd)) %>%

# plot!

ggplot(aes(x = prior)) +

geom_histogram(fill = "grey67", color = "grey92", size = .2,

binwidth = .05, boundary = 0) +

scale_x_continuous(labels = c("0", ".25", ".5", ".75", "1")) +

scale_y_continuous(NULL, breaks = NULL) +

coord_cartesian(xlim = 0:1) +

facet_wrap(~sd)

It appears that as \(\sigma\) goes lower than 1.25, the prior becomes increasingly regularizing, pulling the estimate for \(\theta\) to a neutral .5. However, as the prior’s \(\sigma\) gets larger than 1.25, more and more of the probability mass ends up at extreme values.

Next, Kruschke examined the prior distribution. There are a few ways to do this. The one we’ll explore involved adding the sample_prior = "only" argument to the brm() function. When you do so, the results of the model are just the prior. That is, brm() leaves out the likelihood. This returns a bunch of samples from the prior predictive distribution.

fit1_prior <-

brm(data = my_data,

family = bernoulli(link = logit),

y ~ 1 + (1 | s),

prior = c(prior(normal(0, 1.5), class = Intercept),

prior(normal(0, 1), class = sd)),

iter = 20000, warmup = 1000, thin = 10, chains = 4, cores = 4,

seed = 9,

sample_prior = "only")If we feed fit1_prior into the posterior_samples() function, we’ll get back a data frame of samples from the prior, but with the same parameter names we’d get from the posterior.

## # A tibble: 6 x 31

## b_Intercept sd_s__Intercept `r_s[S01,Interc… `r_s[S02,Interc…

## <dbl> <dbl> <dbl> <dbl>

## 1 -2.15 1.36 1.18 -0.892

## 2 -0.825 0.0112 -0.00256 -0.0330

## 3 0.299 0.315 -0.00208 -0.535

## 4 2.75 0.654 0.0715 0.821

## 5 0.350 0.352 -0.127 0.0561

## 6 -3.23 0.433 -0.680 0.0825

## # … with 27 more variables: `r_s[S03,Intercept]` <dbl>,

## # `r_s[S04,Intercept]` <dbl>, `r_s[S05,Intercept]` <dbl>,

## # `r_s[S06,Intercept]` <dbl>, `r_s[S07,Intercept]` <dbl>,

## # `r_s[S08,Intercept]` <dbl>, `r_s[S09,Intercept]` <dbl>,

## # `r_s[S10,Intercept]` <dbl>, `r_s[S11,Intercept]` <dbl>,

## # `r_s[S12,Intercept]` <dbl>, `r_s[S13,Intercept]` <dbl>,

## # `r_s[S14,Intercept]` <dbl>, `r_s[S15,Intercept]` <dbl>,

## # `r_s[S16,Intercept]` <dbl>, `r_s[S17,Intercept]` <dbl>,

## # `r_s[S18,Intercept]` <dbl>, `r_s[S19,Intercept]` <dbl>,

## # `r_s[S20,Intercept]` <dbl>, `r_s[S21,Intercept]` <dbl>,

## # `r_s[S22,Intercept]` <dbl>, `r_s[S23,Intercept]` <dbl>,

## # `r_s[S24,Intercept]` <dbl>, `r_s[S25,Intercept]` <dbl>,

## # `r_s[S26,Intercept]` <dbl>, `r_s[S27,Intercept]` <dbl>,

## # `r_s[S28,Intercept]` <dbl>, lp__ <dbl>And here we’ll take a subset of the columns in prior, transform the results to the probability metric, and save the results as prior_samples.

prior_samples <-

prior %>%

transmute(`theta[1]` = b_Intercept + `r_s[S01,Intercept]`,

`theta[14]` = b_Intercept + `r_s[S14,Intercept]`,

`theta[28]` = b_Intercept + `r_s[S28,Intercept]`) %>%

mutate_all(.funs = inv_logit_scaled)

head(prior_samples)## # A tibble: 6 x 3

## `theta[1]` `theta[14]` `theta[28]`

## <dbl> <dbl> <dbl>

## 1 0.276 0.669 0.0393

## 2 0.304 0.303 0.301

## 3 0.574 0.527 0.565

## 4 0.944 0.890 0.788

## 5 0.556 0.711 0.505

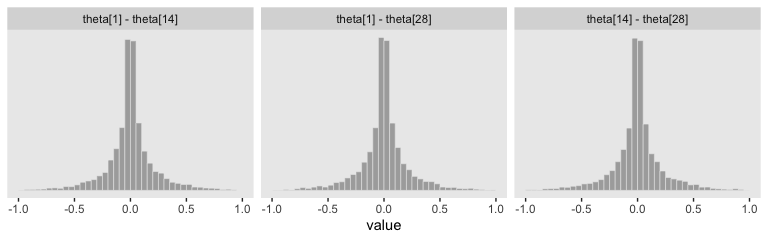

## 6 0.0197 0.0436 0.0305Now we can use our prior_samples object to make the diagonal of the lower grid of Figure 9.11.

prior_samples %>%

gather() %>%

ggplot(aes(x = value)) +

geom_histogram(fill = "grey67", color = "grey92", size = .2,

binwidth = .05, boundary = 0) +

scale_x_continuous(labels = c("0", ".25", ".5", ".75", "1")) +

scale_y_continuous(NULL, breaks = NULL) +

coord_cartesian(xlim = 0:1) +

facet_wrap(~key)

With a little subtraction, we can reproduce the plots in the upper triangle.

prior_samples %>%

transmute(`theta[1] - theta[14]` = `theta[1]` - `theta[14]`,

`theta[1] - theta[28]` = `theta[1]` - `theta[28]`,

`theta[14] - theta[28]` = `theta[14]` - `theta[28]`) %>%

gather() %>%

ggplot(aes(x = value)) +

geom_histogram(fill = "grey67", color = "grey92", size = .2,

binwidth = .05, boundary = 0) +

scale_y_continuous(NULL, breaks = NULL) +

facet_wrap(~key)

Those plots clarify our hierarchical logistic regression model was a little more regularizing than Kruschke’s. The consequence of our priors was more aggressive regularization, greater shrinkage toward zero. The prose in the next section of the text clarifies this isn’t necessarily a bad thing.

Finally, here are the plots for the lower triangle in Figure 9.11.

p1 <-

prior_samples %>%

ggplot(aes(x = `theta[1]`, y = `theta[14]`)) +

geom_point(color = "grey50", size = 1/8, alpha = 1/8) +

geom_abline(linetype = 1, color = "white") +

coord_cartesian(xlim = 0:1,

ylim = 0:1)

p2 <-

prior_samples %>%

ggplot(aes(x = `theta[1]`, y = `theta[28]`)) +

geom_point(color = "grey50", size = 1/8, alpha = 1/8) +

geom_abline(linetype = 1, color = "white") +

coord_cartesian(xlim = 0:1,

ylim = 0:1)

p3 <-

prior_samples %>%

ggplot(aes(x = `theta[14]`, y = `theta[28]`)) +

geom_point(color = "grey50", size = 1/8, alpha = 1/8) +

geom_abline(linetype = 1, color = "white") +

coord_cartesian(xlim = 0:1,

ylim = 0:1)

p1 + p2 + p3

In case you were curious, here are the Pearson’s correlation coefficients among the priors.

## theta[1] theta[14] theta[28]

## theta[1] 1.00 0.73 0.73

## theta[14] 0.73 1.00 0.72

## theta[28] 0.73 0.72 1.009.3 Shrinkage in hierarchical models

“In typical hierarchical models, the estimates of low-level parameters are pulled closer together than they would be if there were not a higher-level distribution. This pulling together is called shrinkage of the estimates” (p. 245, emphasis in the original)

Further,

shrinkage is a rational implication of hierarchical model structure, and is (usually) desired by the analyst because the shrunken parameter estimates are less affected by random sampling noise than estimates derived without hierarchical structure. Intuitively, shrinkage occurs because the estimate of each low-level parameter is influenced from two sources: (1) the subset of data that are directly dependent on the low-level parameter, and (2) the higher-level parameters on which the low-level parameter depends. The higher- level parameters are affected by all the data, and therefore the estimate of a low-level parameter is affected indirectly by all the data, via their influence on the higher-level parameters. (p. 247)

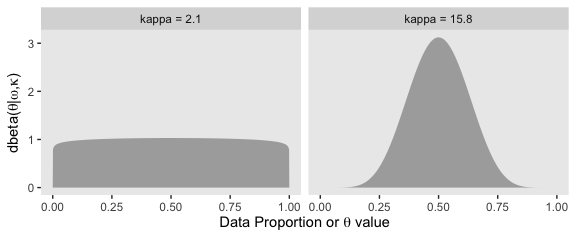

Recall Formula 9.4 from page 223,

\[\theta \sim \operatorname{dbeta} (\omega(\kappa - 2) + 1), (1 - \omega)(\kappa - 2) + 1).\]

With that formula, we can express dbeta()’s shape1 and shape2 in terms of \(\omega\) and \(\kappa\) and make the shapes in Figure 9.12.

omega <- 0.5

kappa1 <- 2.1

kappa2 <- 15.8

tibble(x = seq(from = 0, to = 1, by = .001)) %>%

mutate(`kappa = 2.1` = dbeta(x = x,

shape1 = omega * (kappa1 - 2) + 1,

shape2 = (1 - omega) * (kappa1 - 2) + 1),

`kappa = 15.8` = dbeta(x = x,

shape1 = omega * (kappa2 - 2) + 1,

shape2 = (1 - omega) * (kappa2 - 2) + 1)) %>%

gather(key, value, - x) %>%

mutate(key = factor(key, levels = c("kappa = 2.1", "kappa = 15.8"))) %>%

ggplot(aes(x = x,

ymin = 0,

ymax = value)) +

geom_ribbon(fill = "grey67") +

labs(x = expression(paste("Data Proportion or ", theta, " value")),

y = expression(paste("dbeta(", theta, "|", omega, ",", kappa, ")"))) +

facet_wrap(~key)

9.4 Speeding up JAGS brms

Here we’ll compare the time it takes to fit fit1 as either bernoulli(link = logit) or binomial(link = logit).

# bernoulli

start_time_bernoulli <- proc.time()

brm(data = my_data,

family = bernoulli(link = logit),

y ~ 1 + (1 | s),

prior = c(prior(normal(0, 1.5), class = Intercept),

prior(normal(0, 1), class = sd)),

iter = 20000, warmup = 1000, thin = 10, chains = 4, cores = 4,

seed = 9)

stop_time_bernoulli <- proc.time()

# binomial

start_time_binomial <- proc.time()

brm(data = my_data,

family = binomial(link = logit),

y | trials(1) ~ 1 + (1 | s),

prior = c(prior(normal(0, 1.5), class = Intercept),

prior(normal(0, 1), class = sd)),

iter = 20000, warmup = 1000, thin = 10, chains = 4, cores = 4,

seed = 9)

stop_time_binomial <- proc.time()See how we’re using proc.time() to record when we began and finished evaluating our brm() code? The last time we covered that was way back in Chapter 3. In Chapter 3 we also learned how subtracting the former from the latter yields the total elapsed time.

## user system elapsed

## 2.889 1.059 50.630## user system elapsed

## 53.496 4.285 144.122If you wanted to be rigorous about this, you could do this multiple times in a mini simulation.

As to the issue of parallel processing, we’ve been doing this all along. Note our chains = 4, cores = 4 code.

9.5 Extending the hierarchy: Subjects within categories

Many data structures invite hierarchical descriptions that may have multiple levels. Software such as

JAGS[brms] makes it easy to implement hierarchical models, and Bayesian inference makes it easy to interpret the parameter estimates, even for complex nonlinear hierarchical models. Here, we take a look at one type of extended hierarchical model. (p. 251)

Here we depart a little from Kruschke, again. Though we will be fitting a hierarchical model with subjects \(s\) within categories \(c\), the higher-level parameters will not be \(\omega\) and \(\kappa\). As we’ll go over, below, we will use the binomial distribution within a more conventional hierarchical logistic regression paradigm. In this paradigm, we have an overall intercept, often called \(\alpha\) or \(\beta_0\), which will be our analogue to Kruschke’s overall \(\omega\). For the two grouping categories, \(s\) and \(c\), we will have \(\sigma\) estimates, which express the variability within those grouping. You’ll see when we get there.

9.5.1 Example: Baseball batting abilities by position.

Here are the batting average data.

## Observations: 948

## Variables: 6

## $ Player <chr> "Fernando Abad", "Bobby Abreu", "Tony Abreu", "Dust…

## $ PriPos <chr> "Pitcher", "Left Field", "2nd Base", "2nd Base", "1…

## $ Hits <dbl> 1, 53, 18, 137, 21, 0, 0, 2, 150, 167, 0, 128, 66, …

## $ AtBats <dbl> 7, 219, 70, 607, 86, 1, 1, 20, 549, 576, 1, 525, 27…

## $ PlayerNumber <dbl> 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, …

## $ PriPosNumber <dbl> 1, 7, 4, 4, 3, 1, 1, 3, 3, 4, 1, 5, 4, 2, 7, 4, 6, …To give a sense of the data, here are the number of occasions by primary position, PriPos, with their median at bat, AtBats, values.

## # A tibble: 9 x 3

## PriPos n median

## <chr> <int> <dbl>

## 1 Pitcher 324 4

## 2 Catcher 103 170

## 3 Left Field 103 164

## 4 1st Base 81 265

## 5 3rd Base 75 267

## 6 2nd Base 72 228.

## 7 Center Field 67 259

## 8 Shortstop 63 205

## 9 Right Field 60 340.As these data are aggregated, we’ll fit with an aggregated binomial model. This is still logistic regression. The Bernoulli distribution is a special case of the binomial distribution when the number of trials in each data point is 1 (see this vignette for details). Since our data are aggregated, the information encoded in Hits is a combination of multiple trials, which requires us to jump up to the more general binomial likelihood. Note the Hits | trials(AtBats) syntax. With that bit, we instructed brms that our criterion, Hits, is an aggregate of multiple trials and the number of trials is encoded in AtBats.

Also note the (1 | PriPos) + (1 | PriPos:Player) syntax. In this model, we have two grouping factors, PriPos and Player. Thus we have two (|) arguments. But since players are themselves nested within positions, we have encoded that nesting with the (1 | PriPos:Player) syntax. For more on this style of syntax, see Kristoffer Magnusson’s handy post. Since brms syntax is based on that from the earlier nlme and lme4 packages, the basic syntax rules apply. Bürkner, of course, also covers these topics in the brmsformula subsection of his brms reference manual.

fit2 <-

brm(data = my_data,

family = binomial(link = logit),

Hits | trials(AtBats) ~ 1 + (1 | PriPos) + (1 | PriPos:Player),

prior = c(prior(normal(0, 1.5), class = Intercept),

prior(normal(0, 1), class = sd)),

iter = 3500, warmup = 500, chains = 3, cores = 3,

control = list(adapt_delta = .99),

seed = 9)The chains look good.

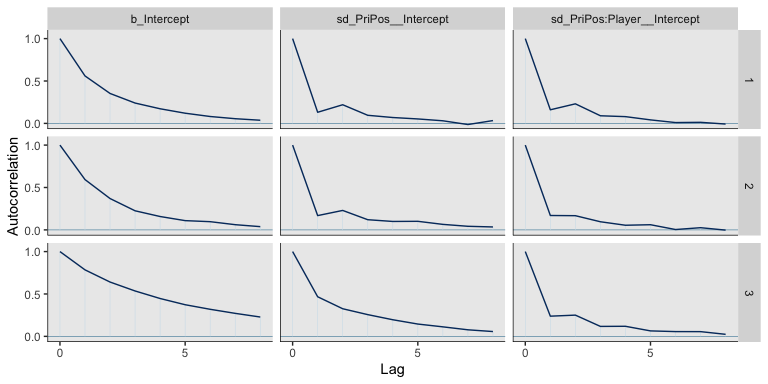

We might examine the autocorrelations within the chains.

post <- posterior_samples(fit2, add_chain = T)

mcmc_acf(post, pars = c("b_Intercept",

"sd_PriPos__Intercept",

"sd_PriPos:Player__Intercept"), lags = 8)

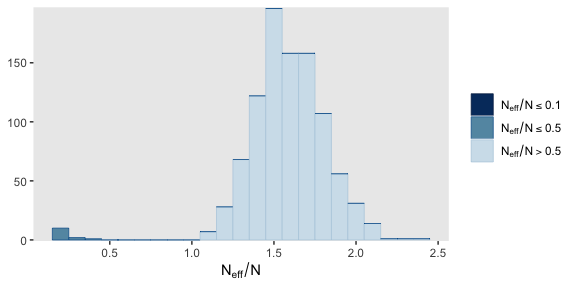

Here’s a histogram of the \(N_{eff}/N\) ratios.

Happily, most have a very favorable ratio. Here’s a numeric summary of the primary model parameters.

## Family: binomial

## Links: mu = logit

## Formula: Hits | trials(AtBats) ~ 1 + (1 | PriPos) + (1 | PriPos:Player)

## Data: my_data (Number of observations: 948)

## Samples: 3 chains, each with iter = 3500; warmup = 500; thin = 1;

## total post-warmup samples = 9000

##

## Group-Level Effects:

## ~PriPos (Number of levels: 9)

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sd(Intercept) 0.32 0.10 0.19 0.59 1.00 2660 4283

##

## ~PriPos:Player (Number of levels: 948)

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sd(Intercept) 0.14 0.01 0.12 0.15 1.00 3695 5911

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept -1.17 0.11 -1.40 -0.94 1.00 1507 2262

##

## Samples were drawn using sampling(NUTS). For each parameter, Eff.Sample

## is a crude measure of effective sample size, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).As far as I’m aware, brms offers three major ways to get the group-level parameters for a hierarchical model: using posterior_samples(), coef(), or fitted(). We’ll cover each, beginning with posterior_samples(). In order to look at the autocorrelation plots, above, we already saved the posterior_samples(fit2) output as post. Here we’ll look at its structure with head(). Before doing so we’ll convert post, which is currently saved as a data frame, into a tibble in order to keep the output from getting unwieldy.

## # A tibble: 6 x 963

## b_Intercept sd_PriPos__Inte… `sd_PriPos:Play… `r_PriPos[1st.B…

## <dbl> <dbl> <dbl> <dbl>

## 1 -1.11 0.213 0.145 -0.00504

## 2 -1.13 0.262 0.147 0.101

## 3 -1.18 0.351 0.149 0.169

## 4 -1.18 0.279 0.130 0.141

## 5 -1.16 0.408 0.137 0.0744

## 6 -1.20 0.344 0.142 0.146

## # … with 959 more variables: `r_PriPos[2nd.Base,Intercept]` <dbl>,

## # `r_PriPos[3rd.Base,Intercept]` <dbl>,

## # `r_PriPos[Catcher,Intercept]` <dbl>,

## # `r_PriPos[Center.Field,Intercept]` <dbl>,

## # `r_PriPos[Left.Field,Intercept]` <dbl>,

## # `r_PriPos[Pitcher,Intercept]` <dbl>,

## # `r_PriPos[Right.Field,Intercept]` <dbl>,

## # `r_PriPos[Shortstop,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Adam.Dunn,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Adam.LaRoche,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Adam.Lind,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Adrian.Gonzalez,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Albert.Pujols,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Allen.Craig,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Anthony.Rizzo,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Aubrey.Huff,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Billy.Butler,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Brandon.Allen,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Brandon.Belt,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Brandon.Moss,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Brandon.Snyder,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Brent.Lillibridge,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Brett.Pill,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Brett.Wallace,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Bryan.LaHair,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Carlos.Lee,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Carlos.Pena,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Casey.Kotchman,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Casey.McGehee,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Chad.Tracy,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Chris.Carter,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Chris.Davis,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Chris.Parmelee,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Corey.Hart,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Dan.Johnson,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Daric.Barton,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_David.Cooper,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_David.Ortiz,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Edwin.Encarnacion,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Eric.Hinske,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Eric.Hosmer,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Freddie.Freeman,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Gaby.Sanchez,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Garrett.Jones,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Hector.Luna,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Ike.Davis,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_James.Loney,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Jason.Giambi,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Jeff.Clement,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Jim.Thome,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Joe.Mahoney,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Joey.Votto,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Juan.Rivera,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Justin.Morneau,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Justin.Smoak,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Kendrys.Morales,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Kila.Kaaihue,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Kyle.Blanks,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Lance.Berkman,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Luke.Scott,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Lyle.Overbay,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Mark.Reynolds,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Mark.Teixeira,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Mat.Gamel,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Matt.Adams,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Matt.Carpenter,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Matt.Downs,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Matt.Hague,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Matt.LaPorta,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Mauro.Gomez,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Michael.Young,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Miguel.Cairo,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Mike.Costanzo,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Mike.Jacobs,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Mike.Olt,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Mitch.Moreland,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Nick.Johnson,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Paul.Goldschmidt,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Paul.Konerko,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Prince.Fielder,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Ryan.Howard,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Steven.Hill,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Taylor.Green,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Todd.Helton,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Travis.Ishikawa,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Ty.Wigginton,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Yan.Gomes,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Yonder.Alonso,Intercept]` <dbl>,

## # `r_PriPos:Player[1st.Base_Zach.Lutz,Intercept]` <dbl>,

## # `r_PriPos:Player[2nd.Base_Aaron.Hill,Intercept]` <dbl>,

## # `r_PriPos:Player[2nd.Base_Adam.Rosales,Intercept]` <dbl>,

## # `r_PriPos:Player[2nd.Base_Adrian.Cardenas,Intercept]` <dbl>,

## # `r_PriPos:Player[2nd.Base_Alexi.Amarista,Intercept]` <dbl>,

## # `r_PriPos:Player[2nd.Base_Alexi.Casilla,Intercept]` <dbl>,

## # `r_PriPos:Player[2nd.Base_Blake.DeWitt,Intercept]` <dbl>,

## # `r_PriPos:Player[2nd.Base_Brandon.Phillips,Intercept]` <dbl>,

## # `r_PriPos:Player[2nd.Base_Brian.Roberts,Intercept]` <dbl>,

## # `r_PriPos:Player[2nd.Base_Brock.Holt,Intercept]` <dbl>,

## # `r_PriPos:Player[2nd.Base_Charlie.Culberson,Intercept]` <dbl>,

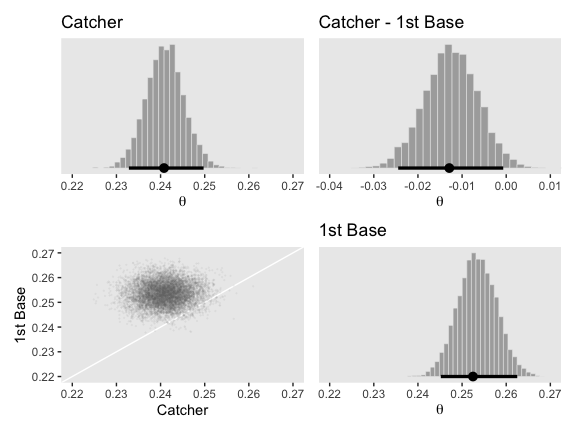

## # `r_PriPos:Player[2nd.Base_Chase.dArnaud,Intercept]` <dbl>, …In the text, Kruschke described the model as having 968 parameters. Our post tibble has one vector for each, with a couple others tacked onto the end. In the hierarchical logistic regression model, the group-specific parameters for the levels of PriPos are additive combinations of the global intercept vector, b_Intercept and each position-specific vector, r_PriPos[i.Base,Intercept], where i is a fill-in for the position of interest. And recall that since the linear model is of the logit of the criterion, we’ll need to use inv_logit_scaled() to convert that to the probability space.

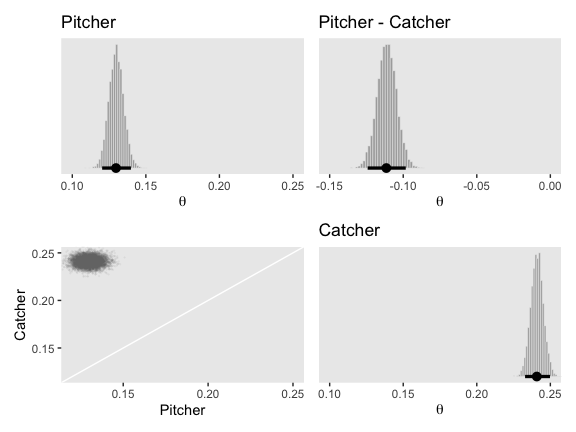

post_small <-

post %>%

transmute(`1st Base` = (b_Intercept + `r_PriPos[1st.Base,Intercept]`),

Catcher = (b_Intercept + `r_PriPos[Catcher,Intercept]`),

Pitcher = (b_Intercept + `r_PriPos[Pitcher,Intercept]`)) %>%

mutate_all(inv_logit_scaled) %>%

# here we compute our difference distributions

mutate(`Pitcher - Catcher` = Pitcher - Catcher,

`Catcher - 1st Base` = Catcher - `1st Base`)

head(post_small)## # A tibble: 6 x 5

## `1st Base` Catcher Pitcher `Pitcher - Catcher` `Catcher - 1st Base`

## <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 0.246 0.237 0.135 -0.102 -0.00921

## 2 0.264 0.251 0.134 -0.117 -0.0131

## 3 0.267 0.242 0.122 -0.120 -0.0256

## 4 0.262 0.242 0.126 -0.116 -0.0201

## 5 0.252 0.241 0.133 -0.109 -0.0110

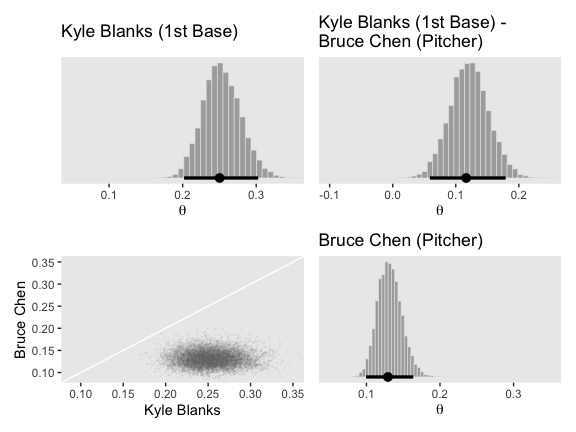

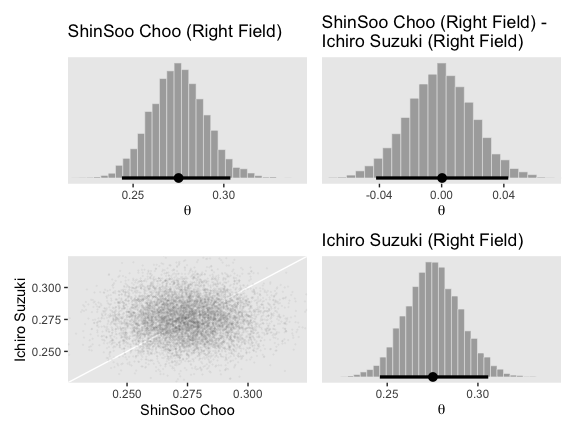

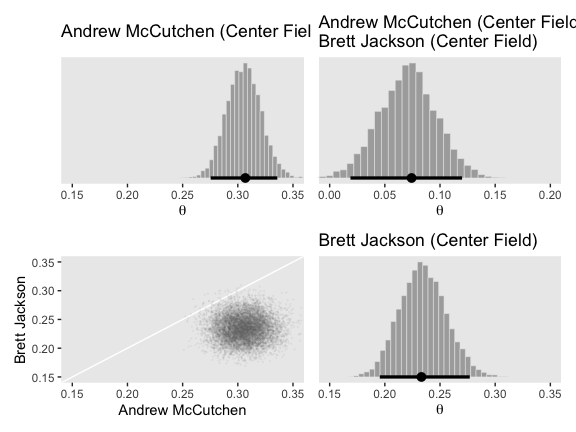

## 6 0.259 0.243 0.137 -0.106 -0.0161If you take a glance at Figures 9.14 through 9.16 in the text, we’ll be making a lot of histograms of the same basic structure. To streamline our code a bit, we can make a custom histogram plotting function.

make_histogram <- function(data, mapping, title, xlim, ...) {

ggplot(data, mapping) +

geom_histogram(fill = "grey67", color = "grey92", size = .2,

bins = 30) +

stat_pointintervalh(aes(y = 0),

point_interval = mode_hdi, .width = .95) +

scale_y_continuous(NULL, breaks = NULL) +

labs(title = title,

x = expression(theta)) +

coord_cartesian(xlim = xlim) +

theme(legend.position = "none")

}We’ll do the same thing for the correlation plots.

make_point <- function(data, mapping, limits, ...) {

ggplot(data, mapping) +

geom_abline(color = "white") +

geom_point(color = "grey50", size = 1/10, alpha = 1/20) +

coord_cartesian(xlim = limits,

ylim = limits)

}To learn more about wrapping custom plots into custom functions, check out Chapter 16 of Wickham’s ggplot2, Elegant graphics for data analysis.

Now we have our make_histogram() and make_point() functions, we’ll use grid.arrange() to paste together the left half of Figure 9.14.

p1 <-

make_histogram(data = post_small,

aes(x = Pitcher),

title = "Pitcher",

xlim = c(.1, .25))

p2 <-

make_histogram(data = post_small,

aes(x = `Pitcher - Catcher`),

title = "Pitcher - Catcher",

xlim = c(-.15, 0))

p3 <-

make_point(data = post_small,

aes(x = Pitcher, y = Catcher),

limits = c(.12, .25))

p4 <-

make_histogram(data = post_small,

aes(x = Catcher),

title = "Catcher",

xlim = c(.1, .25))

library(patchwork)

p1 + p2 + p3 + p4

We could follow the same procedure to make the right portion of Figure 9.14. But instead, let’s switch gears and explore the second way brms affords us for plotting group-level parameters. This time, we’ll use coef().