Chapter 10 Supervised Learning

Machine learning is very similar to statistics, but it is certainly not the same. As the name suggests, in machine learning we want machines to learn. This means that we want to replace hard-coded expert algorithm, with data-driven self-learned algorithm.

There are many learning setups, that depend on what information is available to the machine. The most common setup, discussed in this chapter, is supervised learning. The name takes from the fact that by giving the machine data samples with known inputs (a.k.a. features) and desired outputs (a.k.a. labels), the human is effectively supervising the learning. If we think of the inputs as predictors, and outcomes as predicted, it is no wonder that supervised learning is very similar to statistical prediction. When asked “are these the same?” I like to give the example of internet fraud. If you take a sample of fraud “attacks”, a statistical formulation of the problem is highly unlikely. This is because fraud events are not randomly drawn from some distribution, but rather, arrive from an adversary learning the defenses and adapting to it. This instance of supervised learning is more similar to game theory than statistics.

Other types of machine learning problems include (Sammut and Webb 2011):

Unsupervised learning: See Chapter 11.

Semi supervised learning: Where only part of the samples are labeled. A.k.a. co-training, learning from labeled and unlabeled data, transductive learning.

Active learning: Where the machine is allowed to query the user for labels. Very similar to adaptive design of experiments.

Learning on a budget: A version of active learning where querying for labels induces variable costs.

Weak learning: A version of supervised learning where the labels are given not by an expert, but rather by some heuristic rule. Example: mass-labeling cyber attacks by a rule based software, instead of a manual inspection.

Reinforcement learning:

Similar to active learning, in that the machine may query for labels. Different from active learning, in that the machine does not receive labels, but rewards.Structure learning: When predicting objects with structure such as dependent vectors, graphs, images, tensors, etc.

Manifold learning: An instance of unsupervised learning, where the goal is to reduce the dimension of the data by embedding it into a lower dimensional manifold. A.k.a. support estimation.

Similarity Learning: Where we try to learn how to measure similarity between objects (like faces, texts, images, etc.).

Metric Learning: Like similarity learning, only that the similarity has to obey the definitioin of a metric.

Learning to learn: Deals with the carriage of “experience” from one learning problem to another. A.k.a. cummulative learning, knowledge transfer, and meta learning.

10.1 Problem Setup

We now present the empirical risk minimization (ERM) approach to supervised learning, a.k.a. M-estimation in the statistical literature.

Given \(n\) samples with inputs \(x\) from some space \(\mathcal{X}\) and desired outcome, \(y\), from some space \(\mathcal{Y}\). In our example, \(y\) is the spam/no-spam label, and \(x\) is a vector of the mail’s attributes. Samples, \((x,y)\) have some distribution we denote \(P\). We want to learn a function that maps inputs to outputs, i.e., that classifies to spam given. This function is called a hypothesis, or predictor, denoted \(f\), that belongs to a hypothesis class \(\mathcal{F}\) such that \(f:\mathcal{X} \to \mathcal{Y}\). We also choose some other function that fines us for erroneous prediction. This function is called the loss, and we denote it by \(l:\mathcal{Y}\times \mathcal{Y} \to \mathbb{R}^+\).

The fundamental task in supervised (statistical) learning is to recover a hypothesis that minimizes the average loss in the sample, and not in the population. This is know as the risk minimization problem.

The best predictor, is the risk minimizer: \[\begin{align} f^* := argmin_f \{R(f)\}. \tag{10.1} \end{align}\]

Another fundamental problem is that we do not know the distribution of all possible inputs and outputs, \(P\). We typically only have a sample of \((x_i,y_i), i=1,\dots,n\). We thus state the empirical counterpart of (10.1), which consists of minimizing the average loss. This is known as the empirical risk miminization problem (ERM).

A good candidate proxy for \(f^*\) is its empirical counterpart, \(\hat f\), known as the empirical risk minimizer: \[\begin{align} \hat f := argmin_f \{ R_n(f) \}. \tag{10.2} \end{align}\]

To make things more explicit:

- \(f\) may be a linear function of the attributes, so that it may be indexed simply with its coefficient vector \(\beta\).

- \(l\) may be a squared error loss: \(l(f(x),y):=(f(x)-y)^2\).

Under these conditions, the best predictor \(f^* \in \mathcal{F}\) from problem (10.1) is to \[\begin{align} f^* := argmin_\beta \{ \mathbb{E}_{P(x,y)}[(x'\beta-y)^2] \}. \end{align}\]

When using a linear hypothesis with squared loss, we see that the empirical risk minimization problem collapses to an ordinary least-squares problem: \[\begin{align} \hat f := argmin_\beta \{1/n \sum_i (x_i'\beta - y_i)^2 \}. \end{align}\]

When data samples are assumingly independent, then maximum likelihood estimation is also an instance of ERM, when using the (negative) log likelihood as the loss function.

If we don’t assume any structure on the hypothesis, \(f\), then \(\hat f\) from (10.2) will interpolate the data, and \(\hat f\) will be a very bad predictor. We say, it will overfit the observed data, and will have bad performance on new data.

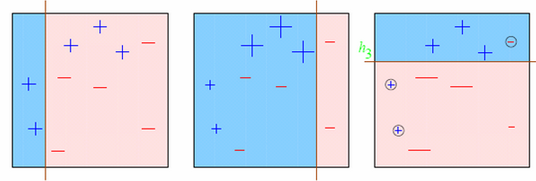

Figure 10.1 demonstrate an overfitting: Green and black lines represent overfitted and non-overfitted classification models. The green line perfectly classify the training data, but it seems that it is too dependent on that data, we call this “memorizing the data”. The green line is likely to have a higher error rate on new unseen data, compared to the black line.

We have several ways to avoid overfitting:

- Restrict the hypothesis class \(\mathcal{F}\) (such as linear functions).

- Penalize for the complexity of \(f\). The penalty denoted by \(\Vert f \Vert\).

- Unbiased risk estimation: \(R_n(f)\) is not an unbiased estimator of \(R(f)\). Why? Think of estimating the mean with the sample minimum… Because \(R_n(f)\) is downward biased, we may add some correction term, or compute \(R_n(f)\) on different data than the one used to recover \(\hat f\).

Almost all ERM algorithms consist of some combination of all the three methods above.

10.1.1 Common Hypothesis Classes

Some common hypothesis classes, \(\mathcal{F}\), with restricted complexity, are:

Linear hypotheses: such as linear models, GLMs, and (linear) support vector machines (SVM).

Neural networks: a.k.a. feed-forward neural nets, artificial neural nets, and the celebrated class of deep neural nets.

Tree: a.k.a. decision rules, is a class of hypotheses which can be stated as “if-then” rules.

Reproducing Kernel Hilbert Space: a.k.a. RKHS, is a subset of “the space of all functions16” that is both large enough to capture very complicated relations, but small enough so that it is less prone to overfitting, and also surprisingly simple to compute with.

10.1.2 Common Complexity Penalties

The most common complexity penalty applies to classes that have a finite dimensional parametric representation, such as the class of linear predictors, parametrized via its coefficients \(\beta\). In such classes we may penalize for the norm of the parameters. Common penalties include:

- Ridge penalty: penalizing the \(l_2\) norm of the parameter. I.e. \(\Vert f \Vert=\Vert \beta \Vert_2^2=\sum_j \beta_j^2\).

- LASSO penalty: penalizing the \(l_1\) norm of the parameter. I.e., \(\Vert f \Vert=\Vert \beta \Vert_1=\sum_j |\beta_j|\)

- Elastic net: a combination of the lasso and ridge penalty. I.e. ,\(\Vert f \Vert= (1-\alpha) \Vert \beta \Vert_2^2 + \alpha \Vert \beta \Vert_1\).

- Function Norms: If the hypothesis class \(\mathcal{F}\) does not admit a finite dimensional representation, the penalty is no longer a function of the parameters of the function. We may, however, penalize not the parametric representation of the function, but rather the function itself \(\Vert f \Vert=\sqrt{\int f(t)^2 dt}\).

other ways to reduce model complexity can be Pruning (in trees based algorithms such as random forset - make simpler trees); Subsampling (when iterating over the data; don’t use all features, but a subsample), pooling (e.g., max pooling mostly used in deep nets); seting a learning rate (when learning \(f\), don’t let the data change the model too much each iteration), etc.

The following figure (from Elements of stat. learning) depicts the lasso (left) and ridge regression (right) when there are only two parameters. The residual sum of squares has elliptical contours, centered at the full least squares estimate. The constraint region for ridge regression is the disk, while that for lasso is the diamond. Both methods find the first point where the elliptical contours hit the constraint region. Unlike the disk, the diamond has corners; if the solution occurs at a corner, then it has one parameter βj equal to zero. When p > 2, the diamond becomes a rhomboid, and has many corners, flat edges and faces; there are many more opportunities for the estimated parameters to be zero.

10.1.3 Unbiased Risk Estimation

The fundamental problem of overfitting, is that the empirical risk, \(R_n(\hat f)\), is downward biased to the population risk, \(R(\hat f)\). We can remove this bias in two ways: (a) purely algorithmic resampling approaches, and (b) theory driven estimators.

Train-Validate-Test: The simplest form of algorithmic validation is to split the data. A train set to train/estimate/learn \(\hat f\). A validation set to compute the out-of-sample expected loss, \(R(\hat f)\), and pick the best performing predictor. A test sample to compute the out-of-sample performance of the selected hypothesis. This is a very simple approach, but it is very “data inefficient”, thus motivating the next method.

K-Fold Cross Validation: By far the most popular algorithmic unbiased risk estimator; in K-fold CV we “fold” the data into \(K\) non-overlapping sets. For each of the \(K\) sets, we learn \(\hat f\) with the non-selected fold, and assess \(R(\hat f)\)) on the selected fold. We then aggregate results over the \(K\) folds, typically by averaging. More on data splitting and cross validation in Chapter ??

AIC: Akaike’s information criterion (AIC) is a theory driven correction of the empirical risk, so that it is unbiased to the true risk. It is appropriate when using the likelihood loss.

Cp: Mallow’s Cp is an instance of AIC for likelihood loss under normal noise.

Other theory driven unbiased risk estimators include the Bayesian Information Criterion (BIC, aka SBC, aka SBIC), the Minimum Description Length (MDL), Vapnic’s Structural Risk Minimization (SRM), the Deviance Information Criterion (DIC), and the Hannan-Quinn Information Criterion (HQC).

Other resampling based unbiased risk estimators include resampling without replacement algorithms like delete-d cross validation with its many variations, and resampling with replacement, like the bootstrap, with its many variations.

10.1.4 Collecting the Pieces

An ERM problem with regularization will look like \[\begin{align} \hat f := argmin_{f \in \mathcal{F}} \{ R_n(f) + \lambda \Vert f \Vert \}. \tag{10.3} \end{align}\]

Collecting ideas from the above sections, a typical supervised learning pipeline will include: choosing the hypothesis class, choosing the penalty function and level, unbiased risk estimator. We emphasize that choosing the penalty function, \(\Vert f \Vert\) is not enough, and we need to choose how “hard” to apply it. This if known as the regularization level, denoted by \(\lambda\) in Eq.(10.3).

Examples of such combos include:

- Linear regression, no penalty, train-validate test.

- Linear regression, no penalty, AIC.

- Linear regression, \(l_2\) penalty, K-fold CV. This combo is typically known as ridge regression.

- Linear regression, \(l_1\) penalty, K-fold CV. This combo is typically known as LASSO regression.

- Linear regression, \(l_1\) and \(l_2\) penalty, K-fold CV. This combo is typically known as elastic net regression.

- Logistic regression, \(l_2\) penalty, K-fold CV.

- SVM classification, \(l_2\) penalty, K-fold CV.

- Deep network, no penalty, K-fold CV.

- Unrestricted, \(\Vert \partial^2 f \Vert_2\), K-fold CV. This combo is typically known as a smoothing spline.

For fans of statistical hypothesis testing we will also emphasize: Testing and prediction are related, but are not the same:

- In the current chapter, we do not claim our models, \(f\), are generative. I.e., we do not claim that there is some causal relation between \(x\) and \(y\). We only claim that \(x\) predicts \(y\).

- It is possible that we will want to ignore a significant predictor, and add a non-significant one (Foster and Stine 2004).

- Some authors will use hypothesis testing as an initial screening for candidate predictors. This is a useful heuristic, but that is all it is– a heuristic. It may also fail miserably if predictors are linearly dependent (a.k.a. multicollinear).

10.2 Supervised Learning in R

At this point, we have a rich enough language to do supervised learning with R.

In these examples, I will use two data sets from the ElemStatLearn package, that accompanies the seminal book by Friedman, Hastie, and Tibshirani (2001).

I use the spam data for categorical predictions, and prostate for continuous predictions.

In spam we will try to decide if a mail is spam or not.

In prostate we will try to predict the size of a cancerous tumor.

You can now call ?prostate and ?spam to learn more about these data sets.

Some boring pre-processing.

# Preparing prostate data

data("prostate", package = 'ElemStatLearn')

prostate <- data.table::data.table(prostate)

prostate.train <- prostate[train==TRUE, -"train"]

prostate.test <- prostate[train!=TRUE, -"train"]

y.train <- prostate.train$lcavol

X.train <- as.matrix(prostate.train[, -'lcavol'] )

y.test <- prostate.test$lcavol

X.test <- as.matrix(prostate.test[, -'lcavol'] )

# Preparing spam data:

data("spam", package = 'ElemStatLearn')

n <- nrow(spam)

train.prop <- 0.66

train.ind <- sample(x = c(TRUE,FALSE),

size = n,

prob = c(train.prop,1-train.prop),

replace=TRUE)

spam.train <- spam[train.ind,]

spam.test <- spam[!train.ind,]

y.train.spam <- spam.train$spam

X.train.spam <- as.matrix(spam.train[,names(spam.train)!='spam'] )

y.test.spam <- spam.test$spam

X.test.spam <- as.matrix(spam.test[,names(spam.test)!='spam'])

spam.dummy <- spam

spam.dummy$spam <- as.numeric(spam$spam=='spam')

spam.train.dummy <- spam.dummy[train.ind,]

spam.test.dummy <- spam.dummy[!train.ind,]We also define some utility functions that we will require down the road.

l2 <- function(x) x^2 %>% sum %>% sqrt

l1 <- function(x) abs(x) %>% sum

MSE <- function(x) x^2 %>% mean

missclassification <- function(tab) sum(tab[c(2,3)])/sum(tab)10.2.1 Linear Models with Least Squares Loss

The simplest approach to supervised learning, is simply with OLS: a linear predictor, squared error loss, and train-test risk estimator. Notice the better in-sample MSE than the out-of-sample. That is overfitting in action.

ols.1 <- lm(lcavol~. ,data = prostate.train)

# Train error:

MSE( predict(ols.1)-prostate.train$lcavol) ## [1] 0.4383709## [1] 0.5084068Things to note:

- I use the

newdataargument of thepredictfunction to make the out-of-sample predictions required to compute the test-error. - The test error is larger than the train error. That is overfitting in action.

We now implement a K-fold CV, instead of our train-test approach.

The assignment of each observation to each fold is encoded in fold.assignment.

The following code is extremely inefficient, but easy to read.

folds <- 10

fold.assignment <- sample(1:folds, nrow(prostate), replace = TRUE)

errors <- NULL

for (k in 1:folds){

prostate.cross.train <- prostate[fold.assignment!=k,] # train subset

prostate.cross.test <- prostate[fold.assignment==k,] # test subset

.ols <- lm(lcavol~. ,data = prostate.cross.train) # train

.predictions <- predict(.ols, newdata=prostate.cross.test)

.errors <- .predictions-prostate.cross.test$lcavol # save prediction errors in the fold

errors <- c(errors, .errors) # aggregate error over folds.

}

# Cross validated prediction error:

MSE(errors)## [1] 0.5742128Let’s try all possible variable subsets, and choose the best performer with respect to the Cp criterion, which is an unbiased risk estimator.

This is done with leaps::regsubsets.

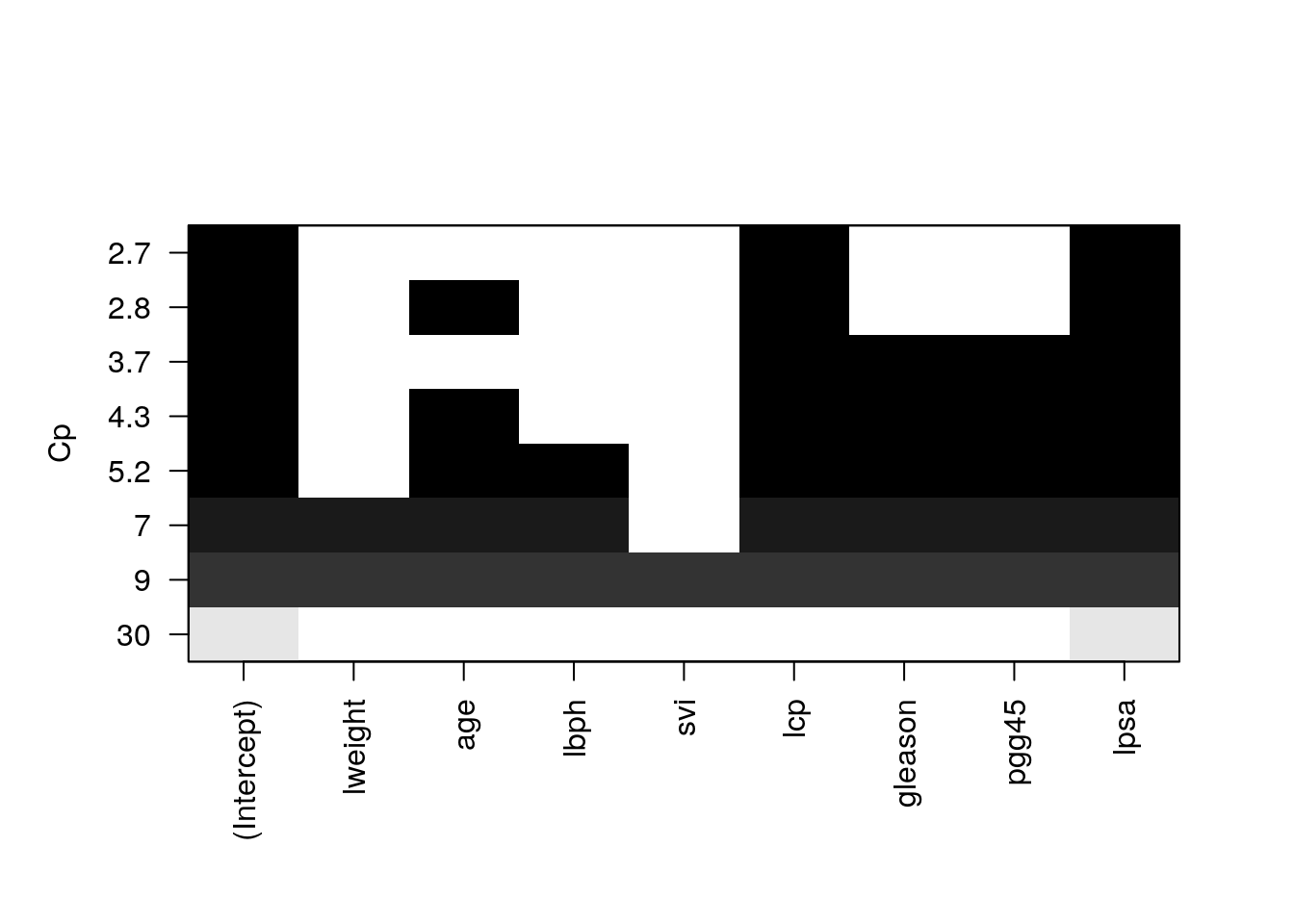

We see that the best performer has 3 predictors.

regfit.full <- prostate.train %>%

leaps::regsubsets(lcavol~.,data = ., method = 'exhaustive') # best subset selection

plot(regfit.full, scale = "Cp")

Things to note:

- The plot shows us which is the variable combination which is the best, i.e., has the smallest Cp.

- Scanning over all variable subsets is impossible when the number of variables is large.

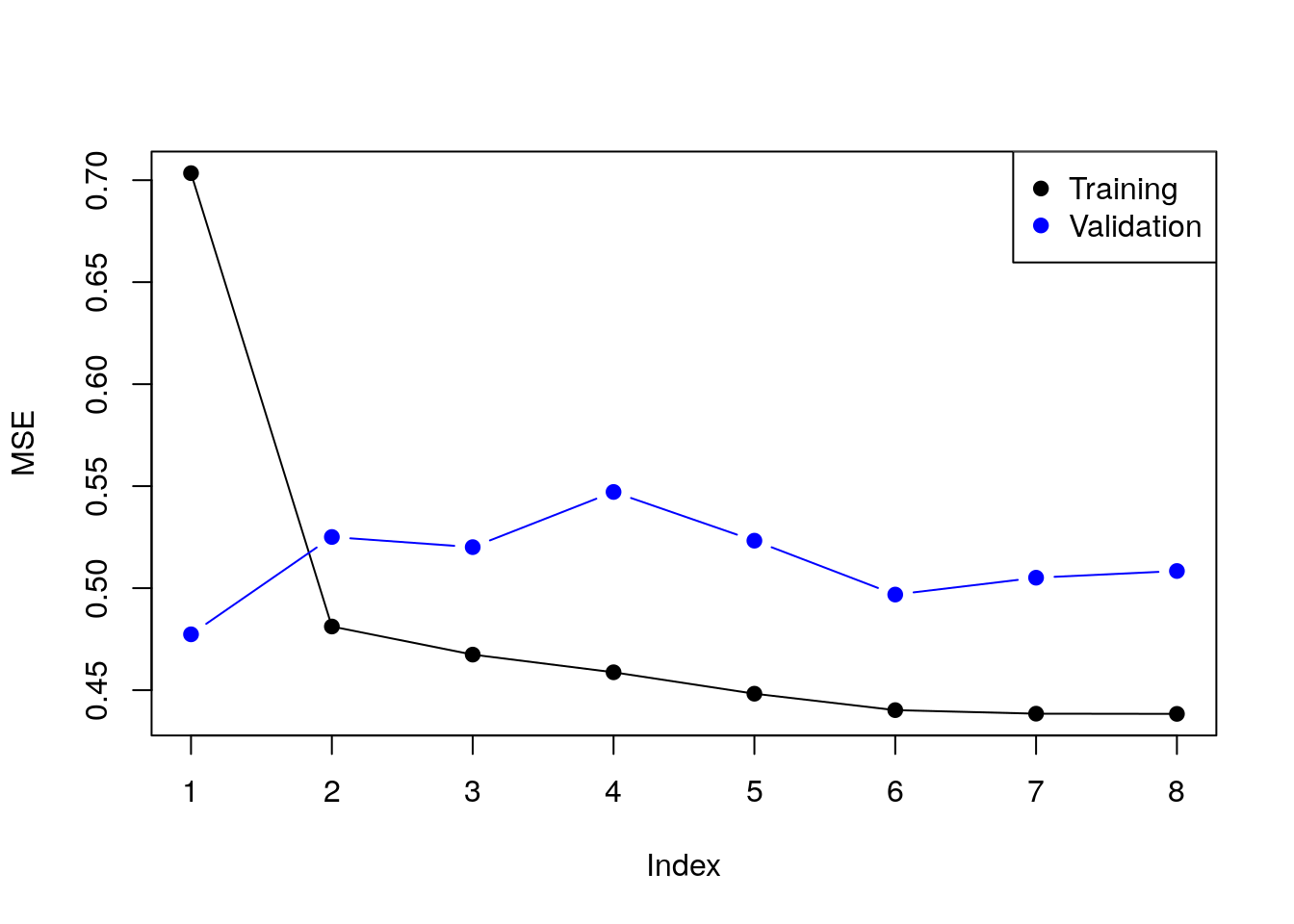

Instead of the Cp criterion, we now compute the train and test errors for all the possible predictor subsets17. In the resulting plot we can see overfitting in action.

model.n <- regfit.full %>% summary %>% length

X.train.named <- model.matrix(lcavol ~ ., data = prostate.train )

X.test.named <- model.matrix(lcavol ~ ., data = prostate.test )

val.errors <- rep(NA, model.n)

train.errors <- rep(NA, model.n)

for (i in 1:model.n) {

coefi <- coef(regfit.full, id = i) # exctract coefficients of i'th model

pred <- X.train.named[, names(coefi)] %*% coefi # make in-sample predictions

train.errors[i] <- MSE(y.train - pred) # train errors

pred <- X.test.named[, names(coefi)] %*% coefi # make out-of-sample predictions

val.errors[i] <- MSE(y.test - pred) # test errors

}Plotting results.

plot(train.errors, ylab = "MSE", pch = 19, type = "o")

points(val.errors, pch = 19, type = "b", col="blue")

legend("topright",

legend = c("Training", "Validation"),

col = c("black", "blue"),

pch = 19)

Checking all possible models is computationally very hard. Forward selection is a greedy approach that adds one variable at a time.

ols.0 <- lm(lcavol~1 ,data = prostate.train)

model.scope <- list(upper=ols.1, lower=ols.0)

step(ols.0, scope=model.scope, direction='forward', trace = TRUE)## Start: AIC=30.1

## lcavol ~ 1

##

## Df Sum of Sq RSS AIC

## + lpsa 1 54.776 47.130 -19.570

## + lcp 1 48.805 53.101 -11.578

## + svi 1 35.829 66.077 3.071

## + pgg45 1 23.789 78.117 14.285

## + gleason 1 18.529 83.377 18.651

## + lweight 1 9.186 92.720 25.768

## + age 1 8.354 93.552 26.366

## <none> 101.906 30.097

## + lbph 1 0.407 101.499 31.829

##

## Step: AIC=-19.57

## lcavol ~ lpsa

##

## Df Sum of Sq RSS AIC

## + lcp 1 14.8895 32.240 -43.009

## + svi 1 5.0373 42.093 -25.143

## + gleason 1 3.5500 43.580 -22.817

## + pgg45 1 3.0503 44.080 -22.053

## + lbph 1 1.8389 45.291 -20.236

## + age 1 1.5329 45.597 -19.785

## <none> 47.130 -19.570

## + lweight 1 0.4106 46.719 -18.156

##

## Step: AIC=-43.01

## lcavol ~ lpsa + lcp

##

## Df Sum of Sq RSS AIC

## <none> 32.240 -43.009

## + age 1 0.92315 31.317 -42.955

## + pgg45 1 0.29594 31.944 -41.627

## + gleason 1 0.21500 32.025 -41.457

## + lbph 1 0.13904 32.101 -41.298

## + lweight 1 0.05504 32.185 -41.123

## + svi 1 0.02069 32.220 -41.052##

## Call:

## lm(formula = lcavol ~ lpsa + lcp, data = prostate.train)

##

## Coefficients:

## (Intercept) lpsa lcp

## 0.08798 0.53369 0.38879Things to note:

- By default

stepadd variables according to the AIC criterion, which is a theory-driven unbiased risk estimator. - We need to tell

stepwhich is the smallest and largest models to consider using thescopeargument. direction='forward'is used to “grow” from a small model. For “shrinking” a large model, usedirection='backward', or the defaultdirection='stepwise'.

We now learn a linear predictor on the spam data using, a least squares loss, and train-test risk estimator.

# Train the predictor

ols.2 <- lm(spam~., data = spam.train.dummy)

# make in-sample predictions

.predictions.train <- predict(ols.2) > 0.5

# inspect the confusion matrix

(confusion.train <- table(prediction=.predictions.train, truth=spam.train.dummy$spam)) ## truth

## prediction 0 1

## FALSE 1778 227

## TRUE 66 980## [1] 0.09603409# make out-of-sample prediction

.predictions.test <- predict(ols.2, newdata = spam.test.dummy) > 0.5

# inspect the confusion matrix

(confusion.test <- table(prediction=.predictions.test, truth=spam.test.dummy$spam))## truth

## prediction 0 1

## FALSE 884 139

## TRUE 60 467## [1] 0.1283871Things to note:

- I can use

lmfor categorical outcomes.lmwill simply dummy-code the outcome. - A linear predictor trained on 0’s and 1’s will predict numbers. Think of these numbers as the probability of 1, and my prediction is the most probable class:

predicts()>0.5. - The train error is smaller than the test error. This is overfitting in action.

The glmnet package is an excellent package that provides ridge, LASSO, and elastic net regularization, for all GLMs, so for linear models in particular.

suppressMessages(library(glmnet))

means <- apply(X.train, 2, mean)

sds <- apply(X.train, 2, sd)

X.train.scaled <- X.train %>% sweep(MARGIN = 2, STATS = means, FUN = `-`) %>%

sweep(MARGIN = 2, STATS = sds, FUN = `/`)

ridge.2 <- glmnet(x=X.train.scaled, y=y.train, family = 'gaussian', alpha = 0)

# Train error:

MSE( predict(ridge.2, newx =X.train.scaled)- y.train)## [1] 1.006028# Test error:

X.test.scaled <- X.test %>% sweep(MARGIN = 2, STATS = means, FUN = `-`) %>%

sweep(MARGIN = 2, STATS = sds, FUN = `/`)

MSE(predict(ridge.2, newx = X.test.scaled)- y.test)## [1] 0.7678264Things to note:

- The

alpha=0parameters tells R to do ridge regression. Setting \(\alpha=1\) will do LASSO, and any other value, with return an elastic net with appropriate weights. - The

family='gaussian'argument tells R to fit a linear model, with least squares loss. - Features for regularized predictors should be z-scored before learning.

- We use the

sweepfunction to z-score the predictors: we learn the z-scoring from the train set, and apply it to both the train and the test. - The test error is smaller than the train error. This may happen because risk estimators are random. Their variance may mask the overfitting.

We now use the LASSO penalty.

lasso.1 <- glmnet(x=X.train.scaled, y=y.train, , family='gaussian', alpha = 1)

# Train error:

MSE( predict(lasso.1, newx =X.train.scaled)- y.train)## [1] 0.5525279## [1] 0.5211263We now use glmnet for classification.

means.spam <- apply(X.train.spam, 2, mean)

sds.spam <- apply(X.train.spam, 2, sd)

X.train.spam.scaled <- X.train.spam %>% sweep(MARGIN = 2, STATS = means.spam, FUN = `-`) %>%

sweep(MARGIN = 2, STATS = sds.spam, FUN = `/`) %>% as.matrix

logistic.2 <- cv.glmnet(x=X.train.spam.scaled, y=y.train.spam, family = "binomial", alpha = 0)Things to note:

- We used

cv.glmnetto do an automatic search for the optimal level of regularization (thelambdaargument inglmnet) using K-fold CV. - Just like the

glmfunction,'family='binomial'is used for logistic regression. - We z-scored features so that they all have the same scale.

- We set

alpha=0for an \(l_2\) penalization of the coefficients of the logistic regression.

# Train confusion matrix:

.predictions.train <- predict(logistic.2, newx = X.train.spam.scaled, type = 'class')

(confusion.train <- table(prediction=.predictions.train, truth=spam.train$spam))## truth

## prediction email spam

## email 1778 167

## spam 66 1040## [1] 0.0763684# Test confusion matrix:

X.test.spam.scaled <- X.test.spam %>% sweep(MARGIN = 2, STATS = means.spam, FUN = `-`) %>%

sweep(MARGIN = 2, STATS = sds.spam, FUN = `/`) %>% as.matrix

.predictions.test <- predict(logistic.2, newx = X.test.spam.scaled, type='class')

(confusion.test <- table(prediction=.predictions.test, truth=y.test.spam))## truth

## prediction email spam

## email 885 110

## spam 59 496## [1] 0.109032310.2.1.1 Another glmnet example

First let’s load some data with numeric output/label anf fit a glmnet with default parameters (i.e., lasso):

library(ggplot2)

data("diamonds")

d <- diamonds[1:5000,] # we take a subset from computational reasons

X <- model.matrix(price~.-1, data=d) %>% scale()

y <- d$price %>% scale()

fit <- glmnet(X, y)In defalut, the glmnet fit the model for 100 (sometimes little less) values of \(\lambda\) (hence, 100 different models). The coeficients can be accessed in a matrix via:

## [1] 24 86## 24 x 5 sparse Matrix of class "dgCMatrix"

## s0 s9 s19 s29 s49

## carat . . . . .

## cutFair . . . . -0.035894995

## cutGood . . . . .

## cutVery Good . . . . .

## cutPremium . . . . .

## cutIdeal . . . . 0.035536104

## color.L . . -0.00760010 -0.1235543 -0.187679636

## color.Q . . . . -0.008298318

## color.C . . . . .

## color^4 . . . . 0.005915433

## color^5 . . . . .

## color^6 . . . . .

## clarity.L . . 0.04507745 0.2047064 0.312886244

## clarity.Q . . . -0.0029780 -0.074734260

## clarity.C . . . . 0.036823841

## clarity^4 . . . . -0.048978454

## clarity^5 . . . . 0.006778281

## clarity^6 . . . . -0.002433626

## clarity^7 . . . . .

## depth . . . . 0.082958602

## table . . . . .

## x . . . . 0.089682676

## y . 0.502974 0.71112174 0.7913728 0.893429132

## z . . 0.04696494 0.1469210 0.071262737From some reason, the lambda values are called s.

When y is scaled, lambda is usually beteen 0 to 1.

You can get specific model’s coefficient by specifying the lambda value (for example for \(\lambda=0.01\) with: coef(glmn_lasso, s = 0.01)). Alternatively:

## carat cutFair cutGood cutVery Good cutPremium

## 0 0 0 0 0

## cutIdeal color.L color.Q color.C color^4

## 0 0 0 0 0

## color^5 color^6 clarity.L clarity.Q clarity.C

## 0 0 0 0 0

## clarity^4 clarity^5 clarity^6 clarity^7 depth

## 0 0 0 0 0

## table x y z

## 0 0 0 0## carat cutFair cutGood cutVery Good cutPremium

## 0.00000000 0.00000000 0.00000000 0.00000000 0.00000000

## cutIdeal color.L color.Q color.C color^4

## 0.00000000 0.00000000 0.00000000 0.00000000 0.00000000

## color^5 color^6 clarity.L clarity.Q clarity.C

## 0.00000000 0.00000000 0.00000000 0.00000000 0.00000000

## clarity^4 clarity^5 clarity^6 clarity^7 depth

## 0.00000000 0.00000000 0.00000000 0.00000000 0.00000000

## table x y z

## 0.00000000 0.00000000 0.07878848 0.00000000## carat cutFair cutGood cutVery Good cutPremium

## -0.0460505878 -0.0545861033 -0.0027630465 0.0086051078 0.0000000000

## cutIdeal color.L color.Q color.C color^4

## 0.0616082673 -0.2016675236 -0.0218796345 -0.0030919145 0.0124480448

## color^5 color^6 clarity.L clarity.Q clarity.C

## -0.0006628745 0.0071567871 0.3402527907 -0.0922788362 0.0600307092

## clarity^4 clarity^5 clarity^6 clarity^7 depth

## -0.0658633509 0.0185131339 -0.0103034807 0.0062673193 0.1234320835

## table x y z

## 0.0294788503 0.2284405594 0.8486733603 0.0409936795We see that the more penalty, the less complex the model is.

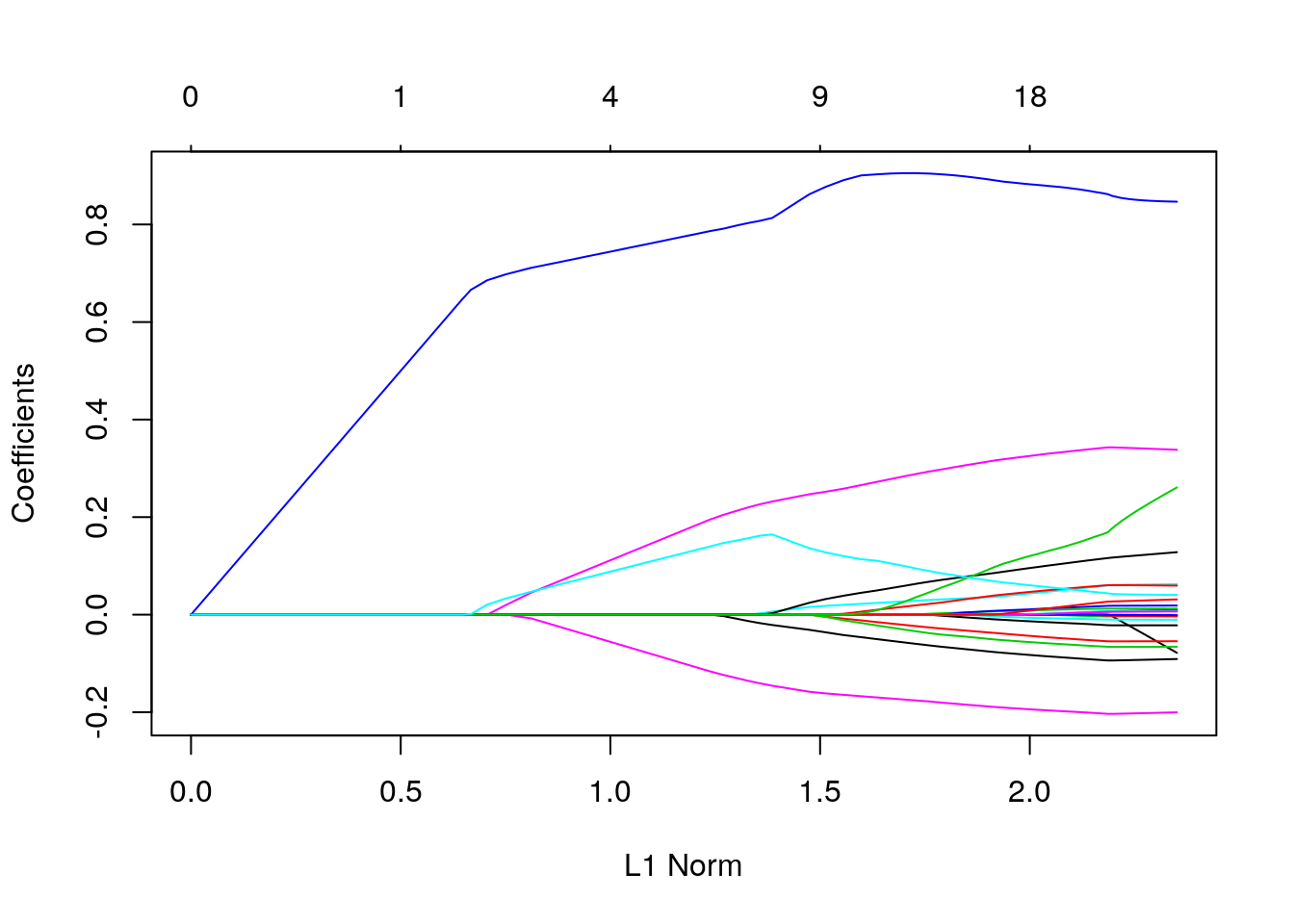

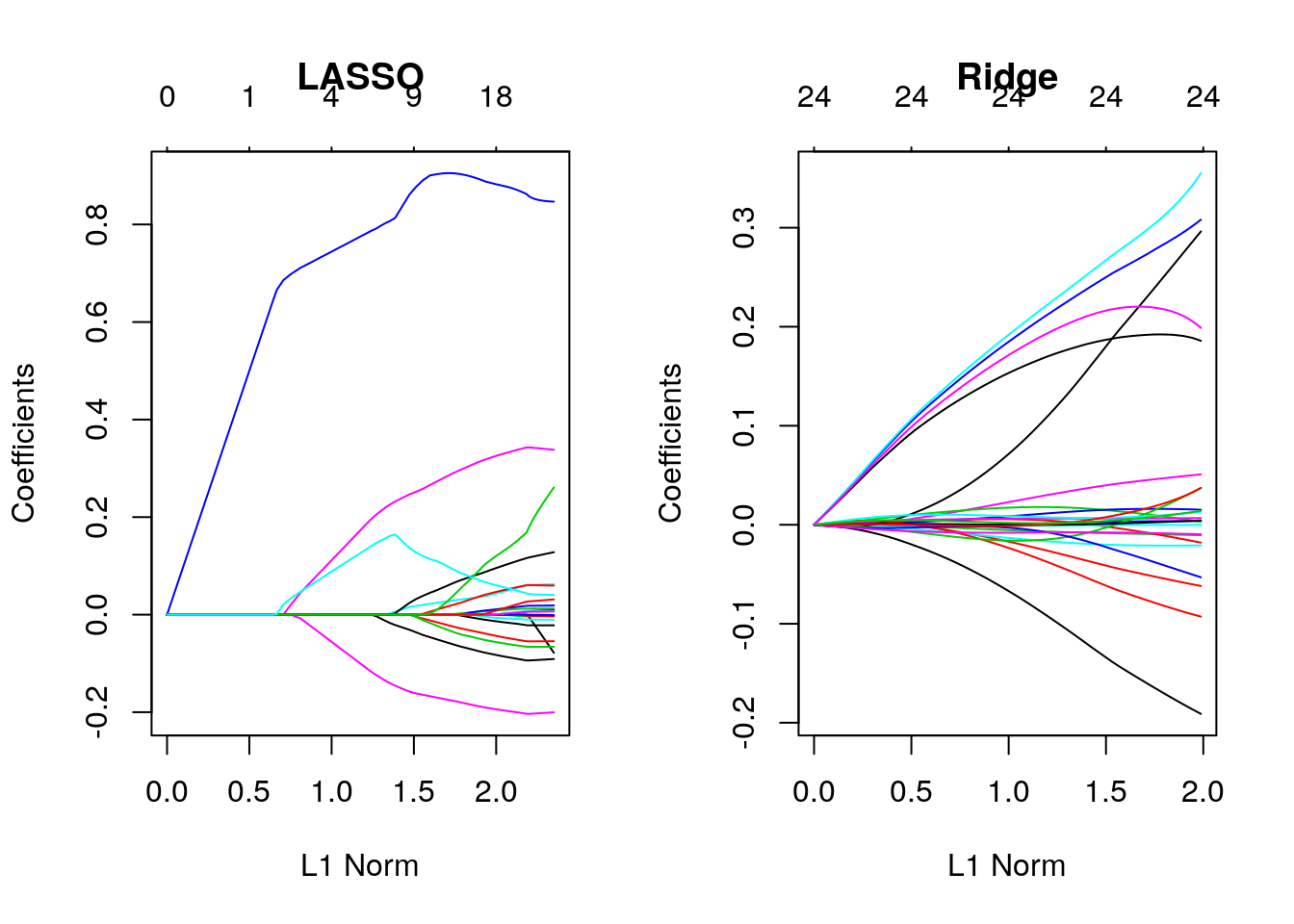

We can visualize the coefficients by executing the plot function:

Each curve corresponds to a variable. It shows the path of its coefficient against the \(l_1\)-norm of the whole coefficient vector at as \(\lambda\) varies.

The axis above indicates the number of nonzero coefficients at the current \(\lambda\), which is the effective degrees of freedom (df) for the lasso.

Users may also wish to annotate the curves by setting label = TRUE in the plot command.

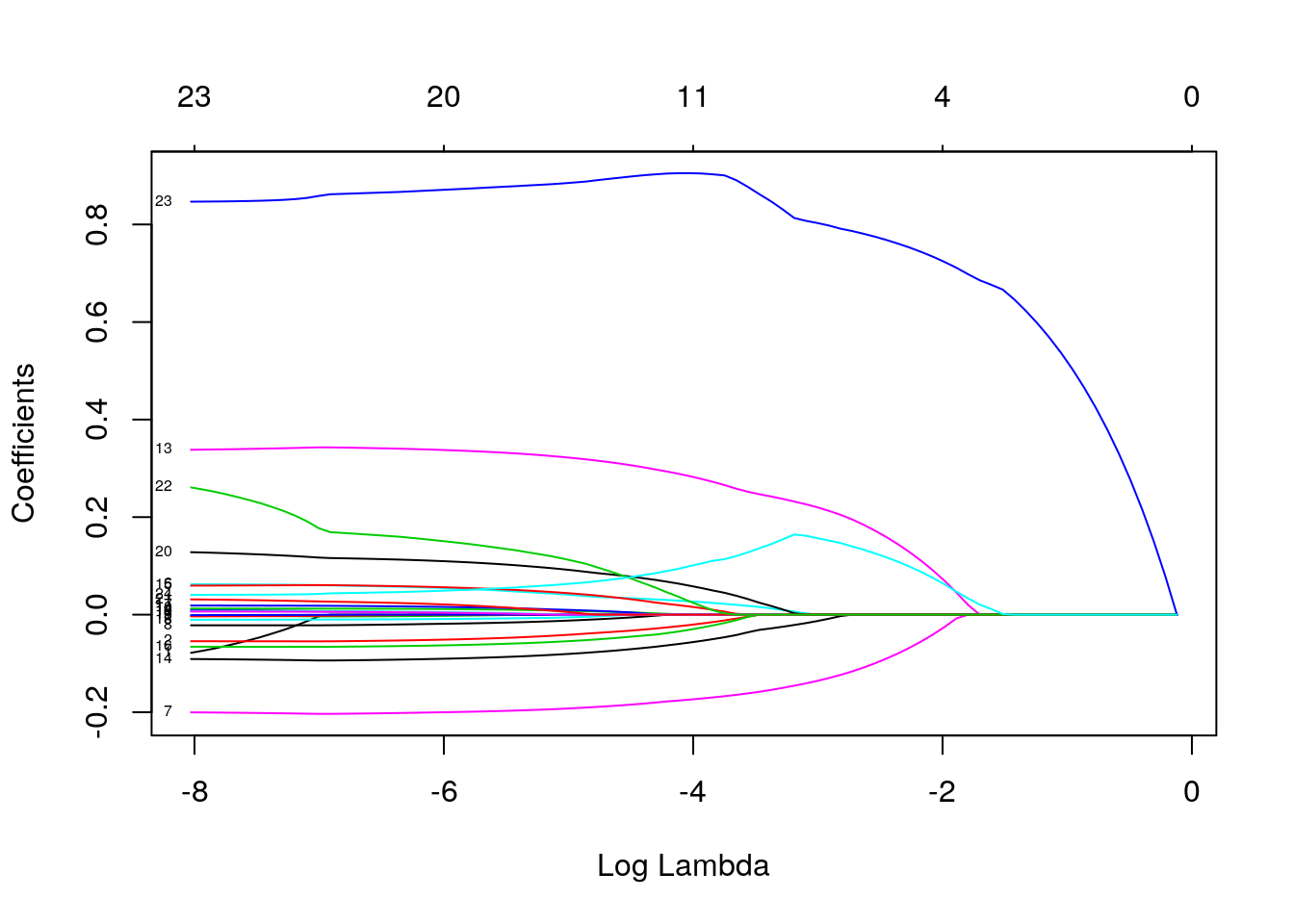

Alternatively, check the coefficients as \(\lambda\) itself changes:

Note that here we also annotate the curves by setting

Note that here we also annotate the curves by setting label = TRUE in the plot command.

You can see the shrinkage in action. See also the difference of the lasso from the ridge (\(\alpha=0\)) solution:

opar <- par()

par(mfrow=c(1,2))

fit_ridge <- glmnet(X, y, alpha = 0)

plot(fit, main = "LASSO"); plot(fit_ridge, main = "Ridge")

Recall our discussion on \(l_1\) vs. \(l_2\) norm. You can see that the \(l_1\)-norm forces certain coefficients to be set to zero (thus performing feature selection), effectively choosing a simpler model that does not include those coefficients. Ridge regression only shrinks the size of the coefficients, but does not set any of them to zero.

calling the model itself returns a matrix with the number of nonzero coefficients (df), the percent (of null) deviance explained (%dev) and the value of \(\lambda\).

##

## Call: glmnet(x = X, y = y)

##

## Df %Dev Lambda

## [1,] 0 0.0000 0.8868000

## [2,] 1 0.1335 0.8080000

## [3,] 1 0.2444 0.7362000

## [4,] 1 0.3365 0.6708000

## [5,] 1 0.4129 0.6112000

## [6,] 1 0.4763 0.5569000

## [7,] 1 0.5290 0.5075000

## [8,] 1 0.5727 0.4624000

## [9,] 1 0.6090 0.4213000

## [10,] 1 0.6392 0.3839000

## [11,] 1 0.6642 0.3498000

## [12,] 1 0.6850 0.3187000

## [13,] 1 0.7022 0.2904000

## [14,] 1 0.7165 0.2646000

## [15,] 1 0.7284 0.2411000

## [16,] 2 0.7384 0.2197000

## [17,] 2 0.7469 0.2002000

## [18,] 2 0.7540 0.1824000

## [19,] 3 0.7695 0.1662000

## [20,] 4 0.7884 0.1514000

## [21,] 4 0.8077 0.1380000

## [22,] 4 0.8238 0.1257000

## [23,] 4 0.8372 0.1145000

## [24,] 4 0.8482 0.1044000

## [25,] 4 0.8574 0.0950900

## [26,] 4 0.8651 0.0866400

## [27,] 4 0.8714 0.0789400

## [28,] 4 0.8767 0.0719300

## [29,] 4 0.8810 0.0655400

## [30,] 5 0.8850 0.0597200

## [31,] 5 0.8885 0.0544100

## [32,] 5 0.8915 0.0495800

## [33,] 6 0.8941 0.0451700

## [34,] 7 0.8966 0.0411600

## [35,] 7 0.8992 0.0375000

## [36,] 7 0.9013 0.0341700

## [37,] 8 0.9031 0.0311400

## [38,] 9 0.9054 0.0283700

## [39,] 10 0.9077 0.0258500

## [40,] 11 0.9097 0.0235500

## [41,] 11 0.9114 0.0214600

## [42,] 11 0.9129 0.0195600

## [43,] 11 0.9141 0.0178200

## [44,] 12 0.9151 0.0162300

## [45,] 13 0.9160 0.0147900

## [46,] 14 0.9168 0.0134800

## [47,] 14 0.9176 0.0122800

## [48,] 15 0.9182 0.0111900

## [49,] 15 0.9187 0.0102000

## [50,] 15 0.9192 0.0092900

## [51,] 15 0.9195 0.0084650

## [52,] 16 0.9199 0.0077130

## [53,] 16 0.9202 0.0070280

## [54,] 17 0.9205 0.0064030

## [55,] 18 0.9208 0.0058350

## [56,] 18 0.9210 0.0053160

## [57,] 18 0.9211 0.0048440

## [58,] 20 0.9213 0.0044140

## [59,] 20 0.9214 0.0040220

## [60,] 20 0.9215 0.0036640

## [61,] 20 0.9216 0.0033390

## [62,] 20 0.9217 0.0030420

## [63,] 20 0.9218 0.0027720

## [64,] 20 0.9218 0.0025260

## [65,] 20 0.9219 0.0023010

## [66,] 21 0.9219 0.0020970

## [67,] 21 0.9219 0.0019110

## [68,] 21 0.9219 0.0017410

## [69,] 21 0.9220 0.0015860

## [70,] 21 0.9220 0.0014450

## [71,] 21 0.9220 0.0013170

## [72,] 22 0.9220 0.0012000

## [73,] 22 0.9220 0.0010930

## [74,] 22 0.9220 0.0009962

## [75,] 23 0.9221 0.0009077

## [76,] 23 0.9221 0.0008270

## [77,] 23 0.9221 0.0007536

## [78,] 23 0.9222 0.0006866

## [79,] 23 0.9222 0.0006256

## [80,] 23 0.9222 0.0005700

## [81,] 23 0.9222 0.0005194

## [82,] 23 0.9223 0.0004733

## [83,] 23 0.9223 0.0004312

## [84,] 23 0.9223 0.0003929

## [85,] 23 0.9223 0.0003580

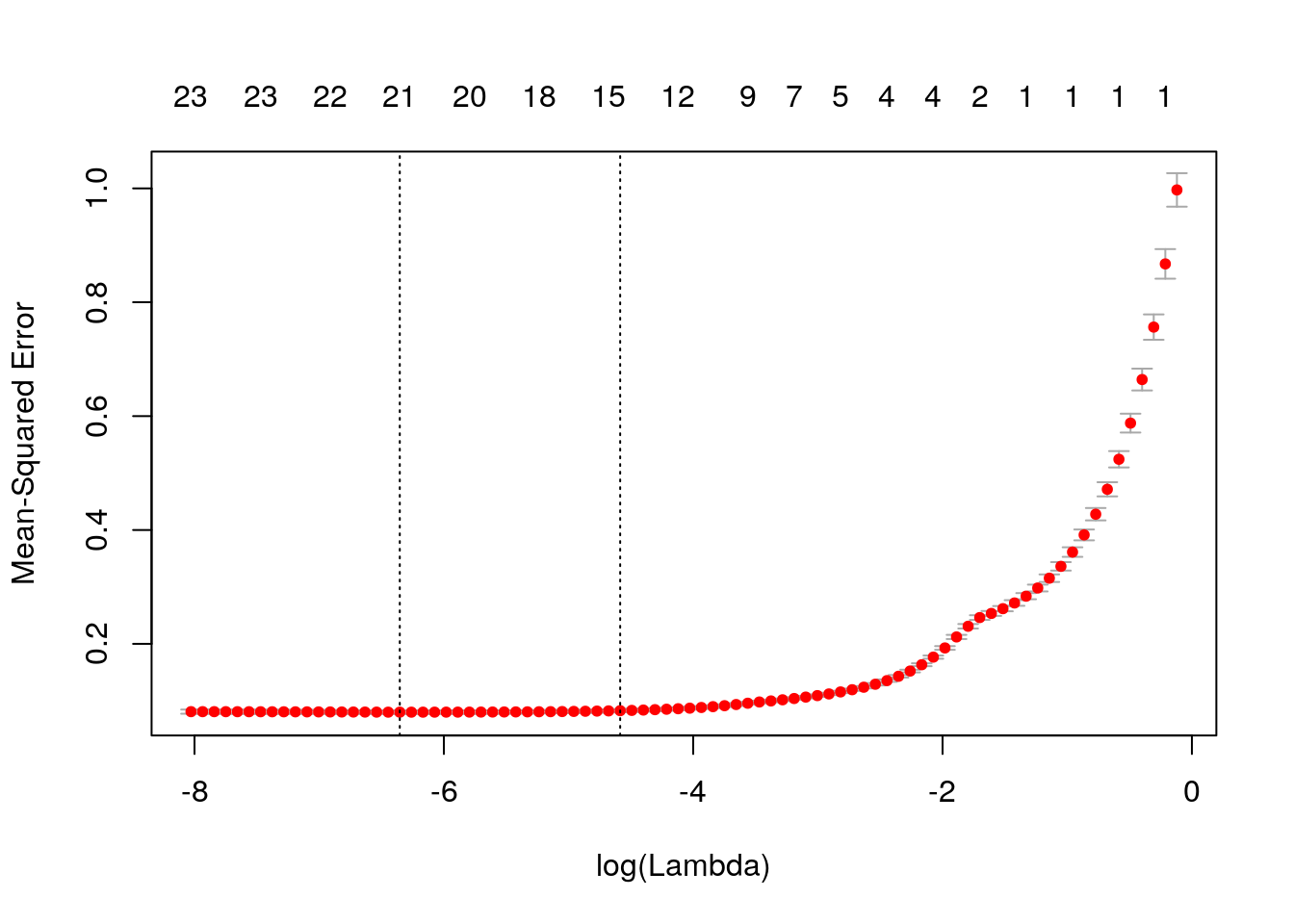

## [86,] 23 0.9223 0.0003262The function glmnet returns a sequence of models for the users to choose from. In many cases, users may prefer the software to select one of them. Cross-validation is perhaps the simplest and most widely used method for that task.

We see cross-validated MSE vs log(lambda), one of the lines is log(lamda) at the minimum MSE, and the other one is lambda.1se, which gives the most regularized model such that error is within one standard error of the minimum

We can extract the minimum-MSE \(\lambda\) and use it while predicting with:

## [1] -3.466903 -3.664798 -3.123675 -3.107562 -3.330688 -3.346324 -3.057386

## [8] -3.390206 -3.436090 -3.300953The power of shrinkage models like lasso/ridge is that it allows to include many features. glmnet can be used when you want to include many features like interactions or variables transformations; large part of them is probably noise. E.g.:

X_interactions <- model.matrix(price~(.)^2-1, data = d) %>% scale()

dim(X); dim(X_interactions) # check how many more variables we added## [1] 5000 24## [1] 5000 235Lastly, glmnet can be used to predict other type of labels, assuming different generative distribution:

E.g., binomial:

y_binary <- y > mean(y)

glmnet_binomial = glmnet(X, y_binary, family = "binomial", lambda = 0.01)

predict(glmnet_binomial, X, type = "response" ) %>% head()## s0

## 1 0.0013520834

## 2 0.0005424651

## 3 0.0045449530

## 4 0.0012719477

## 5 0.0009356790

## 6 0.0019846112## s0

## 1 "FALSE"

## 2 "FALSE"

## 3 "FALSE"

## 4 "FALSE"

## 5 "FALSE"

## 6 "FALSE"## y_binary

## FALSE TRUE

## FALSE 671 38

## TRUE 859 3432E.g., multinomial:

y_multiclass <- cut(y, breaks = quantile(y, seq(0,1,0.2)), labels = letters[1:5], include.lowest = T)

table(y_multiclass)## y_multiclass

## a b c d e

## 1003 997 1000 1003 997glmnet_multinomial <- glmnet(X, y_multiclass, family = "multinomial", nlambda = 4)

sapply(glmnet_multinomial$lambda, function(lambda)

table(predict(glmnet_multinomial, X, type = "class", s=lambda) , y_multiclass) )## [[1]]

## y_multiclass

## a b c d e

## a 1003 997 1000 1003 997

##

## [[2]]

## y_multiclass

## a b c d e

## a 623 41 21 11 1

## b 241 579 441 340 194

## c 68 162 207 149 88

## d 39 100 95 72 34

## e 32 115 236 431 680

##

## [[3]]

## y_multiclass

## a b c d e

## a 640 61 19 4 2

## b 288 597 354 176 60

## c 45 192 307 176 95

## d 16 85 183 318 187

## e 14 62 137 329 653

##

## [[4]]

## y_multiclass

## a b c d e

## a 649 77 21 6 3

## b 280 578 329 154 53

## c 49 195 329 197 100

## d 17 91 188 315 198

## e 8 56 133 331 643Note:

- type = "class" in predict applies only to “binomial” or “multinomial” models, and produces the class label corresponding to the maximum probability.

- nlambda specify the number of lambda values to fit models for - default is 100.

- s= in the predict tells R with which model, based on which \(\lambda\) to predict.

10.2.2 SVM

A support vector machine (SVM) is a linear hypothesis class with a particular loss function known as a hinge loss.

We learn an SVM with the svm function from the e1071 package, which is merely a wrapper for the libsvm C library; the most popular implementation of SVM today.

library(e1071)

svm.1 <- svm(spam~., data = spam.train, kernel='linear')

# Train confusion matrix:

.predictions.train <- predict(svm.1)

(confusion.train <- table(prediction=.predictions.train, truth=spam.train$spam))## truth

## prediction email spam

## email 1774 106

## spam 70 1101## [1] 0.057686# Test confusion matrix:

.predictions.test <- predict(svm.1, newdata = spam.test)

(confusion.test <- table(prediction=.predictions.test, truth=spam.test$spam))## truth

## prediction email spam

## email 876 75

## spam 68 531## [1] 0.09225806We can also use SVM for regression.

svm.2 <- svm(lcavol~., data = prostate.train, kernel='linear')

# Train error:

MSE( predict(svm.2)- prostate.train$lcavol)## [1] 0.4488577## [1] 0.5547759Things to note:

- The use of

kernel='linear'forces the predictor to be linear. Various hypothesis classes may be used by changing thekernelargument.

10.2.2.1 Another SVM example

The following example is taken from Datacampa’s SVM tutorial.

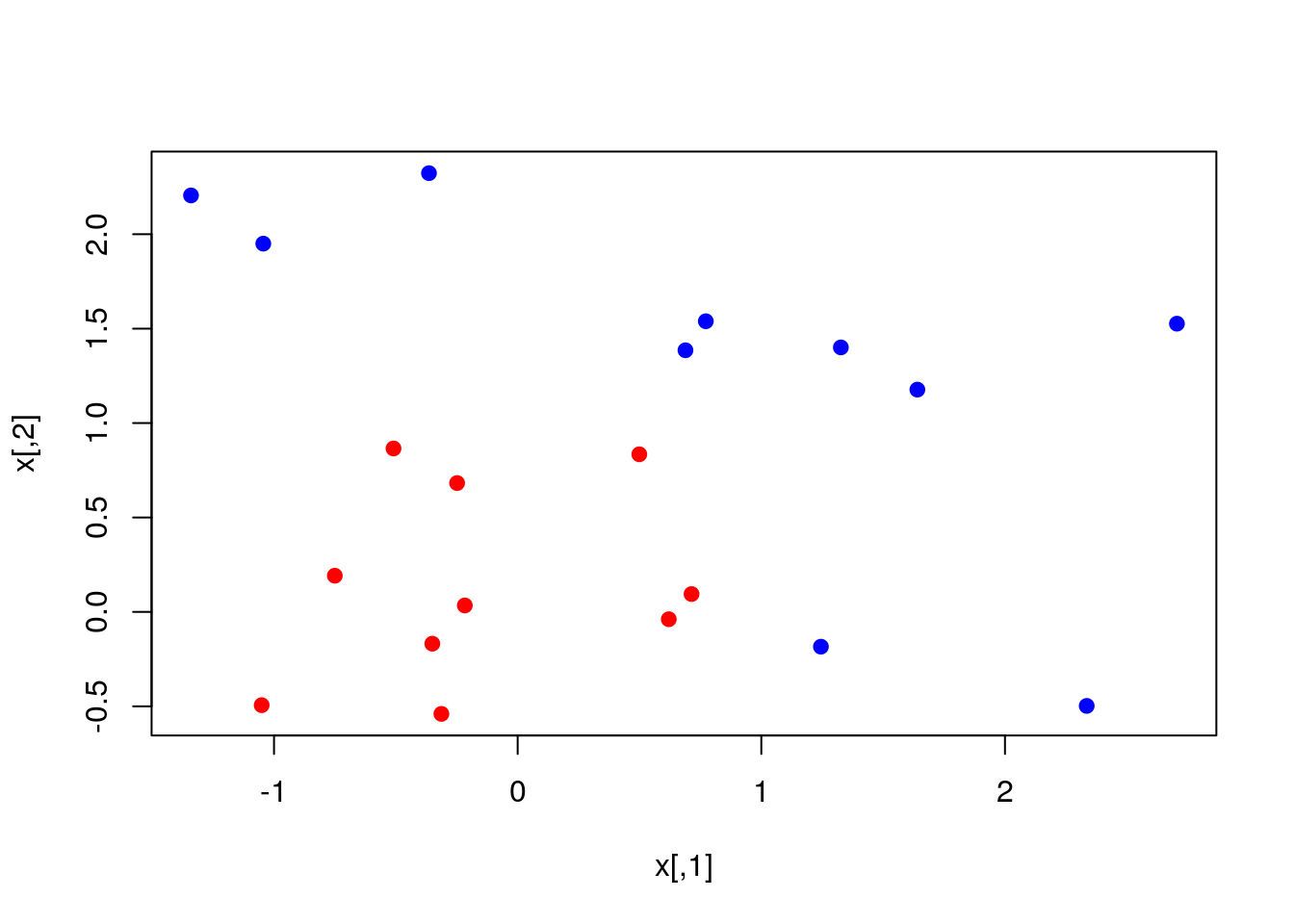

We start by SVM with linear kernel. Generating some data:

set.seed(10111)

x = matrix(rnorm(40), 20, 2)

y = rep(c(-1, 1), c(10, 10))

x[y == 1,] = x[y == 1,] + 1

plot(x, col = y + 3, pch = 19)

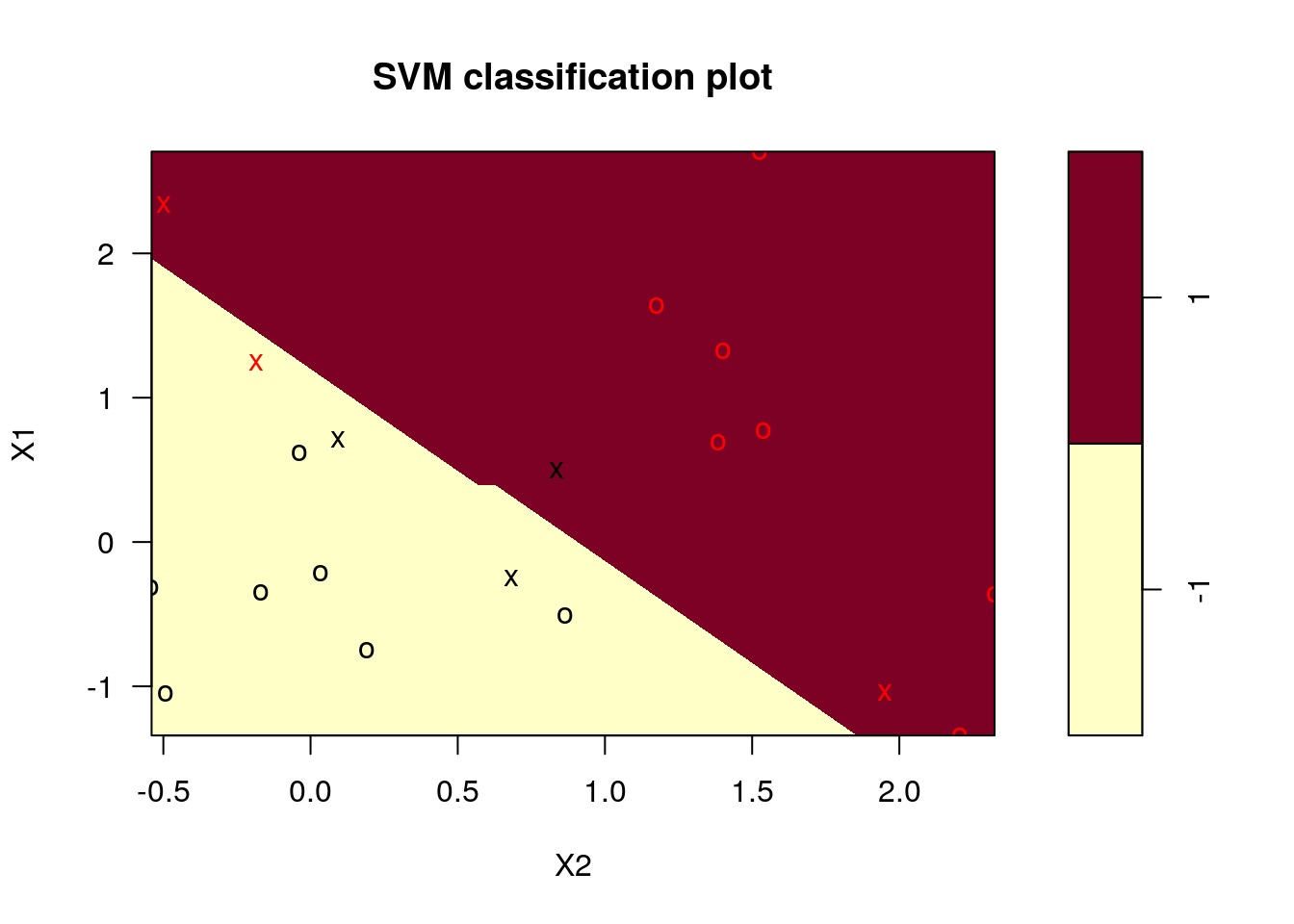

Fit the SVM model and plot it:

library(e1071)

svmfit = svm(y ~ ., data = dat, kernel = "linear", cost = 10, scale = FALSE)

print(svmfit)##

## Call:

## svm(formula = y ~ ., data = dat, kernel = "linear", cost = 10,

## scale = FALSE)

##

##

## Parameters:

## SVM-Type: C-classification

## SVM-Kernel: linear

## cost: 10

##

## Number of Support Vectors: 6

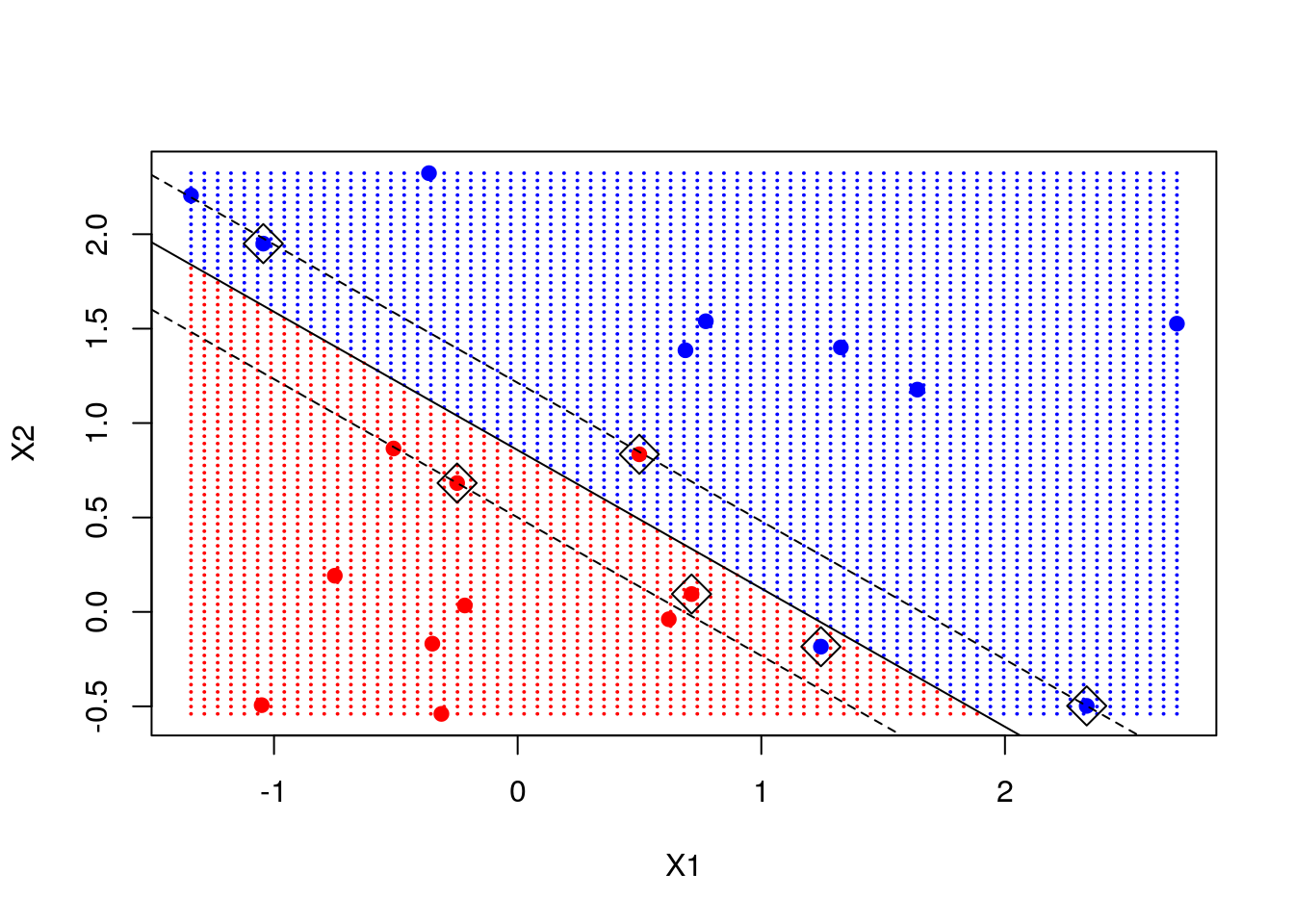

Note: we chose cost=10 although it may be that this is not the optimal choice. A better way to choose the cost is by cross-validation. We used linear kernel, which seems to be appropriate for the complexity of the problem.

Using our trained SVM for prediction on the entire grid (think of the grid as a test set)

make.grid = function(X, n = 75) {

grange = apply(X, 2, range)

x1 = seq(from = grange[1,1], to = grange[2,1], length = n)

x2 = seq(from = grange[1,2], to = grange[2,2], length = n)

expand.grid(X1 = x1, X2 = x2)

}

xgrid = make.grid(x)

xgrid[1:10,] # xgrid is just a matrix of x1 and x2 values## X1 X2

## 1 -1.3406379 -0.5400074

## 2 -1.2859572 -0.5400074

## 3 -1.2312766 -0.5400074

## 4 -1.1765959 -0.5400074

## 5 -1.1219153 -0.5400074

## 6 -1.0672346 -0.5400074

## 7 -1.0125540 -0.5400074

## 8 -0.9578733 -0.5400074

## 9 -0.9031927 -0.5400074

## 10 -0.8485120 -0.5400074ygrid = predict(svmfit, xgrid)

beta = drop(t(svmfit$coefs)%*%x[svmfit$index,])

beta0 = svmfit$rho

plot(xgrid, col = c("red", "blue")[as.numeric(ygrid)], pch = 20, cex = .2)

points(x, col = y + 3, pch = 19)

points(x[svmfit$index,], pch = 5, cex = 2)

abline(beta0 / beta[2], -beta[1] / beta[2])

abline((beta0 - 1) / beta[2], -beta[1] / beta[2], lty = 2)

abline((beta0 + 1) / beta[2], -beta[1] / beta[2], lty = 2)

Note: we marked the support points - points that are close to the decision boundary and are instrumental in determining that boundary. We add the decision line and the margin.

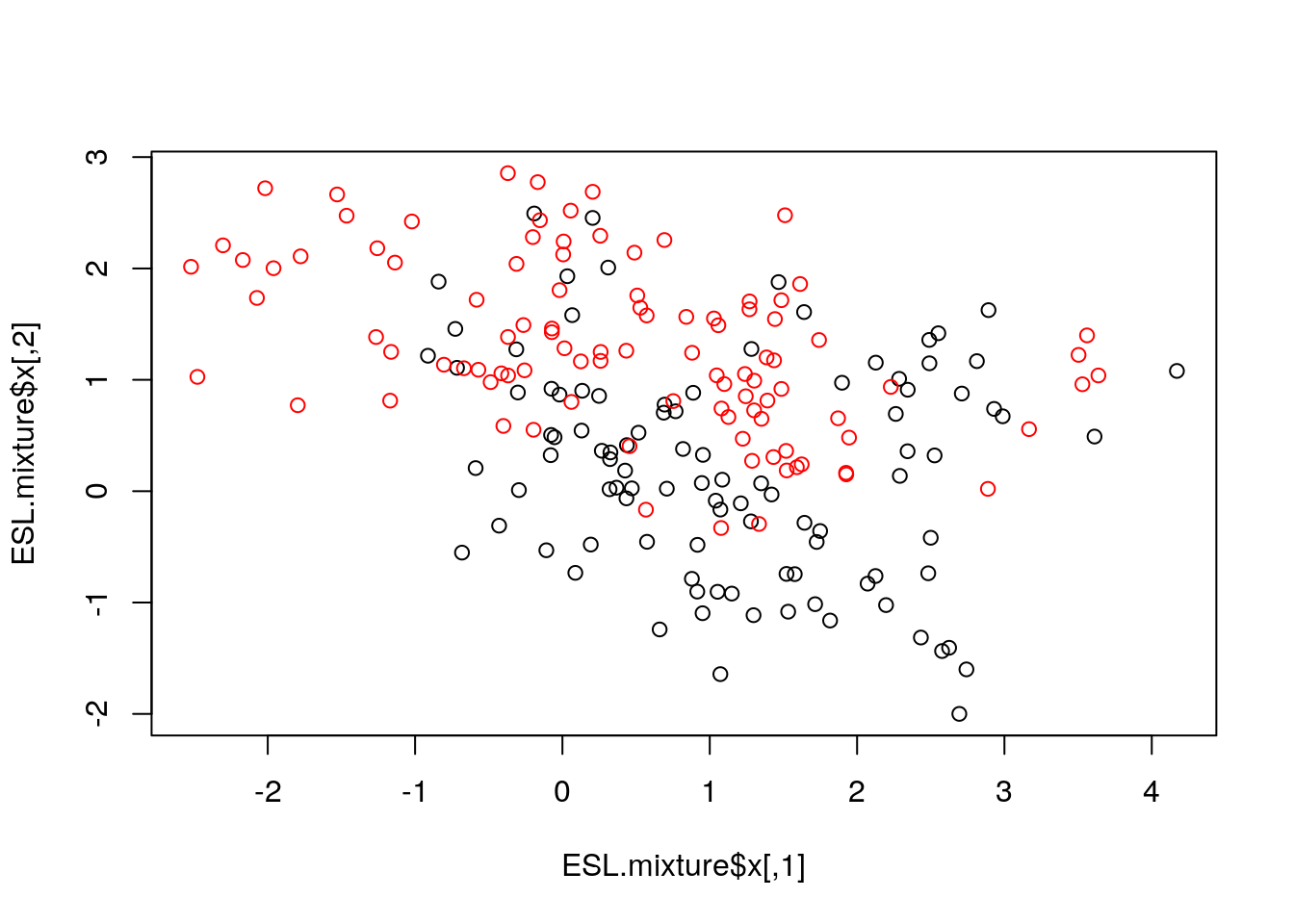

Now let’s consider a classification problem that seems not linear in the features space.

## [1] "x" "y" "xnew" "prob" "marginal" "px1"

## [7] "px2" "means"## The following objects are masked _by_ .GlobalEnv:

##

## means, x, y

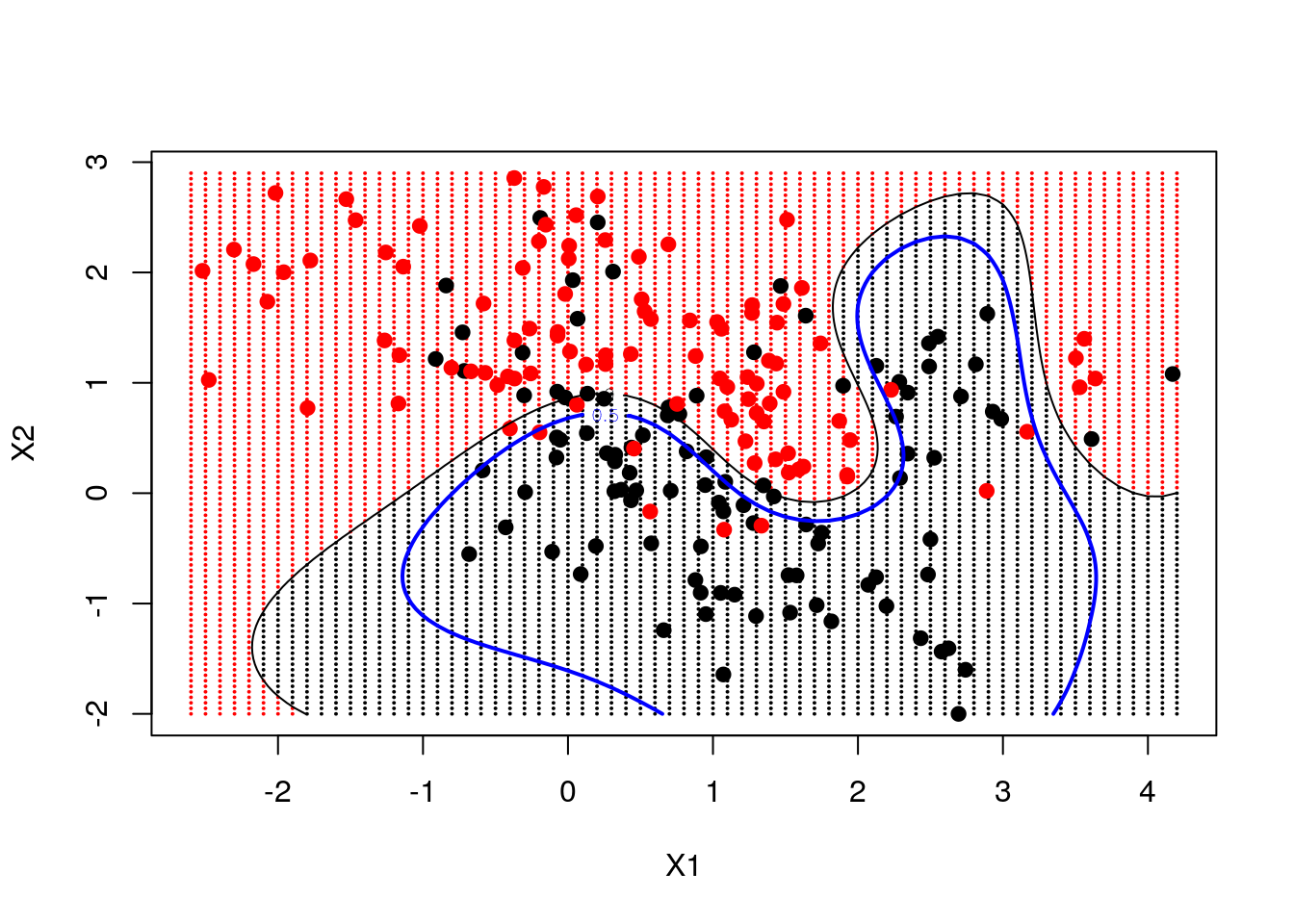

We now fit an SVM with "radial" kernel to catch non-linear relations, and plot its prediction on the grid, the decision line and the margin

fit = svm(factor(ESL.mixture$y) ~ ., data = dat, scale = FALSE, kernel = "radial", cost = 5)

xgrid = expand.grid(X1 = ESL.mixture$px1, X2 = ESL.mixture$px2)

ygrid = predict(fit, xgrid)

func = predict(fit, xgrid, decision.values = TRUE)

func = attributes(func)$decision

plot(xgrid, col = as.numeric(ygrid), pch = 20, cex = .2)

points(ESL.mixture$x, col = as.factor(ESL.mixture$y) , pch = 19)

contour(ESL.mixture$px1, ESL.mixture$px2, matrix(func, 69, 99), level = 0, add = TRUE) # our learned decision boundary

contour(ESL.mixture$px1, ESL.mixture$px2, matrix(func, 69, 99), level = 0.5, add = TRUE, col = "blue", lwd = 2) # the true decision boundary

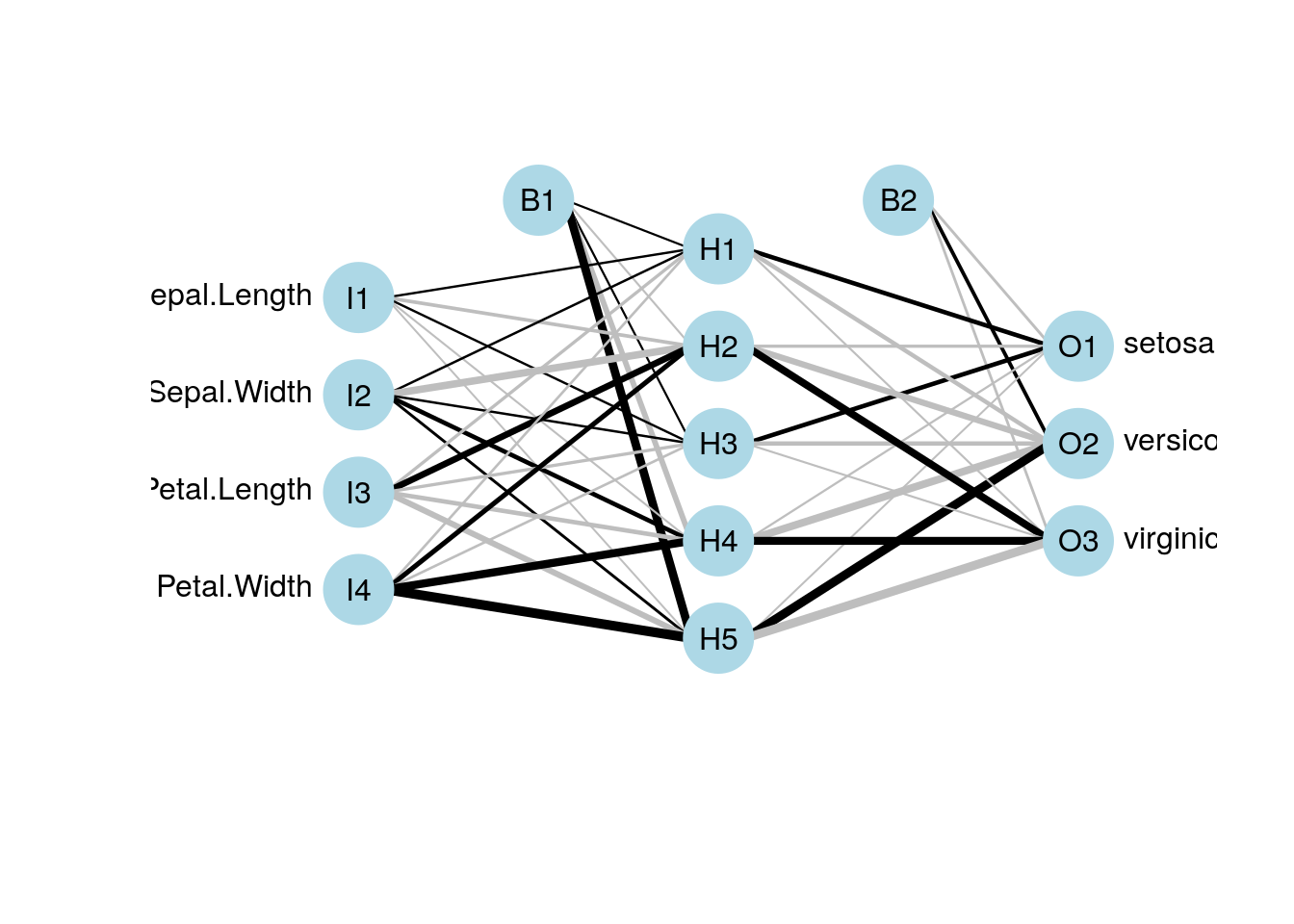

10.2.3 Neural Nets

Neural nets (i.e., shallow, non deep) can be fitted, for example, with the (somewhat old) nnet function in the nnet package.

This package fits a single-hidden-layer neural network (fully connected layer).

We start with a nnet regression.

library(nnet)

nnet.1 <- nnet(lcavol~., size=20, data=prostate.train, rang = 0.1, decay = 5e-4, maxit = 1000, trace=FALSE)

# Train error:

MSE( predict(nnet.1)- prostate.train$lcavol)## [1] 1.177099## [1] 1.21175Note: size is the number of units in the hidden layer. maxit is the maximum number of iterations, default 100. It controls the computation time and affects the accuracy and the overfitting. trace=FALSE is for not printing the training. Read more with ?nnet.

And nnet classification.

nnet.2 <- nnet(spam~., size=5, data=spam.train, rang = 0.1, decay = 5e-4, maxit = 1000, trace=FALSE)

# Train confusion matrix:

.predictions.train <- predict(nnet.2, type='class')

(confusion.train <- table(prediction=.predictions.train, truth=spam.train$spam))## truth

## prediction email spam

## email 1806 59

## spam 38 1148## [1] 0.03179285# Test confusion matrix:

.predictions.test <- predict(nnet.2, newdata = spam.test, type='class')

(confusion.test <- table(prediction=.predictions.test, truth=spam.test$spam))## truth

## prediction email spam

## email 897 64

## spam 47 542## [1] 0.0716129You can also use type='prob' to get the probabilities instead of the class.

Plotting the network can be done via the gamlss.add package. Here is an example:

library(gamlss.add)

nn_iris <- nnet(Species~., size=5, data=iris, rang = 0.1, decay = 5e-4, maxit = 1000, trace=FALSE)

plot(nn_iris)

10.2.3.1 Deep Nets

Not in our scope. See the R interface to Keras which makes it easy to quickly prototype deep learning models (But for serious problems you may need a GPU).

10.2.4 Classification and Regression Trees (CART)

A CART, is not a linear hypothesis class. It partitions the feature space \(\mathcal{X}\), thus creating a set of if-then rules for prediction or classification. It is thus particularly useful when you believe that the predicted classes may change abruptly with small changes in \(x\).

10.2.4.1 The rpart Package

This view clarifies the name of the function rpart, which recursively partitions the feature space.

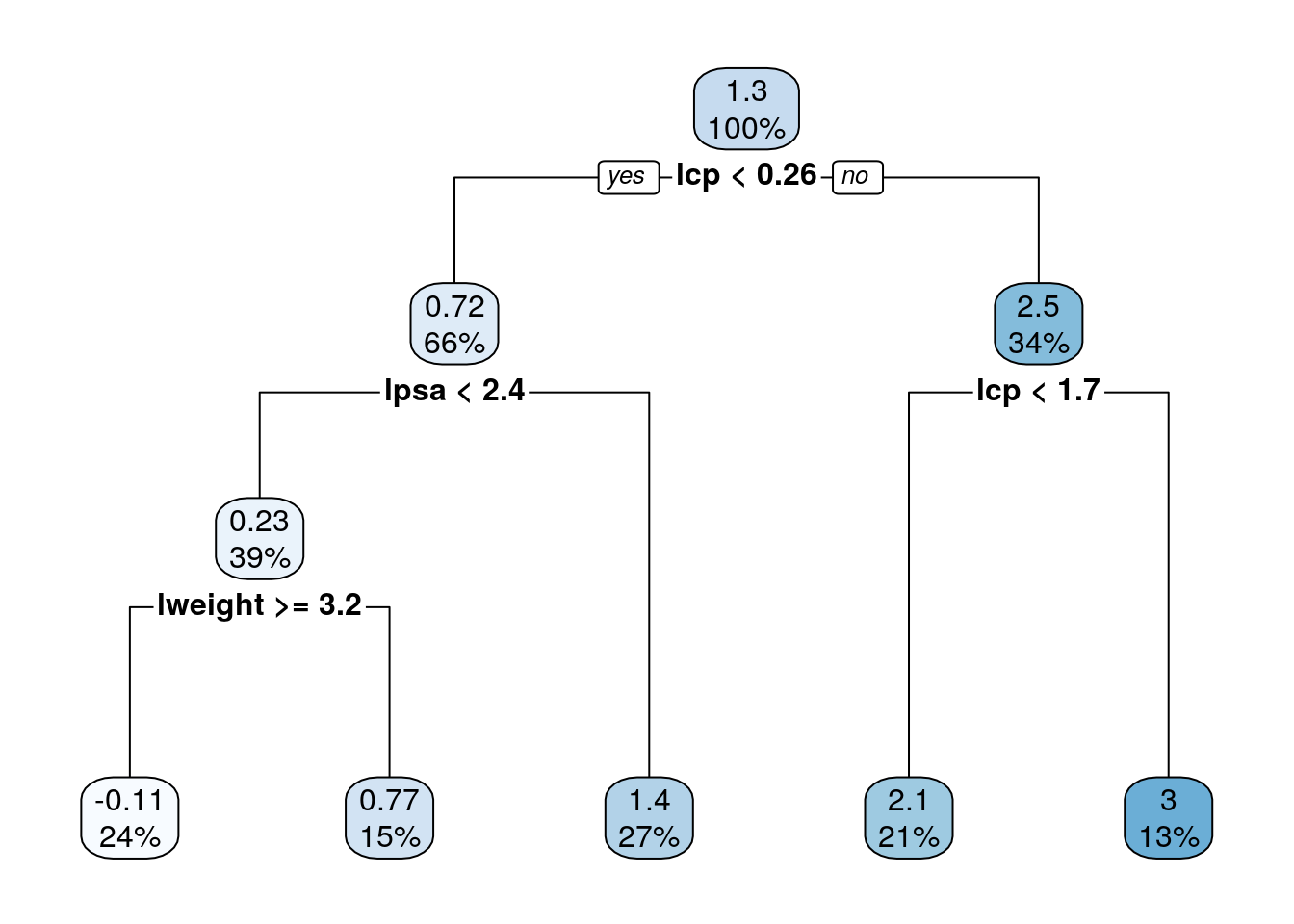

We start with a regression tree.

library(rpart)

library(rpart.plot)

tree.1 <- rpart(lcavol~., data=prostate.train)

# Train error:

MSE( predict(tree.1)- prostate.train$lcavol)## [1] 0.4909568## [1] 0.5623316We can use the rpart.plot package to visualize and interpret the predictor.

Trees are very prone to overfitting.

To avoid this, we reduce a tree’s complexity by pruning it.

This is done with the prune function (not demonstrated herein).

We now fit a classification tree.

tree.2 <- rpart(spam~., data=spam.train)

# Train confusion matrix:

.predictions.train <- predict(tree.2, type='class')

(confusion.train <- table(prediction=.predictions.train, truth=spam.train$spam))## truth

## prediction email spam

## email 1785 217

## spam 59 990## [1] 0.09046214# Test confusion matrix:

.predictions.test <- predict(tree.2, newdata = spam.test, type='class')

(confusion.test <- table(prediction=.predictions.test, truth=spam.test$spam))## truth

## prediction email spam

## email 906 125

## spam 38 481## [1] 0.105161310.2.4.2 Anoter trees examples

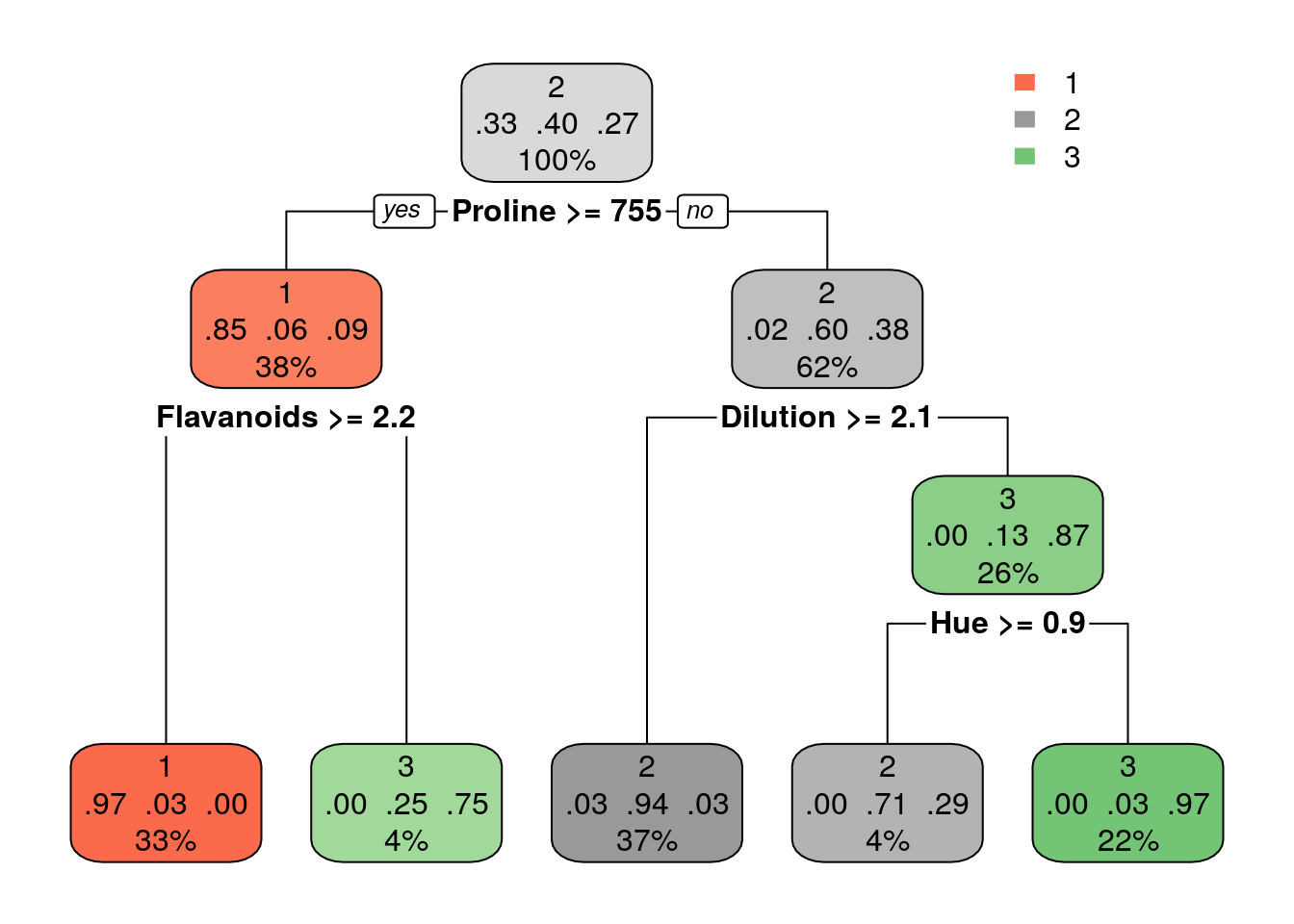

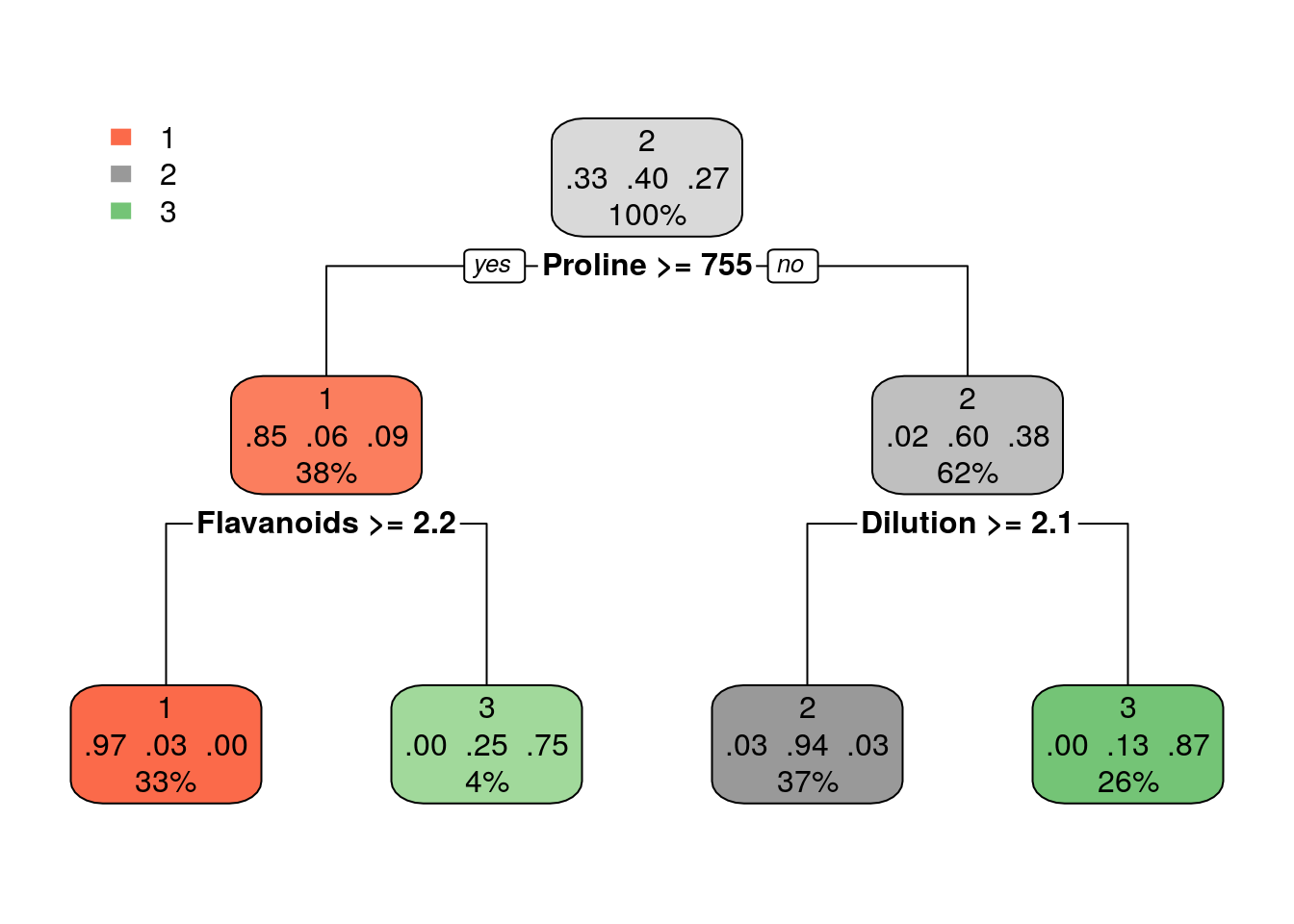

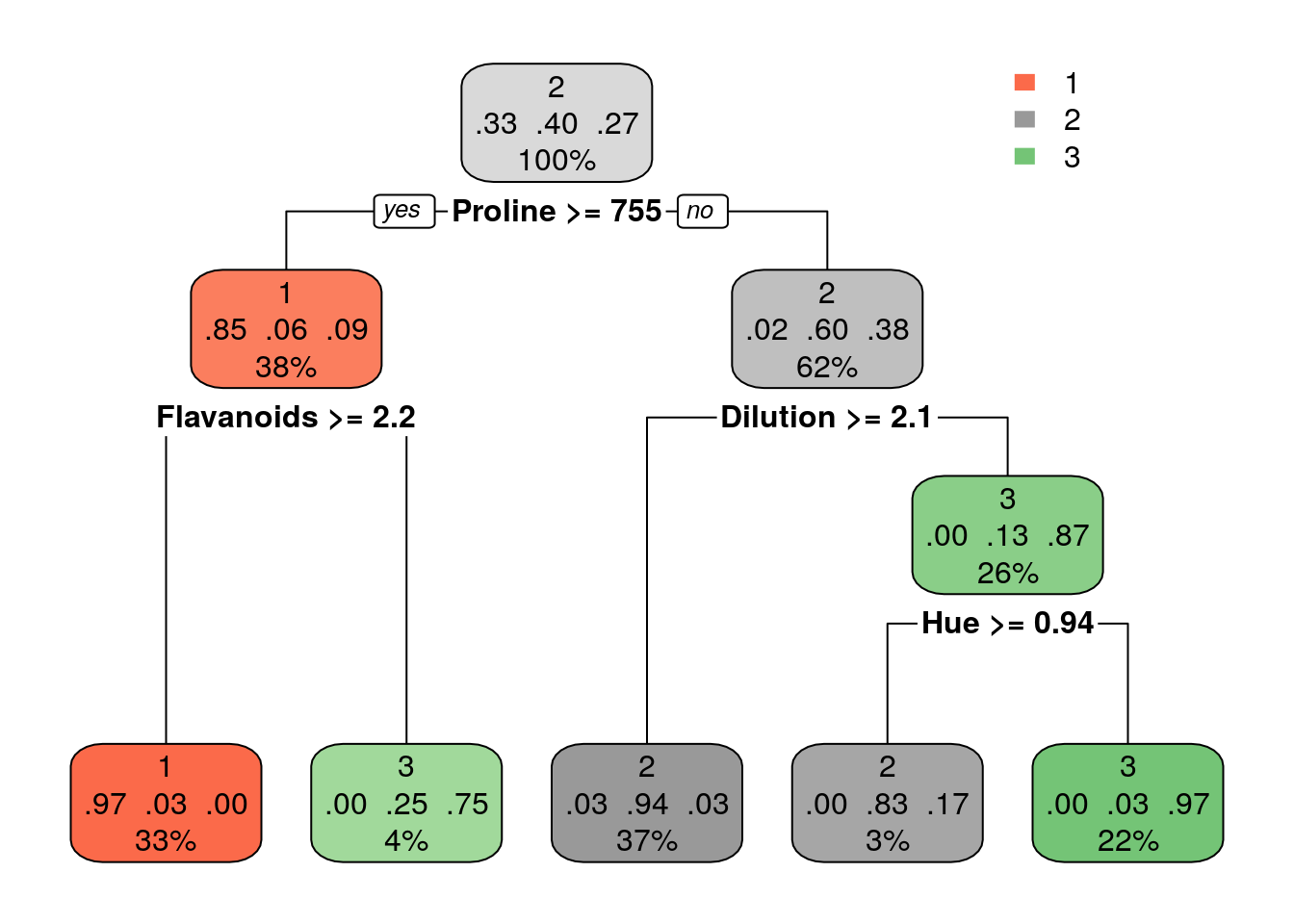

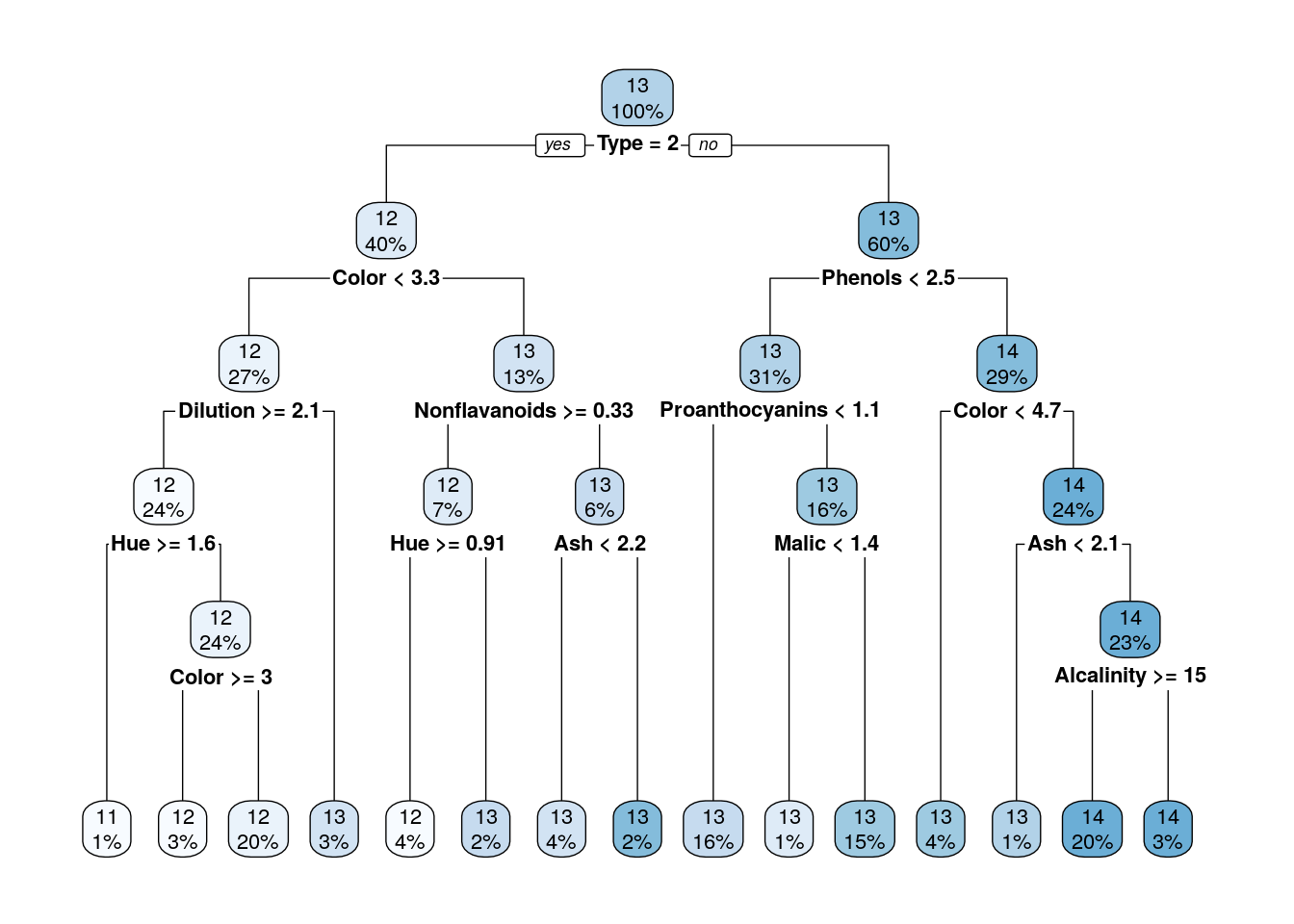

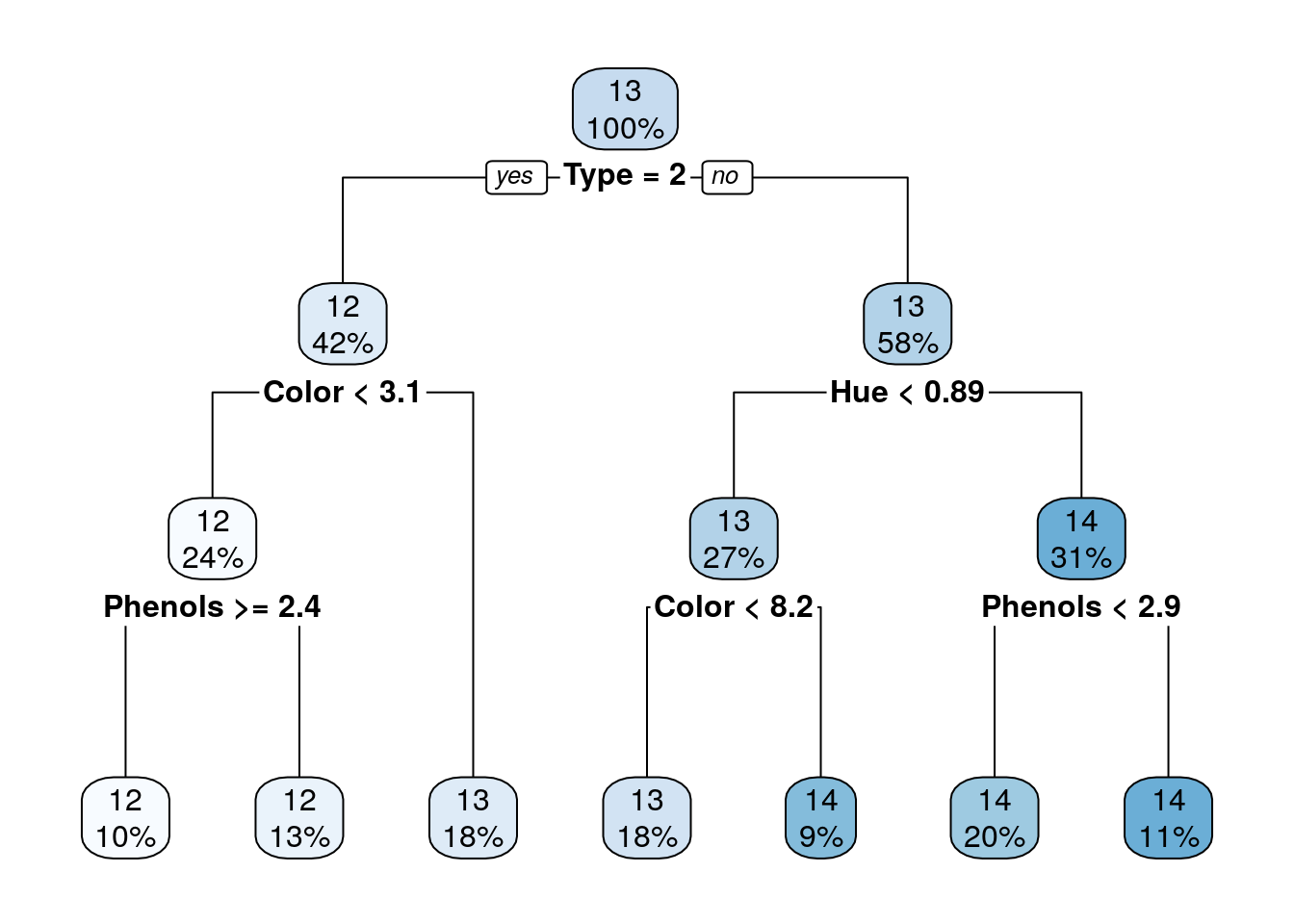

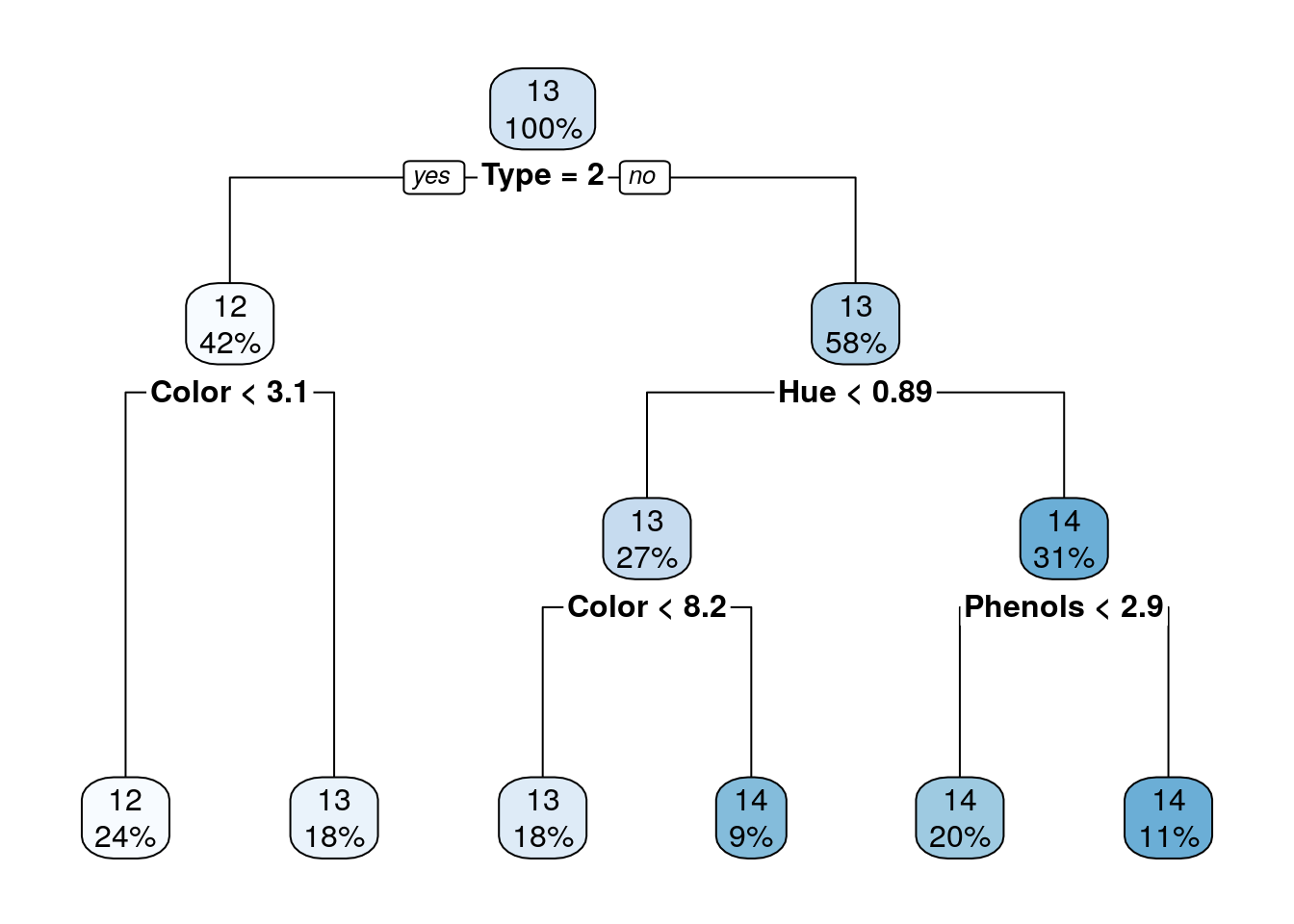

Classification:

Grow larger tree with smaller minsplit (the minimum number of observations that must exist in a node in order for a split to be attempted). See ?rpart.control for information on various parameters that control aspects of the rpart fit.

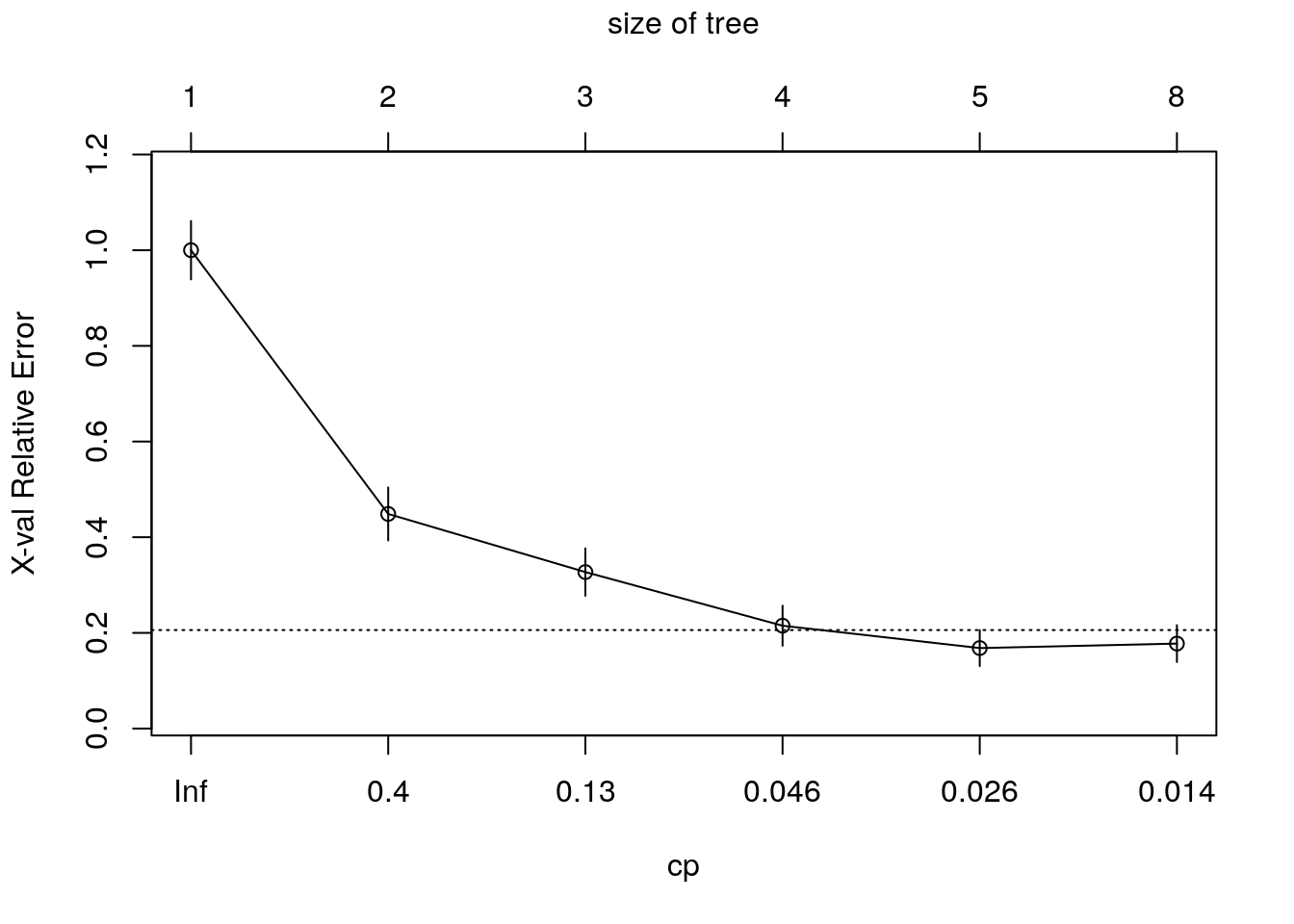

Once we trained a tree, we can prune it (good to avoid overfitting). How much to prune? use CV or decide on a point from which the performance improvement over the train in not significant. Use plotcp to get visual representation of the performance along the tree (using cross-validation)

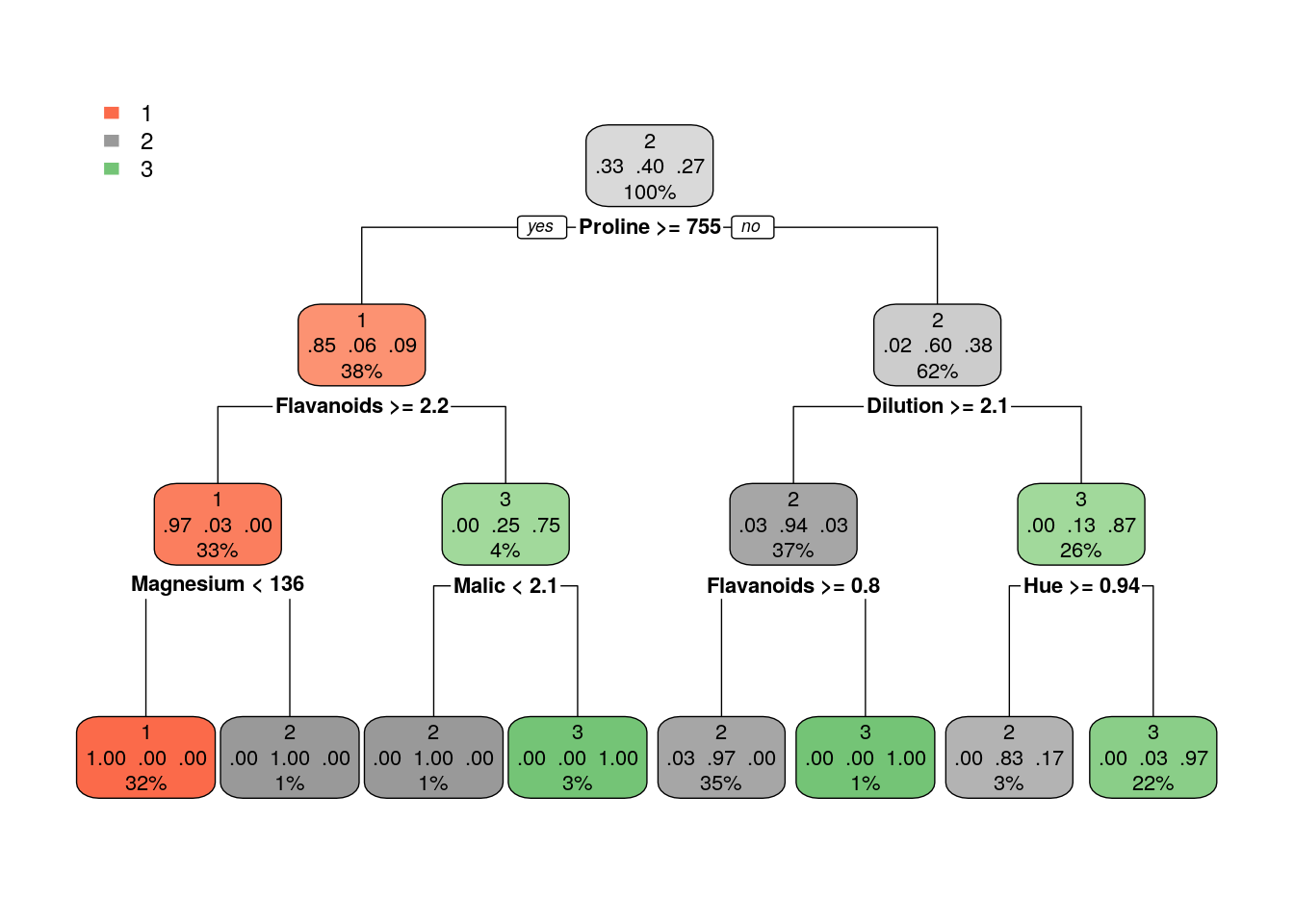

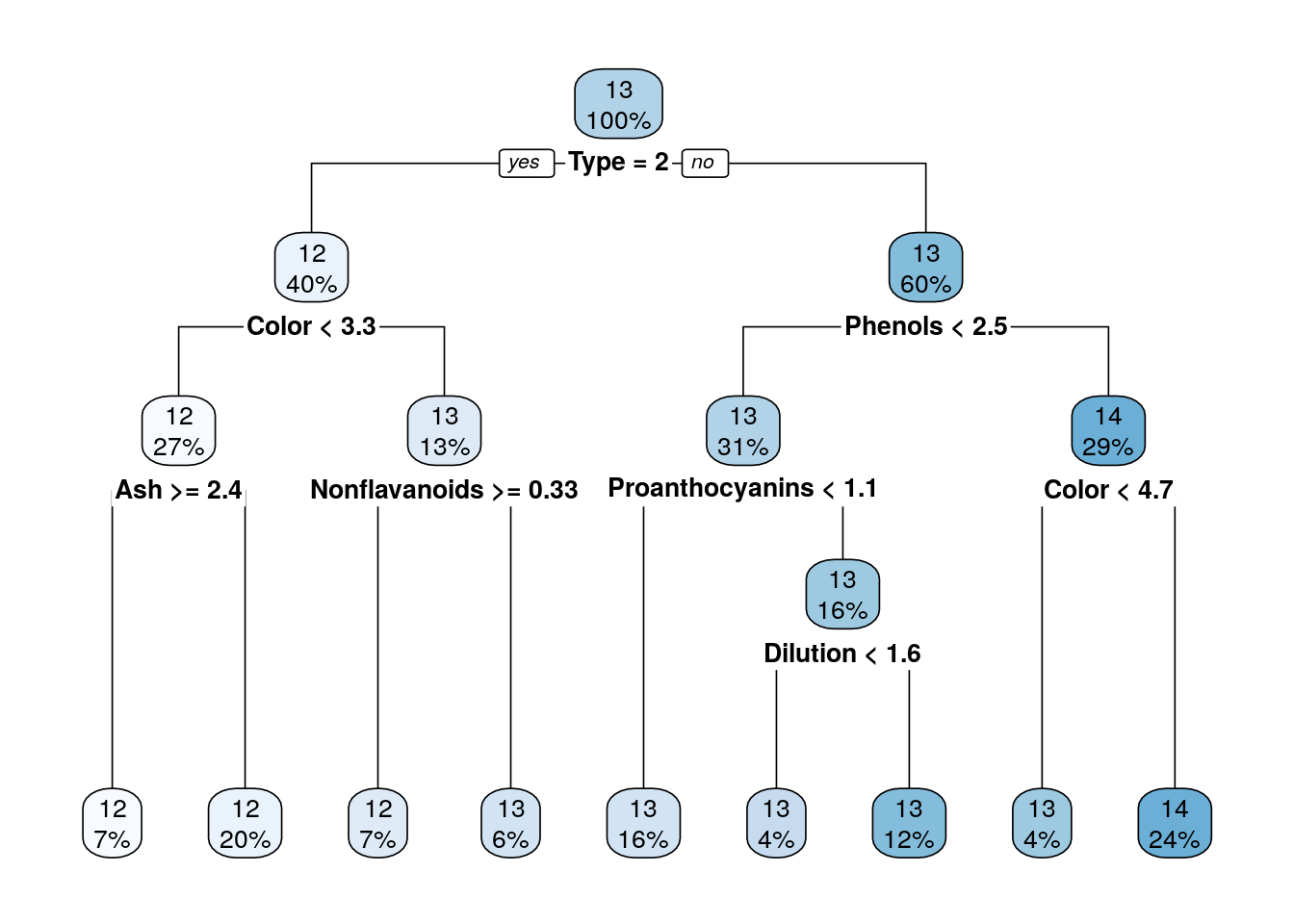

Using trees for regression:

Compare the performance of trees that were pruned on different heights on a test set.

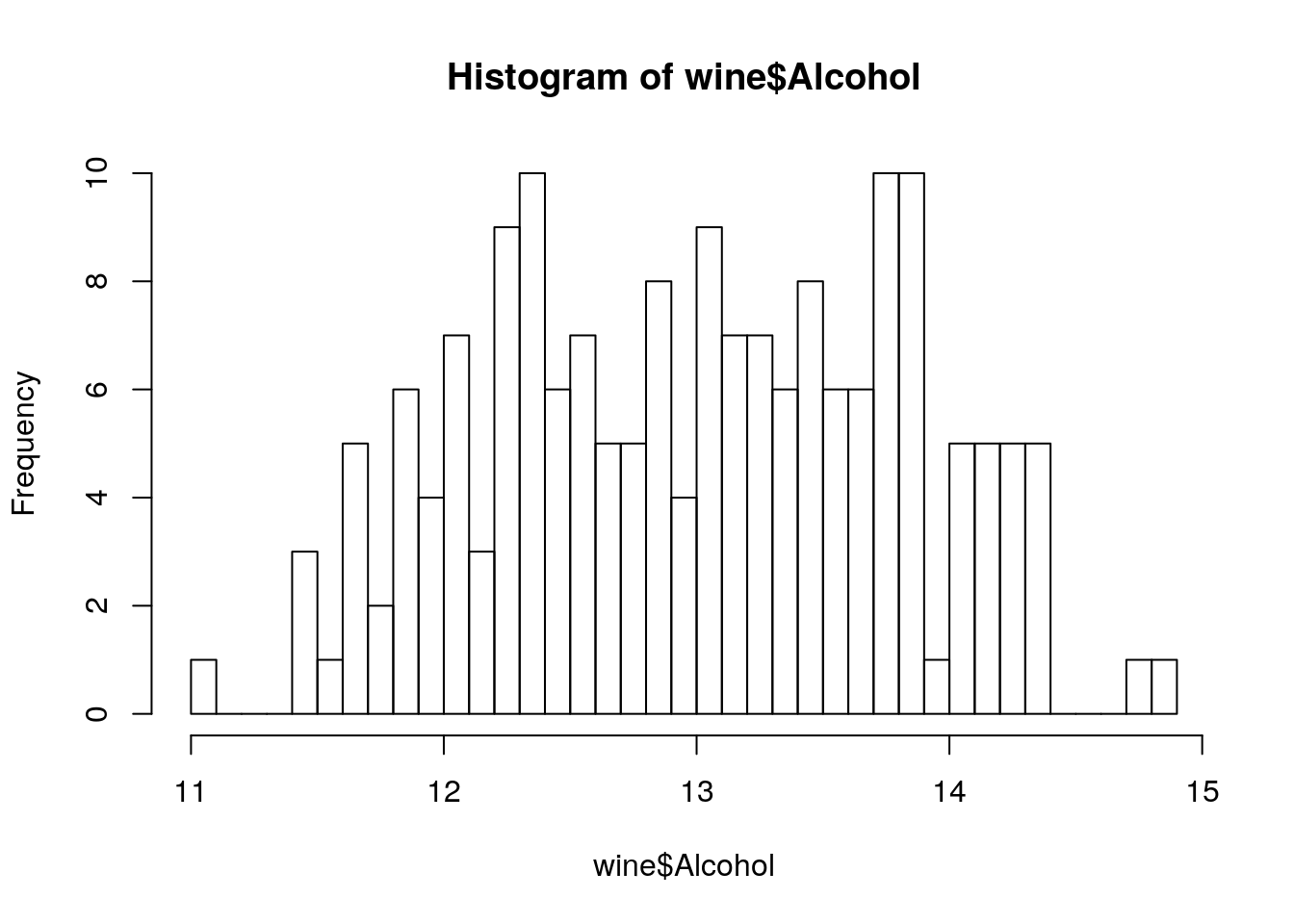

set.seed(256)

intrn <- sample(1:nrow(wine), 0.5*nrow(wine))

d_tr <- wine[intrn,]

d_ts <- wine[-intrn,]

tree_cp001 <- rpart(Alcohol~., data=d_tr, control = rpart.control(cp = 0.001))

rpart.plot(tree_cp001)

## [1] 0.3304413## [1] 0.322374The main advantage of a decsion trees is interpretability: it is very easy to understand the classiction/regression model of a tree. Yet, only in rare scenarios a single tree will learn a model that describes the phenomenon well. It is less common in complex and natural processes; more where there are yes-no decisions of the kind that people make.

Single trees usually produce noisy (bushy) or weak (stunted) classifiers. Multiple trees models are therfore better for prediction. But much worse for interpretation:

Bagging (Bootstrap aggregated): fit many large trees to bootstrap-resampled version of the data (“shake the data”), and aggregate them to choose a classifier (e.g. in classification - by majority vote). Random Forest: Fancier version of bagging: reduce variance by decorrelating the trees by choosing different set of features in each tree.Boosting: fit many large or small trees to reweighted versions of the training data. aggregate to choose a classifier.

We will see them next.

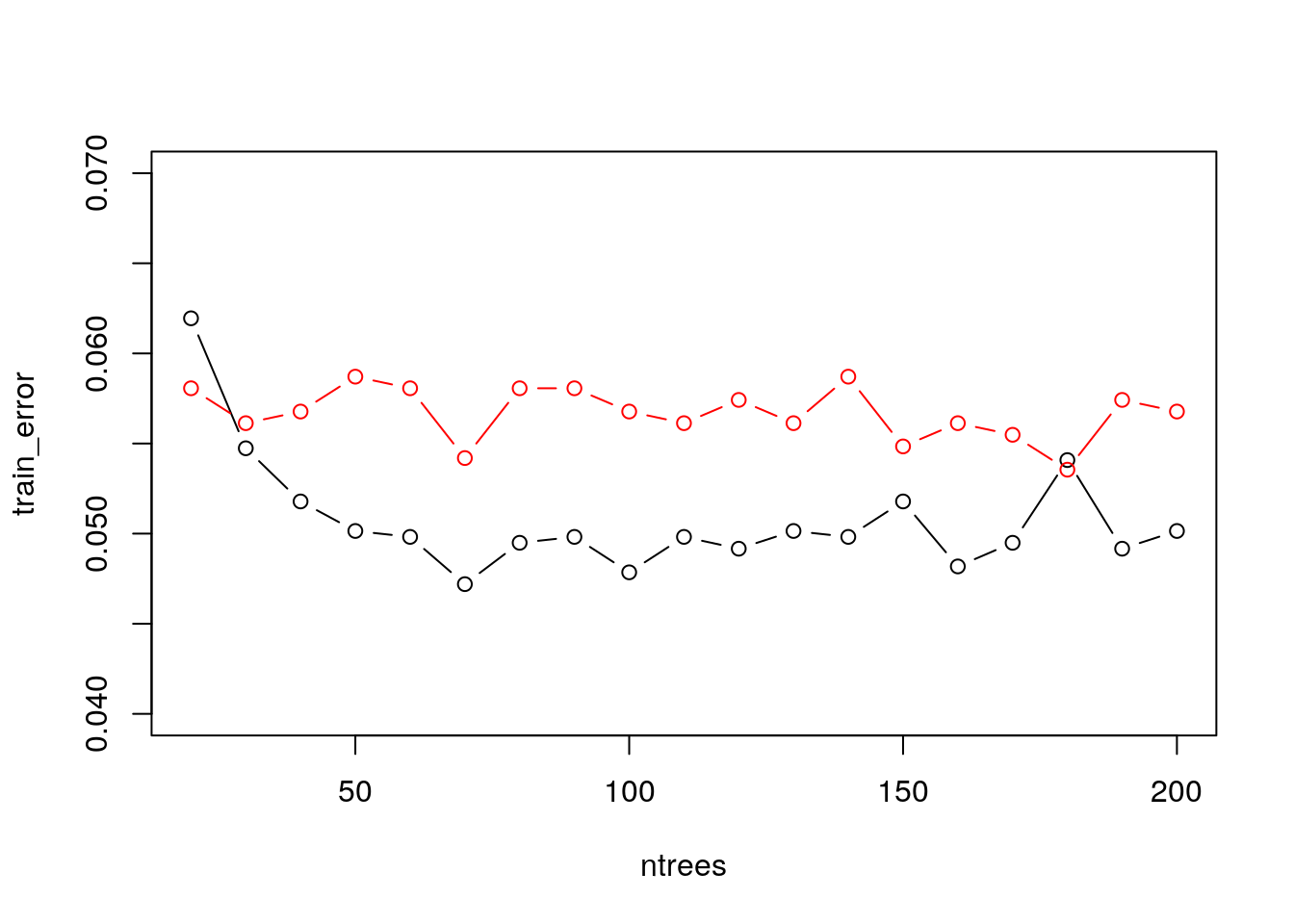

10.2.5 Random Forrest

With one tree we are very likely to get to overfitting, but with many trees - a forest, we can do a much better job. In Random Forests the idea is to decorrelate the several trees which are generated by the different bootstrapped samples from training Data (that means that each tree is fitted on a random subset of the training data). Then, we simply reduce the variance in the Trees by averaging them.

The Random forest is a Bootstrap aggregating approach, known as Bagging: Bagging is a machine learning ensemble meta-algorithm designed to improve the stability and accuracy of machine learning algorithms used in statistical classification and regression. It also reduces variance and helps to avoid overfitting. Although it is usually applied to decision tree methods (but not only).

Averaging the Trees helps to reduce the variance and improve the performance on test set so it helps to avoid Overfitting. The idea is to build lots of Trees in such a way to make the correlation between the Trees smaller. To reduce correlation between trees, in each split on the training exampleswe we also use only a subset of the features \(m\) (typically \(m \approx \sqrt{p}\) (where \(p\) is the number of features))

Throwing away features might sounds crazy, but it makes sense as it make the trees less correlated, and by growing A LOT of trees, that helps to reduce the variance of the predictions so the performance increase.

Random Forrest is one of the most popular supervised learning algorithms. It it an extremely successful algorithm, with very few tuning parameters, and easily parallelizable (thus scalable to massive datasets).

10.2.5.1 The randomForest Package

library(randomForest)

m_rf <- randomForest(spam~., data = spam.train, ntree=200, mtry = sqrt(ncol(spam.train)))

# Train confusion matrix:

pr_train <- predict(m_rf)

(rf_confusion.train <- table(prediction=pr_train, truth=spam.train$spam))## truth

## prediction email spam

## email 1785 89

## spam 59 1118## [1] 0.04850869# Test confusion matrix:

pr_test <- predict(m_rf, newdata = spam.test)

(rf_confusion.test <- table(prediction=pr_test, truth=spam.test$spam))## truth

## prediction email spam

## email 906 54

## spam 38 552## [1] 0.05935484Where ntree is the number of trees to grow. This should not be set to too small a number, to ensure that every input row gets predicted at least a few times. mtry is the number of variables randomly sampled as candidates at each split. Note that the default values are different for classification (sqrt(p) where p is number of variables in x) and regression (p/3). Other hyperparameters which you can tune are: replace (Should sampling of cases be done with or without replacement?); nodesize (minimum size of terminal nodes. Setting this number larger causes smaller trees to be grown (and thus take less time));maxnodes (Maximum number of terminal nodes trees in the forest can have). See more details with ?randomForest.

See the bagging in action:

ntrees <- seq(20,200,by = 10)

train_error <- numeric(length(ntrees))

test_error <- numeric(length(ntrees))

# this loop can take a while

for (i in 1:length(ntrees)) {

m_rf_i <- randomForest(spam~., data = spam.train, ntree=ntrees[i], mtry = 10)

train_error[i] <- mean(predict(m_rf_i) != spam.train$spam)

test_error[i] <- mean(predict(m_rf_i, spam.test) != spam.test$spam)

}

plot(train_error~ntrees, type = "b", ylim = c(0.04,0.07))

points(test_error~ntrees, col = 2,type = "b")

10.2.5.2 The ranger Package

A fast implementation of Random Forests, particularly suited for highdimensional data. Ensembles of classification, regression, survival andprobability prediction trees are supported. See this paper for details

10.2.6 Gradient Boosting

Gradient boosted machines (GBMs) are an extremely popular machine learning algorithm that have proven successful across many domains and is one of the leading methods for winning Kaggle competitions. Whereas random forests build an ensemble of deep independent trees, GBMs build an ensemble of shallow and weak successive trees with each tree learning and improving on the previous. When combined, these many weak successive trees produce a powerful “committee” that are often hard to beat with other algorithms. This tutorial will cover the fundamentals of GBMs for regression problems.

Some of the advantages of GBM is that it can optimize on different loss functions and provides several hyperparameter tuning options. Also, usually it works very well on non-preprocessed data (e.g., categorical and numerical variables together) and handels missing data.

Watch out: GBMs can also overfit! use validation (deafult in gbm package).

Caution: can be computationally expensive when many trees (>1000) are required.

How does it works:

- Initialize the outcome

- Iterate from 1 to total number of trees 2.1 Update the weights for targets based on previous run (higher for the ones mis-classified) 2.2 Fit the model on selected subsample of data 2.3 Make predictions on the full set of observations 2.4 Update the output with current results taking into account the learning rate

- Return the final output.

Updating weights:

additive training:

for \(i \in 1,...,n\):

\[ \begin{align} \hat{y}^{(0)}_i &= 0 \\ \hat{y}^{(1)}_i &= f_1(x_i) = \hat{y}^{(0)}_i + f_1(x_i) \\ \hat{y}^{(2)}_i &= f_2(x_i) = \hat{y}^{(1)}_i + f_2(x_i) \\ ... \\ \hat{y}^{(t)}_i &= \sum_{k=1}^t f_k(x_i) = \hat{y}^{(t-1)}_i + f_t(x_i) \\ \end{align}\]

at each step we add the tree that optimizes an objective function that includes a regularization term.

more on Gradient Boosting: Trevor Hastie - Gradient Boosting Machine Learning and check also this nice post, from which the following GBM code is taken.

10.2.6.1 The gbm Package

Train a GBM model:

library(gbm)

gbm.fit <- gbm(

formula = lcavol ~ .,

distribution = "gaussian",

data = prostate.train,

n.trees = 500, # use with caution

interaction.depth = 3,

shrinkage = 0.1,

cv.folds = 5,

n.cores = 10, # NULL will use all cores by default

verbose = FALSE

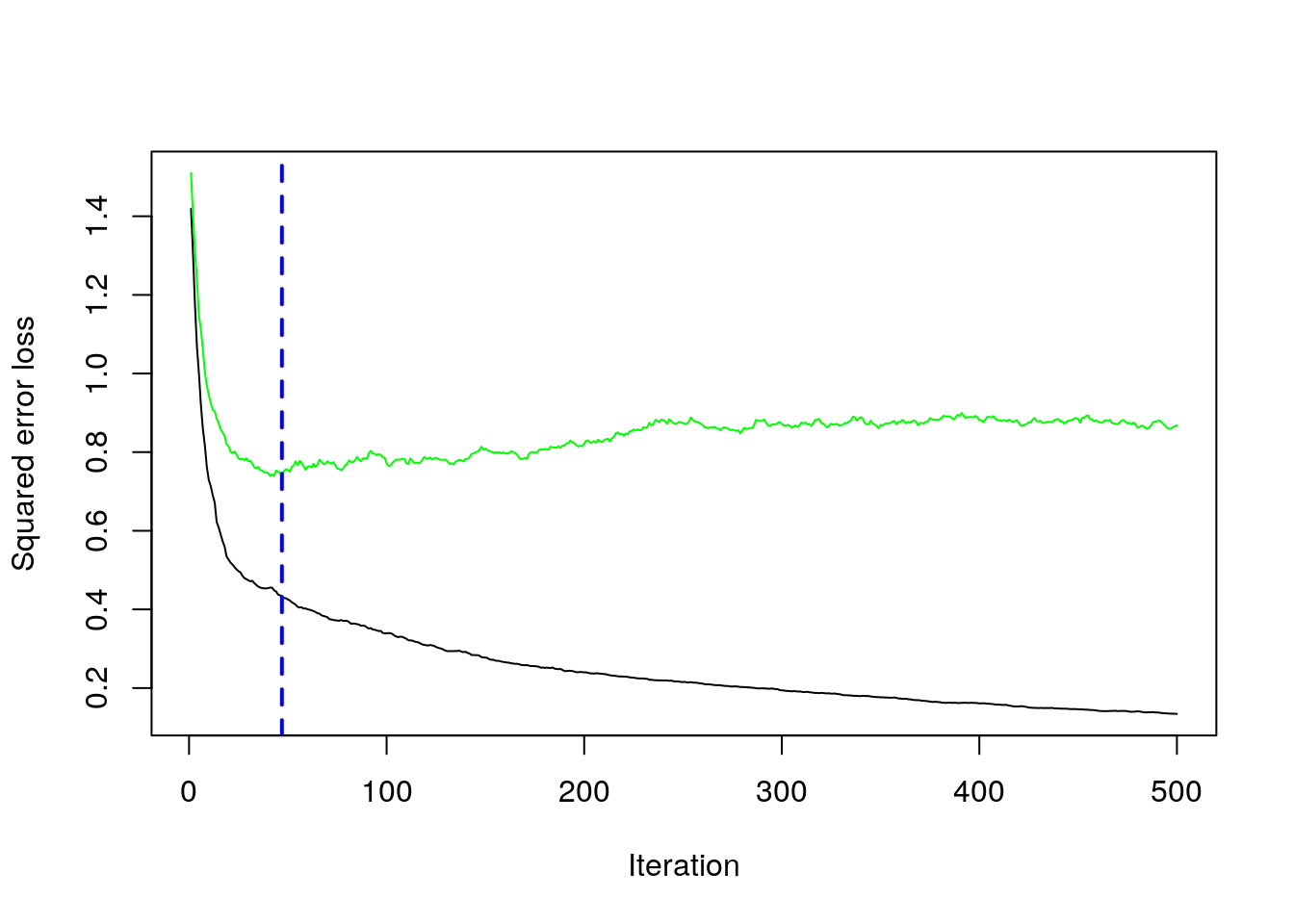

) Find index for n trees with minimum CV error

## [1] 47Get MSE and compute RMSE

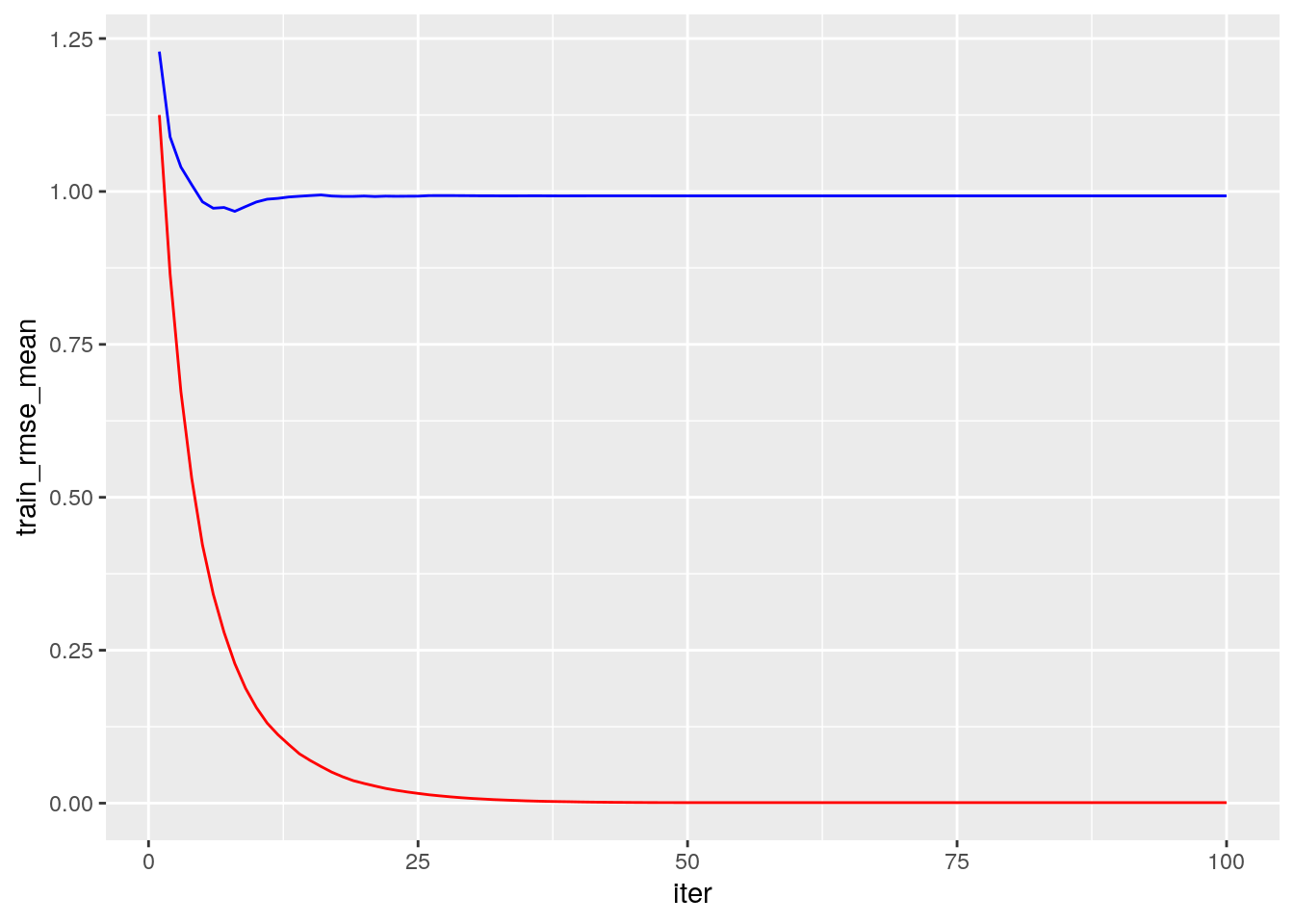

## [1] 0.8588556plot loss function as a result of n trees added to the ensemble

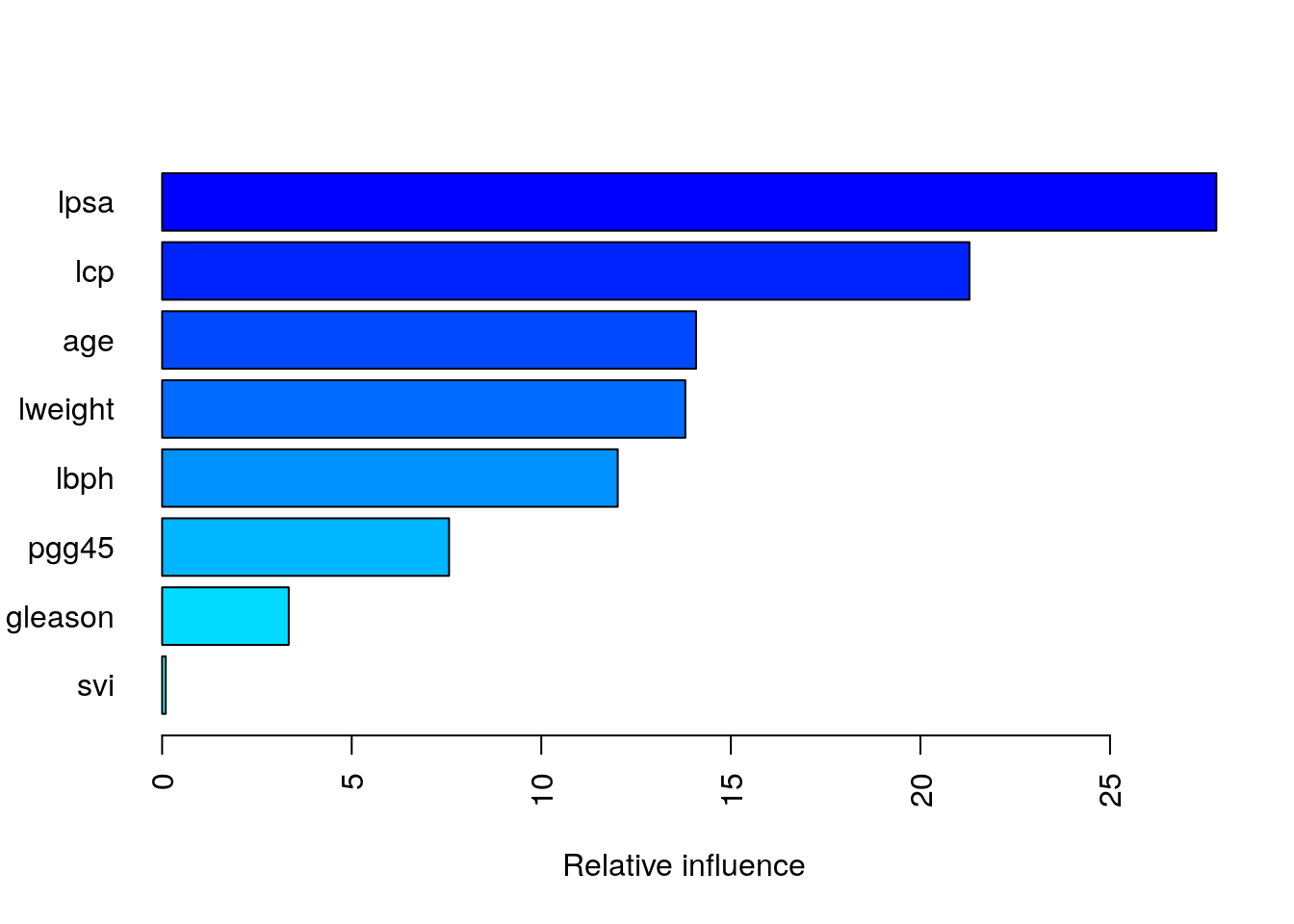

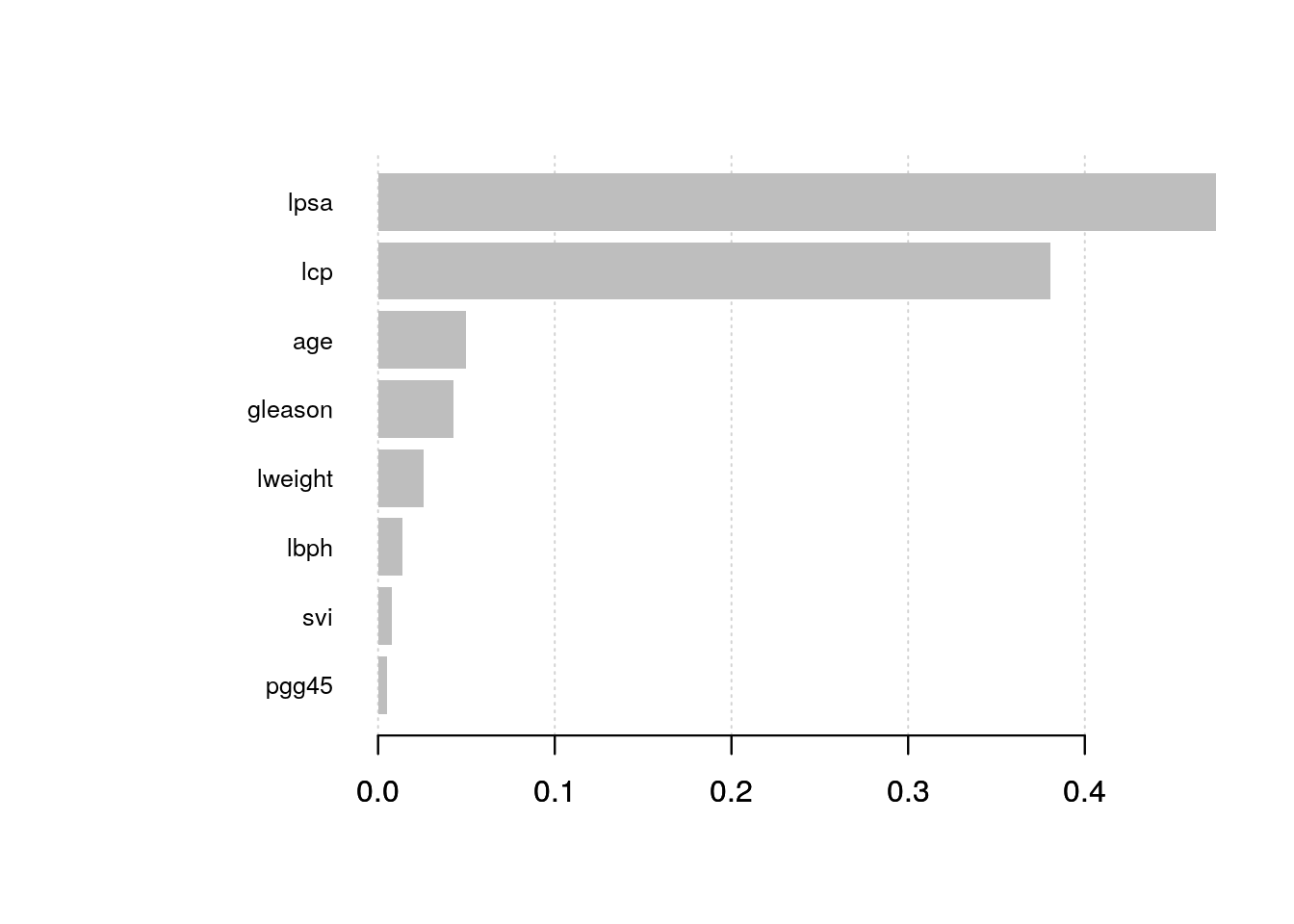

## [1] 47After tuning the GBM (which we didn’t show here), we likely want to understand the variables that have the largest influence.

The summary method for gbm will output a data frame and a plot that shows the most influential variables.

cBars allows you to adjust the number of variables to show (in order of influence).

The default method for computing variable importance is with relative influence

summary(

gbm.fit,

cBars = 10,

method = relative.influence, # also can use permutation.test.gbm

las = 2

)

## var rel.inf

## lpsa lpsa 27.80468330

## lcp lcp 21.29365479

## age age 14.08329747

## lweight lweight 13.79978513

## lbph lbph 12.01629085

## pgg45 pgg45 7.56751941

## gleason gleason 3.33953875

## svi svi 0.09523028Partial dependence plots: After the most relevant variables have been identified, we can attempt to understand how the response variable lcavol changes based on these variables.

For this we can use partial dependence plots (PDPs) and individual conditional expectation (ICE) curves.

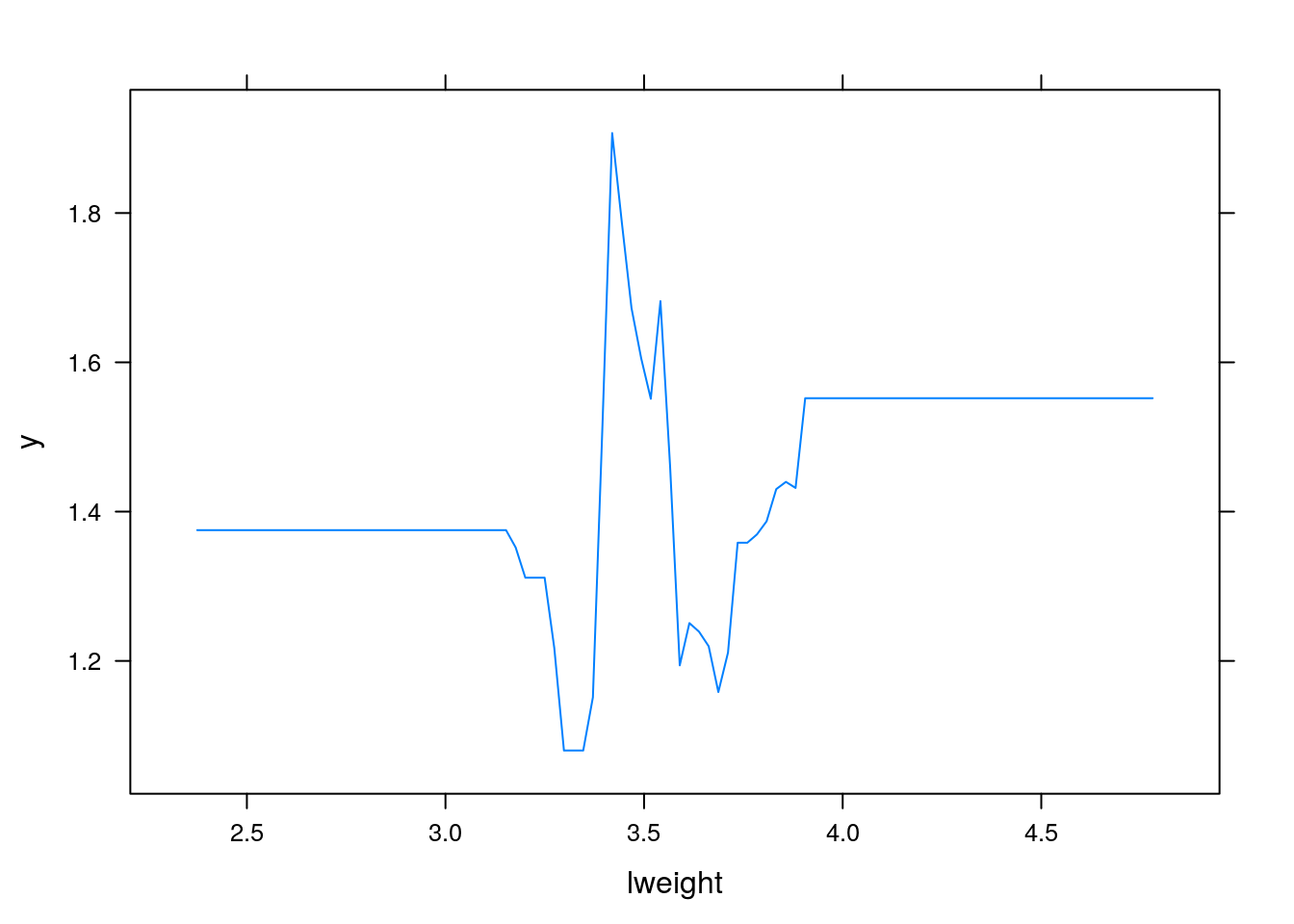

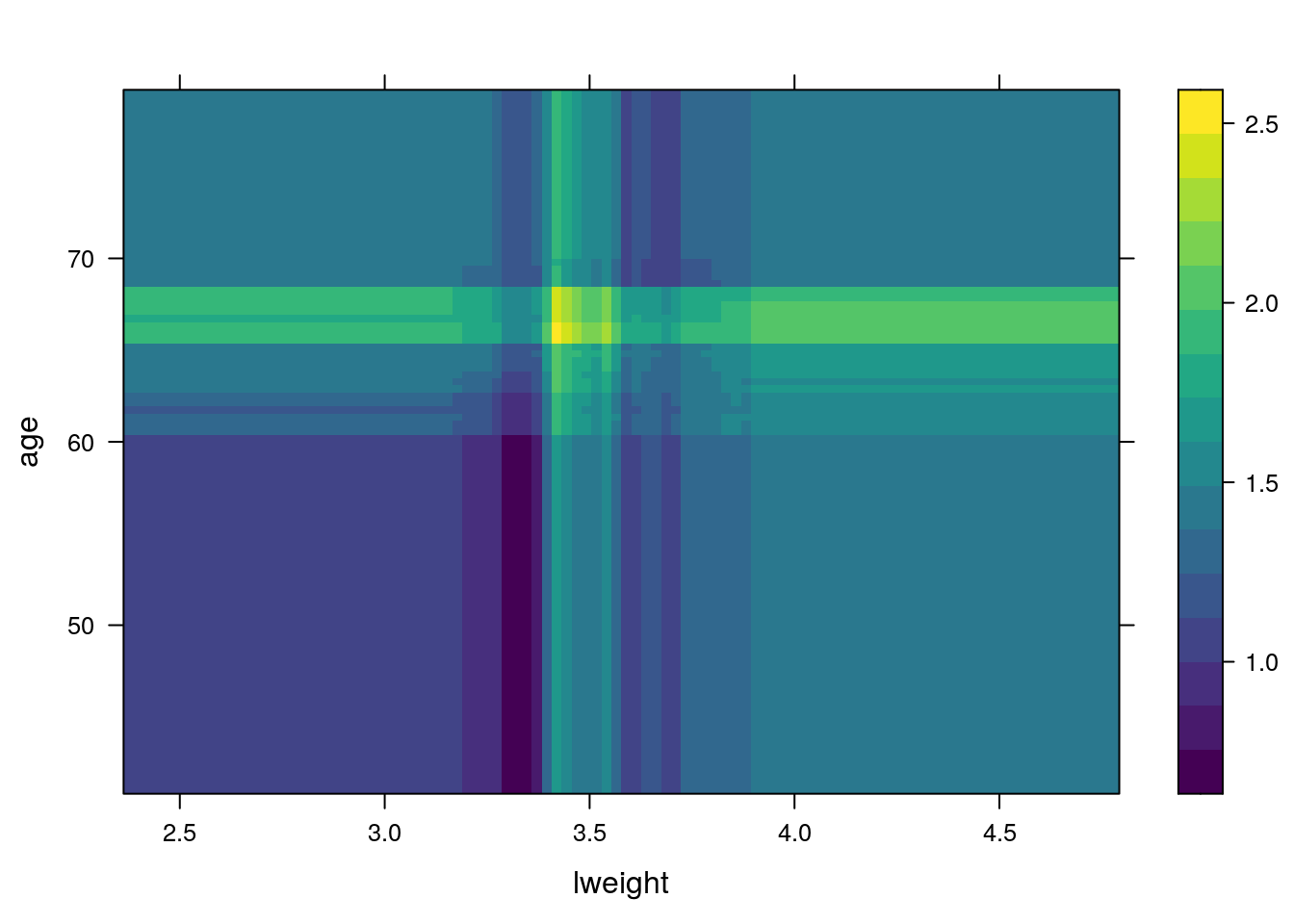

PDPs plot the change in the average predicted value as specified feature(s) vary over their marginal distribution. It is a great tool for model interpretation. The PDP plot below displays the average change in predicted lcavol as we vary lweight while holding all other variables constant.

i.var controls the index of the variable to plot. You can also do two (or more) way plots using:

When predicting the test data, you need to specify the number of trees:

Predicting with a gbm object (i.e., predict.gbm) produces predicted values for each observation in newdata using the the first n.trees iterations of the boosting sequence. If n.trees is a vector than the result is a matrix with each column representing the predictions from gbm models with n.trees[1] iterations, n.trees[2] iterations, and so on.

## [1] 0.403507310.2.6.2 The xgboost Package

The xgboost R package provides an R API to “Extreme Gradient Boosting”, which is an efficient implementation of gradient boosting framework (apprx 10x faster than gbm). The xgboost/demo repository provides a wealth of information. You can also find a fairly comprehensive parameter tuning tutorials here or here (in Python). The xgboost package has been quite popular and successful on Kaggle for data mining competitions.

Features include:

Provides built-in k-fold cross-validation Stochastic GBM with column and row sampling (per split and per tree) for better generalization. Includes efficient linear model solver and tree learning algorithms. Parallel computation on a single machine. Supports various objective functions, including regression, classification and ranking. The package is made to be extensible, so that users are also allowed to define their own objectives easily.Apache 2.0 License.

XGBoost only works with matrices that contain all numeric variables so you need to encode our data (dummies or effect coding).

Let’s fit an xgboost with default hyperparameters. We use the xgb.cv for cross-validated xgboost.

The example is for a regression problem, therefore objective = "reg:squarederror".

The objective argument specify the learning task and the corresponding learning objective.

There are many objective options but the most popular are binary:logistic for binary classification, multi:softmax, multi:softprob for multiclass classification and reg:squarederror for regression with squared loss.

library(xgboost)

xgb.fit1 <- xgb.cv(

data = X.train,

label = y.train,

nrounds = 100,

nfold = 5,

objective = "reg:squarederror", # for regression models

verbose = 0 # silent,

)Plot error vs number trees

ggplot(xgb.fit1$evaluation_log) +

geom_line(aes(iter, train_rmse_mean), color = "red") +

geom_line(aes(iter, test_rmse_mean), color = "blue")

After tuning your parameters (see the tuning with caret section) fit the final model with:

# example of tunned parameters:

params <- list( eta = 0.01, max_depth = 5, min_child_weight = 5, subsample = 0.65, colsample_bytree = 1)

# train final model

xgb.fit.final <- xgboost(

params = params,

data = X.train,

label = y.train,

nrounds = 50, # example for best nrounds found with cv

objective = "reg:linear",

verbose = 0

)Visualizing variable importance:

# create importance matrix

importance_matrix <- xgb.importance(model = xgb.fit.final)

# variable importance plot

xgb.plot.importance(importance_matrix, top_n = 10, measure = "Gain")

Predicting:

Building an xgboost predictor is easy. But, improving it is difficult. This algorithm uses multiple parameters. To improve the model, parameter tuning is must. Tuning is art (but look for some tips in the web, e.g., like here ).

The full list of hyperparameters is given here. Here are the most important:

- Booster Parameters: (some are tree-specific and some affect the boosting operation)

- eta [default=0.3]

- Analogous to learning rate in GBM

- Makes the model more robust by shrinking the weights on each step

- Typical final values to be used: 0.01-0.2

- min_child_weight [default=1]

- Defines the minimum sum of weights of all observations required in a child.

- Used to control over-fitting. Higher values prevent a model from learning relations which might be highly specific to the particular sample selected for a tree.

- Too high values can lead to under-fitting hence, it should be tuned using CV.

- max_depth [default=6]

- The maximum depth of a tree, same as GBM.

- Used to control over-fitting as higher depth will allow model to learn relations very specific to a particular sample.

- Should be tuned using CV.

- Typical values: 3-10

- gamma [default=0]

- A node is split only when the resulting split gives a positive reduction in the loss function. Gamma specifies the minimum loss reduction required to make a split.

- Makes the algorithm conservative. The values can vary depending on the loss function and should be tuned.

- subsample[default=1]

- Same as the subsample of GBM. Denotes the fraction of observations to be randomly samples for each tree.

- Lower values make the algorithm more conservative and prevents overfitting but too small values might lead to under-fitting.

- Typical values: 0.5-1

- lambda [default=1]

- L2 regularization term on weights (analogous to Ridge regression)

- This used to handle the regularization part of XGBoost. Though many data scientists don’t use it often, it should be explored to reduce overfitting.

- alpha [default=0]

- L1 regularization term on weight (analogous to Lasso regression)

- Can be used in case of very high dimensionality so that the algorithm runs faster when implemented

- eta [default=0.3]

- Learning Task Parameters: These parameters are used to define the optimization objective the metric to be calculated at each step.

- objective [default=reg:linear]

- This defines the loss function to be minimized. Mostly used values are binary:logistic, multi:softmax, multi:softprob

- eval_metric

- The metric to be used for validation data

- typical values: rmse, mae (mean absolute error), logloss, …

- objective [default=reg:linear]

10.2.7 K-nearest neighbour (KNN)

KNN is not an ERM problem. In the KNN algorithm, a prediction at some \(x\) is made based on the \(y\) is it neighbours. This means that:

- KNN is an Instance Based learning algorith where we do not learn the values of some parametric fnuction, but rather, need the original sample to make predictions. This has many implications when dealing with “BigData”.

- It may only be applied in spaces with known/defined matric. It is thus harder to apply in the presence of missing values, or in “string-spaces”, “genome-spaces”, etc. where no canonical metric exists.

KNN is so fundamental that we show how to fit such a hypothesis class, even if it not an ERM algorith.

The KNN rule:

Given new test instance \(x_t\):

- find its \(K\) nearest neighbors- i.e., the \(K\) training instances with the smallest distance. (e.g., the Euclidian distance: \(d(x,z) = ||x-z|| = \sqrt{\sum_{i=1}^{p}(z_i-x_i)^2}\) (Note: the scale of the data matter, sclae your dataset!))

- For classification: do majority vote among the neighbors’ labels. For regression, take the average of the neighbors’ labels.

Note: the 1-NN rule: return the label of the nearest neighbor.

Is it good?

Advantage: when the dataset is large and \(K\) is well chosen, KNN can be very effective. Disdvantage: May be slow for a large training sample - need to compute all distances to the query.

Note: the error in a KNN model is consists of two errors:

- estimation error: do we well estimate the true label of the test instance from the K neighbors? reduce it by increasing K

- representation error: do the K neighbors represent the test instance? reduce it by decreasing K

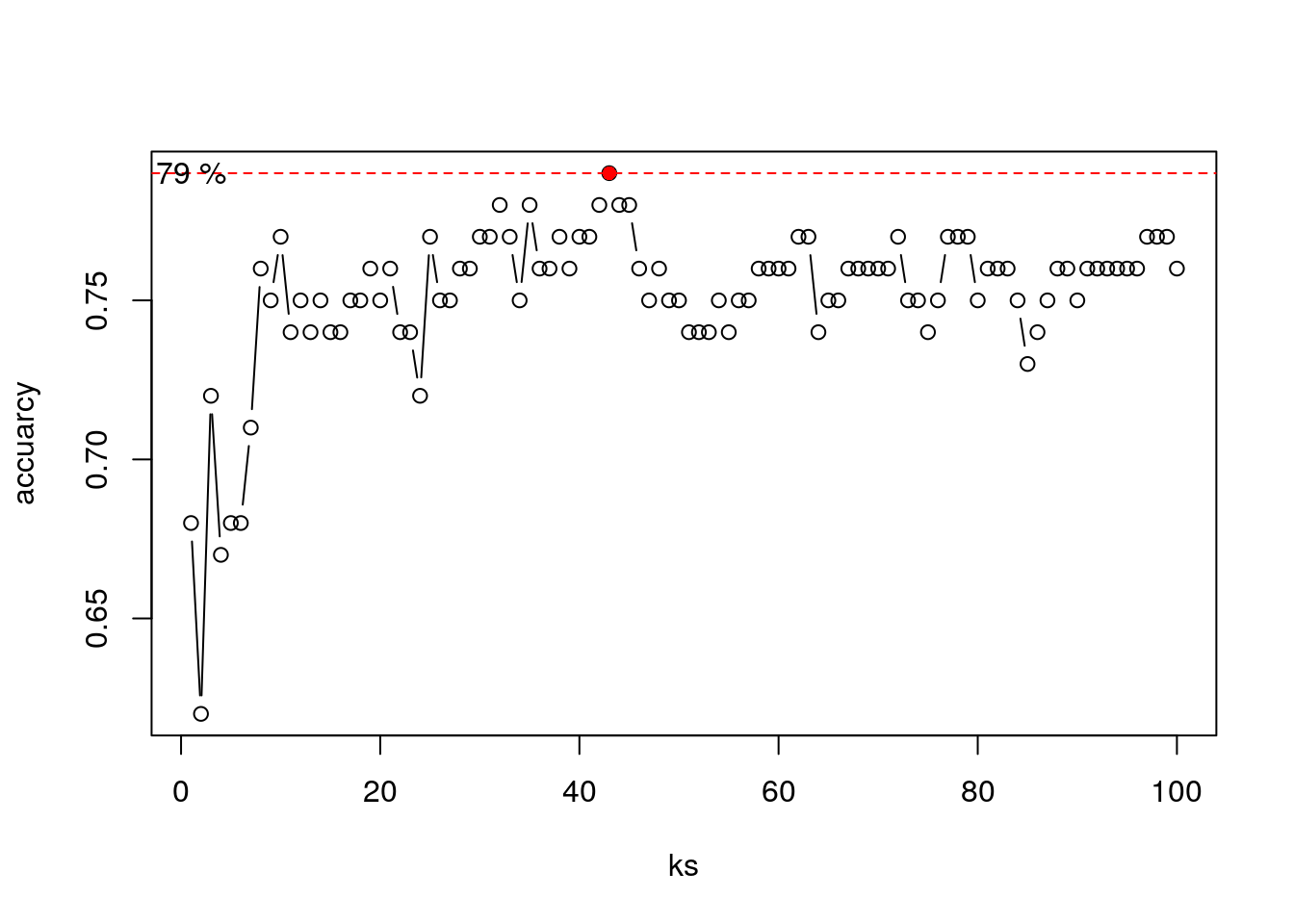

How to choose a good \(K\)? usually, do CV.

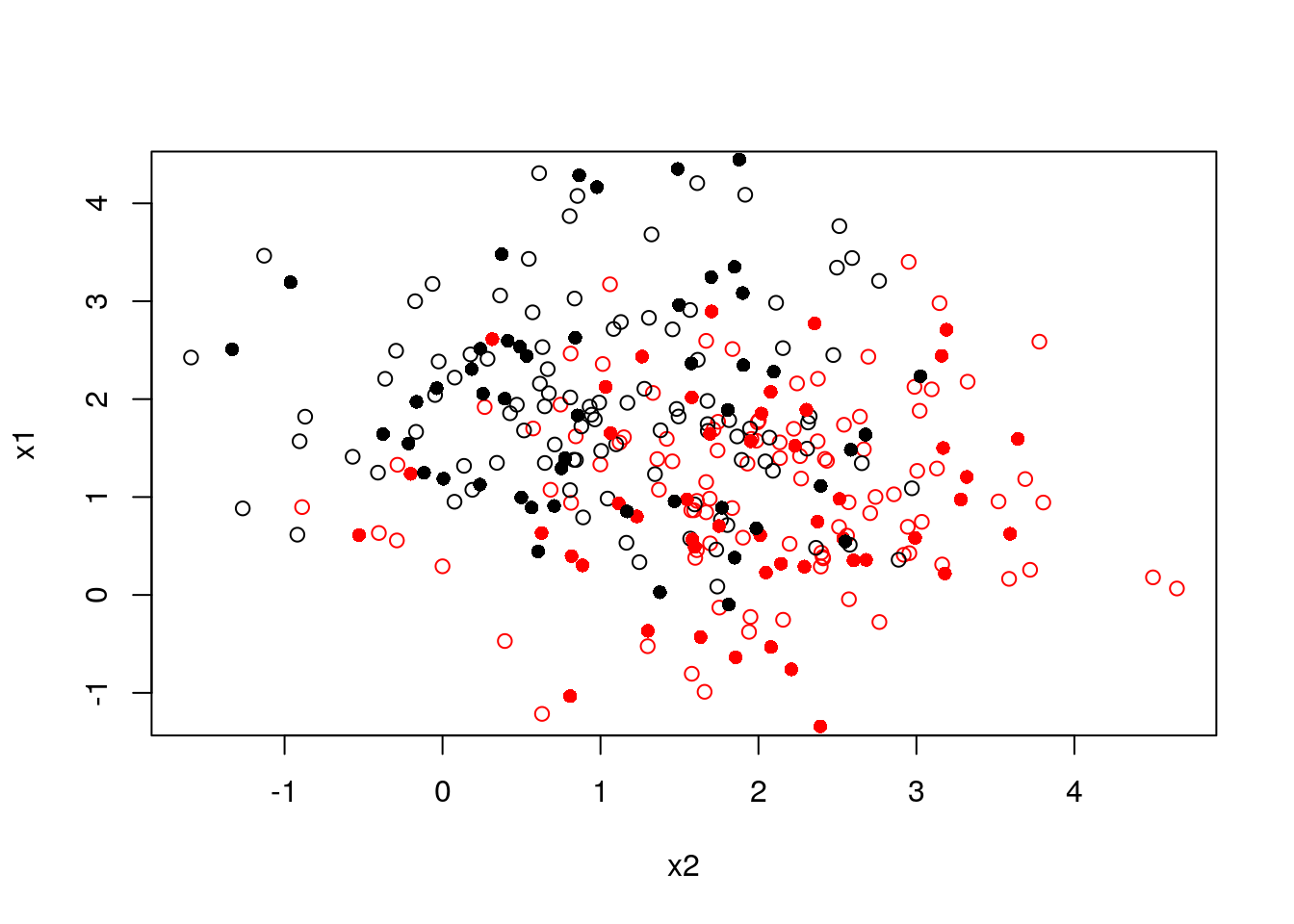

Here is a 2D example: assume we have a labeled training set (circles) and a labeled validation set (filled circle)

set.seed(1)

x1 <- rnorm(200, mean = rep(c(1,2),each = 100))

x2 <- rnorm(200, mean = rep(c(2,1),each = 100))

y <- as.factor(rep(c(1,-1), each = 100))

train <- data.frame(x1,x2,y)

x1_val <- rnorm(100, mean = rep(c(1,2),each = 50))

x2_val <- rnorm(100, mean = rep(c(2,1),each = 50))

y_val <- as.factor(rep(c(1,-1), each = 50))

validation <- data.frame(x1_val,x2_val,y_val)

plot(x1~x2, col = y, data = train)

points(x1_val~x2_val, col = y_val, data = validation, pch = 16)

Choosing \(K\):

ks <- 1:100

accuarcy <- numeric(length(ks))

for (k in ks) {

k_nn <- knn(train = train[,-3], test = validation[,-3], cl = train$y, k = k)

accuarcy[k] <- mean(k_nn == validation$y_val)

}

plot(accuarcy~ks, type = "b")

best_k <- which.max(accuarcy)

points(accuarcy[best_k]~best_k, col = "red", pch = 16)

abline(h = accuarcy[best_k], lty = 2, col = "red")

text((accuarcy[best_k])~1, labels = paste(accuarcy[best_k]*100,"%"))

Now to our spam example:

library(class)

knn.1 <- knn(train = X.train.spam.scaled, test = X.test.spam.scaled, cl =y.train.spam, k = 1)

# Test confusion matrix:

.predictions.test <- knn.1

(confusion.test <- table(prediction=.predictions.test, truth=spam.test$spam))## truth

## prediction email spam

## email 856 86

## spam 88 520## [1] 0.112258110.2.8 Linear Discriminant Analysis (LDA) and Quadratic Discriminant Analysis (QDA)

Linear Discriminant Analysis (LDA) and Quadratic Discriminant Analysis (QDA) are two classic classifiers, with, as their names suggest, a linear and a quadratic decision surface, respectively. These classifiers are attractive because they have closed-form solutions that can be easily computed, are inherently multiclass, have proven to work well in practice, and have no hyperparameters to tune.

Under certain assumptions, LDA is equivalent to least squares classification 10.2.1. This means that we actually did LDA when we used lm for binary classification (feel free to compare the confusion matrices).

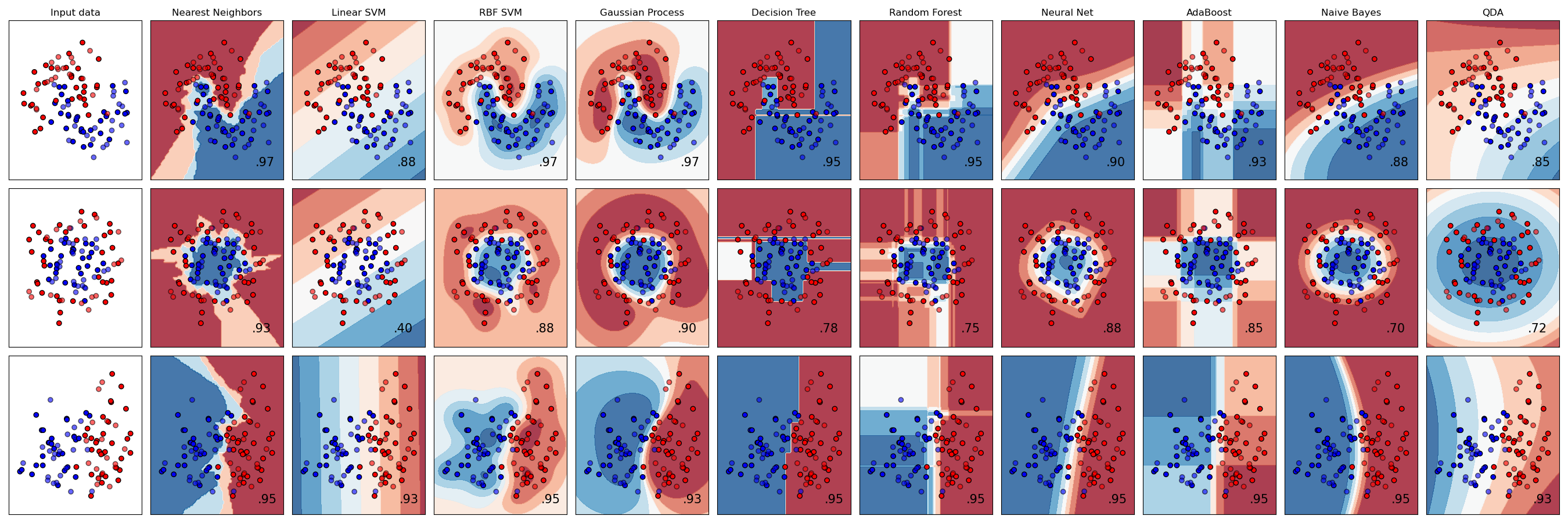

source: Python’s scikit-learn page

So how does LDA and QDA work?

Both LDA and QDA can be derived from simple probabilistic models which model the class conditional distribution of the data \(P(X|y=k)\) for each class \(k\). \(k\) is selected such that it maximize this conditional probability. \(P(X|y)\) is usually modeled as \(p\) multivariate Gaussian distribution with known density:

\[ P(X|y=k) = (2 \pi)^{-\frac{p}{2}} det(\Sigma)^{-\frac{1}{2}} e^{-\frac{1}{2} (X-\mu)' \Sigma^{-1} (X-\mu)}\] where \(p\) is ther number of features.

To use this model as a classifier, we need to estimate from the training data the class priors (by the proportion of instances of class), the class means (by the empirical sample class means) and the covariance matrices (e.g. by the empirical sample class covariance matrices).

In the case of LDA, the Gaussians for each class are assumed to share the same covariance matrix: This leads to linear decision surfaces, which can be seen by comparing the log-probability ratios.

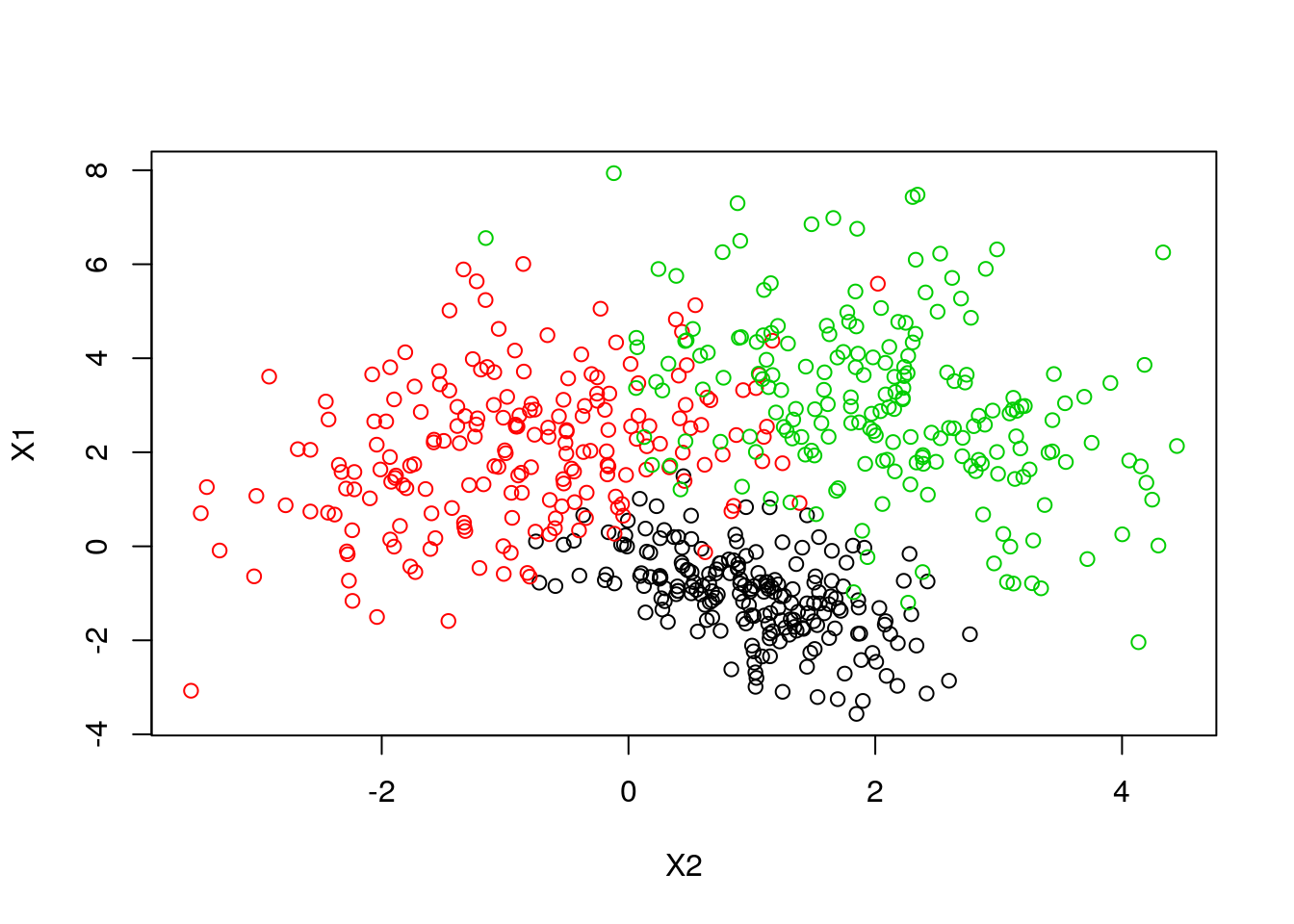

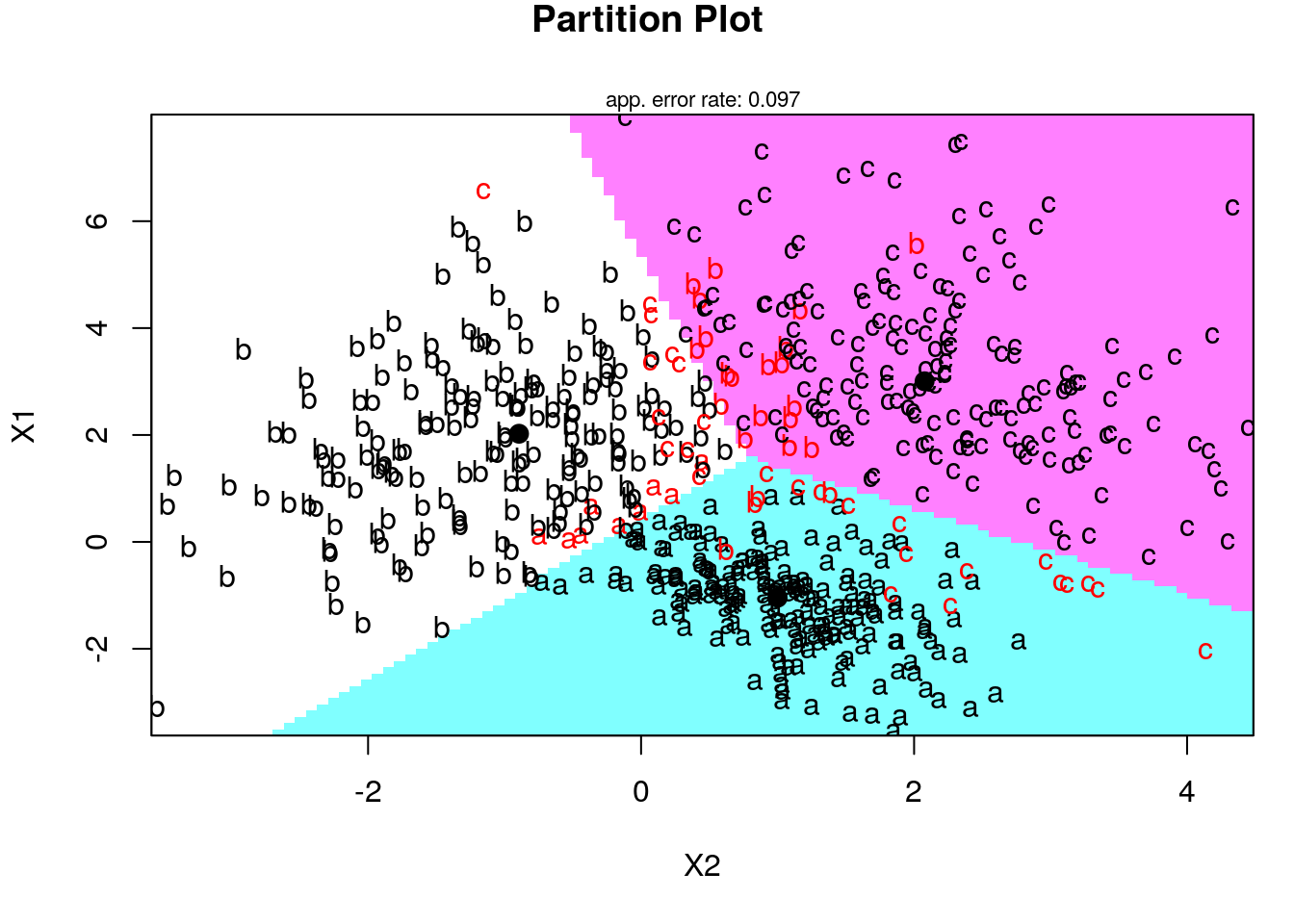

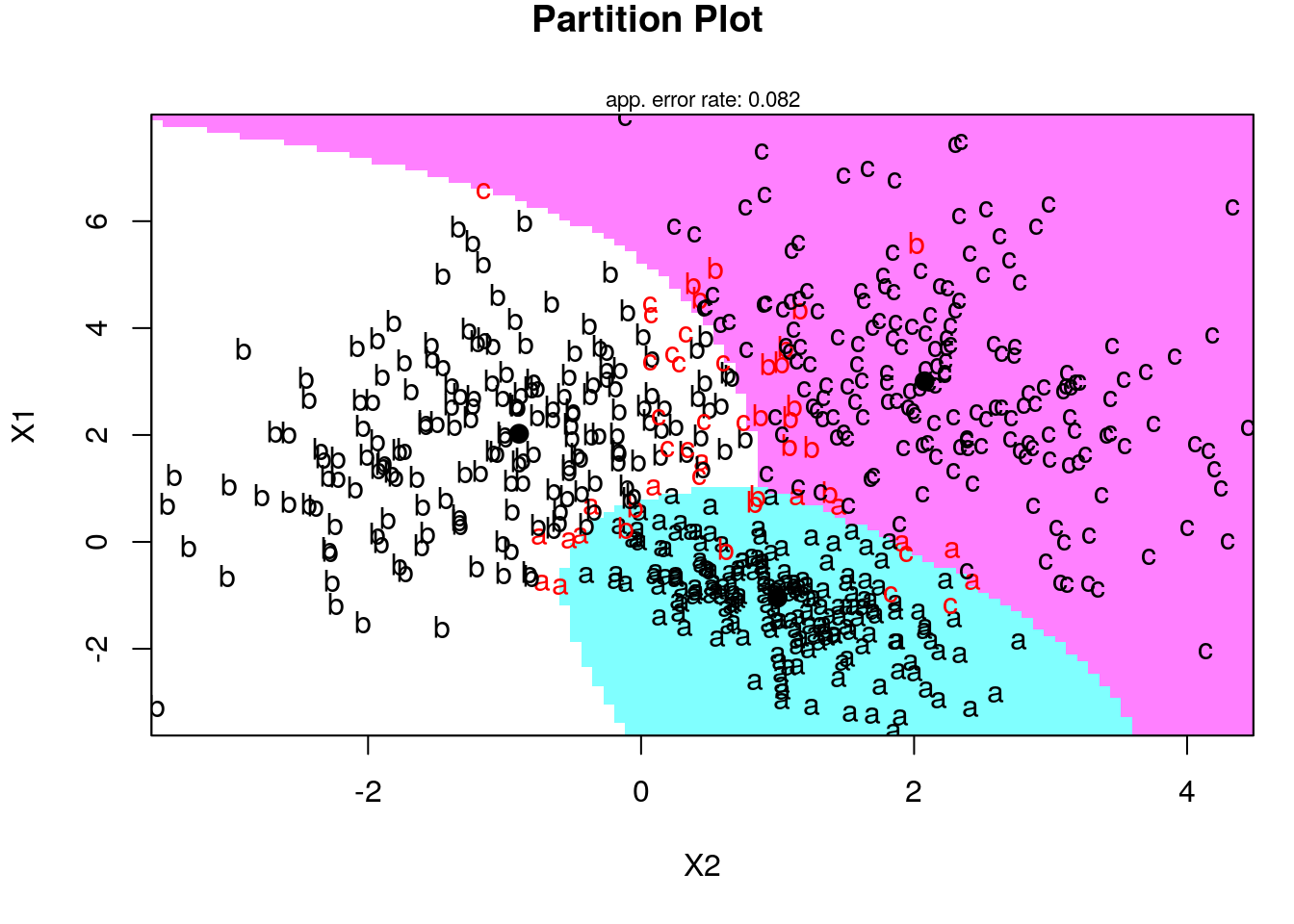

In the case of QDA, there are no assumptions on the covariance matrices of the Gaussians, leading to quadratic decision surfaces. 2D Example: Generating data from three distributions:

set.seed(1)

mu1 <- c(-1,1)

Sigma1 <- matrix(c(1,-0.4,-0.4,0.5),2,2)

mu2 <- c(2,-1)

Sigma2 <- matrix(c(2,0.5,0.5,1),2,2)

mu3 <- c(3,2)

Sigma3 <- matrix(c(3,-0.5,-0.5,1),2,2)

library(MASS)

G1 <- mvrnorm(200, mu = mu1, Sigma = Sigma1)

G2 <- mvrnorm(200, mu = mu2, Sigma = Sigma2)

G3 <- mvrnorm(200, mu = mu3, Sigma = Sigma3)

alldata <- data.frame(rbind(G1,G2,G3), y = rep(c("a","b","c"), each = 200))

plot(X1~X2, col = y, alldata)

Now let’s use the klaR package for visualization of LDA and QDA fitting:

Fitting LDA in the spam prediction:

library(MASS)

lda.1 <- lda(spam~., spam.train)

# Train confusion matrix:

.predictions.train <- predict(lda.1)$class

(confusion.train <- table(prediction=.predictions.train, truth=spam.train$spam))## truth

## prediction email spam

## email 1776 227

## spam 68 980## [1] 0.09668961# Test confusion matrix:

.predictions.test <- predict(lda.1, newdata = spam.test)$class

(confusion.test <- table(prediction=.predictions.test, truth=spam.test$spam))## truth

## prediction email spam

## email 884 138

## spam 60 468## [1] 0.1277419Fitting QDA in the spam prediction:

library(MASS)

qda.1 <- qda(spam~., spam.train[,50:58]) # we use subset of the features to avoid numerical problems (rank deficiency)

# Train confusion matrix:

.predictions.train <- predict(qda.1)$class

(confusion.train <- table(prediction=.predictions.train, truth=spam.train$spam))## truth

## prediction email spam

## email 1773 710

## spam 71 497## [1] 0.2559816# Test confusion matrix:

.predictions.test <- predict(qda.1, newdata = spam.test[,20:58])$class

(confusion.test <- table(prediction=.predictions.test, truth=spam.test$spam))## truth

## prediction email spam

## email 904 373

## spam 40 233## [1] 0.266451610.2.9 Naive Bayes

Naive-Bayes can be thought of LDA, i.e. linear regression, where predictors are assume to be uncorrelated. Predictions may be very good and certianly very fast, even if this assumption is not true.

library(e1071)

nb.1 <- naiveBayes(spam~., data = spam.train)

# Train confusion matrix:

.predictions.train <- predict(nb.1, newdata = spam.train)

(confusion.train <- table(prediction=.predictions.train, truth=spam.train$spam))## truth

## prediction email spam

## email 1025 55

## spam 819 1152## [1] 0.2864635# Test confusion matrix:

.predictions.test <- predict(nb.1, newdata = spam.test)

(confusion.test <- table(prediction=.predictions.test, truth=spam.test$spam))## truth

## prediction email spam

## email 484 42

## spam 460 564## [1] 0.32387110.3 How to choose your learning algorithm??

10.4 Supervised Learning Pipeline

Recap so far:

The Training set is a set of examples (i.e. observations) used to fit a learner by minimizing the empirical risk on it. For instance, if our learner is an SVM classifier, than we use the training dataset to learn its weights in a supervised learning framework.

Successively, the fitted model is used to predict the responses for the observations in a second dataset called the validation dataset. The validation dataset provides an unbiased evaluation of a learner fit on the training dataset. We evaluate the performance of the learner over a (potentially) independent dataset in order to do hyper-parameter tuning, variable selection, or model selection. That is, for each type of learner we trained over the training set, we have an independent performance measure. If we believe independence exist, and that the train-validation split generalizes the data distribution in the real world, we can chose the learner with the best score (given some aggregation method) to be the final model. Examples of hyperparameters that we use validation set to tune: k in k-nearest Neighbors, the cost parameter in SVM, the number of hidden layers in deep neural net, the regularization parameters in Elastic net, or the maximal tree depth in Xgboost. The motivation in validate trained models can be seen as: empirically deciding on the appropriate relation between complexity and simplicity. In other words, answering the question: when do we start to overfit the training data.

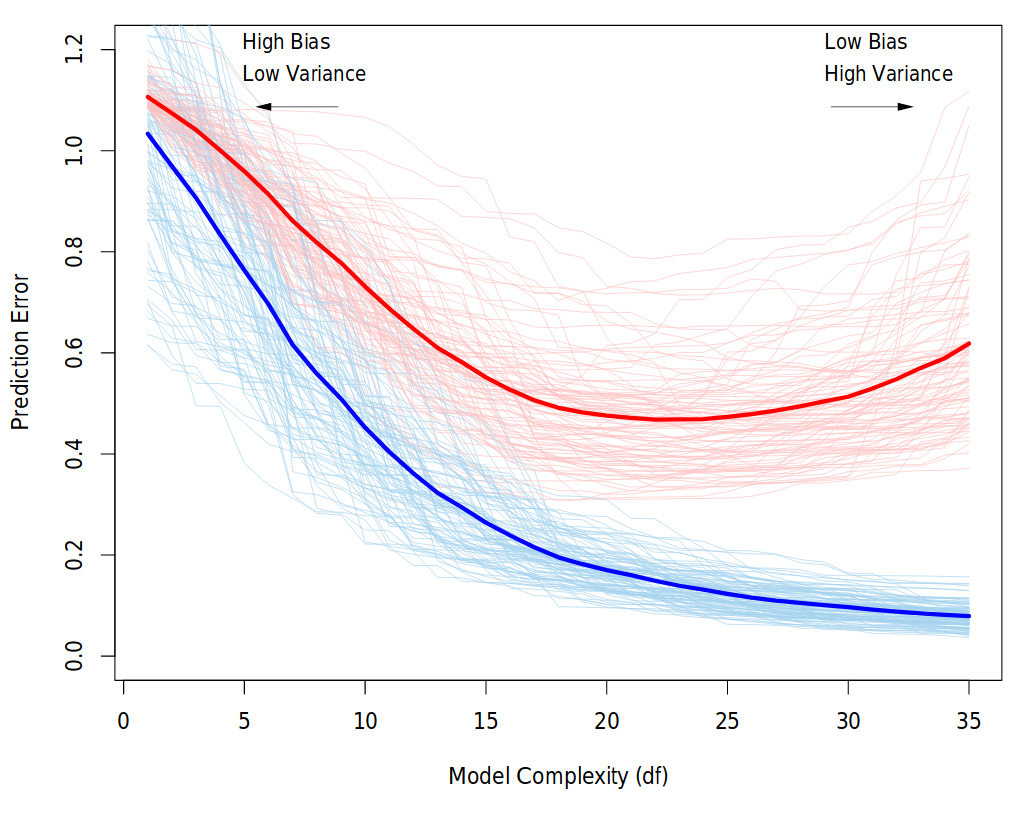

Behavior of validation and training sample error as the model complexity is varied. The light blue and red curves show the training error and the conditional validation error for 100 training sets, as the model complexity is increased. The solid curves show the expected validation and training error. It is clear that from some level, adding more complexity to the model hurts validation performance, while training performance continue to improve. The purpose of the validation phase here is to find the point of the minimum validation error. Source: “The Elements of Statistical Learning” book

Finally, the test dataset is a dataset used to provide an unbiased evaluation of a final model fit on the training dataset. Note: it is important that data from the test set was held out, i.e., has never been used in any of the training phases.

Cross validation (CV)

Instead of a permanent train-validation splitting, a dataset can be repeatedly split into a training and a validation datasets. This is known as cross-validation (CV). These repeated partitions can be done in various ways.

The most common is the K-fold Cross-validation, but also, Repeated random sub-sampling; Leave-one-out (LOO); Leave-p-out (LPO); Monte-Carlo; and h-block cross-validations.

We emphasize that CV in dependence setting is not straightforward, and its a gentle art to cross-validate in situations where you can’t shuffle your data, e.g.: time-series data or spatial data.

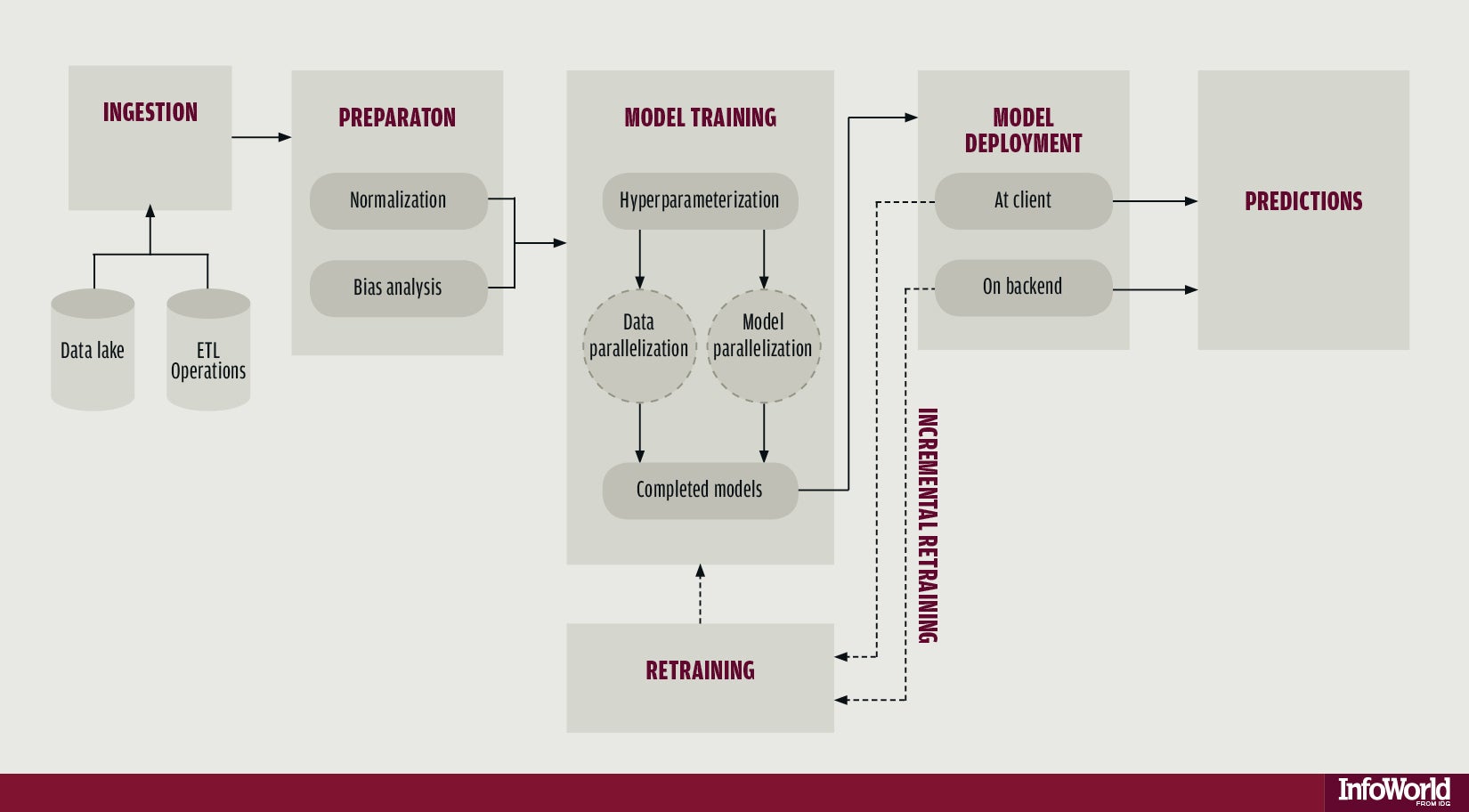

10.4.1 What is the (Supervised) Machine Learning Pipeline?

A machine learning typical project can usually be seen as a set of data processing elements connected in series, where the output of one element is the input of the next one. Usually there are some feedbacks between phases in the series, which relate to the learning process. This structure is sometimes referred as a machine learning pipeline. The parallelization structure of the process (i.e., the precise manner in which the computations will be distributed across multiple processing units) is of great importance in the pipeline. However, Explicit Parallelism is beyond the scope of this course and we will rely on the internal parallelization structure of R libraries.

The machine learning pipeline

The four main phases are:

- Data ingestion

- Data preparation / preprocessing

- Models training

- Prediction

10.4.1.1 Data ingestion

The raw data is loaded. When the data is to big for RAM see the memory chapter in R(BGU) Course. It is important that any manipulation on the raw data will be documented, for reproducability, that is, any subsetting, cleaning, arranging will be perform by code. The rational here, is that when new data comes, it will pass through the pipeline without having to do anything manually.

10.4.1.2 Preparation and Preprocessing

If you use a hold-out set for testing (that’s what I teach here, but testing can be performed repeatedly as in the training-validation CV, by defining inner (train-validation) and outer (train-test) foldings) than splitting is done at the beginning of this stage, before any preprocessing.

After you left some of the data aside for testing, you do all your preprocessing on the remaining.

In the preprocessing step you make the data ready to be trained by algorithms. You want the data to be as informative as possible, i.e., that your features would be most suitable for solving the prediction problem. Actions that are sometimes performed at this stage are data scaling, data imputation, bias corrections, outliers handling, dimensionality reduction, feature engineering and more. (Note: in deep learning, some of these are internal to the learning process, i.e., data representation is learned during the Model training phase.)

Before moving to the training, you need to prepare the test set so it will have the same variables and meaning as the training. For example, if you scaled the training set by subtracting from each variable the mean and dividing by its standard error, than you should apply this also to the test set, but with means and standard errors calculated on the test set.

Sometimes this phase involves Exploratory data analysis and visualization, in order to understand patterns in the data whose recognition may affect prediction success.

10.4.1.3 Models training

Once you have your data set established, next comes the training process, where the data is used to generate a model from which predictions can be made. You will generally try many different algorithms before finding the one that performs best with your data.

In order to evaluate the performance of some algorithm train it on a training set, and test it on the validation set. compare different algorithms using same train and test.

In this stage you also tune your hyperparameters. A hyperparameter for a machine learning model is a setting that governs how the resulting model is produced from the algorithm. These are parameters that are not learned in the fitting itself. You would learn it manually and systemically: train with different hyperparameters values combinations and test the performance over the validation set. chose the hyperparameters which resulted in the best predictive accuracy.

It is highly recommended to use cross-validation so the results would be more robust.

After convincing yourself which model is best suited and you have tuned the hyperparameters you finally have learner, and you are ready for the next step.

10.4.1.4 Prediction

In the last phase of the pipeline, you use your trained learner in order to predict with the target in new data. If you saved a test set, you can estimate the performance of this learner with it and report it.

10.4.2 The caret Package

The caret package (short for Classification And REgression Training) is a set of functions that attempt to streamline the process for creating predictive models. The package contains tools for:

- data splitting

- pre-processing

- feature selection

- model tuning using resampling

- variable importance estimation

as well as other functionalities.

There are many different learning algorithems implementations in R. Some have different syntax for model training and/or prediction. The package started off as a way to provide a uniform interface the functions themselves, as well as a way to standardize common tasks (such parameter tuning and variable importance).

caret is well documented online. See here, here and here.

10.4.2.1 Pre-Processing

See caret documentation for more on pre-processing.

10.4.2.2 Zero- and Near Zero-Variance Predictors

Identify variables with very small variance or not at all. There are many models where predictors with a single or almost single unique values (zero- and near zero- variance predictors) will cause the model to fail. We are concerned about this, especially when we split our data into subsamples. Deciding when to Include or not these features is a gentle art (see Kuhn and others (2008)). Anyway, with nearZeroVar() function you would be able to quickly identify these. Type ?nearZeroVar to know exactly how.

##

## 0 1 2

## 501 4 23nzv <- nearZeroVar(mdrrDescr, saveMetrics= TRUE)

head(nzv[nzv$nzv,]) # get a table of values identified as "Near Zero-Variance Predictors"(nzv$nzv == TRUE) ## freqRatio percentUnique zeroVar nzv

## nTB 23.00000 0.3787879 FALSE TRUE

## nBR 131.00000 0.3787879 FALSE TRUE

## nI 527.00000 0.3787879 FALSE TRUE

## nR03 527.00000 0.3787879 FALSE TRUE

## nR08 527.00000 0.3787879 FALSE TRUE

## nR11 21.78261 0.5681818 FALSE TRUE10.4.2.3 Centering and Scaling

Centering and Scaling is highly recommended before training, especially from computational perspective. Note that after these transformations you lose the numerical reasoning of the variables’ effects (important only if you are interested in statistical inference)

## Sepal.Length Sepal.Width Petal.Length Petal.Width Species

## 1 5.1 3.5 1.4 0.2 setosa

## 2 4.9 3.0 1.4 0.2 setosa

## 3 4.7 3.2 1.3 0.2 setosa

## 4 4.6 3.1 1.5 0.2 setosa

## 5 5.0 3.6 1.4 0.2 setosa

## 6 5.4 3.9 1.7 0.4 setosatrain <- iris[1:100,]

test <- iris[101:150,]

(preproc <- preProcess(train, method = c("center","scale"))) # note that factors are not transformed## Created from 100 samples and 5 variables

##

## Pre-processing:

## - centered (4)

## - ignored (1)

## - scaled (4)10.4.2.4 Model Training and Parameter Tuning

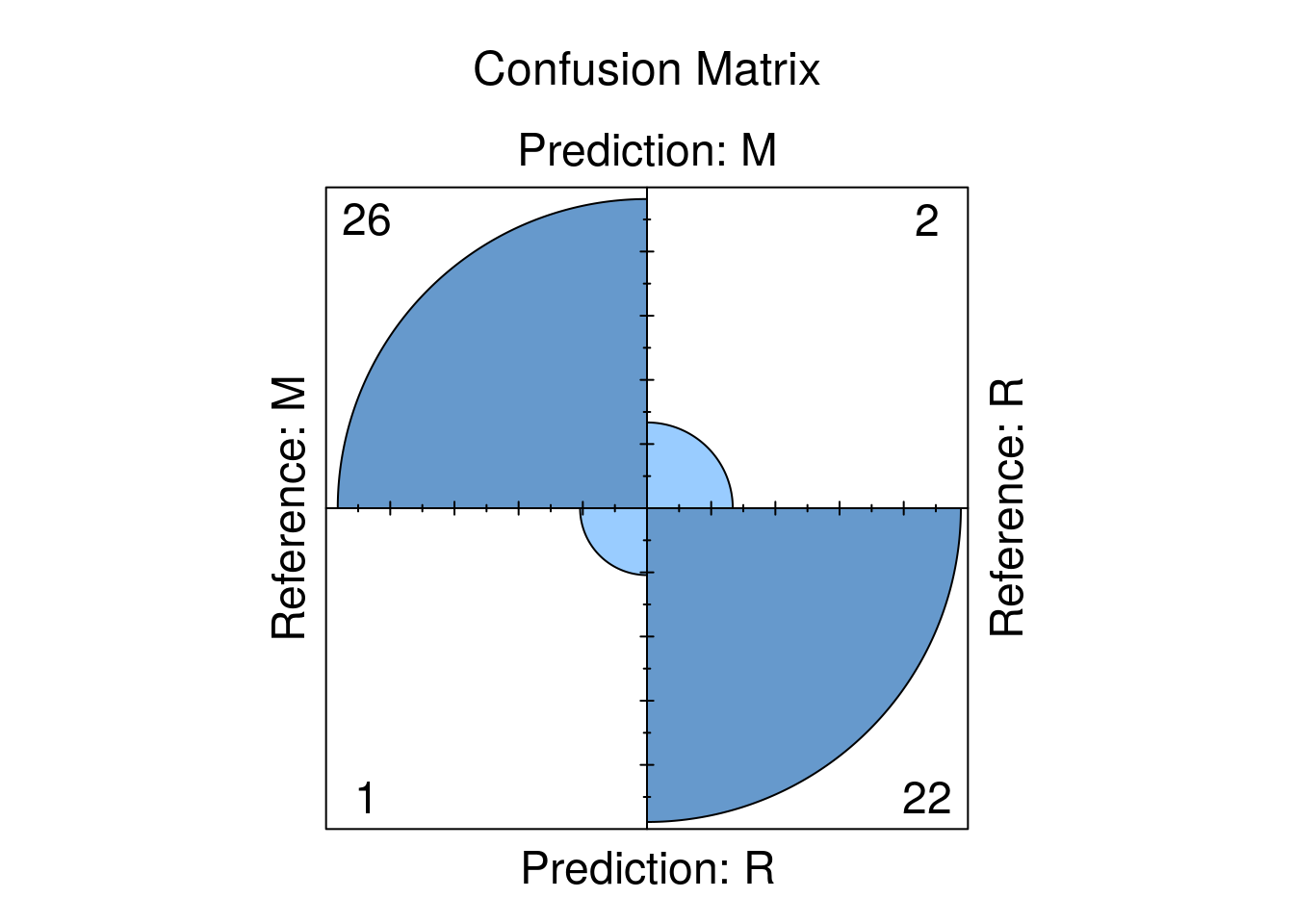

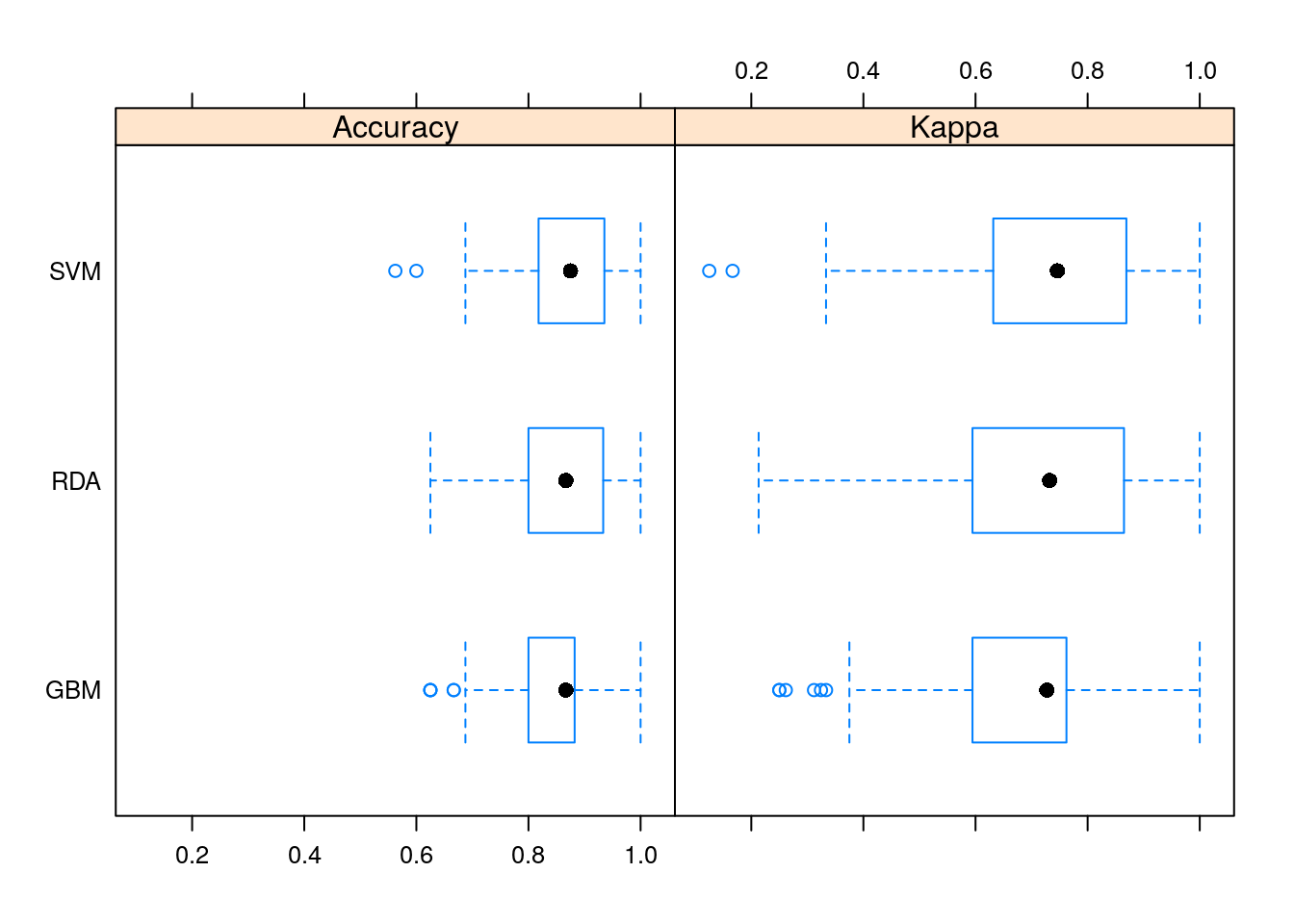

This is the most important and interesting stage in the pipeline, and it is very convenient to do it in caret