Chapter 4 Decision Trees

These notes rely on PSU STAT 508.

Decision trees, also known as classification and regression tree (CART) models, are tree-based methods for supervised machine learning. Simple classification trees and regression trees are easy to use and interpret, but are not competitive with the best machine learning methods. However, they form the foundation for ensemble models such as bagged trees, random forests, and boosted trees, which although less interpretable, are very accurate.

CART models segment the predictor space into \(K\) non-overlapping terminal nodes (leaves). Each node is described by a set of rules which can be used to predict new responses. The predicted value \(\hat{y}\) for each node is the mode (classification) or mean (regression).

CART models define the nodes through a top-down greedy process called recursive binary splitting. The process is top-down because it begins at the top of the tree with all observations in a single region and successively splits the predictor space. It is greedy because at each splitting step, the best split is made at that particular step without consideration to subsequent splits.

The best split is the predictor variable and cutpoint that minimizes a cost function. The most common cost function for regression trees is the sum of squared residuals,

\[RSS = \sum_{k=1}^K\sum_{i \in A_k}{\left(y_i - \hat{y}_{A_k} \right)^2}.\]

For classification trees, it is the Gini index,

\[G = \sum_{c=1}^C{\hat{p}_{kc}(1 - \hat{p}_{kc})},\]

or the entropy (aka information statistic),

\[D = - \sum_{c=1}^C{\hat{p}_{kc} \log \hat{p}_{kc}}\]

where \(\hat{p}_{kc}\) is the proportion of training observations in node \(k\) that are class \(c\). A completely pure node in a binary tree would have \(\hat{p} \in \{ 0, 1 \}\) and \(G = D = 0\). A completely impure node in a binary tree would have \(\hat{p} = 0.5\) and \(G = 0.5^2 \cdot 2 = 0.5\) and \(D = -(0.5 \log(0.5)) \cdot 2 = 0.69\).
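The two impurity measures are easy to check numerically. The sketch below is only an illustration: the helper names `gini_index()` and `entropy()` are my own (not rpart functions), and both assume the input is a vector of class proportions that sums to 1.

```r
# Node impurity from a vector of class proportions (assumed to sum to 1).
gini_index <- function(p) {
  sum(p * (1 - p))
}

entropy <- function(p) {
  p <- p[p > 0]        # treat 0 * log(0) as 0
  -sum(p * log(p))     # natural log, matching D above
}

pure   <- c(1, 0)      # every observation in one class
impure <- c(0.5, 0.5)  # evenly split binary node

gini_index(pure)
entropy(pure)
gini_index(impure)
entropy(impure)
```

For the evenly split node this gives a Gini index of 0.5 and an entropy of log(2), about 0.69; both measures are 0 for the pure node.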

CART repeats the splitting process for each child node until a stopping criterion is satisfied, usually when no node size surpasses a predefined maximum, or continued splitting does not improve the model significantly. CART may also impose a minimum number of observations in each node.
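To make the greedy step concrete, here is a minimal sketch of a single split search for a regression tree using RSS as the cost function. It is not how rpart is implemented; `best_split()`, `min_node`, and the toy data are hypothetical names and values chosen for illustration.

```r
# One greedy step of recursive binary splitting for a regression tree:
# scan every predictor and every candidate cutpoint, and keep the split
# with the smallest total RSS across the two child nodes.
rss <- function(y) sum((y - mean(y))^2)

best_split <- function(x, y, min_node = 1) {
  best <- list(variable = NA_character_, cutpoint = NA_real_, rss = rss(y))
  for (v in names(x)) {
    vals <- sort(unique(x[[v]]))
    if (length(vals) < 2) next
    # candidate cutpoints: midpoints between adjacent distinct values
    cutpoints <- (head(vals, -1) + tail(vals, -1)) / 2
    for (cutpoint in cutpoints) {
      left <- x[[v]] < cutpoint
      # enforce a minimum number of observations in each child node
      if (sum(left) < min_node || sum(!left) < min_node) next
      total <- rss(y[left]) + rss(y[!left])
      if (total < best$rss) {
        best <- list(variable = v, cutpoint = cutpoint, rss = total)
      }
    }
  }
  best
}

# Toy data (made up): y jumps when x1 crosses 3.5; x2 is noise.
toy_x <- data.frame(x1 = 1:6, x2 = c(5, 1, 4, 2, 6, 3))
toy_y <- c(1, 1, 1, 10, 10, 10)
toy_split <- best_split(toy_x, toy_y)
toy_split
```

A full tree would call this search again on each child node until a stopping criterion is met.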

The resulting tree likely over-fits the training data and therefore does not generalize well to test data, so CART prunes the tree, minimizing the cross-validated prediction error. Rather than cross-validating every possible subtree to find the one with minimum error, CART uses cost-complexity pruning. Cost-complexity is the tradeoff between error (cost) and tree size (complexity) where the tradeoff is quantified with cost-complexity parameter \(c_p\). The cost complexity of the tree, \(R_{c_p}(T)\), is the sum of its risk (error) plus a “cost complexity” factor \(c_p\) multiple of the tree size \(|T|\).

\[R_{c_p}(T) = R(T) + c_p|T|\]

\(c_p\) can take on any value in \([0, \infty)\), but it turns out there is an optimal tree for ranges of \(c_p\) values, so there is only a finite set of interesting values for \(c_p\) (James et al. 2013) (Therneau and Atkinson 2019) (Kuhn and Johnson 2016). A parametric algorithm identifies the interesting \(c_p\) values and their associated pruned trees, \(T_{c_p}\). CART uses cross-validation to determine which \(c_p\) is optimal.
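The tradeoff is easier to see with numbers. In this sketch the subtree sizes and risks are made up, and `cost_complexity()` and `best_size()` are hypothetical helpers. It applies the formula \(R_{c_p}(T) = R(T) + c_p|T|\) directly, whereas rpart reports \(c_p\) on a scale relative to the root node risk.

```r
# Hypothetical nested subtrees: risk R(T) falls as the number of leaves grows.
subtrees <- data.frame(
  leaves = c(1, 2, 4, 6),
  risk   = c(0.390, 0.203, 0.177, 0.167)
)

# Cost complexity R_cp(T) = R(T) + cp * |T| for one value of cp.
cost_complexity <- function(cp, trees = subtrees) {
  trees$risk + cp * trees$leaves
}

# Size (in leaves) of the subtree that minimizes R_cp(T).
best_size <- function(cp, trees = subtrees) {
  trees$leaves[which.min(cost_complexity(cp, trees))]
}

chosen <- sapply(c(0, 0.01, 0.05, 0.5), best_size)
chosen
```

As \(c_p\) grows the selected subtree shrinks from 6 leaves to 4, 2, and finally the root alone, and each subtree stays optimal over a whole range of \(c_p\) values.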

4.1 Classification Tree

You don’t usually build a simple classification tree on its own, but it is a good way to build understanding, and the ensemble models build on the logic. I’ll learn by example, using the ISLR::OJ data set to predict which brand of orange juice, Citrus Hill (CH) or Minute Maid (MM), customers Purchase from its 17 predictor variables.

library(tidyverse)

library(caret)

library(rpart) # classification and regression trees

library(rpart.plot) # better formatted plots than the ones in rpart

oj_dat <- ISLR::OJ

skimr::skim(oj_dat)

| Name | oj_dat |

| Number of rows | 1070 |

| Number of columns | 18 |

| _______________________ | |

| Column type frequency: | |

| factor | 2 |

| numeric | 16 |

| ________________________ | |

| Group variables | None |

Variable type: factor

| skim_variable | n_missing | complete_rate | ordered | n_unique | top_counts |

|---|---|---|---|---|---|

| Purchase | 0 | 1 | FALSE | 2 | CH: 653, MM: 417 |

| Store7 | 0 | 1 | FALSE | 2 | No: 714, Yes: 356 |

Variable type: numeric

| skim_variable | n_missing | complete_rate | mean | sd | p0 | p25 | p50 | p75 | p100 | hist |

|---|---|---|---|---|---|---|---|---|---|---|

| WeekofPurchase | 0 | 1 | 254.38 | 15.56 | 227.00 | 240.00 | 257.00 | 268.00 | 278.00 | ▆▅▅▇▇ |

| StoreID | 0 | 1 | 3.96 | 2.31 | 1.00 | 2.00 | 3.00 | 7.00 | 7.00 | ▇▅▃▁▇ |

| PriceCH | 0 | 1 | 1.87 | 0.10 | 1.69 | 1.79 | 1.86 | 1.99 | 2.09 | ▅▂▇▆▁ |

| PriceMM | 0 | 1 | 2.09 | 0.13 | 1.69 | 1.99 | 2.09 | 2.18 | 2.29 | ▂▁▃▇▆ |

| DiscCH | 0 | 1 | 0.05 | 0.12 | 0.00 | 0.00 | 0.00 | 0.00 | 0.50 | ▇▁▁▁▁ |

| DiscMM | 0 | 1 | 0.12 | 0.21 | 0.00 | 0.00 | 0.00 | 0.23 | 0.80 | ▇▁▂▁▁ |

| SpecialCH | 0 | 1 | 0.15 | 0.35 | 0.00 | 0.00 | 0.00 | 0.00 | 1.00 | ▇▁▁▁▂ |

| SpecialMM | 0 | 1 | 0.16 | 0.37 | 0.00 | 0.00 | 0.00 | 0.00 | 1.00 | ▇▁▁▁▂ |

| LoyalCH | 0 | 1 | 0.57 | 0.31 | 0.00 | 0.33 | 0.60 | 0.85 | 1.00 | ▅▃▆▆▇ |

| SalePriceMM | 0 | 1 | 1.96 | 0.25 | 1.19 | 1.69 | 2.09 | 2.13 | 2.29 | ▁▂▂▂▇ |

| SalePriceCH | 0 | 1 | 1.82 | 0.14 | 1.39 | 1.75 | 1.86 | 1.89 | 2.09 | ▂▁▇▇▅ |

| PriceDiff | 0 | 1 | 0.15 | 0.27 | -0.67 | 0.00 | 0.23 | 0.32 | 0.64 | ▁▂▃▇▂ |

| PctDiscMM | 0 | 1 | 0.06 | 0.10 | 0.00 | 0.00 | 0.00 | 0.11 | 0.40 | ▇▁▂▁▁ |

| PctDiscCH | 0 | 1 | 0.03 | 0.06 | 0.00 | 0.00 | 0.00 | 0.00 | 0.25 | ▇▁▁▁▁ |

| ListPriceDiff | 0 | 1 | 0.22 | 0.11 | 0.00 | 0.14 | 0.24 | 0.30 | 0.44 | ▂▃▆▇▁ |

| STORE | 0 | 1 | 1.63 | 1.43 | 0.00 | 0.00 | 2.00 | 3.00 | 4.00 | ▇▃▅▅▃ |

I’ll split oj_dat (n = 1,070) into oj_train (80%, n = 857) to fit various models, and oj_test (20%, n = 213) to compare their performance on new data.

set.seed(12345)

partition <- createDataPartition(y = oj_dat$Purchase, p = 0.8, list = FALSE)

oj_train <- oj_dat[partition, ]

oj_test <- oj_dat[-partition, ]

Function rpart::rpart() builds a full tree, minimizing the Gini index \(G\) by default (parms = list(split = "gini")), until the stopping criterion is satisfied. The default stopping criterion is

- only attempt a split if the current node has at least minsplit = 20 observations, and
- only accept a split if
  - the resulting nodes have at least minbucket = round(minsplit/3) observations, and
  - the resulting overall fit improves by at least cp = 0.01 (i.e., the relative improvement is \(\ge 0.01\)).
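These defaults can be written out explicitly with rpart.control(). The sketch below uses the kyphosis data that ships with rpart as a stand-in (not the OJ data), and the object names are my own. It only checks that spelling out the defaults leaves the tree unchanged.

```r
library(rpart)

# Spell out the stopping rules that rpart() otherwise applies by default.
default_ctrl <- rpart.control(
  minsplit  = 20,             # smallest node rpart will try to split
  minbucket = round(20 / 3),  # smallest allowed terminal node (7)
  cp        = 0.01            # minimum relative improvement for a split
)

# Stand-in data set bundled with rpart.
fit_default  <- rpart(Kyphosis ~ ., data = kyphosis, method = "class")
fit_explicit <- rpart(Kyphosis ~ ., data = kyphosis, method = "class",
                      control = default_ctrl)

# Both fits should choose the same sequence of split variables.
same_tree <- identical(fit_default$frame$var, fit_explicit$frame$var)
same_tree
```

Loosening any of the three values (for example a smaller cp) lets the tree grow deeper before pruning.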

# Use method = "class" for classification, method = "anova" for regression

set.seed(123)

oj_mdl_cart_full <- rpart(formula = Purchase ~ ., data = oj_train, method = "class")

print(oj_mdl_cart_full)

## n= 857

##

## node), split, n, loss, yval, (yprob)

## * denotes terminal node

##

## 1) root 857 334 CH (0.61026838 0.38973162)

## 2) LoyalCH>=0.48285 537 94 CH (0.82495345 0.17504655)

## 4) LoyalCH>=0.7648795 271 13 CH (0.95202952 0.04797048) *

## 5) LoyalCH< 0.7648795 266 81 CH (0.69548872 0.30451128)

## 10) PriceDiff>=-0.165 226 50 CH (0.77876106 0.22123894) *

## 11) PriceDiff< -0.165 40 9 MM (0.22500000 0.77500000) *

## 3) LoyalCH< 0.48285 320 80 MM (0.25000000 0.75000000)

## 6) LoyalCH>=0.2761415 146 58 MM (0.39726027 0.60273973)

## 12) SalePriceMM>=2.04 71 31 CH (0.56338028 0.43661972) *

## 13) SalePriceMM< 2.04 75 18 MM (0.24000000 0.76000000) *

## 7) LoyalCH< 0.2761415 174 22 MM (0.12643678 0.87356322) *

The output starts with the root node. The predicted class at the root is CH and this prediction produces 334 errors on the 857 observations for a success rate (accuracy) of 61% (0.61026838) and an error rate of 39% (0.38973162). The child nodes of node “x” are labeled 2x) and 2x+1), so the child nodes of 1) are 2) and 3), and the child nodes of 2) are 4) and 5). Terminal nodes are labeled with an asterisk (*).
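The numbering rule makes it simple to move around the printed tree. These helpers are hypothetical conveniences, not part of rpart; they just restate the 2x and 2x+1 rule.

```r
# Node x has children 2x and 2x + 1, so its parent is x %/% 2.
node_children <- function(x) c(2 * x, 2 * x + 1)
node_parent   <- function(x) x %/% 2
node_depth    <- function(x) floor(log2(x))  # root (node 1) has depth 0

node_children(1)   # children of the root
node_children(5)   # children of node 5
node_parent(11)    # parent of node 11
node_depth(11)     # node 11 sits three splits below the root
```

For example, node 11) is reached through nodes 1), 2), and 5), which matches the indentation in the printed output.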

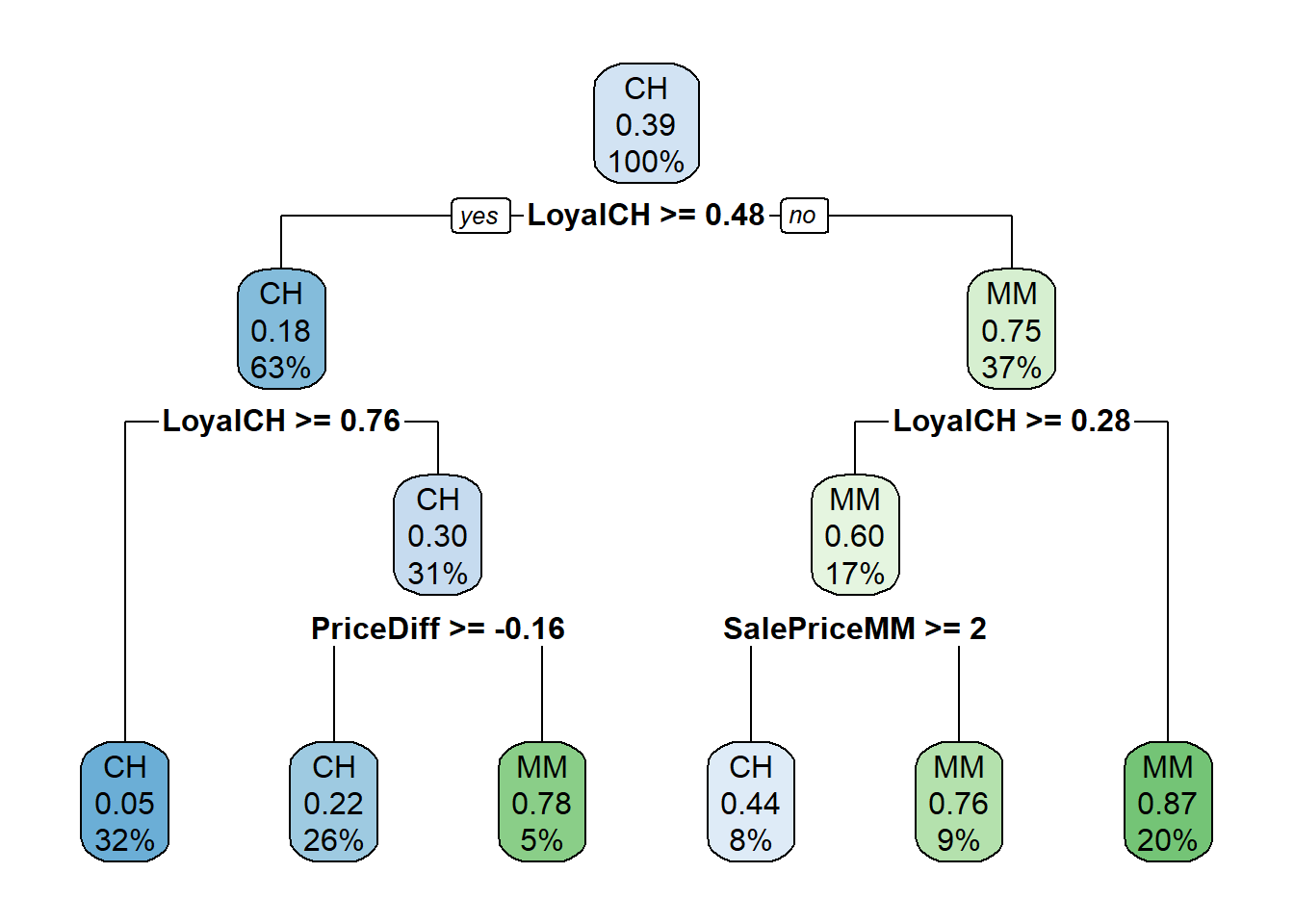

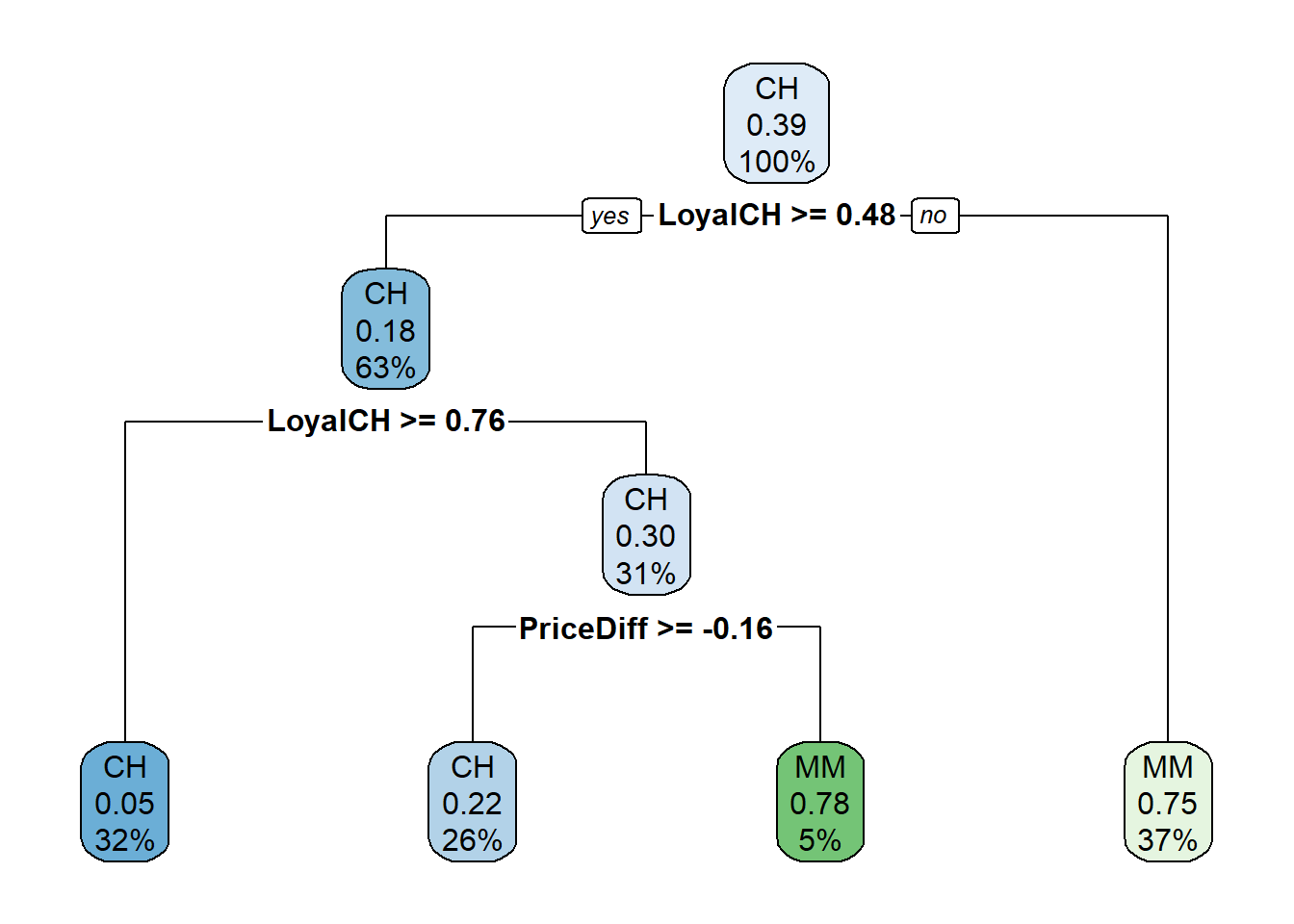

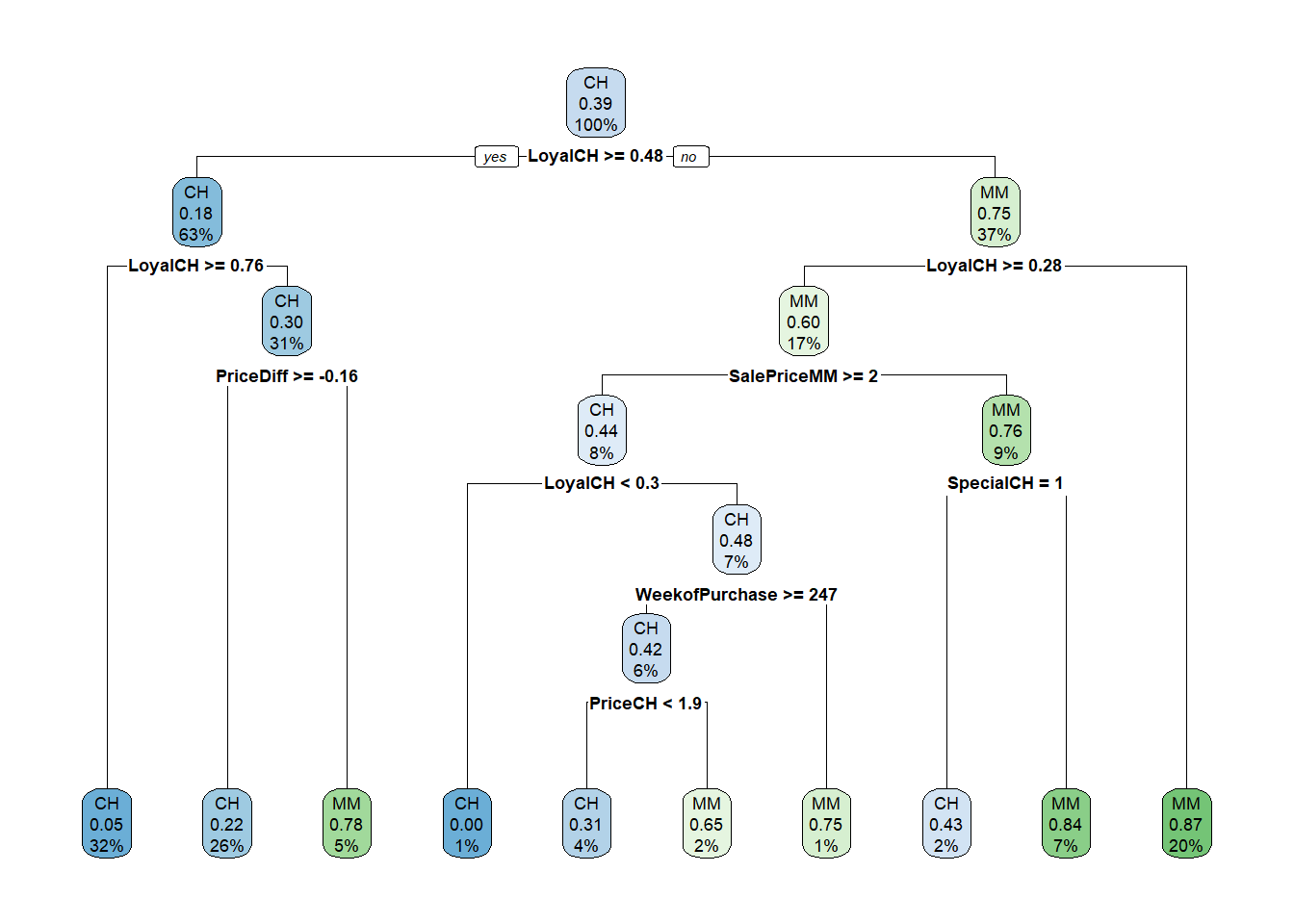

Surprisingly, only 3 of the 17 features were used in the full tree: LoyalCH (customer brand loyalty for CH), PriceDiff (relative price of MM over CH), and SalePriceMM (absolute price of MM). The first split is at LoyalCH = 0.48285. Here is a diagram of the full (unpruned) tree.

rpart.plot(oj_mdl_cart_full, yesno = TRUE)

The boxes show the node classification (based on mode), the proportion of observations that are not CH, and the proportion of observations included in the node.

rpart() not only grew the full tree, it identified the set of cost complexity parameters, and measured the model performance of each corresponding tree using cross-validation. printcp() displays the candidate \(c_p\) values. You can use this table to decide how to prune the tree.

printcp(oj_mdl_cart_full)

##

## Classification tree:

## rpart(formula = Purchase ~ ., data = oj_train, method = "class")

##

## Variables actually used in tree construction:

## [1] LoyalCH PriceDiff SalePriceMM

##

## Root node error: 334/857 = 0.38973

##

## n= 857

##

## CP nsplit rel error xerror xstd

## 1 0.479042 0 1.00000 1.00000 0.042745

## 2 0.032934 1 0.52096 0.54192 0.035775

## 3 0.013473 3 0.45509 0.47006 0.033905

## 4 0.010000 5 0.42814 0.46407 0.033736

There are 4 \(c_p\) values in this model. The model with the smallest complexity parameter allows the most splits (nsplit). The highest complexity parameter corresponds to a tree with just a root node. rel error is the error rate relative to the root node. The root node absolute error is 0.38973162 (the proportion of MM), so its rel error is 0.38973162/0.38973162 = 1.0. That means the absolute error of the full tree (at CP = 0.01) is 0.42814 * 0.38973162 = 0.1669. You can verify that by calculating the error rate of the predicted values:

data.frame(pred = predict(oj_mdl_cart_full, newdata = oj_train, type = "class")) %>%

mutate(obs = oj_train$Purchase,

err = if_else(pred != obs, 1, 0)) %>%

summarize(mean_err = mean(err))

## mean_err

## 1 0.1668611

Finishing the CP table tour, xerror is the relative cross-validated error rate and xstd is its standard error. If you want the lowest possible error, then prune to the tree with the smallest relative CV error, \(c_p\) = 0.01. If you want to balance predictive power with simplicity, prune to the smallest tree within 1 SE of the one with the smallest relative error. The CP table is not super-helpful for finding that tree, so add a column to find it.

oj_mdl_cart_full$cptable %>%

data.frame() %>%

mutate(

min_idx = which.min(oj_mdl_cart_full$cptable[, "xerror"]),

rownum = row_number(),

xerror_cap = oj_mdl_cart_full$cptable[min_idx, "xerror"] +

oj_mdl_cart_full$cptable[min_idx, "xstd"],

eval = case_when(rownum == min_idx ~ "min xerror",

xerror < xerror_cap ~ "under cap",

TRUE ~ "")

) %>%

select(-rownum, -min_idx)

## CP nsplit rel.error xerror xstd xerror_cap eval

## 1 0.47904192 0 1.0000000 1.0000000 0.04274518 0.4978082

## 2 0.03293413 1 0.5209581 0.5419162 0.03577468 0.4978082

## 3 0.01347305 3 0.4550898 0.4700599 0.03390486 0.4978082 under cap

## 4 0.01000000 5 0.4281437 0.4640719 0.03373631 0.4978082 min xerror

The simplest tree using the 1-SE rule is the one with 3 splits (\(c_p = 0.01347305\), CV error = 0.1832). Fortunately, plotcp() presents a nice graphical representation of the relationship between xerror and cp.

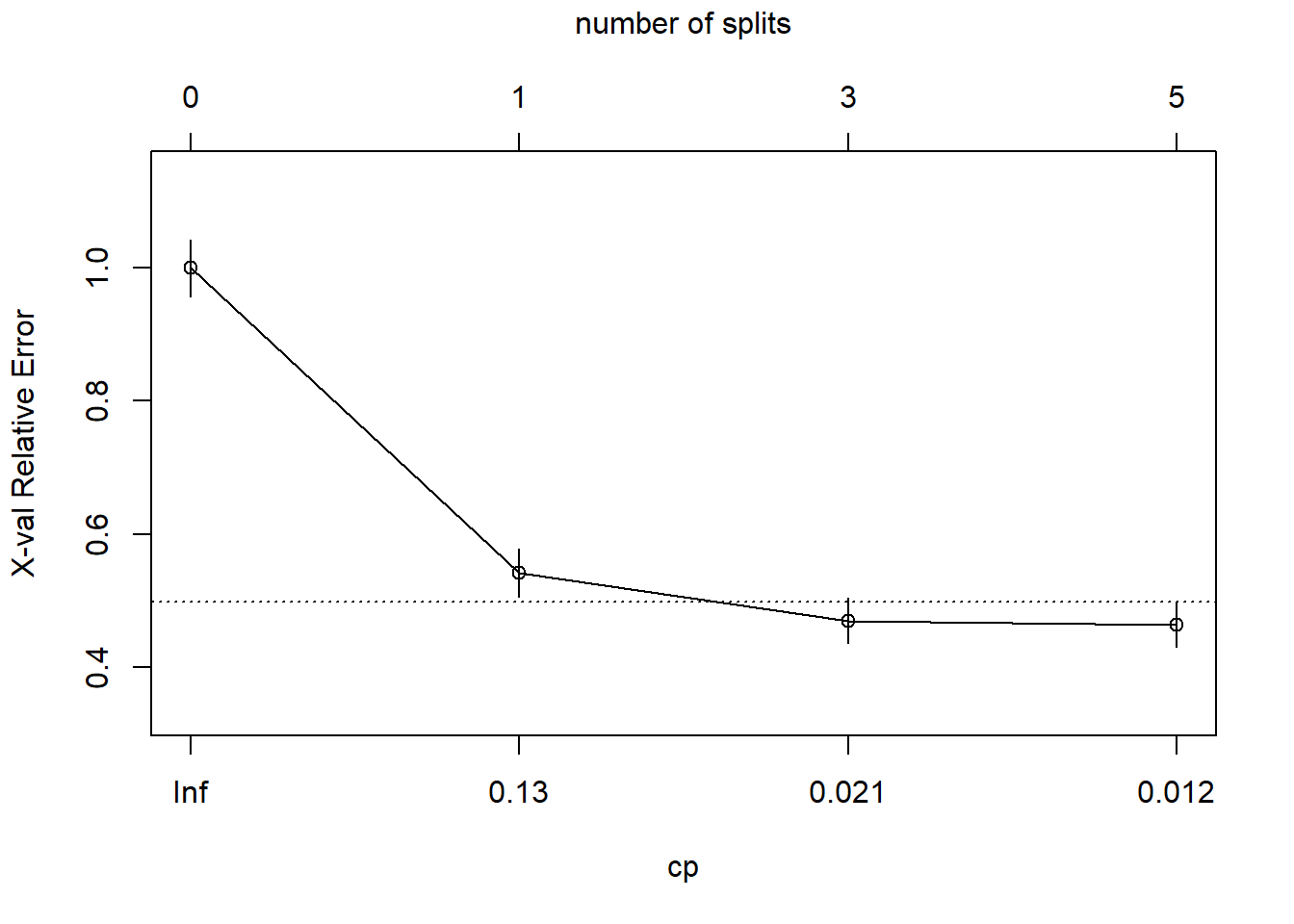

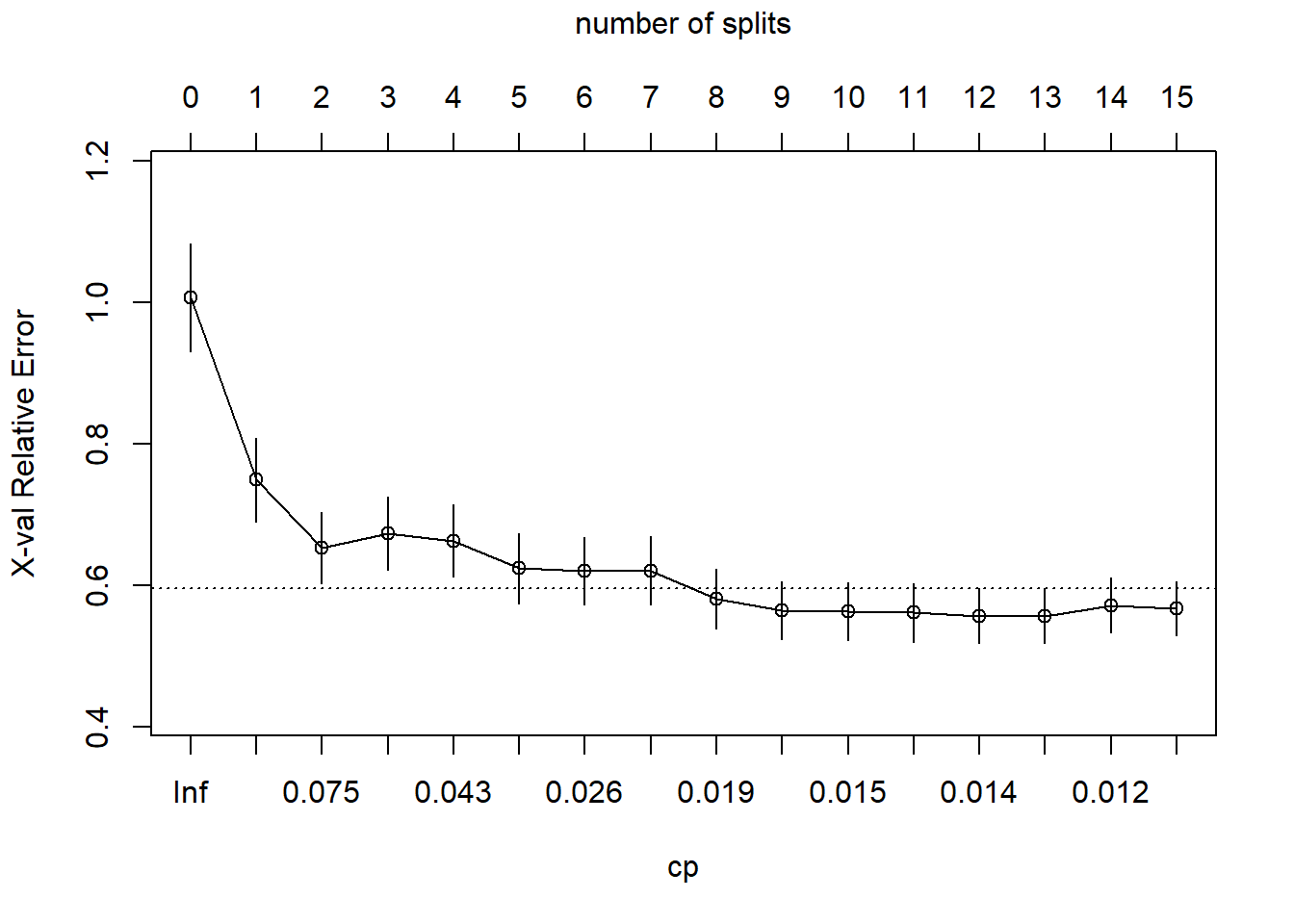

plotcp(oj_mdl_cart_full, upper = "splits")

The dashed line is set at the minimum xerror + xstd. The top axis shows the number of splits in the tree. The CP values on the bottom axis are close to, but not the same as, the values in the table because plotcp() labels each tree with the geometric mean of its CP and the CP of the next-smaller tree. The figure suggests I should prune to 5 or 3 splits. This curve never really hits a minimum - it is still decreasing at 5 splits. The default tuning parameter value cp = 0.01 may be too large, so I’ll set it to cp = 0.001 and start over.

set.seed(123)

oj_mdl_cart_full <- rpart(

formula = Purchase ~ .,

data = oj_train,

method = "class",

cp = 0.001

)

print(oj_mdl_cart_full)

## n= 857

##

## node), split, n, loss, yval, (yprob)

## * denotes terminal node

##

## 1) root 857 334 CH (0.61026838 0.38973162)

## 2) LoyalCH>=0.48285 537 94 CH (0.82495345 0.17504655)

## 4) LoyalCH>=0.7648795 271 13 CH (0.95202952 0.04797048) *

## 5) LoyalCH< 0.7648795 266 81 CH (0.69548872 0.30451128)

## 10) PriceDiff>=-0.165 226 50 CH (0.77876106 0.22123894)

## 20) ListPriceDiff>=0.255 115 11 CH (0.90434783 0.09565217) *

## 21) ListPriceDiff< 0.255 111 39 CH (0.64864865 0.35135135)

## 42) PriceMM>=2.155 19 2 CH (0.89473684 0.10526316) *

## 43) PriceMM< 2.155 92 37 CH (0.59782609 0.40217391)

## 86) DiscCH>=0.115 7 0 CH (1.00000000 0.00000000) *

## 87) DiscCH< 0.115 85 37 CH (0.56470588 0.43529412)

## 174) ListPriceDiff>=0.215 45 15 CH (0.66666667 0.33333333) *

## 175) ListPriceDiff< 0.215 40 18 MM (0.45000000 0.55000000)

## 350) LoyalCH>=0.527571 28 13 CH (0.53571429 0.46428571)

## 700) WeekofPurchase< 266.5 21 8 CH (0.61904762 0.38095238) *

## 701) WeekofPurchase>=266.5 7 2 MM (0.28571429 0.71428571) *

## 351) LoyalCH< 0.527571 12 3 MM (0.25000000 0.75000000) *

## 11) PriceDiff< -0.165 40 9 MM (0.22500000 0.77500000) *

## 3) LoyalCH< 0.48285 320 80 MM (0.25000000 0.75000000)

## 6) LoyalCH>=0.2761415 146 58 MM (0.39726027 0.60273973)

## 12) SalePriceMM>=2.04 71 31 CH (0.56338028 0.43661972)

## 24) LoyalCH< 0.303104 7 0 CH (1.00000000 0.00000000) *

## 25) LoyalCH>=0.303104 64 31 CH (0.51562500 0.48437500)

## 50) WeekofPurchase>=246.5 52 22 CH (0.57692308 0.42307692)

## 100) PriceCH< 1.94 35 11 CH (0.68571429 0.31428571)

## 200) StoreID< 1.5 9 1 CH (0.88888889 0.11111111) *

## 201) StoreID>=1.5 26 10 CH (0.61538462 0.38461538)

## 402) LoyalCH< 0.410969 17 4 CH (0.76470588 0.23529412) *

## 403) LoyalCH>=0.410969 9 3 MM (0.33333333 0.66666667) *

## 101) PriceCH>=1.94 17 6 MM (0.35294118 0.64705882) *

## 51) WeekofPurchase< 246.5 12 3 MM (0.25000000 0.75000000) *

## 13) SalePriceMM< 2.04 75 18 MM (0.24000000 0.76000000)

## 26) SpecialCH>=0.5 14 6 CH (0.57142857 0.42857143) *

## 27) SpecialCH< 0.5 61 10 MM (0.16393443 0.83606557) *

## 7) LoyalCH< 0.2761415 174 22 MM (0.12643678 0.87356322)

## 14) LoyalCH>=0.035047 117 21 MM (0.17948718 0.82051282)

## 28) WeekofPurchase< 273.5 104 21 MM (0.20192308 0.79807692)

## 56) PriceCH>=1.875 20 9 MM (0.45000000 0.55000000)

## 112) WeekofPurchase>=252.5 12 5 CH (0.58333333 0.41666667) *

## 113) WeekofPurchase< 252.5 8 2 MM (0.25000000 0.75000000) *

## 57) PriceCH< 1.875 84 12 MM (0.14285714 0.85714286) *

## 29) WeekofPurchase>=273.5 13 0 MM (0.00000000 1.00000000) *

## 15) LoyalCH< 0.035047 57 1 MM (0.01754386 0.98245614) *

This is a much larger tree. Did I find a cp value that produces a local min?

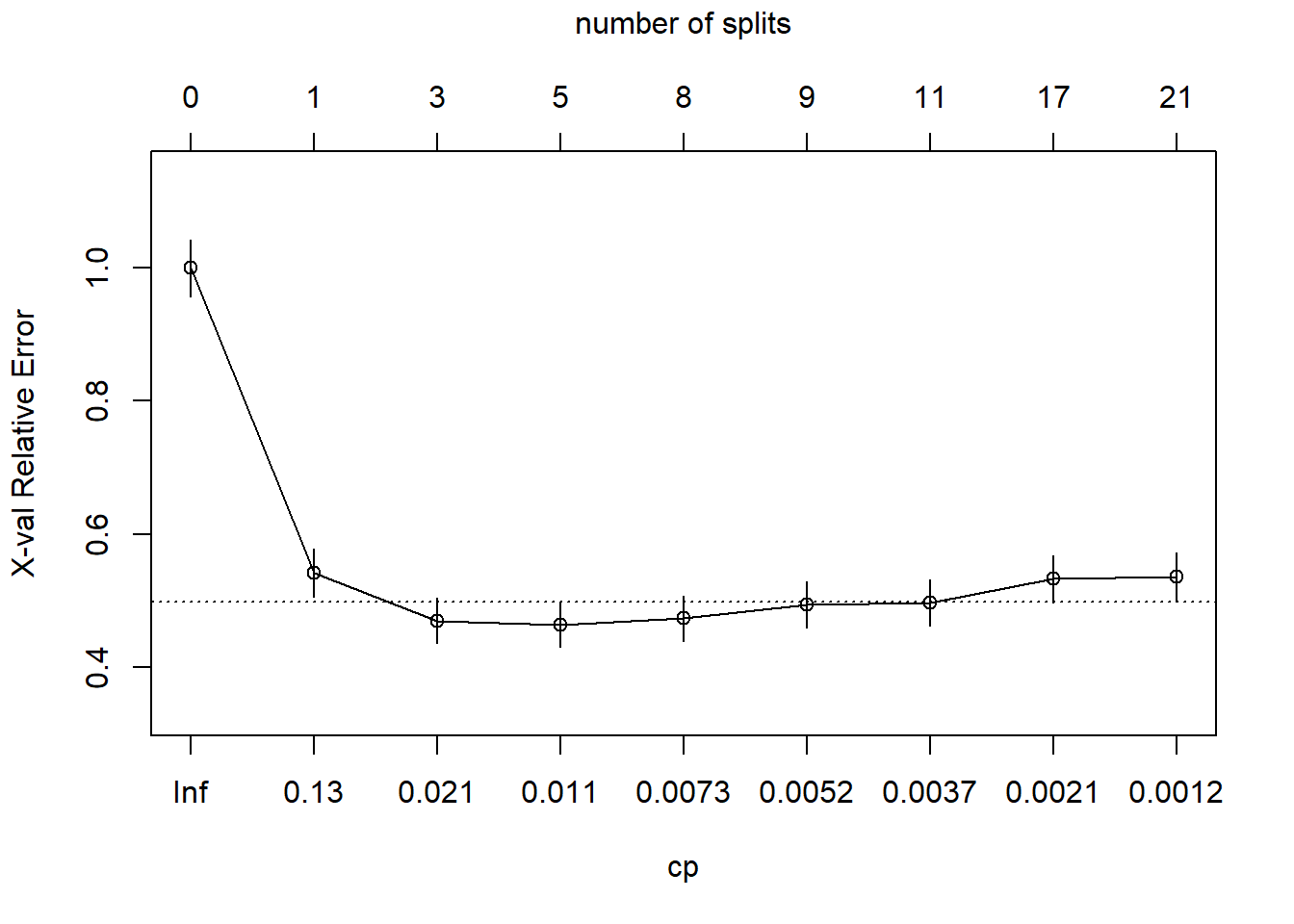

plotcp(oj_mdl_cart_full, upper = "splits")

Yes, the min is at CP = 0.011 with 5 splits. The min + 1 SE is at CP = 0.021 with 3 splits. I’ll prune the tree to 3 splits.

oj_mdl_cart <- prune(

oj_mdl_cart_full,

cp = oj_mdl_cart_full$cptable[oj_mdl_cart_full$cptable[, 2] == 3, "CP"]

)

rpart.plot(oj_mdl_cart, yesno = TRUE)
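The CP values read off the plotcp() axis (such as 0.021 at 3 splits) are not the raw CP table values. plotcp() places each tree at the geometric mean of its own CP and the CP of the next-smaller tree. The sketch below reproduces that calculation for the first three rows of the CP table printed earlier; the variable names are my own.

```r
# CP column and nsplit from the first three rows of the printed CP table.
cp_table <- c(0.47904192, 0.03293413, 0.01347305)
nsplit   <- c(0, 1, 3)

# Geometric mean of each CP with the CP of the row above it;
# the root has no row above it, so its axis value is Inf.
cp_axis <- sqrt(cp_table * c(Inf, head(cp_table, -1)))

data.frame(nsplit, cp_table, cp_axis = round(cp_axis, 3))
```

The 3-split tree lands at about 0.021 on the plot axis even though its CP table value is 0.0135.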

The most “important” indicator of Purchase appears to be LoyalCH. From the rpart vignette (page 12),

“An overall measure of variable importance is the sum of the goodness of split measures for each split for which it was the primary variable, plus goodness (adjusted agreement) for all splits in which it was a surrogate.”

Surrogates refer to alternative features for a node to handle missing data. For each split, CART evaluates a variety of alternative “surrogate” splits to use when the feature value for the primary split is NA. Surrogate splits are splits that produce results similar to the original split.

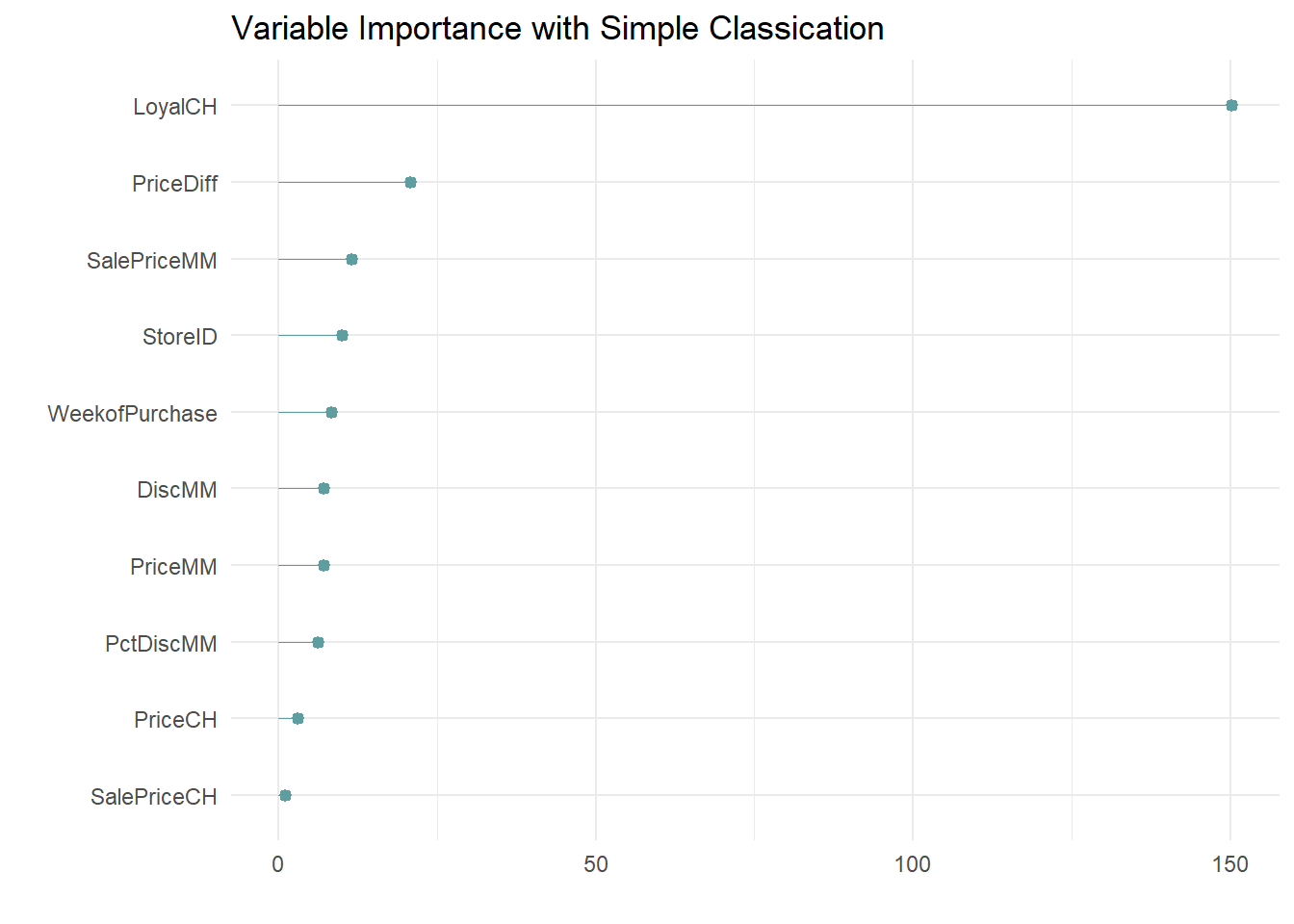

A variable’s importance is the sum of the improvement in the overall Gini (or RMSE) measure produced by the nodes in which it appears. Here is the variable importance for this model.

oj_mdl_cart$variable.importance %>%

data.frame() %>%

rownames_to_column(var = "Feature") %>%

rename(Overall = '.') %>%

ggplot(aes(x = fct_reorder(Feature, Overall), y = Overall)) +

geom_pointrange(aes(ymin = 0, ymax = Overall), color = "cadetblue", size = .3) +

theme_minimal() +

coord_flip() +

labs(x = "", y = "", title = "Variable Importance with Simple Classification")

LoyalCH is by far the most important variable, as expected from its position at the top of the tree, and one level down.

You can see how the surrogates appear in the model with the summary() function.

summary(oj_mdl_cart)

## Call:

## rpart(formula = Purchase ~ ., data = oj_train, method = "class",

## cp = 0.001)

## n= 857

##

## CP nsplit rel error xerror xstd

## 1 0.47904192 0 1.0000000 1.0000000 0.04274518

## 2 0.03293413 1 0.5209581 0.5419162 0.03577468

## 3 0.01347305 3 0.4550898 0.4700599 0.03390486

##

## Variable importance

## LoyalCH PriceDiff SalePriceMM StoreID WeekofPurchase

## 67 9 5 4 4

## DiscMM PriceMM PctDiscMM PriceCH

## 3 3 3 1

##

## Node number 1: 857 observations, complexity param=0.4790419

## predicted class=CH expected loss=0.3897316 P(node) =1

## class counts: 523 334

## probabilities: 0.610 0.390

## left son=2 (537 obs) right son=3 (320 obs)

## Primary splits:

## LoyalCH < 0.48285 to the right, improve=132.56800, (0 missing)

## StoreID < 3.5 to the right, improve= 40.12097, (0 missing)

## PriceDiff < 0.015 to the right, improve= 24.26552, (0 missing)

## ListPriceDiff < 0.255 to the right, improve= 22.79117, (0 missing)

## SalePriceMM < 1.84 to the right, improve= 20.16447, (0 missing)

## Surrogate splits:

## StoreID < 3.5 to the right, agree=0.646, adj=0.053, (0 split)

## PriceMM < 1.89 to the right, agree=0.638, adj=0.031, (0 split)

## WeekofPurchase < 229.5 to the right, agree=0.632, adj=0.016, (0 split)

## DiscMM < 0.77 to the left, agree=0.629, adj=0.006, (0 split)

## SalePriceMM < 1.385 to the right, agree=0.629, adj=0.006, (0 split)

##

## Node number 2: 537 observations, complexity param=0.03293413

## predicted class=CH expected loss=0.1750466 P(node) =0.6266044

## class counts: 443 94

## probabilities: 0.825 0.175

## left son=4 (271 obs) right son=5 (266 obs)

## Primary splits:

## LoyalCH < 0.7648795 to the right, improve=17.669310, (0 missing)

## PriceDiff < 0.015 to the right, improve=15.475200, (0 missing)

## SalePriceMM < 1.84 to the right, improve=13.951730, (0 missing)

## ListPriceDiff < 0.255 to the right, improve=11.407560, (0 missing)

## DiscMM < 0.15 to the left, improve= 7.795122, (0 missing)

## Surrogate splits:

## WeekofPurchase < 257.5 to the right, agree=0.594, adj=0.180, (0 split)

## PriceCH < 1.775 to the right, agree=0.590, adj=0.173, (0 split)

## StoreID < 3.5 to the right, agree=0.587, adj=0.165, (0 split)

## PriceMM < 2.04 to the right, agree=0.587, adj=0.165, (0 split)

## SalePriceMM < 2.04 to the right, agree=0.587, adj=0.165, (0 split)

##

## Node number 3: 320 observations

## predicted class=MM expected loss=0.25 P(node) =0.3733956

## class counts: 80 240

## probabilities: 0.250 0.750

##

## Node number 4: 271 observations

## predicted class=CH expected loss=0.04797048 P(node) =0.3162194

## class counts: 258 13

## probabilities: 0.952 0.048

##

## Node number 5: 266 observations, complexity param=0.03293413

## predicted class=CH expected loss=0.3045113 P(node) =0.3103851

## class counts: 185 81

## probabilities: 0.695 0.305

## left son=10 (226 obs) right son=11 (40 obs)

## Primary splits:

## PriceDiff < -0.165 to the right, improve=20.84307, (0 missing)

## ListPriceDiff < 0.235 to the right, improve=20.82404, (0 missing)

## SalePriceMM < 1.84 to the right, improve=16.80587, (0 missing)

## DiscMM < 0.15 to the left, improve=10.05120, (0 missing)

## PctDiscMM < 0.0729725 to the left, improve=10.05120, (0 missing)

## Surrogate splits:

## SalePriceMM < 1.585 to the right, agree=0.906, adj=0.375, (0 split)

## DiscMM < 0.57 to the left, agree=0.895, adj=0.300, (0 split)

## PctDiscMM < 0.264375 to the left, agree=0.895, adj=0.300, (0 split)

## WeekofPurchase < 274.5 to the left, agree=0.872, adj=0.150, (0 split)

## SalePriceCH < 2.075 to the left, agree=0.857, adj=0.050, (0 split)

##

## Node number 10: 226 observations

## predicted class=CH expected loss=0.2212389 P(node) =0.2637106

## class counts: 176 50

## probabilities: 0.779 0.221

##

## Node number 11: 40 observations

## predicted class=MM expected loss=0.225 P(node) =0.04667445

## class counts: 9 31

## probabilities: 0.225 0.775

I’ll evaluate the predictions and record the accuracy (correct classification percentage) for comparison to other models. Two ways to evaluate the model are the confusion matrix and the ROC curve.

4.1.1 Measuring Performance

4.1.1.1 Confusion Matrix

Print the confusion matrix with caret::confusionMatrix() to see how well this model performs against the holdout set.

oj_preds_cart <- bind_cols(

predict(oj_mdl_cart, newdata = oj_test, type = "prob"),

predicted = predict(oj_mdl_cart, newdata = oj_test, type = "class"),

actual = oj_test$Purchase

)

oj_cm_cart <- confusionMatrix(oj_preds_cart$predicted, reference = oj_preds_cart$actual)

oj_cm_cart

## Confusion Matrix and Statistics

##

## Reference

## Prediction CH MM

## CH 113 13

## MM 17 70

##

## Accuracy : 0.8592

## 95% CI : (0.8051, 0.9029)

## No Information Rate : 0.6103

## P-Value [Acc > NIR] : 1.265e-15

##

## Kappa : 0.7064

##

## Mcnemar's Test P-Value : 0.5839

##

## Sensitivity : 0.8692

## Specificity : 0.8434

## Pos Pred Value : 0.8968

## Neg Pred Value : 0.8046

## Prevalence : 0.6103

## Detection Rate : 0.5305

## Detection Prevalence : 0.5915

## Balanced Accuracy : 0.8563

##

## 'Positive' Class : CH

##

The confusion matrix is at the top. It also includes a lot of statistics. It’s worth getting familiar with the stats. The model accuracy and 95% CI are calculated from the binomial test.

binom.test(x = 113 + 70, n = 213)

##

## Exact binomial test

##

## data: 113 + 70 and 213

## number of successes = 183, number of trials = 213, p-value < 2.2e-16

## alternative hypothesis: true probability of success is not equal to 0.5

## 95 percent confidence interval:

## 0.8050785 0.9029123

## sample estimates:

## probability of success

## 0.8591549

The “No Information Rate” (NIR) statistic is the class rate for the largest class. In this case CH is the largest class, so NIR = 130/213 = 0.6103. “P-Value [Acc > NIR]” is the binomial test that the model accuracy is significantly better than the NIR (i.e., significantly better than just always guessing CH).

binom.test(x = 113 + 70, n = 213, p = 130/213, alternative = "greater")

##

## Exact binomial test

##

## data: 113 + 70 and 213

## number of successes = 183, number of trials = 213, p-value = 1.265e-15

## alternative hypothesis: true probability of success is greater than 0.6103286

## 95 percent confidence interval:

## 0.8138446 1.0000000

## sample estimates:

## probability of success

## 0.8591549

The “Accuracy” statistic indicates the model predicts 0.8592 of the observations correctly. That’s good, but less impressive when you consider the prevalence of CH is 0.6103 - you could achieve 61% accuracy just by predicting CH every time. A measure that controls for the prevalence is Cohen’s kappa statistic. The kappa statistic is explained here. It compares the accuracy to the accuracy of a “random system”. It is defined as

\[\kappa = \frac{Acc - RA}{1-RA}\]

where

\[RA = \frac{ActFalse \times PredFalse + ActTrue \times PredTrue}{Total \times Total}\]

is the hypothetical probability of a chance agreement. ActFalse will be the number of “MM” (13 + 70 = 83) and actual true will be the number of “CH” (113 + 17 = 130). The predicted counts are

table(oj_preds_cart$predicted)

##

## CH MM

## 126 87

So, \(RA = (83*87 + 130*126) / 213^2 = 0.5202\) and \(\kappa = (0.8592 - 0.5202)/(1 - 0.5202) = 0.7064\). The kappa statistic can range from -1 to 1, where 0 means accurate predictions occur merely by chance, 1 means the predictions are in perfect agreement with the observations, and negative values mean agreement worse than chance. In this case, a kappa statistic of 0.7064 is “substantial”. See chart here.
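The kappa arithmetic can be reproduced from the four cells of the holdout confusion matrix. This is a sketch in base R rather than the caret implementation; `cohen_kappa()` is a hypothetical helper name.

```r
# Holdout confusion matrix: rows are predictions, columns are actuals.
cm <- matrix(c(113, 17, 13, 70), nrow = 2,
             dimnames = list(Prediction = c("CH", "MM"),
                             Reference  = c("CH", "MM")))

cohen_kappa <- function(cm) {
  total    <- sum(cm)
  accuracy <- sum(diag(cm)) / total
  # chance agreement: products of the marginal totals for each class
  chance   <- sum(rowSums(cm) * colSums(cm)) / total^2
  (accuracy - chance) / (1 - chance)
}

kappa_cart <- cohen_kappa(cm)
round(kappa_cart, 4)
```

The result agrees with the Kappa line in the confusionMatrix() output (0.7064).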

The other measures from the confusionMatrix() output are various proportions and you can remind yourself of their definitions in the documentation with ?confusionMatrix.
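As a quick reference, the main proportions can be recomputed by hand from the four cell counts, taking CH as the positive class. The variable names below are my own shorthand.

```r
# Cell counts from the holdout confusion matrix, with CH as the positive class.
tp <- 113  # predicted CH, actually CH
fp <- 13   # predicted CH, actually MM
fn <- 17   # predicted MM, actually CH
tn <- 70   # predicted MM, actually MM

sensitivity <- tp / (tp + fn)  # share of actual CH that was predicted CH
specificity <- tn / (tn + fp)  # share of actual MM that was predicted MM
ppv         <- tp / (tp + fp)  # share of CH predictions that were correct
npv         <- tn / (tn + fn)  # share of MM predictions that were correct
balanced    <- (sensitivity + specificity) / 2

round(c(Sensitivity = sensitivity, Specificity = specificity,
        PosPredValue = ppv, NegPredValue = npv,
        BalancedAccuracy = balanced), 4)
```

These match the Sensitivity, Specificity, Pos Pred Value, Neg Pred Value, and Balanced Accuracy lines in the output above.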

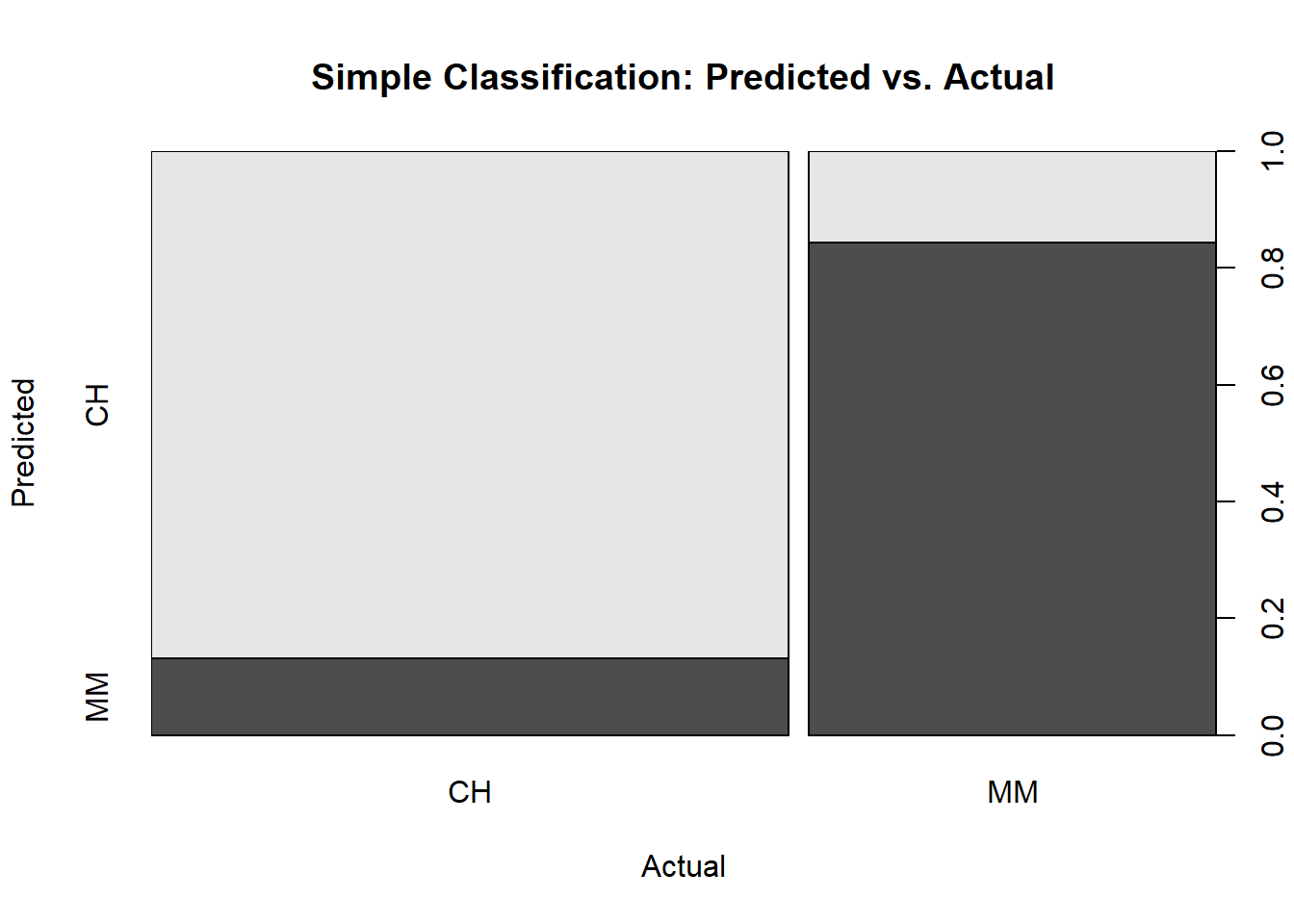

Visuals are almost always helpful. Here is a plot of the confusion matrix.

plot(oj_preds_cart$actual, oj_preds_cart$predicted,

main = "Simple Classification: Predicted vs. Actual",

xlab = "Actual",

ylab = "Predicted")

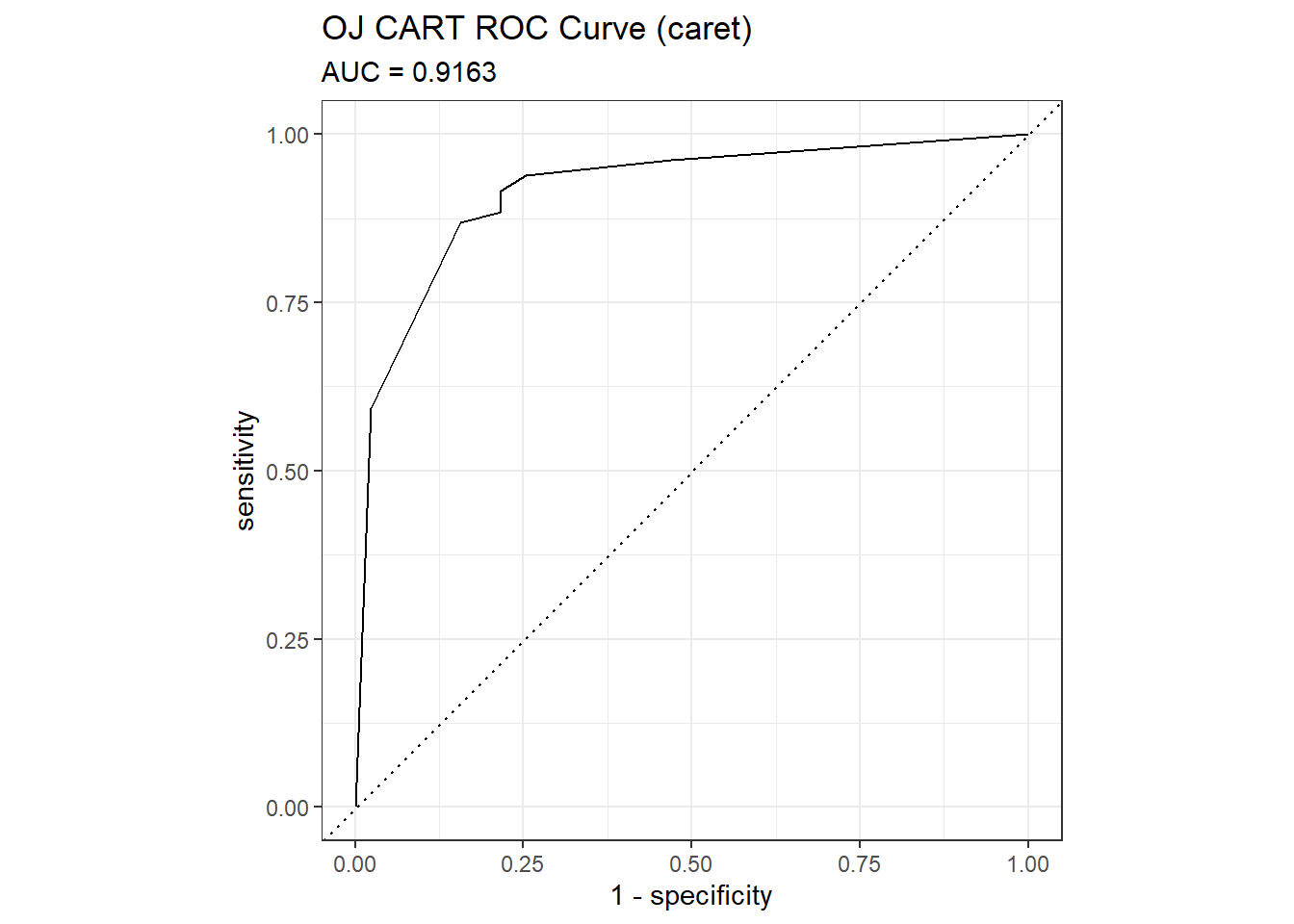

4.1.1.2 ROC Curve

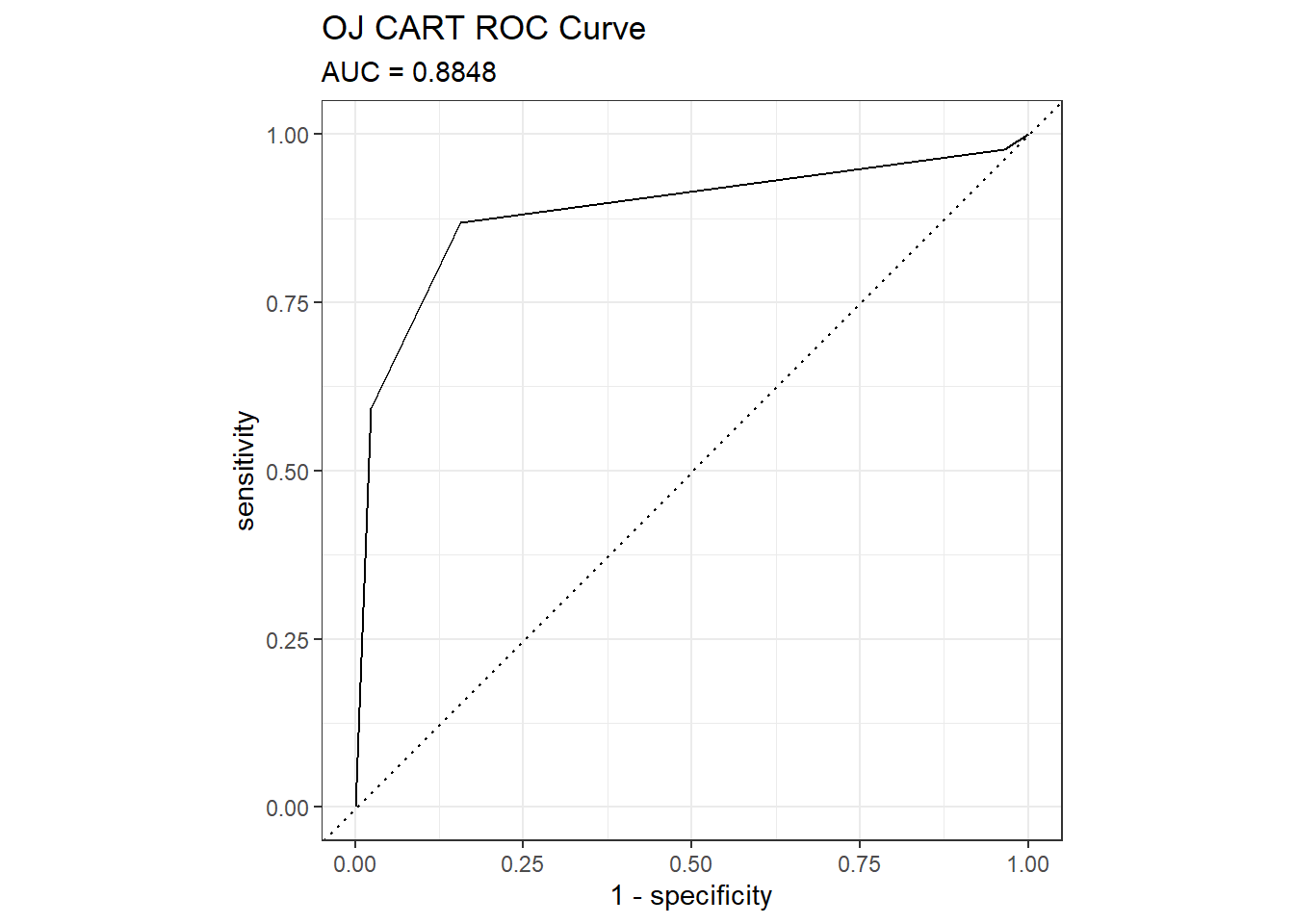

The ROC (receiver operating characteristic) curve (Fawcett 2005) is another measure of accuracy. The ROC curve is a plot of the true positive rate (TPR, sensitivity) versus the false positive rate (FPR, 1 - specificity) for a set of thresholds. By default, the threshold for predicting the default classification is 0.50, but it could be any threshold. precrec::evalmod() calculates the confusion matrix values from the model using the holdout data set. The AUC on the holdout set is 0.8848. pROC::plot.roc(), plotROC::geom_roc(), and yardstick::roc_curve() are all options for plotting a ROC curve.

mdl_auc <- Metrics::auc(actual = oj_preds_cart$actual == "CH", oj_preds_cart$CH)

yardstick::roc_curve(oj_preds_cart, actual, CH) %>%

autoplot() +

labs(

title = "OJ CART ROC Curve",

subtitle = paste0("AUC = ", round(mdl_auc, 4))

)

A few points on the ROC space are helpful for understanding how to use it.

- The lower left point (0, 0) is the result of always predicting “negative” or in this case “MM” if “CH” is taken as the default class. No false positives, but no true positives either.

- The upper right point (1, 1) is the result of always predicting “positive” (“CH” here). You catch all true positives, but miss all the true negatives.

- The upper left point (0, 1) is the result of perfect accuracy.

- The lower right point (1, 0) is the result of perfect imbecility. You made the exact wrong prediction every time.

- The 45 degree diagonal is the result of randomly guessing positive (CH) X percent of the time. If you guess positive 90% of the time and the prevalence is 50%, your TPR will be 90% and your FPR will also be 90%, etc.

The goal is for all points to bunch up in the upper left.

Points to the left of the diagonal with a low TPR can be thought of as “conservative” predictors - they only make positive (CH) predictions with strong evidence. Points to the left of the diagonal with a high TPR can be thought of as “liberal” predictors - they make positive (CH) predictions with weak evidence.
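To see where the points on the curve come from, here is a small base R sketch that sweeps the threshold over a made-up set of scores. The data and the `roc_point()` helper are hypothetical and are not taken from the OJ model.

```r
# Hypothetical scores: predicted probability of the positive class.
score  <- c(0.95, 0.85, 0.70, 0.60, 0.40, 0.30, 0.20, 0.10)
actual <- c(TRUE, TRUE, FALSE, TRUE, TRUE, FALSE, FALSE, FALSE)

# FPR and TPR when predicting positive for score >= threshold.
roc_point <- function(threshold) {
  pred <- score >= threshold
  c(threshold = threshold,
    fpr = sum(pred & !actual) / sum(!actual),
    tpr = sum(pred & actual) / sum(actual))
}

# An infinite threshold predicts all negative: the (0, 0) corner.
thresholds <- c(Inf, sort(unique(score), decreasing = TRUE))
roc <- as.data.frame(t(sapply(thresholds, roc_point)))

# Area under the curve by the trapezoid rule.
auc <- sum(diff(roc$fpr) * (head(roc$tpr, -1) + tail(roc$tpr, -1)) / 2)
roc
auc
```

The strictest threshold gives the (0, 0) corner, the loosest gives (1, 1), and the AUC of 0.875 equals the share of positive-negative pairs in which the positive case has the higher score.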

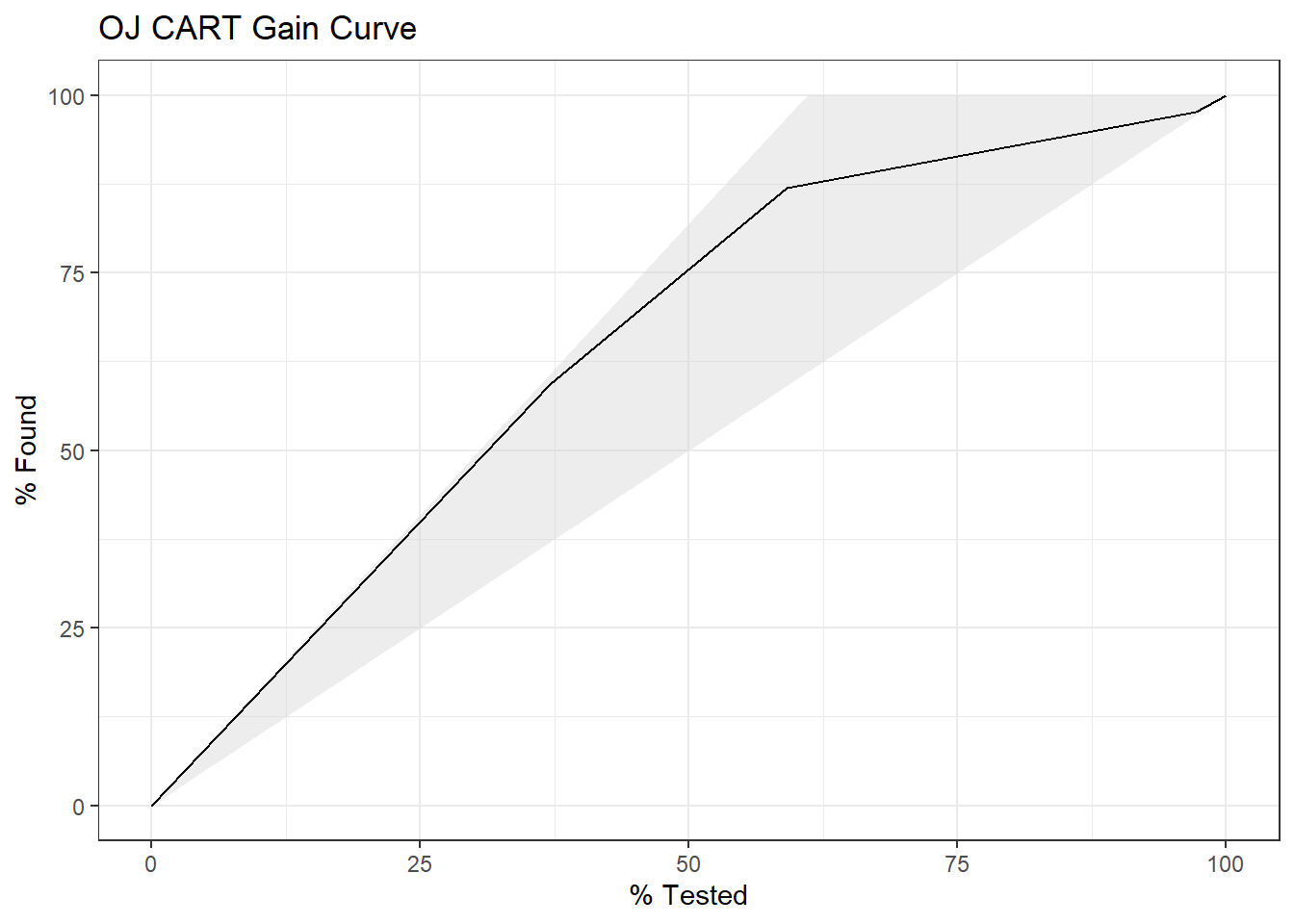

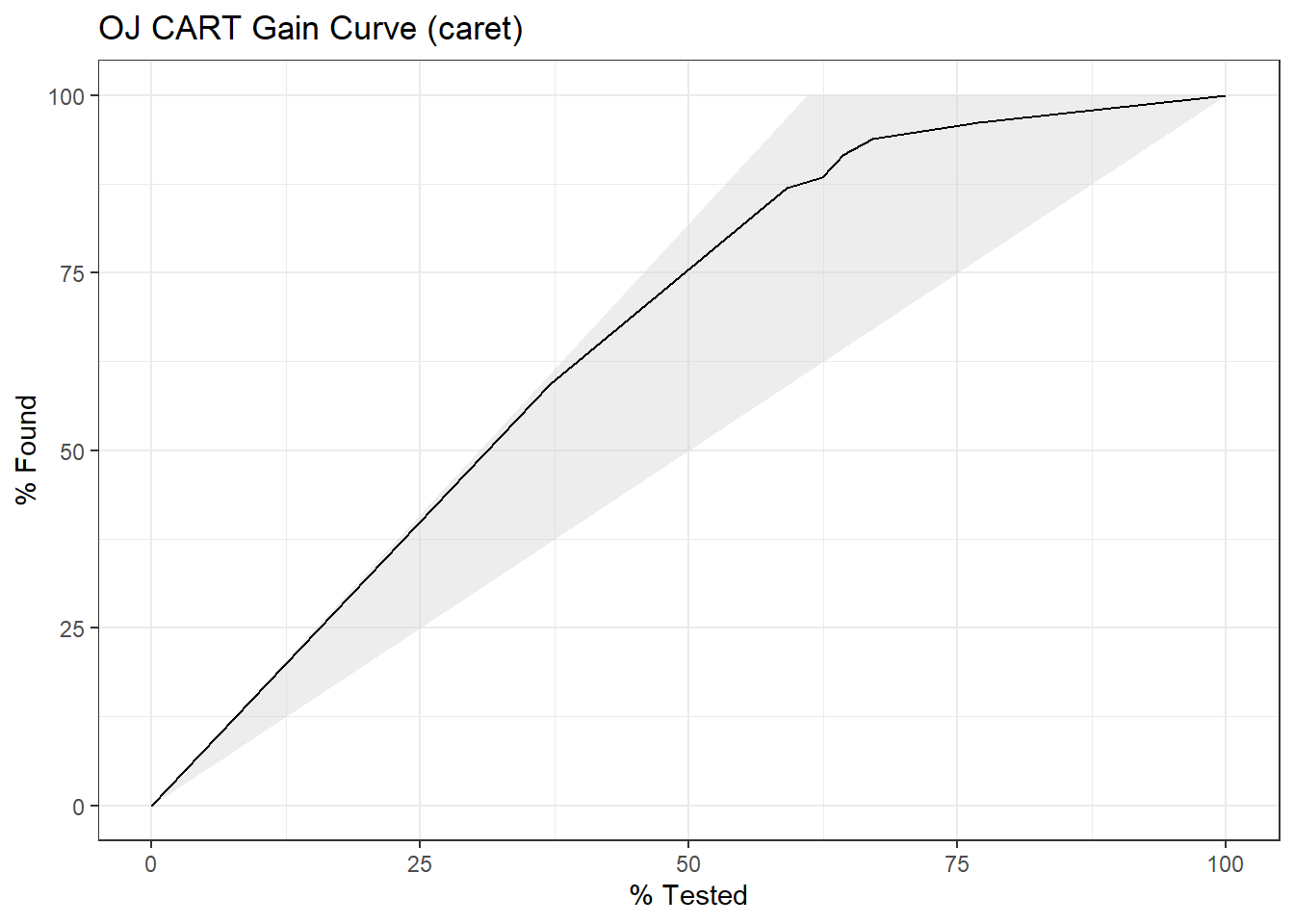

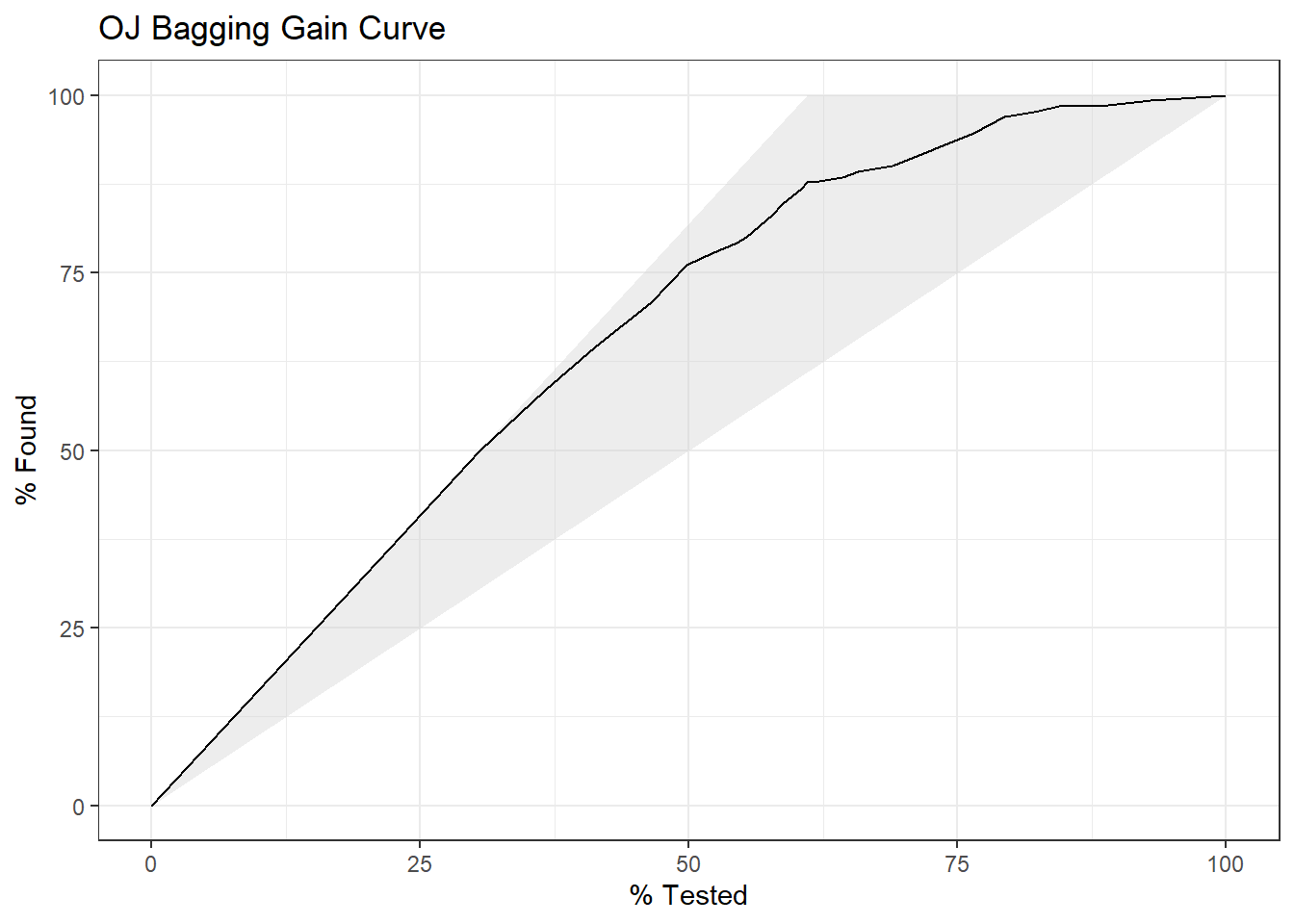

4.1.1.3 Gain Curve

The gain curve plots the cumulative summed true outcome versus the fraction of items seen when sorted by the predicted value. The “wizard” curve is the gain curve when the data is sorted by the true outcome. If the model’s gain curve is close to the wizard curve, then the model predicted the response variable well. The gray area is the “gain” over a random prediction.

130 of the 213 consumers in the holdout set purchased CH.

The gain curve encountered 77 CH purchasers (59%) within the first 79 observations (37%).

It encountered all 130 CH purchasers on the 213th observation (100%).

The bottom of the gray area is the outcome of a random model. Only half the CH purchasers would be observed within 50% of the observations. The top of the gray area is the outcome of the perfect model, the “wizard curve”. Half the CH purchasers would be observed in 65/213=31% of the observations.

yardstick::gain_curve(oj_preds_cart, actual, CH) %>%

autoplot() +

labs(

title = "OJ CART Gain Curve"

)
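The quantities behind a gain curve are simple cumulative sums. The sketch below computes them in base R for a made-up set of eight predictions; it illustrates the idea and is not the yardstick implementation.

```r
# Hypothetical holdout results: predicted P(CH) and whether CH was purchased.
score <- c(0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2)
is_ch <- c(TRUE, TRUE, FALSE, TRUE, FALSE, FALSE, TRUE, FALSE)

# Sort by predicted probability, then accumulate the true outcomes.
ord        <- order(score, decreasing = TRUE)
pct_tested <- seq_along(ord) / length(ord)
pct_found  <- cumsum(is_ch[ord]) / sum(is_ch)

# The "wizard" curve sorts by the true outcome instead.
pct_wizard <- cumsum(sort(is_ch, decreasing = TRUE)) / sum(is_ch)

data.frame(pct_tested, pct_found, pct_wizard)
```

The model curve can never rise above the wizard curve, and both reach 100% once every observation has been seen.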

4.1.2 Training with Caret

I can also fit the model with caret::train(). There are two ways to tune hyperparameters in train():

- set the number of tuning parameter values to consider by setting

tuneLength, or - set particular values to consider for each parameter by defining a

tuneGrid.

I’ll build the model using 10-fold cross-validation to optimize the hyperparameter CP. If you have no idea what is the optimal tuning parameter, start with tuneLength to get close, then fine-tune with tuneGrid. That’s what I’ll do. I’ll create a training control object that I can re-use in other model builds.

oj_trControl = trainControl(

method = "cv",

number = 10,

savePredictions = "final", # save preds for the optimal tuning parameter

classProbs = TRUE, # class probs in addition to preds

summaryFunction = twoClassSummary

)

Now fit the model.

set.seed(1234)

oj_mdl_cart2 <- train(

Purchase ~ .,

data = oj_train,

method = "rpart",

tuneLength = 5,

metric = "ROC",

trControl = oj_trControl

)

caret built a full tree using rpart’s default parameters: Gini splitting index, at least 20 observations in a node in order to consider splitting it, and at least 7 observations in each terminal node (minbucket = round(20/3)). caret then calculated the cross-validated ROC AUC for each candidate value of \(c_p\). Here are the results.

print(oj_mdl_cart2)

## CART

##

## 857 samples

## 17 predictor

## 2 classes: 'CH', 'MM'

##

## No pre-processing

## Resampling: Cross-Validated (10 fold)

## Summary of sample sizes: 772, 772, 771, 770, 771, 771, ...

## Resampling results across tuning parameters:

##

## cp ROC Sens Spec

## 0.005988024 0.8539885 0.8605225 0.7274510

## 0.008982036 0.8502309 0.8568578 0.7334225

## 0.013473054 0.8459290 0.8473149 0.7397504

## 0.032934132 0.7776483 0.8509071 0.6796791

## 0.479041916 0.5878764 0.9201379 0.2556150

##

## ROC was used to select the optimal model using the largest value.

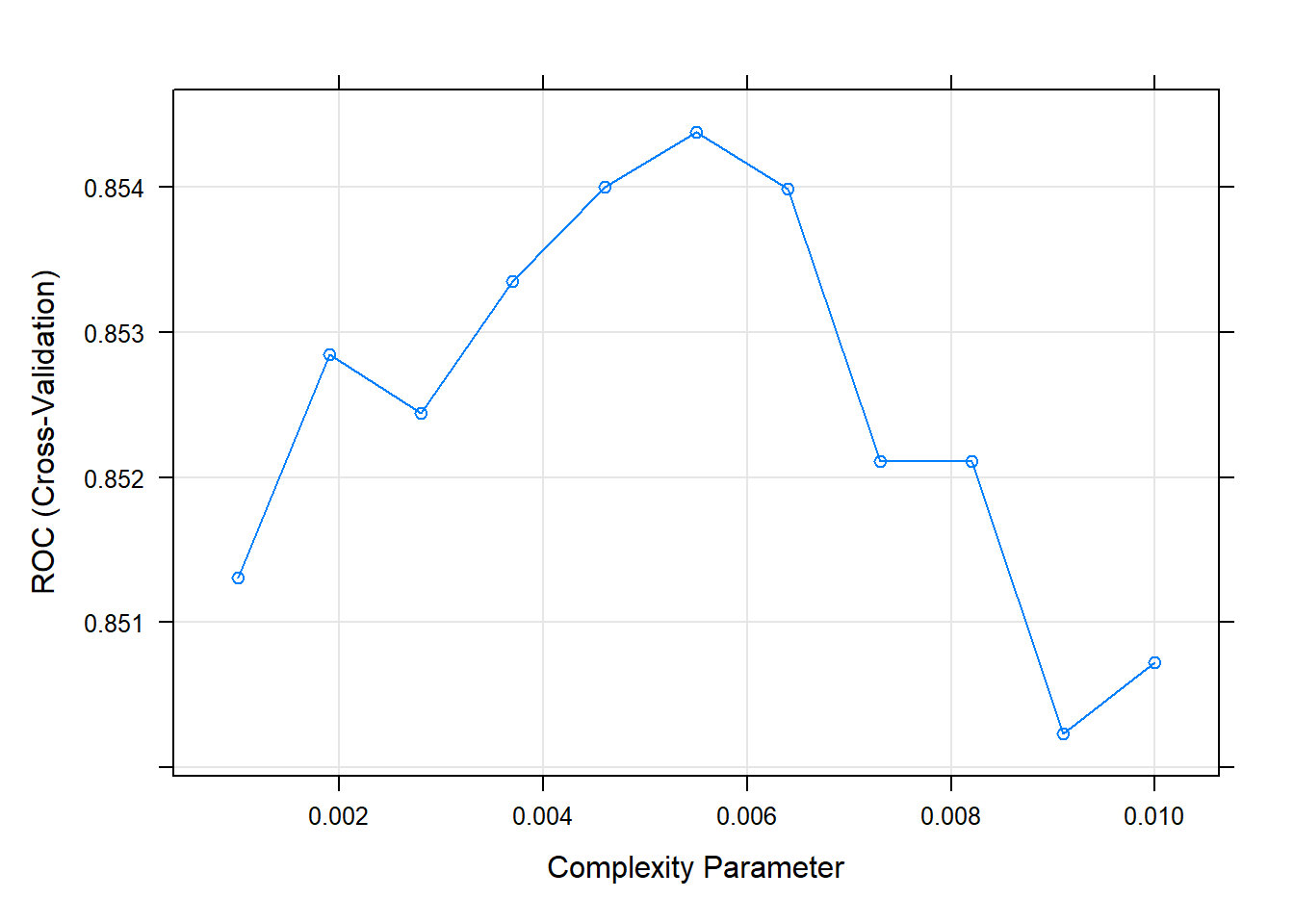

## The final value used for the model was cp = 0.005988024.

The first cp (0.005988024) produced the highest ROC. I can drill into the best value of cp using a tuning grid.

set.seed(1234)

oj_mdl_cart2 <- train(

Purchase ~ .,

data = oj_train,

method = "rpart",

tuneGrid = expand.grid(cp = seq(from = 0.001, to = 0.010, length = 11)),

metric = "ROC",

trControl = oj_trControl

)

print(oj_mdl_cart2)

## CART

##

## 857 samples

## 17 predictor

## 2 classes: 'CH', 'MM'

##

## No pre-processing

## Resampling: Cross-Validated (10 fold)

## Summary of sample sizes: 772, 772, 771, 770, 771, 771, ...

## Resampling results across tuning parameters:

##

## cp ROC Sens Spec

## 0.0010 0.8513056 0.8529390 0.7182709

## 0.0019 0.8528471 0.8529753 0.7213012

## 0.0028 0.8524435 0.8510522 0.7302139

## 0.0037 0.8533529 0.8510522 0.7421569

## 0.0046 0.8540042 0.8491292 0.7333333

## 0.0055 0.8543820 0.8567126 0.7334225

## 0.0064 0.8539885 0.8605225 0.7274510

## 0.0073 0.8521076 0.8625181 0.7335116

## 0.0082 0.8521076 0.8625181 0.7335116

## 0.0091 0.8502309 0.8568578 0.7334225

## 0.0100 0.8507262 0.8510885 0.7424242

##

## ROC was used to select the optimal model using the largest value.

## The final value used for the model was cp = 0.0055.

The best model is at cp = 0.0055. Here are the cross-validated ROC values for the candidate cp values.

plot(oj_mdl_cart2)

Here are the rules in the final model.

oj_mdl_cart2$finalModel

## n= 857

##

## node), split, n, loss, yval, (yprob)

## * denotes terminal node

##

## 1) root 857 334 CH (0.61026838 0.38973162)

## 2) LoyalCH>=0.48285 537 94 CH (0.82495345 0.17504655)

## 4) LoyalCH>=0.7648795 271 13 CH (0.95202952 0.04797048) *

## 5) LoyalCH< 0.7648795 266 81 CH (0.69548872 0.30451128)

## 10) PriceDiff>=-0.165 226 50 CH (0.77876106 0.22123894) *

## 11) PriceDiff< -0.165 40 9 MM (0.22500000 0.77500000) *

## 3) LoyalCH< 0.48285 320 80 MM (0.25000000 0.75000000)

## 6) LoyalCH>=0.2761415 146 58 MM (0.39726027 0.60273973)

## 12) SalePriceMM>=2.04 71 31 CH (0.56338028 0.43661972)

## 24) LoyalCH< 0.303104 7 0 CH (1.00000000 0.00000000) *

## 25) LoyalCH>=0.303104 64 31 CH (0.51562500 0.48437500)

## 50) WeekofPurchase>=246.5 52 22 CH (0.57692308 0.42307692)

## 100) PriceCH< 1.94 35 11 CH (0.68571429 0.31428571) *

## 101) PriceCH>=1.94 17 6 MM (0.35294118 0.64705882) *

## 51) WeekofPurchase< 246.5 12 3 MM (0.25000000 0.75000000) *

## 13) SalePriceMM< 2.04 75 18 MM (0.24000000 0.76000000)

## 26) SpecialCH>=0.5 14 6 CH (0.57142857 0.42857143) *

## 27) SpecialCH< 0.5 61 10 MM (0.16393443 0.83606557) *

## 7) LoyalCH< 0.2761415 174 22 MM (0.12643678 0.87356322) *

rpart.plot(oj_mdl_cart2$finalModel)

Let’s look at the performance on the holdout data set.

oj_preds_cart2 <- bind_cols(

predict(oj_mdl_cart2, newdata = oj_test, type = "prob"),

Predicted = predict(oj_mdl_cart2, newdata = oj_test, type = "raw"),

Actual = oj_test$Purchase

)

oj_cm_cart2 <- confusionMatrix(oj_preds_cart2$Predicted, oj_preds_cart2$Actual)

oj_cm_cart2

## Confusion Matrix and Statistics

##

## Reference

## Prediction CH MM

## CH 117 18

## MM 13 65

##

## Accuracy : 0.8545

## 95% CI : (0.7998, 0.8989)

## No Information Rate : 0.6103

## P-Value [Acc > NIR] : 4.83e-15

##

## Kappa : 0.6907

##

## Mcnemar's Test P-Value : 0.4725

##

## Sensitivity : 0.9000

## Specificity : 0.7831

## Pos Pred Value : 0.8667

## Neg Pred Value : 0.8333

## Prevalence : 0.6103

## Detection Rate : 0.5493

## Detection Prevalence : 0.6338

## Balanced Accuracy : 0.8416

##

## 'Positive' Class : CH

##

The accuracy is 0.8545 - a little worse than the 0.8592 from the direct method. The AUC is 0.9102.

mdl_auc <- Metrics::auc(actual = oj_preds_cart2$Actual == "CH", oj_preds_cart2$CH)

yardstick::roc_curve(oj_preds_cart2, Actual, CH) %>%

autoplot() +

labs(

title = "OJ CART ROC Curve (caret)",

subtitle = paste0("AUC = ", round(mdl_auc, 4))

)

yardstick::gain_curve(oj_preds_cart2, Actual, CH) %>%

autoplot() +

labs(title = "OJ CART Gain Curve (caret)")

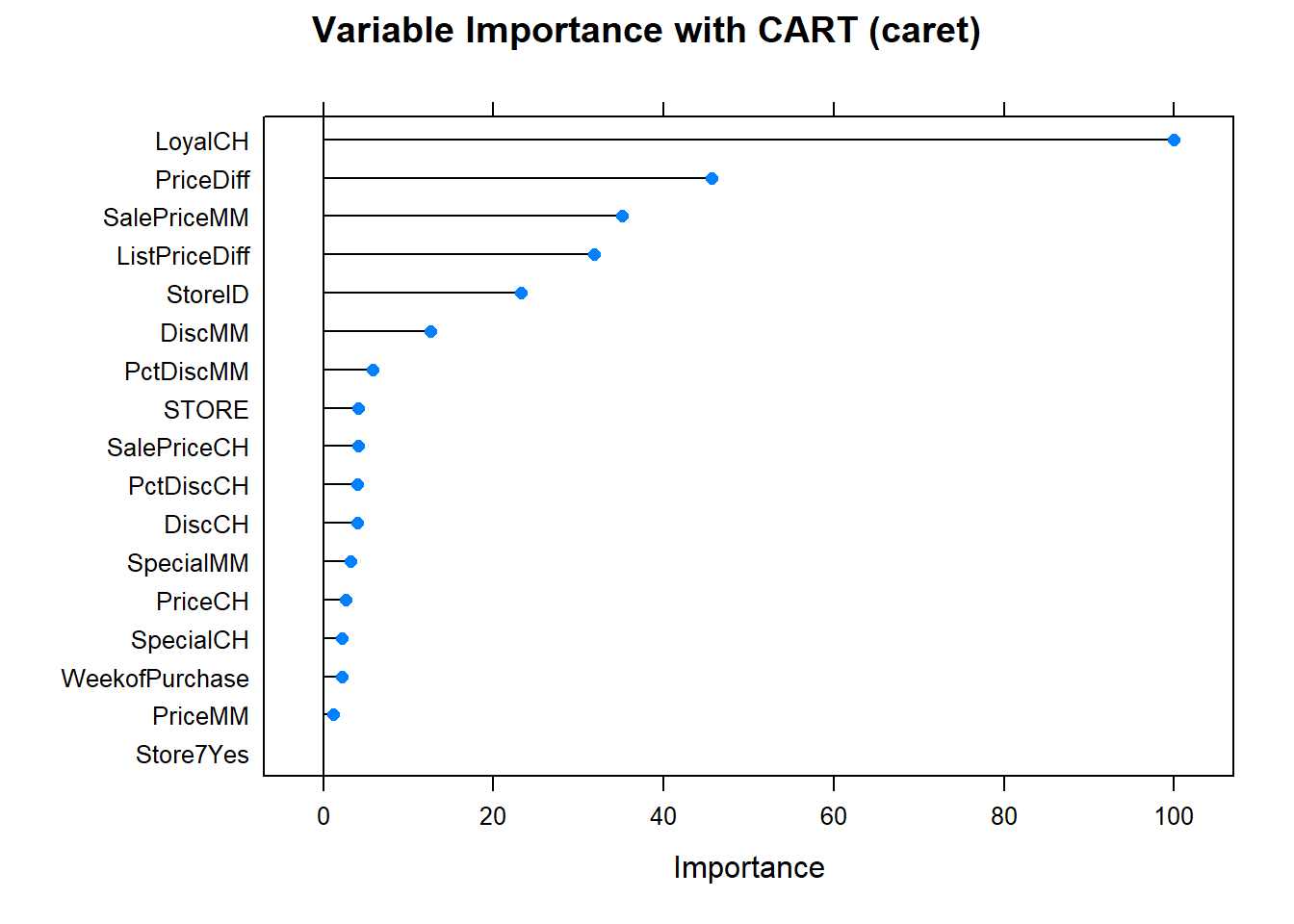
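The statistics in the confusion matrix output above can be reproduced from the four cell counts. The following is a minimal base R sketch, not part of the original analysis; the counts are copied from the printed matrix, and the object names (cm, accuracy, and so on) are my own.

```r
# Holdout confusion matrix copied from the caret output above
# (rows = prediction, columns = reference; positive class = CH).
# matrix() fills column by column.
cm <- matrix(
  c(117, 13, 18, 65),
  nrow = 2,
  dimnames = list(Prediction = c("CH", "MM"), Reference = c("CH", "MM"))
)

accuracy    <- sum(diag(cm)) / sum(cm)            # (117 + 65) / 213
sensitivity <- cm["CH", "CH"] / sum(cm[, "CH"])   # 117 / 130
specificity <- cm["MM", "MM"] / sum(cm[, "MM"])   # 65 / 83
balanced    <- (sensitivity + specificity) / 2    # balanced accuracy

round(c(accuracy = accuracy, sensitivity = sensitivity,
        specificity = specificity, balanced = balanced), 4)
```

The rounded values should agree with the Accuracy, Sensitivity, Specificity, and Balanced Accuracy lines in the caret printout.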

Finally, here is the variable importance plot. Brand loyalty is most important, followed by price difference.

plot(varImp(oj_mdl_cart2), main="Variable Importance with CART (caret)")

Looks like the manual effort fared best. Here is a summary of the accuracy rates of the two models.

oj_scoreboard <- rbind(

data.frame(Model = "Single Tree", Accuracy = oj_cm_cart$overall["Accuracy"]),

data.frame(Model = "Single Tree (caret)", Accuracy = oj_cm_cart2$overall["Accuracy"])

) %>% arrange(desc(Accuracy))

scoreboard(oj_scoreboard)

| Model | Accuracy |
|---|---|
| Single Tree | 0.8591549 |
| Single Tree (caret) | 0.8544601 |

4.2 Regression Tree

A simple regression tree is built in a manner similar to a simple classification tree, and like the simple classification tree, it is rarely invoked on its own; the bagged, random forest, and gradient boosting methods build on this logic. I’ll learn by example again, using the ISLR::Carseats data set to predict Sales from the 10 feature variables.

cs_dat <- ISLR::Carseats

skimr::skim(cs_dat)

| Name | cs_dat |

| Number of rows | 400 |

| Number of columns | 11 |

| _______________________ | |

| Column type frequency: | |

| factor | 3 |

| numeric | 8 |

| ________________________ | |

| Group variables | None |

Variable type: factor

| skim_variable | n_missing | complete_rate | ordered | n_unique | top_counts |

|---|---|---|---|---|---|

| ShelveLoc | 0 | 1 | FALSE | 3 | Med: 219, Bad: 96, Goo: 85 |

| Urban | 0 | 1 | FALSE | 2 | Yes: 282, No: 118 |

| US | 0 | 1 | FALSE | 2 | Yes: 258, No: 142 |

Variable type: numeric

| skim_variable | n_missing | complete_rate | mean | sd | p0 | p25 | p50 | p75 | p100 | hist |

|---|---|---|---|---|---|---|---|---|---|---|

| Sales | 0 | 1 | 7.50 | 2.82 | 0 | 5.39 | 7.49 | 9.32 | 16.27 | ▁▆▇▃▁ |

| CompPrice | 0 | 1 | 124.97 | 15.33 | 77 | 115.00 | 125.00 | 135.00 | 175.00 | ▁▅▇▃▁ |

| Income | 0 | 1 | 68.66 | 27.99 | 21 | 42.75 | 69.00 | 91.00 | 120.00 | ▇▆▇▆▅ |

| Advertising | 0 | 1 | 6.64 | 6.65 | 0 | 0.00 | 5.00 | 12.00 | 29.00 | ▇▃▃▁▁ |

| Population | 0 | 1 | 264.84 | 147.38 | 10 | 139.00 | 272.00 | 398.50 | 509.00 | ▇▇▇▇▇ |

| Price | 0 | 1 | 115.80 | 23.68 | 24 | 100.00 | 117.00 | 131.00 | 191.00 | ▁▂▇▆▁ |

| Age | 0 | 1 | 53.32 | 16.20 | 25 | 39.75 | 54.50 | 66.00 | 80.00 | ▇▆▇▇▇ |

| Education | 0 | 1 | 13.90 | 2.62 | 10 | 12.00 | 14.00 | 16.00 | 18.00 | ▇▇▃▇▇ |

Split cs_dat (n = 400) into cs_train (80%, n = 321) and cs_test (20%, n = 79).

set.seed(12345)

partition <- createDataPartition(y = cs_dat$Sales, p = 0.8, list = FALSE)

cs_train <- cs_dat[partition, ]

cs_test <- cs_dat[-partition, ]

The first step is to build a full tree, then perform k-fold cross-validation to help select the optimal cost complexity (cp). The only difference from the classification tree is the rpart() argument method = "anova", which produces a regression tree.

set.seed(1234)

cs_mdl_cart_full <- rpart(Sales ~ ., cs_train, method = "anova")

print(cs_mdl_cart_full)

## n= 321

##

## node), split, n, deviance, yval

## * denotes terminal node

##

## 1) root 321 2567.76800 7.535950

## 2) ShelveLoc=Bad,Medium 251 1474.14100 6.770359

## 4) Price>=105.5 168 719.70630 5.987024

## 8) ShelveLoc=Bad 50 165.70160 4.693600

## 16) Population< 201.5 20 48.35505 3.646500 *

## 17) Population>=201.5 30 80.79922 5.391667 *

## 9) ShelveLoc=Medium 118 434.91370 6.535085

## 18) Advertising< 11.5 88 290.05490 6.113068

## 36) CompPrice< 142 69 193.86340 5.769420

## 72) Price>=132.5 16 50.75440 4.455000 *

## 73) Price< 132.5 53 107.12060 6.166226 *

## 37) CompPrice>=142 19 58.45118 7.361053 *

## 19) Advertising>=11.5 30 83.21323 7.773000 *

## 5) Price< 105.5 83 442.68920 8.355904

## 10) Age>=63.5 32 153.42300 6.922500

## 20) Price>=85 25 66.89398 6.160800

## 40) ShelveLoc=Bad 9 18.39396 4.772222 *

## 41) ShelveLoc=Medium 16 21.38544 6.941875 *

## 21) Price< 85 7 20.22194 9.642857 *

## 11) Age< 63.5 51 182.26350 9.255294

## 22) Income< 57.5 12 28.03042 7.707500 *

## 23) Income>=57.5 39 116.63950 9.731538

## 46) Age>=50.5 14 21.32597 8.451429 *

## 47) Age< 50.5 25 59.52474 10.448400 *

## 3) ShelveLoc=Good 70 418.98290 10.281140

## 6) Price>=107.5 49 242.58730 9.441633

## 12) Advertising< 13.5 41 162.47820 8.926098

## 24) Age>=61 17 53.37051 7.757647 *

## 25) Age< 61 24 69.45776 9.753750 *

## 13) Advertising>=13.5 8 13.36599 12.083750 *

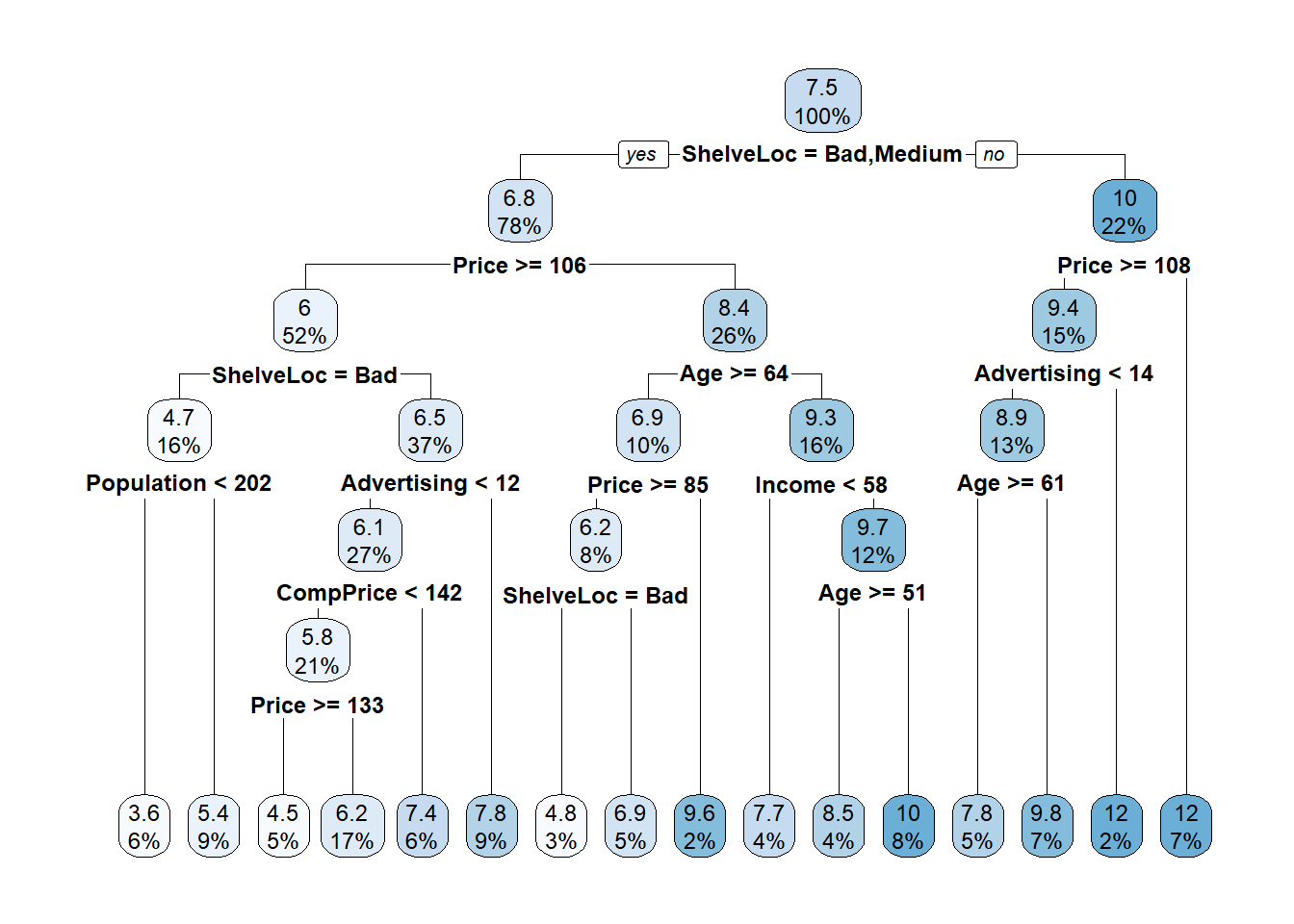

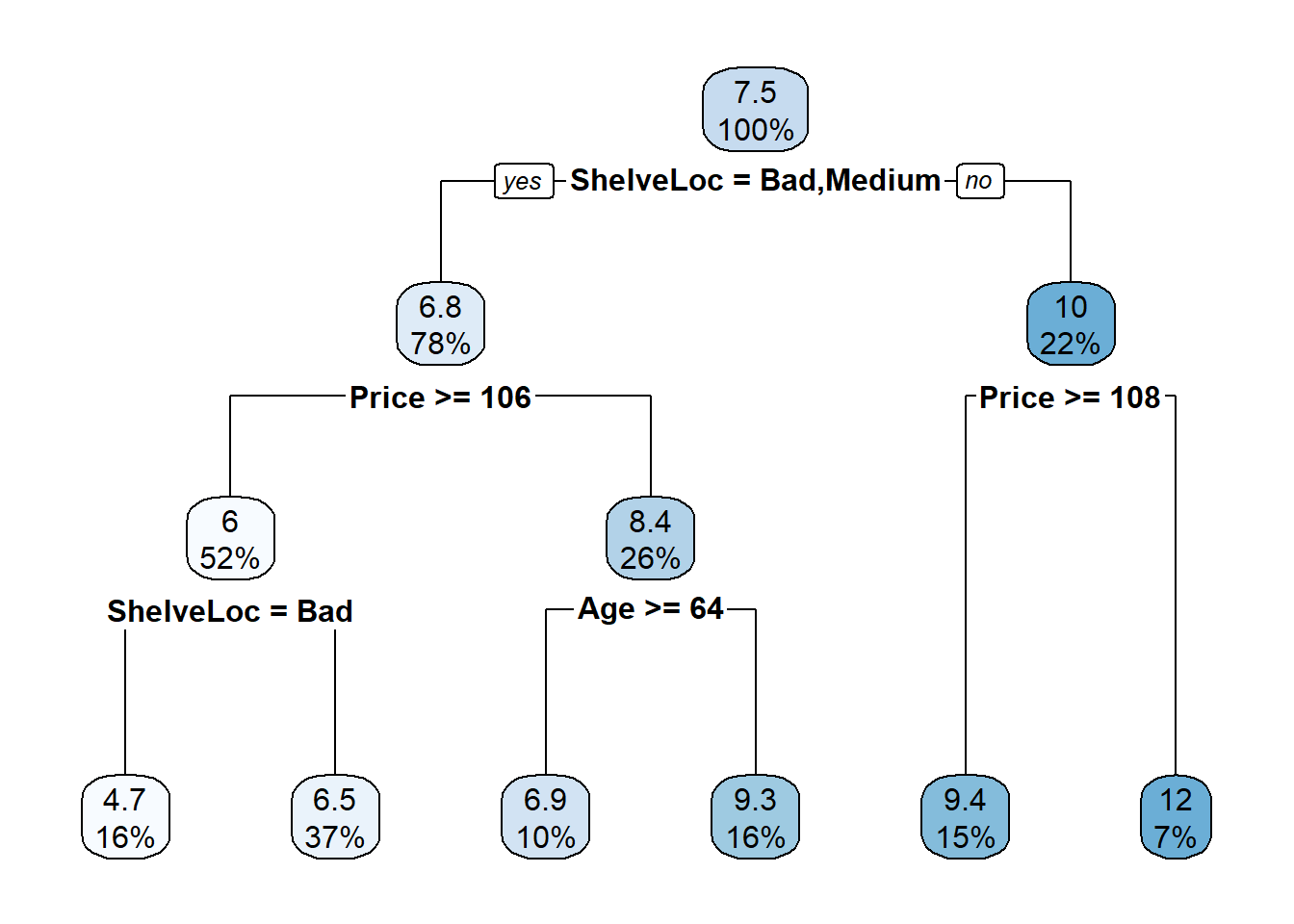

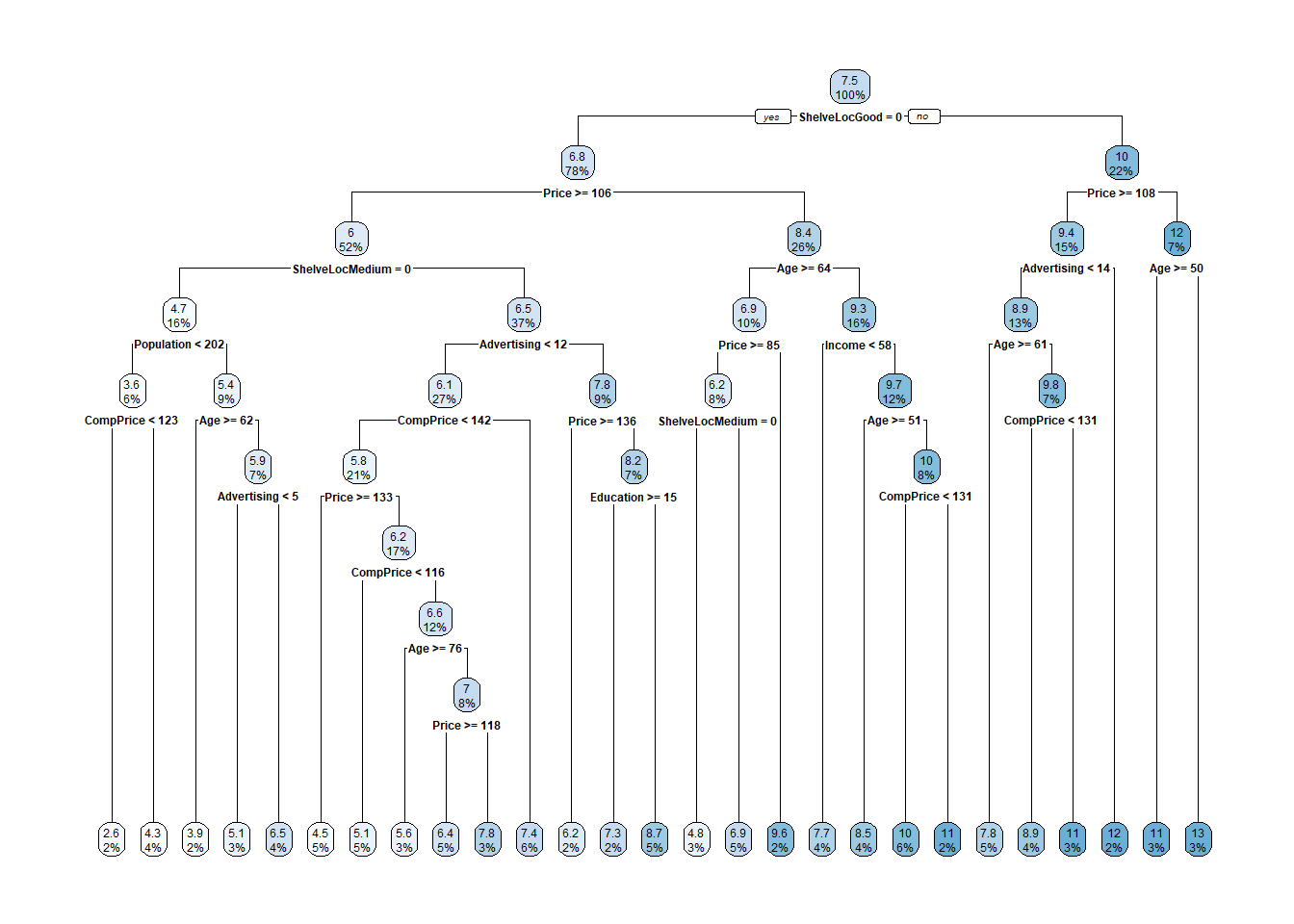

## 7) Price< 107.5 21 61.28200 12.240000 *The predicted Sales at the root is the mean Sales for the training data set, 7.535950 (values are $000s). The deviance at the root is the SSE, 2567.768. The first split is at ShelveLoc = [Bad, Medium] vs Good. Here is the unpruned tree diagram.

rpart.plot(cs_mdl_cart_full, yesno = TRUE)

The boxes show the node predicted value (mean) and the proportion of observations that are in the node (or child nodes).
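To make the node mean, the node deviance, and the choice of a split concrete, here is a minimal base R sketch on toy data. The x and y values are hypothetical (not the Carseats data), and the helper sse() is my own name; rpart's actual implementation handles many more cases (factors, surrogates, minimum node sizes).

```r
# Toy data (hypothetical values, not the Carseats data)
x <- c(1, 2, 3, 4, 5, 6, 7, 8)
y <- c(5.1, 4.8, 5.3, 5.0, 9.2, 8.9, 9.4, 9.1)

# Sum of squared errors around the node mean (the node "deviance")
sse <- function(v) sum((v - mean(v))^2)

root_pred <- mean(y)   # predicted value at the root
root_dev  <- sse(y)    # deviance at the root

# Candidate cutpoints: midpoints between consecutive sorted x values
xs   <- sort(x)
cuts <- (head(xs, -1) + tail(xs, -1)) / 2

# Total SSE of the two child nodes for each candidate cutpoint
split_sse <- sapply(cuts, function(cut) sse(y[x < cut]) + sse(y[x >= cut]))

# The greedy step picks the cutpoint with the smallest child SSE
best_cut <- cuts[which.min(split_sse)]
best_cut
```

A full tree repeats this search over every predictor at every node and keeps the single best predictor and cutpoint each time.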

rpart() grew the full tree, and used cross-validation to test the performance of the possible complexity hyperparameters. printcp() displays the candidate cp values. You can use this table to decide how to prune the tree.

printcp(cs_mdl_cart_full)

##

## Regression tree:

## rpart(formula = Sales ~ ., data = cs_train, method = "anova")

##

## Variables actually used in tree construction:

## [1] Advertising Age CompPrice Income Population Price

## [7] ShelveLoc

##

## Root node error: 2567.8/321 = 7.9993

##

## n= 321

##

## CP nsplit rel error xerror xstd

## 1 0.262736 0 1.00000 1.00635 0.076664

## 2 0.121407 1 0.73726 0.74888 0.058981

## 3 0.046379 2 0.61586 0.65278 0.050839

## 4 0.044830 3 0.56948 0.67245 0.051638

## 5 0.041671 4 0.52465 0.66230 0.051065

## 6 0.025993 5 0.48298 0.62345 0.049368

## 7 0.025823 6 0.45698 0.61980 0.048026

## 8 0.024007 7 0.43116 0.62058 0.048213

## 9 0.015441 8 0.40715 0.58061 0.041738

## 10 0.014698 9 0.39171 0.56413 0.041368

## 11 0.014641 10 0.37701 0.56277 0.041271

## 12 0.014233 11 0.36237 0.56081 0.041097

## 13 0.014015 12 0.34814 0.55647 0.038308

## 14 0.013938 13 0.33413 0.55647 0.038308

## 15 0.010560 14 0.32019 0.57110 0.038872

## 16 0.010000 15 0.30963 0.56676 0.038090

There were 16 possible cp values in this model. The model with the smallest complexity parameter allows the most splits (nsplit). The highest complexity parameter corresponds to a tree with just a root node. rel error is the SSE relative to the root node. The root node SSE is 2567.76800, so its rel error is 2567.76800/2567.76800 = 1.0. That means the absolute error of the full tree (at CP = 0.01) is 0.30963 * 2567.76800 = 795.058. You can verify that by calculating the SSE of the model predicted values:

data.frame(pred = predict(cs_mdl_cart_full, newdata = cs_train)) %>%

mutate(obs = cs_train$Sales,

sq_err = (obs - pred)^2) %>%

summarize(sse = sum(sq_err))

## sse

## 1 795.0525

Finishing the CP table tour, xerror is the cross-validated error, also expressed relative to the root node SSE, and xstd is its standard error. If you want the lowest possible error, then prune to the tree with the smallest xerror. If you want to balance predictive power with simplicity, prune to the smallest tree within 1 SE of the one with the smallest xerror. The CP table is not super-helpful for finding that tree. I’ll add a column to find it.

cs_mdl_cart_full$cptable %>%

data.frame() %>%

mutate(min_xerror_idx = which.min(cs_mdl_cart_full$cptable[, "xerror"]),

rownum = row_number(),

xerror_cap = cs_mdl_cart_full$cptable[min_xerror_idx, "xerror"] +

cs_mdl_cart_full$cptable[min_xerror_idx, "xstd"],

eval = case_when(rownum == min_xerror_idx ~ "min xerror",

xerror < xerror_cap ~ "under cap",

TRUE ~ "")) %>%

select(-rownum, -min_xerror_idx)

## CP nsplit rel.error xerror xstd xerror_cap eval

## 1 0.26273578 0 1.0000000 1.0063530 0.07666355 0.5947744

## 2 0.12140705 1 0.7372642 0.7488767 0.05898146 0.5947744

## 3 0.04637919 2 0.6158572 0.6527823 0.05083938 0.5947744

## 4 0.04483023 3 0.5694780 0.6724529 0.05163819 0.5947744

## 5 0.04167149 4 0.5246478 0.6623028 0.05106530 0.5947744

## 6 0.02599265 5 0.4829763 0.6234457 0.04936799 0.5947744

## 7 0.02582284 6 0.4569836 0.6198034 0.04802643 0.5947744

## 8 0.02400748 7 0.4311608 0.6205756 0.04821332 0.5947744

## 9 0.01544139 8 0.4071533 0.5806072 0.04173785 0.5947744 under cap

## 10 0.01469771 9 0.3917119 0.5641331 0.04136793 0.5947744 under cap

## 11 0.01464055 10 0.3770142 0.5627713 0.04127139 0.5947744 under cap

## 12 0.01423309 11 0.3623736 0.5608073 0.04109662 0.5947744 under cap

## 13 0.01401541 12 0.3481405 0.5564663 0.03830810 0.5947744 min xerror

## 14 0.01393771 13 0.3341251 0.5564663 0.03830810 0.5947744 under cap

## 15 0.01055959 14 0.3201874 0.5710951 0.03887227 0.5947744 under cap

## 16 0.01000000 15 0.3096278 0.5667561 0.03808991 0.5947744 under cap

Okay, so in this table the simplest tree under the cap is the one with CP = 0.01544139 (8 splits), and the smallest xerror is at CP = 0.01401541 (12 splits). Fortunately, plotcp() presents a nice graphical representation of the relationship between xerror and cp.

plotcp(cs_mdl_cart_full, upper = "splits")

The dashed line is set at the minimum xerror + xstd. The top axis shows the number of splits in the tree. The CP values on the bottom axis are close to, but not the same as, those in the table because plotcp() labels each tree with the geometric mean of its CP and the preceding one. The cross-validation folds are assigned at random, so the tree picked by the 1-SE rule can differ from run to run; the code below prunes to the 5-split tree (CP = 0.02599265), which is the tree the rest of this section describes. Use the prune() function to prune the tree by specifying the associated cost-complexity cp.

cs_mdl_cart <- prune(

cs_mdl_cart_full,

cp = cs_mdl_cart_full$cptable[cs_mdl_cart_full$cptable[, 2] == 5, "CP"]

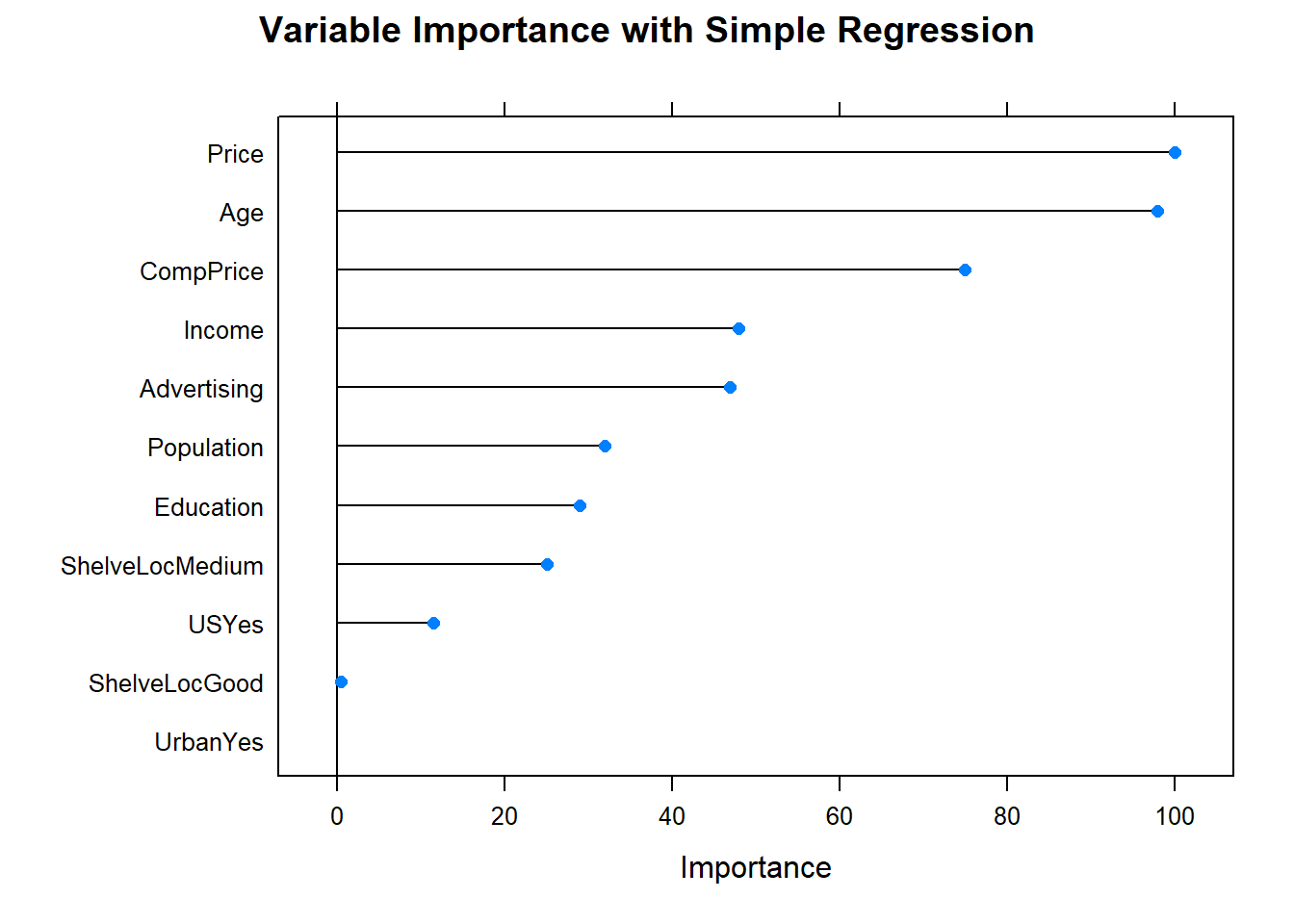

)

rpart.plot(cs_mdl_cart, yesno = TRUE)

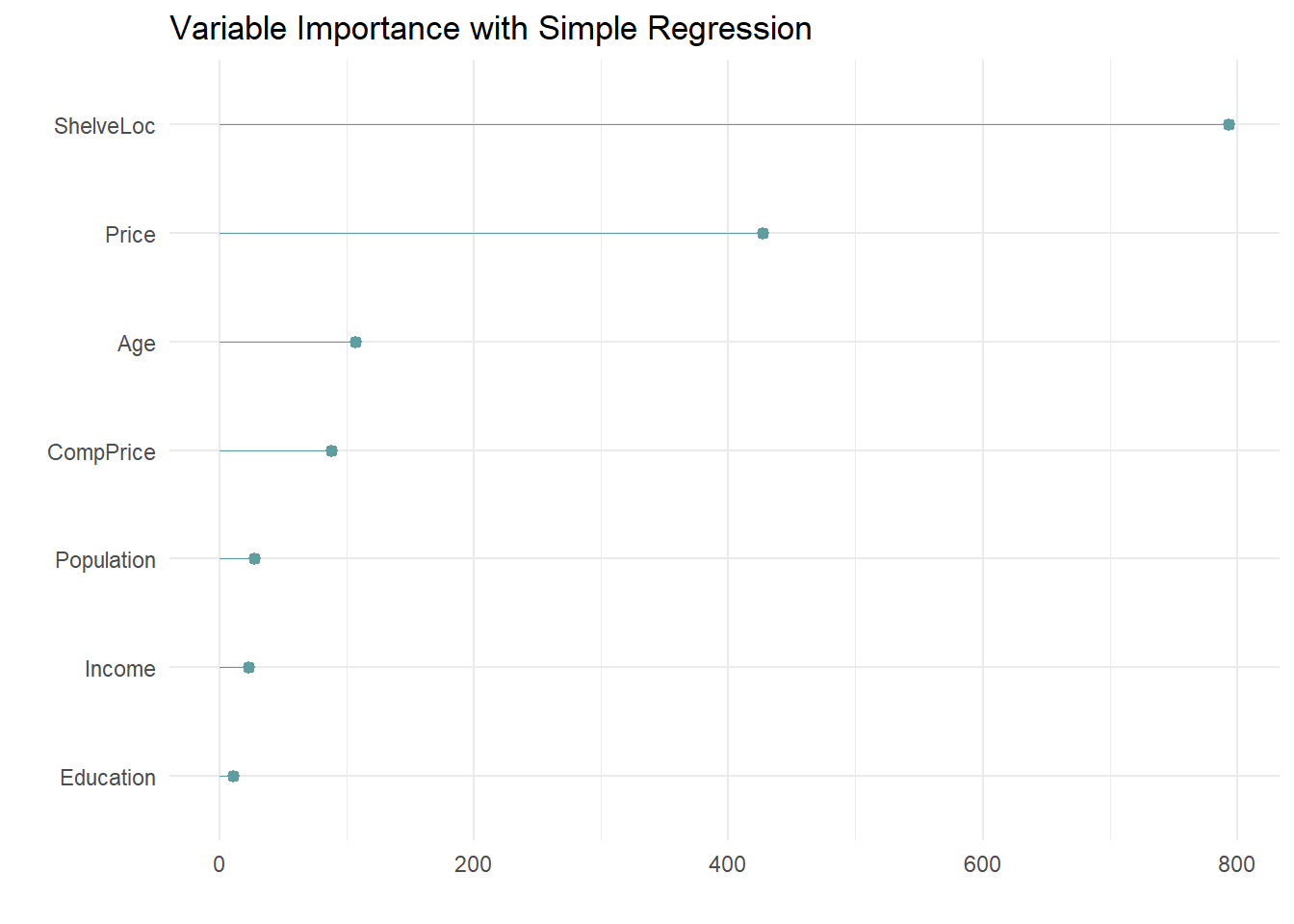
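The 1-SE rule described above can also be written in a few lines of base R. This is a sketch under stated assumptions: the xerror and xstd values are copied by hand from the CP table printed earlier, and the names cp_tbl, min_idx, cap, and one_se are my own. With a fitted model you would read the same columns from cs_mdl_cart_full$cptable instead.

```r
# CP, xerror, and xstd columns copied from the CP table shown above
cp_tbl <- data.frame(
  CP = c(0.26273578, 0.12140705, 0.04637919, 0.04483023, 0.04167149,
         0.02599265, 0.02582284, 0.02400748, 0.01544139, 0.01469771,
         0.01464055, 0.01423309, 0.01401541, 0.01393771, 0.01055959,
         0.01000000),
  nsplit = 0:15,
  xerror = c(1.0063530, 0.7488767, 0.6527823, 0.6724529, 0.6623028,
             0.6234457, 0.6198034, 0.6205756, 0.5806072, 0.5641331,
             0.5627713, 0.5608073, 0.5564663, 0.5564663, 0.5710951,
             0.5667561),
  xstd = c(0.07666355, 0.05898146, 0.05083938, 0.05163819, 0.05106530,
           0.04936799, 0.04802643, 0.04821332, 0.04173785, 0.04136793,
           0.04127139, 0.04109662, 0.03830810, 0.03830810, 0.03887227,
           0.03808991)
)

# Row with the smallest cross-validated error (first one if there are ties)
min_idx <- which.min(cp_tbl$xerror)

# 1-SE cap: minimum xerror plus its standard error
cap <- cp_tbl$xerror[min_idx] + cp_tbl$xstd[min_idx]

# Rows are ordered from simplest to most complex tree, so the first row
# at or under the cap is the simplest tree within 1 SE of the minimum
one_se <- min(which(cp_tbl$xerror <= cap))

cp_tbl[c(min_idx, one_se), ]
```

The returned CP can be passed straight to prune(); choosing the row programmatically avoids hard-coding a split count that may change when the cross-validation is rerun.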

The most “important” indicator of Sales is ShelveLoc. Here are the importance values from the model.

cs_mdl_cart$variable.importance %>%

data.frame() %>%

rownames_to_column(var = "Feature") %>%

rename(Overall = '.') %>%

ggplot(aes(x = fct_reorder(Feature, Overall), y = Overall)) +

geom_pointrange(aes(ymin = 0, ymax = Overall), color = "cadetblue", size = .3) +

theme_minimal() +

coord_flip() +

labs(x = "", y = "", title = "Variable Importance with Simple Regression")

The most important indicator of Sales is ShelveLoc, then Price, then Age, all of which appear in the final model. CompPrice was also important.

The last step is to make predictions on the test data set. The root mean squared error (\(RMSE = \sqrt{(1/n) \sum{(actual - pred)^2}}\)) and mean absolute error (\(MAE = (1/n) \sum{|actual - pred|}\)) are the two most common measures of predictive accuracy. The key difference is that RMSE punishes large errors more harshly. For a regression tree, set argument type = "vector" (or do not specify at all).

cs_preds_cart <- predict(cs_mdl_cart, cs_test, type = "vector")

cs_rmse_cart <- RMSE(

pred = cs_preds_cart,

obs = cs_test$Sales

)

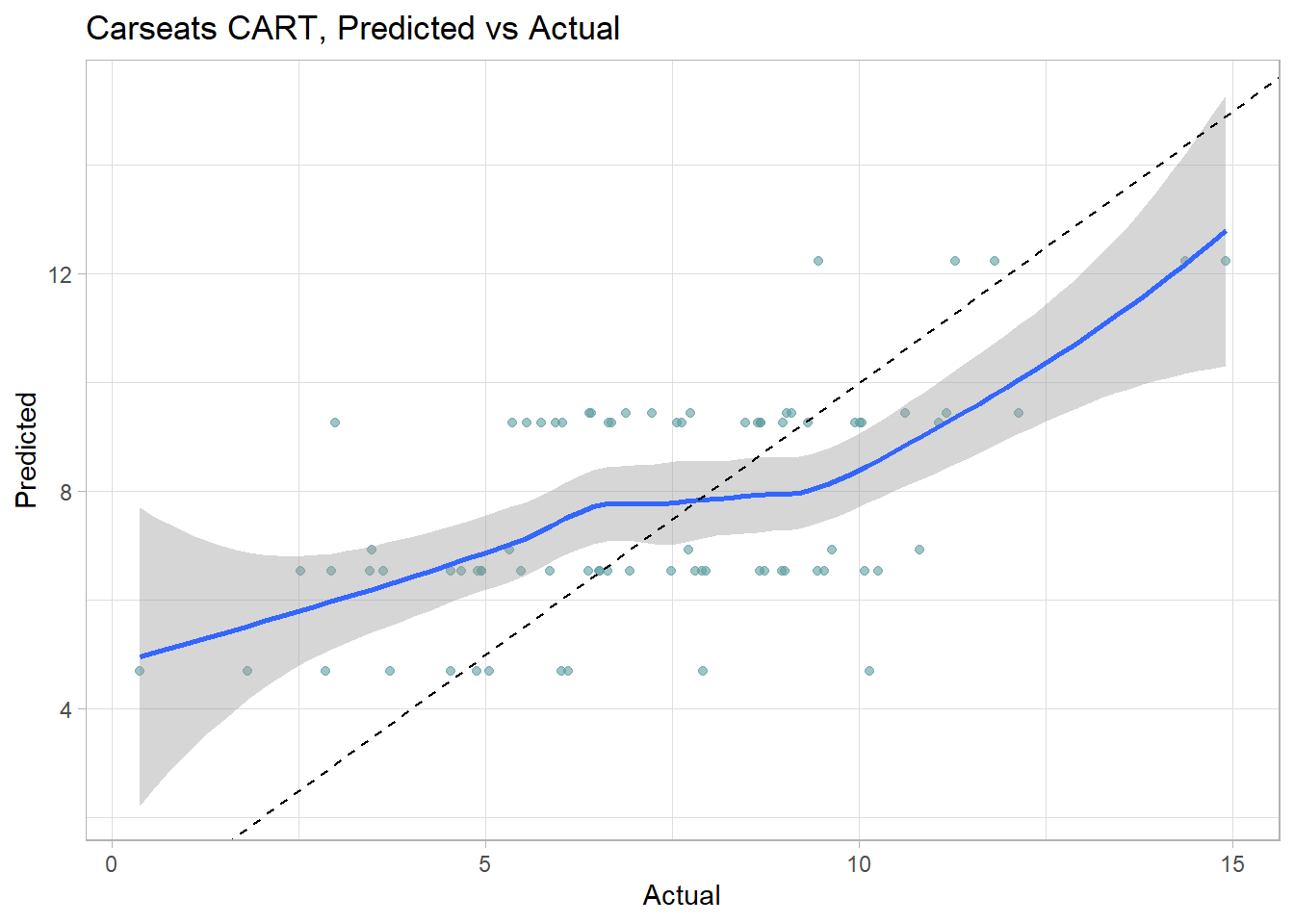

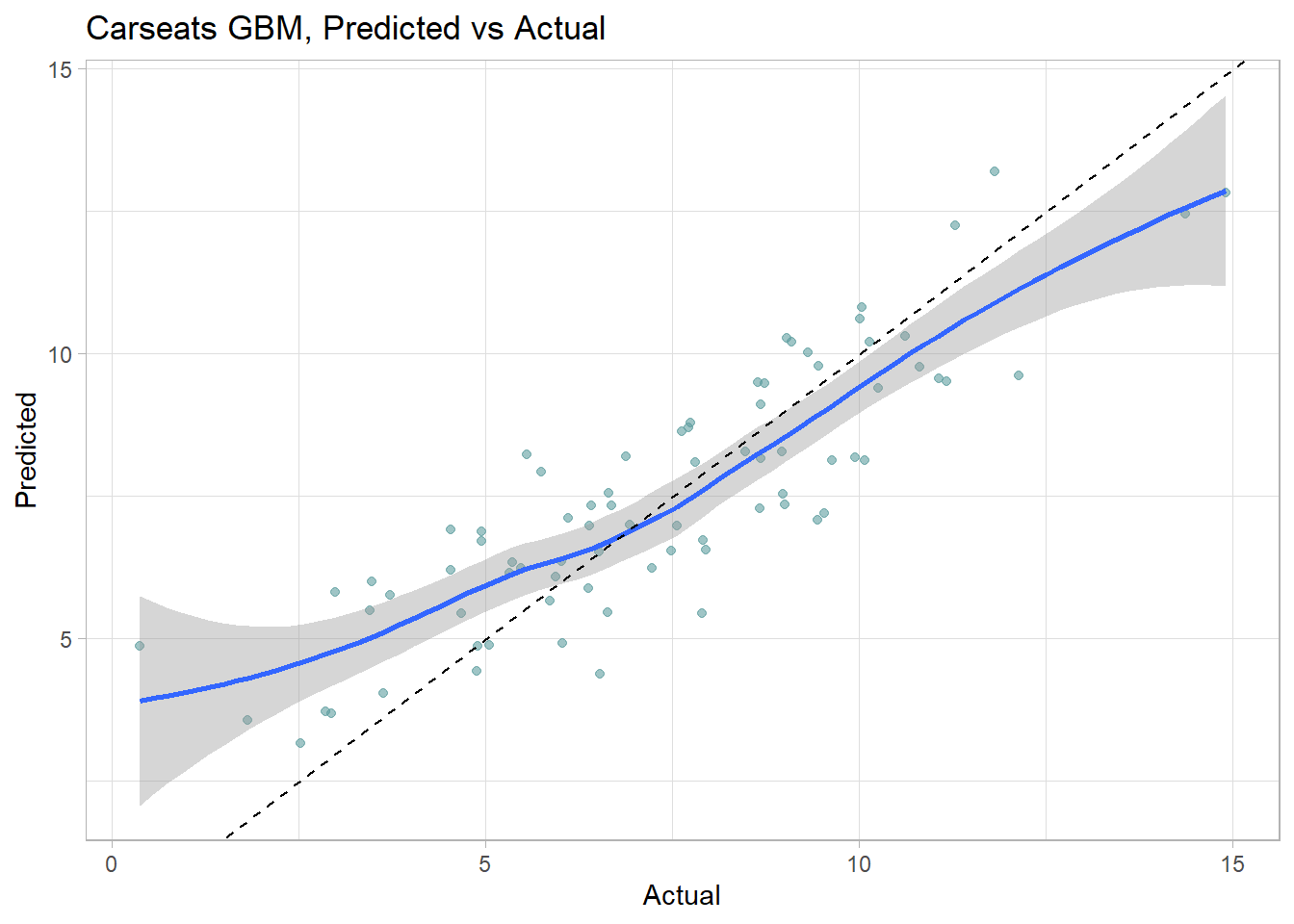

cs_rmse_cart

## [1] 2.363202

The pruned tree has a root mean squared prediction error of 2.363 on the test data set. Not too bad considering the standard deviation of Sales is 2.801. Here is a predicted vs actual plot.

data.frame(Predicted = cs_preds_cart, Actual = cs_test$Sales) %>%

ggplot(aes(x = Actual, y = Predicted)) +

geom_point(alpha = 0.6, color = "cadetblue") +

geom_smooth() +

geom_abline(intercept = 0, slope = 1, linetype = 2) +

labs(title = "Carseats CART, Predicted vs Actual")

## `geom_smooth()` using method = 'loess' and formula 'y ~ x'

The 6 possible predicted values do a decent job of binning the observations.
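The two error measures used in this section, RMSE and MAE, are easy to compute by hand. Here is a minimal base R sketch with made-up numbers (the actual and pred vectors are hypothetical, not model output) that shows why a single large miss moves RMSE more than MAE.

```r
# Hypothetical actual and predicted values, for illustration only
actual <- c(10, 12, 9, 15, 11)
pred   <- c(11, 12, 7, 10, 11)

err <- actual - pred          # -1, 0, 2, 5, 0

rmse <- sqrt(mean(err^2))     # squares the errors, so the miss of 5 dominates
mae  <- mean(abs(err))        # every unit of error counts the same

c(RMSE = rmse, MAE = mae)
```

Here the squared errors sum to 30, so RMSE is sqrt(30 / 5), about 2.45, while MAE is 8 / 5 = 1.6.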

4.2.1 Training with Caret

I can also fit the model with caret::train(), specifying method = "rpart". I’ll build the model using 10-fold cross-validation to optimize the hyperparameter CP.

cs_trControl = trainControl(

method = "cv",

number = 10,

savePredictions = "final" # save predictions for the optimal tuning parameter

)

I’ll let the model look for the best CP tuning parameter with tuneLength to get close, then fine-tune with tuneGrid.

set.seed(1234)

cs_mdl_cart2 = train(

Sales ~ .,

data = cs_train,

method = "rpart",

tuneLength = 5,

metric = "RMSE",

trControl = cs_trControl

)

print(cs_mdl_cart2)

## CART

##

## 321 samples

## 10 predictor

##

## No pre-processing

## Resampling: Cross-Validated (10 fold)

## Summary of sample sizes: 289, 289, 289, 289, 289, 289, ...

## Resampling results across tuning parameters:

##

## cp RMSE Rsquared MAE

## 0.04167149 2.209383 0.4065251 1.778797

## 0.04483023 2.243618 0.3849728 1.805027

## 0.04637919 2.275563 0.3684309 1.808814

## 0.12140705 2.400455 0.2942663 1.936927

## 0.26273578 2.692867 0.1898998 2.192774

##

## RMSE was used to select the optimal model using the smallest value.

## The final value used for the model was cp = 0.04167149.

The first cp (0.04167149) produced the smallest RMSE. I can drill into the best value of cp using a tuning grid. I’ll try that now.

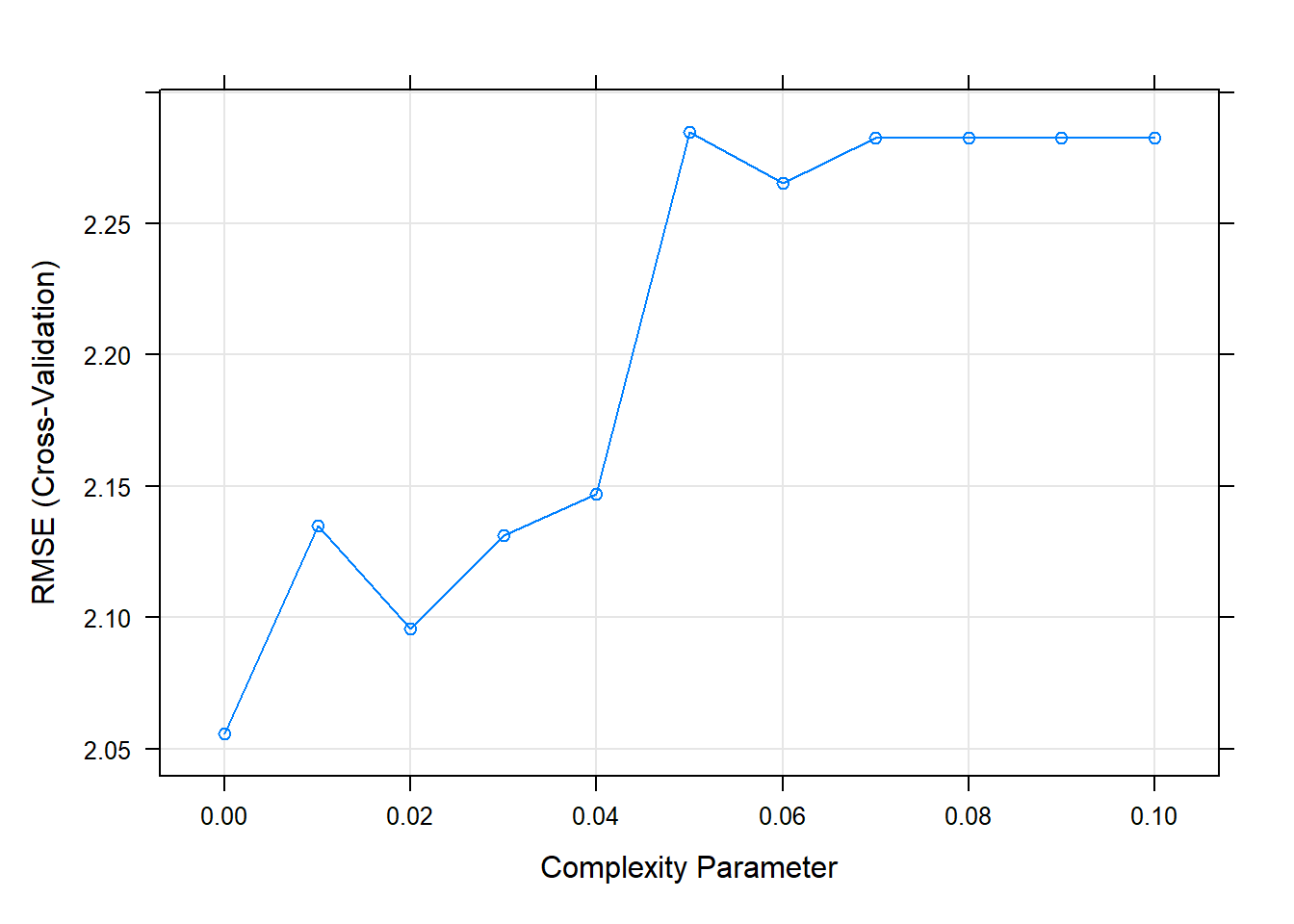

set.seed(1234)

cs_mdl_cart2 = train(

Sales ~ .,

data = cs_train,

method = "rpart",

tuneGrid = expand.grid(cp = seq(from = 0, to = 0.1, by = 0.01)),

metric = "RMSE",

trControl = cs_trControl

)

print(cs_mdl_cart2)

## CART

##

## 321 samples

## 10 predictor

##

## No pre-processing

## Resampling: Cross-Validated (10 fold)

## Summary of sample sizes: 289, 289, 289, 289, 289, 289, ...

## Resampling results across tuning parameters:

##

## cp RMSE Rsquared MAE

## 0.00 2.055676 0.5027431 1.695453

## 0.01 2.135096 0.4642577 1.745937

## 0.02 2.095767 0.4733269 1.699235

## 0.03 2.131246 0.4534544 1.690453

## 0.04 2.146886 0.4411380 1.712705

## 0.05 2.284937 0.3614130 1.837782

## 0.06 2.265498 0.3709523 1.808319

## 0.07 2.282630 0.3597216 1.836227

## 0.08 2.282630 0.3597216 1.836227

## 0.09 2.282630 0.3597216 1.836227

## 0.10 2.282630 0.3597216 1.836227

##

## RMSE was used to select the optimal model using the smallest value.

## The final value used for the model was cp = 0.

It looks like the best performing tree is the unpruned one.

plot(cs_mdl_cart2)

Let’s see the final model.

rpart.plot(cs_mdl_cart2$finalModel)

What were the most important variables?

plot(varImp(cs_mdl_cart2), main="Variable Importance with Simple Regression")

Evaluate the model by making predictions with the test data set.

cs_preds_cart2 <- predict(cs_mdl_cart2, cs_test, type = "raw")

data.frame(Actual = cs_test$Sales, Predicted = cs_preds_cart2) %>%

ggplot(aes(x = Actual, y = Predicted)) +

geom_point(alpha = 0.6, color = "cadetblue") +

geom_smooth(method = "loess", formula = "y ~ x") +

geom_abline(intercept = 0, slope = 1, linetype = 2) +

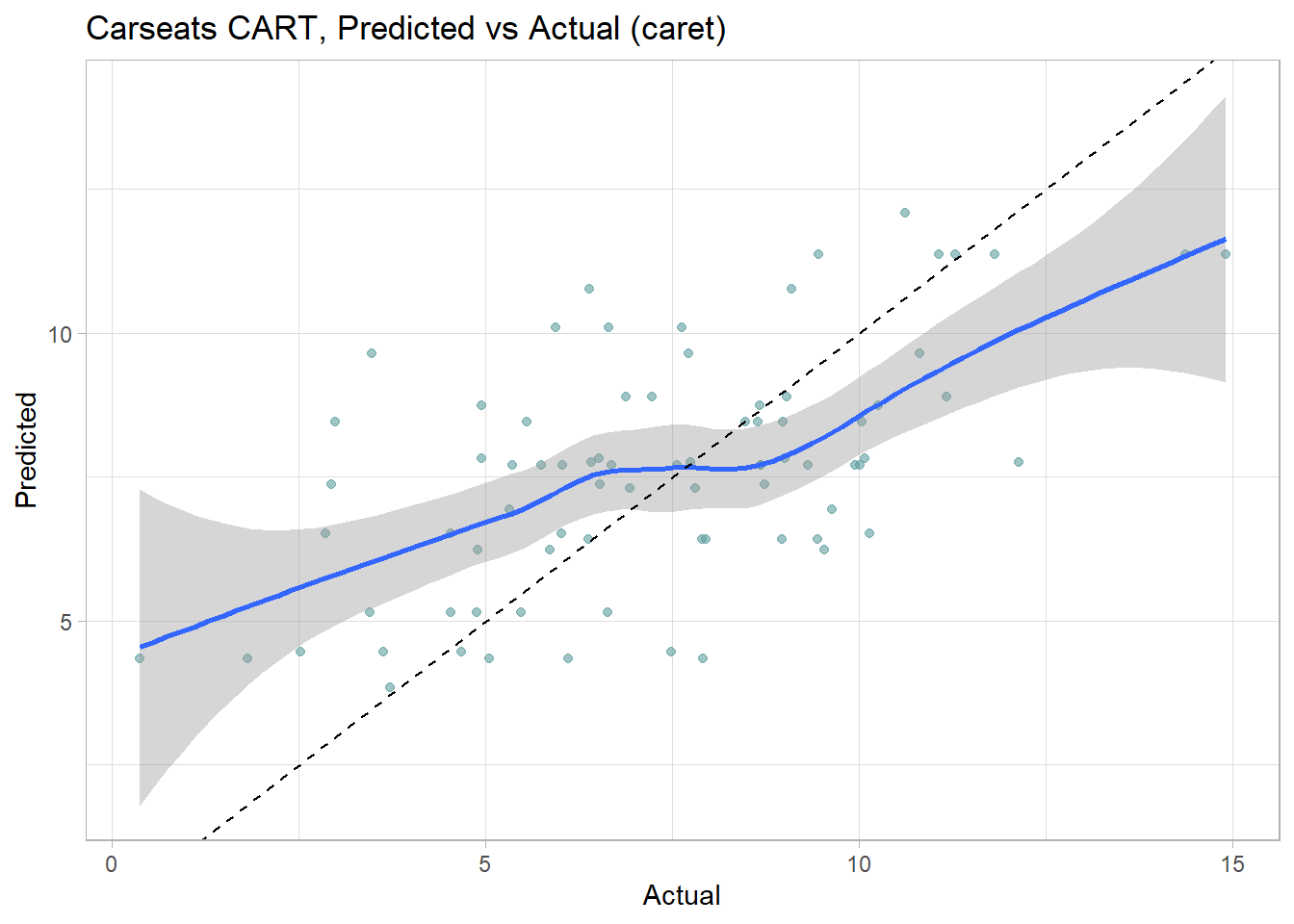

labs(title = "Carseats CART, Predicted vs Actual (caret)")

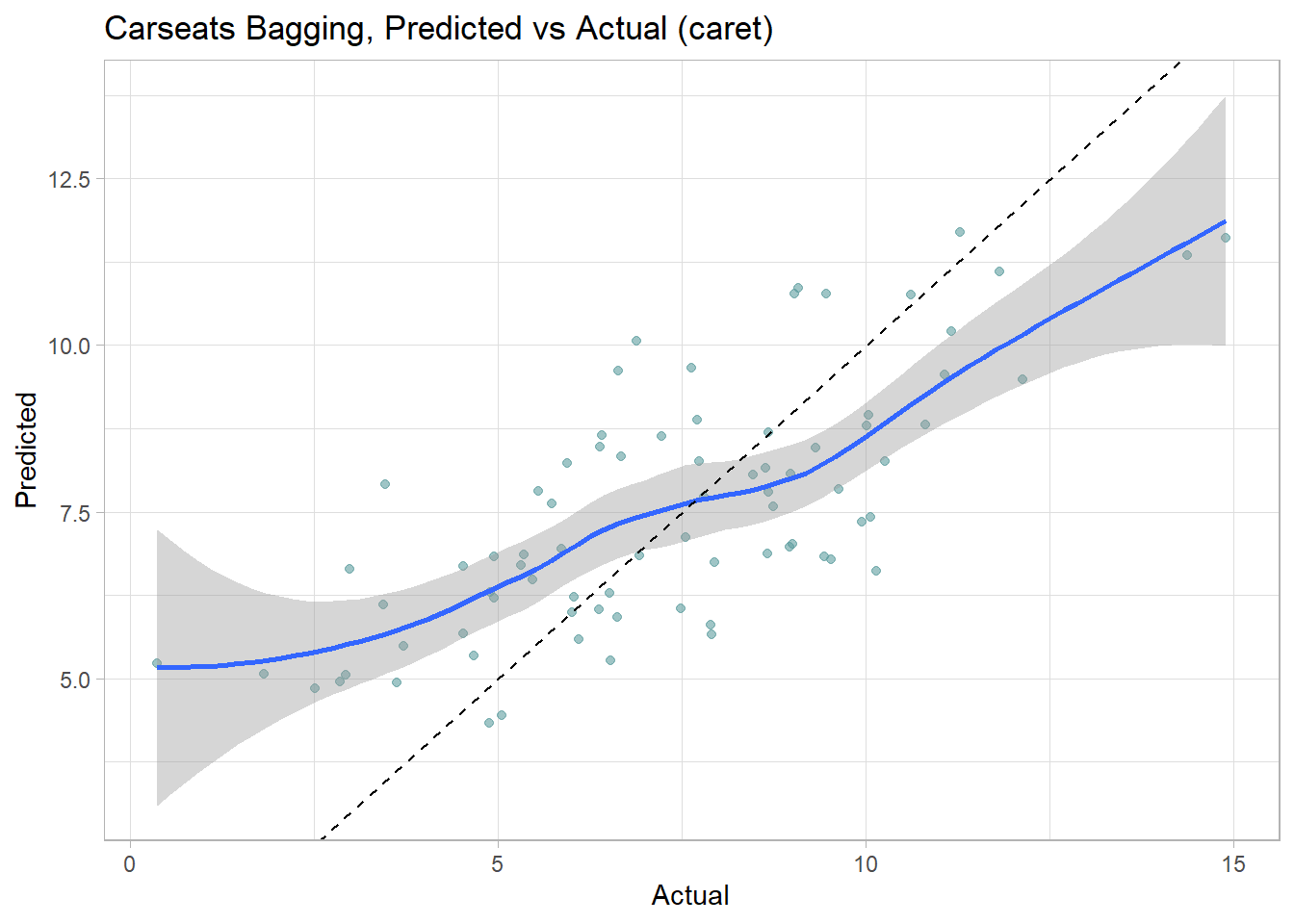

The model over-estimates at the low end and underestimates at the high end. Calculate the test data set RMSE.

(cs_rmse_cart2 <- RMSE(pred = cs_preds_cart2, obs = cs_test$Sales))

## [1] 2.298331

Caret performed better in this model. Here is a summary of the RMSE values of the two models.

cs_scoreboard <- rbind(

data.frame(Model = "Single Tree", RMSE = cs_rmse_cart),

data.frame(Model = "Single Tree (caret)", RMSE = cs_rmse_cart2)

) %>% arrange(RMSE)

scoreboard(cs_scoreboard)

| Model | RMSE |
|---|---|
| Single Tree (caret) | 2.298331 |
| Single Tree | 2.363202 |

4.3 Bagged Trees

One drawback of decision trees is that they are high-variance estimators. A small number of additional training observations can dramatically alter the prediction performance of a learned tree.

Bootstrap aggregation, or bagging, is a general-purpose procedure for reducing the variance of a statistical learning method. The algorithm constructs B regression trees using B bootstrapped training sets, and averages the resulting predictions. These trees are grown deep, and are not pruned. Hence each individual tree has high variance, but low bias. Averaging the B trees reduces the variance. The predicted value for an observation is the mode (classification) or mean (regression) of the trees. B usually equals ~25.

To test the model accuracy, the out-of-bag observations are predicted from the models. For a training set of size n, each tree is composed of \(\sim (1 - e^{-1})n = .632n\) unique observations in-bag and \(.368n\) out-of-bag. For each tree in the ensemble, bagging makes predictions on the tree’s out-of-bag observations. I think (see page 197 of (Kuhn and Johnson 2016)) bagging measures the performance (RMSE, Accuracy, ROC, etc.) of each tree in the ensemble and averages them to produce an overall performance estimate. (This makes no sense to me. If each tree has poor performance, then the average performance of many trees will still be poor. An ensemble of B trees will produce \(\sim .368 B\) predictions per unique observation. Seems like you should take the mean/mode of each observation’s prediction as the final prediction. Then you have n predictions to compare to n actuals, and you assess performance on that.)
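The in-bag fraction quoted above is easy to check by simulation. This is a small base R sketch; the training set size n and the number of bootstrap samples B are arbitrary values chosen for illustration, not settings from the models in this chapter.

```r
set.seed(1234)

n <- 1000   # training set size (arbitrary, for illustration)
B <- 200    # number of bootstrap samples (arbitrary)

# For each bootstrap sample, the fraction of distinct training rows drawn
in_bag_frac <- replicate(B, length(unique(sample(n, n, replace = TRUE))) / n)

mean(in_bag_frac)       # simulated in-bag fraction
1 - exp(-1)             # theoretical limit, about 0.632
1 - mean(in_bag_frac)   # out-of-bag fraction, about 0.368
```

Each row therefore sits out of roughly 37% of the trees, which is what makes out-of-bag predictions available for every observation once B is moderately large.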

The downside to bagging is that there is no single tree with a set of rules to interpret. It becomes unclear which variables are more important than others.

The next section explains how bagged trees are a special case of random forests.

4.3.1 Bagged Classification Tree

Learning by example, I’ll predict Purchase from the OJ data set again, this time using the bagging method by specifying method = "treebag". Caret has no hyperparameters to tune with this model, so I won’t set tuneLength or tuneGrid. The ensemble size defaults to nbagg = 25, but you can override it (I didn’t).

set.seed(1234)

oj_mdl_bag <- train(

Purchase ~ .,

data = oj_train,

method = "treebag",

trControl = oj_trControl,

metric = "ROC"

)

oj_mdl_bag$finalModel

##

## Bagging classification trees with 25 bootstrap replications

oj_mdl_bag

## Bagged CART

##

## 857 samples

## 17 predictor

## 2 classes: 'CH', 'MM'

##

## No pre-processing

## Resampling: Cross-Validated (10 fold)

## Summary of sample sizes: 772, 772, 771, 770, 771, 771, ...

## Resampling results:

##

## ROC Sens Spec

## 0.8553731 0.8432511 0.7186275

# summary(oj_mdl_bag)

If you review the summary(oj_mdl_bag), you’ll see that caret built B = 25 trees from 25 bootstrapped training sets of 857 samples (the size of oj_train). I think caret started by splitting the training set into 10 folds, then using 9 of the folds to run the bagging algorithm and collect performance measures on the hold-out fold. After repeating the process for all 10 folds, it averaged the performance measures to produce the resampling results shown above. Had there been hyperparameters to tune, caret would have repeated this process for all hyperparameter combinations and the resampling results above would be from the best performing combination. Then caret ran the bagging algorithm again on the entire training set, and the trees you see in summary(oj_mdl_bag) are what it produced. (It seems inefficient to cross-validate a bagging algorithm given that the out-of-bag samples are there for performance testing.)

Let’s look at the performance on the holdout data set.

oj_preds_bag <- bind_cols(

predict(oj_mdl_bag, newdata = oj_test, type = "prob"),

Predicted = predict(oj_mdl_bag, newdata = oj_test, type = "raw"),

Actual = oj_test$Purchase

)

oj_cm_bag <- confusionMatrix(oj_preds_bag$Predicted, reference = oj_preds_bag$Actual)

oj_cm_bag

## Confusion Matrix and Statistics

##

## Reference

## Prediction CH MM

## CH 113 16

## MM 17 67

##

## Accuracy : 0.8451

## 95% CI : (0.7894, 0.8909)

## No Information Rate : 0.6103

## P-Value [Acc > NIR] : 6.311e-14

##

## Kappa : 0.675

##

## Mcnemar's Test P-Value : 1

##

## Sensitivity : 0.8692

## Specificity : 0.8072

## Pos Pred Value : 0.8760

## Neg Pred Value : 0.7976

## Prevalence : 0.6103

## Detection Rate : 0.5305

## Detection Prevalence : 0.6056

## Balanced Accuracy : 0.8382

##

## 'Positive' Class : CH

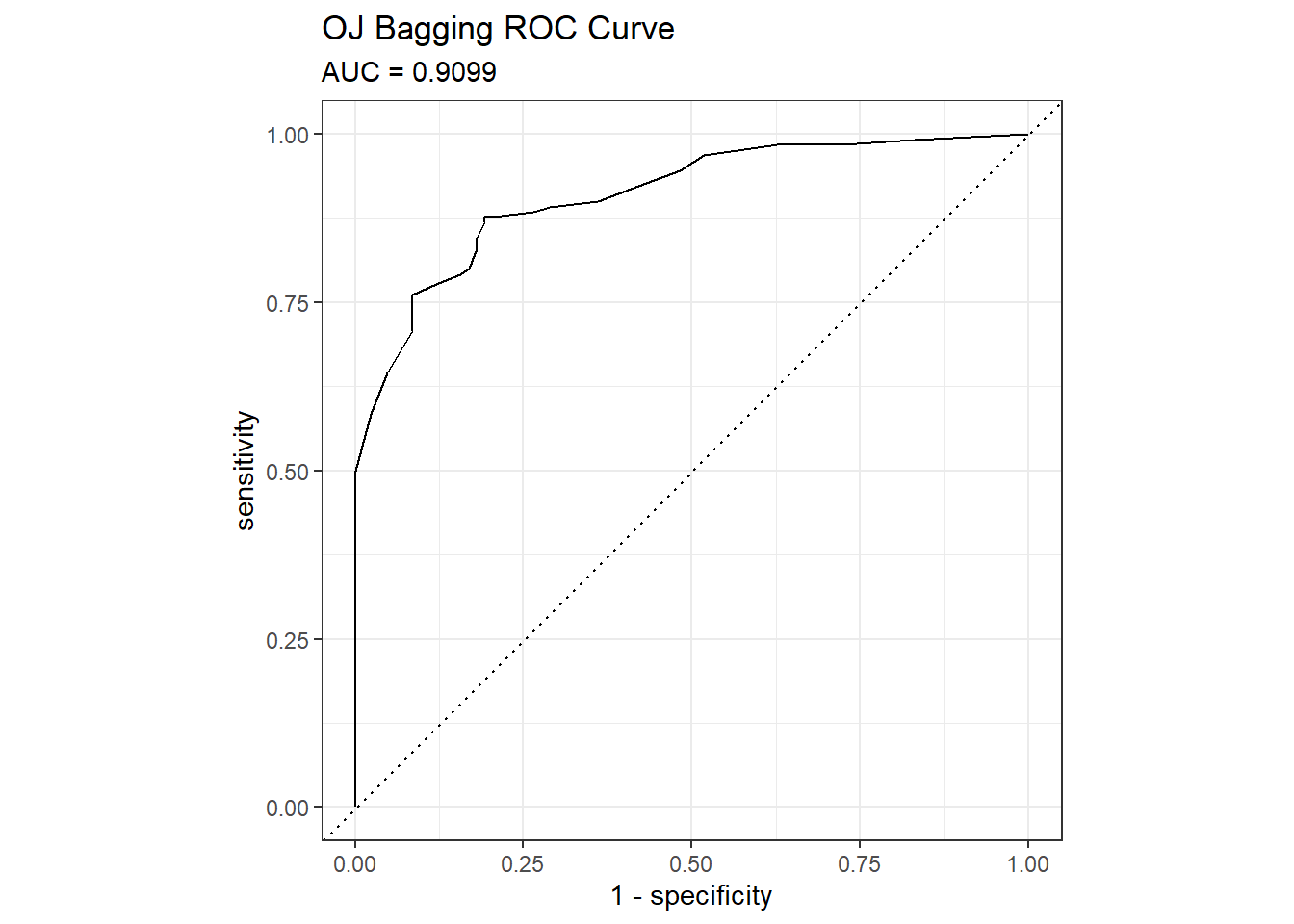

## The accuracy is 0.8451 - surprisingly worse than the 0.85915 of the single tree model, but that is a difference of three predictions in a set of 213. Here are the ROC and gain curves.

mdl_auc <- Metrics::auc(actual = oj_preds_bag$Actual == "CH", oj_preds_bag$CH)

yardstick::roc_curve(oj_preds_bag, Actual, CH) %>%

autoplot() +

labs(

title = "OJ Bagging ROC Curve",

subtitle = paste0("AUC = ", round(mdl_auc, 4))

)

yardstick::gain_curve(oj_preds_bag, Actual, CH) %>%

autoplot() +

labs(title = "OJ Bagging Gain Curve")

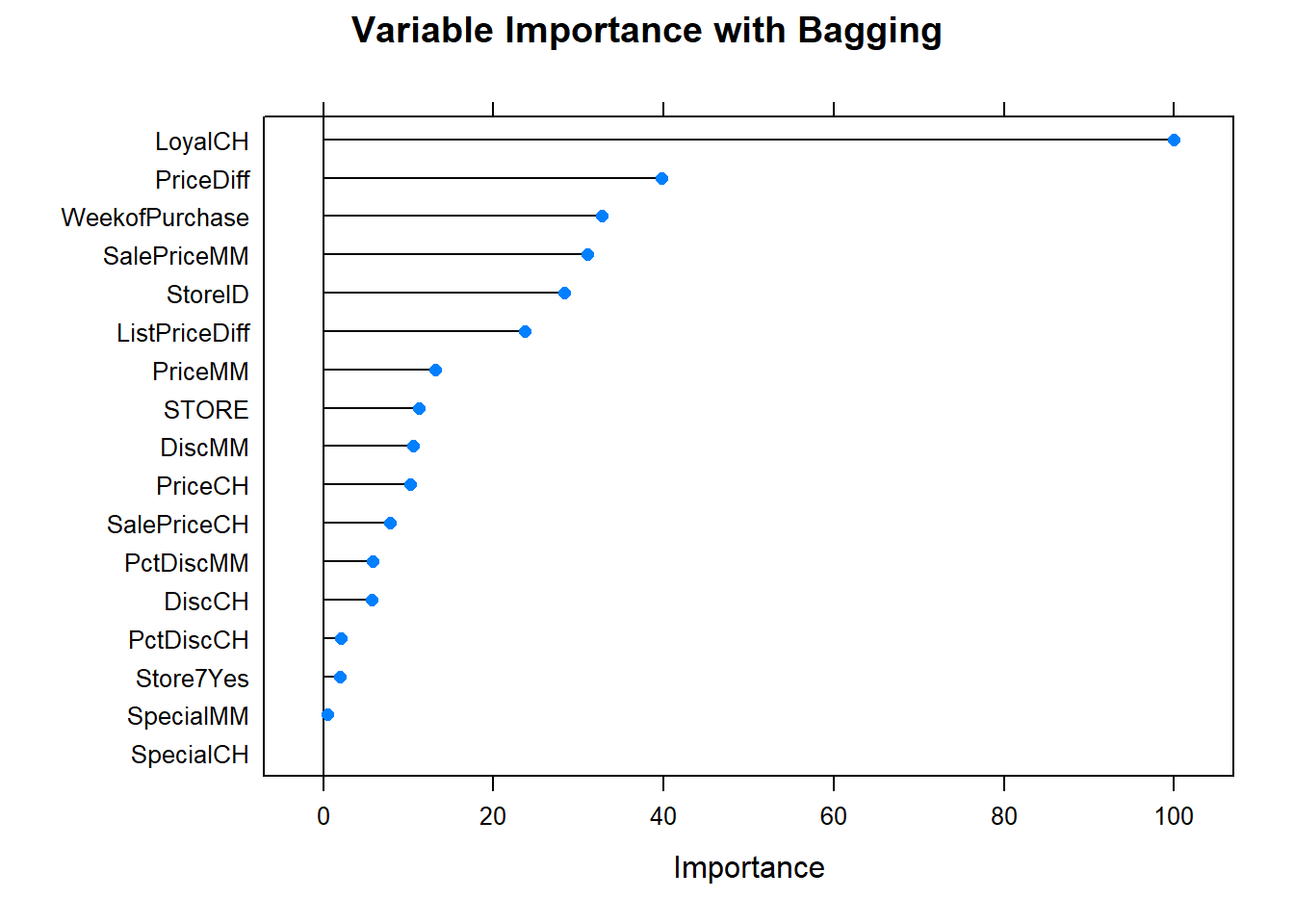

Let’s see what are the most important variables.

plot(varImp(oj_mdl_bag), main="Variable Importance with Bagging")

Finally, let’s check out the scoreboard. Bagging fared worse than the single tree models.

oj_scoreboard <- rbind(oj_scoreboard,

data.frame(Model = "Bagging", Accuracy = oj_cm_bag$overall["Accuracy"])

) %>% arrange(desc(Accuracy))

scoreboard(oj_scoreboard)

| Model | Accuracy |
|---|---|
| Single Tree | 0.8591549 |
| Single Tree (caret) | 0.8544601 |
| Bagging | 0.8450704 |

4.3.2 Bagging Regression Tree

I’ll predict Sales from the Carseats data set again, this time using the bagging method by specifying method = "treebag".

set.seed(1234)

cs_mdl_bag <- train(

Sales ~ .,

data = cs_train,

method = "treebag",

trControl = cs_trControl

)

cs_mdl_bag

## Bagged CART

##

## 321 samples

## 10 predictor

##

## No pre-processing

## Resampling: Cross-Validated (10 fold)

## Summary of sample sizes: 289, 289, 289, 289, 289, 289, ...

## Resampling results:

##

## RMSE Rsquared MAE

## 1.681889 0.675239 1.343427

Let’s look at the performance on the holdout data set. The RMSE is 1.9185, but the model over-predicts at the low end of Sales and under-predicts at the high end.

cs_preds_bag <- bind_cols(

Predicted = predict(cs_mdl_bag, newdata = cs_test),

Actual = cs_test$Sales

)

(cs_rmse_bag <- RMSE(pred = cs_preds_bag$Predicted, obs = cs_preds_bag$Actual))

## [1] 1.918473

cs_preds_bag %>%

ggplot(aes(x = Actual, y = Predicted)) +

geom_point(alpha = 0.6, color = "cadetblue") +

geom_smooth(method = "loess", formula = "y ~ x") +

geom_abline(intercept = 0, slope = 1, linetype = 2) +

labs(title = "Carseats Bagging, Predicted vs Actual (caret)")

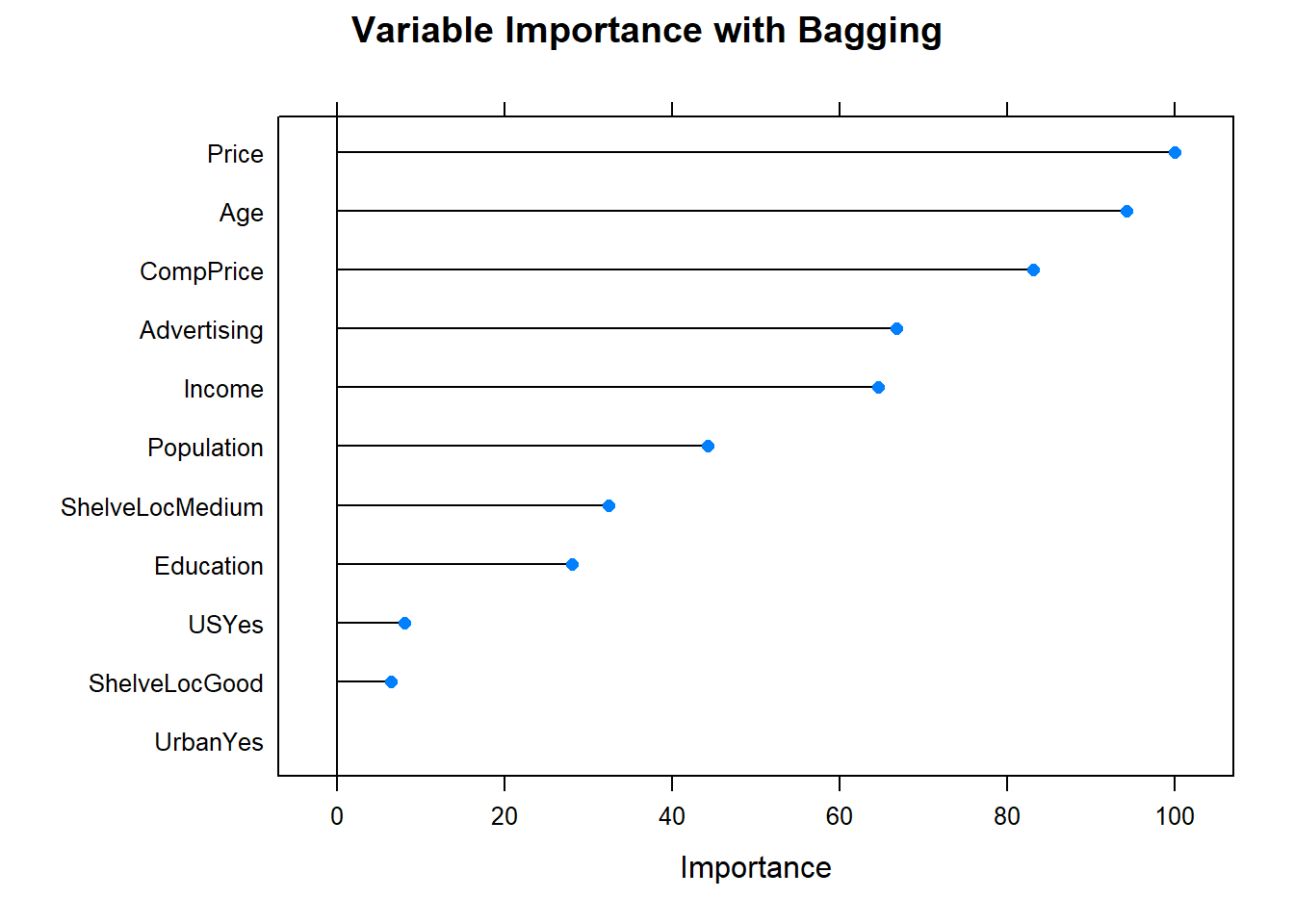

Now the variable importance.

plot(varImp(cs_mdl_bag), main="Variable Importance with Bagging")

Before moving on, check in with the scoreboard.

cs_scoreboard <- rbind(cs_scoreboard,

data.frame(Model = "Bagging", RMSE = cs_rmse_bag)

) %>% arrange(RMSE)

scoreboard(cs_scoreboard)

| Model | RMSE |
|---|---|
| Bagging | 1.918473 |
| Single Tree (caret) | 2.298331 |
| Single Tree | 2.363202 |

4.4 Random Forests

Random forests improve bagged trees by way of a small tweak that de-correlates the trees. As in bagging, the algorithm builds a number of decision trees on bootstrapped training samples. But when building these decision trees, each time a split in a tree is considered, a random sample of mtry predictors is chosen as split candidates from the full set of p predictors. A fresh sample of mtry predictors is taken at each split. Typically \(mtry \sim \sqrt{p}\). Bagged trees are thus a special case of random forests where mtry = p.
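The per-split sampling of predictors can be illustrated in a couple of lines of base R. This is only a sketch of the sampling step, not of a full random forest; p = 17 matches the OJ predictor count used in this chapter, and the variable names are my own.

```r
set.seed(1234)

p    <- 17               # number of predictors, as in the OJ data
mtry <- floor(sqrt(p))   # a common choice for classification: 4

# Candidate predictors for one split: a fresh random subset of size mtry.
# A random forest repeats this draw at every split of every tree.
candidates <- sample(seq_len(p), size = mtry)
candidates

# With mtry = p every predictor is a candidate at every split,
# which is ordinary bagging
all_candidates <- sample(seq_len(p), size = p)
setequal(all_candidates, seq_len(p))
```

Because a strong predictor is left out of many of these candidate sets, the trees end up less alike than bagged trees, and averaging them reduces variance further.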

4.4.0.1 Random Forest Classification Tree

Now I’ll try it with the random forest method by specifying method = "rf". Hyperparameter mtry can take any value from 1 to 17 (the number of predictors) and I expect the best value to be near \(\sqrt{17} \sim 4\).

set.seed(1234)

oj_mdl_rf <- train(

Purchase ~ .,

data = oj_train,

method = "rf",

metric = "ROC",

tuneGrid = expand.grid(mtry = 1:10), # searching around mtry=4

trControl = oj_trControl

)

oj_mdl_rf

## Random Forest

##

## 857 samples

## 17 predictor

## 2 classes: 'CH', 'MM'

##

## No pre-processing

## Resampling: Cross-Validated (10 fold)

## Summary of sample sizes: 772, 772, 771, 770, 771, 771, ...

## Resampling results across tuning parameters:

##

## mtry ROC Sens Spec

## 1 0.8419091 0.9024673 0.5479501

## 2 0.8625832 0.8756531 0.6976827

## 3 0.8667189 0.8623004 0.7214795

## 4 0.8680957 0.8507983 0.7183601

## 5 0.8678322 0.8469521 0.7091800

## 6 0.8687425 0.8431785 0.7273619

## 7 0.8690552 0.8394049 0.7213904

## 8 0.8673816 0.8432148 0.7212121

## 9 0.8675878 0.8317489 0.7182709

## 10 0.8653655 0.8412917 0.7152406

##

## ROC was used to select the optimal model using the largest value.

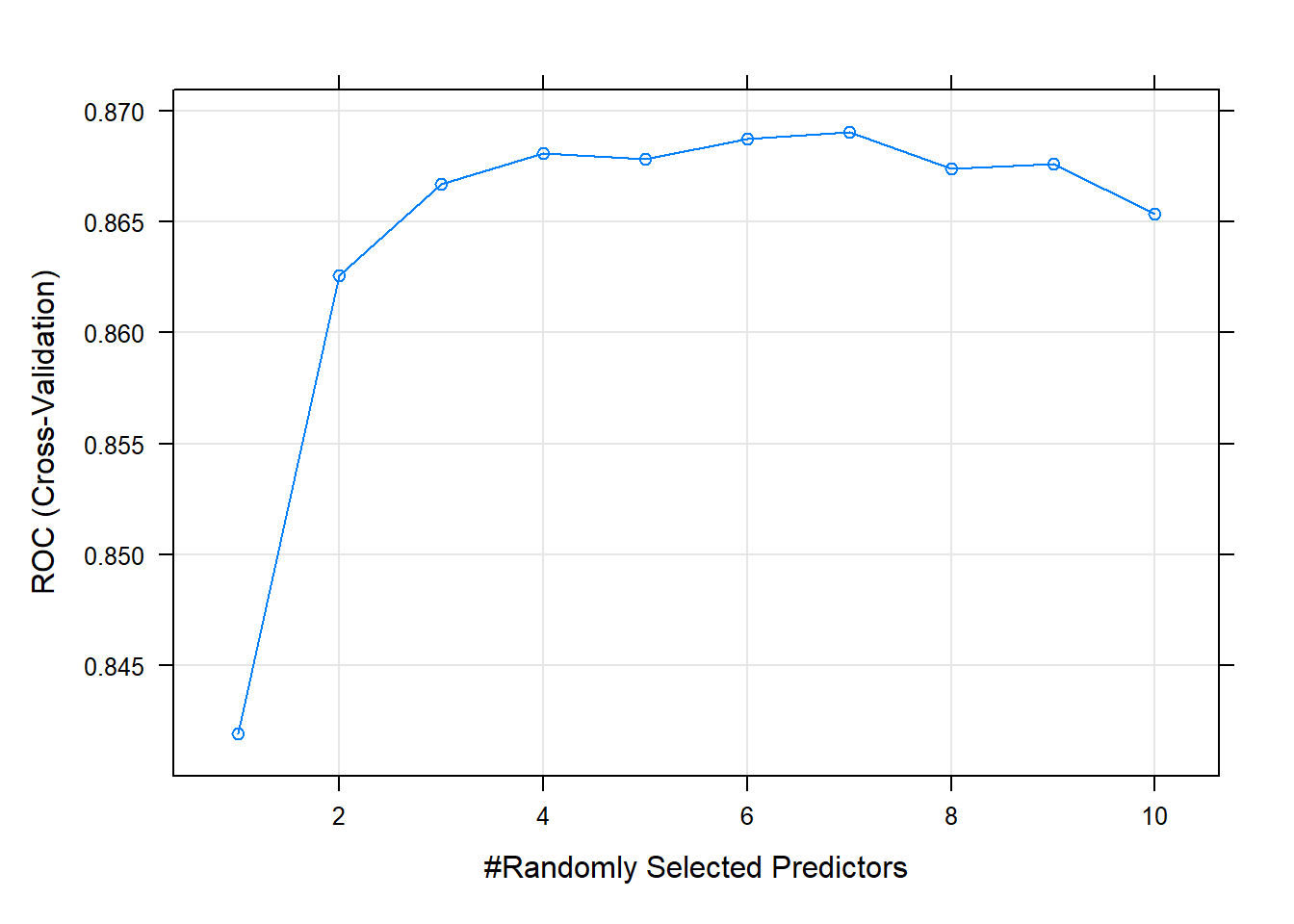

## The final value used for the model was mtry = 7.The largest ROC score was at mtry = 7 - higher than I expected.

plot(oj_mdl_rf)

Use the model to make predictions on the test set.

oj_preds_rf <- bind_cols(

predict(oj_mdl_rf, newdata = oj_test, type = "prob"),

Predicted = predict(oj_mdl_rf, newdata = oj_test, type = "raw"),

Actual = oj_test$Purchase

)

oj_cm_rf <- confusionMatrix(oj_preds_rf$Predicted, reference = oj_preds_rf$Actual)

oj_cm_rf

## Confusion Matrix and Statistics

##

## Reference

## Prediction CH MM

## CH 110 16

## MM 20 67

##

## Accuracy : 0.831

## 95% CI : (0.7738, 0.8787)

## No Information Rate : 0.6103

## P-Value [Acc > NIR] : 2.296e-12

##

## Kappa : 0.6477

##

## Mcnemar's Test P-Value : 0.6171

##

## Sensitivity : 0.8462

## Specificity : 0.8072

## Pos Pred Value : 0.8730

## Neg Pred Value : 0.7701

## Prevalence : 0.6103

## Detection Rate : 0.5164

## Detection Prevalence : 0.5915

## Balanced Accuracy : 0.8267

##

## 'Positive' Class : CH

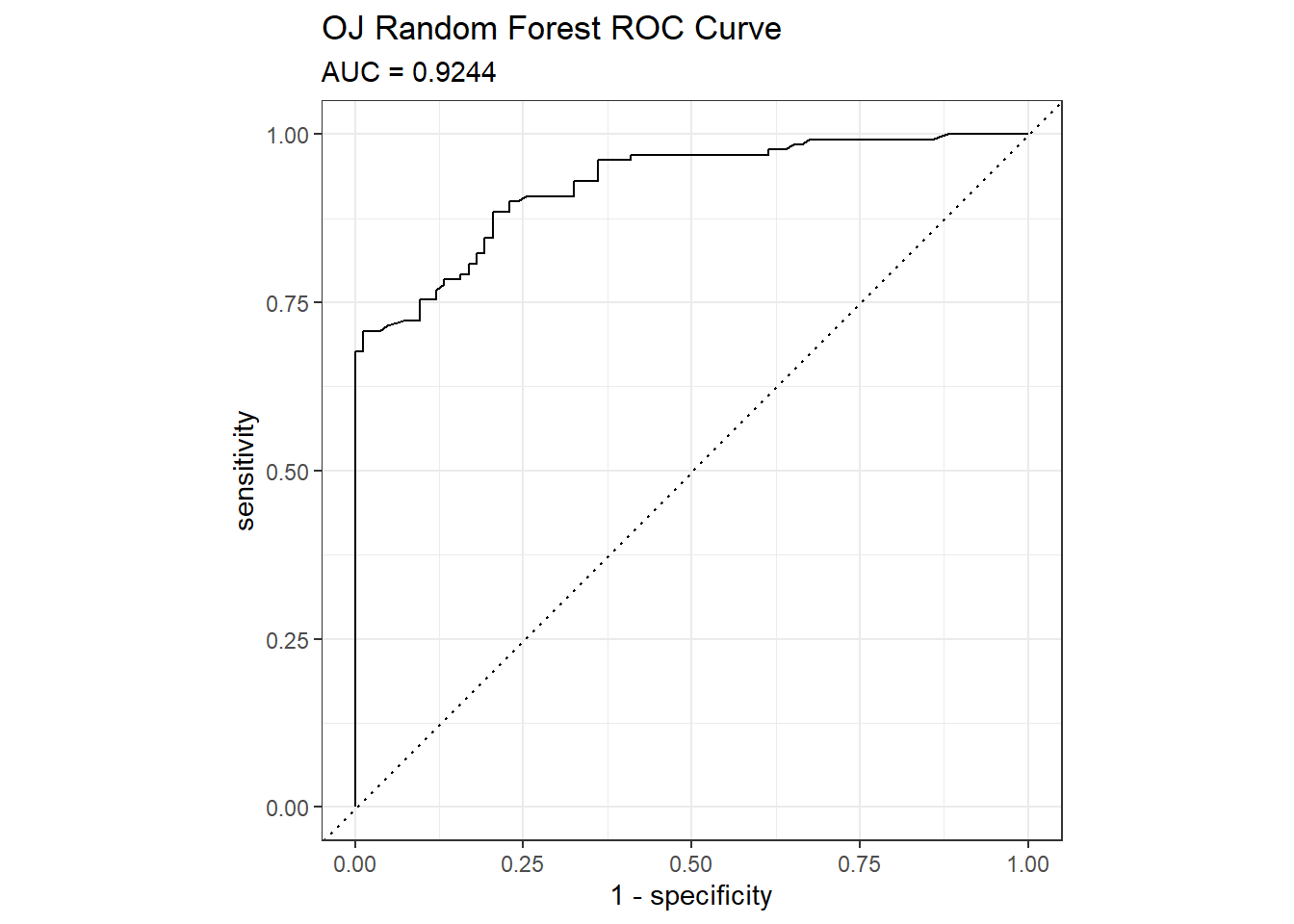

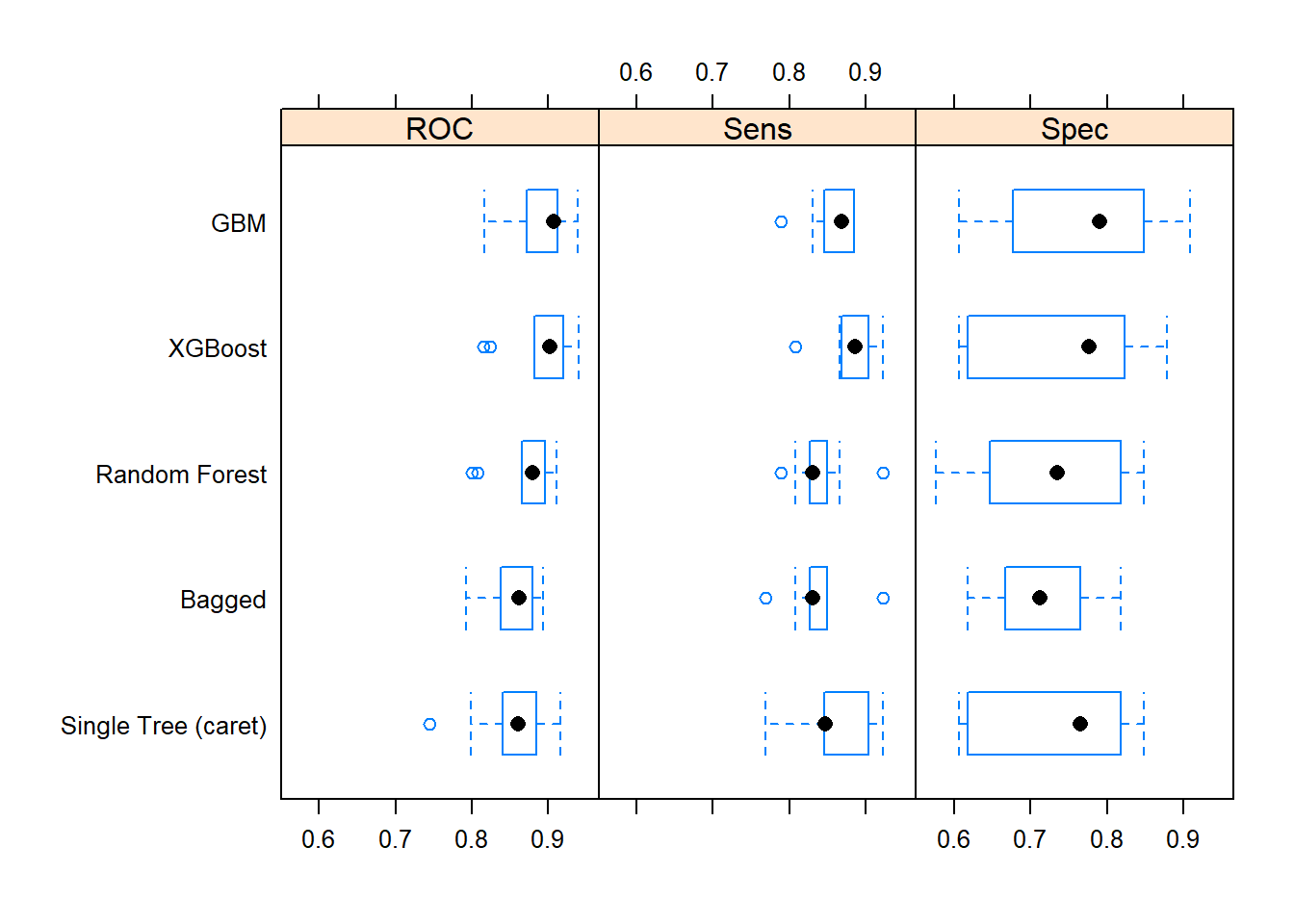

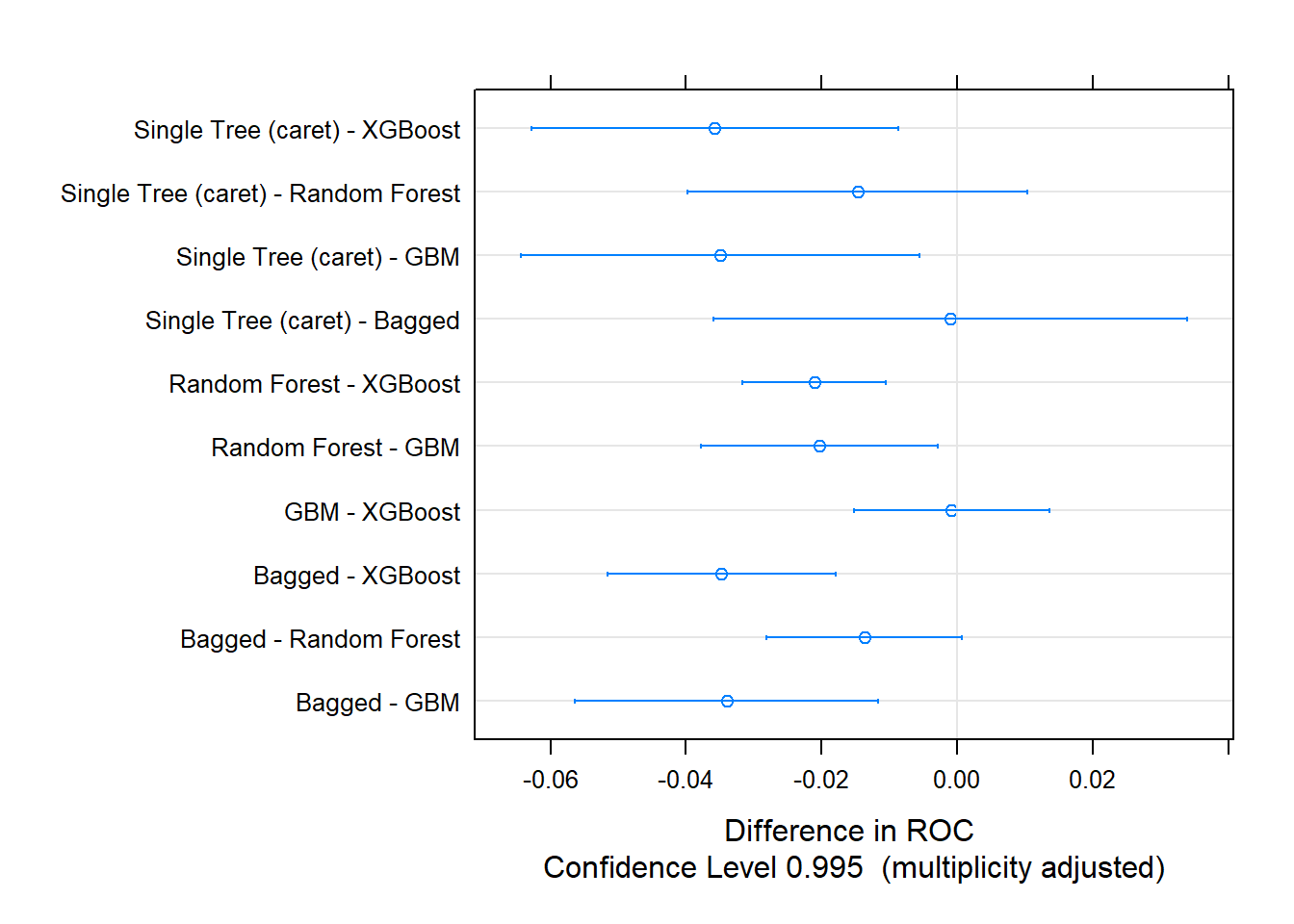

## The accuracy on the holdout set is 0.8310. The AUC is 0.9244. Here are the ROC and gain curves.

# AUC is 0.9190

mdl_auc <- Metrics::auc(actual = oj_preds_rf$Actual == "CH", oj_preds_rf$CH)

yardstick::roc_curve(oj_preds_rf, Actual, CH) %>%

autoplot() +

labs(

title = "OJ Random Forest ROC Curve",

subtitle = paste0("AUC = ", round(mdl_auc, 4))

)

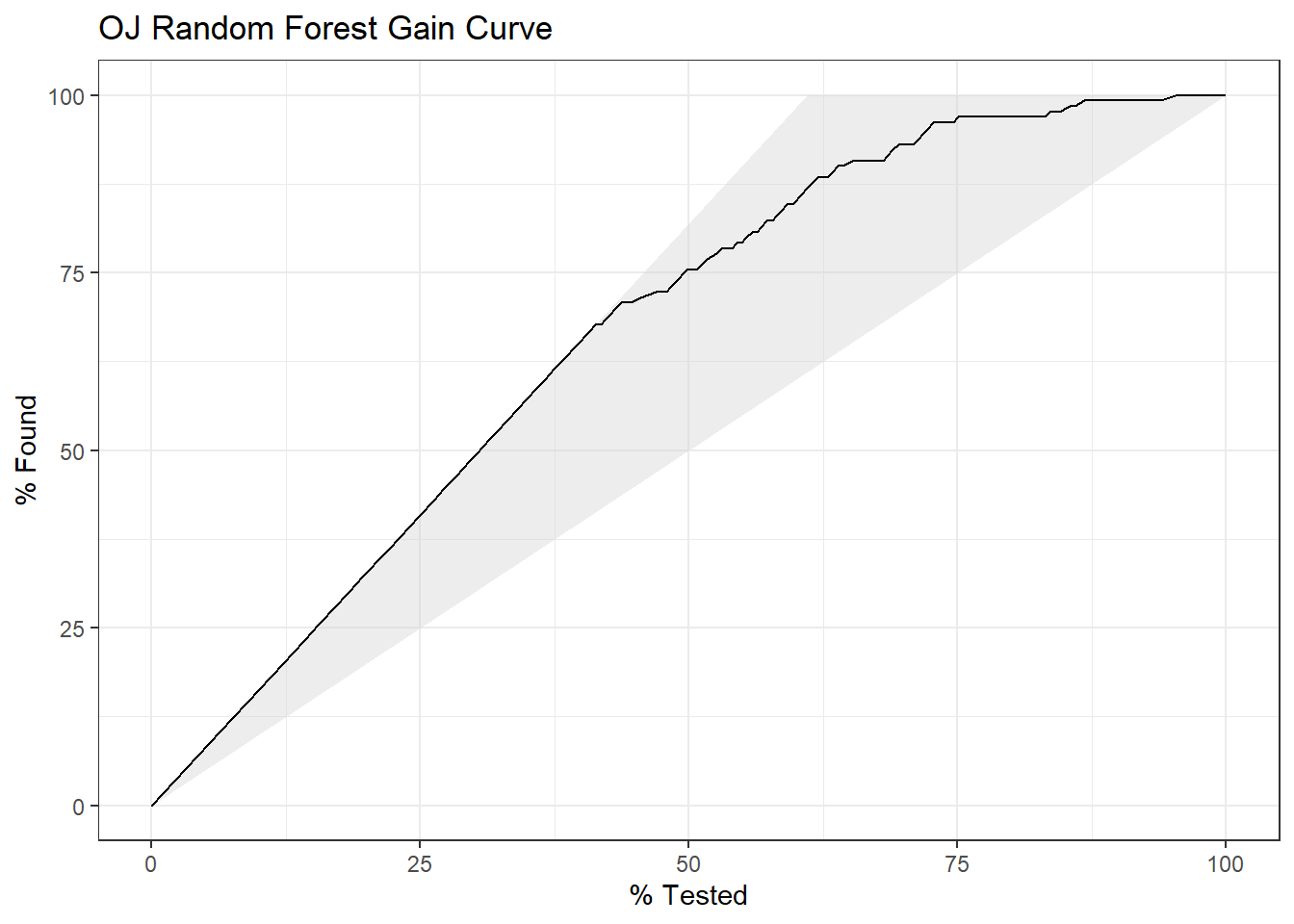

yardstick::gain_curve(oj_preds_rf, Actual, CH) %>%

autoplot() +

labs(title = "OJ Random Forest Gain Curve")

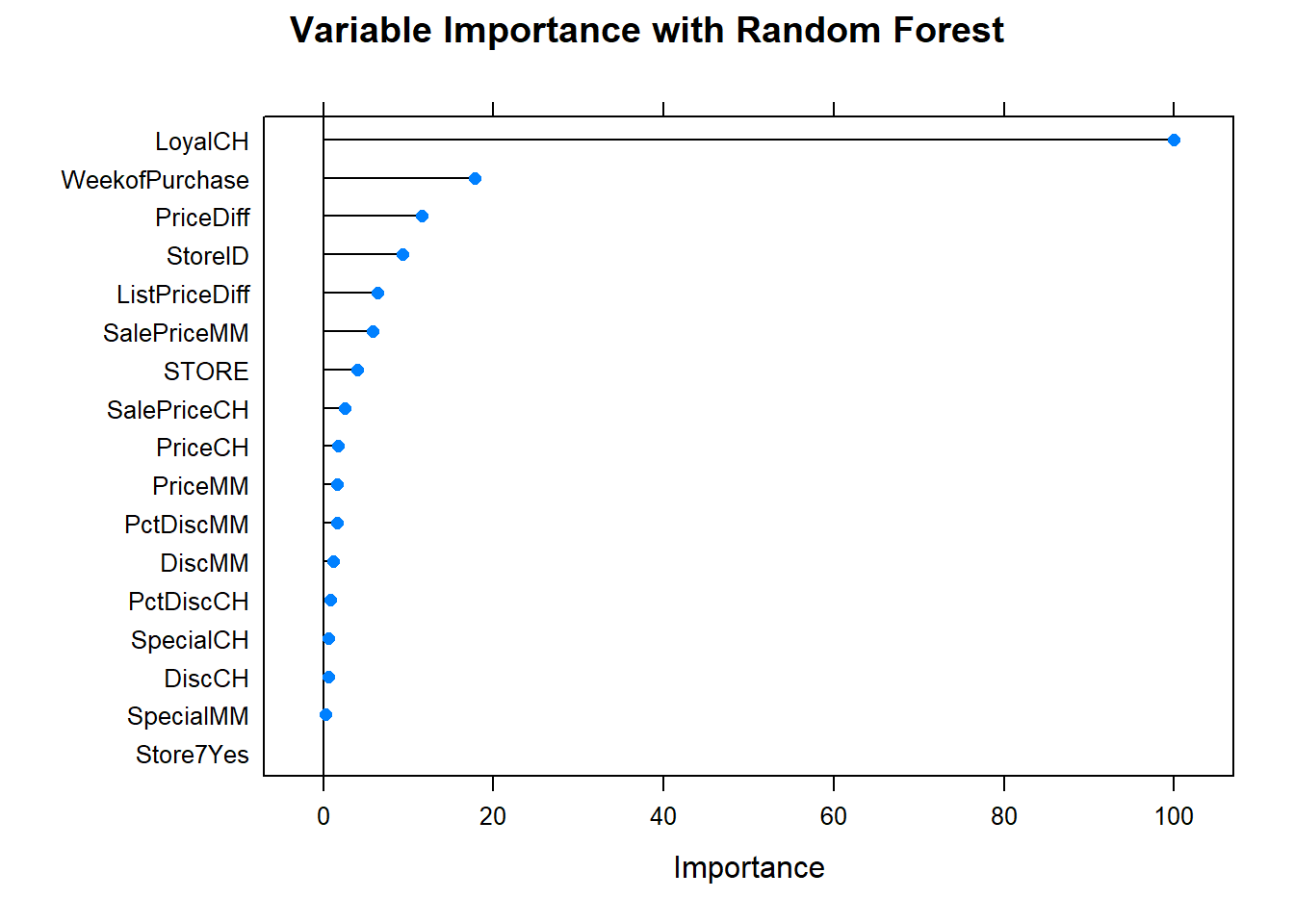

What are the most important variables?

plot(varImp(oj_mdl_rf), main="Variable Importance with Random Forest")

Let’s update the scoreboard. The bagging and random forest models did pretty well, but the manual classification tree is still in first place. There’s still gradient boosting to investigate!

oj_scoreboard <- rbind(oj_scoreboard,

data.frame(Model = "Random Forest", Accuracy = oj_cm_rf$overall["Accuracy"])

) %>% arrange(desc(Accuracy))

scoreboard(oj_scoreboard)

| Model | Accuracy |
|---|---|
| Single Tree | 0.8591549 |
| Single Tree (caret) | 0.8544601 |
| Bagging | 0.8450704 |
| Random Forest | 0.8309859 |

4.4.0.2 Random Forest Regression Tree

Now I’ll try it with the random forest method by specifying method = "rf". Hyperparameter mtry can take any value from 1 to 10 (the number of predictors) and I expect the best value to be near \(\sqrt{10} \sim 3\).

set.seed(1234)

cs_mdl_rf <- train(

Sales ~ .,

data = cs_train,

method = "rf",

tuneGrid = expand.grid(mtry = 1:10), # searching around mtry=3

trControl = cs_trControl

)

cs_mdl_rf

## Random Forest

##

## 321 samples

## 10 predictor

##

## No pre-processing

## Resampling: Cross-Validated (10 fold)

## Summary of sample sizes: 289, 289, 289, 289, 289, 289, ...

## Resampling results across tuning parameters:

##

## mtry RMSE Rsquared MAE

## 1 2.170362 0.6401338 1.739791

## 2 1.806516 0.7281537 1.444411

## 3 1.661626 0.7539811 1.320989

## 4 1.588878 0.7531926 1.259214

## 5 1.539960 0.7580374 1.222062

## 6 1.526479 0.7536928 1.211417

## 7 1.515426 0.7541277 1.205956

## 8 1.523217 0.7456623 1.215768

## 9 1.521271 0.7447813 1.217091

## 10 1.527277 0.7380014 1.218469

##

## RMSE was used to select the optimal model using the smallest value.

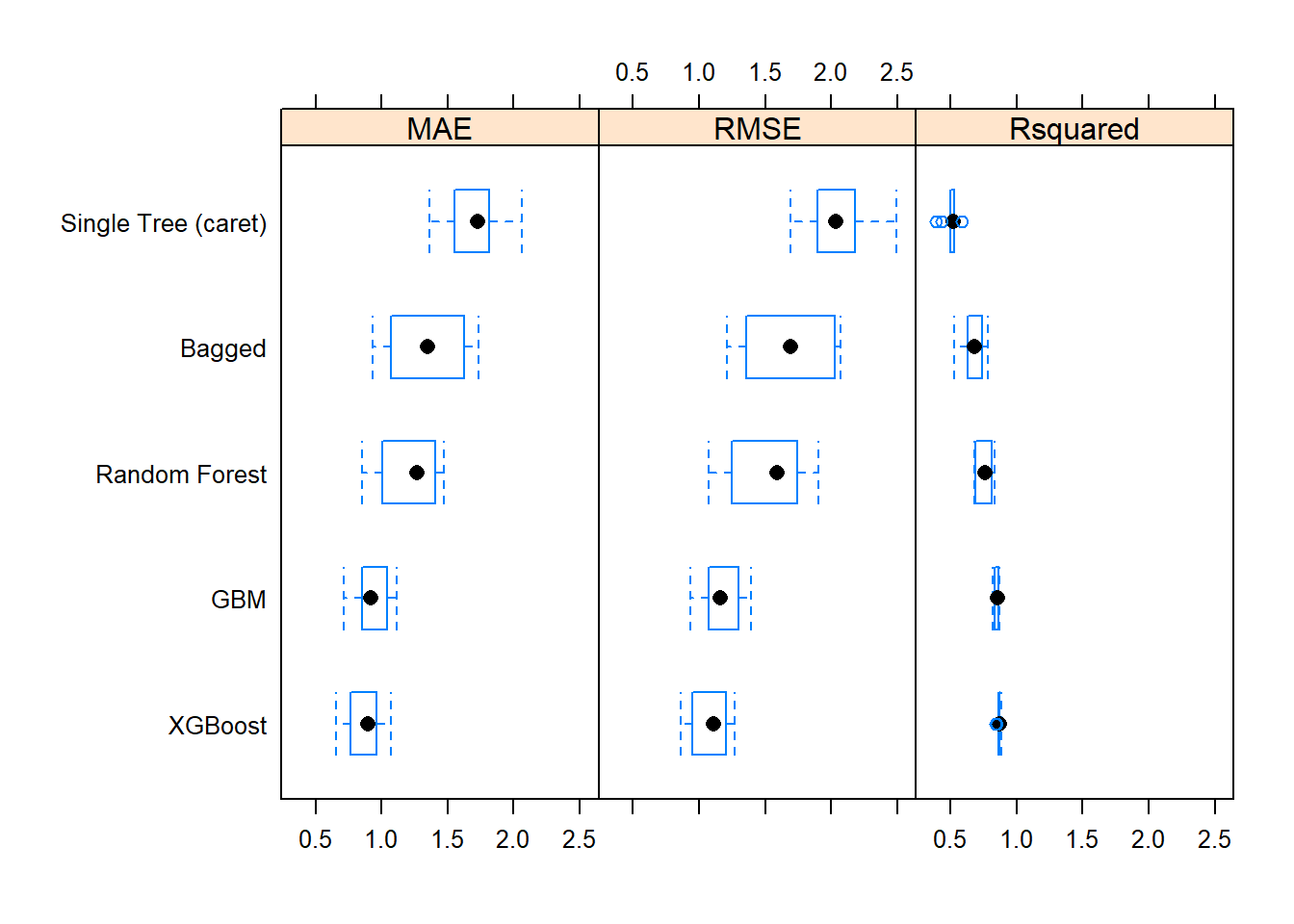

## The final value used for the model was mtry = 7.The minimum RMSE is at mtry = 7.

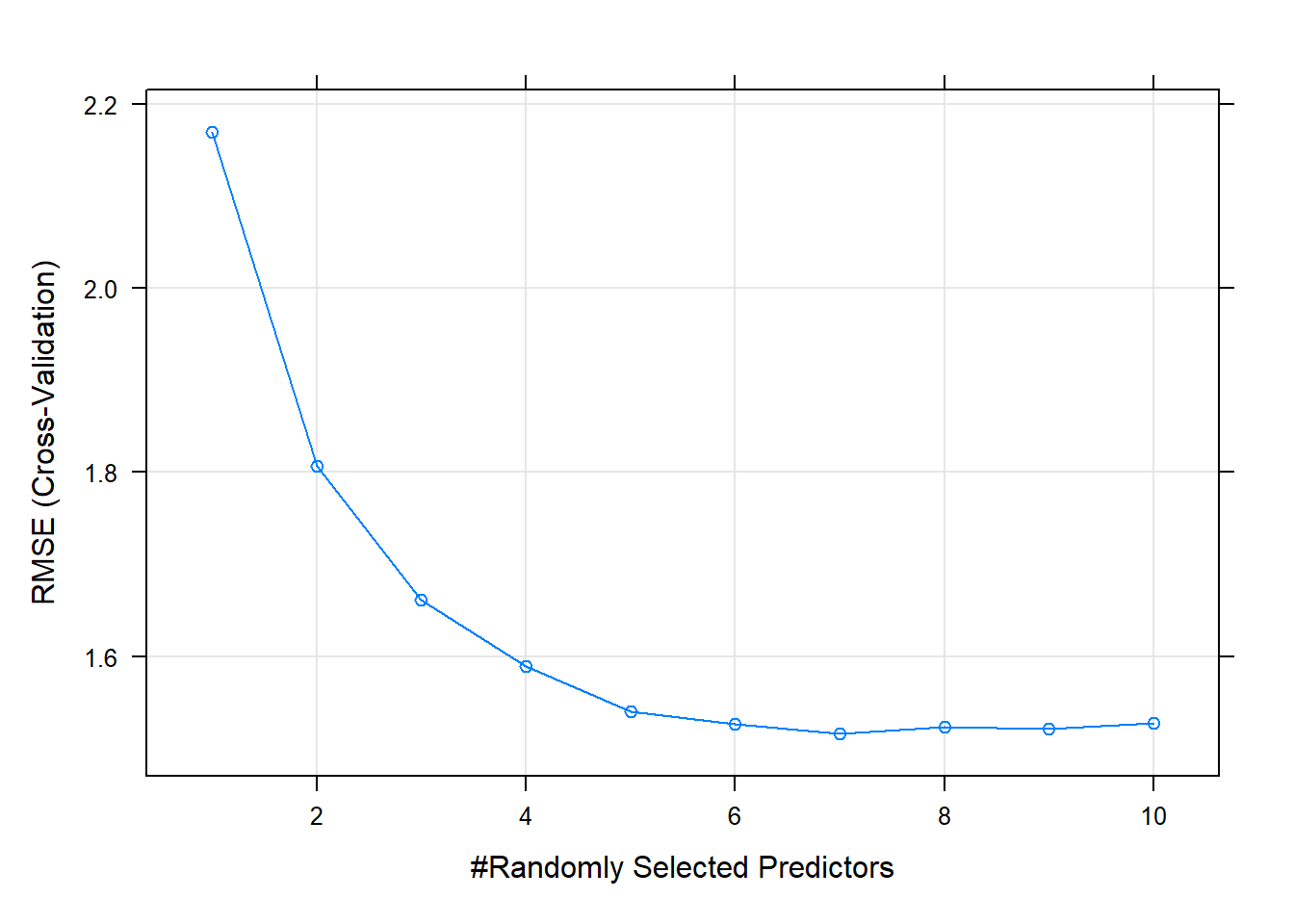

plot(cs_mdl_rf)

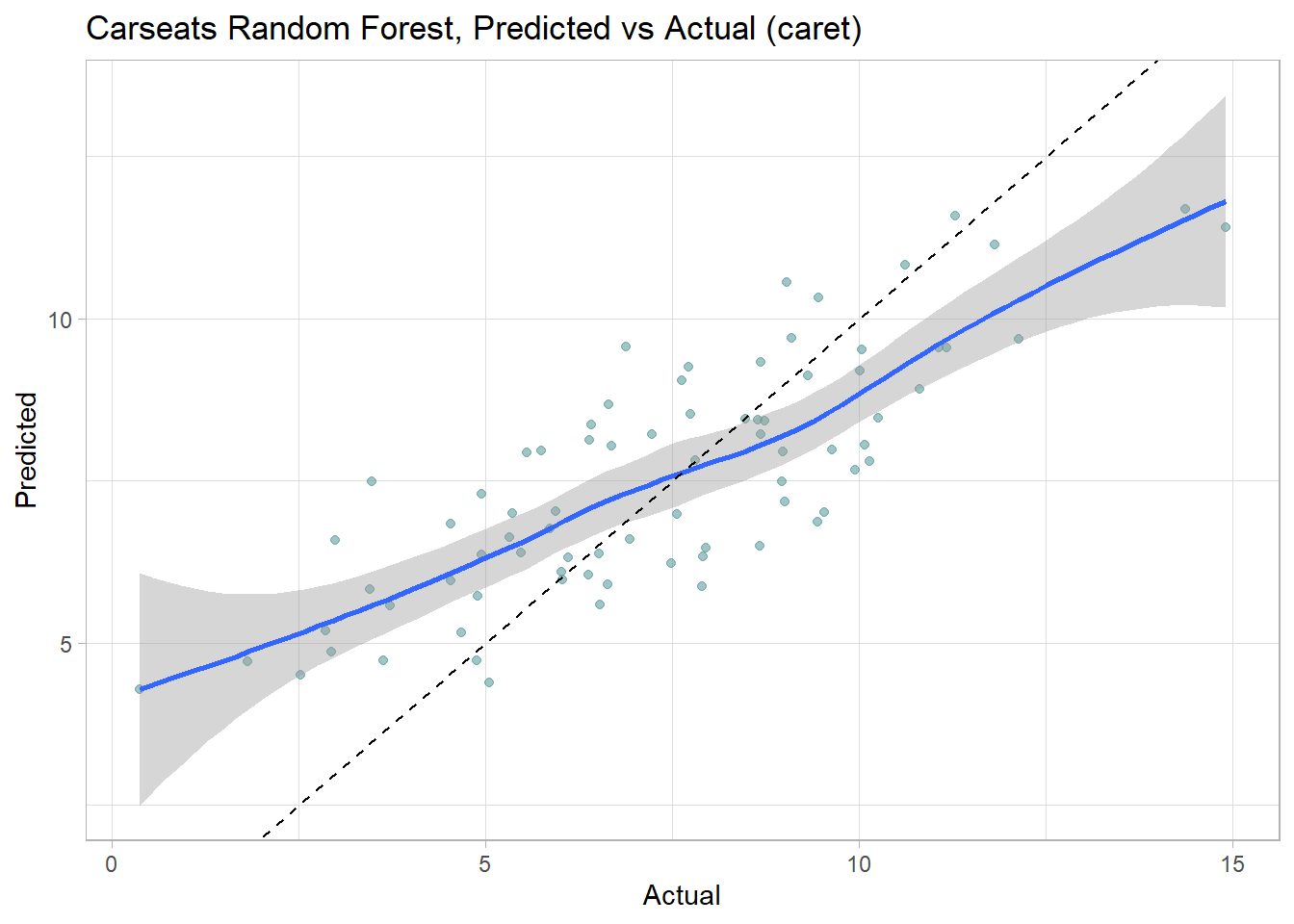

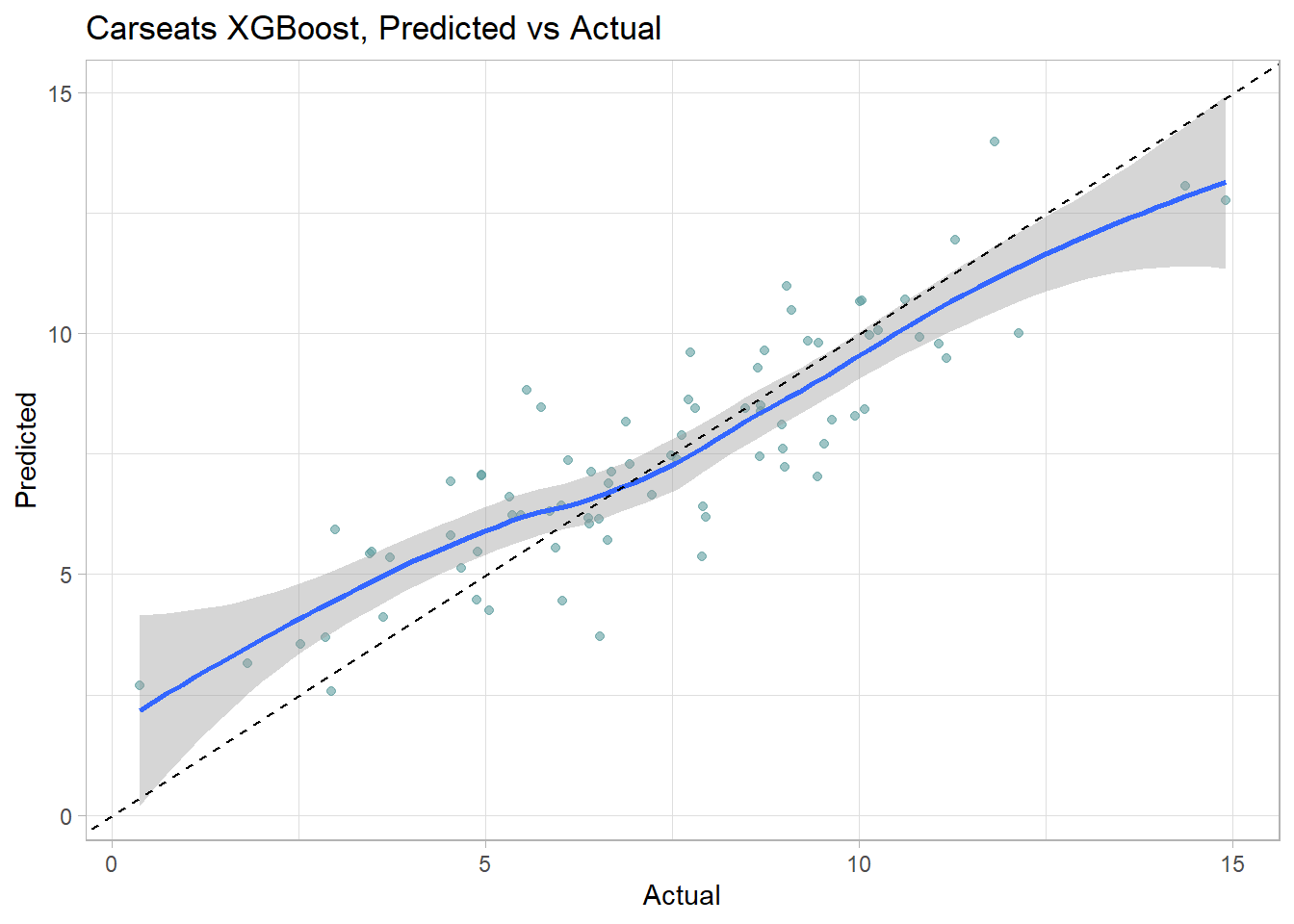

Make predictions on the test set. Like the bagged tree model, this one also over-predicts at the low end of Sales and under-predicts at the high end. The RMSE of 1.7184 is better than bagging’s 1.9185.

cs_preds_rf <- bind_cols(

Predicted = predict(cs_mdl_rf, newdata = cs_test),

Actual = cs_test$Sales

)

(cs_rmse_rf <- RMSE(pred = cs_preds_rf$Predicted, obs = cs_preds_rf$Actual))

## [1] 1.718358

cs_preds_rf %>%

ggplot(aes(x = Actual, y = Predicted)) +

geom_point(alpha = 0.6, color = "cadetblue") +

geom_smooth(method = "loess", formula = "y ~ x") +

geom_abline(intercept = 0, slope = 1, linetype = 2) +

labs(title = "Carseats Random Forest, Predicted vs Actual (caret)")

plot(varImp(cs_mdl_rf), main="Variable Importance with Random Forest")

Let’s check in with the scoreboard.

cs_scoreboard <- rbind(cs_scoreboard,

data.frame(Model = "Random Forest", RMSE = cs_rmse_rf)

) %>% arrange(RMSE)

scoreboard(cs_scoreboard)

| Model | RMSE |
|---|---|
| Random Forest | 1.718358 |
| Bagging | 1.918473 |
| Single Tree (caret) | 2.298331 |
| Single Tree | 2.363202 |

The bagging and random forest models did very well - they took over the top positions!

4.5 Gradient Boosting

Note: I learned gradient boosting from explained.ai.

Gradient boosting machine (GBM) is an additive modeling algorithm that gradually builds a composite model by iteratively adding M weak sub-models based on the performance of the prior iteration’s composite,

\[F_M(x) = \sum_{m = 1}^M f_m(x).\]

The idea is to fit a weak model, then replace the response values with the residuals from that model, and fit another model. Adding the residual prediction model to the original response prediction model produces a more accurate model. GBM repeats this process over and over, running new models to predict the residuals of the previous composite models, and adding the results to produce new composites. With each iteration, the model becomes stronger and stronger. The successive trees are usually weighted to slow down the learning rate. “Shrinkage” reduces the influence of each individual tree and leaves space for future trees to improve the model.

\[F_M(x) = f_0 + \eta\sum_{m = 1}^M f_m(x).\]

The smaller the learning rate, \(\eta\), the more trees, \(M\), are needed to reach the same fit. \(\eta\) and \(M\) are hyperparameters. Other constraints on the trees are usually applied as additional hyperparameters, including tree depth, number of nodes, minimum observations per split, and minimum improvement to loss.

The name “gradient boosting” refers to the boosting of a model with a gradient. Each round of training builds a weak learner and uses the residuals to calculate a gradient, the partial derivative of the loss function. Gradient boosting “descends the gradient” to adjust the model parameters to reduce the error in the next round of training.

In the case of classification problems, the loss function is the log-loss; for regression problems, the loss function is mean squared error. GBM continues until it reaches the maximum number of trees or an acceptable error level.
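A short derivation shows why fitting residuals amounts to descending a gradient. The notation is my own assumption: \(F(x_i)\) is the current composite prediction for observation \(i\), and the squared-error loss is scaled by \(1/2\) for convenience. For regression,

\[L(y_i, F(x_i)) = \frac{1}{2}\left(y_i - F(x_i)\right)^2 \quad \Rightarrow \quad -\frac{\partial L}{\partial F(x_i)} = y_i - F(x_i).\]

For binary classification with \(y_i \in \{0, 1\}\) and \(p_i = 1 / (1 + e^{-F(x_i)})\),

\[L(y_i, F(x_i)) = -\left[y_i \log p_i + (1 - y_i) \log(1 - p_i)\right] \quad \Rightarrow \quad -\frac{\partial L}{\partial F(x_i)} = y_i - p_i.\]

In both cases the negative gradient is a residual, observed minus predicted, so fitting the next tree to the residuals moves the composite model in the direction that reduces the loss.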

4.5.0.1 Gradient Boosting Classification Tree

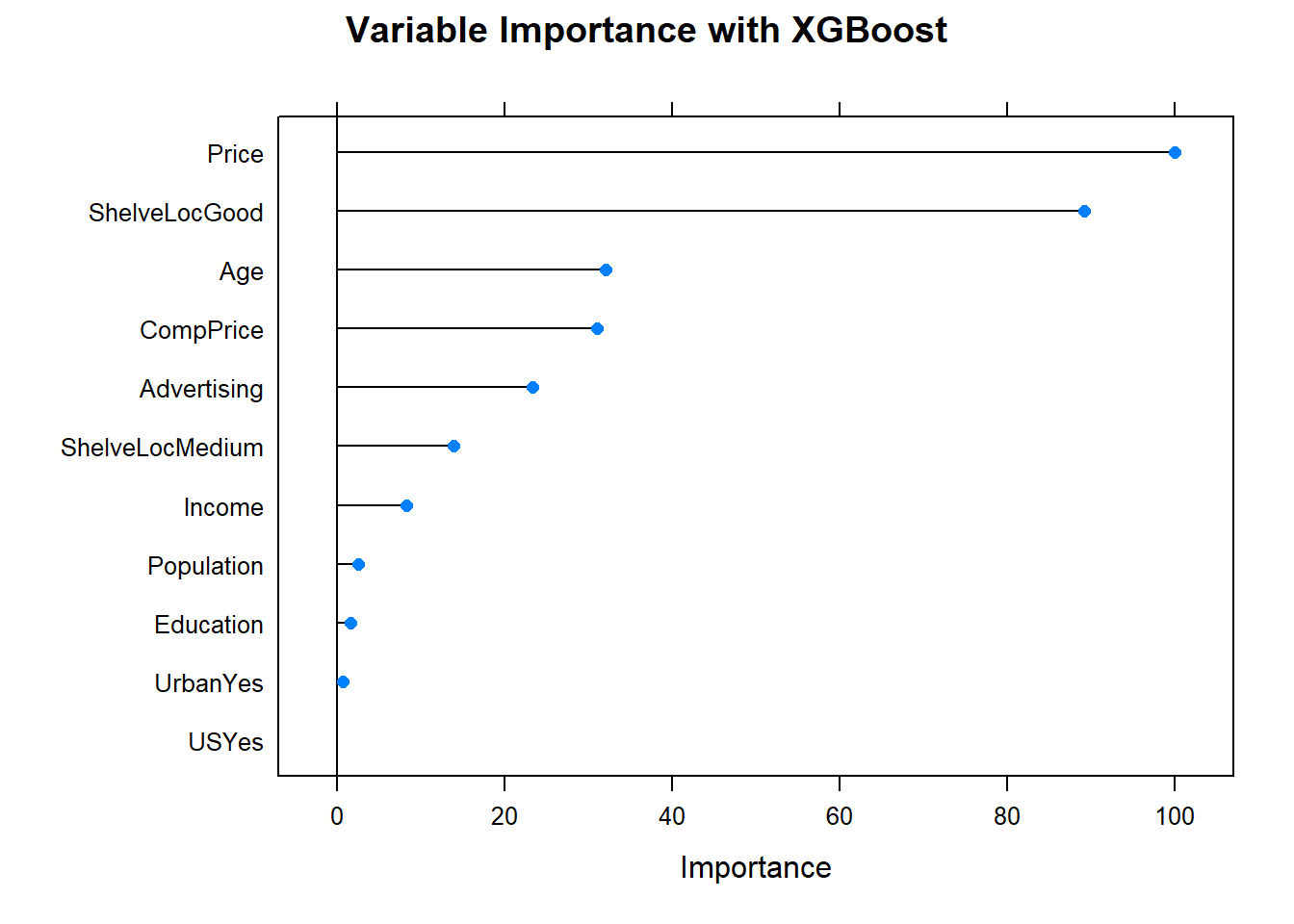

In addition to the gradient boosting machine algorithm, implemented in caret with method = "gbm", there is a variant called Extreme Gradient Boosting (XGBoost), which frankly I don’t know anything about other than it is supposed to work extremely well. Let’s try them both!

4.5.0.1.1 GBM

I’ll predict Purchase from the OJ data set again, this time using the GBM method by specifying method = "gbm". gbm has the following tuneable hyperparameters (see modelLookup("gbm")).

n.trees: number of boosting iterations, \(M\)

interaction.depth: maximum tree depth

shrinkage: shrinkage, \(\eta\)

n.minobsinnode: minimum terminal node size

I’ll use tuneLength = 5.

set.seed(1234)

garbage <- capture.output(

oj_mdl_gbm <- train(

Purchase ~ .,

data = oj_train,

method = "gbm",

metric = "ROC",

tuneLength = 5,

trControl = oj_trControl

))

oj_mdl_gbm

## Stochastic Gradient Boosting

##

## 857 samples

## 17 predictor

## 2 classes: 'CH', 'MM'

##

## No pre-processing

## Resampling: Cross-Validated (10 fold)

## Summary of sample sizes: 772, 772, 771, 770, 771, 771, ...

## Resampling results across tuning parameters:

##

## interaction.depth n.trees ROC Sens Spec

## 1 50 0.8838502 0.8701016 0.7155971

## 1 100 0.8851784 0.8719521 0.7427807

## 1 150 0.8843982 0.8738389 0.7548128

## 1 200 0.8828378 0.8738389 0.7487522

## 1 250 0.8812937 0.8719884 0.7367201

## 2 50 0.8843060 0.8718795 0.7546346

## 2 100 0.8865391 0.8681422 0.7546346

## 2 150 0.8830249 0.8642961 0.7456328

## 2 200 0.8822619 0.8642598 0.7515152

## 2 250 0.8771918 0.8529028 0.7515152

## 3 50 0.8874290 0.8681422 0.7606061

## 3 100 0.8828219 0.8605588 0.7726381

## 3 150 0.8806565 0.8566038 0.7634581

## 3 200 0.8732572 0.8661829 0.7695187

## 3 250 0.8711321 0.8604499 0.7604278

## 4 50 0.8828612 0.8489840 0.7515152

## 4 100 0.8792110 0.8604862 0.7606061

## 4 150 0.8723941 0.8527939 0.7695187

## 4 200 0.8690015 0.8546444 0.7605169

## 4 250 0.8683316 0.8451016 0.7512478

## 5 50 0.8893367 0.8604499 0.7636364

## 5 100 0.8818969 0.8546807 0.7426025

## 5 150 0.8762509 0.8490203 0.7574866

## 5 200 0.8739284 0.8470247 0.7426025

## 5 250 0.8713918 0.8413643 0.7455437

##

## Tuning parameter 'shrinkage' was held constant at a value of 0.1

##

## Tuning parameter 'n.minobsinnode' was held constant at a value of 10

## ROC was used to select the optimal model using the largest value.

## The final values used for the model were n.trees = 50, interaction.depth =

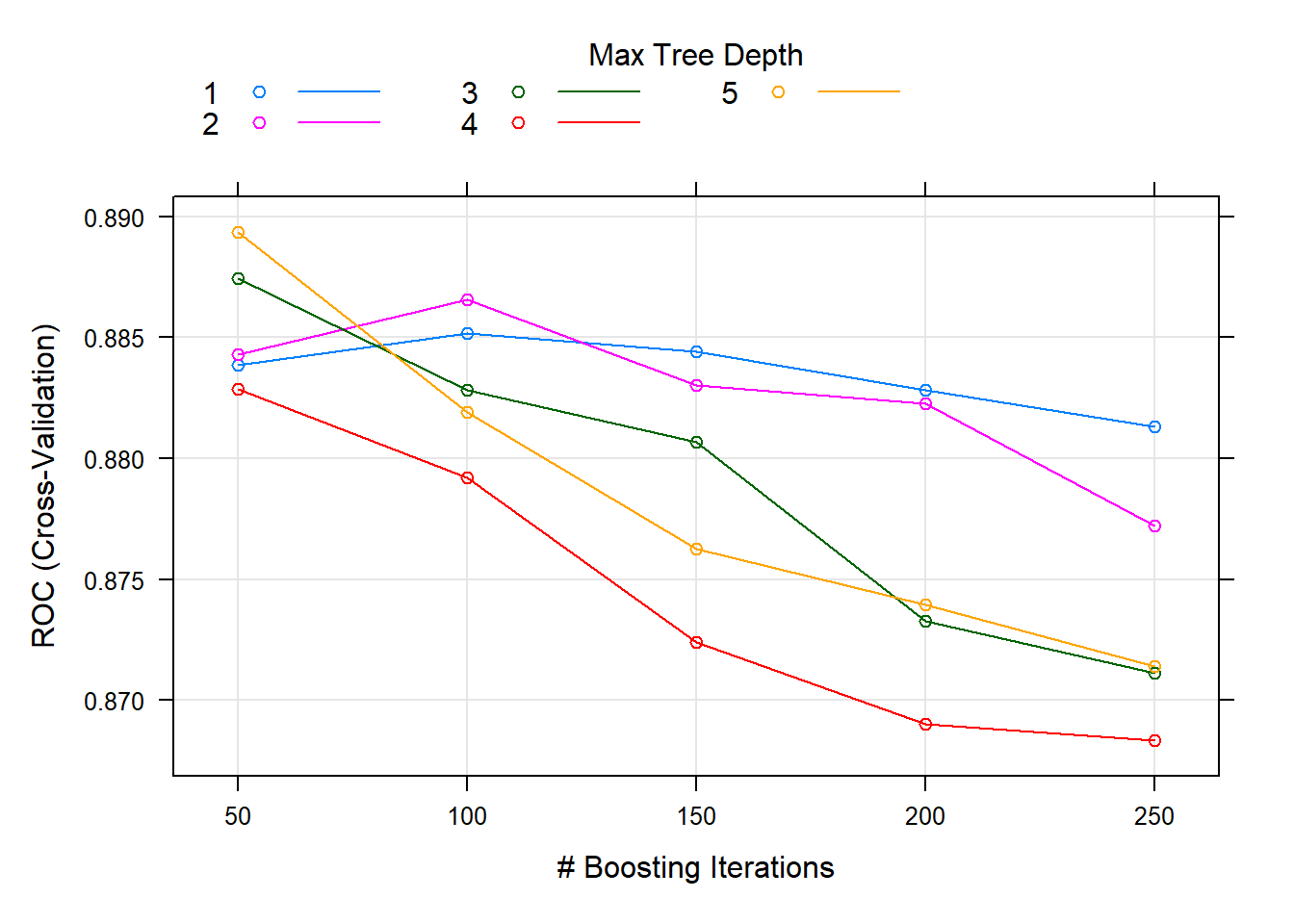

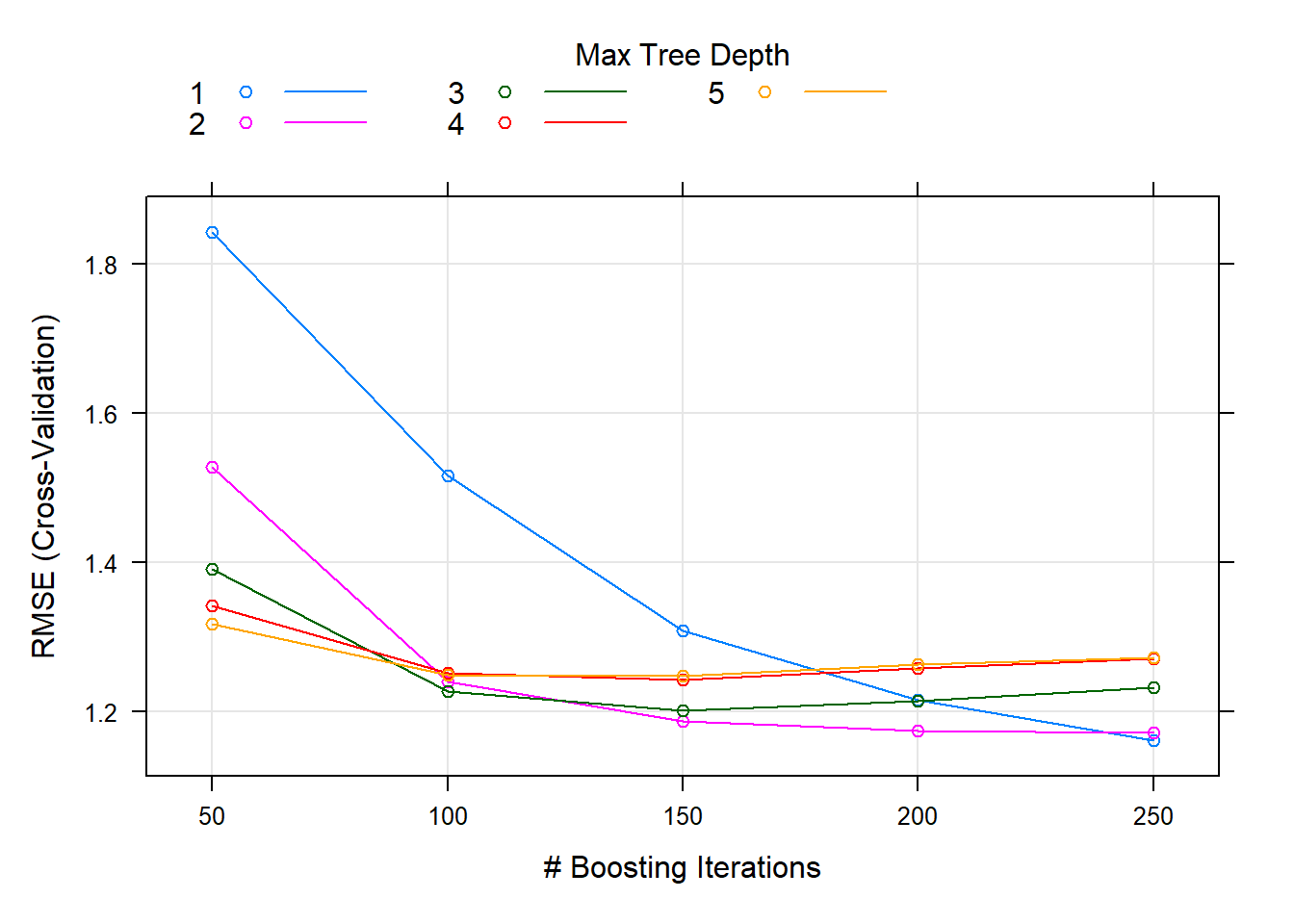

## 5, shrinkage = 0.1 and n.minobsinnode = 10.train() tuned n.trees ($M) and interaction.depth, holding shrinkage = 0.1 (), and n.minobsinnode = 10. The optimal hyperparameter values were n.trees = 50, and interaction.depth = 5.

You can see from the tuning plot that the cross-validated ROC is maximized at \(M=50\) for a tree depth of 5, but \(M=50\) with a tree depth of 3 worked nearly as well.

plot(oj_mdl_gbm)
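The 25 combinations in the tuning output can also be written out explicitly. Here is a sketch that rebuilds the same grid with expand.grid(); the object name gbm_grid is hypothetical, and the values are simply the ones reported in the output above.

```r
# Rebuild the grid that tuneLength = 5 searched, using the values from the output
gbm_grid <- expand.grid(
  interaction.depth = 1:5,          # tree depths 1 through 5
  n.trees           = (1:5) * 50,   # 50, 100, ..., 250 boosting iterations
  shrinkage         = 0.1,          # held constant
  n.minobsinnode    = 10            # held constant
)

nrow(gbm_grid)   # 5 depths x 5 tree counts = 25 candidate models
head(gbm_grid)
```

Passing a data frame like this to train() through its tuneGrid argument, in place of tuneLength, is how I would try other values, for example a smaller shrinkage paired with more trees.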

Let’s see how the model performed on the holdout set. The accuracy was 0.8451.

oj_preds_gbm <- bind_cols(

predict(oj_mdl_gbm, newdata = oj_test, type = "prob"),

Predicted = predict(oj_mdl_gbm, newdata = oj_test, type = "raw"),

Actual = oj_test$Purchase

)

oj_cm_gbm <- confusionMatrix(oj_preds_gbm$Predicted, reference = oj_preds_gbm$Actual)

oj_cm_gbm

## Confusion Matrix and Statistics

##

## Reference

## Prediction CH MM

## CH 113 16

## MM 17 67

##

## Accuracy : 0.8451

## 95% CI : (0.7894, 0.8909)

## No Information Rate : 0.6103

## P-Value [Acc > NIR] : 6.311e-14

##

## Kappa : 0.675

##

## Mcnemar's Test P-Value : 1

##

## Sensitivity : 0.8692

## Specificity : 0.8072

## Pos Pred Value : 0.8760

## Neg Pred Value : 0.7976

## Prevalence : 0.6103

## Detection Rate : 0.5305

## Detection Prevalence : 0.6056

## Balanced Accuracy : 0.8382

##

## 'Positive' Class : CH
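As a check on how these statistics relate to the four cell counts, here is a small sketch that recomputes several of them by hand. The counts are copied from the confusion matrix above; the object names (cm, cohen_kappa, and so on) are my own.

```r
# Counts from the confusion matrix above: rows = predicted, columns = actual
cm <- matrix(c(113, 17, 16, 67), nrow = 2,
             dimnames = list(Prediction = c("CH", "MM"),
                             Reference  = c("CH", "MM")))
n <- sum(cm)

accuracy    <- sum(diag(cm)) / n                  # correct / total
sensitivity <- cm["CH", "CH"] / sum(cm[, "CH"])   # true CH correctly predicted
specificity <- cm["MM", "MM"] / sum(cm[, "MM"])   # true MM correctly predicted
balanced    <- (sensitivity + specificity) / 2

# Cohen's kappa: agreement beyond what the row and column totals imply by chance
expected    <- sum(rowSums(cm) * colSums(cm)) / n^2
cohen_kappa <- (accuracy - expected) / (1 - expected)

round(c(accuracy = accuracy, sensitivity = sensitivity,
        specificity = specificity, balanced = balanced,
        kappa = cohen_kappa), 4)
```

The rounded values agree with the Accuracy, Sensitivity, Specificity, Balanced Accuracy, and Kappa lines reported by confusionMatrix().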

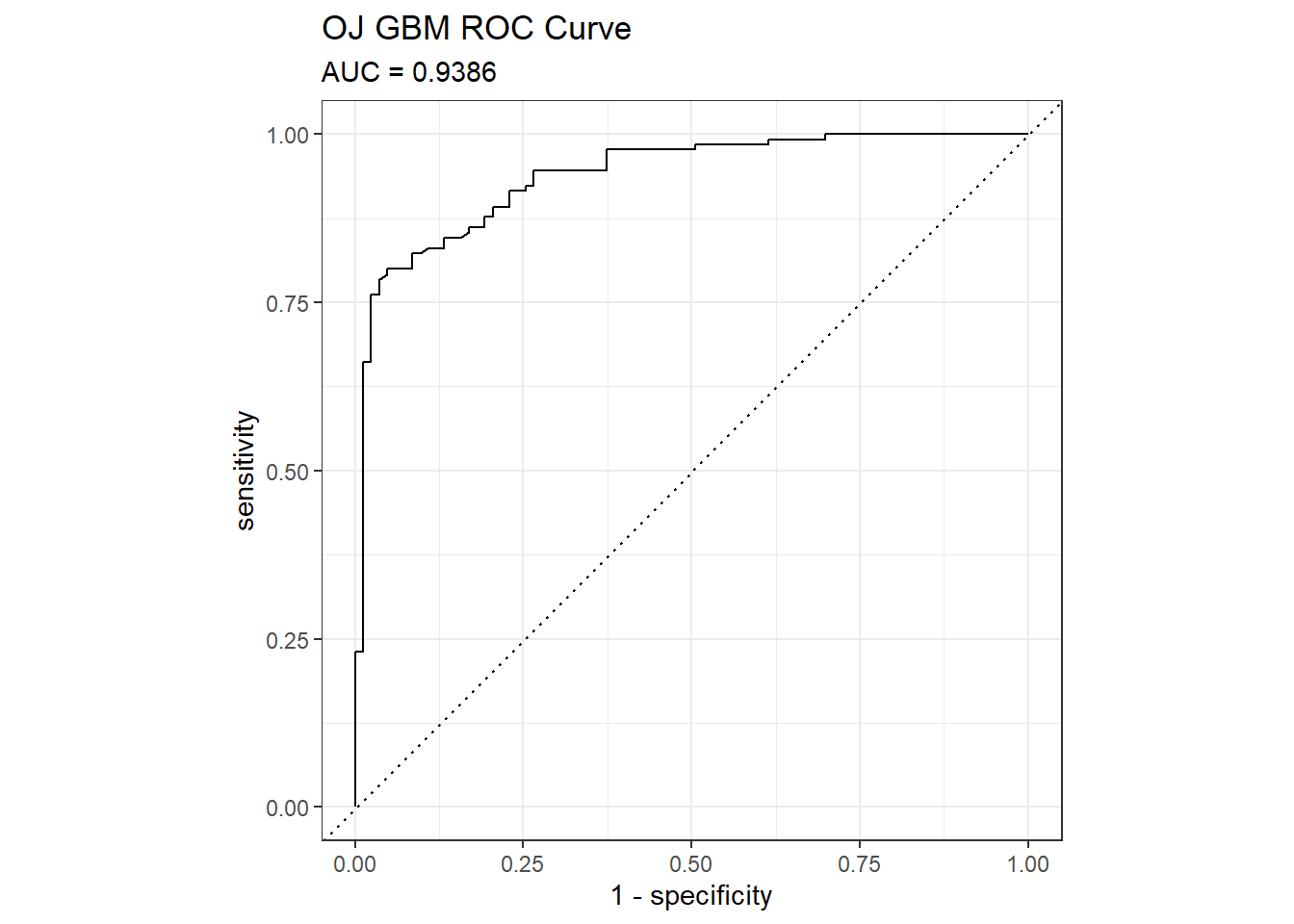

AUC was 0.9386. Here are the ROC and gain curves.

mdl_auc <- Metrics::auc(actual = oj_preds_gbm$Actual == "CH", predicted = oj_preds_gbm$CH)

yardstick::roc_curve(oj_preds_gbm, Actual, CH) %>%

autoplot() +

labs(

title = "OJ GBM ROC Curve",

subtitle = paste0("AUC = ", round(mdl_auc, 4))

)

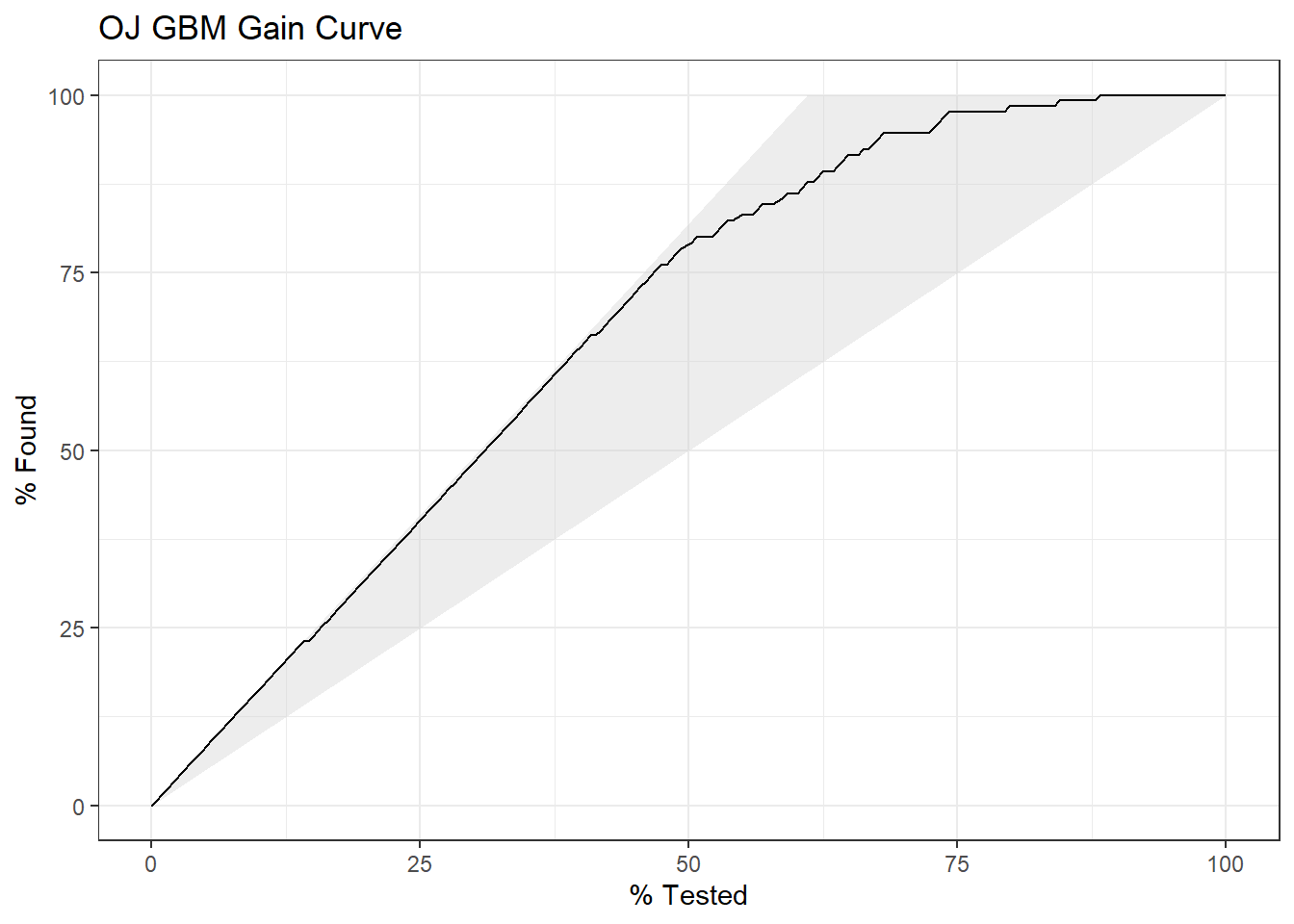
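AUC has a simple interpretation: it is the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case. The sketch below computes it from ranks on a tiny made-up example. The function auc_rank() and the toy vectors are hypothetical, and I am not claiming this is how any particular package computes it.

```r
# Rank-based AUC: share of (positive, negative) pairs where the positive scores higher
auc_rank <- function(actual, score) {
  r  <- rank(score)     # average ranks, so ties count as half
  n1 <- sum(actual)     # number of positives
  n0 <- sum(!actual)    # number of negatives
  (sum(r[actual]) - n1 * (n1 + 1) / 2) / (n1 * n0)
}

# Toy data: two positives, two negatives, with one positive ranked below a negative
actual <- c(TRUE, TRUE, FALSE, FALSE)
score  <- c(0.9, 0.4, 0.6, 0.1)

auc_rank(actual, score)   # 3 of the 4 positive/negative pairs are ordered correctly: 0.75
```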

yardstick::gain_curve(oj_preds_gbm, Actual, CH) %>%

autoplot() +

labs(title = "OJ GBM Gain Curve")

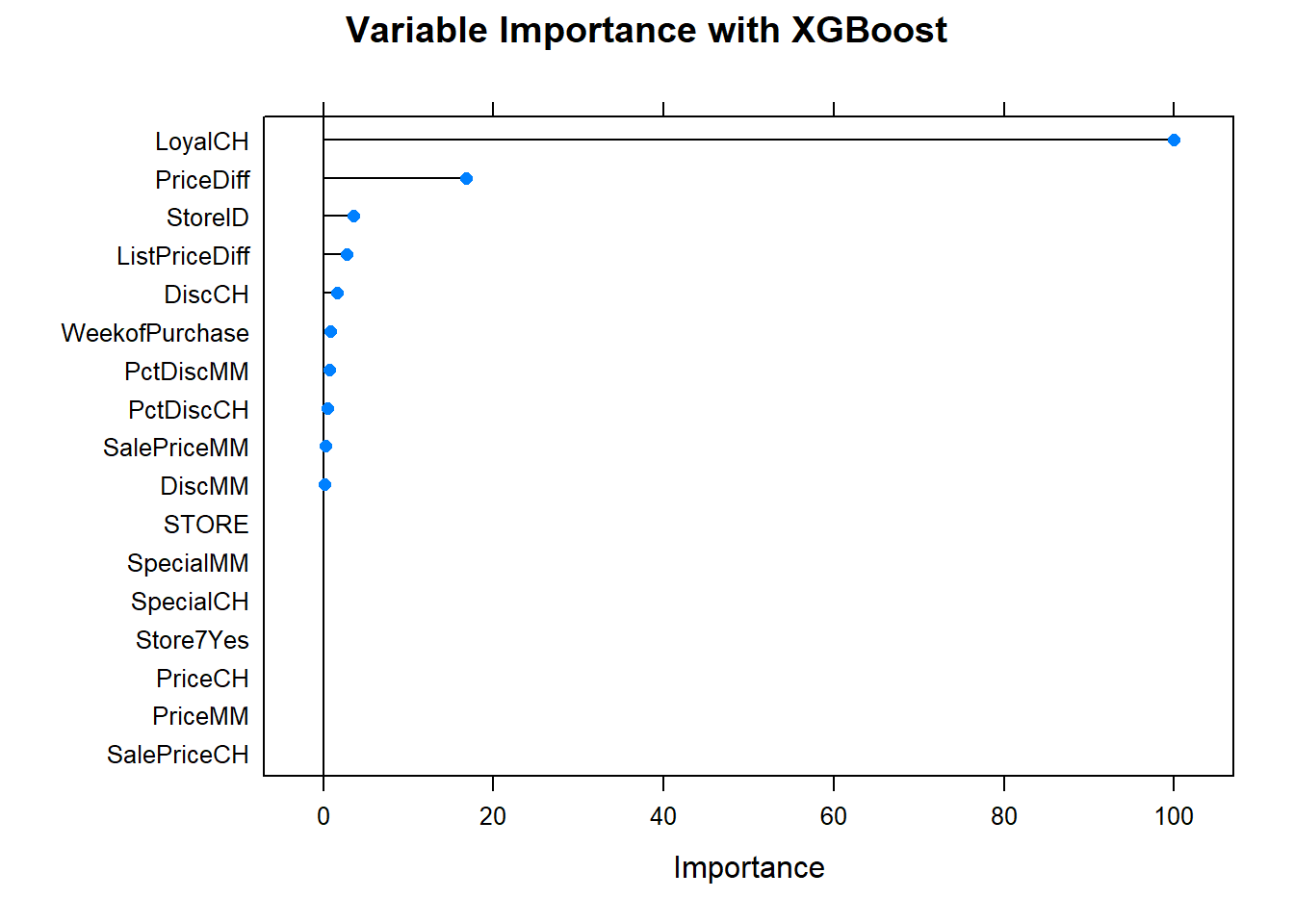
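The gain curve answers a different question: after sorting cases from highest to lowest predicted probability, what share of the positives has been found once a given share of cases has been examined? A minimal sketch on made-up data follows; gain_points() and the toy vectors are hypothetical names of mine.

```r
# Cumulative gain: fraction of positives captured vs. fraction of cases examined
gain_points <- function(actual, score) {
  ord <- order(score, decreasing = TRUE)   # highest scores first
  data.frame(
    pct_tested = seq_along(ord) / length(ord),
    pct_found  = cumsum(actual[ord]) / sum(actual)
  )
}

# Toy data: two positives, two negatives
actual <- c(TRUE, TRUE, FALSE, FALSE)
score  <- c(0.9, 0.4, 0.6, 0.1)

gain_points(actual, score)
```

In this toy example, examining the top 25% of cases finds half of the positives, and the second positive does not turn up until 75% of cases have been examined.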

Now the variable importance. Just a few variables. LoyalCH is at the top again.

#plot(varImp(oj_mdl_gbm), main="Variable Importance with Gradient Boosting")

4.5.0.1.2 XGBoost

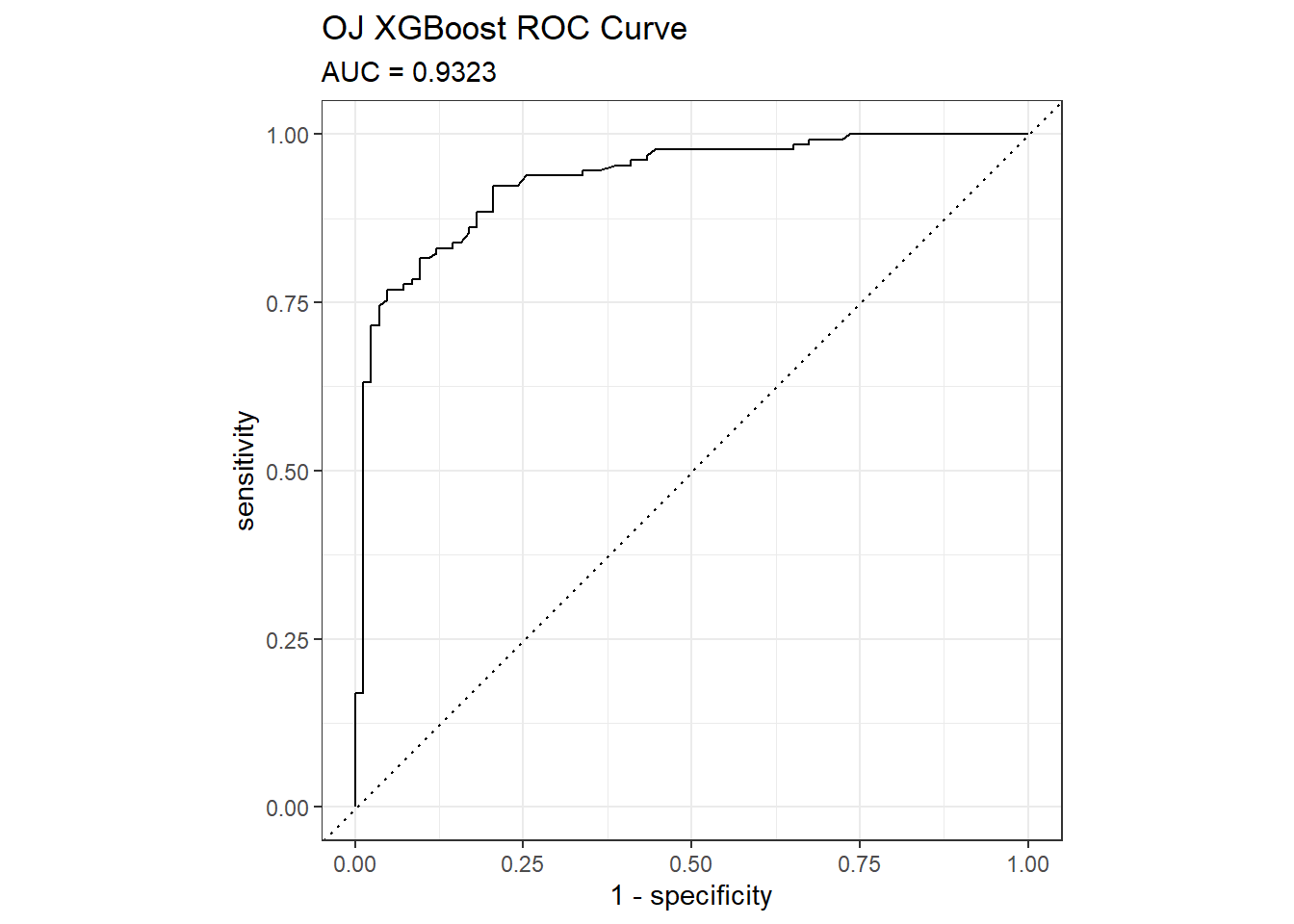

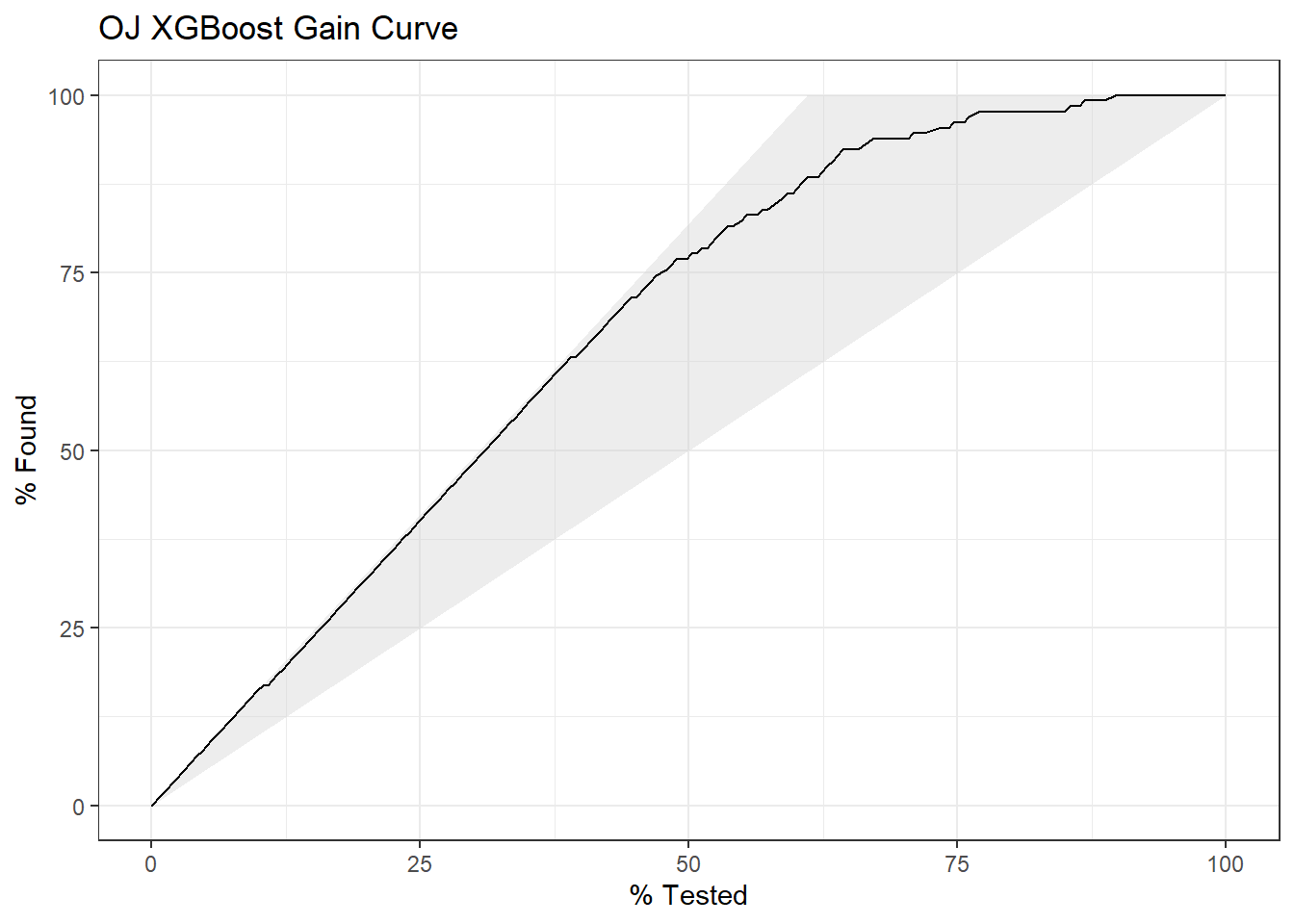

I’ll predict Purchase from the OJ data set again, this time using the XGBoost method by specifying method = "xgbTree". xgbTree has the following tuneable hyperparameters (see modelLookup("xgbTree")). The first three correspond to the gbm hyperparameters n.trees, interaction.depth, and shrinkage.

nrounds: number of boosting iterations, \(M\)

max_depth: maximum tree depth

eta: shrinkage, \(\eta\)

gamma: minimum loss reduction

colsample_bytree: subsample ratio of columns

min_child_weight: minimum sum of instance weight

subsample: subsample percentage

I’ll use tuneLength = 5 again.
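With seven hyperparameters, the default grid gets large quickly. As an alternative to tuneLength, a custom grid can be supplied through train()'s tuneGrid argument, with one column for each of the seven parameters listed above. The values below are illustrative assumptions of mine, not recommendations, and xgb_grid is a hypothetical name.

```r
# A small custom grid for method = "xgbTree": one column per tuning parameter
xgb_grid <- expand.grid(
  nrounds          = c(50, 100),   # boosting iterations
  max_depth        = c(1, 2),      # tree depth
  eta              = c(0.1, 0.3),  # shrinkage
  gamma            = 0,            # minimum loss reduction, held constant
  colsample_bytree = 0.8,          # column subsample ratio, held constant
  min_child_weight = 1,            # held constant
  subsample        = c(0.75, 1)    # row subsample percentage
)

nrow(xgb_grid)    # 2 x 2 x 2 x 2 = 16 candidate models
names(xgb_grid)
```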

set.seed(1234)

garbage <- capture.output(

oj_mdl_xgb <- train(

Purchase ~ .,

data = oj_train,

method = "xgbTree",

metric = "ROC",

tuneLength = 5,

trControl = oj_trControl

))

oj_mdl_xgb

## eXtreme Gradient Boosting

##

## 857 samples

## 17 predictor

## 2 classes: 'CH', 'MM'

##

## No pre-processing

## Resampling: Cross-Validated (10 fold)

## Summary of sample sizes: 772, 772, 771, 770, 771, 771, ...

## Resampling results across tuning parameters:

##

## eta max_depth colsample_bytree subsample nrounds ROC Sens

## 0.3 1 0.6 0.500 50 0.8840766 0.8834180

## 0.3 1 0.6 0.500 100 0.8790561 0.8662192

## 0.3 1 0.6 0.500 150 0.8766781 0.8701379

## 0.3 1 0.6 0.500 200 0.8776000 0.8624093

## 0.3 1 0.6 0.500 250 0.8757948 0.8624456

## 0.3 1 0.6 0.625 50 0.8859505 0.8834180

## 0.3 1 0.6 0.625 100 0.8835632 0.8719158

## 0.3 1 0.6 0.625 150 0.8795049 0.8623730

## 0.3 1 0.6 0.625 200 0.8779641 0.8623730

## 0.3 1 0.6 0.625 250 0.8750637 0.8604499

## 0.3 1 0.6 0.750 50 0.8888085 0.8796081

## 0.3 1 0.6 0.750 100 0.8821363 0.8701016

## 0.3 1 0.6 0.750 150 0.8788971 0.8758708

## 0.3 1 0.6 0.750 200 0.8795012 0.8682148

## 0.3 1 0.6 0.750 250 0.8776496 0.8643324

## 0.3 1 0.6 0.875 50 0.8873750 0.8699927

## 0.3 1 0.6 0.875 100 0.8842872 0.8738752

## 0.3 1 0.6 0.875 150 0.8836644 0.8643687

## 0.3 1 0.6 0.875 200 0.8815095 0.8643687

## 0.3 1 0.6 0.875 250 0.8810575 0.8585994

## 0.3 1 0.6 1.000 50 0.8901207 0.8814949

## 0.3 1 0.6 1.000 100 0.8873808 0.8814949

## 0.3 1 0.6 1.000 150 0.8850800 0.8777213

## 0.3 1 0.6 1.000 200 0.8839367 0.8777213

## 0.3 1 0.6 1.000 250 0.8830755 0.8701016

## 0.3 1 0.8 0.500 50 0.8813948 0.8661466

## 0.3 1 0.8 0.500 100 0.8758169 0.8605225

## 0.3 1 0.8 0.500 150 0.8775673 0.8719884

## 0.3 1 0.8 0.500 200 0.8705204 0.8452104

## 0.3 1 0.8 0.500 250 0.8708827 0.8623730

## 0.3 1 0.8 0.625 50 0.8837989 0.8680697

## 0.3 1 0.8 0.625 100 0.8798634 0.8642598

## 0.3 1 0.8 0.625 150 0.8771115 0.8681785

## 0.3 1 0.8 0.625 200 0.8767673 0.8643687

## 0.3 1 0.8 0.625 250 0.8757636 0.8662917

## 0.3 1 0.8 0.750 50 0.8873982 0.8776851

## 0.3 1 0.8 0.750 100 0.8845928 0.8663280

## 0.3 1 0.8 0.750 150 0.8812139 0.8796807

## 0.3 1 0.8 0.750 200 0.8810481 0.8662192

## 0.3 1 0.8 0.750 250 0.8791379 0.8605225

## 0.3 1 0.8 0.875 50 0.8860863 0.8699927

## 0.3 1 0.8 0.875 100 0.8824399 0.8757983

## 0.3 1 0.8 0.875 150 0.8800854 0.8720247

## 0.3 1 0.8 0.875 200 0.8781308 0.8643324

## 0.3 1 0.8 0.875 250 0.8760868 0.8623730

## 0.3 1 0.8 1.000 50 0.8885750 0.8738389

## 0.3 1 0.8 1.000 100 0.8864060 0.8738752

## 0.3 1 0.8 1.000 150 0.8846236 0.8738752

## 0.3 1 0.8 1.000 200 0.8834568 0.8777213

## 0.3 1 0.8 1.000 250 0.8828532 0.8701016

## 0.3 2 0.6 0.500 50 0.8827826 0.8604499

## 0.3 2 0.6 0.500 100 0.8738138 0.8623367

## 0.3 2 0.6 0.500 150 0.8712299 0.8509071

## 0.3 2 0.6 0.500 200 0.8628896 0.8489840

## 0.3 2 0.6 0.500 250 0.8588455 0.8432874

## 0.3 2 0.6 0.625 50 0.8852692 0.8566763

## 0.3 2 0.6 0.625 100 0.8763552 0.8432148

## 0.3 2 0.6 0.625 150 0.8707889 0.8355225

## 0.3 2 0.6 0.625 200 0.8673676 0.8317489

## 0.3 2 0.6 0.625 250 0.8648451 0.8393687

## 0.3 2 0.6 0.750 50 0.8820965 0.8662554

## 0.3 2 0.6 0.750 100 0.8763104 0.8623730

## 0.3 2 0.6 0.750 150 0.8705862 0.8623004

## 0.3 2 0.6 0.750 200 0.8665872 0.8566401

## 0.3 2 0.6 0.750 250 0.8646026 0.8528302

## 0.3 2 0.6 0.875 50 0.8854970 0.8604499

## 0.3 2 0.6 0.875 100 0.8756684 0.8546807

## 0.3 2 0.6 0.875 150 0.8718297 0.8546807

## 0.3 2 0.6 0.875 200 0.8662731 0.8489115

## 0.3 2 0.6 0.875 250 0.8657367 0.8432511

## 0.3 2 0.6 1.000 50 0.8851624 0.8662554

## 0.3 2 0.6 1.000 100 0.8790797 0.8547170

## 0.3 2 0.6 1.000 150 0.8764584 0.8547170

## 0.3 2 0.6 1.000 200 0.8731986 0.8585269

## 0.3 2 0.6 1.000 250 0.8691992 0.8470247

## 0.3 2 0.8 0.500 50 0.8795484 0.8546807

## 0.3 2 0.8 0.500 100 0.8725858 0.8585269

## 0.3 2 0.8 0.500 150 0.8668489 0.8490929

## 0.3 2 0.8 0.500 200 0.8627999 0.8317852

## 0.3 2 0.8 0.500 250 0.8604951 0.8260160

## 0.3 2 0.8 0.625 50 0.8798383 0.8585994

## 0.3 2 0.8 0.625 100 0.8731001 0.8546807

## 0.3 2 0.8 0.625 150 0.8727083 0.8547170

## 0.3 2 0.8 0.625 200 0.8705551 0.8489478

## 0.3 2 0.8 0.625 250 0.8648865 0.8432511

## 0.3 2 0.8 0.750 50 0.8807836 0.8528302

## 0.3 2 0.8 0.750 100 0.8778557 0.8413280

## 0.3 2 0.8 0.750 150 0.8710876 0.8489840

## 0.3 2 0.8 0.750 200 0.8675137 0.8527213

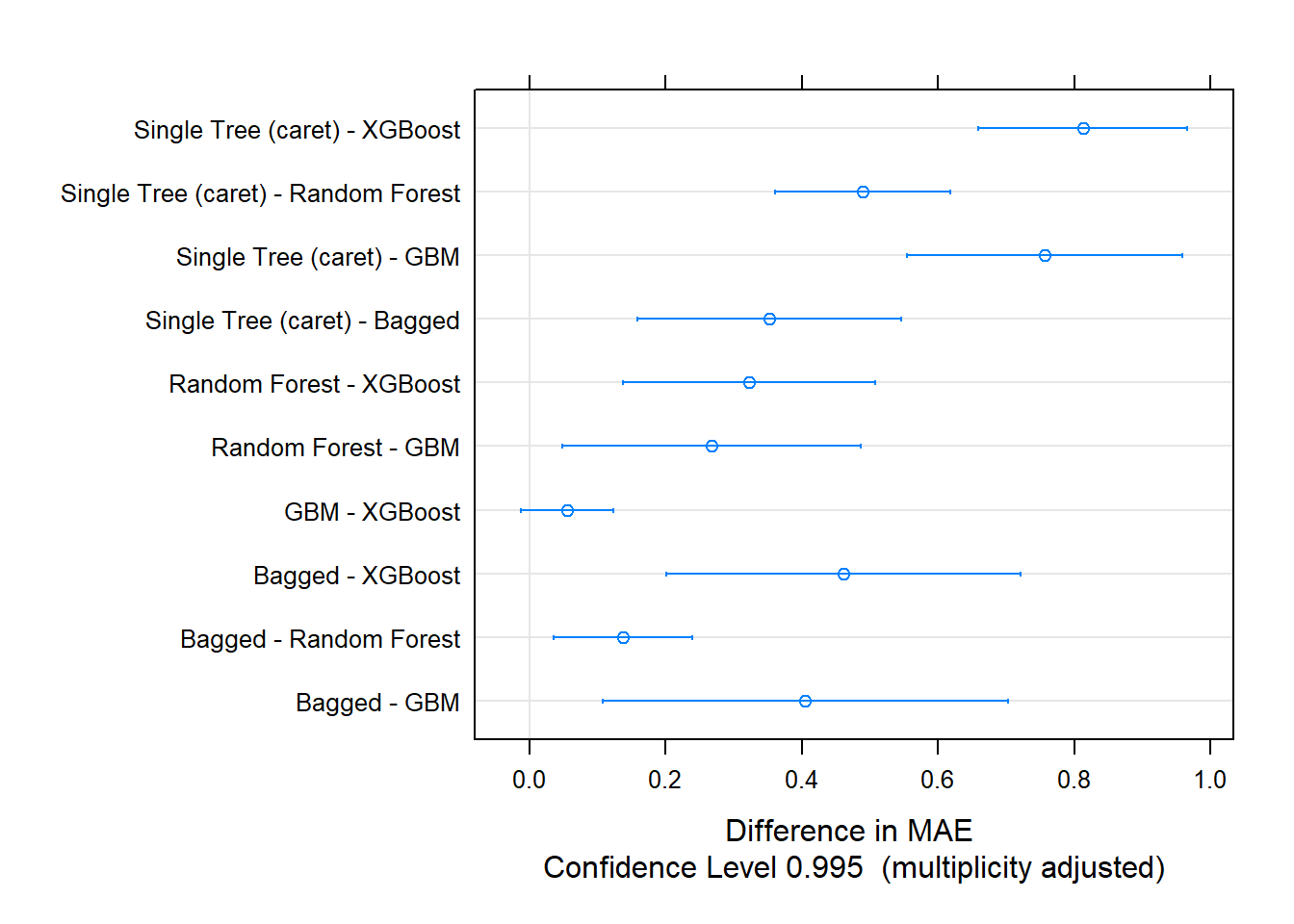

## 0.3 2 0.8 0.750 250 0.8669419 0.8432511