Day 6 Supervised Classification

In the following script, we will introduce you to the supervised classification of text. Supervised means that we will need to “show” the machine a data set that already contains the value or label we want to predict (the “dependent variable”) as well as all the variables that are used to predict the class/value (the independent variables or, in ML lingo, features). In the examples we will showcase, the features are the tokens that are contained in a document. Dependent variables are in our examples sentiment or party affiliation.

Overall, the process of supervised classification using text in R encompasses the following steps:

- Split data into training and test set

- Pre-processing and featurization

- Training

- Evaluation and tuning (through cross-validation) (… repeat 2.-4. as often as necessary)

- Applying the model to the held-out test set

- Final evaluation

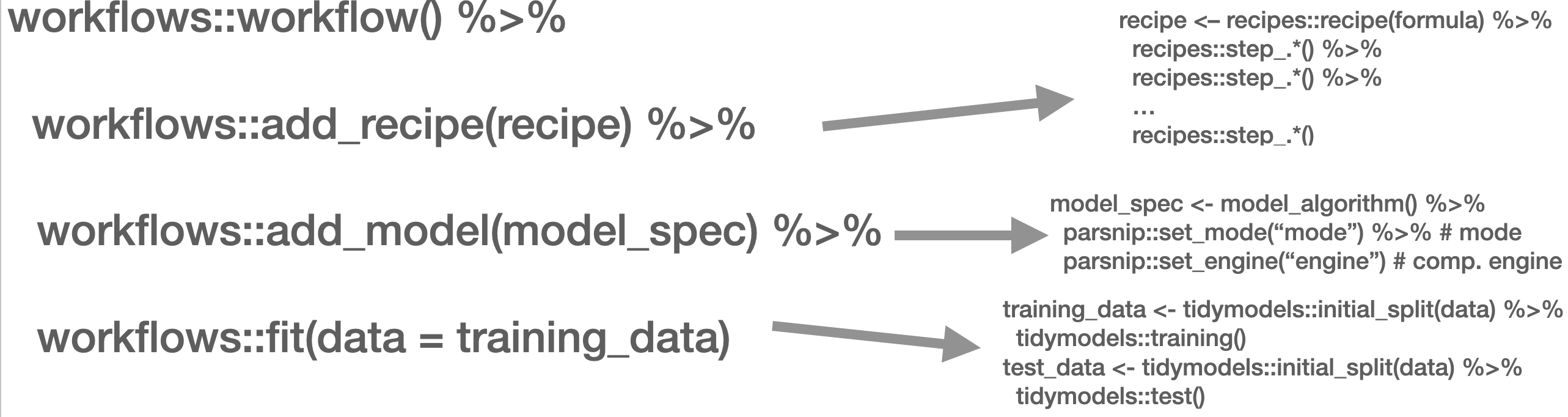

This is mirrored in the workflow() function from the workflows (Vaughan 2022) package. There, you define the pre-processing procedure (add_recipe() – created through the recipe() function from the recipes (Kuhn and Wickham 2022) and/or textrecipes (Hvitfeldt 2022) package(s)), the model specification with add_spec() – taking a model specification as created by the parsnip (Kuhn, Vaughan, and Hvitfeldt 2022) package.

In the next part, other approaches such as Support Vector Machines (SVM), penalized logistic regression models (penalized here means, loosely speaking, that insignificant predictors which contribute little will be shrunk and ignored – as the text contains many tokens that might not contribute much, those models lend themselves nicely to such tasks), random forest models, or XGBoost will be introduced. Those approaches are not to be explained in-depth, third-party articles will be linked though, but their intuition and the particularities of their implementation will be described. Since we use the tidymodels (Kuhn and Wickham 2020) framework for implementation, trying out different approaches is straightforward. Also, the pre-processing differs, recipes and textrecipes facilitate this task decisively. Third, the evaluation of different classifiers will be described. Finally, the entire workflow will be demonstrated using a Twitter data set.

The first example for today’s session is the IMDb data set. First, we load a whole bunch of packages and the data set.

needs(discrim, glmnet, gutenbergr, ranger, textrecipes, themis, tidymodels, tidytext, tidyverse, tune, vip, workflows)

imdb_data <- read_csv("https://www.dropbox.com/s/0cfr4rkthtfryyp/imdb_reviews.csv?dl=1")6.1 Split the data

The first step is to divide the data into training and test sets using initial_split(). You need to make sure that the test and training set are fairly balanced which is achieved by using strata =. prop = refers to the proportion of rows that make it into the training set.

split <- initial_split(imdb_data, prop = 0.2, strata = sentiment)

imdb_train <- training(split)

imdb_test <- testing(split)

glimpse(imdb_train)## Rows: 5,000

## Columns: 2

## $ text <chr> "This German horror film has to be one of the weirdest I hav…

## $ sentiment <chr> "negative", "negative", "negative", "negative", "negative", …## # A tibble: 2 × 2

## sentiment n

## <chr> <int>

## 1 negative 2500

## 2 positive 25006.2 Pre-processing and featurization

In the tidymodels framework, pre-processing and featurization are performed through so-called recipes. For text data, so-called textrecipes are available.

6.3 textrecipes – basic example

In the initial call, the formula needs to be provided. In our example, we want to predict the sentiment (“positive” or “negative”) using the text in the review. Then, different steps for pre-processing are added. Similar to what you have learned in the prior chapters containing measures based on the bag of words assumption, the first step is usually tokenization, achieved through step_tokenize(). In the end, the features need to be quantified, either through step_tf(), for raw term frequencies, or step_tfidf(), for TF-IDF. In between, various pre-processing steps such as word normalization (i.e., stemming or lemmatization), and removal of rare or common words Hence, a recipe for a very basic model just using raw frequencies and the 1,000 most common words would look as follows:

imdb_basic_recipe <- recipe(sentiment ~ text, data = imdb_train) |>

step_tokenize(text) |> # first step: tokenize text

step_tokenfilter(text, max_tokens = 1000) |> # only retain 1000 most common words

# additional pre-processing steps can be added, see next chapter

step_tfidf(text) # final step: add term frequenciesIn case you want to know what the data set for the classification task looks like, you can prep() and finally bake() the recipe. Note that we need to specify the data set we want to pre-process in the recipe’s manner. In our case, we want to perform the operations on the data specified in the basic_recipe and, hence, need to specify new_data = NULL.

## # A tibble: 5,000 × 1,001

## sentiment tfidf_text_1 tfidf_text_10 tfidf_text_2 tfidf_text_20 tfidf_text_3

## <fct> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 negative 0 0 0 0 0

## 2 negative 0 0.0173 0.0201 0 0

## 3 negative 0 0 0 0 0

## 4 negative 0 0 0 0 0

## 5 negative 0 0 0 0 0

## 6 negative 0 0 0 0 0

## 7 negative 0 0 0 0 0

## 8 negative 0.00812 0.00634 0 0 0.00834

## 9 negative 0 0 0 0 0

## 10 negative 0 0 0 0 0

## # ℹ 4,990 more rows

## # ℹ 995 more variables: tfidf_text_30 <dbl>, tfidf_text_4 <dbl>,

## # tfidf_text_5 <dbl>, tfidf_text_7 <dbl>, tfidf_text_8 <dbl>,

## # tfidf_text_80 <dbl>, tfidf_text_9 <dbl>, tfidf_text_a <dbl>,

## # tfidf_text_able <dbl>, tfidf_text_about <dbl>, tfidf_text_above <dbl>,

## # tfidf_text_absolutely <dbl>, tfidf_text_across <dbl>, tfidf_text_act <dbl>,

## # tfidf_text_acted <dbl>, tfidf_text_acting <dbl>, tfidf_text_action <dbl>, …6.4 textrecipes – further preprocessing steps

More steps exist. These always follow the same structure: their first two arguments are the recipe (which in practice does not matter, because they are generally used in a “pipeline”) and the variable that is affected (in our example “text” because it is the one to be modified). The rest of the arguments depends on the function. In the following, we will briefly list them and their most important arguments. Find the exhaustive list here,

step_tokenfilter(): filters tokensmax_times =upper threshold for how often a term can appear (removes common words)min_times =lower threshold for how often a term can appear (removes rare words)max_tokens =maximum number of tokens to be retained; will only keep the ones that appear the most often- you should filter before using

step_tforstep_tfidfto limit the number of variables that are created

step_lemma(): allows you to extract the lemma- in case you want to use it, make sure you tokenize via

spacyr(by usingstep_tokenize(text, engine = "spacyr"))

- in case you want to use it, make sure you tokenize via

step_pos_filter(): adds the Part-of-speech tagsstep_stem(): stems tokenscustom_stem =specifies the stemming function. Defaults toSnowballC. Custom functions can be provided.options =can be used to provide arguments (stored as named elements of a list) to the stemming function. E.g.,step_stem(text, custom_stem = "SnowballC", options = list(language = "russian"))

step_stopwords(): removes stopwordssource =alternative stopword lists can be used; potential values are contained instopwords::stopwords_getsources()custom_stopword_source =provide your own stopword listlanguage =specify language of stop word list; potential values can be found instopwords::stopwords_getlanguages()

step_ngram(): takes into account order of terms, provides more contextnum_tokens =number of tokens in n-gram – defaults to 3 – trigramsmin_num_tokens =minimal number of tokens in n-gram –step_ngram(text, num_tokens = 3, min_num_tokens = 1)will return all uni-, bi-, and trigrams.

step_word_embeddings(): use pre-trained embeddings for wordsembeddings(): tibble of pre-trained embeddings

step_normalize(): performs unicode normalization as a preprocessing stepnormalization_form =which Unicode Normalization to use, overview instringi::stri_trans_nfc()

themis::step_upsample()takes care of unbalanced dependent variables (which need to be specified in the call)over_ratio =ratio of desired minority-to-majority frequencies

6.5 Model specification

Now that the data is ready, the model can be specified. The parsnip package is used for this. It contains a model specification, the type, and the engine.

- Logistic regression

lr_spec <- logistic_reg() |> # the initial function, coming from the parsnip package

set_engine("glm") |> # glm package needs to be installed

set_mode("classification") # classification for discrete values, regression for continuous onesOther model specifications you might deem relevant:

- Naïve Bayes:

- Logistic regression (penalized with Lasso):

- SVM (here,

step_normalize(all_predictors())needs to be the last step in the recipe)

svm_spec <- svm_linear() |>

set_mode("regression") |> # can also be "classification"

set_engine("LiblineaR")- Random Forest (with 1000 decision trees):

rf_spec <- rand_forest(trees = 100) |>

set_engine("ranger") |> #to get important fea

set_mode("classification") # can also be "classification"- xgBoost (with 20 decision trees):

6.6 Model training – workflows

A workflow can be defined to train the model. It will contain the recipe, hence taking care of the pre-processing, and the model specification. In the end, it can be used to fit the model.

It can then be fit using fit().

## Warning: glm.fit: fitted probabilities numerically 0 or 1 occurredWe can also look at the words’ weights that were learned by the model.

coefs <- imdb_lr_basic |>

extract_fit_parsnip() |>

tidy() |>

mutate(term = str_remove(term, "^.?tfidf\\_text\\_"))Once we have the model, we can augment() it to our test set and predict:

## Warning in predict.lm(object, newdata, se.fit, scale = 1, type = if (type == :

## prediction from rank-deficient fit; attr(*, "non-estim") has doubtful cases

## Warning in predict.lm(object, newdata, se.fit, scale = 1, type = if (type == :

## prediction from rank-deficient fit; attr(*, "non-estim") has doubtful cases## [1] 0.8080580% accuracy – not bad! Maybe we can do even better in exercise 1.

6.7 Model evaluation

Now that a first model has been trained, its performance can be evaluated. In theory, we have a test set for this. However, the test set is precious and should only be used once we are sure that we have found a good model. Hence, for these intermediary tuning steps, we need to come up with another solution. So-called cross-validation lends itself nicely to this task. The rationale behind it is that chunks from the training set are used as test sets. So, in the case of 10-fold cross-validation, the test set is divided into 10 distinctive chunks of data. Then, 10 models are trained on the respective 9/10 of the training set that is not used for evaluation. Finally, each model is evaluated against the respective held-out “test set” and the performance metrics averaged.

First, the folds need to be determined. we set a seed in the beginning to ensure reproducibility.

fit_resamples() trains models on the respective samples. (Note that for this to work, no model must have been fit to this workflow before. Hence, you either need to define a new workflow first or restart the session and skip the fit-line from before.)

imdb_nb_resampled <- fit_resamples(

imdb_nb_wf,

imdb_folds,

control = control_resamples(save_pred = TRUE),

metrics = metric_set(accuracy, recall, precision)

)collect_metrics() can be used to evaluate the results.

- Accuracy tells me the share of correct predictions overall

- Precision tells me the number of correct positive predictions

- Recall tells me how many actual positives are predicted properly

In all cases, values close to 1 are better.

collect_predictions() will give you the predicted values.

nb_rs_metrics <- collect_metrics(imdb_nb_resampled)

nb_rs_predictions <- collect_predictions(imdb_nb_resampled)This can also be used to create the confusion matrix by hand.

confusion_mat <- nb_rs_predictions |>

group_by(id) |>

mutate(confusion_class = case_when(.pred_class == "positive" & sentiment == "positive" ~ "TP",

.pred_class == "positive" & sentiment == "negative" ~ "FP",

.pred_class == "negative" & sentiment == "negative" ~ "TN",

.pred_class == "negative" & sentiment == "positive" ~ "FN")) |>

count(confusion_class) |>

ungroup() |>

pivot_wider(names_from = confusion_class, values_from = n)Now you can go back and adapt the pre-processing recipe, fit a new model, or try a different classifier, and evaluate it against the same set of folds. Once you are satisfied, you can proceed to check the workflow on the held-out test data.

6.8 Hyperparameter tuning

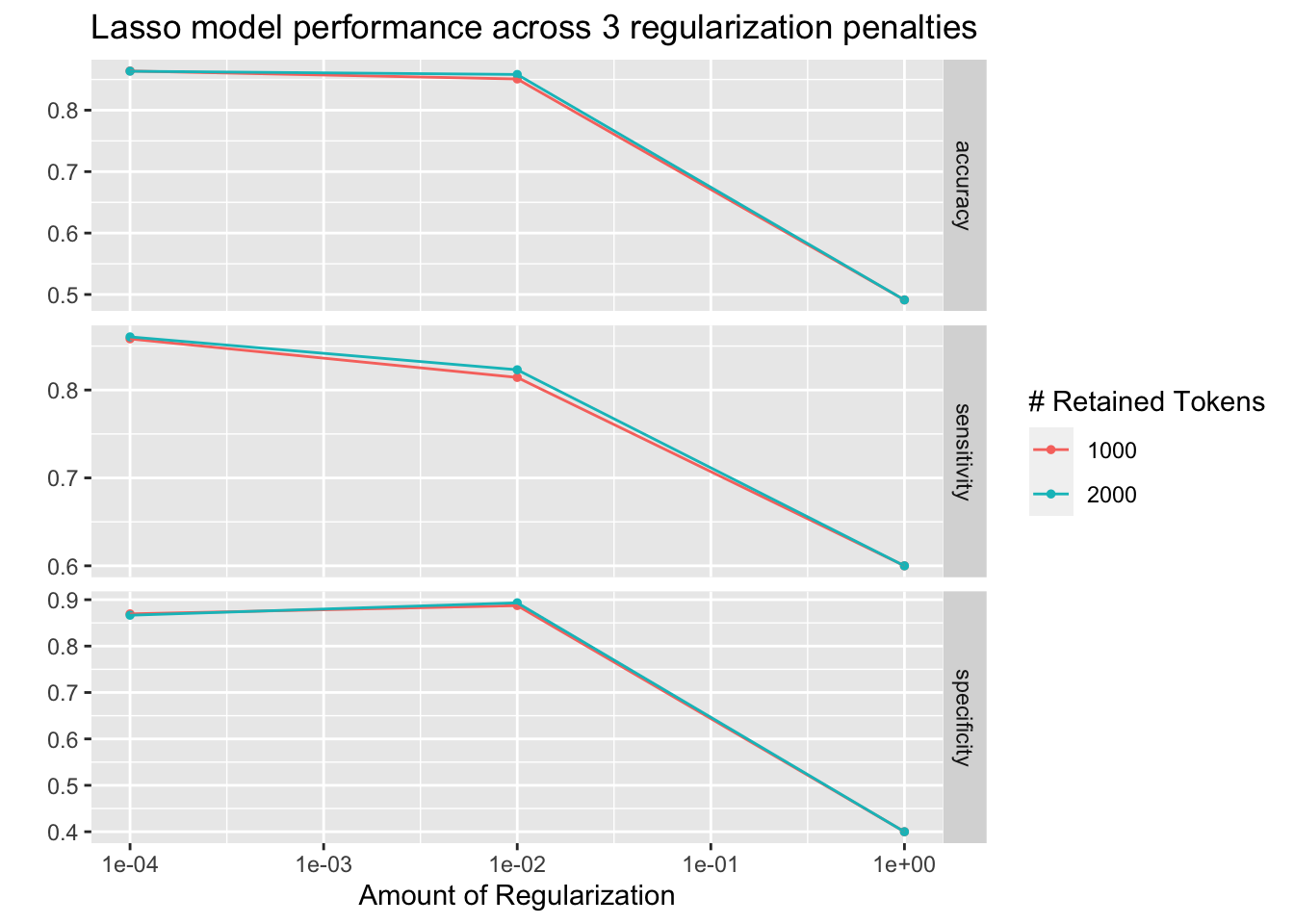

Some models also require the tuning of hyperparameters (for instance, lasso regression). If we wanted to tune these values, we could do so using the tune package. There, the parameter that needs to be tuned gets a placeholder in the model specification. Through variation of the placeholder, the optimal solution can be empirically determined.

So, in the first example, we will try to determine a good penalty value for LASSO regression.

lasso_tune_spec <- logistic_reg(penalty = tune(), mixture = 1) |>

set_mode("classification") |>

set_engine("glmnet")We will also play with the numbers of tokens to be included:

imdb_tune_basic_recipe <- recipe(sentiment ~ text, data = imdb_train) |>

step_tokenize(text) |>

step_tokenfilter(text, max_tokens = tune()) |>

step_tf(text)The dials (Kuhn and Frick 2022) package provides the handy grid_regular() function which chooses suitable values for certain parameters.

lambda_grid <- grid_regular(

penalty(range = c(-4, 0)),

max_tokens(range = c(1e3, 2e3)),

levels = c(penalty = 3, max_tokens = 2)

)Then, we need to define a new workflow, too.

For the resampling, we can use tune_grid() which will use the workflow, a set of folds (we use the ones we created earlier), and a grid containing the different parameters.

set.seed(123)

tune_lasso_rs <- tune_grid(

lasso_tune_wf,

imdb_folds,

grid = lambda_grid,

metrics = metric_set(accuracy)

)Again, we can access the resulting metrics using collect_metrics():

## # A tibble: 18 × 8

## penalty max_tokens .metric .estimator mean n std_err .config

## <dbl> <int> <chr> <chr> <dbl> <int> <dbl> <chr>

## 1 0.0001 1000 accuracy binary 0.864 10 0.00433 Preprocessor1_…

## 2 0.0001 1000 sensitivity binary 0.858 10 0.00537 Preprocessor1_…

## 3 0.0001 1000 specificity binary 0.869 10 0.00441 Preprocessor1_…

## 4 0.01 1000 accuracy binary 0.851 10 0.00329 Preprocessor1_…

## 5 0.01 1000 sensitivity binary 0.814 10 0.00556 Preprocessor1_…

## 6 0.01 1000 specificity binary 0.887 10 0.00298 Preprocessor1_…

## 7 1 1000 accuracy binary 0.491 10 0.00240 Preprocessor1_…

## 8 1 1000 sensitivity binary 0.6 10 0.163 Preprocessor1_…

## 9 1 1000 specificity binary 0.4 10 0.163 Preprocessor1_…

## 10 0.0001 2000 accuracy binary 0.864 10 0.00254 Preprocessor2_…

## 11 0.0001 2000 sensitivity binary 0.860 10 0.00439 Preprocessor2_…

## 12 0.0001 2000 specificity binary 0.867 10 0.00267 Preprocessor2_…

## 13 0.01 2000 accuracy binary 0.858 10 0.00273 Preprocessor2_…

## 14 0.01 2000 sensitivity binary 0.823 10 0.00542 Preprocessor2_…

## 15 0.01 2000 specificity binary 0.893 10 0.00332 Preprocessor2_…

## 16 1 2000 accuracy binary 0.491 10 0.00240 Preprocessor2_…

## 17 1 2000 sensitivity binary 0.6 10 0.163 Preprocessor2_…

## 18 1 2000 specificity binary 0.4 10 0.163 Preprocessor2_…autoplot() can be used to visualize them:

autoplot(tune_lasso_rs) +

labs(

title = "Lasso model performance across 3 regularization penalties"

)

Also, we can use show_best() to look at the best result. Subsequently, select_best() allows me to choose it. In real life, we would choose the best trade-off between a model as simple and as good as possible. Using select_by_pct_loss(), we choose the one that performs still more or less on par with the best option (i.e., within 2 percent accuracy) but is considerably simpler. Finally, once we are satisfied with the outcome, we can finalize_workflow() and fit the final model to the test data.

## # A tibble: 5 × 8

## penalty max_tokens .metric .estimator mean n std_err .config

## <dbl> <int> <chr> <chr> <dbl> <int> <dbl> <chr>

## 1 0.0001 1000 accuracy binary 0.864 10 0.00433 Preprocessor1_Mode…

## 2 0.0001 2000 accuracy binary 0.864 10 0.00254 Preprocessor2_Mode…

## 3 0.01 2000 accuracy binary 0.858 10 0.00273 Preprocessor2_Mode…

## 4 0.01 1000 accuracy binary 0.851 10 0.00329 Preprocessor1_Mode…

## 5 1 1000 accuracy binary 0.491 10 0.00240 Preprocessor1_Mode…6.9 Final fit

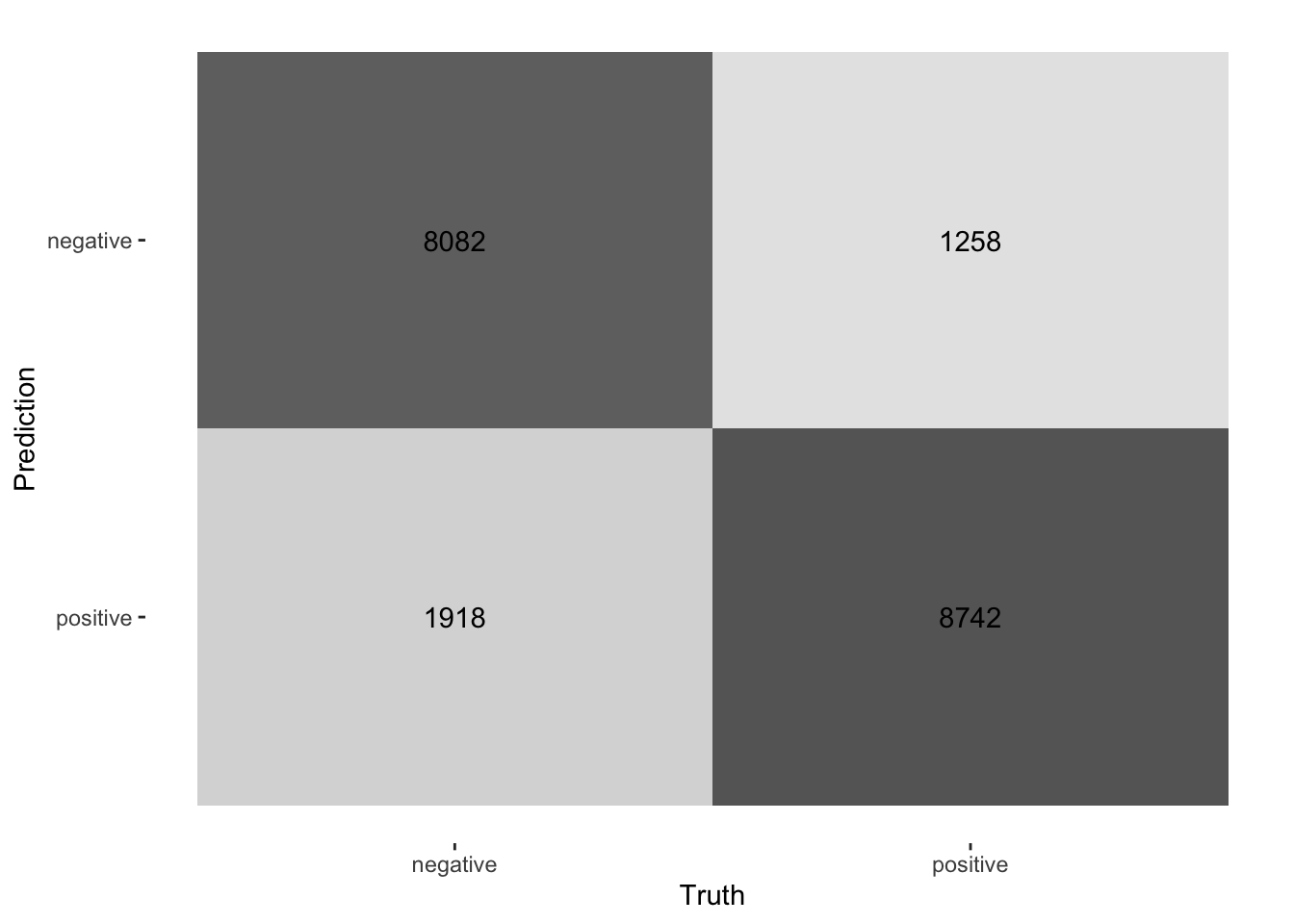

Now we can finally fit our model to the training data and predict on the test data. last_fit() is the way to go. It takes the workflow and the split (as defined by initial_split()) as parameters.

## # A tibble: 2 × 4

## .metric .estimator .estimate .config

## <chr> <chr> <dbl> <chr>

## 1 accuracy binary 0.841 Preprocessor1_Model1

## 2 roc_auc binary 0.919 Preprocessor1_Model1collect_predictions(final_fitted) |>

conf_mat(truth = sentiment, estimate = .pred_class) |>

autoplot(type = "heatmap")

In a final step, we will also want to check how different features (i.e., words) are associated with a certain outcome. For this we can pull the coefficients from the fitted model and bring them into a tidy format:

coef_tbl <- final_lasso_imdb |>

fit(imdb_train) |>

extract_fit_parsnip() |>

tidy() |>

mutate(term = str_remove(term, "^tf\\_text\\_"))Seems to make a lot of sense!

6.10 Supervised ML with tidymodels in a nutshell

Here, we give you the condensed version of how you would train a model on a number of documents and predict on a certain test set. To exemplify this, I use Tweets from British MPs from the two largest parties.

First, you take your data and split it into training and test set:

set.seed(1)

timelines_gb <- read_csv("https://www.dropbox.com/s/1lrv3i655u5d7ps/timelines_gb_2022.csv?dl=1")## Rows: 59444 Columns: 2

## ── Column specification ─────────────────────────────────────────────────────────

## Delimiter: ","

## chr (2): party, text

##

## ℹ Use `spec()` to retrieve the full column specification for this data.

## ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.split_gb <- initial_split(timelines_gb, prop = 0.3, strata = party)

party_tweets_train_gb <- training(split_gb)

party_tweets_test_gb <- testing(split_gb)Second, define your text pre-processing steps. In this case, we want to predict partisanship based on textual content. We tokenize the text, upsample to have balanced classed in terms of party in the training set, and retain only the 1,000 most commonly appearing tokens.

twitter_recipe <- recipe(party ~ text, data = party_tweets_train_gb) |>

step_tokenize(text) |> # tokenize text

themis::step_upsample(party) |>

step_tokenfilter(text, max_tokens = 1000) |>

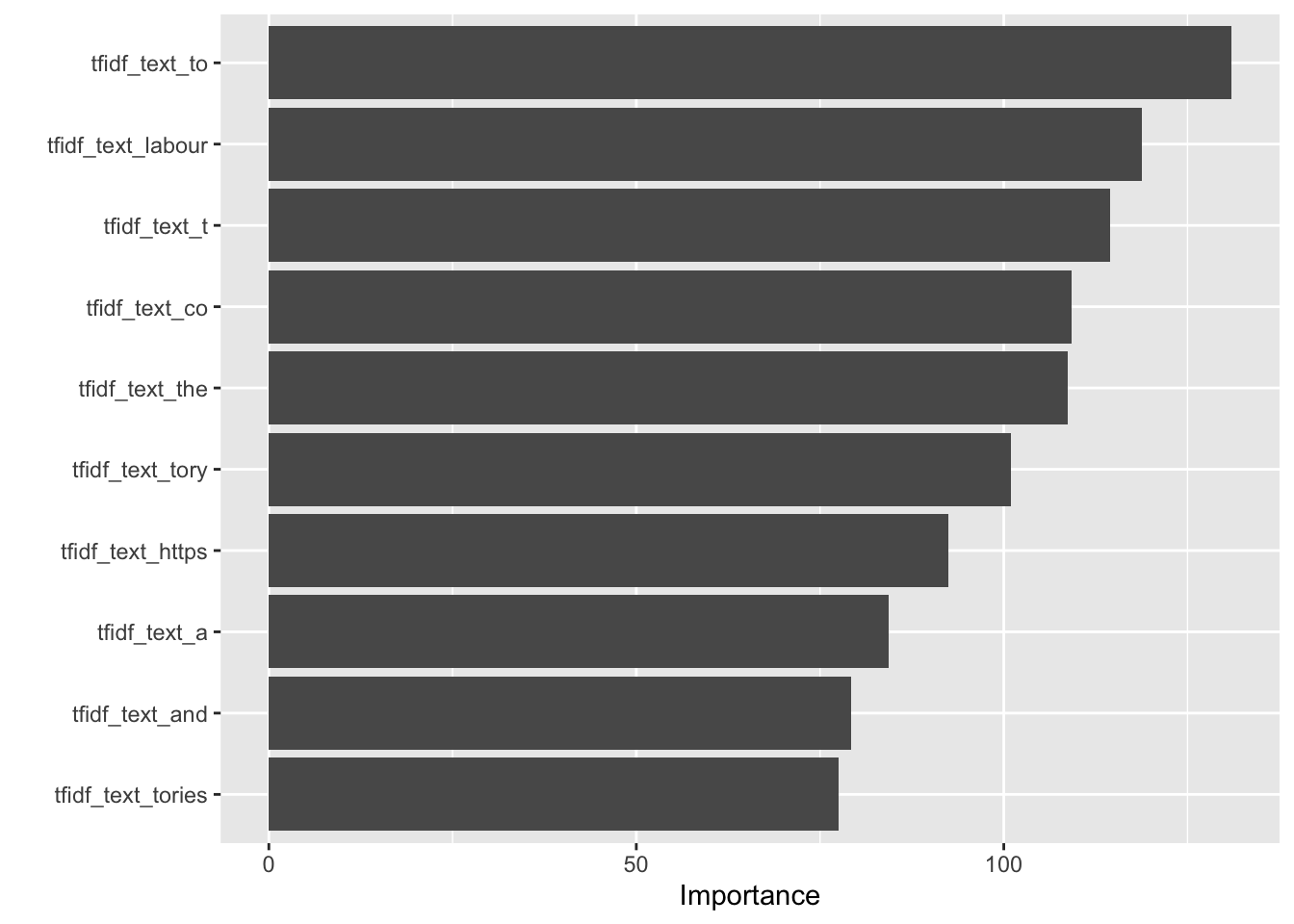

step_tfidf(text) Third, the model specification needs to be defined. In this case, we go with a random forest classifier containing 50 decision trees.

rf_spec <- rand_forest(trees = 50) |>

set_engine("ranger", importance = "impurity") |> #to get important features

set_mode("classification") Finally, the pre-processing “recipe” and the model specification can be summarized in one workflow and the model can be fit to the training data.

twitter_party_rf_workflow <- workflow() |>

add_recipe(twitter_recipe) |>

add_model(rf_spec)

party_gb <- twitter_party_rf_workflow |> fit(data = party_tweets_train_gb)

party_gb |> extract_fit_parsnip() |> vip::vip()

Now we have arrived at a model we can apply to make predictions using augment(). Finally, we can evaluate its accuracy on the test data by taking the share of correctly predicted values.

predictions_gb <- augment(party_gb, party_tweets_test_gb)

mean(predictions_gb$party == predictions_gb$.pred_class)## [1] 0.6989096.11 Further readings

- Check out the SMLTAR book

- More on tidymodels

- Basic descriptions of ML models

6.12 Exercises

- Train a new logistic regression model on the same IMDb data – but vary the preprocessing. Can you beat 80% accuracy?

set.seed(123)

## updated recipe, remove stopwords

imdb_adv_recipe <- recipe(sentiment ~ text, data = imdb_train) |>

step_tokenize(text) |>

step_stopwords(text) |>

step_tokenfilter(text, max_tokens = 1000) |>

step_tfidf(text)

adv_imdb_lr_wf <- workflow() |>

add_recipe(imdb_adv_recipe) |>

add_model(lr_spec)

adv_imdb_lr <- adv_imdb_lr_wf |> fit(data = imdb_train)

coefs <- adv_imdb_lr |>

extract_fit_parsnip() |>

tidy() |>

mutate(term = str_remove(term, "^.?tfidf\\_text\\_"))

## check accuracy

predictions_lr_imdb_adv <- adv_imdb_lr |> augment(imdb_test)

mean(predictions_lr_imdb_adv$sentiment == predictions_lr_imdb_adv$.pred_class)- Try to predict partisanship in the tweets from last week with a classifier of your choice (hint: do initial split with prop 0.1 or lower). How well does it perform? Now extract Tweets that are about abortion by using Friday’s approach. Perform the same prediction task (but now with

initial_split(prop = 0.8)). How does the accuracy change? What could this mean? What are predictive terms?

timelines <- read_csv("https://www.dropbox.com/s/dpu5m3xqz4u4nv7/tweets_house_rep_party.csv?dl=1") |> filter(!is.na(party))

## train general model

split_us <- initial_split(timelines, prop = 0.1, strata = party)

train_us <- training(split_us)

test_us <- testing(split_us)

twitter_recipe <- recipe(party ~ text, data = train_us) |>

step_tokenize(text) |> # tokenize text

themis::step_upsample(party) |>

step_tokenfilter(text, max_tokens = 1000) |>

step_tfidf(text)

rf_spec <- rand_forest(trees = 50) |>

set_engine("ranger", importance = "impurity") |> #to get important features

set_mode("classification")

twitter_party_rf_workflow <- workflow() |>

add_recipe(twitter_recipe) |>

add_model(rf_spec)

model_us <- twitter_party_rf_workflow |> fit(data = train_us)

predictions_party_us <- augment(model_us, test_us)

mean(predictions_party_us$party == predictions_party_us$.pred_class)

## train abortion-based model

keywords <- c("abortion", "prolife", " roe ", " wade ", "roevswade", "baby", "fetus", "womb", "prochoice", "leak")

timelines_us_abortion <- timelines |> filter(str_detect(text, keywords |> str_c(collapse = "|")))

split_us_abortion <- initial_split(timelines_us_abortion, prop = 0.8, strata = party)

abortion_tweets_train_us <- training(split_us_abortion)

abortion_tweets_test_us <- testing(split_us_abortion)

abortion_us <- twitter_party_rf_workflow |> fit(data = abortion_tweets_train_us)

predictions_abortion_us <- augment(abortion_us, abortion_tweets_test_us)

mean(predictions_abortion_us$party == predictions_abortion_us$.pred_class)

## compare accuracies

model_us |> extract_fit_parsnip() |> vip()

abortion_us |> extract_fit_parsnip() |> vip()