6 Modeling Discontinuous and Nonlinear Change

All the multilevel models for change presented so far assume that individual growth is smooth and linear. Yet individual change can also be discontinuous or nonlinear…

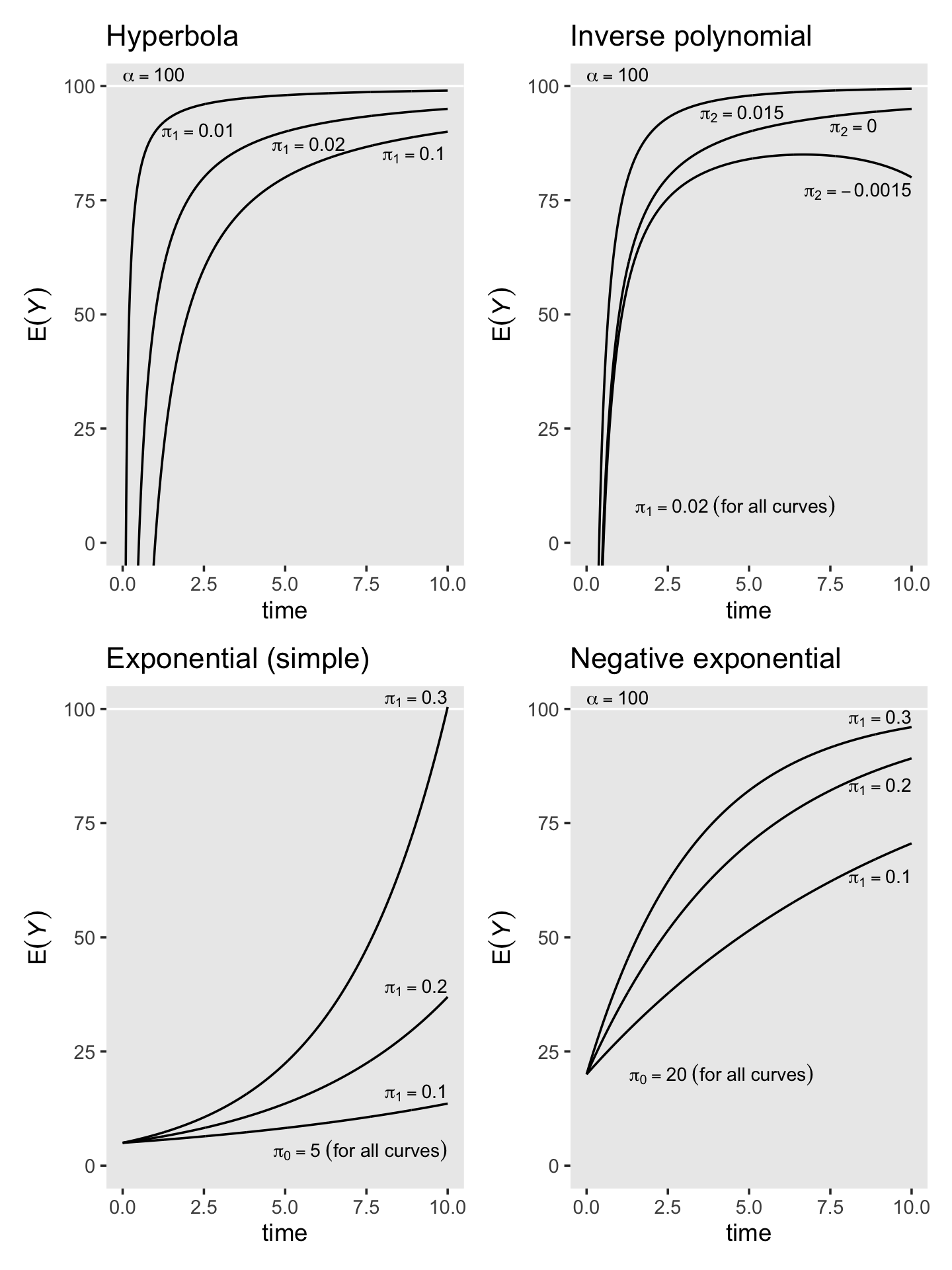

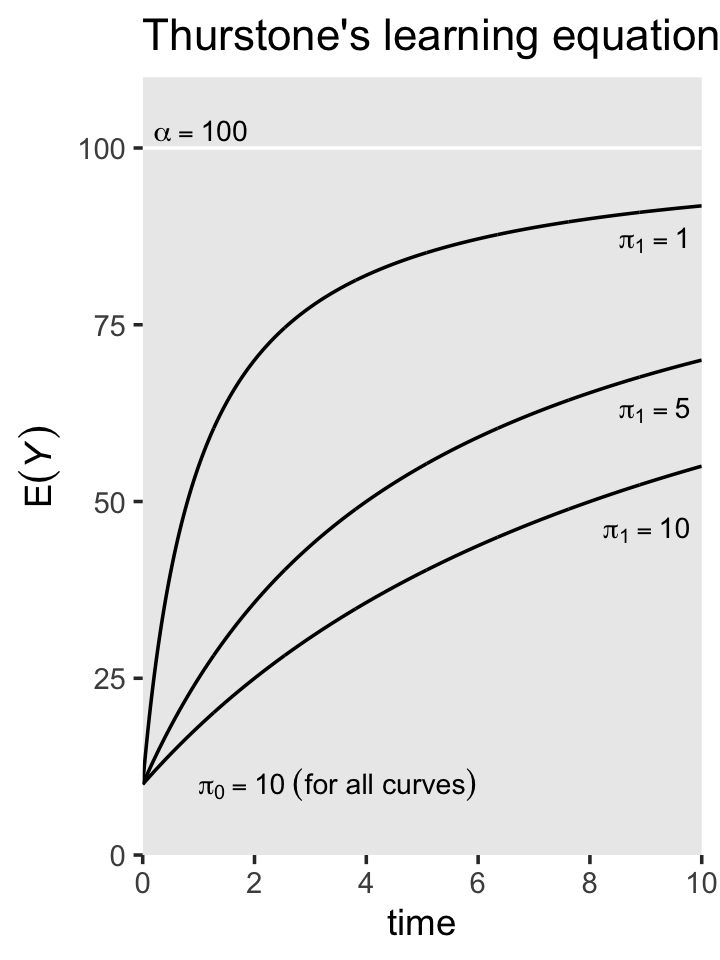

In this chapter, we introduce strategies for fitting models in which individual change is explicitly discontinuous or nonlinear. Rather than view these patterns as inconveniences, we treat them as substantively compelling opportunities. In doing so, we broaden our questions about the nature of change beyond the basic concepts of initial status and rate of change to a consideration of acceleration, deceleration, turning points, shifts, and asymptotes. The strategies that we use fall into two broad classes. Empirical strategies that let the “data speak for themselves.” Under this approach, you inspect observed growth records systematically and identify a transformation of the outcome, or of TIME, that linearizes the individual change trajectory. Unfortunately, this approach can lead to interpretive difficulties, especially if it involves esoteric transformations or higher order polynomials. Under rational strategies, on the other hand, you use theory to hypothesize a substantively meaningful functional form for the individual change trajectory. Although rational strategies generally yield clearer interpretations, their dependence on good theory makes them somewhat more difficult to develop and apply. (Singer & Willett, 2003, pp. 189–190, emphasis in the original)

6.1 Discontinuous individual change

Not all individual change trajectories are continuous functions of time…

If you have reason to believe that individual change trajectories might shift in elevation and/or slope, your level-1 model should reflect this hypothesis. Doing so allows you to test ideas about how the trajectory’s shape might change over time…

To postulate a discontinuous individual change trajectory, you need to know not just why the shift might occur but also when. This is because your level-1 individual growth model must include one (or more) time-varying predictor(s) that specify whether and if so, when each person experiences the hypothesized shift. (pp. 190–191, emphasis in the original)

6.1.1 Alternative discontinuous level-1 models for change.

To postulate a discontinuous level-1 individual growth model, you must first decide on its functional form. Although you can begin empirically, we prefer to focus on substance and the longitudinal process that gave rise to the data. What kind of discontinuity might the precipitating event create? What would a plausible level-1 trajectory look like? Before parameterizing models and constructing variables, we suggest that you: (1) take a pen and paper and sketch some options; and (2) articulate–in words, not equations–the rationale for each. We recommend these steps because, as we demonstrate, the easiest models to specify may not display the type of discontinuity you expect to find. (pp. 191–192)

I’ll leave the pen and paper scribbling to you. Here we load the wages_pp.csv data.

library(tidyverse)

wages_pp <- read_csv("data/wages_pp.csv")

glimpse(wages_pp)## Rows: 6,402

## Columns: 15

## $ id <dbl> 31, 31, 31, 31, 31, 31, 31, 31, 36, 36, 36, 36, 36, 36, 36, 36, 36, 36, 53, 53, 53, 53…

## $ lnw <dbl> 1.491, 1.433, 1.469, 1.749, 1.931, 1.709, 2.086, 2.129, 1.982, 1.798, 2.256, 2.573, 1.…

## $ exper <dbl> 0.015, 0.715, 1.734, 2.773, 3.927, 4.946, 5.965, 6.984, 0.315, 0.983, 2.040, 3.021, 4.…

## $ ged <dbl> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 1, 1, 1, 1, 1, 1, 0, 0, 0,…

## $ postexp <dbl> 0.015, 0.715, 1.734, 2.773, 3.927, 4.946, 5.965, 6.984, 0.315, 0.983, 2.040, 3.021, 4.…

## $ black <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

## $ hispanic <dbl> 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0,…

## $ hgc <dbl> 8, 8, 8, 8, 8, 8, 8, 8, 9, 9, 9, 9, 9, 9, 9, 9, 9, 9, 7, 7, 7, 7, 7, 7, 7, 7, 12, 12, …

## $ hgc.9 <dbl> -1, -1, -1, -1, -1, -1, -1, -1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, -2, -2, -2, -2, -2, -2, …

## $ uerate <dbl> 3.215, 3.215, 3.215, 3.295, 2.895, 2.495, 2.595, 4.795, 4.895, 7.400, 7.400, 5.295, 4.…

## $ ue.7 <dbl> -3.785, -3.785, -3.785, -3.705, -4.105, -4.505, -4.405, -2.205, -2.105, 0.400, 0.400, …

## $ ue.centert1 <dbl> 0.000, 0.000, 0.000, 0.080, -0.320, -0.720, -0.620, 1.580, 0.000, 2.505, 2.505, 0.400,…

## $ ue.mean <dbl> 3.2150, 3.2150, 3.2150, 3.2150, 3.2150, 3.2150, 3.2150, 3.2150, 5.0965, 5.0965, 5.0965…

## $ ue.person.cen <dbl> 0.0000, 0.0000, 0.0000, 0.0800, -0.3200, -0.7200, -0.6200, 1.5800, -0.2015, 2.3035, 2.…

## $ ue1 <dbl> 3.215, 3.215, 3.215, 3.215, 3.215, 3.215, 3.215, 3.215, 4.895, 4.895, 4.895, 4.895, 4.…Here’s a more focused look along the lines of Table 6.1.

wages_pp %>%

select(id, lnw, exper, ged, postexp) %>%

mutate(`ged by exper` = ged * exper) %>%

filter(id %in% c(206, 2365, 4384))## # A tibble: 22 × 6

## id lnw exper ged postexp `ged by exper`

## <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 206 2.03 1.87 0 0 0

## 2 206 2.30 2.81 0 0 0

## 3 206 2.48 4.31 0 0 0

## 4 2365 1.78 0.66 0 0 0

## 5 2365 1.76 1.68 0 0 0

## 6 2365 1.71 2.74 0 0 0

## 7 2365 1.74 3.68 0 0 0

## 8 2365 2.19 4.68 1 0 4.68

## 9 2365 2.04 5.72 1 1.04 5.72

## 10 2365 2.32 6.72 1 2.04 6.72

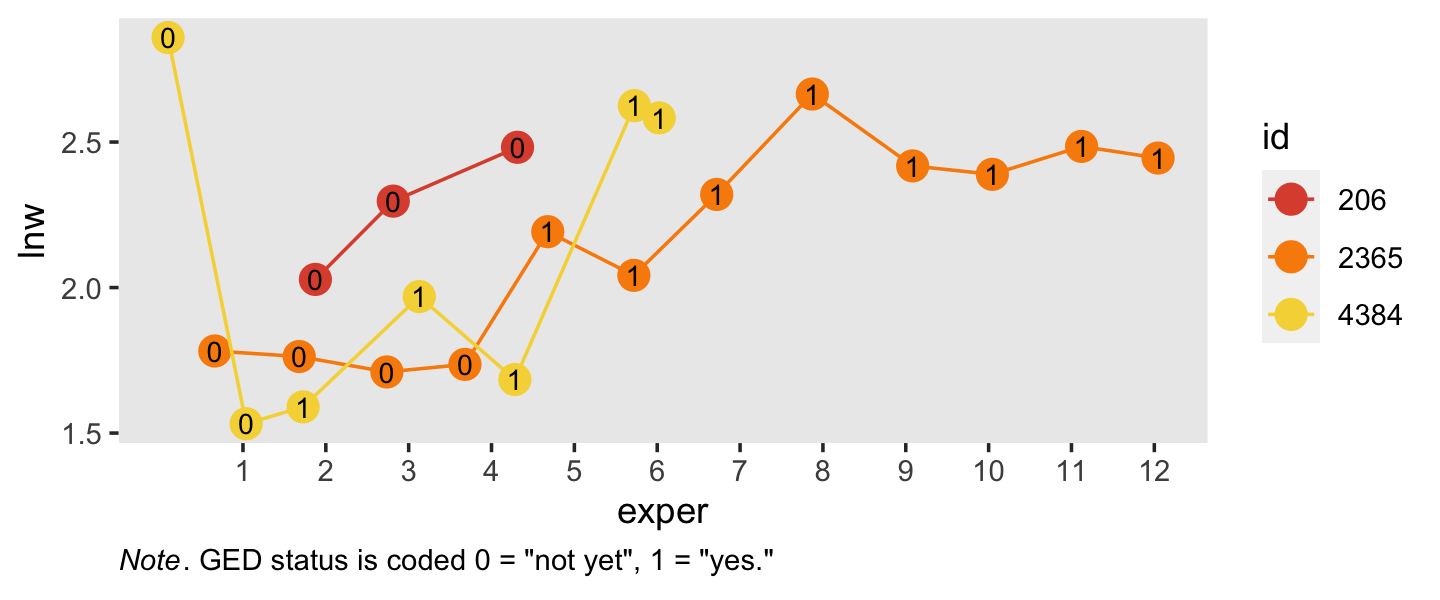

## # ℹ 12 more rowsSimilar to what we did in Section 5.2.1, here is a visualization of the two primary variables, exper and lnw, for those three participants.

wages_pp %>%

filter(id %in% c(206, 2365, 4384)) %>%

mutate(id = factor(id)) %>%

ggplot(aes(x = exper, y = lnw)) +

geom_point(aes(color = id),

size = 4) +

geom_line(aes(color = id)) +

geom_text(aes(label = ged),

size = 3) +

scale_x_continuous(breaks = 1:13) +

scale_color_viridis_d(option = "B", begin = .6, end = .9) +

labs(caption = expression(italic("Note")*'. GED status is coded 0 = "not yet", 1 = "yes."')) +

theme(panel.grid = element_blank(),

plot.caption = element_text(hjust = 0))

Note how the time-varying predictor ged is depicted as either an 0 or a 1 in the center of the dots. Maybe we might describe that change as linear with the simple model \(\text{lnw}_{ij} = \pi_{0i} + \pi_{1i} \text{exper}_{ij} + \epsilon_{ij}\). Maybe a different model that included ged would be helpful.

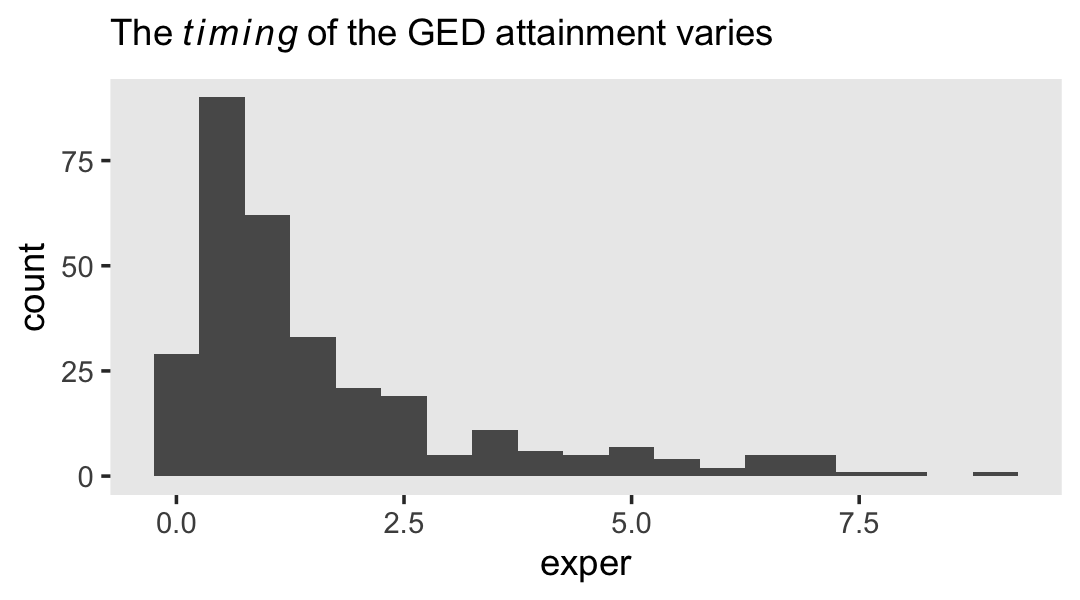

In the data, \(n = 581\) never got their GED’s, while \(n = 307\) did.

wages_pp %>%

group_by(id) %>%

summarise(got_ged = sum(ged) > 0) %>%

count(got_ged)## # A tibble: 2 × 2

## got_ged n

## <lgl> <int>

## 1 FALSE 581

## 2 TRUE 307However, those who did get their GED’s did so at different times. Here’s their distribution.

wages_pp %>%

filter(ged == 1) %>%

group_by(id) %>%

slice(1) %>%

ggplot(aes(x = exper)) +

geom_histogram(binwidth = 0.5) +

labs(subtitle = expression(The~italic(timing)~of~the~GED~attainment~varies)) +

theme(panel.grid = element_blank())

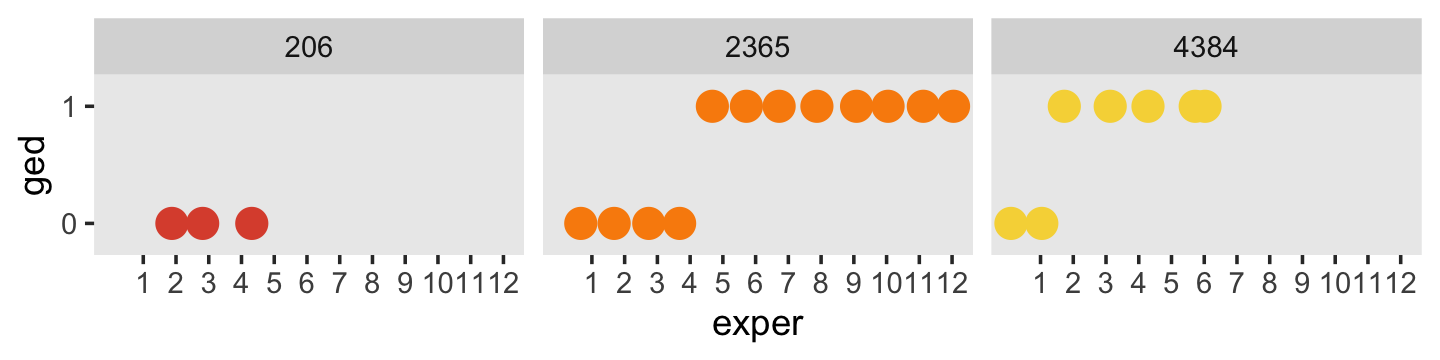

Here’s another, more focused, look at the GED status for our three focal participants, over exper.

wages_pp %>%

select(id, lnw, exper, ged, postexp) %>%

filter(id %in% c(206, 2365, 4384)) %>%

mutate(id = factor(id)) %>%

ggplot(aes(x = exper, y = ged)) +

geom_point(aes(color = id),

size = 4) +

scale_x_continuous(breaks = 1:13) +

scale_y_continuous(breaks = 0:1, limits = c(-0.2, 1.2)) +

scale_color_viridis_d(option = "B", begin = .6, end = .9, breaks = NULL) +

theme(panel.grid = element_blank(),

plot.caption = element_text(hjust = 0)) +

facet_wrap(~ id)

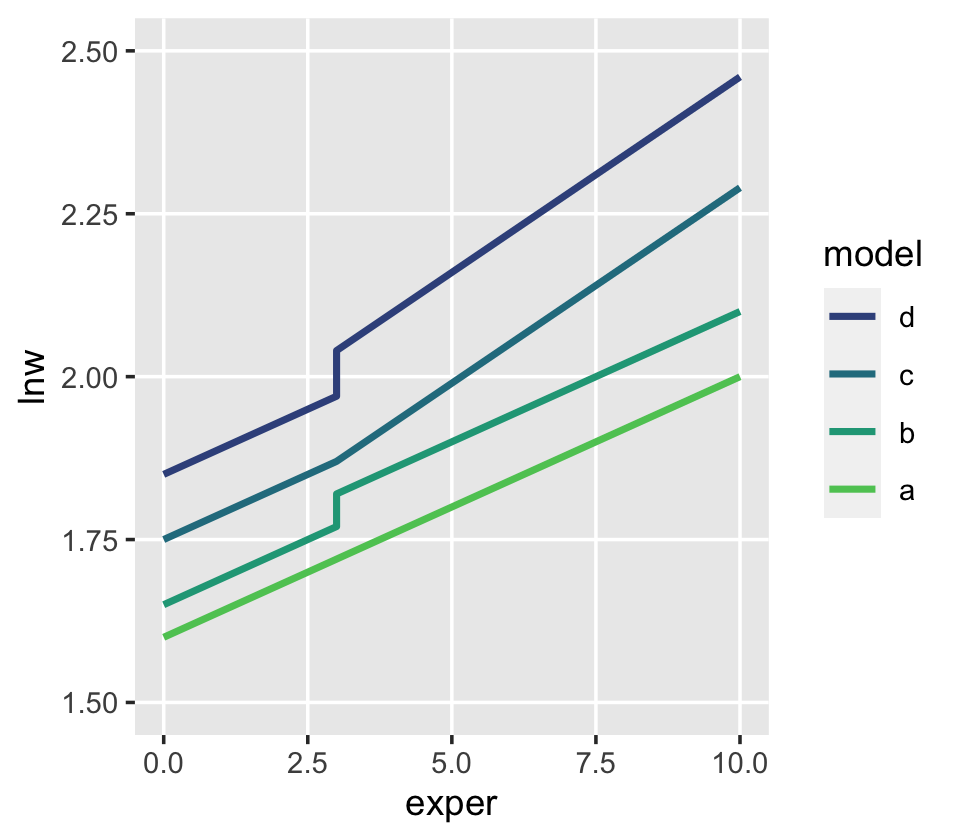

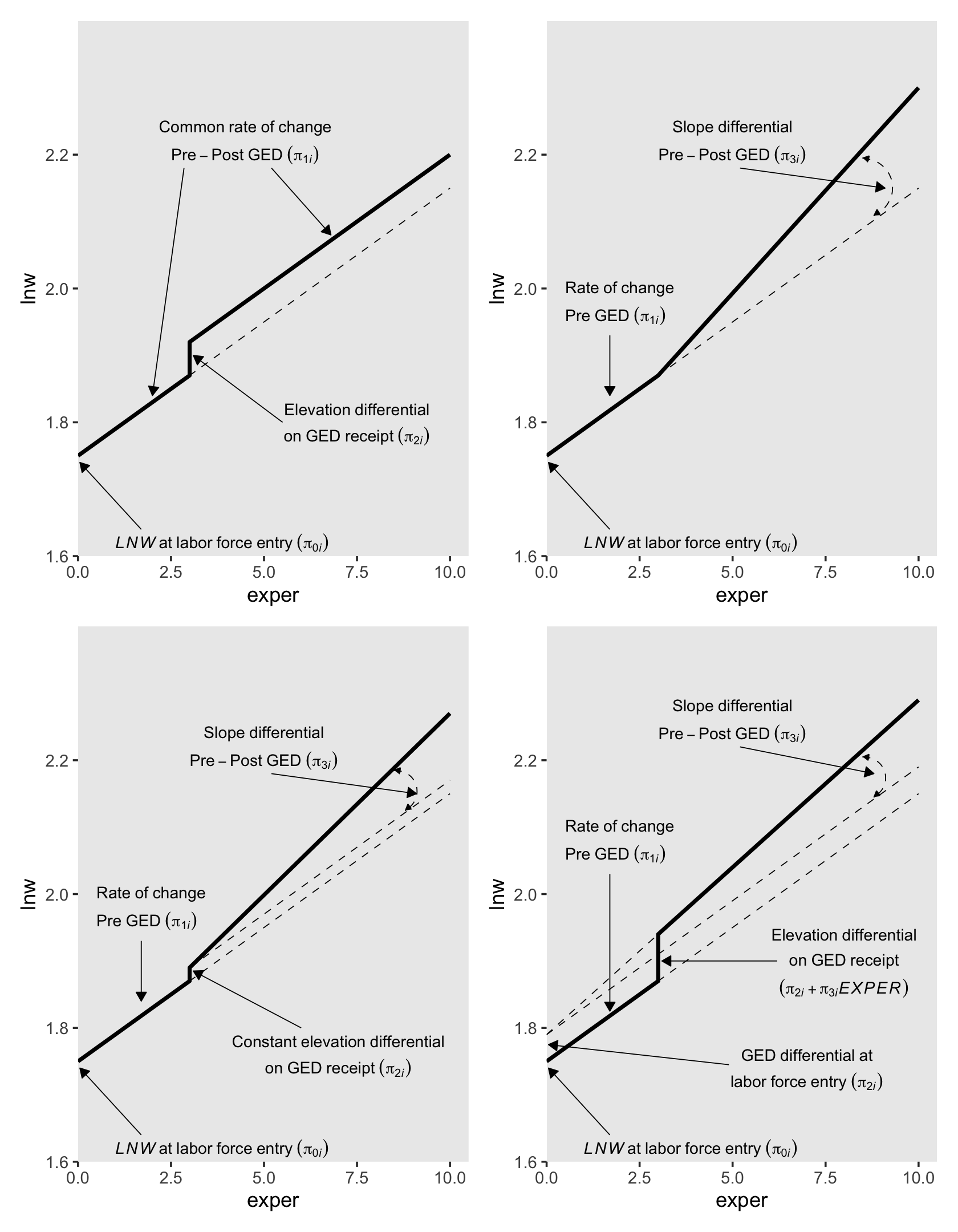

One might wonder: “How might GED receipt affect individual \(i\)’s wage trajectory?” (p. 193). Here we reproduce Figure 6.1, which entertains four possibilities.

tibble(exper = c(0, 3, 3, 10),

ged = rep(0:1, each = 2)) %>%

expand(model = letters[1:4],

nesting(exper, ged)) %>%

mutate(exper2 = if_else(ged == 0, 0, exper - 3)) %>%

mutate(lnw = case_when(

model == "a" ~ 1.60 + 0.04 * exper,

model == "b" ~ 1.65 + 0.04 * exper + 0.05 * ged,

model == "c" ~ 1.75 + 0.04 * exper + 0.02 * exper2 * ged,

model == "d" ~ 1.85 + 0.04 * exper + 0.01 * ged + 0.02 * exper * ged

),

model = fct_rev(model)) %>%

ggplot(aes(x = exper, y = lnw)) +

geom_line(aes(color = model),

linewidth = 1) +

scale_color_viridis_d(option = "D", begin = 1/4, end = 3/4) +

ylim(1.5, 2.5) +

theme(panel.grid.minor = element_blank())

6.1.1.1 Including a discontinuity in elevation, not slope.

We can write the level-1 formula for when there is a change in elevation, but not slope, as

\[ \text{lnw}_{ij} = \pi_{0i} + \pi_{1i} \text{exper}_{ij} + \pi_{2i} \text{ged}_{ij} + \epsilon_{ij}. \]

Because we are equating ged values as relating to the intercept, but not the slope, it might be helpful to rewrite that formula as

\[ \text{lnw}_{ij} = (\pi_{0i} + \pi_{2i} \text{ged}_{ij}) + \pi_{1i} \text{exper}_{ij} + \epsilon_{ij}, \]

where the portion inside of the parentheses concerns initial status and discontinuity in elevation, but not slope. Because the ged values only come in 0’s and 1’s, we can express the two versions of this equation as

\[ \begin{align*} \text{lnw}_{ij} & = [\pi_{0i} + \pi_{2i} (0)] + \pi_{1i} \text{exper}_{ij} + \epsilon_{ij} \;\;\; \text{and} \\ & = [\pi_{0i} + \pi_{2i} (1)] + \pi_{1i} \text{exper}_{ij} + \epsilon_{ij}. \end{align*} \]

In other words, whereas the pre-GED intercept is \(\pi_{0i}\), the post-GED intercept is \(\pi_{0i} + \pi_{2i}\). To get a better sense of this, we might make a version of the upper left panel of Figure 6.2. Since we’ll be making four of these over the next few sections, we might reduce the redundancies in the code by making a custom plotting function. We’ll call it plot_figure_6.2().

plot_figure_6.2 <- function(data,

mapping,

sizes = c(1, 1/4),

linetypes = c(1, 2),

...) {

ggplot(data, mapping) +

geom_line(aes(size = model, linetype = model)) +

geom_text(data = text,

aes(label = label, hjust = hjust),

size = 3, parse = T) +

geom_segment(data = arrow,

aes(xend = xend, yend = yend),

arrow = arrow(length = unit(0.075, "inches"), type = "closed"),

size = 1/4) +

scale_size_manual(values = sizes) +

scale_linetype_manual(values = linetypes) +

scale_x_continuous(expand = expansion(mult = c(0, 0.05))) +

scale_y_continuous(breaks = 0:4 * 0.2 + 1.6, expand = c(0, 0)) +

coord_cartesian(ylim = c(1.6, 2.4)) +

theme(legend.position = "none",

panel.grid = element_blank())

}Now we have our custom plotting function, let’s take it for a spin.

text <-

tibble(exper = c(4.5, 4.5, 7.5, 7.5, 1),

lnw = c(2.24, 2.2, 1.82, 1.78, 1.62),

label = c("Common~rate~of~change",

"Pre-Post~GED~(pi[1][italic(i)])",

"Elevation~differential",

"on~GED~receipt~(pi[2][italic(i)])",

"italic(LNW)~at~labor~force~entry~(pi[0][italic(i)])"),

hjust = c(.5, .5, .5, .5, 0))

arrow <-

tibble(exper = c(2.85, 5.2, 5.5, 1.7),

xend = c(2, 6.8, 3.1, 0.05),

lnw = c(2.18, 2.18, 1.8, 1.64),

yend = c(1.84, 2.08, 1.9, 1.74))

p1 <-

tibble(exper = c(0, 3, 3, 10),

ged = rep(0:1, each = 2)) %>%

expand(model = letters[1:2],

nesting(exper, ged)) %>%

mutate(exper2 = if_else(ged == 0, 0, exper - 3)) %>%

mutate(lnw = case_when(

model == "a" ~ 1.75 + 0.04 * exper,

model == "b" ~ 1.75 + 0.04 * exper + 0.05 * ged),

model = fct_rev(model)) %>%

plot_figure_6.2(aes(x = exper, y = lnw))## Warning: Using `size` aesthetic for lines was deprecated in ggplot2 3.4.0.

## ℹ Please use `linewidth` instead.

## This warning is displayed once every 8 hours.

## Call `lifecycle::last_lifecycle_warnings()` to see where this warning was generated.p1

Worked like a dream!

6.1.1.2 Including a discontinuity in slope, not elevation.

To specify a level-1 individual growth model that includes a discontinuity in slope, not elevation, you need a different time-varying predictor. Unlike GED, this predictor must clock the passage of time (like EXPER). But unlike EXPER, it must do so within only one of the two epochs (pre- or post-GED receipt). Adding a second temporal predictor allows each individual change trajectory to have two distinct slopes: one before the hypothesized discontinuity an another after. (p. 195, emphasis in the original)

In the wages_pp data, postexp is this second variable. Here is how it compares to the other relevant variables.

wages_pp %>%

select(id, ged, exper, postexp) %>%

filter(id >= 53)## # A tibble: 6,384 × 4

## id ged exper postexp

## <dbl> <dbl> <dbl> <dbl>

## 1 53 0 0.781 0

## 2 53 0 0.943 0

## 3 53 1 0.957 0

## 4 53 1 1.04 0.08

## 5 53 1 1.06 0.1

## 6 53 1 1.11 0.152

## 7 53 1 1.18 0.227

## 8 53 1 1.78 0.82

## 9 122 0 2.04 0

## 10 122 0 2.64 0

## # ℹ 6,374 more rowsSinger and Willett then went on to report “construction of a suitable time-varying predictor to register the desired discontinuity is often the hardest part of the model specification” (p. 195). They weren’t kidding.

This concept caused me a good bit of frustration when learning about these models. Let’s walk through this slowly. In the last code block, we looked at four relevant variables. You may wonder why we executed filter(id >= 53). This is because the first two participants always had ged == 1. They’re valid cases and all, but those data won’t be immediately helpful for understanding what’s going on with postexp. Happily, the next case, id == 53, is perfect for our goal. First, notice how that person’s postexp values are always 0 when ged == 0. Second, notice how the first time where ged == 1, postexp is still a 0. Third, notice that after that first initial row, postexp increases. If you caught all that, go you!

To make the next point, it’ll come in handy to subset the data. Because we’re trying to understand the relationship between exper and postesp conditional on ged, cases for which ged is always the same will be of little use. Let’s drop them.

wages_pp_subset <-

wages_pp %>%

group_by(id) %>%

filter(mean(ged) > 0) %>%

filter(mean(ged) < 1) %>%

ungroup() %>%

select(id, ged, exper, postexp)

wages_pp_subset## # A tibble: 819 × 4

## id ged exper postexp

## <dbl> <dbl> <dbl> <dbl>

## 1 53 0 0.781 0

## 2 53 0 0.943 0

## 3 53 1 0.957 0

## 4 53 1 1.04 0.08

## 5 53 1 1.06 0.1

## 6 53 1 1.11 0.152

## 7 53 1 1.18 0.227

## 8 53 1 1.78 0.82

## 9 134 0 0.192 0

## 10 134 0 0.972 0

## # ℹ 809 more rowsWhat might not be obvious yet is exper and postexp scale together. To show how this works, we’ll make two new columns. First, we’ll mark the minimum exper value for each level of id. Then we’ll make a exper - postexp which is exactly what the name implies. Here’s what that looks like.

wages_pp_subset %>%

filter(ged == 1) %>%

group_by(id) %>%

mutate(min_exper = min(exper),

`exper - postexp` = exper - postexp)## # A tibble: 525 × 6

## # Groups: id [107]

## id ged exper postexp min_exper `exper - postexp`

## <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 53 1 0.957 0 0.957 0.957

## 2 53 1 1.04 0.08 0.957 0.957

## 3 53 1 1.06 0.1 0.957 0.957

## 4 53 1 1.11 0.152 0.957 0.958

## 5 53 1 1.18 0.227 0.957 0.958

## 6 53 1 1.78 0.82 0.957 0.957

## 7 134 1 3.92 0 3.92 3.92

## 8 134 1 4.64 0.72 3.92 3.92

## 9 134 1 5.64 1.72 3.92 3.92

## 10 134 1 6.75 2.84 3.92 3.92

## # ℹ 515 more rowsHuh. For each case, the min_exper value is (near)identical with exper - postexp. The reason they’re not always identical is simply rounding error. Had we computed them by hand without rounding, they would always be the same. This relationship is the consequence of our having coded postexp == 0 the very first time ged == 1, but allowed it to linearly increase afterward. Within each level of id–and conditional on ged == 1–, the way it increases is simply exper – min(exper). Here’s that value.

wages_pp_subset %>%

filter(ged == 1) %>%

group_by(id) %>%

mutate(min_exper = min(exper),

`exper - postexp` = exper - postexp) %>%

mutate(`exper - min_exper` = exper - min_exper)## # A tibble: 525 × 7

## # Groups: id [107]

## id ged exper postexp min_exper `exper - postexp` `exper - min_exper`

## <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 53 1 0.957 0 0.957 0.957 0

## 2 53 1 1.04 0.08 0.957 0.957 0.0800

## 3 53 1 1.06 0.1 0.957 0.957 0.1

## 4 53 1 1.11 0.152 0.957 0.958 0.153

## 5 53 1 1.18 0.227 0.957 0.958 0.228

## 6 53 1 1.78 0.82 0.957 0.957 0.82

## 7 134 1 3.92 0 3.92 3.92 0

## 8 134 1 4.64 0.72 3.92 3.92 0.72

## 9 134 1 5.64 1.72 3.92 3.92 1.72

## 10 134 1 6.75 2.84 3.92 3.92 2.84

## # ℹ 515 more rowsSee? Our new exper - min_exper column is the same, within rounding error, as postexp.

A fundamental feature of POSTEXP–indeed, any temporal predictor designed to register a shift in slope–is that the difference between each non-zero pair of consecutive values must be numerically identical to the difference between the corresponding pair of values for the basic predictor (here, EXPER). (p. 197, emphasis in the original)

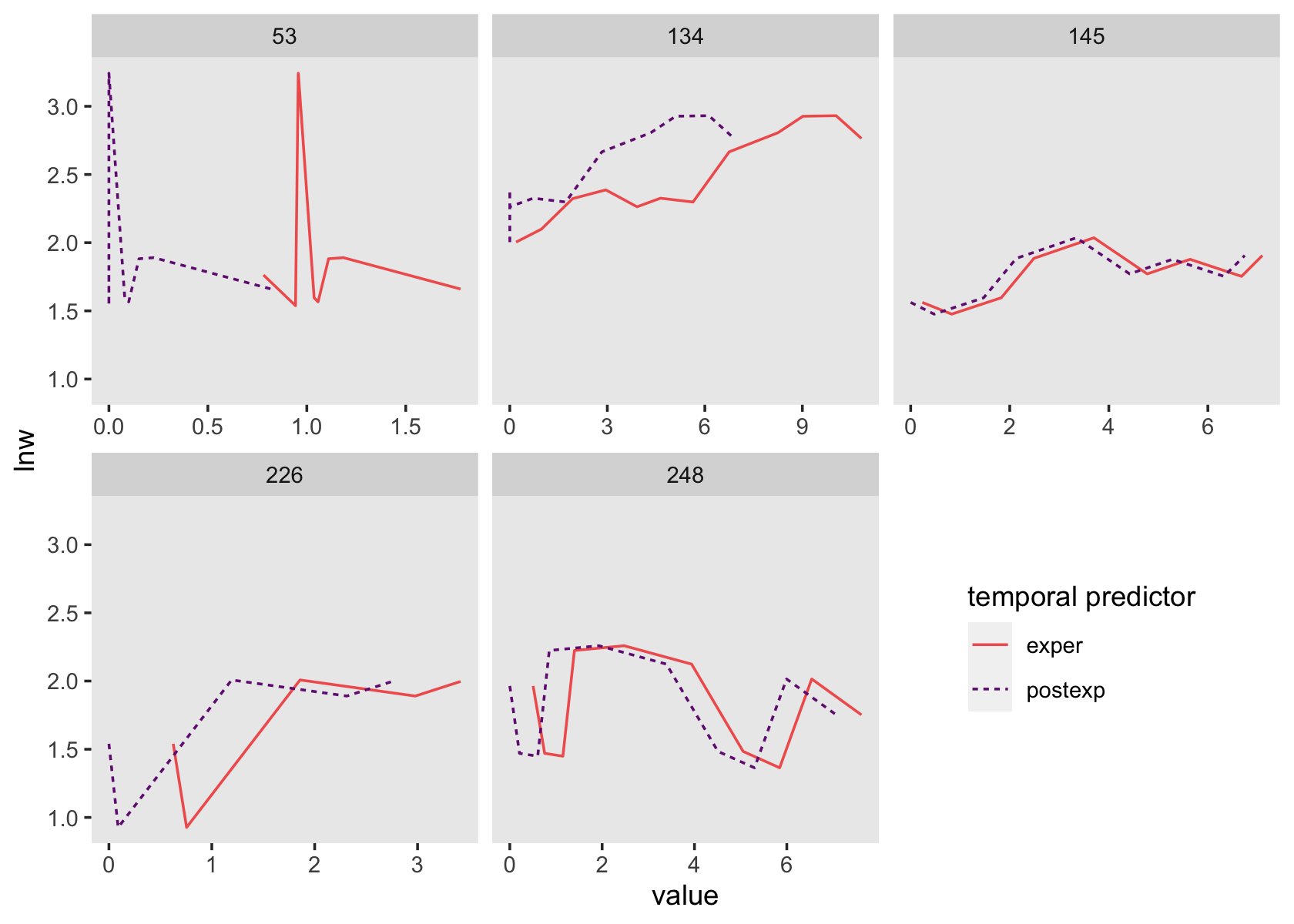

wages_pp %>%

group_by(id) %>%

filter(mean(ged) > 0 & mean(ged) < 1) %>%

ungroup() %>%

pivot_longer(c(exper, postexp),

names_to = "temporal predictor") %>%

filter(id < 250) %>%

ggplot(aes(x = value, y = lnw)) +

geom_line(aes(linetype = `temporal predictor`, color = `temporal predictor`)) +

scale_color_viridis_d(option = "A", begin = 1/3, end = 2/3, direction = -1) +

theme(legend.position = c(5/6, .25),

panel.grid = element_blank()) +

facet_wrap(~id, scales = "free_x")

See how those two scale together within each level of id?

All of this work is a setup for the level-1 equation

\[ \text{lnw}_{ij} = \pi_{0i} + \pi_{1i} \text{exper}_{ij} + \pi_{3i} \text{postexp}_{ij} + \epsilon_{ij}, \]

where \(\pi_{0i}\) is the only intercept parameter and \(\pi_{1i}\) and \(\pi_{3i}\) are two slope parameters. Singer and Willett explained

each slope assesses the effect of work experience, but it does so from a different origin: (1) \(\pi_{1i}\) captures the effects of total work experience (measured from labor force entry); and (2) \(\pi_{3i}\) captures the added effect of post-GED work experience (measured from GED receipt). (p. 197, emphasis in the original)

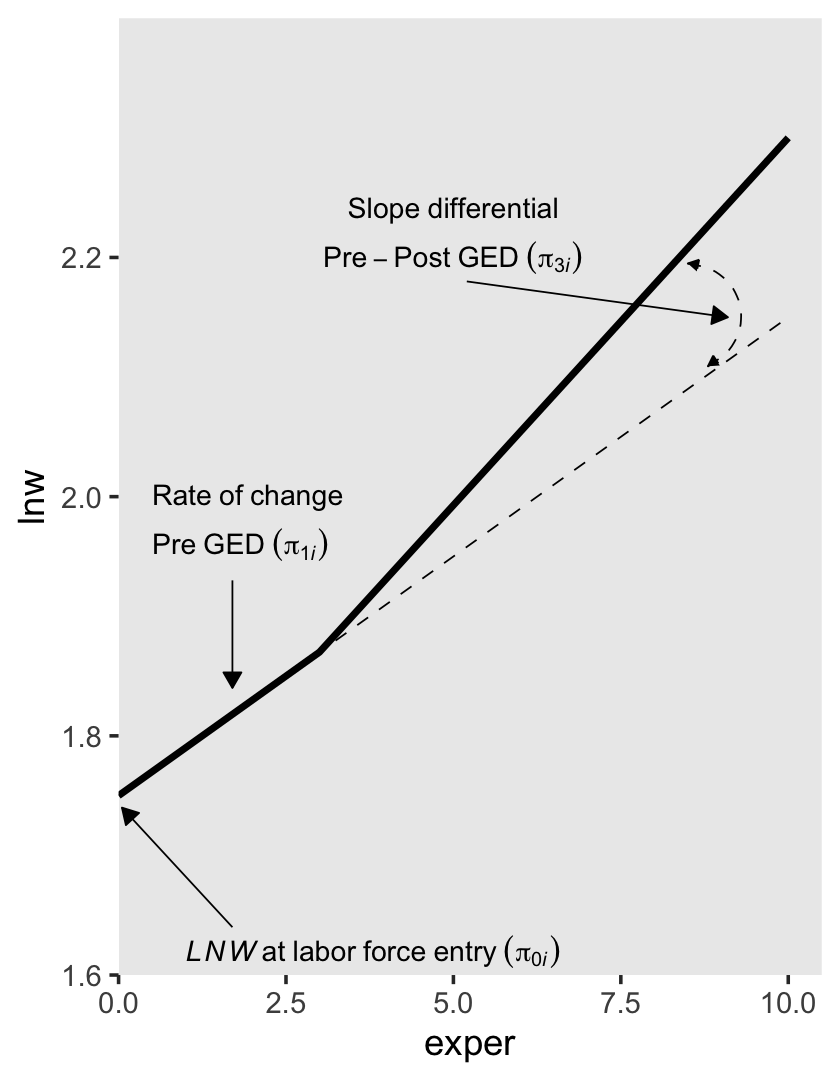

To get a sense of what this looks like, here’s our version of the upper right panel of Figure 6.2.

text <-

tibble(exper = c(5, 5, 0.5, 0.5, 1),

lnw = c(2.24, 2.2, 2, 1.96, 1.62),

label = c("Slope~differential",

"Pre-Post~GED~(pi[3][italic(i)])",

"Rate~of~change",

"Pre~GED~(pi[1][italic(i)])",

"italic(LNW)~at~labor~force~entry~(pi[0][italic(i)])"),

hjust = c(.5, .5, 0, 0, 0))

arrow <-

tibble(exper = c(5.2, 1.7, 1.7),

xend = c(9.1, 1.7, 0.05),

lnw = c(2.18, 1.93, 1.64),

yend = c(2.15, 1.84, 1.74))

p2 <-

tibble(exper = c(0, 3, 3, 10),

ged = rep(0:1, each = 2)) %>%

expand(model = letters[1:2],

nesting(exper, ged)) %>%

mutate(postexp = ifelse(exper == 10, 1, 0)) %>%

mutate(lnw = case_when(

model == "a" ~ 1.75 + 0.04 * exper,

model == "b" ~ 1.75 + 0.04 * exper + 0.15 * postexp),

model = fct_rev(model)) %>%

plot_figure_6.2(aes(x = exper, y = lnw)) +

annotate(geom = "curve",

x = 8.5, xend = 8.8,

y = 2.195, yend = 2.109,

arrow = arrow(length = unit(0.05, "inches"), type = "closed", ends = "both"),

size = 1/4, linetype = 2, curvature = -0.85)

p2

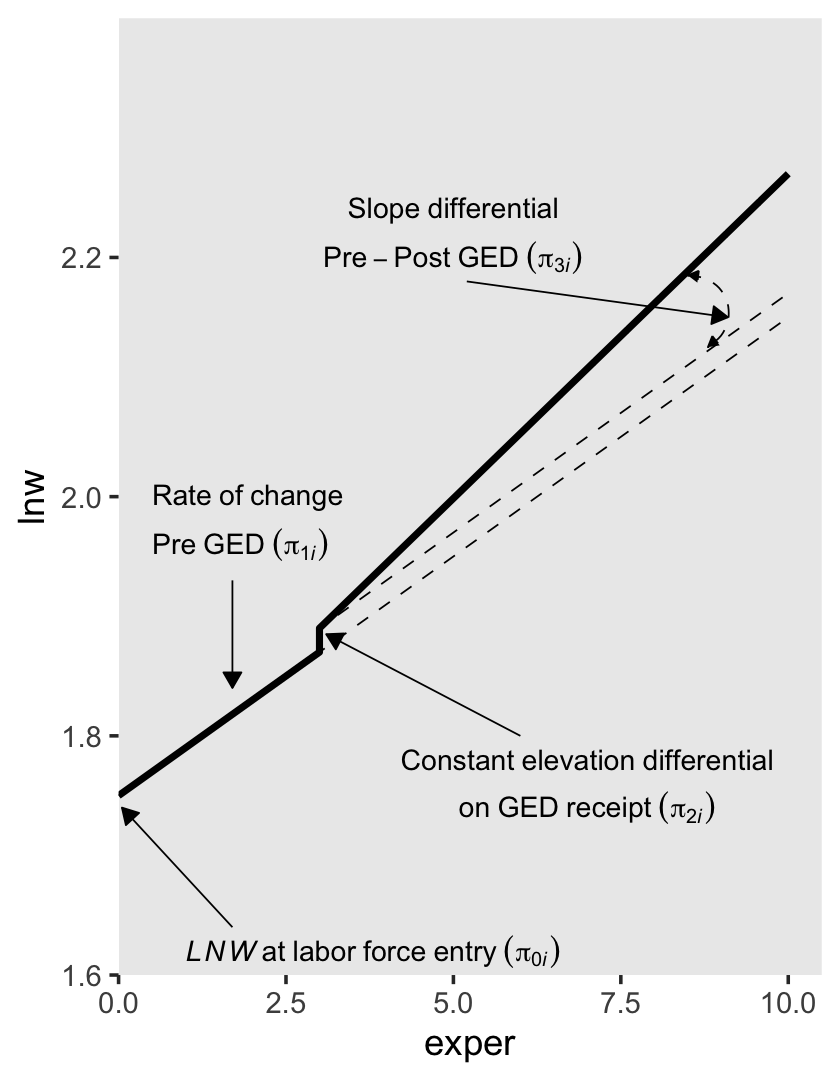

6.1.1.3 Including discontinuities in both elevation and slope.

There are (at least) two ways to do this. They are similar, but not identical. The first is an extension of the model from the last subsection where we retain postexp from our second slope parameter. We can express this as the equation

\[ \text{lnw}_{ij} = \pi_{0i} + \pi_{1i} \text{exper}_{ij} + \pi_{2i} \text{ged}_{ij} + \pi_{3i} \text{postexp}_{ij} + \epsilon_{ij}. \]

For those without a GED, the equation reduces to

\[ \begin{align*} \text{lnw}_{ij} & = \pi_{0i} + \pi_{1i} \text{exper}_{ij} + \pi_{2i} (0) + \pi_{3i} (0) + \epsilon_{ij} \\ & = \pi_{0i} + \pi_{1i} \text{exper}_{ij} + \epsilon_{ij}. \end{align*} \]

Once people secure their GED, \(\pi_{2i}\) is always multiplied by 1 (i.e., \(\pi_{2i} (1)\)) and the values by which we multiply \(\pi_{3i}\) scale linearly with exper, but with the offset the way we discussed in the previous subsection. To emphasize that, we might rewrite the equation as

\[ \begin{align*} \text{lnw}_{ij} & = \pi_{0i} + \pi_{1i} \text{exper}_{ij} + \pi_{2i} (1) + \pi_{3i} \text{postexp} + \epsilon_{ij} \\ & = (\pi_{0i} + \pi_{2i}) + \pi_{1i} \text{exper}_{ij} + \pi_{3i} \text{postexp} + \epsilon_{ij}. \end{align*} \]

To get a sense of what this looks like, here’s our version of the lower left panel of Figure 6.2.

text <-

tibble(exper = c(5, 5, 0.5, 0.5, 1, 7, 7),

lnw = c(2.24, 2.2, 2, 1.96, 1.62, 1.78, 1.74),

label = c("Slope~differential",

"Pre-Post~GED~(pi[3][italic(i)])",

"Rate~of~change",

"Pre~GED~(pi[1][italic(i)])",

"italic(LNW)~at~labor~force~entry~(pi[0][italic(i)])",

"Constant~elevation~differential",

"on~GED~receipt~(pi[2][italic(i)])"),

hjust = c(.5, .5, 0, 0, 0, .5, .5))

arrow <-

tibble(exper = c(5.2, 1.7, 1.7, 6),

xend = c(9.1, 1.7, 0.05, 3.1),

lnw = c(2.18, 1.93, 1.64, 1.8),

yend = c(2.15, 1.84, 1.74, 1.885))

p3 <-

tibble(exper = c(0, 3, 3, 10),

ged = rep(0:1, each = 2)) %>%

expand(model = letters[1:3],

nesting(exper, ged)) %>%

mutate(postexp = ifelse(exper == 10, 1, 0)) %>%

mutate(lnw = case_when(

model == "a" ~ 1.75 + 0.04 * exper,

model == "b" ~ 1.75 + 0.04 * exper + 0.02 * ged,

model == "c" ~ 1.75 + 0.04 * exper + 0.02 * ged + 0.1 * postexp),

model = fct_rev(model)) %>%

plot_figure_6.2(aes(x = exper, y = lnw),

sizes = c(1, 1/4, 1/4),

linetypes = c(1, 2, 2)) +

annotate(geom = "curve",

x = 8.5, xend = 8.8,

y = 2.185, yend = 2.125,

arrow = arrow(length = unit(0.05, "inches"), type = "closed", ends = "both"),

size = 1/4, linetype = 2, curvature = -0.85)

p3

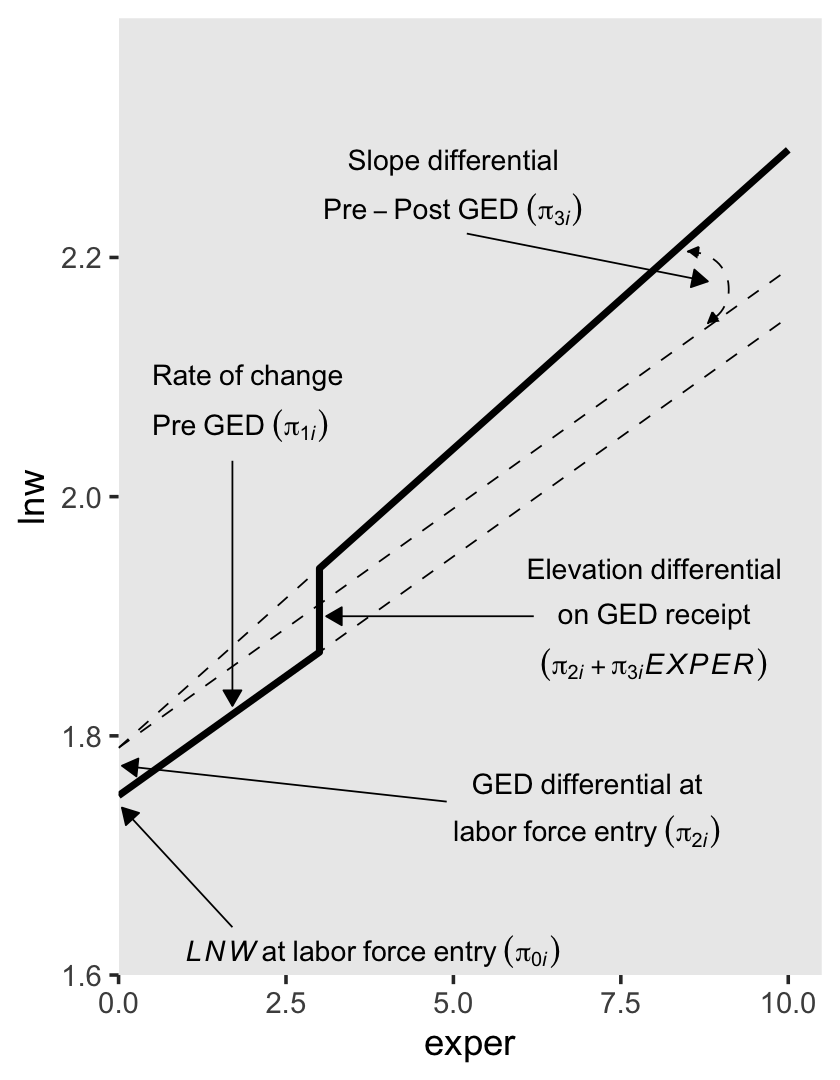

The second way to include discontinuities in both elevation and slope replaces the postexp variable with an interaction between exper and ged. Here’s the equation:

\[ \text{lnw}_{ij} = \pi_{0i} + \pi_{1i} \text{exper}_{ij} + \pi_{2i} \text{ged}_{ij} + \pi_{3i} (\text{exper}_{ij} \times \text{ged}_{ij}) + \epsilon_{ij}. \]

For those without a GED, the equation simplifies to

\[ \begin{align*} \text{lnw}_{ij} & = \pi_{0i} + \pi_{1i} \text{exper}_{ij} + \pi_{2i} (0) + \pi_{3i} (\text{exper}_{ij} \times 0) + \epsilon_{ij} \\ & = \pi_{0i} + \pi_{1i} \text{exper}_{ij} + \epsilon_{ij}. \end{align*} \]

Once a participant secures their GED, the equation changes to

\[ \begin{align*} \text{lnw}_{ij} & = \pi_{0i} + \pi_{1i} \text{exper}_{ij} + \pi_{2i} (1) + \pi_{3i} (\text{exper}_{ij} \times 1) + \epsilon_{ij} \\ & = (\pi_{0i} + \pi_{2i}) + (\pi_{1i} + \pi_{3i}) \text{exper}_{ij} + \epsilon_{ij}. \end{align*} \]

So again, the two ways we might include discontinuities in both elevation and slope are

\[ \begin{align*} \text{lnw}_{ij} & = \pi_{0i} + \pi_{1i} \text{exper}_{ij} + \pi_{2i} \text{ged}_{ij} + \pi_{3i} \text{postexp}_{ij} + \epsilon_{ij} & \text{and} \\ \text{lnw}_{ij} & = \pi_{0i} + \pi_{1i} \text{exper}_{ij} + \pi_{2i} \text{ged}_{ij} + \pi_{3i} (\text{exper}_{ij} \times \text{ged}_{ij}) + \epsilon_{ij}. \end{align*} \]

The \(\pi_{0i}\) and \(\pi_{1i}\) terms have the same meaning in both. Even though \(\pi_{3i}\) is multiplied by different values in the two equations, it has the same interpretation: “it represents the increment (or decrement) of the slope in the post-GED epoch” (p. 200). However, the big difference is the behavior and interpretation for \(\pi_{2i}\). In the equation for the first approach, it “assesses the magnitude of the instantaneous increment (or decrement) associated with GED attainment” (p. 200). But in the equation for the second approach, “\(\pi_{2i}\) assesses the magnitude of the increment (or decrement) associated with GED attainment at a particular–and not particularly meaningful–moment: the day of labor force entry” (p. 220, emphasis added). That is, whereas \(\pi_{2i}\) has a fixed value for the first approach, its magnitude changes with time in the second.

To get a sense of what this looks like, here’s our version of the lower right panel of Figure 6.2.

text <-

tibble(exper = c(5, 5, 0.5, 0.5, 1, 7, 7, 8, 8, 8),

lnw = c(2.28, 2.24, 2.1, 2.06, 1.62, 1.76, 1.72, 1.94, 1.9, 1.86),

label = c("Slope~differential",

"Pre-Post~GED~(pi[3][italic(i)])",

"Rate~of~change",

"Pre~GED~(pi[1][italic(i)])",

"italic(LNW)~at~labor~force~entry~(pi[0][italic(i)])",

"GED~differential~at",

"labor~force~entry~(pi[2][italic(i)])",

"Elevation~differential",

"on~GED~receipt",

"(pi[2][italic(i)]+pi[3][italic(i)]*italic(EXPER))"),

hjust = c(.5, .5, 0, 0, 0, .5, .5, .5, .5, .5))

arrow <-

tibble(exper = c(5.2, 1.7, 1.7, 4.9, 6.2),

xend = c(8.8, 1.7, 0.05, 0.05, 3.1),

lnw = c(2.22, 2.03, 1.64, 1.745, 1.9),

yend = c(2.18, 1.825, 1.74, 1.775, 1.9))

p4 <-

crossing(model = letters[1:4],

point = 1:4) %>%

mutate(exper = ifelse(point == 1, 0,

ifelse(point == 4, 10, 3)),

ged = c(0, 0, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 1, 1, 1, 1)) %>%

mutate(lnw = case_when(

model %in% letters[1:3] ~ 1.75 + 0.04 * exper + 0.04 * ged + 0.01 * exper * ged,

model == "d" ~ 1.75 + 0.04 * exper + 0.04 * ged)) %>%

plot_figure_6.2(aes(x = exper, y = lnw),

sizes = c(1, 1/4, 1/4, 1/4),

linetypes = c(1, 2, 2, 2)) +

annotate(geom = "curve",

x = 8.5, xend = 8.8,

y = 2.205, yend = 2.145,

arrow = arrow(length = unit(0.05, "inches"), type = "closed", ends = "both"),

size = 1/4, linetype = 2, curvature = -0.85)

p4

You may have noticed we’ve been saving the various subplot panels as objects. Here we combine them to make the full version of Figure 6.2.

library(patchwork)

(p1 + p2) / (p3 + p4)

Glorious.

6.1.2 Selecting among the alternative discontinuous models.

Our first model in this section will be a call back from the last chapter, fit5.16.

library(brms)

# model a

fit5.16 <-

brm(data = wages_pp,

family = gaussian,

lnw ~ 0 + Intercept + exper + hgc_9 + black:exper + uerate_7 + (1 + exper | id),

prior = c(prior(normal(1.335, 1), class = b, coef = Intercept),

prior(normal(0, 0.5), class = b),

prior(student_t(3, 0, 1), class = sd),

prior(student_t(3, 0, 1), class = sigma),

prior(lkj(4), class = cor)),

iter = 2500, warmup = 1000, chains = 3, cores = 3,

seed = 5,

file = "fits/fit05.16")Review the summary.

print(fit5.16, digits = 3)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: lnw ~ 0 + Intercept + exper + hgc_9 + black:exper + uerate_7 + (1 + exper | id)

## Data: wages_pp (Number of observations: 6402)

## Draws: 3 chains, each with iter = 2500; warmup = 1000; thin = 1;

## total post-warmup draws = 4500

##

## Group-Level Effects:

## ~id (Number of levels: 888)

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sd(Intercept) 0.224 0.010 0.204 0.245 1.002 1704 2884

## sd(exper) 0.040 0.003 0.035 0.046 1.004 605 917

## cor(Intercept,exper) -0.306 0.068 -0.432 -0.165 1.004 713 1634

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 1.749 0.011 1.727 1.772 1.000 2856 3207

## exper 0.044 0.003 0.039 0.049 1.000 2694 3522

## hgc_9 0.040 0.006 0.027 0.052 1.002 2100 3469

## uerate_7 -0.012 0.002 -0.016 -0.008 1.001 5116 4125

## exper:black -0.018 0.005 -0.027 -0.009 1.001 2338 2587

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 0.308 0.003 0.302 0.314 1.001 3798 3478

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).Before we move forward with the next models, we’ll need to wrangle the data a bit. First, we rename hgc.9 to the more tidyverse-centric hgc_9. Then we compute uerate_7, which is uerate centered on 7.

wages_pp <-

wages_pp %>%

rename(hgc_9 = hgc.9) %>%

mutate(uerate_7 = uerate - 7)Our model A (fit5.16) and the rest of the models B through J (fit6.1 through fit6.9) can all be thought of as variants of two parent models. The first parent model is model F (fit6.5), which follows the form

\[ \begin{align*} \text{lnw}_{ij} & = \gamma_{00} + \gamma_{01} (\text{hgc}_i - 9) \\ & \;\;\; + \gamma_{10} \text{exper}_{ij} + \gamma_{12} \text{black}_i \times \text{exper}_{ij} \\ & \;\;\; + \gamma_{20} (\text{uerate}_{ij} - 7) \\ & \;\;\; + \gamma_{30} \text{ged}_{ij} \\ & \;\;\; + \gamma_{40} \text{postexp}_{ij}\\ & \;\;\; + \zeta_{0i} + \zeta_{1i} \text{exper}_{ij} + \zeta_{3i} \text{ged}_{ij} + \zeta_{4i} \text{postexp}_{ij} + \epsilon_{ij}, \;\;\; \text{where} \\ \epsilon_{ij} & \sim \operatorname{Normal}(0, \sigma_\epsilon) \\ \begin{bmatrix} \zeta_{0i} \\ \zeta_{1i} \\ \zeta_{3i} \\ \zeta_{4i} \end{bmatrix} & \sim \operatorname{Normal} \begin{pmatrix} \begin{bmatrix} 0 \\ 0 \\ 0 \\ 0 \end{bmatrix}, \mathbf D \mathbf \Omega \mathbf D' \end{pmatrix} \\ \mathbf D & = \begin{bmatrix} \sigma_0 & 0 & 0 & 0 \\ 0 & \sigma_1 & 0 & 0 \\ 0 & 0 & \sigma_3 & 0 \\ 0 & 0 & 0 & \sigma_4 \end{bmatrix} \\ \mathbf\Omega & = \begin{bmatrix} 1 & \rho_{01} & \rho_{03} & \rho_{04} \\ \rho_{10} & 1 & \rho_{13} & \rho_{14} \\ \rho_{30} & \rho_{31} & 1 & \rho_{34} \\ \rho_{40} & \rho_{41} & \rho_{43} &1 \end{bmatrix} \\ \gamma_{00} & \sim \operatorname{Normal}(1.335, 1) \\ \gamma_{01}, \dots, \gamma_{40} & \sim \operatorname{Normal}(0, 0.5) \\ \sigma_0, \dots, \sigma_4 & \sim \operatorname{Student-t}(3, 0, 1) \\ \sigma_\epsilon & \sim \operatorname{Student-t}(3, 0, 1) \\ \mathbf\Omega & \sim \operatorname{LKJ}(4), \end{align*} \]

which uses the postexp-based approach for discontinuity in slopes. Notice how we’re using the same basic prior specification as with fit5.16. The second parent model is model I (fit6.8), which follows the form

\[ \begin{align*} \text{lnw}_{ij} & = \gamma_{00} + \gamma_{01} (\text{hgc}_i - 9) \\ & \;\;\; + \gamma_{10} \text{exper}_{ij} + \gamma_{12} \text{black}_i \times \text{exper}_{ij} \\ & \;\;\; + \gamma_{20} (\text{uerate}_{ij} - 7) \\ & \;\;\; + \gamma_{30} \text{ged}_{ij} \\ & \;\;\; + \gamma_{50} \text{ged}_{ij} \times \text{exper}_{ij} \\ & \;\;\; + \zeta_{0i} + \zeta_{1i} \text{exper}_{ij} + \zeta_{3i} \text{ged}_{ij} + \zeta_{5i} \text{ged}_{ij} \times \text{exper}_{ij} + \epsilon_{ij}, \;\;\; \text{where} \\ \epsilon_{ij} & \sim \operatorname{Normal}(0, \sigma_\epsilon) \\ \begin{bmatrix} \zeta_{0i} \\ \zeta_{1i} \\ \zeta_{3i} \\ \zeta_{5i} \end{bmatrix} & \sim \operatorname{Normal} \begin{pmatrix} \begin{bmatrix} 0 \\ 0 \\ 0 \\ 0 \end{bmatrix}, \mathbf D \mathbf \Omega \mathbf D' \end{pmatrix} \\ \mathbf D & = \begin{bmatrix} \sigma_0 & 0 & 0 & 0 \\ 0 & \sigma_1 & 0 & 0 \\ 0 & 0 & \sigma_3 & 0 \\ 0 & 0 & 0 & \sigma_5 \end{bmatrix} \\ \mathbf\Omega & = \begin{bmatrix} 1 & \rho_{01} & \rho_{03} & \rho_{05} \\ \rho_{10} & 1 & \rho_{13} & \rho_{15} \\ \rho_{30} & \rho_{31} & 1 & \rho_{35} \\ \rho_{50} & \rho_{51} & \rho_{53} &1 \end{bmatrix} \\ \gamma_{00} & \sim \operatorname{Normal}(1.335, 1) \\ \gamma_{01}, \dots, \gamma_{50} & \sim \operatorname{Normal}(0, 0.5) \\ \sigma_0, \dots, \sigma_5 & \sim \operatorname{Student-t}(3, 0, 1) \\ \sigma_\epsilon & \sim \operatorname{Student-t}(3, 0, 1) \\ \mathbf\Omega & \sim \operatorname{LKJ}(4). \end{align*} \]

which uses the ged:exper-based approach for discontinuity in slopes. Here we fit the models in bulk.

# model b

fit6.1 <-

brm(data = wages_pp,

family = gaussian,

lnw ~ 0 + Intercept + exper + hgc_9 + black:exper + uerate_7 + ged + (1 + exper + ged | id),

prior = c(prior(normal(1.335, 1), class = b, coef = Intercept),

prior(normal(0, 0.5), class = b),

prior(student_t(3, 0, 1), class = sd),

prior(student_t(3, 0, 1), class = sigma),

prior(lkj(4), class = cor)),

iter = 2500, warmup = 1000, chains = 3, cores = 3,

seed = 6,

file = "fits/fit06.01")

# model c

fit6.2 <-

brm(data = wages_pp,

family = gaussian,

lnw ~ 0 + Intercept + exper + hgc_9 + black:exper + uerate_7 + ged + (1 + exper | id),

prior = c(prior(normal(1.335, 1), class = b, coef = Intercept),

prior(normal(0, 0.5), class = b),

prior(student_t(3, 0, 1), class = sd),

prior(student_t(3, 0, 1), class = sigma),

prior(lkj(4), class = cor)),

iter = 2500, warmup = 1000, chains = 3, cores = 3,

seed = 6,

file = "fits/fit06.02")

# model d

fit6.3 <-

brm(data = wages_pp,

family = gaussian,

lnw ~ 0 + Intercept + exper + hgc_9 + black:exper + uerate_7 + postexp + (1 + exper + postexp | id),

prior = c(prior(normal(1.335, 1), class = b, coef = Intercept),

prior(normal(0, 0.5), class = b),

prior(student_t(3, 0, 1), class = sd),

prior(student_t(3, 0, 1), class = sigma),

prior(lkj(4), class = cor)),

iter = 2500, warmup = 1000, chains = 3, cores = 3,

seed = 6,

control = list(adapt_delta = .99),

file = "fits/fit06.03")

# model e

fit6.4 <-

brm(data = wages_pp,

family = gaussian,

lnw ~ 0 + Intercept + exper + hgc_9 + black:exper + uerate_7 + postexp + (1 + exper | id),

prior = c(prior(normal(1.335, 1), class = b, coef = Intercept),

prior(normal(0, 0.5), class = b),

prior(student_t(3, 0, 1), class = sd),

prior(student_t(3, 0, 1), class = sigma),

prior(lkj(4), class = cor)),

iter = 2500, warmup = 1000, chains = 3, cores = 3,

seed = 6,

file = "fits/fit06.04")

# model f

fit6.5 <-

brm(data = wages_pp,

family = gaussian,

lnw ~ 0 + Intercept + exper + hgc_9 + black:exper + uerate_7 + ged + postexp + (1 + exper + ged + postexp | id),

prior = c(prior(normal(1.335, 1), class = b, coef = Intercept),

prior(normal(0, 0.5), class = b),

prior(student_t(3, 0, 1), class = sd),

prior(student_t(3, 0, 1), class = sigma),

prior(lkj(4), class = cor)),

iter = 2500, warmup = 1000, chains = 3, cores = 3,

seed = 6,

file = "fits/fit06.05")

# model g

fit6.6 <-

brm(data = wages_pp,

family = gaussian,

lnw ~ 0 + Intercept + exper + hgc_9 + black:exper + uerate_7 + ged + postexp + (1 + exper + ged | id),

prior = c(prior(normal(1.335, 1), class = b, coef = Intercept),

prior(normal(0, 0.5), class = b),

prior(student_t(3, 0, 1), class = sd),

prior(student_t(3, 0, 1), class = sigma),

prior(lkj(4), class = cor)),

iter = 2500, warmup = 1000, chains = 3, cores = 3,

seed = 6,

file = "fits/fit06.06")

# model h

fit6.7 <-

brm(data = wages_pp,

family = gaussian,

lnw ~ 0 + Intercept + exper + hgc_9 + black:exper + uerate_7 + ged + postexp + (1 + exper + postexp | id),

prior = c(prior(normal(1.335, 1), class = b, coef = Intercept),

prior(normal(0, 0.5), class = b),

prior(student_t(3, 0, 1), class = sd),

prior(student_t(3, 0, 1), class = sigma),

prior(lkj(4), class = cor)),

iter = 2500, warmup = 1000, chains = 3, cores = 3,

seed = 6,

control = list(adapt_delta = .99),

file = "fits/fit06.07")

# model i

fit6.8 <-

brm(data = wages_pp,

family = gaussian,

lnw ~ 0 + Intercept + exper + hgc_9 + black:exper + uerate_7 + ged + ged:exper + (1 + exper + ged + ged:exper | id),

prior = c(prior(normal(1.335, 1), class = b, coef = Intercept),

prior(normal(0, 0.5), class = b),

prior(student_t(3, 0, 1), class = sd),

prior(student_t(3, 0, 1), class = sigma),

prior(lkj(4), class = cor)),

iter = 2500, warmup = 1000, chains = 3, cores = 3,

seed = 6,

control = list(adapt_delta = .99),

file = "fits/fit06.08")

# model j

fit6.9 <-

brm(data = wages_pp,

family = gaussian,

lnw ~ 0 + Intercept + exper + hgc_9 + black:exper + uerate_7 + ged + ged:exper + (1 + exper + ged | id),

prior = c(prior(normal(1.335, 1), class = b, coef = Intercept),

prior(normal(0, 0.5), class = b),

prior(student_t(3, 0, 1), class = sd),

prior(student_t(3, 0, 1), class = sigma),

prior(lkj(4), class = cor)),

iter = 2500, warmup = 1000, chains = 3, cores = 3,

seed = 6,

file = "fits/fit06.09")To keep from cluttering up this ebook, I’m not going to show the summary print() output for fit6.1 through fit6.7. If you go through that output yourself, you’ll see that several of them had low effective sample size estimates for one or a few of the \(\sigma\) parameters. For our pedagogical purposes, I’m okay with moving forward with these. But do note that when fitting a model for your scientific or other real-world projects, make sure you attend to your effective sample size issues (i.e., extract more posterior draws, as needed, by adjusting the warmup, iter, and chains arguments).

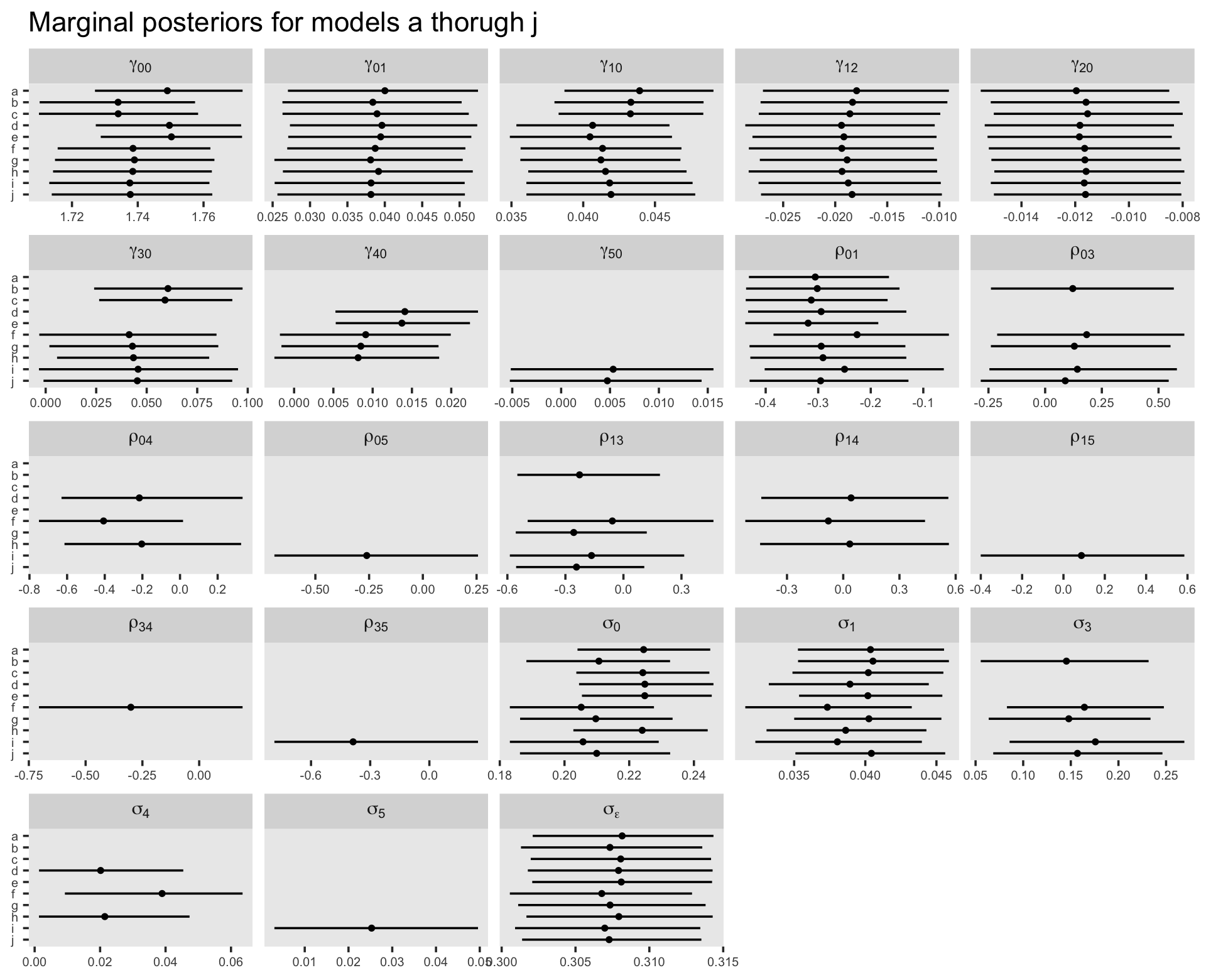

Though we’re avoiding print() output, we might take a birds-eye perspective and summarize the parameters for our competing models with a faceted coefficient plot. First we extract and save the relevant information as an object, post, and then we wrangle and plot.

# compute

post <-

tibble(model = letters[1:10],

fit = c("fit5.16", str_c("fit6.", 1:9))) %>%

mutate(p = map(fit, ~ get(.) %>%

posterior_summary() %>%

data.frame() %>%

rownames_to_column("parameter") %>%

filter(!str_detect(parameter, "r_id\\[") &

parameter != "lp__" &

parameter != "lprior"))) %>%

unnest(p)

# wrangle

post %>%

mutate(greek = case_when(

parameter == "b_Intercept" ~ "gamma[0][0]",

parameter == "b_hgc_9" ~ "gamma[0][1]",

parameter == "b_exper" ~ "gamma[1][0]",

parameter == "b_exper:black" ~ "gamma[1][2]",

parameter == "b_uerate_7" ~ "gamma[2][0]",

parameter == "b_ged" ~ "gamma[3][0]",

parameter == "b_postexp" ~ "gamma[4][0]",

parameter == "b_exper:ged" ~ "gamma[5][0]",

parameter == "sd_id__Intercept" ~ "sigma[0]",

parameter == "sd_id__exper" ~ "sigma[1]",

parameter == "sd_id__ged" ~ "sigma[3]",

parameter == "sd_id__postexp" ~ "sigma[4]",

parameter == "sd_id__exper:ged" ~ "sigma[5]",

parameter == "sigma" ~ "sigma[epsilon]",

parameter == "cor_id__Intercept__exper" ~ "rho[0][1]",

parameter == "cor_id__Intercept__ged" ~ "rho[0][3]",

parameter == "cor_id__exper__ged" ~ "rho[1][3]",

parameter == "cor_id__Intercept__postexp" ~ "rho[0][4]",

parameter == "cor_id__Intercept__exper:ged" ~ "rho[0][5]",

parameter == "cor_id__exper__postexp" ~ "rho[1][4]",

parameter == "cor_id__exper__exper:ged" ~ "rho[1][5]",

parameter == "cor_id__ged__postexp" ~ "rho[3][4]",

parameter == "cor_id__ged__exper:ged" ~ "rho[3][5]"

)) %>%

mutate(model = fct_rev(model)) %>%

# plot

ggplot(aes(x = Estimate, xmin = Q2.5, xmax = Q97.5, y = model)) +

geom_pointrange(size = 1/4, fatten = 1) +

labs(title = "Marginal posteriors for models a thorugh j",

x = NULL, y = NULL) +

theme(axis.text = element_text(size = 6),

axis.text.y = element_text(hjust = 0),

panel.grid = element_blank()) +

facet_wrap(~ greek, labeller = label_parsed, scales = "free_x")

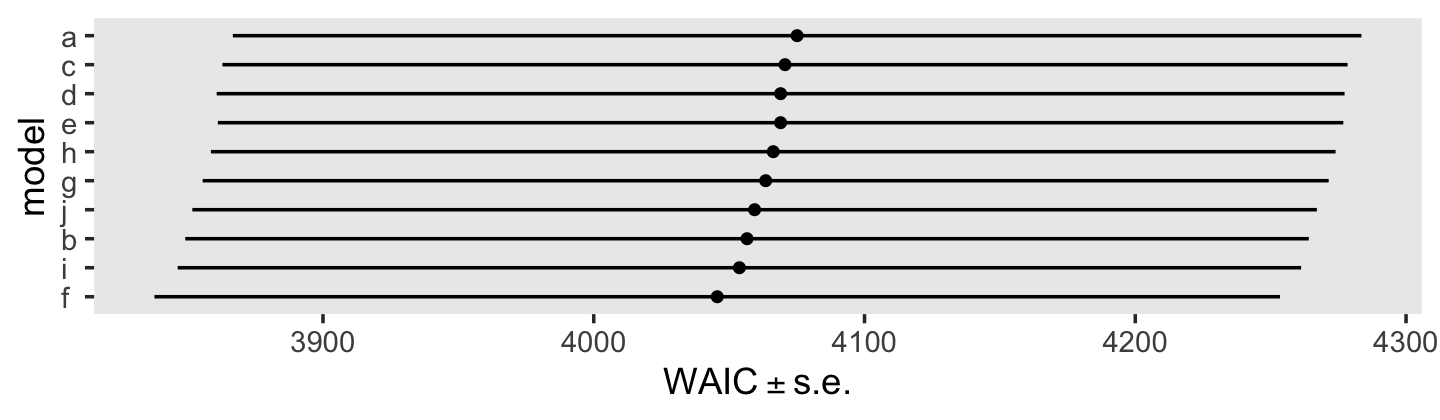

For our model comparisons, let’s compute the WAIC estimates for each.

fit5.16 <- add_criterion(fit5.16, criterion = "waic")

fit6.1 <- add_criterion(fit6.1, criterion = "waic")

fit6.2 <- add_criterion(fit6.2, criterion = "waic")

fit6.3 <- add_criterion(fit6.3, criterion = "waic")

fit6.4 <- add_criterion(fit6.4, criterion = "waic")

fit6.5 <- add_criterion(fit6.5, criterion = "waic")

fit6.6 <- add_criterion(fit6.6, criterion = "waic")

fit6.7 <- add_criterion(fit6.7, criterion = "waic")

fit6.8 <- add_criterion(fit6.8, criterion = "waic")

fit6.9 <- add_criterion(fit6.9, criterion = "waic")On pages 202 through 204, Singer and Willett performed a number of model comparisons with deviance tests and the frequentist AIC and BIC. Here we do the analogous comparisons with WAIC difference estimates.

# a vs b

loo_compare(fit5.16, fit6.1, criterion = "waic") %>% print(simplify = F)## elpd_diff se_diff elpd_waic se_elpd_waic p_waic se_p_waic waic se_waic

## fit6.1 0.0 0.0 -2028.3 103.7 878.4 27.4 4056.6 207.5

## fit5.16 -9.2 6.0 -2037.6 104.2 864.8 27.0 4075.1 208.4# b vs c

loo_compare(fit6.1, fit6.2, criterion = "waic") %>% print(simplify = F)## elpd_diff se_diff elpd_waic se_elpd_waic p_waic se_p_waic waic se_waic

## fit6.1 0.0 0.0 -2028.3 103.7 878.4 27.4 4056.6 207.5

## fit6.2 -7.0 4.9 -2035.3 103.9 863.8 27.0 4070.6 207.8# a vs d

loo_compare(fit5.16, fit6.3, criterion = "waic") %>% print(simplify = F)## elpd_diff se_diff elpd_waic se_elpd_waic p_waic se_p_waic waic se_waic

## fit6.3 0.0 0.0 -2034.5 104.1 867.0 27.2 4069.0 208.3

## fit5.16 -3.0 2.7 -2037.6 104.2 864.8 27.0 4075.1 208.4# d vs e

loo_compare(fit6.3, fit6.4, criterion = "waic") %>% print(simplify = F)## elpd_diff se_diff elpd_waic se_elpd_waic p_waic se_p_waic waic se_waic

## fit6.4 0.0 0.0 -2034.5 103.9 861.7 26.7 4069.0 207.8

## fit6.3 0.0 1.9 -2034.5 104.1 867.0 27.2 4069.0 208.3# f vs b

loo_compare(fit6.5, fit6.1, criterion = "waic") %>% print(simplify = F)## elpd_diff se_diff elpd_waic se_elpd_waic p_waic se_p_waic waic se_waic

## fit6.5 0.0 0.0 -2022.8 103.9 887.6 27.9 4045.6 207.9

## fit6.1 -5.5 3.6 -2028.3 103.7 878.4 27.4 4056.6 207.5# f vs d

loo_compare(fit6.5, fit6.3, criterion = "waic") %>% print(simplify = F)## elpd_diff se_diff elpd_waic se_elpd_waic p_waic se_p_waic waic se_waic

## fit6.5 0.0 0.0 -2022.8 103.9 887.6 27.9 4045.6 207.9

## fit6.3 -11.7 7.6 -2034.5 104.1 867.0 27.2 4069.0 208.3# f vs g

loo_compare(fit6.5, fit6.6, criterion = "waic") %>% print(simplify = F)## elpd_diff se_diff elpd_waic se_elpd_waic p_waic se_p_waic waic se_waic

## fit6.5 0.0 0.0 -2022.8 103.9 887.6 27.9 4045.6 207.9

## fit6.6 -8.9 3.7 -2031.7 104.0 881.2 27.8 4063.5 207.9# f vs h

loo_compare(fit6.5, fit6.7, criterion = "waic") %>% print(simplify = F)## elpd_diff se_diff elpd_waic se_elpd_waic p_waic se_p_waic waic se_waic

## fit6.5 0.0 0.0 -2022.8 103.9 887.6 27.9 4045.6 207.9

## fit6.7 -10.3 6.2 -2033.1 103.8 865.6 26.8 4066.3 207.7# i vs b

loo_compare(fit6.8, fit6.1, criterion = "waic") %>% print(simplify = F)## elpd_diff se_diff elpd_waic se_elpd_waic p_waic se_p_waic waic se_waic

## fit6.8 0.0 0.0 -2026.9 103.7 886.7 27.8 4053.8 207.5

## fit6.1 -1.4 2.6 -2028.3 103.7 878.4 27.4 4056.6 207.5# j vs i

loo_compare(fit6.9, fit6.8, criterion = "waic") %>% print(simplify = F)## elpd_diff se_diff elpd_waic se_elpd_waic p_waic se_p_waic waic se_waic

## fit6.8 0.0 0.0 -2026.9 103.7 886.7 27.8 4053.8 207.5

## fit6.9 -2.8 2.5 -2029.7 103.8 881.5 27.6 4059.4 207.7# i vs f

loo_compare(fit6.8, fit6.5, criterion = "waic") %>% print(simplify = F)## elpd_diff se_diff elpd_waic se_elpd_waic p_waic se_p_waic waic se_waic

## fit6.5 0.0 0.0 -2022.8 103.9 887.6 27.9 4045.6 207.9

## fit6.8 -4.1 2.4 -2026.9 103.7 886.7 27.8 4053.8 207.5We might also just look at all of the WAIC estimates, plus and minus their standard errors, in a coefficient plot.

loo_compare(fit5.16, fit6.1, fit6.2, fit6.3, fit6.4, fit6.5, fit6.6, fit6.7, fit6.8, fit6.9, criterion = "waic") %>%

data.frame() %>%

rownames_to_column("fit") %>%

arrange(fit) %>%

mutate(model = letters[1:n()]) %>%

ggplot(aes(x = waic, xmin = waic - se_waic, xmax = waic + se_waic, y = reorder(model, waic))) +

geom_pointrange(fatten = 1) +

labs(x = expression(WAIC%+-%s.e.),

y = "model") +

theme(axis.text.y = element_text(hjust = 0),

panel.grid = element_blank())

In the text, model I had the lowest (best) deviance and information criteria values, with model F coming in a close second. Our WAIC results are the reverse. However, look at the widths of the standard error intervals, for each, relative to their point estimates. I wouldn’t get too upset about differences in our results versus those in the text. Our Bayesian models reveal there’s massive uncertainty in each estimate, an insight missing from the frequent analyses reported in the text.

Here’s a focused look at the parameter summary for model F.

print(fit6.5, digits = 3)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: lnw ~ 0 + Intercept + exper + hgc_9 + black:exper + uerate_7 + ged + postexp + (1 + exper + ged + postexp | id)

## Data: wages_pp (Number of observations: 6402)

## Draws: 3 chains, each with iter = 2500; warmup = 1000; thin = 1;

## total post-warmup draws = 4500

##

## Group-Level Effects:

## ~id (Number of levels: 888)

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sd(Intercept) 0.205 0.011 0.183 0.228 1.002 1180 2248

## sd(exper) 0.037 0.003 0.032 0.043 1.004 575 1674

## sd(ged) 0.164 0.042 0.083 0.248 1.016 238 373

## sd(postexp) 0.039 0.013 0.009 0.064 1.032 159 206

## cor(Intercept,exper) -0.226 0.087 -0.385 -0.051 1.005 680 1163

## cor(Intercept,ged) 0.183 0.212 -0.212 0.614 1.008 446 847

## cor(exper,ged) -0.057 0.249 -0.495 0.465 1.008 403 730

## cor(Intercept,postexp) -0.406 0.195 -0.750 0.016 1.006 644 1024

## cor(exper,postexp) -0.079 0.245 -0.523 0.437 1.017 276 604

## cor(ged,postexp) -0.301 0.232 -0.705 0.191 1.008 448 941

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 1.739 0.012 1.716 1.762 1.000 3337 3456

## exper 0.041 0.003 0.036 0.047 1.000 3185 3550

## hgc_9 0.039 0.006 0.027 0.051 1.001 3557 3700

## uerate_7 -0.012 0.002 -0.015 -0.008 1.001 6343 3864

## ged 0.041 0.022 -0.003 0.085 1.000 3486 3123

## postexp 0.009 0.005 -0.002 0.020 1.000 4099 3249

## exper:black -0.019 0.005 -0.028 -0.011 1.001 3341 3311

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 0.307 0.003 0.301 0.313 1.003 2671 2878

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

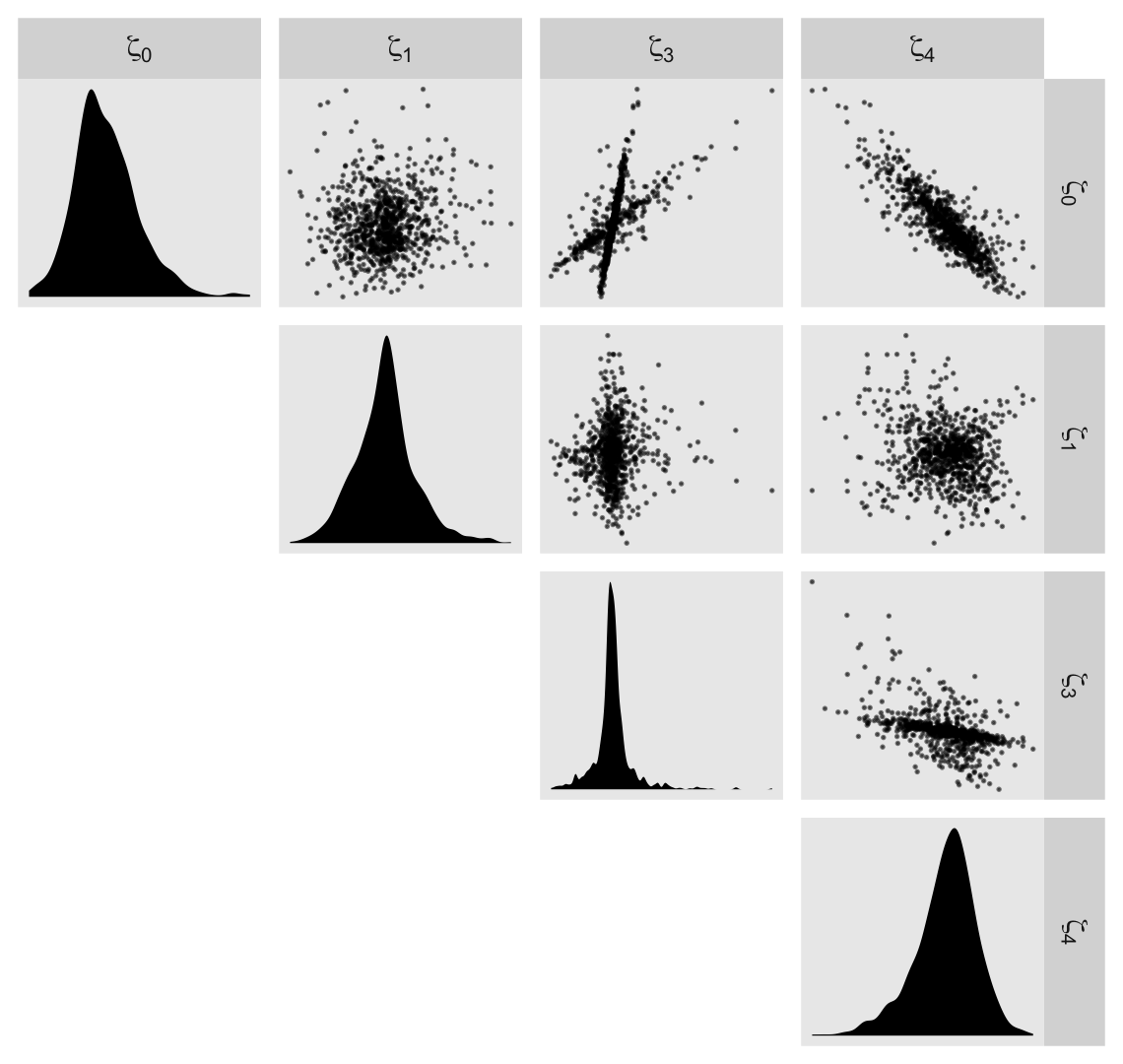

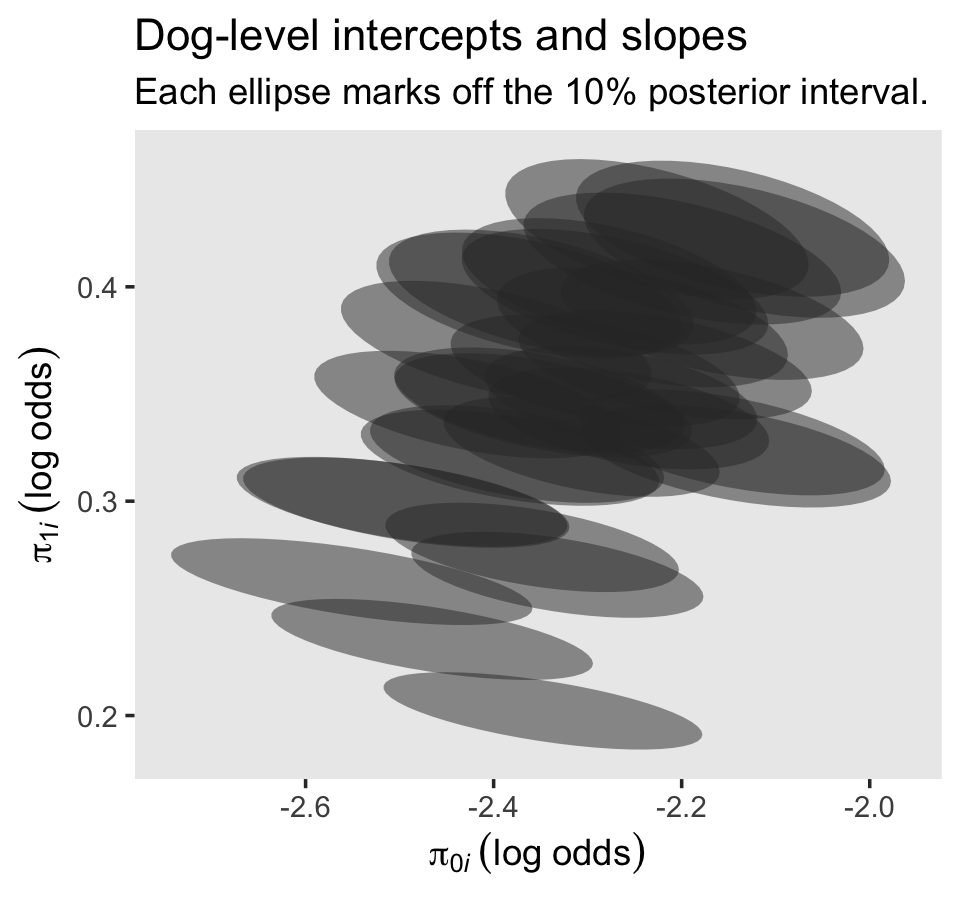

## scale reduction factor on split chains (at convergence, Rhat = 1).Before we dive into the \(\gamma\)-based counterfactual trajectories of Figure 6.3, we might use a plot to get at sense of the \(\zeta\)’s, as summarized by the \(\sigma\) and \(\rho\) parameters. Here we extract the \(\zeta\)’s. Since there’s such a large number, we’ll focus on their posterior means.

r <-

tibble(`zeta[0]` = ranef(fit6.5)$id[, 1, "Intercept"],

`zeta[1]` = ranef(fit6.5)$id[, 1, "exper"],

`zeta[3]` = ranef(fit6.5)$id[, 1, "ged"],

`zeta[4]` = ranef(fit6.5)$id[, 1, "postexp"])

glimpse(r)## Rows: 888

## Columns: 4

## $ `zeta[0]` <dbl> -0.151683338, 0.101952101, 0.058855105, 0.032556479, 0.162725921, -0.115933285, 0.01842935…

## $ `zeta[1]` <dbl> 0.0077954424, 0.0092990259, -0.0055013067, -0.0018657393, 0.0295247250, -0.0084006310, 0.0…

## $ `zeta[3]` <dbl> -0.1044292573, 0.0873875749, 0.0881116827, 0.0045926705, 0.0276905819, -0.0756621507, -0.0…

## $ `zeta[4]` <dbl> 0.0179942545, 0.0012571856, -0.0121561226, -0.0022347957, -0.0045111664, 0.0005690249, -0.…We’ll be plotting with help from the GGally package (Schloerke et al., 2021), which does a nice job displaying a grid of bivariate plots via the ggpairs(). We’re going to get fancy with our ggpairs() plot by using a handful of custom settings. Here we save them as two functions.

my_diag <- function(data, mapping, ...) {

ggplot(data = data, mapping = mapping) +

geom_density(fill = "black", linewidth = 0) +

scale_x_continuous(NULL, breaks = NULL) +

scale_y_continuous(NULL, breaks = NULL)

}

my_upper <- function(data, mapping, ...) {

ggplot(data = data, mapping = mapping) +

geom_point(size = 1/10, alpha = 1/2) +

scale_x_continuous(NULL, breaks = NULL) +

scale_y_continuous(NULL, breaks = NULL)

}Now visualize the model F \(\zeta\)’s with GGally::ggpairs().

library(GGally)

r %>%

ggpairs(upper = list(continuous = my_upper),

diag = list(continuous = my_diag),

lower = NULL,

labeller = label_parsed)

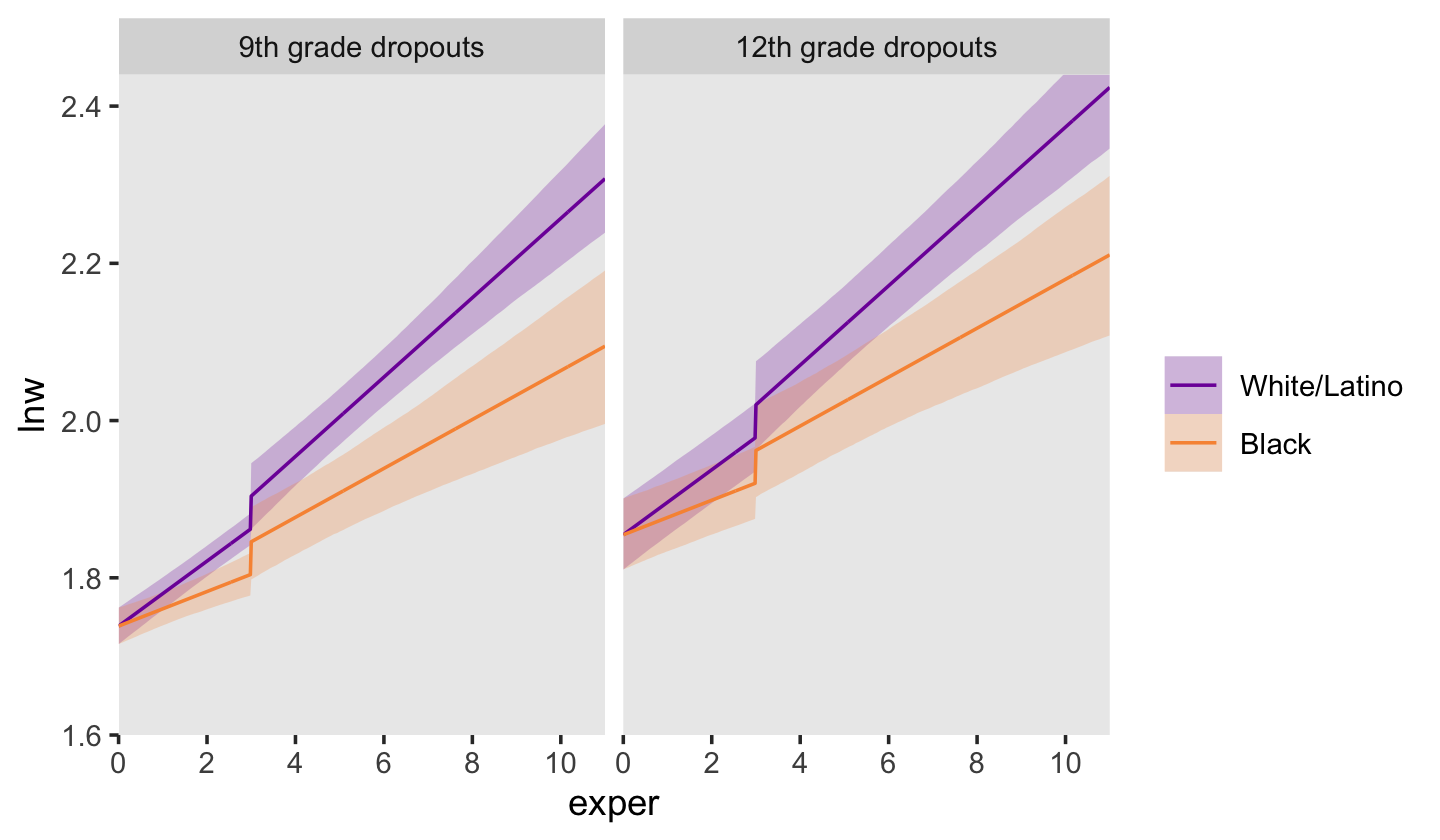

Now we’re ready to make our version of Figure 6.3. Since we will be expressing the uncertainty of our counterfactual trajectories with 95% interval bands, we’ll be faceting the plot by the two levels of hgc_9. Otherwise, the overplotting would become too much.

# define the new data

nd <-

crossing(black = 0:1,

hgc_9 = c(0, 3)) %>%

expand_grid(exper = seq(from = 0, to = 11, by = 0.02)) %>%

mutate(ged = ifelse(exper < 3, 0, 1),

postexp = ifelse(ged == 0, 0, exper - 3),

uerate_7 = 0)

# compute the fitted draws

fitted(fit6.5,

re_formula = NA,

newdata = nd) %>%

# wrangle

data.frame() %>%

bind_cols(nd) %>%

mutate(race = ifelse(black == 0, "White/Latino", "Black"),

hgc_9 = ifelse(hgc_9 == 0, "9th grade dropouts", "12th grade dropouts")) %>%

mutate(race = fct_rev(race),

hgc_9 = fct_rev(hgc_9)) %>%

# plot!

ggplot(aes(x = exper, y = Estimate, ymin = Q2.5, ymax = Q97.5,

fill = race, color = race)) +

geom_ribbon(size = 0, alpha = 1/4) +

geom_line() +

scale_fill_viridis_d(NULL, option = "C", begin = .25, end = .75) +

scale_color_viridis_d(NULL, option = "C", begin = .25, end = .75) +

scale_x_continuous(breaks = 0:5 * 2, expand = c(0, 0)) +

scale_y_continuous("lnw", breaks = 1.6 + 0:4 * 0.2,

expand = expansion(mult = c(0, 0.05))) +

coord_cartesian(ylim = c(1.6, 2.4)) +

theme(panel.grid = element_blank()) +

facet_wrap(~ hgc_9)

6.1.3 Further extensions of the discontinuous growth model.

“It is easy to generalize these strategies to models with other discontinuities” (p. 206).

6.1.3.1 Dividing TIME into multiple phases.

“You can divide TIME into multiple epochs, allowing the trajectories to differ in elevation (and perhaps slope) during each” (p. 206, emphasis in the original). With our examples, above, we divided time into two epochs: before and (possibly) after receipt of one’s GED. More possible epochs might after completing college or graduate school. All such epochs might influence intercepts and/or slopes (by either of the slope methods, above).

6.1.3.2 Discontinuities at common points in time.

In some data sets, the timing of the discontinuity will not be person-specific; instead, everyone will experience the hypothesized transition at a common point in time. You can hypothesize a similar discontinuous change trajectory for such data sets by applying the strategies outlined above. (p. 207)

Examples might include months, seasons, and academic quarters or semesters.

6.2 Using transformations to model nonlinear individual change

When confronted by obviously nonlinear trajectories, we usually begin with the transformation approach for two reasons. First, a straight line–even on a transformed scale–is a simple mathematical form whose two parameters have clear interpretations. Second, because the metrics of many variables are ad hoc to begin with, transformation to another ad hoc scale may sacrifice little. (p. 208)

As an example, consider fit4.6 from back in Chapter 4.

fit4.6 <-

brm(data = alcohol1_pp,

family = gaussian,

alcuse ~ 0 + Intercept + age_14 + coa + peer + age_14:peer + (1 + age_14 | id),

prior = c(prior(student_t(3, 0, 2.5), class = sd),

prior(student_t(3, 0, 2.5), class = sigma),

prior(lkj(1), class = cor)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 4,

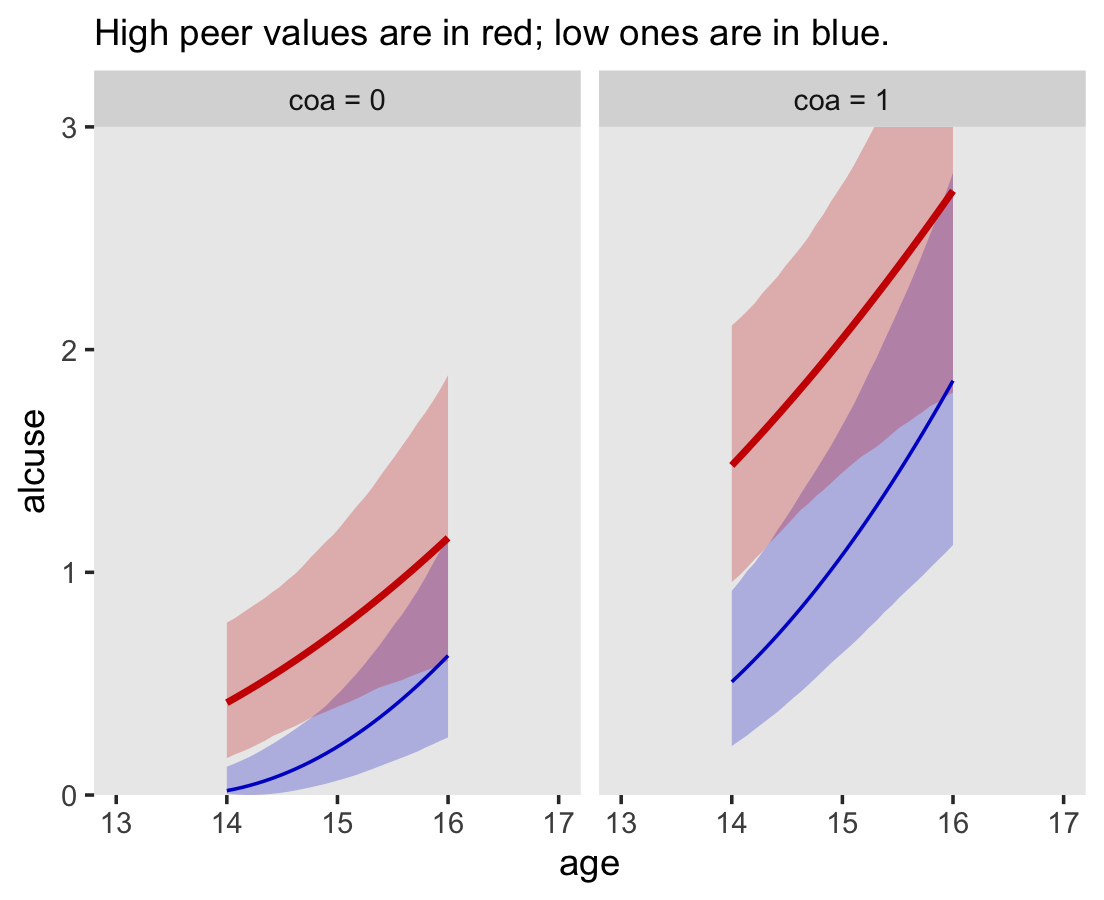

file = "fits/fit04.06")In the alcohol1_pp data, the criterion alcuse was transformed by taking its square root. Since we fit a linear model on that transformed variable, our model is actually non-linear on the original metric of alcuse. To see, we’ll square the fitted()-based counterfactual trajectories and their intervals to make our version of Figure 6.4.

# define the new data

nd <-

crossing(coa = 0:1,

peer = c(.655, 1.381)) %>%

expand_grid(age_14 = seq(from = 0, to = 2, length.out = 30))

# compute the counterfactual trajectories

fitted(fit4.6,

newdata = nd,

re_formula = NA) %>%

data.frame() %>%

bind_cols(nd) %>%

# transform the predictions by squaring them

mutate(Estimate = Estimate^2,

Q2.5 = Q2.5^2,

Q97.5 = Q97.5^2) %>%

# a little wrangling will make plotting much easier

mutate(age = age_14 + 14,

coa = ifelse(coa == 0, "coa = 0", "coa = 1"),

peer = factor(peer)) %>%

# plot!

ggplot(aes(x = age, color = peer, fill = peer)) +

geom_ribbon(aes(ymin = Q2.5, ymax = Q97.5),

size = 0, alpha = 1/4) +

geom_line(aes(y = Estimate, size = peer)) +

scale_size_manual(values = c(1/2, 1)) +

scale_fill_manual(values = c("blue3", "red3")) +

scale_color_manual(values = c("blue3", "red3")) +

scale_y_continuous("alcuse", breaks = 0:3, expand = c(0, 0)) +

labs(subtitle = "High peer values are in red; low ones are in blue.") +

coord_cartesian(xlim = c(13, 17),

ylim = c(0, 3)) +

theme(legend.position = "none",

panel.grid = element_blank()) +

facet_wrap(~coa)

Compare these to the linear trajectories depicted back in Figure 4.3c.

6.2.1 The ladder of transformations and the rule of the bulge.

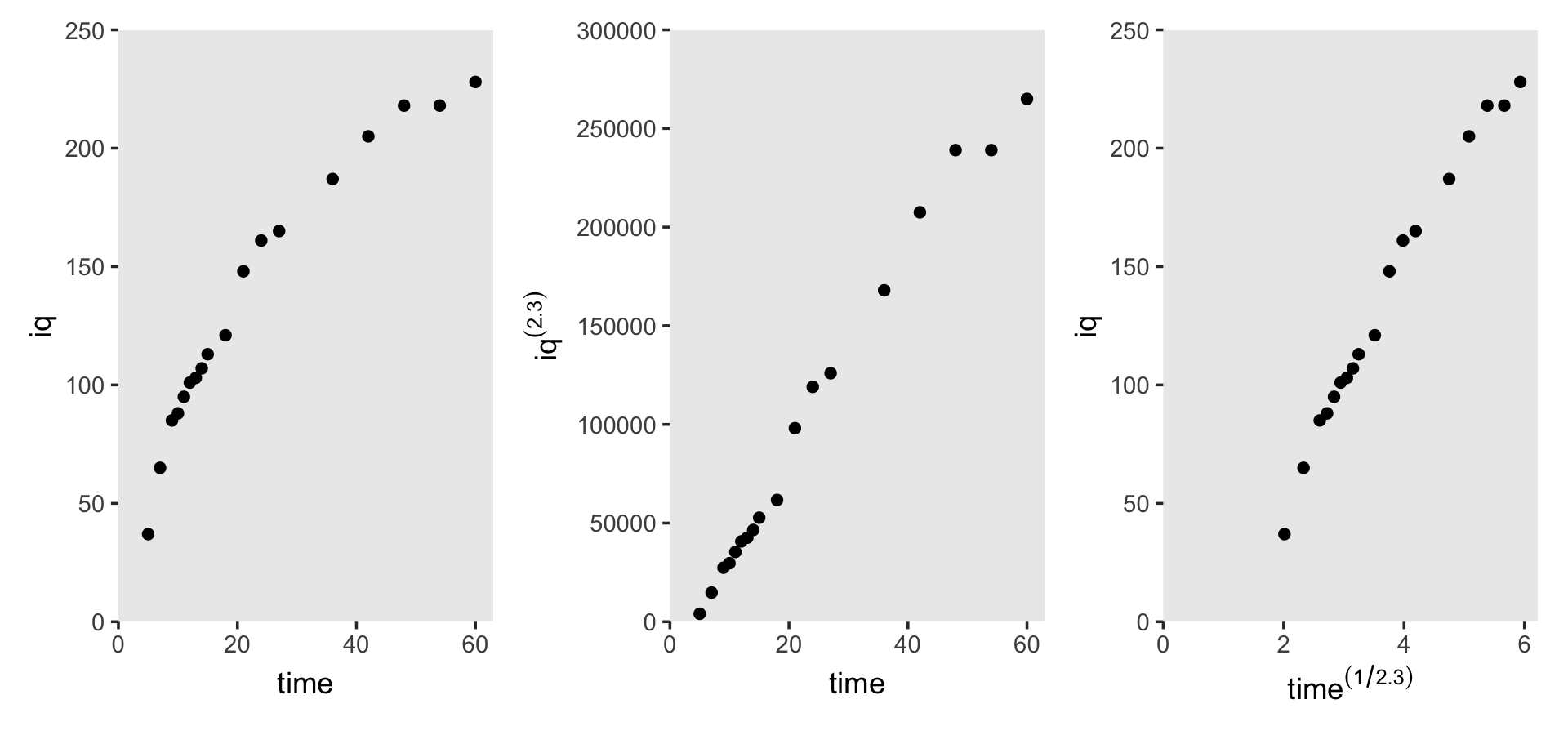

I just not a fan of the “ladder of powers” idea and I’m not interested in reproducing Figure 6.5. However, we will make the next one, real quick. Load the berkeley_pp data.

berkeley_pp <-

read_csv("data/berkeley_pp.csv") %>%

mutate(time = age)

glimpse(berkeley_pp)## Rows: 18

## Columns: 3

## $ age <dbl> 5, 7, 9, 10, 11, 12, 13, 14, 15, 18, 21, 24, 27, 36, 42, 48, 54, 60

## $ iq <dbl> 37, 65, 85, 88, 95, 101, 103, 107, 113, 121, 148, 161, 165, 187, 205, 218, 218, 228

## $ time <dbl> 5, 7, 9, 10, 11, 12, 13, 14, 15, 18, 21, 24, 27, 36, 42, 48, 54, 60Here’s how we might make Figure 6.6.

# left

p1 <-

berkeley_pp %>%

ggplot(aes(x = time, y = iq)) +

geom_point() +

scale_y_continuous(expand = c(0, 0), limits = c(0, 250))

# middle

p2 <-

berkeley_pp %>%

ggplot(aes(x = time, y = iq^2.3)) +

geom_point() +

scale_y_continuous(expression(iq^(2.3)), breaks = 0:6 * 5e4,

limits = c(0, 3e5), expand = c(0, 0))

# right

p3 <-

berkeley_pp %>%

ggplot(aes(x = time^(1/2.3), y = iq)) +

geom_point() +

scale_y_continuous(expand = c(0, 0), limits = c(0, 250)) +

xlab(expression(time^(1/2.3)))

# combine

(p1 + p2 + p3) &

scale_x_continuous(expand = expansion(mult = c(0, 0.05)), limits = c(0, NA)) &

theme(panel.grid = element_blank())

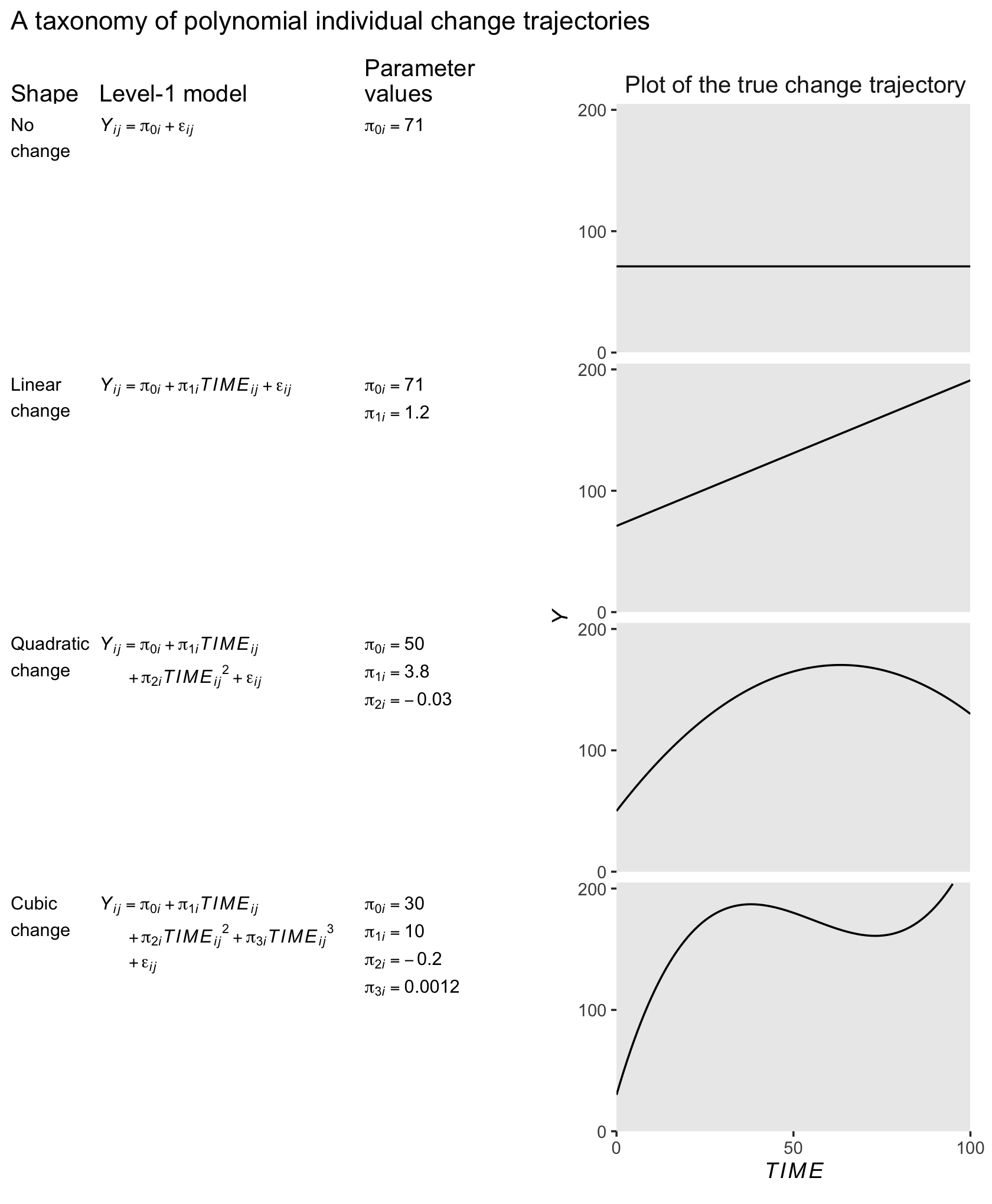

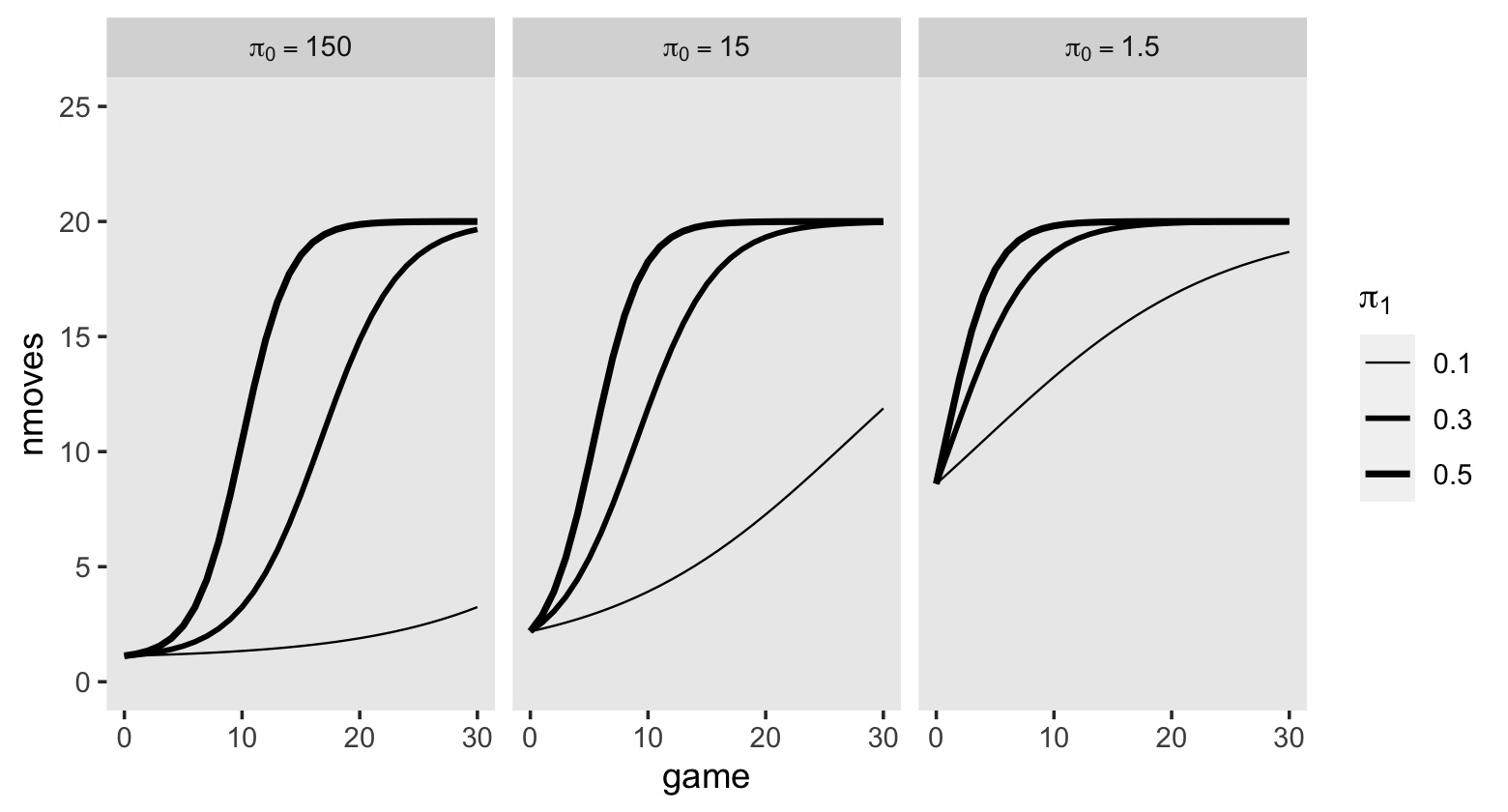

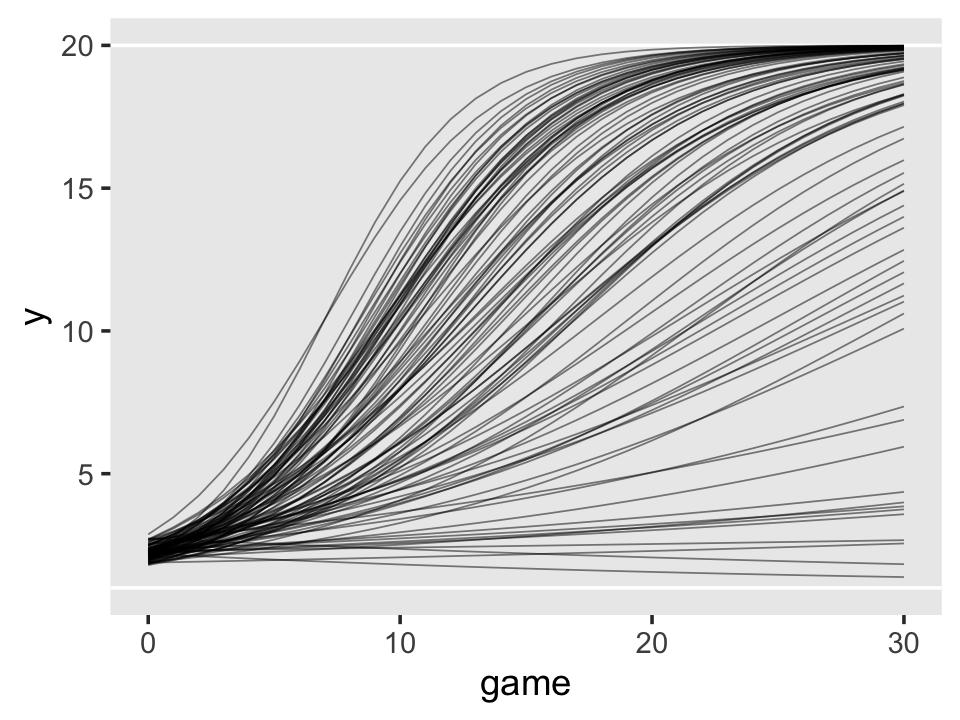

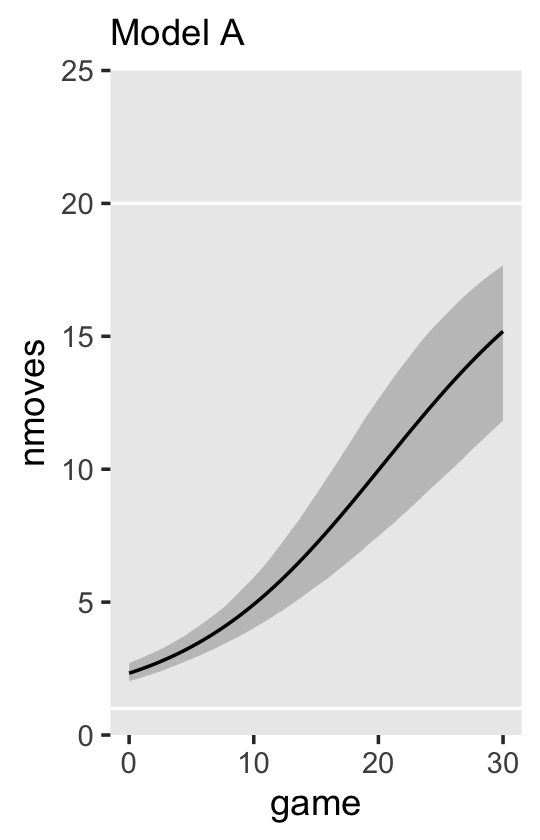

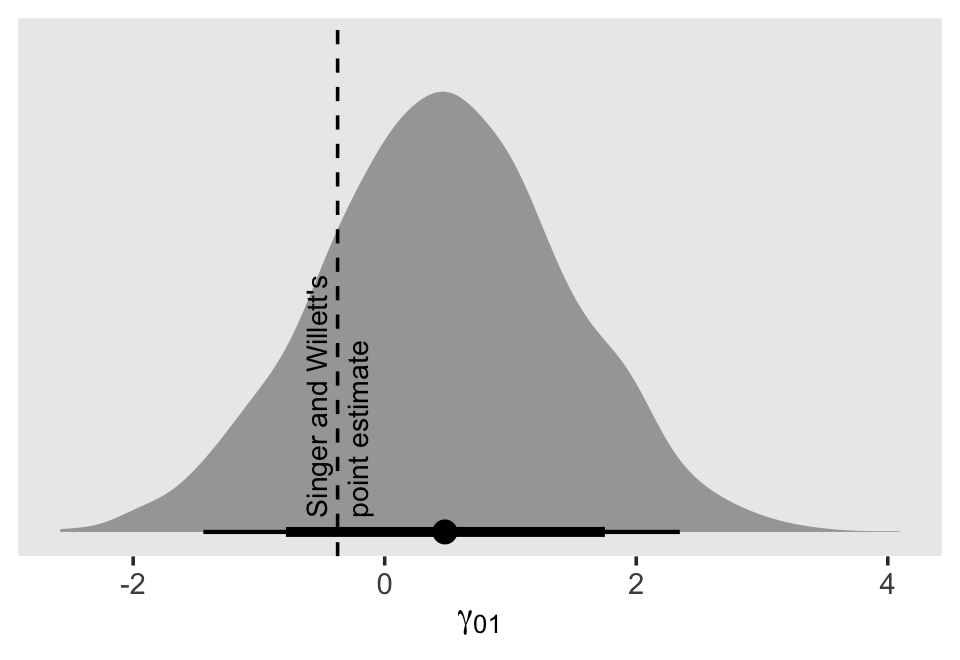

6.3 Representing individual change using a polynomial function of TIME

We can also model curvilinear change by including several level-1 predictors that collectively represent a polynomial function of time. Although the resulting polynomial growth model can be cumbersome, it can capture an even wider array of complex patterns of change over time. (p. 213, emphasis in the original)

To my eye, it will be easiest to make Table by dividing it up into columns, making each column individually, and then combining them on the back end. For our first step, here’s our first column, the “Shape” column.

p1 <-

tibble(x = 1,

y = 9.5,

label = c("No\nchange", "Linear\nchange", "Quadratic\nchange", "Cubic\nchange"),

row = 1:4,

col = "Shape") %>%

ggplot(aes(x = x, y = y, label = label)) +

geom_text(hjust = 0, vjust = 1, size = 3.25) +

scale_x_continuous(expand = c(0, 0), limits = 1:2) +

scale_y_continuous(expand = c(0, 0), limits = c(1, 10)) +

theme_void() +

theme(strip.background.y = element_blank(),

strip.text.x = element_text(hjust = 0, size = 12),

strip.text.y = element_blank()) +

facet_grid(row ~ col)For our second step, here’s the “Level-1 model” column.

p2 <-

tibble(x = c(1, 1, 1, 2, 1, 2, 2),

y = c(9.5, 9.5, 9.5, 8.5, 9.5, 8.5, 7.25),

label = c("italic(Y[i][j])==pi[0][italic(i)]+epsilon[italic(ij)]",

"italic(Y[i][j])==pi[0][italic(i)]+pi[1][italic(i)]*italic(TIME[ij])+epsilon[italic(ij)]",

"italic(Y[i][j])==pi[0][italic(i)]+pi[1][italic(i)]*italic(TIME[ij])",

"+pi[2][italic(i)]*italic(TIME[ij])^2+epsilon[italic(ij)]",

"italic(Y[i][j])==pi[0][italic(i)]+pi[1][italic(i)]*italic(TIME[ij])",

"+pi[2][italic(i)]*italic(TIME[ij])^2+pi[3][italic(i)]*italic(TIME[ij])^3",

"+epsilon[italic(ij)]"),

row = c(1:3, 3:4, 4, 4),

col = "Level-1 model") %>%

ggplot(aes(x = x, y = y, label = label)) +

geom_text(hjust = 0, vjust = 1, size = 3.25, parse = T) +

scale_x_continuous(expand = c(0, 0), limits = c(1, 10)) +

scale_y_continuous(expand = c(0, 0), limits = c(1, 10)) +

theme_void() +

theme(strip.background.y = element_blank(),

strip.text.x = element_text(hjust = 0, size = 12),

strip.text.y = element_blank()) +

facet_grid(row ~ col)For our third step, here’s the “Parameter values” column.

p3 <-

tibble(x = 1,

y = c(9.5, 9.5, 8.5, 9.5, 8.5, 7.5, 9.5, 8.5, 7.5, 6.5),

label = c("pi[0][italic(i)]==71",

"pi[0][italic(i)]==71",

"pi[1][italic(i)]==1.2",

"pi[0][italic(i)]==50",

"pi[1][italic(i)]==3.8",

"pi[2][italic(i)]==-0.03",

"pi[0][italic(i)]==30",

"pi[1][italic(i)]==10",

"pi[2][italic(i)]==-0.2",

"pi[3][italic(i)]==0.0012"),

row = rep(1:4, times = 1:4),

col = "Parameter\nvalues") %>%

ggplot(aes(x = x, y = y, label = label)) +

geom_text(hjust = 0, vjust = 1, size = 3.25, parse = T) +

scale_x_continuous(expand = c(0, 0), limits = c(1, 10)) +

scale_y_continuous(expand = c(0, 0), limits = c(1, 10)) +

theme_void() +

theme(strip.background.y = element_blank(),

strip.text.x = element_text(hjust = 0, size = 12),

strip.text.y = element_blank()) +

facet_grid(row ~ col)We’ll do our fourth step in three stages. First, we’ll make and save four data sets, one for each of the plot panels. Second, we’ll combine those into a single data set, which we’ll wrangle a bit. Third, we’ll make the plots in the final column.

# make the four small data sets

pi0 <- 71

d1 <- tibble(time = 0:100) %>%

mutate(y = pi0)

pi0 <- 71

pi1 <- 1.2

d2 <- tibble(time = 0:100) %>%

mutate(y = pi0 + pi1 * time)

pi0 <- 50

pi1 <- 3.8

pi2 <- -0.03

d3 <- tibble(time = 0:100) %>%

mutate(y = pi0 + pi1 * time + pi2 * time^2)

pi0 <- 30

pi1 <- 10

pi2 <- -0.2

pi3 <- 0.0012

d4 <- tibble(time = 0:100) %>%

mutate(y = pi0 + pi1 * time + pi2 * time^2 + pi3 * time^3)

# combine the data sets

p4 <-

bind_rows(d1, d2, d3, d4) %>%

# wrangle

mutate(row = rep(1:4, each = n() / 4),

col = "Plot of the true change trajectory") %>%

# plot!

ggplot(aes(x = time, y = y)) +

geom_line() +

scale_x_continuous(expression(italic(TIME)), expand = c(0, 0),

breaks = 0:2 * 50, limits = c(0, 100)) +

scale_y_continuous(expression(italic(Y)), expand = c(0, 0),

breaks = 0:2 * 100, limits = c(0, 205)) +

theme(panel.grid = element_blank(),

strip.background = element_blank(),

strip.text.x = element_text(hjust = 0, size = 12),

strip.text.y = element_blank()) +

facet_grid(row ~ col)Now we’re finally ready to combine all the elements to make Table 6.4.

(p1 | p2 | p3 | p4) +

plot_annotation(title = "A taxonomy of polynomial individual change trajectories") +

plot_layout(widths = c(1, 3, 2, 4))

6.3.1 The shapes of polynomial individual change trajectories.

“The ‘no change’ and ‘linear change’ models are familiar; the remaining models, which contain quadratic and cubic functions of TIME, are new” (p. 213, emphasis in the original).

6.3.1.1 “No change” trajectory.

The “no change” trajectory is known as a polynomial function of “zero order” because TIME raised to the 0th power is 1 (i.e., \(TIME^0 = 1\)). This model is tantamount to including a constant predictor, 1, in the level-1 model, as a multiplier of the sole individual growth parameter, the intercept, \(\pi_{0i}\)… Even though each trajectory is flat, different individuals can have different intercepts and so a collection of true “no change” trajectories is a set of vertically scattered horizontal lines. (p. 215, emphasis in the original)

6.3.1.2 “Linear change” trajectory.

The “linear change” trajectory is known as a “first order” polynomial in time because TIME raised to the 1st power equals TIME itself (i.e., \(TIME^1 = TIME\)). Linear TIME is the sole predictor and the two individual growth parameters have the usual interpretations. (p. 215, emphasis in the original)

6.3.1.3 “Quadratic change” trajectory.

Adding \(TIME^2\) to a level-1 individual growth model that already includes linear TIME yields a second order polynomial for quadratic change. Unlike a level-1 model that includes only \(TIME^2\), a second order polynomial change trajectory includes two TIME predictors and three growth parameters (\(\pi_{0i}\), \(\pi_{1i}\) and \(\pi_{2i}\)). The first two parameters have interpretations that are similar, but not identical, to those in the linear change trajectory; the third is new. (p. 215, emphasis in the original)

In this model, \(\pi_{0i}\) is still the intercept. The \(\pi_{1i}\) parameter is now the instantaneous rate of change when \(TIME = 0\). The new \(\pi_{2i}\) parameter, sometimes called the curvature parameter, describes the change in the rate of change.

6.3.2 Selecting a suitable level-1 polynomial trajectory for change.

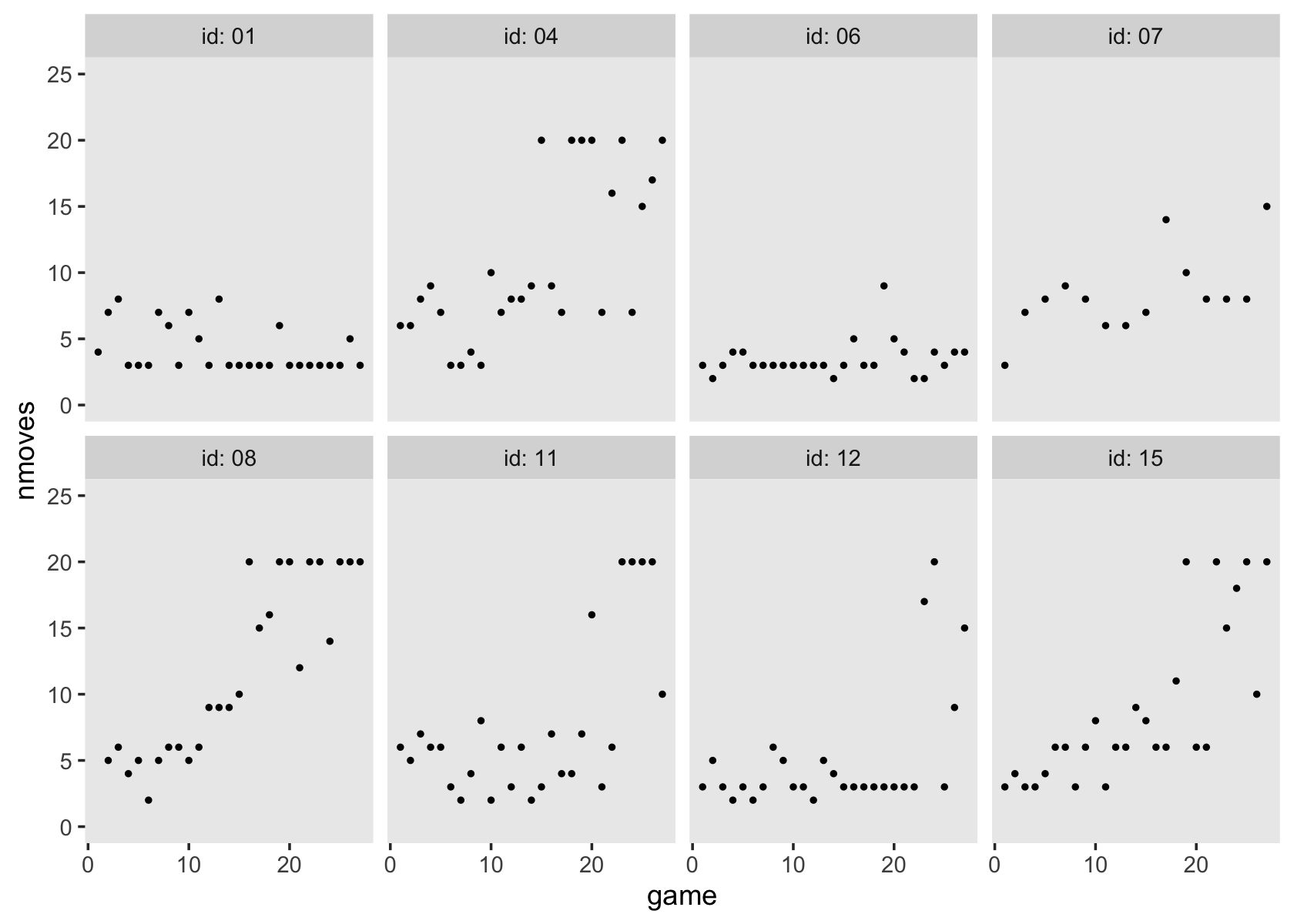

It appears that both the external_pp.csv and external_pp.txt files within the data folder (here) are missing a few occasions. Happily, you can download a more complete version of the data from the good people at stats.idre.ucla.edu.

external_pp <-

read.table("https://stats.idre.ucla.edu/wp-content/uploads/2020/01/external_pp.txt",

header = T, sep = ",")

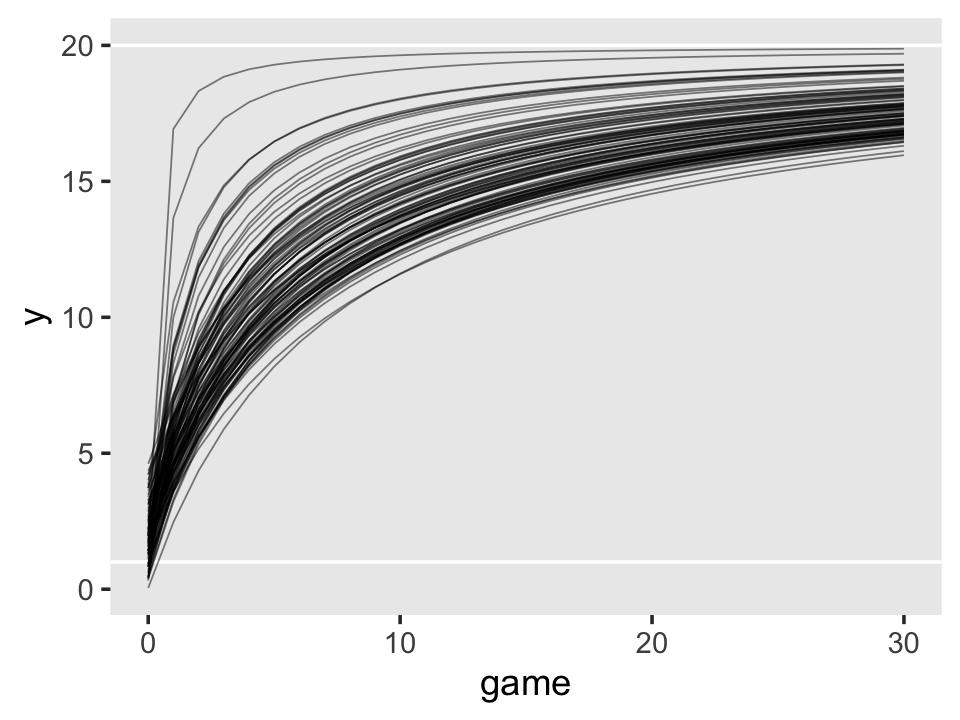

glimpse(external_pp)## Rows: 270

## Columns: 5

## $ id <int> 1, 1, 1, 1, 1, 1, 2, 2, 2, 2, 2, 2, 3, 3, 3, 3, 3, 3, 4, 4, 4, 4, 4, 4, 5, 5, 5, 5, 5, 5, 6…

## $ external <int> 50, 57, 51, 48, 43, 19, 4, 6, 3, 3, 5, 12, 0, 1, 9, 26, 10, 24, 14, 5, 18, 31, 31, 23, 26, …

## $ female <int> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0…

## $ time <int> 0, 1, 2, 3, 4, 5, 0, 1, 2, 3, 4, 5, 0, 1, 2, 3, 4, 5, 0, 1, 2, 3, 4, 5, 0, 1, 2, 3, 4, 5, 0…

## $ grade <int> 1, 2, 3, 4, 5, 6, 1, 2, 3, 4, 5, 6, 1, 2, 3, 4, 5, 6, 1, 2, 3, 4, 5, 6, 1, 2, 3, 4, 5, 6, 1…There are data from 45 children.

external_pp %>%

distinct(id) %>%

nrow()## [1] 45The 45 kids were composed of 28 boys and 17 girls.

external_pp %>%

distinct(id, female) %>%

count(female) %>%

mutate(percent = 100 * n / sum(n))## female n percent

## 1 0 28 62.22222

## 2 1 17 37.77778The data were collected over the children’s first through sixth grades.

external_pp %>%

distinct(grade)## grade

## 1 1

## 2 2

## 3 3

## 4 4

## 5 5

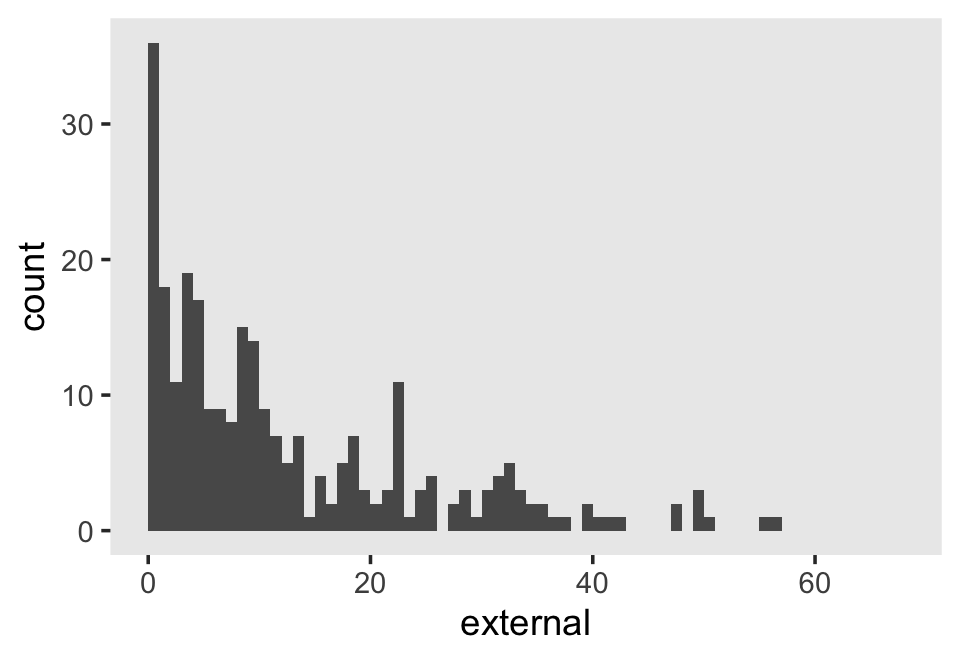

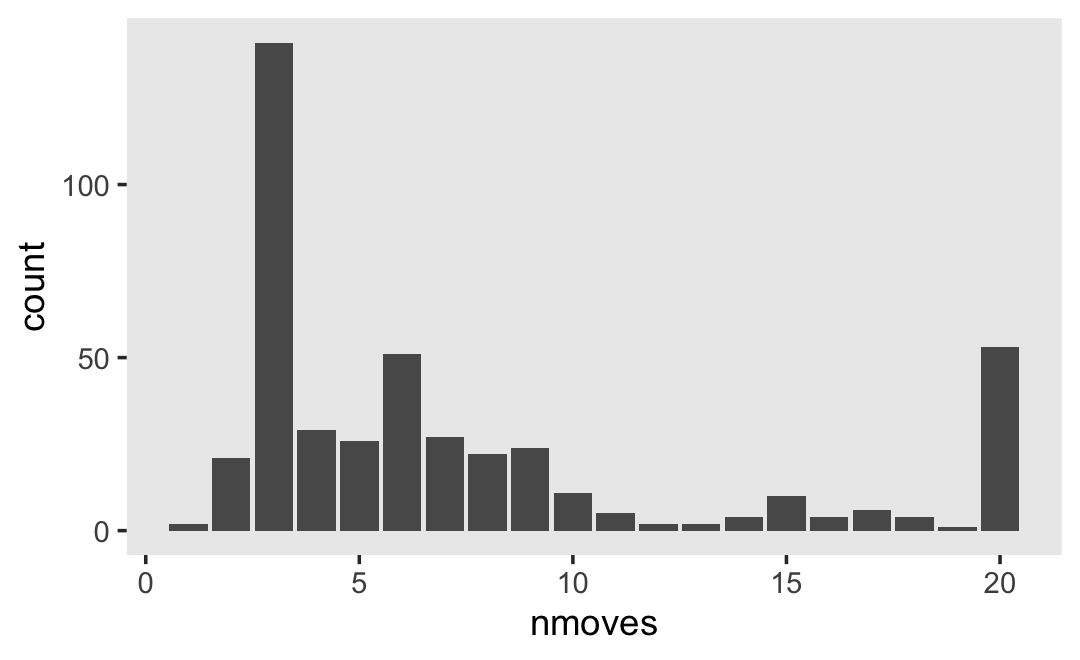

## 6 6Our criterion, external, is the sum of the 34 items in the Externalizing subscale of the Child Behavior Checklist. Each item is rated on a 3-point Likert-type scale, ranging from 0 (rarely/never) to 2 (often). The possible range for the sum score of the Externalizing subscale is 0 to 68. Here’s the overall distribution.

external_pp %>%

ggplot(aes(x = external)) +

geom_histogram(binwidth = 1, boundary = 0) +

xlim(0, 68) +

theme(panel.grid = element_blank())

The data are strongly bound to the left, which is a good thing in this case. We generally like it when our children exhibit fewer externalizing behaviors.

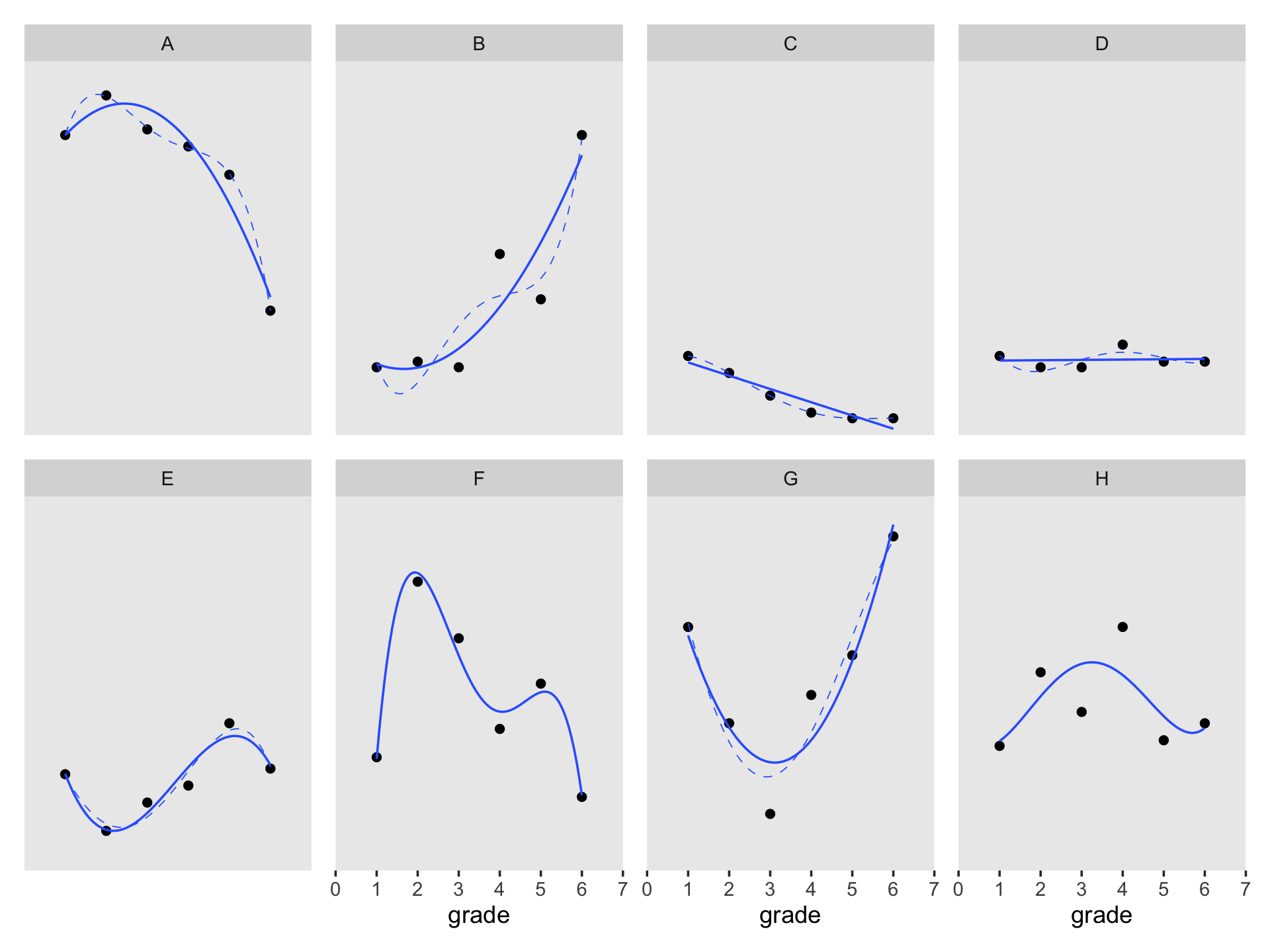

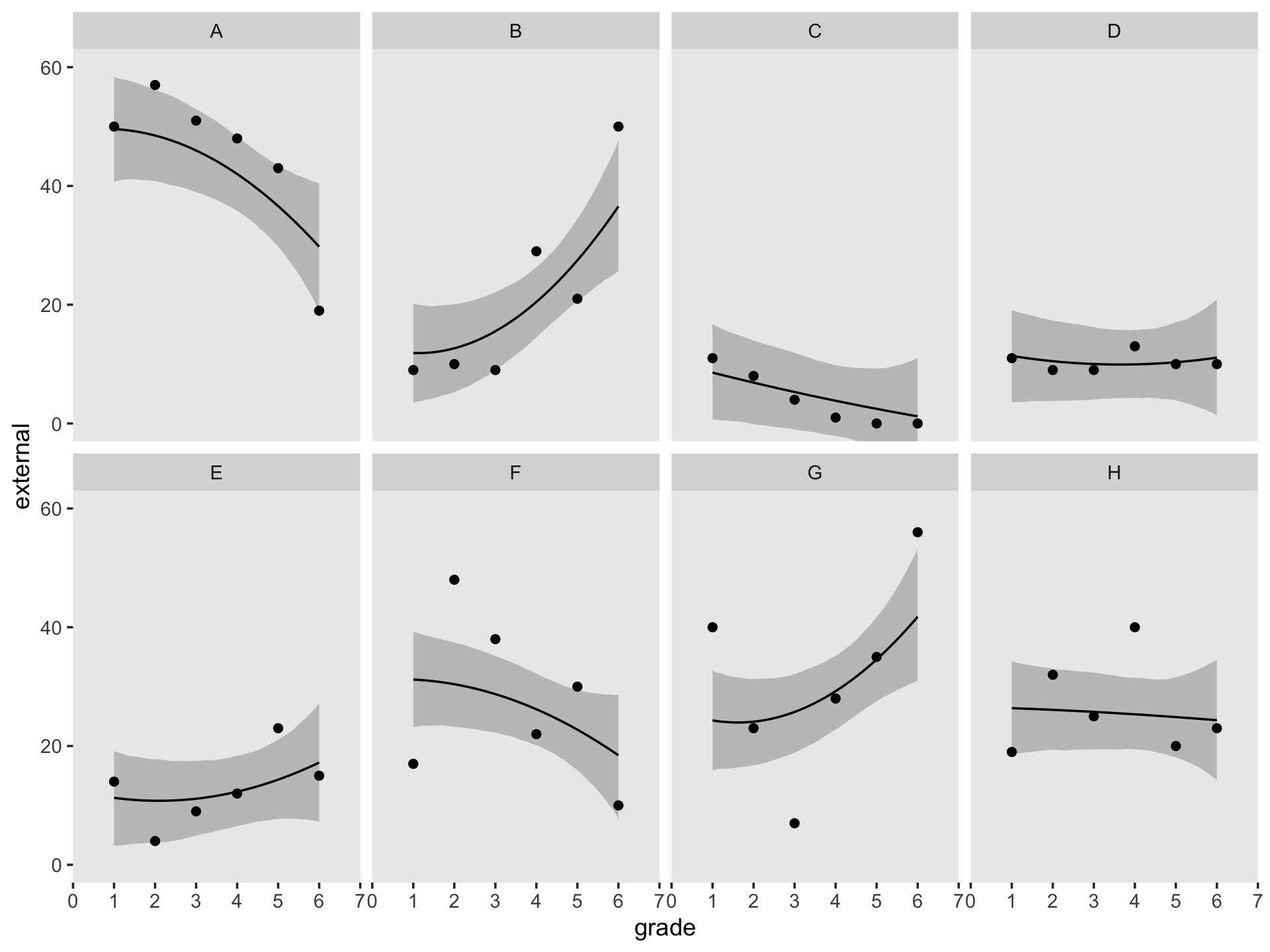

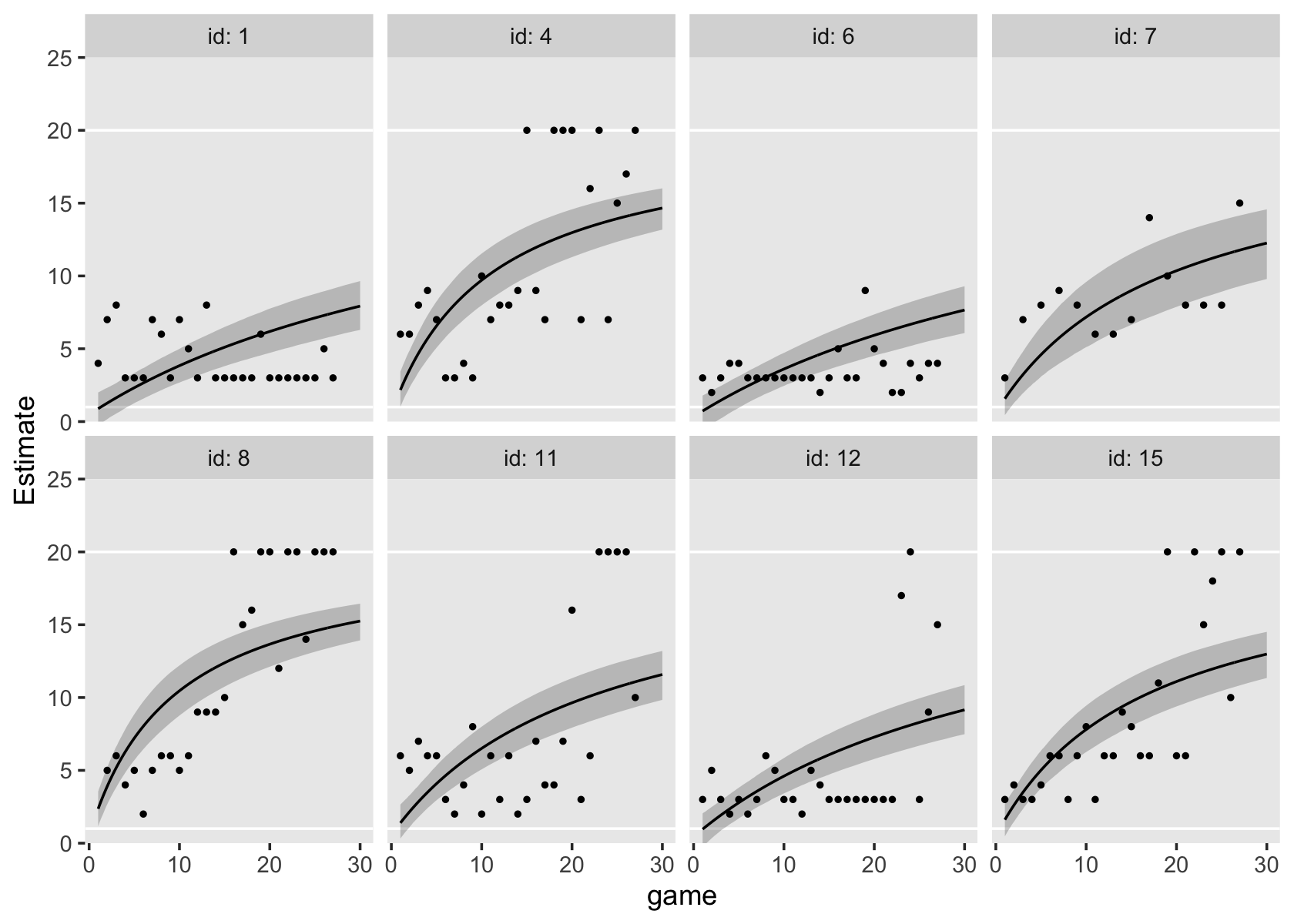

Figure 6.7 is based on a subset of the cases in the data. It might make our job easier if we just make a subset of the data, called external_pp_subset.

subset <- c(1, 6, 11, 25, 34, 36, 40, 26)

external_pp_subset <-

external_pp %>%

filter(id %in% subset) %>%

# this is for the facets in the plot

mutate(case = factor(id,

levels = subset,

labels = LETTERS[1:8]))

glimpse(external_pp_subset)## Rows: 48

## Columns: 6

## $ id <int> 1, 1, 1, 1, 1, 1, 6, 6, 6, 6, 6, 6, 11, 11, 11, 11, 11, 11, 25, 25, 25, 25, 25, 25, 26, 26,…

## $ external <int> 50, 57, 51, 48, 43, 19, 9, 10, 9, 29, 21, 50, 11, 8, 4, 1, 0, 0, 11, 9, 9, 13, 10, 10, 19, …

## $ female <int> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0…

## $ time <int> 0, 1, 2, 3, 4, 5, 0, 1, 2, 3, 4, 5, 0, 1, 2, 3, 4, 5, 0, 1, 2, 3, 4, 5, 0, 1, 2, 3, 4, 5, 0…

## $ grade <int> 1, 2, 3, 4, 5, 6, 1, 2, 3, 4, 5, 6, 1, 2, 3, 4, 5, 6, 1, 2, 3, 4, 5, 6, 1, 2, 3, 4, 5, 6, 1…

## $ case <fct> A, A, A, A, A, A, B, B, B, B, B, B, C, C, C, C, C, C, D, D, D, D, D, D, H, H, H, H, H, H, E…Since the order of the polynomials in Figure 6.7 is tailored to each case, we’ll have to first build the subplots in pieces, and then combine then at the end. Make the pieces.

# a and e

p1 <-

external_pp_subset %>%

filter(case %in% c("A")) %>%

ggplot(aes(x = grade, y = external)) +

geom_point() +

stat_smooth(method = "lm", formula = y ~ x + I(x^2) + I(x^3) + I(x^4),

se = F, size = 1/4, linetype = 2) + # quartic

stat_smooth(method = "lm", formula = y ~ x + I(x^2), # quadratic

se = F, size = 1/2) +

scale_x_continuous(NULL, breaks = NULL,

limits = c(0, 7), expand = c(0, 0)) +

scale_y_continuous(NULL, breaks = NULL) +

facet_wrap(~ case)

# e

p2 <-

external_pp_subset %>%

filter(case %in% c("E")) %>%

ggplot(aes(x = grade, y = external)) +

geom_point() +

stat_smooth(method = "lm", formula = y ~ x + I(x^2) + I(x^3) + I(x^4),

se = F, size = 1/4, linetype = 2) + # quartic

stat_smooth(method = "lm", formula = y ~ x + I(x^2) + I(x^3), # cubic

se = F, size = 1/2) +

scale_x_continuous(NULL, breaks = NULL,

limits = c(0, 7), expand = c(0, 0)) +

scale_y_continuous(NULL, breaks = NULL) +

facet_wrap(~ case)

# b

p3 <-

external_pp_subset %>%

filter(case %in% c("B")) %>%

ggplot(aes(x = grade, y = external)) +

geom_point() +

stat_smooth(method = "lm", formula = y ~ x + I(x^2) + I(x^3) + I(x^4),

se = F, size = 1/4, linetype = 2) + # quartic

stat_smooth(method = "lm", formula = y ~ x + I(x^2), # quadratic

se = F, size = 1/2) +

scale_x_continuous(NULL, breaks = NULL,

limits = c(0, 7), expand = c(0, 0)) +

scale_y_continuous(NULL, breaks = NULL) +

facet_wrap(~ case)

# f

p4 <-

external_pp_subset %>%

filter(case %in% c("F")) %>%

ggplot(aes(x = grade, y = external)) +

geom_point() +

stat_smooth(method = "lm", formula = y ~ x + I(x^2) + I(x^3) + I(x^4),

se = F, size = 1/2) + # quartic

scale_x_continuous(breaks = 0:7, limits = c(0, 7), expand = c(0, 0)) +

scale_y_continuous(NULL, breaks = NULL) +

facet_wrap(~ case)

# c

p5 <-

external_pp_subset %>%

filter(case %in% c("C")) %>%

ggplot(aes(x = grade, y = external)) +

geom_point() +

stat_smooth(method = "lm", formula = y ~ x + I(x^2) + I(x^3) + I(x^4),

se = F, size = 1/4, linetype = 2) + # quartic

stat_smooth(method = "lm", formula = y ~ x, # linear

se = F, size = 1/2) +

scale_x_continuous(NULL, breaks = NULL,

limits = c(0, 7), expand = c(0, 0)) +

scale_y_continuous(NULL, breaks = NULL) +

facet_wrap(~ case)

# g

p6 <-

external_pp_subset %>%

filter(case %in% c("G")) %>%

ggplot(aes(x = grade, y = external)) +

geom_point() +

stat_smooth(method = "lm", formula = y ~ x + I(x^2) + I(x^3) + I(x^4),

se = F, size = 1/4, linetype = 2) + # quartic

stat_smooth(method = "lm", formula = y ~ x + I(x^2), # quadratic

se = F, size = 1/2) +

scale_x_continuous(breaks = 0:7, limits = c(0, 7), expand = c(0, 0)) +

scale_y_continuous(NULL, breaks = NULL) +

facet_wrap(~ case)

# d

p7 <-

external_pp_subset %>%

filter(case %in% c("D")) %>%

ggplot(aes(x = grade, y = external)) +

geom_point() +

stat_smooth(method = "lm", formula = y ~ x + I(x^2) + I(x^3) + I(x^4),

se = F, size = 1/4, linetype = 2) + # quartic

stat_smooth(method = "lm", formula = y ~ x, # linear

se = F, size = 1/2) +

scale_x_continuous(NULL, breaks = NULL,

limits = c(0, 7), expand = c(0, 0)) +

scale_y_continuous(NULL, breaks = NULL) +

facet_wrap(~ case)

# h

p8 <-

external_pp_subset %>%

filter(case %in% c("H")) %>%

ggplot(aes(x = grade, y = external)) +

geom_point() +

stat_smooth(method = "lm", formula = y ~ x + I(x^2) + I(x^3) + I(x^4),

se = F, size = 1/2) + # quartic

scale_x_continuous(breaks = 0:7, limits = c(0, 7), expand = c(0, 0)) +

scale_y_continuous(NULL, breaks = NULL) +

facet_wrap(~ case)Now combine the subplots to make the full Figure 6.7.

((p1 / p2) | (p3 / p4) | (p5 / p6) | (p7 / p8)) &

coord_cartesian(ylim = c(0, 60)) &

theme(panel.grid = element_blank())

6.3.3 Testing higher order terms in a polynomial level-1 model.

Let’s talk about priors, first focusing on the overall intercept \(\pi_{01}\). At the time of the original article by Margaret Kraatz Keiley et al. (2000), it was known that boys tended to show more externalizing behaviors than girls, and that boys tend to either increase or remain fairly stable during primary school. Less was known about typical trajectories for girls. However, Sandberg et al. (1991) can give us a sense of what values are reasonable to center on. I their paper, they compared externalizing in two groups if children, broken down between boys and girls. From their second and third tables, we get the following descriptive statistics:

sample_statistics <-

crossing(gender = c("boys", "girls"),

sample = c("School", "CBCL Nonclinical")) %>%

mutate(n = c(300, 261, 300, 267),

mean = c(10.8, 14.5, 10.7, 16.6),

sd = c(8.4, 10.4, 8.6, 12.1))

sample_statistics %>%

flextable::flextable()gender | sample | n | mean | sd |

|---|---|---|---|---|

boys | CBCL Nonclinical | 300 | 10.8 | 8.4 |

boys | School | 261 | 14.5 | 10.4 |

girls | CBCL Nonclinical | 300 | 10.7 | 8.6 |

girls | School | 267 | 16.6 | 12.1 |

Here’s the weighted mean of the externalizing scores.

sample_statistics %>%

summarise(weighted_average = sum(n * mean) / sum(n))## # A tibble: 1 × 1

## weighted_average

## <dbl>

## 1 13.0Here’s the pooled standard deviation.

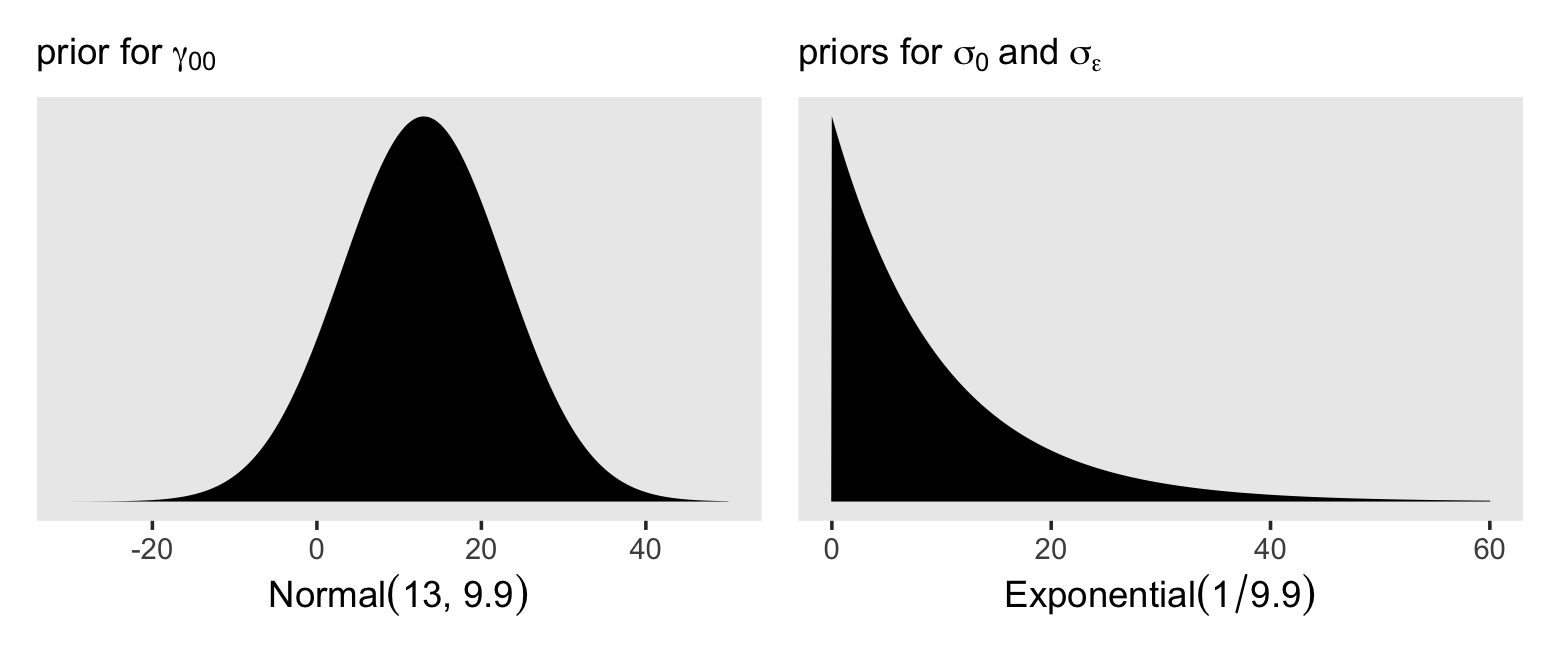

sample_statistics %>%

summarise(pooled_sd = sqrt(sum((n - 1) * sd^2) / (sum(n) - 4)))## # A tibble: 1 × 1

## pooled_sd

## <dbl>

## 1 9.91Thus, I propose a good place to start with is with a \(\operatorname{Normal}(13, 9.9)\) prior on the overall intercept, \(\gamma_{00}\). So far, we’ve been using the Student-\(t\) distribution for the priors on our variance parameters. Another option favored by McElreath in the second edition of his text is the exponential distribution. The exponential distribution has a single parameter, \(\lambda\), which is often called the rate. The mean of the exponential distribution is the inverse of the rate, \(1 / \lambda\). When you’re working with standardized data, a nice weakly-regularizing priors on the variance parameters is \(\operatorname{Exponential}(1)\), which has a mean of 1. Since our data are not standardized, we might use the prior \(\operatorname{Exponential}(1 / s_p)\), where \(s_p\) is the pooled standard deviation. In our case, that would be \(\operatorname{Exponential}(1 / 9.9)\). Here’s what those priors would look like.

# left

p1 <-

tibble(x = seq(from = -30, to = 50, length.out = 200)) %>%

mutate(d = dnorm(x, mean = 13, sd = 9.9)) %>%

ggplot(aes(x = x, y = d)) +

geom_area(fill = "black") +

scale_y_continuous(NULL, breaks = NULL) +

labs(subtitle = expression("prior for "*gamma[0][0]),

x = (expression(Normal(13*', '*9.9)))) +

theme(panel.grid = element_blank())

# right

p2 <-

tibble(x = seq(from = 0, to = 60, length.out = 200)) %>%

mutate(d = dexp(x, rate = 1.0 / 9.9)) %>%

ggplot(aes(x = x, y = d)) +

geom_area(fill = "black") +

scale_y_continuous(NULL, breaks = NULL) +

labs(subtitle = expression("priors for "*sigma[0]~and~sigma[epsilon]),

x = (expression(Exponential(1/9.9)))) +

theme(panel.grid = element_blank())

# combine

p1 | p2

Now using those priors, here’s how to fit the model.

fit6.10 <-

brm(data = external_pp,

family = gaussian,

external ~ 1 + (1 | id),

prior = c(prior(normal(13, 9.9), class = Intercept),

prior(exponential(1.0 / 9.9), class = sd),

prior(exponential(1.0 / 9.9), class = sigma)),

iter = 2500, warmup = 1000, chains = 3, cores = 3,

seed = 6,

file = "fits/fit06.10")For the next three models, we’ll keep those priors for \(\gamma_{00}\) and the \(\sigma\) parameters. Yet now we have to consider the new \(\gamma_{00}\) parameters which will account for the population-level effects if time, from a linear, quadratic, and cubic perspective. Given that time takes on integer values 0 through 5, the simple linear slope \(\gamma_{10}\) is the expected change from one grade to the next. Since we know from the previous literature that boys tend to have either stable or slightly-increasing trajectories for their externalizing behaviors (recall we’re uncertain about girls), a mildly conservative prior might be centered on zero with, say, half of the pooled standard deviation on the scale, \(\operatorname{Normal}(0, 4.95)\). This is the rough analogue to a \(\operatorname{Normal}(0, 0.5)\) prior on standardized data. Without better information, we’ll extend that same \(\operatorname{Normal}(0, 4.95)\) prior on \(\gamma_{20}\) and \(\gamma_{30}\). As to the new \(\rho\) parameters, we’ll use our typical weakly-regularizing \(\operatorname{LKJ}(4)\) prior on the level-2 correlation matrices.

# linear

fit6.11 <-

brm(data = external_pp,

family = gaussian,

external ~ 0 + Intercept + time + (1 + time | id),

prior = c(prior(normal(0, 4.95), class = b),

prior(normal(13, 9.9), class = b, coef = Intercept),

prior(exponential(1.0 / 9.9), class = sd),

prior(exponential(1.0 / 9.9), class = sigma),

prior(lkj(4), class = cor)),

iter = 2500, warmup = 1000, chains = 3, cores = 3,

seed = 6,

file = "fits/fit06.11")

# quadratic

fit6.12 <-

brm(data = external_pp,

family = gaussian,

external ~ 0 + Intercept + time + I(time^2) + (1 + time + I(time^2) | id),

prior = c(prior(normal(0, 4.95), class = b),

prior(normal(13, 9.9), class = b, coef = Intercept),

prior(exponential(1.0 / 9.9), class = sd),

prior(exponential(1.0 / 9.9), class = sigma),

prior(lkj(4), class = cor)),

iter = 2500, warmup = 1000, chains = 3, cores = 3,

seed = 6,

control = list(adapt_delta = .995),

file = "fits/fit06.12")

# cubic

fit6.13 <-

brm(data = external_pp,

family = gaussian,

external ~ 0 + Intercept + time + I(time^2) + I(time^3) + (1 + time + I(time^2) + I(time^3) | id),

prior = c(prior(normal(0, 4.95), class = b),

prior(normal(13, 9.9), class = b, coef = Intercept),

prior(exponential(1.0 / 9.9), class = sd),

prior(exponential(1.0 / 9.9), class = sigma),

prior(lkj(4), class = cor)),

iter = 2500, warmup = 1000, chains = 3, cores = 3,

seed = 6,

control = list(adapt_delta = .85),

file = "fits/fit06.13")Compute and save the WAIC estimates.

fit6.10 <- add_criterion(fit6.10, criterion = "waic")

fit6.11 <- add_criterion(fit6.11, criterion = "waic")

fit6.12 <- add_criterion(fit6.12, criterion = "waic")

fit6.13 <- add_criterion(fit6.13, criterion = "waic")Here we’ll make a simplified version of the WAIC output, saving the results as waic_summary.

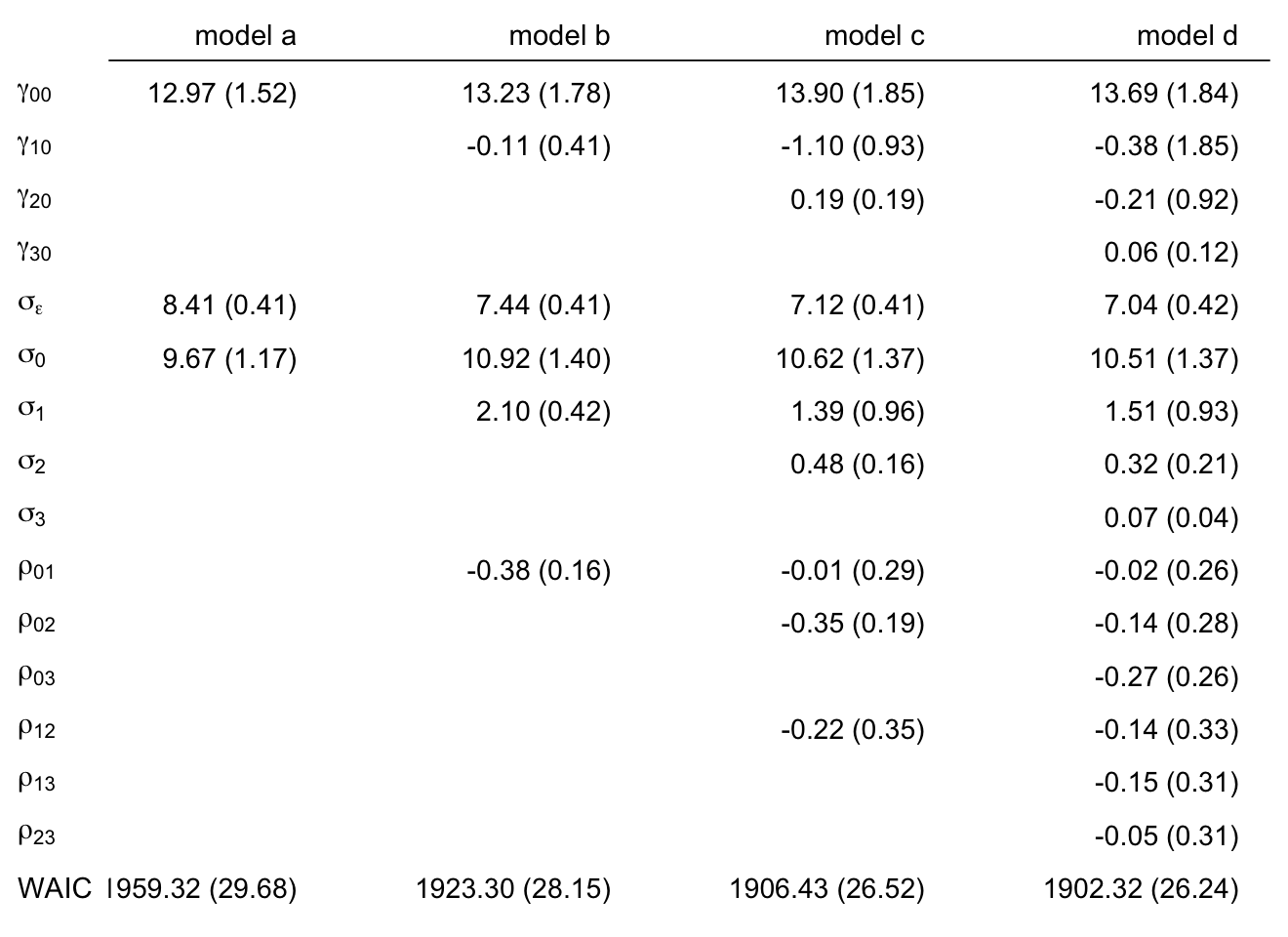

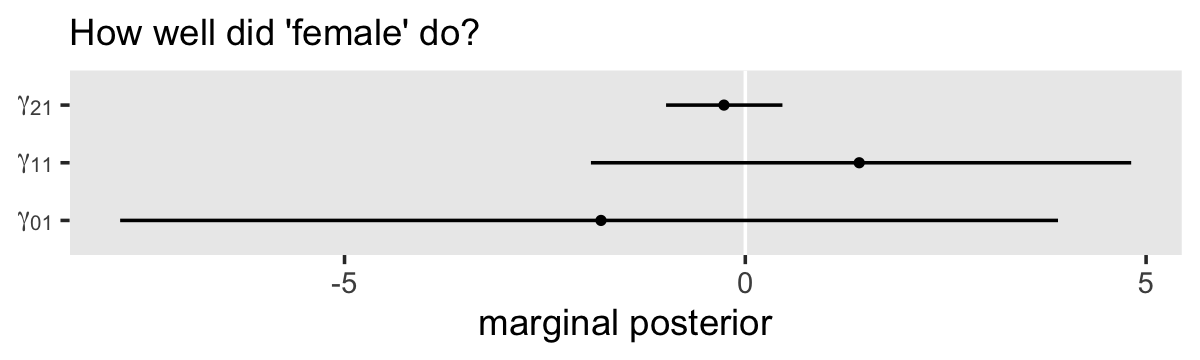

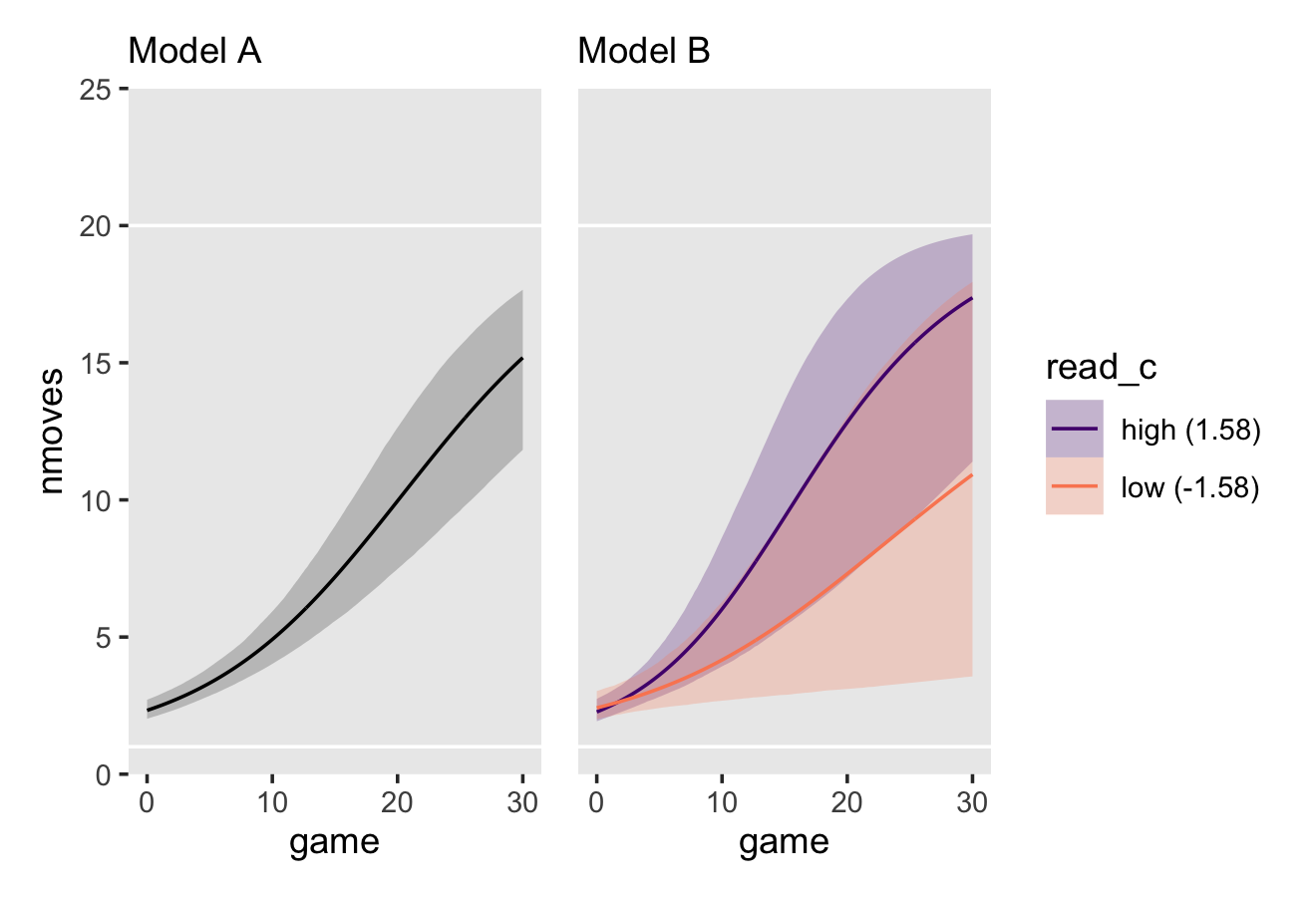

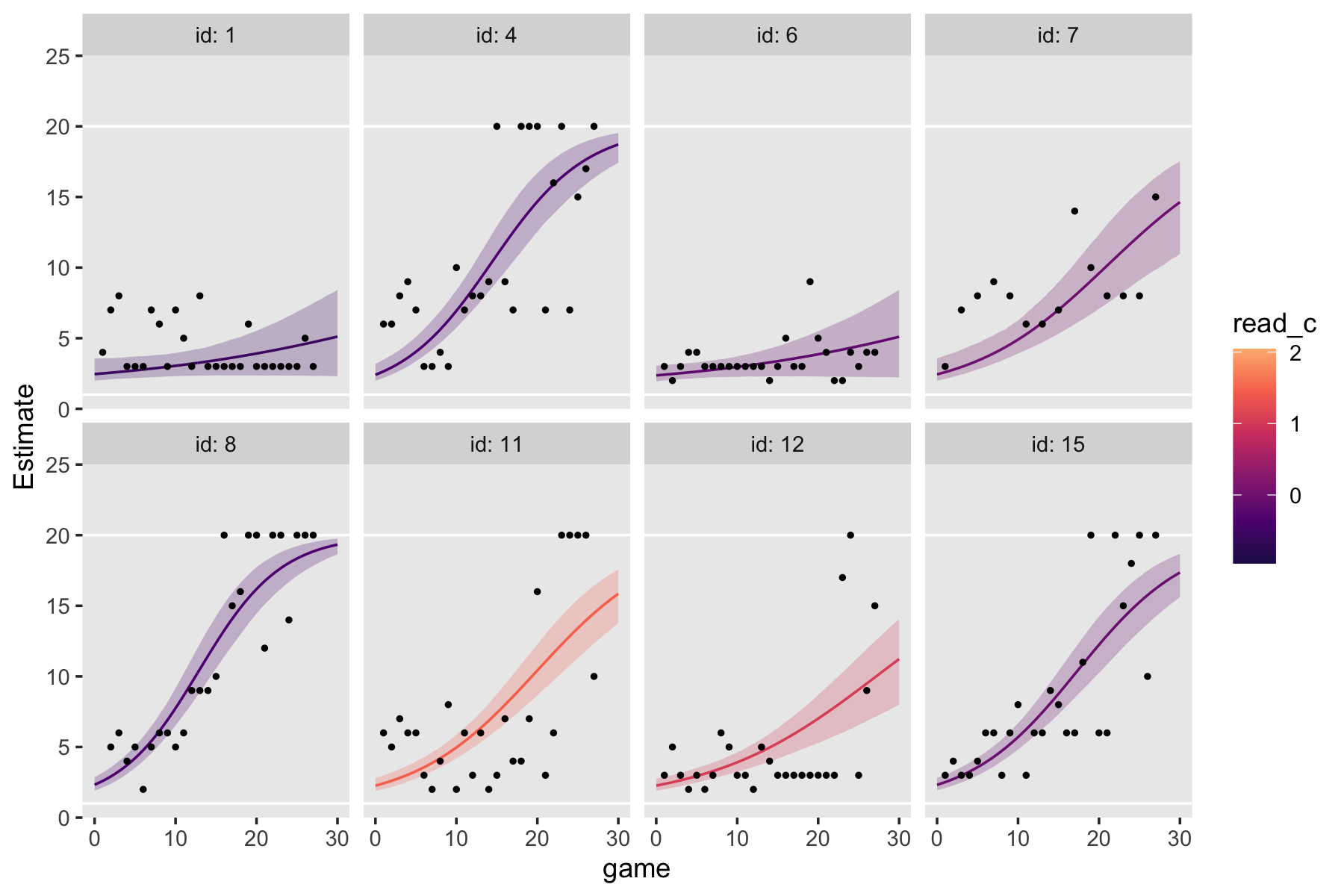

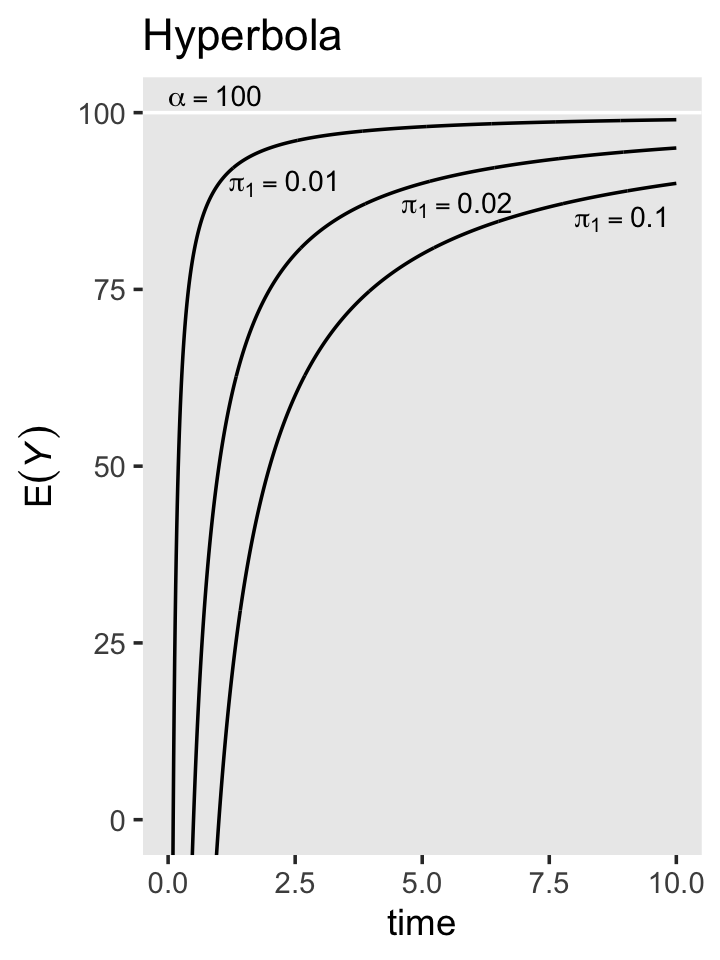

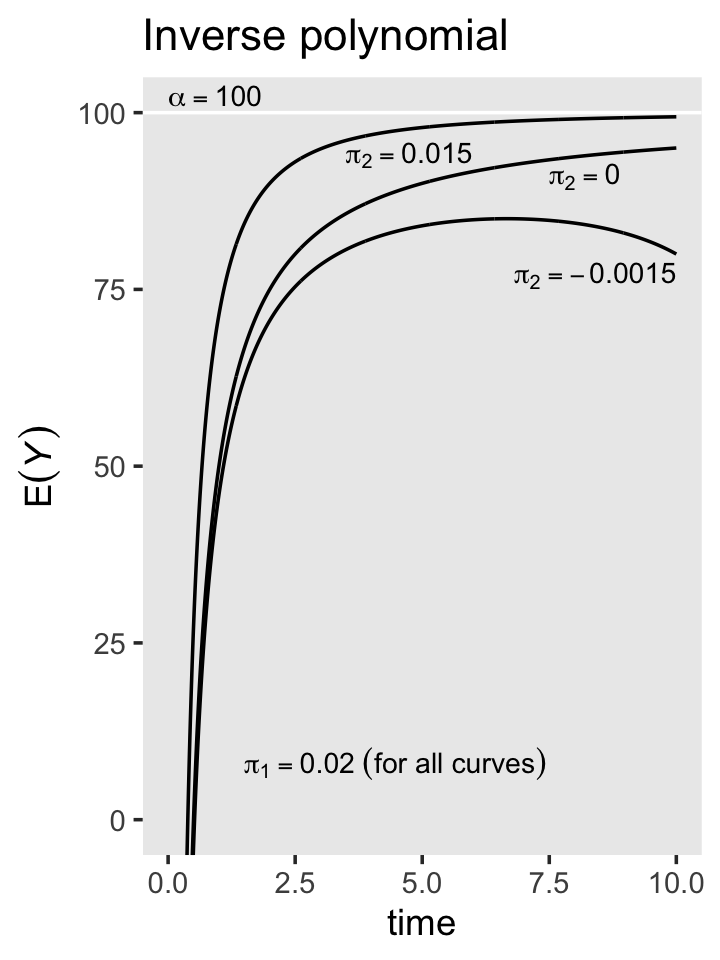

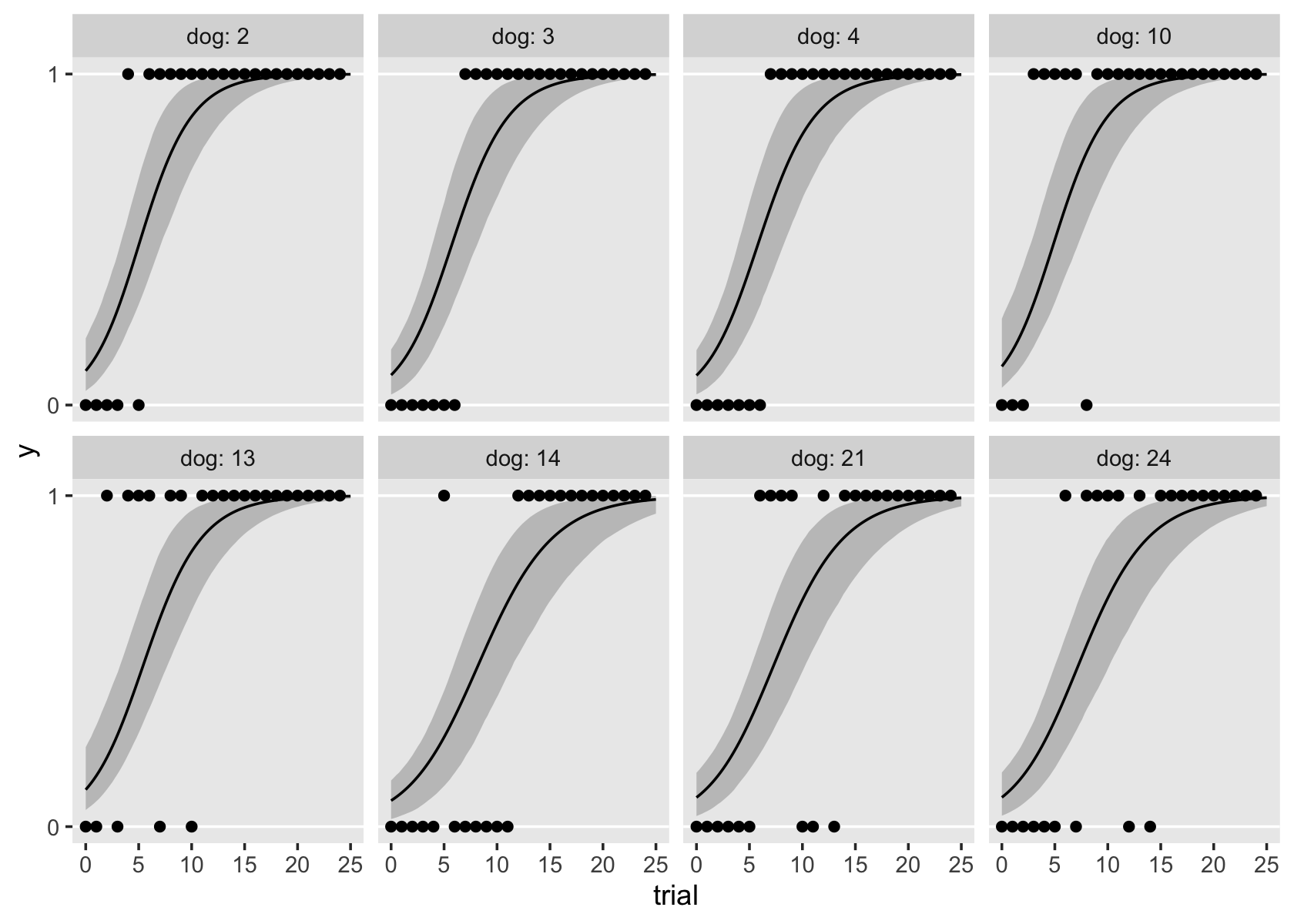

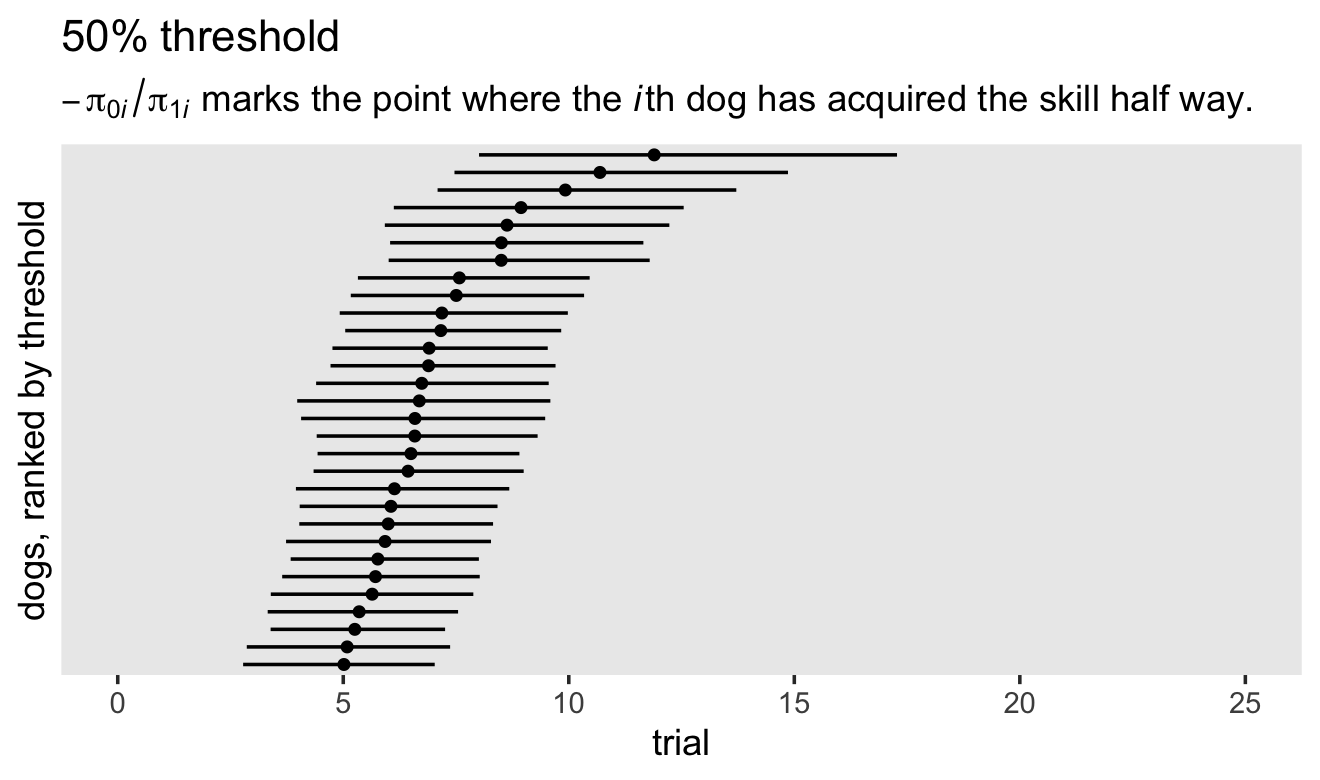

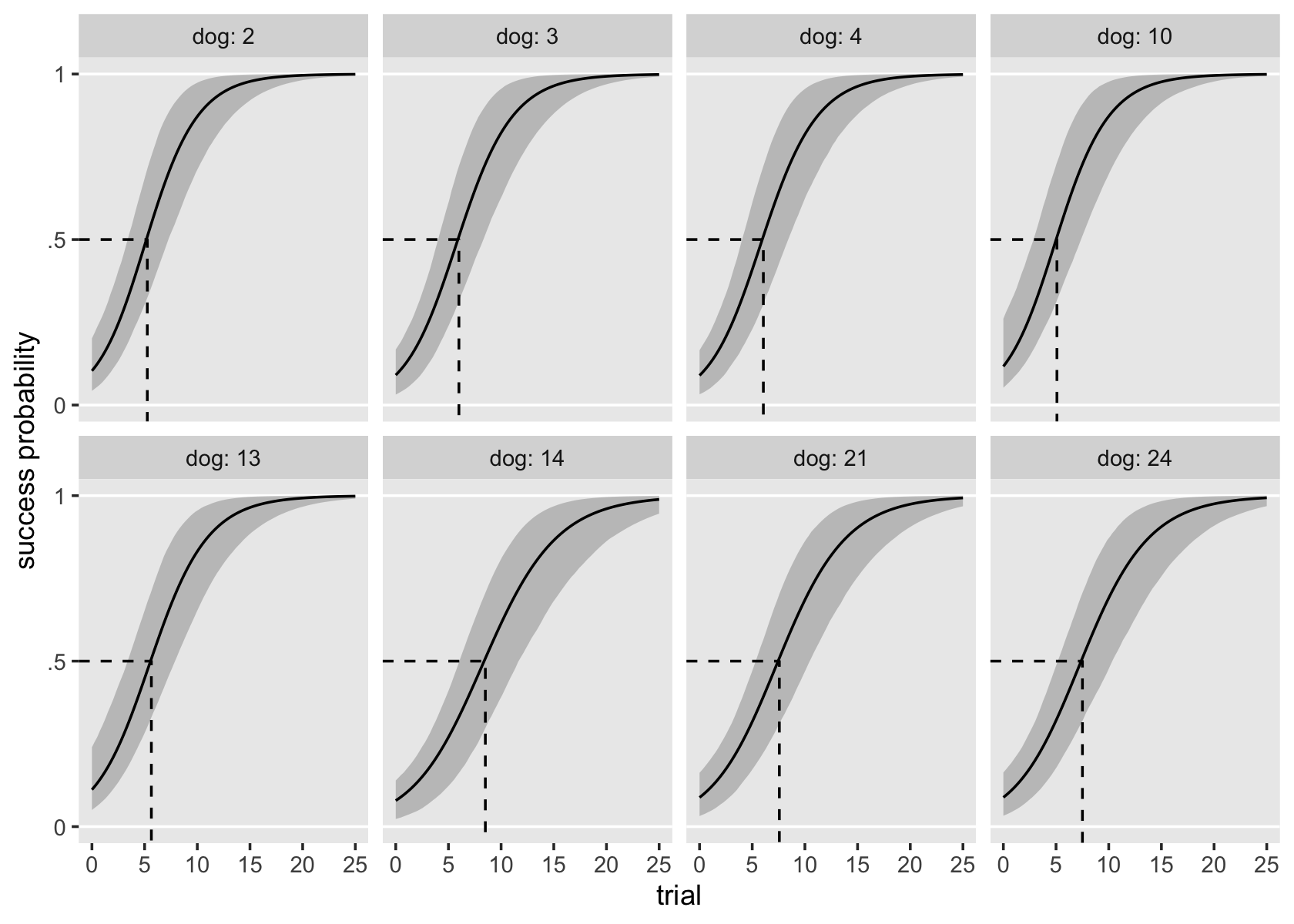

# order the parameters, which will come in handy in the next block