12 Extending the Discrete-Time Hazard Model

Like all statistical models, the basic discrete-time hazard model invokes assumptions about the population that may, or may not, hold in practice. Because no model should be adopted without scrutiny, we devote this chapter to examining its assumptions, demonstrating how to evaluate their tenability and relax their constraints when appropriate. In doing so, we illustrate practical principles of data analysis and offer theoretical insights into the model’s behavior and interpretation. (Singer & Willett, 2003, p. 407)

12.1 Alternative specification for the “main effect” of TIME

Use of a completely general specification for TIME [as explored in the last chapter] is an analytic decision, not an integral feature of the model. Nothing about the model or its estimation requires adoption of this, or any other, particular specification for TIME (p. 409, emphasis in the original)

In the next page, Singer and Willett listed three circumstances under which we might consider alternatives to the completely general approach to time. They were

- in studies with many discrete time periods,

- when hazard is expected to be near zero in some time periods, and

- when some time periods have small risk sets.

In the subsections to follow, we will explore each in turn.

12.1.1 An ordered series of polynomial specifications for TIME.

Load the Gamse and Conger’s (1997) tenure_pp.csv data.

library(tidyverse)

tenure_pp <-

read_csv("data/tenure_pp.csv") %>%

# convert the column names to lower case

rename_all(tolower)

glimpse(tenure_pp)## Rows: 1,474

## Columns: 12

## $ id <dbl> 111, 111, 111, 111, 111, 211, 211, 211, 211, 211, 211, 311, 311, 311, 311, 311, 311, 311, 311…

## $ period <dbl> 1, 2, 3, 4, 5, 1, 2, 3, 4, 5, 6, 1, 2, 3, 4, 5, 6, 7, 8, 1, 2, 3, 4, 5, 6, 7, 8, 9, 1, 2, 3, …

## $ event <dbl> 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 1, …

## $ d1 <dbl> 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, …

## $ d2 <dbl> 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, …

## $ d3 <dbl> 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 1, …

## $ d4 <dbl> 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, …

## $ d5 <dbl> 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, …

## $ d6 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, …

## $ d7 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, …

## $ d8 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, …

## $ d9 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, …Let’s confirm these data are composed of the records of \(n = 260\) early-career academics.

tenure_pp %>%

distinct(id) %>%

count()## # A tibble: 1 × 1

## n

## <int>

## 1 260Here’s a way to count how many cases were censored.

tenure_pp %>%

group_by(id) %>%

arrange(desc(period)) %>%

slice(1) %>%

ungroup() %>%

count(event) %>%

mutate(percent = 100 * n / sum(n))## # A tibble: 2 × 3

## event n percent

## <dbl> <int> <dbl>

## 1 0 94 36.2

## 2 1 166 63.8Let’s fire up brms.

library(brms)As discussed in the prose and displayed in Tables 12.1 and 12.2, we will fit seven models, ranging from a constant (i.e., intercept only) model to a general (i.e., discrete factor) model. In the last chapter, we discussed how one can fit a general model with a series of \(J\) dummies or equivalently with the time variable, period in these data, set as a factor. Here we’ll do both. In preparation, we’ll make a period_f version of period.

tenure_pp <-

tenure_pp %>%

mutate(period_f = factor(period))Now fit the models.

# constant

fit12.1 <-

brm(data = tenure_pp,

family = binomial,

event | trials(1) ~ 1,

prior(normal(0, 4), class = Intercept),

chains = 4, cores = 4, iter = 2000, warmup = 1000,

seed = 12,

file = "fits/fit12.01")

# linear

fit12.2 <-

brm(data = tenure_pp,

family = binomial,

event | trials(1) ~ 0 + Intercept + period,

prior(normal(0, 4), class = b),

chains = 4, cores = 4, iter = 2000, warmup = 1000,

seed = 12,

file = "fits/fit12.02")

# quadratic

fit12.3 <-

brm(data = tenure_pp,

family = binomial,

event | trials(1) ~ 0 + Intercept + period + I(period^2),

prior(normal(0, 4), class = b),

chains = 4, cores = 4, iter = 2000, warmup = 1000,

seed = 12,

file = "fits/fit12.03")

# cubic

fit12.4 <-

brm(data = tenure_pp,

family = binomial,

event | trials(1) ~ 0 + Intercept + period + I(period^2) + I(period^3),

prior(normal(0, 4), class = b),

chains = 4, cores = 4, iter = 2000, warmup = 1000,

seed = 12,

file = "fits/fit12.04")

# fourth order

fit12.5 <-

brm(data = tenure_pp,

family = binomial,

event | trials(1) ~ 0 + Intercept + period + I(period^2) + I(period^3) + I(period^4),

prior(normal(0, 4), class = b),

chains = 4, cores = 4, iter = 2000, warmup = 1000,

control = list(max_treedepth = 12),

seed = 12,

file = "fits/fit12.05")

# fifth order

fit12.6 <-

brm(data = tenure_pp,

family = binomial,

event | trials(1) ~ 0 + Intercept + period + I(period^2) + I(period^3) + I(period^4) + I(period^5),

prior(normal(0, 4), class = b),

chains = 4, cores = 4, iter = 2000, warmup = 1000,

control = list(max_treedepth = 14),

seed = 12,

init = 0,

file = "fits/fit12.06")

# general

fit12.7 <-

brm(data = tenure_pp,

family = binomial,

event | trials(1) ~ 0 + d1 + d2 + d3 + d4 + d5 + d6 + d7 + d8 + d9,

prior(normal(0, 4), class = b),

chains = 4, cores = 4, iter = 2000, warmup = 1000,

seed = 12,

file = "fits/fit12.07")

# general with `factor(period)`

fit12.8 <-

brm(data = tenure_pp,

family = binomial,

event | trials(1) ~ 0 + period_f,

prior(normal(0, 4), class = b),

chains = 4, cores = 4, iter = 2000, warmup = 1000,

seed = 12,

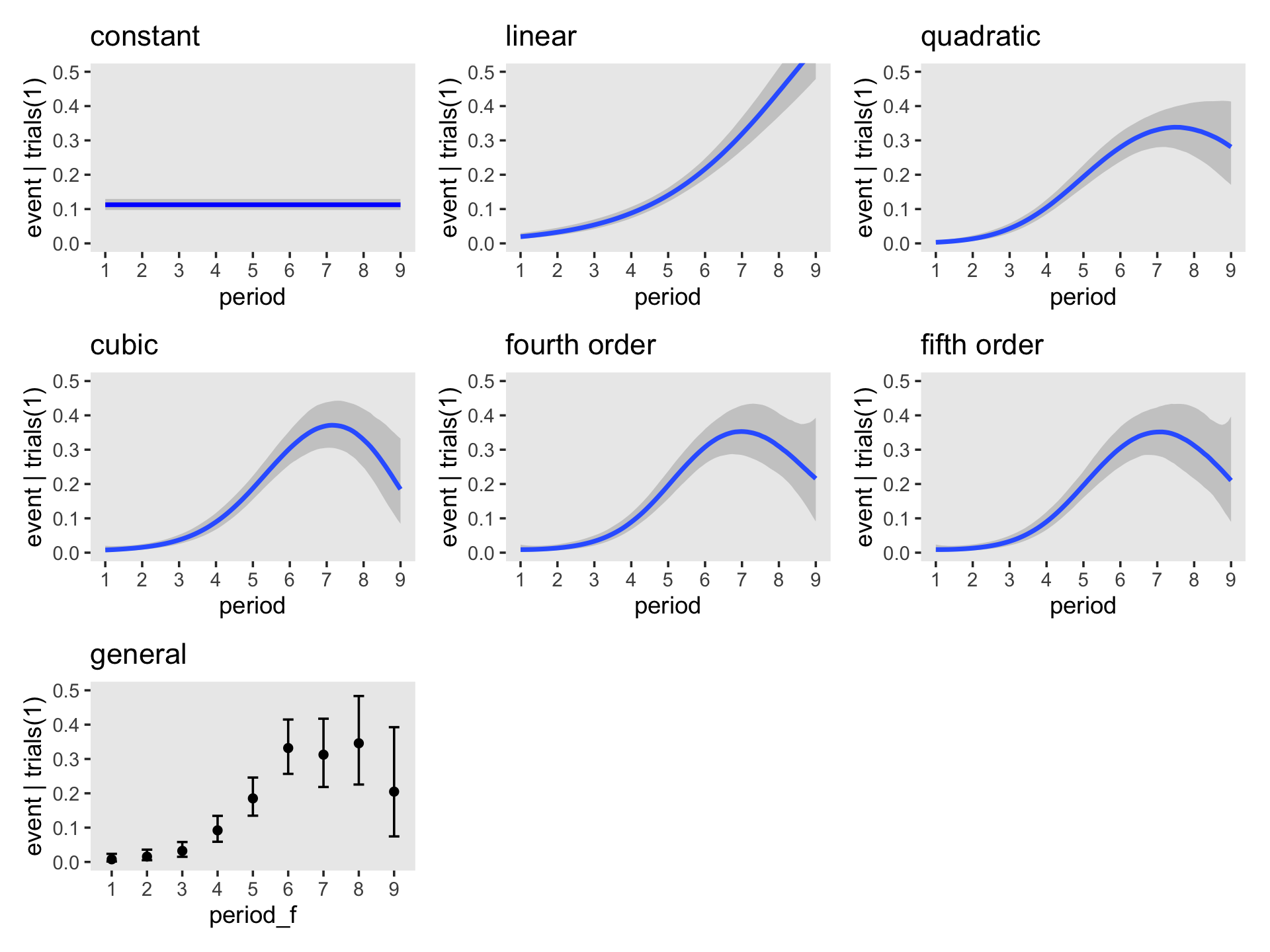

file = "fits/fit12.08")Before we compare the models with information criteria, it might be handy to look at the hazard functions for each. A relatively quick way is with the conditional_effects() function.

p2 <- plot(conditional_effects(fit12.2), plot = F)[[1]] + ggtitle("linear")

p3 <- plot(conditional_effects(fit12.3), plot = F)[[1]] + ggtitle("quadratic")

p4 <- plot(conditional_effects(fit12.4), plot = F)[[1]] + ggtitle("cubic")

p5 <- plot(conditional_effects(fit12.5), plot = F)[[1]] + ggtitle("fourth order")

p6 <- plot(conditional_effects(fit12.6), plot = F)[[1]] + ggtitle("fifth order")

p7 <- plot(conditional_effects(fit12.8),

cat_args = list(size = 3/2),

plot = F)[[1]] + ggtitle("general")Because it contains no predictors, we cannot use conditional_effects() to make a plot for the constant model (i.e., fit12.1). We’ll have to do that by hand.

p1 <-

tibble(period = 1:9) %>%

ggplot(aes(x = period)) +

geom_ribbon(aes(ymin = fixef(fit12.1)[, 3] %>% inv_logit_scaled(),

ymax = fixef(fit12.1)[, 4] %>% inv_logit_scaled()),

alpha = 1/5) +

geom_line(aes(y = fixef(fit12.1)[, 1] %>% inv_logit_scaled()),

linewidth = 1, color = "blue1") +

ggtitle("constant") +

ylab("event | trials(1)")Now combine and format the subplots with patchwork.

library(patchwork)

(((p1 + p2 + p3 + p4 + p5 + p6) & scale_x_continuous(breaks = 1:9)) + p7) &

coord_cartesian(ylim = c(0, .5)) &

theme(panel.grid = element_blank())

We are going to depart from Singer and Willett and no longer entertain using deviance for Bayesian model comparison. But we will compare then using the WAIC and the LOO.

fit12.1 <- add_criterion(fit12.1, criterion = c("loo", "waic"))

fit12.2 <- add_criterion(fit12.2, criterion = c("loo", "waic"))

fit12.3 <- add_criterion(fit12.3, criterion = c("loo", "waic"))

fit12.4 <- add_criterion(fit12.4, criterion = c("loo", "waic"))

fit12.5 <- add_criterion(fit12.5, criterion = c("loo", "waic"))

fit12.6 <- add_criterion(fit12.6, criterion = c("loo", "waic"))

fit12.7 <- add_criterion(fit12.7, criterion = c("loo", "waic"))

fit12.8 <- add_criterion(fit12.8, criterion = c("loo", "waic"))Before comparing the models in bulk, as in Table 12.2, let’s confirm that whether we use the dummy variable method (fit12.7) or the factor variable method (fit12.8), the results for the general model are the same.

loo_compare(fit12.7, fit12.8, criterion = "loo") %>% print(simplify = F)## elpd_diff se_diff elpd_loo se_elpd_loo p_loo se_p_loo looic se_looic

## fit12.7 0.0 0.0 -425.1 22.5 9.4 1.1 850.1 45.0

## fit12.8 0.0 0.0 -425.1 22.5 9.4 1.1 850.1 45.0loo_compare(fit12.7, fit12.8, criterion = "waic") %>% print(simplify = F)## elpd_diff se_diff elpd_waic se_elpd_waic p_waic se_p_waic waic se_waic

## fit12.7 0.0 0.0 -425.0 22.5 9.3 1.1 850.0 45.0

## fit12.8 0.0 0.0 -425.0 22.5 9.3 1.1 850.0 45.0Yep, both WAIC and LOO confirm both methods are equivalent. One of the main reasons we used the factor method, here, was because it made it easier to plot the results with conditional_effects(). But with the following model comparisons, we’ll focus on fit12.1 through fit12.7.

loo_compare(fit12.1, fit12.2, fit12.3, fit12.4, fit12.5, fit12.6, fit12.7, criterion = "loo") %>%

print(simplify = F)## elpd_diff se_diff elpd_loo se_elpd_loo p_loo se_p_loo looic se_looic

## fit12.4 0.0 0.0 -420.7 22.0 4.1 0.5 841.4 44.1

## fit12.3 -0.5 2.2 -421.2 22.0 3.1 0.4 842.3 44.1

## fit12.5 -0.6 0.8 -421.3 22.2 4.8 0.6 842.5 44.4

## fit12.6 -0.9 0.8 -421.6 22.2 5.2 0.6 843.3 44.4

## fit12.7 -4.4 1.5 -425.1 22.5 9.4 1.1 850.1 45.0

## fit12.2 -14.9 5.9 -435.6 22.0 1.8 0.1 871.2 44.1

## fit12.1 -99.1 13.2 -519.8 25.1 1.0 0.1 1039.5 50.2loo_compare(fit12.1, fit12.2, fit12.3, fit12.4, fit12.5, fit12.6, fit12.7, criterion = "waic") %>%

print(simplify = F)## elpd_diff se_diff elpd_waic se_elpd_waic p_waic se_p_waic waic se_waic

## fit12.4 0.0 0.0 -420.7 22.0 4.1 0.5 841.4 44.1

## fit12.3 -0.5 2.2 -421.2 22.0 3.1 0.4 842.3 44.1

## fit12.5 -0.6 0.8 -421.2 22.2 4.8 0.6 842.5 44.4

## fit12.6 -0.9 0.8 -421.6 22.2 5.2 0.6 843.2 44.4

## fit12.7 -4.3 1.5 -425.0 22.5 9.3 1.1 850.0 45.0

## fit12.2 -14.9 5.9 -435.6 22.0 1.8 0.1 871.2 44.1

## fit12.1 -99.1 13.2 -519.8 25.1 1.0 0.1 1039.5 50.2Our results are very similar to those in the AIC column in Table 12.2. Just for kicks and giggles, here are the model weights based on the LOO, WAIC, and stacking method.

model_weights(fit12.1, fit12.2, fit12.3, fit12.4, fit12.5, fit12.6, fit12.7, weights = "loo") %>%

round(digits = 3)## fit12.1 fit12.2 fit12.3 fit12.4 fit12.5 fit12.6 fit12.7

## 0.000 0.000 0.240 0.385 0.219 0.150 0.005model_weights(fit12.1, fit12.2, fit12.3, fit12.4, fit12.5, fit12.6, fit12.7, weights = "waic") %>%

round(digits = 3)## fit12.1 fit12.2 fit12.3 fit12.4 fit12.5 fit12.6 fit12.7

## 0.000 0.000 0.238 0.384 0.221 0.151 0.005model_weights(fit12.1, fit12.2, fit12.3, fit12.4, fit12.5, fit12.6, fit12.7, weights = "stacking") %>%

round(digits = 3)## fit12.1 fit12.2 fit12.3 fit12.4 fit12.5 fit12.6 fit12.7

## 0.019 0.000 0.487 0.494 0.000 0.000 0.000Across the comparison methods, the overall pattern is the cubic model (fit12.4) is marginally better than the rest, but that both the quadratic and fourth-order models were quite close. Unlike when you use model deviance, the parsimony corrections used in the information-criteria-based methods all suggest the general model is overfit.

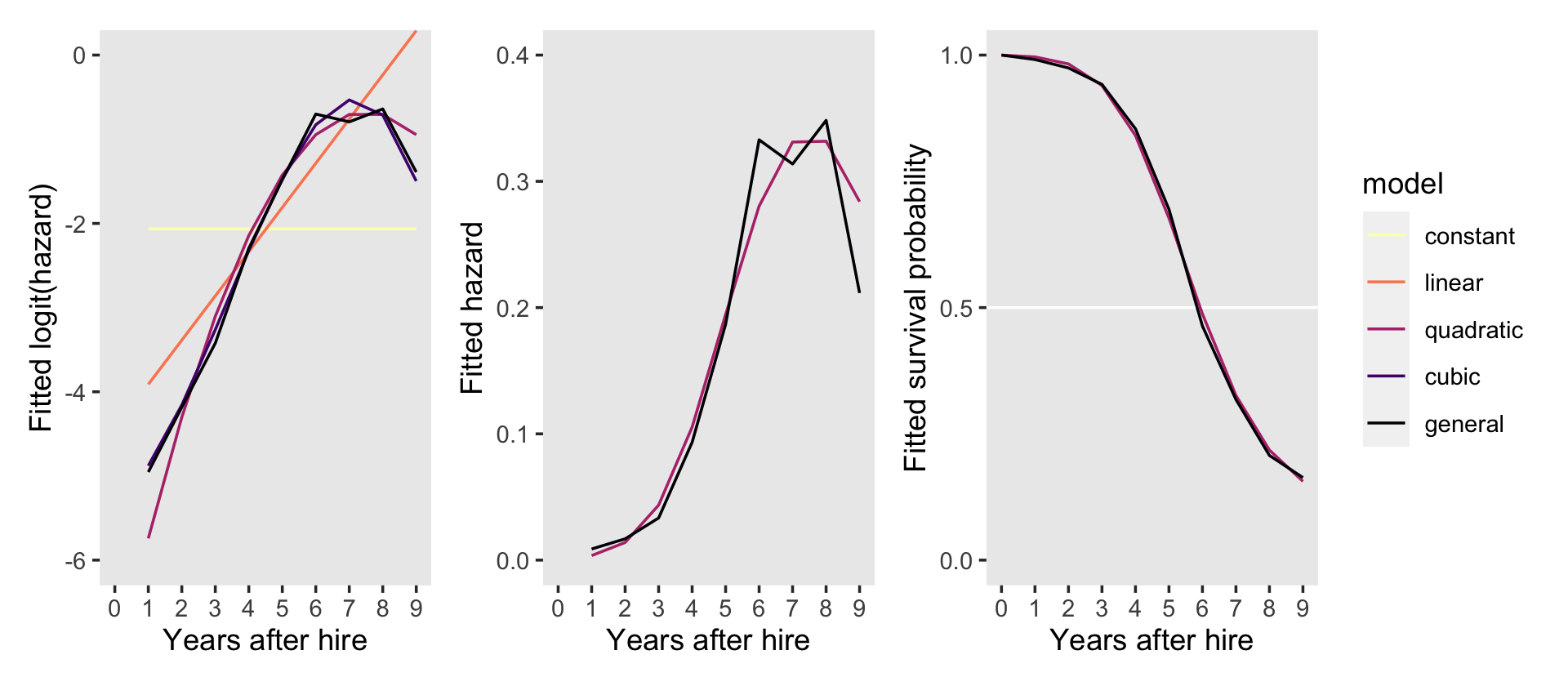

Before considering whether these differences in [information criteria] are sufficient to warrant use of an alternative specification for TIME, let us examine the corresponding fitted logit hazard functions. Doing so not only highlights the behavior of logit hazard, it also offers a graphical means of comparing the fit of competing specifications. (p. 413, emphasis in the original)

In preparation for our Figure 12.1, we’ll make a custom function called make_fitted(), which will streamline some of the data wrangling code.

make_fitted <- function(fit, scale, ...) {

fitted(fit,

newdata = nd,

scale = scale,

...) %>%

data.frame() %>%

bind_cols(nd)

}In addition to taking different brms fit objects as input, make_fitted() will allow us to adjust the scale of the output. As you’ll see, we will need to work with to settings in the coming plots. For our first batch of code, we’ll use scale = "linear". Because the newdata for our version of the general model (i.e., fit12.8) requires the predictor to be called period_f and the rest of the models require the predictor to be named period, we’ll make and save the results for the former first, redefine our newdata, apply make_fitted() to the rest of the models, and then combine them all. Here it is in one fell swoop.

nd <- tibble(period_f = 1:9)

f <- make_fitted(fit12.8, scale = "linear") %>% rename(period = period_f)

# this will simplify the `mutate()` code below

models <- c("constant", "linear", "quadratic", "cubic", "general")

nd <- tibble(period = 1:9)

f <-

bind_rows(make_fitted(fit12.1, scale = "linear"), # constant

make_fitted(fit12.2, scale = "linear"), # linear

make_fitted(fit12.3, scale = "linear"), # quadratic

make_fitted(fit12.4, scale = "linear"), # cubic

f) %>% # general

mutate(model = factor(rep(models, each = 9),

levels = models))

# what have we done?

glimpse(f)## Rows: 45

## Columns: 6

## $ Estimate <dbl> -2.0652119, -2.0652119, -2.0652119, -2.0652119, -2.0652119, -2.0652119, -2.0652119, -2.065…

## $ Est.Error <dbl> 0.08186425, 0.08186425, 0.08186425, 0.08186425, 0.08186425, 0.08186425, 0.08186425, 0.0818…

## $ Q2.5 <dbl> -2.22771603, -2.22771603, -2.22771603, -2.22771603, -2.22771603, -2.22771603, -2.22771603,…

## $ Q97.5 <dbl> -1.90606492, -1.90606492, -1.90606492, -1.90606492, -1.90606492, -1.90606492, -1.90606492,…

## $ period <int> 1, 2, 3, 4, 5, 6, 7, 8, 9, 1, 2, 3, 4, 5, 6, 7, 8, 9, 1, 2, 3, 4, 5, 6, 7, 8, 9, 1, 2, 3, …

## $ model <fct> constant, constant, constant, constant, constant, constant, constant, constant, constant, …Now we’re in good shape to make and save our version of the top panel of Figure 12.1.

p1 <-

f %>%

ggplot(aes(x = period, y = Estimate, color = model)) +

geom_line() +

scale_color_viridis_d(option = "A", direction = -1) +

ylab("Fitted logit(hazard)") +

coord_cartesian(ylim = -c(6, 0)) +

theme(panel.grid = element_blank())The bottom two panels require we redo our make_fitted() code from above, this time setting scale = "response".

nd <- tibble(period_f = 1:9)

f <- make_fitted(fit12.8, scale = "response") %>% rename(period = period_f)

nd <- tibble(period = 1:9)

f <-

bind_rows(make_fitted(fit12.1, scale = "response"), # constant

make_fitted(fit12.2, scale = "response"), # linear

make_fitted(fit12.3, scale = "response"), # quadratic

make_fitted(fit12.4, scale = "response"), # cubic

f) %>% # general

mutate(model = factor(rep(models, each = 9),

levels = models))Now make and save the bottom left panel for Figure 12.1.

p2 <-

f %>%

filter(model %in% c("quadratic", "general")) %>%

ggplot(aes(x = period, y = Estimate, color = model)) +

geom_line() +

scale_color_viridis_d(option = "A", end = .5, direction = -1) +

ylab("Fitted hazard") +

coord_cartesian(ylim = c(0, .4)) +

theme(legend.position = "none",

panel.grid = element_blank())Our f data will also work to make the final bottom right panel for the figure, but we’ll need to convert the Estimate values from the hazard metric to the survival-probability metric. In addition, we will need to add in values for when period = 0. Here we wrangle, plot, and save in one block.

new_rows <-

tibble(Estimate = 0,

period = 0,

model = factor(c("quadratic", "general"),

levels = models))

p3 <-

f %>%

filter(model %in% c("quadratic", "general")) %>%

select(Estimate, period, model) %>%

# add the `new_rows` data

bind_rows(new_rows) %>%

arrange(model, period) %>%

group_by(model) %>%

# convert hazards to survival probabilities

mutate(Estimate = cumprod(1 - Estimate)) %>%

# plot!

ggplot(aes(x = period, y = Estimate, color = model)) +

geom_hline(yintercept = .5, color = "white") +

geom_line() +

scale_color_viridis_d(option = "A", end = .5, direction = -1) +

scale_y_continuous("Fitted survival probability", breaks = c(0, .5, 1)) +

coord_cartesian(ylim = c(0, 1)) +

theme(legend.position = "none",

panel.grid = element_blank())Now combine the subplots and format a little with patchwork to make our version of Figure 12.1.

p1 + p2 + p3 +

plot_layout(guides = "collect") &

scale_x_continuous("Years after hire", breaks = 0:9, limits = c(0, 9))

Instead of the two-row layout in the text, it seemed simpler to arrange the panels all in one row. This way we can let the full version of the line-color legend serve for all three panels.

12.1.2 Criteria for comparing alternative specification.

The decline in the deviance statistic across models indicates that fit improves with increasing complexity of the temporal specification. To evaluate the magnitude of this decline, we must also account for the increased number of parameters in the model. You should not adopt a more complex specification if it fits no better than a simpler one. But if an alternative specification is (nearly) as good as the most general one, it may be “good enough.” At the same time, we would not want an alternative that performs measurably worse than we know we can do. (p. 415)

We won’t be comparing deviances with \(\chi^2\) tests, here. As to information criteria, we got ahead of the authors a bit and presented those comparisons in the last section. Although our use of the WAIC and the LOO is similar to Singer and Willett’s use of the AIC and BIC in that they yield no formal hypothesis test in the form of a \(p\)-value, their estimates and difference scores do come with standard errors.

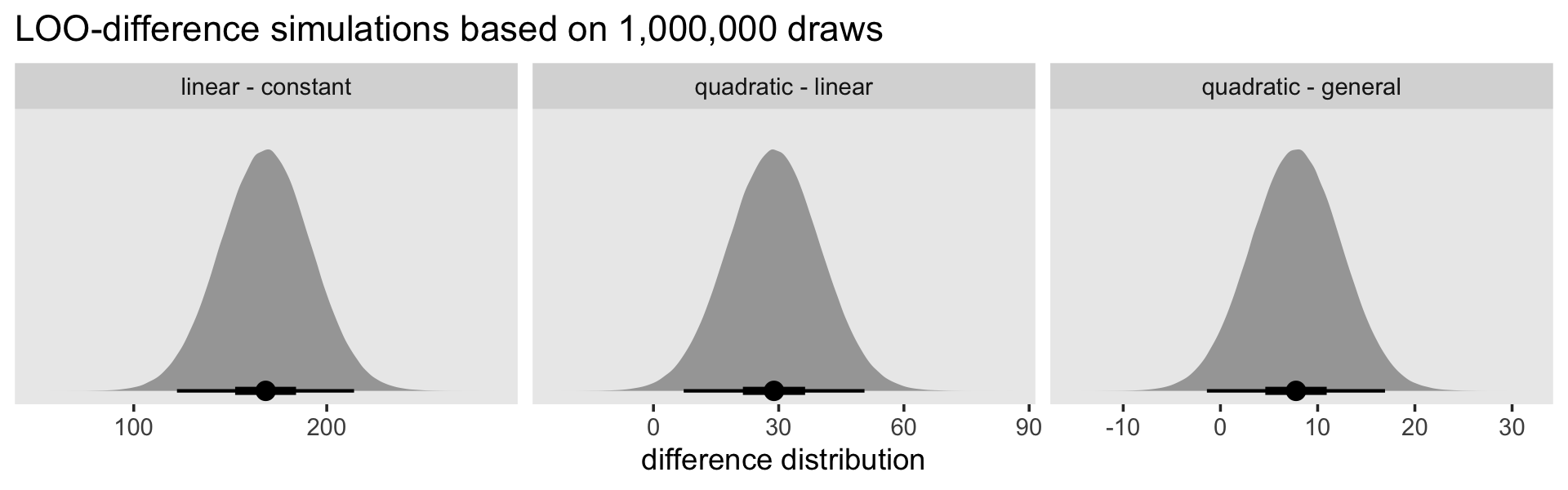

In the middle of page 416, the authors focused on comparing the constant and linear models, the linear and quadratic models, and the quadratic and general models. The LOO and WAIC estimates for each were near identical. For the sake of simplicity, here are those three focused comparisons using the LOO.

# the constant and linear models

l1 <- loo_compare(fit12.1, fit12.2, criterion = "loo")

# the linear and quadratic models

l2 <- loo_compare(fit12.2, fit12.3, criterion = "loo")

# the quadratic and general models

l3 <- loo_compare(fit12.3, fit12.7, criterion = "loo")

l1 %>% print(simplify = F)## elpd_diff se_diff elpd_loo se_elpd_loo p_loo se_p_loo looic se_looic

## fit12.2 0.0 0.0 -435.6 22.0 1.8 0.1 871.2 44.1

## fit12.1 -84.2 11.7 -519.8 25.1 1.0 0.1 1039.5 50.2l2 %>% print(simplify = F)## elpd_diff se_diff elpd_loo se_elpd_loo p_loo se_p_loo looic se_looic

## fit12.3 0.0 0.0 -421.2 22.0 3.1 0.4 842.3 44.1

## fit12.2 -14.4 5.5 -435.6 22.0 1.8 0.1 871.2 44.1l3 %>% print(simplify = F)## elpd_diff se_diff elpd_loo se_elpd_loo p_loo se_p_loo looic se_looic

## fit12.3 0.0 0.0 -421.2 22.0 3.1 0.4 842.3 44.1

## fit12.7 -3.9 2.3 -425.1 22.5 9.4 1.1 850.1 45.0If we presume the LOO differences follow a normal distribution, we can use their point estimates and standard errors to plot those distributions using simulated data from good old rnorm().

library(tidybayes)

n <- 1e6

models <- c("linear - constant", "quadratic - linear", "quadratic - general")

set.seed(12)

# wrangle

tibble(loo_difference = c(rnorm(n, mean = l1[2, 1] * -2, sd = l1[2, 2] * 2),

rnorm(n, mean = l2[2, 1] * -2, sd = l2[2, 2] * 2),

rnorm(n, mean = l3[2, 1] * -2, sd = l3[2, 2] * 2))) %>%

mutate(models = factor(rep(models, each = n),

levels = models)) %>%

# plot!

ggplot(aes(x = loo_difference, y = 0)) +

stat_halfeye(.width = c(.5, .95), normalize = "panels") +

scale_y_continuous(NULL, breaks = NULL) +

labs(title = "LOO-difference simulations based on 1,000,000 draws",

x = "difference distribution") +

theme(panel.grid = element_blank()) +

facet_wrap(~ models, scales = "free")

The LOO difference for the linear and constant models is decisive. The difference for the quadratic and linear models is fairly large on the information-criteria scale, but the uncertainty in that distribution is fairly large relative to its location (i.e., its mean), which might temper overly-strong conclusions about how much better the quadratic was compared to the linear. The comparison between the quadratic and the general produced a simulation with a modest location and rather large uncertainty relative to the magnitude of that location. All in all, “all signs point to the superiority of the quadratic specification, which fits nearly as well as the general mode, but with fewer parameters” (p. 416).

In the next page, Singer and Willett briefly focused on comparing the cubic and quadratic models. Here are their LOO and WAIC caparisons.

loo_compare(fit12.3, fit12.4, criterion = "loo") %>% print(simplify = F)## elpd_diff se_diff elpd_loo se_elpd_loo p_loo se_p_loo looic se_looic

## fit12.4 0.0 0.0 -420.7 22.0 4.1 0.5 841.4 44.1

## fit12.3 -0.5 2.2 -421.2 22.0 3.1 0.4 842.3 44.1loo_compare(fit12.3, fit12.4, criterion = "waic") %>% print(simplify = F)## elpd_diff se_diff elpd_waic se_elpd_waic p_waic se_p_waic waic se_waic

## fit12.4 0.0 0.0 -420.7 22.0 4.1 0.5 841.4 44.1

## fit12.3 -0.5 2.2 -421.2 22.0 3.1 0.4 842.3 44.1In the text, the results for the AIC and BIC differed. Our LOO and WAIC results both agree with the AIC: the cubic model has a slightly lower LOO and WAIC estimate compared to the quadratic. However, the standard errors for the formal difference score are about twice the size of that difference and the absolute magnitude of the difference is rather small to begin with. Here’s what it looks like if we compare them using LOO weights, WAIC weights, and stacking weights.

model_weights(fit12.3, fit12.4, weights = "loo") %>% round(digits = 3)## fit12.3 fit12.4

## 0.384 0.616model_weights(fit12.3, fit12.4, weights = "waic") %>% round(digits = 3)## fit12.3 fit12.4

## 0.383 0.617model_weights(fit12.3, fit12.4, weights = "stacking") %>% round(digits = 3)## fit12.3 fit12.4

## 0.404 0.596Across all three weight comparisons, there was a modest edge for the quadratic model. But back to Singer and Willett:

Although decision rules cannot substitute for judgment, intuition, and common sense, we nevertheless conclude by offering two guidelines for selecting among alternative specifications:

- If a smooth specification works nearly as well as the completely general one, appreciably better than all simpler ones, and no worse than all more complex ones, consider adopting it.

- If no smooth specifications meet these criteria, retain the completely general specification.

If this decision process leads you to a polynomial specification, then you can interpret the model’s parameters easily, as we [will discuss in a bit]. (p. 417, emphasis in the original)

Before moving on, we might point out that our Bayesian brms-based framework offers a different option: model averaging. We plot the hazard and survival curves based on weighted averages of multiple models. The weights can be based on various criteria. One approach would be to use the model weights from the LOO or the WAIC. As an example, here we use the LOO weights for the quadratic and cubic models.

nd <- tibble(period = 1:9)

pp <-

pp_average(fit12.3, fit12.4,

weights = "loo",

newdata = nd,

method = "pp_expect") %>%

data.frame() %>%

bind_cols(nd)The pp_average() function works much like fitted() or predict(). If you input models and perhaps newdata, it will return estimates that are the weighted averages of the specified models. With the weights = "loo" argument, we indicated our desired weights were those from the LOO, just as we computed earlier with the model_weights() function. With the method = "pp_expect" argument, we indicated we wanted fitted values like we would get from fitted().

Here we plot the results in terms of hazard and survival.

# hazard

p1 <-

pp %>%

ggplot(aes(x = period)) +

geom_ribbon(aes(ymin = Q2.5, ymax = Q97.5),

alpha = 1/5) +

geom_line(aes(y = Estimate)) +

scale_x_continuous("Years after hire", breaks = 0:9, limits = c(0, 9)) +

ylab("hazard") +

theme(panel.grid = element_blank())

# survival

p2 <-

pp %>%

select(-Est.Error) %>%

bind_rows(tibble(Estimate = 0, Q2.5 = 0, Q97.5 = 0, period = 0)) %>%

arrange(period) %>%

mutate_at(vars(Estimate:Q97.5), .funs = ~ cumprod(1 - .)) %>%

ggplot(aes(x = period)) +

geom_hline(yintercept = .5, color = "white") +

geom_ribbon(aes(ymin = Q2.5, ymax = Q97.5),

alpha = 1/5) +

geom_line(aes(y = Estimate)) +

scale_x_continuous("Years after hire", breaks = 0:9) +

scale_y_continuous("survival", breaks = c(0, .5, 1), limits = c(0, 1)) +

theme(panel.grid = element_blank())

# combine

(p1 | p2) +

plot_annotation(title = "Behold the fitted hazard and survival curves based on a weighted\naverage of the quadratic and linear models!")

12.1.3 Interpreting parameters from linear, quadratic, and cubic specifications.

“One advantage of a polynomial specification is that you can often interpret its parameters directly” (p. 417). For the polynomial models in this section, Singer and Willett used the \(TIME - c\) specification for period where \(c\) is a centering constant. They used \(c = 5\). Before we can refit our polynomial models with this parameterization, we’ll want to make a new period variable with this centering. We’ll call it period_5.

tenure_pp <-

tenure_pp %>%

mutate(period_5 = period - 5)

# how do the two `period` variables compare?

tenure_pp %>%

distinct(period, period_5)## # A tibble: 9 × 2

## period period_5

## <dbl> <dbl>

## 1 1 -4

## 2 2 -3

## 3 3 -2

## 4 4 -1

## 5 5 0

## 6 6 1

## 7 7 2

## 8 8 3

## 9 9 4Now refit the quadratic model using period_5.

fit12.9 <-

update(fit12.3,

newdata = tenure_pp,

event | trials(1) ~ 0 + Intercept + period_5 + I(period_5^2),

chains = 4, cores = 4, iter = 2000, warmup = 1000,

seed = 12,

file = "fits/fit12.09")Check the model summary.

print(fit12.9)## Family: binomial

## Links: mu = logit

## Formula: event | trials(1) ~ Intercept + period_5 + I(period_5^2) - 1

## Data: tenure_pp (Number of observations: 1474)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept -1.41 0.11 -1.64 -1.19 1.00 2318 2182

## period_5 0.61 0.06 0.50 0.73 1.00 2379 2147

## Iperiod_5E2 -0.13 0.03 -0.18 -0.08 1.00 2158 2633

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).Focusing just on the posterior means, this yields the following formula for the quadratic discrete-time hazard model: \(\operatorname{logit} \hat h (t_j) = \;\) -1.4122 \(\text{one } +\) 0.6150\((\text{time}_j - 5)\) -0.1282\((\text{time}_j - 5)^2\).

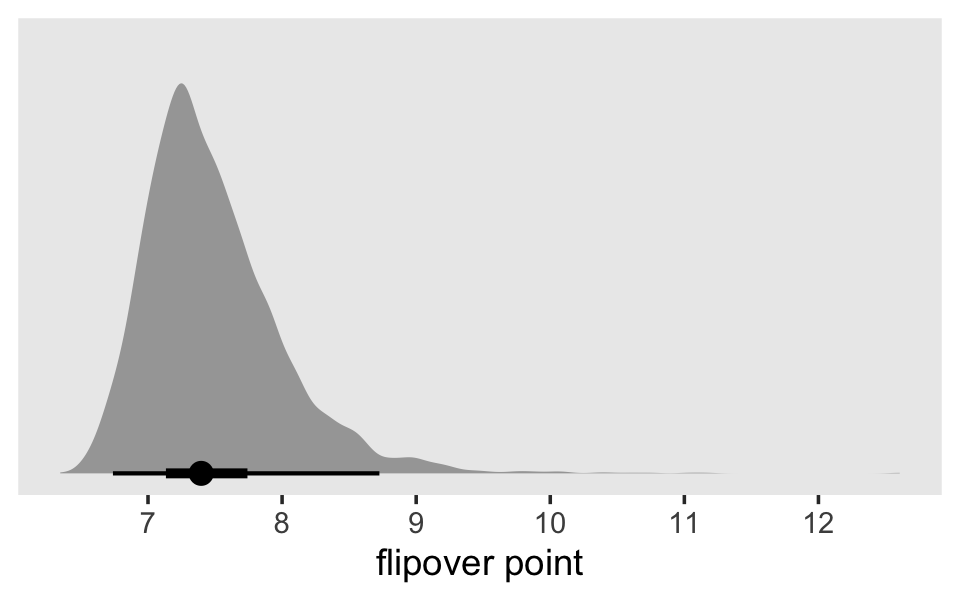

Singer and Willett used the term “flipover point” for the point at which the quadratic function reaches its peak or trough. If we let \(c\) be the centering constant for time variable, \(\alpha_1\) be the linear coefficient for time, and \(\alpha_2\) be the quadratic coefficient for time, we define the flipover point as

\[\text{flipover point} = [c - 1/2 (\alpha_1 / \alpha_2)].\]

Here’s what that looks like using the posterior samples.

as_draws_df(fit12.9) %>%

transmute(c = 5,

a1 = b_period_5,

a2 = b_Iperiod_5E2) %>%

mutate(`flipover point` = c - 0.5 * (a1 / a2)) %>%

ggplot(aes(x = `flipover point`, y = 0)) +

stat_halfeye(.width = c(.5, .95)) +

scale_x_continuous(breaks = 7:12) +

scale_y_continuous(NULL, breaks = NULL) +

theme(panel.grid = element_blank())

Just as each parameter has a posterior distribution, the flipover point, which is a function of two of the parameters, also has a posterior distribution. To understand what this flipover distribution means, it might be helpful to look at it in another way. For that, we’ll employ fitted().

nd <-

tibble(period = seq(from = 0, to = 12, by = .1)) %>%

mutate(period_5 = period - 5)

f <-

fitted(fit12.9,

newdata = nd,

summary = F,

scale = "linear") %>%

data.frame() %>%

pivot_longer(everything()) %>%

bind_cols(expand(nd,

iter = 1:4000,

nesting(period, period_5)))

f %>%

glimpse()## Rows: 484,000

## Columns: 5

## $ name <chr> "X1", "X2", "X3", "X4", "X5", "X6", "X7", "X8", "X9", "X10", "X11", "X12", "X13", "X14", "X…

## $ value <dbl> -6.718848, -6.570127, -6.423196, -6.278057, -6.134709, -5.993153, -5.853387, -5.715413, -5.…

## $ iter <int> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1…

## $ period <dbl> 0.0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 1.0, 1.1, 1.2, 1.3, 1.4, 1.5, 1.6, 1.7, 1…

## $ period_5 <dbl> -5.0, -4.9, -4.8, -4.7, -4.6, -4.5, -4.4, -4.3, -4.2, -4.1, -4.0, -3.9, -3.8, -3.7, -3.6, -…Now make a logit hazard spaghetti plot.

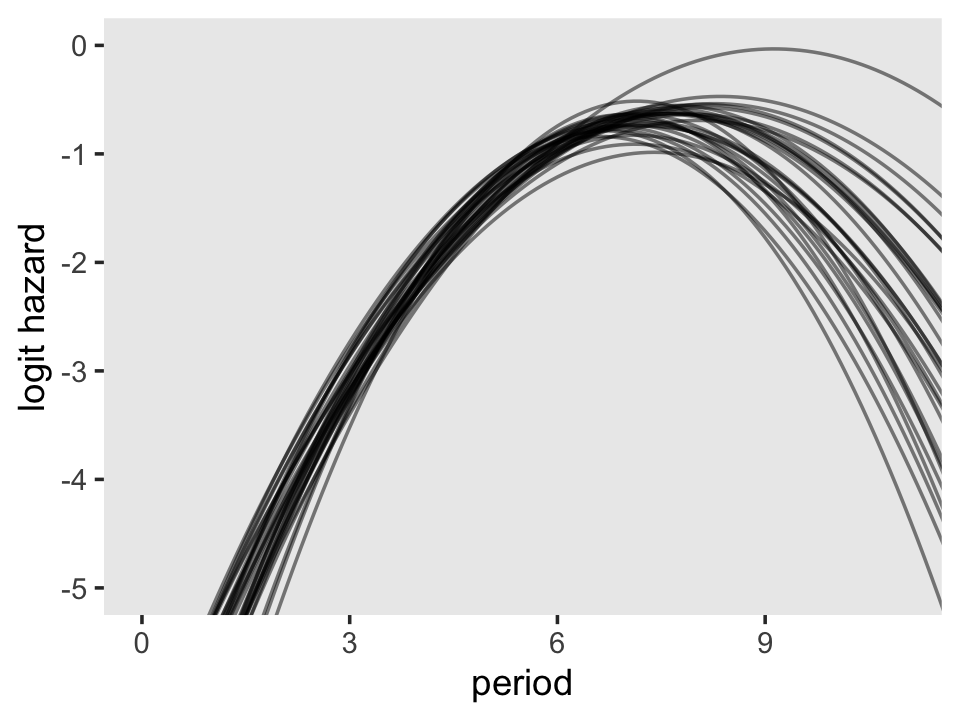

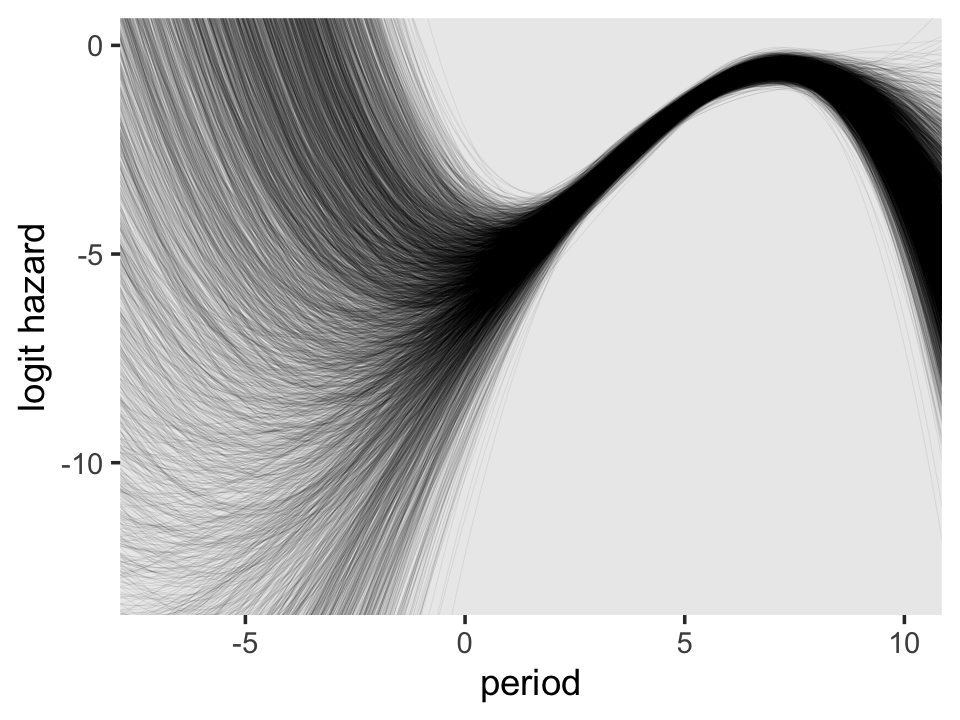

f %>%

# how many lines would you like?

filter(iter <= 30) %>%

ggplot(aes(x = period, y = value, group = iter)) +

geom_line(alpha = 1/2) +

ylab("logit hazard") +

coord_cartesian(xlim = c(0, 11),

ylim = c(-5, 0)) +

theme(panel.grid = element_blank())

To keep the plot manageable, we filtered to just the first 30 posterior draws. Each was depicted with its own hazard line. Note how each of those hazard lines peaks at a different point along the \(x\)-axis. Most peak somewhere around 7.4. Some take on notably higher values.

Now fit a cubic model using period_5, \((TIME_j - 5)\), as the measure of time.

fit12.10 <-

update(fit12.4,

newdata = tenure_pp,

event | trials(1) ~ 0 + Intercept + period_5 + I(period_5^2) + I(period_5^3),

chains = 4, cores = 4, iter = 2000, warmup = 1000,

seed = 12,

file = "fits/fit12.10")Check the model summary.

print(fit12.10)## Family: binomial

## Links: mu = logit

## Formula: event | trials(1) ~ Intercept + period_5 + I(period_5^2) + I(period_5^3) - 1

## Data: tenure_pp (Number of observations: 1474)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept -1.45 0.12 -1.68 -1.23 1.00 2027 2390

## period_5 0.75 0.10 0.56 0.94 1.00 1981 2332

## Iperiod_5E2 -0.11 0.03 -0.17 -0.07 1.00 1947 1964

## Iperiod_5E3 -0.02 0.01 -0.04 0.00 1.00 1927 2027

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

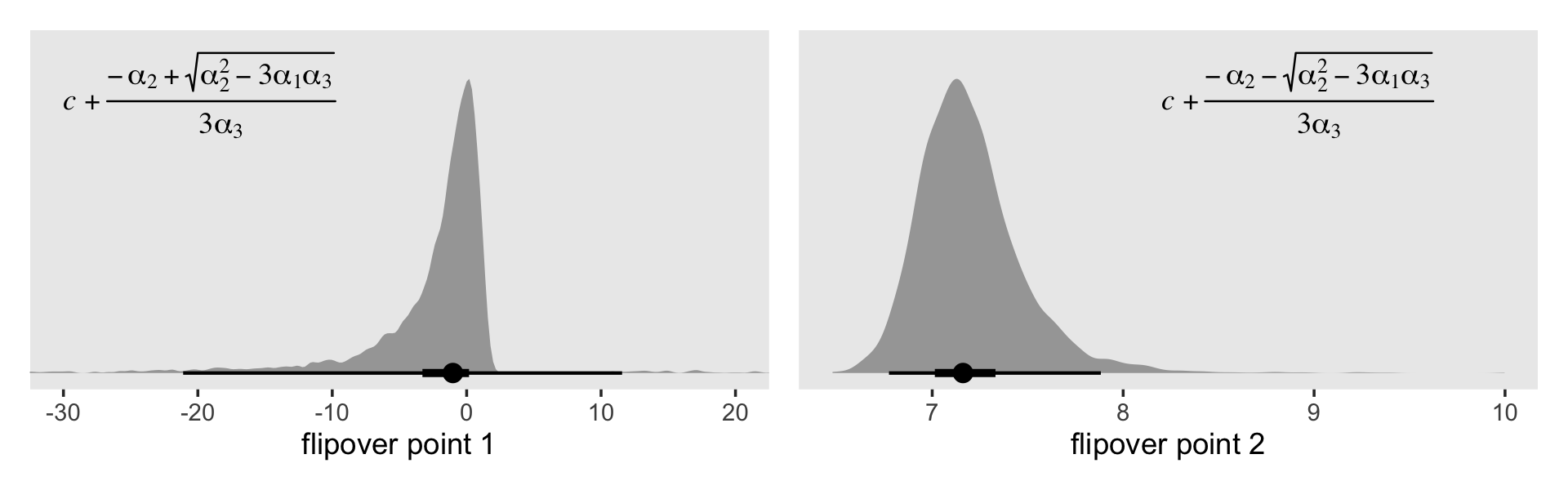

## scale reduction factor on split chains (at convergence, Rhat = 1).Focusing again on just the posterior means, this yields the following formula for the cubic discrete-time hazard model: \(\operatorname{logit} \hat h (t_j) =\;\) -1.4535 \(\text{one } +\) 0.7505\((\text{time}_j - 5)\) -0.1149\((\text{time}_j - 5)^2\) -0.0182\((\text{time}_j - 5)^3\). Now if we let \(c\), \(\alpha_1\) and \(\alpha_2\) retain their meanings from before and further let \(\alpha_3\) stand for the cubic term for time, we can define the two flipover points in the cubic logit hazard model as

\[\text{flipover points} = c + \frac{-\alpha_2 \pm \sqrt{\alpha_2^2 - 3 \alpha_1 \alpha_3}}{3 \alpha_3}.\]

Do you see that \(\pm\) sign in the numerator? That’s what gives us the two points. Now apply the formula to fit12.10 and plot.

# extract the posterior draws

draws <-

as_draws_df(fit12.10) %>%

transmute(c = 5,

a1 = b_period_5,

a2 = b_Iperiod_5E2,

a3 = b_Iperiod_5E3)

# flipover point with "+" in the numerator

p1 <-

draws %>%

mutate(`flipover point 1` = c + (- a2 + sqrt(a2^2 - 3 * a1 * a3)) / (3 * a3)) %>%

filter(!is.na(`flipover point 1`)) %>%

filter(`flipover point 1` > -50 & `flipover point 1` < 50) %>%

ggplot(aes(x = `flipover point 1`, y = 0)) +

stat_halfeyeh(.width = c(.5, .95),) +

annotate(geom = "text",

x = -30, y = .85,

label = "italic(c)+frac(-alpha[2]+sqrt(alpha[2]^2-3*alpha[1]*alpha[3]), 3*alpha[3])",

hjust = 0, family = "Times", parse = T) +

scale_y_continuous(NULL, breaks = NULL) +

coord_cartesian(xlim = c(-30, 20))

# flipover point with "-" in the numerator

p2 <-

draws %>%

mutate(`flipover point 2` = c + (- a2 - sqrt(a2^2 - 3 * a1 * a3)) / (3 * a3)) %>%

filter(!is.na(`flipover point 2`)) %>%

ggplot(aes(x = `flipover point 2`, y = 0)) +

stat_halfeyeh(.width = c(.5, .95)) +

annotate(geom = "text",

x = 8.2, y = .85,

label = "italic(c)+frac(-alpha[2]-sqrt(alpha[2]^2-3*alpha[1]*alpha[3]), 3*alpha[3])",

hjust = 0, family = "Times", parse = T) +

scale_y_continuous(NULL, breaks = NULL)

# combine!

(p1 | p2) & theme(panel.grid = element_blank())

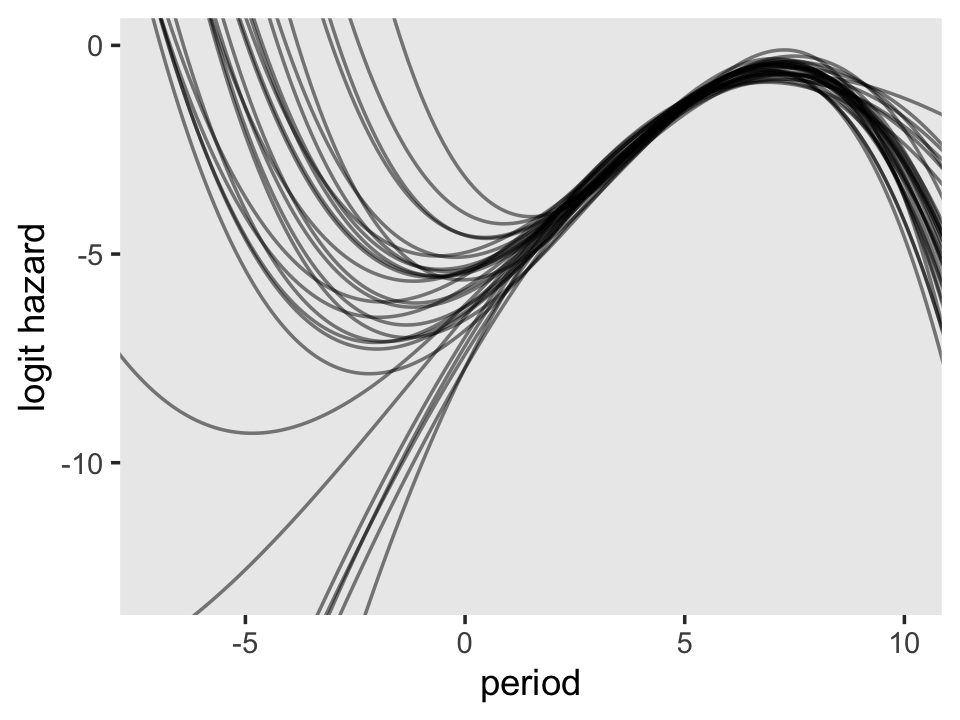

The plot on the right looks similar to the flipover plot from fit12.9. But look at the massive uncertainty in the flipover point in the plot on the left. If you play around with the code, you’ll see the \(x\)-axis extends far beyond the boundaries in the plot. Another spaghetti plot might help show what’s going on.

# redifine the `newdata`

nd <-

tibble(period = seq(from = -8, to = 11, by = .1)) %>%

mutate(period_5 = period - 5)

# employ `fitted()` and wrangle

f <-

fitted(fit12.10,

newdata = nd,

summary = F,

scale = "linear") %>%

data.frame() %>%

pivot_longer(everything()) %>%

bind_cols(expand(nd,

iter = 1:4000,

nesting(period, period_5)))

# plot!

f %>%

filter(iter <= 30) %>%

ggplot(aes(x = period, y = value, group = iter)) +

geom_line(alpha = 1/2) +

ylab("logit hazard") +

coord_cartesian(xlim = c(-7, 10),

ylim = c(-13, 0)) +

theme(panel.grid = element_blank())

On the region to the right of 0 on the \(x\)-axis, the plot looks a lot like the one for the quadratic model. But look at how wildly the lines fan out on the left side of 0. Since that’s the region where we find the second flipover point, all that uncertainty got baked into its marginal posterior. Just because I think it looks cool, here’s a version of that plot with lines corresponding to all 4,000 posterior draws.

f %>%

ggplot(aes(x = period, y = value, group = iter)) +

geom_line(alpha = 1/10, linewidth = 1/10) +

ylab("logit hazard") +

coord_cartesian(xlim = c(-7, 10),

ylim = c(-13, 0)) +

theme(panel.grid = element_blank())

12.2 Using the complementary log-log link to specify a discrete-time hazard model

So far we’ve been using the

logit transformation [which] represented a natural choice because it allowed us to: (1) specify the model using familiar terminology; (2) use widely available software for estimation; and (3) exploit interpretative strategies with which many empirical researchers are comfortable.

Just like the choice of a completely general specification for the main effect of TIME, use of a logit link is an analytic decision. Nothing about the way in which the model is postulated or fit requires the adoption of this, or any other, particular link function. (p. 419–420, emphasis in the original)

The complimentary log-log transformation–clog-log for short–is a widely-used alternative. It follows the form

\[\operatorname{clog-log} = \log \big (- \log (1 - p) \big),\]

where \(p\) is a probability value. In words, “while the logit transformation yields the logarithm of the odds of event occurrence, the clog-log transformation yields the logarithm of the negated logarithm of the probability of event nonoccurrence” (p. 420, emphasis in the original).

12.2.1 The clog-log transformation: When and why it is useful.

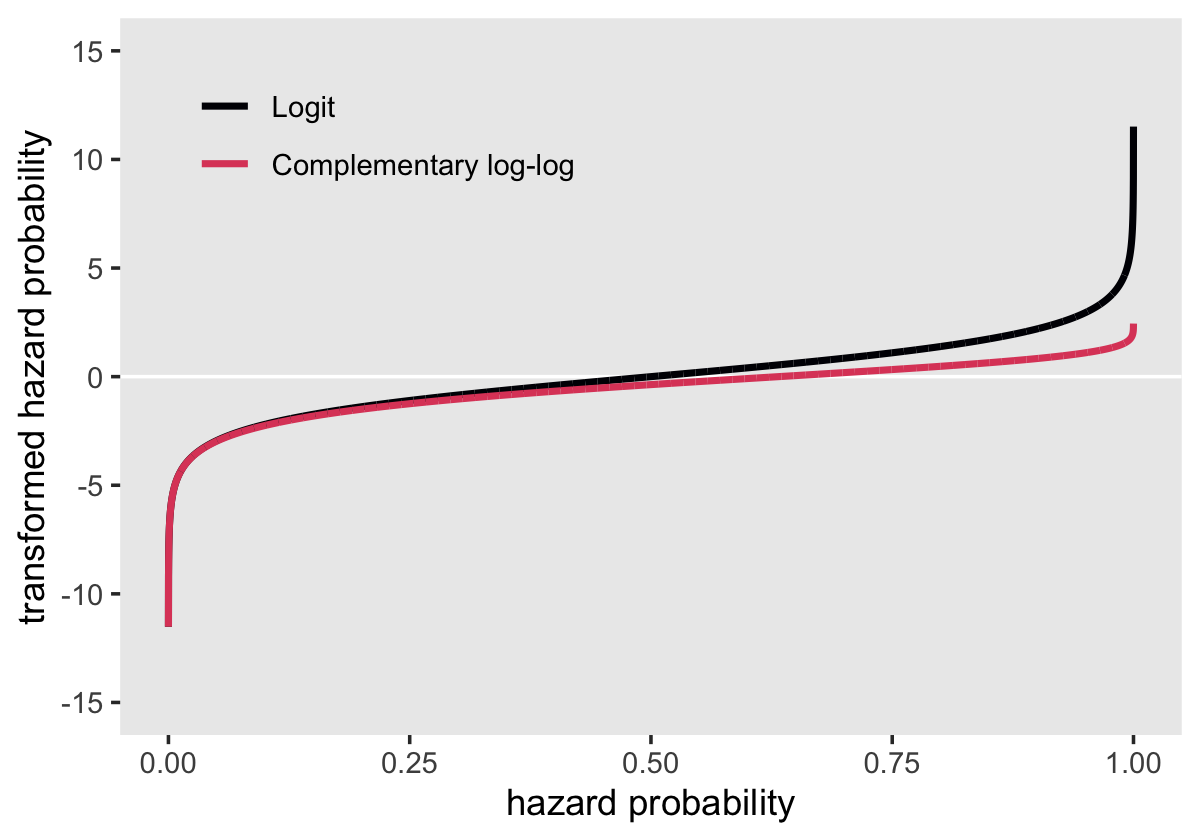

Here’s our version of Figure 12.2, where we compare the logit and clog-log transformations.

# simulate the data

tibble(p = seq(from = .00001, to = .99999, length.out = 1e4)) %>%

mutate(logit = log(p / (1 - p)),

cloglog = log(-log(1 - p))) %>%

pivot_longer(-p) %>%

mutate(name = factor(name,

levels = c("logit", "cloglog"),

labels = c("Logit", "Complementary log-log"))) %>%

# plot

ggplot(aes(x = p, y = value, color = name)) +

geom_hline(yintercept = 0, color = "white") +

geom_line(linewidth = 1) +

scale_color_viridis_d(NULL, option = "A", end = .6) +

scale_y_continuous("transformed hazard probability",

breaks = -3:3 * 5, limits = c(-15, 15)) +

xlab("hazard probability") +

theme(legend.background = element_rect(fill = "grey92"),

legend.key = element_rect(fill = "grey92", color = "grey92"),

legend.position = c(.25, .85),

panel.grid = element_blank())

Both transformations extend to the full \(-\infty\) to \(\infty\) parameter space. But whereas the logit is symmetric around zero and has a memorable point corresponding to \(p = .5\) (i.e., 0), the clog-log is asymmetric and has a less-intuitive point corresponding to \(p = .5\) (i.e., -0.3665129). Though somewhat odd, the advantage of the clog-log is

it provides a discrete-time statistical model for hazard that has a built-in proportional hazards assumption, and not a proportional odds assumption (as in the case of the logit link). This would be completely unremarkable except for one thing: it provides a conceptual parallelism between the clog-log discrete-time hazard model and the models that we will ultimately describe for continuous-time survival analysis. (p. 421, emphasis in the original)

12.2.2 A discrete-time hazard model using the complementary log-log link.

Singer and Willett (p. 422):

Any discrete-time hazard model postulated using a logit link can be rewritten using a clog-log link, simply by substituting transformations of the outcome. For example, we can write a general discrete-time hazard model for \(J\) time periods and \(P\) substantive predictors as:

\[\begin{align*} \operatorname{clog-log} h(t_j) & = [\alpha_1 D_1 + \alpha_2 D_2 + \cdots + \alpha_J D_J] \\ & \;\; + [\beta_1 X_1 + \beta_2 X_2 + \cdots + \beta_P X_P]. \end{align*}\]

Now reload the firstsex_pp.csv data to test this baby out.

sex_pp <- read_csv("data/firstsex_pp.csv")

glimpse(sex_pp)## Rows: 822

## Columns: 11

## $ id <dbl> 1, 1, 1, 2, 2, 2, 2, 2, 2, 3, 3, 3, 3, 3, 3, 5, 5, 5, 5, 5, 5, 6, 6, 6, 6, 6, 7, 7, 7, 9, 9, …

## $ period <dbl> 7, 8, 9, 7, 8, 9, 10, 11, 12, 7, 8, 9, 10, 11, 12, 7, 8, 9, 10, 11, 12, 7, 8, 9, 10, 11, 7, 8…

## $ event <dbl> 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 1, 0, 0, …

## $ d7 <dbl> 1, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 1, 0, …

## $ d8 <dbl> 0, 1, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 1, …

## $ d9 <dbl> 0, 0, 1, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, …

## $ d10 <dbl> 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, …

## $ d11 <dbl> 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, …

## $ d12 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, …

## $ pt <dbl> 0, 0, 0, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 1, 1, 1, 0, 0, …

## $ pas <dbl> 1.9788670, 1.9788670, 1.9788670, -0.5454916, -0.5454916, -0.5454916, -0.5454916, -0.5454916, …Our next task is to recreate Figure 12.3, which shows the “sample hazard functions for the grade of first intercourse data displayed on different scales,” namely the logit and clog-log (p. 423). The key word here is “sample,” meaning we’re not fitting full models. For our version of the corresponding figure from the last chapter (i.e., Figure 11.2 from page 363), we computed the sample logit hazard functions with life tables based on the output from the survival::survfit() function. I am not aware that you can get hazards in the clog-log scale from the survfit() function. The folks at IDRE solved the problem by fitting four maximum likelihood models using the glm() function (see here). We’ll follow a similar approach, but with weakly-regularizing priors using the brms::brm() function.

## logit

# pt == 0

fit12.11 <-

brm(data = sex_pp %>% filter(pt == 0),

family = binomial,

event | trials(1) ~ 0 + d7 + d8 + d9 + d10 + d11 + d12,

prior(normal(0, 4), class = b),

chains = 4, cores = 4, iter = 2000, warmup = 1000,

seed = 12,

file = "fits/fit12.11")

# pt == 1

fit12.12 <-

brm(data = sex_pp %>% filter(pt == 1),

family = binomial,

event | trials(1) ~ 0 + d7 + d8 + d9 + d10 + d11 + d12,

prior(normal(0, 4), class = b),

chains = 4, cores = 4, iter = 2000, warmup = 1000,

seed = 12,

file = "fits/fit12.12")

## clog-log

# pt == 0

fit12.13 <-

brm(data = sex_pp %>% filter(pt == 0),

family = binomial(link = cloglog),

event | trials(1) ~ 0 + d7 + d8 + d9 + d10 + d11 + d12,

prior(normal(0, 4), class = b),

chains = 4, cores = 4, iter = 2000, warmup = 1000,

seed = 12,

file = "fits/fit12.13")

# pt == 1

fit12.14 <-

brm(data = sex_pp %>% filter(pt == 1),

family = binomial(link = cloglog),

event | trials(1) ~ 0 + d7 + d8 + d9 + d10 + d11 + d12,

prior(normal(0, 4), class = b),

chains = 4, cores = 4, iter = 2000, warmup = 1000,

seed = 12,

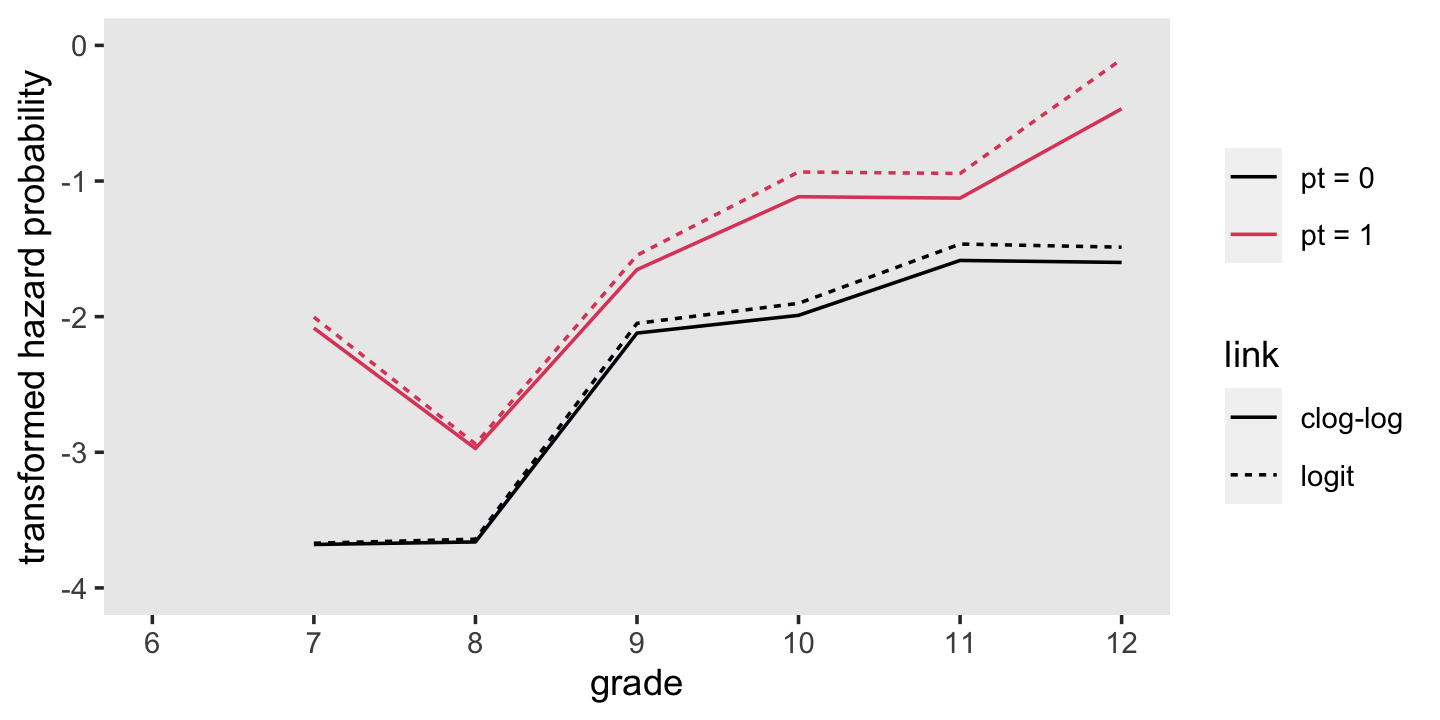

file = "fits/fit12.14")The primary line of code that distinguishes fit12.11 and fit12.12 from fit12.13 and fit12.14 is family = binomial(link = cloglog). With the family argument, we switched out the default logit link for the clog-log link. Before we can make our version of Figure 12.5, we will redefine our nd data for fitted(), pump our four fit objects into our custom make_fitted() function from earlier, and wrangle a little.

nd <-

tibble(period = 7:12) %>%

mutate(d7 = if_else(period == 7, 1, 0),

d8 = if_else(period == 8, 1, 0),

d9 = if_else(period == 9, 1, 0),

d10 = if_else(period == 10, 1, 0),

d11 = if_else(period == 11, 1, 0),

d12 = if_else(period == 12, 1, 0))

f <-

bind_rows(make_fitted(fit12.11, scale = "linear"),

make_fitted(fit12.12, scale = "linear"),

make_fitted(fit12.13, scale = "linear"),

make_fitted(fit12.14, scale = "linear")) %>%

mutate(pt = rep(str_c("pt = ", c(0:1, 0:1)), each = n() / 4),

link = rep(c("logit", "clog-log"), each = n() / 2))

f %>% glimpse()## Rows: 24

## Columns: 13

## $ Estimate <dbl> -3.6706692, -3.6407969, -2.0493384, -1.9018679, -1.4646795, -1.4876617, -2.0033286, -2.938…

## $ Est.Error <dbl> 0.7488902, 0.7365261, 0.3807953, 0.3840227, 0.3501475, 0.4042828, 0.3020423, 0.4622748, 0.…

## $ Q2.5 <dbl> -5.3764207, -5.3293475, -2.8240043, -2.7003814, -2.1926528, -2.3309166, -2.6308878, -3.926…

## $ Q97.5 <dbl> -2.41270860, -2.42506307, -1.33723119, -1.19372384, -0.81299679, -0.74145934, -1.44619911,…

## $ period <int> 7, 8, 9, 10, 11, 12, 7, 8, 9, 10, 11, 12, 7, 8, 9, 10, 11, 12, 7, 8, 9, 10, 11, 12

## $ d7 <dbl> 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0

## $ d8 <dbl> 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0

## $ d9 <dbl> 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0

## $ d10 <dbl> 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0

## $ d11 <dbl> 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0

## $ d12 <dbl> 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1

## $ pt <chr> "pt = 0", "pt = 0", "pt = 0", "pt = 0", "pt = 0", "pt = 0", "pt = 1", "pt = 1", "pt = 1", …

## $ link <chr> "logit", "logit", "logit", "logit", "logit", "logit", "logit", "logit", "logit", "logit", …Make our version of Figure 12.3.

f %>%

ggplot(aes(x = period, group = interaction(pt, link),

color = pt)) +

geom_line(aes(y = Estimate, linetype = link)) +

scale_color_viridis_d(NULL, option = "A", end = .6) +

scale_x_continuous("grade", breaks = 6:12, limits = c(6, 12)) +

ylab("transformed hazard probability") +

coord_cartesian(ylim = c(-4, 0)) +

theme(panel.grid = element_blank())

Our next step is to fit proper models to the data. First, we will refit fit11.8 from last chapter. Then we’ll fit the clog-log analogue to the same model. The two models, respectively, follow the form

\[ \begin{align*} \operatorname{logit} h(t_{ij}) & = [\alpha_7 D_{7ij} + \alpha_8 D_{8ij} + \cdots + \alpha_{12} D_{12ij}] + [\beta_1 \text{PT}_i ] \; \text{and} \\ \operatorname{clog-log} h(t_{ij}) & = [\alpha_7 D_{7ij} + \alpha_8 D_{8ij} + \cdots + \alpha_{12} D_{12ij}] + [\beta_1 \text{PT}_i ]. \end{align*} \]

Fit the models.

# logit

fit11.8 <-

brm(data = sex_pp,

family = binomial,

event | trials(1) ~ 0 + d7 + d8 + d9 + d10 + d11 + d12 + pt,

prior(normal(0, 4), class = b),

chains = 4, cores = 4, iter = 2000, warmup = 1000,

seed = 11,

file = "fits/fit11.08")

# clog-log

fit12.15 <-

brm(data = sex_pp,

family = binomial(link = cloglog),

event | trials(1) ~ 0 + d7 + d8 + d9 + d10 + d11 + d12 + pt,

prior(normal(0, 4), class = b),

chains = 4, cores = 4, iter = 2000, warmup = 1000,

seed = 12,

file = "fits/fit12.15")Both models used the binomial likelihood. They only differed in which link function we used to transform the data. To further explicate, we can more fully write out our Bayesian clog-log model as

\[ \begin{align*} \text{event}_{ij} & = \operatorname{Binomial}(n = 1, p_{ij}) \\ \operatorname{clog-log} (p_{ij}) & = [\alpha_7 D_{7ij} + \alpha_8 D_{8ij} + \cdots + \alpha_{12} D_{12ij}] + [\beta_1 \text{PT}_{1i} ] \\ \alpha_7, \alpha_8, ..., \alpha_{12} & \sim \operatorname{Normal}(0, 4) \\ \beta_1 & \sim \operatorname{Normal}(0, 4). \end{align*} \]

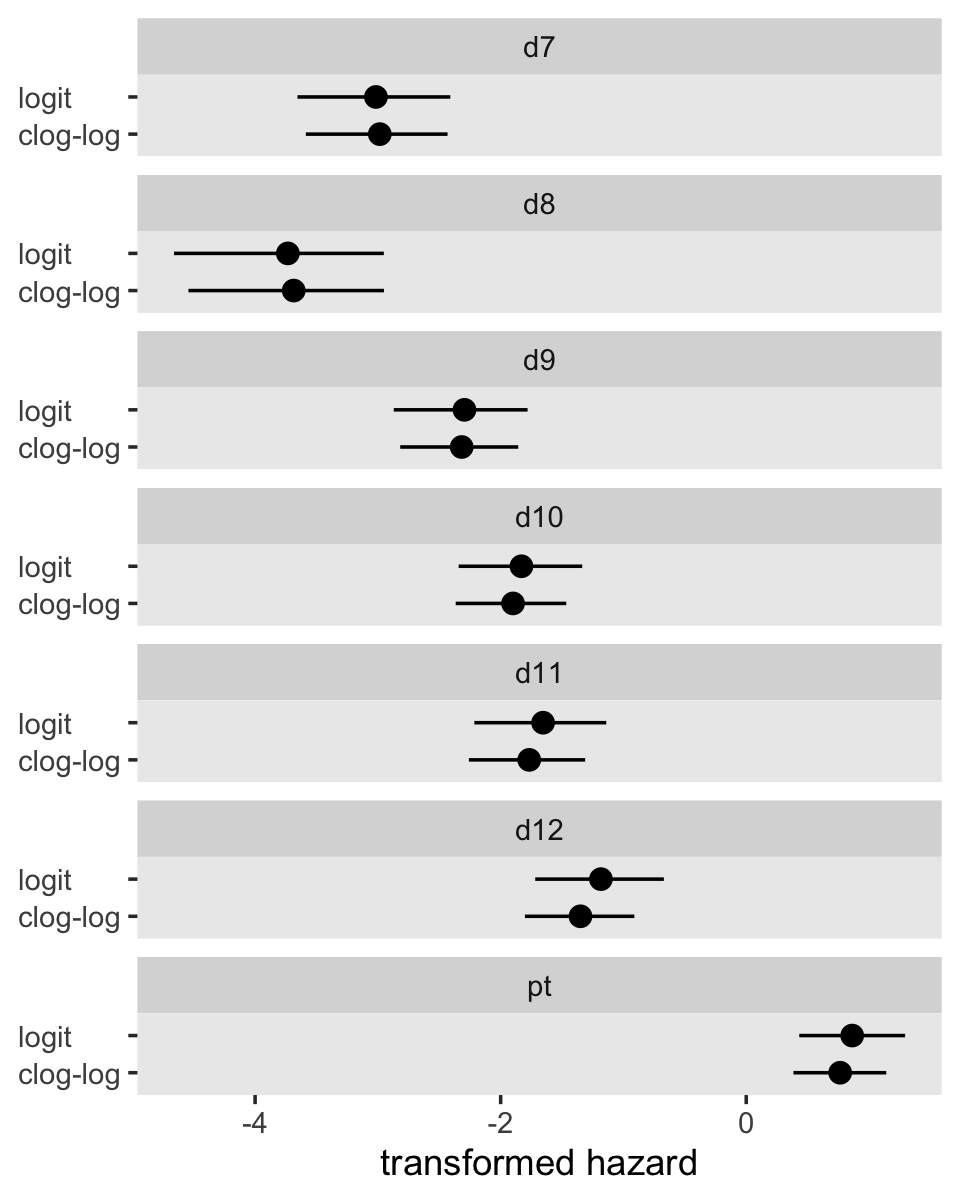

Here are the posterior means for fit11.8 and fit12.15, as depicted in the firs three columns of Table 12.3.

pars <-

bind_rows(fixef(fit11.8) %>% data.frame() %>% rownames_to_column("par"),

fixef(fit12.15) %>% data.frame() %>% rownames_to_column("par")) %>%

mutate(link = rep(c("logit", "clog-log"), each = n() / 2),

par = factor(par, levels = c(str_c("d", 7:12), "pt")))

pars %>%

select(par, link, Estimate) %>%

pivot_wider(names_from = link,

values_from = Estimate) %>%

select(par, `clog-log`, logit) %>%

mutate_if(is.double, round, digits = 4)## # A tibble: 7 × 3

## par `clog-log` logit

## <fct> <dbl> <dbl>

## 1 d7 -2.98 -3.02

## 2 d8 -3.69 -3.73

## 3 d9 -2.32 -2.29

## 4 d10 -1.90 -1.83

## 5 d11 -1.77 -1.65

## 6 d12 -1.35 -1.18

## 7 pt 0.765 0.863They might be easier to compare in a coefficient plot.

pars %>%

ggplot(aes(x = link, y = Estimate, ymin = Q2.5, ymax = Q97.5)) +

geom_pointrange() +

labs(x = NULL,

y = "transformed hazard") +

coord_flip() +

theme(axis.text.y = element_text(hjust = 0),

panel.grid = element_blank()) +

facet_wrap(~ par, ncol = 1)

Instead of comparing them with deviance values, we will compare the two models using Bayesian information criteria. For simplicity, we’ll focus on the LOO.

fit12.15 <- add_criterion(fit12.15, c("loo", "waic"))

loo_compare(fit12.15, fit11.8, criterion = "loo") %>% print(simplify = F)## elpd_diff se_diff elpd_loo se_elpd_loo p_loo se_p_loo looic se_looic

## fit12.15 0.0 0.0 -324.3 17.6 7.1 0.5 648.6 35.2

## fit11.8 -0.2 0.2 -324.5 17.6 7.2 0.6 649.0 35.3model_weights(fit12.15, fit11.8, weights = "loo") %>% round(digits = 3)## fit12.15 fit11.8

## 0.548 0.452From a LOO perspective, they’re basically the same. The parameter summaries are also quite similar between the two models. A coefficient plot might make it easy to see. “Numerical similarity is common when fitting identical models with alternate link functions (and net risks of event occurrence are low) and suggests that choice of a link function should depend on other considerations” (p. 423).

We already know from the last chapter that we can convert logits to probabilities with the function

\[p = \frac{1}{1 + e^{- \text{logit}}}.\]

The relevant inverse transformation for the clog-log link is

\[p = 1 - e^{\left (- e^{( \text{clog-log})} \right)}.\]

Now use those formulas to convert our Estimate values into the hazard metric, as shown in the last two columns of Table 12.3.

pars %>%

filter(par != "pt") %>%

mutate(Estimate = if_else(str_detect(link, "logit"),

1 / (1 + exp(-1 * Estimate)),

1 - exp(-exp(Estimate)))) %>%

select(par, link, Estimate) %>%

pivot_wider(names_from = link,

values_from = Estimate) %>%

select(par, `clog-log`, logit) %>%

mutate(`clog-log - logit` = `clog-log` - logit) %>%

mutate_if(is.double, round, digits = 4)## # A tibble: 6 × 4

## par `clog-log` logit `clog-log - logit`

## <fct> <dbl> <dbl> <dbl>

## 1 d7 0.0493 0.0467 0.0026

## 2 d8 0.0248 0.0234 0.0014

## 3 d9 0.0938 0.0916 0.0023

## 4 d10 0.139 0.138 0.0009

## 5 d11 0.157 0.160 -0.0035

## 6 d12 0.228 0.234 -0.0061For kicks, we threw in a column of their differences. The clog-log - logit column highlights how

in general, fitted hazard functions from models estimated with both link functions will be indistinguishable unless hazard is high, once again suggesting that the quality of the estimates does not provide a rationale for selecting one of these link functions over the other. (p. 424)

Now focus on the pt parameter for both models.

In both cases, we antilog [i.e., exponentiate] parameter estimates, but whereas an antilogged [i.e., exponentiated] coefficient from a model with a logit link is an odds ratio, an antilogged coefficient from a model with a clog-log link is a hazard ratio. (p. 424)

Here’s that in a plot.

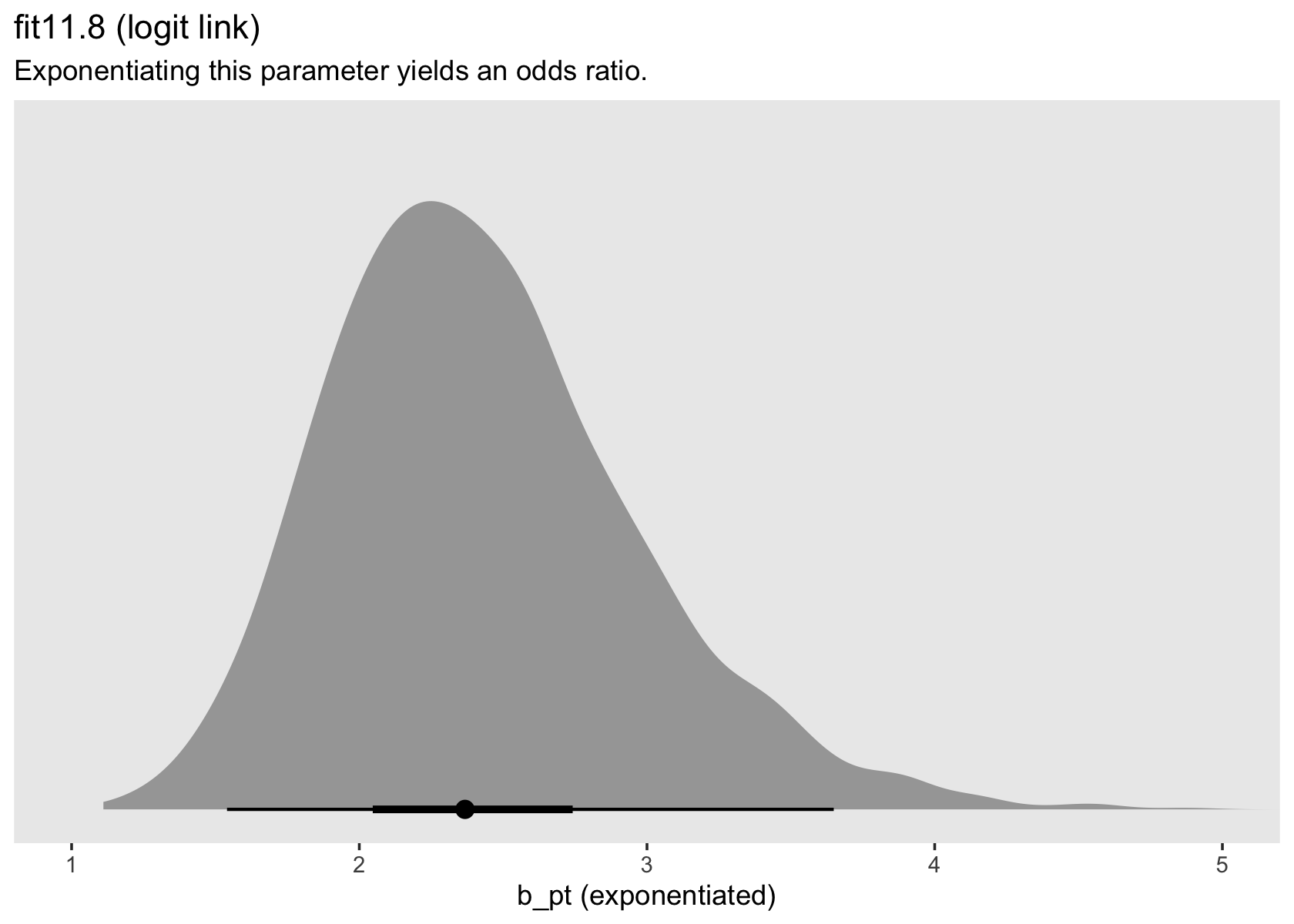

as_draws_df(fit11.8) %>%

ggplot(aes(x = b_pt %>% exp(), y = 0)) +

stat_halfeye(.width = c(.5, .95), na.rm = T) +

scale_x_continuous("b_pt (exponentiated)") +

scale_y_continuous(NULL, breaks = NULL) +

labs(title = "fit11.8 (logit link)",

subtitle = "Exponentiating this parameter yields an odds ratio.") +

coord_cartesian(xlim = c(1, 5)) +

theme(panel.grid = element_blank())

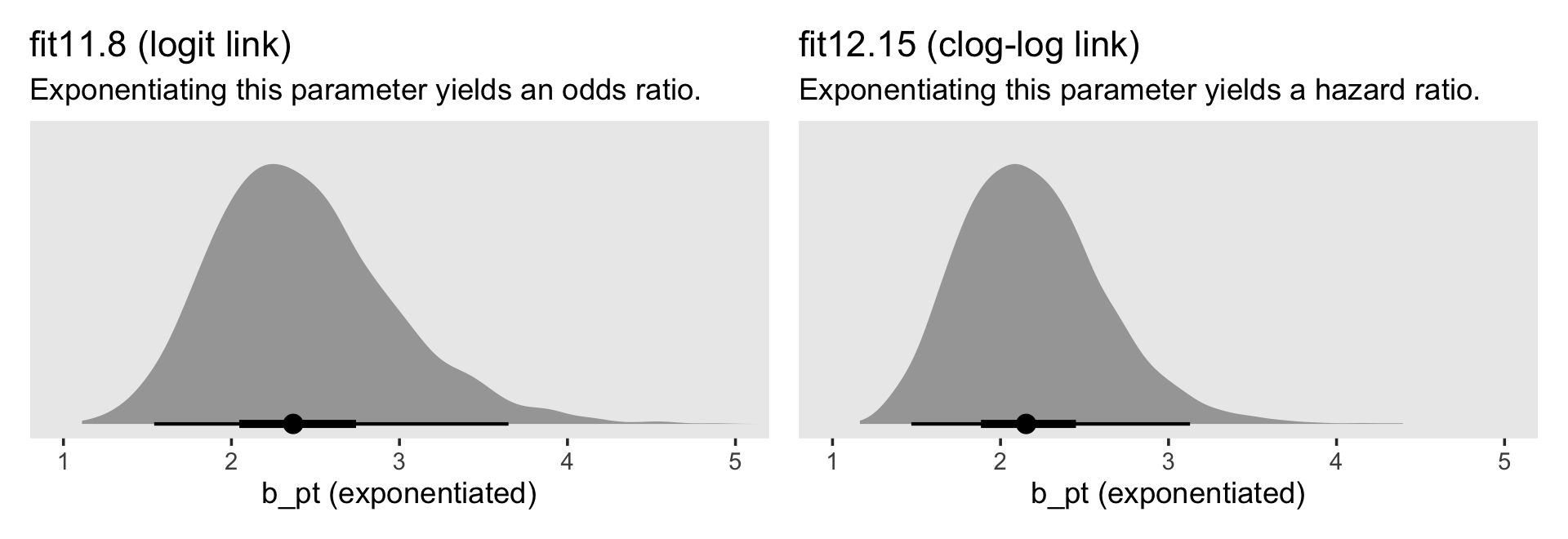

# left

p1 <-

as_draws_df(fit11.8) %>%

ggplot(aes(x = b_pt %>% exp(), y = 0)) +

stat_halfeye(.width = c(.5, .95), na.rm = T) +

scale_x_continuous("b_pt (exponentiated)") +

scale_y_continuous(NULL, breaks = NULL) +

labs(title = "fit11.8 (logit link)",

subtitle = "Exponentiating this parameter yields an odds ratio.") +

coord_cartesian(xlim = c(1, 5)) +

theme(panel.grid = element_blank())

# right

p2 <-

as_draws_df(fit12.15) %>%

ggplot(aes(x = b_pt %>% exp(), y = 0)) +

stat_halfeye(.width = c(.5, .95)) +

scale_x_continuous("b_pt (exponentiated)", limits = c(1, 5)) +

scale_y_continuous(NULL, breaks = NULL) +

labs(title = "fit12.15 (clog-log link)",

subtitle = "Exponentiating this parameter yields a hazard ratio.") +

theme(panel.grid = element_blank())

# combine

p1 | p2

For the clog-log model, then,

in every grade from 7th to 12th, we estimate the hazard of first intercourse for boys who experienced a parenting transition to be [about] 2.2 times the hazard for their peers raised with both biological parents. This interpretation contrasts with that from the model with a logit link, which suggests that the odds of first intercourse are [about] 2.4 times the odds for boys who experienced a parenting transition. (pp. 424–425, emphasis in the original)

12.2.3 Choosing between logit and clog-log links for discrete-time hazard models.

The primary advantage of the clog-log link is that in invoking a proportional hazards assumption it yields a direct analog to the continuous time hazard model…. If you believe that the underlying metric for time is truly continuous and that the only reason you observe discretized values is due to measurement difficulties, a model specified with a clog-log link has much to recommend it….

[Yet,] if data are collected in truly discrete time, the clog-log specification has no particular advantage. As N. Beck (1999); Beck, Katz, and Tucker (1998); and Sueyoshi (1995) argue, the proportional hazards assumption is no more sacred than the proportional odds assumption, and while consistency across models is noble, so, too, is simplicity (which decreases the chances of mistake). (p. 426)

12.3 Time-varying predictors

“Discrete-time survival analysis adopts naturally to the inclusion of time-varying predictors. Because models are fit using a person-period data set, a time-varying predictor simply takes on its appropriate value for each person in each period” (p. 427).

12.3.1 Assumptions underlying a model with time-varying predictors.

Load the depression data from Wheaton, Rozell, and Hall (1997).

depression_pp <-

read_csv("data/depression_pp.csv") %>%

# convert the column names to lower case

rename_all(tolower)

glimpse(depression_pp)## Rows: 36,997

## Columns: 22

## $ id <dbl> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, …

## $ onset <dbl> NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA…

## $ age <dbl> 34, 34, 34, 34, 34, 34, 34, 34, 34, 34, 34, 34, 34, 34, 34, 34, 34, 34, 34, 34, 34, 34, 34…

## $ censor <dbl> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, …

## $ censage <dbl> 34, 34, 34, 34, 34, 34, 34, 34, 34, 34, 34, 34, 34, 34, 34, 34, 34, 34, 34, 34, 34, 34, 34…

## $ aged <dbl> NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA…

## $ female <dbl> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, …

## $ nsibs <dbl> 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, …

## $ sibs12 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, …

## $ sibs34 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, …

## $ sibs56 <dbl> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, …

## $ sibs78 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, …

## $ sibs9plus <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, …

## $ bigfamily <dbl> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, …

## $ period <dbl> 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, …

## $ event <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, …

## $ pd <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, …

## $ pdnow <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, …

## $ one <dbl> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, …

## $ age_18 <dbl> -14, -13, -12, -11, -10, -9, -8, -7, -6, -5, -4, -3, -2, -1, 0, 1, 2, 3, 4, 5, 6, 7, 8, 9,…

## $ age_18sq <dbl> 196, 169, 144, 121, 100, 81, 64, 49, 36, 25, 16, 9, 4, 1, 0, 1, 4, 9, 16, 25, 36, 49, 64, …

## $ age_18cub <dbl> -2744, -2197, -1728, -1331, -1000, -729, -512, -343, -216, -125, -64, -27, -8, -1, 0, 1, 8…Here is the participant age range.

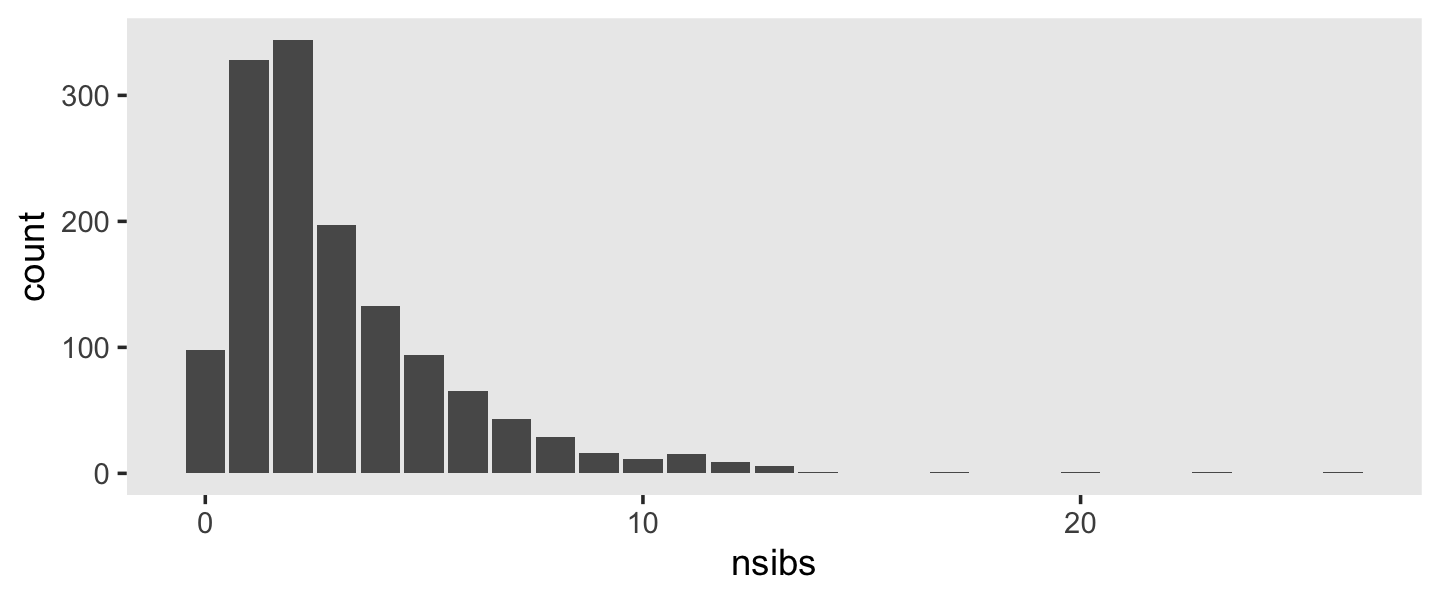

range(depression_pp$age)## [1] 17 57We might count how many participants experienced a parental divorce, pd, like this.

depression_pp %>%

group_by(id) %>%

summarise(pd = if_else(sum(pd) > 0, 1, 0)) %>%

ungroup() %>%

count(pd) %>%

mutate(percent = 100 * n / sum(n))## # A tibble: 2 × 3

## pd n percent

## <dbl> <int> <dbl>

## 1 0 1248 89.6

## 2 1 145 10.4Here we fit the discrete hazard model based on a quadratic treatment of \(\text{age} - 18\) with and the time-varying predictor pd and without/with the time-invariant predictor female.

1:52

# 1.092947 hours

fit12.16 <-

brm(data = depression_pp,

family = binomial,

event | trials(1) ~ 0 + Intercept + age_18 + I(age_18^2) + I(age_18^3) + pd,

prior(normal(0, 4), class = b),

chains = 4, cores = 4, iter = 2000, warmup = 1000,

seed = 12,

file = "fits/fit12.16")

# 1.055131 hours

fit12.17 <-

brm(data = depression_pp,

family = binomial,

event | trials(1) ~ 0 + Intercept + age_18 + I(age_18^2) + I(age_18^3) + pd + female,

prior(normal(0, 4), class = b),

chains = 4, cores = 4, iter = 2000, warmup = 1000,

seed = 12,

file = "fits/fit12.17")Check the summaries.

print(fit12.16)## Family: binomial

## Links: mu = logit

## Formula: event | trials(1) ~ 0 + Intercept + age_18 + I(age_18^2) + I(age_18^3) + pd

## Data: depression_pp (Number of observations: 36997)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept -4.25 0.08 -4.40 -4.10 1.00 1743 1884

## age_18 0.06 0.01 0.04 0.08 1.00 1874 2082

## Iage_18E2 -0.01 0.00 -0.01 -0.01 1.00 2637 2715

## Iage_18E3 0.00 0.00 0.00 0.00 1.00 2290 2451

## pd 0.42 0.16 0.09 0.73 1.00 2333 1984

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).print(fit12.17)## Family: binomial

## Links: mu = logit

## Formula: event | trials(1) ~ 0 + Intercept + age_18 + I(age_18^2) + I(age_18^3) + pd + female

## Data: depression_pp (Number of observations: 36997)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept -4.59 0.11 -4.81 -4.38 1.00 1550 1673

## age_18 0.06 0.01 0.04 0.08 1.00 2095 2161

## Iage_18E2 -0.01 0.00 -0.01 -0.01 1.00 3563 3281

## Iage_18E3 0.00 0.00 0.00 0.00 1.00 2955 2815

## pd 0.41 0.16 0.08 0.71 1.00 2113 1779

## female 0.55 0.11 0.33 0.77 1.00 1759 1859

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).Before we make our version of Figure 12.4, we’ll need to make an aggregated version of the data, which will allow us to replicate the dots and plus signs. We’ll use the same basic wrangling steps from when we made sex_aggregated from back in Chapter 11.

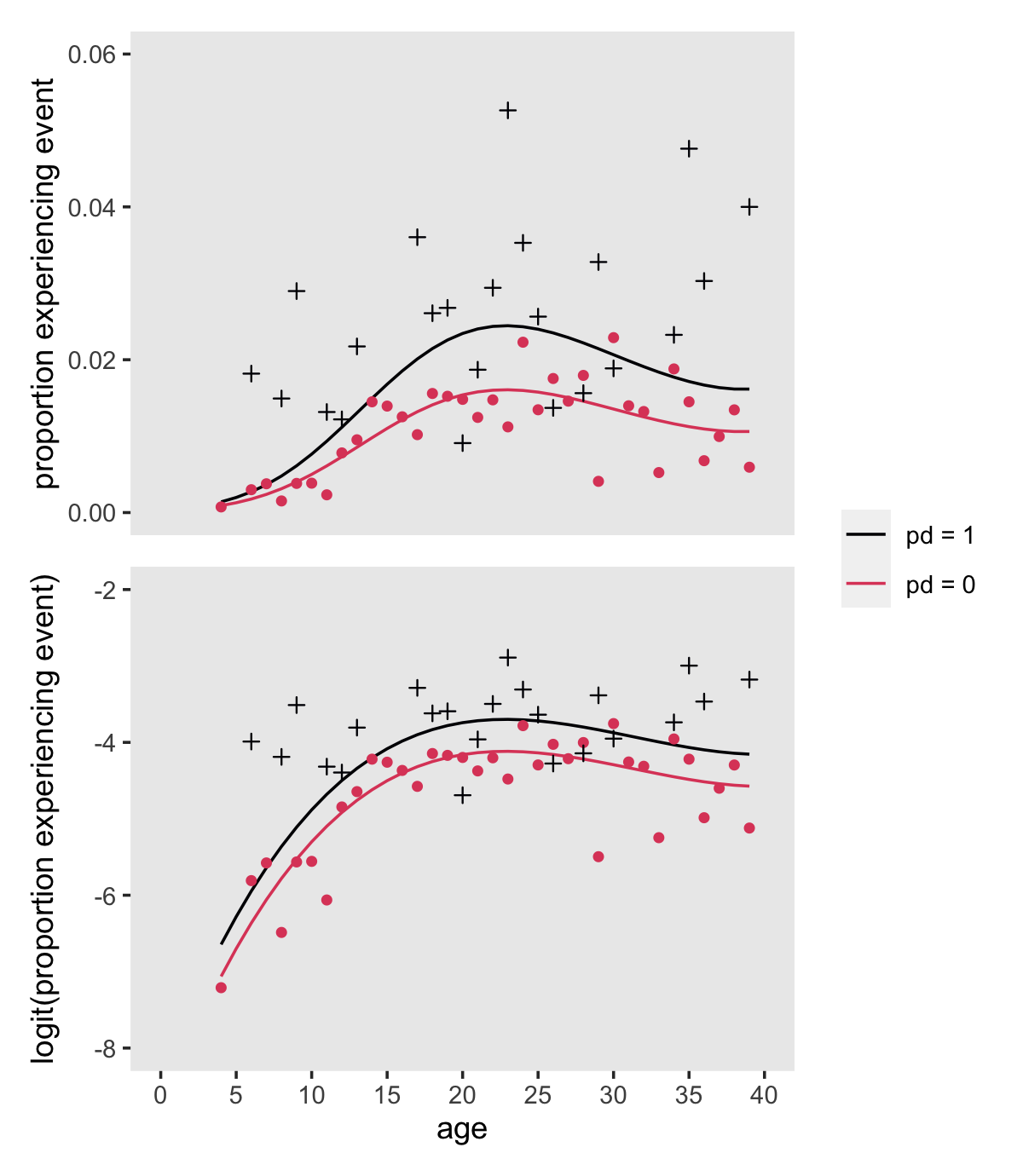

depression_aggregated <-

depression_pp %>%

mutate(event = if_else(event == 1, "event", "no_event")) %>%

group_by(age_18) %>%

count(event, pd) %>%

ungroup() %>%

complete(age_18, event, pd) %>%

pivot_wider(names_from = event,

values_from = n) %>%

mutate(pd = factor(str_c("pd = ", pd), levels = str_c("pd = ", 1:0)),

age = age_18 + 18,

total = event + no_event) %>%

mutate(proportion = event / total) %>%

mutate(logit = log(proportion / (1 - proportion)))

depression_aggregated## # A tibble: 72 × 8

## age_18 pd event no_event age total proportion logit

## <dbl> <fct> <int> <int> <dbl> <int> <dbl> <dbl>

## 1 -14 pd = 0 1 1353 4 1354 0.000739 -7.21

## 2 -14 pd = 1 NA 39 4 NA NA NA

## 3 -13 pd = 0 NA 1344 5 NA NA NA

## 4 -13 pd = 1 NA 48 5 NA NA NA

## 5 -12 pd = 0 4 1333 6 1337 0.00299 -5.81

## 6 -12 pd = 1 1 54 6 55 0.0182 -3.99

## 7 -11 pd = 0 5 1322 7 1327 0.00377 -5.58

## 8 -11 pd = 1 NA 60 7 NA NA NA

## 9 -10 pd = 0 2 1313 8 1315 0.00152 -6.49

## 10 -10 pd = 1 1 66 8 67 0.0149 -4.19

## # ℹ 62 more rowsNow make Figure 12.4.

nd <-

crossing(age_18 = -14:21,

pd = 0:1) %>%

mutate(age = age_18 + 18)

# hazard (top panel)

p1 <-

fitted(fit12.16,

newdata = nd) %>%

data.frame() %>%

bind_cols(nd) %>%

mutate(pd = factor(str_c("pd = ", pd),

levels = str_c("pd = ", 1:0))) %>%

ggplot(aes(x = age, group = pd, color = pd)) +

geom_line(aes(y = Estimate)) +

geom_point(data = depression_aggregated,

aes(y = proportion, shape = pd),

show.legend = F) +

scale_x_continuous(NULL, breaks = NULL, limits = c(0, 40)) +

scale_y_continuous("proportion experiencing event", limits = c(0, 0.06))

# logit(hazard) (top panel)

p2 <-

fitted(fit12.16,

newdata = nd,

scale = "linear") %>%

data.frame() %>%

bind_cols(nd) %>%

mutate(pd = factor(str_c("pd = ", pd),

levels = str_c("pd = ", 1:0))) %>%

ggplot(aes(x = age, group = pd, color = pd)) +

geom_line(aes(y = Estimate)) +

geom_point(data = depression_aggregated,

aes(y = logit, shape = pd),

show.legend = F) +

scale_x_continuous(breaks = 0:8 * 5, limits = c(0, 40)) +

scale_y_continuous("logit(proportion experiencing event)", limits = c(-8, -2))

# combine

(

(p1 / p2) &

scale_shape_manual(values = c(3, 16)) &

scale_color_viridis_d(NULL, option = "A", end = .6) &

theme(panel.grid = element_blank())

) +

plot_layout(guides = "collect")

12.3.2 Interpreting and displaying time-varying predictors’ effects.

We jumped the gun a little, but to repeat:

you can fit a discrete-time hazard model with time-varying predictors using exactly the same strategies presented in chapter 11. In the person-period data set, use a logistic regression routine to regress the event indicator on variables representing the main effect of TIME and the desired predictors. (pp. 434–435, emphasis in the original)

For example, here is the statistical formula we used when we fit fit12.17:

\[ \begin{align*} \text{event}_{ij} & = \operatorname{Binomial}(n = 1, p_{ij}) \\ \operatorname{logit} (p_{ij}) & = [\alpha_0 + \alpha_1 (\text{age}_{ij} - 18) + \alpha_2 (\text{age}_{ij} - 18)^2 + \alpha_3 (\text{age}_{ij} - 18)^3] \\ & \; \; + [\beta_1 \text{pd}_{ij} + \beta_2 \text{female}_{i}] \\ \alpha_0, ..., \alpha_3 & \sim \operatorname{Normal}(0, 4) \\ \beta_1 \text{ and } \beta_2 & \sim \operatorname{Normal}(0, 4), \end{align*} \]

where \(\operatorname{logit} (p_{ij}) = \operatorname{logit} \hat h(t_{ij})\). If you’d like to compare our results for those displayed by Singer and Willett in Equation 12.8, here are our posterior means.

fixef(fit12.17)[, 1] %>% round(digits = 4)## Intercept age_18 Iage_18E2 Iage_18E3 pd female

## -4.5895 0.0602 -0.0075 0.0002 0.4066 0.5453The values are pretty close. Though we won’t compare fit12.16 and fit12.17 using deviance values, we will use information criteria.

fit12.16 <- add_criterion(fit12.16, criterion = "waic")

fit12.17 <- add_criterion(fit12.17, criterion = "waic")

loo_compare(fit12.16, fit12.17, criterion = "waic") %>% print(simplify = F)## elpd_diff se_diff elpd_waic se_elpd_waic p_waic se_p_waic waic se_waic

## fit12.17 0.0 0.0 -2075.6 86.0 6.0 0.4 4151.3 172.0

## fit12.16 -12.0 5.0 -2087.7 86.4 5.0 0.4 4175.4 172.8model_weights(fit12.16, fit12.17, weights = "waic") %>% round(digits = 3)## fit12.16 fit12.17

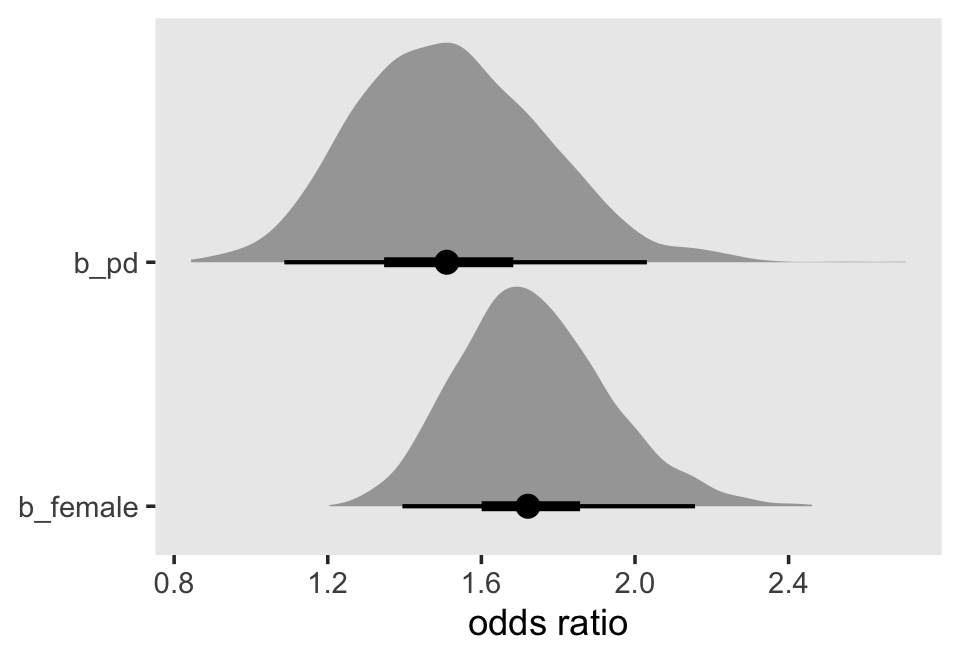

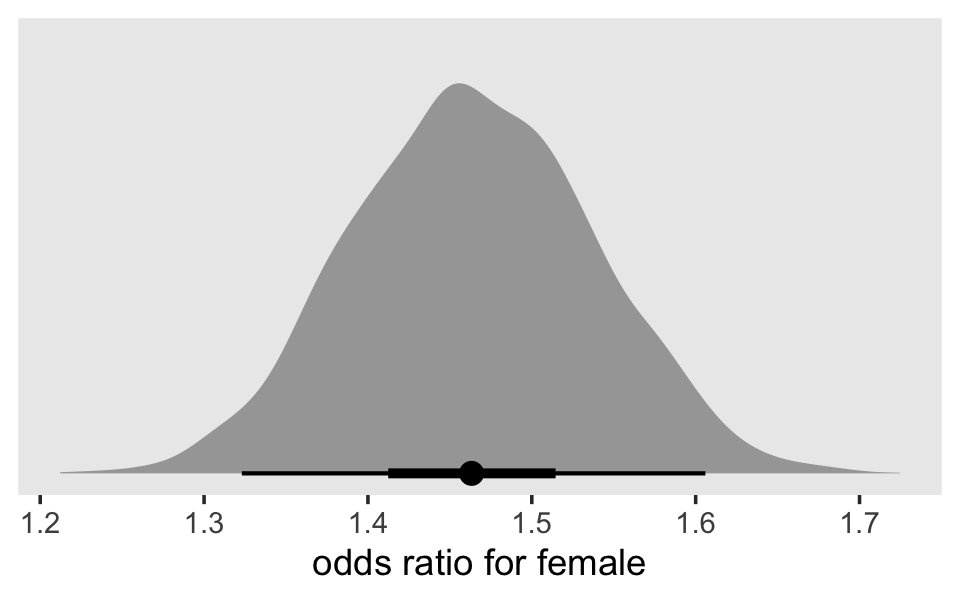

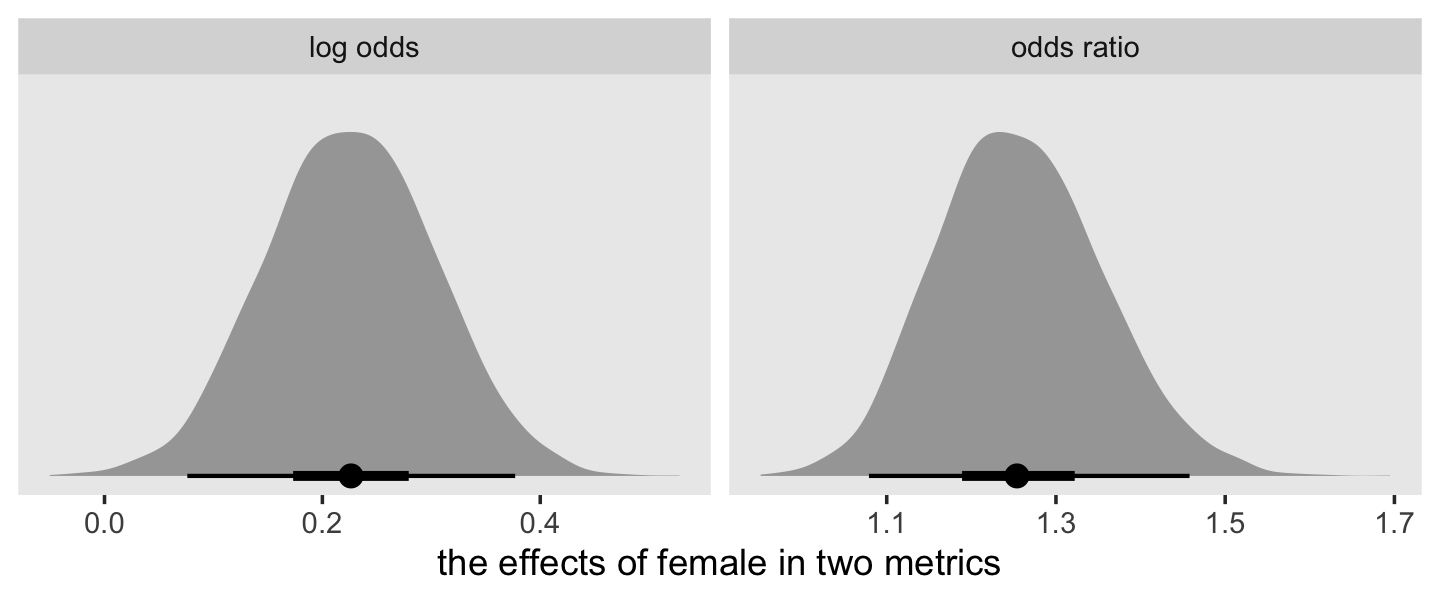

## 0 1Based on both the WAIC difference and the WAIC weights, fit12.17 is the clear favorite. From that model, Here’s a plot of the anti-logged (i.e., exponentiated) posteriors for pd and female, which yields their odds ratios.

as_draws_df(fit12.17) %>%

pivot_longer(b_pd:b_female) %>%

mutate(`odds ratio` = exp(value)) %>%

ggplot(aes(x = `odds ratio`, y = name)) +

stat_halfeye(.width = c(.5, .95), normalize = "xy") +

ylab(NULL) +

coord_cartesian(ylim = c(1.4, 2.4)) +

theme(panel.grid = element_blank())

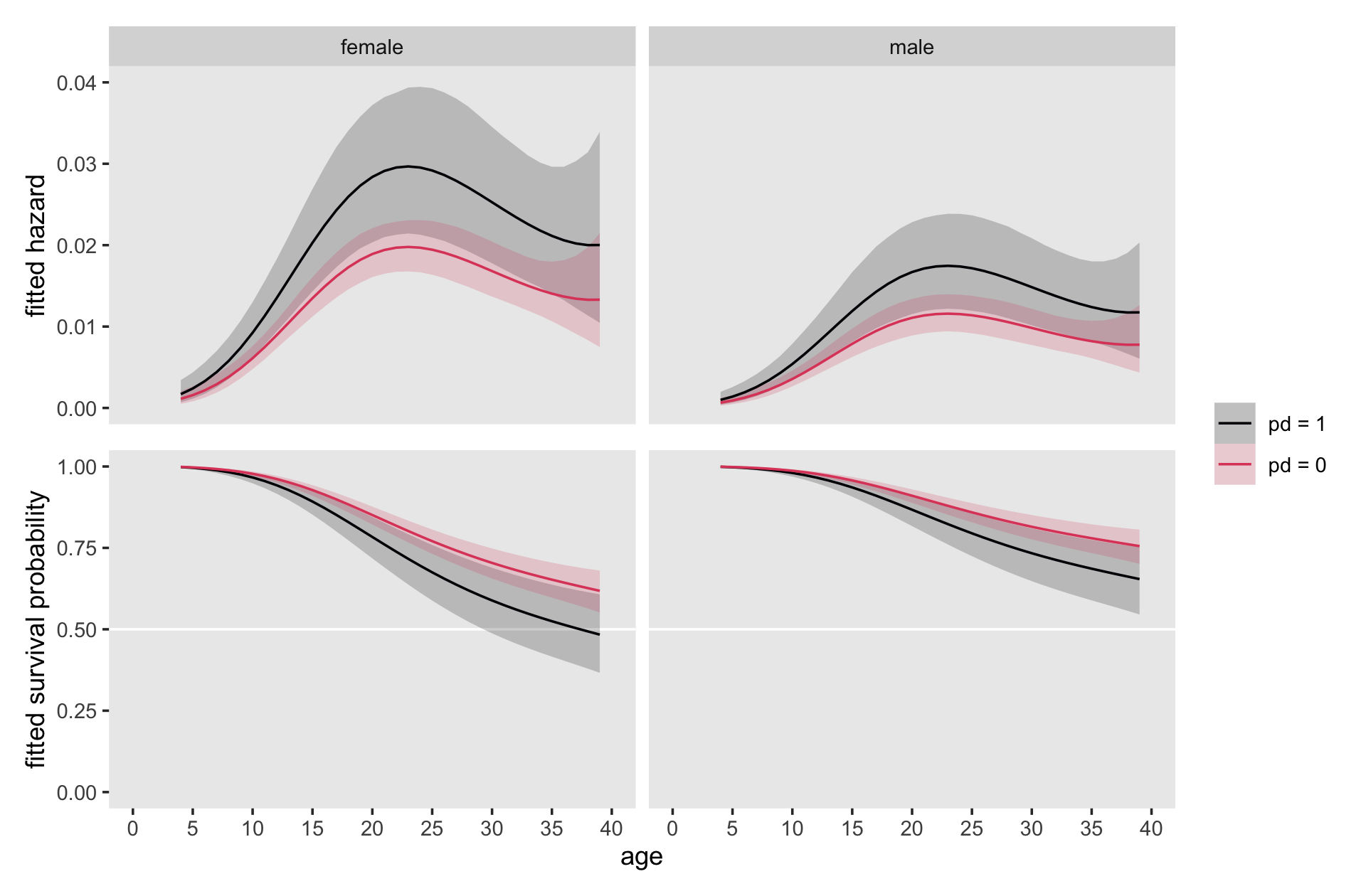

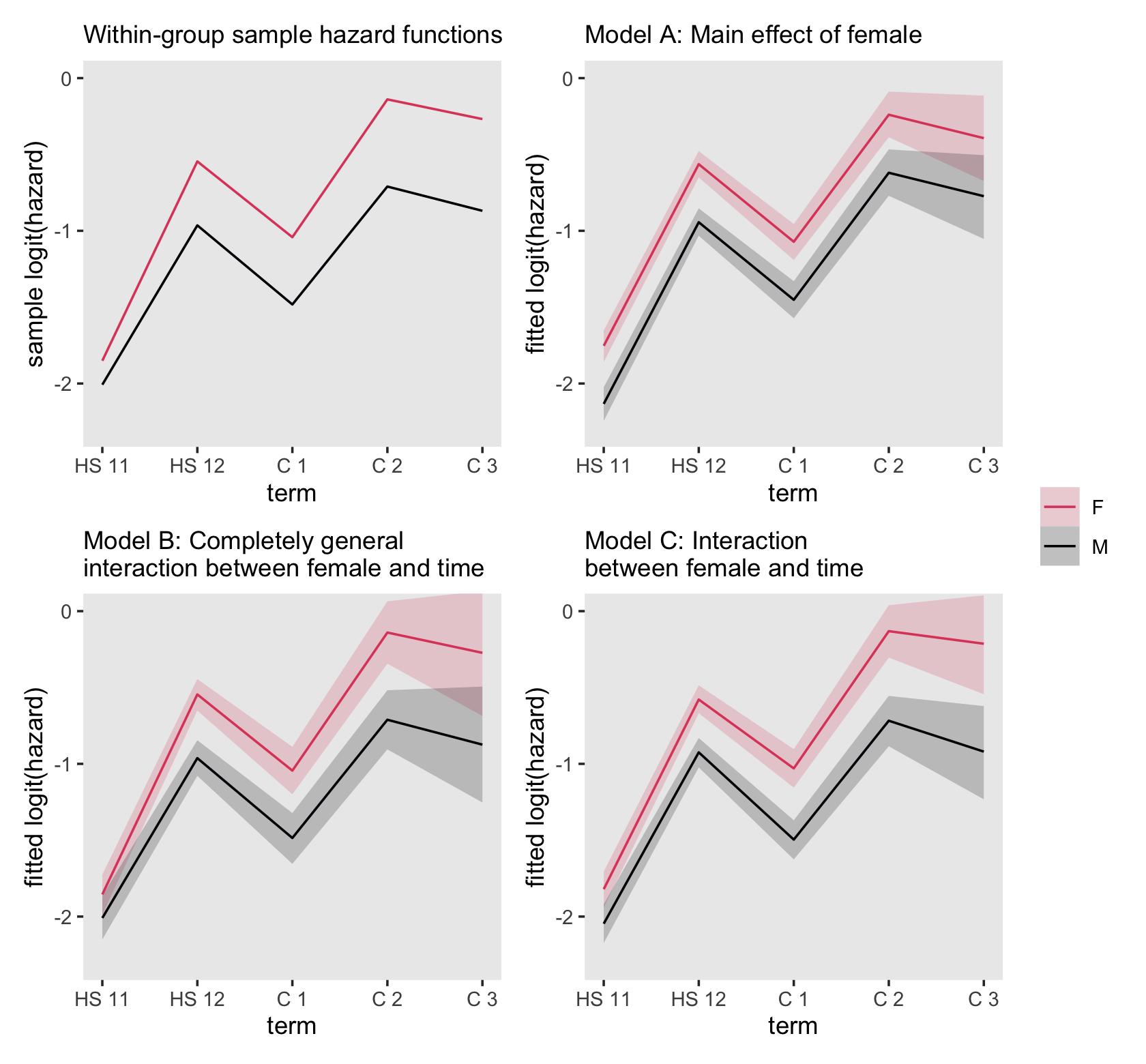

Here is our version of Figure 12.5.

nd <-

crossing(female = 0:1,

pd = 0:1) %>%

expand_grid(age_18 = -14:21) %>%

mutate(age = age_18 + 18)

f <-

fitted(fit12.17,

newdata = nd) %>%

data.frame() %>%

bind_cols(nd) %>%

mutate(sex = if_else(female == 0, "male", "female"),

pd = factor(str_c("pd = ", pd),

levels = str_c("pd = ", 1:0)))

# hazard (top panel)

p1 <-

f %>%

ggplot(aes(x = age, group = pd, color = pd, fill = pd)) +

geom_ribbon(aes(ymin = Q2.5, ymax = Q97.5),

alpha = 1/5, linewidth = 0) +

geom_line(aes(y = Estimate)) +

scale_x_continuous(NULL, breaks = NULL, limits = c(0, 40)) +

scale_y_continuous("fitted hazard", limits = c(0, 0.04))

# survival (bottom panel)

p2 <-

f %>%

group_by(sex, pd) %>%

mutate_at(vars(Estimate, Q2.5, Q97.5), .funs = ~cumprod(1 - .)) %>%

ggplot(aes(x = age, group = pd, color = pd, fill = pd)) +

geom_hline(yintercept = .5, color = "white") +

geom_ribbon(aes(ymin = Q2.5, ymax = Q97.5),

alpha = 1/5, linewidth = 0) +

geom_line(aes(y = Estimate)) +

scale_x_continuous(breaks = 0:8 * 5, limits = c(0, 40)) +

scale_y_continuous("fitted survival probability", limits = c(0, 1)) +

theme(strip.background.x = element_blank(),

strip.text.x = element_blank())

# combine

(

(p1 / p2) &

scale_fill_viridis_d(NULL, option = "A", end = .6) &

scale_color_viridis_d(NULL, option = "A", end = .6) &

theme(panel.grid = element_blank()) &

facet_wrap(~ sex)

) +

plot_layout(guides = "collect")

Note how with this many age levels, our discrete-time models are beginning to look like continuous-time models.

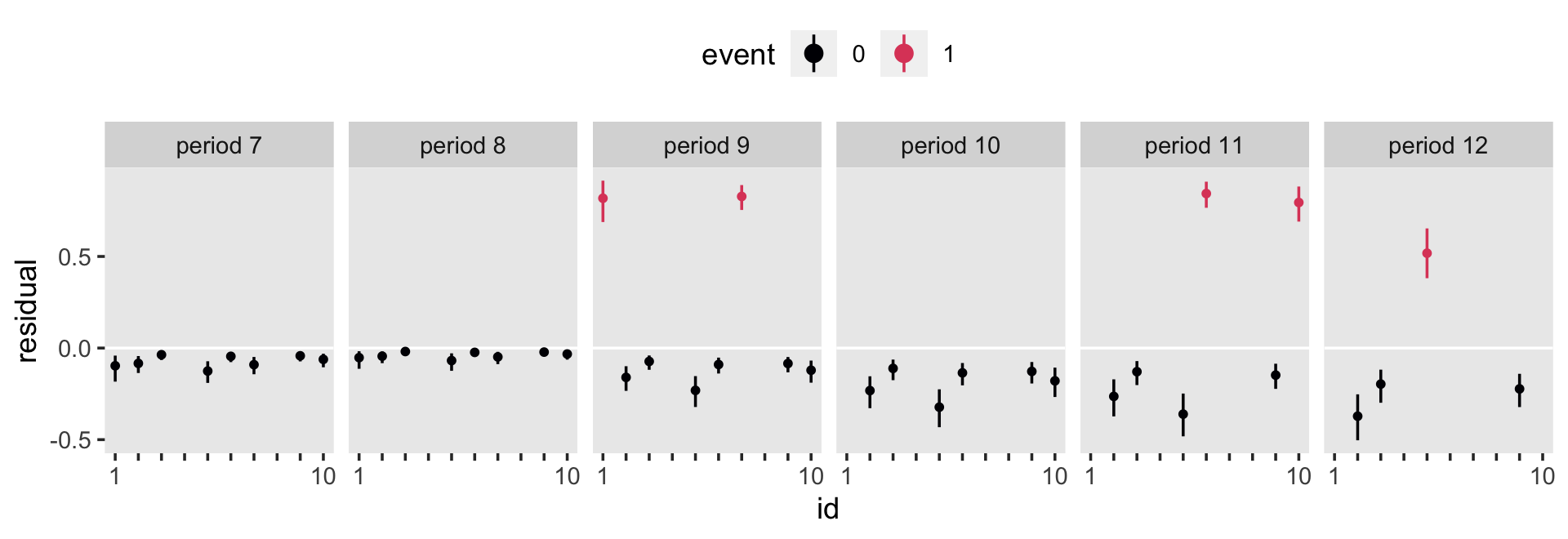

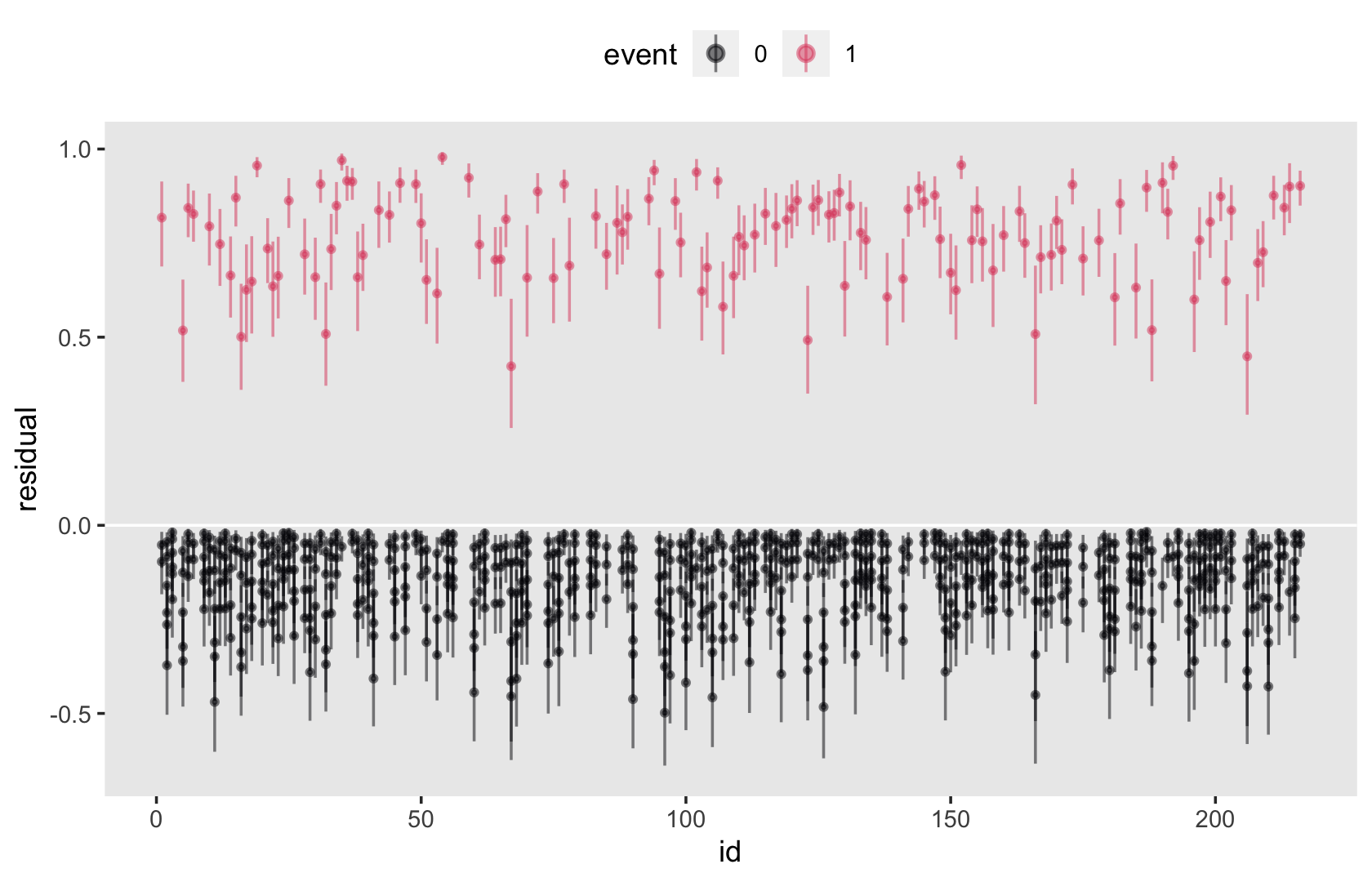

12.3.3 Two caveats: The problems of state and rate dependence.

A time-varying predictor is state-dependent if its values at time \(t_j\) are affected by an individual’s state (event occurrence status) at time \(t_j\): \(EVENT_{ij}\). A time-varying predictor is rate-dependent if its values at time \(t_j\) are affected by the individuals value of hazard (the “rate”) at time \(t_j\): \(h (t_{ij})\). (p. 440, emphasis in the original)

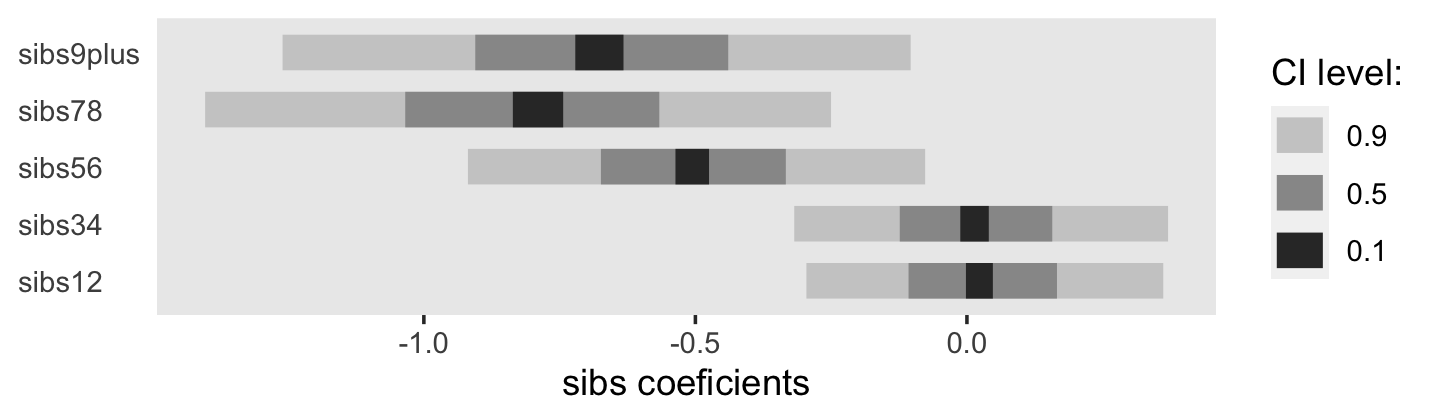

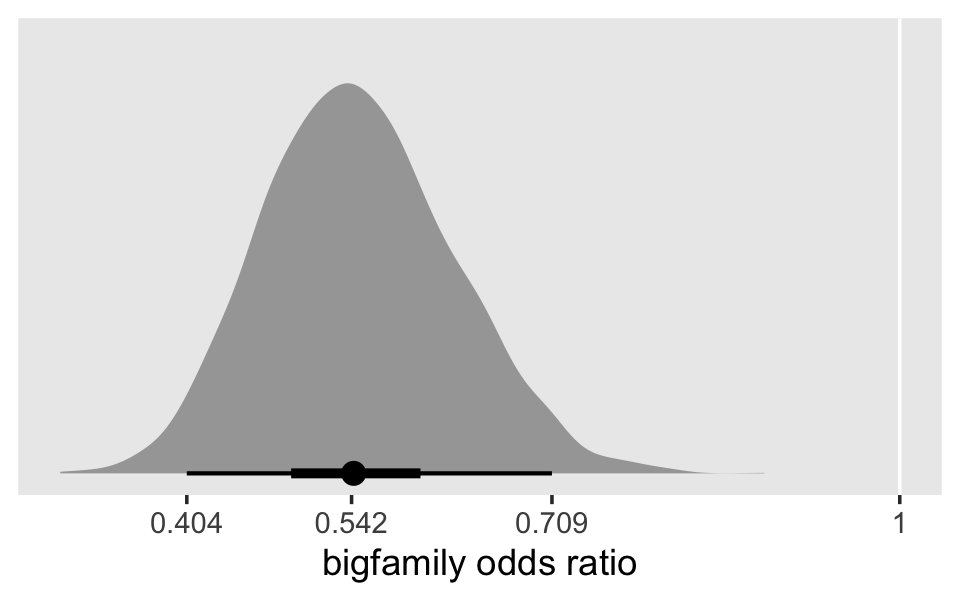

12.4 The linear additivity assumption: Uncovering violations and simple solutions

Because the focus on hazard causes you to analyze group level summaries, model violations can be more difficult to discern [than in other kinds of regression models]. We therefore devote this section to introducing practical strategies for diagnosing and correcting violations of the linear additivity assumption. (p. 443)

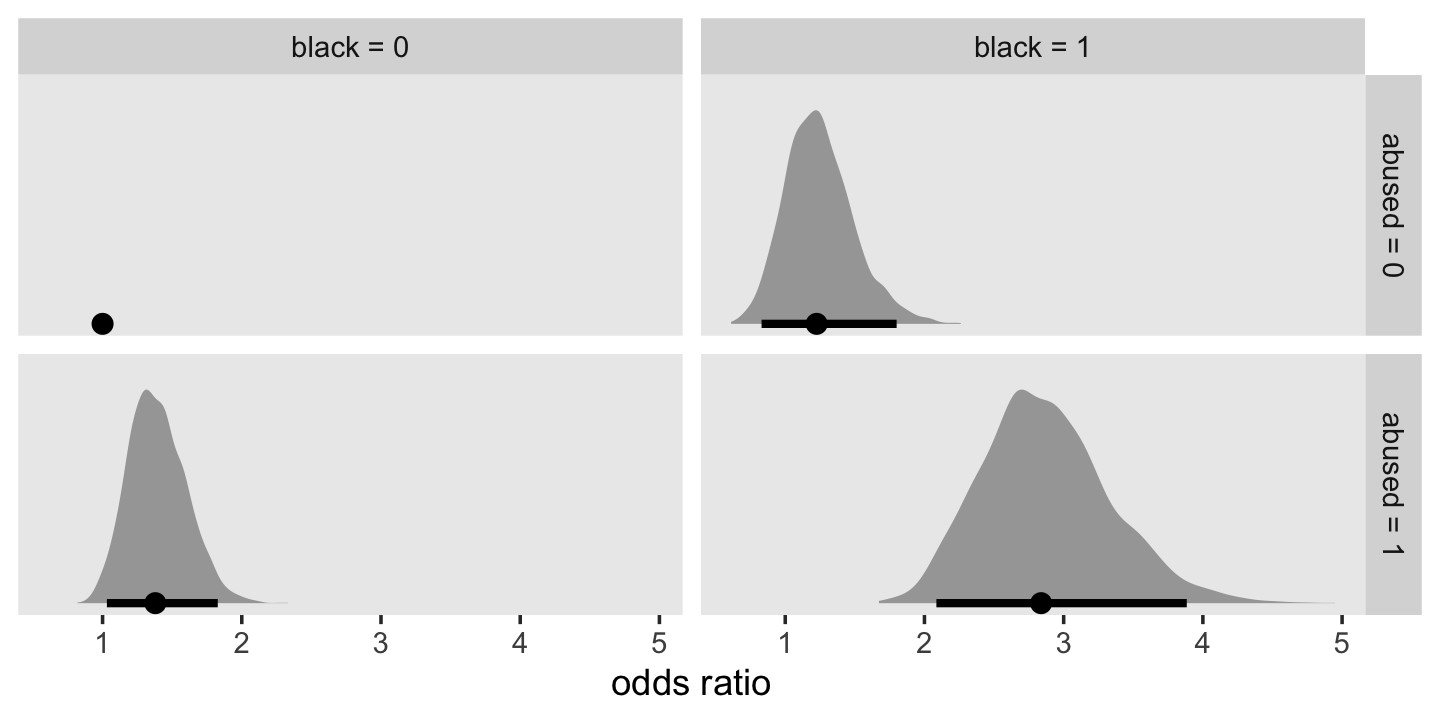

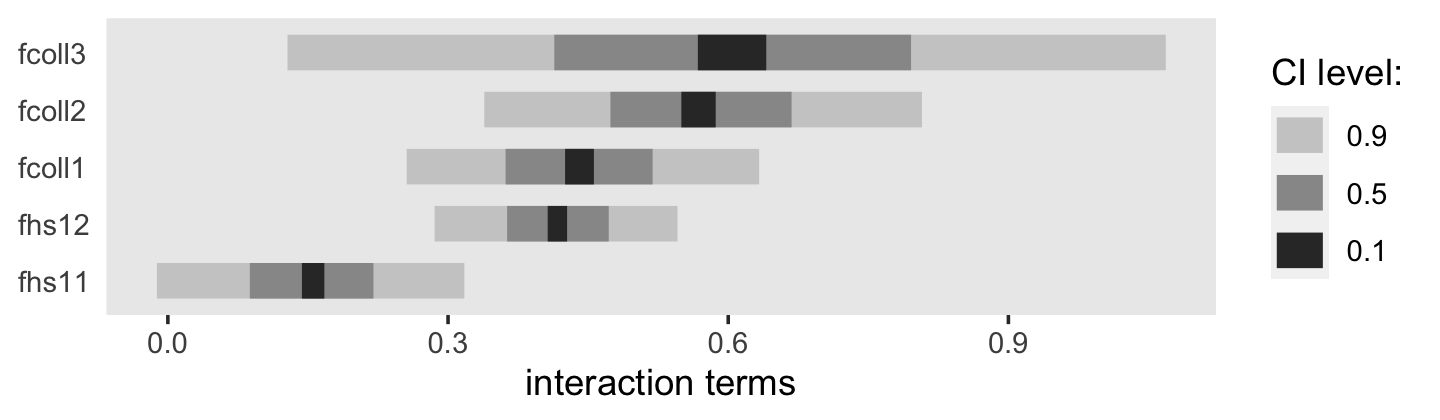

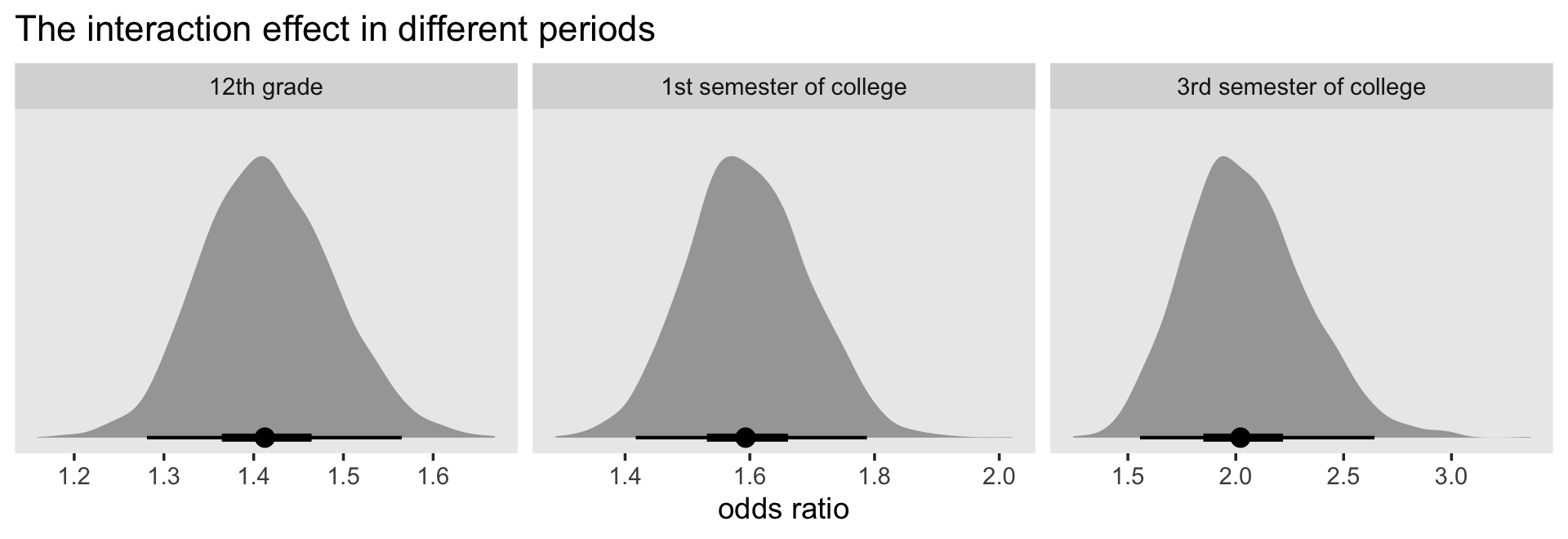

12.4.1 Interactions between substantive predictors.

We do not advocate fishing expeditions. Open searches for interactions can be counterproductive, leading to the discovery of many “effects” that are little more than sampling variation. But there are at least two circumstances when a guided search for interactions is crucial:

- When theory (or common sense!) suggests that two (or more) predictors will interact in the prediction of the outcome. If you hypothesize the existence of interactions a priori, your search will be targeted and efficient.

- When examining the effects of “question” predictor(s), variables whose effects you intend to emphasize in your report. You need to be certain that these predictors’ effects do not differ according ot levels of other important predictors, lest you misinterpret your major findings.

With this in mind, we now demonstrate how to (1) explore your data for the possibility of statistical interactions; and (2) include the additional appropriate terms when necessary. (p. 444, emphasis in the original)

Load the data from Keiley and Martin [-M. K. Keiley & Martin (2002)]3.

firstarrest_pp <-

read_csv("data/firstarrest_pp.csv") %>%

# convert the column names to lower case

rename_all(tolower)

glimpse(firstarrest_pp)## Rows: 15,834

## Columns: 19

## $ id <dbl> 1, 1, 1, 1, 1, 1, 1, 1, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 4, 4, …

## $ time <dbl> 15, 15, 15, 15, 15, 15, 15, 15, 29, 29, 29, 29, 29, 29, 29, 29, 29, 29, 29, 17, 17, 17, 17, 1…

## $ censor <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, …

## $ abused <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, …

## $ black <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, …

## $ ablack <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, …

## $ d8 <dbl> 1, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, …

## $ d9 <dbl> 0, 1, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, …

## $ d10 <dbl> 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, …

## $ d11 <dbl> 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, …

## $ d12 <dbl> 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, …

## $ d13 <dbl> 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, …

## $ d14 <dbl> 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, …

## $ d15 <dbl> 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, …

## $ d16 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, …

## $ d17 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, …

## $ d18 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, …

## $ period <dbl> 8, 9, 10, 11, 12, 13, 14, 15, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 8, 9, 10, 11, 12, 13,…

## $ event <dbl> 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, …The total \(N\) is 1553.

firstarrest_pp %>%

distinct(id) %>%

count()## # A tibble: 1 × 1

## n

## <int>

## 1 1553\(n = 887\) were abused.

firstarrest_pp %>%

filter(abused == 1) %>%

distinct(id) %>%

count()## # A tibble: 1 × 1

## n

## <int>

## 1 887\(n = 342\) were arrested between the ages of 8 and 18.

firstarrest_pp %>%

filter(censor == 0) %>%

distinct(id) %>%

count()## # A tibble: 1 × 1

## n

## <int>

## 1 342Since the two focal predictors in this section with be black and abused, here are the counts broken down by both.

firstarrest_pp %>%

group_by(id) %>%

slice(1) %>%

ungroup() %>%

count(black, abused) %>%

mutate(percent = 100 * n / sum(n))## # A tibble: 4 × 4

## black abused n percent

## <dbl> <dbl> <int> <dbl>

## 1 0 0 434 27.9

## 2 0 1 605 39.0

## 3 1 0 232 14.9

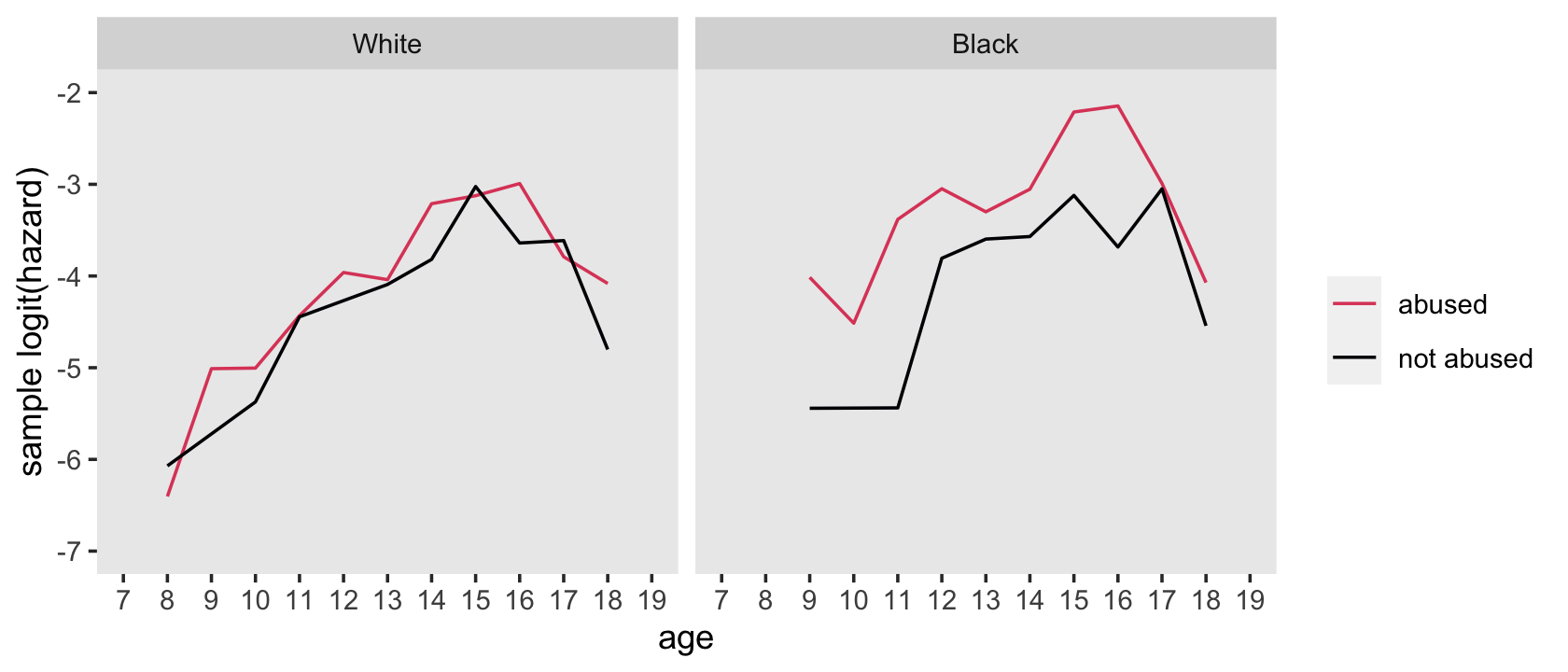

## 4 1 1 282 18.2Here’s how to hand compute the values for the sample logit(hazard) functions depicted in the top panels of Figure 12.6.

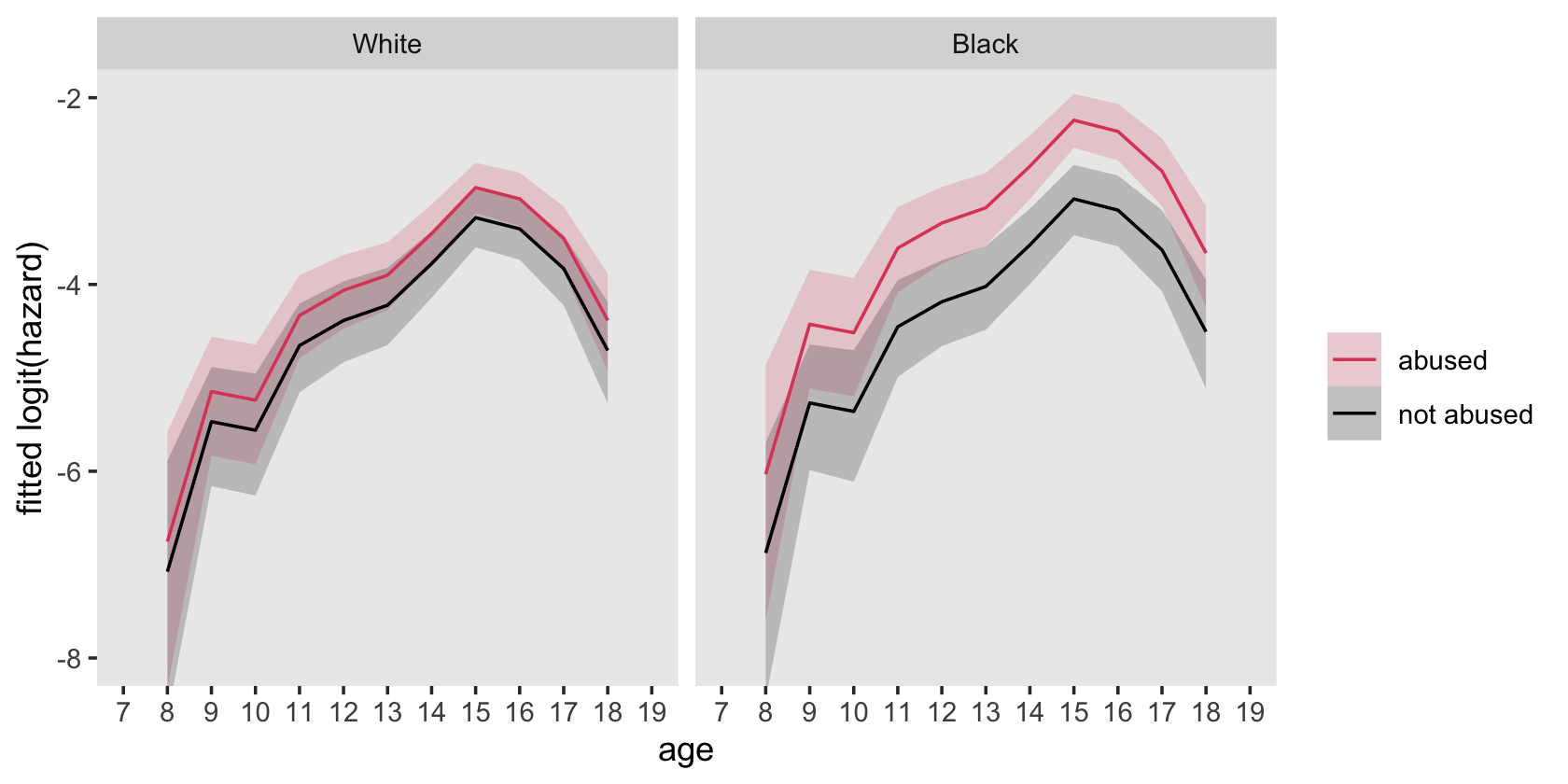

# wrangle

firstarrest_pp %>%

mutate(event = if_else(event == 1, "event", "no_event")) %>%

group_by(period) %>%

count(event, black, abused) %>%

ungroup() %>%

pivot_wider(names_from = event,

values_from = n) %>%

drop_na(event) %>%

mutate(total = event + no_event,

logit = log(event / total / (1 - event / total)),

race = factor(ifelse(black == 1, "Black", "White"),

levels = c("White", "Black")),

abused = ifelse(abused == 1, "abused", "not abused") %>% factor()) %>%

# plot!

ggplot(aes(x = period, y = logit)) +

geom_line(aes(color = abused, group = abused)) +

scale_color_viridis_d(NULL, option = "A", end = .6, direction = -1) +

scale_x_continuous("age", breaks = 7:19, limits = c(7, 19)) +

scale_y_continuous("sample logit(hazard)", limits = c(-7, -2)) +

theme(panel.grid = element_blank()) +

facet_wrap(~ race)

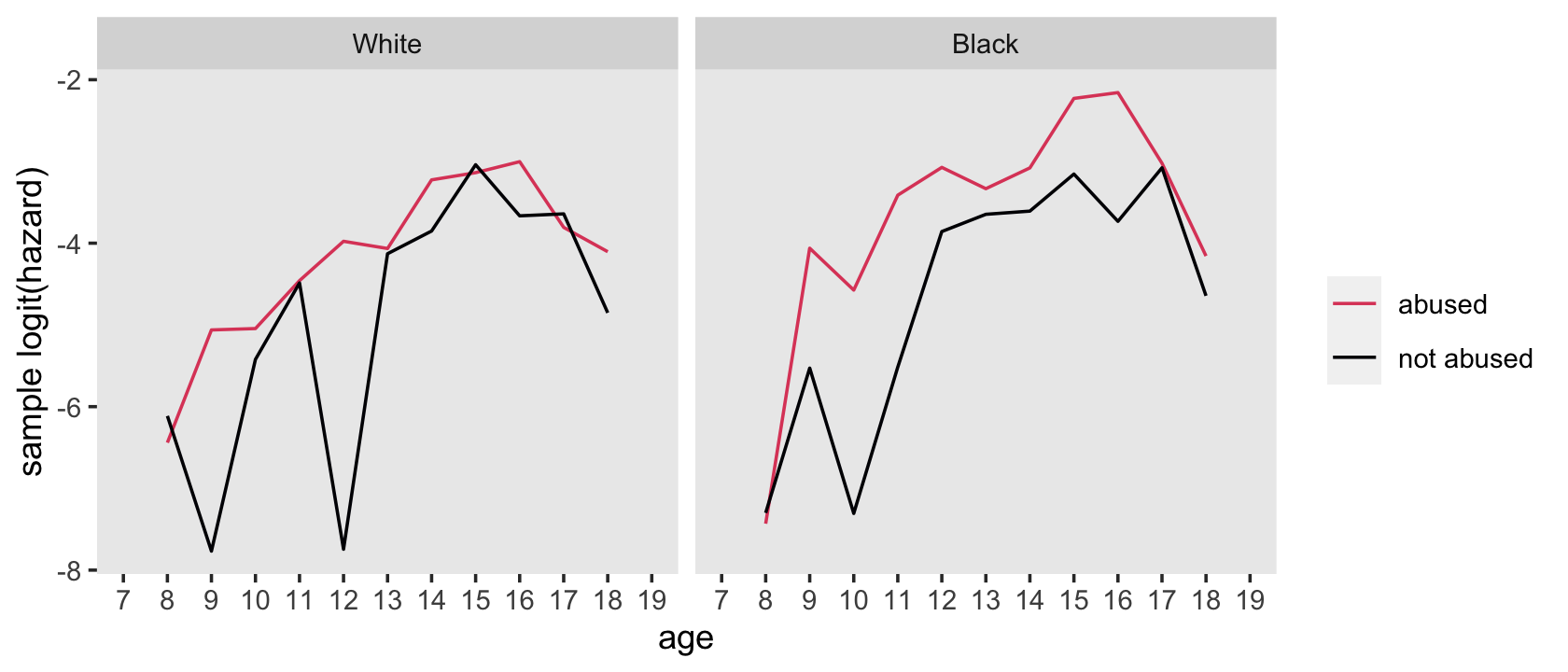

For kicks and giggles, it might informative to fit a series of subset Bayesian models to make similar versions of those sample hazard functions. Before we fit the models, let’s make our lives easier and make a factor version of our time variable, period.

firstarrest_pp <-

firstarrest_pp %>%

mutate(period_f = factor(period))Fit the four subset models.

# white, not abused

fit12.18 <-

brm(data = firstarrest_pp %>% filter(black == 0 & abused == 0),

family = binomial,

event | trials(1) ~ 0 + period_f,

prior(normal(0, 4), class = b),

chains = 4, cores = 4, iter = 2000, warmup = 1000,

seed = 12,

file = "fits/fit12.18")

# white, abused

fit12.19 <-

update(fit12.18,

newdata = firstarrest_pp %>% filter(black == 0 & abused == 1),

chains = 4, cores = 4, iter = 2000, warmup = 1000,

seed = 12,

file = "fits/fit12.19")

# black, not abused

fit12.20 <-

update(fit12.18,

newdata = firstarrest_pp %>% filter(black == 1 & abused == 0),

chains = 4, cores = 4, iter = 2000, warmup = 1000,

seed = 12,

file = "fits/fit12.20")

# black, abused

fit12.21 <-

update(fit12.18,

newdata = firstarrest_pp %>% filter(black == 1 & abused == 1),

chains = 4, cores = 4, iter = 2000, warmup = 1000,

seed = 12,

file = "fits/fit12.21")We might use fitted() via our custom make_fitted() function to perform some of the pre-plotting computation and wrangling.

nd <- tibble(period_f = 8:18)

# this will simplify the `mutate()` code below

models <- c("constant", "linear", "quadratic", "cubic", "general")

f <-

bind_rows(make_fitted(fit12.18, scale = "linear"), # white, not abused

make_fitted(fit12.19, scale = "linear"), # white, abused

make_fitted(fit12.20, scale = "linear"), # black, not abused

make_fitted(fit12.21, scale = "linear")) %>% # black, abused

mutate(race = factor(rep(c("White", "Black"), each = n() / 2),

levels = c("White", "Black")),

abuse = rep(c("not abused", "abused", "not abused", "abused"), each = n() / 4))

# what have we done?

glimpse(f)## Rows: 44

## Columns: 7

## $ Estimate <dbl> -6.113737, -7.767396, -5.421275, -4.486981, -7.744434, -4.128871, -3.851241, -3.039893, -3…

## $ Est.Error <dbl> 0.9614576, 1.7504718, 0.6720442, 0.4486308, 1.7498479, 0.3776723, 0.3404120, 0.2335922, 0.…

## $ Q2.5 <dbl> -8.345358, -11.981231, -6.962572, -5.458206, -12.100321, -4.942711, -4.558721, -3.510652, …

## $ Q97.5 <dbl> -4.579547, -5.171702, -4.295233, -3.687680, -5.140699, -3.451226, -3.252522, -2.600993, -3…

## $ period_f <int> 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 8, 9, …

## $ race <fct> White, White, White, White, White, White, White, White, White, White, White, White, White,…

## $ abuse <chr> "not abused", "not abused", "not abused", "not abused", "not abused", "not abused", "not a…Now plot.

f %>%

ggplot(aes(x = period_f, y = Estimate, group = abuse, color = abuse)) +

geom_line() +

scale_color_viridis_d(NULL, option = "A", end = .6, direction = -1) +

scale_x_continuous("age", breaks = 7:19, limits = c(7, 19)) +

ylab("sample logit(hazard)") +

theme(panel.grid = element_blank()) +

facet_wrap(~ race)