4 Doing Data Analysis with the Multilevel Model for Change

“We now delve deeper into the specification, estimation, and interpretation of the multilevel model for change” (Singer & Willett, 2003, p. 75).

4.1 Example: Changes in adolescent alcohol use

Load the data.

library(tidyverse)

alcohol1_pp <- read_csv("data/alcohol1_pp.csv")

head(alcohol1_pp)## # A tibble: 6 × 9

## id age coa male age_14 alcuse peer cpeer ccoa

## <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 1 14 1 0 0 1.73 1.26 0.247 0.549

## 2 1 15 1 0 1 2 1.26 0.247 0.549

## 3 1 16 1 0 2 2 1.26 0.247 0.549

## 4 2 14 1 1 0 0 0.894 -0.124 0.549

## 5 2 15 1 1 1 0 0.894 -0.124 0.549

## 6 2 16 1 1 2 1 0.894 -0.124 0.549Do note we already have an \((\text{age} - 14)\) variable in the data, age_14.

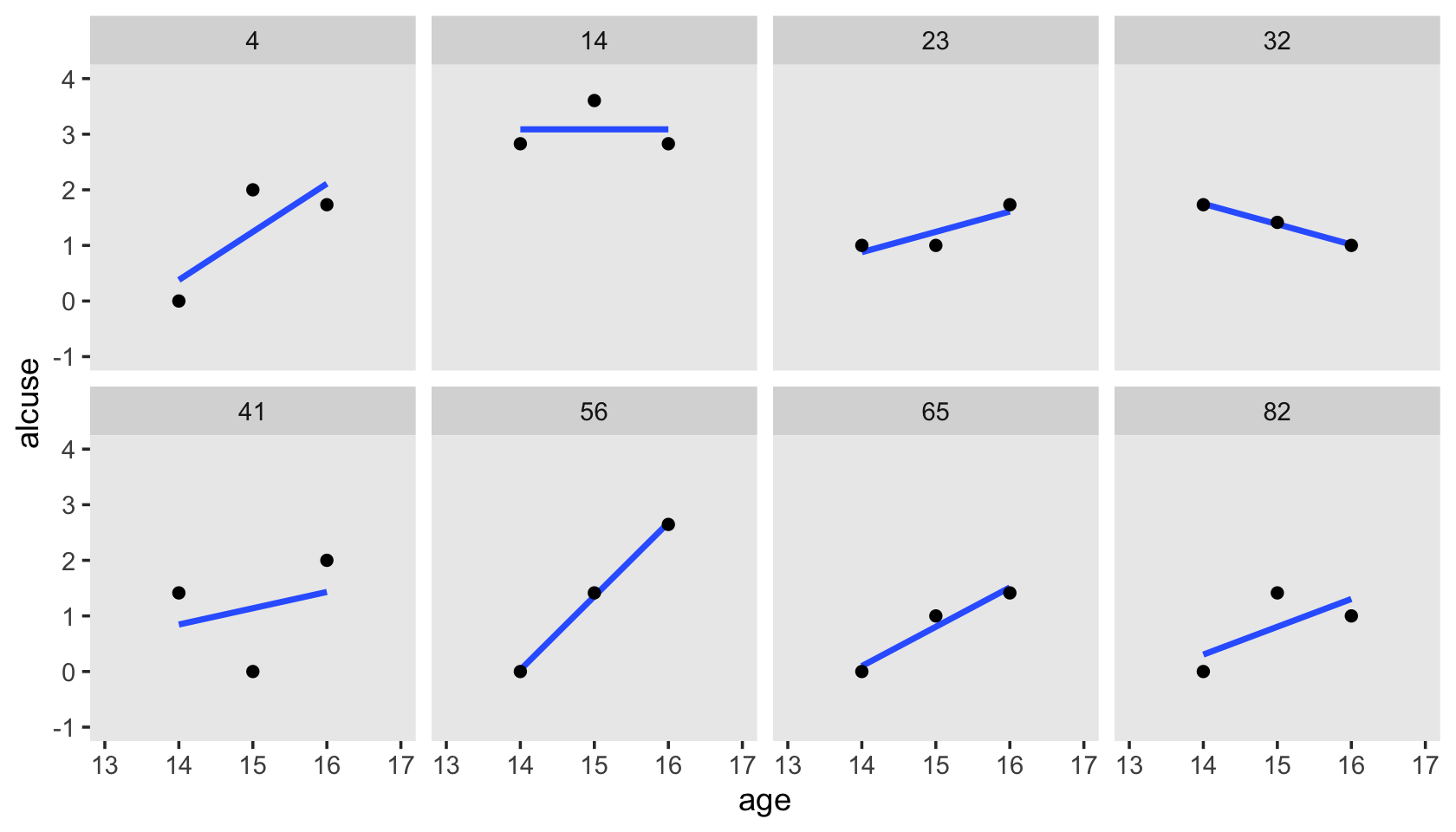

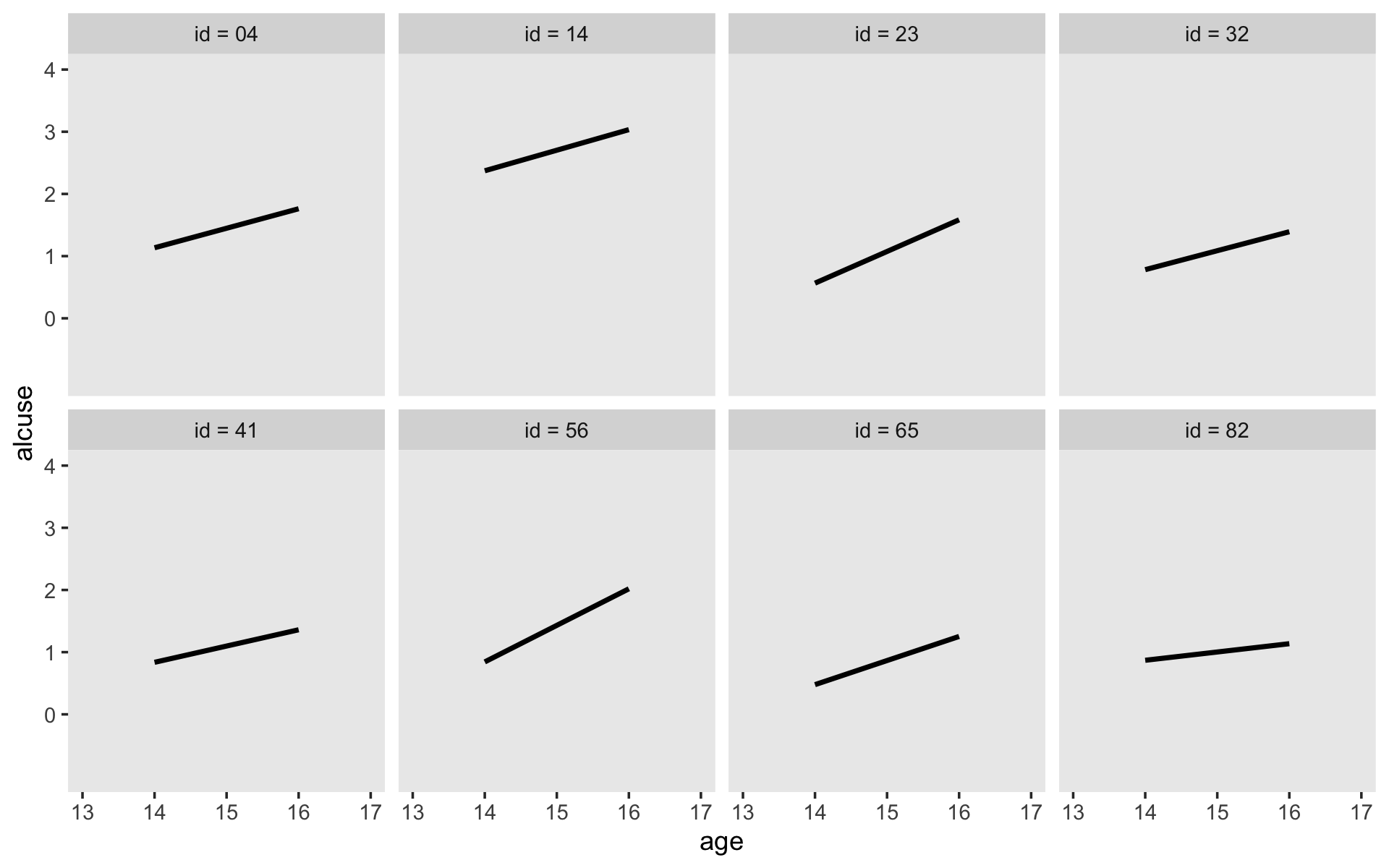

Here’s our version of Figure 4.1, using stat_smooth() to get the exploratory OLS trajectories.

alcohol1_pp %>%

filter(id %in% c(4, 14, 23, 32, 41, 56, 65, 82)) %>%

ggplot(aes(x = age, y = alcuse)) +

stat_smooth(method = "lm", se = F) +

geom_point() +

coord_cartesian(xlim = c(13, 17),

ylim = c(-1, 4)) +

theme(panel.grid = element_blank()) +

facet_wrap(~ id, ncol = 4)

By this figure, Singer and Willett suggested the simple linear level-1 submodel following the form

\[\begin{align*} \text{alcuse}_{ij} & = \pi_{0i} + \pi_{1i} (\text{age}_{ij} - 14) + \epsilon_{ij}\\ \epsilon_{ij} & \sim \operatorname{Normal}(0, \sigma_\epsilon^2), \end{align*}\]

where \(\pi_{0i}\) is the initial status of participant \(i\), \(\pi_{1i}\) is participant \(i\)’s rate of change, and \(\epsilon_{ij}\) is the variation in participant \(i\)’s data not accounted for in the model.

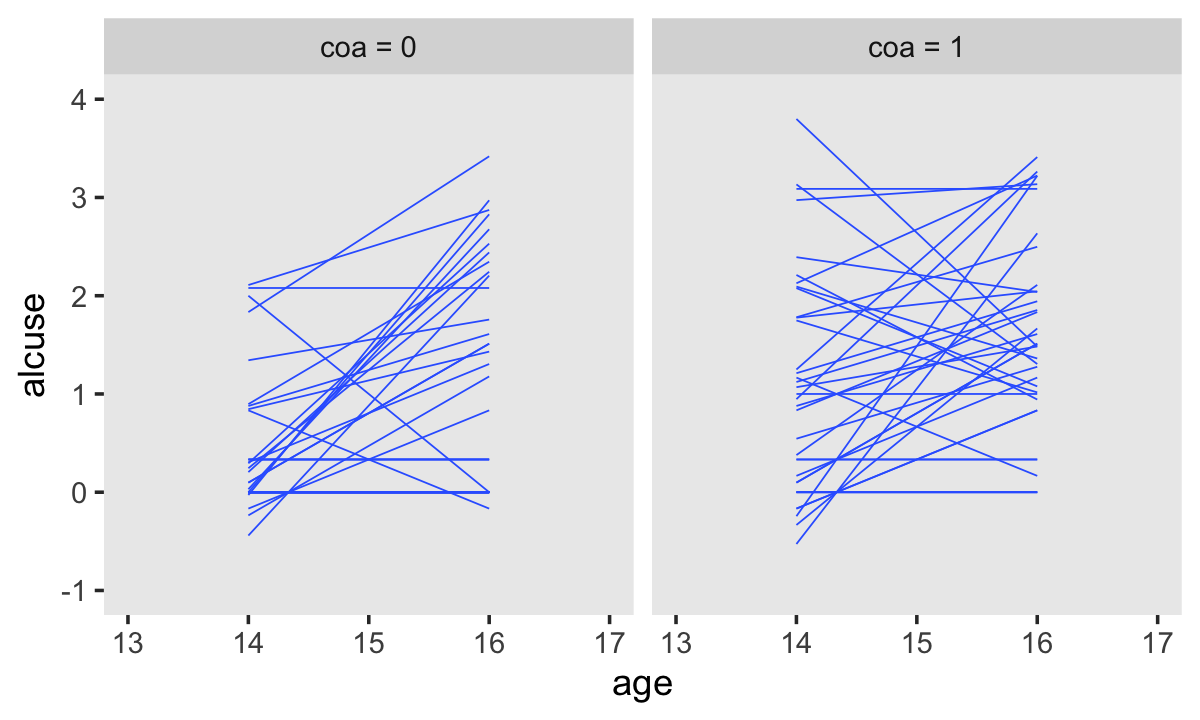

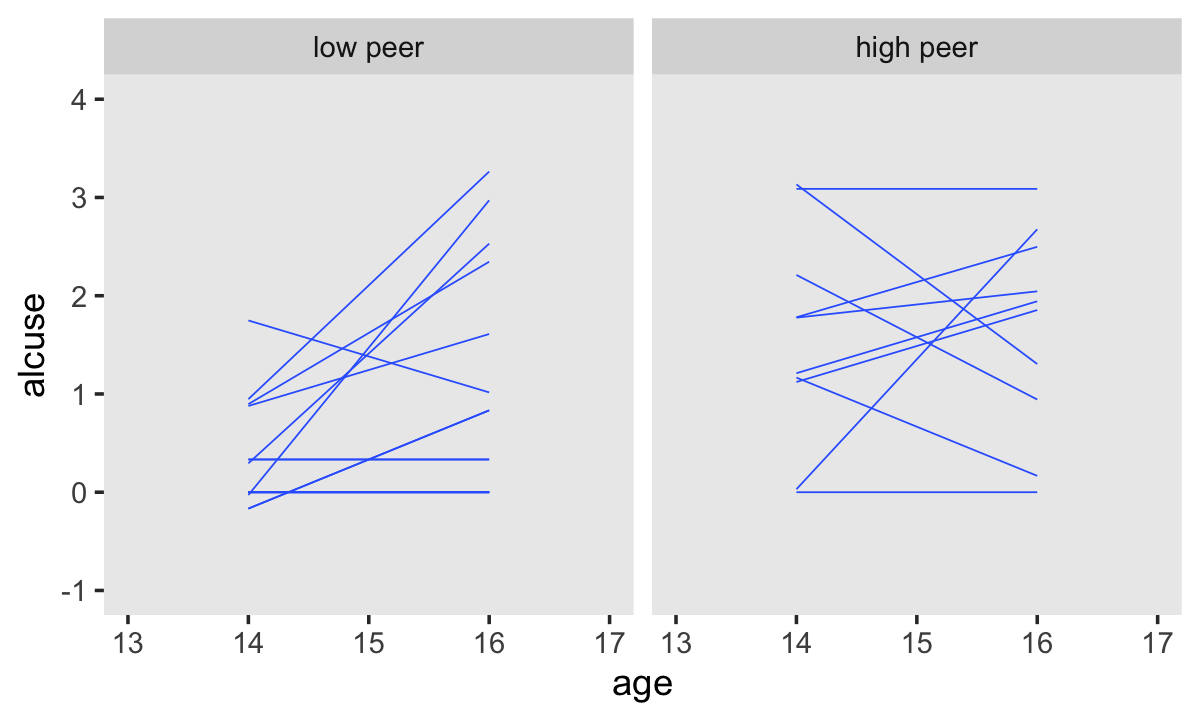

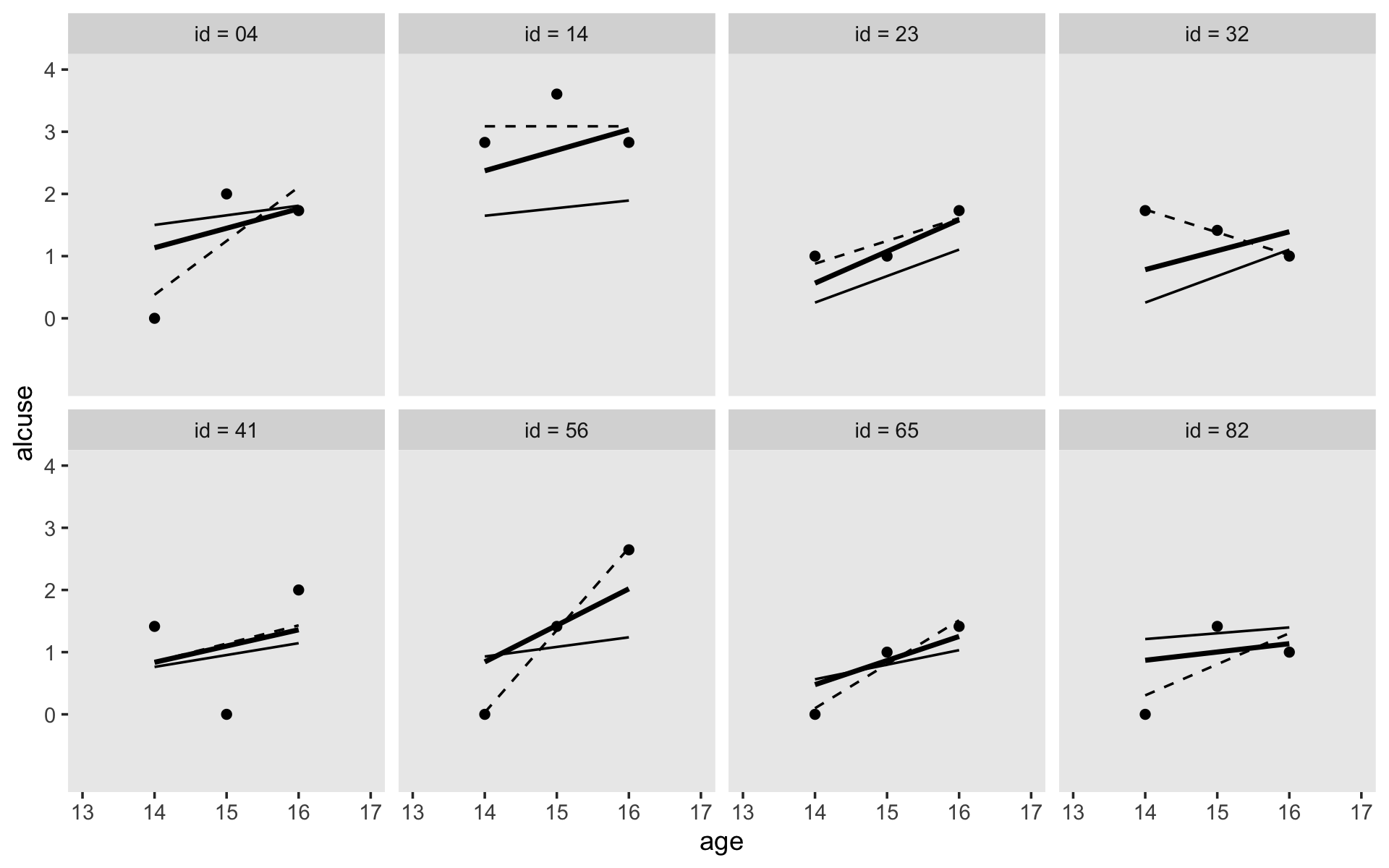

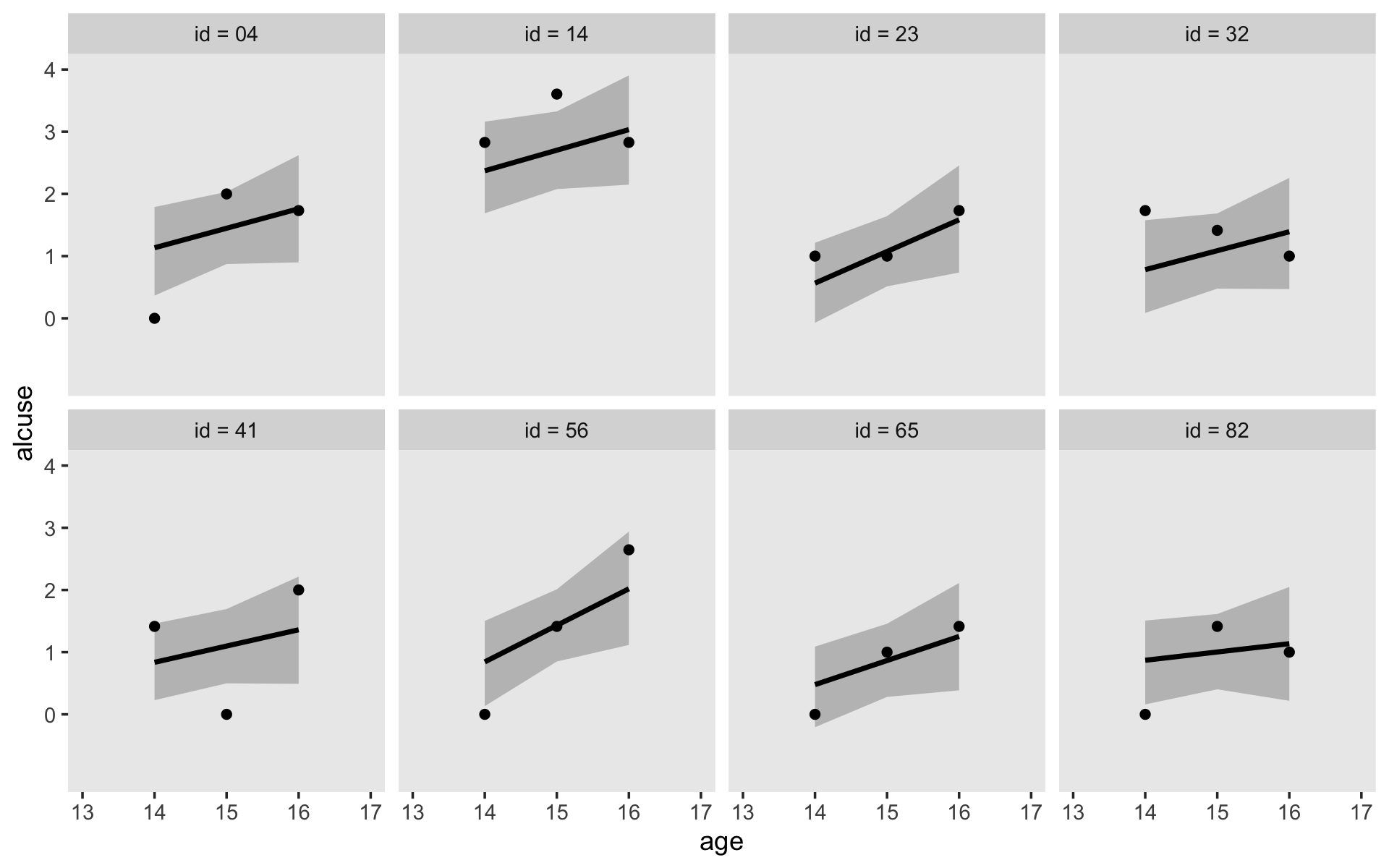

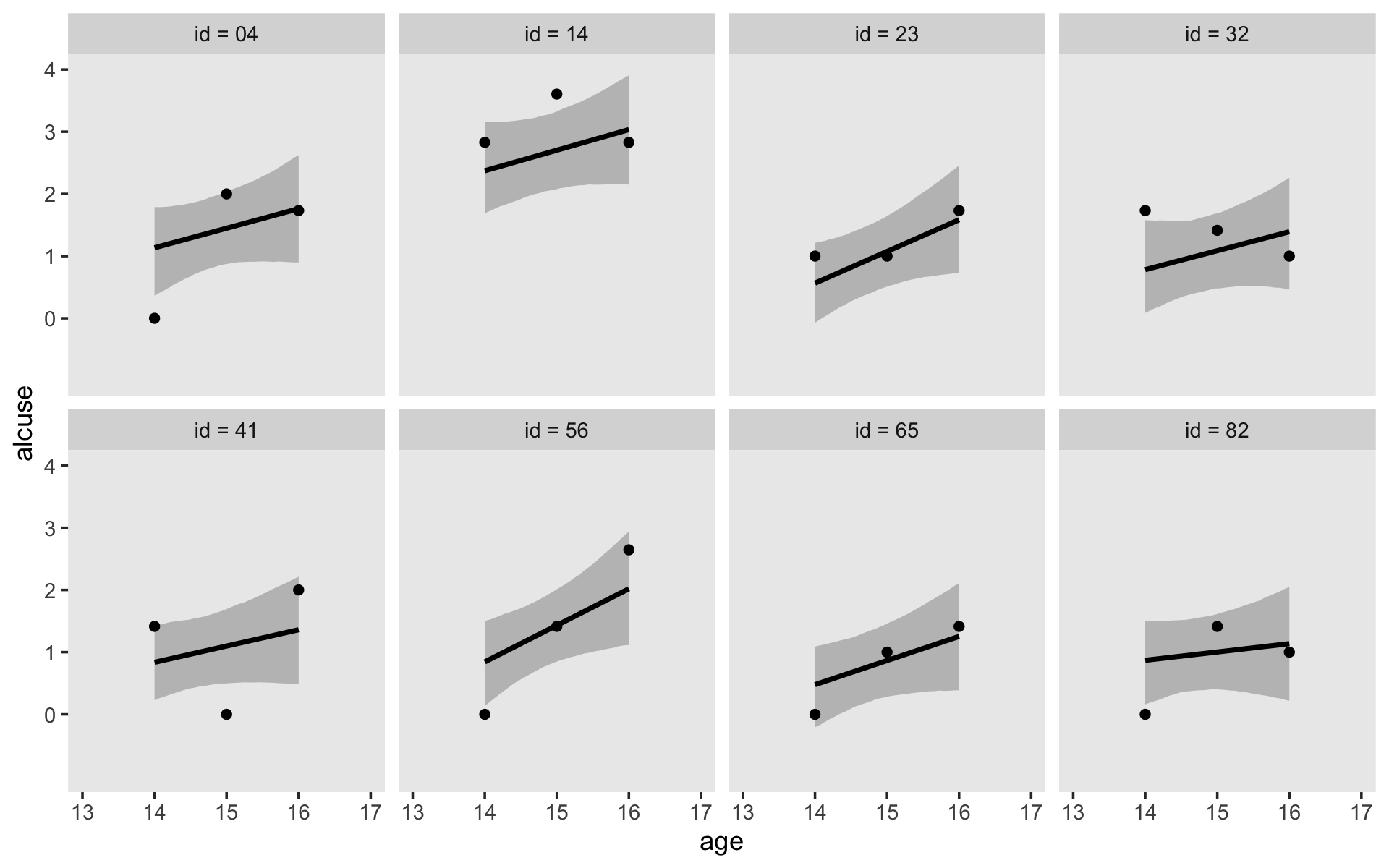

Singer and Willett made their Figure 4.2 “with a random sample of 32 of the adolescents” (p. 78). If we just wanted a random sample of rows, the sample_n() function would do the job. But since we’re working with long data, we’ll need some group_by() + nest() mojo. I got the trick from Jenny Bryan’s vignette, Sample from groups, n varies by group. Setting the seed makes the results from sample_n() reproducible. Here are the top panels.

set.seed(4)

alcohol1_pp %>%

group_by(id) %>%

nest() %>%

sample_n(size = 32, replace = T) %>%

unnest(data) %>%

mutate(coa = ifelse(coa == 0, "coa = 0", "coa = 1")) %>%

ggplot(aes(x = age, y = alcuse, group = id)) +

stat_smooth(method = "lm", se = F, linewidth = 1/4) +

coord_cartesian(xlim = c(13, 17),

ylim = c(-1, 4)) +

theme(panel.grid = element_blank()) +

facet_wrap(~ coa)

We have similar data wrangling needs for the bottom panels.

set.seed(4)

alcohol1_pp %>%

group_by(id) %>%

nest() %>%

ungroup() %>%

sample_n(size = 32, replace = T) %>%

unnest(data) %>%

mutate(hp = ifelse(peer < mean(peer), "low peer", "high peer")) %>%

mutate(hp = factor(hp, levels = c("low peer", "high peer"))) %>%

ggplot(aes(x = age, y = alcuse, group = id)) +

stat_smooth(method = "lm", se = F, linewidth = 1/4) +

coord_cartesian(xlim = c(13, 17),

ylim = c(-1, 4)) +

theme(panel.grid = element_blank()) +

facet_wrap(~ hp)

Based on the exploratory analyses, Singer and Willett posited the initial level-2 submodel might take the form

$$ \[\begin{align*} \pi_{0i} & = \gamma_{00} + \gamma_{01} \text{coa}_i + \zeta_{0i}\\ \pi_{1i} & = \gamma_{10} + \gamma_{11} \text{coa}_i + \zeta_{1i} \\ \begin{bmatrix} \zeta_{0i} \\ \zeta_{1i} \end{bmatrix} & \sim \operatorname{Normal} \begin{pmatrix} \begin{bmatrix} 0 \\ 0 \end{bmatrix}, \begin{bmatrix} \sigma_0^2 & \sigma_{01}\\ \sigma_{01} & \sigma_1^2 \end{bmatrix} \end{pmatrix}, \end{align*}\] $$

where \(\gamma_{00}\) and \(\gamma_{10}\) are the level-2 intercepts, the population averages when \(\text{coa} = 0\), \(\gamma_{10}\) and \(\gamma_{11}\) are the level-2 slopes expressing the difference when \(\text{coa} = 1\) and \(\zeta_{0i}\) and \(\zeta_{1i}\) are the unexplained variation across the \(\text{id}\)-level intercepts and slopes. Since we’ll be fitting the model with brms::brm(), the \(\Sigma\) matrix will be parameterized in terms of standard deviations and their correlation. So we might reexpress the model as

$$ \[\begin{align*} \pi_{0i} & = \gamma_{00} + \gamma_{01} \text{coa}_i + \zeta_{0i}\\ \pi_{1i} & = \gamma_{10} + \gamma_{11} \text{coa}_i + \zeta_{1i} \\ \begin{bmatrix} \zeta_{0i} \\ \zeta_{1i} \end{bmatrix} & \sim \operatorname{Normal} \begin{pmatrix} \begin{bmatrix} 0 \\ 0 \end{bmatrix}, \begin{bmatrix} \sigma_0 & \rho_{01}\\ \rho_{01} & \sigma_1 \end{bmatrix} \end{pmatrix}. \end{align*}\] $$

4.2 The composite specification of the multilevel model for change

With a little algebra, we can combine the level-1 and level-2 submodels into the composite multilevel model for change, which follows the form

$$ \[\begin{align*} \text{alcuse}_{ij} & = \big [ \gamma_{00} + \gamma_{10} \text{age_14}_{ij} + \gamma_{01} \text{coa}_i + \gamma_{11} (\text{coa}_i \times \text{age_14}_{ij}) \big ] \\ & \;\;\;\;\; + [ \zeta_{0i} + \zeta_{1i} \text{age_14}_{ij} + \epsilon_{ij} ] \\ \epsilon_{ij} & \sim \operatorname{Normal} (0, \sigma_\epsilon^2) \\ \begin{bmatrix} \zeta_{0i} \\ \zeta_{1i} \end{bmatrix} & \sim \operatorname{Normal} \begin{pmatrix} \begin{bmatrix} 0 \\ 0 \end{bmatrix}, \begin{bmatrix} \sigma_0^2 & \sigma_{01} \\ \sigma_{01} & \sigma_1^2 \end{bmatrix} \end{pmatrix}, \end{align*}\] $$

where the brackets in the first line partition the structural model (i.e., the model for \(\mu\)) and the stochastic components (i.e., the \(\sigma\) terms). We should note that this is the format that most closely mirrors what we use in the formula argument in brms::brm(). As long as age is not centered on the mean, our brms syntax would be: formula = alcuse ~ 0 + Intercept + age_c + coa + age_c:coa + (1 + age_c | id).

4.2.1 The structural component of the composite model.

Although their interpretation is identical, the \(\gamma\)s in the composite model describe patterns of change in a different way. Rather than postulating first how ALCUSE is related to TIME and the individual growth parameters, and second how the individual growth parameters are related to COA, the composite specification in equation 4.3 postulates that ALCUSE depends simultaneously on: (1) the level-1 predictor, TIME; (2) the level-2 predictor, COA; and (3) the cross-level interaction, COA by TIME. From this perspective, the composite model’s structural portion strongly resembles a regular regression model with predictors, TIME and COA, appearing as main effects (associated with \(\gamma_{10}\) and \(\gamma_{01}\), respectively) and in a cross-level interaction (associated with \(\gamma_{11}\)). (p. 82, emphasis in the original)

4.2.2 The stochastic component of the composite model.

A distinctive feature of the composite multilevel model is its composite residual, the three terms in the second set of brackets on the right of equation 4.3 that combine together the level-1 residual and the two level-2 residuals:

\[\text{Composite residual: } [ \zeta_{0i} + \zeta_{1i} \text{age_14}_{ij} + \epsilon_{ij} ].\] The composite residual is not a simple sum. Instead, the second level-2 residual, \(\zeta_{1i}\), is multiplied by the level-1 predictor, \([\text{age_14}_{ij}]\), before joining its siblings. Despite its unusual construction, the interpretation of the composite residual is straightforward: it describes the difference between the observed and expected value of \([\text{alcuse}]\) for individual \(i\) on occasion \(j\).

The mathematical form of the composite residual reveals two important properties about the occasion-specific residuals not readily apparent in the level-1/level-2 specification: they can be both autocorrelated and heteroscedastic within person. (p. 84, emphasis in the original)

4.3 Methods of estimation, revisited

In this section, the authors introduced generalized least squares (GLS) estimation and iterative generalized least squares (IGLS) estimation and then distinguished between full and restricted maximum likelihood estimation. Since our goal is to fit these models as Bayesians, we won’t be using or discussing any of these in this project. There are, of course, different ways to approach Bayesian estimation. Though we’re using Hamiltonian Monte Carlo, we could use other algorithms, such as the Gibbs sampler. However, all that is outside of the scope of this project.

I suppose the only thing to add is that whereas GLS estimates come from minimizing a weighted function of the residuals and maximum likelihood estimates come from maximizing the log-likelihood function, the results of our Bayesian analyses (i.e., the posterior distribution) come from the consequences of Bayes’ theorem,

\[ p(\theta \mid d) = \frac{p(d \mid \theta)\ p(\theta)}{p(d)}. \]

If you really want to dive into the details of this, I suggest referencing a proper introductory Bayesian textbook, such as McElreath (2020a, 2015), Kruschke (2015), or Gelman et al. (2013). I haven’t had time to check it out, but I’ve heard Labmert’s (2018) text is good, too. And for details specific to Stan, and thus brms, you might check out the documentation resources at https://mc-stan.org/users/documentation/.

4.4 First steps: Fitting two unconditional multilevel models for change

Singer and Willett recommended that before you fit your full theoretical multilevel model of change–the one with all the interesting covariates–you should fit two simpler preliminary models. The first is the unconditional means model. The second is the unconditional growth model.

I agree. In addition to the reasons they cover in the text, this is just good pragmatic data analysis. Start simple and build up to the more complicated models only after you’re confident you understand what’s going on with the simpler ones. And if you’re new to them, you’ll discover this is especially so with Bayesian methods.

4.4.1 The unconditional means model.

The likelihood for the unconditional means model follows the formula

\[ \begin{align*} \text{alcuse}_{ij} & = \gamma_{00} + \zeta_{0i} + \epsilon_{ij} \\ \epsilon_{ij} & \sim \operatorname{Normal}(0, \sigma_\epsilon^2) \\ \zeta_{0i} & \sim \operatorname{Normal}(0, \sigma_0^2). \end{align*} \]

Let’s open brms.

library(brms)Up till this point, we haven’t focused on priors. It would have been reasonable to wonder if we’d been using them at all. Yes, we have. Even if you don’t specify priors in the brm() function, it’ll compute default weakly-informative priors for you. You might be wondering, What might these default priors look like? The get_prior() function let us take a look.

get_prior(data = alcohol1_pp,

family = gaussian,

alcuse ~ 1 + (1 | id))## prior class coef group resp dpar nlpar lb ub source

## student_t(3, 1, 2.5) Intercept default

## student_t(3, 0, 2.5) sd 0 default

## student_t(3, 0, 2.5) sd id 0 (vectorized)

## student_t(3, 0, 2.5) sd Intercept id 0 (vectorized)

## student_t(3, 0, 2.5) sigma 0 defaultFor this model, all three priors are based on Student’s \(t\)-distribution. In case you’re rusty, the normal distribution is just a special case of Student’s \(t\)-distribution. Whereas the normal is defined by two parameters (\(\mu\) and \(\sigma\)), the \(t\) distribution is defined by \(\nu\), \(\mu\), and \(\sigma\). In frequentist circles, \(\nu\) is often called the degrees of freedom. More generally, it’s also referred to as a normality parameter. We’ll examine the prior more closely in a bit.

For now, let’s practice setting our priors by manually specifying them within brm(). You do with the prior argument. There are actually several ways to do this. To explore all the options, check out the set_prior section of the brms reference manual (Bürkner, 2021d). I typically define my individual priors with the prior() function. When there are more than one priors to define, I typically bind them together within c(...).

Other than the addition of our fancy prior statement, the rest of the settings within brm() are much like those in prior chapters. Let’s fit the model.

fit4.1 <-

brm(data = alcohol1_pp,

family = gaussian,

alcuse ~ 1 + (1 | id),

prior = c(prior(student_t(3, 1, 2.5), class = Intercept),

prior(student_t(3, 0, 2.5), class = sd),

prior(student_t(3, 0, 2.5), class = sigma)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 4,

file = "fits/fit04.01")Here are the results.

print(fit4.1)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: alcuse ~ 1 + (1 | id)

## Data: alcohol1_pp (Number of observations: 246)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Group-Level Effects:

## ~id (Number of levels: 82)

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sd(Intercept) 0.77 0.08 0.61 0.94 1.00 1513 2071

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 0.92 0.10 0.73 1.12 1.00 2194 2304

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 0.75 0.04 0.68 0.84 1.00 3220 3037

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).Compare the results to those listed under “Model A” in Table 4.1. It’s important to keep in mind that brms returns ‘sigma’ and ‘sd(Intercept)’ in the standard deviation metric rather than the variance metric. “But I want them in the variance metric like in the text!”, you say. Okay fine. The best way to do the transformations is after saving the results from as_draws_df().

draws <- as_draws_df(fit4.1)

# first 12 columns

glimpse(draws[, 1:12])## Rows: 4,000

## Columns: 12

## $ b_Intercept <dbl> 0.9406565, 0.9862519, 0.8047797, 0.9481130, 0.8541637, 0.8386853, 0.92…

## $ sd_id__Intercept <dbl> 0.7185852, 0.6471299, 0.7926978, 0.7718956, 0.7410648, 0.6956998, 0.78…

## $ sigma <dbl> 0.7373521, 0.8087970, 0.8117765, 0.8095093, 0.8179681, 0.7830781, 0.77…

## $ `r_id[1,Intercept]` <dbl> 0.9163717, 0.6145222, 0.2345081, 0.7683651, 0.3509155, 0.6096043, 1.01…

## $ `r_id[2,Intercept]` <dbl> -1.009483116, 0.249090063, -0.562924108, 0.203001424, 0.173337825, -0.…

## $ `r_id[3,Intercept]` <dbl> 0.1852035, 1.6224810, -0.4611725, 1.1150763, 0.6648818, 0.7228837, 1.0…

## $ `r_id[4,Intercept]` <dbl> 0.35781229, -0.01697348, 0.83689995, 0.89752770, 0.88948250, 0.6430061…

## $ `r_id[5,Intercept]` <dbl> -0.68773929, -0.65962658, -1.06090865, -0.71889869, -0.55364888, 0.159…

## $ `r_id[6,Intercept]` <dbl> 1.6937918, 1.5190274, 1.1928640, 1.3234571, 1.8810541, 1.9431236, 1.49…

## $ `r_id[7,Intercept]` <dbl> 0.82085231, 0.43493772, 0.73868701, 0.06875076, 0.91568144, 0.16150059…

## $ `r_id[8,Intercept]` <dbl> -0.44182207, -0.82966404, -0.29547227, -0.84191779, -0.45499854, -0.56…

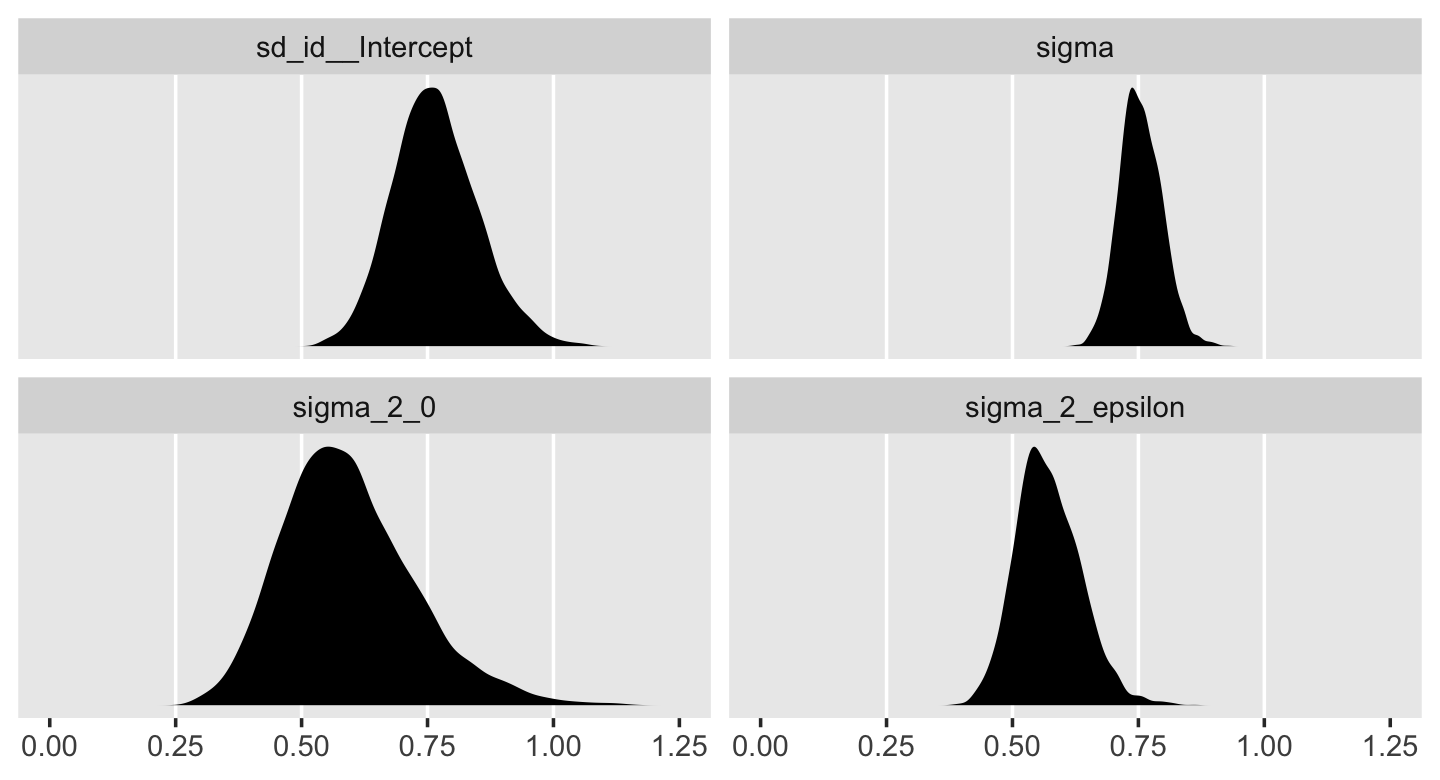

## $ `r_id[9,Intercept]` <dbl> 0.316124825, 0.621843617, -0.346237928, 0.162988921, 0.530201557, 0.30…Since all we’re interested in are the variance components, we’ll select() out the relevant columns from draws, compute the squared versions, and save the results in a mini data frame, v.

v <-

draws %>%

select(sigma, sd_id__Intercept) %>%

mutate(sigma_2_epsilon = sigma^2,

sigma_2_0 = sd_id__Intercept^2)

head(v)## # A tibble: 6 × 4

## sigma sd_id__Intercept sigma_2_epsilon sigma_2_0

## <dbl> <dbl> <dbl> <dbl>

## 1 0.737 0.719 0.544 0.516

## 2 0.809 0.647 0.654 0.419

## 3 0.812 0.793 0.659 0.628

## 4 0.810 0.772 0.655 0.596

## 5 0.818 0.741 0.669 0.549

## 6 0.783 0.696 0.613 0.484We can view their distributions like this.

v %>%

pivot_longer(everything()) %>%

ggplot(aes(x = value)) +

geom_vline(xintercept = c(.25, .5, .75, 1), color = "white") +

geom_density(size = 0, fill = "black") +

scale_x_continuous(NULL, limits = c(0, 1.25),

breaks = seq(from = 0, to = 1.25, by = .25)) +

scale_y_continuous(NULL, breaks = NULL) +

theme(panel.grid = element_blank()) +

facet_wrap(~ name, scales = "free_y")

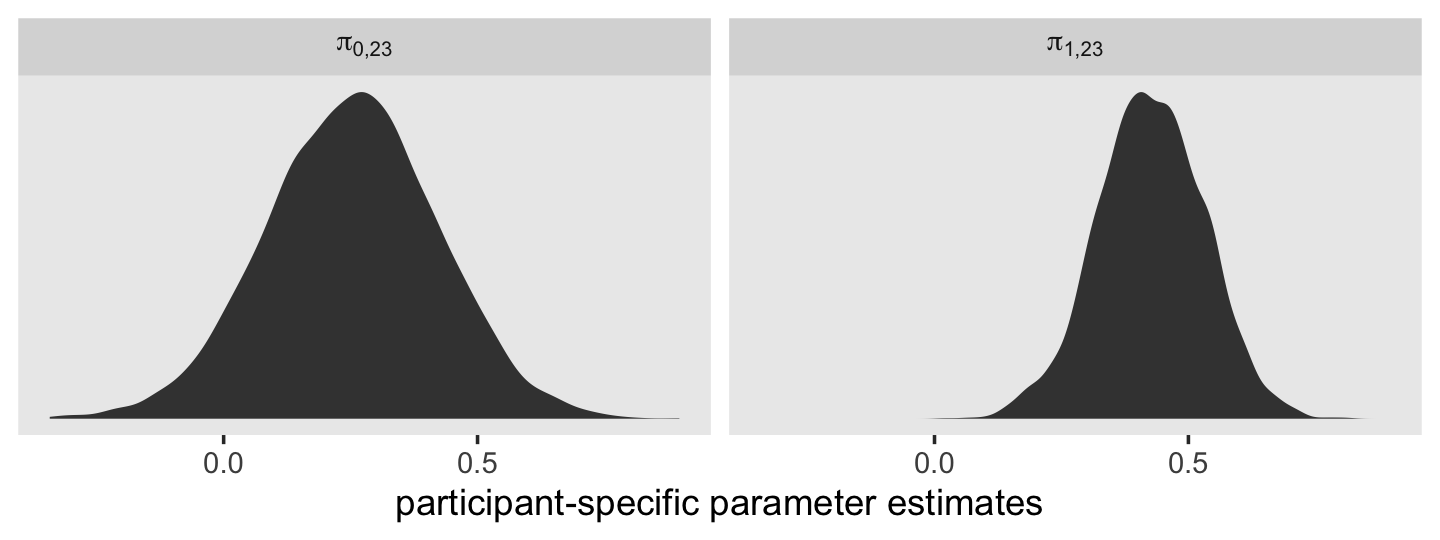

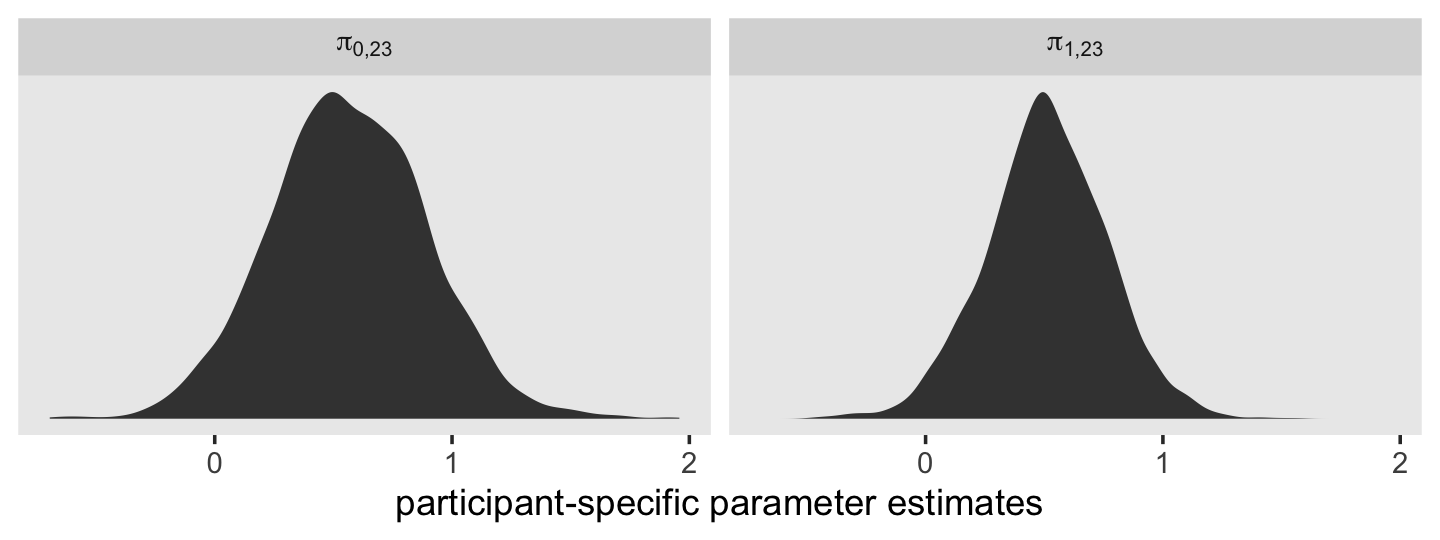

In case it’s hard to follow what just happened, the estimates in the brms-default standard-deviation metric are the two panels on the top. Those on the bottom are in the Singer-and-Willett style variance metric. Like we discussed toward the end of last chapter, the variance parameters won’t often be Gaussian. In my experience, they’re typically skewed to the right. There’s nothing wrong with that. This is a recurrent pattern among distributions that are constrained to be zero and above.

If you’re interested, you can summarize those posteriors like so.

v %>%

pivot_longer(everything()) %>%

group_by(name) %>%

summarise(mean = mean(value),

median = median(value),

sd = sd(value),

ll = quantile(value, prob = .025),

ul = quantile(value, prob = .975)) %>%

# this last bit just rounds the output

mutate_if(is.double, round, digits = 3)## # A tibble: 4 × 6

## name mean median sd ll ul

## <chr> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 sd_id__Intercept 0.766 0.761 0.084 0.612 0.945

## 2 sigma 0.755 0.752 0.042 0.678 0.841

## 3 sigma_2_0 0.593 0.58 0.131 0.375 0.893

## 4 sigma_2_epsilon 0.571 0.566 0.064 0.459 0.707For this model, our posterior medians are closer to the estimates in the text (Table 4.1) than the means. However, our posterior standard deviations are pretty close to the standard errors in the text.

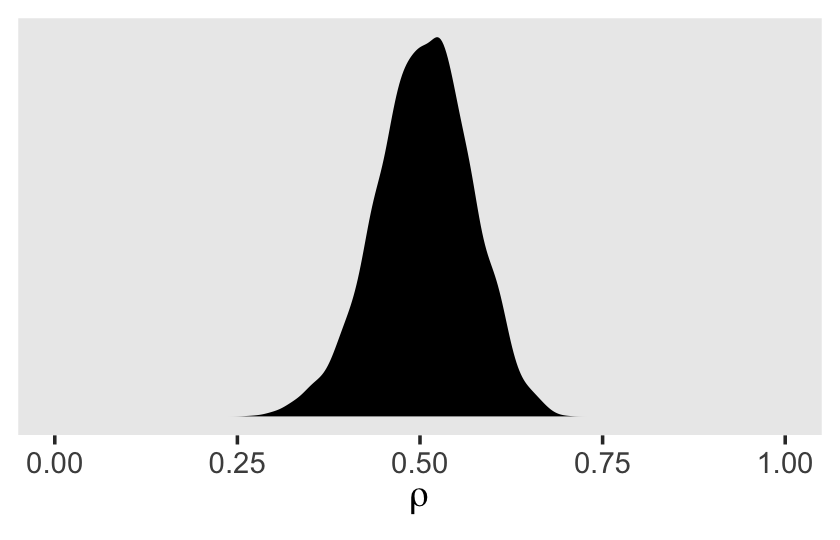

One of the advantages of our Bayesian method is that when we compute something like the intraclass correlation coefficient \(\rho\), we get an entire distribution for the parameter rather than a measly point estimates. This is always the case with Bayes. The algebraic transformations of the posterior distribution are themselves distributions. Before we compute \(\rho\), do pay close attention to the formula,

\[ \rho = \frac{\sigma_0^2}{\sigma_0^2 + \sigma_\epsilon^2}. \]

Even though our brms output yields the variance parameters in the standard-deviation metric, the formula for \(\rho\) demands we use variances. That’s nothing a little squaring can’t fix. Here’s what our \(\rho\) looks like.

v %>%

transmute(rho = sd_id__Intercept^2 / (sd_id__Intercept^2 + sigma^2)) %>%

ggplot(aes(x = rho)) +

geom_density(size = 0, fill = "black") +

scale_x_continuous(expression(rho), limits = 0:1) +

scale_y_continuous(NULL, breaks = NULL) +

theme(panel.grid = element_blank())

Though the posterior for \(\rho\) is indeed centered around .5, look at how wide and uncertain that distribution is. The bulk of the posterior mass takes up almost half of the parameter space. If you wanted the summary statistics, you might do what we did for the variance parameters, above.

v %>%

transmute(rho = sd_id__Intercept^2 / (sd_id__Intercept^2 + sigma^2)) %>%

summarise(mean = mean(rho),

median = median(rho),

sd = sd(rho),

ll = quantile(rho, prob = .025),

ul = quantile(rho, prob = .975)) %>%

mutate_if(is.double, round, digits = 3)## # A tibble: 1 × 5

## mean median sd ll ul

## <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 0.505 0.507 0.065 0.371 0.624Concerning \(\rho\), Singer and Willett pointed out

it summarizes the size of the residual autocorrelation in the composite unconditional means mode….

Each person has a different composite residual on each occasion of measurement. But notice the difference in the subscripts of the pieces of the composite residual: while the level-1 residual, \(\epsilon_{ij}\) has two subscripts (\(i\) and \(j\)), the level-2 residual, \(\zeta_{0i}\), has only one (\(i\)). Each person can have a different \(\epsilon_{ij}\) on each occasion, but has only one \(\zeta_{0i}\) across every occasion. The repeated presence of \(\zeta_{0i}\) in individual \(i\)’s composite residual links his or her composite residuals across occasions. The error autocorrelation coefficient quantifies the magnitude of this linkage; in the unconditional means model, the error autocorrelation coefficient is the intraclass correlation coefficient. Thus, we estimate that, for each person, the average correlation between any pair of composite residuals–between occasions 1 and 2, or 2 and 3, or 1 and 3–is [.5]. (pp. 96–97, emphasis in the original)

Because of the differences in how they’re estimated with and presented by brm(), we focused right on the variance components. But before we move on to the next section, we should back up a bit. On page 93, Singer and Willett discussed their estimate for \(\gamma_{00}\). Here’s ours.

fixef(fit4.1)## Estimate Est.Error Q2.5 Q97.5

## Intercept 0.9226063 0.09813736 0.728995 1.119117They talked about how squaring that value puts it back to the natural metric the data were originally collected in. [Recall that as discussed earlier in the text the alcuse variable was square-root transformed because of excessive skew.] If you want a quick and dirty look, you can square our results, too.

fixef(fit4.1)^2 ## Estimate Est.Error Q2.5 Q97.5

## Intercept 0.8512023 0.009630941 0.5314337 1.252424However, I do not recommend this method. Though it did okay at transforming the posterior mean (i.e., Estimate), it’s not a great way to get the summary statistics correct. To do that, you’ll need to work with the posterior samples themselves. Remember how we saved them as draws? Let’s refresh ourselves and look at the first few columns.

draws %>%

select(b_Intercept:sigma) %>%

head()## # A tibble: 6 × 3

## b_Intercept sd_id__Intercept sigma

## <dbl> <dbl> <dbl>

## 1 0.941 0.719 0.737

## 2 0.986 0.647 0.809

## 3 0.805 0.793 0.812

## 4 0.948 0.772 0.810

## 5 0.854 0.741 0.818

## 6 0.839 0.696 0.783See that b_Intercept column there? That contains our posterior draws from \(\gamma_{00}\). If you want proper summary statistics from the transformed estimate, get them after transforming that column.

draws %>%

transmute(gamma_00_squared = b_Intercept^2) %>%

summarise(mean = mean(gamma_00_squared),

median = median(gamma_00_squared),

sd = sd(gamma_00_squared),

ll = quantile(gamma_00_squared, prob = .025),

ul = quantile(gamma_00_squared, prob = .975)) %>%

mutate_if(is.double, round, digits = 3) %>%

pivot_longer(everything())## # A tibble: 5 × 2

## name value

## <chr> <dbl>

## 1 mean 0.861

## 2 median 0.849

## 3 sd 0.181

## 4 ll 0.531

## 5 ul 1.25And one last bit before we move on to the next section. Remember how we discovered what the brm() default priors were for our model with the handy get_prior() function? Let’s refresh ourselves on how that worked.

get_prior(data = alcohol1_pp,

family = gaussian,

alcuse ~ 1 + (1 | id))## prior class coef group resp dpar nlpar lb ub source

## student_t(3, 1, 2.5) Intercept default

## student_t(3, 0, 2.5) sd 0 default

## student_t(3, 0, 2.5) sd id 0 (vectorized)

## student_t(3, 0, 2.5) sd Intercept id 0 (vectorized)

## student_t(3, 0, 2.5) sigma 0 defaultWe inserted the data and the model and get_prior() returned the default priors. Especially for new Bayesians, or even for experienced Bayesians working with unfamiliar models, it can be handy to plot your priors to get a sense of them.

Base R has an array of functions based on the \(t\) distribution (e.g., rt(), dt()). These functions are limited in that while they allow users to select the desired \(\nu\) values (i.e., degrees of freedom), they fix \(\mu = 0\) and \(\sigma = 1\). If you want to stick with the base R functions, you can find tricky ways around this. To avoid overwhelming anyone new to Bayes or the multilevel model or R or some exasperating combination, let’s just make things simpler and use a couple convenience functions from the ggdist package (Kay, 2021).

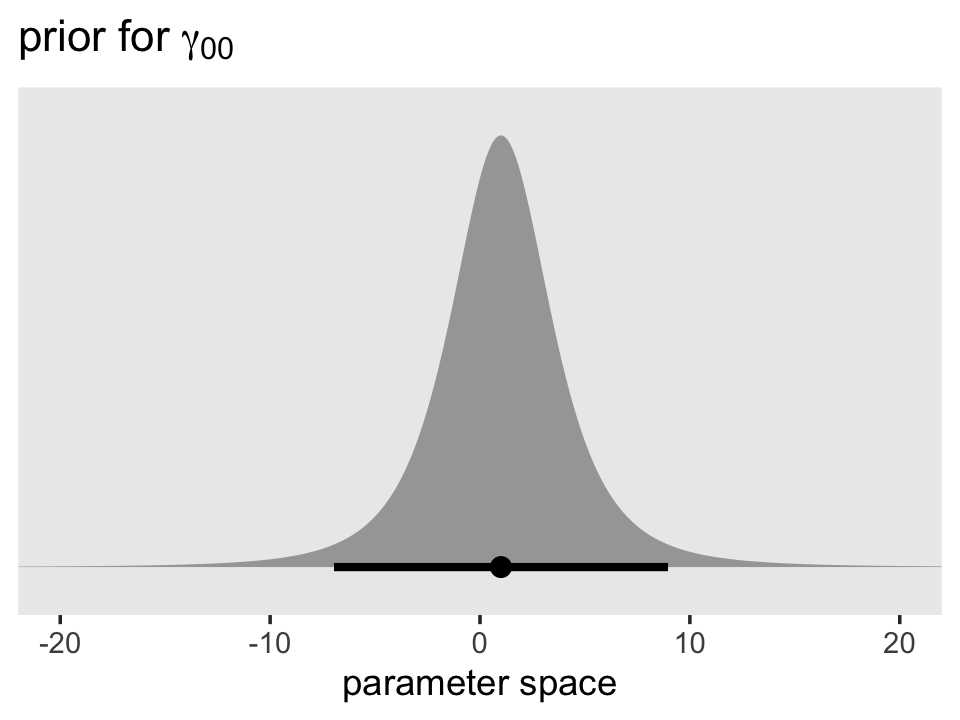

We’ll start with the default intercept prior, \(t(\nu = 3, \mu = 1, \sigma = 2.5)\). Here’s the density in the range \([-20, 20]\).

library(ggdist)##

## Attaching package: 'ggdist'## The following objects are masked from 'package:brms':

##

## dstudent_t, pstudent_t, qstudent_t, rstudent_tprior(student_t(3, 1, 2.5)) %>%

parse_dist() %>%

ggplot(aes(xdist = .dist_obj, y = prior)) +

stat_halfeye(.width = .95, p_limits = c(.001, .999)) +

scale_y_discrete(NULL, breaks = NULL, expand = expansion(add = 0.1)) +

labs(title = expression(paste("prior for ", gamma[0][0])),

x = "parameter space") +

theme(panel.grid = element_blank()) +

coord_cartesian(xlim = c(-20, 20))

Though it’s centered on 1, the inner 95% of the density is well between -10 and 10. Given the model estimate ended up about 0.9, it looks like that was a pretty broad and minimally-informative prior. However, the prior isn’t flat and it does help guard against wasting time and HMC iterations sampling from ridiculous regions of the parameter space such as -10,000 or +500,000,000. No adolescent is drinking that much (or that little–how does one drink a negative value?).

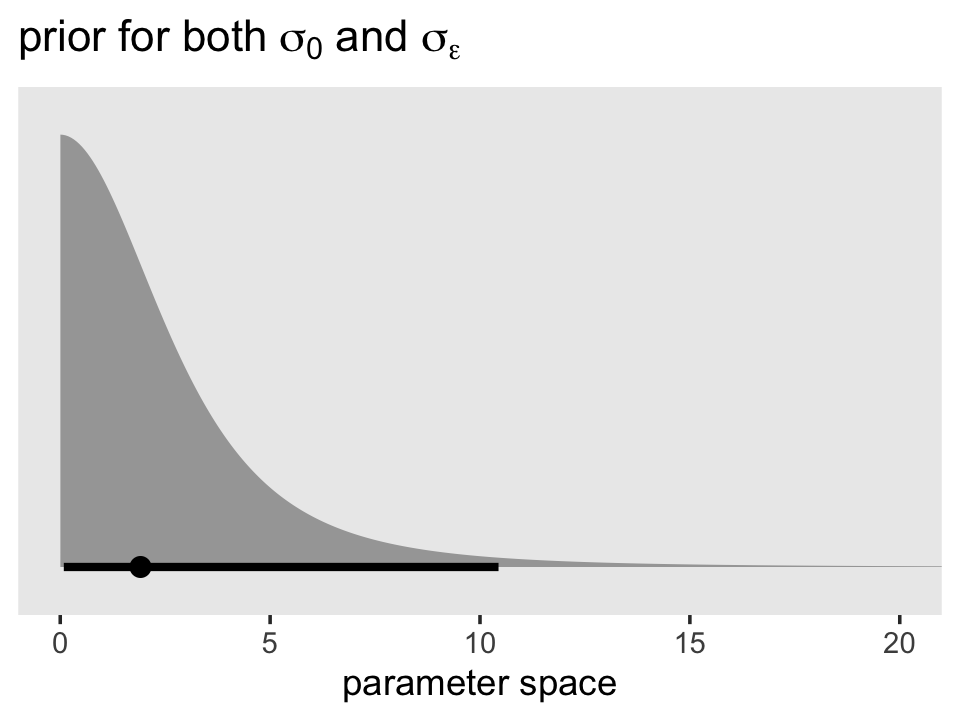

Here’s the shape of the variance priors.

prior(student_t(3, 0, 2.5), lb = 0) %>%

parse_dist() %>%

ggplot(aes(xdist = .dist_obj, y = prior)) +

stat_halfeye(.width = .95, p_limits = c(.001, .999)) +

scale_y_discrete(NULL, breaks = NULL, expand = expansion(add = 0.1)) +

labs(title = expression(paste("prior for both ", sigma[0], " and ", sigma[epsilon])),

x = "parameter space") +

coord_cartesian(xlim = c(0, 20)) +

theme(panel.grid = element_blank())

Recall that by brms default, the variance parameters have a lower-limit of 0. So specifying a Student’s \(t\) or other Gaussian-like prior on them ends up cutting the distribution off at 0. Given that our estimates were both below 1, it appears that these priors were minimally informative. But again, they did help prevent brm() from sampling from negative values or from obscenely-large values.

These priors look kinda silly, you might say. Anyone with a little common sense can do better. Well, sure. Probably. Maybe. But keep in mind we’re still getting the layout of the land. And plus, this was a pretty simple model. Selecting high-quality priors gets tricky as the models get more complicated. In other chapters, we’ll explore other ways to specify priors for our multilevel models. But to keep things simple for now, let’s keep practicing inspecting and using the defaults with get_prior() and so on.

4.4.2 The unconditional growth model.

Using the composite formula, our next model, the unconditional growth model, follows the form

\[ \begin{align*} \text{alcuse}_{ij} & = \gamma_{00} + \gamma_{10} \text{age_14}_{ij} + \zeta_{0i} + \zeta_{1i} \text{age_14}_{ij} + \epsilon_{ij} \\ \epsilon_{ij} & \sim \operatorname{Normal} (0, \sigma_\epsilon^2) \\ \begin{bmatrix} \zeta_{0i} \\ \zeta_{1i} \end{bmatrix} & \sim \operatorname{Normal} \begin{pmatrix} \begin{bmatrix} 0 \\ 0 \end{bmatrix}, \begin{bmatrix} \sigma_0^2 & \sigma_{01} \\ \sigma_{01} & \sigma_1^2 \end{bmatrix} \end{pmatrix}. \end{align*} \]

With it, we now have a full composite stochastic model. Let’s query the brms::brm() default priors when we apply this model to our data.

get_prior(data = alcohol1_pp,

family = gaussian,

alcuse ~ 0 + Intercept + age_14 + (1 + age_14 | id))## prior class coef group resp dpar nlpar lb ub source

## (flat) b default

## (flat) b age_14 (vectorized)

## (flat) b Intercept (vectorized)

## lkj(1) cor default

## lkj(1) cor id (vectorized)

## student_t(3, 0, 2.5) sd 0 default

## student_t(3, 0, 2.5) sd id 0 (vectorized)

## student_t(3, 0, 2.5) sd age_14 id 0 (vectorized)

## student_t(3, 0, 2.5) sd Intercept id 0 (vectorized)

## student_t(3, 0, 2.5) sigma 0 defaultSeveral things of note: First, notice how we continue to use the student_t(3, 0, 2.5) for all three of our standard-deviation-metric variance parameters. Since we’re now estimating \(\sigma_0\) and \(\sigma_1\), which themselves have a correlation, \(\rho_{01}\), we have a prior of class = cor. I’m going to put off what is meant by the name lkj, but for the moment just realize that this prior is essentially noninformative within this context.

There’s a major odd development with this output. Notice how the prior column is (flat) for the rows for our two coefficients of class b. And if you’re a little confused, recall that because our predictor age_14 is not mean-centered, we’ve used the 0 + Intercept syntax, which switches the model intercept parameter to the class of b. From the set_prior section of the reference manual for brms version 2.12.0, we read: “The default prior for population-level effects (including monotonic and category specific effects) is an improper flat prior over the reals” (p. 179). At present, these priors are uniform across the entire parameter space. They’re not just weak, their entirely noninformative. That is, the likelihood dominates the posterior for those parameters.

Here’s how to fit the model with these priors.

fit4.2 <-

brm(data = alcohol1_pp,

family = gaussian,

alcuse ~ 0 + Intercept + age_14 + (1 + age_14 | id),

prior = c(prior(student_t(3, 0, 2.5), class = sd),

prior(student_t(3, 0, 2.5), class = sigma),

prior(lkj(1), class = cor)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 4,

control = list(adapt_delta = .9),

file = "fits/fit04.02")How did we do?

print(fit4.2, digits = 3)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: alcuse ~ 0 + Intercept + age_14 + (1 + age_14 | id)

## Data: alcohol1_pp (Number of observations: 246)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Group-Level Effects:

## ~id (Number of levels: 82)

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sd(Intercept) 0.792 0.103 0.595 0.998 1.001 803 1574

## sd(age_14) 0.368 0.092 0.155 0.529 1.007 360 314

## cor(Intercept,age_14) -0.120 0.263 -0.504 0.583 1.004 499 379

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 0.650 0.107 0.438 0.865 1.003 2078 2594

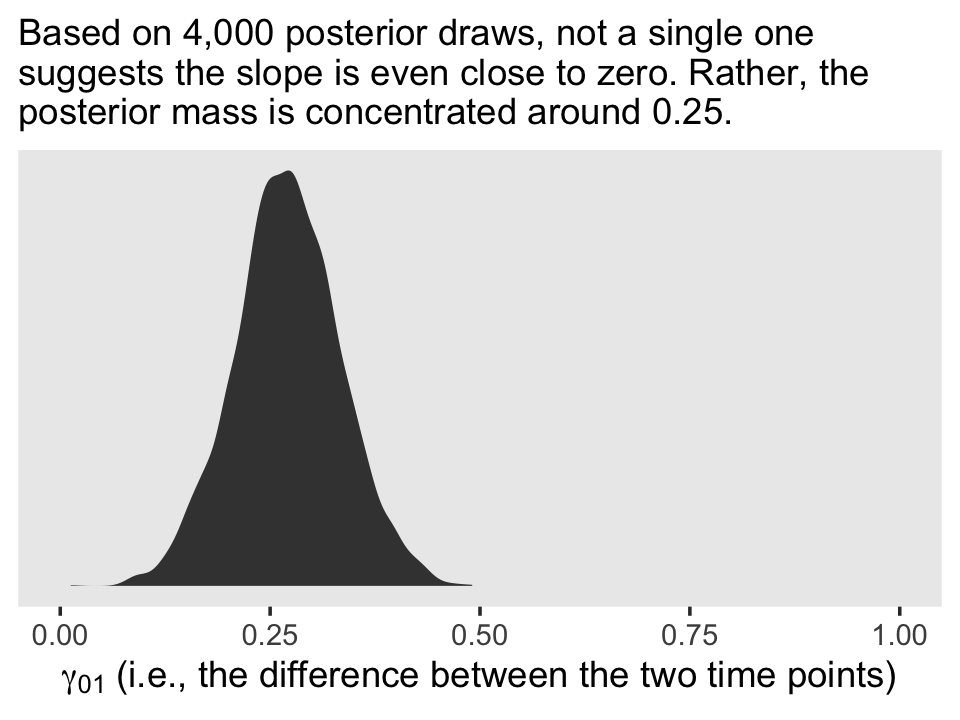

## age_14 0.272 0.065 0.147 0.400 1.001 3540 2850

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 0.604 0.051 0.515 0.715 1.003 435 500

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

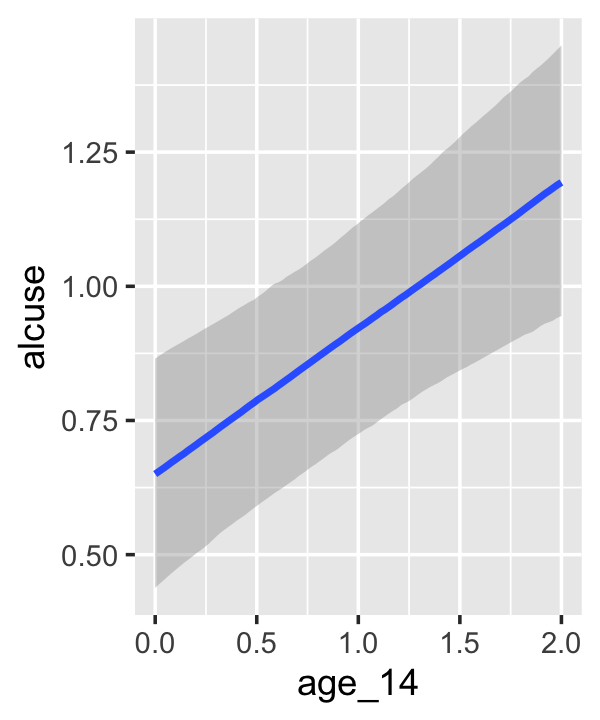

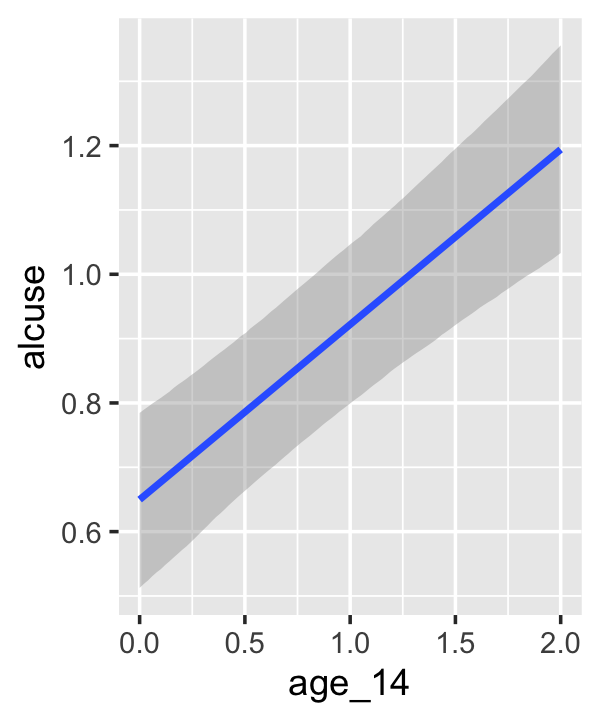

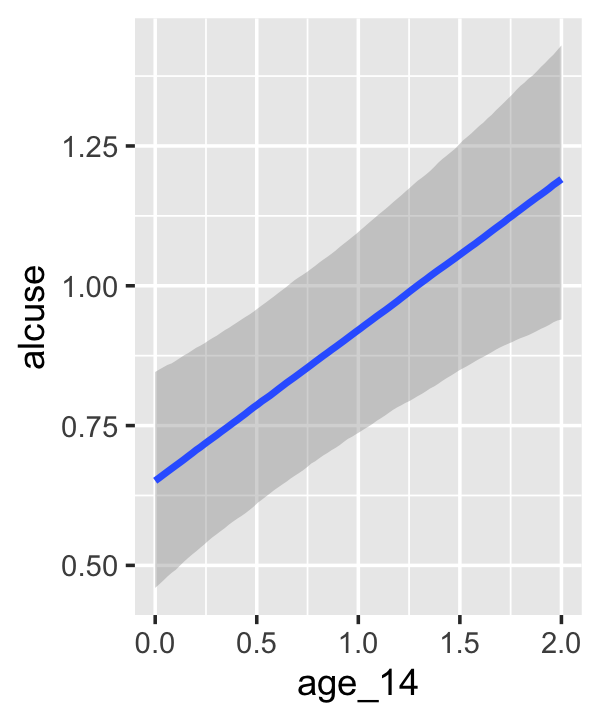

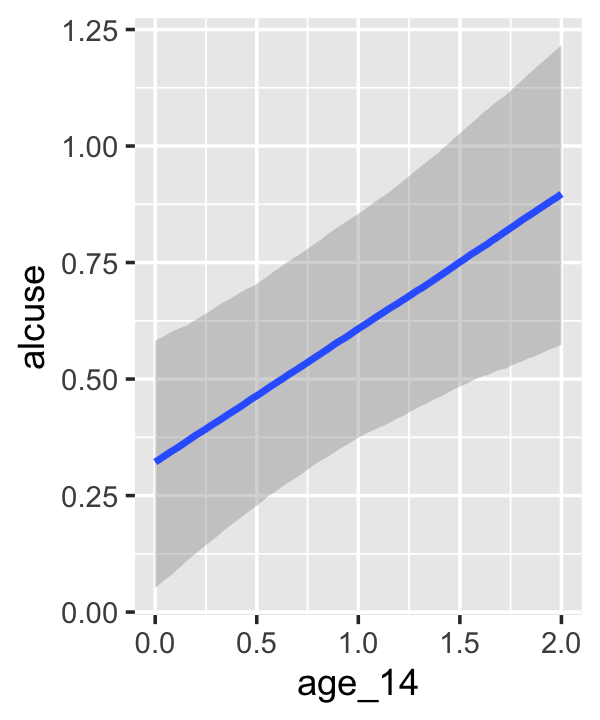

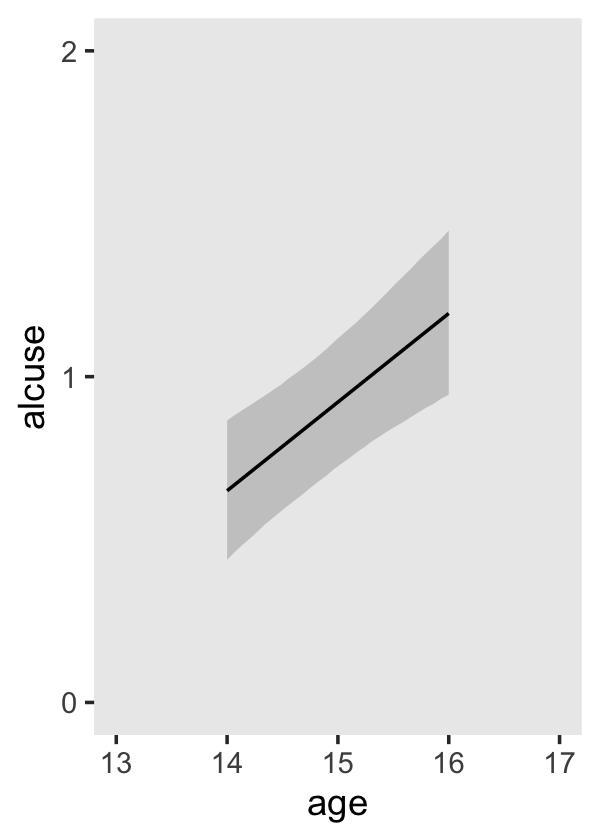

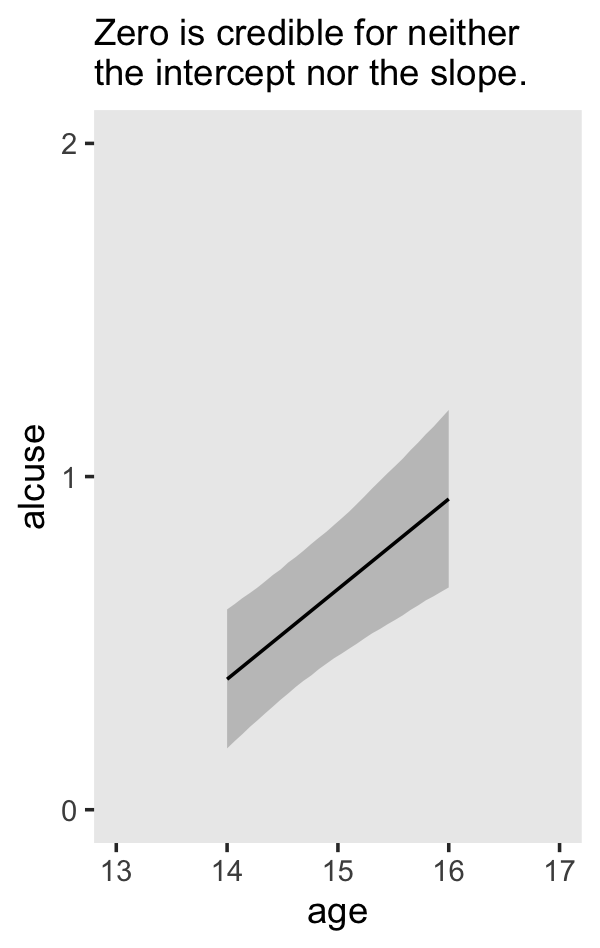

## scale reduction factor on split chains (at convergence, Rhat = 1).If your compare our results with those in the “Model B” column in Table 4.1, you’ll see our summary results match well with those in the text. Our \(\gamma\)’s (i.e., ‘Population-Level Effects:’) are near identical. The leftmost panel in Figure 4.3 shows the prototypical trajectory, based on the \(\gamma\)s. A quick way to get that within our brms framework is with the conditional_effects() function. Here’s the default output.

conditional_effects(fit4.2)

Staying with conditional_effects() allows users some flexibility for customizing the plot(s). For example, the default behavior is to depict the trajectory in terms of its 95% intervals and posterior median. If you’d prefer the 80% intervals and the posterior mean, customize it like so.

conditional_effects(fit4.2,

robust = F,

prob = .8)

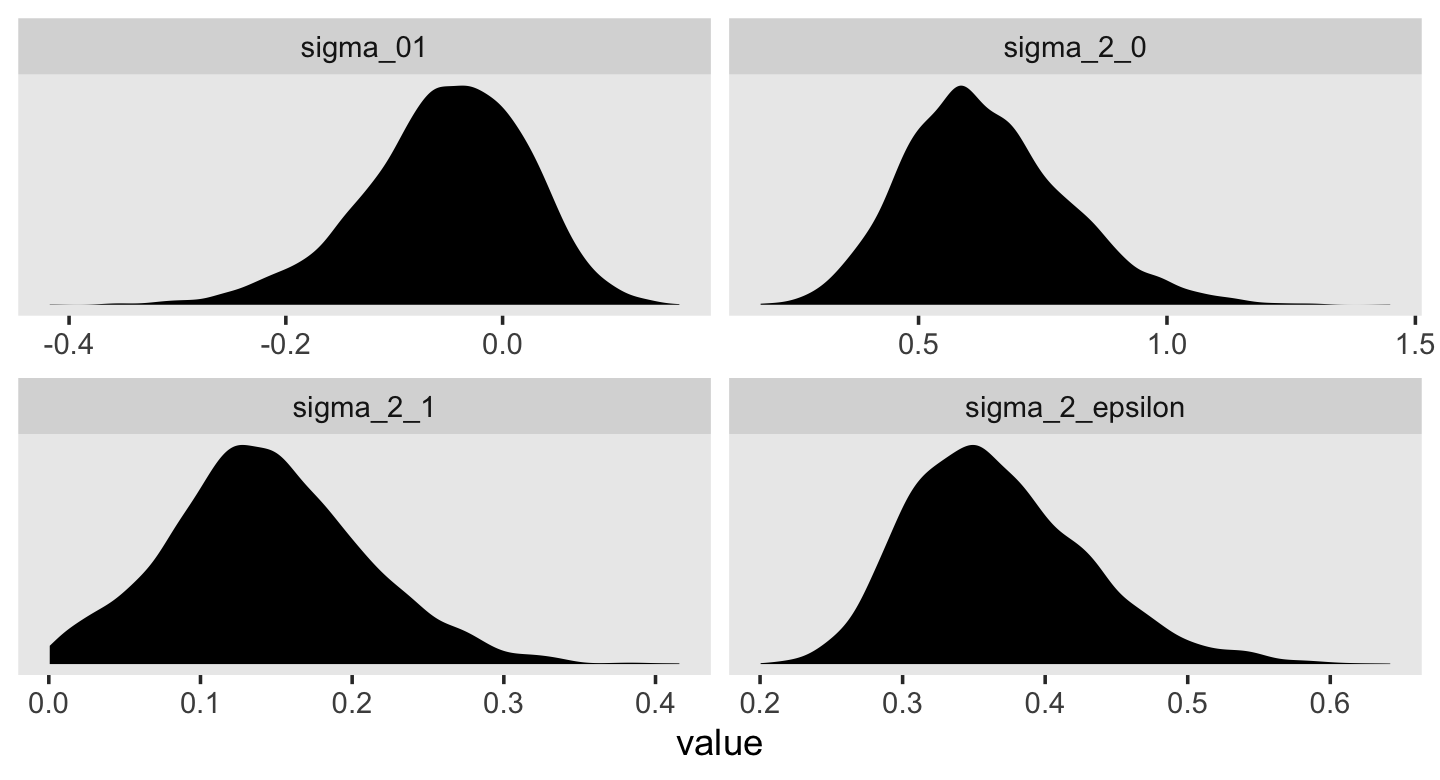

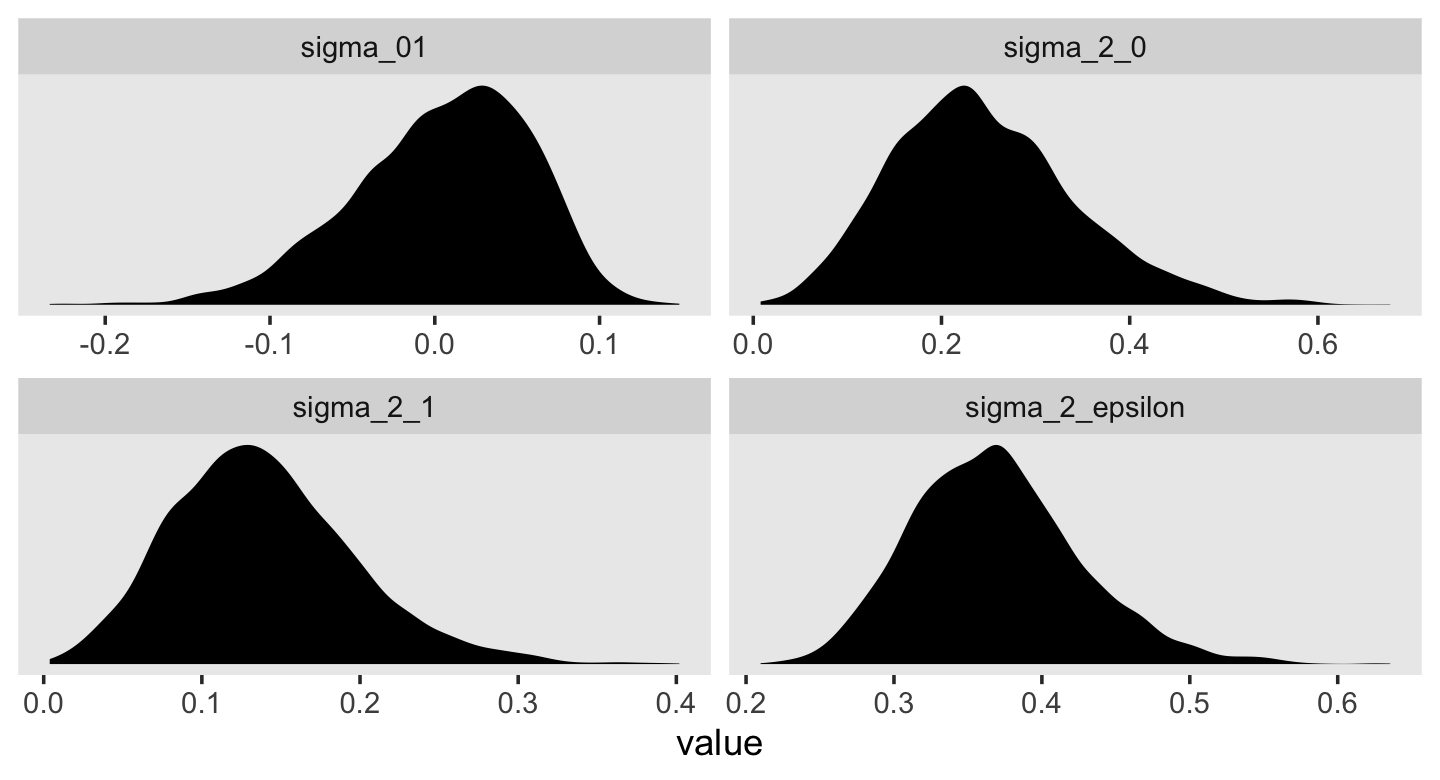

We’ll explore more options with brms::conditional_effects() with Model C. For now, let’s turn our focus on the stochastic elements in the model. Here we extract the posterior samples and do the conversions to see how they compare with Singer and Willett’s.

draws <- as_draws_df(fit4.2)

v <-

draws %>%

transmute(sigma_2_epsilon = sigma^2,

sigma_2_0 = sd_id__Intercept^2,

sigma_2_1 = sd_id__age_14^2,

sigma_01 = sd_id__Intercept * cor_id__Intercept__age_14 * sd_id__age_14)

head(v)## # A tibble: 6 × 4

## sigma_2_epsilon sigma_2_0 sigma_2_1 sigma_01

## <dbl> <dbl> <dbl> <dbl>

## 1 0.316 0.568 0.104 -0.0409

## 2 0.333 0.751 0.0648 0.0311

## 3 0.429 0.543 0.0544 0.0210

## 4 0.505 0.408 0.106 0.0311

## 5 0.450 0.694 0.0122 -0.00762

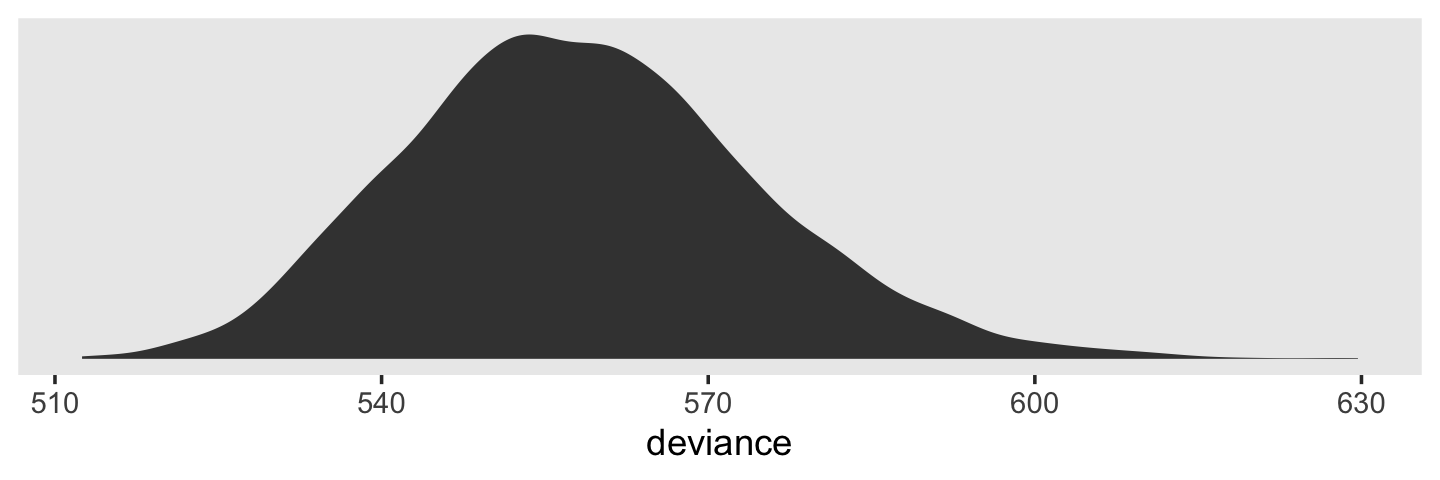

## 6 0.454 0.501 0.0177 0.0522This time, our v object only contains the stochastic components in the variance metric. Let’s plot.

v %>%

pivot_longer(everything()) %>%

ggplot(aes(x = value)) +

geom_density(size = 0, fill = "black") +

scale_y_continuous(NULL, breaks = NULL) +

theme(panel.grid = element_blank()) +

facet_wrap(~ name, scales = "free")

For each, their posterior mass is centered near the point estimates Singer and Willet reported in the text. Here are the summary statistics.

v %>%

pivot_longer(everything()) %>%

group_by(name) %>%

summarise(mean = mean(value),

median = median(value),

sd = sd(value),

ll = quantile(value, prob = .025),

ul = quantile(value, prob = .975)) %>%

mutate_if(is.double, round, digits = 3)## # A tibble: 4 × 6

## name mean median sd ll ul

## <chr> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 sigma_01 -0.053 -0.047 0.076 -0.222 0.078

## 2 sigma_2_0 0.637 0.62 0.165 0.354 0.997

## 3 sigma_2_1 0.144 0.14 0.065 0.024 0.28

## 4 sigma_2_epsilon 0.367 0.359 0.063 0.265 0.511Happily, they’re quite comparable to those in the text.

We’ve been pulling the posterior samples for all parameters with as_draws_df() and subsetting to a few variables of interest, such as the variance parameters. But it our primary interest is just the iterations for the variance parameters, we can extract them in a more focused way with the VarCorr() function. Here’s how we’d do so for fit4.2.

VarCorr(fit4.2, summary = F) %>%

str()## List of 2

## $ id :List of 3

## ..$ sd : num [1:4000, 1:2] 0.754 0.866 0.737 0.639 0.833 ...

## .. ..- attr(*, "dimnames")=List of 2

## .. .. ..$ draw : chr [1:4000] "1" "2" "3" "4" ...

## .. .. ..$ variable: chr [1:2] "Intercept" "age_14"

## .. ..- attr(*, "nchains")= int 4

## ..$ cor: num [1:4000, 1:2, 1:2] 1 1 1 1 1 1 1 1 1 1 ...

## .. ..- attr(*, "dimnames")=List of 3

## .. .. ..$ : NULL

## .. .. ..$ : chr [1:2] "Intercept" "age_14"

## .. .. ..$ : chr [1:2] "Intercept" "age_14"

## ..$ cov: num [1:4000, 1:2, 1:2] 0.568 0.751 0.543 0.408 0.694 ...

## .. ..- attr(*, "dimnames")=List of 3

## .. .. ..$ : NULL

## .. .. ..$ : chr [1:2] "Intercept" "age_14"

## .. .. ..$ : chr [1:2] "Intercept" "age_14"

## $ residual__:List of 1

## ..$ sd: num [1:4000, 1] 0.562 0.577 0.655 0.71 0.671 ...

## .. ..- attr(*, "dimnames")=List of 2

## .. .. ..$ draw : chr [1:4000] "1" "2" "3" "4" ...

## .. .. ..$ variable: chr ""

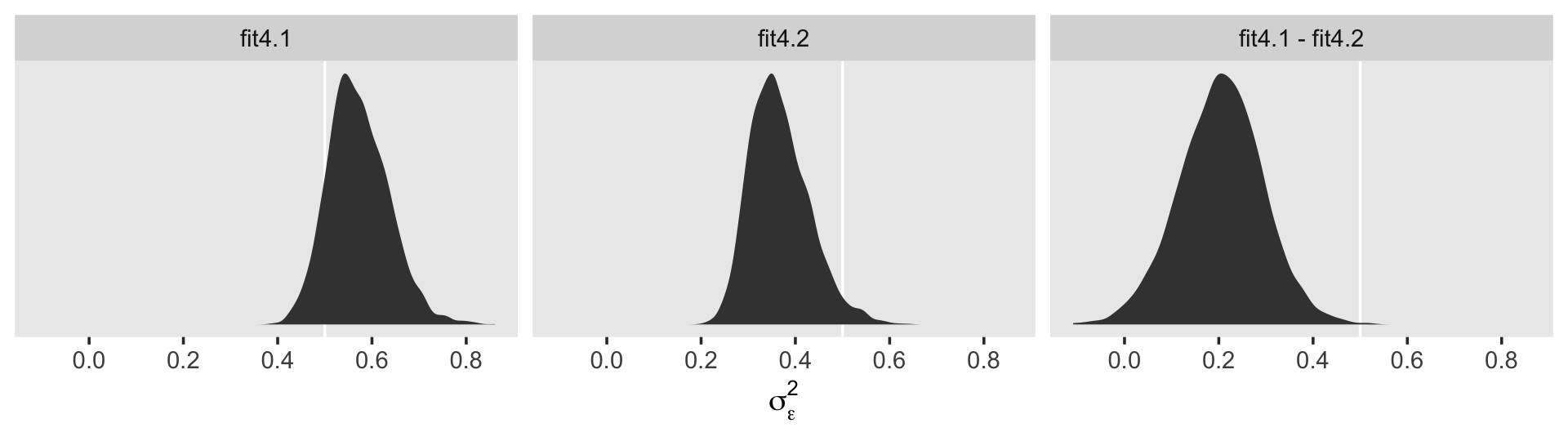

## .. ..- attr(*, "nchains")= int 4That last part, the contents of the second higher-level list indexed by $ residual, contains the contents for \(\sigma_\epsilon\). On page 100 in the text, Singer and Willett compared \(\sigma_\epsilon^2\) from the first model to that from the second. We might do that like so.

cbind(VarCorr(fit4.1, summary = F)[[2]][[1]],

VarCorr(fit4.2, summary = F)[[2]][[1]]) %>%

data.frame() %>%

mutate_all(~.^2) %>%

set_names(str_c("fit4.", 1:2)) %>%

mutate(`fit4.1 - fit4.2` = fit4.1 - fit4.2) %>%

pivot_longer(everything()) %>%

mutate(name = factor(name, levels = c("fit4.1", "fit4.2", "fit4.1 - fit4.2"))) %>%

ggplot(aes(x = value)) +

geom_vline(xintercept = .5, color = "white") +

geom_density(fill = "grey25", color = "transparent") +

scale_y_continuous(NULL, breaks = NULL) +

xlab(expression(sigma[epsilon]^2)) +

theme(panel.grid = element_blank()) +

facet_wrap(~ name, scales = "free_y", ncol = 3)

To compute a formal summary of the decline in \(\sigma_\epsilon^2\) after adding time to the model, we might summarize like before.

cbind(VarCorr(fit4.1, summary = F)[[2]][[1]],

VarCorr(fit4.2, summary = F)[[2]][[1]]) %>%

data.frame() %>%

mutate_all(~ .^2) %>%

set_names(str_c("fit4.", 1:2)) %>%

mutate(proportion_decline = (fit4.1 - fit4.2) / fit4.1) %>%

summarise(mean = mean(proportion_decline),

median = median(proportion_decline),

sd = sd(proportion_decline),

ll = quantile(proportion_decline, prob = .025),

ul = quantile(proportion_decline, prob = .975)) %>%

mutate_if(is.double, round, digits = 3)## mean median sd ll ul

## 1 0.35 0.365 0.132 0.051 0.569In case it wasn’t clear, when we presented fit4.1 – fit4.2 in the density plot, that was a simple difference score. However, we computed proportion_decline above by dividing that difference score by fit4.1; that’s what put the difference in a proportion metric. Anyway, Singer and Willett’s method led them to summarize the decline as .40. Our method was a more conservative .34-ish. And very happily, our method allows us to describe the proportion decline with summary statistics for the full posterior, such as with the \(\textit{SD}\) and the 95% intervals.

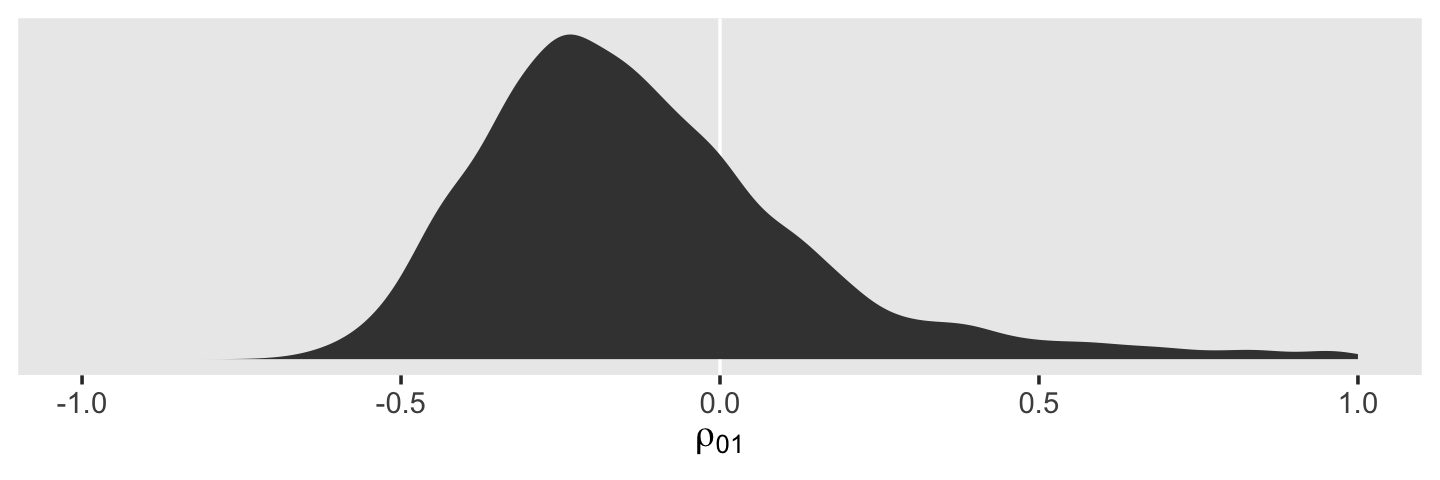

draws %>%

ggplot(aes(x = cor_id__Intercept__age_14)) +

geom_vline(xintercept = 0, color = "white") +

geom_density(fill = "grey25", color = "transparent") +

scale_x_continuous(expression(rho[0][1]), limits = c(-1, 1)) +

scale_y_continuous(NULL, breaks = NULL) +

theme(panel.grid = element_blank())

The estimate Singer and Willett hand-computed in the text, -.22, is near the mean of our posterior distribution for \(\rho_{01}\). However, our distribution provides a full expression of the uncertainty in the parameter. As are many other values within the parameter space, zero is indeed a credible value for \(\rho_{01}\).

On page 101, we get the generic formula for computing the residual variance for a given occasion \(j\),

\[ \sigma_{\text{Residual}_j}^2 = \sigma_0^2 + \sigma_1^2 \text{time}_j + 2 \sigma_{01} \text{time}_j + \sigma_\epsilon^2. \]

If we were just interested in applying it to one of our age values, say 14, we might apply the formula to the posterior like this.

draws %>%

transmute(sigma_2_residual_j = sd_id__Intercept^2 +

sd_id__age_14^2 * 0 +

2 * sd_id__Intercept * cor_id__Intercept__age_14 * sd_id__age_14 * 0 +

sigma^2) %>%

head()## # A tibble: 6 × 1

## sigma_2_residual_j

## <dbl>

## 1 0.884

## 2 1.08

## 3 0.972

## 4 0.913

## 5 1.14

## 6 0.955But given we’d like to do so over several values of age, it might be better to wrap the equation in a custom function. Let’s call it make_s2rj().

make_s2rj <- function(x) {

draws %>%

transmute(sigma_2_residual_j = sd_id__Intercept^2 + sd_id__age_14^2 * x + 2 * sd_id__Intercept * cor_id__Intercept__age_14 * sd_id__age_14 * x + sigma^2) %>%

pull()

}Now we can put our custom make_s2rj() function to work within the purrr::map() paradigm. We’ll plot the results.

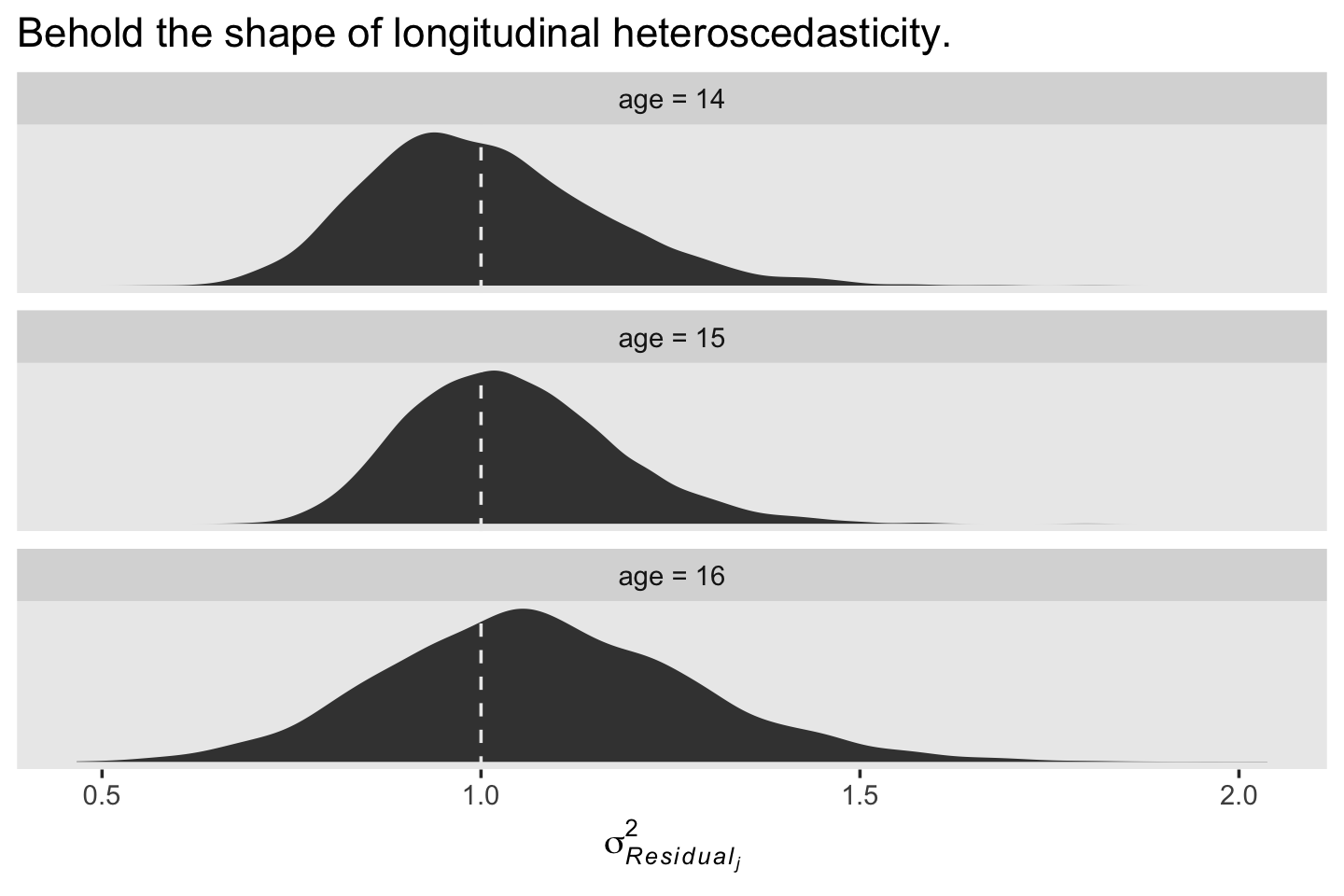

tibble(age = 14:16) %>%

mutate(age_c = age - 14) %>%

mutate(s2rj = map(age_c, make_s2rj)) %>%

unnest(s2rj) %>%

mutate(label = str_c("age = ", age)) %>%

ggplot(aes(x = s2rj)) +

geom_density(fill = "grey25", color = "transparent") +

# just for reference

geom_vline(xintercept = 1, color = "grey92", linetype = 2) +

scale_y_continuous(NULL, breaks = NULL) +

labs(title = "Behold the shape of longitudinal heteroscedasticity.",

x = expression(sigma[italic(Residual[j])]^2)) +

theme(panel.grid = element_blank()) +

facet_wrap(~ label, scales = "free_y", ncol = 1)

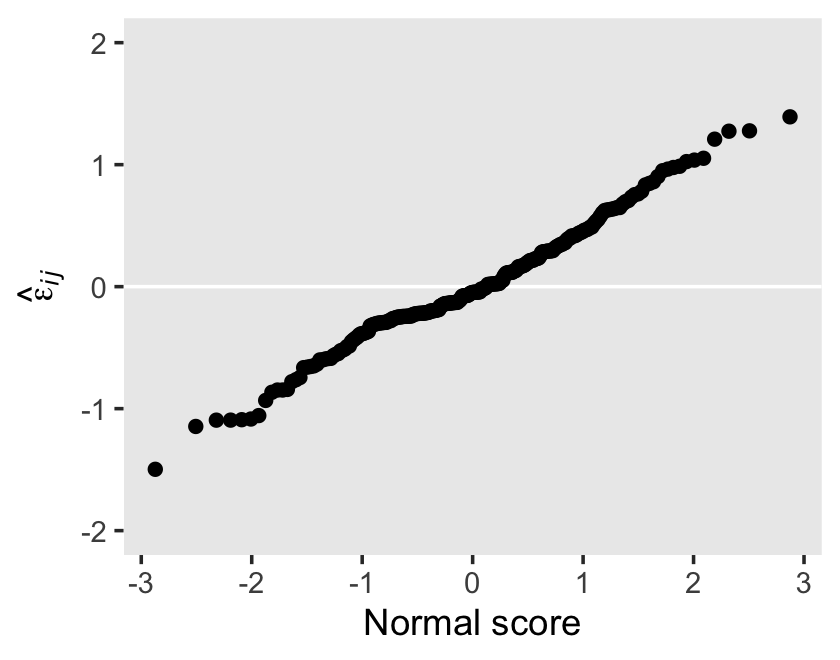

We see a subtle increase over time, particularly from age = 15 to age = 16. Yep, that’s heteroscedasticity. It is indeed “beyond the bland homoscedasticity we assume of residuals in cross-sectional data” (p. 101).

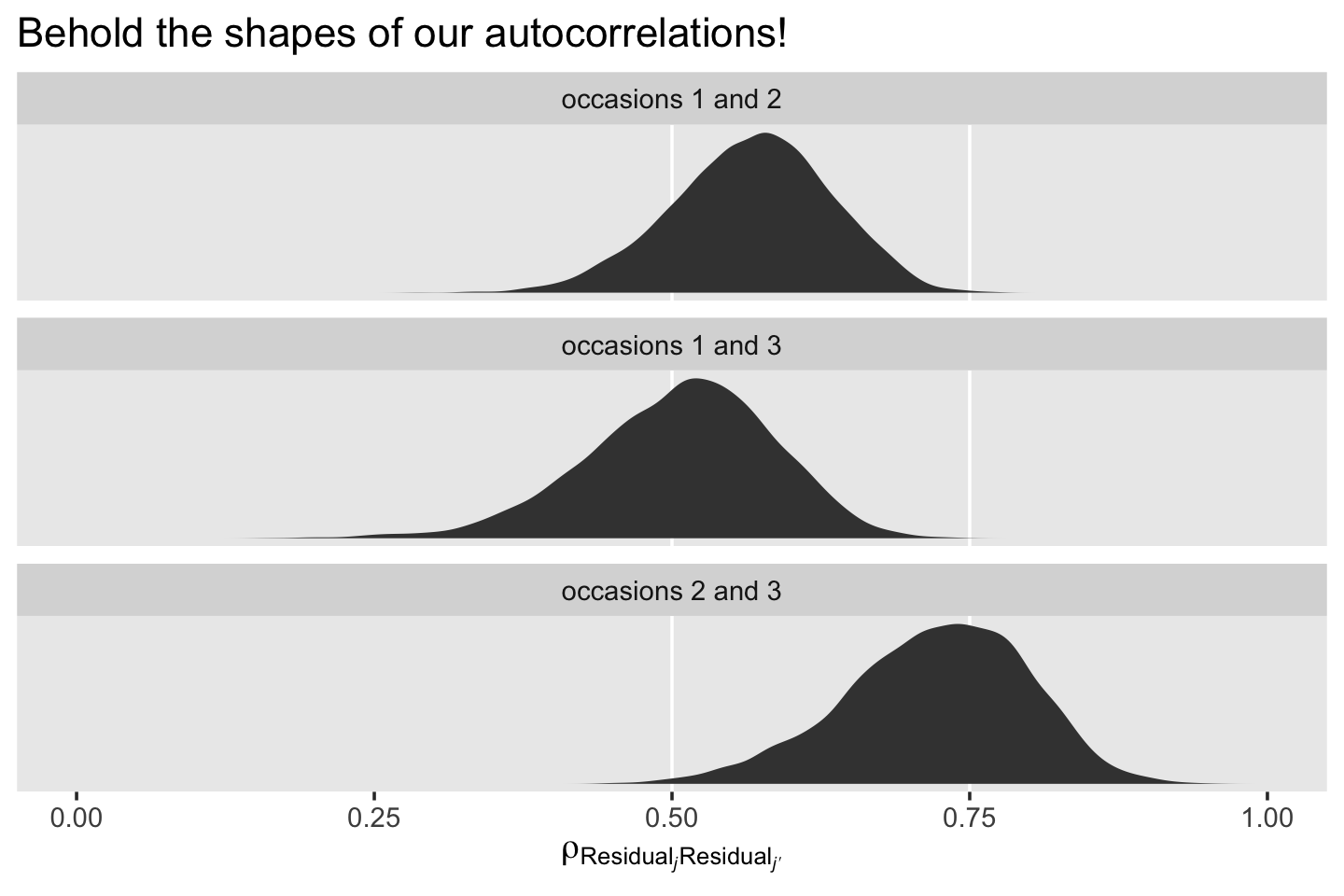

We might also be interested in computing the autocorrelation between the composite residuals on occasions \(j\) and \(j'\), which follows the formula

\[ \rho_{\text{Residual}_j, \text{Residual}_{j'}} = \frac{\sigma_0^2 + \sigma_{01} (\text{time}_j + \text{time}_{j'}) + \sigma_1^2 \text{time}_j \text{time}_{j'}} {\sqrt{\sigma_{\text{Residual}_j}^2 \sigma_{\text{Residual}_{j'}}^2 }}. \]

We only want to do that by hand once. Let’s make a custom function following the formula.

make_rho_rj_rjp <- function(j, jp) {

# define the elements in the denominator

s2rj_j <- make_s2rj(j)

s2rj_jp <- make_s2rj(jp)

# compute

draws %>%

transmute(r = (sd_id__Intercept^2 +

sd_id__Intercept * cor_id__Intercept__age_14 * sd_id__age_14 * (j + jp) +

sd_id__age_14^2 * j * jp) /

sqrt(s2rj_j * s2rj_jp)) %>%

pull()

}If you only cared about measures of central tendency, such as the posterior median, you could use the function like this.

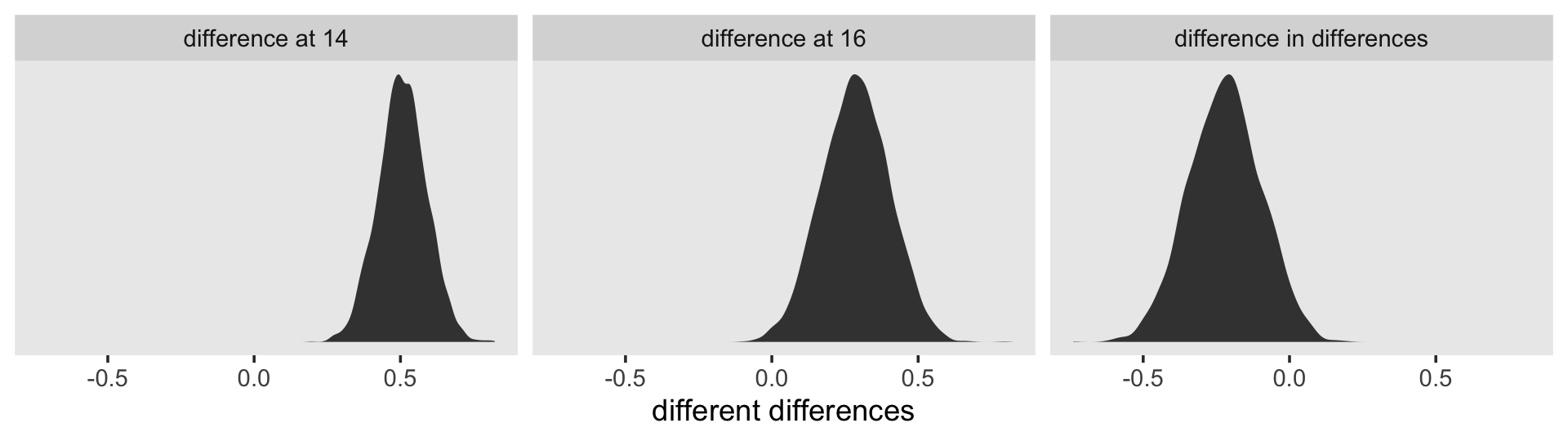

make_rho_rj_rjp(0, 1) %>% median()## [1] 0.5705756make_rho_rj_rjp(1, 2) %>% median()## [1] 0.7269452make_rho_rj_rjp(0, 2) %>% median()## [1] 0.5121775Here are the full posteriors.

tibble(occasion = 1:3) %>%

mutate(age_c = occasion - 1,

j = c(1, 2, 1) - 1,

jp = c(2, 3, 3) - 1) %>%

mutate(r = map2(j, jp, make_rho_rj_rjp)) %>%

unnest(r) %>%

mutate(label = str_c("occasions ", j + 1, " and ", jp + 1)) %>%

ggplot(aes(x = r)) +

# just for reference

geom_vline(xintercept = c(.5, .75), color = "white") +

geom_density(fill = "grey25", color = "transparent") +

scale_x_continuous(expression(rho[Residual[italic(j)]][Residual[italic(j*minute)]]), limits = 0:1) +

scale_y_continuous(NULL, breaks = NULL) +

ggtitle("Behold the shapes of our autocorrelations!") +

theme(panel.grid = element_blank()) +

facet_wrap(~ label, scales = "free_y", ncol = 1)

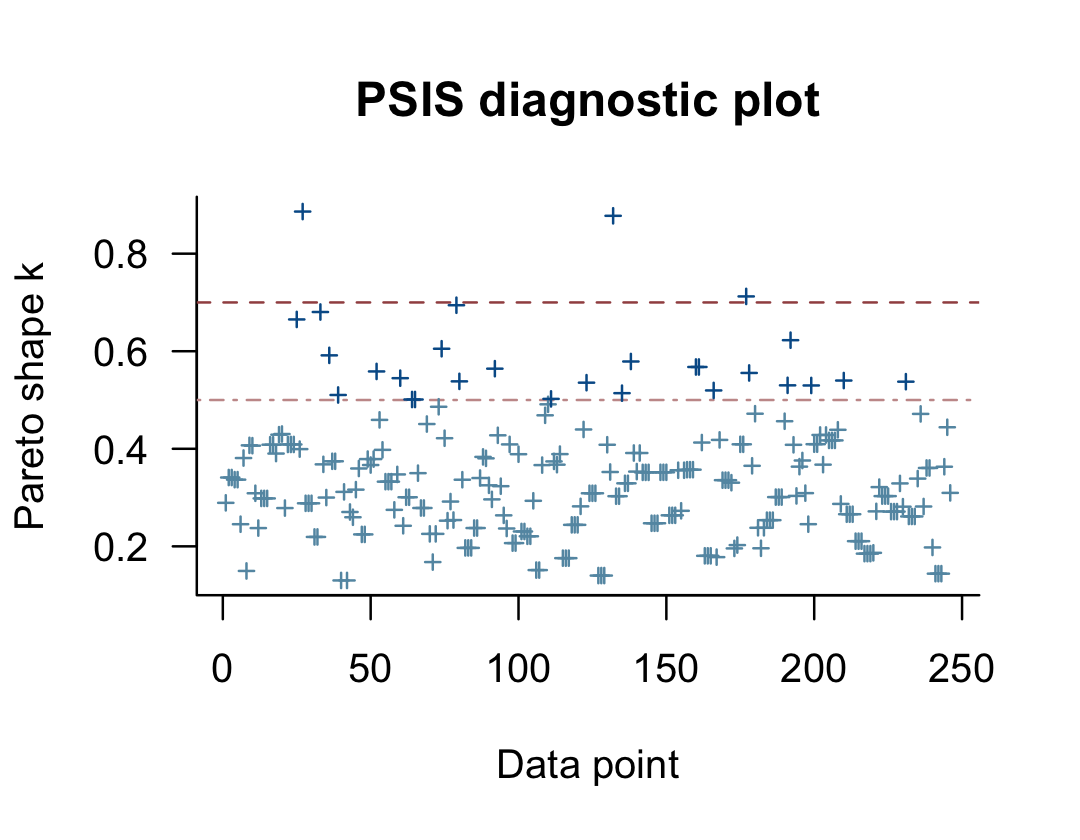

4.4.3 Quantifying the proportion of outcome variation “explained.”

Because of the way the multilevel model partitions off variance into different sources (e.g., \(\sigma_0^2\), \(\sigma_1^2\), and \(\sigma_\epsilon^2\) in the unconditional growth model), the conventional \(R^2\) is not applicable for evaluating models in the traditional OLS sense of percent of variance explained. Several pseudo \(R^2\) statistics are frequently used instead. Be warned, “statisticians have yet to agree on appropriate summaries (I. G. Kreft & de Leeuw, 1998; Snijders & Bosker, 1994)” (p. 102). See also Jaeger et al. (2017), Jason D. Rights & Cole (2018), and Jason D. Rights & Sterba (2020). To my eye, none of the solutions presented in this section are magic bullets.

4.4.3.1 An overall summary of total outcome variability explained.

In multiple regression, one simple way of computing a summary \(R^2\) statistic is to square the sample correlation between observed and predicted values of the outcome. The same approach can be used in the multilevel model for change. All you need to do is: (1) compute the predicted outcome value for each person on each occasion of measurement; and (2) square the sample correlation between observed and predicted values. The resultant pseudo-\(R^2\) statistic assesses the proportion of total outcome variation “explained” by the multilevel model’s specific contribution of predictors. (p. 102, emphasis added)

Singer and Willett called this \(R_{y, \hat y}^2\). They then walked through an example with their Model B (fit4.2), the unconditional growth model. Within our brms paradigm, we typically use the fitted() function to return predicted outcome values for cases within the data. The default option for the fitted() function is to return these predictions after accounting for the level-2 clustering. As we will see, Singer and Willett’s \(R_{y, \hat y}^2\) statistic only accounts for predictors (i.e., age_14, in this case), not clustering variables (i.e., id, in this case). To follow Singer and Willett’s specification, we need to set re_formula = NA, which will instruct fitted() to return the expected values without reference to the level-2 clustering. Here’s a look at the first six rows of that output.

fitted(fit4.2, re_formula = NA) %>%

head()## Estimate Est.Error Q2.5 Q97.5

## [1,] 0.6497368 0.10745950 0.4380402 0.8652803

## [2,] 0.9218824 0.09834597 0.7247202 1.1173032

## [3,] 1.1940281 0.12716581 0.9444807 1.4488893

## [4,] 0.6497368 0.10745950 0.4380402 0.8652803

## [5,] 0.9218824 0.09834597 0.7247202 1.1173032

## [6,] 1.1940281 0.12716581 0.9444807 1.4488893Within our Bayesian/brms paradigm, out expected values come with expressions of uncertainty in terms of the posterior standard deviation and percentile-based 95% intervals. If we followed Singer and Willett’s method in the text, we’d only work with the posterior means as presented within the Estimate column. But since we’re Bayesians, we should attempt to work with the model uncertainty. One approach is to set summary = F.

f <-

fitted(fit4.2,

summary = F,

re_formula = NA) %>%

data.frame() %>%

set_names(1:ncol(.)) %>%

rownames_to_column("draw")

head(f)## draw 1 2 3 4 5 6 7 8 9

## 1 1 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269

## 2 2 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545

## 3 3 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412

## 4 4 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410

## 5 5 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353

## 6 6 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989

## 10 11 12 13 14 15 16 17 18 19

## 1 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915

## 2 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860

## 3 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876

## 4 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516

## 5 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123

## 6 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015

## 20 21 22 23 24 25 26 27 28 29

## 1 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302

## 2 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653

## 3 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998

## 4 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807

## 5 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329

## 6 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454

## 30 31 32 33 34 35 36 37 38 39

## 1 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269

## 2 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545

## 3 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412

## 4 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410

## 5 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353

## 6 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989

## 40 41 42 43 44 45 46 47 48 49

## 1 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915

## 2 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860

## 3 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876

## 4 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516

## 5 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123

## 6 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015

## 50 51 52 53 54 55 56 57 58 59

## 1 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302

## 2 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653

## 3 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998

## 4 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807

## 5 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329

## 6 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454

## 60 61 62 63 64 65 66 67 68 69

## 1 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269

## 2 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545

## 3 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412

## 4 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410

## 5 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353

## 6 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989

## 70 71 72 73 74 75 76 77 78 79

## 1 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915

## 2 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860

## 3 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876

## 4 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516

## 5 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123

## 6 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015

## 80 81 82 83 84 85 86 87 88 89

## 1 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302

## 2 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653

## 3 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998

## 4 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807

## 5 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329

## 6 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454

## 90 91 92 93 94 95 96 97 98 99

## 1 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269

## 2 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545

## 3 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412

## 4 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410

## 5 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353

## 6 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989

## 100 101 102 103 104 105 106 107 108 109

## 1 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915

## 2 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860

## 3 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876

## 4 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516

## 5 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123

## 6 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015

## 110 111 112 113 114 115 116 117 118 119

## 1 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302

## 2 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653

## 3 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998

## 4 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807

## 5 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329

## 6 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454

## 120 121 122 123 124 125 126 127 128 129

## 1 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269

## 2 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545

## 3 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412

## 4 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410

## 5 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353

## 6 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989

## 130 131 132 133 134 135 136 137 138 139

## 1 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915

## 2 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860

## 3 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876

## 4 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516

## 5 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123

## 6 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015

## 140 141 142 143 144 145 146 147 148 149

## 1 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302

## 2 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653

## 3 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998

## 4 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807

## 5 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329

## 6 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454

## 150 151 152 153 154 155 156 157 158 159

## 1 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269

## 2 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545

## 3 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412

## 4 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410

## 5 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353

## 6 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989

## 160 161 162 163 164 165 166 167 168 169

## 1 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915

## 2 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860

## 3 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876

## 4 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516

## 5 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123

## 6 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015

## 170 171 172 173 174 175 176 177 178 179

## 1 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302

## 2 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653

## 3 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998

## 4 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807

## 5 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329

## 6 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454

## 180 181 182 183 184 185 186 187 188 189

## 1 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269

## 2 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545

## 3 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412

## 4 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410

## 5 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353

## 6 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989

## 190 191 192 193 194 195 196 197 198 199

## 1 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915

## 2 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860

## 3 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876

## 4 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516

## 5 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123

## 6 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015

## 200 201 202 203 204 205 206 207 208 209

## 1 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302

## 2 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653

## 3 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998

## 4 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807

## 5 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329

## 6 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454

## 210 211 212 213 214 215 216 217 218 219

## 1 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269

## 2 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545

## 3 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412

## 4 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410

## 5 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353

## 6 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989

## 220 221 222 223 224 225 226 227 228 229

## 1 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915

## 2 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860

## 3 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876

## 4 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516

## 5 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123

## 6 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015

## 230 231 232 233 234 235 236 237 238 239

## 1 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302

## 2 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653

## 3 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998

## 4 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807

## 5 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329

## 6 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454

## 240 241 242 243 244 245 246

## 1 1.245269 0.5987915 0.9220302 1.245269 0.5987915 0.9220302 1.245269

## 2 1.276545 0.8109860 1.0437653 1.276545 0.8109860 1.0437653 1.276545

## 3 1.208412 0.7543876 0.9813998 1.208412 0.7543876 0.9813998 1.208412

## 4 1.272410 0.6657516 0.9690807 1.272410 0.6657516 0.9690807 1.272410

## 5 1.321353 0.8239123 1.0726329 1.321353 0.8239123 1.0726329 1.321353

## 6 1.215989 0.6303015 0.9231454 1.215989 0.6303015 0.9231454 1.215989With those settings, fitted() returned a \(4,000 \times 246\) numeric array. The 4,000 rows corresponded to the 4,000 post-warmup HMC draws. Each of the 246 columns corresponded to one of the 246 rows in the original alcohol1_pp data. To make the output more useful, we converted it to a data frame, named the columns by the row numbers corresponding to the original alcohol1_pp data, and converted the row names to an draw column.

In the next code block, we’ll convert f to the long format and use left_join() to join it with the relevant subset of the alcohol1_pp data.

f <-

f %>%

pivot_longer(-draw,

names_to = "row",

values_to = "fitted") %>%

mutate(row = row %>% as.integer()) %>%

left_join(

alcohol1_pp %>%

mutate(row = 1:n()) %>%

select(row, alcuse),

by = "row"

)

f## # A tibble: 984,000 × 4

## draw row fitted alcuse

## <chr> <int> <dbl> <dbl>

## 1 1 1 0.599 1.73

## 2 1 2 0.922 2

## 3 1 3 1.25 2

## 4 1 4 0.599 0

## 5 1 5 0.922 0

## 6 1 6 1.25 1

## 7 1 7 0.599 1

## 8 1 8 0.922 2

## 9 1 9 1.25 3.32

## 10 1 10 0.599 0

## # ℹ 983,990 more rowsIf we collapse the distinction across the 4,000 HMC draws, here is the squared correlation between fitted and alcuse.

f %>%

summarise(r = cor(fitted, alcuse),

r2 = cor(fitted, alcuse)^2)## # A tibble: 1 × 2

## r r2

## <dbl> <dbl>

## 1 0.186 0.0346This is close to the \(R_{y, \hat y}^2 = .043\) Singer and Willett reported in the text. It might seem unsatisfying how this seemingly ignores model uncertainty by collapsing across HMC draws. Here’s a look at what happens is we compute the \(R_{y, \hat y}^2\) separately for each iteration.

f %>%

mutate(draw = draw %>% as.double()) %>%

group_by(draw) %>%

summarise(r = cor(fitted, alcuse),

r2 = cor(fitted, alcuse)^2)## # A tibble: 4,000 × 3

## draw r r2

## <dbl> <dbl> <dbl>

## 1 1 0.208 0.0434

## 2 2 0.208 0.0434

## 3 3 0.208 0.0434

## 4 4 0.208 0.0434

## 5 5 0.208 0.0434

## 6 6 0.208 0.0434

## 7 7 0.208 0.0434

## 8 8 0.208 0.0434

## 9 9 0.208 0.0434

## 10 10 0.208 0.0434

## # ℹ 3,990 more rowsNow for every level of draw, \(R_{y, \hat y}^2 = .0434\), which matches up nicely with the text. But it seems odd that the value should be the same for each of the 4,000 HMC draws. Sadly, my efforts to debug my workflow have been unsuccessful. If you see a flaw in this method, please share on GitHub.

Just for kicks, here’s a more compact alternative to our fitted() + left_join() approach that more closely resembles the work flow Singer and Willett showed on pages 102 and 103.

tibble(age_14 = 0:2) %>%

mutate(fitted = map(age_14, ~ draws$b_Intercept + draws$b_age_14 * .)) %>%

full_join(alcohol1_pp %>% select(id, age_14, alcuse),

by = "age_14") %>%

mutate(row = 1:n()) %>%

unnest(fitted) %>%

mutate(draw = rep(1:4000, times = alcohol1_pp %>% nrow())) %>%

group_by(draw) %>%

summarise(r = cor(fitted, alcuse),

r2 = cor(fitted, alcuse)^2)## # A tibble: 4,000 × 3

## draw r r2

## <int> <dbl> <dbl>

## 1 1 0.208 0.0434

## 2 2 0.208 0.0434

## 3 3 0.208 0.0434

## 4 4 0.208 0.0434

## 5 5 0.208 0.0434

## 6 6 0.208 0.0434

## 7 7 0.208 0.0434

## 8 8 0.208 0.0434

## 9 9 0.208 0.0434

## 10 10 0.208 0.0434

## # ℹ 3,990 more rowsEither way, our results agree with those in the text: about “4.3% of the total variability in ALCUSE is associated with linear time” (p. 103, emphasis in the original).

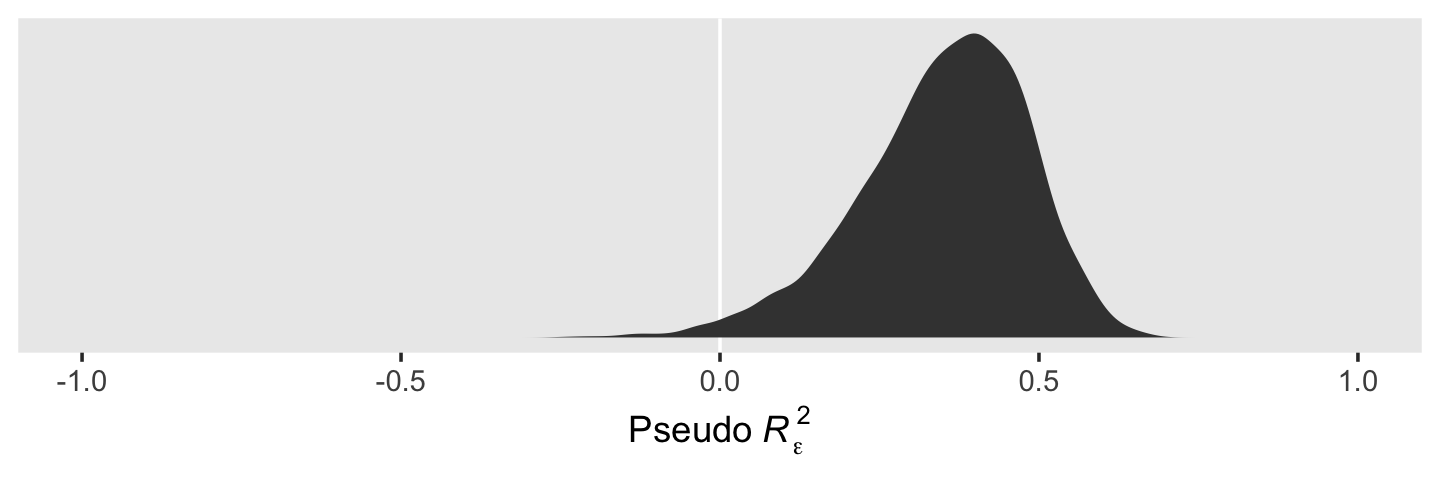

4.4.3.2 Pseudo-\(R^2\) statistics computed from the variance components.

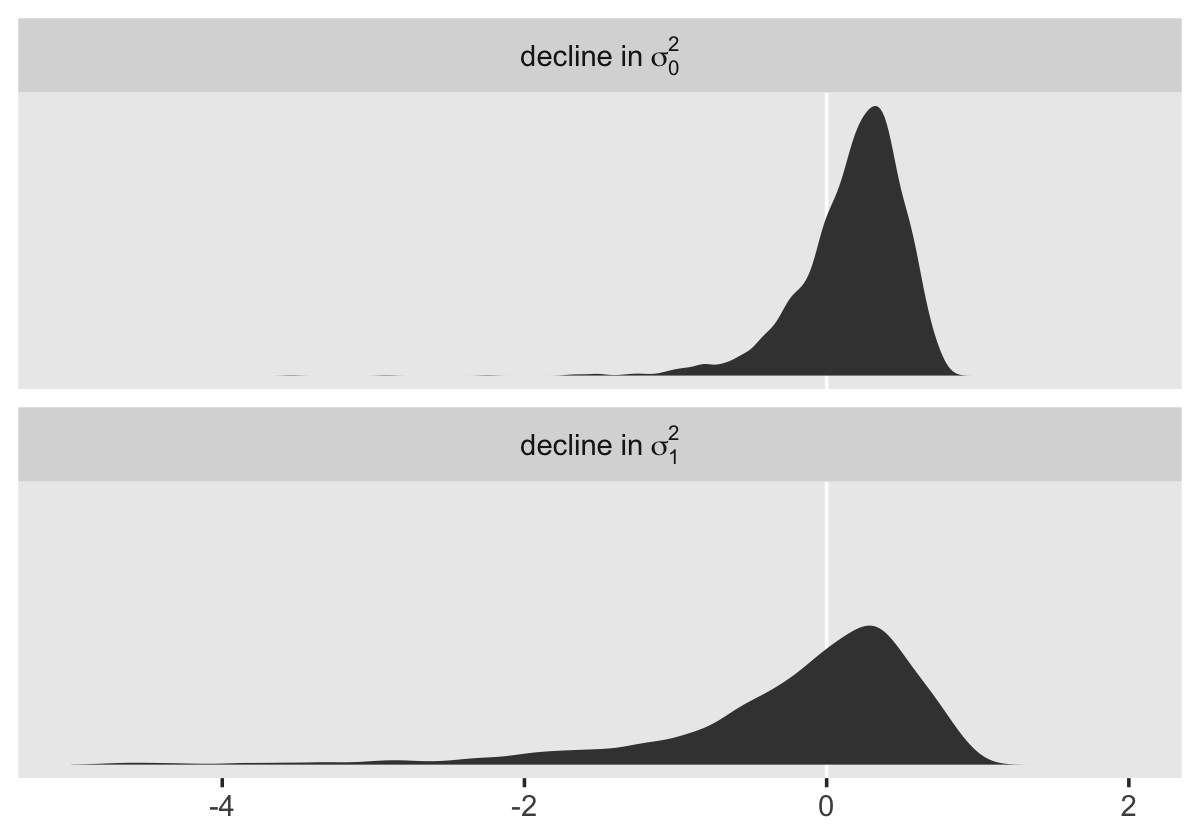

Residual variation–that portion of the outcome variation unexplained by a model’s predictors–provides another criterion for comparison. When you fit a series of models, you hope that added predictors further explain unexplained outcome variation, causing residual variation to decline. The magnitude of this decline quantifies the improvement in fit. A large decline suggests that the predictors make a big difference; a small, or zero, decline suggests that they do not. To assess these declines on a common scale, we compute the proportional reduction in residual variance as we add predictors.

Each unconditional model yields residual variances that serve as yardsticks for comparison. The unconditional means model provides a baseline estimate of \(\sigma_\epsilon^2\); the unconditional growth model provides baseline estimates of \(\sigma_0^2\) and \(\sigma_1^2\). Each leads to its own pseudo-\(R^2\) statistic. (p. 103, emphasis in the original)

This provides three more pseudo-\(R^2\) statistics: \(R_\epsilon^2\), \(R_0^2\), and \(R_1^2\). The formula for the first is

\[ R_\epsilon^2 = \frac{\sigma_\epsilon^2 (\text{unconditional means model}) - \sigma_\epsilon^2 (\text{unconditional growth model})}{\sigma_\epsilon^2 (\text{unconditional means model})}. \]

We’ve actually already computed this one, above, under the name where we referred to it as the decline in \(\sigma_\epsilon^2\) after adding time to the model. Here it is again.

cbind(VarCorr(fit4.1, summary = F)[[2]][[1]],

VarCorr(fit4.2, summary = F)[[2]][[1]]) %>%

data.frame() %>%

mutate_all(~ .^2) %>%

set_names(str_c("fit4.", 1:2)) %>%

mutate(r_2_epsilon = (fit4.1 - fit4.2) / fit4.1) %>%

summarise(mean = mean(r_2_epsilon),

median = median(r_2_epsilon),

sd = sd(r_2_epsilon),

ll = quantile(r_2_epsilon, prob = .025),

ul = quantile(r_2_epsilon, prob = .975)) %>%

mutate_if(is.double, round, digits = 3)## mean median sd ll ul

## 1 0.35 0.365 0.132 0.051 0.569Here’s a look at the full distribution for our \(\sigma_\epsilon^2\).

cbind(VarCorr(fit4.1, summary = F)[[2]][[1]],

VarCorr(fit4.2, summary = F)[[2]][[1]]) %>%

data.frame() %>%

mutate_all(~ .^2) %>%

set_names(str_c("fit4.", 1:2)) %>%

mutate(r_2_epsilon = (fit4.1 - fit4.2) / fit4.1) %>%

ggplot(aes(x = r_2_epsilon)) +

geom_vline(xintercept = 0, color = "white") +

geom_density(fill = "grey25", color = "transparent") +

scale_x_continuous(expression(Pseudo~italic(R)[epsilon]^2), limits = c(-1, 1)) +

scale_y_continuous(NULL, breaks = NULL) +

theme(panel.grid = element_blank())

When we use the full posteriors of our two \(\epsilon_\epsilon^2\) parameters, we end up with a slightly smaller statistic than the one in the text. So our conclusion is about 35% of the intraindividual variance is accounted for by time.

If we consider additional models with predictors for the \(\zeta\)s, we can examine similar pseudo \(R^2\) statistics following the generic form

\[ R_\zeta^2 = \frac{\sigma_\zeta^2 (\text{unconditional growth model}) - \sigma_\zeta^2 (\text{subsequent model})}{\sigma_\zeta^2 (\text{unconditional growth model})}, \]

where \(\zeta\) could refer to \(\zeta_{0i}\), \(\zeta_{1i}\), and so on. If you look back up at the shape of the full posterior of \(R_\epsilon^2\), you’ll notice part of the left tail crosses zero. “Unlike traditional \(R^2\) statistics, which will always be positive (or zero), some of these statistics can be negative” (p. 104)! If you compute them, interpret pseudo-\(R^2\) statistics with a grain of salt.

4.5 Practical data analytic strategies for model building

A sound statistical model includes all necessary predictors and no unnecessary ones. But how do you separate the wheat from the chaff? We suggest you rely on a combination of substantive theory, research questions, and statistical evidence. Never let a computer select predictors mechanically. (pp. 104–105, emphasis in the original)

4.5.1 A taxonomy of statistical models.

We suggest that you base decisions to enter, retain, and remove predictors on a combination of logic, theory, and prior research, supplemented by judicious [parameter evaluation] and comparison of model fit. At the outset, you might examine the effect of each predictor individually. You might then focus on predictors of primary interest (while including others whose effects you want to control). As in regular regression, you can add predictors singly or in groups and you can address issues of functional form using interactions and transformations. As you develop the taxonomy, you will progress toward a “final model” whose interpretation addresses your research questions. We place quotes around this term to emphasize that we believe no statistical model is ever final; it is simply a placeholder until a better model is found. (p. 105, emphasis in the original)

4.5.2 Interpreting fitted models.

You need not interpret every model you fit, especially those designed to guide interim decision making. When writing up findings for presentation and publication, we suggest that you identify a manageable subset of models that, taken together, tells a persuasive story parsimoniously. At a minimum, this includes the unconditional means model, the unconditional growth model, and a “final model”. You may also want to present intermediate models that either provide important building blocks or tell interesting stories in their own right. (p. 106)

In the dawn of the post-replication crisis era, it’s astonishing to reread and transcribe this section and the one above. I like a lot of what the authors had to say. Much of it seems like good pragmatic advice. But if they were to rewrite these sections again, I wonder what changes they’d make. Would they recommend researchers preregister their primary hypothesis, variables of interest, and perhaps their model building strategy (Nosek et al., 2018)? Would they be interested in a multiverse analysis (Steegen et al., 2016)? Would they still recommend sharing only a subset of one’s analyses in the era of sharing platforms like GitHub and the Open Science Framework? Would they weigh in on developments in causal inference (Pearl et al., 2016)?

4.5.2.1 Model C: The uncontrolled effects of COA.

The default priors for Model C are the same as for the unconditional growth model. All we’ve done is add parameters of class = b. As these default to improper flat priors, we have nothing to add to the prior argument to include them. Feel free to check with get_prior(). For the sake of practice, this model follows the form

\[ \begin{align*} \text{alcuse}_{ij} & = \gamma_{00} + \gamma_{01} \text{coa}_i + \gamma_{10} \text{age_14}_{ij} + \gamma_{11} \text{coa}_i \times \text{age_14}_{ij} + \zeta_{0i} + \zeta_{1i} \text{age_14}_{ij} + \epsilon_{ij} \\ \epsilon_{ij} & \sim \text{Normal} (0, \sigma_\epsilon^2) \\ \begin{bmatrix} \zeta_{0i} \\ \zeta_{1i} \end{bmatrix} & \sim \text{Normal} \begin{pmatrix} \begin{bmatrix} 0 \\ 0 \end{bmatrix}, \begin{bmatrix} \sigma_0^2 & \sigma_{01} \\ \sigma_{01} & \sigma_1^2 \end{bmatrix} \end{pmatrix}. \end{align*} \]

Fit the model.

fit4.3 <-

brm(data = alcohol1_pp,

family = gaussian,

alcuse ~ 0 + Intercept + age_14 + coa + age_14:coa + (1 + age_14 | id),

prior = c(prior(student_t(3, 0, 2.5), class = sd),

prior(student_t(3, 0, 2.5), class = sigma),

prior(lkj(1), class = cor)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 4,

file = "fits/fit04.03")Check the summary.

print(fit4.3, digits = 3)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: alcuse ~ 0 + Intercept + age_14 + coa + age_14:coa + (1 + age_14 | id)

## Data: alcohol1_pp (Number of observations: 246)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Group-Level Effects:

## ~id (Number of levels: 82)

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sd(Intercept) 0.695 0.101 0.500 0.899 1.001 809 1602

## sd(age_14) 0.369 0.092 0.166 0.535 1.004 411 526

## cor(Intercept,age_14) -0.103 0.279 -0.509 0.622 1.002 609 557

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 0.320 0.134 0.052 0.582 1.000 2091 2190

## age_14 0.288 0.086 0.119 0.458 1.000 2671 2703

## coa 0.736 0.198 0.349 1.126 1.001 1980 2402

## age_14:coa -0.044 0.128 -0.292 0.210 1.002 2698 2701

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 0.607 0.051 0.516 0.716 1.002 456 1041

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

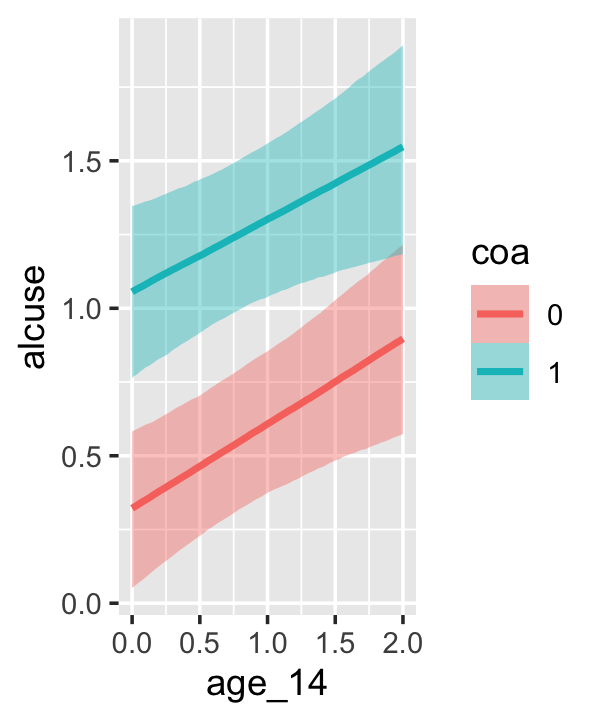

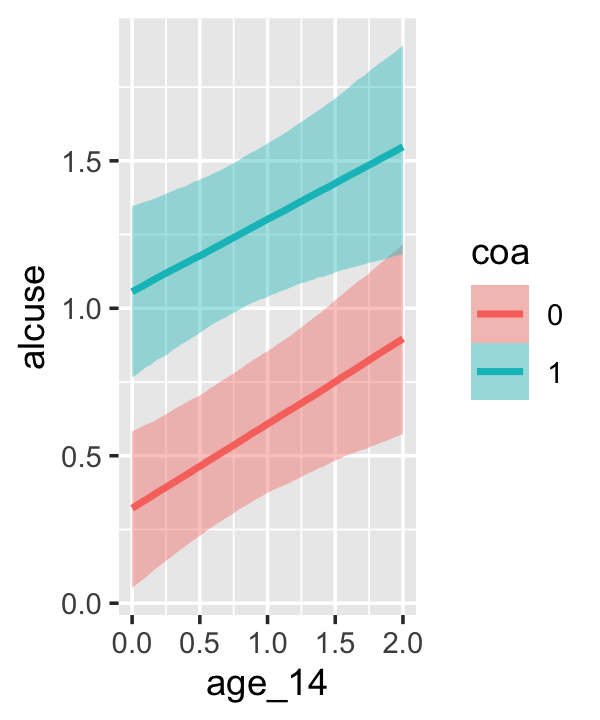

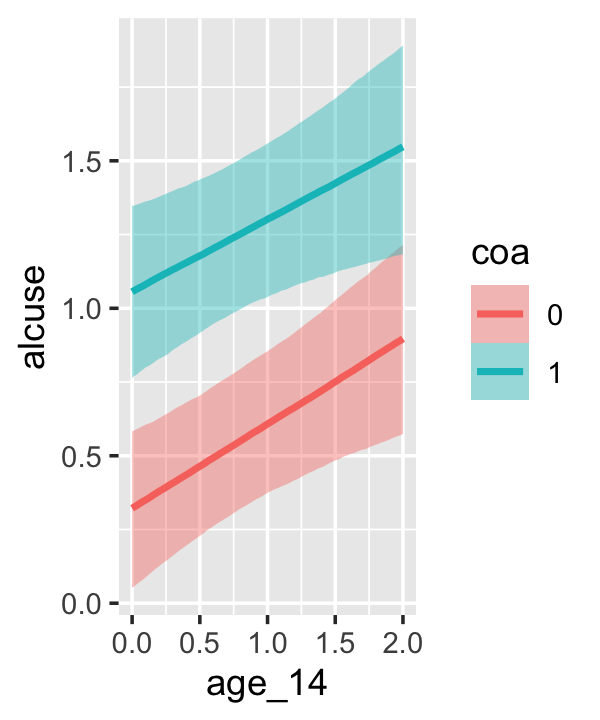

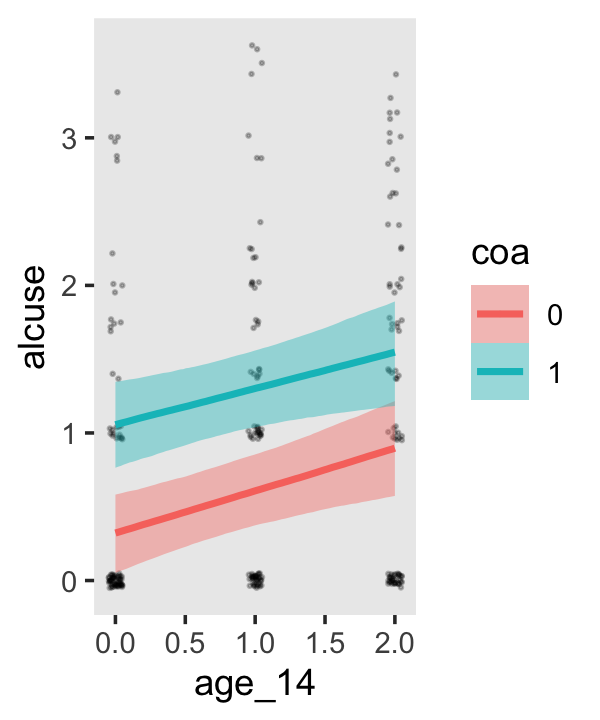

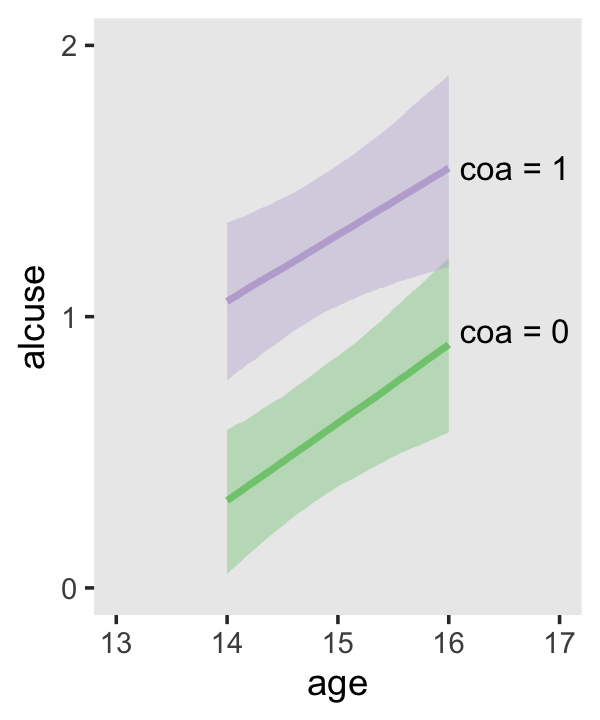

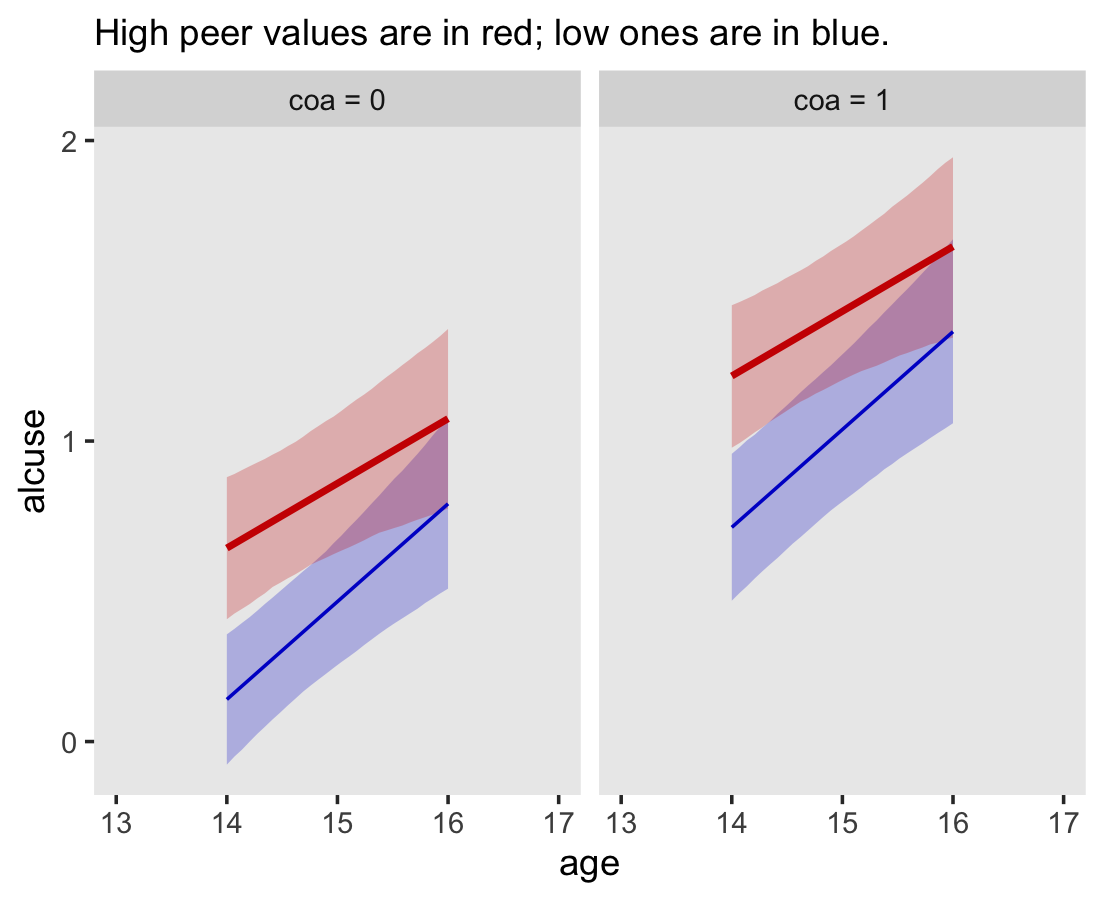

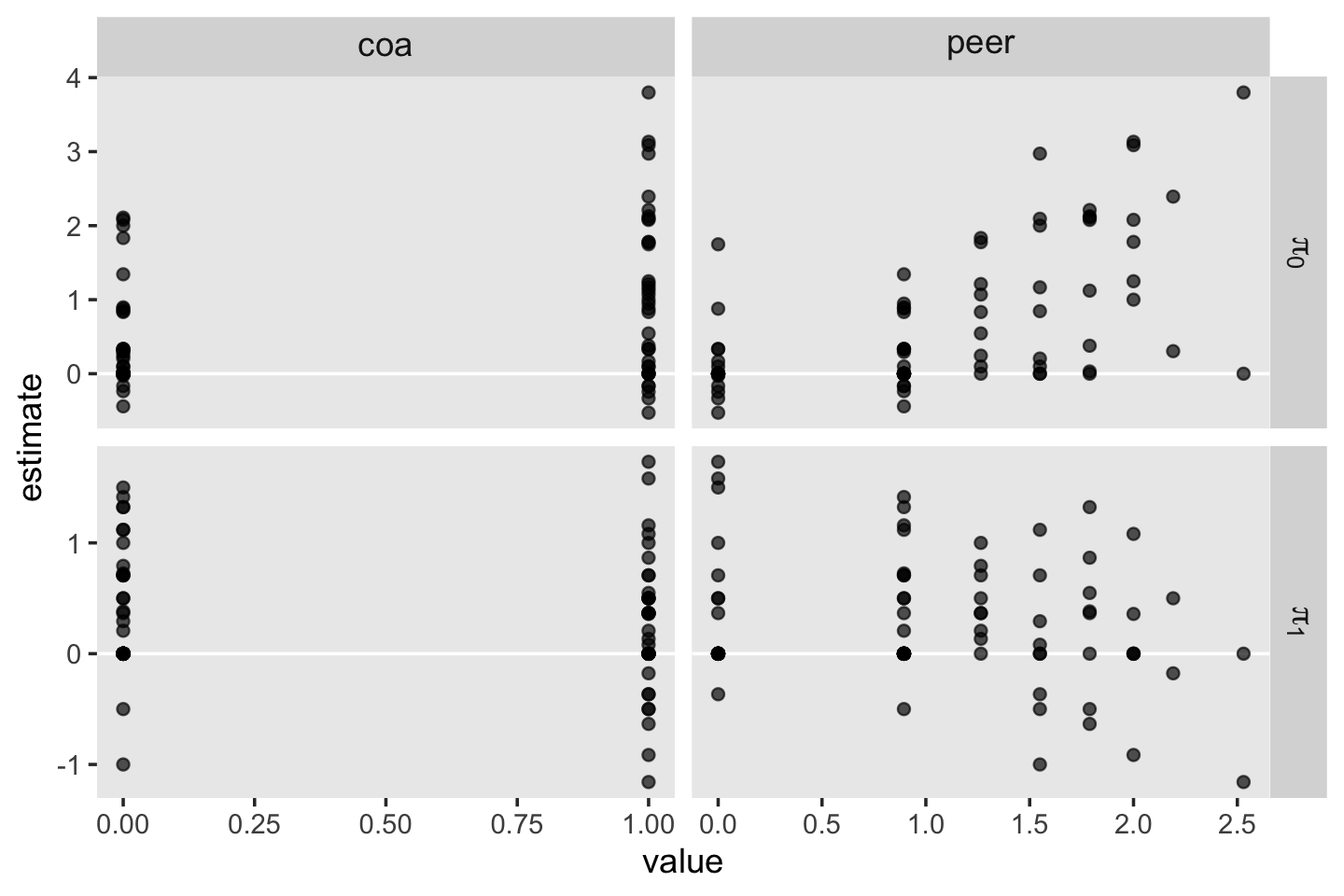

## scale reduction factor on split chains (at convergence, Rhat = 1).Our \(\gamma\)’s are quite similar to those presented in the text. Our \(\sigma_\epsilon\) for this model is about the same as with fit4.2. Let’s practice with conditional_effects() to plot the consequences of this model.

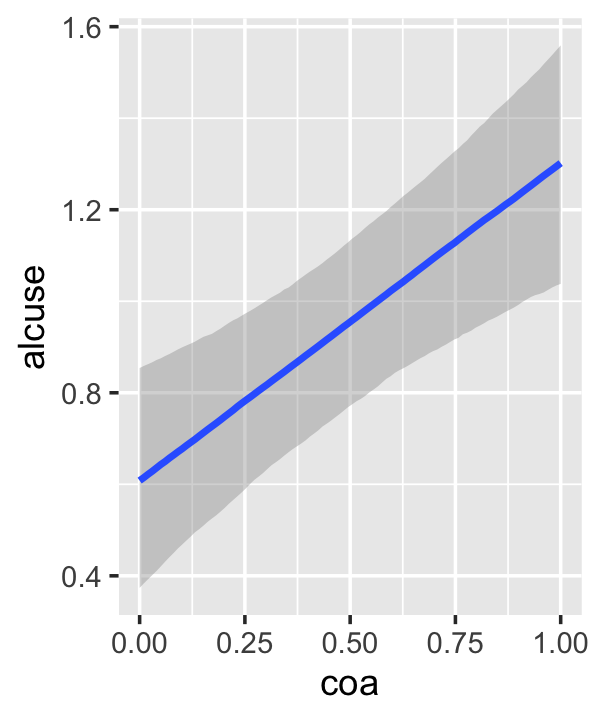

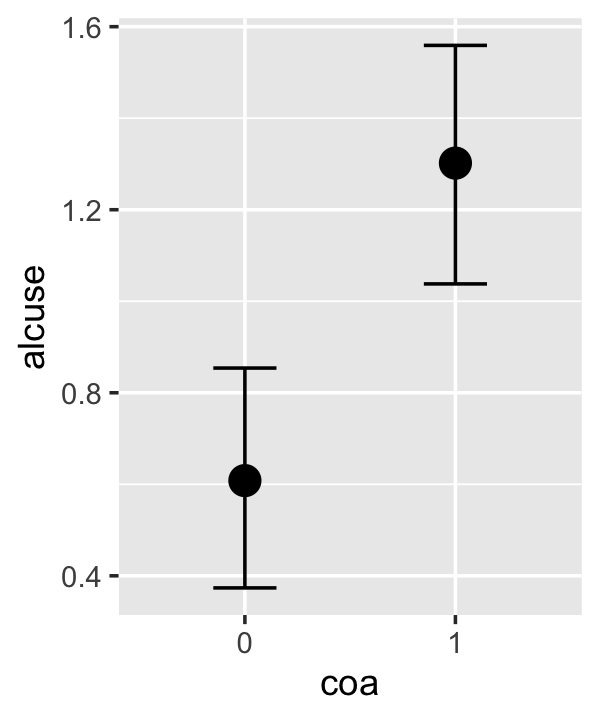

conditional_effects(fit4.3)

This time we got back three plots. The first two were of the lower-order parameters \(\gamma_{10}\) and \(\gamma_{01}\). Note how the plot for coa treated it as a continuous variable. This is because the variable was saved as an integer in the original data set.

fit4.3$data %>%

glimpse()## Rows: 246

## Columns: 5

## $ alcuse <dbl> 1.732051, 2.000000, 2.000000, 0.000000, 0.000000, 1.000000, 1.000000, 2.000000, …

## $ Intercept <dbl> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,…

## $ age_14 <dbl> 0, 1, 2, 0, 1, 2, 0, 1, 2, 0, 1, 2, 0, 1, 2, 0, 1, 2, 0, 1, 2, 0, 1, 2, 0, 1, 2,…

## $ coa <dbl> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,…

## $ id <dbl> 1, 1, 1, 2, 2, 2, 3, 3, 3, 4, 4, 4, 5, 5, 5, 6, 6, 6, 7, 7, 7, 8, 8, 8, 9, 9, 9,…Coding it as an integer further complicated things for the third plot returned by conditional_effects(), the one for the interaction of age_14 and coa, \(\gamma_{11}\).

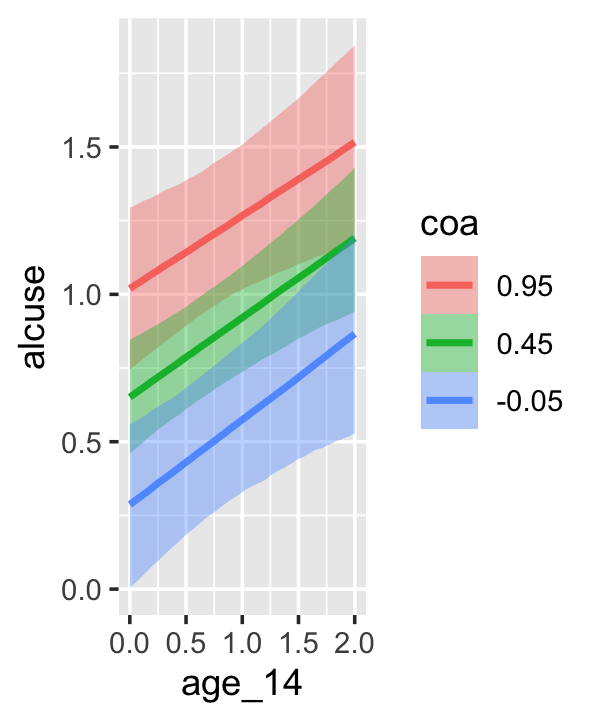

Since coa is binary, the natural way to express its interaction with age_14 would be with age_14 on the \(x\)-axis and two separate trajectories, one for each value of coa. That’s what Singer and Willett very sensibly did with the middle panel of Figure 4.3. However, the conditional_effects()function defaults to expressing interactions such that the first variable in the term–in this case,age_14–is on the \(x\)-axis and the second variable in the term–coa, treated as an integer–is depicted in three lines corresponding its mean and its mean \(\pm\) one standard deviation. This is great for continuous variables, but incoherent for categorical ones. The fix is to adjust the data and refit the model.

fit4.4 <-

update(fit4.3,

newdata = alcohol1_pp %>% mutate(coa = factor(coa)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 4,

file = "fits/fit04.04")We might compare the updated model with its predecessor. To get a focused look, we can use the posterior_summary() function with a little subsetting.

posterior_summary(fit4.3)[1:4, ] %>% round(digits = 3)## Estimate Est.Error Q2.5 Q97.5

## b_Intercept 0.320 0.134 0.052 0.582

## b_age_14 0.288 0.086 0.119 0.458

## b_coa 0.736 0.198 0.349 1.126

## b_age_14:coa -0.044 0.128 -0.292 0.210posterior_summary(fit4.4)[1:4, ] %>% round(digits = 3)## Estimate Est.Error Q2.5 Q97.5

## b_Intercept 0.320 0.134 0.052 0.582

## b_age_14 0.288 0.086 0.119 0.458

## b_coa1 0.736 0.198 0.349 1.126

## b_age_14:coa1 -0.044 0.128 -0.292 0.210The results are about the same. The payoff comes when we try again with conditional_effects().

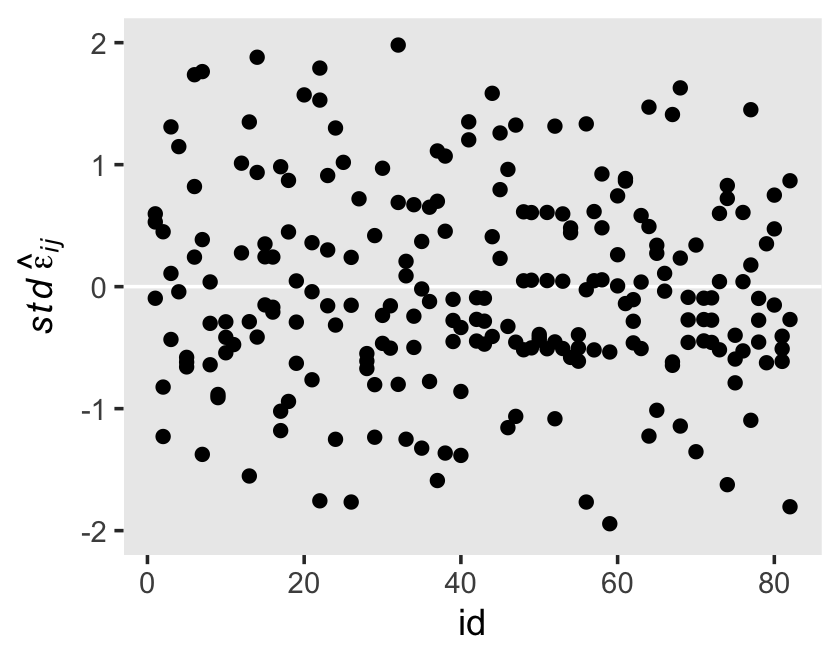

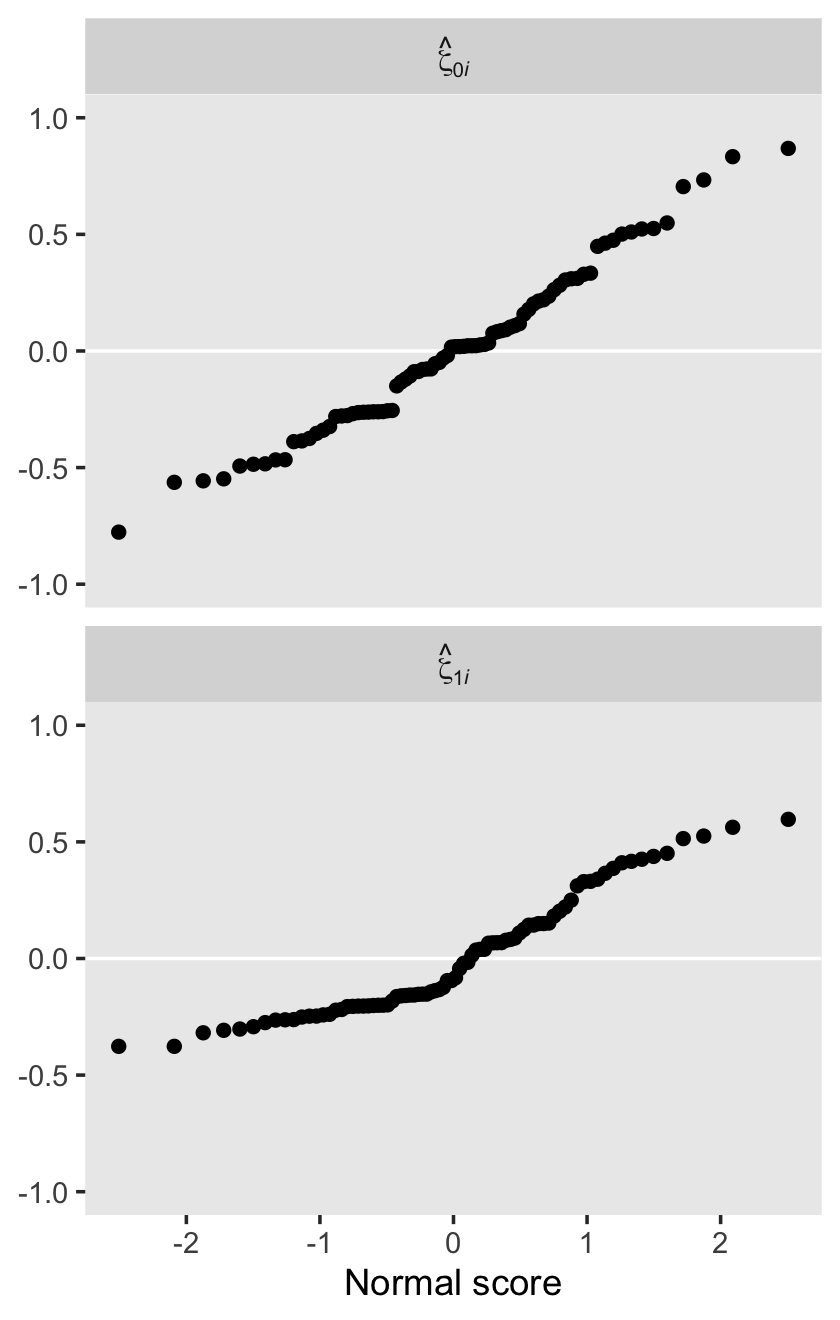

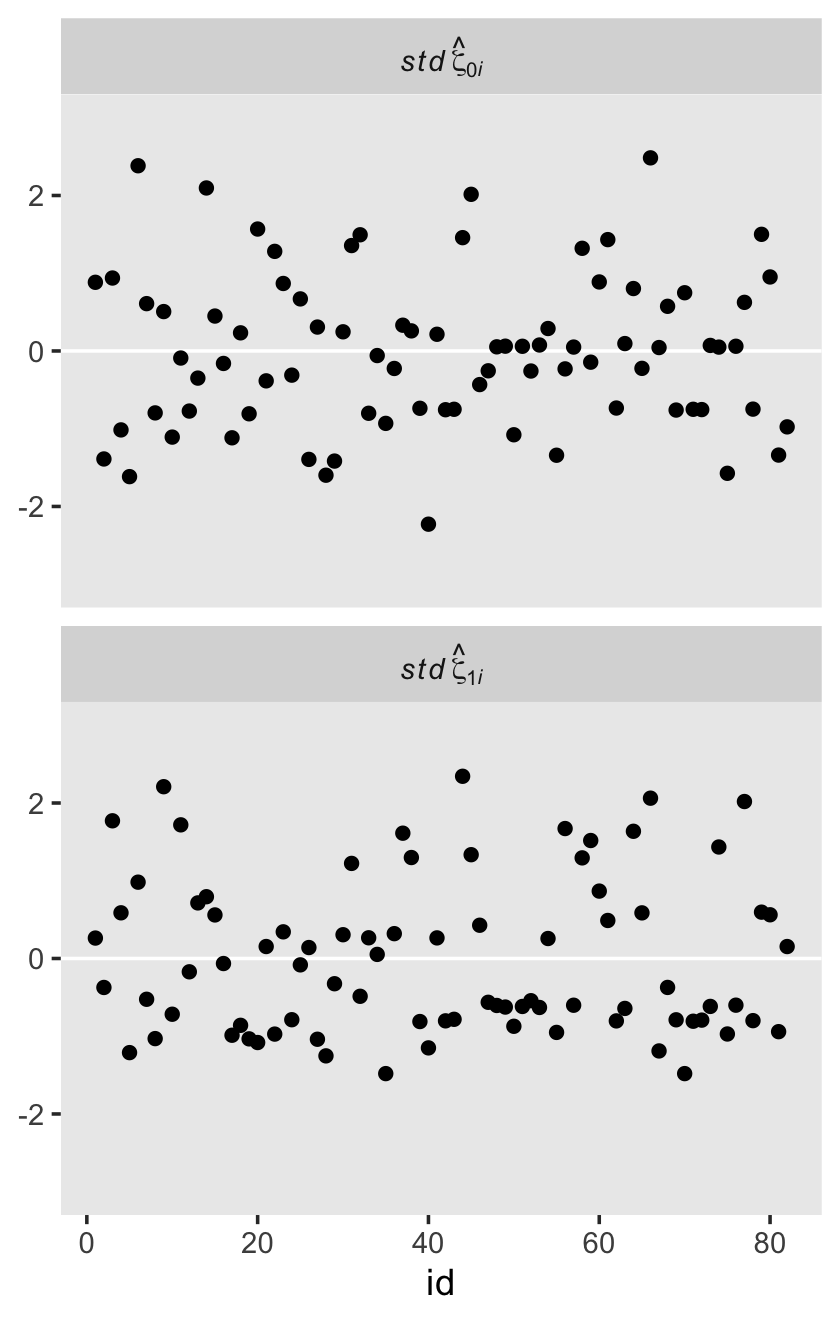

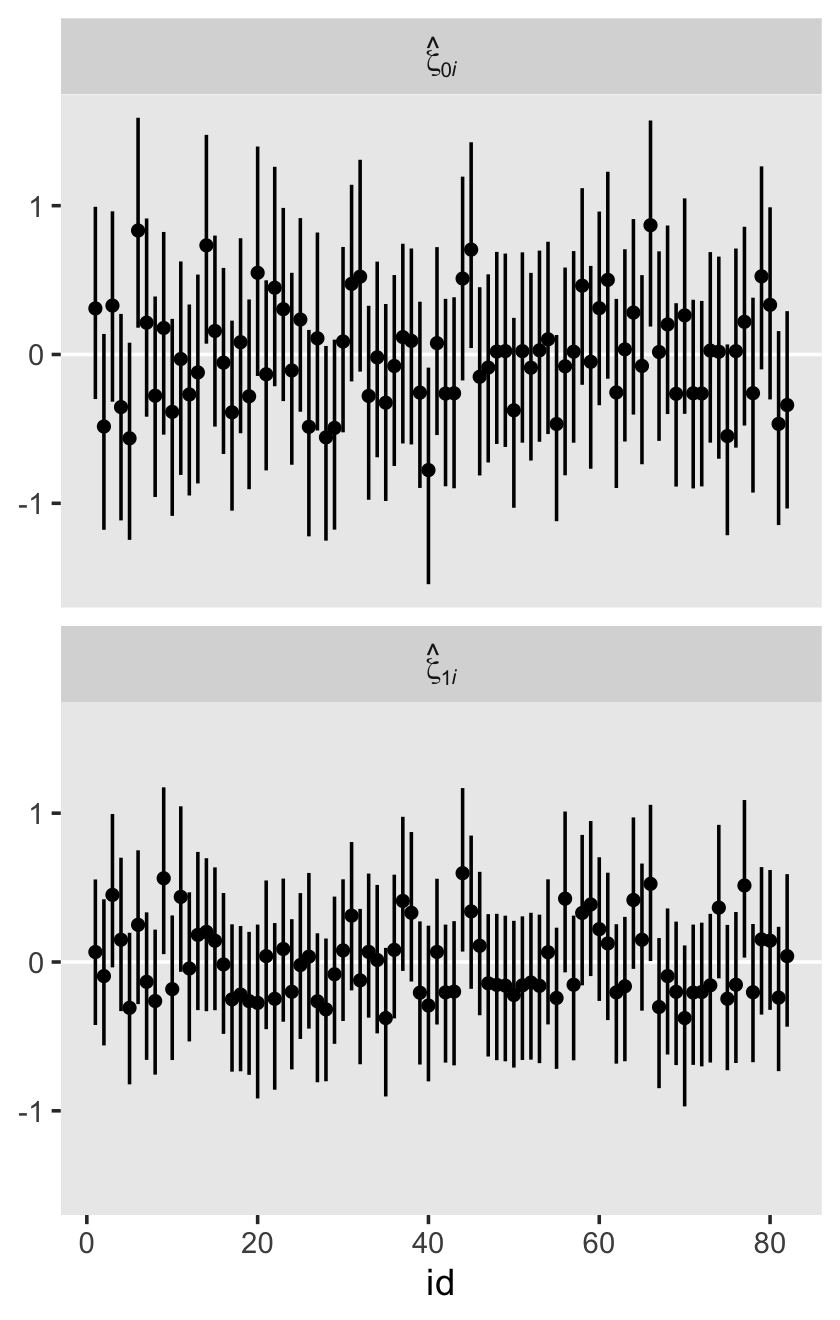

conditional_effects(fit4.4)