12 Multilevel Models

Multilevel models… remember features of each cluster in the data as they learn about all of the clusters. Depending upon the variation among clusters, which is learned from the data as well, the model pools information across clusters. This pooling tends to improve estimates about each cluster. This improved estimation leads to several, more pragmatic sounding, benefits of the multilevel approach. (p. 356)

These benefits include:

- improved estimates for repeated sampling (e.g., in longitudinal data)

- improved estimates when there are imbalances among subsamples

- estimates of the variation across subsamples

- avoiding simplistic averaging by retaining variation across subsamples

All of these benefits flow out of the same strategy and model structure. You learn one basic design and you get all of this for free.

When it comes to regression, multilevel regression deserves to be the default approach. There are certainly contexts in which it would be better to use an old-fashioned single-level model. But the contexts in which multilevel models are superior are much more numerous. It is better to begin to build a multilevel analysis, and then realize it’s unnecessary, than to overlook it. And once you grasp the basic multilevel strategy, it becomes much easier to incorporate related tricks such as allowing for measurement error in the data and even model missing data itself (Chapter 14). (p. 356)

I’m totally on board with this. After learning about the multilevel model, I see it everywhere. For more on the sentiment that it should be the default, check out McElreath’s blog post, Multilevel Regression as Default.

12.1 Example: Multilevel tadpoles

Let’s get the reedfrogs data from rethinking.

library(rethinking)

data(reedfrogs)

d <- reedfrogs

Detach rethinking and load brms.

rm(reedfrogs)

detach(package:rethinking, unload = T)

library(brms)

## Warning: package 'Rcpp' was built under R version 3.5.2

Go ahead and acquaint yourself with the reedfrogs.

library(tidyverse)

d %>%

glimpse()

## Observations: 48

## Variables: 5

## $ density <int> 10, 10, 10, 10, 10, 10, 10, 10, 10, 10, 10, 10, 10, 10, 10, 10, 25, 25, 25, 25, …

## $ pred <fct> no, no, no, no, no, no, no, no, pred, pred, pred, pred, pred, pred, pred, pred, …

## $ size <fct> big, big, big, big, small, small, small, small, big, big, big, big, small, small…

## $ surv <int> 9, 10, 7, 10, 9, 9, 10, 9, 4, 9, 7, 6, 7, 5, 9, 9, 24, 23, 22, 25, 23, 23, 23, 2…

## $ propsurv <dbl> 0.9000000, 1.0000000, 0.7000000, 1.0000000, 0.9000000, 0.9000000, 1.0000000, 0.9…

Making the tank cluster variable is easy.

d <-

d %>%

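mutate(tank = 1:nrow(d))

Here’s the formula for the un-pooled model in which each tank gets its own intercept.

\[\begin{align*} \text{surv}_i & \sim \text{Binomial} (n_i, p_i) \\ \text{logit} (p_i) & = \alpha_{\text{tank}_i} \\ \alpha_{\text{tank}} & \sim \text{Normal} (0, 5) \end{align*}\]

Here \(n_i = \text{density}_i\). Now we’ll fit this simple aggregated binomial model much like we practiced in Chapter 10.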

b12.1 <-

brm(data = d, family = binomial,

surv | trials(density) ~ 0 + factor(tank),

prior = prior(normal(0, 5), class = b),

iter = 2000, warmup = 500, chains = 4, cores = 4,

seed = 12)

The formula for the multilevel alternative is

\[\begin{align*} \text{surv}_i & \sim \text{Binomial} (n_i, p_i) \\ \text{logit} (p_i) & = \alpha_{\text{tank}_i} \\ \alpha_{\text{tank}} & \sim \text{Normal} (\alpha, \sigma) \\ \alpha & \sim \text{Normal} (0, 1) \\ \sigma & \sim \text{HalfCauchy} (0, 1) \end{align*}\]

You specify the corresponding multilevel model like this.

b12.2 <-

brm(data = d, family = binomial,

surv | trials(density) ~ 1 + (1 | tank),

prior = c(prior(normal(0, 1), class = Intercept),

prior(cauchy(0, 1), class = sd)),

iter = 4000, warmup = 1000, chains = 4, cores = 4,

seed = 12)

The syntax for the varying effects follows the lme4 style, ( <varying parameter(s)> | <grouping variable(s)> ). In this case (1 | tank) indicates that only the intercept, 1, varies by tank. The extent to which parameters vary is controlled by the prior, prior(cauchy(0, 1), class = sd), which is parameterized in the standard-deviation metric. Do note that last part. It’s common in multilevel software to model in the variance metric instead.
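To see why the metric matters, here is a minimal base-R sketch (not brms code). It draws from the half-Cauchy(0, 1) prior that prior(cauchy(0, 1), class = sd) places on the standard deviation, then squares the draws to show the prior this implies for the variance. Generating half-Cauchy draws as `abs(rcauchy())` is an assumption of the sketch. It works because the Cauchy is symmetric around 0.

```r
set.seed(12)

# half-Cauchy(0, 1) draws for the standard deviation: fold a Cauchy(0, 1) at zero
sd_draws <- abs(rcauchy(n = 1e5, location = 0, scale = 1))

# the same prior, re-expressed in the variance metric
var_draws <- sd_draws^2

# compare a few quantiles of the two metrics
probs <- c(.25, .5, .75, .9)
round(rbind(sd       = quantile(sd_draws, probs),
            variance = quantile(var_draws, probs)), 2)
```

Squaring is monotone, so the variance quantiles are just the squared SD quantiles. The upper tail, however, stretches much farther. A prior that looks modest in one metric can therefore behave quite differently in the other.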

Let’s do the WAIC comparisons.

b12.1 <- add_criterion(b12.1, "waic")

b12.2 <- add_criterion(b12.2, "waic")

w <- loo_compare(b12.1, b12.2, criterion = "waic")

print(w, simplify = F)

## elpd_diff se_diff elpd_waic se_elpd_waic p_waic se_p_waic waic se_waic

## b12.2 0.0 0.0 -100.0 3.6 20.9 0.8 200.0 7.2

## b12.1 -1.1 2.3 -101.0 4.7 22.9 0.7 202.1 9.4

The se_diff is large relative to the elpd_diff. If we convert the \(\text{elpd}\) difference to the WAIC metric, the message stays the same.

cbind(waic_diff = w[, 1] * -2,

se = w[, 2] * 2)

## waic_diff se

## b12.2 0.000000 0.000000

## b12.1 2.132735 4.555047

I’m not going to show it here, but if you’d like a challenge, try comparing the models with the LOO. You’ll learn all about high pareto_k values, kfold() recommendations, and the challenges of implementing those kfold() recommendations. If you’re interested, pour yourself a calming adult beverage, execute the code below, and check out the Kfold(): “Error: New factor levels are not allowed” thread in the Stan forums.

b12.1 <- add_criterion(b12.1, "loo")

b12.2 <- add_criterion(b12.2, "loo")

But back on track, here’s our prep work for Figure 12.1.

post <- posterior_samples(b12.2, add_chain = T)

post_mdn <-

coef(b12.2, robust = T)$tank[, , ] %>%

as_tibble() %>%

bind_cols(d) %>%

mutate(post_mdn = inv_logit_scaled(Estimate))

post_mdn

## # A tibble: 48 x 11

## Estimate Est.Error Q2.5 Q97.5 density pred size surv propsurv tank post_mdn

## <dbl> <dbl> <dbl> <dbl> <int> <fct> <fct> <int> <dbl> <int> <dbl>

## 1 2.08 0.850 0.589 4.09 10 no big 9 0.9 1 0.889

## 2 2.95 1.05 1.19 5.44 10 no big 10 1 2 0.950

## 3 0.970 0.652 -0.255 2.39 10 no big 7 0.7 3 0.725

## 4 2.95 1.06 1.19 5.52 10 no big 10 1 4 0.950

## 5 2.07 0.856 0.567 4.02 10 no small 9 0.9 5 0.888

## 6 2.06 0.832 0.602 4.00 10 no small 9 0.9 6 0.887

## 7 2.96 1.09 1.17 5.51 10 no small 10 1 7 0.951

## 8 2.05 0.842 0.598 3.96 10 no small 9 0.9 8 0.886

## 9 -0.183 0.606 -1.41 0.992 10 pred big 4 0.4 9 0.454

## 10 2.06 0.839 0.596 4.05 10 pred big 9 0.9 10 0.887

## # … with 38 more rows

For kicks and giggles, let’s use a FiveThirtyEight-like theme for this chapter’s plots. An easy way to do so is with help from the ggthemes package.

# install.packages("ggthemes", dependencies = T)

library(ggthemes)

Finally, here’s the ggplot2 code to reproduce Figure 12.1.

post_mdn %>%

ggplot(aes(x = tank)) +

geom_hline(yintercept = inv_logit_scaled(median(post$b_Intercept)), linetype = 2, size = 1/4) +

geom_vline(xintercept = c(16.5, 32.5), size = 1/4) +

geom_point(aes(y = propsurv), color = "orange2") +

geom_point(aes(y = post_mdn), shape = 1) +

coord_cartesian(ylim = c(0, 1)) +

scale_x_continuous(breaks = c(1, 16, 32, 48)) +

labs(title = "Multilevel shrinkage!",

subtitle = "The empirical proportions are in orange while the model-\nimplied proportions are the black circles. The dashed line is\nthe model-implied average survival proportion.") +

annotate("text", x = c(8, 16 + 8, 32 + 8), y = 0,

label = c("small tanks", "medium tanks", "large tanks")) +

theme_fivethirtyeight() +

theme(panel.grid = element_blank())

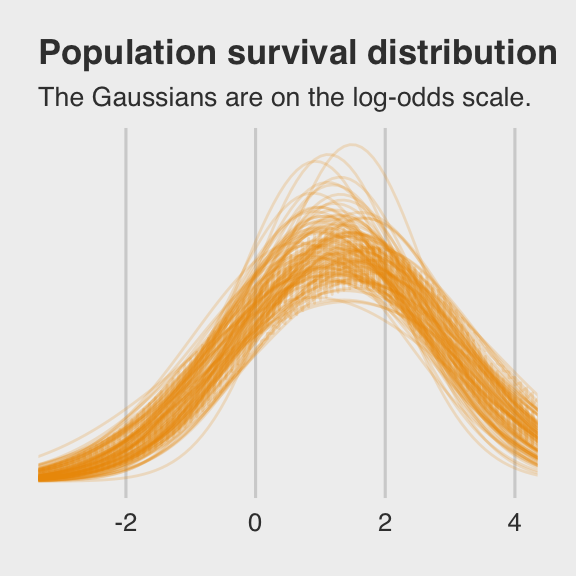

Here is our version of Figure 12.2.a.

# this makes the output of `sample_n()` reproducible

set.seed(12)

post %>%

sample_n(100) %>%

expand(nesting(iter, b_Intercept, sd_tank__Intercept),

x = seq(from = -4, to = 5, length.out = 100)) %>%

ggplot(aes(x = x, group = iter)) +

geom_line(aes(y = dnorm(x, b_Intercept, sd_tank__Intercept)),

alpha = .2, color = "orange2") +

labs(title = "Population survival distribution",

subtitle = "The Gaussians are on the log-odds scale.") +

scale_y_continuous(NULL, breaks = NULL) +

coord_cartesian(xlim = c(-3, 4)) +

theme_fivethirtyeight() +

theme(plot.title = element_text(size = 13),

plot.subtitle = element_text(size = 10))

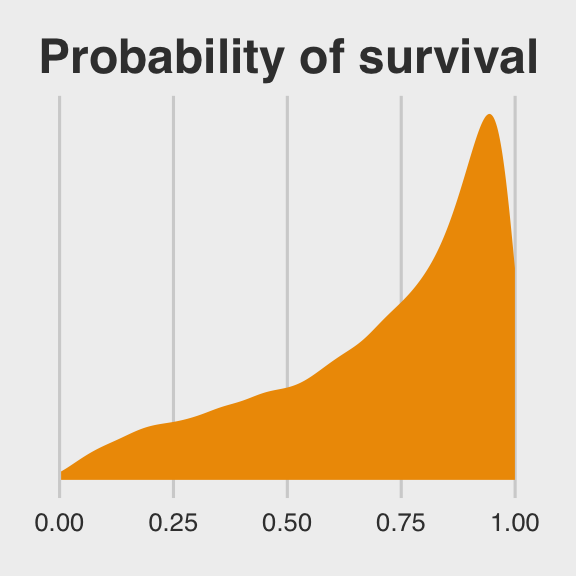

Note the uncertainty in terms of both location \(\alpha\) and scale \(\sigma\). Now here’s the code for Figure 12.2.b.

ggplot(data = post,

aes(x = rnorm(n = nrow(post),

mean = b_Intercept,

sd = sd_tank__Intercept) %>%

inv_logit_scaled())) +

geom_density(size = 0, fill = "orange2") +

scale_y_continuous(NULL, breaks = NULL) +

ggtitle("Probability of survival") +

theme_fivethirtyeight()

Note how we sampled 12,000 imaginary tanks rather than McElreath’s 8,000. This is because we had 12,000 post-warmup HMC draws: 4 chains, each with 4,000 iterations minus 1,000 warmup iterations (execute nrow(post) to confirm).

The aes() code, above, was a bit much. To get a sense of how it worked, consider this:

set.seed(12)

rnorm(n = 1,

mean = post$b_Intercept,

sd = post$sd_tank__Intercept) %>%

inv_logit_scaled()

## [1] 0.2135091

First, we took one random draw from a normal distribution with a mean equal to the first value in post$b_Intercept and a standard deviation equal to the first value in post$sd_tank__Intercept, and passed it through the inv_logit_scaled() function. When n = 1, rnorm() uses only the first elements of vector-valued mean and sd arguments. By replacing the 1 with nrow(post), we do this nrow(post) times (i.e., 12,000), and the i-th draw uses the values from the i-th row. Our orange density, then, is the summary of that process.
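The row-by-row pairing that aes() code relies on is ordinary base R behavior: rnorm() matches the i-th draw with the i-th elements of `mean` and `sd`. Here’s a minimal sketch in which three hypothetical values stand in for post$b_Intercept and post$sd_tank__Intercept. It uses `plogis()`, base R’s inverse logit, which does the same job as inv_logit_scaled() with that function’s default bounds of 0 and 1.

```r
set.seed(12)

# hypothetical stand-ins for three rows of post$b_Intercept and post$sd_tank__Intercept
b_intercept <- c(-5, 0, 5)
sd_tank     <- c(0.01, 0.01, 0.01)

# one draw per row: the i-th draw uses the i-th mean and the i-th sd
draws_logodds <- rnorm(n = length(b_intercept), mean = b_intercept, sd = sd_tank)

# convert from the log-odds scale to the probability scale
draws_prob <- plogis(draws_logodds)

round(cbind(b_intercept, draws_logodds, draws_prob), 3)
```

The standard deviations are tiny, so each draw lands right next to its own mean. That makes the pairing easy to see.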

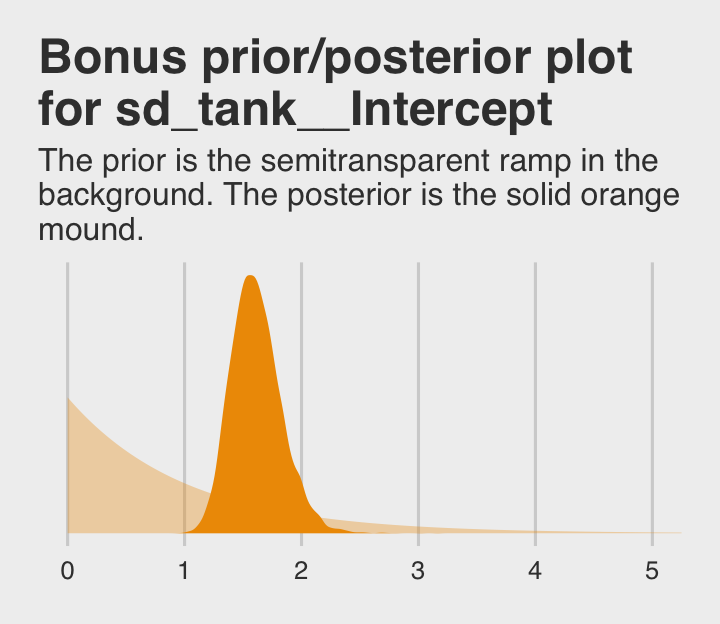

12.1.0.1 Overthinking: Prior for variance components.

Yep, you can use the exponential distribution for your priors in brms. Here it is for model b12.2.

b12.2.e <-

update(b12.2,

prior = c(prior(normal(0, 1), class = Intercept),

prior(exponential(1), class = sd)))

The model summary:

print(b12.2.e)

## Family: binomial

## Links: mu = logit

## Formula: surv | trials(density) ~ 1 + (1 | tank)

## Data: d (Number of observations: 48)

## Samples: 4 chains, each with iter = 4000; warmup = 1000; thin = 1;

## total post-warmup samples = 12000

##

## Group-Level Effects:

## ~tank (Number of levels: 48)

## Estimate Est.Error l-95% CI u-95% CI Eff.Sample Rhat

## sd(Intercept) 1.61 0.22 1.24 2.08 2962 1.00

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Eff.Sample Rhat

## Intercept 1.30 0.25 0.82 1.78 2304 1.00

##

## Samples were drawn using sampling(NUTS). For each parameter, Eff.Sample

## is a crude measure of effective sample size, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).

If you’re curious how the exponential prior compares to the posterior, you might just plot them.

tibble(x = seq(from = 0, to = 6, by = .01)) %>%

ggplot() +

# the prior

geom_ribbon(aes(x = x, ymin = 0, ymax = dexp(x, rate = 1)),

fill = "orange2", alpha = 1/3) +

# the posterior

geom_density(data = posterior_samples(b12.2.e),

aes(x = sd_tank__Intercept),

fill = "orange2", size = 0) +

scale_y_continuous(NULL, breaks = NULL) +

coord_cartesian(xlim = c(0, 5)) +

labs(title = "Bonus prior/posterior plot\nfor sd_tank__Intercept",

subtitle = "The prior is the semitransparent ramp in the\nbackground. The posterior is the solid orange\nmound.") +

theme_fivethirtyeight()
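One way to see how the exponential prior differs from the half-Cauchy we used for b12.2 is to compare how much mass each puts in the upper tail. This base-R sketch computes \(\Pr(\sigma > s)\) for a few cutoffs. For Exponential(1) that is `pexp(s, rate = 1, lower.tail = FALSE)`. For the half-Cauchy(0, 1) it is twice the upper tail of a Cauchy(0, 1).

```r
# cutoffs on the standard-deviation (log-odds) scale
s <- c(1, 2, 5, 10)

tail_probs <- data.frame(
  s           = s,
  # Pr(sigma > s) under Exponential(1)
  exponential = pexp(s, rate = 1, lower.tail = FALSE),
  # Pr(sigma > s) under half-Cauchy(0, 1): double the Cauchy(0, 1) upper tail
  half_cauchy = 2 * pcauchy(s, location = 0, scale = 1, lower.tail = FALSE)
)

round(tail_probs, 4)
```

The exponential tail decays much faster. Of the two, it is the one that pulls harder against large values of \(\sigma\).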

12.2 Varying effects and the underfitting/overfitting trade-off

Varying intercepts are just regularized estimates, but adaptively regularized by estimating how diverse the clusters are while estimating the features of each cluster. This fact is not easy to grasp…

A major benefit of using varying effects estimates, instead of the empirical raw estimates, is that they provide more accurate estimates of the individual cluster (tank) intercepts. On average, the varying effects actually provide a better estimate of the individual tank (cluster) means. The reason that the varying intercepts provide better estimates is that they do a better job trading off underfitting and overfitting. (p. 364)

In this section, we explicate this by contrasting three perspectives:

- Complete pooling (i.e., a single-\(\alpha\) model)

- No pooling (i.e., the single-level \(\alpha_{\text{tank}_i}\) model)

- Partial pooling (i.e., the multilevel model for which \(\alpha_{\text{tank}} \sim \text{Normal} (\alpha, \sigma)\))

To demonstrate [the magic of the multilevel model], we’ll simulate some tadpole data. That way, we’ll know the true per-pond survival probabilities. Then we can compare the no-pooling estimates to the partial pooling estimates, by computing how close each gets to the true values they are trying to estimate. The rest of this section shows how to do such a simulation. (p. 365)

12.2.1 The model.

The simulation formula should look familiar.

\[\begin{align*} \text{surv}_i & \sim \text{Binomial} (n_i, p_i) \\ \text{logit} (p_i) & = \alpha_{\text{pond}_i} \\ \alpha_{\text{pond}} & \sim \text{Normal} (\alpha, \sigma) \\ \alpha & \sim \text{Normal} (0, 1) \\ \sigma & \sim \text{HalfCauchy} (0, 1) \end{align*}\]

12.2.2 Assign values to the parameters.

a <- 1.4

sigma <- 1.5

n_ponds <- 60

set.seed(12)

(

dsim <-

tibble(pond = 1:n_ponds,

ni = rep(c(5, 10, 25, 35), each = n_ponds / 4) %>% as.integer(),

true_a = rnorm(n = n_ponds, mean = a, sd = sigma))

)

## # A tibble: 60 x 3

## pond ni true_a

## <int> <int> <dbl>

## 1 1 5 -0.821

## 2 2 5 3.77

## 3 3 5 -0.0351

## 4 4 5 0.0200

## 5 5 5 -1.60

## 6 6 5 0.992

## 7 7 5 0.927

## 8 8 5 0.458

## 9 9 5 1.24

## 10 10 5 2.04

## # … with 50 more rows

12.2.3 Simulate survivors.

Each pond \(i\) has \(n_i\) potential survivors, and nature flips each tadpole’s coin, so to speak, with probability of survival \(p_i\). This probability \(p_i\) is implied by the model definition, and is equal to:

\[p_i = \frac{\text{exp} (\alpha_i)}{1 + \text{exp} (\alpha_i)}\]

The model uses a logit link, and so the probability is defined by the [inv_logit_scaled()] function. (p. 367)
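As a quick check on the formula, here’s a base-R sketch that computes \(\exp(\alpha_i) / (1 + \exp(\alpha_i))\) by hand for a few of the true_a values printed above, rounded. It then compares the results with `plogis()`, base R’s logistic CDF. The helper name `inv_logit_by_hand()` is hypothetical, just for illustration.

```r
# hypothetical helper that writes out p = exp(a) / (1 + exp(a)) explicitly
inv_logit_by_hand <- function(a) exp(a) / (1 + exp(a))

# a few log-odds values, rounded from the true_a column above
true_a <- c(-0.821, 3.77, -0.0351, 0.0200, -1.60)

p_by_hand <- inv_logit_by_hand(true_a)
p_plogis  <- plogis(true_a)

round(cbind(true_a, p_by_hand, p_plogis), 3)
```

The two probability columns agree to within floating-point error, and every value lies strictly between 0 and 1.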

set.seed(12)

(

dsim <-

dsim %>%

mutate(si = rbinom(n = n(), prob = inv_logit_scaled(true_a), size = ni))

)

## # A tibble: 60 x 4

## pond ni true_a si

## <int> <int> <dbl> <int>

## 1 1 5 -0.821 0

## 2 2 5 3.77 5

## 3 3 5 -0.0351 4

## 4 4 5 0.0200 3

## 5 5 5 -1.60 0

## 6 6 5 0.992 5

## 7 7 5 0.927 5

## 8 8 5 0.458 3

## 9 9 5 1.24 5

## 10 10 5 2.04 5

## # … with 50 more rows

12.2.4 Compute the no-pooling estimates.

The no-pooling estimates (i.e., the raw proportions of survivors, which correspond to \(\alpha_{\text{pond}_i}\) on the probability scale) are the results of simple algebra.

(

dsim <-

dsim %>%

mutate(p_nopool = si / ni)

)

## # A tibble: 60 x 5

## pond ni true_a si p_nopool

## <int> <int> <dbl> <int> <dbl>

## 1 1 5 -0.821 0 0

## 2 2 5 3.77 5 1

## 3 3 5 -0.0351 4 0.8

## 4 4 5 0.0200 3 0.6

## 5 5 5 -1.60 0 0

## 6 6 5 0.992 5 1

## 7 7 5 0.927 5 1

## 8 8 5 0.458 3 0.6

## 9 9 5 1.24 5 1

## 10 10 5 2.04 5 1

## # … with 50 more rows

“These are the same no-pooling estimates you’d get by fitting a model with a dummy variable for each pond and flat priors that induce no regularization” (p. 367).
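Here’s a minimal base-R sketch of that claim, using a tiny hypothetical data set rather than dsim. It fits a binomial `glm()` with one dummy coefficient per pond and no prior. That is plain maximum likelihood, which is what flat priors reduce to, and its fitted probabilities should equal the raw proportions si / ni. The toy counts avoid 0 and ni survivors, because those push the maximum-likelihood log-odds toward plus or minus infinity.

```r
# hypothetical toy data: three ponds with known counts
toy <- data.frame(pond = factor(1:3),
                  ni   = c(5, 10, 25),
                  si   = c(2, 7, 20))

# one dummy intercept per pond (no global intercept), no regularization
fit_nopool <- glm(cbind(si, ni - si) ~ 0 + pond, family = binomial, data = toy)

# fitted probabilities versus the raw no-pooling proportions
cbind(glm_fit  = unname(fitted(fit_nopool)),
      p_nopool = toy$si / toy$ni)
```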

12.2.5 Compute the partial-pooling estimates.

To follow along with McElreath, set chains = 1, cores = 1 to fit with one chain.

b12.3 <-

brm(data = dsim, family = binomial,

si | trials(ni) ~ 1 + (1 | pond),

prior = c(prior(normal(0, 1), class = Intercept),

prior(cauchy(0, 1), class = sd)),

iter = 10000, warmup = 1000, chains = 1, cores = 1,

seed = 12)

print(b12.3)

## Family: binomial

## Links: mu = logit

## Formula: si | trials(ni) ~ 1 + (1 | pond)

## Data: dsim (Number of observations: 60)

## Samples: 1 chains, each with iter = 10000; warmup = 1000; thin = 1;

## total post-warmup samples = 9000

##

## Group-Level Effects:

## ~pond (Number of levels: 60)

## Estimate Est.Error l-95% CI u-95% CI Eff.Sample Rhat

## sd(Intercept) 1.30 0.19 0.97 1.71 2948 1.00

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Eff.Sample Rhat

## Intercept 1.28 0.20 0.90 1.67 2996 1.00

##

## Samples were drawn using sampling(NUTS). For each parameter, Eff.Sample

## is a crude measure of effective sample size, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).

I’m not aware that you can use McElreath’s depth=2 trick in brms for summary() or print(). But you can get that information with the coef() function.

coef(b12.3)$pond[c(1:2, 59:60), , ] %>%

round(digits = 2)

## Estimate Est.Error Q2.5 Q97.5

## 1 -1.07 0.89 -3.03 0.54

## 2 2.30 1.02 0.51 4.54

## 59 0.97 0.37 0.27 1.72

## 60 1.42 0.41 0.65 2.29

Note how we just peeked at the top and bottom two rows with the c(1:2, 59:60) part of the code, there. Somewhat discouragingly, coef() doesn’t return the ‘Eff.Sample’ or ‘Rhat’ columns as in McElreath’s output. We can still extract that information, though. For \(\hat{R}\), the solution is simple; use the brms::rhat() function.

rhat(b12.3)

## b_Intercept sd_pond__Intercept r_pond[1,Intercept] r_pond[2,Intercept]

## 1.0002418 0.9999371 0.9999115 1.0002275

## r_pond[3,Intercept] r_pond[4,Intercept] r_pond[5,Intercept] r_pond[6,Intercept]

## 0.9998903 0.9999139 0.9999526 0.9998954

## r_pond[7,Intercept] r_pond[8,Intercept] r_pond[9,Intercept] r_pond[10,Intercept]

## 1.0002992 1.0000516 0.9999460 0.9999920

## r_pond[11,Intercept] r_pond[12,Intercept] r_pond[13,Intercept] r_pond[14,Intercept]

## 0.9999889 0.9999319 0.9999591 0.9999101

## r_pond[15,Intercept] r_pond[16,Intercept] r_pond[17,Intercept] r_pond[18,Intercept]

## 1.0001250 0.9999149 0.9999016 0.9999239

## r_pond[19,Intercept] r_pond[20,Intercept] r_pond[21,Intercept] r_pond[22,Intercept]

## 0.9998892 0.9998931 0.9998939 0.9998921

## r_pond[23,Intercept] r_pond[24,Intercept] r_pond[25,Intercept] r_pond[26,Intercept]

## 0.9998933 0.9998986 0.9999875 0.9999691

## r_pond[27,Intercept] r_pond[28,Intercept] r_pond[29,Intercept] r_pond[30,Intercept]

## 0.9999052 0.9998893 0.9999064 1.0000005

## r_pond[31,Intercept] r_pond[32,Intercept] r_pond[33,Intercept] r_pond[34,Intercept]

## 1.0000856 0.9998893 0.9998950 0.9999871

## r_pond[35,Intercept] r_pond[36,Intercept] r_pond[37,Intercept] r_pond[38,Intercept]

## 0.9999385 1.0000467 0.9998907 0.9999261

## r_pond[39,Intercept] r_pond[40,Intercept] r_pond[41,Intercept] r_pond[42,Intercept]

## 0.9999002 0.9999136 0.9999024 0.9998960

## r_pond[43,Intercept] r_pond[44,Intercept] r_pond[45,Intercept] r_pond[46,Intercept]

## 0.9999817 1.0000738 1.0000156 1.0000022

## r_pond[47,Intercept] r_pond[48,Intercept] r_pond[49,Intercept] r_pond[50,Intercept]

## 0.9999479 0.9999159 0.9999162 0.9998972

## r_pond[51,Intercept] r_pond[52,Intercept] r_pond[53,Intercept] r_pond[54,Intercept]

## 0.9998896 0.9999483 0.9999232 0.9999950

## r_pond[55,Intercept] r_pond[56,Intercept] r_pond[57,Intercept] r_pond[58,Intercept]

## 0.9999233 0.9998901 0.9999382 0.9999012

## r_pond[59,Intercept] r_pond[60,Intercept] lp__

## 0.9999175 0.9998905 0.9999222

Extracting the ‘Eff.Sample’ values is a little more complicated. There is no effsamples() function. However, we do have neff_ratio().

neff_ratio(b12.3)

## b_Intercept sd_pond__Intercept r_pond[1,Intercept] r_pond[2,Intercept]

## 0.3328983 0.3276092 1.3086470 1.4222957

## r_pond[3,Intercept] r_pond[4,Intercept] r_pond[5,Intercept] r_pond[6,Intercept]

## 1.7287578 1.7931600 1.5172159 1.7087264

## r_pond[7,Intercept] r_pond[8,Intercept] r_pond[9,Intercept] r_pond[10,Intercept]

## 1.4415308 1.6367495 1.5541417 1.5271929

## r_pond[11,Intercept] r_pond[12,Intercept] r_pond[13,Intercept] r_pond[14,Intercept]

## 1.5474498 1.8682911 1.7837498 1.8735102

## r_pond[15,Intercept] r_pond[16,Intercept] r_pond[17,Intercept] r_pond[18,Intercept]

## 1.6606211 1.4500515 1.3307980 1.4573910

## r_pond[19,Intercept] r_pond[20,Intercept] r_pond[21,Intercept] r_pond[22,Intercept]

## 1.4950252 1.4288447 1.4943737 1.3833042

## r_pond[23,Intercept] r_pond[24,Intercept] r_pond[25,Intercept] r_pond[26,Intercept]

## 1.3976878 1.3691378 1.3661559 1.6933039

## r_pond[27,Intercept] r_pond[28,Intercept] r_pond[29,Intercept] r_pond[30,Intercept]

## 1.3845310 1.7058099 1.7976571 1.6092142

## r_pond[31,Intercept] r_pond[32,Intercept] r_pond[33,Intercept] r_pond[34,Intercept]

## 1.2267648 1.2972673 1.0352256 0.9503992

## r_pond[35,Intercept] r_pond[36,Intercept] r_pond[37,Intercept] r_pond[38,Intercept]

## 0.9909477 0.9580389 1.1905664 1.0429481

## r_pond[39,Intercept] r_pond[40,Intercept] r_pond[41,Intercept] r_pond[42,Intercept]

## 1.3957889 1.1026741 1.1539322 0.9819976

## r_pond[43,Intercept] r_pond[44,Intercept] r_pond[45,Intercept] r_pond[46,Intercept]

## 1.0965749 1.1089698 0.9528173 1.1863659

## r_pond[47,Intercept] r_pond[48,Intercept] r_pond[49,Intercept] r_pond[50,Intercept]

## 0.9622555 1.0673472 1.3589549 0.8415543

## r_pond[51,Intercept] r_pond[52,Intercept] r_pond[53,Intercept] r_pond[54,Intercept]

## 0.9910013 0.9237361 1.2107973 1.1505275

## r_pond[55,Intercept] r_pond[56,Intercept] r_pond[57,Intercept] r_pond[58,Intercept]

## 0.8304270 1.0743924 1.1347820 0.8837469

## r_pond[59,Intercept] r_pond[60,Intercept] lp__

## 0.8612560 0.9822023 0.1988217

The brms::neff_ratio() function returns ratios of the effective samples over the total number of post-warmup iterations. So if we know the neff_ratio() values and the number of post-warmup iterations, the ‘Eff.Sample’ values are just a little algebra away. A quick solution is to look at the ‘total post-warmup samples’ line at the top of our print() output. Another way is to extract that information from our brm() fit object. I’m not aware of a way to do that directly, but we can extract the iter value (i.e., b12.3$fit@sim$iter), the warmup value (i.e., b12.3$fit@sim$warmup), and the number of chains (i.e., b12.3$fit@sim$chains). With those values in hand, simple algebra will return the ‘total post-warmup samples’ value. E.g.,

(n_iter <- (b12.3$fit@sim$iter - b12.3$fit@sim$warmup) * b12.3$fit@sim$chains)

## [1] 9000

Now that we have n_iter, we can calculate the ‘Eff.Sample’ values.

neff_ratio(b12.3) %>%

data.frame() %>%

rownames_to_column() %>%

set_names("parameter", "neff_ratio") %>%

mutate(eff_sample = (neff_ratio * n_iter) %>% round(digits = 0)) %>%

head()

## parameter neff_ratio eff_sample

## 1 b_Intercept 0.3328983 2996

## 2 sd_pond__Intercept 0.3276092 2948

## 3 r_pond[1,Intercept] 1.3086470 11778

## 4 r_pond[2,Intercept] 1.4222957 12801

## 5 r_pond[3,Intercept] 1.7287578 15559

## 6 r_pond[4,Intercept] 1.7931600 16138

Digressions aside, let’s get ready for the diagnostic plot of Figure 12.3.

dsim %>%

glimpse()

## Observations: 60

## Variables: 5

## $ pond <int> 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 2…

## $ ni <int> 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 10, 10, 10, 10, 10, 10, 10, 10, 10,…

## $ true_a <dbl> -0.82085139, 3.76575421, -0.03511672, 0.01999213, -1.59646315, 0.99155593, 0.926…

## $ si <int> 0, 5, 4, 3, 0, 5, 5, 3, 5, 5, 3, 3, 3, 4, 4, 6, 10, 9, 9, 9, 9, 10, 10, 6, 3, 8,…

## $ p_nopool <dbl> 0.00, 1.00, 0.80, 0.60, 0.00, 1.00, 1.00, 0.60, 1.00, 1.00, 0.60, 0.60, 0.60, 0.…

# we could have included this step in the block of code below, if we wanted to

p_partpool <-

coef(b12.3)$pond[, , ] %>%

as_tibble() %>%

transmute(p_partpool = inv_logit_scaled(Estimate))

dsim <-

dsim %>%

bind_cols(p_partpool) %>%

mutate(p_true = inv_logit_scaled(true_a)) %>%

mutate(nopool_error = abs(p_nopool - p_true),

partpool_error = abs(p_partpool - p_true))

dsim %>%

glimpse()

## Observations: 60

## Variables: 9

## $ pond <int> 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21,…

## $ ni <int> 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 10, 10, 10, 10, 10, 10, 10, 1…

## $ true_a <dbl> -0.82085139, 3.76575421, -0.03511672, 0.01999213, -1.59646315, 0.99155593,…

## $ si <int> 0, 5, 4, 3, 0, 5, 5, 3, 5, 5, 3, 3, 3, 4, 4, 6, 10, 9, 9, 9, 9, 10, 10, 6,…

## $ p_nopool <dbl> 0.00, 1.00, 0.80, 0.60, 0.00, 1.00, 1.00, 0.60, 1.00, 1.00, 0.60, 0.60, 0.…

## $ p_partpool <dbl> 0.2548519, 0.9089353, 0.8096274, 0.6809599, 0.2541776, 0.9097880, 0.909561…

## $ p_true <dbl> 0.3055830, 0.9773737, 0.4912217, 0.5049979, 0.1684765, 0.7293951, 0.716461…

## $ nopool_error <dbl> 0.305582963, 0.022626343, 0.308778278, 0.095002134, 0.168476520, 0.2706048…

## $ partpool_error <dbl> 0.050731032, 0.068438323, 0.318405689, 0.175962060, 0.085701039, 0.1803928…

Here is our code for Figure 12.3. The extra data processing for dfline is how we get the values necessary for the horizontal summary lines.

dfline <-

dsim %>%

select(ni, nopool_error:partpool_error) %>%

gather(key, value, -ni) %>%

group_by(key, ni) %>%

summarise(mean_error = mean(value)) %>%

mutate(x = c( 1, 16, 31, 46),

xend = c(15, 30, 45, 60))

dsim %>%

ggplot(aes(x = pond)) +

geom_vline(xintercept = c(15.5, 30.5, 45.5),

color = "white", size = 2/3) +

geom_point(aes(y = nopool_error), color = "orange2") +

geom_point(aes(y = partpool_error), shape = 1) +

geom_segment(data = dfline,

aes(x = x, xend = xend,

y = mean_error, yend = mean_error),

color = rep(c("orange2", "black"), each = 4),

linetype = rep(1:2, each = 4)) +

scale_x_continuous(breaks = c(1, 10, 20, 30, 40, 50, 60)) +

annotate("text", x = c(15 - 7.5, 30 - 7.5, 45 - 7.5, 60 - 7.5), y = .45,

label = c("tiny (5)", "small (10)", "medium (25)", "large (35)")) +

labs(y = "absolute error",

title = "Estimate error by model type",

subtitle = "The horizontal axis displays pond number. The vertical axis measures\nthe absolute error in the predicted proportion of survivors, compared to\nthe true value used in the simulation. The higher the point, the worse\nthe estimate. No-pooling shown in orange. Partial pooling shown in black.\nThe orange and dashed black lines show the average error for each kind\nof estimate, across each initial density of tadpoles (pond size). Smaller\nponds produce more error, but the partial pooling estimates are better\non average, especially in smaller ponds.") +

theme_fivethirtyeight() +

theme(panel.grid = element_blank(),

plot.subtitle = element_text(size = 10))

If you wanted to quantify the difference in simple summaries, you might do something like this:

dsim %>%

select(ni, nopool_error:partpool_error) %>%

gather(key, value, -ni) %>%

group_by(key) %>%

summarise(mean_error = mean(value) %>% round(digits = 3),

median_error = median(value) %>% round(digits = 3))

## # A tibble: 2 x 3

## key mean_error median_error

## <chr> <dbl> <dbl>

## 1 nopool_error 0.078 0.05

## 2 partpool_error 0.067 0.051

I originally learned about multilevel models in order to work with longitudinal data. In that context, I found the basic principles of a multilevel structure quite intuitive. The concept of partial pooling, however, took me some time to wrap my head around. If you’re struggling with this, be patient and keep chipping away.

When McElreath lectured on this topic in 2015, he traced partial pooling to statistician Charles M. Stein. In 1977, Efron and Morris wrote the now classic paper, Stein’s Paradox in Statistics, which does a nice job breaking down why partial pooling can be so powerful. One of the primary examples they used in the paper was 1970 batting-average data. If you’d like more practice seeing how partial pooling works (or if you just like baseball), check out my blog post, Stein’s Paradox and What Partial Pooling Can Do For You.
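If you want to see Stein’s result without any MCMC, here’s a minimal base-R sketch of the James-Stein estimator for normal means, the setting Efron and Morris describe. It is not the model brms fits. It assumes a known measurement error of 1 and shrinks toward zero, whereas our multilevel models estimate both the center and the amount of shrinkage. The number of groups `k`, the number of replications, and the Normal(0, 1) population of true means are arbitrary choices for illustration.

```r
set.seed(12)

k    <- 50    # number of groups (think ponds)
reps <- 2000  # number of simulated data sets

sq_error <- replicate(reps, {
  theta <- rnorm(k, mean = 0, sd = 1)       # true group means
  y     <- rnorm(k, mean = theta, sd = 1)   # one noisy observation per group

  # James-Stein: shrink every observation toward 0 by a common, data-driven factor
  shrink <- 1 - (k - 2) / sum(y^2)
  js     <- shrink * y

  c(no_pooling  = sum((y - theta)^2),
    james_stein = sum((js - theta)^2))
})

# average total squared error across replications
rowMeans(sq_error)
```

Averaged over replications, the shrunken estimates have noticeably lower total squared error than the raw observations. Figure 12.3 shows a similar pattern for the ponds.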

12.2.5.1 Overthinking: Repeating the pond simulation.

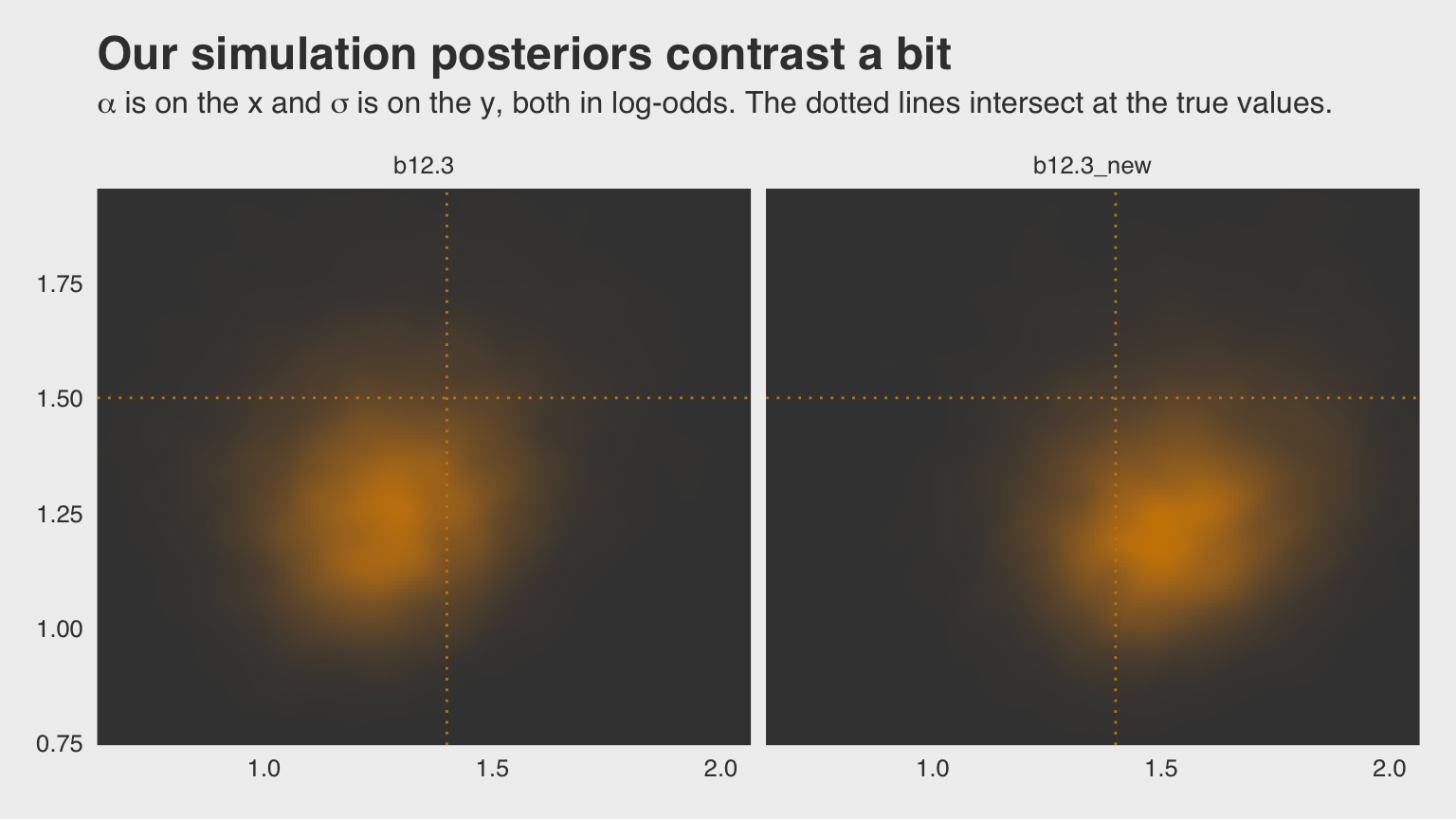

Within the brms workflow, we can reuse a compiled model with update(). But first, we’ll simulate new data.

a <- 1.4

sigma <- 1.5

n_ponds <- 60

set.seed(1999) # for new data, set a new seed

new_dsim <-

tibble(pond = 1:n_ponds,

ni = rep(c(5, 10, 25, 35), each = n_ponds / 4) %>% as.integer(),

true_a = rnorm(n = n_ponds, mean = a, sd = sigma)) %>%

mutate(si = rbinom(n = n(), prob = inv_logit_scaled(true_a), size = ni)) %>%

mutate(p_nopool = si / ni)

glimpse(new_dsim)

## Observations: 60

## Variables: 5

## $ pond <int> 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 2…

## $ ni <int> 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 10, 10, 10, 10, 10, 10, 10, 10, 10,…

## $ true_a <dbl> 2.4990087, 1.3432554, 3.2045137, 3.6047030, 1.6005354, 2.1797409, 0.5759270, -0.…

## $ si <int> 4, 4, 5, 4, 4, 4, 2, 4, 3, 5, 4, 5, 2, 2, 5, 10, 7, 10, 10, 8, 10, 9, 5, 10, 10,…

## $ p_nopool <dbl> 0.80, 0.80, 1.00, 0.80, 0.80, 0.80, 0.40, 0.80, 0.60, 1.00, 0.80, 1.00, 0.40, 0.…

Fit the new model.

b12.3_new <-

update(b12.3,

newdata = new_dsim,

iter = 10000, warmup = 1000, chains = 1, cores = 1)

print(b12.3_new)

## Family: binomial

## Links: mu = logit

## Formula: si | trials(ni) ~ 1 + (1 | pond)

## Data: new_dsim (Number of observations: 60)

## Samples: 1 chains, each with iter = 10000; warmup = 1000; thin = 1;

## total post-warmup samples = 9000

##

## Group-Level Effects:

## ~pond (Number of levels: 60)

## Estimate Est.Error l-95% CI u-95% CI Eff.Sample Rhat

## sd(Intercept) 1.26 0.18 0.95 1.66 3633 1.00

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Eff.Sample Rhat

## Intercept 1.53 0.20 1.16 1.92 3279 1.00

##

## Samples were drawn using sampling(NUTS). For each parameter, Eff.Sample

## is a crude measure of effective sample size, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).

Why not plot the first simulation versus the second one?

bind_rows(posterior_samples(b12.3),

posterior_samples(b12.3_new)) %>%

mutate(model = rep(c("b12.3", "b12.3_new"), each = n() / 2)) %>%

ggplot(aes(x = b_Intercept, y = sd_pond__Intercept)) +

stat_density_2d(geom = "raster",

aes(fill = stat(density)),

contour = F, n = 200) +

geom_vline(xintercept = a, color = "orange3", linetype = 3) +

geom_hline(yintercept = sigma, color = "orange3", linetype = 3) +

scale_fill_gradient(low = "grey25", high = "orange3") +

ggtitle("Our simulation posteriors contrast a bit",

subtitle = expression(paste(alpha, " is on the x and ", sigma, " is on the y, both in log-odds. The dotted lines intersect at the true values."))) +

coord_cartesian(xlim = c(.7, 2),

ylim = c(.8, 1.9)) +

theme_fivethirtyeight() +

theme(legend.position = "none",

panel.grid = element_blank()) +

facet_wrap(~model, ncol = 2)

If you’d like the stanfit portion of your brm() object, subset with $fit. Take b12.3, for example. You might check out its structure via b12.3$fit %>% str(). Here’s the actual Stan code.

b12.3$fit@stanmodel

## S4 class stanmodel 'bc042b5064dd9e434e229501da3f89fb' coded as follows:

## // generated with brms 2.8.8

## functions {

## }

## data {

## int<lower=1> N; // number of observations

## int Y[N]; // response variable

## int trials[N]; // number of trials

## // data for group-level effects of ID 1

## int<lower=1> N_1;

## int<lower=1> M_1;

## int<lower=1> J_1[N];

## vector[N] Z_1_1;

## int prior_only; // should the likelihood be ignored?

## }

## transformed data {

## }

## parameters {

## real temp_Intercept; // temporary intercept

## vector<lower=0>[M_1] sd_1; // group-level standard deviations

## vector[N_1] z_1[M_1]; // unscaled group-level effects

## }

## transformed parameters {

## // group-level effects

## vector[N_1] r_1_1 = (sd_1[1] * (z_1[1]));

## }

## model {

## vector[N] mu = temp_Intercept + rep_vector(0, N);

## for (n in 1:N) {

## mu[n] += r_1_1[J_1[n]] * Z_1_1[n];

## }

## // priors including all constants

## target += normal_lpdf(temp_Intercept | 0, 1);

## target += cauchy_lpdf(sd_1 | 0, 1)

## - 1 * cauchy_lccdf(0 | 0, 1);

## target += normal_lpdf(z_1[1] | 0, 1);

## // likelihood including all constants

## if (!prior_only) {

## target += binomial_logit_lpmf(Y | trials, mu);

## }

## }

## generated quantities {

## // actual population-level intercept

## real b_Intercept = temp_Intercept;

## }

And you can get the data of a given brm() fit object like so.

b12.3$data %>%

head()

## si ni pond

## 1 0 5 1

## 2 5 5 2

## 3 4 5 3

## 4 3 5 4

## 5 0 5 5

## 6 5 5 6

12.3 More than one type of cluster

“We can use and often should use more than one type of cluster in the same model” (p. 370).

12.3.1 Multilevel chimpanzees.

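The initial multilevel update of model b10.4 from Chapter 10 follows the statistical formula

\[\begin{align*} \text{pulled\_left}_i & \sim \text{Binomial} (n_i = 1, p_i) \\ \text{logit} (p_i) & = \alpha + \alpha_{\text{actor}_i} + (\beta_1 + \beta_2 \text{condition}_i) \text{prosoc\_left}_i \\ \alpha_{\text{actor}} & \sim \text{Normal} (0, \sigma_{\text{actor}}) \\ \alpha & \sim \text{Normal} (0, 10) \\ \beta_1 & \sim \text{Normal} (0, 10) \\ \beta_2 & \sim \text{Normal} (0, 10) \\ \sigma_{\text{actor}} & \sim \text{HalfCauchy} (0, 1) \end{align*}\]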

Notice that \(\alpha\) is inside the linear model, not inside the Gaussian prior for \(\alpha_\text{actor}\). This is mathematically equivalent to what [we] did with the tadpoles earlier in the chapter. You can always take the mean out of a Gaussian distribution and treat that distribution as a constant plus a Gaussian distribution centered on zero.

This might seem a little weird at first, so it might help train your intuition by experimenting in R. (p. 371)

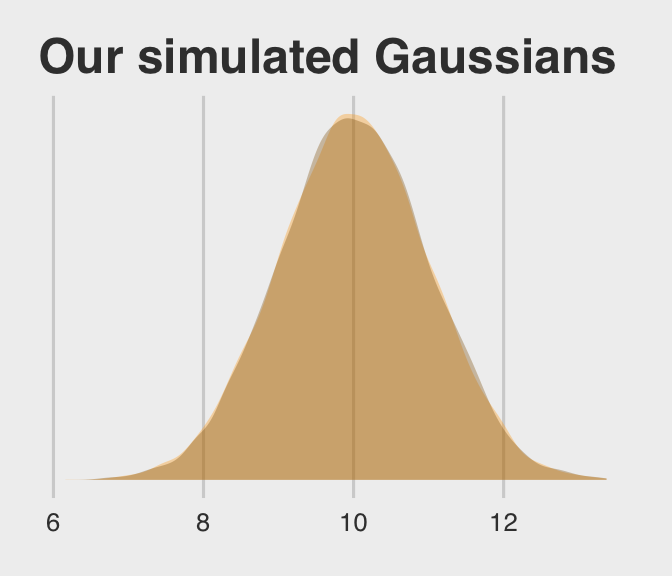

Behold our two identical Gaussians in a tidy tibble.

set.seed(12)

two_gaussians <-

tibble(y1 = rnorm(n = 1e4, mean = 10, sd = 1),

y2 = 10 + rnorm(n = 1e4, mean = 0, sd = 1))

Let’s follow McElreath’s advice to make sure they are the same by superimposing the density of one on the other.

two_gaussians %>%

ggplot() +

geom_density(aes(x = y1),

size = 0, fill = "orange1", alpha = 1/3) +

geom_density(aes(x = y2),

size = 0, fill = "orange4", alpha = 1/3) +

scale_y_continuous(NULL, breaks = NULL) +

labs(title = "Our simulated Gaussians") +

theme_fivethirtyeight()

Yep, those Gaussians look about the same.
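The same equivalence can also be checked exactly, without any simulation, by comparing density functions. Here’s a minimal base-R sketch: a Normal(0, 1) density evaluated at \(x - 10\) is the same function as a Normal(10, 1) density evaluated at \(x\). The grid x is an arbitrary choice of ours; the values 10 and 1 are the ones from the simulation above.

```r
# an arbitrary grid of values at which to evaluate the densities
x <- seq(from = 6, to = 14, by = 0.1)

# Normal(10, 1), parameterized with the mean inside the distribution
dens_direct <- dnorm(x, mean = 10, sd = 1)

# 10 + Normal(0, 1): a zero-centered Gaussian shifted by the constant 10
dens_shifted <- dnorm(x - 10, mean = 0, sd = 1)

# the two densities agree up to floating-point error
all.equal(dens_direct, dens_shifted)  # TRUE
```

This is the same move the model makes when it pulls \(\alpha\) out of the prior for \(\alpha_\text{actor}\) and into the linear model.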

Let’s get the chimpanzees data from rethinking.

library(rethinking)

data(chimpanzees)

d <- chimpanzees

Detach rethinking and reload brms.

rm(chimpanzees)

detach(package:rethinking, unload = T)

library(brms)

For our brms model with varying intercepts for actor but not block, we employ the pulled_left ~ 1 + ... + (1 | actor) syntax, specifically omitting a (1 | block) section.

b12.4 <-

brm(data = d, family = binomial,

pulled_left | trials(1) ~ 1 + prosoc_left + prosoc_left:condition + (1 | actor),

prior = c(prior(normal(0, 10), class = Intercept),

prior(normal(0, 10), class = b),

prior(cauchy(0, 1), class = sd)),

# I'm using 4 cores, instead of the `cores=3` in McElreath's code

iter = 5000, warmup = 1000, chains = 4, cores = 4,

control = list(adapt_delta = 0.95),

seed = 12)

The initial solutions came with a few divergent transitions. Increasing adapt_delta to 0.95 solved the problem. You can also solve the problem with more strongly regularizing priors such as normal(0, 2) on the intercept and slope parameters (see recommendations from the Stan team). Consider trying both methods and comparing the results. They’re similar.

Here we add the actor-level deviations to the fixed intercept, the grand mean.

post <- posterior_samples(b12.4)

post %>%

select(starts_with("r_actor")) %>%

gather() %>%

# this is how we might add the grand mean to the actor-level deviations

mutate(value = value + post$b_Intercept) %>%

group_by(key) %>%

summarise(mean = mean(value) %>% round(digits = 2))

## # A tibble: 7 x 2

## key mean

## <chr> <dbl>

## 1 r_actor[1,Intercept] -0.71

## 2 r_actor[2,Intercept] 4.6

## 3 r_actor[3,Intercept] -1.02

## 4 r_actor[4,Intercept] -1.02

## 5 r_actor[5,Intercept] -0.71

## 6 r_actor[6,Intercept] 0.23

## 7 r_actor[7,Intercept] 1.76

Here’s another way to get at the same information, this time using coef() and a little formatting help from the stringr::str_c() function. Just for kicks, we’ll throw in the 95% intervals, too.

coef(b12.4)$actor[, c(1, 3:4), 1] %>%

as_tibble() %>%

round(digits = 2) %>%

# here we put the credible intervals in an APA-6-style format

mutate(`95% CIs` = str_c("[", Q2.5, ", ", Q97.5, "]"),

actor = str_c("chimp #", 1:7)) %>%

rename(mean = Estimate) %>%

select(actor, mean, `95% CIs`) %>%

knitr::kable()

| actor | mean | 95% CIs |
|---|---|---|
| chimp #1 | -0.71 | [-1.24, -0.2] |
| chimp #2 | 4.60 | [2.54, 8.49] |
| chimp #3 | -1.02 | [-1.57, -0.48] |
| chimp #4 | -1.02 | [-1.57, -0.49] |
| chimp #5 | -0.71 | [-1.23, -0.21] |
| chimp #6 | 0.23 | [-0.29, 0.77] |
| chimp #7 | 1.76 | [1.06, 2.54] |

If you prefer the posterior median to the mean, just add a robust = T argument inside the coef() function.
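That robust = T tip hides a subtlety worth seeing once. As we understand it, coef() adds the population-level intercept to each actor’s deviation draw by draw and only then summarizes. Posterior means are additive, so adding the two posterior means gives the same answer; posterior medians generally are not. Here’s a minimal base-R sketch with simulated, purely hypothetical draws (the object names grand_mean and deviation are ours, not brms output).

```r
set.seed(12)

# hypothetical posterior draws, not taken from any fitted model
n_draws    <- 1e4
grand_mean <- rnorm(n_draws, mean = 0.4, sd = 0.9)  # stands in for b_Intercept
deviation  <- rexp(n_draws, rate = 1)                # a deliberately skewed stand-in for one r_actor column

# the actor-specific intercept, computed draw by draw
actor_intercept <- grand_mean + deviation

# means are additive, so this gap is zero up to floating-point error
mean_gap <- mean(actor_intercept) - (mean(grand_mean) + mean(deviation))

# medians are not additive, so this gap generally is not zero
median_gap <- median(actor_intercept) - (median(grand_mean) + median(deviation))

c(mean_gap = mean_gap, median_gap = median_gap)
```

The practical upshot: if you want actor-level medians, compute them on the summed draws (which is what coef() does) rather than adding up separately summarized medians.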

12.3.2 Two types of cluster.

The full statistical model follows the form

\[\begin{align*} \text{left_pull}_i & \sim \text{Binomial} (n_i = 1, p_i) \\ \text{logit} (p_i) & = \alpha + \alpha_{\text{actor}_i} + \alpha_{\text{block}_i} + (\beta_1 + \beta_2 \text{condition}_i) \text{prosoc_left}_i \\ \alpha_{\text{actor}} & \sim \text{Normal} (0, \sigma_{\text{actor}}) \\ \alpha_{\text{block}} & \sim \text{Normal} (0, \sigma_{\text{block}}) \\ \alpha & \sim \text{Normal} (0, 10) \\ \beta_1 & \sim \text{Normal} (0, 10) \\ \beta_2 & \sim \text{Normal} (0, 10) \\ \sigma_{\text{actor}} & \sim \text{HalfCauchy} (0, 1) \\ \sigma_{\text{block}} & \sim \text{HalfCauchy} (0, 1) \end{align*}\]

Our brms model with varying intercepts for both actor and block now employs the ... (1 | actor) + (1 | block) syntax.

b12.5 <-

update(b12.4,

newdata = d,

formula = pulled_left | trials(1) ~ 1 + prosoc_left + prosoc_left:condition +

(1 | actor) + (1 | block),

iter = 6000, warmup = 1000, cores = 4, chains = 4,

control = list(adapt_delta = 0.99),

seed = 12)

This time we increased adapt_delta to 0.99 to avoid divergent transitions. We can look at the primary coefficients with print(). McElreath encouraged us to inspect the trace plots. Here they are.

library(bayesplot)

color_scheme_set("orange")

post <- posterior_samples(b12.5, add_chain = T)

post %>%

select(-lp__, -iter) %>%

mcmc_trace(facet_args = list(ncol = 4)) +

scale_x_continuous(breaks = c(0, 2500, 5000)) +

theme_fivethirtyeight() +

theme(legend.position = c(.75, .06))

The trace plots look great. We may as well examine the \(n_\text{eff} / N\) ratios, too.

neff_ratio(b12.5) %>%

mcmc_neff() +

theme_fivethirtyeight()

About half of them are lower than we might like, but none are in the embarrassing \(n_\text{eff} / N \leq .1\) range. Let’s look at the summary of the main parameters.

print(b12.5)

## Family: binomial

## Links: mu = logit

## Formula: pulled_left | trials(1) ~ prosoc_left + (1 | actor) + (1 | block) + prosoc_left:condition

## Data: d (Number of observations: 504)

## Samples: 4 chains, each with iter = 6000; warmup = 1000; thin = 1;

## total post-warmup samples = 20000

##

## Group-Level Effects:

## ~actor (Number of levels: 7)

## Estimate Est.Error l-95% CI u-95% CI Eff.Sample Rhat

## sd(Intercept) 2.27 0.97 1.13 4.63 5171 1.00

##

## ~block (Number of levels: 6)

## Estimate Est.Error l-95% CI u-95% CI Eff.Sample Rhat

## sd(Intercept) 0.22 0.18 0.01 0.66 8624 1.00

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Eff.Sample Rhat

## Intercept 0.43 0.99 -1.39 2.47 4251 1.00

## prosoc_left 0.83 0.26 0.31 1.35 16813 1.00

## prosoc_left:condition -0.14 0.30 -0.73 0.45 16285 1.00

##

## Samples were drawn using sampling(NUTS). For each parameter, Eff.Sample

## is a crude measure of effective sample size, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).

This time, we’ll need to use brms::ranef() to get those depth=2-type estimates in the same metric displayed in the text. With ranef(), you get the group-specific estimates in a deviance metric. The coef() function, in contrast, yields the group-specific estimates in what you might call the natural metric. We’ll get more language for this in the next chapter.

ranef(b12.5)$actor[, , "Intercept"] %>%

round(digits = 2)

## Estimate Est.Error Q2.5 Q97.5

## 1 -1.15 1.00 -3.24 0.68

## 2 4.21 1.72 1.78 8.14

## 3 -1.46 1.00 -3.54 0.37

## 4 -1.46 1.00 -3.54 0.36

## 5 -1.15 1.00 -3.21 0.65

## 6 -0.20 1.00 -2.29 1.64

## 7 1.34 1.02 -0.74 3.24

ranef(b12.5)$block[, , "Intercept"] %>%

round(digits = 2)

## Estimate Est.Error Q2.5 Q97.5

## 1 -0.18 0.23 -0.74 0.12

## 2 0.04 0.19 -0.33 0.45

## 3 0.05 0.19 -0.30 0.48

## 4 0.00 0.18 -0.38 0.40

## 5 -0.04 0.18 -0.46 0.32

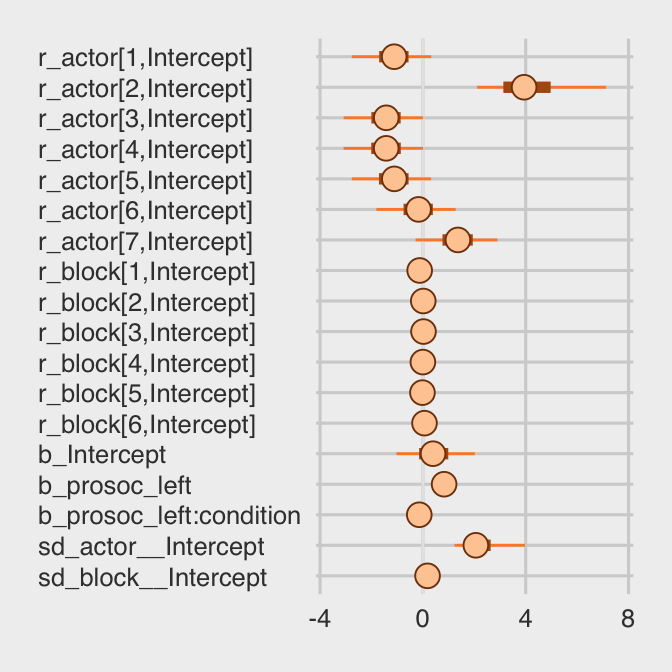

## 6 0.11 0.20 -0.21 0.59We might make the coefficient plot of Figure 12.4.a like this:

stanplot(b12.5, pars = c("^r_", "^b_", "^sd_")) +

theme_fivethirtyeight() +

theme(axis.text.y = element_text(hjust = 0))

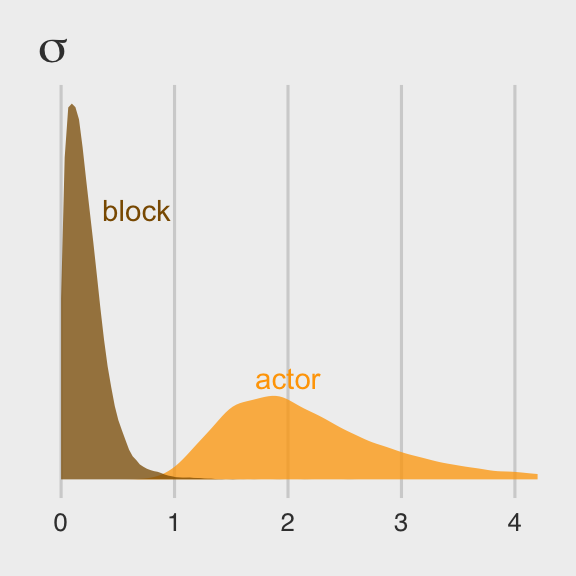

Since post still holds the b12.5 posterior samples, it’s easy to compare the two group-level standard deviations, as in Figure 12.4.b.

post %>%

ggplot(aes(x = sd_actor__Intercept)) +

geom_density(size = 0, fill = "orange1", alpha = 3/4) +

geom_density(aes(x = sd_block__Intercept),

size = 0, fill = "orange4", alpha = 3/4) +

scale_y_continuous(NULL, breaks = NULL) +

coord_cartesian(xlim = c(0, 4)) +

labs(title = expression(sigma)) +

annotate("text", x = 2/3, y = 2, label = "block", color = "orange4") +

annotate("text", x = 2, y = 3/4, label = "actor", color = "orange1") +

theme_fivethirtyeight()

We might compare our models by their PSIS-LOO values.

b12.4 <- add_criterion(b12.4, "loo")

b12.5 <- add_criterion(b12.5, "loo")

loo_compare(b12.4, b12.5) %>%

print(simplify = F)

## elpd_diff se_diff elpd_loo se_elpd_loo p_loo se_p_loo looic se_looic

## b12.4 0.0 0.0 -265.7 9.8 8.1 0.4 531.4 19.5

## b12.5 -0.6 0.9 -266.3 9.9 10.4 0.5 532.6 19.7

The two models yield nearly equivalent information criteria values. Yet recall what McElreath wrote: “There is nothing to gain here by selecting either model. The comparison of the two models tells a richer story” (p. 367).
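To make that loo_compare() table a little less opaque, here’s some quick base-R arithmetic on the printed values (copied by hand; nothing here refits the models). The looic column is elpd_loo on the deviance scale, i.e., multiplied by -2, and the elpd_diff is small relative to its standard error, which is one way to see why neither model stands out.

```r
# values copied from the loo_compare() output above
elpd_loo  <- c(b12.4 = -265.7, b12.5 = -266.3)
looic     <- c(b12.4 = 531.4,  b12.5 = 532.6)
elpd_diff <- -0.6
se_diff   <- 0.9

# looic is just elpd_loo on the deviance scale
-2 * elpd_loo  # b12.4: 531.4, b12.5: 532.6

# elpd_diff is the gap between the two elpd_loo values
unname(elpd_loo["b12.5"] - elpd_loo["b12.4"])  # -0.6

# the difference relative to its standard error: well under one SE
elpd_diff / se_diff  # about -0.67
```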

12.4 Multilevel posterior predictions

… producing implied predictions from a fit model, is very helpful for understanding what the model means. Every model is a merger of sense and nonsense. When we understand a model, we can find its sense and control its nonsense. But as models get more complex, it is very difficult to impossible to understand them just by inspecting tables of posterior means and intervals. Exploring implied posterior predictions helps much more…

… The introduction of varying effects does introduce nuance, however.

First, we should no longer expect the model to exactly retrodict the sample, because adaptive regularization has as its goal to trade off poorer fit in sample for better inference and hopefully better fit out of sample. This is what shrinkage does for us…

Second, “prediction” in the context of a multilevel model requires additional choices. If we wish to validate a model against the specific clusters used to fit the model, that is one thing. But if we instead wish to compute predictions for new clusters, other than the one observed in the sample, that is quite another. We’ll consider each of these in turn, continuing to use the chimpanzees model from the previous section. (p. 376)

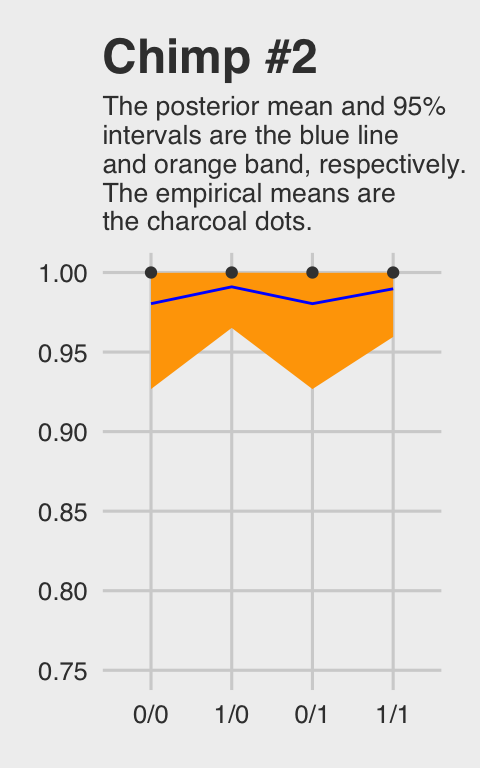

12.4.1 Posterior prediction for same clusters.

Like McElreath did in the text, we’ll do this two ways. Recall we use brms::fitted() in place of rethinking::link().

chimp <- 2

nd <-

tibble(prosoc_left = c(0, 1, 0, 1),

condition = c(0, 0, 1, 1),

actor = chimp)

(

chimp_2_fitted <-

fitted(b12.4,

newdata = nd) %>%

as_tibble() %>%

mutate(condition = factor(c("0/0", "1/0", "0/1", "1/1"),

levels = c("0/0", "1/0", "0/1", "1/1")))

)

## # A tibble: 4 x 5

## Estimate Est.Error Q2.5 Q97.5 condition

## <dbl> <dbl> <dbl> <dbl> <fct>

## 1 0.980 0.0198 0.927 1.000 0/0

## 2 0.991 0.00963 0.965 1.000 1/0

## 3 0.980 0.0198 0.927 1.000 0/1

## 4 0.990 0.0109 0.960 1.000 1/1

(

chimp_2_d <-

d %>%

filter(actor == chimp) %>%

group_by(prosoc_left, condition) %>%

summarise(prob = mean(pulled_left)) %>%

ungroup() %>%

mutate(condition = str_c(prosoc_left, "/", condition)) %>%

mutate(condition = factor(condition, levels = c("0/0", "1/0", "0/1", "1/1")))

)

## # A tibble: 4 x 3

## prosoc_left condition prob

## <int> <fct> <dbl>

## 1 0 0/0 1

## 2 0 0/1 1

## 3 1 1/0 1

## 4 1 1/1 1

McElreath didn’t show the corresponding plot in the text. It might look like this.

chimp_2_fitted %>%

# if you want to use `geom_line()` or `geom_ribbon()` with a factor on the x axis,

# you need to code something like `group = 1` in `aes()`

ggplot(aes(x = condition, y = Estimate, group = 1)) +

geom_ribbon(aes(ymin = Q2.5, ymax = Q97.5), fill = "orange1") +

geom_line(color = "blue") +

geom_point(data = chimp_2_d,

aes(y = prob),

color = "grey25") +

ggtitle("Chimp #2",

subtitle = "The posterior mean and 95%\nintervals are the blue line\nand orange band, respectively.\nThe empirical means are\nthe charcoal dots.") +

coord_cartesian(ylim = c(.75, 1)) +

theme_fivethirtyeight() +

theme(plot.subtitle = element_text(size = 10))

Do note how severely we’ve restricted the y-axis range. But okay, now let’s do things by hand. We’ll need to extract the posterior samples and look at the structure of the data.

post <- posterior_samples(b12.4)

glimpse(post)

## Observations: 16,000

## Variables: 12

## $ b_Intercept <dbl> -0.49845428, 0.36263851, 1.91766750, 1.82740632, 2.24556133, 0.…

## $ b_prosoc_left <dbl> 0.9032174, 1.3799669, 0.8727582, 0.8015609, 0.6627169, 1.156136…

## $ `b_prosoc_left:condition` <dbl> -0.56182114, -0.46722870, -0.70816999, -0.33963142, -0.49257705…

## $ sd_actor__Intercept <dbl> 2.571271, 1.476708, 2.084258, 2.729417, 2.724787, 2.071737, 1.9…

## $ `r_actor[1,Intercept]` <dbl> -0.468006872, -1.364675261, -2.403394919, -2.574390330, -2.7928…

## $ `r_actor[2,Intercept]` <dbl> 5.533991, 2.910396, 5.437039, 4.342981, 3.537432, 2.313620, 3.7…

## $ `r_actor[3,Intercept]` <dbl> -0.45618115, -1.59925747, -2.81698107, -2.51778170, -3.15780434…

## $ `r_actor[4,Intercept]` <dbl> -0.65347198, -1.77826745, -2.54650655, -2.79884389, -2.96142601…

## $ `r_actor[5,Intercept]` <dbl> -0.3303643, -1.3395610, -2.4678893, -2.5197585, -2.6281694, -1.…

## $ `r_actor[6,Intercept]` <dbl> 0.56380900, -0.67941570, -1.10245504, -1.98517600, -1.52414804,…

## $ `r_actor[7,Intercept]` <dbl> 2.0842292, 1.6935043, -0.5287177, 0.4990477, -0.4595667, 1.0253…

## $ lp__ <dbl> -280.4227, -284.5651, -286.6607, -281.1776, -281.1189, -281.660…

McElreath didn’t show what his R code 12.29, dens( post$a_actor[,5] ), would look like. But here’s our analogue.

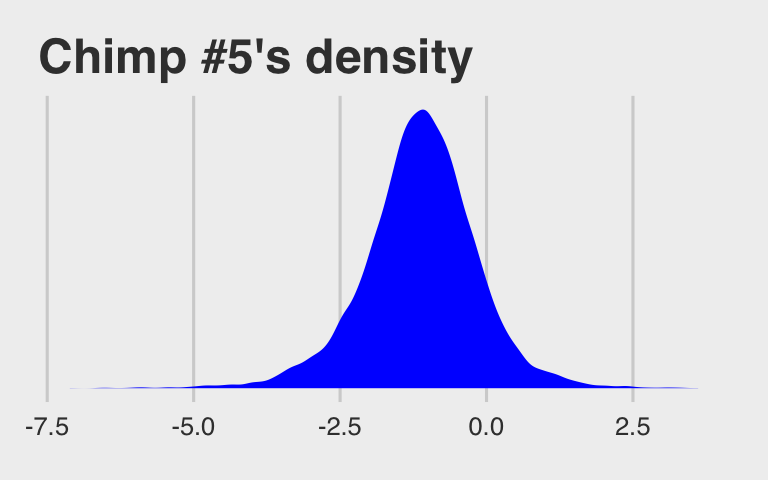

post %>%

transmute(actor_5 = `r_actor[5,Intercept]`) %>%

ggplot(aes(x = actor_5)) +

geom_density(size = 0, fill = "blue") +

scale_y_continuous(breaks = NULL) +

ggtitle("Chimp #5's density") +

theme_fivethirtyeight()

Because we made the density using only the r_actor[5,Intercept] values (i.e., we didn’t add b_Intercept to them), the density is in a deviance-score metric.

McElreath built his own link() function. Here we’ll build an alternative to fitted().

# our hand-made `brms::fitted()` alternative

my_fitted <- function(prosoc_left, condition){

post %>%

transmute(fitted = (b_Intercept +

`r_actor[5,Intercept]` +

b_prosoc_left * prosoc_left +

`b_prosoc_left:condition` * prosoc_left * condition) %>%

inv_logit_scaled())

}

# the posterior summaries

(

chimp_5_my_fitted <-

tibble(prosoc_left = c(0, 1, 0, 1),

condition = c(0, 0, 1, 1)) %>%

mutate(post = map2(prosoc_left, condition, my_fitted)) %>%

unnest() %>%

mutate(condition = str_c(prosoc_left, "/", condition)) %>%

mutate(condition = factor(condition, levels = c("0/0", "1/0", "0/1", "1/1"))) %>%

group_by(condition) %>%

tidybayes::mean_qi(fitted)

)

## # A tibble: 4 x 7

## condition fitted .lower .upper .width .point .interval

## <fct> <dbl> <dbl> <dbl> <dbl> <chr> <chr>

## 1 0/0 0.331 0.226 0.448 0.95 mean qi

## 2 1/0 0.527 0.384 0.667 0.95 mean qi

## 3 0/1 0.331 0.226 0.448 0.95 mean qi

## 4 1/1 0.495 0.354 0.637 0.95 mean qi

# the empirical summaries

chimp <- 5

(

chimp_5_d <-

d %>%

filter(actor == chimp) %>%

group_by(prosoc_left, condition) %>%

summarise(prob = mean(pulled_left)) %>%

ungroup() %>%

mutate(condition = str_c(prosoc_left, "/", condition)) %>%

mutate(condition = factor(condition, levels = c("0/0", "1/0", "0/1", "1/1")))

)

## # A tibble: 4 x 3

## prosoc_left condition prob

## <int> <fct> <dbl>

## 1 0 0/0 0.333

## 2 0 0/1 0.278

## 3 1 1/0 0.556

## 4 1 1/1 0.5

Okay, let’s see how good we are at retrodicting the pulled_left probabilities for actor == 5.

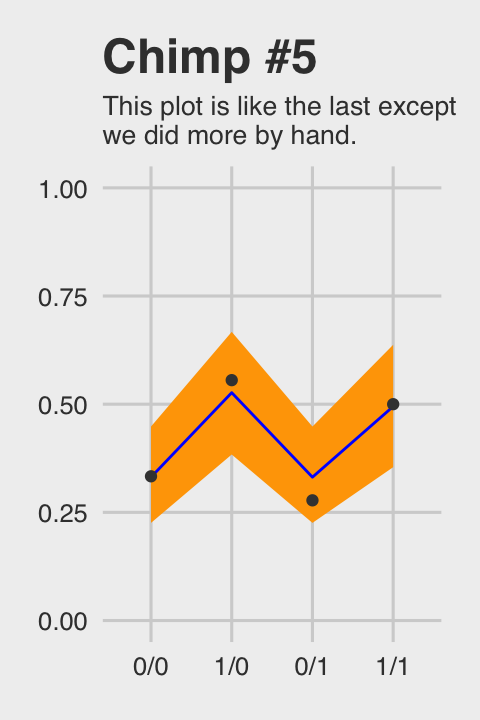

chimp_5_my_fitted %>%

ggplot(aes(x = condition, y = fitted, group = 1)) +

geom_ribbon(aes(ymin = .lower, ymax = .upper), fill = "orange1") +

geom_line(color = "blue") +

geom_point(data = chimp_5_d,

aes(y = prob),

color = "grey25") +

ggtitle("Chimp #5",

subtitle = "This plot is like the last except\nwe did more by hand.") +

coord_cartesian(ylim = 0:1) +

theme_fivethirtyeight() +

theme(plot.subtitle = element_text(size = 10))

Not bad.
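For practice, here’s the core logic of my_fitted() stripped down to base R: build actor 5’s linear predictor on the log-odds scale draw by draw, then convert it to a probability. This is a sketch under stated assumptions: post_toy is a hypothetical stand-in for post filled with made-up draws, fitted_actor_5() is a hypothetical helper, and base R’s plogis() plays the role of brms’s inv_logit_scaled().

```r
set.seed(5)

# hypothetical stand-in for `post`: made-up draws with posterior_samples()-style names
post_toy <- data.frame(
  b_Intercept               = rnorm(1000, mean = 0.4,  sd = 0.5),
  `r_actor[5,Intercept]`    = rnorm(1000, mean = -1.1, sd = 0.5),
  b_prosoc_left             = rnorm(1000, mean = 0.8,  sd = 0.25),
  `b_prosoc_left:condition` = rnorm(1000, mean = -0.1, sd = 0.3),
  check.names = FALSE
)

# hypothetical helper: actor 5's linear predictor, pushed through the inverse logit
fitted_actor_5 <- function(prosoc_left, condition) {
  with(post_toy,
       plogis(b_Intercept + `r_actor[5,Intercept]` +
                b_prosoc_left * prosoc_left +
                `b_prosoc_left:condition` * prosoc_left * condition))
}

# the four prosoc_left/condition combinations
conditions <- data.frame(prosoc_left = c(0, 1, 0, 1),
                         condition   = c(0, 0, 1, 1),
                         row.names   = c("0/0", "1/0", "0/1", "1/1"))

# posterior mean and 95% interval for a vector of draws
summarize_draws <- function(draws) {
  c(mean = mean(draws), quantile(draws, probs = c(.025, .975)))
}

summaries <- t(sapply(seq_len(nrow(conditions)), function(i) {
  summarize_draws(fitted_actor_5(conditions$prosoc_left[i],
                                 conditions$condition[i]))
}))
rownames(summaries) <- rownames(conditions)

round(summaries, digits = 3)
```

Because condition only enters the model through the prosoc_left:condition interaction, the linear predictor is identical whenever prosoc_left = 0. That’s why the 0/0 and 0/1 rows matched in the tables above.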

12.4.2 Posterior prediction for new clusters.

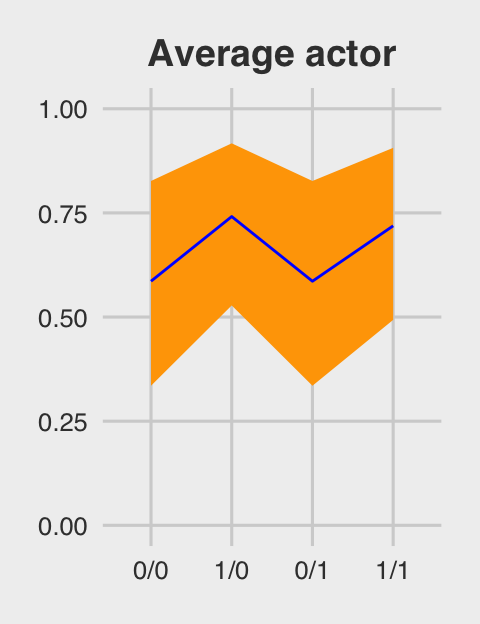

By average actor, McElreath referred to a chimp with an intercept exactly at the population mean \(\alpha\). So this time we’ll only be working with the population parameters, or what are also sometimes called the fixed effects. When using brms::posterior_samples() output, this would mean working with columns beginning with the b_ prefix (i.e., b_Intercept, b_prosoc_left, and b_prosoc_left:condition).

post_average_actor <-

post %>%

# here we use the linear regression formula to get the log_odds for the 4 conditions

transmute(`0/0` = b_Intercept,

`1/0` = b_Intercept + b_prosoc_left,

`0/1` = b_Intercept,

`1/1` = b_Intercept + b_prosoc_left + `b_prosoc_left:condition`) %>%

# with `mutate_all()` we can convert the estimates to probabilities in one fell swoop

mutate_all(inv_logit_scaled) %>%

# putting the data in the long format and grouping by condition (i.e., `key`)

gather() %>%

mutate(key = factor(key, levels = c("0/0", "1/0", "0/1", "1/1"))) %>%

group_by(key) %>%

# here we get the summary values for the plot

summarise(m = mean(value),

# note we're using 80% intervals

ll = quantile(value, probs = .1),

ul = quantile(value, probs = .9))

post_average_actor

## # A tibble: 4 x 4

## key m ll ul

## <fct> <dbl> <dbl> <dbl>

## 1 0/0 0.586 0.335 0.827

## 2 1/0 0.741 0.528 0.917

## 3 0/1 0.586 0.335 0.827

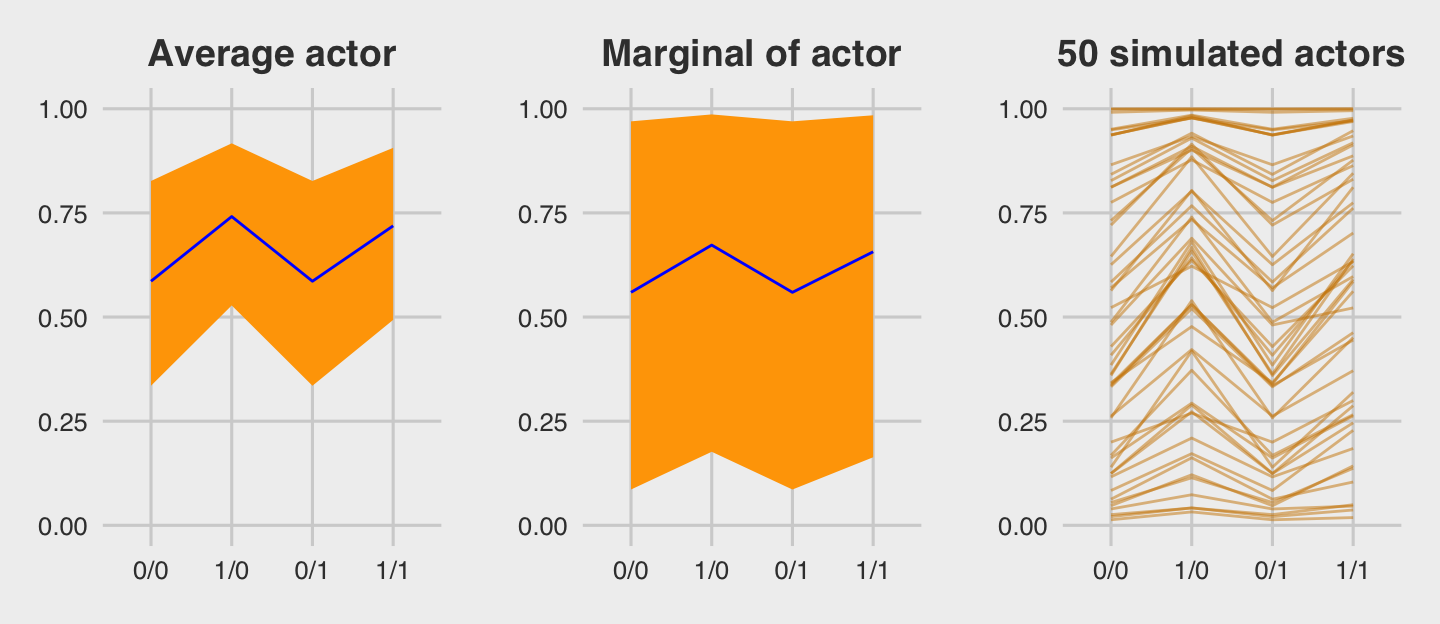

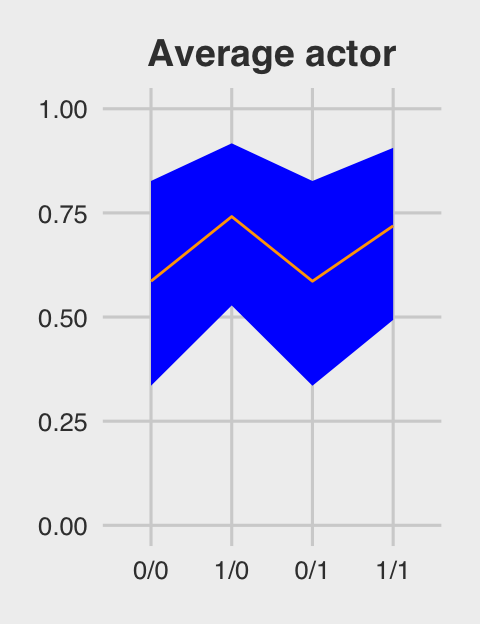

## 4 1/1 0.719 0.493 0.906Figure 12.5.a.

p1 <-

post_average_actor %>%

ggplot(aes(x = key, y = m, group = 1)) +

geom_ribbon(aes(ymin = ll, ymax = ul), fill = "orange1") +

geom_line(color = "blue") +

ggtitle("Average actor") +

coord_cartesian(ylim = 0:1) +

theme_fivethirtyeight() +

theme(plot.title = element_text(size = 14, hjust = .5))

p1

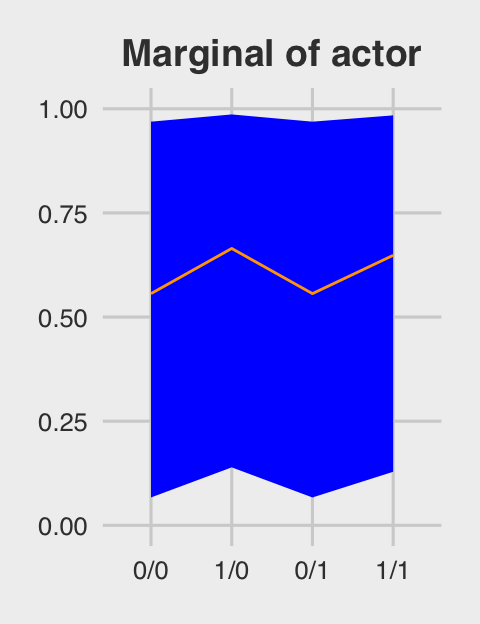

If we want to depict the variability across the chimps, we need to include sd_actor__Intercept in the calculations. In the first block of code below, we simulate a bundle of new intercepts defined by

\[\alpha_\text{actor} \sim \text{Normal} (0, \sigma_\text{actor})\]

# the random effects

set.seed(12.42)

ran_ef <-

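# rnorm() recycles `sd`: with n = 1000, each simulated intercept uses one of the first 1,000 posterior draws of sd_actor__Intercept, matching `slice(1:1000)` below

tibble(random_effect = rnorm(n = 1000, mean = 0, sd = post$sd_actor__Intercept)) %>%

# with the `., ., ., .` syntax, we stack four copies of the tibble, one for each of the four conditions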

bind_rows(., ., ., .)

# the fixed effects (i.e., the population parameters)

fix_ef <-

post %>%

slice(1:1000) %>%

transmute(`0/0` = b_Intercept,

`1/0` = b_Intercept + b_prosoc_left,

`0/1` = b_Intercept,

`1/1` = b_Intercept + b_prosoc_left + `b_prosoc_left:condition`) %>%

gather() %>%

rename(condition = key,

fixed_effect = value) %>%

mutate(condition = factor(condition, levels = c("0/0", "1/0", "0/1", "1/1")))

# combine them

ran_and_fix_ef <-

bind_cols(ran_ef, fix_ef) %>%

mutate(intercept = fixed_effect + random_effect) %>%

mutate(prob = inv_logit_scaled(intercept))

# to simplify things, we'll reduce them to summaries

(

marginal_effects <-

ran_and_fix_ef %>%

group_by(condition) %>%

summarise(m = mean(prob),

ll = quantile(prob, probs = .1),

ul = quantile(prob, probs = .9))

)

## # A tibble: 4 x 4

## condition m ll ul

## <fct> <dbl> <dbl> <dbl>

## 1 0/0 0.559 0.0860 0.970

## 2 1/0 0.673 0.177 0.986

## 3 0/1 0.559 0.0860 0.970

## 4 1/1 0.657 0.163 0.984

Behold Figure 12.5.b.

p2 <-

marginal_effects %>%

ggplot(aes(x = condition, y = m, group = 1)) +

geom_ribbon(aes(ymin = ll, ymax = ul), fill = "orange1") +

geom_line(color = "blue") +

ggtitle("Marginal of actor") +

coord_cartesian(ylim = 0:1) +

theme_fivethirtyeight() +

theme(plot.title = element_text(size = 14, hjust = .5))

p2

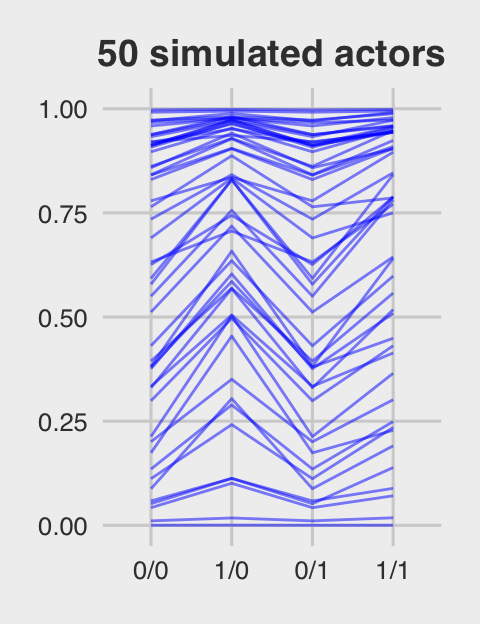

Figure 12.5.c just takes a tiny bit more wrangling.

p3 <-

ran_and_fix_ef %>%

mutate(iter = rep(1:1000, times = 4)) %>%

filter(iter %in% c(1:50)) %>%

ggplot(aes(x = condition, y = prob, group = iter)) +

theme_fivethirtyeight() +

ggtitle("50 simulated actors") +

coord_cartesian(ylim = 0:1) +

geom_line(alpha = 1/2, color = "orange3") +

theme(plot.title = element_text(size = 14, hjust = .5))

p3

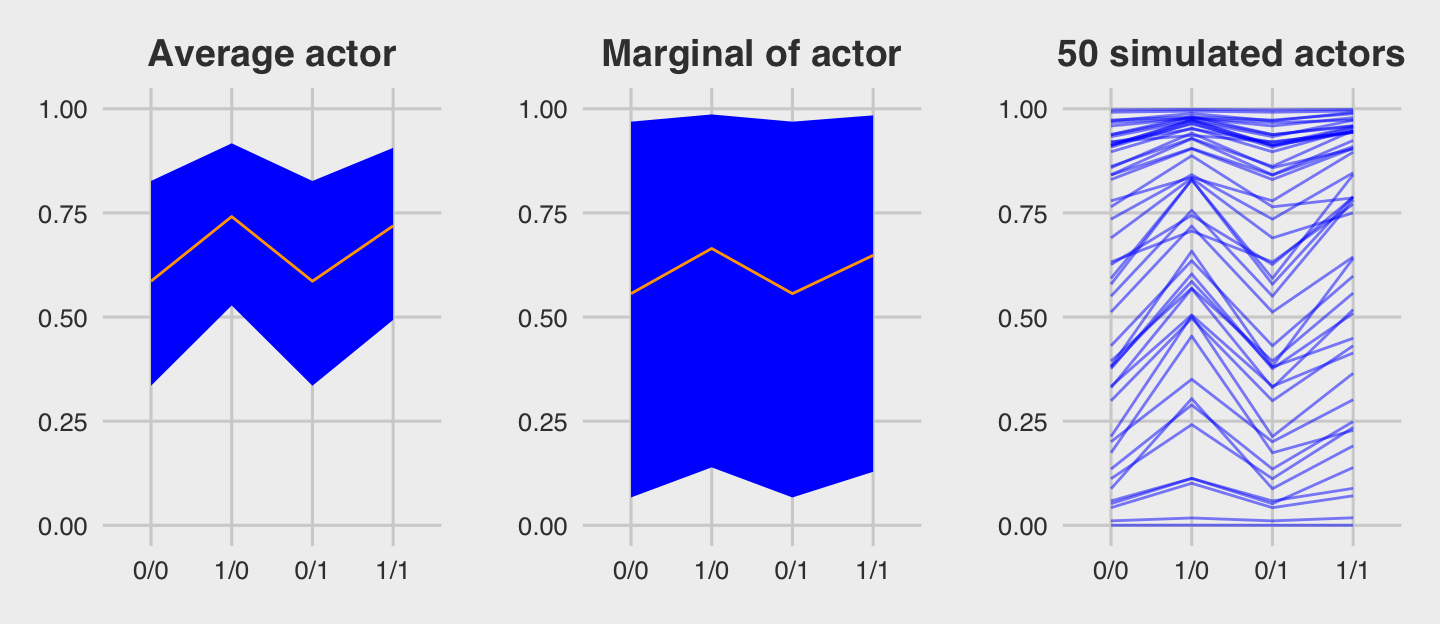

For the finale, we’ll stitch the three plots together.

library(gridExtra)

grid.arrange(p1, p2, p3, ncol = 3)
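Why does the marginal-of-actor line sit closer to 0.5 than the average-actor line? Because the inverse logit is nonlinear, averaging probabilities across many simulated actors is not the same as converting the average intercept into a probability. Here’s a minimal base-R sketch. It assumes a single hypothetical intercept alpha and actor-level SD sigma in place of the full posterior, so, unlike our code above, it ignores uncertainty in those parameters.

```r
set.seed(12)

alpha <- 0.35  # hypothetical population-level intercept (log-odds scale)
sigma <- 2.2   # hypothetical actor-level standard deviation

# "average actor": an intercept exactly at alpha
p_average_actor <- plogis(alpha)

# "marginal of actor": simulate many new actors, convert each to a probability, then average
new_intercepts <- rnorm(1e5, mean = alpha, sd = sigma)
p_marginal     <- mean(plogis(new_intercepts))

# with alpha > 0, averaging over actors pulls the probability toward 0.5
c(average_actor = p_average_actor, marginal_of_actor = p_marginal)
```

The larger sigma is, the more strongly the marginal average gets pulled toward 0.5, even though no single simulated actor behaves like that average.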

12.4.2.1 Bonus: Let’s use fitted() this time.

We just made those plots using various wrangled versions of post, the data frame returned by posterior_samples(b12.4). If you followed along closely, part of what made that a great exercise is that it forced you to consider what the various vectors in post meant with respect to the model formula. But it’s also handy to see how to do that from a different perspective. So in this section, we’ll repeat that process by relying on the fitted() function, instead. We’ll go in the same order, starting with the average actor.

nd <-

tibble(prosoc_left = c(0, 1, 0, 1),

condition = c(0, 0, 1, 1))

(

f <-

fitted(b12.4,

newdata = nd,

re_formula = NA,

probs = c(.1, .9)) %>%

as_tibble() %>%

bind_cols(nd) %>%

mutate(condition = str_c(prosoc_left, "/", condition) %>%

factor(., levels = c("0/0", "1/0", "0/1", "1/1")))

)

## # A tibble: 4 x 6

## Estimate Est.Error Q10 Q90 prosoc_left condition

## <dbl> <dbl> <dbl> <dbl> <dbl> <fct>

## 1 0.586 0.187 0.335 0.827 0 0/0

## 2 0.741 0.159 0.528 0.917 1 1/0

## 3 0.586 0.187 0.335 0.827 0 0/1

## 4 0.719 0.165 0.493 0.906 1 1/1

You should notice a few things. Since b12.4 is a multilevel model, it used three variables: prosoc_left, condition, and actor. However, our nd data only included the first two of those. That worked because we set re_formula = NA, which tells fitted() to ignore the group-level effects (i.e., to focus only on the fixed effects). This was our fitted() version of ignoring the r_ vectors returned by posterior_samples(). Here’s the plot.

p4 <-

f %>%

ggplot(aes(x = condition, y = Estimate, group = 1)) +

geom_ribbon(aes(ymin = Q10, ymax = Q90), fill = "blue") +

geom_line(color = "orange1") +

ggtitle("Average actor") +

coord_cartesian(ylim = 0:1) +

theme_fivethirtyeight() +

theme(plot.title = element_text(size = 14, hjust = .5))

p4

For marginal of actor, we can continue using the same nd data. This time we’ll stick with the default re_formula setting, which accommodates the multilevel nature of the model. However, we’ll also add allow_new_levels = T and sample_new_levels = "gaussian". The former permits predictions for an actor who isn’t in the original data (our nd doesn’t even include an actor column), and the latter instructs fitted() to draw that new actor’s intercept from the multivariate normal distribution implied by the group-level standard deviations and correlations. It’ll make more sense why I say multivariate normal by the end of the next chapter. For now, just go with it.

(

f <-

fitted(b12.4,

newdata = nd,

probs = c(.1, .9),

allow_new_levels = T,

sample_new_levels = "gaussian") %>%

as_tibble() %>%

bind_cols(nd) %>%

mutate(condition = str_c(prosoc_left, "/", condition) %>%

factor(., levels = c("0/0", "1/0", "0/1", "1/1")))

)

## # A tibble: 4 x 6

## Estimate Est.Error Q10 Q90 prosoc_left condition

## <dbl> <dbl> <dbl> <dbl> <dbl> <fct>

## 1 0.556 0.330 0.0669 0.969 0 0/0

## 2 0.665 0.312 0.139 0.986 1 1/0

## 3 0.556 0.330 0.0669 0.969 0 0/1

## 4 0.648 0.316 0.129 0.984 1 1/1

Here’s our fitted()-based marginal of actor plot.

p5 <-

f %>%

ggplot(aes(x = condition, y = Estimate, group = 1)) +

geom_ribbon(aes(ymin = Q10, ymax = Q90), fill = "blue") +

geom_line(color = "orange1") +

ggtitle("Marginal of actor") +

coord_cartesian(ylim = 0:1) +

theme_fivethirtyeight() +

theme(plot.title = element_text(size = 14, hjust = .5))

p5

For the simulated actors plot, we’ll just amend our process from the last one. This time we’re setting summary = F, in order to keep the iteration-specific results, and setting nsamples = n_sim. n_sim is just a name for the number of actors we’d like to simulate (i.e., 50, as in the text).

# how many simulated actors would you like?

n_sim <- 50

(

f <-

fitted(b12.4,

newdata = nd,

probs = c(.1, .9),

allow_new_levels = T,

sample_new_levels = "gaussian",

summary = F,

nsamples = n_sim) %>%

as_tibble() %>%

mutate(iter = 1:n_sim) %>%

gather(key, value, -iter) %>%

bind_cols(nd %>%

transmute(condition = str_c(prosoc_left, "/", condition) %>%

factor(., levels = c("0/0", "1/0", "0/1", "1/1"))) %>%

expand(condition, iter = 1:n_sim))

)

## # A tibble: 200 x 5

## iter key value condition iter1

## <int> <chr> <dbl> <fct> <int>

## 1 1 V1 0.330 0/0 1

## 2 2 V1 0.299 0/0 2

## 3 3 V1 0.841 0/0 3

## 4 4 V1 0.735 0/0 4

## 5 5 V1 0.858 0/0 5

## 6 6 V1 0.382 0/0 6

## 7 7 V1 0.690 0/0 7

## 8 8 V1 0.512 0/0 8

## 9 9 V1 0.912 0/0 9

## 10 10 V1 0.394 0/0 10

## # … with 190 more rows

p6 <-

f %>%

ggplot(aes(x = condition, y = value, group = iter)) +

geom_line(alpha = 1/2, color = "blue") +

ggtitle("50 simulated actors") +

coord_cartesian(ylim = 0:1) +

theme_fivethirtyeight() +

theme(plot.title = element_text(size = 14, hjust = .5))

p6

Here they are altogether.

grid.arrange(p4, p5, p6, ncol = 3)

12.4.3 Focus and multilevel prediction.

First, let’s load the Kline data.

# prep data

library(rethinking)

data(Kline)

d <- Kline

Switch out the packages, once again.

detach(package:rethinking, unload = T)

library(brms)

rm(Kline)

The statistical formula for our multilevel count model is

\[\begin{align*} \text{total_tools}_i & \sim \text{Poisson} (\mu_i) \\ \text{log} (\mu_i) & = \alpha + \alpha_{\text{culture}_i} + \beta \text{log} (\text{population}_i) \\ \alpha & \sim \text{Normal} (0, 10) \\ \beta & \sim \text{Normal} (0, 1) \\ \alpha_{\text{culture}} & \sim \text{Normal} (0, \sigma_{\text{culture}}) \\ \sigma_{\text{culture}} & \sim \text{HalfCauchy} (0, 1) \end{align*}\]

With brms, we don’t actually need to make the logpop or society variables. We’re ready to fit the multilevel Kline model with the data in hand.

b12.6 <-

brm(data = d, family = poisson,

total_tools ~ 0 + intercept + log(population) +

(1 | culture),

prior = c(prior(normal(0, 10), class = b, coef = intercept),

prior(normal(0, 1), class = b),

prior(cauchy(0, 1), class = sd)),

iter = 4000, warmup = 1000, cores = 3, chains = 3,

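seed = 12)

Note how we used the special 0 + intercept syntax rather than the default Intercept. This is because our predictor variable, log(population), was not mean centered. By default, brms internally centers the population-level predictors, so a prior set with class = Intercept applies to the intercept at the predictor means; with 0 + intercept, our normal(0, 10) prior applies to the intercept where log(population) = 0, as in the statistical formula. For more info, see here. Though we used the 0 + intercept syntax for the fixed effect, it was not necessary for the random effect. Both ways work.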
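Because log(population) enters the log-linear model directly, exponentiating both sides of \(\text{log} (\mu_i) = \alpha + \alpha_{\text{culture}_i} + \beta \text{log} (\text{population}_i)\) gives a power law, \(\mu_i = \exp(\alpha + \alpha_{\text{culture}_i}) \times \text{population}_i^\beta\). Here’s a quick numerical check in base R. The parameter values are made up for illustration; they are not estimates from b12.6.

```r
alpha      <- 1.1    # hypothetical intercept (log scale)
alpha_cult <- -0.2   # hypothetical culture-level deviation
beta       <- 0.26   # hypothetical slope on log(population)
population <- c(1000, 10000, 100000)

# the linear model on the log scale, exponentiated
mu_log_link <- exp(alpha + alpha_cult + beta * log(population))

# the same expected counts written as a power law
mu_power <- exp(alpha + alpha_cult) * population^beta

all.equal(mu_log_link, mu_power)  # TRUE

# a tenfold increase in population multiplies the expected count by 10^beta
mu_log_link[2] / mu_log_link[1]
```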

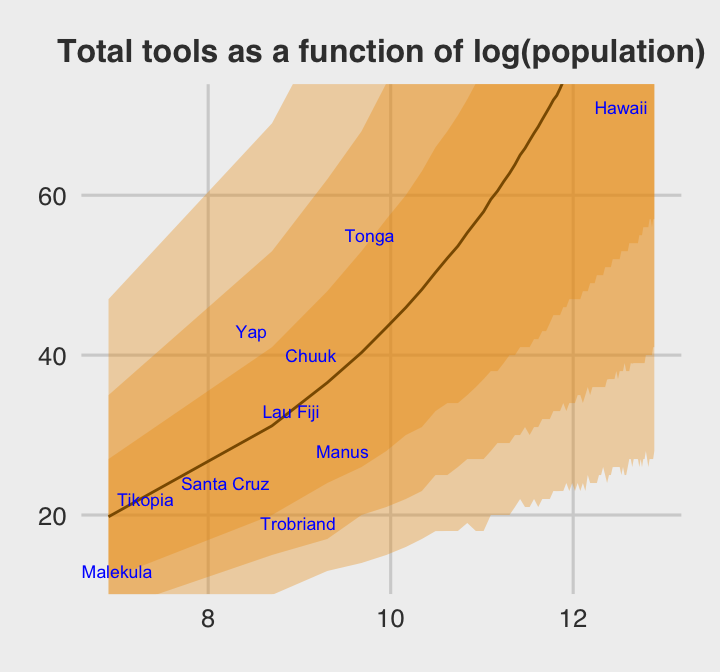

Here is the data-processing work for our variant of Figure 12.6.

nd <-

tibble(population = seq(from = 1000, to = 400000, by = 5000),

# to "simulate counterfactual societies, using the hyper-parameters" (p. 383),

# we'll plug a new island into the `culture` variable

culture = "my_island")

p <-

predict(b12.6,

# this allows us to simulate values for our counterfactual island, "my_island"

allow_new_levels = T,

# here we explicitly tell brms we want to include the group-level effects

re_formula = ~ (1 | culture),

# from the brms manual, this uses the "(multivariate) normal distribution implied by

# the group-level standard deviations and correlations", which appears to be

# what McElreath did in the text.

sample_new_levels = "gaussian",

newdata = nd,

probs = c(.015, .055, .165, .835, .945, .985)) %>%

as_tibble() %>%

bind_cols(nd)

p %>%

glimpse()

## Observations: 80

## Variables: 10

## $ Estimate <dbl> 19.78322, 31.16189, 36.55989, 40.31711, 43.44322, 45.94956, 48.21122, 50.34489…

## $ Est.Error <dbl> 9.864749, 14.103522, 16.554112, 18.521093, 20.545470, 22.052733, 23.445121, 25…

## $ Q1.5 <dbl> 5.000, 10.000, 13.000, 14.000, 15.000, 16.000, 17.000, 17.985, 18.000, 18.000,…

## $ Q5.5 <dbl> 8, 15, 17, 20, 21, 22, 23, 25, 25, 26, 27, 27, 27, 28, 29, 29, 29, 30, 30, 31,…

## $ Q16.5 <dbl> 12, 20, 24, 26, 28, 30, 31, 33, 34, 34, 35, 36, 37, 38, 38, 39, 40, 40, 41, 41…

## $ Q83.5 <dbl> 27, 41, 48, 53, 57, 60, 63, 66, 68, 70, 72, 74, 76, 78, 79, 81, 82, 83, 85, 87…

## $ Q94.5 <dbl> 35.000, 53.000, 62.000, 68.000, 74.000, 78.000, 81.000, 85.000, 89.000, 91.055…

## $ Q98.5 <dbl> 47.000, 69.000, 82.000, 91.000, 98.015, 106.000, 109.000, 115.000, 120.015, 12…

## $ population <dbl> 1000, 6000, 11000, 16000, 21000, 26000, 31000, 36000, 41000, 46000, 51000, 560…

## $ culture <chr> "my_island", "my_island", "my_island", "my_island", "my_island", "my_island", …

For a detailed discussion on this way of using brms::predict(), see Andrew MacDonald’s great blog post on this very figure. Here’s what we’ve been working for:

p %>%

ggplot(aes(x = log(population), y = Estimate)) +

geom_ribbon(aes(ymin = Q1.5, ymax = Q98.5), fill = "orange2", alpha = 1/3) +

geom_ribbon(aes(ymin = Q5.5, ymax = Q94.5), fill = "orange2", alpha = 1/3) +

geom_ribbon(aes(ymin = Q16.5, ymax = Q83.5), fill = "orange2", alpha = 1/3) +

geom_line(color = "orange4") +

geom_text(data = d, aes(y = total_tools, label = culture),

size = 2.33, color = "blue") +

ggtitle("Total tools as a function of log(population)") +

coord_cartesian(ylim = range(d$total_tools)) +

theme_fivethirtyeight() +

theme(plot.title = element_text(size = 12, hjust = .5))

Glorious.

The envelope of predictions is a lot wider here than it was back in Chapter 10. This is a consequence of the varying intercepts, combined with the fact that there is much more variation in the data than a pure-Poisson model anticipates. (p. 384)
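A small simulation can make that last point concrete. This is a sketch with hypothetical values, not estimates from b12.6: we draw counts from a pure Poisson and from a Poisson whose log-mean gets a Normal culture-level deviation, in the spirit of our multilevel Kline model. For a pure Poisson, the variance-to-mean ratio is about 1; the varying intercepts push it well above 1, which is one way to see why the prediction envelope widens.

```r
set.seed(12)

n          <- 1e5
log_mu     <- log(35)  # hypothetical population-level log-mean
sigma_cult <- 0.3      # hypothetical culture-level SD on the log scale

# pure Poisson: every society shares the same expected count
y_poisson <- rpois(n, lambda = exp(log_mu))

# Poisson with varying intercepts: each society gets its own log-mean
alpha_culture <- rnorm(n, mean = 0, sd = sigma_cult)
y_varying     <- rpois(n, lambda = exp(log_mu + alpha_culture))

# variance-to-mean ratios: about 1 for the pure Poisson, larger with varying intercepts
c(pure_poisson      = var(y_poisson) / mean(y_poisson),
  varying_intercept = var(y_varying) / mean(y_varying))
```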

12.5 Summary Bonus: tidybayes::spread_draws()

A big part of this chapter, both what McElreath focused on in the text and even our plotting digression a bit above, concerned how to combine the fixed effects of a multilevel model with its group-level effects. Given some binomial variable, \(\text{criterion}\), and some group term, \(\text{grouping variable}\), we’ve learned the simple multilevel model follows a form like

\[\begin{align*} \text{criterion}_i & \sim \text{Binomial} (n_i \geq 1, p_i) \\ \text{logit} (p_i) & = \alpha + \alpha_{\text{grouping variable}_i}\\ \alpha & \sim \text{Normal} (0, 1) \\ \alpha_{\text{grouping variable}} & \sim \text{Normal} (0, \sigma_{\text{grouping variable}}) \\ \sigma_{\text{grouping variable}} & \sim \text{HalfCauchy} (0, 1) \end{align*}\]

and we’ve been grappling with the relation between the grand mean \(\alpha\) and the group-level deviations \(\alpha_{\text{grouping variable}}\). For situations where we have the brms::brm() model fit in hand, we’ve been playing with various ways to use the iterations, particularly with either the posterior_samples() method or the fitted()/predict() method. Both are great. But (a) we have other options, which I’d like to share, and (b) if you’re like me, you probably need more practice than following along with the examples in the text. In this bonus section, we are going to introduce two simplified models and then practice combining the grand mean with various combinations of the random effects.

For our first step, we’ll introduce the models.

12.5.1 Intercepts-only models with one or two grouping variables

If you recall, b12.4 was our first multilevel model with the chimps data. We can retrieve the model formula like so.

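b12.4$formula

## pulled_left | trials(1) ~ 1 + prosoc_left + prosoc_left:condition + (1 | actor)

In addition to the model intercept and random effects for the individual chimps (i.e., actor), we also included fixed effects for the study conditions. For our bonus section, it’ll be easier if we reduce this to a simple intercepts-only model with the sole actor grouping factor. That model will follow the form

\[\begin{align*} \text{left_pull}_i & \sim \text{Binomial} (n_i = 1, p_i) \\ \text{logit} (p_i) & = \alpha + \alpha_{\text{actor}_i} \\ \alpha & \sim \text{Normal} (0, 10) \\ \alpha_{\text{actor}} & \sim \text{Normal} (0, \sigma_{\text{actor}}) \\ \sigma_{\text{actor}} & \sim \text{HalfCauchy} (0, 1) \end{align*}\]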

Before we fit the model, you might recall that (a) we’ve already removed the chimpanzees data after saving the data as d and (b) we subsequently reassigned the Kline data to d. Instead of reloading the rethinking package to retrieve the chimpanzees data, we can use the copy of the data saved within our b12.4 fit object. [It’s easy to forget such things.]

b12.4$data %>%

glimpse()

## Observations: 504

## Variables: 4

## $ pulled_left <int> 0, 1, 0, 0, 1, 1, 0, 0, 0, 0, 1, 0, 1, 1, 0, 1, 0, 0, 1, 1, 1, 0, 1, 0, 0, 1,…

## $ prosoc_left <int> 0, 0, 1, 0, 1, 1, 1, 1, 0, 0, 0, 1, 0, 1, 0, 1, 1, 0, 1, 0, 0, 0, 1, 1, 0, 0,…

## $ condition <int> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

## $ actor <int> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,…

So there’s no need to reload anything. Everything we need is already at hand. Let’s fit the intercepts-only model.

b12.7 <-

brm(data = b12.4$data, family = binomial,

pulled_left | trials(1) ~ 1 + (1 | actor),

prior = c(prior(normal(0, 10), class = Intercept),

prior(cauchy(0, 1), class = sd)),

iter = 5000, warmup = 1000, chains = 4, cores = 4,

control = list(adapt_delta = 0.95),

seed = 12)

Here’s the model summary:

print(b12.7)

## Family: binomial

## Links: mu = logit

## Formula: pulled_left | trials(1) ~ 1 + (1 | actor)

## Data: b12.4$data (Number of observations: 504)

## Samples: 4 chains, each with iter = 5000; warmup = 1000; thin = 1;

## total post-warmup samples = 16000

##

## Group-Level Effects:

## ~actor (Number of levels: 7)

## Estimate Est.Error l-95% CI u-95% CI Eff.Sample Rhat

## sd(Intercept) 2.20 0.89 1.10 4.55 2859 1.00

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Eff.Sample Rhat

## Intercept 0.76 0.92 -0.93 2.70 2370 1.00

##

## Samples were drawn using sampling(NUTS). For each parameter, Eff.Sample

## is a crude measure of effective sample size, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).

Now recall that our competing cross-classified model, b12.5, added random effects for the trial blocks. Here was that formula.

b12.5$formula

## pulled_left | trials(1) ~ prosoc_left + (1 | actor) + (1 | block) + prosoc_left:condition

And, of course, we can retrieve the data from that model, too.

b12.5$data %>%

glimpse()

## Observations: 504

## Variables: 5

## $ pulled_left <int> 0, 1, 0, 0, 1, 1, 0, 0, 0, 0, 1, 0, 1, 1, 0, 1, 0, 0, 1, 1, 1, 0, 1, 0, 0, 1,…

## $ prosoc_left <int> 0, 0, 1, 0, 1, 1, 1, 1, 0, 0, 0, 1, 0, 1, 0, 1, 1, 0, 1, 0, 0, 0, 1, 1, 0, 0,…

## $ condition <int> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

## $ actor <int> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,…

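## $ block <int> 1, 1, 1, 1, 1, 1, 2, 2, 2, 2, 2, 2, 3, 3, 3, 3, 3, 3, 4, 4, 4, 4, 4, 4, 5, 5,…

These are the same data we used in the b12.4 model, but with the addition of the block index. With those data in hand, we can fit the intercepts-only version of our cross-classified model. This model follows the form

\[\begin{align*} \text{pulled}\_\text{left}_i & \sim \text{Binomial} (n_i = 1, p_i) \\ \text{logit} (p_i) & = \alpha + \alpha_{\text{actor}_i} + \alpha_{\text{block}_i}\\ \alpha & \sim \text{Normal} (0, 10) \\ \alpha_{\text{actor}} & \sim \text{Normal} (0, \sigma_{\text{actor}}) \\ \alpha_{\text{block}} & \sim \text{Normal} (0, \sigma_{\text{block}}) \\ \sigma_{\text{actor}} & \sim \text{HalfCauchy} (0, 1) \\ \sigma_{\text{block}} & \sim \text{HalfCauchy} (0, 1) \end{align*}\]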

Fit the model.

b12.8 <-

brm(data = b12.5$data, family = binomial,

pulled_left | trials(1) ~ 1 + (1 | actor) + (1 | block),

prior = c(prior(normal(0, 10), class = Intercept),

prior(cauchy(0, 1), class = sd)),

iter = 5000, warmup = 1000, chains = 4, cores = 4,

control = list(adapt_delta = 0.95),

seed = 12)

Here’s the summary.

print(b12.8)

## Family: binomial

## Links: mu = logit

## Formula: pulled_left | trials(1) ~ 1 + (1 | actor) + (1 | block)

## Data: b12.5$data (Number of observations: 504)

## Samples: 4 chains, each with iter = 5000; warmup = 1000; thin = 1;

## total post-warmup samples = 16000

##

## Group-Level Effects:

## ~actor (Number of levels: 7)

## Estimate Est.Error l-95% CI u-95% CI Eff.Sample Rhat

## sd(Intercept) 2.24 0.94 1.09 4.53 4049 1.00

##

## ~block (Number of levels: 6)

## Estimate Est.Error l-95% CI u-95% CI Eff.Sample Rhat

## sd(Intercept) 0.22 0.18 0.01 0.67 6647 1.00

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Eff.Sample Rhat

## Intercept 0.82 0.94 -0.92 2.79 3460 1.00

##

## Samples were drawn using sampling(NUTS). For each parameter, Eff.Sample

## is a crude measure of effective sample size, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).

Now that we’ve fit our two intercepts-only models, let’s get to the heart of this section. We are going to practice four methods for working with the posterior samples. Each method will revolve around a different primary function. In order, they are

- brms::posterior_samples()

- brms::coef()

- brms::fitted()

- tidybayes::spread_draws()

We’ve already had some practice with the first three, but I hope this section will make them even clearer. The tidybayes::spread_draws() method will be new to us. I think you’ll find it’s a handy alternative.
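If spread_draws() is new, it may help to see the kind of reshaping it saves us from doing by hand. The sketch below is plain base R, not tidybayes code: it takes a wide data frame with brms-style column names like r_actor[1,Intercept] and stacks it into a long layout with one row per draw per actor, which is roughly the shape spread_draws() hands back. The three draws are copied, rounded, from the str() output of posterior_samples(b12.7) in the next subsection, and the object names (draws, r_cols, actor_index, long) are hypothetical.

```r
# Base-R illustration only; this is NOT how tidybayes is implemented.
# Wide draws with brms-style names (values rounded from str() output).
draws <- data.frame(
  b_Intercept            = c(1.53, 1.30, 1.85),
  `r_actor[1,Intercept]` = c(-2.08, -1.35, -2.27),
  `r_actor[2,Intercept]` = c(2.44, 2.19, 3.17),
  check.names = FALSE   # keep the brackets and commas in the names
)
draws$.draw <- seq_len(nrow(draws))

# columns holding the actor-level deviations
r_cols <- grep("^r_actor\\[", names(draws), value = TRUE)

# extract the integer index from "r_actor[<index>,Intercept]"
actor_index <- as.integer(sub("^r_actor\\[([0-9]+),.*$", "\\1", r_cols))

# stack into long format: one row per draw per actor
long <- do.call(rbind, lapply(seq_along(r_cols), function(j) {
  data.frame(.draw       = draws$.draw,
             actor       = actor_index[j],
             r_actor     = draws[[r_cols[j]]],
             b_Intercept = draws$b_Intercept)
}))
long <- long[order(long$.draw, long$actor), ]
long
```

In this long layout, b_Intercept + r_actor is a single vectorized column operation, no matter how many actors there are.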

With each of the four methods, we’ll practice three different model summaries.

- Getting the posterior draws for the actor-level estimates from the b12.7 model

- Getting the posterior draws for the actor-level estimates from the cross-classified b12.8 model, averaging over the levels of block

- Getting the posterior draws for the actor-level estimates from the cross-classified b12.8 model, based on block == 1

So to be clear, our goal is to accomplish those three tasks with four methods, each of which should yield equivalent results.
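Before turning to the brms helpers, here’s a base-R sketch of the arithmetic behind the second and third tasks. It uses simulated stand-ins for posterior_samples(b12.8); the draws, their means and SDs, and the object names are all hypothetical. One subtlety is worth making explicit: when we simply leave the r_block terms out, we plug in a block deviation of zero, the mean of the block-level distribution. That is not quite the same as averaging the six estimated block deviations within each draw, so the sketch computes both, along with the block == 1 version.

```r
# Hypothetical stand-in for posterior_samples(b12.8): simulated draws whose
# column names mimic brms's naming. None of these numbers come from the fit.
set.seed(12)
n_draws <- 1000
post <- data.frame(b_Intercept = rnorm(n_draws, mean = 0.8, sd = 0.9))
actor_cols <- sprintf("r_actor[%d,Intercept]", 1:7)
block_cols <- sprintf("r_block[%d,Intercept]", 1:6)
for (col in actor_cols) post[[col]] <- rnorm(n_draws, mean = 0, sd = 2)
for (col in block_cols) post[[col]] <- rnorm(n_draws, mean = 0, sd = 0.2)

# inverse logit written out so matrix dimensions are preserved; same values
# as plogis() or brms::inv_logit_scaled() with its default bounds
inv_logit <- function(x) 1 / (1 + exp(-x))

alpha   <- post$b_Intercept              # grand mean, one value per draw
r_actor <- as.matrix(post[actor_cols])   # draws x 7 actor deviations

# (a) block deviations left out, i.e., fixed at zero
p_zero <- inv_logit(alpha + r_actor)

# (b) literal average of the six block deviations within each draw
r_block_mean <- rowMeans(as.matrix(post[block_cols]))
p_avg <- inv_logit(alpha + r_block_mean + r_actor)

# (c) conditional on block == 1
p_block1 <- inv_logit(alpha + post[["r_block[1,Intercept]"]] + r_actor)

# posterior means per actor under each definition
round(rbind(zero   = colMeans(p_zero),
            avg    = colMeans(p_avg),
            block1 = colMeans(p_block1)), 3)
```

When \(\sigma_{\text{block}}\) is small, as the 0.22 estimate from b12.8 suggests, the zero and averaged versions should land close together, but they need not match exactly.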

12.5.2 brms::posterior_samples()

To warm up, let’s take a look at the structure of the posterior_samples() output for the simple b12.7 model.

posterior_samples(b12.7) %>% str()

## 'data.frame': 16000 obs. of 10 variables:

## $ b_Intercept : num 1.53 1.3 1.85 1.97 1.85 ...

## $ sd_actor__Intercept : num 1.76 1.34 1.8 1.6 1.98 ...

## $ r_actor[1,Intercept]: num -2.08 -1.35 -2.27 -2.24 -2.22 ...

## $ r_actor[2,Intercept]: num 2.44 2.19 3.17 1.61 2.24 ...

## $ r_actor[3,Intercept]: num -2.04 -1.85 -2.38 -2.08 -3.03 ...

## $ r_actor[4,Intercept]: num -2.62 -1.53 -2.16 -2.72 -2.26 ...

## $ r_actor[5,Intercept]: num -1.56 -2.09 -2.32 -2.22 -2.33 ...

## $ r_actor[6,Intercept]: num -1.046 -0.873 -0.829 -1.539 -1.272 ...

## $ r_actor[7,Intercept]: num 0.947 0.273 -0.241 0.574 0.785 ...

## $ lp__ : num -283 -286 -283 -285 -283 ...

The b_Intercept vector corresponds to the \(\alpha\) term in the statistical model. The second vector, sd_actor__Intercept, corresponds to the \(\sigma_{\text{actor}}\) term. And the next 7 vectors, beginning with the r_actor prefix, are the \(\alpha_{\text{actor}}\) deviations from the grand mean, \(\alpha\). Thus if we wanted to get the model-implied probability for our first chimp, we’d add b_Intercept to r_actor[1,Intercept] and then take the inverse logit.
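To make that last step concrete, here’s a hand check using the first draw as str() displays it (b_Intercept = 1.53 and r_actor[1,Intercept] = -2.08). Because str() rounds, this only approximates what the full-precision draws give. The inverse logit is written out by hand and compared with base R’s plogis(); brms::inv_logit_scaled() with its default bounds (lb = 0, ub = 1) computes the same function.

```r
# Worked check of "add b_Intercept to r_actor[1,Intercept], then take the
# inverse logit", using the first draw as rounded by str()
b_Intercept <- 1.53    # first displayed value of b_Intercept
r_actor_1   <- -2.08   # first displayed value of r_actor[1,Intercept]

eta      <- b_Intercept + r_actor_1   # linear predictor on the logit scale
p_manual <- 1 / (1 + exp(-eta))       # inverse logit written out by hand
p_plogis <- plogis(eta)               # base R's logistic CDF, same function

c(eta = eta, manual = p_manual, plogis = p_plogis)
```

The result is about 0.366, close to the 0.3666511 reported for chimp 1’s first draw; the small gap comes from the rounding in str().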

posterior_samples(b12.7) %>%

transmute(`chimp 1's average probability of pulling left` = (b_Intercept + `r_actor[1,Intercept]`) %>% inv_logit_scaled()) %>%

head()

## chimp 1's average probability of pulling left

## 1 0.3666511

## 2 0.4891179

## 3 0.3965780

## 4 0.4309511

## 5 0.4097169

## 6 0.4485629

To complete our first task, getting the posterior draws for the actor-level estimates from the b12.7 model, we can do the same thing for all seven chimps in bulk.

p1 <-

posterior_samples(b12.7) %>%

transmute(`chimp 1's average probability of pulling left` = b_Intercept + `r_actor[1,Intercept]`,

`chimp 2's average probability of pulling left` = b_Intercept + `r_actor[2,Intercept]`,

`chimp 3's average probability of pulling left` = b_Intercept + `r_actor[3,Intercept]`,

`chimp 4's average probability of pulling left` = b_Intercept + `r_actor[4,Intercept]`,

`chimp 5's average probability of pulling left` = b_Intercept + `r_actor[5,Intercept]`,

`chimp 6's average probability of pulling left` = b_Intercept + `r_actor[6,Intercept]`,

`chimp 7's average probability of pulling left` = b_Intercept + `r_actor[7,Intercept]`) %>%

mutate_all(inv_logit_scaled)

str(p1)

## 'data.frame': 16000 obs. of 7 variables:

## $ chimp 1's average probability of pulling left: num 0.367 0.489 0.397 0.431 0.41 ...

## $ chimp 2's average probability of pulling left: num 0.982 0.971 0.993 0.973 0.984 ...

## $ chimp 3's average probability of pulling left: num 0.375 0.367 0.37 0.472 0.236 ...

## $ chimp 4's average probability of pulling left: num 0.252 0.443 0.424 0.319 0.399 ...

## $ chimp 5's average probability of pulling left: num 0.493 0.313 0.386 0.436 0.383 ...

## $ chimp 6's average probability of pulling left: num 0.619 0.606 0.735 0.605 0.642 ...

## $ chimp 7's average probability of pulling left: num 0.922 0.829 0.833 0.927 0.933 ...

One of the things I really like about this method is that the b_Intercept + r_actor[i,Intercept] part of the code makes it very clear, to me, how the posterior_samples() columns correspond to the statistical model, \(\text{logit} (p_i) = \alpha + \alpha_{\text{actor}_i}\). This method easily extends to our next task, getting the posterior draws for the actor-level estimates from the cross-classified b12.8 model, averaging over the levels of block. In fact, other than switching out b12.7 for b12.8, the method is identical: by leaving out the r_block columns, we effectively set the block deviation to zero, the mean of the block-level distribution.

p2 <-

posterior_samples(b12.8) %>%

transmute(`chimp 1's average probability of pulling left` = b_Intercept + `r_actor[1,Intercept]`,

`chimp 2's average probability of pulling left` = b_Intercept + `r_actor[2,Intercept]`,

`chimp 3's average probability of pulling left` = b_Intercept + `r_actor[3,Intercept]`,

`chimp 4's average probability of pulling left` = b_Intercept + `r_actor[4,Intercept]`,

`chimp 5's average probability of pulling left` = b_Intercept + `r_actor[5,Intercept]`,

`chimp 6's average probability of pulling left` = b_Intercept + `r_actor[6,Intercept]`,

`chimp 7's average probability of pulling left` = b_Intercept + `r_actor[7,Intercept]`) %>%

mutate_all(inv_logit_scaled)

str(p2)

## 'data.frame': 16000 obs. of 7 variables:

## $ chimp 1's average probability of pulling left: num 0.403 0.437 0.458 0.421 0.416 ...

## $ chimp 2's average probability of pulling left: num 0.993 0.99 0.991 1 0.999 ...

## $ chimp 3's average probability of pulling left: num 0.406 0.419 0.385 0.343 0.384 ...

## $ chimp 4's average probability of pulling left: num 0.353 0.347 0.31 0.286 0.429 ...

## $ chimp 5's average probability of pulling left: num 0.391 0.388 0.352 0.478 0.36 ...