Chapter 3 Use case 1: basic use of jointModel()

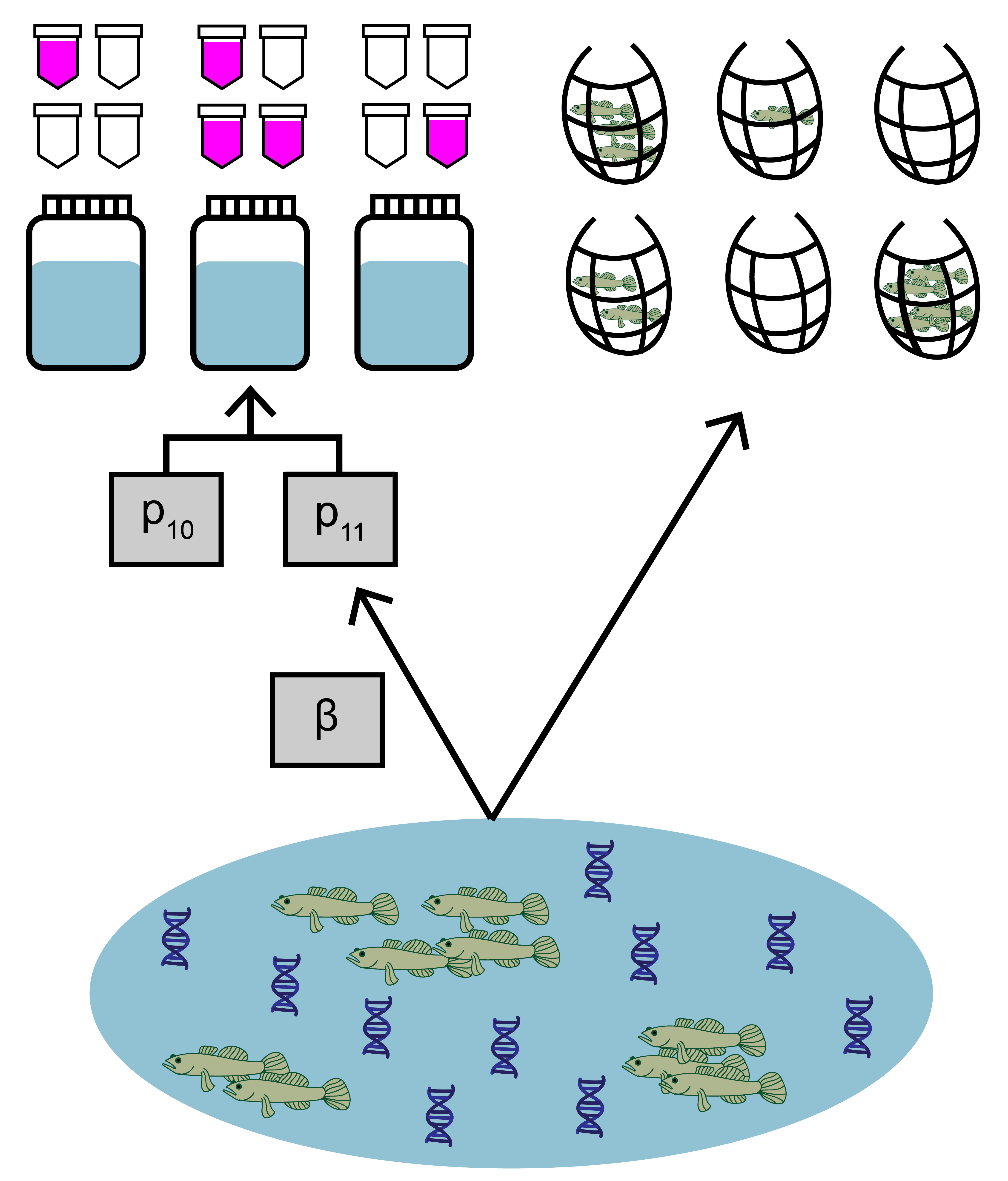

This first use case will show how to fit and interpret the joint model with paired eDNA and traditional survey data.

The data used in this example comes from a study by Schmelzle and Kinziger (2016) about endangered tidewater gobies (Eucyclogobius newberryi) in California. Environmental DNA samples were collected at 39 sites, along with paired traditional seine sampling. The eDNA data is detection/non-detection data generated through quantitative polymerase chain reaction (qPCR).

3.1 Prepare the data

Both eDNA and traditional survey data should have a hierarchical structure:

- Sites (primary sample units) within a study area

- eDNA and traditional samples (secondary sample units) collected from each site

- eDNA subsamples (replicate observations) taken from each eDNA sample

Ensuring that your data is formatted correctly is essential for successfully using eDNAjoint. Let’s first explore the structure of the goby data.

## List of 4

## $ qPCR.N : num [1:39, 1:22] 6 6 6 6 6 6 6 6 6 6 ...

## ..- attr(*, "dimnames")=List of 2

## .. ..$ : NULL

## .. ..$ : chr [1:22] "1" "2" "3" "4" ...

## $ qPCR.K : num [1:39, 1:22] 0 0 0 0 6 0 0 0 0 5 ...

## ..- attr(*, "dimnames")=List of 2

## .. ..$ : NULL

## .. ..$ : chr [1:22] "1" "2" "3" "4" ...

## $ count : int [1:39, 1:22] 0 0 0 0 0 0 0 0 0 1 ...

## ..- attr(*, "dimnames")=List of 2

## .. ..$ : NULL

## .. ..$ : chr [1:22] "1" "2" "3" "4" ...

## $ site.cov: num [1:39, 1:5] -0.711 -0.211 -1.16 -0.556 -0.988 ...

## ..- attr(*, "dimnames")=List of 2

## .. ..$ : NULL

## .. ..$ : chr [1:5] "Salinity" "Filter_time" "Other_fishes" "Hab_size" ...You can see that the data is a list of four matrices all with the same number of rows that represent each site (n=39). Across all matrices, rows in the data should correspond to the same sites (i.e., row one in all matrices corresponds to site one, and row 31 in all matrices corresponds to site 31).

qPCR.K, qPCR.N, and count are required for all implementations of jointModel(), and site.cov is optional and will be used in use case 2.

Let’s look first at qPCR.K. These are the total number of positive qPCR detections for each site (row) and eDNA sample (column). The number of columns should equal the maximum number of eDNA samples collected at any site. Blank spaces are filled with NA at sites where fewer eDNA samples were collected than the maximum.

For example, at site one, 11 eDNA samples were collected, and at site five, 20 eDNA samples were collected.

## 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22

## [1,] 0 0 0 0 0 0 0 0 0 0 0 NA NA NA NA NA NA NA NA NA NA NA

## [2,] 0 0 0 0 0 0 0 0 0 0 0 NA NA NA NA NA NA NA NA NA NA NA

## [3,] 0 0 0 0 0 0 0 0 0 0 0 NA NA NA NA NA NA NA NA NA NA NA

## [4,] 0 6 6 4 6 5 4 6 5 3 NA NA NA NA NA NA NA NA NA NA NA NA

## [5,] 6 6 4 6 6 6 5 4 2 2 0 6 5 5 6 6 6 5 5 4 NA NA

## [6,] 0 0 0 NA NA NA NA NA NA NA NA NA NA NA NA NA NA NA NA NA NA NANow let’s look at qPCR.N. These are the total number of qPCR eDNA subsamples (replicate observations) collected for each site (row) and eDNA sample (column). In this data, six qPCR replicate observations were collected for each eDNA sample. Notice that the locations of the NAs in the matrix match qPCR.K.

## 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22

## [1,] 6 6 6 6 6 6 6 6 6 6 6 NA NA NA NA NA NA NA NA NA NA NA

## [2,] 6 6 6 6 6 6 6 6 6 6 6 NA NA NA NA NA NA NA NA NA NA NA

## [3,] 6 6 6 6 6 6 6 6 6 6 6 NA NA NA NA NA NA NA NA NA NA NA

## [4,] 6 6 6 6 6 6 6 6 6 6 NA NA NA NA NA NA NA NA NA NA NA NA

## [5,] 6 6 6 6 6 6 6 6 6 6 6 6 6 6 6 6 6 6 6 6 NA NA

## [6,] 6 6 6 NA NA NA NA NA NA NA NA NA NA NA NA NA NA NA NA NA NA NANext, let’s look at count. This data is from seine sampling for tidewater gobies. Each integer refers to the catch of each seine sample (i.e., catch per unit effort, when effort = 1). Again, the rows correspond to sites, and the columns refer to replicated seine samples (secondary sample units) at each site, with a maximum of 22 samples. Blank spaces are filled with NA at sites where fewer seine samples were collected than the maximum.

In this example, the count data are integers, but continuous values can be used in the model (see Eq. 1.3 in the model description).

## 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22

## [1,] 0 0 0 0 0 0 0 0 0 0 0 NA NA NA NA NA NA NA NA NA NA NA

## [2,] 0 0 0 0 0 0 0 0 0 0 0 NA NA NA NA NA NA NA NA NA NA NA

## [3,] 0 0 0 0 0 0 0 0 0 0 0 NA NA NA NA NA NA NA NA NA NA NA

## [4,] 0 4 1 0 2 1 38 112 1 15 NA NA NA NA NA NA NA NA NA NA NA NA

## [5,] 0 0 0 2 0 0 0 0 0 0 0 0 4 1 0 2 0 8 NA NA NA NA

## [6,] 0 0 0 NA NA NA NA NA NA NA NA NA NA NA NA NA NA NA NA NA NA NA3.1.1 Converting data from long to wide

Using eDNAjoint requires your data to be in “wide” format. Wide vs. long data refers to the shape of your data in tidyverse jargon (see more here). Below is an example for how to convert your data to wide format.

First let’s simulate “long” qPCR data.

## data dimensions

# number of sites (primary sample units)

nsite <- 4

# number of eDNA samples (secondary sample units)

neDNA_samples <- 6

# number of traditional samples (secondary sample units)

ntraditional_subsamples <- 8

# number of eDNA subsamples (replicate observations)

neDNA_subsamples <- 3

# simulate qPCR data

qPCR_long <- data.frame(

site = rep(1:nsite, each = neDNA_samples),

eDNA_sample = rep(1:neDNA_samples, times = nsite),

N = rep(neDNA_subsamples, nsite*neDNA_samples),

K = c(

rbinom(neDNA_samples, neDNA_subsamples, 0.1), # site 1 positive detections

rbinom(neDNA_samples, neDNA_subsamples, 0.6), # site 2 positive detections

rbinom(neDNA_samples, neDNA_subsamples, 0), # site 3 positive detections

rbinom(neDNA_samples, neDNA_subsamples, 0.4) # site 4 positive detections

)

)

head(qPCR_long)## site eDNA_sample N K

## 1 1 1 3 0

## 2 1 2 3 0

## 3 1 3 3 0

## 4 1 4 3 1

## 5 1 5 3 0

## 6 1 6 3 1And simulate “long” traditional data:

# simulate traditional count data

count_long <- data.frame(

site = rep(1:nsite, each = ntraditional_subsamples),

traditional_sample = rep(1:ntraditional_subsamples, times = nsite),

count = c(

rpois(ntraditional_subsamples, 0.5), # site 1 count data

rpois(ntraditional_subsamples, 4), # site 2 count data

rpois(ntraditional_subsamples, 0), # site 3 count data

rpois(ntraditional_subsamples, 2) # site 4 count data

)

)

head(count_long)## site traditional_sample count

## 1 1 1 1

## 2 1 2 0

## 3 1 3 1

## 4 1 4 1

## 5 1 5 0

## 6 1 6 1Now let’s convert the data from “long” to “wide”.

# N: number of qPCR eDNA subsamples (i.e., replicate observations)

# per eDNA sample (i.e., secondary sample)

qPCR_N_wide <- qPCR_long %>%

pivot_wider(id_cols=site,

names_from=eDNA_sample,

values_from=N) %>%

arrange(site) %>%

select(-site)

# K: number of positive qPCR detections in each

# eDNA sample (i.e., secondary sample)

qPCR_K_wide <- qPCR_long %>%

pivot_wider(id_cols=site,

names_from=eDNA_sample,

values_from=K) %>%

arrange(site) %>%

select(-site)

# count: number of individuals in each traditional sample

# (i.e., secondary sample)

count_wide <- count_long %>%

pivot_wider(id_cols=site,

names_from=traditional_sample,

values_from=count) %>%

arrange(site) %>%

select(-site)Finally, we’ll bundle this data up into a named list of matrices.

3.2 Fit the model

Now that we understand our data, let’s fit the joint model. The key arguments of this function include:

- data: list of

qPCR.K,qPCR.N, andcountmatrices - family: probability distribution used to model the seine count data. A poisson distribution is chosen here.

- p10priors: Beta distribution parameters for the prior on the probability of false positive eDNA detection, p10. c(1,20) is the default specification. More on this later.

- q: logical value indicating the presence of multiple traditional gear types. Here, we’re only using data from one traditional method.

More parameters exist to further customize the MCMC sampling, but we’ll stick with the defaults.

# run the joint model

goby.fit1 <- jointModel(data = gobyData, family = 'poisson',

p10priors = c(1,20), q=FALSE)goby.fit1 is a list containing:

- model fit (

goby.fit1$model) of the class ‘stanfit’ and can be accessed and interpreted using all functions in the rstan package. - initial values used for each chain in MCMC (

goby.fit1$inits)

3.3 Model selection

We previously made a choice to use a poisson distribution to describe the count data. This distribution assumes that the mean equals variance for count data at each site. Perhaps we want to test how that model specification compares to a model specification using a negative binomial distribution, which allows the variance of the count data to be greater than the mean.

# fit a new model with negative binomial distribution

goby.fit2 <- jointModel(data = gobyData, family = 'negbin',

p10priors = c(1,20), q=FALSE)We can now compare the fit of these model to our data using the jointSelect() function, which performs leave-one-out cross validation with functions from the loo package.

## elpd_diff se_diff

## model2 0.0 0.0

## model1 -2339.4 619.9These results tell us that model1 has a higher Bayesian LOO estimate of the expected log pointwise predictive density (elpd_loo). This means that goby.fit1 is likely a better fit to the data.

3.4 Interpret the output

3.4.1 Summarize posterior distributions

Let’s interpret goby.fit1. Use jointSummarize() to see the posterior summaries of the model parameters.

## mean se_mean sd 2.5% 97.5% n_eff Rhat

## p10 0.002 0.000 0.001 0.001 0.003 16629.35 1

## beta 0.657 0.001 0.094 0.472 0.842 12362.86 1This summarizes the mean, sd, and quantiles of the posterior estimates of p10 and β, as well as the effective sample size (n_eff) and Rhat for the parameters.

The mean estimated probability of a false positive eDNA detection is 0.001. β is the parameter that scales the sensitivity between eDNA and traditional sampling.

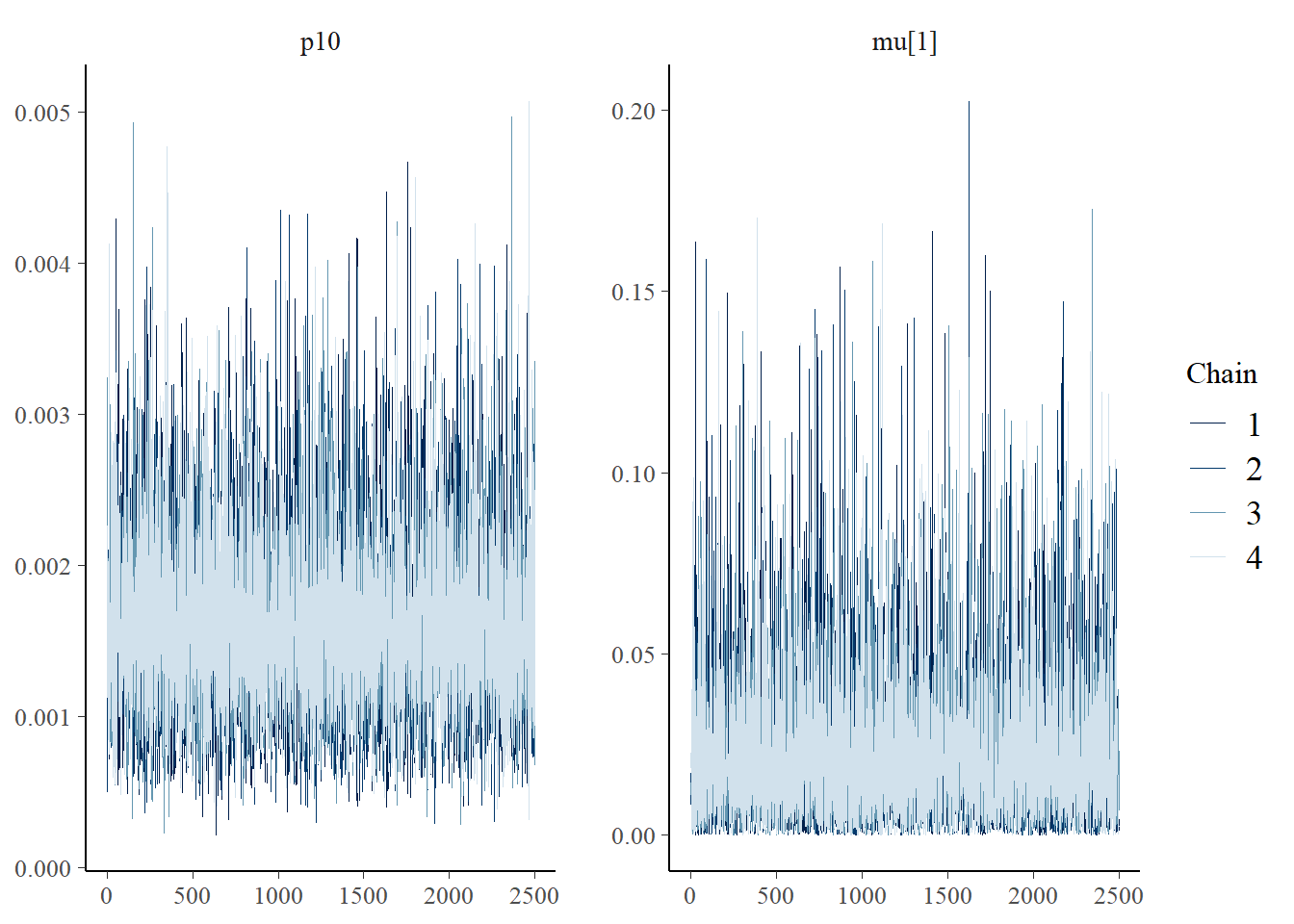

We can also use functions from the bayesplot package to examine the posterior distributions and chain convergence.

First let’s look at the posterior distribution for p10.

library(bayesplot)

# plot posterior distribution, highlighting median and 80% credibility interval

mcmc_areas(as.matrix(goby.fit1$model), pars = 'p10', prob = 0.8)

Next let’s look at chain convergence for p10 and μi=1.

# this will plot the MCMC chains for p10 and mu at site 1

mcmc_trace(rstan::extract(goby.fit1$model, permuted = FALSE),

pars = c('p10', 'mu[1]'))

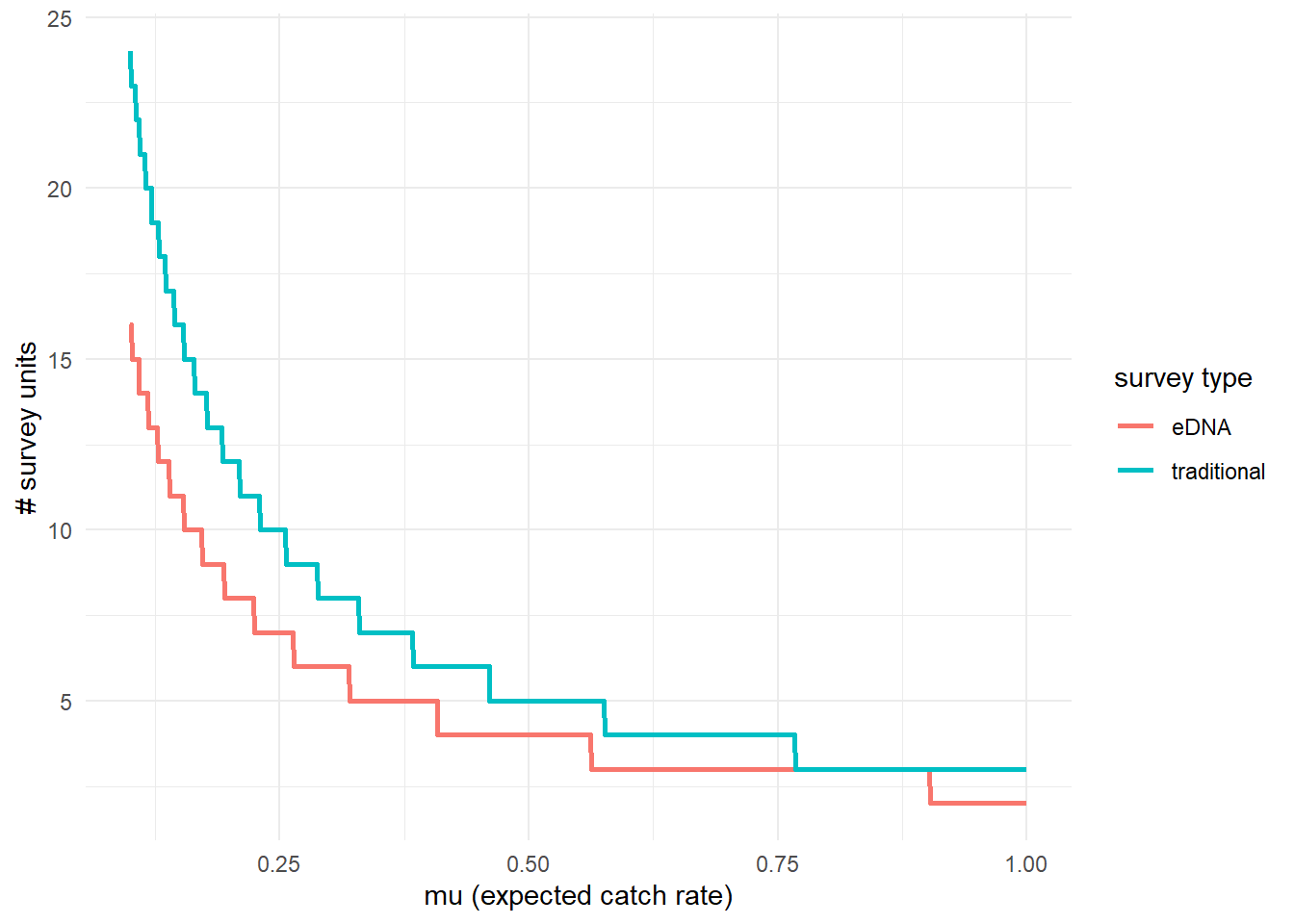

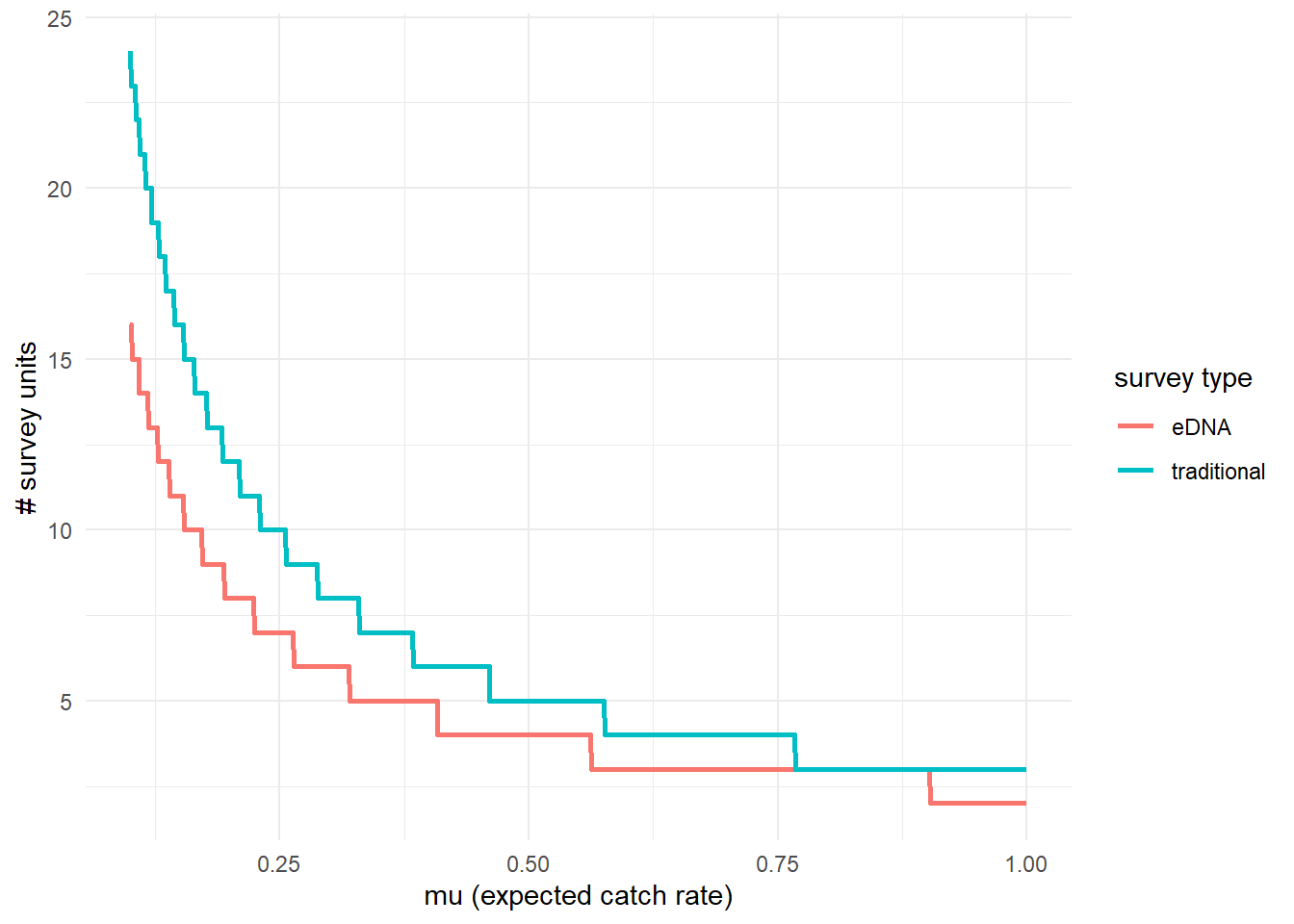

3.4.2 Effort necessary to detect presence

To further highlight the relative sensitivity of eDNA and traditional sampling, we can use detectionCalculate() to find the units of survey effort necessary to detect presence of the species. Here, detecting presence refers to producing at least one true positive eDNA detection or catching at least one individual in a traditional survey.

This function is finding the median number of survey units necessary to detect species presence if the expected catch rate, μ is 0.1, 0.5, or 1.

## mu n_traditional n_eDNA

## [1,] 0.1 24 16

## [2,] 0.5 5 4

## [3,] 1.0 3 2We can also plot these comparisons. mu.min and mu.max define the x-axis in the plot.

3.4.3 Calculate μcritical

The probability of a true positive eDNA detection, p11, is a function of the expected catch rate, μ. Low values of μ correspond to low probability of eDNA detection. Since the probability of a false-positive eDNA detection is non-zero, the probability of a false positive detection may be higher than the probability of a true positive detection at very low values of μ.

μcritical describes the value of μ where the probability of a false positive eDNA detection equals the probability of a true positive eDNA detection. This value can be calculated using muCritical().

## $median

## [1] 0.002958105

##

## $lower_ci

## Highest Density Interval: 1.13e-03

##

## $upper_ci

## Highest Density Interval: 5.19e-03This function calculates μcritical using the entire posterior distributions of parameters from the model, and ‘HDI’ corresponds to the 90% credibility interval calculated using the highest density interval.

3.5 Prior sensitivity analysis

The previous model implementation used default values for the beta prior distribution for p10. The choice of these prior parameters can impose undue influence on the model’s inference. The best way to investigate this is to perform a prior sensitivity analysis.

Let’s look at how three prior choices affect the posterior estimates of p10 and β.

Prior choice 1: default prior parameters c(1,20). The mean and sd of this prior distribution are 0.048 and 0.045, respectively.

## mean se_mean sd 2.5% 97.5% n_eff Rhat

## p10 0.002 0.000 0.001 0.001 0.003 16629.35 1

## beta 0.657 0.001 0.094 0.472 0.842 12362.86 1Prior choice 2: c(1,15). The mean and sd of this prior distribution are 0.063 and 0.058, respectively.

## mean se_mean sd 2.5% 97.5% n_eff Rhat

## p10 0.002 0.000 0.001 0.001 0.003 18783.13 1

## beta 0.661 0.001 0.095 0.476 0.845 10833.95 1Prior choice 3: c(1,10). The mean and sd of this prior distribution are 0.091 and 0.083, respectively.

## mean se_mean sd 2.5% 97.5% n_eff Rhat

## p10 0.002 0.000 0.001 0.001 0.004 16410.58 1

## beta 0.669 0.001 0.094 0.487 0.854 10486.92 1You can see that the choice of the p10 prior within this range has little influence on the estimated parameters.

3.6 Initial values

By default, eDNAjoint will provide initial values for parameters estimated by the model, but you can provide your own initial values if you prefer. Here is an example of providing initial values for parameters, mu,p10, and beta, as an input in jointModel().

# set number of chains

n.chain <- 4

# initial values should be a list of named lists

inits <- list()

for(i in 1:n.chain){

inits[[i]] <- list(

# length should equal the number of sites (dim(gobyData$count)[1]) for each chain

mu = stats::runif(dim(gobyData$count)[1], 0.01, 5),

# length should equal 1 for each chain

p10 = stats::runif(1,0.0001,0.08),

# length should equal 1 for each chain

beta = stats::runif(1,0.05,0.2)

)

}

# now fit the model

fit.w.inits <- jointModel(data = gobyData, initial_values = inits)##

## SAMPLING FOR MODEL 'joint_binary_pois' NOW (CHAIN 1).

## Chain 1:

## Chain 1: Gradient evaluation took 0.000175 seconds

## Chain 1: 1000 transitions using 10 leapfrog steps per transition would take 1.75 seconds.

## Chain 1: Adjust your expectations accordingly!

## Chain 1:

## Chain 1:

## Chain 1: Iteration: 1 / 3000 [ 0%] (Warmup)

## Chain 1: Iteration: 500 / 3000 [ 16%] (Warmup)

## Chain 1: Iteration: 501 / 3000 [ 16%] (Sampling)

## Chain 1: Iteration: 1000 / 3000 [ 33%] (Sampling)

## Chain 1: Iteration: 1500 / 3000 [ 50%] (Sampling)

## Chain 1: Iteration: 2000 / 3000 [ 66%] (Sampling)

## Chain 1: Iteration: 2500 / 3000 [ 83%] (Sampling)

## Chain 1: Iteration: 3000 / 3000 [100%] (Sampling)

## Chain 1:

## Chain 1: Elapsed Time: 1.941 seconds (Warm-up)

## Chain 1: 4.359 seconds (Sampling)

## Chain 1: 6.3 seconds (Total)

## Chain 1:

##

## SAMPLING FOR MODEL 'joint_binary_pois' NOW (CHAIN 2).

## Chain 2:

## Chain 2: Gradient evaluation took 0.000165 seconds

## Chain 2: 1000 transitions using 10 leapfrog steps per transition would take 1.65 seconds.

## Chain 2: Adjust your expectations accordingly!

## Chain 2:

## Chain 2:

## Chain 2: Iteration: 1 / 3000 [ 0%] (Warmup)

## Chain 2: Iteration: 500 / 3000 [ 16%] (Warmup)

## Chain 2: Iteration: 501 / 3000 [ 16%] (Sampling)

## Chain 2: Iteration: 1000 / 3000 [ 33%] (Sampling)

## Chain 2: Iteration: 1500 / 3000 [ 50%] (Sampling)

## Chain 2: Iteration: 2000 / 3000 [ 66%] (Sampling)

## Chain 2: Iteration: 2500 / 3000 [ 83%] (Sampling)

## Chain 2: Iteration: 3000 / 3000 [100%] (Sampling)

## Chain 2:

## Chain 2: Elapsed Time: 1.904 seconds (Warm-up)

## Chain 2: 4.786 seconds (Sampling)

## Chain 2: 6.69 seconds (Total)

## Chain 2:

##

## SAMPLING FOR MODEL 'joint_binary_pois' NOW (CHAIN 3).

## Chain 3:

## Chain 3: Gradient evaluation took 8.1e-05 seconds

## Chain 3: 1000 transitions using 10 leapfrog steps per transition would take 0.81 seconds.

## Chain 3: Adjust your expectations accordingly!

## Chain 3:

## Chain 3:

## Chain 3: Iteration: 1 / 3000 [ 0%] (Warmup)

## Chain 3: Iteration: 500 / 3000 [ 16%] (Warmup)

## Chain 3: Iteration: 501 / 3000 [ 16%] (Sampling)

## Chain 3: Iteration: 1000 / 3000 [ 33%] (Sampling)

## Chain 3: Iteration: 1500 / 3000 [ 50%] (Sampling)

## Chain 3: Iteration: 2000 / 3000 [ 66%] (Sampling)

## Chain 3: Iteration: 2500 / 3000 [ 83%] (Sampling)

## Chain 3: Iteration: 3000 / 3000 [100%] (Sampling)

## Chain 3:

## Chain 3: Elapsed Time: 2.29 seconds (Warm-up)

## Chain 3: 4.291 seconds (Sampling)

## Chain 3: 6.581 seconds (Total)

## Chain 3:

##

## SAMPLING FOR MODEL 'joint_binary_pois' NOW (CHAIN 4).

## Chain 4:

## Chain 4: Gradient evaluation took 8.8e-05 seconds

## Chain 4: 1000 transitions using 10 leapfrog steps per transition would take 0.88 seconds.

## Chain 4: Adjust your expectations accordingly!

## Chain 4:

## Chain 4:

## Chain 4: Iteration: 1 / 3000 [ 0%] (Warmup)

## Chain 4: Iteration: 500 / 3000 [ 16%] (Warmup)

## Chain 4: Iteration: 501 / 3000 [ 16%] (Sampling)

## Chain 4: Iteration: 1000 / 3000 [ 33%] (Sampling)

## Chain 4: Iteration: 1500 / 3000 [ 50%] (Sampling)

## Chain 4: Iteration: 2000 / 3000 [ 66%] (Sampling)

## Chain 4: Iteration: 2500 / 3000 [ 83%] (Sampling)

## Chain 4: Iteration: 3000 / 3000 [100%] (Sampling)

## Chain 4:

## Chain 4: Elapsed Time: 1.923 seconds (Warm-up)

## Chain 4: 4.338 seconds (Sampling)

## Chain 4: 6.261 seconds (Total)

## Chain 4:

## Refer to the eDNAjoint guide for visualization tips: https://bookdown.org/abigailkeller/eDNAjoint_vignette/tips.html#visualization-tips## $chain1

## $chain1$mu_trad

## [1] 2.01391381 1.25559601 0.41563428 4.82694638 0.83600351 4.30748495 2.36601809

## [8] 0.39530283 1.45825815 2.66141690 3.32957309 3.60864127 2.34038247 4.92368838

## [15] 2.11719136 0.91069842 2.67780586 3.86534942 4.80623740 2.17228961 4.20042273

## [22] 3.34444017 2.28607129 0.15141236 0.68654561 3.06456579 4.73994272 3.70362987

## [29] 3.99786725 2.04876099 2.93804214 0.91720229 0.58064812 3.33776691 2.98863764

## [36] 2.55980605 0.06658861 4.39519622 4.67691937

##

## $chain1$mu

## [1] 2.01391381 1.25559601 0.41563428 4.82694638 0.83600351 4.30748495 2.36601809

## [8] 0.39530283 1.45825815 2.66141690 3.32957309 3.60864127 2.34038247 4.92368838

## [15] 2.11719136 0.91069842 2.67780586 3.86534942 4.80623740 2.17228961 4.20042273

## [22] 3.34444017 2.28607129 0.15141236 0.68654561 3.06456579 4.73994272 3.70362987

## [29] 3.99786725 2.04876099 2.93804214 0.91720229 0.58064812 3.33776691 2.98863764

## [36] 2.55980605 0.06658861 4.39519622 4.67691937

##

## $chain1$log_p10

## [1] -2.999918

##

## $chain1$beta

## [1] 0.05397204

##

##

## $chain2

## $chain2$mu_trad

## [1] 0.9654809 2.2014818 0.9671374 3.1678988 4.5603247 1.8170409 4.5563945 3.9641428

## [9] 4.8218774 3.0925700 1.9031798 1.6237121 1.3969457 3.9350216 1.8576002 4.2436057

## [17] 3.2635289 2.5389627 1.2403107 3.2642428 0.6320112 4.7961765 2.1780876 4.3163066

## [25] 3.9847331 4.2370985 0.1399096 1.2657270 1.8978087 2.0389883 4.1236273 3.9105557

## [33] 4.9132148 3.5091323 2.6031631 4.3297012 3.6721368 3.3247593 0.4818758

##

## $chain2$mu

## [1] 0.9654809 2.2014818 0.9671374 3.1678988 4.5603247 1.8170409 4.5563945 3.9641428

## [9] 4.8218774 3.0925700 1.9031798 1.6237121 1.3969457 3.9350216 1.8576002 4.2436057

## [17] 3.2635289 2.5389627 1.2403107 3.2642428 0.6320112 4.7961765 2.1780876 4.3163066

## [25] 3.9847331 4.2370985 0.1399096 1.2657270 1.8978087 2.0389883 4.1236273 3.9105557

## [33] 4.9132148 3.5091323 2.6031631 4.3297012 3.6721368 3.3247593 0.4818758

##

## $chain2$log_p10

## [1] -2.655146

##

## $chain2$beta

## [1] 0.111839

##

##

## $chain3

## $chain3$mu_trad

## [1] 0.9165691 2.6670734 2.2691038 3.8368410 0.8432949 4.3849986 0.4118777 1.0452265

## [9] 1.7920187 3.8483753 2.4016282 4.1951907 1.7224685 3.0833681 0.9852619 1.8655148

## [17] 3.9343529 4.5467676 2.7610491 4.3980515 3.5321668 2.7537674 0.8118323 1.6419269

## [25] 2.2440976 4.0824542 2.9571905 1.3469608 3.3006075 0.5168841 1.1707444 4.2831916

## [33] 3.6723485 3.8128558 4.2430229 2.7496592 2.9975857 4.9951764 2.2973047

##

## $chain3$mu

## [1] 0.9165691 2.6670734 2.2691038 3.8368410 0.8432949 4.3849986 0.4118777 1.0452265

## [9] 1.7920187 3.8483753 2.4016282 4.1951907 1.7224685 3.0833681 0.9852619 1.8655148

## [17] 3.9343529 4.5467676 2.7610491 4.3980515 3.5321668 2.7537674 0.8118323 1.6419269

## [25] 2.2440976 4.0824542 2.9571905 1.3469608 3.3006075 0.5168841 1.1707444 4.2831916

## [33] 3.6723485 3.8128558 4.2430229 2.7496592 2.9975857 4.9951764 2.2973047

##

## $chain3$log_p10

## [1] -2.824668

##

## $chain3$beta

## [1] 0.05075341

##

##

## $chain4

## $chain4$mu_trad

## [1] 0.2139959 3.8336850 2.2284548 3.8006431 3.7800381 0.3500877 2.7647242 1.7389432

## [9] 3.1052340 0.1519294 0.2122061 2.3662545 4.9653820 0.8232471 3.7508484 4.2358386

## [17] 1.8070711 1.4005192 4.5797479 1.3575003 2.6236618 1.8111736 3.9053329 1.1063771

## [25] 1.4386567 2.5149701 2.0564225 4.8987182 4.3265848 1.8907922 2.6205461 2.8983300

## [33] 2.5966957 2.2764267 0.9491063 2.6725291 4.7969291 2.1751389 0.9637220

##

## $chain4$mu

## [1] 0.2139959 3.8336850 2.2284548 3.8006431 3.7800381 0.3500877 2.7647242 1.7389432

## [9] 3.1052340 0.1519294 0.2122061 2.3662545 4.9653820 0.8232471 3.7508484 4.2358386

## [17] 1.8070711 1.4005192 4.5797479 1.3575003 2.6236618 1.8111736 3.9053329 1.1063771

## [25] 1.4386567 2.5149701 2.0564225 4.8987182 4.3265848 1.8907922 2.6205461 2.8983300

## [33] 2.5966957 2.2764267 0.9491063 2.6725291 4.7969291 2.1751389 0.9637220

##

## $chain4$log_p10

## [1] -2.869797

##

## $chain4$beta

## [1] 0.07853136