Chapter 9 GP Fidelity and Scale

Gaussian processes are fantastic, but they’re not without drawbacks. Computational complexity is one. Flops in \(\mathcal{O}(n^3)\) for matrix decompositions, furnishing determinants and inverses, is severe. Many practitioners point out that storage, which is in \(\mathcal{O}(n^2)\), is the real bottleneck, at least on modern laptops and workstations. Even if you’re fine with waiting hours for MVN density calculation for a single likelihood evaluation, chances are you wouldn’t have enough high-speed memory (RAM) to store the \(n \times n\) matrix \(\Sigma_n\), let alone its inverse too and any auxiliary space required. There’s some truth to this, but usually I find time to be the limiting factor. MLE calculations can demand hundreds of decompositions. Big memory supercomputing nodes are a thing, with orders of magnitude more RAM than conventional workstations. Big time nodes are not. Except when executions can be massively parallelized, supercomputers aren’t much faster than high-end laptops.
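To put those storage demands in perspective, here is a back-of-the-envelope calculation. It assumes dense double-precision storage at 8 bytes per entry and counts a single copy of \(\Sigma_n\) only; the helper name `gb` is mine, for illustration.

```r
## gigabytes needed to hold one dense n x n double-precision matrix
gb <- function(n) 8*n^2/1e9

n <- c(1e3, 1e4, 1e5)
data.frame(n=n, GB=gb(n))
```

At \(n = 10^5\) one copy already needs 80 GB, before counting an inverse, a Cholesky factor or any workspace.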

Although GP inference and prediction (5.2)–(5.3) more prominently feature inverses \(\Sigma_n^{-1}\), it’s actually determinants \(|\Sigma_n|\) that impose the real bottleneck. Both are involved in MVN density/likelihood evaluations. Conditional on hyperparameters, only \(\Sigma_n^{-1}\) is required. A clever implementation could solve the requisite system of equations for prediction, e.g., \(\Sigma_n^{-1} Y_n\) for the mean and \(\Sigma_n^{-1} \Sigma(X_n, x)\) for the variance, without explicitly calculating an inverse. Parallelization over elements of \(x \in \mathcal{X}\), extending to \(\Sigma_n^{-1} \Sigma(X_n, \mathcal{X})\), is rather trivial. All that sounds sensible and actionable, but actually it’s little more than trivia. Knowing good hyperparameters (without access to the likelihood) is “rare” to say the least. Let’s presume likelihood-based inference is essential and not pursue that line of thinking further. Full matrix decomposition is required.

Most folks would agree that Cholesky decomposition, leveraging symmetry in distance-based \(\Sigma_n\), offers the best path forward. The Cholesky can furnish both inverse and determinant in \(\mathcal{O}(n^3)\) time, and in some cases even faster depending on the libraries used. Divide-and-conquer parallelization also helps on multi-core architectures. I strongly recommend Intel MKL, which is the workhorse behind Microsoft R Open on Linux and Windows, and the Accelerate Framework on OSX. Both provide conventional BLAS/LAPACK interfaces with modern implementations and customizations under the hood. An example of the potential with GP regression is provided in Appendix A. As explained therein, OpenBLAS is not recommended in this context because of thread safety issues which become relevant in nested, and further parallelized, applications (e.g., §9.3).
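As a reminder of how one Cholesky factor serves both purposes, consider the sketch below. It's not tied to any particular library discussed here: the Gaussian kernel, the lengthscale of 0.1 and the jitter of \(10^{-6}\) are arbitrary choices of mine, made only so that the matrix factorizes cleanly in base R.

```r
set.seed(1)
n <- 200
X <- matrix(runif(n), ncol=1)
D <- as.matrix(dist(X))^2                     ## squared pairwise distances
Sigma <- exp(-D/0.1) + diag(1e-6, n)          ## hypothetical lengthscale and jitter
y <- rnorm(n)

R <- chol(Sigma)                              ## upper triangular: Sigma = t(R) %*% R
ldet <- 2*sum(log(diag(R)))                   ## log |Sigma| from the diagonal of R
Siy <- backsolve(R, forwardsolve(t(R), y))    ## Sigma^{-1} y via two triangular solves
ll <- -0.5*(n*log(2*pi) + ldet + sum(y*Siy))  ## MVN log density of y
ll
```

Neither the determinant nor the inverse is ever formed explicitly. The cubic cost sits entirely in `chol`; the triangular solves that follow are quadratic in \(n\).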

Ok, that’s enough preamble on cumbersome calculations. Computational complexity is one barrier; flexibility is another. Stationarity of covariance is a nice simplifying assumption, but it’s not appropriate for all data generating mechanisms. There are many ways data can be nonstationary. The LGBB rocket booster (see §2.1), whose simulations are deterministic, exhibits mean nonstationarity along with rather abrupt regime changes from steep to flat dynamics. When data/simulations are noisy, variance nonstationarity or heteroskedasticity may be a concern: noise levels which are input-dependent. Changes in either may be smooth or abrupt. GPs don’t cope well in such settings, at least not in their standard form. Enhancing fidelity in GP modeling, to address issues like mean and variance nonstationarity, is easy in theory: just choose a nonstationary kernel. In practice it’s harder than that. What nonstationary kernel? What happens when regimes change and dynamics aren’t smooth?

Often the two issues, speed and flexibility, are coupled together. Coherent high-fidelity GP modeling schemes have been proposed, but in that literature there’s a tendency to exacerbate computational bottlenecks. Coupling processes together, for example to warp a nonstationary surface into one wherein simpler stationary dynamics reign (e.g., A. Schmidt and O’Hagan 2003; Sampson and Guttorp 1992) adds layers of additional computational complexity and/or requires MCMC. Consequently, such methods have only been applied on small data by modern standards. Yet observing complicated dynamics demands rich and numerous examples, putting data collection at odds with modeling goals. In a computer surrogate modeling context, where design and modeling go hand in hand, this state of affairs is particularly limiting. Surrogates must be flexible enough to drive sequential design acquisitions towards efficient learning, but computationally thrifty enough to solve underlying decision problems in time far less than it would take to run the actual simulations – a hallmark of a useful surrogate (§1.2.2).

This chapter focuses on GP methods which address those two issues, computational thrift and modeling fidelity, simultaneously. GPs can only be brought to bear on modern big data problems in statistics and machine learning (ML) by somehow skirting full dense matrix decomposition. There are lots of creative ways of doing that, some explicitly creating sparse matrices, some implicitly. Approximation is a given. A not unbiased selection of examples and references is listed below.

- Pseudo-inputs (Snelson and Ghahramani 2006) or the predictive process [PP; Banerjee et al. (2008)]; both examples of methods based on inducing points

- Iterating over batches (Haaland and Qian 2011) and sequential updating (Gramacy and Polson 2011)

- Fixed rank kriging (Cressie and Johannesson 2008)

- Compactly supported covariances and fast sparse linear algebra (Kaufman et al. 2011; Sang and Huang 2012)

- Partition models (Gramacy and Lee 2008a; Kim, Mallick, and Holmes 2005)

- Composite likelihood (Eidsvik et al. 2014)

- Local neighborhoods (Emery 2009; Gramacy and Apley 2015)

The literature on nonstationary modeling is more niche, although growing. Only a couple of the ideas listed above offer promise in the face of both computational and modeling challenges. This will dramatically narrow the scope of our presentation, as will the accessibility of public implementation in software.

There are a few underlying themes present in each of the approaches above: inducing points, sparse matrices, partitioning, and approximation. In fact all four can be seen as mechanisms for inducing sparsity in covariance. But they differ in how they leverage that sparsity to speed up calculations, and in how they offer scope for enhanced fidelity. It’s worth noting that you can’t just truncate as a means of inducing sparsity. Rounding small entries \(\Sigma^{ij}_n\) to zero will almost certainly destroy positive definiteness.
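That last point is easy to check numerically. The sketch below hard-thresholds a dense Gaussian correlation matrix on a 1d grid; the grid size and the 0.1 threshold are arbitrary choices of mine.

```r
x <- seq(0, 10, length=200)
D <- as.matrix(dist(x))^2                ## squared pairwise distances
K <- exp(-D)                             ## dense Gaussian correlation
Kt <- K*(K > 0.1)                        ## hard truncation of small entries

mean(Kt == 0)                            ## proportion of zeros gained
lam <- eigen(Kt, symmetric=TRUE, only.values=TRUE)$values
min(lam)                                 ## negative: no longer positive definite
fail <- inherits(try(chol(Kt), silent=TRUE), "try-error")
fail                                     ## the Cholesky decomposition fails
```

Plenty of zeros are gained, but the truncated matrix has negative eigenvalues, so it's not a valid covariance and can't be decomposed by Cholesky.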

We begin in this chapter by illustrating a distance-based kernel which guarantees both sparsity and positive definiteness, however as a device that technique underwhelms. Sparse kernels compromise on long-range structure without gaining enhanced local modeling fidelity. Calculations speed up, but accuracy goes down despite valiant efforts to patch up long-range effects.

We then turn instead to implicit sparsity via partitioning and divide-and-conquer. These approaches, separately leveraging two key ideas from computer science – tree data structures on the one hand and transductive learning on the other – offer more control on speed versus accuracy fronts, which we shall see are not always at odds. The downside however is potential lack of smoothness and continuity. Although GPs are famous for their gracefully flowing surfaces and sausage-shaped error-bars, there are many good reasons to eschew that aesthetic when data get large and when mean and variance dynamics may change abruptly.

Finally, Chapter 10 focuses explicitly on variance nonstationarity, or input dependent noise, and low-signal scenarios. Stochastic simulations represent a rapidly growing sub-discipline of computer experiments. In that context, and when response surfaces are essential, tightly coupled active learning and GP modeling strategies (not unlike the warping ideas dismissed above) are quite effective thanks to a simple linear algebra trick, and generous application of replication as a tried and true design strategy for separating signal from noise.

9.1 Compactly supported kernels

A kernel \(k_{r_{\max}}(r)\) is said to have compact support if \(k_{r_{\max}}(r) = 0\) when \(r > r_{\max}\). Recall from §5.3.3 that \(r = |x - x'|\) for a stationary covariance. We may still proceed component-wise with \(r_j = |x_j - x_j'|\) and \(r_{j,\max}\) for a separable compactly supported kernel, augment with scales for amplitude adjustments, nuggets for noisy data and embellish with smoothness parameters (Matérn), etc. Rate of decay of correlation can be managed by lengthscale hyperparameters, a topic we shall return to shortly.

A compactly supported kernel (CSK) introduces zeros into the covariance matrix, so sparse matrix methods may be deployed to aid in computations, both in terms of economizing on storage and more efficient decomposition for inverses and determinants. Recall from §5.3.3 that a product of two kernels is a kernel, so a good way to build a bespoke CSK with certain properties is to take a kernel with those properties and multiply it by a CSK – an example of covariance tapering (Furrer, Genton, and Nychka 2006).

Two families of CSKs, Bohman and truncated power, offer decent approximations to the power exponential family (§5.3.3), of which the Gaussian (power \(\alpha=2\)) is a special case. These kernels are zero for \(r > r_{\max}\), and for \(r \leq r_{\max}\):

\[ \begin{aligned} k^{\mathrm{B}}_{r_{\max}}(r) &= \left(1-\frac{r}{r_{\max}}\right) \cos \left(\frac{\pi r}{r_{\max}}\right) + \frac{1}{\pi}\sin\left(\frac{\pi r}{r_{\max}}\right) \\ k^{\mathrm{tp}}_{r_{\max}}(r; \alpha, \nu) &= [1 - (r/r_{\max})^\alpha]^\nu, \quad \mbox{ where } 0 < \alpha < 2 \mbox{ and } \nu \geq \nu_m(\alpha). \end{aligned} \]

The function \(\nu_m(\alpha)\) in the definition of the truncated power kernel represents a restriction necessary to ensure a valid correlation in \(m\) dimensions, with \(\lim_{\alpha \rightarrow 2} \nu_m(\alpha) = \infty\). Although it’s difficult to calculate \(\nu_m(\alpha)\) directly, there are known upper bounds for a variety of \(\alpha\)-values between \(1.5\) and \(1.955\), e.g., \(\nu_1(3/2) \leq 2\) and \(\nu_1(5/3) \leq 3\). Chapter 9 of Wendland (2004) provides several other common CSKs. The presentation here closely follows Kaufman et al. (2011), concentrating on the simpler Bohman family as a representative case.

Bohman CSKs yield a mean-square differentiable process, whereas the truncated power family does not (unless \(\alpha = 2\)). Notice that \(r_{\max}\) plays a dual role, controlling both lengthscale and degree of sparsity. Augmenting with explicit lengthscales, i.e., \(r_\theta = r/\sqrt{\theta}\), enhances flexibility but at the expense of identifiability and computational concerns. Since CSKs are chosen over other kernels with computational thrift in mind, fine-tuning lengthscales often takes a back seat.

9.1.1 Working with CSKs

Let’s implement the Bohman CSK and kick the tires.

kB <- function(r, rmax)

{

rnorm <- r/rmax

k <- (1 - rnorm)*cos(pi*rnorm) + sin(pi*rnorm)/pi

k <- k*(r < rmax)

}

To have some distances to work with, the code below calculates a rather large \(2000 \times 2000\) distance matrix based on a dense grid in \([0,10]\).
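A minimal base-R sketch of such a distance matrix follows. It assumes, as the `sqrt(D)` calls applied to it suggest, that `D` holds squared Euclidean distances. Base R's `dist` stands in here for the `distance` function used elsewhere in this chapter.

```r
X <- matrix(seq(0, 10, length=2000), ncol=1)   ## dense 1d grid in [0,10]
D <- as.matrix(dist(X))^2                      ## squared pairwise distances
dim(D)
```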

We can then feed these distances into \(k^B_{r_{\max}}(\cdot)\) and check for sparsity under several choices of \(r_{\max}\). Careful: kB is defined for ordinary, rather than squared, pairwise distances. For comparison, an ordinary/dense Gaussian covariance is calculated and saved as K.

eps <- sqrt(.Machine$double.eps) ## numerical stability

K <- exp(-D) + diag(eps, nrow(D))

K2 <- kB(sqrt(D), 2)

K1 <- kB(sqrt(D), 1)

K025 <- kB(sqrt(D), 0.25)

c(mean(K > 0), mean(K2 > 0), mean(K1 > 0), mean(K025 > 0))

## [1] 1.00000 0.35960 0.18955 0.04889

Indeed, as \(r_{\max}\) is decreased, the proportion of nonzero entries decreases. Observe that Bohman-based correlation matrices do not require jitter along the diagonal. Like Matérn, Bohman CSKs provide well-conditioned correlation matrices.
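Returning briefly to covariance tapering, mentioned earlier in this section: multiplying a dense kernel elementwise by a CSK inherits the zeros of the latter while remaining a valid correlation. The sketch below is self-contained, so it redefines the Bohman kernel; the smaller grid and \(r_{\max} = 1\) are arbitrary choices of mine.

```r
kB <- function(r, rmax) {                ## Bohman CSK on ordinary distances
  rn <- r/rmax
  ((1 - rn)*cos(pi*rn) + sin(pi*rn)/pi)*(r < rmax)
}

x <- seq(0, 10, length=200)
r <- as.matrix(dist(x))                  ## ordinary (unsquared) distances
Kg <- exp(-r^2)                          ## dense Gaussian correlation, no jitter
Kt <- Kg*kB(r, 1)                        ## tapered: elementwise product with a CSK

c(dense=mean(Kg > 0), tapered=mean(Kt > 0))
R <- chol(Kt)                            ## decomposes without jitter
```

Contrast this with rounding small entries to zero: tapering by a valid kernel keeps the product positive definite, so the Cholesky goes through.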

Investigating the extent to which those levels of sparsity translate into computational savings requires investing in a sparse matrix library e.g., spam (Furrer 2018) or Matrix (Bates and Maechler 2019). Below I choose Matrix as it’s built-in to base R, however CSK-GP fitting software illustrated later uses spam.

library(Matrix)

c(system.time(chol(K))[3],

system.time(chol(Matrix(K2, sparse=TRUE)))[3],

system.time(chol(Matrix(K1, sparse=TRUE)))[3],

system.time(chol(Matrix(K025, sparse=TRUE)))[3])

## elapsed elapsed elapsed elapsed

## 1.007 0.208 0.102 0.062

As you can see, small \(r_{\max}\) holds the potential for more than an order of magnitude speedup. Further improvements may be possible if the matrix can be built natively in sparse representation. So where is the catch? Such (speed) gains must come at a cost (to modeling and inference). We want to encourage sparsity because that means speed, but getting enough sparsity requires lots of zeros, and that means sacrificing long range spatial correlation. If local modeling is sufficient, then why bother with a global model? In §9.3 we’ll do just that: eschew global modeling altogether. For now, let’s explore the cost–benefit trade-off with CSK, and the potential for mitigating compromises on predictive quality and uncertainty quantification (UQ).
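On building natively in sparse form: here is one way that might look with Matrix, sketched under the assumption of a regular 1d grid so that neighbors within \(r_{\max}\) can be found by index offset alone. Irregular or multi-dimensional designs would need a proper neighbor search, which isn't attempted here. The Bohman kernel is redefined so the block stands alone.

```r
library(Matrix)

kB <- function(r, rmax) {                ## Bohman CSK on ordinary distances
  rn <- r/rmax
  ((1 - rn)*cos(pi*rn) + sin(pi*rn)/pi)*(r < rmax)
}

x <- seq(0, 10, length=2000)
n <- length(x)
rmax <- 0.25
w <- floor(rmax/(x[2] - x[1]))           ## largest index offset within rmax

## index pairs (i, j) with 0 <= j - i <= w, i.e., upper triangle only
ij <- do.call(rbind, lapply(0:w, function(k) cbind(1:(n - k), (1 + k):n)))
r <- abs(x[ij[,1]] - x[ij[,2]])
Ks <- sparseMatrix(i=ij[,1], j=ij[,2], x=kB(r, rmax),
  dims=c(n, n), symmetric=TRUE)

format(object.size(Ks), units="MB")      ## versus about 32 MB when dense
```

Only entries inside the band are ever evaluated or stored, so the dense \(n \times n\) intermediate is avoided entirely.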

Consider a simple 1d random process, observed on a grid.

x <- c(1, 2, 4, 5, 6, 8, 9, 10)/11

n <- length(x)

D <- distance(as.matrix(x))

K <- exp(-5*sqrt(D)^1.5) + diag(eps, n)

library(mvtnorm)

y <- t(rmvnorm(1, sigma=K))

Here are predictions gathered on a dense testing grid in the input space from the “ideal fit” to that data, using an ordinary GP conditioned on known hyperparameterization. (It’s been a while – way back in Chapter 5 – since we entertained such calculations by hand.)

xx <- seq(0, 1, length=100)

DX <- distance(as.matrix(x), as.matrix(xx))

KX <- exp(-5*sqrt(DX)^1.5)

Ki <- solve(K)

m <- t(KX) %*% Ki %*% y

Sigma <- diag(1+eps, ncol(KX)) - t(KX) %*% Ki %*% KX

q1 <- qnorm(0.05, m, sqrt(diag(Sigma)))

q2 <- qnorm(0.95, m, sqrt(diag(Sigma)))

Before offering a visual, consider the analog of those calculations with a Bohman CSK using \(r_{\max} = 0.1\).

K01 <- kB(sqrt(D), 0.1)

KX01 <- kB(sqrt(DX), 0.1)

Ki01 <- solve(K01)

m01 <- t(KX01) %*% Ki01 %*% y

tau2 <- drop(t(y) %*% Ki01 %*% y)/n

Sigma01 <- tau2*(1 - t(KX01) %*% Ki01 %*% KX01)

q101 <- qnorm(0.05, m01, sqrt(diag(Sigma01)))

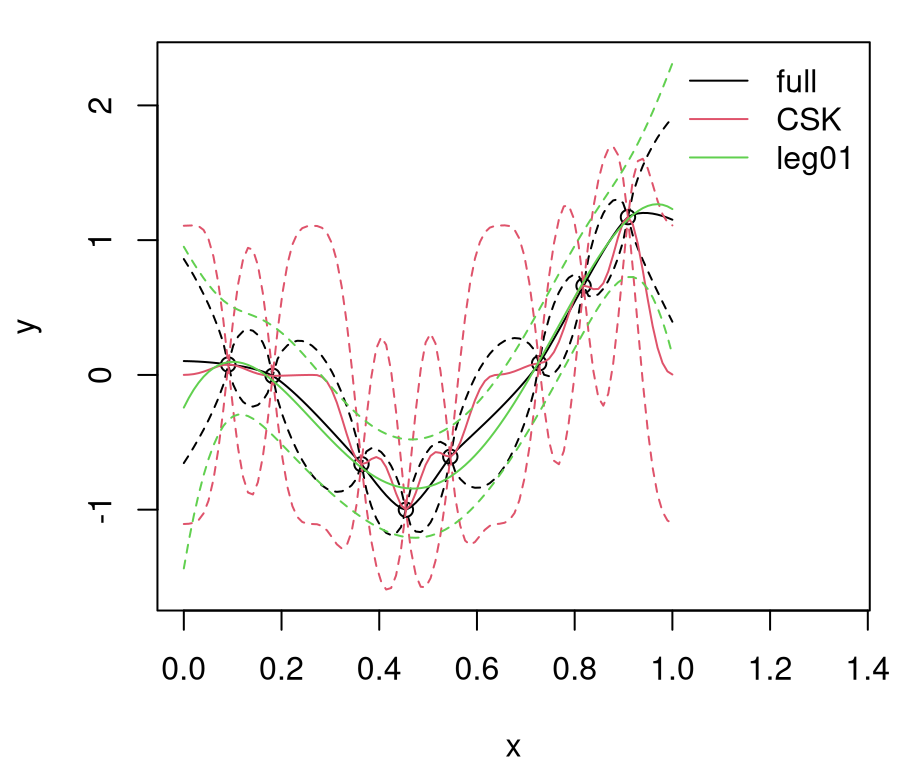

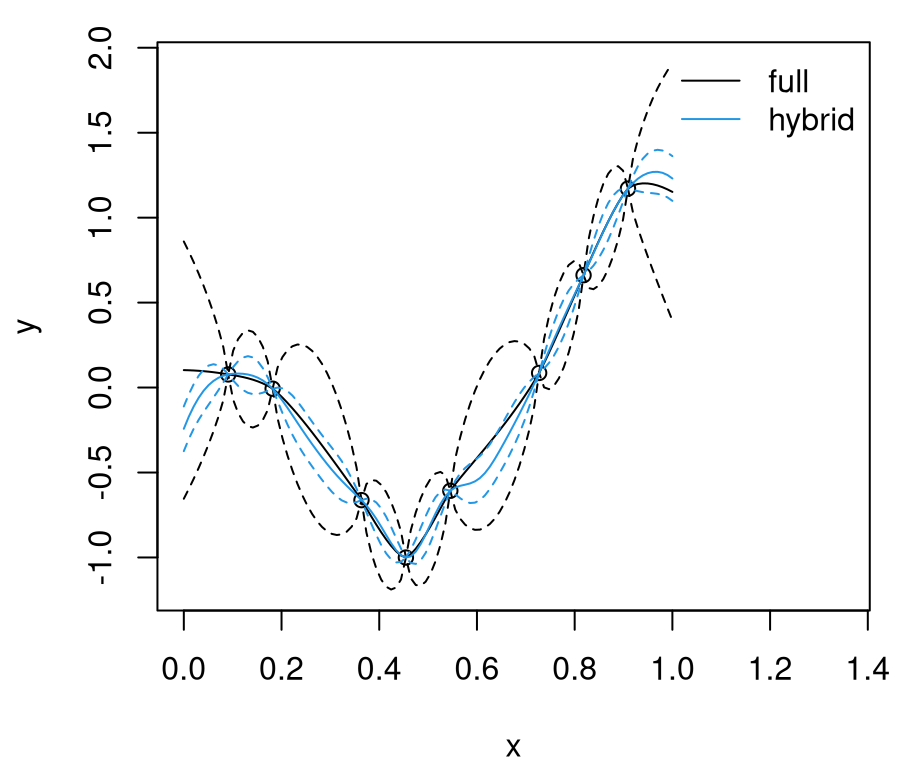

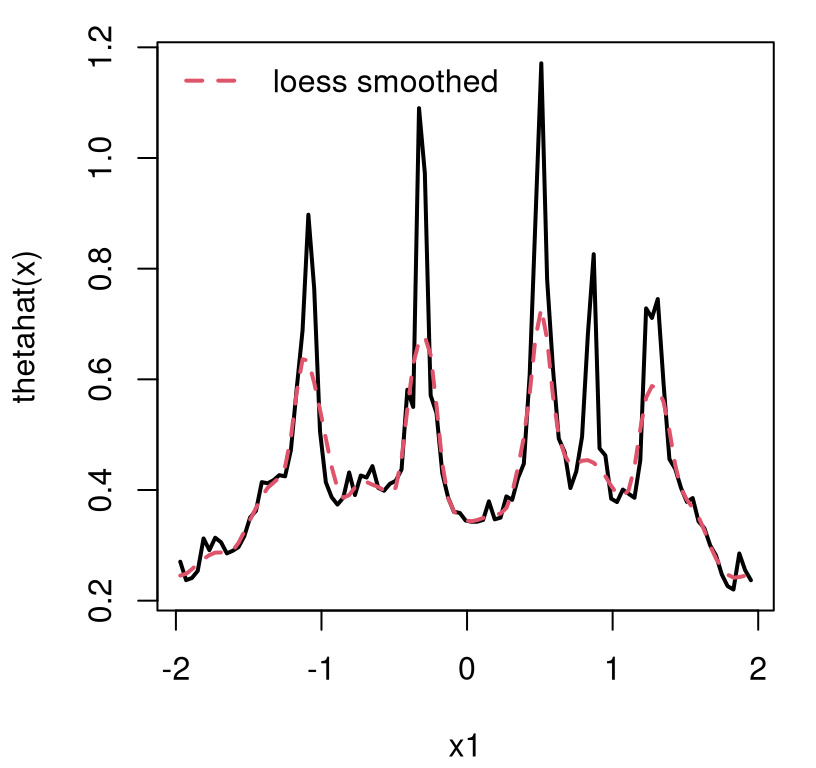

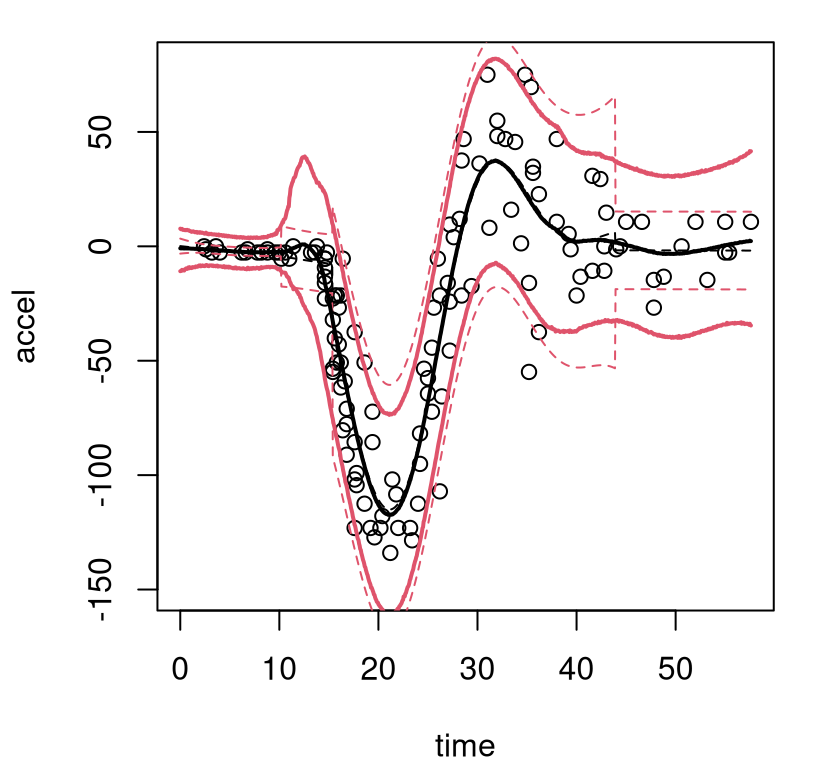

q201 <- qnorm(0.95, m01, sqrt(diag(Sigma01)))Figure 9.1 shows the randomly generated training data and our two GP surrogate fits. “Full”, non-CSK, predictive summaries are shown in black, with a solid line for means and dashed lines for 90% quantiles. Red lines are used for the CSK analog.

plot(x, y, xlim=c(0, 1.3), ylim=range(q101, q201))

lines(xx, m)

lines(xx, q1, lty=2)

lines(xx, q2, lty=2)

lines(xx, m01, col=2)

lines(xx, q101, col=2, lty=2)

lines(xx, q201, col=2, lty=2)

legend("topright", c("full", "CSK"), lty=1, col=1:2, bty="n")

FIGURE 9.1: Predictions under CSK compared to the ideal full GP.

Relative to the ideal baseline, Bohman CSK predictions are too wiggly in the mean and too wide in terms of uncertainty. Predictive means are off because aggregation transpires in a narrower window. Variance is larger simply because sparse \(K\) leads to larger \(K^{-1}\). Evidently, inducing sparsity can have deleterious effects on GP prediction equations. What can be done?

9.1.3 Practical Bayesian inference and UQ

Kaufman et al. argue that the simplest, coherent way to put this hybrid together, and fully account for all relevant uncertainties in prediction while retaining a handle on trade-offs between computational complexity and accuracy (through CSK sparsity), is with Bayesian hierarchical modeling. They describe a prior linking together separable \(r_{\max,j}\) hyperparameters, for each input dimension \(j\). That prior encourages coordinates to trade-off against one another – competing in a manner not unlike in L1 penalization for linear regression using lasso – to produce a covariance matrix with a desired degree of sparsity. That is, some directions yield zero correlations faster than others as a function of coordinate-wise distance. Specifically, Kaufman et al. recommend

\[\begin{equation} r_{\max} \mbox{ uniform in } R_C = \left\{ r_{\max} \in \mathbb{R}^d : r_{\max,j} \geq 0, \; \sum_{j=1}^d r_{\max,j} \leq C \right\}. \tag{9.1} \end{equation}\]

Said another way, this penalty allows some \(r_{\max,j}\) to be large to reflect a high degree of correlation in particular input directions, shifting the burden of sparsity to other coordinates. Parameter \(C\) determines the level of sparsity in the resulting MVN correlation matrix.
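To build some intuition for how \(C\) governs sparsity, the sketch below draws \(r_{\max}\) uniformly from \(R_C\) and measures the density of the resulting separable Bohman correlation matrix. None of this is taken from Kaufman et al.'s software. The sampler uses a standard device: normalize \(d+1\) exponentials and drop one, which is uniform on the solid simplex. The random design, \(d=3\), and the function names `runif.RC` and `kB.sep` are mine, for illustration.

```r
kB <- function(r, rmax) {                ## Bohman CSK on ordinary distances
  rn <- r/rmax
  ((1 - rn)*cos(pi*rn) + sin(pi*rn)/pi)*(r < rmax)
}

## N uniform draws from R_C = {r >= 0, sum(r) <= C} in d dimensions
runif.RC <- function(N, d, C) {
  E <- matrix(rexp(N*(d + 1)), nrow=N)
  C*E[, 1:d, drop=FALSE]/rowSums(E)
}

## separable CSK correlation: product of coordinate-wise Bohman kernels
kB.sep <- function(X, rmax) {
  K <- matrix(1, nrow(X), nrow(X))
  for(j in 1:ncol(X))
    K <- K*kB(abs(outer(X[,j], X[,j], "-")), rmax[j])
  K
}

set.seed(1)
d <- 3
X <- matrix(runif(200*d), ncol=d)        ## hypothetical design in [0,1]^d
dens <- sapply(c(0.5, 1, 2), function(C) {
  R <- runif.RC(20, d, C)
  mean(apply(R, 1, function(r) mean(kB.sep(X, r) > 0)))
})
dens                                     ## average density for C = 0.5, 1, 2
```

Because an entry is nonzero only when every coordinate-wise distance falls inside its own \(r_{\max,j}\), one short range is enough to zero out a pair. That is how the budget \(C\) translates into sparsity.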

That prior on \(r_{\max}\) is then paired with the usual reference priors for scale \(\tau^2\) and regression coefficients \(\beta\) through the Legendre basis. Conditional on \(r_{\max}\), calculations similar to those required for an exercise in §5.5, analytically integrating out \(\tau^2\) and \(\beta\), yield closed form expressions for the marginal posterior density. For details, see, e.g., Appendix A of Gramacy (2005). Inference for \(r_{\max}\) and any other kernel hyperparameters may then be carried out with a conventional mixture of Metropolis and Gibbs sampling steps.

It remains to choose a \(C\) yielding enough sparsity for tractable calculation given data sizes present in the problem on hand. Kaufman et al. produced a map, which I shall not duplicate here, relating degree of sparsity to computation time for various data sizes, \(n\). That map helps mitigate search efforts, modulo computing architecture nuances, toward identifying \(C\) yielding a specified degree of sparsity. Perhaps more practically, they further provide a numerical procedure which estimates the value of \(C\) required, after fixing all other choices for priors and their hyperparameters. I shall illustrate that pre-processing step momentarily.

As one final detail, Kaufman et al. recommend Legendre polynomials up to degree 5 in a “tensor product” form for their motivating cosmology example, including all main effects, and all two-variable interactions in which the sum of the maximum exponent in each interacting variable is constrained to be less than or equal to five. However, in their simpler coded benchmark example, which we shall borrow for our illustration below, it would appear they prefer degree 2.
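For concreteness, here is one plausible reading of a degree-two basis in coded inputs: Legendre main effects of degree one and two in each variable, plus products of degree-one terms for every pair of variables. This is my own sketch with a hypothetical name, `legendre2`. The construction used inside Kaufman et al.'s code may differ in scaling or in which interactions are retained.

```r
## Legendre polynomials of degree 1 and 2 on z in [-1, 1]
P1 <- function(z) z
P2 <- function(z) (3*z^2 - 1)/2

legendre2 <- function(X) {
  Z <- 2*X - 1                           ## map coded inputs [0,1] to [-1,1]
  main <- cbind(P1(Z), P2(Z))            ## 2d main-effect columns
  pairs <- combn(ncol(Z), 2)             ## all two-variable combinations
  inter <- apply(pairs, 2, function(jk) P1(Z[,jk[1]])*P1(Z[,jk[2]]))
  cbind(1, main, inter)                  ## intercept, main effects, interactions
}

set.seed(1)
Fb <- legendre2(matrix(runif(10*8), ncol=8))
dim(Fb)
```

With eight inputs that's \(1 + 16 + 28 = 45\) columns, a modest regression compared to the size of the covariance matrices involved.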

Borehole example

Consider the borehole data, which is a classic synthetic computer simulation example (MD Morris, Mitchell, and Ylvisaker 1993), originally described by Worley (1987). It’s a function of eight inputs, modeling water flow through a borehole.

\[ y = \frac{2\pi T_u [H_u - H_l]}{\log\left(\frac{r}{r_w}\right) \left[1 + \frac{2 L T_u}{\log (r/r_w) r_w^2 K_w} + \frac{T_u}{T_l} \right]}. \]

Input ranges are

\[ \begin{aligned} r_w &\in [0.05, 0.15] & r &\in [100,50000] & T_u &\in [63070, 115600] \\ T_l &\in [63.1, 116] & H_u &\in [990, 1110] & H_l &\in [700, 820] \\ L &\in [1120, 1680] & K_w &\in [9855, 12045]. \end{aligned} \]

The function below provides an implementation in coded inputs.

borehole <- function(x)

{

rw <- x[1]*(0.15 - 0.05) + 0.05

r <- x[2]*(50000 - 100) + 100

Tu <- x[3]*(115600 - 63070) + 63070

Hu <- x[4]*(1110 - 990) + 990

Tl <- x[5]*(116 - 63.1) + 63.1

Hl <- x[6]*(820 - 700) + 700

L <- x[7]*(1680 - 1120) + 1120

Kw <- x[8]*(12045 - 9855) + 9855

m1 <- 2*pi*Tu*(Hu - Hl)

m2 <- log(r/rw)

m3 <- 1 + 2*L*Tu/(m2*rw^2*Kw) + Tu/Tl

return(m1/m2/m3)

}

Consider the following Latin hypercube sample (LHS; §4.1) training and testing partition a la Algorithm 4.1.

n <- 4000

nn <- 500

m <- 8

library(lhs)

x <- randomLHS(n + nn, m)

y <- apply(x, 1, borehole)

X <- x[1:n,]

Y <- y[1:n]

XX <- x[-(1:n),]

YY <- y[-(1:n)]

Observe that the problem here is bigger than any we’ve entertained so far in this text. However it’s worth noting that \(n=4000\) is not too big for conventional GPs. Following from Kaufman’s example, we shall provide a full GP below which (in being fully Bayesian and leveraging a Legendre basis-expanded mean) offers a commensurate look for the purpose of benchmarking computational demands. Yet Appendix A illustrates a thriftier GP implementation leveraging the MKL library which can handle more than \(n=10000\) training data points on this very same borehole problem. But that compares apples with oranges. Therefore we shall press on with the example, whose primary role – by the time the chapter is finished – will anyway be to serve as a straw man against more recent advances in the realm of local–global GP approximation.

The first step is to find the value of \(C\) that provides a desired level of sparsity. I chose 99% sparse through an argument den specifying density as the opposite of sparsity.

C <- find.tau(den=1 - 0.99, dim=ncol(x))*ncol(x)

C

## [1] 2.708

Next, R code below sets up a degree-two Legendre basis with all two-variable interactions, and then collects two thousand samples from the posterior. Compute time is saved for later comparison. Warnings that occasionally come from spam, when challenges arise in solving sparse linear systems, are suppressed in order to keep the document clean.

D <- I <- 2

B <- 2000

tic <- proc.time()[3]

suppressWarnings({

samps99 <- mcmc.sparse(Y, X, mc=C, degree=D, maxint=I,

B=B, verbose=FALSE)

})

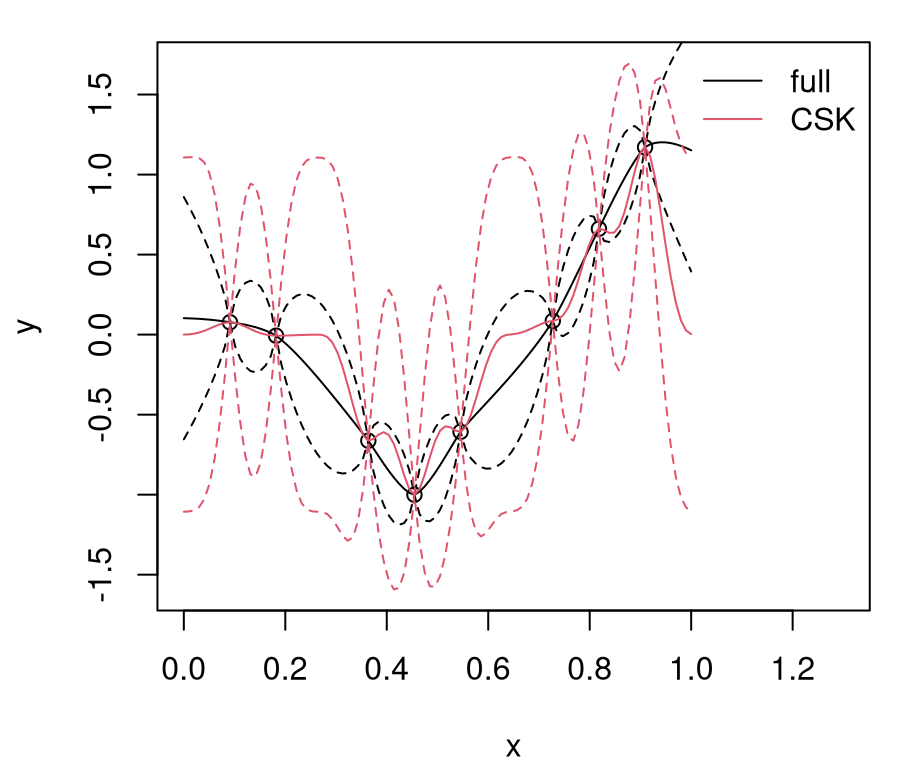

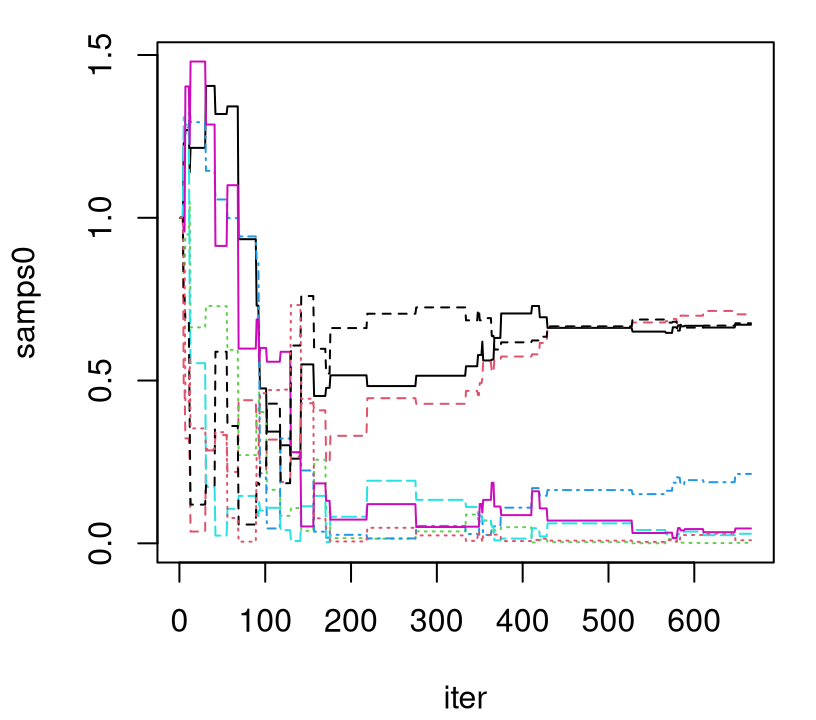

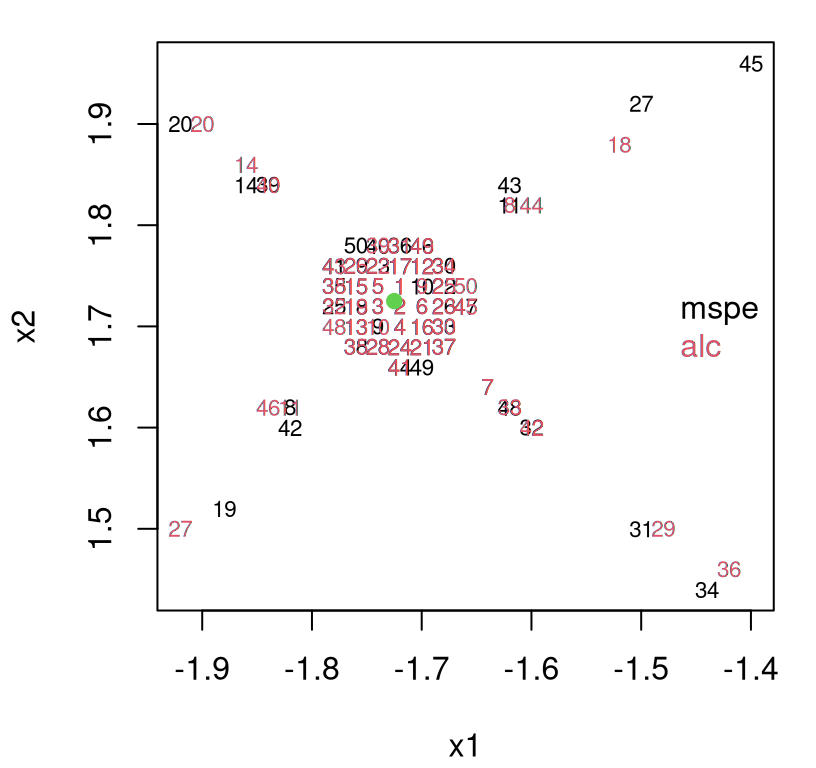

time99 <- as.numeric(proc.time()[3] - tic)Output samps99 is a B \(\times\) d matrix storing \(r_{\max,j}\) samples from the posterior distribution for each input coordinate, \(j=1,\dots,d\). Trace plots for these samples are shown in Figure 9.4. The left panel presents each \(r_{\max, j}\) marginally; on the right is their aggregate as in the definition of \(R_C\) (9.1). Observe how that aggregate bounces up against the estimated \(C\)-value of 2.71. Even with Legendre basis mopping up a degree of global nonlinearity, the posterior distribution over \(r_{\max, j}\) wants to be as dense as possible in order to capture spatial correlations at larger distances. A low \(C\)-value is forcing coordinates to trade off against one another in order to induce the desired degree of sparsity.

par(mfrow=c(1,2))

matplot(samps99, type="l", xlab="iter")

plot(rowSums(samps99), type="l", xlab="iter", ylab="Rc")

FIGURE 9.4: Trace plots of \(r_{\max,j}\) (left) and their aggregate \(\sum_j r_{\max, j}\) (right).

Both trace plots indicate convergence of the Markov chain after five hundred or so iterations followed by adequate – certainly not excellent – mixing. Marginal effective sample size calculations indicate that very few “equivalently independent” samples have been obtained from the posterior, however this could be improved with a longer chain.
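For readers who'd like to see what such a calculation involves, here is a simple autocorrelation-based estimate of effective sample size, \(n/(1 + 2\sum_k \rho_k)\), truncating the sum at the first non-positive sample autocorrelation. That truncation rule is a simplification of mine. Dedicated MCMC diagnostics packages use more careful estimators, so numbers won't match theirs exactly.

```r
ess <- function(x) {
  n <- length(x)
  rho <- acf(x, lag.max=n - 1, plot=FALSE)$acf[-1]   ## lags 1, 2, ...
  k <- which(rho <= 0)[1]                            ## first non-positive lag
  if(!is.na(k)) rho <- rho[seq_len(k - 1)]           ## keep initial positive run
  n/(1 + 2*sum(rho))
}

## sanity check on synthetic chains: independent draws versus a sticky AR(1)
set.seed(1)
iid <- rnorm(2000)
ar <- as.numeric(arima.sim(list(ar=0.95), n=2000))
c(iid=ess(iid), ar=ess(ar))
```

Applied column-wise to post-burn-in samples, e.g., `apply(samps99[-(1:500),], 2, ess)`, it gives a per-coordinate summary of how much independent information the chain carries.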

## [1] 1.925 1.932 2.013 2.762 2.142 4.139 3.686 1.632

Considering that it has already taken 46 minutes to gather these two thousand samples, it may not be worth spending more time to get a bigger collection for this simple example. (We’ll need even more time to convert those samples into predictions.)

## [1] 45.87

Instead, perhaps let me encourage the curious reader to explore longer chains offline. Pushing on, the next step is to convert those hyperparameters into posterior predictive samples on a testing set. Below, discard the first five hundred iterations as burn-in, then save subsamples of predictive evaluations from every tenth iteration thereafter.

burnin <- 500

index <- seq(burnin+1, B, by=10)

tic <- proc.time()[3]

suppressWarnings({

p99 <- pred.sparse(samps99[index,], X, Y, XX, degree=D,

maxint=I, verbose=FALSE)

})

time99 <- as.numeric(time99 + proc.time()[3] - tic)

time99/60

## [1] 54.35

The extra work required to make this conversion depends upon the density of the predictive grid, XX. In this particular case it doesn’t add substantially to the total compute time, which now totals 54 minutes. The keen reader will notice that we didn’t factor in time to calculate \(C\) with find.tau above. Relative to other calculations, this represents a rather small, fixed-cost pre-processing step.

Before assessing the quality of these predictions, consider a couple alternatives to compare to. Kaufman et al. provide a non-sparse version, that otherwise works identically, primarily for timing and accuracy comparisons. That way we’ll be comparing apples to apples, at least in terms of inferential apparatus, when it comes to computation time. Working with dense covariance matrices in this context is really slow. Therefore, the code below collects only a third as many MCMC samples from the posterior. (Sampling and predictive stages are combined.) Again, I encourage the curious reader to gather more for a fairer comparison.

tic <- proc.time()[3]

suppressWarnings({

samps0 <- mcmc.nonsparse(Y, X, B=B/3, verbose=FALSE)

})

index <- seq(burnin/3 + 1, B/3, by=10)

suppressWarnings({

p0 <- pred.nonsparse(samps0[index,], X, Y, XX, 2, verbose=FALSE)

})

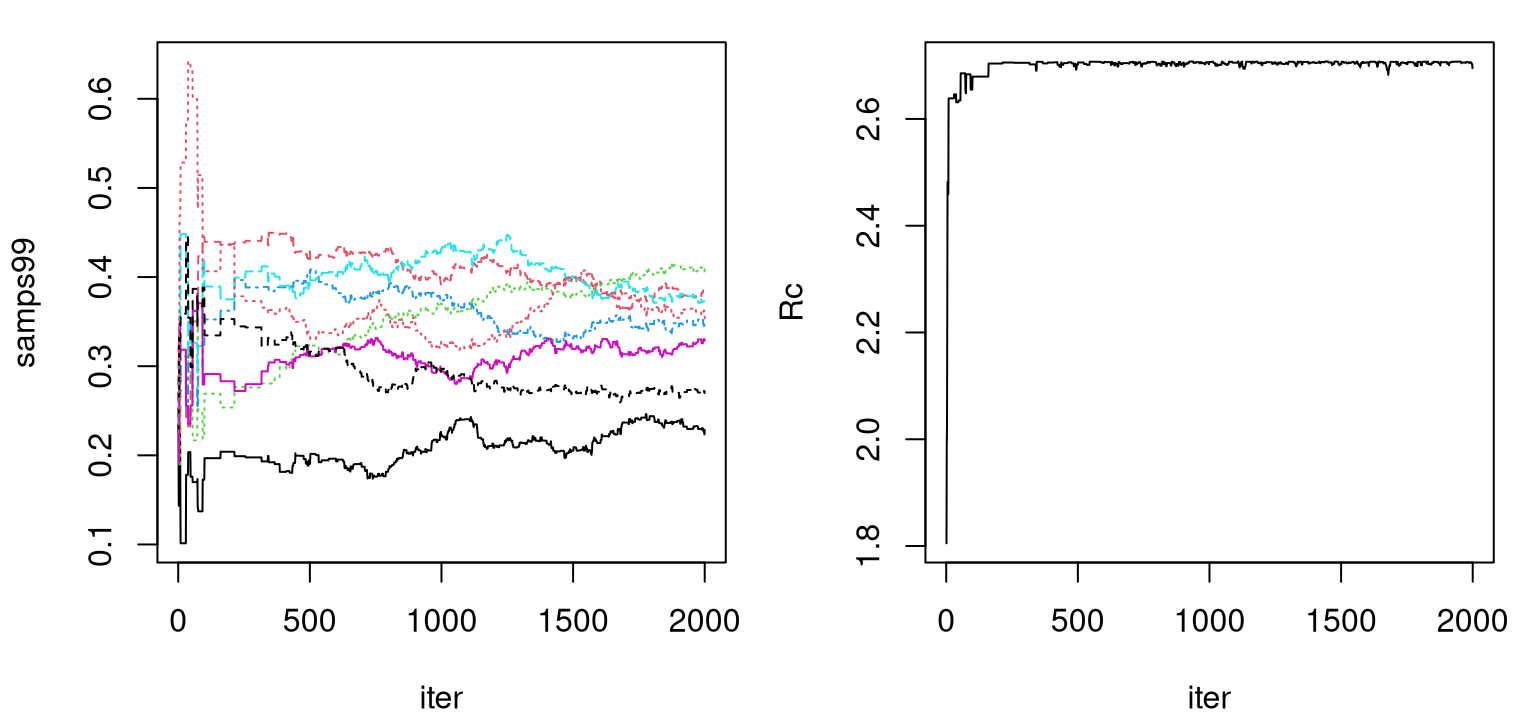

time0 <- as.numeric(proc.time()[3] - tic)As illustrated in Figure 9.5, 667 samples are not nearly sufficient to be confident about convergence. Admittedly, I’m not sure why the mixing here seems so much worse than in the CSK analog. It may have been that Kaufman didn’t put as much effort into fine-tuning this straw man relative to their showcase methodology.

FIGURE 9.5: Trace plot of lengthscales under the full (non CSK) GP posterior; compare to the left panel of Figure 9.4.

Considering the amount of time it took to get as many samples as we did, we’ll have to be content with extrapolating a bit to make a proper timing comparison.

## [1] 51.75

Gathering the same number of samples as in the 99% sparse CSK case would’ve required more than 2 hours of total compute time. For a comparison on accuracy grounds, consider pointwise proper scores via Eq. (27) from Gneiting and Raftery (2007). Also see §8.1.4. Higher scores are better.

scorep <- function(YY, mu, s2) { mean(-(mu - YY)^2/s2 - log(s2)) }

scores <- c(sparse99=scorep(YY, p99$mean, p99$var),

dense=scorep(YY, p0$mean, p0$var))

scores

## sparse99 dense

## -1.918 -6.962

CSK is both faster and more accurate on this example. It’s reasonable to speculate that the dense/ordinary GP results could be improved with better MCMC. Perhaps MCMC proposals, tuned to work well for short \(r_{\max,j}\) on residuals obtained from a rich Legendre basis, work rather less well for ordinary lengthscales \(\theta_j\) applied directly on the main process. In §9.3.4 we’ll show how a full GP under MLE hyperparameters can be quite accurate on these data.

To add one final comparator into the mix, let’s see how much faster and how much less accurate a 99.9% sparse version is. Essentially cutting and pasting from above …

C <- find.tau(den=1 - 0.999, dim=ncol(x))*ncol(x)

tic <- proc.time()[3]

suppressWarnings({

samps999 <- mcmc.sparse(Y, X, mc=C, degree=D, maxint=I,

B=B, verbose=FALSE)

})

index <- seq(burnin+1, B, by=10)

suppressWarnings({

p999 <- pred.sparse(samps999[index,], X, Y, XX, degree=D,

maxint=I, verbose=FALSE)

})

time999 <- as.numeric(proc.time()[3] - tic)

In terms of computing time, the 99.9% sparse version is almost an order of magnitude faster.

## sparse99 dense sparse999

## 3260.9 3104.9 404.8

In terms of accuracy, it’s just a little bit worse than the 99% analog and much better than the slow and poorly mixing full GP.

## sparse99 dense sparse999

## -1.918 -6.962 -2.105

To summarize this segment on CSKs, consider the following notes. Sparse covariance matrices decompose faster compared to their dense analogs, but the gap in execution time is only impressive when matrices are very sparse. In that context, intervention is essential to mop up long-range structure left unattended by all those zeros. A solution entails hybridization between processes targeting long- and short-distance correlation. Kaufman et al. utilize a rich mean structure; Sang and Huang’s FSA stays covariance-centric. Either way, both agree that Bayesian posterior sampling is essential to average over competing explanations. We have seen that the MCMC required can be cumbersome: long chains “eat up” computational savings offered by sparsity. Nevertheless, both camps offer dramatic success stories. For example, Kaufman et al. fit a surrogate to more than twenty thousand runs of a photometric redshift simulation – a cosmology example – in four input dimensions, and predicted with full UQ at more than eighty thousand sites. Results are highly accurate, and computation time is reasonable.

One big downside to these ideas, at least in the context of our motivation for this chapter, is that neither approach addresses nonstationarity head on. Computational demands are eased, somewhat, but modeling fidelity has not been substantially increased. I qualify with “substantially” because the introduction of a basis-expanded mean does hold the potential to enhance fidelity even though it was conceived to compensate. Polynomial bases, when dramatically expanded both by location and degree, do offer a degree of nonstationary flexibility. Smoothing splines are a perfect example; also see the splines supplement. But such techniques break down computationally when input dimension is greater than two. Exponentially many more knots are required as input dimension grows. A more deliberate and nonparametric approach to obtaining local variation in a global landscape could represent an attractive alternative.

9.2 Partition models and regression trees

Another way to induce sparsity in the covariance structure is to partition the input space into independent regions, and fit separate surrogates therein. The resulting covariance matrix is block-diagonal after row–column reordering. In fact, you might say it’s implicitly block-diagonal because it’d be foolish to actually build such a matrix. In fact, even “thinking” about the covariance structure on a global scale, after partitioning into multiple local models, can be a hindrance to efficient inference and effective implementation.
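A toy check makes the point about never needing the global matrix. Below, a 1d input space is split once, and both pieces of an MVN log likelihood (log determinant and quadratic form) are computed two ways: from the full block-diagonal matrix, and as sums over the two regions. The split location, the exponential kernel and its lengthscale are arbitrary choices of mine.

```r
set.seed(1)
x <- sort(runif(60))
left <- x < 0.5                                ## hypothetical single split
K <- exp(-as.matrix(dist(x))/0.2)              ## exponential kernel, for illustration
Kb <- K*outer(left, left, "==")                ## zero out cross-region entries
y <- rnorm(60)

logdet <- function(S) as.numeric(determinant(S, logarithm=TRUE)$modulus)
quad <- function(S, y) sum(y*solve(S, y))

## global quantities from the 60 x 60 block-diagonal matrix ...
glob <- c(logdet(Kb), quad(Kb, y))
## ... match sums over regions, each involving only a small matrix
loc <- c(logdet(K[left,left]) + logdet(K[!left,!left]),
  quad(K[left,left], y[left]) + quad(K[!left,!left], y[!left]))
rbind(glob, loc)
```

Since decomposition is cubic, two blocks of half the size cost roughly a quarter as much as the whole, and the savings compound with more regions.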

The trouble is, it’s hard to know just how to split things up. Divide-and-conquer is almost always an effective strategy computationally. But dividing haphazardly can make conquering hard. When statistical modeling, it’s often sensible to let the data decide. Once we’ve figured that out – i.e., how to let data say how it “wants” to be partitioned for independent modeling – many inferential and computational details naturally suggest themselves.

One happy consequence of partitioning, especially when splitting is spatial in nature, is a cheap nonstationary modeling mechanism. Independent latent processes and hyperparameterizations, thinking particularly about fitting GP surrogates to each partition element, kills two birds with one stone: 1) disparate spatial dynamics across the input space; 2) smaller matrices to decompose for faster local inference and prediction. The downside is that all bets for continuity are off. That “bug” could be a “feature”, e.g., if the data generating mechanism is inherently discontinuous, which is not as uncommon as you might think. But more often a scheme for smoothing, or averaging over all (likely) partitions is desired.

Easy to say, hard to do. I know of only two successful attempts involving GPs on partition elements: 1) with Voronoi tessellations (Kim, Mallick, and Holmes 2005); 2) with trees (Gramacy and Lee 2008a). In both cases, those references point to the original attempts. Other teams of authors have subsequently refined and extended these ideas, but the underlying themes remain the same. Software is a whole different ballgame; I know of only one package for R.

Tessellations are easy to characterize mathematically, but a nightmare computationally. Trees are easy mathematically too, and much friendlier in implementation. Although no walk in the park, tree data structures are well developed from a software engineering perspective. Translating tree data structures from their home world of computer science textbooks over to statistical modeling is relatively straightforward, but as always the devil is in the details.

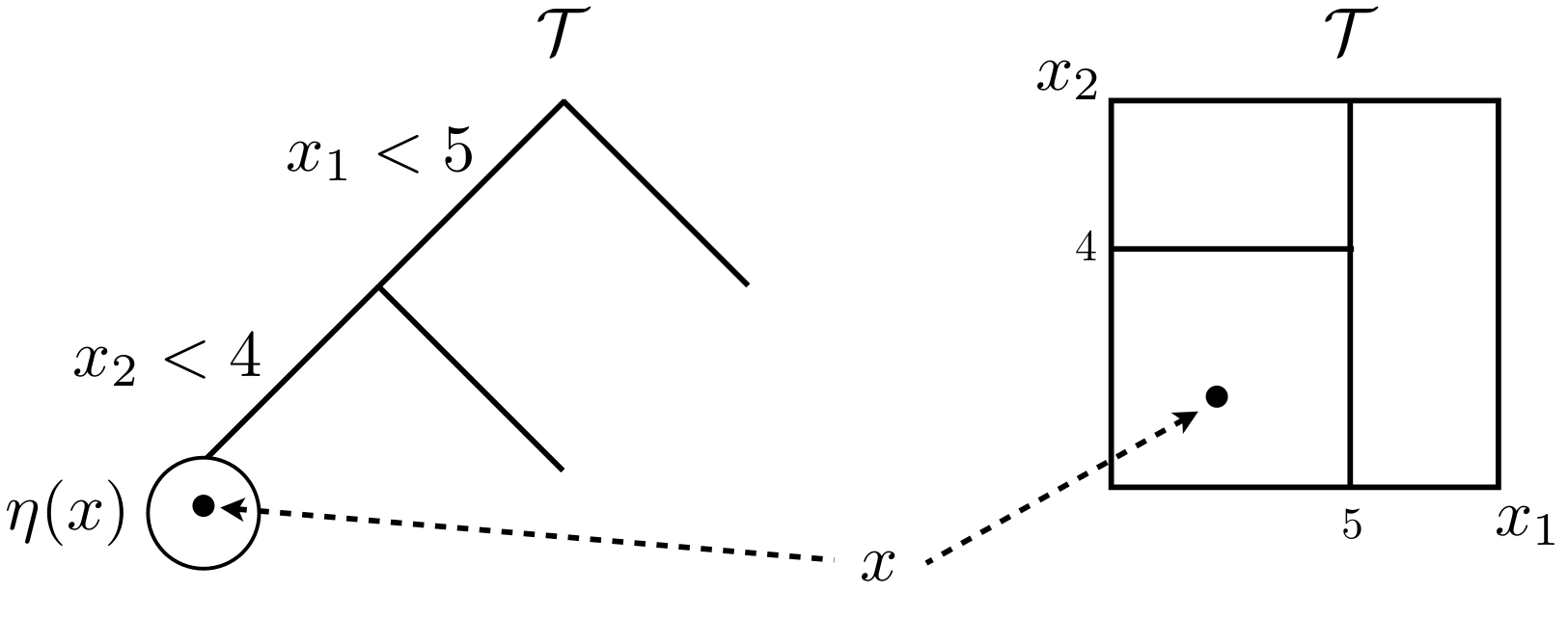

Figure 9.6 shows a partition tree \(\mathcal{T}\) in two views. The left-hand drawing offers a graph view, illustrating a tree with two internal, or splitting nodes, and three leaf or terminal nodes without splits. Internal nodes are endowed with splitting criteria, which in this case refers to conditional splits in a two-dimensional \(x\)-space. Internal nodes have two children, called siblings. All nodes have a parent except the root, paradoxically situated at the top of the tree.

FIGURE 9.6: Tree graph (left) and partition of a 2d input space (right). Borrowed from H. Chipman et al. (2013) with many similar variations elsewhere; used with permission from Wiley.

The right-hand drawing illustrates the recursive nature of those splits geographically in the input space, creating an axis-aligned partition. A generic 2d input coordinate \(x\) would land in one of the three leaf nodes, depending on the setting of its two coordinates \(x_1\) and \(x_2\). Leaf node \(\eta(x)\) resides in the lower-left partition of the input space.
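To make the mapping from \(x\) to leaf \(\eta(x)\) concrete, here is a minimal sketch in R of a partition tree with two internal nodes and three leaves, stored as a nested list. Splitting variables and thresholds are made up for illustration (they aren’t read off Figure 9.6), and the names tree, drop.down and the list fields var, val and id are hypothetical.

```r
## a toy partition tree: internal nodes store a splitting variable (var)
## and threshold (val); leaves store only a label (id)
tree <- list(var=1, val=0.5,
  left=list(id="A"),
  right=list(var=2, val=0.3, left=list(id="B"), right=list(id="C")))

## drop a single input x down the tree, returning the label of eta(x)
drop.down <- function(node, x)
 {
  while(is.null(node$id)) {
    node <- if(x[node$var] <= node$val) node$left else node$right
  }
  node$id
 }

drop.down(tree, c(0.2, 0.9))   ## x1 <= 0.5, so leaf "A"
drop.down(tree, c(0.8, 0.7))   ## x1 > 0.5 and x2 > 0.3, so leaf "C"
```

Each internal node consults a single coordinate, which is what makes the resulting partition axis-aligned.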

For statistical modeling, the idea is that recursive, axis-aligned, splits represent a simple yet effective way to divvy up the input space \(\mathcal{X}\) into independent predictive models for responses \(y\). Predictions \(\hat{y}(x)\) are dictated by tree structure, \(\mathcal{T}\), and leaf model: historically, a simple prediction rule tailored to the subset of data residing at each terminal node. My plan is to showcase GPs at the leaves, but let’s take a step back first.

9.2.1 Divide-and-conquer regression

Use of trees in regression dates back to AID (automatic interaction detection) by Morgan and Sonquist (1963). Classification and regression trees [CART; Breiman et al. (1984)], a suite of methods obtaining fitted partition trees, popularized the idea. The selling point was that trees facilitate parsimonious divide-and-conquer, leading to flexible yet interpretable modeling.

Fitting partition structure (depth, splits, etc.) isn’t easy, however. You need a leaf model/prediction rule, goodness-of-fit criteria, and a search algorithm. And there are lots of very good ways to make choices in that arena. In case it isn’t yet obvious, I prefer likelihood whenever possible. Although other approaches are perhaps more common in the trees literature, with as many/possibly more contributions from computer science as statistics, likelihoods rule the roost as a default in modern statistics.

Given a particular tree, \(\mathcal{T}\), the (marginal) likelihood factorizes into a product form.

\[ p(y^n \mid \mathcal{T}, x^n) \equiv p(y_1, \dots, y_n \mid \mathcal{T}, x_1,\dots, x_n) = \prod_{\eta \in \mathcal{L}_{\mathcal{T}}} p(y^\eta \mid x^\eta) \]

Above, shorthand \(x^n = x_1,\dots,x_n\) and \(y^n = y_1, \dots, y_n\) represents the full dataset of \(n\) pairs \((x,y)^n = \{(x_i, y_i):\; i=1,\dots,n\}\). Analogously, superscript \(\eta\) represents those elements of the data which fall into leaf node \(\eta\). That is, \((x,y)^\eta = \{(x_i, y_i):\; x_i\in \eta, \; i=1,\dots,n\}\). The product arises from independent modeling, and by indexing over all leaf nodes in \(\mathcal{T}\), \(\eta \in \mathcal{L}_{\mathcal{T}}\), we cover all indices \(i \in \{1,\dots,n\}\). All that remains in order to complete the specification is to choose a model for \(p(y^\eta \mid x^\eta)\) to apply at leaf nodes \(\eta\).

Usually such models are specified parametrically, via \(\theta_\eta\), but calculations are simplified substantially if those parameters can be integrated out. Hence the parenthetical “marginal” above. The simplest leaf model for regression is the constant model with unknown mean and variance \(\theta_\eta = (\mu_\eta, \sigma^2_\eta)\):

\[ \begin{aligned} p(y^\eta \mid \mu_\eta, \sigma^2_\eta, x^\eta) &\propto \sigma_\eta^{-|\eta|} \exp\left\{\textstyle-\frac{1}{2\sigma_\eta^2} \sum_{y \in \eta} (y - \mu_\eta)^2\right\} \\ \mbox{so that } \;\; p(y^\eta \mid x^\eta) & = \frac{1}{(2\pi)^{\frac{|\eta|-1}{2}}}\frac{1}{\sqrt{|\eta|}} \left(\frac{s_\eta^2}{2}\right)^{-\frac{|\eta|-1}{2}} \Gamma\left(\frac{|\eta|-1}{2}\right) \end{aligned} \]

upon taking reference prior \(p(\mu_\eta, \sigma_\eta^2) \propto \sigma_\eta^{-2}\). Above, \(|\eta|\) is a count of the number of data points in leaf node \(\eta\), and \(s_\eta^2 \equiv \hat{\sigma}_\eta^2\) is the typical residual sum of squares from \(\bar{y}_\eta \equiv \hat{\mu}_\eta\). Concentrated analogs, i.e., not committing to a Bayesian approach, are similar. However the Bayesian view is natural from the perspective of coherent regularization.
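As a sanity check on the closed form above, the following base R sketch evaluates the constant-leaf marginal likelihood on the log scale, and sums over leaves to obtain the log of the product over \(\eta \in \mathcal{L}_{\mathcal{T}}\). Function names leaf.lml and tree.lml, and the toy step-function data, are my own inventions for illustration; leaf membership is supplied as a vector of labels rather than derived from a tree.

```r
## log p(y^eta | x^eta) for the constant leaf model under the
## reference prior p(mu, sigma^2) propto 1/sigma^2
leaf.lml <- function(y)
 {
  n <- length(y)
  if(n < 2) stop("need at least two observations in a leaf")
  s2 <- sum((y - mean(y))^2)   ## residual sum of squares s_eta^2
  -0.5*(n-1)*log(2*pi) - 0.5*log(n) - 0.5*(n-1)*log(s2/2) + lgamma((n-1)/2)
 }

## log marginal likelihood of a partition: sum over leaves
tree.lml <- function(y, leaf) sum(tapply(y, leaf, leaf.lml))

## toy data with a jump half way through
y <- c(0.1, -0.1, 0.05, -0.05, 5.1, 4.9, 5.05, 4.95)
one.leaf <- leaf.lml(y)
two.leaves <- tree.lml(y, rep(1:2, each=4))
c(one.leaf, two.leaves)
```

Splitting at the jump yields a much larger log marginal likelihood than a single leaf does, which is the signal that a tree search exploits.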

Clearly some kind of penalty on complexity is needed for inference, otherwise marginal likelihood is maximized when there’s one leaf for each observation. The original CART family of methods relied on minimum leaf-size and other heuristics, paired with a cross validation (CV) pruning stage commencing after greedily growing a deep tree. The fully Bayesian approach, which is more recent, has a more natural feel to it although at the expense of greater computation through MCMC. A silver lining, however, is that the Monte Carlo can smooth over hard breaks and lend a degree of continuity to an inherently “jumpy” predictive surface.

Completing the Bayesian specification requires a prior over trees, \(p(\mathcal{T})\). There were two papers, published at almost the same time, proposing a so-called Bayesian CART model, or what is known as the Bayesian treed constant model in the regression (as opposed to classification) context. Denison, Mallick, and Smith (1998) were looking for a light touch, and put a Poisson on the number of leaves, but otherwise specified a uniform prior over other aspects such as tree depth. H. A. Chipman, George, and McCulloch (1998) called for a more intricate class of priors which allowed heavier regularization to be placed on tree depth. Time says they won the argument, although the reasons for that are complicated. Almost everyone has since adopted the so-called CGM prior, although that’s not evidence of much except popularity. (VHS beat out BetaMax in the videotape format war, but not because the former is better.) If the last twenty years have taught us anything, it’s that a hearty dose of regularization – even when not strictly essential – is often a good default.

CGM’s prior is based on the following tree growing stochastic process. A tree \(\mathcal{T}\) may grow from one of its leaf nodes \(\eta\), which might be the root, with a probability that depends on the depth \(D_\eta\) of that node in the tree. The family of probabilities preferred by CGM, dictating terms for when such a leaf node might split into two new children, is provided by the expression below.

\[ p_{\mathrm{split}}(\eta,\mathcal{T}) = \alpha(1+D_{\eta})^{-\beta} \]

One can use this probability to simulate the tree growing process, generating recursively starting from a null, single leaf/root tree, and stopping when all leaves refuse to draw a split. CGM studied this distribution under various choices of hyperparameters \(0 < \alpha < 1\) and \(\beta \geq 0\) in order to provide insight into characteristics of trees which are typical under this prior.

Of course, the primary aim here isn’t to generate trees a priori, but to learn trees via partitions of the data and the subsequent patchwork of regressions they imply. For that, a density on \(\mathcal{T}\) is required. It’s simple to show that this prior process induces a prior density for tree \(\mathcal{T}\) through the probability that internal nodes \(\mathcal{I}_{\mathcal{T}}\) split and leaves \(\mathcal{L}_{\mathcal{T}}\) do not:

\[ p(\mathcal{T}) \propto \prod_{\eta\,\in\,\mathcal{I}_{\mathcal{T}}} p_{\mathrm{split}}(\eta, \mathcal{T}) \prod_{\eta\,\in\,\mathcal{L}_{\mathcal{T}}} [1-p_{\mathrm{split}}(\eta, \mathcal{T})]. \]

As in the DMS prior (Denison, Mallick, and Smith 1998), CGM retains uniformity on everything else: splitting location/dimension, number of leaf node observations. Note that a minimum number of observations must be enforced in order to ensure proper posteriors at the leaves. That is, under the reference prior \(p(\mu_\eta, \sigma_\eta^2) \propto \sigma_\eta^{-2}\), we must have at least \(|\eta| \geq 2\) observations in each leaf node \(\eta \in \mathcal{L}_{\mathcal{T}}\).
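The sketch below, in base R, codes up the splitting probability, the induced (log) prior for a tree summarized by the depths of its internal and leaf nodes, and a recursive simulation of the growing process that returns the number of leaves. It assumes the root sits at depth zero. Values \(\alpha = 0.5\) and \(\beta = 2\) are illustrative choices, and the names psplit, tree.lprior and grow.leaves are hypothetical; the maxD cap is my own safeguard against runaway recursion.

```r
## CGM split probability for a node at depth D
psplit <- function(D, alpha=0.5, beta=2) alpha*(1 + D)^(-beta)

## log prior from depths of internal nodes (Dint) and leaves (Dleaf):
## internal nodes split, leaves do not
tree.lprior <- function(Dint, Dleaf, alpha=0.5, beta=2)
  sum(log(psplit(Dint, alpha, beta))) +
    sum(log(1 - psplit(Dleaf, alpha, beta)))

## simulate growth from a node at depth D; return the number of leaves
grow.leaves <- function(D=0, alpha=0.5, beta=2, maxD=50)
 {
  if(D >= maxD || runif(1) > psplit(D, alpha, beta)) return(1)
  grow.leaves(D+1, alpha, beta, maxD) + grow.leaves(D+1, alpha, beta, maxD)
 }

## a tree shaped like the one in Figure 9.6: root (depth 0) and one
## internal child (depth 1); leaves at depths 1, 2 and 2
lp <- tree.lprior(Dint=c(0, 1), Dleaf=c(1, 2, 2))

## typical tree sizes a priori
set.seed(1)
nl <- replicate(1000, grow.leaves())
table(nl)
```

Tabulating simulated leaf counts in this way is one route to seeing how strongly a particular \((\alpha, \beta)\) setting regularizes tree depth.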

Inference

Posterior inference proceeds by MCMC. Note that there are no parameters except tree \(\mathcal{T}\) when leaf-node \(\theta_\eta\) are integrated out. Here is how a single iteration of MCMC would go. Randomly choose one of a limited number of stochastic tree modification operations (grow, prune, change, swap, rotate; more below), and conditional on that choice, randomly select a node \(\eta \in \mathcal{T}\) on which that proposed modification would apply. Those two choices comprise proposal \(q(\mathcal{T}, \mathcal{T}')\) for generating a new tree \(\mathcal{T}'\) from \(\mathcal{T}\), taking a step along a random walk in tree space. Accept the move with probability given by the minimum of one and the Metropolis–Hastings (MH) ratio:

\[ \frac{p(\mathcal{T}' \mid y^n, x^n)}{p(\mathcal{T} \mid y^n, x^n)} \times \frac{q(\mathcal{T}', \mathcal{T})}{q(\mathcal{T}, \mathcal{T}')} = \frac{p(y^n \mid \mathcal{T}', x^n)}{p(y^n \mid \mathcal{T}, x^n)} \times \frac{p(\mathcal{T}')}{p(\mathcal{T})} \times \frac{q(\mathcal{T}', \mathcal{T})}{q(\mathcal{T}, \mathcal{T}')}. \]

There’s substantial scope for computational savings here with local moves \(q(\mathcal{T}, \mathcal{T}')\) in tree space, since many terms in the big product over \(\eta \in \mathcal{L}_{\mathcal{T}}\) in the denominator marginal likelihood, and over \(\eta' \in \mathcal{L}_{\mathcal{T'}}\) in the numerator one, would cancel for unaltered leaves in \(\mathcal{T} \rightarrow \mathcal{T}'\).
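Here is a minimal sketch, in base R, of how that cancellation plays out for a grow move under the constant leaf model and CGM prior. Only the leaf being split contributes: its marginal likelihood is swapped for those of its two children, and the prior swaps one “don’t split” term for a “split” term and two new “don’t split” terms one level deeper. The log proposal ratio lq is left as an argument and defaulted to zero, which is a simplifying assumption and not how any particular implementation works. All function names are hypothetical.

```r
## constant-leaf log marginal likelihood under the reference prior
leaf.lml <- function(y)
 {
  n <- length(y)
  s2 <- sum((y - mean(y))^2)
  -0.5*(n-1)*log(2*pi) - 0.5*log(n) - 0.5*(n-1)*log(s2/2) + lgamma((n-1)/2)
 }

## CGM split probability for a node at depth D
psplit <- function(D, alpha=0.5, beta=2) alpha*(1 + D)^(-beta)

## log MH ratio for growing the leaf holding (x, y), at depth D, by
## splitting at x <= s; lq is the log proposal ratio (assumed zero)
grow.lratio <- function(x, y, s, D, alpha=0.5, beta=2, lq=0)
 {
  left <- x <= s
  if(sum(left) < 2 || sum(!left) < 2) return(-Inf)  ## improper leaf
  llik <- leaf.lml(y[left]) + leaf.lml(y[!left]) - leaf.lml(y)
  lpri <- log(psplit(D, alpha, beta)) +
    2*log(1 - psplit(D + 1, alpha, beta)) -
    log(1 - psplit(D, alpha, beta))
  llik + lpri + lq
 }

x <- 1:8
y <- c(0.1, -0.1, 0.05, -0.05, 5.1, 4.9, 5.05, 4.95)
lr <- grow.lratio(x, y, s=4.5, D=0)
accept <- log(runif(1)) < lr   ## accept with probability min(1, exp(lr))
```

Leaves elsewhere in the tree never enter the calculation, so the cost of a grow proposal depends only on the size of the leaf being split.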

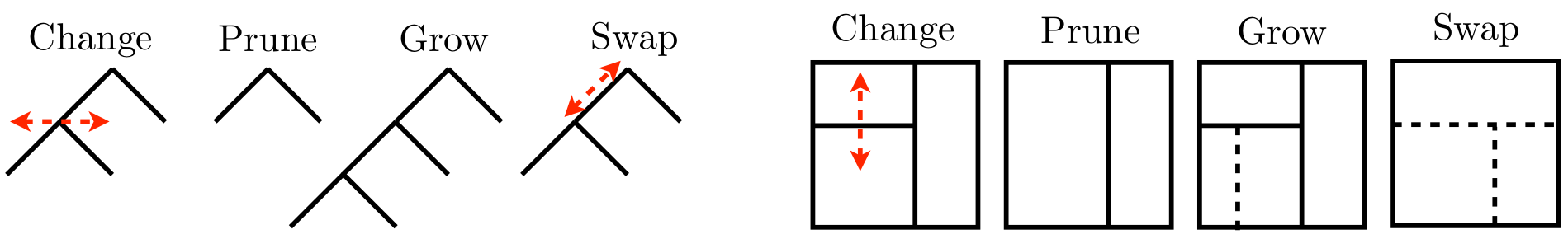

What do tree proposals \(q\) look like? Well, they can be whatever you like so long as they’re reversible, which is required by the ergodic theorem for MCMC convergence, providing samples from the target distribution \(p(\mathcal{T} \mid y^n, x^n)\). That basically means proposals must be matched with an opposite, undo proposal. Figure 9.7 provides an example of the four most popular tree moves, converting tree \(\mathcal{T}\) from Figure 9.6 to \(\mathcal{T}'\) shown in the same two views.

FIGURE 9.7: Random-walk proposals in tree space graphically (left four) and as partitions (right four). Borrowed from H. Chipman et al. (2013) with many similar variations elsewhere; used with permission from Wiley.

Observe that these moves have reversibility built in. Grow and prune are the undo of one another, and change and swap are the undo of themselves. Grow and prune are extremely local moves, acting only on leaf nodes and parents thereof, respectively. Change and swap are slightly more global as they may be performed on any internal node, or adjacent pair of nodes, respectively. Consequently, they may shuffle the contents of all their descendant leaves. Such “high up” proposals can have low MH acceptance rates because they tend to create \(\mathcal{T}'\) far from \(\mathcal{T}\).

Several new moves have been introduced to help. Gramacy and Lee (2008a) provide rotate which, like swap, acts on pairs of nodes which might reside anywhere in the tree. However, no matter how high up a rotation is, leaf nodes always remain unchanged. Thus acceptance is determined only by the prior. The idea comes from tree re-balancing in the computer science literature, for example as applied for red–black trees. Y. Wu, Tjelmeland, and West (2007) provide a radical restructure move targeting similar features, but with greater ambition. M. T. Pratola (2016) offers an alternative rotate targeting local moves which traverse disparate regions of partition space along contours of high, rather than identical likelihood.

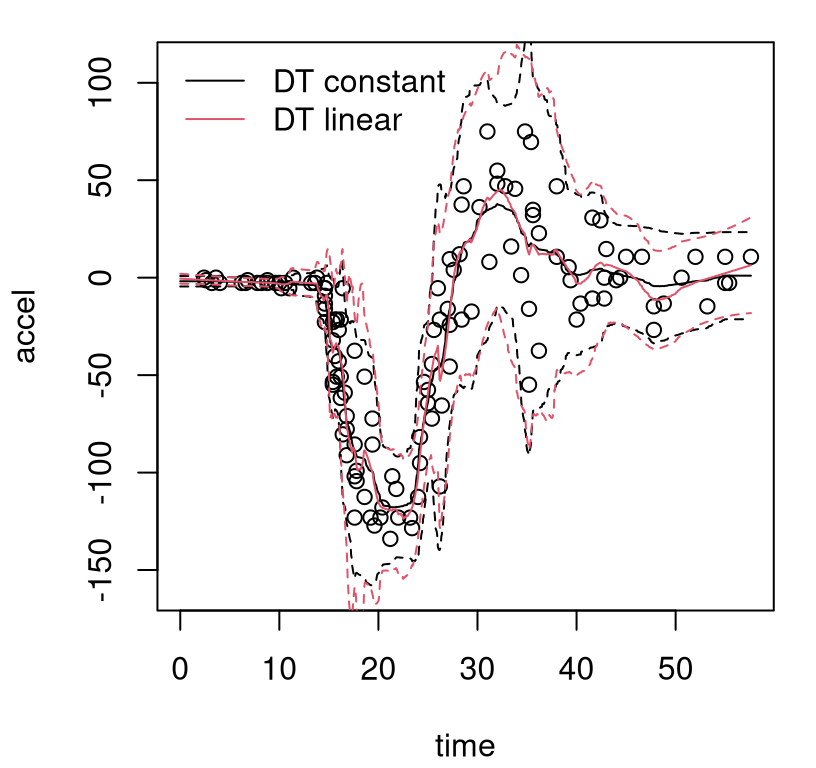

To illustrate inference under the conventional move set, consider the motorcycle accident data in the MASS library for R. These data are derived from simulation of the acceleration of the helmet of a motorcycle rider before and after an impact. The pre- and post-whiplash effect, which we shall visualize momentarily, is extremely hard to model, whether using parametric (linear) models, or with GPs.

We shall utilize a Bayesian CART (BCART) implementation from the tgp package (Gramacy and Taddy 2016) on CRAN. One quirk of tgp is that you must provide a predictive grid, XX below, at the time of fitting. Trees are complicated data structures, which makes saving samples from lots of MCMC iterations cumbersome. It’s far easier to save predictions derived from those trees, obtained by dropping elements of \(x \in \mathcal{X} \equiv\) XX down to leaf(s). In fact, it’s sufficient to save the average means and quantiles accumulated over MCMC iterations. For each \(x \in \mathcal{X}\), one can separately aggregate \(\hat{\mu}_{\eta(x)}\) and quantiles \(\hat{\mu}_{\eta(x)} \pm 1.96\hat{\sigma}_{\eta(x)}\) for all \(\eta(x) \in \mathcal{L}_{\mathcal{T}}\), for every tree \(\mathcal{T}\) visited by the Markov chain, normalizing at the end. That quick description is close to what tgp does by default.
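A rough sketch of that accumulation in base R follows. It isn’t tgp’s implementation: it handles only means (not quantiles), only 1d inputs, and it encodes each tree by nothing more than a sorted vector of split locations. The list of trees stands in for samples from a Markov chain which I don’t simulate here. Names leaf.means and avg.pred are hypothetical.

```r
## predictive means on grid XX under a 1d tree encoded by sorted splits;
## assumes every leaf contains training data
leaf.means <- function(splits, x, y, XX)
 {
  leaf <- findInterval(x, splits)    ## leaf index for training inputs
  mu <- tapply(y, leaf, mean)        ## constant-leaf estimates
  as.numeric(mu[as.character(findInterval(XX, splits))])
 }

## accumulate over trees visited by the chain, normalizing at the end
avg.pred <- function(trees, x, y, XX)
 {
  acc <- rep(0, length(XX))
  for(s in trees) acc <- acc + leaf.means(s, x, y, XX)
  acc / length(trees)
 }

x <- 1:8
y <- c(0, 0, 0, 0, 4, 4, 4, 4)
trees <- list(4.5, 2.5)   ## two stand-in "samples", one split each
p <- avg.pred(trees, x, y, XX=c(1, 3, 6))
p
```

Each individual tree gives a piecewise constant surface, yet the average at the middle grid location lands between the two levels. Only the running sums need to be stored, never the trees themselves.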

library(MASS)
library(tgp)
XX <- seq(0, max(mcycle[,1]), length=1000)
out.bcart <- bcart(X=mcycle[,1], Z=mcycle[,2], XX=XX, R=100, verb=0)

Another peculiarity here is the argument R=100, which calls for one hundred restarts of the MCMC. CGM observed that mixing in tree space can be poor, resulting in chains becoming stuck in local posterior maxima. Multiple restarts can help alleviate this. Whereas posterior mean predictive surfaces require accumulating predictive draws over MCMC iterations, the most probable predictions can be extracted after the fact. Code below utilizes predict.tgp to extract the predictive surface from the most probable tree, i.e., the maximum a posteriori (MAP) tree \(\hat{\mathcal{T}}\).

It’s worth reiterating that this way of working is different from the typical fit-then-predict scheme in R. The main prediction vehicle in tgp is driven by providing XX to bcart and similar methods. Still both surfaces, posterior mean and MAP, offer instructive visualizations. To that end, the R code below establishes a macro that I shall reuse, in several variations, to visualize predictive output from fitted tree models.

plot.moto <- function(out, outp)

{

plot(outp$XX[,1], outp$ZZ.km, ylab="accel", xlab="time",

ylim=c(-150, 80), lty=2, col=1, type="l")

points(mcycle)

lines(outp$XX[,1], outp$ZZ.km + 1.96*sqrt(outp$ZZ.ks2), col=2, lty=2)

lines(outp$XX[,1], outp$ZZ.km - 1.96*sqrt(outp$ZZ.ks2), col=2, lty=2)

lines(out$XX[,1], out$ZZ.mean, col=1, lwd=2)

lines(out$XX[,1], out$ZZ.q1, col=2, lwd=2)

lines(out$XX[,1], out$ZZ.q2, col=2, lwd=2)

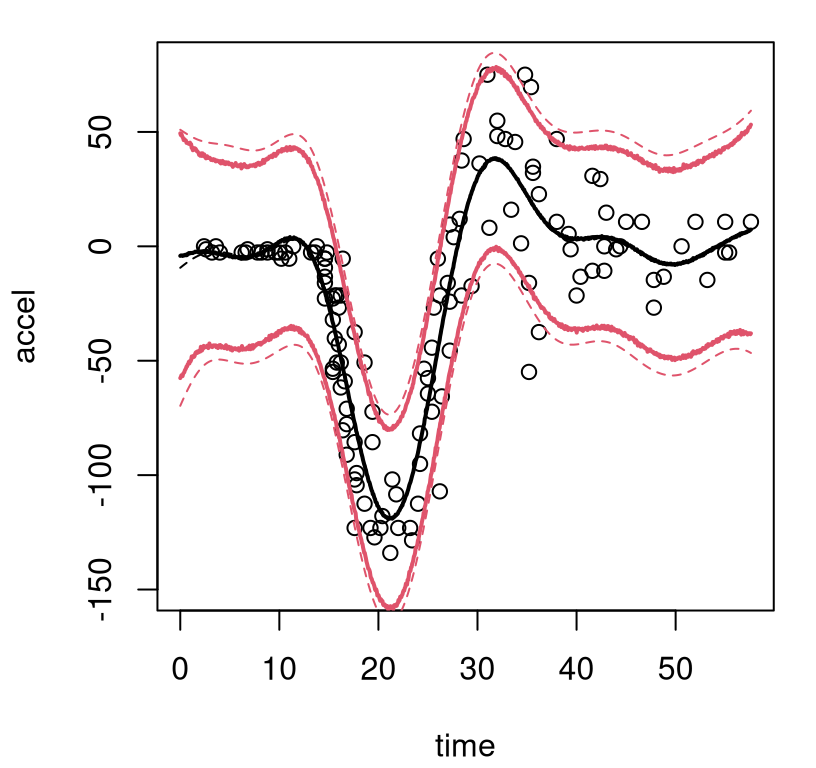

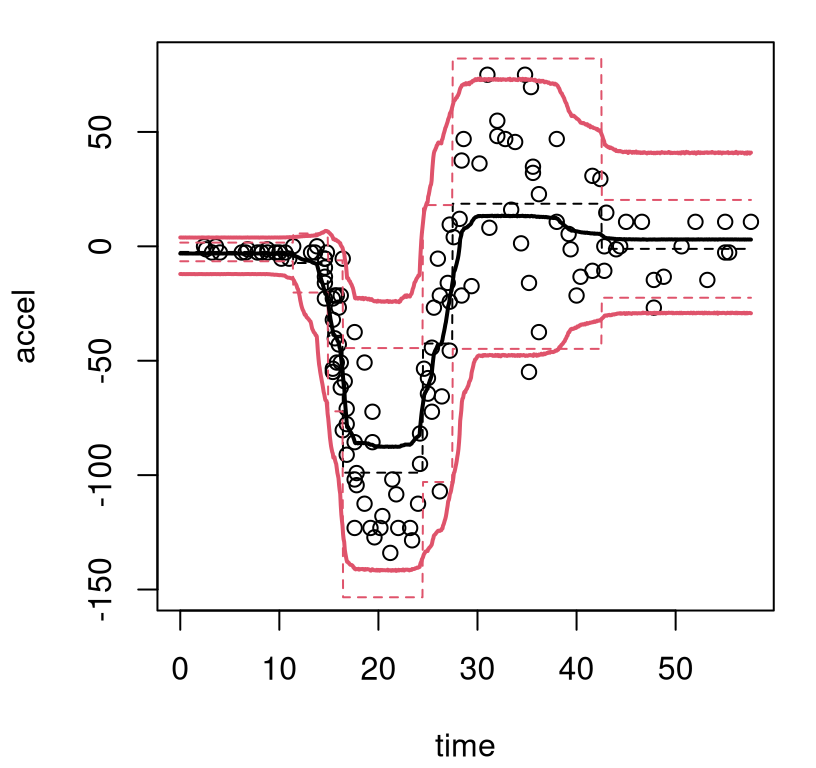

}Observe in the macro that solid bold (lwd=2) lines are used to indicate posterior mean predictive; thinner dashed lines (lty=2) indicate the MAP. On both, black (col=1) shows the center (mean of means or MAP mean) and red (col=2) shows 95% quantiles. Figure 9.8 uses this macro for the first time in the context of our Bayesian CART fit, also for the first time showing the training data.

FIGURE 9.8: Bayesian treed constant model fit to the motorcycle accident data in terms of means and 95% quantiles. Posterior means are indicated by solid lines; MAP dashed.

What can be seen in these surfaces? Organic nonstationarity and heteroskedasticity, that’s what. The rate of change of outputs is changing as a function of inputs, and so is the noise level. Training data exhibit these features, and predictive surfaces are coping well, albeit not gracefully. Variances, exhibited by quantiles, may be too high (wide) at the end. The whiplash effect in the middle of the data appears overly dampened by forecasts on the testing grid.

The MAP surface (dashed lines) in the figure exemplifies an “old CART way” of regression. Hard breaks abound, being both unsightly and a poor surrogate for what are likely smooth physical dynamics. Posterior mean predictive summaries (solid lines) are somewhat more smooth. Averaging over the posterior for \(\mathcal{T}\) with MCMC smooths over abrupt transitions that come in disparate form with each individual sample from the chain. Yet the surface, even after aggregation, is still blocky: like a meandering staircase with rounded edges. A longer MCMC chain, or more restarts, could smooth things out more, but with diminishing return.

Other leaf models

One of the cool things about this setup is that any data type/leaf model may be used without extra computational effort if \(p(y^\eta \mid x^\eta)\) is analytic; that is, as long as we can evaluate the marginal likelihood, integrating out parameters \(\theta_\eta\) in closed form. Fully conjugate, scale-invariant, default (non-informative) priors on \(\theta_\eta\) make this possible for a wide class of models for response \(y\), even conditional on \(x\). A so-called Bayesian treed linear model [BTLM; H. A. Chipman, George, and McCulloch (2002)] uses

\[ \begin{aligned} p(y^\eta \mid \beta_\eta, \sigma_\eta^2, x^\eta) & \propto \sigma_\eta^{-|\eta|} \exp \left\{-\frac{1}{2\sigma_\eta^2} (y^\eta - \bar{X}_\eta \beta_\eta)^\top (y^\eta - \bar{X}_\eta \beta_\eta) \right\} \quad \mbox{ and } \quad p(\beta_\eta, \sigma_\eta^2) \propto \sigma_\eta^{-2}. \end{aligned} \]

In that case we have

\[ p(y^\eta \mid x^{\eta}) = \frac{1}{(2\pi)^{\frac{|\eta|-m-1}{2}}} \left(\frac{|\mathcal{G}_\eta^{-1}|}{|\eta|}\right)^{\frac{1}{2}} \left(\frac{s_\eta^2-\mathcal{R}_\eta}{2}\right)^{-\frac{|\eta|-m-1}{2}} \Gamma\left(\frac{|\eta|-m-1}{2}\right), \]

where \(\mathcal{G}_\eta = \bar{X}_\eta^\top\bar{X}_\eta\), \(\mathcal{R}_\eta = \hat{\beta}_\eta^\top \mathcal{G}_\eta \hat{\beta}_\eta\) and intercept-adjusted \((m+1)\)-column \(\bar{X}_\eta\) is a centered design matrix derived from \(x^\eta\).

Without getting too bogged down in details, how about a showcase of BTLM in action through its tgp implementation? Again, we must specify XX during the fitting stage for full posterior averaging in prediction.

out.btlm <- btlm(X=mcycle[,1], Z=mcycle[,2], XX=XX, R=100, verb=0)

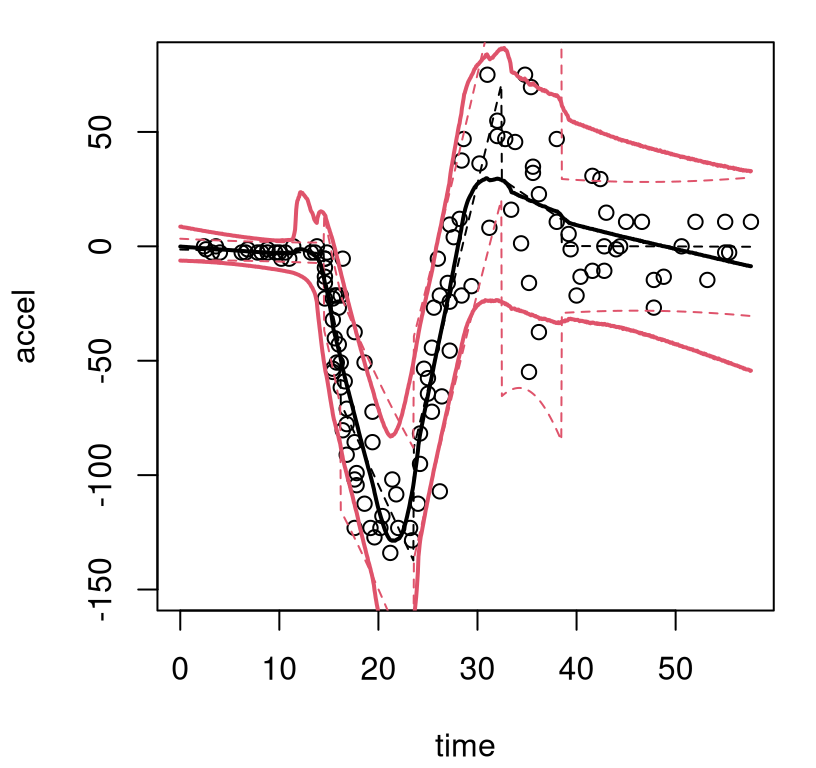

outp.btlm <- predict(out.btlm, XX=XX)As before, a MAP predictor may be extracted after the fact. Figure 9.9 reuses the plotting macro in order to view the result, and qualitatively compare to the earlier BCART fit.

FIGURE 9.9: Bayesian treed linear model fit to the motorcycle accident data; compare to Figure 9.8.

The MAP surface (dashed) indicates fewer partitions compared to the BCART analog, but the full posterior average (solid) implies greater diversity in trees and linear-leaves over MCMC iterations. In particular, there’s substantial posterior uncertainty in timing of the impact, when acceleration transitions from level at zero into whiplash around time=14. For the right third of inputs there’s disagreement about both slope and noise level. Both BTLM surfaces, MAP and posterior mean, dampen the whiplash to a lesser extent compared to BCART. Which surface is better likely depends upon intended use.

If responses \(y\) are categorical, then a multinomial leaf model and Dirichlet prior pair leads to an analytic marginal likelihood (H. A. Chipman, George, and McCulloch 1998). Other members of the exponential family proceed similarly: Poisson, exponential, negative binomial… Yet to my knowledge none of these choices – besides multinomial – have actually been implemented in software as leaf models in a Bayesian setting.

Technically, any leaf model can be deployed by extending the MCMC to integrate over leaf parameters \(\theta_\eta\) too; in other words, replace analytic integration to calculate marginal likelihoods, in closed form, with a numerical alternative. Since the dimension of the parameter space is changing when trees grow or prune, reversible jump MCMC (Richardson and Green 1997) is required. Beyond that technical detail, a more practical issue is that deep trees/many leaves can result in a prohibitively large parameter space. An important exception is GPs. GPs offer a parsimonious take on nonlinear nonparametric regression, mopping up much of the variability left to the tree with simpler leaf models. GP leaves encourage shallow trees with fewer leaf nodes. At the same time, treed partitioning enables (axis aligned) regime changes in mean stationarity and skedasticity.

Before getting into further detail, let’s look at a stationary GP fit to the motorcycle data. The tgp package provides a Bayesian GP fitting method that works similarly to bcart and btlm. Since GP MCMC mixes well, fewer restarts need be entertained. (Even the default of R=1 works well.)

Although the code above executes an order of magnitude fewer MCMC iterations, runtimes (not quoted here) are much slower for BGP due to the requisite matrix decompositions. Figure 9.10, again using our macro, shows predictive surfaces which result.

Both are nice and smooth, but lead to a less than ideal fit especially as regards variance. In fact you might say that GP and tree-based predictors are complementary. Where one is good the other is bad. Can they work together in harmony?

9.2.2 Treed Gaussian process

Bayesian treed Gaussian process (TGP) models (Gramacy and Lee 2008a) can offer the best of both worlds, marrying the smooth global perspective of an infinite basis expansion, via GPs, with the thrifty local adaptivity of trees. Their divide-and-conquer nature means faster computation from smaller matrix decompositions, and nonstationarity and heteroskedasticity effects as conditionally independent leaves allow for disparate spatial dependencies. Perversely, the two go hand in hand. The more the training data exhibit nonstationary/heteroskedastic features, the more treed partitioning and the faster it goes!

There are too many modeling and implementation details to introduce here. References shall be provided – in addition to the original methodology paper cited above – in due course. For now the goal is to illustrate potential and then move on to more ambitious enterprises with TGP. The program is the same as above, using tgp from CRAN, but with btgp instead. Bayesian posterior sampling is extended to cover GP hyperparameters (lengthscales and nuggets) at the leaves.

Previous calls to tgp’s suite of b* functions specified verb=0 to suppress MCMC progress output printed to the screen by default. The call above is no exception. That output was suppressed because it was either excessive (bcart and btlm) or boring (bgp). Situated in-between on the modeling landscape, btgp progress statements are rather more informative, and less excessive, providing information about accepted tree moves and giving an online indication of trade-offs navigated between smooth and abrupt dynamics. I recommend trying verb=1.

Argument bprior="b0", above, is optional. By default, tgp fits a linear mean GP at the leaves, unless meanfn="constant" is given. Specifying bprior="b0" creates a hierarchical prior linking \(\beta_\eta\) and \(\sigma^2_\eta\), for all \(\eta \in \mathcal{L}_{\mathcal{T}}\), together. That makes sense for the motorcycle data because it starts and ends flat. Under the default setting of bprior="bflat", \(\beta_\eta\) and \(\sigma_\eta^2\) parameters of the linear mean are unrestricted. Results are not much different in that case.

As before, the MAP predictor may be extracted for comparison.

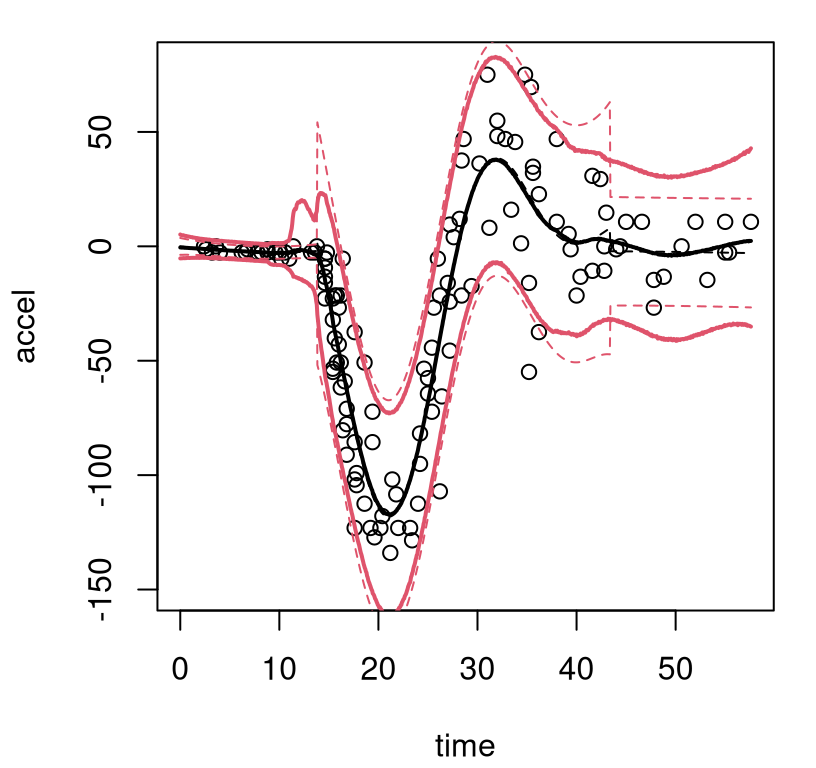

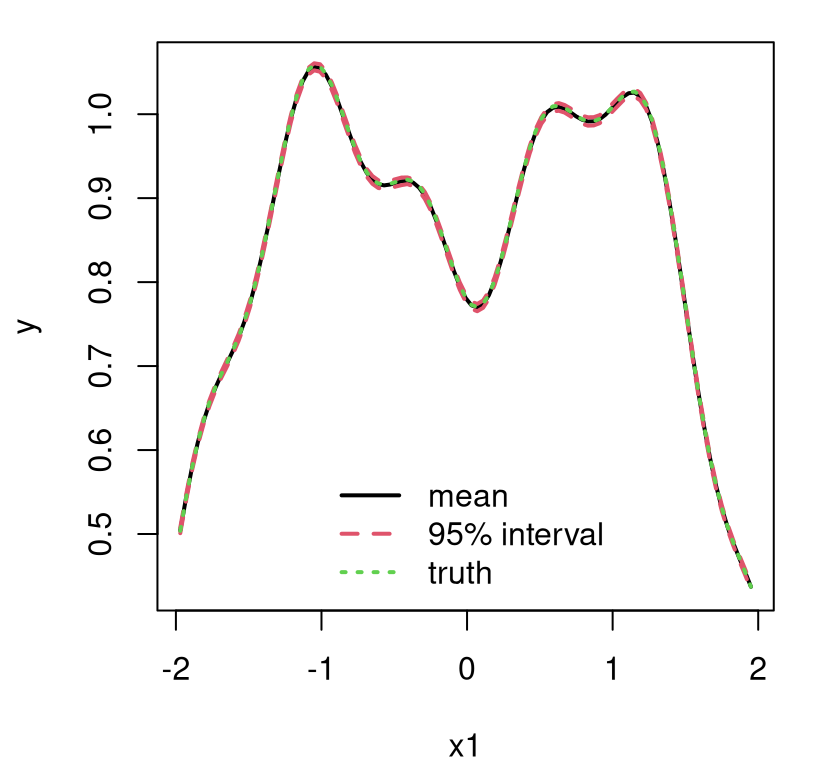

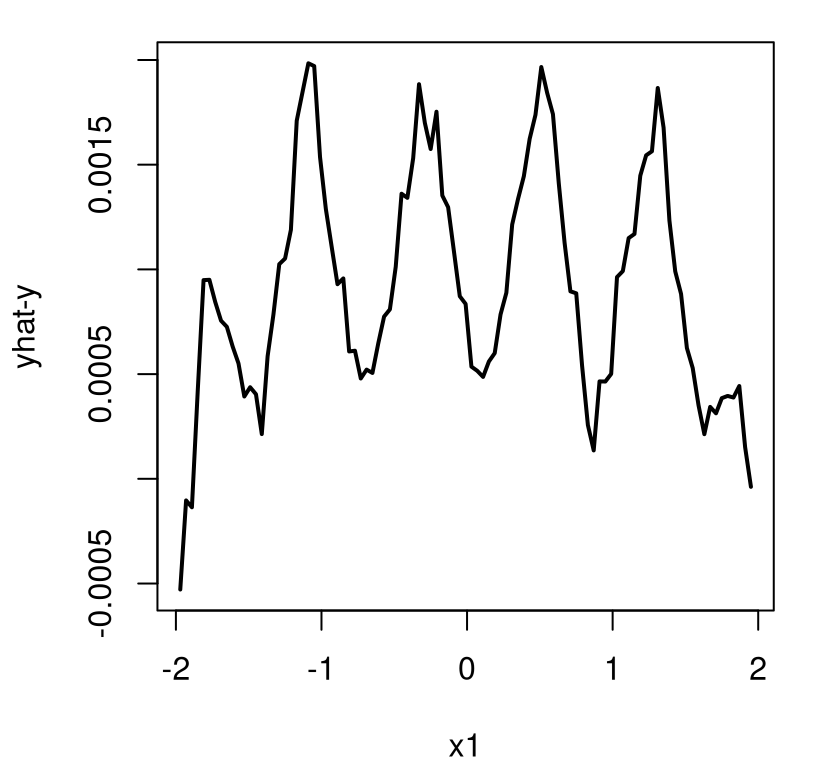

Figure 9.11, generated with our macro, provides a summary of both mean and MAP predictive surfaces.

It’s hard to imagine a better compromise. Both surfaces offer excellent fits on their own, but the posterior mean clearly enjoys greater smoothness, which is warranted by the physics under study. Both surfaces, but particularly the posterior mean, reflect uncertainty in the location of the transition between zero-acceleration and whiplash dynamics for time \(\in (10,15)\). The posterior over trees supports many transition points in that region more-or-less equally.

Often having a GP at all leaves is overkill, and this is the case with the motorcycle accident data. The response is flat for the first third of inputs, and potentially flat in the last third too. Sometimes spatial correlation is only expressed in some input coordinates; linear may be sufficient in others. Gramacy and Lee (2008b) explain how a limiting linear model (LLM) can allow the data to determine the flexibility of the leaf model, offering a more parsimonious fit and speed enhancements when training data determine that a linear model is sufficient to explain local dynamics.

For now, consider how LLMs work in the simple 1d case offered by mcycle.

out.btgpllm <- btgpllm(X=mcycle[,1], Z=mcycle[,2], XX=XX, R=30,
  bprior="b0", verb=0)
outp.btgpllm <- predict(out.btgpllm, XX=XX)

Figure 9.12, showcasing btgpllm, offers a subtle contrast to the btgp fit shown in Figure 9.11.

FIGURE 9.12: Bayesian treed GP with jumps to the limiting linear model (LLM) on the motorcycle data; compare to Figure 9.11.

Observe how the latter third of inputs enjoys a slightly tighter predictive interval in this setting, borrowing strength from the obviously linear (actually completely flat) fit to the first third of inputs. Transition uncertainty from zero-to-whiplash is also somewhat diminished.

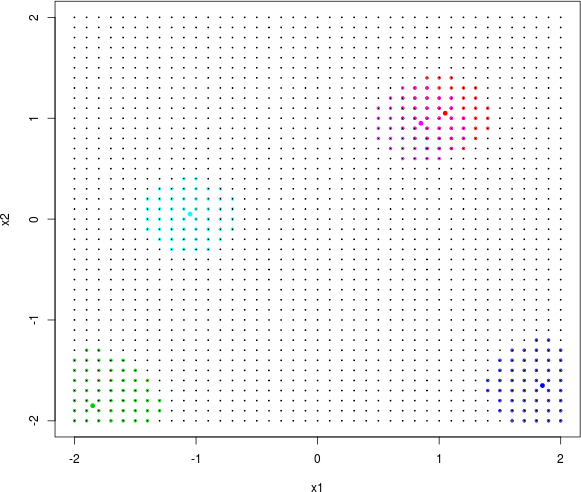

For a two-dimensional example, revisit the exponential data first introduced in §5.1.2. In fact, that data was created to showcase subtle nonstationarity with TGP. A data-generating shorthand is included in the tgp package.

The exp2d.rand function works with a grid in the input space and allows users to specify how many training data points should come from the interesting, lower-left quadrant of the input space versus the other three flat quadrants. The call targets slightly higher sampling in the interesting region, taking remaining grid elements as testing locations. Consider an ordinary (Bayesian) GP fit to these data as a warm up. To illustrate some of the alternatives offered by tgp’s GP capability, the call below asks for isotropic Gaussian correlation with corr="exp".

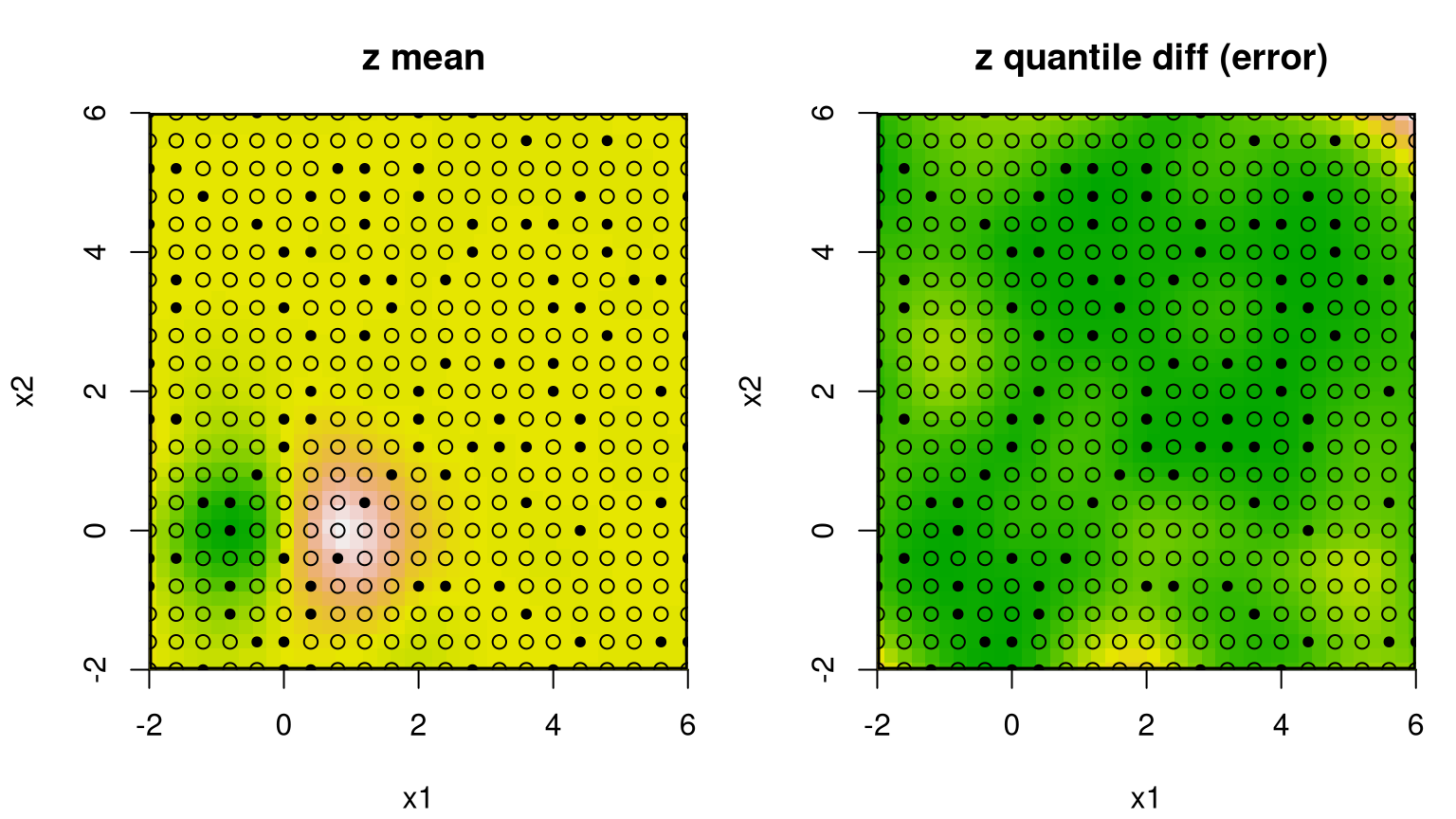

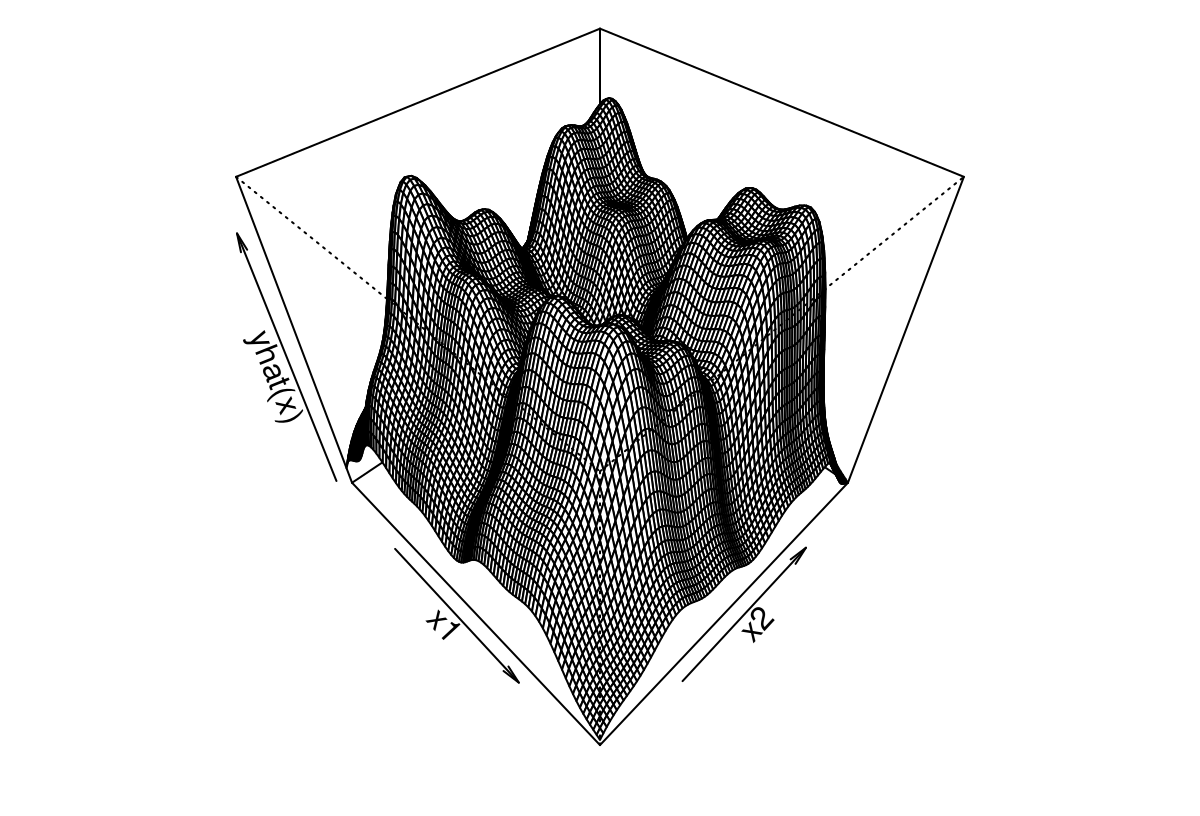

The tgp package provides a somewhat elaborate suite of plot methods defined for "tgp"-class objects. Figure 9.13 utilizes a paired image layout for mean and variance (actually 90% quantile gap) surfaces.

FIGURE 9.13: Bayesian GP fit to the 2d exponential data (§5.1.2) via mean (left) and uncertainty (right; difference between 95% and 5% quantiles).

Occasionally the predictive mean surface (left panel) is exceptionally poor, depending on the random design and response generated by exp2d.rand. The predictive variance (right) almost always disappoints. That’s because the GP is stationary which implies, among other things, uniform uncertainty in distance. Consequently, the uncertainty surface is unable to reveal what is intuitively obvious from the pictures: that the interesting quadrant is harder to predict than the other flat ones. The uncertainty surface is sausage-shaped: higher where training data is scarce. To learn otherwise requires building in a degree of nonstationary flexibility, which is what the tree in TGP facilitates. Consider the analogous btgp fit, with modest restarting to avoid the Markov chain becoming stuck in local posterior modes. With GPs at the leaves, rather than constant or linear models, trees are less deep so tree movement is more fluid.

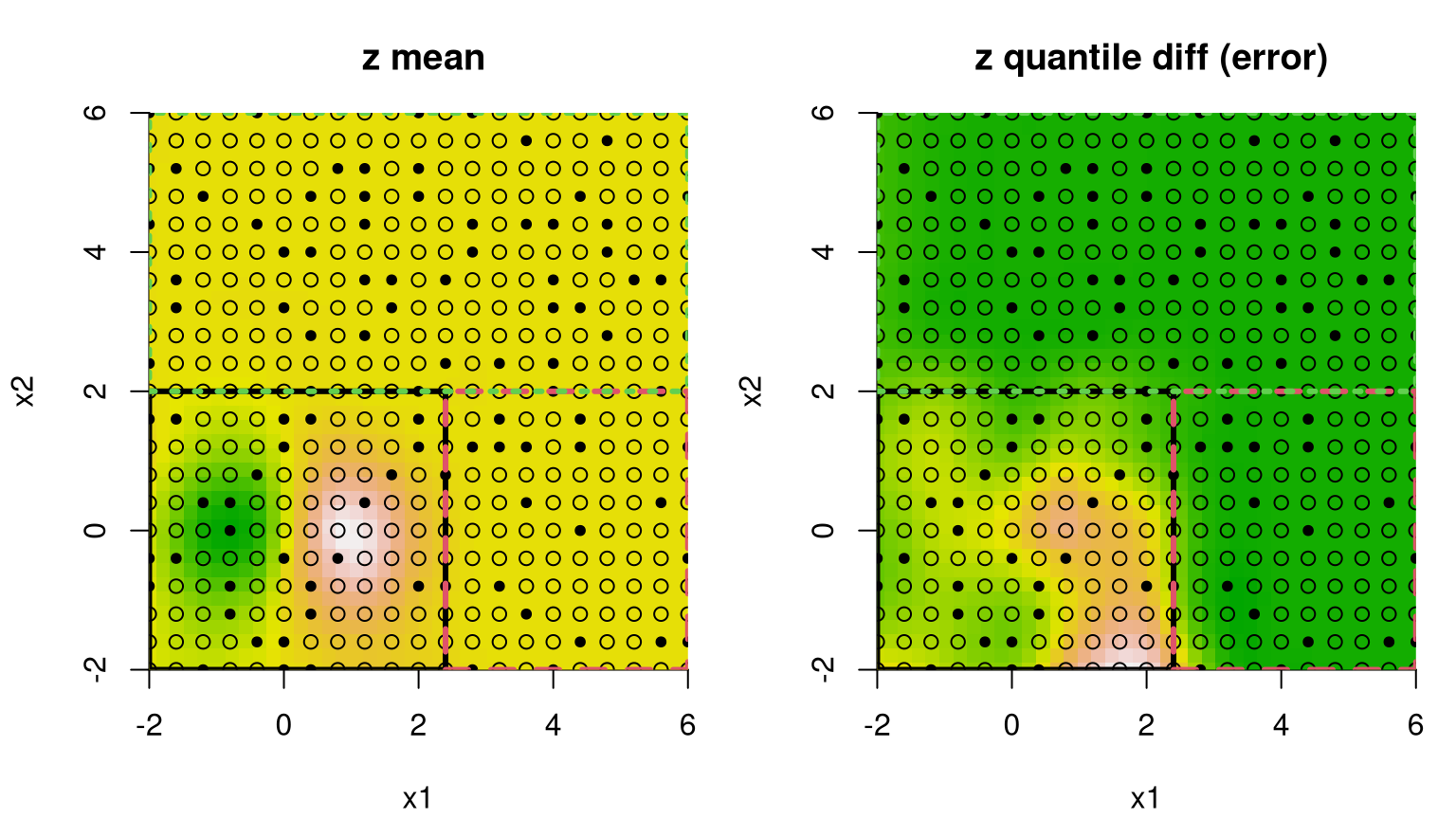

Analogous plots of posterior predictive mean and uncertainty reveal a partition structure that quarantines the interesting region away from the rest, and learns that uncertainty is indeed higher in the lower-left quadrant in spite of denser sampling there. Dashed lines in Figure 9.14 correspond to the MAP treed partition found during posterior sampling.

FIGURE 9.14: Bayesian treed GP fit to the 2d exponential data; compare to Figure 9.13.

Recursive axis-aligned partitioning is both a blessing and curse in this example. Notice that one of the flat quadrants is needlessly partitioned away from the other two. But the view in the figure only depicts one, highly probable tree. Posterior sampling averages over many other trees. In fact, a single accepted swap move would result in the diametrically opposed quadrant being isolated instead. This averaging over disparate, highly probable partitions explains why variance is about the same in these two regions. Unfortunately, there’s no support for viewing all of these trees at once, which would be a mess anyway.

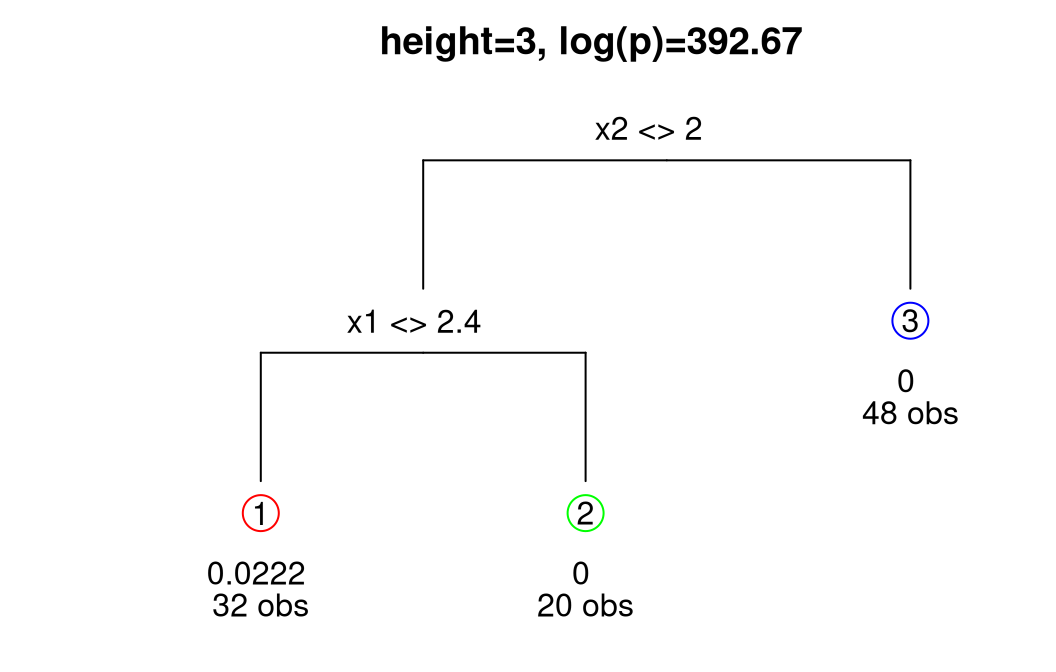

Increased uncertainty for the lower-left quadrant in the right panel of Figure 9.14 is primarily due to the shorter lengthscale and higher nugget estimated for data in that region, as supported by many of the trees sampled from the posterior, particularly the MAP. The diagram in Figure 9.15 provides another visual of the MAP tree, relaying a count of the number of observations in each leaf and estimated marginal variance therein.

FIGURE 9.15: MAP tree under Bayesian treed GP. Leaf information includes an estimate of local scale (equivalent to \(\hat{\tau}^2\)) and number of observations.

Again, the tree indicates that only the lower-left quadrant has substantial uncertainty. Having a regional notion of model inadequacy is essential to sequential design efforts which utilize variance-based acquisition. Examples are ALM/C, IMSPE, etc., from Chapter 6. All those heuristics are ultimately space-filling unless the model accommodates nonstationary flexibility. A homework exercise in §9.4 targets exploration of these ideas on the motivating NASA rocket booster data (§2.1). In an earlier §6.4 exercise we manually partitioned these data to affect sequential design decisions and direct acquisition towards more challenging-to-model regimes. TGP can take the human out of that loop, automating iteration between flexible learning and adaptive design.

Revisiting LGBB (rocket booster) data

TGP was invented for the rocket booster data. NASA scientists knew they needed to partition modeling, and accompanying design, to separate subsonic and supersonic speeds. They had an idea about how the partition might go, but thought it might be better if the data helped out. Below we shall explore that potential with data collected from a carefully implemented, sequentially designed, computer experiment conducted on NASA’s Columbia supercomputer.

lgbb.as <- read.table("lgbb/lgbb_as.txt", header=TRUE)
lgbb.rest <- read.table("lgbb/lgbb_as_rest.txt", header=TRUE)

Those files contain training input–output pairs obtained with ALC-based sequential design (§6.2.2) selected from a dense candidate grid (Gramacy and Lee 2009). Un-selected elements from that grid form a testing set on which predictions are desired. Here our illustration centers on depicting the final predictive surface, culminating after a sequential design effort on the lift output, one of six responses. The curious reader may wish to repeat this analysis and subsequent visuals with one of the other five output columns. Code below sets up the data we shall use for training and testing.

##     X    XX
##   780 37128

The training set is modestly sized and the testing set is big. Fitting isn’t speedy, so we won’t do any restarts (using default R=1). Even better results can be obtained with larger R and with more MCMC iterations, the latter controlled by the BTE argument (“B”urn-in, “T”otal and thinning level to save “E”very sample). Defaults used here target fast execution, not necessarily ideal inferential or predictive performance. CRAN requires that all coded examples in documentation files finish in five seconds. This larger example has no hope of achieving that speed; however, results with the defaults are acceptable as we shall see.

## elapsed
##   138.5

A fitting time of 138 minutes is quite a wait, but not outrageous. Compared to CSK timings from earlier, these btgpllm calculations are slower even though the training data entertained here is almost an order of magnitude smaller. The reason is that our “effective” covariance matrices from treed partitioning aren’t nearly as sparse. We entertained CSKs at 99% and 99.9% sparsity but our btgpllm MAP tree yields effective sparsity closer to 30%, as we illustrate below. Also, keep in mind that tgp bundles fitting and prediction, and our predictive set is huge. CSK examples entertained just five-hundred predictive locations.

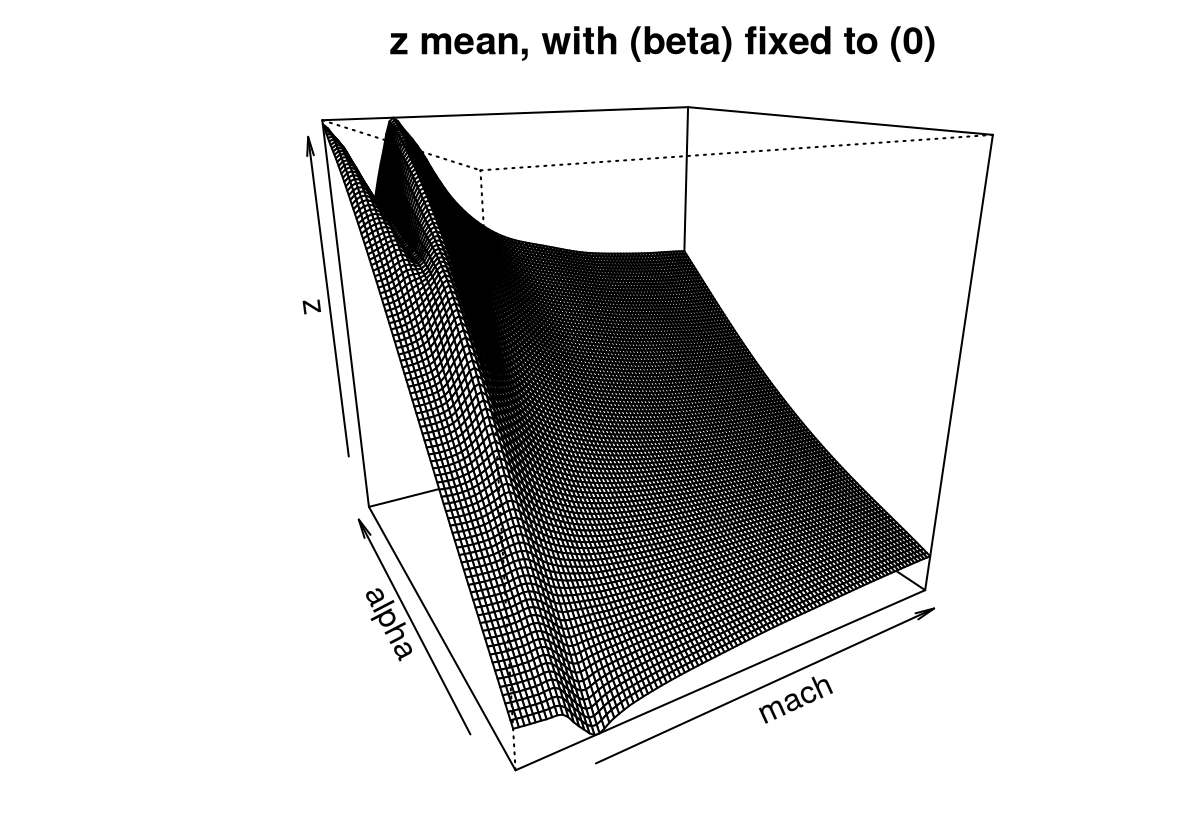

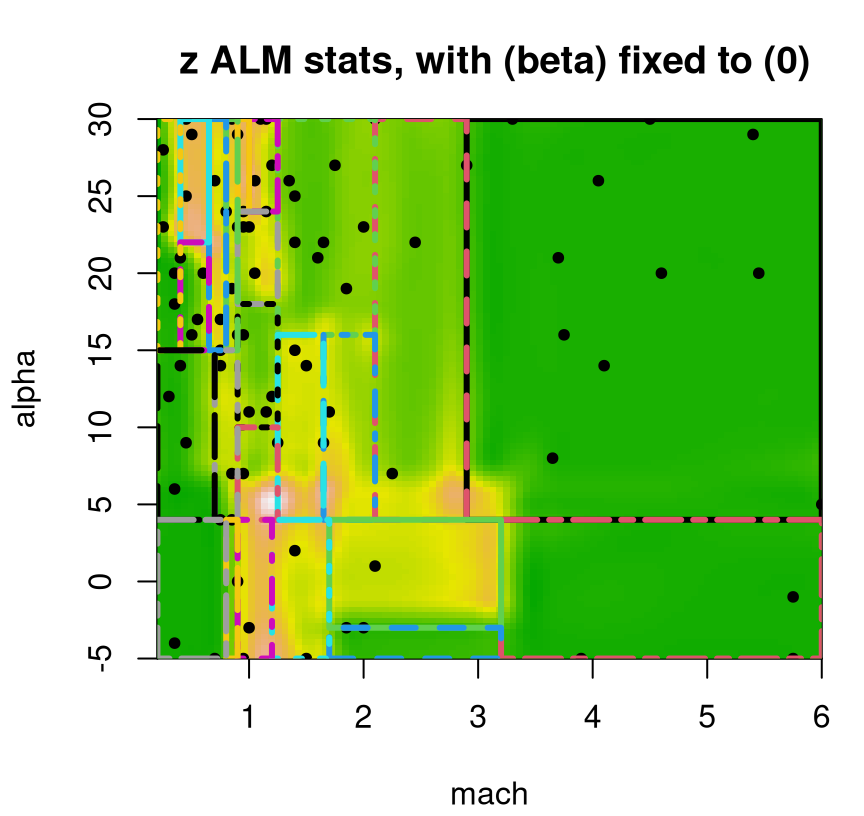

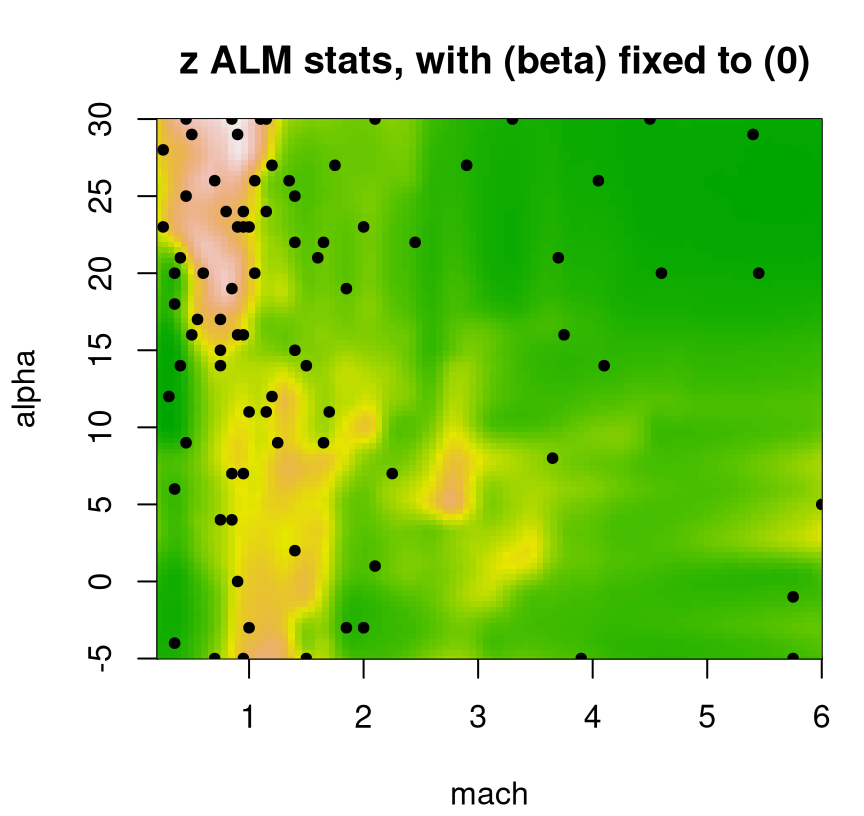

Figure 9.16 provides a 2d slice of the posterior predictive surface where the third input, side-slip angle (beta), is fixed to zero. The plot.tgp method provides several hooks that assist in 2d visualization of higher dimensional fitted surfaces through slices and projections. More details can be found in package documentation.

FIGURE 9.16: Slice of the Bayesian TGP with LLM predictive surface for the LGBB lift response.

Observe that the predictive mean surface is able to capture the ridge nearby low speeds (mach) and for high angles of attack (alpha), yet at the same time furnish a more slowly varying surface at higher speeds. That would not be possible under a stationary GP: one of the two regimes (or both) must compromise, and the result would be an inferior fit.

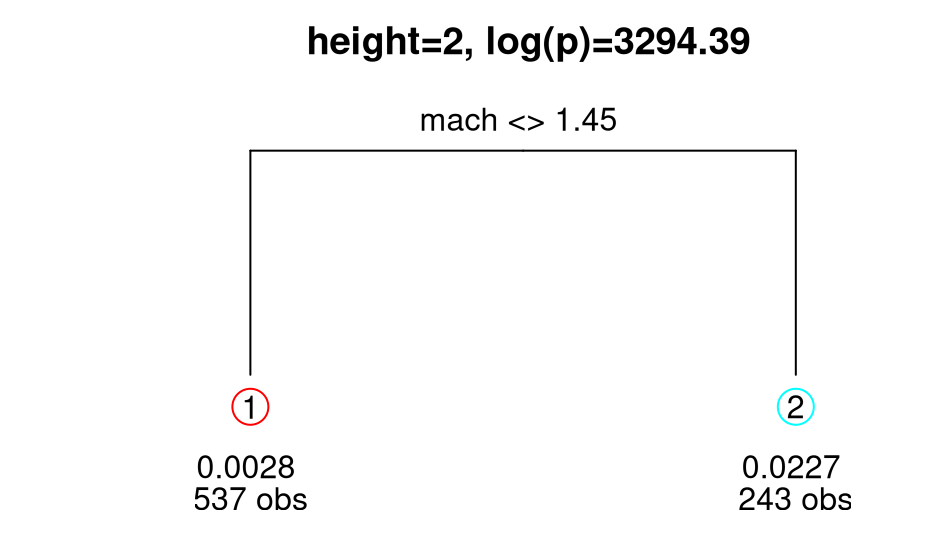

The MAP tree, visualized in Figure 9.17, indicates a two-element partition.

FIGURE 9.17: MAP tree for the lift response.

The number of data points in each leaf implies an effective (global) covariance matrix that’s about 30% sparse, with the precise number depending on the random seed used to generate this Rmarkdown build. Code for a more precise calculation – for a more interesting case with more leaves – is provided momentarily. Note this applies only for the MAP tree; the other thousands of trees visited by the Markov chain would likely be similar but seldom identical.
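As a minimal sketch of that arithmetic, assume rows are ordered by leaf so that the effective covariance matrix is block diagonal. The fraction of structurally zero entries is then one minus the sum of squared leaf sizes over \(N^2\). Leaf counts below (650 and 130) are hypothetical stand-ins that total 780, not values read from the fitted tree, and eff.sparsity is a helper introduced only for illustration.

```r
## fraction of structural zeros in a block-diagonal covariance
## whose block sizes are given by the leaf counts n
eff.sparsity <- function(n) 1 - sum(n^2)/sum(n)^2

## hypothetical two-leaf split of the 780 training runs
n.hyp <- c(650, 130)
eff.sparsity(n.hyp)

## brute-force check: flag same-leaf pairs and average the rest
leaf <- rep(seq_along(n.hyp), times=n.hyp)
same <- outer(leaf, leaf, "==")
mean(!same)
```

With only two leaves this quantity cannot exceed 50%, which is attained by an even split.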

By default, bt* fitting functions begin with a null tree/single leaf containing all of the data, implying a 100% dense \(780 \times 780\) covariance matrix. So the first several hundred iterations of MCMC, before the first grow move is accepted, may be particularly slow. Even after successfully accepting a grow, subsequent prunes entertain a full \(780 \times 780\) covariance matrix when evaluating MH acceptance ratios. Thus the method is still in \(\mathcal{O}(N^3)\), pointing to very little improvement on computation, at least in terms of computational order. A more favorable assessment would be that we get enhanced fidelity at no extra cost compared to a (dense covariance) stationary GP. More aggressive use of the tree is required when speed is a priority. But let’s finish this example first.
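To get a rough feel for what more aggressive partitioning could buy, here is a small sketch comparing leaf-wise cubic costs to a single dense decomposition. It is a crude proxy under stated assumptions: constants are ignored, as are tree moves that revisit larger matrices, and the leaf sizes and the helper rel.flops are hypothetical.

```r
## cubic-cost proxy: leaf-wise decompositions relative to one dense N x N
rel.flops <- function(n) sum(n^3)/sum(n)^3

rel.flops(780)             ## null tree: same cost as a stationary GP
rel.flops(c(650, 130))     ## hypothetical lopsided two-leaf tree
rel.flops(rep(78, 10))     ## hypothetical ten equal leaves
```

Under this proxy, \(k\) equal leaves reduce the cost by a factor of \(1/k^2\), so a lopsided two-leaf tree offers only modest relief.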

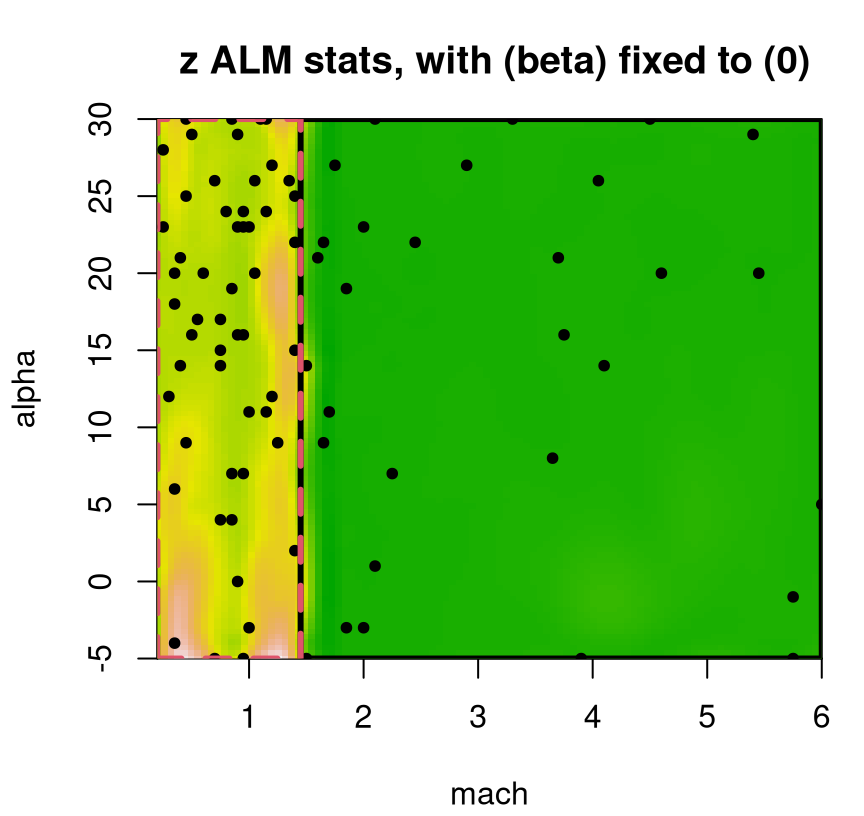

A slightly tweaked plot.tgp call can provide the predictive variance surface. See Figure 9.18. As in our visuals for the 2d exponential data (e.g., Figure 9.14), training inputs (dots) and testing locations (open circles) are shown automatically. A dense testing grid causes lots of open circles to be drawn, which unfortunately darkens the predictive surface. Adding pXX=FALSE makes for a prettier picture, but leaves the testing grid to the imagination. (Here, that grid is pretty easy to imagine in the negative space.) The main title says “z ALM stats”, which should be interpreted as “variance of the response(s)”. Recall that ALM sequential design from §6.2.1 involves a maximizing variance heuristic.

FIGURE 9.18: Predictive uncertainty surface for a slice of the lift response with MAP partition and training data (in slice) overlaid.

Several notable observations can be drawn from this surface. First, the partition doesn’t split the input space equally in a geographic sense. However it does partition the 780 inputs somewhat more equally. This is because the design is non-uniform, emphasizing low-speed inputs. Sequential design was based on ALC, not ALM, but since both focus on variance they would recommend similar acquisitions. Observe that predictive uncertainty is much higher in the low-speed regime, so future acquisitions would likely demand even heavier sampling in that region. In the next iteration of an ALC/M scheme, one might select a new run from the lower-left (low mach, low alpha) region to add into the training data. Finally, notice how high uncertainty bleeds across the MAP partition boundary – a relic of uncertainty in the posterior for \(\mathcal{T}\).

The tgp package provides a number of “knobs” to help speed things up at the expense of faithful modeling. One way is through the prior. For example, \(p_{\mathrm{split}}\) arguments \(\alpha\) (bigger) and \(\beta\) (smaller) can encourage deeper trees and consequently smaller matrices and faster execution.
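To see how those two arguments shape tree depth, recall the CGM form in which a node at depth \(D\) splits with prior probability \(\alpha(1 + D)^{-\beta}\). The sketch below tabulates that probability for two settings. Both \((\alpha, \beta)\) pairs are illustrative choices, not claimed to be tgp defaults, and psplit is a hypothetical helper rather than a package function.

```r
## CGM-style prior probability that a node at depth D splits
psplit <- function(D, alpha, beta) alpha * (1 + D)^(-beta)

D <- 0:5
shallow <- psplit(D, alpha=0.5, beta=2)     ## illustrative: discourages depth
deep <- psplit(D, alpha=0.95, beta=0.5)     ## illustrative: encourages depth
round(rbind(D=D, shallow=shallow, deep=deep), 3)
```

The second setting keeps split probabilities high several levels down, which is what favors smaller leaves and hence smaller matrices.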

Another way is through MCMC initialization. Providing linburn=TRUE will burn-in treed GP MCMC with a treed linear model, and then switch-on GPs at the leaves before collecting samples. That facilitates two economies. For starters it shortcuts an expensive full GP burn-in while waiting for accepted grow moves to organically partition up the input space into smaller GPs. More importantly, it causes the tree to over-grow, ensuring smaller partitions, initializing the chain in a local mode of tree space that’s hard to escape even after GPs are turned on. Once that happens some pruning is typical, but almost never entirely back to where the chain should be under the target distribution. You might say that linburn=TRUE takes advantage of poor tree mixing, originally observed by CGM, to favor speed.

Consider a btgpllm call identical to the one we did above, except with linburn=TRUE.

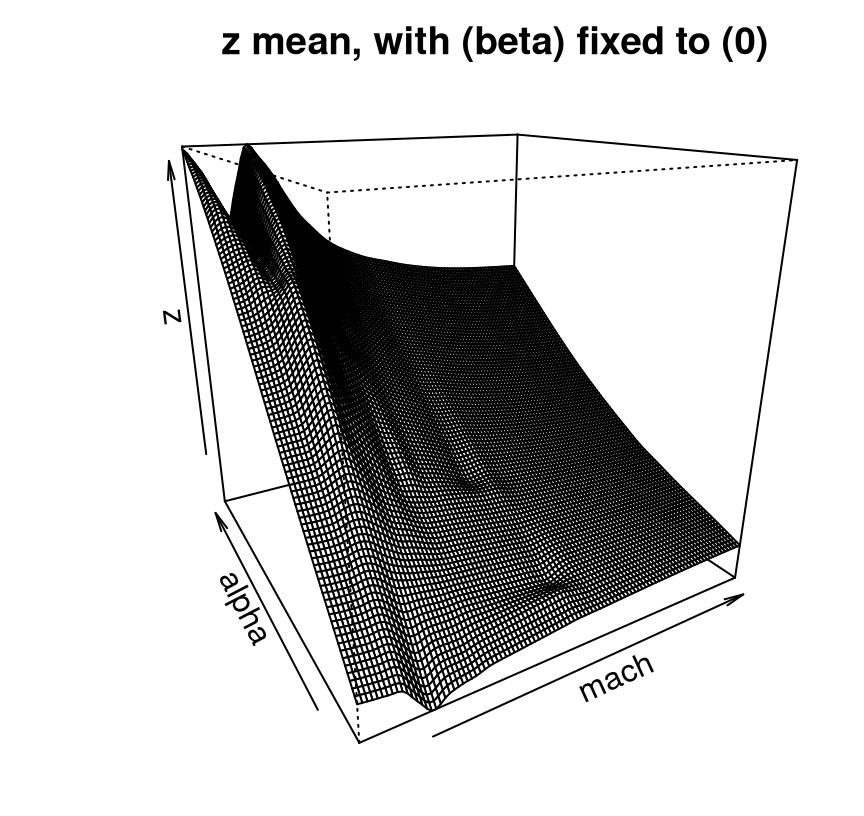

As you can see in Figure 9.19, our new visual of the beta=0 slice is not much different than Figure 9.16’s ideal fit. Much of the space is plausibly piecewise linear anyway, but it helps to smooth out rough edges with the GP.

FIGURE 9.19: Approximate LGBB lift posterior mean slice under linear model burn-in; compare to Figure 9.16.

It’s difficult to speculate on the exact nature of the aesthetic difference between this new linburn=TRUE surface and the earlier ideal one. Sometimes the thriftier surface over-smooths. Sometimes it under-smooths revealing kinks or wrinkles. Sometimes it looks pretty much the same. What is consistent, however, is that nothing looks entirely out of place while execution time is about 29 times faster than otherwise, taking about 5 minutes in this build.

##    full.elapsed linburn.elapsed
##          8309.8           287.5

To explain the speedup, code below calculates the effective degree of sparsity realized by the MAP tree.

map.height <- fit2$posts$height[which.max(fit2$posts$lpost)]
leafs.n <- fit2$trees[[map.height]]$n
1 - sum(leafs.n^2)/(sum(leafs.n)^2)

## [1] 0.9314

Approximately 93% sparse – much closer to the 99% of CSK. Maybe its predictive surface isn’t as smooth as it could be, or as would be ideal, say for a final visualization in a published article. For most other purposes, however, linburn fits possess all of the requisite ingredients.

One such purpose is sequential design, where turning around acquisitions quickly can be essential to applicability. We already talked about ALM: an estimate of predictive variance at XX locations comes for free. Usually, although not in all Rmarkdown builds, the ALM/variance surface plotted in Figure 9.20 demands future acquisitions in the interesting part of the space, for low speeds and high angles of attack. Even when that’s not the case, the maximizing location is usually sensible. An important thing to keep in mind about sequential design, especially when individual decisions seem erratic, is that the long run of many acquisitions is what really counts.
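The ALM rule itself is simple once variances at candidates are in hand: pick the candidate with the largest one. Below is a minimal sketch on toy data. The grid XX.toy, its ranges, and the variance surface s2.toy are all hypothetical stand-ins constructed for illustration, not output from a tgp fit.

```r
## toy stand-ins: candidate grid and predictive variances (hypothetical)
XX.toy <- expand.grid(mach=seq(0, 6, length.out=13),
  alpha=seq(-5, 30, length.out=8))
## made-up variance surface, larger at low mach and high alpha
s2.toy <- exp(-XX.toy$mach) * (1 + XX.toy$alpha/30)

## ALM: acquire the candidate with the largest predictive variance
i.star <- which.max(s2.toy)
XX.toy[i.star,]
```

In a sequential loop, the selected row would be evaluated, appended to the training data, and the fit refreshed before the next acquisition.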

FIGURE 9.20: Approximate predictive uncertainty surface under linear model burn-in; compare to Figure 9.18.

Having to specify XX locations in advance rules out continuous optimization of acquisition criteria, say with optim. Gramacy and Lee (2009) describe a sequential treed maximum entropy (ME) candidate-based scheme for coping with this drawback. The essence is to seek space-filling candidates separately in each region of the MAP partition. ALC (§6.2.2) is available via Ds2x=TRUE, signaling calculations of integrated change in variance \(\Delta \sigma^2(x)\) for all \(x \in\) XX. Testing XX locations are also used as reference sites in a sum approximating the ALC integral (6.7). This makes the computational expense quadratic in nrow(XX), which can be a tall order for testing sets sized in the tens of thousands \((780^3 \ll 37128^2)\). Treed ME XX thus prove valuable as reference locations as well. Finally, additional computational savings may be realized by undoing some of tgp’s defaults, such as automatic predictive sampling at training X locations. Those aren’t really necessary for acquisition and can be skipped by providing pred.n=FALSE.
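A quick check of the magnitudes involved may help. This is a crude count that ignores constants, using the training and testing sizes reported earlier. The reduced reference set size m is a hypothetical value chosen only to illustrate how a smaller set of reference sites would scale the ALC sum.

```r
n <- 780        ## training set size (from the text)
nXX <- 37128    ## testing/candidate set size (from the text)

## cubic in n versus quadratic in nrow(XX)
c(cubic.in.n=n^3, quad.in.XX=nXX^2, ratio=nXX^2/n^3)

## hypothetical: m reference sites make the ALC sum cost nXX*m
m <- 1000
nXX*m / nXX^2   ## fraction of the full quadratic cost
```

Under this count the quadratic term in nrow(XX) is the larger of the two, so trimming reference sites is where savings would be found.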

Often simple leaf models, e.g., constant or linear, lead to great sequential designs. Variance estimates can be quite good, on relative terms, even if predictive means are unfaithful to dynamics exhibited by the response surface. Consequently ALM/C heuristics work quite well. You don’t need smooth prediction to find out where model uncertainty (predictive variance) is high. Consider the treed linear model …

… and resulting ALM surface provided in Figure 9.21. Treed partitioning can be quite heavy, so option pparts=FALSE suppresses those colorful rectangles to provide a clearer view of spatial variance.

plot(fit3, slice=list(x=3,z=0), gridlen=c(100,100), layout="as", as="alm",
  span=0.01, pparts=FALSE, pXX=FALSE)

FIGURE 9.21: Even more approximate predictive uncertainty surface under a Bayesian treed LM for a slice of the lift response; compare to Figures 9.18 and 9.20.

That surface indicates a sufficiently localized sense of uncertainty despite having what would most certainly be a highly discontinuous (and thus potentially quite inaccurate) predictive mean analog. Despite a factor of R=10 more MCMC sampling, that fit takes a fraction of the time: a little over 1 minute, which is about 100 times faster than a full TGP.

##    full.elapsed linburn.elapsed    btlm.elapsed
##         8309.82          287.49           82.83

Sometimes treed LMs provide even better acquisitions than treed GPs do. In my experience, this only happens in the latter stages of sequential design. Early on, GP smoothness – at least in part – is key to sensible acquisition. Linear models only have high variance at the edges, i.e., at partition boundaries, which can cause self-reinforcing acquisitions and trigger a vicious cycle. Boundary-targeting sequential design puts a heavy burden on tree mixing in the MCMC, which CGM remind us is problematic for linear and constant leaves. If speed is a concern, my preference is for a treed GP with linburn=TRUE shortcuts over wholesale treed linear or constant models. Treed LM burn-in offers a nice compromise: fast predictions and design estimates; smoothed out rough edges, reducing spurious variances due to “over-quilted” input spaces arising from deep trees compensating for crude linear fits at the leaves.
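As a quick cross-check of the speedups quoted in this subsection, the elapsed times reported above (taken here to be in seconds) can be converted to minutes and expressed relative to the full fit. The vector name secs is introduced only for this illustration.

```r
## elapsed times as reported above, assumed to be in seconds
secs <- c(full=8309.82, linburn=287.49, btlm=82.83)

round(secs/60, 1)               ## minutes
round(secs["full"]/secs, 1)     ## speedup relative to the full fit
```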

9.2.3 Regression tree extensions, off-shoots and fix-ups

The tgp package also supports expected improvement (EI) for Bayesian optimization (BO; §7.2). Providing improv=TRUE to any b* function causes samples to be gathered from the posterior mean of improvements, converted from raw improvement \(I(x)\) using \(Y(x)\) sampled from the posterior predictive distribution. So you get a fully Bayesian EI, averaged over tree and leaf model uncertainty, for a truly Bayesian BO. (Before the “B” in BO meant marginalizing over a GP latent field; see §5.3.2. Here it means averaging over all uncertainties through posterior integration over the full set of unknown parameters, including trees.) A downside is that candidates, as with other Bayesian TGP sampling of active learning heuristics (ALC/M), must be specified in advance of sampling, thwarting acquisition by local optimization. The upside is that such sampling naturally extends to powered up improvements, encouraging exploration, and ranking by improvement for batch sequential optimization (Taddy et al. 2009).
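The sampling-based estimate described above can be sketched in a few lines, assuming minimization with \(I(x) = \max\{0, f_{\min} - Y(x)\}\) as in §7.2. Independent normal draws stand in for posterior predictive samples, which in TGP would come from MCMC over trees and leaf models. The helper mc.improv and all means, standard deviations and the value of fmin are hypothetical; this is not how tgp organizes the calculation internally.

```r
## Monte Carlo improvement: rows of Ysamp are posterior predictive draws
## of Y(x), columns are candidates; g > 1 "powers up" improvements
mc.improv <- function(Ysamp, fmin, g=1) colMeans(pmax(fmin - Ysamp, 0)^g)

set.seed(1)
mu <- c(1.0, 0.2, 0.5)      ## hypothetical predictive means
s <- c(0.1, 0.1, 1.0)       ## hypothetical predictive sds
Ysamp <- sapply(1:3, function(j) rnorm(5000, mu[j], s[j]))
fmin <- 0.3                 ## hypothetical best value found so far

ei <- mc.improv(Ysamp, fmin)         ## sample-averaged improvement
ei2 <- mc.improv(Ysamp, fmin, g=2)   ## powered-up, more exploratory
rank(-ei)                            ## rank candidates by improvement
```

Because everything is an average over samples, swapping in a power \(g\) or ranking candidates requires no new derivation, which is the convenience the text alludes to.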

For more details on tgp, tutorials and examples, see

- Gramacy (2007): a beginner’s primer, including instruction on custom compilation for fast linear algebra and threaded prediction for large XX, e.g., for LGBB; also see vignette("tgp") in the package;
- Gramacy and Taddy (2010): advanced topics like EI, categorical inputs (Broderick and Gramacy 2011), sensitivity analysis, and importance tempering (Gramacy, Samworth, and King 2010) to improve MCMC mixing; also see vignette("tgp2") in the package.

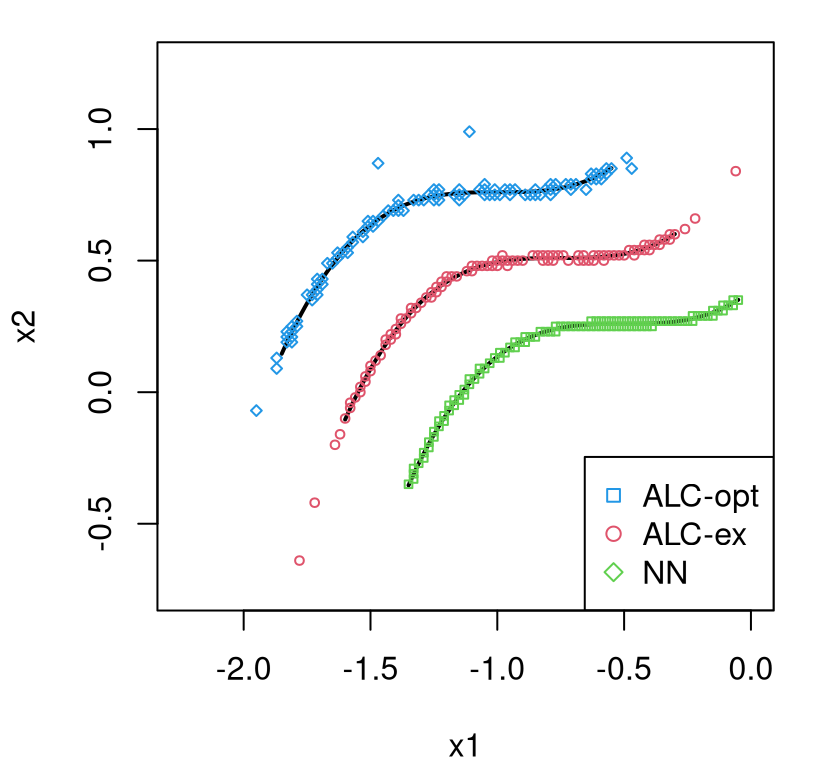

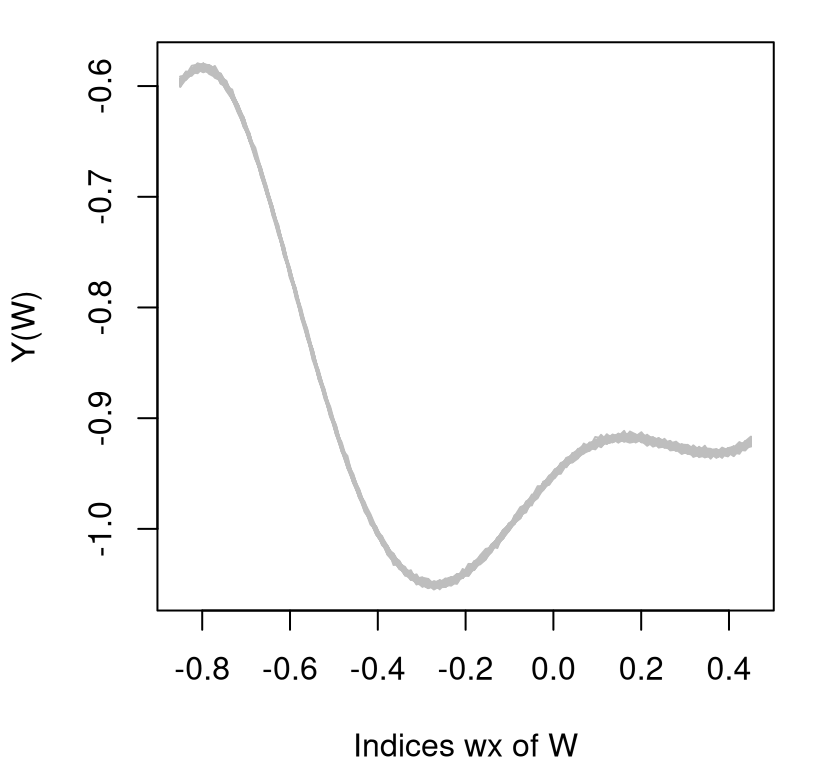

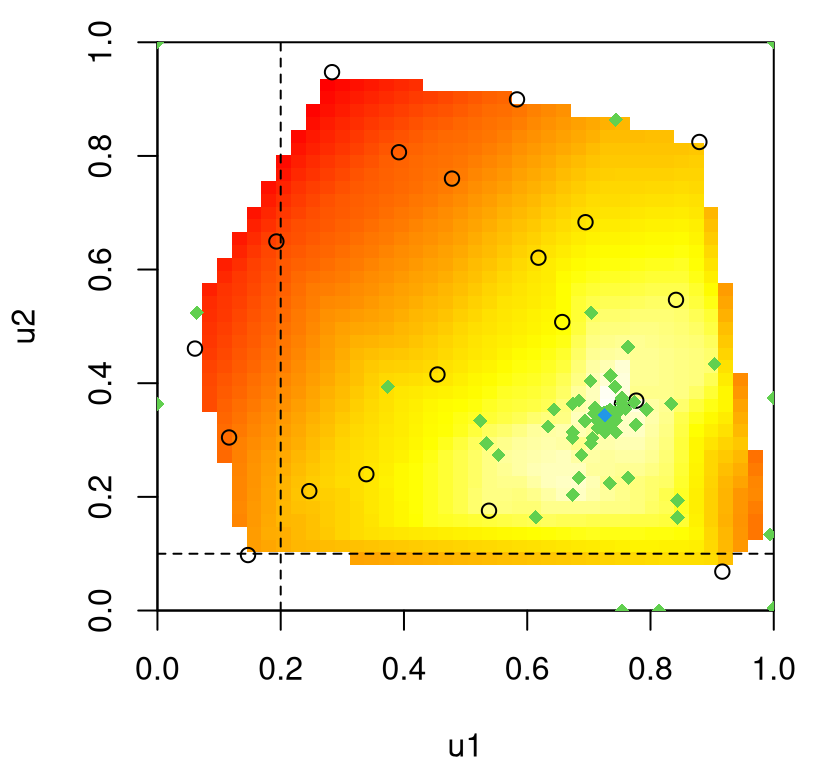

Several authors have further extended TGP capability. Konomi et al. (2017) demonstrate a nonstationary calibration framework (§8.1) based on TGP. MATLAB® code is provided as supplementary material supporting their paper. Classification with TGP, utilizing a logit linked multinomial response model paired with latent (treed) GP random field, has been explored (Broderick and Gramacy 2011). However synergy between tree and GP is weaker here than in the regression context. Notions of smoothness are artificial when classification labels are involved. A GP latent field can mimic tree-like partitioning features without the help of the tree. Yet the authors were able to engineer some examples where their hybrid was successful.