6 Linear Model Selection and Regularization

Load the packages used in this chapter:

library(tidyverse)

library(tidymodels)

library(broom)

library(gt)

library(patchwork)

library(tictoc)

# Load my R package and set the ggplot theme

library(dunnr)

extrafont::loadfonts(device = "win", quiet = TRUE)

theme_set(theme_td())

set_geom_fonts()

set_palette()Before discussing non-linear models in Chapters 7, 8 and 10, this chapter discusses some ways in which the simple linear model can be improved by replacing the familiar least squares fitting with some alternative fitting procedures. These alternatives can sometimes yield better prediction accuracy and model interpretability.

Prediction Accuracy: Provided that the true relationship between the response and the predictors is approximately linear, the least squares estimates will have low bias. If \(n >> p\)—that is, if \(n\), the number of observations, is much larger than \(p\), the number of variables—then the least squares estimates tend to also have low variance, and hence will perform well on test observations. However, if \(n\) is not much larger than \(p\), then there can be a lot of variability in the least squares fit, resulting in overfitting and consequently poor predictions on future observations not used in model training. And if \(p > n\), then there is no longer a unique least squares coefficient estimate: the variance is infinite so the method cannot be used at all. By constraining or shrinking the estimated coefficients, we can often substantially reduce the variance at the cost of a negligible increase in bias. This can lead to substantial improvements in the accuracy with which we can predict the response for observations not used in model training.

Model Interpretability: It is often the case that some or many of the variables used in a multiple regression model are in fact not associated with the response. Including such irrelevant variables leads to unnecessary complexity in the resulting model. By removing these variables—that is, by setting the corresponding coefficient estimates to zero—we can obtain a model that is more easily interpreted. Now least squares is extremely unlikely to yield any coefficient estimates that are exactly zero. In this chapter, we see some approaches for au- tomatically performing feature selection or variable selection—that is, feature for excluding irrelevant variables from a multiple regression model.

In this chapter, we discuss three important classes of methods:

Subset Selection. This approach involves identifying a subset of the \(p\) predictors that we believe to be related to the response. We then fit a model using least squares on the reduced set of variables.

Shrinkage. This approach involves fitting a model involving all \(p\) predictors. However, the estimated coefficients are shrunken towards zero relative to the least squares estimates. This shrinkage (also known as regularization) has the effect of reducing variance. Depending on what type of shrinkage is performed, some of the coefficients may be esti- mated to be exactly zero. Hence, shrinkage methods can also perform variable selection.

Dimension Reduction. This approach involves projecting the \(p\) predictors into an \(M\)-dimensional subspace, where \(M < p\). This is achieved by computing \(M\) different linear combinations, or projections, of the variables. Then these \(M\) projections are used as predictors to fit a linear regression model by least squares.

Although this chapter is specifically about extensions to the linear model for regression, the same concepts apply to other methods, such as the classification models in Chapter 4.

6.1 Subset Selection

Disclaimer at the top: as mentioned in section 3.1, there are a lot of reasons to avoid subset and stepwise model selection. Here are some resources on this topic:

- The 2018 paper by Smith (2018).

- A Stack Overflow response.

- Frank Harrell comments.

Regardless, I will still work through the examples in the text as a programming exercise.

However, tidymodels does not have the functionality for subset/stepwise selection, so I will be using alternatives.

6.1.1 Best Subset Selection

To perform best subset selection, we fit \(p\) models that contain exactly one predictor, \({p \choose 2} = p(p-1)/2\) models that contain exactly two predictors, and so on. In total, this involves fitting \(2^p\) models. Then we select the model that is best, usually following these steps

- Let \(\mathcal{M}_0\) denote the null model, which contains no predictors. This model simply predicts the sample mean.

- For \(k = 1, 2, \dots, p\):

- Fit all \({p \choose k}\) models that contain exactly \(k\) predictors.

- Pick the best among these \({p \choose k}\) models, and call it \(\mathcal{M}_k\). Here, best is defined as having the smallest RSS, or equivalently the largest \(R^2\).

- Select a single best model from among \(\mathcal{M}_0, \dots, \mathcal{M}_p\) using cross-validated prediction error \(C_p\) (AIC), BIC, or adjusted \(R^2\).

Step 2 identifies the best model (on the training data) for each subset size, in order to reduce the problem from \(2^p\) to \(p + 1\) possible models. Choosing the single best model from the \(p + 1\) options must be done with care, because the RSS of these models decreases monotonically, and the \(R^2\) increases monotonically, as the number of predictors increases. A model that minimizes these metrics will have a low training error, but not necessarily a low test error, as we saw in Chapter 2 in Figures 2.9-2.11. Therefore, in step 3, we use a cross-validated prediction error \(C_p\), BIC, or adjusted \(R^2\) in order to select the best model.

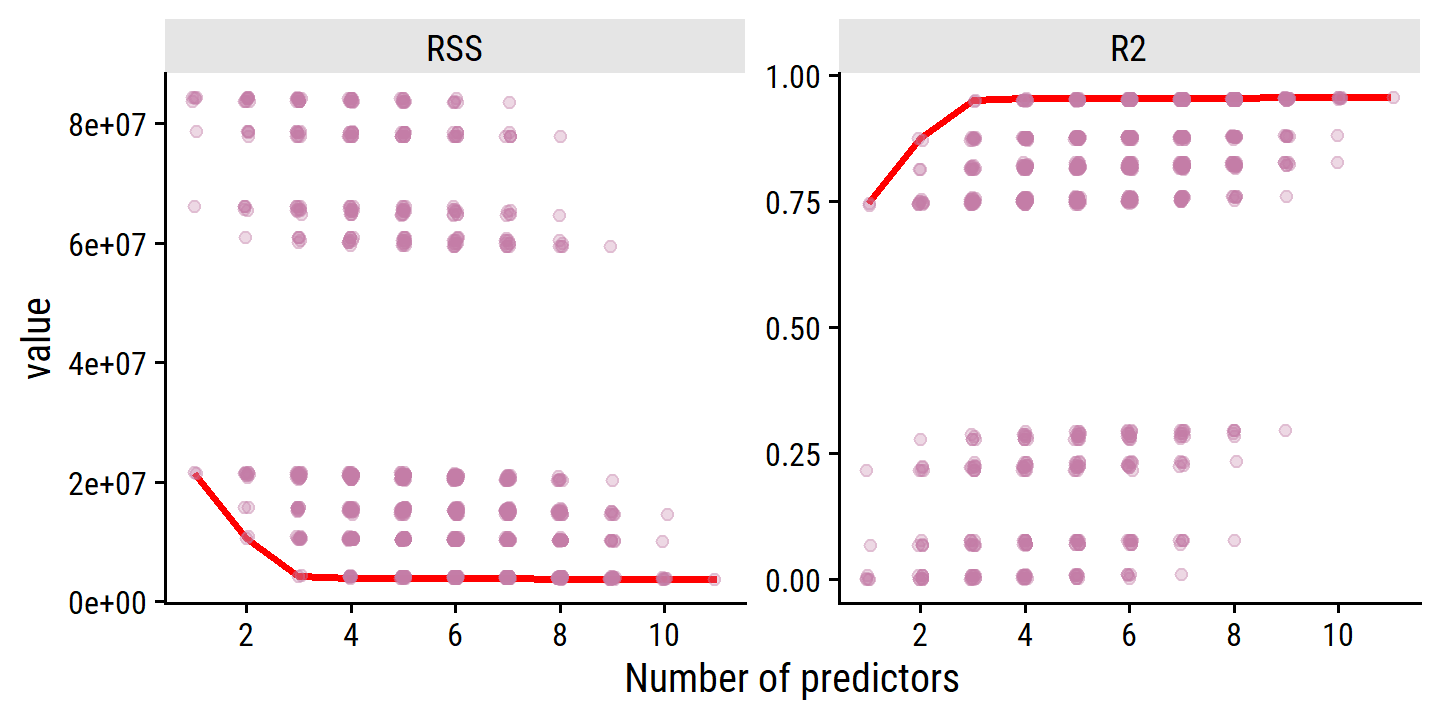

Figure 6.1 includes many least squares regression models predicting Balance, and fit using a different subsets of 10 predictors.

Load the credit data set:

credit <- ISLR2::Credit

glimpse(credit)## Rows: 400

## Columns: 11

## $ Income <dbl> 14.891, 106.025, 104.593, 148.924, 55.882, 80.180, 20.996, 7…

## $ Limit <dbl> 3606, 6645, 7075, 9504, 4897, 8047, 3388, 7114, 3300, 6819, …

## $ Rating <dbl> 283, 483, 514, 681, 357, 569, 259, 512, 266, 491, 589, 138, …

## $ Cards <dbl> 2, 3, 4, 3, 2, 4, 2, 2, 5, 3, 4, 3, 1, 1, 2, 3, 3, 3, 1, 2, …

## $ Age <dbl> 34, 82, 71, 36, 68, 77, 37, 87, 66, 41, 30, 64, 57, 49, 75, …

## $ Education <dbl> 11, 15, 11, 11, 16, 10, 12, 9, 13, 19, 14, 16, 7, 9, 13, 15,…

## $ Own <fct> No, Yes, No, Yes, No, No, Yes, No, Yes, Yes, No, No, Yes, No…

## $ Student <fct> No, Yes, No, No, No, No, No, No, No, Yes, No, No, No, No, No…

## $ Married <fct> Yes, Yes, No, No, Yes, No, No, No, No, Yes, Yes, No, Yes, Ye…

## $ Region <fct> South, West, West, West, South, South, East, West, South, Ea…

## $ Balance <dbl> 333, 903, 580, 964, 331, 1151, 203, 872, 279, 1350, 1407, 0,…For \(p = 10\) predictors, there are \(2^{10} = 1024\) possible combinations for models (including the null model, but this example doesn’t include it).

To get every combination, I’ll use the utils::combn() function:

credit_predictors <- names(credit)

credit_predictors <- credit_predictors[credit_predictors != "Balance"]

credit_model_subsets <- tibble(

n_preds = 1:10,

predictors = map(n_preds,

~ utils::combn(credit_predictors, .x, simplify = FALSE))

) %>%

unnest(predictors) %>%

mutate(

model_formula = map(predictors,

~ as.formula(paste("Balance ~", paste(.x, collapse = "+"))))

)

glimpse(credit_model_subsets)## Rows: 1,023

## Columns: 3

## $ n_preds <int> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 2, 2, 2, 2, 2, 2, 2, 2, 2,…

## $ predictors <list> "Income", "Limit", "Rating", "Cards", "Age", "Education…

## $ model_formula <list> <Balance ~ Income>, <Balance ~ Limit>, <Balance ~ Ratin…For differing numbers of predictors \(k = 1, 2, \dots, p\), we should have \({p \choose k}\) models:

credit_model_subsets %>%

count(n_preds) %>%

mutate(p_choose_k = choose(10, n_preds))## # A tibble: 10 × 3

## n_preds n p_choose_k

## <int> <int> <dbl>

## 1 1 10 10

## 2 2 45 45

## 3 3 120 120

## 4 4 210 210

## 5 5 252 252

## 6 6 210 210

## 7 7 120 120

## 8 8 45 45

## 9 9 10 10

## 10 10 1 1Fit all of the models and extract RSS and \(R^2\) metrics:

credit_model_subsets <- credit_model_subsets %>%

mutate(

model_fit = map(model_formula, ~ lm(.x, data = credit)),

RSS = map_dbl(model_fit, ~ sum(.x$residuals^2)),

R2 = map_dbl(model_fit, ~ summary(.x)$r.squared),

# Because of one of the categorical variables (Region) having three levels,

# some models will have +1 dummy variable predictor, which I can calculate

# from the number of coefficients returned from the fit

n_preds_adj = map_int(model_fit, ~ length(.x$coefficients) - 1L)

)Figure 6.1:

credit_model_subsets %>%

pivot_longer(cols = c(RSS, R2), names_to = "metric", values_to = "value") %>%

mutate(metric = factor(metric, levels = c("RSS", "R2"))) %>%

group_by(n_preds_adj, metric) %>%

mutate(

# The "best" model has the lowest value by RSS...

best_model = (metric == "RSS" & value == min(value)) |

# ... and the highest value by R2

(metric == "R2" & value == max(value))

) %>%

ungroup() %>%

ggplot(aes(x = n_preds_adj, y = value)) +

geom_line(data = . %>% filter(best_model), color = "red", size = 1) +

geom_jitter(width = 0.05, height = 0, alpha = 0.3,

color = td_colors$nice$opera_mauve) +

facet_wrap(~ metric, ncol = 2, scales = "free_y") +

scale_x_continuous("Number of predictors", breaks = seq(2, 10, 2))

As expected, the values are monotonically decreasing (RSS) and increasing (\(R^2\)) with number of predictors. There is little improvement past 3 predictors, however.

Although we have presented best subset selection here for least squares regression, the same ideas apply to other types of models, such as logistic regression. In the case of logistic regression, instead of ordering models by RSS in Step 2 of Algorithm 6.1, we instead use the deviance, a measure that plays the role of RSS for a broader class of models. The deviance is negative two times the maximized log-likelihood; the smaller the deviance, the better the fit.

Because it scales as \(2^p\) models, best subset selection can quickly become computationally expensive. The next sections explore computationally efficient alternatives.

6.1.2 Stepwise Selection

Forward Stepwise Selection

Forward stepwise selection begins with a model containing no predictors, and then adds predictors to the model, one-at-a-time, until all predictors are in the model.

- Let \(\mathcal{M}_0\) denote the null model, which contains no predictors.

- For \(k = 0, 1, \dots, p-1\):

- Consider all \(p - k\) models that augment the predictors in \(\mathcal{M}_k\) with one additional predictor.

- Choose the best among these \(p - k\) models, and call it \(\mathcal{M}_{k+1}\). Here, best is defined as having smallest RSS or highest \(R^2\).

- Select a single best model from among \(\mathcal{M}_0, \dots, \mathcal{M}_p\) using cross-validated prediction error \(C_p\) (AIC), BIC, or adjusted \(R^2\).

Step 2 is similar to step 2 in best subset selection, in that we simply choose the model with the lowest RSS or highest \(R^2\). Step 3 is more tricky, and is discussed in Section 6.1.3.

Though much more computationally efficient, it is not guaranteed to find the best possible model (via best subset selection) out of all \(2^p\) possible models.

As a comparison, the example in the text involves performing four forward steps to find the best predictors.

The MASS package has an addTerm() function for taking a single step:

# Model with no predictors

balance_null <- lm(Balance ~ 1, data = credit)

# Model with all predictors

balance_full <- lm(Balance ~ ., data = credit)

MASS::addterm(balance_null, scope = balance_full, sorted = TRUE)## Single term additions

##

## Model:

## Balance ~ 1

## Df Sum of Sq RSS AIC

## Rating 1 62904790 21435122 4359.6

## Limit 1 62624255 21715657 4364.8

## Income 1 18131167 66208745 4810.7

## Student 1 5658372 78681540 4879.8

## Cards 1 630416 83709496 4904.6

## <none> 84339912 4905.6

## Own 1 38892 84301020 4907.4

## Education 1 5481 84334431 4907.5

## Married 1 2715 84337197 4907.5

## Age 1 284 84339628 4907.6

## Region 2 18454 84321458 4909.5Here, the Rating variable offers the best improvement over the null model (by both RSS and AIC).

To run this four times, I’ll use a for loop:

balance_preds <- c("1")

for (forward_step in 1:4) {

balance_formula <- as.formula(

paste("Balance ~", str_replace_all(balance_preds[forward_step], ",", "+"))

)

balance_model <- lm(balance_formula, data = credit)

# Find the next predictor by RSS

new_predictor <- MASS::addterm(balance_model, scope = balance_full) %>%

#broom::tidy() %>%

as_tibble(rownames = "term") %>%

filter(RSS == min(RSS)) %>%

pull(term)

balance_preds <- append(balance_preds,

paste(balance_preds[forward_step], new_predictor,

sep = ", "))

}

balance_preds## [1] "1" "1, Rating"

## [3] "1, Rating, Income" "1, Rating, Income, Student"

## [5] "1, Rating, Income, Student, Limit"Now re-create Table 6.1:

bind_cols(

credit_model_subsets %>%

filter(n_preds_adj <= 4) %>%

group_by(n_preds_adj) %>%

filter(RSS == min(RSS)) %>%

ungroup() %>%

transmute(

`# variables` = n_preds_adj,

`Best subset` = map_chr(predictors, str_c, collapse = ", ")

),

`Forward stepwise` = balance_preds[2:5] %>% str_remove("1, ")

) %>%

gt(rowname_col = "# variables")| Best subset | Forward stepwise | |

|---|---|---|

| 1 | Rating | Rating |

| 2 | Income, Rating | Rating, Income |

| 3 | Income, Rating, Student | Rating, Income, Student |

| 4 | Income, Limit, Cards, Student | Rating, Income, Student, Limit |

Backward Stepwise Selection

Backwards stepwise selection begins with the full model containing all \(p\) predictors, and then iteratively removes the least useful predictor.

- Let \(\mathcal{M}_p\) denote the full model, which contains all \(p\) predictors.

- For \(k = p, p-1, \dots, 1\):

- Consider all \(k\) models that contain all but one of the predictors in \(\mathcal{M}_k\) for a total of \(k - 1\) predictors.

- Choose the best among these \(k\) models, and call it \(\mathcal{M}_{k-1}\). Here, best is defined as having smallest RSS or highest \(R^2\).

- Select a single best model from among \(\mathcal{M}_0, \dots, \mathcal{M}_p\) using cross-validated prediction error \(C_p\) (AIC), BIC, or adjusted \(R^2\).

Like forward stepwise selection, the backward selection approach searches through only \(1+p(p+1)/2\) models, and so can be applied in settings where \(p\) is too large to apply best subset selection. Also like forward stepwise selection, backward stepwise selection is not guaranteed to yield the best model containing a subset of the \(p\) predictors.

Backward selection requires that the number of samples \(n\) is larger than the number of variables \(p\) (so that the full model can be fit). In contrast, forward stepwise can be used even when \(n < p\), and so is the only viable subset method when \(p\) is very large.

Hybrid Approaches

The best subset, forward stepwise, and backward stepwise selection approaches generally give similar but not identical models. As another alternative, hybrid versions of forward and backward stepwise selection are available, in which variables are added to the model sequentially, in analogy to forward selection. However, after adding each new variable, the method may also remove any variables that no longer provide an improvement in the model fit. Such an approach attempts to more closely mimic best subset selection while retaining the computational advantages of forward and backward stepwise selection.

6.1.3 Choosing the Optimal Model

To apply these subset selection methods, we need to determine which model is best. Since more predictors will always lead to smaller RSS and larger \(R^2\) (training error), we need to estimate the test error. There are two common approaches:

- We can indirectly estimate test error by making an adjustment to the training error to account for the bias due to overfitting.

- We can directly estimate the test error, using either a validation set approach or a cross-validation approach, as discussed in Chapter 5.

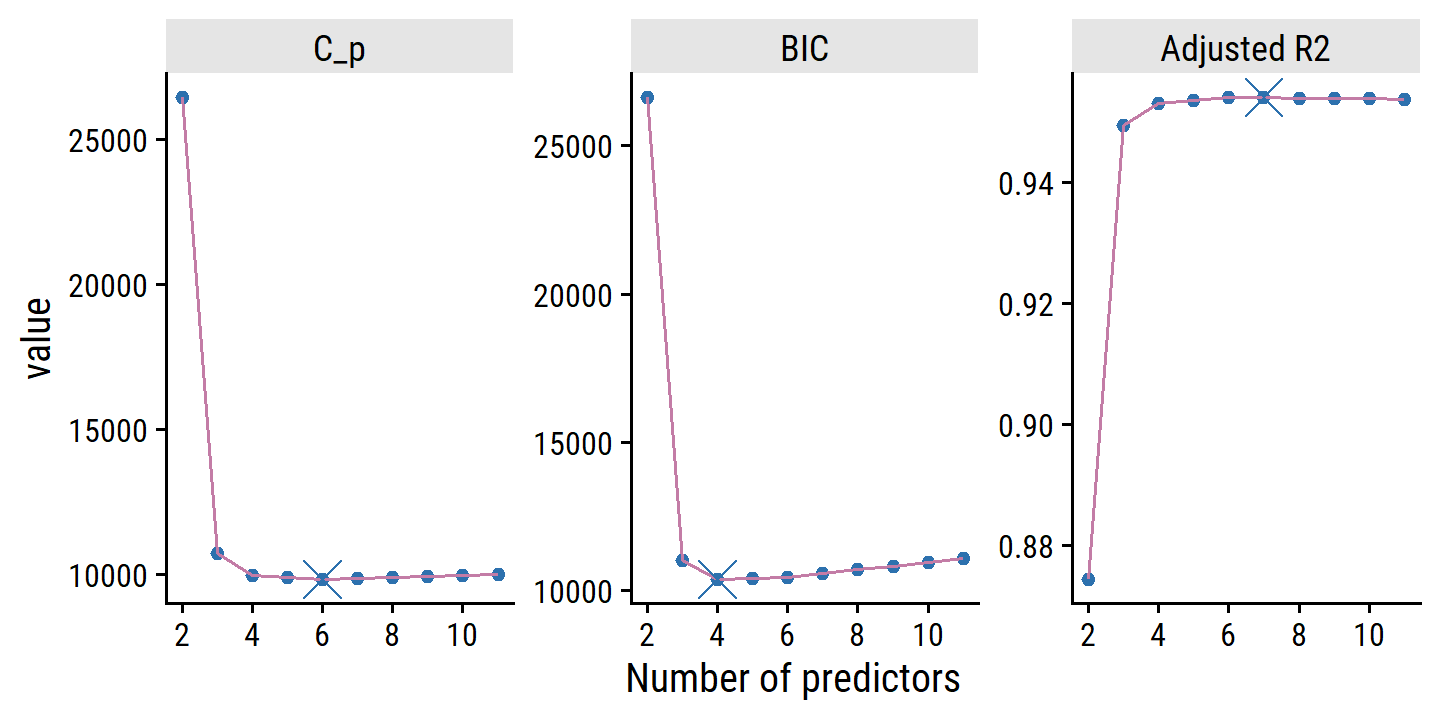

\(C_p\), AIC, BIC, and Adjusted \(R^2\)

These techniques involve adjusting the training error to select among a set a models with different numbers of variables.

For a fitted least squares model containg \(d\) predictors, the \(C_p\) estimate of test MSE is computed as:

\[ C_p = \frac{1}{n} (\text{RSS} + 2d \hat{\sigma}^2), \]

where \(\hat{\sigma}^2\) is an estimate of the variance of the error \(\epsilon\) associated with each response measurement. Typically, this is estimated using the full model containing all predictors.

Essentially, the \(C_p\) statistic adds a penalty of \(2d\hat{\sigma}^2\) to the training RSS in order to adjust for the fact that the training error tends to underestimate the test error. Clearly, the penalty increases as the number of predictors in the model increases; this is intended to adjust for the corresponding decrease in training RSS. Though it is beyond the scope of this book, one can show that if \(\hat{\sigma}^2\) is an unbiased estimate of \(\sigma^2\) in (6.2), then \(C_p\) is an unbiased estimate of test MSE. As a consequence, the \(C_p\) statistic tends to take on a small value for models with a low test error, so when determining which of a set of models is best, we choose the model with the lowest \(C_p\) value.

Compute \(\hat{\sigma}\) and \(C_p\) for the best model at the different numbers of predictors:

# Get the estimated variance of the error for calculating C_p

sigma_hat <- summary(balance_full)$sigma

credit_model_best <- credit_model_subsets %>%

group_by(n_preds_adj) %>%

filter(RSS == min(RSS)) %>%

ungroup() %>%

mutate(

`C_p` = (1 / nrow(credit)) * (RSS + 2 * n_preds_adj * sigma_hat^2)

)The AIC criterion is defined for a large class of models fit by maximum likelihood. In the case of the model (6.1) with Gaussian errors, maximum likelihood and least squares are the same thing. In this case AIC is given by

\[ \text{AIC} = \frac{1}{n} (\text{RSS} + 2 d \hat{\sigma}^2), \]

where, for simplicity, we have omitted irrelevant constants. Hence for least squares models, \(C_p\) and AIC are proportional to each other, and so only \(C_p\) is displayed in Figure 6.2.

BIC is derived from a Bayesian point of view, and looks similar to the AIC/\(C_p\):

\[ \text{BIC} = \frac{1}{n} (\text{RSS} + \log (n) d \hat{\sigma}^2), \]

where irrelevant constants were excluded. Here, the factor of 2 in the AIC/\(C_p\) is replaced with \(\log (n)\). Since \(\log n > 2\) for any \(n > 7\), the BIC statistic generally penalizes models with many variables more heavily.

credit_model_best <- credit_model_best %>%

mutate(

BIC = (1 / nrow(credit)) *

(RSS + log(nrow(credit)) * n_preds_adj * sigma_hat^2)

)Recall that the usual \(R^2\) is defined as 1 - RSS/TSS, where TSS = \(\sum (y_i - \bar{y})^2\) is the total sum of squares for the response. The adjusted \(R^2\) statistic is calculated as

\[ \text{Adjusted } R^2 = 1 - \frac{\text{RSS}/(n - d - 1)}{\text{TSS}/(n - 1)} \]

Unlike \(C_p\), AIC, and BIC, for which a smaller value indicates a lower test error, a larger value of adjusted \(R^2\) indicates smaller test error.

The intuition behind the adjusted \(R^2\) is that once all of the correct variables have been included in the model, adding additional noise variables will lead to only a very small decrease in RSS. Since adding noise variables leads to an increase in \(d\), such variables will lead to an increase in \(\text{RSS}/(n−d−1)\), and consequently a decrease in the adjusted \(R^2\). Therefore, in theory, the model with the largest adjusted \(R^2\) will have only correct variables and no noise variables. Unlike the \(R^2\) statistic, the adjusted \(R^2\) statistic pays a price for the inclusion of unnecessary variables in the model.

A model’s \(R^2\) value can be obtained directly from the lm object, so I don’t need to manually compute it:

credit_model_best <- credit_model_best %>%

mutate(

`Adjusted R2` = map_dbl(model_fit, ~ summary(.x)$adj.r.squared)

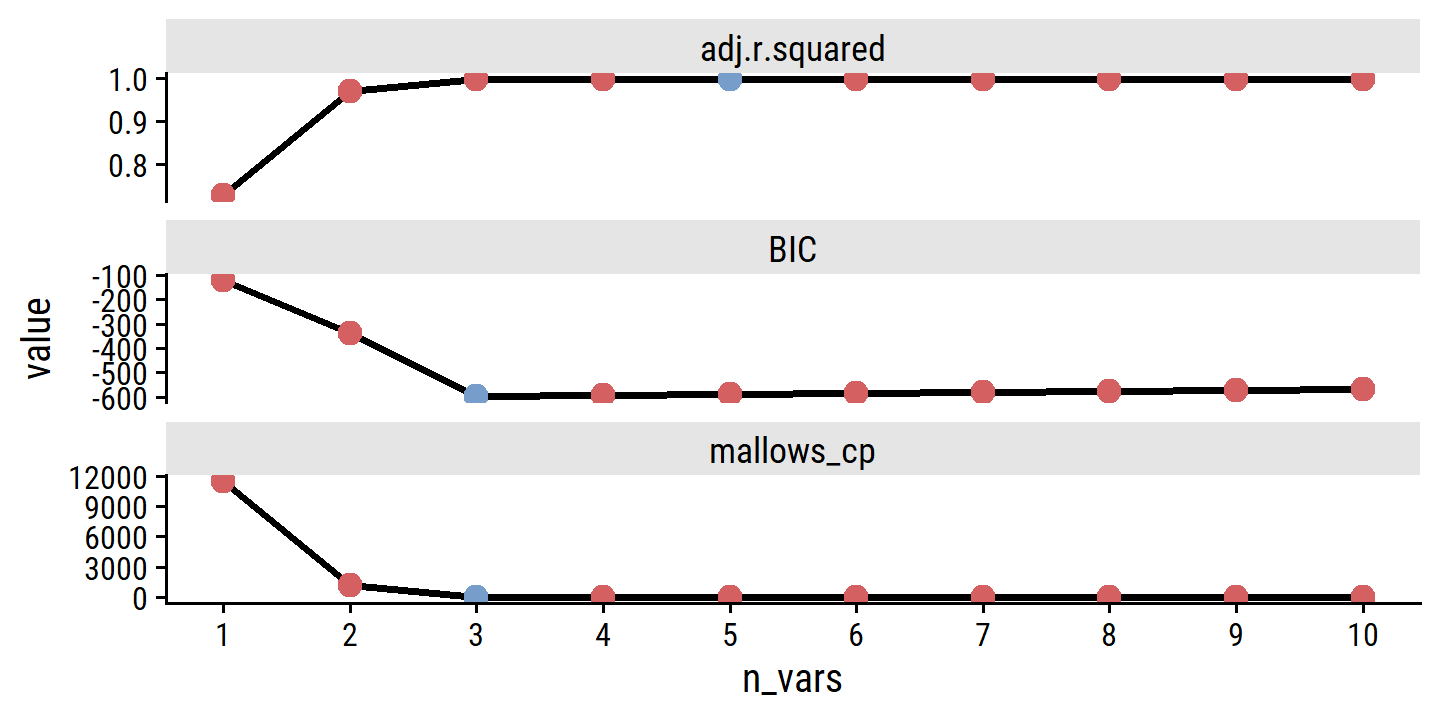

)Figure 6.2:

credit_model_best %>%

pivot_longer(cols = c("C_p", "BIC", "Adjusted R2"),

names_to = "metric", values_to = "value") %>%

group_by(metric) %>%

mutate(

best_model = ifelse(metric == "Adjusted R2",

value == max(value),

value == min(value))

) %>%

ungroup() %>%

mutate(metric = factor(metric, levels = c("C_p", "BIC", "Adjusted R2"))) %>%

filter(n_preds_adj >= 2) %>%

ggplot(aes(x = n_preds_adj, y = value)) +

geom_point(color = td_colors$nice$spanish_blue) +

geom_line(color = td_colors$nice$opera_mauve) +

geom_point(data = . %>% filter(best_model), size = 5, shape = 4,

color = td_colors$nice$spanish_blue) +

facet_wrap(~ metric, nrow = 1, scales = "free_y") +

scale_x_continuous("Number of predictors", breaks = seq(2, 10, 2))

\(C_p\), AIC, and BIC all have rigorous theoretical justifications that are beyond the scope of this book. These justifications rely on asymptotic arguments (scenarios where the sample size \(n\) is very large). Despite its popularity, and even though it is quite intuitive, the adjusted \(R^2\) is not as well motivated in statistical theory as AIC, BIC, and \(C_p\). All of these measures are simple to use and compute. Here we have presented their formulas in the case of a linear model fit using least squares; however, AIC and BIC can also be defined for more general types of models.

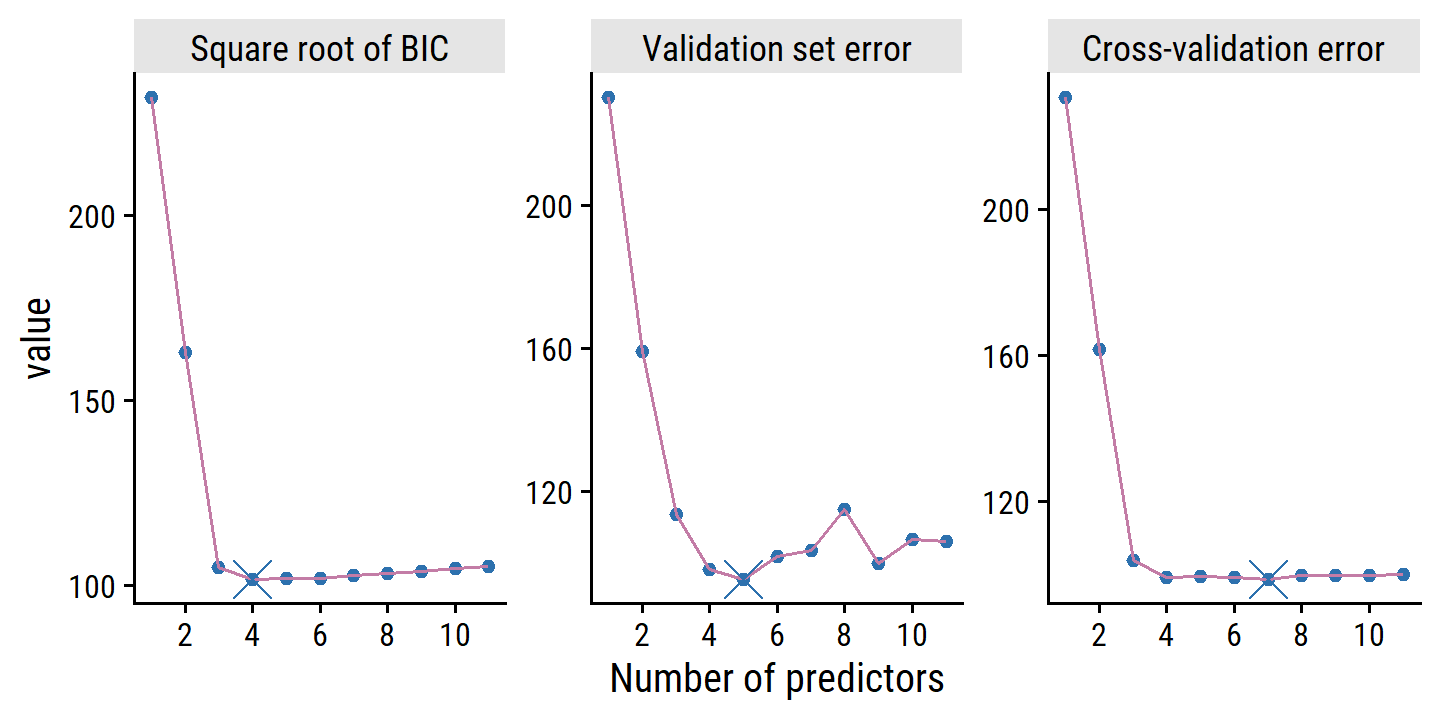

Validation and Cross-validation

Validation and cross-validation from Chapter 5 provide an advantage over AIC, BIC, \(C_p\) and adjusted \(R^2\), in that they provide a direct estimate of the test error, and make fewer assumptions about the true underlying model. It can also be used in a wider ranger of model selection tasks, including scenarios where the model degrees of freedom (e.g. the number of predictors) or error variance \(\sigma^2\) are hard to estimate.

To re-create Figure 6.3, I’ll use the tidymodels approach with rsample to make the validation set and cross-validation splits:

set.seed(499)

tic()

credit_model_best <- credit_model_best %>%

mutate(

validation_set_error = map(

model_formula,

function(model_formula) {

workflow() %>%

add_model(linear_reg()) %>%

add_recipe(recipe(model_formula, credit)) %>%

fit_resamples(validation_split(credit, prop = 0.75)) %>%

collect_metrics() %>%

filter(.metric == "rmse") %>%

select(`Validation set error` = mean, validation_std_err = std_err)

}

),

cross_validation_error = map(

model_formula,

function(model_formula) {

workflow() %>%

add_model(linear_reg()) %>%

add_recipe(recipe(model_formula, credit)) %>%

fit_resamples(vfold_cv(credit, v = 10)) %>%

collect_metrics() %>%

filter(.metric == "rmse") %>%

select(`Cross-validation error` = mean, cv_std_err = std_err)

}

),

`Square root of BIC` = sqrt(BIC)

) %>%

unnest(c(validation_set_error, cross_validation_error))

toc()## 13.95 sec elapsedcredit_model_best %>%

pivot_longer(cols = c("Validation set error", "Cross-validation error",

"Square root of BIC"),

names_to = "metric", values_to = "value") %>%

group_by(metric) %>%

mutate(best_model = value == min(value)) %>%

ungroup() %>%

mutate(

metric = factor(metric,

levels = c("Square root of BIC", "Validation set error",

"Cross-validation error"))

) %>%

ggplot(aes(x = n_preds_adj, y = value)) +

geom_point(color = td_colors$nice$spanish_blue) +

geom_line(color = td_colors$nice$opera_mauve) +

geom_point(data = . %>% filter(best_model), size = 5, shape = 4,

color = td_colors$nice$spanish_blue) +

facet_wrap(~ metric, nrow = 1, scales = "free_y") +

scale_x_continuous("Number of predictors", breaks = seq(2, 10, 2))

Because the randomness associated with splitting the data in the validation set and cross-validation approaches, we will likely find a different best model with different splits. In this case, we can select a model using the one-standard-error rule, where we calculate the standard error of the test MSE for each model, and then select the smallest model for which the estimated test error is within one standard error of the lowest test error.

credit_model_best %>%

transmute(

`# predictors` = n_preds_adj, `Cross-validation error`, cv_std_err,

lowest_error = min(`Cross-validation error`),

lowest_std_error = cv_std_err[`Cross-validation error` == lowest_error],

# The best model is the minimum `n_preds_adj` (number of predictors) for

# which the CV test error is within the standard error of the lowest error

best_model = n_preds_adj == min(n_preds_adj[`Cross-validation error` < lowest_error + lowest_std_error])

) %>%

gt() %>%

tab_style(style = cell_text(weight = "bold"),

locations = cells_body(rows = best_model))| # predictors | Cross-validation error | cv_std_err | lowest_error | lowest_std_error | best_model |

|---|---|---|---|---|---|

| 1 | 230.43401 | 12.663270 | 98.69851 | 3.405358 | FALSE |

| 2 | 161.43387 | 8.886096 | 98.69851 | 3.405358 | FALSE |

| 3 | 103.70715 | 2.457797 | 98.69851 | 3.405358 | FALSE |

| 4 | 99.24797 | 4.544705 | 98.69851 | 3.405358 | TRUE |

| 5 | 99.34556 | 3.231063 | 98.69851 | 3.405358 | FALSE |

| 6 | 99.01851 | 4.147442 | 98.69851 | 3.405358 | FALSE |

| 7 | 98.69851 | 3.405358 | 98.69851 | 3.405358 | FALSE |

| 8 | 99.65805 | 2.937664 | 98.69851 | 3.405358 | FALSE |

| 9 | 99.63657 | 4.109147 | 98.69851 | 3.405358 | FALSE |

| 10 | 99.67476 | 3.288357 | 98.69851 | 3.405358 | FALSE |

| 11 | 99.95464 | 3.082506 | 98.69851 | 3.405358 | FALSE |

The rationale here is that if a set of models appear to be more or less equally good, then we might as well choose the simplest model—that is, the model with the smallest number of predictors. In this case, applying the one-standard-error rule to the validation set or cross-validation approach leads to selection of the three-variable model.

Here, the four-variable model was selected, not the three-variable model like in the text, but I would chalk that up to the random sampling.

6.2 Shrinkage Methods

As already discussed, subset selection has a lot of issues. A much better alternative is use a technique that contrains or regularizes or shrinks the coefficient estimates towards zero in a model fit with all \(p\) predictors. It turns out that shrinking the coefficient estimates can significantly reduce the variance.

6.2.1 Ridge Regression

Recall from Chapter 3 that the least squares fitting procedure involves estimating \(\beta_0, \beta_1, \dots, \beta_p\) by minimizing the RSS:

\[ \text{RSS} = \sum_{i=1}^n \left(y_i - \hat{y}_i \right)^2 = \sum_{i=1}^n \left(y_i - \beta_0 - \sum_{j=1}^p \beta_j x_{ij} \right)^2. \]

Ridge regression is very similar to least squares, except the quantity minimized is slightly different:

\[ \sum_{i=1}^n \left(y_i - \beta_0 - \sum_{j=1}^p \beta_j x_{ij} \right)^2 + \lambda \sum_{j=1}^p \beta_j^2 = \text{RSS} + \lambda \sum_{j=1}^p \beta_j^2, \]

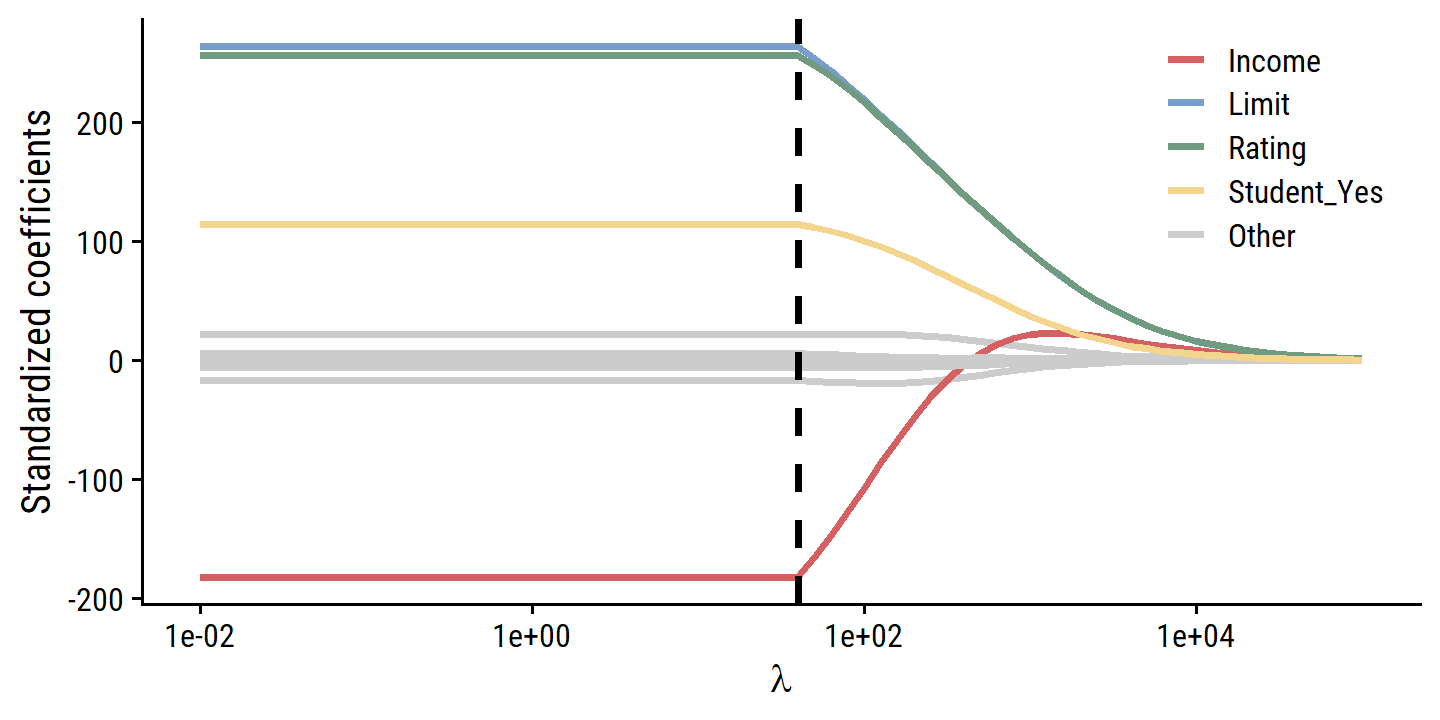

where \(\lambda \geq 0\) is a tuning parameter, to be determined separately. The second term above, called a shrinkage penalty is small when the coefficients are close to zero, and so has the effect of shrinking the estimates \(\beta_j\) towards zero. The tuning parameter \(\lambda\) serves to control the relative impact of these two terms on the regression coefficient estimates. When \(\lambda = 0\), the penalty term has no effect, and ridge regression will produce the same least squares estimates. Unlike least squares, which generates only one set of coefficient estimates (the “best fit”), ridge regression will produce a different set of coefficient estimates \(\hat{\beta}_{\lambda}^R\) for each value \(\lambda\). Selecting a good value for \(\lambda\) is critical.

Note that the shrinkage penalty is not applied to the intercept \(\beta_0\), which is simply a measure of the mean value of the response when all predictors are zero (\(x_{i1} = x_{i2} = \dots = 0\))

An Application to the Credit Data

In tidymodels, regularized least squares is done with the glmnet engine.

(See this article for a list of models available in parnsip and this article for examples using glmnet.)

Specify the model:

ridge_spec <- linear_reg(penalty = 0, mixture = 0) %>%

set_engine("glmnet")

# The `parnship::translate()` function is a helpful way to "decode" a model spec

ridge_spec %>% translate()## Linear Regression Model Specification (regression)

##

## Main Arguments:

## penalty = 0

## mixture = 0

##

## Computational engine: glmnet

##

## Model fit template:

## glmnet::glmnet(x = missing_arg(), y = missing_arg(), weights = missing_arg(),

## alpha = 0, family = "gaussian")The penalty argument above refers to the \(\lambda\) tuning parameter.

The mixture variable ranges from 0 to 1, with 0 corresponding to ridge regression, 1 corresponding to lasso regression, and values between using a mixture of both.

Fit Balance to all the predictors:

credit_ridge_fit <- fit(ridge_spec, Balance ~ ., data = credit)

tidy(credit_ridge_fit)## # A tibble: 12 × 3

## term estimate penalty

## <chr> <dbl> <dbl>

## 1 (Intercept) -401. 0

## 2 Income -5.18 0

## 3 Limit 0.114 0

## 4 Rating 1.66 0

## 5 Cards 15.8 0

## 6 Age -0.957 0

## 7 Education -0.474 0

## 8 OwnYes -4.86 0

## 9 StudentYes 382. 0

## 10 MarriedYes -12.1 0

## 11 RegionSouth 9.11 0

## 12 RegionWest 13.1 0Because our ridge_spec had penalty = 0, the coefficients here correspond to no penalty, but the glmnet::glmnet() function fits a range of penalty values all at once, which we can extract with broom::tidy like so:

tidy(credit_ridge_fit, penalty = 100)## # A tibble: 12 × 3

## term estimate penalty

## <chr> <dbl> <dbl>

## 1 (Intercept) -307. 100

## 2 Income -3.04 100

## 3 Limit 0.0951 100

## 4 Rating 1.40 100

## 5 Cards 16.4 100

## 6 Age -1.10 100

## 7 Education -0.178 100

## 8 OwnYes -0.341 100

## 9 StudentYes 335. 100

## 10 MarriedYes -12.4 100

## 11 RegionSouth 7.45 100

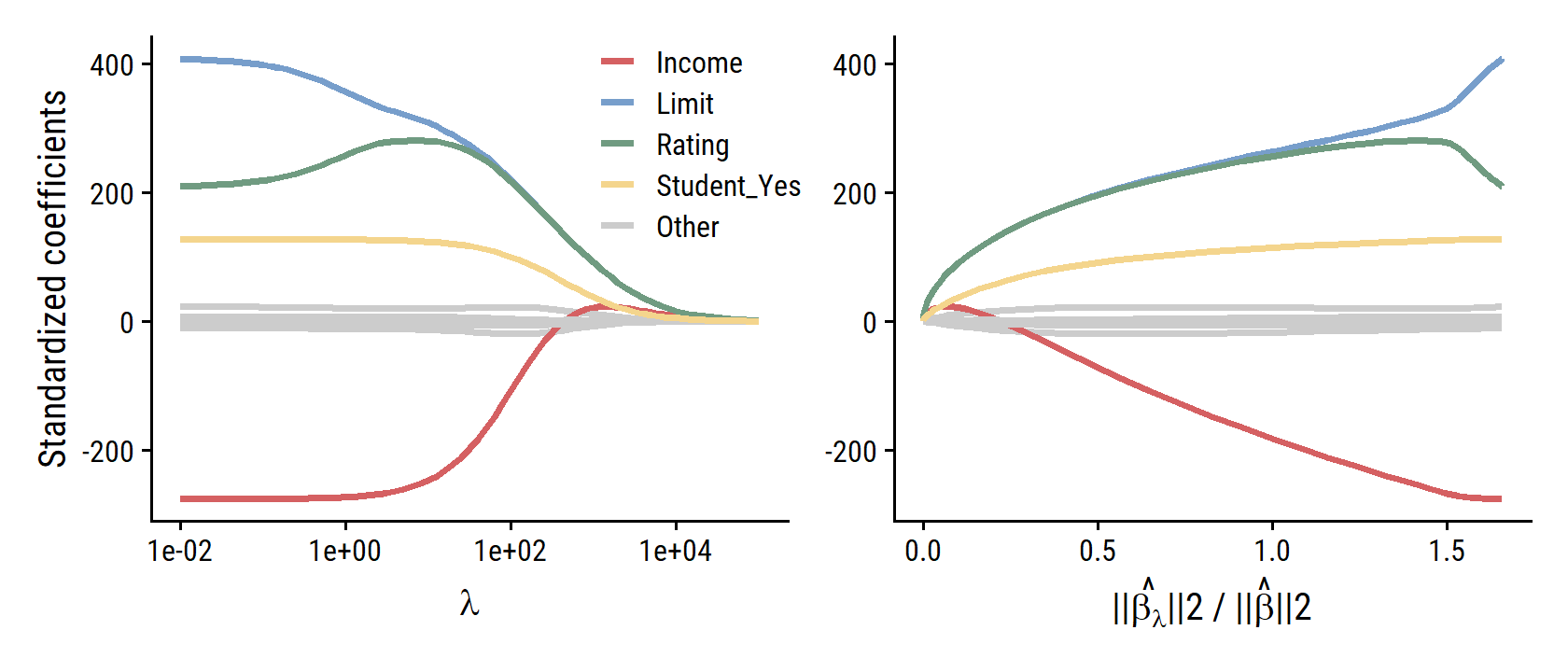

## 12 RegionWest 8.79 100To re-create Figure 6.4, I’ll first re-fit the data on standardized data (continuous variables re-scaled to have mean of 0, standard deviation of 1):

credit_recipe <- recipe(Balance ~ ., data = credit) %>%

step_dummy(all_nominal_predictors()) %>%

step_normalize(all_predictors())

credit_ridge_workflow <- workflow() %>%

add_recipe(credit_recipe) %>%

add_model(ridge_spec)

credit_ridge_fit <- fit(credit_ridge_workflow, data = credit)Then I’ll compile coefficient estimates for a wide range of \(\lambda\) values with purrr::map_dfr():

map_dfr(seq(-2, 5, 0.1),

~ tidy(credit_ridge_fit, penalty = 10^.x)) %>%

filter(term != "(Intercept)") %>%

mutate(

term_highlight = fct_other(

term, keep = c("Income", "Limit", "Rating", "Student_Yes")

)

) %>%

ggplot(aes(x = penalty, y = estimate)) +

geom_line(aes(group = term, color = term_highlight), size = 1) +

scale_x_log10(breaks = 10^c(-2, 0, 2, 4)) +

geom_vline(xintercept = 40, lty = 2, size = 1) +

labs(x = expression(lambda), y = "Standardized coefficients", color = NULL) +

scale_color_manual(values = c(td_colors$pastel6[1:4], "grey80")) +

theme(legend.position = c(0.8, 0.8))

From this figure, it is clear that the fitting procedure is truncated at a penalty values of \(\lambda\) = 40 (the vertical line above).

This has to do with the regularization path chosen by glmnet.

We can see from the grid of values for \(\lambda\) that the minimum value is 40:

extract_fit_engine(credit_ridge_fit)$lambda## [1] 396562.69957 361333.16232 329233.32005 299985.13930 273335.28632

## [6] 249052.93283 226927.75669 206768.12023 188399.41030 171662.52594

## [11] 156412.50026 142517.24483 129856.40559 118320.32042 107809.06926

## [16] 98231.60868 89504.98330 81553.60727 74308.60957 67707.23750

## [21] 61692.31313 56211.73806 51218.04218 46667.97247 42522.11842

## [26] 38744.57062 35302.60975 32166.42320 29308.84681 26705.12964

## [31] 24332.71953 22171.06779 20201.45123 18406.80998 16771.59971

## [36] 15281.65702 13924.07673 12687.10013 11560.01312 10533.05342

## [41] 9597.32598 8744.72599 7967.86864 7260.02515 6615.06452

## [46] 6027.40042 5491.94278 5004.05372 4559.50738 4154.45331

## [51] 3785.38313 3449.10012 3142.69158 2863.50352 2609.11776

## [56] 2377.33093 2166.13540 1973.70190 1798.36366 1638.60199

## [61] 1493.03311 1360.39616 1239.54232 1129.42479 1029.08981

## [66] 937.66830 854.36844 778.46870 709.31169 646.29839

## [71] 588.88302 536.56828 488.90103 445.46841 405.89423

## [76] 369.83570 336.98052 307.04410 279.76714 254.91340

## [81] 232.26760 211.63359 192.83264 175.70192 160.09305

## [86] 145.87082 132.91206 121.10452 110.34593 100.54310

## [91] 91.61113 83.47265 76.05717 69.30046 63.14400

## [96] 57.53446 52.42326 47.76612 43.52271 39.65627The regularization path can be set manually using the path_values argument (see this article for more details):

coef_path_values <- 10^seq(-2, 5, 0.1)

ridge_spec_path <- linear_reg(penalty = 0, mixture = 0) %>%

set_engine("glmnet", path_values = coef_path_values)

credit_ridge_workflow_path <- workflow() %>%

add_recipe(credit_recipe) %>%

add_model(ridge_spec_path)

credit_ridge_fit <- fit(credit_ridge_workflow_path, data = credit)Now with the full range of \(\lambda\) values, I can re-create the figure properly:

# Compute the l2 norm for the least squares model

credit_lm_fit <- lm(Balance ~ ., data = credit)

credit_lm_fit_l2_norm <- sum(credit_lm_fit$coefficients[-1]^2)

d <- map_dfr(seq(-2, 5, 0.1),

~ tidy(credit_ridge_fit, penalty = 10^.x)) %>%

filter(term != "(Intercept)") %>%

group_by(penalty) %>%

mutate(l2_norm = sum(estimate^2),

l2_norm_ratio = l2_norm / credit_lm_fit_l2_norm) %>%

ungroup() %>%

mutate(

term_highlight = fct_other(

term, keep = c("Income", "Limit", "Rating", "Student_Yes")

)

)

p1 <- d %>%

ggplot(aes(x = penalty, y = estimate)) +

geom_line(aes(group = term, color = term_highlight), size = 1) +

scale_x_log10(breaks = 10^c(-2, 0, 2, 4)) +

labs(x = expression(lambda), y = "Standardized coefficients", color = NULL) +

scale_color_manual(values = c(td_colors$pastel6[1:4], "grey80")) +

theme(legend.position = c(0.7, 0.8))

p2 <- d %>%

ggplot(aes(x = l2_norm_ratio, y = estimate)) +

geom_line(aes(group = term, color = term_highlight), size = 1) +

labs(

x = expression(paste("||", hat(beta[lambda]), "||2 / ||", hat(beta), "||2")),

y = NULL, color = NULL

) +

scale_color_manual(values = c(td_colors$pastel6[1:4], "grey80")) +

theme(legend.position = "none")

p1 | p2

On the subject of standardizing predictors:

The standard least squares coefficient estimates discussed in Chapter 3 are scale equivariant: multiplying \(X_j\) by a constant \(c\) simply leads to a scaling of the least squares coefficient estimates by a factor of \(1/c\). In other words, regardless of how the \(j\)th predictor is scaled, \(X_j \hat{\beta}_j\) will remain the same. In contrast, the ridge regression coefficient estimates can change substantially when multiplying a given predictor by a constant. For instance, consider the income variable, which is measured in dollars. One could reasonably have measured income in thousands of dollars, which would result in a reduction in the observed values of income by a factor of 1,000. Now due to the sum of squared coefficients term in the ridge regression formulation (6.5), such a change in scale will not simply cause the ridge regression coefficient estimate for income to change by a factor of 1,000. In other words, \(X_j \hat{\beta}^R_{j,λ}\) will depend not only on the value of \(\lambda\), but also on the scaling of the \(j\)th predictor. In fact, the value of \(X_j \hat{\beta}^R_{j, \lambda}\) may even depend on the scaling of the other predictors! Therefore, it is best to apply ridge regression after standardizing the predictors, … so that they are all on the same scale.

Why Does Ridge Regression Improve Over Least Squares?

Ridge regression’s advantage over least squares has to do with the bias-variance trade-off. As \(\lambda\) increases, the flexibility of the fit decreases, leading to decreased variance but increased bias. So ridge regression works best in situations where the least squares estimates have high variance, like when the number of variables \(p\) is almost as large as the number of observations \(n\) (as in Figure 6.5).

Ridge regression also has substantial computational advantages over best subset selection, which requires searching through \(2p\) models. As we discussed previously, even for moderate values of \(p\), such a search can be computationally infeasible. In contrast, for any fixed value of \(\lambda\), ridge regression only fits a single model, and the model-fitting procedure can be performed quite quickly. In fact, one can show that the computations required to solve (6.5), simultaneously for all values of \(\lambda\), are almost identical to those for fitting a model using least squares.

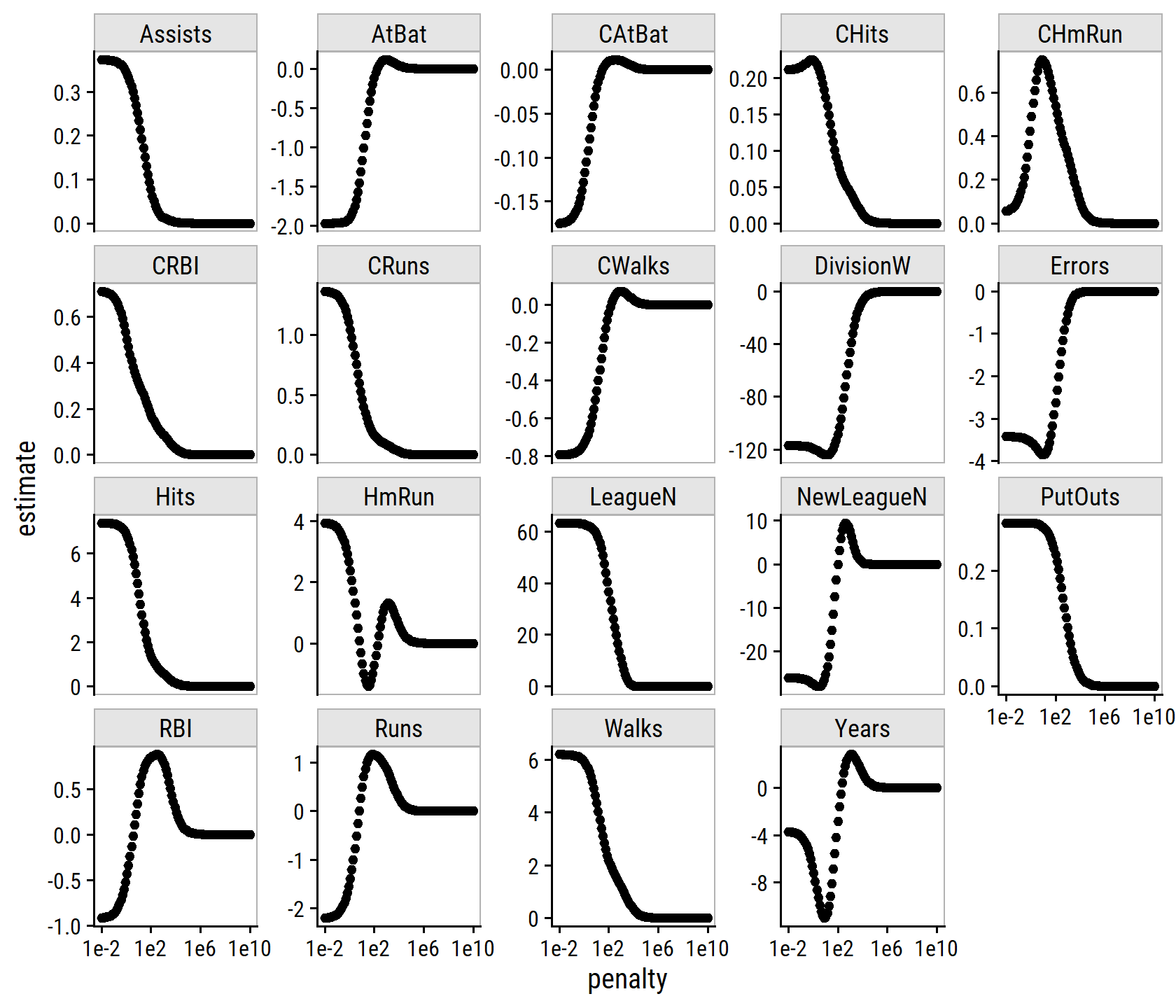

6.2.2 The Lasso

Ridge regression will shrink all coefficients towards zero, but will not set them to exactly zero (unless \(\lambda = \infty\)). This doesn’t affect prediction accuracy, but may cause challenges in model interpretation for large numbers of variables \(p\).

The lasso is a relatively recent alternative to ridge regression with a similar formulation as ridge regression. The coefficients \(\hat{\beta}_{\lambda}^L\) minimize the quantity:

\[ \sum_{i=1}^n \left(y_i - \beta_0 - \sum_{j=1}^p \beta_j x_{ij} \right)^2 + \lambda \sum_{j=1}^p | \beta_j | = \text{RSS} + \lambda \sum_{j=1}^p | \beta_j |. \]

The only difference is that the \(\beta_j^2\) term in the ridge regression penalty (6.5) has been replaced by \(|\beta_j|\) in the lasso penalty (6.7). In statistical parlance, the lasso uses an \(\mathcal{l}_1\) (pronounced “ell 1”) penalty instead of an \(\mathcal{l}_2\) penalty. The \(\mathcal{l}_1\) norm of a coefficient vector \(\beta\) is given by \(||\beta||_1 = \sum |\beta_j|\).

The \(\mathcal{l}_1\) penalty has the effect of forcing some of the coefficient estimates to be exactly equal to zero for sufficiently large \(\lambda\). Functionally, this is a form of automatic variable selection like the best subset techniques. We say that the lasso yields sparse models which only involve a subset of the variables. This makes lasso models much easier to interpret than those produced by ridge regression.

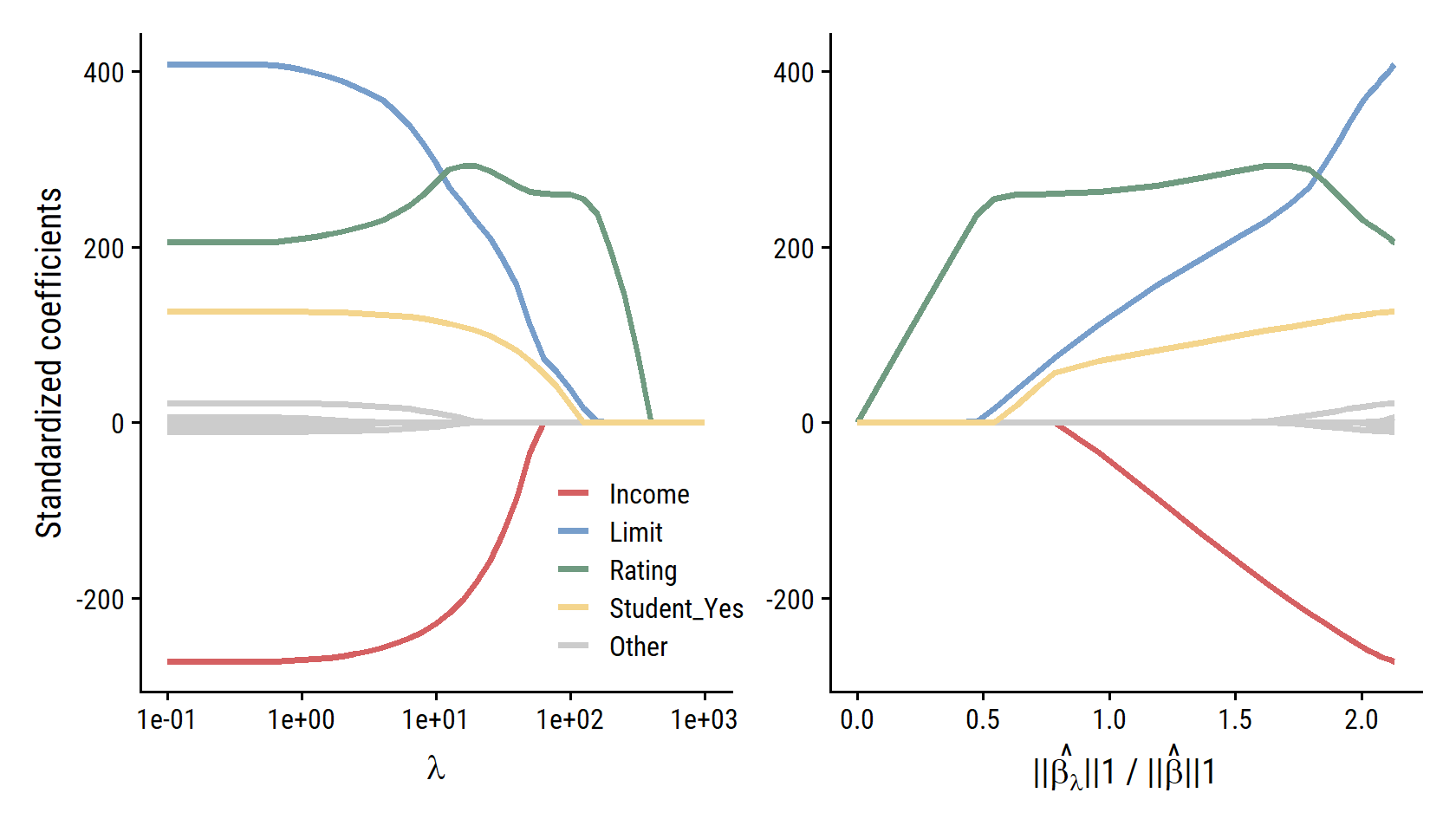

Fit the credit model with lasso regression:

credit_recipe <- recipe(Balance ~ ., data = credit) %>%

step_dummy(all_nominal_predictors()) %>%

step_normalize(all_predictors())lasso_spec <- linear_reg(penalty = 20, mixture = 1) %>%

set_engine("glmnet")

credit_lasso_workflow <- workflow() %>%

add_recipe(credit_recipe) %>%

add_model(lasso_spec)

credit_lasso_fit <- fit(credit_lasso_workflow, data = credit)# Compute the l1 norm for the least squares model

credit_lm_fit_l1_norm <- sum(abs(credit_lm_fit$coefficients[-1]))

d <- map_dfr(seq(-1, 3, 0.1),

~ tidy(credit_lasso_fit, penalty = 10^.x)) %>%

filter(term != "(Intercept)") %>%

group_by(penalty) %>%

mutate(l1_norm = sum(abs(estimate)),

l1_norm_ratio = l1_norm / credit_lm_fit_l1_norm) %>%

ungroup() %>%

mutate(

term_highlight = fct_other(

term, keep = c("Income", "Limit", "Rating", "Student_Yes")

)

)

p1 <- d %>%

ggplot(aes(x = penalty, y = estimate)) +

geom_line(aes(group = term, color = term_highlight), size = 1) +

scale_x_log10() +

labs(x = expression(lambda), y = "Standardized coefficients", color = NULL) +

scale_color_manual(values = c(td_colors$pastel6[1:4], "grey80")) +

theme(legend.position = c(0.7, 0.2))

p2 <- d %>%

ggplot(aes(x = l1_norm_ratio, y = estimate)) +

geom_line(aes(group = term, color = term_highlight), size = 1) +

labs(

x = expression(paste("||", hat(beta[lambda]), "||1 / ||", hat(beta), "||1")),

y = NULL, color = NULL

) +

scale_color_manual(values = c(td_colors$pastel6[1:4], "grey80")) +

theme(legend.position = "none")

p1 | p2

The shapes of the curves are right, but the scale of the \(\lambda\) parameter in the left panel and the ratio in the right panel are much different from the text – not sure what happened there.

Another Formulation for Ridge Regression and the Lasso

One can show that the lasso and ridge regression coefficient estimates solve the problems:

\[ \begin{align} &\text{minimize } \beta \left[ \sum_{i=1}^n \left(y_i - \beta_0 - \sum_{j=1}^p \beta_j x_{ij} \right)^2 \right] \text{ subject to } \sum_{j=1}^p |\beta_j| \leq s \\ &\text{minimize } \beta \left[ \sum_{i=1}^n \left(y_i - \beta_0 - \sum_{j=1}^p \beta_j x_{ij} \right)^2 \right] \text{ subject to } \sum_{j=1}^p \beta_j^2 \leq s, \end{align} \]

respectively. In other words, for every value of \(\lambda\), there is some \(s\) associated with the lasso/ridge coefficient estimates. For \(p = 2\), then the lasso coefficient estimates have the smallest RSS out of all points that lie within the diamond defined by \(|\beta_1| + |\beta_2| \leq s\); likewise, the circle \(\beta_1^2 + \beta_2^2 \leq s\) for ridge regression.

This formulation with \(s\) can be though of in terms of a budget of coefficient size:

When we perform the lasso we are trying to find the set of coefficient estimates that lead to the smallest RSS, subject to the constraint that there is a budget \(s\) for how large \(\sum_{j=1}^p |\beta_j|\) can be. When \(s\) is extremely large, then this budget is not very restrictive, and so the coefficient estimates can be large. In fact, if \(s\) is large enough that the least squares solution falls within the budget, then (6.8) will simply yield the least squares solution. In contrast, if \(s\) is small, then \(\sum_{j=1}^p |\beta_j|\) must be small in order to avoid violating the budget. Similarly, (6.9) indicates that when we perform ridge regression, we seek a set of coefficient estimates such that the RSS is as small as possible, subject to the requirement that \(\sum^p_{j=1} \beta_j^2\) does not exceed the budget \(s\).

This formulation also allows us to see the close connection between lasso, ridge and best subset selection. Best subset selection is the minimization problem:

\[ \text{minimize } \beta \left[ \sum_{i=1}^n \left(y_i - \beta_0 - \sum_{j=1}^p \beta_j x_{ij} \right)^2 \right] \text{ subject to } \sum_{j=1}^p I(\beta_j \neq 0) \leq s. \]

The Variable Selection Property of the Lasso

To understand why the lasso can remove predictors by shrinking coefficients to exactly zero, we can think of the shapes of the constraint functions, and the contours of RSS around the least squares estimate (see Figure 6.7 in the book for a visualization). For \(p = 2\), this is a circle (\(\beta_1^2 + \beta_2^2 \leq s\)) for ridge, and square (\(|\beta_1| + |\beta_2| \leq s\)) for lasso regression.

Each of the ellipses centered around \(\hat{\beta}\) represents a contour: this means that all of the points on a particular ellipse have the same RSS value. As the ellipses expand away from the least squares coefficient estimates, the RSS increases. Equations (6.8) and (6.9) indicate that the lasso and ridge regression coefficient estimates are given by the first point at which an ellipse contacts the constraint region. Since ridge regression has a circular constraint with no sharp points, this intersection will not generally occur on an axis, and so the ridge regression coefficient estimates will be exclusively non-zero. However, the lasso constraint has corners at each of the axes, and so the ellipse will often intersect the constraint region at an axis. When this occurs, one of the coefficients will equal zero. In higher dimensions, many of the coefficient estimates may equal zero simultaneously. In Figure 6.7, the intersection occurs at \(\beta_1 = 0\), and so the resulting model will only include \(\beta_2\).

This key idea holds for large dimension \(p > 2\) – the lasso constraint will always have sharp corners, and the ridge constraint will not.

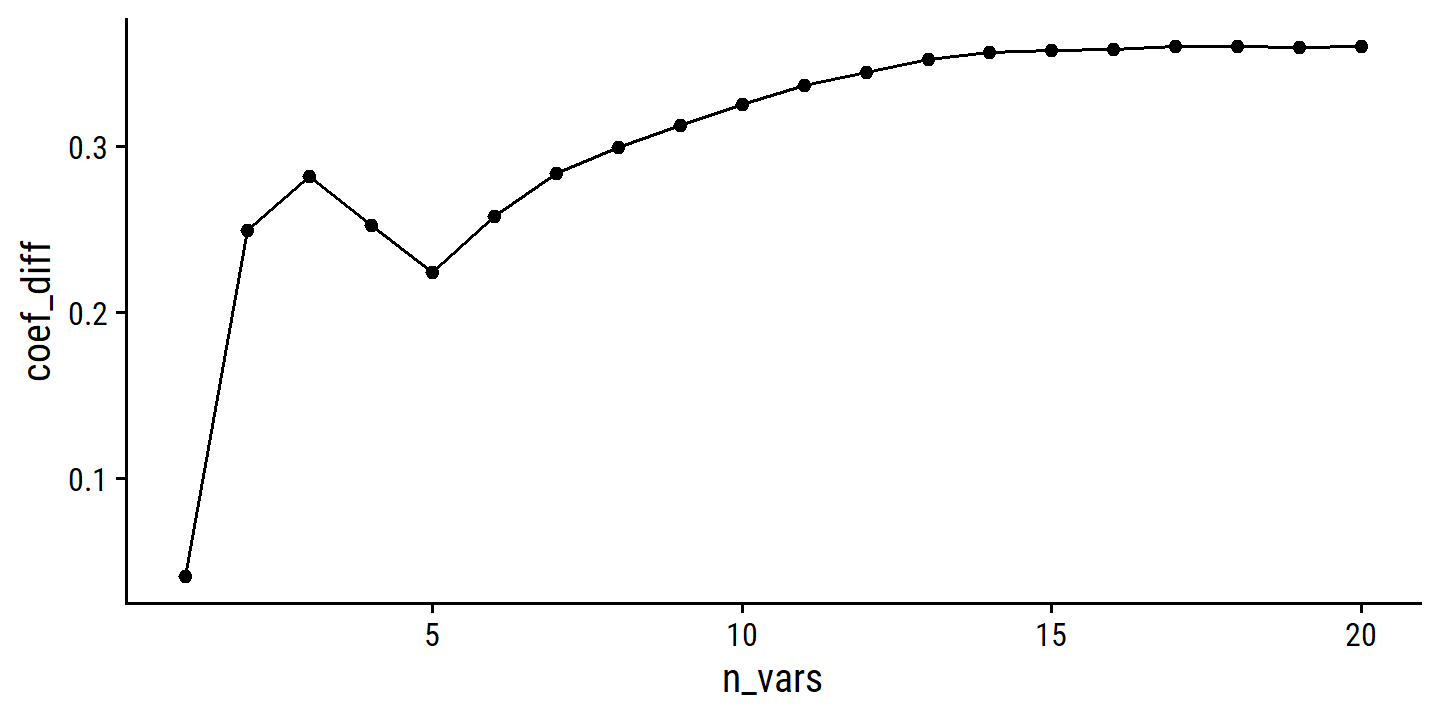

Comparing the Lasso and Ridge Regression

Generally, the lasso leads to qualitatively similar behavior to ridge regression, in that a larger \(\lambda\) increases bias and decreases variance. A helpful way to compare models with different types of regularization is to plot \(R^2\) as in Figure 6.8 comparing lasso and ridge MSE. In this example, the results are almost identical, with a slight edge to the ridge regression due to lower variance. This advantage is due to the fact that the simulated data consisted of 45 predictors, that were all related to the response – that is, none of the true coefficients equaled zero. Lasso implicitly assumes that a number of the coefficients are truly zero, so it is not surprising it performs slightly worse on this example. By contrast, the example in Figure 6.9 was simulated so that only 2 out of 45 predictors were related to the response. In this case, the lasso tends to outperform ridge regression in terms of bias, variance and MSE.

These two examples illustrate that neither ridge regression nor the lasso will universally dominate the other. In general, one might expect the lasso to perform better in a setting where a relatively small number of predictors have substantial coefficients, and the remaining predictors have coefficients that are very small or that equal zero. Ridge regression will perform better when the response is a function of many predictors, all with coefficients of roughly equal size. However, the number of predictors that is related to the response is never known a priori for real data sets. A technique such as cross-validation can be used in order to determine which approach is better on a particular data set.

As with ridge regression, when the least squares estimates have excessively high variance, the lasso solution can yield a reduction in variance at the expense of a small increase in bias, and consequently can gener- ate more accurate predictions. Unlike ridge regression, the lasso performs variable selection, and hence results in models that are easier to interpret.

There are very efficient algorithms for fitting both ridge and lasso models; in both cases the entire coefficient paths can be computed with about the same amount of work as a single least squares fit.

A Simple Special Case for Ridge Regression and the Lasso

Consider a simple case with \(n = p\) and a diagonal matrix \(\bf{X}\) with 1’s on the diagonal and 0’s in all off-diagonal elements. To simplify further, assume that we are performing regression without an intercept. Then the usual least squares problem involves minimizing:

\[ \sum_{j=1}^p (y_j - \beta_j)^2. \]

Which has the solution \(\hat{\beta}_j = y_j\). In ridge and lasso regression, the following are minimized:

\[ \begin{align} &\sum_{j=1}^p (y_j - \beta_j)^2 + \lambda \sum_{j=1}^p \beta_j^2 \\ &\sum_{j=1}^p (y_j - \beta_j)^2 + \lambda \sum_{j=1}^p |\beta_j|. \end{align} \]

One can show that the ridge regression estimates take the form

\[ \hat{\beta}_j^R = y_j / (1 + \lambda) \]

and the lasso estimates take the form

\[ \hat{\beta}_j^L = \left\{\begin{array}{lr} y_j - \lambda / 2, &\text{if } y_j > \lambda / 2 \\ y_j + \lambda / 2, &\text{if } y_j < - \lambda / 2 \\ 0, &\text{if } |y_j| \leq \lambda / 2 \end{array}\right. \]

The coefficient estimates are shown in Figure 6.10. The ridge regression estimates are all shrunken towards zero by the same proportion, while the lasso shrinks each coefficient by the same amount and values less than \(\lambda / 2\) are shrunken to exactly zero. This latter type of shrinkage is known as soft-thresholding, and is how lasso performs feature selection.

…ridge regression more or less shrinks every dimension of the data by the same proportion, whereas the lasso more or less shrinks all coefficients toward zero by a similar amount, and sufficiently small coefficients are shrunken all the way to zero.

Bayesian Interpretation for Ridge Regression and the Lasso

A Bayesian viewpoint for regression assumes that the vector of coefficients \(\beta\) has some prior distribution \(p(\beta)\), where \(\beta = (\beta_0, \dots , \beta_p)^T\). The likelihood of the data is then \(f(Y|X,\beta)\). Multiplying the prior by the likelihood gives us (up to a proportionality constant) the posterior distribution from Bayes’ theorem:

\[ p (\beta|X, Y) \propto f(Y|X,\beta) p(\beta|X) = f(Y|X,\beta) p(\beta). \]

If we assume

- the usual linear model \(Y = \beta_0 + X_1 \beta_1 + \dots X_p \beta_p + \epsilon\),

- the errors are independent and drawn from a normal distribution,

- that \(p(\beta) = \Pi_{j=1}^p g(\beta_j)\) for some density function \(g\).

It turns out that ridge and lasso follow naturally from two special cases of \(g\):

- If \(g\) is a Gaussian distribution with mean zero and standard deviation a function of \(\lambda\), then it follow that the posterior mode for \(\beta\) – that is, the most likely value for \(\beta\), given the data – is given by the ridge regression solution. (In fact, the ridge regression solution is also the posterior mean.)

- If \(g\) is a double-exponential (Laplace) distribution with mean zero and scale parameter a function of \(\lambda\), then it follow that the posterior mode for \(\beta\) is the lasso solution. (However, the lasso solution is not the posterior mean, and in fact, the posterior mean does not yield a spare coefficient vector).

The Gaussian and double-exponential priors are displayed in Figure 6.11. Therefore, from a Bayesian viewpoint, ridge regression and the lasso follow directly from assuming the usual linear model with normal errors, together with a simple prior distribution for \(β\). Notice that the lasso prior is steeply peaked at zero, while the Gaussian is flatter and fatter at zero. Hence, the lasso expects a priori that many of the coefficients are (exactly) zero, while ridge assumes the coefficients are randomly distributed about zero.

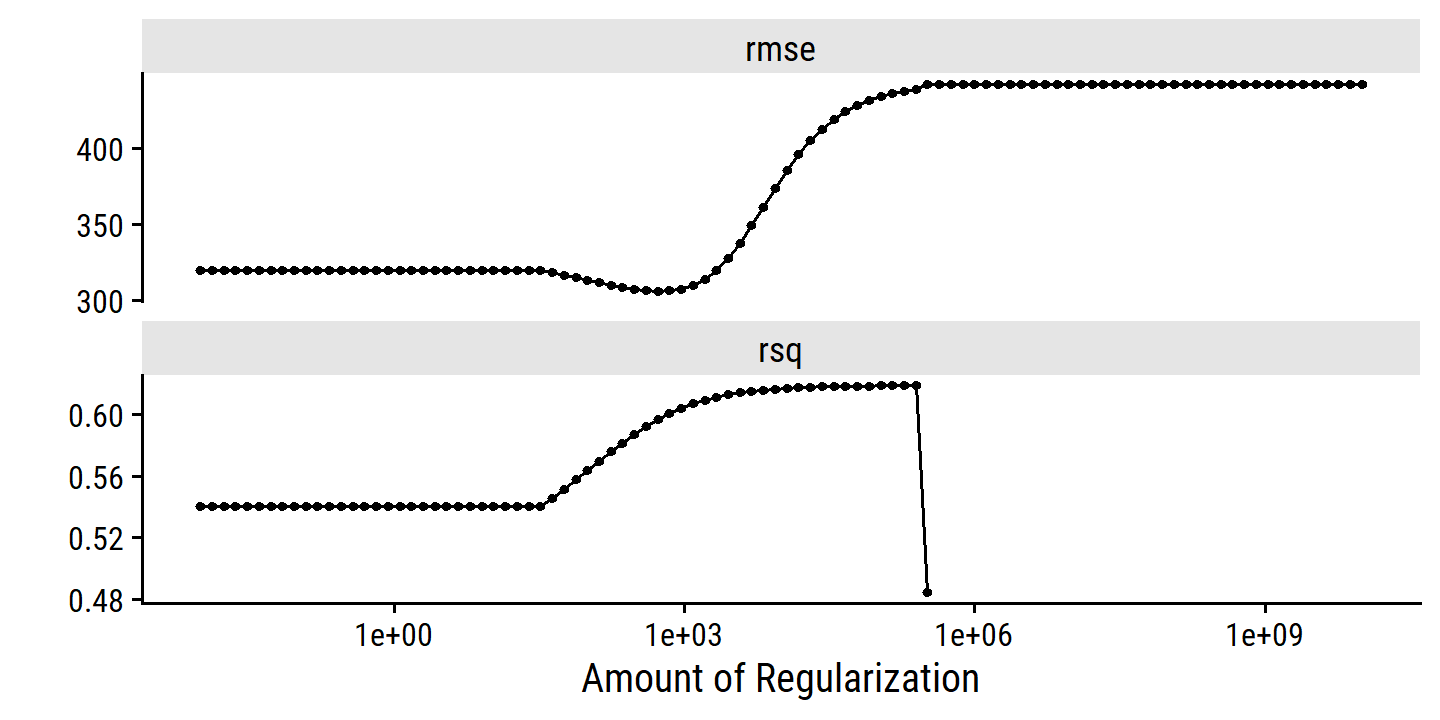

6.2.3 Selecting the Tuning Parameter

Just like subset selection requires a method to determine which models are best, these regularization methods require a method for selecting a value for the tuning parameter \(\lambda\) (or constraint \(s\)). Cross-validation is the simple way to tackle this. We choose a grid of \(\lambda\) values, and compute CV error for each value of \(\lambda\), and select the value for which error is smallest. The model is then re-fit using all available observations with the selected tuning parameter \(\lambda\).

As explained previously, the tidydmodels framework does not allow fitting by LOOCV.

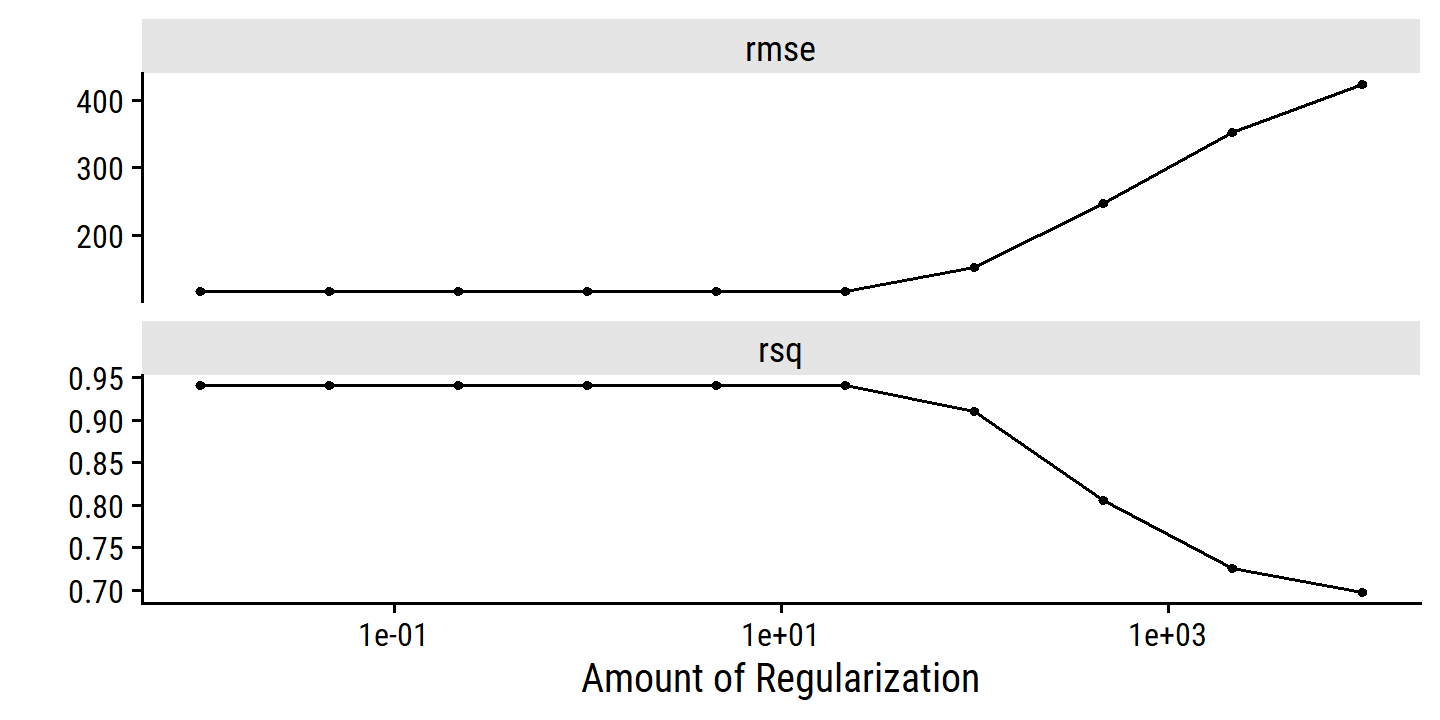

credit_splits <- vfold_cv(credit, v = 10)Then the recipe (nothing new here):

credit_ridge_recipe <- recipe(Balance ~ ., data = credit) %>%

step_dummy(all_nominal_predictors()) %>%

step_normalize(all_predictors())In the model specification, I set mixture = 0 for ridge regression, and set penalty = tune() to indicate it as a tunable parameter:

ridge_spec <- linear_reg(mixture = 0, penalty = tune()) %>%

set_engine("glmnet")Combine into a workflow:

credit_ridge_workflow <- workflow() %>%

add_recipe(credit_ridge_recipe) %>%

add_model(ridge_spec)

credit_ridge_workflow## ══ Workflow ════════════════════════════════════════════════════════════════════

## Preprocessor: Recipe

## Model: linear_reg()

##

## ── Preprocessor ────────────────────────────────────────────────────────────────

## 2 Recipe Steps

##

## • step_dummy()

## • step_normalize()

##

## ── Model ───────────────────────────────────────────────────────────────────────

## Linear Regression Model Specification (regression)

##

## Main Arguments:

## penalty = tune()

## mixture = 0

##

## Computational engine: glmnetLastly, because we are tuning penalty (\(\lambda\)), we need to define a grid of values to try when fitting the model.

The dials package provides many tools for tuning in tidymodels.

grid_regular() creates a grid of evenly spaced points.

As the first argument, I provide a penalty() with argument range that takes minimum and maximum values on a log scale:

penalty_grid <- grid_regular(penalty(range = c(-2, 4)), levels = 10)

penalty_grid## # A tibble: 10 × 1

## penalty

## <dbl>

## 1 0.01

## 2 0.0464

## 3 0.215

## 4 1

## 5 4.64

## 6 21.5

## 7 100

## 8 464.

## 9 2154.

## 10 10000To fit the models using this grid of values, we use tune_grid():

tic()

credit_ridge_tune <- tune_grid(

credit_ridge_workflow,

resamples = credit_splits,

grid = penalty_grid

)

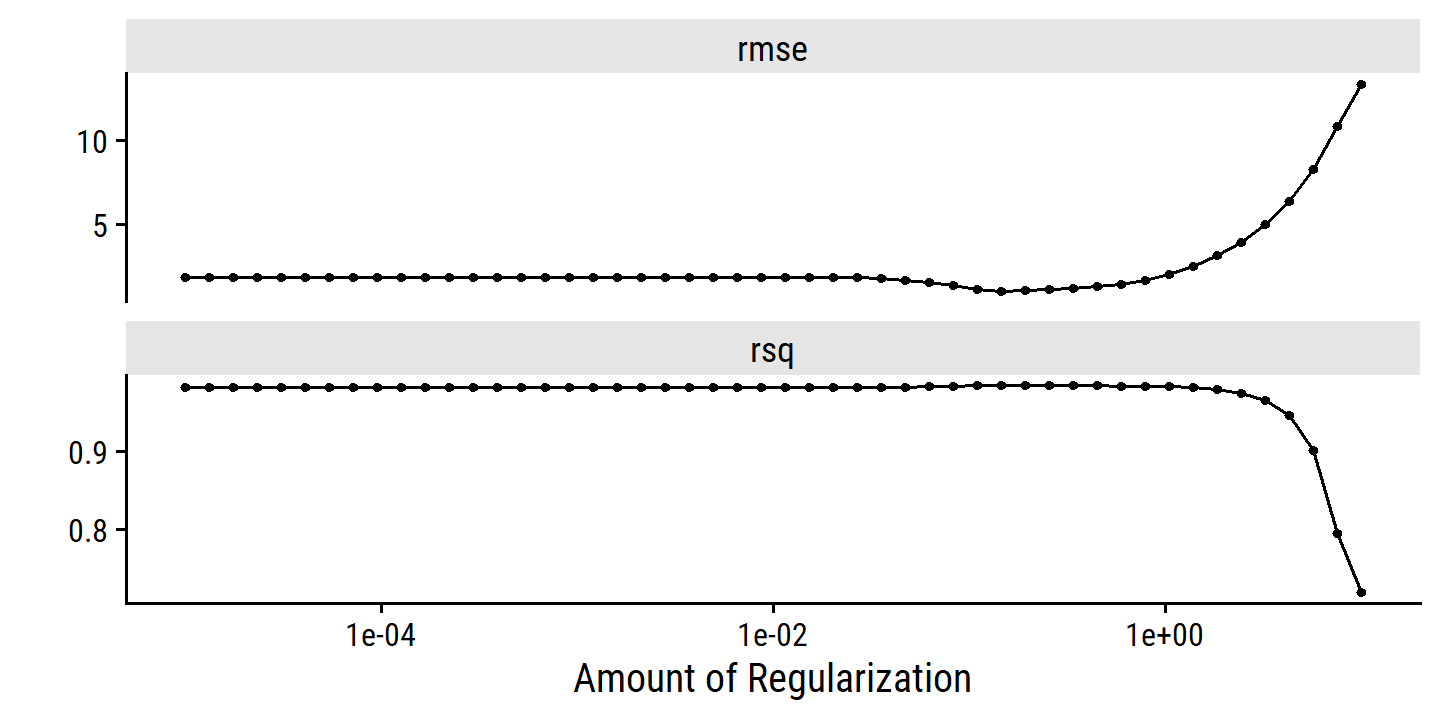

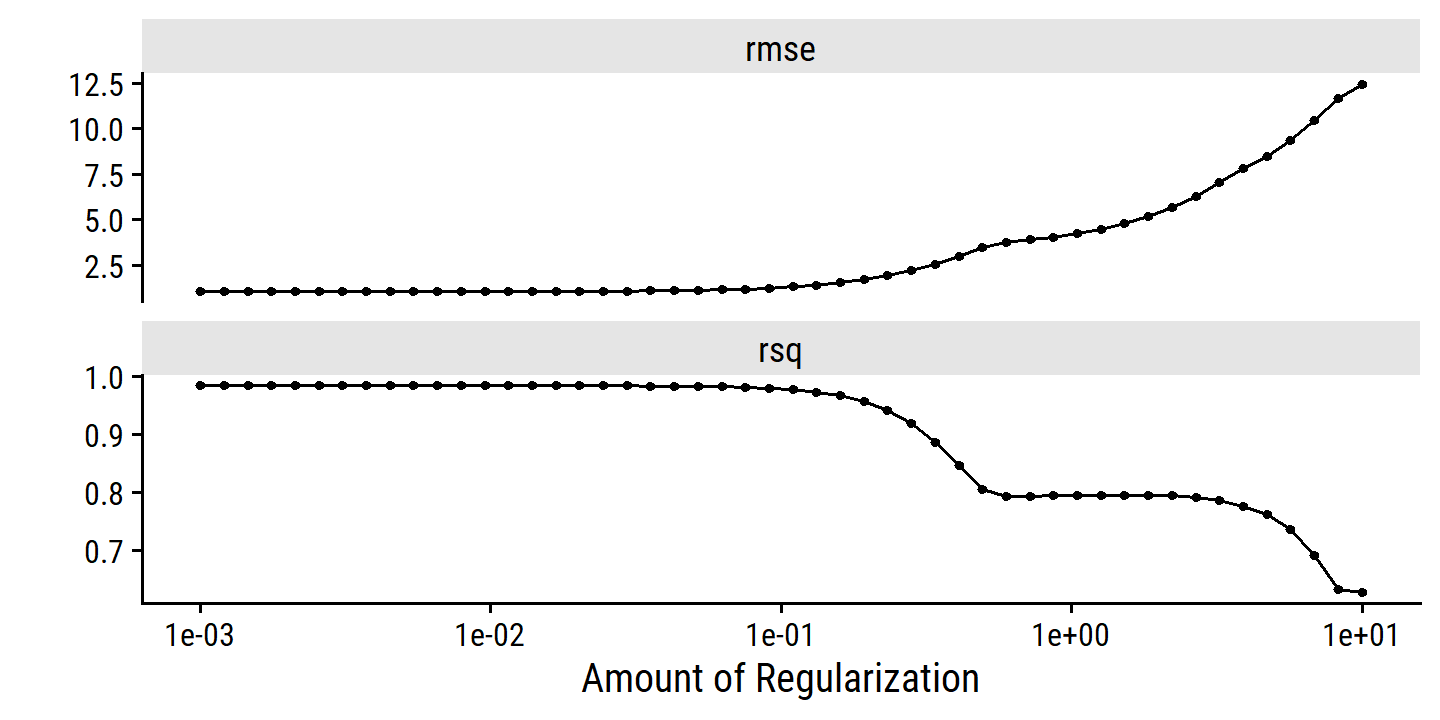

toc()## 2.36 sec elapsedcredit_ridge_tune %>% autoplot()

6.3 Dimension Reduction Methods

The methods that we have discussed so far in this chapter have controlled variance in two different ways, either by using a subset of the original variables, or by shrinking their coefficients toward zero. All of these methods are defined using the original predictors, \(X_1, X_2, \dots , X_p\). We now explore a class of approaches that transform the predictors and then fit a least squares model using the transformed variables. We will refer to these techniques as dimension reduction methods.

We represent our original \(p\) predictors \(X_j\) as \(M\) (\(< p\)) linear combinations:

\[ Z_m = \sum_{j=1}^p \phi_{jm} X_j \]

for some constants \(\phi_{1m}, \phi_{2m}, \dots \phi_{pm}\) , \(m = 1, \dots, M\). We can then fit a linear regression model:

\[ y_i = \theta_0 + \sum_{m=1}^M \theta_m z_{im} + \epsilon_i, \ \ \ \ i = 1, \dots, n, \]

using least squares. Note that the regression coefficients here are represented by \(\theta_0, \theta_1, \dots, \theta_M\).

If the constants \(\phi_{1m}, \phi_{2m}, \dots , \phi_{pm}\) are chosen wisely, then such dimension reduction approaches can often outperform least squares regression.

This method is called dimension reduction because it involves reducing the \(p+1\) coefficients \(\beta_0, \dots, \beta_p\) down to \(M + 1\) coefficients \(\theta_0, \dots, \theta_M\) (\(M < p\)).

Notice that the models are numerically equivalent:

\[ \sum_{m=1}^M \theta_m z_{im} = \sum_{m=1}^M \theta_m \sum_{j=1}^p \phi_{jm} x_{ij} = \sum_{j=1}^p \sum_{m=1}^M \theta_m \phi_{jm} x_{ij} = \sum_{j=1}^p \beta_j x_{ij}, \]

where

\[ \beta_j = \sum_{m=1}^M \theta_m \phi_{jm}. \]

Hence, this can be thought of as a special case of the original linear regression model. Dimension reduction serves to constrain the estimated \(\beta_j\) coefficients to the above form, which has the potential to bias the estimates. However, in situations where \(p\) is large relative to \(n\), selecting a value of \(M \ll p\) can significantly reduce the variance of the fitted coefficients.

All dimension reduction methods work in two steps. First, the transformed predictors \(Z_1, Z_2, \dots Z_M\) are obtained. Second, the model is fit using these \(M\) predictors. However, the choice of \(Z_1, Z_2, \dots Z_M\), or equivalently, the selection of the \(\phi_{jm}\)’s, can be achieved in different ways. In this chapter, we will consider two approaches for this task: principal components and partial least squares.

6.3.1 Principal Components Regression

An Overview of Principal Components Analysis

Principal components analysis (PCA) is a technique for reducing the dimension of an \(n \times p\) data matrix \(\textbf{X}\). The first principal component direction of the data is that along which the observations vary the most. If the observations were to be projected onto the line defined by this principal component, then the resulting projected observations would have the largest possible variance. Alternatively: the first principal component defines the line that is as close as possible to the data. The principal component can be summarized mathematically as a linear combination of the variables, \(Z_1\).

The second principal component \(Z_2\) is a linear combination of the variables that is uncorrelated with \(Z_1\), and has the largest variance subject to this constraint. Visually, the direction of this line must be perpendicular or orthogonal to the first principal component.

Up to \(p\) distinct principal components can be constructed, but the first component will contain the most information. Each component successively maximizes variance, subject to the constraint of being uncorrelated with the previous components.

The Principal Components Regression Approach

The principal components regression (PCR) approach involves constructing the first \(M\) principal components, \(Z_1, \dots, Z_M\), and then using these components as the predictors in a linear regression model that is fit using least squares. The key idea is that often a small number of principal components suffice to explain most of the variability in the data, as well as the relationship with the response. In other words, we assume that the directions in which \(X_1, \dots, X_p\) show the most variation are the directions that are associated with \(Y\). While this assumption is not guaranteed to be true, it often turns out to be a reasonable enough approximation to give good results.

If the assumption underlying PCR holds, then fitting a least squares model to \(Z_1,\dots, Z_M\) will lead to better results than fitting a least squares model to \(X_1,\dots,X_p\), since most or all of the information in the data that relates to the response is contained in \(Z_1,\dots,Z_M\), and by estimating only \(M ≪ p\) coefficients we can mitigate overfitting

It is important to note that, although PCR offers a simple way to perform regression using a smaller number of predictors (\(M < p\)), it is not a feature selection method. The \(M\) principal components used in the regression is a linear combination of all \(p\) of the original features. In this sense, PCR is more closely related to ridge regression than the lasso (because the lasso shrinks coefficients to exactly zero, essentially removing them). In fact, ridge regression can be considered a continuous version of PCR.

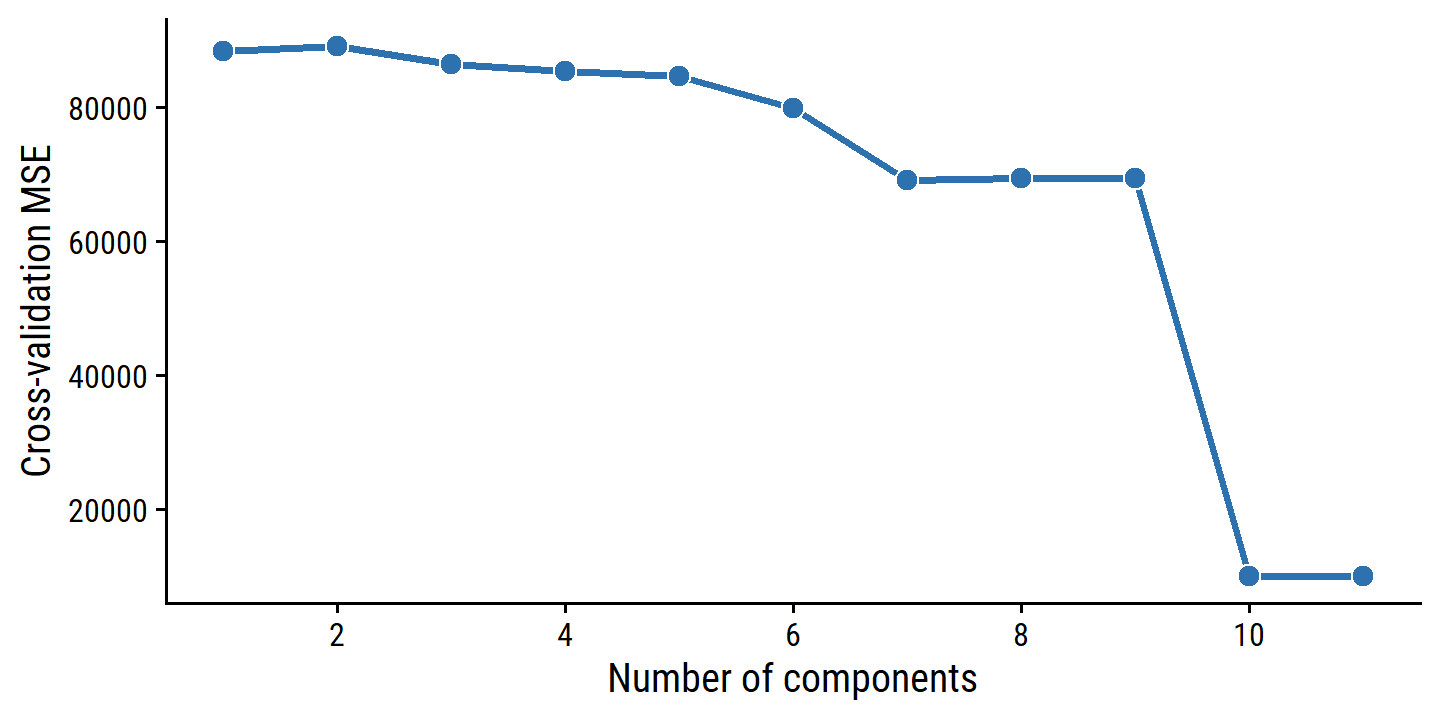

In tidymodels, principal components are extracted in pre-processing via recipes::step_pca().

Here is the workflow applied to the credit data set with a linear model:

lm_spec <- linear_reg() %>% set_engine("lm")

credit_splits <- vfold_cv(credit, v = 10)

credit_pca_recipe <- recipe(Balance ~ ., data = credit) %>%

step_dummy(all_nominal_predictors()) %>%

step_normalize(all_predictors()) %>%

step_pca(all_predictors(), num_comp = tune())

credit_pca_workflow <- workflow() %>%

add_recipe(credit_pca_recipe) %>%

add_model(lm_spec)The num_comp = tune() argument to step_pca() allows variation in the number of principal components \(M\).

To tune_grid(), I only need to provide a data frame of possible num_comp values, but here is the dials::grid_regular() approach to doing that:

pca_grid <- grid_regular(num_comp(range = c(1, 11)), levels = 11)

pca_grid## # A tibble: 11 × 1

## num_comp

## <int>

## 1 1

## 2 2

## 3 3

## 4 4

## 5 5

## 6 6

## 7 7

## 8 8

## 9 9

## 10 10

## 11 11Perform 10 fold cross-validation for PCR with 1 to 11 components:

credit_pca_tune <- tune_grid(

credit_pca_workflow,

resamples = credit_splits, grid = pca_grid,

# This option extracts the model fits, which are otherwise discarded

control = control_grid(extract = function(m) extract_fit_engine(m))

)And re-create the right panel of Figure 6.20:

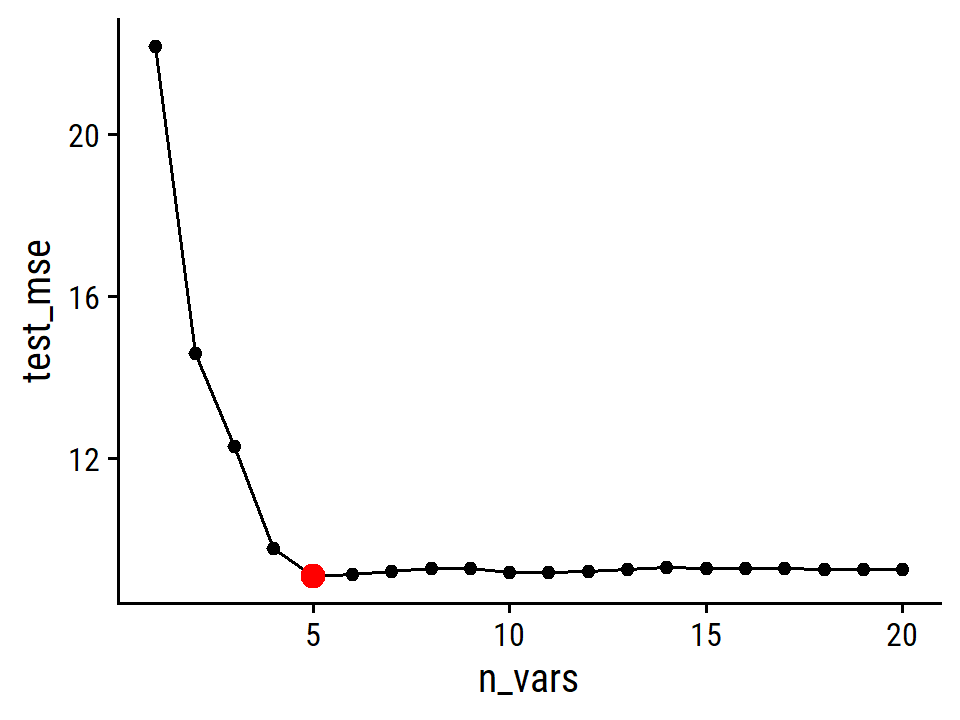

credit_pca_tune %>%

collect_metrics() %>%

filter(.metric == "rmse") %>%

mutate(mse = mean^2) %>%

ggplot(aes(x = num_comp, y = mse)) +

geom_line(color = td_colors$nice$spanish_blue, size = 1) +

geom_point(shape = 21, size = 3,

fill = td_colors$nice$spanish_blue, color = "white") +

scale_y_continuous("Cross-validation MSE",

breaks = c(20000, 40000, 60000, 80000)) +

scale_x_continuous("Number of components", breaks = c(2, 4, 6, 8, 10))

By MSE, the best performing models are \(M\) = 10 and 11 principal components, which mean that dimension reduction is not needed here because \(p = 11\).

Note the step_normalize() function in the recipe used here.

This is important when performing PCR:

We generally recommend standardizing each predictor, using (6.6), prior to generating the principal components. This standardization ensures that all variables are on the same scale. In the absence of standardization, the high-variance variables will tend to play a larger role in the principal components obtained, and the scale on which the variables are measured will ultimately have an effect on the final PCR model. However, if the variables are all measured in the same units (say, kilograms, or inches), then one might choose not to standardize them.

6.3.2 Partial Least Squares

The PCR approach that we just described involves identifying linear combinations, or directions, that best represent the predictors \(X1,\dots,X_p\). These directions are identified in an unsupervised way, since the response \(Y\) is not used to help determine the principal component directions. That is, the response does not supervise the identification of the principal components. Consequently, PCR suffers from a drawback: there is no guarantee that the directions that best explain the predictors will also be the best directions to use for predicting the response. Unsupervised methods are discussed further in Chapter 12.

Like PCR, partial least squares (PLS) is a dimension reduction method which identifies a set of features \(Z_1, \dots, Z_M\) that are linear combinations of the \(p\) original predictors. Unlike PCR, PLS identifies the new features which are related to the response \(Y\). In this way, PLS is a supervised alternative to PCR. Roughly speaking, the PLS approach attempts to find directions that help explain both the response and the predictors.

After standardizing the \(p\) predictors, the first PLS direction \(Z_1 = \sum_{j=1}^p \phi_{j1} X_j\) is found from the simple linear regression of \(Y\) onto \(X_j\). This means that PLS places the highest weight on the variables that are most strongly related to the response. For the second PLS direction, each of the variables is adjusted by regression on \(Z_1\) and taking residuals. These residuals can be interpreted as the remanining information that has not been explained by the first PLS direction. \(Z_2\) is then computed using this orthogonalized data in the same way as \(Z_1\) was computed on the original data. This iterative approach is repeated \(M\) times, and the final step is to fit the linear model to predict \(Y\) using \(Z_1, \dots, Z_M\) in exactly the same way as for PCR.

As with PCR, the number \(M\) of partial least squares directions used in PLS is a tuning parameter that is typically chosen by cross-validation. We generally standardize the predictors and response before performing PLS.

In practice, PLS often performs no better than ridge regression or PCR. It can often reduce bias, but it also has the potential to increase variance, so it evens out relative to other methods.

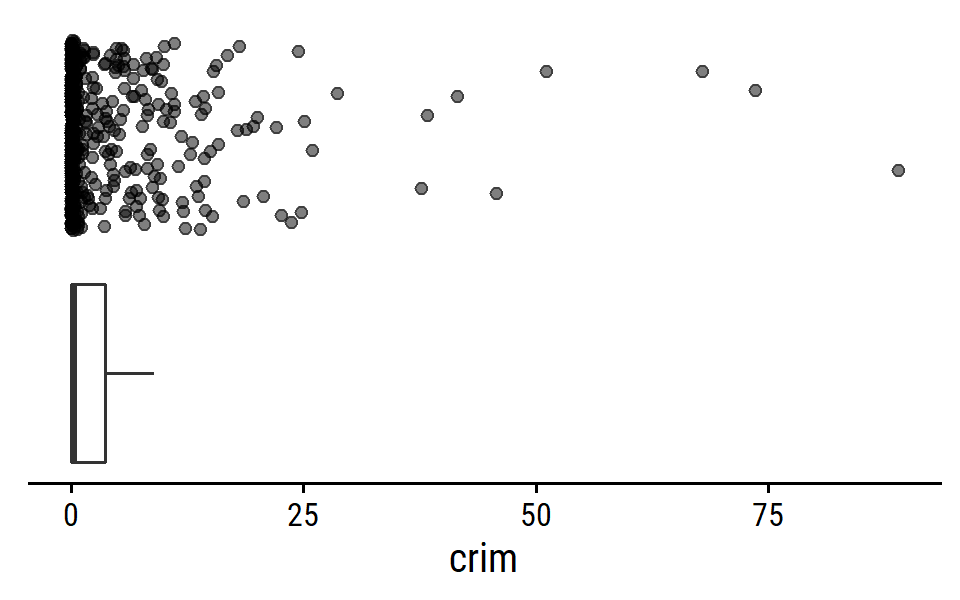

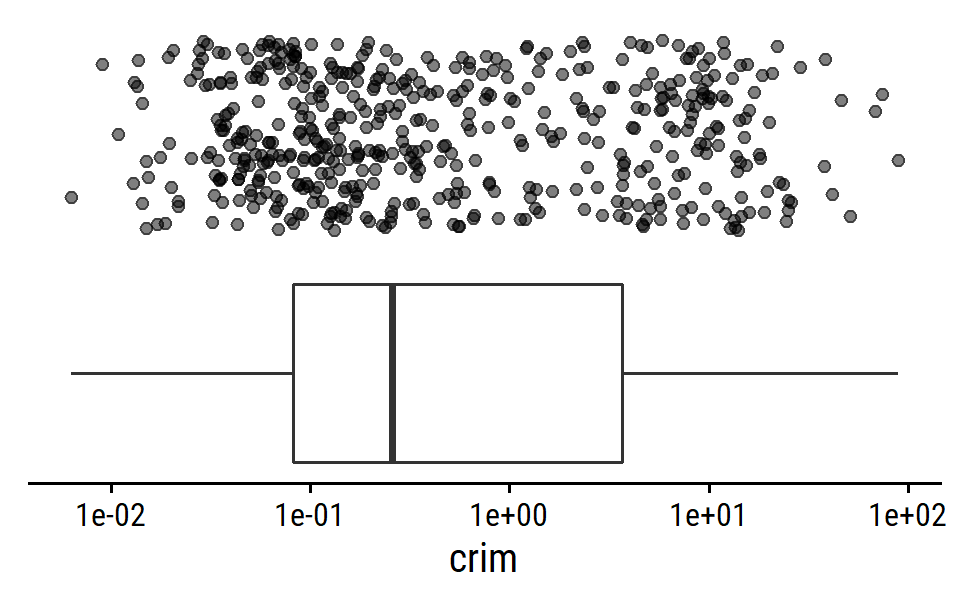

6.4 Considerations in High Dimensions

6.4.1 High-Dimensional Data

Most traditional statistical techniques for regression and classification are intended for the low-dimensional setting in which \(n\), the number of observations, is much greater than \(p\), the number of features. This is due in part to the fact that throughout most of the field’s history, the bulk of scientific problems requiring the use of statistics have been low-dimensional.

To be clear, by dimension, we are referring to the size of \(p\).

In the past 20 years, new technologies have changed the way that data are collected in fields as diverse as finance, marketing, and medicine. It is now commonplace to collect an almost unlimited number of feature measurements (\(p\) very large). While \(p\) can be extremely large, the number of observations \(n\) is often limited due to cost, sample availability, or other considerations.

These high-dimensional problems, in which the number of features \(p\) is larger than observations \(n\), are becoming more commonplace.

6.4.2 What Goes Wrong in High Dimensions?

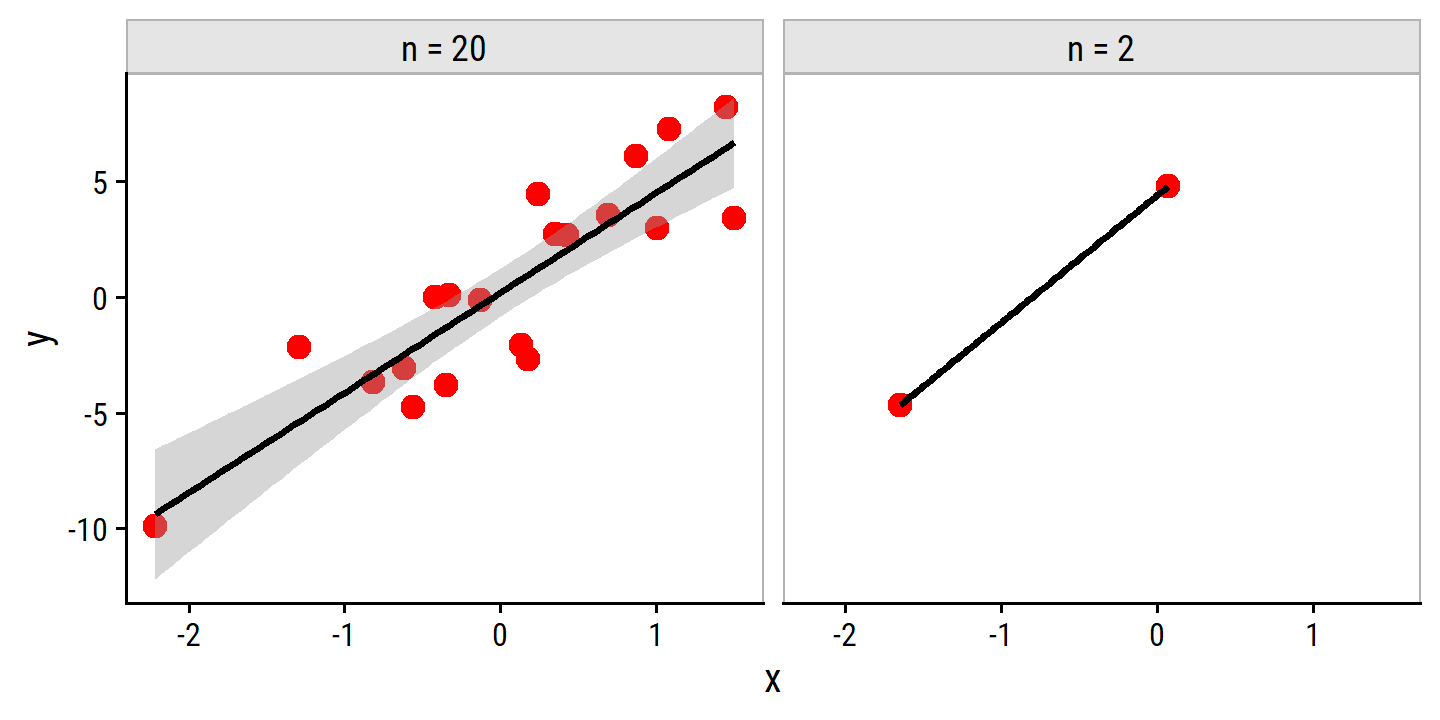

To illustrate the \(p > n\) issue, we examine least squares regression (but the same concepts apply to logistic regression, linear discriminant analysis and others). Least squares cannot be (or rather should not) be performed in this setting because it will yield coefficient estimates that perfectly fit the data, such that the residuals are zero.

tibble(n_obs = c(2, 20)) %>%

mutate(x = map(n_obs, rnorm)) %>%

unnest(x) %>%

mutate(y = 5 * x + rnorm(n(), 0, 3)) %>%

ggplot(aes(x, y)) +

geom_point(color = "red", size = 3) +

geom_smooth(method = "lm", formula = "y ~ x", color = "black") +

facet_wrap(~ fct_rev(paste0("n = ", as.character(n_obs)))) +

add_facet_borders()## Warning in qt((1 - level)/2, df): NaNs produced## Warning in max(ids, na.rm = TRUE): no non-missing arguments to max; returning

## -Inf

Note the warning returned by geom_smooth (NaNs and Infs produced) and the lack of errors around the best fit line in the right panel.

On the other hand, when there are only two observations, then regardless of the values of those observations, the regression line will fit the data exactly. This is problematic because this perfect fit will almost certainly lead to overfitting of the data. In other words, though it is possible to perfectly fit the training data in the high-dimensional setting, the resulting linear model will perform extremely poorly on an independent test set, and therefore does not constitute a useful model. In fact, we can see that this happened in Figure 6.22: the least squares line obtained in the right-hand panel will perform very poorly on a test set comprised of the observations in the lefth-and panel. The problem is simple: when \(p>n\) or \(p \approx n\), a simple least squares regression line is too flexible and hence overfits the data.

Previously we saw a number of approaches for adjusting the training set error to account for the number of variables used to fit a least squares model. Unfortunately, the \(C_p\), AIC, and BIC approaches are not appropriate in this setting, because estimating \(\hat{\sigma}^2\) is problematic). Similarly, a model can easily obtain an adjusted \(R^2\) value of 1. Clearly, alternative approaches that are better-suited to the high-dimensional setting are required.

6.4.3 Regression in High Dimensions

The methods learned in this chapter – stepwise selection, ridge and lasso regression, and principal components regression – are useful for performing regression in the high-dimensional setting because they are less flexible fitting procedures.

The lasso example in Figure 6.24 highlights three important points:

- regularlization or shrinkage plays a key role in high-dimensional problems,

- appropriate tuning parameter selection is crucial for good predictive performance, and

- the test error tends to increase as the dimensionality of the problem (i.e. the number of feature or predictors) increases, unless those additional features are truly associated with the response.

The third point is known as the curve of dimensionality. In general, adding additional signal features that are truly associated with the response will improve the fitted model (and a reduction in test set error). However, adding noise features that are not truly associated with teh response will lead to a worse fitted model (and an increase in test set error).

Thus, we see that new technologies that allow for the collection of measurements for thousands or millions of features are a double-edged sword: they can lead to improved predictive models if these features are in fact relevant to the problem at hand, but will lead to worse results if the features are not relevant. Even if they are relevant, the variance incurred in fitting their coefficients may outweigh the reduction in bias that they bring.

6.4.4 Interpreting Results in High Dimensions

When performing lasso, ridge or other regression in the high-dimensional setting, multicollinearity (where variables in a regression are correlated with each other) can be a big problem. Any variable in the model can be written as a linear combination of all other variables in the model, so we can never know exactly which variables (if any) truly are predictive of the outcome, and can never identify the best coefficients for use in regression. A model like this can still have very high predictive value, but we must be careful not to overstate the results and make it clear that we have identified one of many possible models for predicting the outcome, and that is must be further validated on independent data sets. It is also important to be careful in reporting errors. We have seen in the example that when \(p > n\), it is easy to obtain a useless model that has zero residuals. In this case, traditional measures of model fit (e.g. \(R^2\)) are misleading; instead, models should be assessed on an independent test set or cross-validation errors.

6.5 Lab: Linear Models and Regularization Methods

6.5.1 Subset Selection Methods

Best Subset Selection

Here, we aim to predict a baseball player’s Salary from performance statistics in the previous year:

hitters <- as_tibble(ISLR2::Hitters) %>%

filter(!is.na(Salary))

glimpse(hitters)## Rows: 263

## Columns: 20

## $ AtBat <int> 315, 479, 496, 321, 594, 185, 298, 323, 401, 574, 202, 418, …

## $ Hits <int> 81, 130, 141, 87, 169, 37, 73, 81, 92, 159, 53, 113, 60, 43,…

## $ HmRun <int> 7, 18, 20, 10, 4, 1, 0, 6, 17, 21, 4, 13, 0, 7, 20, 2, 8, 16…

## $ Runs <int> 24, 66, 65, 39, 74, 23, 24, 26, 49, 107, 31, 48, 30, 29, 89,…

## $ RBI <int> 38, 72, 78, 42, 51, 8, 24, 32, 66, 75, 26, 61, 11, 27, 75, 8…

## $ Walks <int> 39, 76, 37, 30, 35, 21, 7, 8, 65, 59, 27, 47, 22, 30, 73, 15…

## $ Years <int> 14, 3, 11, 2, 11, 2, 3, 2, 13, 10, 9, 4, 6, 13, 15, 5, 8, 1,…

## $ CAtBat <int> 3449, 1624, 5628, 396, 4408, 214, 509, 341, 5206, 4631, 1876…

## $ CHits <int> 835, 457, 1575, 101, 1133, 42, 108, 86, 1332, 1300, 467, 392…

## $ CHmRun <int> 69, 63, 225, 12, 19, 1, 0, 6, 253, 90, 15, 41, 4, 36, 177, 5…

## $ CRuns <int> 321, 224, 828, 48, 501, 30, 41, 32, 784, 702, 192, 205, 309,…

## $ CRBI <int> 414, 266, 838, 46, 336, 9, 37, 34, 890, 504, 186, 204, 103, …

## $ CWalks <int> 375, 263, 354, 33, 194, 24, 12, 8, 866, 488, 161, 203, 207, …

## $ League <fct> N, A, N, N, A, N, A, N, A, A, N, N, A, N, N, A, N, N, A, N, …

## $ Division <fct> W, W, E, E, W, E, W, W, E, E, W, E, E, E, W, W, W, E, W, W, …

## $ PutOuts <int> 632, 880, 200, 805, 282, 76, 121, 143, 0, 238, 304, 211, 121…

## $ Assists <int> 43, 82, 11, 40, 421, 127, 283, 290, 0, 445, 45, 11, 151, 45,…

## $ Errors <int> 10, 14, 3, 4, 25, 7, 9, 19, 0, 22, 11, 7, 6, 8, 10, 16, 2, 5…

## $ Salary <dbl> 475.000, 480.000, 500.000, 91.500, 750.000, 70.000, 100.000,…

## $ NewLeague <fct> N, A, N, N, A, A, A, N, A, A, N, N, A, N, N, A, N, N, N, N, …As in the text, I’ll use the leaps package to perform best subset selection via RSS.

library(leaps)

regsubsets_hitters_salary <- regsubsets(Salary ~ ., data = hitters)

summary(regsubsets_hitters_salary)## Subset selection object

## Call: regsubsets.formula(Salary ~ ., data = hitters)

## 19 Variables (and intercept)

## Forced in Forced out

## AtBat FALSE FALSE

## Hits FALSE FALSE

## HmRun FALSE FALSE

## Runs FALSE FALSE

## RBI FALSE FALSE

## Walks FALSE FALSE

## Years FALSE FALSE

## CAtBat FALSE FALSE

## CHits FALSE FALSE

## CHmRun FALSE FALSE

## CRuns FALSE FALSE

## CRBI FALSE FALSE

## CWalks FALSE FALSE

## LeagueN FALSE FALSE

## DivisionW FALSE FALSE

## PutOuts FALSE FALSE

## Assists FALSE FALSE

## Errors FALSE FALSE

## NewLeagueN FALSE FALSE

## 1 subsets of each size up to 8

## Selection Algorithm: exhaustive

## AtBat Hits HmRun Runs RBI Walks Years CAtBat CHits CHmRun CRuns CRBI

## 1 ( 1 ) " " " " " " " " " " " " " " " " " " " " " " "*"

## 2 ( 1 ) " " "*" " " " " " " " " " " " " " " " " " " "*"

## 3 ( 1 ) " " "*" " " " " " " " " " " " " " " " " " " "*"

## 4 ( 1 ) " " "*" " " " " " " " " " " " " " " " " " " "*"

## 5 ( 1 ) "*" "*" " " " " " " " " " " " " " " " " " " "*"

## 6 ( 1 ) "*" "*" " " " " " " "*" " " " " " " " " " " "*"

## 7 ( 1 ) " " "*" " " " " " " "*" " " "*" "*" "*" " " " "

## 8 ( 1 ) "*" "*" " " " " " " "*" " " " " " " "*" "*" " "

## CWalks LeagueN DivisionW PutOuts Assists Errors NewLeagueN

## 1 ( 1 ) " " " " " " " " " " " " " "

## 2 ( 1 ) " " " " " " " " " " " " " "

## 3 ( 1 ) " " " " " " "*" " " " " " "

## 4 ( 1 ) " " " " "*" "*" " " " " " "

## 5 ( 1 ) " " " " "*" "*" " " " " " "

## 6 ( 1 ) " " " " "*" "*" " " " " " "

## 7 ( 1 ) " " " " "*" "*" " " " " " "

## 8 ( 1 ) "*" " " "*" "*" " " " " " "An asterisk indicates the variable is included in the model, so the best variables in the three-predictor model are Hits, CRBI and PutOuts.

Note that there is a broom::tidy() function available for these objects:

broom::tidy(regsubsets_hitters_salary) %>%

glimpse()## Rows: 8

## Columns: 24

## $ `(Intercept)` <lgl> TRUE, TRUE, TRUE, TRUE, TRUE, TRUE, TRUE, TRUE

## $ AtBat <lgl> FALSE, FALSE, FALSE, FALSE, TRUE, TRUE, FALSE, TRUE

## $ Hits <lgl> FALSE, TRUE, TRUE, TRUE, TRUE, TRUE, TRUE, TRUE

## $ HmRun <lgl> FALSE, FALSE, FALSE, FALSE, FALSE, FALSE, FALSE, FALSE

## $ Runs <lgl> FALSE, FALSE, FALSE, FALSE, FALSE, FALSE, FALSE, FALSE

## $ RBI <lgl> FALSE, FALSE, FALSE, FALSE, FALSE, FALSE, FALSE, FALSE

## $ Walks <lgl> FALSE, FALSE, FALSE, FALSE, FALSE, TRUE, TRUE, TRUE

## $ Years <lgl> FALSE, FALSE, FALSE, FALSE, FALSE, FALSE, FALSE, FALSE

## $ CAtBat <lgl> FALSE, FALSE, FALSE, FALSE, FALSE, FALSE, TRUE, FALSE

## $ CHits <lgl> FALSE, FALSE, FALSE, FALSE, FALSE, FALSE, TRUE, FALSE

## $ CHmRun <lgl> FALSE, FALSE, FALSE, FALSE, FALSE, FALSE, TRUE, TRUE

## $ CRuns <lgl> FALSE, FALSE, FALSE, FALSE, FALSE, FALSE, FALSE, TRUE

## $ CRBI <lgl> TRUE, TRUE, TRUE, TRUE, TRUE, TRUE, FALSE, FALSE

## $ CWalks <lgl> FALSE, FALSE, FALSE, FALSE, FALSE, FALSE, FALSE, TRUE

## $ LeagueN <lgl> FALSE, FALSE, FALSE, FALSE, FALSE, FALSE, FALSE, FALSE

## $ DivisionW <lgl> FALSE, FALSE, FALSE, TRUE, TRUE, TRUE, TRUE, TRUE

## $ PutOuts <lgl> FALSE, FALSE, TRUE, TRUE, TRUE, TRUE, TRUE, TRUE

## $ Assists <lgl> FALSE, FALSE, FALSE, FALSE, FALSE, FALSE, FALSE, FALSE

## $ Errors <lgl> FALSE, FALSE, FALSE, FALSE, FALSE, FALSE, FALSE, FALSE

## $ NewLeagueN <lgl> FALSE, FALSE, FALSE, FALSE, FALSE, FALSE, FALSE, FALSE

## $ r.squared <dbl> 0.3214501, 0.4252237, 0.4514294, 0.4754067, 0.4908036, 0…

## $ adj.r.squared <dbl> 0.3188503, 0.4208024, 0.4450753, 0.4672734, 0.4808971, 0…

## $ BIC <dbl> -90.84637, -128.92622, -135.62693, -141.80892, -144.0714…

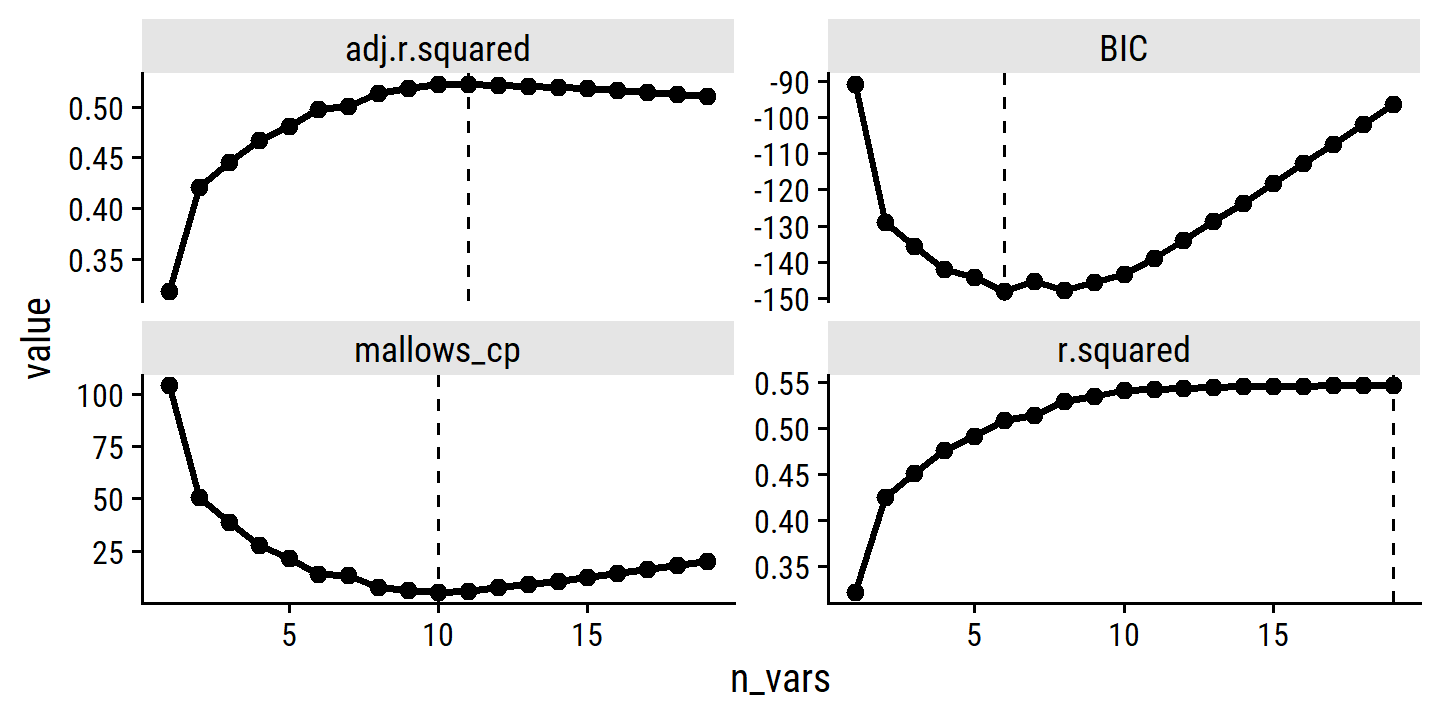

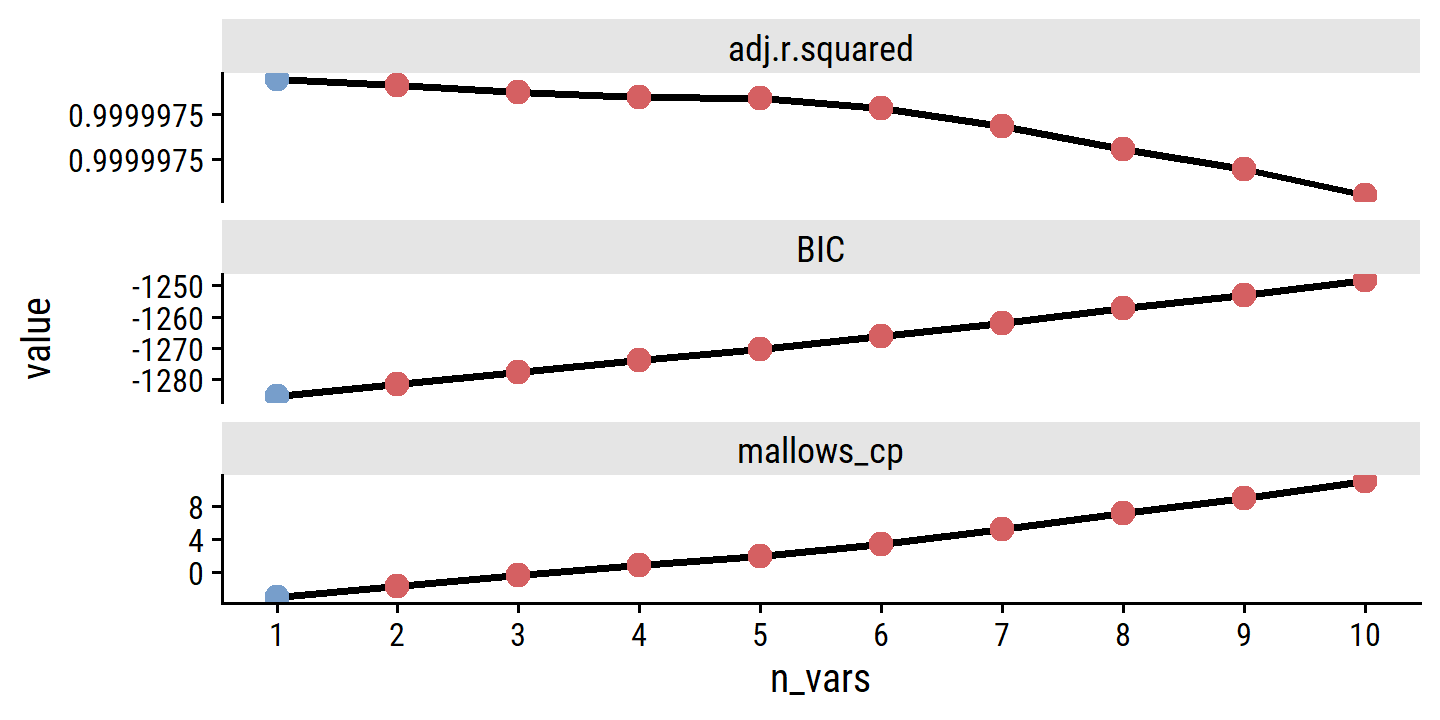

## $ mallows_cp <dbl> 104.281319, 50.723090, 38.693127, 27.856220, 21.613011, …Instead of a formatted table of asterisks, this gives us a tibble of booleans which are nice to work with for producing custom outputs like:

broom::tidy(regsubsets_hitters_salary) %>%

select(-`(Intercept)`) %>%

rownames_to_column(var = "n_vars") %>%

gt(rowname_col = "n_vars") %>%

gt::data_color(

columns = AtBat:NewLeagueN,

colors = col_numeric(

palette = c(td_colors$nice$soft_orange, td_colors$nice$lime_green),

domain = c(0, 1))

) %>%

gt::fmt_number(r.squared:mallows_cp, n_sigfig = 4)| AtBat | Hits | HmRun | Runs | RBI | Walks | Years | CAtBat | CHits | CHmRun | CRuns | CRBI | CWalks | LeagueN | DivisionW | PutOuts | Assists | Errors | NewLeagueN | r.squared | adj.r.squared | BIC | mallows_cp | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | FALSE | FALSE | FALSE | FALSE | FALSE | FALSE | FALSE | FALSE | FALSE | FALSE | FALSE | TRUE | FALSE | FALSE | FALSE | FALSE | FALSE | FALSE | FALSE | 0.3215 | 0.3189 | −90.85 | 104.3 |

| 2 | FALSE | TRUE | FALSE | FALSE | FALSE | FALSE | FALSE | FALSE | FALSE | FALSE | FALSE | TRUE | FALSE | FALSE | FALSE | FALSE | FALSE | FALSE | FALSE | 0.4252 | 0.4208 | −128.9 | 50.72 |

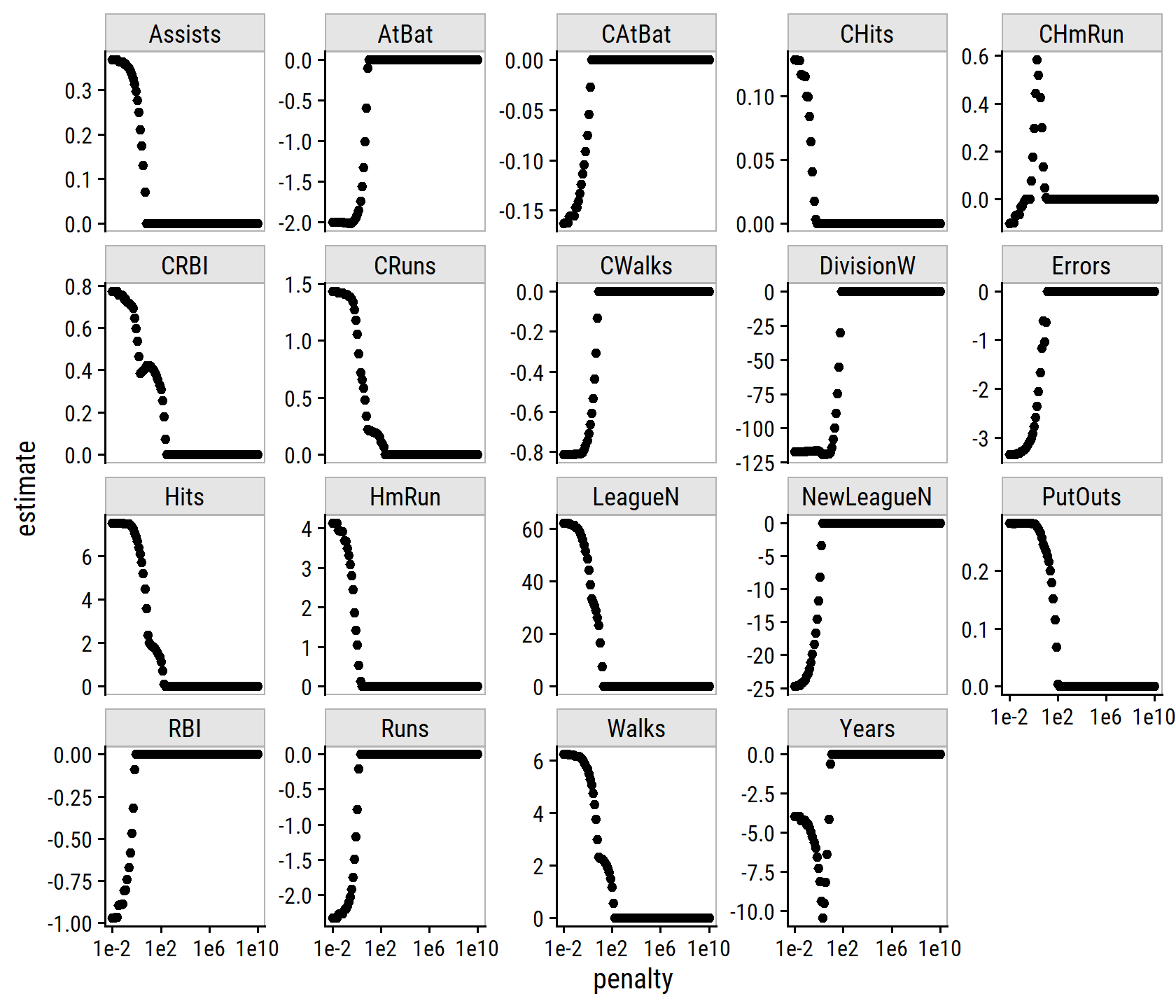

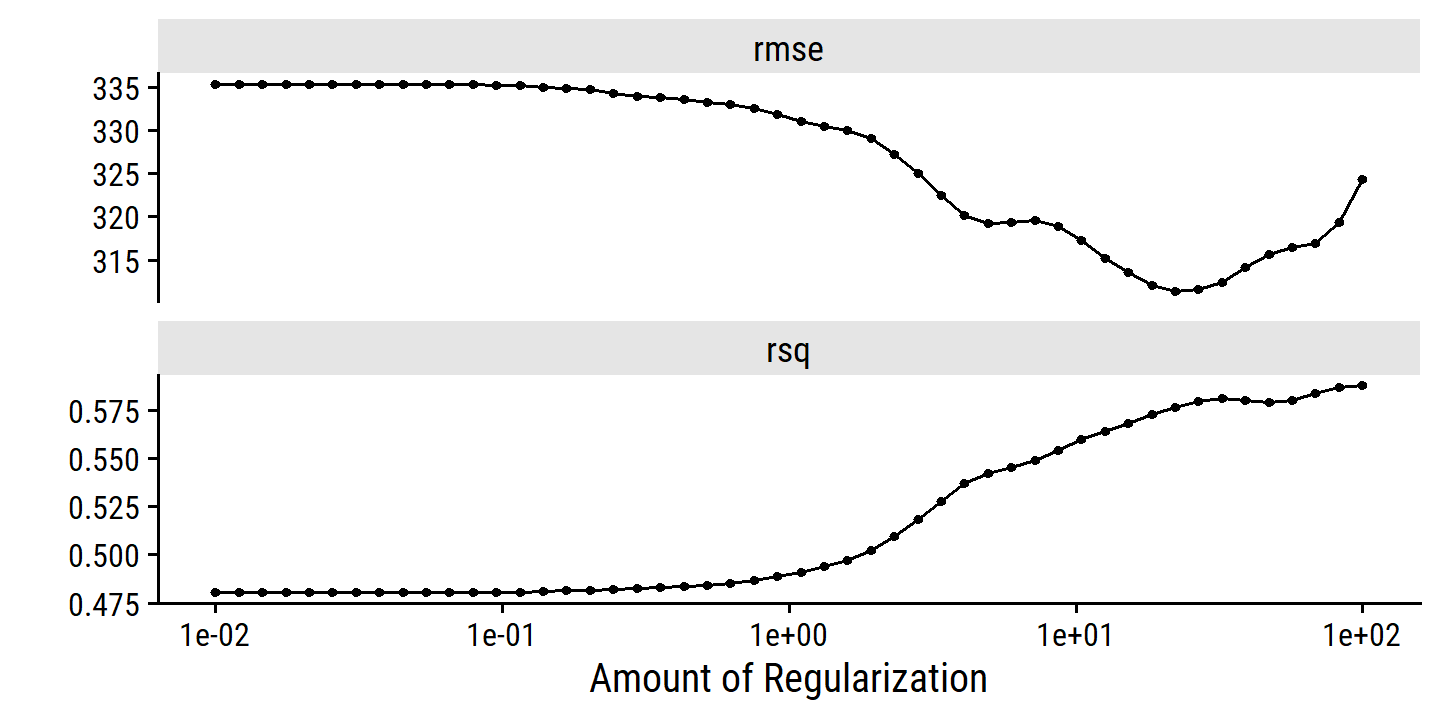

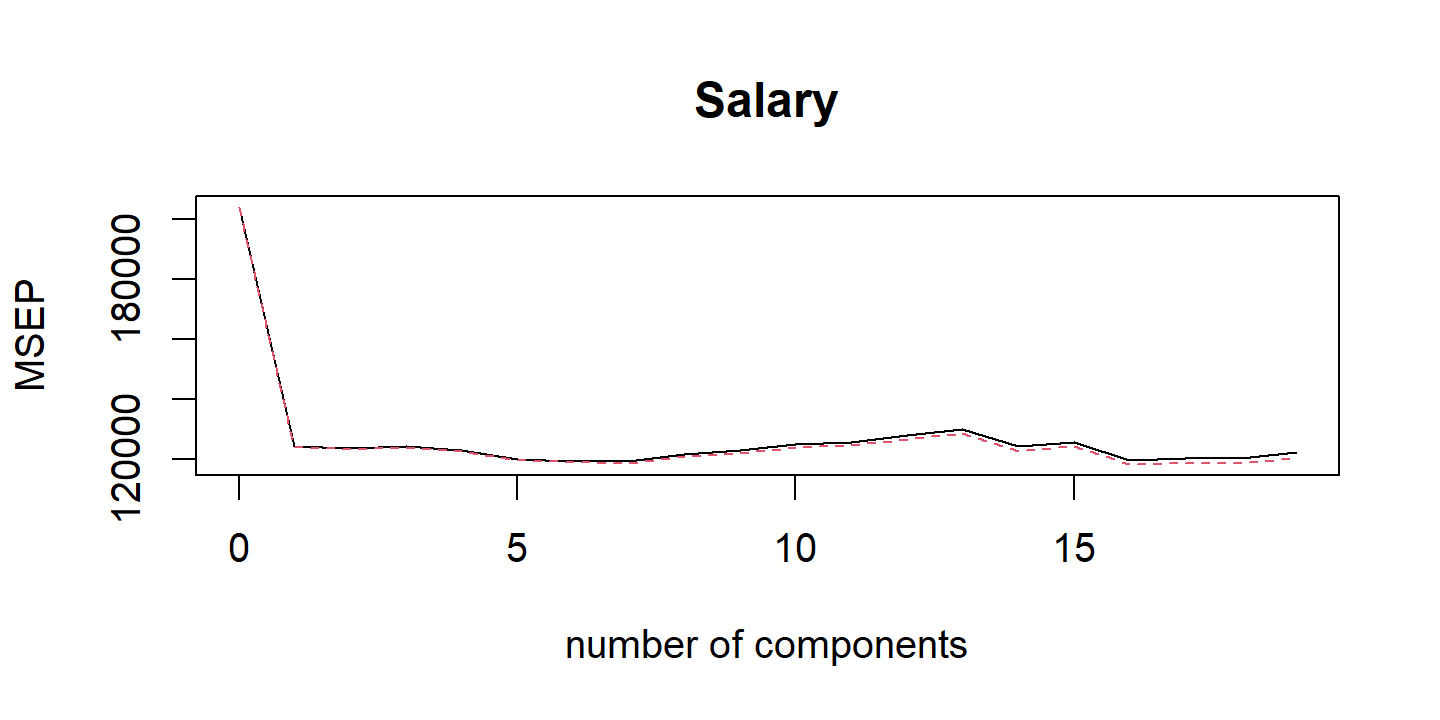

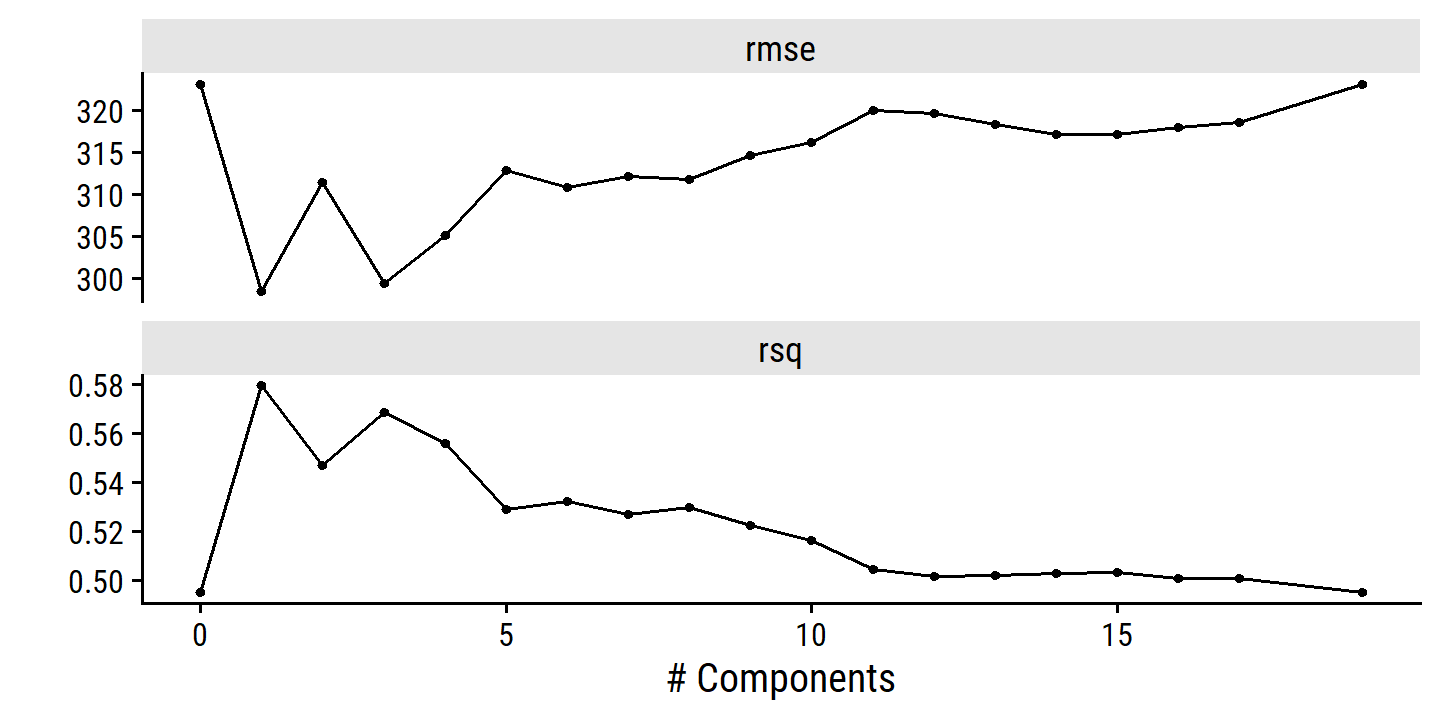

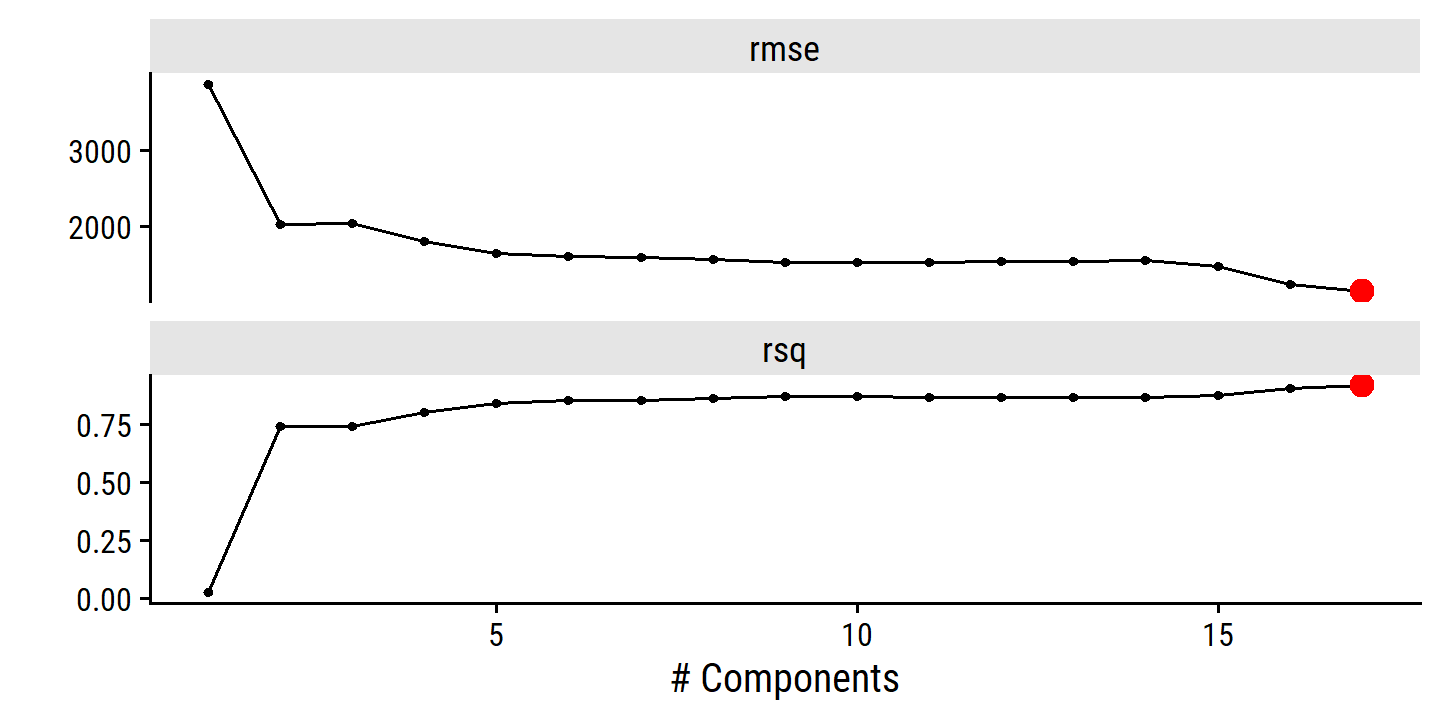

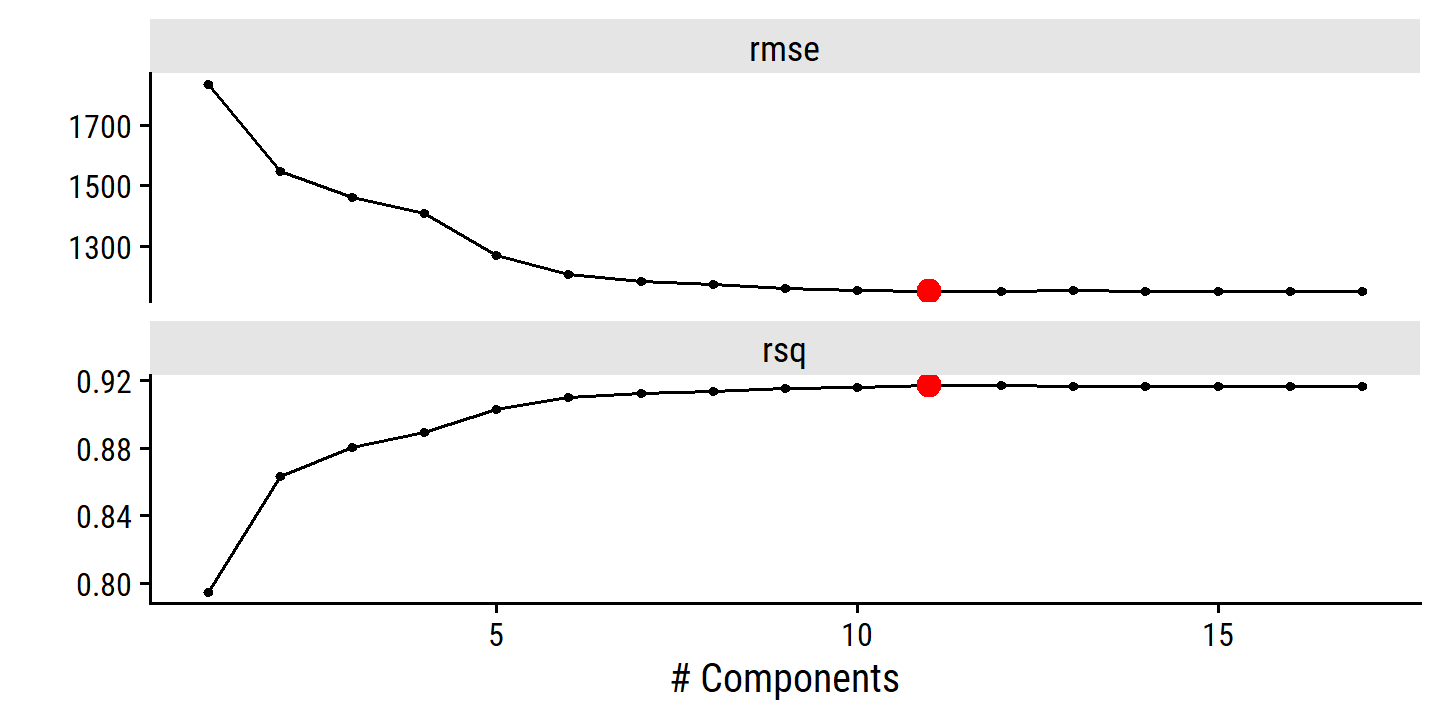

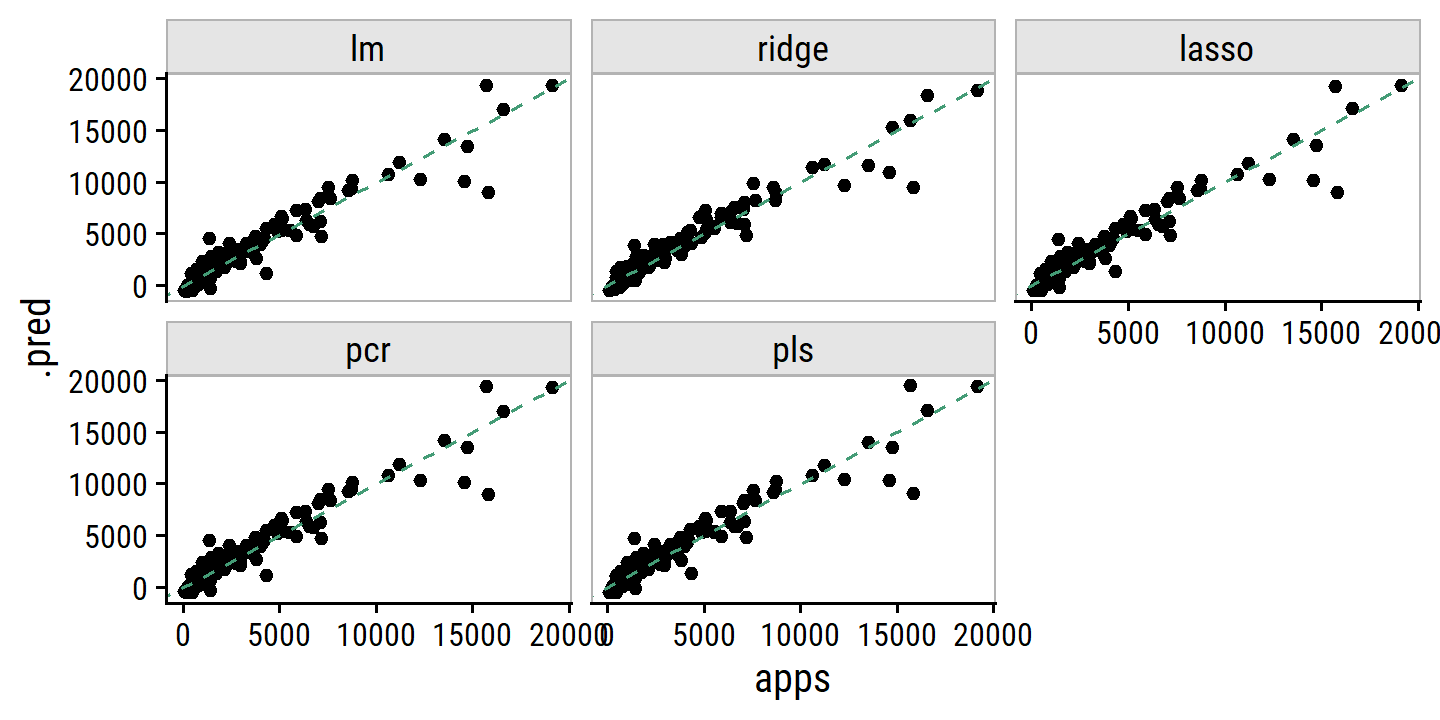

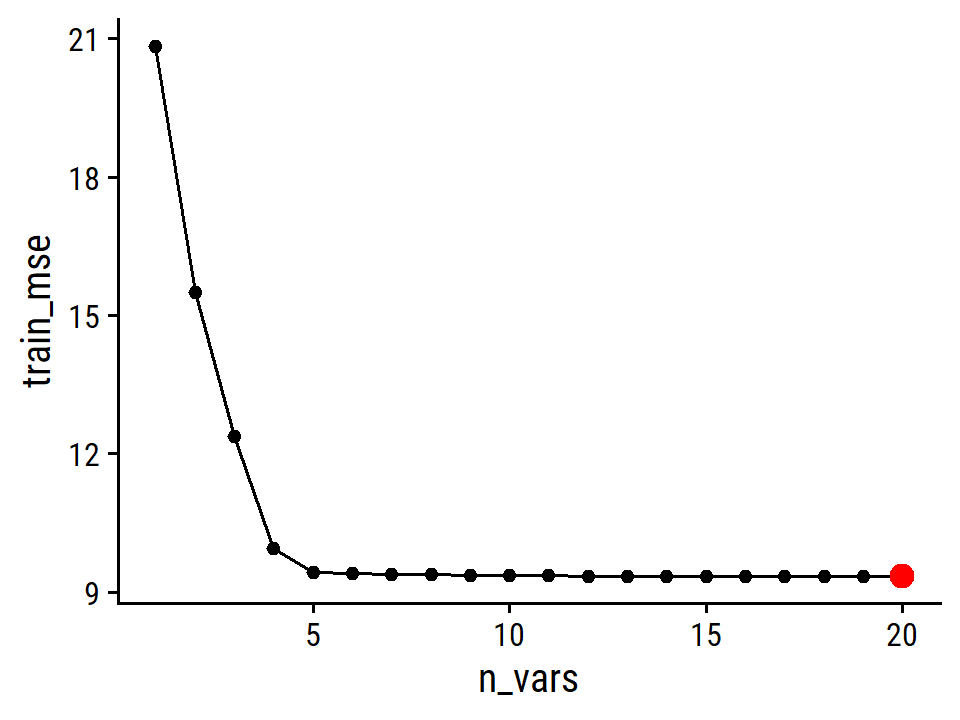

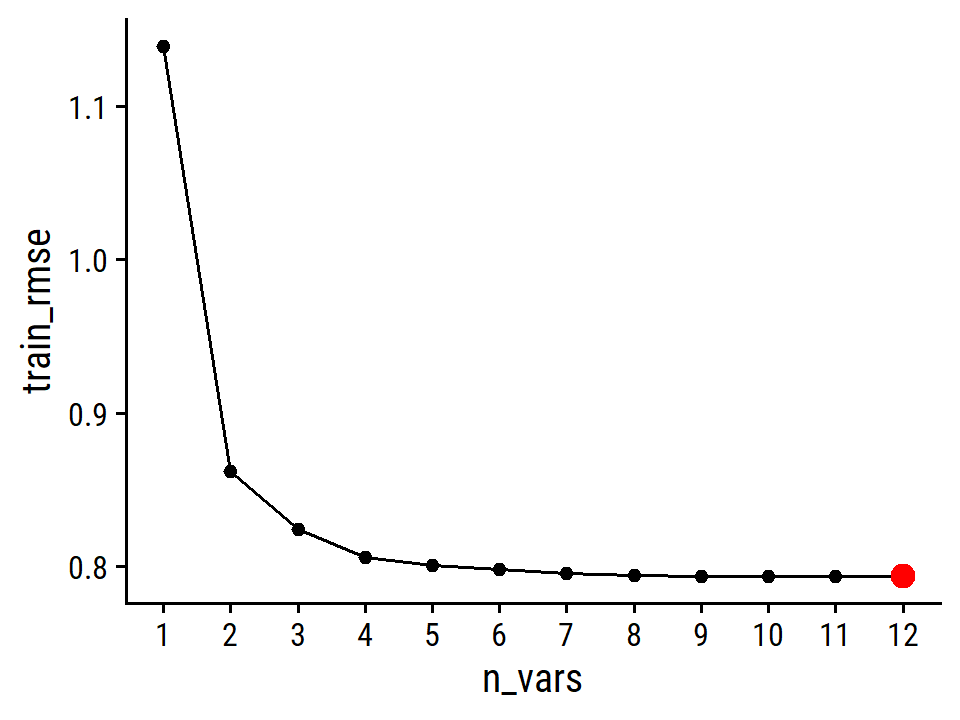

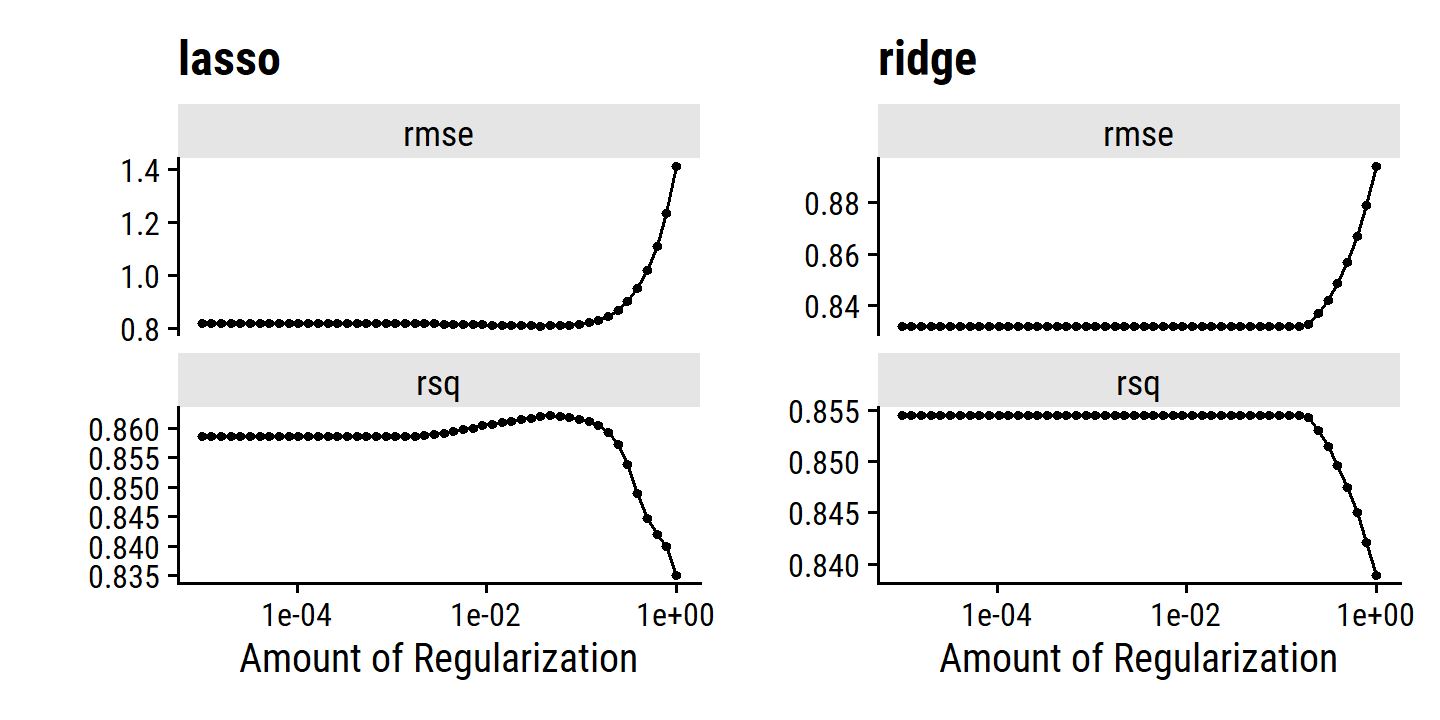

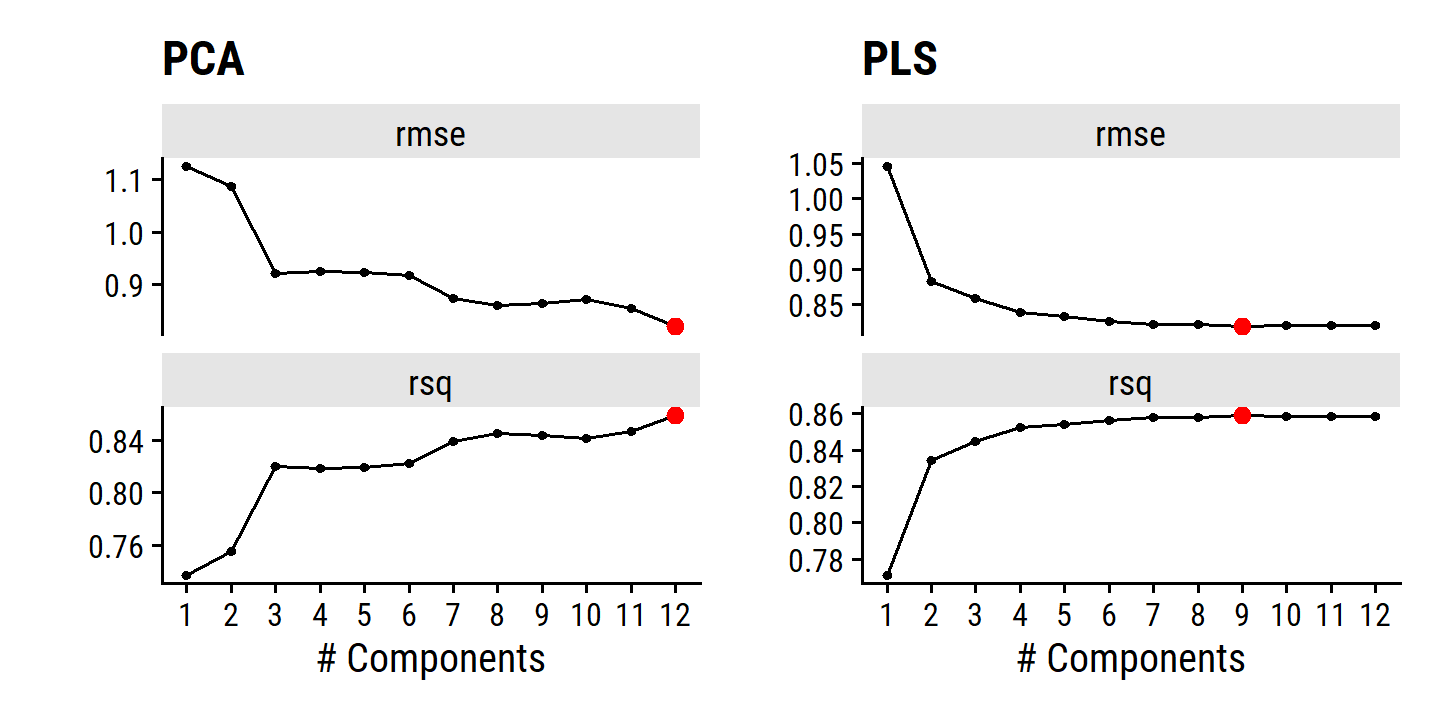

| 3 | FALSE | TRUE | FALSE | FALSE | FALSE | FALSE | FALSE | FALSE | FALSE | FALSE | FALSE | TRUE | FALSE | FALSE | FALSE | TRUE | FALSE | FALSE | FALSE | 0.4514 | 0.4451 | −135.6 | 38.69 |