15 Homophily and Exponential Random Graphs (ERGM)

15.1 Homophily

In this tutorial, we cover how to A) calculate homophily on a network and B) fit exponential random graphs to networks. First, we will need one of the Add Health data sets that we have been playing around with in previous tutorials. The code below downloads the data from Moreno’s website and converts it into an igraph object. We went over this in the Transitivy tutorial, so here, I just paste the code.

Through this tutorial, we will rely on igraph to analyze the comm59 Add Health network that we made use of last class.

# read in the edge list from our github

el <- read.table("https://raw.githubusercontent.com/mahoffman/stanford_networks/main/data/comm59.dat.txt", header = T)

# Read in attributes from our github

attributes <- read.table("https://raw.githubusercontent.com/mahoffman/stanford_networks/main/data/comm59_att.dat.txt", header = T)

# add an ID column

attributes$ID <- 1:nrow(attributes)

# Indexing data so that you only put in certain columns

el_no_weight <- el[,1:2] # We will ignore the ranking variable for now.

el_no_weight <- as.matrix(el_no_weight) # igraph requires a matrix

# convert ids to characters so they are preserved as names

el_no_weight[,1] <- as.character(el_no_weight[,1])

el_no_weight[,2] <- as.character(el_no_weight[,2])

# Graph the network

library(igraph)

net59 <- graph.edgelist(el_no_weight, directed = T)

# Finally, add attributes

# First link vertex names to their place in the attribute dataset

linked_ids <- match(V(net59)$name, attributes$ID)

# Then we can use that to assign a variable to each user in the network

V(net59)$race <- attributes$race[linked_ids]

V(net59)$sex <- attributes$sex[linked_ids]

V(net59)$grade <- attributes$grade[linked_ids]

V(net59)$school <- attributes$school[linked_ids]

net59 <- delete.vertices(net59, which(is.na(V(net59)$sex) | V(net59)$sex == 0))

net59 <- delete.vertices(net59, which(is.na(V(net59)$race) | V(net59)$race == 0))

net59 <- delete.vertices(net59, which(is.na(V(net59)$grade) | V(net59)$grade == 0))Great, now that we have the network, we can evaluate homophily. We can either use igraph’s built in function…

assortativity(net59, types1 = as.numeric(V(net59)$sex))## [1] 0.2180534Or do it ourselves. If you’ll remember from class on Tuesday, assortativity on variable is calculated by simply correlating the values of an attribute for every ego-alter pair in the network. We just grab the edgelist, match in ego and alter’s values for the variable of interest, and correlate with cor.test.

df <- data.frame(get.edgelist(net59), stringsAsFactors = F)

df$sex1 <- as.numeric(attributes$sex[match(df$X1, attributes$ID)])

df$sex2 <- as.numeric(attributes$sex[match(df$X2, attributes$ID)])

cor.test(df$sex1, df$sex2)##

## Pearson's product-moment correlation

##

## data: df$sex1 and df$sex2

## t = 14.092, df = 3978, p-value < 2.2e-16

## alternative hypothesis: true correlation is not equal to 0

## 95 percent confidence interval:

## 0.1882596 0.2474463

## sample estimates:

## cor

## 0.2180534Race, however, is probably better conceptualized as a categorical variable. Assortativity_nominal can be used to evaluate assortativity for categorical variables. It requires a numeric vector, denoting the different categories, which starts at 1, so we add one to the race variable, which starts at 0.

assortativity_nominal(net59, types = as.numeric(V(net59)$race) + 1)## [1] 0.0410346615.2 ERGMs

Now imagine, like Wimmer and Lewis, we wanted to calculate homophily for race, but wanted to control for other network factors, such as transitivity, which might lead to a higher degree of same race friendships, but which don’t actually signal an in-group preference. Just like them, we can use exponential random graphs, which model networks as a function of network statistics. Specifically, ERGMs imagine the observed network to be just one instantiation of a set of possible networks with similar features, that is, as the outcome of a stochastic process, which is unknown and must therefore be inferred.

The package that allows one to fit ergm models is part of the statnet (statistical networks) suite of packages. Much like tidyverse, which you might be familiar with, statnet includes a number of complementary packages for the statistical analysis of networks. Let’s install statnet and load it into R.

install.packages("statnet")library(statnet)Great, now we can use statnet’s ergm() function to fit our first ERGM. The only problem? Our network is an igraph object rather than a statnet one. There is some good news though. People have built a package for converting igraph objects to statnet and vise versa - intergraph. Let’s install that and load it in too.

install.packages("intergraph")library(intergraph)Now that we have intergraph installed and in our R environment, we can use the asNetwork function to convert it to a statnet object. I will also subset the data here, because ERGMs take forever to run and the network is somewhat large (~1000 nodes).

set.seed(1234)

sampled_students <- sample(V(net59)$name, 350, replace = F)

net59_for_ergm <- igraph::delete.vertices(net59, !V(net59)$name %in% sampled_students)

statnet59 <- asNetwork(net59_for_ergm)

statnet59## Network attributes:

## vertices = 350

## directed = TRUE

## hyper = FALSE

## loops = FALSE

## multiple = FALSE

## bipartite = FALSE

## total edges= 547

## missing edges= 0

## non-missing edges= 547

##

## Vertex attribute names:

## grade race school sex vertex.names

##

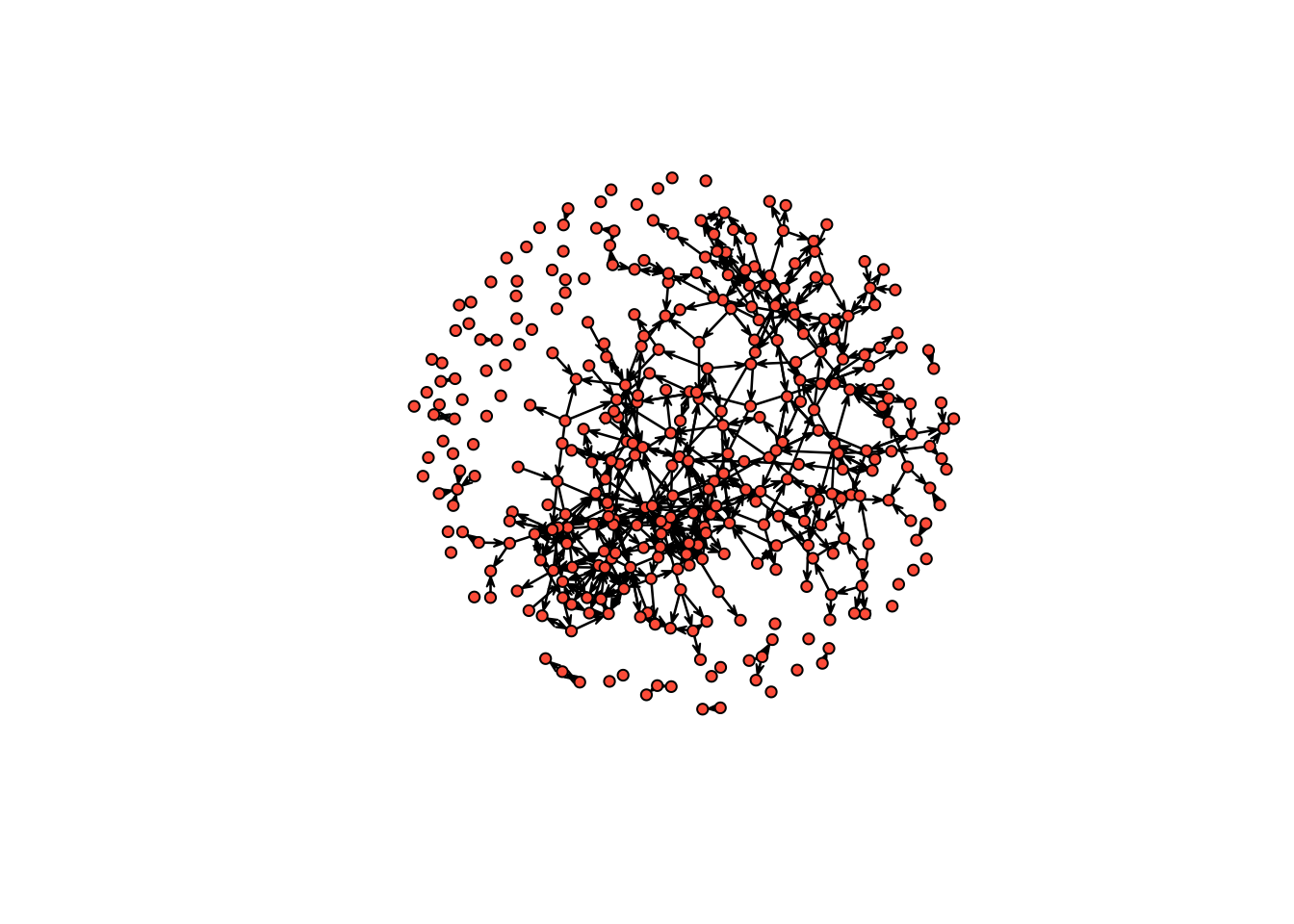

## No edge attributesIt even ported over our node attributes! You can plot it to see if it looks the same as it did in igraph.

plot(statnet59,

vertex.col = "tomato",

vertex.cex = 1)

With our data ready for analysis using statnet, let’s build our first ERGM. There are a number of potential ERG model terms that we could use in fitting our model. You can view a full list by looking at the documentation for:

?ergm.termsThat said, it is common to build up from some basic terms first. The McFarland-Moody paper we read in class is a useful reference point as is the Wimmer-Lewis paper. Generally, the first term that people use is the edges term. It is a statistic which counts how many edges there are in the network.

random_graph <- ergm(statnet59 ~ edges, control = control.ergm(seed = 1234))How do we interpret this coefficient? Coefficients in ERGMs represent the change in the (log-odds) likelihood of a tie for a unit change in a predictor. We can use a simple formula for converting log-odds to probability to understand them better.

inv.logit <- function(logit){

odds <- exp(logit)

prob <- odds / (1 + odds)

return(prob)

}

theta <- coef(random_graph)

inv.logit(theta)## edges

## 0.004478101So the probability of an edge being in the graph is roughly 0.02. The probability of an edge being drawn should in theory be the same as density - let’s check.

network.density(statnet59)## [1] 0.004478101Nice.

To put it all back into the theoretical underpinnings of ERGMs, we have modeled a stochastic generating process, and the only constraint we have put on the stochastic process is the probability with which edges are drawn, which was set equal to the network density of our observed graph.

We can more closely examine the model fit using the summary() function, just like lm() or glm() in base R.

summary(random_graph)## Call:

## ergm(formula = statnet59 ~ edges, control = control.ergm(seed = 1234))

##

## Maximum Likelihood Results:

##

## Estimate Std. Error MCMC % z value Pr(>|z|)

## edges -5.40407 0.04285 0 -126.1 <1e-04 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Null Deviance: 169336 on 122150 degrees of freedom

## Residual Deviance: 7009 on 122149 degrees of freedom

##

## AIC: 7011 BIC: 7020 (Smaller is better. MC Std. Err. = 0)We can also simulate graphs using our ERGM fit. We did something similar to this when we simulated random graphs and set the probability of an edge being drawn to be equal to our network’s density. We just didn’t know it at the time!

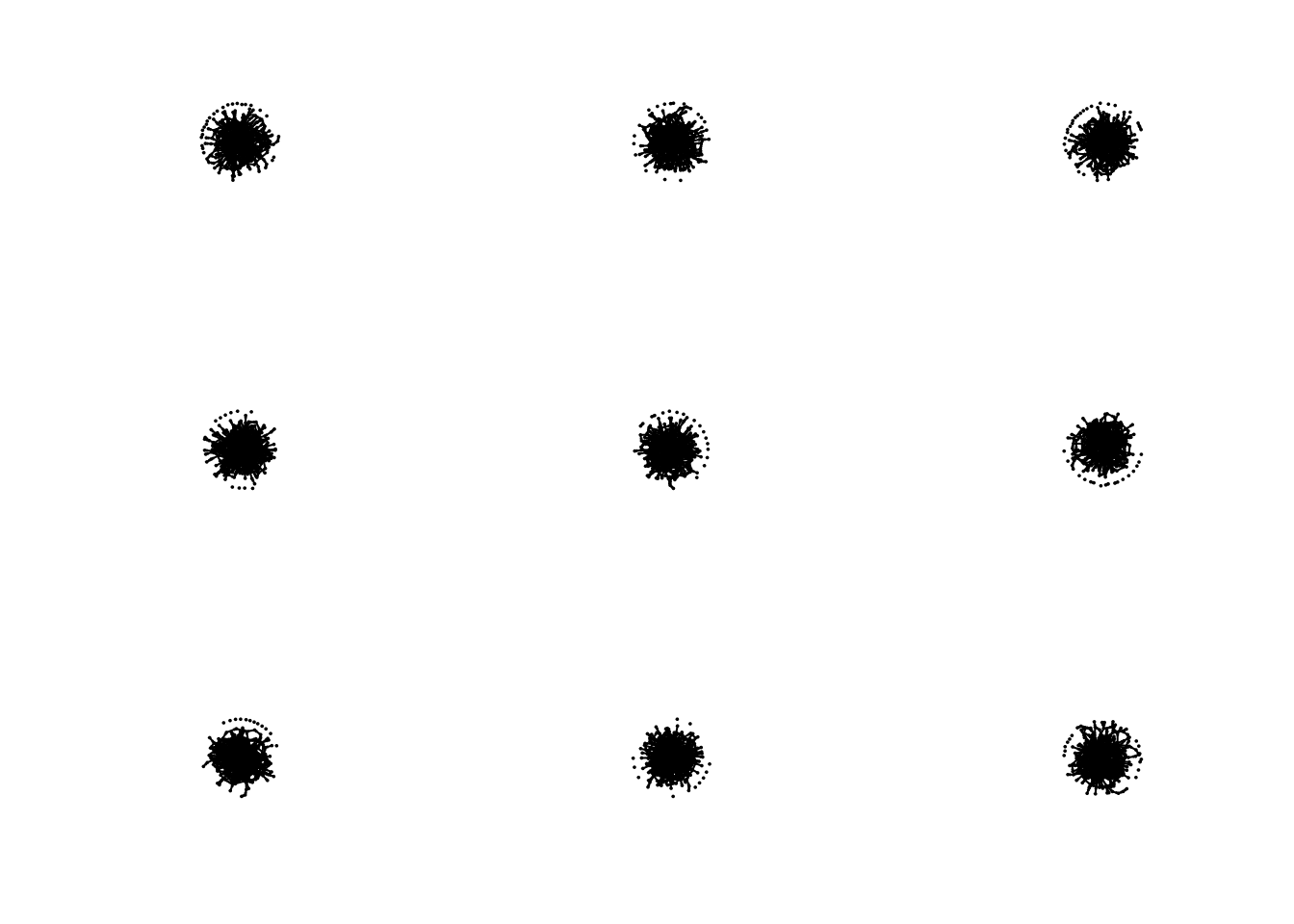

set.seed(1234)

hundred_simulations <- simulate(random_graph,

coef = theta,

nsim = 100,

control = control.simulate.ergm(MCMC.burnin = 1000,

MCMC.interval = 1000))Let’s examine the first nine simulations.

par(mfrow = c(3, 3))

sapply(hundred_simulations[1:9], plot, vertex.cex = 1, vertex.col = "tomato")

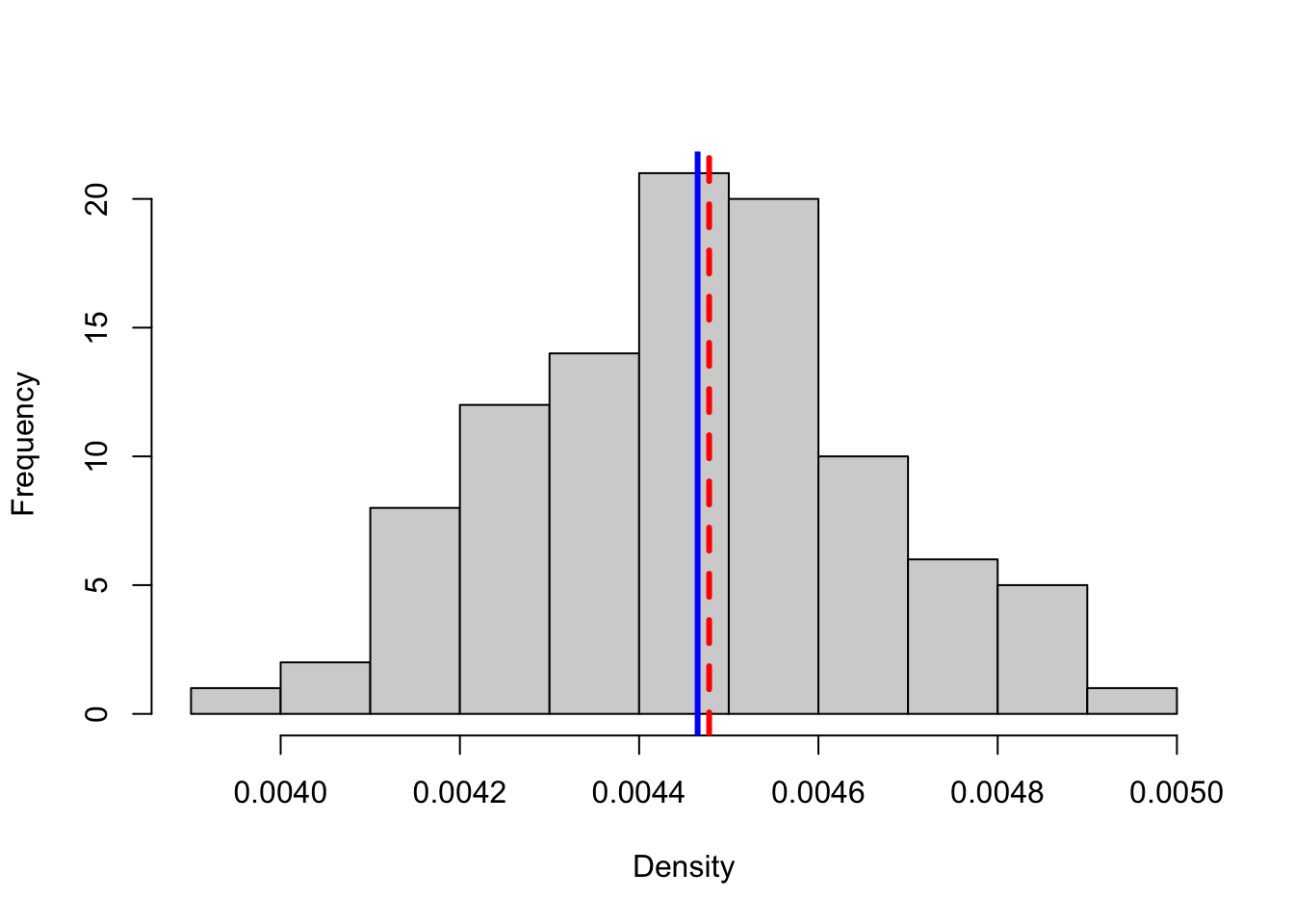

We can compare the number of edges our observed graph has to the average of the simulated networks.

net_densities <- unlist(lapply(hundred_simulations, network.density))

hist(net_densities, xlab = "Density", main = "", col = "lightgray")

abline(v = network.density(statnet59), col = "red", lwd = 3, lty = 2)

abline(v = mean(net_densities), col = "blue", lwd = 3, lty = 1) Pretty close!

Pretty close!

Another way to evaluate our model is to use the built-in goodness of fit measures. Essentially, we will evaluate whether our network has similar structural features as the simulated graphs. ergm has a built-in function - gof() - which calculates the statistics for us. We just have to tell it how many simulations we want to use in the comparison set - the larger the number, the more accurate representation of the model.

gof_stats <- gof(random_graph)

par(mfrow = c(2, 3))

plot(gof_stats, main = '')

On one measure, edgewise shared partners, our model looks okay. On the others, especially in and out degree, it looks awful. How to we improve our fit? By adding more terms to the model!

First, let’s build a model with only dyad-independent terms. Just like with the random graph, we are essentially fitting a logistic regression.

nodematch is the homophily term in ergm. We can specify the attribute we want to examine as well as the diff argument, which allows for differential levels of homophily for different groups.

model1 <- ergm(statnet59 ~ edges +

nodematch("race") +

nodematch("sex") +

nodematch("grade"))summary(model1)## Call:

## ergm(formula = statnet59 ~ edges + nodematch("race") + nodematch("sex") +

## nodematch("grade"))

##

## Maximum Likelihood Results:

##

## Estimate Std. Error MCMC % z value Pr(>|z|)

## edges -6.91859 0.12039 0 -57.468 < 1e-04 ***

## nodematch.race 0.05272 0.09125 0 0.578 0.56341

## nodematch.sex 0.26996 0.08717 0 3.097 0.00195 **

## nodematch.grade 2.87780 0.10361 0 27.774 < 1e-04 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Null Deviance: 169336 on 122150 degrees of freedom

## Residual Deviance: 6007 on 122146 degrees of freedom

##

## AIC: 6015 BIC: 6054 (Smaller is better. MC Std. Err. = 0)Every variable is significant! Grade and race have especially large coefficients and all three are positive.

Let’s try it with diff set to T. We will limit our examination to only grade/race/sex categories represented by a large number of vertices in our network. You can examine this using the table function

table(V(net59_for_ergm)$race) # 1 = white, 2 = black, 3 = hispanic, 4 = asian, and 5 = mixed/other

table(V(net59_for_ergm)$sex) # 1 = male, 2 = female

table(V(net59_for_ergm)$grade)

model2 <- ergm(statnet59 ~ edges +

nodematch("race", diff = T, levels = c("1", "2", "5")) +

nodematch("sex", diff = T, levels = c("1", "2")) +

nodematch("grade", diff = T, levels = as.character(c(7:12))))summary(model2)## Call:

## ergm(formula = statnet59 ~ edges + nodematch("race", diff = T,

## levels = c("1", "2", "5")) + nodematch("sex", diff = T, levels = c("1",

## "2")) + nodematch("grade", diff = T, levels = as.character(c(7:12))))

##

## Maximum Likelihood Results:

##

## Estimate Std. Error MCMC % z value Pr(>|z|)

## edges -6.96247 0.12167 0 -57.226 < 1e-04 ***

## nodematch.race.1 0.11676 0.09397 0 1.243 0.214032

## nodematch.race.2 -Inf 0.00000 0 -Inf < 1e-04 ***

## nodematch.race.5 -0.07078 0.28052 0 -0.252 0.800805

## nodematch.sex.1 0.17007 0.11570 0 1.470 0.141582

## nodematch.sex.2 0.34239 0.09813 0 3.489 0.000485 ***

## nodematch.grade.7 2.86262 0.14430 0 19.838 < 1e-04 ***

## nodematch.grade.8 2.65692 0.16632 0 15.975 < 1e-04 ***

## nodematch.grade.9 2.97460 0.12574 0 23.657 < 1e-04 ***

## nodematch.grade.10 2.42069 0.19922 0 12.151 < 1e-04 ***

## nodematch.grade.11 2.54508 0.21460 0 11.860 < 1e-04 ***

## nodematch.grade.12 3.31829 0.14238 0 23.306 < 1e-04 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Null Deviance: 169336 on 122150 degrees of freedom

## Residual Deviance: 5976 on 122138 degrees of freedom

##

## AIC: 5998 BIC: 6105 (Smaller is better. MC Std. Err. = 0)

##

## Warning: The following terms have infinite coefficient estimates:

## nodematch.race.2Interesting! Now let’s add some dyad-dependent terms. These can be a bit finnicky, especially transitivity ones.

mutual is the term for reciprocity.

model3 <- ergm(statnet59 ~ edges +

nodematch("race") +

nodematch("sex") +

nodematch("grade") +

mutual)summary(model3)## Call:

## ergm(formula = statnet59 ~ edges + nodematch("race") + nodematch("sex") +

## nodematch("grade") + mutual)

##

## Monte Carlo Maximum Likelihood Results:

##

## Estimate Std. Error MCMC % z value Pr(>|z|)

## edges -6.95232 0.10987 0 -63.275 <1e-04 ***

## nodematch.race 0.04004 0.07970 0 0.502 0.6154

## nodematch.sex 0.19509 0.07657 0 2.548 0.0108 *

## nodematch.grade 2.32082 0.10386 0 22.345 <1e-04 ***

## mutual 4.39947 0.15846 0 27.764 <1e-04 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Null Deviance: 169336 on 122150 degrees of freedom

## Residual Deviance: 5418 on 122145 degrees of freedom

##

## AIC: 5428 BIC: 5477 (Smaller is better. MC Std. Err. = 1.117)Now let’s add a term for triadic closure. There are a few terms for triads - one of them, triangles, tends to lead to degeneracy. The gwesp term behaves better, though convergence is far from guaranteed.

It may be a good time to use the bathroom. This will take a while…

model4 <- ergm(statnet59 ~ edges +

nodematch("race") +

nodematch("sex") +

nodematch("grade") +

mutual +

gwesp(0.25, fixed = T),

control=control.ergm(MCMLE.maxit= 40))

# you can change a number of other things about the MCMC algorithm - from its burn-in to its step and sample size

# here we just up the maximum iterations we wait to see if it has convergedsummary(model4)Let’s take a look at the goodness-of-fit statistics for this most elaborate model.

model4_gof <- gof(model4)

par(mfrow = c(3, 2))

plot(model4_gof, main = '')Definitely an improvement over the random graph.

Lab: Run ergm on a graph of interest to you and include balance (reciprocity and triadic closure) and homophily terms. Interpret the results. Simulate a graph from this model and compare it to your original graph.