Chapter 2 Introduction to R

This section lays the foundation for exploratory data analysis using the R language and packages especially within the tidyverse. This foundation progresses through:

- Introduction : An introduction to the R language

- Abstraction : Exploration of data via reorganization using

dplyrand other packages in the tidyverse (Chapter 3) - Visualization : Adding visual tools to enhance our data exploration (Chapter 4)

- Transformation : Reorganizing our data with pivots and data joins (Chapter 5)

In this chapter we’ll introduce the R language, using RStudio to explore its basic data types, structures, functions and programming methods in base R. We’re assuming you’re either new to R or need a refresher. Later chapters will add packages that extend what you can do with base R for data abstraction, transformation, and visualization, then explore the spatial world, statistical models, and time series applied to environmental research.

The following code illustrates a few of the methods we’ll explore in this chapter:

tC <- c(10.7, 9.7, 7.7, 9.2, 7.3)

elev <- c(52, 394, 510, 564, 725)

lat <- c(39.52, 38.91, 37.97, 38.70, 39.09)

elevft <- round(elev / 0.3048)

deg <- as.integer(lat)

min <- as.integer((lat-deg) * 60)

sec <- round((lat-deg-min/60)*3600)

sierradata <- cbind(tC, elev, elevft, lat, deg, min, sec)

mydata <- as.data.frame(sierradata)

mydata## tC elev elevft lat deg min sec

## 1 10.7 52 171 39.52 39 31 12

## 2 9.7 394 1293 38.91 38 54 36

## 3 7.7 510 1673 37.97 37 58 12

## 4 9.2 564 1850 38.70 38 42 0

## 5 7.3 725 2379 39.09 39 5 24RStudio

If you’re new to RStudio, or would like to learn more about using it, there are plenty of resources you can access to learn more about using it. As with many of the major packages we’ll explore, there’s even a cheat sheet: https://www.rstudio.com/resources/cheatsheets/. Have a look at this cheat sheet while you have RStudio running, and use it to learn about some of its different components:

- The Console, where you’ll enter short lines of code, install packages, and get help on functions. Messages created from running code will also be displayed here. There are other tabs in this area (e.g. Terminal, R Markdown) we may explore a bit, but mostly we’ll use the console.

- The Source Editor, where you’ll write full R scripts and R Markdown documents. You should get used to writing complete scripts and R Markdown documents as we go through the book.

- Various Tab Panes such as the Environment pane, where you can explore what scalars and more complex objects contain.

- The Plots pane in the lower right for static plots (graphs and maps that aren’t interactive), which also lets you see a listing of Files, or View interactive maps and maps.

2.1 Data Objects

As with all programming languages, R works with data and since it’s an object-oriented language, these are data objects. Data objects can range from the most basic type – the scalar which holds one value, like a number or text – to everything from an array of values to spatial data for mapping or a time series of data.

2.1.1 Scalars and assignment

We’ll be looking at a variety of types of data objects, but scalars are the most basic type, holding individual values, so we’ll start with it. Every computer language, like in math, stores values by assigning them constants or results of expressions. These are often called “variables,” but we’ll be using that name to refer to a column of data stored in a data frame, which we’ll look at later in this chapter. R uses a lot of objects, and not all are data objects; we’ll also create functions 2.8.1, a type of object that does something (runs the function code you’ve defined for it) with what you provide it.

To create a scalar (or other data objects), we’ll use the most common type of statement, the assignment statement, that takes an expression and assigns it to a new data object that we’ll name. The class of that data object is determined by the class of the expression provided, and that expression might be something as simple as a constant like a number or a character string of text. Here’s an example of a very basic assignment statement that assigns the value of a constant 5 to a new scalar x:

x <- 5

Note that this uses the assignment operator <- that is standard for R. You can also use = as most languages do (and I sometimes do), but we’ll use = for other types of assignments.

All object names must start with a letter, have no spaces, and must not use any names that are built into the R language or used in package libraries, such as reserved words like for or function names like log. Object names are case-sensitive (which you’ll probably discover at some point by typing in something wrong and getting an error).

To check the value of a data object, you can just enter the name in the console, or even in a script or code chunk.

## [1] 5## [1] 8## [1] -122.4## [1] 37.8## [1] "Inigo Montoya"This is counter to the way printing out values commonly works in other programming languages, and you will need to know how this method works as well because you will want to use your code to develop tools that accomplish things, and there are also limitations to what you can see by just naming objects.

To see the values of objects in programming mode, you can also use the print() function (but we rarely do); or to concatenate character string output, use paste() or paste0.

Numbers concatenated with character strings are converted to characters.

paste0(paste("The Ultimate Answer to Life", "The Universe",

"and Everything is ... ", sep=", "),42,"!")## [1] "The location is latitude 37.8 longitude -122.4"Review the code above and what it produces. Without looking it up, what’s the difference between paste() and paste0()?

We’ll use

paste0()a lot in this book to deal with long file paths which create problems for the printed/pdf version of this book, basically extending into the margins. Breaking the path into multiple strings and then combining them withpaste0()is one way to handle them. For instance, in the Imagery and Classification Models chapter, the Sentinel2 imagery is provided in a very long file path. So here’s how we usepaste0()to recombine after breaking up the path, and we then take it one more step and build out the full path to the 20 m imagery subset.

2.2 Functions

Just as in regular mathematics, R makes a lot of use of functions that accept an input and create an output:

log10(100)

log(exp(5))

cos(pi)

sin(90 * pi/180)But functions can be much more than numerical ones, and R functions can return a lot of different data objects. You’ll find that most of your work will involve functions, from those in base R to a wide variety in packages you’ll be adding. You will likely have already used the install.packages() and library() functions that add in an array of other functions.

Later in this chapter, we’ll also learn how to write our own functions, a capability that is easy to accomplish and also gives you a sense of what developing your own package might be like.

Arithmetic operators There are, of course, all the normal arithmetic operators (that are actually functions) like plus + and minus - or the key-stroke approximations of multiply * and divide / operators. You’re probably familiar with these approximations from using equations in Excel if not in some other programming language you may have learned. These operators look a bit different from how they’d look when creating a nicely formatted equation.

For example, \(\frac{NIR - R}{NIR + R}\) instead has to look like (NIR-R)/(NIR+R).

Similarly * must be used to multiply; there’s no implied multiplication that we expect in a math equation like \(x(2+y)\), which would need to be written x*(2+y).

In contrast to those four well-known operators, the symbol used to exponentiate – raise to a power – varies among programming languages. R uses either ** or ^ so the the Pythagorean theorem \(c^2=a^2+b^2\) might be written c**2 = a**2 + b**2 or c^2 = a^2 + b^2 except for the fact that it wouldn’t make sense as a statement to R. Why?

And how would you write an R statement that assigns the variable c an expression derived from the Pythagorean theorem? (And don’t use any new functions from a Google search – from deep math memory, how do you do \(\sqrt{x}\) using an exponent?)

It’s time to talk more about expressions and statements.

2.3 Expressions and Statements

The concepts of expressions and statements are very important to understand in any programming language.

An expression in R (or any programming language) has a value just like an object has a value. An expression will commonly combine data objects and functions to be evaluated to derive the value of the expression. Here are some examples of expressions:

5

x

x*2

sin(x)

(a^2 + b^2)^0.5

(-b+sqrt(b**2-4*a*c))/2*a

paste("My name is", aname)Note that some of those expressions used previously assigned objects – x, a, b, c, aname.

An expression can be entered in the console to display its current value, and this is commonly done in R for objects of many types and complexity.

## [1] -1## Time Series:

## Start = 1871

## End = 1970

## Frequency = 1

## [1] 1120 1160 963 1210 1160 1160 813 1230 1370 1140 995 935 1110 994 1020

## [16] 960 1180 799 958 1140 1100 1210 1150 1250 1260 1220 1030 1100 774 840

## [31] 874 694 940 833 701 916 692 1020 1050 969 831 726 456 824 702

## [46] 1120 1100 832 764 821 768 845 864 862 698 845 744 796 1040 759

## [61] 781 865 845 944 984 897 822 1010 771 676 649 846 812 742 801

## [76] 1040 860 874 848 890 744 749 838 1050 918 986 797 923 975 815

## [91] 1020 906 901 1170 912 746 919 718 714 740Whoa, what was that? We entered the expression Nile and got a bunch of stuff! Nile is a type of data object called a time series that we’ll be looking at much later, and since it’s in the built-in data in base R, just entering its name will display it. And since time series are also vectors which are like entire columns, rows, or variables of data, we can vectorize it (apply mathematical operations and functions element-wise) in an expression:

## Time Series:

## Start = 1871

## End = 1970

## Frequency = 1

## [1] 2240 2320 1926 2420 2320 2320 1626 2460 2740 2280 1990 1870 2220 1988 2040

## [16] 1920 2360 1598 1916 2280 2200 2420 2300 2500 2520 2440 2060 2200 1548 1680

## [31] 1748 1388 1880 1666 1402 1832 1384 2040 2100 1938 1662 1452 912 1648 1404

## [46] 2240 2200 1664 1528 1642 1536 1690 1728 1724 1396 1690 1488 1592 2080 1518

## [61] 1562 1730 1690 1888 1968 1794 1644 2020 1542 1352 1298 1692 1624 1484 1602

## [76] 2080 1720 1748 1696 1780 1488 1498 1676 2100 1836 1972 1594 1846 1950 1630

## [91] 2040 1812 1802 2340 1824 1492 1838 1436 1428 1480More on that later, but we’ll start using vectors here and there. Back to expressions and statements:

A statement in R does something. It represents a directive we’re assigning to the computer, or maybe the environment we’re running on the computer (like RStudio, which then runs R). A simple print() statement seems a lot like what we just did when we entered an expression in the console, but recognize that it does something:

## [1] "Hello, World"Which is the same as just typing "Hello, World", but either way we write it, it does something.

Statements in R are usually put on one line, but you can use a semicolon to have multiple statements on one line, if desired:

## [1] 5## [1] 25## [1] 5## [1] 2.236068What’s the print function for? It appears that you don’t really need a print function, since you can just enter an object you want to print in a statement, so the print() is implied. And indeed we’ll rarely use it, though there are some situations where it’ll be needed, for instance in a structure like a loop. It also has a couple of parameters you can use like setting the number of significant digits:

## [1] 2.24Many (perhaps most) statements don’t actually display anything. For instance:

doesn’t display anything, but it does assign the constant 5 to the object x, so it simply does something. It’s an assignment statement, easily the most common type of statement that we’ll use in R, and uses that special assignment operator <- . Most languages just use = which the designers of R didn’t want to use, to avoid confusing it with the equal sign meaning “is equal to”.

An assignment statement assigns an expression to a object. If that object already exists, it is reused with the new value. For instance it’s completely legit (and commonly done in coding) to update the object in an assignment statement. This is very common when using a counter scalar:

i = i + 1You’re simply updating the index object with the next value. This also illustrates why it’s not an equation: i=i+1 doesn’t work as an equation (unless i is actually \(\infty\) but that’s just really weird.)

And c**2 = a**2 + b**2 doesn’t make sense as an R statement because c**2 isn’t an object to be created. The ** part is interpreted as raise to a power. What is to the left of the assignment operator = must be an object to be assigned the value of the expression.

2.4 Data Classes

Scalars, constants, vectors, and other data objects in R have data classes. Common types are numeric and character, but we’ll also see some special types like Date.

## [1] "numeric"## [1] "numeric"## [1] "character"## [1] "Date"2.4.1 Integers

By default, R creates double-precision floating-point numeric data objects. To create integer objects:

- append an L to a constant, e.g.

5Lis an integer 5 - convert with

as.integer

We’re going to be looking at various as. functions in R, more on that later, but we should look at as.integer() now. Most other languages use int() for this, and what it does is convert any number into an integer, truncating it to an integer, not rounding it.

## [1] 5## [1] 4To round a number, there’s a round() function or you can instead use as.integer adding 0.5:

## [1] 5## [1] 5## [1] 4## [1] 4Integer division is really the first kind of division you learned about in elementary school, and is the kind of division that each step in long division employs, where you first get the highest integer you can get …

## [1] 2… but then there’s a remainder from division, which we can call the modulus. To see the modulus we use %% instead of %/%:

## [1] 1That modulus is handy for periodic data (like angles of a circle, hours of the day, days of the year), where if we use the length of that period (like 360°) as the divisor, the remainder will always be the value’s position in the repeated period. We’ll use a vector created by the seq function, and then apply a modulus operation:

## [1] 90 180 270 360 450 540## [1] 90 180 270 0 90 180Surprisingly, the values returned by integer division or the remainder are not stored as integers. R seems to prefer floating point…

2.5 Rectangular Data

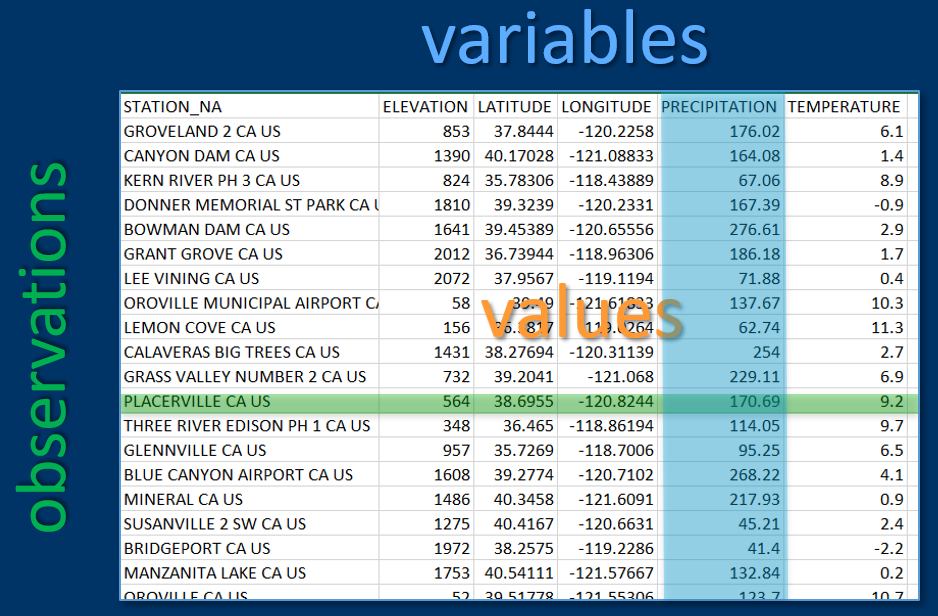

A common data format used in most types of research is rectangular data such as in a spreadsheet, with rows and columns, where rows might be observations and columns might be variables (Figure 2.1). We’ll read this type of data in from spreadsheets or even more commonly from comma-separated-variable (CSV) files, though some of these package data sets are already available directly as data frames.

FIGURE 2.1: Variables, observations, and values in rectangular data

## # A tibble: 82 × 7

## STATION_NAME COUNTY ELEVATION LATITUDE LONGITUDE PRECIPITATION TEMPERATURE

## <chr> <chr> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 GROVELAND 2, C… Tuolu… 853. 37.8 -120. 176. 6.1

## 2 CANYON DAM, CA… Plumas 1390. 40.2 -121. 164. 1.4

## 3 KERN RIVER PH … Kern 824. 35.8 -118. 67.1 8.9

## 4 DONNER MEMORIA… Nevada 1810. 39.3 -120. 167. -0.9

## 5 BOWMAN DAM, CA… Nevada 1641. 39.5 -121. 277. 2.9

## 6 BRUSH CREEK RA… Butte 1085. 39.7 -121. 296. NA

## 7 GRANT GROVE, C… Tulare 2012. 36.7 -119. 186. 1.7

## 8 LEE VINING, CA… Mono 2072. 38.0 -119. 71.9 0.4

## 9 OROVILLE MUNIC… Butte 57.9 39.5 -122. 138. 10.3

## 10 LEMON COVE, CA… Tulare 156. 36.4 -119. 62.7 11.3

## # ℹ 72 more rows2.6 Data Structures in R

We’ve already started using the most common data structures – scalars and vectors – but haven’t really talked about vectors yet, so we’ll start there.

2.6.1 Vectors

A vector is an ordered collection of numbers, strings, vectors, data frames, etc. What we mostly refer to simply as vectors are formally called atomic vectors, which require that they be homogeneous sets of whatever type we’re referring to, such as a vector of numbers, a vector of strings, or a vector of dates/times.

You can create a simple vector with the c() function:

## [1] 37.5 47.4 29.4 33.4## [1] "VA" "WA" "TX" "AZ"## [1] 23173 98801 78006 85001The class of a vector is the type of data it holds

## [1] "numeric"Let’s also introduce the handy str() function, which in one step gives you a view of the class of an item and its content – so its structure. We’ll often use it in this book when we want to tell the reader what a data object contains, instead of listing a vector and its class separately, so instead of …

## [1] 10.7 9.7 7.7 9.2 7.3 6.7## [1] "numeric"… we’ll just use str():

## num [1:6] 10.7 9.7 7.7 9.2 7.3 6.7Vectors can only have one data class, and if mixed with character types, numeric elements will become character:

## chr [1:3] "1" "fred" "7"## [1] "7"2.6.1.1 NA

Data science requires dealing with missing data by storing some sort of null value, called various things:

- null

- nodata

NA“not available” or “not applicable”

## [1] 1 NA 5Note that NA doesn’t really have a data class. The above example created a numeric vector with the one it couldn’t figure out being assigned NA. Remember that vectors (and matrices and arrays) have to be all the same data class. A character vector can also include NAs. Both of the following are valid vectors, with the second item being NA:

## [1] 5 NA 7

## [1] "alpha" NA "delta"Note that we typed

NAwithout quotations. It’s kind of like a special constant, like theTRUEandFALSElogical values, neither of which uses quotations.

We often want to ignore NA in statistical summaries. Where normally the summary statistic can only return NA…

## [1] NA… with na.rm=T you can still get the result for all actual data:

## [1] 3Don’t confuse with nan (“not a number”), which is used for things like imaginary numbers (explore the help for more on this), as you can see here:

## [1] FALSE## [1] TRUE## [1] FALSE## [1] TRUE## [1] TRUE2.6.1.2 Creating a vector from a sequence

We often need sequences of values, and there are a few ways of creating them. The following three examples are equivalent:

seq(1,10)

1:10

c(1,2,3,4,5,6,7,8,9,10)The seq() function has special uses like using a step parameter:

## [1] 2 4 6 8 102.6.1.3 Vectorization and vector arithmetic

Arithmetic on vectors operates element-wise, a process called vectorization.

## [1] 170.6037 1292.6509 1673.2283 1850.3937 2378.6089 2782.1522 3418.6352

## [8] 4019.0289 4875.3281 5823.4908 6230.3150 8369.4226Another example, with two vectors:

temp03 <- c(13.1,11.4,9.4,10.9,8.9,8.4,6.7,7.6,2.8,1.6,1.2,-2.1)

temp02 <- c(10.7,9.7,7.7,9.2,7.3,6.7,4.0,5.0,0.9,-1.1,-0.8,-4.4)

tempdiff <- temp03 - temp02

tempdiff## [1] 2.4 1.7 1.7 1.7 1.6 1.7 2.7 2.6 1.9 2.7 2.0 2.32.6.1.4 Plotting vectors

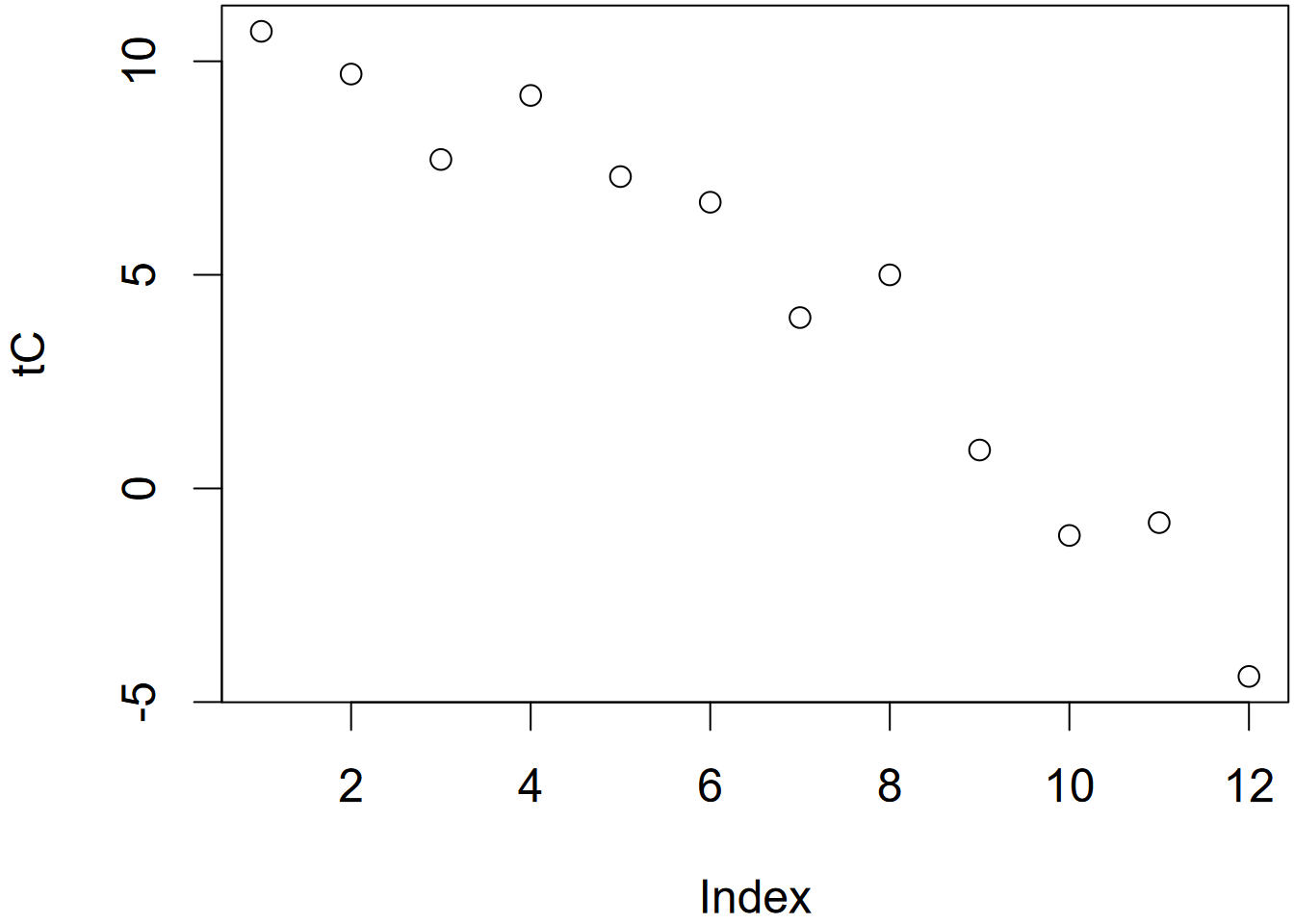

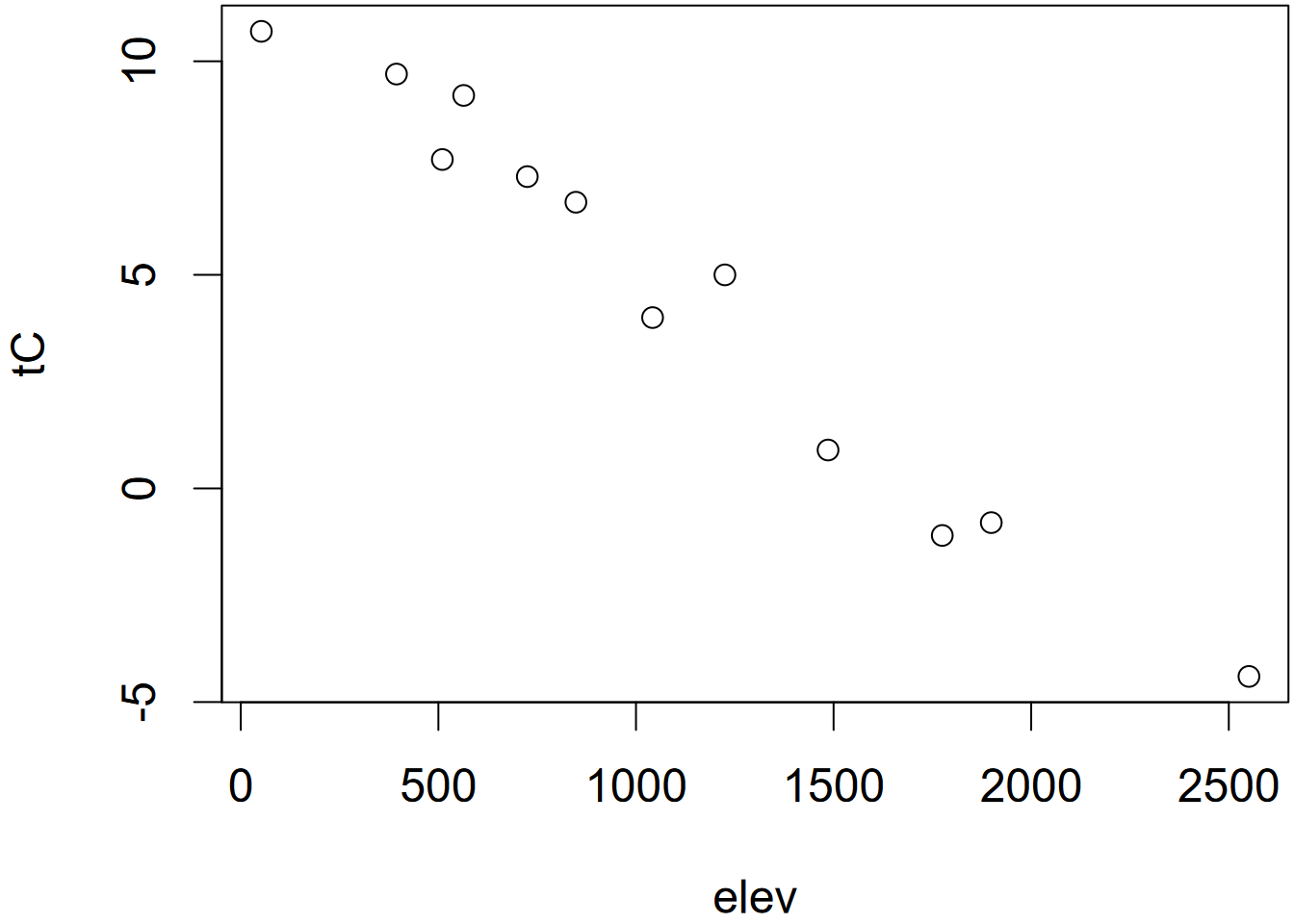

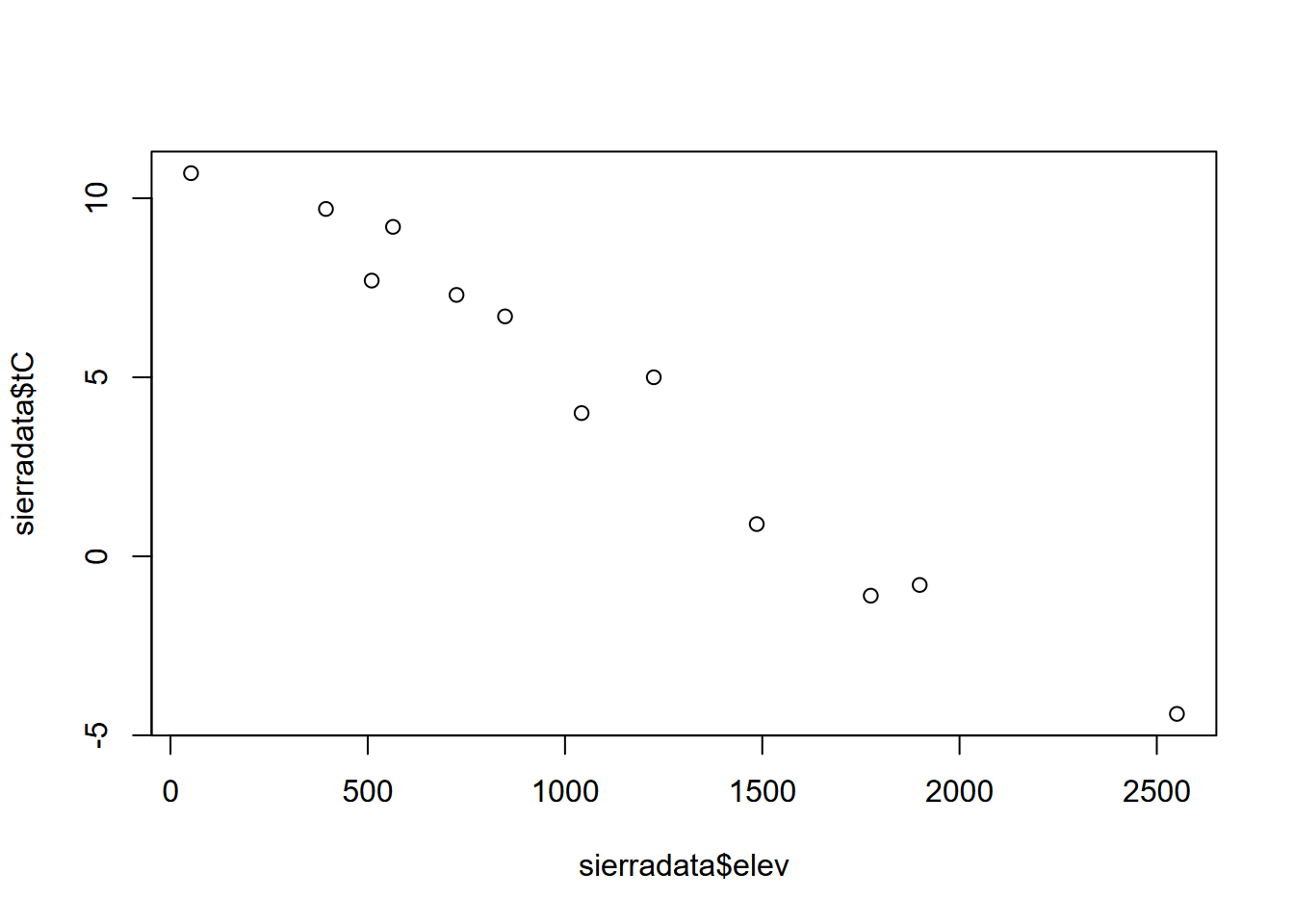

Vectors of Feb temperature, elevation, and latitude at stations in the Sierra:

tC <- c(10.7, 9.7, 7.7, 9.2, 7.3, 6.7, 4.0, 5.0, 0.9, -1.1, -0.8,-4.4)

elev <- c(52, 394, 510, 564, 725, 848, 1042, 1225, 1486, 1775, 1899, 2551)

lat <- c(39.52,38.91,37.97,38.70,39.09,39.25,39.94,37.75,40.35,39.33,39.17,38.21)Plot individually by index vs a scatterplot

We’ll use the plot() function to visualize what’s in a vector. The plot() function will create an output based upon its best guess of what you’re wanting to see, and will depend on the nature of the data you provide it. We’ll be looking at a lot of ways to visualize data soon, but it’s often useful to just see what plot() gives you. In this case, it just makes a bivariate plot where the x dimension is the sequential index of the vector from 1 through the length of the vector, and the values are in the y dimension. For comparison is a scatterplot with elevation on the x axis (Figure 2.2).

FIGURE 2.2: Temperature plotted by index (left) and elevation (right)

2.6.1.5 Named indices

Vectors themselves have names (like elev, tC, and lat above), but individual indices can also be named.

## num [1:3] 16 30 56## Named num [1:3] 16 30 56

## - attr(*, "names")= chr [1:3] "idaho" "montana" "wyoming"The reason we might do this is so you can refer to observations by name instead of index, maybe to filter observations based on criteria where the name will be useful. The following are equivalent:

## montana

## 30## montana

## 30The names() function can be used to display a character vector of names, or assign names from a character vector:

## [1] "idaho" "montana" "wyoming"## [1] "Idaho" "Montana" "Wyoming"2.6.2 Lists

Lists can be heterogeneous, with multiple class types. Lists are actually used a lot in R, and are created by many operations, but they can be confusing to get used to especially when it’s unclear what we’ll be using them for. We’ll avoid them for a while, and look into specific examples as we need them.

2.6.3 Matrices

Vectors are commonly used as a column in a matrix (or as we’ll see, a data frame), like a variable

tC <- c(10.7, 9.7, 7.7, 9.2, 7.3, 6.7, 4.0, 5.0, 0.9, -1.1, -0.8,-4.4)

elev <- c(52, 394, 510, 564, 725, 848, 1042, 1225, 1486, 1775, 1899, 2551)

lat <- c(39.52,38.91,37.97,38.70,39.09,39.25,39.94,37.75,40.35,39.33,39.17,38.21)Building a matrix from vectors as columns

The cbind() in base R binds multiple vectors into columns in a matrix.

## [1] "matrix" "array"## num [1:12, 1:3] 10.7 9.7 7.7 9.2 7.3 6.7 4 5 0.9 -1.1 ...

## - attr(*, "dimnames")=List of 2

## ..$ : NULL

## ..$ : chr [1:3] "tC" "elev" "lat"## tC elev lat

## [1,] 10.7 52 39.52

## [2,] 9.7 394 38.91

## [3,] 7.7 510 37.97

## [4,] 9.2 564 38.70

## [5,] 7.3 725 39.09

## [6,] 6.7 848 39.25

## [7,] 4.0 1042 39.94

## [8,] 5.0 1225 37.75

## [9,] 0.9 1486 40.35

## [10,] -1.1 1775 39.33

## [11,] -0.8 1899 39.17

## [12,] -4.4 2551 38.21Remember that matrices are similar to (atomic) vectors in that they all have to be of the same data type.

2.6.3.1 Dimensions for arrays and matrices

Note: a matrix is just a 2D array. Arrays have 1, 3, or more dimensions.

## [1] 12 3It’s also important to remember that a matrix or an array is a vector with dimensions, and we can change those dimensions in various ways as long as they work for the length of the vector.

## [1] "matrix" "array"## [1] "array"## [1] "array"We just saw that we can change the dimensions of an existing matrix or array. But what if the matrix has names for its columns? I wasn’t sure so following my basic philosophy of empirical programming I just tried it:

## [,1] [,2] [,3] [,4] [,5] [,6] [,7] [,8] [,9] [,10] [,11] [,12]

## [1,] 10.7 9.2 4.0 -1.1 52 564 1042 1775 39.52 38.70 39.94 39.33

## [2,] 9.7 7.3 5.0 -0.8 394 725 1225 1899 38.91 39.09 37.75 39.17

## [3,] 7.7 6.7 0.9 -4.4 510 848 1486 2551 37.97 39.25 40.35 38.21So the answer is that it gets rid of the column names, and we can also see that redimensioning changes a lot more about how the data appears (though dim(sierradata) <- c(12,3) will return it to its original structure, but without column names). It’s actually a little odd that matrices can have column names, because that really just makes them seem like data frames, so let’s look at those next. Let’s consider a situation where we want to create a rectangular data set from some data for a set of states:

We can use cbind to create a matrix out of them, just like we did with the sierradata above

## abb area pop

## [1,] "CO" "269837" "5758736"

## [2,] "WY" "253600" "578759"

## [3,] "UT" "84899" "3205958"But notice what it did – area and pop were converted to character type. This reminds us that matrices are still atomic vectors – all of the same class. So to comply with this, the numbers were converted to character strings, since you can’t convert character strings to numbers.

This isn’t very satisfactory as a data object, so we’ll need to use a data frame, which is not a vector, though its individual column variables are vectors.

2.6.4 Data frames

A data frame is a database with variables in columns and rows of observations. They’re kind of like a spreadsheet with rules (like the first row is field names) or a matrix that can have variables of unique types. Data frames will be very important for data analysis and GIS. We can easily create a data frame using the data.frame function, and then display it simply…

## abb area pop

## 1 CO 269837 5758736

## 2 WY 253600 578759

## 3 UT 84899 3205958… or use one of several table display tools. For instance, the gt tool in the gt package is designed for creating tables for a report, with many options to optimize its design for a given purpose. Typically you’d use it for the final results, as a static table with everything shown at once. See https://gt.rstudio.com/ for more information.

| abb | area | pop |

|---|---|---|

| CO | 269837 | 5758736 |

| WY | 253600 | 578759 |

| UT | 84899 | 3205958 |

Before we get started looking further into data frames, we’re going to use the palmerpenguins data set, so you need to install it if you haven’t yet, and then load the library with:

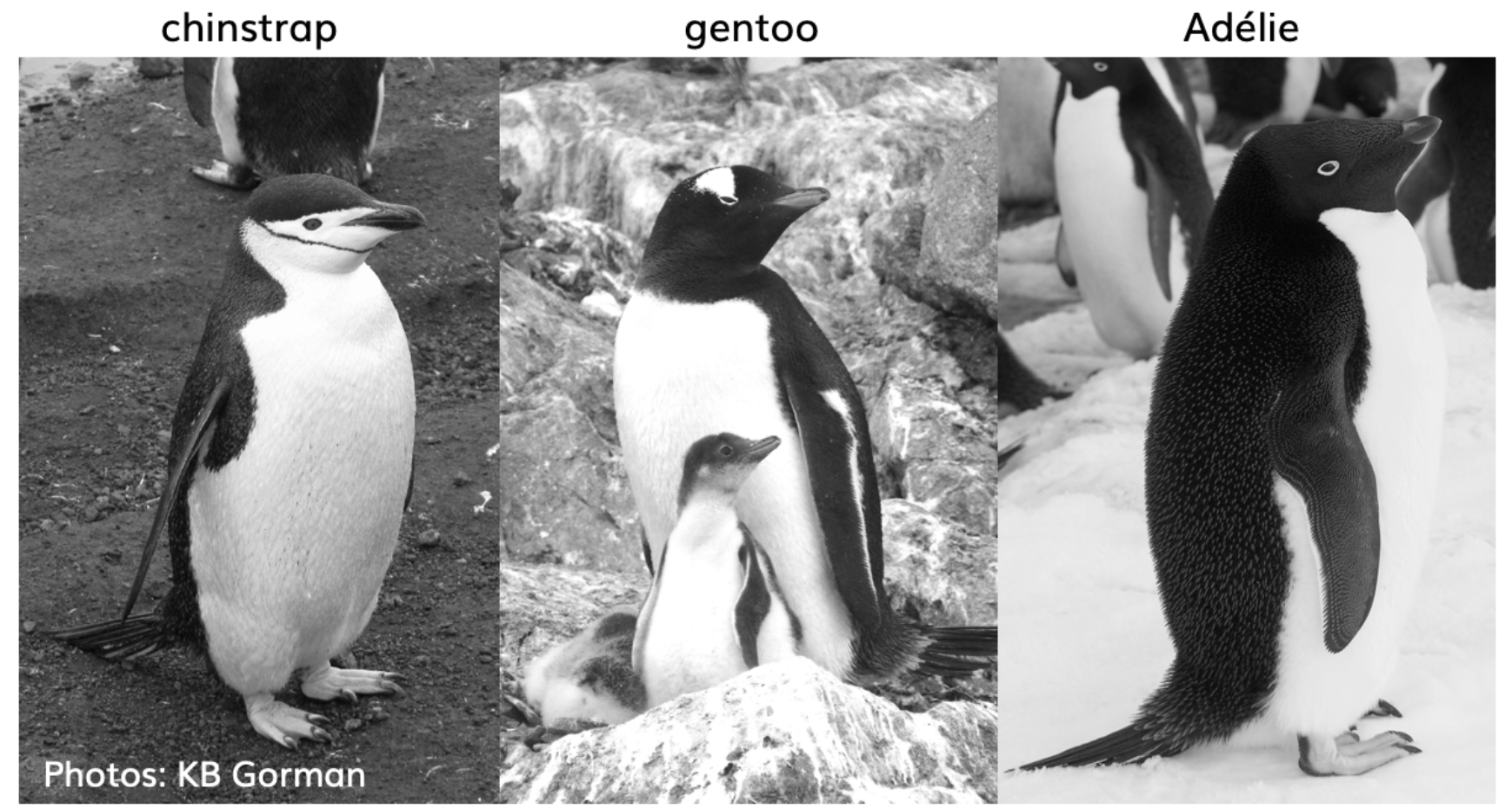

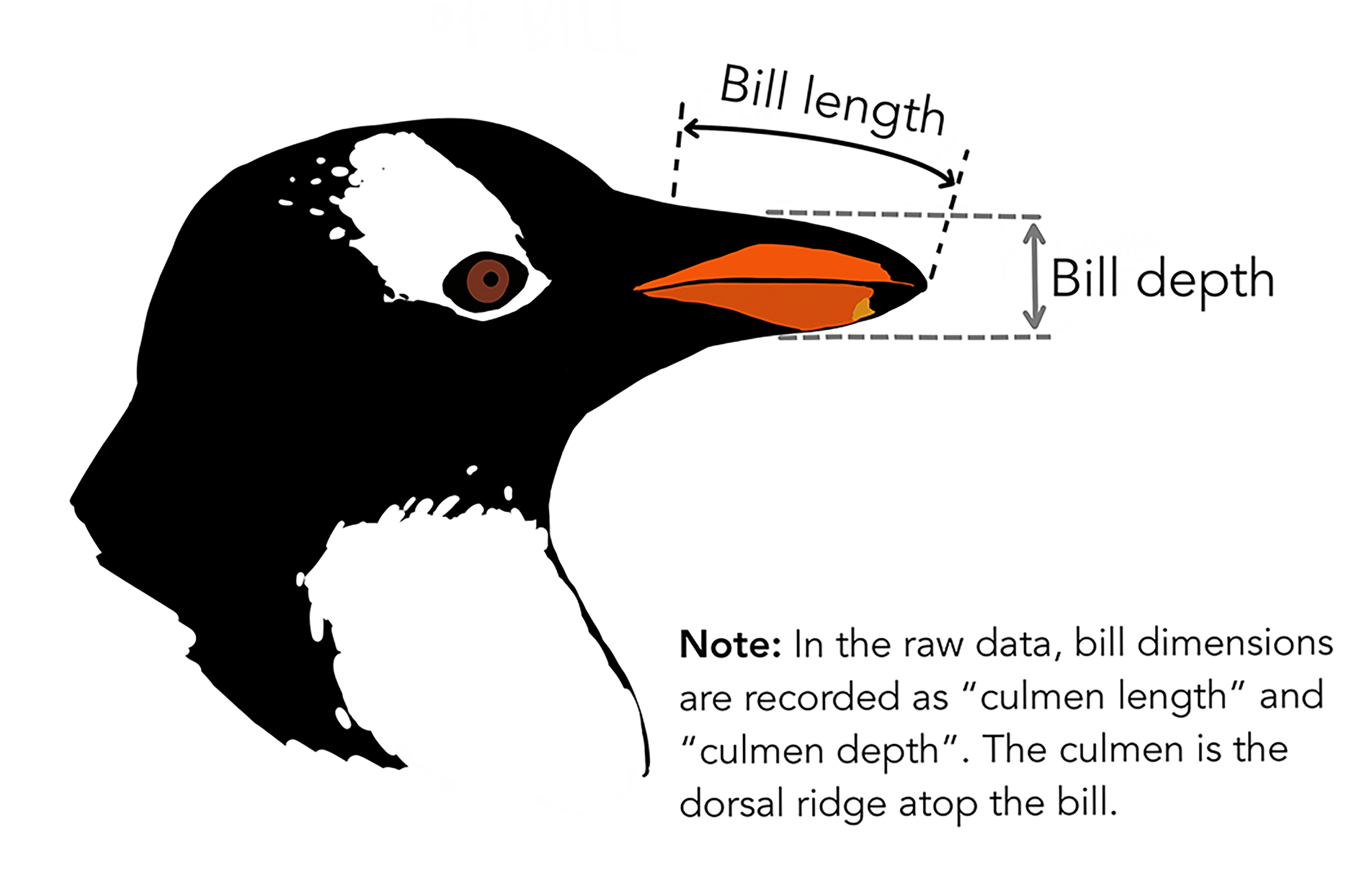

I’d encourage you to learn more about this dataset at https://allisonhorst.github.io/palmerpenguins/articles/intro.html(Horst, Hill, and Gorman (2020)) (Figures 2.3 and 2.4). It will be useful for a variety of demonstrations using numerical morphometric variables as well as a couple of categorical factors (species and island).

FIGURE 2.3: The three penguin species in palmerpenguins. Photos by KB Gorman. Used with permission

FIGURE 2.4: Diagram of penguin head with indication of bill length and bill depth (from Horst, Hill, and Gorman (2020), used with permission)

We’ll use a couple of alternative table display methods, first a simple one…

## # A tibble: 344 × 8

## species island bill_length_mm bill_depth_mm flipper_length_mm body_mass_g

## <fct> <fct> <dbl> <dbl> <int> <int>

## 1 Adelie Torgersen 39.1 18.7 181 3750

## 2 Adelie Torgersen 39.5 17.4 186 3800

## 3 Adelie Torgersen 40.3 18 195 3250

## 4 Adelie Torgersen NA NA NA NA

## 5 Adelie Torgersen 36.7 19.3 193 3450

## 6 Adelie Torgersen 39.3 20.6 190 3650

## 7 Adelie Torgersen 38.9 17.8 181 3625

## 8 Adelie Torgersen 39.2 19.6 195 4675

## 9 Adelie Torgersen 34.1 18.1 193 3475

## 10 Adelie Torgersen 42 20.2 190 4250

## # ℹ 334 more rows

## # ℹ 2 more variables: sex <fct>, year <int>… then a nicer table display using DT::datatable, with a bit of improvement using an option…

2.6.4.1 Creating a data frame out of a matrix

There are many functions that start with as. that convert things to a desired type. We’ll use as.data.frame() to create a data frame out of a matrix, the same sierradata we created earlier, but we’ll build it again so it’ll have variable names, and use yet another table display method from the knitr package (which also has a lot of options you might want to explore), which works well for both the html and pdf versions of this book, and creates numbered table headings, so I’ll use it a lot (Table 2.1).

tC <- c(10.7, 9.7, 7.7, 9.2, 7.3, 6.7, 4.0, 5.0, 0.9, -1.1, -0.8,-4.4)

elev <- c(52, 394, 510, 564, 725, 848, 1042, 1225, 1486, 1775, 1899, 2551)

lat <- c(39.52,38.91,37.97,38.70,39.09,39.25,39.94,37.75,40.35,39.33,39.17,38.21)

sierradata <- cbind(tC, elev, lat)

sierradata <- as.data.frame(sierradata) # It's ok to reuse the object name

knitr::kable(sierradata,

caption = 'Temperatures (Feb), elevations, and latitudes of 12 Sierra stations')| tC | elev | lat |

|---|---|---|

| 10.7 | 52 | 39.52 |

| 9.7 | 394 | 38.91 |

| 7.7 | 510 | 37.97 |

| 9.2 | 564 | 38.70 |

| 7.3 | 725 | 39.09 |

| 6.7 | 848 | 39.25 |

| 4.0 | 1042 | 39.94 |

| 5.0 | 1225 | 37.75 |

| 0.9 | 1486 | 40.35 |

| -1.1 | 1775 | 39.33 |

| -0.8 | 1899 | 39.17 |

| -4.4 | 2551 | 38.21 |

Then to plot the two variables that are now part of the data frame, we’ll need to make vectors out of them again using the accessor (Figure 2.5).

FIGURE 2.5: Temperature and elevation scatter plot

2.6.4.2 Creating a data frame directly from vectors

We’ll be using the dplyr package a lot in the next chapter, but we might as well introduce one of its functions – bind_cols – that works a lot like cbind but creates data frames instead of matrices. Assuming we’ve already read in the vectors, we’d use this:

There are in fact multiple ways of creating data frames from vectors or other data. Here are a few examples that all use just one line of code.

sierradataD <- as.data.frame(cbind(tC,elev,lat))

sierradataE <- data.frame(tC,elev,lat)

sierradataT <- tibble(tC,elev,lat)There are other ways to do this, but in general if you want a data frame, you should create it directly and not go through a matrix, as the first example above does. This one works fine but there are other situations where

cbindwon’t end up with the right column names.

Note that the last example above uses the

tibblepackage, which is loaded with eitherlibrary(tibble)orlibrary(tidyverse). We’ll be looking at tibbles and other parts of the “tidyverse” more in the next chapter, but they’re essentially an improved type of data frame.

2.6.4.3 Read a data frame from a CSV

We’ll be looking at this more in the next chapter, but a common need is to read data from a spreadsheet stored in the CSV format. Normally, you’d have that stored with your project and can just specify the file name, but we’ll access CSVs from the igisci package. Since you have this installed, it will already be on your computer, but not in your project folder. The path to it can be derived using the system.file() function.

Reading a csv in readr (part of the tidyverse that we’ll be looking at in the next chapter) is done with read_csv(). We’ll use the DT::datatable for this because it lets you interactively scroll across the many variables, and this table is included as Figure 2.6.

library(readr)

csvPath <- system.file("extdata","TRI/TRI_2017_CA.csv", package="igisci")

TRI2017 <- read_csv(csvPath)FIGURE 2.6: TRI dataframe – DT datatable output

Note that we could have used the built-in read.csv function, but as you’ll see later, there are advantages of readr::read_csv so we should get in the habit of using that instead.

2.6.4.4 Reorganizing data frames

There are quite a few ways to reorganize your data in R, and we’ll learn other methods in the next chapter where we start using the tidyverse, which makes data abstraction and transformation much easier. For now, we’ll work with a simpler CSV I’ve prepared:

csvPath <- system.file("extdata","TRI/TRI_1987_BaySites.csv", package="igisci")

TRI87 <- read_csv(csvPath)

TRI87## # A tibble: 335 × 9

## TRI_FACILITY_ID count FACILITY_NAME COUNTY air_releases fugitive_air

## <chr> <dbl> <chr> <chr> <dbl> <dbl>

## 1 91002FRMND585BR 2 FORUM IND SAN M… 1423 1423

## 2 92052ZPMNF2970C 1 ZEP MFG CO SANTA… 337 337

## 3 93117TLDYN3165P 2 TELEDYNE MEC SANTA… 12600 12600

## 4 94002GTWSG477HA 2 MORGAN ADVANCED CERAM… SAN M… 18700 18700

## 5 94002SMPRD120SE 2 SEM PRODS INC SAN M… 1500 500

## 6 94025HBLNN151CO 2 HEUBLEIN INC SAN M… 500 0

## 7 94025RYCHM300CO 10 TE CONNECTIVITY CORP SAN M… 144871 47562

## 8 94025SNFRD990OB 1 SANFORD METAL PROCESS… SAN M… 9675 9675

## 9 94026BYPCK3575H 2 BAY PACKAGING & CONVE… SAN M… 80000 32000

## 10 94026CDRSY3475E 2 CDR SYS CORP SAN M… 126800 0

## # ℹ 325 more rows

## # ℹ 3 more variables: stack_air <dbl>, LATITUDE <dbl>, LONGITUDE <dbl>Sort, Index, and Max/Min

One simple task is to sort data (numerically or by alphabetic order), such as a variable extracted as a vector.

## [1] 2 5 5 7 9 10… or create an index vector of the order of our vector/variable…

… where the index vector is just used to store the order of the TRI87$air_releases vector/variable; then we can use that index to display facilities in order of their air releases.

## [1] "AIR PRODUCTS MANUFACTURING CORP"

## [2] "UNITED FIBERS"

## [3] "CLOROX MANUFACTURING CO"

## [4] "ICI AMERICAS INC WESTERN RESEARCH CENTER"

## [5] "UNION CARBIDE CORP"

## [6] "SCOTTS-SIERRA HORTICULTURAL PRODS CO INC"This is similar to filtering for a subset. We can also pull out individual values using functions like which.max to find the desired index value:

## [1] "TESLA INC"2.6.4.5 Length of a data frame

You might expect the length of a data frame to be its number of rows, but it’s the number of its variables. You’ll get the same thing with ncol.

## [1] 9

## [1] 9To get the number of rows, you can access a single variable as a vector, or you can use nrow.

## [1] 335

## [1] 3352.6.5 Factors

Factors are vectors with predefined values, normally used for categorical data, and as R is a statistical language are frequently used to stratify data, such as defining groups for analysis of variance among those groups. They are built on an integer vector, and levels are the set of predefined values, which are commonly character data.

nut <- factor(c("almond", "walnut", "pecan", "almond"))

str(nut) # note that levels will be in alphabetical order## Factor w/ 3 levels "almond","pecan",..: 1 3 2 1## [1] "integer"As always, there are multiple ways of doing things. Here’s an equivalent conversion that illustrates their relation to integers:

nutint <- c(1, 2, 3, 2) # equivalent conversion

nut <- factor(nutint, labels = c("almond", "pecan", "walnut"))

str(nut)## Factor w/ 3 levels "almond","pecan",..: 1 2 3 22.6.5.1 Categorical data and factors

While character data might be seen as categorical (e.g. "urban", "agricultural", "forest" land covers), to be used as categorical variables they must be made into factors. So we have something to work with, we’ll generate some random memberships in one of three vegetation moisture categories using the sample() function:

veg_moisture_categories <- c("xeric", "mesic", "hydric")

veg_moisture_char <- sample(veg_moisture_categories, 42, replace = TRUE)

veg_moisture_fact <- factor(veg_moisture_char, levels = veg_moisture_categories)

veg_moisture_char## [1] "xeric" "mesic" "xeric" "mesic" "xeric" "hydric" "xeric" "hydric"

## [9] "mesic" "mesic" "hydric" "xeric" "hydric" "mesic" "mesic" "hydric"

## [17] "mesic" "xeric" "hydric" "mesic" "mesic" "xeric" "hydric" "mesic"

## [25] "hydric" "hydric" "mesic" "xeric" "mesic" "xeric" "hydric" "xeric"

## [33] "xeric" "mesic" "mesic" "hydric" "mesic" "xeric" "xeric" "xeric"

## [41] "hydric" "hydric"## [1] xeric mesic xeric mesic xeric hydric xeric hydric mesic mesic

## [11] hydric xeric hydric mesic mesic hydric mesic xeric hydric mesic

## [21] mesic xeric hydric mesic hydric hydric mesic xeric mesic xeric

## [31] hydric xeric xeric mesic mesic hydric mesic xeric xeric xeric

## [41] hydric hydric

## Levels: xeric mesic hydricTo make a categorical variable a factor:

nut <- c("almond", "walnut", "pecan", "almond")

farm <- c("organic", "conventional", "organic", "organic")

ag <- as.data.frame(cbind(nut, farm))

ag$nut <- factor(ag$nut)

ag$nut## [1] almond walnut pecan almond

## Levels: almond pecan walnutFactor example

## Factor w/ 21 levels "Amador","Butte",..: 20 14 7 12 12 2 19 11 2 19 ...2.7 Accessors and Subsetting

The use of accessors in R can be confusing, but they’re very important to understand. An accessor is “a method for accessing data in an object usually an attribute of that object” (Brown (n.d.)), so a method for subsetting, and for R these are [], [[]], and $, but it can be confusing to know when you might use which one. There are good reasons to have these three types for code clarity, however you can also use [] with a bit of clumsiness for all purposes.

We’ve already been using these in this chapter and will continue to use them throughout the book. Let’s look at the various accessors and subsetting options:

2.7.1 [] Subsetting

You use this to get a subset of any R object, whether it be a vector, list, or data frame. For a vector, the subset might be defined by indices …

## num 33.4## num [1:2] 47.4 29.4## chr [1:3] "x" "y" "z"… or a Boolean with TRUE or FALSE values representing which to keep or not.

## num [1:3] 37.5 29.4 33.4An initially surprising but very handy way of creating one of these is to reference the vector itself in a relational expression. The following returns the same as the above, with the purpose of selecting all lats that are less than 40:

## num [1:3] 37.5 29.4 33.4Getting one element from a data frame will return a data frame, in this case a data frame with just one variable. For columns especially, you might expect the result to be a vector, but it’s a data frame with just one variable. Note that you can use either the column number or the name of the variable.

## 'data.frame': 50 obs. of 2 variables:

## $ speed: num 4 4 7 7 8 9 10 10 10 11 ...

## $ dist : num 2 10 4 22 16 10 18 26 34 17 ...## 'data.frame': 50 obs. of 1 variable:

## $ speed: num 4 4 7 7 8 9 10 10 10 11 ...## 'data.frame': 50 obs. of 1 variable:

## $ speed: num 4 4 7 7 8 9 10 10 10 11 ...Similarly, subsetting to get a single observation (a row of values) with the [n,] method returns a one-row data frame, or it can be used to create a subset of a range or selection of observations. You can also get all but a given observation with [-n,].

## speed dist

## 1 4 2## speed dist

## 3 7 4

## 4 7 22

## 5 8 16## speed dist

## 1 4 2

## 3 7 4

## 4 7 22

## 5 8 16

## 6 9 10

## 7 10 18

## 8 10 26

## 9 10 34

## 10 11 17

## 11 11 28

## 12 12 14

## 13 12 20

## 14 12 24

## 15 12 28

## 16 13 26

## 17 13 34

## 18 13 34

## 19 13 46

## 20 14 26

## 21 14 36

## 22 14 60

## 23 14 80

## 24 15 20

## 25 15 26

## 26 15 54

## 27 16 32

## 28 16 40

## 29 17 32

## 30 17 40

## 31 17 50

## 32 18 42

## 33 18 56

## 34 18 76

## 35 18 84

## 36 19 36

## 37 19 46

## 38 19 68

## 39 20 32

## 40 20 48

## 41 20 52

## 42 20 56

## 43 20 64

## 44 22 66

## 45 23 54

## 46 24 70

## 47 24 92

## 48 24 93

## 49 24 120

## 50 25 85## speed dist

## 1 4 2

## 2 4 10

## 3 7 4

## 4 7 22

## 5 8 16

## 6 9 10Getting a data frame this way is very useful because many functions you’ll want to use require a data frame as input.

But sometimes you just want a vector. If you select an individual variable using the [,n] method, you’ll get one. You’ll want to assign it to a meaningful name like the original variable name. Note that you can either use the variable name or the variable column number:

## [1] 2 10 4 22 16 10 18 26 34 17 28 14 20 24 28 26 34 34 46

## [20] 26 36 60 80 20 26 54 32 40 32 40 50 42 56 76 84 36 46 68

## [39] 32 48 52 56 64 66 54 70 92 93 120 852.7.2 [[]] The mysterious double bracket

Double brackets extract just one element, so just one value from a vector or one vector from a data frame. You’re going one step further into the structure.

## num [1:50] 4 4 7 7 8 9 10 10 10 11 ...## num [1:50] 4 4 7 7 8 9 10 10 10 11 ...Note that the str result is telling you that these are both simply vectors, not something like a data frame. Though uncommon, you can also use the index of variables instead of its name. Similar to the examples above, the variable can be specified either with the index (or column number) or the variable name. Since “speed” is the first variable in the cars data frame, these return the same thing:

## num [1:50] 4 4 7 7 8 9 10 10 10 11 ...## num [1:50] 4 4 7 7 8 9 10 10 10 11 ...2.7.3 $ Accessing a vector from a data frame

The $ accessor is really just a shortcut, but any shortcut reduces code and thus increases clarity, so it’s a good idea and this accessor is commonly used. Their only limitation is that you can’t use the integer indices, which would allow you to loop through a numerical sequence.

These accessor operations do the same thing:

## num [1:50] 4 4 7 7 8 9 10 10 10 11 ...Again, to contrast, the following accessor operations do the same thing – create a data frame:

## 'data.frame': 50 obs. of 1 variable:

## $ speed: num 4 4 7 7 8 9 10 10 10 11 ...## [1] TRUE2.8 Programming scripts in RStudio

Given the exploratory nature of the R language, we sometimes forget that it provides significant capabilities as a programming language where we can solve more complex problems by coding procedures and using logic to control the process and handle a range of possible scenarios.

Programming languages are used for a wide range of purposes, from developing operating systems built from low-level code to high-level scripting used to run existing functions in libraries. R and Python are commonly used for scripting, and you may be familiar with using arcpy to script ArcGIS geoprocessing tools. But whether low- or high-level, some common operational structures are used in all computer programming languages:

- functions (defining your own)

- conditional operations: if a condition is true, do this

- loops

Common to all of these is an input in parentheses followed by what to do in braces:

- obj

<- function(input){process ending in an expression result} if(condition){process}if(condition){process} else {process}for(objectinlist){process}

The { } braces are only needed if the process goes past the first line, so for instance the following examples don’t need them (and actually some of the spaces aren’t necessary either, but I left them in for clarity):

a <- 2

f <- function(a) a*2

print(f(4))

if(a==3) print('yes') else print('no')

for(i in 1:5) print(i)2.8.1 function : creating your own

myFunction <- function(input){Do this and return the resulting expression}

The various packages that we’re installing, all those that aren’t purely data (like igisci), are built primarily of functions and perhaps most of those functions are written in R. Many of these simply make existing R functions work better or at least differently, often for a particular data science task commonly needed in a discipline or application area.

In geospatial environmental research for instance, we are often dealing with direction, for instance the movement of marine mammals who might be influenced by ship traffic. An agent-based model simulation of marine mammal movement might have the animal respond by shifting to the left or right, so we might want a turnLeft() or turnRight() function. Given the nature of circular data however, the code might be sufficiently complex to warrant writing a function that will make our main code easier to read:

Then in our code later on, after we’ve prepared some data …

id <- c("5A", "12D", "3B")

direction <- c(260, 270, 300)

whale <- dplyr::bind_cols(id = id,direction = direction) # better than cbind

whale## # A tibble: 3 × 2

## id direction

## <chr> <dbl>

## 1 5A 260

## 2 12D 270

## 3 3B 300… we can call this function:

## # A tibble: 3 × 2

## id direction

## <chr> <dbl>

## 1 5A 350

## 2 12D 0

## 3 3B 30Another function I found useful for our external data in igisci is to simplify the code needed to access the external data. I found I had to keep looking up the syntax for that task that we use a lot. It also makes the code difficult to read. Adding this function to the top of your code helps for both:

Then our code that accesses data is greatly simplified, with read.csv calls looking a lot like reading data stored in our project folder. For example, where if we had fishdata.csv stored locally in our project folder we might read it with …

read.csv("fishdata.csv")

… reading from the data package’s extdata folder looks pretty similar:

read.csv(ex("fishdata.csv"))

This simple function was so useful that it’s now included in the igisci package, so you can just call it with

ex()if you havelibrary(igisci)in your code.

2.8.2 if : conditional operations

if (condition) {Do this} else {Do this other thing}

Probably not used as much in R for most users, as it’s mostly useful for building a new tool (or function) that needs to run as a complete operation. (But I found it essential in writing this book to create some outputs differently for the output to html than for the output to LaTeX for the pdf/book version.)

Common in coding is the need to be able to handle a variety of inputs and avoid errors. Here’s an admittedly trivial example of some short code that avoids an error:

## [1] Inf## [1] 0.4Note that the

else {needs to follow the close of theif (...) {...}section on the same line.

2.8.3 for loops

for(counterinlist){*Do something*}`

Loops are very common in computer languages (in FORTRAN they were called DO loops) as they allow us to iterate through a list. The list could be just a series of numbers, and if starting from 1 might be called a counter, but could also be a list of objects like data frames or spatial features. The following example of a counter is trivial but illustrates a simple loop that prints a series of results, starting with the counter i itself:

## [1] "1 1"

## [1] "2 0.5"

## [1] "3 0.333333333333333"

## [1] "4 0.25"

## [1] "5 0.2"

## [1] "6 0.166666666666667"

## [1] "7 0.142857142857143"

## [1] "8 0.125"

## [1] "9 0.111111111111111"

## [1] "10 0.1"Note the use of

2.8.3.1 A for loop with an internal if..else

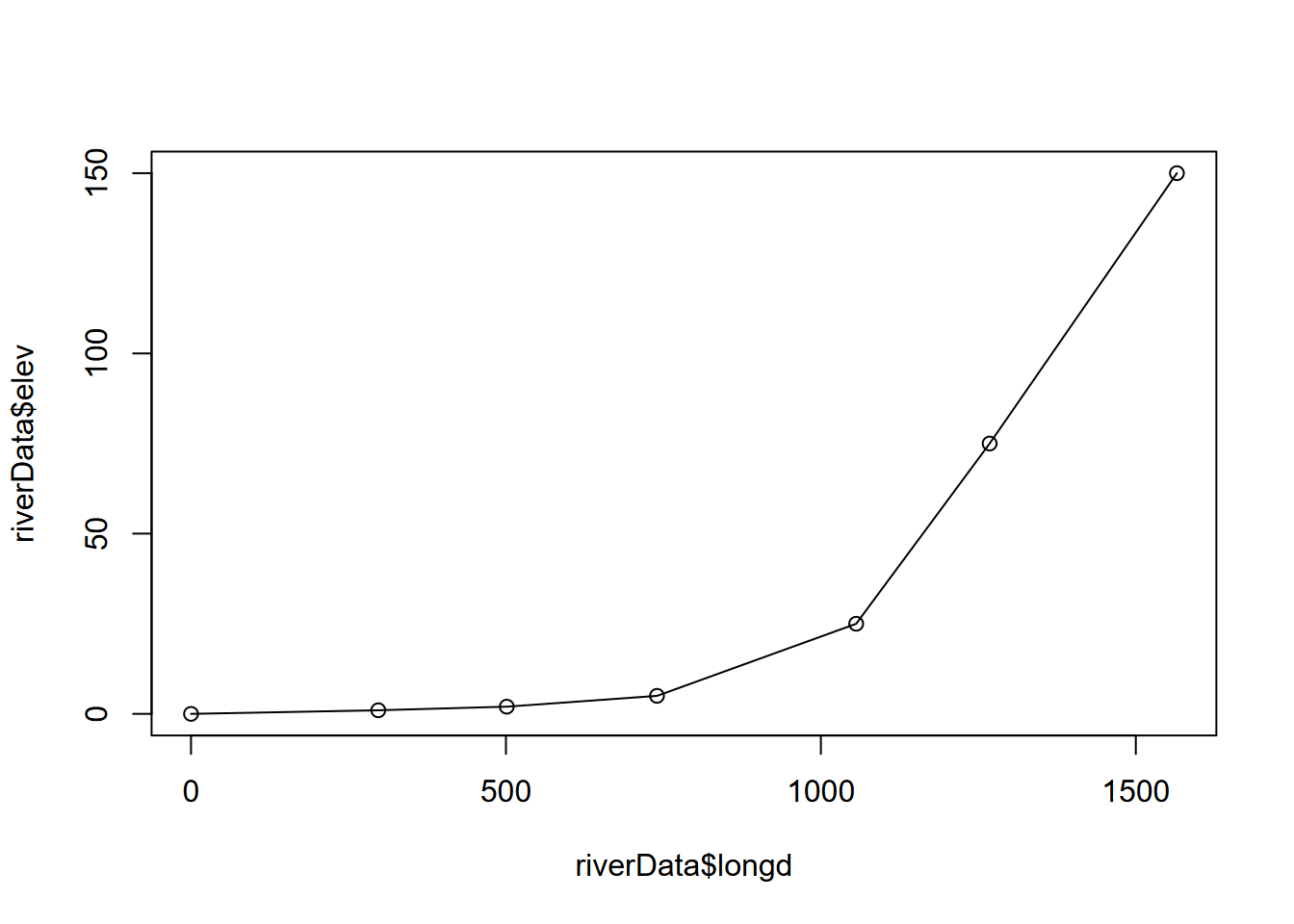

Here’s a more complex loop that builds river data for a map and profile that we’ll look at again in the visualization chapter. It also includes a conditional operation with if..else, embedded within the for loop (note the { } enclosures for each structure.)

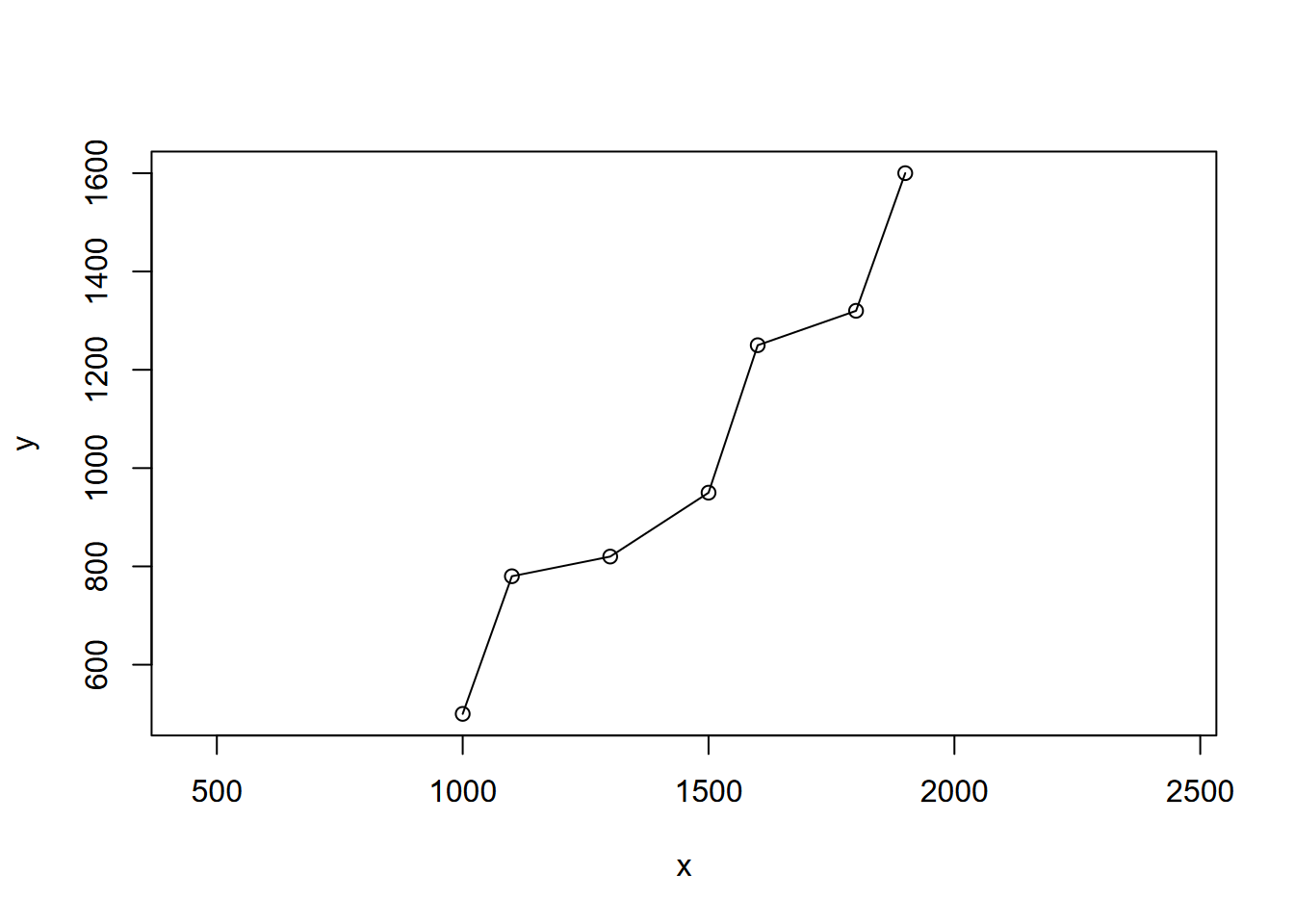

x <- c(1000, 1100, 1300, 1500, 1600, 1800, 1900)

y <- c(500, 780, 820, 950, 1250, 1320, 1600)

elev <- c(0, 1, 2, 5, 25, 75, 150)First we’ll create a crude map from the xy coordinates using the base plot system (Figure 2.7):

FIGURE 2.7: Crude river map using x y coordinates

Options for the

plotfunction in base R: In the visualization chapter, we’ll be making use of many options inggplot2, but for now we’re using theplotfunction of base R, which has quite a few options that we’ll introduce from time to time as needed. We’ll continue to useploteither for a quick output of our results, or where various packages liketerrahave extended its functionality for its data types. In the above code, we used two options in plot:asp=1for setting the x and y scales to be the same (like on a map) with an aspect ratio of 1; andadd=TRUEto allow the lines to be added to the same plot, using the same coordinate space.

Now we’ll use a loop to create a longitudinal graph by determining a cumulative longitudinal distance along the path of the river. You could look at it as straightening out the curves.

First we’ll need empty vectors to populate with the longitudinal profile data:

d <- double() # creates an empty numeric vector

longd <- double() # ("double" means double-precision floating point)

s <- double()Then the for loop that goes through all of the points, but uses an if statement to assign an initial distance and longitudinal distance of zero, since the longitudinal profile data is just started and we don’t have a distance yet.

for(i in 1:length(x)){

if(i==1){longd[i] <- 0; d[i] <- 0} else {

d[i] <- sqrt((x[i]-x[i-1])^2 + (y[i]-y[i-1])^2)

longd[i] <- longd[i-1] + d[i]

s[i-1] <- (elev[i]-elev[i-1])/d[i]

}

}What well-known theorem is used to derive each distance? Can you find \(c^2 = a^2 + b^2\) or \(c=\sqrt{a^2+b^2}\) in the above code? Consider how Cartesian (x and y) coordinates work and where you might find a right triangle and a hypotenuse in such a coordinate system. Hint: what if \(a=\Delta{x}\) and \(b=\Delta{y}\)? Draw it out on a piece of paper to help.

There is no known slope for the last point (since we have no next point), so the last slope is assigned NA.

Then we’ll create a data frame out of the vectors we just built, and then display the longitudinal profile (Figure 2.8).

## x y elev d longd s

## 1 1000 500 0 0.0000 0.0000 0.003363364

## 2 1100 780 1 297.3214 297.3214 0.004902903

## 3 1300 820 2 203.9608 501.2822 0.012576654

## 4 1500 950 5 238.5372 739.8194 0.063245553

## 5 1600 1250 25 316.2278 1056.0471 0.235964589

## 6 1800 1320 75 211.8962 1267.9433 0.252252298

## 7 1900 1600 150 297.3214 1565.2647 NA

FIGURE 2.8: Longitudinal profile built from cumulative distances and elevation

2.8.4 Subsetting with logic

We’ll use the 2022 USDOE fuel efficiency data to list all of the car lines with at least 50 miles to the gallon. Note the creation of a Boolean logical expression with an admittedly long variable ... >= 50; this will return TRUE or FALSE, so i will be a logical (Boolean) vector which can be used to subset a couple of variables which we’ll then paste together (using vectorization of course) to create a character string vector.

library(readxl)

fuelEff22 <- read_excel(ex("USDOE_FuelEfficiency2022.xlsx"))

i <- fuelEff22$`Hwy FE (Guide) - Conventional Fuel` >= 50

str(i)## logi [1:394] FALSE FALSE FALSE FALSE FALSE FALSE ...## chr [1:9] "TOYOTA COROLLA HYBRID" "HYUNDAI MOTOR COMPANY Elantra Hybrid" ...## [1] "TOYOTA COROLLA HYBRID"

## [2] "HYUNDAI MOTOR COMPANY Elantra Hybrid"

## [3] "HYUNDAI MOTOR COMPANY Elantra Hybrid Blue"

## [4] "TOYOTA PRIUS"

## [5] "TOYOTA PRIUS Eco"

## [6] "HYUNDAI MOTOR COMPANY Ioniq"

## [7] "HYUNDAI MOTOR COMPANY Ioniq Blue"

## [8] "HYUNDAI MOTOR COMPANY Sonata Hybrid"

## [9] "HYUNDAI MOTOR COMPANY Sonata Hybrid Blue"The which function allows us to do something similar, returning the indices of the variable or vector for which a condition TRI87$air_releases > 1e6 is TRUE. Then we can use that index to do the subset.

library(readr)

csvPath = system.file("extdata","TRI/TRI_1987_BaySites.csv", package="igisci")

TRI87 <- read_csv(csvPath)

i <- which(TRI87$air_releases > 1e6)

str(i)## int [1:4] 85 96 127 328## [1] "VALERO REFINING CO-CALI FORNIA BENICIA REFINERY"

## [2] "TESLA INC"

## [3] "TESORO REFINING & MARKETING CO LLC"

## [4] "HGST INC"The %in% operator is very useful when we have very specific items to select.

library(readr)

csvPath = system.file("extdata","TRI/TRI_1987_BaySites.csv", package="igisci")

TRI87 <- read_csv(csvPath)

i <- TRI87$COUNTY %in% c("NAPA","SONOMA")

TRI87$FACILITY_NAME[i]## [1] "SAWYER OF NAPA"

## [2] "BERINGER VINEYARDS"

## [3] "CAL-WOOD DOOR INC"

## [4] "SOLA OPTICAL USA INC"

## [5] "KEYSIGHT TECHNOLOGIES INC"

## [6] "SANTA ROSA STAINLESS STEEL"

## [7] "OPTICAL COATING LABORATORY INC"

## [8] "MGM BRAKES"

## [9] "SEBASTIANI VINEYARDS INC, SONOMA CASK CELLARS"2.8.5 Apply functions

There are many apply functions in R, and they often obviate the need for looping. For instance:

applyderives values at margins of rows and columns, e.g. to sum across rows or down columns.

# matrix apply – the same would apply to data frames

matrix12 <- 1:12

dim(matrix12) <- c(3,4)

rowsums <- apply(matrix12, 1, sum)

colsums <- apply(matrix12, 2, sum)

sum(rowsums)## [1] 78## [1] 78## [,1] [,2] [,3] [,4]

## [1,] 1 4 7 10

## [2,] 2 5 8 11

## [3,] 3 6 9 12Apply functions satisfy one of the needs that spreadsheets are used for. Consider how often you use sum, mean, or similar functions in Excel.

sapply

sapply applies functions to either:

- all elements of a vector – unary functions only

## [1] 1.000000 1.414214 1.732051 2.000000 2.236068 2.449490 2.645751 2.828427

## [9] 3.000000 3.162278 3.316625 3.464102- or all variables of a data frame (not a matrix), where it works much like a column-based apply (since variables are columns) but more easily interpreted without the need of specifying columns with 2:

## speed dist

## 15.40 42.98temp02 <- c(10.7,9.7,7.7,9.2,7.3,6.7,4.0,5.0,0.9,-1.1,-0.8,-4.4)

temp03 <- c(13.1,11.4,9.4,10.9,8.9,8.4,6.7,7.6,2.8,1.6,1.2,-2.1)

sapply(as.data.frame(cbind(temp02,temp03)),mean) # has to be a data frame## temp02 temp03

## 4.575000 6.658333While various apply functions are in base R, the purrr package takes these further. See https://www.rstudio.com/resources/cheatsheets/ for more information on this and other packages in the RStudio/tidyverse world.

2.9 RStudio projects

So far, you’ve been using RStudio, and it organizes your code and data into a project folder. You should familiarize yourself with where things are being saved and where you can find things. Start by seeing your working directory with :

getwd()When you create a new RStudio project with File/New Project..., it will set the working directory to the project folder, where you create the project. (You can change the working directory with setwd() but I don’t recommend it.) The project folder is useful for keeping things organized and allowing you to use relative paths to your data and allow everything to be moved somewhere else and still work. The project file has the extension .Rproj and it will reside in the project folder. If you’ve saved any scripts (.R)or R Markdown (.Rmd) documents, they’ll also reside in the project folder; and if you’ve saved any data, or if you want to read any data without providing the full path or using the extdata access method, those data files (e.g. .csv) will be in that project folder. You can see your scripts, R Markdown documents, and data files using the Files tab in the default lower right pane of RStudio.

RStudio projects are going to be the way we’ll want to work for the rest of this book, so you’ll often want to create new ones for particular data sets so things don’t get messy. And you may want to create data folders within your project folder, as we have in the igisci extdata folder, to keep things organized. Since we’re using our igisci package, this is less of an issue since at least input data isn’t stored in the project folder. However you’re going to be creating data, so you’ll want to manage your data in individual projects. You may want to start a new project for each data set, using File/New Project, and try to keep things organized (things can get messy fast!)

Just opening an .Rmd file from your computer will not set the working directory to the folder you’re in, even if it’s a project folder. It will instead open the document within the most recent project you’ve been working in. You will find that you don’t have simple relative access to any data in your folder. Instead, you need to open the project!

In this book, we’ll be making a lot of use of data provided for you from various data packages such as built-in data, palmerpenguins (Horst, Hill, and Gorman 2020), or igisci, but they correspond to specific research projects, such as Sierra Climate to which several data frames and spatial data apply. For this chapter, you can probably just use one project, but later you’ll find it useful to create separate projects for each data set – such as a sierra project and return to it every time it applies.

In that project, you’ll build a series of scripts, many of which you’ll re-use to develop new methods. When you’re working on your own project with your own data files, you should store these in a data folder inside the project folder. With the project folder as the default working directory, you can use relative paths, and everything will work even if the project folder is moved. So, for instance, you can specify "data/mydata.csv" as the path to a csv of that name. You can still access package data, including extdata folders and files, but your processed and saved or imported data will reside with your project.

An absolute path to somewhere on your computer in contrast won’t work for anyone else trying to run your code; absolute paths should only be used for servers that other users have access to and URLs on the web.

2.9.1 R Markdown

An alternative to writing scripts is writing R Markdown documents, which includes both formatted text (such as you’re seeing in this book, like italics created using asterisks) and code chunks. R lends itself to running code in chunks, as opposed to creating complete tools that run all of the way through. This book is built from R Markdown documents organized in a bookdown structure, and most of the figures are created from R code chunks. There are also many good resources on writing R Markdown documents, including the very thorough R Markdown: The Definitive Guide (Xie, Allaire, and Grolemund 2019).

2.10 Exercises: Introduction to R

Exercise 2.1 Assign scalars for your name, city, state and zip code, and use paste() to combine them, and assign them to the object me. What is the class of me?

Exercise 2.2 Knowing that trigonometric functions require angles (including azimuth directions) to be provided in radians, and that degrees can be converted into radians by dividing by 180 and multiplying that by pi, derive the sine of 30 degrees with an R expression. (Base R knows what pi is, so you can just use pi.)

Exercise 2.3 If two sides of a right triangle on a map can be represented as \(dX\) and \(dY\) and the direct line path between them \(c\), and the coordinates of two points on a map might be given as \((x1,y1)\) and \((x2,y2)\), with \(dX=x2-x1\) and \(dY=y2-y1\), use the Pythagorean theorem to derive the distance between them and assign that expression to \(c\). Start by assigning the input scalars.

Exercise 2.4 You can create a vector uniform random numbers from 0 to 1 using runif(n=30) where n=30 says to make 30 of them. Use the round() function to round each of the values (it vectorizes them), and provide what you created and explain what happened.

Exercise 2.5 Create two vectors x and y of 10 numbers each with the c() function, then assigning to x and y. Then plot(x,y), and provide the three lines of code you used to do the assignment and plot.

Exercise 2.6 Change your code from the previous question so that one value is NA (entered simply as NA, no quotation marks), and derive the mean value for x. Then add ,na.rm=T to the parameters for mean(). Also do this for y. Describe your results and explain what happens.

Exercise 2.7 Create two sequences, a and b, with a all odd numbers from 1 to 99, b all even numbers from 2 to 100. Then derive c through vector division of b/a. Plot a and c together as a scatterplot.

Exercise 2.8 Referring to the Matrices section, create the same sierradata matrix using the same data vectors repeated here …

… then convert it to a data frame (using the same sierradata object name), and from that data frame plot temperature (tC) against latitude (lat).

Exercise 2.9 From that sierradata data frame, derive colmeans using the mean parameter on the columns 2 for apply().

Exercise 2.10 Do the same thing with the sierra data frame with sapply().