Section 4 Child-Serving Organizations

4.1 Introduction

This section of the report includes quantitative and qualitative research findings from a survey and interview protocol that were intended to provide information around concepts related to trauma and racial equity policies and practices within human service institutions. The institutions and/or organizational types included in the analysis were Winston-Salem/Forsyth County Schools, Department of Social Services, medical providers (Wake Forest Baptist Health and Novant Health), child care and Pre-K providers, and home visitation service providers. The survey included the following constructs or measures:

- staff experiences of ACEs

- staff impact of COVID-19

- staff experiences of Secondary Traumatic Stress (STS)

- current implementation of trauma-informed practices

- familiarity with trauma-informed practices

- environmental assessment

- staff practices

- transformational leadership

More detailed descriptions of each of these measures or constructs can be found in their respective section introductions. Interviews with institutional and organizational representatives provide information on how organizations are approaching racial justice and equity-based policies and practices.

4.1.1 Key Findings

4.1.1.1 Adverse Childhood Experiences, COVID-19, and Secondary Traumatic Stress

- Most respondents in child-serving agencies have experienced at least one ACE, with almost half experiencing 1-3 ACEs. The reported ACEs of staff in child-serving agencies closely mirrored the adverse experiences of adults in Forsyth County in general, which means that staff may be interacting with families who have experienced or are experiencing similar trauma to what they have experienced.

- About 44% of staff in child-serving agencies reported experiences of discrimination. Respondents who identified as Black / African American, who did not identify as White, and middle-income respondents were the most likely to report experiences of discrimination.

- 78% of respondents reported being at least somewhat impacted by COVID-19, and 20% reported being “very much impacted.” Interviews with staff found that COVID-19 both changed how providers were serving the community and added personal and professional stressors and trauma.

- Scores on the Secondary Traumatic Stress Scale subscales were generally low, but some respondents did have high scores. Staff reporting that they were not impacted by COVID-19 reported fewer indications of arousal in particular.

4.1.1.2 Current State of Trauma Informed Practice

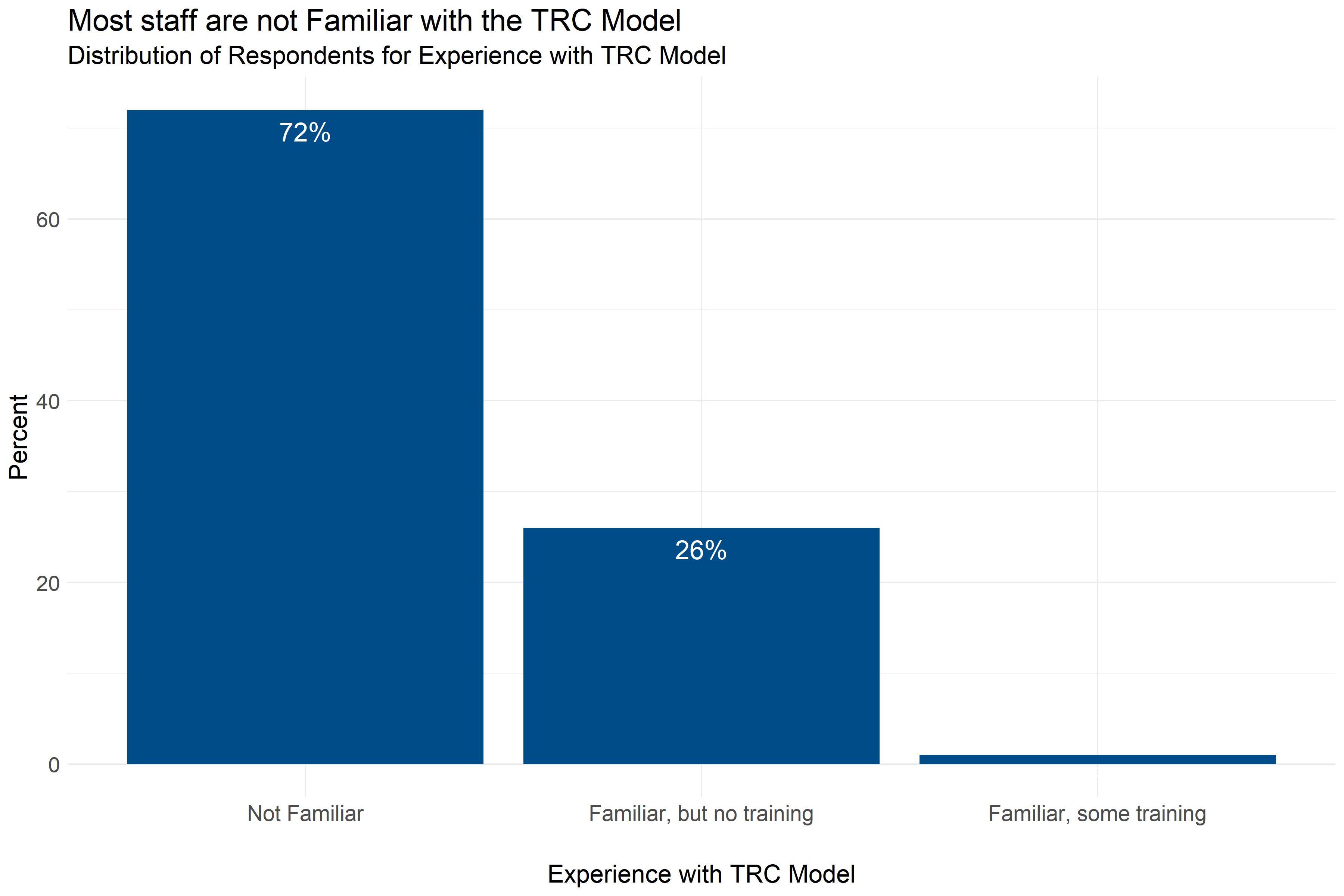

- About 72% of respondents in child-serving agencies were not familiar with the TRC model, and an additional 26% of respondents reported familiarity with the model but no training.

- Respondents with higher levels of training generally scored higher on the staff practices survey, indicating higher levels of alignment with trauma informed care practice at their agencies.

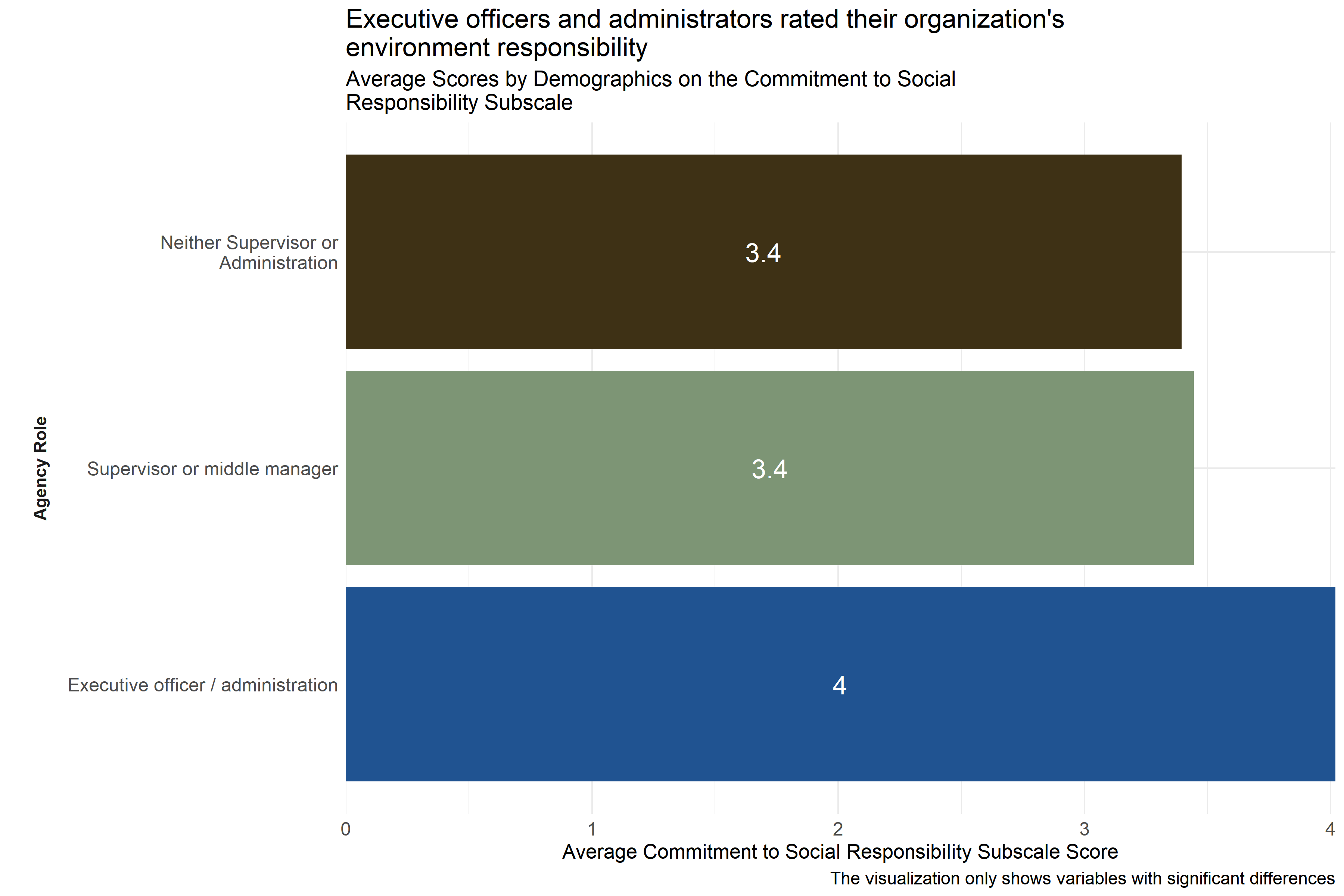

- Environmental assessment and staff practice scales indicated neutral to moderately high levels of alignment with trauma informed practice, but there was variation across respondents, particularly in the environmental assessment. Respondent experiences may differ across different agencies.

- Organizational leaders often had higher scores than other employees on scales measuring the alignment of staff practice and their environments, which indicates that their experiences may be different from other staff in significant ways.

4.1.1.3 Racial Equity and Structural Violence

- Interview participants indicated that the sense of urgency around racial equity-based work has grown over the past few years, with some respondents reporting additional organizational activities around racial equity in response to broader socio-political events (e.g., the George Floyd protests). This is consistent with a main finding reported by a plurality of interview participants: racial equity-based work is an area of growth, or at least is not yet at the level it should be, at their organization.

- Some organizations prioritize diversity of staff over diversity of leadership in order to match the populations they serve. In these cases, there can be less diversity in leadership, reflecting the lack of an employee pipeline within the organization for people of color.

- Some organizations have sufficient resources to engage in racial equity-based practices and policies, while others face barriers such as leadership, funding, and/or staff buy-in.

- Respondents reported that, in general, their organization’s culture is inclusive and supportive of people from diverse backgrounds; however, several respondents indicated that there is an expectation for people of color to assimilate into the norms of the dominant white culture.

4.1.2 Methodology

To learn more about current trauma-informed practices and styles of leadership at child-serving agencies and the experiences of their staff, analysts designed a survey to measure staff experiences of Adverse Childhood Experiences (ACEs), the impact of COVID-19, Secondary Traumatic Stress (STS), familiarity with trauma-informed practices, and the alignment of the work environment and staff practice with a trauma-informed model. These topics were selected and prioritized by the TRC Advisory Committee. Experiences of ACEs, STS, the work environment, and staff practice were measured using survey questions that have been tested by other researchers and found to be good measures of those concepts. These groups of questions are combined to make scales and subscales, and the scores on these scales and subscales are used to measure these concepts.

The survey instrument and data collection plan were shared with the Institutional Review Board at Wake Forest Baptist Health to ensure that researchers were protecting the privacy and rights of research participants. To collect survey data, analysts reached out to organizational leaders at agencies and organizations in Forsyth County that provide child care, home visitation, medical care, or educational services to children, asking them to invite the staff at their organization to take the survey online.

Analysts used instructions provided by the researchers who wrote the scales to calculate scores. They ran tests to ensure that people were answering questions about the concepts in the scales relatively consistently, which is a sign that the measures are working as expected. The results of these tests can be found in Appendix A: Output Tables. When respondents skipped particular items on a scale, their score was not calculated for that scale, but their answers were used for other scales.
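
The scoring rule for skipped items can be illustrated with a minimal sketch. It assumes a scale score is the sum of its items, which may differ from the scale authors' actual instructions, and it uses Cronbach's alpha as one common internal-consistency check; the report does not name the test that was run, so that choice is an assumption. All respondent data, scale items, and function names here are hypothetical.

```python
from statistics import variance
from typing import Optional, Sequence


def scale_score(items: Sequence[Optional[int]]) -> Optional[int]:
    """Return the summed score for one scale, or None if any item was skipped."""
    if any(value is None for value in items):
        return None  # skipped item: no score is calculated for this scale
    return sum(items)


def cronbach_alpha(rows: Sequence[Sequence[int]]) -> float:
    """Cronbach's alpha for complete responses (one row per respondent).

    Assumption: this is only one common consistency statistic, not
    necessarily the one used in the report. Needs at least two respondents
    and some variation in total scores (otherwise the division fails).
    """
    k = len(rows[0])                                   # number of items
    item_variances = [variance(col) for col in zip(*rows)]
    total_variance = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical answers to a four-item scale; None marks a skipped item.
responses = {
    "r1": [4, 5, 3, 4],
    "r2": [3, None, 4, 2],
    "r3": [2, 2, 1, 2],
}

scores = {rid: scale_score(items) for rid, items in responses.items()}
complete_rows = [items for items in responses.values() if None not in items]
alpha = cronbach_alpha(complete_rows)
```

Because each scale is scored separately, a respondent such as `r2` drops out of this scale only and would still contribute to any other scale they completed.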

Analysts worked with the TRC Advisory Committee to identify which organizations would be invited to complete the survey and which kinds of organizations would be included in the analysis. A total of 255 surveys met the survey-inclusion criteria. The criteria required that respondents be at least 18 years old, consent to participate, complete at least the first set of questions in the survey, and be currently employed at an agency or organization in Forsyth County that provides child care, home visitation, medical care, or educational services to children.
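
The four inclusion criteria can be read as a simple filter applied to each submitted survey. The sketch below is illustrative only: the field names and example records are hypothetical and do not reflect the structure of the actual survey data.

```python
from dataclasses import dataclass


@dataclass
class SurveyResponse:
    # Hypothetical fields standing in for the four criteria in the text.
    age: int
    consented: bool
    completed_first_question_set: bool
    works_at_eligible_forsyth_agency: bool


def meets_inclusion_criteria(response: SurveyResponse) -> bool:
    """True only when every inclusion criterion is satisfied."""
    return (
        response.age >= 18
        and response.consented
        and response.completed_first_question_set
        and response.works_at_eligible_forsyth_agency
    )


submitted = [
    SurveyResponse(34, True, True, True),    # included
    SurveyResponse(17, True, True, True),    # excluded: under 18
    SurveyResponse(45, True, False, True),   # excluded: first question set incomplete
    SurveyResponse(52, True, True, False),   # excluded: not at an eligible agency
]

included = [r for r in submitted if meets_inclusion_criteria(r)]
```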

The tables below show the demographic distribution of respondents who answered the survey. To protect respondent privacy, analysts did not report disaggregated findings for demographic groups with fewer than 10 respondents. For example, 2 respondents self-identified as Asian. Analysts included these respondents’ data, but did not report results for Asian respondents specifically in any disaggregated summaries.
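
The privacy rule described above, where small groups stay in the overall results but are not reported on their own, can be sketched as follows. The threshold of 10 comes from the text; the record layout, group labels, and function names are hypothetical.

```python
from collections import defaultdict

MIN_GROUP_SIZE = 10  # groups with fewer respondents are not reported separately


def percent_yes(values):
    """Percentage of True values, rounded to one decimal place."""
    return round(100 * sum(values) / len(values), 1)


def disaggregate(records, group_key, outcome_key, min_n=MIN_GROUP_SIZE):
    """Report the outcome by group, omitting groups smaller than min_n."""
    groups = defaultdict(list)
    for record in records:
        groups[record[group_key]].append(record[outcome_key])
    return {
        group: percent_yes(values)
        for group, values in groups.items()
        if len(values) >= min_n
    }


# Hypothetical data: group "A" has 12 respondents, group "B" has only 2.
records = (
    [{"group": "A", "outcome": i < 6} for i in range(12)]
    + [{"group": "B", "outcome": True} for _ in range(2)]
)

overall = percent_yes([r["outcome"] for r in records])  # uses all 14 respondents
by_group = disaggregate(records, "group", "outcome")    # group "B" is suppressed
```

Here the two respondents in group "B" still count toward `overall`, but no separate figure is produced for them.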

4.1.2.1 Demographics of Respondents

4.1.2.1.1 Gender and Sexual Orientation

Table 4.1 shows that 89% of survey respondents identified themselves as women compared to 11% who identified as men and less than 1% who identified as Non-binary / third gender.

| Gender | Respondents (n = 255) |

|---|---|

| Woman | 226 ( 89 %) |

| Man | 27 ( 11 %) |

| Non-binary / third gender | 1 ( 0 %) |

| Prefer not to answer | 1 ( 0 %) |

Table 4.2 shows that 93% of survey respondents identified themselves as heterosexual, compared to 3% who identified as Gay or Lesbian and 2% who preferred not to answer.

| Sexual Orientation | Respondents (n = 254) |

|---|---|

| Heterosexual | 237 ( 93 %) |

| Gay or Lesbian | 8 ( 3 %) |

| Pansexual | 1 ( 0 %) |

| Bisexual | 1 ( 0 %) |

| Prefer to self-describe another way | 1 ( 0 %) |

| Prefer not to answer | 6 ( 2 %) |

4.1.2.1.2 Race and Ethnicity

Table 4.3 shows that 95% of survey respondents did not identify themselves as Hispanic, Latino, Latina, or Latinx, compared to 5% who did.

| Hispanic, Latino, Latina, or Latinx | Respondents (n = 255) |

|---|---|

| No | 242 ( 95 %) |

| Yes | 13 ( 5 %) |

Table 4.4 shows that 99% of survey respondents did not identify themselves as American Indian or Alaska Native, compared to 1% who did.

| American Indian or Alaska Native | Respondents (n = 247) |

|---|---|

| American Indian or Alaska Native | 3 ( 1 %) |

| Not American Indian or Alaska Native | 244 ( 99 %) |

Table 4.5 shows that 99% of survey respondents did not identify themselves as Asian, compared to 1% who did.

| Asian | Respondents (n = 247) |

|---|---|

| Asian | 2 ( 1 %) |

| Not Asian | 245 ( 99 %) |

Table 4.6 shows that 78% of survey respondents did not identify themselves as Black / African American, compared to 22% who did.

| Black / African American | Respondents (n = 247) |

|---|---|

| Black / African American | 54 ( 22 %) |

| Not Black / African American | 193 ( 78 %) |

Table 4.7 shows that no survey respondents identified themselves as Native Hawaiian or Pacific Islander.

| Native Hawaiian or Pacific Islander | Respondents (n = 247) |

|---|---|

| Not Native Hawaiian or Pacific Islander | 247 ( 100 %) |

Table 4.8 shows that 73% of survey respondents identified themselves as White, compared to 27% who did not.

| White | Respondents (n = 247) |

|---|---|

| Not White | 67 ( 27 %) |

| White | 180 ( 73 %) |

4.1.2.1.3 Age

Table 4.9 shows that the average age of survey respondents was 48 years. Ages ranged from 19 to 80 years, with an interquartile range of 42 to 55.5 years.

| Number of Responses | Average | Lowest | First Quartile | Median | Third Quartile | Highest |

|---|---|---|---|---|---|---|

| 255 | 48 | 19 | 42 | 50 | 55.5 | 80 |

4.1.2.1.4 Educational Attainment

Table 4.10 shows survey respondents’ highest level of education attained: 6% reported a high school diploma or GED, 13% an associate’s degree or technical college, 31% a bachelor’s degree, 40% a master’s degree, 5% a doctoral degree, and 5% other.

| Highest Level of Education | Respondents (n = 255) |

|---|---|

| Other | 14 ( 5 %) |

| High School Diploma / GED | 15 ( 6 %) |

| Associate’s Degree or Technical College | 34 ( 13 %) |

| Bachelor’s Degree | 78 ( 31 %) |

| Master’s Degree | 101 ( 40 %) |

| Doctoral Degree | 13 ( 5 %) |

4.1.2.1.5 Residential Zip Code

Table 4.11 shows the residential zip codes that survey respondents reported living in at the time of the survey.

| Residential Zip Code | Respondents (n = 255) |

|---|---|

| 27006 | 4 ( 2 %) |

| 27009 | 1 ( 0 %) |

| 27012 | 16 ( 6 %) |

| 27016 | 1 ( 0 %) |

| 27018 | 1 ( 0 %) |

| 27019 | 2 ( 1 %) |

| 27021 | 3 ( 1 %) |

| 27023 | 8 ( 3 %) |

| 27028 | 1 ( 0 %) |

| 27030 | 1 ( 0 %) |

| 27040 | 14 ( 5 %) |

| 27045 | 3 ( 1 %) |

| 27050 | 2 ( 1 %) |

| 27051 | 6 ( 2 %) |

| 27052 | 3 ( 1 %) |

| 27055 | 2 ( 1 %) |

| 27101 | 12 ( 5 %) |

| 27102 | 1 ( 0 %) |

| 27103 | 21 ( 8 %) |

| 27104 | 21 ( 8 %) |

| 27105 | 17 ( 7 %) |

| 27106 | 34 ( 13 %) |

| 27107 | 15 ( 6 %) |

| 27127 | 23 ( 9 %) |

| 27214 | 1 ( 0 %) |

| 27235 | 1 ( 0 %) |

| 27263 | 1 ( 0 %) |

| 27265 | 3 ( 1 %) |

| 27284 | 19 ( 7 %) |

| 27288 | 1 ( 0 %) |

| 27292 | 2 ( 1 %) |

| 27295 | 1 ( 0 %) |

| 27302 | 1 ( 0 %) |

| 27310 | 1 ( 0 %) |

| 27313 | 1 ( 0 %) |

| 27326 | 1 ( 0 %) |

| 27357 | 1 ( 0 %) |

| 27401 | 1 ( 0 %) |

| 27406 | 1 ( 0 %) |

| 27407 | 1 ( 0 %) |

| 27409 | 1 ( 0 %) |

| 27410 | 2 ( 1 %) |

| 28625 | 1 ( 0 %) |

| 28634 | 1 ( 0 %) |

| 28640 | 1 ( 0 %) |

4.1.2.1.6 Employment Experience

Table 4.12 shows that the average amount of time a survey respondent had worked in the field was 18 years. Responses ranged from 0 to 46 years, with an interquartile range of 8 to 25 years.

| Number of Responses | Average | Lowest | First Quartile | Median | Third Quartile | Highest |

|---|---|---|---|---|---|---|

| 255 | 18 | 0 | 8 | 17 | 25 | 46 |

Table 4.13 shows that the average amount of time a survey respondent had worked at their agency was 10 years. Responses ranged from 0 to 38 years, with an interquartile range of 3 to 16 years.

| Number of Responses | Average | Lowest | First Quartile | Median | Third Quartile | Highest |

|---|---|---|---|---|---|---|

| 255 | 10 | 0 | 3 | 7 | 16 | 38 |

4.1.2.1.7 Characteristics of Respondents’ Agencies and Positions

4.1.2.1.7.1 Agency Characteristics

Table 4.14 shows that 52% of survey respondents work in the Winston-Salem/Forsyth County school system, 18% in the Forsyth County Department of Social Services, 7% in a child care or Pre-K facility, 5% in the Forsyth County Department of Public Health, 4% in a pediatric office, and 14% in none of the listed organizations.

| Which organization or kind of organization do you work with? | Respondents (n = 255) |

|---|---|

| A child care or Pre-K facility | 19 ( 7 %) |

| A pediatric office | 11 ( 4 %) |

| Forsyth County Department of Public Health | 13 ( 5 %) |

| Forsyth County Department of Social Services | 45 ( 18 %) |

| Winston-Salem/Forsyth County School System | 132 ( 52 %) |

| None of the above | 35 ( 14 %) |

Table 4.15 shows that 47% of survey respondents reported that their program does not provide home visitation as a part of its services, 42% reported that it does, and 11% were not sure.

| Does your program within your agency provide home visitation as a part of your services? | Respondents (n = 253) |

|---|---|

| No | 119 ( 47 %) |

| Yes | 105 ( 42 %) |

| Not sure | 29 ( 11 %) |

Table 4.16 shows the zip codes of the agencies where survey respondents worked at the time of the survey.

| Agency Zip Code | Respondents (n = 250) |

|---|---|

| 27012 | 7 ( 3 %) |

| 27023 | 2 ( 1 %) |

| 27040 | 7 ( 3 %) |

| 27045 | 1 ( 0 %) |

| 27051 | 7 ( 3 %) |

| 27101 | 75 ( 30 %) |

| 27102 | 12 ( 5 %) |

| 27103 | 19 ( 8 %) |

| 27104 | 7 ( 3 %) |

| 27105 | 46 ( 18 %) |

| 27106 | 29 ( 12 %) |

| 27107 | 6 ( 2 %) |

| 27110 | 1 ( 0 %) |

| 27127 | 7 ( 3 %) |

| 27157 | 1 ( 0 %) |

| 27284 | 23 ( 9 %) |

4.1.2.1.7.2 Position Characteristics

Table 4.17 shows that 68% of survey respondents stated at least 50% of their work is with clients, i.e., direct care, 9% stated less than 50% of their work is with clients, i.e., indirect care, and 23% stated neither direct nor indirect care.

| Direct or Indirect Care | Respondents (n = 248) |

|---|---|

| Direct Care (at least 50% of your work is with clients) | 168 ( 68 %) |

| Indirect care (less than 50% of your work is with clients) | 22 ( 9 %) |

| Neither Direct nor Indirect Care | 58 ( 23 %) |

Table 4.18 shows that 10% of survey respondents identified themselves as an executive officer or administrator, 14% as a supervisor or middle manager, and 75% as neither a supervisor nor an administrator.

| Supervisor, Administrator, or Neither | Respondents (n = 249) |

|---|---|

| Executive officer / administration | 26 ( 10 %) |

| Supervisor or middle manager | 36 ( 14 %) |

| Neither Supervisor or Administration | 187 ( 75 %) |

Table 4.19 shows that 93% of survey respondents identified themselves as full-time and 7% identified themselves as part-time.

| Hours Worked | Respondents (n = 251) |

|---|---|

| Full-time | 234 ( 93 %) |

| Part-time | 17 ( 7 %) |

Table 4.20 shows that 42% of survey respondents reported an annual income between $40,001 and $66,000, 24% between $22,000 and $40,000, 17% between $66,001 and $107,000, 12% less than $22,000, and 5% more than $107,000.

| Annual Income for Position | Respondents (n = 254) |

|---|---|

| Less than $22,000 | 31 ( 12 %) |

| Between $22,000 and $40,000 | 62 ( 24 %) |

| Between $40,001 and $66,000 | 106 ( 42 %) |

| Between $66,001 and $107,000 | 42 ( 17 %) |

| More than $107,000 | 13 ( 5 %) |

4.1.2.2 Survey Analysis

To answer questions about the local network of child-serving agencies, analysts performed a series of statistical tests and analyses for each measure. They first looked at how respondents answered the questions overall, either by showing the distribution of scores on scales or by showing the percentage of respondents who gave specific answers to specific questions. Analysts then checked for differences in responses based on the type of program, the respondent’s role in the organization, whether or not the respondent provided direct care to clients, the years of experience respondents had in the field, the years respondents had worked at their particular agency, their experience with the TRC Model, their racial and ethnic identity, the extent to which they had been impacted by COVID-19, their gender, sexual orientation, level of education, income, and whether or not they were employed full time. For cases in which differences across respondents in these groups were large enough (analysts were at least 95% sure that differences were due to actual differences in responses and not random chance), scores were compared and reported. These differences are called statistically significant differences in this report. More details on tests that were run and exact test results can be found in Appendix A: Output Tables.
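
To illustrate what the 95% threshold means in practice, the sketch below compares two groups on a yes/no question with a Pearson chi-square test and reports a difference only when the p-value is below 0.05. The specific test is an assumption made for illustration; the tests actually used are documented in Appendix A. The counts are hypothetical.

```python
import math

ALPHA = 0.05  # corresponds to being at least 95% sure the difference is not chance


def chi_square_2x2(a: int, b: int, c: int, d: int) -> tuple:
    """Pearson chi-square test (no continuity correction) for the table
    [[a, b], [c, d]], e.g. rows = two groups, columns = yes / no.

    Returns (chi-square statistic, p-value) with 1 degree of freedom.
    """
    n = a + b + c + d
    margins = (a + b) * (c + d) * (a + c) * (b + d)
    if margins == 0:
        raise ValueError("every row and column needs at least one respondent")
    chi2 = n * (a * d - b * c) ** 2 / margins
    # With 1 degree of freedom, the upper-tail probability is erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Hypothetical counts: group 1 has 30 "yes" and 20 "no"; group 2 has 40 and 110.
chi2, p_value = chi_square_2x2(30, 20, 40, 110)
is_significant = p_value < ALPHA
```

With small expected counts, an exact test would usually be preferred over this approximation.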

Analysts also checked for statistically significant differences across agencies. For cases in which analysts identified such differences, they were noted in the text, but specific scores and comparisons were omitted to protect the privacy of agencies and respondents.

Lastly, survey respondents were invited to answer an open-ended question about the ways in which they had been impacted by COVID-19. These answers were analyzed by the research team conducting the micro-level assessment at Wake Forest Baptist Health so that the categories used would be the same across the two reports.

4.1.2.3 Interview Methodology

Interviews were conducted as part of a larger assessment of the current human service provider landscape in Forsyth County, North Carolina, in relation to trauma resilience among the community. Specifically, interviews were administered to evaluate the extent to which employees in various community organizations experienced structural violence and their experiences around racial equity-based practices within their organizations.

Structural violence can be defined as a form of violence in which social institutions prevent people from meeting their basic needs (Farmer 1996). An open-ended question asked whether staff had experienced any structural violence, but most of the interview questions focused on organizational racial equity-based work. Those questions were adapted from a survey instrument, the “Racial Justice Assessment Tool,” developed by the Western States Center (2015), which helps organizations determine the extent to which they are oriented toward racial equity across different organizational areas, such as workplace culture and policies.

The data were derived from interviews with 23 employees across five types of human service providers, including:

- in-home visit providers,

- child care providers,

- teachers in the Winston-Salem/Forsyth County Schools system,

- employees from health and human services organizations, and

- staff from local hospital systems.

The following two sections present the demographic information of the interview participants and key findings from the interviews.

| Demographic | Respondent / Average |

|---|---|

| Gender | |

| Man | 4 |

| Woman | 17 |

| Other Gender Identity | 1 |

| Race/Ethnicity | |

| Non-Hispanic Black/African American | 10 |

| Hispanic/Latino(a) | 3 |

| Non-Hispanic White | 9 |

| Educational Attainment | |

| Bachelor’s Degree | 6 |

| Master’s Degree | 14 |

| Doctoral Degree | 2 |

| Sexual Orientation | |

| Heterosexual | 21 |

| Other Sexual Orientation | 1 |

| Age (Years) | 47 |

| Income | |

| Less than $40,000 | 2 |

| $40,001 - $66,000 | 6 |

| $66,001 - $107,000 | 8 |

| More than $107,000 | 4 |

| Years Employed in Field | 18 |

| Years Employed at Organization | 7 |

| Organization Sector | |

| Child Care | 4 |

| DSS | 4 |

| Home Visitation | 5 |

| Medical | 5 |

| Public Schools | 4 |

| Position | |

| Direct Care | 1 |

| Executive Officer or Administration | 10 |

| Supervisor or Middle Manager | 9 |

| Other Position Combination | 2 |

| Work Status | |

| Full-Time | 22 |

| Part-Time | 0 |

| Experience with TRC Model | |

| Familiar and involved in trainings | 4 |

| Familiar but no direct activities | 12 |

| Not familiar | 6 |

4.1.2.4 Demographics of Interview Participants

All but one interview participant completed a demographic sheet and all but two of the submitted demographic sheets were complete. Of the two incomplete sheets, one participant did not answer how COVID-19 affected them or their organization while the second participant did not answer the income question.

The majority of our qualitative sample (sample size: 23) identified as women and heterosexual. The racial and ethnic distribution is less skewed, with almost the same number of non-Hispanic White and non-Hispanic Black/African American respondents participating in the interviews, along with three Hispanic/Latino(a) participants. Our sample is highly educated, which reflects the educational requirements of participants’ jobs, usually at least a bachelor’s degree. Participants ranged in age from 34 to 64, with an average age of 47. Half of the participants who answered the income question make $66,000 or more, and no one in our sample worked less than full-time. Most respondents were experienced in their field, with an average of 18 years working in their respective field and an average of 7 years at their specific organization. However, it is important to note that some respondents had less than a year of experience in their field or organization. Efforts were made to ensure that at least four participants represented each organizational sector listed in the table above, and while organizational position varies, the sample skews heavily toward participants who are not in direct care. Lastly, 27% of participants were not familiar with the Trauma Resilient Community model at the time of the interview.

4.1.2.5 Funding Web Diagram

In response to a request from the expanded advisory committee, Forsyth Futures analysts created diagrams to communicate how data, money, and influence were distributed among stakeholders involved in the mezzo-level analysis.

Diagram showing the distribution of data among stakeholders for the mezzo-level assessment

This diagram shows how data was shared and distributed across stakeholders in the mezzo-level assessment. Forsyth Futures staff collected data from organizational staff, analyzed that data, and then shared it with organizational staff members, academic partners, the Stakeholder Advisory Committee, Community Partners for Change, Crossnore, and MDC. Crossnore and MDC shared that data with the Kate B. Reynolds Charitable Trust.

Diagram showing the distribution of funding among stakeholders for the mezzo-level assessment

This diagram shows how funding was distributed among stakeholders for the mezzo-level assessment. Funding flowed from the Kate B. Reynolds Charitable Trust to Crossnore. Crossnore distributed that funding to Forsyth Futures and academic partners from the Center for Trauma Resilient Communities. Forsyth Futures distributed funding to some organization staff by compensating interview participants for their time and entering child care providers into a drawing to win gift cards. Interview participants were compensated because of the increased time commitment associated with participating in a 45-minute to hour-long interview, and child care providers were offered an incentive because past work with child care providers has indicated that completing surveys is disproportionately burdensome for respondents working in those settings.

Diagram showing the distribution of influence among stakeholders for the mezzo-level assessment

This diagram shows how influence was distributed among stakeholders for the mezzo-level assessment. Influence was defined as the ability to meaningfully impact decisions that were being made about the project. In its role as a funder, the Kate B. Reynolds Charitable Trust had the ability to exert influence over Crossnore and MDC. As the key convener, Crossnore had the ability to exert influence over the initial Stakeholder Advisory Committee convened to advise on the project. As the grantees supervising Forsyth Futures as a subcontractor, Crossnore and MDC had the ability to exert influence over Forsyth Futures. The initial Stakeholder Advisory Committee and academic partners at the Center for Trauma Resilient Communities provided feedback and advice that influenced the methodological decisions made by Forsyth Futures. Staff at surveyed organizations and members of the Community Partners for Change, which convened after the research was completed, did not have the ability to exert influence in this project.

4.1.2.6 More Information on Methodology

Technical documentation for specific statistical tests and scale performance can be found in the appendices of this report. Please reach out to info@forsythfutures.org with additional questions about the methods used in this report or to request a technical methodology.

4.2 Staff Experiences of ACEs and Discrimination

4.2.1 Introduction

This section covers staff experiences of Adverse Childhood Experiences (ACEs) reported in the survey. The ACEs component of the survey contains 10 items that ask whether or not the respondent experienced various ACEs, which are potentially traumatic experiences that some people have in childhood (0-17 years old). Experiencing a greater number of ACEs can have a long-term negative impact on health, well-being, and life opportunities. Below are a few example questions from this measure:

- Did you feel that you didn’t have enough to eat, had to wear dirty clothes, or had no one to protect or take care of you?

- Did you live with anyone who had a problem with drinking or using drugs, including prescription drugs?

This section of the survey also asked staff members if they had ever experienced discrimination.

4.2.2 Key Findings

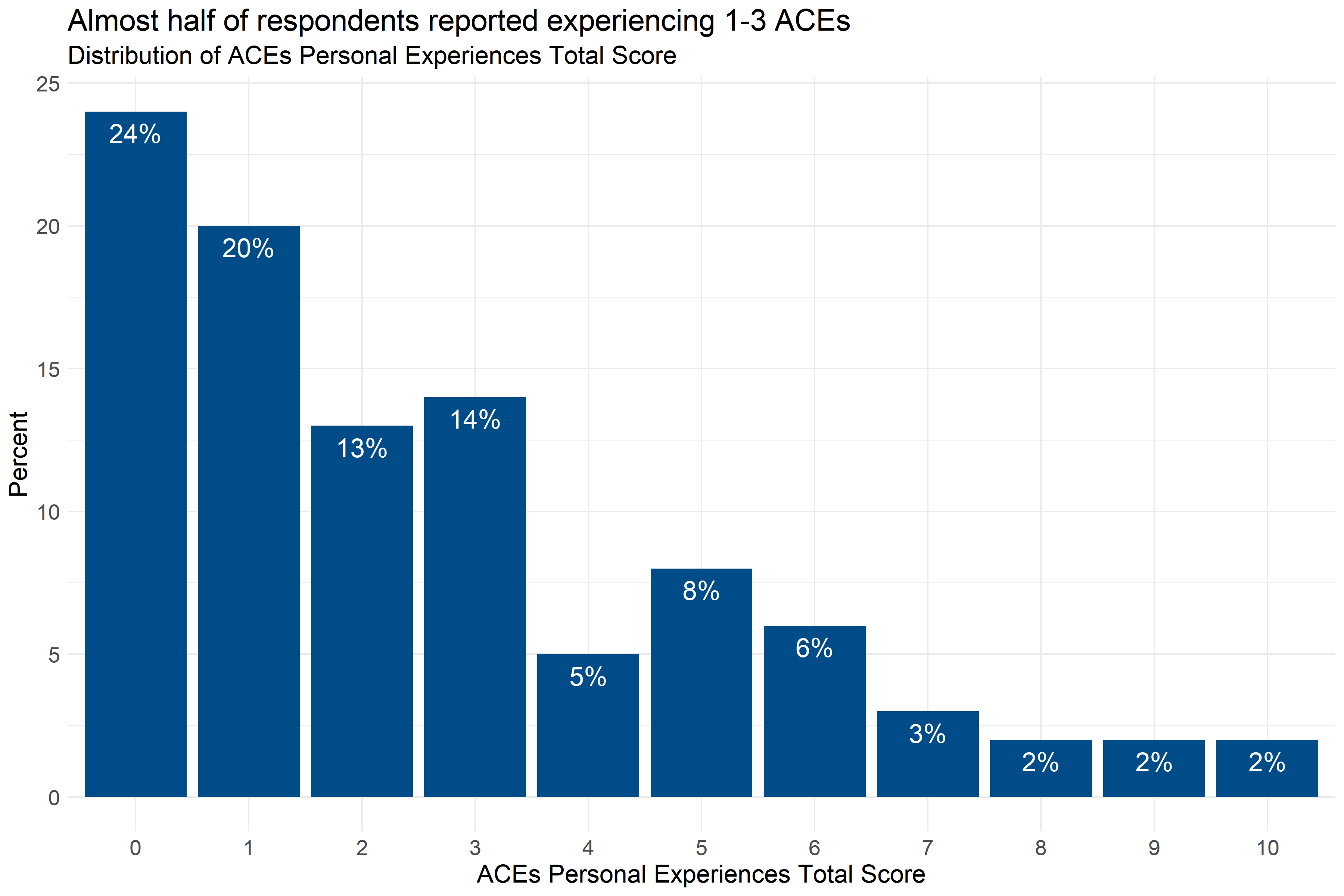

- Most respondents in child-serving agencies have experienced at least one ACE, with almost half experiencing 1-3 ACEs.

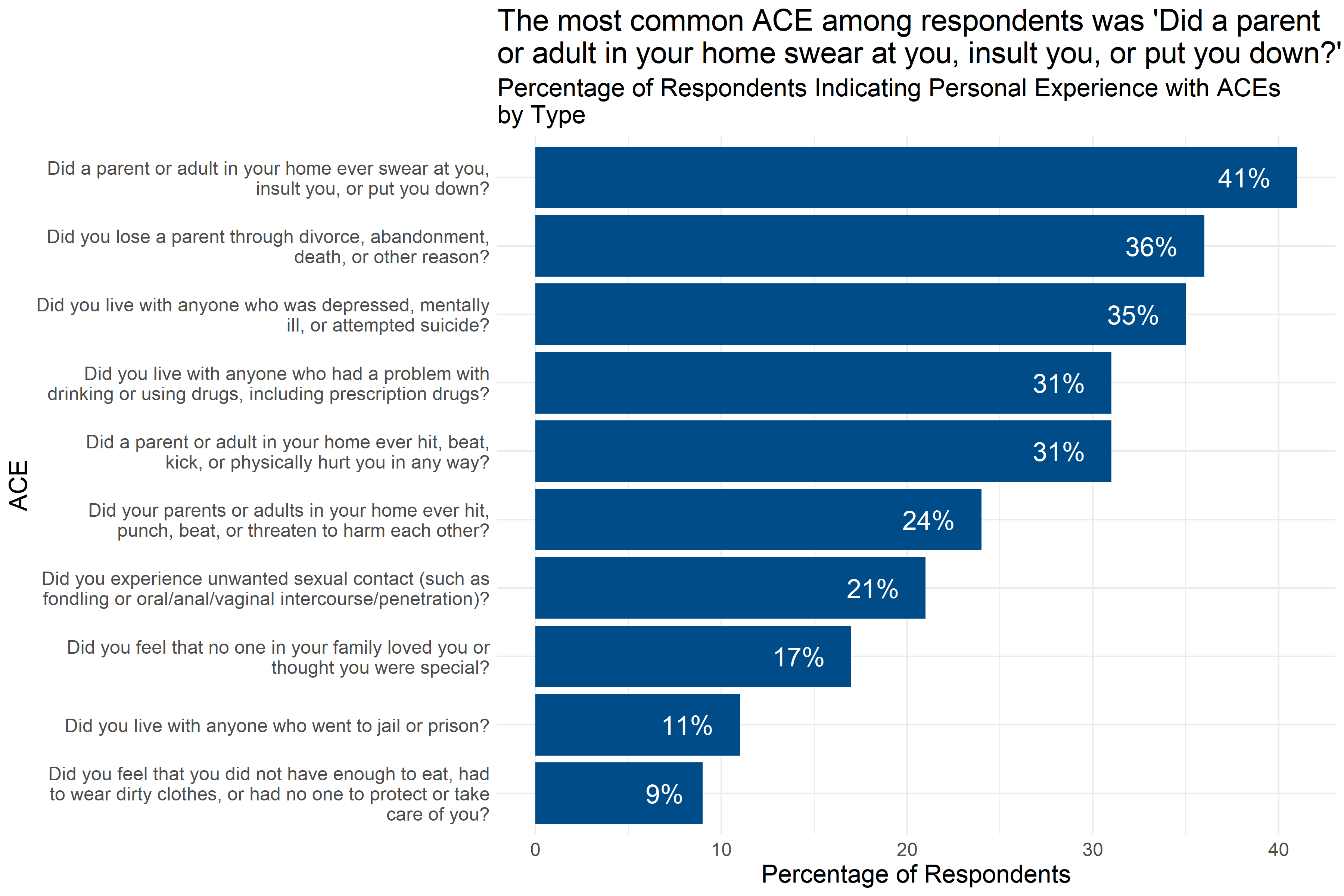

- The most common ACEs experienced were emotional abuse, loss of a parent, household mental illness, substance abuse, and physical abuse.

- Staff experiences of ACEs closely mirror those reported by adults in Forsyth County.

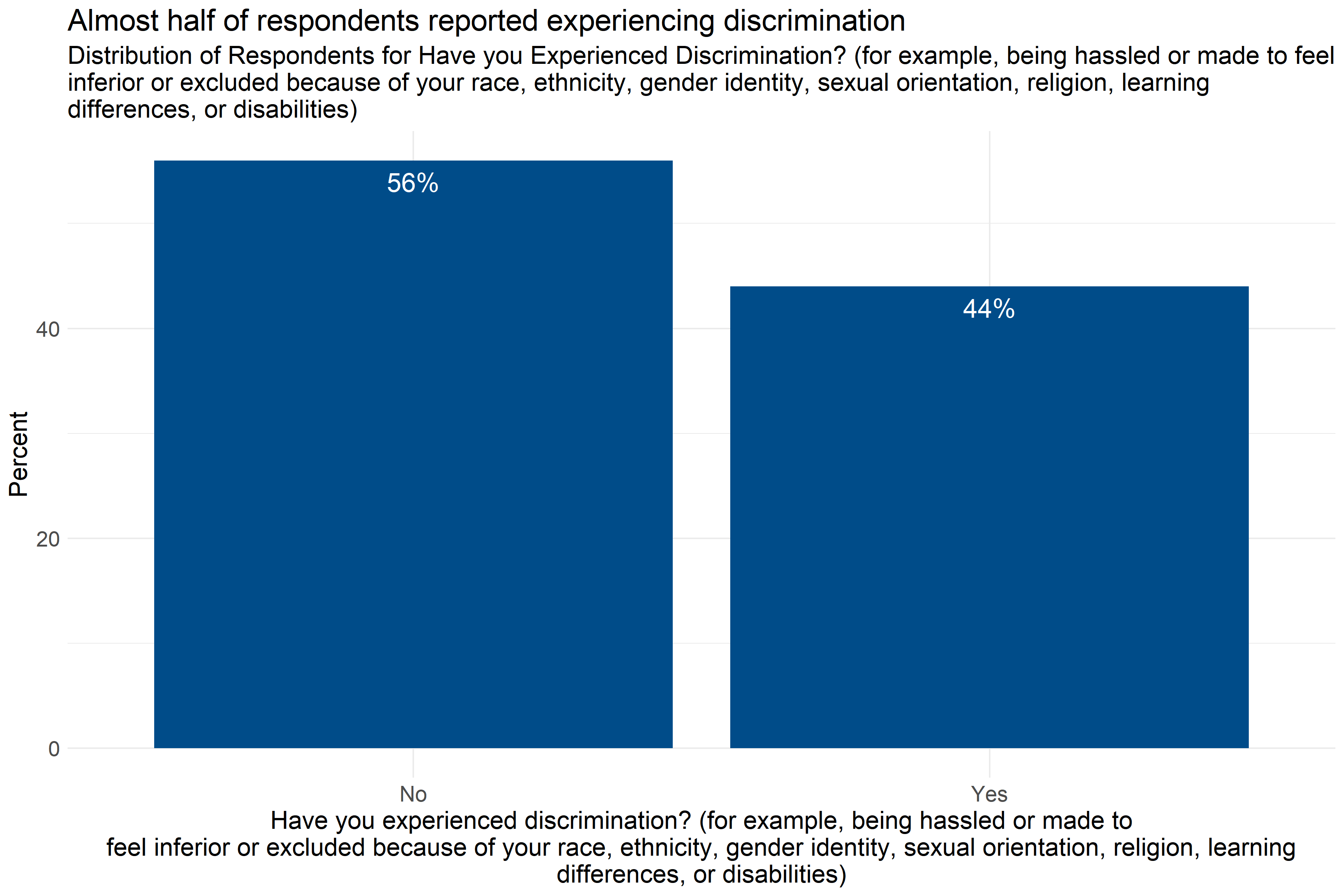

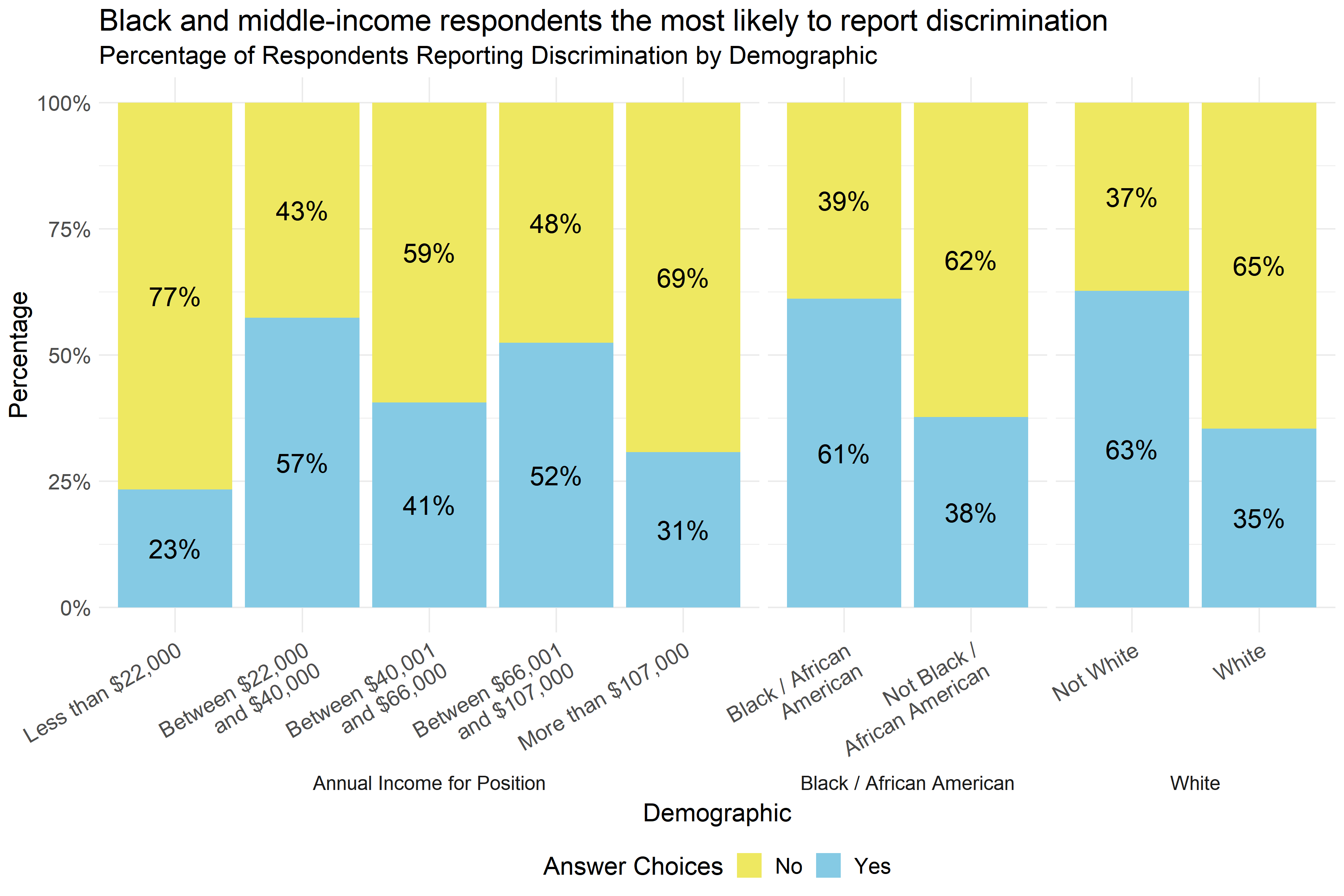

- About 44% of respondents reported experiences of discrimination with Black / African American respondents, respondents not identifying as White, and middle-income respondents being the most likely to report these experiences.

4.2.3 Staff Experiences of ACEs

To measure ACEs, respondents were asked a series of yes/no questions about their childhood experiences. This section looks at the number and types of experiences respondents reported.

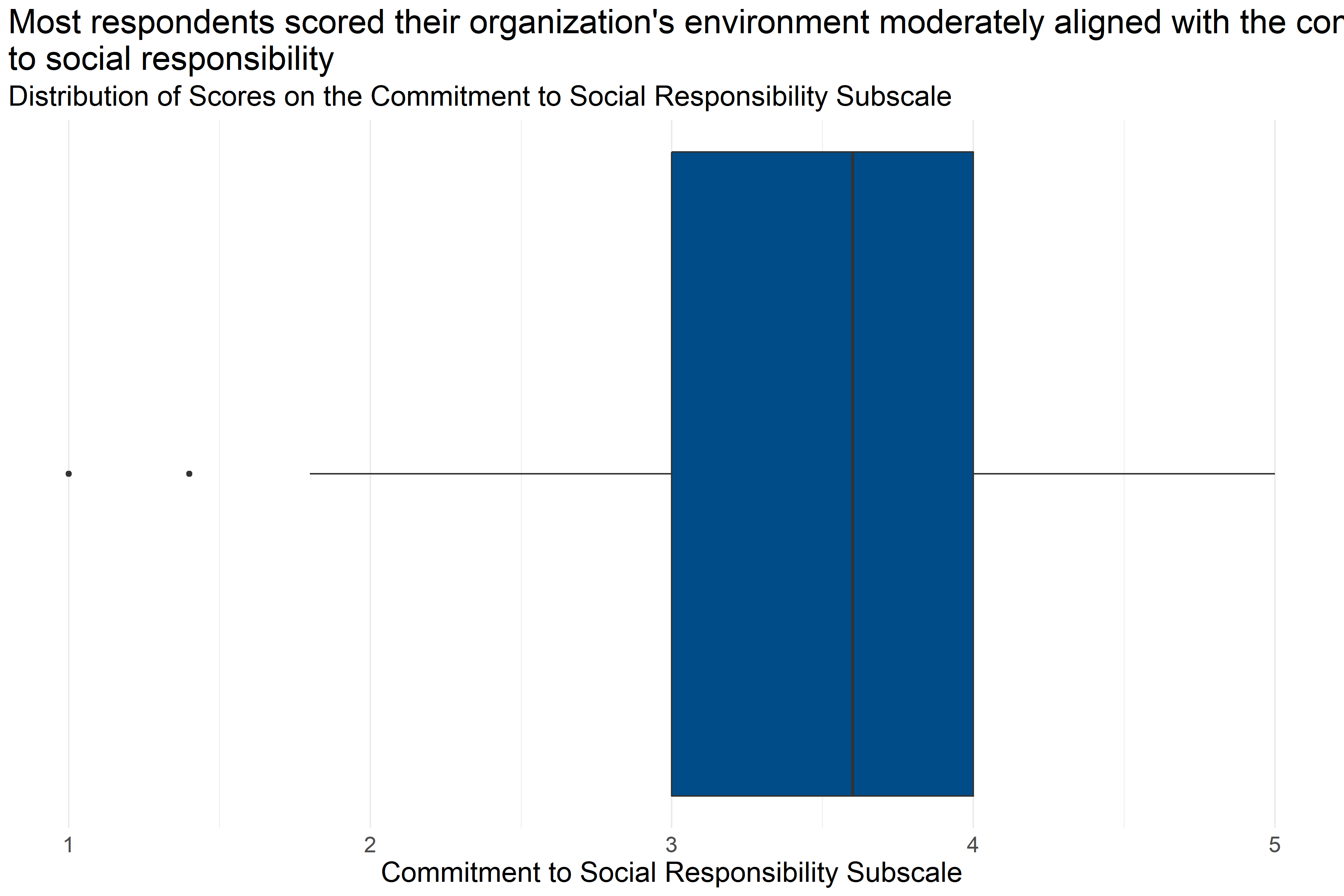

Figure 4.1: Distribution of the number of ACEs reported by respondents

Figure 4.1 shows that 76% of respondents reported experiencing at least one ACE, and 47% of respondents reported experiencing 1-3 ACEs. Analysts also tested the data to see if there were significant differences in the number of ACEs experienced by staff from different demographic groups and agencies, but did not find any statistically significant differences.
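
As a rough illustration of how a distribution like this can be built, the sketch below counts "yes" answers across the 10 yes/no ACE items for each respondent and groups the counts into bands. The "0" and "1-3" bands follow the text; the "4 or more" band and all example answers are assumptions made for illustration.

```python
from collections import Counter

NUM_ACE_ITEMS = 10  # the ACEs component of the survey contains 10 yes/no items


def ace_count(answers):
    """Number of ACE items answered 'yes' (True) by one respondent."""
    if len(answers) != NUM_ACE_ITEMS:
        raise ValueError("expected an answer for every ACE item")
    return sum(1 for answer in answers if answer)


def ace_band(count):
    """Group an ACE count into a reporting band (top band is an assumption)."""
    if count == 0:
        return "0"
    if count <= 3:
        return "1-3"
    return "4 or more"


def band_distribution(all_answers):
    """Percentage of respondents in each band, rounded to whole percents."""
    bands = Counter(ace_band(ace_count(answers)) for answers in all_answers)
    total = len(all_answers)
    return {band: round(100 * n / total) for band, n in bands.items()}


# Hypothetical respondents with 0, 2, 3, and 5 reported ACEs.
respondents = [
    [False] * 10,
    [True] * 2 + [False] * 8,
    [True] * 3 + [False] * 7,
    [True] * 5 + [False] * 5,
]

distribution = band_distribution(respondents)
```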

Figure 4.2: Percentage of respondents indicating personal experiences with ACEs by type of ACE

Figure 4.2 shows the percentage of survey respondents who indicated that they had experienced each kind of adverse experience. The most common adverse experience was having “a parent or adult in your home swear at you, insult you, or put you down” with 41% of respondents reporting this experience. Other common adverse experiences included the loss of a parent (including through divorce), household mental illness, substance abuse, and physical abuse, all reported by more than 25% of respondents.

4.2.4 Staff Experiences of Discrimination

Researchers also asked an additional question about experiences of discrimination: “Have you experienced discrimination? (for example, being hassled or made to feel inferior or excluded because of your race, ethnicity, gender identity, sexual orientation, religion, learning differences, or disabilities)”

Figure 4.3: Percentage of respondents reporting experiences of discrimination

Figure 4.3 shows that almost half (44%) of respondents reported experiencing discrimination on the basis of their race, ethnicity, gender identity, sexual orientation, religion, learning differences, or disabilities.

Figure 4.4: Percentage of respondents reporting discrimination by demographic

Figure 4.4 shows that there were significant differences in the percentage of respondents reporting discrimination by income and race. About 61% of respondents identifying as Black / African American reported experiences of discrimination, compared to 38% of respondents not identifying as Black / African American. Similarly, 35% of White respondents reported experiences of discrimination compared to 63% of respondents who did not identify as White. Respondents reporting mid-range annual incomes were also more likely to report experiences of discrimination, with the highest rate of reporting among those making $22,000-$40,000 a year.

The differences in reported experiences of discrimination and annual income are driven by respondents making less than $22,000 a year being much less likely to report discrimination and those making between $22,000 and $40,000 being more likely to report discrimination.

4.2.5 Conclusions

Most respondents in child-serving agencies have experienced at least one Adverse Childhood Experience (ACE), with almost half experiencing 1-3 ACEs. The most common ACEs experienced were emotional abuse, loss of a parent, household mental illness, substance abuse, and physical abuse, which closely mirrored the adverse experiences of adults in Forsyth County in general.

Additionally, 44% of respondents reported experiences of discrimination, with Black / African American respondents, respondents not identifying as White, and middle-income respondents being the most likely to report experiences of discrimination.

It is important to note that survey questions asked whether or not a staff member had ever had one of these experiences. Events that happened only once or rarely may have different long-term impacts on staff members than events that happened frequently, especially for questions about verbal or emotional abuse. This survey did not ask how frequently these experiences happened; it only asked whether or not they happened.

These findings suggest that ACEs and experiences of discrimination, both potentially traumatizing experiences, are relatively common among staff in child-serving agencies. Some of the specific ACEs experienced by staff are likely to be similar to the experiences of adults in their clients’ households, which could result in staff experiencing triggers when interacting with some clients. A plan to implement a trauma-informed model in Forsyth County should take into account the experiences and potential needs of staff as well as residents in the community.

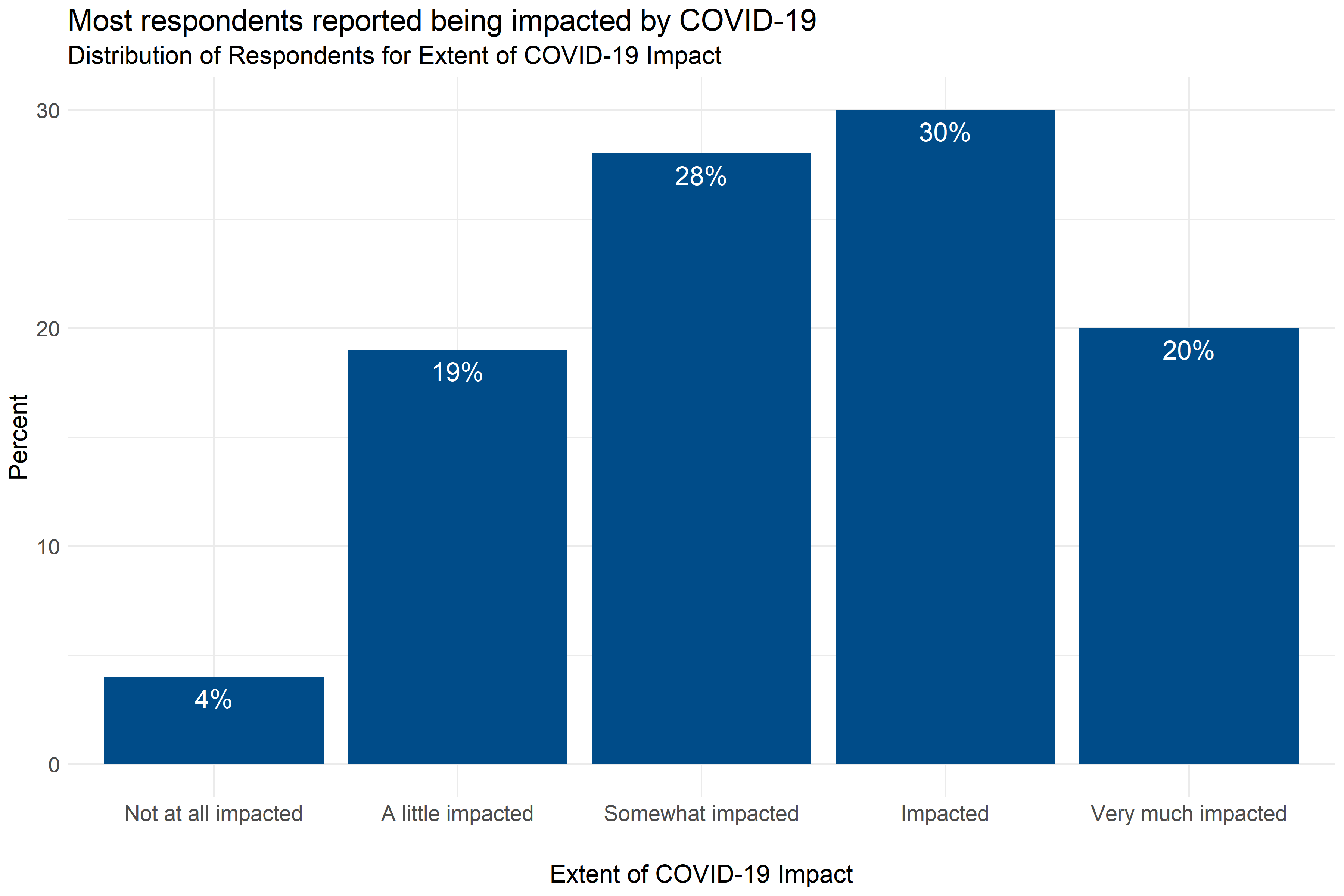

4.3 Staff Impact from COVID-19

This section includes results of staff responses on the degree to which COVID-19 has impacted them, as well as the type of impact it has had on them. To assess the degree of impact, the survey asked, “To what extent have you been impacted by the COVID 19 pandemic?” with response options on a five-point scale from “Not at all impacted” to “Very much impacted.” The type of impact was determined from an open-response survey question, and the responses were analyzed by the Wake Forest Baptist Health team.

4.3.1 Key Findings

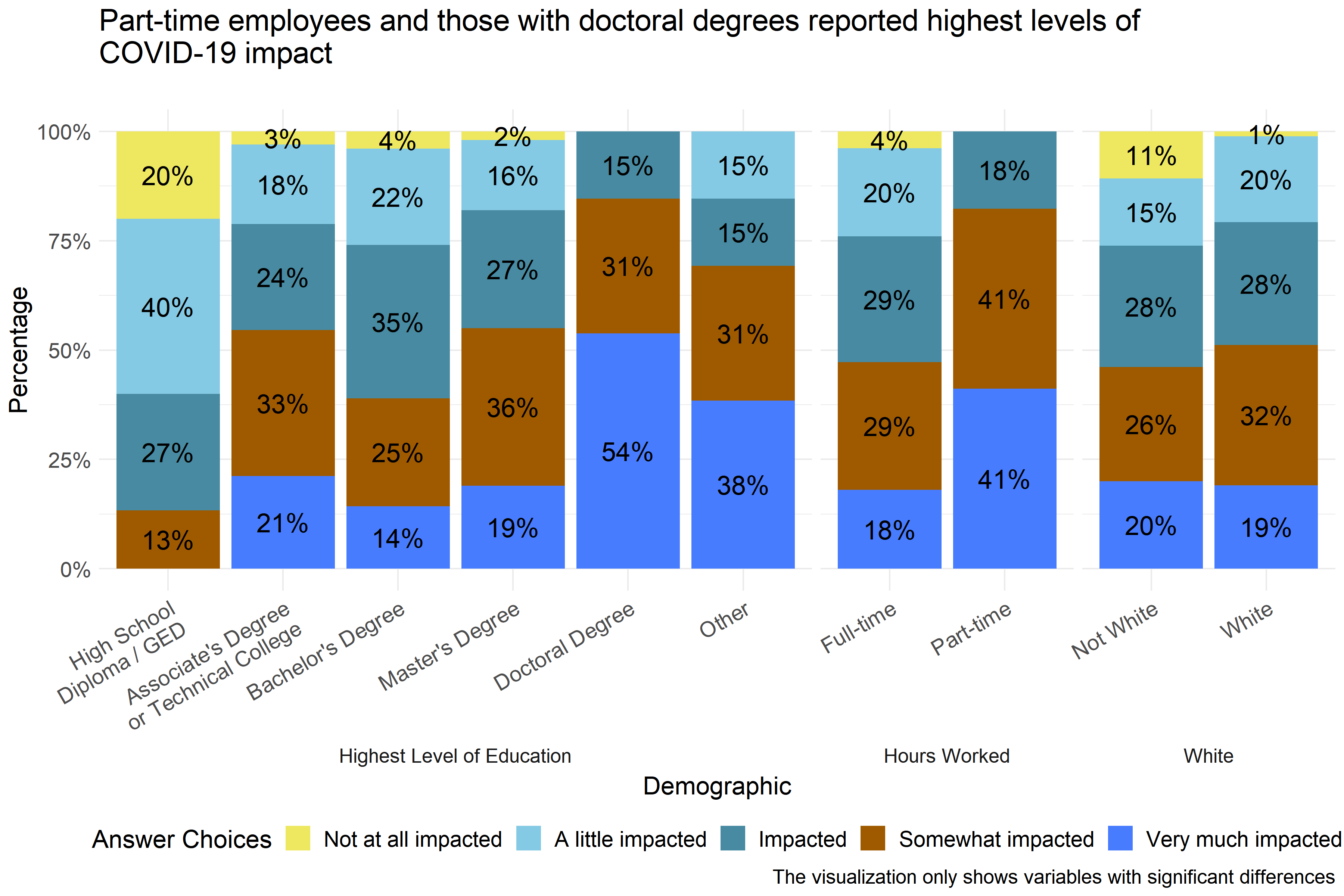

- 78% of respondents reported being at least somewhat impacted by COVID-19, and 20% reported being “very much impacted.”

- Respondents with doctoral degrees and part-time respondents reported more impact than others from COVID-19.

- The most common ways that COVID-19 has impacted respondents were job changes and stressors, isolation/separation from others, job losses or disruption, kids’ school changes, and family stressors.

4.3.2 Extent of COVID-19 Impact

Figure 4.5: Distribution of responses about the extent to which COVID-19 has impacted respondents

Figure 4.5 shows that most respondents, around 78%, reported being at least somewhat impacted by COVID-19. Only 4% of respondents reported being “not at all impacted,” and 20% of respondents reported being “very much impacted.”

Figure 4.6: Extent of COVID-19 impact reported by demographic

Analysts found statistically significant differences in how respondents described the extent of COVID-19 impact that they had experienced by level of education, hours worked, whether or not respondents described themselves as White, and specific agency where respondents were employed. Figure 4.6 shows that all respondents with doctoral degrees reported that they were at least “impacted” by COVID-19, with 54% reporting that they have been “very much impacted.” Similarly, all part-time workers reported being at least “impacted,” with 41% reporting being “very much impacted.” Only 1% of White respondents reported being “not at all impacted,” compared to 11% of respondents who did not describe themselves as White. Analysts also found significant differences in reported COVID-19 impact across specific agencies, but did not include that information in Figure 4.6 to protect the privacy of employees at those agencies.

4.3.3 Types of Impact from COVID-19 Pandemic

The survey included an open-item response option for respondents to indicate how COVID-19 has impacted them and their family. The five most common types of impacts were as follows:

- Job changes or stressors (e.g., delivering services virtually) - 29% of respondents

- Isolation/Separation from others - 22% of respondents

- Job loss or disruption (self/spouse/family member) - 14% of respondents

- Kids’ School Changes - 14% of respondents

- Family stressors (including illness, tension over COVID) - 12% of respondents

4.3.4 Conclusions

The survey found that most staff in child-serving agencies have been impacted by the COVID-19 pandemic. About 78% of survey respondents reported being at least “somewhat impacted” by COVID-19, and about 20% reported being “very much impacted.” Analysts found that respondents with doctoral degrees and part-time respondents reported more impact from COVID-19 than others. (It is possible that respondents with doctoral degrees were disproportionately working in pediatric healthcare settings.) This suggests that any efforts taken to mitigate the stressors or emotional impact of COVID-19 on agency staff should be sure to include part-time employees as well as those with high levels of education. Some individual agencies may also have staff who have been more impacted by COVID-19 than others.

The most common COVID-19 impacts reported by child-serving agencies were job changes and stressors, isolation/separation from others, job losses or disruption, kids’ school changes, and family stressors. This is consistent with what analysts learned from interviews with child-serving agency staff.

4.4 Staff Experiences of Secondary Traumatic Stress (STS)

This section includes survey results of the staff experiences of STS or what is also known as ‘compassion fatigue.’ The questions asked in this section include statements made by people who have been impacted by their work with traumatized clients. STS (or compassion fatigue) can have negative effects such as mental and physical health issues, strained relationships at home, and poor work performance. Below are a few example questions from this measure:

- “My heart started pounding when I thought about my work with clients.”

- “I felt jumpy.”

4.4.1 Key Findings

- Scores on the STS Scale subscales were generally low, but some respondents did report high scores.

- Scores on the Arousal Subscale were the highest of the three subscales.

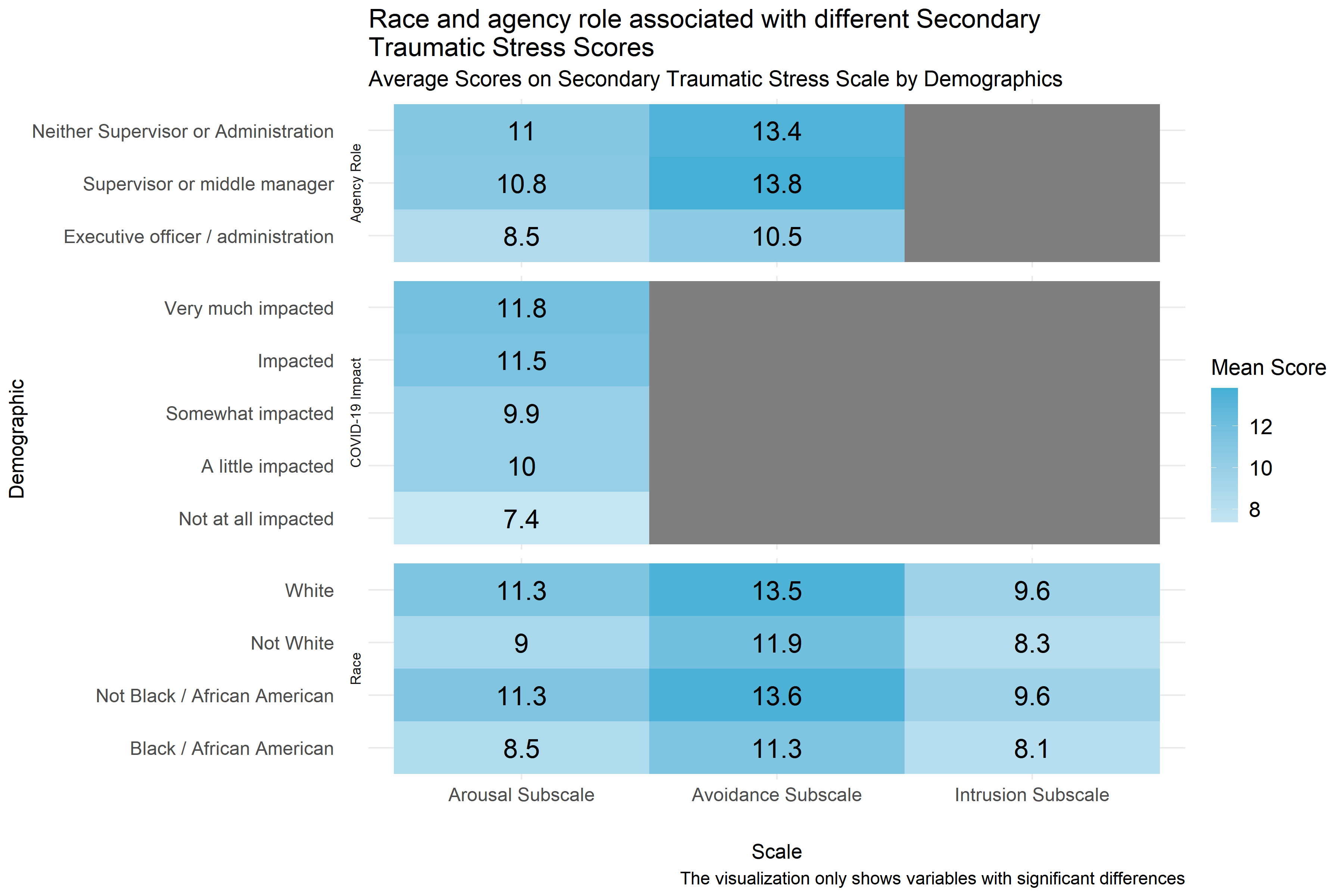

- Across all three subscales, respondents who identified themselves as White had higher scores than those who did not, and those who identified themselves as Black / African American had lower subscores than those who did not.

- Executive officers and administrators had lower scores on the Arousal and Avoidance subscales than other respondents.

- Respondents not at all impacted by COVID-19 and those reporting more years of experience in the field had lower scores on the Arousal Subscale.

4.4.1.1 Intrusion Subscale

The Intrusion Subscale of the Secondary Traumatic Stress Scale Survey measures how employees responded to questions about the “recurrent and distressing recollections of patients” (Orrù et al. 2021). Respondents answered on a five-point scale with options ranging from 1 “Never” to 5 “Very Often” to statements like “My heart started pounding when I thought about my work with clients” and “Reminders of my work with clients upset me.” Individual responses to each of the questions in this scale can be found in Appendix B: Detailed Question Responses by Scale. Lower scores on this scale indicate little or no intrusion, and higher scores indicate high or severe intrusion.

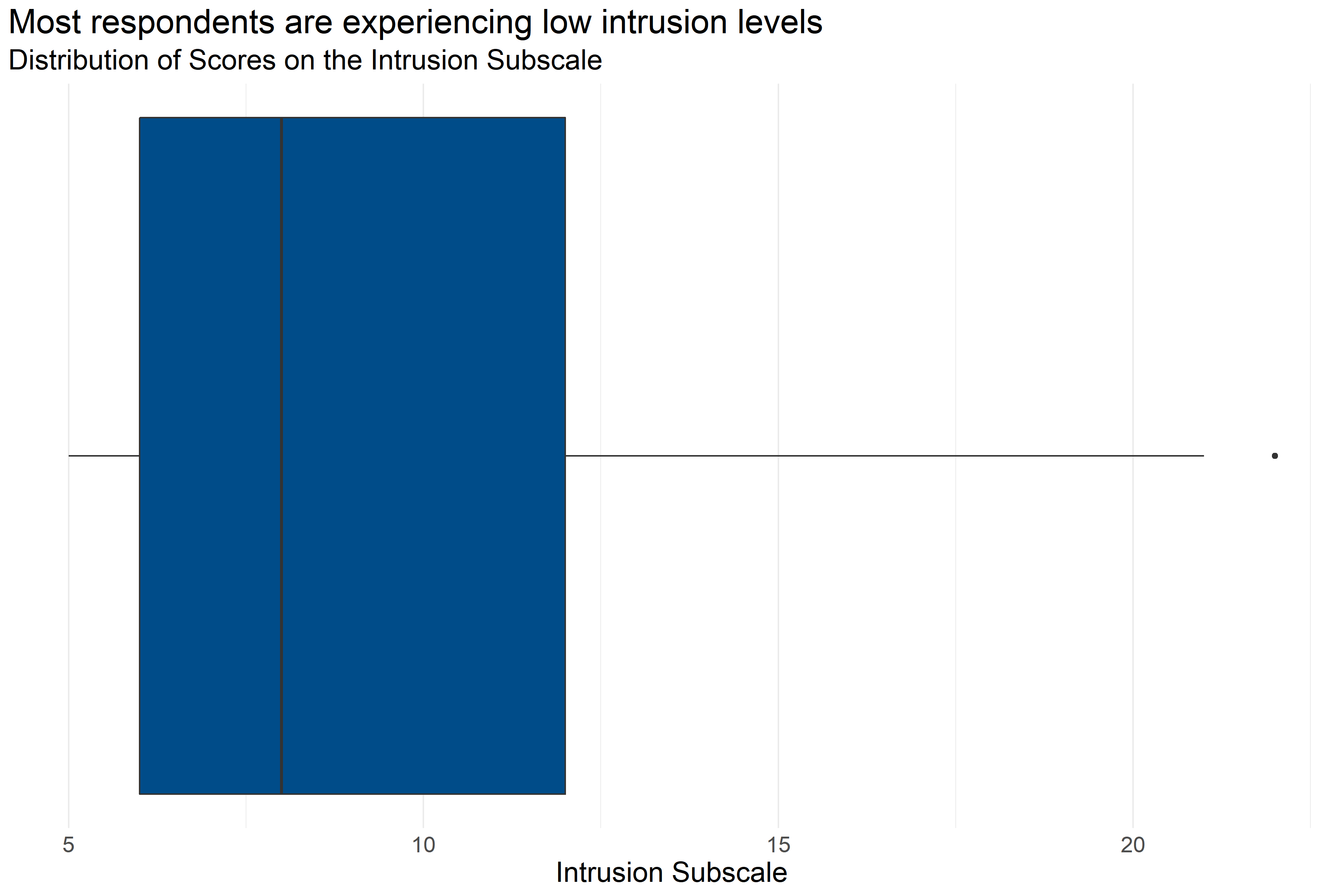

Figure 4.7: Boxplot of score distributions for the Intrusion Subscale

| Number of Answers | Average Score | Lowest Score | First Quartile | Median | Third Quartile | Highest Score |
|---|---|---|---|---|---|---|
| 227 | 9.32 | 5 | 6 | 8 | 12 | 22 |

Figure 4.7 and Table 4.22 provide information about how respondents scored on the Intrusion Subscale. They provide the following information:

- Response: 227 respondents answered all questions in the scale.

- Average: The average score was 9.32.

- Range: Scores ranged from 5 to 22.

- Median: About half of respondents scored below 8 and half scored above 8.

- Interquartile Range: If the range of responses were cut into quarters, the middle half of respondents (between the first and third quartiles) scored between 6 and 12.

This indicates that most respondents are experiencing relatively low levels of intrusion as a potential symptom of secondary traumatic stress syndrome, but some respondents reported experiencing higher levels of intrusion.
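The summary statistics listed above (number of answers, average, lowest and highest score, median, and quartiles) can be reproduced from a list of subscale totals. The following is a minimal sketch, not the analysts' actual procedure: the function name `summarize` and the example scores are hypothetical, and the quartile convention (`method="inclusive"`) is an assumption, since the report does not say which convention was used.

```python
from statistics import mean, quantiles


def summarize(scores):
    """Return the kinds of summary statistics shown in the subscale tables."""
    ordered = sorted(scores)
    # ASSUMPTION: "inclusive" quartiles; other conventions can give
    # slightly different first and third quartile values.
    q1, med, q3 = quantiles(ordered, n=4, method="inclusive")
    return {
        "n": len(ordered),
        "mean": round(mean(ordered), 2),
        "min": ordered[0],
        "q1": q1,
        "median": med,
        "q3": q3,
        "max": ordered[-1],
    }


# Hypothetical subscale totals for nine respondents (not survey data).
example_scores = [5, 6, 7, 8, 9, 10, 11, 12, 13]
summary = summarize(example_scores)
print(summary)
```

The middle half of respondents corresponds to the span between `q1` and `q3`, which is the box drawn in each boxplot.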

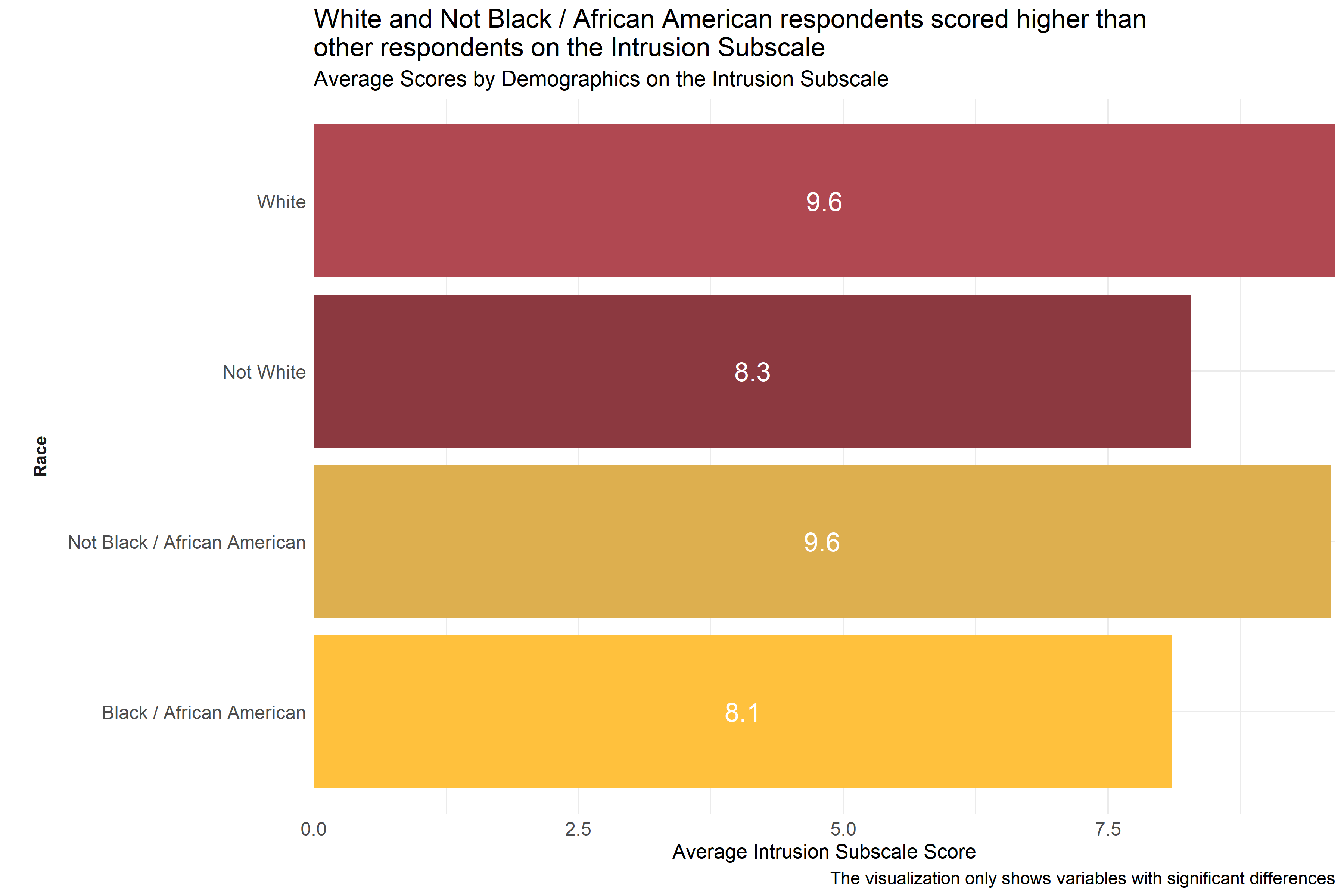

Figure 4.8: Average scores for Intrusion Subscale across demographic groups

Analysts tested the average scores on the Intrusion Subscale across demographic groups to identify differences in how respondents answered questions about potential symptoms of secondary traumatic stress. As Figure 4.8 shows, respondents who identified themselves as White or Not Black / African American scored higher on the Intrusion Subscale than other respondents. (Respondents were asked to select racial identities from a list of options and could select more than one.) This suggests that respondents identifying as White or Not Black / African American are experiencing more intrusion.

Analysts also observed differences in average scores across different agencies, but these scores are not included in Figure 4.8 to protect the privacy of those agencies.

4.4.1.2 Avoidance Subscale

The Avoidance Subscale of the Secondary Traumatic Stress Scale Survey measures how employees responded to questions about “the avoidance of stimuli associated with the care of patients and the numbing of general responsiveness” (Orrù et al. 2021). Respondents answered on a five-point scale with options ranging from 1 “Never” to 5 “Very Often” to statements like “I had little interest in being around others” and “I avoided people, places, or things that reminded me of my work with clients.” Individual responses to each of the questions in this scale can be found in Appendix B: Detailed Question Responses by Scale. Lower scores on this scale indicate little or no avoidance, and higher scores indicate high or severe avoidance.

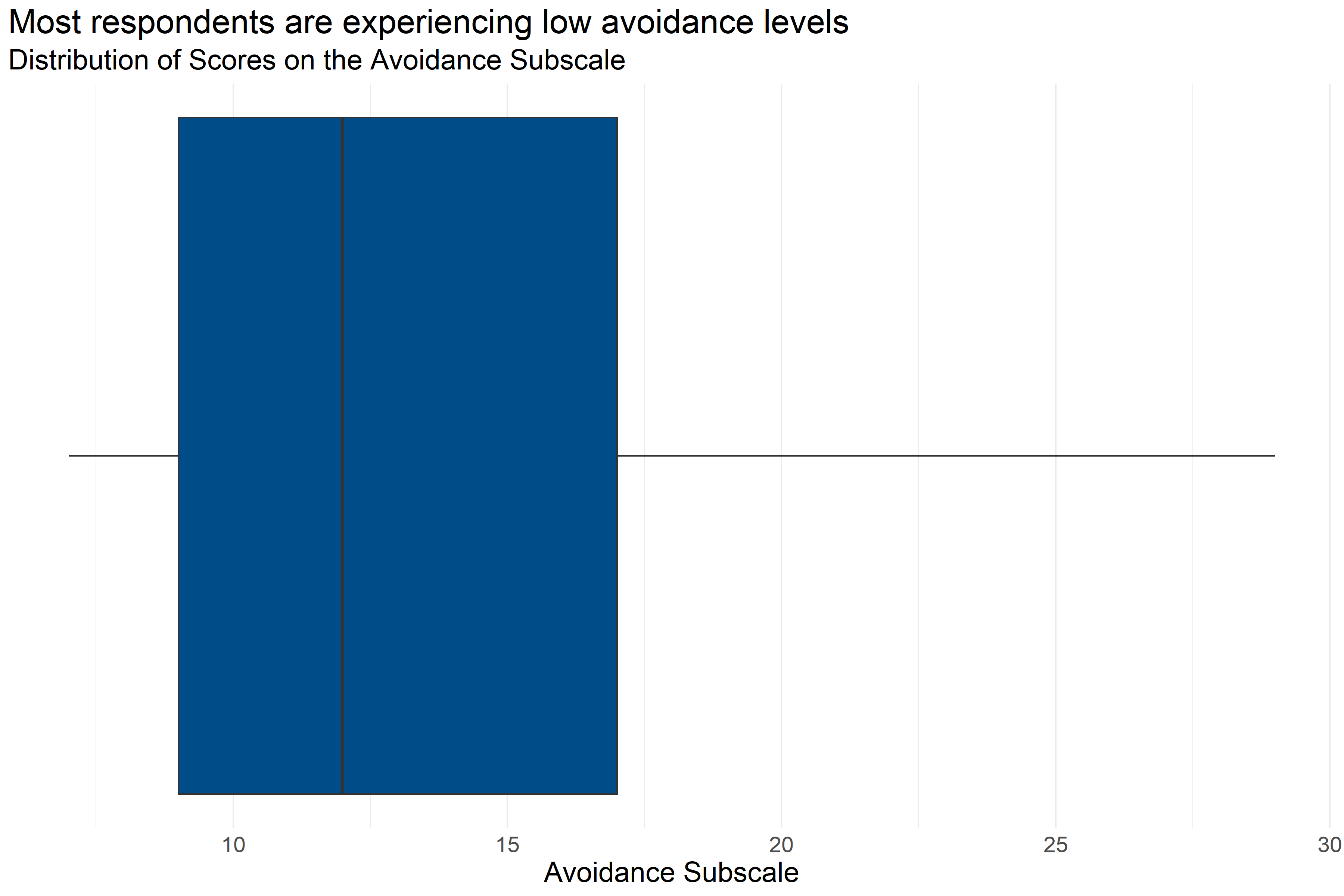

Figure 4.9: Boxplot of score distributions for the Avoidance Subscale

| Number of Answers | Average Score | Lowest Score | First Quartile | Median | Third Quartile | Highest Score |
|---|---|---|---|---|---|---|
| 217 | 13.13 | 7 | 9 | 12 | 17 | 29 |

Figure 4.9 and Table 4.23 provide information about how respondents scored on the Avoidance Subscale. They provide the following information:

- Response: 217 respondents answered all questions in the scale.

- Average: The average score was 13.13.

- Range: Scores ranged from 7 to 29.

- Median: About half of respondents scored below 12 and half scored above 12.

- Interquartile Range: If the range of responses were cut into quarters, the middle half of respondents (between the first and third quartiles) scored between 9 and 17.

While most respondents scored relatively low on the avoidance subscale, there were some respondents with relatively high scores.

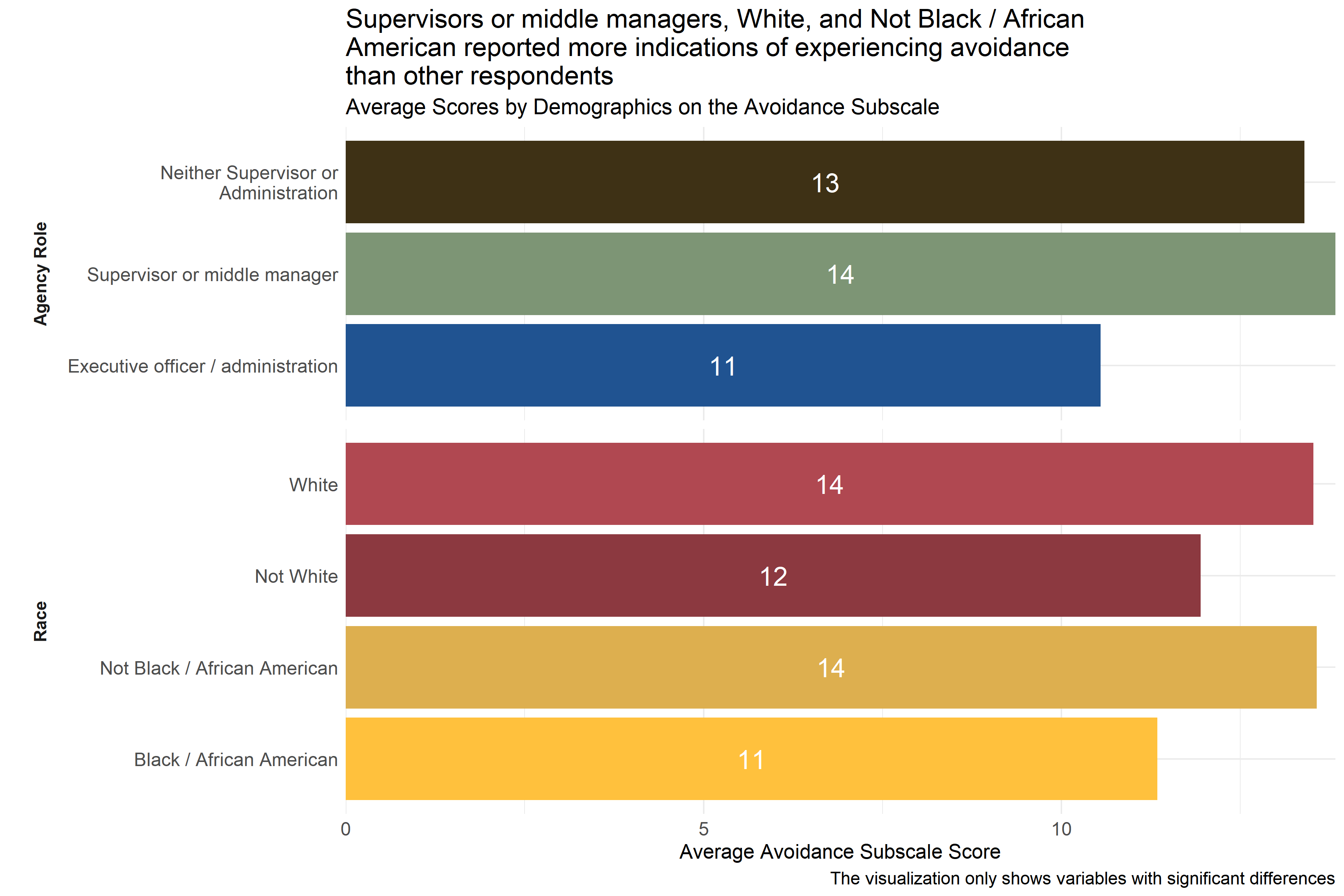

Figure 4.10: Average scores for Avoidance Subscale across demographic groups

Analysts tested the average scores on the Avoidance Subscale across demographic groups to identify differences in how respondents answered questions about potential symptoms of secondary traumatic stress. As Figure 4.10 shows, there were some statistically significant differences in average score across respondent groups by race and role in agency. Respondents identifying as executive officers or administrators had lower average scores than respondents in other agency roles. Additionally, Not Black / African American and White respondents reported higher scores than other respondents. (Respondents were asked to select racial identities from a list of options and could select more than one.)

Analysts also observed significant differences in average scores across agencies, but scores from these specific agencies were not included in Figure 4.10 to protect those agencies’ privacy.

4.4.1.3 Arousal Subscale

The Arousal Subscale of the Secondary Traumatic Stress Scale Survey measures how employees responded to questions “that assess symptoms like irritability, hypervigilance, and difficulty concentrating” (Orrù et al. 2021). Respondents answered on a five-point scale with options ranging from 1 “Never” to 5 “Very Often” to statements like “I had trouble concentrating” and “I expected something bad to happen.” Individual responses to each of the questions in this scale can be found in Appendix B: Detailed Question Responses by Scale. Lower scores on this scale indicate little or no arousal, and higher scores indicate high or severe arousal.

Figure 4.11: Boxplot of score distributions for the Arousal Subscale

| Number of Answers | Average Score | Lowest Score | First Quartile | Median | Third Quartile | Highest Score |
|---|---|---|---|---|---|---|
| 239 | 10.66 | 5 | 7 | 10 | 13 | 25 |

Figure 4.11 and Table 4.24 provide information about how respondents scored on the Arousal Subscale. They provide the following information:

- Response: 239 respondents answered all questions in the scale.

- Average: The average score was 10.66.

- Range: Scores ranged from 5 to 25.

- Median: About half of respondents scored below 10 and half scored above 10.

- Interquartile Range: If the range of responses were cut into quarters, the middle half of respondents (between the first and third quartiles) scored between 7 and 13.

While most respondents scored relatively low on the arousal subscale, there were some respondents with relatively high scores.

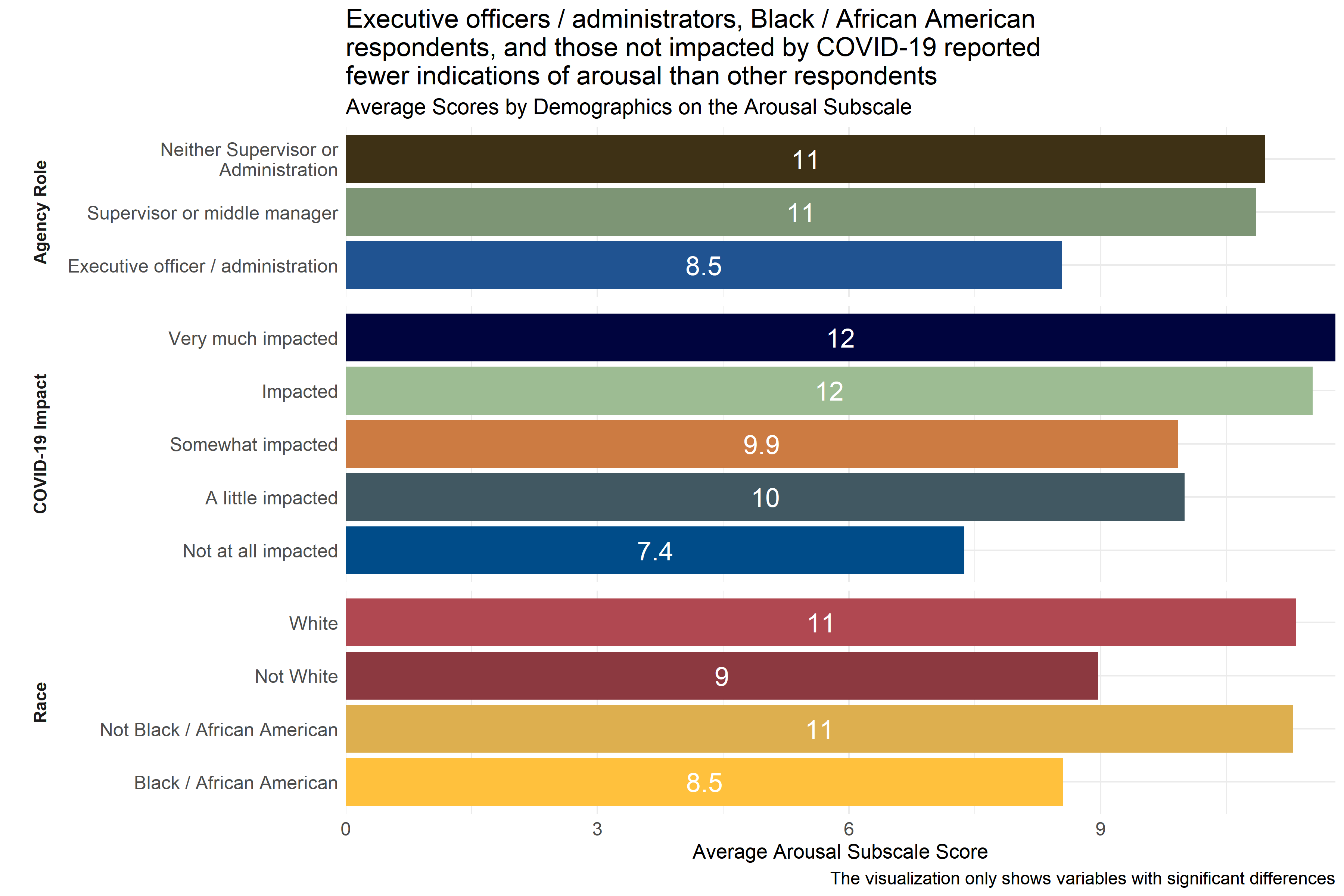

Figure 4.12: Average scores for Arousal Subscale across demographic groups

Analysts tested the average scores on the Arousal Subscale across demographic groups to identify differences in how respondents answered questions about potential symptoms of secondary traumatic stress. As Figure 4.12 shows, executive officers / administrators had lower average scores than respondents in other agency roles, as did respondents who identified themselves as Black / African American or did not identify as White. (Respondents were asked to select racial identities from a list of options and could select more than one.) When compared to respondents who reported experiencing some level of impact from COVID-19, those who reported that they were “not at all impacted” had lower scores on the arousal subscale. This suggests that respondents identifying as executive officers / administrators or as Black / African American and those reporting no impact from COVID-19 are experiencing less arousal.

Analysts also observed significant differences in average scores across agencies, but scores from these specific agencies were not included in Figure 4.12 to protect those agencies’ privacy.

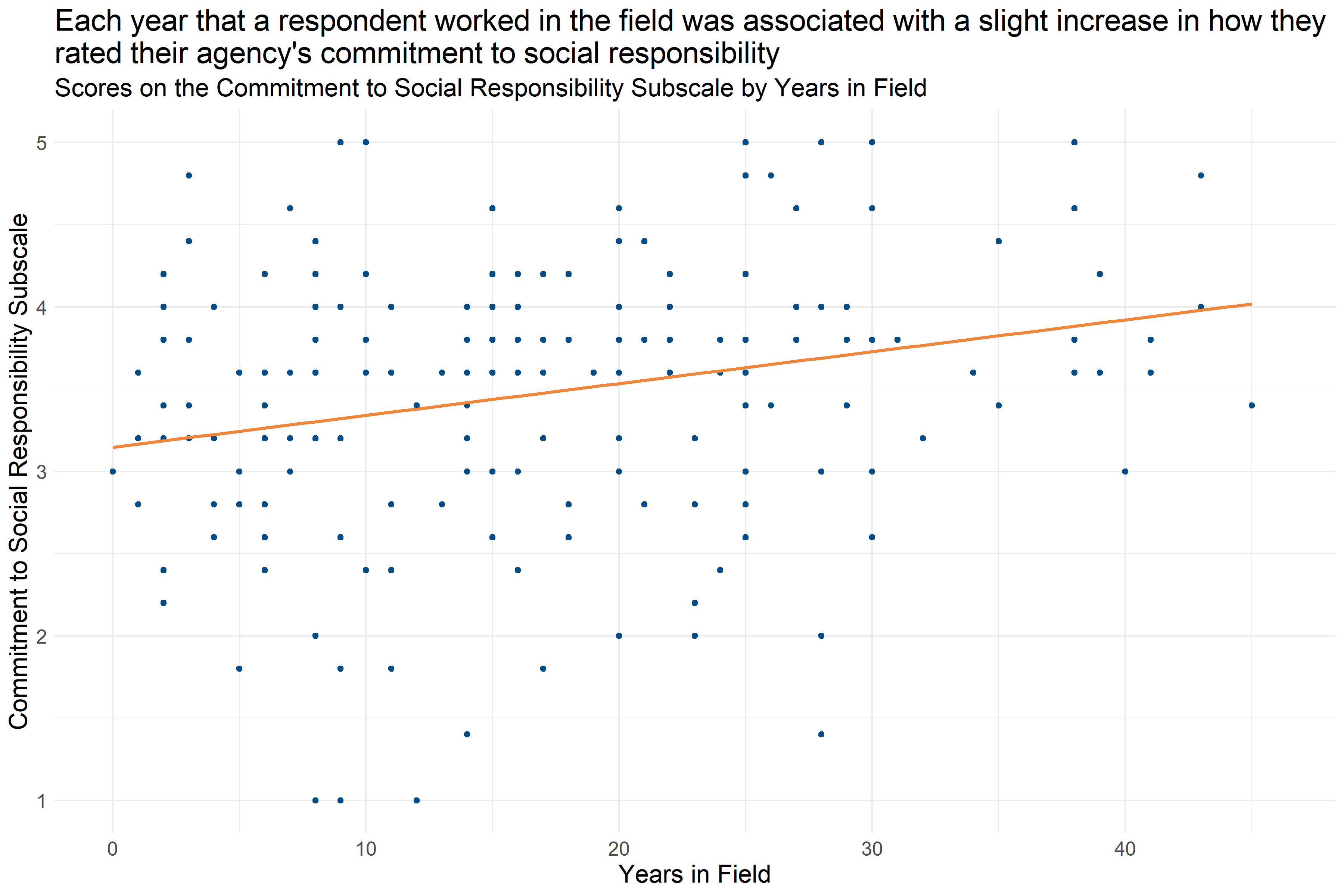

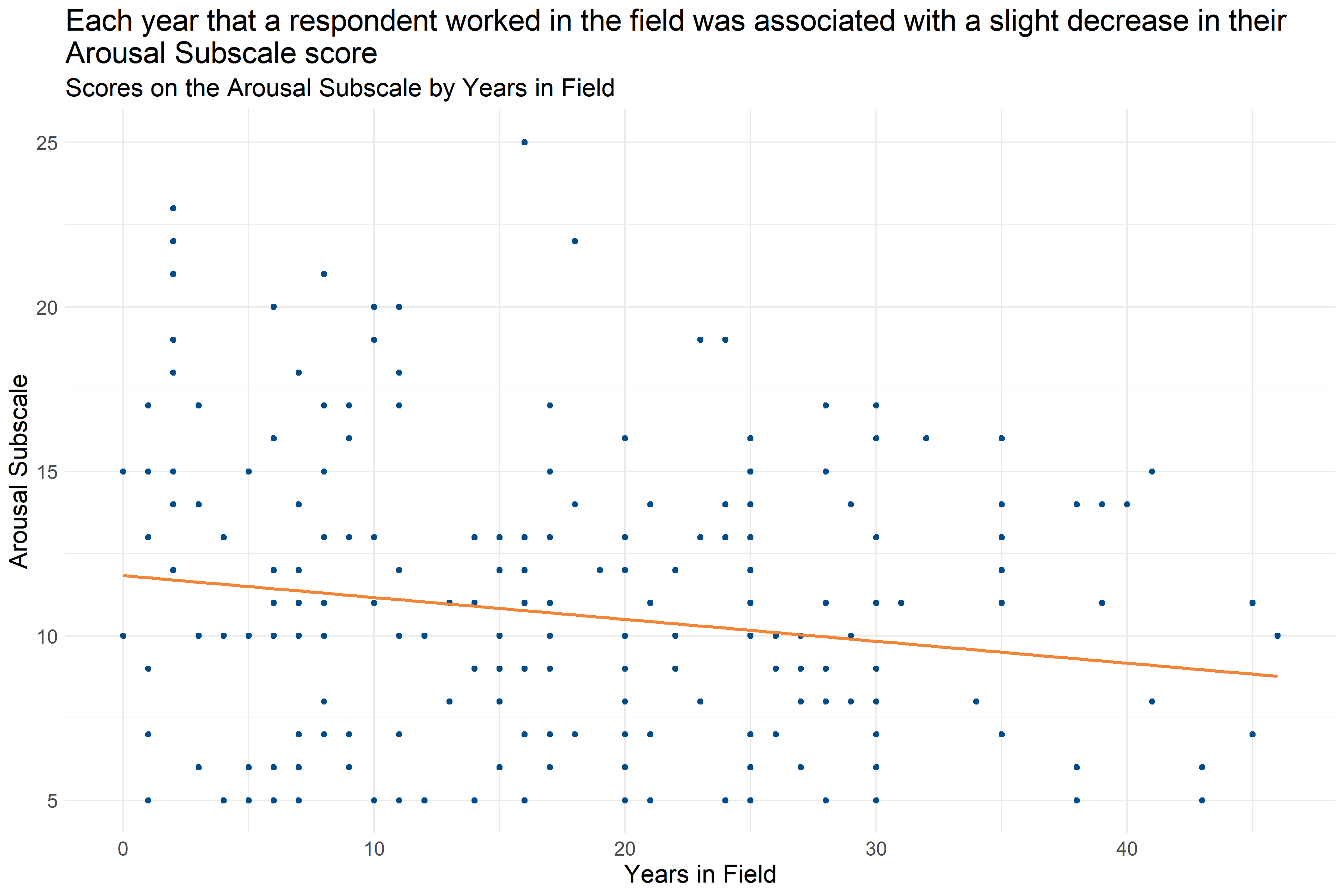

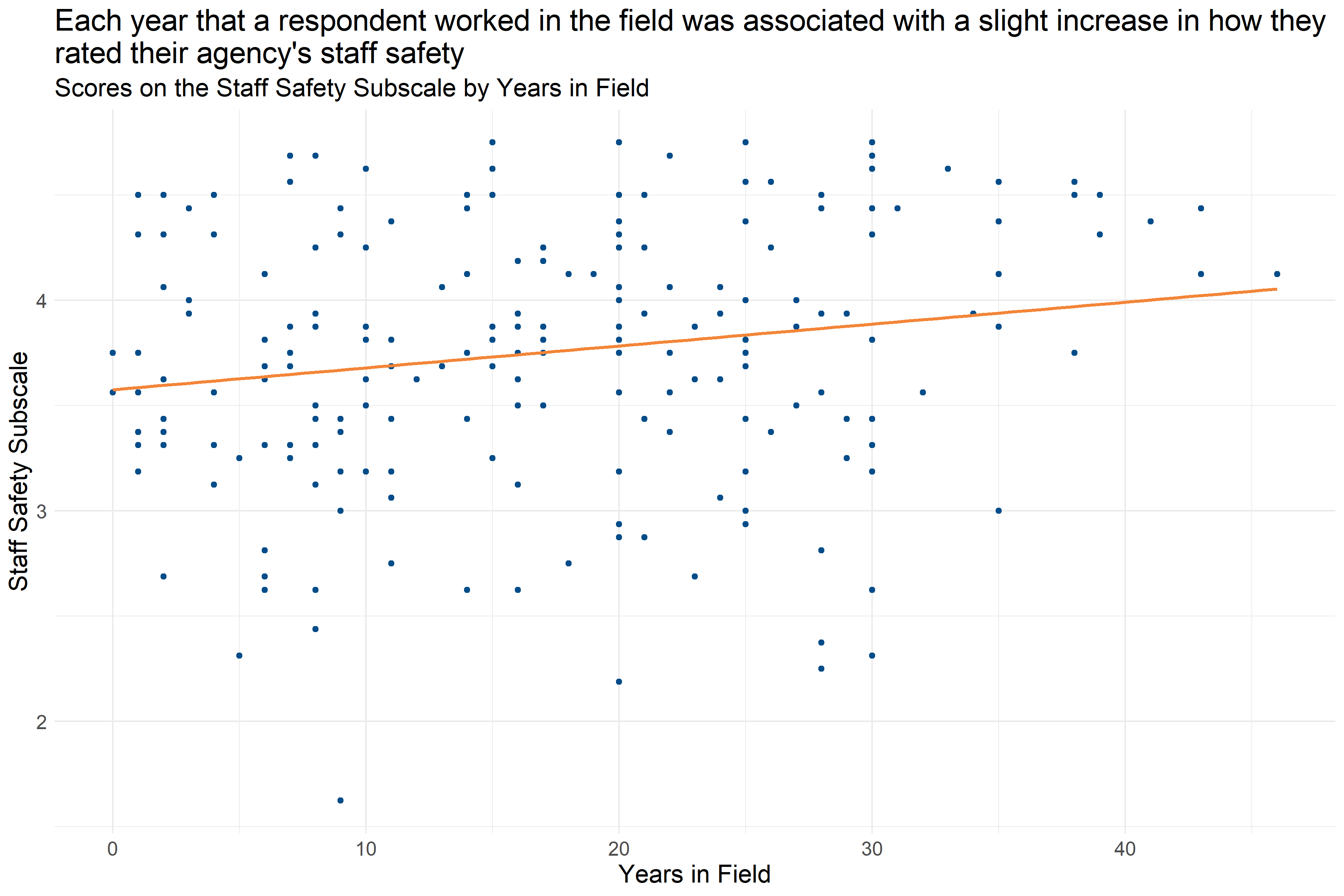

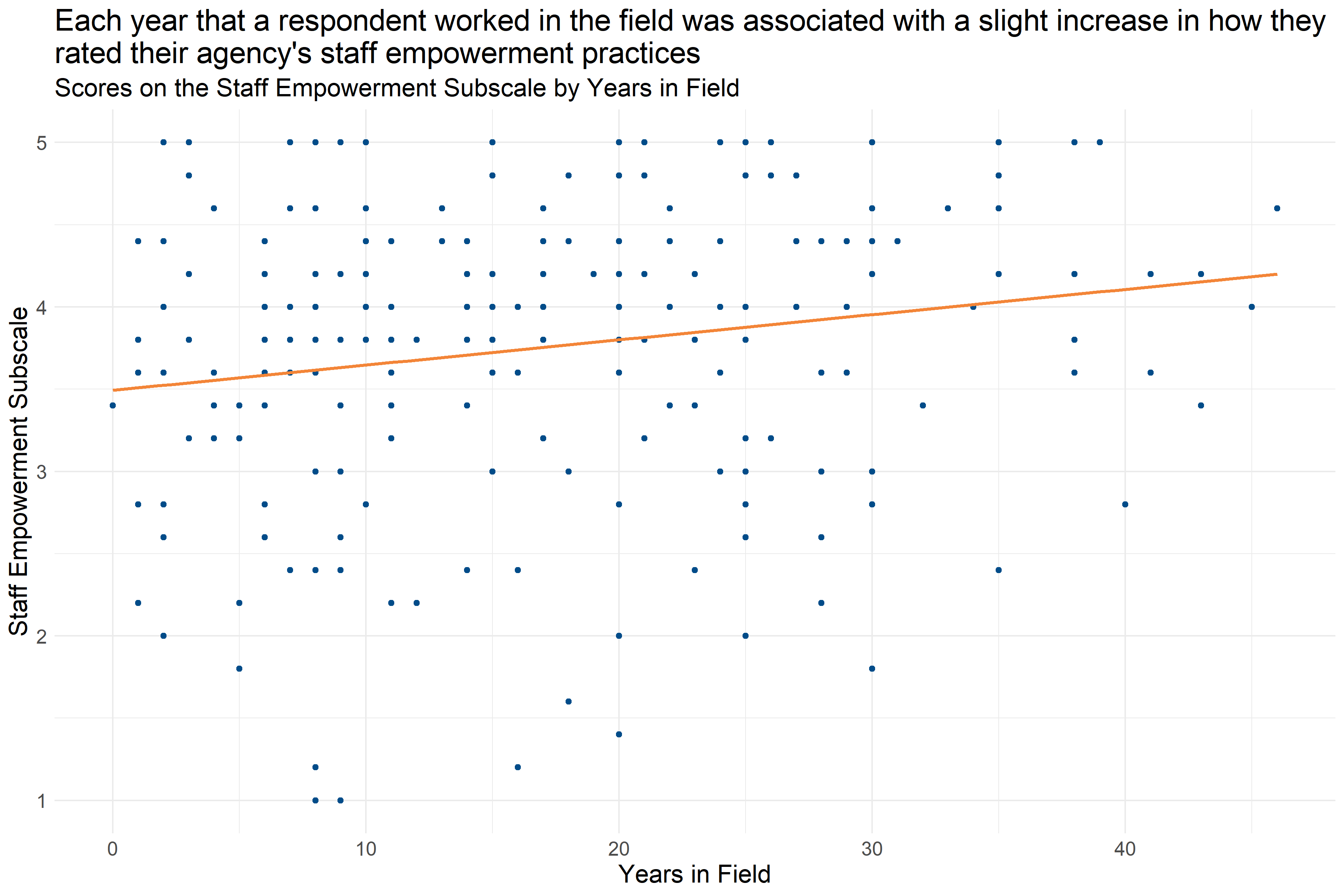

Figure 4.13: Scatter plot of Years in Field and Arousal Subscale Score

Analysts measured Years in Field against the Arousal Subscale to identify patterns between the two variables. As Figure 4.13 displays, as Years in Field increases, there is a slight decline in ratings on the Arousal Subscale.

4.4.2 Conclusions

4.4.2.1 Scores on Secondary Traumatic Stress Scale Subscales

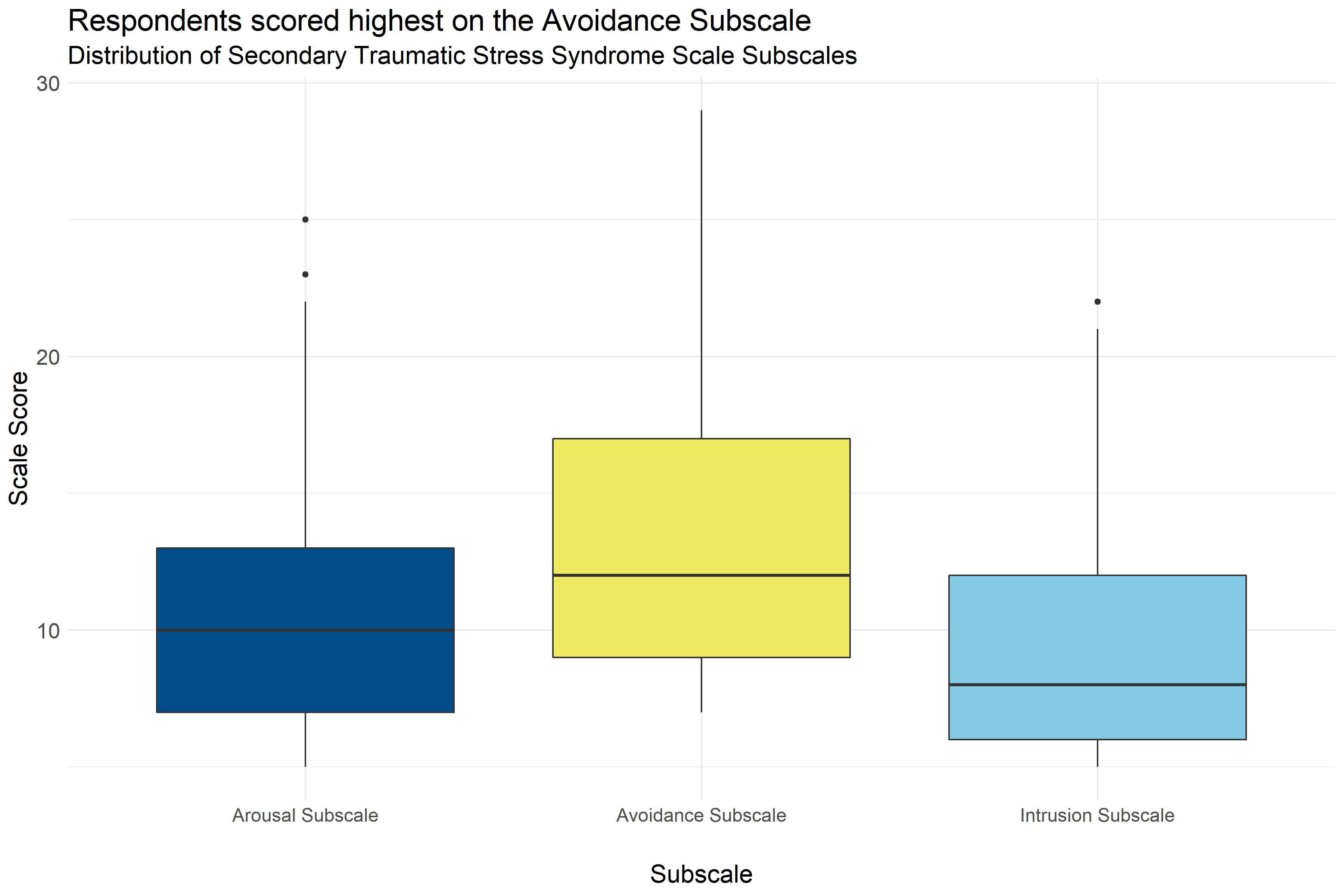

Figure 4.14: Boxplot of score distributions on the Secondary Traumatic Stress Syndrome Scale Subscales

Figure 4.15: Mean score on Secondary Traumatic Stress Subscales by demographic

Figure 4.14 shows the distribution of scores on the Secondary Traumatic Stress Scale’s three subscales: Arousal, Avoidance, and Intrusion. The colored boxes represent the range that the scores of the middle half of respondents fell into. (If you were to cut all of the scores into quarters, this would be between the first and third quartiles.) The lines and dots represent the full distribution of scores on each scale. Scales with dots on either side indicate unusually high or low scores. Figure 4.14 shows that of the three subscales, respondents generally scored highest on the Arousal subscale, but scores on all three scales were generally low. Despite the generally low scores, it is notable that some respondents scored high on these scales, indicating that some staff at child-serving agencies may be experiencing symptoms of secondary traumatic stress.
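Raw subscale totals are only directly comparable when the subscales contain the same number of questions. One way to compare them is to divide each reported average total by the number of items in that subscale. The sketch below does this with the averages reported in the tables of this section. The item counts (5, 7, and 5) are an assumption: they are inferred from the lowest observed totals, on the reasoning that a respondent who answered 1 “Never” to every question would score exactly the number of items. The report itself does not state the item counts or confirm that totals are simple sums.

```python
# Average subscale totals as reported in the tables of this section.
reported_mean_total = {"Intrusion": 9.32, "Avoidance": 13.13, "Arousal": 10.66}

# ASSUMPTION: items per subscale, inferred from the lowest observed totals
# (5, 7, and 5); not stated in the report.
item_count = {"Intrusion": 5, "Avoidance": 7, "Arousal": 5}

# Average rating per question on the 1 ("Never") to 5 ("Very Often") scale.
per_item_mean = {
    name: round(total / item_count[name], 2)
    for name, total in reported_mean_total.items()
}

for name, value in per_item_mean.items():
    print(f"{name}: {value}")

highest = max(per_item_mean, key=per_item_mean.get)
print("Highest per-item average:", highest)
```

Under these assumptions, all three per-item averages fall near 2 on the five-point scale, which is consistent with the description of scores as generally low.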

Similarly, Figure 4.15 shows the average scores on all three subscales for each demographic for which analysts were able to identify statistically significant differences in scores. Gray boxes indicate that demographic differences for that scale were not statistically significant. Figure 4.15 shows that in addition to having the highest average scores, the Arousal subscale had the most consistent significant differences across different demographic groups, and that there are consistent racial differences in scores across all three subscales. Respondents identifying themselves as White and respondents not identifying themselves as Black / African American consistently scored higher than those not identifying themselves as White and those identifying as Black / African American on all three scales. Respondents who identified their agency roles as being executive officers and administrators also consistently scored lower than other respondents on both the Arousal and Avoidance Subscales. It is also worth noting that respondents reporting that they were not at all impacted by COVID-19 reported lower scores on the Arousal Subscale. Analysts also noted significant differences between specific agencies across all three subscales, but those scores are not included in Figure 4.15 to protect the privacy of those respondents and agencies.

Lastly, the number of years that respondents reported working in the field was only significantly associated with the Arousal Subscale, in which it was associated with a slight decrease (about one point decrease per every ten years of experience) as can be seen in Figure 4.13, and years spent at a particular agency were not associated with any of the subscales.
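A statement such as “about one point decrease per every ten years of experience” can be read as the slope of a fitted straight line, scaled to a ten-year span. The following is a minimal sketch assuming an ordinary least-squares line; the report does not state which method the analysts used, and the data points and the function name `least_squares_slope` are invented for illustration.

```python
def least_squares_slope(x, y):
    """Slope of the ordinary least-squares line of y on x."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    numerator = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    denominator = sum((xi - mean_x) ** 2 for xi in x)
    return numerator / denominator


# Hypothetical data (not survey data): years in field and Arousal score.
years_in_field = [0, 10, 20, 30]
arousal_score = [12, 11, 10, 9]

slope_per_year = least_squares_slope(years_in_field, arousal_score)
slope_per_decade = slope_per_year * 10
print(f"Change in score per ten years: {slope_per_decade:.1f}")
```

A slope of this size is small relative to the 5 to 25 range of observed Arousal scores, which is why the report describes the decline as slight.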

4.4.2.2 Important Considerations

These demographic breakdowns only compare scores by demographics considering one demographic at a time. In cases where these demographics may be interrelated (for example, executive officers and administrators also having more experience than other staff or agencies having different racial demographics) it is not possible to know from this analysis what those differences would look like if those relationships were controlled for (for example, looking at agency role while controlling for years of experience). It is possible that some of these differences in scores are actually the result of other demographic differences, even differences not measured in this analysis.

Additionally, this data is based on a survey that respondents volunteered to take across a variety of fields. There could be ways that the people who volunteered to take the survey are different than other people who work in child-serving agencies. And, because smaller differences between groups are easier to see with a higher number of survey responses, it is possible that there are other differences that exist across demographics that analysts would be able to measure with a larger group of respondents.

4.4.2.3 Implications

Despite the fact that most scores on the Secondary Traumatic Stress Scale were relatively low, some staff scored highly on the subscales, indicating that some respondents may be experiencing symptoms of secondary traumatic stress syndrome. It is essential for planners to be mindful that those not describing themselves as executive officers and administrators and individuals who have not worked in the field long are, in the aggregate, likely to score higher on the Secondary Traumatic Stress Scales, and may need more assistance in their current roles.

4.5 Current Implementation of Trauma Informed Practices

4.5.1 Introduction

This section includes survey results on the current implementation of trauma-informed practices within local human service organizations and institutions. It has three subsections:

- Familiarity with trauma-informed practices among survey respondents;

- An environmental assessment; and

- Staff practices.

4.5.2 Key Findings

- About 72% of respondents in child-serving agencies were not familiar with the TRC model, and an additional 26% of respondents reported familiarity with the model but no training.

- Respondents with higher levels of training generally scored higher on the staff practices survey, indicating higher levels of alignment with trauma-informed care practice at their agencies.

- Scores on the Environmental Assessment scale and Staff Practices Survey generally showed neutral to moderate alignment with Trauma informed practice. Some respondents had lower scores, particularly on the Environmental Assessment and some subscales of the staff practices survey. This suggests that there may be variation across agencies or staff.

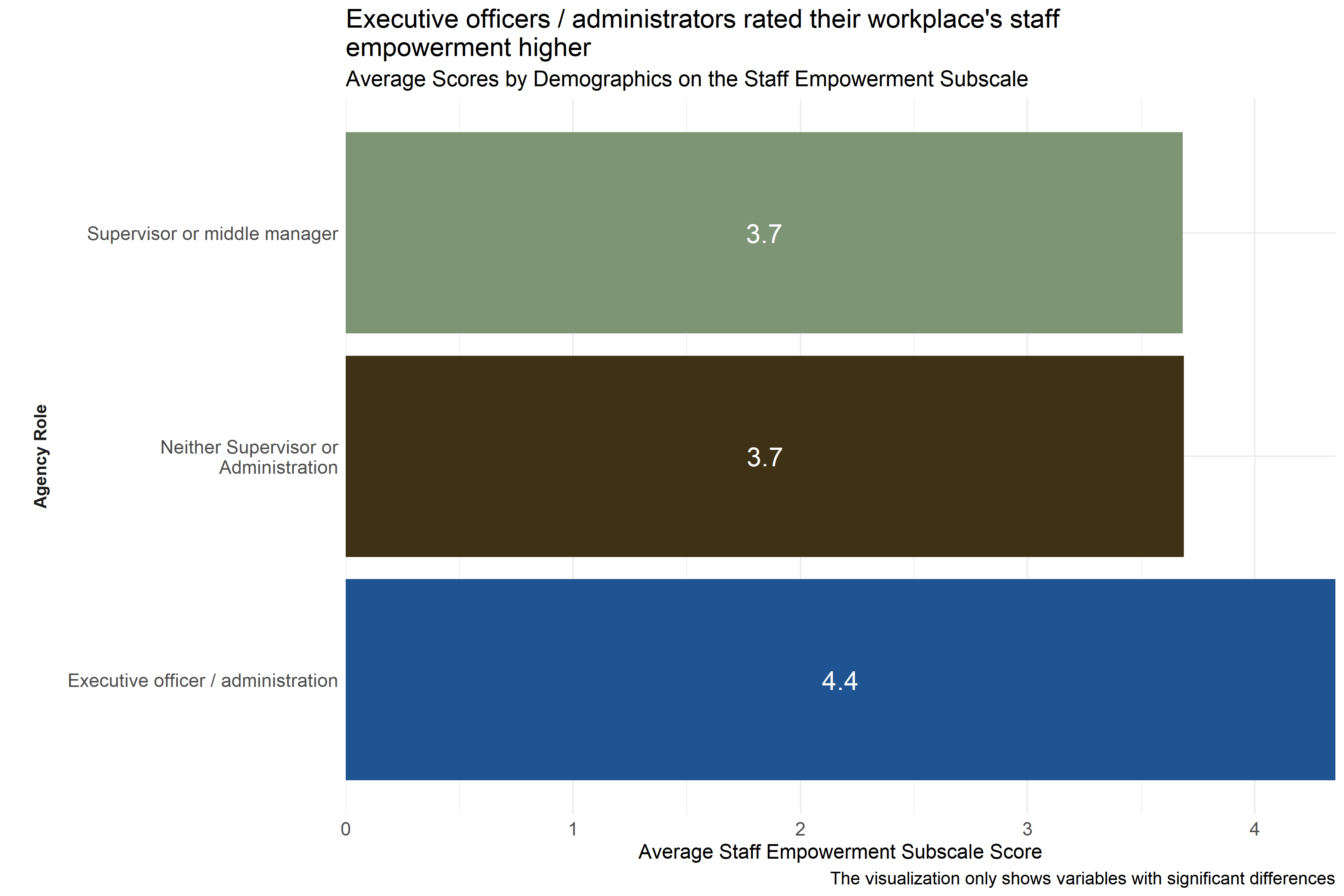

- Organizational leaders often had higher scores than other employees on the Environmental Assessment Scale and Staff Practices Survey.

- Interview participants indicated that there has been a greater sense of urgency for racial equity-based work within the past few years, suggesting that organizations and staff are responsive to the socio-political landscape (e.g., COVID-19, George Floyd protests, etc.).

- Some interview respondents identified that engaging in more racial equity-based work is an area of growth in their organization. There were more instances reported of organizations lacking equity-based policies and practices than having such measures in place.

- Some organizations prioritize diversity of staff over diversity of leadership in order to match the populations they serve. In these cases, there can be less diversity in leadership, reflecting the lack of an employee pipeline within the organization for people of color.

- Some organizations have sufficient resources to engage in racial equity-based practices and policies, while others face barriers such as leadership, funding, and/or staff buy-in.

- Respondents report that, in general, their organization’s culture is inclusive and supportive of people from diverse backgrounds; however, several respondents indicated that there is an expectation for people of color to assimilate into dominant white norm culture.

- The COVID-19 pandemic not only affected how community service providers served the community, but it also added extra personal and professional stressors and trauma.

- Many organizations serve members of the community who have experienced structural violence, which suggests that there are many adverse experiences that the community encounters which can compound pre-existing trauma or lead to new forms of stress and trauma.

4.5.3 Familiarity with Trauma Informed Practices

This section includes survey results about staff familiarity with trauma-informed practices. The survey included a question that asked the respondent “Which of the following BEST describes your current experience with the TRC Model?” with the following response options:

- I am not familiar with the TRC Model

- I am familiar with the TRC Model and have not attended any presentations or participated in any training activities

- I am in the process of participating in TRC staff training modules

- I have attended and completed the 3-day TRC leadership training

- I have attended and completed the 5-day TRC Train-the-Trainer training

4.5.3.1 Key Findings

- About 72% of respondents in child-serving agencies were not familiar with the TRC model, and an additional 26% of respondents reported familiarity with the model but no training.

- A small percentage of respondents reported that they were in the process of attending staff training modules.

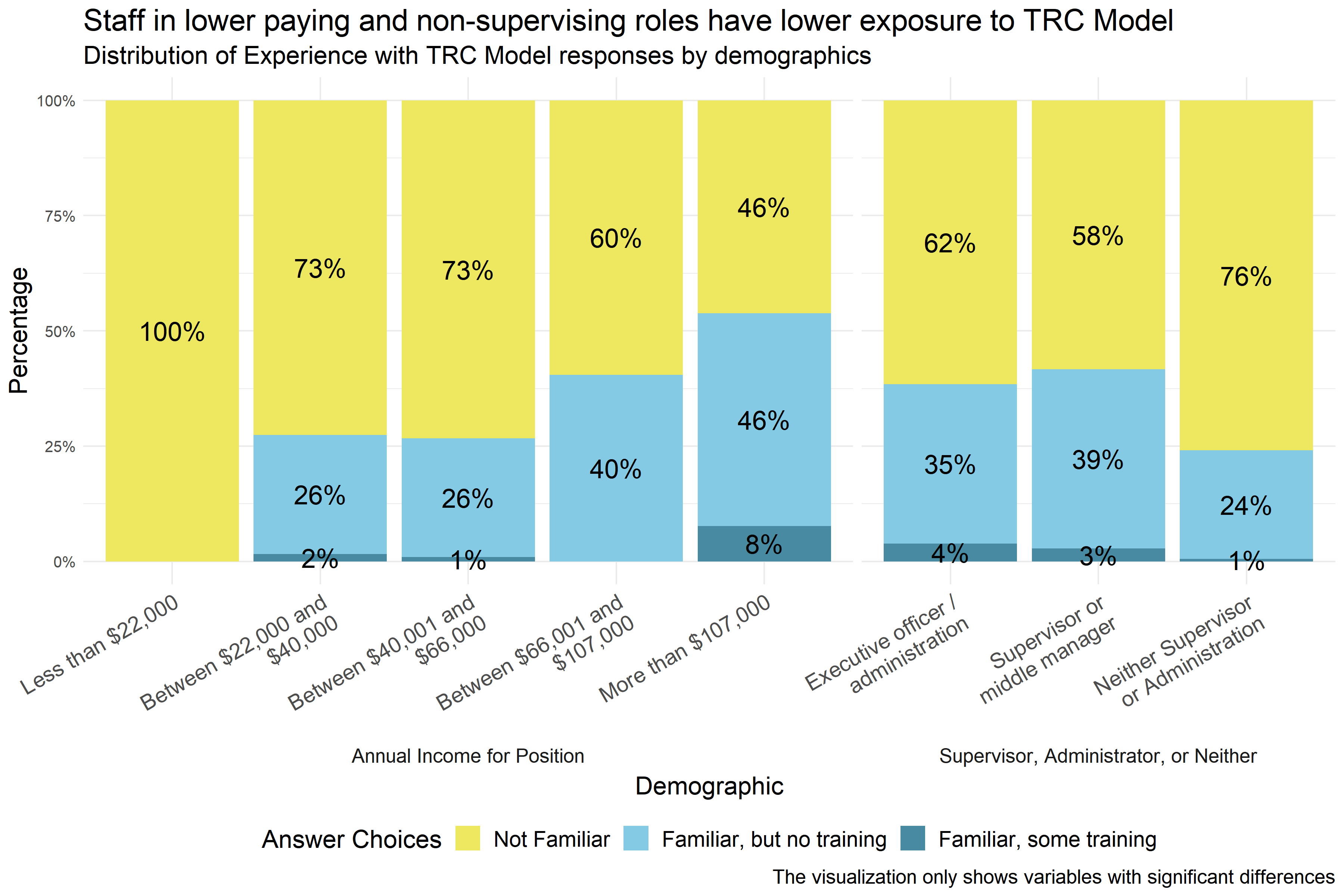

- Respondents in lower-earning positions and respondents who were not in supervisory or administrative roles generally reported lower levels of familiarity with the model.

Figure 4.16: Distribution of responses about level of experience with the TRC Model

Figure 4.16 shows that most respondents, 72%, were not familiar with the TRC Model, and an additional 26% of respondents reported being familiar with the TRC Model but not having any training. However, a small percentage of respondents indicated that they were in the process of participating in TRC staff training modules.

Figure 4.17: Level of experience with the TRC Model reported by demographic

Analysts found significant differences in respondents’ level of experience with the TRC Model across respondents in positions with different levels of annual income, different roles in the agency, and different agencies. Figure 4.17 shows that respondents with lower annual incomes and respondents who are not supervisors or administrators generally had less exposure to the TRC Model. Even among executive officers, administrators, supervisors, and middle managers more than half of all respondents indicated that they were not familiar with the TRC Model, and even at the highest levels of annual income, more than $107,000, almost half of respondents did not have exposure to the TRC Model. The small percentage of respondents who reported that they were in the process of attending TRC training modules were mostly in higher-income positions or positions of leadership. Analysts also found significant differences across specific agencies, but that data is not shown in Figure 4.17 to protect the privacy of the respondents and the agencies.

4.5.3.2 Conclusions

4.5.3.2.1 Important Considerations

Demographic breakdowns only compare scores by demographics considering one demographic at a time. In cases where these demographics may be interrelated (for example, executive officers and administrators also having higher incomes) it is not possible to know from this analysis what those differences would look like if those relationships were controlled for (for example, looking at agency role while controlling for income). It is possible that some of these differences in scores are actually the result of other demographic differences, even differences not measured in this analysis.

Additionally, this data is based on a survey that respondents volunteered to take across a variety of fields. There could be ways that the people who volunteered to take the survey are different from other people who work in child-serving agencies. And, because smaller differences between groups are easier to see with a higher number of survey responses, it is possible that there are other differences that exist across demographics that analysts would be able to measure with a larger group of respondents.

4.5.3.2.2 Implications

Implementation of a TRC Model should not assume widespread exposure to or knowledge of the TRC Model, especially among lower-earning staff members and employees who are not supervisors or administrators. If widespread familiarity with the TRC Model is the goal, training is needed across all positions and agencies.

4.5.4 Environmental Assessment Survey

This section includes survey results from the environmental assessment scale, which includes questions about the physical environment - questions about the physical work space - and the social environment - questions about relationships and how people feel and are treated - as well as the seven commitments of the Trauma Informed Care (TIC) model. The TRC model promotes organizational change and addresses the ways in which chronic stress, adversity, and trauma influence individual behavior. It also recognizes the ways in which whole organizations can be influenced by chronic stress, adversity, and trauma. The seven commitments are as follows: Non-Violence, Emotional Intelligence, Social Learning, Shared Governance, Open Communication, Social Responsibility, and Growth and Change.

4.5.4.1 Key Findings

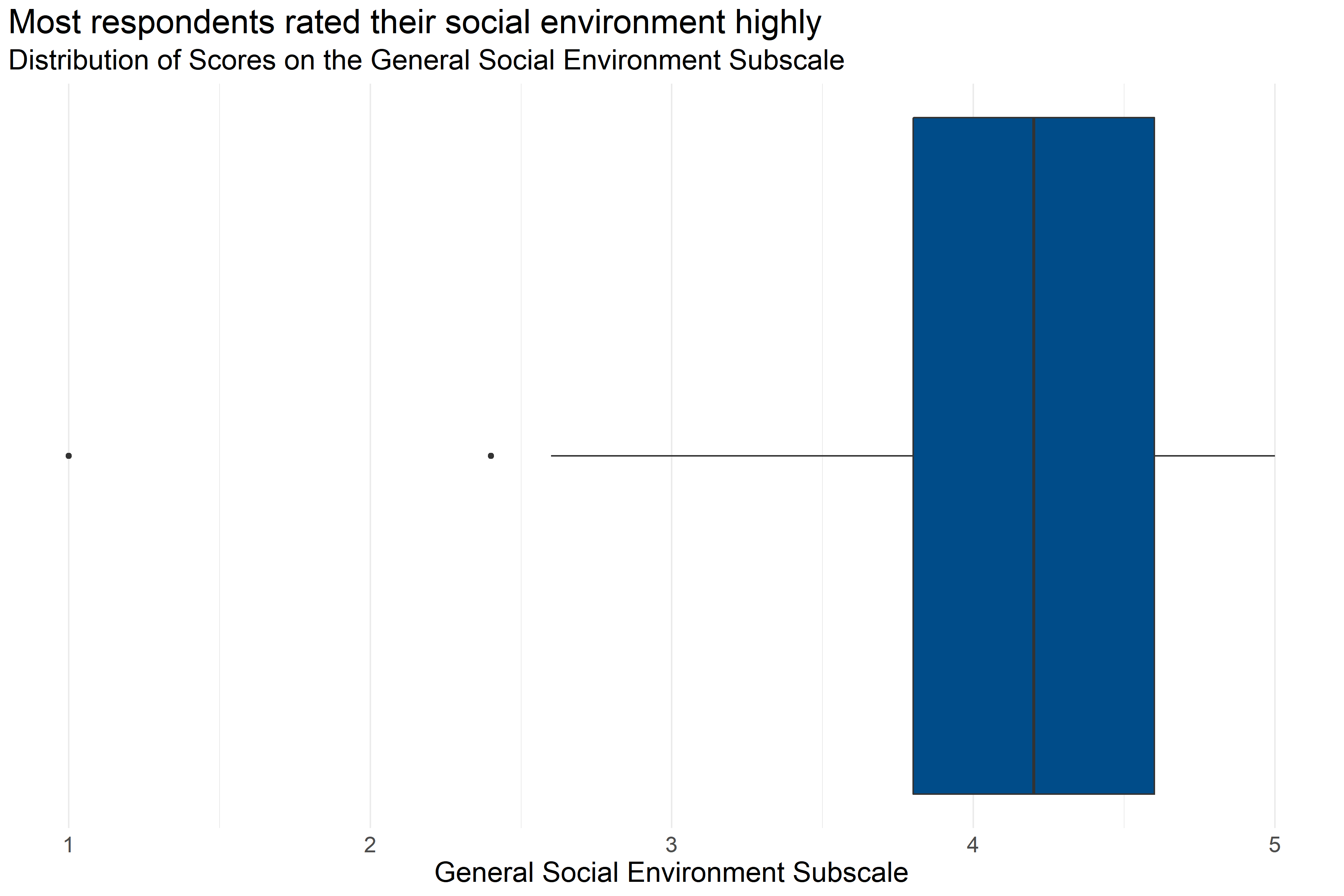

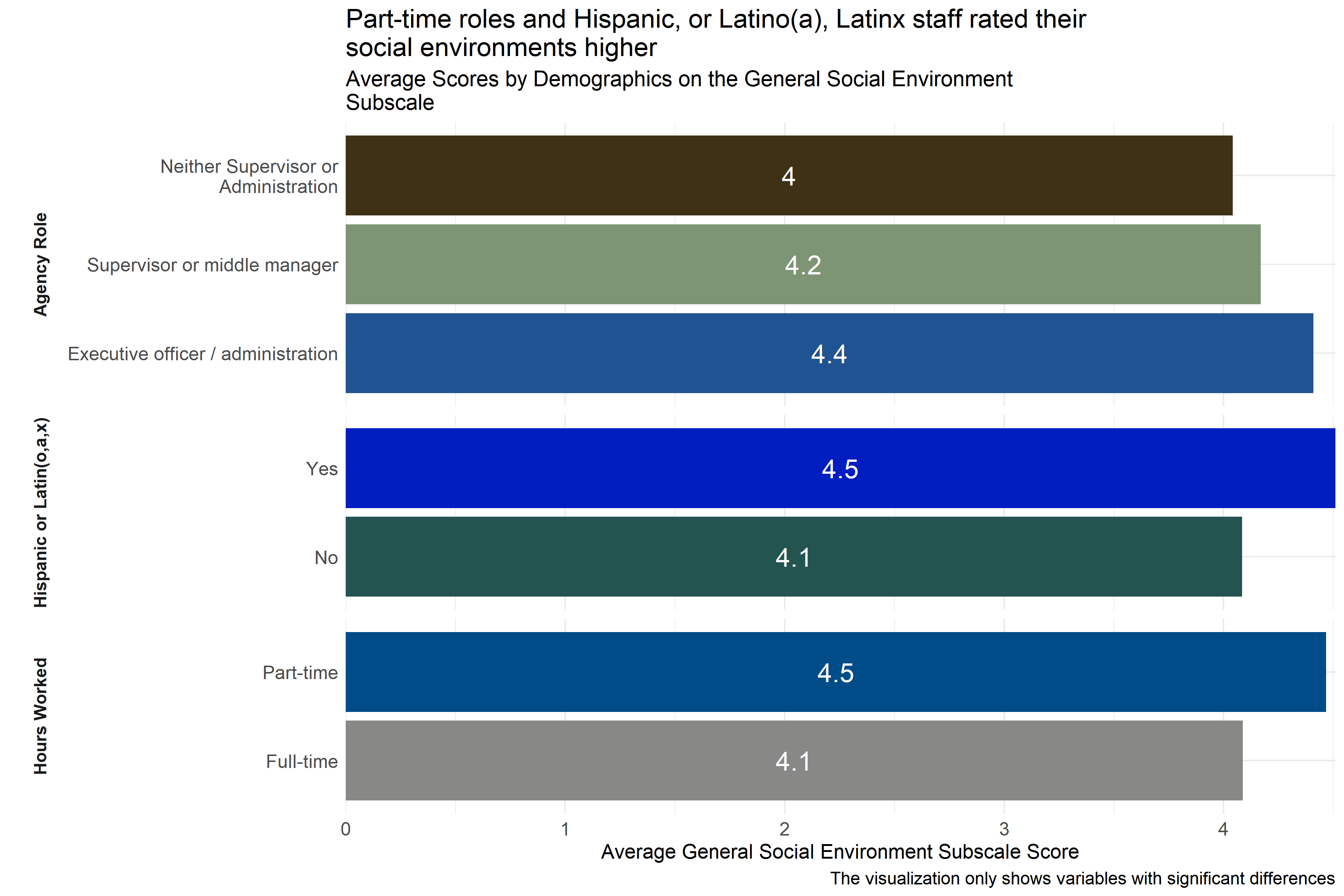

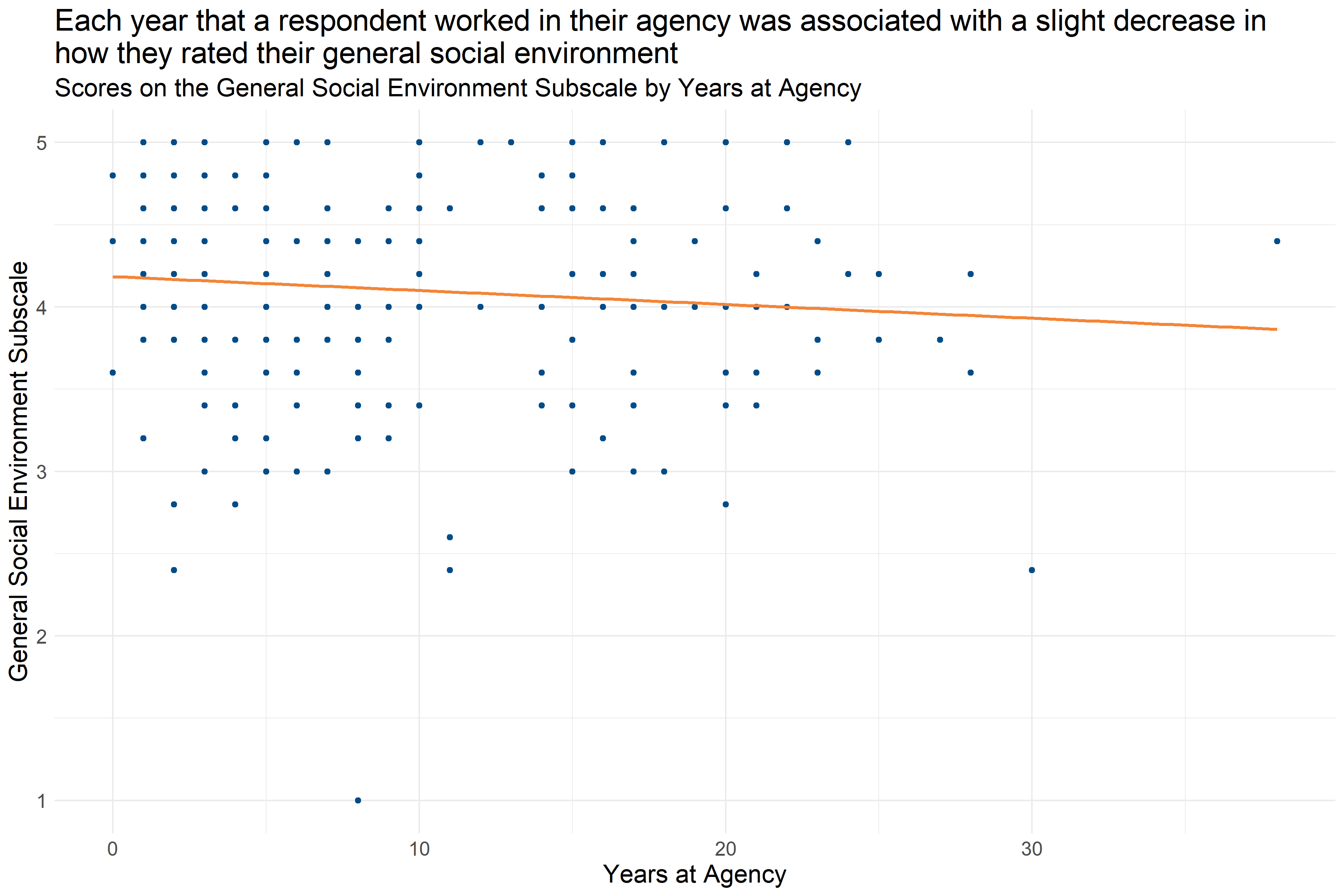

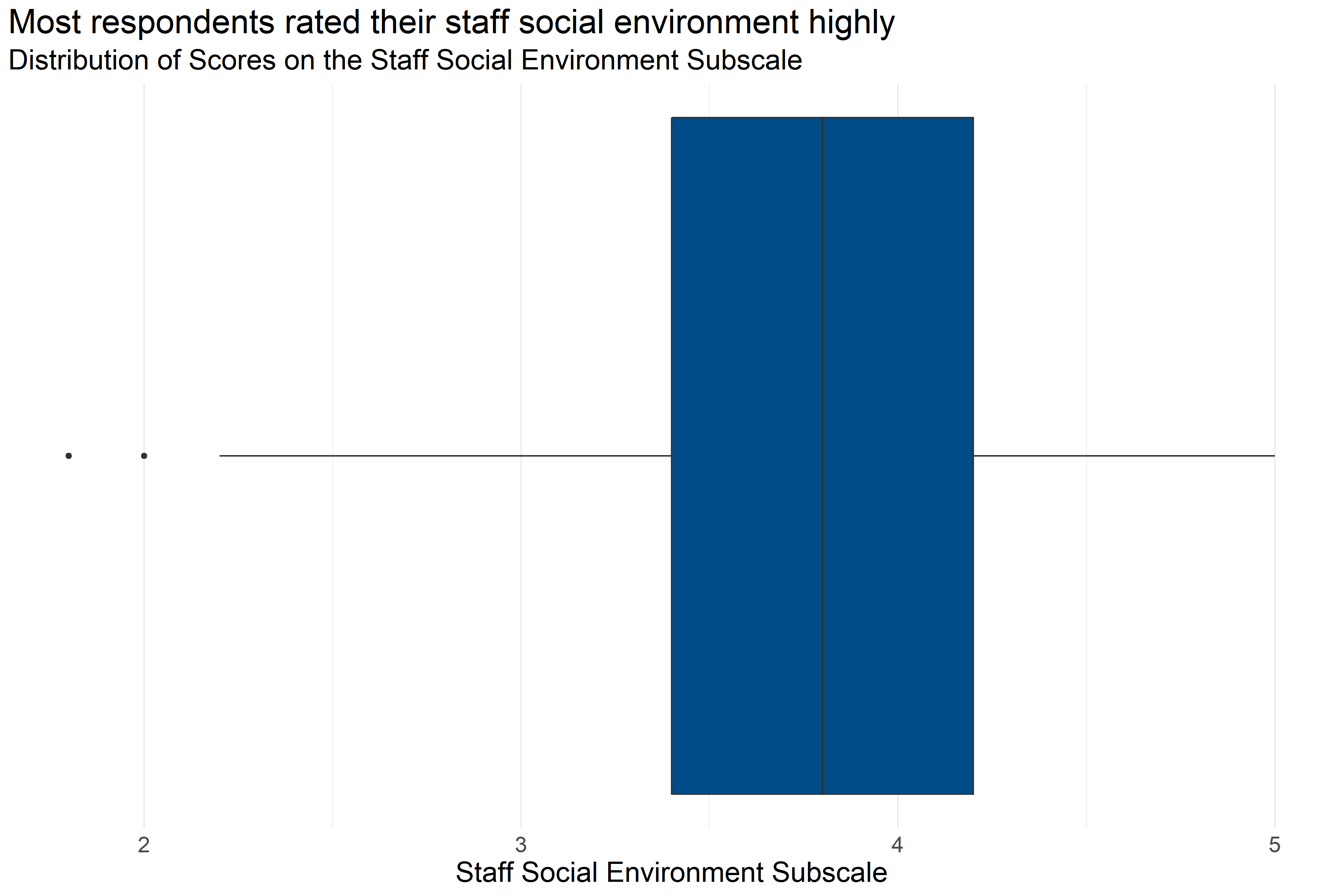

- Scores on the Environmental Assessment scale were generally high, with the General Social Environment subscale generally having the highest scores.

- About a quarter of respondents generally gave responses ranging from neutral to negative on the commitment to open communication, shared governance, emotional intelligence, social learning, and social responsibility scale. Given the range of responses, it is possible that some individual agencies tend to have lower scores on these scales.

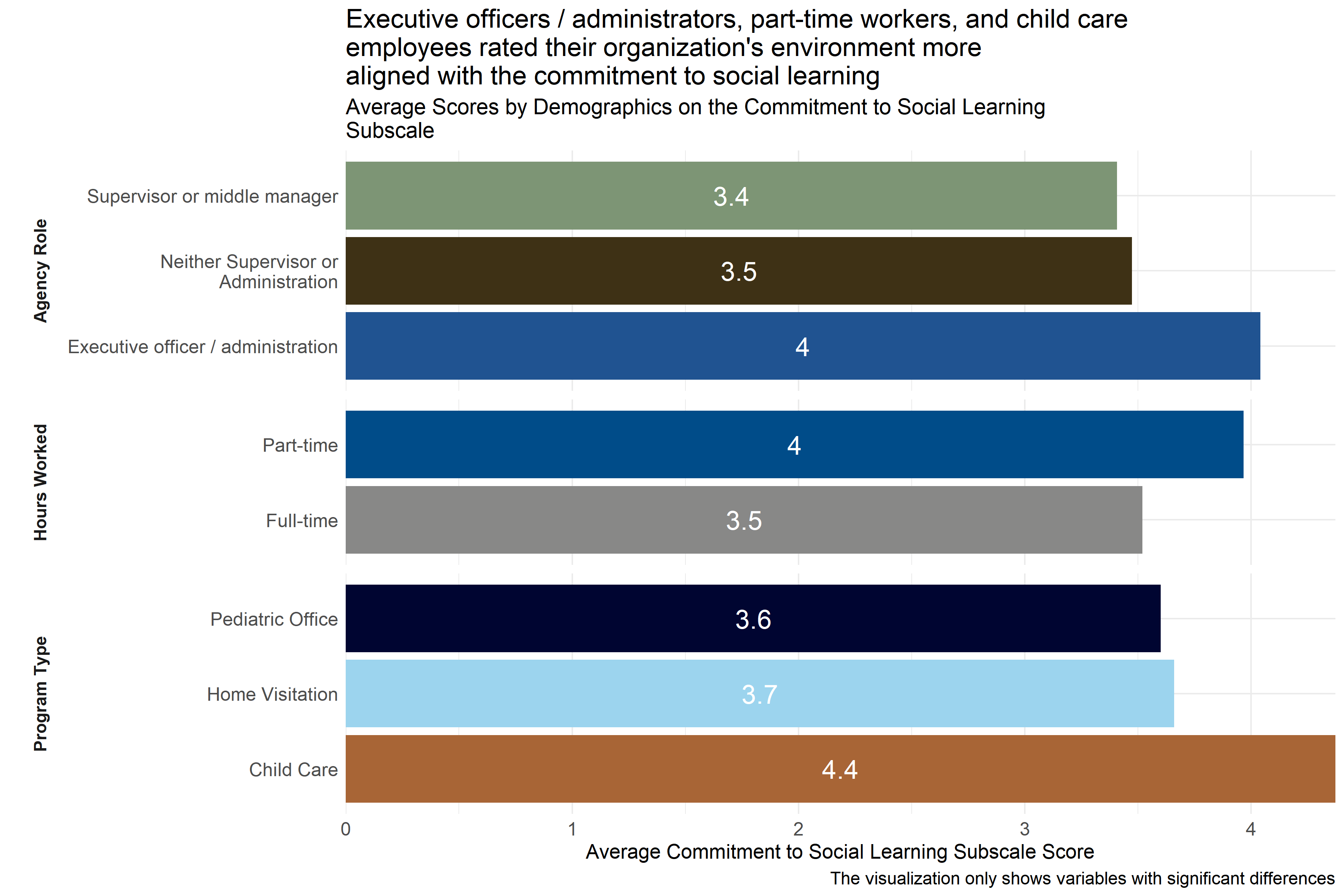

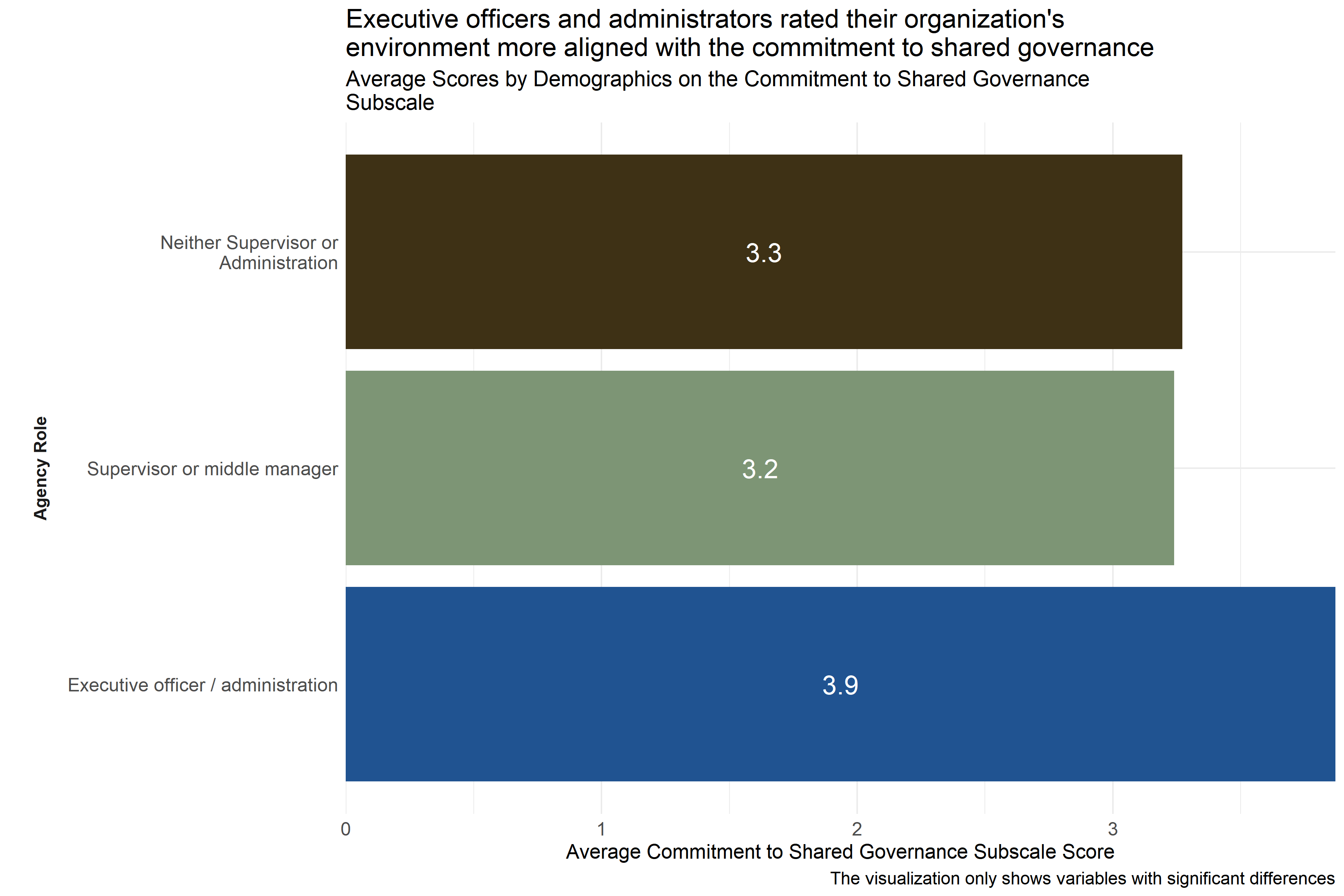

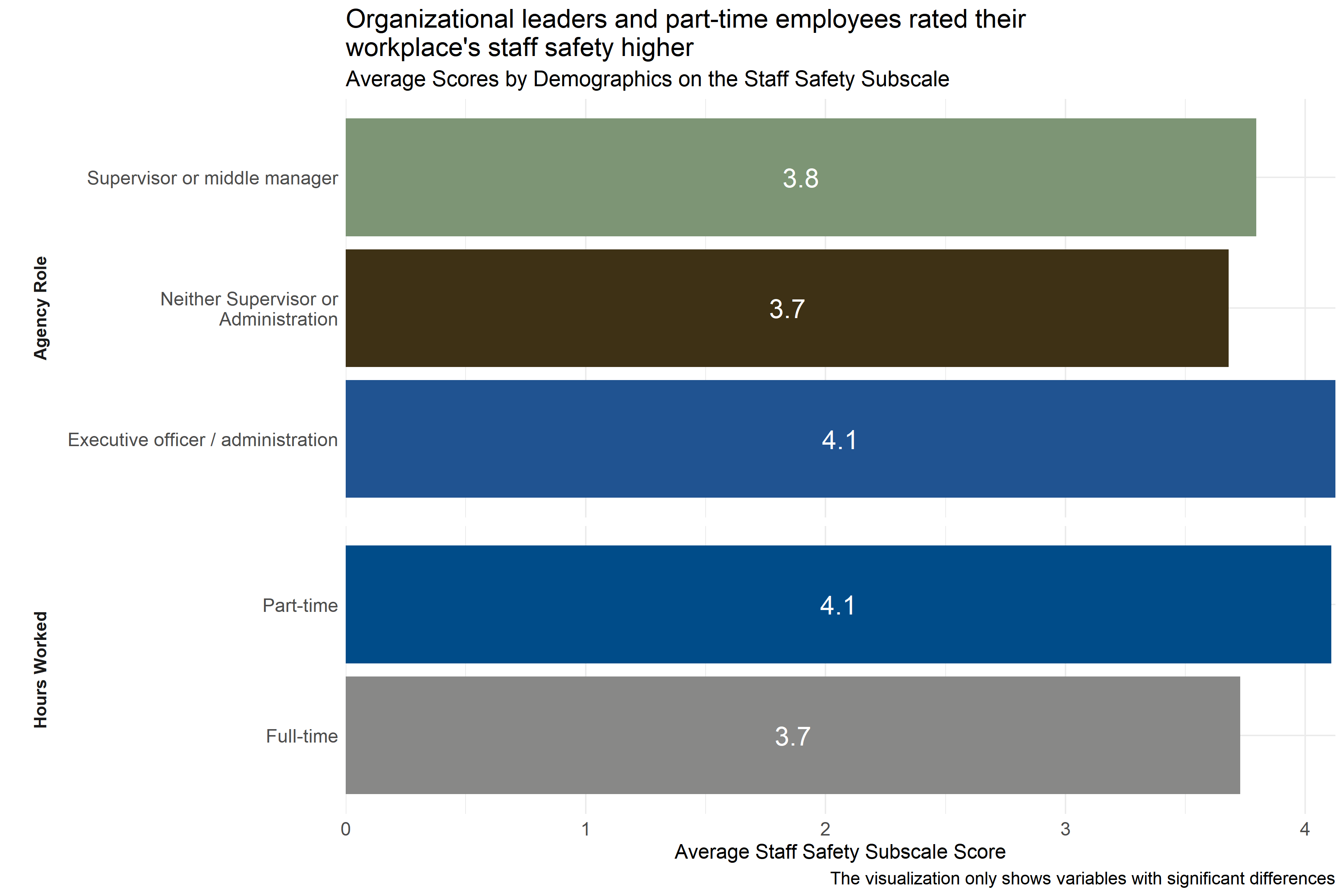

- When there were differences between groups, executive directors and administrators, child care providers, and part-time employees consistently scored higher on Environmental Assessment Scale subscales than full-time employees and staff in other organizational roles or fields.

- Scores on the Environmental Assessment scale were generally between neutral and moderately aligned with a trauma-informed model, with the General Social Environment subscale generally having the highest scores.


4.5.4.2 Physical Environment

The Physical Environment Subscale of the Environmental Assessment Survey measures how employees responded to questions about their physical environment. Respondents answered on a five-point scale with options ranging from “Strongly disagree” to “Strongly agree” to statements like “There is enough community space for gathering with seating that can become a circle.” Individual responses to each of the questions in this scale can be found in Appendix B: Detailed Question Responses by Scale. Lower scores on this scale indicate a more negative physical environment, and higher scores indicate a more positive physical environment.

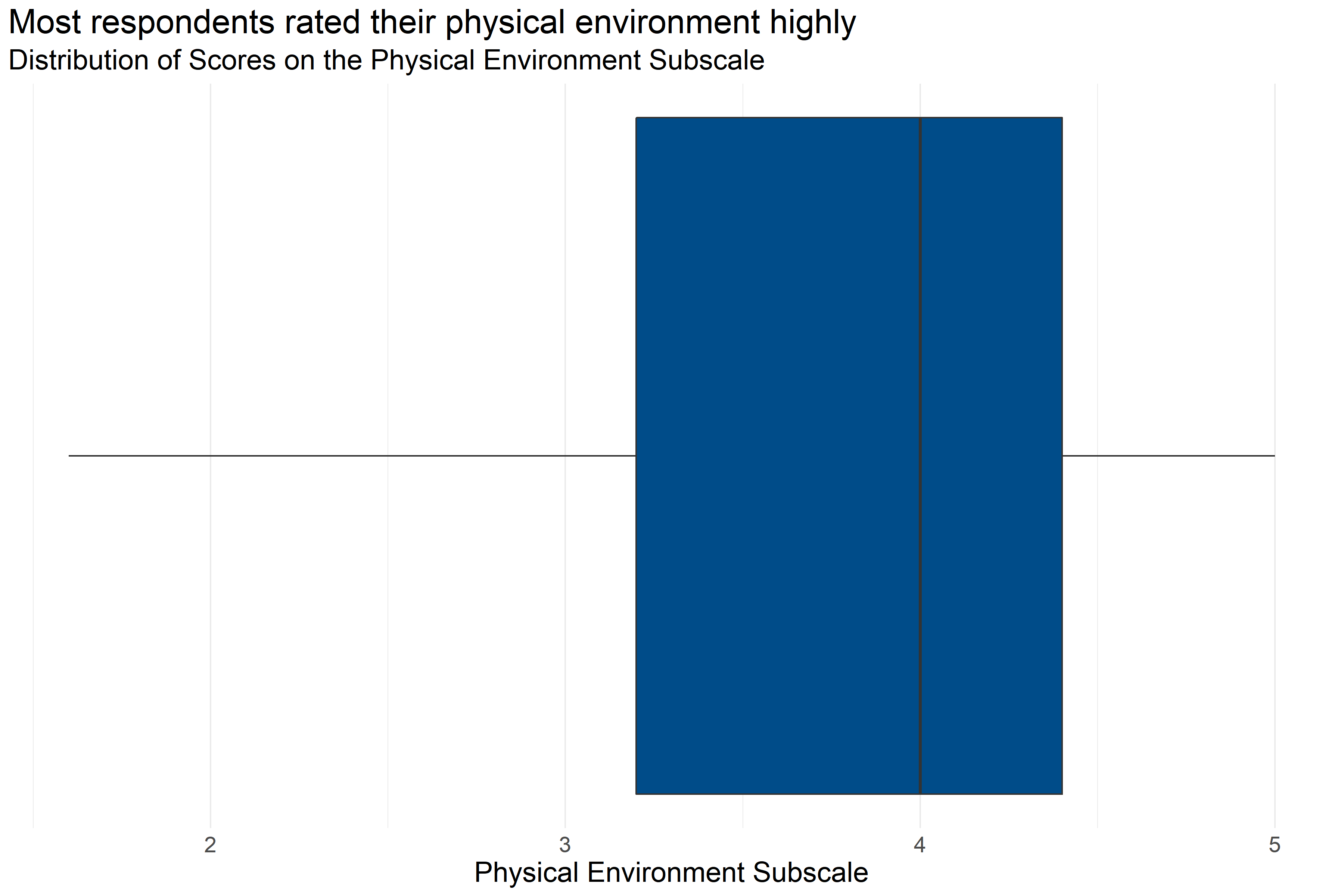

Figure 4.18: Boxplot of score distributions for the Physical Environment Subscale

| Number of Answers | Average Score | Lowest Score | First Quartile | Median | Third Quartile | Highest Score |
|---|---|---|---|---|---|---|
| 220 | 3.79 | 1.6 | 3.2 | 4 | 4.4 | 5 |

Figure 4.18 and Table 4.25 provide information about how respondents scored on the Physical Environment Subscale. They provide the following information:

- Response: 220 respondents answered all questions in the scale.

- Average: The average score was 3.79.

- Range: Scores ranged from 1.6 to 5.

- Median: About half of respondents scored below 4 and half scored above 4.

- Interquartile Range: If the range of responses were cut into quarters, the middle half of respondents (between the first and third quartiles) scored between 3.2 and 4.4.

This means that more than half of respondents generally answered that they agreed or strongly agreed with statements about their physical environment while on the job that were consistent with a Trauma Informed Model.

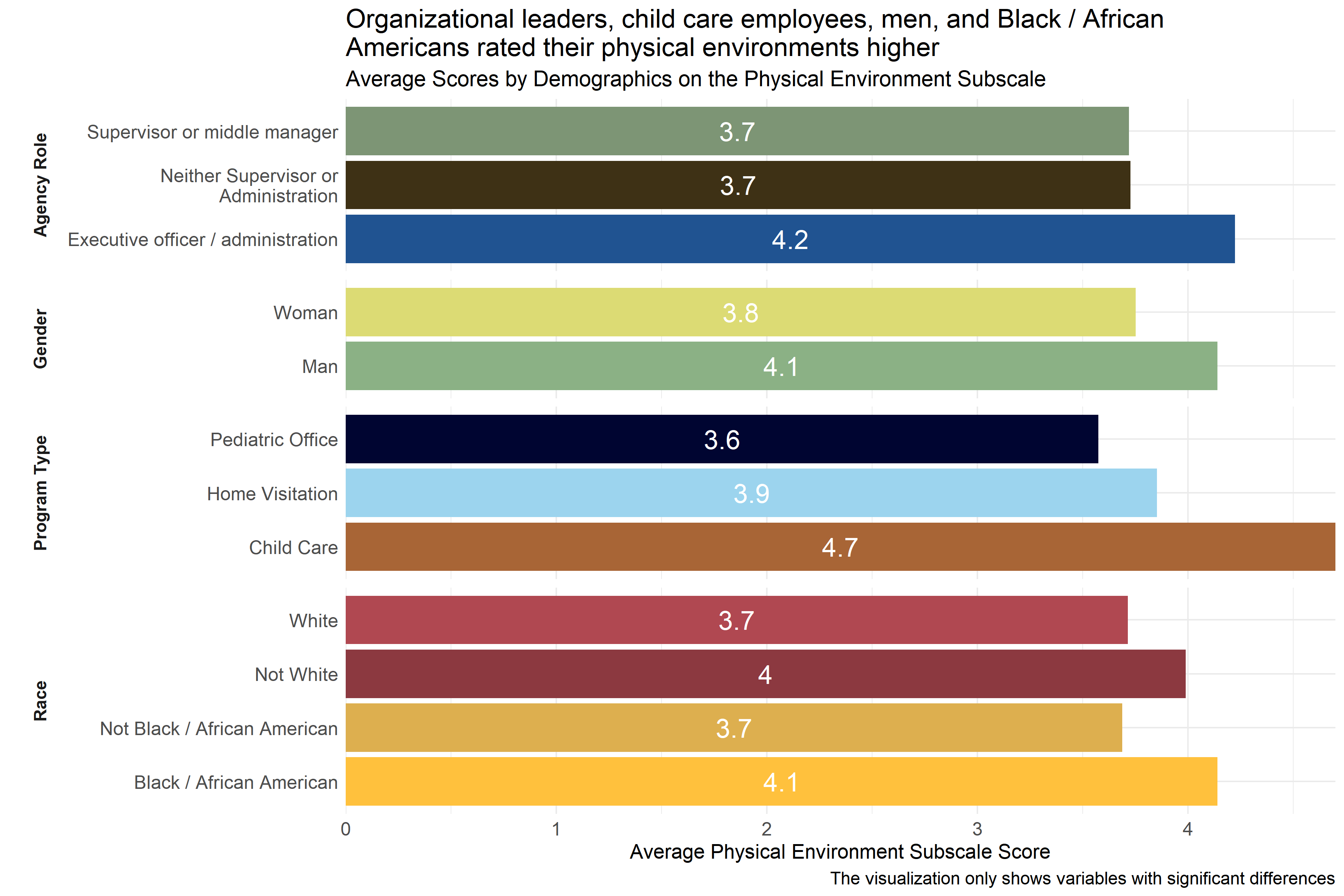

Figure 4.19: Average scores for Physical Environment Subscale across demographic groups

Analysts tested the average scores on the Physical Environment Subscale across demographic groups to identify differences in how respondents scored their environment. As Figure 4.19 shows, there were some statistically significant differences in average score across respondent groups by race, gender, program type, and role in agency. Respondents identifying as executive officers or members of the organization’s administration had higher average scores than those who did not identify as supervisors, middle managers, administrators, or executive officers. Similarly, respondents working in child care settings and men reported higher scores on average than those in other settings and women, respectively. In addition, Black / African American respondents and respondents who did not identify as White reported higher scores than other respondents.

4.5.4.5 Commitment to Nonviolence

The Commitment to Nonviolence Subscale measures the extent to which the cultural and social environment at an agency aligns with the trauma-informed norm and value of commitment to nonviolence. Survey participants responded to statements like “Destructive or violent incidents are addressed nonviolently and openly reviewed as soon as possible.” and “The community has a clear set of boundaries.” on a scale of 1 “Strongly Disagree” to 5 “Strongly Agree.” Individual responses to each of the questions in this scale can be found in Appendix B: Detailed Question Responses by Scale. Low scores on this scale indicate that the organization’s environment is less aligned with the commitment to nonviolence.

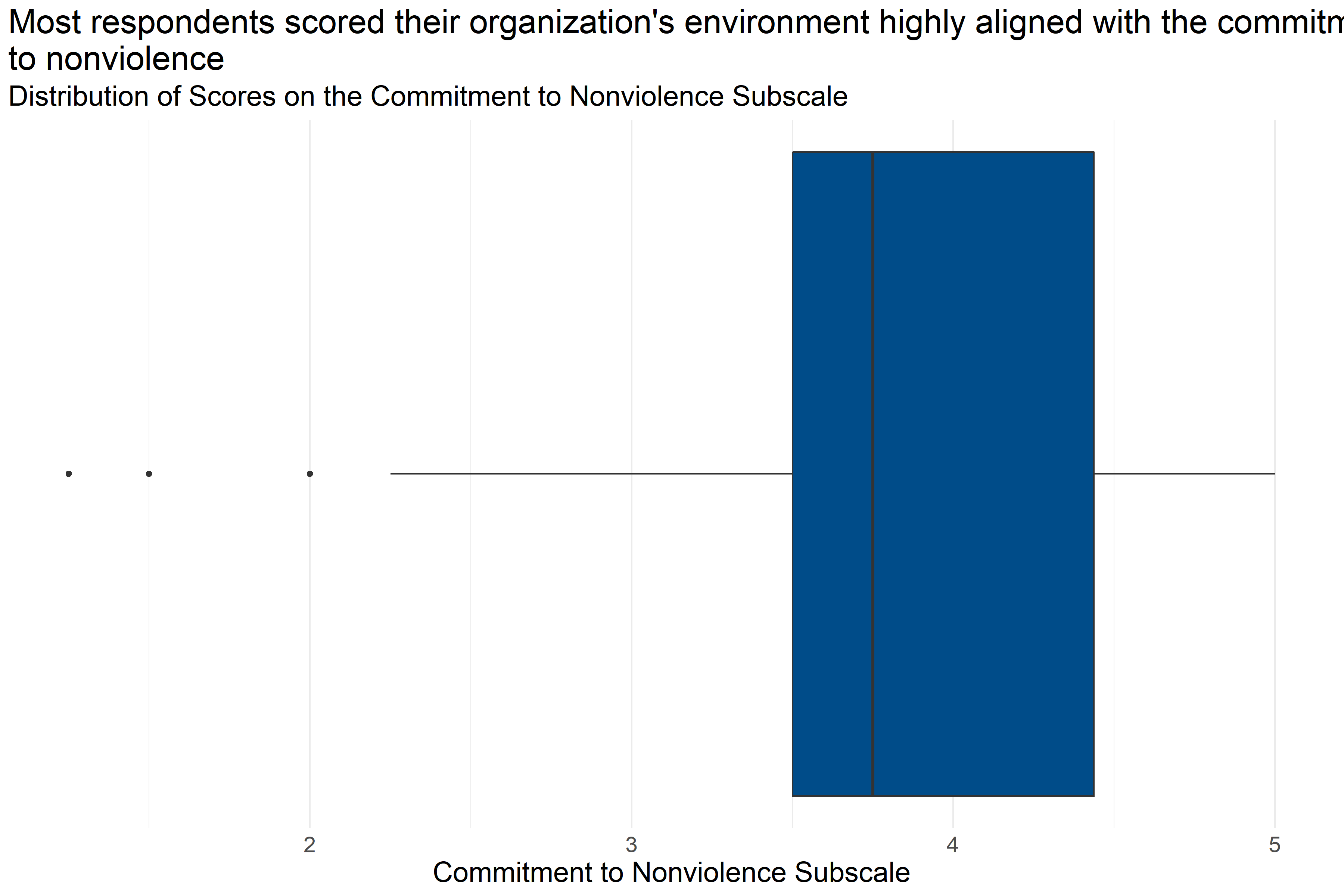

Figure 4.24: Boxplot of score distributions for Commitment to Nonviolence Subscale

| Number of Answers | Average Score | Lowest Score | First Quartile | Median | Third Quartile | Highest Score |
|---|---|---|---|---|---|---|
| 218 | 3.82 | 1.25 | 3.5 | 3.75 | 4.44 | 5 |

Figure 4.24 and Table 4.28 provide information about how respondents scored on the Commitment to Nonviolence Subscale. They provide the following information:

- Response: 218 respondents answered all questions in the scale.

- Average: The average score was 3.82.

- Range: Scores ranged from 1.25 to 5.

- Median: About half of respondents scored below 3.75 and half scored above 3.75.

- Interquartile Range: If the range of responses were cut into quarters, the middle half of respondents (between the first and third quartiles) scored between 3.5 and 4.44.

This means that more than half of respondents generally answered that they agreed or strongly agreed with statements about the alignment of their organization’s commitment to nonviolence that were consistent with a Trauma Informed Model.
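For readers who want to reproduce this kind of summary from raw scale scores, the statistics listed above can be sketched as follows. This is a minimal illustration, not the analysts' actual procedure: the function name `summarize_scores`, the example scores, and the choice of the "inclusive" quartile method are all assumptions made for the sake of the example.

```python
from statistics import mean, median, quantiles


def summarize_scores(scores):
    """Return the summary statistics reported for each subscale.

    `scores` holds one value per respondent (hypothetically, each
    respondent's mean across the 1-5 items in a subscale).
    """
    # Quartile method is an assumption; the report does not state which was used.
    q1, _, q3 = quantiles(scores, n=4, method="inclusive")
    return {
        "n": len(scores),
        "average": round(mean(scores), 2),
        "lowest": min(scores),
        "first_quartile": q1,
        "median": median(scores),
        "third_quartile": q3,
        "highest": max(scores),
    }


# Hypothetical scores for illustration only; these are not survey data.
example_scores = [1.25, 3.5, 3.5, 3.75, 3.75, 4.0, 4.5, 4.5, 5.0]
summary = summarize_scores(example_scores)
```

The "lowest" and "highest" entries together give the range, and the first and third quartiles bound the middle half of respondents.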

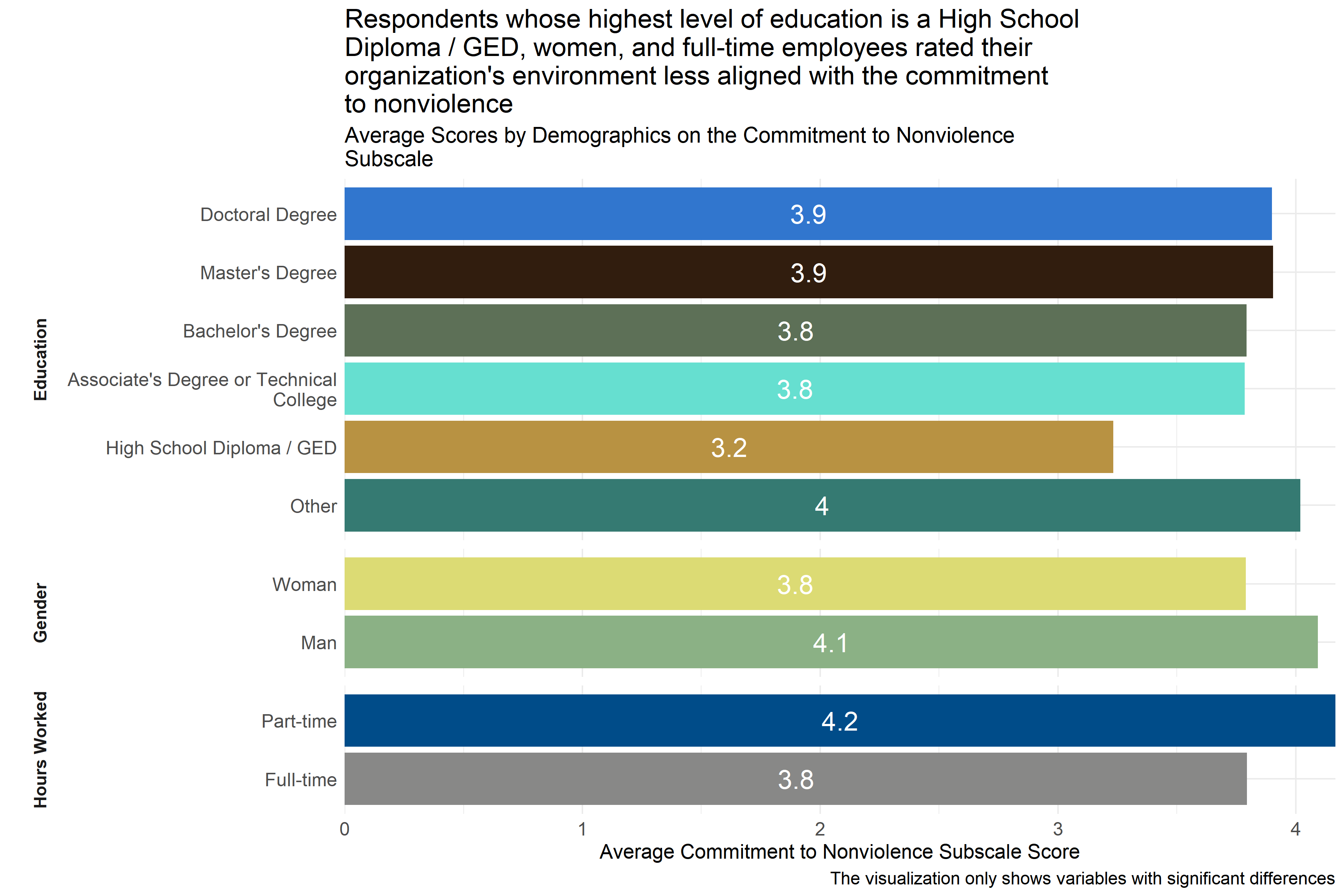

Figure 4.25: Average scores for Commitment to Nonviolence Subscale across demographic groups

Analysts tested the average scores on the Commitment to Nonviolence Subscale across demographic groups to identify differences in how respondents answered questions about how they perceived indications of their agencies’ commitment to nonviolence in a way that was consistent with the Trauma Informed Care model. As Figure 4.25 shows, respondents whose highest level of education is a High School Diploma / GED reported lower scores on average than those who have achieved higher levels of education, as did women and full-time employees. Analysts also saw significant differences in responses across specific agencies, but those scores are not included in Figure 4.25 above to protect the privacy of those agencies.

This suggests that respondents whose highest level of education is a High School Diploma / GED, who are women, or who work full-time are experiencing less alignment between their organizations’ commitment to nonviolence and the commitment to nonviolence that is consistent with the Trauma Informed Care model.

4.5.4.6 Commitment to Emotional Intelligence

The Commitment to Emotional Intelligence Subscale measures the extent to which the cultural and social environment at an agency aligns with the trauma-informed norm and value of commitment to emotional intelligence. Survey participants responded to statements like “When staff members discuss a client, there is always an emphasis on thoughtful exploration of complicated issues.” and “My supervisor talks with me about work-related stress and helps me manage that stress in appropriate ways.” on a scale of 1 “Strongly Disagree” to 5 “Strongly Agree.” Individual responses to each of the questions in this scale can be found in Appendix B: Detailed Question Responses by Scale. Low scores on this scale indicate that the organization’s environment is less aligned with the Commitment to Emotional Intelligence.

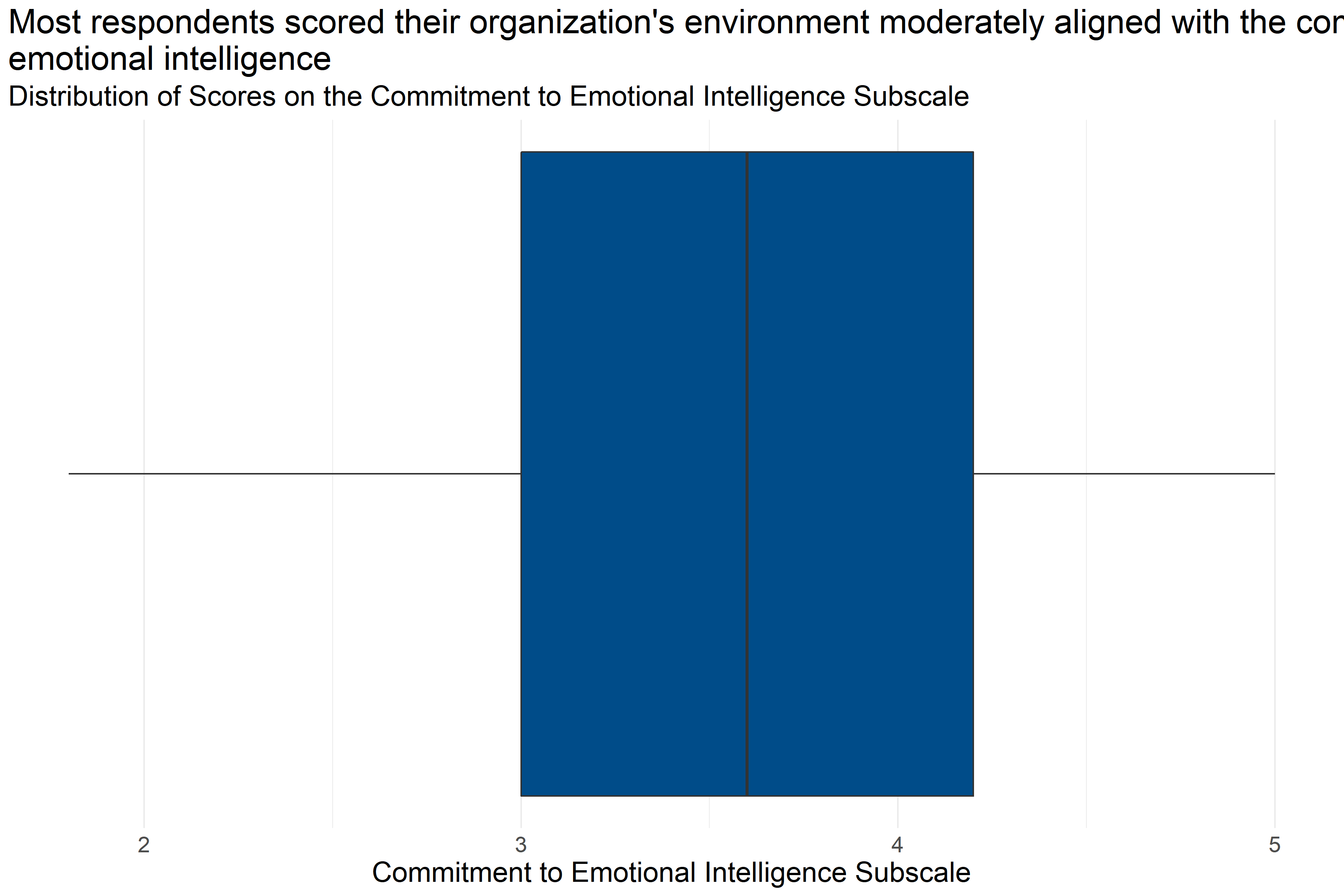

Figure 4.26: Boxplot of score distributions for Commitment to Emotional Intelligence Subscale

| Number of Answers | Average Score | Lowest Score | First Quartile | Median | Third Quartile | Highest Score |
|---|---|---|---|---|---|---|
| 226 | 3.64 | 1.8 | 3 | 3.6 | 4.2 | 5 |

Figure 4.26 and Table 4.29 provide information about how respondents scored on the Commitment to Emotional Intelligence Subscale. They provide the following information:

- Response: 226 respondents answered all questions in the scale.

- Average: The average score was 3.64.

- Range: Scores ranged from 1.8 to 5.

- Median: About half of respondents scored below 3.6 and half scored above 3.6.

- Interquartile Range: If the range of responses were cut into quarters, the middle half of respondents (between the first and third quartiles) scored between 3 and 4.2.

This means that more than half of respondents generally answered that they neither agreed nor disagreed or agreed with statements about the alignment of their organization’s commitment to emotional intelligence that were consistent with a Trauma Informed Model.

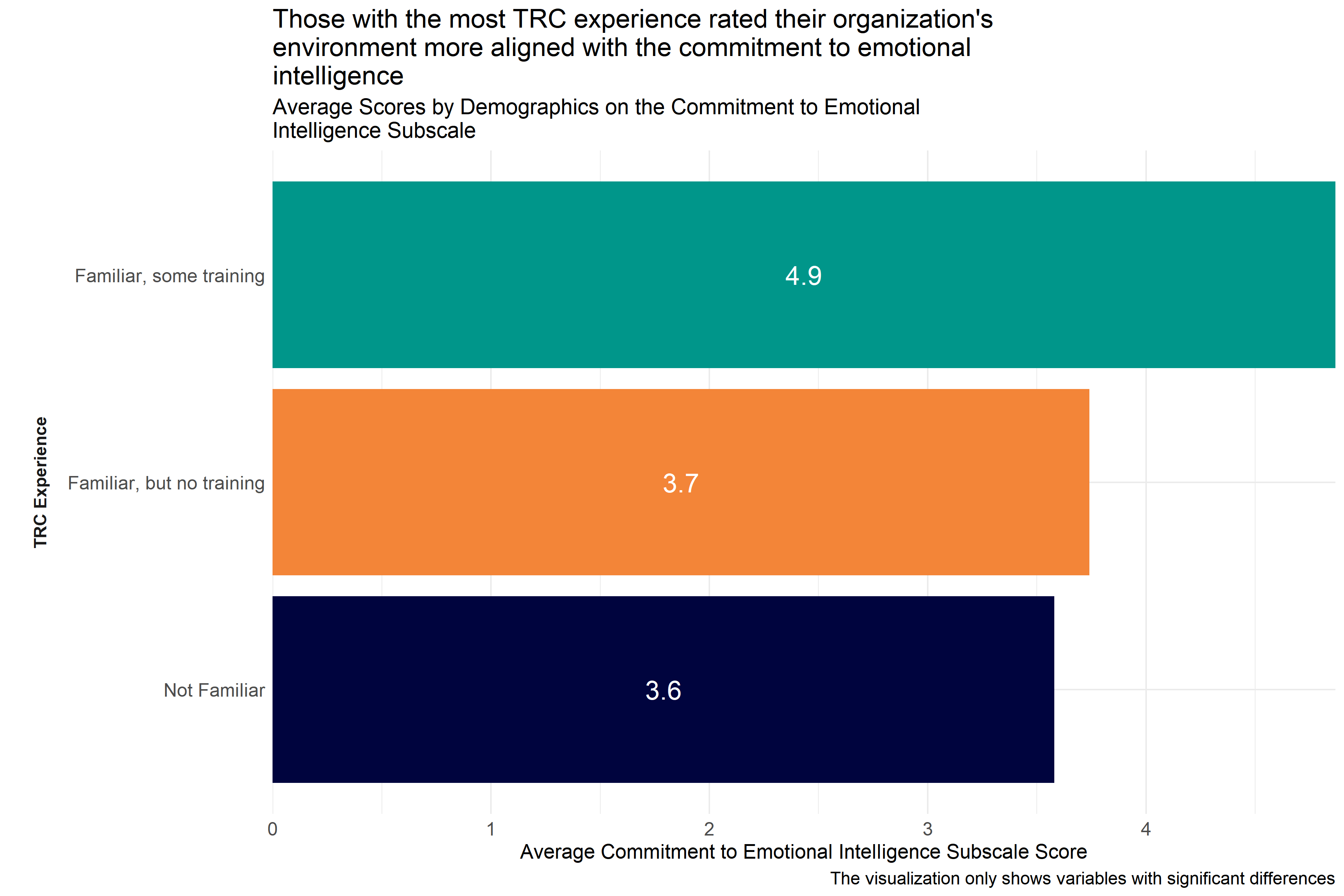

Figure 4.27: Average scores for Commitment to Emotional Intelligence Subscale across demographic groups

Analysts tested the average scores on the Commitment to Emotional Intelligence Subscale across demographic groups to identify differences in how respondents answered questions about how they perceived indications of their agencies’ commitment to emotional intelligence in a way that was consistent with the Trauma Informed Care model. As Figure 4.27 shows, those who identified themselves as “I am in the process of participating in the TRC staff training modules” or “I have attended and completed the 3-day TRC leadership training” scored higher than respondents who had less experience with TRC. This suggests that those with more TRC experience are witnessing more alignment within their respective organizations towards a commitment to emotional intelligence.

Analysts also observed significant differences in responses across specific agencies, but did not include that information in Figure 4.27 to protect the privacy of those agencies.

4.5.4.9 Commitment to Open Communication

The Commitment to Open Communication Subscale of the Environmental Assessment Survey measures the extent to which the cultural and social environment at an agency aligns with the trauma-informed norm and value of commitment to open communication. Survey participants responded to statements like “The schedule of program activities and events are available and accessible to clients and staff.” and “All staff are aware of decisions made around policies and procedures.” on a scale of 1 “Strongly Disagree” to 5 “Strongly Agree.” Individual responses to each of the questions in this scale can be found in Appendix B: Detailed Question Responses by Scale. Low scores on this scale indicate that the organization’s environment is less aligned with the commitment to open communication.

Figure 4.32: Boxplot of score distributions for Commitment to Open Communication Subscale

| Number of Answers | Average Score | Lowest Score | First Quartile | Median | Third Quartile | Highest Score |
|---|---|---|---|---|---|---|
| 219 | 3.51 | 1 | 2.8 | 3.6 | 4 | 5 |

Figure 4.32 and Table 4.32 provide information about how respondents scored on the Commitment to Open Communication Subscale. They provide the following information:

- Response: 219 respondents answered all questions in the scale.

- Average: The average score was 3.51.

- Range: Scores ranged from 1 to 5.

- Median: About half of respondents scored below 3.6 and half scored above 3.6.

- Interquartile Range: If the range of responses were cut into quarters, the middle half of respondents (between the first and third quartiles) scored between 2.8 and 4.

This means that more than half of respondents generally answered that they neither agreed nor disagreed or agreed with statements about the alignment of their organization’s commitment to open communication that were consistent with a Trauma Informed Model.

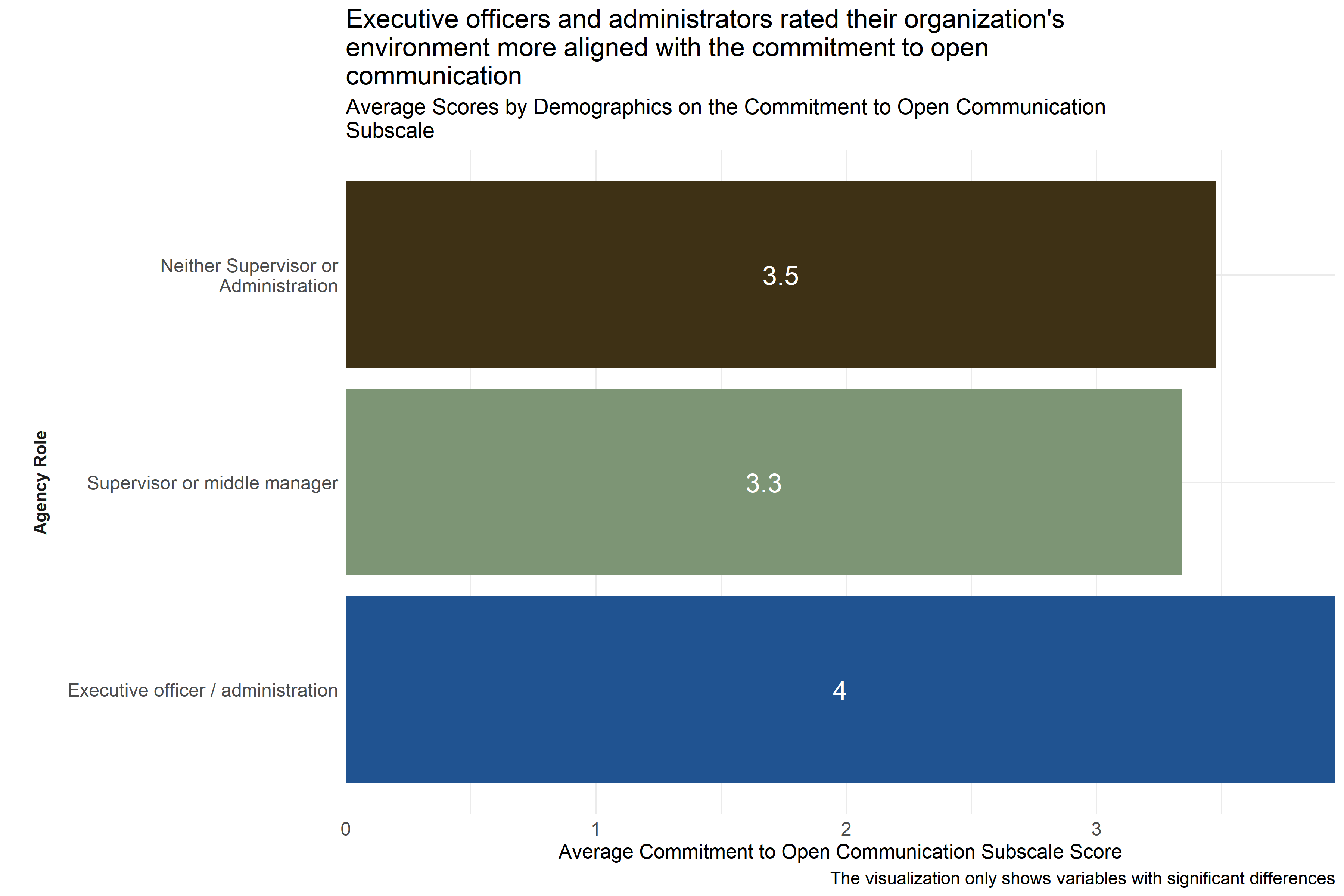

Figure 4.33: Average scores for Commitment to Open Communication Subscale across demographic groups

Analysts tested the average scores on the Commitment to Open Communication Subscale across demographic groups to identify differences in how respondents answered questions about how they perceived indications of their agencies’ commitment to open communication in a way that was consistent with the Trauma Informed Care model. As Figure 4.33 shows, executive officers / administrators scored higher than respondents in other agency roles. This suggests that executive officers / administrators are experiencing more alignment within their respective organizations towards a commitment to open communication.

Analysts also observed significant differences in responses across specific agencies, but did not include that information in Figure 4.33 to protect the privacy of those agencies.

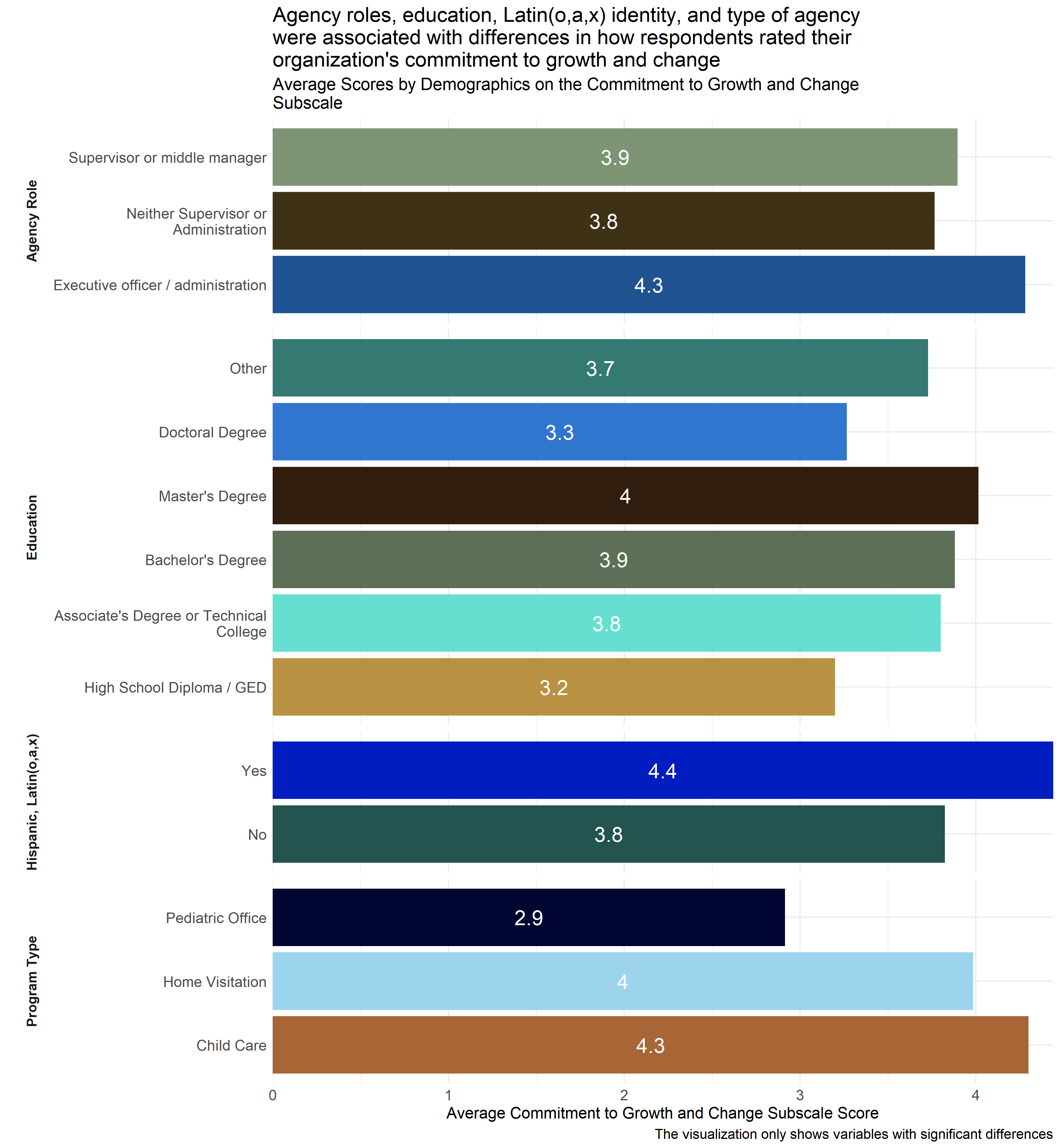

4.5.4.11 Commitment to Growth and Change

The Commitment to Growth and Change Subscale measures the extent to which the cultural and social environment at an agency aligns with the trauma-informed norm and value of commitment to growth and change. Survey participants responded to statements like “Clients are routinely encouraged to think about, plan and work on goals for the immediate, short-term and long-term future.” and “Administrators, managers and staff truly believe in the potential for positive change in the clients we serve.” on a scale of 1 “Strongly Disagree” to 5 “Strongly Agree.” Individual responses to each of the questions in this scale can be found in Appendix B: Detailed Question Responses by Scale. Low scores on this scale indicate that the organization’s environment is less aligned with the commitment to growth and change.

Figure 4.37: Boxplot of score distributions for Commitment to Growth and Change Subscale

| Number of Answers | Average Score | Lowest Score | First Quartile | Median | Third Quartile | Highest Score |
|---|---|---|---|---|---|---|
| 215 | 3.85 | 1.6 | 3.4 | 4 | 4.4 | 5 |

Figure 4.37 and Table 4.34 provide information about how respondents scored on the Commitment to Growth and Change Subscale. They provide the following information:

- Response: 215 respondents answered all questions in the scale.

- Average: The average score was 3.85.

- Range: Scores ranged from 1.6 to 5.

- Median: About half of respondents scored below 4 and half scored above 4.

- Interquartile Range: If the range of responses were cut into quarters, the middle half of respondents (between the first and third quartiles) scored between 3.4 and 4.4.

This means that more than half of respondents generally answered that they agreed or strongly agreed with statements about the alignment of their organization’s commitment to growth and change that were consistent with a Trauma Informed Model.

Figure 4.38: Average scores for Commitment to Growth and Change Subscale across demographic groups

Analysts tested the average scores on the Commitment to Growth and Change Subscale across demographic groups to identify differences in how respondents answered questions about how they perceived indications of their agencies’ commitment to growth and change in a way that was consistent with the Trauma Informed Care model. As Figure 4.38 shows, analysts found significant differences by agency role, highest level of education, whether or not the respondent self-identified as Hispanic, Latino(a) or Latinx, and agency type. Respondents whose highest level of education is a High School Diploma / GED or Doctoral degree reported lower scores on average than those who have achieved other levels of education. Specifically, those with a High School Diploma / GED as their highest level of education had lower scores than respondents who had bachelor’s or master’s degrees, and those with doctoral degrees had lower scores than those with master’s degrees. Respondents who identified themselves as working at pediatric offices had lower scores than those working in home visitation programs or child care settings. In addition, executive officers and administrators scored higher than respondents who did not identify as supervisors or administrators.

Additionally, respondents identifying as Hispanic, Latino(a) or Latinx rated their organizations higher on average than those who did not identify as Hispanic, Latino(a) or Latinx. This suggests that respondents whose highest level of education is a High School Diploma / GED or Doctoral degree, who work at pediatric offices, did not identify as Hispanic, Latino(a) or Latinx, and are not supervisors or administrators are experiencing less alignment within their respective organizations towards a commitment to growth and change.

4.5.4.12 Conclusion

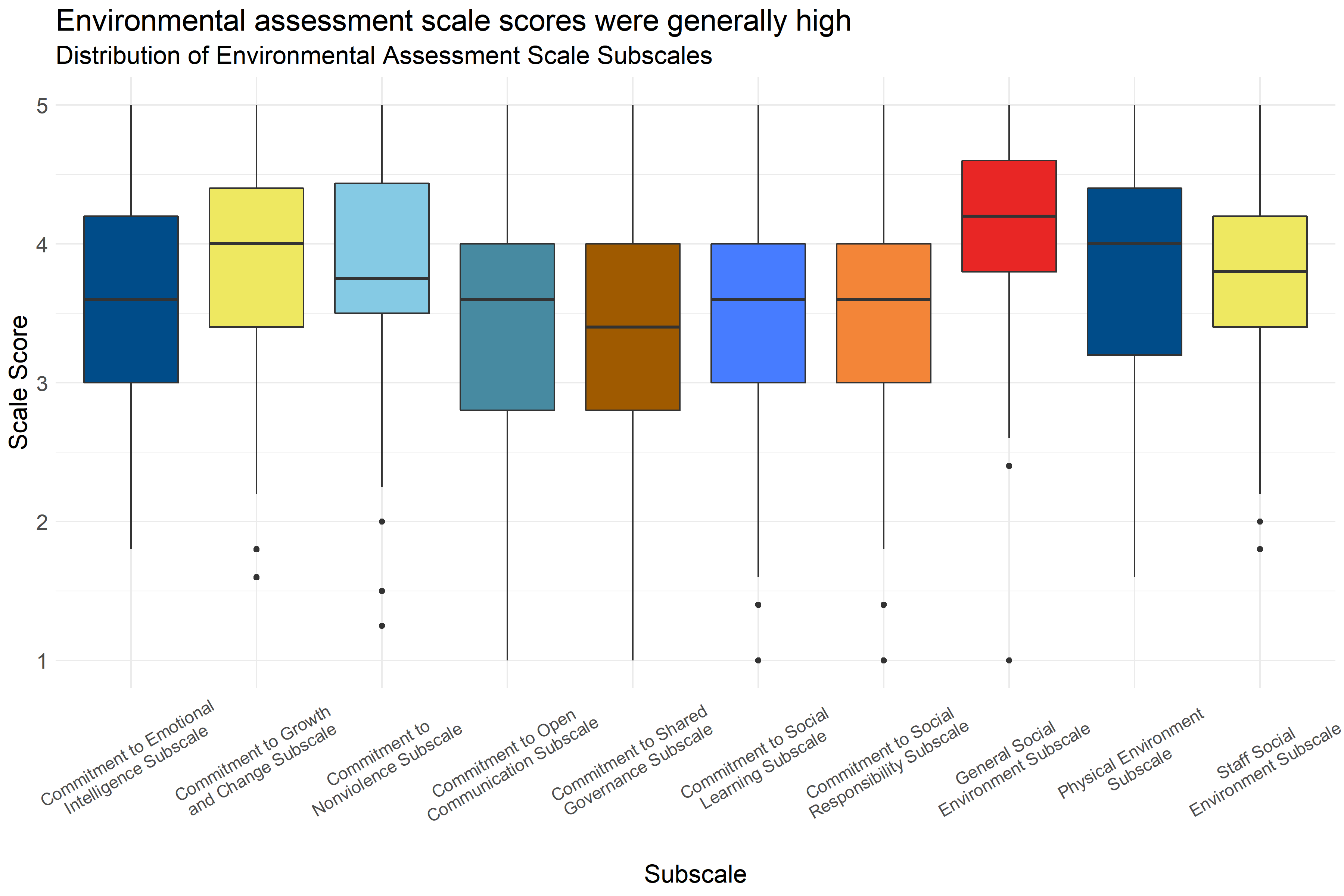

Figure 4.39: Boxplot of score distributions on the Environmental Assessment Scale Subscales

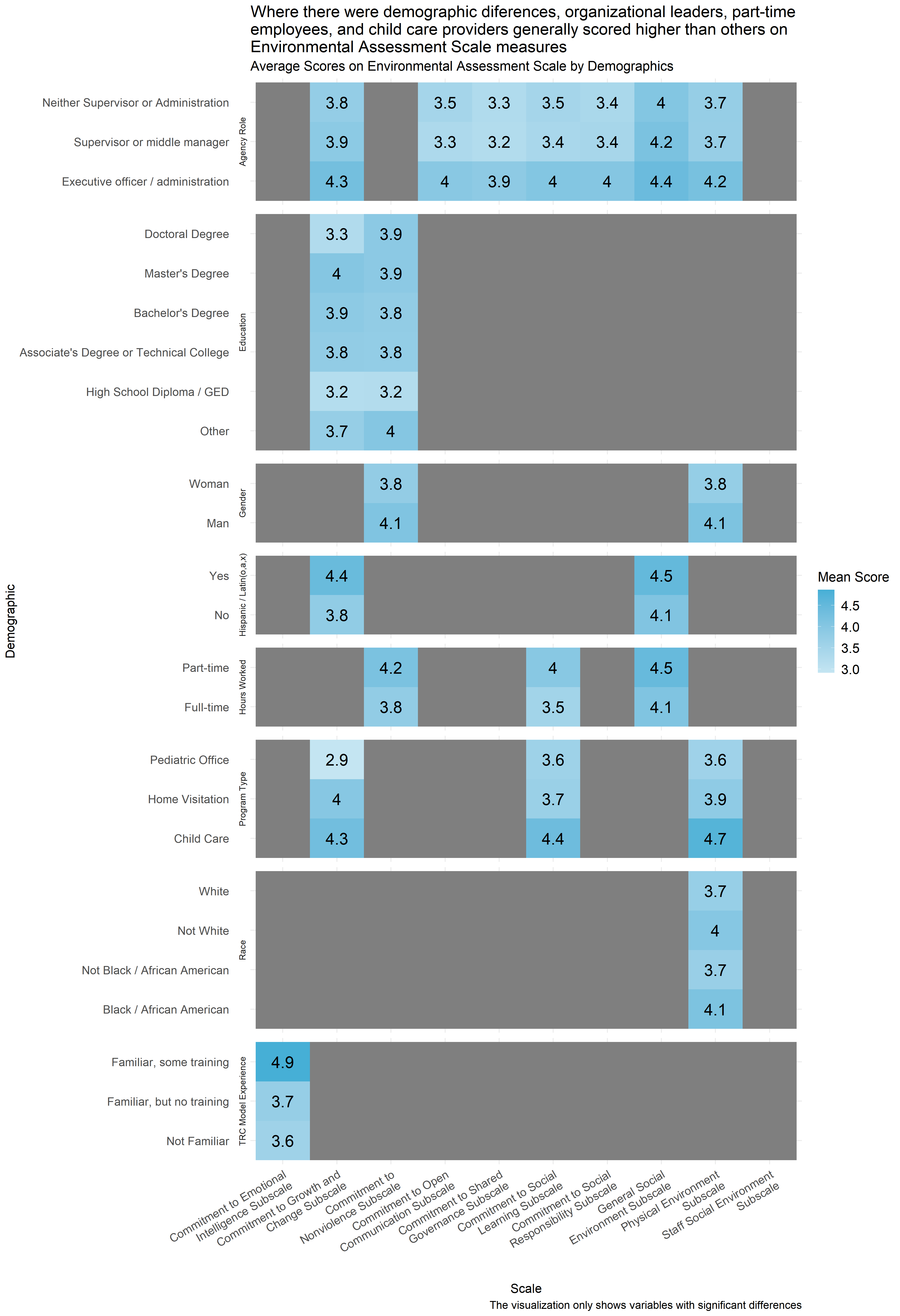

Figure 4.40: Mean score on Environmental Assessment Scale Subscales by demographic

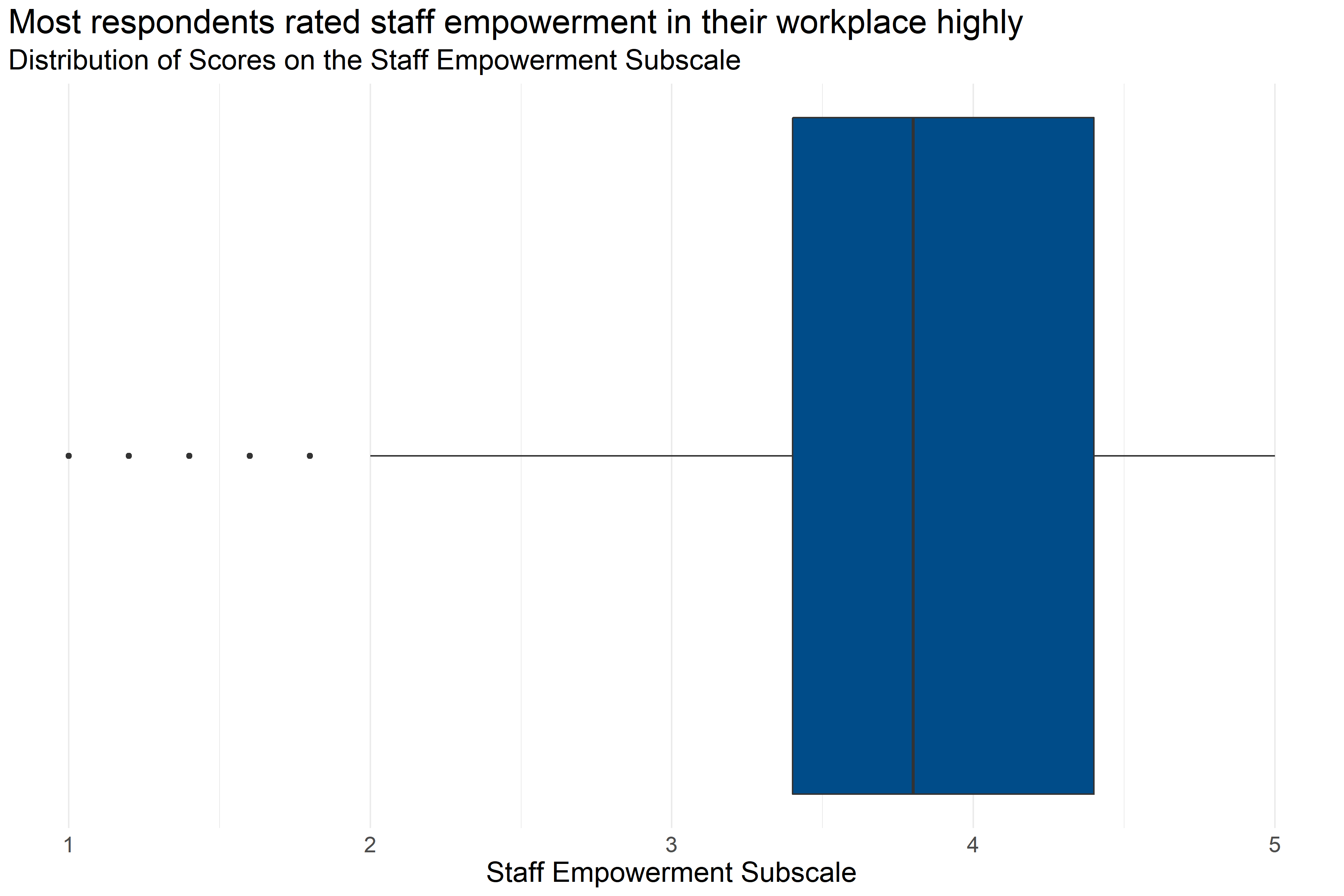

Figure 4.39 shows the distribution of scores on the Environmental Assessment Scale subscales. The colored boxes represent the range of scores for the middle half of respondents. (If you were to cut all of the scores into quarters, this would be between the first and third quartiles.) The lines and dots represent the full distribution of scores on each scale. Dots on either side of a scale indicate unusually high or low scores. Figure 4.39 shows that the scores on the Environmental Assessment Scale subscales were generally between neutral and moderately aligned with trauma-informed practice, with the highest scores generally being on the General Social Environment subscale.
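As an illustration of how a boxplot can separate "unusually high or low" scores from the rest, the sketch below applies a common convention in which any score more than 1.5 times the interquartile range beyond the first or third quartile is drawn as a dot. The report does not state which rule its figures use, so the 1.5 multiplier, the quartile method, the function name `flag_unusual_scores`, and the example scores are all assumptions.

```python
from statistics import quantiles


def flag_unusual_scores(scores, k=1.5):
    """Return the scores a conventional boxplot would draw as dots.

    Assumes the common 1.5 x IQR rule; the report's figures may use
    a different convention.
    """
    q1, _, q3 = quantiles(scores, n=4, method="inclusive")
    iqr = q3 - q1  # width of the colored box (middle half of respondents)
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [s for s in scores if s < lower or s > upper]


# Hypothetical scores for illustration only; these are not survey data.
example_scores = [1.25, 3.5, 3.5, 3.75, 3.75, 4.0, 4.5, 4.5, 5.0]
unusual = flag_unusual_scores(example_scores)
```

With these made-up scores, the box spans 3.5 to 4.5, so only a score below 2.0 or above 6.0 would be flagged.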

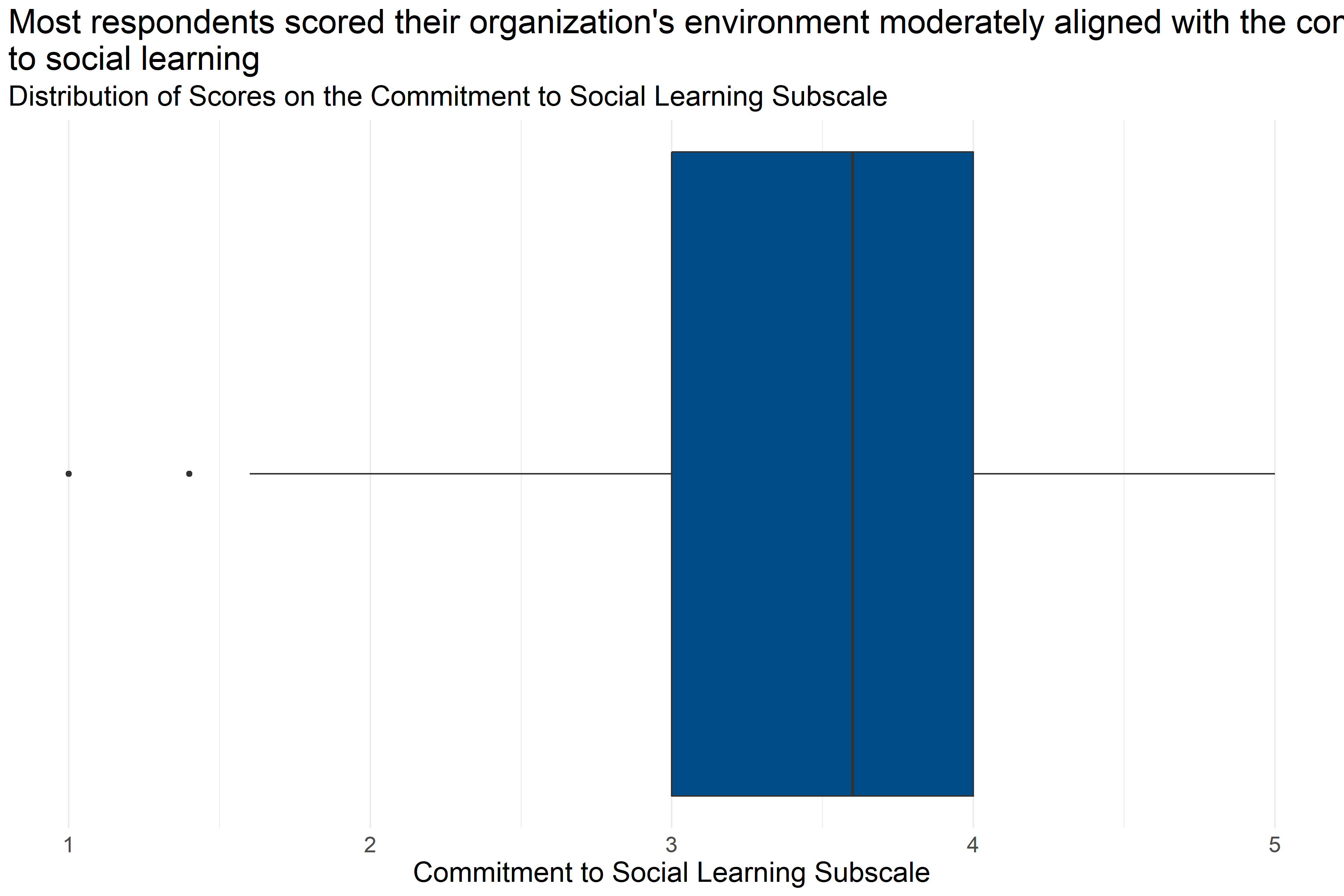

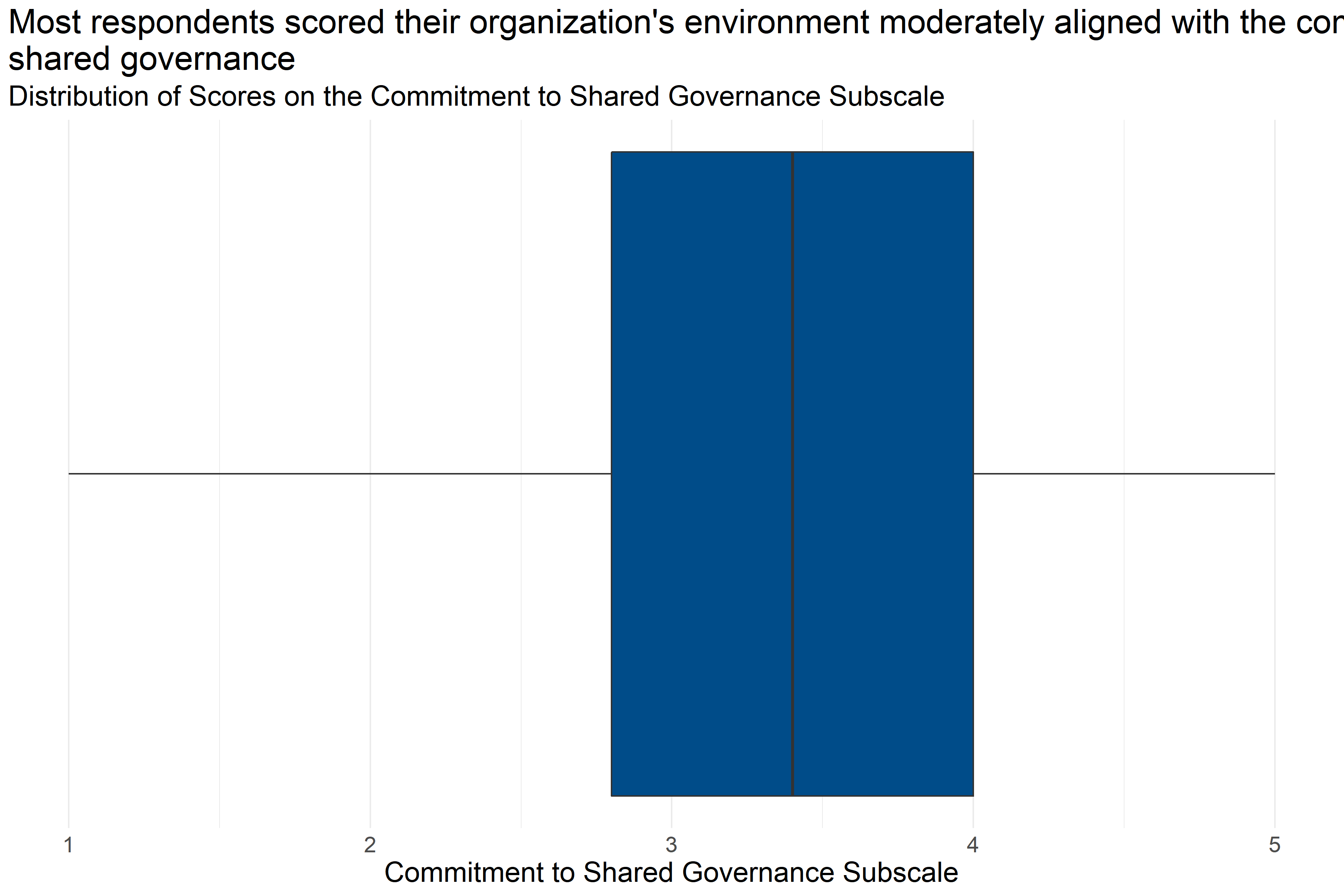

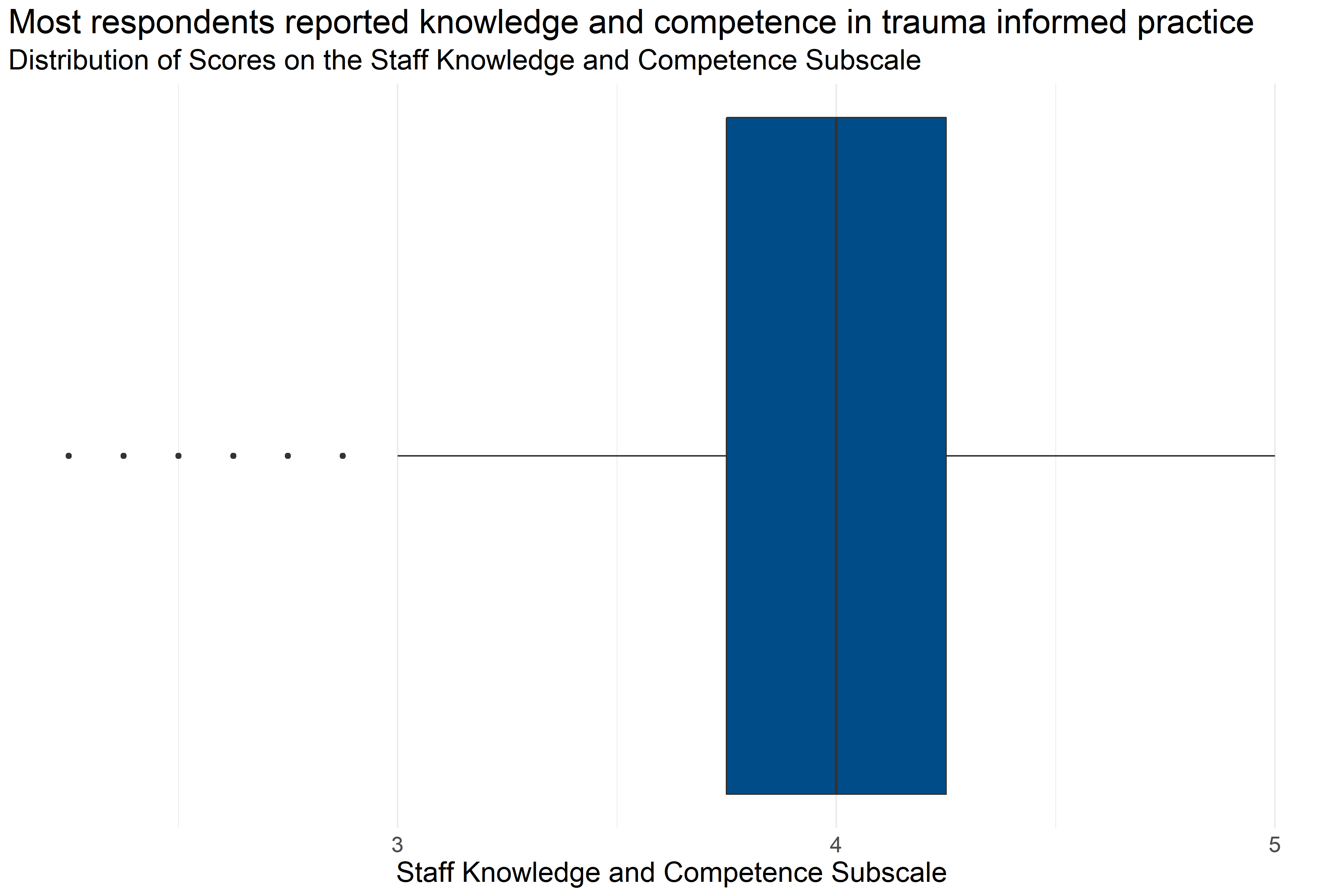

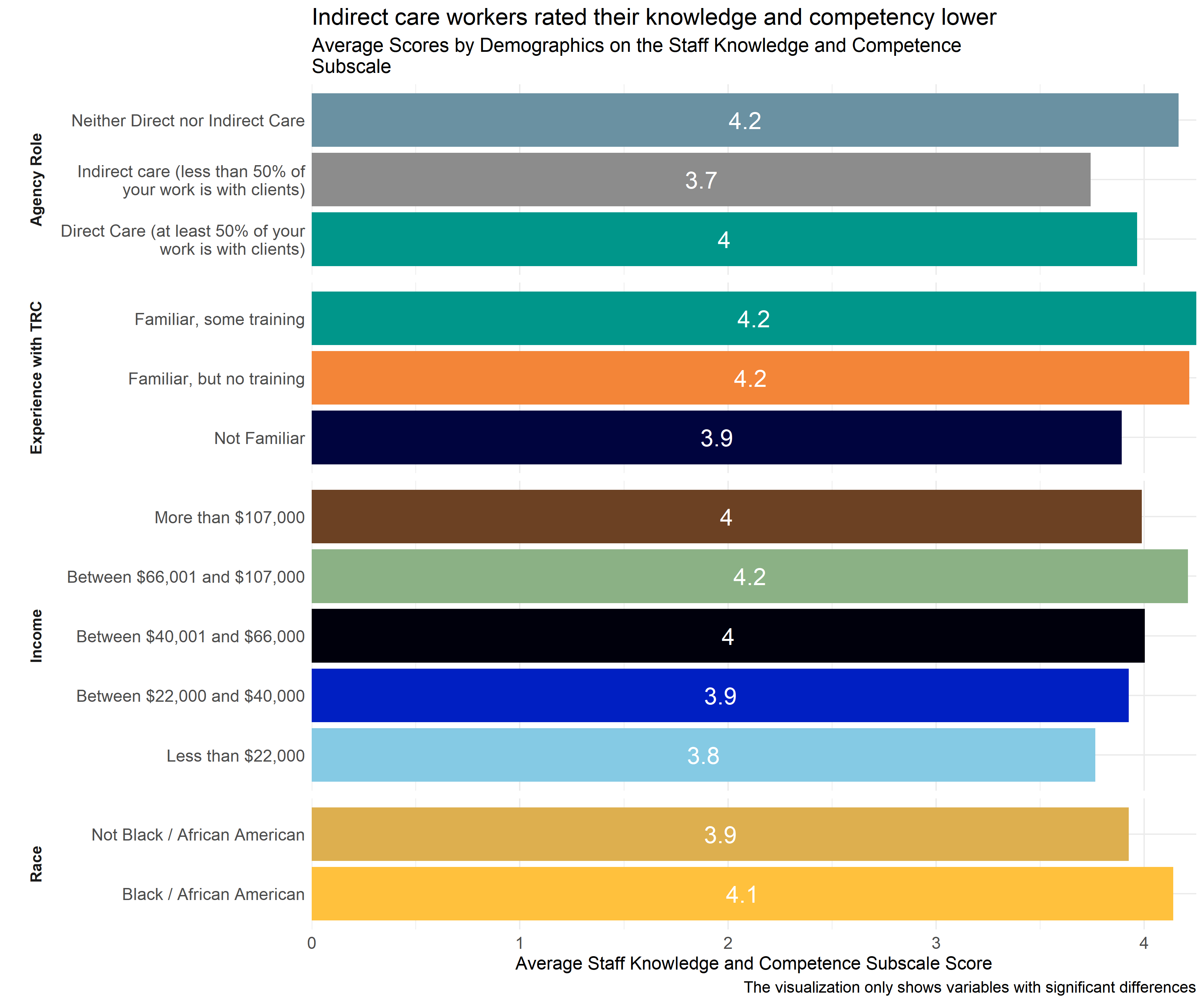

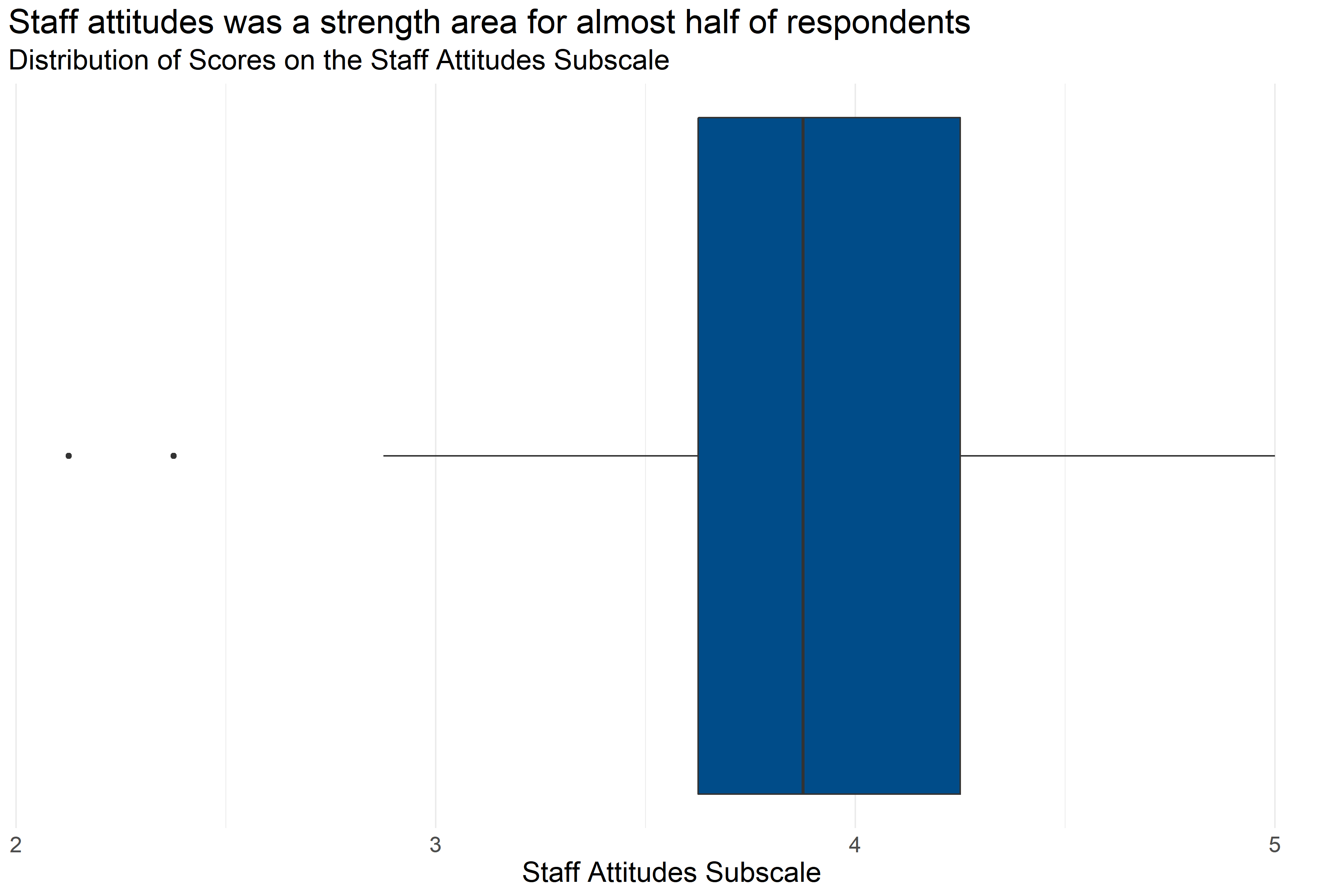

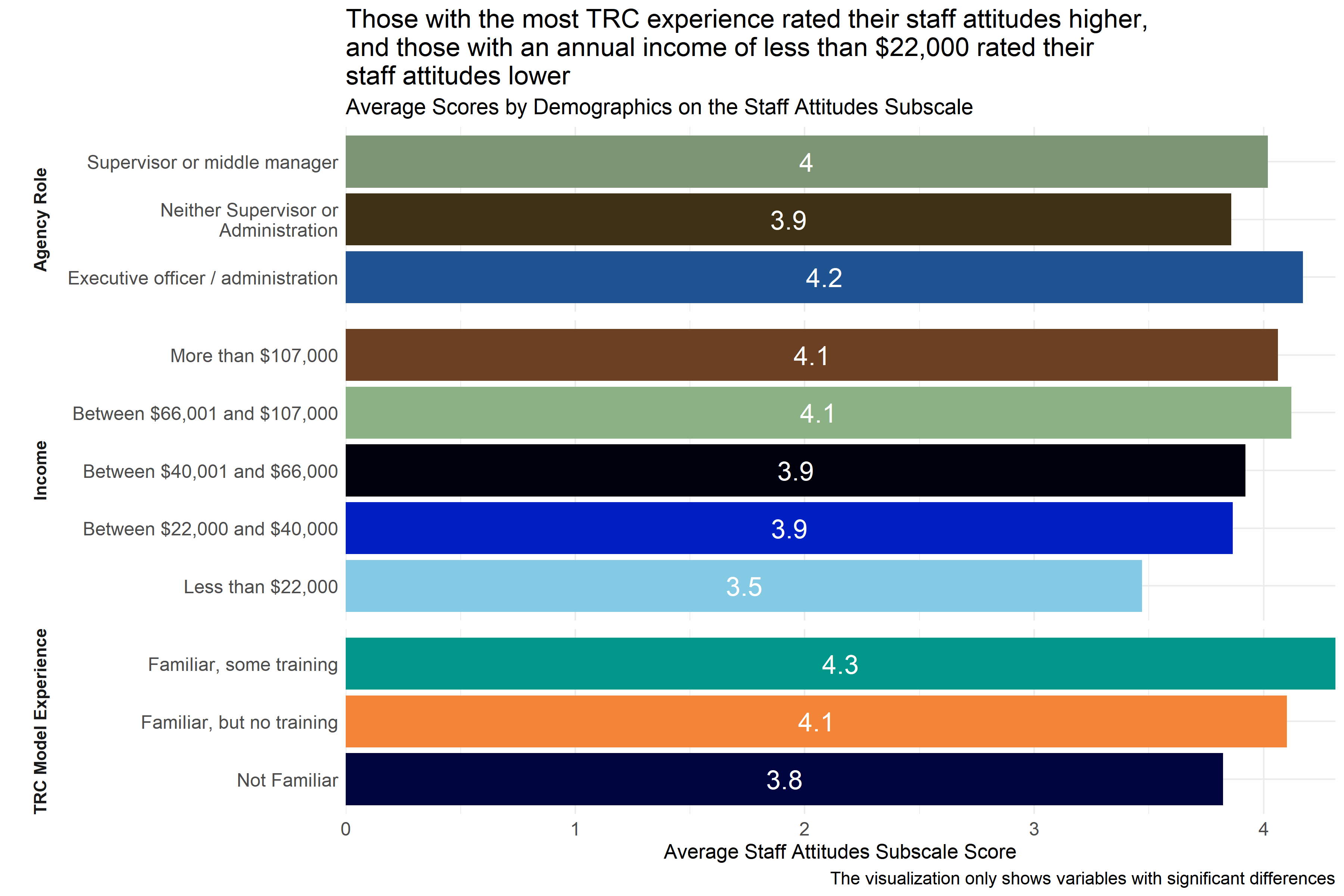

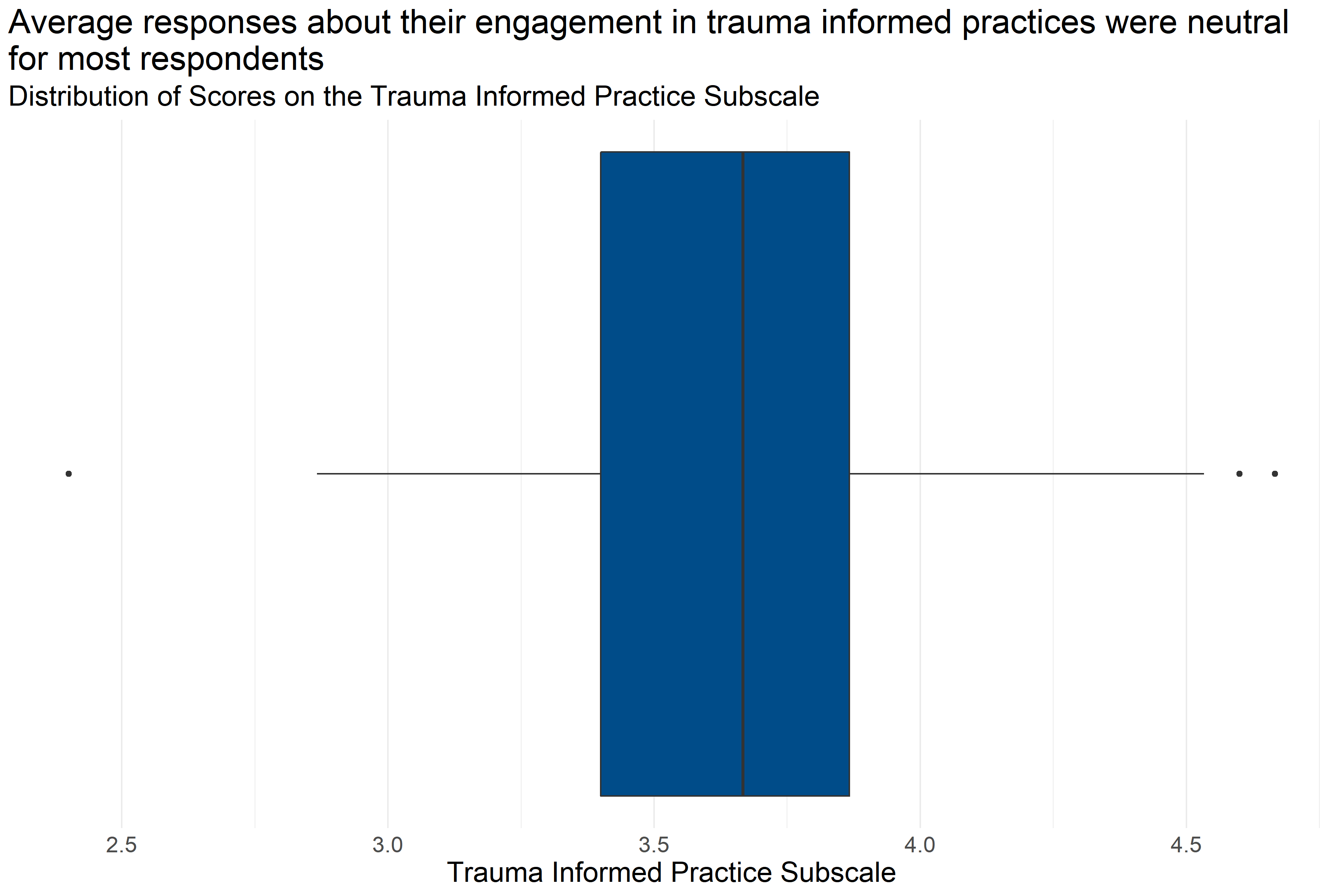

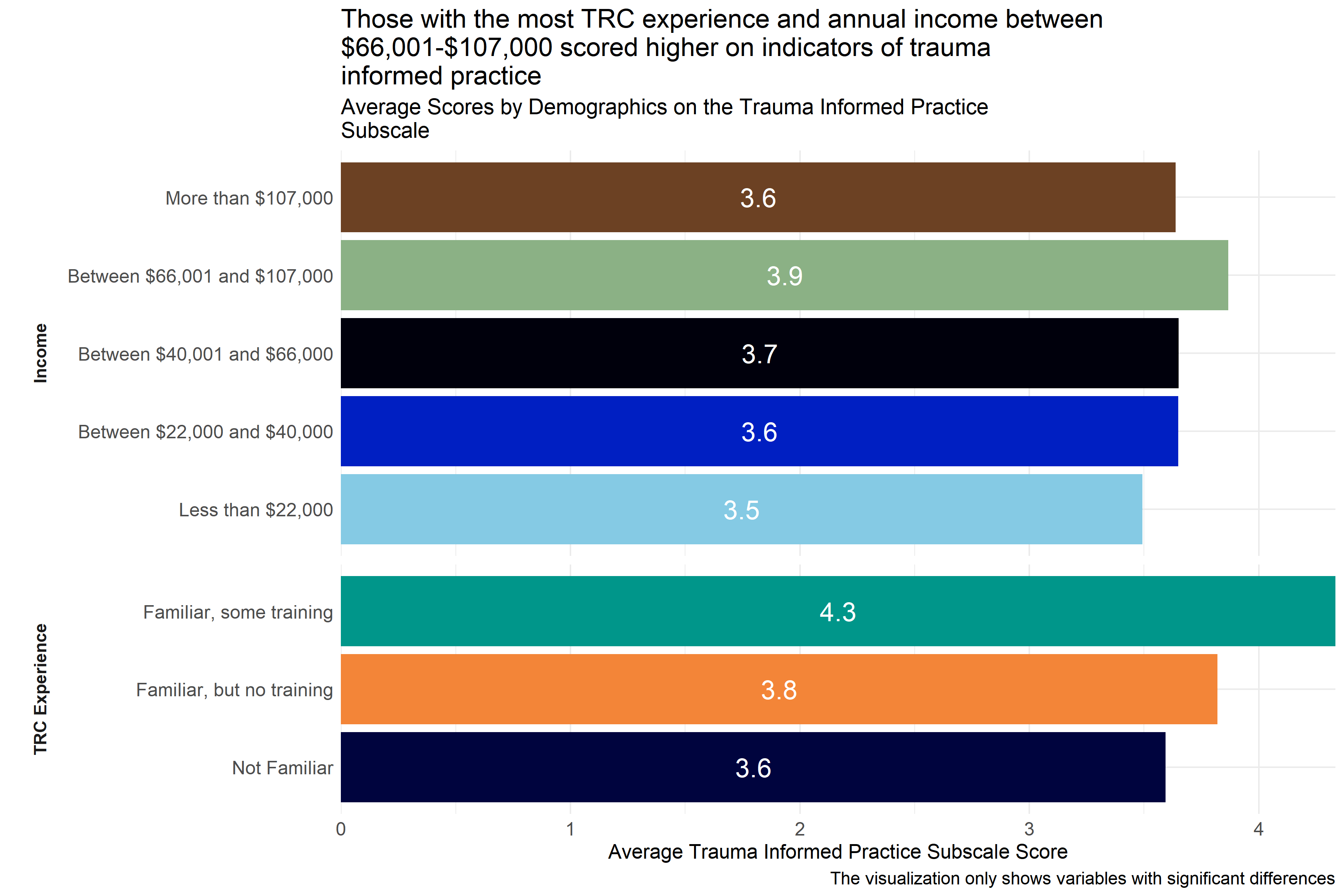

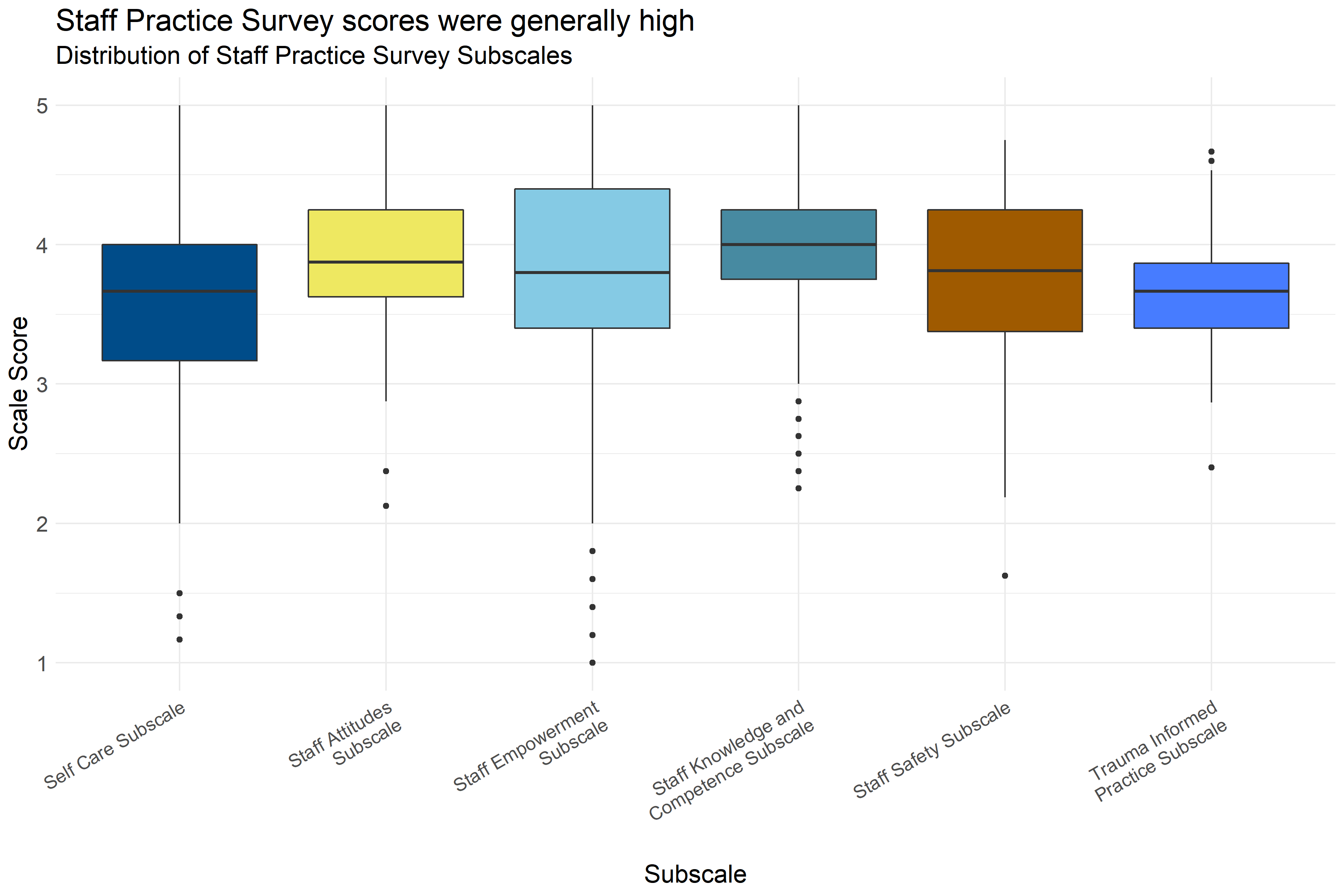

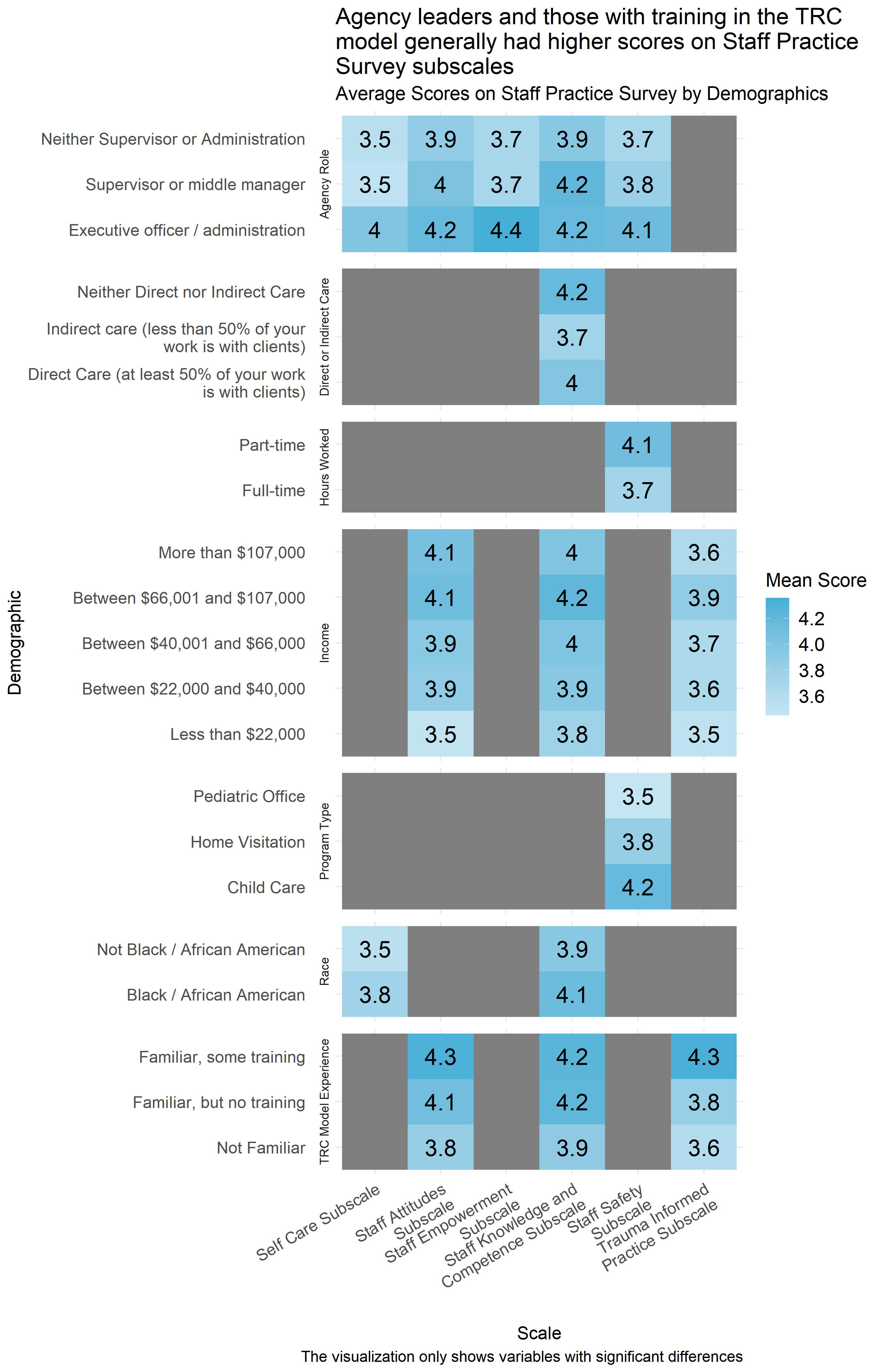

While most respondents rated their organization’s commitment to open communication and shared governance neutrally or highly on average, at least 25% of respondents responded to questions about their organizations’ environment more negatively on average. Similarly, at least 75% of respondents generally responded to questions about their organizations’ commitment to emotional intelligence, social learning, and social responsibility neutrally or positively, but at least 25% gave responses ranging from negative to neutral.