5 Dictionary-based Analysis

Sentiment analyses are fairly easy when you have your data in tidy text format. As they basically consist of matching the particular words’ sentiment values to the corpus, this can be done with an inner_join(). tidytext comes with four dictionaries: bing, loughran, afinn, and nrc:

#install.packages("textdata") #may be required to access the lexicons

needs(SnowballC, sotu, stopwords, tidyverse, tidytext)

walk(c("bing", "loughran", "afinn", "nrc"), \(x) get_sentiments(lexicon = x) |>

head() |>

print())# A tibble: 6 × 2

word sentiment

<chr> <chr>

1 2-faces negative

2 abnormal negative

3 abolish negative

4 abominable negative

5 abominably negative

6 abominate negative

# A tibble: 6 × 2

word sentiment

<chr> <chr>

1 abandon negative

2 abandoned negative

3 abandoning negative

4 abandonment negative

5 abandonments negative

6 abandons negative

# A tibble: 6 × 2

word value

<chr> <dbl>

1 abandon -2

2 abandoned -2

3 abandons -2

4 abducted -2

5 abduction -2

6 abductions -2

# A tibble: 6 × 2

word sentiment

<chr> <chr>

1 abacus trust

2 abandon fear

3 abandon negative

4 abandon sadness

5 abandoned anger

6 abandoned fear As you can see here, the dictionaries are mere tibbles with two columns: “word” and “sentiment”. For easier joining, I should rename my column “token” to word.

needs(magrittr)

sotu_20cent_clean <- sotu_meta |>

mutate(text = sotu_text) |>

distinct(text, .keep_all = TRUE) |>

filter(between(year, 1900, 2000)) |>

unnest_tokens(output = token, input = text) |>

anti_join(get_stopwords(), by = c("token" = "word")) |>

filter(!str_detect(token, "[0-9]")) |>

mutate(token = wordStem(token, language = "en")) |>

mutate(n_rows = length(token))

sotu_20cent_clean %<>% rename(word = token)The AFINN dictionary is the only one with numeric values. You might have noticed that its words are not stemmed. Hence, I need to do this before I can join it with my tibble. To get the sentiment value per document, I need to average it.

sotu_20cent_afinn <- get_sentiments("afinn") |>

mutate(word = wordStem(word, language = "en")) |>

inner_join(sotu_20cent_clean) |>

group_by(year) |>

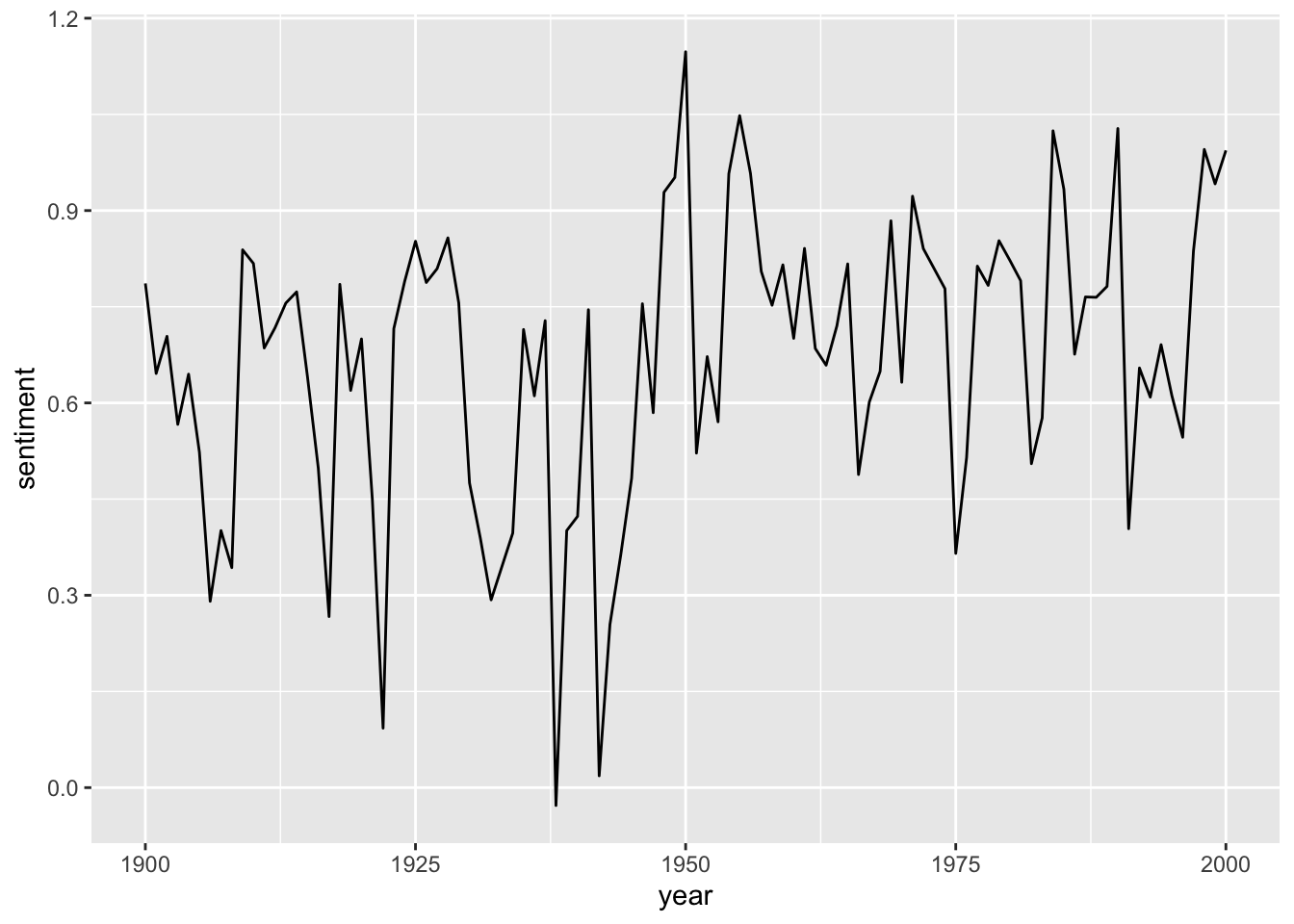

summarize(sentiment = mean(value))Thereafter, I can just plot it:

sotu_20cent_afinn |>

ggplot() +

geom_line(aes(x = year, y = sentiment))

That’s a bit hard to interpret. geom_smooth() might help:

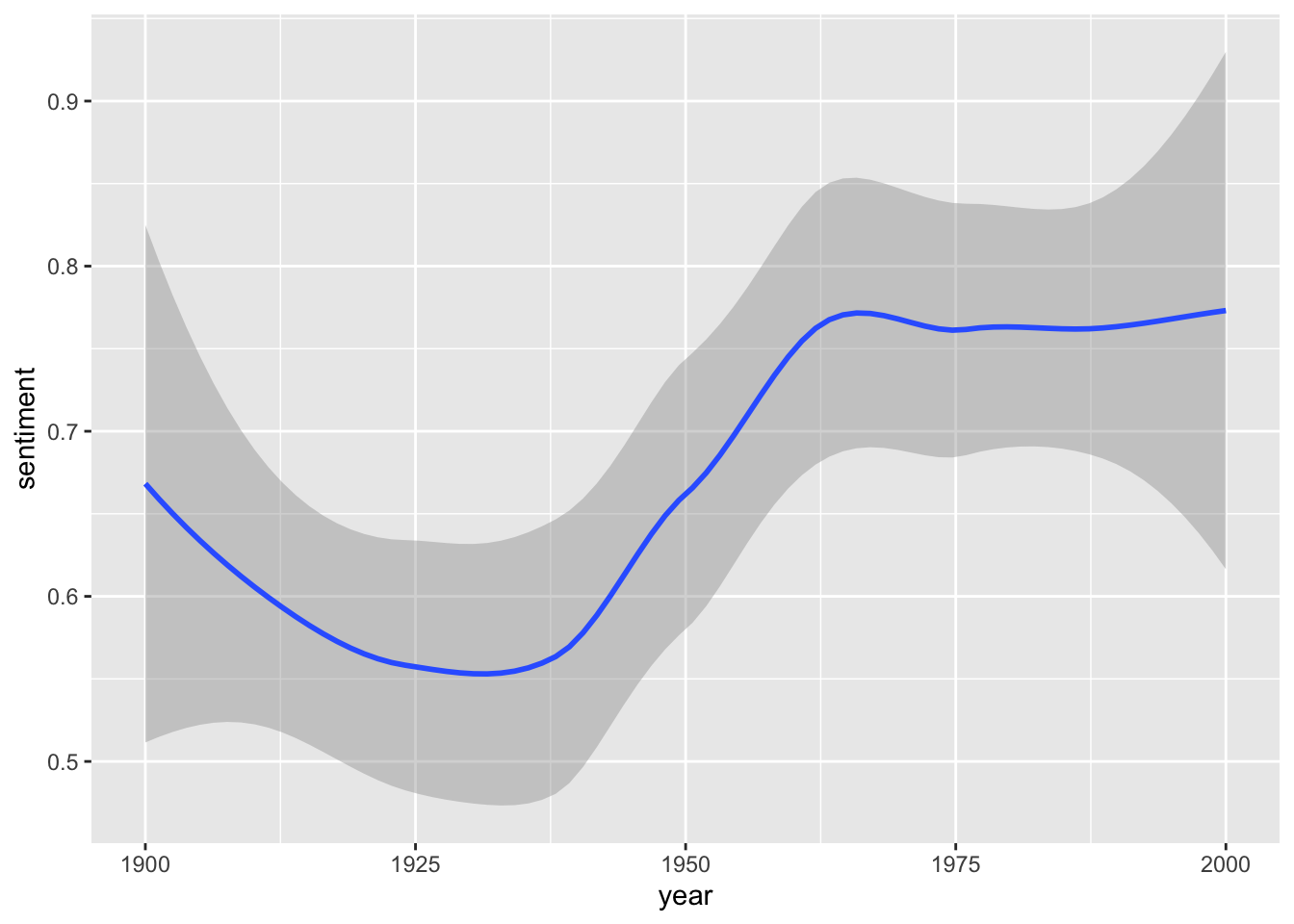

sotu_20cent_afinn |>

ggplot() +

geom_smooth(aes(x = year, y = sentiment))

Interesting. When you think of the tone in the SOTU addresses as a proxy measure for the circumstances, the worst phase appears to be during the 1920s and 1930s – might make sense given the then economic circumstances, etc. The maximum was in around the 1960s and since then it has, apparently, remained fairly stable.

5.1 Assessing the results

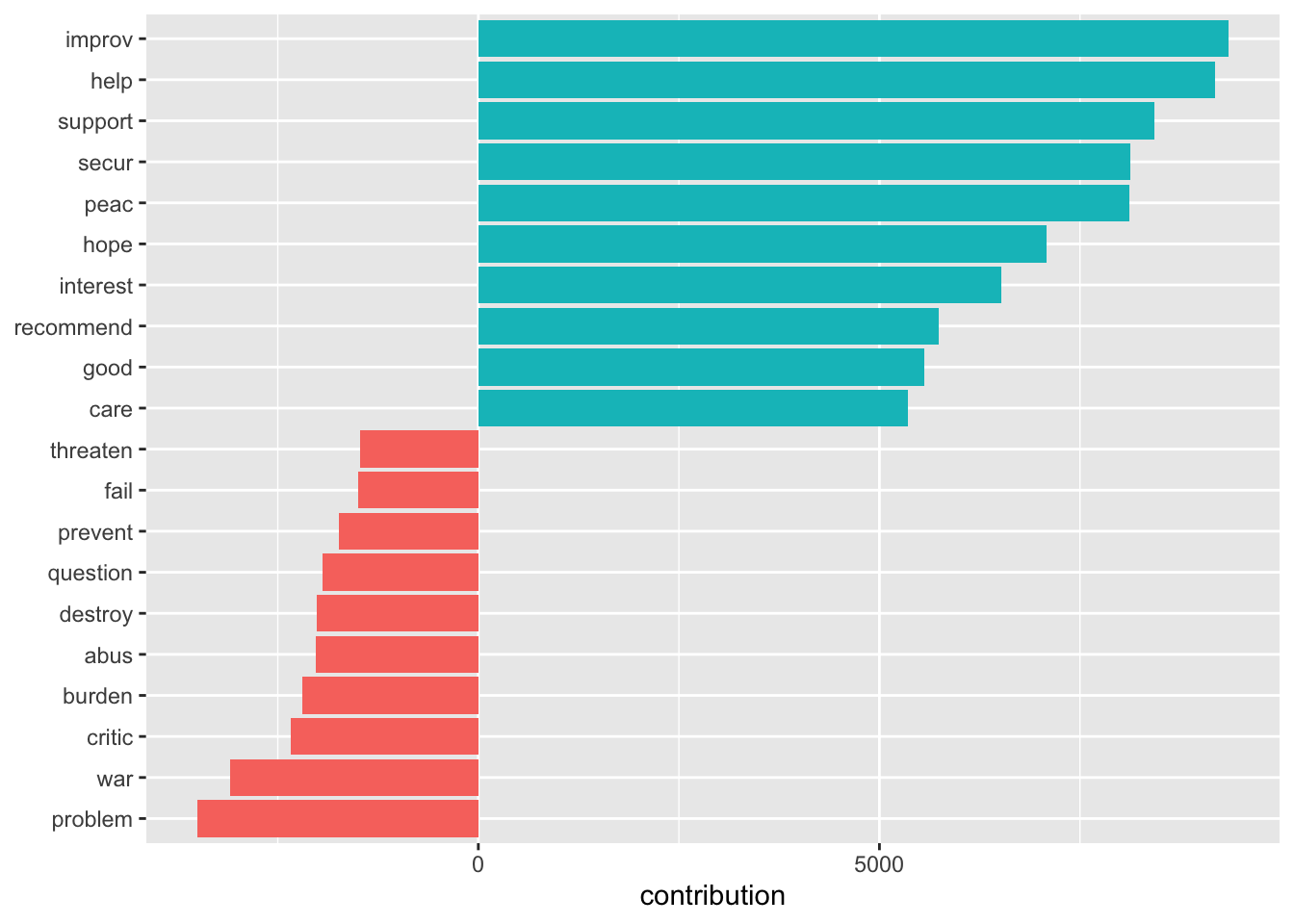

However, we have no idea whether we are capturing some valid signal or not. Let’s look at what drives those classifications the most:

sotu_20cent_contribution <- get_sentiments("afinn") |>

mutate(word = wordStem(word, language = "en")) |>

inner_join(sotu_20cent_clean) |>

group_by(word) |>

summarize(occurences = n(),

contribution = sum(value))sotu_20cent_contribution |>

slice_max(contribution, n = 10) |>

bind_rows(sotu_20cent_contribution |> slice_min(contribution, n = 10)) |>

mutate(word = reorder(word, contribution)) |>

ggplot(aes(contribution, word, fill = contribution > 0)) +

geom_col(show.legend = FALSE) +

labs(y = NULL)

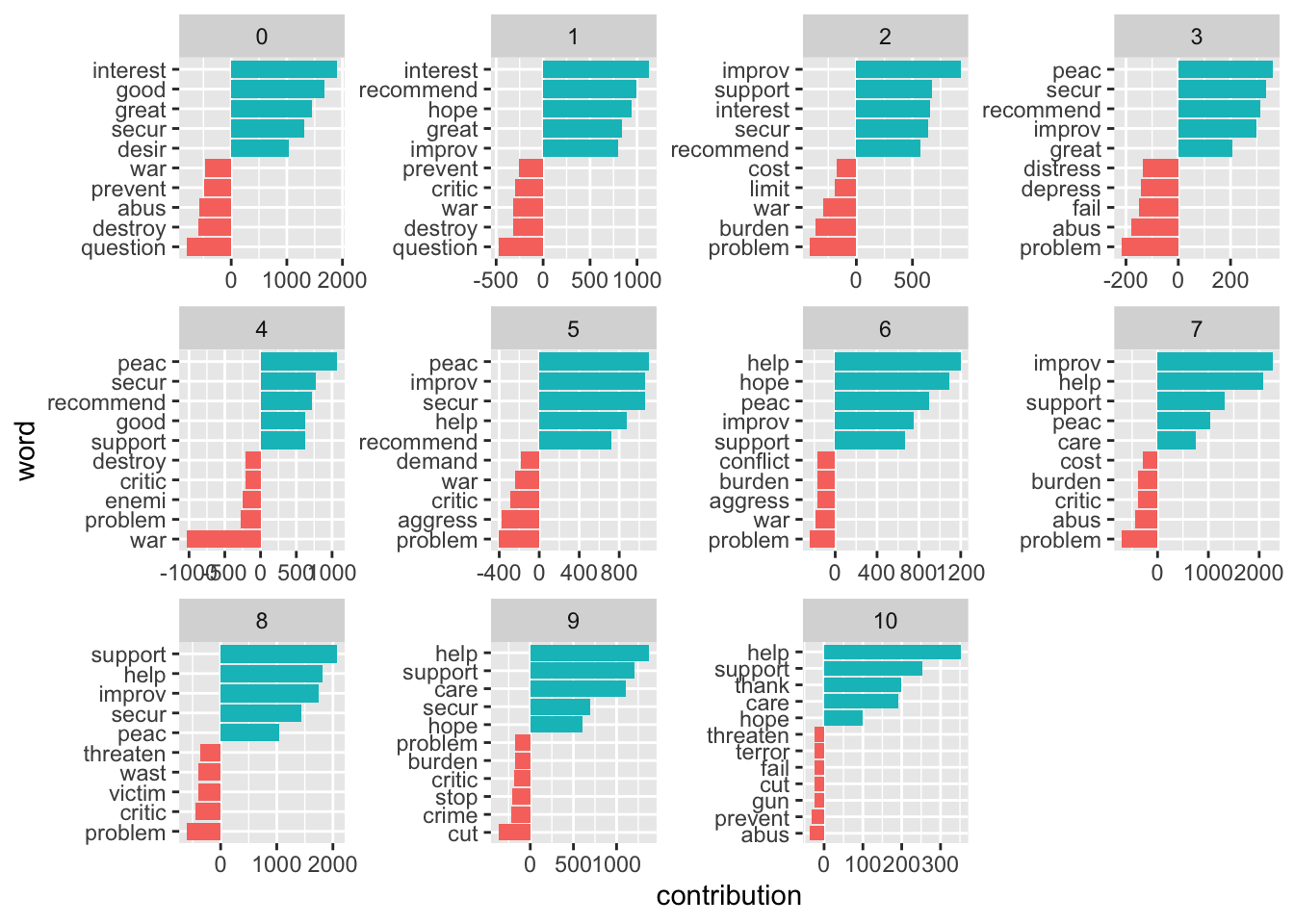

Let’s split this up per decade:

get_sentiments("afinn") |>

mutate(word = wordStem(word, language = "en")) |>

inner_join(sotu_20cent_clean) |>

mutate(decade = ((year - 1900)/10) |> floor()) |>

group_by(decade, word) |>

summarize(occurrences = n(),

contribution = sum(value)) |>

slice_max(contribution, n = 5) |>

bind_rows(get_sentiments("afinn") |>

mutate(word = wordStem(word, language = "en")) |>

inner_join(sotu_20cent_clean) |>

mutate(decade = ((year - 1900)/10) |> floor()) |>

group_by(decade, word) |>

summarize(occurrences = n(),

contribution = sum(value)) |>

slice_min(contribution, n = 5)) |>

mutate(word = reorder_within(word, contribution, decade)) |>

ggplot(aes(contribution, word, fill = contribution > 0)) +

geom_col(show.legend = FALSE) +

facet_wrap(~decade, ncol = 4, scales = "free") +

scale_y_reordered()

5.2 Assessing the quality of the rating

We need to assess the reliability of our classification (would different raters come to the same conclusion; and, if we compare it to a gold standard, how does the classification live up to its standards). One measure we can use here is Krippendorf’s Alpha which is defined as

\[\alpha = \frac{D_o}{D_e}\]

where \(D_{o}\) is the observed disagreement and \(D_{e}\) is the expected disagreement (by chance). The calculation of the measure is far more complicated, but R can easily take care of that – we just need to feed it with proper data. For this example I use a commonly used benchmark data set containing IMDb reviews of movies and whether they’re positive or negative.

imdb_reviews <- read_csv("https://www.dropbox.com/scl/fi/psgj6ze6at3zovildm728/imdb_reviews.csv?rlkey=ve2s02ydosbweemalvskyiu4s&dl=1")

glimpse(imdb_reviews)Rows: 25,000

Columns: 2

$ text <chr> "Once again Mr. Costner has dragged out a movie for far long…

$ sentiment <chr> "negative", "negative", "negative", "negative", "negative", …imdb_reviews_afinn <- imdb_reviews |>

rowid_to_column("doc") |>

unnest_tokens(token, text) |>

anti_join(get_stopwords(), by = c("token" = "word")) |>

mutate(stemmed = wordStem(token)) |>

inner_join(get_sentiments("afinn") |> mutate(stemmed = wordStem(word))) |>

group_by(doc) |>

summarize(sentiment = mean(value)) |>

mutate(sentiment_afinn = case_when(sentiment > 0 ~ "positive",

TRUE ~ "negative") |>

factor(levels = c("positive", "negative")))Now we have two classifications, one “gold standard” from the data and the one obtained through AFINN.

review_coding <- imdb_reviews |>

mutate(true_sentiment = sentiment |>

factor(levels = c("positive", "negative"))) |>

select(-sentiment) |>

rowid_to_column("doc") |>

left_join(imdb_reviews_afinn |> select(doc, sentiment_afinn)) First, we can check how often AFINN got it right, the accuracy:

sum(review_coding$true_sentiment == review_coding$sentiment_afinn, na.rm = TRUE)/25000[1] 0.64712However, accuracy is not a perfect metric because it doesn’t tell you anything about the details. For instance, your classifier might just predict “positive” all of the time. If your gold standard has 50 percent “positive” cases, the accuracy would lie at 0.5. We can address this using the following measures.

For the calculation of Krippendorff’s Alpha, the data must be in a different format: a matrix containing with documents as columns and the respective ratings as rows.

needs(irr)

mat <- review_coding |>

select(-text) |>

as.matrix() |>

t()

mat[1:3, 1:5] [,1] [,2] [,3] [,4] [,5]

doc " 1" " 2" " 3" " 4" " 5"

true_sentiment "negative" "negative" "negative" "negative" "negative"

sentiment_afinn "positive" "negative" "negative" "positive" "positive"colnames(mat) <- mat[1,]

mat <- mat[2:3,]

mat[1:2, 1:5] 1 2 3 4 5

true_sentiment "negative" "negative" "negative" "negative" "negative"

sentiment_afinn "positive" "negative" "negative" "positive" "positive"kripp.alpha(mat, method = "nominal") Krippendorff's alpha

Subjects = 25000

Raters = 2

alpha = 0.266 Good are alpha values of around 0.8 – AFINN missed that one.

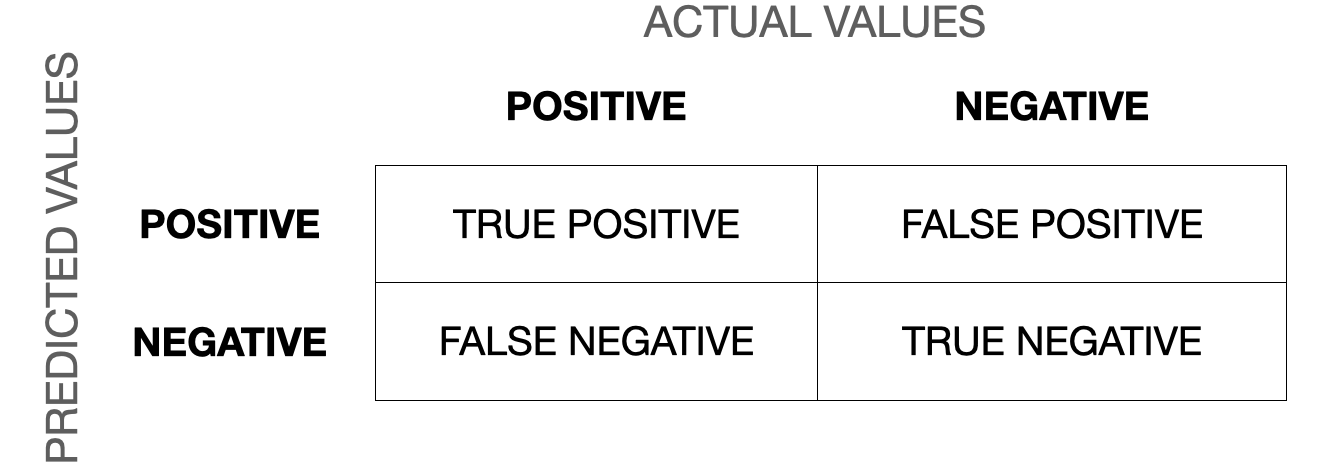

Another way to evaluate the quality of classification is through a confusion matrix.

Now we can calculate precision (when it predicts “positive”, how often is it correct), recall/sensitivity (when it is “positive”, how often is this predicted), specificity (when it’s “negative”, how often is it actually negative). The F1-score is the harmonic mean of precision and recall and defined as \(F_1 = \frac{2}{\frac{1}{recall}\times \frac{1}{precision}} = 2\times \frac{precision\times recall}{precision + recall}\) and the most commonly used measure to assess the accuracy of the classification. The closer to 1 it is, the better. You can find a more thorough description of the confusion matrix and the different measures in this blog post.

We can do this in R using the caret package.

needs(caret)

confusion_matrix <- confusionMatrix(data = review_coding$sentiment_afinn,

reference = review_coding$true_sentiment,

positive = "positive")

confusion_matrix$byClass Sensitivity Specificity Pos Pred Value

0.8439750 0.4504721 0.6056500

Neg Pred Value Precision Recall

0.7427441 0.6056500 0.8439750

F1 Prevalence Detection Rate

0.7052216 0.5000000 0.4219875

Detection Prevalence Balanced Accuracy

0.6967515 0.6472236 5.3 Exercises

Take the data from before (State of the Union Addresses). Have a look at different dictionaries (e.g., Bing or Loughran). Check the words that contributed the most. Do you see any immediate ambiguities or flaws?

sotu_20cent_clean <- sotu_meta |>

mutate(text = sotu_text) |>

distinct(text, .keep_all = TRUE) |>

filter(between(year, 1900, 2000)) |>

unnest_tokens(output = word, input = text) |>

mutate(word = wordStem(word, language = "en"))Preprocess the dictionaries properly so that they match the terms in the text (stemming!).

Join the respective tibbles together using an

inner_join().Determine the sentiments for every year, plot it.

Think about how you can transform the dictionaries into a more unified representation (i.e., so that labels are “positive” or “negative”).

Compare the share of “positive” and “negative” the different dictionaries give over time.

Which terms contribute the most to “positive” or “negative?” Compare.