5.4 Convex Optimisation

Recognizing convex optimization problems, or those that can be transformed to convex optimization problems, can therefore be challenging. (Boyd, Boyd, and Vandenberghe 2004)

5.4.1 Convex or Concave Functions

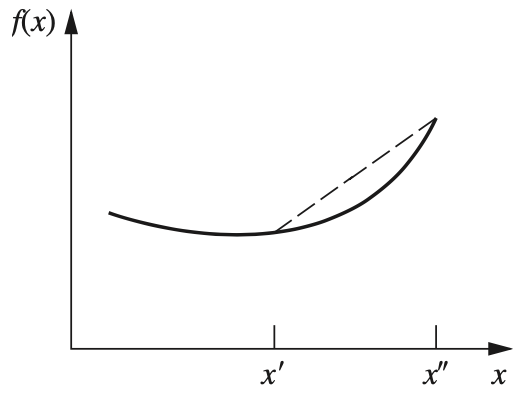

Figure 5.1: A strictly convex function.

The sum of convex functions is a convex function, and the sum of concave functions is a concave function. (Hillier 2012)

Convexity Test for Multi-Variate Function

In mathematical terminology, \(f\left(x_{1}, x_{2}, \ldots, x_{n}\right)\) is convex if and only if its \(n \times n\) Hessian matrix is positive semidefinite for all possible values of \(\left(x_{1}, x_{2}, \ldots, x_{n}\right)\). (Hillier 2012)

\[ \begin{array}{ccccc} \hline & \text { Strictly } & & \text { Strictly } \\ \text { Quantity } & \text { Convex } & \text { Convex } & \text { Concave } & \text { Concave } \\ \hline \frac{\partial^{2} f\left(x_{1}, x_{2}\right)}{\partial x_{1}^{2}} \frac{\partial^{2} f\left(x_{1}, x_{2}\right)}{\partial x_{2}^{2}}-\left[\frac{\partial^{2} f\left(x_{1}, x_{2}\right)}{\partial x_{1} \partial x_{2}}\right]^{2} & \geq 0 & >0 & \geq 0 & >0 \\ \frac{\partial^{2} f\left(x_{1}, x_{2}\right)}{\partial x_{1}^{2}} & \geq 0 & >0 & \leq 0 & <0 \\ \frac{\partial^{2} f\left(x_{1}, x_{2}\right)}{\partial x_{2}^{2}} & \geq 0 & >0 & \leq 0 & <0 \\ \hline \end{array} \]

5.4.2 Convex Set

5.4.3 Regularity Conditions (Constraint Qualifications)

There are many results that establish conditions on the problem, beyond convexity, under which strong duality holds. These conditions are called constraint qualifications. (Boyd, Boyd, and Vandenberghe 2004)

In mathematics, Slater’s condition (or Slater condition) is a sufficient condition for strong duality to hold for a convex optimisation problem.

If a convex optimization problem with differentiable objective and constraint functions satisfies Slater’s condition, then the KKT conditions provide necessary and sufficient conditions for optimality. (Boyd, Boyd, and Vandenberghe 2004)

5.4.4 Lagrangian Duality

There also are many valuable indirect applications of the KKT conditions. One of these applications arises in the duality theory that has been developed for nonlinear programming to parallel the duality theory for linear programming. (Hillier 2012)

The basic idea in Lagrangian duality is to take the constraints in (5.1) into account by augmenting the objective function with a weighted sum of the constraint functions.

For example: \[ \begin{align} \max_{\mathbf{x}} \quad & f(\mathbf{x})\\ \text{s.t.} \quad & \boldsymbol{g}(\boldsymbol{x}) \leq \mathbf{0} \\ & \boldsymbol{h}(\boldsymbol{x}) = \mathbf{0} \end{align} \]

The dual problem is \[ \begin{align} \min_{\boldsymbol{u}, \boldsymbol{v}} \quad & \theta(\boldsymbol{u}, \boldsymbol{v}) \\ \text{s.t.} \quad & \boldsymbol{u} \geq 0 \end{align} \] where \[ \theta(\boldsymbol{u}, \boldsymbol{v}) = \max _{\boldsymbol{x}} \{f(\boldsymbol{x}) + \boldsymbol{u} \boldsymbol{g}(\boldsymbol{x}) + \boldsymbol{v} \boldsymbol{h}(\boldsymbol{x}) \} \]

References

Boyd, Stephen, Stephen P Boyd, and Lieven Vandenberghe. 2004. Convex Optimization. Cambridge university press.

Hillier, Frederick S. 2012. Introduction to Operations Research. Tata McGraw-Hill Education.

Morgan, Peter B. 2015. An Explanation of Constrained Optimization for Economists. University of Toronto Press.