25 Tools in the Trunk

“This chapter includes some important topics that apply to many different models throughout the book… The sections can be read independently of each other and at any time” (Kruschke, 2015, p. 721).

25.1 Reporting a Bayesian analysis

Bayesian data analyses are not yet standard procedure in many fields of research, and no conventional format for reporting them has been established. Therefore, the researcher who reports a Bayesian analysis must be sensitive to the background knowledge of his or her specific audience, and must frame the description accordingly. (p. 721)

At the time of this writing (mid 2022), this is still generally the case. See Aczel et al. (2020), Discussion points for Bayesian inference, for a recent discussion from several Bayesian scholars. For a take from a familiar voice, see Kruschke’s (2021) Bayesian analysis reporting guidelines.

25.1.1 Essential points.

Recall the basic steps of a Bayesian analysis from Section 2.3 (p. 25): Identify the data, define a descriptive model, specify a prior, compute the posterior distribution, interpret the posterior distribution, and, check that the model is a reasonable description of the data. Those steps are in logical order, with each step building on the previous step. That logical order should be preserved in the report of the analysis. (p. 722)

Kruschke then gave recommendations for motivating Bayesian inference. His (2018) paper with Liddell, The Bayesian New Statistics: Hypothesis testing, estimation, meta-analysis, and power analysis from a Bayesian perspective, might be helpful in this regard. Many of the other points Kruschke made in this section (e.g., adequately reporting the data structure, the priors, evidence for convergence) can be handled by adopting open science practices.

If your data and research questions are simple and straightforward, you might find it easy to detail these and other concerns in the primary manuscript. The harsh reality is many journals place tight constraints on word and/or page limits. If your projects are not of the simple and straightforward type, supplemental materials are your friend. Regardless of a journal’s policy on hosting supplemental materials on the official journal website, you can detail your data, priors, MCMC diagnostics, and all the other fine-grained details of your analysis in supplemental documents hosted in publicly-accessible repositories like the Open Science Framework (OSF) or GutHub. If possible, do consider making your data openly available. Regardless of the status of your data, please consider making all your R scripts available as supplementary material. To reiterate from Chapter 3, I strongly recommend checking out R Notebooks for that purpose. They are a type of R Markdown document with augmentations that make them more useful for working scientists. You can learn more about them here and here. And for a more comprehensive overview, check out Xie, Allaire, and Grolemund’s (2022) R markdown: The definitive guide.

25.1.2 Optional points.

For more thoughts on robustness checks, check out a couple Gelman’s blog posts, What’s the point of a robustness check? and Robustness checks are a joke, along with the action in the comments section.

In addition to posterior predictive checks, which are great (see Kruschke, 2013), consider prior predictive checks, too. For a great introduction to the topic, check out Gabry, Simpson, Vehtari, Betancourt, and Gelman’s (2019) Visualization in Bayesian workflow.

25.1.3 Helpful points.

For more ideas on open data, check out Rouder’s (2016) The what, why, and how of born-open data. You might also check out Klein and colleagues’ (2018) A practical guide for transparency in psychological science and Martone, Garcia-Castro, and VandenBos’s (2018) Data sharing in psychology.

As to posting your model fits, this could be done in any number of ways, including as official supplemental materials hosted by the journal, on GitHub, or on the OSF. At a base level, this means saving your fits as external files. We’ve already been modeling this with our brm() code throughout this book. With the save argument, we saved the model fits within the fits folder on GitHub. You might adopt a similar approach. But do be warned: brms fit objects contain a copy of the data used to create them. For example, here’s how we might reload fit24.1 from last chapter.

fit24.1 <- readRDS("fits/fit24.01.rds")By indexing the fit object with $data, you can see the data.

library(tidyverse)

library(brms)

fit24.1$data %>%

glimpse()## Rows: 16

## Columns: 4

## $ Count <dbl> 20, 68, 5, 15, 94, 7, 16, 10, 84, 119, 29, 54, 17, 26, 14, …

## $ Hair <fct> Black, Black, Black, Black, Blond, Blond, Blond, Blond, Bru…

## $ Eye <chr> "Blue", "Brown", "Green", "Hazel", "Blue", "Brown", "Green"…

## $ `Hair:Eye` <chr> "Black_Blue", "Black_Brown", "Black_Green", "Black_Hazel", …Here’s a quick way to remove the data from the fit object.

fit24.1$data <- NULLConfirm it worked.

fit24.1$data## NULLHappily, the rest of the information is still there for you. E.g., here’s the summary.

summary(fit24.1)## Family: poisson

## Links: mu = log

## Formula: Count ~ 1 + (1 | Hair) + (1 | Eye) + (1 | Hair:Eye)

## Data: NULL (Number of observations: )

## Draws: 4 chains, each with iter = 3000; warmup = 1000; thin = 1;

## total post-warmup draws = 8000

##

## Group-Level Effects:

## ~Eye (Number of levels: 4)

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sd(Intercept) 1.80 1.51 0.30 5.80 1.00 2907 5001

##

## ~Hair (Number of levels: 4)

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sd(Intercept) 1.89 1.52 0.35 6.10 1.00 3302 5159

##

## ~Hair:Eye (Number of levels: 16)

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sd(Intercept) 0.95 0.30 0.53 1.67 1.00 2534 4849

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 3.13 1.47 -0.04 6.12 1.00 4494 4726

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).25.2 Functions for computing highest density intervals

You can find a copy of Kruschke’s scripts, including DBDA2E-utilities.R, at https://github.com/ASKurz/Doing-Bayesian-Data-Analysis-in-brms-and-the-tidyverse/tree/master/data.R.

25.2.1 R code for computing HDI of a grid approximation.

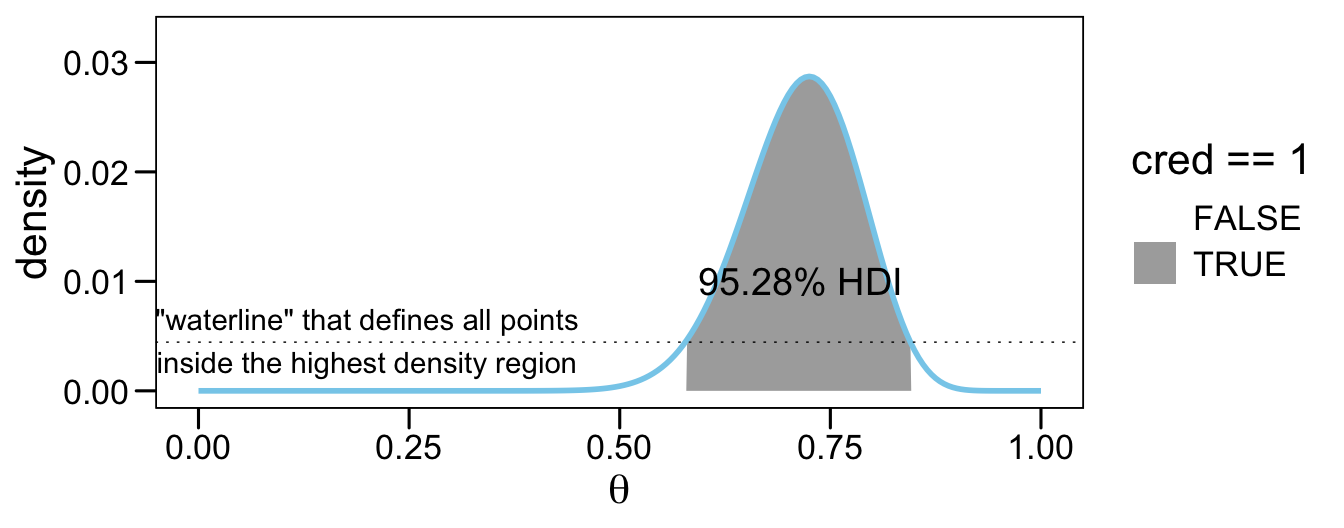

We can imagine the grid approximation of a distribution as a landscape of poles sticking up from each point on the parameter grid, with the height of each pole indicating the probability mass at that discrete point. We can imagine the highest density region by visualizing a rising tide: We gradually flood the landscape, monitoring the total mass of the poles that protrude above water, stopping the flood when 95% (say) of the mass remains protruding. The waterline at that moment defines the highest density region (e.g., Hyndman, 1996). (p. 725)

We can use Kruschke’s HDIofGrid() function for such a task.

HDIofGrid <- function(probMassVec, credMass = 0.95) {

# Arguments:

# probMassVec is a vector of probability masses at each grid point.

# credMass is the desired mass of the HDI region.

# Return value:

# A list with components:

# indices is a vector of indices that are in the HDI

# mass is the total mass of the included indices

# height is the smallest component probability mass in the HDI

# Example of use: For determining HDI of a beta(30,12) distribution

# approximated on a grid:

# > probDensityVec = dbeta( seq(0,1,length=201) , 30 , 12 )

# > probMassVec = probDensityVec / sum( probDensityVec )

# > HDIinfo = HDIofGrid( probMassVec )

# > show( HDIinfo )

sortedProbMass <- sort(probMassVec, decreasing = TRUE)

HDIheightIdx <- min(which(cumsum(sortedProbMass) >= credMass))

HDIheight <- sortedProbMass[HDIheightIdx]

HDImass <- sum(probMassVec[probMassVec >= HDIheight])

return(list(indices = which(probMassVec >= HDIheight),

mass = HDImass, height = HDIheight))

}I found Kruschke’s description of his HDIofGrid() a bit opaque. Happily, we can understand this function with a little help from an example posted at https://rdrr.io/github/kyusque/DBDA2E-utilities/man/HDIofGrid.html.

prob_density_vec <- dbeta(seq(0, 1, length = 201), 30, 12)

prob_mass_vec <- prob_density_vec / sum(prob_density_vec)

HDI_info <- HDIofGrid(prob_mass_vec)

show(HDI_info)## $indices

## [1] 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135

## [20] 136 137 138 139 140 141 142 143 144 145 146 147 148 149 150 151 152 153 154

## [39] 155 156 157 158 159 160 161 162 163 164 165 166 167 168 169 170

##

## $mass

## [1] 0.9528232

##

## $height

## [1] 0.004448336To walk that through a bit, prob_density_vec is a vector of density values for Beta(30,12) based on 201 evenly-spaced values spanning the parameter space for θ (i.e., from 0 to 1). In the second line, we converted those density values to the probability metric by dividing each by their sum, which we then saved as prob_mass_vec. In the third line we shoved those probability values into Kruschke’s HDIofGrid() and saved the results as HDI_info. The output of the fourth line, show(HDI_info), showed us the results (i.e., the contents of HDI_info).

As to those results, the values in saved as $indices are the row numbers for all cases in prob_mass_vec that were within the HDI. The value in $mass showed the actual width of the HDI. Because we’re only working with finite samples (i.e., length = 201), we won’t likely get a perfect 95% HDI. The value in $height is the density value for the waterline that defines the highest density region. A plot might make that less abstract.

library(ggthemes)

theme_set(

theme_base() +

theme(plot.background = element_rect(color = "transparent"))

)

# wrangle

tibble(row = 1:length(prob_density_vec),

theta = seq(0, 1, length = length(prob_density_vec)),

density = prob_mass_vec,

cred = if_else(row %in% HDI_info$indices, 1, 0)) %>%

# plot

ggplot(aes(x = theta, y = density)) +

# HDI

geom_area(aes(fill = cred == 1)) +

# density line

geom_line(color = "skyblue", linewidth = 1) +

# waterline

geom_hline(yintercept = HDI_info$height, linetype = 3, linewidth = 1/4) +

# fluff

annotate(geom = "text", x = .2, y = 0.0046,

label = '"waterline" that defines all points\ninside the highest density region') +

annotate(geom = "text", x = .715, y = 0.01,

label = "95.28% HDI", color = "black", size = 5) +

scale_fill_manual(values = c("transparent", "grey67")) +

xlab(expression(theta))

You might have noticed our theme_set() lines at the top of that code block. For the plots in this chapter, we’ll give a nod to the source material and make them with a similar aesthetic to Kruschke’s figures.

25.2.2 HDI of unimodal distribution is shortest interval.

The algorithms [in the next sections] find the HDI by searching among candidate intervals of mass M. The shortest one found is declared to be the HDI. It is an approximation, of course. See Chen & Shao (1999) for more details, and Chen, He, Shao, and Xu (2003) for dealing with the unusual situation of multimodal distributions. (p. 727)

25.2.3 R code for computing HDI of a MCMC sample.

In this section, Kruschke provided the code for his HDIofMCMC() function. We recreate it, below, with a few mild formatting changes. The ggthemes::theme_base() theme gets us most of the way there.

HDIofMCMC <- function(sampleVec, credMass = .95) {

# Computes highest density interval from a sample of representative values,

# estimated as shortest credible interval.

# Arguments:

# sampleVec

# is a vector of representative values from a probability distribution.

# credMass

# is a scalar between 0 and 1, indicating the mass within the credible

# interval that is to be estimated.

# Value:

# HDIlim is a vector containing the limits of the HDI

sortedPts <- sort(sampleVec)

ciIdxInc <- ceiling(credMass * length(sortedPts))

nCIs <- length(sortedPts) - ciIdxInc

ciWidth <- rep(0, nCIs)

for (i in 1:nCIs) {

ciWidth[i] <- sortedPts[i + ciIdxInc] - sortedPts[i]

}

HDImin <- sortedPts[which.min(ciWidth)]

HDImax <- sortedPts[which.min(ciWidth) + ciIdxInc]

HDIlim <- c(HDImin, HDImax)

return(HDIlim)

}Let’s continue working with fit24.1 to see how Kruschke’s HDIofMCMC() works. First we need to extract the posterior draws.

draws <- as_draws_df(fit24.1)Here’s how you might use the function to get the HDIs for the intercept parameter.

HDIofMCMC(draws$b_Intercept)## [1] -0.06511808 6.08724849Kruschke’s HDIofMCMC() works very much the same as the summary functions from tidybayes. For example, here’s good old tidybayes::mode_hdi().

library(tidybayes)

mode_hdi(draws$b_Intercept)## y ymin ymax .width .point .interval

## 1 3.207135 -0.06511808 6.087248 0.95 mode hdiIf you’d like to use tidybayes to just pull the HDIs without the extra information, just use the hdi() function.

hdi(draws$b_Intercept)## [,1] [,2]

## [1,] -0.06511808 6.087248Just in case you’re curious, Kruschke’s HDIofMCMC() function returns the same information as tidybayes::hdi(). Let’s confirm.

HDIofMCMC(draws$b_Intercept) == hdi(draws$b_Intercept)## [,1] [,2]

## [1,] TRUE TRUEIdentical.

25.2.4 R code for computing HDI of a function.

The function described in this section finds the HDI of a unimodal probability density function that is specified mathematically in R. For example, the function can find HDI’s of normal densities or of beta densities or of gamma densities, because those densities are specified as functions in R. (p. 728)

If you recall, we’ve been using this function off and on since Chapter 4. Here is it, again, with mildly reformatted code and parameter names.

hdi_of_icdf <- function(name, width = .95, tol = 1e-8, ... ) {

# Arguments:

# `name` is R's name for the inverse cumulative density function

# of the distribution.

# `width` is the desired mass of the HDI region.

# `tol` is passed to R's optimize function.

# Return value:

# Highest density iterval (HDI) limits in a vector.

# Example of use: For determining HDI of a beta(30, 12) distribution, type

# `hdi_of_icdf(qbeta, shape1 = 30, shape2 = 12)`

# Notice that the parameters of the `name` must be explicitly stated;

# e.g., `hdi_of_icdf(qbeta, 30, 12)` does not work.

# Adapted and corrected from Greg Snow's TeachingDemos package.

incredible_mass <- 1.0 - width

interval_width <- function(low_tail_prob, name, width, ...) {

name(width + low_tail_prob, ...) - name(low_tail_prob, ...)

}

opt_info <- optimize(interval_width, c(0, incredible_mass),

name = name, width = width,

tol = tol, ...)

hdi_lower_tail_prob <- opt_info$minimum

return(c(name(hdi_lower_tail_prob, ...),

name(width + hdi_lower_tail_prob, ...)))

}Here’s how it works for the standard normal distribution.

hdi_of_icdf(qnorm, mean = 0, sd = 1)## [1] -1.959964 1.959964By default, it returns 95% HDIs. Here’s how it’d work if you wanted the 80% intervals for Beta(2,2).

hdi_of_icdf(qbeta, shape1 = 2, shape2 = 2, width = .8)## [1] 0.1958001 0.804199925.3 Reparameterization

There are situations in which one parameterization is intuitive to express a distribution, but a different parameterization is required for mathematical convenience. For example, we may think intuitively of the standard deviation of a normal distribution, but have to parameterize the distribution in terms of the precision (i.e., reciprocal of the variance). (p. 729)

The details in the rest of this section are beyond the scope of this project.

25.4 Censored data in JAGS brms

“In many situations some data are censored, which means that their values are known only within a certain range” (p. 732) Happily, brms is capable of handling censored variables. The setup is a little different from how Kruschke described for JAGS. From the brmsformula section of the brms reference manual (Bürkner, 2022f), we read:

With the exception of categorical, ordinal, and mixture families, left, right, and interval censoring can be modeled through

y | cens(censored) ~ predictors. The censoring variable (namedcensoredin this example) should contain the values'left','none','right', and'interval'(or equivalently-1,0,1, and2) to indicate that the corresponding observation is left censored, not censored, right censored, or interval censored. For interval censored data, a second variable (let’s call ity2) has to be passed tocens. In this case, the formula has the structurey | cens(censored,y2) ~ predictors. While the lower bounds are given iny, the upper bounds are given iny2for interval censored data. Intervals are assumed to be open on the left and closed on the right:(y,y2].

We’ll make sense of all this in just a moment. First, let’s see how Kruschke described the example in the text.

To illustrate why it is important to include censored data in the analysis, consider a case in which N=500 values are generated randomly from a normal distribution with μ=100 and σ=15. Suppose that values above 106 are censored, as are values in the interval between 94 and 100. For the censored values, all we know is the interval in which they occurred, but not their exact value. (p. 732)

I’m not aware that we have access to Kruschke’s censored data, so we’ll just make our own based on his description. We’ll start off by simulating the idealized uncensored data, y, based on Normal(100,15).

n <- 500

set.seed(25)

d <- tibble(y = rnorm(n, mean = 100, sd = 15))To repeat, Kruschke described two kinds of censoring:

- “values above 106 are censored,”

- “as are values in the interval between 94 and 100.”

⚠️ Rather than examine both kinds of censoring at once, as in the text, I’m going to slow down and break this section up. First, we’ll explore right censoring. Second, we’ll explore interval censoring. For our grand finale, we’ll combine the two, as in the text.

25.4.1 Right censoring: When you’re uncertain beyond a single threshold.

In the case where the “values above 106 are censored,” we need to save a single threshold value. We’ll call it t1.

t1 <- 106Now we can use our t1 threshold value to make a right censored version of the y column called y1. We’ll also make a character value listing out a row’s censoring status in nominal terms.

d <-

d %>%

mutate(y1 = if_else(y > t1, t1, y),

cen1 = if_else(y > t1, "right", "none"))

d## # A tibble: 500 × 3

## y y1 cen1

## <dbl> <dbl> <chr>

## 1 96.8 96.8 none

## 2 84.4 84.4 none

## 3 82.7 82.7 none

## 4 105. 105. none

## 5 77.5 77.5 none

## 6 93.3 93.3 none

## 7 126. 106 right

## 8 108. 106 right

## 9 101. 101. none

## 10 99.1 99.1 none

## # … with 490 more rowsWhen the values in y1 are not censored, we see cen1 == "none". For all cases where the original y value exceeded the t1 threshold (i.e., 106), y1 == 106 and cen1 == "right". We are using the terms "none" and "right" because they are based on the block quote from the brms reference manual. For plotting purposes, we’ll make a new variable, y_na, that only has values for which cen1 == "none".

d <-

d %>%

mutate(y_na = ifelse(cen1 == "none", y, NA))

d## # A tibble: 500 × 4

## y y1 cen1 y_na

## <dbl> <dbl> <chr> <dbl>

## 1 96.8 96.8 none 96.8

## 2 84.4 84.4 none 84.4

## 3 82.7 82.7 none 82.7

## 4 105. 105. none 105.

## 5 77.5 77.5 none 77.5

## 6 93.3 93.3 none 93.3

## 7 126. 106 right NA

## 8 108. 106 right NA

## 9 101. 101. none 101.

## 10 99.1 99.1 none 99.1

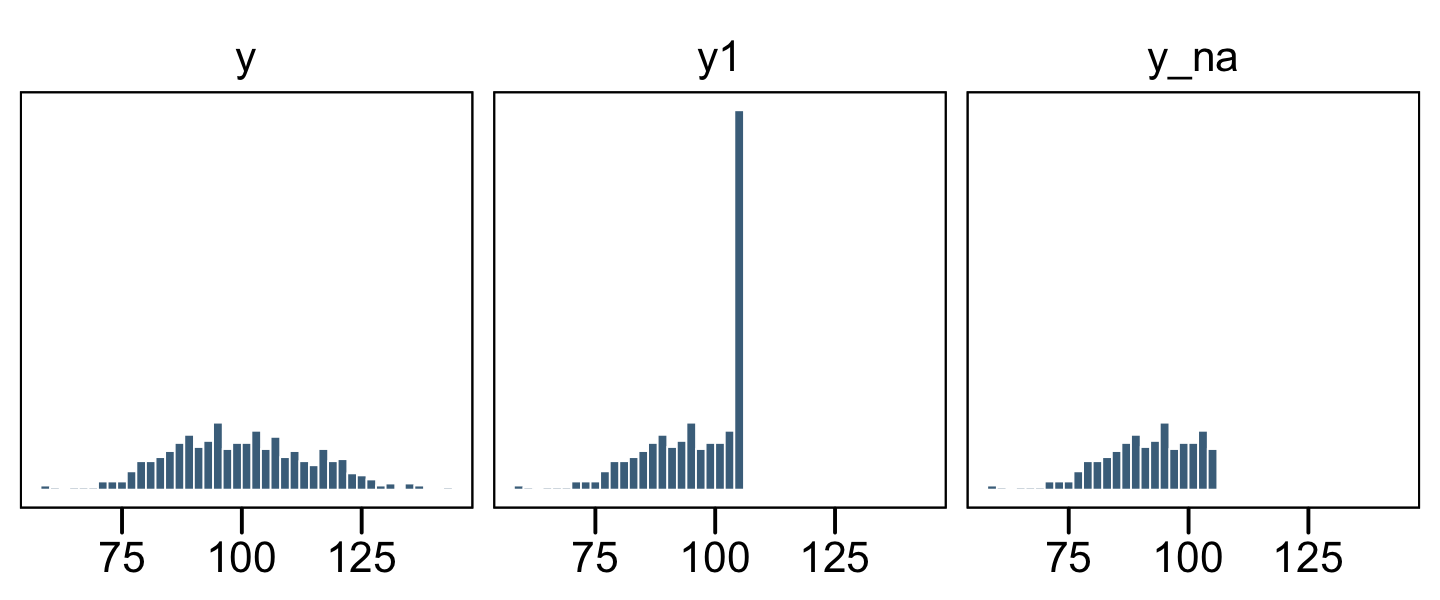

## # … with 490 more rowsNow let’s get a better sense of the data with a few histograms.

d %>%

pivot_longer(-cen1) %>%

mutate(name = factor(name, levels = c("y", "y1", "y_na"))) %>%

ggplot(aes(x = value)) +

geom_histogram(linewidth = 0.25, binwidth = 2, boundary = 106,

fill = "skyblue4", color = "white") +

xlab(NULL) +

scale_y_continuous(NULL, breaks = NULL) +

facet_wrap(~ name, ncol = 3)

The original un-censored data are in y. The censored data, for which all values above 106 were simply recoded 106, is y1. The y_na column shows what the distribution would look like if the censored values were omitted.

Here’s how we might fit a model which only uses the uncensored values, those in y_na.

# define the stanvars

mean_y <- mean(d$y_na, na.rm = T)

sd_y <- sd(d$y_na, na.rm = T)

stanvars <-

stanvar(mean_y, name = "mean_y") +

stanvar(sd_y, name = "sd_y")

# fit the model

fit25.1a <-

brm(data = d,

family = gaussian,

y_na ~ 1,

prior = c(prior(normal(mean_y, sd_y * 100), class = Intercept),

prior(normal(0, sd_y), class = sigma)),

chains = 4, cores = 4,

stanvars = stanvars,

file = "fits/fit25.01a")Check the summary for the naïve model.

print(fit25.1a)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: y_na ~ 1

## Data: d (Number of observations: 333)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 91.43 0.51 90.40 92.41 1.00 3410 2678

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 9.39 0.36 8.70 10.13 1.00 3708 2881

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).Relative to the true data-generating process for the original variable y, Normal(100,15), those parameters look pretty biased. Now let’s practice fitting our first censored model. Here we use the y1 | cens(cen1) syntax in the left side of the model formula to indicate that the criterion variable, y1, has been censored according to the process as defined in the cens() function. Within cens() we inserted our nominal variable, cens1, which indicates which cases are censored ("right") or not ("none").

This model is one of the rare occasions where we’ll set our initial values for the model intercept. In my first few attempts, brm() had great difficulty initializing the chains using the default initial values. We’ll help it out by setting them at mean_y. Recall that when you set custom initial values in brms, you save them in a list with the number of lists equaling the number of HMC chains. Because we’re using the default chains = 4, well need four lists of intercept start values, mean_y. You can set them to different values, if you’d like.

inits <- list(Intercept = mean_y)

inits_list <- list(inits, inits, inits, inits)

fit25.2a <-

brm(data = d,

family = gaussian,

y1 | cens(cen1) ~ 1,

prior = c(prior(normal(mean_y, sd_y * 100), class = Intercept),

prior(normal(0, sd_y), class = sigma)),

chains = 4, cores = 4,

stanvars = stanvars,

# here we insert our start values for the intercept

inits = inits_list,

file = "fits/fit25.02a")Now check the summary for the model accounting for the censoring.

print(fit25.2a)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: y1 | cens(cen1) ~ 1

## Data: d (Number of observations: 500)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 99.43 0.71 98.09 100.90 1.00 2939 2197

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 14.29 0.61 13.17 15.56 1.00 2918 2440

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

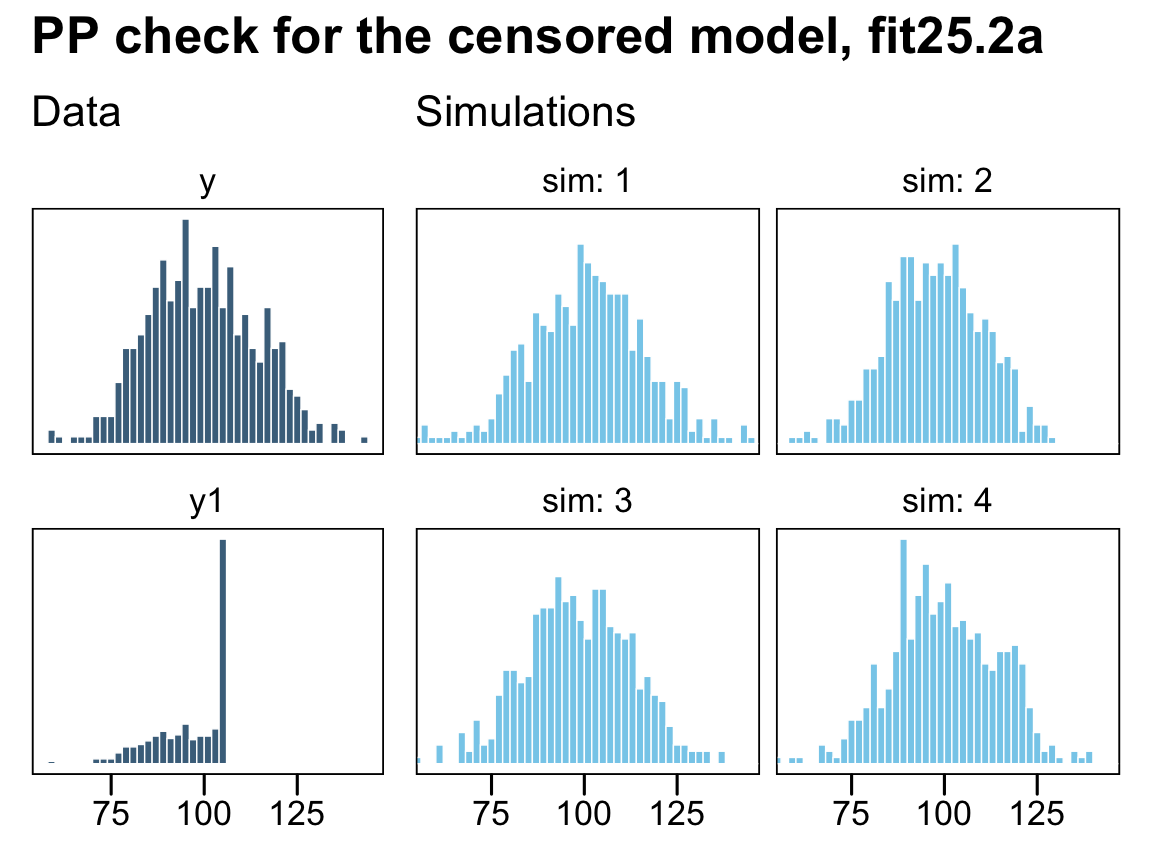

## scale reduction factor on split chains (at convergence, Rhat = 1).To get a better sense of what these models are doing, we might do a posterior predictive check with predict().

# original

p1 <-

d %>%

pivot_longer(cols = c(y, y_na)) %>%

ggplot(aes(x = value)) +

geom_histogram(linewidth = 0.25, binwidth = 2, boundary = 106,

fill = "skyblue4", color = "white") +

labs(subtitle = "Data",

x = NULL) +

scale_x_continuous(breaks = 3:5 * 25) +

scale_y_continuous(NULL, breaks = NULL) +

facet_wrap(~ name, ncol = 1)

# posterior simulations

set.seed(25)

p2 <-

predict(fit25.1a,

summary = F,

ndraws = 4) %>%

data.frame() %>%

mutate(sim = 1:n()) %>%

pivot_longer(-sim) %>%

ggplot(aes(x = value)) +

geom_histogram(linewidth = 0.25, binwidth = 2, boundary = 106,

fill = "skyblue", color = "white") +

geom_vline(xintercept = t1, linetype = 2) +

labs(subtitle = "Simulations",

x = NULL) +

scale_x_continuous(breaks = 3:5 * 25) +

scale_y_continuous(NULL, breaks = NULL) +

coord_cartesian(xlim = range(d$y)) +

facet_wrap(~ sim, ncol = 2, labeller = label_both)

# combine

library(patchwork)

p1 + p2 +

plot_layout(widths = c(1, 2)) +

plot_annotation(title = "PP check for the naïve model, fit25.1a")

On the left column, the have the original uncensored data y and the y_na data where the censored values were removed. The four lighter histograms on the right are individual simulations based on our naïve model, fit25.1a, which only used the y_na data. In all cases, the simulated data are biased to be too small compared with the original data, y. They also have the undesirable quality that they don’t even match up with the y_na data, which drops sharply off at the 106 threshold, whereas the simulated data all contain values exceeding that point (depicted by the dashed vertical lines). Now let’s see what happens when we execute a few posterior predictive checks with our first censored model, fit25.2a.

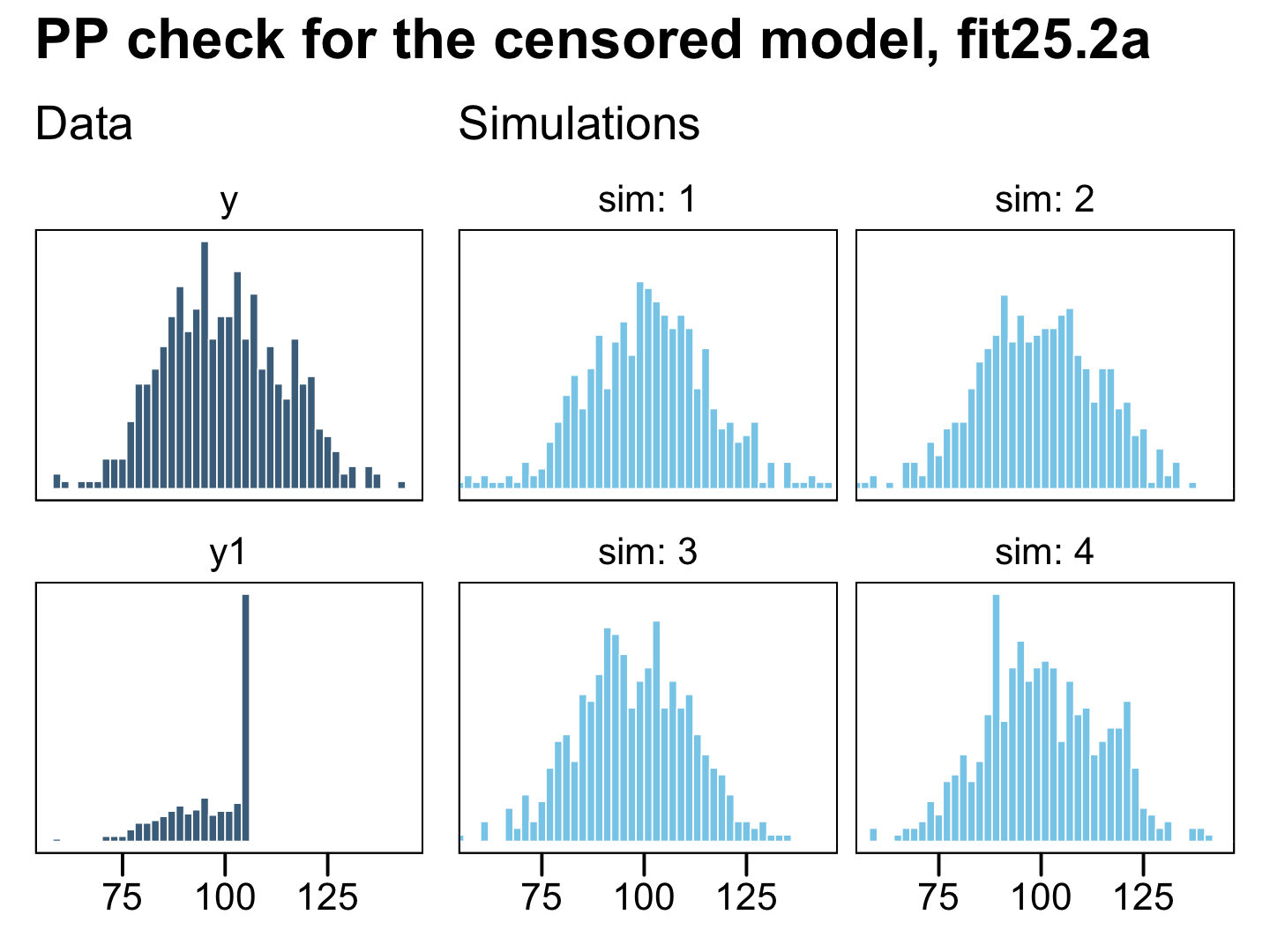

p1 <-

d %>%

pivot_longer(cols = c(y, y1)) %>%

ggplot(aes(x = value)) +

geom_histogram(linewidth = 0.25, binwidth = 2, boundary = 106,

fill = "skyblue4", color = "white") +

labs(subtitle = "Data",

x = NULL) +

scale_x_continuous(breaks = 3:5 * 25) +

scale_y_continuous(NULL, breaks = NULL) +

facet_wrap(~ name, ncol = 1, scales = "free_y")

set.seed(25)

p2 <-

predict(fit25.2a,

summary = F,

ndraws = 4) %>%

data.frame() %>%

mutate(sim = 1:n()) %>%

pivot_longer(-sim) %>%

ggplot(aes(x = value)) +

geom_histogram(linewidth = 0.25, binwidth = 2, boundary = 106,

fill = "skyblue", color = "white") +

labs(subtitle = "Simulations",

x = NULL) +

scale_x_continuous(breaks = 3:5 * 25) +

scale_y_continuous(NULL, breaks = NULL) +

coord_cartesian(xlim = range(d$y)) +

facet_wrap(~ sim, ncol = 2, labeller = label_both)

# combine

p1 + p2 +

plot_layout(widths = c(1, 2)) +

plot_annotation(title = "PP check for the censored model, fit25.2a")

Now the posterior predictive draws match up nicely from the original uncensored data y in terms of central tendency, dispersion, and their overall shapes. This is the magic of a model that accounts for right (or left) censoring. It can take all those stacked-up 106 values and approximate the underlying distribution, had they been more accurately recorded.

25.4.2 Interval censoring: When your values are somewhere in the middle.

Time to move onto interval censoring. Kruschke’s example in the text included values censored in the interval between 94 and 100. This will require two more thresholds, which we’ll call t2 and t3.

t2 <- 94

t3 <- 100We should revisit part of the block quote from the brms reference manual, above:

For interval censored data, a second variable (let’s call it

y2) has to be passed tocens. In this case, the formula has the structurey | cens(censored,y2) ~ predictors. While the lower bounds are given iny, the upper bounds are given iny2for interval censored data. Intervals are assumed to be open on the left and closed on the right:(y,y2]

It’s a little unclear, to me, if this is how Kruschke defined his intervals, but since we’re working with brms we’ll just use this convention. Thus, we will define “values in the interval between 94 and 100” as y >= t2 & y < t3. Here we’ll follow the brms convention and define a new variable y2 for our lower bound and y3 for our upper bound. Additionally, the new cen2 variable will tell us whether a case is interval censored ("interval") or not ("none"). We have also redefined y_na in terms of our cen2 variable.

d <-

d %>%

mutate(y2 = if_else(y >= t2 & y < t3, t2, y),

y3 = if_else(y >= t2 & y < t3, t3, y),

cen2 = if_else(y >= t2 & y < t3, "interval", "none")) %>%

mutate(y_na = ifelse(cen2 == "none", y, NA))

d## # A tibble: 500 × 7

## y y1 cen1 y_na y2 y3 cen2

## <dbl> <dbl> <chr> <dbl> <dbl> <dbl> <chr>

## 1 96.8 96.8 none NA 94 100 interval

## 2 84.4 84.4 none 84.4 84.4 84.4 none

## 3 82.7 82.7 none 82.7 82.7 82.7 none

## 4 105. 105. none 105. 105. 105. none

## 5 77.5 77.5 none 77.5 77.5 77.5 none

## 6 93.3 93.3 none 93.3 93.3 93.3 none

## 7 126. 106 right 126. 126. 126. none

## 8 108. 106 right 108. 108. 108. none

## 9 101. 101. none 101. 101. 101. none

## 10 99.1 99.1 none NA 94 100 interval

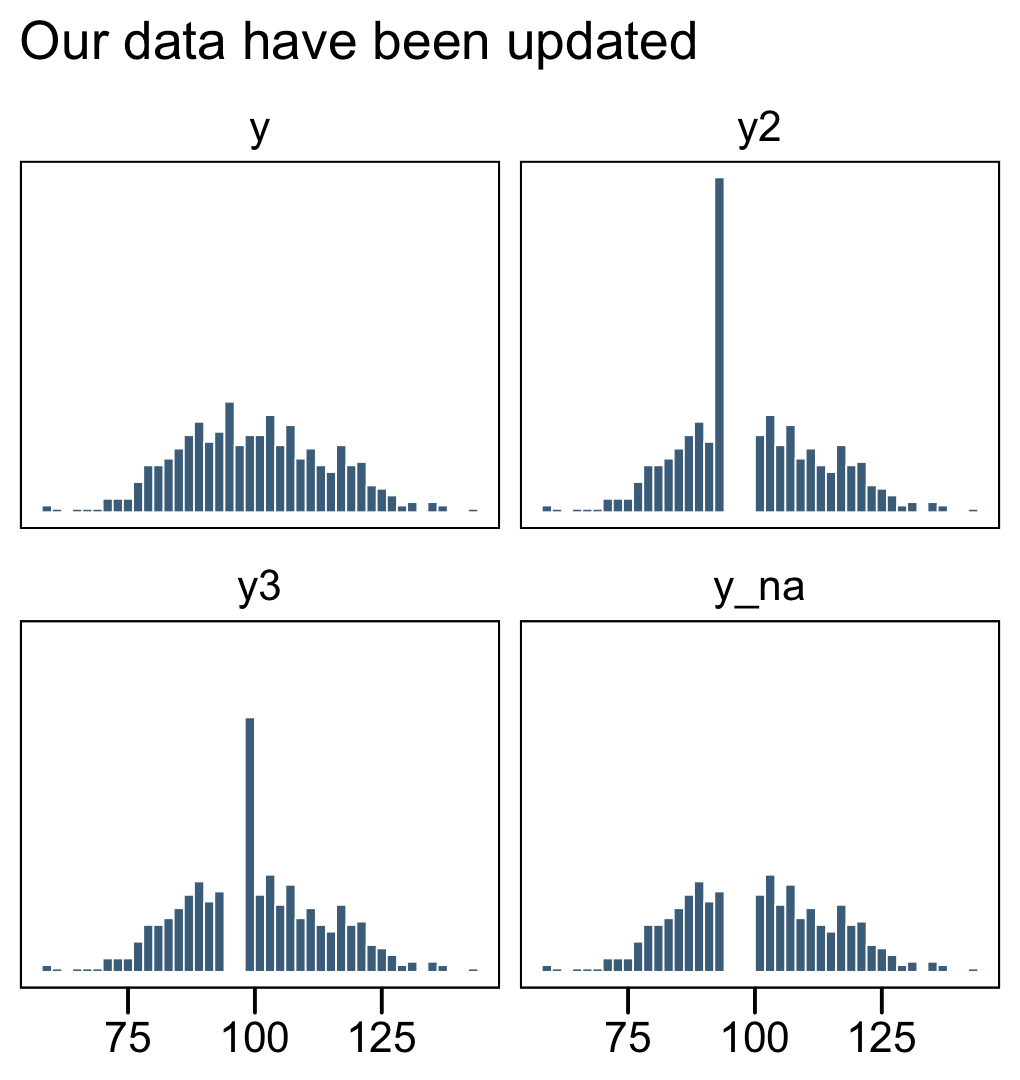

## # … with 490 more rowsNow let’s get a better sense of the new data with a few histograms.

d %>%

pivot_longer(cols = c(y, y2, y3, y_na)) %>%

mutate(name = factor(name, levels = c("y", "y2", "y3", "y_na"))) %>%

ggplot(aes(x = value)) +

geom_histogram(linewidth = 0.25, binwidth = 2, boundary = 106,

fill = "skyblue4", color = "white") +

labs(subtitle = "Our data have been updated",

x = NULL) +

scale_y_continuous(NULL, breaks = NULL) +

facet_wrap(~ name, ncol = 2)

Now we fit two models. The first, fit25.1b, will only used the values not interval censored, y_na. The second model, fit25.2b, will use the cens() function to fit account for the interval censored data with the y2, y3, and cen2 columns. As before, we’ll want to use inits to help the censored model run smoothly.

# naïve

fit25.1b <-

brm(data = d,

family = gaussian,

y_na ~ 1,

prior = c(prior(normal(mean_y, sd_y * 100), class = Intercept),

prior(normal(0, sd_y), class = sigma)),

chains = 4, cores = 4,

stanvars = stanvars,

file = "fits/fit25.01b")

# censored

fit25.2b <-

brm(data = d,

family = gaussian,

y2 | cens(cen2, y3) ~ 1,

prior = c(prior(normal(mean_y, sd_y * 100), class = Intercept),

prior(normal(0, sd_y), class = sigma)),

chains = 4, cores = 4,

stanvars = stanvars,

# here we insert our start values for the intercept

inits = inits_list,

file = "fits/fit25.02b")Review the model summaries.

print(fit25.1b)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: y_na ~ 1

## Data: d (Number of observations: 424)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 100.24 0.77 98.74 101.73 1.00 3302 2636

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 15.79 0.53 14.81 16.87 1.00 3801 2459

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).print(fit25.2b)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: y2 | cens(cen2, y3) ~ 1

## Data: d (Number of observations: 500)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 99.75 0.65 98.49 101.07 1.00 3475 2566

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 14.61 0.46 13.74 15.56 1.00 2822 2343

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

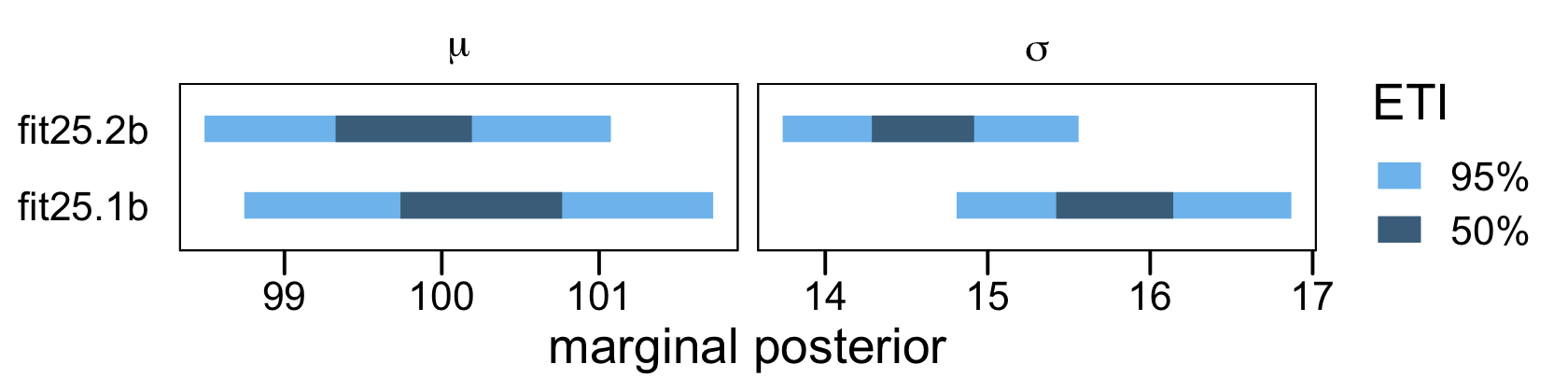

## scale reduction factor on split chains (at convergence, Rhat = 1).In this case, both models did a reasonable job approximating the original data-generating parameters, Normal(100,15). However, the naïve model fit25.1b did so at a considerable loss of precision, as indicated by the posterior standard deviations. To give a better sense, here are the model parameters in an interval plot.

# wrangle

bind_rows(as_draws_df(fit25.1b),

as_draws_df(fit25.2b)) %>%

mutate(fit = rep(c("fit25.1b", "fit25.2b"), each = n() / 2)) %>%

pivot_longer(b_Intercept:sigma) %>%

mutate(parameter = if_else(name == "b_Intercept", "mu", "sigma")) %>%

# plot

ggplot(aes(x = value, y = fit)) +

stat_interval(.width = c(.5, .95)) +

scale_color_manual("ETI",

values = c("skyblue2", "skyblue4"),

labels = c("95%", "50%")) +

labs(x = "marginal posterior",

y = NULL) +

theme(axis.ticks.y = element_blank()) +

facet_wrap(~ parameter, scales = "free_x", labeller = label_parsed)

One could also argue the parameters for the censored model fit25.2b were a little less biased. However, I’d be leery of making that as a general claim based on this example, alone.

25.4.3 Complex censoring: When the going gets tough…

Okay, let’s put what we’ve learned together and practice with data that are both right AND interval censored. This is going to require some tricky coding on our part. Since interval censoring is in play, we’ll need two y variables, again. When the data are indeed interval censored, their lower and upper bounds will be depicted by y4 and y5, respectively. When the data are right censored, y4 will contain the threshold 106 and y5 will contain the original value from y. All this tricky information will be indexed in our nominal variable cen3. Once again, we update our y_na variable, too.

d <-

d %>%

mutate(y4 = if_else(y >= t2 & y < t3, t2,

if_else(y > t1, t1, y)),

y5 = if_else(y >= t2 & y < t3, t3, y),

cen3 = if_else(y >= t2 & y < t3, "interval",

if_else(y > t1, "right", "none"))) %>%

mutate(y_na = ifelse(cen3 == "none", y, NA))

d## # A tibble: 500 × 10

## y y1 cen1 y_na y2 y3 cen2 y4 y5 cen3

## <dbl> <dbl> <chr> <dbl> <dbl> <dbl> <chr> <dbl> <dbl> <chr>

## 1 96.8 96.8 none NA 94 100 interval 94 100 interval

## 2 84.4 84.4 none 84.4 84.4 84.4 none 84.4 84.4 none

## 3 82.7 82.7 none 82.7 82.7 82.7 none 82.7 82.7 none

## 4 105. 105. none 105. 105. 105. none 105. 105. none

## 5 77.5 77.5 none 77.5 77.5 77.5 none 77.5 77.5 none

## 6 93.3 93.3 none 93.3 93.3 93.3 none 93.3 93.3 none

## 7 126. 106 right NA 126. 126. none 106 126. right

## 8 108. 106 right NA 108. 108. none 106 108. right

## 9 101. 101. none 101. 101. 101. none 101. 101. none

## 10 99.1 99.1 none NA 94 100 interval 94 100 interval

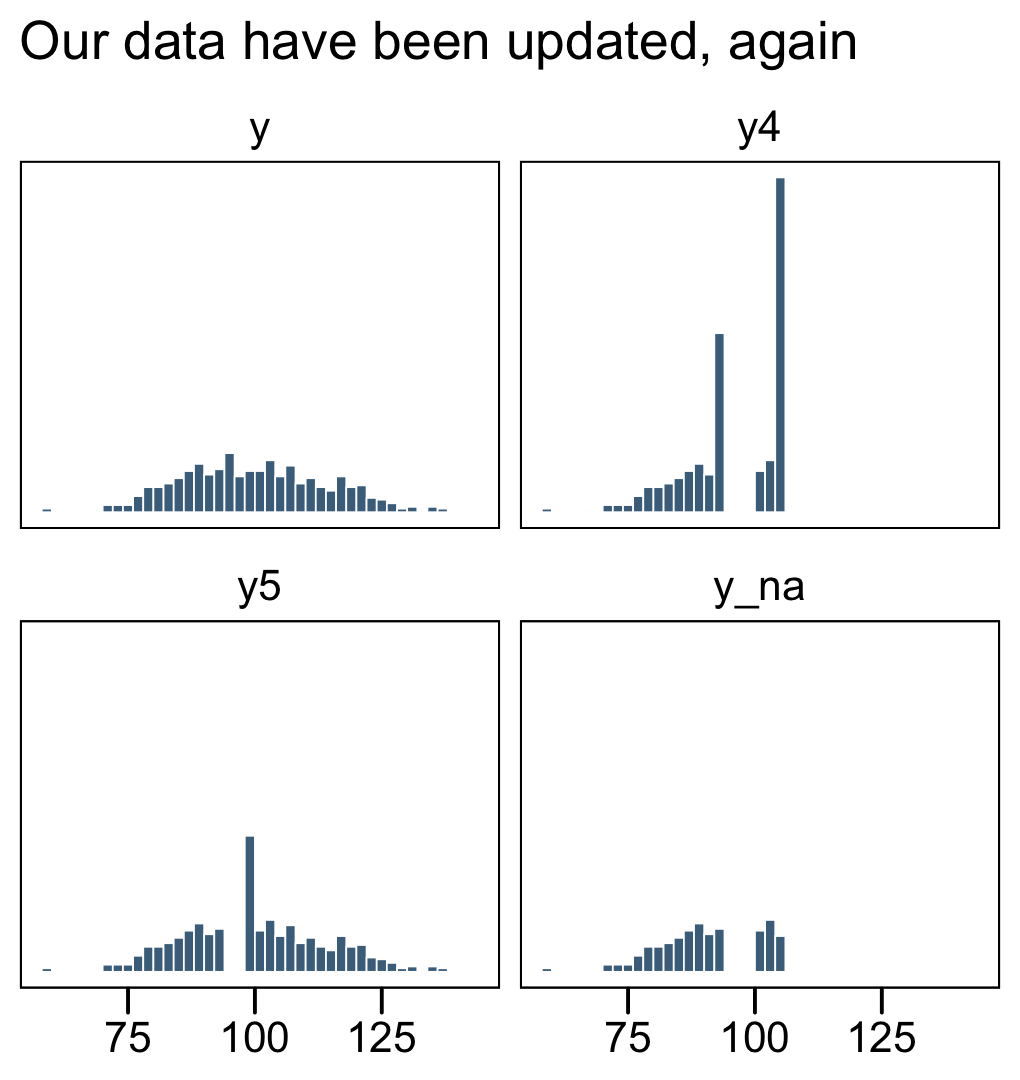

## # … with 490 more rowsExplore the new data with histograms.

d %>%

pivot_longer(cols = c(y, y4, y5, y_na)) %>%

mutate(name = factor(name, levels = c("y", "y4", "y5", "y_na"))) %>%

ggplot(aes(x = value)) +

geom_histogram(linewidth = 0.25, binwidth = 2, boundary = 106,

fill = "skyblue4", color = "white") +

labs(subtitle = "Our data have been updated, again",

x = NULL) +

scale_y_continuous(NULL, breaks = NULL) +

facet_wrap(~ name, ncol = 2)

In the text, Kruschke reported he had 255 uncensored values (p. 732). Here’s the breakdown of our data.

d %>%

count(cen3)## # A tibble: 3 × 2

## cen3 n

## <chr> <int>

## 1 interval 76

## 2 none 257

## 3 right 167We got really close! Now we fit our two models: fit25.1c, which ignores the censoring problem and just drops those cases, and fit25.2c, which makes slick use of the cens() function.

# naïve

fit25.1c <-

brm(data = d,

family = gaussian,

y_na ~ 1,

prior = c(prior(normal(mean_y, sd_y * 100), class = Intercept),

prior(normal(0, sd_y), class = sigma)),

chains = 4, cores = 4,

stanvars = stanvars,

file = "fits/fit25.01c")

# censored

fit25.2c <-

brm(data = d,

family = gaussian,

y4 | cens(cen3, y5) ~ 1,

prior = c(prior(normal(mean_y, sd_y * 100), class = Intercept),

prior(normal(0, sd_y), class = sigma)),

chains = 4, cores = 4,

stanvars = stanvars,

# here we insert our start values for the intercept

inits = inits_list,

file = "fits/fit25.02c")Compare the model summaries.

print(fit25.1c)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: y_na ~ 1

## Data: d (Number of observations: 257)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 89.87 0.64 88.62 91.12 1.00 3532 2900

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 10.12 0.44 9.30 11.01 1.00 4041 2475

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).print(fit25.2c)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: y4 | cens(cen3, y5) ~ 1

## Data: d (Number of observations: 500)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 99.48 0.70 98.13 100.89 1.00 3074 2240

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 14.27 0.58 13.21 15.45 1.00 2792 2545

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

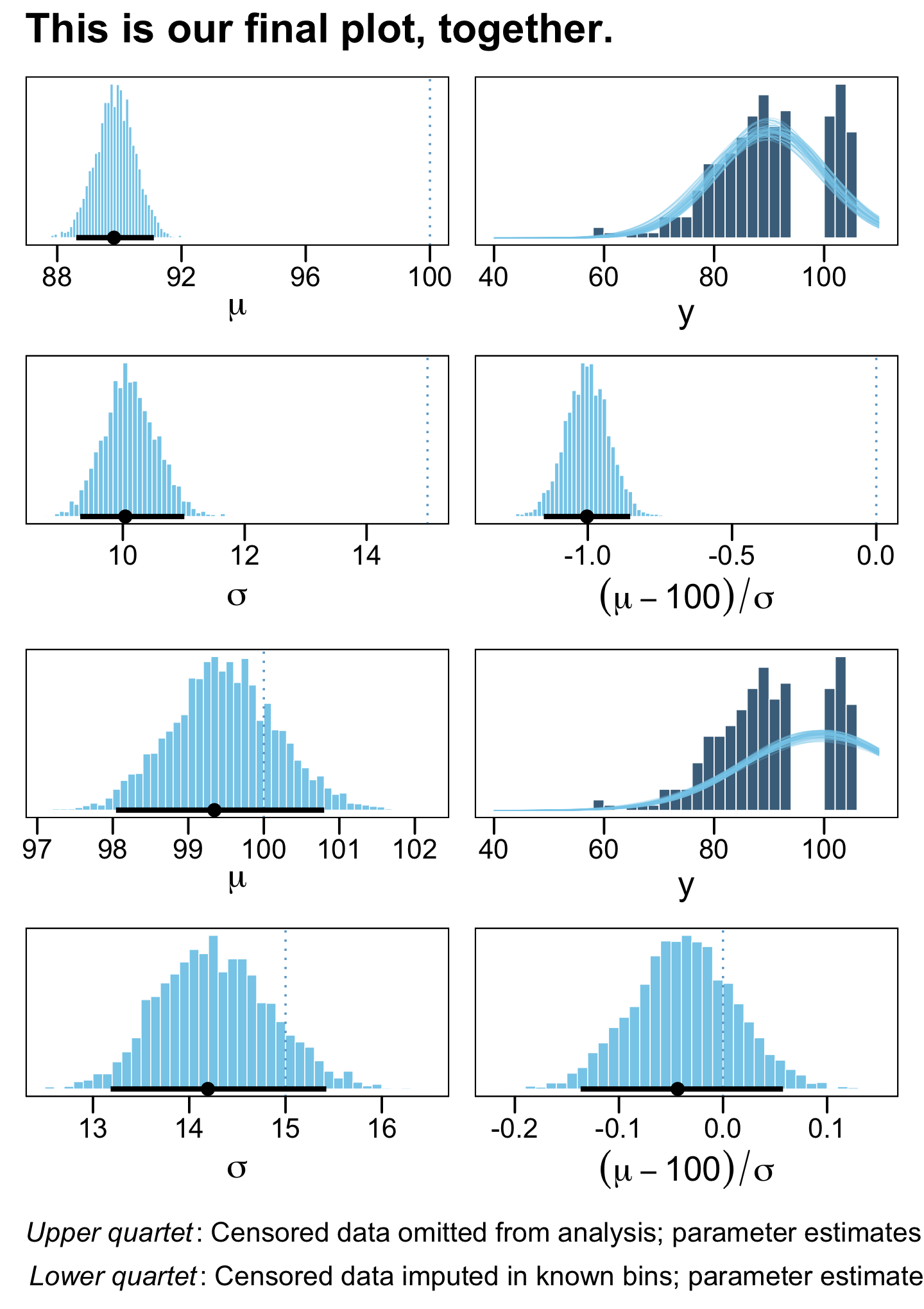

## scale reduction factor on split chains (at convergence, Rhat = 1).The results for the naïve model are a mess and those from the censored model look great! Also note how the latter used all avaliable cases, whereas the former dropped nearly half of them.

Before we can make our version of Figure 25.4, we’ll need to extract the posterior draws. We’ll start with fit25.1c.

draws <-

as_draws_df(fit25.1c) %>%

mutate(mu = b_Intercept,

`(mu-100)/sigma` = (b_Intercept - 100) / sigma)

head(draws)## # A draws_df: 6 iterations, 1 chains, and 6 variables

## b_Intercept sigma lprior lp__ mu (mu-100)/sigma

## 1 89 9.9 -11 -968 89 -1.10

## 2 90 10.6 -11 -968 90 -0.98

## 3 91 9.7 -11 -968 91 -0.98

## 4 90 10.3 -11 -967 90 -0.99

## 5 90 9.8 -11 -968 90 -0.97

## 6 90 9.6 -11 -968 90 -1.08

## # ... hidden reserved variables {'.chain', '.iteration', '.draw'}These subplots look a lot like those from back in Section 16.2. Since this is the last plot from the book, it seems like we should go all out. To reduce some of the code redundancy with the six subplots of the marginal posteriors, we’ll make a custom geom, geom_hist().

geom_hist <- function(xintercept = xintercept, binwidth = binwidth, ...) {

list(

geom_histogram(fill = "skyblue", color = "white", linewidth = 0.2, binwidth = binwidth, boundary = 106),

geom_vline(xintercept = xintercept, color = "skyblue3", linewidth = 1/2, linetype = 3),

stat_pointinterval(aes(y = 0), point_interval = mode_hdi, .width = .95),

scale_y_continuous(NULL, breaks = NULL)

)

}Now we have our geom_hist(), here are the first three histograms for the marginal posteriors from fit25.1c.

p1 <-

draws %>%

ggplot(aes(x = mu)) +

geom_hist(xintercept = 100, binwidth = 0.1) +

xlab(expression(mu))

p3 <-

draws %>%

ggplot(aes(x = sigma)) +

geom_hist(xintercept = 15, binwidth = 0.08) +

xlab(expression(sigma))

p4 <-

draws %>%

ggplot(aes(x = `(mu-100)/sigma`)) +

geom_hist(xintercept = 0, binwidth = 0.015) +

xlab(expression((mu-100)/sigma))The histogram of the censored data with the posterior predictive density curves superimposed will take a little more work.

n_lines <- 50

p2 <-

draws %>%

slice(1:n_lines) %>%

expand_grid(y_na = seq(from = 40, to = 120, by = 1)) %>%

mutate(density = dnorm(x = y_na, mean = mu, sd = sigma)) %>%

ggplot(aes(x = y_na)) +

geom_histogram(data = d,

aes(y = after_stat(density)),

linewidth = 0.25, binwidth = 2, boundary = 106,

fill = "skyblue4", color = "white") +

geom_line(aes(y = density, group = .draw),

size = 1/4, alpha = 1/3, color = "skyblue") +

scale_x_continuous("y", limits = c(40, 110)) +

scale_y_continuous(NULL, breaks = NULL)Now extract the posterior draws from our censored model, fit25.2, and repeat the process.

draws <-

as_draws_df(fit25.2c) %>%

mutate(mu = b_Intercept,

`(mu-100)/sigma` = (b_Intercept - 100) / sigma)

p5 <-

draws %>%

ggplot(aes(x = mu)) +

geom_hist(xintercept = 100, binwidth = 0.1) +

xlab(expression(mu))

p7 <-

draws %>%

ggplot(aes(x = sigma)) +

geom_hist(xintercept = 15, binwidth = 0.1) +

xlab(expression(sigma))

p8 <-

draws %>%

ggplot(aes(x = `(mu-100)/sigma`)) +

geom_hist(xintercept = 0, binwidth = 0.01) +

xlab(expression((mu-100)/sigma))

p6 <-

draws %>%

slice(1:n_lines) %>%

expand_grid(y_na = seq(from = 40, to = 120, by = 1)) %>%

mutate(density = dnorm(x = y_na, mean = mu, sd = sigma)) %>%

ggplot(aes(x = y_na)) +

geom_histogram(data = d,

aes(y = after_stat(density)),

linewidth = 0.25, binwidth = 2, boundary = 106,

fill = "skyblue4", color = "white") +

geom_line(aes(y = density, group = .draw),

size = 1/4, alpha = 1/3, color = "skyblue") +

scale_x_continuous("y", limits = c(40, 110)) +

scale_y_continuous(NULL, breaks = NULL)Finally, combine the subplots, and annotate a bit.

((p1 | p2) / (p3 | p4) / (p5 | p6) / (p7 | p8)) +

plot_annotation(title = "This is our final plot, together.",

caption = expression(atop(italic("Upper quartet")*": Censored data omitted from analysis; parameter estimates are too small. ", italic("Lower quartet")*": Censored data imputed in known bins; parameter estimates are accurate."))) &

theme(plot.caption = element_text(hjust = 0))

To learn more about censored data, check out the nice lecture by Gordon Fox, Introduction to analysis of censored and truncated data.

25.4.4 Bonus: Truncation.

Though Kruschke didn’t cover it, truncation is often confused with censoring. When you have actually collected all your values, but some are just less certain than you’d like, you have a censoring issue. That is, you can think of censoring as an issue with measurement precision. Truncation has to do with data collection. When there’s some level in your criterion variable that you haven’t collected data on, that’s a truncation issue. For example, imagine you wanted to predict scores on an IQ test with socioeconomic status (SES). There might be a relation, there. But now imagine you only collected data on people with an IQ above 110. Those IQ data are severely left truncated. If you only care about the relation of SES and IQ for those with high IQ scores, your data are fine. But if you want to make general statements across the full range of IQ values, standard regression methods will likely produce biased results. Happily, brms allows users to accommodate truncated criterion variables with the trunc() function, which works in a similar way to the cens() function. For details on fitting truncated regression models with trunc(), check out the Additional response information subsection of the brmsformula section of the brms reference manual. The end of the Fox lecture, above, briefly covers truncated regression. You can also find a brief vignette on truncation by the good folks at the UCLA Institute for Digital Research and Education.

25.5 What Next?

“If you have made it this far and you are looking for more, you might peruse posts at [Kruschke’s] blog, https://doingbayesiandataanalysis.blogspot.com/, and search there for topics that interest you.” In addition to the other references Kruschke mentioned, you might also check out McElreath’s Statistical rethinking, both first (2015) and second (2020) editions. Much like this ebook, I have recoded both editions of Statistical rethinking in a bookdown form (Kurz, 2023a, 2023b). Click here for the first and here for the second. You can find other pedagogical material at my academic blog, https://solomonkurz.netlify.com/post/. For assistance on brms-related issues, check out the brms section on the Stan forums at https://discourse.mc-stan.org/c/interfaces/brms/36. Nicenboim, Schad, and Vasishth also have a nice new (2021) ebook called An introduction to Bayesian data analysis for cognitive science, which highlights brms in a way that could compliment the material we have practiced together.

Happy modeling, friends!

Session info

sessionInfo()## R version 4.2.2 (2022-10-31)

## Platform: x86_64-apple-darwin17.0 (64-bit)

## Running under: macOS Big Sur ... 10.16

##

## Matrix products: default

## BLAS: /Library/Frameworks/R.framework/Versions/4.2/Resources/lib/libRblas.0.dylib

## LAPACK: /Library/Frameworks/R.framework/Versions/4.2/Resources/lib/libRlapack.dylib

##

## locale:

## [1] en_US.UTF-8/en_US.UTF-8/en_US.UTF-8/C/en_US.UTF-8/en_US.UTF-8

##

## attached base packages:

## [1] stats graphics grDevices utils datasets methods base

##

## other attached packages:

## [1] patchwork_1.1.2 tidybayes_3.0.2 ggthemes_4.2.4 brms_2.18.0

## [5] Rcpp_1.0.9 forcats_0.5.1 stringr_1.4.1 dplyr_1.0.10

## [9] purrr_1.0.1 readr_2.1.2 tidyr_1.2.1 tibble_3.1.8

## [13] ggplot2_3.4.0 tidyverse_1.3.2

##

## loaded via a namespace (and not attached):

## [1] readxl_1.4.1 backports_1.4.1 plyr_1.8.7

## [4] igraph_1.3.4 svUnit_1.0.6 sp_1.5-0

## [7] splines_4.2.2 crosstalk_1.2.0 TH.data_1.1-1

## [10] rstantools_2.2.0 inline_0.3.19 digest_0.6.31

## [13] htmltools_0.5.3 fansi_1.0.3 magrittr_2.0.3

## [16] checkmate_2.1.0 googlesheets4_1.0.1 tzdb_0.3.0

## [19] modelr_0.1.8 RcppParallel_5.1.5 matrixStats_0.63.0

## [22] xts_0.12.1 sandwich_3.0-2 prettyunits_1.1.1

## [25] colorspace_2.0-3 rvest_1.0.2 ggdist_3.2.1

## [28] haven_2.5.1 xfun_0.35 callr_3.7.3

## [31] crayon_1.5.2 jsonlite_1.8.4 lme4_1.1-31

## [34] survival_3.4-0 zoo_1.8-10 glue_1.6.2

## [37] gtable_0.3.1 gargle_1.2.0 emmeans_1.8.0

## [40] distributional_0.3.1 pkgbuild_1.3.1 rstan_2.21.8

## [43] abind_1.4-5 scales_1.2.1 mvtnorm_1.1-3

## [46] emo_0.0.0.9000 DBI_1.1.3 miniUI_0.1.1.1

## [49] xtable_1.8-4 HDInterval_0.2.4 stats4_4.2.2

## [52] StanHeaders_2.21.0-7 DT_0.24 htmlwidgets_1.5.4

## [55] httr_1.4.4 threejs_0.3.3 arrayhelpers_1.1-0

## [58] posterior_1.3.1 ellipsis_0.3.2 pkgconfig_2.0.3

## [61] loo_2.5.1 farver_2.1.1 sass_0.4.2

## [64] dbplyr_2.2.1 utf8_1.2.2 labeling_0.4.2

## [67] tidyselect_1.2.0 rlang_1.0.6 reshape2_1.4.4

## [70] later_1.3.0 munsell_0.5.0 cellranger_1.1.0

## [73] tools_4.2.2 cachem_1.0.6 cli_3.6.0

## [76] generics_0.1.3 broom_1.0.2 evaluate_0.18

## [79] fastmap_1.1.0 processx_3.8.0 knitr_1.40

## [82] fs_1.5.2 nlme_3.1-160 mime_0.12

## [85] projpred_2.2.1 xml2_1.3.3 compiler_4.2.2

## [88] bayesplot_1.10.0 shinythemes_1.2.0 rstudioapi_0.13

## [91] gamm4_0.2-6 reprex_2.0.2 bslib_0.4.0

## [94] stringi_1.7.8 highr_0.9 ps_1.7.2

## [97] Brobdingnag_1.2-8 lattice_0.20-45 Matrix_1.5-1

## [100] nloptr_2.0.3 markdown_1.1 shinyjs_2.1.0

## [103] tensorA_0.36.2 vctrs_0.5.1 pillar_1.8.1

## [106] lifecycle_1.0.3 jquerylib_0.1.4 bridgesampling_1.1-2

## [109] estimability_1.4.1 raster_3.5-15 httpuv_1.6.5

## [112] R6_2.5.1 bookdown_0.28 promises_1.2.0.1

## [115] gridExtra_2.3 codetools_0.2-18 boot_1.3-28

## [118] colourpicker_1.1.1 MASS_7.3-58.1 gtools_3.9.4

## [121] assertthat_0.2.1 withr_2.5.0 shinystan_2.6.0

## [124] multcomp_1.4-20 mgcv_1.8-41 parallel_4.2.2

## [127] hms_1.1.1 terra_1.5-21 grid_4.2.2

## [130] coda_0.19-4 minqa_1.2.5 rmarkdown_2.16

## [133] googledrive_2.0.0 shiny_1.7.2 lubridate_1.8.0

## [136] base64enc_0.1-3 dygraphs_1.1.1.6