15 Overview of the Generalized Linear Model

Along with Kruschke’s text, in this part if the project we’re moving away from simple Bernoulli coin flipping examples to more complicated analyses of the type we’d actually see in applied data analysis. As Kruschke explained, we’ll be using a

versatile family of models known as the generalized linear model [GLM; Nelder & Wedderburn (1972); Nelder & Wedderburn (1972)]. This family of models comprises the traditional “off the shelf” analyses such as t tests, analysis of variance (ANOVA), multiple linear regression, logistic regression, log-linear models, etc. (Kruschke, 2015, p. 420)

15.1 Types of variables

“To understand the GLM and its many specific cases, we must build up a variety of component concepts regarding relationships between variables and how variables are measured in the first place (p. 420).”

15.1.1 Predictor and predicted variables.

It’s worth repeating the second paragraph of this subsection in its entirety.

The key mathematical difference between predictor and predicted variables is that the likelihood function expresses the probability of values of the predicted variable as a function of values of the predictor variable. The likelihood function does not describe the probabilities of values of the predictor variable. The value of the predictor variable comes from outside the system being modeled, whereas the value of the predicted variable depends on the value of the predictor variable. (p. 420)

This is one of those fine points that can be easy to miss when you’re struggling through the examples in this book or chest-deep in the murky waters of your own real-world data problem. But write this down on a sticky note and put it in your sock drawer or something. There are good reasons to fret about the distributional properties of your predictor variables–rules of thumb about the likelihood aren’t among them.

15.1.2 Scale types: metric, ordinal, nominal, and count.

I don’t know that I’m interested in detailing the content of this section. But it’s worth while considering what Kruschke wrote in its close.

Why we care: We care about the scale type because the likelihood function must specify a probability distribution on the appropriate scale. If the scale has two nominal values, then a Bernoulli likelihood function may be appropriate. If the scale is metric, then a normal distribution may be appropriate as a probability distribution to describe the data. Whenever we are choosing a model for data, we must answer the question, What kind of scale are we dealing with? (p. 423, emphasis in the original)

15.2 Linear combination of predictors

“The core of the GLM is expressing the combined influence of predictors as their weighted sum. The following sections build this idea by scaffolding from the simplest intuitive cases” (p. 423).

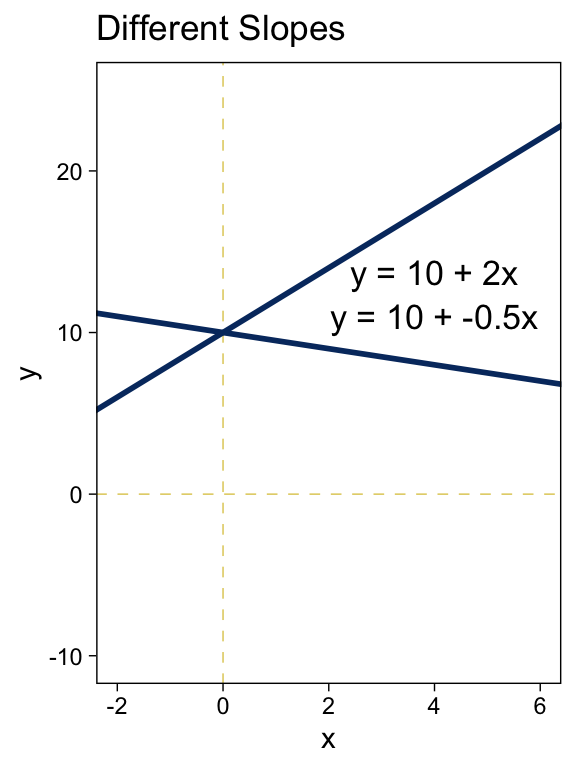

15.2.1 Linear function of a single metric predictor.

“A linear function is the generic, ‘vanilla,’ off-the-shelf dependency that is used in statistical models” (p. 424). Its basic form is

y=β0+β1x,

where y is the variable being predicted and x is the predictor. β0 is the intercept (i.e., the expected value when x is zero) and β1 is the expected increase in y after a one-unit increase in x.

Before we make our version of Figure 15.1, let’s talk about our theme and color palette. In this chapter, we’ll base our overall on ggplot2::theme_linedraw(). We’ll take selections from option = "E" from the viridis package to make our color palette. Based on our ggplot2 skills from the last few chapters, these moves are pretty mundane. We can go further.

In this chapter, we’ll extend our skillset by practicing how to alter the default settings of our ggplot2 geoms. If you browse through the content of the text, you’ll see we’ll a lot of the upcomming plots will require lines of various sorts. We’ll make the bulk of those lines with geom_line(), geom_hline(), geom_vline(), and geom_segment(). as a first step, it’d be handy to see how their default aesthetic settings are defined. Here’s how to do that for geom_line().

ggplot2:::check_subclass("line", "Geom")$default_aes## Aesthetic mapping:

## * `colour` -> "black"

## * `linewidth` -> 0.5

## * `linetype` -> 1

## * `alpha` -> NAWe can use the ggplot2::update_geom_defaults() function to change those default settings. Here’s how we might go about increasing the default size and color parameters.

library(tidyverse)

library(viridis)

default_line <- ggplot2:::check_subclass("line", "Geom")$default_aes

update_geom_defaults(

geom = "line",

new = list(

color = viridis_pal(option = "E")(9)[2],

linewidth = 1)

)Now confirm our new defaults.

ggplot2:::check_subclass("line", "Geom")$default_aes## $colour

## [1] "#05366EFF"

##

## $linewidth

## [1] 1

##

## $linetype

## [1] 1

##

## $alpha

## [1] NACritically, notice how we saved the original default settings for geom_line() as default_line before we changed them. That way, all we have to do is execute this to change things back to normal.

update_geom_defaults(

geom = "line",

new = default_line

)

ggplot2:::check_subclass("line", "Geom")$default_aes## $colour

## [1] "black"

##

## $linewidth

## [1] 0.5

##

## $linetype

## [1] 1

##

## $alpha

## [1] NANow you see how this works, let’s save the default settings for the other three geoms.

default_hline <- ggplot2:::check_subclass("vline", "Geom")$default_aes

default_vline <- ggplot2:::check_subclass("hline", "Geom")$default_aes

default_segment <- ggplot2:::check_subclass("segment", "Geom")$default_aesHere we’ll update the defaults of all four of our line-oriented geoms and change the settings for our global plot theme.

update_geom_defaults(

geom = "line",

new = list(

color = viridis_pal(option = "E")(9)[2],

linewidth = 1)

)

update_geom_defaults(

geom = "hline",

new = list(

color = viridis_pal(option = "E")(9)[8],

linewidth = 1/4,

linetype = 2)

)

update_geom_defaults(

geom = "vline",

new = list(

color = viridis_pal(option = "E")(9)[8],

linewidth = 1/4,

linetype = 2)

)

update_geom_defaults(

geom = "segment",

new = list(

color = viridis_pal(option = "E")(9)[5],

linewidth = 1/4)

)

theme_set(

theme_linedraw() +

theme(panel.grid = element_blank())

)You can learn more about these methods here and here. Let’s see what we’ve done by making the left panel of Figure 15.1.

tibble(x = -3:7) %>%

mutate(y_1 = -5 + 2 * x,

y_2 = 10 + 2 * x) %>%

ggplot(aes(x = x)) +

geom_vline(xintercept = 0) +

geom_hline(yintercept = 0) +

geom_line(aes(y = y_1)) +

geom_line(aes(y = y_2)) +

geom_text(data = tibble(

x = 2.25,

y = c(-5, 10),

label = c("y = -5 + 2x", "y = 10 + 2x")

),

aes(y = y, label = label),

size = 4.5) +

labs(title = "Different Intercepts",

y = "y") +

coord_cartesian(xlim = c(-2, 6),

ylim = c(-10, 25))

Did you notice how we followed the form of the basic linear function when we defined the values for y_1 and y_2? It’s that simple! Here’s the right panel.

tibble(x = -3:7) %>%

mutate(y_1 = 10 + -0.5 * x,

y_2 = 10 + 2 * x) %>%

ggplot(aes(x = x)) +

geom_vline(xintercept = 0) +

geom_hline(yintercept = 0) +

geom_line(aes(y = y_1)) +

geom_line(aes(y = y_2)) +

geom_text(data = tibble(

x = 4,

y = c(11, 13.75),

label = c("y = 10 + -0.5x", "y = 10 + 2x")

),

aes(y = y, label = label),

size = 4.5) +

labs(title = "Different Slopes",

y = "y") +

coord_cartesian(xlim = c(-2, 6),

ylim = c(-10, 25))

Summary of why we care. The likelihood function includes the form of the dependency of y on x. When y and x are metric variables, the simplest form of dependency, both mathematically and intuitively, is one that preserves proportionality. The mathematical expression of this relation is a so-called linear function. The usual mathematical expression of a line is the y-intercept form, but sometimes a more intuitive expression is the x threshold form. Linear functions form the core of the GLM. (pp. 424–425, emphasis in the original)

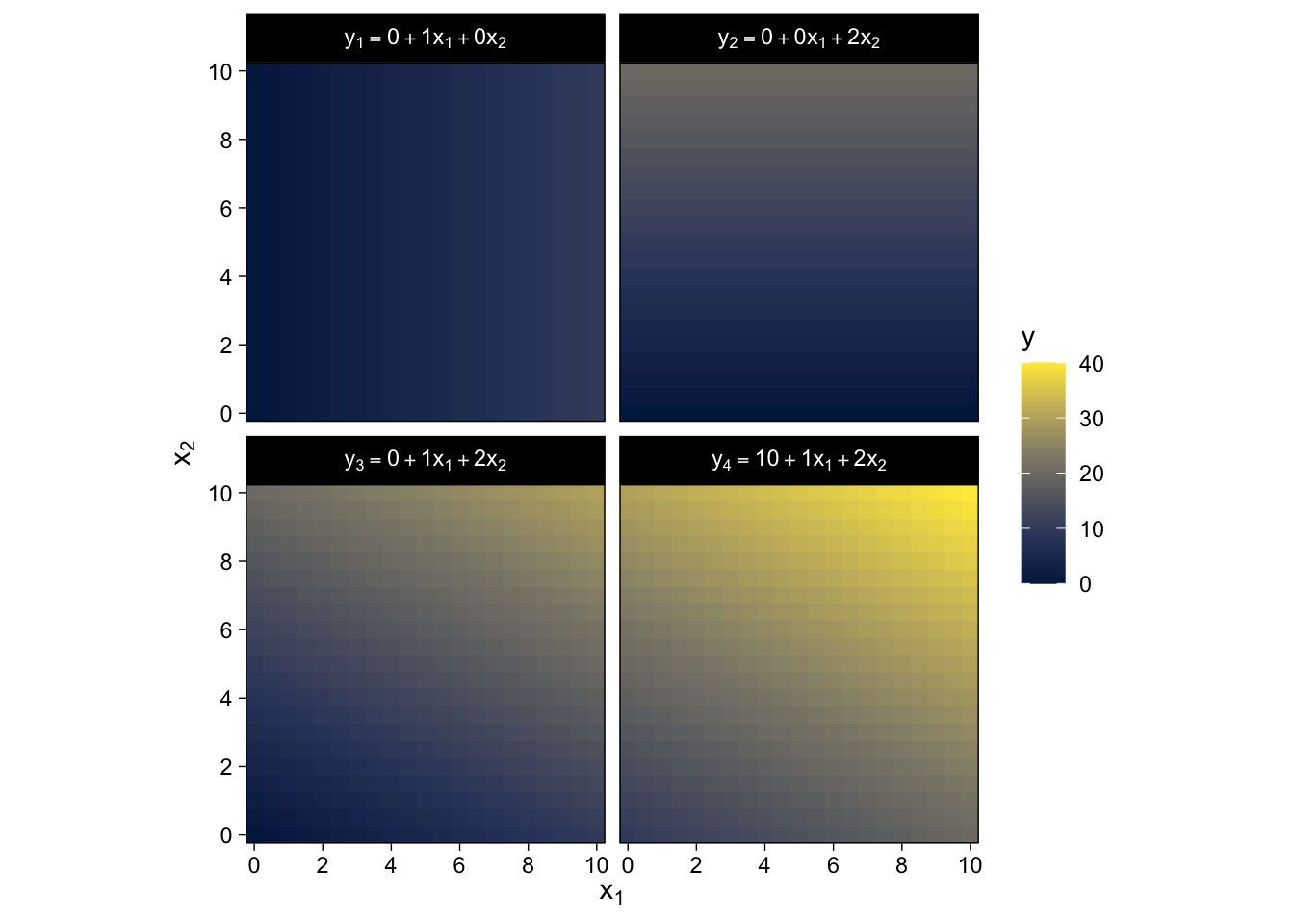

15.2.2 Additive combination of metric predictors.

The linear combination of K predictors has the general form

y=β0+β1x1+⋯+βKxK=β0+K∑k=1βkxk.

In the special case where K=0, you have an intercept-only model,

y=β0,

in which β0 simply models the mean of y.

We won’t be able to reproduce the wireframe plots of Figure 15.2, exactly. But we can use some geom_raster() tricks from back in Chapter 10 to express the third y dimension as fill gradients.

crossing(x1 = seq(from = 0, to = 10, by = 0.5),

x2 = seq(from = 0, to = 10, by = 0.5)) %>%

mutate(`y[1] == 0 + 1 * x[1] + 0 * x[2]` = 0 + 1 * x1 + 0 * x2,

`y[2] == 0 + 0 * x[1] + 2 * x[2]` = 0 + 0 * x1 + 2 * x2,

`y[3] == 0 + 1 * x[1] + 2 * x[2]` = 0 + 1 * x1 + 2 * x2,

`y[4] == 10 + 1 * x[1] + 2 * x[2]` = 10 + 1 * x1 + 2 * x2) %>%

pivot_longer(cols = -c(x1, x2),

values_to = "y") %>%

ggplot(aes(x = x1, y = x2, fill = y)) +

geom_raster() +

scale_fill_viridis_c(option = "E") +

scale_x_continuous(expression(x[1]), expand = c(0, 0),

breaks = seq(from = 0, to = 10, by = 2)) +

scale_y_continuous(expression(x[2]), expand = c(0, 0),

breaks = seq(from = 0, to = 10, by = 2)) +

coord_equal() +

facet_wrap(~ name, labeller = label_parsed)

Here we’ve captured some of Kruschke’s grid aesthetic by keeping the by argument within seq() somewhat coarse and omitting the interpolate = T argument from geom_raster(). If you’d prefer smoother fill transitions for the y-values, set by = 0.1 and interpolate = T.

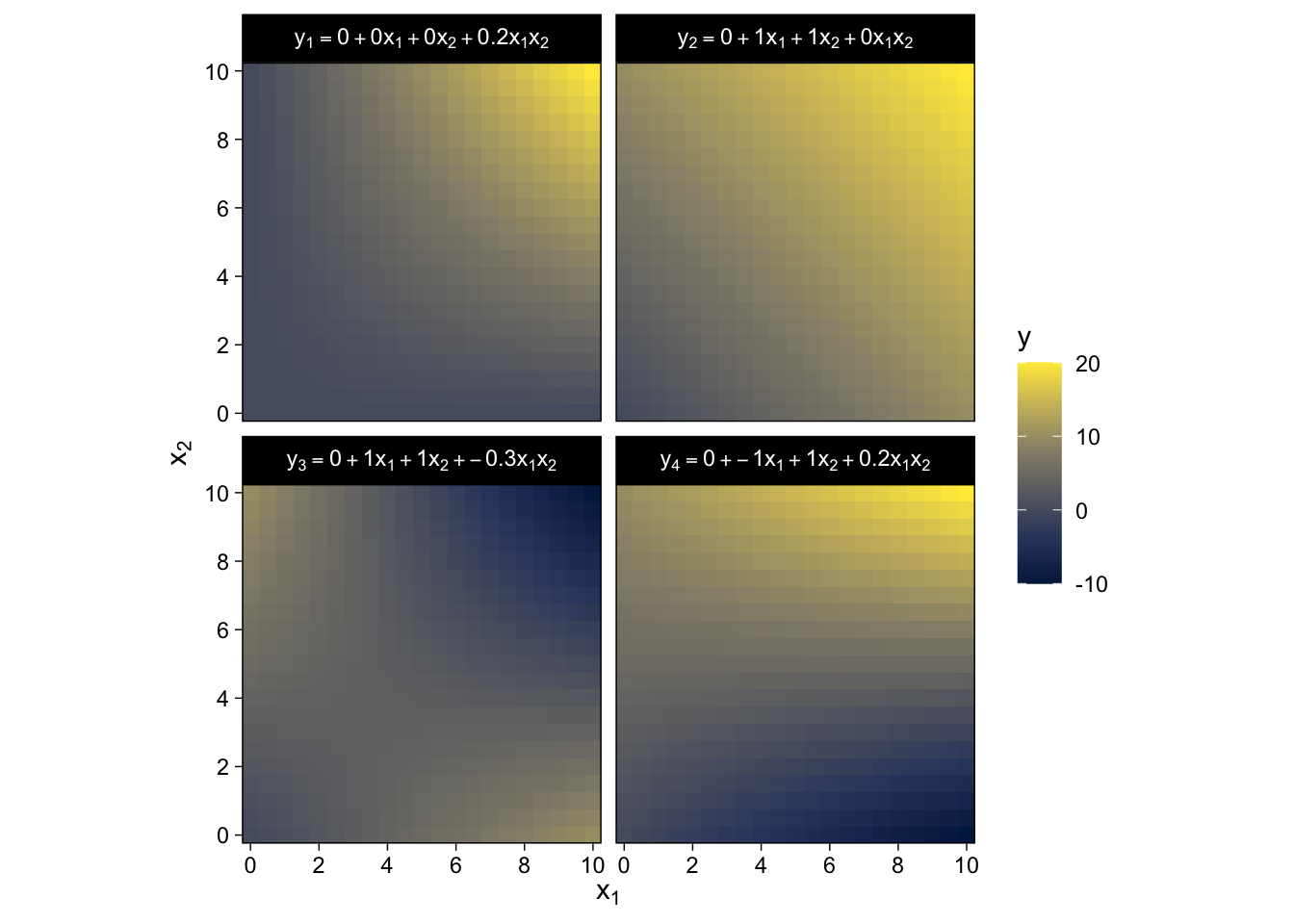

15.2.3 Nonadditive interaction of metric predictors.

As it turns out, “the combined influence of two predictors does not have to be additive” (p. 427). Let’s explore what that can look like with our version of Figure 15.3.

crossing(x1 = seq(from = 0, to = 10, by = 0.5),

x2 = seq(from = 0, to = 10, by = 0.5)) %>%

mutate(`y[1] == 0 + 0 * x[1] + 0 * x[2] + 0.2 * x[1] * x[2]` = 0 + 0 * x1 + 0 * x2 + 0.2 * x1 * x2,

`y[2] == 0 + 1 * x[1] + 1 * x[2] + 0 * x[1] * x[2]` = 0 + 1 * x1 + 1 * x2 + 0 * x1 * x2,

`y[3] == 0 + 1 * x[1] + 1 * x[2] + -0.3 * x[1] * x[2]` = 0 + 1 * x1 + 1 * x2 + -0.3 * x1 * x2,

`y[4] == 0 + -1 * x[1] + 1 * x[2] + 0.2 * x[1] * x[2]` = 0 + -1 * x1 + 1 * x2 + 0.2 * x1 * x2) %>%

pivot_longer(cols = -c(x1, x2),

values_to = "y") %>%

ggplot(aes(x = x1, y = x2, fill = y)) +

geom_raster() +

scale_fill_viridis_c(option = "E") +

scale_x_continuous(expression(x[1]), expand = c(0, 0),

breaks = seq(from = 0, to = 10, by = 2)) +

scale_y_continuous(expression(x[2]), expand = c(0, 0),

breaks = seq(from = 0, to = 10, by = 2)) +

coord_equal() +

facet_wrap(~ name, labeller = label_parsed)

Did you notice all those * operators in the mutate() code? We’re no longer restricting ourselves to additive functions. We’re square in the middle of multiplicative town!

There is a subtlety in the use of the term “linear” that can sometimes cause confusion in this context. The interactions shown in Figure 15.3 are not linear on the two predictors x1 and x2. But if the product of the two predictors, x1x2, is thought of as a third predictor, then the model is linear on the three predictors, because the predicted value of y is a weighted additive combination of the three predictors. This reconceptualization can be useful for implementing nonlinear interactions in software for linear models, but we will not be making that semantic leap to a third predictor, and instead we will think of a nonadditive combination of two predictors.

A nonadditive interaction of predictors does not have to be multiplicative. Other types of interaction are possible. (p. 428, emphasis in the original)

In my experience, this “subtlety” is really easy to misunderstand. You might be surprised how often it pops up even among senior scholars. Which is all to say, this stuff is difficult, so give yourself a break.

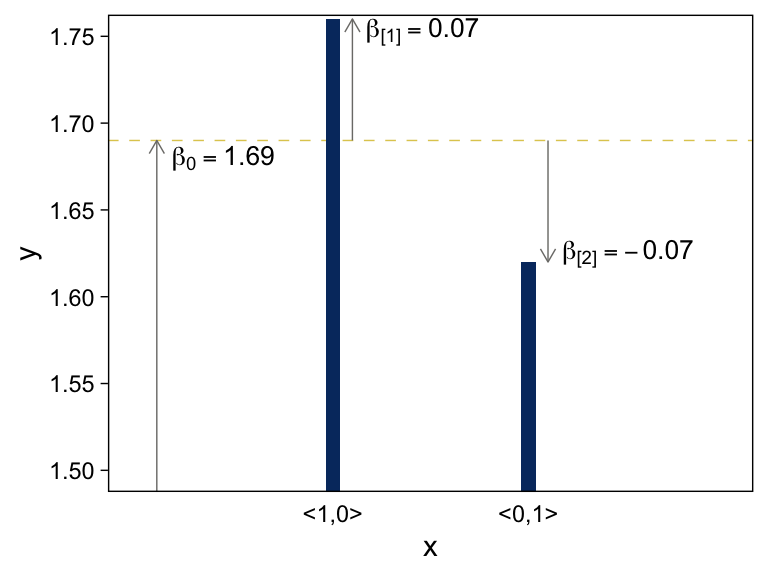

15.2.4 Nominal predictors.

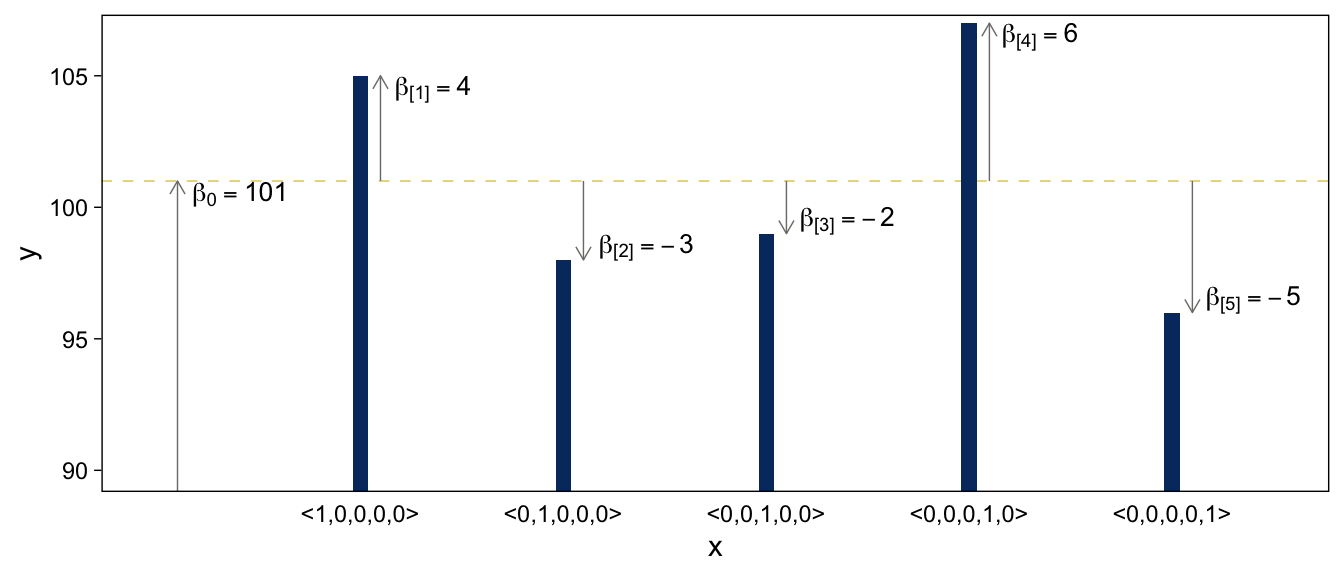

15.2.4.1 Linear model for a single nominal predictor.

Instead of representing the value of the nominal predictor by a single scalar value x, we will represent the nominal predictor by a vector →x=⟨x[1],...,x[J]⟩ where J is the number of categories that the predictor has…

We will denote the baseline value of the prediction as β0. The deflection for the jth level of the predictor is denoted β[j]. Then the predicted value is

y=β0+β[1]x[1]+⋯+β[J]x[J]=β0+→β⋅→x

where the notation →β⋅→x is sometimes called the “dot product” of the vectors. (p. 429)

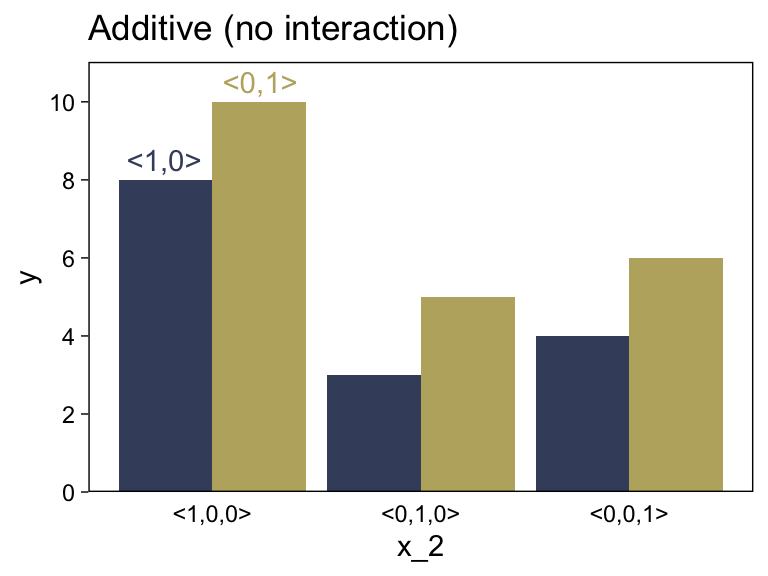

15.2.4.2 Additive combination of nominal predictors.

When you have two additive nominal predictors, the model follows the form

y=β0+→β1→x1+→β2→x2=β0+∑jβ1[j]x1[j]+∑kβ2[k]x2[k],

given the constraints

∑jβ1[j]=0and∑kβ2[k]=0.

Both panels in Figure 15.4 are going to require three separate data objects. Here’s the code for the top panel.

arrows <-

tibble(x = c(0.1, 1.1, 2.1),

y = c(1, 1.69, 1.69),

yend = c(1.69, 1.69 + .07, 1.69 - .07))

text <-

tibble(x = c(0.44, 1.46, 2.51),

y = c(1.68, 1.753, 1.625),

label = c("beta[0] == 1.69", "beta['[1]'] == 0.07", "beta['[2]'] == -0.07"))

tibble(x = 1:2,

y = c(1.69 + .07, 1.69 - .07)) %>%

# plot!

ggplot(aes(x = x, y = y)) +

geom_hline(yintercept = 1.69) +

geom_col(width = .075, fill = viridis_pal(option = "E")(9)[2]) +

geom_segment(data = arrows,

aes(xend = x, yend = yend),

arrow = arrow(length = unit(0.2, "cm"))) +

geom_text(data = text,

aes(label = label),

size = 3.5, parse = T) +

scale_x_continuous(breaks = 1:2, labels = c("<1,0>", "<0,1>")) +

coord_cartesian(xlim = c(0, 3),

ylim = c(1.5, 1.75)) +

theme(axis.ticks.x = element_blank())

Here’s the code for the bottom panel.

arrows <-

tibble(x = c(0.1, 1.1, 2.1, 3.1, 4.1, 5.1),

y = rep(c(50, 101), times = c(1, 5)),

yend = c(101, 101 + 4, 101 - 3, 101 - 2, 101 + 6, 101 - 5))

text <-

tibble(x = c(0.41, 1.36, 2.41, 3.4, 4.35, 5.4),

y = c(100.5, 104.5, 98.5, 99.5, 106.5, 96.5),

label = c("beta[0] == 101", "beta['[1]'] == 4", "beta['[2]'] == -3", "beta['[3]'] == -2", "beta['[4]'] == 6", "beta['[5]'] == -5"))

tibble(x = 1:5,

y = c(101 + 4, 101 - 3, 101 - 2, 101 + 6, 101 - 5)) %>%

# the plot

ggplot(aes(x = x, y = y)) +

geom_hline(yintercept = 101) +

geom_col(width = .075, fill = viridis_pal(option = "E")(9)[2]) +

geom_segment(data = arrows,

aes(xend = x, yend = yend),

arrow = arrow(length = unit(0.2, "cm"))) +

geom_text(data = text,

aes(label = label),

size = 3.5, parse = T) +

scale_x_continuous(breaks = 1:5,

labels = c("<1,0,0,0,0>", "<0,1,0,0,0>", "<0,0,1,0,0>", "<0,0,0,1,0>", "<0,0,0,0,1>")) +

coord_cartesian(xlim = c(0, 5.5),

ylim = c(90, 106.5)) +

theme(axis.ticks.x = element_blank())

Before we make our versions of the two panels in Figure 15.5, we should note that the values in the prose are at odds with the values implied in the figure. For simplicity, our versions of Figure 15.5 will match up with the values in the original figure, not the prose. For a look at what the figure might have looked like had it been based on the values in the prose, check out Kruschke’s Corrigenda.

Here’s the code for the left panel of Figure 15.5.

d <-

tibble(x_1 = rep(c(" <1,0>", "<0,1> "), times = 4),

x_2 = c(rep(0:2, each = 2), -0.25, 0.25),

y = c(8, 10, 3, 5, 4, 6, 8.5, 10.5),

type = rep(c("number", "text"), times = c(6, 2))) %>%

mutate(x_1 = factor(x_1, levels = c(" <1,0>", "<0,1> ")))

d %>%

filter(type == "number") %>%

ggplot(aes(x = x_2, y = y, fill = x_1)) +

geom_col(position = "dodge") +

geom_text(data = d %>% filter(type == "text"),

aes(label = x_1, color = x_1)) +

scale_fill_viridis_d(NULL, option = "E", begin = .25, end = .75) +

scale_color_viridis_d(NULL, option = "E", begin = .25, end = .75) +

scale_x_continuous(breaks = 0:2, labels = c("<1,0,0>", "<0,1,0>", "<0,0,1>")) +

scale_y_continuous(breaks = seq(from = 0, to = 10, by = 2), expand = expansion(mult = c(0, 0.05))) +

ggtitle("Additive (no interaction)") +

theme(axis.ticks.x = element_blank(),

legend.position = "none")

Did you notice our filter() trick in the code?

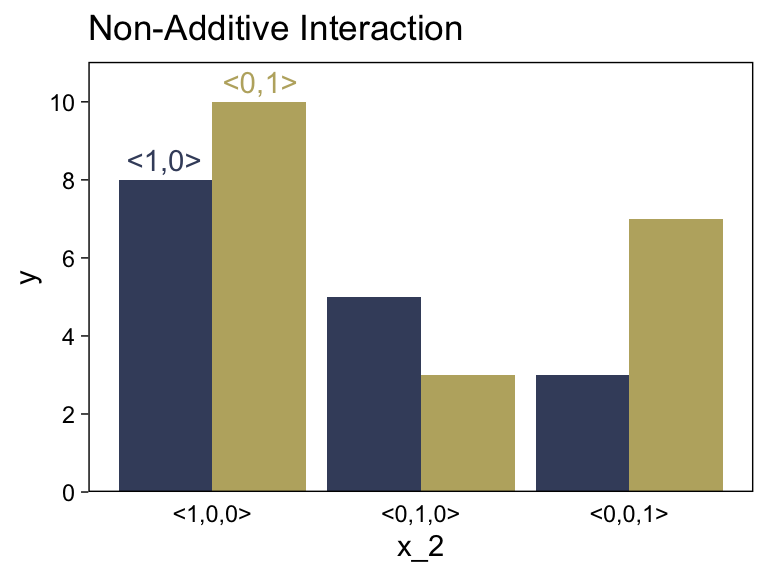

15.2.4.3 Nonadditive interaction of nominal predictors.

We need new notation to formalize the nonadditive influence of a combination of nominal values. Just as →x1 refers to the value of predictor 1, and →x2 refers to the value of predictor 2, the notation →x1×2 will refer to a particular combination of values of predictors 1 and 2. If there are J levels of predictor 1 and K levels of predictor 2, then there are J×K combinations of the two predictors. To indicate a particular combination of levels from predictors 1 and 2, the corresponding component of →x1×2 is set to 1 while all other components are set to 0. A nonadditive interaction of predictors is formally represented by including a term for the influence of combinations of predictors, beyond the additive influences, as follows: y=β0+→β1⋅→x1+→β2⋅→x2+→β1×2⋅→x1×2. (pp. 432–433, emphasis in the original)

Now we’re in nonadditive interaction land, here’s the code for the right panel of Figure 15.5.

d <-

tibble(x_1 = rep(c(" <1,0>", "<0,1> "), times = 4),

x_2 = c(rep(0:2, each = 2), -0.25, 0.25),

y = c(8, 10, 5, 3, 3, 7, 8.5, 10.5),

type = rep(c("number", "text"), times = c(6, 2))) %>%

mutate(x_1 = factor(x_1, levels = c(" <1,0>", "<0,1> ")))

d %>%

filter(type == "number") %>%

ggplot(aes(x = x_2, y = y, fill = x_1)) +

geom_col(position = "dodge") +

geom_text(data = d %>% filter(type == "text"),

aes(label = x_1, color = x_1)) +

scale_fill_viridis_d(NULL, option = "E", begin = .25, end = .75) +

scale_color_viridis_d(NULL, option = "E", begin = .25, end = .75) +

scale_x_continuous(breaks = 0:2, labels = c("<1,0,0>", "<0,1,0>", "<0,0,1>")) +

scale_y_continuous(breaks = 0:5 * 2, expand = expansion(mult = c(0, 0.05))) +

ggtitle("Non-Additive Interaction") +

theme(axis.ticks.x = element_blank(),

legend.position = "none")

“The main point to understand now is that the term ‘interaction’ refers to a nonadditive influence of the predictors on the predicted, regardless of whether the predictors are measured on a nominal scale or a metric scale” (p. 434, emphasis in the original).

15.3 Linking from combined predictors to noisy predicted data

15.3.1 From predictors to predicted central tendency.

After the predictor variables are combined, they need to be mapped to the predicted variable. This mathematical mapping is called the (inverse) link function, and is denoted by f() in the following equation:

y=f(lin(x))

Until now, we have been assuming that the link function is merely the identity function, f(lin(x))=lin(x). (p. 436, emphasis in the original)

Yet as we’ll see, many models use links other than the identity function.

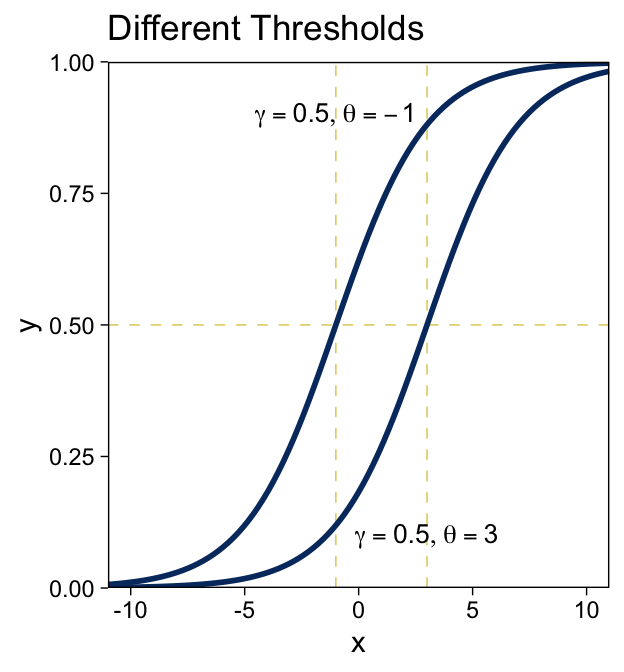

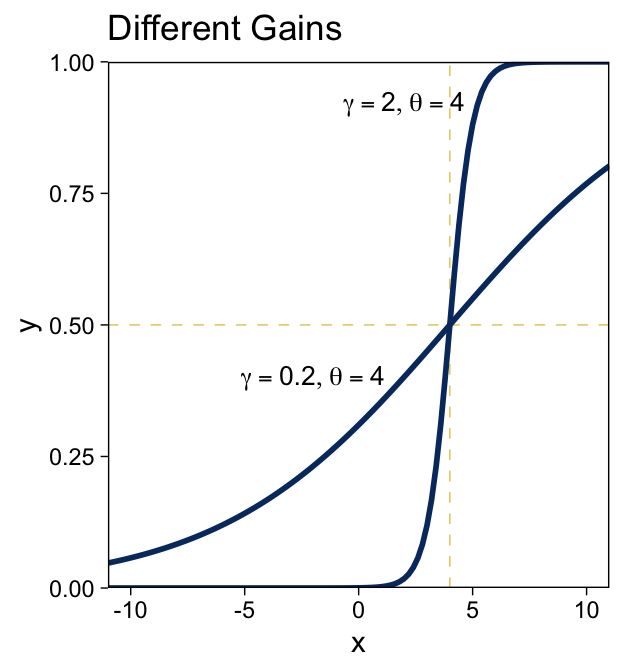

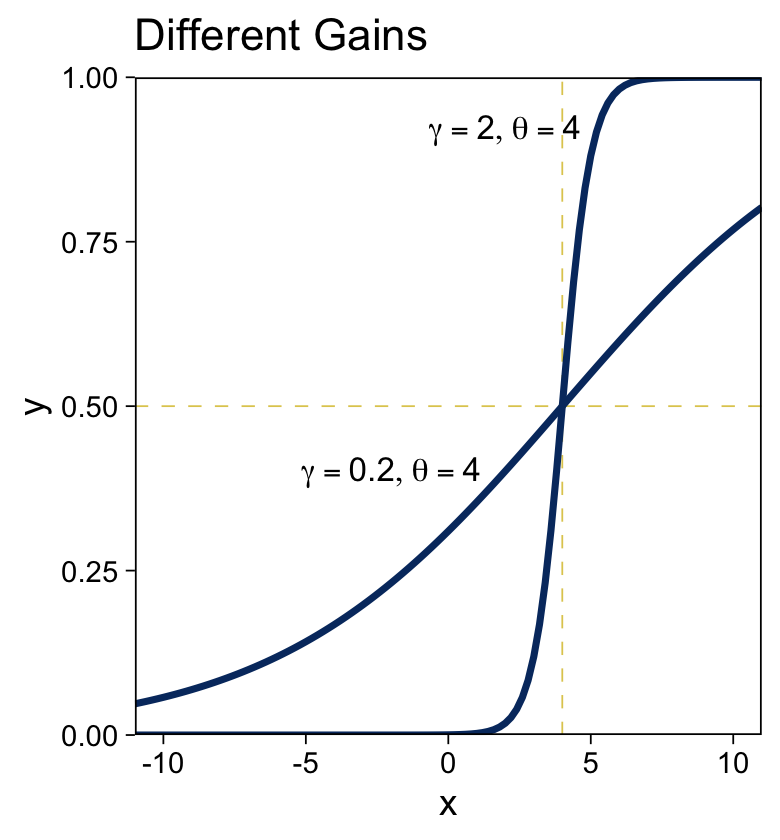

15.3.1.1 The logistic function.

The logistic link function follows the form

y=logistic(x)=1(1+exp(−x)).

We can write the logistic function for a univariable metric predictor as

y=logistic(x;β0,β1)=1(1+exp(−[β0+β1x])).

And if we prefer to parameterize it in terms of gain γ and threshold θ, it’d be

y=logistic(x;γ,θ)=1(1+exp(−γ[x−θ])).

We can make the sexy logistic curves of Figure 15.6 with stat_function(), into which we’ll plug our very own custom make_logistic() function. Here’s the left panel.

make_logistic <- function(x, gamma, theta) {

1 / (1 + exp(-gamma * (x - theta)))

}

# annotation

text <-

tibble(x = c(-1, 3),

y = c(.9, .1),

label = str_c("list(gamma == 0.5, theta == ", c(-1, 3), ")"))

# plot

tibble(x = c(-11, 11)) %>%

ggplot(aes(x = x)) +

geom_vline(xintercept = c(-1, 3)) +

geom_hline(yintercept = .5) +

stat_function(fun = make_logistic,

args = list(gamma = .5, theta = -1),

linewidth = 1, color = viridis_pal(option = "E")(9)[2]) +

stat_function(fun = make_logistic,

args = list(gamma = .5, theta = 3),

linewidth = 1, color = viridis_pal(option = "E")(9)[2]) +

geom_text(data = text,

aes(y = y, label = label),

size = 3.5, parse = T) +

scale_y_continuous(expand = expansion(mult = 0), limits = c(0, 1)) +

coord_cartesian(xlim = c(-10, 10)) +

ggtitle("Different Thresholds")

For kicks, we’ll take a different approach for the right panel. Instead of pumping values through stat_function() within our plot code, we’ll use our make_logistic() function within mutate() before beginning the plot code.

# define the annotation values

text <-

tibble(x = c(2, -2),

y = c(.92, .4),

label = str_c("list(gamma == ", c(2, 0.2), ", theta == 4)"))

# make the data

crossing(gamma = c(2, .2),

x = seq(from = -11, to = 11, by = 0.2)) %>%

mutate(y = make_logistic(x, gamma, theta = 4)) %>%

# plot!

ggplot(aes(x = x, y = y)) +

geom_vline(xintercept = 4) +

geom_hline(yintercept = .5) +

geom_line(aes(group = gamma),

linewidth = 1, color = viridis_pal(option = "E")(9)[2]) +

geom_text(data = text,

aes(label = label),

size = 3.5, parse = T) +

scale_y_continuous(expand = expansion(mult = 0), limits = c(0, 1)) +

coord_cartesian(xlim = c(-10, 10)) +

ggtitle("Different Gains")

I don’t know that one plotting approach is better than the other. It’s good to have options.

To make our two-dimensional version of the wireframe plots of Figure 15.7, we’ll first want to define the logistic() function.

logistic <- function(x) {

1 / (1 + exp(-x))

}Now we’ll just extend the same method we used for Figures 15.2 and 15.3.

crossing(x1 = seq(from = -6, to = 6, by = 0.5),

x2 = seq(from = -6, to = 6, by = 0.5)) %>%

mutate(`y[1] == logistic(1 * ( 0 * x[1] + 1 * x[2] - 0))` = logistic(1 * ( 0 * x1 + 1 * x2 - 0)),

`y[2] == logistic(1 * ( 0.71 * x[1] + 0.71 * x[2] - 0))` = logistic(1 * ( 0.71 * x1 + 0.71 * x2 - 0)),

`y[3] == logistic(2 * ( 0 * x[1] + 1 * x[2] - -3))` = logistic(2 * ( 0 * x1 + 1 * x2 - -3)),

`y[4] == logistic(2 * (-0.71 * x[1] + 0.71 * x[2] - 3))` = logistic(2 * (-0.71 * x1 + 0.71 * x2 - 3))) %>%

pivot_longer(cols = c(-x1, -x2), values_to = "y") %>%

ggplot(aes(x = x1, y = x2, fill = y)) +

geom_raster() +

scale_fill_viridis_c(option = "E", limits = c(0, 1)) +

scale_x_continuous(expression(x[1]), expand = c(0, 0),

breaks = seq(from = -6, to = 6, by = 2)) +

scale_y_continuous(expression(x[2]), expand = c(0, 0),

breaks = seq(from = -6, to = 6, by = 2),

position = "right") +

coord_equal() +

theme(legend.position = "left",

strip.text = element_text(size = 8)) +

facet_wrap(~ name, labeller = label_parsed)

“The threshold determines the position of the logistical cliff. In other words, the threshold determines the x values for which y=0.5… The gain determines the steepness of the logistical cliff” (pp. 437–438, emphasis in the original).

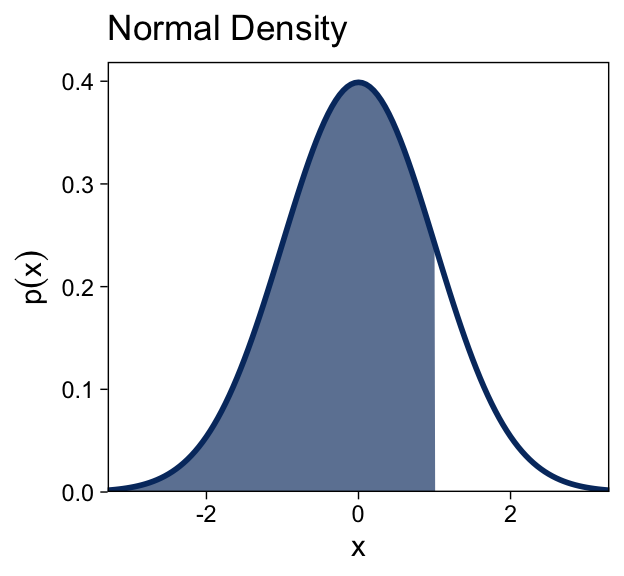

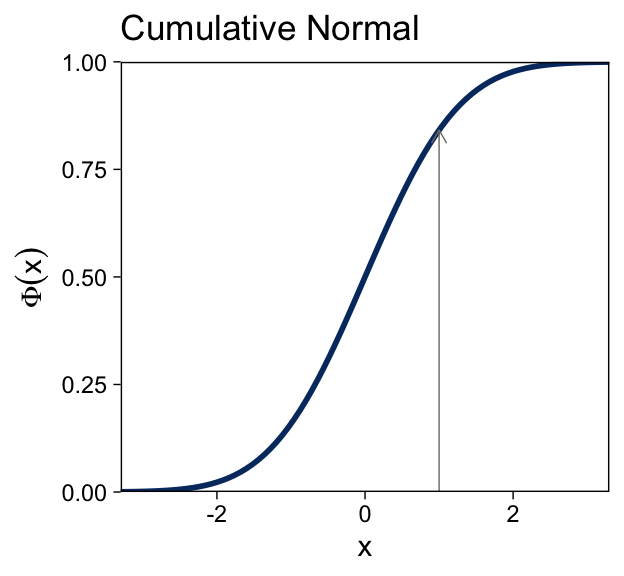

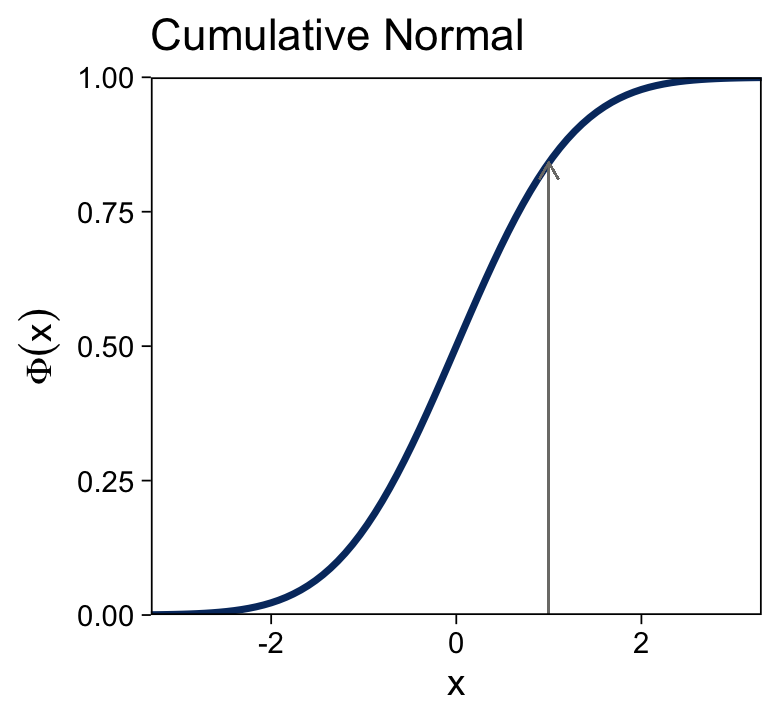

15.3.1.2 The cumulative normal function.

The cumulative normal is denoted Φ(x;μ,σ), where x is a real number and where μ and σ are parameter values, called the mean and standard deviation of the normal distribution. The parameter μ governs the point at which the cumulative normal, Φ(x), equals 0.5. In other words, μ plays the same role as the threshold in the logistic. The parameter σ governs the steepness of the cumulative normal function at x=μ, but inversely, such that a smaller value of σ corresponds to a steeper cumulative normal. (p. 440, emphasis in the original)

Here we plot the standard normal density in the top panel of Figure 15.8.

tibble(x = seq(from = -4, to = 4, by = 0.05)) %>%

mutate(d = dnorm(x, mean = 0, sd = 1)) %>%

ggplot(aes(x = x, y = d)) +

geom_area(aes(fill = x <= 1), show.legend = F) +

geom_line(linewidth = 1, color = viridis_pal(option = "E")(9)[2]) +

scale_fill_manual(values = c("white", alpha(viridis_pal(option = "E")(9)[2], 2/3))) +

scale_y_continuous(expression(p(x)), expand = expansion(mult = c(0, 0.05))) +

coord_cartesian(xlim = c(-3, 3)) +

ggtitle("Normal Density")

Here’s the analogous cumulative normal function depicted in the bottom panel of Figure 15.8.

tibble(x = seq(from = -4, to = 4, by = 0.05)) %>%

ggplot(aes(x = x)) +

stat_function(fun = pnorm,

args = list(mean = 0, sd = 1),

linewidth = 1, color = viridis_pal(option = "E")(9)[2]) +

geom_segment(aes(x = 1, xend = 1,

y = 0, yend = pnorm(1, 0, 1)),

arrow = arrow(length = unit(0.2, "cm"))) +

scale_y_continuous(expression(Phi(x)), expand = expansion(mult = 0), limits = c(0, 1)) +

coord_cartesian(xlim = c(-3, 3)) +

ggtitle("Cumulative Normal")

Terminology: The inverse of the cumulative normal is called the probit function. [“Probit” stands for “probability unit”; Bliss (1934)]. The probit function maps a value p, for 0.0≤p≤1.0, onto the infinite real line, and a graph of the probit function looks very much like the logit function. (p. 439, emphasis in the original)

15.3.2 From predicted central tendency to noisy data.

In the real world, there is always variation in y that we cannot predict from x. This unpredictable “noise” in y might be deterministically caused by sundry factors we have neither measured nor controlled, or the noise might be caused by inherent non-determinism in y. It does not matter either way because in practice the best we can do is predict the probability that y will have any particular value, dependent upon x…

To make this notion of probabilistic tendency precise, we need to specify a probability distribution for y that depends on f(lin(x)). To keep the notation tractable, first define μ=f(lin(x)). The value μ represents the central tendency of the predicted y values, which might or might not be the mean. With this notation, we then denote the probability distribution of y as some to-be-specified probability density function, abbreviated as “pdf”:

y∼pdf(μ,[scale, shape, etc.])

As indicated by the bracketed terms after μ, the pdf might have various additional parameters that control the distribution’s scale (i.e., standard deviation), shape, etc.

The form of the pdf depends on the measurement scale of the predicted variable. (pp. 440–441, emphasis in the original)

The top panel of Figure 15.9 is tricky. One way to make those multiple densities tipped on their sides is with ggridges::geom_ridgeline() followed by coord_flip(). However, explore a different approach that’ll come in handy in many of the plots we’ll be making in later chapters. The method requires we make a data set with the necessary coordinates for the side-tipped densities. Let’s walk through that step slowly.

curves <-

# define the 3 x-values we want the Gaussians to originate from

tibble(x = seq(from = -7.5, to = 7.5, length.out = 4)) %>%

# use the formula 10 + 2x to compute the expected y-value for x

mutate(y_mean = 10 + (2 * x)) %>%

# based on a Gaussian with `mean = y_mean` and `sd = 2`, compute the 99.5% intervals

mutate(ll = qnorm(.0025, mean = y_mean, sd = 2),

ul = qnorm(.9975, mean = y_mean, sd = 2)) %>%

# now use those interval bounds to make a sequence of y-values

mutate(y = map2(ll, ul, seq, length.out = 100)) %>%

# since that operation returned a nested column, we need to `unnest()`

unnest(y) %>%

# compute the density values

mutate(density = map2_dbl(y, y_mean, dnorm, sd = 2)) %>%

# now rescale the density values to be wider.

# since we want these to be our x-values, we'll

# just redefine the x column with these results

mutate(x = x - density * 2 / max(density))

str(curves)## tibble [400 × 6] (S3: tbl_df/tbl/data.frame)

## $ x : num [1:400] -7.54 -7.55 -7.55 -7.56 -7.57 ...

## $ y_mean : num [1:400] -5 -5 -5 -5 -5 -5 -5 -5 -5 -5 ...

## $ ll : num [1:400] -10.6 -10.6 -10.6 -10.6 -10.6 ...

## $ ul : num [1:400] 0.614 0.614 0.614 0.614 0.614 ...

## $ y : num [1:400] -10.6 -10.5 -10.4 -10.3 -10.2 ...

## $ density: num [1:400] 0.00388 0.00454 0.0053 0.00617 0.00715 ...In case it’s not clear, we’ll be plotting with the x and y columns. Think of the other columns as showing our work. But now we’ve got those curves data, we’re ready to simulate the points and plot.

# how many points would you like?

n_samples <- 750

# generate the points

tibble(x = runif(n = n_samples, min = -10, max = 10)) %>%

mutate(y = rnorm(n = n_samples, mean = 10 + 2 * x, sd = 2)) %>%

# plot!

ggplot(aes(x = x, y = y)) +

geom_vline(xintercept = 0) +

geom_hline(yintercept = 0) +

geom_point(size = 1/5) +

geom_abline(intercept = 10, slope = 2,

linewidth = 1, color = viridis_pal(option = "E")(9)[2]) +

geom_path(data = curves,

aes(group = y_mean),

color = viridis_pal(option = "E")(9)[2], linewidth = 3/4) +

labs(title = "Normal PDF around Linear Function",

y = "y") +

coord_cartesian(ylim = c(-10, 30))

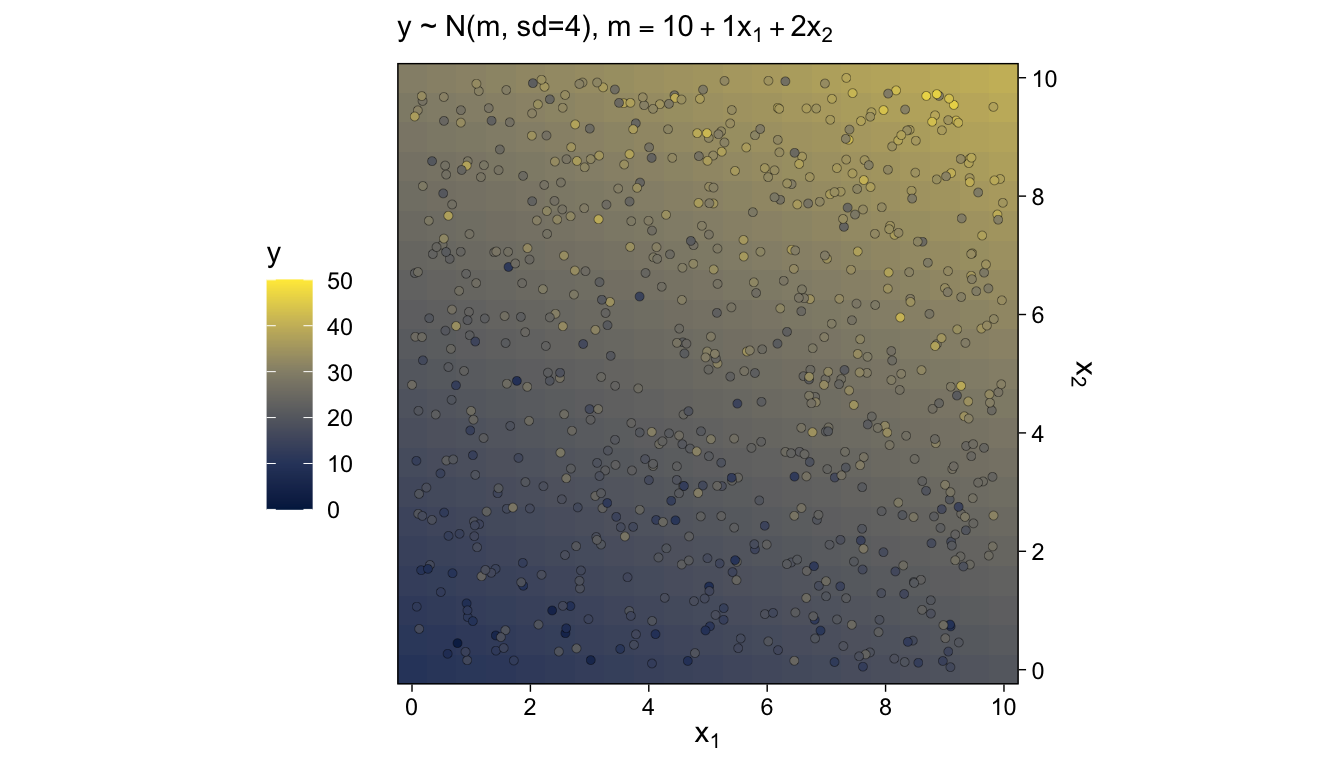

We’ll revisit this method in Chapter 17. The wireframe plots at the bottom of Figure 15.9 and in Figure 15.10 are outside of our ggplot2 purview. However, we can continue on with our approach of expressing the third y dimension as fill gradients. Thus, here’s a version of the bottom of Figure 15.9.

# how many points would you like?

n_samples <- 750

# generate and save the points

set.seed(15)

d <-

tibble(x1 = runif(n = n_samples, min = 0, max = 10),

x2 = runif(n = n_samples, min = 0, max = 10)) %>%

mutate(y = rnorm(n = n(), mean = 10 + 1 * x1 + 2 * x2, sd = 4))

# make the grid

crossing(x1 = seq(from = 0, to = 10, by = 0.5),

x2 = seq(from = 0, to = 10, by = 0.5)) %>%

mutate(y = 10 + 1 * x1 + 2 * x2) %>%

# plot!

ggplot(aes(x = x1, y = x2, fill = y)) +

geom_raster() +

geom_point(data = d,

shape = 21, stroke = 1/10) +

scale_color_viridis_c(option = "E", limits = c(0, 50)) +

scale_fill_viridis_c(option = "E", limits = c(0, 50)) +

scale_x_continuous(expression(x[1]), expand = c(0, 0), breaks = 0:5 * 2) +

scale_y_continuous(expression(x[2]), expand = c(0, 0), breaks = 0:5 * 2,

position = "right") +

coord_equal() +

labs(subtitle = expression("y ~ N(m, sd=4), "*m==10+1*x[1]+2*x[2])) +

theme(legend.position = "left")

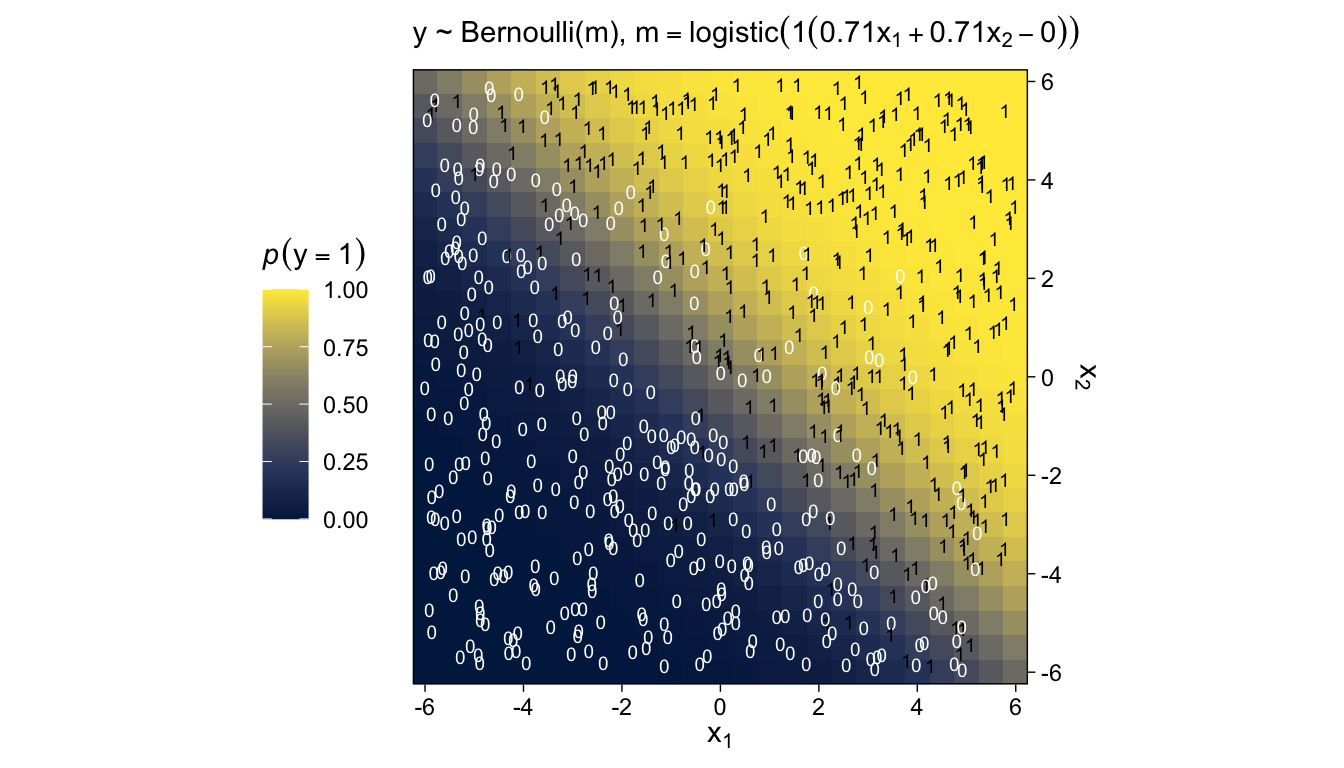

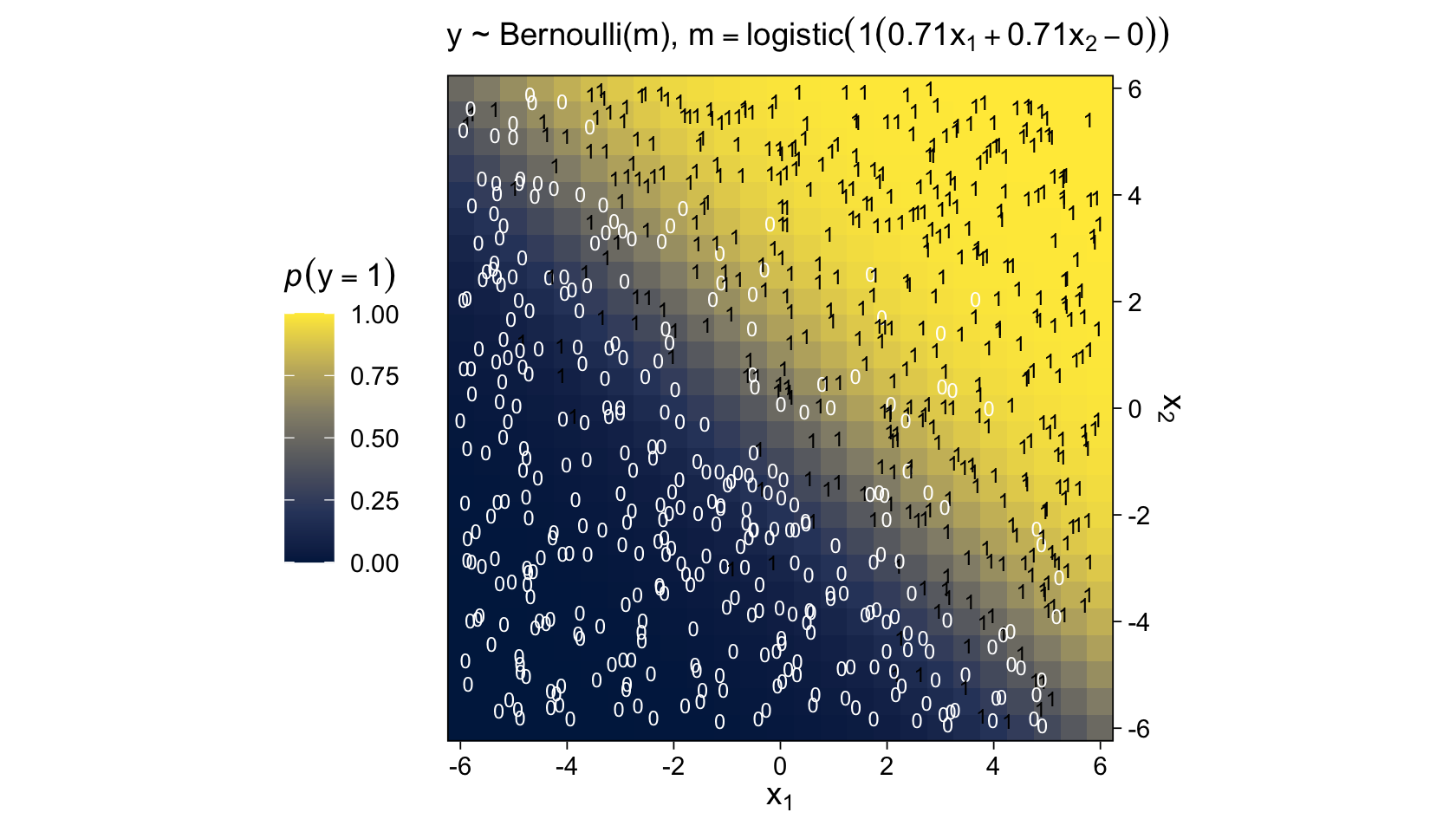

For Figure 15.10, we can simulate Bernoulli distributed data with the rbinom() function, provided we set size = 1.

# how many points would you like?

n_samples <- 750

# generate and save the points

set.seed(15)

d <-

tibble(x1 = runif(n = n_samples, min = -6, max = 6),

x2 = runif(n = n_samples, min = -6, max = 6)) %>%

mutate(y = rbinom(n = n(), size = 1, prob = logistic(1 * (0.71 * x1 + 0.71 * x2 - 0))))

# make the grid

crossing(x1 = seq(from = -6, to = 6, by = 0.5),

x2 = seq(from = -6, to = 6, by = 0.5)) %>%

mutate(y = logistic(1 * (0.71 * x1 + 0.71 * x2 - 0))) %>%

# plot!

ggplot(aes(x = x1, y = x2, fill = y)) +

geom_raster() +

geom_text(data = d,

aes(label = y, color = factor(y)),

size = 2.5) +

scale_color_manual(values = c("white", "black"), breaks = NULL) +

scale_fill_viridis_c(expression(italic(p)(y==1)), option = "E", limits = 0:1) +

scale_x_continuous(expression(x[1]), expand = c(0, 0), breaks = -3:3 * 2) +

scale_y_continuous(expression(x[2]), expand = c(0, 0), breaks = -3:3 * 2,

position = "right") +

coord_equal() +

labs(subtitle = expression("y ~ Bernoulli(m), "*m==logistic(1*(0.71*x[1]+0.71*x[2]-0)))) +

theme(legend.position = "left")

Because of their use of the logistic function, we typically refer to models of this kind as logistic regression.

15.4 Formal expression of the GLM

We can write the GLM as

y∼pdf(μ,[parameters]),whereμ=f(lin(x),[parameters]).

As has been previously explained, the predictors x are combined in the linear function lin(x), and the function f in [the first equation] is called the inverse link function. The data, y, are distributed around the central tendency μ according to the probability density function labeled “pdf.” (p. 444)

15.4.1 Cases of the GLM.

When a client brings an application to a [statistical] consultant, one of the first things the consultant does is find out from the client which data are supposed to be predictors and which data are supposed to be predicted, and the measurement scales of the data… When you are considering how to analyze data, your first task is to be your own consultant and find out which data are predictors, which are predicted, and what measurement scales they are. (pp. 445–446)

Soak this last bit in. It’s gold. I’ve found it to be true in my exchanges with other researchers and with my own data problems, too.

Session info

sessionInfo()## R version 4.2.2 (2022-10-31)

## Platform: x86_64-apple-darwin17.0 (64-bit)

## Running under: macOS Big Sur ... 10.16

##

## Matrix products: default

## BLAS: /Library/Frameworks/R.framework/Versions/4.2/Resources/lib/libRblas.0.dylib

## LAPACK: /Library/Frameworks/R.framework/Versions/4.2/Resources/lib/libRlapack.dylib

##

## locale:

## [1] en_US.UTF-8/en_US.UTF-8/en_US.UTF-8/C/en_US.UTF-8/en_US.UTF-8

##

## attached base packages:

## [1] stats graphics grDevices utils datasets methods base

##

## other attached packages:

## [1] viridis_0.6.2 viridisLite_0.4.1 forcats_0.5.1 stringr_1.4.1

## [5] dplyr_1.0.10 purrr_1.0.1 readr_2.1.2 tidyr_1.2.1

## [9] tibble_3.1.8 ggplot2_3.4.0 tidyverse_1.3.2

##

## loaded via a namespace (and not attached):

## [1] lubridate_1.8.0 assertthat_0.2.1 digest_0.6.31

## [4] utf8_1.2.2 R6_2.5.1 cellranger_1.1.0

## [7] backports_1.4.1 reprex_2.0.2 evaluate_0.18

## [10] highr_0.9 httr_1.4.4 pillar_1.8.1

## [13] rlang_1.0.6 googlesheets4_1.0.1 readxl_1.4.1

## [16] rstudioapi_0.13 jquerylib_0.1.4 rmarkdown_2.16

## [19] labeling_0.4.2 googledrive_2.0.0 munsell_0.5.0

## [22] broom_1.0.2 compiler_4.2.2 modelr_0.1.8

## [25] xfun_0.35 pkgconfig_2.0.3 htmltools_0.5.3

## [28] tidyselect_1.2.0 gridExtra_2.3 bookdown_0.28

## [31] fansi_1.0.3 crayon_1.5.2 tzdb_0.3.0

## [34] dbplyr_2.2.1 withr_2.5.0 grid_4.2.2

## [37] jsonlite_1.8.4 gtable_0.3.1 lifecycle_1.0.3

## [40] DBI_1.1.3 magrittr_2.0.3 scales_1.2.1

## [43] cli_3.6.0 stringi_1.7.8 cachem_1.0.6

## [46] farver_2.1.1 fs_1.5.2 xml2_1.3.3

## [49] bslib_0.4.0 ellipsis_0.3.2 generics_0.1.3

## [52] vctrs_0.5.1 tools_4.2.2 glue_1.6.2

## [55] hms_1.1.1 fastmap_1.1.0 colorspace_2.0-3

## [58] gargle_1.2.0 rvest_1.0.2 knitr_1.40

## [61] haven_2.5.1 sass_0.4.2