5 The Many Variables & The Spurious Waffles

Correlation in general is not surprising. In large data sets, every pair of variables has a statistically discernible non-zero correlation. But since most correlations do not indicate causal relationships, we need tools for distinguishing mere association from evidence of causation. This is why so much effort is devoted to multiple regression, using more than one predictor variable to simultaneously model an outcome. (McElreath, 2020a, p. 123, emphasis in the original)

In his endnote #80 (p. 562), McElreath wrote: “See Meehl (1990), in particular the ‘crud factor’ described on page 204.” For a fun look at some dubious correlations, check out the examples at https://www.tylervigen.com/spurious-correlations.

But back to the text, McElreath’s listed reasons for multivariable regression include:

- statistical control for confounds

- multiple/complex causation

- interactions

We’ll approach the first two in this chapter. Interactions are reserved for Chapter 7.

5.0.0.1 Rethinking: Causal inference.

“Despite its central importance, there is no unified approach to causal inference yet in the sciences” (p. 124). To dip into the topic, you might check out the recent blog post by Finn Lattimore and David Rohde, Causal inference with Bayes rule; McElreath blog series on causal inference, starting with Regression, Fire, and Dangerous Things (1/3); or McElreath’s epic 3-hour introductory lecture on causal inference called Science Before Statistics: Causal Inference.

5.1 Spurious associations

Load the Waffle House data.

library(tidyverse)

data(WaffleDivorce, package = "rethinking")

d <- WaffleDivorceDid you notice how we used the package argument within the data() function, there? That allowed us to load the WaffleDivorce without actually loading the rethinking package. Since we generally don’t want to have both rethinking and brms loaded up at the same time, using the package argument will save us a line of code.

Now standardize the focal variables with the rethinking::standardize() function.

d <-

d %>%

mutate(d = rethinking::standardize(Divorce),

m = rethinking::standardize(Marriage),

a = rethinking::standardize(MedianAgeMarriage))Because we avoided directly loading the rethinking package, we did not have immediate access to McElreath’s handy standardize() function. If you want to use a function from a package without loading that package, you can use the double colon operator ::. You can learn more about the double colon operator here. Now load brms.

rm(WaffleDivorce)

library(brms)I’m not going to show the output, but you might go ahead and investigate the data with the typical functions. E.g.,

head(d)

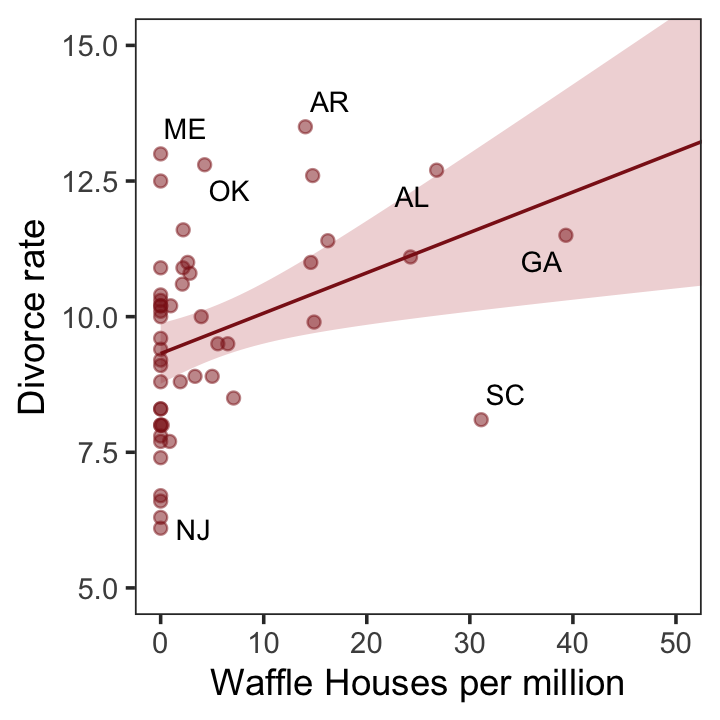

glimpse(d)Now we have our data, we can reproduce Figure 5.1. One convenient way to get the handful of sate labels into the plot was with the geom_text_repel() function from the ggrepel package (Slowikowski, 2022). But first, we spent the last few chapters warming up with ggplot2. Going forward, each chapter will have its own plot theme. In this chapter, we’ll characterize the plots with theme_bw() + theme(panel.grid = element_rect()) and coloring based off of "firebrick".

library(ggrepel)

d %>%

ggplot(aes(x = WaffleHouses/Population, y = Divorce)) +

stat_smooth(method = "lm", fullrange = T, linewidth = 1/2,

color = "firebrick4", fill = "firebrick", alpha = 1/5) +

geom_point(size = 1.5, color = "firebrick4", alpha = 1/2) +

geom_text_repel(data = d %>% filter(Loc %in% c("ME", "OK", "AR", "AL", "GA", "SC", "NJ")),

aes(label = Loc),

size = 3, seed = 1042) + # this makes it reproducible

scale_x_continuous("Waffle Houses per million", limits = c(0, 55)) +

ylab("Divorce rate") +

coord_cartesian(xlim = c(0, 50), ylim = c(5, 15)) +

theme_bw() +

theme(panel.grid = element_blank())

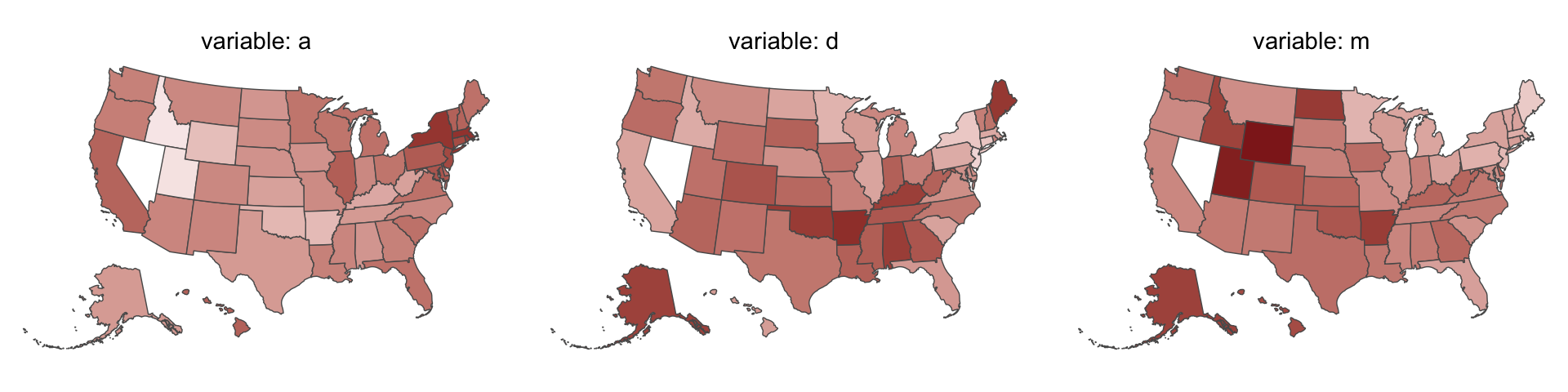

Since these are geographically-based data, we might plot our three major variables in a map format. The tigris package (Walker, 2022) provides functions for retrieving latitude and longitude data for the 50 states and we can plot then with the ggplot2::geom_sf() function. We’ll use the right_join() function to combine those data with our primary data d2.

library(tigris)

# get the map data

d_states <- states(cb = TRUE, resolution = "20m") %>%

shift_geometry() %>%

# add the primary data

right_join(d %>%

mutate(NAME = Location %>% as.character()) %>%

select(d:a, NAME),

by = "NAME") %>%

# convert to the long format for faceting

pivot_longer(cols = c("d", "m", "a"), names_to = "variable")Now plot.

d_states %>%

ggplot() +

geom_sf(aes(fill = value, geometry = geometry),

size = 0) +

scale_fill_gradient(low = "#f8eaea", high = "firebrick4") +

theme_void() +

theme(legend.position = "none",

strip.text = element_text(margin = margin(0, 0, .5, 0))) +

facet_wrap(~ variable, labeller = label_both)

One of the advantages of this visualization method is it just became clear that Nevada is missing from the WaffleDivorce data. Execute d %>% distinct(Location) to see for yourself and click here to find out why it’s missing. Those missing data should motivate the skills we’ll cover in Chapter 15. But let’s get back on track.

Here’s the standard deviation for MedianAgeMarriage in its current metric.

sd(d$MedianAgeMarriage)## [1] 1.24363Our first statistical model follows the form

\[\begin{align*} \text{divorce_std}_i & \sim \operatorname{Normal}(\mu_i, \sigma) \\ \mu_i & = \alpha + \beta_1 \text{median_age_at_marriage_std}_i \\ \alpha & \sim \operatorname{Normal}(0, 0.2) \\ \beta_1 & \sim \operatorname{Normal}(0, 0.5) \\ \sigma & \sim \operatorname{Exponential}(1), \end{align*}\]

where the _std suffix indicates the variables are standardized (i.e., zero centered, with a standard deviation of one). Let’s fit the first univariable model.

b5.1 <-

brm(data = d,

family = gaussian,

d ~ 1 + a,

prior = c(prior(normal(0, 0.2), class = Intercept),

prior(normal(0, 0.5), class = b),

prior(exponential(1), class = sigma)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 5,

sample_prior = T,

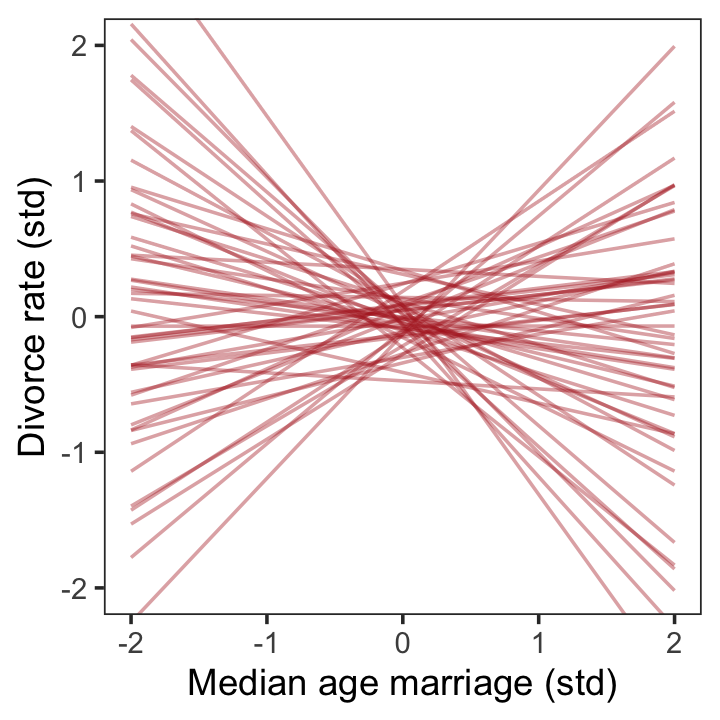

file = "fits/b05.01")Did you notice the sample_prior = T line? This told brms to take draws from both the posterior distribution (as usual) and from the prior predictive distribution. If you look at McElreath’s R code 5.4, you’ll see he plotted 50 draws from the prior predictive distribution of his m5.1. For our brms workflow, our first step is the extract our prior draws with the well-named prior_draws() function.

prior <- prior_draws(b5.1)

prior %>% glimpse()## Rows: 4,000

## Columns: 3

## $ Intercept <dbl> 0.262235852, 0.435592131, -0.294742095, -0.007232040, 0.058308258, 0.050358902, …

## $ b <dbl> 0.01116464, -0.18996254, 0.43071360, -0.60435682, -0.31401810, 0.22439532, -0.06…

## $ sigma <dbl> 2.20380842, 0.57847121, 1.00816092, 2.94427170, 0.30736807, 0.94694471, 0.660626…We ended up with 4,000 draws from the prior predictive distribution, much like as_draws_df() would return 4,000 draws from the posterior. Next we’ll use slice_sample() to take a random sample from our prior object. After just a little more wrangling, we’ll be in good shape to plot our version of Figure 5.3.

set.seed(5)

prior %>%

slice_sample(n = 50) %>%

rownames_to_column("draw") %>%

expand_grid(a = c(-2, 2)) %>%

mutate(d = Intercept + b * a) %>%

ggplot(aes(x = a, y = d)) +

geom_line(aes(group = draw),

color = "firebrick", alpha = .4) +

labs(x = "Median age marriage (std)",

y = "Divorce rate (std)") +

coord_cartesian(ylim = c(-2, 2)) +

theme_bw() +

theme(panel.grid = element_blank())

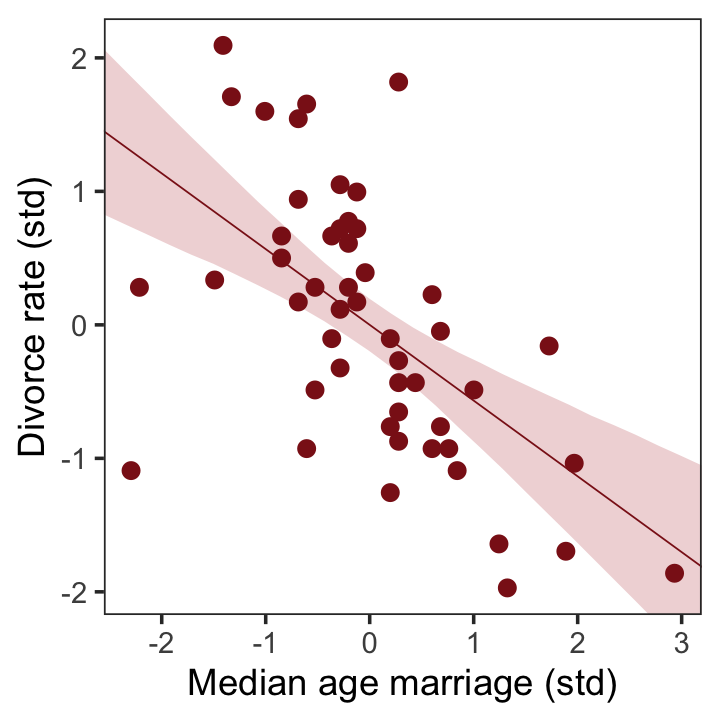

To get the posterior predictions from our brms model, we’ll use fitted() in place of link().

# determine the range of `a` values we'd like to feed into `fitted()`

nd <- tibble(a = seq(from = -3, to = 3.2, length.out = 30))

# now use `fitted()` to get the model-implied trajectories

fitted(b5.1,

newdata = nd) %>%

data.frame() %>%

bind_cols(nd) %>%

# plot

ggplot(aes(x = a)) +

geom_smooth(aes(y = Estimate, ymin = Q2.5, ymax = Q97.5),

stat = "identity",

fill = "firebrick", color = "firebrick4", alpha = 1/5, linewidth = 1/4) +

geom_point(data = d,

aes(y = d),

size = 2, color = "firebrick4") +

labs(x = "Median age marriage (std)",

y = "Divorce rate (std)") +

coord_cartesian(xlim = range(d$a),

ylim = range(d$d)) +

theme_bw() +

theme(panel.grid = element_blank())

That’ll serve as our version of the right panel of Figure 5.2. To paraphrase McElreath, “if you inspect the [print()] output, you’ll see that posterior for \([\beta_\text{a}]\) is reliably negative” (p. 127). Let’s see.

print(b5.1)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: d ~ 1 + a

## Data: d (Number of observations: 50)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 0.00 0.10 -0.20 0.19 1.00 4143 2663

## a -0.57 0.12 -0.80 -0.34 1.00 3660 2775

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 0.82 0.09 0.68 1.02 1.00 3413 2900

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).On the standardized scale, -0.57 95% CI [-0.79, -0.34] is pretty negative, indeed.

We’re ready to fit our second univariable model.

b5.2 <-

brm(data = d,

family = gaussian,

d ~ 1 + m,

prior = c(prior(normal(0, 0.2), class = Intercept),

prior(normal(0, 0.5), class = b),

prior(exponential(1), class = sigma)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 5,

file = "fits/b05.02")The summary suggests \(\beta_\text{m}\) is of a smaller magnitude.

print(b5.2)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: d ~ 1 + m

## Data: d (Number of observations: 50)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept -0.00 0.11 -0.22 0.22 1.00 4273 2828

## m 0.35 0.13 0.09 0.61 1.00 3555 2749

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 0.95 0.10 0.78 1.17 1.00 3881 2939

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

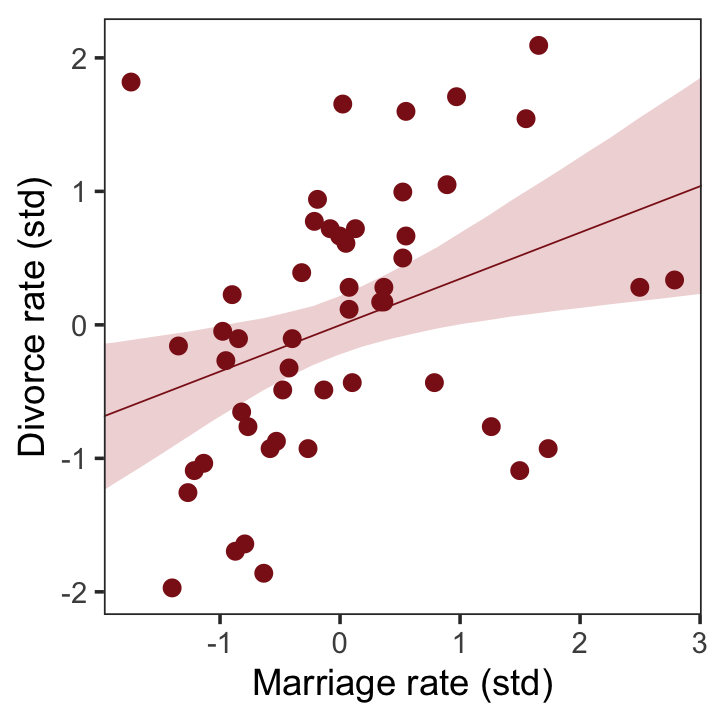

## scale reduction factor on split chains (at convergence, Rhat = 1).Now we’ll wangle and plot our version of the left panel in Figure 5.2.

nd <- tibble(m = seq(from = -2.5, to = 3.5, length.out = 30))

fitted(b5.2, newdata = nd) %>%

data.frame() %>%

bind_cols(nd) %>%

ggplot(aes(x = m)) +

geom_smooth(aes(y = Estimate, ymin = Q2.5, ymax = Q97.5),

stat = "identity",

fill = "firebrick", color = "firebrick4", alpha = 1/5, linewidth = 1/4) +

geom_point(data = d,

aes(y = d),

size = 2, color = "firebrick4") +

labs(x = "Marriage rate (std)",

y = "Divorce rate (std)") +

coord_cartesian(xlim = range(d$m),

ylim = range(d$d)) +

theme_bw() +

theme(panel.grid = element_blank())

But merely comparing parameter means between different bivariate regressions is no way to decide which predictor is better. Both of these predictors could provide independent value, or they could be redundant, or one could eliminate the value of the other.

To make sense of this, we’re going to have to think causally. And then, only after we’ve done some thinking, a bigger regression model that includes both age at marriage and marriage rate will help us. (pp. 127–128)

5.1.1 Think before you regress.

It is helpful to introduce a particular type of causal graph known as a DAG, short for directed acyclic graph. Graph means it is nodes and connections. Directed means the connections have arrows that indicate directions of causal influence. And acyclic means that causes do not eventually flow back on themselves. A DAG is a way of describing qualitative causal relationships among variables. It isn’t as detailed as a full model description, but it contains information that a purely statistical model does not. Unlike a statistical model, a DAG will tell you the consequences of intervening to change a variable. But only if the DAG is correct. There is no inference without assumption. (p. 128, emphasis in the original)

If you’re interested in making directed acyclic graphs (DAG) in R, the dagitty (Textor et al., 2016, 2021) and ggdag (Barrett, 2022b) packages are handy. Our approach will focus on ggdag.

# library(dagitty)

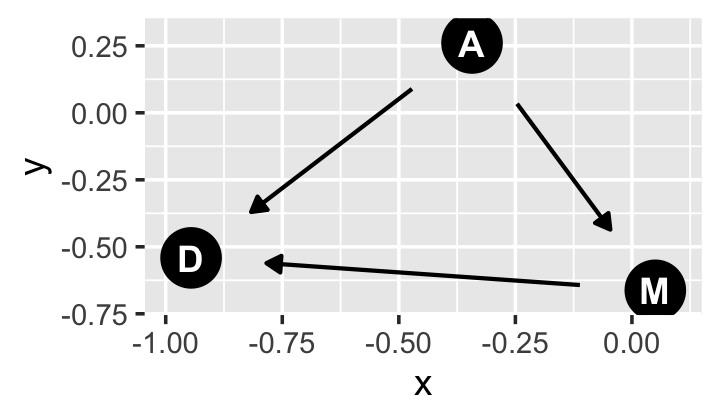

library(ggdag)If all you want is a quick and dirty DAG for our three variables, you might execute something like this.

set.seed(5)

dagify(M ~ A,

D ~ A + M) %>%

ggdag(node_size = 8)

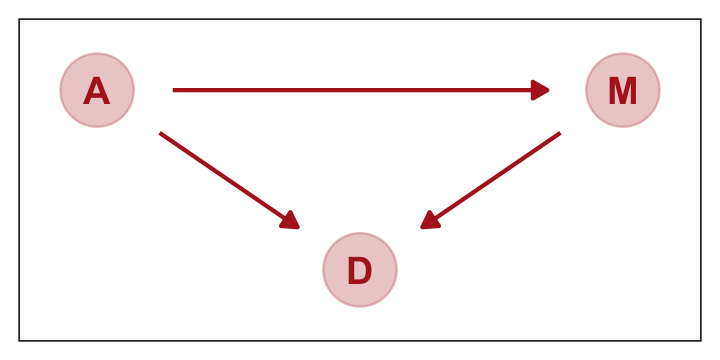

We can pretty it up a little, too.

dag_coords <-

tibble(name = c("A", "M", "D"),

x = c(1, 3, 2),

y = c(2, 2, 1))

p1 <-

dagify(M ~ A,

D ~ A + M,

coords = dag_coords) %>%

ggplot(aes(x = x, y = y, xend = xend, yend = yend)) +

geom_dag_point(color = "firebrick", alpha = 1/4, size = 10) +

geom_dag_text(color = "firebrick") +

geom_dag_edges(edge_color = "firebrick") +

scale_x_continuous(NULL, breaks = NULL, expand = c(0.1, 0.1)) +

scale_y_continuous(NULL, breaks = NULL, expand = c(0.2, 0.2)) +

theme_bw() +

theme(panel.grid = element_blank())

p1

We could have left out the coords argument and let the dagify() function set the layout of the nodes on its own. But since we were picky and wanted to ape McElreath, we first specified our coordinates in a tibble and then included that tibble in the coords argument. For more on the topic, check out the Barrett’s (2022a) vignette, An introduction to ggdag.

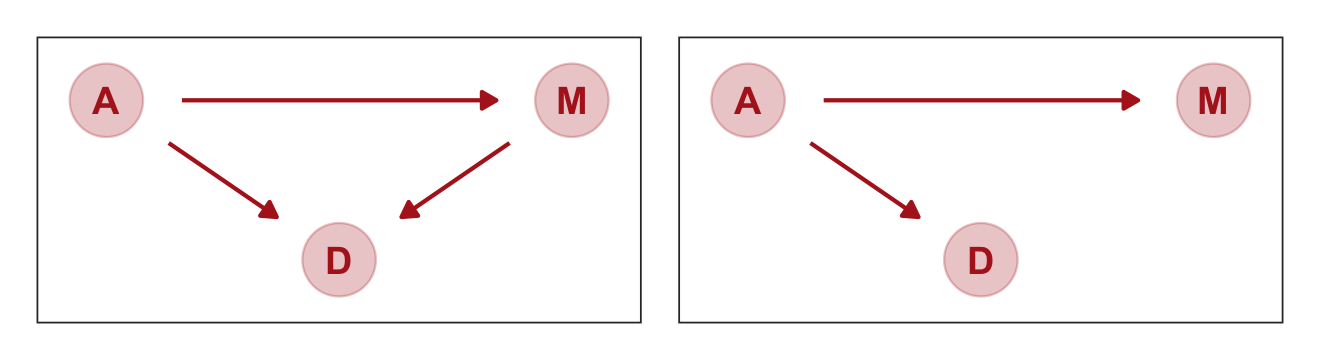

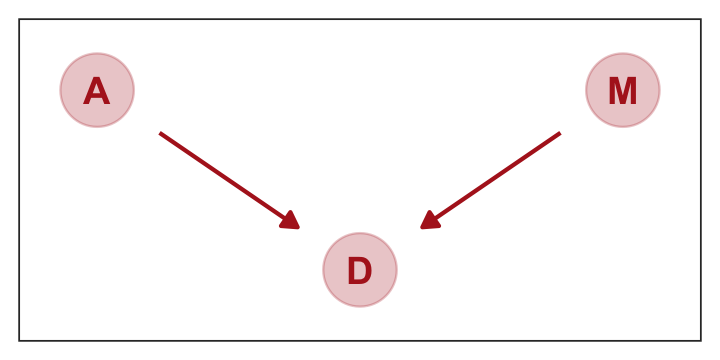

Buy anyway, our DAG

represents a heuristic causal model. Like other models, it is an analytical assumption. The symbols \(A\), \(M\), and \(D\) are our observed variables. The arrows show directions of influence. What this DAG says is:

- \(A\) directly influences \(D\)

- \(M\) directly influences \(D\)

- \(A\) directly influences \(M\)

These statements can then have further implications. In this case, age of marriage influences divorce in two ways. First it has a direct effect, \(A \rightarrow D\). Perhaps a direct effect would arise because younger people change faster than older people and are therefore more likely to grow incompatible with a partner. Second, it has an indirect effect by influencing the marriage rate, which then influences divorce, \(A \rightarrow M \rightarrow D\). If people get married earlier, then the marriage rate may rise, because there are more young people. (p. 128)

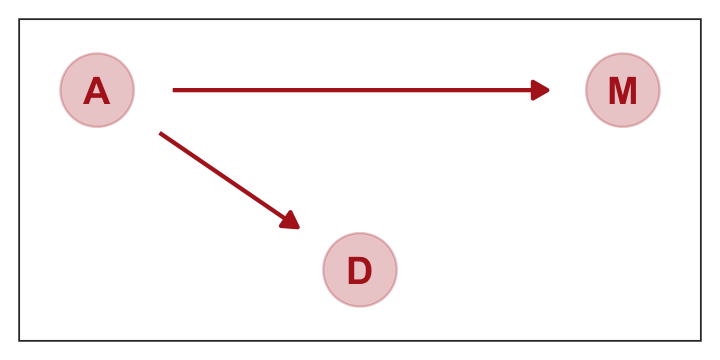

Considering alternative models, “It could be that the association between \(M\) and \(D\) arises entirely from \(A\)’s influence on both \(M\) and \(D\). Like this:” (p. 129)

p2 <-

dagify(M ~ A,

D ~ A,

coords = dag_coords) %>%

ggplot(aes(x = x, y = y, xend = xend, yend = yend)) +

geom_dag_point(color = "firebrick", alpha = 1/4, size = 10) +

geom_dag_text(color = "firebrick") +

geom_dag_edges(edge_color = "firebrick") +

scale_x_continuous(NULL, breaks = NULL, expand = c(0.1, 0.1)) +

scale_y_continuous(NULL, breaks = NULL, expand = c(0.2, 0.2)) +

theme_bw() +

theme(panel.grid = element_blank())

p2

This DAG is also consistent with the posterior distributions of models [

b5.1] and [b5.2]. Why? Because both \(M\) and \(D\) “listen” to \(A\). They have information from \(A\). So when you inspect the association between \(D\) and \(M\), you pick up that common information that they both got from listening to \(A\). You’ll see a more formal way to deduce this, in the next chapter.So which is it? Is there a direct effect of marriage rate, or rather is age at marriage just driving both, creating a spurious correlation between marriage rate and divorce rate? To find out, we need to consider carefully what each DAG implies. That’s what’s next. (p. 129)

5.1.1.1 Rethinking: What’s a cause?

Questions of causation can become bogged down in philosophical debates. These debates are worth having. But they don’t usually intersect with statistical concerns. Knowing a cause in statistics means being able to correctly predict the consequences of an intervention. There are contexts in which even this is complicated. (p. 129)

5.1.2 Testable implications.

So far, we have entertained two DAGs. Here we use patchwork to combine them into one plot.

library(patchwork)

p1 | p2

McElreath encouraged us to examine the correlations among these three variables with cor().

d %>%

select(d:a) %>%

cor()## d m a

## d 1.0000000 0.3737314 -0.5972392

## m 0.3737314 1.0000000 -0.7210960

## a -0.5972392 -0.7210960 1.0000000If you just want the lower triangle, you can use the lowerCor() function from the psych package (Revelle, 2022).

library(psych)

d %>%

select(d:a) %>%

lowerCor(digits = 3)## d m a

## d 1.000

## m 0.374 1.000

## a -0.597 -0.721 1.000Our second DAG, above, suggests “that \(D\) is independent of \(M\), conditional on \(A\)” (p. 130). We can use the dagitty::impliedConditionalIndependencies() function to express that conditional independence in formal notation.

library(dagitty)

dagitty('dag{ D <- A -> M }') %>%

impliedConditionalIndependencies()## D _||_ M | AThe lack of conditional dependencies in the first DAG may be expressed this way.

dagitty('dag{D <- A -> M -> D}') %>%

impliedConditionalIndependencies()Okay, that was a bit of a tease. “There are no conditional independencies, so there is no output to display” (p. 131). To close out this section,

once you fit a multiple regression to predict divorce using both marriage rate and age at marriage, the model addresses the questions:

- After I already know marriage rate, what additional value is there in also knowing age at marriage?

- After I already know age at marriage, what additional value is there in also knowing marriage rate?

The parameter estimates corresponding to each predictor are the (often opaque) answers to these questions. The questions above are descriptive, and the answers are also descriptive. It is only the derivation of the testable implications above that gives these descriptive results a causal meaning. But that meaning is still dependent upon believing the DAG. (p. 131)

5.1.3 Multiple regression notation.

We can write the statistical formula for our first multivariable model as

\[\begin{align*} \text{Divorce_std}_i & \sim \operatorname{Normal}(\mu_i, \sigma) \\ \mu_i & = \alpha + \beta_1 \text{Marriage_std}_i + \beta_2 \text{MedianAgeMarriage_std}_i \\ \alpha & \sim \operatorname{Normal}(0, 0.2) \\ \beta_1 & \sim \operatorname{Normal}(0, 0.5) \\ \beta_2 & \sim \operatorname{Normal}(0, 0.5) \\ \sigma & \sim \operatorname{Exponential}(1). \end{align*}\]

5.1.4 Approximating the posterior.

Much like we used the + operator to add single predictors to the intercept, we just use more + operators in the formula argument to add more predictors. Also notice we’re using the same prior prior(normal(0, 1), class = b) for both predictors. Within the brms framework, they are both of class = b. But if we wanted their priors to differ, we’d make two prior() statements and differentiate them with the coef argument. You’ll see examples of that later on.

b5.3 <-

brm(data = d,

family = gaussian,

d ~ 1 + m + a,

prior = c(prior(normal(0, 0.2), class = Intercept),

prior(normal(0, 0.5), class = b),

prior(exponential(1), class = sigma)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 5,

file = "fits/b05.03")Behold the summary.

print(b5.3)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: d ~ 1 + m + a

## Data: d (Number of observations: 50)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 0.00 0.10 -0.19 0.20 1.00 3683 2454

## m -0.06 0.16 -0.38 0.26 1.00 2641 2654

## a -0.61 0.16 -0.93 -0.29 1.00 2799 2710

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 0.83 0.09 0.68 1.02 1.00 3492 2455

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

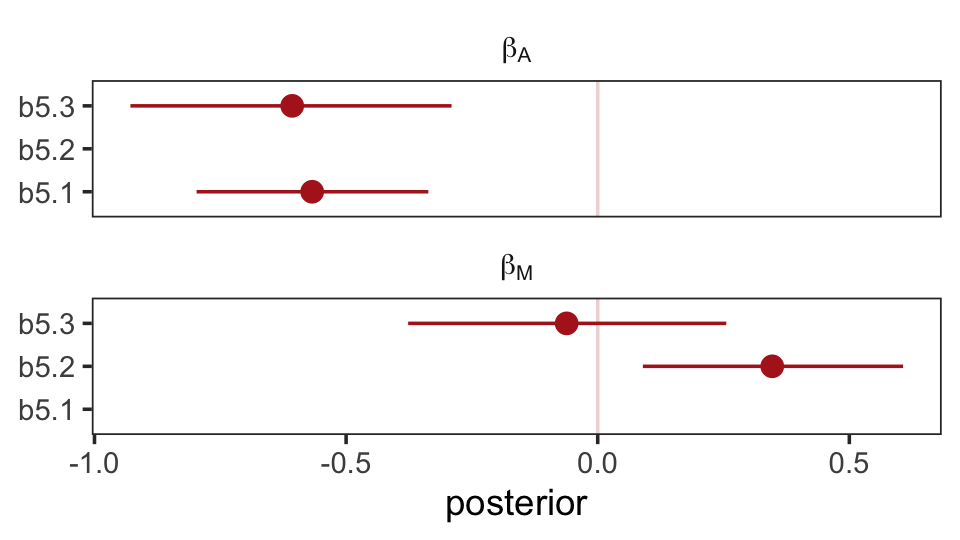

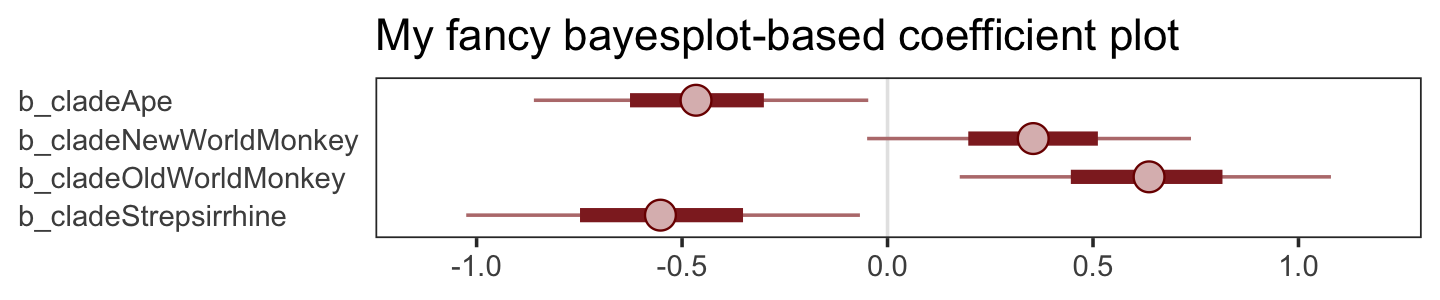

## scale reduction factor on split chains (at convergence, Rhat = 1).The brms package doesn’t have a convenience function like rethinking::coeftab(). However, we can make something similar with a little deft wrangling and ggplot2 code.

# first, extract and rename the necessary posterior parameters

bind_cols(

as_draws_df(b5.1) %>%

transmute(`b5.1_beta[A]` = b_a),

as_draws_df(b5.2) %>%

transmute(`b5.2_beta[M]` = b_m),

as_draws_df(b5.3) %>%

transmute(`b5.3_beta[M]` = b_m,

`b5.3_beta[A]` = b_a)

) %>%

# convert them to the long format, group, and get the posterior summaries

pivot_longer(everything()) %>%

group_by(name) %>%

summarise(mean = mean(value),

ll = quantile(value, prob = .025),

ul = quantile(value, prob = .975)) %>%

# since the `key` variable is really two variables in one, here we split them up

separate(col = name, into = c("fit", "parameter"), sep = "_") %>%

# plot!

ggplot(aes(x = mean, xmin = ll, xmax = ul, y = fit)) +

geom_vline(xintercept = 0, color = "firebrick", alpha = 1/5) +

geom_pointrange(color = "firebrick") +

labs(x = "posterior", y = NULL) +

theme_bw() +

theme(panel.grid = element_blank(),

strip.background = element_rect(fill = "transparent", color = "transparent")) +

facet_wrap(~ parameter, ncol = 1, labeller = label_parsed)

Don’t worry, coefficient plots won’t always be this complicated. We’ll walk out simpler ones toward the end of the chapter.

The substantive interpretation of all those coefficients is: “Once we know median age at marriage for a State, there is little or no additional predictive power in also knowing the rate of marriage in that State” (p. 134, emphasis in the original). This coheres well with one of our impliedConditionalIndependencies() statements, from above.

dagitty('dag{ D <- A -> M }') %>%

impliedConditionalIndependencies()## D _||_ M | A5.1.4.1 Overthinking: Simulating the divorce example.

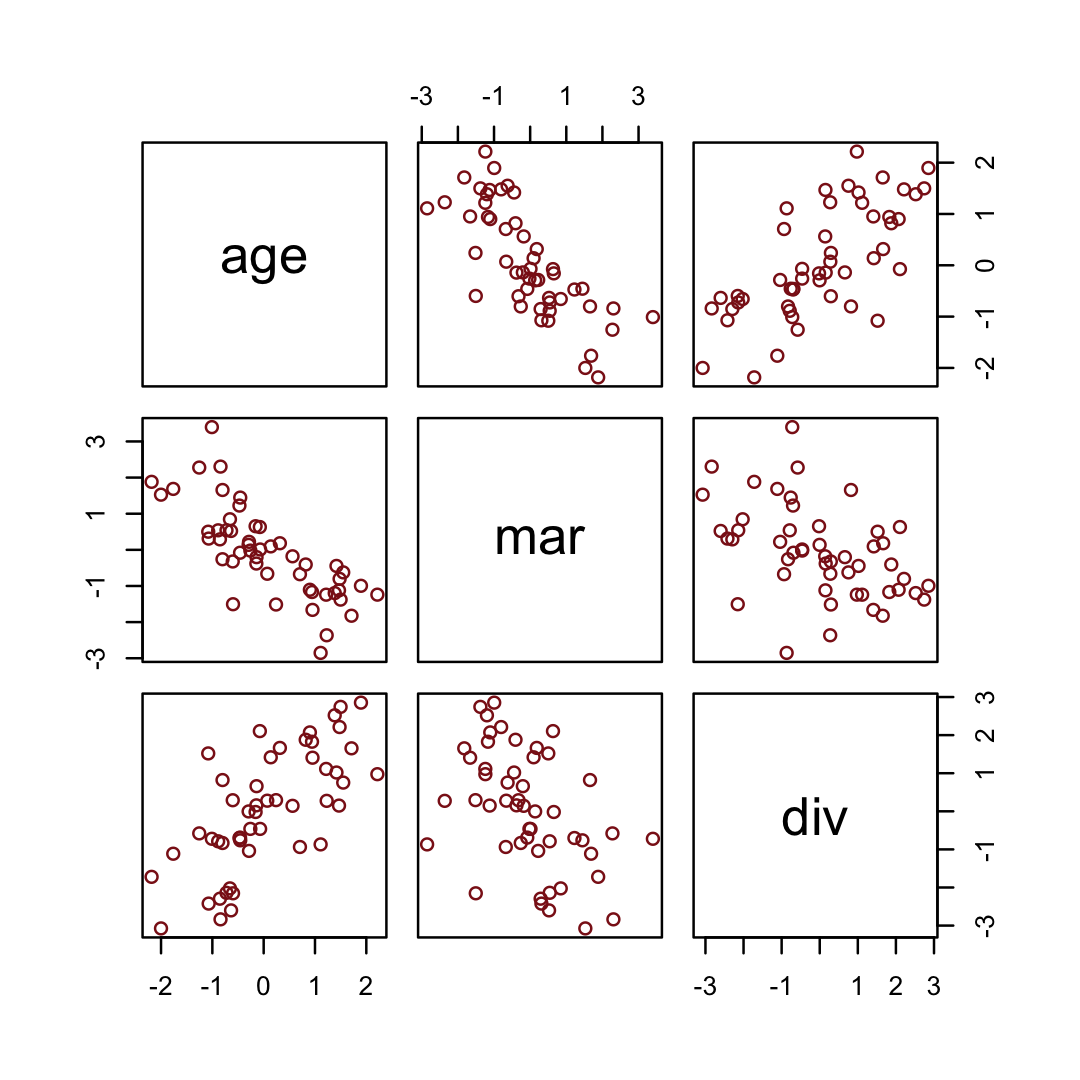

Okay, let’s simulate our divorce data in a tidyverse sort of way.

# how many states would you like?

n <- 50

set.seed(5)

sim_d <-

tibble(age = rnorm(n, mean = 0, sd = 1)) %>% # sim A

mutate(mar = rnorm(n, mean = -age, sd = 1), # sim A -> M

div = rnorm(n, mean = age, sd = 1)) # sim A -> D

head(sim_d)## # A tibble: 6 × 3

## age mar div

## <dbl> <dbl> <dbl>

## 1 -0.841 2.30 -2.84

## 2 1.38 -1.20 2.52

## 3 -1.26 2.28 -0.580

## 4 0.0701 -0.662 0.279

## 5 1.71 -1.82 1.65

## 6 -0.603 -0.322 0.291We simulated those data based on this formulation.

dagitty('dag{divorce <- age -> marriage}') %>%

impliedConditionalIndependencies()## dvrc _||_ mrrg | ageHere are the quick pairs() plots.

pairs(sim_d, col = "firebrick4")

If we use the update() function, we can refit the last models in haste.

b5.1_sim <-

update(b5.1,

newdata = sim_d,

formula = div ~ 1 + age,

seed = 5,

file = "fits/b05.01_sim")

b5.2_sim <-

update(b5.2,

newdata = sim_d,

formula = div ~ 1 + mar,

seed = 5,

file = "fits/b05.02_sim")

b5.3_sim <-

update(b5.3,

newdata = sim_d,

formula = div ~ 1 + mar + age,

seed = 5,

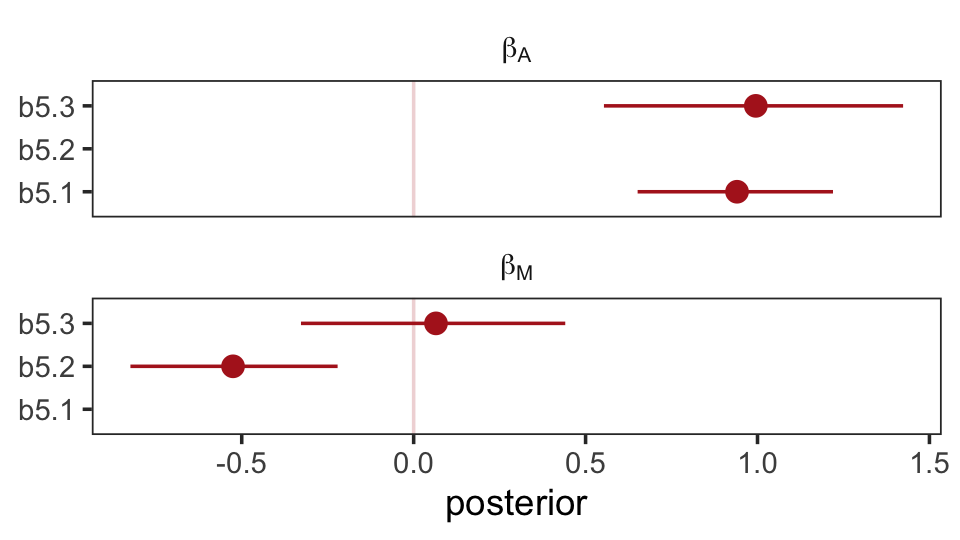

file = "fits/b05.03_sim")The steps for our homemade coefplot() plot are basically the same. Just switch out some of the names.

bind_cols(

as_draws_df(b5.1_sim) %>%

transmute(`b5.1_beta[A]` = b_age),

as_draws_df(b5.2_sim) %>%

transmute(`b5.2_beta[M]` = b_mar),

as_draws_df(b5.3_sim) %>%

transmute(`b5.3_beta[M]` = b_mar,

`b5.3_beta[A]` = b_age)

) %>%

pivot_longer(everything()) %>%

group_by(name) %>%

summarise(mean = mean(value),

ll = quantile(value, prob = .025),

ul = quantile(value, prob = .975)) %>%

# since the `key` variable is really two variables in one, here we split them up

separate(name, into = c("fit", "parameter"), sep = "_") %>%

# plot!

ggplot(aes(x = mean, xmin = ll, xmax = ul, y = fit)) +

geom_vline(xintercept = 0, color = "firebrick", alpha = 1/5) +

geom_pointrange(color = "firebrick") +

labs(x = "posterior", y = NULL) +

theme_bw() +

theme(panel.grid = element_blank(),

strip.background = element_blank()) +

facet_wrap(~ parameter, ncol = 1, labeller = label_parsed)

Well, okay. This is the same basic pattern, but with the signs switched and with a little simulation variability thrown in. But you get the picture.

5.1.5 Plotting multivariate posteriors.

“Let’s pause for a moment, before moving on. There are a lot of moving parts here: three variables, some strange DAGs, and three models. If you feel at all confused, it is only because you are paying attention” (p. 133).

Preach, brother.

Down a little further, McElreath gave us this deflationary delight: “There is a huge literature detailing a variety of plotting techniques that all attempt to help one understand multiple linear regression. None of these techniques is suitable for all jobs, and most do not generalize beyond linear regression” (pp. 134–135). Now you’re inspired, let’s learn three:

- predictor residual plots

- posterior prediction plots

- counterfactual plots

5.1.5.1 Predictor residual plots.

To get ready to make our residual plots, we’ll predict one predictor, m, with another one, a.

b5.4 <-

brm(data = d,

family = gaussian,

m ~ 1 + a,

prior = c(prior(normal(0, 0.2), class = Intercept),

prior(normal(0, 0.5), class = b),

prior(exponential(1), class = sigma)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 5,

file = "fits/b05.04")print(b5.4)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: m ~ 1 + a

## Data: d (Number of observations: 50)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept -0.00 0.09 -0.19 0.17 1.00 3337 2718

## a -0.69 0.10 -0.89 -0.49 1.00 3961 2856

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 0.71 0.07 0.59 0.87 1.00 3746 2498

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).With fitted(), we compute the expected values for each state (with the exception of Nevada). Since the a values for each state are in the date we used to fit the model, we’ll omit the newdata argument.

f <-

fitted(b5.4) %>%

data.frame() %>%

bind_cols(d)

glimpse(f)## Rows: 50

## Columns: 20

## $ Estimate <dbl> 0.4168429, 0.4723741, 0.1391869, 0.9721549, -0.4161251, 0.1947181, -0.86…

## $ Est.Error <dbl> 0.11084576, 0.11575588, 0.09359310, 0.17181804, 0.10902234, 0.09588333, …

## $ Q2.5 <dbl> 0.1919818448, 0.2397930892, -0.0511494288, 0.6390384012, -0.6351883273, …

## $ Q97.5 <dbl> 0.63066723, 0.69604942, 0.32323792, 1.30586005, -0.20011387, 0.38252344,…

## $ Location <fct> Alabama, Alaska, Arizona, Arkansas, California, Colorado, Connecticut, D…

## $ Loc <fct> AL, AK, AZ, AR, CA, CO, CT, DE, DC, FL, GA, HI, ID, IL, IN, IA, KS, KY, …

## $ Population <dbl> 4.78, 0.71, 6.33, 2.92, 37.25, 5.03, 3.57, 0.90, 0.60, 18.80, 9.69, 1.36…

## $ MedianAgeMarriage <dbl> 25.3, 25.2, 25.8, 24.3, 26.8, 25.7, 27.6, 26.6, 29.7, 26.4, 25.9, 26.9, …

## $ Marriage <dbl> 20.2, 26.0, 20.3, 26.4, 19.1, 23.5, 17.1, 23.1, 17.7, 17.0, 22.1, 24.9, …

## $ Marriage.SE <dbl> 1.27, 2.93, 0.98, 1.70, 0.39, 1.24, 1.06, 2.89, 2.53, 0.58, 0.81, 2.54, …

## $ Divorce <dbl> 12.7, 12.5, 10.8, 13.5, 8.0, 11.6, 6.7, 8.9, 6.3, 8.5, 11.5, 8.3, 7.7, 8…

## $ Divorce.SE <dbl> 0.79, 2.05, 0.74, 1.22, 0.24, 0.94, 0.77, 1.39, 1.89, 0.32, 0.58, 1.27, …

## $ WaffleHouses <int> 128, 0, 18, 41, 0, 11, 0, 3, 0, 133, 381, 0, 0, 2, 17, 0, 6, 64, 66, 0, …

## $ South <int> 1, 0, 0, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, …

## $ Slaves1860 <int> 435080, 0, 0, 111115, 0, 0, 0, 1798, 0, 61745, 462198, 0, 0, 0, 0, 0, 2,…

## $ Population1860 <int> 964201, 0, 0, 435450, 379994, 34277, 460147, 112216, 75080, 140424, 1057…

## $ PropSlaves1860 <dbl> 4.5e-01, 0.0e+00, 0.0e+00, 2.6e-01, 0.0e+00, 0.0e+00, 0.0e+00, 1.6e-02, …

## $ d <dbl> 1.6542053, 1.5443643, 0.6107159, 2.0935693, -0.9270579, 1.0500799, -1.64…

## $ m <dbl> 0.02264406, 1.54980162, 0.04897436, 1.65512283, -0.26698927, 0.89154405,…

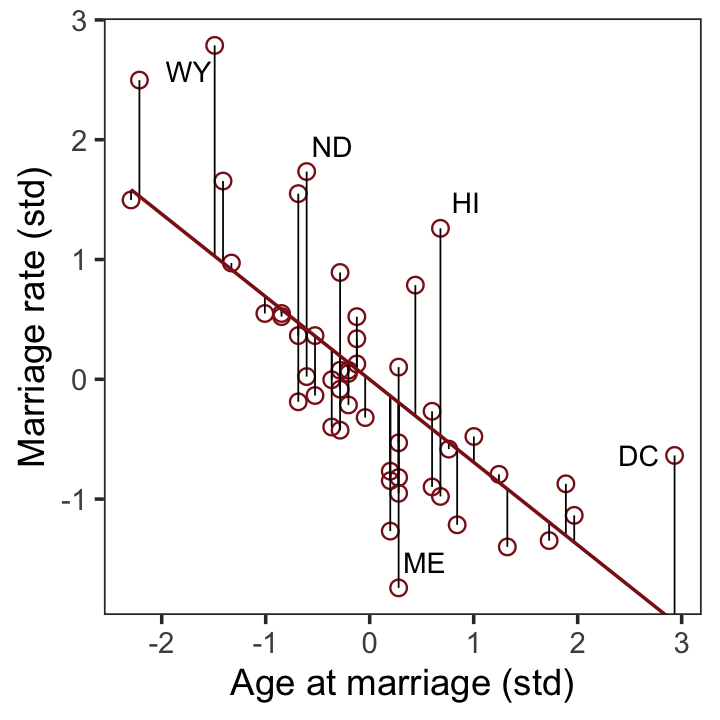

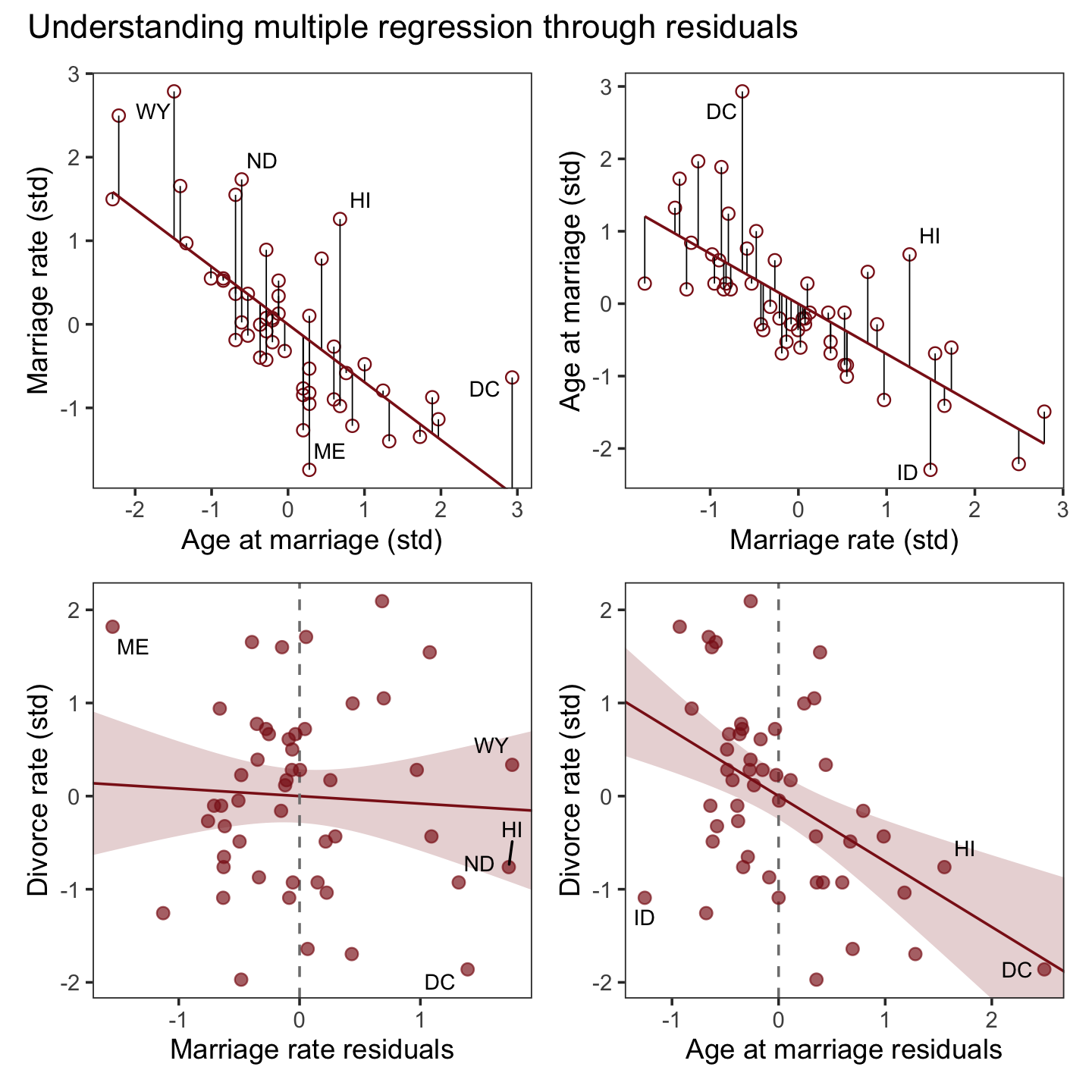

## $ a <dbl> -0.6062895, -0.6866993, -0.2042408, -1.4103870, 0.5998567, -0.2846505, 1…After a little data processing, we can make the upper left panel of Figure 5.4.

p1 <-

f %>%

ggplot(aes(x = a, y = m)) +

geom_point(size = 2, shape = 1, color = "firebrick4") +

geom_segment(aes(xend = a, yend = Estimate),

linewidth = 1/4) +

geom_line(aes(y = Estimate),

color = "firebrick4") +

geom_text_repel(data = . %>% filter(Loc %in% c("WY", "ND", "ME", "HI", "DC")),

aes(label = Loc),

size = 3, seed = 14) +

labs(x = "Age at marriage (std)",

y = "Marriage rate (std)") +

coord_cartesian(ylim = range(d$m)) +

theme_bw() +

theme(panel.grid = element_blank())

p1

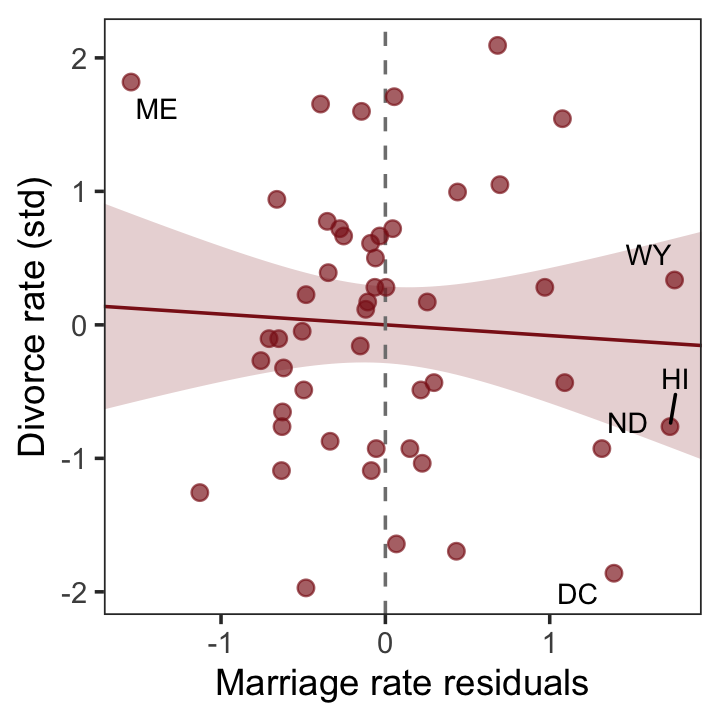

We get the residuals with the well-named residuals() function. Much like with brms::fitted(), brms::residuals() returns a four-vector matrix with the number of rows equal to the number of observations in the original data (by default, anyway). The vectors have the familiar names: Estimate, Est.Error, Q2.5, and Q97.5. See the brms reference manual (Bürkner, 2022i) for details.

With our residuals in hand, we just need a little more data processing to make lower left panel of Figure 5.4.

r <-

residuals(b5.4) %>%

# to use this in ggplot2, we need to make it a tibble or data frame

data.frame() %>%

bind_cols(d)

p3 <-

r %>%

ggplot(aes(x = Estimate, y = d)) +

stat_smooth(method = "lm", fullrange = T,

color = "firebrick4", fill = "firebrick4",

alpha = 1/5, linewidth = 1/2) +

geom_vline(xintercept = 0, linetype = 2, color = "grey50") +

geom_point(size = 2, color = "firebrick4", alpha = 2/3) +

geom_text_repel(data = . %>% filter(Loc %in% c("WY", "ND", "ME", "HI", "DC")),

aes(label = Loc),

size = 3, seed = 5) +

scale_x_continuous(limits = c(-2, 2)) +

coord_cartesian(xlim = range(r$Estimate)) +

labs(x = "Marriage rate residuals",

y = "Divorce rate (std)") +

theme_bw() +

theme(panel.grid = element_blank())

p3

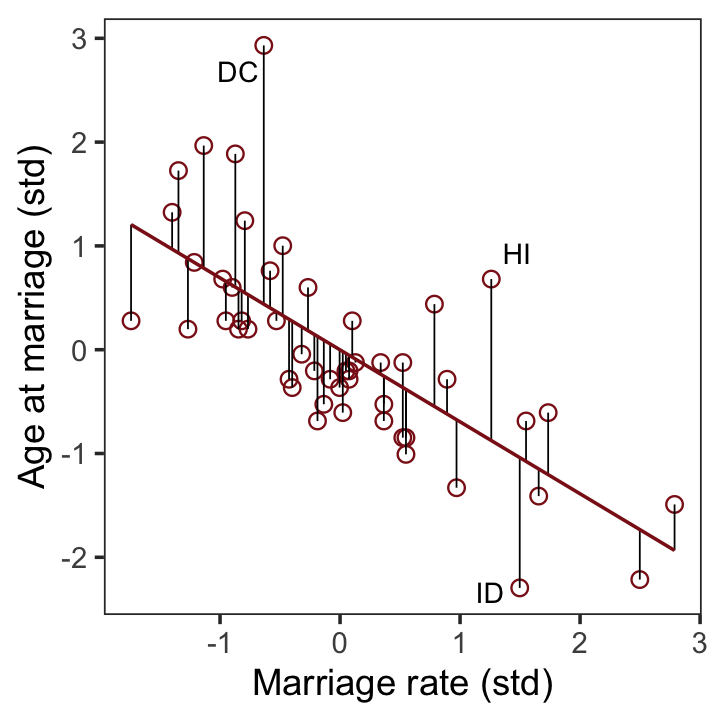

To get the MedianAgeMarriage_s residuals, we have to fit the corresponding model where m predicts a.

b5.4b <-

brm(data = d,

family = gaussian,

a ~ 1 + m,

prior = c(prior(normal(0, 0.2), class = Intercept),

prior(normal(0, 0.5), class = b),

prior(exponential(1), class = sigma)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 5,

file = "fits/b05.04b")With b5.4b in hand, we’re ready to make the upper right panel of Figure 5.4.

p2 <-

fitted(b5.4b) %>%

data.frame() %>%

bind_cols(d) %>%

ggplot(aes(x = m, y = a)) +

geom_point(size = 2, shape = 1, color = "firebrick4") +

geom_segment(aes(xend = m, yend = Estimate),

linewidth = 1/4) +

geom_line(aes(y = Estimate),

color = "firebrick4") +

geom_text_repel(data = . %>% filter(Loc %in% c("DC", "HI", "ID")),

aes(label = Loc),

size = 3, seed = 5) +

labs(x = "Marriage rate (std)",

y = "Age at marriage (std)") +

coord_cartesian(ylim = range(d$a)) +

theme_bw() +

theme(panel.grid = element_blank())

p2

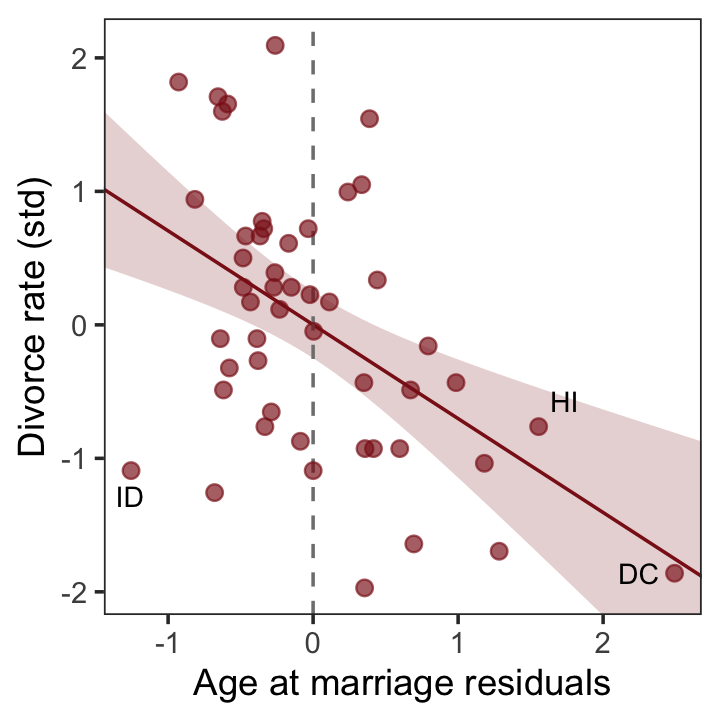

And now we’ll get the new batch of residuals, do a little data processing, and make a plot corresponding to the final panel of Figure 5.4.

r <-

residuals(b5.4b) %>%

data.frame() %>%

bind_cols(d)

p4 <-

r %>%

ggplot(aes(x = Estimate, y = d)) +

stat_smooth(method = "lm", fullrange = T,

color = "firebrick4", fill = "firebrick4",

alpha = 1/5, linewidth = 1/2) +

geom_vline(xintercept = 0, linetype = 2, color = "grey50") +

geom_point(size = 2, color = "firebrick4", alpha = 2/3) +

geom_text_repel(data = . %>% filter(Loc %in% c("ID", "HI", "DC")),

aes(label = Loc),

size = 3, seed = 5) +

scale_x_continuous(limits = c(-2, 3)) +

coord_cartesian(xlim = range(r$Estimate),

ylim = range(d$d)) +

labs(x = "Age at marriage residuals",

y = "Divorce rate (std)") +

theme_bw() +

theme(panel.grid = element_blank())

p4

Here we close out the section by combining our four subplots into one glorious whole with a little patchwork syntax.

p1 + p2 + p3 + p4 + plot_annotation(title = "Understanding multiple regression through residuals")

5.1.5.1.1 Rethinking: Residuals are parameters, not data.

There is a tradition, especially in parts of biology, of using residuals from one model as data in another model. For example, a biologist might regress brain size on body size and then use the brain size residuals as data in another model. This procedure is always a mistake. Residuals are not known. They are parameters, variables with unobserved values. Treating them as known values throws away uncertainty. (p. 137)

Let’s hammer this point home. Recall how brms::residuals() returns four columns: Estimate, Est.Error, Q2.5, and Q97.5.

r %>%

glimpse()## Rows: 50

## Columns: 20

## $ Estimate <dbl> -0.58903636, 0.38918106, -0.16873543, -0.26149791, 0.41633575, 0.3349249…

## $ Est.Error <dbl> 0.09007649, 0.18147491, 0.09015909, 0.19089755, 0.09437421, 0.12750315, …

## $ Q2.5 <dbl> -0.76631179, 0.03230000, -0.34731730, -0.63777776, 0.23116991, 0.0856600…

## $ Q97.5 <dbl> -0.413936585, 0.741616393, 0.004905611, 0.109295277, 0.599561771, 0.5784…

## $ Location <fct> Alabama, Alaska, Arizona, Arkansas, California, Colorado, Connecticut, D…

## $ Loc <fct> AL, AK, AZ, AR, CA, CO, CT, DE, DC, FL, GA, HI, ID, IL, IN, IA, KS, KY, …

## $ Population <dbl> 4.78, 0.71, 6.33, 2.92, 37.25, 5.03, 3.57, 0.90, 0.60, 18.80, 9.69, 1.36…

## $ MedianAgeMarriage <dbl> 25.3, 25.2, 25.8, 24.3, 26.8, 25.7, 27.6, 26.6, 29.7, 26.4, 25.9, 26.9, …

## $ Marriage <dbl> 20.2, 26.0, 20.3, 26.4, 19.1, 23.5, 17.1, 23.1, 17.7, 17.0, 22.1, 24.9, …

## $ Marriage.SE <dbl> 1.27, 2.93, 0.98, 1.70, 0.39, 1.24, 1.06, 2.89, 2.53, 0.58, 0.81, 2.54, …

## $ Divorce <dbl> 12.7, 12.5, 10.8, 13.5, 8.0, 11.6, 6.7, 8.9, 6.3, 8.5, 11.5, 8.3, 7.7, 8…

## $ Divorce.SE <dbl> 0.79, 2.05, 0.74, 1.22, 0.24, 0.94, 0.77, 1.39, 1.89, 0.32, 0.58, 1.27, …

## $ WaffleHouses <int> 128, 0, 18, 41, 0, 11, 0, 3, 0, 133, 381, 0, 0, 2, 17, 0, 6, 64, 66, 0, …

## $ South <int> 1, 0, 0, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, …

## $ Slaves1860 <int> 435080, 0, 0, 111115, 0, 0, 0, 1798, 0, 61745, 462198, 0, 0, 0, 0, 0, 2,…

## $ Population1860 <int> 964201, 0, 0, 435450, 379994, 34277, 460147, 112216, 75080, 140424, 1057…

## $ PropSlaves1860 <dbl> 4.5e-01, 0.0e+00, 0.0e+00, 2.6e-01, 0.0e+00, 0.0e+00, 0.0e+00, 1.6e-02, …

## $ d <dbl> 1.6542053, 1.5443643, 0.6107159, 2.0935693, -0.9270579, 1.0500799, -1.64…

## $ m <dbl> 0.02264406, 1.54980162, 0.04897436, 1.65512283, -0.26698927, 0.89154405,…

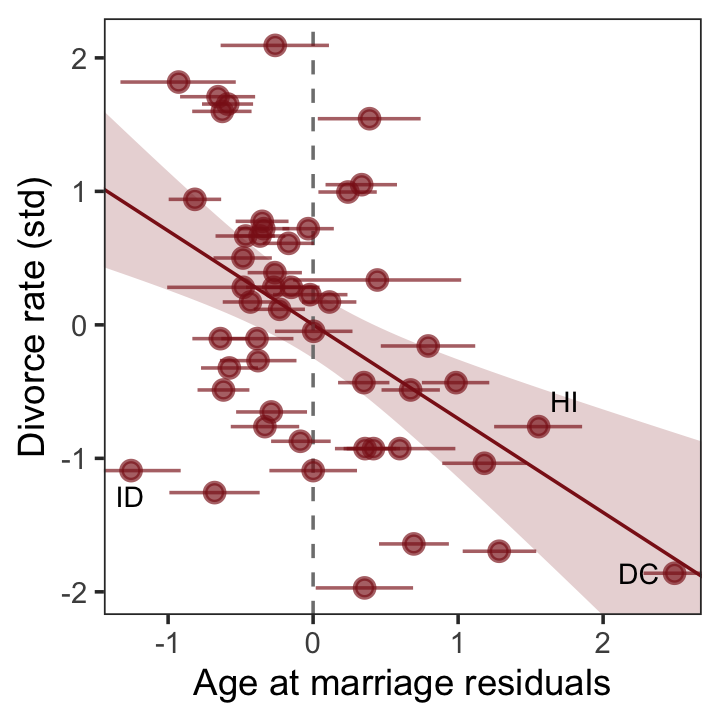

## $ a <dbl> -0.6062895, -0.6866993, -0.2042408, -1.4103870, 0.5998567, -0.2846505, 1…In the residual plots from the lower two panels of Figure 5.4, we focused on the means of the residuals (i.e., Estimate). However, we can express the uncertainty in the residuals by including error bars for the 95% intervals. Here’s what that might look like with a slight reworking of the lower right panel of Figure 5.4.

r %>%

ggplot(aes(x = Estimate, y = d)) +

stat_smooth(method = "lm", fullrange = T,

color = "firebrick4", fill = "firebrick4",

alpha = 1/5, linewidth = 1/2) +

geom_vline(xintercept = 0, linetype = 2, color = "grey50") +

# the only change is here

geom_pointrange(aes(xmin = Q2.5, xmax = Q97.5),

color = "firebrick4", alpha = 2/3) +

geom_text_repel(data = . %>% filter(Loc %in% c("ID", "HI", "DC")),

aes(label = Loc),

size = 3, seed = 5) +

scale_x_continuous(limits = c(-2, 3)) +

coord_cartesian(xlim = range(r$Estimate),

ylim = range(d$d)) +

labs(x = "Age at marriage residuals",

y = "Divorce rate (std)") +

theme_bw() +

theme(panel.grid = element_blank())

Look at that. If you were to fit a follow-up model based on only the point estimates (posterior means) of those residuals, you’d be ignoring a lot of uncertainty. For more on the topic of residuals, see Freckleton (2002), On the misuse of residuals in ecology: regression of residuals vs. multiple regression.

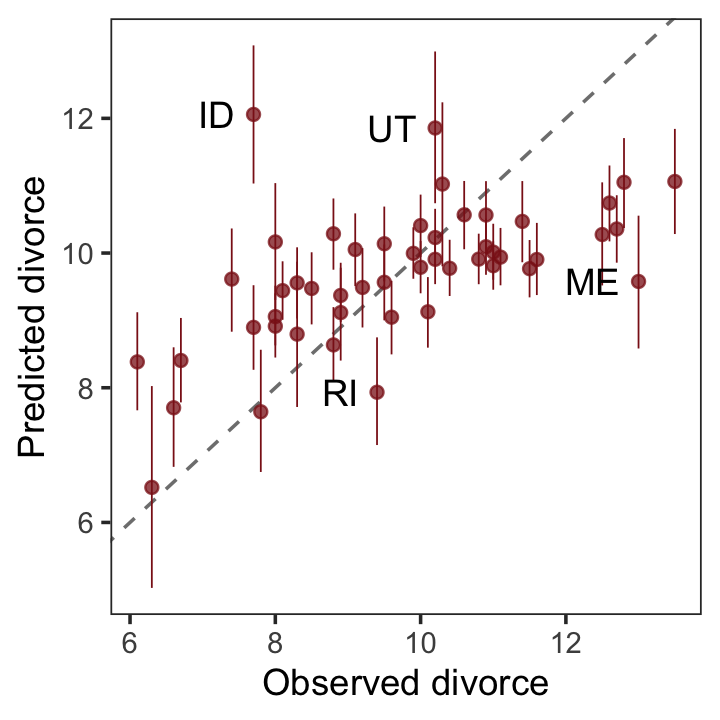

5.1.5.2 Posterior prediction plots.

“It’s important to check the model’s implied predictions against the observed data” (p. 137). For more on the topic, check out Gabry and colleagues’ (2019) Visualization in Bayesian workflow or Simpson’s related blog post, Touch me, I want to feel your data.

The code below will make our version of Figure 5.5.

fitted(b5.3) %>%

data.frame() %>%

# un-standardize the model predictions

mutate_all(~. * sd(d$Divorce) + mean(d$Divorce)) %>%

bind_cols(d) %>%

ggplot(aes(x = Divorce, y = Estimate)) +

geom_abline(linetype = 2, color = "grey50", linewidth = 0.5) +

geom_point(size = 1.5, color = "firebrick4", alpha = 3/4) +

geom_linerange(aes(ymin = Q2.5, ymax = Q97.5),

linewidth = 1/4, color = "firebrick4") +

geom_text(data = . %>% filter(Loc %in% c("ID", "UT", "RI", "ME")),

aes(label = Loc),

hjust = 1, nudge_x = - 0.25) +

labs(x = "Observed divorce", y = "Predicted divorce") +

theme_bw() +

theme(panel.grid = element_blank())

It’s easy to see from this arrangement of the simulations that the model under-predicts for States with very high divorce rates while it over-predicts for States with very low divorce rates. That’s normal. This is what regression does–it is skeptical of extreme values, so it expects regression towards the mean. But beyond this general regression to the mean, some States are very frustrating to the model, lying very far from the diagonal. (p. 139)

5.1.5.2.1 Rethinking: Stats, huh, yeah what is it good for?

Often people want statistical modeling to do things that statistical modeling cannot do. For example, we’d like to know whether an effect is “real” or rather spurious. Unfortunately, modeling merely quantifies uncertainty in the precise way that the model understands the problem. Usually answers to large world questions about truth and causation depend upon information not included in the model. For example, any observed correlation between an outcome and predictor could be eliminated or reversed once another predictor is added to the model. But if we cannot think of the right variable, we might never notice. Therefore all statistical models are vulnerable to and demand critique, regardless of the precision of their estimates and apparent accuracy of their predictions. (p. 139)

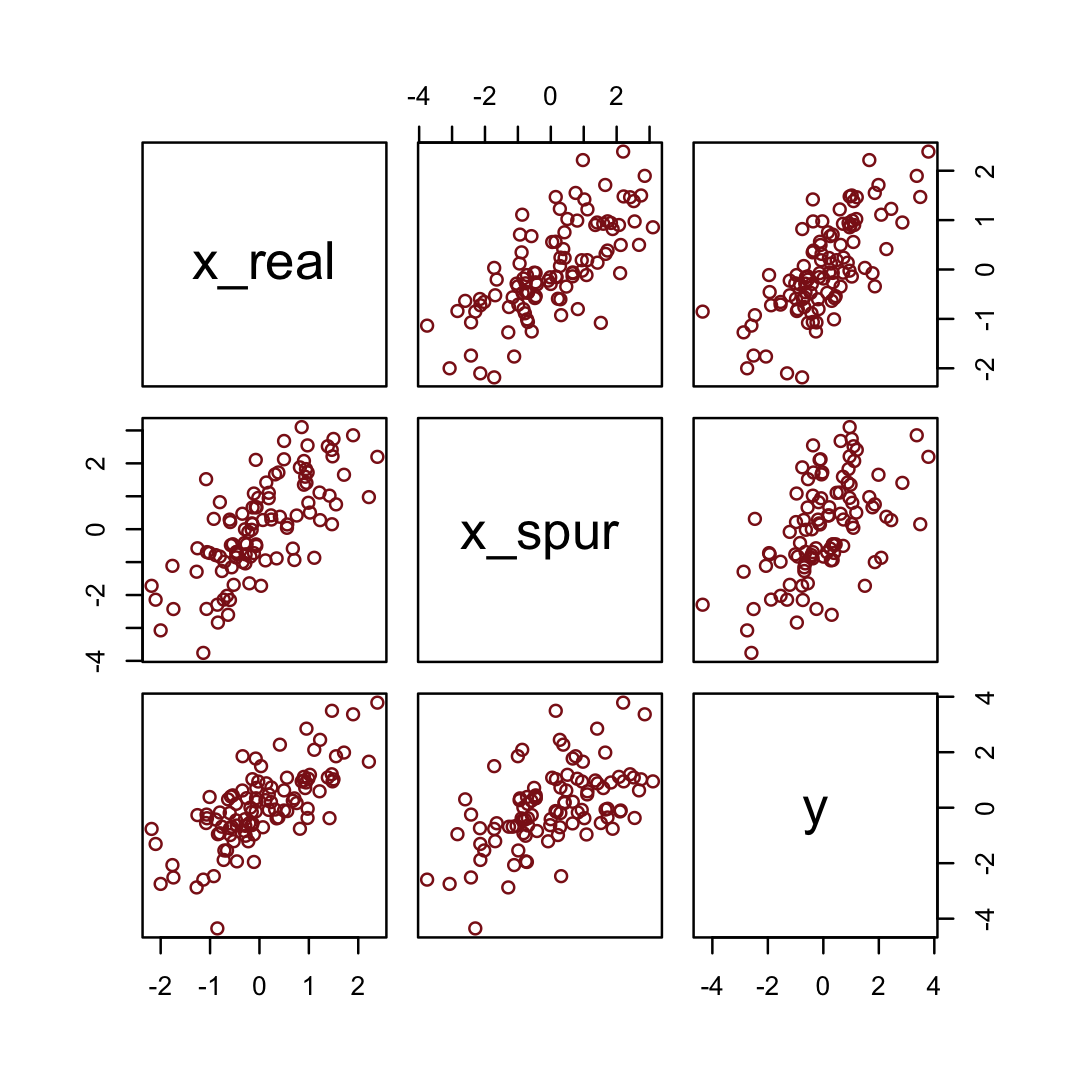

5.1.5.2.2 Overthinking: Simulating spurious association.

n <- 100 # number of cases

set.seed(5) # setting the seed makes the results reproducible

d_spur <-

tibble(x_real = rnorm(n), # x_real as Gaussian with mean 0 and SD 1 (i.e., the defaults)

x_spur = rnorm(n, x_real), # x_spur as Gaussian with mean = x_real

y = rnorm(n, x_real)) # y as Gaussian with mean = x_realHere are the quick pairs() plots.

pairs(d_spur, col = "firebrick4")

We may as well fit and evaluate a model.

b5.0_spur <-

brm(data = d_spur,

family = gaussian,

y ~ 1 + x_real + x_spur,

prior = c(prior(normal(0, 0.2), class = Intercept),

prior(normal(0, 0.5), class = b),

prior(exponential(1), class = sigma)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 5,

file = "fits/b05.00_spur")fixef(b5.0_spur) %>%

round(digits = 2)## Estimate Est.Error Q2.5 Q97.5

## Intercept -0.01 0.09 -0.18 0.16

## x_real 0.93 0.15 0.65 1.22

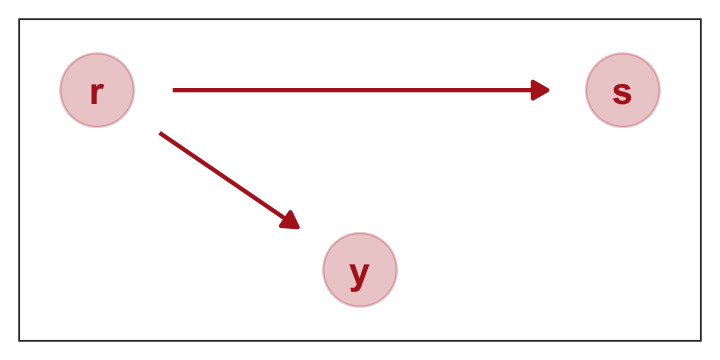

## x_spur 0.08 0.09 -0.10 0.26If we let “r” stand for x_rel and “s” stand for x_spur, here’s how we might depict that our simulation in a DAG.

dag_coords <-

tibble(name = c("r", "s", "y"),

x = c(1, 3, 2),

y = c(2, 2, 1))

dagify(s ~ r,

y ~ r,

coords = dag_coords) %>%

ggplot(aes(x = x, y = y, xend = xend, yend = yend)) +

geom_dag_point(color = "firebrick", alpha = 1/4, size = 10) +

geom_dag_text(color = "firebrick") +

geom_dag_edges(edge_color = "firebrick") +

scale_x_continuous(NULL, breaks = NULL, expand = c(0.1, 0.1)) +

scale_y_continuous(NULL, breaks = NULL, expand = c(0.2, 0.2)) +

theme_bw() +

theme(panel.grid = element_blank())

5.1.5.3 Counterfactual plots.

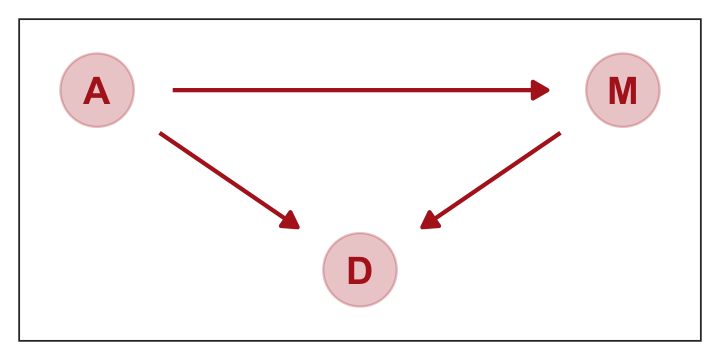

A second sort of inferential plot displays the causal implications of the model. I call these plots counterfactual, because they can be produced for any values of the predictor variables you like, even unobserved combinations like very high median age of marriage and very high marriage rate. There are no States with this combination, but in a counterfactual plot, you can ask the model for a prediction for such a State. (p. 140, emphasis in the original)

Take another look at one of the DAGs from back in Section 5.1.2.

dag_coords <-

tibble(name = c("A", "M", "D"),

x = c(1, 3, 2),

y = c(2, 2, 1))

dagify(M ~ A,

D ~ A + M,

coords = dag_coords) %>%

ggplot(aes(x = x, y = y, xend = xend, yend = yend)) +

geom_dag_point(color = "firebrick", alpha = 1/4, size = 10) +

geom_dag_text(color = "firebrick") +

geom_dag_edges(edge_color = "firebrick") +

scale_x_continuous(NULL, breaks = NULL, expand = c(0.1, 0.1)) +

scale_y_continuous(NULL, breaks = NULL, expand = c(0.2, 0.2)) +

theme_bw() +

theme(panel.grid = element_blank())

The full statistical model implied in this DAG requires we have two criterion variables, \(D\) and \(M\). To simultaneously model the effects of \(A\) on \(M\) and \(D\) AND the effects of \(A\) on \(M\) with brms, we’ll need to invoke the multivariate syntax. There are several ways to do this with brms, which Bürkner outlines in his (2022d) vignette, Estimating multivariate models with brms. At this point, it’s important to recognize we have two regression models. As a first step, we might specify each model separately in a bf() function and save them as objects.

d_model <- bf(d ~ 1 + a + m)

m_model <- bf(m ~ 1 + a)Next we will combine our bf() objects with the + operator within the brm() function. For a model like this, we also specify set_rescor(FALSE) to prevent brms from adding a residual correlation between d and m. Also, notice how each prior statement includes a resp argument. This clarifies which sub-model the prior refers to.

b5.3_A <-

brm(data = d,

family = gaussian,

d_model + m_model + set_rescor(FALSE),

prior = c(prior(normal(0, 0.2), class = Intercept, resp = d),

prior(normal(0, 0.5), class = b, resp = d),

prior(exponential(1), class = sigma, resp = d),

prior(normal(0, 0.2), class = Intercept, resp = m),

prior(normal(0, 0.5), class = b, resp = m),

prior(exponential(1), class = sigma, resp = m)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 5,

file = "fits/b05.03_A")Look at the summary.

print(b5.3_A)## Family: MV(gaussian, gaussian)

## Links: mu = identity; sigma = identity

## mu = identity; sigma = identity

## Formula: d ~ 1 + a + m

## m ~ 1 + a

## Data: d (Number of observations: 50)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## d_Intercept 0.00 0.10 -0.20 0.20 1.00 5428 2829

## m_Intercept -0.00 0.09 -0.18 0.18 1.00 5395 3085

## d_a -0.61 0.15 -0.91 -0.30 1.00 3712 3233

## d_m -0.06 0.16 -0.37 0.26 1.00 3514 2872

## m_a -0.69 0.10 -0.90 -0.49 1.00 5957 2665

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma_d 0.82 0.08 0.68 1.01 1.00 5243 3246

## sigma_m 0.71 0.08 0.58 0.88 1.00 4792 3021

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

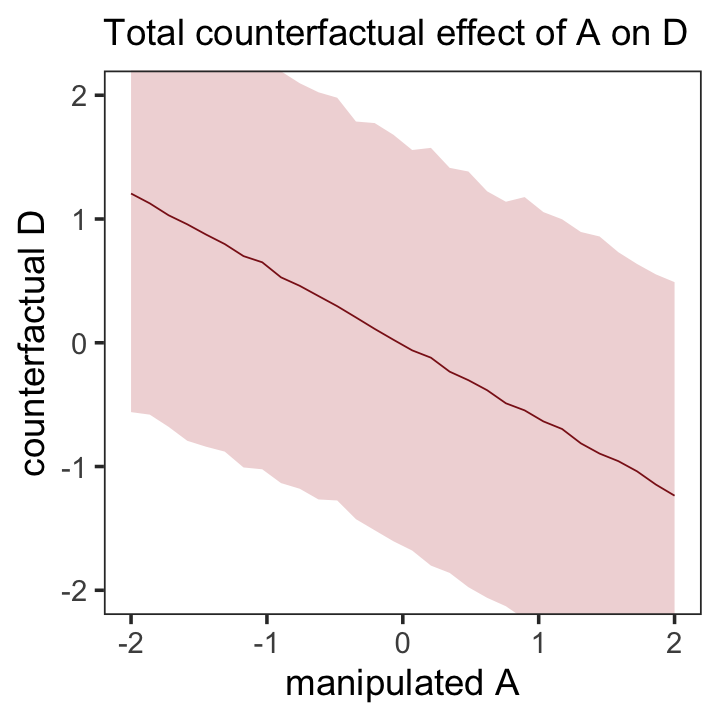

## scale reduction factor on split chains (at convergence, Rhat = 1).Note our parameters now all have either a d_ or an m_ prefix to help clarify which sub-model they were for. The m_a row shows how strongly and negatively associated a is to m. Here’s how we might use predict() to make our version of the counterfactual plot in the left panel of Figure 5.6.

nd <- tibble(a = seq(from = -2, to = 2, length.out = 30),

m = 0)

p1 <-

predict(b5.3_A,

resp = "d",

newdata = nd) %>%

data.frame() %>%

bind_cols(nd) %>%

ggplot(aes(x = a, y = Estimate, ymin = Q2.5, ymax = Q97.5)) +

geom_smooth(stat = "identity",

fill = "firebrick", color = "firebrick4", alpha = 1/5, linewidth = 1/4) +

labs(subtitle = "Total counterfactual effect of A on D",

x = "manipulated A",

y = "counterfactual D") +

coord_cartesian(ylim = c(-2, 2)) +

theme_bw() +

theme(panel.grid = element_blank())

p1

Because the plot is based on a multivariate model, we used the resp argument within predict() to tell brms which of our two criterion variables (d or m) we were interested in. Unlike McElreath’s R code 5.20, we included predictor values for both a and m. This is because brms requires we provide values for all predictors in a model when using predict(). Even though we set all the m values to 0 for the counterfactual, it was necessary to tell predict() that’s exactly what we wanted.

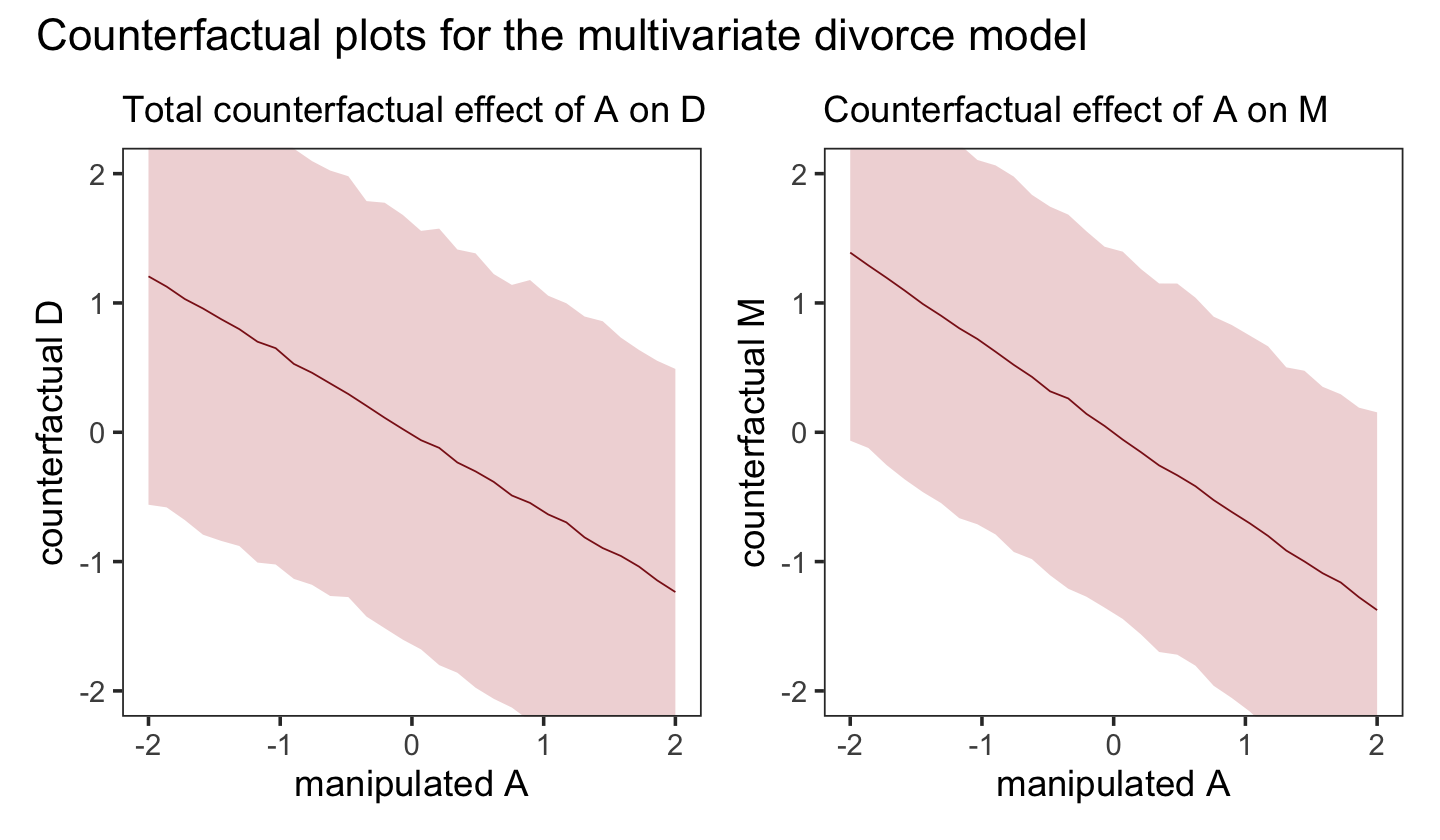

Let’s do that all again, this time making the counterfactual for d. While we’re at it, we’ll combine this subplot with the last one to make the full version of Figure 5.6.

nd <- tibble(a = seq(from = -2, to = 2, length.out = 30))

p2 <-

predict(b5.3_A,

resp = "m",

newdata = nd) %>%

data.frame() %>%

bind_cols(nd) %>%

ggplot(aes(x = a, y = Estimate, ymin = Q2.5, ymax = Q97.5)) +

geom_smooth(stat = "identity",

fill = "firebrick", color = "firebrick4", alpha = 1/5, linewidth = 1/4) +

labs(subtitle = "Counterfactual effect of A on M",

x = "manipulated A",

y = "counterfactual M") +

coord_cartesian(ylim = c(-2, 2)) +

theme_bw() +

theme(panel.grid = element_blank())

p1 + p2 + plot_annotation(title = "Counterfactual plots for the multivariate divorce model")

With our brms + tidyverse paradigm, we might compute “the expected causal effect of increasing median age at marriage from 20 to 30” (p. 142) like this.

# new data frame, standardized to mean 26.1 and std dev 1.24

nd <- tibble(a = (c(20, 30) - 26.1) / 1.24,

m = 0)

predict(b5.3_A,

resp = "d",

newdata = nd,

summary = F) %>%

data.frame() %>%

set_names("a20", "a30") %>%

mutate(difference = a30 - a20) %>%

summarise(mean = mean(difference))## mean

## 1 -4.899465The trick with simulating counterfactuals is to realize that when we manipulate some variable \(X\), we break the causal influence of other variables on \(X\). This is the same as saying we modify the DAG so that no arrows enter \(X\). Suppose for example that we now simulate the effect of manipulating \(M.\) (p. 143)

Here’s how to plot that DAG.

dag_coords <-

tibble(name = c("A", "M", "D"),

x = c(1, 3, 2),

y = c(2, 2, 1))

dagify(D ~ A + M,

coords = dag_coords) %>%

ggplot(aes(x = x, y = y, xend = xend, yend = yend)) +

geom_dag_point(color = "firebrick", alpha = 1/4, size = 10) +

geom_dag_text(color = "firebrick") +

geom_dag_edges(edge_color = "firebrick") +

scale_x_continuous(NULL, breaks = NULL, expand = c(0.1, 0.1)) +

scale_y_continuous(NULL, breaks = NULL, expand = c(0.2, 0.2)) +

theme_bw() +

theme(panel.grid = element_blank())

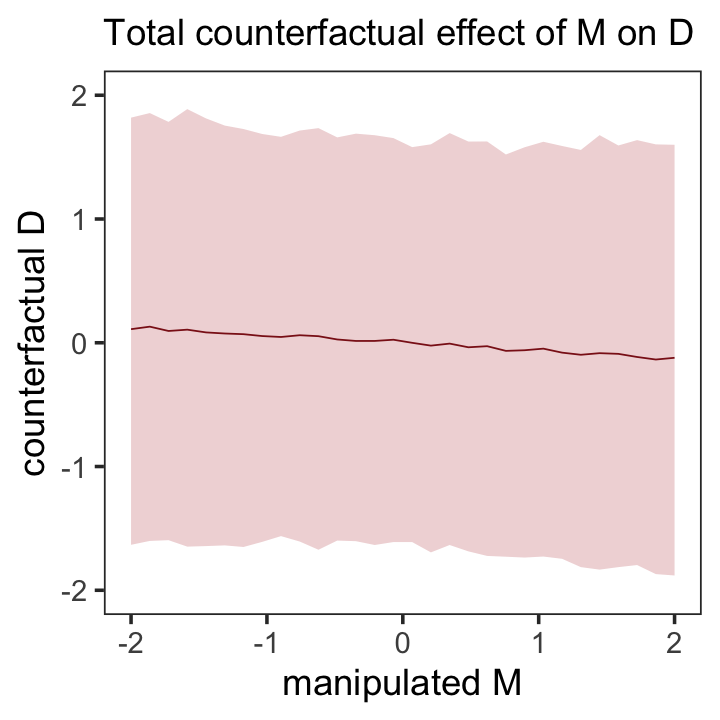

Here’s the new counterfactual plot focusing on \(M \rightarrow D\), holding \(A = 0\), Figure 5.7.

nd <- tibble(m = seq(from = -2, to = 2, length.out = 30),

a = 0)

predict(b5.3_A,

resp = "d",

newdata = nd) %>%

data.frame() %>%

bind_cols(nd) %>%

ggplot(aes(x = m, y = Estimate, ymin = Q2.5, ymax = Q97.5)) +

geom_smooth(stat = "identity",

fill = "firebrick", color = "firebrick4", alpha = 1/5, linewidth = 1/4) +

labs(subtitle = "Total counterfactual effect of M on D",

x = "manipulated M",

y = "counterfactual D") +

coord_cartesian(ylim = c(-2, 2)) +

theme_bw() +

theme(panel.grid = element_blank())

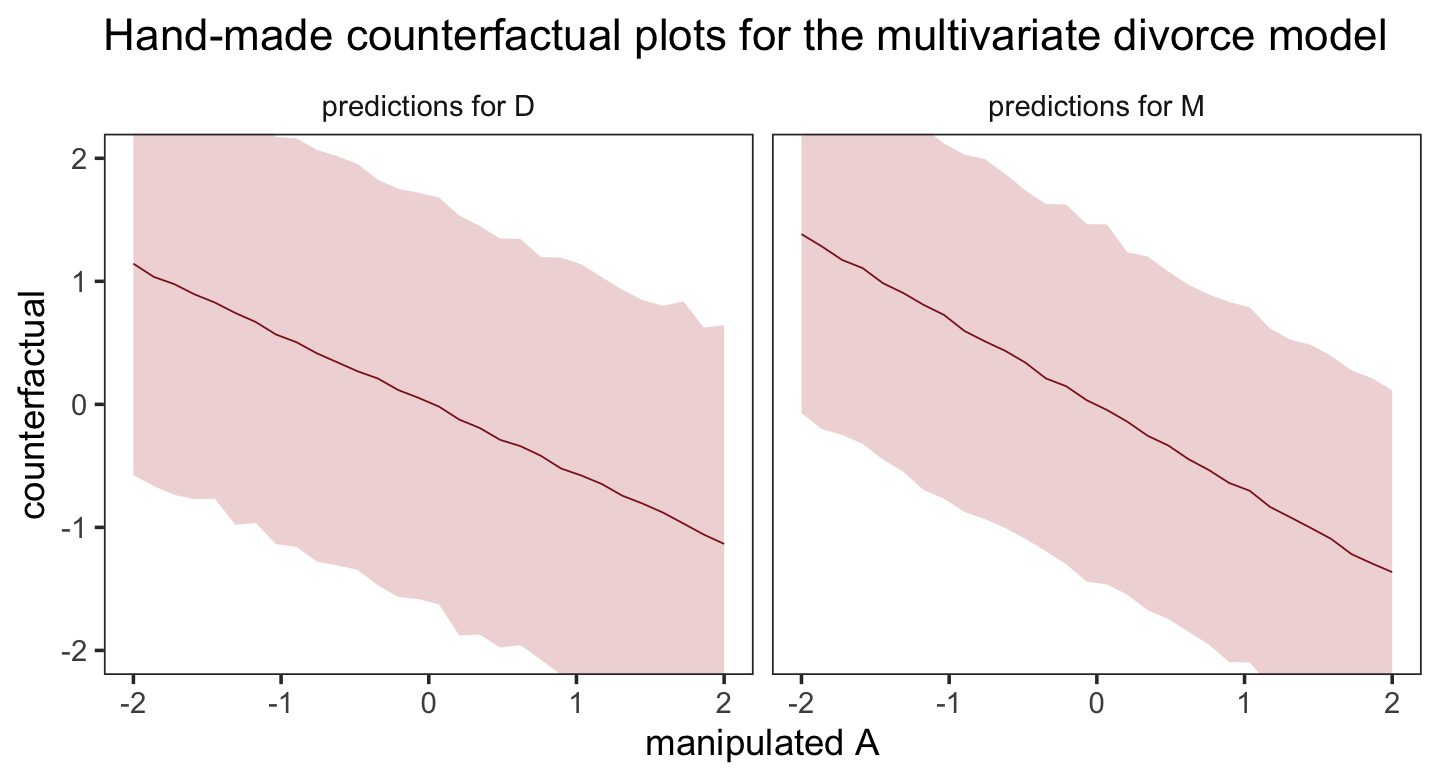

5.1.5.3.1 Overthinking: Simulating counterfactuals.

Just like McElreath showed how to compute the counterfactuals without his sim() function, we can make ours without brms::predict(). First we’ll start out extracting the posterior draws.

post <-

as_draws_df(b5.3_A)Here we use expand_grid() to elongate the output from above by a factor of thirty, each time corresponding to one of the levels of a = seq(from = -2, to = 2, length.out = 30). In the two mutate() lines that follow, we plug the model formulas into the rnorm() function to take random draws from posterior predictive distribution. The rest is just wrangling and summarizing.

set.seed(5)

post <-

post %>%

expand_grid(a = seq(from = -2, to = 2, length.out = 30)) %>%

mutate(m_sim = rnorm(n(), mean = b_m_Intercept + b_m_a * a, sd = sigma_m)) %>%

mutate(d_sim = rnorm(n(), mean = b_d_Intercept + b_d_a * a + b_d_m * m_sim, sd = sigma_d)) %>%

pivot_longer(ends_with("sim")) %>%

group_by(a, name) %>%

summarise(mean = mean(value),

ll = quantile(value, prob = .025),

ul = quantile(value, prob = .975))

# what did we do?

head(post)## # A tibble: 6 × 5

## # Groups: a [3]

## a name mean ll ul

## <dbl> <chr> <dbl> <dbl> <dbl>

## 1 -2 d_sim 1.14 -0.575 2.87

## 2 -2 m_sim 1.38 -0.0700 2.86

## 3 -1.86 d_sim 1.04 -0.665 2.68

## 4 -1.86 m_sim 1.28 -0.201 2.79

## 5 -1.72 d_sim 0.978 -0.735 2.65

## 6 -1.72 m_sim 1.17 -0.249 2.65Now we plot.

post %>%

mutate(dv = if_else(name == "d_sim", "predictions for D", "predictions for M")) %>%

ggplot(aes(x = a, y = mean, ymin = ll, ymax = ul)) +

geom_smooth(stat = "identity",

fill = "firebrick", color = "firebrick4", alpha = 1/5, linewidth = 1/4) +

labs(title = "Hand-made counterfactual plots for the multivariate divorce model",

x = "manipulated A",

y = "counterfactual") +

coord_cartesian(ylim = c(-2, 2)) +

theme_bw() +

theme(panel.grid = element_blank(),

strip.background = element_blank()) +

facet_wrap(~ dv)

5.2 Masked relationship

A second reason to use more than one predictor variable is to measure the direct influences of multiple factors on an outcome, when none of those influences is apparent from bivariate relationships. This kind of problem tends to arise when there are two predictor variables that are correlated with one another. However, one of these is positively correlated with the outcome and the other is negatively correlated with it. (p. 144)

Let’s load the Hinde & Milligan (2011) milk data.

data(milk, package = "rethinking")

d <- milk

rm(milk)

glimpse(d)## Rows: 29

## Columns: 8

## $ clade <fct> Strepsirrhine, Strepsirrhine, Strepsirrhine, Strepsirrhine, Strepsirrhine, …

## $ species <fct> Eulemur fulvus, E macaco, E mongoz, E rubriventer, Lemur catta, Alouatta se…

## $ kcal.per.g <dbl> 0.49, 0.51, 0.46, 0.48, 0.60, 0.47, 0.56, 0.89, 0.91, 0.92, 0.80, 0.46, 0.7…

## $ perc.fat <dbl> 16.60, 19.27, 14.11, 14.91, 27.28, 21.22, 29.66, 53.41, 46.08, 50.58, 41.35…

## $ perc.protein <dbl> 15.42, 16.91, 16.85, 13.18, 19.50, 23.58, 23.46, 15.80, 23.34, 22.33, 20.85…

## $ perc.lactose <dbl> 67.98, 63.82, 69.04, 71.91, 53.22, 55.20, 46.88, 30.79, 30.58, 27.09, 37.80…

## $ mass <dbl> 1.95, 2.09, 2.51, 1.62, 2.19, 5.25, 5.37, 2.51, 0.71, 0.68, 0.12, 0.47, 0.3…

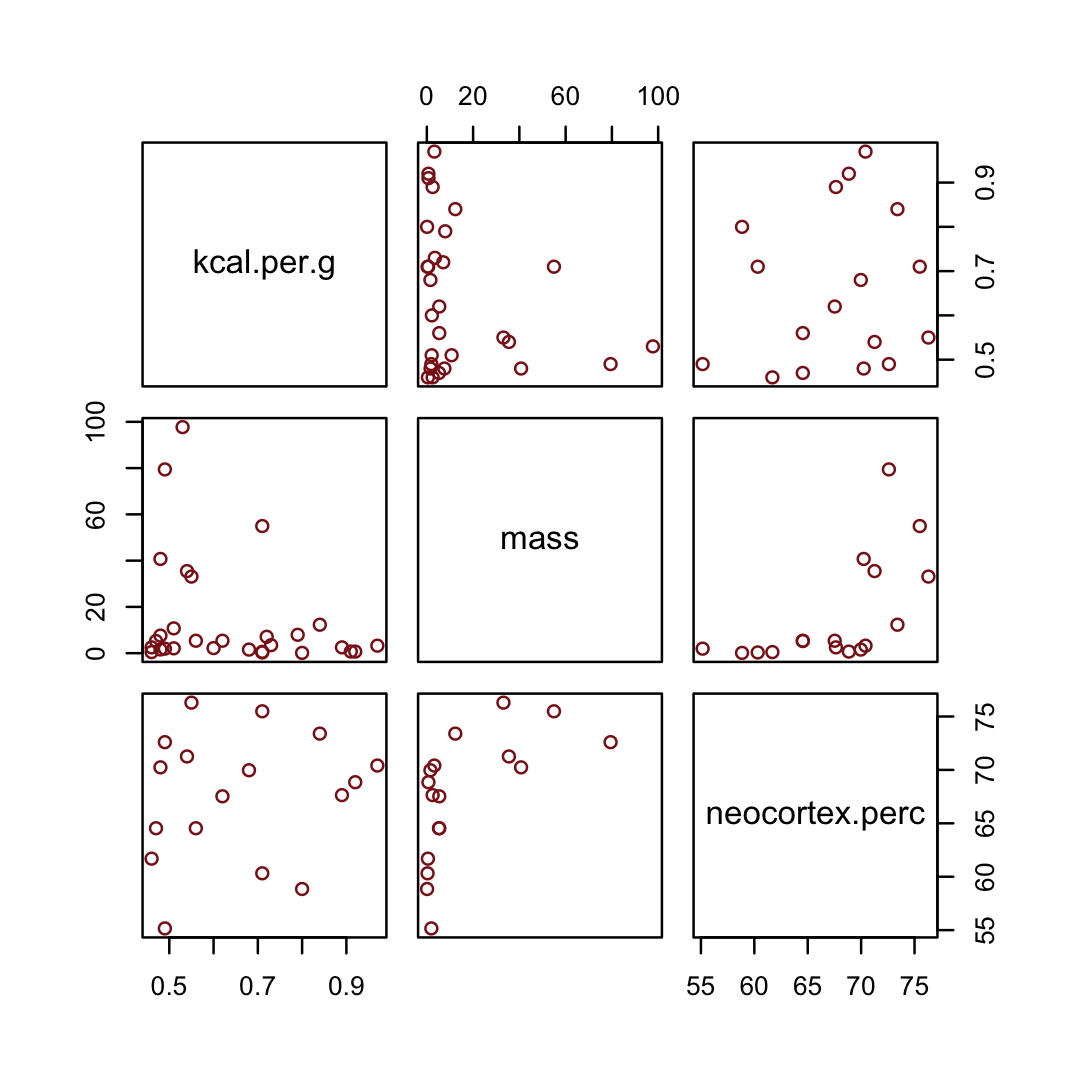

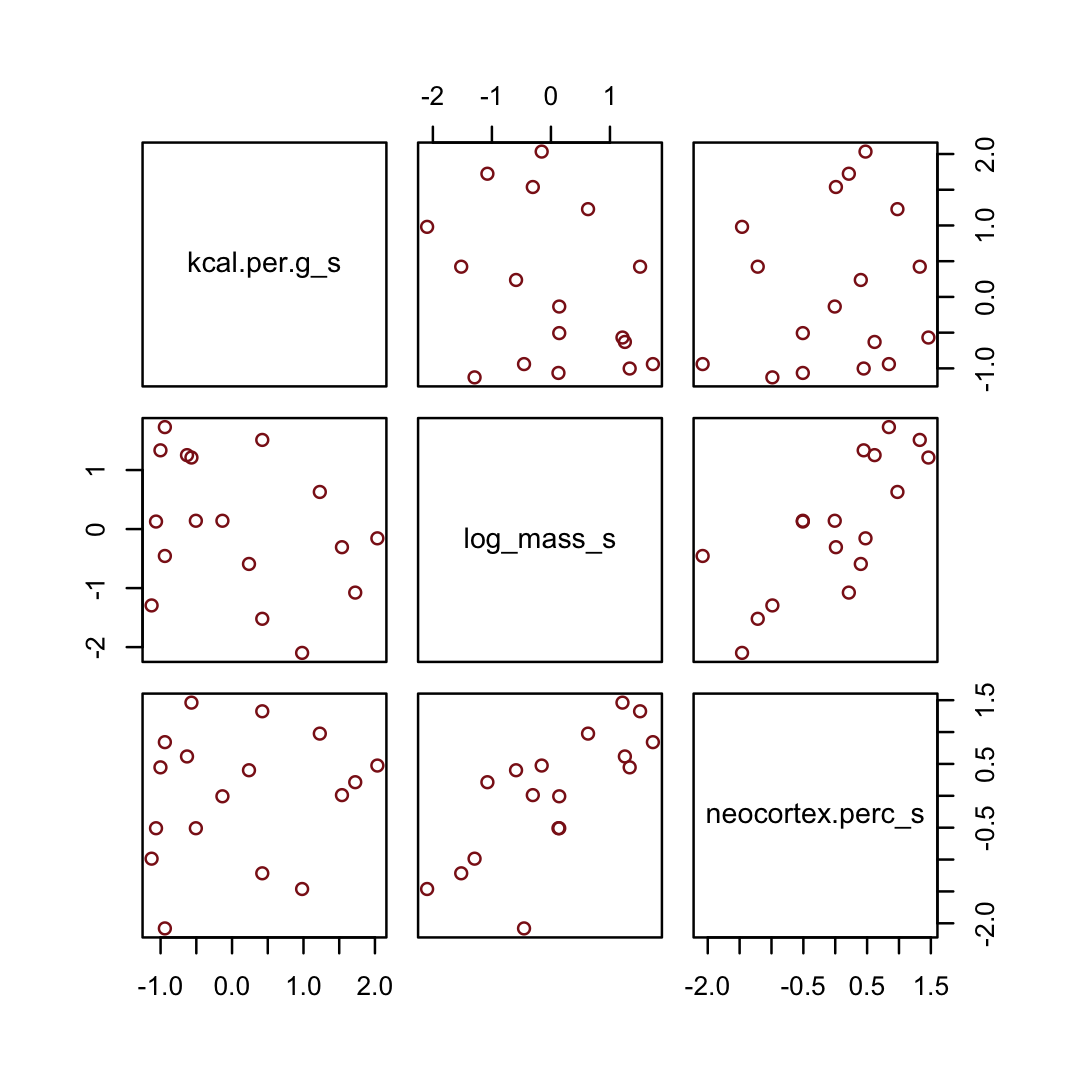

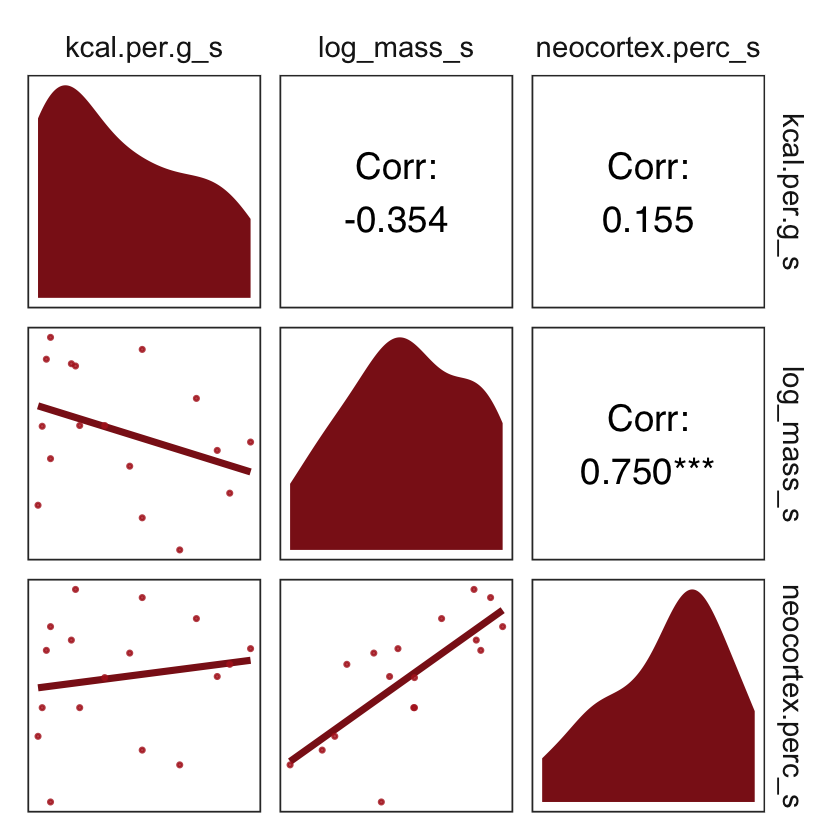

## $ neocortex.perc <dbl> 55.16, NA, NA, NA, NA, 64.54, 64.54, 67.64, NA, 68.85, 58.85, 61.69, 60.32,…You might inspect the primary variables in the data with the pairs() function.

d %>%

select(kcal.per.g, mass, neocortex.perc) %>%

pairs(col = "firebrick4")

By just looking at that mess, do you think you could describe the associations of mass and neocortex.perc with the criterion, kcal.per.g? I couldn’t. It’s a good thing we have math.

Let’s standardize our variables by hand.

d <-

d %>%

mutate(kcal.per.g_s = (kcal.per.g - mean(kcal.per.g)) / sd(kcal.per.g),

log_mass_s = (log(mass) - mean(log(mass))) / sd(log(mass)),

neocortex.perc_s = (neocortex.perc - mean(neocortex.perc, na.rm = T)) / sd(neocortex.perc, na.rm = T))McElreath has us starting off our first milk model with more permissive priors than we’ve used in the past. Although we should note that from a historical perspective, these priors are pretty informative. Times keep changing.

b5.5_draft <-

brm(data = d,

family = gaussian,

kcal.per.g_s ~ 1 + neocortex.perc_s,

prior = c(prior(normal(0, 1), class = Intercept),

prior(normal(0, 1), class = b),

prior(exponential(1), class = sigma)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 5,

sample_prior = T,

file = "fits/b05.05_draft")Similar to the rethinking example in the text, brms warned that “Rows containing NAs were excluded from the model.” This isn’t necessarily a problem; the model fit just fine. But we should be ashamed of ourselves and look eagerly forward to Chapter 15 where we’ll learn how to do better.

To compliment how McElreath removed cases with missing values on our variables of interest with base R complete.cases(), here we’ll do so with tidyr::drop_na() and a little help with ends_with().

dcc <-

d %>%

drop_na(ends_with("_s"))

# how many rows did we drop?

nrow(d) - nrow(dcc)## [1] 12We’ll use update() to refit the model with the altered data.

b5.5_draft <-

update(b5.5_draft,

newdata = dcc,

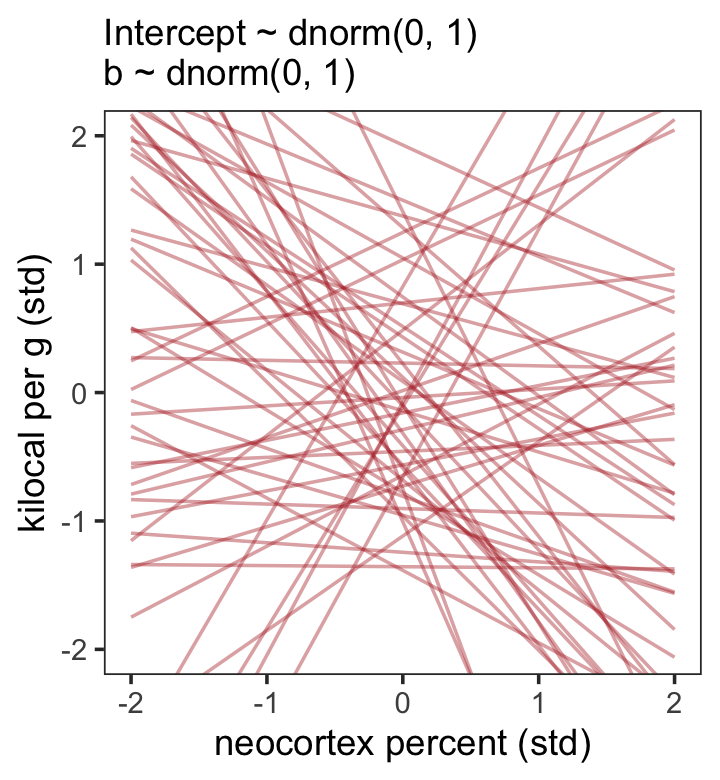

seed = 5)“Before considering the posterior predictions, let’s consider those priors. As in many simple linear regression problems, these priors are harmless. But are they reasonable?” (p. 146). Let’s find out with our version of Figure 5.8.a.

set.seed(5)

prior_draws(b5.5_draft) %>%

slice_sample(n = 50) %>%

rownames_to_column() %>%

expand_grid(neocortex.perc_s = c(-2, 2)) %>%

mutate(kcal.per.g_s = Intercept + b * neocortex.perc_s) %>%

ggplot(aes(x = neocortex.perc_s, y = kcal.per.g_s)) +

geom_line(aes(group = rowname),

color = "firebrick", alpha = .4) +

coord_cartesian(ylim = c(-2, 2)) +

labs(x = "neocortex percent (std)",

y = "kilocal per g (std)",

subtitle = "Intercept ~ dnorm(0, 1)\nb ~ dnorm(0, 1)") +

theme_bw() +

theme(panel.grid = element_blank())

That’s a mess. How’d the posterior turn out?

print(b5.5_draft)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: kcal.per.g_s ~ 1 + neocortex.perc_s

## Data: dcc (Number of observations: 17)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 0.10 0.27 -0.44 0.63 1.00 3333 2518

## neocortex.perc_s 0.16 0.27 -0.40 0.71 1.00 3076 2077

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 1.14 0.21 0.81 1.61 1.00 2953 2747

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).Let’s tighten up our priors and fit b5.5.

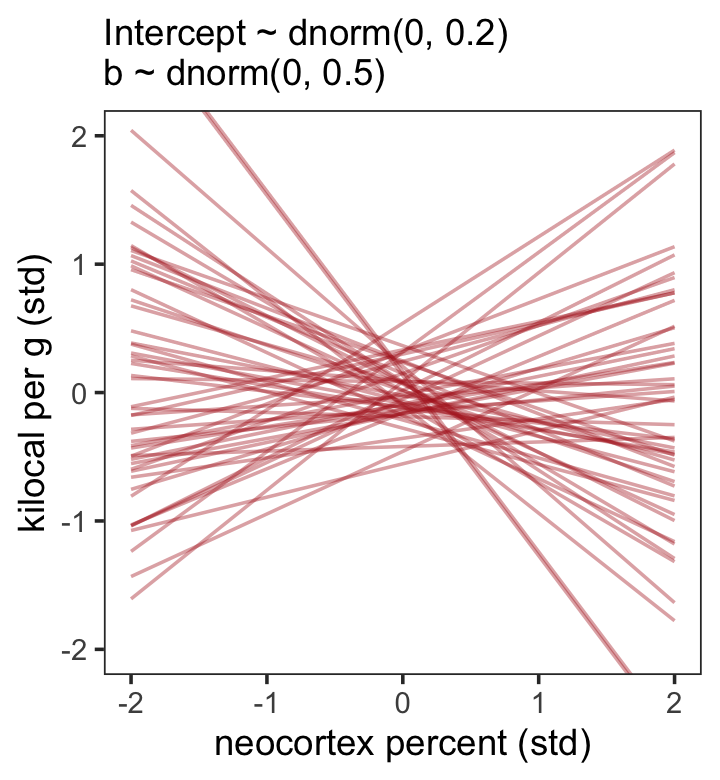

b5.5 <-

brm(data = dcc,

family = gaussian,

kcal.per.g_s ~ 1 + neocortex.perc_s,

prior = c(prior(normal(0, 0.2), class = Intercept),

prior(normal(0, 0.5), class = b),

prior(exponential(1), class = sigma)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 5,

sample_prior = T,

file = "fits/b05.05")Now make our version of Figure 5.8.b.

set.seed(5)

prior_draws(b5.5) %>%

slice_sample(n = 50) %>%

rownames_to_column() %>%

expand_grid(neocortex.perc_s = c(-2, 2)) %>%

mutate(kcal.per.g_s = Intercept + b * neocortex.perc_s) %>%

ggplot(aes(x = neocortex.perc_s, y = kcal.per.g_s, group = rowname)) +

geom_line(color = "firebrick", alpha = .4) +

coord_cartesian(ylim = c(-2, 2)) +

labs(subtitle = "Intercept ~ dnorm(0, 0.2)\nb ~ dnorm(0, 0.5)",

x = "neocortex percent (std)",

y = "kilocal per g (std)") +

theme_bw() +

theme(panel.grid = element_blank())

Look at the posterior summary.

print(b5.5)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: kcal.per.g_s ~ 1 + neocortex.perc_s

## Data: dcc (Number of observations: 17)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 0.04 0.16 -0.28 0.34 1.00 3569 2651

## neocortex.perc_s 0.12 0.24 -0.36 0.58 1.00 3028 2556

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 1.11 0.20 0.80 1.58 1.00 2917 2283

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

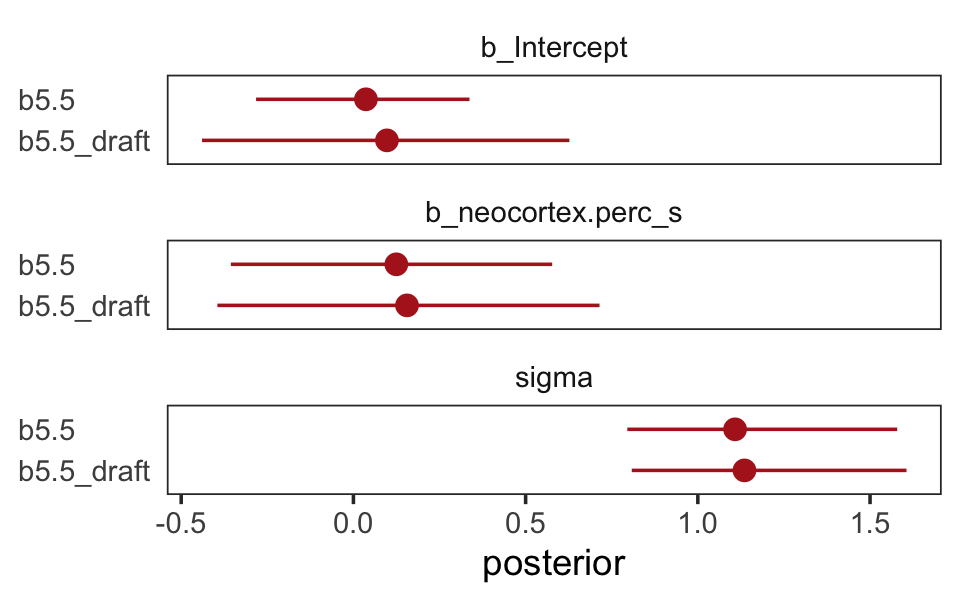

## scale reduction factor on split chains (at convergence, Rhat = 1).The results are very similar to those returned earlier from print(b5.5_draft). It’s not in the text, but let’s compare the parameter estimates between the two models with another version of our homemade coeftab() plot.

# wrangle

bind_rows(

as_draws_df(b5.5_draft) %>% select(b_Intercept:sigma),

as_draws_df(b5.5) %>% select(b_Intercept:sigma)

) %>%

mutate(fit = rep(c("b5.5_draft", "b5.5"), each = n() / 2)) %>%

pivot_longer(-fit) %>%

group_by(name, fit) %>%

summarise(mean = mean(value),

ll = quantile(value, prob = .025),

ul = quantile(value, prob = .975)) %>%

mutate(fit = factor(fit, levels = c("b5.5_draft", "b5.5"))) %>%

# plot

ggplot(aes(x = mean, y = fit, xmin = ll, xmax = ul)) +

geom_pointrange(color = "firebrick") +

geom_hline(yintercept = 0, color = "firebrick", alpha = 1/5) +

labs(x = "posterior",

y = NULL) +

theme_bw() +

theme(axis.text.y = element_text(hjust = 0),

axis.ticks.y = element_blank(),

panel.grid = element_blank(),

strip.background = element_blank()) +

facet_wrap(~ name, ncol = 1)

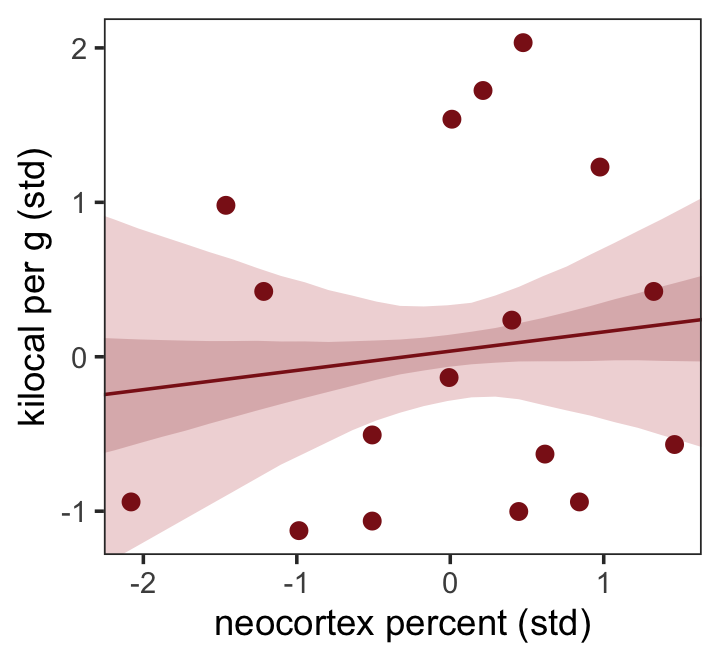

The results were quite similar, but the posteriors from b5.5 are more precise. Let’s get back on track with the text and make the top left panel of Figure 5.9. Just for kicks, we’ll superimpose 50% intervals atop 95% intervals for the next few plots. Here’s Figure 5.9, top left.

nd <- tibble(neocortex.perc_s = seq(from = -2.5, to = 2, length.out = 30))

fitted(b5.5,

newdata = nd,

probs = c(.025, .975, .25, .75)) %>%

data.frame() %>%

bind_cols(nd) %>%

ggplot(aes(x = neocortex.perc_s, y = Estimate)) +

geom_ribbon(aes(ymin = Q2.5, ymax = Q97.5),

fill = "firebrick", alpha = 1/5) +

geom_smooth(aes(ymin = Q25, ymax = Q75),

stat = "identity",

fill = "firebrick4", color = "firebrick4", alpha = 1/5, linewidth = 1/2) +

geom_point(data = dcc,

aes(x = neocortex.perc_s, y = kcal.per.g_s),

size = 2, color = "firebrick4") +

coord_cartesian(xlim = range(dcc$neocortex.perc_s),

ylim = range(dcc$kcal.per.g_s)) +

labs(x = "neocortex percent (std)",

y = "kilocal per g (std)") +

theme_bw() +

theme(panel.grid = element_blank())

Do note the probs argument in the fitted() code, above.

Now we use log_mass_s as the new sole predictor.

b5.6 <-

brm(data = dcc,

family = gaussian,

kcal.per.g_s ~ 1 + log_mass_s,

prior = c(prior(normal(0, 0.2), class = Intercept),

prior(normal(0, 0.5), class = b),

prior(exponential(1), class = sigma)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 5,

sample_prior = T,

file = "fits/b05.06")print(b5.6)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: kcal.per.g_s ~ 1 + log_mass_s

## Data: dcc (Number of observations: 17)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 0.05 0.16 -0.26 0.36 1.00 3320 2765

## log_mass_s -0.28 0.21 -0.69 0.13 1.00 3285 2586

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 1.06 0.19 0.75 1.52 1.00 3388 2359

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

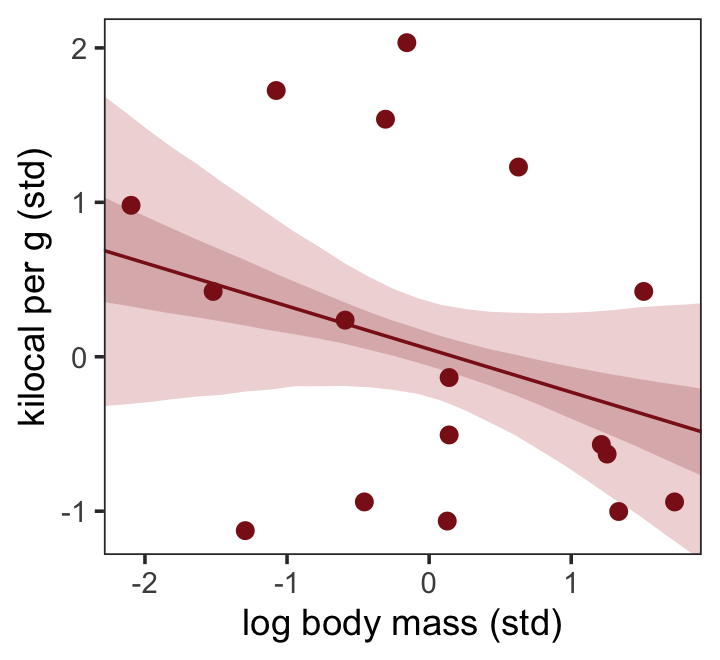

## scale reduction factor on split chains (at convergence, Rhat = 1).Make Figure 5.9, top right.

nd <- tibble(log_mass_s = seq(from = -2.5, to = 2.5, length.out = 30))

fitted(b5.6,

newdata = nd,

probs = c(.025, .975, .25, .75)) %>%

data.frame() %>%

bind_cols(nd) %>%

ggplot(aes(x = log_mass_s, y = Estimate)) +

geom_ribbon(aes(ymin = Q2.5, ymax = Q97.5),

fill = "firebrick", alpha = 1/5) +

geom_smooth(aes(ymin = Q25, ymax = Q75),

stat = "identity",

fill = "firebrick4", color = "firebrick4", alpha = 1/5, linewidth = 1/2) +

geom_point(data = dcc,

aes(y = kcal.per.g_s),

size = 2, color = "firebrick4") +

coord_cartesian(xlim = range(dcc$log_mass_s),

ylim = range(dcc$kcal.per.g_s)) +

labs(x = "log body mass (std)",

y = "kilocal per g (std)") +

theme_bw() +

theme(panel.grid = element_blank())

Finally, we’re ready to fit with both predictors included in a multivariable model. The statistical formula is

\[\begin{align*} \text{kcal.per.g_s}_i & \sim \operatorname{Normal}(\mu_i, \sigma) \\ \mu_i & = \alpha + \beta_1 \text{neocortex.perc_s}_i + \beta_2 \text{log_mass_s} \\ \alpha & \sim \operatorname{Normal}(0, 0.2) \\ \beta_1 & \sim \operatorname{Normal}(0, 0.5) \\ \beta_2 & \sim \operatorname{Normal}(0, 0.5) \\ \sigma & \sim \operatorname{Exponential}(1). \end{align*}\]

Fit the model.

b5.7 <-

brm(data = dcc,

family = gaussian,

kcal.per.g_s ~ 1 + neocortex.perc_s + log_mass_s,

prior = c(prior(normal(0, 0.2), class = Intercept),

prior(normal(0, 0.5), class = b),

prior(exponential(1), class = sigma)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 5,

file = "fits/b05.07")print(b5.7)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: kcal.per.g_s ~ 1 + neocortex.perc_s + log_mass_s

## Data: dcc (Number of observations: 17)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 0.06 0.15 -0.23 0.34 1.00 3250 2504

## neocortex.perc_s 0.60 0.29 -0.00 1.12 1.00 2389 2369

## log_mass_s -0.64 0.25 -1.10 -0.10 1.00 2315 2397

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 0.87 0.18 0.59 1.29 1.00 2587 2564

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

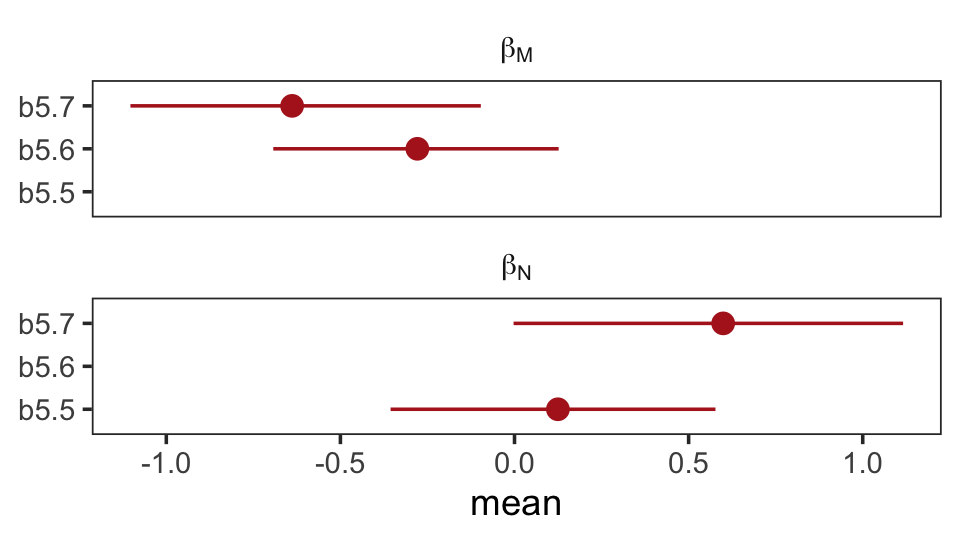

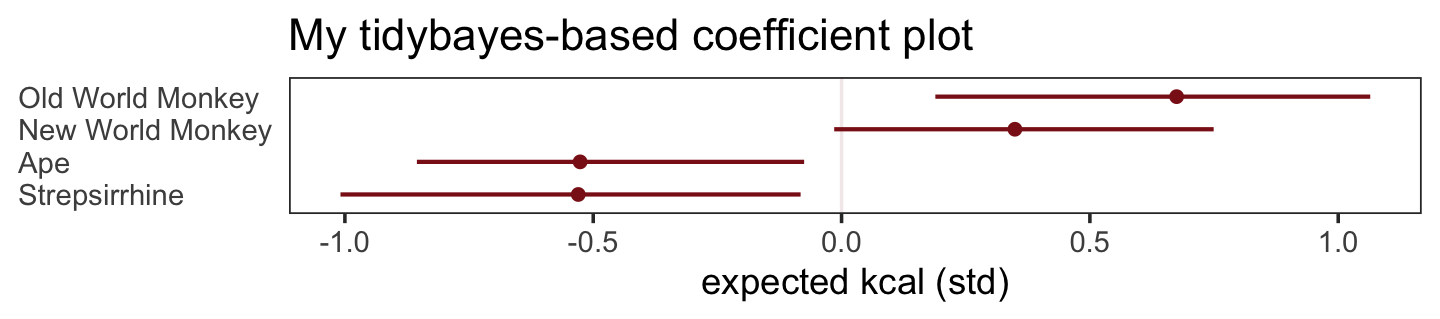

## scale reduction factor on split chains (at convergence, Rhat = 1).Once again, let’s roll out our homemade coefplot() plot code.

bind_cols(

as_draws_df(b5.5) %>%

transmute(`b5.5_beta[N]` = b_neocortex.perc_s),

as_draws_df(b5.6) %>%

transmute(`b5.6_beta[M]` = b_log_mass_s),

as_draws_df(b5.7) %>%

transmute(`b5.7_beta[N]` = b_neocortex.perc_s,

`b5.7_beta[M]` = b_log_mass_s)

) %>%

pivot_longer(everything()) %>%

group_by(name) %>%

summarise(mean = mean(value),

ll = quantile(value, prob = .025),

ul = quantile(value, prob = .975)) %>%

separate(name, into = c("fit", "parameter"), sep = "_") %>%

# complete(fit, parameter) %>%

ggplot(aes(x = mean, y = fit, xmin = ll, xmax = ul)) +

geom_pointrange(color = "firebrick") +

geom_hline(yintercept = 0, color = "firebrick", alpha = 1/5) +

ylab(NULL) +

theme_bw() +

theme(panel.grid = element_blank(),

strip.background = element_rect(fill = "transparent", color = "transparent")) +

facet_wrap(~ parameter, ncol = 1, labeller = label_parsed)

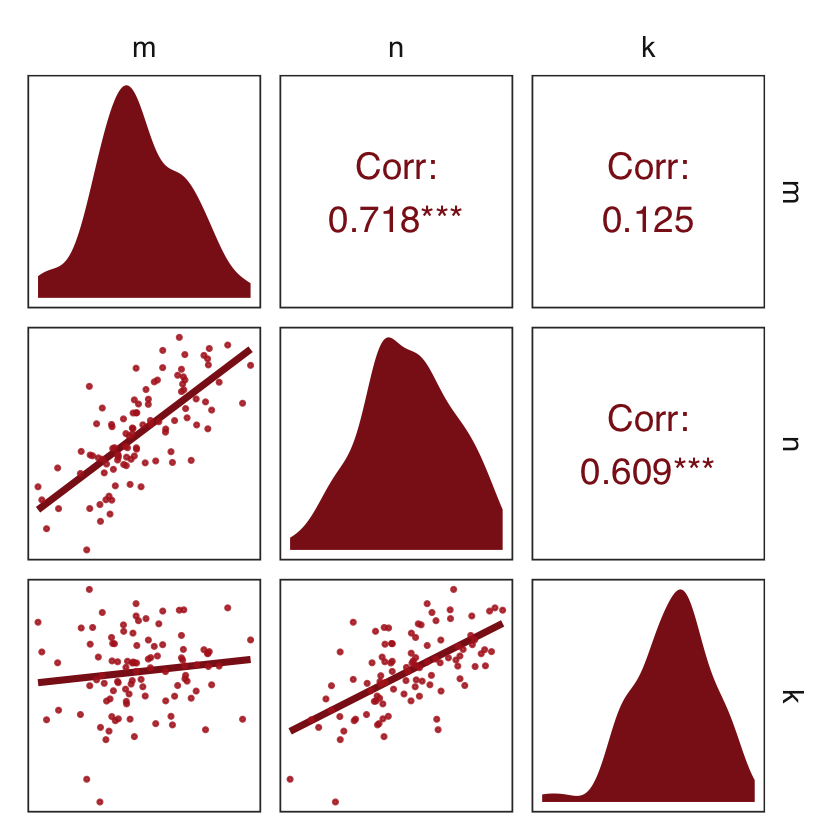

On page 151, McElreath suggested we look at a pairs plot to get a sense of the zero-order correlations. We did that once with the raw data. Here it is, again, but with the transformed variables.

dcc %>%

select(ends_with("_s")) %>%

pairs(col = "firebrick4")

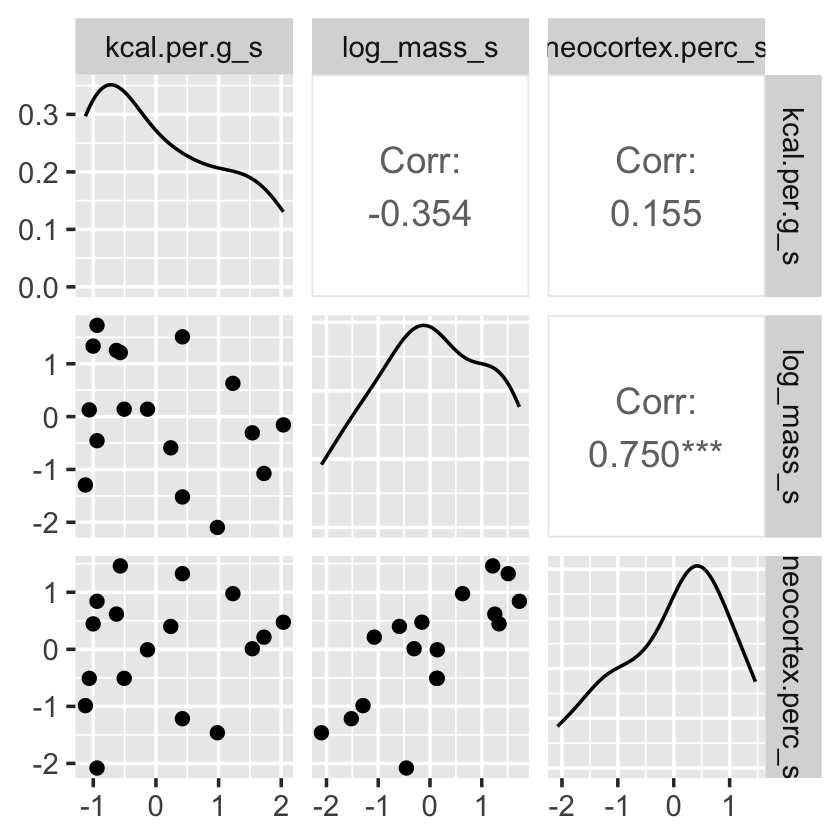

Have you noticed how un-tidyverse-like those pairs() plots are? I have. Within the tidyverse, you can make custom pairs plots with the GGally package (Schloerke et al., 2021), which will also compute the point estimates for the bivariate correlations. Here’s a default-style plot.

library(GGally)

dcc %>%

select(ends_with("_s")) %>%

ggpairs()

But you can customize these, too. E.g.,

# define custom functions

my_diag <- function(data, mapping, ...) {

ggplot(data = data, mapping = mapping) +

geom_density(fill = "firebrick4", linewidth = 0)

}

my_lower <- function(data, mapping, ...) {

ggplot(data = data, mapping = mapping) +

geom_smooth(method = "lm", color = "firebrick4", linewidth = 1,

se = F) +

geom_point(color = "firebrick", alpha = .8, size = 1/3)

}

# plot

dcc %>%

select(ends_with("_s")) %>%

ggpairs(upper = list(continuous = wrap("cor", family = "sans", color = "black")),

# plug those custom functions into `ggpairs()`

diag = list(continuous = my_diag),

lower = list(continuous = my_lower)) +

theme_bw() +

theme(axis.text = element_blank(),

axis.ticks = element_blank(),

panel.grid = element_blank(),

strip.background = element_rect(fill = "white", color = "white"))

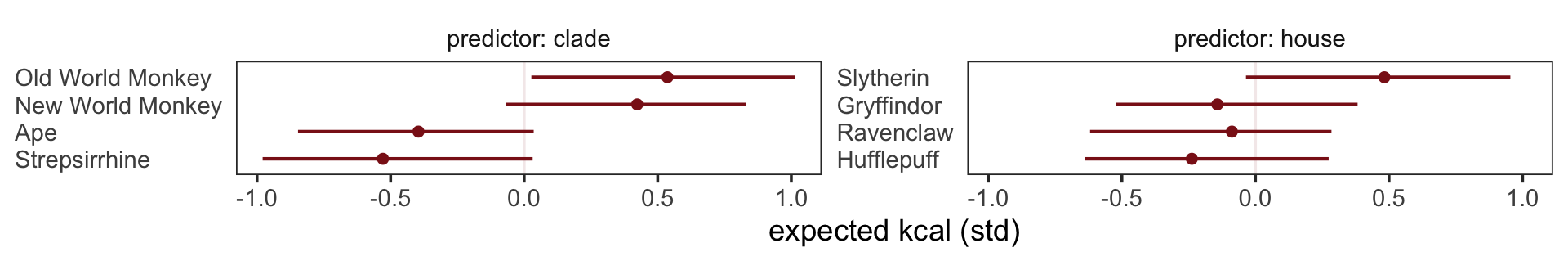

What the regression model does is ask if species that have high neocortex percent for their body mass have higher milk energy. Likewise, the model asks if species with high body mass for their neocortex percent have higher milk energy. Bigger species, like apes, have milk with less energy. But species with more neocortex tend to have richer milk. The fact that these two variables, body size and neocortex, are correlated across species makes it hard to see these relationships, unless we account for both.

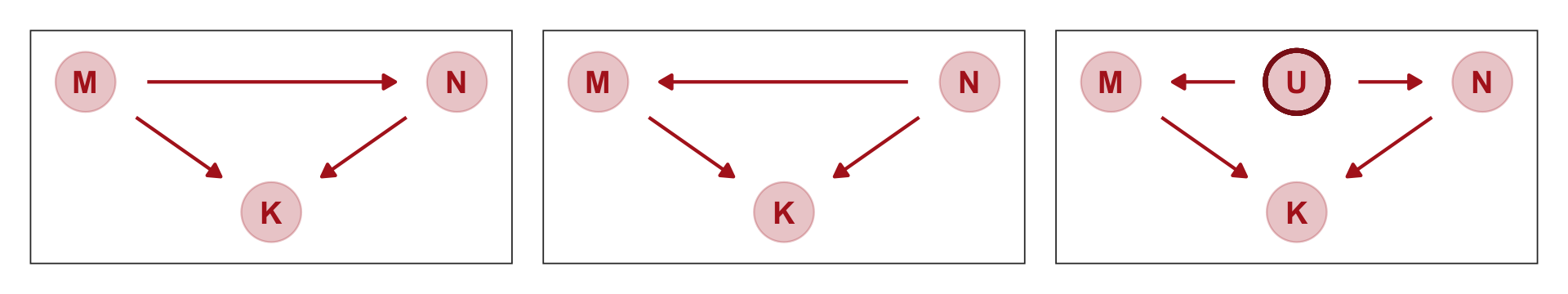

Some DAGs will help. (p. 148, emphasis in the original)

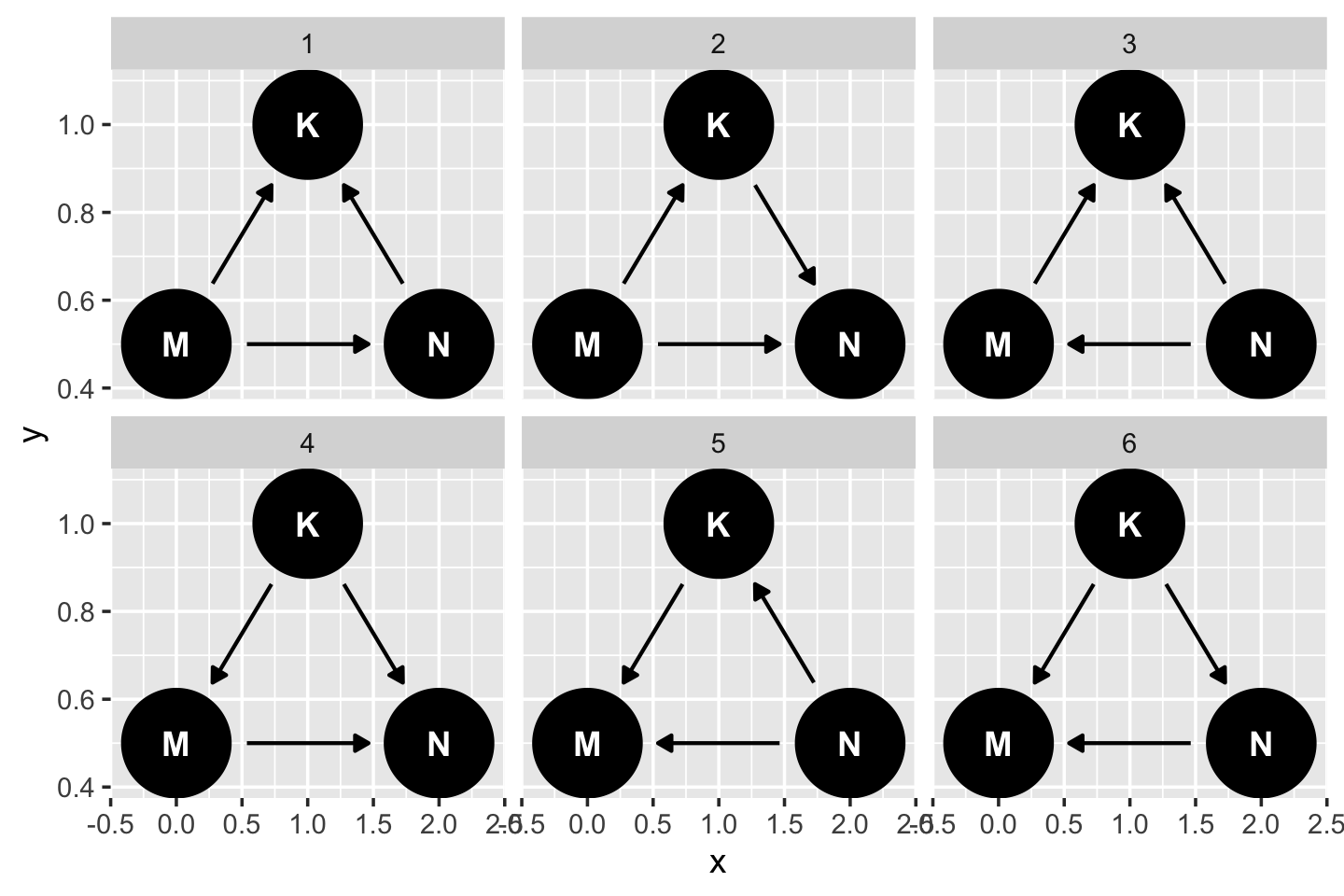

Here are three. I’m not aware we can facet dagify() objects. But we can take cues from Chapter 4 to link our three DAGs like McElreath did his. first, we’ll recognize the ggplot2 code will be nearly identical for each DAG. So we can just wrap the ggplot2 code into a compact function, like so.

gg_dag <- function(d) {

d %>%

ggplot(aes(x = x, y = y, xend = xend, yend = yend)) +

geom_dag_point(color = "firebrick", alpha = 1/4, size = 10) +

geom_dag_text(color = "firebrick") +

geom_dag_edges(edge_color = "firebrick") +

scale_x_continuous(NULL, breaks = NULL, expand = c(0.1, 0.1)) +

scale_y_continuous(NULL, breaks = NULL, expand = c(0.2, 0.2)) +

theme_bw() +

theme(panel.grid = element_blank())

}Now we’ll make the three individual DAGs, saving each.

# left DAG

dag_coords <-

tibble(name = c("M", "N", "K"),

x = c(1, 3, 2),

y = c(2, 2, 1))

p1 <-

dagify(N ~ M,

K ~ M + N,

coords = dag_coords) %>%

gg_dag()

# middle DAG

p2 <-

dagify(M ~ N,

K ~ M + N,

coords = dag_coords) %>%

gg_dag()

# right DAG

dag_coords <-

tibble(name = c("M", "N", "K", "U"),

x = c(1, 3, 2, 2),

y = c(2, 2, 1, 2))

p3 <-

dagify(M ~ U,

N ~ U,

K ~ M + N,

coords = dag_coords) %>%

gg_dag() +

geom_point(x = 2, y = 2,

shape = 1, size = 10, stroke = 1.25, color = "firebrick4")Now we combine our gg_dag() plots together with patchwork syntax.

p1 + p2 + p3

Which of these graphs is right? We can’t tell from the data alone, because these graphs imply the same set of conditional independencies. In this case, there are no conditional independencies–each DAG above implies that all pairs of variables are associated, regardless of what we condition on. A set of DAGs with the same conditional independencies is known as a Markov equivalence set. (p. 151, emphasis in the original).

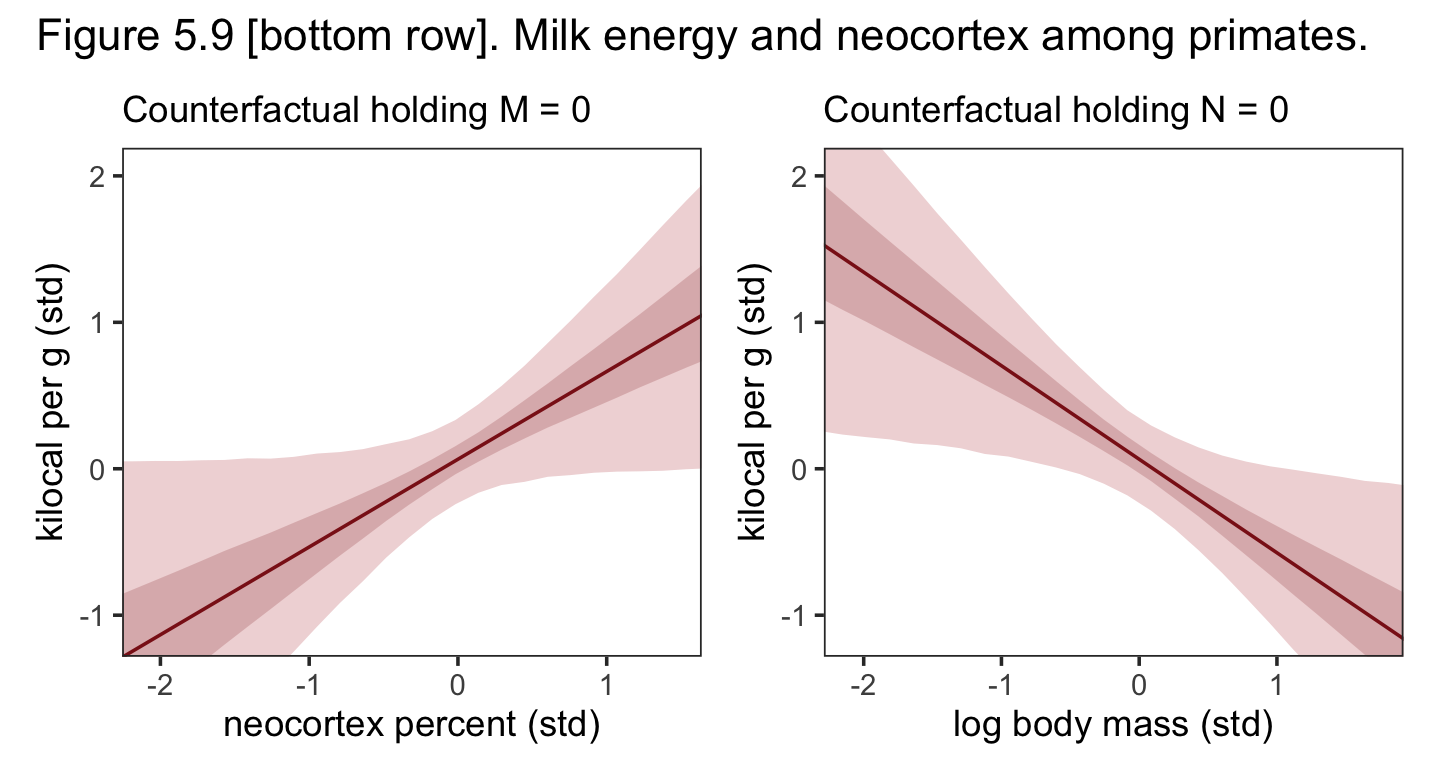

Let’s make the counterfactual plots at the bottom of Figure 5.9. Here’s the one on the left.

nd <- tibble(neocortex.perc_s = seq(from = -2.5, to = 2, length.out = 30),

log_mass_s = 0)

p1 <-

fitted(b5.7,

newdata = nd,

probs = c(.025, .975, .25, .75)) %>%

data.frame() %>%

bind_cols(nd) %>%

ggplot(aes(x = neocortex.perc_s, y = Estimate)) +

geom_ribbon(aes(ymin = Q2.5, ymax = Q97.5),

fill = "firebrick", alpha = 1/5) +

geom_smooth(aes(ymin = Q25, ymax = Q75),

stat = "identity",

fill = "firebrick4", color = "firebrick4", alpha = 1/5, linewidth = 1/2) +

coord_cartesian(xlim = range(dcc$neocortex.perc_s),

ylim = range(dcc$kcal.per.g_s)) +

labs(subtitle = "Counterfactual holding M = 0",

x = "neocortex percent (std)",

y = "kilocal per g (std)")Now make Figure 5.9, bottom right, and combine the two.

nd <- tibble(log_mass_s = seq(from = -2.5, to = 2.5, length.out = 30),

neocortex.perc_s = 0)

p2 <-

fitted(b5.7,

newdata = nd,

probs = c(.025, .975, .25, .75)) %>%

data.frame() %>%

bind_cols(nd) %>%

ggplot(aes(x = log_mass_s, y = Estimate)) +

geom_ribbon(aes(ymin = Q2.5, ymax = Q97.5),

fill = "firebrick", alpha = 1/5) +

geom_smooth(aes(ymin = Q25, ymax = Q75),

stat = "identity",

fill = "firebrick4", color = "firebrick4", alpha = 1/5, linewidth = 1/2) +

coord_cartesian(xlim = range(dcc$log_mass_s),

ylim = range(dcc$kcal.per.g_s)) +

labs(subtitle = "Counterfactual holding N = 0",

x = "log body mass (std)",

y = "kilocal per g (std)")

# combine

p1 + p2 +

plot_annotation(title = "Figure 5.9 [bottom row]. Milk energy and neocortex among primates.") &

theme_bw() &

theme(panel.grid = element_blank())

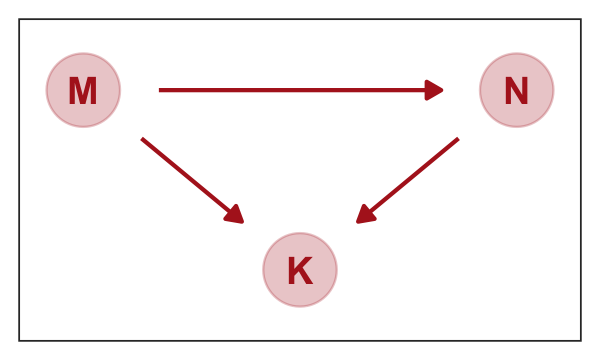

5.2.0.1 Overthinking: Simulating a masking relationship.

As a refresher, here’s our focal DAG.

dag_coords <-

tibble(name = c("M", "N", "K"),

x = c(1, 3, 2),

y = c(2, 2, 1))

dagify(N ~ M,

K ~ M + N,

coords = dag_coords) %>%

gg_dag()

Now simulate data consistent with that DAG.

# how many cases would you like?

n <- 100

set.seed(5)

d_sim <-

tibble(m = rnorm(n, mean = 0, sd = 1)) %>%

mutate(n = rnorm(n, mean = m, sd = 1)) %>%

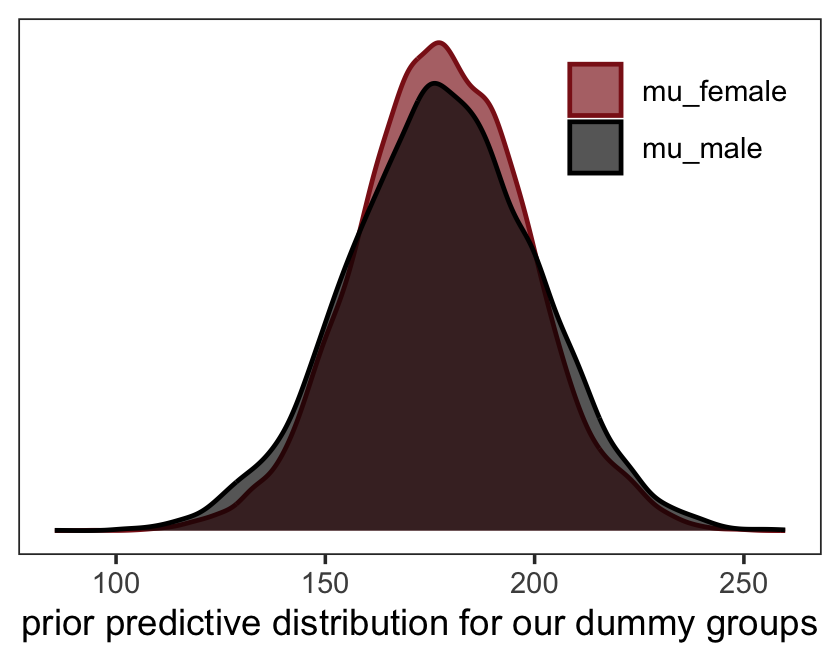

mutate(k = rnorm(n, mean = n - m, sd = 1))Use ggpairs() to get a sense of what we just simulated.

d_sim %>%

ggpairs(upper = list(continuous = wrap("cor", family = "sans", color = "firebrick4")),

diag = list(continuous = my_diag),

lower = list(continuous = my_lower)) +

theme_bw() +

theme(axis.text = element_blank(),

axis.ticks = element_blank(),

panel.grid = element_blank(),

strip.background = element_rect(fill = "white", color = "white"))

Here we fit the simulation models with a little help from the update() function.

b5.7_sim <-

update(b5.7,

newdata = d_sim,

formula = k ~ 1 + n + m,

seed = 5,

file = "fits/b05.07_sim")

b5.5_sim <-

update(b5.7_sim,

formula = k ~ 1 + n,

seed = 5,

file = "fits/b05.05_sim")

b5.6_sim <-

update(b5.7_sim,

formula = k ~ 1 + m,

seed = 5,

file = "fits/b05.06_sim")Compare the coefficients.

fixef(b5.5_sim) %>% round(digits = 2)## Estimate Est.Error Q2.5 Q97.5

## Intercept -0.02 0.10 -0.21 0.18

## n 0.58 0.08 0.43 0.73fixef(b5.6_sim) %>% round(digits = 2)## Estimate Est.Error Q2.5 Q97.5

## Intercept 0.00 0.12 -0.23 0.23

## m 0.17 0.15 -0.12 0.46fixef(b5.7_sim) %>% round(digits = 2)## Estimate Est.Error Q2.5 Q97.5

## Intercept -0.01 0.09 -0.18 0.17

## n 0.98 0.09 0.79 1.16

## m -0.88 0.15 -1.16 -0.59Due to space considerations, I’m not going to show the code corresponding to the other two DAGs from the R code 5.43 block. Rather, I’ll leave that as an exercise for the interested reader.

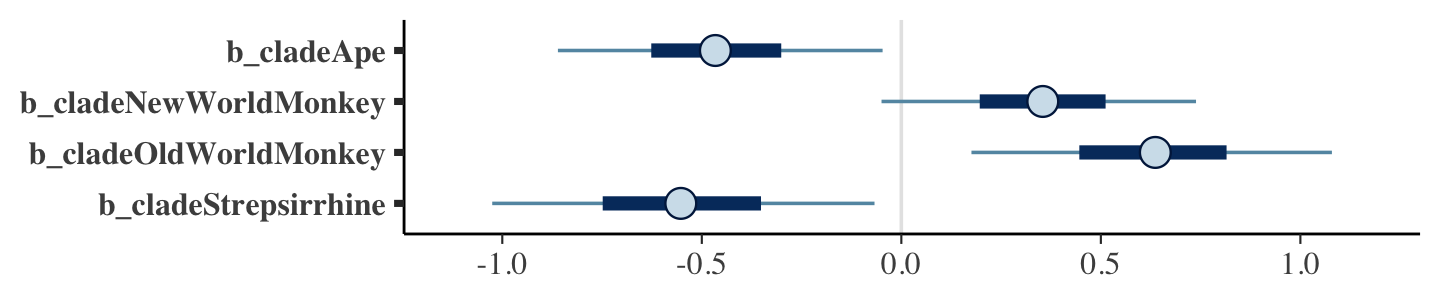

Let’s do the preliminary work to making our DAGs.

dag5.7 <- dagitty("dag{ M -> K <- N M -> N }" )

coordinates(dag5.7) <- list(x = c(M = 0, K = 1, N = 2),

y = c(M = 0.5, K = 1, N = 0.5)) If you just want a quick default plot, ggdag::ggdag_equivalent_dags() is the way to go.

ggdag_equivalent_dags(dag5.7)

However, if you’d like to customize your DAGs, start with the ggdag::node_equivalent_dags() function and build from there.

dag5.7 %>%

node_equivalent_dags() %>%

ggplot(aes(x = x, y = y, xend = xend, yend = yend)) +

geom_dag_point(color = "firebrick", alpha = 1/4, size = 10) +

geom_dag_text(color = "firebrick") +

geom_dag_edges(edge_color = "firebrick") +

scale_x_continuous(NULL, breaks = NULL, expand = c(0.1, 0.1)) +

scale_y_continuous(NULL, breaks = NULL, expand = c(0.1, 0.1)) +

theme_bw() +

theme(panel.grid = element_blank(),

strip.background = element_blank()) +

facet_wrap(~ dag, labeller = label_both)

These all demonstrate Markov equivalence. I should note that I got help from the great Malcolm Barrett on how to make this plot with ggdag.

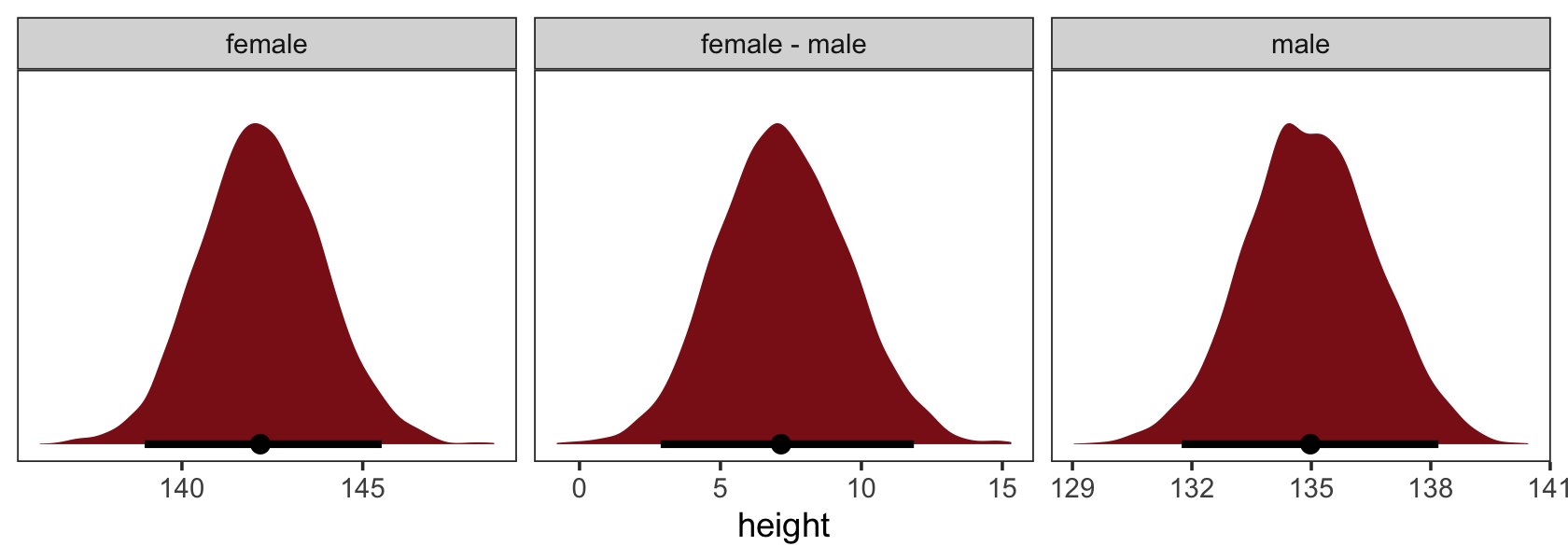

5.3 Categorical variables

Many readers will already know that variables like this, routinely called factors, can easily be included in linear models. But what is not widely understood is how these variables are represented in a model… Knowing how the machine (golem) works both helps you interpret the posterior distribution and gives you additional power in building the model. (p. 153, emphasis in the original)

5.3.1 Binary categories.

Reload the Howell1 data.

data(Howell1, package = "rethinking")

d <- Howell1

rm(Howell1)If you forgot what these data were like, take a glimpse().

d %>%

glimpse()## Rows: 544

## Columns: 4

## $ height <dbl> 151.7650, 139.7000, 136.5250, 156.8450, 145.4150, 163.8300, 149.2250, 168.9100, 147…

## $ weight <dbl> 47.82561, 36.48581, 31.86484, 53.04191, 41.27687, 62.99259, 38.24348, 55.47997, 34.…

## $ age <dbl> 63.0, 63.0, 65.0, 41.0, 51.0, 35.0, 32.0, 27.0, 19.0, 54.0, 47.0, 66.0, 73.0, 20.0,…

## $ male <int> 1, 0, 0, 1, 0, 1, 0, 1, 0, 1, 0, 1, 0, 0, 0, 1, 1, 0, 1, 0, 0, 1, 0, 1, 0, 1, 0, 0,…The