14 Adventures in Covariance

In this chapter, you’ll see how to… specify varying slopes in combination with the varying intercepts of the previous chapter. This will enable pooling that will improve estimates of how different units respond to or are influenced by predictor variables. It will also improve estimates of intercepts, by borrowing information across parameter types. Essentially, varying slopes models are massive interaction machines. They allow every unit in the data to have its own response to any treatment or exposure or event, while also improving estimates via pooling. When the variation in slopes is large, the average slope is of less interest. Sometimes, the pattern of variation in slopes provides hints about omitted variables that explain why some units respond more or less. We’ll see an example in this chapter.

The machinery that makes such complex varying effects possible will be used later in the chapter to extend the varying effects strategy to more subtle model types, including the use of continuous categories, using Gaussian process. Ordinary varying effects work only with discrete, unordered categories, such as individuals, countries, or ponds. In these cases, each category is equally different from all of the others. But it is possible to use pooling with categories such as age or location. In these cases, some ages and some locations are more similar than others. You’ll see how to model covariation among continuous categories of this kind, as well as how to generalize the strategy to seemingly unrelated types of models such as phylogenetic and network regressions. Finally, we’ll circle back to causal inference and use our new powers over covariance to go beyond the tools of Chapter 6, introducing instrumental variables. Instruments are ways of inferring cause without closing backdoor paths. However they are very tricky both in design and estimation. (McElreath, 2020a, pp. 436–437, emphasis in the original)

14.1 Varying slopes by construction

How should the robot pool information across intercepts and slopes? By modeling the joint population of intercepts and slopes, which means by modeling their covariance. In conventional multilevel models, the device that makes this possible is a joint multivariate Gaussian distribution for all of the varying effects, both intercepts and slopes. So instead of having two independent Gaussian distributions of intercepts and of slopes, the robot can do better by assigning a two-dimensional Gaussian distribution to both the intercepts (first dimension) and the slopes (second dimension). (p. 437)

14.1.0.1 Rethinking: Why Gaussian?

There is no reason the multivariate distribution of intercepts and slopes must be Gaussian. But there are both practical and epistemological justifications. On the practical side, there aren’t many multivariate distributions that are easy to work with. The only common ones are multivariate Gaussian and multivariate Student-t distributions. On the epistemological side, if all we want to say about these intercepts and slopes is their means, variances, and covariances, then the maximum entropy distribution is multivariate Gaussian. (p. 437)

As it turns out, brms does currently allow users to use the multivariate Student-\(t\) distribution in this way. For details, check out this discussion from the brms GitHub repository. Bürkner’s exemplar syntax from his comment on May 13, 2018, was y ~ x + (x | gr(g, dist = "student")). I haven’t experimented with this, but if you do, do consider sharing how it went.

14.1.1 Simulate the population.

If you follow this section closely, it’s a great template for simulating multilevel code for any of your future projects. You might think of this as an alternative to a frequentist power analysis. Vourre has done some nice work along these lines, I have a blog series on Bayesian power analysis, and Kruschke covered the topic in Chapter 13 of his (2015) text.

a <- 3.5 # average morning wait time

b <- -1 # average difference afternoon wait time

sigma_a <- 1 # std dev in intercepts

sigma_b <- 0.5 # std dev in slopes

rho <- -.7 # correlation between intercepts and slopes

# the next three lines of code simply combine the terms, above

mu <- c(a, b)

cov_ab <- sigma_a * sigma_b * rho

sigma <- matrix(c(sigma_a^2, cov_ab,

cov_ab, sigma_b^2), ncol = 2)It’s common to refer to a covariance matrix as \(\mathbf \Sigma\). The mathematical notation for those last couple lines of code is

\[ \mathbf \Sigma = \begin{bmatrix} \sigma_\alpha^2 & \sigma_\alpha \sigma_\beta \rho \\ \sigma_\alpha \sigma_\beta \rho & \sigma_\beta^2 \end{bmatrix}. \]

Anyway, if you haven’t used the matrix() function before, you might get a sense of the elements like so.

matrix(1:4, nrow = 2, ncol = 2)## [,1] [,2]

## [1,] 1 3

## [2,] 2 4This next block of code will finally yield our café data.

library(tidyverse)

sigmas <- c(sigma_a, sigma_b) # standard deviations

rho <- matrix(c(1, rho, # correlation matrix

rho, 1), nrow = 2)

# now matrix multiply to get covariance matrix

sigma <- diag(sigmas) %*% rho %*% diag(sigmas)

# how many cafes would you like?

n_cafes <- 20

set.seed(5) # used to replicate example

vary_effects <-

MASS::mvrnorm(n_cafes, mu, sigma) %>%

data.frame() %>%

set_names("a_cafe", "b_cafe")

head(vary_effects)## a_cafe b_cafe

## 1 4.223962 -1.6093565

## 2 2.010498 -0.7517704

## 3 4.565811 -1.9482646

## 4 3.343635 -1.1926539

## 5 1.700971 -0.5855618

## 6 4.134373 -1.1444539Let’s make sure we’re keeping this all straight. a_cafe = our café-specific intercepts; b_cafe = our café-specific slopes. These aren’t the actual data, yet. But at this stage, it might make sense to ask What’s the distribution of a_cafe and b_cafe? Our variant of Figure 14.2 contains the answer.

For our plots in this chapter, we’ll make our own custom ggplot2 theme. The color palette will come from the “pearl_earring” palette of the dutchmasters package (Thoen, 2022). You can learn more about the original painting, Vermeer’s (1665) Girl with a Pearl Earring, here.

# devtools::install_github("EdwinTh/dutchmasters")

library(dutchmasters)

dutchmasters$pearl_earring## red(lips) skin blue(scarf1) blue(scarf2) white(colar) gold(dress)

## "#A65141" "#E7CDC2" "#80A0C7" "#394165" "#FCF9F0" "#B1934A"

## gold(dress2) black(background) grey(scarf3) yellow(scarf4)

## "#DCA258" "#100F14" "#8B9DAF" "#EEDA9D" "#E8DCCF"scales::show_col(dutchmasters$pearl_earring)

We’ll name our custom theme theme_pearl_earring(). I cobbled together this approach to defining a custom ggplot2 theme with help from

- Chapter 19 of Wichkam’s (2016) ggplot2: Elegant graphics for data analysis;

- Section 4.6 of Peng, Kross, and Anderson’s (2017) Mastering Software Development in R;

- Lea Waniek’s blog post, Custom themes in ggplot2, and

- Joey Stanley’s blog post of the same name, Custom themes in ggplot2.

theme_pearl_earring <- function(light_color = "#E8DCCF",

dark_color = "#100F14",

my_family = "Courier",

...) {

theme(line = element_line(color = light_color),

text = element_text(color = light_color, family = my_family),

axis.line = element_blank(),

axis.text = element_text(color = light_color),

axis.ticks = element_line(color = light_color),

legend.background = element_rect(fill = dark_color, color = "transparent"),

legend.key = element_rect(fill = dark_color, color = "transparent"),

panel.background = element_rect(fill = dark_color, color = light_color),

panel.grid = element_blank(),

plot.background = element_rect(fill = dark_color, color = dark_color),

strip.background = element_rect(fill = dark_color, color = "transparent"),

strip.text = element_text(color = light_color, family = my_family),

...)

}

# now set `theme_pearl_earring()` as the default theme

theme_set(theme_pearl_earring())Note how our custom theme_pearl_earing() function has a few adjustable parameters. Feel free to play around with alternative settings to see how they work. If we just use the defaults as we have defined them, here is our Figure 14.2.

vary_effects %>%

ggplot(aes(x = a_cafe, y = b_cafe)) +

geom_point(color = "#80A0C7") +

geom_rug(color = "#8B9DAF", linewidth = 1/7)

Again, these are not “data.” Figure 14.2 shows a distribution of parameters. Here’s their Pearson’s correlation coefficient.

cor(vary_effects$a_cafe, vary_effects$b_cafe)## [1] -0.5721537Since there are only 20 rows in our vary_effects simulation, it shouldn’t be a surprise that the Pearson’s correlation is a bit off from the population value of \(\rho = -.7\). If you rerun the simulation with n_cafes <- 200, the Pearson’s correlation is much closer to the data generating value.

14.1.2 Simulate observations.

Here we put those simulated parameters to use and simulate actual data from them.

n_visits <- 10

sigma <- 0.5 # std dev within cafes

set.seed(22) # used to replicate example

d <-

vary_effects %>%

mutate(cafe = 1:n_cafes) %>%

expand_grid(visit = 1:n_visits) %>%

mutate(afternoon = rep(0:1, times = n() / 2)) %>%

mutate(mu = a_cafe + b_cafe * afternoon) %>%

mutate(wait = rnorm(n = n(), mean = mu, sd = sigma)) %>%

select(cafe, everything())We might peek at the data.

d %>%

glimpse()## Rows: 200

## Columns: 7

## $ cafe <int> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, …

## $ a_cafe <dbl> 4.223962, 4.223962, 4.223962, 4.223962, 4.223962, 4.223962, 4.223962, 4.223962, 4.223962, …

## $ b_cafe <dbl> -1.6093565, -1.6093565, -1.6093565, -1.6093565, -1.6093565, -1.6093565, -1.6093565, -1.609…

## $ visit <int> 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 1, 2, 3, 4, 5, 6, 7, 8, 9, 1…

## $ afternoon <int> 0, 1, 0, 1, 0, 1, 0, 1, 0, 1, 0, 1, 0, 1, 0, 1, 0, 1, 0, 1, 0, 1, 0, 1, 0, 1, 0, 1, 0, 1, …

## $ mu <dbl> 4.223962, 2.614606, 4.223962, 2.614606, 4.223962, 2.614606, 4.223962, 2.614606, 4.223962, …

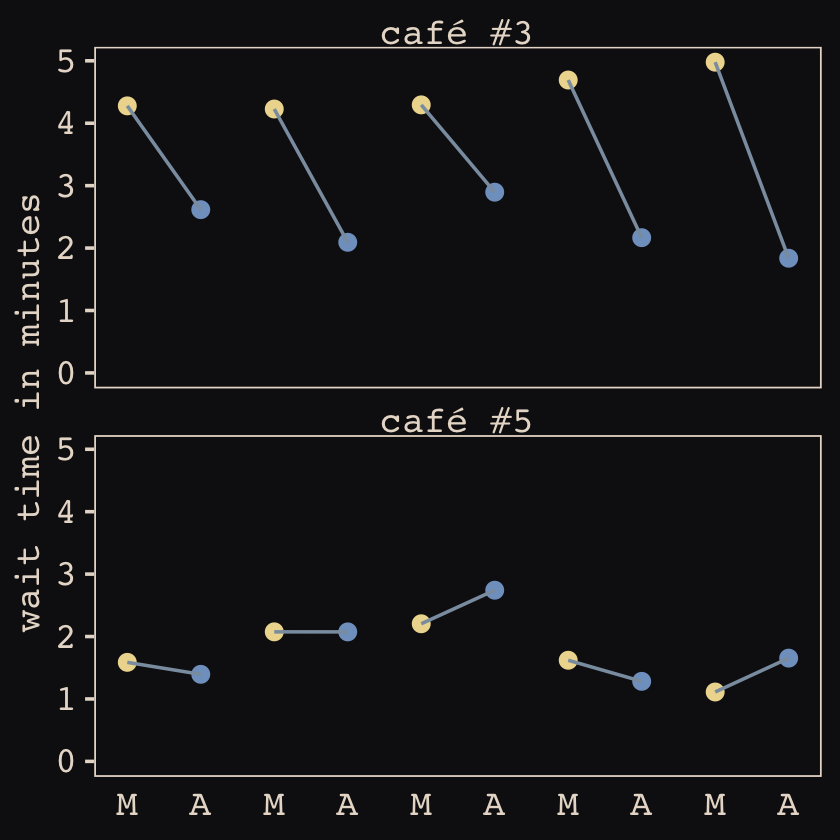

## $ wait <dbl> 3.9678929, 3.8571978, 4.7278755, 2.7610133, 4.1194827, 3.5436522, 4.1909492, 2.5332235, 4.…Now we’ve finally simulated our data, we are ready to make our version of Figure 14.1, from way back on page 436.

d %>%

mutate(afternoon = ifelse(afternoon == 0, "M", "A"),

day = rep(rep(1:5, each = 2), times = n_cafes)) %>%

filter(cafe %in% c(3, 5)) %>%

mutate(cafe = str_c("café #", cafe)) %>%

ggplot(aes(x = visit, y = wait, group = day)) +

geom_point(aes(color = afternoon), size = 2) +

geom_line(color = "#8B9DAF") +

scale_color_manual(values = c("#80A0C7", "#EEDA9D")) +

scale_x_continuous(NULL, breaks = 1:10, labels = rep(c("M", "A"), times = 5)) +

scale_y_continuous("wait time in minutes", limits = c(0, NA)) +

theme_pearl_earring(axis.ticks.x = element_blank(),

legend.position = "none") +

facet_wrap(~ cafe, ncol = 1)

14.1.2.1 Rethinking: Simulation and misspecification.

In this exercise, we are simulating data from a generative process and then analyzing that data with a model that reflects exactly the correct structure of that process. But in the real world, we’re never so lucky. Instead we are always forced to analyze data with a model that is misspecified: The true data-generating process is different than the model. Simulation can be used however to explore misspecification. Just simulate data from a process and then see how a number of models, none of which match exactly the data-generating process, perform. And always remember that Bayesian inference does not depend upon data-generating assumptions, such as the likelihood, being true. (p. 441)

14.1.3 The varying slopes model.

The statistical formula for our varying intercepts and slopes café model follows the form

\[\begin{align*} \text{wait}_i & \sim \operatorname{Normal}(\mu_i, \sigma) \\ \mu_i & = \alpha_{\text{café}[i]} + \beta_{\text{café}[i]} \text{afternoon}_i \\ \begin{bmatrix} \alpha_\text{café} \\ \beta_\text{café} \end{bmatrix} & \sim \operatorname{MVNormal} \begin{pmatrix} \begin{bmatrix} \alpha \\ \beta \end{bmatrix}, \mathbf \Sigma \end{pmatrix} \\ \mathbf \Sigma & = \begin{bmatrix} \sigma_\alpha & 0 \\ 0 & \sigma_\beta \end{bmatrix} \mathbf R \begin{bmatrix} \sigma_\alpha & 0 \\ 0 & \sigma_\beta \end{bmatrix} \\ \alpha & \sim \operatorname{Normal}(5, 2) \\ \beta & \sim \operatorname{Normal}(-1, 0.5) \\ \sigma & \sim \operatorname{Exponential}(1) \\ \sigma_\alpha & \sim \operatorname{Exponential}(1) \\ \sigma_\beta & \sim \operatorname{Exponential}(1) \\ \mathbf R & \sim \operatorname{LKJcorr}(2), \end{align*}\]

where \(\mathbf \Sigma\) is the covariance matrix and \(\mathbf R\) is the corresponding correlation matrix, which we might more fully express as

\[\mathbf R = \begin{bmatrix} 1 & \rho \\ \rho & 1 \end{bmatrix}.\]

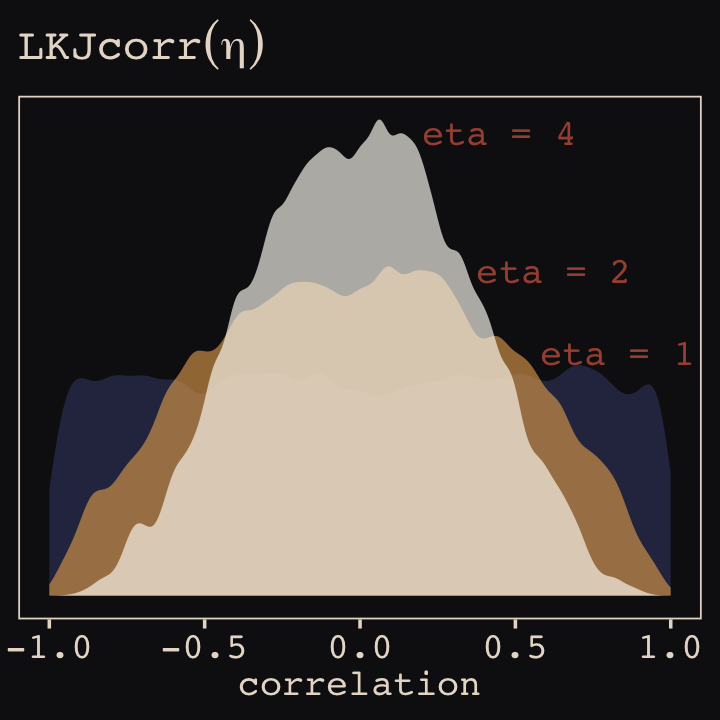

And according to our prior, \(\mathbf R\) is distributed as \(\operatorname{LKJcorr}(2)\). We’ll use rethinking::rlkjcorr() to get a better sense of what that even is.

library(rethinking)

n_sim <- 1e4

set.seed(14)

r_1 <-

rlkjcorr(n_sim, K = 2, eta = 1) %>%

data.frame()

set.seed(14)

r_2 <-

rlkjcorr(n_sim, K = 2, eta = 2) %>%

data.frame()

set.seed(14)

r_4 <-

rlkjcorr(n_sim, K = 2, eta = 4) %>%

data.frame()Here are the \(\text{LKJcorr}\) distributions of Figure 14.3.

# for annotation

text <-

tibble(x = c(.83, .625, .45),

y = c(.56, .75, 1.07),

label = c("eta = 1", "eta = 2", "eta = 4"))

# plot

r_1 %>%

ggplot(aes(x = X2)) +

geom_density(color = "transparent", fill = "#394165", alpha = 2/3, adjust = 1/2) +

geom_density(data = r_2,

color = "transparent", fill = "#DCA258", alpha = 2/3, adjust = 1/2) +

geom_density(data = r_4,

color = "transparent", fill = "#FCF9F0", alpha = 2/3, adjust = 1/2) +

geom_text(data = text,

aes(x = x, y = y, label = label),

color = "#A65141", family = "Courier") +

scale_y_continuous(NULL, breaks = NULL) +

labs(title = expression(LKJcorr(eta)),

x = "correlation")

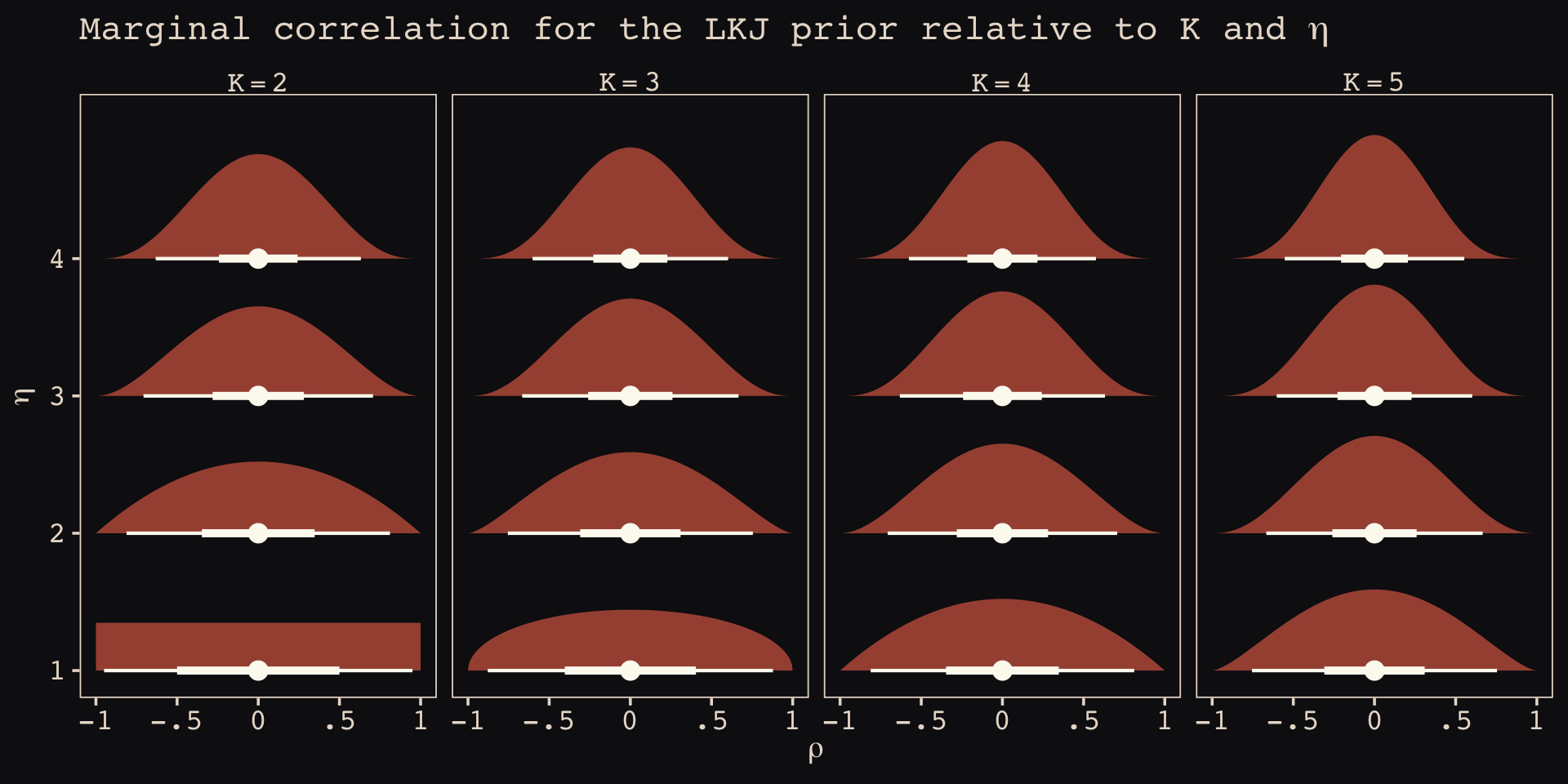

As it turns out, the shape of the LKJ is sensitive to both \(\eta\) and the \(K\) dimensions of the correlation matrix. Our simulations only considered the shapes for when \(K = 2\). We can use a combination of the parse_dist() and stat_dist_halfeye() functions from the tidybayes package to derive analytic solutions for different combinations of \(\eta\) and \(K\).

library(tidybayes)

crossing(k = 2:5,

eta = 1:4) %>%

mutate(prior = str_c("lkjcorr_marginal(", k, ", ", eta, ")"),

strip = str_c("K==", k)) %>%

parse_dist(prior) %>%

ggplot(aes(y = eta, dist = .dist, args = .args)) +

stat_dist_halfeye(.width = c(.5, .95),

color = "#FCF9F0", fill = "#A65141") +

scale_x_continuous(expression(rho), limits = c(-1, 1),

breaks = c(-1, -.5, 0, .5, 1), labels = c("-1", "-.5", "0", ".5", "1")) +

scale_y_continuous(expression(eta), breaks = 1:4) +

ggtitle(expression("Marginal correlation for the LKJ prior relative to K and "*eta)) +

facet_wrap(~ strip, labeller = label_parsed, ncol = 4)

To learn more about this plotting method, check out Kay’s (2020) Marginal distribution of a single correlation from an LKJ distribution. To get a better intuition what that plot means, check out the illuminating blog post by Stephen Martin, Is the LKJ(1) prior uniform? “Yes”.

Okay, let’s get ready to model and switch out rethinking for brms.

detach(package:rethinking, unload = T)

library(brms)As defined above, our first model has both varying intercepts and afternoon slopes. I should point out that the (1 + afternoon | cafe) syntax specifies that we’d like brm() to fit the random effects for 1 (i.e., the intercept) and the afternoon slope as correlated. Had we wanted to fit a model in which they were orthogonal, we’d have coded (1 + afternoon || cafe).

b14.1 <-

brm(data = d,

family = gaussian,

wait ~ 1 + afternoon + (1 + afternoon | cafe),

prior = c(prior(normal(5, 2), class = Intercept),

prior(normal(-1, 0.5), class = b),

prior(exponential(1), class = sd),

prior(exponential(1), class = sigma),

prior(lkj(2), class = cor)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 867530,

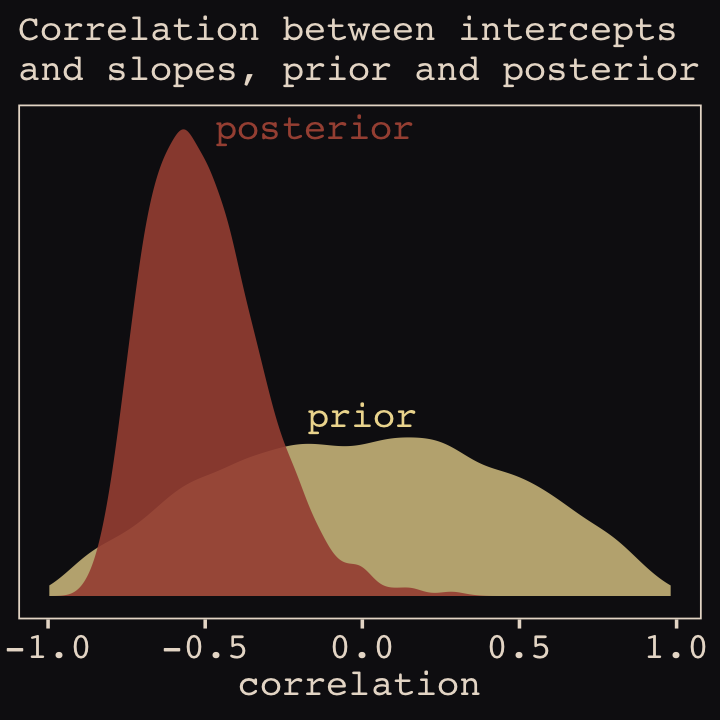

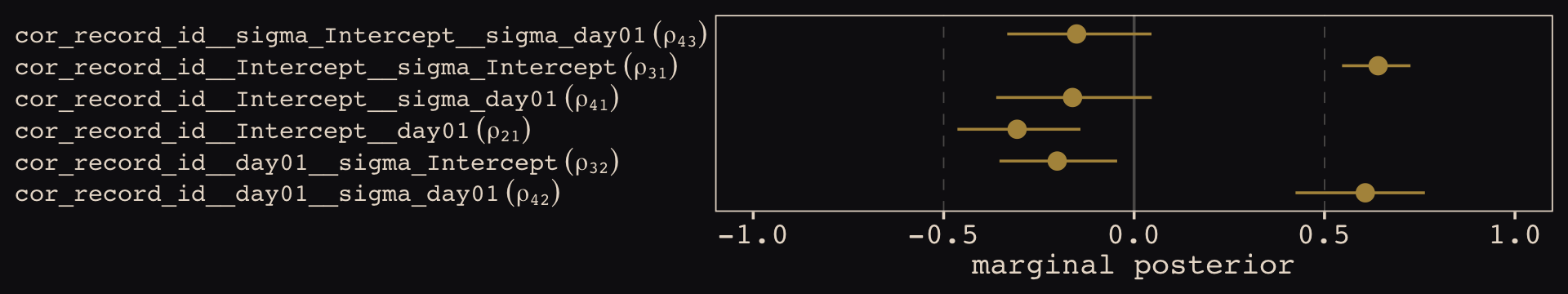

file = "fits/b14.01")With Figure 14.4, we assess how the posterior for the correlation of the random effects compares to its prior.

post <- as_draws_df(b14.1)

post %>%

ggplot() +

geom_density(data = r_2, aes(x = X2),

color = "transparent", fill = "#EEDA9D", alpha = 3/4) +

geom_density(aes(x = cor_cafe__Intercept__afternoon),

color = "transparent", fill = "#A65141", alpha = 9/10) +

annotate(geom = "text",

x = c(-0.15, 0), y = c(2.21, 0.85),

label = c("posterior", "prior"),

color = c("#A65141", "#EEDA9D"), family = "Courier") +

scale_y_continuous(NULL, breaks = NULL) +

labs(subtitle = "Correlation between intercepts\nand slopes, prior and posterior",

x = "correlation")

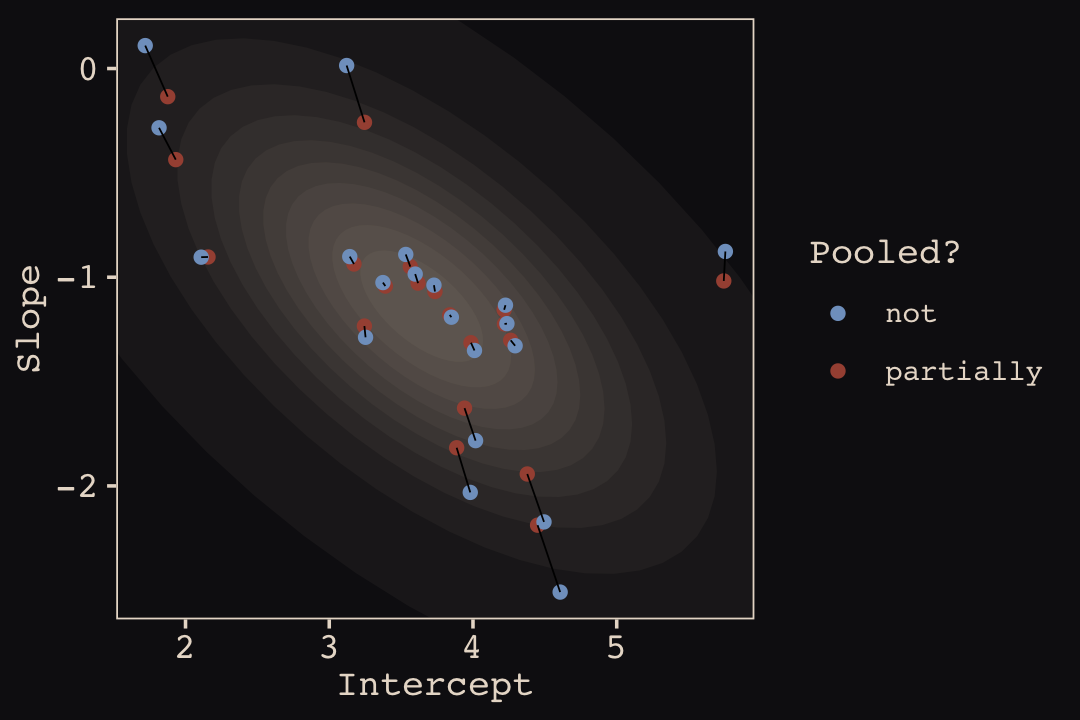

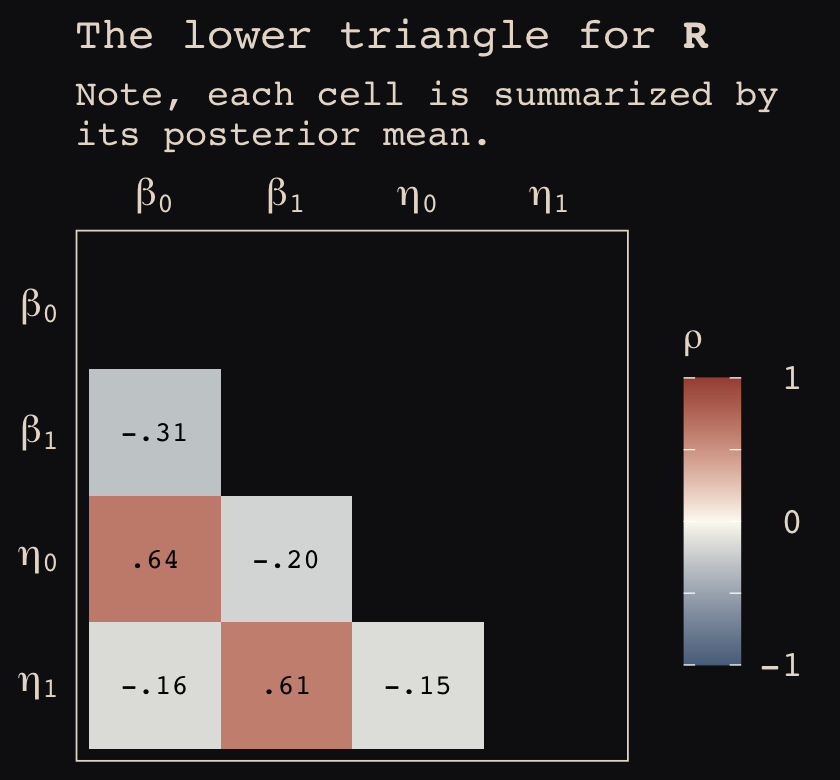

McElreath then depicted multidimensional shrinkage by plotting the posterior mean of the varying effects compared to their raw, unpooled estimated. With brms, we can get the cafe-specific intercepts and afternoon slopes with coef(), which returns a three-dimensional list.

# coef(b14.1) %>% glimpse()

coef(b14.1)## $cafe

## , , Intercept

##

## Estimate Est.Error Q2.5 Q97.5

## 1 4.216792 0.2000687 3.821914 4.608532

## 2 2.156508 0.2005916 1.774121 2.555899

## 3 4.377672 0.2027371 3.988610 4.777364

## 4 3.244505 0.1986072 2.856801 3.646256

## 5 1.876298 0.2088697 1.458488 2.286024

## 6 4.262255 0.2008303 3.875215 4.645384

## 7 3.617877 0.2021080 3.223550 4.011151

## 8 3.941250 0.2018318 3.543704 4.335027

## 9 3.985973 0.1991844 3.594660 4.375243

## 10 3.563857 0.2026278 3.180496 3.967585

## 11 1.932069 0.2042003 1.543031 2.337843

## 12 3.838104 0.2003312 3.447778 4.232749

## 13 3.886496 0.1963709 3.496096 4.266538

## 14 3.171946 0.2020853 2.779492 3.564929

## 15 4.449334 0.2057724 4.045851 4.853363

## 16 3.390196 0.2056161 2.984552 3.791244

## 17 4.219108 0.1959220 3.832107 4.605128

## 18 5.744843 0.2043381 5.336572 6.147451

## 19 3.245238 0.2056034 2.836199 3.643502

## 20 3.735587 0.1989374 3.338077 4.117335

##

## , , afternoon

##

## Estimate Est.Error Q2.5 Q97.5

## 1 -1.1558221 0.2665564 -1.6777742 -0.62643336

## 2 -0.9030389 0.2618489 -1.4233655 -0.38857670

## 3 -1.9428430 0.2793570 -2.4906668 -1.41633174

## 4 -1.2339085 0.2630983 -1.7607847 -0.72434996

## 5 -0.1348526 0.2760740 -0.6757120 0.42042554

## 6 -1.3022076 0.2729663 -1.8464983 -0.77908494

## 7 -1.0284096 0.2754878 -1.5592451 -0.49208696

## 8 -1.6272876 0.2678544 -2.1524894 -1.10279089

## 9 -1.3138842 0.2698650 -1.8531660 -0.77163692

## 10 -0.9493012 0.2663965 -1.4637540 -0.43254540

## 11 -0.4366110 0.2754459 -0.9576143 0.09921394

## 12 -1.1804850 0.2658584 -1.7120566 -0.65263010

## 13 -1.8171061 0.2673527 -2.3517635 -1.29795483

## 14 -0.9367173 0.2686524 -1.4559835 -0.40796057

## 15 -2.1880870 0.2871369 -2.7415055 -1.63301873

## 16 -1.0422942 0.2724214 -1.5660179 -0.51131852

## 17 -1.2239292 0.2606178 -1.7472319 -0.71671980

## 18 -1.0178642 0.2803683 -1.5563606 -0.45398219

## 19 -0.2578258 0.2901755 -0.8122857 0.31412096

## 20 -1.0685338 0.2583032 -1.5728118 -0.55443586Here’s the code to extract the relevant elements from the coef() list, convert them to a tibble, and add the cafe index.

partially_pooled_params <-

# with this line we select each of the 20 cafe's posterior mean (i.e., Estimate)

# for both `Intercept` and `afternoon`

coef(b14.1)$cafe[ , 1, 1:2] %>%

data.frame() %>% # convert the two vectors to a data frame

rename(Slope = afternoon) %>%

mutate(cafe = 1:nrow(.)) %>% # add the `cafe` index

select(cafe, everything()) # simply moving `cafe` to the leftmost positionLike McElreath, we’ll compute the unpooled estimates directly from the data.

# compute unpooled estimates directly from data

un_pooled_params <-

d %>%

# with these two lines, we compute the mean value for each cafe's wait time

# in the morning and then the afternoon

group_by(afternoon, cafe) %>%

summarise(mean = mean(wait)) %>%

ungroup() %>% # ungrouping allows us to alter afternoon, one of the grouping variables

mutate(afternoon = ifelse(afternoon == 0, "Intercept", "Slope")) %>%

spread(key = afternoon, value = mean) %>% # use `spread()` just as in the previous block

mutate(Slope = Slope - Intercept) # finally, here's our slope!

# here we combine the partially-pooled and unpooled means into a single data object,

# which will make plotting easier.

params <-

# `bind_rows()` will stack the second tibble below the first

bind_rows(partially_pooled_params, un_pooled_params) %>%

# index whether the estimates are pooled

mutate(pooled = rep(c("partially", "not"), each = nrow(.)/2))

# here's a glimpse at what we've been working for

params %>%

slice(c(1:5, 36:40))## cafe Intercept Slope pooled

## 1 1 4.216792 -1.15582209 partially

## 2 2 2.156508 -0.90303887 partially

## 3 3 4.377672 -1.94284298 partially

## 4 4 3.244505 -1.23390848 partially

## 5 5 1.876298 -0.13485264 partially

## ...6 16 3.373496 -1.02563866 not

## ...7 17 4.236192 -1.22236910 not

## ...8 18 5.755987 -0.87660383 not

## ...9 19 3.121060 0.01441784 not

## ...10 20 3.728481 -1.03811567 notFinally, here’s our code for Figure 14.5.a, showing shrinkage in two dimensions.

p1 <-

ggplot(data = params, aes(x = Intercept, y = Slope)) +

stat_ellipse(geom = "polygon", type = "norm", level = 1/10, linewidth = 0, alpha = 1/20, fill = "#E7CDC2") +

stat_ellipse(geom = "polygon", type = "norm", level = 2/10, linewidth = 0, alpha = 1/20, fill = "#E7CDC2") +

stat_ellipse(geom = "polygon", type = "norm", level = 3/10, linewidth = 0, alpha = 1/20, fill = "#E7CDC2") +

stat_ellipse(geom = "polygon", type = "norm", level = 4/10, linewidth = 0, alpha = 1/20, fill = "#E7CDC2") +

stat_ellipse(geom = "polygon", type = "norm", level = 5/10, linewidth = 0, alpha = 1/20, fill = "#E7CDC2") +

stat_ellipse(geom = "polygon", type = "norm", level = 6/10, linewidth = 0, alpha = 1/20, fill = "#E7CDC2") +

stat_ellipse(geom = "polygon", type = "norm", level = 7/10, linewidth = 0, alpha = 1/20, fill = "#E7CDC2") +

stat_ellipse(geom = "polygon", type = "norm", level = 8/10, linewidth = 0, alpha = 1/20, fill = "#E7CDC2") +

stat_ellipse(geom = "polygon", type = "norm", level = 9/10, linewidth = 0, alpha = 1/20, fill = "#E7CDC2") +

stat_ellipse(geom = "polygon", type = "norm", level = .99, linewidth = 0, alpha = 1/20, fill = "#E7CDC2") +

geom_point(aes(group = cafe, color = pooled)) +

geom_line(aes(group = cafe), linewidth = 1/4) +

scale_color_manual("Pooled?", values = c("#80A0C7", "#A65141")) +

coord_cartesian(xlim = range(params$Intercept),

ylim = range(params$Slope))

p1

Learn more about stat_ellipse(), here. Let’s prep for Figure 14.5.b.

# retrieve the partially-pooled estimates with `coef()`

partially_pooled_estimates <-

coef(b14.1)$cafe[ , 1, 1:2] %>%

# convert the two vectors to a data frame

data.frame() %>%

# the Intercept is the wait time for morning (i.e., `afternoon == 0`)

rename(morning = Intercept) %>%

# `afternoon` wait time is the `morning` wait time plus the afternoon slope

mutate(afternoon = morning + afternoon,

cafe = 1:n()) %>% # add the `cafe` index

select(cafe, everything())

# compute unpooled estimates directly from data

un_pooled_estimates <-

d %>%

# as above, with these two lines, we compute each cafe's mean wait value by time of day

group_by(afternoon, cafe) %>%

summarise(mean = mean(wait)) %>%

# ungrouping allows us to alter the grouping variable, afternoon

ungroup() %>%

mutate(afternoon = ifelse(afternoon == 0, "morning", "afternoon")) %>%

# this separates out the values into morning and afternoon columns

spread(key = afternoon, value = mean)

estimates <-

bind_rows(partially_pooled_estimates, un_pooled_estimates) %>%

mutate(pooled = rep(c("partially", "not"), each = n() / 2))The code for Figure 14.5.b.

p2 <-

ggplot(data = estimates, aes(x = morning, y = afternoon)) +

# nesting `stat_ellipse()` within `mapply()` is a less redundant way to produce the

# ten-layered semitransparent ellipses we did with ten lines of `stat_ellipse()`

# functions in the previous plot

mapply(function(level) {

stat_ellipse(geom = "polygon", type = "norm",

linewidth = 0, alpha = 1/20, fill = "#E7CDC2",

level = level)

},

# enter the levels here

level = c(1:9 / 10, .99)) +

geom_point(aes(group = cafe, color = pooled)) +

geom_line(aes(group = cafe), linewidth = 1/4) +

scale_color_manual("Pooled?", values = c("#80A0C7", "#A65141")) +

labs(x = "morning wait (mins)",

y = "afternoon wait (mins)") +

coord_cartesian(xlim = range(estimates$morning),

ylim = range(estimates$afternoon))Here we combine the two subplots together with patchwork syntax.

library(patchwork)

(p1 + theme(legend.position = "none")) +

p2 +

plot_annotation(title = "Shrinkage in two dimensions")

What I want you to appreciate in this plot is that shrinkage on the parameter scale naturally produces shrinkage where we actually care about it: on the outcome scale. And it also implies a population of wait times, shown by the [semitransparent ellipses]. That population is now positively correlated–cafés with longer morning waits also tend to have longer afternoon waits. They are popular, after all. But the population lies mostly below the dashed line where the waits are equal. You’ll wait less in the afternoon, on average. (p. 446)

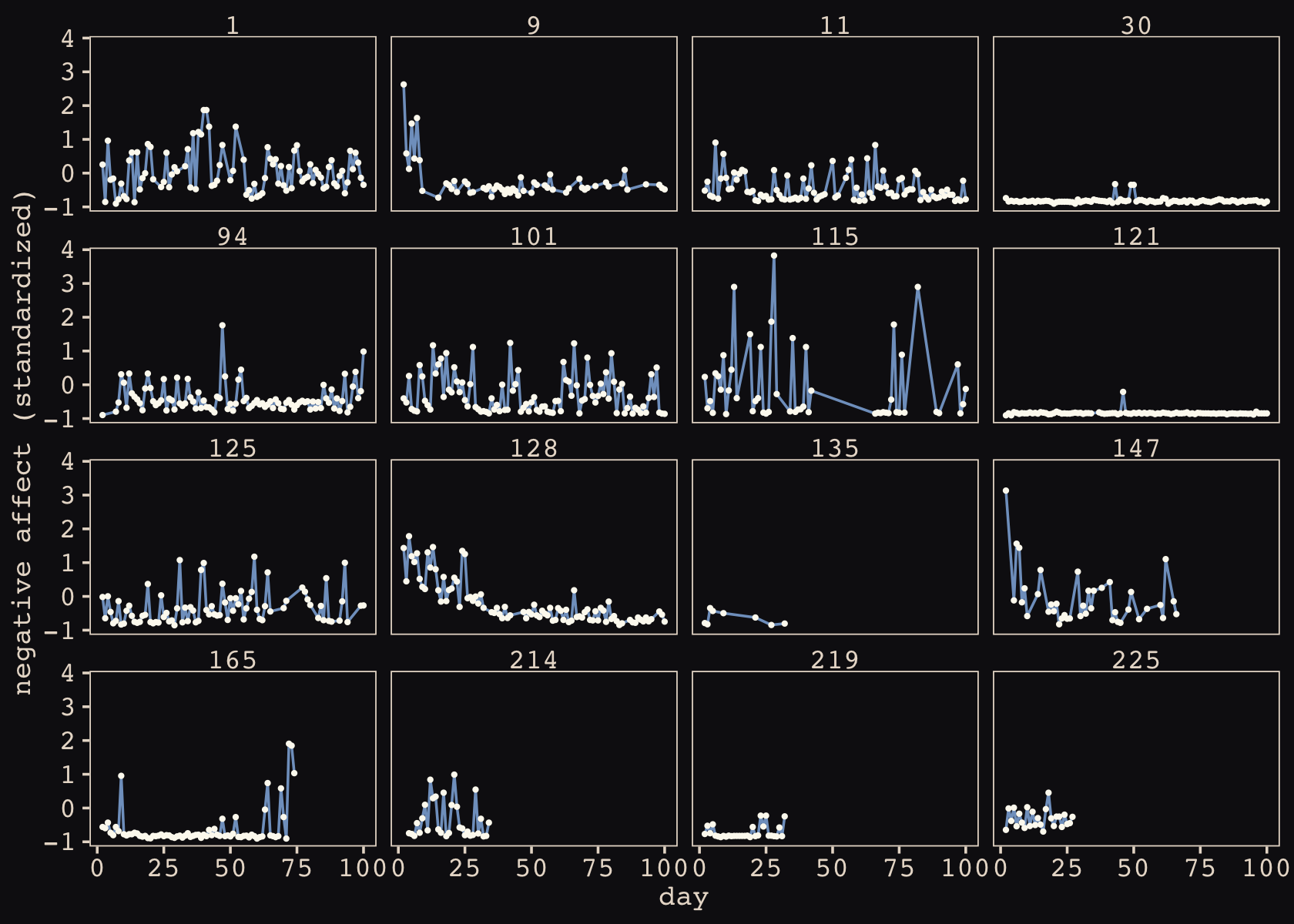

14.2 Advanced varying slopes

In Section 13.3 we saw that data can be considered cross-classified if they have multiple grouping factors. We used the chipanzees data in that section and we only considered cross-classification by single intercepts. Turns out cross-classified models can be extended further. Let’s load and wrangle those data.

data(chimpanzees, package = "rethinking")

d <- chimpanzees

rm(chimpanzees)

# wrangle

d <-

d %>%

mutate(actor = factor(actor),

block = factor(block),

treatment = factor(1 + prosoc_left + 2 * condition),

# this will come in handy, later

labels = factor(treatment,

levels = 1:4,

labels = c("r/n", "l/n", "r/p", "l/p")))

glimpse(d)## Rows: 504

## Columns: 10

## $ actor <fct> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, …

## $ recipient <int> NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA,…

## $ condition <int> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, …

## $ block <fct> 1, 1, 1, 1, 1, 1, 2, 2, 2, 2, 2, 2, 3, 3, 3, 3, 3, 3, 4, 4, 4, 4, 4, 4, 5, 5, 5, 5, 5, …

## $ trial <int> 2, 4, 6, 8, 10, 12, 14, 16, 18, 20, 22, 24, 26, 28, 30, 32, 34, 36, 38, 40, 42, 44, 46,…

## $ prosoc_left <int> 0, 0, 1, 0, 1, 1, 1, 1, 0, 0, 0, 1, 0, 1, 0, 1, 1, 0, 1, 0, 0, 0, 1, 1, 0, 0, 1, 1, 0, …

## $ chose_prosoc <int> 1, 0, 0, 1, 1, 1, 0, 0, 1, 1, 0, 0, 0, 1, 1, 1, 0, 1, 1, 0, 0, 1, 1, 0, 1, 0, 0, 0, 1, …

## $ pulled_left <int> 0, 1, 0, 0, 1, 1, 0, 0, 0, 0, 1, 0, 1, 1, 0, 1, 0, 0, 1, 1, 1, 0, 1, 0, 0, 1, 0, 0, 0, …

## $ treatment <fct> 1, 1, 2, 1, 2, 2, 2, 2, 1, 1, 1, 2, 1, 2, 1, 2, 2, 1, 2, 1, 1, 1, 2, 2, 1, 1, 2, 2, 1, …

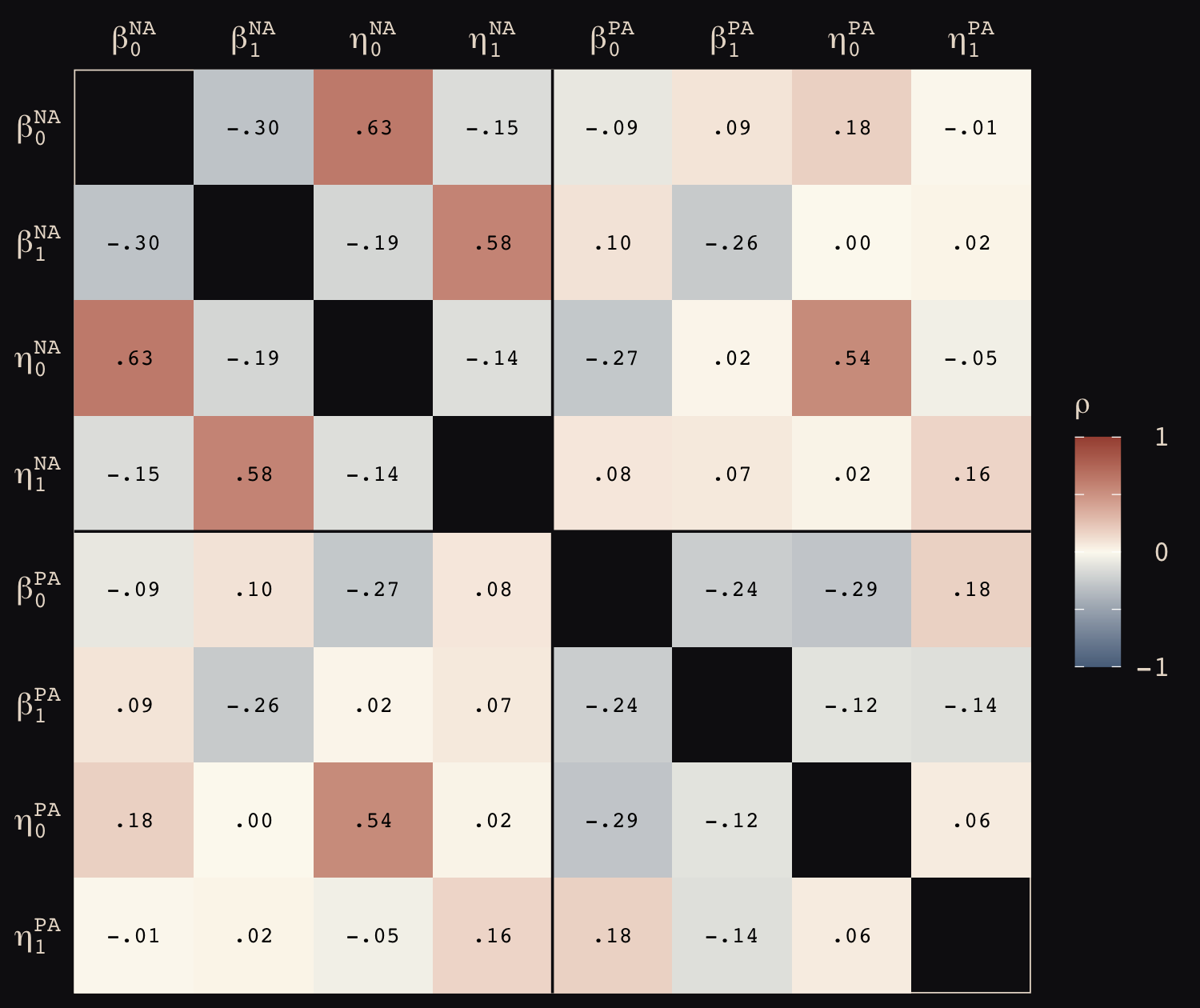

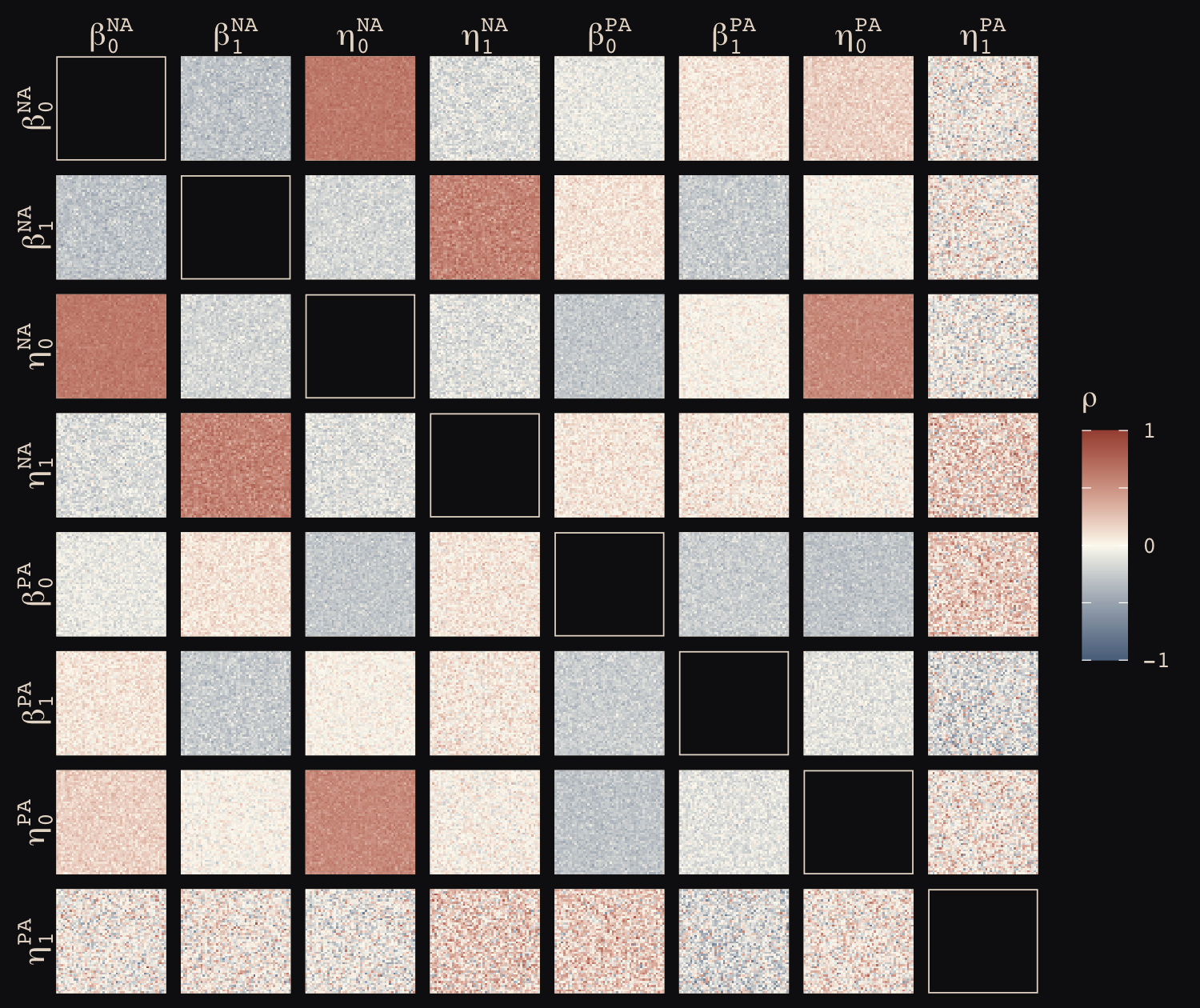

## $ labels <fct> r/n, r/n, l/n, r/n, l/n, l/n, l/n, l/n, r/n, r/n, r/n, l/n, r/n, l/n, r/n, l/n, l/n, r/…If I’m following along correctly with the text, McElreath’s m14.2 uses the centered parameterization. Recall from the last chapter that brms only supports the non-centered parameterization. Happily, McElreath’s m14.3 appears to use the non-centered parameterization. Thus, we’ll skip making a b14.2 and jump directly into making a b14.3. I believe one could describe the statistical model as

\[\begin{align*} \text{left_pull}_i & \sim \operatorname{Binomial}(n_i = 1, p_i) \\ \operatorname{logit} (p_i) & = \gamma_{\text{treatment}[i]} + \alpha_{\text{actor}[i], \text{treatment}[i]} + \beta_{\text{block}[i], \text{treatment}[i]} \\ \gamma_j & \sim \operatorname{Normal}(0, 1), \;\;\; \text{for } j = 1, \dots, 4 \\ \begin{bmatrix} \alpha_{j, 1} \\ \alpha_{j, 2} \\ \alpha_{j, 3} \\ \alpha_{j, 4} \end{bmatrix} & \sim \operatorname{MVNormal} \begin{pmatrix} \begin{bmatrix} 0 \\ 0 \\ 0 \\ 0 \end{bmatrix}, \mathbf \Sigma_\text{actor} \end{pmatrix} \\ \begin{bmatrix} \beta_{j, 1} \\ \beta_{j, 2} \\ \beta_{j, 3} \\ \beta_{j, 4} \end{bmatrix} & \sim \operatorname{MVNormal} \begin{pmatrix} \begin{bmatrix} 0 \\ 0 \\ 0 \\ 0 \end{bmatrix}, \mathbf \Sigma_\text{block} \end{pmatrix} \\ \mathbf \Sigma_\text{actor} & = \mathbf{S_\alpha R_\alpha S_\alpha} \\ \mathbf \Sigma_\text{block} & = \mathbf{S_\beta R_\beta S_\beta} \\ \sigma_{\alpha, [1]}, \dots, \sigma_{\alpha, [4]} & \sim \operatorname{Exponential}(1) \\ \sigma_{\beta, [1]}, \dots, \sigma_{\beta, [4]} & \sim \operatorname{Exponential}(1) \\ \mathbf R_\alpha & \sim \operatorname{LKJ}(2) \\ \mathbf R_\beta & \sim \operatorname{LKJ}(2). \end{align*}\]

In this model, we have four population-level intercepts, \(\gamma_1, \dots, \gamma_4\), one for each of the four levels of treatment. There are two higher-level grouping variables, actor and block, making this a cross-classified model.

The term \(\alpha_{\text{actor}[i], \text{treatment}[i]}\) is meant to convey that each of the treatment effects can vary by actor. The first line containing the \(\operatorname{MVNormal}(\cdot)\) operator indicates the actor-level deviations from the population-level estimates for \(\gamma_j\) follow the multivariate normal distribution where the four means are set to zero (i.e., they are deviations) and their spread around those zeros are controlled by \(\Sigma_\text{actor}\). In the first line below the last line containing \(\operatorname{MVNormal}(\cdot)\), we learn that \(\Sigma_\text{actor}\) can be decomposed into two terms, \(\mathbf S_\alpha\) and \(\mathbf R_\alpha\). It may not yet be clear by the notation, but \(\mathbf S_\alpha\) is a \(4 \times 4\) matrix,

\[ \mathbf S_\alpha = \begin{bmatrix} \sigma_{\alpha, [1]} & 0 & 0 & 0 \\ 0 & \sigma_{\alpha, [2]} & 0 & 0 \\ 0 & 0 & \sigma_{\alpha, [3]} & 0 \\ 0 & 0 & 0 & \sigma_{\alpha, [4]} \end{bmatrix}. \]

In a similar way, \(\mathbf R_\alpha\) is a \(4 \times 4\) matrix,

\[ \mathbf R_\alpha = \begin{bmatrix} 1 & \rho_{\alpha, [1, 2]} & \rho_{\alpha, [1, 3]} & \rho_{\alpha, [1, 4]} \\ \rho_{\alpha, [2, 1]} & 1 & \rho_{\alpha, [2, 3]} & \rho_{\alpha, [2, 4]} \\ \rho_{\alpha, [3, 1]} & \rho_{\alpha, [3, 2]} & 1 & \rho_{\alpha, [3, 4]} \\ \rho_{\alpha, [4, 1]} & \rho_{\alpha, [4, 2]} & \rho_{\alpha, [4, 3]} & 1 \end{bmatrix}. \]

The same overall pattern holds true for \(\beta_{\text{block}[i], \text{treatment}[i]}\) and the associated \(\beta\) parameters connected to the block grouping variable. All the population parameters \(\sigma_{\alpha, [1]}, \dots, \sigma_{\alpha, [4]}\) and \(\sigma_{\beta, [1]}, \dots, \sigma_{\beta, [4]}\) have individual \(\operatorname{Exponential}(1)\) priors. The two \(\mathbf R_{< \cdot >}\) matrices have the priors \(\operatorname{LKJ}(2)\).

I know; this is a lot. This all takes time to grapple with. Here’s how to fit such a model with brms.

b14.3 <-

brm(data = d,

family = binomial,

pulled_left | trials(1) ~ 0 + treatment + (0 + treatment | actor) + (0 + treatment | block),

prior = c(prior(normal(0, 1), class = b),

prior(exponential(1), class = sd, group = actor),

prior(exponential(1), class = sd, group = block),

prior(lkj(2), class = cor, group = actor),

prior(lkj(2), class = cor, group = block)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 4387510,

file = "fits/b14.03")Happily, we got no warnings about divergent transitions. Since it’s been a while, we’ll use bayesplot::mcmc_rank_overlay() to examine the primary parameters with a trank plot.

library(bayesplot)

# give the parameters fancy names

names <-

c(str_c("treatment[", 1:4, "]"),

str_c("sigma['actor[", 1:4, "]']"),

str_c("sigma['block[", 1:4, "]']"),

str_c("rho['actor:treatment[", c(1, 1:2, 1:3), ",", rep(2:4, times = 1:3), "]']"),

str_c("rho['block:treatment[", c(1, 1:2, 1:3), ",", rep(2:4, times = 1:3), "]']"),

"Chain")

# wrangle

as_draws_df(b14.3) %>%

select(b_treatment1:`cor_block__treatment3__treatment4`, .chain) %>%

set_names(names) %>%

# plot

mcmc_rank_overlay() +

scale_color_manual(values = c("#80A0C7", "#B1934A", "#A65141", "#EEDA9D")) +

scale_x_continuous(NULL, breaks = 0:4 * 1e3, labels = c(0, str_c(1:4, "K"))) +

coord_cartesian(ylim = c(30, NA)) +

ggtitle("McElreath would love this trank plot.") +

theme(legend.position = "bottom") +

facet_wrap(~ parameter, labeller = label_parsed, ncol = 4)

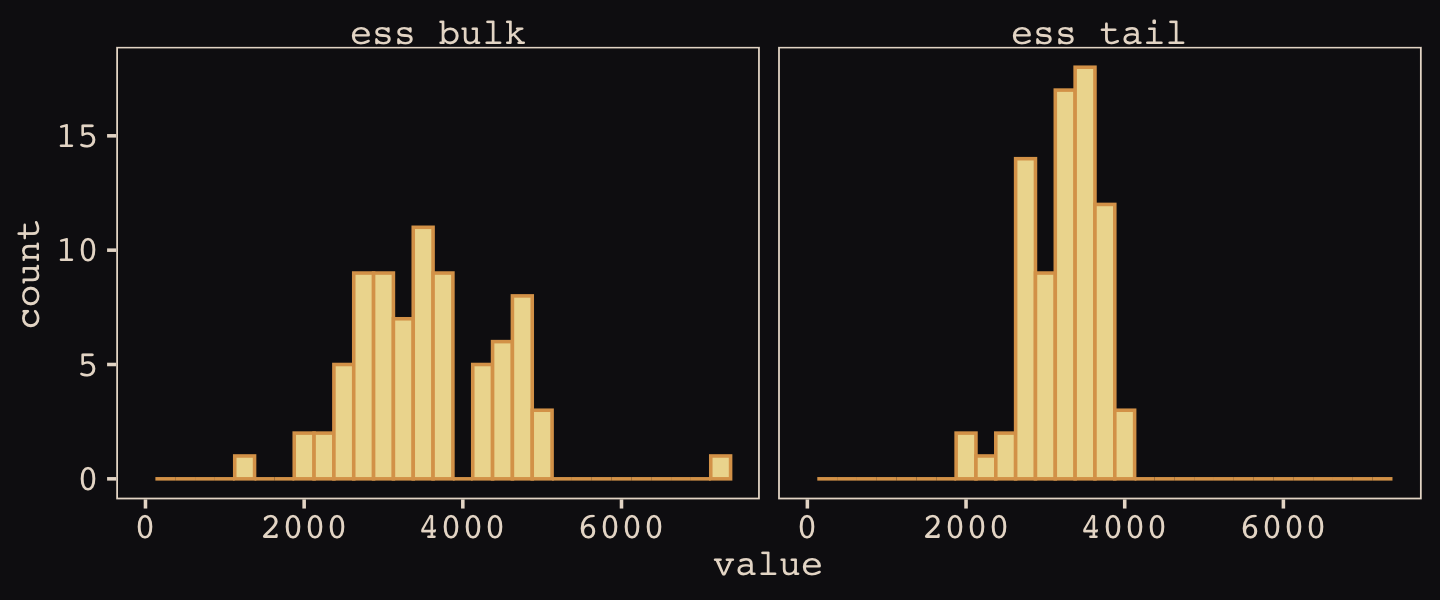

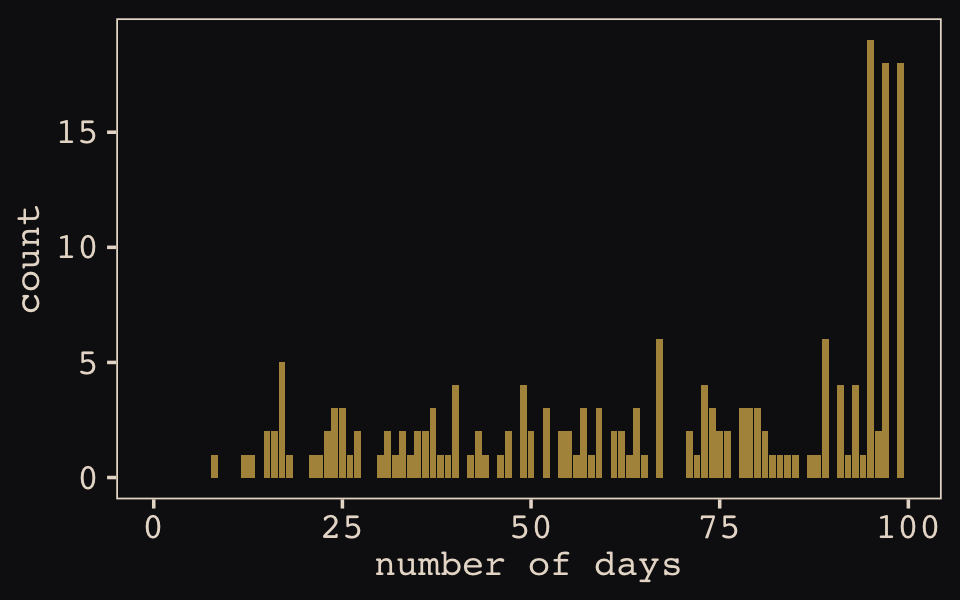

Because we only fit a non-centered version of the model, we aren’t able to make a faithful version of McElreath’s Figure 14.6. However, we can still use posterior::summarise_draws() to help make histograms of the two kinds of effective sample sizes for our b14.3.

library(posterior)

as_draws_df(b14.3) %>%

summarise_draws() %>%

pivot_longer(starts_with("ess")) %>%

ggplot(aes(x = value)) +

geom_histogram(binwidth = 250, fill = "#EEDA9D", color = "#DCA258") +

xlim(0, NA) +

facet_wrap(~ name)

Here is a summary of the model parameters.

print(b14.3)## Family: binomial

## Links: mu = logit

## Formula: pulled_left | trials(1) ~ 0 + treatment + (0 + treatment | actor) + (0 + treatment | block)

## Data: d (Number of observations: 504)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Group-Level Effects:

## ~actor (Number of levels: 7)

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sd(treatment1) 1.39 0.49 0.69 2.59 1.00 2379 2865

## sd(treatment2) 0.90 0.38 0.33 1.80 1.00 2391 2793

## sd(treatment3) 1.87 0.58 1.04 3.30 1.00 3471 3184

## sd(treatment4) 1.56 0.58 0.73 2.93 1.00 2904 2669

## cor(treatment1,treatment2) 0.43 0.29 -0.19 0.87 1.00 2754 2751

## cor(treatment1,treatment3) 0.53 0.25 -0.03 0.90 1.00 2903 3376

## cor(treatment2,treatment3) 0.48 0.26 -0.11 0.89 1.00 2911 2839

## cor(treatment1,treatment4) 0.45 0.27 -0.15 0.86 1.00 3082 3183

## cor(treatment2,treatment4) 0.45 0.28 -0.18 0.87 1.00 2873 2777

## cor(treatment3,treatment4) 0.57 0.24 0.02 0.92 1.00 3031 3183

##

## ~block (Number of levels: 6)

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sd(treatment1) 0.40 0.32 0.02 1.19 1.00 2193 2382

## sd(treatment2) 0.43 0.33 0.02 1.22 1.00 1977 1916

## sd(treatment3) 0.30 0.27 0.01 1.00 1.00 3778 2695

## sd(treatment4) 0.47 0.37 0.03 1.41 1.00 2192 2678

## cor(treatment1,treatment2) -0.08 0.37 -0.74 0.66 1.00 4622 2500

## cor(treatment1,treatment3) -0.01 0.38 -0.72 0.70 1.00 7145 2739

## cor(treatment2,treatment3) -0.03 0.38 -0.73 0.68 1.00 4894 2905

## cor(treatment1,treatment4) 0.05 0.37 -0.66 0.74 1.00 4847 2657

## cor(treatment2,treatment4) 0.05 0.37 -0.67 0.73 1.00 4666 3334

## cor(treatment3,treatment4) 0.01 0.38 -0.68 0.70 1.00 3558 3328

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## treatment1 0.21 0.50 -0.78 1.19 1.00 1943 2323

## treatment2 0.64 0.40 -0.17 1.45 1.00 2792 2646

## treatment3 -0.03 0.58 -1.16 1.10 1.00 2685 2962

## treatment4 0.66 0.55 -0.47 1.76 1.00 3002 2800

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).Like McElreath explained on page 450, our b14.3 has 76 parameters:

- 4 average

treatmenteffects, as listed in the ‘Population-Level Effects’ section; - 7 \(\times\) 4 = 28 varying effects on

actor, as indicated in the ‘~actor:treatment (Number of levels: 7)’ header multiplied by the four levels oftreatment; - 6 \(\times\) 4 = 24 varying effects on

block, as indicated in the ‘~block:treatment (Number of levels: 6)’ header multiplied by the four levels oftreatment; - 8 standard deviations listed in the eight rows beginning with

sd(; and - 12 free correlation parameters listed in the eight rows beginning with

cor(.

Compute the WAIC estimate.

b14.3 <- add_criterion(b14.3, "waic")

waic(b14.3)##

## Computed from 4000 by 504 log-likelihood matrix

##

## Estimate SE

## elpd_waic -272.7 9.9

## p_waic 27.0 1.4

## waic 545.3 19.8

##

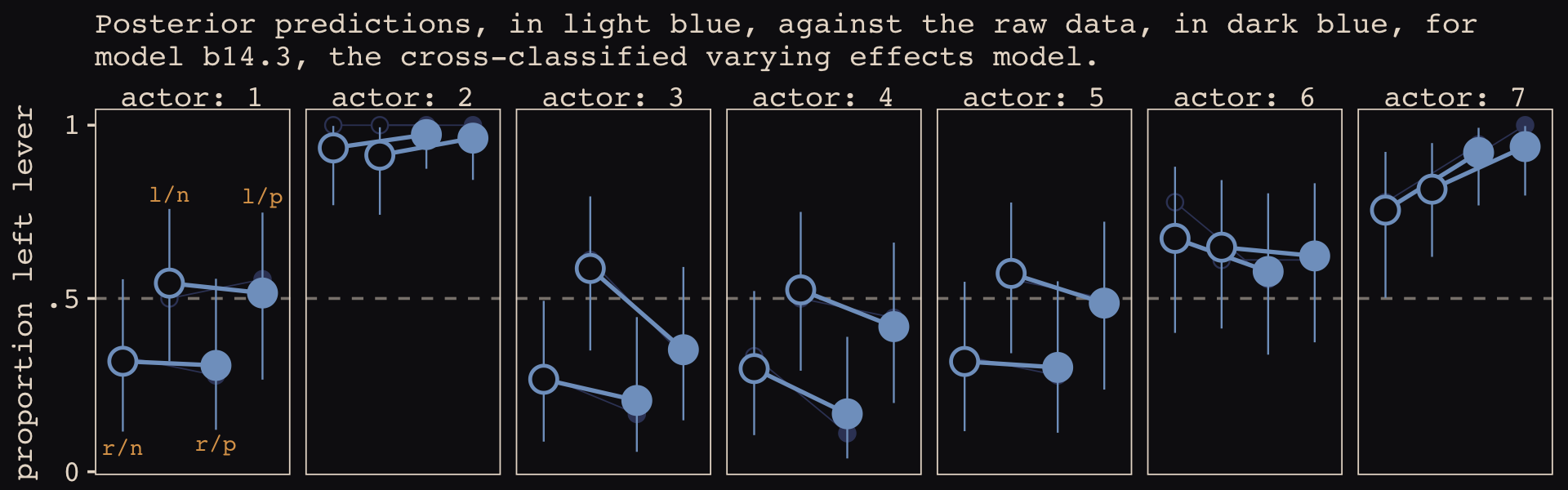

## 1 (0.2%) p_waic estimates greater than 0.4. We recommend trying loo instead.Like the \(p_\text{WAIC}\), our brms version of the model has about 27 effective parameters. Now we’ll get a better sense of the model with a posterior predictive check in the form of our version of Figure 14.7. McElreath described his R code 14.22 as “a big chunk of code” (p. 451). I’ll leave up to the reader to decide whether our big code chunk is any better.

# for annotation

text <-

distinct(d, labels) %>%

mutate(actor = 1,

prop = c(.07, .8, .08, .795))

nd <-

d %>%

distinct(actor, condition, labels, prosoc_left, treatment) %>%

mutate(block = 5)

# compute and wrangle the posterior predictions

fitted(b14.3,

newdata = nd) %>%

data.frame() %>%

bind_cols(nd) %>%

# add the empirical proportions

left_join(

d %>%

group_by(actor, treatment) %>%

mutate(proportion = mean(pulled_left)) %>%

distinct(actor, treatment, proportion),

by = c("actor", "treatment")

) %>%

mutate(condition = factor(condition)) %>%

# plot!

ggplot(aes(x = labels)) +

geom_hline(yintercept = .5, color = "#E8DCCF", alpha = 1/2, linetype = 2) +

# empirical proportions

geom_line(aes(y = proportion, group = prosoc_left),

linewidth = 1/4, color = "#394165") +

geom_point(aes(y = proportion, shape = condition),

color = "#394165", fill = "#100F14", size = 2.5, show.legend = F) +

# posterior predictions

geom_line(aes(y = Estimate, group = prosoc_left),

linewidth = 3/4, color = "#80A0C7") +

geom_pointrange(aes(y = Estimate, ymin = Q2.5, ymax = Q97.5, shape = condition),

color = "#80A0C7", fill = "#100F14", fatten = 8, linewidth = 1/3, show.legend = F) +

# annotation for the conditions

geom_text(data = text,

aes(y = prop, label = labels),

color = "#DCA258", family = "Courier", size = 3) +

scale_shape_manual(values = c(21, 19)) +

scale_x_discrete(NULL, breaks = NULL) +

scale_y_continuous("proportion left lever", breaks = 0:2 / 2, labels = c("0", ".5", "1")) +

labs(subtitle = "Posterior predictions, in light blue, against the raw data, in dark blue, for\nmodel b14.3, the cross-classified varying effects model.") +

facet_wrap(~ actor, nrow = 1, labeller = label_both)

These chimpanzees simply did not behave in any consistently different way in the partner treatments. The model we’ve used here does have some advantages, though. Since it allows for some individuals to differ in how they respond to the treatments, it could reveal a situation in which a treatment has no effect on average, even though some of the individuals respond strongly. That wasn’t the case here. But often we are more interested in the distribution of responses than in the average response, so a model that estimates the distribution of treatment effects is very useful. (p. 452)

For more practice with models of this kind, check out my blog post, Multilevel models and the index-variable approach.

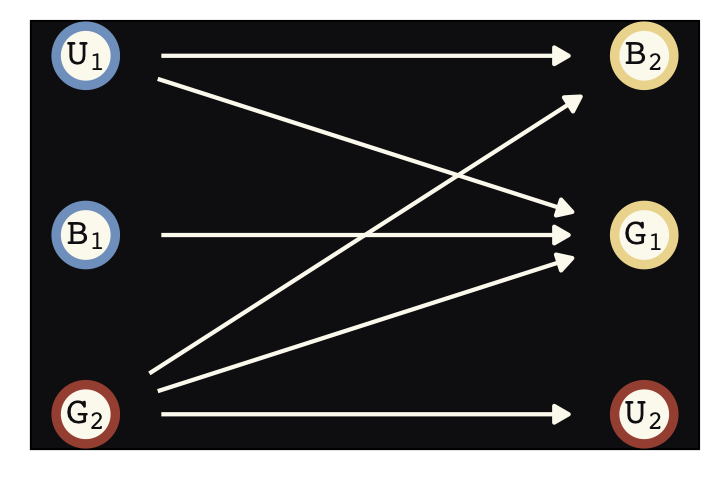

14.3 Instruments and causal designs

Of course sometimes it won’t be possible to close all of the non-causal paths or rule of unobserved confounds. What can be done in that case? More than nothing. If you are lucky, there are ways to exploit a combination of natural experiments and clever modeling that allow causal inference even when non-causal paths cannot be closed. (p. 455)

14.3.1 Instrumental variables.

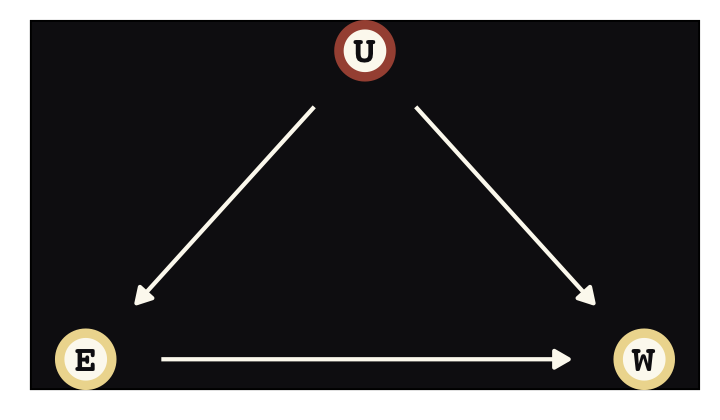

Say were are interested in the causal impact of education \(E\) on wages \(W\), \(E \rightarrow W\). Further imagine there is some unmeasured variable \(U\) that has causal relations with both, \(E \leftarrow U \rightarrow W\), creating a backdoor path. We might use good old ggdag to plot the DAG.

library(ggdag)

dag_coords <-

tibble(name = c("E", "U", "W"),

x = c(1, 2, 3),

y = c(1, 2, 1))Before we make the plot, we’ll make a custom theme, theme_pearl_dag(), to streamline our DAG plots.

theme_pearl_dag <- function(...) {

theme_pearl_earring() +

theme_dag() +

theme(panel.background = element_rect(fill = "#100F14"),

...)

}

dagify(E ~ U,

W ~ E + U,

coords = dag_coords) %>%

ggplot(aes(x = x, y = y, xend = xend, yend = yend)) +

geom_dag_point(aes(color = name == "U"),

shape = 21, stroke = 2, fill = "#FCF9F0", size = 6, show.legend = F) +

geom_dag_text(color = "#100F14", family = "Courier") +

geom_dag_edges(edge_colour = "#FCF9F0") +

scale_color_manual(values = c("#EEDA9D", "#A65141")) +

theme_pearl_dag()

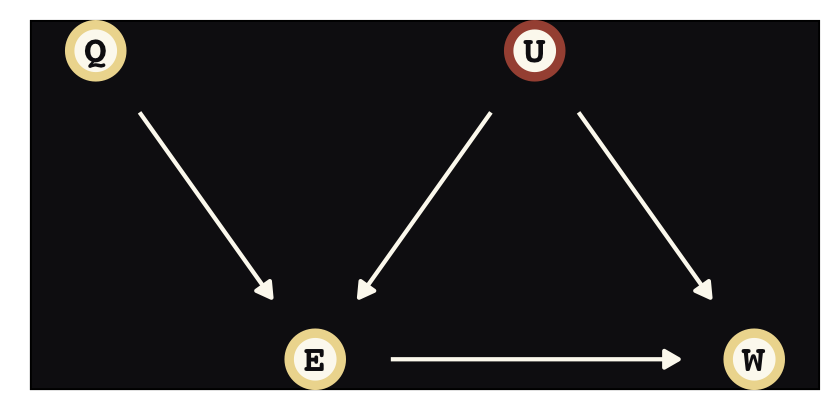

Instrumental variables will solve some of the difficulties we have in not being able to condition on \(U\). Here we’ll call our instrumental variable \(Q\). In the terms of the present example, the instrumental variable has the qualities that

- \(Q\) is independent of \(U\),

- \(Q\) is not independent of \(E\), and

- \(Q\) can only influence \(W\) through \(E\) (i.e., the effect of \(Q\) on \(W\) is fully mediated by \(E\)).

There is what this looks like in a DAG.

dag_coords <-

tibble(name = c("Q", "E", "U", "W"),

x = c(0, 1, 2, 3),

y = c(2, 1, 2, 1))

dagify(E ~ Q + U,

W ~ E + U,

coords = dag_coords) %>%

ggplot(aes(x = x, y = y, xend = xend, yend = yend)) +

geom_dag_point(aes(color = name == "U"),

shape = 21, stroke = 2, fill = "#FCF9F0", size = 6, show.legend = F) +

geom_dag_text(color = "#100F14", family = "Courier") +

geom_dag_edges(edge_colour = "#FCF9F0") +

scale_color_manual(values = c("#EEDA9D", "#A65141")) +

theme_pearl_dag()

Sadly, our condition that \(Q\) can only influence \(W\) through \(E\)–often called the exclusion restriction–generally cannot be tested. Given \(U\) is unmeasured, by definition, we also cannot test that \(Q\) is independent of \(U\). These are model assumptions.

Let’s simulate data based on Angrist & Keueger (1991) to get a sense of how this works.

# make a standardizing function

standardize <- function(x) {

(x - mean(x)) / sd(x)

}

# simulate

set.seed(73)

n <- 500

dat_sim <-

tibble(u_sim = rnorm(n, mean = 0, sd = 1),

q_sim = sample(1:4, size = n, replace = T)) %>%

mutate(e_sim = rnorm(n, mean = u_sim + q_sim, sd = 1)) %>%

mutate(w_sim = rnorm(n, mean = u_sim + 0 * e_sim, sd = 1)) %>%

mutate(w = standardize(w_sim),

e = standardize(e_sim),

q = standardize(q_sim))

dat_sim## # A tibble: 500 × 7

## u_sim q_sim e_sim w_sim w e q

## <dbl> <int> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 -0.145 1 1.51 0.216 0.173 -0.575 -1.36

## 2 0.291 1 0.664 0.846 0.584 -1.09 -1.36

## 3 0.0938 3 2.44 -0.664 -0.402 -0.0185 0.428

## 4 -0.127 3 4.09 -0.725 -0.442 0.978 0.428

## 5 -0.847 4 2.62 -1.24 -0.780 0.0939 1.32

## 6 0.141 4 3.54 -0.0700 -0.0146 0.651 1.32

## 7 1.54 2 3.65 1.88 1.26 0.714 -0.464

## 8 2.74 3 4.91 2.52 1.67 1.48 0.428

## 9 1.55 3 4.18 0.624 0.439 1.04 0.428

## 10 0.462 1 0.360 0.390 0.286 -1.27 -1.36

## # … with 490 more rows\(Q\) in this context is like quarter in the school year, but inversely scaled such that larger numbers indicate more quarters. In this simulation, we have set the true effect of education on wages–\(E \rightarrow W\)–to be zero. Any univariate association is through the confounding variable \(U\). Also, \(Q\) has no direct effect on \(W\) or \(U\), but it does have a causal relation with \(E\), which is \(Q \rightarrow E \leftarrow U\). First we fit the univariable model corresponding to \(E \rightarrow W\).

b14.4 <-

brm(data = dat_sim,

family = gaussian,

w ~ 1 + e,

prior = c(prior(normal(0, 0.2), class = Intercept),

prior(normal(0, 0.5), class = b),

prior(exponential(1), class = sigma)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 14,

file = "fits/b14.04")print(b14.4)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: w ~ 1 + e

## Data: dat_sim (Number of observations: 500)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 0.00 0.04 -0.08 0.08 1.00 3649 2979

## e 0.40 0.04 0.32 0.48 1.00 3940 3096

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 0.92 0.03 0.86 0.98 1.00 4065 2876

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).Because we have not conditioned on \(U\), then model suggests a moderately large spurious causal relation for \(E \rightarrow W\). Now see what happens when we also condition directly on \(Q\), as in \(Q \rightarrow W \leftarrow E\).

b14.5 <-

brm(data = dat_sim,

family = gaussian,

w ~ 1 + e + q,

prior = c(prior(normal(0, 0.2), class = Intercept),

prior(normal(0, 0.5), class = b),

prior(exponential(1), class = sigma)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 14,

file = "fits/b14.05")print(b14.5)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: w ~ 1 + e + q

## Data: dat_sim (Number of observations: 500)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 0.00 0.04 -0.08 0.07 1.00 3237 2650

## e 0.64 0.05 0.54 0.72 1.00 3399 2874

## q -0.40 0.05 -0.49 -0.32 1.00 3291 2794

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 0.86 0.03 0.81 0.92 1.00 3915 2920

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).Holy smokes that’s a mess. This model suggests both \(E\) and \(Q\) have moderate to strong causal effects on \(W\), even though we know neither do based on the true data-generating model. Like McElreath said, “bad stuff happens” when we condition on an instrumental variable this way.

There is no backdoor path through \(Q\), as you can see. But there is a non-causal path from \(Q\) to \(W\) through \(U\): \(Q \rightarrow E \leftarrow U \rightarrow W\). This is a non-causal path, because changing \(Q\) doesn’t result in any change in \(W\) through this path. But since we are conditioning on \(E\) in the same model, and \(E\) is a collider of \(Q\) and \(U\), the non-causal path is open. This confounds the coefficient on \(Q\). It won’t be zero, because it’ll pick up the association between \(U\) and \(W\). And then, as a result, the coefficient on \(E\) can get even more confounded. Used this way, an instrument like \(Q\) might be called a bias amplifier. (p. 456, emphasis in the original)

The statistical solution to this mess is to express the data-generating DAG as a multivariate statistical model following the form

\[\begin{align*} \begin{bmatrix} W_i \\ E_i \end{bmatrix} & \sim \operatorname{MVNormal} \begin{pmatrix} \begin{bmatrix} \mu_{\text W,i} \\ \mu_{\text E,i} \end{bmatrix}, \color{#A65141}{\mathbf \Sigma} \end{pmatrix} \\ \mu_{\text W,i} & = \alpha_\text W + \beta_\text{EW} E_i \\ \mu_{\text E,i} & = \alpha_\text E + \beta_\text{QE} Q_i \\ \color{#A65141}{\mathbf\Sigma} & \color{#A65141}= \color{#A65141}{\begin{bmatrix} \sigma_\text W & 0 \\ 0 & \sigma_\text E \end{bmatrix} \mathbf R \begin{bmatrix} \sigma_\text W & 0 \\ 0 & \sigma_\text E \end{bmatrix}} \\ \color{#A65141}{\mathbf R} & \color{#A65141}= \color{#A65141}{\begin{bmatrix} 1 & \rho \\ \rho & 1 \end{bmatrix}} \\ \alpha_\text W \text{ and } \alpha_\text E & \sim \operatorname{Normal}(0, 0.2) \\ \beta_\text{EW} \text{ and } \beta_\text{QE} & \sim \operatorname{Normal}(0, 0.5) \\ \sigma_\text W \text{ and } \sigma_\text E & \sim \operatorname{Exponential}(1) \\ \rho & \sim \operatorname{LKJ}(2). \end{align*}\]

You might not remember, but we’ve actually fit a model like this before. It was b5.3_A from way back in Section 5.1.5.3. The big difference between that earlier model and this one is whereas the former did not include a residual correlation, \(\rho\), this one will. Thus, this time we will make sure to set set_rescor(TRUE) in the formula. Within brms parlance, priors for residual correlations are of class = rescor.

e_model <- bf(e ~ 1 + q)

w_model <- bf(w ~ 1 + e)

b14.6 <-

brm(data = dat_sim,

family = gaussian,

e_model + w_model + set_rescor(TRUE),

prior = c(# E model

prior(normal(0, 0.2), class = Intercept, resp = e),

prior(normal(0, 0.5), class = b, resp = e),

prior(exponential(1), class = sigma, resp = e),

# W model

prior(normal(0, 0.2), class = Intercept, resp = w),

prior(normal(0, 0.5), class = b, resp = w),

prior(exponential(1), class = sigma, resp = w),

# rho

prior(lkj(2), class = rescor)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 14,

file = "fits/b14.06")print(b14.6)## Family: MV(gaussian, gaussian)

## Links: mu = identity; sigma = identity

## mu = identity; sigma = identity

## Formula: e ~ 1 + q

## w ~ 1 + e

## Data: dat_sim (Number of observations: 500)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## e_Intercept -0.00 0.04 -0.07 0.07 1.00 3121 2942

## w_Intercept 0.00 0.04 -0.08 0.09 1.00 3207 3131

## e_q 0.59 0.04 0.52 0.66 1.00 2973 2487

## w_e -0.05 0.08 -0.21 0.09 1.00 1840 2007

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma_e 0.81 0.03 0.76 0.86 1.00 3291 2515

## sigma_w 1.02 0.05 0.94 1.12 1.00 2073 2041

##

## Residual Correlations:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## rescor(e,w) 0.54 0.05 0.44 0.64 1.00 1892 2233

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).Now the parameter for \(E \rightarrow W\), w_e, is just where it should be–near zero. The residual correlation between \(E\) and \(Q\), rescor(e,w), is positive and large in magnitude, indicating their common influence from the unmeasured variable \(U\). Next we’ll take McElreath’s direction to “adjust the simulation and try other scenarios” (p. 459) by adjusting the causal relations, as in his R code 14.28.

set.seed(73)

n <- 500

dat_sim <-

tibble(u_sim = rnorm(n, mean = 0, sd = 1),

q_sim = sample(1:4, size = n, replace = T)) %>%

mutate(e_sim = rnorm(n, mean = u_sim + q_sim, sd = 1)) %>%

mutate(w_sim = rnorm(n, mean = -u_sim + 0.2 * e_sim, sd = 1)) %>%

mutate(w = standardize(w_sim),

e = standardize(e_sim),

q = standardize(q_sim))

dat_sim## # A tibble: 500 × 7

## u_sim q_sim e_sim w_sim w e q

## <dbl> <int> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 -0.145 1 1.51 0.809 0.248 -0.575 -1.36

## 2 0.291 1 0.664 0.396 -0.0563 -1.09 -1.36

## 3 0.0938 3 2.44 -0.364 -0.615 -0.0185 0.428

## 4 -0.127 3 4.09 0.347 -0.0922 0.978 0.428

## 5 -0.847 4 2.62 0.976 0.370 0.0939 1.32

## 6 0.141 4 3.54 0.357 -0.0852 0.651 1.32

## 7 1.54 2 3.65 -0.466 -0.690 0.714 -0.464

## 8 2.74 3 4.91 -1.98 -1.80 1.48 0.428

## 9 1.55 3 4.18 -1.64 -1.55 1.04 0.428

## 10 0.462 1 0.360 -0.461 -0.686 -1.27 -1.36

## # … with 490 more rowsWe’ll use update() to avoid re-compiling the models.

b14.4x <-

update(b14.4,

newdata = dat_sim,

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 14,

file = "fits/b14.04x")

b14.6x <-

update(b14.6,

newdata = dat_sim,

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 14,

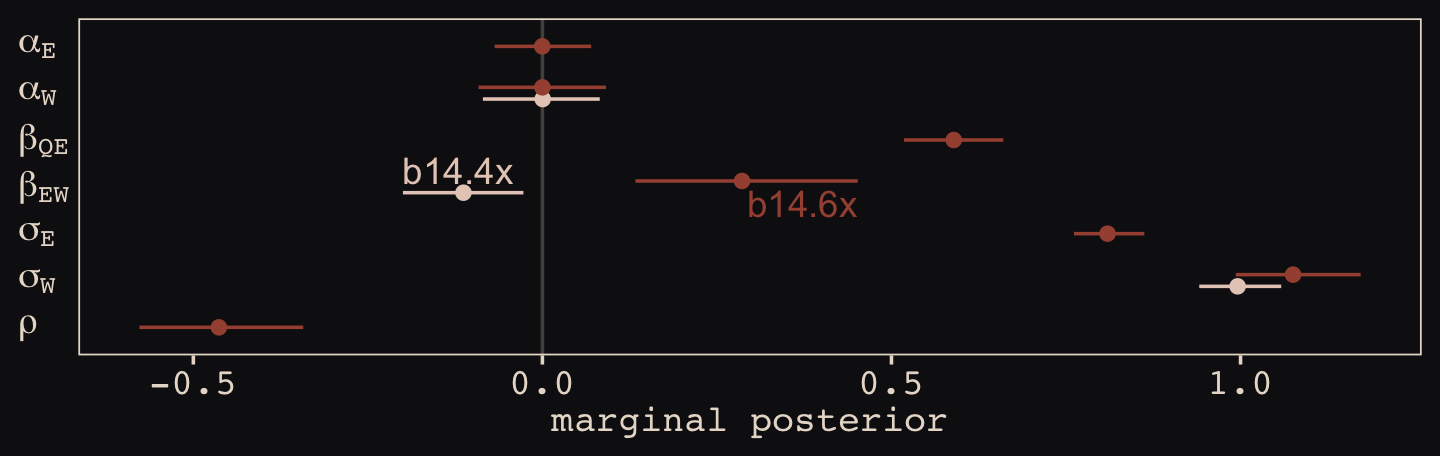

file = "fits/b14.06x")Just for kicks, let’s examine the results with a coefficient plot.

text <-

tibble(Estimate = c(fixef(b14.4x)[2, 3], fixef(b14.6x)[4, 4]),

y = c(4.35, 3.65),

hjust = c(0, 1),

fit = c("b14.4x", "b14.6x"))

bind_rows(

# b_14.4x

posterior_summary(b14.4x)[1:3, ] %>%

data.frame() %>%

mutate(param = c("alpha[W]", "beta[EW]", "sigma[W]"),

fit = "b14.4x"),

# b_14.6x

posterior_summary(b14.6x)[1:7, ] %>%

data.frame() %>%

mutate(param = c("alpha[E]", "alpha[W]", "beta[QE]", "beta[EW]", "sigma[E]", "sigma[W]", "rho"),

fit = "b14.6x")) %>%

mutate(param = factor(param,

levels = c("rho", "sigma[W]", "sigma[E]", "beta[EW]", "beta[QE]", "alpha[W]", "alpha[E]"))) %>%

ggplot(aes(x = param, y = Estimate, color = fit)) +

geom_hline(yintercept = 0, color = "#E8DCCF", alpha = 1/4) +

geom_pointrange(aes(ymin = Q2.5, ymax = Q97.5),

fatten = 2, position = position_dodge(width = 0.5)) +

geom_text(data = text,

aes(x = y, label = fit, hjust = hjust)) +

scale_color_manual(NULL, values = c("#E7CDC2", "#A65141")) +

scale_x_discrete(NULL, labels = ggplot2:::parse_safe) +

ylab("marginal posterior") +

coord_flip() +

theme(axis.text.y = element_text(hjust = 0),

axis.ticks.y = element_blank(),

legend.position = "none")

With the help from b14.6x, we found “that \(E\) and \(W\) have a negative correlation in their residual variance, because the confound positively influences one and negatively influences the other” (p. 459).

One can use the dagitty() and instrumentalVariables() functions from the dagitty package to first define a DAG and then query whether there are instrumental variables for a given exposure and outcome.

library(dagitty)

dagIV <- dagitty("dag{Q -> E <- U -> W <- E}")

instrumentalVariables(dagIV, exposure = "E", outcome = "W")## QThe hardest thing about instrumental variables is believing in any particular instrument. If you believe in your DAG, they are easy to believe. But should you believe in your DAG?…

In general, it is not possible to statistically prove whether a variable is a good instrument. As always, we need scientific knowledge outside of the data to make sense of the data. (p. 460)

14.3.1.1 Rethinking: Two-stage worst squares.

“The instrumental variable model is often discussed with an estimation procedure known as two-stage least squares (2SLS)” (p. 460, emphasis in the original). For a nice introduction to instrumental variables via 2SLS, see this practical introduction, and also the slides and video-lecture files, from the great Andrew Heiss. For the clinical researchers in the room, Kristoffer Magnusson has a nice brms-based blog post on the topic called Confounded dose-response effects of treatment adherence: fitting Bayesian instrumental variable models using brms.

14.3.2 Other designs.

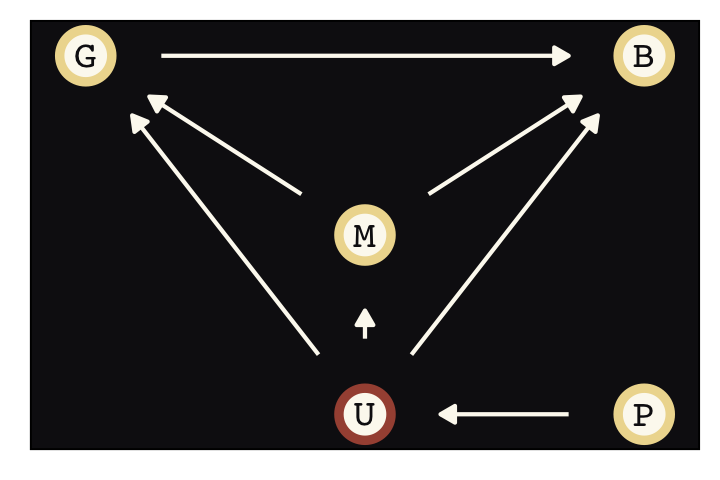

There are potentially many ways to find natural experiments. Not all of them are strictly instrumental variables. But they can provide theoretically correct designs for causal inference, if you can believe the assumptions. Let’s consider two more.

In addition to the backdoor criterion you met in Chapter 6, there is something called the front-door criterion. (p. 460, emphasis in the original)

To get a sense of the front-door criterion, consider the following DAG with observed variables \(X\), \(Y\), and \(Z\) and an unobserved variable, \(U\).

dag_coords <-

tibble(name = c("X", "Z", "U", "Y"),

x = c(1, 2, 2, 3),

y = c(1, 1, 2, 1))

dagify(X ~ U,

Z ~ X,

Y ~ U + Z,

coords = dag_coords) %>%

ggplot(aes(x = x, y = y, xend = xend, yend = yend)) +

geom_dag_point(aes(color = name == "U"),

shape = 21, stroke = 2, fill = "#FCF9F0", size = 6, show.legend = F) +

geom_dag_text(color = "#100F14", family = "Courier") +

geom_dag_edges(edge_colour = "#FCF9F0") +

scale_color_manual(values = c("#EEDA9D", "#A65141")) +

theme_pearl_dag()

We are interested, as usual, in the causal influence of \(X\) on \(Y\). But there is an unobserved confound \(U\), again as usual. It turns out that, if we can find a perfect mediator \(Z\), then we can possibly estimate the causal effect of \(X\) on \(Y\). It isn’t crazy to think that causes are mediated by other causes. Everything has a mechanism. \(Z\) in the DAG above is such a mechanism. If you have a believable \(Z\) variable, then the causal effect of \(X\) on \(Y\) is estimated by expressing the generative model as a statistical model, similar to the instrumental variable example before. (p. 461)

McElreath’s second example is the regression discontinuity approach. If you have a time series where the variable of interest is measured before and after some relevant intervention variable, you can estimate intercepts and slopes before and after the intervention, the cutoff. However,

in practice, one trend is fit for individuals above the cutoff and another to those below the cutoff. Then an estimate of the causal effect is the average difference between individuals just above and just below the cutoff. While the difference near the cuttoff is of interest, the entire function influences this difference. So some care is needed in choosing functions for the overall relationship between the exposure and the outcome. (p. 461)

McElreath’s not kidding about the need for care when fitting regression discontinuity models. Gleman’s blog is littered with awful examples (e.g., here, here, here, here, here). See also Gelman and Imbens’ (2019) paper, Why high-order polynomials should not be used in regression discontinuity designs, or Nick HK’s informative tweet on how this applies to autocorrelated data 7.

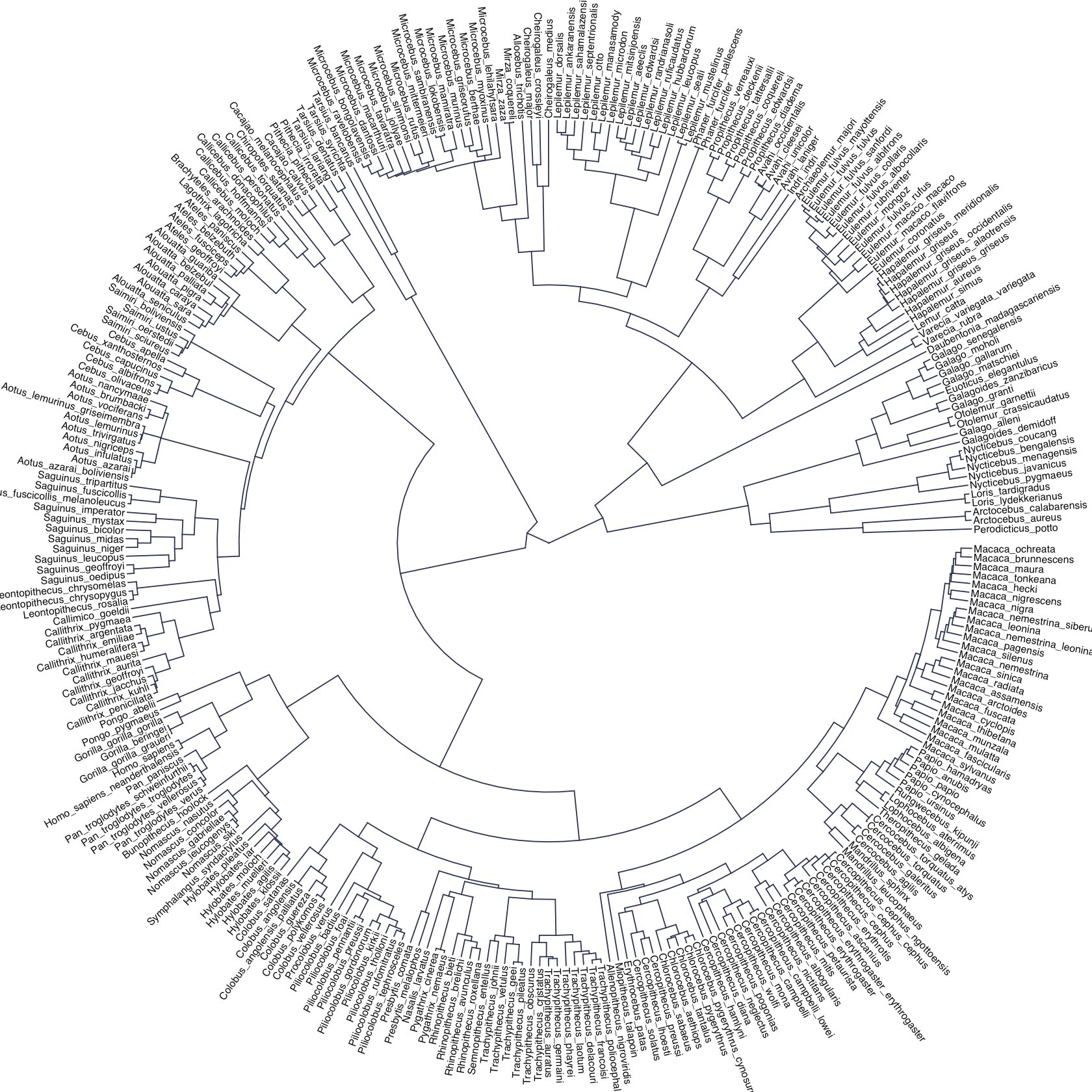

14.5 Continuous categories and the Gaussian process

There is a way to apply the varying effects approach to continuous categories… The general approach is known as Gaussian process regression. This name is unfortunately wholly uninformative about what it is for and how it works.

We’ll proceed to work through a basic example that demonstrates both what it is for and how it works. The general purpose is to define some dimension along which cases differ. This might be individual differences in age. Or it could be differences in location. Then we measure the distance between each pair of cases. What the model then does is estimate a function for the covariance between pairs of cases at different distances. This covariance function provides one continuous category generalization of the varying effects approach. (p. 468, emphasis in the original)

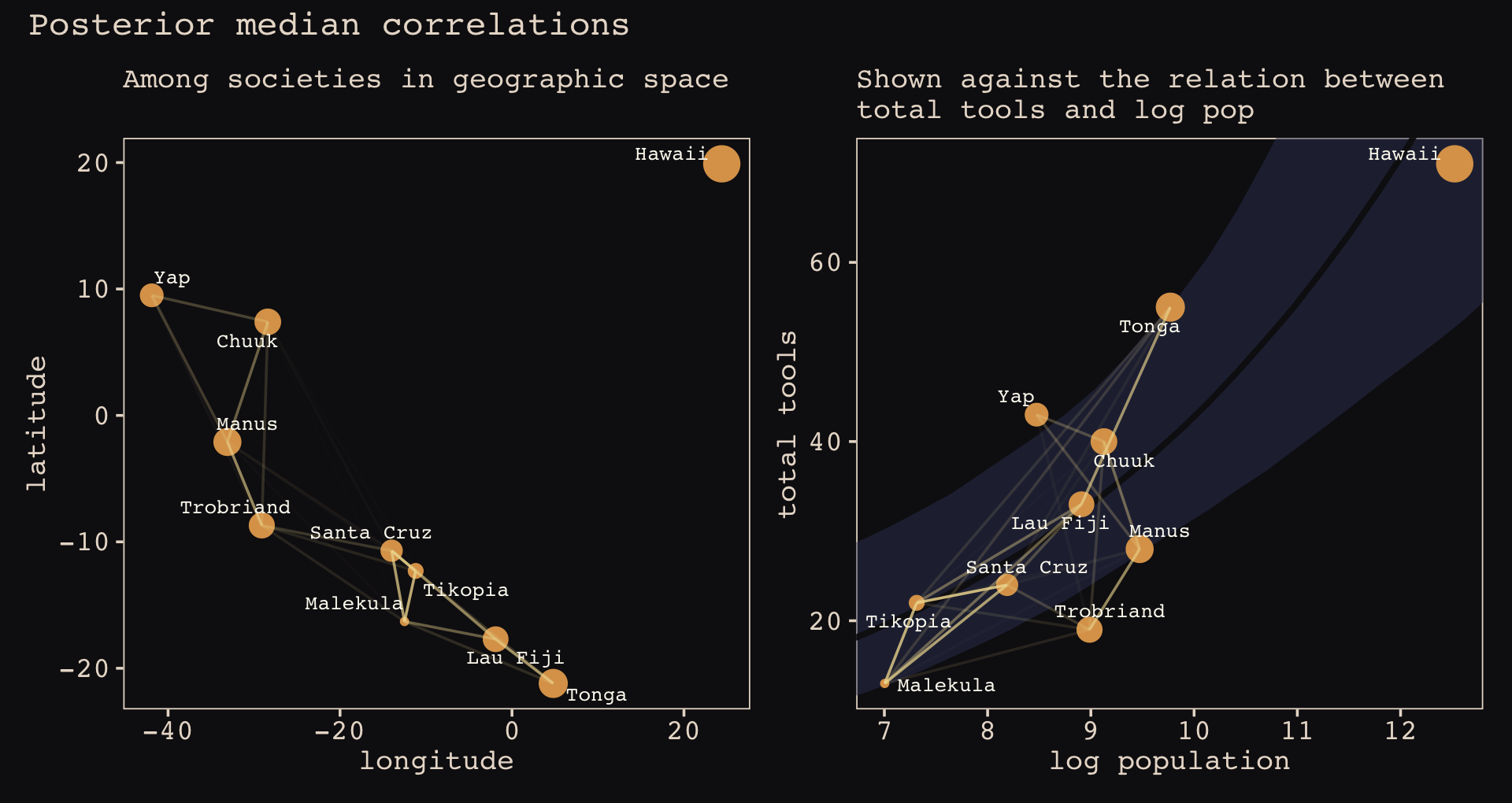

14.5.1 Example: Spatial autocorrelation in Oceanic tools.

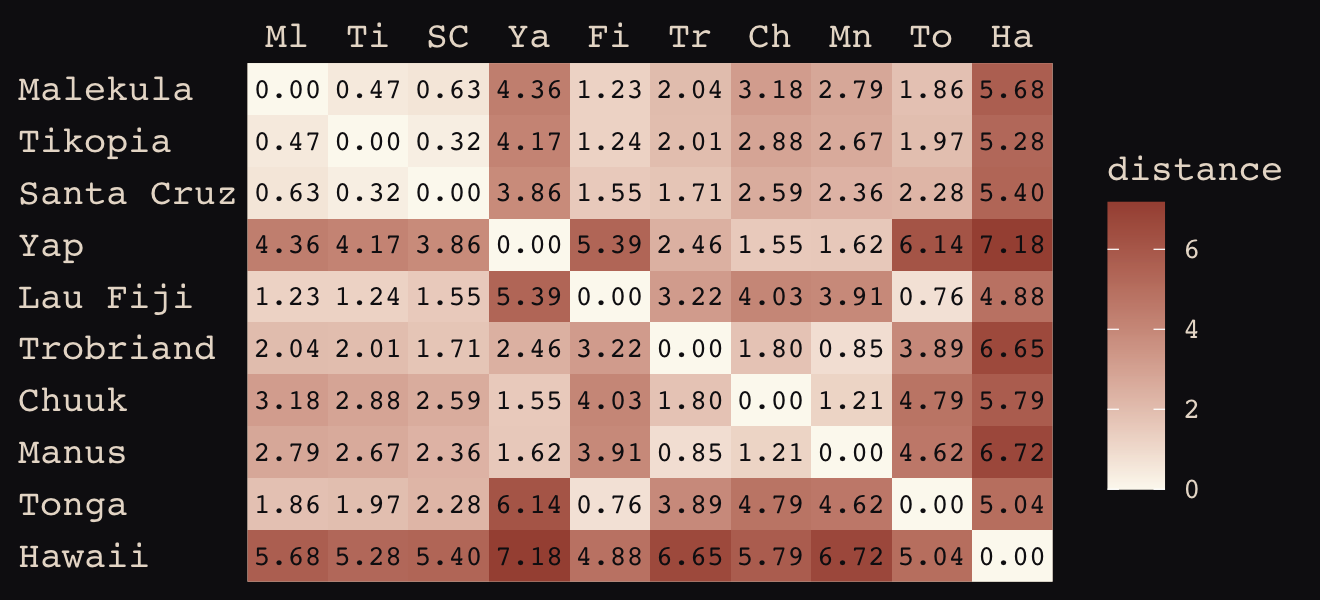

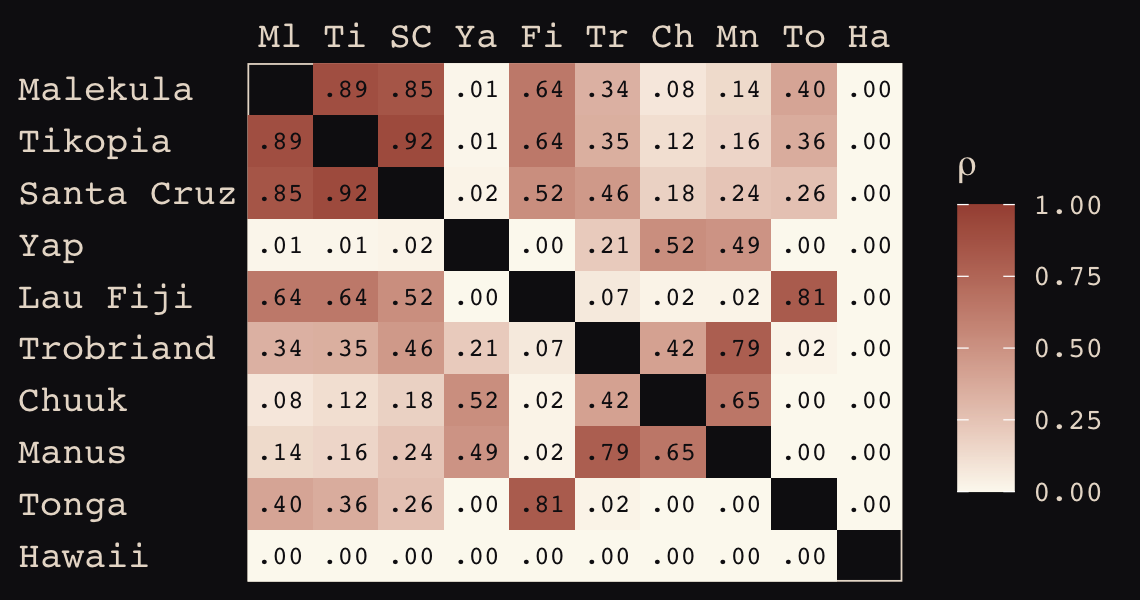

We start by loading the matrix of geographic distances.

# load the distance matrix

library(rethinking)

data(islandsDistMatrix)

# display (measured in thousands of km)

d_mat <- islandsDistMatrix

colnames(d_mat) <- c("Ml", "Ti", "SC", "Ya", "Fi", "Tr", "Ch", "Mn", "To", "Ha")

round(d_mat, 1)## Ml Ti SC Ya Fi Tr Ch Mn To Ha

## Malekula 0.0 0.5 0.6 4.4 1.2 2.0 3.2 2.8 1.9 5.7

## Tikopia 0.5 0.0 0.3 4.2 1.2 2.0 2.9 2.7 2.0 5.3

## Santa Cruz 0.6 0.3 0.0 3.9 1.6 1.7 2.6 2.4 2.3 5.4

## Yap 4.4 4.2 3.9 0.0 5.4 2.5 1.6 1.6 6.1 7.2

## Lau Fiji 1.2 1.2 1.6 5.4 0.0 3.2 4.0 3.9 0.8 4.9

## Trobriand 2.0 2.0 1.7 2.5 3.2 0.0 1.8 0.8 3.9 6.7

## Chuuk 3.2 2.9 2.6 1.6 4.0 1.8 0.0 1.2 4.8 5.8

## Manus 2.8 2.7 2.4 1.6 3.9 0.8 1.2 0.0 4.6 6.7

## Tonga 1.9 2.0 2.3 6.1 0.8 3.9 4.8 4.6 0.0 5.0

## Hawaii 5.7 5.3 5.4 7.2 4.9 6.7 5.8 6.7 5.0 0.0If you wanted to use color to more effectively visualize the values in the matrix, you might do something like this.

d_mat %>%

data.frame() %>%

rownames_to_column("row") %>%

gather(column, distance, -row) %>%

mutate(column = factor(column, levels = colnames(d_mat)),

row = factor(row, levels = rownames(d_mat)) %>% fct_rev(),

label = formatC(distance, format = 'f', digits = 2)) %>%

ggplot(aes(x = column, y = row)) +

geom_raster(aes(fill = distance)) +

geom_text(aes(label = label),

size = 3, family = "Courier", color = "#100F14") +

scale_fill_gradient(low = "#FCF9F0", high = "#A65141") +

scale_x_discrete(NULL, position = "top", expand = c(0, 0)) +

scale_y_discrete(NULL, expand = c(0, 0)) +

theme_pearl_earring(axis.text.y = element_text(hjust = 0)) +

theme(axis.ticks = element_blank())

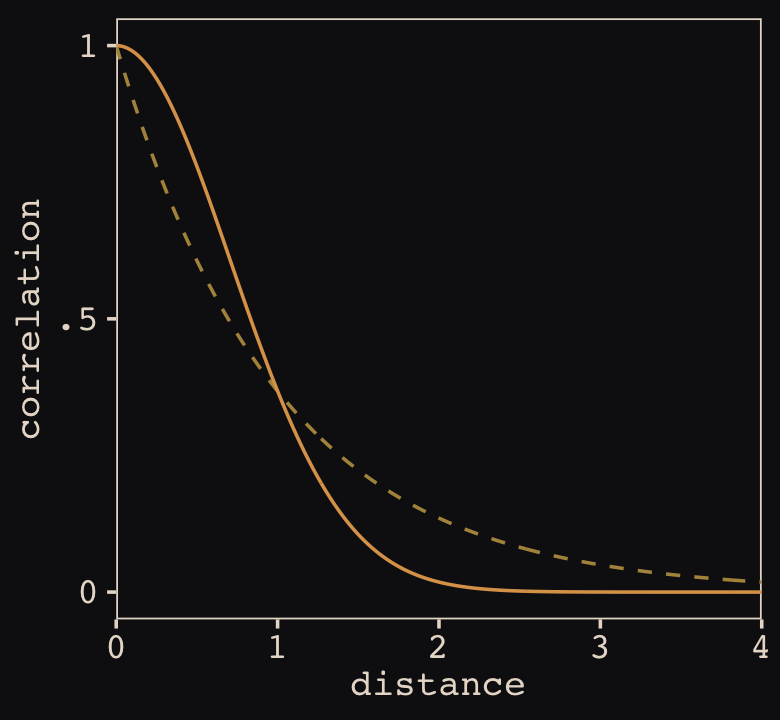

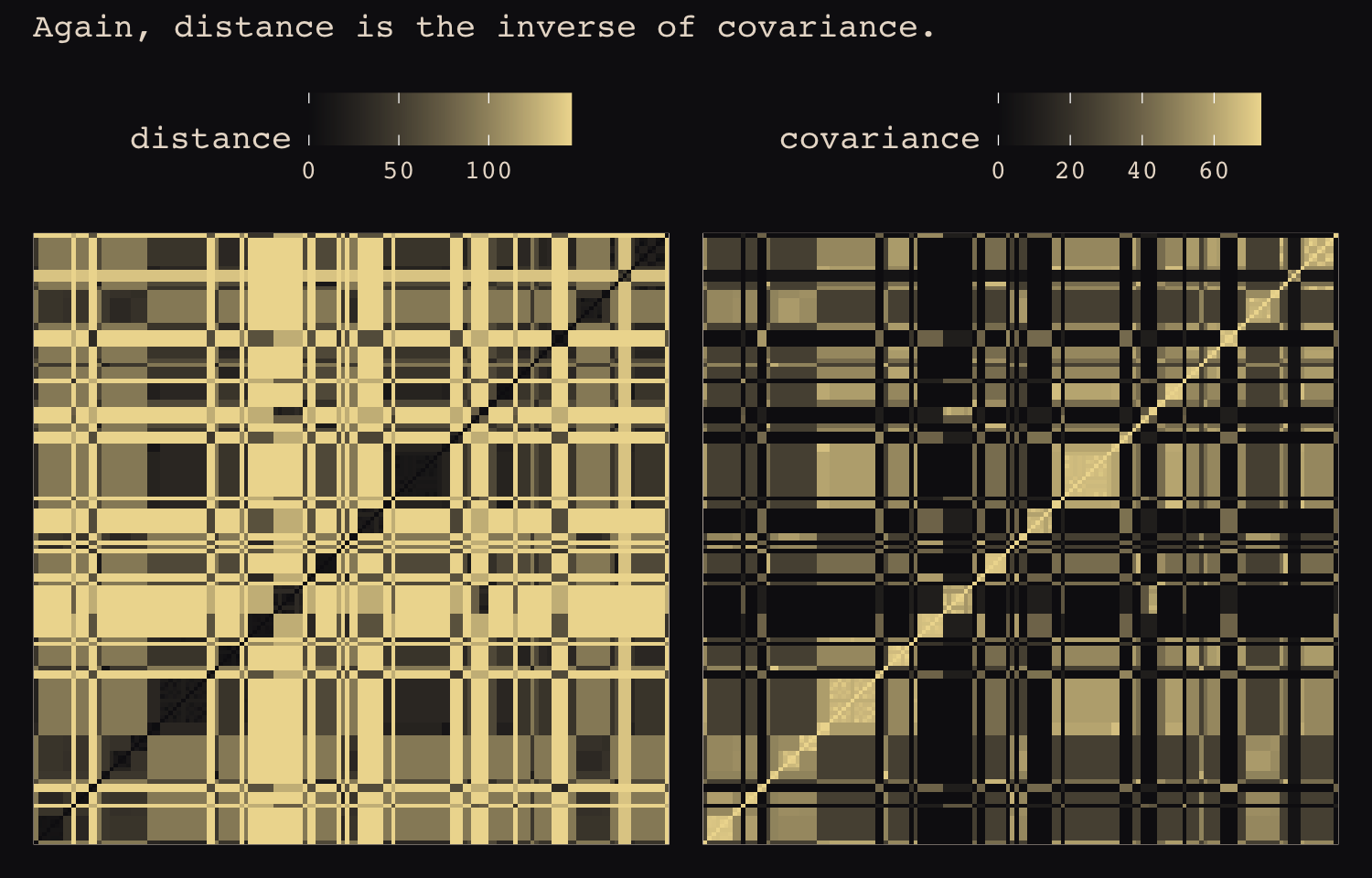

Figure 14.10 shows the “shape of the function relating distance to the covariance \(\mathbf K_{ij}\).”

tibble(x = seq(from = 0, to = 4, by = .01),

linear = exp(-1 * x),

squared = exp(-1 * x^2)) %>%

ggplot(aes(x = x)) +

geom_line(aes(y = linear),

color = "#B1934A", linetype = 2) +

geom_line(aes(y = squared),

color = "#DCA258") +

scale_x_continuous("distance", expand = c(0, 0)) +

scale_y_continuous("correlation",

breaks = c(0, .5, 1),

labels = c(0, ".5", 1))

Now load the primary data.

data(Kline2) # load the ordinary data, now with coordinates

d <-

Kline2 %>%

mutate(society = 1:10)

rm(Kline2)

d %>% glimpse()## Rows: 10

## Columns: 10

## $ culture <fct> Malekula, Tikopia, Santa Cruz, Yap, Lau Fiji, Trobriand, Chuuk, Manus, Tonga, Hawaii

## $ population <int> 1100, 1500, 3600, 4791, 7400, 8000, 9200, 13000, 17500, 275000

## $ contact <fct> low, low, low, high, high, high, high, low, high, low

## $ total_tools <int> 13, 22, 24, 43, 33, 19, 40, 28, 55, 71

## $ mean_TU <dbl> 3.2, 4.7, 4.0, 5.0, 5.0, 4.0, 3.8, 6.6, 5.4, 6.6

## $ lat <dbl> -16.3, -12.3, -10.7, 9.5, -17.7, -8.7, 7.4, -2.1, -21.2, 19.9

## $ lon <dbl> 167.5, 168.8, 166.0, 138.1, 178.1, 150.9, 151.6, 146.9, -175.2, -155.6

## $ lon2 <dbl> -12.5, -11.2, -14.0, -41.9, -1.9, -29.1, -28.4, -33.1, 4.8, 24.4

## $ logpop <dbl> 7.003065, 7.313220, 8.188689, 8.474494, 8.909235, 8.987197, 9.126959, 9.472705, 9.769956…

## $ society <int> 1, 2, 3, 4, 5, 6, 7, 8, 9, 10👋 Heads up: The brms package is capable of handling a variety of Gaussian process models using the gp() function. As we will see throughout this section, this method will depart in important ways from how McElreath fits Gaussian process models with rethinking. Due in large part to these differences, this section and its analogue in the first edition of Statistical rethinking (McElreath, 2015) baffled me, at first. Happily, fellow enthusiasts Louis Bliard and Richard Torkar reached out and helped me hammer this section out behind the scenes. The method to follow is due in large part to their efforts. 🤝

The brms::gp() function takes a handful of arguments. The first and most important argument, ..., accepts the names of one or more predictors from the data. When fitting a spatial Gaussian process of this kind, we’ll enter in the latitude and longitude data for each of levels of culture. This will be an important departure from the text. For his m14.8, McElreath directly entered in the Dmat distance matrix data into ulam(). In so doing, he defined \(D_{ij}\), the matrix of distances between each of the societies. When using brms, we instead estimate the distance matrix from the latitude and longitude variables.

Before we practice fitting a Gaussian process with the brms::gp() function, we’ll first need to think a little bit about our data. McElreath’s Dmat measured the distances in thousands of km. However, the lat and lon2 variables in the data above are in decimal degrees, which means they need to be transformed to keep our model in the same metric as McElreath’s. Turns out that one decimal degree is 111.32km (at the equator). Thus, we can turn both lat and lon2 into 1,000 km units by multiplying each by 0.11132. Here’s the conversion.

d <-

d %>%

mutate(lat_adj = lat * 0.11132,

lon2_adj = lon2 * 0.11132)

d %>%

select(culture, lat, lon2, lat_adj:lon2_adj)## culture lat lon2 lat_adj lon2_adj

## 1 Malekula -16.3 -12.5 -1.814516 -1.391500

## 2 Tikopia -12.3 -11.2 -1.369236 -1.246784

## 3 Santa Cruz -10.7 -14.0 -1.191124 -1.558480

## 4 Yap 9.5 -41.9 1.057540 -4.664308

## 5 Lau Fiji -17.7 -1.9 -1.970364 -0.211508

## 6 Trobriand -8.7 -29.1 -0.968484 -3.239412

## 7 Chuuk 7.4 -28.4 0.823768 -3.161488

## 8 Manus -2.1 -33.1 -0.233772 -3.684692

## 9 Tonga -21.2 4.8 -2.359984 0.534336

## 10 Hawaii 19.9 24.4 2.215268 2.716208Note that because this conversion is valid at the equator, it is only an approximation for latitude and longitude coordinates for our island societies.

Now we’ve scaled our two spatial variables, the basic way to use them in a brms Gaussian process is including gp(lat_adj, lon2_adj) into the formula argument within the brm() function. Note however that one of the default gp() settings is scale = TRUE, which scales predictors so that the maximum distance between two points is 1. We don’t want this for our example, so we will set scale = FALSE instead.

Our Gaussian process model is an extension of the non-linear model from Section 11.2.1.1, b11.11. Thus our model here will also use the non-linear syntax. Here’s how we might use brms to fit our amended non-centered version of McElreath’s m14.8.

b14.8 <-

brm(data = d,

family = poisson(link = "identity"),

bf(total_tools ~ exp(a) * population^b / g,

a ~ 1 + gp(lat_adj, lon2_adj, scale = FALSE),

b + g ~ 1,

nl = TRUE),

prior = c(prior(normal(0, 1), nlpar = a),

prior(exponential(1), nlpar = b, lb = 0),

prior(exponential(1), nlpar = g, lb = 0),

prior(inv_gamma(2.874624, 2.941204), class = lscale, coef = gplat_adjlon2_adj, nlpar = a),

prior(exponential(1), class = sdgp, coef = gplat_adjlon2_adj, nlpar = a)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 14,

sample_prior = T,

file = "fits/b14.08")Check the results.

print(b14.8)## Family: poisson

## Links: mu = identity

## Formula: total_tools ~ exp(a) * population^b/g

## a ~ 1 + gp(lat_adj, lon2_adj, scale = FALSE)

## b ~ 1

## g ~ 1

## Data: d (Number of observations: 10)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Gaussian Process Terms:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sdgp(a_gplat_adjlon2_adj) 0.47 0.29 0.15 1.21 1.00 1392 2260

## lscale(a_gplat_adjlon2_adj) 1.61 0.88 0.51 3.85 1.00 1317 2263

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## a_Intercept 0.34 0.85 -1.33 1.95 1.00 2851 2981

## b_Intercept 0.26 0.09 0.09 0.44 1.00 1860 1514

## g_Intercept 0.68 0.65 0.05 2.42 1.00 2273 2203

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).The posterior_summary() function will return a summary that looks more like the one in the text.

posterior_summary(b14.8)[1:15, ] %>% round(digits = 2)## Estimate Est.Error Q2.5 Q97.5

## b_a_Intercept 0.34 0.85 -1.33 1.95

## b_b_Intercept 0.26 0.09 0.09 0.44

## b_g_Intercept 0.68 0.65 0.05 2.42

## sdgp_a_gplat_adjlon2_adj 0.47 0.29 0.15 1.21

## lscale_a_gplat_adjlon2_adj 1.61 0.88 0.51 3.85

## zgp_a_gplat_adjlon2_adj[1] -0.48 0.76 -2.00 0.95

## zgp_a_gplat_adjlon2_adj[2] 0.46 0.86 -1.25 2.11

## zgp_a_gplat_adjlon2_adj[3] -0.63 0.74 -2.05 0.92

## zgp_a_gplat_adjlon2_adj[4] 0.97 0.69 -0.36 2.35

## zgp_a_gplat_adjlon2_adj[5] 0.22 0.75 -1.25 1.67

## zgp_a_gplat_adjlon2_adj[6] -1.05 0.78 -2.65 0.52

## zgp_a_gplat_adjlon2_adj[7] 0.16 0.70 -1.30 1.47

## zgp_a_gplat_adjlon2_adj[8] -0.20 0.88 -1.94 1.67

## zgp_a_gplat_adjlon2_adj[9] 0.44 0.91 -1.49 2.16

## zgp_a_gplat_adjlon2_adj[10] -0.42 0.80 -1.97 1.14Let’s focus on our three non-linear parameters, first. Happily, both our b_b_Intercept and b_g_Intercept summaries look a lot like those for McElreath’s b and g, respectively. Our b_a_Intercept might look distressingly small, but that’s just because of how we parameterized our model. It’s actually very close to McElreath’s a after you exponentiate.

fixef(b14.8, probs = c(.055, .945))["a_Intercept", c(1, 3:4)] %>%

exp() %>%

round(digits = 2)## Estimate Q5.5 Q94.5

## 1.40 0.36 5.22Our Gaussian process parameters are different from McElreath’s. From the gp section of the brms reference manual (Bürkner, 2022i), we learn the brms parameterization follows the form

\[k(x_{i},x_{j}) = sdgp^2 \exp \big (-||x_i - x_j||^2 / (2 lscale^2) \big ),\]

where \(k(x_{i},x_{j})\) is the same as McElreath’s \(\mathbf K_{ij}\) and \(||x_i - x_j||^2\) is the Euclidean distance, the same as McElreath’s \(D_{ij}^2\). Thus we could also express the brms parameterization as

\[\mathbf K_{ij} = sdgp^2 \exp \big (-D_{ij}^2 / (2 lscale^2) \big ),\]

which is much closer to McElreath’s

\[\mathbf K_{ij} = \eta^2 \exp \big (-\rho^2 D_{ij}^2 \big ) + \delta_{ij} \sigma^2\]

On page 470, McElreath explained that the final \(\delta_{ij} \sigma^2\) term is mute with the Oceanic societies data. Thus we won’t consider it further. This reduces McElreath’s equation to

\[\mathbf K_{ij} = \eta^2 \exp \big (-\rho^2 D_{ij}^2 \big ).\]

Importantly, what McElreath called \(\eta\), Bürkner called \(sdgp\). While McElreath estimated \(\eta^2\), brms simply estimated \(sdgp\). So we’ll have to square our sdgp(a_gplat_adjlon2_adj) before it’s on the same scale as etasq in the text. Here it is.

post <-

as_draws_df(b14.8) %>%

mutate(etasq = sdgp_a_gplat_adjlon2_adj^2)

post %>%

mean_hdi(etasq, .width = .89) %>%

mutate_if(is.double, round, digits = 3)## # A tibble: 1 × 6

## etasq .lower .upper .width .point .interval

## <dbl> <dbl> <dbl> <dbl> <chr> <chr>

## 1 0.308 0 0.618 0.89 mean hdiThough our posterior is a little bit larger than McElreath’s, we’re in the ballpark. You may have noticed that in our model brm() code, above, we just went with the flow and kept the exponential(1) prior on sdgp. The brms default would have been student_t(3, 0, 15.6).

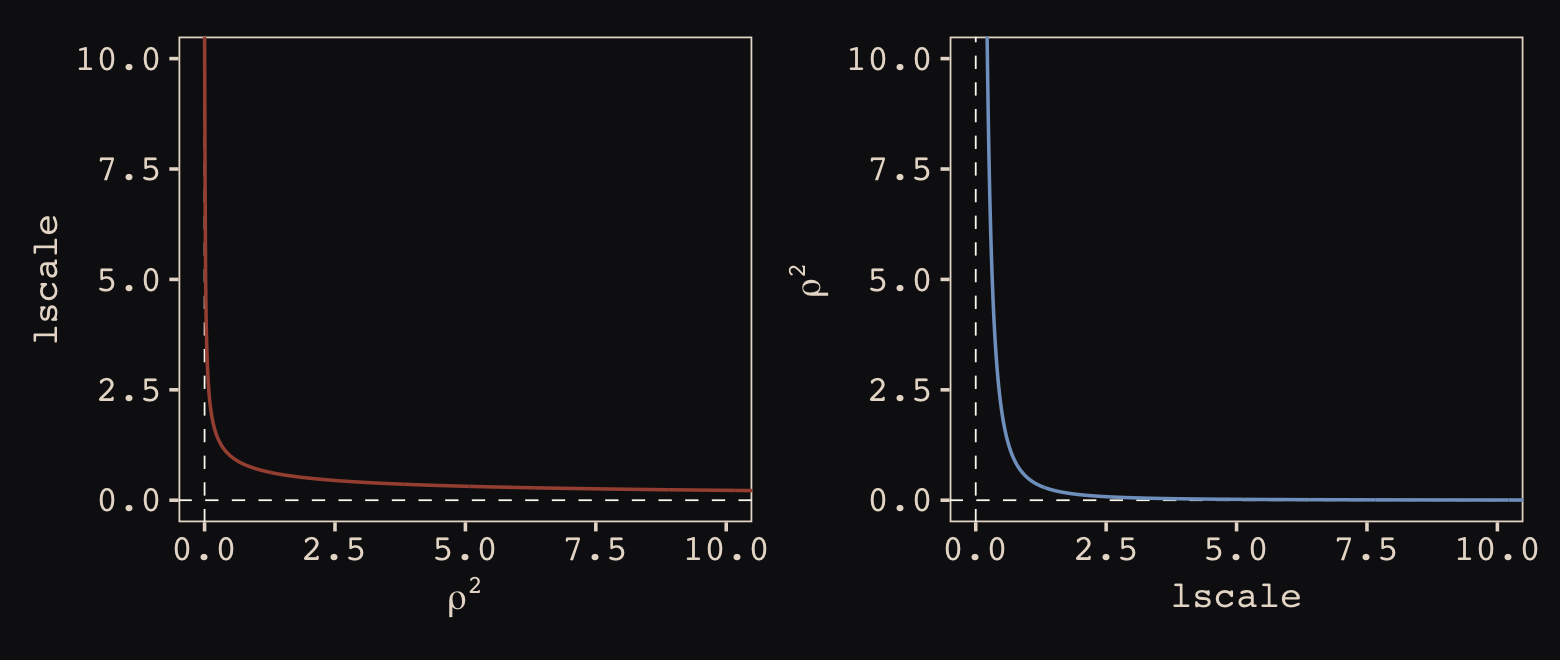

Now look at the denominator of the inner part of Bürkner’s equation, \(2 lscale^2\). This appears to be the brms equivalent to McElreath’s \(\rho^2\). Or at least it’s what we’ve got. Anyway, also note that McElreath estimated \(\rho^2\) directly as rhosq. If I’m doing the algebra correctly, we might expect

\[\begin{align*} \rho^2 & = 1/(2 \cdot lscale^2) & \text{and thus} \\ lscale & = \sqrt{1 / (2 \cdot \rho^2)}. \end{align*}\]

To get a sense of this relation, it might be helpful to plot.

p1 <-

tibble(`rho^2` = seq(from = 0, to = 11, by = 0.01)) %>%

mutate(lscale = sqrt(1 / (2 * `rho^2`))) %>%

ggplot(aes(x = `rho^2`, y = lscale)) +

geom_hline(yintercept = 0, color = "#FCF9F0", linewidth = 1/4, linetype = 2) +

geom_vline(xintercept = 0, color = "#FCF9F0", linewidth = 1/4, linetype = 2) +

geom_line(color = "#A65141") +

xlab(expression(rho^2)) +

coord_cartesian(xlim = c(0, 10),

ylim = c(0, 10))

p2 <-

tibble(lscale = seq(from = 0, to = 11, by = 0.01)) %>%

mutate(`rho^2` = 1 / (2 * lscale^2)) %>%

ggplot(aes(x = lscale, y = `rho^2`)) +

geom_hline(yintercept = 0, color = "#FCF9F0", linewidth = 1/4, linetype = 2) +

geom_vline(xintercept = 0, color = "#FCF9F0", linewidth = 1/4, linetype = 2) +

geom_line(color = "#80A0C7") +

ylab(expression(rho^2)) +

coord_cartesian(xlim = c(0, 10),

ylim = c(0, 10))

p1 + p2

The two aren’t quite inverses of one another, but the overall pattern is when one is large, the other is small. Now we have a sense of how they compare and how to covert one to the other, let’s see how our posterior for \(lscale\) looks when we convert it to the scale of McElreath’s \(\rho^2\).

post <-

post %>%

mutate(rhosq = 1 / (2 * lscale_a_gplat_adjlon2_adj^2))

post %>%

mean_hdi(rhosq, .width = .89) %>%

mutate_if(is.double, round, digits = 3)## # A tibble: 1 × 6

## rhosq .lower .upper .width .point .interval

## <dbl> <dbl> <dbl> <dbl> <chr> <chr>

## 1 0.43 0.009 0.913 0.89 mean hdiThis is about a third of the size of the McElreath’s \(\rho^2 = 1.31, 89 \text{% HDI } [0.08, 4.41]\). The plot deepens. If you look back, you’ll see we used a very different prior for lscale. Here it is: inv_gamma(2.874624, 2.941204). Use get_prior() to discover where that came from.

get_prior(data = d,

family = poisson(link = "identity"),

bf(total_tools ~ exp(a) * population^b / g,

a ~ 1 + gp(lat_adj, lon2_adj, scale = FALSE),

b + g ~ 1,

nl = TRUE))## prior class coef group resp dpar nlpar lb ub source

## (flat) b a default

## (flat) b Intercept a (vectorized)

## (flat) lscale a 0 default

## inv_gamma(2.874624, 2.941204) lscale gplat_adjlon2_adj a 0 default

## student_t(3, 0, 15.6) sdgp a 0 default

## student_t(3, 0, 15.6) sdgp gplat_adjlon2_adj a 0 (vectorized)

## (flat) b b default

## (flat) b Intercept b (vectorized)

## (flat) b g default

## (flat) b Intercept g (vectorized)That is, we used the brms default prior for \(lscale\). In a GitHub exchange, Bürkner pointed out that brms uses special priors for \(lscale\) parameters based on Michael Betancourt’s (2017) vignette, Robust Gaussian processes in Stan. We can use the dinvgamma() function from the well-named invgamma package (Kahle & Stamey, 2017) to get a sense of what that prior looks like.

tibble(lscale = seq(from = 0.01, to = 9, by = 0.01)) %>%

mutate(density = invgamma::dinvgamma(lscale, shape = 2.874624, rate = 2.941204)) %>%

ggplot(aes(x = lscale, y = density)) +

geom_area(fill = "#80A0C7") +

annotate(geom = "text",

x = 4.75, y = 0.75,

label = "inverse gamma(2.874624, 2.941204)",

color = "#8B9DAF", family = "Courier") +

scale_y_continuous(NULL, breaks = NULL) +

coord_cartesian(xlim = c(0, 8))

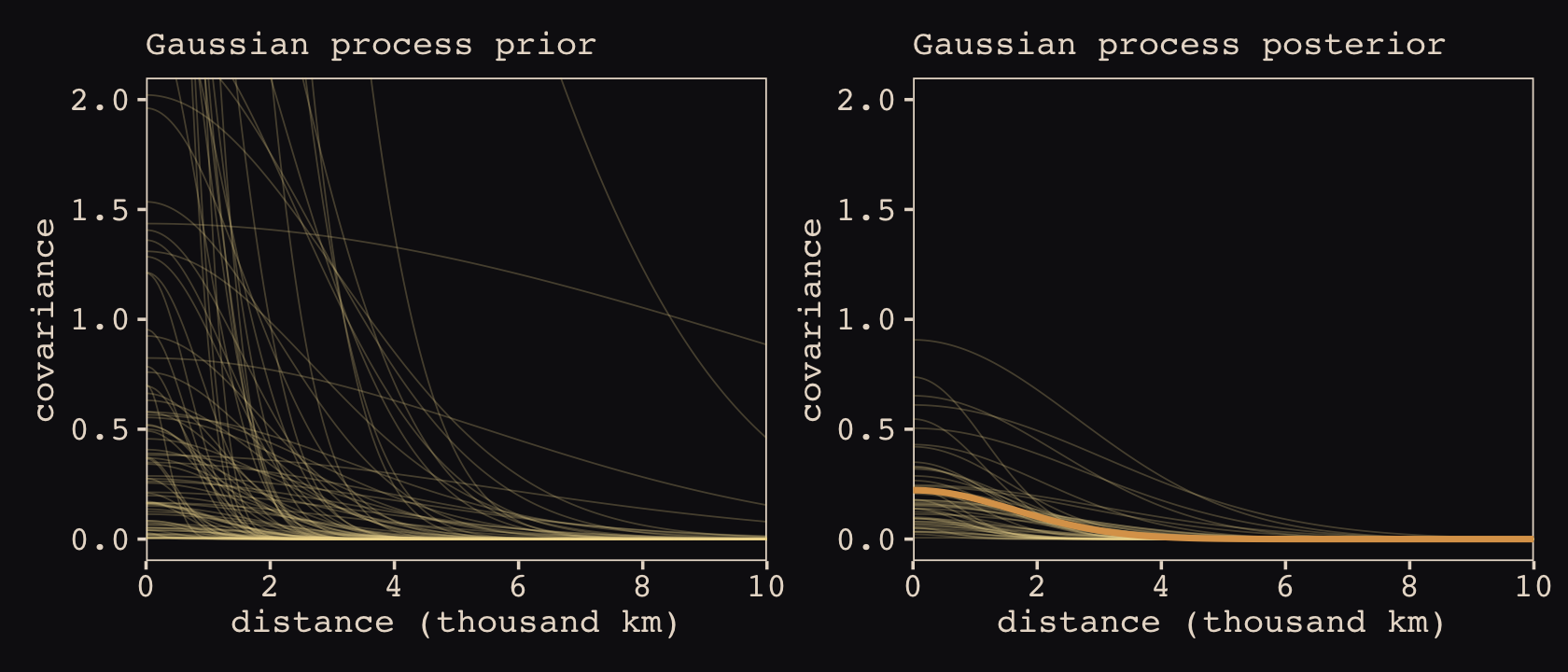

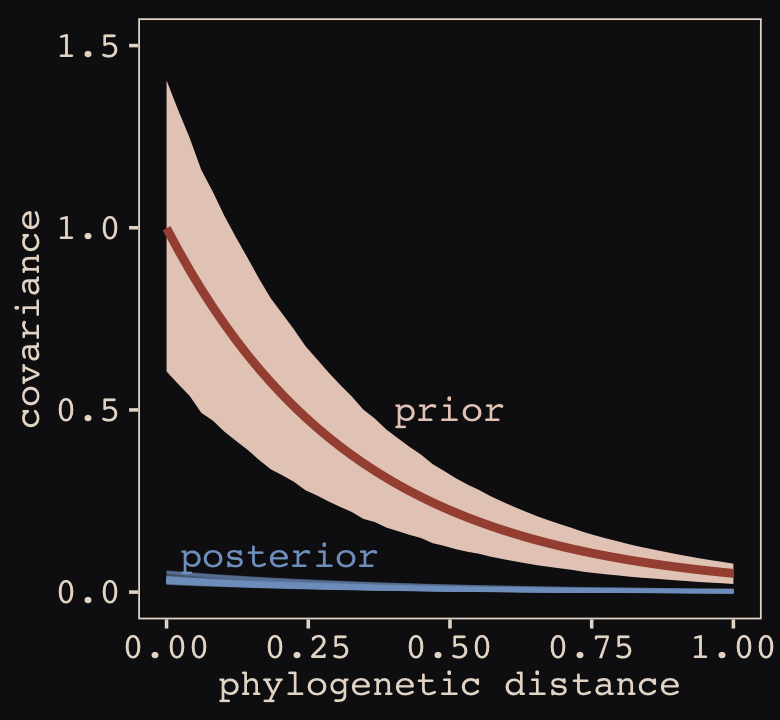

Anyways, let’s make the subplots for our version of Figure 14.11 to get a sense of what this all means. Start with the left panel, the prior predictive distribution for the covariance.

# for `slice_sample()`

set.seed(14)

# wrangle

p1 <-

prior_draws(b14.8) %>%

transmute(draw = 1:n(),

etasq = sdgp_a_gplat_adjlon2_adj^2,

rhosq = 1 / (2 * lscale_a__1_gplat_adjlon2_adj^2)) %>%

slice_sample(n = 100) %>%

expand_grid(x = seq(from = 0, to = 10, by = .05)) %>%

mutate(covariance = etasq * exp(-rhosq * x^2)) %>%

# plot

ggplot(aes(x = x, y = covariance)) +

geom_line(aes(group = draw),

linewidth = 1/4, alpha = 1/4, color = "#EEDA9D") +

scale_x_continuous("distance (thousand km)", expand = c(0, 0),

breaks = 0:5 * 2) +

coord_cartesian(xlim = c(0, 10),

ylim = c(0, 2)) +

labs(subtitle = "Gaussian process prior")Now make the right panel, the posterior distribution.

# for `slice_sample()`

set.seed(14)

# wrangle

p2 <-

post %>%

mutate(etasq = sdgp_a_gplat_adjlon2_adj^2,

rhosq = 1 / (2 * lscale_a_gplat_adjlon2_adj^2)) %>%

slice_sample(n = 50) %>%

expand_grid(x = seq(from = 0, to = 10, by = .05)) %>%

mutate(covariance = etasq * exp(-rhosq * x^2)) %>%

# plot

ggplot(aes(x = x, y = covariance)) +

geom_line(aes(group = .draw),

linewidth = 1/4, alpha = 1/4, color = "#EEDA9D") +

stat_function(fun = function(x) mean(post$sdgp_a_gplat_adjlon2_adj)^2 *

exp(-(1 / (2 * mean(post$lscale_a_gplat_adjlon2_adj)^2)) * x^2),

color = "#DCA258", linewidth = 1) +

scale_x_continuous("distance (thousand km)", expand = c(0, 0),

breaks = 0:5 * 2) +

coord_cartesian(xlim = c(0, 10),

ylim = c(0, 2)) +

labs(subtitle = "Gaussian process posterior")Combine the two with patchwork.

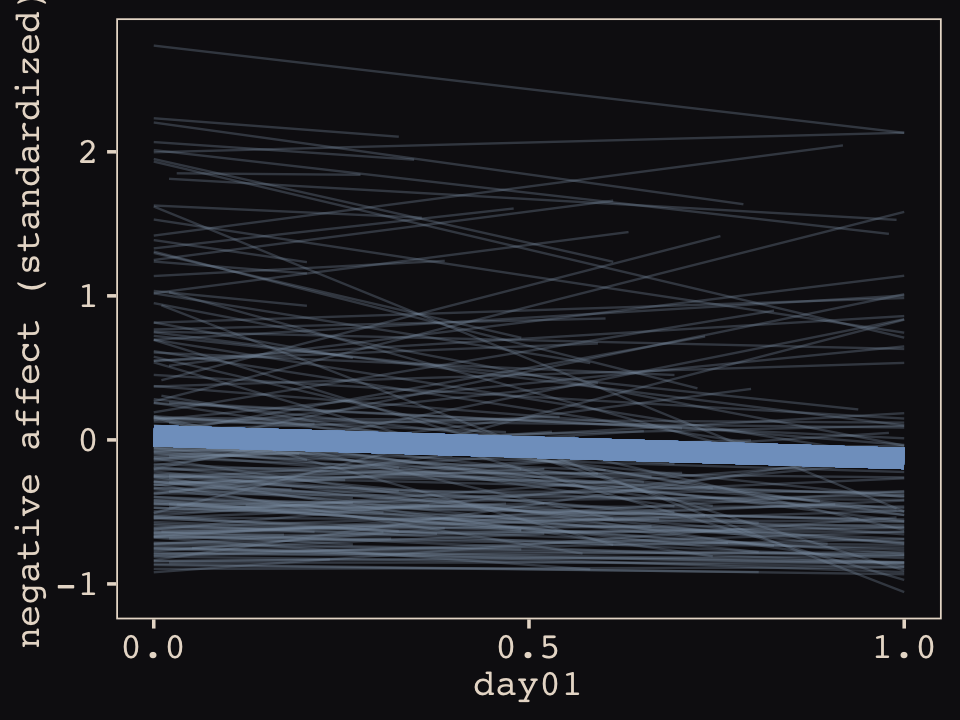

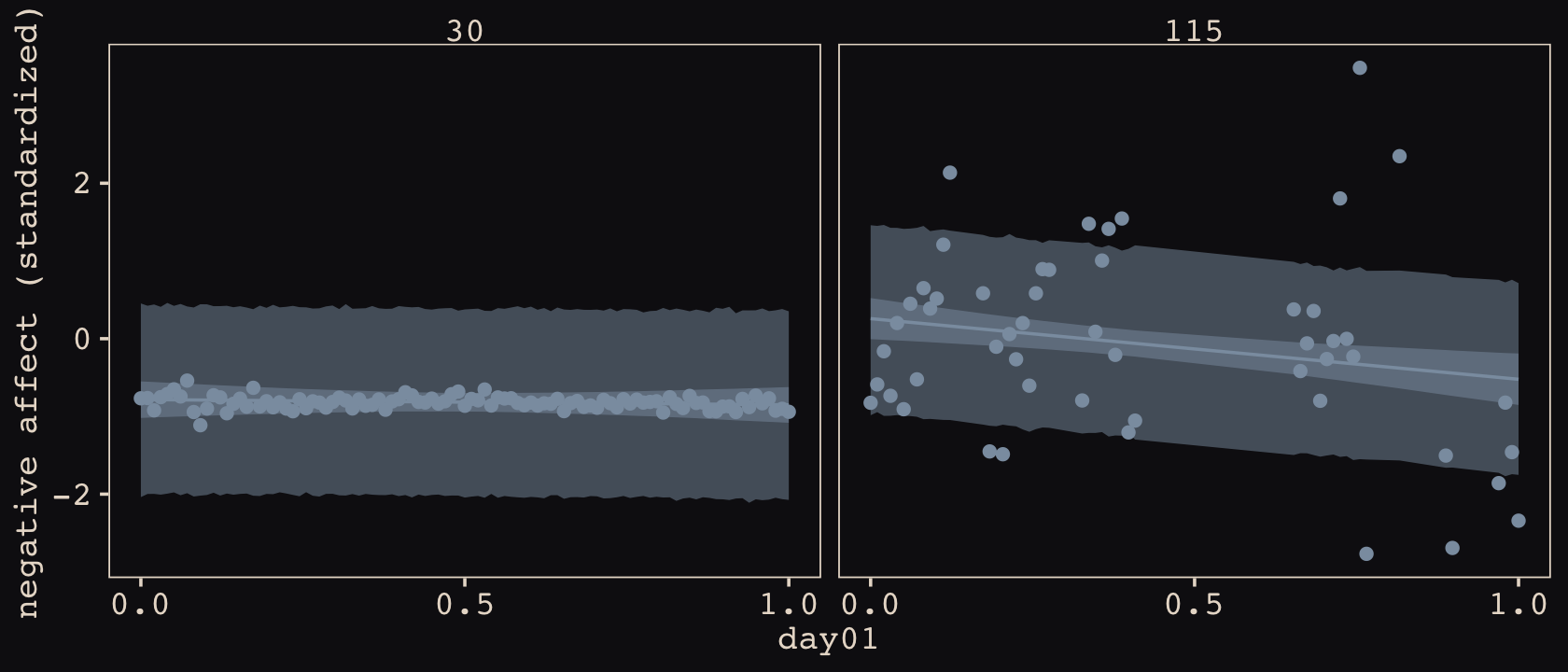

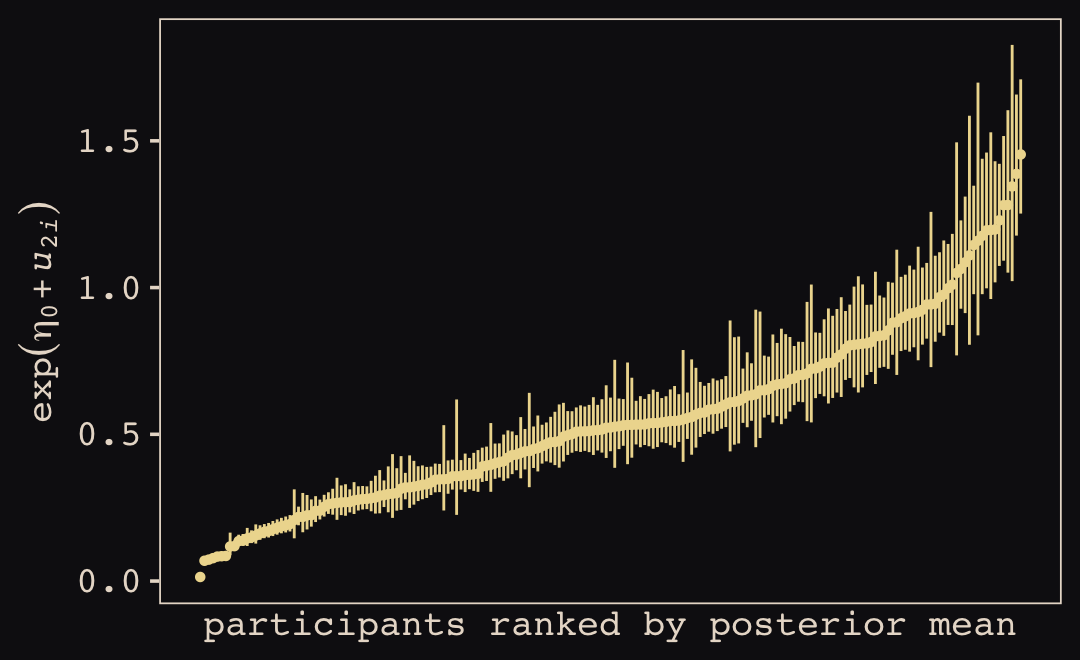

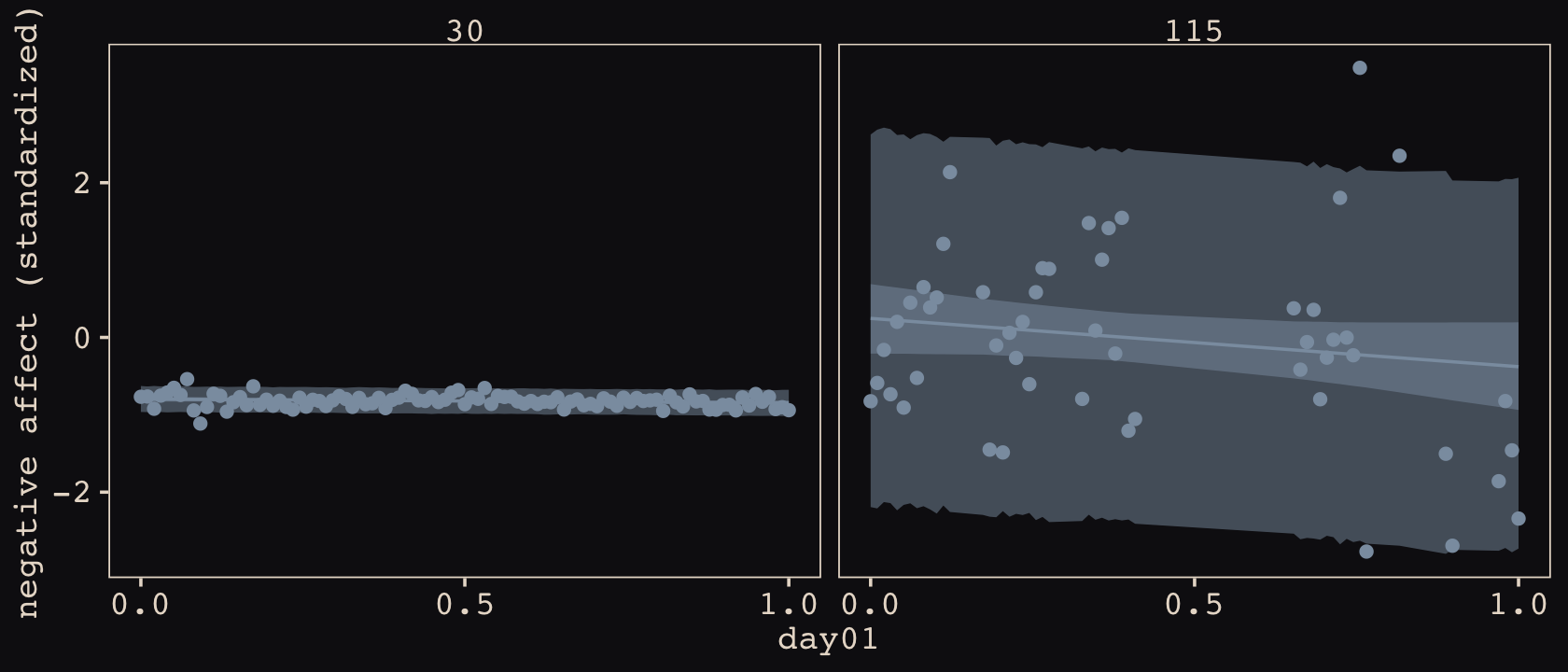

p1 | p2