5 Treating Time More Flexibly

All the illustrative longitudinal data sets in previous chapters share two structural features that simplify analysis. Each is: (1) balanced–everyone is assessed on the identical number of occasions; and (2) time-structured–each set of occasions is identical across individuals. Our analyses have also been limited in that we have used only: (1) time-invariant predictors that describe immutable characteristics of individuals or their environment (except for TIME itself); and (2) a representation of TIME that forces the level-1 individual growth parameters to represent “initial status” and “rate of change.”

The multilevel model for change is far more flexible than these examples suggest. With little or no adjustment, you can use the same strategies to analyze more complex data sets. Not only can the waves of data be irregularly spaced, their number and spacing can vary across participants. Each individual can have his or her own data collection schedule and number of waves can vary without limit from person to person. So, too, predictors of change can be time-invariant or time-varying, and the level-1 submodel can be parameterized in a variety of interesting ways. (Singer & Willett, 2003, p. 138, emphasis in the original)

5.1 Variably spaced measurement occasions

Many researchers design their studies with the goal of assessing each individual on an identical set of occasions…

Yet sometimes, despite a valiant attempt to collect time-structured data, actual measurement occasions will differ. Variation often results from the realities of fieldwork and data collection…

So, too, many researchers design their studies knowing full well that the measurement occasions may differ across participants. This is certainly true, for example, of those who use an accelerated cohort design in which an age-heterogeneous cohort of individuals is followed for a constant period of time. Because respondents initial vary in age, and age, not wave, is usually the appropriate metric for analyses (see the discussion of time metrics in section 1.3.2), observed measurement occasions will differ across individuals. (p. 139, emphasis in the original)

5.1.1 The structure of variably spaced data sets.

You can find the PIAT data from the CNLSY study in the reading_pp.csv file.

library(tidyverse)

reading_pp <- read_csv("data/reading_pp.csv")

head(reading_pp)## # A tibble: 6 × 5

## id wave agegrp age piat

## <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 1 1 6.5 6 18

## 2 1 2 8.5 8.33 35

## 3 1 3 10.5 10.3 59

## 4 2 1 6.5 6 18

## 5 2 2 8.5 8.5 25

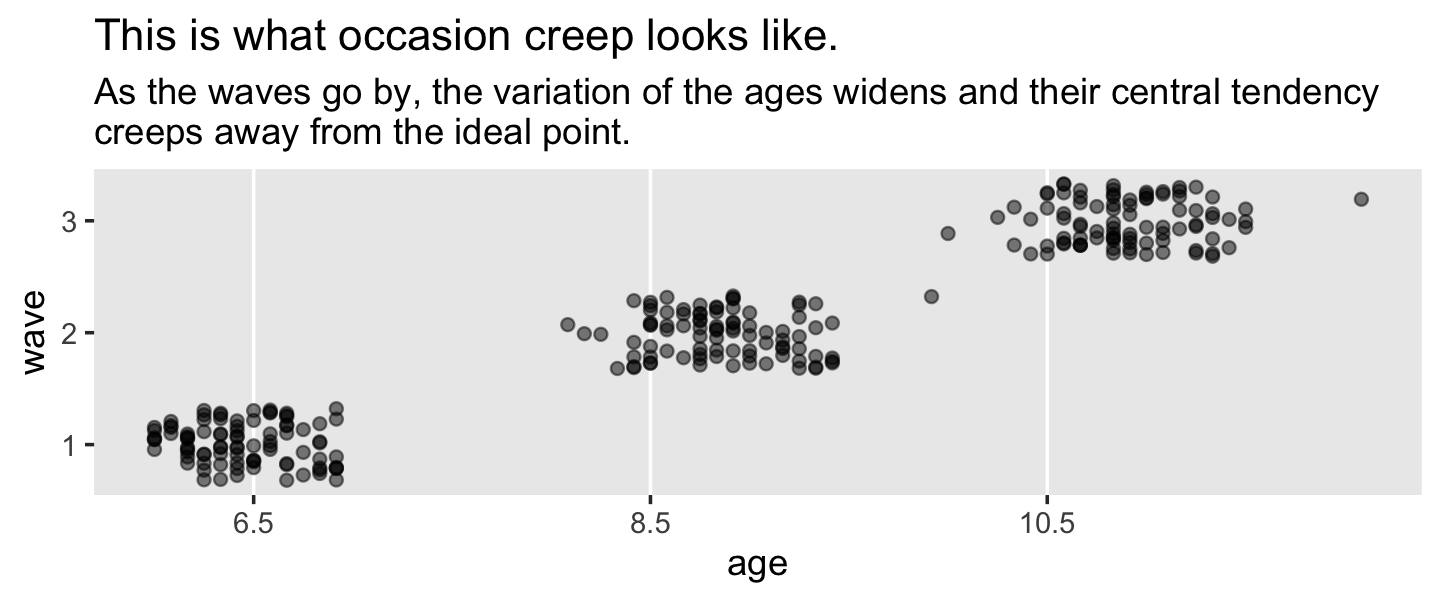

## 6 2 3 10.5 10.6 28On pages 141 and 142, Singer and Willett discussed the phenomena of occasion creep, which is when “the temporal separations of occasions widens as the actual ages exceed design projections”. Here’s what that might look like.

reading_pp %>%

ggplot(aes(x = age, y = wave)) +

geom_vline(xintercept = c(6.5, 8.5, 10.5), color = "white") +

geom_jitter(alpha = .5, height = .33, width = 0) +

scale_x_continuous(breaks = c(6.5, 8.5, 10.5)) +

scale_y_continuous(breaks = 1:3) +

ggtitle("This is what occasion creep looks like.",

subtitle = "As the waves go by, the variation of the ages widens and their central tendency\ncreeps away from the ideal point.") +

theme(panel.grid = element_blank())

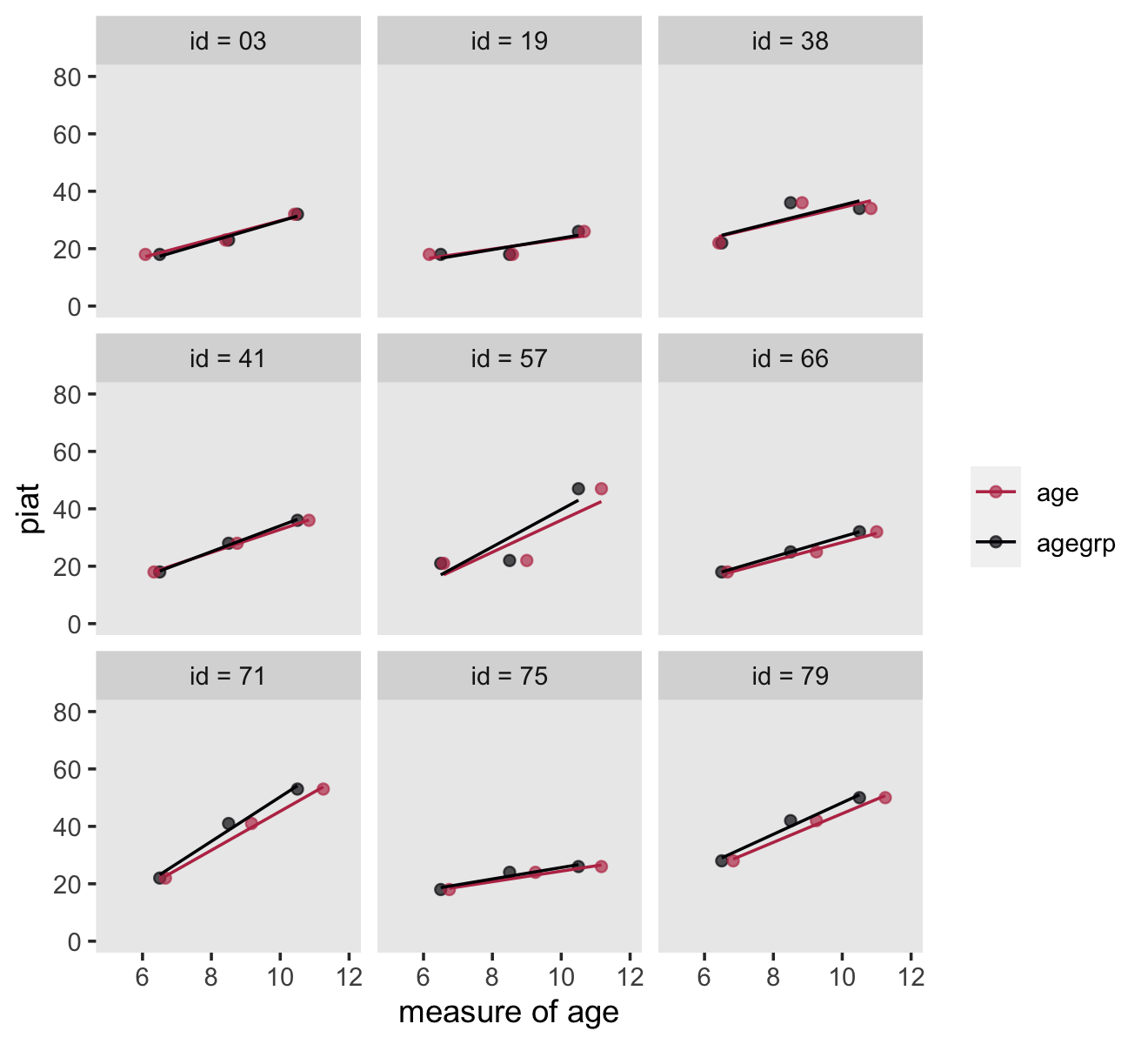

Here’s how we might make our version of Figure 5.1.

set.seed(5)

# wrangle

reading_pp %>%

nest(data = c(wave, agegrp, age, piat)) %>%

sample_n(size = 9) %>%

unnest(data) %>%

# this will help format and order the facets

mutate(id = ifelse(id < 10, str_c("0", id), id) %>% str_c("id = ", .)) %>%

pivot_longer(contains("age")) %>%

# plot

ggplot(aes(x = value, y = piat, color = name)) +

geom_point(alpha = 2/3) +

stat_smooth(method = "lm", se = F, linewidth = 1/2) +

scale_color_viridis_d(NULL, option = "B", end = .5, direction = -1) +

xlab("measure of age") +

coord_cartesian(xlim = c(5, 12),

ylim = c(0, 80)) +

theme(panel.grid = element_blank()) +

facet_wrap(~ id)

Since it wasn’t clear which id values the authors used in the text, we just randomized. Change the seed to view different samples.

5.1.2 Postulating and fitting multilevel models with variably spaced waves of data.

The composite formula for our first model is

$$ \[\begin{align*} \text{piat}_{ij} & = \gamma_{00} + \gamma_{10} (\text{agegrp}_{ij} - 6.5) + \zeta_{0i} + \zeta_{1i} (\text{agegrp}_{ij} - 6.5) + \epsilon_{ij} \\ \epsilon_{ij} & \sim \operatorname{Normal} (0, \sigma_\epsilon) \\ \begin{bmatrix} \zeta_{0i} \\ \zeta_{1i} \end{bmatrix} & \sim \operatorname{Normal} \begin{pmatrix} \begin{bmatrix} 0 \\ 0 \end{bmatrix}, \mathbf \Sigma \end{pmatrix}, \text{where} \\ \mathbf \Sigma & = \mathbf D \mathbf\Omega \mathbf D', \text{where} \\ \mathbf D & = \begin{bmatrix} \sigma_0 & 0 \\ 0 & \sigma_1 \end{bmatrix} \text{and} \\ \mathbf \Omega & = \begin{bmatrix} 1 & \rho_{01} \\ \rho_{01} & 1 \end{bmatrix} \end{align*}\] $$

It’s the same for the twin model using age rather than agegrp. Notice how we’ve switched from Singer and Willett’s \(\sigma^2\) parameterization to the \(\sigma\) parameterization typical of brms.

reading_pp <-

reading_pp %>%

mutate(agegrp_c = agegrp - 6.5,

age_c = age - 6.5)

head(reading_pp)## # A tibble: 6 × 7

## id wave agegrp age piat agegrp_c age_c

## <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 1 1 6.5 6 18 0 -0.5

## 2 1 2 8.5 8.33 35 2 1.83

## 3 1 3 10.5 10.3 59 4 3.83

## 4 2 1 6.5 6 18 0 -0.5

## 5 2 2 8.5 8.5 25 2 2

## 6 2 3 10.5 10.6 28 4 4.08In the last chapter, we began familiarizing ourselves with brms::brm() default priors. It’s time to level up. Another approach is to use domain knowledge to set weakly-informative priors. Let’s start with the PIAT. The Peabody Individual Achievement Test is a standardized individual test of scholastic achievement. It yields several subtest scores. The reading subtest is the one we’re focusing on, here. As is typical for such tests, the PIAT scores are normed to yield a population mean of 100 and a standard deviation of 15.

With that information alone, even a PIAT novice should have an idea about how to specify the priors. Since our sole predictor variables are versions of age centered at 6.5, we know that the model intercept is interpreted as the expected value on the PIAT when the children are 6.5 years old. If you knew nothing else, you’d guess the mean score would be 100 with a standard deviation around 15. One way to use a weakly-informative prior on the intercept would be to multiply that \(SD\) by a number like 2.

Next we need a prior for the time variables, age_c and agegrp_c. A one-unit increase in either of these is the expected increase in the PIAT with one year’s passage of age. Bringing in a little domain knowledge, IQ and achievement tests tend to be rather stable over time. However, we also expect children to get better as they age and we also don’t know exactly how these data have been adjusted for the children’s ages. It’s also important to know that it’s typical within the Bayesian world to place Normal priors on \(\beta\) parameters. So one approach would be to center the Normal prior on 0 and put something like twice the PIAT’s standard deviation on the prior’s \(\sigma\). If we were PIAT researchers, we could do much better. But with minimal knowledge of the test, this approach is certainly beats defaults.

Next we have the variance parameters. Recall that brms::brm() defaults are Student’s \(t\)-distributions with \(\nu = 3\) and \(\mu = 0\). Let’s start there. Now we just need to put values on \(\sigma\). Since the PIAT has a standard deviation of 15 in the population, why not just use 15? If you felt insecure about this, multiply if by a factor of 2 or so. Also recall that when Student’s \(t\)-distributions has a \(\nu = 3\), the tails are quite fat. Within the context of Bayesian priors, those fat tails make it easy for the likelihood to dominate the prior even when it’s a good way into the tail.

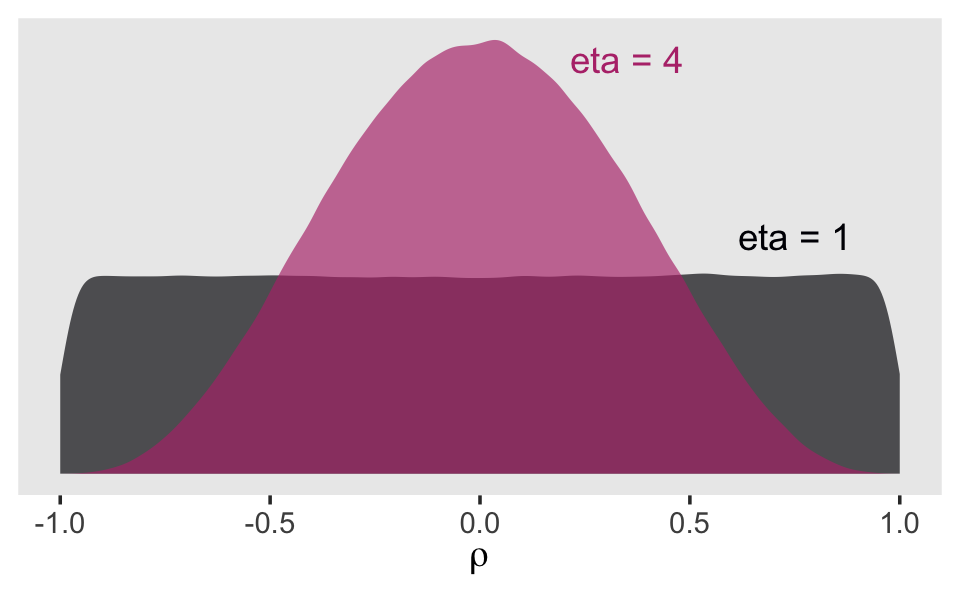

Finally, we have the correlation among the group-level variance parameters, \(\sigma_0\) and \(\sigma_1\). Recall that last chapter we learned the brms::brm() default was lkj(1). To get a sense of what the LKJ does, we’ll simulate from it. McElreath’s rethinking package contains a handy rlkjcorr() function, which will allow us to simulate n draws from a K by K correlation matrix for which \(\eta\) is defined by eta. Let’s take n <- 1e6 draws from two LKJ prior distributions, one with \(\eta = 1\) and the other with \(\eta = 4\).

library(rethinking)

n <- 1e6

set.seed(5)

lkj <-

tibble(eta = c(1, 4)) %>%

mutate(draws = purrr::map(eta, ~ rlkjcorr(n, K = 2, eta = .)[, 2, 1])) %>%

unnest(draws)

glimpse(lkj)## Rows: 2,000,000

## Columns: 2

## $ eta <dbl> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, …

## $ draws <dbl> 0.59957109, -0.83375155, 0.79069974, -0.05591997, -0.91300025, 0.45343010, 0.3631919…Now plot that lkj.

lkj %>%

mutate(eta = factor(eta)) %>%

ggplot(aes(x = draws, fill = eta, color = eta)) +

geom_density(size = 0, alpha = 2/3) +

geom_text(data = tibble(

draws = c(.75, .35),

y = c(.6, 1.05),

label = c("eta = 1", "eta = 4"),

eta = c(1, 4) %>% as.factor()),

aes(y = y, label = label)) +

scale_fill_viridis_d(option = "A", end = .5) +

scale_color_viridis_d(option = "A", end = .5) +

scale_y_continuous(NULL, breaks = NULL) +

xlab(expression(rho)) +

theme(panel.grid = element_blank(),

legend.position = "none")## Warning: Using `size` aesthetic for lines was deprecated in ggplot2 3.4.0.

## ℹ Please use `linewidth` instead.

## This warning is displayed once every 8 hours.

## Call `lifecycle::last_lifecycle_warnings()` to see where this warning was generated.

When we use lkj(1), the prior is flat over the parameter space. However, setting lkj(4) is tantamount to a prior with a probability mass concentrated a bit towards zero. It’s a prior that’s skeptical of extremely large or small correlations. Within the context of our multilevel model \(\rho\) parameters, this will be our weakly-regularizing prior.

Let’s prepare to fit our models and load brms.

detach(package:rethinking, unload = T)

library(brms)Fit the models. Following the same form, the differ in that the first uses agegrp_c and the second uses age_c.

fit5.1 <-

brm(data = reading_pp,

family = gaussian,

piat ~ 0 + Intercept + agegrp_c + (1 + agegrp_c | id),

prior = c(prior(normal(100, 30), class = b, coef = Intercept),

prior(normal(0, 30), class = b, coef = agegrp_c),

prior(student_t(3, 0, 15), class = sd),

prior(student_t(3, 0, 15), class = sigma),

prior(lkj(4), class = cor)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 5,

file = "fits/fit05.01")

fit5.2 <-

brm(data = reading_pp,

family = gaussian,

piat ~ 0 + Intercept + age_c + (1 + age_c | id),

prior = c(prior(normal(100, 30), class = b, coef = Intercept),

prior(normal(0, 30), class = b, coef = age_c),

prior(student_t(3, 0, 15), class = sd),

prior(student_t(3, 0, 15), class = sigma),

prior(lkj(4), class = cor)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 5,

file = "fits/fit05.02")Focusing first on fit5.1, our analogue to the \((AGEGRP – 6.5)\) model displayed in Table 5.2, here is our model summary.

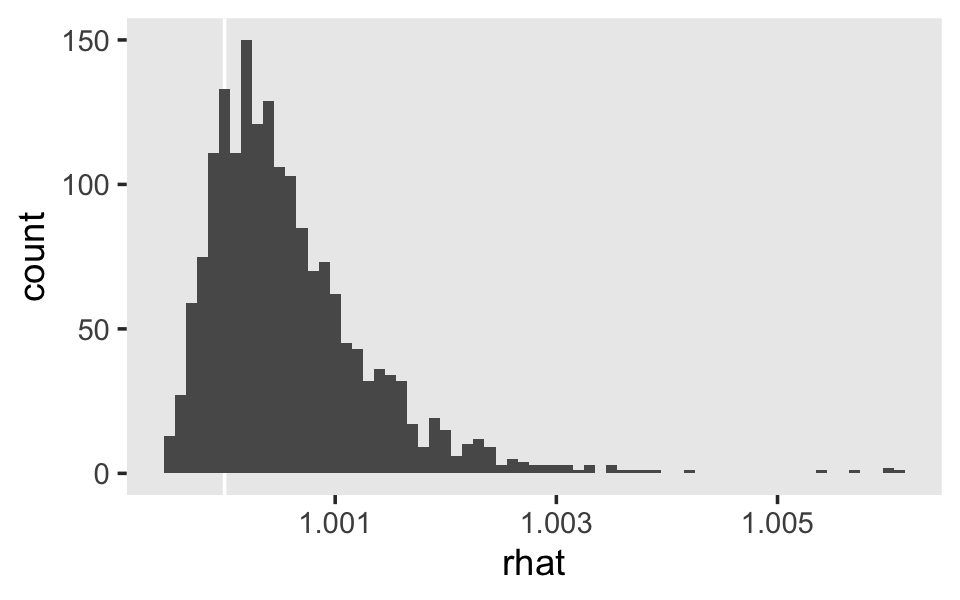

print(fit5.1, digits = 3)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: piat ~ 0 + Intercept + agegrp_c + (1 + agegrp_c | id)

## Data: reading_pp (Number of observations: 267)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Group-Level Effects:

## ~id (Number of levels: 89)

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sd(Intercept) 3.388 0.824 1.724 4.981 1.009 1067 1719

## sd(agegrp_c) 2.197 0.284 1.659 2.787 1.003 1139 2070

## cor(Intercept,agegrp_c) 0.172 0.220 -0.226 0.627 1.007 616 1068

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 21.198 0.634 19.941 22.452 1.000 4896 3166

## agegrp_c 5.028 0.309 4.422 5.637 1.000 4121 3240

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 5.271 0.360 4.604 6.012 1.010 991 1720

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).Here’s the age_c model.

print(fit5.2, digits = 3)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: piat ~ 0 + Intercept + age_c + (1 + age_c | id)

## Data: reading_pp (Number of observations: 267)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Group-Level Effects:

## ~id (Number of levels: 89)

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sd(Intercept) 2.748 0.848 1.037 4.364 1.001 1081 1128

## sd(age_c) 1.976 0.247 1.520 2.477 1.002 1152 2193

## cor(Intercept,age_c) 0.244 0.229 -0.177 0.695 1.006 479 1107

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 21.103 0.605 19.937 22.305 1.001 5162 3451

## age_c 4.537 0.283 3.973 5.078 1.001 2742 2634

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 5.187 0.345 4.512 5.870 1.000 1220 1896

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).For a more focused look, we can use fixef() compare our \(\gamma\)’s to each other and those in the text.

fixef(fit5.1) %>% round(digits = 3)## Estimate Est.Error Q2.5 Q97.5

## Intercept 21.198 0.634 19.941 22.452

## agegrp_c 5.028 0.309 4.422 5.637fixef(fit5.2) %>% round(digits = 3)## Estimate Est.Error Q2.5 Q97.5

## Intercept 21.103 0.605 19.937 22.305

## age_c 4.537 0.283 3.973 5.078Here are our \(\sigma_\epsilon\) summaries.

VarCorr(fit5.1)$residual$sd %>% round(digits = 3)## Estimate Est.Error Q2.5 Q97.5

## 5.271 0.36 4.604 6.012VarCorr(fit5.2)$residual$sd %>% round(digits = 3)## Estimate Est.Error Q2.5 Q97.5

## 5.187 0.345 4.512 5.87From a quick glance, you can see they are about the square of the \(\sigma_\epsilon^2\) estimates in the text.

Let’s go ahead and compute the LOO and WAIC.

fit5.1 <- add_criterion(fit5.1, criterion = c("loo", "waic"))

fit5.2 <- add_criterion(fit5.2, criterion = c("loo", "waic"))Compare the models with a WAIC difference.

loo_compare(fit5.1, fit5.2, criterion = "waic") %>%

print(simplify = F)## elpd_diff se_diff elpd_waic se_elpd_waic p_waic se_p_waic waic se_waic

## fit5.2 0.0 0.0 -865.6 15.3 77.4 7.2 1731.1 30.6

## fit5.1 -5.1 3.4 -870.6 13.6 78.5 6.5 1741.3 27.2The WAIC difference between the two isn’t that large relative to its standard error. The LOO tells a similar story.

loo_compare(fit5.1, fit5.2, criterion = "loo") %>%

print(simplify = F)## elpd_diff se_diff elpd_loo se_elpd_loo p_loo se_p_loo looic se_looic

## fit5.2 0.0 0.0 -879.9 16.0 91.7 8.3 1759.8 32.1

## fit5.1 -4.5 3.6 -884.4 14.3 92.3 7.5 1768.9 28.5The uncertainty in our WAIC and LOO estimates and their differences provides information that was not available for the AIC and the BIC comparisons in the text. We can also compare the WAIC and the LOO with model weights. Given the WAIC, from McElreath (2015) we learn

A total weight of 1 is partitioned among the considered models, making it easier to compare their relative predictive accuracy. The weight for a model \(i\) in a set of \(m\) models is given by:

\[w_i = \frac{\exp(-\frac{1}{2} \text{dWAIC}_i)}{\sum_{j = 1}^m \exp(-\frac{1}{2} \text{dWAIC}_i)}\]

where dWAIC is the

dWAICin thecomparetable output. This example uses WAIC but the formula is the same for any other information criterion, since they are all on the deviance scale. (p. 199)

The compare() function McElreath referenced is from his (2020b) rethinking package, which is meant to accompany his texts. We don’t have that function with brms. A rough analogue to the rethinking::compare() function is loo_compare(). We don’t quite have a dWAIC column from loo_compare(). Remember how last chapter we discussed how Aki Vehtari isn’t a fan of converting information criteria to the \(\chi^2\) difference metric with that last \(-2 \times ...\) step? That’s why we have an elpd_diff instead of a dWAIC. But to get the corresponding value, you just multiply those values by -2. And yet if you look closely at the formula for \(w_i\), you’ll see that each time the dWAIC term appears, it’s multiplied by \(-\frac{1}{2}\). So we don’t really need that dWAIC value anyway. As it turns out, we’re good to go with our elpd_diff. Thus the above equation simplifies to

\[ w_i = \frac{\exp(\text{elpd_diff}_i)}{\sum_{j = 1}^m \exp(\text{elpd_diff}_i)} \]

But recall you don’t have to do any of this by hand. We have the brms::model_weights() function, which we can use to compute weights with the WAIC or the LOO.

model_weights(fit5.1, fit5.2, weights = "waic") %>% round(digits = 3)## fit5.1 fit5.2

## 0.006 0.994model_weights(fit5.1, fit5.2, weights = "loo") %>% round(digits = 3)## fit5.1 fit5.2

## 0.011 0.989Both put the lion’s share of the weight on the age_c model. Back to McElreath McElreath (2015):

But what do these weights mean? There actually isn’t a consensus about that. But here’s Akaike’s interpretation, which is common.

A model’s weight is an estimate of the probability that the model will make the best predictions on new data, conditional on the set of models considered.

Here’s the heuristic explanation. First, regard WAIC as the expected deviance of a model on future data. That is to say that WAIC gives us an estimate of \(\text{E} (D_\text{test})\). Akaike weights convert these deviance values, which are log-likelihoods, to plain likelihoods and then standardize them all. This is just like Bayes’ theorem uses a sum in the denominator to standardize the produce of the likelihood and prior. Therefore the Akaike weights are analogous to posterior probabilities of models, conditional on expected future data. (p. 199, emphasis in the original)

5.2 Varying numbers of measurement occasions

As Singer and Willett pointed out,

once you allow the spacing of waves to vary across individuals, it is a small leap to allow their number to vary as well. Statisticians say that such data sets are unbalanced. As you would expect, balance facilitates analysis: models can be parameterized more easily, random effects can be estimated more precisely, and computer algorithms will converge more rapidly.

Yet a major advantage of the multilevel model for change is that it is easily fit to unbalanced data. (p. 146, emphasis in the original)

5.2.1 Analyzing data sets in which the number of waves per person varies.

Here we load the wages_pp.csv data.

wages_pp <- read_csv("data/wages_pp.csv")

glimpse(wages_pp)## Rows: 6,402

## Columns: 15

## $ id <dbl> 31, 31, 31, 31, 31, 31, 31, 31, 36, 36, 36, 36, 36, 36, 36, 36, 36, 36, 53, …

## $ lnw <dbl> 1.491, 1.433, 1.469, 1.749, 1.931, 1.709, 2.086, 2.129, 1.982, 1.798, 2.256,…

## $ exper <dbl> 0.015, 0.715, 1.734, 2.773, 3.927, 4.946, 5.965, 6.984, 0.315, 0.983, 2.040,…

## $ ged <dbl> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 1, 1, 1, 1, 1, 1…

## $ postexp <dbl> 0.015, 0.715, 1.734, 2.773, 3.927, 4.946, 5.965, 6.984, 0.315, 0.983, 2.040,…

## $ black <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0…

## $ hispanic <dbl> 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 1…

## $ hgc <dbl> 8, 8, 8, 8, 8, 8, 8, 8, 9, 9, 9, 9, 9, 9, 9, 9, 9, 9, 7, 7, 7, 7, 7, 7, 7, 7…

## $ hgc.9 <dbl> -1, -1, -1, -1, -1, -1, -1, -1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, -2, -2, -2, -2…

## $ uerate <dbl> 3.215, 3.215, 3.215, 3.295, 2.895, 2.495, 2.595, 4.795, 4.895, 7.400, 7.400,…

## $ ue.7 <dbl> -3.785, -3.785, -3.785, -3.705, -4.105, -4.505, -4.405, -2.205, -2.105, 0.40…

## $ ue.centert1 <dbl> 0.000, 0.000, 0.000, 0.080, -0.320, -0.720, -0.620, 1.580, 0.000, 2.505, 2.5…

## $ ue.mean <dbl> 3.2150, 3.2150, 3.2150, 3.2150, 3.2150, 3.2150, 3.2150, 3.2150, 5.0965, 5.09…

## $ ue.person.cen <dbl> 0.0000, 0.0000, 0.0000, 0.0800, -0.3200, -0.7200, -0.6200, 1.5800, -0.2015, …

## $ ue1 <dbl> 3.215, 3.215, 3.215, 3.215, 3.215, 3.215, 3.215, 3.215, 4.895, 4.895, 4.895,…Here’s a more focused look along the lines of Table 5.3.

wages_pp %>%

select(id, exper, lnw, black, hgc, uerate) %>%

filter(id %in% c(206, 332, 1028))## # A tibble: 20 × 6

## id exper lnw black hgc uerate

## <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 206 1.87 2.03 0 10 9.2

## 2 206 2.81 2.30 0 10 11

## 3 206 4.31 2.48 0 10 6.30

## 4 332 0.125 1.63 0 8 7.1

## 5 332 1.62 1.48 0 8 9.6

## 6 332 2.41 1.80 0 8 7.2

## 7 332 3.39 1.44 0 8 6.20

## 8 332 4.47 1.75 0 8 5.60

## 9 332 5.18 1.53 0 8 4.60

## 10 332 6.08 2.04 0 8 4.30

## 11 332 7.04 2.18 0 8 3.40

## 12 332 8.20 2.19 0 8 4.39

## 13 332 9.09 4.04 0 8 6.70

## 14 1028 0.004 0.872 1 8 9.3

## 15 1028 0.035 0.903 1 8 7.4

## 16 1028 0.515 1.39 1 8 7.3

## 17 1028 1.48 2.32 1 8 7.4

## 18 1028 2.14 1.48 1 8 6.30

## 19 1028 3.16 1.70 1 8 5.90

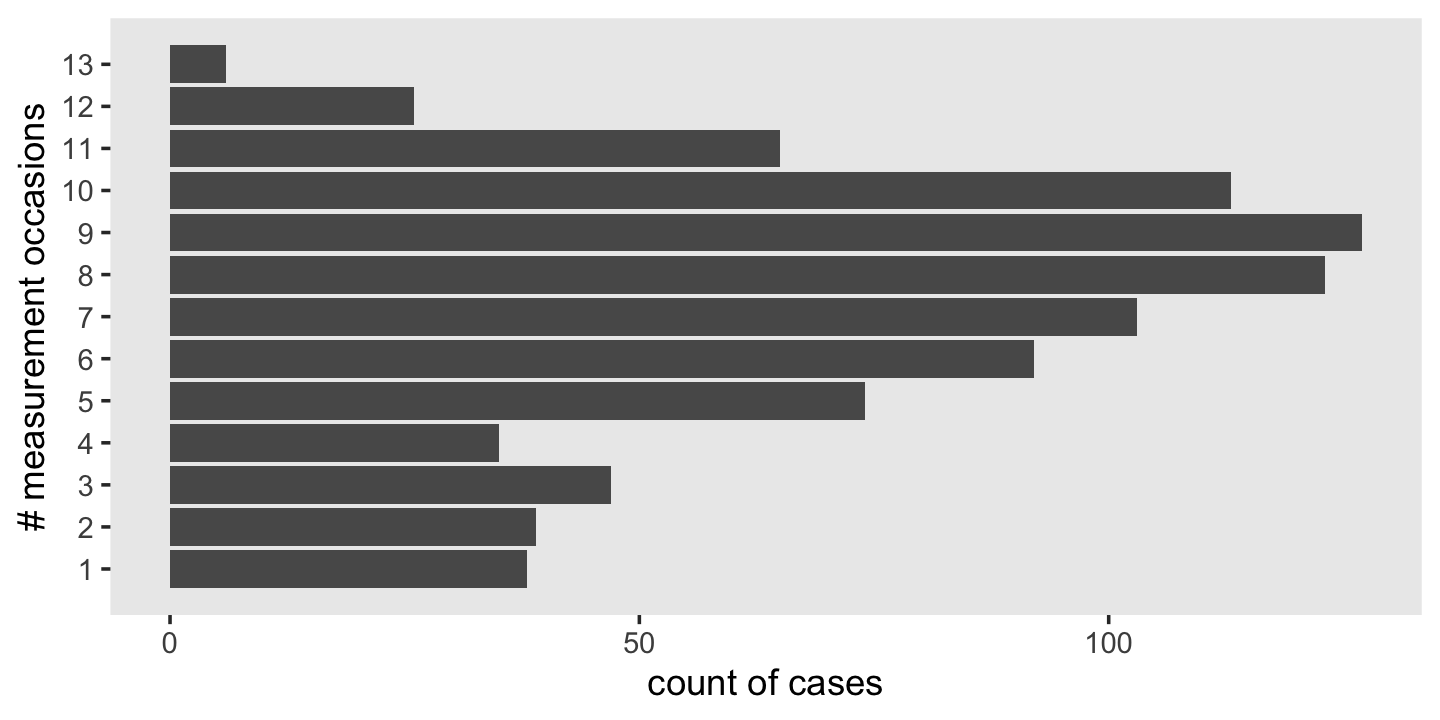

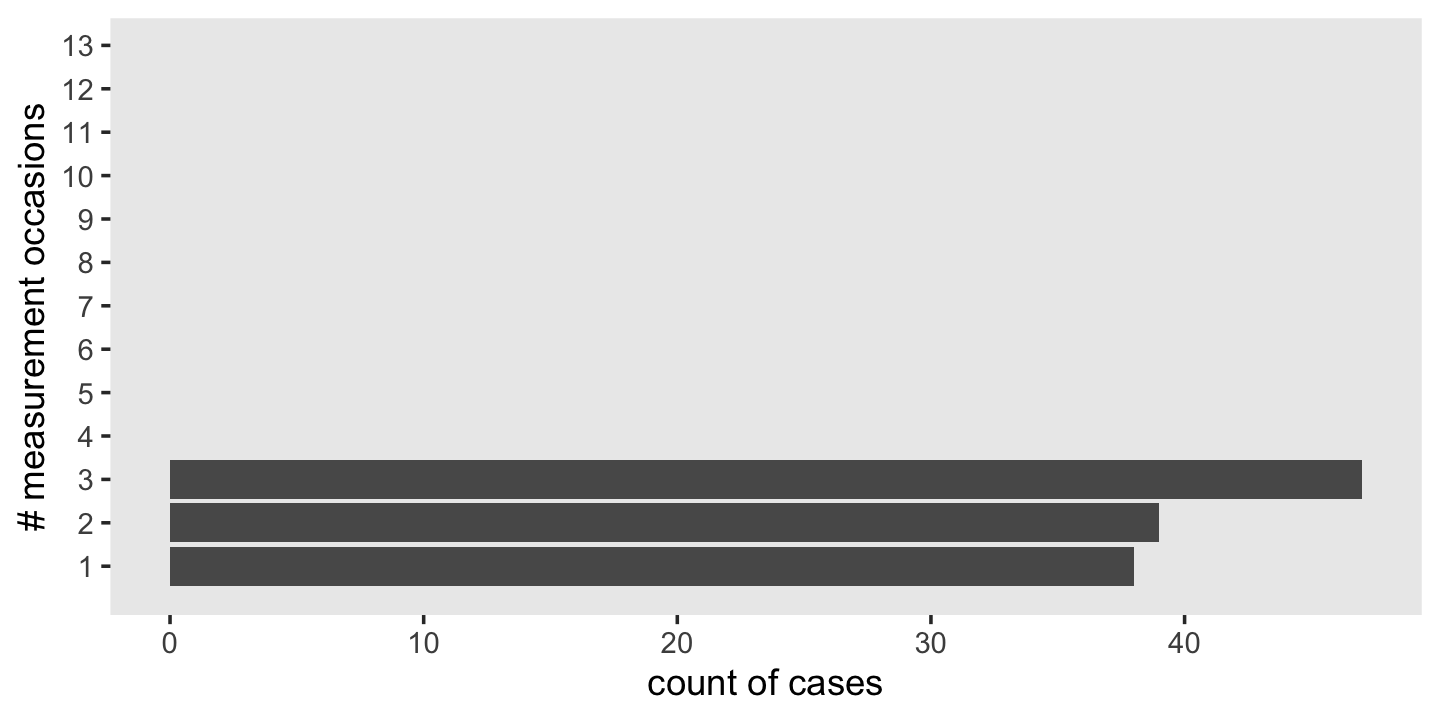

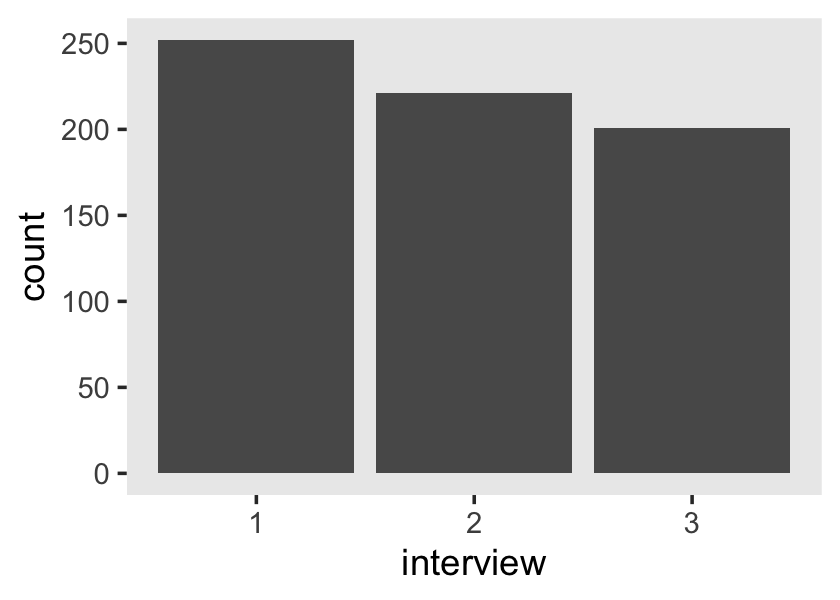

## 20 1028 4.10 2.34 1 8 6.9To get a sense of the diversity in the number of occasions per id, use group_by() and count().

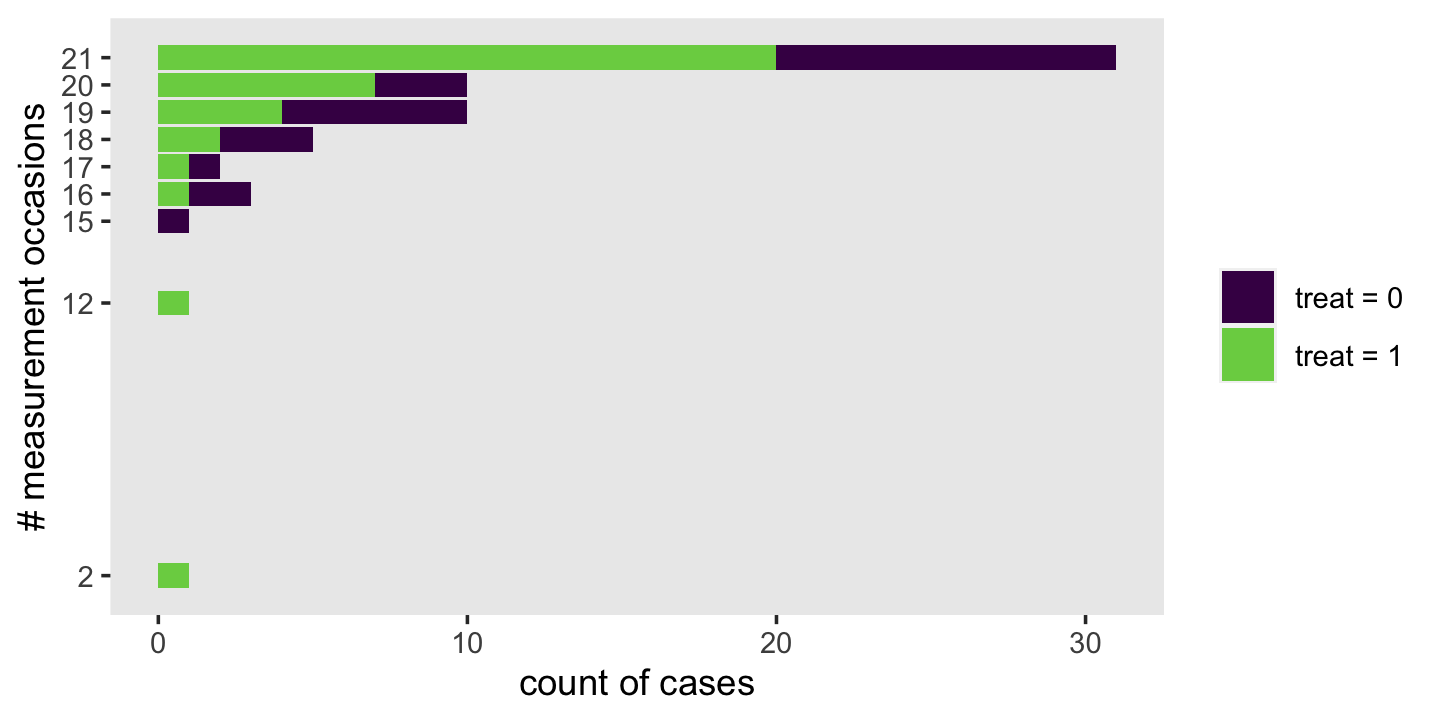

wages_pp %>%

count(id) %>%

ggplot(aes(y = n)) +

geom_bar() +

scale_y_continuous("# measurement occasions", breaks = 1:13) +

xlab("count of cases") +

theme(panel.grid = element_blank())

The spacing of the measurement occasions also differs a lot across cases. Recall that exper “identifies the specific moment–to the nearest day–in each man’s labor force history associated with each observed value of” lnw (p. 147). Here’s a sense of what that looks like.

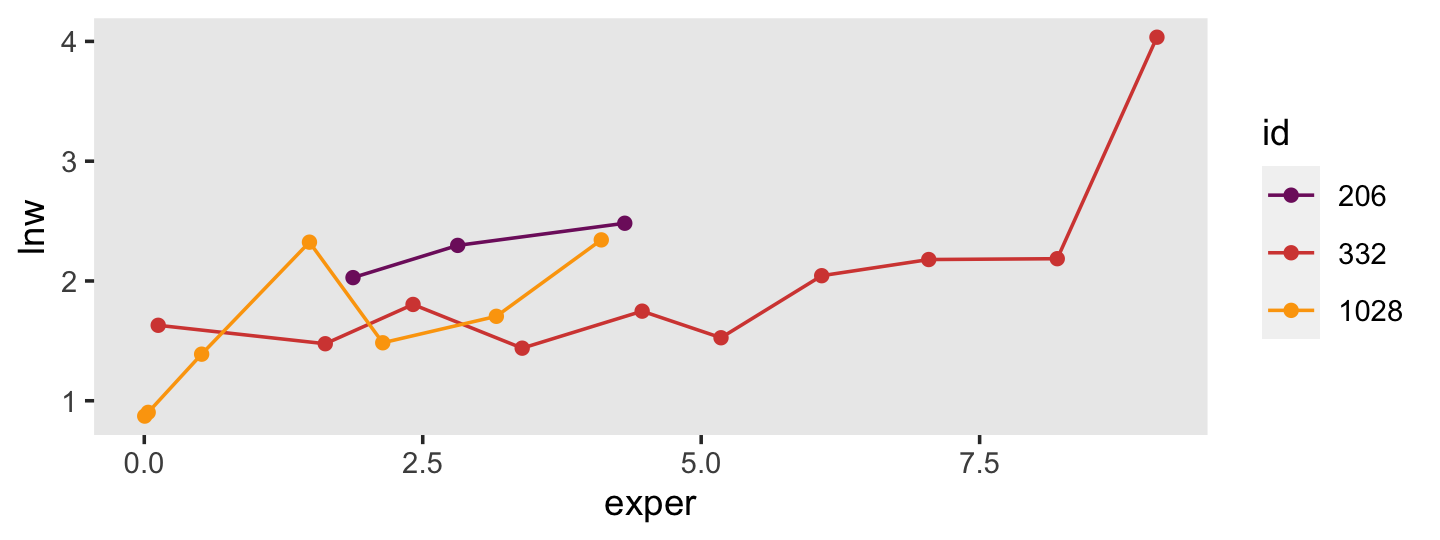

wages_pp %>%

filter(id %in% c(206, 332, 1028)) %>%

mutate(id = factor(id)) %>%

ggplot(aes(x = exper, y = lnw, color = id)) +

geom_point() +

geom_line() +

scale_color_viridis_d(option = "B", begin = .35, end = .8) +

theme(panel.grid = element_blank())

Uneven for dayz.

Here’s the brms version of the composite formula for Model A, the unconditional growth model for lnw.

$$ \[\begin{align*} \text{lnw}_{ij} & = \gamma_{00} + \gamma_{10} \text{exper}_{ij} + \zeta_{0i} + \zeta_{1i} \text{exper}_{ij} + \epsilon_{ij} \\ \epsilon_{ij} & \sim \operatorname{Normal}(0, \sigma_\epsilon) \\ \begin{bmatrix} \zeta_{0i} \\ \zeta_{1i} \end{bmatrix} & \sim \operatorname{Normal} \begin{pmatrix} \begin{bmatrix} 0 \\ 0 \end{bmatrix}, \mathbf\Sigma \end{pmatrix}, \text{where} \\ \mathbf\Sigma & = \mathbf D \mathbf \Omega \mathbf D', \text{where} \\ \mathbf D & = \begin{bmatrix} \sigma_0 & 0 \\ 0 & \sigma_1 \end{bmatrix}, \text{and} \\ \mathbf\Omega & = \begin{bmatrix} 1 & \rho_{01} \\ \rho_{01} & 1 \end{bmatrix} \end{align*}\] $$

To attempt setting priors for this, we need to review what lnw is. From the text: “To adjust for inflation, each hourly wage is expressed in constant 1990 dollars. To address the skewness commonly found in wage data and to linearize the individual wage trajectories, we analyze the natural logarithm of wages, LNW” (p. 147). So it’s the log of participant wages in 1990 dollars. From the official US Social Secutiry website, we learn the average yearly wage in 1990 was $20,172.11. Here’s that natural log for that.

log(20172.11)## [1] 9.912056However, that’s the yearly wage. In the text, this is conceptualized as rate per hour. If we presume a 40 hour week for 52 weeks, this translates to a little less than $10 per hour.

20172.11 / (40 * 52)## [1] 9.69813Here’s what that looks like in a log metric.

log(20172.11 / (40 * 52))## [1] 2.271933But keep in mind that “to track wages on a common temporal scale, Murnane and colleagues decided to clock time from each respondent’s first day of work” (p. 147). So the wages at one’s initial point in the study were often entry-level wages. From the official website for the US Department of Labor, we learn the national US minimum wage in 1990 was $3.80 per hour. Here’s what that looks like on the log scale.

log(3.80)## [1] 1.335001So perhaps this is a better figure to center our prior for the model intercept on. If we stay with a conventional Gaussian prior and put \(\mu = 1.335\), what value should we use for the standard deviation? Well, if that’s the log minimum and 2.27 is the log mean, then there’s less than a log value of 1 between the minimum and the mean. If we’d like to continue our practice of weakly regularizing priors a value of 1 or even 0.5 on the log scale would seem reasonable. For simplicity, we’ll use normal(1.335, 1).

Next we need a prior for the expected increase over a single year’s employment. A conservative default might be to center it on zero—no change from year to year. Since as we’ve established a 1 on the log scale is more than the difference between the minimum and average hourly wages in 1990 dollars, we might just use normal(0, 0.5) as a starting point.

So then what about our variance parameters? Given these are all entry-level workers and given how little we’d expect them to increase from year to year, a student_t(3, 0, 1) on the log scale would seem pretty permissive.

So then here’s how we might formally specify our model priors:

\[ \begin{align*} \gamma_{00} & \sim \operatorname{Normal}(1.335, 1) \\ \gamma_{10} & \sim \operatorname{Normal}(0, 0.5) \\ \sigma_\epsilon & \sim \operatorname{Student-t}(3, 0, 1) \\ \sigma_0 & \sim \operatorname{Student-t}(3, 0, 1) \\ \sigma_1 & \sim \operatorname{Student-t}(3, 0, 1) \\ \rho_{01} & \sim \operatorname{LKJ} (4) \end{align*} \]

For a point of comparison, here are the brms::brm() default priors.

get_prior(data = wages_pp,

family = gaussian,

lnw ~ 0 + Intercept + exper + (1 + exper | id))## prior class coef group resp dpar nlpar lb ub source

## (flat) b default

## (flat) b exper (vectorized)

## (flat) b Intercept (vectorized)

## lkj(1) cor default

## lkj(1) cor id (vectorized)

## student_t(3, 0, 2.5) sd 0 default

## student_t(3, 0, 2.5) sd id 0 (vectorized)

## student_t(3, 0, 2.5) sd exper id 0 (vectorized)

## student_t(3, 0, 2.5) sd Intercept id 0 (vectorized)

## student_t(3, 0, 2.5) sigma 0 defaultEven though our priors are still quite permissive on the scale of the data, they’re much more informative than the defaults. If we had formal backgrounds in the entry-level economy of the US in the early 1900s, we’d be able to specify even better priors. But hopefully this walk-through gives a sense of how to start thinking about model priors.

Let’s fit the model. To keep the size of the fits/fit05.03.rds file below the 100MB GitHub limit, we’ll set chains = 3 and compensate by upping iter a little.

fit5.3 <-

brm(data = wages_pp,

family = gaussian,

lnw ~ 0 + Intercept + exper + (1 + exper | id),

prior = c(prior(normal(1.335, 1), class = b, coef = Intercept),

prior(normal(0, 0.5), class = b, coef = exper),

prior(student_t(3, 0, 1), class = sd),

prior(student_t(3, 0, 1), class = sigma),

prior(lkj(4), class = cor)),

iter = 2500, warmup = 1000, chains = 3, cores = 3,

seed = 5,

file = "fits/fit05.03")Here are the results.

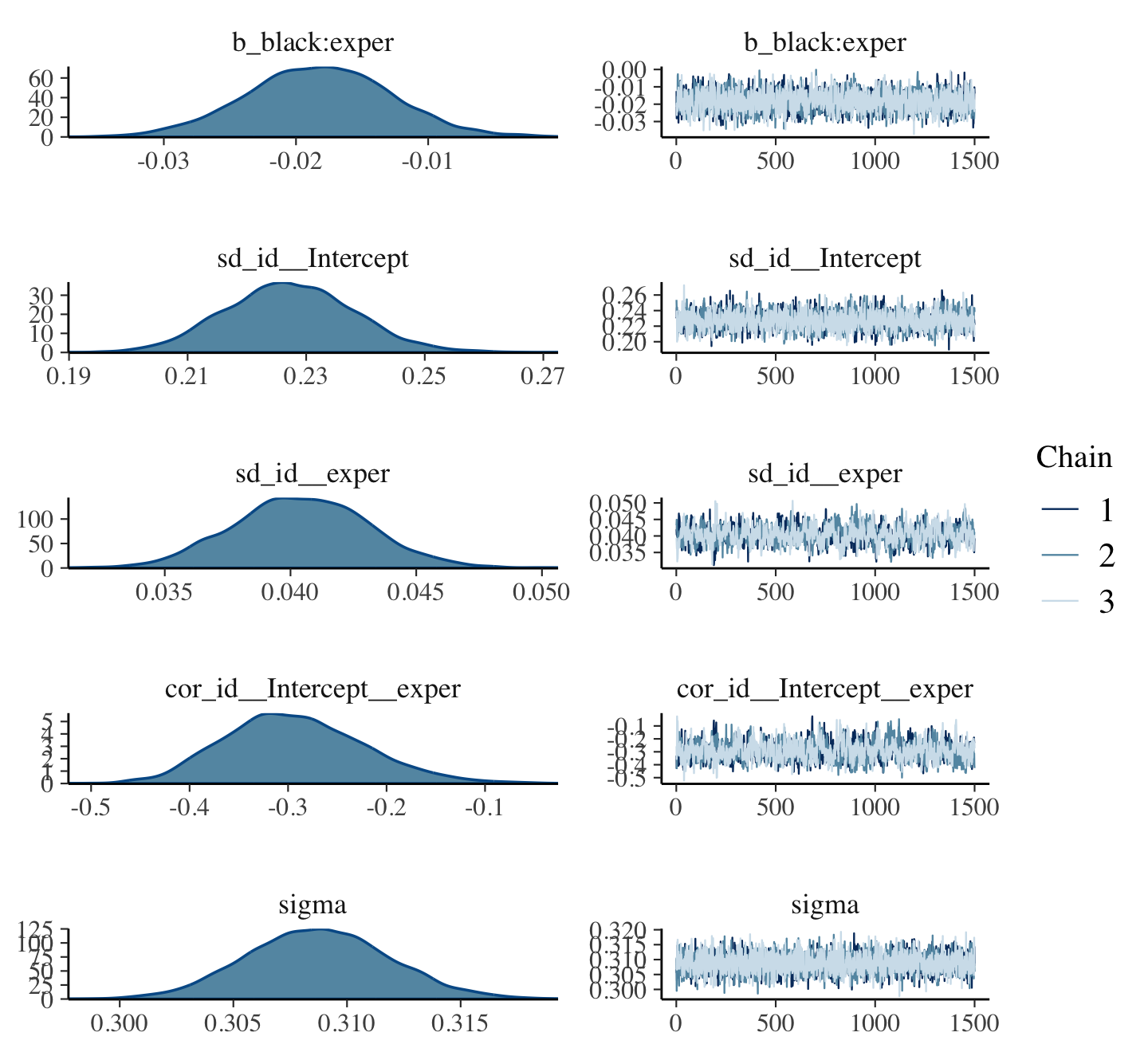

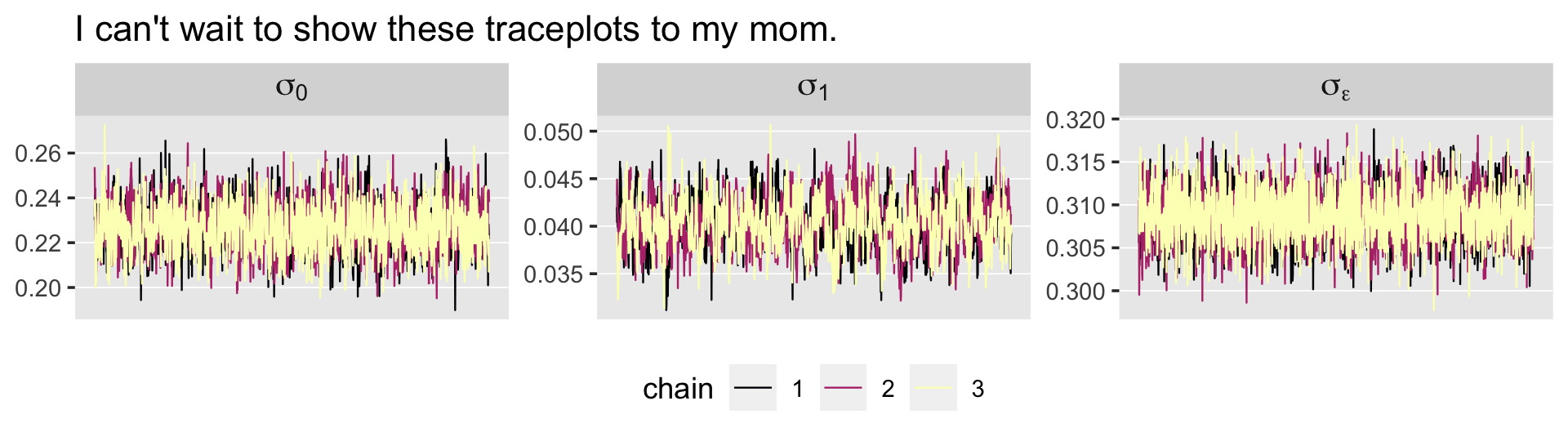

print(fit5.3, digits = 3)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: lnw ~ 0 + Intercept + exper + (1 + exper | id)

## Data: wages_pp (Number of observations: 6402)

## Draws: 3 chains, each with iter = 2500; warmup = 1000; thin = 1;

## total post-warmup draws = 4500

##

## Group-Level Effects:

## ~id (Number of levels: 888)

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sd(Intercept) 0.232 0.011 0.212 0.253 1.002 2004 2969

## sd(exper) 0.041 0.003 0.037 0.047 1.001 674 1505

## cor(Intercept,exper) -0.287 0.067 -0.410 -0.147 1.001 727 1645

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 1.716 0.011 1.694 1.737 1.000 2959 3329

## exper 0.046 0.002 0.041 0.050 1.001 2821 3499

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 0.309 0.003 0.303 0.315 1.000 4212 3206

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

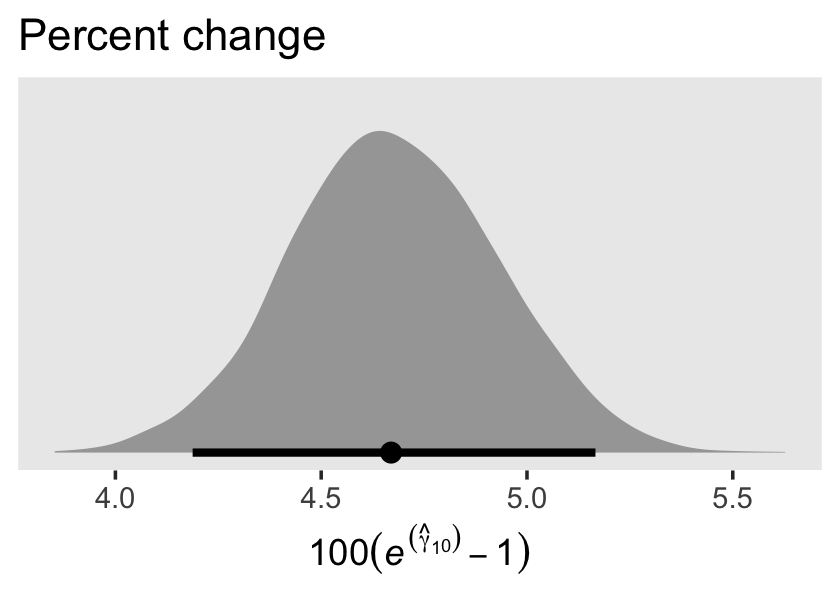

## scale reduction factor on split chains (at convergence, Rhat = 1).Since the criterion lnw is on the log scale, Singer and Willett pointed out our estimate for \(\gamma_{10}\) indicates a nonlinear growth rate on the natural dollar scale. They further explicated that “if an outcome in a linear relationship, \(Y\), is expressed as a natural logarithm and \(\hat \gamma_{01}\) is the regression coefficient for a predictor \(X\), then \(100(e^{\hat{\gamma}_{01}} - 1)\) is the percentage change in \(Y\) per unit difference in \(X\)” (p. 148, emphasis in the original). Here’s how to do that conversion with our brms output.

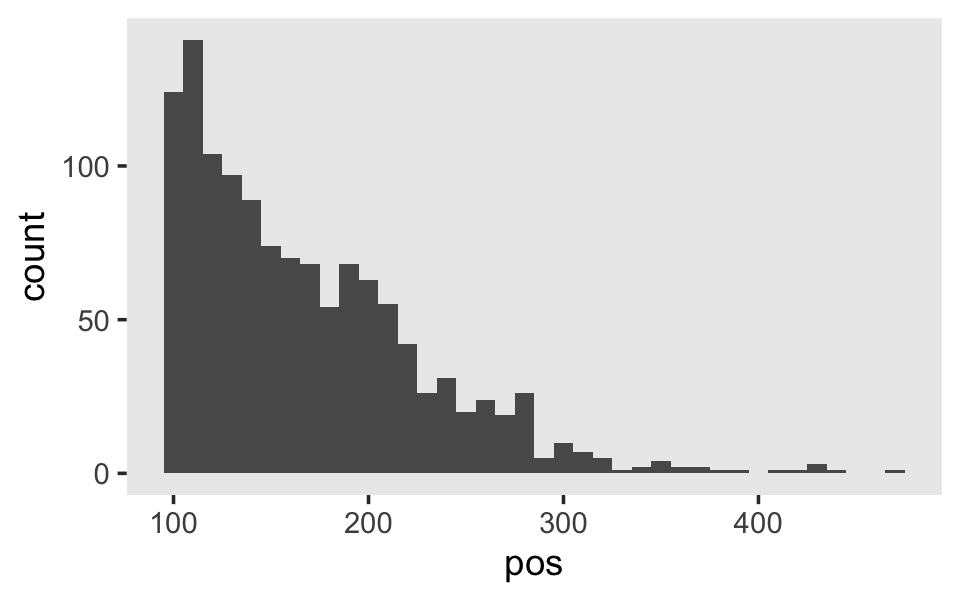

draws <-

as_draws_df(fit5.3) %>%

transmute(percent_change = 100 * (exp(b_exper) - 1))

head(draws)## # A tibble: 6 × 1

## percent_change

## <dbl>

## 1 4.88

## 2 4.69

## 3 5.07

## 4 4.51

## 5 4.53

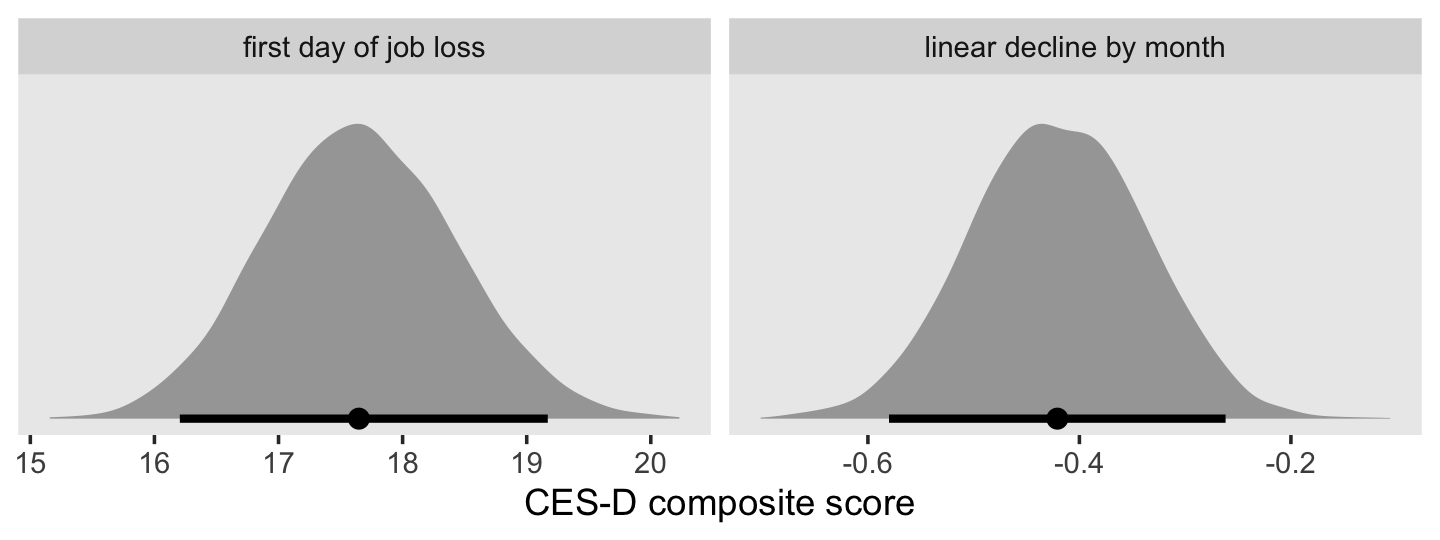

## 6 4.51For our plot, let’s break out Matthew Kay’s handy tidybayes package (Kay, 2023). With the tidybayes::stat_halfeye() function, it’s easy to put horizontal point intervals beneath out parameter densities. Here we’ll use 95% intervals.

library(tidybayes)

draws %>%

ggplot(aes(x = percent_change, y = 0)) +

stat_halfeye(.width = .95) +

scale_y_continuous(NULL, breaks = NULL) +

labs(title = "Percent change",

x = expression(100*(italic(e)^(hat(gamma)[1][0])-1))) +

theme(panel.grid = element_blank())

The tidybayes package also has a group of functions that make it easy to summarize posterior parameters with measures of central tendency (i.e., mean, median, mode) and intervals (i.e., percentile based, highest posterior density intervals). Here we’ll use median_qi() to get the posterior median and percentile-based 95% intervals.

draws %>%

median_qi(percent_change)## # A tibble: 1 × 6

## percent_change .lower .upper .width .point .interval

## <dbl> <dbl> <dbl> <dbl> <chr> <chr>

## 1 4.67 4.19 5.17 0.95 median qiFor our next model, Model B in Table 5.4, we add two time-invariant covariates. In the data, these are listed as black and hgc.9. Before we proceed, let’s rename hgc.9 to be more consistent with tidyverse style.

wages_pp <-

wages_pp %>%

rename(hgc_9 = hgc.9)There we go. Let’s take a look at the distributions of our covariates.

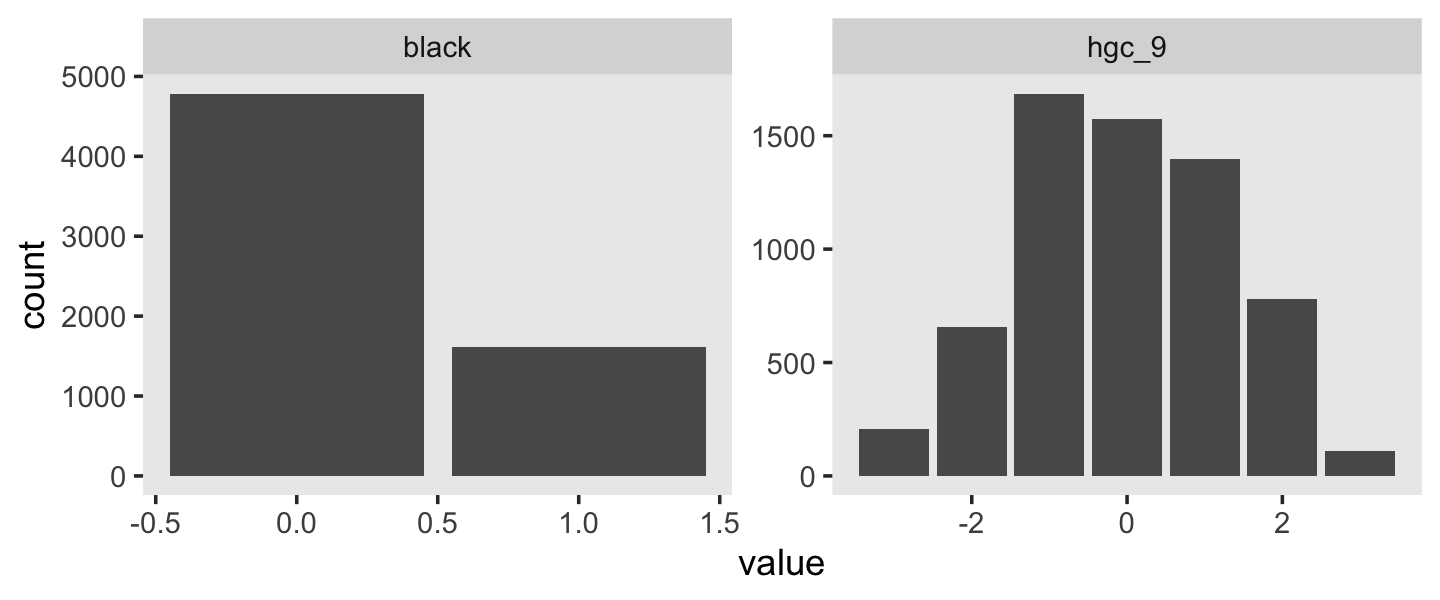

wages_pp %>%

pivot_longer(c(black, hgc_9)) %>%

ggplot(aes(x = value)) +

geom_bar() +

theme(panel.grid = element_blank()) +

facet_wrap(~ name, scales = "free")

We see black is a dummy variable coded “Black” = 1, “Non-black” = 0. hgc_9 is a somewhat Gaussian ordinal centered around zero. For context, it might also help to check its standard deviation.

sd(wages_pp$hgc_9)## [1] 1.347135With a mean near 0 and an \(SD\) near 1, hgc_9 is almost in a standardized metric. If we wanted to keep with our weakly-regularizing approach, normal(0, 1) or even normal(0, 0.5) would be pretty permissive for both these variables. Recall that we’re predicting wage on the log scale. A \(\gamma\) value of 1 or even 0.5 would be humongous for the social sciences. Since we already have the \(\gamma\) for exper set to normal(0, 0.5), let’s just keep with that. Here’s how we might describe our model in statistical terms:

$$ \[\begin{align*} \text{lnw}_{ij} & = \gamma_{00} + \gamma_{01} (\text{hgc}_{i} - 9) + \gamma_{02} \text{black}_{i} \\ & \;\;\; + \gamma_{10} \text{exper}_{ij} + \gamma_{11} \text{exper}_{ij} \times (\text{hgc}_{i} - 9) + \gamma_{12} \text{exper}_{ij} \times \text{black}_{i} \\ & \;\;\; + \zeta_{0i} + \zeta_{1i} \text{exper}_{ij} + \epsilon_{ij} \\ \epsilon_{ij} & \sim \operatorname{Normal} (0, \sigma_\epsilon) \\ \begin{bmatrix} \zeta_{0i} \\ \zeta_{1i} \end{bmatrix} & \sim \operatorname{Normal} \begin{pmatrix} \begin{bmatrix} 0 \\ 0 \end{bmatrix}, \mathbf D \mathbf\Omega \mathbf D' \end{pmatrix} \\ \mathbf D & = \begin{bmatrix} \sigma_0 & 0 \\ 0 & \sigma_1 \end{bmatrix} \\ \mathbf\Omega & = \begin{bmatrix} 1 & \rho_{01} \\ \rho_{01} & 1 \end{bmatrix} \\ \gamma_{00} & \sim \operatorname{Normal}(1.335, 1) \\ \gamma_{01}, \dots, \gamma_{12} & \sim \operatorname{Normal}(0, 0.5) \\ \sigma_\epsilon, \sigma_0, \text{ and } \sigma_1 & \sim \operatorname{Student-t} (3, 0, 1) \\ \rho_{01} & \sim \operatorname{LKJ} (4). \end{align*}\] $$

The top portion up through the \(\mathbf\Omega\) line is the likelihood. Starting with \(\gamma_{00} \sim \text{Normal}(1.335, 1)\) on down, we’ve listed our priors. Here’s how to fit the model with brms.

fit5.4 <-

brm(data = wages_pp,

family = gaussian,

lnw ~ 0 + Intercept + hgc_9 + black + exper + exper:hgc_9 + exper:black + (1 + exper | id),

prior = c(prior(normal(1.335, 1), class = b, coef = Intercept),

prior(normal(0, 0.5), class = b),

prior(student_t(3, 0, 1), class = sd),

prior(student_t(3, 0, 1), class = sigma),

prior(lkj(4), class = cor)),

iter = 2500, warmup = 1000, chains = 3, cores = 3,

seed = 5,

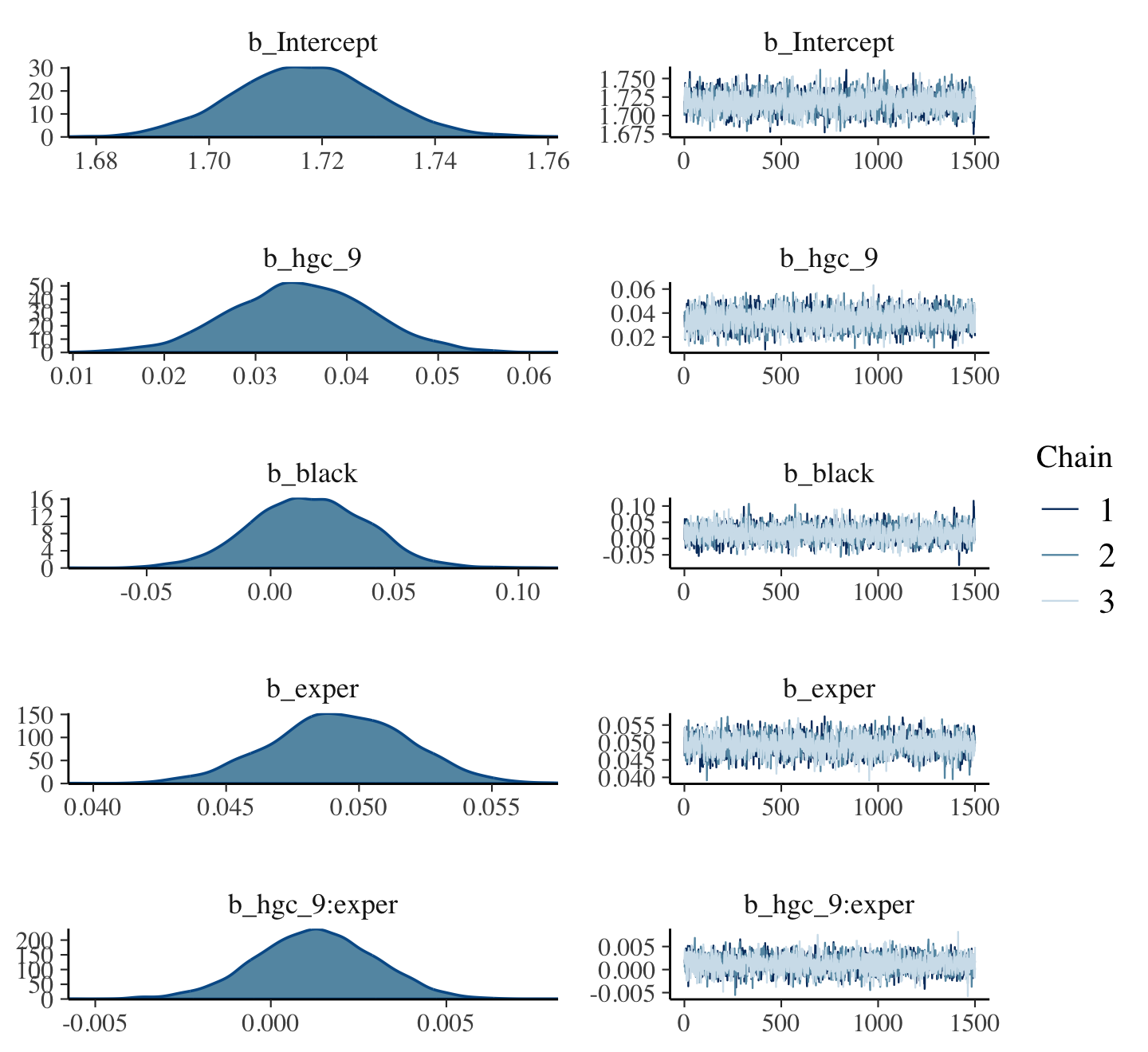

file = "fits/fit05.04")Let’s take a look at the results.

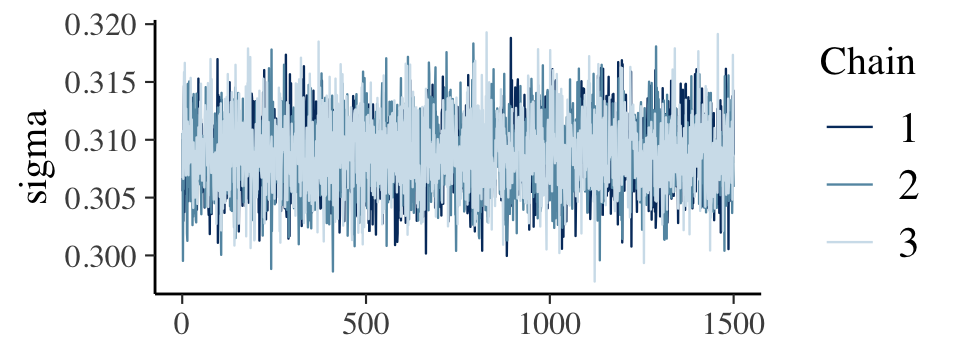

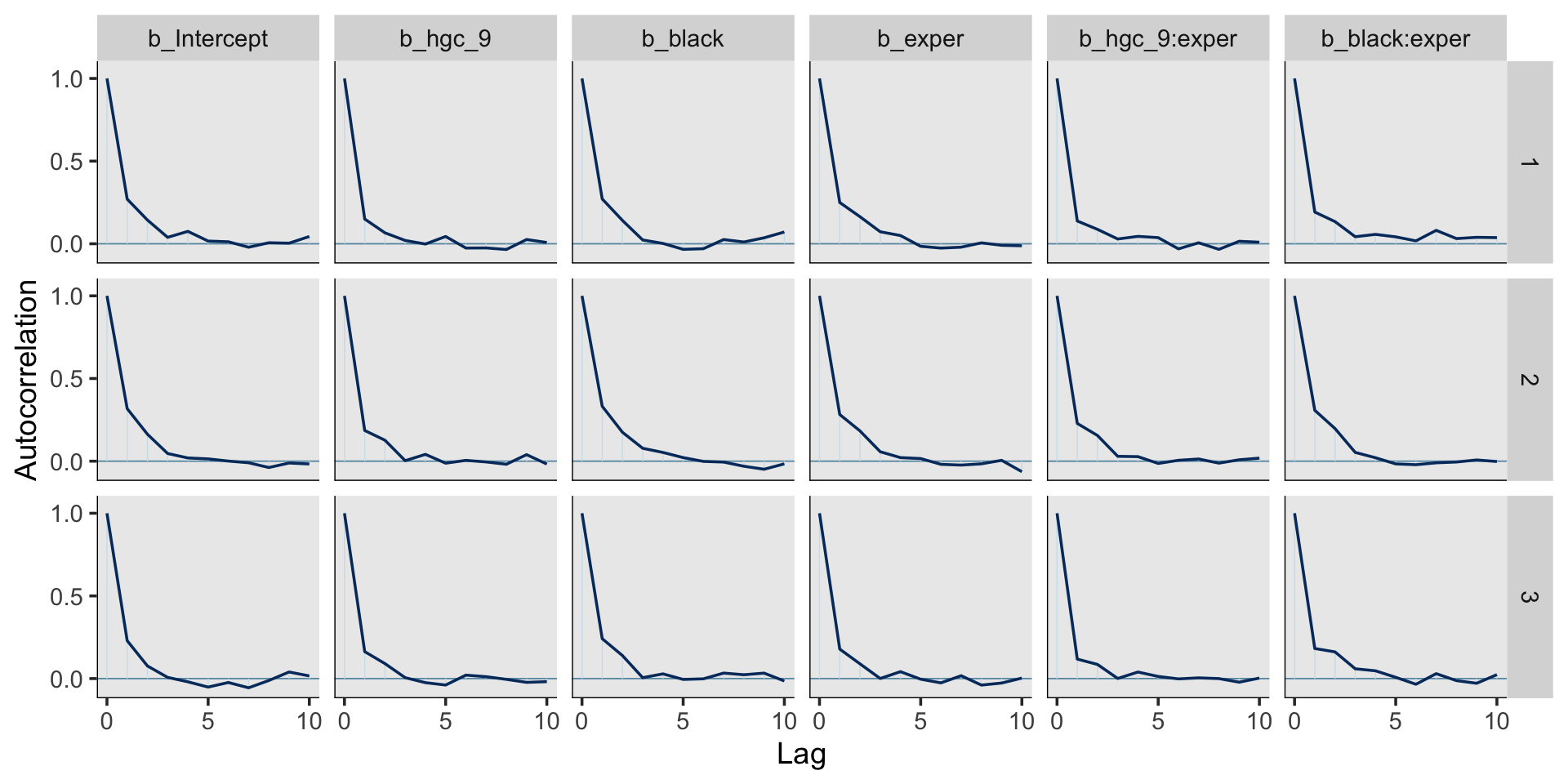

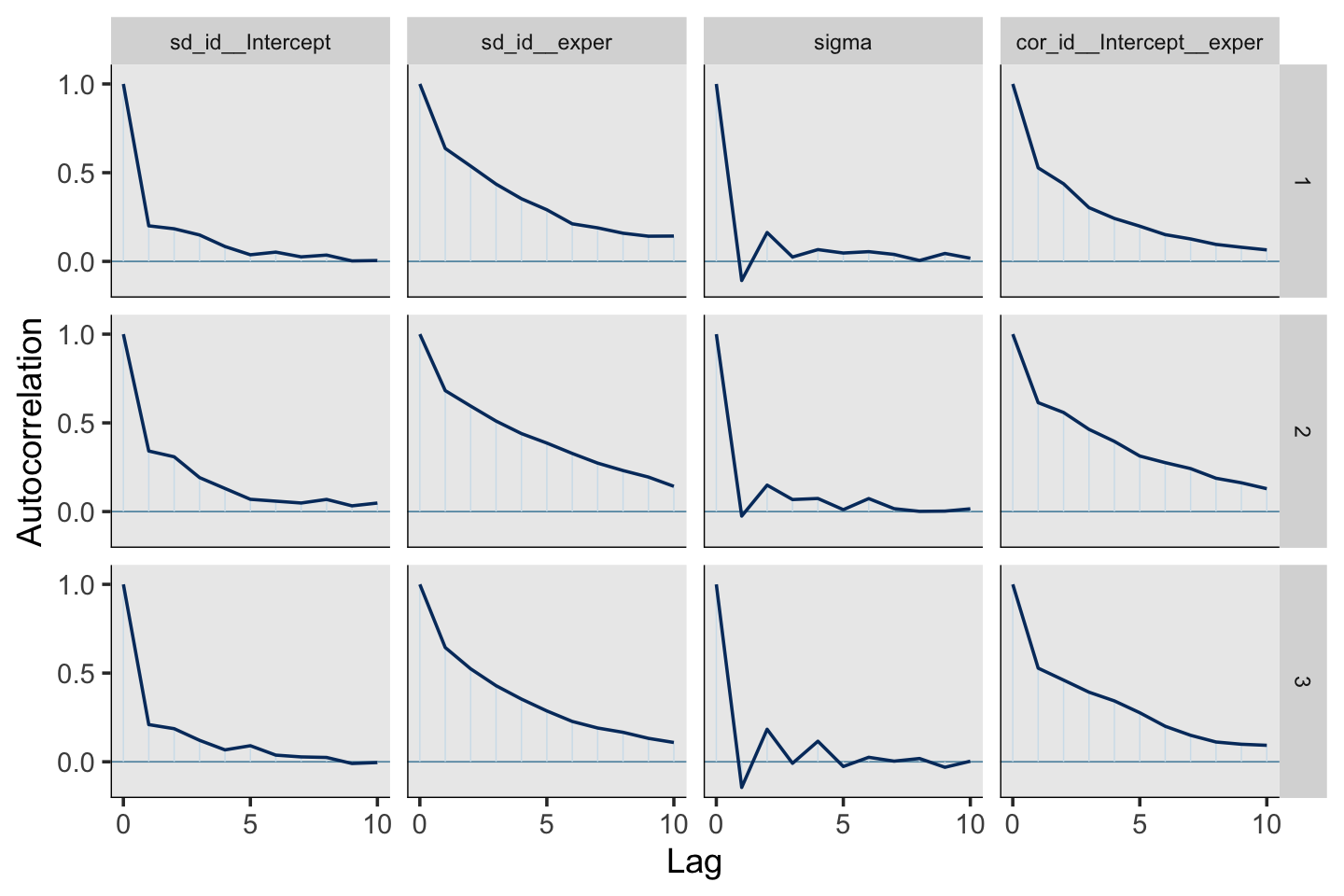

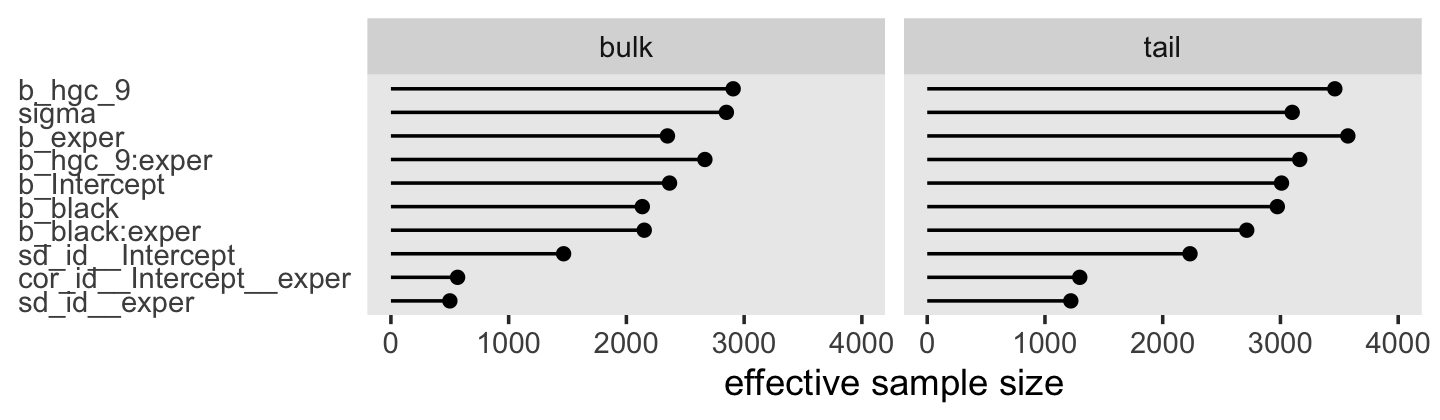

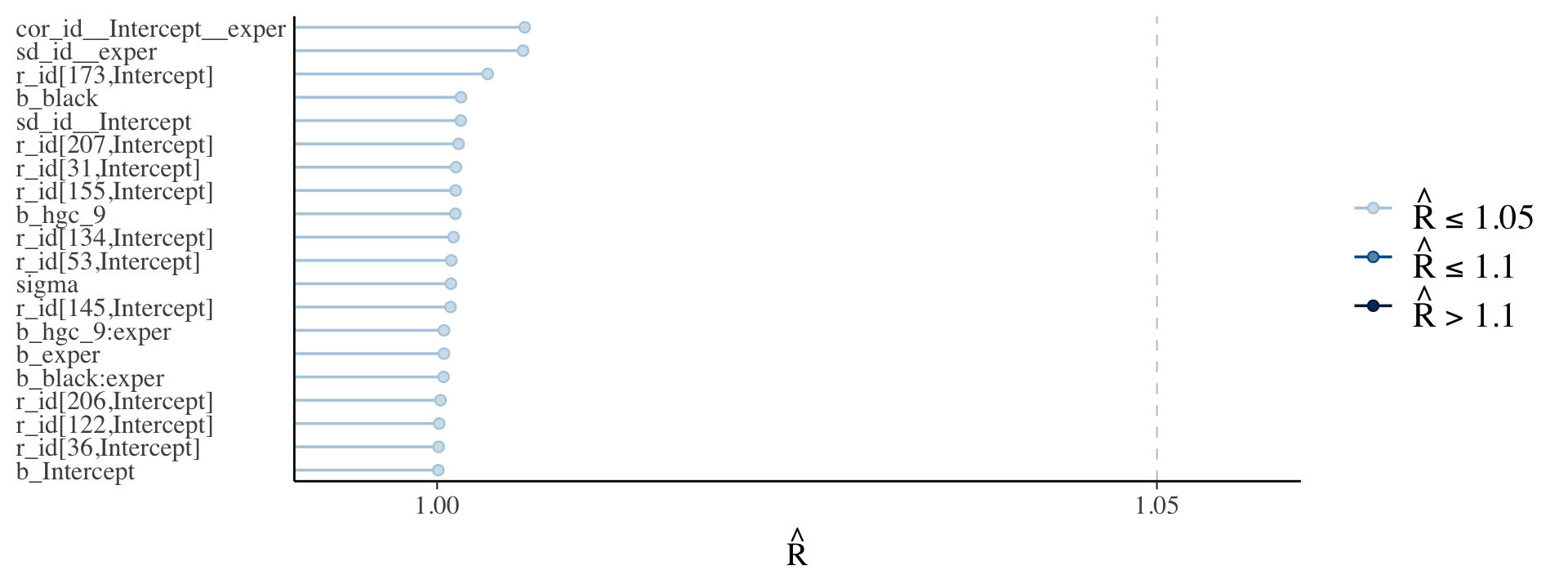

print(fit5.4, digits = 3)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: lnw ~ 0 + Intercept + hgc_9 + black + exper + exper:hgc_9 + exper:black + (1 + exper | id)

## Data: wages_pp (Number of observations: 6402)

## Draws: 3 chains, each with iter = 2500; warmup = 1000; thin = 1;

## total post-warmup draws = 4500

##

## Group-Level Effects:

## ~id (Number of levels: 888)

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sd(Intercept) 0.227 0.011 0.207 0.249 1.002 1467 2232

## sd(exper) 0.040 0.003 0.035 0.046 1.006 501 1219

## cor(Intercept,exper) -0.293 0.071 -0.418 -0.144 1.006 566 1296

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 1.717 0.013 1.693 1.742 1.000 2367 3009

## hgc_9 0.035 0.008 0.020 0.050 1.001 2907 3462

## black 0.016 0.024 -0.030 0.062 1.002 2135 2973

## exper 0.049 0.003 0.044 0.054 1.000 2349 3573

## hgc_9:exper 0.001 0.002 -0.002 0.005 1.000 2667 3164

## black:exper -0.018 0.005 -0.029 -0.007 1.000 2152 2714

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 0.309 0.003 0.303 0.315 1.001 2849 3100

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

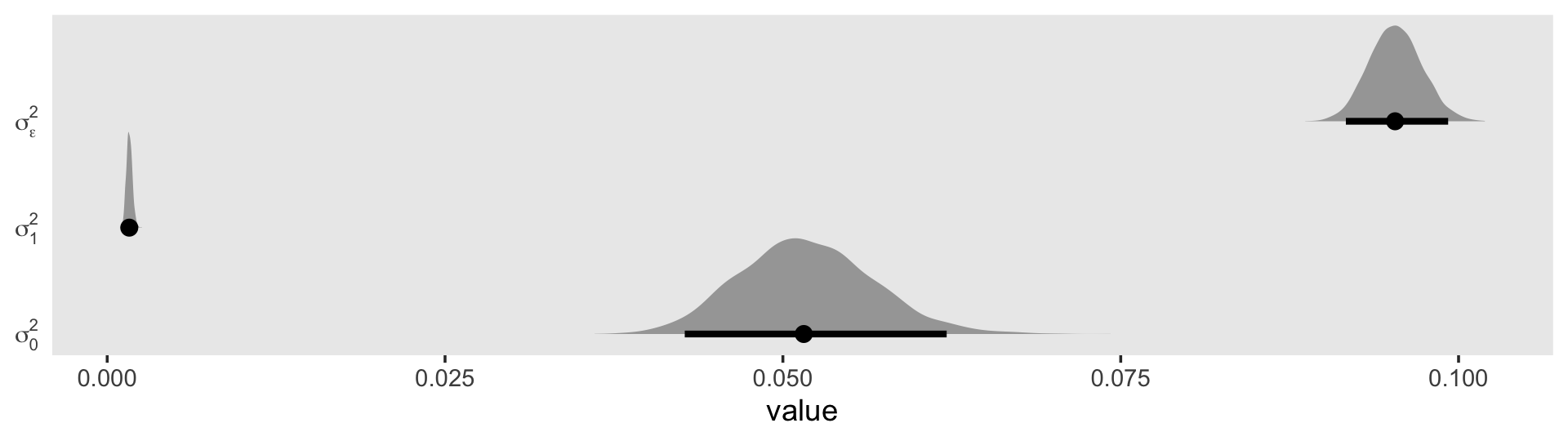

## scale reduction factor on split chains (at convergence, Rhat = 1).The \(\gamma\)’s are on par with those in the text. When we convert the \(\sigma\) parameters to the \(\sigma^2\) metric, here’s what they look like.

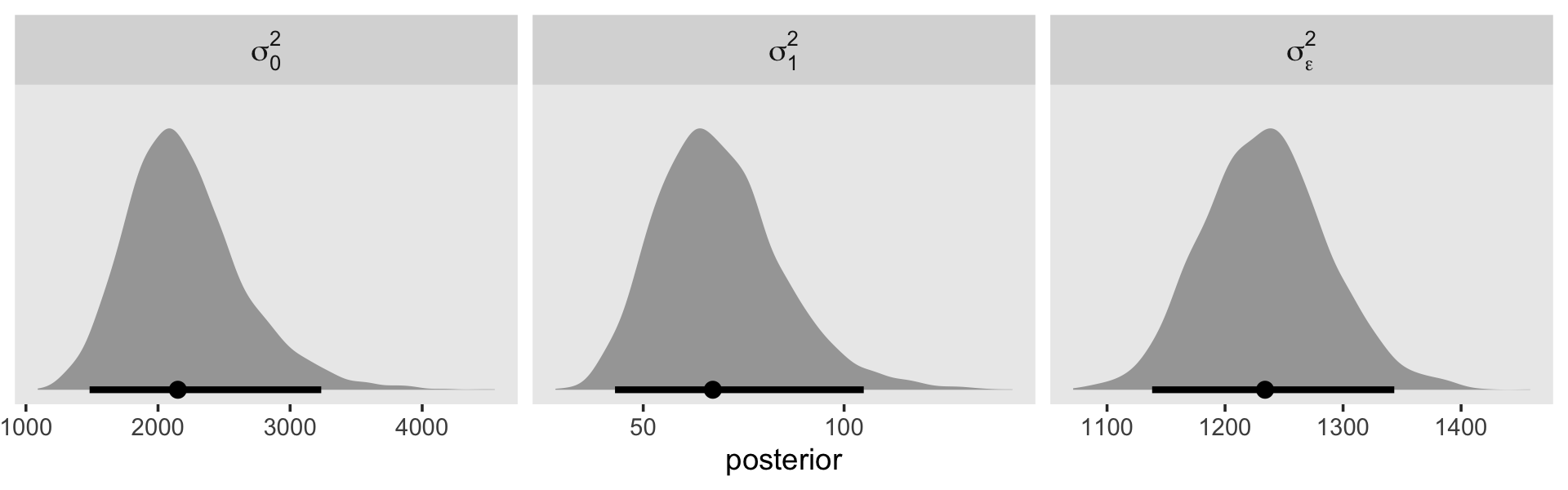

draws <- as_draws_df(fit5.4)

draws %>%

transmute(`sigma[0]^2` = sd_id__Intercept^2,

`sigma[1]^2` = sd_id__exper^2,

`sigma[epsilon]^2` = sigma^2) %>%

pivot_longer(everything()) %>%

ggplot(aes(x = value, y = name)) +

stat_halfeye(.width = .95, normalize = "xy") +

scale_y_discrete(NULL, labels = ggplot2:::parse_safe) +

coord_cartesian(ylim = c(1.4, 3.4)) +

theme(axis.ticks.y = element_blank(),

panel.grid = element_blank())

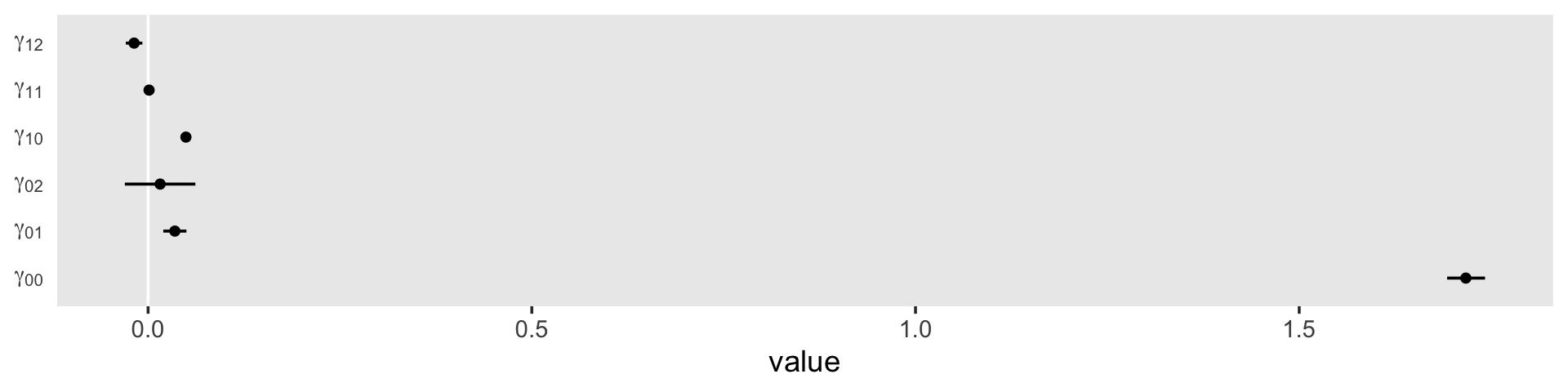

We might plot our \(\gamma\)’s, too. Here we’ll use tidybayes::stat_pointinterval() to just focus on the points and intervals.

draws %>%

select(b_Intercept:`b_black:exper`) %>%

set_names(str_c("gamma", c("[0][0]", "[0][1]", "[0][2]", "[1][0]", "[1][1]", "[1][2]"))) %>%

pivot_longer(everything()) %>%

ggplot(aes(x = value, y = name)) +

geom_vline(xintercept = 0, color = "white") +

stat_pointinterval(.width = .95, size = 1/2) +

scale_y_discrete(NULL, labels = ggplot2:::parse_safe) +

theme(axis.ticks.y = element_blank(),

panel.grid = element_blank())

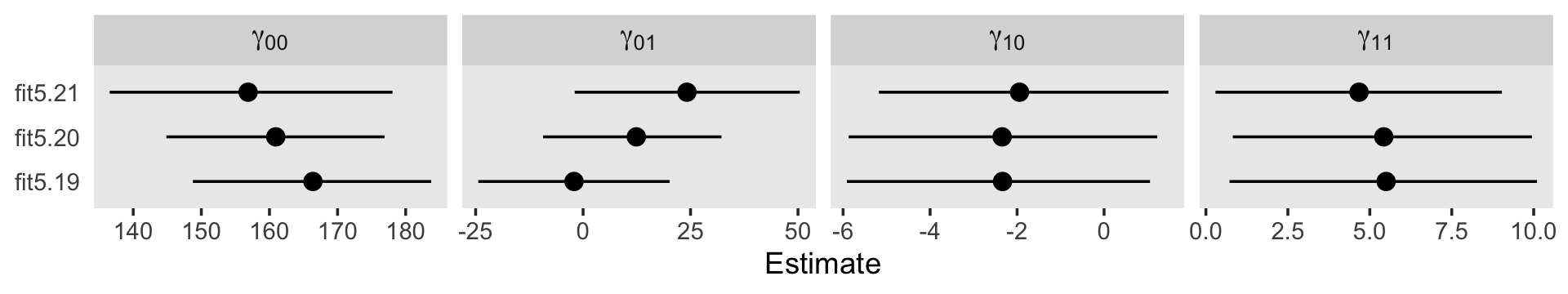

As in the text, our \(\gamma_{02}\) and \(\gamma_{11}\) parameters hovered around zero. For our next model, Model C in Table 5.4, we’ll drop those parameters.

fit5.5 <-

brm(data = wages_pp,

family = gaussian,

lnw ~ 0 + Intercept + hgc_9 + exper + exper:black + (1 + exper | id),

prior = c(prior(normal(1.335, 1), class = b, coef = Intercept),

prior(normal(0, 0.5), class = b),

prior(student_t(3, 0, 1), class = sd),

prior(student_t(3, 0, 1), class = sigma),

prior(lkj(4), class = cor)),

iter = 2500, warmup = 1000, chains = 3, cores = 3,

seed = 5,

file = "fits/fit05.05")Let’s take a look at the results.

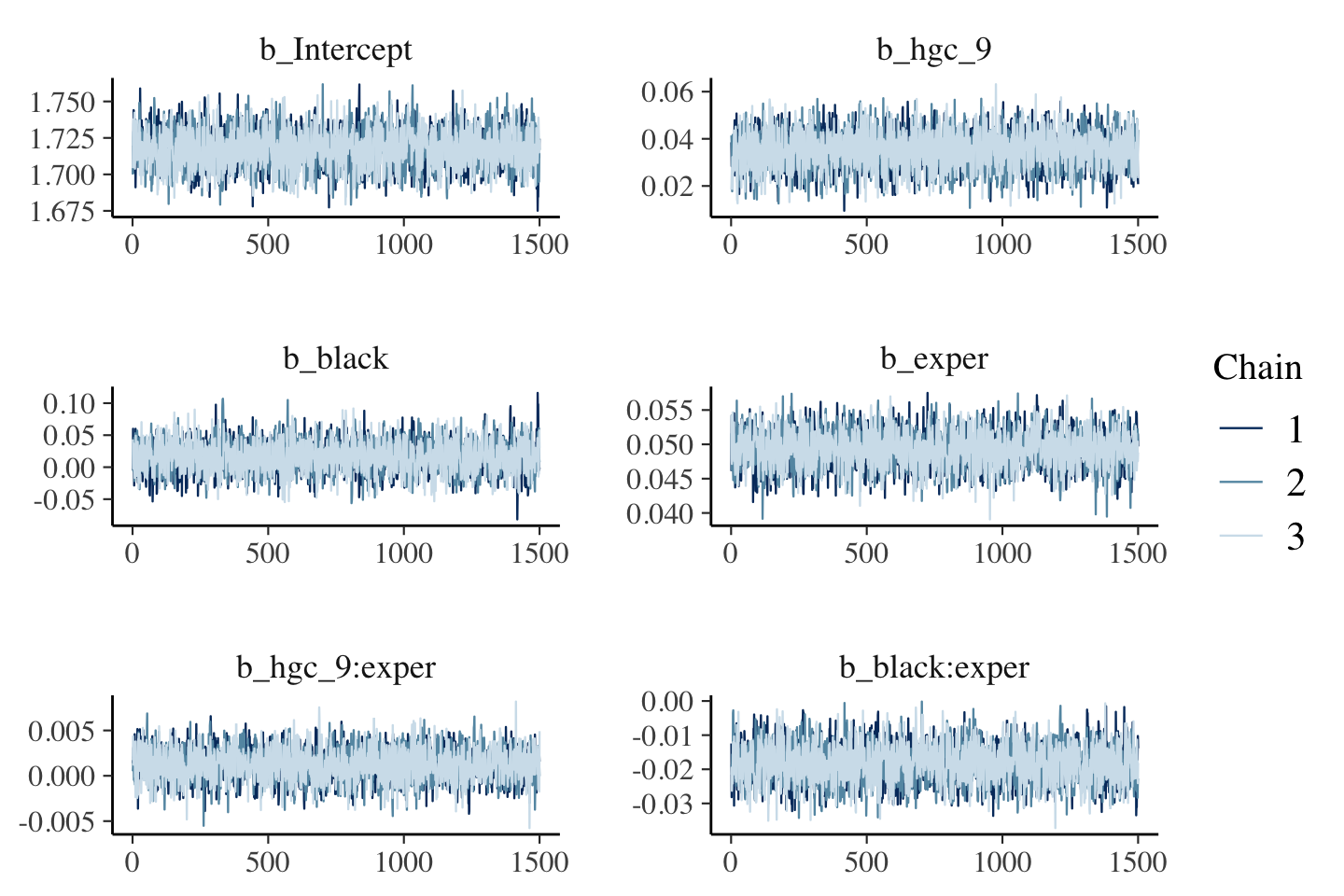

print(fit5.5, digits = 3)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: lnw ~ 0 + Intercept + hgc_9 + exper + exper:black + (1 + exper | id)

## Data: wages_pp (Number of observations: 6402)

## Draws: 3 chains, each with iter = 2500; warmup = 1000; thin = 1;

## total post-warmup draws = 4500

##

## Group-Level Effects:

## ~id (Number of levels: 888)

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sd(Intercept) 0.227 0.011 0.206 0.249 1.000 1687 2519

## sd(exper) 0.041 0.003 0.035 0.046 1.002 651 1287

## cor(Intercept,exper) -0.297 0.069 -0.424 -0.156 1.001 699 1767

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 1.722 0.011 1.701 1.743 1.001 2946 3624

## hgc_9 0.038 0.006 0.025 0.051 1.000 2909 3307

## exper 0.049 0.003 0.044 0.054 1.001 2656 3501

## exper:black -0.016 0.005 -0.025 -0.007 1.001 2448 3128

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 0.309 0.003 0.303 0.315 1.002 3348 3526

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).Perhaps unsurprisingly, the parameter estimates for fit5.5 ended up quite similar to those from fit5.4. Happily, they’re also similar to those in the text. Let’s compute the WAIC estimates.

fit5.3 <- add_criterion(fit5.3, criterion = "waic")

fit5.4 <- add_criterion(fit5.4, criterion = "waic")

fit5.5 <- add_criterion(fit5.5, criterion = "waic")Compare their WAIC estimates using \(\text{elpd}\) difference scores.

loo_compare(fit5.3, fit5.4, fit5.5, criterion = "waic") %>%

print(simplify = F)## elpd_diff se_diff elpd_waic se_elpd_waic p_waic se_p_waic waic se_waic

## fit5.5 0.0 0.0 -2051.2 103.8 866.6 27.0 4102.3 207.5

## fit5.4 -0.2 1.6 -2051.4 103.5 867.8 26.9 4102.8 207.1

## fit5.3 -2.5 4.2 -2053.7 103.5 876.2 27.0 4107.4 207.1The differences are subtle. Here are the WAIC weights.

model_weights(fit5.3, fit5.4, fit5.5, weights = "waic") %>%

round(digits = 3)## fit5.3 fit5.4 fit5.5

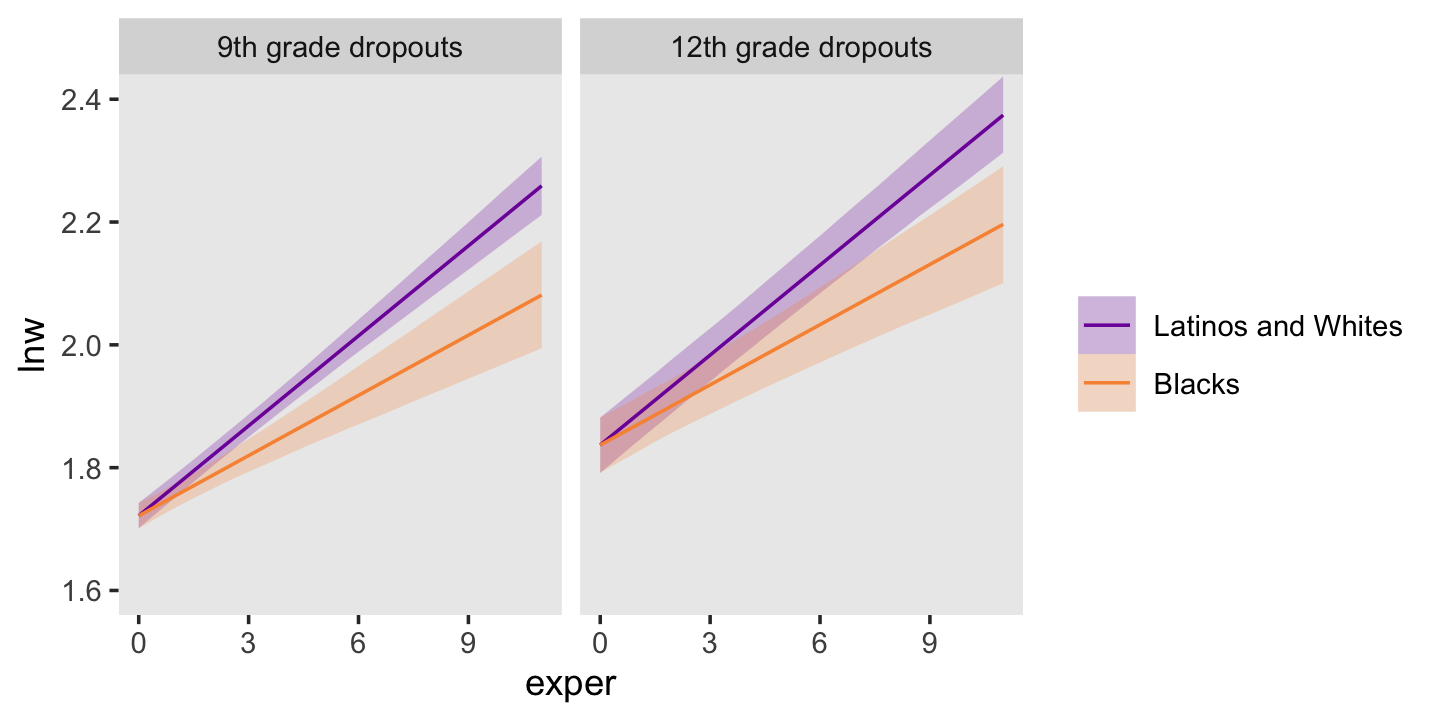

## 0.042 0.424 0.534When we use weights, almost all goes to fit5.4 and fit5.5. Focusing on the trimmed model, fit5.5, let’s get ready to make our version of Figure 5.2. We’ll start with fitted() work.

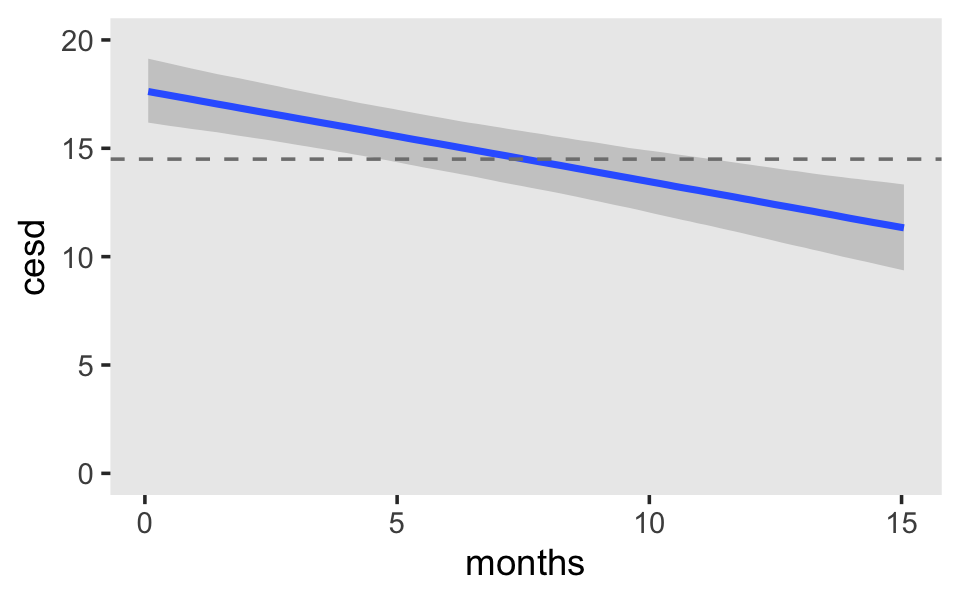

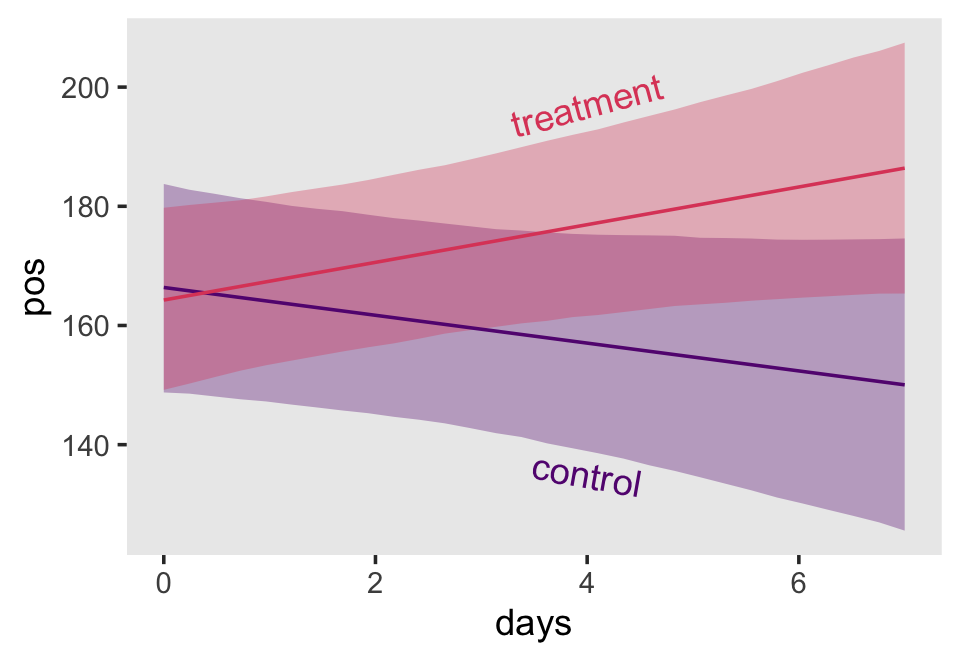

nd <-

crossing(black = 0:1,

hgc_9 = c(0, 3)) %>%

expand_grid(exper = seq(from = 0, to = 11, length.out = 30))

f <-

fitted(fit5.5,

newdata = nd,

re_formula = NA) %>%

data.frame() %>%

bind_cols(nd)

head(f)## Estimate Est.Error Q2.5 Q97.5 black hgc_9 exper

## 1 1.721590 0.010598941 1.701243 1.742709 0 0 0.0000000

## 2 1.740120 0.010144588 1.720661 1.760299 0 0 0.3793103

## 3 1.758651 0.009765267 1.739506 1.778078 0 0 0.7586207

## 4 1.777181 0.009469999 1.758576 1.795902 0 0 1.1379310

## 5 1.795712 0.009266821 1.777720 1.814151 0 0 1.5172414

## 6 1.814242 0.009161863 1.796453 1.832464 0 0 1.8965517Here it is, our two-panel version of Figure 5.2.

f %>%

mutate(black = factor(black,

labels = c("Latinos and Whites", "Blacks")),

hgc_9 = factor(hgc_9,

labels = c("9th grade dropouts", "12th grade dropouts"))) %>%

ggplot(aes(x = exper,

color = black, fill = black)) +

geom_ribbon(aes(ymin = Q2.5, ymax = Q97.5),

size = 0, alpha = 1/4) +

geom_line(aes(y = Estimate)) +

scale_fill_viridis_d(NULL, option = "C", begin = .25, end = .75) +

scale_color_viridis_d(NULL, option = "C", begin = .25, end = .75) +

ylab("lnw") +

coord_cartesian(ylim = c(1.6, 2.4)) +

theme(panel.grid = element_blank()) +

facet_wrap(~ hgc_9)

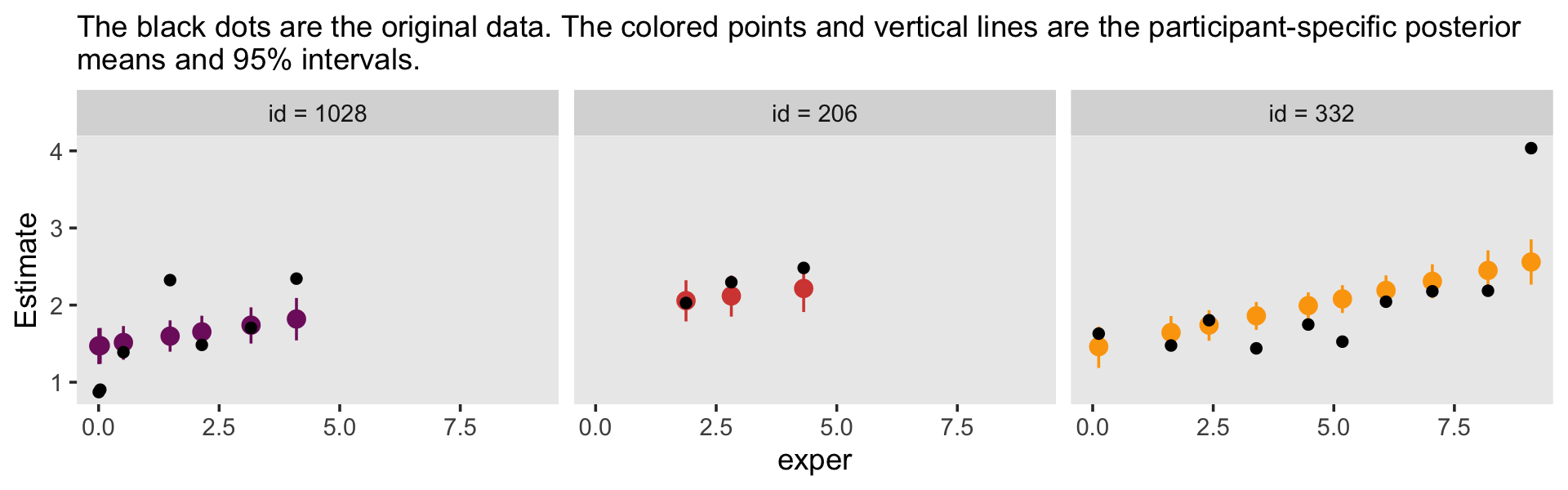

This leads in nicely to a brief discussion of posterior predictive checks (PPC). The basic idea is that good models should be able to retrodict the data used to produce them. Table 5.3 in the text introduced the data set by highlighting three participants and we went ahead and looked at their data in a plot. One way to do a PPC might be to plot their original data atop their model estimates. The fitted() function will help us with the preparatory work.

nd <-

wages_pp %>%

filter(id %in% c(206, 332, 1028))

f <-

fitted(fit5.5,

newdata = nd) %>%

data.frame() %>%

bind_cols(nd)

head(f)## Estimate Est.Error Q2.5 Q97.5 id lnw exper ged postexp black hispanic hgc hgc_9 uerate

## 1 2.057977 0.1382457 1.788240 2.322068 206 2.028 1.874 0 0 0 0 10 1 9.200

## 2 2.118612 0.1377214 1.850584 2.384066 206 2.297 2.814 0 0 0 0 10 1 11.000

## 3 2.215370 0.1559436 1.910120 2.519224 206 2.482 4.314 0 0 0 0 10 1 6.295

## 4 1.461034 0.1366113 1.184824 1.722247 332 1.630 0.125 0 0 0 1 8 -1 7.100

## 5 1.644720 0.1109559 1.420882 1.858243 332 1.476 1.625 0 0 0 1 8 -1 9.600

## 6 1.741217 0.1005240 1.538177 1.934535 332 1.804 2.413 0 0 0 1 8 -1 7.200

## ue.7 ue.centert1 ue.mean ue.person.cen ue1

## 1 2.200 0.000 8.831667 0.3683333 9.2

## 2 4.000 1.800 8.831667 2.1683333 9.2

## 3 -0.705 -2.905 8.831667 -2.5366667 9.2

## 4 0.100 0.000 5.906500 1.1935000 7.1

## 5 2.600 2.500 5.906500 3.6935000 7.1

## 6 0.200 0.100 5.906500 1.2935000 7.1Here’s the plot.

f %>%

mutate(id = str_c("id = ", id)) %>%

ggplot(aes(x = exper)) +

geom_pointrange(aes(y = Estimate, ymin = Q2.5, ymax = Q97.5,

color = id)) +

geom_point(aes(y = lnw)) +

scale_color_viridis_d(option = "B", begin = .35, end = .8) +

labs(subtitle = "The black dots are the original data. The colored points and vertical lines are the participant-specific posterior\nmeans and 95% intervals.") +

theme(legend.position = "none",

panel.grid = element_blank()) +

facet_wrap(~ id)

Although each participant got their own intercept and slope, the estimates all fall in straight lines. Since we’re only working with time-invariant covariates, that’s about the best we can do. Though our models can express gross trends over time, they’re unable to speak to variation from occasion to occasion. Just a little later on in this chapter and we’ll learn how to do better.

5.2.2 Practical problems that may arise when analyzing unbalanced data sets.

With HMC, the issues with non-convergence aren’t quite the same as with maximum likelihood estimation. However, the basic issue still remains:

Estimation of variance components requires that enough people have sufficient data to allow quantification of within-person residual variation–variation in the residuals over and above the fixed effects. If too many people have too little data, you will

be unable to quantify[have difficulty quantifying] this residual variability. (p. 152)

The big difference is that as Bayesians, our priors add additional information that will help us define the posterior distributions of our variance components. Thus our challenge will choosing sensible priors for our \(\sigma\)’s.

5.2.2.1 Boundary constraints.

Unlike with the frequentist multilevel software discussed in the text, brms will not yield negative values on the \(\sigma\) parameters. This is because the brms default is to set a lower limit of zero on those parameters. For example, see what happens when we execute fit5.3$model.

fit5.3$model## // generated with brms 2.19.0

## functions {

## /* compute correlated group-level effects

## * Args:

## * z: matrix of unscaled group-level effects

## * SD: vector of standard deviation parameters

## * L: cholesky factor correlation matrix

## * Returns:

## * matrix of scaled group-level effects

## */

## matrix scale_r_cor(matrix z, vector SD, matrix L) {

## // r is stored in another dimension order than z

## return transpose(diag_pre_multiply(SD, L) * z);

## }

## }

## data {

## int<lower=1> N; // total number of observations

## vector[N] Y; // response variable

## int<lower=1> K; // number of population-level effects

## matrix[N, K] X; // population-level design matrix

## // data for group-level effects of ID 1

## int<lower=1> N_1; // number of grouping levels

## int<lower=1> M_1; // number of coefficients per level

## int<lower=1> J_1[N]; // grouping indicator per observation

## // group-level predictor values

## vector[N] Z_1_1;

## vector[N] Z_1_2;

## int<lower=1> NC_1; // number of group-level correlations

## int prior_only; // should the likelihood be ignored?

## }

## transformed data {

## }

## parameters {

## vector[K] b; // population-level effects

## real<lower=0> sigma; // dispersion parameter

## vector<lower=0>[M_1] sd_1; // group-level standard deviations

## matrix[M_1, N_1] z_1; // standardized group-level effects

## cholesky_factor_corr[M_1] L_1; // cholesky factor of correlation matrix

## }

## transformed parameters {

## matrix[N_1, M_1] r_1; // actual group-level effects

## // using vectors speeds up indexing in loops

## vector[N_1] r_1_1;

## vector[N_1] r_1_2;

## real lprior = 0; // prior contributions to the log posterior

## // compute actual group-level effects

## r_1 = scale_r_cor(z_1, sd_1, L_1);

## r_1_1 = r_1[, 1];

## r_1_2 = r_1[, 2];

## lprior += normal_lpdf(b[1] | 1.335, 1);

## lprior += normal_lpdf(b[2] | 0, 0.5);

## lprior += student_t_lpdf(sigma | 3, 0, 1)

## - 1 * student_t_lccdf(0 | 3, 0, 1);

## lprior += student_t_lpdf(sd_1 | 3, 0, 1)

## - 2 * student_t_lccdf(0 | 3, 0, 1);

## lprior += lkj_corr_cholesky_lpdf(L_1 | 4);

## }

## model {

## // likelihood including constants

## if (!prior_only) {

## // initialize linear predictor term

## vector[N] mu = rep_vector(0.0, N);

## for (n in 1:N) {

## // add more terms to the linear predictor

## mu[n] += r_1_1[J_1[n]] * Z_1_1[n] + r_1_2[J_1[n]] * Z_1_2[n];

## }

## target += normal_id_glm_lpdf(Y | X, mu, b, sigma);

## }

## // priors including constants

## target += lprior;

## target += std_normal_lpdf(to_vector(z_1));

## }

## generated quantities {

## // compute group-level correlations

## corr_matrix[M_1] Cor_1 = multiply_lower_tri_self_transpose(L_1);

## vector<lower=-1,upper=1>[NC_1] cor_1;

## // extract upper diagonal of correlation matrix

## for (k in 1:M_1) {

## for (j in 1:(k - 1)) {

## cor_1[choose(k - 1, 2) + j] = Cor_1[j, k];

## }

## }

## }That returned the Stan code corresponding to our brms::brm() code, above. Notice the second and third lines in the parameters block. Both contained <lower=0>, which indicated the lower bounds for those parameters was zero. See? Stan has you covered.

Let’s load the wages_small_pp.csv data.

wages_small_pp <- read_csv("data/wages_small_pp.csv") %>%

rename(hgc_9 = hcg.9)

glimpse(wages_small_pp)## Rows: 257

## Columns: 5

## $ id <dbl> 206, 206, 206, 266, 304, 329, 329, 329, 336, 336, 336, 394, 394, 394, 518, 518, 541,…

## $ lnw <dbl> 2.028, 2.297, 2.482, 1.808, 1.842, 1.422, 1.308, 1.885, 1.892, 1.279, 2.224, 2.383, …

## $ exper <dbl> 1.874, 2.814, 4.314, 0.322, 0.580, 0.016, 0.716, 1.756, 1.910, 2.514, 3.706, 1.890, …

## $ black <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 1, 1, 1, 1, 1, 1, 0, 0, 0, …

## $ hgc_9 <dbl> 1, 1, 1, 0, -1, -1, -1, -1, -1, -1, -1, 1, 1, 1, -1, -1, 1, 1, 1, 1, 1, 1, 2, -1, 0,…Here’s the distribution of the number of measurement occasions for our small data set.

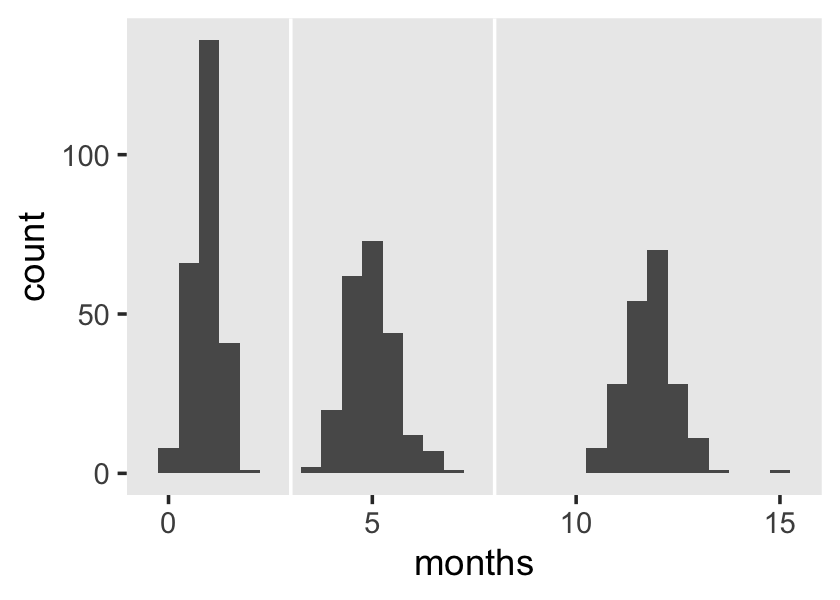

wages_small_pp %>%

count(id) %>%

ggplot(aes(y = n)) +

geom_bar() +

scale_y_continuous("# measurement occasions", breaks = 1:13, limits = c(.5, 13)) +

xlab("count of cases") +

theme(panel.grid = element_blank())

Our brm() code is the same as that for fit5.5, above, with just a slightly different data argument. If we wanted to, we could be hasty and just use update(), instead. But since we’re still practicing setting our priors and such, here we’ll be exhaustive.

fit5.6 <-

brm(data = wages_small_pp,

family = gaussian,

lnw ~ 0 + Intercept + hgc_9 + exper + exper:black + (1 + exper | id),

prior = c(prior(normal(1.335, 1), class = b, coef = Intercept),

prior(normal(0, 0.5), class = b),

prior(student_t(3, 0, 1), class = sd),

prior(student_t(3, 0, 1), class = sigma),

prior(lkj(4), class = cor)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 5,

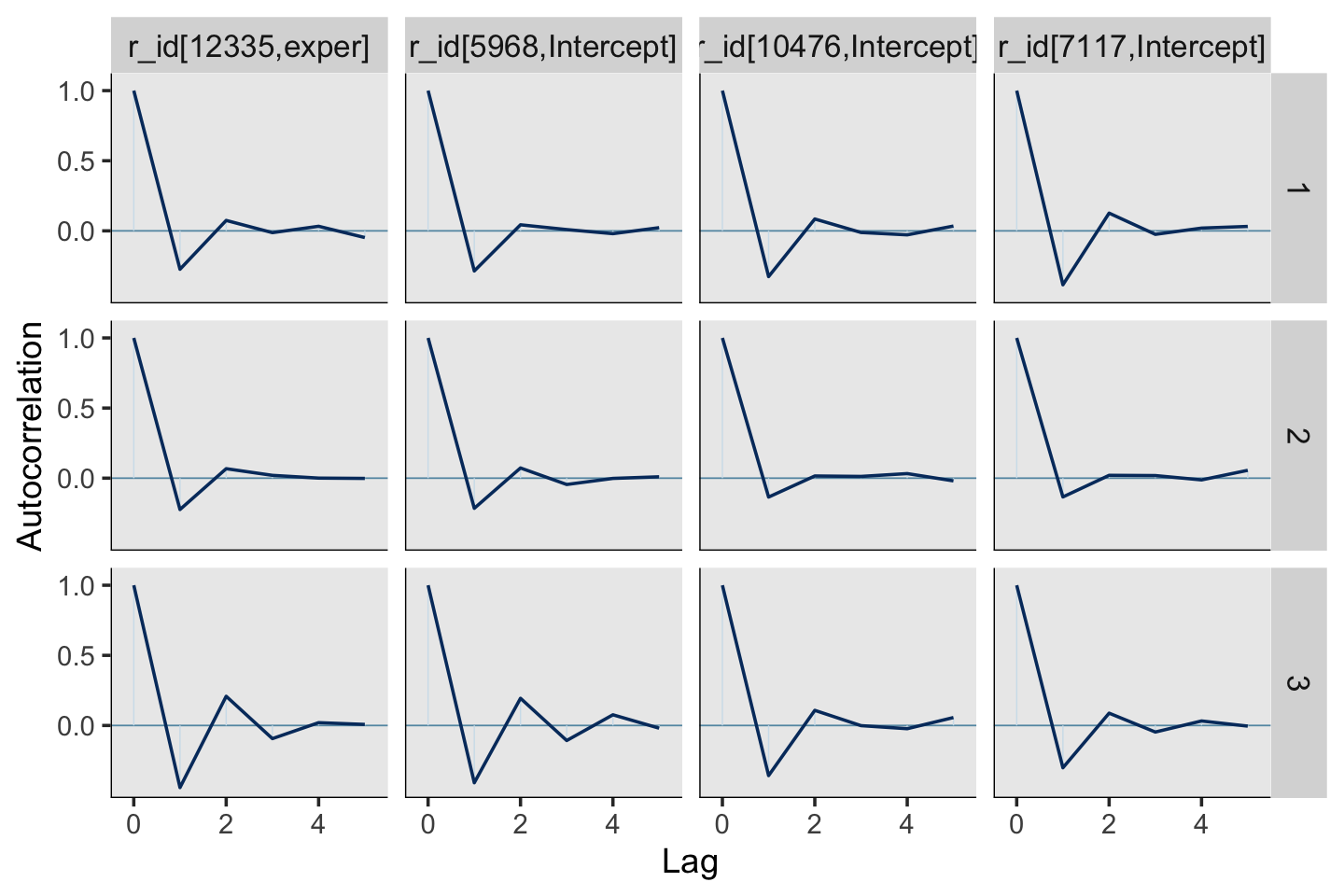

file = "fits/fit05.06")print(fit5.6)## Warning: There were 28 divergent transitions after warmup. Increasing adapt_delta above 0.8 may

## help. See http://mc-stan.org/misc/warnings.html#divergent-transitions-after-warmup## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: lnw ~ 0 + Intercept + hgc_9 + exper + exper:black + (1 + exper | id)

## Data: wages_small_pp (Number of observations: 257)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Group-Level Effects:

## ~id (Number of levels: 124)

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sd(Intercept) 0.29 0.05 0.19 0.38 1.01 681 628

## sd(exper) 0.04 0.03 0.00 0.11 1.02 338 213

## cor(Intercept,exper) -0.05 0.31 -0.62 0.59 1.00 974 648

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 1.74 0.05 1.64 1.83 1.00 2166 2413

## hgc_9 0.05 0.02 -0.00 0.10 1.00 2186 2537

## exper 0.05 0.02 0.00 0.10 1.00 2283 1898

## exper:black -0.05 0.04 -0.13 0.02 1.00 1686 2786

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 0.34 0.02 0.30 0.39 1.00 1118 2328

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).Let’s walk through this slow.

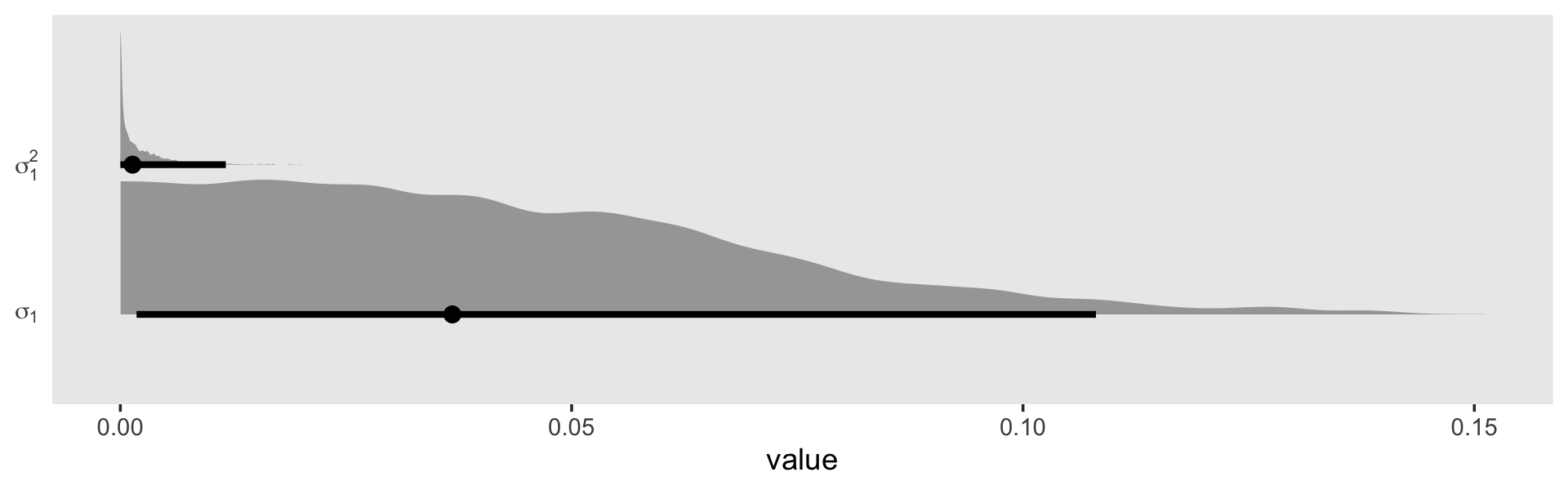

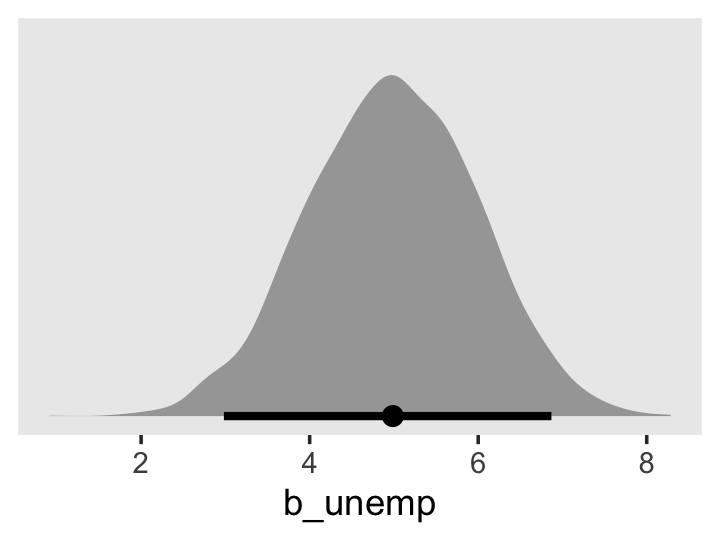

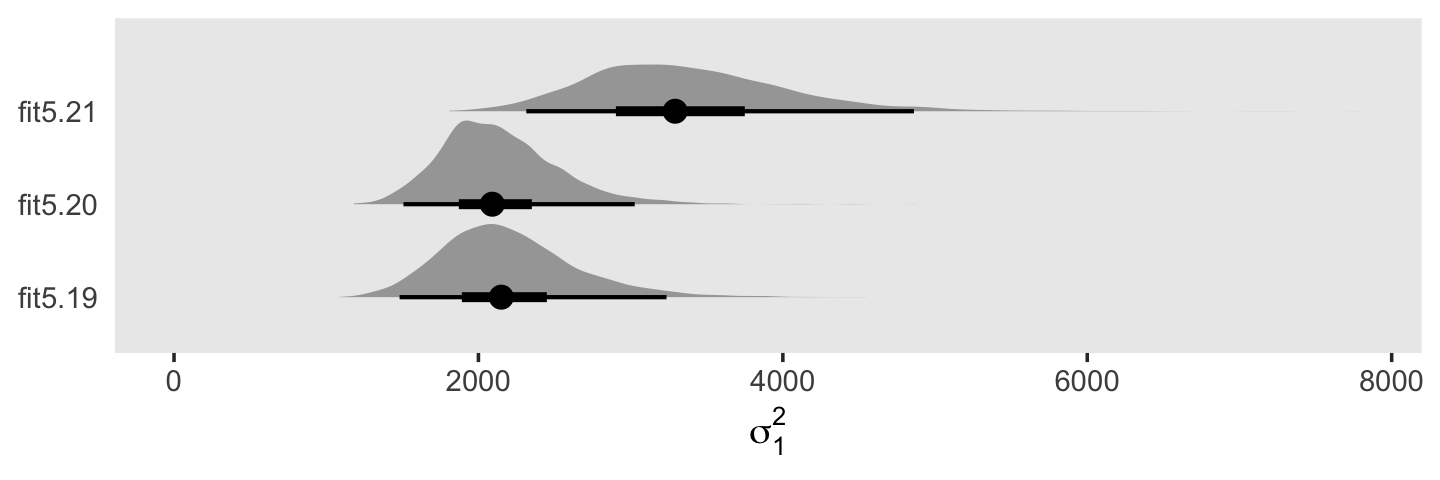

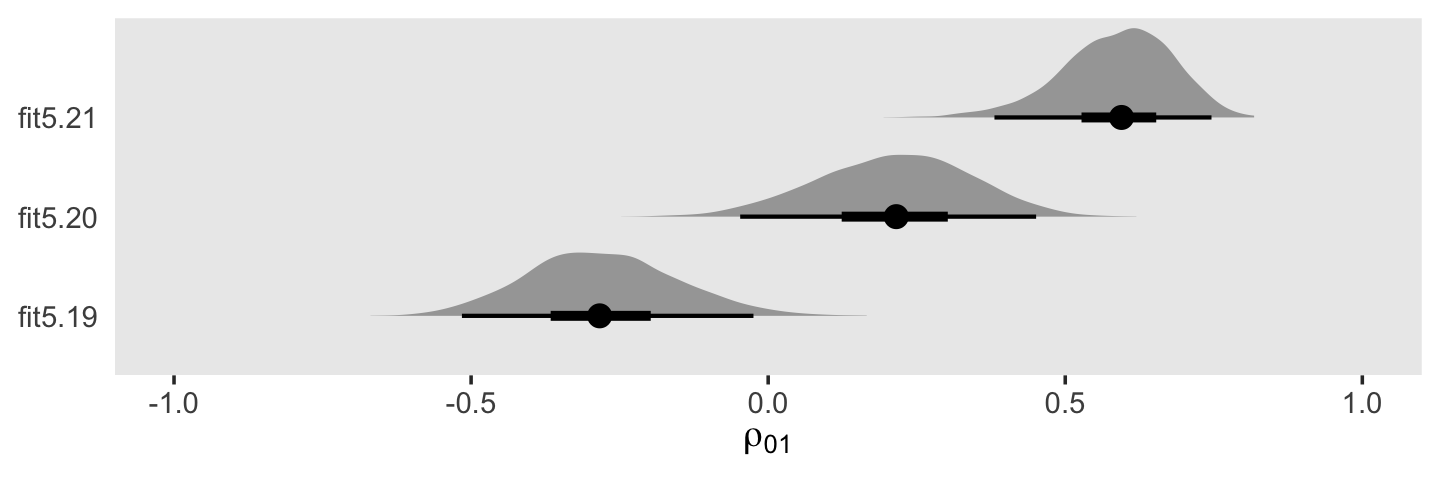

You may have noticed that warning message about divergent transitions. We’ll get to that in a bit. First focus on the parameter estimates for sd(exper). Unlike in the text, our posterior mean is not 0.000. But do remember that our posterior is parameterized in the \(\sigma\) metric. Let’s do a little converting and look at it in a plot.

draws <- as_draws_df(fit5.6)

v <-

draws %>%

transmute(sigma_1 = sd_id__exper) %>%

mutate(sigma_2_1 = sigma_1^2) %>%

set_names("sigma[1]", "sigma[1]^2") %>%

pivot_longer(everything())Plot.

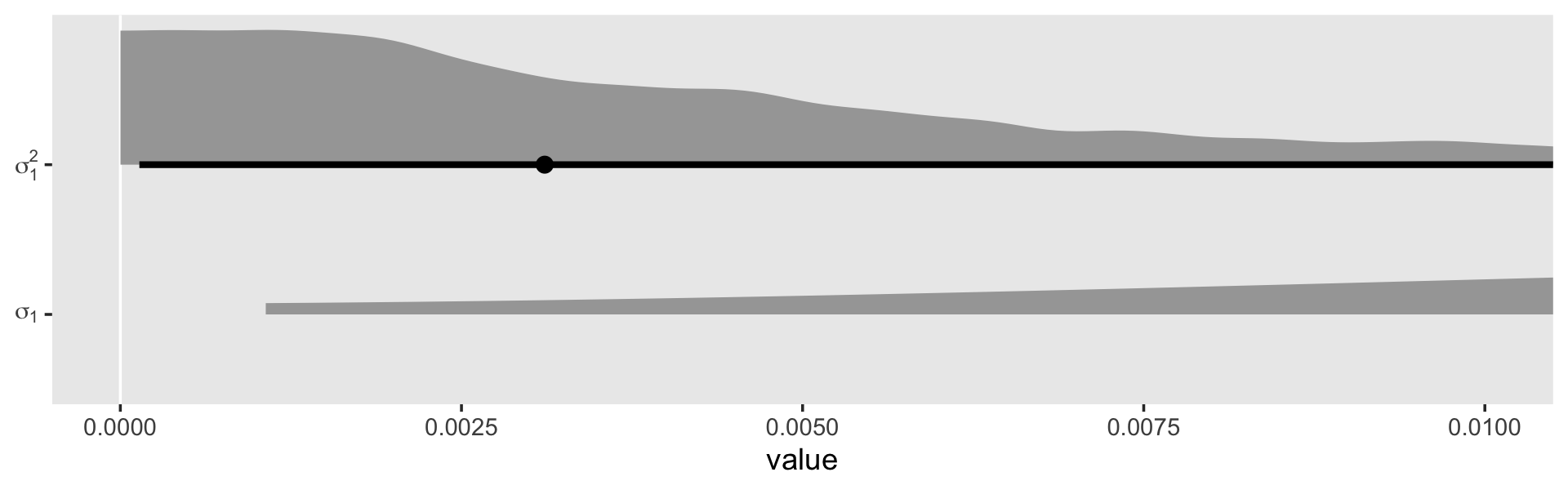

v %>%

ggplot(aes(x = value, y = name)) +

stat_halfeye(.width = .95, normalize = "xy") +

scale_y_discrete(NULL, labels = parse(text = c("sigma[1]", "sigma[1]^2"))) +

theme(axis.ticks.y = element_blank(),

panel.grid = element_blank())

In the \(\sigma\) metric, the posterior is bunched up a little on the boundary, but much of its mass is a gently right-skewed mound concentrated in the 0—0.1 range. When we convert the posterior to the \(\sigma^2\) metric, the parameter appears much more bunched up against the boundary. Because we typically summarize our posteriors with means or medians, the point estimate still moves away from zero.

v %>%

group_by(name) %>%

mean_qi() %>%

mutate_if(is.double, round, digits = 4)## # A tibble: 2 × 7

## name value .lower .upper .width .point .interval

## <chr> <dbl> <dbl> <dbl> <dbl> <chr> <chr>

## 1 sigma[1] 0.0412 0.0018 0.108 0.95 mean qi

## 2 sigma[1]^2 0.0025 0 0.0117 0.95 mean qiv %>%

group_by(name) %>%

median_qi() %>%

mutate_if(is.double, round, digits = 4)## # A tibble: 2 × 7

## name value .lower .upper .width .point .interval

## <chr> <dbl> <dbl> <dbl> <dbl> <chr> <chr>

## 1 sigma[1] 0.0368 0.0018 0.108 0.95 median qi

## 2 sigma[1]^2 0.0014 0 0.0117 0.95 median qiBut it really does start to shoot to zero if we attempt to summarize the central tendency with the mode, as within the maximum likelihood paradigm.

v %>%

group_by(name) %>%

mode_qi() %>%

mutate_if(is.double, round, digits = 4)## # A tibble: 2 × 7

## name value .lower .upper .width .point .interval

## <chr> <dbl> <dbl> <dbl> <dbl> <chr> <chr>

## 1 sigma[1] 0.0159 0.0018 0.108 0.95 mode qi

## 2 sigma[1]^2 0 0 0.0117 0.95 mode qiBacking up to that warning message, we were informed that “Increasing adapt_delta above 0.8 may help.” The adapt_delta parameter ranges from 0 to 1. The brm() default is .8. In my experience, increasing to .9 or .99 is often a good place to start. For this model, .9 wasn’t quite enough, but .99 worked. Here’s how to do it.

fit5.7 <-

brm(data = wages_small_pp,

family = gaussian,

lnw ~ 0 + Intercept + hgc_9 + exper + exper:black + (1 + exper | id),

prior = c(prior(normal(1.335, 1), class = b, coef = Intercept),

prior(normal(0, 0.5), class = b),

prior(student_t(3, 0, 1), class = sd),

prior(student_t(3, 0, 1), class = sigma),

prior(lkj(4), class = cor)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 5,

control = list(adapt_delta = .99),

file = "fits/fit05.07")Now look at the summary.

print(fit5.7)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: lnw ~ 0 + Intercept + hgc_9 + exper + exper:black + (1 + exper | id)

## Data: wages_small_pp (Number of observations: 257)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Group-Level Effects:

## ~id (Number of levels: 124)

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sd(Intercept) 0.28 0.05 0.19 0.37 1.00 1076 1236

## sd(exper) 0.04 0.03 0.00 0.10 1.02 321 791

## cor(Intercept,exper) -0.05 0.32 -0.62 0.60 1.00 2443 2768

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 1.74 0.05 1.64 1.83 1.00 2364 2688

## hgc_9 0.05 0.02 -0.00 0.10 1.00 1981 2913

## exper 0.05 0.02 0.01 0.09 1.00 2410 2746

## exper:black -0.05 0.04 -0.12 0.02 1.00 2841 3135

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 0.34 0.02 0.30 0.39 1.00 1398 2111

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).Our estimates were pretty much the same as before. Happily, this time we got our summary without any warning signs. It won’t always be that way, so make sure to take adapt_delta warnings seriously.

Now do note that for both fit5.6 and fit5.7, our effective sample sizes for \(\sigma_0\) and \(\sigma_1\) aren’t terribly large relative to the total number of post-warmup draws, 4,000. If it was really important that you had high-quality summary statistics for these parameters, you might need to refit the model with something like iter = 20000, warmup = 2000.

In Model B in Table 5.5, Singer and Willett gave the results of a model with the boundary constraints on the \(\sigma^2\) parameters removed. I am not going to attempt something like that with brms. If you’re interested, you’re on your own.

But we will fit a version of their Model C where we’ve removed the \(\sigma_1\) parameter. Notice that this results in our removal of the LKJ prior for \(\rho_{01}\), too. Without a \(\sigma_1\), there’s no other parameter with which our lonely \(\sigma_0\) might covary.

fit5.8 <-

brm(data = wages_small_pp,

family = gaussian,

lnw ~ 0 + Intercept + hgc_9 + exper + exper:black + (1 | id),

prior = c(prior(normal(1.335, 1), class = b, coef = Intercept),

prior(normal(0, 0.5), class = b),

prior(student_t(3, 0, 1), class = sd),

prior(student_t(3, 0, 1), class = sigma)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 5,

file = "fits/fit05.08")Here is the basic model summary.

print(fit5.8)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: lnw ~ 0 + Intercept + hgc_9 + exper + exper:black + (1 | id)

## Data: wages_small_pp (Number of observations: 257)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Group-Level Effects:

## ~id (Number of levels: 124)

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sd(Intercept) 0.30 0.04 0.22 0.37 1.00 975 2080

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 1.74 0.05 1.64 1.83 1.00 2494 3027

## hgc_9 0.05 0.03 -0.01 0.10 1.00 2323 2477

## exper 0.05 0.02 0.01 0.10 1.00 2504 2850

## exper:black -0.06 0.04 -0.13 0.01 1.00 3029 2647

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 0.34 0.02 0.30 0.39 1.00 1616 2259

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).No warning messages and our effective samples for \(\sigma_0\) improved a bit. Compute the WAIC for both models.

fit5.7 <- add_criterion(fit5.7, criterion = "waic")

fit5.8 <- add_criterion(fit5.8, criterion = "waic")Compare.

loo_compare(fit5.7, fit5.8, criterion = "waic") %>%

print(simplify = F, digits = 3)## elpd_diff se_diff elpd_waic se_elpd_waic p_waic se_p_waic waic se_waic

## fit5.8 0.000 0.000 -132.245 20.411 70.235 11.916 264.490 40.821

## fit5.7 -1.822 2.195 -134.067 21.880 71.175 13.388 268.135 43.760Yep. Those WAIC estimates are quite similar and when you compare them with formal \(\text{elpd}\) difference scores, the standard error is about the same size as the difference itself.

Though we’re stepping away from the text a bit, we should explore more alternatives for this boundary issue. The Stan team has put together a Prior Choice Recommendations wiki at https://github.com/stan-dev/stan/wiki/Prior-Choice-Recommendations. In the Boundary-avoiding priors for modal estimation (posterior mode, MAP, marginal posterior mode, marginal maximum likelihood, MML) section, we read:

- These are for parameters such as group-level scale parameters, group-level correlations, group-level covariance matrix

- What all these parameters have in common is that (a) they’re defined on a space with a boundary, and (b) the likelihood, or marginal likelihood, can have a mode on the boundary. Most famous example is the group-level scale parameter tau for the 8-schools hierarchical model.

- With full Bayes the boundary shouldn’t be a problem (as long as you have any proper prior).

- But with modal estimation, the estimate can be on the boundary, which can create problems in posterior predictions. For example, consider a varying-intercept varying-slope multilevel model which has an intercept and slope for each group. Suppose you fit marginal maximum likelihood and get a modal estimate of 1 for the group-level correlation. Then in your predictions the intercept and slope will be perfectly correlated, which in general will be unrealistic.

- For a one-dimensional parameter restricted to be positive (e.g., the scale parameter in a hierarchical model), we recommend Gamma(2,0) prior (that is, p(tau) proportional to tau) which will keep the mode away from 0 but still allows it to be arbitrarily close to the data if that is what the likelihood wants. For details see this paper by Chung et al.: http://www.stat.columbia.edu/~gelman/research/published/chung_etal_Pmetrika2013.pdf

- Gamma(2,0) biases the estimate upward. When number of groups is small, try Gamma(2,1/A), where A is a scale parameter representing how high tau can be.

We should walk those Gamma priors out, a bit. The paper by Chung et al. (2013) is quite helpful. We’ll first let them give us a little more background in the topic:

Zero group-level variance estimates can cause several problems. Zero variance can go against prior knowledge of researchers and results in underestimation of uncertainty in fixed coefficient estimates. Inferences for groups are often of interest to researchers, but when the group-level variance is estimated as zero, the resulting predictions of the group-level errors will all be zero, so one fails to find unexplained differences between groups. In addition, uncertainty in predictions for new and existing groups is also understated. (p. 686)

They expounded further on page 687.

When a variance parameter is estimated as zero, there is typically a large amount of uncertainty about this variance. One possibility is to declare in such situations that not enough information is available to estimate a multilevel model. However, the available alternatives can be unappealing since, as noted in the introduction, discarding a variance component or setting the variance to zero understates the uncertainty. In particular, standard errors for coefficients of covariates that vary between groups will be too low as we will see in Section 2.2. The other extreme is to fit a regression with indicators for groups (a fixed-effects model), but this will overcorrect for group effects (it is mathematically equivalent to a mixed-effects model with variance set to infinity), and also does not allow predictions for new groups.

Degenerate variance estimates lead to complete shrinkage of predictions for new and existing groups and yield estimated prediction standard errors that understate uncertainty. This problem has been pointed out by Li and Lahiri (2010) and Morris and Tang (2011) in small area estimation….

If zero variance is not a null hypothesis of interest, a boundary estimate, and the corresponding zero likelihood ratio test statistic, should not necessarily lead us to accept the null hypothesis and to proceed as if the true variance is zero.

In their paper, they covered both penalized maximum likelihood and full Bayesian estimation. We’re just going to focus on Bayes, but some of the quotes will contain ML talk. Further, we read:

We recommend a class of log-gamma penalties (or gamma priors) that in our default setting (the log-gamma(2, \(\lambda\)) penalty with \(\lambda \rightarrow 0\)) produce maximum penalized likelihood (MPL) estimates (or Bayes modal estimates) approximately one standard error away from zero when the maximum likelihood estimate is at zero. We consider these priors to be weakly informative in the sense that they supply some direction but still allow inference to be driven by the data. The penalty has little influence when the number of groups is large or when the data are informative about the variance, and the asymptotic mean squared error of the proposed estimator is the same as that of the maximum likelihood estimator. (p. 686)

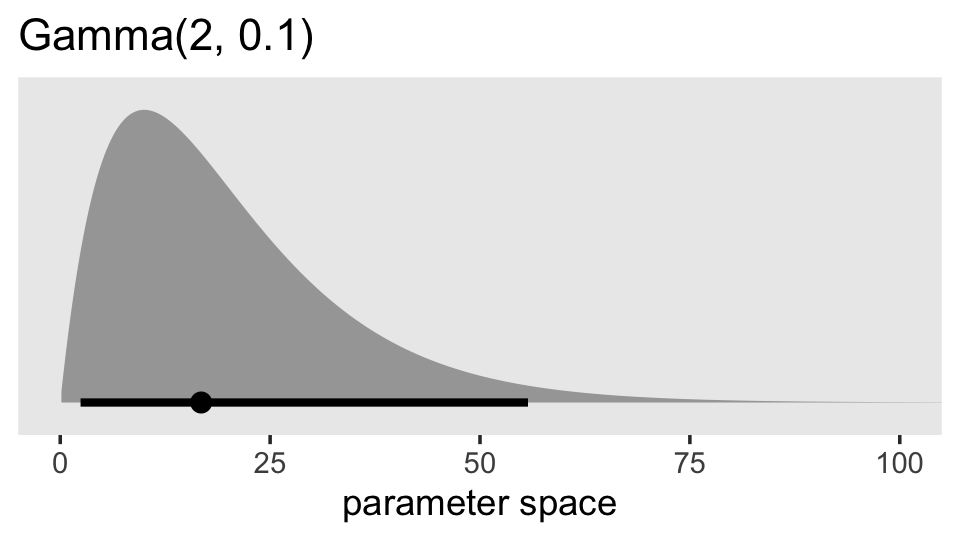

In the upper left panel of Figure 3, Chung and colleagues gave an example of what they mean by \(\lambda \rightarrow 0\): \(\lambda = 0.1\). Here’s an example of what \(\operatorname{Gamma}(2, 0.1)\) looks like across the parameter space of 0 to 100.

library(ggdist)

prior(gamma(2, 0.1)) %>%

parse_dist() %>%

ggplot(aes(xdist = .dist_obj, y = prior)) +

stat_halfeye(.width = .95, p_limits = c(.0001, .9999)) +

scale_y_discrete(NULL, breaks = NULL, expand = expansion(add = 0.1)) +

labs(title = "Gamma(2, 0.1)",

x = "parameter space") +

coord_cartesian(xlim = c(0, 100)) +

theme(panel.grid = element_blank())

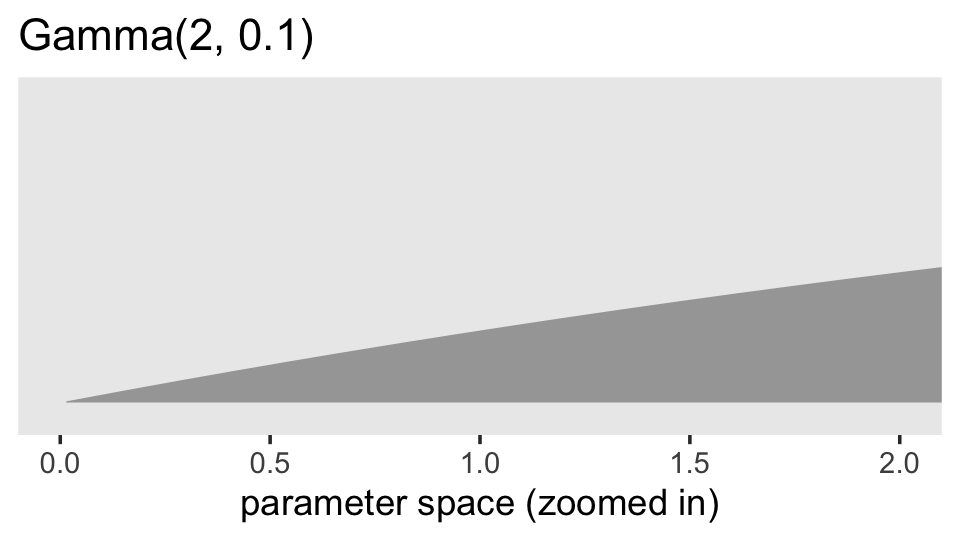

Given we’re working with data on the log scale, that’s a massively permissive prior. Let’s zoom in and see what it means for the parameter space of possible values for our data.

prior(gamma(2, 0.1)) %>%

parse_dist() %>%

ggplot(aes(xdist = .dist_obj, y = prior)) +

stat_halfeye(.width = .95, p_limits = c(.000001, .99)) +

scale_y_discrete(NULL, breaks = NULL, expand = expansion(add = 0.1)) +

labs(title = "Gamma(2, 0.1)",

x = "parameter space (zoomed in)") +

coord_cartesian(xlim = c(0, 2)) +

theme(panel.grid = element_blank())

Now keep that picture in mind as we read further along in the paper:

In addition, with \(\lambda \rightarrow 0\), the gamma density function has a positive constant derivative at zero, which allows the likelihood to dominate if it is strongly curved near zero. The positive constant derivative implies that the prior is linear at zero so that there is no dead zone near zero. The top-left panel of Figure 3 shows that the gamma(2,0.1) density increases linearly from zero with a gentle slope. The shape will be even flatter with a smaller rate parameter. (p. 691)

In case you’re not familiar with the gamma distribution, the rate parameter is what we’ve been calling \(\lambda\). Let’s test this baby out with our model. Here’s how to specify it in brms.

fit5.9 <-

brm(data = wages_small_pp,

family = gaussian,

lnw ~ 0 + Intercept + hgc_9 + exper + exper:black + (1 + exper | id),

prior = c(prior(normal(1.335, 1), class = b, coef = Intercept),

prior(normal(0, 0.5), class = b),

prior(student_t(3, 0, 1), class = sd),

prior(gamma(2, 0.1), class = sd, group = id, coef = exper),

prior(student_t(3, 0, 1), class = sigma),

prior(lkj(4), class = cor)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 5,

control = list(adapt_delta = .85),

file = "fits/fit05.09")Notice how we had to increase adapt_delta a bit. Here are the results.

print(fit5.9, digits = 3)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: lnw ~ 0 + Intercept + hgc_9 + exper + exper:black + (1 + exper | id)

## Data: wages_small_pp (Number of observations: 257)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Group-Level Effects:

## ~id (Number of levels: 124)

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sd(Intercept) 0.282 0.053 0.175 0.386 1.004 953 1685

## sd(exper) 0.059 0.028 0.012 0.118 1.005 554 1239

## cor(Intercept,exper) -0.091 0.312 -0.642 0.541 1.001 2070 2666

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 1.735 0.050 1.639 1.835 1.001 2811 3069

## hgc_9 0.048 0.025 -0.001 0.097 1.002 2871 2588

## exper 0.050 0.025 0.002 0.097 1.001 3205 3038

## exper:black -0.051 0.038 -0.125 0.022 1.000 2624 2718

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 0.344 0.023 0.303 0.393 1.002 1171 2337

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).Our sd(exper) is still quite close to zero. But notice how not the lower level of the 95% interval is higher than zero. Here’s what it looks like in both \(\sigma\) and \(\sigma^2\) metrics.

as_draws_df(fit5.9) %>%

transmute(sigma_1 = sd_id__exper) %>%

mutate(sigma_2_1 = sigma_1^2) %>%

set_names("sigma[1]", "sigma[1]^2") %>%

pivot_longer(everything()) %>%

ggplot(aes(x = value, y = name)) +

stat_halfeye(.width = .95, normalize = "xy") +

scale_y_discrete(NULL, labels = parse(text = c("sigma[1]", "sigma[1]^2"))) +

theme(axis.ticks.y = element_blank(),

panel.grid = element_blank())

Let’s zoom in on the leftmost part of the plot.

as_draws_df(fit5.9) %>%

transmute(sigma_1 = sd_id__exper) %>%

mutate(sigma_2_1 = sigma_1^2) %>%

set_names("sigma[1]", "sigma[1]^2") %>%

pivot_longer(everything()) %>%

ggplot(aes(x = value, y = name)) +

geom_vline(xintercept = 0, color = "white") +

stat_halfeye(.width = .95, normalize = "xy") +

scale_y_discrete(NULL, labels = parse(text = c("sigma[1]", "sigma[1]^2"))) +

coord_cartesian(xlim = c(0, 0.01)) +

theme(panel.grid = element_blank())

Although we are still brushing up on the boundary with \(\sigma_1^2\), the mode is no longer at zero. In the discussion, Chung and colleagues pointed out “sometimes weak prior information is available about a variance parameter. When \(\alpha = 2\), the gamma density has its mode at \(1 / \lambda\), and so one can use the \(\operatorname{gamma}(\alpha, \lambda)\) prior with \(1 / \lambda\) set to the prior estimate of \(\sigma_\theta\)” (p. 703). Let’s say we only had our wages_small_pp, but the results of something like the wages_pp data were published by some earlier group of researchers. In this case, we do have good prior data; we have the point estimate from the model of the wages_pp data! Here’s what that was in terms of the median.

as_draws_df(fit5.3) %>%

median_qi(sd_id__exper)## # A tibble: 1 × 6

## sd_id__exper .lower .upper .width .point .interval

## <dbl> <dbl> <dbl> <dbl> <chr> <chr>

## 1 0.0413 0.0366 0.0469 0.95 median qiAnd here’s what that value is when set as the divisor of 1.

1 / 0.04154273## [1] 24.0716What does that distribution look like?

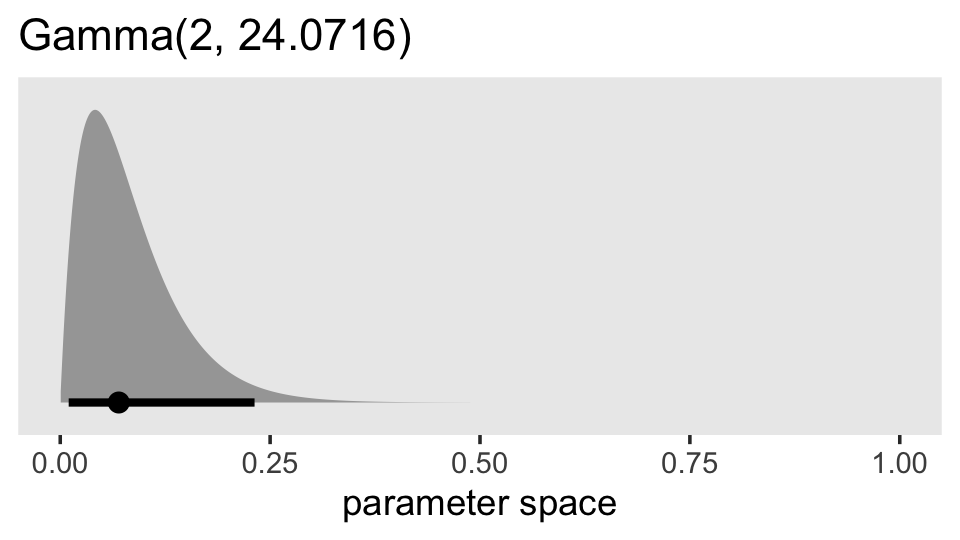

prior(gamma(2, 24.0716)) %>%

parse_dist() %>%

ggplot(aes(xdist = .dist_obj, y = prior)) +

stat_halfeye(.width = .95, p_limits = c(.0001, .9999)) +

scale_y_discrete(NULL, breaks = NULL, expand = expansion(add = 0.1)) +

labs(title = "Gamma(2, 24.0716)",

x = "parameter space") +

coord_cartesian(xlim = c(0, 1)) +

theme(panel.grid = element_blank())

So this is much more informative than our gamma(2, 0.1) prior from before. But given the magnitude of the estimate from fit5.3, it’s still fairly liberal. Let’s practice using it.

fit5.10 <-

brm(data = wages_small_pp,

family = gaussian,

lnw ~ 0 + Intercept + hgc_9 + exper + exper:black + (1 + exper | id),

prior = c(prior(normal(1.335, 1), class = b, coef = Intercept),

prior(normal(0, 0.5), class = b),

prior(student_t(3, 0, 1), class = sd),

prior(gamma(2, 24.0716), class = sd, group = id, coef = exper),

prior(student_t(3, 0, 1), class = sigma),

prior(lkj(4), class = cor)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 5,

file = "fits/fit05.10")Check out the results.

print(fit5.10, digits = 3)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: lnw ~ 0 + Intercept + hgc_9 + exper + exper:black + (1 + exper | id)

## Data: wages_small_pp (Number of observations: 257)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Group-Level Effects:

## ~id (Number of levels: 124)

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sd(Intercept) 0.286 0.047 0.191 0.376 1.002 985 1685

## sd(exper) 0.042 0.024 0.007 0.095 1.008 524 825

## cor(Intercept,exper) -0.039 0.309 -0.583 0.595 1.001 1684 2298

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 1.735 0.048 1.638 1.825 1.000 1688 2461

## hgc_9 0.046 0.025 -0.004 0.096 1.005 1756 2425

## exper 0.051 0.023 0.005 0.095 1.001 1801 2211

## exper:black -0.054 0.037 -0.127 0.019 1.002 1810 2320

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 0.344 0.022 0.303 0.392 1.001 1297 2428

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

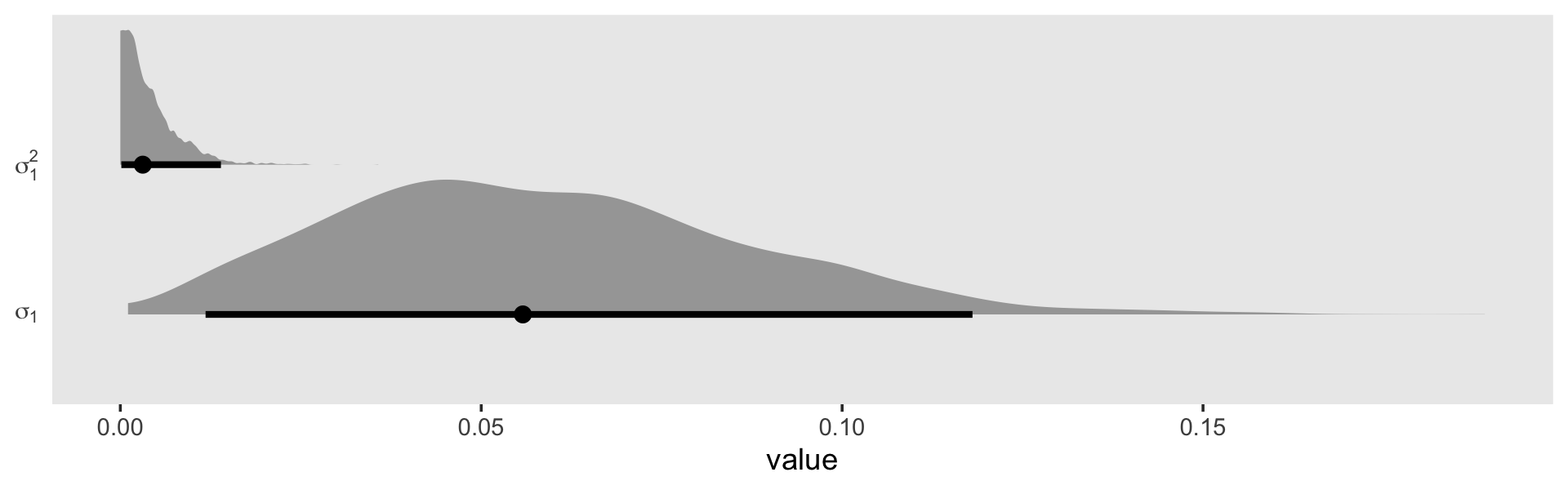

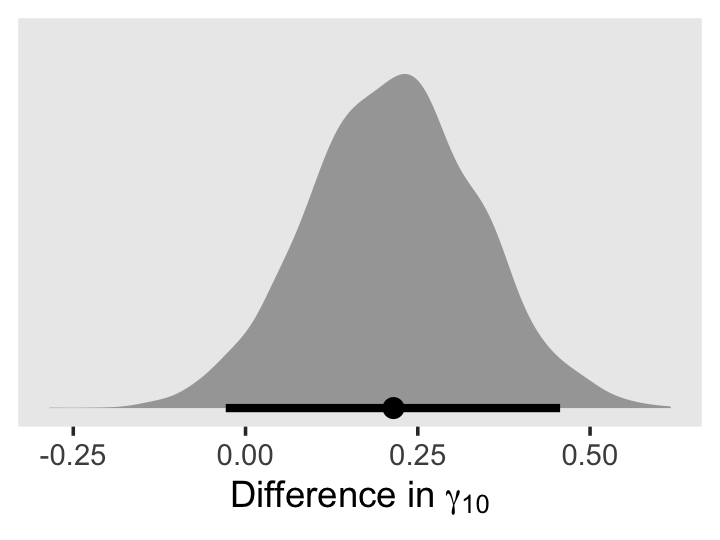

## scale reduction factor on split chains (at convergence, Rhat = 1).Here we compare the three ways to specify the \(\sigma_1\) prior with the posterior from the original model fit with the full data set. For simplicity, we’ll just look at the results in the brms-like \(\sigma\) metric. Hopefully by now you’ll know how to do the conversions to get the values into the \(\sigma^2\) metric.

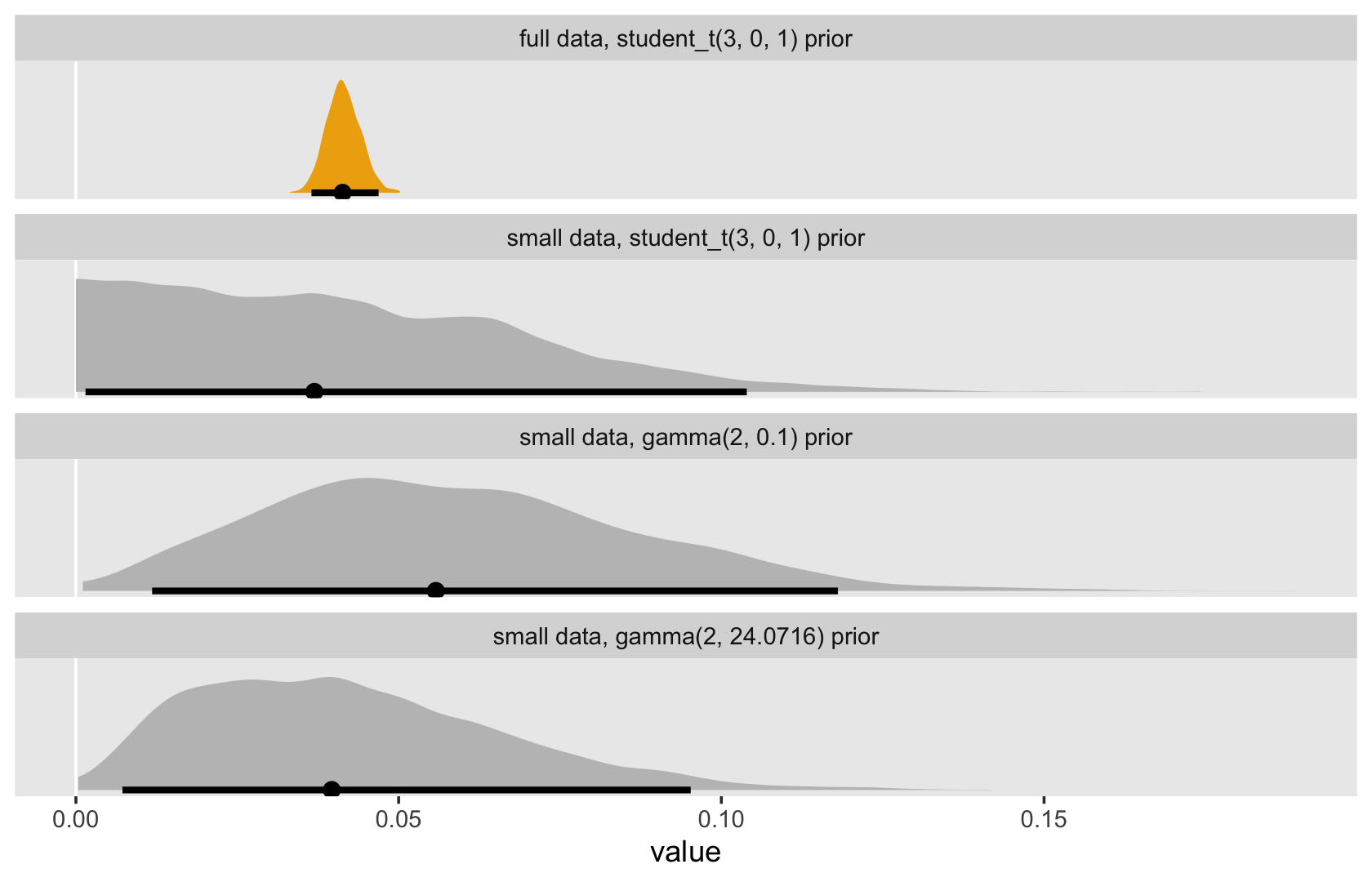

tibble(`full data, student_t(3, 0, 1) prior` = VarCorr(fit5.3, summary = F)[[1]][[1]][1:4000, 2],

`small data, student_t(3, 0, 1) prior` = VarCorr(fit5.7, summary = F)[[1]][[1]][, 2],

`small data, gamma(2, 0.1) prior` = VarCorr(fit5.9, summary = F)[[1]][[1]][, 2],

`small data, gamma(2, 24.0716) prior` = VarCorr(fit5.10, summary = F)[[1]][[1]][, 2]) %>%

pivot_longer(everything()) %>%

mutate(name = factor(name,

levels = c("full data, student_t(3, 0, 1) prior",

"small data, student_t(3, 0, 1) prior",

"small data, gamma(2, 0.1) prior",

"small data, gamma(2, 24.0716) prior"))) %>%

ggplot(aes(x = value, y = 0, fill = name == "full data, student_t(3, 0, 1) prior")) +

geom_vline(xintercept = 0, color = "white") +

stat_halfeye(.width = .95, normalize = "panels") +

scale_y_continuous(NULL, breaks = NULL) +

scale_fill_manual(values = c("grey75", "darkgoldenrod2")) +

theme(legend.position = "none",

panel.grid = element_blank()) +

facet_wrap(~ name, ncol = 1)

One thing to notice is that when you’re working with full Bayesian estimation with even a rather vague prior with a boundary on zero, the measure of central tendency in the posterior is away from zero. Things get more compact when you’re working in the \(\sigma^2\) metric. But remember that when we’re fitting our models with brms, we’re in the \(\sigma\) metric, anyway. And with either of these three options, you don’t have a compelling reason to set the \(\sigma_\theta\) parameter to zero the way you would with ML. Even a rather vague prior will add enough information to the model that we can feel confident about keeping our theoretically-derived \(\sigma_1\) parameter.

5.2.2.2 Nonconvergence [i.e., it’s time to talk chains and such].

As discussed in Section 4.3, all multilevel modeling programs implement iterative numeric algorithms for model fitting. (p. 155). This is also true for our Stan-propelled brms software. However, what’s going on under the hood, here, is not what’s happening with the frequentist packages discussed by Singer and Willett. We’re using Hamiltonian Monte Carlo (HMC) to draw from the posterior. To my eye, Bürkner gave in a (2020) preprint probably the clearest and most direct introduction to why we need fancy algorithms like HMC to fit Bayesian models. First, Bürkner warmed up by contrasting Bayes with conventional frequentist inference:

In frequentist statistics, parameter estimates are usually obtained by finding those parameter values that maximise the likelihood. In contrast, Bayesian statistics aims to estimate the full (joint) posterior distribution of the parameters. This is not only fully consistent with probability theory, but also much more informative than a single point estimate (and an approximate measure of uncertainty commonly known as ‘standard error’). (p. 9)

Those iterative algorithms Singer and Willett discussed in this section, that’s what they’re doing. They are maximizing the likelihood. But with Bayes, we have the more challenging goal of describing the entire posterior distribution, which is the product of the likelihood and the prior. As such,