Chapter 3 GAMA101 - Mathematics for Computer and Information Science-1

Course Objective: This course is designed as an entry-level course in Computer and Information Science. Upon successful completion of this course, the student can use calculus as an effective tool for analysis.

3.1 Module-1 Single Variable Calculus and its applications in Computer Science

Syllabus Content: Limits of Function Values, The Limit Laws, Continuous Functions, Rates of Change, Second- and Higher-Order Derivatives, Instantaneous Rates of Change, Derivative as a Function, Chain Rule, Implicit Differentiation, Tangents and Normal Lines, Linearization, Concavity. Reference text & Sections: (Thomas Jr et al. 2014), relevant topics from sections 2.2, 2.5, 3.1, 3.2, 3.3, 3.4, 3.6, 3.7, 3.9, 4.4.

3.1.1 Instantaneous Average Throughput of a Network Using Limits

Suppose we want to calculate the average throughput (data transfer rate) of a network over a week. The throughput \(D\) (in megabits per second, Mbps) is recorded periodically:

- Monday: \(D = 100\) Mbps

- Tuesday: \(D = 110\) Mbps

- Wednesday: \(D = 120\) Mbps

- Thursday: \(D = 115\) Mbps

- Friday: \(D = 105\) Mbps

- Saturday: \(D = 108\) Mbps

- Sunday: \(D = 112\) Mbps

To find the average throughput over the week, we use the standard formula for average: \[ \text{Average Throughput} = \frac{D_{\text{Mon}} + D_{\text{Tue}} + D_{\text{Wed}} + D_{\text{Thu}} + D_{\text{Fri}} + D_{\text{Sat}} + D_{\text{Sun}}}{7} \]

Let’s calculate this:

Sum of Throughputs: \[ D_{\text{total}} = 100 + 110 + 120 + 115 + 105 + 108 + 112 = 770 \text{ Mbps} \]

Average Throughput: \[ \text{Average Throughput} = \frac{770}{7} = 110 \text{ Mbps} \]
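The arithmetic above can be reproduced in a few lines of Python. This is a minimal sketch; the dictionary name `daily_throughput_mbps` is chosen here for illustration and holds the seven readings listed earlier.

```python
# Daily throughput readings in Mbps, copied from the list above.
daily_throughput_mbps = {
    "Mon": 100, "Tue": 110, "Wed": 120, "Thu": 115,
    "Fri": 105, "Sat": 108, "Sun": 112,
}

# Sum the readings and divide by the number of days.
total = sum(daily_throughput_mbps.values())
average = total / len(daily_throughput_mbps)

print(f"Sum of readings: {total} Mbps")
print(f"Average throughput: {average} Mbps")
```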

Now, let’s formulate and interpret this in terms of a limit in computer science:

Limit Interpretation

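Suppose we want to estimate the average throughput if we measured it continuously rather than periodically. Sampling an observation window of length \(T\) every \(\Delta t\) gives \(n = T/\Delta t\) readings, and the continuous average is the limit of the sample average as the sampling interval shrinks: \[ \lim_{\Delta t \to 0} \frac{1}{n} \sum_{i=1}^{n} D(t_i) \]

Here, \(\Delta t\) represents the time interval between readings (approaching zero), \(D(t_i)\) is the throughput at the \(i\)-th sampling time \(t_i\), and \(n\) is the number of intervals, which grows as \(\Delta t\) shrinks.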

Interpretation

As \(\Delta t\) (the time interval) approaches zero, the average throughput calculated for smaller and smaller time intervals (like per second instead of per day) would approach the continuous average throughput we would theoretically obtain if we could monitor throughput continuously.

This example demonstrates how limits can be applied in computer science to understand data processing rates or system performance as we consider finer and finer time resolutions (approaching continuous monitoring). It aligns with concepts like real-time data processing, system performance analysis, and network optimization, where understanding behavior as variables approach specific conditions (like time intervals approaching zero) is crucial.
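To see this convergence numerically, the sketch below samples a throughput curve at finer and finer intervals. The curve `throughput(t) = 110 + 10 sin(t)` is purely an assumption made for illustration (it is not measured data), chosen because its exact continuous average over \([0, T]\) is easy to write down for comparison.

```python
import math

def throughput(t):
    """Hypothetical throughput in Mbps at time t (in days); an illustrative assumption."""
    return 110.0 + 10.0 * math.sin(t)

def sampled_average(total_time, n):
    """Average of n equally spaced readings taken over [0, total_time]."""
    dt = total_time / n
    return sum(throughput(i * dt) for i in range(n)) / n

T = 7.0  # one week, measured in days
# Exact continuous average: (1/T) * integral of throughput(t) from 0 to T.
exact_average = 110.0 + 10.0 * (1.0 - math.cos(T)) / T

for n in (7, 70, 700, 7000):
    approx = sampled_average(T, n)
    print(f"n = {n:5d}, dt = {T / n:.4f}, sampled average = {approx:.4f}")
print(f"continuous average = {exact_average:.4f}")
```

As the sampling interval shrinks, the printed sample averages should settle toward the continuous average, which is the behaviour the limit describes.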

3.2 Refreshing knowledge

- Main points to be highlighted along with the formal definition

- The limit is the value one expects the function to have at the point \(x=a\), based on the values of the function in a close vicinity of \(x=a\), regardless of the value of \(f(x)\) at \(x=a\) and regardless of whether \(f(a)\) is defined at all. See https://en.wikipedia.org/wiki/Limit_of_a_function#/media/File:Epsilon-delta_limit.svg for a geometrical explanation.

- For the limit to exist at \(x=a\), the one-sided limits must agree: \(f(a^+)=f(a^-)\). Use this property geometrically to check the existence of the limit at \(x=0\) of the unit step function and similar examples from the textbook.

An interesting video session is available at:

3.3 Limit of a Function

3.3.1 Understanding Limits of a Function

The concept of a limit is essential for studying the local behavior of a function near a specified point. It helps us understand how a function behaves as the input value approaches a particular point from both the left and the right.

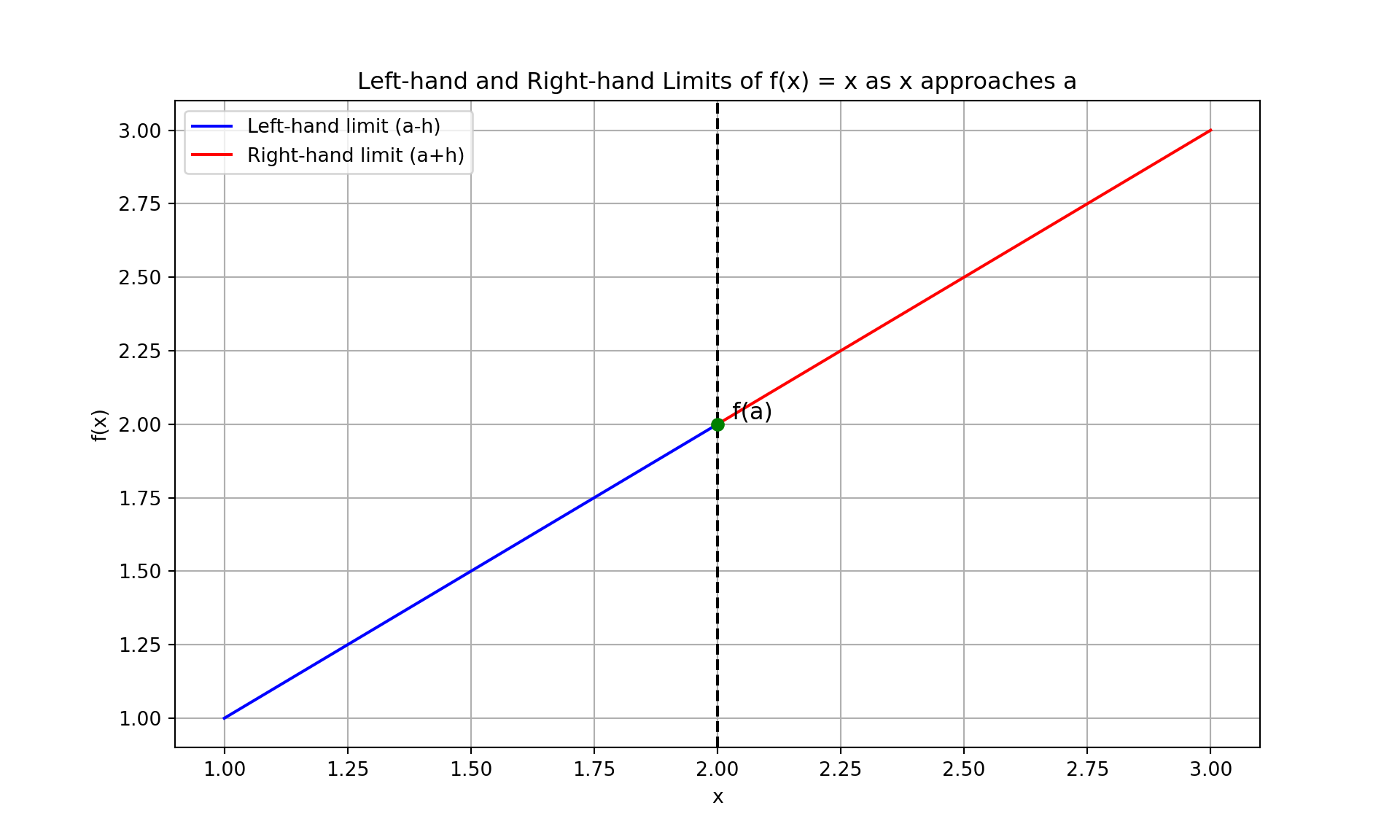

3.3.1.1 Identity Function \(f(x) = x\)

For the identity function \(f(x) = x\):

- Left-hand limit as \(x\) approaches \(a\): \[ \lim_{{x \to a^-}} x = \lim_{{h \to 0^+}} (a - h) = a \]

- Right-hand limit as \(x\) approaches \(a\): \[ \lim_{{x \to a^+}} x = \lim_{{h \to 0^+}} (a + h) = a \]

Python Visualization:

import numpy as np

import matplotlib.pyplot as plt

# Identity function

def f(x):

    return x

a = 2 # Point to approach

h_values = np.linspace(0.01, 1, 100)

# Left-hand limit

left_hand_limit = f(a - h_values)

# Right-hand limit

right_hand_limit = f(a + h_values)

plt.figure(figsize=(10, 6))

plt.plot(a - h_values, left_hand_limit, label='Left-hand limit (a-h)', color='blue')

plt.plot(a + h_values, right_hand_limit, label='Right-hand limit (a+h)', color='red')

plt.axvline(x=a, color='black', linestyle='--')

plt.scatter([a], [f(a)], color='green', zorder=5)

plt.text(a, f(a), ' f(a)', fontsize=12, verticalalignment='bottom')

plt.xlabel('x')

plt.ylabel('f(x)')

plt.title('Left-hand and Right-hand Limits of f(x) = x as x approaches a')

plt.legend()

plt.grid(True)

plt.show()

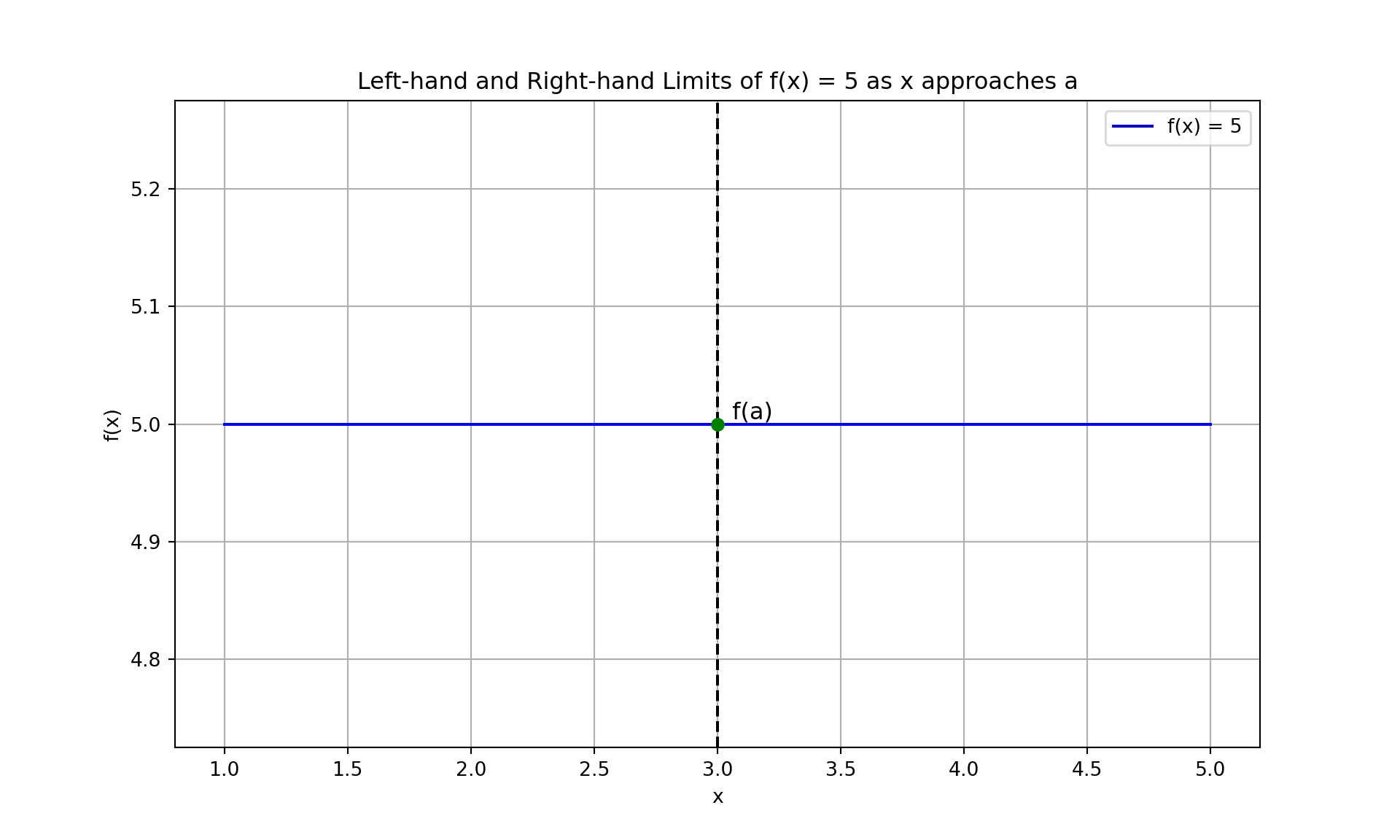

3.3.1.2 Constant Function \(f(x) = c\)

For a constant function \(f(x) = c\):

- Left-hand limit as \(x\) approaches \(a\): \[ \lim_{{x \to a^-}} c = c \]

- Right-hand limit as \(x\) approaches \(a\): \[ \lim_{{x \to a^+}} c = c \]

Python Visualization:

import numpy as np

import matplotlib.pyplot as plt

# Constant function

def f(x):

    return np.full_like(x, 5) # Constant value

a = 3 # Point to approach

h_values = np.linspace(0.01, 1, 100)

# Left-hand limit

left_hand_limit = f(a - h_values)

# Right-hand limit

right_hand_limit = f(a + h_values)

x_range = np.linspace(a - 2, a + 2, 400)

y_range = f(x_range)

plt.figure(figsize=(10, 6))

plt.plot(x_range, y_range, label='f(x) = 5', color='blue')

plt.axvline(x=a, color='black', linestyle='--')

plt.scatter([a], [f(a)], color='green', zorder=5)

plt.text(a, f(a), ' f(a)', fontsize=12, verticalalignment='bottom')

plt.xlabel('x')

plt.ylabel('f(x)')

plt.title('Left-hand and Right-hand Limits of f(x) = 5 as x approaches a')

plt.legend()

plt.grid(True)

plt.show()

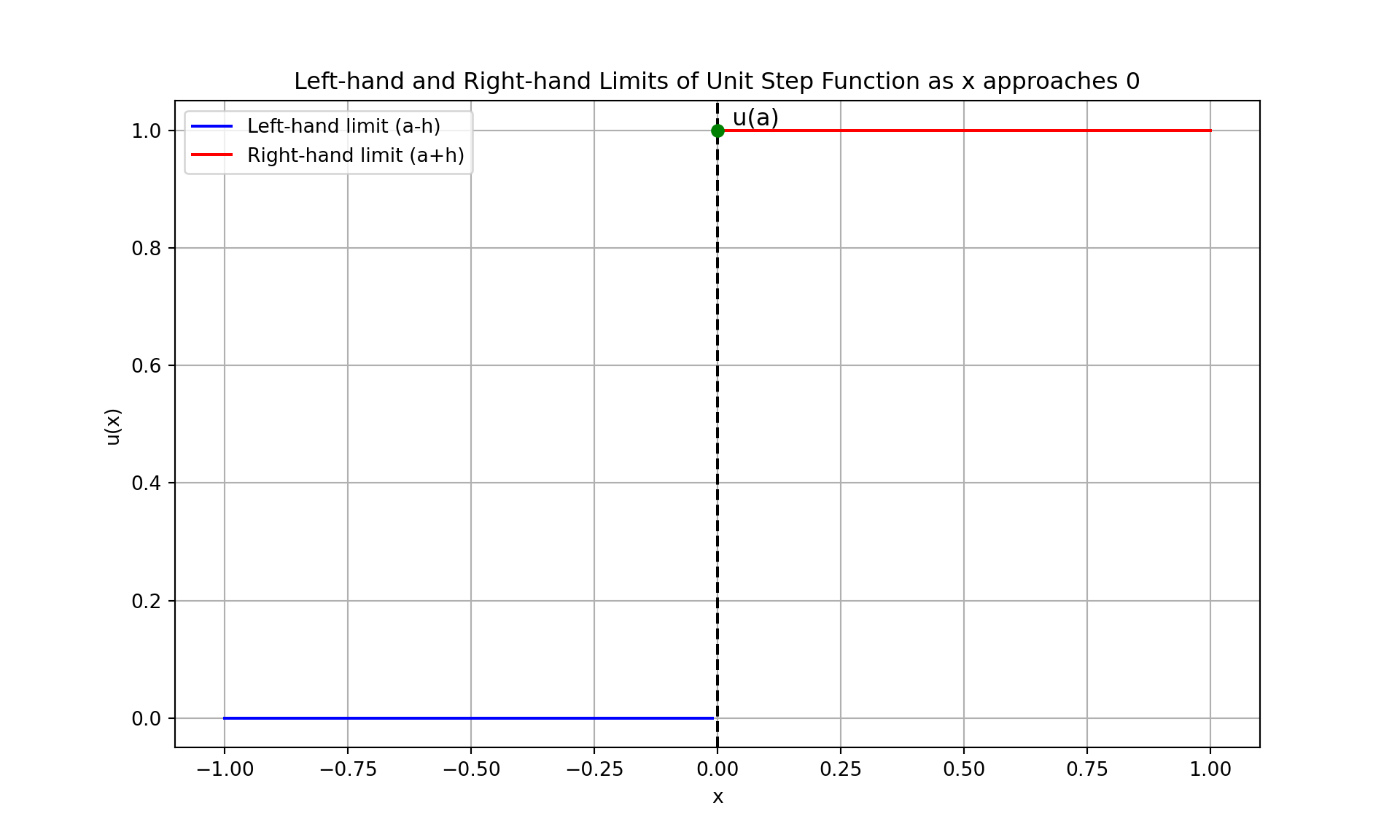

3.3.1.3 Unit Step Function \(u(x)\)

The unit step function \(u(x)\) is defined as: \[ u(x) = \begin{cases} 0 & \text{if } x < 0 \\ 1 & \text{if } x \geq 0 \end{cases} \]

- Left-hand limit as \(x\) approaches 0: \[ \lim_{{x \to 0^-}} u(x) = \lim_{{h \to 0^+}} u(-h) = 0 \]

- Right-hand limit as \(x\) approaches 0: \[ \lim_{{x \to 0^+}} u(x) = \lim_{{h \to 0^+}} u(h) = 1 \]

Python Visualization:

import numpy as np

import matplotlib.pyplot as plt

# Unit step function

def u(x):

    return np.where(x < 0, 0, 1)

a = 0 # Point to approach

h_values = np.linspace(0.01, 1, 100)

# Left-hand limit

left_hand_limit = u(a - h_values)

# Right-hand limit

right_hand_limit = u(a + h_values)

plt.figure(figsize=(10, 6))

plt.plot(a - h_values, left_hand_limit, label='Left-hand limit (a-h)', color='blue')

plt.plot(a + h_values, right_hand_limit, label='Right-hand limit (a+h)', color='red')

plt.axvline(x=a, color='black', linestyle='--')

plt.scatter([a], [u(a)], color='green', zorder=5)

plt.text(a, u(a), ' u(a)', fontsize=12, verticalalignment='bottom')

plt.xlabel('x')

plt.ylabel('u(x)')

plt.title('Left-hand and Right-hand Limits of Unit Step Function as x approaches 0')

plt.legend()

plt.grid(True)

plt.show()

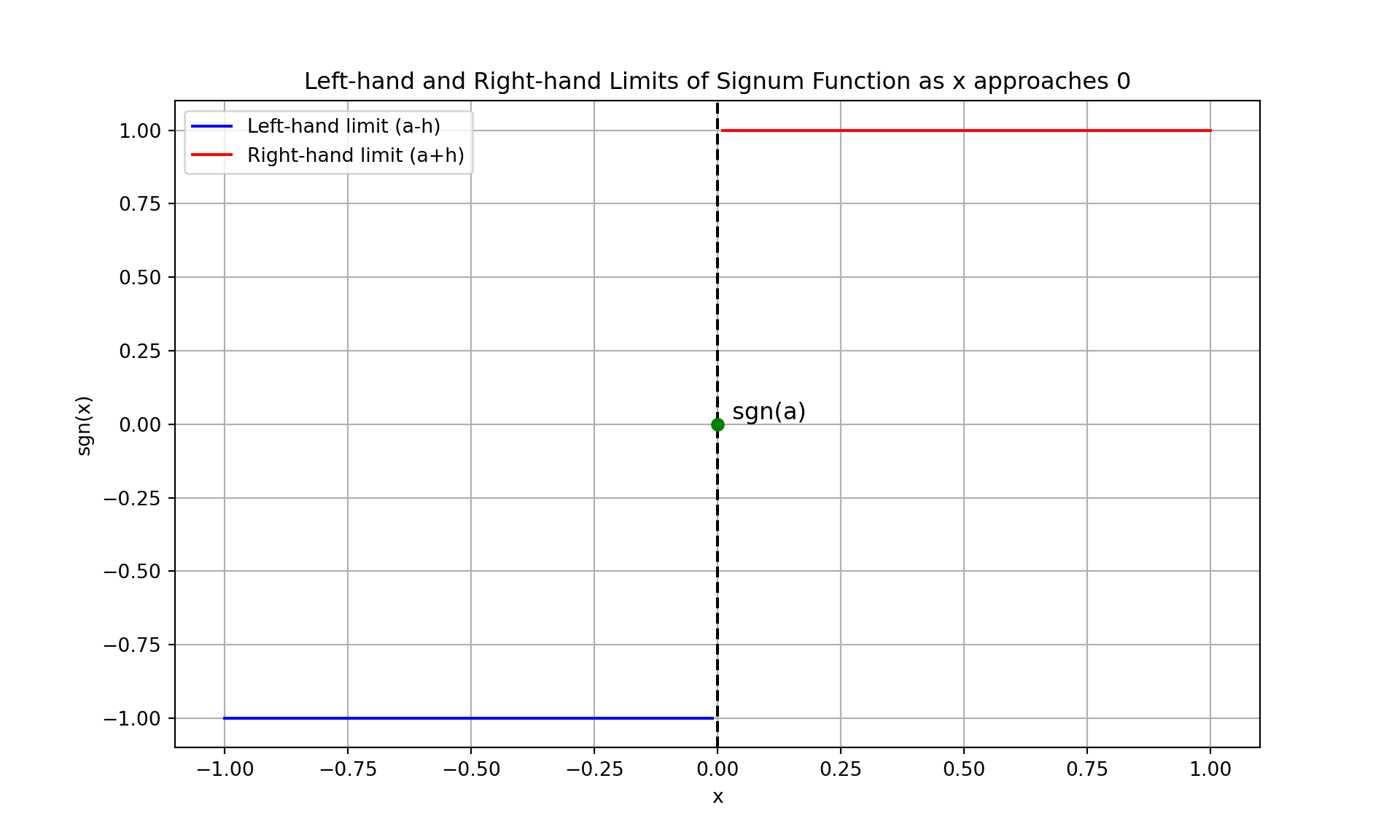

3.3.1.4 Signum Function \(\text{sgn}(x)\)

The signum function \(\text{sgn}(x)\) is defined as: \[ \text{sgn}(x) = \begin{cases} -1 & \text{if } x < 0 \\ 0 & \text{if } x = 0 \\ 1 & \text{if } x > 0 \end{cases} \]

- Left-hand limit as \(x\) approaches 0: \[ \lim_{{x \to 0^-}} \text{sgn}(x) = \lim_{{h \to 0^+}} \text{sgn}(-h) = -1 \]

- Right-hand limit as \(x\) approaches 0: \[ \lim_{{x \to 0^+}} \text{sgn}(x) = \lim_{{h \to 0^+}} \text{sgn}(h) = 1 \]

Python Visualization:

import numpy as np

import matplotlib.pyplot as plt

# Signum function

def sgn(x):

    return np.where(x < 0, -1, np.where(x > 0, 1, 0))

a = 0 # Point to approach

h_values = np.linspace(0.01, 1, 100)

# Left-hand limit

left_hand_limit = sgn(a - h_values)

# Right-hand limit

right_hand_limit = sgn(a + h_values)

plt.figure(figsize=(10, 6))

plt.plot(a - h_values, left_hand_limit, label='Left-hand limit (a-h)', color='blue')

plt.plot(a + h_values, right_hand_limit, label='Right-hand limit (a+h)', color='red')

plt.axvline(x=a, color='black', linestyle='--')

plt.scatter([a], [sgn(a)], color='green', zorder=5)

plt.text(a, sgn(a), ' sgn(a)', fontsize=12, verticalalignment='bottom')

plt.xlabel('x')

plt.ylabel('sgn(x)')

plt.title('Left-hand and Right-hand Limits of Signum Function as x approaches 0')

plt.legend()

plt.grid(True)

plt.show()

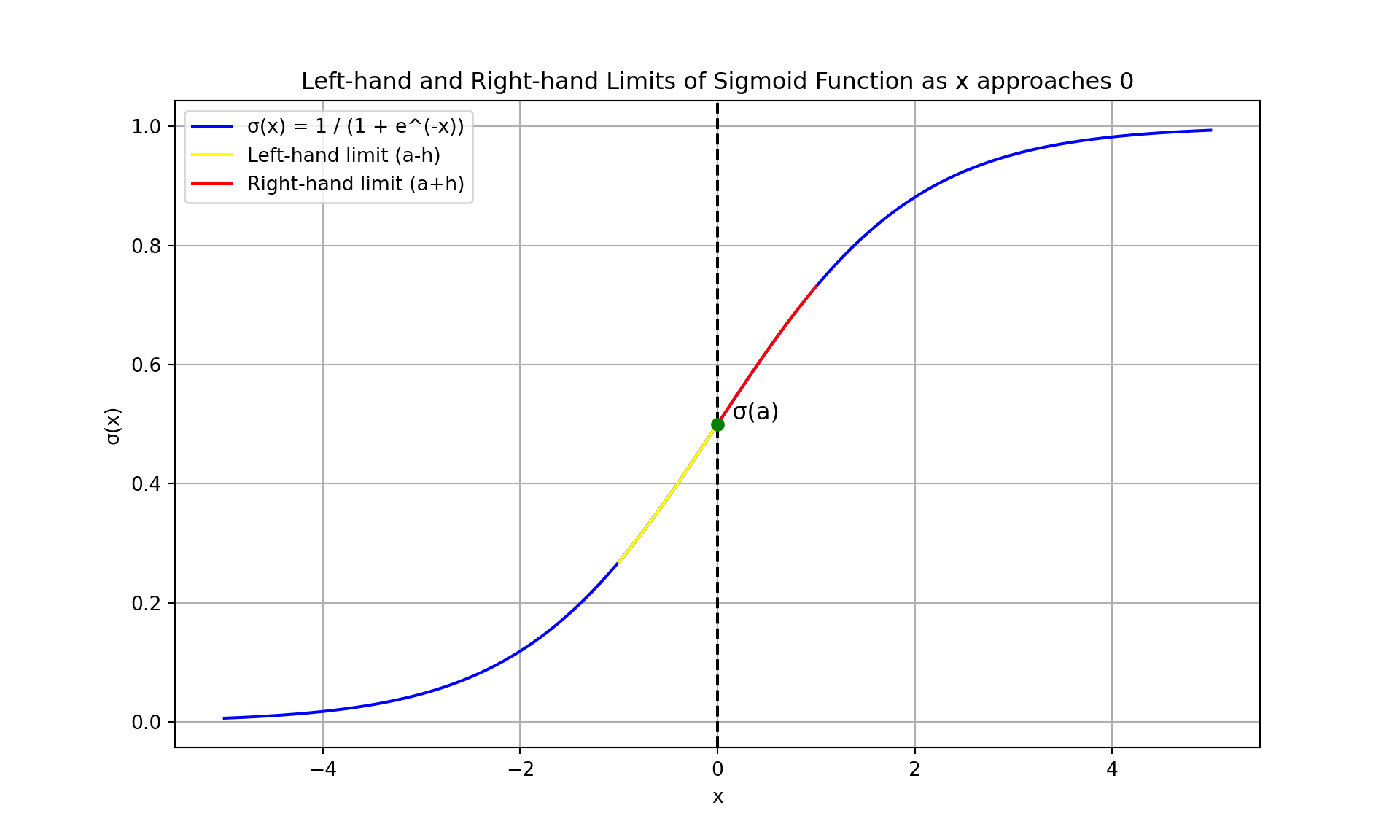

3.3.1.5 Sigmoid Function \(\sigma(x)\)

The sigmoid function \(\sigma(x)\) is defined as: \[ \sigma(x) = \frac{1}{1 + e^{-x}} \]

- Left-hand limit as \(x\) approaches 0: \[ \lim_{{x \to 0^-}} \sigma(x) = \frac{1}{1 + e^{-0}} = \frac{1}{2} \]

- Right-hand limit as \(x\) approaches 0: \[ \lim_{{x \to 0^+}} \sigma(x) = \frac{1}{1 + e^{0}} = \frac{1}{2} \]

Python Visualization:

import numpy as np

import matplotlib.pyplot as plt

# Sigmoid function

def sigmoid(x):

    return 1 / (1 + np.exp(-x))

a = 0 # Point to approach

h_values = np.linspace(0.01, 1, 100)

# Left-hand limit

left_hand_limit = sigmoid(a - h_values)

# Right-hand limit

right_hand_limit = sigmoid(a + h_values)

x_range = np.linspace(a - 5, a + 5, 400)

y_range = sigmoid(x_range)

plt.figure(figsize=(10, 6))

plt.plot(x_range, y_range, label='σ(x) = 1 / (1 + e^(-x))', color='blue')

plt.plot(a - h_values, left_hand_limit, label='Left-hand limit (a-h)', color='yellow')

plt.plot(a + h_values, right_hand_limit, label='Right-hand limit (a+h)', color='red')

plt.axvline(x=a, color='black', linestyle='--')

plt.scatter([a], [sigmoid(a)], color='green', zorder=5)

plt.text(a, sigmoid(a), ' σ(a)', fontsize=12, verticalalignment='bottom')

plt.xlabel('x')

plt.ylabel('σ(x)')

plt.title('Left-hand and Right-hand Limits of Sigmoid Function as x approaches 0')

plt.legend()

plt.grid(True)

plt.show()

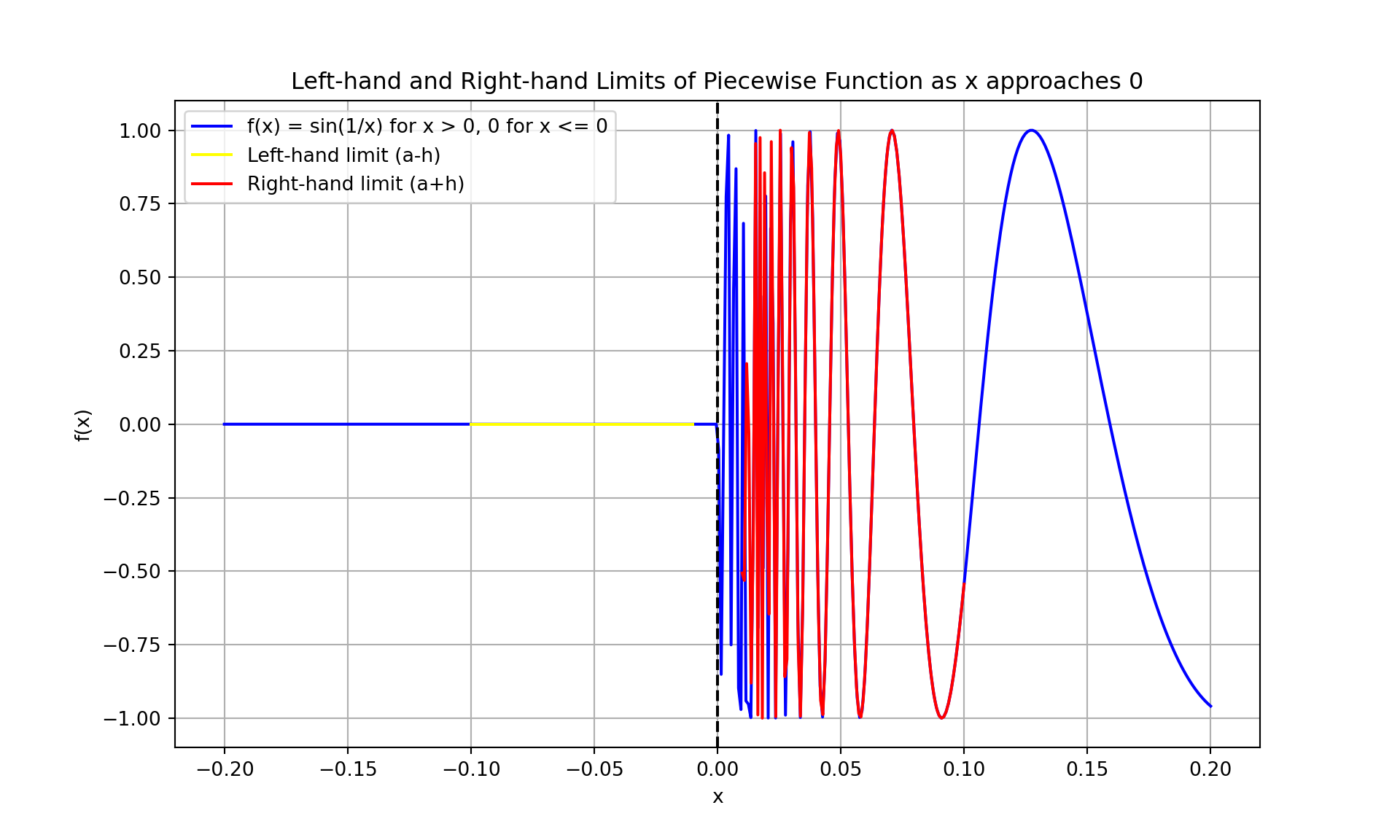

3.3.1.6 Piecewise Function \(f(x)\)

The piecewise function \(f(x)\) is defined as: \[ f(x) = \begin{cases} \sin\left(\frac{1}{x}\right) & \text{if } x > 0 \\ 0 & \text{if } x \leq 0 \end{cases} \]

- Left-hand limit as \(x\) approaches 0: \[ \lim_{{x \to 0^-}} f(x) = 0 \]

- Right-hand limit as \(x\) approaches 0: \[ \lim_{{x \to 0^+}} f(x) = \text{Does not exist (oscillates)} \]

Python Visualization:

import numpy as np

import matplotlib.pyplot as plt

# Piecewise function

def f(x):

    # sin(1/x) where x > 0 and 0 elsewhere. np.where evaluates both branches,

    # so the inputs used below are chosen to never include x == 0 exactly.

    return np.where(x > 0, np.sin(1 / x), 0)

a = 0 # Point to approach

h_values = np.linspace(0.01, 0.1, 100)

# Left-hand limit (x <= 0)

x_negative_h_values = -h_values

left_hand_limit = f(x_negative_h_values)

# Right-hand limit (x > 0)

x_positive_h_values = h_values

right_hand_limit = f(x_positive_h_values)

x_range = np.linspace(a - 0.2, a + 0.2, 400)

y_range = f(x_range)

plt.figure(figsize=(10, 6))

plt.plot(x_range, y_range, label='f(x) = sin(1/x) for x > 0, 0 for x <= 0', color='blue')

plt.plot(a - h_values, left_hand_limit, label='Left-hand limit (a-h)', color='yellow')

plt.plot(a + h_values, right_hand_limit, label='Right-hand limit (a+h)', color='red')

plt.axvline(x=a, color='black', linestyle='--')

#plt.scatter([a], [f(a)], color='green', zorder=5)

#plt.text(a, f(a), ' f(a)', fontsize=12, verticalalignment='bottom')

plt.xlabel('x')

plt.ylabel('f(x)')

plt.title('Left-hand and Right-hand Limits of Piecewise Function as x approaches 0')

plt.legend()

plt.grid(True)

plt.show()

3.3.1.7 Takeaway

In this introductory session on limits, we explored how single-variable functions behave as they approach a specific point from both sides. Here are the key takeaways:

- Definition of Limits: The limit of a function at a point provides insight into the function’s behavior as it approaches that point from both directions. Specifically:

- Left-hand limit: The value the function approaches as the input approaches the point from the left.

- Right-hand limit: The value the function approaches as the input approaches the point from the right.

- Stability in Behavior: A function is considered stable at a point if the left-hand limit and the right-hand limit are equal. This implies that:

- The function approaches the same value from both sides of the point.

- The function has a well-defined limit at that point.

3.3.1.8 Examples

- Constant Function:

- For \(f(x) = c\), the left-hand limit and the right-hand limit are both \(c\), demonstrating stability.

- Unit Step Function:

- For \(u(x)\), the left-hand limit as \(x\) approaches 0 is 0, while the right-hand limit is 1, indicating a discontinuity at \(x = 0\).

- Sigmoid Function:

- For \(\sigma(x) = \frac{1}{1 + e^{-x}}\), both the left-hand limit and the right-hand limit as \(x\) approaches 0 are \(\frac{1}{2}\), showing stability.

- Piecewise Function \(f(x)\):

- For \(f(x) = \sin\left(\frac{1}{x}\right)\) for \(x > 0\) and \(0\) for \(x \leq 0\), the left-hand limit is 0, and the right-hand limit does not exist due to oscillation, showing instability.

By analyzing these examples, we observe that stability at a point is characterized by the equality of the left-hand and right-hand limits. When these limits are equal and also coincide with the function value at that point, the function is continuous there. When they are not equal, or when a one-sided limit does not exist, the function exhibits a discontinuity such as a jump or oscillatory behavior.

Understanding limits helps in analyzing and predicting the behavior of functions in various contexts, including computer science, where stability and continuity can be crucial in algorithms and system behaviors.
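The left/right comparison used in the examples above can also be sketched numerically. The helper `one_sided_estimates` below is a hypothetical name introduced for illustration; it evaluates a function just left and just right of a point for shrinking offsets. Such sampling only suggests what the one-sided limits are and does not prove that they exist.

```python
import math

def one_sided_estimates(f, a, steps=(1e-1, 1e-3, 1e-6)):
    """Evaluate f just left and just right of a for shrinking offsets h."""
    left = [f(a - h) for h in steps]
    right = [f(a + h) for h in steps]
    return left, right

def unit_step(x):
    return 1.0 if x >= 0 else 0.0

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

for name, f in (("unit step", unit_step), ("sigmoid", sigmoid)):
    left, right = one_sided_estimates(f, 0.0)
    # Compare the estimates obtained with the smallest offset.
    agree = math.isclose(left[-1], right[-1], abs_tol=1e-5)
    print(f"{name}: left -> {left[-1]:.6f}, right -> {right[-1]:.6f}, agree: {agree}")
```

For the unit step the two estimates stay one unit apart, while for the sigmoid they both approach \(\frac{1}{2}\).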

3.3.2 Practice Problems

Solving Limits of Functions - Thomas Calculus Section 2.2

- Problem: Find the limit of the function \(f(x) = 3x^2 - 2x + 1\) as \(x\) approaches 2.

Solution: \[ \lim_{{x \to 2}} (3x^2 - 2x + 1) = 3(2)^2 - 2(2) + 1 = 12 - 4 + 1 = 9 \]

Left-hand limit: \(\lim\limits_{{x \to 2^-}} (3x^2 - 2x + 1) = 9\)

Right-hand limit: \(\lim\limits_{{x \to 2^+}} (3x^2 - 2x + 1) = 9\)

- Problem: Find the limit of the function \(f(x) = \sqrt{x + 4}\) as \(x\) approaches 1.

Solution: \[ \lim_{{x \to 1}} \sqrt{x + 4} = \sqrt{1 + 4} = \sqrt{5} \]

Left-hand limit: \(\lim\limits_{{x \to 1^-}} \sqrt{x + 4} = \sqrt{5}\)

Right-hand limit: \(\lim\limits_{{x \to 1^+}} \sqrt{x + 4} = \sqrt{5}\)

- Problem: Find the limit of the function \(f(x) = \frac{x^2 - 1}{x - 1}\) as \(x\) approaches 1.

Solution: First, simplify the function: \[ \frac{x^2 - 1}{x - 1} = \frac{(x - 1)(x + 1)}{x - 1} = x + 1 \quad \text{for } x \neq 1 \] Thus, \[ \lim_{{x \to 1}} \frac{x^2 - 1}{x - 1} = \lim_{{x \to 1}} (x + 1) = 2 \]

Left-hand limit: \(\lim\limits_{{x \to 1^-}} \frac{x^2 - 1}{x - 1} = 2\)

Right-hand limit: \(\lim\limits_{{x \to 1^+}} \frac{x^2 - 1}{x - 1} = 2\)

- Problem: Find the limit of the function \(f(x) = \frac{\sin(x)}{x}\) as \(x\) approaches 0.

Solution: Use the fact that \(\lim\limits_{{x \to 0}} \frac{\sin(x)}{x} = 1\): \[ \lim_{{x \to 0}} \frac{\sin(x)}{x} = 1 \]

- Left-hand limit: \(\lim_{{x \to 0^-}} \frac{\sin(x)}{x} = 1\)

- Right-hand limit: \(\lim_{{x \to 0^+}} \frac{\sin(x)}{x} = 1\)

- Problem: Find the limit of the function \(f(x) = \frac{e^x - 1}{x}\) as \(x\) approaches 0.

Solution: Apply L’Hôpital’s rule, which is used when the limit is of the form \(\frac{0}{0}\): \[ \lim_{{x \to 0}} \frac{e^x - 1}{x} = \lim_{{x \to 0}} \frac{e^x}{1} = e^0 = 1 \]

Left-hand limit: \(\lim\limits_{{x \to 0^-}} \frac{e^x - 1}{x} = 1\)

Right-hand limit: \(\lim\limits_{{x \to 0^+}} \frac{e^x - 1}{x} = 1\)

- Problem: Find the limit of the function \(f(x) = \frac{\ln(x)}{x - 1}\) as \(x\) approaches 1.

Solution: Apply L’Hôpital’s rule: \[ \lim_{{x \to 1}} \frac{\ln(x)}{x - 1} = \lim_{{x \to 1}} \frac{\frac{d}{dx}[\ln(x)]}{\frac{d}{dx}[x - 1]} = \lim_{{x \to 1}} \frac{\frac{1}{x}}{1} = \frac{1}{1} = 1 \]

Left-hand limit: \(\lim\limits_{{x \to 1^-}} \frac{\ln(x)}{x - 1} = 1\)

Right-hand limit: \(\lim\limits_{{x \to 1^+}} \frac{\ln(x)}{x - 1} = 1\)

- Problem: Find the limit of the function \(f(x) = \frac{x^3 - 8}{x - 2}\) as \(x\) approaches 2.

Solution: First, simplify the function using polynomial division or factoring: \[ \frac{x^3 - 8}{x - 2} = \frac{(x - 2)(x^2 + 2x + 4)}{x - 2} = x^2 + 2x + 4 \quad \text{for } x \neq 2 \] Thus, \[ \lim_{{x \to 2}} \frac{x^3 - 8}{x - 2} = \lim_{{x \to 2}} (x^2 + 2x + 4) = 4 + 4 + 4 = 12 \]

Left-hand limit: \(\lim\limits_{{x \to 2^-}} \frac{x^3 - 8}{x - 2} = 12\)

Right-hand limit: \(\lim\limits_{{x \to 2^+}} \frac{x^3 - 8}{x - 2} = 12\)

- Problem: Find the limit of the function \(f(x) = \frac{\tan(x)}{x}\) as \(x\) approaches 0.

Solution: Use the fact that \(\lim_{{x \to 0}} \frac{\tan(x)}{x} = 1\): \[ \lim_{{x \to 0}} \frac{\tan(x)}{x} = 1 \]

Left-hand limit: \(\lim\limits_{{x \to 0^-}} \frac{\tan(x)}{x} = 1\)

Right-hand limit: \(\lim\limits_{{x \to 0^+}} \frac{\tan(x)}{x} = 1\)

- Problem: Find the limit of the function \(f(x) = \frac{x^2 - 4}{x^2 - x - 6}\) as \(x\) approaches 3.

Solution: First, simplify the function by factoring: \[ \frac{x^2 - 4}{x^2 - x - 6} = \frac{(x - 2)(x + 2)}{(x - 3)(x + 2)} = \frac{x - 2}{x - 3} \quad \text{for } x \neq -2,\ x \neq 3 \] As \(x \to 3\), the numerator approaches \(3 - 2 = 1\) while the denominator approaches \(0\), so the quotient grows without bound and the two-sided limit does not exist (there is a vertical asymptote at \(x = 3\)). The one-sided limits are infinite with opposite signs:

Left-hand limit: \(\lim\limits_{{x \to 3^-}} \frac{x^2 - 4}{x^2 - x - 6} = -\infty\)

Right-hand limit: \(\lim\limits_{{x \to 3^+}} \frac{x^2 - 4}{x^2 - x - 6} = +\infty\)

- Problem: Find the limit of the function \(f(x) = \frac{e^{2x} - e^x}{x}\) as \(x\) approaches 0.

Solution: Apply L’Hôpital’s rule: \[ \lim_{{x \to 0}} \frac{e^{2x} - e^x}{x} = \lim_{{x \to 0}} \frac{2e^{2x} - e^x}{1} = 2e^0 - e^0 = 2 - 1 = 1 \]

Left-hand limit: \(\lim\limits_{{x \to 0^-}} \frac{e^{2x} - e^x}{x} = 1\)

Right-hand limit: \(\lim\limits_{{x \to 0^+}} \frac{e^{2x} - e^x}{x} = 1\)
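The finite limits worked out above can be sanity-checked by evaluating each function slightly to the left and right of the point. The sketch below uses only the standard `math` module; the list name `problems` and the offset `h = 1e-6` are choices made here for illustration. The problem with the vertical asymptote at \(x = 3\) is left out because its one-sided limits are infinite.

```python
import math

# (description, function, point approached, value stated in the worked solution)
problems = [
    ("3x^2 - 2x + 1 at 2", lambda x: 3 * x**2 - 2 * x + 1, 2.0, 9.0),
    ("sqrt(x + 4) at 1", lambda x: math.sqrt(x + 4), 1.0, math.sqrt(5)),
    ("(x^2 - 1)/(x - 1) at 1", lambda x: (x**2 - 1) / (x - 1), 1.0, 2.0),
    ("sin(x)/x at 0", lambda x: math.sin(x) / x, 0.0, 1.0),
    ("(e^x - 1)/x at 0", lambda x: math.expm1(x) / x, 0.0, 1.0),
    ("ln(x)/(x - 1) at 1", lambda x: math.log(x) / (x - 1), 1.0, 1.0),
    ("(x^3 - 8)/(x - 2) at 2", lambda x: (x**3 - 8) / (x - 2), 2.0, 12.0),
    ("tan(x)/x at 0", lambda x: math.tan(x) / x, 0.0, 1.0),
    ("(e^(2x) - e^x)/x at 0", lambda x: (math.exp(2 * x) - math.exp(x)) / x, 0.0, 1.0),
]

h = 1e-6  # small offset; the function is never evaluated at the point itself
for label, f, a, expected in problems:
    left, right = f(a - h), f(a + h)
    print(f"{label}: left = {left:.5f}, right = {right:.5f}, stated = {expected:.5f}")
```

Agreement of the two columns with the stated value supports the algebraic answer, although a numerical check of this kind is evidence rather than proof.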

3.3.3 Limit laws

| Serial No. | Law | Description | Formula |

|---|---|---|---|

| 1 | Constant Law | If \(c\) is a constant, then: | \[ \lim_{{x \to a}} c = c \] |

| 2 | Identity Law | If \(f(x) = x\), then: | \[ \lim_{{x \to a}} x = a \] |

| 3 | Sum Law | If \(\lim_{{x \to a}} f(x) = L\) and \(\lim_{{x \to a}} g(x) = M\), then: | \[ \lim_{{x \to a}} [f(x) + g(x)] = L + M \] |

| 4 | Difference Law | If \(\lim_{{x \to a}} f(x) = L\) and \(\lim_{{x \to a}} g(x) = M\), then: | \[ \lim_{{x \to a}} [f(x) - g(x)] = L - M \] |

| 5 | Product Law | If \(\lim_{{x \to a}} f(x) = L\) and \(\lim_{{x \to a}} g(x) = M\), then: | \[ \lim_{{x \to a}} [f(x) \cdot g(x)] = L \cdot M \] |

| 6 | Quotient Law | If \(\lim_{{x \to a}} f(x) = L\) and \(\lim_{{x \to a}} g(x) = M\), and \(M \neq 0\), then: | \[ \lim_{{x \to a}} \frac{f(x)}{g(x)} = \frac{L}{M} \] |

| 7 | Power Law | If \(\lim_{{x \to a}} f(x) = L\) and \(n\) is a positive integer, then: | \[ \lim_{{x \to a}} [f(x)]^n = L^n \] |

| 8 | Root Law | If \(\lim_{{x \to a}} f(x) = L\) and \(n\) is a positive integer (if \(n\) is even, assume \(f(x) \geq 0\) near \(a\)), then: | \[ \lim_{{x \to a}} \sqrt[n]{f(x)} = \sqrt[n]{L} \] |

| 9 | Composite Function Law | If \(\lim_{{x \to a}} f(x) = L\) and \(g\) is continuous at \(L\), then: | \[ \lim_{{x \to a}} g(f(x)) = g(L) \] |

| 10 | Limit of a Constant Multiple | If \(\lim_{{x \to a}} f(x) = L\) and \(c\) is a constant, then: | \[ \lim_{{x \to a}} [c \cdot f(x)] = c \cdot L \] |

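The laws in the table can be illustrated numerically. In the sketch below, the functions `f(x) = x^2 + 1` and `g(x) = 3x - 2` and the point `a = 2` are assumptions chosen for illustration, with limits \(L = 5\) and \(M = 4\). Each combination is evaluated very close to \(a\) and compared with the value the corresponding law predicts.

```python
def f(x):
    return x**2 + 1      # limit L = 5 as x -> 2

def g(x):
    return 3 * x - 2     # limit M = 4 as x -> 2

a, h = 2.0, 1e-7
L, M = 5.0, 4.0
x = a + h  # a point close to, but not equal to, a

# Each entry pairs the combined function's value near a with the law's prediction.
checks = {
    "sum": (f(x) + g(x), L + M),
    "difference": (f(x) - g(x), L - M),
    "product": (f(x) * g(x), L * M),
    "quotient": (f(x) / g(x), L / M),
    "power (n = 3)": (f(x) ** 3, L ** 3),
}
for law, (near_value, predicted) in checks.items():
    print(f"{law}: value near a = {near_value:.6f}, law predicts {predicted:.6f}")
```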

3.3.4 Limit Problems and Solutions

Problem: Find \(\lim_{{x \to 3}} (2x + 1)\)

Solution: Using the Sum Law and Constant Multiple Law: \[ \lim_{{x \to 3}} (2x + 1) = 2 \cdot \lim_{{x \to 3}} x + \lim_{{x \to 3}} 1 = 2 \cdot 3 + 1 = 7 \]

Problem: Find \(\lim_{{x \to -1}} (x^2 - 4)\)

Solution: Using the Difference Law and Power Law: \[ \lim_{{x \to -1}} (x^2 - 4) = \lim_{{x \to -1}} x^2 - \lim_{{x \to -1}} 4 = (-1)^2 - 4 = 1 - 4 = -3 \]

Problem: Find \(\lim_{{x \to 2}} \frac{x^2 - 4}{x - 2}\)

Solution: Factor the numerator: \[ \frac{x^2 - 4}{x - 2} = \frac{(x - 2)(x + 2)}{x - 2} = x + 2 \quad \text{for } x \neq 2 \] Then apply the Sum, Identity, and Constant Laws: \[ \lim_{{x \to 2}} \frac{x^2 - 4}{x - 2} = \lim_{{x \to 2}} (x + 2) = 2 + 2 = 4 \]

Problem: Find \(\lim_{{x \to 0}} \frac{\sin x}{x}\)

Solution: Using L’Hôpital’s Rule: \[ \lim_{{x \to 0}} \frac{\sin x}{x} = \lim_{{x \to 0}} \frac{\cos x}{1} = \cos 0 = 1 \]

Problem: Find \(\lim_{{x \to \infty}} \frac{3x^2 - 2x + 1}{x^2 + 5}\)

Solution: Divide numerator and denominator by \(x^2\): \[ \lim_{{x \to \infty}} \frac{3x^2 - 2x + 1}{x^2 + 5} = \lim_{{x \to \infty}} \frac{3 - \frac{2}{x} + \frac{1}{x^2}}{1 + \frac{5}{x^2}} = \frac{3 - 0 + 0}{1 + 0} = 3 \]
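A quick numerical look at this limit at infinity: the sketch below evaluates the same rational function for increasingly large \(x\). The function name `r` is introduced here only for illustration.

```python
def r(x):
    """The rational function from the problem above."""
    return (3 * x**2 - 2 * x + 1) / (x**2 + 5)

# The values should drift toward 3 as x grows.
for x in (10.0, 1e3, 1e6, 1e9):
    print(f"x = {x:.0e}, r(x) = {r(x):.9f}")
```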

Problem: Find \(\lim_{{x \to 0^+}} \frac{1}{x}\)

Solution: As \(x\) approaches 0 from the right: \[ \lim_{{x \to 0^+}} \frac{1}{x} = \infty \]

Problem: Find \(\lim_{{x \to 1}} (x^3 - 1)\)

Solution: Using the Power Law and Difference Law: \[ \lim_{{x \to 1}} (x^3 - 1) = \lim_{{x \to 1}} x^3 - \lim_{{x \to 1}} 1 = 1^3 - 1 = 0 \]

Problem: Find \(\lim_{{x \to \infty}} \frac{e^x}{x^2}\)

Solution: Using L’Hôpital’s Rule twice: \[ \lim_{{x \to \infty}} \frac{e^x}{x^2} = \lim_{{x \to \infty}} \frac{e^x}{2x} = \lim_{{x \to \infty}} \frac{e^x}{2} = \infty \]

Problem: Find \(\lim\limits_{{x \to 0}} \frac{x^2 - \sin^2 x}{x^2}\)

Solution: Using the Taylor expansion of \(\sin x\) about \(0\): \[ \sin x = x - \frac{x^3}{6} + O(x^5) \] so that \(\sin^2 x = x^2 - \frac{x^4}{3} + O(x^6)\). Therefore \[ \lim_{{x \to 0}} \frac{x^2 - \sin^2 x}{x^2} = \lim_{{x \to 0}} \frac{\frac{x^4}{3} + O(x^6)}{x^2} = \lim_{{x \to 0}} \left(\frac{x^2}{3} + O(x^4)\right) = 0 \]

Problem: Find \(\lim_{{x \to 0}} \frac{e^x - 1}{x}\)

Solution: Using L’Hôpital’s Rule: \[ \lim_{{x \to 0}} \frac{e^x - 1}{x} = \lim_{{x \to 0}} \frac{e^x}{1} = e^0 = 1 \]

3.3.5 Additional Problems

Problem (a)

Find:

- \(\lim\limits_{{x \to -2}} (4x^2 - 3)\)

- \(\lim\limits_{{x \to -2}} \frac{4x^2 - 3}{x - 2}\)

- \(\lim\limits_{{x \to 2}} \frac{2x^3 - 5x^2 + 1}{x^2 - 3}\)

Solution:

For the limit \(\lim\limits_{{x \to -2}} (4x^2 - 3)\):

Using the Sum and Difference Rules and Power Rule: \[ \lim\limits_{{x \to -2}} (4x^2 - 3) = \lim\limits_{{x \to -2}} 4x^2 - \lim\limits_{{x \to -2}} 3 \] Calculate each term separately: \[ \lim\limits_{{x \to -2}} 4x^2 = 4 \cdot (-2)^2 = 4 \cdot 4 = 16 \] \[ \lim\limits_{{x \to -2}} 3 = 3 \] Thus: \[ \lim\limits_{{x \to -2}} (4x^2 - 3) = 16 - 3 = 13 \]

For the limit \(\lim\limits_{{x \to -2}} \frac{4x^2 - 3}{x - 2}\):

Using the Quotient Rule: \[ \lim\limits_{{x \to -2}} \frac{4x^2 - 3}{x - 2} = \frac{\lim\limits_{{x \to -2}} (4x^2 - 3)}{\lim\limits_{{x \to -2}} (x - 2)} \] Calculate each part: \[ \lim\limits_{{x \to -2}} (4x^2 - 3) = 13 \] \[ \lim\limits_{{x \to -2}} (x - 2) = -2 - 2 = -4 \] Thus: \[ \lim\limits_{{x \to -2}} \frac{4x^2 - 3}{x - 2} = \frac{13}{-4} = -\frac{13}{4} \]

For the limit \(\lim\limits_{{x \to 2}} \frac{2x^3 - 5x^2 + 1}{x^2 - 3}\):

Using the Quotient Rule: \[ \lim\limits_{{x \to 2}} (2x^3 - 5x^2 + 1) = 2 \cdot 2^3 - 5 \cdot 2^2 + 1 = 16 - 20 + 1 = -3 \] \[ \lim\limits_{{x \to 2}} (x^2 - 3) = 2^2 - 3 = 4 - 3 = 1 \] Thus: \[ \lim\limits_{{x \to 2}} \frac{2x^3 - 5x^2 + 1}{x^2 - 3} = \frac{-3}{1} = -3 \]

Problem (b)

Find:

- \(\lim\limits_{{x \to c}} \frac{x^4 + x^2 - 1}{x^2 + 5}\)

- \(\lim\limits_{{x \to c}} (x^4 + x^2 - 1)\)

- \(\lim\limits_{{x \to c}} (x^2 + 5)\)

Solution:

For the limit \(\lim\limits_{{x \to c}} \frac{x^4 + x^2 - 1}{x^2 + 5}\):

Using the Quotient Rule: \[ \lim\limits_{{x \to c}} \frac{x^4 + x^2 - 1}{x^2 + 5} = \frac{\lim\limits_{{x \to c}} (x^4 + x^2 - 1)}{\lim\limits_{{x \to c}} (x^2 + 5)} \] Calculate each part: \[ \lim\limits_{{x \to c}} (x^4 + x^2 - 1) = c^4 + c^2 - 1 \] \[ \lim\limits_{{x \to c}} (x^2 + 5) = c^2 + 5 \] Thus: \[ \lim\limits_{{x \to c}} \frac{x^4 + x^2 - 1}{x^2 + 5} = \frac{c^4 + c^2 - 1}{c^2 + 5} \]

For the limit \(\lim\limits_{{x \to c}} (x^4 + x^2 - 1)\):

Using the Sum and Difference Rules and Power Rule: \[ \lim\limits_{{x \to c}} (x^4 + x^2 - 1) = c^4 + c^2 - 1 \]

For the limit \(\lim\limits_{{x \to c}} (x^2 + 5)\):

Using the Sum and Difference Rules and Power Rule: \[ \lim\limits_{{x \to c}} (x^2 + 5) = c^2 + 5 \]

Problem (c)

Find:

- \(\lim\limits_{{x \to c}} (x^3 + 4x^2 - 3)\)

- \(\lim\limits_{{x \to c}} \frac{x^3 + 4x^2 - 3}{x^2 + 5}\)

Solution:

For the limit \(\lim\limits_{{x \to c}} (x^3 + 4x^2 - 3)\):

Using the Sum and Difference Rules and Power Rule: \[ \lim\limits_{{x \to c}} (x^3 + 4x^2 - 3) = c^3 + 4c^2 - 3 \]

For the limit \(\lim\limits_{{x \to c}} \frac{x^3 + 4x^2 - 3}{x^2 + 5}\):

Using the Quotient Rule: \[ \lim\limits_{{x \to c}} \frac{x^3 + 4x^2 - 3}{x^2 + 5} = \frac{\lim\limits_{{x \to c}} (x^3 + 4x^2 - 3)}{\lim\limits_{{x \to c}} (x^2 + 5)} \] Calculate each part: \[ \lim\limits_{{x \to c}} (x^3 + 4x^2 - 3) = c^3 + 4c^2 - 3 \] \[ \lim\limits_{{x \to c}} (x^2 + 5) = c^2 + 5 \] Thus: \[ \lim\limits_{{x \to c}} \frac{x^3 + 4x^2 - 3}{x^2 + 5} = \frac{c^3 + 4c^2 - 3}{c^2 + 5} \]
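The substitution pattern used throughout these problems can be sketched in code. The helpers `poly_eval` and `rational_limit` are hypothetical names introduced for illustration; polynomials are given as coefficient lists from the highest degree down, and the quotient law is applied only when the denominator's limit is nonzero.

```python
def poly_eval(coeffs, x):
    """Evaluate a polynomial (coefficients from highest to lowest degree) by Horner's method."""
    result = 0.0
    for c in coeffs:
        result = result * x + c
    return result

def rational_limit(num, den, c):
    """Limit of num(x)/den(x) as x -> c by substitution; valid only when den(c) != 0."""
    denominator = poly_eval(den, c)
    if denominator == 0:
        raise ValueError("Quotient law does not apply: the denominator's limit is 0")
    return poly_eval(num, c) / denominator

# Problem (a): (4x^2 - 3)/(x - 2) as x -> -2, and (2x^3 - 5x^2 + 1)/(x^2 - 3) as x -> 2
print(rational_limit([4, 0, -3], [1, -2], -2.0))       # -3.25, i.e. -13/4
print(rational_limit([2, -5, 0, 1], [1, 0, -3], 2.0))  # -3.0
```

For Problems (b) and (c) the denominator \(x^2 + 5\) is never zero, so the same call works for any real \(c\).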

3.4 Continuity of Functions

In calculus, continuity is a fundamental property of functions. Intuitively, a function is continuous if its graph can be drawn without lifting the pencil from the paper. More formally, a function \(f(x)\) is said to be continuous at a point \(x = c\) if the following three conditions are met:

The function is defined at \(c\): \[ f(c) \text{ exists.} \]

The limit of the function as \(x\) approaches \(c\) exists: \[ \lim\limits_{{x \to c}} f(x) \text{ exists.} \]

The limit of the function as \(x\) approaches \(c\) is equal to the value of the function at \(c\): \[ \lim\limits_{{x \to c}} f(x) = f(c). \]

If a function \(f(x)\) is continuous at every point in its domain, it is said to be a continuous function.
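The three conditions can be turned into a rough numerical test. The function `looks_continuous_at` below is a hypothetical helper written for illustration; the offset `h` and tolerance `tol` are arbitrary choices. It is a heuristic only and can be fooled by functions that vary rapidly near the point.

```python
import math

def looks_continuous_at(f, c, h=1e-6, tol=1e-4):
    """Heuristic check of the three continuity conditions at x = c (not a proof)."""
    try:
        value = f(c)                           # condition 1: f(c) is defined
        left, right = f(c - h), f(c + h)
    except (ZeroDivisionError, ValueError):
        return False                           # f is undefined at c or right next to it
    limit_exists = abs(left - right) < tol     # condition 2: one-sided values agree
    matches_value = abs(left - value) < tol and abs(right - value) < tol  # condition 3
    return limit_exists and matches_value

print(looks_continuous_at(lambda x: x**2, 1.0))     # smooth function
print(looks_continuous_at(math.floor, 1.0))         # jump at every integer
print(looks_continuous_at(lambda x: 1.0 / x, 0.0))  # undefined at 0
```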

3.4.1 Examples of Continuity

3.4.1.1 Identity Function

The identity function \(f(x) = x\) is continuous everywhere because: \[ \lim\limits_{{x \to c}} f(x) = \lim\limits_{{x \to c}} x = c = f(c). \]

3.4.1.2 Constant Function

The constant function \(f(x) = k\) (where \(k\) is a constant) is continuous everywhere because: \[ \lim\limits_{{x \to c}} f(x) = \lim\limits_{{x \to c}} k = k = f(c). \]

3.4.1.3 Piecewise Functions

Piecewise functions can exhibit points of discontinuity. Consider the unit step function \(u(x)\): \[ u(x) = \begin{cases} 0 & \text{if } x < 0, \\ 1 & \text{if } x \ge 0. \end{cases} \]

This function has a discontinuity at \(x = 0\), because the left-hand limit and the right-hand limit are not equal: \[ \lim\limits_{{x \to 0^-}} u(x) = 0 \quad \text{and} \quad \lim\limits_{{x \to 0^+}} u(x) = 1. \]

3.4.1.4 Signum Function

The signum function \(\text{sgn}(x)\) is defined as: \[ \text{sgn}(x) = \begin{cases} -1 & \text{if } x < 0, \\ 0 & \text{if } x = 0, \\ 1 & \text{if } x > 0. \end{cases} \]

The signum function has a discontinuity at \(x = 0\) because the left-hand limit and the right-hand limit are not equal: \[ \lim\limits_{{x \to 0^-}} \text{sgn}(x) = -1 \quad \text{and} \quad \lim\limits_{{x \to 0^+}} \text{sgn}(x) = 1. \]

3.4.1.5 Sigmoid Function

The sigmoid function \(\sigma(x)\) is defined as: \[ \sigma(x) = \frac{1}{1 + e^{-x}}. \]

This function is continuous everywhere because it is defined for all \(x\), and the limit at every point \(x = c\) matches the function value \(\sigma(c)\).

3.4.1.6 Oscillating Function

Consider the function: \[ f(x) = \begin{cases} \sin\left(\frac{1}{x}\right) & \text{if } x > 0, \\ 0 & \text{if } x \le 0. \end{cases} \]

This function is not continuous at \(x = 0\). As \(x\) approaches 0 from the right, the function oscillates between -1 and 1, and does not settle to a single value. Therefore, the limit as \(x\) approaches 0 does not exist, which means the function is not continuous at \(x = 0\).
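One way to make the oscillation concrete is to follow two sequences of inputs that both shrink to 0 from the right. In the sketch below (list names `zeros` and `peaks` are chosen for illustration), the first sequence hits points where \(\sin(1/x) = 0\) and the second hits points where \(\sin(1/x) = 1\), so no single limiting value can exist.

```python
import math

def f(x):
    return math.sin(1.0 / x) if x > 0 else 0.0

# Two sequences that both tend to 0 from the right.
zeros = [1.0 / (n * math.pi) for n in range(1, 6)]                    # 1/x = n*pi
peaks = [1.0 / (math.pi / 2 + 2 * n * math.pi) for n in range(1, 6)]  # 1/x = pi/2 + 2*n*pi

for xz, xp in zip(zeros, peaks):
    print(f"f({xz:.5f}) = {f(xz):+.3f}    f({xp:.5f}) = {f(xp):+.3f}")
```

Up to floating-point rounding, the first column stays at 0 and the second at 1, however close the inputs get to 0.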

3.4.2 Visualization of Continuity

We can visualize the continuity of these functions using Python. Below is the code for plotting these functions and their behaviors around points of interest.

import numpy as np

import matplotlib.pyplot as plt

def identity_function(x):

    return x

def constant_function(x):

    # Return an array shaped like x so that it can be plotted against x

    return np.full_like(x, 3.0) # Arbitrary constant

def unit_step_function(x):

    return np.where(x >= 0, 1, 0)

def signum_function(x):

    return np.sign(x)

def sigmoid_function(x):

    return 1 / (1 + np.exp(-x))

def f(x):

    return np.where(x > 0, np.sin(1 / x), 0)

x = np.linspace(-2, 2, 400)

x_positive = np.linspace(0.001, 2, 400) # Avoiding division by zero for f(x)

# Plotting the functions

plt.figure(figsize=(10, 8))

# Identity function

plt.subplot(3, 2, 1)

plt.plot(x, identity_function(x), label='f(x) = x')

plt.axvline(x=0, color='grey', linestyle='--')

plt.title('Identity Function')

plt.legend()

# Constant function

plt.subplot(3, 2, 2)

plt.plot(x, constant_function(x), label='f(x) = 3')

plt.axvline(x=0, color='grey', linestyle='--')

plt.title('Constant Function')

plt.legend()

# Unit step function

plt.subplot(3, 2, 3)

plt.plot(x, unit_step_function(x), label='u(x)')

plt.axvline(x=0, color='grey', linestyle='--')

plt.title('Unit Step Function')

plt.legend()

# Signum function

plt.subplot(3, 2, 4)

plt.plot(x, signum_function(x), label='sgn(x)')

plt.axvline(x=0, color='grey', linestyle='--')

plt.title('Signum Function')

plt.legend()

# Sigmoid function

plt.subplot(3, 2, 5)

plt.plot(x, sigmoid_function(x), label='σ(x)')

plt.axvline(x=0, color='grey', linestyle='--')

plt.title('Sigmoid Function')

plt.legend()

# f(x) = sin(1/x) for x > 0 and 0 for x ≤ 0

plt.subplot(3, 2, 6)

plt.plot(x_positive, f(x_positive), label='f(x) = sin(1/x) if x>0, 0 if x≤0')

plt.axvline(x=0, color='grey', linestyle='--')

plt.title('Function with Oscillation')

plt.legend()

plt.tight_layout()

plt.show()

3.4.3 Importance of Continuity in Analysis and Design of Computational Models

Continuity is a crucial concept in the analysis and design of computational models in computer science. It ensures that small changes in input lead to small changes in output, providing stability and predictability in the behavior of algorithms and systems. This property is vital for various applications, including optimization, numerical analysis, machine learning, and graphics.
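The "small change in, small change out" property can be illustrated with a tiny experiment. Both models below are hypothetical examples chosen for illustration: a continuous map (`math.sqrt`) and a discontinuous one (`math.floor`), each fed two inputs that differ only by a small amount of noise around \(x = 3\).

```python
import math

def smooth_model(x):
    """A continuous map (hypothetical example)."""
    return math.sqrt(x)

def thresholded_model(x):
    """A discontinuous map that jumps at every integer (hypothetical example)."""
    return float(math.floor(x))

x, noise = 3.0, 1e-6
for name, model in (("continuous", smooth_model), ("discontinuous", thresholded_model)):
    # Compare the outputs for two inputs that straddle x.
    change = abs(model(x + noise) - model(x - noise))
    print(f"{name}: input changed by {2 * noise:.0e}, output changed by {change:.2e}")
```

The continuous model's output moves by an amount comparable to the noise, while the discontinuous one jumps by a full unit.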

3.4.4 Examples of Continuity in Computer Science

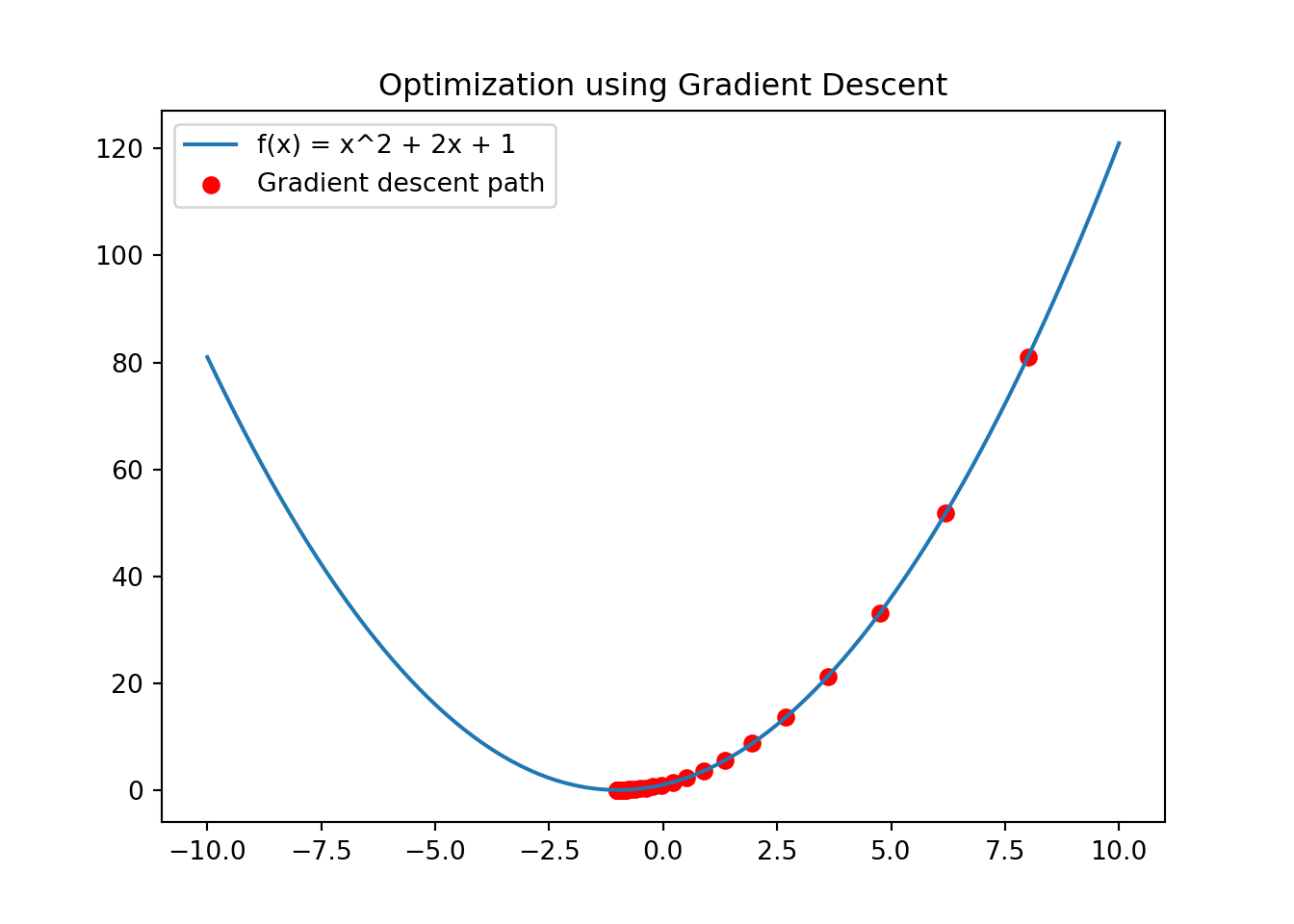

3.4.4.1 1. Optimization

In optimization problems, we often seek to find the minimum or maximum of a function. If the function is continuous, optimization algorithms can reliably find these extrema by following the gradient or using other methods. Discontinuities can cause algorithms to fail or converge to incorrect solutions.

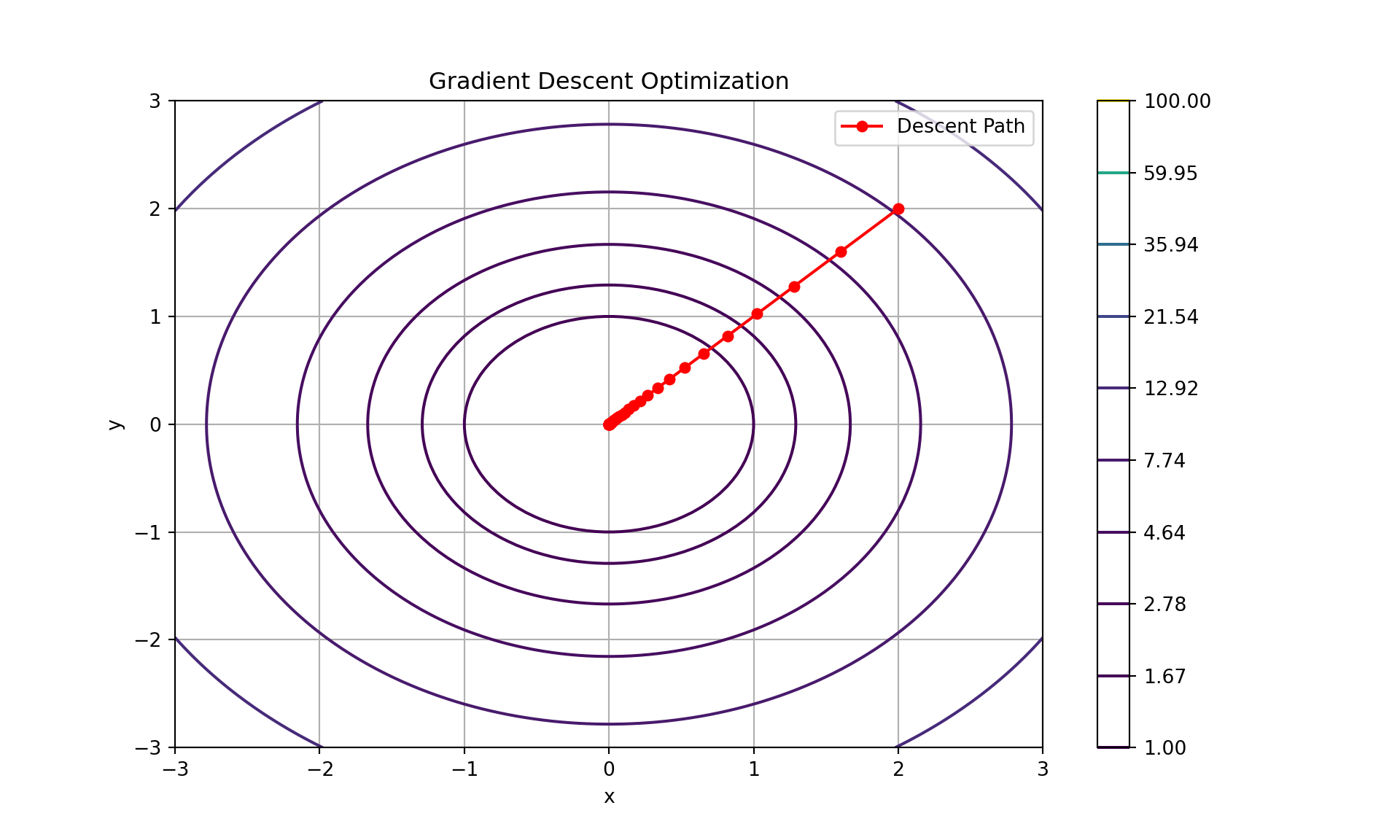

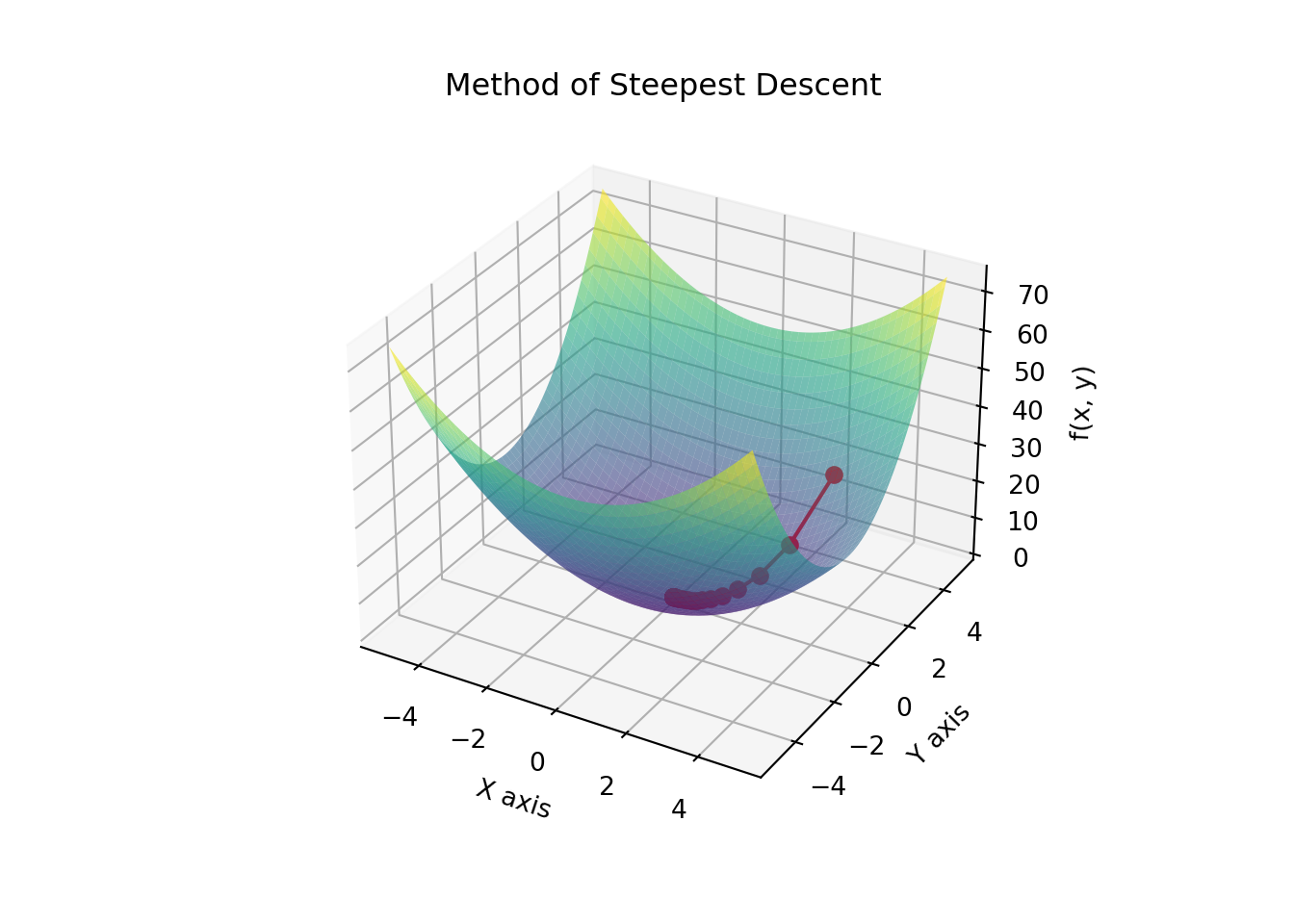

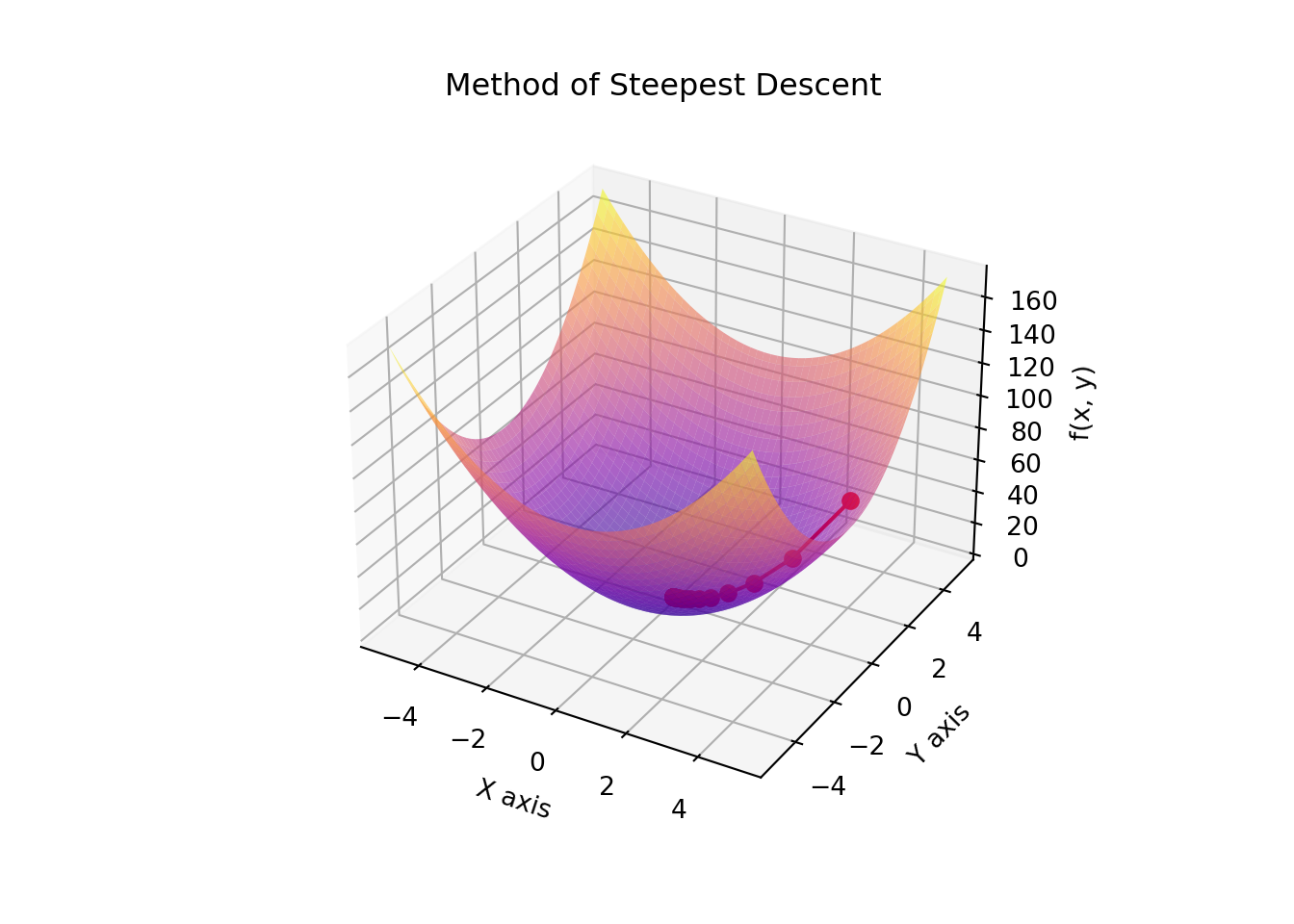

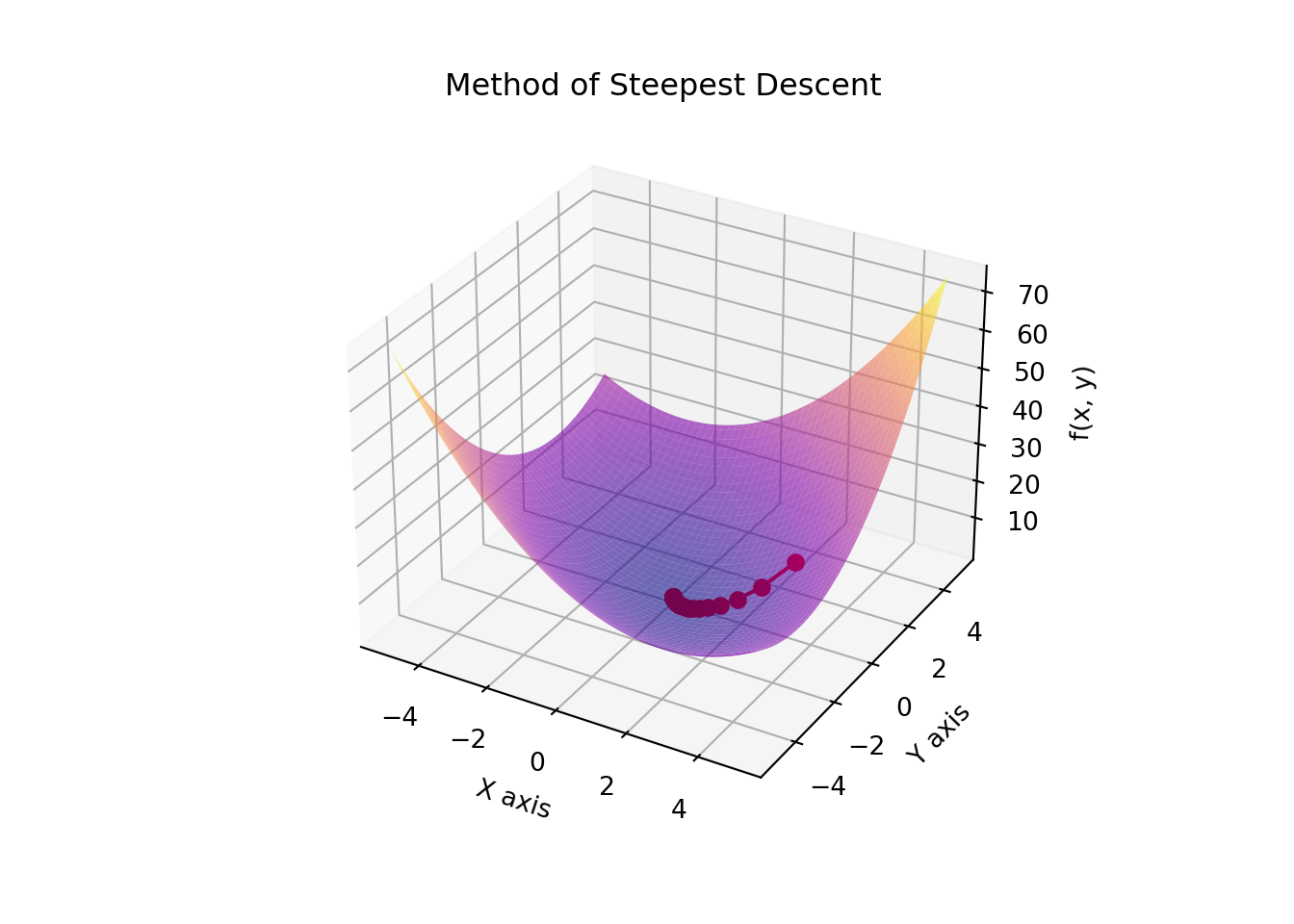

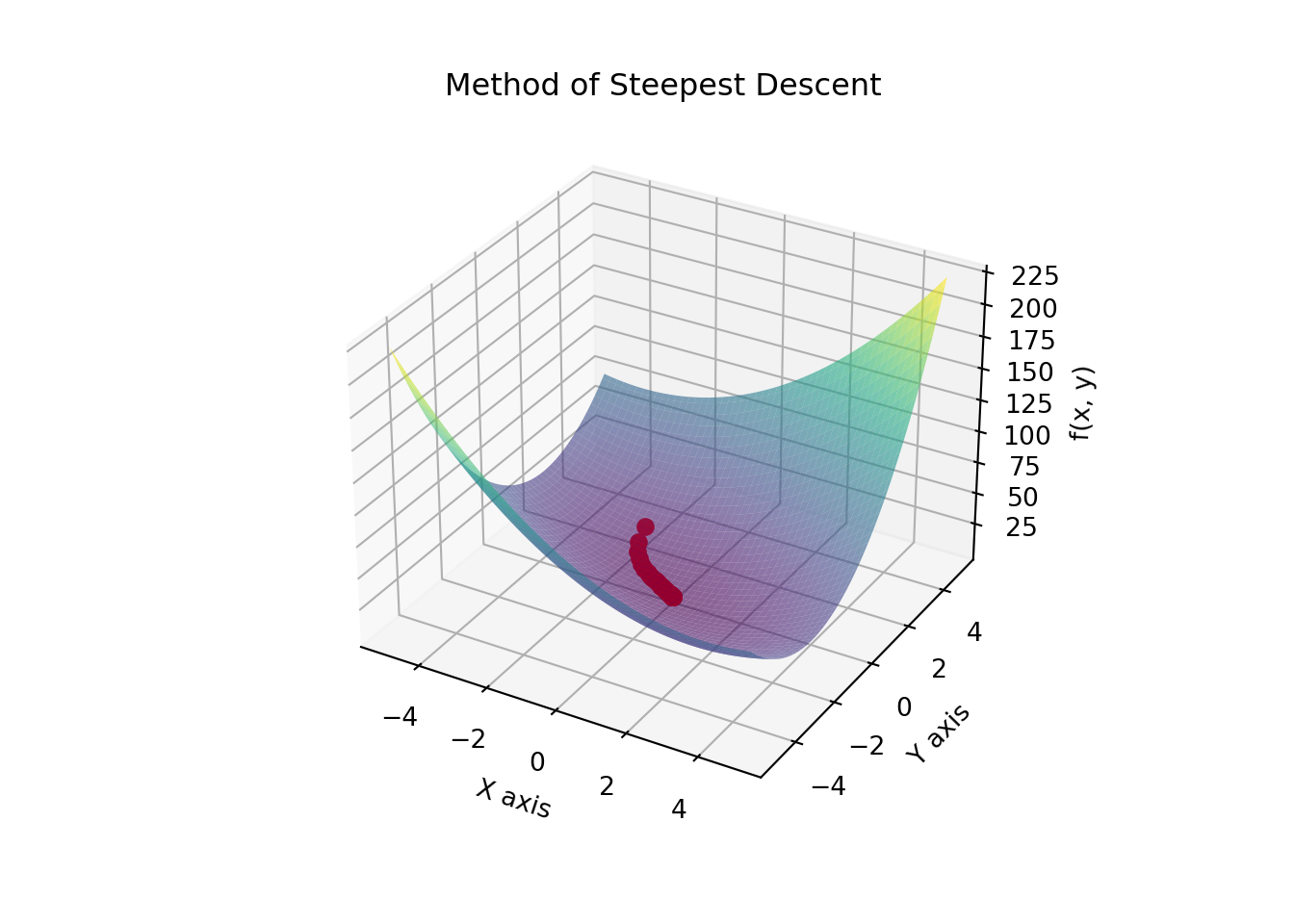

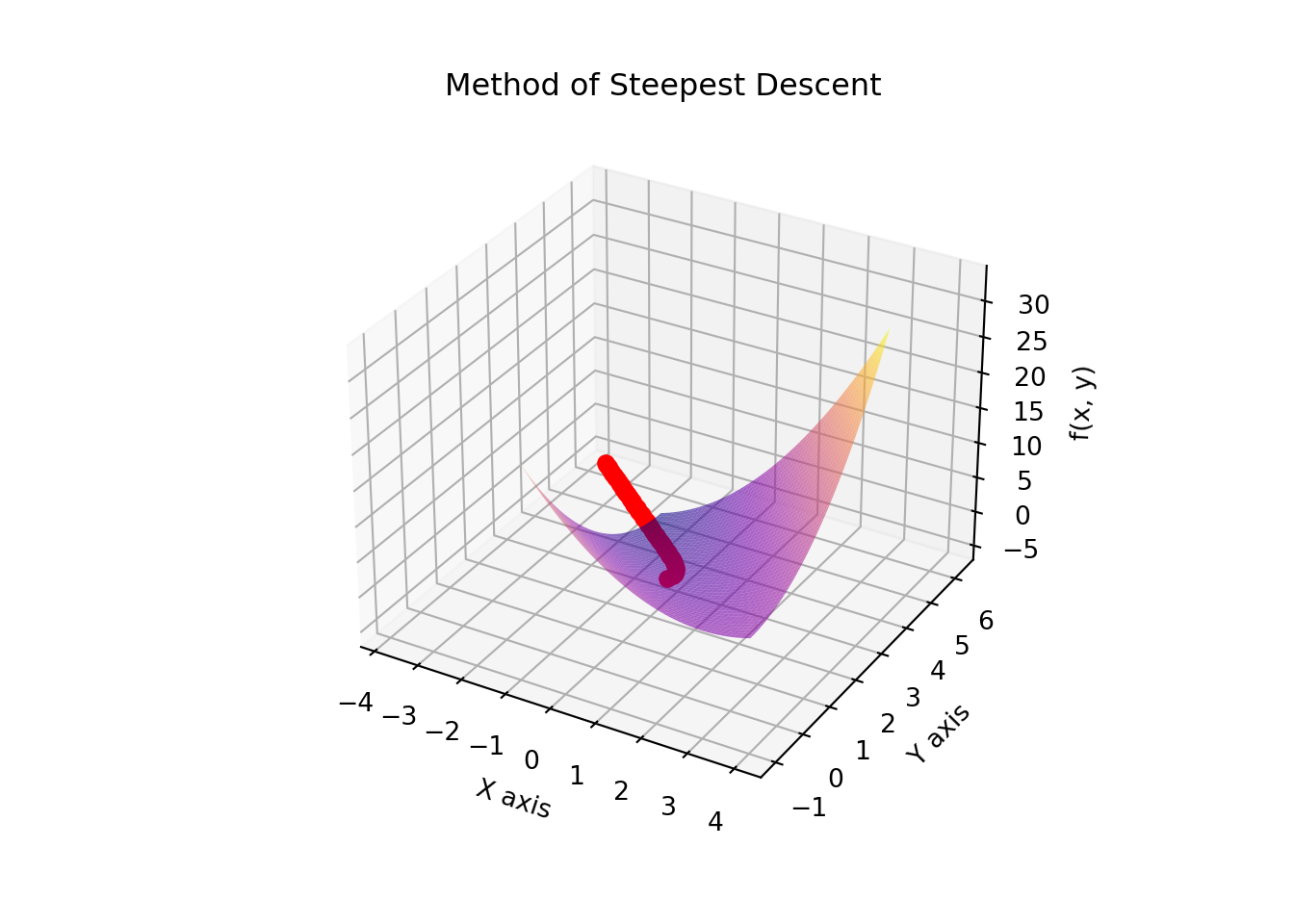

Example: Gradient Descent

Gradient descent is an optimization algorithm used to minimize functions. It relies on the continuity of the function to ensure that the gradient (rate of change) provides accurate information about the direction to move to decrease the function value. If the function is continuous, gradient descent can converge to a local minimum.

import numpy as np

import matplotlib.pyplot as plt

def f(x):

    return x**2 + 2*x + 1

def df(x):

    return 2*x + 2

x = np.linspace(-10, 10, 100)

y = f(x)

# Gradient descent

x0 = 8 # Starting point

learning_rate = 0.1

iterations = 50

x_history = [x0]

for _ in range(iterations):

    x0 = x0 - learning_rate * df(x0)

    x_history.append(x0)

plt.plot(x, y, label='f(x) = x^2 + 2x + 1')

# Mark the points visited by gradient descent

plt.scatter(x_history, [f(xi) for xi in x_history], color='red', label='Gradient descent steps')

plt.legend()

plt.title('Optimization using Gradient Descent')

plt.show()

3.4.4.2 2. Numerical Analysis

Numerical methods, such as numerical integration and differentiation, rely on the continuity of functions to provide accurate approximations. Discontinuous functions can lead to large errors or even make the numerical methods inapplicable.

Example: Numerical Integration

Numerical integration methods, like the trapezoidal rule, approximate the area under a curve. Continuity ensures that these approximations are accurate.
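As a minimal sketch of the trapezoidal rule mentioned above, the snippet below compares a continuous integrand with a discontinuous one. The helper `trapezoid`, the step function `step`, and the choice of 100 and 200 subintervals are illustrative assumptions and not part of the original notes.

```python
import numpy as np

def trapezoid(f, a, b, n):
    """Composite trapezoidal rule on [a, b] with n equal subintervals."""
    x = np.linspace(a, b, n + 1)
    y = f(x)
    h = (b - a) / n
    return h * (0.5 * y[0] + y[1:-1].sum() + 0.5 * y[-1])

def step(x):
    """Illustrative discontinuous integrand: jumps from 0 to 1 at x = 1/3."""
    return np.where(x >= 1 / 3, 1.0, 0.0)

# Exact values: the integral of sin on [0, pi] is 2; the integral of step on [0, 1] is 2/3
for n in (100, 200):
    err_sin = abs(trapezoid(np.sin, 0.0, np.pi, n) - 2.0)
    err_step = abs(trapezoid(step, 0.0, 1.0, n) - 2 / 3)
    print(f"n = {n}: error for sin = {err_sin:.2e}, error for step = {err_step:.2e}")
```

In this run the error for the continuous integrand shrinks roughly fourfold when `n` doubles, while the error for the step function only about halves, because the subinterval containing the jump is always approximated poorly.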

from scipy.integrate import quad

def f(x):

return np.sin(x)

result, error = quad(f, 0, np.pi)

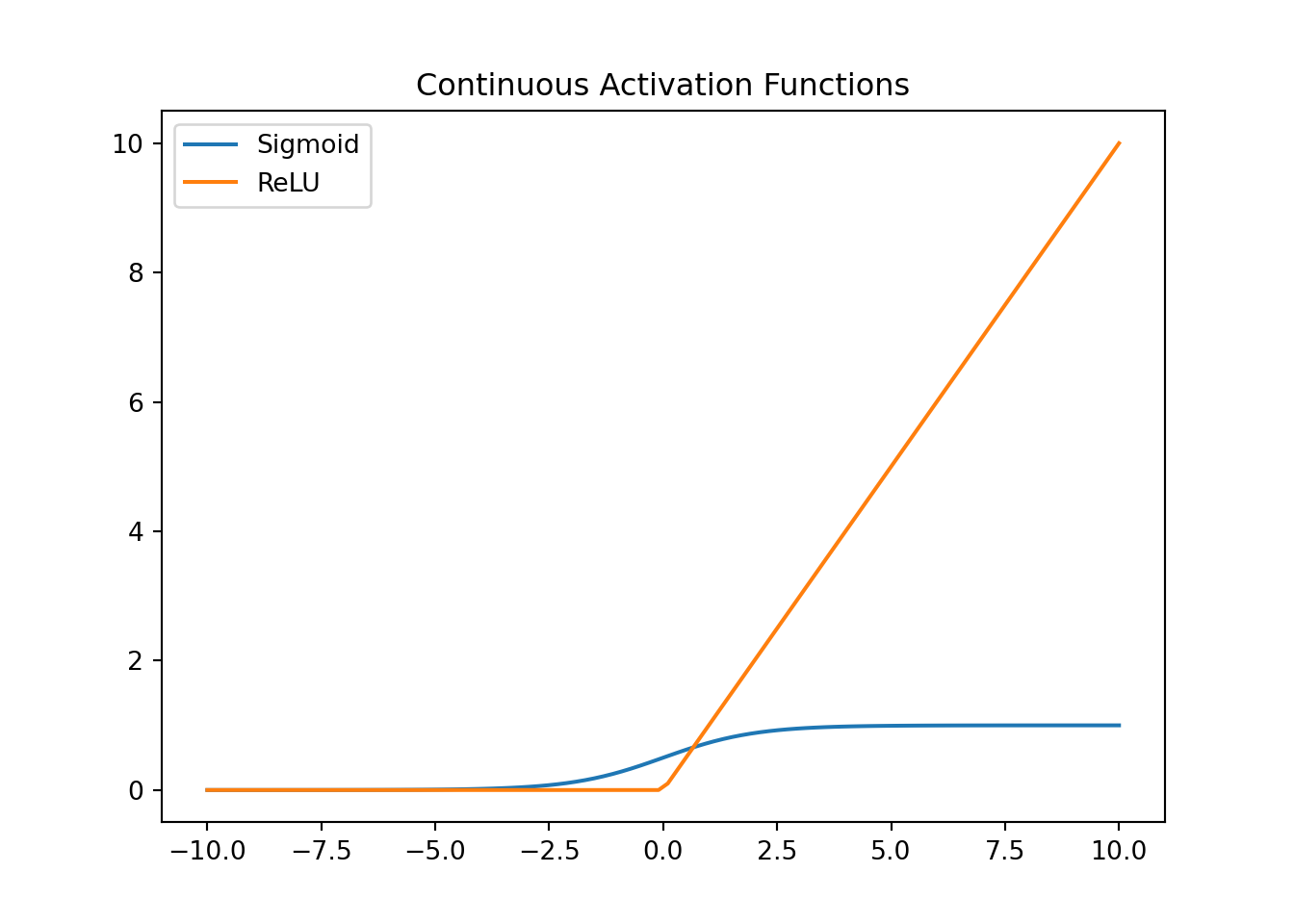

print(f"Numerical integration result: {result}")## Numerical integration result: 2.03.4.4.3 3. Machine Learning

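In machine learning, continuous functions are used to model data and make predictions. Activation functions in neural networks, such as the sigmoid and ReLU functions, are continuous, which keeps the gradients used in backpropagation well-behaved and enables the network to learn effectively. (ReLU is continuous everywhere but not differentiable at \(x = 0\); in practice a fixed value such as 0 is used for its derivative there.)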

Example: Activation Functions in Neural Networks

Activation functions introduce non-linearity into neural networks, allowing them to learn complex patterns. Continuous activation functions ensure that the gradients are well-behaved during training.
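To make "well-behaved gradients" concrete, here is a small sketch that evaluates the derivatives of the two activation functions at a few sample inputs. The helper names `sigmoid_grad` and `relu_grad`, the sample points, and the convention of using 0 for the ReLU derivative at \(x = 0\) are assumptions made for illustration.

```python
import numpy as np

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

def sigmoid_grad(x):
    # Derivative of the sigmoid: s(x) * (1 - s(x))
    s = sigmoid(x)
    return s * (1 - s)

def relu_grad(x):
    # Derivative of ReLU away from 0; the value 0 at x = 0 is a convention (assumption)
    return np.where(x > 0, 1.0, 0.0)

x = np.array([-5.0, -1.0, 0.0, 1.0, 5.0])
print("sigmoid gradient:", sigmoid_grad(x))
print("ReLU gradient:   ", relu_grad(x))
```

The sigmoid gradient varies smoothly and never exceeds 0.25, while the ReLU gradient jumps between 0 and 1 at the origin even though ReLU itself is continuous.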

import numpy as np

import matplotlib.pyplot as plt

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

def relu(x):
    return np.maximum(0, x)

x = np.linspace(-10, 10, 100)
y_sigmoid = sigmoid(x)
y_relu = relu(x)

plt.plot(x, y_sigmoid, label='Sigmoid')
plt.plot(x, y_relu, label='ReLU')
plt.legend()
plt.title('Continuous Activation Functions')
plt.show()

4. Computer Graphics

In computer graphics, continuity is essential for rendering smooth curves and surfaces. Techniques like Bézier curves and B-splines rely on continuous functions to create visually appealing graphics.

Example: Bezier Curves

Bezier curves are used in vector graphics and animation to model smooth curves. The continuity of the curve ensures smooth transitions between points.

import numpy as np

import matplotlib.pyplot as plt

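def bezier(t, P0, P1, P2, P3):
    # Cubic Bezier curve in Bernstein form
    return (1 - t)**3 * P0 + 3 * (1 - t)**2 * t * P1 + 3 * (1 - t) * t**2 * P2 + t**3 * P3

# Column vector of parameter values so that t broadcasts against the 2D control points
t = np.linspace(0, 1, 100).reshape(-1, 1)

P0, P1, P2, P3 = np.array([0, 0]), np.array([1, 2]), np.array([3, 3]), np.array([4, 0])

curve = bezier(t, P0, P1, P2, P3)  # Shape (100, 2): one (x, y) pair per value of t

plt.plot(curve[:, 0], curve[:, 1], label='Bezier Curve')

# Control points: x-coordinates first, then y-coordinates
plt.scatter([P0[0], P1[0], P2[0], P3[0]], [P0[1], P1[1], P2[1], P3[1]], color='red', label='Control Points')

plt.title('Bezier Curve in Computer Graphics')

plt.legend()

plt.show()

3.4.4.4 Practical Example of Continuity in Game Design: Smooth Character Movement with Animation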

Problem Statement:

In game design, ensuring smooth and natural character movement is essential for a good player experience. Discontinuous or jerky movements can disrupt gameplay and reduce immersion. We will model and animate a character’s movement in a 2D platformer game to ensure smooth transitions.

Mathematical Modelling:

We’ll model the character’s position \(x(t)\) and \(y(t)\) over time \(t\). The movement is influenced by constant velocities in both horizontal and vertical directions.

The equations for position are: \[ x(t) = x_0 + v_x \cdot t \] \[ y(t) = y_0 + v_y \cdot t \]

where:

- \(x_0\) and \(y_0\) are the initial positions.

- \(v_x\) and \(v_y\) are the constant velocities in the x and y directions, respectively.

Solution:

Model the Movement: Update the position continuously based on the time elapsed.

Animate the Movement: Create an animation to visualize the smooth movement of the character.

Python Animation Code:

We will use the matplotlib library to create an animation of the character’s movement.

import numpy as np

import matplotlib.pyplot as plt

import matplotlib.animation as animation

# Constants

v_x = 5 # Velocity in x direction

v_y = 3 # Velocity in y direction

x_0 = 0 # Initial x position

y_0 = 0 # Initial y position

# Time array

t = np.linspace(0, 10, 500) # Time from 0 to 10 seconds

# Position functions

x_t = x_0 + v_x * t

y_t = y_0 + v_y * t

# Create a figure and axis for plotting

fig, ax = plt.subplots()

ax.set_xlim(0, max(x_t) + 10)

ax.set_ylim(min(y_t) - 10, max(y_t) + 10)

line, = ax.plot([], [], 'bo', markersize=10) # Character represented as a blue dot

trail, = ax.plot([], [], 'b-', alpha=0.5) # Character's path

def init():
    line.set_data([], [])
    trail.set_data([], [])
    return line, trail

def update(frame):
    # set_data expects sequences, so the single point is wrapped in lists
    line.set_data([x_t[frame]], [y_t[frame]])
    trail.set_data(x_t[:frame+1], y_t[:frame+1])
    return line, trail

# Create animation

ani = animation.FuncAnimation(fig, update, frames=len(t), init_func=init, blit=True, interval=20)

plt.xlabel('X Position')

plt.ylabel('Y Position')

plt.title('Smooth Character Movement in 2D Space')

plt.grid(True)

plt.show()

3.5 Rate of Change

Building on our understanding of limits and continuity, we can now explore the concept of the rate of change, which is crucial for analyzing and optimizing various computational processes in computer science and engineering.

3.5.1 Definition of Rate of Change

The rate of change of a function \(f(x)\) at a particular point \(x\) describes how \(f(x)\) varies as \(x\) changes. Mathematically, it is defined using the concept of a derivative. For a function \(f(x)\), the derivative at a point \(a\) is given by:

\[ f'(a) = \lim_{h \to 0} \frac{f(a+h) - f(a)}{h} \]

This formula represents the instantaneous rate of change of \(f\) with respect to \(x\) at the point \(x = a\).
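The limit above can be explored numerically. The following sketch, with the illustrative choices \(f(x) = x^2\) and \(a = 3\) (assumptions made only for this example), evaluates the difference quotient for shrinking values of \(h\).

```python
def f(x):
    return x ** 2

def difference_quotient(f, a, h):
    """Average rate of change of f between a and a + h."""
    return (f(a + h) - f(a)) / h

a = 3.0
quotients = [(h, difference_quotient(f, a, h)) for h in (1.0, 0.1, 0.01, 0.001)]

for h, q in quotients:
    print(f"h = {h:<6} difference quotient = {q:.6f}")
```

As \(h\) shrinks, the printed values approach \(f'(3) = 6\), which is the instantaneous rate of change at \(x = 3\).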

3.5.2 Practical Applications in Computer Science

- Algorithm Analysis: The rate of change helps describe how an algorithm's cost grows. If the running time is modelled as a function of the input size, its derivative indicates how quickly the running time increases as the input grows.

- Network Traffic Management: Understanding the rate of data flow in networks can help in optimizing bandwidth usage and avoiding congestion.

- Machine Learning: In gradient-based optimization methods, such as gradient descent, the derivative (or gradient) is used to update model parameters to minimize the loss function.

- Signal Processing: The rate of change of signals can be analyzed to filter noise and improve signal quality.

- Graphics and Animation: Smooth rendering of graphics and animations often involves understanding the rate of change of various parameters to create realistic movements and transitions.

3.5.2.1 Visualizing Rate of Change with Python

To make this concept more tangible, let’s use Python and the turtle library to visualize the rate of change for the function \(y = x^2\). We’ll draw the function and dynamically show the tangent line at any point you click, representing the instantaneous rate of change at that point.

Python Code for Visualization: You can visualize the rate of change of \(f(x)\) as the tangent at \(x\) using the following Python code (preferably run in an IDE such as VS Code or PyCharm).

import turtle

# Setup the screen

screen = turtle.Screen()

screen.bgcolor("white")

screen.setup(width=600, height=600) # Set window size

screen.setworldcoordinates(-6, -1, 6, 36) # Set coordinate system to match the function range

# Create a turtle object for drawing the function

pen = turtle.Turtle()

pen.speed(0) # Fastest drawing speed

# Function to draw the quadratic function y = x^2

def draw_function():
    pen.penup()
    pen.goto(-6, 36)  # Move to the starting point
    pen.pendown()
    for x in range(-300, 301):
        x_scaled = x / 50
        y = x_scaled ** 2  # Quadratic function
        pen.goto(x_scaled, y)
    pen.penup()

# Function to draw the tangent line at a given point

def draw_tangent(x, y):
    h = 0.01  # A small increment
    f_a = (x) ** 2  # Function value at x
    f_a_h = (x + h) ** 2  # Function value at x + h
    slope = (f_a_h - f_a) / h  # Approximate derivative (slope) from the difference quotient with small h

    # Create a new turtle for drawing the tangent
    tangent_pen = turtle.Turtle()
    tangent_pen.speed(0)
    tangent_pen.color("red")
    tangent_pen.penup()
    tangent_pen.goto(x, y)
    tangent_pen.pendown()

    # Draw the tangent line within the visible range
    tangent_pen.goto(x + 1, y + slope * 1)
    tangent_pen.penup()
    tangent_pen.goto(x, y)
    tangent_pen.pendown()
    tangent_pen.goto(x - 1, y - slope * 1)
    tangent_pen.hideturtle()

# Function to handle mouse click events

def on_click(x, y):
    # Adjust y-coordinate to the quadratic function
    y_adjusted = x ** 2
    draw_tangent(x, y_adjusted)

# Draw the function

draw_function()

# Set the mouse click event handler

screen.onclick(on_click)

# Hide the main pen and display the window

pen.hideturtle()

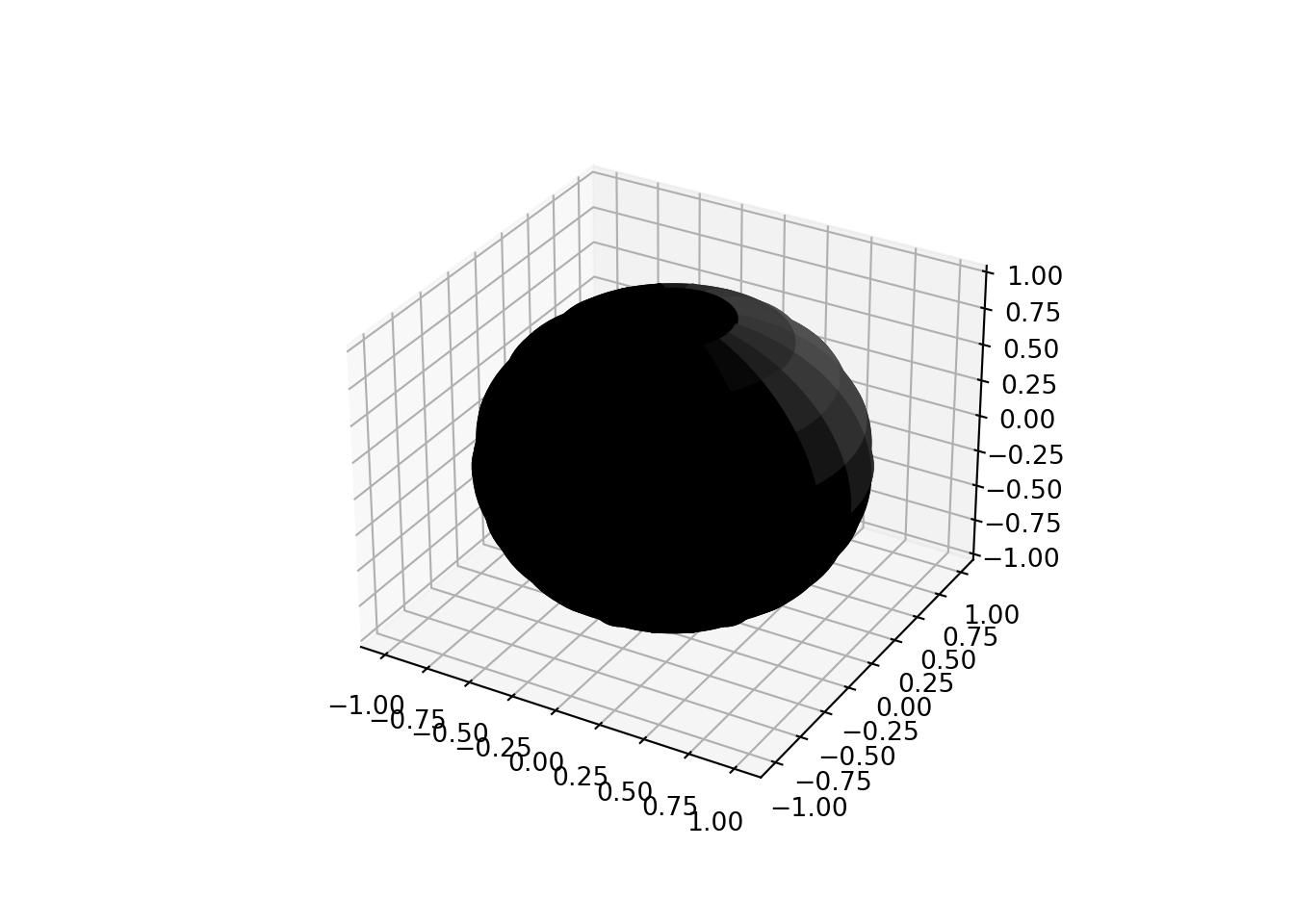

turtle.done()3.5.2.2 Example 1: Shading a Sphere in Computer Graphics**

In computer graphics, shading techniques are used to create realistic visuals by simulating how light interacts with surfaces. The rate of change is crucial in determining how light intensity varies across the surface of an object, such as a sphere.

Mathematical Model

To shade a sphere realistically, we use the concept of the surface normal and the dot product between the light direction and the normal vector. The intensity of light at a point on the surface is influenced by these factors.

Surface Normal Vector: For a sphere centered at the origin with radius \(R\), the surface normal at a point \((x, y, z)\) on the sphere is:

\[ \mathbf{n} = \frac{(x, y, z)}{R} \]

Light Direction Vector: Suppose the light source is located at \((L_x, L_y, L_z)\). The light direction vector at a point on the sphere is:

\[ \mathbf{L} = (L_x - x, L_y - y, L_z - z) \]

Dot Product: The dot product of the surface normal and the light direction vectors is used to compute the intensity of light at that point:

\[ I = \mathbf{n} \cdot \mathbf{L} \]

where \(\cdot\) denotes the dot product.

Shading Intensity: To ensure the shading intensity is within a valid range, it is often clamped to a minimum value of 0:

\[ I = \max(0, \mathbf{n} \cdot \mathbf{L}) \]

Python Code for Visualizing Shading on a Sphere

Below is an example code snippet using Python with matplotlib to visualize the shading of a sphere:

import numpy as np

import matplotlib.pyplot as plt

from mpl_toolkits.mplot3d import Axes3D

# Parameters

R = 1 # Radius of the sphere

L = np.array([1, 1, 1]) # Light source position

# Generate spherical coordinates

phi, theta = np.mgrid[0:2*np.pi:100j, 0:np.pi:50j]

x = R * np.sin(theta) * np.cos(phi)

y = R * np.sin(theta) * np.sin(phi)

z = R * np.cos(theta)

# Compute normals

normals = np.stack([x, y, z], axis=-1) / R

# Compute light direction vectors

light_directions = np.stack([L[0] - x, L[1] - y, L[2] - z], axis=-1)

# Compute dot products (shading intensity)

intensities = np.maximum(0, np.sum(normals * light_directions, axis=-1))

# Plotting the sphere with shading

fig = plt.figure()

ax = fig.add_subplot(111, projection='3d')

ax.plot_surface(x, y, z, facecolors=plt.cm.gray(intensities), rstride=5, cstride=5, antialiased=True)

plt.show()

Explanation of terms and notations used:

Surface Normal: The surface normal at any point on the sphere is a unit vector pointing outward, which is essential for calculating how light interacts with the surface.

Light Direction: The vector from the point on the sphere to the light source determines the angle at which light hits the surface.

Dot Product: The dot product between the normal and light direction vectors gives the shading intensity, which is used to color the surface.
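To see the three quantities at work for a single point, the sketch below evaluates the shading formula by hand. The chosen point `p` (the point of the sphere closest to the light) and the optional normalization of the light direction are assumptions added for illustration; the notes themselves use the unnormalized vector.

```python
import numpy as np

R = 1.0                                # Sphere radius, as in the example above
light = np.array([1.0, 1.0, 1.0])      # Light source position, as in the example above

# Illustrative point on the sphere (assumption): the point closest to the light
p = R * light / np.linalg.norm(light)

n = p / R                              # Unit surface normal at p
L_vec = light - p                      # Light direction from p to the source

# Intensity exactly as defined in the text: max(0, n . L)
raw_intensity = max(0.0, float(np.dot(n, L_vec)))

# Optional variant (assumption, not in the notes): normalize L so the value is a cosine in [0, 1]
unit_intensity = max(0.0, float(np.dot(n, L_vec / np.linalg.norm(L_vec))))

print(f"raw intensity:        {raw_intensity:.3f}")
print(f"normalized intensity: {unit_intensity:.3f}")
```

At this point the normal points straight at the light, so the normalized intensity reaches its maximum; moving `p` around the sphere lowers the value until it is clamped to 0 on the side facing away from the light.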

3.5.2.3 Example 2: Animating a Bouncing Ball

In animation, the rate of change is crucial for simulating realistic motion. For example, animating a bouncing ball involves understanding how position, velocity, and acceleration change over time.

Mathematical Model:

To simulate the bouncing ball, we use the following kinematic equations:

Position as a Function of Time: \(h(t)\)

- The height \(h(t)\) of the ball at time \(t\) is updated based on its velocity and acceleration.

Velocity as a Function of Time: \(v(t)\)

- The velocity \(v(t)\) changes due to gravity, which is a constant acceleration \(g\).

The equations are:

\[ h(t + \Delta t) = h(t) + v(t) \Delta t \]

\[ v(t + \Delta t) = v(t) - g \Delta t \]

where:

- \(g\) is the acceleration due to gravity (\(\approx 9.81 \, \text{m/s}^2\)).

- \(\Delta t\) is the time step.

Handling Bounces: When the ball hits the ground, its velocity is reversed and reduced by a coefficient of restitution \(e\):

\[ v(t + \Delta t) = -e \cdot v(t) \]

where \(e\) represents the bounciness of the ball.

Python Code for Visualizing a Bouncing Ball Animation:

Below is an example code snippet using Python with matplotlib to visualize the bouncing ball:

import numpy as np

import matplotlib.pyplot as plt

import matplotlib.animation as animation

# Parameters

g = 9.81 # Acceleration due to gravity (m/s^2)

v_init = 15 # Initial velocity (m/s)

h_init = 0 # Initial height (m)

dt = 0.01 # Time step (s)

e = 0.8 # Coefficient of restitution (bounciness)

# Initial conditions

t = 0 # Initial time

h = h_init # Initial height

v = v_init # Initial velocity

# Lists to store the position and time values

positions = []

times = []

# Function to update the position and velocity

def update_position_velocity(h, v, dt):
    h_new = h + v * dt
    v_new = v - g * dt
    return h_new, v_new

# Simulation loop

while t < 5:
    positions.append(h)
    times.append(t)
    h, v = update_position_velocity(h, v, dt)
    if h <= 0:
        h = 0
        v = -v * e
    t += dt

# Create the animation

fig, ax = plt.subplots()

ax.set_xlim(0, 5)

ax.set_ylim(0, max(positions) + 1)

line, = ax.plot([], [], 'o', markersize=10)

def init():
    line.set_data([], [])
    return line,

def animate(i):
    # set_data expects sequences, so the single point is wrapped in lists
    line.set_data([times[i]], [positions[i]])
    return line,

ani = animation.FuncAnimation(fig, animate, frames=len(times), init_func=init, blit=True, interval=dt*1000)

plt.show()

Explanation of terms and constructs used:

Position and Velocity: The height \(h\) and velocity \(v\) of the ball are updated iteratively. The position changes based on the current velocity, and the velocity changes based on acceleration due to gravity.

Bounce Dynamics: When the ball reaches the ground (height \(\leq 0\)), the velocity is reversed and scaled by the coefficient of restitution, which simulates the bounce effect.

Rate of Change: The rate of change of position (velocity) and the rate of change of velocity (acceleration) are key to simulating realistic motion.
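The update equations replace the true rates of change by finite steps, so the result depends on the time step. As a rough check, the sketch below compares the simulated peak height before the first bounce with the closed-form value \(v_0^2 / (2g)\). The function `simulated_peak` and the list of time steps are hypothetical choices made for this illustration.

```python
g = 9.81        # Acceleration due to gravity (m/s^2), as in the example above
v_init = 15.0   # Initial upward velocity (m/s), as in the example above

def simulated_peak(dt):
    """Highest point reached before the first bounce, using the same update rule."""
    h, v = 0.0, v_init
    peak = 0.0
    while v > 0:
        h = h + v * dt   # Position changes at the rate given by the velocity
        v = v - g * dt   # Velocity changes at the rate given by the acceleration
        peak = max(peak, h)
    return peak

exact_peak = v_init ** 2 / (2 * g)   # Closed-form peak height from kinematics

for dt in (0.1, 0.01, 0.001):
    print(f"dt = {dt}: simulated peak = {simulated_peak(dt):.4f} m, exact = {exact_peak:.4f} m")
```

The gap between the simulated and exact peak shrinks roughly in proportion to `dt`, which is one reason a small time step is used in the animation.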

3.5.3 Takeaway

The rate of change is a fundamental concept in calculus that finds extensive applications in computer science. By understanding and visualizing how functions change at specific points, computer science students can gain deeper insights into various computational processes and enhance their problem-solving skills.

3.6 Transition from Rate of Change to First Derivative

In our previous discussions, we explored the concept of rate of change in various contexts. We used this idea to understand how quantities such as shading intensity in graphics or position in animation change over time or space. This concept can be formally connected to the mathematical idea of derivatives.

3.6.1 From Rate of Change to Derivatives

The rate of change describes how one quantity changes relative to another. When we talk about the rate of change over an interval, we are referring to the average rate of change. However, to analyze how a function behaves at an exact point, we need to consider the instantaneous rate of change. This is where derivatives come into play.

3.6.2 Instantaneous Rate of Change

While the average rate of change over an interval can be insightful, the derivative provides a precise measure of how a function changes at a specific point. The derivative of a function at a point is essentially the limit of the average rate of change as the interval approaches zero. This captures the instantaneous rate of change.

3.6.3 Definition of the Derivative

The derivative of a function provides a new function that describes the rate of change of the original function at any given point. This new function, known as the derivative function, gives us important insights into the behavior of the original function, including its slope at any point and how that slope changes over different regions.

Concept: Given a function \(f(x)\), its derivative \(f'(x)\) is a function that gives the slope of the tangent to the graph of \(f(x)\) at any point \(x\). The derivative function \(f'(x)\) itself can be analyzed to understand how the rate of change of \(f(x)\) varies:

- Rate of Change: The derivative function \(f'(x)\) tells us how quickly \(f(x)\) is changing at each point \(x\).

- Slope of Tangent: At any point on the curve of \(f(x)\), the value of \(f'(x)\) represents the slope of the tangent line at that point.

Formally, the derivative of a function \(f(x)\) at a point \(x\) is defined as:

\[ f'(x) = \lim_{h \to 0} \frac{f(x + h) - f(x)}{h} \]

This definition states that the derivative \(f'(x)\) is the limit of the average rate of change of the function over the interval from \(x\) to \(x + h\) as the interval length \(h\) approaches zero. Taking this limit is what turns the average rate of change into the instantaneous rate of change at exactly \(x\).

Key Points to remember:

- Average Rate of Change: Refers to how a function changes over an interval.

- Instantaneous Rate of Change: Requires the interval to be infinitesimally small, capturing how the function changes precisely at a point.

- Derivative: The limit of the average rate of change as the interval approaches zero, providing a precise measure of the instantaneous rate of change.

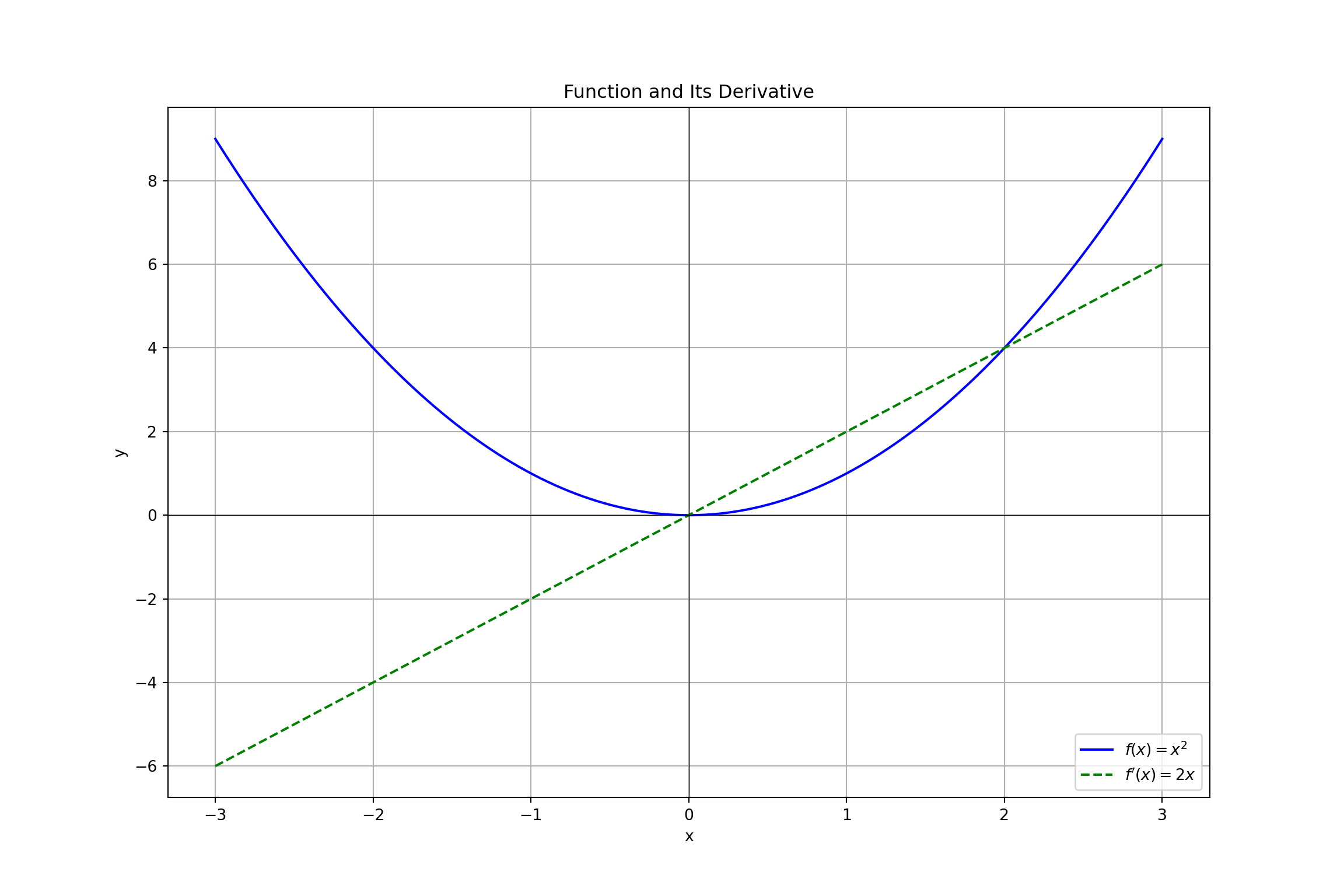

Example:

Consider a simple function \(f(x) = x^2\). The rate of change between two points \(x\) and \(x + h\) is:

\[ \frac{f(x + h) - f(x)}{h} = \frac{(x + h)^2 - x^2}{h} = \frac{2xh + h^2}{h} = 2x + h \]

As \(h\) approaches zero, the rate of change approaches \(2x\), which is the derivative of \(f(x)\):

\[ f'(x) = \lim_{h \to 0} \left(2x + h\right) = 2x \]

Here, \(2x\) represents the instantaneous rate of change of the function \(f(x) = x^2\) at any point \(x\).

This means the derivative function \(f'(x) = 2x\) tells us that the slope of the tangent line to the curve \(f(x) = x^2\) at any point \(x\) is \(2x\). For instance:

- At \(x = 1\), the slope of the tangent line is \(2 \times 1 = 2\).

- At \(x = -2\), the slope of the tangent line is \(2 \times (-2) = -4\).
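The slopes computed above determine the full tangent line \(y = f(a) + f'(a)(x - a)\). The short sketch below, in which the helper `tangent_line` is a hypothetical name introduced for illustration, computes the slope and intercept at the two points used in the text.

```python
def f(x):
    return x ** 2

def f_prime(x):
    return 2 * x

def tangent_line(a):
    """Return (slope, intercept) of the tangent to f at x = a."""
    slope = f_prime(a)
    intercept = f(a) - slope * a
    return slope, intercept

for a in (1, -2):
    m, c = tangent_line(a)
    print(f"at x = {a}: slope = {m}, intercept = {c}")
```

For example, at \(x = 1\) the tangent is \(y = 2x - 1\), and at \(x = -2\) it is \(y = -4x - 4\).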

Visualization

To visualize the derivative as a function, we can plot the original function along with its derivative:

import numpy as np

import matplotlib.pyplot as plt

# Define the function and its derivative

def f(x):
    return x**2

def f_prime(x):
    return 2*x

# Define the x values

x = np.linspace(-3, 3, 400)

y = f(x)

y_prime = f_prime(x)

# Create the figure and plot the function together with its derivative

plt.figure(figsize=(12, 8))
plt.plot(x, y, label='f(x) = x^2')
plt.plot(x, y_prime, label="f'(x) = 2x", linestyle='--')
plt.title('Function and Its Derivative')
plt.xlabel('x')
plt.ylabel('y')
plt.axhline(0, color='black', linewidth=0.5)
plt.axvline(0, color='black', linewidth=0.5)
plt.legend()
plt.show()

By transitioning from the average rate of change to the instantaneous rate of change, we use the concept of the derivative. The derivative provides a precise measure of how a function behaves at a particular point, capturing the essence of instantaneous change. This concept is fundamental in various applications, from optimization in machine learning to dynamic simulations in computer graphics.

3.6.4 Chain Rule in Differentiation

The Chain Rule is a fundamental technique in calculus used to differentiate composite functions. In practical terms, the Chain Rule helps us determine how a function changes when it is composed of other functions. This is particularly useful in computer science and engineering when dealing with complex functions or systems where one function is nested within another.

Concept:

The Chain Rule states that if you have a composite function \(g(f(x))\), where:

- \(f(x)\) is an inner function

- \(g(u)\) is an outer function, where \(u = f(x)\)

then the derivative of the composite function \(g(f(x))\) with respect to \(x\) is given by:

\[ \frac{d}{dx} [g(f(x))] = g'(f(x)) \cdot f'(x) \]

Note: In simpler terms, the derivative of the composite function is the derivative of the outer function evaluated at the inner function multiplied by the derivative of the inner function.

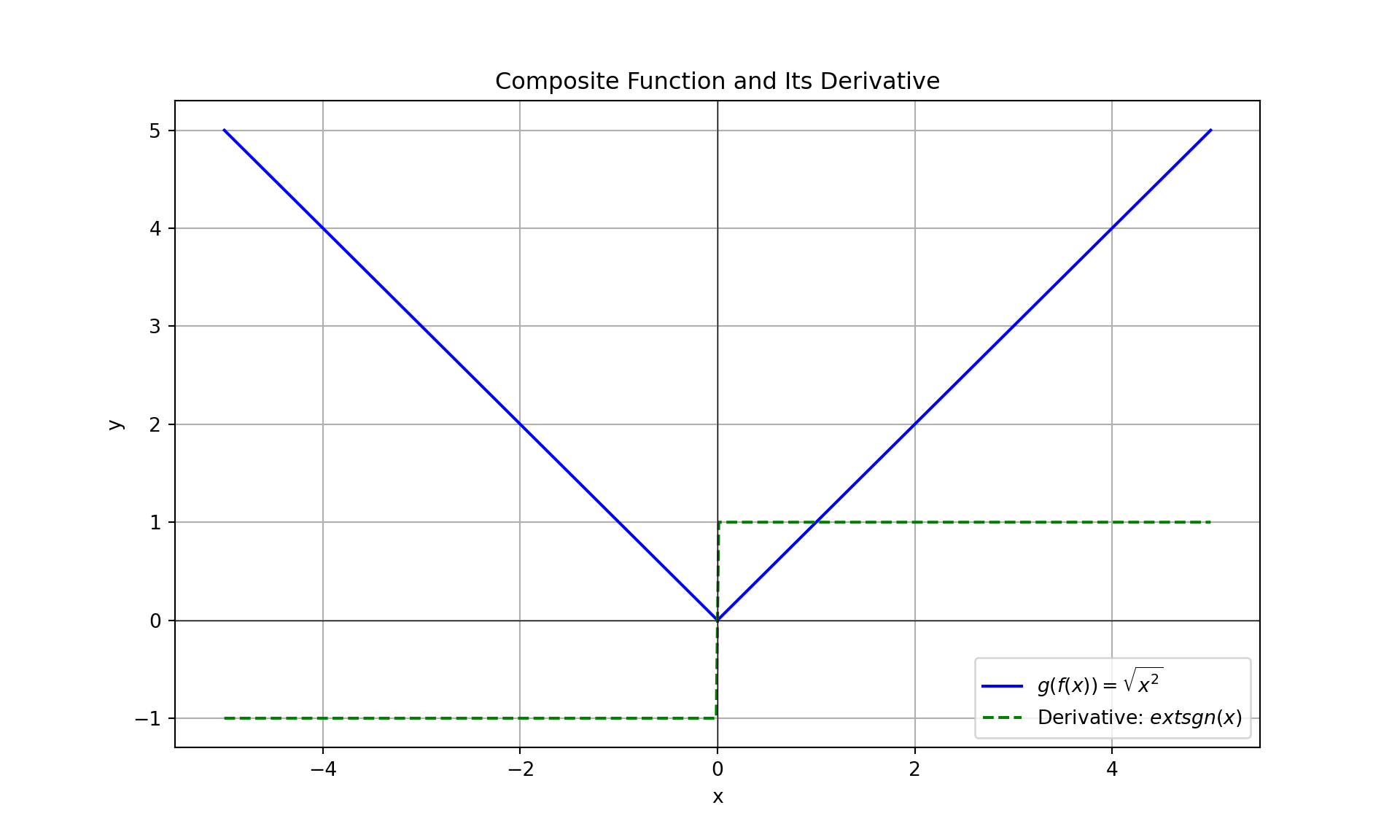

Practical Example:

Consider a scenario in computer graphics where you need to compute the rate of change of color intensity on a surface, which depends on a parameter such as light intensity. Let’s break it down into a practical example:

- Inner Function \(f(x)\): Represents the light intensity as a function of some parameter \(x\).

- Outer Function \(g(u)\): Represents the color intensity as a function of light intensity \(u\), where \(u = f(x)\).

Suppose:

- The inner function \(f(x) = x^2\) represents how light intensity changes with parameter \(x\).

- The outer function \(g(u) = \sqrt{u}\) represents how color intensity changes with light intensity \(u\).

To find the rate of change of color intensity with respect to \(x\), use the Chain Rule:

Differentiate the inner function \(f(x)\): \[ f'(x) = 2x \]

Differentiate the outer function \(g(u)\): \[ g'(u) = \frac{1}{2\sqrt{u}} \]

Apply the Chain Rule: \[ \frac{d}{dx} [g(f(x))] = g'(f(x)) \cdot f'(x) \]

Substituting \(u = f(x) = x^2\) into \(g'(u)\): \[ \frac{d}{dx} [\sqrt{x^2}] = \frac{1}{2\sqrt{x^2}} \cdot 2x = \frac{x}{\sqrt{x^2}} \]

Since \(\sqrt{x^2} = |x|\), this simplifies, for \(x \neq 0\), to: \[ \frac{d}{dx} [\sqrt{x^2}] = \frac{x}{|x|} = \text{sgn}(x) \]

where \(\text{sgn}(x)\) is the sign function, equal to \(1\) for \(x > 0\) and \(-1\) for \(x < 0\). At \(x = 0\) the derivative does not exist.

Practical Visualization

In programming or simulations, applying the Chain Rule often involves writing functions that call other functions. Here’s an example in Python:

import numpy as np

import matplotlib.pyplot as plt

# Define the inner function and its derivative

def f(x):
    return x**2

def f_prime(x):
    return 2*x

# Define the outer function and its derivative

def g(u):
    return np.sqrt(u)

def g_prime(u):
    return 1 / (2 * np.sqrt(u))

# Define the composite function and its derivative

def composite_function(x):
    return g(f(x))

def composite_derivative(x):
    return g_prime(f(x)) * f_prime(x)

# Define the x values

x = np.linspace(-5, 5, 400)

y = composite_function(x)

y_prime = composite_derivative(x)

# Create the plot

plt.figure(figsize=(10, 6))

# Plot the composite function
plt.plot(x, y, label=r"Composite function: $\sqrt{x^2}$", color='blue')

# Plot the derivative of the composite function
# (raw strings keep the backslashes in the labels from being read as escape sequences)
plt.plot(x, y_prime, label=r"Derivative: $\mathrm{sgn}(x)$", color='green', linestyle='--')

plt.title('Composite Function and Its Derivative')
plt.xlabel('x')
plt.ylabel('y')
plt.axhline(0, color='black', linewidth=0.5)
plt.axvline(0, color='black', linewidth=0.5)
plt.legend()
plt.show()

To find the derivatives of functions, we need the standard rules of differentiation. These rules are listed in the following table.

3.6.5 Basic Rules in Differentiation

| Rule | Formula | Description |
|---|---|---|
| Constant Rule | \(\frac{d}{dx}[c] = 0\) | The derivative of a constant \(c\) is zero. |
| Power Rule | \(\frac{d}{dx}[x^n] = nx^{n-1}\) | For \(n\) a real number, the derivative of \(x^n\) is \(nx^{n-1}\). |
| Constant Multiple Rule | \(\frac{d}{dx}[cf(x)] = c \cdot f'(x)\) | The derivative of \(cf(x)\) is \(c\) times the derivative of \(f(x)\). |
| Sum Rule | \(\frac{d}{dx}[f(x) + g(x)] = f'(x) + g'(x)\) | The derivative of a sum is the sum of the derivatives. |
| Difference Rule | \(\frac{d}{dx}[f(x) - g(x)] = f'(x) - g'(x)\) | The derivative of a difference is the difference of the derivatives. |
| Product Rule | \(\frac{d}{dx}[f(x) \cdot g(x)] = f'(x) \cdot g(x) + f(x) \cdot g'(x)\) | The derivative of a product is given by: \(f'(x) \cdot g(x) + f(x) \cdot g'(x)\). |
| Quotient Rule | \(\frac{d}{dx}\left[\frac{f(x)}{g(x)}\right] = \frac{f'(x) \cdot g(x) - f(x) \cdot g'(x)}{[g(x)]^2}\) | The derivative of a quotient is \(\frac{f'(x) \cdot g(x) - f(x) \cdot g'(x)}{[g(x)]^2}\). |
| Chain Rule | \(\frac{d}{dx}[f(g(x))] = f'(g(x)) \cdot g'(x)\) | The derivative of a composite function is the derivative of the outer function evaluated at the inner function, multiplied by the derivative of the inner function. |
| Exponential Rule | \(\frac{d}{dx}[e^x] = e^x\) | The derivative of \(e^x\) is \(e^x\). |
| Logarithmic Rule | \(\frac{d}{dx}[\ln(x)] = \frac{1}{x}\) | The derivative of \(\ln(x)\) is \(\frac{1}{x}\). |
| Trigonometric Functions | \(\frac{d}{dx}[\sin(x)] = \cos(x)\), \(\frac{d}{dx}[\cos(x)] = -\sin(x)\), \(\frac{d}{dx}[\tan(x)] = \sec^2(x)\) | Derivatives of common trigonometric functions: \(\sin(x)\), \(\cos(x)\), and \(\tan(x)\). |

3.6.5.1 Practice Problems for Basic Differentiation Rules

Constant Rule

Problems:

- Find the derivative of \(f(x) = 7\).

- Find the derivative of \(f(x) = -3\).

- Find the derivative of \(f(x) = \pi\).

- Find the derivative of \(f(x) = 0\).

- Find the derivative of \(f(x) = \sqrt{2}\).

Solutions:

- Solution: \(\frac{d}{dx}[7] = 0\)

- Solution: \(\frac{d}{dx}[-3] = 0\)

- Solution: \(\frac{d}{dx}[\pi] = 0\)

- Solution: \(\frac{d}{dx}[0] = 0\)

- Solution: \(\frac{d}{dx}[\sqrt{2}] = 0\)

Power Rule

Problems:

- Find the derivative of \(f(x) = x^5\).

- Find the derivative of \(f(x) = x^{-2}\).

- Find the derivative of \(f(x) = x^{1/2}\).

- Find the derivative of \(f(x) = 4x^3\).

- Find the derivative of \(f(x) = \frac{1}{x^4}\).

Solutions:

- Solution: \(\frac{d}{dx}[x^5] = 5x^4\)

- Solution: \(\frac{d}{dx}[x^{-2}] = -2x^{-3}\)

- Solution: \(\frac{d}{dx}[x^{1/2}] = \frac{1}{2}x^{-1/2}\)

- Solution: \(\frac{d}{dx}[4x^3] = 12x^2\)

- Solution: \(\frac{d}{dx}\left[\frac{1}{x^4}\right] = -4x^{-5}\)

Constant Multiple Rule

Problems:

- Find the derivative of \(f(x) = 3 \cdot x^4\).

- Find the derivative of \(f(x) = -7 \cdot x^2\).

- Find the derivative of \(f(x) = 5 \cdot x^{-1}\).

- Find the derivative of \(f(x) = \frac{2}{3} \cdot x^3\).

- Find the derivative of \(f(x) = 4 \cdot \sqrt{x}\).

Solutions:

- Solution: \(\frac{d}{dx}[3x^4] = 12x^3\)

- Solution: \(\frac{d}{dx}[-7x^2] = -14x\)

- Solution: \(\frac{d}{dx}[5x^{-1}] = -5x^{-2}\)

- Solution: \(\frac{d}{dx}\left[\frac{2}{3}x^3\right] = 2x^2\)

- Solution: \(\frac{d}{dx}[4\sqrt{x}] = 2x^{-1/2}\)

Sum Rule

Problems:

- Find the derivative of \(f(x) = x^3 + 2x^2\).

- Find the derivative of \(f(x) = 4x^2 - x + 5\).

- Find the derivative of \(f(x) = \sin(x) + \cos(x)\).

- Find the derivative of \(f(x) = x^{-1} + e^x\).

- Find the derivative of \(f(x) = 3x^4 + 2x^3 - x\).

Solutions:

- Solution: \(\frac{d}{dx}[x^3 + 2x^2] = 3x^2 + 4x\)

- Solution: \(\frac{d}{dx}[4x^2 - x + 5] = 8x - 1\)

- Solution: \(\frac{d}{dx}[\sin(x) + \cos(x)] = \cos(x) - \sin(x)\)

- Solution: \(\frac{d}{dx}\left[x^{-1} + e^x\right] = -x^{-2} + e^x\)

- Solution: \(\frac{d}{dx}[3x^4 + 2x^3 - x] = 12x^3 + 6x^2 - 1\)

Difference Rule

Problems:

- Find the derivative of \(f(x) = x^3 - 3x^2\).

- Find the derivative of \(f(x) = e^x - \ln(x)\).

- Find the derivative of \(f(x) = \tan(x) - \sin(x)\).

- Find the derivative of \(f(x) = \frac{1}{x^2} - x\).

- Find the derivative of \(f(x) = 4x^3 - 2x^2 + x - 5\).

Solutions:

- Solution: \(\frac{d}{dx}[x^3 - 3x^2] = 3x^2 - 6x\)

- Solution: \(\frac{d}{dx}[e^x - \ln(x)] = e^x - \frac{1}{x}\)

- Solution: \(\frac{d}{dx}[\tan(x) - \sin(x)] = \sec^2(x) - \cos(x)\)

- Solution: \(\frac{d}{dx}\left[\frac{1}{x^2} - x\right] = -\frac{2}{x^3} - 1\)

- Solution: \(\frac{d}{dx}[4x^3 - 2x^2 + x - 5] = 12x^2 - 4x + 1\)

Product Rule

Problems:

- Find the derivative of \(f(x) = x^2 \cdot \sin(x)\).

- Find the derivative of \(f(x) = e^x \cdot \cos(x)\).

- Find the derivative of \(f(x) = x \cdot \ln(x)\).

- Find the derivative of \(f(x) = x^3 \cdot e^x\).

- Find the derivative of \(f(x) = \sqrt{x} \cdot \tan(x)\).

Solutions:

- Solution: \(\frac{d}{dx}[x^2 \sin(x)] = 2x \sin(x) + x^2 \cos(x)\)

- Solution: \(\frac{d}{dx}[e^x \cos(x)] = e^x \cos(x) - e^x \sin(x)\)

- Solution: \(\frac{d}{dx}[x \ln(x)] = \ln(x) + 1\)

- Solution: \(\frac{d}{dx}[x^3 e^x] = x^3 e^x + 3x^2 e^x\)

- Solution: \(\frac{d}{dx}[\sqrt{x} \cdot \tan(x)] = \frac{1}{2\sqrt{x}} \cdot \tan(x) + \sqrt{x} \cdot \sec^2(x)\)

Quotient Rule

Problems:

- Find the derivative of \(f(x) = \frac{x^2}{\sin(x)}\).

- Find the derivative of \(f(x) = \frac{e^x}{x}\).

- Find the derivative of \(f(x) = \frac{\cos(x)}{x^2}\).

- Find the derivative of \(f(x) = \frac{x \cdot \ln(x)}{x^2 + 1}\).

- Find the derivative of \(f(x) = \frac{\sqrt{x}}{\tan(x)}\).

Solutions:

- Solution: \(\frac{d}{dx}\left[\frac{x^2}{\sin(x)}\right] = \frac{2x \sin(x) - x^2 \cos(x)}{\sin^2(x)}\)

- Solution: \(\frac{d}{dx}\left[\frac{e^x}{x}\right] = \frac{e^x (x - 1)}{x^2}\)

- Solution: \(\frac{d}{dx}\left[\frac{\cos(x)}{x^2}\right] = \frac{-\sin(x) \cdot x^2 - \cos(x) \cdot 2x}{x^4}\)

- Solution: \(\frac{d}{dx}\left[\frac{x \ln(x)}{x^2 + 1}\right] = \frac{(x \cdot \frac{1}{x} + \ln(x)) (x^2 + 1) - x \ln(x) \cdot 2x}{(x^2 + 1)^2}\)

- Solution: \(\frac{d}{dx}\left[\frac{\sqrt{x}}{\tan(x)}\right] = \frac{\frac{1}{2\sqrt{x}} \cdot \tan(x) - \sqrt{x} \cdot \sec^2(x)}{\tan^2(x)}\)
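The Product Rule and Quotient Rule can also be written as small functions that assemble a derivative from its parts. The sketch below is one possible way to do this; the helper names `product_rule` and `quotient_rule` and the test point `x0` are assumptions introduced for illustration. It checks the first product solution and the second quotient solution listed above.

```python
import math

def product_rule(f, df, g, dg):
    """Derivative of f*g built from the Product Rule."""
    return lambda x: df(x) * g(x) + f(x) * dg(x)

def quotient_rule(f, df, g, dg):
    """Derivative of f/g built from the Quotient Rule."""
    return lambda x: (df(x) * g(x) - f(x) * dg(x)) / g(x) ** 2

# Product example from above: x^2 * sin(x)
d_product = product_rule(lambda x: x ** 2, lambda x: 2 * x, math.sin, math.cos)

# Quotient example from above: e^x / x
d_quotient = quotient_rule(math.exp, math.exp, lambda x: x, lambda x: 1.0)

x0 = 1.5  # Arbitrary test point (assumption)
print(d_product(x0), 2 * x0 * math.sin(x0) + x0 ** 2 * math.cos(x0))
print(d_quotient(x0), math.exp(x0) * (x0 - 1) / x0 ** 2)
```

Each printed pair should agree up to rounding, since the second value in each line is the closed-form solution given above.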

Chain Rule

Problems:

- Find the derivative of \(f(x) = \sin(x^2)\).

- Find the derivative of \(f(x) = \ln(\cos(x))\).

- Find the derivative of \(f(x) = e^{\sin(x)}\).

- Find the derivative of \(f(x) = (3x + 1)^5\).

- Find the derivative of \(f(x) = \sqrt{\ln(x)}\).

Solutions:

- Solution: \(\frac{d}{dx}[\sin(x^2)] = \cos(x^2) \cdot 2x\)

- Solution: \(\frac{d}{dx}[\ln(\cos(x))] = \frac{-\sin(x)}{\cos(x)} = -\tan(x)\)

- Solution: \(\frac{d}{dx}[e^{\sin(x)}] = e^{\sin(x)} \cdot \cos(x)\)

- Solution: \(\frac{d}{dx}[(3x + 1)^5] = 5(3x + 1)^4 \cdot 3\)

- Solution: \(\frac{d}{dx}[\sqrt{\ln(x)}] = \frac{1}{2\sqrt{\ln(x)}} \cdot \frac{1}{x}\)
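The chain rule pattern used in each solution above, "derivative of the outer function evaluated at the inner function, times the derivative of the inner function", can be written as a small helper. This is a sketch for illustration only; the name `chain_rule` and its arguments are assumptions, not something defined in the text.

```python
import math

def chain_rule(outer_prime, inner, inner_prime):
    """Return the derivative of outer(inner(x)) as a function of x."""
    return lambda x: outer_prime(inner(x)) * inner_prime(x)

# d/dx sin(x^2): outer = sin (derivative cos), inner = x^2 (derivative 2x)
d_sin_x2 = chain_rule(math.cos, lambda x: x**2, lambda x: 2 * x)

# d/dx (3x + 1)^5: outer = u^5 (derivative 5u^4), inner = 3x + 1 (derivative 3)
d_poly = chain_rule(lambda u: 5 * u**4, lambda x: 3 * x + 1, lambda x: 3)

print(d_sin_x2(1.0))  # equals cos(1) * 2
print(d_poly(1.0))    # equals 5 * 4**4 * 3 = 3840
```

Writing the rule this way makes the two ingredients explicit: each problem reduces to identifying the outer and inner functions and differentiating them separately.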

Exponential Rule

Problems:

- Find the derivative of \(f(x) = e^{3x}\).

- Find the derivative of \(f(x) = 2e^x\).

- Find the derivative of \(f(x) = e^{-x}\).

- Find the derivative of \(f(x) = e^{x^2}\).

- Find the derivative of \(f(x) = e^{\sin(x)}\).

Solutions:

- Solution: \(\frac{d}{dx}[e^{3x}] = 3e^{3x}\)

- Solution: \(\frac{d}{dx}[2e^x] = 2e^x\)

- Solution: \(\frac{d}{dx}[e^{-x}] = -e^{-x}\)

- Solution: \(\frac{d}{dx}[e^{x^2}] = 2x e^{x^2}\)

- Solution: \(\frac{d}{dx}[e^{\sin(x)}] = e^{\sin(x)} \cdot \cos(x)\)

Logarithmic Rule

Problems:

- Find the derivative of \(f(x) = \ln(x^2 + 1)\).

- Find the derivative of \(f(x) = \ln(3x)\).

- Find the derivative of \(f(x) = \ln(\sin(x))\).

- Find the derivative of \(f(x) = \ln(x^3 + x)\).

- Find the derivative of \(f(x) = \ln(e^x + 1)\).

Solutions:

- Solution: \(\frac{d}{dx}[\ln(x^2 + 1)] = \frac{2x}{x^2 + 1}\)

- Solution: \(\frac{d}{dx}[\ln(3x)] = \frac{1}{x}\)

- Solution: \(\frac{d}{dx}[\ln(\sin(x))] = \cot(x)\)

- Solution: \(\frac{d}{dx}[\ln(x^3 + x)] = \frac{3x^2 + 1}{x^3 + x}\)

- Solution: \(\frac{d}{dx}[\ln(e^x + 1)] = \frac{e^x}{e^x + 1}\)
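The second answer may look surprising because the factor \(3\) disappears. A short worked check, added here for clarity, shows why. Using the chain rule:

\[ \frac{d}{dx}[\ln(3x)] = \frac{1}{3x} \cdot 3 = \frac{1}{x} \]

Equivalently, for \(x > 0\) the logarithm law \(\ln(3x) = \ln(3) + \ln(x)\) shows that \(\ln(3x)\) differs from \(\ln(x)\) only by a constant, so both have the same derivative:

\[ \frac{d}{dx}[\ln(3) + \ln(x)] = 0 + \frac{1}{x} = \frac{1}{x} \]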

3.6.6 Implicit Differentiation

Implicit Differentiation is a technique used to find the derivative of a function when it is not explicitly defined in terms of one variable. Instead, the function is given in an implicit form, where the variables are mixed together in an equation. This method is crucial when dealing with equations where solving for one variable in terms of another is difficult or impossible.

Concept: When a function \(y\) is defined implicitly by an equation involving both \(x\) and \(y\), such as:

\[ F(x, y) = 0 \]

where \(F\) is a function of both \(x\) and \(y\), implicit differentiation allows us to find \(\frac{dy}{dx}\) without explicitly solving for \(y\) as a function of \(x\).

To perform implicit differentiation, follow these steps:

- Differentiate both sides of the equation with respect to \(x\), treating \(y\) as an implicit function of \(x\).

- Apply the chain rule to every term involving \(y\): because \(y\) depends on \(x\), each such term contributes a factor of \(\frac{dy}{dx}\).

- Solve for \(\frac{dy}{dx}\) to find the derivative.

Necessity in Application: Implicit differentiation is particularly useful in several scenarios:

- Complex Relationships: When dealing with curves defined by equations that are difficult to solve for \(y\) explicitly.

- Conic Sections: In engineering and physics problems where curves such as circles and ellipses are given in implicit form.

- Machine Learning: When optimizing loss functions where constraints are given implicitly.

- Computer Graphics: When modeling curves and surfaces where explicit formulas are hard to derive.

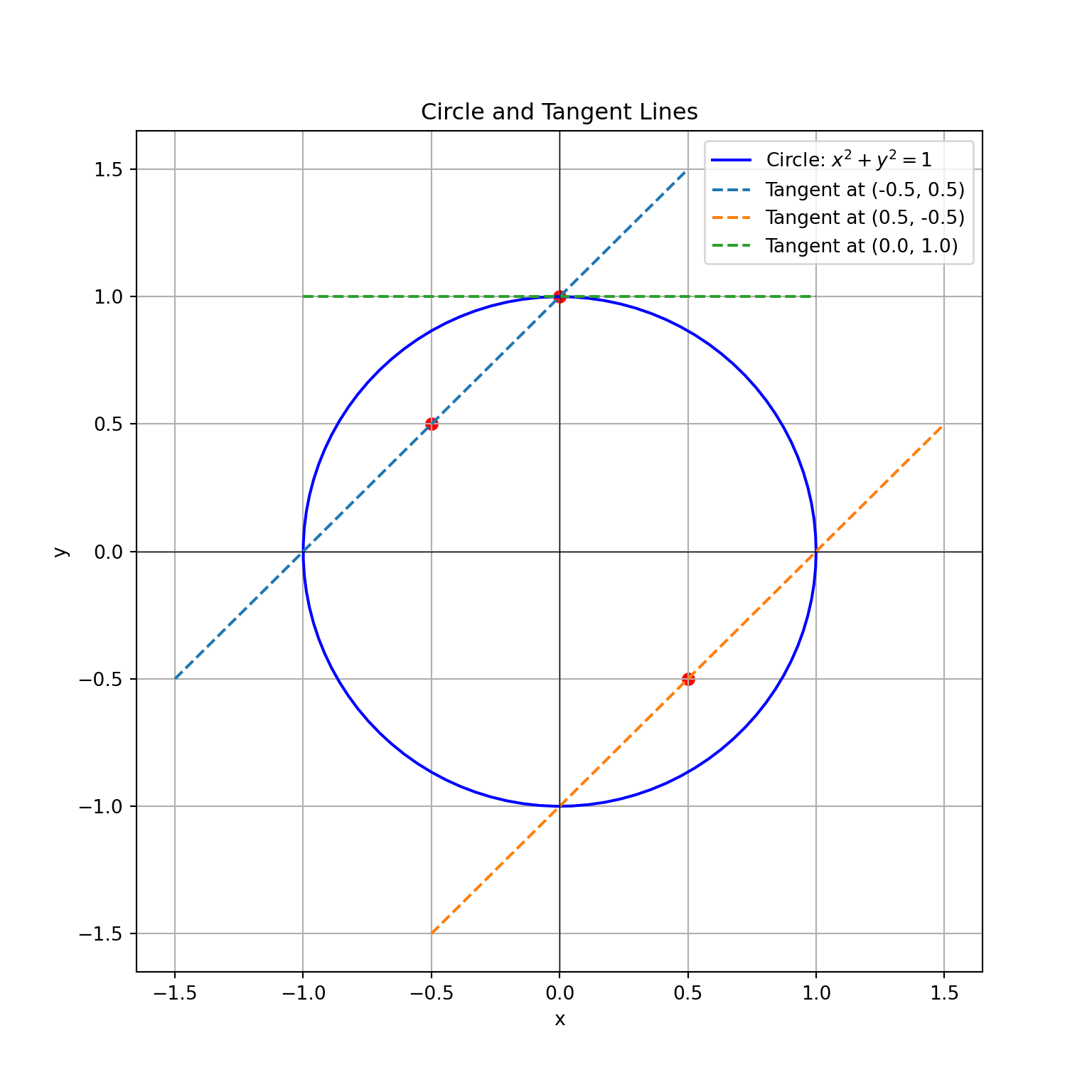

Practical Example:

Consider the circle defined by the equation:

\[ x^2 + y^2 = 1 \]

We want to find the slope of the tangent line to the circle at any point \((x, y)\).

Differentiate both sides of the equation with respect to \(x\): \[ \frac{d}{dx}(x^2 + y^2) = \frac{d}{dx}(1) \]

Apply the chain rule: \[ 2x + 2y \frac{dy}{dx} = 0 \]

Solve for \(\frac{dy}{dx}\) (valid wherever \(y \neq 0\)): \[ \frac{dy}{dx} = -\frac{x}{y} \]

This result shows how the slope of the tangent to the circle changes depending on the coordinates \((x, y)\).
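As a quick check of this result, the implicit slope \(-\frac{x}{y}\) can be compared with a numerical derivative of the explicit upper half of the circle, \(y = \sqrt{1 - x^2}\). The sketch below is illustrative only; the function names, the test point \(x_0 = 0.6\), and the step size `h` are assumptions chosen for the example.

```python
import math

def slope_implicit(x, y):
    """Slope of the tangent to the unit circle at (x, y), from dy/dx = -x / y."""
    return -x / y

def upper_branch(x):
    """Explicit upper half of the circle: y = sqrt(1 - x^2)."""
    return math.sqrt(1 - x**2)

x0 = 0.6
y0 = upper_branch(x0)  # about 0.8, so (0.6, 0.8) lies on the circle
h = 1e-6               # step size for the central difference

slope_numeric = (upper_branch(x0 + h) - upper_branch(x0 - h)) / (2 * h)

print(slope_implicit(x0, y0))  # close to -0.75
print(slope_numeric)           # should agree to several decimal places
```

The agreement illustrates that implicit differentiation gives the same slope as differentiating an explicit branch, without having to solve for \(y\) first.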

Python Visualization

Here’s a Python example to visualize the tangent lines to a circle:

import numpy as np

import matplotlib.pyplot as plt

# Define the circle

theta = np.linspace(0, 2 * np.pi, 100)

x_circle = np.cos(theta)

y_circle = np.sin(theta)

# Define points of interest (each must lie on the circle: x^2 + y^2 = 1)

r = np.sqrt(0.5)

points = np.array([[-r, r], [r, -r], [0, 1]])

# Create the plot

plt.figure(figsize=(8, 8))

plt.plot(x_circle, y_circle, label='Circle')

# Plot tangent lines

for point in points:

    x, y = point

    slope = -x / y  # dy/dx = -x/y from implicit differentiation (requires y != 0)

    x_tangent = np.linspace(x - 1, x + 1, 10)

    y_tangent = slope * (x_tangent - x) + y

    plt.plot(x_tangent, y_tangent, '--', label=f'Tangent at ({x:.2f}, {y:.2f})')

# Mark the points of tangency and label the plot

plt.scatter(points[:, 0], points[:, 1], color='red')

plt.title('Circle and Tangent Lines')

plt.xlabel('x')

plt.ylabel('y')

plt.axhline(0, color='black', linewidth=0.5)

plt.axvline(0, color='black', linewidth=0.5)

plt.axis('equal')  # equal scales so the circle is not drawn as an ellipse

plt.legend()

plt.show()

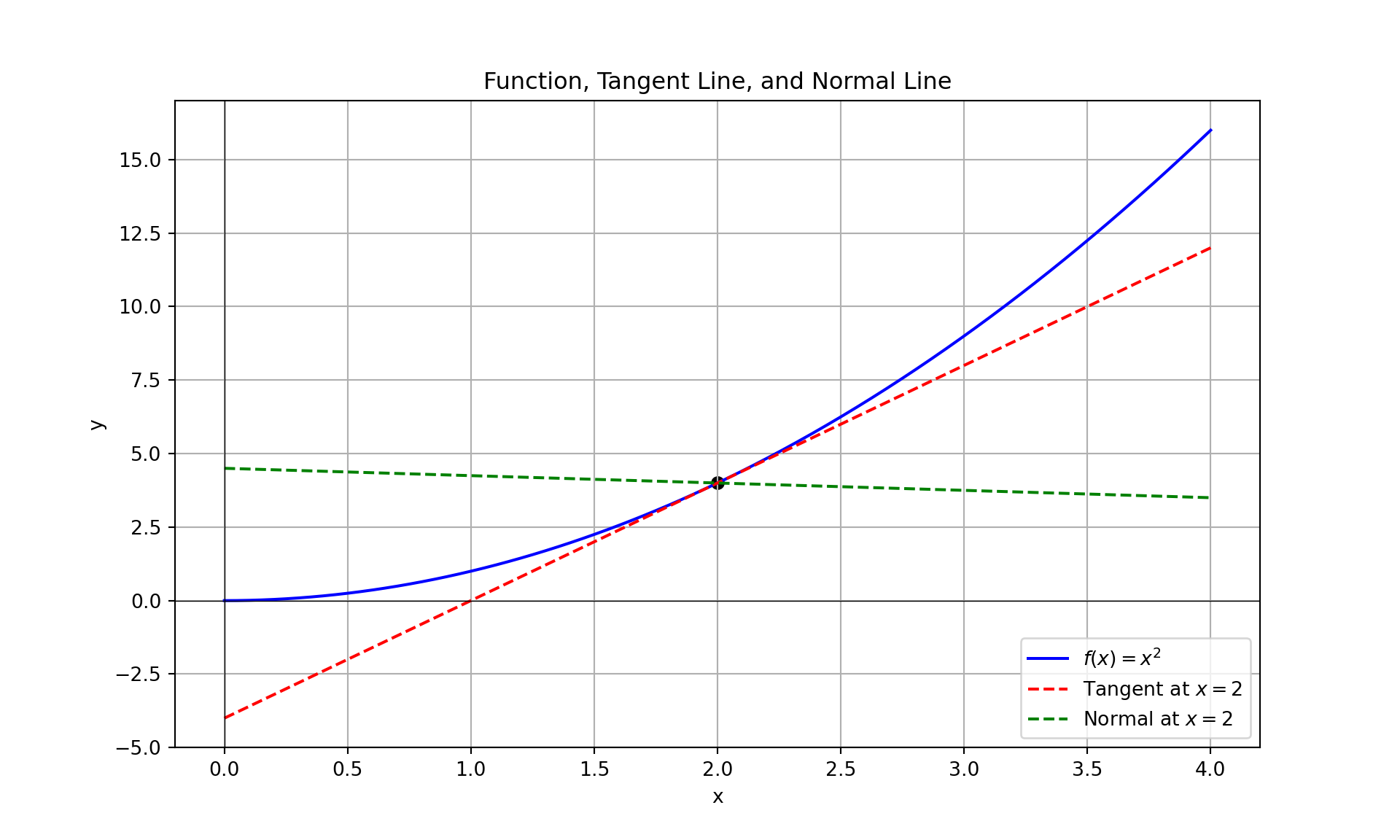

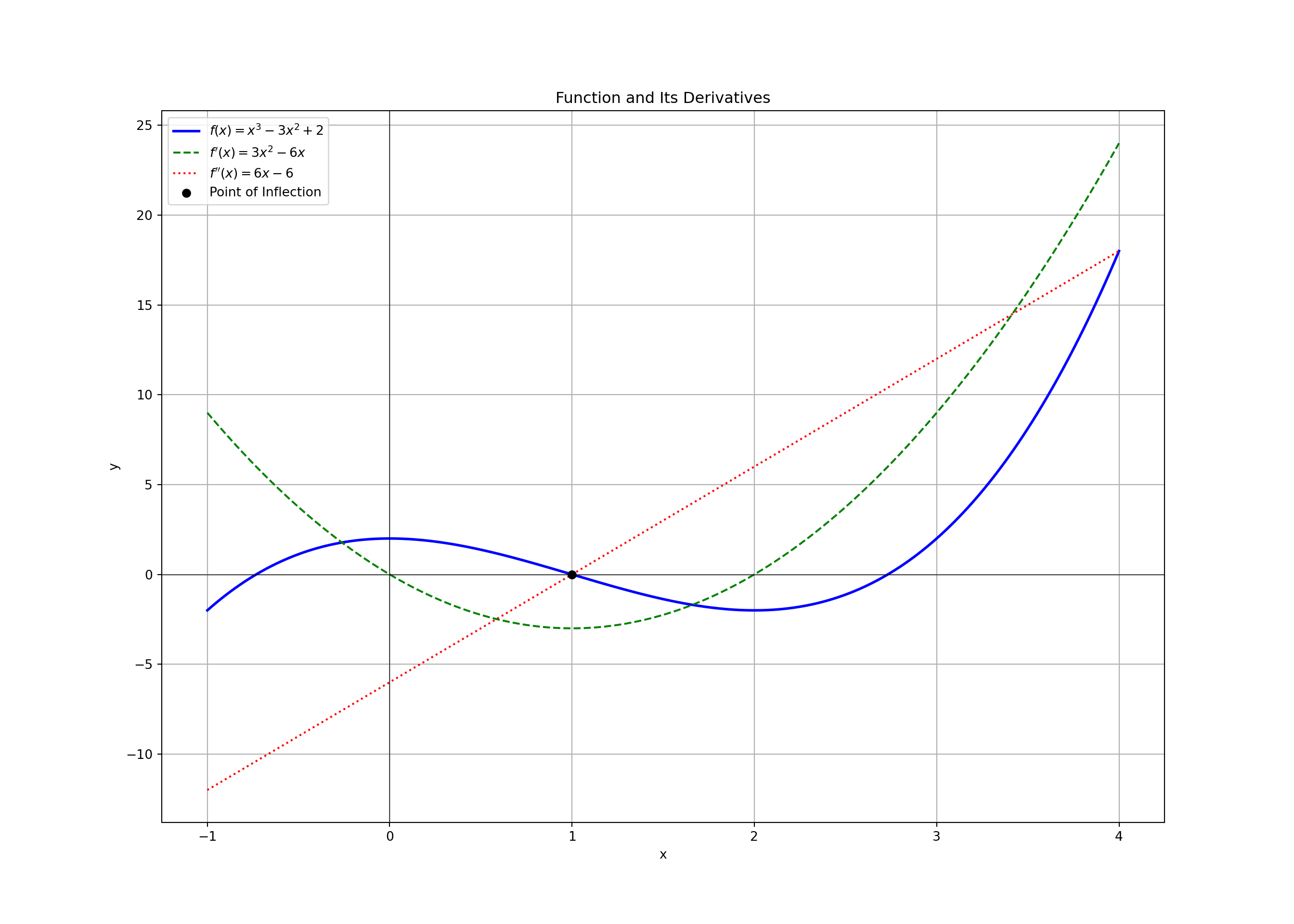

3.6.7 Tangents and Normal Lines

In our previous discussions, we explored the concept of a tangent at a point on a curve. To recap, the slope of the tangent line to a function \(f(x)\) at a specific point \(x = x_0\) represents the instantaneous rate of change of the function at that point.

Tangent Line: The tangent line to \(f(x)\) at \(x = x_0\) is the line that just touches the curve at that point, providing the best linear approximation of the function at \(x_0\). The formula for the tangent line is: \[ y - f(x_0) = f'(x_0) (x - x_0) \] where \(f'(x_0)\) is the derivative of \(f(x)\) at \(x_0\), and \((x_0, f(x_0))\) is the point of tangency.

Normal Line: The normal line to \(f(x)\) at \(x = x_0\) is the line through the point of tangency that is perpendicular to the tangent line. When \(f'(x_0) \neq 0\), the formula for the normal line is: \[ y - f(x_0) = -\frac{1}{f'(x_0)} (x - x_0) \] where \(-\frac{1}{f'(x_0)}\), the negative reciprocal of the tangent slope, is the slope of the normal line. If \(f'(x_0) = 0\), the tangent is horizontal and the normal is the vertical line \(x = x_0\).

Example: Consider the Function \(f(x) = x^2\) at \(x = 2\)

Let’s use the function \(f(x) = x^2\) to find both the tangent and normal lines at \(x = 2\):

Find the derivative \(f'(x)\): \[ f'(x) = 2x \]

Evaluate the derivative at \(x = 2\): \[ f'(2) = 2 \cdot 2 = 4 \] The slope of the tangent line at \(x = 2\) is \(4\).

Write the equation of the tangent line: Using the point-slope form: \[ y - 4 = 4 (x - 2) \] where \(f(2) = 4\). Simplifying: \[ y = 4x - 4 \]

Find the slope of the normal line: The slope of the normal line is the negative reciprocal of the tangent line’s slope: \[ \text{slope of normal line} = -\frac{1}{4} \]

Write the equation of the normal line: Using the point-slope form: \[ y - 4 = -\frac{1}{4} (x - 2) \] Simplify to: \[ y = -\frac{1}{4}x + \frac{9}{2} \]
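The steps of this example can be collected into a small helper that returns each line in slope-intercept form. This is a minimal sketch under stated assumptions: the function name `tangent_and_normal` and its return format are illustrative, and the derivative is supplied by hand rather than computed.

```python
def tangent_and_normal(f, f_prime, x0):
    """Return (slope, intercept) pairs for the tangent and normal lines at x0.

    Assumes f_prime(x0) != 0; otherwise the normal line is vertical (x = x0).
    """
    y0 = f(x0)
    m_tangent = f_prime(x0)
    if m_tangent == 0:
        raise ValueError("horizontal tangent: the normal line is vertical")
    m_normal = -1 / m_tangent
    # Rewrite y - y0 = m (x - x0) as y = m x + (y0 - m x0)
    tangent = (m_tangent, y0 - m_tangent * x0)
    normal = (m_normal, y0 - m_normal * x0)
    return tangent, normal

tangent, normal = tangent_and_normal(lambda x: x**2, lambda x: 2 * x, 2)
print(tangent)  # (4, -4)      -> y = 4x - 4
print(normal)   # (-0.25, 4.5) -> y = -x/4 + 9/2
```

The printed pairs match the equations derived by hand above. The explicit check for a zero slope reflects the one case in which the negative-reciprocal formula does not apply.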

Python Visualization

To visualize both the tangent and normal lines for the function \(f(x) = x^2\) at \(x = 2\), use the following Python code:

import numpy as np

import matplotlib.pyplot as plt

# Define the function and its derivative

def f(x):

    return x**2

def f_prime(x):

    return 2*x

# Define the point of interest

x0 = 2

y0 = f(x0)

slope_tangent = f_prime(x0)

slope_normal = -1 / slope_tangent

# Define x values for plotting

x = np.linspace(0, 4, 400)

y = f(x)

# Calculate tangent and normal lines

x_tangent = np.linspace(0, 4, 10)

y_tangent = slope_tangent * (x_tangent - x0) + y0

x_normal = np.linspace(0, 4, 10)

y_normal = slope_normal * (x_normal - x0) + y0

# Create the plot

plt.figure(figsize=(10, 6))

plt.plot(x, y, label='f(x) = x^2')

plt.plot(x_tangent, y_tangent, '--', label='Tangent line')

plt.plot(x_normal, y_normal, ':', label='Normal line')

plt.scatter([x0], [y0], color='red')

plt.title('Function, Tangent Line, and Normal Line')

plt.xlabel('x')

plt.ylabel('y')

plt.axhline(0, color='black', linewidth=0.5)

plt.axvline(0, color='black', linewidth=0.5)

plt.axis('equal')  # equal scales so the normal line looks perpendicular to the tangent

plt.legend()

plt.show()

3.6.7.1 Problems on Tangent Line and Normal Line

Problem 1:

Find the equation of the tangent line to the curve \(y = x^2\) at the point \((1, 1)\).

Solution:

1. Find the derivative \(y'\) of the function \(y = x^2\): \[ y' = 2x \]

2. Evaluate the derivative at \(x = 1\): \[ y'(1) = 2(1) = 2 \]

3. Use the point-slope form of the equation of the tangent line: \[ y - y_1 = m(x - x_1) \] \[ y - 1 = 2(x - 1) \] \[ y = 2x - 1 \]

Problem 2:

Find the equation of the normal line to the curve \(y = \sqrt{x}\) at the point \((4, 2)\).

Solution:

1. Find the derivative \(y'\) of the function \(y = \sqrt{x}\): \[ y' = \frac{1}{2\sqrt{x}} \]

2. Evaluate the derivative at \(x = 4\): \[ y'(4) = \frac{1}{2\sqrt{4}} = \frac{1}{4} \]

3. The slope of the normal line is the negative reciprocal of the slope of the tangent line: \[ m_{\text{normal}} = -\frac{1}{y'(4)} = -4 \]

4. Use the point-slope form of the equation of the normal line: \[ y - y_1 = m_{\text{normal}}(x - x_1) \] \[ y - 2 = -4(x - 4) \] \[ y = -4x + 18 \]

Problem 3:

Find the equation of the tangent line to the curve \(y = e^x\) at the point where \(x = 0\).

Solution:

1. Find the derivative \(y'\) of the function \(y = e^x\): \[ y' = e^x \]

2. Evaluate the derivative at \(x = 0\): \[ y'(0) = e^0 = 1 \]

3. The point of tangency is \((0, 1)\), since \(e^0 = 1\). Use the point-slope form of the equation of the tangent line: \[ y - y_1 = m(x - x_1) \] \[ y - 1 = 1(x - 0) \] \[ y = x + 1 \]

Problem 4:

Find the equation of the normal line to the curve \(y = \ln(x)\) at the point where \(x = 1\).

Solution:

1. Find the derivative \(y'\) of the function \(y = \ln(x)\): \[ y' = \frac{1}{x} \]

2. Evaluate the derivative at \(x = 1\): \[ y'(1) = 1 \]

3. The slope of the normal line is the negative reciprocal of the slope of the tangent line: \[ m_{\text{normal}} = -\frac{1}{y'(1)} = -1 \]

4. The point on the curve is \((1, 0)\), since \(\ln(1) = 0\). Use the point-slope form of the equation of the normal line: \[ y - y_1 = m_{\text{normal}}(x - x_1) \] \[ y - 0 = -1(x - 1) \] \[ y = -x + 1 \]

Problem 5:

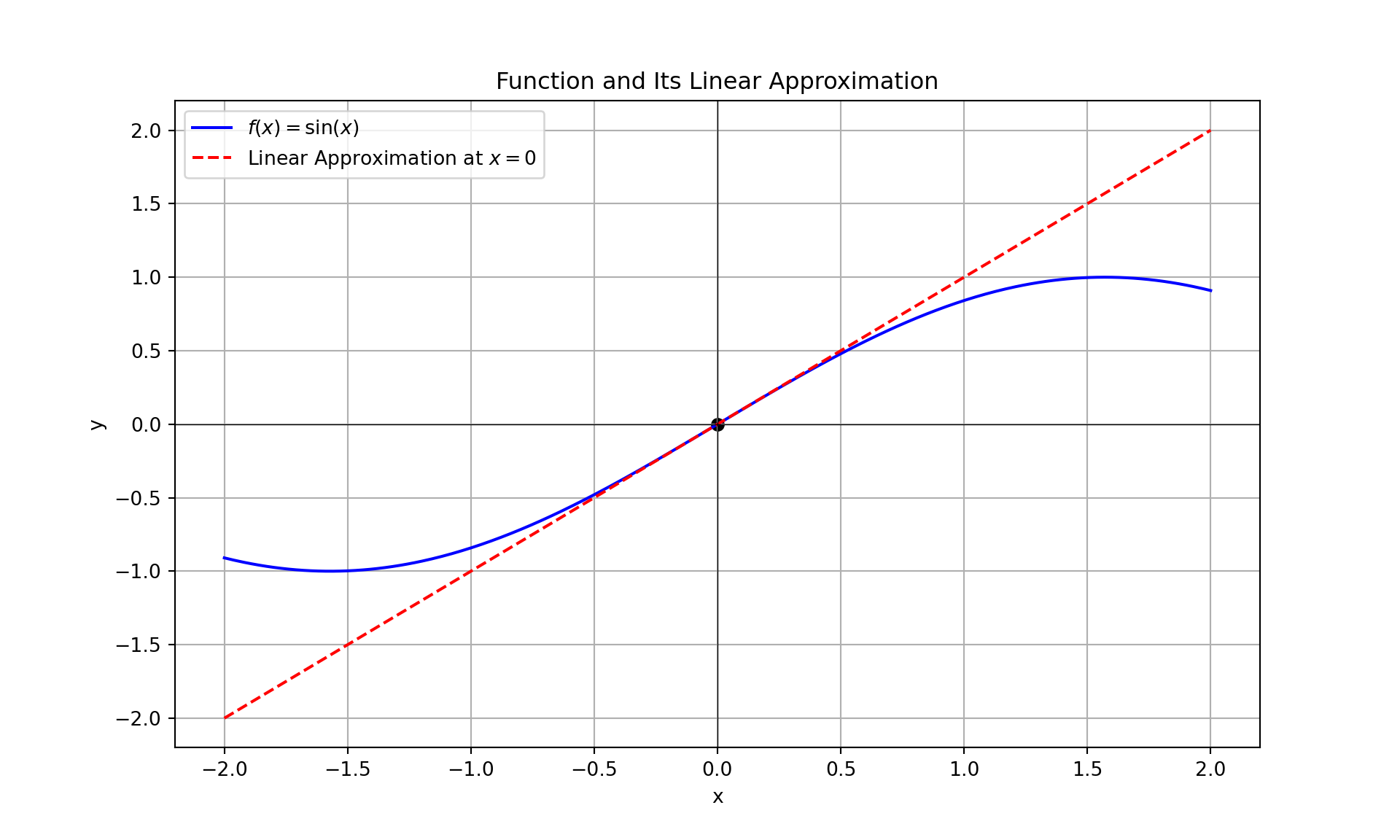

Find the equation of the tangent line to the curve \(y = \sin(x)\) at the point where \(x = \frac{\pi}{2}\).

Solution:

1. Find the derivative \(y'\) of the function \(y = \sin(x)\): \[ y' = \cos(x) \]

2. Evaluate the derivative at \(x = \frac{\pi}{2}\): \[ y'\left(\frac{\pi}{2}\right) = \cos\left(\frac{\pi}{2}\right) = 0 \]

3. Use the point-slope form of the equation of the tangent line:

\[