Chapter 1 Cluster Analysis

These notes are primarily taken from studying DataCamp courses Cluster Analysis in R and Unsupervised Learning in R, AIHR, UC Business Analytics R Programming Guide, and PSU STAT-505.

Cluster analysis is a data exploration (mining) in which an algorithm divides features into distinct populations (clusters) with no a priori defining characteristics. The researcher then describes the clusters by summarizing the observed data within the clusters. Popular uses of clustering include audience segmentation, persona creation, anomaly detection, and image pattern recognition.

There are two common approaches to cluster analysis.

Agglomerative hierarchical algorithms (e.g., HCA) start by defining each data point as a cluster, then repeatedly combine the two closest clusters into a new cluster until all data points are merged into a single cluster.

Non-hierarchical methods (e.g., kNN, K-means) randomly partition the data into a set of K clusters, then iteratively move data points among clusters until no sensible reassignments remain.

1.1 Case Study

Let’s learn by example, using the IBM HR Analytics Employee Attrition & Performance data set from Kaggle to discover which factors are associated with employee turnover and whether distinct clusters of employees are more susceptible to turnover.1 Data set eap includes 1,470 employee records consisting of the EmployeeNumber, a flag for Attrition (Yes|No) during an (unspecified) time frame, and 32 other descriptive variables.

library(tidyverse)

library(plotly) # interactive graphing

library(cluster) # daisy and pam

library(Rtsne) # dimensionality reduction and visualization

library(dendextend) # color_branches

# set.seed(1234) # reproducibility

eap_0 <- read_csv("./input/WA_Fn-UseC_-HR-Employee-Attrition.csv")

eap_1 <- eap_0 %>%

mutate_if(is.character, as_factor) %>%

mutate(

EnvironmentSatisfaction = factor(EnvironmentSatisfaction, ordered = TRUE),

StockOptionLevel = factor(StockOptionLevel, ordered = TRUE),

JobLevel = factor(JobLevel, ordered = TRUE),

JobInvolvement = factor(JobInvolvement, ordered = TRUE)

) %>%

select(EmployeeNumber, Attrition, everything())

my_skim <- skimr::skim_with(numeric = skimr::sfl(p25 = NULL, p50 = NULL, p75 = NULL, hist = NULL))

my_skim(eap_1)| Name | eap_1 |

| Number of rows | 1470 |

| Number of columns | 35 |

| _______________________ | |

| Column type frequency: | |

| factor | 13 |

| numeric | 22 |

| ________________________ | |

| Group variables | None |

Variable type: factor

| skim_variable | n_missing | complete_rate | ordered | n_unique | top_counts |

|---|---|---|---|---|---|

| Attrition | 0 | 1 | FALSE | 2 | No: 1233, Yes: 237 |

| BusinessTravel | 0 | 1 | FALSE | 3 | Tra: 1043, Tra: 277, Non: 150 |

| Department | 0 | 1 | FALSE | 3 | Res: 961, Sal: 446, Hum: 63 |

| EducationField | 0 | 1 | FALSE | 6 | Lif: 606, Med: 464, Mar: 159, Tec: 132 |

| EnvironmentSatisfaction | 0 | 1 | TRUE | 4 | 3: 453, 4: 446, 2: 287, 1: 284 |

| Gender | 0 | 1 | FALSE | 2 | Mal: 882, Fem: 588 |

| JobInvolvement | 0 | 1 | TRUE | 4 | 3: 868, 2: 375, 4: 144, 1: 83 |

| JobLevel | 0 | 1 | TRUE | 5 | 1: 543, 2: 534, 3: 218, 4: 106 |

| JobRole | 0 | 1 | FALSE | 9 | Sal: 326, Res: 292, Lab: 259, Man: 145 |

| MaritalStatus | 0 | 1 | FALSE | 3 | Mar: 673, Sin: 470, Div: 327 |

| Over18 | 0 | 1 | FALSE | 1 | Y: 1470 |

| OverTime | 0 | 1 | FALSE | 2 | No: 1054, Yes: 416 |

| StockOptionLevel | 0 | 1 | TRUE | 4 | 0: 631, 1: 596, 2: 158, 3: 85 |

Variable type: numeric

| skim_variable | n_missing | complete_rate | mean | sd | p0 | p100 |

|---|---|---|---|---|---|---|

| EmployeeNumber | 0 | 1 | 1024.87 | 602.02 | 1 | 2068 |

| Age | 0 | 1 | 36.92 | 9.14 | 18 | 60 |

| DailyRate | 0 | 1 | 802.49 | 403.51 | 102 | 1499 |

| DistanceFromHome | 0 | 1 | 9.19 | 8.11 | 1 | 29 |

| Education | 0 | 1 | 2.91 | 1.02 | 1 | 5 |

| EmployeeCount | 0 | 1 | 1.00 | 0.00 | 1 | 1 |

| HourlyRate | 0 | 1 | 65.89 | 20.33 | 30 | 100 |

| JobSatisfaction | 0 | 1 | 2.73 | 1.10 | 1 | 4 |

| MonthlyIncome | 0 | 1 | 6502.93 | 4707.96 | 1009 | 19999 |

| MonthlyRate | 0 | 1 | 14313.10 | 7117.79 | 2094 | 26999 |

| NumCompaniesWorked | 0 | 1 | 2.69 | 2.50 | 0 | 9 |

| PercentSalaryHike | 0 | 1 | 15.21 | 3.66 | 11 | 25 |

| PerformanceRating | 0 | 1 | 3.15 | 0.36 | 3 | 4 |

| RelationshipSatisfaction | 0 | 1 | 2.71 | 1.08 | 1 | 4 |

| StandardHours | 0 | 1 | 80.00 | 0.00 | 80 | 80 |

| TotalWorkingYears | 0 | 1 | 11.28 | 7.78 | 0 | 40 |

| TrainingTimesLastYear | 0 | 1 | 2.80 | 1.29 | 0 | 6 |

| WorkLifeBalance | 0 | 1 | 2.76 | 0.71 | 1 | 4 |

| YearsAtCompany | 0 | 1 | 7.01 | 6.13 | 0 | 40 |

| YearsInCurrentRole | 0 | 1 | 4.23 | 3.62 | 0 | 18 |

| YearsSinceLastPromotion | 0 | 1 | 2.19 | 3.22 | 0 | 15 |

| YearsWithCurrManager | 0 | 1 | 4.12 | 3.57 | 0 | 17 |

Data Exploration

You would normally start a cluster analysis with an exploration of the data to determine which variables are interesting and relevant to your goal. I’ll bypass that rigor and just use a binary correlation analysis using the correlationfunnel package. binarize() converts features into binary format by binning the continuous features and one-hot encoding the binary features. correlate() calculates the correlation coefficient between each binary feature and the response variable. plot_correlation_funnel() creates a tornado plot that lists the highest correlation features (based on absolute magnitude) at the top.

cf <- eap_1 %>%

select(-EmployeeNumber) %>%

correlationfunnel::binarize(n_bins = 5, thresh_infreq = 0.01) %>%

correlationfunnel::correlate(Attrition__Yes)

cf %>%

correlationfunnel::plot_correlation_funnel(interactive = FALSE) %>%

ggplotly()OverTime (Y|N) has the largest correlation (0.25). I’ll include just the variables with a correlation coefficient of at least 0.10.

feature_cols <- cf %>%

filter(abs(correlation) >= .1 & feature != "Attrition") %>%

pull(feature) %>%

as.character() %>%

unique()

eap_2 <- eap_1 %>%

select(one_of(c("EmployeeNumber", "Attrition", feature_cols)))Using the cutoff of 0.10 leaves 14 features for the analysis.

eap_2 %>%

select(-EmployeeNumber) %>%

correlationfunnel::binarize(n_bins = 5, thresh_infreq = 0.01) %>%

correlationfunnel::correlate(Attrition__Yes) %>%

correlationfunnel::plot_correlation_funnel(interactive = FALSE) %>%

ggplotly()Data Preparation

Measuring distance is central to clustering. Two observations are similar if the distance between their features is small. There are many ways to define distance (see options in ?dist). Two common measures are Euclidean distance (\(d = \sqrt{\sum{(x_i - y_i)^2}}\)), and Jaccard distance (proportion of unshared features). If you have a mix of feature data types, use the Gower distance. Gower range-normalizes the quantitative variables, one-hot encodes the nominal variables, and ranks the ordinal variables, then calculates the Manhattan distance for quantitative and ordinal variables and the Dice coefficient for nominal variables. calculate Gower’s distance with cluster::daisy().

eap_2_gwr <- cluster::daisy(eap_2[, 2:16], metric = "gower")Let’s see the most similar and dissimilar pairs of employees according to their Gower distance. The most similar pair are identical except for MonthlyIncome. The most dissimilar pair have nothing in common.

x <- as.matrix(eap_2_gwr)

bind_rows(

eap_2[which(x == min(x[x != 0]), arr.ind = TRUE)[1, ], ],

eap_2[which(x == max(x[x != 0]), arr.ind = TRUE)[1, ], ]

) %>%

as.data.frame() %>%

mutate(across(everything(), as.character)) %>%

pivot_longer(cols = -EmployeeNumber) %>%

pivot_wider(names_from = EmployeeNumber) %>%

flextable::flextable() %>%

flextable::add_header_row(values = c("", "Similar", "Dissimilar"), colwidths = c(1, 2, 2)) %>%

flextable::border(j = c(1, 3), border.right = officer::fp_border("gray80"), part = "all")Similar | Dissimilar | |||

name | 1624 | 614 | 1094 | 825 |

Attrition | Yes | Yes | No | Yes |

OverTime | Yes | Yes | No | Yes |

JobLevel | 1 | 1 | 1 | 5 |

MonthlyIncome | 1569 | 1878 | 4621 | 19246 |

YearsAtCompany | 0 | 0 | 3 | 31 |

StockOptionLevel | 0 | 0 | 3 | 0 |

YearsWithCurrManager | 0 | 0 | 2 | 8 |

TotalWorkingYears | 0 | 0 | 3 | 40 |

MaritalStatus | Single | Single | Married | Single |

Age | 18 | 18 | 27 | 58 |

YearsInCurrentRole | 0 | 0 | 2 | 15 |

JobRole | Sales Representative | Sales Representative | Laboratory Technician | Research Director |

EnvironmentSatisfaction | 2 | 2 | 1 | 4 |

JobInvolvement | 3 | 3 | 1 | 3 |

BusinessTravel | Travel_Frequently | Travel_Frequently | Non-Travel | Travel_Rarely |

With the data preparation complete, we are ready to fit a clustering model.

1.2 K-Means (Medoids)

The K-means algorithm randomly assigns all observations to one of K clusters. K-means iteratively calculates the cluster centroids and reassigns observations to their nearest centroid. Centroids are set of mean values for each feature (hence the name “K-means”). The iterations continue until either the centroids stabilize or the iterations reach a set maximum (typically 50). The result is K clusters with the minimum total intra-cluster variation.

The centroid of cluster \(c_i \in C\) is the mean of the cluster observations \(S_i\): \(c_i = \frac{1}{|S_i|} \sum_{x_i \in S_i}{x_i}\). The nearest centroid is the minimum squared euclidean distance, \(\underset{c_i \in C}{\operatorname{arg min}} D(c_i, x)^2\). A more robust version of K-means is K-medoids which minimizes the sum of dissimilarities instead of a sum of squared euclidean distances.

1.2.0.1 Fitting the Model

What value should k take? You may have a preference in advance, but more likely you will use either a scree plot (K-means) or the silhouette plot (K-medoids).

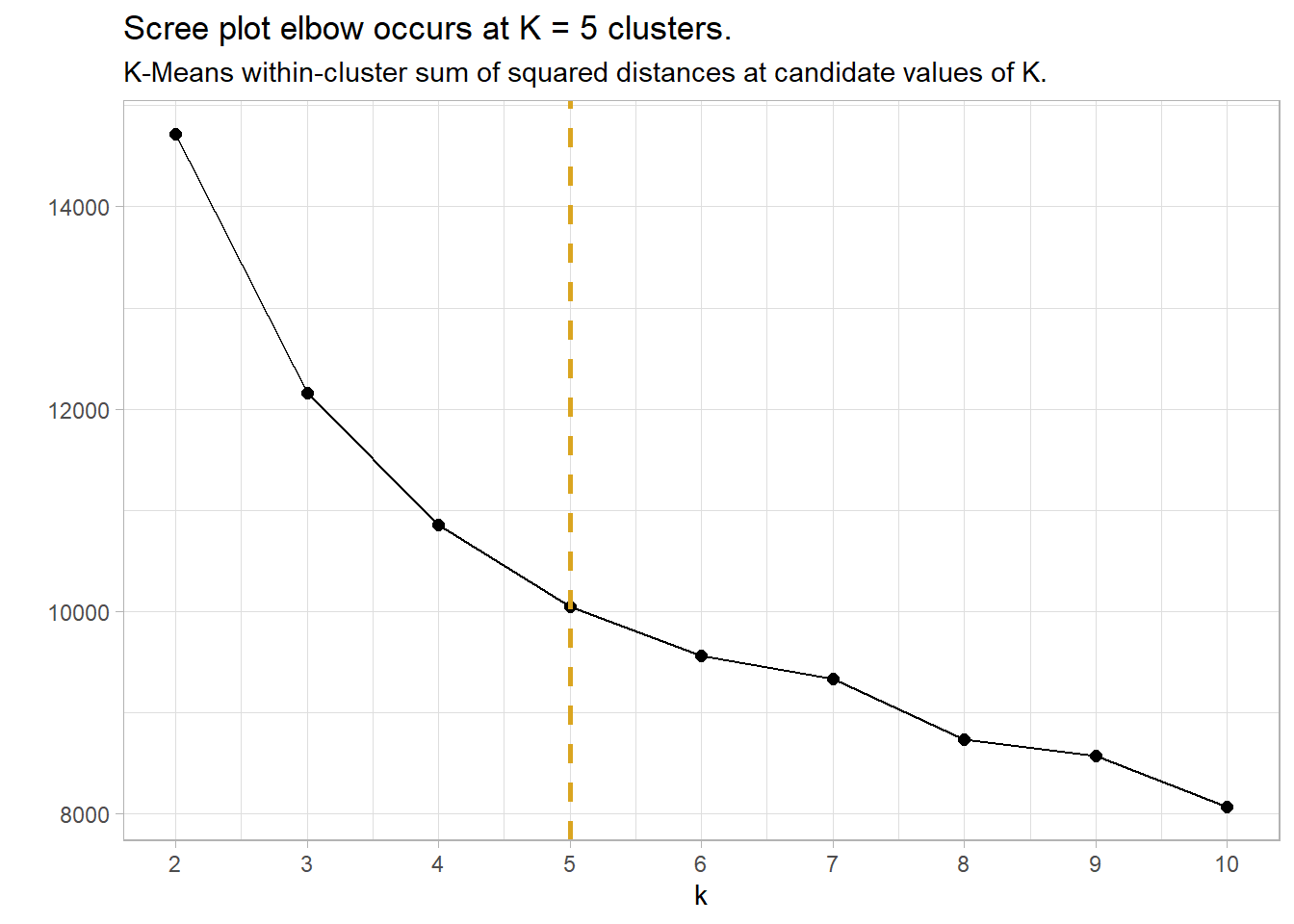

I’ll construct a scree plot for reference, but I think K-medoids and silhouette plots are the newer, better way to cluster. The scree plot is a plot of the total within-cluster sum of squared distances as a function of K. The sum of squares always decreases as K increases, but at a declining rate. The optimal K is at the “elbow” in the curve - the point at which the curve flattens. In the scree plot below, the elbow may be K = 5.

set.seed(1234)

kmeans_mdl <- data.frame(k = 2:10) %>%

mutate(

mdl = map(k, ~stats::kmeans(eap_2_gwr, centers = .)),

wss = map_dbl(mdl, ~ .$tot.withinss)

)

kmeans_mdl %>%

ggplot(aes(x = k, y = wss)) +

geom_point(size = 2) +

geom_line() +

geom_vline(aes(xintercept = 5), linetype = 2, size = 1, color = "goldenrod") +

scale_x_continuous(breaks = kmeans_mdl$k) +

theme_light() +

labs(title = "Scree plot elbow occurs at K = 5 clusters.",

subtitle = "K-Means within-cluster sum of squared distances at candidate values of K.",

y = "")

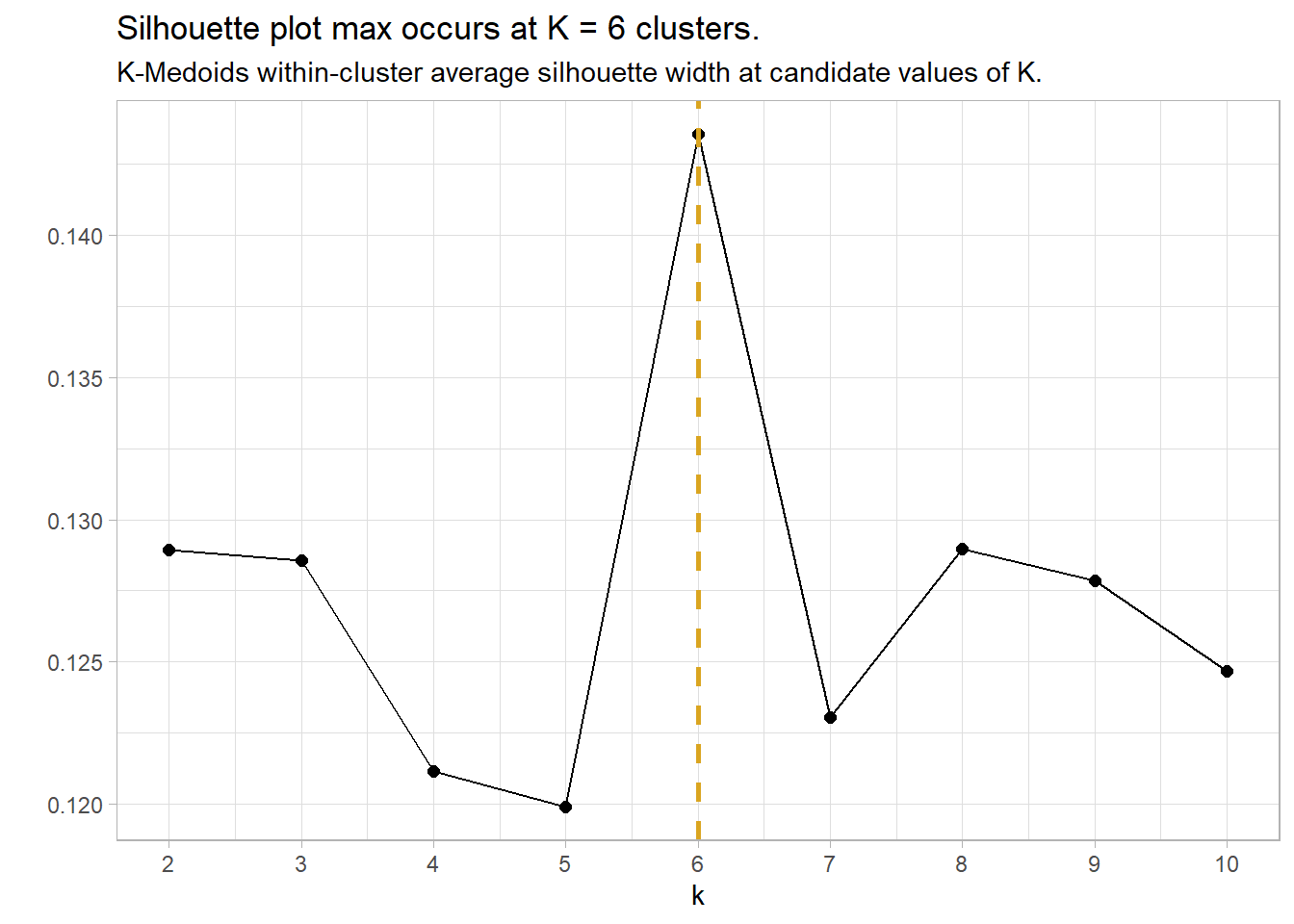

The silhouette method calculates the within-cluster distance \(C(i)\) for each observation, and its distance to the nearest cluster \(N(i)\). The silhouette width is

\[ \begin{align} S &= \frac{C(i)}{N(i)} - 1, & C(i) > N(i)\\ &= 1 - \frac{C(i)}{N(i)}, & C(i) < N(i). \end{align} \]

A value close to 1 means the observation is well-matched to its current cluster; A value near 0 means the observation is on the border between the two clusters; and a value near -1 means the observation is better-matched to the other cluster. The optimal number of clusters is the number that maximizes the total silhouette width. cluster::pam() returns a list in which one of the components is the average width silinfo$avg.width. In the silhouette plot below, the maximum width is at k = 6.

set.seed(1234)

pam_mdl <- data.frame(k = 2:10) %>%

mutate(

mdl = map(k, ~pam(eap_2_gwr, k = .)),

sil = map_dbl(mdl, ~ .$silinfo$avg.width)

)

pam_mdl %>%

ggplot(aes(x = k, y = sil)) +

geom_point(size = 2) +

geom_line() +

geom_vline(aes(xintercept = 6), linetype = 2, size = 1, color = "goldenrod") +

scale_x_continuous(breaks = pam_mdl$k) +

theme_light() +

labs(title = "Silhouette plot max occurs at K = 6 clusters.",

subtitle = "K-Medoids within-cluster average silhouette width at candidate values of K.",

y = "")

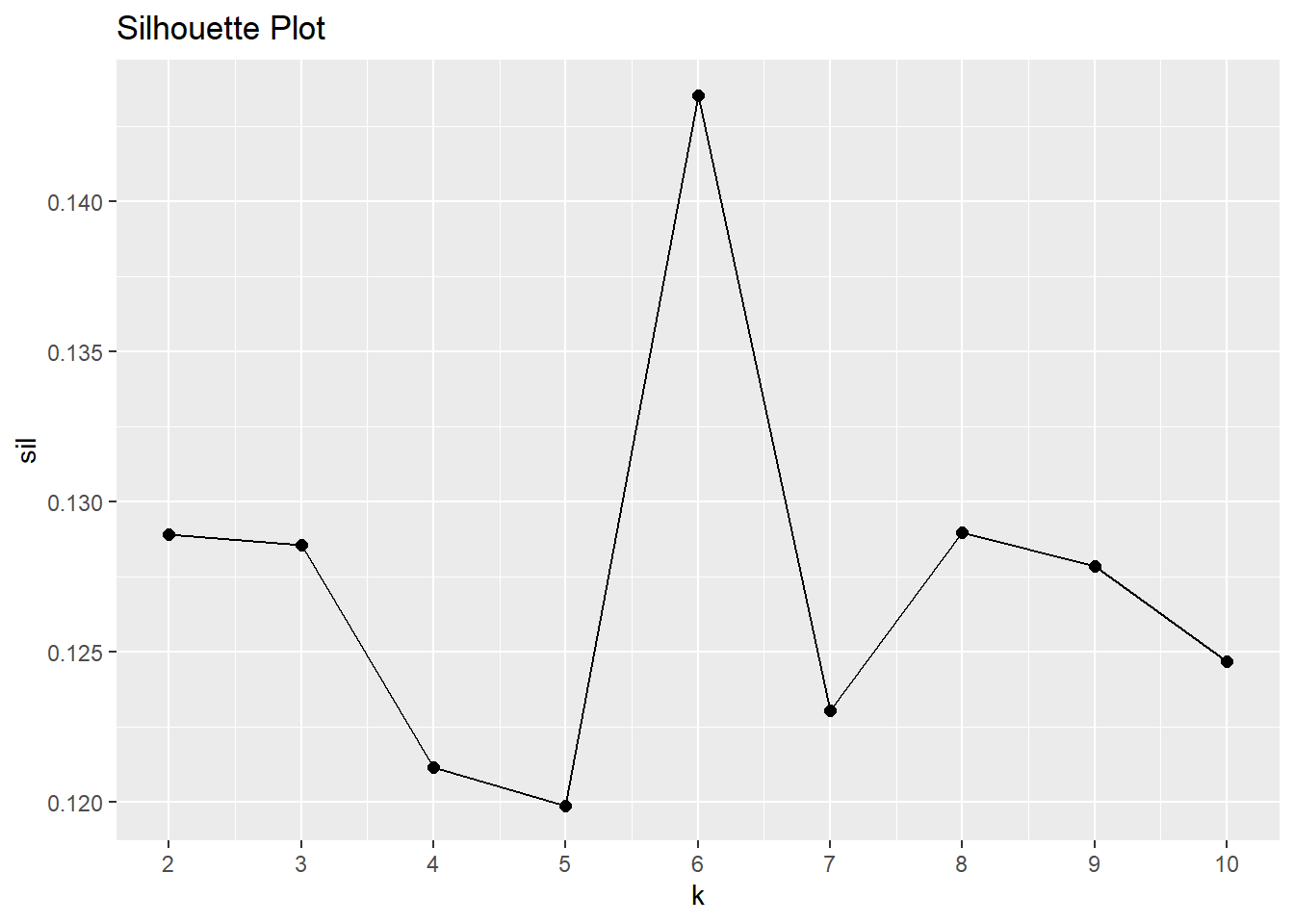

sil <- map_dbl(2:10, ~ pam(eap_2_gwr, k = .x)$silinfo$avg.width)

sil_tbl <- tibble(k = 2:10, sil)

ggplot(sil_tbl, aes(x = k, y = sil)) +

geom_point(size = 2) +

geom_line() +

scale_x_continuous(breaks = 2:10) +

labs(title = "Silhouette Plot")

Summarize Results

Attach the results to the original table for visualization and summary statistics.

pam_mdl_final <- pam_mdl %>% filter(k == 6) %>% pluck("mdl", 1)

eap_3 <- eap_2 %>%

mutate(cluster = as.factor(pam_mdl_final$clustering))Here are the six medoids observations.

eap_3[pam_mdl_final$medoids, ] %>%

t() %>% as.data.frame() %>% rownames_to_column() %>%

flextable::flextable() %>% flextable::autofit()rowname | V1 | V2 | V3 | V4 | V5 | V6 |

EmployeeNumber | 1171 | 35 | 65 | 221 | 747 | 1408 |

Attrition | No | No | Yes | No | No | No |

OverTime | No | No | Yes | No | No | No |

JobLevel | 2 | 2 | 1 | 1 | 2 | 4 |

MonthlyIncome | 5155 | 6825 | 3441 | 2713 | 5304 | 16799 |

YearsAtCompany | 6 | 9 | 2 | 5 | 8 | 20 |

StockOptionLevel | 0 | 1 | 0 | 1 | 1 | 1 |

YearsWithCurrManager | 5 | 2 | 2 | 2 | 7 | 9 |

TotalWorkingYears | 9 | 10 | 2 | 5 | 10 | 21 |

MaritalStatus | Single | Married | Single | Married | Divorced | Married |

Age | 42 | 42 | 28 | 28 | 30 | 42 |

YearsInCurrentRole | 4 | 7 | 2 | 2 | 7 | 7 |

JobRole | Sales Executive | Sales Executive | Laboratory Technician | Research Scientist | Sales Executive | Manager |

EnvironmentSatisfaction | 2 | 3 | 3 | 3 | 3 | 3 |

JobInvolvement | 3 | 3 | 3 | 3 | 3 | 3 |

BusinessTravel | Travel_Rarely | Travel_Rarely | Travel_Rarely | Travel_Rarely | Travel_Rarely | Travel_Rarely |

cluster | 1 | 2 | 3 | 4 | 5 | 6 |

What can the medoids tell us about attrition?. Do high-attrition employees fall into a particular cluster? Yes! 79.7% of cluster 3 left the company. - that’s 59.5% of all turnover in the company.

eap_3_smry <- eap_3 %>%

count(cluster, Attrition) %>%

group_by(cluster) %>%

mutate(cluster_n = sum(n),

turnover_rate = scales::percent(n / sum(n), accuracy = 0.1)) %>%

ungroup() %>%

filter(Attrition == "Yes") %>%

mutate(pct_of_turnover = scales::percent(n / sum(n), accuracy = 0.1)) %>%

select(cluster, cluster_n, turnover_n = n, turnover_rate, pct_of_turnover)

eap_3_smry %>% flextable::flextable() %>% flextable::autofit()cluster | cluster_n | turnover_n | turnover_rate | pct_of_turnover |

1 | 268 | 23 | 8.6% | 9.7% |

2 | 280 | 24 | 8.6% | 10.1% |

3 | 177 | 141 | 79.7% | 59.5% |

4 | 364 | 29 | 8.0% | 12.2% |

5 | 202 | 8 | 4.0% | 3.4% |

6 | 179 | 12 | 6.7% | 5.1% |

You can get some sense of the quality of clustering by constructing the Barnes-Hut t-Distributed Stochastic Neighbor Embedding (t-SNE).

eap_4 <- eap_3 %>%

left_join(eap_3_smry, by = "cluster") %>%

rename(Cluster = cluster) %>%

mutate(

MonthlyIncome = MonthlyIncome %>% scales::dollar(),

description = str_glue("Turnover = {Attrition}

MaritalDesc = {MaritalStatus}

Age = {Age}

Job Role = {JobRole}

Job Level {JobLevel}

Overtime = {OverTime}

Current Role Tenure = {YearsInCurrentRole}

Professional Tenure = {TotalWorkingYears}

Monthly Income = {MonthlyIncome}

Cluster: {Cluster}

Cluster Size: {cluster_n}

Cluster Turnover Rate: {turnover_rate}

Cluster Turnover Count: {turnover_n}

"))

tsne_obj <- Rtsne(eap_2_gwr, is_distance = TRUE)

tsne_tbl <- tsne_obj$Y %>%

as_tibble() %>%

setNames(c("X", "Y")) %>%

bind_cols(eap_4) %>%

mutate(Cluster = as_factor(Cluster))

g <- tsne_tbl %>%

ggplot(aes(x = X, y = Y, colour = Cluster, text = description)) +

geom_point()

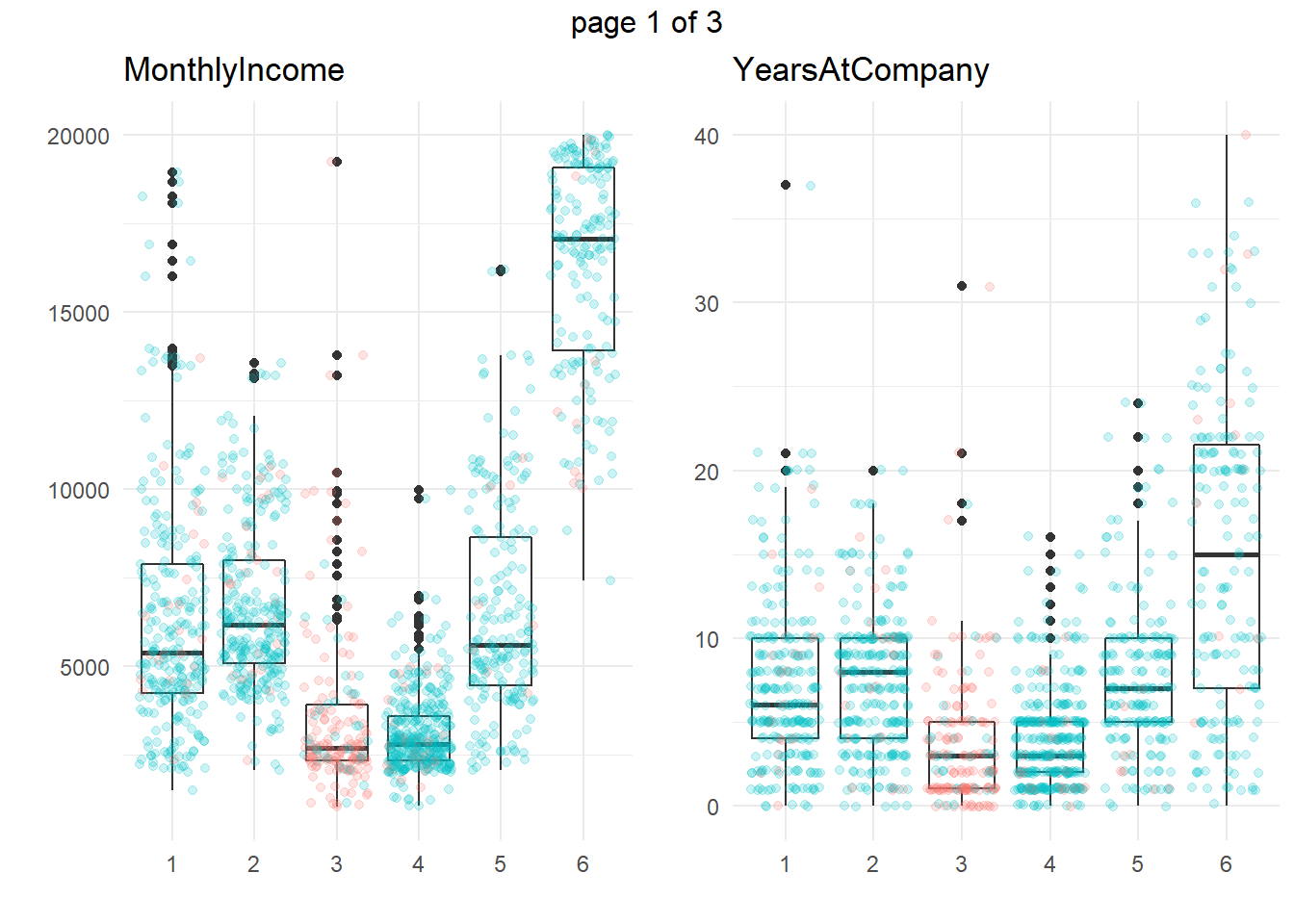

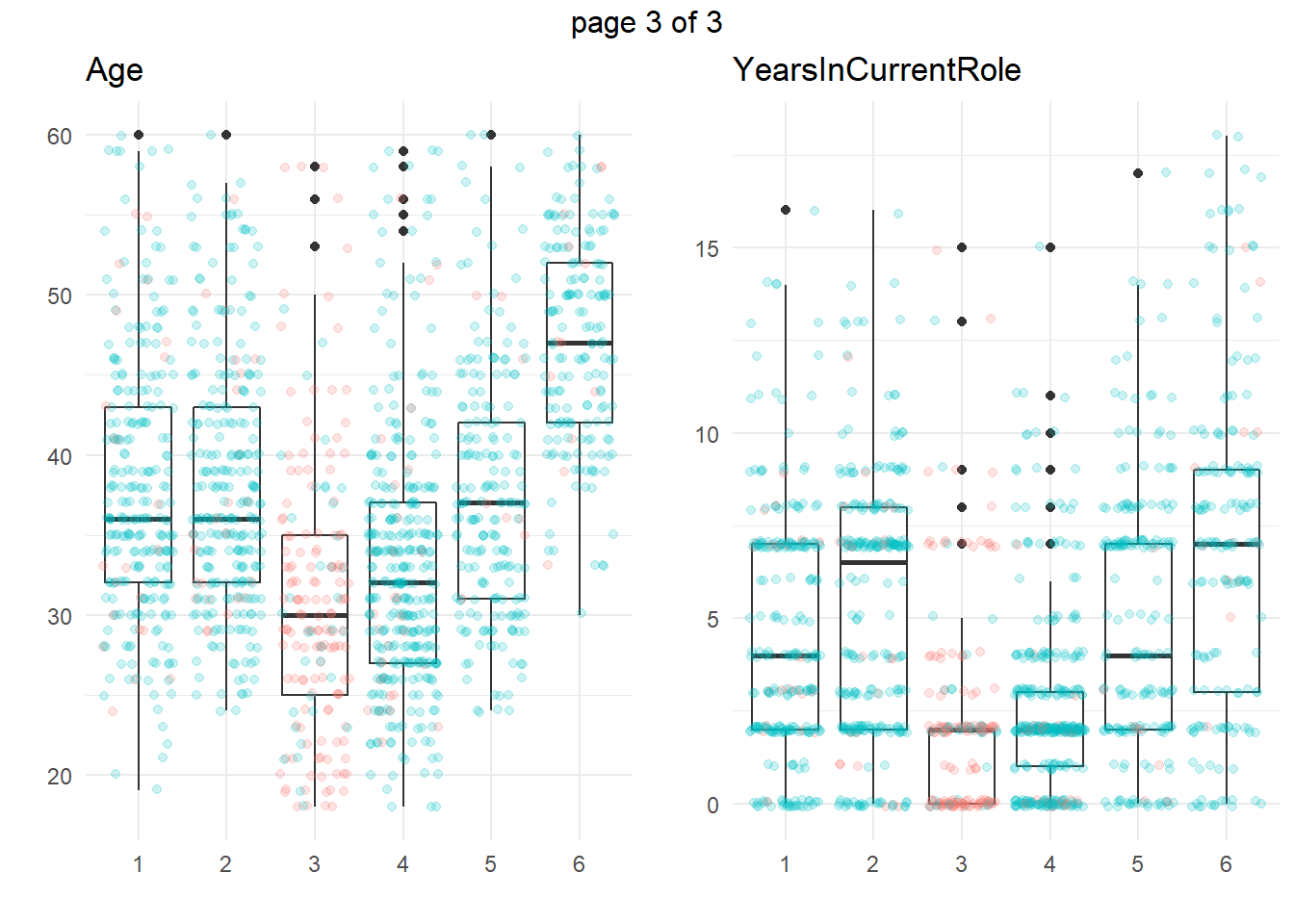

ggplotly(g)Another approach is to take summary statistics and draw your own conclusions. You might start by asking which attributes differ among the clusters. The box plots below show the distribution of the numeric variables. All of the numeric variable distributions appear to vary among the clusters.

my_boxplot <- function(y_var){

eap_3 %>%

ggplot(aes(x = cluster, y = !!sym(y_var))) +

geom_boxplot() +

geom_jitter(aes(color = Attrition), alpha = 0.2, height = 0.10) +

theme_minimal() +

theme(legend.position = "none") +

labs(x = "", y = "", title = y_var)

}

vars_numeric <- eap_3 %>% select(-EmployeeNumber) %>% select_if(is.numeric) %>% colnames()

g <- map(vars_numeric, my_boxplot)

gridExtra::marrangeGrob(g, nrow=1, ncol = 2)

You can perform an analysis of variance to confirm. The table below collects the ANOVA results for each of the numeric variables. The results indicate that there are significant differences among clusters at the .01 level for all of the numeric variables.

km_aov <- vars_numeric %>%

map(~ aov(rlang::eval_tidy(expr(!!sym(.x) ~ cluster)), data = eap_3))

km_aov %>%

map(anova) %>%

map(~ data.frame(F = .x$`F value`[[1]], p = .x$`Pr(>F)`[[1]])) %>%

bind_rows() %>%

bind_cols(Attribute = vars_numeric) %>%

select(Attribute, everything()) %>%

flextable::flextable() %>%

flextable::colformat_double(j = 2, digits = 2) %>%

flextable::colformat_double(j = 3, digits = 4) %>%

flextable::autofit()Attribute | F | p |

MonthlyIncome | 718.88 | 0.0000 |

YearsAtCompany | 134.58 | 0.0000 |

YearsWithCurrManager | 65.53 | 0.0000 |

TotalWorkingYears | 342.88 | 0.0000 |

Age | 98.76 | 0.0000 |

YearsInCurrentRole | 69.53 | 0.0000 |

What sets Cluster 3, the high attrition cluster, apart from the others? Cluster 3 is similar to cluster 4 in almost all attributes. They tend to have the smaller incomes, company and job tenure, years with their current manager, total working experience, and age. I.e., they tend to be lower on the career ladder.

To drill into cluster differences to determine which clusters differ from others, use the Tukey HSD post hoc test with Bonferroni method applied to control the experiment-wise error rate. That is, only reject the null hypothesis of equal means among clusters if the p-value is less than \(\alpha / p\), or \(.05 / 6 = 0.0083\). The significantly different cluster combinations are shown in bold. Clusters 3 and 4 differ from the others on all six measures. However, there are no significant differences between c3 and c4 (highlighted green) for the numeric variables.

km_hsd <- map(km_aov, TukeyHSD)

map(km_hsd, ~ .x$cluster %>%

data.frame() %>%

rownames_to_column() %>%

filter(str_detect(rowname, "-"))) %>%

map2(vars_numeric, bind_cols) %>%

bind_rows() %>%

select(predictor = `...6`, everything()) %>%

mutate(cluster_a = str_sub(rowname, start = 1, end = 1),

cluster = paste0("c", str_sub(rowname, start = 3, end = 3))) %>%

pivot_wider(c(predictor, cluster_a, cluster, p.adj),

names_from = cluster_a, values_from = p.adj,

names_prefix = "c") %>%

flextable::flextable() %>%

flextable::colformat_double(j = c(3:7), digits = 4) %>%

flextable::bold(i = ~ c2 < .05 / length(vars_numeric), j = ~ c2, bold = TRUE) %>%

flextable::bold(i = ~ c3 < .05 / length(vars_numeric), j = ~ c3, bold = TRUE) %>%

flextable::bold(i = ~ c4 < .05 / length(vars_numeric), j = ~ c4, bold = TRUE) %>%

flextable::bold(i = ~ c5 < .05 / length(vars_numeric), j = ~ c5, bold = TRUE) %>%

flextable::bold(i = ~ c6 < .05 / length(vars_numeric), j = ~ c6, bold = TRUE) %>%

flextable::bg(i = ~ cluster == "c3", j = ~ c4, bg = "#B6E2D3") %>%

flextable::border(i = ~ cluster == "c1", border.top = officer::fp_border()) %>%

flextable::autofit() predictor | cluster | c2 | c3 | c4 | c5 | c6 |

MonthlyIncome | c1 | 0.6587 | 0.0000 | 0.0000 | 0.9720 | 0.0000 |

MonthlyIncome | c2 | 0.0000 | 0.0000 | 0.9899 | 0.0000 | |

MonthlyIncome | c3 | 0.3975 | 0.0000 | 0.0000 | ||

MonthlyIncome | c4 | 0.0000 | 0.0000 | |||

MonthlyIncome | c5 | 0.0000 | ||||

YearsAtCompany | c1 | 0.9999 | 0.0000 | 0.0000 | 0.9922 | 0.0000 |

YearsAtCompany | c2 | 0.0000 | 0.0000 | 0.9989 | 0.0000 | |

YearsAtCompany | c3 | 0.9935 | 0.0000 | 0.0000 | ||

YearsAtCompany | c4 | 0.0000 | 0.0000 | |||

YearsAtCompany | c5 | 0.0000 | ||||

YearsWithCurrManager | c1 | 1.0000 | 0.0000 | 0.0000 | 0.9763 | 0.0000 |

YearsWithCurrManager | c2 | 0.0000 | 0.0000 | 0.9595 | 0.0000 | |

YearsWithCurrManager | c3 | 0.9965 | 0.0000 | 0.0000 | ||

YearsWithCurrManager | c4 | 0.0000 | 0.0000 | |||

YearsWithCurrManager | c5 | 0.0000 | ||||

TotalWorkingYears | c1 | 0.9840 | 0.0000 | 0.0000 | 0.9980 | 0.0000 |

TotalWorkingYears | c2 | 0.0000 | 0.0000 | 0.8934 | 0.0000 | |

TotalWorkingYears | c3 | 0.9998 | 0.0000 | 0.0000 | ||

TotalWorkingYears | c4 | 0.0000 | 0.0000 | |||

TotalWorkingYears | c5 | 0.0000 | ||||

Age | c1 | 0.9980 | 0.0000 | 0.0000 | 0.9989 | 0.0000 |

Age | c2 | 0.0000 | 0.0000 | 0.9691 | 0.0000 | |

Age | c3 | 0.0532 | 0.0000 | 0.0000 | ||

Age | c4 | 0.0000 | 0.0000 | |||

Age | c5 | 0.0000 | ||||

YearsInCurrentRole | c1 | 0.1013 | 0.0000 | 0.0000 | 0.6471 | 0.0000 |

YearsInCurrentRole | c2 | 0.0000 | 0.0000 | 0.9573 | 0.0000 | |

YearsInCurrentRole | c3 | 0.9854 | 0.0000 | 0.0000 | ||

YearsInCurrentRole | c4 | 0.0000 | 0.0000 | |||

YearsInCurrentRole | c5 | 0.0000 |

So far the picture is incomplete. High-attrition employees are low on the career ladder, but cluster 4 is also low on the career ladder and they are not high-attrition.

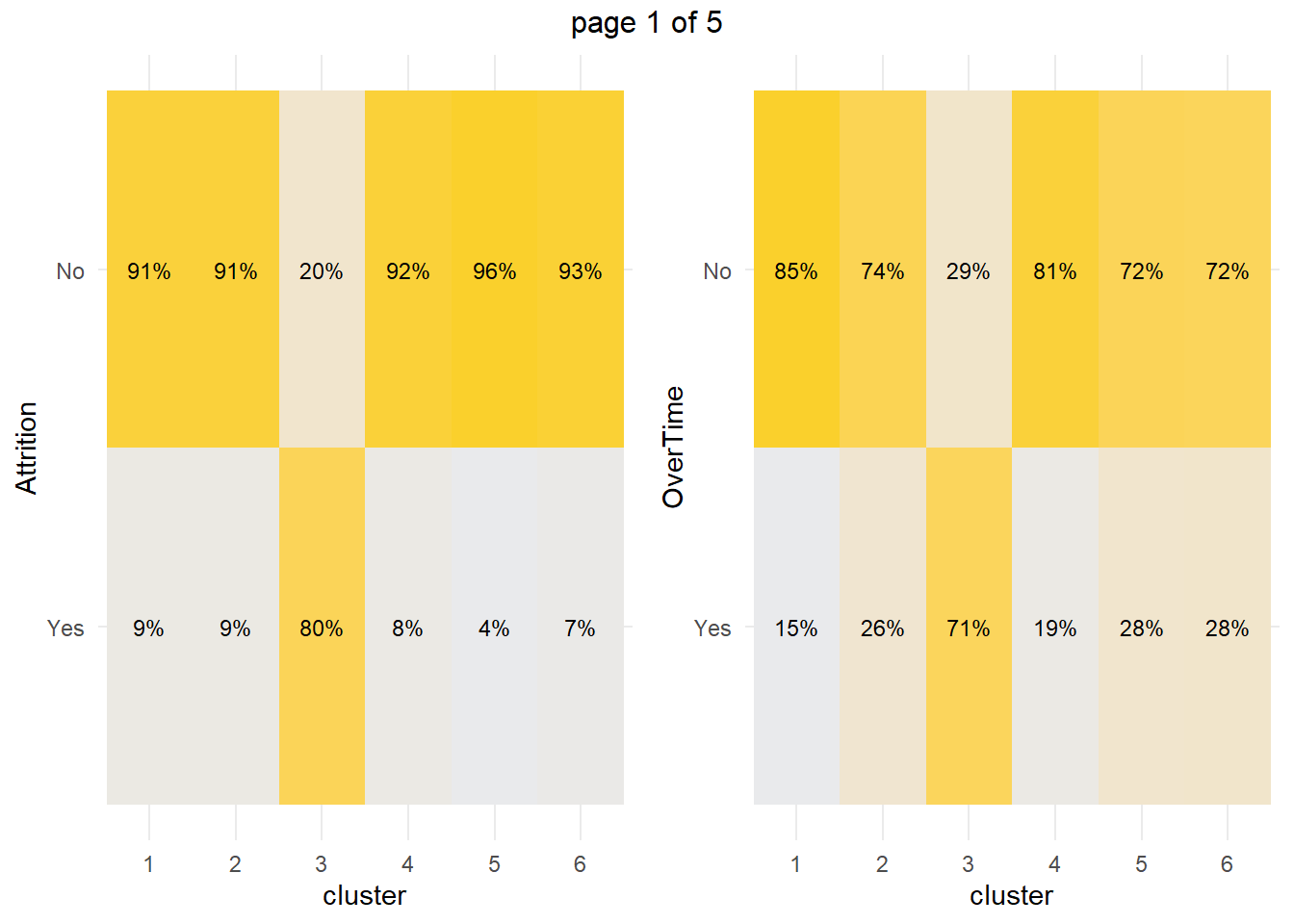

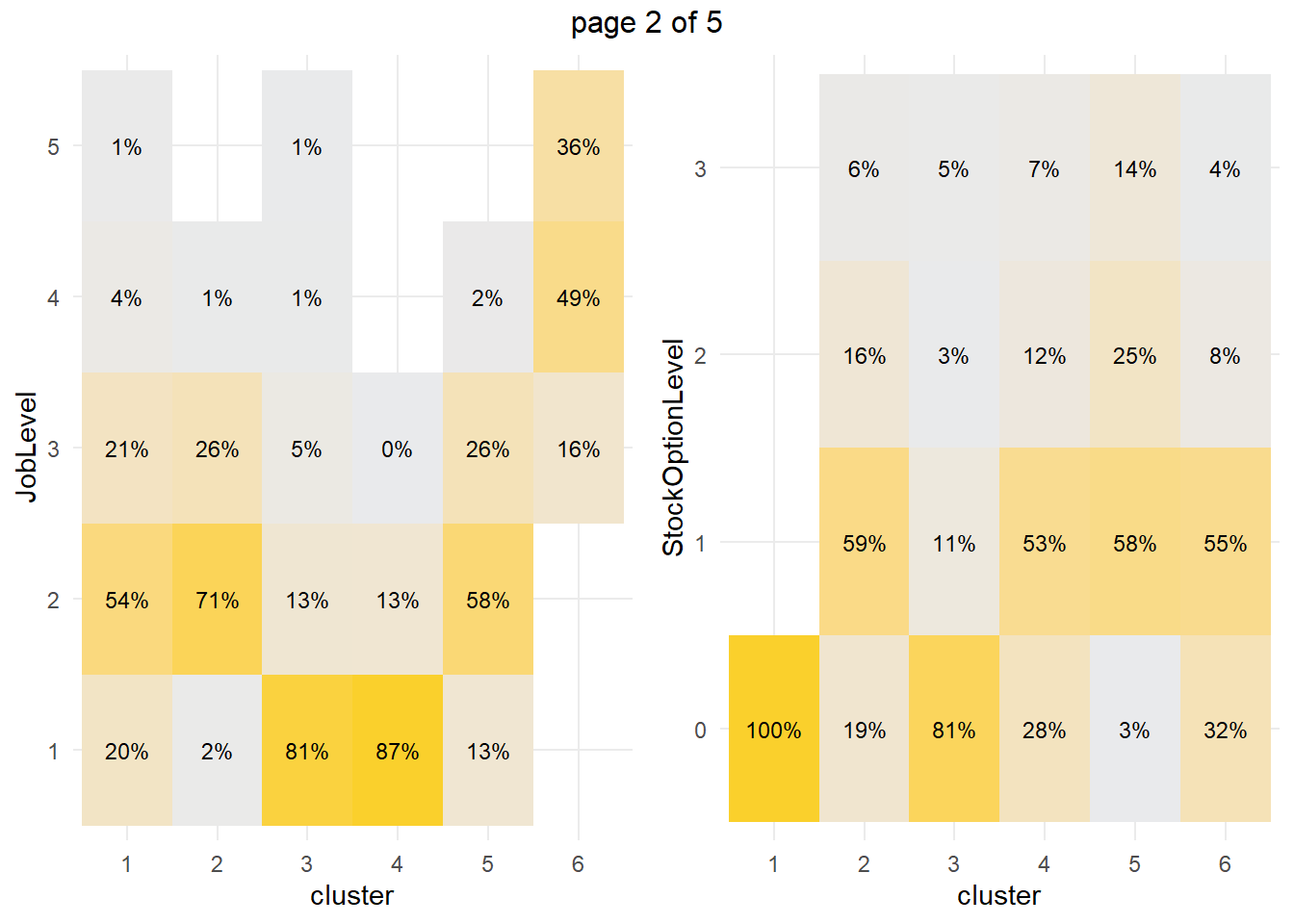

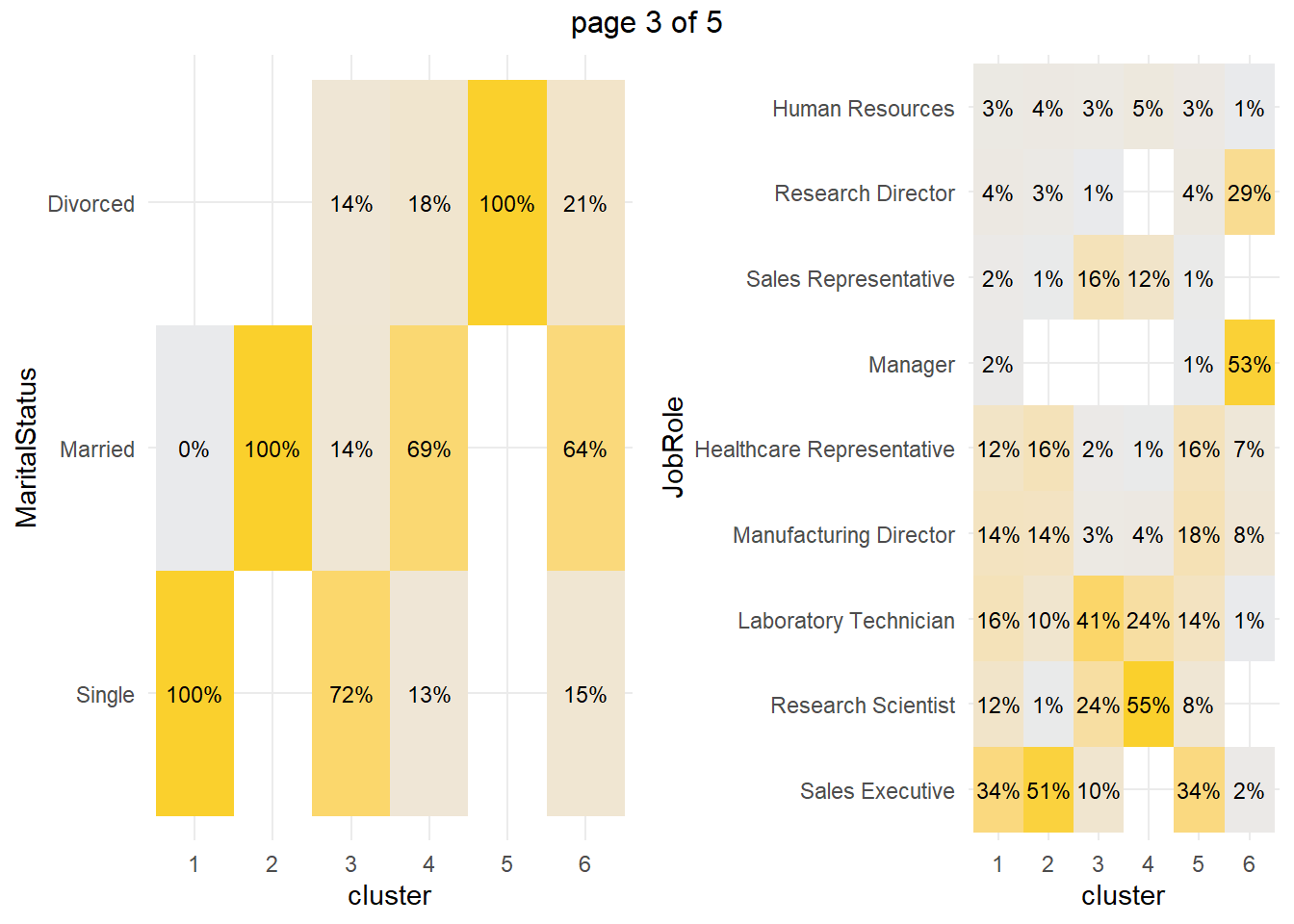

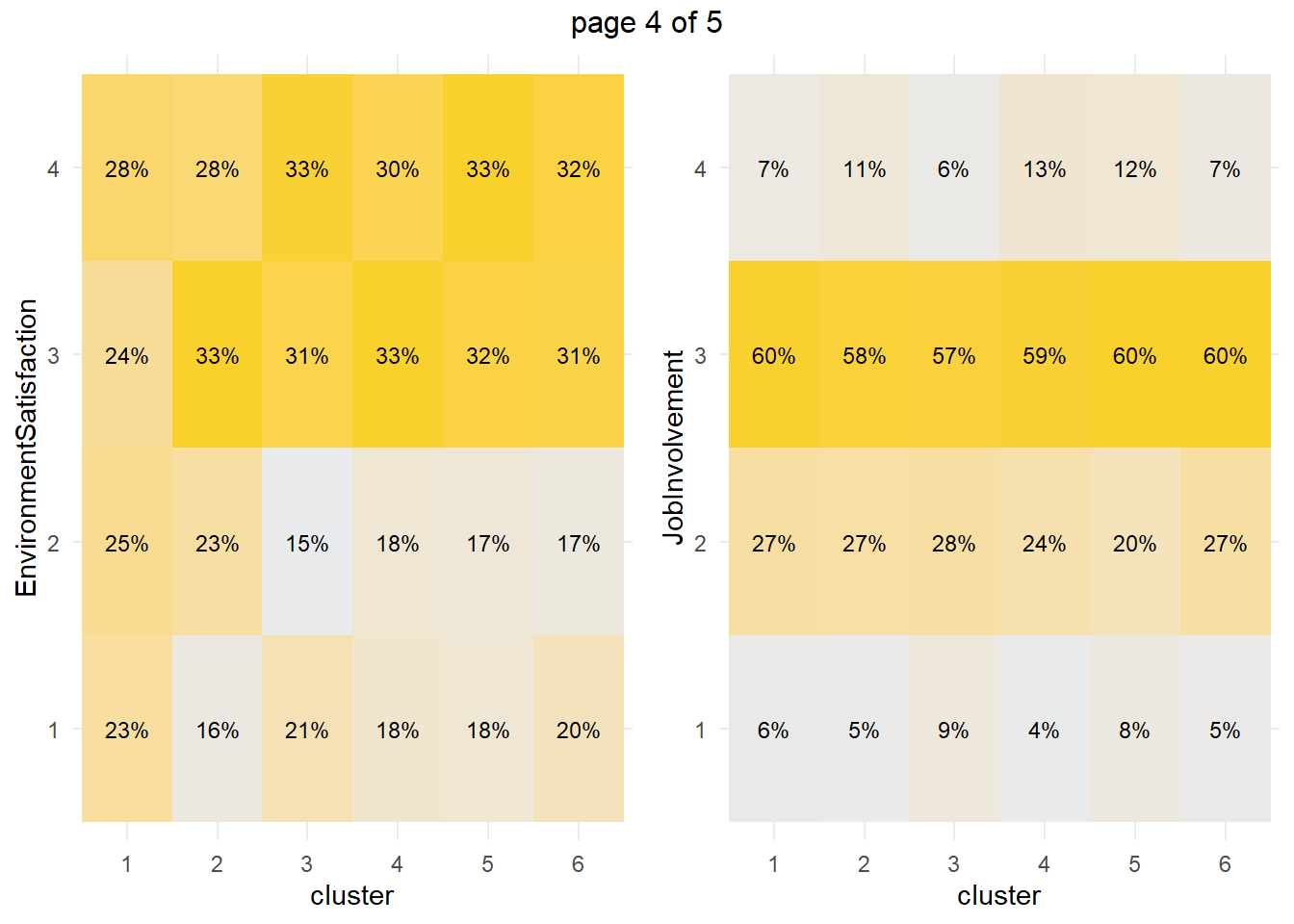

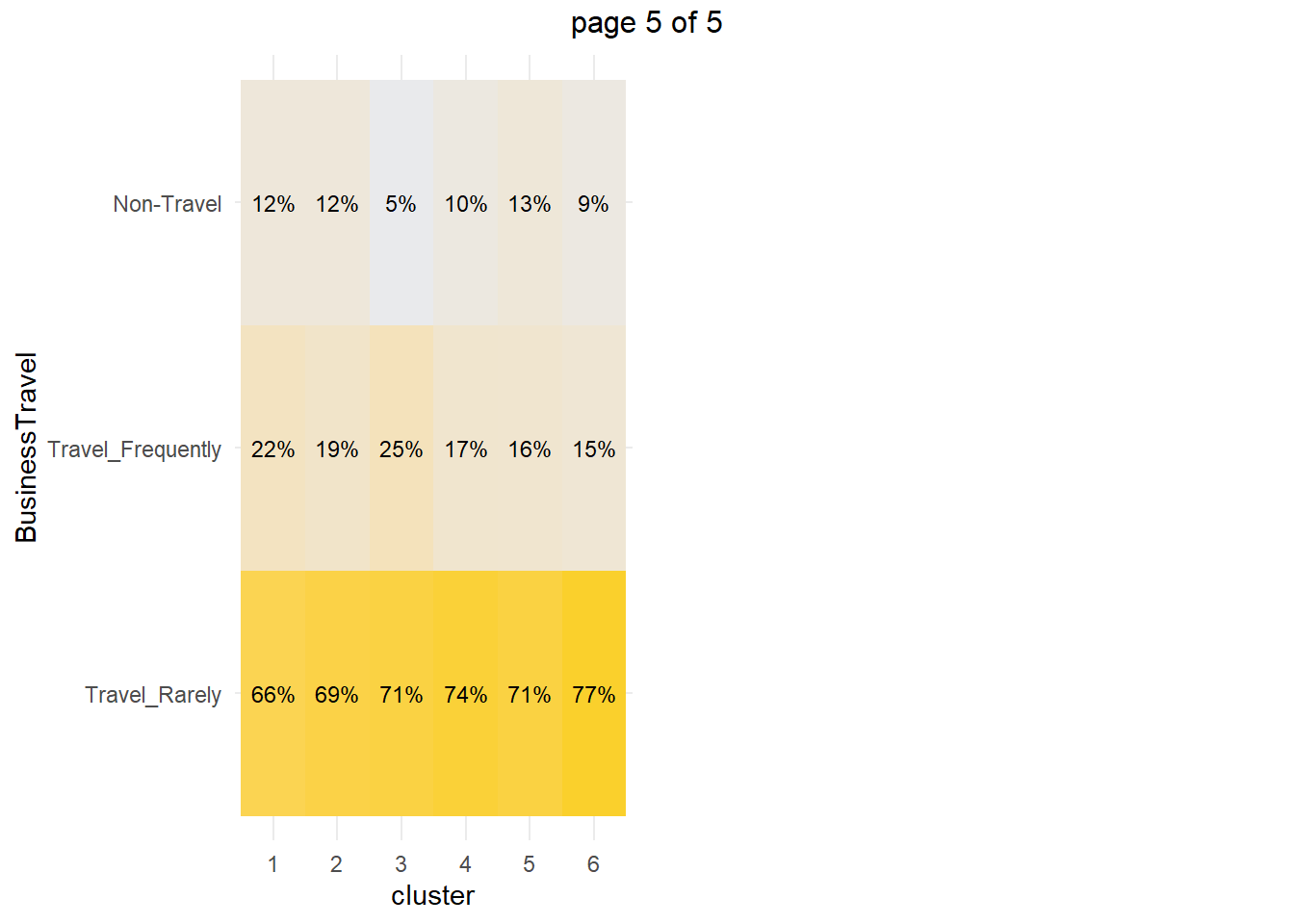

How about the factor variables? The tile plots below show that clusters 3 and 4 are lab technicians and research scientists. They are both at the lowest job level. But three factors distinguish these clusters from each other: cluster 3 is far more likely to work overtime, have no stock options, and be single.

my_tileplot <- function(y_var){

eap_3 %>%

count(cluster, !!sym(y_var)) %>%

ungroup() %>%

group_by(cluster) %>%

mutate(pct = n / sum(n)) %>%

ggplot(aes(y = !!sym(y_var), x = cluster, fill = pct)) +

geom_tile() +

scale_fill_gradient(low = "#E9EAEC", high = "#FAD02C") +

geom_text(aes(label = scales::percent(pct, accuracy = 1.0)), size = 3) +

theme_minimal() +

theme(legend.position = "none")

}

vars_factor <- eap_3 %>% select(-cluster) %>% select_if(is.factor) %>% colnames()

g <- map(vars_factor, my_tileplot)

gridExtra::marrangeGrob(g, nrow=1, ncol = 2)

You can perform a chi-squared independence test to confirm. The table below collects the Chi-Sq test results for each of the factor variables. The results indicate that there are significant differences among clusters at the .05 level for all of the factor variables except EnvironmentSatisfaction and JobInvolvement.

km_chisq <- vars_factor %>%

map(~ janitor::tabyl(eap_3, cluster, !!sym(.x))) %>%

map(janitor::chisq.test)

km_chisq %>%

map(~ data.frame(ChiSq = .x$statistic[[1]], df = .x$parameter[[1]], p = .x$p.value[[1]])) %>%

bind_rows() %>%

bind_cols(Attribute = vars_factor) %>%

select(Attribute, everything()) %>%

flextable::flextable() %>%

flextable::colformat_double(j = ~ ChiSq, digits = 1) %>%

flextable::colformat_double(j = ~ p, digits = 4) %>%

flextable::autofit()Attribute | ChiSq | df | p |

Attrition | 603.2 | 5 | 0.0000 |

OverTime | 199.9 | 5 | 0.0000 |

JobLevel | 1,855.6 | 20 | 0.0000 |

StockOptionLevel | 731.2 | 15 | 0.0000 |

MaritalStatus | 1,853.4 | 10 | 0.0000 |

JobRole | 1,754.0 | 40 | 0.0000 |

EnvironmentSatisfaction | 20.0 | 15 | 0.1712 |

JobInvolvement | 22.6 | 15 | 0.0938 |

BusinessTravel | 19.7 | 10 | 0.0324 |

What sets cluster 3 apart from the others, cluster 4 in particular? Cluster 3 is far more likely to work overtime, have no stock options, and be single. Perform a residuals analysis on the the chi-sq test to verify. The residuals with absolute value >2 are driving the differences among the clusters.

km_chisq %>%

map(~ data.frame(.x$residuals)) %>%

map(data.frame) %>%

map(t) %>%

map(data.frame) %>%

map(rownames_to_column, var = "Predictor") %>%

map(~ filter(.x, Predictor != "cluster")) %>%

map(~ select(.x, Predictor, c1 = X1, c2 = X2, c3 = X3, c4 = X4, c5 = X5, c6 = X6)) %>%

map(~ mutate_at(.x, vars(2:7), as.numeric)) %>%

map(flextable::flextable) %>%

map(~ flextable::colformat_double(.x, j = ~ c1 + c2 + c3 + c4 + c5 + c6, digits = 1)) %>%

map(~ flextable::bold(.x, i = ~ abs(c1) > 2, j = ~ c1, bold = TRUE)) %>%

map(~ flextable::bold(.x, i = ~ abs(c2) > 2, j = ~ c2, bold = TRUE)) %>%

map(~ flextable::bold(.x, i = ~ abs(c3) > 2, j = ~ c3, bold = TRUE)) %>%

map(~ flextable::bold(.x, i = ~ abs(c4) > 2, j = ~ c4, bold = TRUE)) %>%

map(~ flextable::bold(.x, i = ~ abs(c5) > 2, j = ~ c5, bold = TRUE)) %>%

map(~ flextable::bold(.x, i = ~ abs(c6) > 2, j = ~ c6, bold = TRUE)) %>%

# map(~ flextable::bg(i = ~ cluster == "c3", j = ~ c4, bg = "#B6E2D3") %>%

map2(vars_factor, ~ flextable::set_caption(.x, caption = .y))## [[1]]

## a flextable object.

## col_keys: `Predictor`, `c1`, `c2`, `c3`, `c4`, `c5`, `c6`

## header has 1 row(s)

## body has 2 row(s)

## original dataset sample:

## Predictor c1 c2 c3 c4 c5 c6

## 1 Yes -3.074284 -3.146800 21.052736 -3.875086 -4.304940 -3.138300

## 2 No 1.347835 1.379627 -9.229989 1.698924 1.887382 1.375901

##

## [[2]]

## a flextable object.

## col_keys: `Predictor`, `c1`, `c2`, `c3`, `c4`, `c5`, `c6`

## header has 1 row(s)

## body has 2 row(s)

## original dataset sample:

## Predictor c1 c2 c3 c4 c5 c6

## 1 Yes -4.000828 -0.5884457 10.725697 -3.449431 -0.15403618 0.04836361

## 2 No 2.513485 0.3696858 -6.738324 2.167075 0.09677186 -0.03038401

##

## [[3]]

## a flextable object.

## col_keys: `Predictor`, `c1`, `c2`, `c3`, `c4`, `c5`, `c6`

## header has 1 row(s)

## body has 5 row(s)

## original dataset sample:

## Predictor c1 c2 c3 c4 c5 c6

## 1 X1 -4.622859 -9.678341 9.599235 15.569991 -5.512378 -8.1314460

## 2 X2 4.828776 9.745394 -5.150270 -7.324817 5.208898 -8.0637760

## 3 X3 2.578522 4.729466 -3.561905 -7.211066 4.210211 0.2822892

## 4 X4 -2.121266 -3.825733 -3.012750 -5.123243 -2.768472 20.6230820

## 5 X5 -2.418986 -3.625308 -2.535454 -4.133487 -3.079226 19.1807890

##

## [[4]]

## a flextable object.

## col_keys: `Predictor`, `c1`, `c2`, `c3`, `c4`, `c5`, `c6`

## header has 1 row(s)

## body has 4 row(s)

## original dataset sample:

## Predictor c1 c2 c3 c4 c5 c6

## 1 X0 14.261198 -6.03754600 7.6891380 -4.3398430 -8.667412 -2.1488560

## 2 X1 -10.423939 4.83128800 -6.1104130 3.8210350 3.989103 3.1019730

## 3 X2 -5.367070 2.71691560 -2.9860991 0.4598298 6.071044 -0.9665264

## 4 X3 -3.936572 -0.04733811 -0.6985228 1.0794749 4.775144 -1.0413857

##

## [[5]]

## a flextable object.

## col_keys: `Predictor`, `c1`, `c2`, `c3`, `c4`, `c5`, `c6`

## header has 1 row(s)

## body has 3 row(s)

## original dataset sample:

## Predictor c1 c2 c3 c4 c5 c6

## 1 Single 19.587144 -9.461702 9.492289 -6.338612 -8.036481 -3.9961330

## 2 Married -10.986571 13.408220 -6.224744 6.611745 -9.616666 3.6508300

## 3 Divorced -7.721161 -7.892130 -2.450022 -1.886047 23.430922 -0.4466382

##

## [[6]]

## a flextable object.

## col_keys: `Predictor`, `c1`, `c2`, `c3`, `c4`, `c5`, `c6`

## header has 1 row(s)

## body has 9 row(s)

## original dataset sample:

## Predictor c1 c2 c3 c4 c5

## 1 Sales.Executive 4.224222 10.393935 -3.551837 -8.984643 3.616083

## 2 Research.Scientist -3.047504 -7.055555 1.153688 15.017279 -3.808570

## 3 Laboratory.Technician -0.468456 -3.037306 7.308603 2.730491 -1.272337

## 4 Manufacturing.Director 2.249256 1.975299 -2.742469 -3.822520 3.601185

## 5 Healthcare.Representative 1.865554 4.213539 -2.964428 -4.993130 3.299386

## c6

## 1 -5.6656560

## 2 -5.9629240

## 3 -5.4378120

## 4 -0.8701804

## 5 -0.9894197

##

## [[7]]

## a flextable object.

## col_keys: `Predictor`, `c1`, `c2`, `c3`, `c4`, `c5`, `c6`

## header has 1 row(s)

## body has 4 row(s)

## original dataset sample:

## Predictor c1 c2 c3 c4 c5 c6

## 1 X1 1.4207444 -1.1006522 0.47951650 -0.39635550 -0.4843633 0.2410757

## 2 X2 1.8906688 1.2623375 -1.28554920 -0.71964310 -0.7067058 -0.8369268

## 3 X3 -2.0453569 0.7228204 0.06162142 0.83358388 0.2219346 0.1129351

## 4 X4 -0.5890421 -0.8627985 0.58649848 0.05346915 0.7297500 0.3651772

##

## [[8]]

## a flextable object.

## col_keys: `Predictor`, `c1`, `c2`, `c3`, `c4`, `c5`, `c6`

## header has 1 row(s)

## body has 4 row(s)

## original dataset sample:

## Predictor c1 c2 c3 c4 c5 c6

## 1 X1 -0.03392631 -0.7065995 1.8998844 -1.44533350 1.3604659 -0.3481475

## 2 X2 0.56028040 0.4225771 0.5724950 -0.40027460 -1.6062743 0.4937853

## 3 X3 0.21879629 -0.2592379 -0.3437552 -0.06366268 0.2494020 0.2241808

## 4 X4 -1.41557010 0.4909903 -1.5222857 1.89954330 0.9469245 -1.0829262

##

## [[9]]

## a flextable object.

## col_keys: `Predictor`, `c1`, `c2`, `c3`, `c4`, `c5`, `c6`

## header has 1 row(s)

## body has 3 row(s)

## original dataset sample:

## Predictor c1 c2 c3 c4 c5

## 1 Travel_Rarely -1.0263102 -0.33108864 -0.05226557 0.6056579 -0.02704771

## 2 Travel_Frequently 1.3367306 0.03277861 1.84355843 -0.9165098 -0.82079013

## 3 Non.Travel 0.8897836 0.82850980 -2.36743050 -0.3516054 1.18671160

## c6

## 1 0.8869173

## 2 -1.3309690

## 3 -0.5300458Cluster 3 are significantly more likely to work overtime while cluster 4 (and 1) are significantly less likely. Cluster 3 (and 1) are significantly more likely to have no stock options and be single.

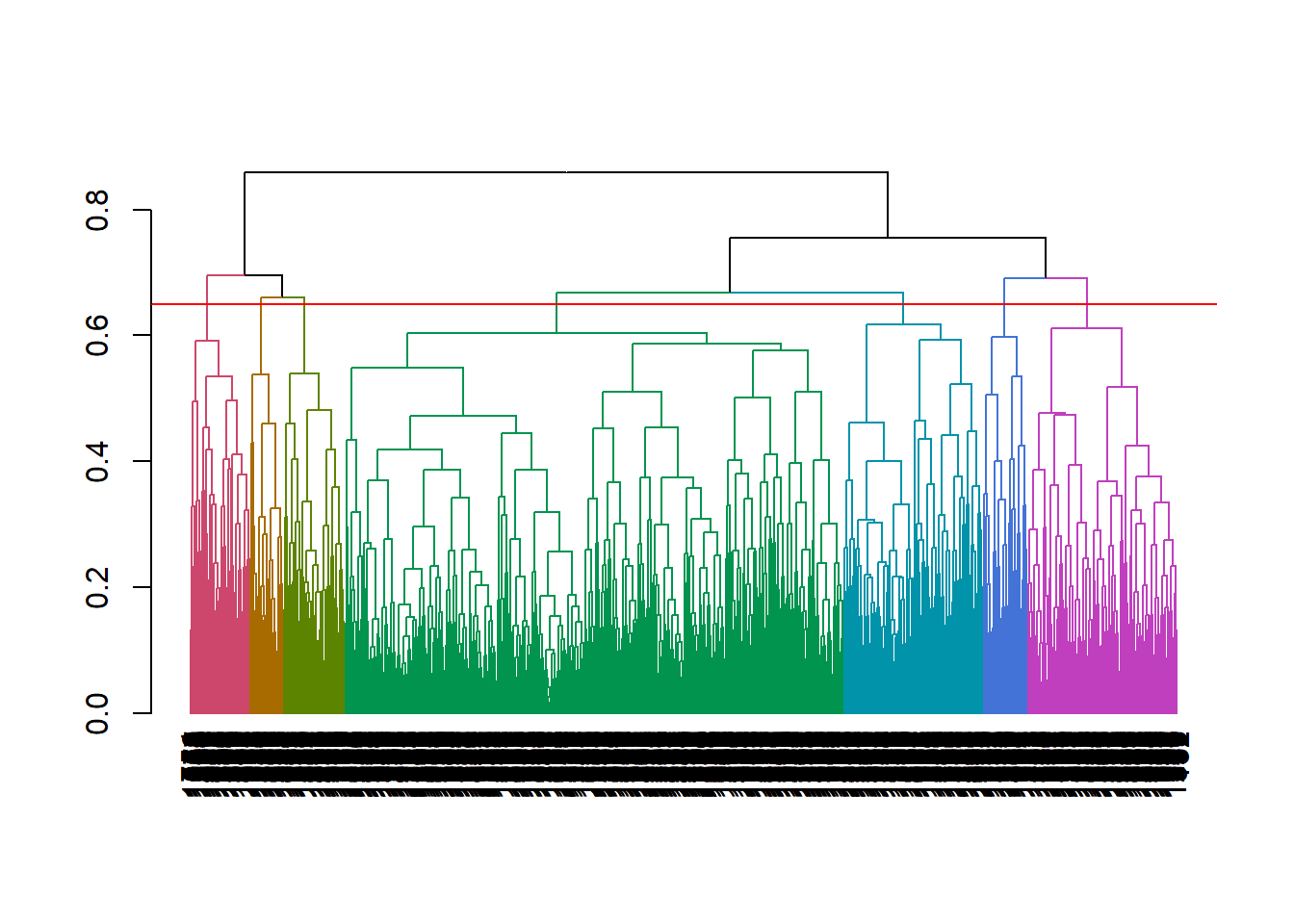

1.3 HCA

Hierarchical clustering (also called hierarchical cluster analysis or HCA) is a method of cluster analysis which builds a hierarchy of clusters (usually presented in a dendrogram). The HCA process is:

Calculate the distance between each observation with

dist()ordaisy(). We did that above when we createdeap_2_gwr.Cluster the two closest observations into a cluster with

hclust(). Then calculate the cluster’s distance to the remaining observations. If the shortest distance is between two observations, define a second cluster, otherwise adds the observation as a new level to the cluster. The process repeats until all observations belong to a single cluster. The “distance” to a cluster can be defined as:

- complete: distance to the furthest member of the cluster,

- single: distance to the closest member of the cluster,

- average: average distance to all members of the cluster, or

- centroid: distance between the centroids of each cluster.

Complete and average distances tend to produce more balanced trees and are most common. Pruning an unbalanced tree can result in most observations assigned to one cluster and only a few observations assigned to other clusters. This is useful for identifying outliers.

mdl_hc <- hclust(eap_2_gwr, method = "complete")- Evaluate the

hclusttree with a dendogram, principal component analysis (PCA), and/or summary statistics. The vertical lines in a dendogram indicate the distance between nodes and their associated cluster. Choose the number of clusters to keep by identifying a cut point that creates a reasonable number of clusters with a substantial number of observations per cluster (I know, “reasonable” and “substantial” are squishy terms). Below, cutting at height 0.65 to create 7 clusters seems good.

# Inspect the tree to choose a size.

plot(color_branches(as.dendrogram(mdl_hc), k = 7))

abline(h = .65, col = "red")

- “Cut” the hierarchical tree into the desired number of clusters (

k) or heighthwithcutree(hclust, k = NULL, h = NULL).cutree()returns a vector of cluster memberships. Attach this vector back to the original dataframe for visualization and summary statistics.

eap_2_clstr_hca <- eap_2 %>% mutate(cluster = cutree(mdl_hc, k = 7))- Calculate summary statistics and draw conclusions. Useful summary statistics are typically membership count, and feature averages (or proportions).

eap_2_clstr_hca %>%

group_by(cluster) %>%

summarise_if(is.numeric, funs(mean(.)))## # A tibble: 7 x 8

## cluster EmployeeNumber MonthlyIncome YearsAtCompany YearsWithCurrManager

## <int> <dbl> <dbl> <dbl> <dbl>

## 1 1 1051. 8056. 8.21 4.23

## 2 2 1029. 5146. 5.98 3.85

## 3 3 974. 4450. 5.29 3.51

## 4 4 1088. 3961. 4.60 2.64

## 5 5 980. 16534. 14.2 6.80

## 6 6 1036. 12952. 14.9 7.64

## 7 7 993. 13733. 12.8 6.53

## # ... with 3 more variables: TotalWorkingYears <dbl>, Age <dbl>,

## # YearsInCurrentRole <dbl>1.3.0.1 K-Means vs HCA

Hierarchical clustering has some advantages over k-means. It can use any distance method - not just euclidean. The results are stable - k-means can produce different results each time. While they can both be evaluated with the silhouette and elbow plots, hierachical clustering can also be evaluated with a dendogram. But hierarchical clusters has one significant drawback: it is computationally complex compared to k-means. For this last reason, k-means is more common.

Clustering is used to personalize employee experience. See A Complete Guide to the Employee Experience at AIHR.↩︎