Chapter 4 Modeling

In this part we apply two models: a LASSO-logit and XGBoost.

4.1 Cross-Validated LASSO-logit

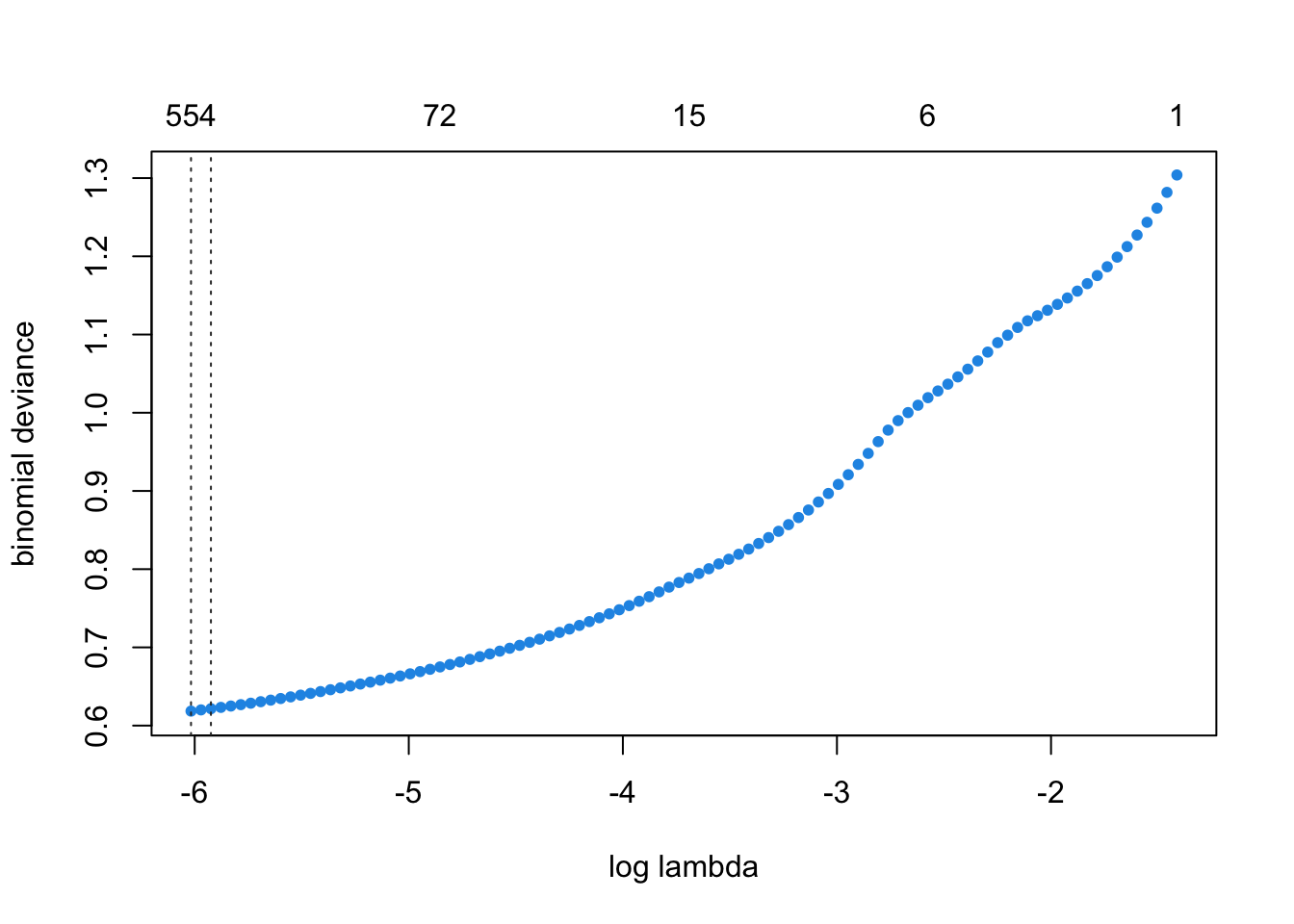

We estimate a cross-validated LASSO and plot the CV binomial deviance against model complexity.

# CV LASSO
# 5 folds are used
library(gamlr)
cvlasso_a <- cv.gamlr(x = Xa, y = Ya, verb = TRUE, family = "binomial", nfold = 5)
## fold 1,2,3,4,5,done.

# Plot
plot(cvlasso_a)

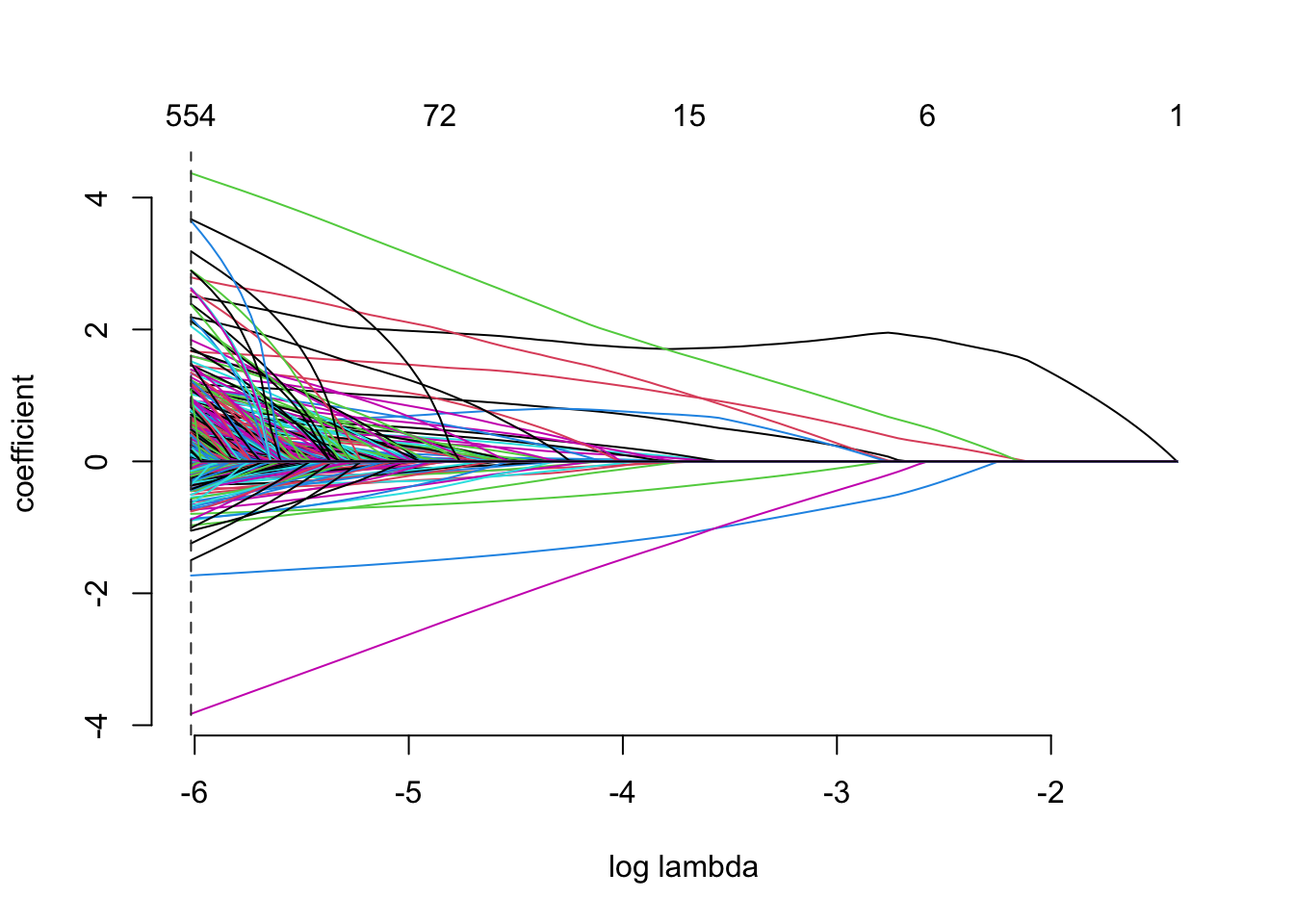

4.1.1 LASSO plot of the coefficients vs. model complexity

plot(cvlasso_a$gamlr)

4.1.2 Hyperparameter

The lambda that minimizes the out-of-sample (OOS) deviance is chosen automatically.
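As a rough illustration of this selection rule, the sketch below picks the segment with the smallest mean CV deviance along a lambda path. The vectors `lambda_path` and `cv_deviance` hold made-up values for illustration only; they are not output from `cv.gamlr`.

```r
# Hypothetical lambda path and mean out-of-sample deviance per segment
# (illustrative numbers only, not taken from the fitted model).
lambda_path <- c(0.10, 0.05, 0.01, 0.005, 0.001)
cv_deviance <- c(1.20, 0.95, 0.71, 0.68, 0.74)
names(lambda_path) <- paste0("seg", seq_along(lambda_path))

# "min" rule: keep the segment whose mean CV deviance is smallest.
best_seg <- which.min(cv_deviance)
best_lambda <- lambda_path[best_seg]
print(best_lambda)
```

In `gamlr`, `select = "min"` applies this rule, whereas the default `select = "1se"` prefers a larger lambda (a sparser model) whose deviance is within one standard error of the minimum.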

# Identifier of the selected lambda
a_lambda <- colnames(coef(cvlasso_a, select = "min"))

# Value of the selected lambda
cvlasso_a$gamlr$lambda[a_lambda]
##      seg100
## 0.002436658

4.1.3 Variables

Below is a table of the coefficients selected by the CV LASSO. Surprisingly, only 561 were selected.

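library(DT)
library(dplyr)  # provides %>%, filter() and arrange()

# Coefficients at the lambda that minimizes the CV deviance
coefs <- coef(cvlasso_a, select = "min", k = 2, corrected = TRUE)
coefs <- as.data.frame(coefs[, 1])
names(coefs) <- "valor"

# Keep only the nonzero coefficients and show them in descending order
coefs <- coefs %>% filter(valor != 0)
datatable(coefs %>% arrange(desc(valor)))

4.1.4 Log loss on the OOS test set

We now evaluate the log loss of the LASSO.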

# Predictions on the validation set
lasso_score <- predict(cvlasso_a,
                       newdata = Xb,
                       type = "response",
                       select = "min")

# Data frame with the observed target and the predicted probability
lasso_validation <- data.frame(y, lasso_score)
colnames(lasso_validation)[2] <- "lasso_score"

library(MLmetrics)
##
## Attaching package: 'MLmetrics'
## The following object is masked from 'package:base':
##
##     Recall

LogLoss(lasso_validation$lasso_score, lasso_validation$y)
## [1] 0.3129797

The error was surprisingly small. With this model we obtained errors of 0.41872 and 0.42131 on the Kaggle test data.
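For reference, the binary log loss can be computed by hand as the negative mean log-likelihood of the observed labels. The function below is a minimal base-R sketch, not the `MLmetrics` source; `log_loss`, `y_toy` and `p_toy` are hypothetical names, and the clipping constant `eps` is an assumption made to keep `log()` finite.

```r
# Binary log loss computed by hand (a sketch; probabilities are clipped
# away from 0 and 1 so that log() stays finite).
log_loss <- function(p, y, eps = 1e-15) {
  p <- pmin(pmax(p, eps), 1 - eps)
  -mean(y * log(p) + (1 - y) * log(1 - p))
}

# Toy check with made-up labels and predicted probabilities.
y_toy <- c(1, 0, 1, 0)
p_toy <- c(0.9, 0.2, 0.6, 0.4)
print(log_loss(p_toy, y_toy))
```

A useful yardstick when reading these numbers: a model that always predicts 0.5 has a log loss of log(2), roughly 0.693, so lower values indicate predictions that are more informative than a coin flip.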

4.2 XGBoost

However, to win the competition we chose to explore other models that generally have more potential to win this kind of contest: XGBoost.

In this case, the hyperparameters were chosen by manual tuning, exploring how the error behaved when all hyperparameters except one were held fixed. In this way the maximum tree depth was set to 6 and the learning rate to 0.06.
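The one-at-a-time search described here can be sketched in base R as follows. This is an illustration under stated assumptions, not the procedure actually run: `tune_one_at_a_time`, `eval_fn` and `toy_error` are hypothetical names, and `toy_error` is an artificial error surface built so that the search lands on the values quoted above. In a real run, `eval_fn` would train a model with the candidate parameters and return its validation log loss.

```r
# One-at-a-time hyperparameter search (sketch).
# `eval_fn` is a hypothetical function that takes a parameter list and
# returns a validation error; here a toy stand-in replaces model training.
tune_one_at_a_time <- function(base, grids, eval_fn) {
  best <- base
  for (name in names(grids)) {
    errors <- sapply(grids[[name]], function(value) {
      candidate <- best
      candidate[[name]] <- value  # vary one hyperparameter only
      eval_fn(candidate)
    })
    # Fix this hyperparameter at its best value before moving on.
    best[[name]] <- grids[[name]][which.min(errors)]
  }
  best
}

# Artificial error surface, minimized at max_depth = 6 and
# learning_rate = 0.06 (NOT the real validation error).
toy_error <- function(p) {
  (p$max_depth - 6)^2 + (100 * (p$learning_rate - 0.06))^2
}

base  <- list(max_depth = 3, learning_rate = 0.3)
grids <- list(max_depth = c(3, 4, 6, 8),
              learning_rate = c(0.01, 0.06, 0.1, 0.3))
tuned <- tune_one_at_a_time(base, grids, toy_error)
print(tuned)
```

This kind of search is cheap but ignores interactions between hyperparameters, so the result can depend on the order in which they are tuned.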

Because the datasets have a large number of variables (many of them not very informative), the colsample for each generated tree is high: 70%. Had we had only highly informative variables, we would have lowered that percentage; however, we wanted to exploit the model's ability to select variables on its own.

# Prepare the training set
library(xgboost)
##
## Attaching package: 'xgboost'
## The following object is masked from 'package:plotly':
##
##     slice
## The following object is masked from 'package:dplyr':
##
##     slice

# label is the target
dtrain <- xgb.DMatrix(Xa, label = Ya)

# Prepare the validation set
dtest <- xgb.DMatrix(Xb, label = y)

# Watchlist used to evaluate the model's performance during training
watchlist <- list(train = dtrain, eval = dtest)

# Train the model
param <- list(max_depth = 6, learning_rate = 0.06,
              objective = "binary:logistic",
              eval_metric = "logloss", subsample = 0.6, colsample_bytree = 0.7)

xgb_model <- xgb.train(params = param, data = dtrain,
                       nrounds = 300,
                       watchlist = watchlist,
                       early_stopping_rounds = 10)
## [1] train-logloss:0.665098 eval-logloss:0.665667

## Multiple eval metrics are present. Will use eval_logloss for early stopping.

## Will train until eval_logloss hasn't improved in 10 rounds.

##

## [2] train-logloss:0.641221 eval-logloss:0.641971

## [3] train-logloss:0.614687 eval-logloss:0.615819

## [4] train-logloss:0.590774 eval-logloss:0.592444

## [5] train-logloss:0.568975 eval-logloss:0.571190

## [6] train-logloss:0.548233 eval-logloss:0.550956

## [7] train-logloss:0.530716 eval-logloss:0.533807

## [8] train-logloss:0.515927 eval-logloss:0.519216

## [9] train-logloss:0.502567 eval-logloss:0.506112

## [10] train-logloss:0.489525 eval-logloss:0.493378

## [11] train-logloss:0.477705 eval-logloss:0.481602

## [12] train-logloss:0.467383 eval-logloss:0.471699

## [13] train-logloss:0.456398 eval-logloss:0.460840

## [14] train-logloss:0.446114 eval-logloss:0.450814

## [15] train-logloss:0.438908 eval-logloss:0.443665

## [16] train-logloss:0.429406 eval-logloss:0.434500

## [17] train-logloss:0.422044 eval-logloss:0.427494

## [18] train-logloss:0.414068 eval-logloss:0.419736

## [19] train-logloss:0.407732 eval-logloss:0.413605

## [20] train-logloss:0.399773 eval-logloss:0.405979

## [21] train-logloss:0.394154 eval-logloss:0.400463

## [22] train-logloss:0.389672 eval-logloss:0.396128

## [23] train-logloss:0.383994 eval-logloss:0.390732

## [24] train-logloss:0.377858 eval-logloss:0.384795

## [25] train-logloss:0.372587 eval-logloss:0.379743

## [26] train-logloss:0.367282 eval-logloss:0.374645

## [27] train-logloss:0.362632 eval-logloss:0.370094

## [28] train-logloss:0.358672 eval-logloss:0.366222

## [29] train-logloss:0.355014 eval-logloss:0.362701

## [30] train-logloss:0.350580 eval-logloss:0.358596

## [31] train-logloss:0.347293 eval-logloss:0.355508

## [32] train-logloss:0.344457 eval-logloss:0.352769

## [33] train-logloss:0.342089 eval-logloss:0.350283

## [34] train-logloss:0.339376 eval-logloss:0.347755

## [35] train-logloss:0.336715 eval-logloss:0.345158

## [36] train-logloss:0.334183 eval-logloss:0.342716

## [37] train-logloss:0.331207 eval-logloss:0.339855

## [38] train-logloss:0.329343 eval-logloss:0.338172

## [39] train-logloss:0.325686 eval-logloss:0.334538

## [40] train-logloss:0.323158 eval-logloss:0.332055

## [41] train-logloss:0.321323 eval-logloss:0.330334

## [42] train-logloss:0.319130 eval-logloss:0.328256

## [43] train-logloss:0.317542 eval-logloss:0.326740

## [44] train-logloss:0.315605 eval-logloss:0.324905

## [45] train-logloss:0.314003 eval-logloss:0.323298

## [46] train-logloss:0.312519 eval-logloss:0.321748

## [47] train-logloss:0.310820 eval-logloss:0.320202

## [48] train-logloss:0.309514 eval-logloss:0.318914

## [49] train-logloss:0.308511 eval-logloss:0.317889

## [50] train-logloss:0.307255 eval-logloss:0.316698

## [51] train-logloss:0.305529 eval-logloss:0.315050

## [52] train-logloss:0.304378 eval-logloss:0.314005

## [53] train-logloss:0.303257 eval-logloss:0.312926

## [54] train-logloss:0.302388 eval-logloss:0.312117

## [55] train-logloss:0.301430 eval-logloss:0.311187

## [56] train-logloss:0.299083 eval-logloss:0.308857

## [57] train-logloss:0.297956 eval-logloss:0.307714

## [58] train-logloss:0.296969 eval-logloss:0.306870

## [59] train-logloss:0.296204 eval-logloss:0.306248

## [60] train-logloss:0.295512 eval-logloss:0.305615

## [61] train-logloss:0.294669 eval-logloss:0.304831

## [62] train-logloss:0.294058 eval-logloss:0.304199

## [63] train-logloss:0.293223 eval-logloss:0.303451

## [64] train-logloss:0.292572 eval-logloss:0.302828

## [65] train-logloss:0.291959 eval-logloss:0.302220

## [66] train-logloss:0.290968 eval-logloss:0.301248

## [67] train-logloss:0.290267 eval-logloss:0.300673

## [68] train-logloss:0.289372 eval-logloss:0.299778

## [69] train-logloss:0.288761 eval-logloss:0.299260

## [70] train-logloss:0.287111 eval-logloss:0.297684

## [71] train-logloss:0.286612 eval-logloss:0.297239

## [72] train-logloss:0.286186 eval-logloss:0.296791

## [73] train-logloss:0.285414 eval-logloss:0.296055

## [74] train-logloss:0.283695 eval-logloss:0.294386

## [75] train-logloss:0.283211 eval-logloss:0.293935

## [76] train-logloss:0.281591 eval-logloss:0.292384

## [77] train-logloss:0.281183 eval-logloss:0.291962

## [78] train-logloss:0.280684 eval-logloss:0.291505

## [79] train-logloss:0.280247 eval-logloss:0.291059

## [80] train-logloss:0.279860 eval-logloss:0.290683

## [81] train-logloss:0.279572 eval-logloss:0.290415

## [82] train-logloss:0.279012 eval-logloss:0.289875

## [83] train-logloss:0.278129 eval-logloss:0.289121

## [84] train-logloss:0.277820 eval-logloss:0.288824

## [85] train-logloss:0.277428 eval-logloss:0.288552

## [86] train-logloss:0.275781 eval-logloss:0.286991

## [87] train-logloss:0.275252 eval-logloss:0.286505

## [88] train-logloss:0.274808 eval-logloss:0.286111

## [89] train-logloss:0.274513 eval-logloss:0.285894

## [90] train-logloss:0.273493 eval-logloss:0.284944

## [91] train-logloss:0.273158 eval-logloss:0.284661

## [92] train-logloss:0.272915 eval-logloss:0.284410

## [93] train-logloss:0.272324 eval-logloss:0.283904

## [94] train-logloss:0.271902 eval-logloss:0.283549

## [95] train-logloss:0.271398 eval-logloss:0.283053

## [96] train-logloss:0.271162 eval-logloss:0.282823

## [97] train-logloss:0.270779 eval-logloss:0.282555

## [98] train-logloss:0.270447 eval-logloss:0.282359

## [99] train-logloss:0.269607 eval-logloss:0.281557

## [100] train-logloss:0.269273 eval-logloss:0.281295

## [101] train-logloss:0.268677 eval-logloss:0.280786

## [102] train-logloss:0.268194 eval-logloss:0.280368

## [103] train-logloss:0.268006 eval-logloss:0.280212

## [104] train-logloss:0.267774 eval-logloss:0.279957

## [105] train-logloss:0.267413 eval-logloss:0.279639

## [106] train-logloss:0.267012 eval-logloss:0.279383

## [107] train-logloss:0.266718 eval-logloss:0.279113

## [108] train-logloss:0.266400 eval-logloss:0.278859

## [109] train-logloss:0.266207 eval-logloss:0.278720

## [110] train-logloss:0.265831 eval-logloss:0.278369

## [111] train-logloss:0.265616 eval-logloss:0.278183

## [112] train-logloss:0.265218 eval-logloss:0.277912

## [113] train-logloss:0.264770 eval-logloss:0.277543

## [114] train-logloss:0.263727 eval-logloss:0.276570

## [115] train-logloss:0.263328 eval-logloss:0.276221

## [116] train-logloss:0.263039 eval-logloss:0.275995

## [117] train-logloss:0.262694 eval-logloss:0.275711

## [118] train-logloss:0.262344 eval-logloss:0.275464

## [119] train-logloss:0.262168 eval-logloss:0.275297

## [120] train-logloss:0.261299 eval-logloss:0.274546

## [121] train-logloss:0.261161 eval-logloss:0.274423

## [122] train-logloss:0.260809 eval-logloss:0.274117

## [123] train-logloss:0.260625 eval-logloss:0.273937

## [124] train-logloss:0.260477 eval-logloss:0.273797

## [125] train-logloss:0.260213 eval-logloss:0.273583

## [126] train-logloss:0.259948 eval-logloss:0.273437

## [127] train-logloss:0.259747 eval-logloss:0.273332

## [128] train-logloss:0.259492 eval-logloss:0.273099

## [129] train-logloss:0.259310 eval-logloss:0.272965

## [130] train-logloss:0.258976 eval-logloss:0.272675

## [131] train-logloss:0.258673 eval-logloss:0.272432

## [132] train-logloss:0.258470 eval-logloss:0.272270

## [133] train-logloss:0.258293 eval-logloss:0.272147

## [134] train-logloss:0.257973 eval-logloss:0.271926

## [135] train-logloss:0.257798 eval-logloss:0.271831

## [136] train-logloss:0.257429 eval-logloss:0.271510

## [137] train-logloss:0.257271 eval-logloss:0.271374

## [138] train-logloss:0.257090 eval-logloss:0.271286

## [139] train-logloss:0.256989 eval-logloss:0.271220

## [140] train-logloss:0.256729 eval-logloss:0.270992

## [141] train-logloss:0.256569 eval-logloss:0.270873

## [142] train-logloss:0.256447 eval-logloss:0.270805

## [143] train-logloss:0.256258 eval-logloss:0.270621

## [144] train-logloss:0.255872 eval-logloss:0.270282

## [145] train-logloss:0.255423 eval-logloss:0.269957

## [146] train-logloss:0.254832 eval-logloss:0.269530

## [147] train-logloss:0.254701 eval-logloss:0.269449

## [148] train-logloss:0.254514 eval-logloss:0.269350

## [149] train-logloss:0.254333 eval-logloss:0.269253

## [150] train-logloss:0.254165 eval-logloss:0.269130

## [151] train-logloss:0.253832 eval-logloss:0.268887

## [152] train-logloss:0.253693 eval-logloss:0.268821

## [153] train-logloss:0.253516 eval-logloss:0.268696

## [154] train-logloss:0.253429 eval-logloss:0.268633

## [155] train-logloss:0.253290 eval-logloss:0.268524

## [156] train-logloss:0.252911 eval-logloss:0.268204

## [157] train-logloss:0.252723 eval-logloss:0.268085

## [158] train-logloss:0.252591 eval-logloss:0.268009

## [159] train-logloss:0.252390 eval-logloss:0.267882

## [160] train-logloss:0.252220 eval-logloss:0.267823

## [161] train-logloss:0.251209 eval-logloss:0.266885

## [162] train-logloss:0.251019 eval-logloss:0.266777

## [163] train-logloss:0.250887 eval-logloss:0.266721

## [164] train-logloss:0.250772 eval-logloss:0.266623

## [165] train-logloss:0.250680 eval-logloss:0.266531

## [166] train-logloss:0.250586 eval-logloss:0.266455

## [167] train-logloss:0.250460 eval-logloss:0.266366

## [168] train-logloss:0.250382 eval-logloss:0.266333

## [169] train-logloss:0.250312 eval-logloss:0.266296

## [170] train-logloss:0.250231 eval-logloss:0.266252

## [171] train-logloss:0.249905 eval-logloss:0.266057

## [172] train-logloss:0.249748 eval-logloss:0.265941

## [173] train-logloss:0.249649 eval-logloss:0.265906

## [174] train-logloss:0.249487 eval-logloss:0.265817

## [175] train-logloss:0.249381 eval-logloss:0.265747

## [176] train-logloss:0.249267 eval-logloss:0.265646

## [177] train-logloss:0.249036 eval-logloss:0.265420

## [178] train-logloss:0.248779 eval-logloss:0.265272

## [179] train-logloss:0.248532 eval-logloss:0.265094

## [180] train-logloss:0.248429 eval-logloss:0.265035

## [181] train-logloss:0.248343 eval-logloss:0.264956

## [182] train-logloss:0.248178 eval-logloss:0.264857

## [183] train-logloss:0.248024 eval-logloss:0.264763

## [184] train-logloss:0.247887 eval-logloss:0.264663

## [185] train-logloss:0.247781 eval-logloss:0.264574

## [186] train-logloss:0.247525 eval-logloss:0.264428

## [187] train-logloss:0.247428 eval-logloss:0.264362

## [188] train-logloss:0.247300 eval-logloss:0.264281

## [189] train-logloss:0.247086 eval-logloss:0.264168

## [190] train-logloss:0.246923 eval-logloss:0.264013

## [191] train-logloss:0.246849 eval-logloss:0.263986

## [192] train-logloss:0.246695 eval-logloss:0.263878

## [193] train-logloss:0.246533 eval-logloss:0.263755

## [194] train-logloss:0.246390 eval-logloss:0.263701

## [195] train-logloss:0.246242 eval-logloss:0.263570

## [196] train-logloss:0.245988 eval-logloss:0.263446

## [197] train-logloss:0.245870 eval-logloss:0.263373

## [198] train-logloss:0.245764 eval-logloss:0.263336

## [199] train-logloss:0.245649 eval-logloss:0.263245

## [200] train-logloss:0.245377 eval-logloss:0.263064

## [201] train-logloss:0.245255 eval-logloss:0.262988

## [202] train-logloss:0.245092 eval-logloss:0.262886

## [203] train-logloss:0.244962 eval-logloss:0.262788

## [204] train-logloss:0.244759 eval-logloss:0.262661

## [205] train-logloss:0.244638 eval-logloss:0.262624

## [206] train-logloss:0.244279 eval-logloss:0.262276

## [207] train-logloss:0.244040 eval-logloss:0.262131

## [208] train-logloss:0.243889 eval-logloss:0.262026

## [209] train-logloss:0.243642 eval-logloss:0.261824

## [210] train-logloss:0.243584 eval-logloss:0.261787

## [211] train-logloss:0.243505 eval-logloss:0.261727

## [212] train-logloss:0.243115 eval-logloss:0.261392

## [213] train-logloss:0.243042 eval-logloss:0.261368

## [214] train-logloss:0.242969 eval-logloss:0.261317

## [215] train-logloss:0.242903 eval-logloss:0.261271

## [216] train-logloss:0.242760 eval-logloss:0.261199

## [217] train-logloss:0.242305 eval-logloss:0.260851

## [218] train-logloss:0.242193 eval-logloss:0.260760

## [219] train-logloss:0.242093 eval-logloss:0.260708

## [220] train-logloss:0.241799 eval-logloss:0.260524

## [221] train-logloss:0.241715 eval-logloss:0.260464

## [222] train-logloss:0.241652 eval-logloss:0.260435

## [223] train-logloss:0.241512 eval-logloss:0.260351

## [224] train-logloss:0.241429 eval-logloss:0.260301

## [225] train-logloss:0.241301 eval-logloss:0.260228

## [226] train-logloss:0.241231 eval-logloss:0.260214

## [227] train-logloss:0.241072 eval-logloss:0.260075

## [228] train-logloss:0.241023 eval-logloss:0.260063

## [229] train-logloss:0.240976 eval-logloss:0.260024

## [230] train-logloss:0.240868 eval-logloss:0.260022

## [231] train-logloss:0.240766 eval-logloss:0.259929

## [232] train-logloss:0.240680 eval-logloss:0.259910

## [233] train-logloss:0.240582 eval-logloss:0.259896

## [234] train-logloss:0.240468 eval-logloss:0.259849

## [235] train-logloss:0.240352 eval-logloss:0.259784

## [236] train-logloss:0.240166 eval-logloss:0.259679

## [237] train-logloss:0.239997 eval-logloss:0.259526

## [238] train-logloss:0.239921 eval-logloss:0.259480

## [239] train-logloss:0.239721 eval-logloss:0.259373

## [240] train-logloss:0.239639 eval-logloss:0.259354

## [241] train-logloss:0.239550 eval-logloss:0.259311

## [242] train-logloss:0.239467 eval-logloss:0.259250

## [243] train-logloss:0.239363 eval-logloss:0.259159

## [244] train-logloss:0.239257 eval-logloss:0.259149

## [245] train-logloss:0.239188 eval-logloss:0.259119

## [246] train-logloss:0.239089 eval-logloss:0.259073

## [247] train-logloss:0.239007 eval-logloss:0.259052

## [248] train-logloss:0.238934 eval-logloss:0.259008

## [249] train-logloss:0.238850 eval-logloss:0.258968

## [250] train-logloss:0.238767 eval-logloss:0.258894

## [251] train-logloss:0.238664 eval-logloss:0.258841

## [252] train-logloss:0.238518 eval-logloss:0.258770

## [253] train-logloss:0.238438 eval-logloss:0.258732

## [254] train-logloss:0.238368 eval-logloss:0.258661

## [255] train-logloss:0.238234 eval-logloss:0.258553

## [256] train-logloss:0.238143 eval-logloss:0.258484

## [257] train-logloss:0.238057 eval-logloss:0.258451

## [258] train-logloss:0.237971 eval-logloss:0.258409

## [259] train-logloss:0.237743 eval-logloss:0.258231

## [260] train-logloss:0.237686 eval-logloss:0.258198

## [261] train-logloss:0.237613 eval-logloss:0.258149

## [262] train-logloss:0.237485 eval-logloss:0.258066

## [263] train-logloss:0.237361 eval-logloss:0.257997

## [264] train-logloss:0.237296 eval-logloss:0.257949

## [265] train-logloss:0.237237 eval-logloss:0.257906

## [266] train-logloss:0.237176 eval-logloss:0.257863

## [267] train-logloss:0.237045 eval-logloss:0.257761

## [268] train-logloss:0.236796 eval-logloss:0.257571

## [269] train-logloss:0.236602 eval-logloss:0.257430

## [270] train-logloss:0.236546 eval-logloss:0.257383

## [271] train-logloss:0.236407 eval-logloss:0.257271

## [272] train-logloss:0.236323 eval-logloss:0.257213

## [273] train-logloss:0.236204 eval-logloss:0.257147

## [274] train-logloss:0.236110 eval-logloss:0.257096

## [275] train-logloss:0.235980 eval-logloss:0.257021

## [276] train-logloss:0.235854 eval-logloss:0.256960

## [277] train-logloss:0.235728 eval-logloss:0.256926

## [278] train-logloss:0.235654 eval-logloss:0.256879

## [279] train-logloss:0.235552 eval-logloss:0.256791

## [280] train-logloss:0.235470 eval-logloss:0.256752

## [281] train-logloss:0.235366 eval-logloss:0.256714

## [282] train-logloss:0.235143 eval-logloss:0.256559

## [283] train-logloss:0.235068 eval-logloss:0.256503

## [284] train-logloss:0.234999 eval-logloss:0.256435

## [285] train-logloss:0.234859 eval-logloss:0.256374

## [286] train-logloss:0.234802 eval-logloss:0.256354

## [287] train-logloss:0.234739 eval-logloss:0.256321

## [288] train-logloss:0.234611 eval-logloss:0.256236

## [289] train-logloss:0.234562 eval-logloss:0.256207

## [290] train-logloss:0.234435 eval-logloss:0.256179

## [291] train-logloss:0.234349 eval-logloss:0.256153

## [292] train-logloss:0.234267 eval-logloss:0.256127

## [293] train-logloss:0.234173 eval-logloss:0.256071

## [294] train-logloss:0.234104 eval-logloss:0.256009

## [295] train-logloss:0.234047 eval-logloss:0.255945

## [296] train-logloss:0.233990 eval-logloss:0.255908

## [297] train-logloss:0.233878 eval-logloss:0.255870

## [298] train-logloss:0.233585 eval-logloss:0.255712

## [299] train-logloss:0.233537 eval-logloss:0.255697

## [300] train-logloss:0.233481 eval-logloss:0.255686

# Prediction
xgb_pred <- predict(xgb_model, Xb)
XGpred <- data.frame(y, xgb_pred)
colnames(XGpred) <- c("y", "xgb_pred")

The log above shows the model's evaluations, both in-sample (train) and out-of-sample (eval), from the first to the last iteration.
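The `early_stopping_rounds = 10` setting used during training can be read as the rule sketched below: stop once the validation metric has gone 10 rounds without improving. This is a simplified base-R illustration, not xgboost's internal code; `early_stop_round` and `toy_loss` are hypothetical names and the loss curve is made up.

```r
# Early-stopping rule (sketch): stop once the validation metric has not
# improved for `patience` consecutive rounds. Returns the round at which
# training would stop and the best round seen up to that point.
early_stop_round <- function(eval_loss, patience = 10) {
  best <- Inf
  best_round <- 0
  for (i in seq_along(eval_loss)) {
    if (eval_loss[i] < best) {
      best <- eval_loss[i]
      best_round <- i
    } else if (i - best_round >= patience) {
      return(list(stopped_at = i, best_round = best_round))
    }
  }
  # No stop triggered: training ran through every available round.
  list(stopped_at = length(eval_loss), best_round = best_round)
}

# Made-up loss curve: improves for 5 rounds, then plateaus.
toy_loss <- c(0.60, 0.50, 0.45, 0.42, 0.41, rep(0.415, 20))
result <- early_stop_round(toy_loss, patience = 10)
print(result)
```

In the training log for this model the output runs through round 300, the `nrounds` cap, which suggests the stopping rule never triggered and that a larger `nrounds` might have lowered the eval log loss a little further.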

4.2.1 Error

LogLoss(XGpred$xgb_pred, XGpred$y)
## [1] 0.255686

This model won the competition, with errors of 0.37598 and 0.37401 on the Kaggle datasets.

4.2.2 Variables

importance <- xgb.importance(feature_names = xgb_model[["feature_names"]], model = xgb_model)

datatable(importance)