Chapter 3 Importing and Cleaning Data

In the previous two chapters, we used mostly built-in data sets to practice visualizing and transforming data. In practice, of course, you’ll have to import a data set into R before starting your analysis. Once a data set is imported, it’s often necessary to spruce it up by fixing broken formatting, changing variable names, deciding what to do with missing values, etc. We address these important steps in this chapter.

We’ll need tidyverse:

library(tidyverse)We’ll also need two new libraries used for importing data sets. They’re installed as part of base R, but you’ll have to load them.

library(readxl) # For importing Excel files

library(readr) # For importing csv (comma separated values) files3.1 Importing Tabular Data

All of our data sets in this course are tabular, meaning that they consist of several rows of observations and several columns of variables. Tabular data is usually entered into spreadsheet software (such as Excel, Google Sheets, etc), where it can be manipulated using the built-in functionality of the software. Importing the data into R, however, allows for greater flexibility and versatility when performing your analysis.

RStudio makes it very easy to import data sets, although the first step is placing your spreadsheet file in the right directory.

Click here to view a very small sample csv file. Our goal is to import this into R. We can do so as follows:

Download the file by clicking the download button in the upper-right corner of the screen.

Import this file into R:

- If you’re using the RStudio desktop version, move this downloaded file to your working directory in R. If you’re not sure what your working directory is, you can enter

getwd()in the RStudio console to find out. - If you’re using Posit Cloud, click the “Files” tab in the lower-right pane, and then click the “Upload” button. Then click “Choose File” and browse to the location of the downloaded csv file. In either case (desktop or cloud), the file should now appear in your list of files.

Click on the csv file in your files list, and then choose “Import Dataset.” You’ll see a preview of what the data set will look like in R, a window of importing options, and the R code used to do the importing.

In the “Import Options” window, choose the name you want to use for the data set once it’s in R. Enter this in the “Name” field. Also, decide whether you want to use the values in the first row in your csv file as column names. If so, make sure the “First Row as Names” box is checked. There are other options in this window as well that you’ll sometimes need to adjust, but these two will get you started.

Now you’re ready to import. You can do so by either clicking the “Import” button at the bottom right or by cutting and pasting the code provided into your document. I’d recommend cutting and pasting the code, as it allows you to quickly re-import your csv over your original in case you have to start your analysis over. The code generated by the import feature is:

library(readr)

sample_csv <- read_csv("sample_csv.csv")

View(sample_csv)readr is the package needed to access the read_csv function. If you’ve already loaded it, it’s unnecessary to do so again. Also, the View(sample_csv) command is, of course, optional. All you really need to import the file is:

sample_csv <- read_csv("sample_csv.csv")Once you import it, you should see sample_csv listed in the Global Environment pane, and you can now start to analyze it like any other data set. It’s always good to at least preview it to make sure it imported correctly:

sample_csv## # A tibble: 3 x 2

## state capital

## <chr> <chr>

## 1 Michigan Lansing

## 2 California Sacramento

## 3 New Jersey TrentonData entry is usually done in Excel (or other spreadsheet software) and then imported into R for analysis. However, you sometimes might want to enter tabular data directly into R, especially when the data set is small. This is often the case when you need a sample data set to test a piece of code.

You can do so in R using the tibble or tribble (= transposed tibble) functions from tidyverse.

(small_data_set_tibble <- tibble(

x = c(3, 4, 2, -5, 7),

y = c(5, 6, -1, 0, 5),

z = c(-3, -2, 0, 1, 6)

))## # A tibble: 5 x 3

## x y z

## <dbl> <dbl> <dbl>

## 1 3 5 -3

## 2 4 6 -2

## 3 2 -1 0

## 4 -5 0 1

## 5 7 5 6(small_data_set_tribble <- tribble(

~name, ~points, ~year,

"Wilt Chamberlain", 100, 1962,

"Kobe Bryant", 81, 2006,

"Wilt Chamberlain", 78, 1961,

"Wilt Chamberlain", 73, 1962,

"Wilt Chamberlain", 73, 1962,

"David Thompson", 73, 1978,

"Luka Doncic", 73, 2024

))## # A tibble: 7 x 3

## name points year

## <chr> <dbl> <dbl>

## 1 Wilt Chamberlain 100 1962

## 2 Kobe Bryant 81 2006

## 3 Wilt Chamberlain 78 1961

## 4 Wilt Chamberlain 73 1962

## 5 Wilt Chamberlain 73 1962

## 6 David Thompson 73 1978

## 7 Luka Doncic 73 2024Notice that the column names do not require quotation marks, but any non-numeric values in the data set do.

Exercises

Click here to access another sample spreadsheet file. Download it as a .xlsx file and then import it into R. (Copy and paste the code generated for the import into your answer.) Why is the code a little different from that used to import the sample spreadsheet from above in this section?

Click here for yet another sample spreadsheet. Download it and import it into R, but when you do, give the imported data set a more meaningful name than the default name. What else will you have to do to make the data set import correctly? (Copy and paste the generated import code into your answer.)

When you chose not to use the first row as the column names in the previous exercise, what default names did

read_excelassign to the columns? By either checking theread_exceldocumentation in R (?read_excel) or by searching the internet, find out how to override the default column names. Then re-import the spreadsheet from the previous exercise and assign more meaningful column names.Use both the

tibbleandtribblefunctions to directly enter your current course schedule into R. Include a column for “course” (e.g., MAT 210), “title” (e.g., Data Analysis with R), and “credits” (e.g., 4).The

tibbleandtribblefunctions both result in the same thing, but try to think about why it’s sometimes easier to usetibblethantribbleand vice versa.

3.2 Data Types and Data Structures

R supports various “types” of data. The table below summarizes the ones we will use most often (but is not a comprehensive list).

| type | description |

|---|---|

| numeric | real numbers, both decimals and whole numbers (known as “doubles”) |

| integer | whole numbers |

| character | text characters or strings of text characters, including letters, numbers, and other symbols |

| logical | statements whose value is either true or false |

You can check the type of an object using the typeof function:

typeof("abc")## [1] "character"typeof("3.6")## [1] "character"The last above output might seem surprising, but when a data object is enclosed in quotes, it is automatically considered a character, even when the quoted object is a number, such as 3.6. Contrast this with the following:

typeof(3.6)## [1] "double"typeof(3)## [1] "double"Notice that the object, 3, is a whole number, but typeof labeled it a double. This is because doubles are the default numeric data type – every whole number is a double but not vice versa. If you want to indicate that a whole number should not be treated as a double, include the suffix “L”:

typeof(3L)## [1] "integer"typeof(10 < 7)## [1] "logical"You can sometimes “coerce” objects of one type into objects of another using the as.<TYPE> function. For example, suppose we want to coerce 3, considered as a double, into an integer:

x <- as.integer(3)

typeof(x)## [1] "integer"One of the exercises below explores coercing in more depth.

Data objects are the building blocks of more complex “data structures.” R uses various data structures, including the following ones most relevant to us:

| data structure | description |

|---|---|

| vector | ordered collection of objects of the same data type |

| list | ordered collection of objects of possibly different data types |

| data frame | tabular structure, a list of column vectors |

| factor | a vector of ordinal categorical data with predefined “levels” |

Vectors and lists have similar functionality, the main difference being that vectors are special lists in which each entry has the same data type. Both are entered using the combine function c(). (Some specially structured lists can be entered without typing each entry into the c() function. You will explore these in the exercises.)

The first list below is not a vector, but the second one is:

L1 <- c("a", 4.7, TRUE)

L2 <- c(4.9, 2, -1/2, pi)There are some helpful functions available for lists. For example, you can calculate the length of a list:

length(L1)## [1] 3length(L2)## [1] 4You can also sort lists:

sort(L1)## [1] "4.7" "a" "TRUE"sort(L2)## [1] -0.500000 2.000000 3.141593 4.900000The vector L2 was sorted into numerical order (from smallest to largest). Do you see how the list L1 was sorted?

You can access entries from a list by referring to its “index” (its numbered position in the list). The following extracts the third entry in L2:

L2[3]## [1] -0.5You can also access several entries at once by referring to a vector of indices. The following extracts the first and fourth entries of L2:

L2[c(1,4)]## [1] 4.900000 3.141593If you want to instead specify which entries you do not want to access, then just insert a negative sign in front of the excluded entry. The following extracts all entries except the second from L2:

L2[-2]## [1] 4.900000 -0.500000 3.141593A data frame is a tabular array of data objects (like a spreadsheet). Each column of a data frame is a vector, so a single column in a data frame cannot contain a mix of data types. The tidyverse package works best with “tibbles,” which are data frames designed to print more nicely in the console.

We’ve seen how to extract a single column vector from a data frame. The following extracts the model column from mpg:

mpg$modelSince each column of a data frame has entries of only one type, we can check the column’s type using typeof:

typeof(mpg$model)## [1] "character"For data frames that are tibbles, we can also view the data type of each column by displaying the tibble. (This is one of the nice features of tibbles.)

mpg## # A tibble: 234 x 11

## manufacturer model displ year cyl trans drv cty hwy fl class

## <chr> <chr> <dbl> <int> <int> <chr> <chr> <int> <int> <chr> <chr>

## 1 audi a4 1.8 1999 4 auto(l5) f 18 29 p compact

## 2 audi a4 1.8 1999 4 manual(m5) f 21 29 p compact

## 3 audi a4 2 2008 4 manual(m6) f 20 31 p compact

## 4 audi a4 2 2008 4 auto(av) f 21 30 p compact

## 5 audi a4 2.8 1999 6 auto(l5) f 16 26 p compact

## 6 audi a4 2.8 1999 6 manual(m5) f 18 26 p compact

## 7 audi a4 3.1 2008 6 auto(av) f 18 27 p compact

## 8 audi a4 quattro 1.8 1999 4 manual(m5) 4 18 26 p compact

## 9 audi a4 quattro 1.8 1999 4 auto(l5) 4 16 25 p compact

## 10 audi a4 quattro 2 2008 4 manual(m6) 4 20 28 p compact

## # i 224 more rowsNotice that an abbreviation of the type is stated under each column name.

Earlier in this section, we saw how to coerce data objects from one type to another using the as.<TYPE> function. We can coerce entire columns of data frames as well by coercing within the mutate function. For example, recall the data set small_data_set_tribble from the previous section:

(small_data_set_tribble <- tribble(

~name, ~points, ~year,

"Wilt Chamberlain", 100, 1962,

"Kobe Bryant", 81, 2006,

"Wilt Chamberlain", 78, 1961,

"Wilt Chamberlain", 73, 1962,

"Wilt Chamberlain", 73, 1962,

"David Thompson", 73, 1978,

"Luka Doncic", 73, 2024

))## # A tibble: 7 x 3

## name points year

## <chr> <dbl> <dbl>

## 1 Wilt Chamberlain 100 1962

## 2 Kobe Bryant 81 2006

## 3 Wilt Chamberlain 78 1961

## 4 Wilt Chamberlain 73 1962

## 5 Wilt Chamberlain 73 1962

## 6 David Thompson 73 1978

## 7 Luka Doncic 73 2024Notice that the points and year columns are both doubles, which is the default numeric data type. However, since the values of these variables must always be whole numbers, it would be appropriate to coerce them into integers.

small_data_set_tribble %>%

mutate(points = as.integer(points),

year = as.integer(year))## # A tibble: 7 x 3

## name points year

## <chr> <int> <int>

## 1 Wilt Chamberlain 100 1962

## 2 Kobe Bryant 81 2006

## 3 Wilt Chamberlain 78 1961

## 4 Wilt Chamberlain 73 1962

## 5 Wilt Chamberlain 73 1962

## 6 David Thompson 73 1978

## 7 Luka Doncic 73 2024Notice that these variables now have integer type.

One final data structure we’ll use is a “factor.” Factors are lists of ordinal categorical data which come with a specified ordering.

To begin to see what factors are, let’s create a small data set:

(schedule <- tribble(

~day, ~task,

"Tuesday", "wash car",

"Friday", "doctor's appointment",

"Monday", "haircut",

"Saturday", "laundry",

"Wednesday", "oil change"

))## # A tibble: 5 x 2

## day task

## <chr> <chr>

## 1 Tuesday wash car

## 2 Friday doctor's appointment

## 3 Monday haircut

## 4 Saturday laundry

## 5 Wednesday oil changeSuppose we wanted to organize this list of tasks by the day. We could try:

arrange(schedule, day)## # A tibble: 5 x 2

## day task

## <chr> <chr>

## 1 Friday doctor's appointment

## 2 Monday haircut

## 3 Saturday laundry

## 4 Tuesday wash car

## 5 Wednesday oil changeAs you can see, this organizes by day alphabetically, which is certainly not what we want. To organize by day chronologically, we should specify our desired ordering so that the arrange function will sort it the way we want.

We first have to define our ordering by establishing a “levels” vector for the day variable:

day_levels <- c("Sunday", "Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday")We can now turn the day column into a factor with the above levels as follows:

(schedule2 <- schedule %>%

mutate(day = factor(day, levels = day_levels)))## # A tibble: 5 x 2

## day task

## <fct> <chr>

## 1 Tuesday wash car

## 2 Friday doctor's appointment

## 3 Monday haircut

## 4 Saturday laundry

## 5 Wednesday oil changeNotice that the type of day has been changed to a factor. Re-trying the sort above, we get:

arrange(schedule2, day)## # A tibble: 5 x 2

## day task

## <fct> <chr>

## 1 Monday haircut

## 2 Tuesday wash car

## 3 Wednesday oil change

## 4 Friday doctor's appointment

## 5 Saturday laundryWhen it’s necessary to exploit the order of an ordinal categorical variable, it’s best to convert it to a factor, specifying the levels in a way that corresponds to the order.

Exercises

You will need the nycflights13 library for these exercises.

Answer the following questions by experimenting.

- Can you coerce an integer into a character? What happens when you try?

- Can you coerce a double into a character? What happens when you try?

- Can you coerce a double into an integer? What happens when you try?

- Can you coerce a character into a double? What happens when you try?

- Can you coerce a logical object into a character? What happens when you try?

- Can you coerce a logical object into a double? What happens when you try?

- Can you coerce a character into a logical object? What happens when you try?

Sort the objects in the list

c("R", 4.2, "math", FALSE, -2). Then try to explain how lists containing more than one data type are sorted.The

flightsdata set has a variable with a type that was not covered in this section. What is the variable, and what is its type?Create a data set called

mpg2which is an exact copy ofmpgexcept that thehwyandctyvariables are doubles rather than integers.Download and import the data set found here.

- What is the type of the variable

day? Change it to a more appropriate type. - Use

arrangeto sort the data set so that the records are in chronological order. (You’ll have to changemonthto a different data structure.)

- What is the type of the variable

Download and import the data set found here. It contains observations about 200 college students with two variables:

classis their college class, andpreferenceis their political party preference.- Obtain a bar graph that shows the distribution of the

classvariable. - What is the obvious problem with your bar graph from part (a)?

- Think of a way to fix the problem noticed in part (b), and then redo the bar graph.

- Obtain a bar graph that shows the distribution of the

Without scrolling through the data set, state the value in the 3,568th row of the

destcolumn inflights.As noted in this section, there are ways to enter certain special lists without typing every entry. Describe the entries in each of the following lists.

3:10seq(5,63,10)rep("j",5)rep(c("MAT",210),4)rep(c("MAT",210),c(6,3))

Use the shortcuts from the previous exercise to enter the following lists.

4 10 16 22 28 34 40 46"a" "b" "c" "a" "b" "c" "a" "b" "c""a" "a" "a" "b" "b" "b" "c" "c" "c""a" "a" "a" "b" "b" "b" "b" "b" "c"

3.3 Renaming Columns

For the next few sections, we’ll be using the data set found here. You should download it and then import it into R using the name high_school_data. It contains high school data for incoming college students as well as data from their first college semester.

You probably immediately notice that one of the variable names, the one that starts with Acad Standing After FA20... is extremely long. (You can’t see the column’s data in the clipped tibble in the console, but it’s one of the off-screen columns.) A data set should have variable names that are descriptive without being prohibitively long. Column names are easy to change in Excel, Google Sheets, etc, but you can also change them using the tidyverse function rename.

First, a few notes about the way R handles column names. By default, column names are not allowed to contain non-syntactic characters, i.e., any character other than letters, numbers, . (period), and _ (underscore). Also, column names must begin with a letter. In particular, column names cannot have spaces. (It’s best to use an underscore in place of a space in a column name). However, you can override these restrictions by enclosing the character string for a column name in backticks. For example, if we want to rename the long variable name above, then `Acad Standing` is allowed, but Acad Standing is not.

Also, when the data set you’re importing has a column name with a space in it, the space will be retained in R. However, to refer to that column, you’ll have to enclose the column name in backticks. For example, high_school_data$`ACT Comp` will return the ACT Comp column in high_school_data, but high_school_data$ACT Comp will produce an error message.

With the above in mind, renaming variables is actually very easy. The rename function is one of the transformation functions like filter, select, etc, and works the same way. Let’s rename the really long Acad Standing After FA20... column as just Acad_Standing:

(high_school_data_clean <- high_school_data %>%

rename("Acad_Standing" = `Acad Standing After FA20 (Dean's List, Good Standing, Academic Warning, Academic Probation, Academic Dismissal, Readmission on Condition)`))## # A tibble: 278 x 10

## `High School GPA` `ACT Comp` `SAT Comp` High School Quality Acad~1 `Bridge Program?` FA20 Credit Hours At~2

## <dbl> <dbl> <dbl> <dbl> <chr> <dbl>

## 1 2.72 14 800 0.888 yes 13

## 2 4 NA 1380 0.839 <NA> 15

## 3 3.08 NA 1030 0.894 <NA> 14

## 4 3.69 NA 1240 NA <NA> 17

## 5 NA NA NA NA <NA> 13

## 6 3.47 NA 1030 0.533 <NA> 16

## 7 3.43 27 NA NA <NA> 15

## 8 3.27 NA 1080 NA <NA> 15

## 9 3.76 NA 1120 0.916 <NA> 15

## 10 3.23 33 NA 0.797 <NA> 15

## # i 268 more rows

## # i abbreviated names: 1: `High School Quality Academic`, 2: `FA20 Credit Hours Attempted`

## # i 4 more variables: `FA20 Credit Hours Earned` <dbl>, Acad_Standing <chr>, Race <chr>, Ethnicity <chr>Notice that the syntax for rename is rename(<"NEW NAME"> = <OLD NAME>). Notice also that in the above code chunk, a new data set high_school_data_clean was created to update high_school_data. The original data set, high_school_data, will still have the original long column name. In general, it’s a good practice to not change the original data set. It’s safer to always create a new data set to accept whatever changes you need to make.

3.4 Missing Values

Another thing that stands out right away in high_school_data is the abundance of NAs. This is an issue in most real-world data sets you will analyze. The way you handle the NAs in your analysis will depend on the context, and we’ll see various methods as we proceed in the course. (Recall that we’ve already seen one way to work around NAs, namely the na.rm = TRUE argument we can include when we calculate summary statistics, such as means, sums, and standard deviations.)

Knowing the scope of the missing values in a data set is important when you first start to explore and clean. We can count how many NAs there are in a given column using the is.na function as follows.

Let’s count how many missing values there are in the SAT Comp column. (Notice the backticks around SAT Comp in the code below. Recall that this is necessary since SAT Comp has a space in it.)

sum(is.na(high_school_data$`SAT Comp`))## [1] 40This works because is.na(high_school_data$SAT Comp) is a logical vector whose entries are TRUE when there is an NA in high_school_data$SAT Comp and FALSE otherwise. Recall that we can do arithmetic with logical values, with TRUE having the value 1 and FALSE having the value 0. Thus, when we find the sum of the entries in is.na(high_school_data$SAT Comp), we just end up counting the TRUEs, and this corresponds to counting the NAs.

The safest way to handle missing values is to just let them be. However, there are sometimes situations in which missing values will be an obstacle that prevents certain types of analysis. When that’s the case, the NAs have to be either filtered out or imputed.

Filtering out observations with NAs is a good option if you’d be losing only a very small percentage of the data. However, as in the case of SAT Comp, there are often far too many NAs to just get rid of them. In this case, imputing is a better option.

To impute a missing value means to assign an actual value to an NA. This is risky since NA stands for “not available,” and making the decision to change an NA to an actual value runs the risk of compromising the integrity of the data set. An analyst should be wary of “playing God” with the data. However, sometimes we can safely convert NAs to actual values when it’s clear what the NAs really mean or when we need to assign them a certain value in order to make a calculation work.

In high_school_data, the Bridge Program? column indicates whether a student was required to attend the summer Bridge Program as a preparation for taking college classes. The missing values in this column are clearly there because the person who entered the data meant it to be understood that a blank cell means “no.” While this convention is immediately understood by anyone viewing the data set, it sets up potential problems when doing our analysis. It’s always a better practice to reserve NA for values that are truly missing rather than using it as a stand-in for some known value.

We can replace NAs in a column with an actual value using the transformation function mutate together with replace_na. Let’s replace the NAs in the Bridge Program? column with “no.” We’ll display only this cleaned up column so we can check our work.

high_school_data_clean <- high_school_data_clean %>%

mutate(`Bridge Program?` = replace_na(`Bridge Program?`, "no"))

high_school_data_clean %>%

select(`Bridge Program?`)## # A tibble: 278 x 1

## `Bridge Program?`

## <chr>

## 1 yes

## 2 no

## 3 no

## 4 no

## 5 no

## 6 no

## 7 no

## 8 no

## 9 no

## 10 no

## # i 268 more rowsWhen there’s a need to impute missing values of a continuous variable, a common method is to replace them with a plausible representative value of that variable, usually the mean or median.

Let’s use replace_na to impute the missing values of the brainwt variable in msleep with the mean of the non-missing values. (The na.rm = TRUE argument in the code below is essential, for obvious reasons.) Note that the value of the mean we’re imputing is 0.2815814.

(msleep_v2 <- msleep %>%

mutate(brainwt = replace_na(brainwt, mean(brainwt, na.rm = TRUE))) %>%

select(name, brainwt))## # A tibble: 83 x 2

## name brainwt

## <chr> <dbl>

## 1 Cheetah 0.282

## 2 Owl monkey 0.0155

## 3 Mountain beaver 0.282

## 4 Greater short-tailed shrew 0.00029

## 5 Cow 0.423

## 6 Three-toed sloth 0.282

## 7 Northern fur seal 0.282

## 8 Vesper mouse 0.282

## 9 Dog 0.07

## 10 Roe deer 0.0982

## # i 73 more rowsThe mean of a variable is more sensitive to outliers than the median, so when there are outliers present, it’s often more sensible to impute the median to the missing values.

Exercises

In

high_school_data, do you feel confident replacing theNAs in any of the columns besidesBridge Program?? Why or why not?Run the command

summary(high_school_data). One of the many things you see is the number ofNAs in some of the columns. For what types of columns is thisNAcount missing?For the columns from the previous exercise that did not produce an

NAcount, find the number ofNAs using the method from this section.A rough formula for converting an ACT score to a comparable SAT score is \(\textrm{SAT} = 41.6 \times\textrm{ACT} + 102.4\).

- Use this formula to create a new column in

high_school_data_cleanthat contains the converted ACT scores for each student who took it. - Create another new column in

high_school_data_cleanthat contains the better of each student’s SAT and converted ACT score. (Hint: Look up thepmaxfunction.) You’ll have to think about how to handle the manyNAs in theACT CompandSAT Compcolumns.

- Use this formula to create a new column in

This exercise requires the Lahman library. In baseball, a “plate appearance” is any instance during a game of a batter standing at home plate being pitched to. Only some plate appearances count as “at bats,” namely the ones for which the batter either gets a hit or fails to reach base through some fault of his own. For example, when a player draws a walk (which is the result of four bad pitches) or is hit by a pitch, these plate appearances do not count as at bats. Or if a batter purposely hits the ball in such a way that he gets out so that other base runners can advance, this type of plate appearance is also not counted as an at bat. The idea is that a player’s batting average (hits divided by at bats) should not be affected by walks, being hit by pitches, or sacrifice hits. A player’s plate appearance total is the sum of at bats (

AB), walks (BB), intentional walks (IBB), number of times being hit by a pitch (HBP), sacrifice hits (SH), and sacrifice flies (SF).- Add a column to

Battingthat records each player’s plate appearance totals. - Why are there so many

NAs in the plate appearance column added in part (a)? - For each of the six variables that contribute to the plate appearances total, determine the number of missing values.

- For all of the variables from part (c) that had missing values, replace the missing values with 0.

- Re-do part (a) and verify that there are no missing values in your new plate appearances column.

- Who holds the record for most plate appearances in a single season?

- Who holds the record for most plate appearances throughout his entire career?

- Add a column to

Impute the median to the

brainwtcolumn inmsleep. Do you think this is more appropriate than imputing the mean? Explain your answer.

3.5 Detecting Entry Errors

It’s very common for data entered into a spreadsheet manually to have a few entry errors, and there are standard methods for detecting these errors. One of the first things to look for are numerical data points that seem way too big or way too small. A good place to start is with the summary statistics. (We’ll be using the data set high_school_data_clean throughout this section, which is the partially cleaned version of the original high_school_data with the renamed Acad_Standing column and with the NAs in the Bridge Program? column replaced by "no".)

summary(high_school_data_clean)## High School GPA ACT Comp SAT Comp High School Quality Academic Bridge Program?

## Min. :2.279 Min. : 4.05 Min. : 100 Min. :0.1420 Length:278

## 1st Qu.:3.225 1st Qu.:20.00 1st Qu.:1020 1st Qu.:0.7322 Class :character

## Median :3.625 Median :23.00 Median :1120 Median :0.8470 Mode :character

## Mean :3.534 Mean :22.62 Mean :1116 Mean :0.7982

## 3rd Qu.:3.930 3rd Qu.:26.00 3rd Qu.:1220 3rd Qu.:0.9117

## Max. :4.650 Max. :33.00 Max. :1480 Max. :0.9870

## NA's :2 NA's :205 NA's :40 NA's :76

## FA20 Credit Hours Attempted FA20 Credit Hours Earned Acad_Standing Race

## Min. : 0.00 Min. : 0.00 Length:278 Length:278

## 1st Qu.: 14.00 1st Qu.:13.00 Class :character Class :character

## Median : 15.00 Median :15.00 Mode :character Mode :character

## Mean : 15.06 Mean :13.94

## 3rd Qu.: 16.00 3rd Qu.:16.00

## Max. :150.00 Max. :19.00

##

## Ethnicity

## Length:278

## Class :character

## Mode :character

##

##

##

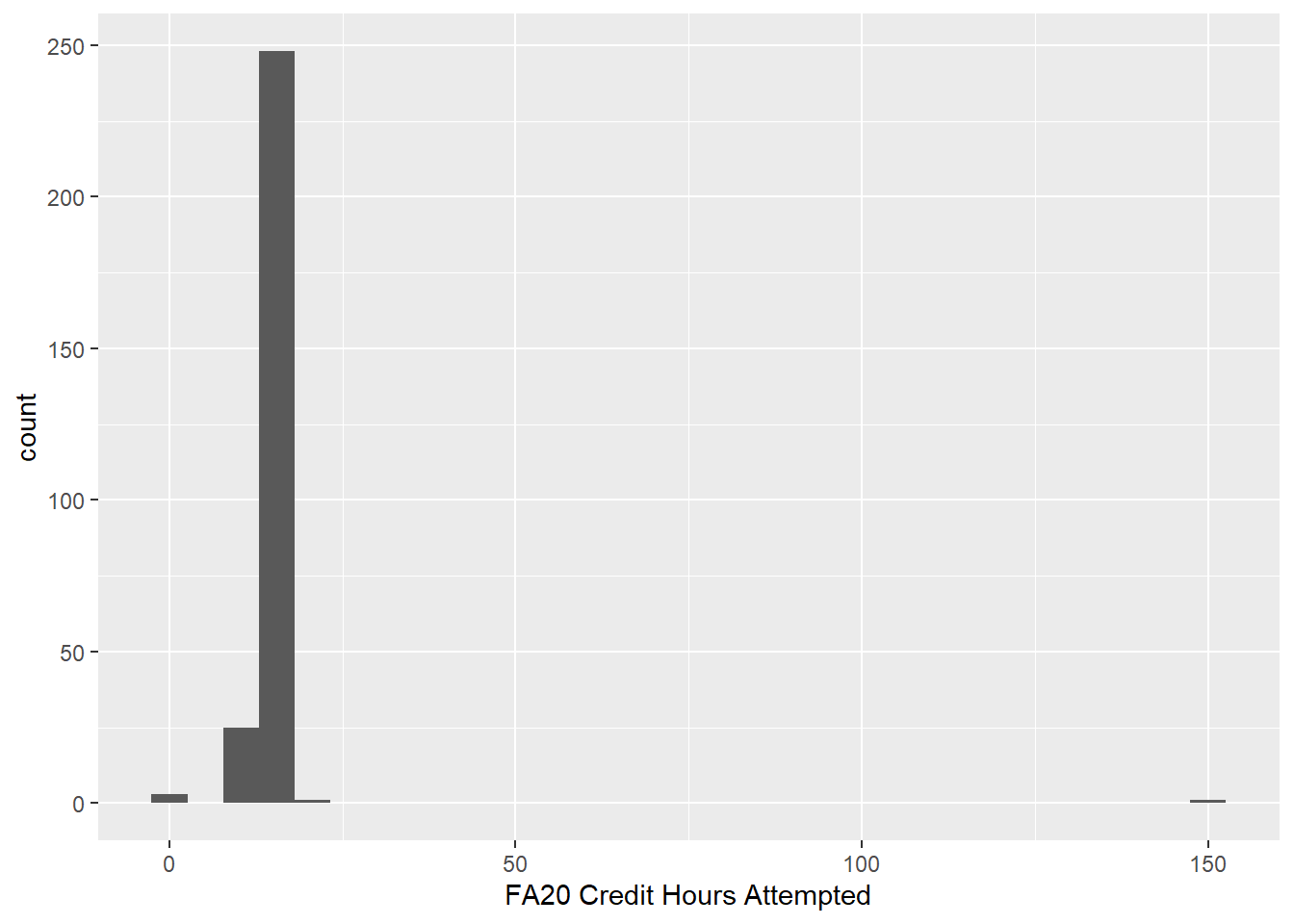

## This summary is most helpful for continuous data. We’ll deal with categorical data separately. Notice that the maximum value of FA20 Credit Hours Attempted is quite a bit bigger than the third quartile value (and totally unrealistic anyway). This is certainly an entry error. A visual way to detect this would be to obtain a plot of the distribution of the FA20 Credit Hours Attempted variable. Since this is a continuous variable, we’ll use a histogram. (Recall that categorical distributions are visualized with bar graphs.)

ggplot(data = high_school_data_clean) +

geom_histogram(mapping = aes(x = `FA20 Credit Hours Attempted`))

This histogram is skewed very far to the right with a huge gap between the right-most bar and the nearest bar to its left. This is another sign of a possible entry error.

We can drill down and see what’s going on with this point by sorting the FA20 Credit Hours Attempted variable in descending order. In the code below, head is a useful function that can be used to display only the beginning of a data set. (There’s also a tail function that displays the end of the data set.) By default, head displays the first 6 records, but you can override this and specify the number you want to display. Let’s display the records with the top ten highest values of FA20 Credit Hours Attempted. (The reason for the select is to make the FA20 Credit Hours Attempted column visible.)

high_school_data_clean %>%

arrange(desc(`FA20 Credit Hours Attempted`)) %>%

select(`FA20 Credit Hours Attempted`,

`FA20 Credit Hours Earned`,

Acad_Standing) %>%

head(10)## # A tibble: 10 x 3

## `FA20 Credit Hours Attempted` `FA20 Credit Hours Earned` Acad_Standing

## <dbl> <dbl> <chr>

## 1 150 15 Good Standing

## 2 19 19 Dean's List

## 3 18 18 Dean's List

## 4 18 18 Dean's List

## 5 18 17 Good Standing

## 6 18 18 Good Standing

## 7 18 18 Good Standing

## 8 18 18 Dean's List

## 9 18 18 Dean's List

## 10 18 18 Dean's ListSince we can see the entire row with the error, we can also get an insight into what probably happened. The student earned 15 credit hours and finished the semester in good academic standing, which likely means they didn’t fail any classes. Therefore the person doing the data entry probably absent-mindedly entered 150 instead of 15.

It’s a minor judgement call, but it’s probably safe to change the 150 to 15. (The other option would be to ignore this row altogether, which is also not ideal.) One way to do this is to make the change in the original spreadsheet and then re-import it. However, we can also make the change directly in R. We just have to access the problematic entry in its specific row and column of the data set, as in Section 3.2.

However, we first have to find the index (i.e., row number) of the 150 entry error. For this, we can use the which function as follows:

which(high_school_data_clean$`FA20 Credit Hours Attempted` == 150)## [1] 74So our problematic 150 value is in the 74th row. Of course, we should store this index so that we can refer to it without the risk of making a typo:

index_150 <- which(high_school_data_clean$`FA20 Credit Hours Attempted` == 150)We can now make the change. We just have to overwrite the entry in row 74 in the column FA20 Credit Hours Attempted with 15.

high_school_data_clean$`FA20 Credit Hours Attempted`[index_150] <- 15Entry errors for categorical variables can be harder to detect than for continuous ones. In this case, entry errors often take the form of inconsistent naming. For example, let’s look at the Race column in high_school_data_clean. One way to check for inconsistencies is to use group_by and summarize to see how many times each value in Race shows up in the data set:

high_school_data_clean %>%

group_by(Race) %>%

summarize(count = n())## # A tibble: 7 x 2

## Race count

## <chr> <int>

## 1 American/Alaska Native 5

## 2 Asian 9

## 3 Black or African American 11

## 4 Black/African American 1

## 5 Hawaiian/Pacific Islander 1

## 6 White 230

## 7 <NA> 21We should especially pay attention to the values with low counts since these might indicate inconsistencies. Notice that “Black or African American” and “Black/African American” are both listed as distinct values, although this is certainly because of an inconsistent naming system. Since “Black/African American” only shows up once, this is probably a mistake and should be changed to “Black or African American.” We can make this change with the str_replace function within mutate. The syntax is str_replace(<VARIABLE>, <VALUE TO REPLACE>, <NEW VALUE>):

high_school_data_clean <- high_school_data_clean %>%

mutate(Race = str_replace(Race, "Black/African American", "Black or African American"))Running the grouped summary again on high_school_data_clean shows that the problem has been fixed:

high_school_data_clean %>%

group_by(Race) %>%

summarize(count = n())## # A tibble: 6 x 2

## Race count

## <chr> <int>

## 1 American/Alaska Native 5

## 2 Asian 9

## 3 Black or African American 12

## 4 Hawaiian/Pacific Islander 1

## 5 White 230

## 6 <NA> 21Exercises

Look for possible entry errors for other continuous variables in

high_school_data_clean. Explain why you think there’s an entry error by:- Inspecting the data set summary.

- Referring to a visualization of the variable’s distribution.

- Sorting the data set by the variable and displaying the first or last few entries.

For any of the entry errors you found in the previous exercise, decide whether you think you should fix them. If so, proceed to do so, but if not, explain why.

Suppose the owner of the data set tells you that the 100 in the

SAT Compcolumn was supposed to be 1000. Make this change directly in R.For this exercise, you will need the Lahman package.

- Use the

whichfunction to return all of the indices (i.e., row numbers) fromBattingthat have a home run (HR) value in the range from 50 through 59. - Use part (a) to write a single-line command that will return a list of the players who had a home run total from 50 through 59. Recall the method introduced in Section 3.2 to access several entries from a vector at once.

- Use the

3.6 Separating and Uniting Columns

Sometimes the entries in a column of a data set contain more than one piece of data. For example, we might have a column that lists peoples’ full names, and we’d prefer to have separate columns for their first and last names. The function to use is separate. We have to tell it which column to separate as well as names for the two new columns that will house the separated values. By default, separate will split the cell values at the first non-syntactic character, such as a space, a comma, an arithmetic symbol, etc.

Let’s create a small data set to see how this works.

(city_data_set <- tibble(

location = c("Detroit, MI", "Chicago, IL", "Boston, MA")

))## # A tibble: 3 x 1

## location

## <chr>

## 1 Detroit, MI

## 2 Chicago, IL

## 3 Boston, MANow let’s separate the location column into two columns named city and state:

city_data_set %>%

separate(location, into = c("city", "state"))## # A tibble: 3 x 2

## city state

## <chr> <chr>

## 1 Detroit MI

## 2 Chicago IL

## 3 Boston MAWhat if we were to add “Grand Rapids, MI” to the original data set and then redo the separate?

city_data_set_v2 <- tibble(

location = c("Detroit, MI", "Chicago, IL", "Boston, MA", "Grand Rapids, MI")

)

city_data_set_v2 %>%

separate(location, into = c("city", "state"))## # A tibble: 4 x 2

## city state

## <chr> <chr>

## 1 Detroit MI

## 2 Chicago IL

## 3 Boston MA

## 4 Grand RapidsDo you see what happened? The entry “Grand Rapids, MI” was separated in “Grand” and “Rapids” rather than “Grand Rapids” and “MI.” The reason is that separate looks for the first non-syntactic character and separates the data at that point. The space in “Grand Rapids” is non-syntactic and therefore acts as the separation character for that observation.

Luckily, we can specify the separation character using the optional sep argument. We want to separate the location variable at the comma/space sequence:

city_data_set_v2 %>%

separate(location, into = c("city", "state"), sep = ", ")## # A tibble: 4 x 2

## city state

## <chr> <chr>

## 1 Detroit MI

## 2 Chicago IL

## 3 Boston MA

## 4 Grand Rapids MIAnother subtlety of separate is revealed in the following example. The following built-in data set contains data about rates of tuberculosis contraction in various countries.

table3## # A tibble: 6 x 3

## country year rate

## <chr> <dbl> <chr>

## 1 Afghanistan 1999 745/19987071

## 2 Afghanistan 2000 2666/20595360

## 3 Brazil 1999 37737/172006362

## 4 Brazil 2000 80488/174504898

## 5 China 1999 212258/1272915272

## 6 China 2000 213766/1280428583The rate column seems to contain the number of tuberculosis cases divided by the population, which would give the rate of the population that contracted tuberculosis. There are a few problems with this. First, it’s not very helpful to report rates this way. Rates are usually more meaningful as decimals or percentages, but even if this is how rate were displayed, remember that when comparing rates, it’s important to also display an overall count. In other words, we should convert the fractions in rate to decimals, but we should also keep the actual case and population numbers, each in its own column. We should therefore separate rate into cases and population columns. The first non-syntactic character in each entry is /, but let’s specify it as a separation character anyway just to be safe. We’ll also go ahead and add a rate column reported as a percentage:

table3_v2 <- table3 %>%

separate(rate, into = c("cases", "population"), sep = "/") %>%

mutate(rate = cases / population * 100)## Error in `mutate()`:

## i In argument: `rate = cases/population * 100`.

## Caused by error in `cases / population`:

## ! non-numeric argument to binary operatorWhat does this error message mean? There’s apparently a problem with rate = cases/population * 100, and it’s that there’s a non-numeric argument to binary operator. A binary operator is an arithmetic operation that takes two numbers as input and produces a single numeric output. The binary operator of concern here is division, so the error message must be saying that one or both of cases or population is non-numeric. Let’s check this out by running the above code again with the offending mutate commented out:

(table3_v2 <- table3 %>%

separate(rate, into = c("cases", "population"), sep = "/")) #%>%## # A tibble: 6 x 4

## country year cases population

## <chr> <dbl> <chr> <chr>

## 1 Afghanistan 1999 745 19987071

## 2 Afghanistan 2000 2666 20595360

## 3 Brazil 1999 37737 172006362

## 4 Brazil 2000 80488 174504898

## 5 China 1999 212258 1272915272

## 6 China 2000 213766 1280428583 #mutate(rate = cases / population * 100)Notice that, indeed, cases and population are both listed as characters. The reason is that the default procedure for separate is to make the separated variables into characters. We can override this by including the convert = TRUE argument in separate:

(table3_v2 <- table3 %>%

separate(rate, into = c("cases", "population"), sep = "/", convert = TRUE)) #%>%## # A tibble: 6 x 4

## country year cases population

## <chr> <dbl> <int> <int>

## 1 Afghanistan 1999 745 19987071

## 2 Afghanistan 2000 2666 20595360

## 3 Brazil 1999 37737 172006362

## 4 Brazil 2000 80488 174504898

## 5 China 1999 212258 1272915272

## 6 China 2000 213766 1280428583 #mutate(rate = cases / population * 100)The cases and population variables are now numeric, so we can compute the rate. Let’s remove the # and run the mutate:

(table3_v2 <- table3 %>%

separate(rate, into = c("cases", "population"), sep = "/", convert = TRUE) %>%

mutate(rate = cases / population * 100))## # A tibble: 6 x 5

## country year cases population rate

## <chr> <dbl> <int> <int> <dbl>

## 1 Afghanistan 1999 745 19987071 0.00373

## 2 Afghanistan 2000 2666 20595360 0.0129

## 3 Brazil 1999 37737 172006362 0.0219

## 4 Brazil 2000 80488 174504898 0.0461

## 5 China 1999 212258 1272915272 0.0167

## 6 China 2000 213766 1280428583 0.0167We’ve successfully separated the data and added a rate column, but there’s still a problem with rate. The percentages are so small as to be somewhat meaningless. The reason is that a percentage tells you how many cases there are for every hundred people (cent = hundred). When the number is very small (less than 1), it’s better to report the rate as cases per some other power of 10. In this case, if we multiply the rates by 100,000, we get more meaningful numbers, although now we’re calculating cases per 100,000:

(table3_v2 <- table3 %>%

separate(rate, into = c("cases", "population"), sep = "/", convert = TRUE) %>%

mutate(rate_per_100K = cases / population * 100000))## # A tibble: 6 x 5

## country year cases population rate_per_100K

## <chr> <dbl> <int> <int> <dbl>

## 1 Afghanistan 1999 745 19987071 3.73

## 2 Afghanistan 2000 2666 20595360 12.9

## 3 Brazil 1999 37737 172006362 21.9

## 4 Brazil 2000 80488 174504898 46.1

## 5 China 1999 212258 1272915272 16.7

## 6 China 2000 213766 1280428583 16.7While the sep = argument allows you to split the cells at any named character, sometimes you’ll want to split the cells into strings of a certain length instead, regardless of the characters involved. Look at this small sample data set, which contains a runner’s split times in a 5K race:

(split_times <- tribble(

~`distance (km)`, ~`time (min)`,

1, 430,

2, 920,

3, 1424,

4, 1920,

5, 2425

))## # A tibble: 5 x 2

## `distance (km)` `time (min)`

## <dbl> <dbl>

## 1 1 430

## 2 2 920

## 3 3 1424

## 4 4 1920

## 5 5 2425It obviously didn’t take the runner 430 minutes to finish the first kilometer; the numbers in the time (min) column are clearly meant to be interpreted as “minutes:seconds.” The first 1 or 2 digits are minutes, and the last two are seconds. Let’s fix this.

First, we should separate the last two digits from the first 1 or 2, although there’s no character we can give to sep =. Luckily, sep = also accepts a number that states how many characters into the string we make our separation. If we include the argument sep = <NUMBER>, then the cell will be separated <NUMBER> characters in from the left if <NUMBER> is positive, and from the right otherwise. In our data set above, we want the separation to occur 2 characters in from the right, so we’ll use sep = -2:

(split_times_v2 <- split_times %>%

separate(`time (min)`, into = c("minutes", "seconds"), sep = -2, convert = TRUE))## # A tibble: 5 x 3

## `distance (km)` minutes seconds

## <dbl> <int> <int>

## 1 1 4 30

## 2 2 9 20

## 3 3 14 24

## 4 4 19 20

## 5 5 24 25Next, we’ll look at the unite function and see how we can combine the minutes and seconds columns back into a single column with a colon : inserted.

The function used to unite two columns into one is unite. We have to specify a name for the new united column as well as the existing columns we want to unite. Let’s unite the minutes and seconds columns from split_times above.

(split_times_v3 <- split_times_v2 %>%

unite("time (min:sec)", minutes, seconds))## # A tibble: 5 x 2

## `distance (km)` `time (min:sec)`

## <dbl> <chr>

## 1 1 4_30

## 2 2 9_20

## 3 3 14_24

## 4 4 19_20

## 5 5 24_25You can see that by default, unite joins united values with an underscore _. We can override this with the optional sep = <SEPARATION CHARACTER> argument. In this case, we want the separation character to be a colon :.

(split_times_v4 <- split_times_v2 %>%

unite("time (min:sec)", minutes, seconds, sep = ":"))## # A tibble: 5 x 2

## `distance (km)` `time (min:sec)`

## <dbl> <chr>

## 1 1 4:30

## 2 2 9:20

## 3 3 14:24

## 4 4 19:20

## 5 5 24:25Of course, in practice, we’d combine the separate and unite in the pipe:

split_times_v5 <- split_times %>%

separate(`time (min)`, into = c("minutes", "seconds"), sep = -2, convert = TRUE) %>%

unite("time (min:sec)", minutes, seconds, sep = ":")We saw that by default, separate treats the newly created variables as characters. How does unite handle data types?

Consider the following built-in data set:

table5## # A tibble: 6 x 4

## country century year rate

## <chr> <chr> <chr> <chr>

## 1 Afghanistan 19 99 745/19987071

## 2 Afghanistan 20 00 2666/20595360

## 3 Brazil 19 99 37737/172006362

## 4 Brazil 20 00 80488/174504898

## 5 China 19 99 212258/1272915272

## 6 China 20 00 213766/1280428583Obviously, century and year should be combined into one variable called year. Notice that when we unite these columns, we don’t want the century and year values to be separated by any character, so we should specify the separation character as an empty pair of quotation marks.

(table5_v2 <- table5 %>%

unite("year", century, year, sep = ""))## # A tibble: 6 x 3

## country year rate

## <chr> <chr> <chr>

## 1 Afghanistan 1999 745/19987071

## 2 Afghanistan 2000 2666/20595360

## 3 Brazil 1999 37737/172006362

## 4 Brazil 2000 80488/174504898

## 5 China 1999 212258/1272915272

## 6 China 2000 213766/1280428583Notice, though, that the new year variable is a character. It would be more appropriate to have it be an integer, but unfortunately, unlike separate, unite does not offer a convert = TRUE option. We have to coerce it into an integer like in Section 3.2.

(table5_v3 <- table5_v2 %>%

mutate(year = as.integer(year)))## # A tibble: 6 x 3

## country year rate

## <chr> <int> <chr>

## 1 Afghanistan 1999 745/19987071

## 2 Afghanistan 2000 2666/20595360

## 3 Brazil 1999 37737/172006362

## 4 Brazil 2000 80488/174504898

## 5 China 1999 212258/1272915272

## 6 China 2000 213766/1280428583Exercises

You will need the nycflights13 package for these exercises.

Create a version of the

flightsdata set that has a single column for the date, with dates entered asmm-dd-yyyyrather than three separate columns for the month, day, and year.Click here to go to the Wikipedia page that lists all members of the Baseball Hall of Fame. Then do the following:

- Copy and paste the list, including the column headings, into an Excel document and import it into R.

- Create a data table that lists only the name of each hall of famer in a single column, alphabetized by last name. Be sure there aren’t any

NAentries. Try to execute this using a sequence of commands in the pipe. Each name should appear in a single cell when you’re done, with the first name listed first. For example, the first entry in your finished list should show up as: Hank Aaron. - Are there any problematic entries in your list? What’s going on with these? (Hint: Try to interpret the warning message you’ll get.)

3.7 Pivoting

Consider the following built-in data set:

table2## # A tibble: 12 x 4

## country year type count

## <chr> <dbl> <chr> <dbl>

## 1 Afghanistan 1999 cases 745

## 2 Afghanistan 1999 population 19987071

## 3 Afghanistan 2000 cases 2666

## 4 Afghanistan 2000 population 20595360

## 5 Brazil 1999 cases 37737

## 6 Brazil 1999 population 172006362

## 7 Brazil 2000 cases 80488

## 8 Brazil 2000 population 174504898

## 9 China 1999 cases 212258

## 10 China 1999 population 1272915272

## 11 China 2000 cases 213766

## 12 China 2000 population 1280428583It’s clear how to interpret this table; for example, we can see that in Afghanistan in 1999, there were 745 cases of tuberculosis, and the population was 19,987,071. However, this method of recording data doesn’t lend itself well to analysis. For example, we couldn’t readily find the average number of tuberculosis cases or the average population since these values are mixed into the same column. Also, each observation, which consists of a given country in a given year, is spread over two rows. Additionally, the variable type has values (cases and population) which are actually variables themselves, and their values are the corresponding numbers in the count column.

It would be much better to rearrange the data set as shown below:

## # A tibble: 6 x 4

## country year cases population

## <chr> <dbl> <dbl> <dbl>

## 1 Afghanistan 1999 745 19987071

## 2 Afghanistan 2000 2666 20595360

## 3 Brazil 1999 37737 172006362

## 4 Brazil 2000 80488 174504898

## 5 China 1999 212258 1272915272

## 6 China 2000 213766 1280428583The way to do this is to perform a pivot on the table that turns the values from the type column into variables and assigns the corresponding values for these new variables from count. Since the values in the type column are being converted to variables, a pivot of this type has the potential to give the data table more columns than it currently has (although not in this case) and thus to make the table wider. The syntax below reflects exactly what we want to do:

table2 %>%

pivot_wider(names_from = type, values_from = count)## # A tibble: 6 x 4

## country year cases population

## <chr> <dbl> <dbl> <dbl>

## 1 Afghanistan 1999 745 19987071

## 2 Afghanistan 2000 2666 20595360

## 3 Brazil 1999 37737 172006362

## 4 Brazil 2000 80488 174504898

## 5 China 1999 212258 1272915272

## 6 China 2000 213766 1280428583In general, pivot_wider should be used whenever the values of some column should be pivoted to variable names.

The opposite problem occurs when a data set has column names that are actually values of some variable. For example, consider the following built-in data set:

table4a## # A tibble: 3 x 3

## country `1999` `2000`

## <chr> <dbl> <dbl>

## 1 Afghanistan 745 2666

## 2 Brazil 37737 80488

## 3 China 212258 213766Again, we know what this means. For example, in 1999, there were 745 cases of tuberculosis in Afghanistan, and in 2000, there were 2666. However, 1999 and 2000 are not variable names. It wouldn’t make sense to say that 745 is a value of 1999. Rather, 1999 and 2000 are values of a missing year variable, and the values in the 1999 and 2000 columns are actually values of a missing cases variable. A better way to arrange the data set would be as follows:

## # A tibble: 6 x 3

## country year cases

## <chr> <chr> <dbl>

## 1 Afghanistan 1999 745

## 2 Afghanistan 2000 2666

## 3 Brazil 1999 37737

## 4 Brazil 2000 80488

## 5 China 1999 212258

## 6 China 2000 213766We can achieve this by performing a pivot that will send the variable names 1999 and 2000 to values of a year variable. We will also have to say what to do with the values in the 1999 and 2000 columns. Since we’re sending variable names to values, a pivot of this type has the potential to add more rows and therefore to make the data table longer. The code below shows how to do this. The first argument is a vector that lists the column names that are being pivoted to values. (Notice the backticks around 1999 and 2000, which are necessary for names that don’t start with a letter.)

(table4a_v2 <- table4a %>%

pivot_longer(cols = c(`1999`, `2000`), names_to = "year", values_to = "cases"))## # A tibble: 6 x 3

## country year cases

## <chr> <chr> <dbl>

## 1 Afghanistan 1999 745

## 2 Afghanistan 2000 2666

## 3 Brazil 1999 37737

## 4 Brazil 2000 80488

## 5 China 1999 212258

## 6 China 2000 213766Notice that pivoting the years 1999 and 2000 away from variable names and into values converted them to character type. We will have to manually convert them back to integers since pivot_longer does not have a convert = TRUE option.

(table4a_v3 <- table4a_v2 %>%

mutate(year = as.integer(year)))## # A tibble: 6 x 3

## country year cases

## <chr> <int> <dbl>

## 1 Afghanistan 1999 745

## 2 Afghanistan 2000 2666

## 3 Brazil 1999 37737

## 4 Brazil 2000 80488

## 5 China 1999 212258

## 6 China 2000 213766In general, use pivot_longer whenever a data set has column names that should actually be values of some variable.

Exercises

- Decide whether

pivot_longerorpivot_wideris needed for the data table below, and explain your decision. Then perform the pivot.

patients <- tribble(

~name, ~attribute, ~value,

"Stephen Johnson", "age", 47,

"Stephen Johnson", "weight", 185,

"Ann Smith", "age", 39,

"Ann Smith", "weight", 125,

"Ann Smith", "height", 64,

"Mark Davidson", "age", 51,

"Mark Davidson", "weight", 210,

"Mark Davidson", "height", 73

)- Repeat the previous exercise for this data table. What problem do you run into? (Use the

Viewcommand to see the pivoted table.) What could be done to avoid this problem?

patients <- tribble(

~name, ~attribute, ~value,

"Stephen Johnson", "age", 47,

"Stephen Johnson", "weight", 185,

"Ann Smith", "age", 39,

"Ann Smith", "weight", 125,

"Ann Smith", "height", 64,

"Mark Davidson", "age", 51,

"Mark Davidson", "weight", 210,

"Mark Davidson", "height", 73,

"Ann Smith", "age", 43,

"Ann Smith", "weight", 140,

"Ann Smith", "height", 66

)- Perform a pivot (or sequence of pivots) to rearrange the data table below to a more appropriate layout.

preg <- tribble(

~pregnant, ~male, ~female,

"yes", NA, 10,

"no", 20, 12

)3.8 Joining Data Sets

In Exercise 2 from Section 2.5, we noticed that the value of air_time from the flights data set does not seem to be consistent with the difference between the departure and arrival times, i.e., the “gate-to-gate time.” This difference is partly explained by the fact that air_time does not include time spent taxiing on the runway, but another explanation is the fact that arrival times are recorded relative to the time zone of the destination airport.

In order to compare air_time to the gate-to-gate time, we would need to know the time zone of each destination airport. Download and import the csv file found here. It lists the airport code for every airport in the world along with its time zone.

library(readr)

timezones <- read_csv("timezones.csv")Between flights and timezones we have enough information to determine the time zone of every destination airport in flights. In fact, we can join these two data sets into one by adding a column to flights that contains the time zones of each destination airport as found in timezones. The idea is to use the dest variable in flights and the airport code variable in timezones to link the data sets together. A variable used in this way is known as a key variable. When we join two related data sets by a key variable, the key variable must have the same name in both data sets. Let’s rename airport code in timezones as dest so that it matches its partner in flights.

timezones_v2 <- timezones %>%

rename("dest" = `airport code`)Now that the key variable has the same name in both data sets, we can join the two data sets by the key variable using the left_join function as follows:

library(nycflights13)

flights_with_timezones <- left_join(flights, timezones_v2, by = "dest")Let’s select a few columns of our joined data set so that we can see the new time zone column:

flights_with_timezones %>%

select(dest, `time zone`)## # A tibble: 336,776 x 2

## dest `time zone`

## <chr> <chr>

## 1 IAH Central Standard Time

## 2 IAH Central Standard Time

## 3 MIA Eastern Standard Time

## 4 BQN SA Western Standard Time

## 5 ATL Eastern Standard Time

## 6 ORD Central Standard Time

## 7 FLL Eastern Standard Time

## 8 IAD Eastern Standard Time

## 9 MCO Eastern Standard Time

## 10 ORD Central Standard Time

## # i 336,766 more rowsYou might be wondering why this is called a left join. The reason is that it uses the data set listed first (i.e., the one on the left), which in this case is flights, as a foundation and then builds onto it using the data set listed second, which in this case is timezones2. We can also construct our join by feeding the first data set into left_join via the pipe as follows:

flights_with_timezones <- flights %>%

left_join(timezones_v2, by = "dest")In the exercises, we’ll investigate three other types of joins: right_join, inner_join, and full_join.

Sometimes a single variable is not enough to join two related data sets. Consider the following two data sets, one containing the points scored per game of three NBA players over two years and the other containing those same players’ minutes played per game over those same two years:

(points <- tribble(

~year, ~player, ~ppg,

"2022-23", "Lebron James", 28.9,

"2022-23", "Stephen Curry", 29.4,

"2022-23", "Nikola Jokic", 24.5,

"2023-24", "Lebron James", 25.7,

"2023-24", "Stephen Curry", 26.4,

"2023-24", "Nikola Jokic", 26.4

))## # A tibble: 6 x 3

## year player ppg

## <chr> <chr> <dbl>

## 1 2022-23 Lebron James 28.9

## 2 2022-23 Stephen Curry 29.4

## 3 2022-23 Nikola Jokic 24.5

## 4 2023-24 Lebron James 25.7

## 5 2023-24 Stephen Curry 26.4

## 6 2023-24 Nikola Jokic 26.4(minutes <- tribble(

~year, ~player, ~mpg,

"2022-23", "Lebron James", 35.5,

"2022-23", "Stephen Curry", 34.7,

"2022-23", "Nikola Jokic", 33.7,

"2023-24", "Lebron James", 35.3,

"2023-24", "Stephen Curry", 32.7,

"2023-24", "Nikola Jokic", 34.6

))## # A tibble: 6 x 3

## year player mpg

## <chr> <chr> <dbl>

## 1 2022-23 Lebron James 35.5

## 2 2022-23 Stephen Curry 34.7

## 3 2022-23 Nikola Jokic 33.7

## 4 2023-24 Lebron James 35.3

## 5 2023-24 Stephen Curry 32.7

## 6 2023-24 Nikola Jokic 34.6Suppose we want to add a column to the points data set containing each player’s mpg value for each year. For example, we would take the mpg value of 35.5 from Lebron James’s 2022-23 row in minutes and add it to his 2022-23 row in points. Notice that this process requires two variables to identify each observation: year and player. Thus, when performing a join, the key will have to be a vector of variables rather than just a single variable:

(NBA_data <- left_join(points, minutes, by = c("year", "player")))## # A tibble: 6 x 4

## year player ppg mpg

## <chr> <chr> <dbl> <dbl>

## 1 2022-23 Lebron James 28.9 35.5

## 2 2022-23 Stephen Curry 29.4 34.7

## 3 2022-23 Nikola Jokic 24.5 33.7

## 4 2023-24 Lebron James 25.7 35.3

## 5 2023-24 Stephen Curry 26.4 32.7

## 6 2023-24 Nikola Jokic 26.4 34.6Exercises

You will need the Lahman package for these exercises.

- Enter the following two small data sets:

sample1 <- tribble(

~x, ~y,

1, "a",

2, "b",

3, "c"

)

sample2 <- tribble(

~x, ~z,

1, "p",

2, "q",

4, "r"

)Predict what will happen when you use left_join to join sample2 to sample1 using x as the key variable. In particular, what z value will be assigned to the x value 3 in sample1? Then perform this join.

- Using the data sets

sample1andsample2from the previous exercise, perform each of the following joins, and based on the results, explain what each one does.

right_join(sample1, sample2, by = "x")

inner_join(sample1, sample2, by = "x")

full_join(sample1, sample2, by = "x")When we created the

NBA_datadata set at the end of this section, we usedleft_join. Explain why usingright_join,inner_join, orfull_joinwould have resulted in the same data set.Another data set included in Lahman is

Salaries, which contains player salaries. Create a data set that contains the salary of each player in theBattingdata set each year. If a player’s salary was not available for a given year, the salary should be displayed asNA.In the previous problem, the joined data set contained the variables

teamID.x,lgID.x,teamID.y, andlgID.y. Try to explain why these variables occurred. Then re-do the join so that this does not happen.Re-do the previous problem, but choose your join so that the only observations that show up in the joined data set are those for which salary data is available.

3.9 Case Study

We’ll close this chapter by cleaning and analyzing data from the World Health Organization’s 2014 Global Tuberculosis Report2. We will be focusing on two data sets (both of which are included with tidyverse): who and population.

who## # A tibble: 7,240 x 60

## country iso2 iso3 year new_sp_m014 new_sp_m1524 new_sp_m2534 new_sp_m3544 new_sp_m4554 new_sp_m5564

## <chr> <chr> <chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 Afghanistan AF AFG 1980 NA NA NA NA NA NA

## 2 Afghanistan AF AFG 1981 NA NA NA NA NA NA

## 3 Afghanistan AF AFG 1982 NA NA NA NA NA NA

## 4 Afghanistan AF AFG 1983 NA NA NA NA NA NA

## 5 Afghanistan AF AFG 1984 NA NA NA NA NA NA

## 6 Afghanistan AF AFG 1985 NA NA NA NA NA NA

## 7 Afghanistan AF AFG 1986 NA NA NA NA NA NA

## 8 Afghanistan AF AFG 1987 NA NA NA NA NA NA

## 9 Afghanistan AF AFG 1988 NA NA NA NA NA NA

## 10 Afghanistan AF AFG 1989 NA NA NA NA NA NA

## # i 7,230 more rows

## # i 50 more variables: new_sp_m65 <dbl>, new_sp_f014 <dbl>, new_sp_f1524 <dbl>, new_sp_f2534 <dbl>,

## # new_sp_f3544 <dbl>, new_sp_f4554 <dbl>, new_sp_f5564 <dbl>, new_sp_f65 <dbl>, new_sn_m014 <dbl>,

## # new_sn_m1524 <dbl>, new_sn_m2534 <dbl>, new_sn_m3544 <dbl>, new_sn_m4554 <dbl>, new_sn_m5564 <dbl>,

## # new_sn_m65 <dbl>, new_sn_f014 <dbl>, new_sn_f1524 <dbl>, new_sn_f2534 <dbl>, new_sn_f3544 <dbl>,

## # new_sn_f4554 <dbl>, new_sn_f5564 <dbl>, new_sn_f65 <dbl>, new_ep_m014 <dbl>, new_ep_m1524 <dbl>,

## # new_ep_m2534 <dbl>, new_ep_m3544 <dbl>, new_ep_m4554 <dbl>, new_ep_m5564 <dbl>, new_ep_m65 <dbl>, ...population## # A tibble: 4,060 x 3

## country year population

## <chr> <dbl> <dbl>

## 1 Afghanistan 1995 17586073

## 2 Afghanistan 1996 18415307

## 3 Afghanistan 1997 19021226

## 4 Afghanistan 1998 19496836

## 5 Afghanistan 1999 19987071

## 6 Afghanistan 2000 20595360

## 7 Afghanistan 2001 21347782

## 8 Afghanistan 2002 22202806

## 9 Afghanistan 2003 23116142

## 10 Afghanistan 2004 24018682

## # i 4,050 more rowsAs always, you can enter View(who) and View(population) to see the full tables.

The data documentation is often found in the publication or web site from which the data is pulled, but since these are built-in data sets, we’re lucky to have access to their dictionaries right in R. They’re both found in the same place:

?whowho contains data about tuberculosis cases in countries throughout the world during the years 1980-2013, and population contains data about those countries’ populations during those years.

Our analysis will be devoted to answering the following question:

How does the prevalence of tuberculosis vary from region-to-region throughout the world during the years 1980-2013?

We will start by thoroughly cleaning these data sets, and then we will try to answer the question above using visualizations and transformations.

The population data set looks fairly clean already. The data types and names of the columns are appropriate, and a quick look at the summary shows us that there are no missing values and no suspiciously low or high year or population values. One thing to note, though, is that population data only dates back to 1995, whereas tuberculosis data dates back to 1980. We’ll keep this in mind moving forward.

summary(population)## country year population

## Length:4060 Min. :1995 Min. :1.129e+03

## Class :character 1st Qu.:1999 1st Qu.:6.029e+05

## Mode :character Median :2004 Median :5.319e+06

## Mean :2004 Mean :3.003e+07

## 3rd Qu.:2009 3rd Qu.:1.855e+07

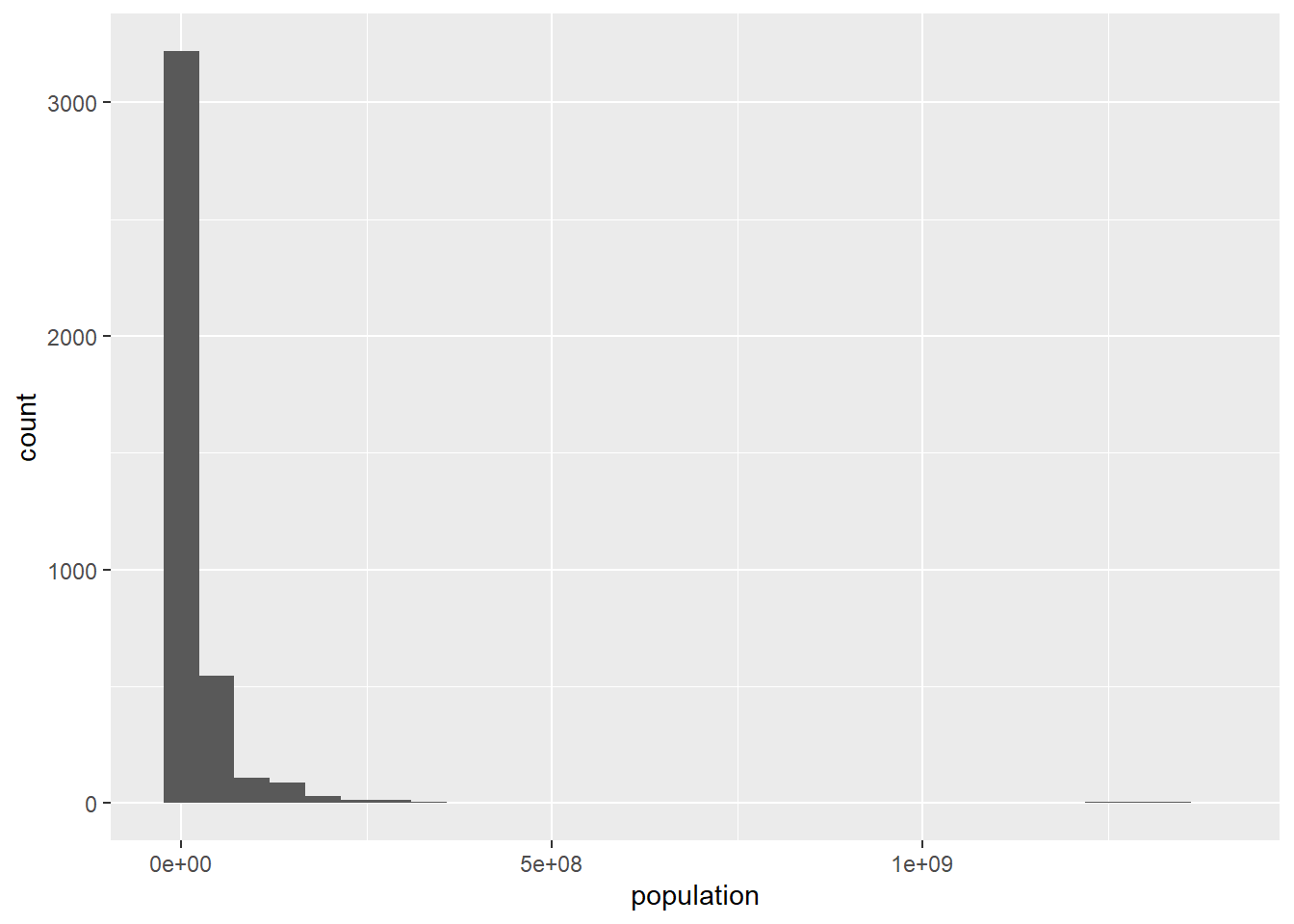

## Max. :2013 Max. :1.386e+09We can also check the histogram of population to see whether there are any obvious outliers:

ggplot(data = population) +

geom_histogram(mapping = aes(x = population))

It looks like there is a possible outlier population at the far right. Let’s see why this is:

population %>%

arrange(desc(population)) %>%

head()## # A tibble: 6 x 3

## country year population

## <chr> <dbl> <dbl>

## 1 China 2013 1385566537

## 2 China 2012 1377064907

## 3 China 2011 1368440300

## 4 China 2010 1359821465

## 5 China 2009 1351247555

## 6 China 2008 1342732604We can see that China’s population is creating the big gap in the histogram. We’ll make note of the outlier behavior of China’s population, but as of now, there’s no reason to remove it from the data set. At least we know that the histogram gap is not due to an entry error.

Since population is ready to go, we’ll move on to who.

Let’s start with the data set summary:

summary(who)## country iso2 iso3 year new_sp_m014 new_sp_m1524

## Length:7240 Length:7240 Length:7240 Min. :1980 Min. : 0.00 Min. : 0

## Class :character Class :character Class :character 1st Qu.:1988 1st Qu.: 0.00 1st Qu.: 9

## Mode :character Mode :character Mode :character Median :1997 Median : 5.00 Median : 90

## Mean :1997 Mean : 83.71 Mean : 1016

## 3rd Qu.:2005 3rd Qu.: 37.00 3rd Qu.: 502

## Max. :2013 Max. :5001.00 Max. :78278

## NA's :4067 NA's :4031

## new_sp_m2534 new_sp_m3544 new_sp_m4554 new_sp_m5564 new_sp_m65 new_sp_f014

## Min. : 0.0 Min. : 0.0 Min. : 0 Min. : 0.0 Min. : 0.0 Min. : 0.00

## 1st Qu.: 14.0 1st Qu.: 13.0 1st Qu.: 12 1st Qu.: 8.0 1st Qu.: 8.0 1st Qu.: 1.00

## Median : 150.0 Median : 130.0 Median : 102 Median : 63.0 Median : 53.0 Median : 7.00

## Mean : 1403.8 Mean : 1315.9 Mean : 1104 Mean : 800.7 Mean : 682.8 Mean : 114.33

## 3rd Qu.: 715.5 3rd Qu.: 583.5 3rd Qu.: 440 3rd Qu.: 279.0 3rd Qu.: 232.0 3rd Qu.: 50.75

## Max. :84003.0 Max. :90830.0 Max. :82921 Max. :63814.0 Max. :70376.0 Max. :8576.00

## NA's :4034 NA's :4021 NA's :4017 NA's :4022 NA's :4031 NA's :4066

## new_sp_f1524 new_sp_f2534 new_sp_f3544 new_sp_f4554 new_sp_f5564 new_sp_f65

## Min. : 0.0 Min. : 0.0 Min. : 0.0 Min. : 0.0 Min. : 0.0 Min. : 0.0

## 1st Qu.: 7.0 1st Qu.: 9.0 1st Qu.: 6.0 1st Qu.: 4.0 1st Qu.: 3.0 1st Qu.: 4.0

## Median : 66.0 Median : 84.0 Median : 57.0 Median : 38.0 Median : 25.0 Median : 30.0

## Mean : 826.1 Mean : 917.3 Mean : 640.4 Mean : 445.8 Mean : 313.9 Mean : 283.9

## 3rd Qu.: 421.0 3rd Qu.: 476.2 3rd Qu.: 308.0 3rd Qu.: 211.0 3rd Qu.: 146.5 3rd Qu.: 129.0

## Max. :53975.0 Max. :49887.0 Max. :34698.0 Max. :23977.0 Max. :18203.0 Max. :21339.0

## NA's :4046 NA's :4040 NA's :4041 NA's :4036 NA's :4045 NA's :4043

## new_sn_m014 new_sn_m1524 new_sn_m2534 new_sn_m3544 new_sn_m4554 new_sn_m5564

## Min. : 0.0 Min. : 0.0 Min. : 0.0 Min. : 0.0 Min. : 0.0 Min. : 0.0

## 1st Qu.: 1.0 1st Qu.: 2.0 1st Qu.: 2.0 1st Qu.: 2.0 1st Qu.: 2.0 1st Qu.: 2.0

## Median : 9.0 Median : 15.5 Median : 23.0 Median : 19.0 Median : 19.0 Median : 16.0

## Mean : 308.7 Mean : 513.0 Mean : 653.7 Mean : 837.9 Mean : 520.8 Mean : 448.6

## 3rd Qu.: 61.0 3rd Qu.: 102.0 3rd Qu.: 135.5 3rd Qu.: 132.0 3rd Qu.: 127.5 3rd Qu.: 101.0

## Max. :22355.0 Max. :60246.0 Max. :50282.0 Max. :250051.0 Max. :57181.0 Max. :64972.0

## NA's :6195 NA's :6210 NA's :6218 NA's :6215 NA's :6213 NA's :6219

## new_sn_m65 new_sn_f014 new_sn_f1524 new_sn_f2534 new_sn_f3544 new_sn_f4554

## Min. : 0.0 Min. : 0 Min. : 0.0 Min. : 0.0 Min. : 0.00 Min. : 0.00

## 1st Qu.: 2.0 1st Qu.: 1 1st Qu.: 1.0 1st Qu.: 2.0 1st Qu.: 1.00 1st Qu.: 1.00

## Median : 20.5 Median : 8 Median : 12.0 Median : 18.0 Median : 11.00 Median : 10.00

## Mean : 460.4 Mean : 292 Mean : 407.9 Mean : 466.3 Mean : 506.59 Mean : 271.16

## 3rd Qu.: 111.8 3rd Qu.: 58 3rd Qu.: 89.0 3rd Qu.: 103.2 3rd Qu.: 82.25 3rd Qu.: 76.75

## Max. :74282.0 Max. :21406 Max. :35518.0 Max. :28753.0 Max. :148811.00 Max. :23869.00

## NA's :6220 NA's :6200 NA's :6218 NA's :6224 NA's :6220 NA's :6222

## new_sn_f5564 new_sn_f65 new_ep_m014 new_ep_m1524 new_ep_m2534 new_ep_m3544

## Min. : 0.0 Min. : 0.0 Min. : 0.0 Min. : 0.0 Min. : 0.0 Min. : 0.00

## 1st Qu.: 1.0 1st Qu.: 1.0 1st Qu.: 0.0 1st Qu.: 1.0 1st Qu.: 1.0 1st Qu.: 1.00

## Median : 8.0 Median : 13.0 Median : 6.0 Median : 11.0 Median : 13.0 Median : 10.50

## Mean : 213.4 Mean : 230.8 Mean : 128.6 Mean : 158.3 Mean : 201.2 Mean : 272.72

## 3rd Qu.: 56.0 3rd Qu.: 74.0 3rd Qu.: 55.0 3rd Qu.: 88.0 3rd Qu.: 124.0 3rd Qu.: 91.25

## Max. :26085.0 Max. :29630.0 Max. :7869.0 Max. :8558.0 Max. :11843.0 Max. :105825.00

## NA's :6223 NA's :6221 NA's :6202 NA's :6214 NA's :6220 NA's :6216

## new_ep_m4554 new_ep_m5564 new_ep_m65 new_ep_f014 new_ep_f1524 new_ep_f2534

## Min. : 0.00 Min. : 0.00 Min. : 0.00 Min. : 0.0 Min. : 0.0 Min. : 0.0

## 1st Qu.: 1.00 1st Qu.: 1.00 1st Qu.: 1.00 1st Qu.: 0.0 1st Qu.: 1.0 1st Qu.: 1.0

## Median : 8.50 Median : 7.00 Median : 10.00 Median : 5.0 Median : 9.0 Median : 12.0

## Mean : 108.11 Mean : 72.17 Mean : 78.94 Mean : 112.9 Mean : 149.2 Mean : 189.5

## 3rd Qu.: 63.25 3rd Qu.: 46.00 3rd Qu.: 55.00 3rd Qu.: 50.0 3rd Qu.: 78.0 3rd Qu.: 95.0

## Max. :5875.00 Max. :3957.00 Max. :3061.00 Max. :6960.0 Max. :7866.0 Max. :10759.0

## NA's :6220 NA's :6225 NA's :6222 NA's :6208 NA's :6219 NA's :6219

## new_ep_f3544 new_ep_f4554 new_ep_f5564 new_ep_f65 newrel_m014 newrel_m1524

## Min. : 0.0 Min. : 0.00 Min. : 0.00 Min. : 0.00 Min. : 0.0 Min. : 0.0

## 1st Qu.: 1.0 1st Qu.: 1.00 1st Qu.: 1.00 1st Qu.: 0.00 1st Qu.: 5.0 1st Qu.: 17.5

## Median : 9.0 Median : 8.00 Median : 6.00 Median : 10.00 Median : 32.5 Median : 171.0

## Mean : 241.7 Mean : 93.77 Mean : 63.04 Mean : 72.31 Mean : 538.2 Mean : 1489.5

## 3rd Qu.: 77.0 3rd Qu.: 56.00 3rd Qu.: 42.00 3rd Qu.: 51.00 3rd Qu.: 210.0 3rd Qu.: 684.2

## Max. :101015.0 Max. :6759.00 Max. :4684.00 Max. :2548.00 Max. :18617.0 Max. :84785.0

## NA's :6219 NA's :6223 NA's :6223 NA's :6226 NA's :7050 NA's :7058

## newrel_m2534 newrel_m3544 newrel_m4554 newrel_m5564 newrel_m65 newrel_f014

## Min. : 0 Min. : 0.00 Min. : 0.0 Min. : 0 Min. : 0.0 Min. : 0.0

## 1st Qu.: 25 1st Qu.: 24.75 1st Qu.: 19.0 1st Qu.: 13 1st Qu.: 17.0 1st Qu.: 5.0

## Median : 217 Median : 208.00 Median : 175.0 Median : 136 Median : 117.0 Median : 32.5

## Mean : 2140 Mean : 2036.40 Mean : 1835.1 Mean : 1525 Mean : 1426.0 Mean : 532.8

## 3rd Qu.: 1091 3rd Qu.: 851.25 3rd Qu.: 688.5 3rd Qu.: 536 3rd Qu.: 453.5 3rd Qu.: 226.0

## Max. :76917 Max. :84565.00 Max. :100297.0 Max. :112558 Max. :124476.0 Max. :18054.0

## NA's :7057 NA's :7056 NA's :7056 NA's :7055 NA's :7058 NA's :7050

## newrel_f1524 newrel_f2534 newrel_f3544 newrel_f4554 newrel_f5564 newrel_f65

## Min. : 0.00 Min. : 0.0 Min. : 0.0 Min. : 0.0 Min. : 0.0 Min. : 0.0

## 1st Qu.: 10.75 1st Qu.: 18.0 1st Qu.: 12.5 1st Qu.: 10.0 1st Qu.: 8.0 1st Qu.: 9.0

## Median : 123.00 Median : 161.0 Median : 125.0 Median : 92.0 Median : 69.0 Median : 69.0

## Mean : 1161.85 Mean : 1472.8 Mean : 1125.0 Mean : 877.3 Mean : 686.4 Mean : 683.8

## 3rd Qu.: 587.75 3rd Qu.: 762.5 3rd Qu.: 544.5 3rd Qu.: 400.5 3rd Qu.: 269.0 3rd Qu.: 339.0

## Max. :49491.00 Max. :44985.0 Max. :38804.0 Max. :37138.0 Max. :40892.0 Max. :47438.0

## NA's :7056 NA's :7058 NA's :7057 NA's :7057 NA's :7057 NA's :7055A couple of things might stand out right away, namely the redundant iso2 and iso3 columns, the several NAs, and the strange looking column names.

Let’s address the column names first. The documentation explains that the column names encode several pieces of information. They all begin with new, indicating that these are newly diagnosed cases. After new_, the next string encodes the method of diagnosis. For example, rel means the case was a relapse. The next string is an m (for male) or f (for female), followed by numbers which indicate an age range. Thus each column heading is actually a set of four data values. The numbers in these columns are the numbers of cases. Since we have values being used as column names, we should pivot the column names into values. This will make the data table longer, so we’ll use pivot_longer. We’ll also get rid of the iso2 column while we’re at it. (We’re going to leave iso3 since we’ll need it later. It provides a standardized name for each country.)

(who_clean <- who %>%

select(-iso2) %>%

pivot_longer(cols = new_sp_m014:newrel_f65, names_to = "case_type", values_to = "cases"))## # A tibble: 405,440 x 5

## country iso3 year case_type cases

## <chr> <chr> <dbl> <chr> <dbl>

## 1 Afghanistan AFG 1980 new_sp_m014 NA

## 2 Afghanistan AFG 1980 new_sp_m1524 NA

## 3 Afghanistan AFG 1980 new_sp_m2534 NA

## 4 Afghanistan AFG 1980 new_sp_m3544 NA

## 5 Afghanistan AFG 1980 new_sp_m4554 NA

## 6 Afghanistan AFG 1980 new_sp_m5564 NA

## 7 Afghanistan AFG 1980 new_sp_m65 NA

## 8 Afghanistan AFG 1980 new_sp_f014 NA

## 9 Afghanistan AFG 1980 new_sp_f1524 NA

## 10 Afghanistan AFG 1980 new_sp_f2534 NA

## # i 405,430 more rowsAnother easy cleanup is to remove the rows with missing values for cases, as our question depends on knowing the number of cases.

(who_clean <- who_clean %>%

filter(!is.na(cases)))## # A tibble: 76,046 x 5

## country iso3 year case_type cases

## <chr> <chr> <dbl> <chr> <dbl>

## 1 Afghanistan AFG 1997 new_sp_m014 0

## 2 Afghanistan AFG 1997 new_sp_m1524 10

## 3 Afghanistan AFG 1997 new_sp_m2534 6

## 4 Afghanistan AFG 1997 new_sp_m3544 3

## 5 Afghanistan AFG 1997 new_sp_m4554 5

## 6 Afghanistan AFG 1997 new_sp_m5564 2

## 7 Afghanistan AFG 1997 new_sp_m65 0

## 8 Afghanistan AFG 1997 new_sp_f014 5

## 9 Afghanistan AFG 1997 new_sp_f1524 38

## 10 Afghanistan AFG 1997 new_sp_f2534 36

## # i 76,036 more rowsYou may have noticed during the pivot above that there are, annoyingly, some inconsistent naming conventions. For example, in some of the case types, there is an underscore after new and in some there are not. Let’s perform a grouped count on the case_type variable to see the extent of this problem. Notice that we’re not storing this grouped table in who_clean since we’re only using it to detect entry errors.

case_type_count <- who_clean %>%

group_by(case_type) %>%

summarize(count = n())By viewing the full case_type_count table (View(case_type_count)), we see that the last 14 values are entered as newrel rather than new_rel. Here’s what you’d see at the end of the table:

tail(case_type_count, 16)## # A tibble: 16 x 2

## case_type count

## <chr> <int>

## 1 new_sp_m5564 3218

## 2 new_sp_m65 3209

## 3 newrel_f014 190

## 4 newrel_f1524 184

## 5 newrel_f2534 182

## 6 newrel_f3544 183

## 7 newrel_f4554 183

## 8 newrel_f5564 183

## 9 newrel_f65 185

## 10 newrel_m014 190

## 11 newrel_m1524 182

## 12 newrel_m2534 183

## 13 newrel_m3544 184

## 14 newrel_m4554 184

## 15 newrel_m5564 185

## 16 newrel_m65 182We can fix this with str_replace: