Chapter 1 Introduction

1.1 What is Machine Learning?

Is ML the same as Statistics? Is it Data Science? Is it its own thing? Is it just a buzzword which is good for employment? Just what is Machine Learning?

Well, I don’t think there’s a single concrete definition for Machine Learning (ML), but let’s have a look at several different ones….

A popular definition:

“A computer program is said to learn from experience E with respect to some class of tasks T, and performance measure P, if its performance at tasks in T, as measured by P, improves with experience E.”. Mitchell (1997)

This means there are many different kinds of machine learning, depending on the nature of the task T we wish the system to learn, the nature of the performance measure P we use to evaluate the system, and the nature of the training signal or experience E we give it. Murphy (2022)

Another definition: “Machine learning is a branch of artificial intelligence (AI) and computer science which focuses on the use of data and algorithms to imitate the way that humans learn, gradually improving its accuracy”. Hurwitz and Kirsch (2018)

And one more definition: “A set of methods that can automatically detect patterns in data, and then use the uncovered patterns to predict future data, or to perform other kinds of decision making under uncertainty (such as planning how to collect more data!).”. Murphy (2012)

Well, it seems that the general consensus is that ML is about getting the machine (most likely a computer in some sense) to learn as much as possible, with the least human interaction possible. This may be an admirable aim, however, I would say that there are elements of human interaction and interpretation of results that machines can’t do. Machines and humans (bringing an analytical mind as well as the know-how to use the machines) must together perform Statistics, Data Science and Machine Learning in my opinion.

With regards to this course specifically, my half of the course could be seen as Statistical Learning, we view machine learning from a probabilistic perspective and apply statistical tools to machine learning.

I will leave you to decide for yourself about these various names for our science as the course progresses! What I do know is that we are going to cover some important statistical tools if you want to get into “Machine Learning”!

1.2 Motivation

We are currently living in a data-driven world, where the amount of digitally-collected data grows exponentially. Being a powerful tool, machine learning derives insights from this tremendous amount of data and helps solve many practical problems.

People who are exploring machine learning as a field to shift their careers or people who are just curious about why there is such a buzz of machine learning all over places often have one burning question in their mind – what all possible things can one achieve with machine learning? Well, the short answer is – that the possibility is endless and one’s creativity is the only limit.

Here are a few machine learning examples that are in popular use in today’s world.

Machine Learning is an integral part of our daily life

Google search engine, now processes over 40,000 searches per second on average and billions of searches daily and yet throws relevant search results within seconds. Did you ever freak out at how Google gives such accurate autocomplete prompts in the search boxes as if it is reading our minds about search context?

Google maps predict which route will be faster and shows such an accurate ETA of reaching the destination.

Ever noticed that spam/junk folder in your university mailbox or many other email providers, for example Gmail, and did you ever open it to see so many spam mails indeed.

Various language translators and automatic subtitles are becoming better and better with time.

Youtube and Tiktok suggestions, which keep people hooked for endless hours. Amazon and Netflix are so good at recommending what you may like.

ChatGPT have been widely utilized in various aspects of daily life, including communication, education, content creation, business marketing and healthcare.

Yes, I think you are getting my point now, it is machine learning that is behind all the magic.

Machine Learning in industrial uses

Industries are adopting machine learning across the world.

Banks are using machine learning to detect frauds, pass loans, and maintain investment portfolios.

Hospitals are using machine learning to detect and diagnose diseases with more accuracy than actual doctors.

Companies are running ad campaigns targeted to specific customer segments and managing their supply chain and inventory control using machine learning.

Enterprise chatbots are now the next big thing, with companies adopting them to interface with customers.

Online retailers are building their recommendation systems with more accuracy than before using the latest advancements in machine learning.

Self Driving Cars are the latest fascination that has caught the imaginations of companies like Google, Uber, and Tesla who are investing heavily into this future vision using next-generation machine learning technology.

These are just a few of the popular use cases and are just the tip of the iceberg. Machine learning can be used in the most creative ways to solve social, economical, and business problems. The possibility is just endless.

Machine Learning – The Artist

This is the coolest part- with so many advancements in Deep Learning a subset field with machine learning things are getting to the next level of reality. Now we are able to produce artwork using machine learning. These are some of the astonishing artworks.

Deepfakes

Jason Allen, a video game designer in Pueblo, Colorado, spent roughly 80 hours working on his entry to the Colorado State Fair’s digital arts competition. Judges awarded his A.I.-generated work (“Théâtre D’opéra Spatial”) first place, which came with a $300 prize.

The beautiful model-looking faces below do not exist and have been generated by machine learning’s imagination.

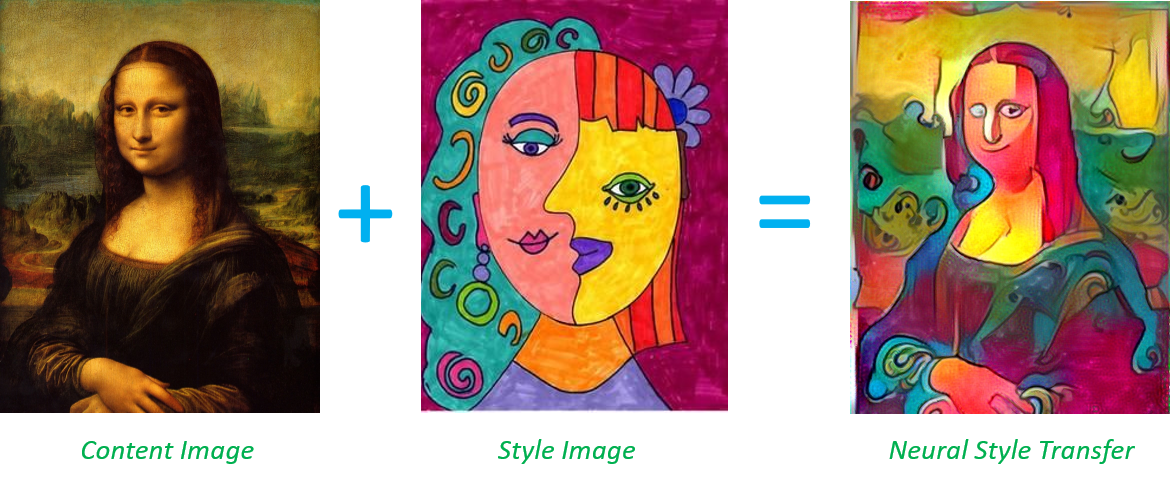

Neural Style Transfer

Neural style transfer is a cool technique in which a style of an image or artwork is transferred to another image.

Music Composer

Nowadays, Machine Learning based apps (for example https://www.aiva.ai/) can assist you to create various styles of AI-generated music.

Check out this soundtrack music (https://www.youtube.com/watch?v=Emidxpkyk6o&ab_channel=Aiva), there is no reason to believe that it was not generated by humans!!

Machine Learning – The Gamer

In 2011, IBM’s machine learning system Watson participated in a popular TV game show Jeopardy – which competed and defeated the world’s best Jeopardy human champions.

In 2016, Deepmind’s machine learning system AlphaGo played Go with Lee Sudol (the best player and champion of Go) and defeated him. This feat is considered to be one of the greatest achievements in the field of machine learning.

Machine learning-based AI-player has been outcompeting top human players in various real-time strategy games, such as StarCraft and League of Legends.

In This Course…

In the first term (Michaelmas term) of this course, we will cover the following topics along with some practical exercises.

Fundamental concepts of Statistical (Machine) Learning

Linear models (Logistic Regression, Linear Regression)

Beyond Linearity (Polynomial Regression, Step Functions, Splines)

Tree-based methods (Classification and regression trees, Random forests, Boosting)

You may find these topics seem to be nowhere near those fascinating application examples listed above. Well, they are in fact the cornerstone of the more advanced machine learning algorithms including neural networks.

1.3 What do we need?

What do we need to achieve “successful” Machine (Statistical) Learning? In my opinion, it requires the following four pillars.

1. Sufficient Data

If you ask any data scientist how much data is needed for machine learning, you’ll most probably get either “It depends” or “The more, the better.” And the thing is, both answers are correct.

It really depends on the type of project you’re working on, and it’s always a great idea to have as many relevant and reliable examples in the datasets as you can get to receive accurate results. But the question remains: how much is enough?

General speaking, more complex algorithms always require a larger amount of data. The more features (the number of input parameters), model parameters, and variability of the expected output it should take into account, the more data you need. For example, you want to train the model to predict housing prices. You are given a table where each row is a house, and the columns are the location, the neighborhood, the number of bedrooms, floors, bathrooms, etc., and the price. In this case, you train the model to predict prices based on the change of variables in the columns. And to learn how each additional input feature influences the input, you’ll need more data examples.

2. Sufficient Computational Resources

There are four steps for conducting machine learning, all require sufficient computational resources:

- Preprocessing input data

- Training the machine learning model

- Storing the trained machine learning model

- Deployment of the model

Among all these, training the machine learning model is the most computationally intensive task. For example, training a neural network often requires intensive computational resources to conduct an enormous amount of matrix multiplications.

3. Performance metrics

Performance metrics are a part of every machine learning pipeline. They tell you if you’re making progress, and put a number on it. All machine learning models, whether it’s linear regression or neural networks, need a metric to judge performance.

Many of the machine learning tasks can be broken down into either Regression or Classification. There are dozens of metrics for both problems, for example, Root Mean Squared Error for Regression problem and Accuracy for Classification problem. Choosing a “proper” performance could be crucial to the whole machine learning process. We must carefully choose the metrics for evaluating machine learning performance because

How the performance of ML algorithms is measured and compared will be dependent entirely on the metric you choose.

How you weigh the importance of various characteristics in the result will be influenced completely by the metric you choose.

We will explore some of the popular performance metrics for machine learning in the coming lectures.

Note most of the performance metrics (especially in supervised learning) require verification/labelled data. For regression problems, we usually require an observed response variable (verification) in order to calculate performance metrics. And for the classification problems, we usually require labelled data. In machine learning, data labelling is the process of identifying raw data (images, text files, videos, etc.) and adding one or more meaningful and informative labels to provide context so that a machine learning model can learn from it. For example, labels might indicate whether a photo contains a bird or car, which words were uttered in an audio recording, or if an x-ray contains a tumor.

4. Proper Machine Learning Algorithm

There are so many algorithms that it can feel overwhelming when algorithm names are thrown around. In this course, we will look into some classic machine learning algorithms including for example linear models, tree-based models and neural networks. Knowing where the algorithms fit is much more important than knowing how to apply them. For example, applying a linear model to the data generated by a nonlinear underlying system is obviously not a good idea.

1.4 A Note on These Notes

Since this is a third-year course, you are encouraged to read about it widely, expanding your knowledge beyond the notes contained here. That’s because that is precisely what these are - Notes! They are written to complement the lectures and give you a flavour of the topics covered in this course. They are not meant to be a comprehensive textbook, covering every detail that will be necessary to become a well-trained Statistician, Data Scientist or Machine Learner - that requires reading around! …and practice!

1.5 Key References

The key references are the following (All of the recommended books are freely and legally available online):

James et al. (2013) - , by Gareth James, Daniela Witten, Trevor Hastie and Robert Tibshiriani. This book is relatively easy to follow and doesn’t require strong maths background. There are quite a few online resources about this book available here.

Faraway (2009) - Linear Models with R, by Julian Faraway. This book gives a thorough introduction to linear models and their applications to make predictions and explaining the relationship between the response and the predictors. Understanding linear models are crucial to a broader competence in the practice of machine learning. https://search-ebscohost-com.ezphost.dur.ac.uk/login.aspx?direct=true&AuthType=cookie,ip,athens&db=nlebk&AN=1910500&site=ehost-live

Murphy (2012) - Machine Learning : A Probabilistic Perspective, by Kevin P. Murphy. This book gives a comprehensive introduction to machine learning and offers a comprehensive and self-contained introduction to the field of machine learning, based on a unified, probabilistic approach. The book is written in an informal, accessible style, complete with pseudo-code for the most important algorithms. https://ebookcentral.proquest.com/lib/durham/detail.action?docID=3339490

Murphy (2022) - Probabilistic Machine Learning : An Introduction, by Kevin P. Murphy. This book is similar to Murphy (2012) which covers the most common types of machine learning but from a probabilistic perspective. This book offers a more detailed and up-to-date introduction to machine learning including relevant mathematical background, basic supervised learning as well as more advanced topics. https://ebookcentral.proquest.com/lib/durham/detail.action?docID=6884472