2 Methods

Spotify for Developers

One resource that was used to learn about the process for retrieving data from Spotify was the Spotify for Developers website [14]. Here, ample information is provided from general guides to pages of reference materials. It has the information needed to learn about authorization, how to work with playlists, and a quick start guide to the Spotify Web API. Most of the code examples used throughout the guides were programmed using Java, therefore, they were not much help. However, a greater understanding was developed of the background process to accessing the data through Spotify.

Spotify Web API

The Spotify Web API is a server-side application to access data from user profiles. An API is an application programming interface, which allows a user to ask for some information and then the API returns that information back to the user. The Spotify Web API allows a user to request track details and it then returns the values that were requested. This application can be used to create new playlists, find information about songs and artists, along with many other functions. The main purpose the Spotify Web API was used for was to acquire authorization scopes, that is, the Spotify Client ID and Client Secret. The Client ID is the unique identifier of personal applications, and the Client Secret is a secure and private code to access Spotify Web API services [14].

Spotipy

In order to run the program, Lamere’s Spotipy library had to be imported at the start of the code [15]. Spotipy is a Python library for the Spotify Web API. The Spotipy site has examples of code that are programmed using Python, which was more comprehensible than the Spotify for Developers page.

2.1 Coding Process

The most time-consuming portion of this project was learning how to use the Python coding language. After reading from multiple resources, a conceptual understanding of Python was developed, but applying that understanding proved to be more challenging than anticipated. First, a tutorial was followed working though the Spotify Web API. With the code produced from this tutorial, specific tracks were able to be extracted from specific artists, but complete playlists could not be extracted as needed. Now, the code from Tamer’s GitHub page became helpful [1]. Tamer’s project’s purpose was to predict the next year’s most popular songs, and her code was able to extract data from entire playlists. The code produced using the Spotify Web API tutorial was adapted to Tamer’s code and a path was created that extracted the information from the Spotify playlists to a .csv file.

2.2 Data Extraction Process

For the code to be able to connect with the Spotify Web API, an app had to created through the Spotify dashboard. These apps can be used to create playlists among other things, but for this project, it was only needed to gain access to the Spotify Client ID and Client Secret. When inserted into the Python script, these credentials permitted access to various data points in Spotify and ultimately provided a path to extract the data from the playlists.

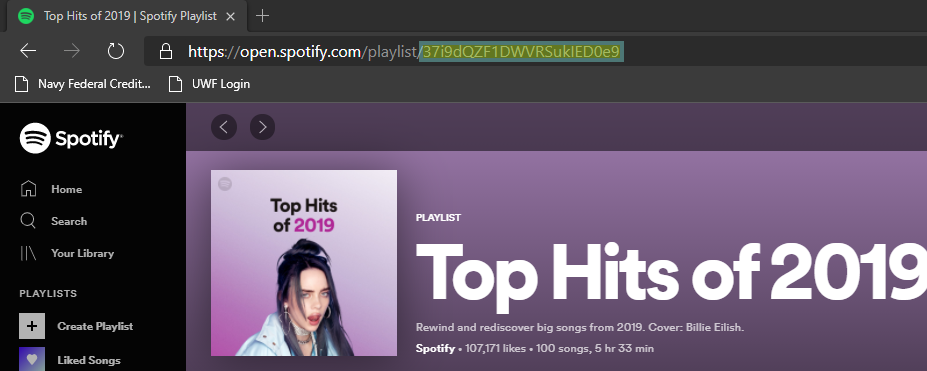

In this app, a whitelisted redirect URI had to be created with Spotify. This URI enabled the Spotify authentication service to automatically re-launch the app every instance of running the Python code. Then, the Top 100 Tracks playlists created by Spotify ranging from 2001 to 2019 were found. With the final Python script, complete with the authorization scopes and redirect URI, the playlist URI from the playlist URL could simply be inserted into the code and the information pulled from Spotify would be sent to a .csv file with all the datapoints needed to conduct the analysis. From here, the data could be compiled into something analyzable.

Pictured here is the process described above of finding the playlist URI and inserting it into the Python code.

The final lines of code provide the path to send the data frame to a .csv file.

2.3 Variables

In each of the datasets extracted from Spotify, there were 100 tracks and 12 variables. These variables included danceability, energy, key, loudness, mode, speechiness, acousticness, instrumentalness, liveness, valence, tempo, duration, and time signature.

- Outcome Variable

- Danceability - describes how easy it is to dance to a song. It is the culmination of tempo, rhythm, and beat. Measured from 0 to 1.

- Predictor Variables

- Energy - measures how intense a track is from 0 to 1. Soft, melodic songs have a lower energy, while loud, dynamic songs have a higher energy.

- Key - defines what key the song is in. Each key from A to G# is assigned to an integer from 0 to 11.

- Loudness - how loud a track is, measured in decibels and averaged across the track.

- Mode - a binary variable that indicates whether a song is in a major key (1) or a minor key (0).

- Speechiness - the portion of the song consisting of spoken word. Rap songs that are highly comprised of speech would have a score closer to 1, while fully instrumental songs would have a score close to 0.

- Acousticness - determines if a song is acoustic or not. A song with a score of 1 would be an acoustic track.

- Instrumentalness - the antithesis to speechiness in that it detects how instrumental a song is. A song with no vocals would have a score of 1 whereas a song with only vocals would have a score of 0.

- Liveness - detects whether or not there is an audience present. Higher values indicate the presence of an audience.

- Valence - how uplifting a song is. A song with a positive mood would have a score nearing 1 and a negative song would have a score closer to 0.

- Tempo - the speed of the song, in beats per minute.

- Duration - how long the song is in milliseconds.

- Time signature - measures how many beats are in a measure.

From previous research in Biostatistics and Regression Analysis, as stated in the literature review, it was determined through regression models that energy, speechiness, acousticness, valence, and tempo are all significant predictors of danceability. In this analysis, year will also be included as a predictor variable. Using this outcome, along with the additional years of data, continuing analyses would consist of how the variables have changed over time.

2.4 Statistical Methods

Multiple linear regression is a modeling approach used when there is more than one predictor variable. In this analysis, with 12 predictor variables, multiple linear regression will provide a model that will be able to predict the outcome of danceability.

\[\hat{Y} = \beta_0 + \beta_1{X_1} + ... + \beta_k{X_k} \] This is the general multiple linear regression formula, where k is the number of predictors in the model.

There are a few assumptions of linear regression that need to be tested with any dataset.

\[\varepsilon_i \overset{\text{iid}}{\sim} N(0, \sigma^2)\]

- Linearity - there must be a linear relationship between the independent and dependent variables.

- Independence - the observations should be independent of one another.

- Normality - the distribution of errors should be normal.

- Homoscedasticity - variance of y should be the same across all x.

The best regression models undergo testing to determine which variables are significant predictors of the outcome variable. To create a model with only statistically significant variables, backward selection was used testing variables based on a significant p-value of 0.05, however other criteria are sometimes used. Backward selection is a type of stepwise regression that starts with a full model including all predictors. Then, the predictors that do not significantly contribute to the model are stripped away leaving a model that includes only statistically significant predictors. This process is used when the number of observations is greater than the number of variables. With 1,900 data points and 12 predictor variables, backward selection is the chosen regression method in this case.

2.5 Results

Testing and Analysis

Initial Model

The initial regression model including all variables is: \[\hat{Y} = -7.0358 + 0.0039\mbox{ year} -0.2561\mbox{ energy} + 0.0003\mbox{ key}\] \[+ 0.001\mbox{ loudness} -0.0178\mbox{ mode} + 0.1798\mbox{ speechiness} -0.1227\mbox{ acousticness}\] \[+ 0.119\mbox{ instumentalness} -0.0928\mbox{ liveness} + 0.3141\mbox{ valence} -0.0009\mbox{ tempo}\] \[ + 0\mbox{ duration} + 0.0185\mbox{ time signature}\]

The following table was examined for variables with a p-value greater than 0.05. As seen here, key, loudness, duration, and time signature all have p-values greater than 0.05. Therefore, they are not significant predictors of danceability and can be removed from the model.

| Characteristic | Beta | 95% CI1 | p-value |

|---|---|---|---|

| year | 0.00 | 0.00, 0.00 | <0.001 |

| energy | -0.26 | -0.31, -0.20 | <0.001 |

| key | 0.00 | 0.00, 0.00 | 0.720 |

| loudness | 0.00 | 0.00, 0.00 | 0.610 |

| mode | -0.02 | -0.03, -0.01 | 0.001 |

| speechiness | 0.18 | 0.12, 0.24 | <0.001 |

| acousticness | -0.12 | -0.15, -0.09 | <0.001 |

| instrumentalness | 0.12 | 0.06, 0.18 | <0.001 |

| liveness | -0.09 | -0.13, -0.05 | <0.001 |

| valence | 0.31 | 0.29, 0.34 | <0.001 |

| tempo | 0.00 | 0.00, 0.00 | <0.001 |

| duration_ms | 0.00 | 0.00, 0.00 | 0.614 |

| time_signature | 0.02 | 0.00, 0.04 | 0.106 |

|

1

CI = Confidence Interval

|

|||

Final Model

After more testing and removing those variables found to be not significant predictors of danceability, this is the final regression model: \[\hat{Y} = -16.8717 + 0.0088\mbox{ year} -0.2426\mbox{ energy} -0.017\mbox{ mode} + 23.877\mbox{ speechiness}\] \[ -0.1224\mbox{ acousticness} + 0.1145\mbox{ instumentalness} -0.0946\mbox{ liveness} + 13.9302\mbox{ valence}\] \[ -0.0008\mbox{ tempo} -0.0118\mbox{ year} \times \mbox{ speechiness} -0.0068\mbox{ year } \times \mbox{ valence}\]

The data was also tested for interactions and the two significant interactions were between year and speechiness and year and valence.

As seen in this table, all of the p-values have remained less than 0.05. Therefore, all of the variables included in the final model are significant.

| Characteristic | Beta | 95% CI1 | p-value |

|---|---|---|---|

| year | 0.01 | 0.01, 0.01 | <0.001 |

| energy | -0.24 | -0.28, -0.20 | <0.001 |

| mode | -0.02 | -0.03, -0.01 | 0.002 |

| speechiness | 24 | 3.7, 44 | 0.021 |

| acousticness | -0.12 | -0.15, -0.09 | <0.001 |

| instrumentalness | 0.11 | 0.05, 0.17 | <0.001 |

| liveness | -0.09 | -0.14, -0.05 | <0.001 |

| valence | 14 | 5.1, 23 | 0.002 |

| tempo | 0.00 | 0.00, 0.00 | <0.001 |

| year * speechiness | -0.01 | -0.02, 0.00 | 0.022 |

| year * valence | -0.01 | -0.01, 0.00 | 0.002 |

|

1

CI = Confidence Interval

|

|||

Testing Assumptions

Here, the assumptions of linear regression were tested. In the residuals versus fitted plot testing for homoscedasticity, there is no clear distribution pattern. Therefore, the variance of residuals is the same for any x value. The Normal Q-Q plot and the Histogram for residuals test for normality. The Q-Q plot shows that the data is normal, and the Histogram is only slightly skewed, therefore the residuals are normally distributed for any value of x.

Graphical Analysis

Here is the graphical analysis of the significant variables over time. The green point plots mean value. In graph 1, average danceability of the Top 100 tracks has increased slightly over time. This means songs are becoming more danceable over time. Graph 2 shows that energy has gone down throughout time. It is worth noting the outliers on the lower end of the energy scale. There are almost always a few songs in the Top 100 per year that have a lower energy which would cause the overall energy to decrease. The range of energy per year has also decreased over time. This could be in part because songs are becoming more similar in energy. Graph 3 looks different than the other graphs pictured. With mode being a binary variable, the mean value is a proportion of the number of songs in a major key and the number of songs in a minor key. Therefore, the average determines whether there were more major songs or minor songs. In graph 4, average speechiness has oscillated slightly over the years This could be because the songs people listen to the most usually have a similar song map with verses, choruses, and bridges in the same pattern. Also, very few songs that make it to the Top 100 have a score greater than 0.5 in speechiness; this means most of the songs that make it into the Top 100 are more instrumental or comprised of vocalizations. In graph 5, average acousticness has recently increased per year which means more acoustic songs are making it into the top 100 tracks. Also, the variability per year has increased slightly over time. In graph 6, instrumentalness has had very little change over time but in recent years most of the top 100 songs are not instrumental at all, meaning most songs have a lot of words and singing. In graph 7, average liveness has stayed the same over time with a slight decrease in recent years. In graph 8, average valence has decreased with time with values at all points on the spectrum. This decrease means more negative songs have made their way into the top 100 tracks. Graph 9 depicting average tempo over time has had little change. This means recent years have had similar speed songs in the Top 100 tracks.

Interactions

An interaction occurs when the relationship between a predictor variable and the outcome variable depends on the value of another variable. The data was tested for interactions with year and found year and speechiness to have an interaction. This means that the relationship between danceability and speechiness depends on the year we are looking at. The following graph plots the slope of speechiness over year. As year has gone on the slope of speechiness has decreased. This means that every year the relationship between speechiness and danceability is decreasing.

An interaction was also found between year and valence. This means that the relationship between danceability and valence depends on the year. The graph below plots the slope of valence over year. As year has gone on, the slope of valence has decreased. This means that every year the relationship between valence and danceability is weakening.