Chapter 9 Hypothesis Testing

9.1 Getting Started

In this chapter, we extend the concepts used in Chapters 7 & 8 to focus more squarely on making statistical inferences through the process of hypothesis testing. The focus here is on taking the abstract ideas that are the foundation for hypothesis testing and applying them to some concrete examples. The only thing you need to load in order to follow along is the anes20.rda data set.

9.2 The Logic of Hypothesis Testing

When engaged in the process of hypothesis testing, we are essentially asking “what is the probability that the statistic found in the sample could have come from a population in which it is equal to some other, specified, value?” As discussed in Chapter 8, social scientists want to know something about a population value of interest but frequently are only able to work with sample data. We generally think the sample data represent the population fairly well but we know that there will be some sampling error. In Chapter 8, we took this into account using confidence intervals around sample statistics. In this chapter, we apply some of the same logic to determine if the sample statistic is different enough from a hypothesized population parameter that we can be confident it did not occur just due to sampling error. (Come back and reread this paragraph when you are done with this chapter; it will make a lot more sense then.)

We generally consider two different types of hypotheses, the null and alternative (or research) hypotheses:

Null Hypothesis (H0): This is the hypothesis that is tested directly. This hypothesis usually states that the sample finding (\(\bar{x}\)) is the same as some hypothetical population parameter (\(\mu\)), even though it may appear that they are different. We usually hope to reject the null hypothesis (H0). I know this sounds strange, but it will make more sense to you soon.

Alternative (research) Hypothesis (H1): This is a substantive hypothesis that we think is true. Usually, the alternative hypothesis posits that a sample statistic (\(\bar{x}\)) does not equal some specified population parameter (\(\mu\)). We don’t actually test this hypothesis directly. Rather, we try to build a case for it by showing that the sample statistic is different enough from the population value hypothesized in H0 that it is unlikely that the null hypothesis is true.

We can use what we know about the z-distribution to test the validity of the null hypothesis by stating and testing hypotheses about specific values of population parameters. Consider the following problem:

An analyst in the Human Resources department for a large metropolitan county is asked to evaluate the impact of a new method of documenting sick leave among county employees. The new policy is intended to cut down on the number of sick leave hours taken by workers. Last year, the average number of hours of sick leave taken by workers was 59.2 (about 7.4 days), a level determined to be too high. To evaluate whether the new policy is working, the analyst took a sample of 100 workers at the end of one year under the new rules and found a sample mean of 54.8 hours (about 6.8 days) and a standard deviation of 15.38. The question is, does this sample mean represent a real change in sick leave use, or does it only reflect sampling error? To answer this, we need to determine how likely it is to get a sample mean of 54.8 from a population in which \(\mu=59.2\).

9.2.1 Using Confidence Intervals

As alluded to at the end of Chapter 8, you already know one way to test hypotheses about population parameters: by using confidence intervals. In this case, we can calculate the lower and upper limits of a 95% confidence interval around the sample mean (54.8) to see if it includes \(\mu\) (59.2):

\[c.i._{.95}=54.8\pm {1.96(S_{\bar{x}})}\] \[S_{\bar{x}}=\frac{15.38}{\sqrt{100}}=1.538\] \[c.i._{.95}=54.8 \pm 1.96(1.538)\] \[c.i._{.95}=54.8 \pm 3.01\] \[51.78\le \mu \le57.81\]
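The same interval can be reproduced in R. This is a minimal sketch that uses only the summary statistics from the example; the object names (`xbar`, `s`, `n`, `se`, `margin`, `ci`) are chosen here for illustration and are not part of the original analysis.

```r
# Summary statistics from the sick leave example
xbar <- 54.8   # sample mean (hours)
s    <- 15.38  # sample standard deviation
n    <- 100    # sample size

se     <- s / sqrt(n)   # estimated standard error of the mean
margin <- 1.96 * se     # margin of error for a 95% interval
ci     <- c(lower = xbar - margin, upper = xbar + margin)
ci

# Does the interval include last year's mean of 59.2?
contains_mu <- ci[["lower"]] <= 59.2 && 59.2 <= ci[["upper"]]
contains_mu
```

If `contains_mu` is `FALSE`, the hypothesized value lies outside the interval, which is the basis for the conclusion drawn in the text.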

From this sample of 100 employees, after one year with the new policy in place, we estimate that there is a 95% chance that \(\mu\) is between 51.78 and 57.81, and the probability that \(\mu\) is outside this range is less than .05. Based on this alone we can say there is less than a 5% chance that the number of hours of sick leave taken is the same as it was in the previous year. In other words, there is a fairly high probability that fewer sick leave hours were used in the year after the policy change than in the previous year.

9.2.2 Direct Hypothesis Tests

We can be a bit more direct and precise by setting this up as a hypothesis test and then calculating the probability that the null hypothesis is true. First, the null hypothesis.

\[H_{0}:\mu=59.2\]

Note that this is saying that there is no real difference between last year’s mean number of sick leave hours (\(\mu\)) and the sample we’ve drawn from this year (\(\bar{x}\)). Even though the sample mean looks different from 59.2, the true population mean is 59.2 and the sample statistic is just a result of random sampling error. After all, if the population mean is equal to 59.2, almost any sample drawn from that population will produce a mean that differs somewhat from 59.2, due to sampling error. In other words, H0 is saying that the new policy had no effect, even though the sample mean suggests otherwise.

Because the county analyst is interested in whether the new policy reduced the use of sick leave hours, the alternative hypothesis is:

\[H_{1}:\mu < 59.2\]

Here, we are saying that the sample statistic is different enough from the hypothesized population value (59.2) that it is unlikely to be the result of random chance, and the population value is less than 59.2.

Note here that we are not testing whether the average number of sick leave hours is equal to 54.8 (the sample mean). Instead, we are testing whether the average hours of sick leave taken this year is lower than the average taken last year. The alternative hypothesis reflects what we really think is happening; it is what we’re really interested in. However, we cannot test the alternative hypothesis directly. Instead, we examine the null hypothesis as a way of gathering evidence to support the alternative.

So, the question we need to answer in order to test the null hypothesis is, how likely is it that a sample mean of this magnitude (54.8) could be drawn from a population in which \(\mu=59.2\)? We know that we would get lots of different mean outcomes if we took repeated samples from this population. We also know that most of them would be clustered near \(\mu\) and a few would be relatively far away from \(\mu\) at both ends of the distribution. All we have to do is estimate the probability of getting a sample mean of 54.8 from a population in which \(\mu=59.2\). If the probability of drawing \(\bar{x}\) from a population with this \(\mu\) is small enough, then we can reject H0.

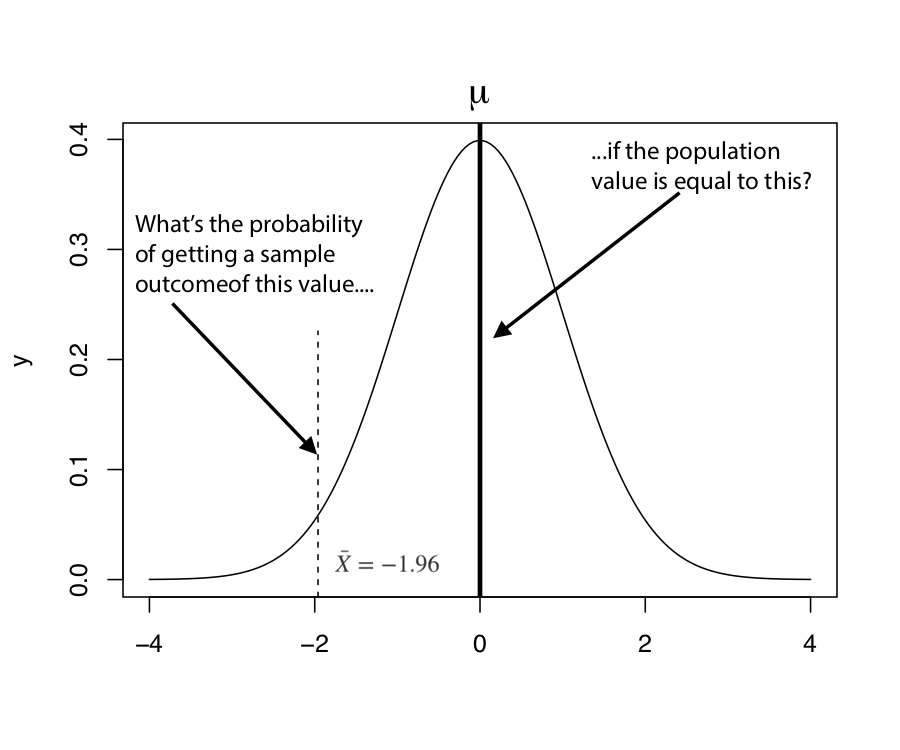

How do we assess this probability? By using what we know about the sampling distributions. Check out the figure below, which illustrates the logic of hypothesis testing using a theoretical distribution:

Figure 9.1: The Logic of Hypothesis Testing

Suppose we draw a sample mean equal to -1.96 from a population in which \(\mu=0\) and the standard error equals 1 (this, of course, is a normal distribution). We can calculate the probability of \(\bar{x}\le -1.96\) by estimating the area under the curve to the left of -1.96. The area on the tail of the distribution used for hypothesis testing is referred to as the \(\alpha\) (alpha) area. We know that this \(\alpha\) area is equal to .025 (How do we know this? Check out the discussion of the z-distribution from the earlier chapters), so we can say that the probability of drawing a sample mean less than or equal to -1.96 from a population in which \(\mu=0\) is about .025. What does this mean in terms of H0? It means that the probability that \(\mu=0\) is about .025, which is pretty low, so we reject the null hypothesis and conclude that \(\mu<0\). The smaller the p-value, the less likely it is that H0 is true.
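The .025 figure can be checked directly with `pnorm()`, which returns the area under the standard normal curve to the left of a given z-score. A quick sketch (the object name `alpha_area` is only illustrative):

```r
# Area under the standard normal curve to the left of z = -1.96
alpha_area <- pnorm(-1.96)
alpha_area  # very close to .025
```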

Critical Values. A common and fairly quick way to use the z-score in hypothesis testing is by comparing it to the critical value (c.v.) for z. The c.v. is the z-score associated with the probability level required to reject the null hypothesis. To determine the critical value of z, we need to determine what the probability threshold is for rejecting the null hypothesis. In the social sciences, it is fairly standard to consider any probability level lower than .05 sufficient for rejecting the null hypothesis. This probability level is also known as the significance level.

So, typically, the critical value is the z-score that gives us .05 as the area on the (left in this case) tail of the normal distribution. Looking at the z-score table from Chapter 6, or using the qnorm function in R, we see that this is z = -1.645. The area beyond the critical value is referred to as the critical region, and is sometimes also called the area of rejection: if the z-score falls in this region, the null hypothesis is rejected.
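In R, this lookup is a single call to `qnorm()`, which takes a lower-tail probability and returns the corresponding z-score. A call along the following lines gives the one-tailed critical value (the object name `cv_one_tail` is only for illustration):

```r
# z-score with .05 of the area under the curve to its left
cv_one_tail <- qnorm(.05)
cv_one_tail
```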

[1] -1.645

Once we have the \(c.v.\), we can calculate the z-score for the difference between \(\bar{x}\) and \(\mu\). If \(|z| > |z_{cv}|\), then we reject the null hypothesis.

So let’s get back to the sick leave example.

- First, what’s the critical value? -1.65 (make sure you understand why this is the value)

- What is the obtained value of z?

\[z=\frac{\bar{x}-\mu}{S_{\bar{x}}} = \frac{54.8-59.2}{1.538} = \frac{-4.4}{1.538}= -2.86\]

- If the |z| is greater than the |c.v.|, then reject H0. If the |z| is less than the |c.v.|, then fail to reject H0.

In this case z (-2.86) is of much greater (absolute) magnitude than c.v. (-1.65), so we reject the null hypothesis and conclude that \(\mu\) is probably < 59.2. By rejecting the null hypothesis we build a case for the alternative hypothesis, though we never test the alternative directly. One way of thinking about this is that there is less than a .05 probability that H0 is true. We are saying that this probability is small enough that we are confident in rejecting H0.
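The comparison of the obtained z-score to the critical value can be sketched in R as follows. The object names are illustrative, and because the alternative hypothesis is one-tailed in the negative direction, the sketch rejects H0 only when z falls below the (negative) critical value; with both values negative, this matches the absolute-value rule used in the text.

```r
# Sick leave example: summary statistics and hypothesized mean
xbar <- 54.8
mu0  <- 59.2
s    <- 15.38
n    <- 100

se <- s / sqrt(n)        # estimated standard error
z  <- (xbar - mu0) / se  # obtained z-score
cv <- qnorm(.05)         # one-tailed critical value (lower tail)

reject_h0 <- z < cv      # TRUE when z lies in the critical region
round(c(z = z, cv = cv), 2)
reject_h0
```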

We can be a bit more precise about the level of confidence in rejecting the null hypothesis (the level of significance) by estimating the alpha area to the left of z=-2.86:
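One way to obtain this area in R is with `pnorm()`, supplying the rounded z-score from the calculation above. The object name `p_one_tail` is an illustrative choice:

```r
# Area under the standard normal curve to the left of z = -2.86
p_one_tail <- pnorm(-2.86)
p_one_tail
```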

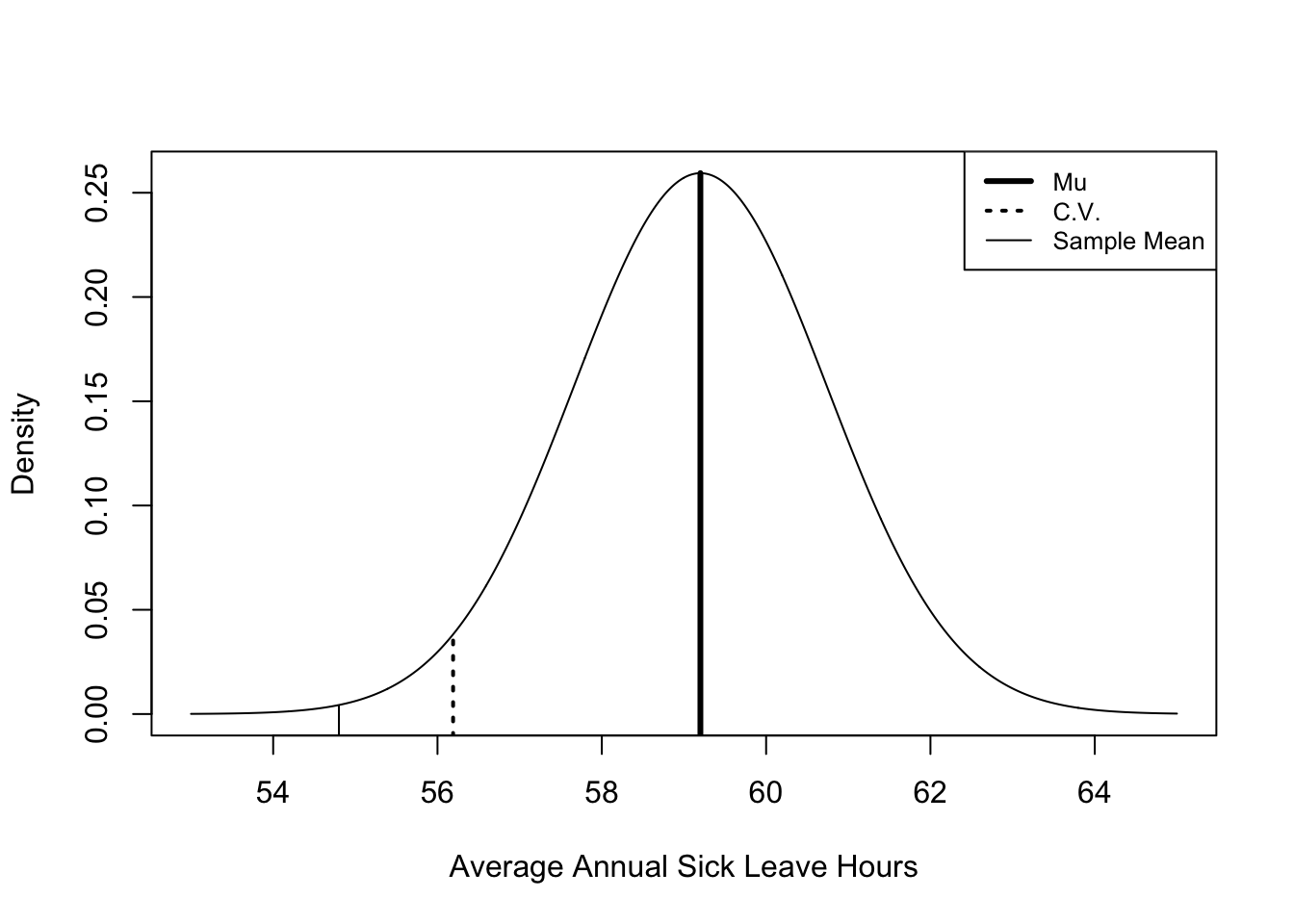

[1] 0.002118

This alpha area (or p-value) is close to zero, meaning that there is little chance that there was no change in sick leave usage. Check out Figure 9.2 as an illustration of how unlikely it is to get a sample mean of 54.8 (thin solid line) from a population in which \(\mu=59.2\) (thick solid line), based on our sample statistics. Remember, the area to the left of the critical value (dashed line) is the critical region, equal to .05 of the area under the curve in this case, and the sample mean is far to the left of this point.

One useful way to think about this p-value is that if we took 1000 samples of 100 workers from a population in which \(\mu=59.2\) and calculated the mean hours of sick leave taken for each sample, only about two samples would give you a result equal to or less than 54.8 simply due to sampling error. In other words, there is roughly a 2/1000 chance that the sample mean was the result of random variation instead of representing a real difference from the hypothesized value.
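This repeated-sampling idea can be explored with a small simulation. The sketch below rests on assumptions that are not in the original example: it treats the population as normally distributed, uses the sample standard deviation (15.38) as a stand-in for the unknown population value, and draws 20,000 samples rather than 1000 so that the estimated proportion is more stable. The seed and object names are arbitrary.

```r
set.seed(2020)  # arbitrary seed so the sketch is reproducible

reps <- 20000   # number of simulated samples (assumption: more than 1000 for stability)
n    <- 100     # workers per sample

# Each row is one sample of 100 workers from a population with mu = 59.2
samples      <- matrix(rnorm(reps * n, mean = 59.2, sd = 15.38), nrow = reps)
sample_means <- rowMeans(samples)

# Share of samples with a mean at or below the observed 54.8
prop_extreme <- mean(sample_means <= 54.8)
prop_extreme
```

The resulting proportion should land in the neighborhood of the p-value discussed above, though it will vary somewhat from one seed to another.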

Figure 9.2: An Illustration of Key Concepts in Hypothesis Testing

9.2.3 One-tail or Two?

Note that we were explicitly testing a one-tailed hypothesis in the example above. We were saying that we expect a reduction in the number of sick days due to the new policy. But suppose someone wanted to argue that there was a loophole in the new policy that might make it easier for people to take sick days. These sorts of unintended consequences almost always occur with new policies. Given that it could go either way (\(\mu\) could be higher or lower than 59.2), we might want to test a two-tailed hypothesis, that the new policy could create a difference in sick day use, maybe positive, maybe negative.

\(H_{1}:\mu \ne 59.2\)

The process for testing two-tailed hypotheses is exactly the same, except that we use a larger critical value because even though the \(\alpha\) area is the same (.05), we must now split it between two tails of the distribution. Again, this is because we are not sure if the policy will increase or decrease sick leave. When the alternative hypothesis does not specify a direction, we use the two-tailed test.

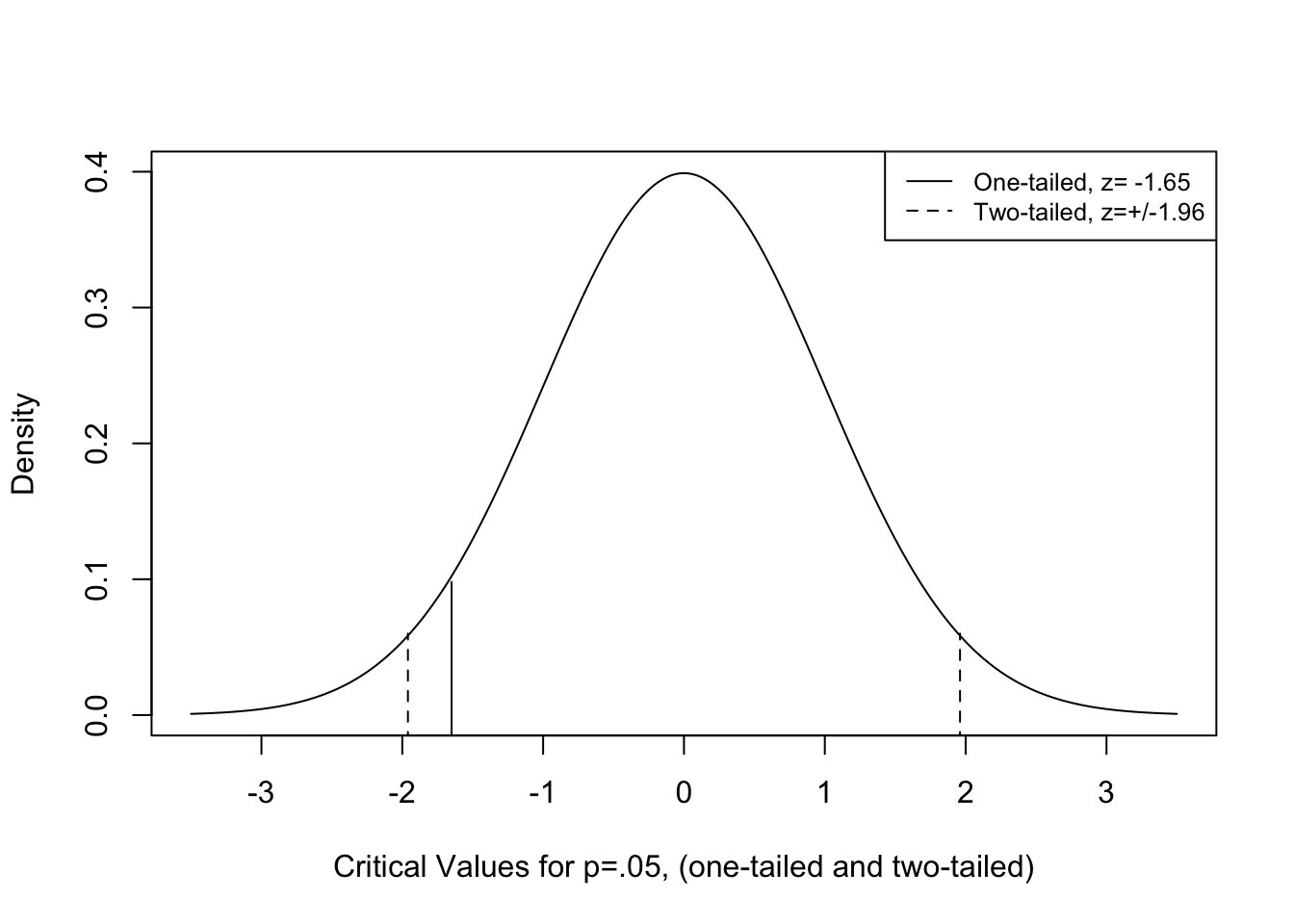

Figure 9.3: Critical Values for One and Two-tailed Tests

Figure 9.3 illustrates the difference in critical values for one- and two-tailed hypothesis tests. Since we are splitting .05 between the two tails, the c.v. for a two-tailed test is now the z-score that gives us .025 as the area beyond z at the tails of the distribution. Using the qnorm function in R (below), we see that this is z = -1.96 for the lower tail, so the critical value for the two-tailed test is \(\pm 1.96\).
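A call along these lines returns the lower-tail critical value for the two-tailed test; the upper-tail value is its mirror image. The object names are illustrative only.

```r
# Split alpha = .05 across both tails: .025 in each
cv_lower <- qnorm(.025)  # lower-tail critical value
cv_upper <- qnorm(.975)  # upper-tail critical value (same magnitude, opposite sign)
cv_lower
```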

[1] -1.96

If we obtain a z-score (positive or negative) that is larger in absolute magnitude than this, we reject H0. Using a two-tailed test requires a larger z-score, making it slightly harder to reject the null hypothesis. However, since the z-score in the sick leave example is -2.86, we would still reject H0 under a two-tailed test.

In truth, the choice between a one- or two-tailed test rarely makes a difference in rejecting or failing to reject the null hypothesis. The choice matters most when the p-value from a one-tailed test falls between .025 and .05, in which case the corresponding two-tailed p-value would be greater than .05. It is worth scrutinizing findings from one-tailed tests that are just barely statistically significant to see if a two-tailed test would be more appropriate. Because the two-tailed test provides a more conservative basis for rejecting the null hypothesis, researchers often choose to report two-tailed significance levels even when a one-tailed test could be justified. Many statistical programs, including R, report two-tailed p-values by default.

9.3 T-Distribution

Thus far, we have focused on using z-scores and the z-distribution for testing hypotheses and constructing confidence intervals. Another distribution available to us is the t-distribution. The t-distribution has an important advantage over the z-distribution: it does not assume that we know the population standard error. This is very important because we rarely know the population standard error. In other words, the t-distribution assumes that we are using an estimate of the standard error. The estimate of the standard error is:

\[\hat{\sigma}_{\bar{x}}=S_{\bar{x}}=\frac{S}{\sqrt{N}}\]

\(S_{\bar{x}}\) is our best guess for \(\sigma_{\bar{x}}\), but it is a sample statistic, so it does involve some level of error.

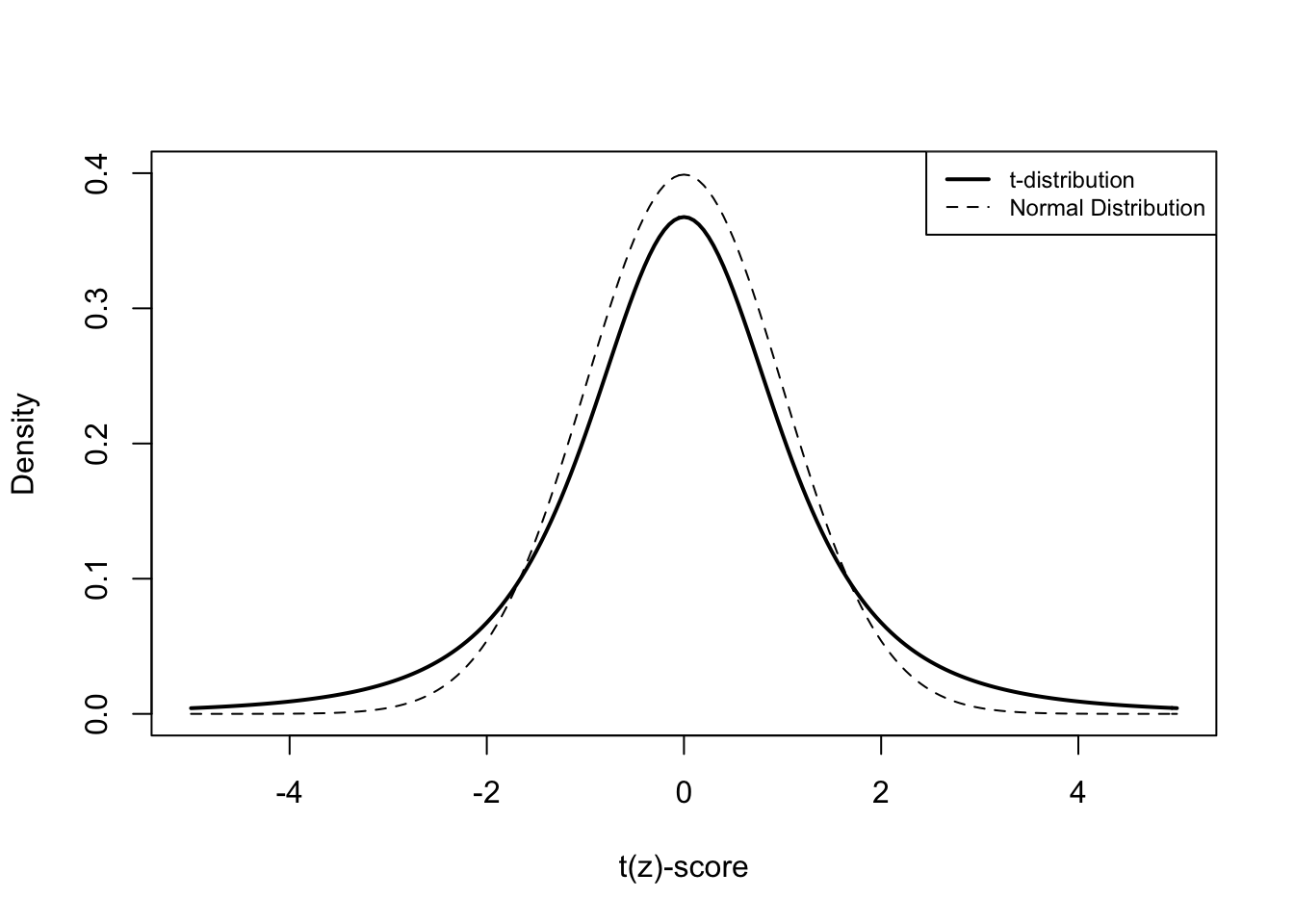

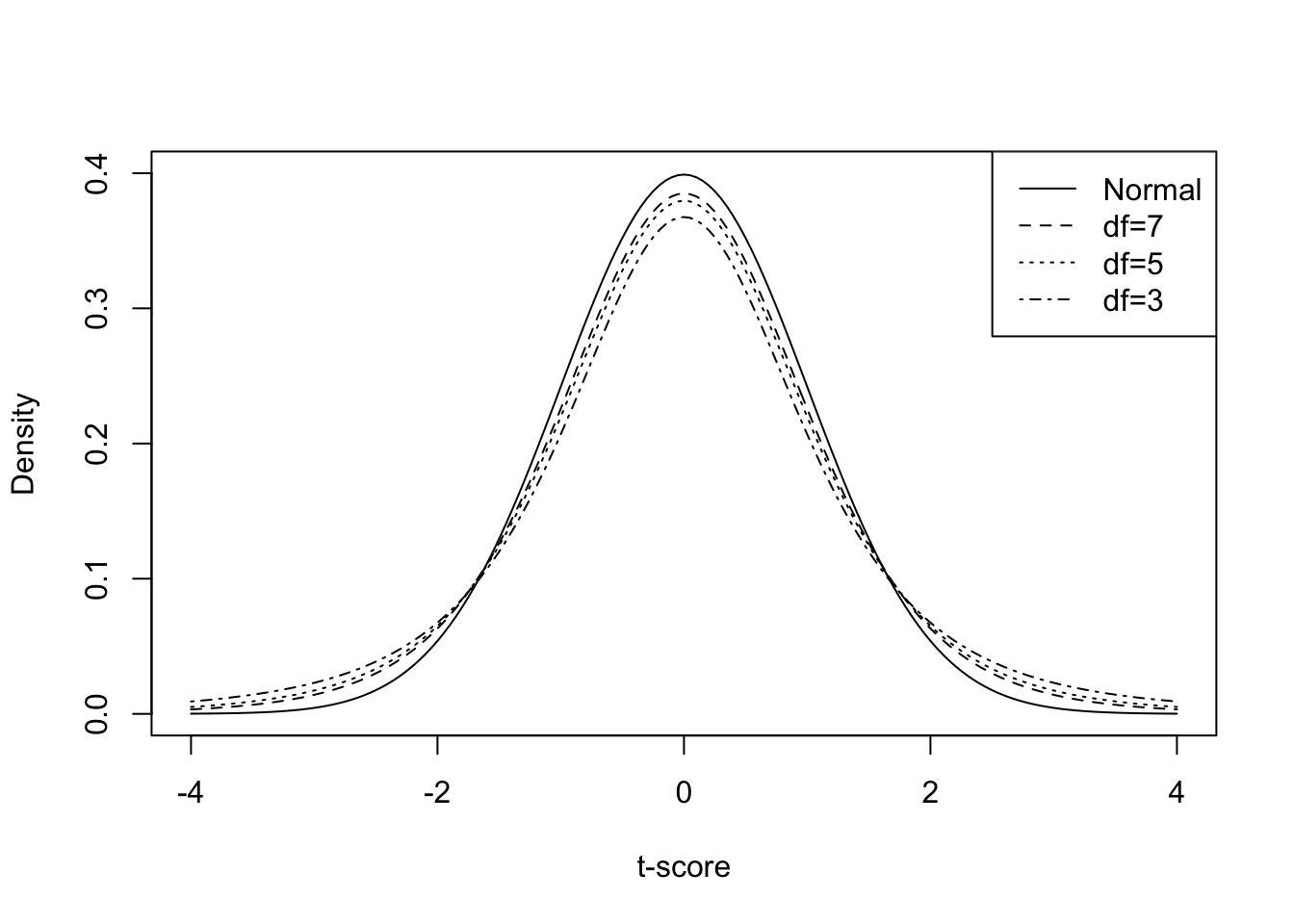

In recognition of the fact that we are estimating the standard error with sample data rather than the population, the t-distribution is somewhat flatter (see Figure 9.4 below) than the z-distribution. This means that the critical value for a given level of significance will be larger in magnitude for a t-score than for a z-score. This difference is especially noticeable for small samples and virtually disappears for samples greater than 100, at which point the t-distribution becomes almost indistinguishable from the z-distribution (see Figure 9.5 below). Comparing the two distributions, you can see that they are both perfectly symmetric but that the t-distribution is a bit more squat and has slightly fatter tails.

Figure 9.4: Comparison of Normal and t-Distributions

Now, here’s the fun part—the t-score is calculated the same way as the z-score. We do nothing different than what we did to calculate the z-score.

\[t=\frac{\bar{x}-\mu}{S_{\bar{x}}}\]

We use the t-score and the t-distribution in the same way and for the same purposes that we use the z-score.

1. Choose a p-value or level of significance (\(\alpha\)) for rejecting H0. (Usually .05)

2. Find the critical value of t associated with \(\alpha\) (depends on degrees of freedom).

3. Calculate the t-score from the sample data.

4. Compare the t-score to the c.v. If \(|t| > c.v.\), then reject H0; if \(|t| < c.v.\), then fail to reject.

While everything else looks about the same as the process for hypothesis testing with z-scores, determining the critical value for a t-distribution is somewhat different and depends upon sample size. This is because we have to consider something called degrees of freedom (df), essentially taking into account the issue discussed in Chapter 8, that sample data tend to slightly underestimate the variance and standard deviation and that this underestimation is a bigger problem with small samples. For testing hypotheses about a single mean, degrees of freedom equal:

\[df=n-1\]

So for the sick leave example used above:

\[df=99\]

In the figure below, you can see the impact of sample size (through degrees of freedom) on the shape of the t-distribution: as sample size and degrees of freedom increase, the t-distribution grows more and more similar to the normal distribution. At df=100 (not shown here) the t-distribution is virtually indistinguishable from the z-distribution.
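The same convergence can be seen numerically by comparing one-tailed critical values of t across several degrees of freedom with the corresponding z value. This is a sketch; the particular df values and object names are chosen here only for illustration.

```r
# One-tailed (.05) critical values of t for increasing degrees of freedom
df_values <- c(5, 10, 30, 100, 1000)
t_cv <- qt(.05, df = df_values)
z_cv <- qnorm(.05)

# The gap between t and z shrinks as df grows
data.frame(df         = df_values,
           t_cv       = round(t_cv, 3),
           gap_from_z = round(t_cv - z_cv, 3))
```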

Figure 9.5: Degrees of Freedom and Resemblance of t-distribution to the Normal Distribution

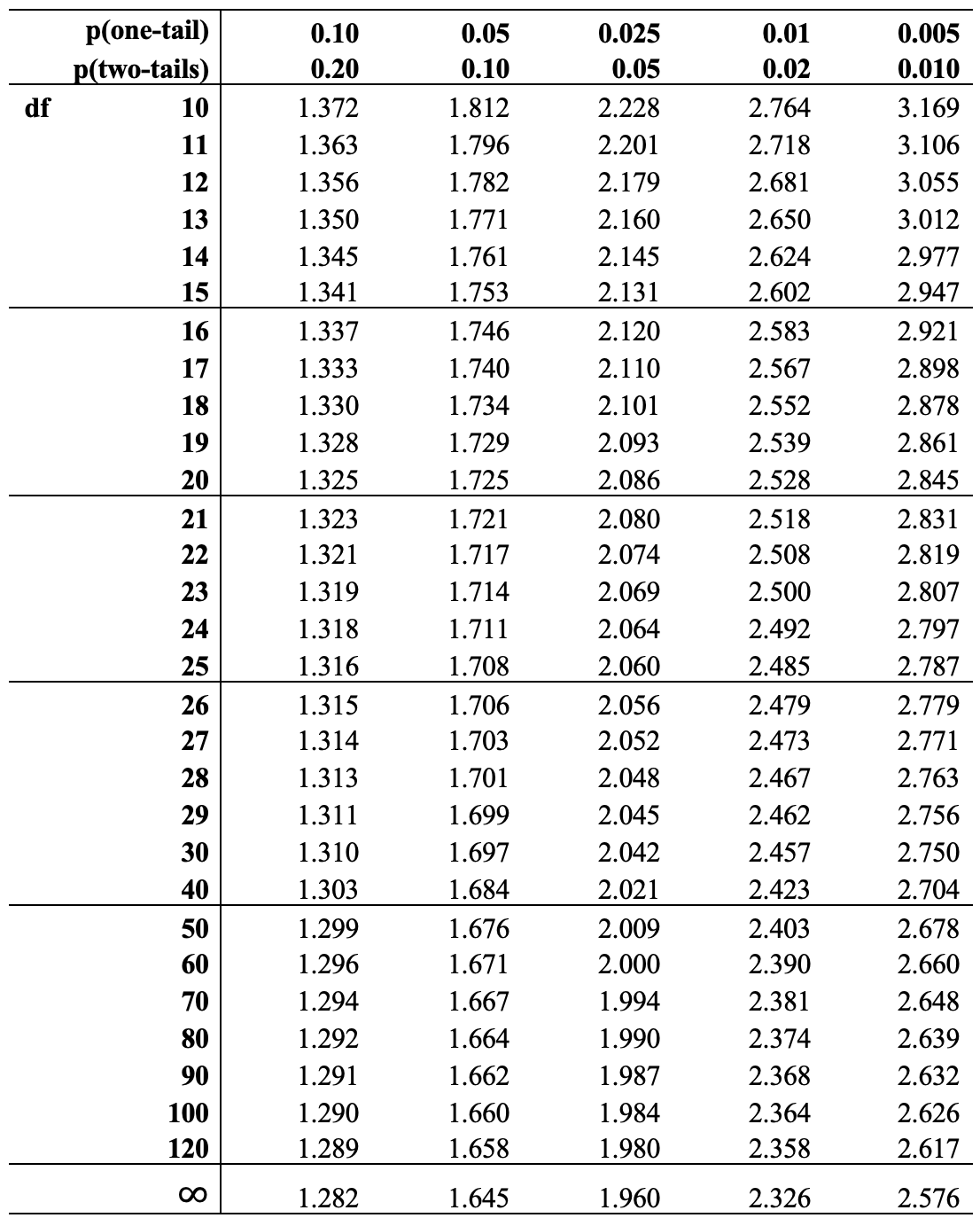

There are two different methods you can use to find the critical value of t for a given level of degrees of freedom. We can go “old school” and look it up in a t-distribution table (below),[23] or we can ask R to figure it out for us. It’s easier to rely on R for this, but there is some benefit to going old school at least once. In particular, it helps reinforce how degrees of freedom, significance levels, and critical values fit together. You should follow along.

The first step is to decide if you are using a one-tailed or two-tailed test, and then decide what your desired level of significance is. For instance, for the sick leave policy example, we can assume a one-tailed test with a .05 level of significance. The relevant column of the table is found by going across the first row (from the top) of significance levels to the column headed by .05. We then scan down the column until we find the point where it intersects with the appropriate degree of freedom row. In this example, df=99, but there is no listing of df=99 in the table so we will err on the side of caution and use the next lowest value, 90. The .05 one-tailed level of significance column intersects with the df=90 row at t=1.662, so -1.662 is the critical value of t in the sick leave example. Note that it is only slightly different than the c.v. for z we used in the sick leave calculations, -1.65. This is because our sample size is relatively large (in statistical terms) and the t-distribution closely approximates the z-distribution for large samples. So, in this case the z- and t-distributions lead to the same outcome: we decide to reject H0.

Alternatively, we could ask R to provide this information using the qt function. For this, you need to declare the desired p-value and specify the degrees of freedom, and R reports the critical value:
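A call of roughly this form does the job: the first argument is the lower-tail probability and `df` is the degrees of freedom. The object name `cv_t_one_tail` is illustrative.

```r
# One-tailed critical value of t for alpha = .05 and df = 99
cv_t_one_tail <- qt(.05, df = 99)
cv_t_one_tail
```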

[1] -1.66

By default, qt() provides the critical values for a specified alpha area at the lower tail of the distribution (hence, -1.66). For a two-tailed test, you need to cut the alpha area in half:
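For the two-tailed case, the call might look like this, with .025 in place of .05 (again, the object name is only illustrative):

```r
# Two-tailed test: alpha = .05 split into .025 per tail, df = 99
cv_t_two_tail <- qt(.025, df = 99)
cv_t_two_tail
```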

[1] -1.984

Here, R reports a critical value of \(\pm 1.984\) for a two-tailed test from a sample with df=99. Again, this is slightly larger than the critical value for a z-score (1.96). If you used the t-score table to do this the old-school way, you would find the critical value is t=1.99, for df=90. The results from using the qt function are more accurate than using the t-table since you are able to specify the correct degrees of freedom.

One-tailed or two-tailed, the conclusion for the sick leave example is unaffected: the t-score obtained from the sample is in the critical region, so we reject H0.

We can also get a bit more precise estimate of the probability of getting a sample mean of 54.8 from a population in which \(\mu\)=59.2 by asking R to tell us the area under the curve to the left of t=-2.86:
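The `pt()` function plays the same role for the t-distribution that `pnorm()` plays for the z-distribution. A sketch using the rounded t-score and df = 99 (the object name `p_t_one_tail` is illustrative):

```r
# Area under the t-distribution (df = 99) to the left of t = -2.86
p_t_one_tail <- pt(-2.86, df = 99)
p_t_one_tail

# Two-tailed p-value: double the one-tailed area
2 * p_t_one_tail
```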

[1] 0.002584

Note that this result is very similar to what we obtained when using the z-distribution (.002118). For a two-tailed test using the t-distribution, we double this to find a p-value equal to .005167.
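The steps for a one-sample t-test from summary statistics can be collected into a small helper function. This is a sketch, not a built-in R function: `t_test_summary()` and its argument names are hypothetical, and the one-tailed decision assumes the alternative hypothesis is in the negative direction, as in the sick leave example.

```r
# Hypothetical helper: one-sample t-test from summary statistics
t_test_summary <- function(xbar, mu0, s, n, alpha = .05) {
  se      <- s / sqrt(n)          # estimated standard error
  df      <- n - 1                # degrees of freedom
  t_score <- (xbar - mu0) / se    # obtained t-score
  cv      <- qt(alpha, df)        # lower-tail critical value
  list(t          = t_score,
       df         = df,
       cv         = cv,
       p_one_tail = pt(t_score, df),            # area to the left of t
       p_two_tail = 2 * pt(-abs(t_score), df),  # both tails
       reject_h0  = t_score < cv)               # one-tailed decision
}

result <- t_test_summary(xbar = 54.8, mu0 = 59.2, s = 15.38, n = 100)
result
```

Because the function keeps the unrounded t-score, its p-values will differ slightly from those based on t = -2.86.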

9.4 Proportions

As discussed in Chapter 8, the logic of hypothesis testing about mean values also applies to proportions. For example, in the sick leave example, instead of testing whether \(\mu=59.2\) we could test a hypothesis regarding the proportion of employees who take a certain number of sick days. Let’s suppose that in the year before the new policy went into effect, 50% of employees took at least 7 sick days. If the new policy has an impact, then the proportion of employees taking at least 7 days sick leave should be lower than .50. In the sample of 100 employees used above, the proportion of employees taking at least 7 sick days was .41. In this case, the null and alternative hypotheses are:

H0: P=.50

H1: P<.50

To review, in the previous example, to test the null hypothesis we established a desired level of statistical significance (.05), determined the critical value for the t-score (-1.66), and calculated the t-statistic. If |t| is greater than the |c.v.|, we can reject H0; if |t| is less than the |c.v.|, we fail to reject H0. There are a couple of differences, however, when working with this proportion.

In this case, because we can calculate the population standard deviation based on the hypothesized value of P (.5), we can use the z-distribution rather than the t-distribution. First, to calculate the z-score, we use the same formula as before:

\[z=\frac{p-P}{S_{p}}\] Where:

\[S_{p}=\sqrt{\frac{P(1-P)}{n}}\]

Using the data from the problem, this gives us:

\[z=\frac{p-P}{S_{p}}=\frac{.41-.5}{\sqrt{\frac{.5(.5)}{100}}}=\frac{-.09}{.05}=-1.8\]

We know from before that the critical value for a one-tailed test using the z-distribution is -1.65. Since this z-score is larger (in absolute terms) than the critical value, we can reject the null hypothesis and conclude that the proportion of employees using at least 7 days of sick leave per year is lower than it was in the year before the new sick leave policy went into effect.

Again, we can be a bit more specific about the p-value:
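The whole proportion test can be sketched in a few lines of R, using only the numbers given in the example. The object names (`p_hat`, `P0`, `se_p`, and so on) are illustrative choices.

```r
# Proportion example: sample proportion, hypothesized proportion, sample size
p_hat <- .41
P0    <- .50
n     <- 100

se_p <- sqrt(P0 * (1 - P0) / n)  # standard error under H0
z    <- (p_hat - P0) / se_p      # obtained z-score

p_two_tail <- 2 * pnorm(-abs(z)) # two-tailed p-value
p_one_tail <- pnorm(z)           # one-tailed (lower) p-value

p_two_tail
p_one_tail
```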

[1] 0.03593

Here are a couple of things to think about with this finding. First, while the p-value is lower than .05, it is not much lower. In this case, if you took 1000 samples of 100 workers from a population in which \(P=.50\) and calculated the proportion who took 7 or more sick days, approximately 36 of those samples would produce a proportion equal to .41 or lower, just due to sampling error. This still means that the probability of getting this sample finding from a population in which the null hypothesis were true is pretty small (.03593), so we should be comfortable rejecting the null hypothesis. But what if there were good reasons to use a two-tailed test? Would we still reject the null hypothesis? No, because the critical value (-1.96) would be larger in absolute terms than the z-score, and the p-value would be .07186. These findings stand in contrast to the findings from the analysis of the average number of sick leave hours taken, where the p-values for both one- and two-tailed tests were well below the .05 cut-off level.

One of the take-home messages from this example is that our confidence in findings is sometimes fragile, since “significance” can be a function of how you frame the hypothesis test (one- or two-tailed test?) or how you measure your outcomes (average hours of sick days taken, or proportion who take a certain number of sick days). For this reason, it is always a good idea to be mindful of how the choices you make might influence your findings.

9.5 T-test in R

Let’s say you are looking at data on public perceptions of the presidential candidates in 2020 and you have a sense that people had mixed feelings about the Democratic nominee, Joe Biden, going into the election. This leads you to conclude that his average rating was probably about 50 on the 0 to 100 feeling thermometer scale from the ANES. You decide to test this directly with the anes20 data set.

The null hypothesis is:

H0: \(\mu=50\)

Because there are good arguments for expecting the mean to be either higher or lower than 50, the alternative hypothesis is two-tailed:

H1: \(\mu\ne50\)

First, you get the sample mean:

[1] 53.41

Here, you see that the mean feeling thermometer rating for Biden in the fall of 2020 was 53.41. This is higher than what you thought it would be (50), but you know that it’s possible to get a sample outcome of 53.41 from a population in which the mean is actually 50, so you need to do a t-test to rule out sampling error as the reason for the difference.

In R, the command for a one-sample two-tailed t-test is relatively simple; you just have to specify the variable of interest and the value of \(\mu\) under the null hypothesis:
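A sketch of the commands involved, based on the variable named in the output (anes20$V202143). It assumes anes20.rda is in the working directory; if the file is not found, the sketch falls back on a simulated stand-in so that the code still runs. The stand-in values are hypothetical and are not the real ANES responses, so they will not reproduce the results reported here.

```r
# Assumes anes20.rda sits in the working directory (see section 9.1)
if (file.exists("anes20.rda")) {
  load("anes20.rda")
} else {
  # Hypothetical stand-in so the sketch runs without the file.
  # These are simulated ratings, NOT the real ANES responses.
  set.seed(1)
  anes20 <- data.frame(V202143 = sample(0:100, 500, replace = TRUE))
}

# Sample mean, ignoring missing responses
mean(anes20$V202143, na.rm = TRUE)

# One-sample t-test of H0: mu = 50 (two-tailed by default)
biden_test <- t.test(anes20$V202143, mu = 50)
biden_test
```

For a one-tailed test, `t.test()` also accepts `alternative = "less"` or `alternative = "greater"`.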

One Sample t-test

data: anes20$V202143

t = 8.2, df = 7368, p-value = 3e-16

alternative hypothesis: true mean is not equal to 50

95 percent confidence interval:

52.59 54.23

sample estimates:

mean of x

53.41

These results are pretty conclusive: the t-score is 8.2 and the p-value is very close to 0.[24] Also, if it makes more sense for you to think of this in terms of a confidence interval, the 95% confidence interval ranges from about 52.6 to 54.2, which does not include 50. We should reject the null hypothesis and conclude instead that Biden’s feeling thermometer rating in the fall of 2020 was greater than 50.

9.6 Next Steps

The last three chapters have given you a foundation in the principles and mechanics of sampling, statistical inference, and hypothesis testing. Everything you have learned thus far is interesting and important in its own right, but what is most exciting is that it prepares you for testing hypotheses about outcomes of a dependent variable across two or more categories of an independent variable. In other words, you now have the tools necessary to begin looking at relationships among variables. We take this up in the next chapter by looking at differences in outcomes across two groups. Following that, we test hypotheses about outcomes across multiple groups in Chapters 11 through 13. In each of the next several chapters, we continue to focus on methods of statistical inference, exploring alternative ways to evaluate statistical significance. At the same time, we also introduce the idea of evaluating the strength of relationships by focusing on measures of effect size. Both of these concepts, statistical significance and effect size, continue to play an important role in the remainder of the book.

9.7 Exercises

9.7.1 Concepts and Calculations

The survey of 300 college students introduced in the exercises in Chapter 8 found that the average semester expenditure was $350 with a standard deviation of $78. At the same time, campus administration has done an audit of required course materials and claims that the average cost of books and supplies for a single semester should be no more than $340. In other words, the administration is saying that the population value is $340.

State a null and alternative hypothesis to test the administration’s claim. Did you use a one- or two-tailed alternative hypothesis? Explain your choice.

Test the null hypothesis and discuss the findings. Show all calculations.

The same survey reports that among the 300 students, 55% reported being satisfied with the university’s response to the COVID-19 pandemic. The administration hailed this finding as evidence that a majority of students support the course they’ve taken in reaction to the pandemic. (Hint: this is a “proportion” problem.)

State a null and alternative hypothesis to test the administration’s claim. Did you use a one- or two-tailed alternative hypothesis? Explain your choice.

Test the null hypothesis and discuss the findings. Show all calculations.

9.7.2 R Problems

For this assignment, you should use the feeling thermometers for Donald Trump (anes20$V202144), liberals (anes20$V20261), and conservatives (anes20$V202164).

Using descriptive statistics and either a histogram, boxplot, or density plot, describe the central tendency and distribution of each feeling thermometer.

Use the t.test function to test the null hypotheses that the mean for each of these variables in the population is equal to 50. State the null and alternative hypotheses and interpret the findings from the t-test.

Taking these findings into account, along with the analysis of Joe Biden’s feeling thermometer at the end of the chapter, do you notice any apparent contradictions in American public opinion? Explain.

[23] The code for generating this table comes from Ben Bolker via stackoverflow (https://stackoverflow.com/questions/31637388/).

[24] Remember that 3e-16 is scientific notation and means that you should move the decimal point 16 places to the left of 3. This means that p=.0000000000000003.