| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| 303 | 42 | 172 | 270 | 369 | 1281 | 853 | 1344 | 1295 | 353 | 356 |

Seminar: Causal Analysis

An introductory seminar for political science students

Updated: Jul 17, 2024

Session 1

Introduction


When, where, requirements, contact, grading: See the syllabus

The document for posting questions can be found here.

The content of these slides combines…

- …the book project Applied Causal Analysis & Machine Learning (with R) (with Denis Cohen and Lion Behrens)

- …past lectures & seminars (Kreuter, Bach, Bauer etc.) (e.g., applied causal analysis seminar)

- …books, articles and material from various disciplines (see citations)

Sociology of research methodology (Where did you study?)

Session 2

Statistical foundations: Measurement, variables, data (distributions) and models

Today’s objectives

- Define research design and discuss its components

- Research questions, hypotheses, population & sampling method, conceptualization & measurement, observations & data

- Scheduling poll: https://forms.gle/Sdtj2fj9U1hzoev37


Research design: Definition

- Wikipedia (careful!) on research design (RD)

- A framework that has been created to find answers to research questions

- A set of methods and procedures used in collecting and analyzing measures of the variables specified in the research question

- Wrong RD → wrong answer to research question (RQ) → potentially drastic consequences (e.g., medicine)

- Bad RQs → bad RDs (e.g., RQ is too vague)

- Criteria for “good research design” change over years/decades

Replication crisis

- Replication crisis (Q: Retraction Watch?) (Open Science Collaboration 2015)

- Reasons: Research design errors, fraud and coding errors (e.g., Psychoticism)

- What a massive database of retracted papers reveals about science publishing’s ‘death penalty’

- Increasing calls for open science to foster replicability and reproducibility

- Terminology: Reproduction vs. replication

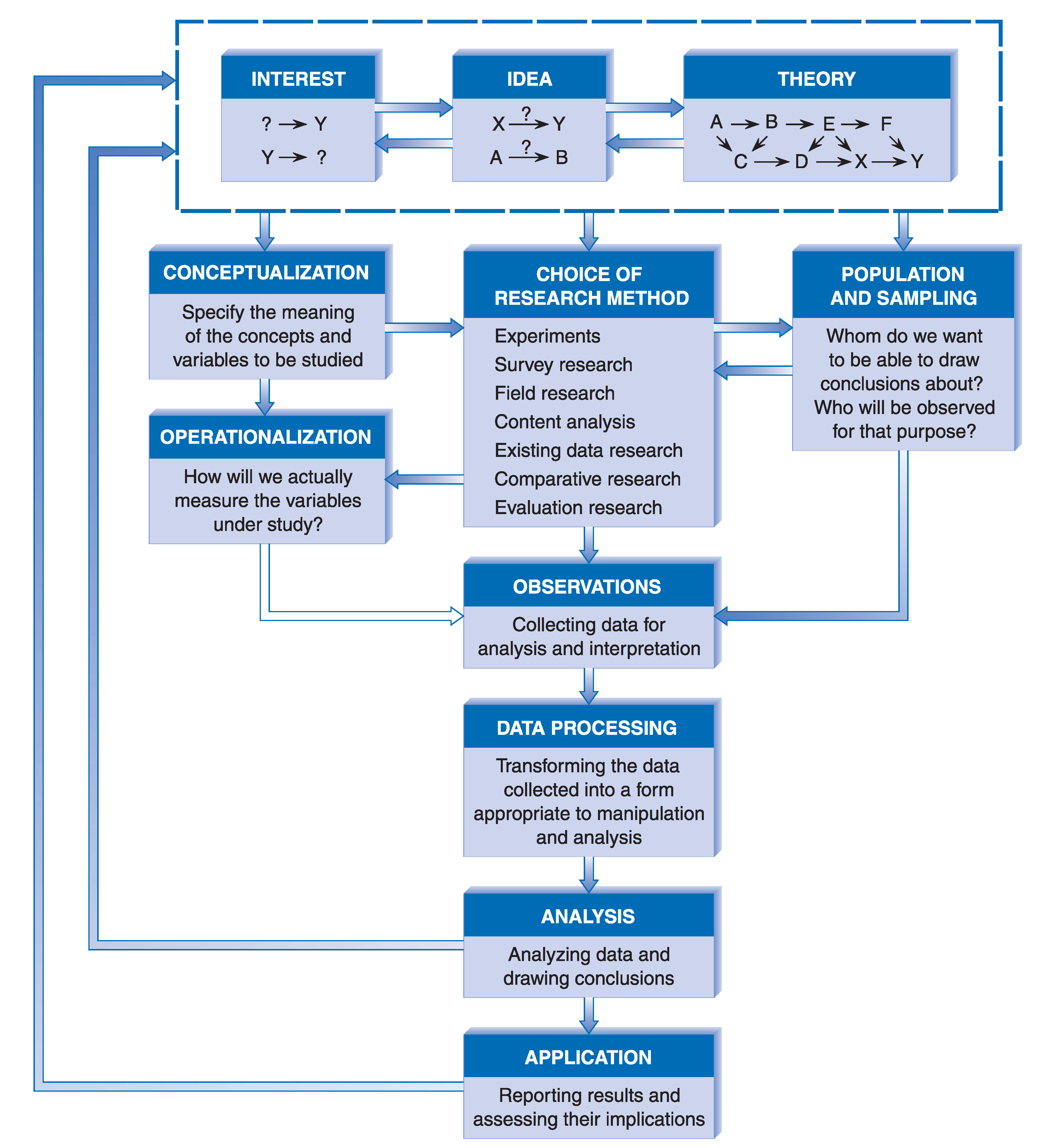

Research design: Components by Babbie (2015, 114)

Research design (RD): Steps

- Formulate a research question and hypotheses

- Specify target population (e.g., humans) and sampling method (e.g., random sample)

- Specify concepts (conceptualization) and their measures (operationalization)

- Choose a research method, e.g., a randomized experiment

- Collect data or use data that has been collected (observations)

- Analyze data (statistical modelling)

- Important: Steps may overlap and their order may change during the research process (see Babbie’s graph)

Research questions: Types

- Empirical analytical (positive) vs. normative

- Should men and women be paid equally? Are men and women paid equally (and why (not))?

- Q: Which one is empirical-analytical, which one normative? Can we derive hypotheses for normative questions?

- Y-based, X-based and y = f(x)-based (Plümper 2014, 22)

- Y-based: What causes differences in income (Y)?

- X-based: What are the consequences of differences in education (X), i.e., how does it impact other outcome variables?

- y = f(x)-based: Do differences in education (X) cause differences in income (Y)? (Gerring 2012, 646–48)

- What? vs. Why? (Gerring 2012, 722–23)

- Describing an aspect of the world (What?) vs. causal arguments holding that one or more phenomena generate change in some outcome, which implies a counterfactual (Why?)

- My personal preference: descriptive vs. causal vs. predictive questions

Research questions: Descriptive

Measure: ‘Would you say that most people can be trusted or that you can’t be too careful in dealing with people, if 0 means “Can’t be too careful” and 10 means “Most people can be trusted”?’

RQ: What is the average level of trust (Y)? How are individuals distributed? (univariate)

- We can add as many variables/dimensions as we like (e.g. gender, time) → multivariate

- Q: What would the table above look like when we add gender as a second dimension?

- Descriptive questions (multivariate)

- RQ: Do females have more trust than males? (multivariate)

- RQ: Did trust rise over time? (multivariate)
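A frequency table is enough to answer the univariate RQ above. The Python sketch below uses the trust2006 counts shown on the "Data: Univariate distribution(s)" slide (values 0 to 10, N = 6633). The helper names `weighted_mean` and `shares` are illustrative and do not come from any library.

```python
# Frequency counts of trust2006 (0 = "Can't be too careful", 10 = "Most people can be trusted"),
# taken from the univariate-distribution slide (N = 6633).
trust_counts = {0: 303, 1: 42, 2: 172, 3: 270, 4: 368, 5: 1281,
                6: 852, 7: 1342, 8: 1294, 9: 353, 10: 356}

def weighted_mean(counts):
    """Average value of a variable given a {value: frequency} table."""
    n = sum(counts.values())
    return sum(value * freq for value, freq in counts.items()) / n

def shares(counts):
    """Relative frequency of each value, i.e., the univariate distribution."""
    n = sum(counts.values())
    return {value: freq / n for value, freq in counts.items()}

n = sum(trust_counts.values())
mean_trust = weighted_mean(trust_counts)
print(f"N = {n}, mean trust = {mean_trust:.2f}")
for value, share in shares(trust_counts).items():
    print(f"trust = {value:2d}: {share:6.1%}")
```

With these counts the mean is about 6.13. `shares` returns the relative frequencies that a bar chart of the distribution would display.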

Research questions: Causal

| | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| no victim | 259 | 36 | 135 | 214 | 320 | 1142 | 782 | 1228 | 1193 | 326 | 331 |
| victim | 44 | 6 | 37 | 56 | 48 | 139 | 70 | 114 | 101 | 27 | 25 |

- Descriptive RQs: Do victims have a different/lower level of trust from/than non-victims?

- Mean Non-victims: 6.2; Mean Victims: 5.48

- Why? questions start with difference(s) and then seek to explain why those difference(s) occurred

- Why does this group of people have a higher level of trust?

- Causal questions: Is there a causal effect of victimization on trust? (We’ll define causal effect later)

- Insights

- The data underlying descriptive and causal questions are the same

- Causal questions link Y to one (or more) explanatory causes X (or D)
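The group means on this slide can be reproduced from the victimization × trust table. The Python sketch below uses illustrative variable names. The resulting difference in means is descriptive: it is not a causal effect unless further assumptions hold.

```python
# Trust (0-10) frequency counts by victimization status, from the table above.
# The list index is the trust value (0..10).
counts = {
    "no victim": [259, 36, 135, 214, 320, 1142, 782, 1228, 1193, 326, 331],
    "victim":    [44, 6, 37, 56, 48, 139, 70, 114, 101, 27, 25],
}

def mean_from_counts(freqs):
    """Mean trust for one group, computed from its frequency counts."""
    return sum(value * f for value, f in enumerate(freqs)) / sum(freqs)

means = {group: mean_from_counts(freqs) for group, freqs in counts.items()}
difference = means["victim"] - means["no victim"]

for group, m in means.items():
    print(f"{group:>9}: N = {sum(counts[group])}, mean trust = {m:.2f}")
print(f"Difference (victim - no victim): {difference:.2f}")
```

The output is a mean of about 6.20 for non-victims and 5.48 for victims, a difference of about -0.73. These match the means reported above.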

Research questions: Precision

- Q: Below you find different versions of the same research question. What is the most precise question and why is it precise?

Is there a causal effect of victimization on trust in a sample of Swiss citizens?

What is the impact of negative experiences on trust?

Is there a causal effect of victimization on generalized trust in a sample of Swiss people aged 18 to 98 in 2010?

What is the impact of victimization on generalized trust?

Research questions → hypotheses

Hypotheses = expectations we have for the answers to our research question (descriptive or causal)

RQ: Does smoking increase the probability of/cause cancer?

- Q: What hypotheses could we formulate?

- Hypotheses:

- Smoking has an/no effect on the probability of getting cancer!

- Smoking has a positive effect on (increases the probability of) getting cancer! (the higher X, the higher Y)

- Smoking has a negative effect on (decreases the probability of) getting cancer! (the higher X, the lower Y)

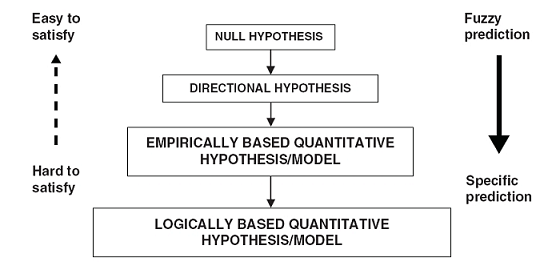

Hypotheses: Precision (1)

- Hypotheses are not created equal (Taagepera 2008, 71–81)

- Null hypothesis: Focus on “disproving that something is due to chance”

- Directional hypothesis: Hold that increasing X will increase or decrease Y (we are here…)

- Emp. based quant. hypothesis: Focuses on the shape of the function y = f(x) [ceteris paribus]

- Log. based quant. hypothesis: Formal theoretical model that makes a prediction, i.e., generates a hypothesis

Hypotheses: Precision (2)

- Q: Which of the hypotheses below is a null/directional/emp. based quant. hypothesis?

- Smoking increases the probability of getting cancer by 1% per 100 cigarettes

- Smoking has an effect on the probability of getting cancer

- Smoking increases the probability of getting cancer

- Most social science research studies use null or directional hypotheses.

Research design (RD): Steps

- Formulate a research question and hypotheses

- Specify target population (e.g., humans) and sampling method (e.g., random sample)

- Specify concepts (conceptualization) and their measures (operationalization)

- Choose a research method, e.g., a randomized experiment

- Collect data/sample or use data that has been collected (observations)

- Analyze data (statistical modelling)

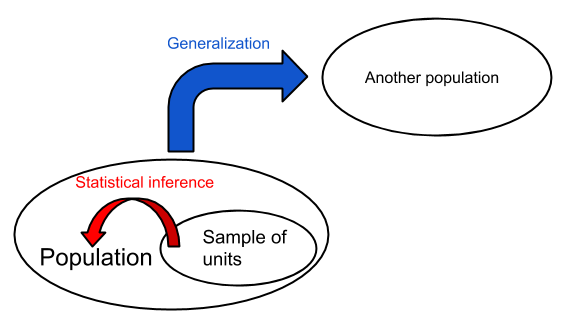

Population & sampling method (1)

- Q: What do the concepts internal/external validity describe?

- Target population = students at Freiburg University; sample = students in this classroom

- Internal validity (Abadie et al. 2020): Is estimate = true (causal) effect in the sample (= students in this classroom) [requires random assignment]

- External validity (Abadie et al. 2020): Is estimate = value in the population (= Freiburg University students) [requires random sampling]

- Generalizability: Can we generalize from the sample (= students in this classroom) to other populations (e.g., LMU Munich students)? To other times (e.g., Freiburg University students in 2027)? To other settings (e.g., cinema)? Or to a combination thereof?

Population & sampling method (2)

Sampling: Select subset of units from population to estimate characteristics of that population

Steps: Researcher (we)…

- …define (target) population.

- …create a sampling frame = list of population members to sample from

- Q: Can you imagine a situation where population \(\neq / =\) sampling frame?

- …choose sampling method & units that should be in the sample.

Q: Are the above steps necessary when we work with secondary data (e.g., ALLBUS)?

- No, but we should still evaluate representativeness (statistical inference).

Population & sampling method (3)

- Q: Imagine we are interested in estimating population averages/proportions. Below you find pairs of (target) population and sample: Are these good or bad samples? Why? Any bias?

- Income: Population: Freiburg university students; Sample: Students in this seminar

- Income: Population: Immigrants in Germany; Sample: Turkish immigrants

- Age: Population: WhatsApp users; Sample: Random sample of WhatsApp users

- Racist comments: Population: Tweets; Sample: Random sample of tweets provided by Twitter

- Q: What might be the problem with secondary data as opposed to data that you collect yourself?

Population & sampling method (4)

Population & sampling method (5)

Sampling techniques (Cochran 2007)

Simple random sampling: (1) Units in the population are numbered from 1 to N; (2) Series of random numbers between 1 and N is drawn; (3) Units which bear these numbers constitute the sample (ibid, 11-12) → Each unit has same probability of being chosen

Stratified random sampling: (1) Population divided into non-overlapping, exhaustive subpopulations (strata); (2) Simple random sample is taken in each stratum (ibid, 65f)

Quota sampling: Decide how many units are wanted from each stratum (e.g., age, gender, state) and continue sampling until the necessary “quota” has been obtained in each stratum (ibid, 105)

Snowball sampling: (1) Locate members of special population (e.g., drug addicts); (2) Ask them to name other members of population and repeat this step (Sudman and Kalton 1986, 413) → use snowballing to create sampling frame, then sample

- “Relaxed” version: Interview those named until sample size is reached

- Q: Does this create weaker or stronger bias? (ibid, 413)

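Simple random sampling and stratified random sampling, as described above, take only a few lines of Python. The population (1,000 numbered units in two hypothetical strata, "east" and "west") and the per-stratum sample sizes are made up for illustration. `random.Random.sample` draws without replacement.

```python
import random

# Hypothetical population: units numbered 1..N, each belonging to exactly one stratum.
N = 1000
population = [{"id": i, "stratum": "east" if i <= 300 else "west"} for i in range(1, N + 1)]

def simple_random_sample(units, n, rng):
    """Draw n distinct units; every unit has the same inclusion probability n/N."""
    return rng.sample(units, n)

def stratified_random_sample(units, n_per_stratum, rng):
    """Split units into non-overlapping strata, then draw a simple random sample in each."""
    strata = {}
    for unit in units:
        strata.setdefault(unit["stratum"], []).append(unit)
    sample = []
    for name, members in strata.items():
        sample.extend(rng.sample(members, n_per_stratum[name]))
    return sample

rng = random.Random(42)  # fixed seed so the draws are reproducible
srs = simple_random_sample(population, 100, rng)
strat = stratified_random_sample(population, {"east": 30, "west": 70}, rng)
print(len(srs), len(strat))
```

Drawing 30 units from the stratum of 300 and 70 from the stratum of 700 gives every unit an inclusion probability of 0.1 in both strata (proportional allocation). Other allocations would require weighting when estimating population quantities.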

Research design (RD): Steps

- Formulate a research question and hypotheses

- Specify target population (e.g., humans) and sampling method (e.g., random sample)

- Specify concepts (conceptualization) and their measures (operationalization)

- Choose a research method, e.g., a randomized experiment

- Collect data or use data that has been collected (observations)

- Analyze data (statistical modelling)

Measurement & variables (1)

“the most important thing in statistics that’s not in the textbooks” (Gelman, April, 2015)

Theories (and the hypotheses they imply) (Moore and Siegel 2013, 3–4)

- Concern relationships among abstract concepts

- Variables are the indicators we use to measure our concepts

A [theoretical] variable has different theoretical “levels” or “values” (Jaccard and Jacoby 2019, 13)

- e.g., gender can be conceptualized as a variable that has two values (or more)

Empirical value of a variable for a given unit \(u\) (\(u_{i}\)): the number assigned by some measurement process to \(u\) (Holland 1986, 954), e.g., male (0) or female (1)

Random variables: “If we have beliefs (i.e., probabilities) attached to the possible values that a variable may attain, we will call that variable a random variable.” (Pearl 2009, 8)

Measurement & variables (2): Quantifying the world

- Albert Einstein: “the whole of science is nothing more than an extension of everyday thinking” (Jaccard and Jacoby 2019, Ch. 2)

- Concepts (gender, education, race etc.) are the foundation stones of thinking in daily life & science

- Quantification = assignment of variable values to real-world objects

| id | gender | age | degree | subject |
|---|---|---|---|---|
| John | M | 25 | bachelor | sociology |
| Petra | F | 30 | master | physics |
| Hans | M | 29 | master | biology |

- Social scientists identify and classify people, countries, etc. according to various concepts

Measurement & variables (3)

| names | id | gender | income | education | happiness | age |
|---|---|---|---|---|---|---|
| Hans | 1 | male | 1000 | 0 | 5 | 30 |
| Peter | 2 | male | 5000 | 3 | 10 | 30 |
| Julia | 3 | female | 500 | 1 | 3 | 30 |
| Andrea | 4 | female | 1600 | 3 | 7 | 30 |
| Feli | 5 | female | 1600 | 3 | 7 | 30 |

Columns = variables

Rows = observations (often observations = units but not always)

Q: Which type of dataset has more observations (rows) than units? (Tip: Pa…)

Q: What are the theoretical and observed (empirical) values of happiness and age?

Q: Which are constants and which are variables in the above data frame? What is the difference?

- Idea of constant relevant later on (holding things constant!)
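One way to find the constants in a dataset is to count the distinct observed values in each column. The Python sketch below uses the five rows of the table above; `classify_columns` is a hypothetical helper. A column that is constant in this sample (only one observed value) may still be a variable in the population.

```python
# Data frame from the slide: rows = observations, columns = variables.
rows = [
    {"names": "Hans",   "id": 1, "gender": "male",   "income": 1000, "education": 0, "happiness": 5,  "age": 30},
    {"names": "Peter",  "id": 2, "gender": "male",   "income": 5000, "education": 3, "happiness": 10, "age": 30},
    {"names": "Julia",  "id": 3, "gender": "female", "income": 500,  "education": 1, "happiness": 3,  "age": 30},
    {"names": "Andrea", "id": 4, "gender": "female", "income": 1600, "education": 3, "happiness": 7,  "age": 30},
    {"names": "Feli",   "id": 5, "gender": "female", "income": 1600, "education": 3, "happiness": 7,  "age": 30},
]

def classify_columns(data):
    """Label a column 'constant' if it takes one observed value across all rows, else 'variable'."""
    columns = data[0].keys()
    return {col: ("constant" if len({row[col] for row in data}) == 1 else "variable")
            for col in columns}

for col, kind in classify_columns(rows).items():
    print(f"{col:>9}: {kind}")
```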

Research design (RD): Steps

- Formulate a research question and hypotheses

- Specify target population (e.g., humans) and sampling method (e.g., random sample)

- Specify concepts (conceptualization) and their measures (operationalization)

- Choose a research method, e.g., a randomized experiment (next weeks!)

- Collect data or use data that has been collected (observations)

- Analyze data (statistical modelling) (next weeks!)

Data collection (1): Measurement error

- Q: What is measurement (or observational) error? Can you give an example?

- Difference between a measured value of a variable and its true value

- e.g., difference between Peter’s measured and his real income

- Q: What is random and systematic measurement error? Can you give an example?

Systematic error:

- If men systematically report incomes above their real incomes

- If victims systematically under-report their victimization (Me Too movement!)

Random error: In repeated measures, a scale randomly deviates from your true weight

There is some conceptual confusion around terms such as reliability and repeatability (Bartlett and Frost 2008) (Q: Validity? Reliability?)
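A small simulation shows the difference between random and systematic measurement error. All numbers are hypothetical: the true incomes, the noise level, and the 10% over-reporting bias. The point is only this: mean-zero random error leaves the average roughly unchanged, whereas systematic error shifts it.

```python
import random
import statistics

rng = random.Random(1)  # fixed seed for reproducibility

# Hypothetical true incomes for 10,000 people (illustrative numbers only).
true_income = [rng.gauss(3000, 800) for _ in range(10_000)]

# Random error: each measurement deviates from the true value by mean-zero noise.
random_error = [y + rng.gauss(0, 300) for y in true_income]

# Systematic error: everyone over-reports by 10% (hypothetical bias).
systematic_error = [y * 1.10 for y in true_income]

true_mean = statistics.fmean(true_income)
print(f"true mean:             {true_mean:8.1f}")
print(f"with random error:     {statistics.fmean(random_error):8.1f}")
print(f"with systematic error: {statistics.fmean(systematic_error):8.1f}")
```

Random error still adds noise to each individual value, which matters for other quantities (e.g., it can attenuate correlations). It just does not move the average in large samples.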

Data collection (2): Meas. error example


- Q: Which colors does this dress have?

- Please give an answer: https://www.menti.com/msg5qk5bb4

- After the vote: What do you think will be the result?

Data collection (3): Meas. error example

- Q: What can we learn from this example? (intersubjective agreement)

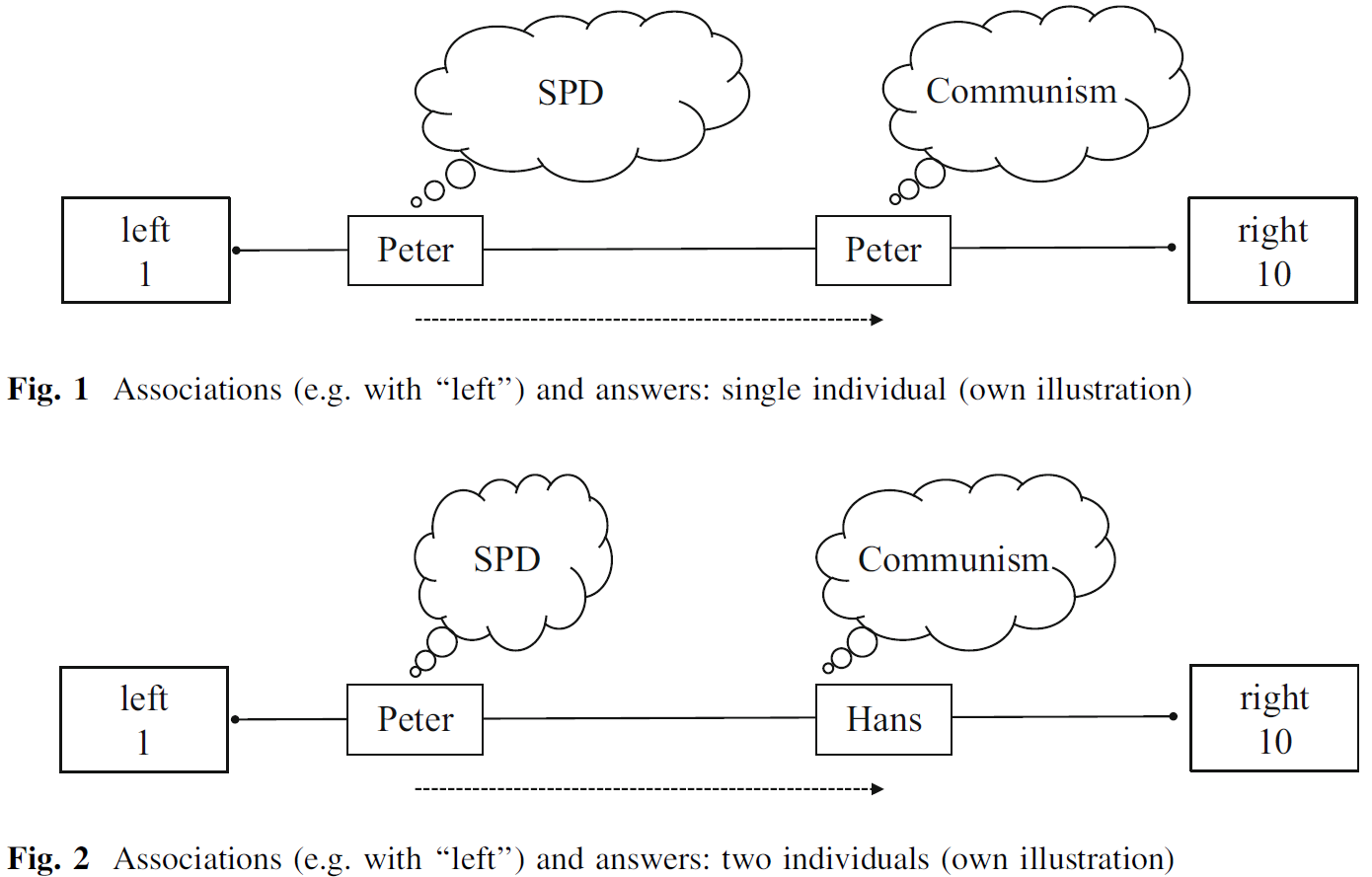

Data collection (4): Meas. equivalence

- Concept: Left-right ideology; Survey measure: “In politics people sometimes talk of ‘left’ and ‘right’. Using this card, where would you place yourself on this scale, where 0 means the left and 10 means the right?” (ESS 2012)

- Q: What does the graph below illustrate? (Bauer et al. 2017, 558)

- Measurement inequivalence: When a measure provides different values for units with the same underlying true value, e.g., because of differential interpretation

Data (1)

After deciding on the research question, population, sample, concepts, and measures, we finally choose a research method and collect data

The lecture focuses on causal inference using experimental/observational data, so let’s quickly recap what data is!

Data: Units’ observed values on different variables (observations)

- Time is just another variable

Variables: Dimensions of the data space

Empirical observations are distributed across those dimensions, i.e., across (theoretical) values of those variables

Data (2)

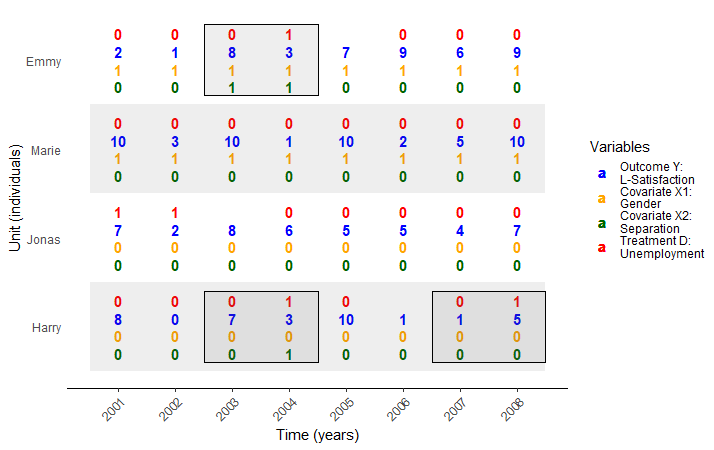

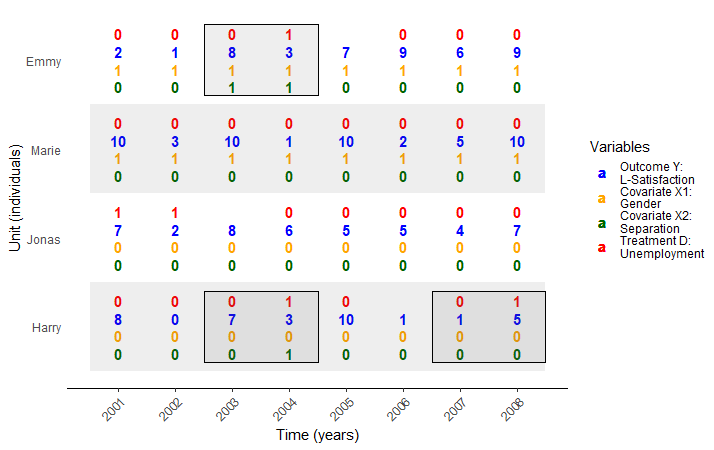

- Data example: ‘Negative Experiences and Trust: A Causal Analysis of the Effects of Victimization on Generalized Trust’ (Bauer 2015)

- What is the causal effect of being victimized (= threatened) on trust?

- Population: People living in Switzerland

- Units: Individuals

- Data:

- Sample: 6633 Individuals (Switzerland!) (Sampling method)

- Variables: Victimization/Threat (0,1); Education (0-10) → Trust (0-10); Age (0-94)

- Time: data from 2006 (here)

- Q: How do we normally show/look at data?

Data: Table format

| Name | trust2006 | threat2006 | education2006 |
|---|---|---|---|
| Aseela | 4 | 0 | 8 |
| Dominic | 5 | 1 | 1 |
| Elshaday | 0 | 0 | 0 |
| Daniel | 5 | 0 | 9 |
| Sulaimaan | 7 | 0 | 4 |
| Peyton | 5 | 0 | 1 |
| Mudrik | 2 | 0 | 4 |
| Alexander | 7 | 0 | 5 |
| .. | .. | .. | .. |

- Q: How many rows should this table have if N = 6633? How many dimensions?

- Aseela[4, 0, 8]: Position of Aseela in the multi-dimensional space

Data: Univariate distribution(s)

trust2006:

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| 303 | 42 | 172 | 270 | 368 | 1281 | 852 | 1342 | 1294 | 353 | 356 |

threat2006:

| 0 | 1 |
|---|---|
| 5966 | 667 |

education2006:

| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| 380 | 806 | 194 | 89 | 2182 | 324 | 687 | 474 | 195 | 425 | 877 |

- Q: Where are most individuals located on the trust2006 and threat2006 variables?

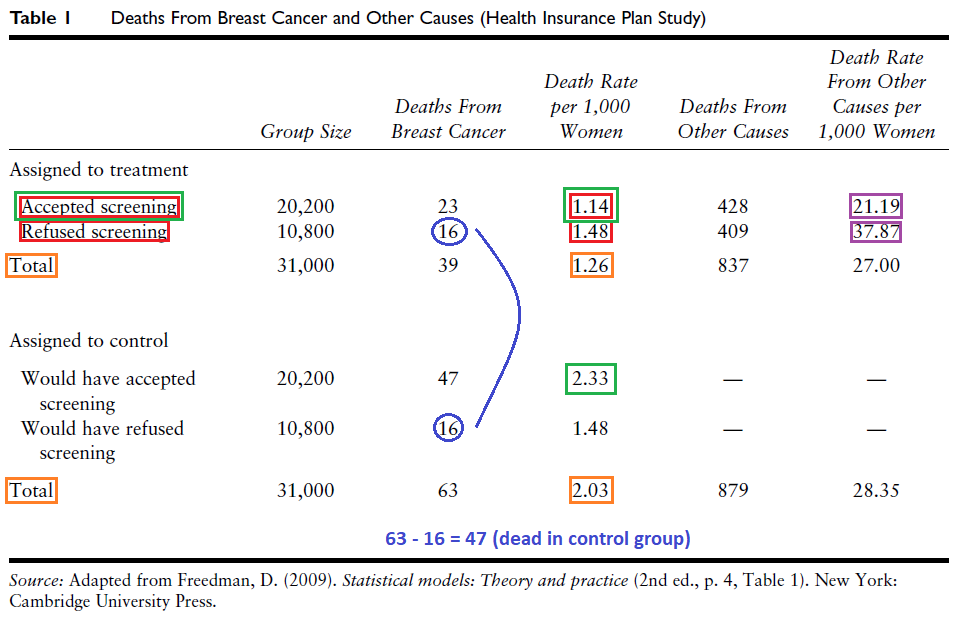

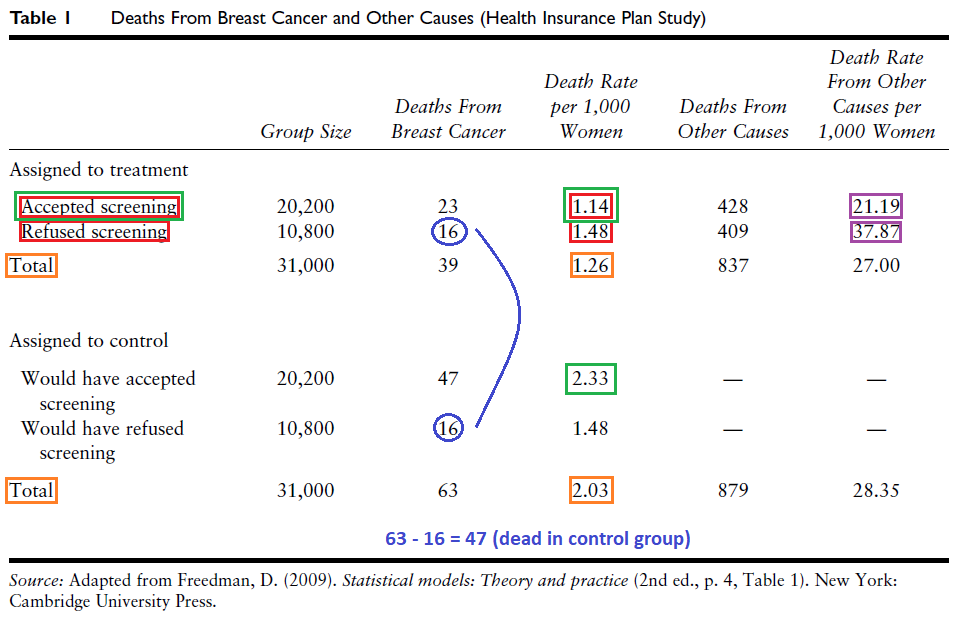

Data: Joint distribution(s) [1]

Measures on several variables → multivariate joint distribution

3 variables: Victimization/Threat (0,1); Education (0-10) → Trust (0-10)

Q: How many dimensions? How many theoretical value combinations?

Data: Joint distribution(s) [2]

- Units are grouped on three variables: Trust (Y), Threat (D) and Education (X)

- Q: What would the corresponding dataset/dataframe look like?

- Q: What would a joint distribution with 4 variables look like?

- Q: What is a conditional distribution?

- Q: What does the joint distribution of two perfectly correlated variables look like?

- Important: Often we can only make causal claims for a subset of our data (e.g., education = 4)

Data: One more joint distribution

Associational vs. causal inference

The joint distribution of the variables used in the design/analysis is the basis for any quantitative analysis (Holland 1986, 948)

Associational inference (descriptive questions, what?):

- Summarize joint distribution with statistical model (e.g., regression model)

- Does not tell us anything about causality, e.g., a coefficient only summarizes an association that is compatible with either direction (Trust ↔ Threat)

Causal inference (causal questions, why?):

- Summarize joint distribution with statistical model (e.g., regression model)

- Add assumptions

- Give causal interpretation to coefficients!

Research design (RD): Steps with examples

- Formulate a research question and hypotheses

- RQ: Is there a causal effect of victimization on trust (Bauer 2015)?

- Hypothesis: Yes, there is a negative effect (victimization lowers trust)!

- Specify target population (e.g., humans) and sampling method (e.g., random sample)

- Target population: Swiss population; Sampling method: Random sample of households

- Specify concepts (conceptualization) and their measures (operationalization)

- Define victimization & trust and choose survey questions

- Choose a research method, e.g., a randomized experiment (next weeks!)

- Use survey with repeated observations (panel data)

- Collect data or use data that has been collected (observations)

- Take data from the Swiss Household Panel (SHP)

- Analyze data (statistical modelling)

- Use matching + difference-in-differences

Quiz

- Please do the quiz under the link provided in the seminar.

Session 3 & 4

Causal analysis: Concepts and definitions

The causal inference ‘revolution(s)’

- Revolution of identification (Keele 2015)

- Revolution of potential outcomes (Rubin 1974)

- Consequences

- New style of writing/conducting data analysis

- New rationales for evaluating research (Causal empiricism)

- Has impacted research questions (search for natural experiments…)

- Exciting ongoing debates (e.g., on RCTs in development economics, Banerjee, Duflo, Deaton)

Causality everywhere!

- Many research questions (at least implicitly) aim to identify a causal effect

- Is there a causal effect of schooling on earnings/electoral participation?

- What is the effect of obtaining a master’s degree (compared to a bachelor’s degree) on lifetime earnings/vote choice?

- Should high school last eight or nine years?

- Does contact with migrants reduce xenophobic attitudes?

- Are gender-mixed teams more productive?

- Does survey question difficulty affect the quality/accuracy of responses?

- Does minimum wage affect employment?

- Are unemployed people less happy?

- Do unemployment benefits affect the health of recipients?

Causality & causes

- Some examples of causal statements

- My headache went away because I took an aspirin.

- Sarah earns a lot of money because she went to college.

- Tom found a job because he participated in a job training program.

- Revenues went up because firm X hired more people.

- Tomatoes are large this year because the summer was hot.

- Causality tied to

- an action

- taking an aspirin / going to college / job training program / hiring new people / a lot of sun (“conceptually”)

- applied to a unit

- me / Sarah / Tom / firm / tomatoes


Cause = action

- Causality tied to action applied to unit at particular point in time (Imbens and Rubin 2015, 4)

- An action (in our case binary)

- Action / No action

- Action A / Action B

- Often called treatment / control

- Easily extendable to multiple actions

- Action A / Action B / Action C

- Action A / Action B / Action C / No action

- Unit: Pretty much anything… a person, a group, any physical object

- Time: The same unit at a different time is a different unit (Imbens and Rubin 2015)

- Better: different observation of the same unit

Potential outcomes & causal effect

Given a unit (an individual/you!) and a set of actions (take aspirin or not), we associate each action-individual pair with a potential outcome

Example (Peter has a headache): Aspirin (0 = no/1 = yes) → headache (0 = no/1 = yes)

- Potential outcome 1: Peter’s headache when taking aspirin

- Potential outcome 2: Peter’s headache when not taking aspirin

Definition individual-level causal effect

Difference in potential outcomes, same individual, same moment in time post-treatment (Imbens and Rubin 2015)

Causal effect = treatment effect

Individual causal/treatment effect

- Treatment variable \(D\): Aspirin

- \(D_{i}\): Value of \(D\) for individual \(i\) (1 = aspirin = treatment, 0 = no aspirin = control)

- Outcome variable \(Y\): Headache

- \(Y_{i}(\color{red}{1})\), \(Y_{i}(\color{blue}{0})\): Potential outcomes for individual \(i\)

- e.g., \(Y_{i}(\color{red}{1})\): Headache Peter would have when taking an aspirin

- \(Y_{i}\): Realized outcome → Headache we observe


- Individual-level Treatment Effect (ITE)

\(ITE = Y_{i}(\color{red}{1}) - Y_{i}(\color{blue}{0})\) (the time index \(t\) is often omitted)

\(ITE_{Peter} = \text{Headache}_{Peter}(\color{red}{\text{Aspirin}}) - \text{Headache}_{Peter}(\color{blue}{\text{No aspirin}})\)

Fundamental problem of causal inference (Holland 1986)

- Only one potential outcome will ever be realized and observed!

- Unobserved potential outcome also called counterfactual outcome

- Let’s assume Peter takes an aspirin \(D_{Peter} = 1\)

- We observe \(Y_{Peter}(\color{red}{1})\) but not \(Y_{Peter}(\color{blue}{0})\)

- Then we have the following data:

| \(\quad i\quad\) | \(\quad D_i\quad\) | \(\quad Y_{i}\quad\) | \(\quad Y_{i}(\color{red}{1})\quad\) | \(Y_{i}(\color{blue}{0})\) |
|---|---|---|---|---|
| Peter | 1 | 0 | 0 | ? |

- But we cannot calculate \(ITE_{Peter} = Y_{Peter}(\color{red}{1}) - Y_{Peter}(\color{blue}{0}) = 0 - ?\)
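The fundamental problem can be written down as a missing-data problem. The Python sketch below stores Peter's row from the table above, with `None` standing in for the unobserved \(Y_{Peter}(0)\). The realized outcome follows the standard switching equation \(Y_{i} = D_{i}Y_{i}(1) + (1 - D_{i})Y_{i}(0)\), which these slides do not state explicitly. The helper names are illustrative.

```python
from typing import Optional

# Peter's potential outcomes (headache: 1 = yes, 0 = no); None marks the unobserved one.
peter = {"D": 1, "Y1": 0, "Y0": None}

def observed_outcome(unit):
    """Realized outcome: Y = D * Y(1) + (1 - D) * Y(0); only the assigned arm is observed."""
    return unit["Y1"] if unit["D"] == 1 else unit["Y0"]

def ite(unit) -> Optional[int]:
    """ITE = Y(1) - Y(0); undefined (None) as soon as one potential outcome is missing."""
    if unit["Y1"] is None or unit["Y0"] is None:
        return None
    return unit["Y1"] - unit["Y0"]

print(observed_outcome(peter))
print(ite(peter))
```

The observed outcome is 0, but `ite(peter)` returns `None`. Any number we put in its place has to come from a comparison (another unit, or Peter at another time) plus assumptions.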

Solution?

Missing data problem: We don’t observe the missing potential outcome

- here \(Y_{i}(\color{blue}{0})\), potential outcome under control is missing

| \(\quad i\quad\) | \(\quad D_i\quad\) | \(\quad Y_{i}\quad\) | \(\quad Y_{i}(\color{red}{1})\quad\) | \(Y_{i}(\color{blue}{0})\) |
|---|---|---|---|---|
| Peter | 1 | 0 | 0 | ? |

- Q: What is the solution? What could we fill in for missing value? Compare Peter with…?

- Q: What kind of person would you choose as a comparison for Peter?

Estimation of causal effects

- Missing data problem:

- Calculating treatment effect requires filling in missing counterfactual outcome

- Two solutions

- Either compare Peter to someone else

- e.g., Peter’s headache with Hans’s who did not take an aspirin (“social twin”)

- Between-individual comparative strategy (requires multiple units)

- Or compare Peter to himself

- e.g., Peter’s headache with his headache before taking the aspirin

- Within-individual comparative strategy


- In making such comparisons we rely on assumptions, e.g., “unit homogeneity” (Holland 1986, 948)

Assumptions: Scientific solution

- unit homogeneity (Holland 1986, 948): Compare two different units and assume they are the same (e.g., 2 samples of substance in lab)

- temporal stability (a) and causal transience (b) (Holland 1986, 948): Measure the causal effect by exposing unit \(i\) first to control and then to treatment, measuring \(Y\) after each exposure

- (a) Temporal stability: the outcome value for unit \(i\) under control does not depend on when the sequence ‘apply control to unit \(i\), then measure \(Y\) on \(i\)’ occurs

- i.e., Peter’s value under control would be the same regardless of when Peter is assigned to the control condition

- (b) Causal transience: the outcome value of unit \(i\) under treatment is not affected by the prior exposure of \(i\) to the sequence in (a)

- i.e., Peter’s value under treatment is not affected by the earlier control condition and measurement of \(Y\)

- Scientific solution (exploit assumptions above) vs. statistical solution (average causal effect = expected value of the differences) (Holland 1986, 947)

Quick summary

Definition of causal effect does not require more than one individual (Imbens and Rubin 2015, 8)

- Difference in potential outcomes, same individual, same moment in time post-treatment

- \(ITE_{Peter} = \text{Headache}_{Peter}(\color{red}{\text{Aspirin}}) - \text{Headache}_{Peter}(\color{blue}{\text{No aspirin}})\)

BUT only one potential outcome realized (and observable)

Estimation: Pursue between-individual comparisons or within-individual comparisons

Individuals = units = can be anything (school classes, firms, governments etc.)

Q: How many potential outcomes do we have for a treatment variable Aspirin (no/yes), Education (primary school/high school/university) and Motivation (lowest/low/high/highest)?

Exercise: Vague actions & potential outcomes

- Sometimes, it is difficult to clearly define actions and potential outcomes

- Q: What is the action, what are the potential outcomes?

- My headache went away because I took an aspirin.

- Sarah earns a lot of money because she went to college.

- Tom found a job because he participated in a job training program.

- Firm X’s revenues went up because it hired more people.

- Tomatoes are large this year because the summer was hot.

- …

- Tom was promoted because he is a man.

- The more precise the better…

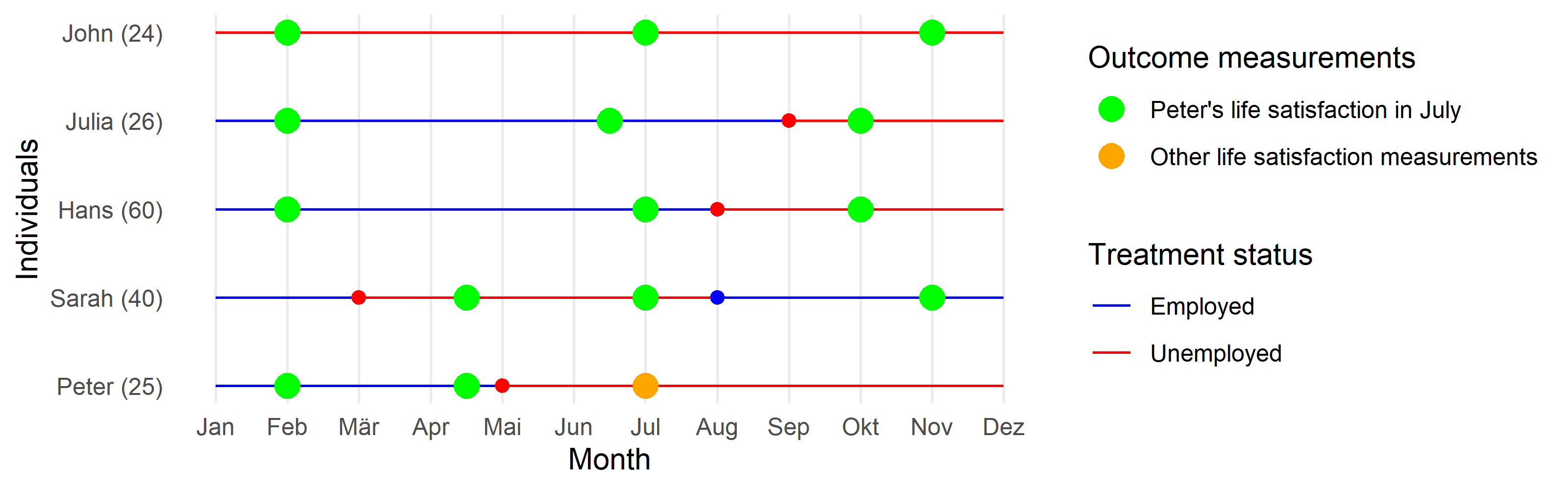

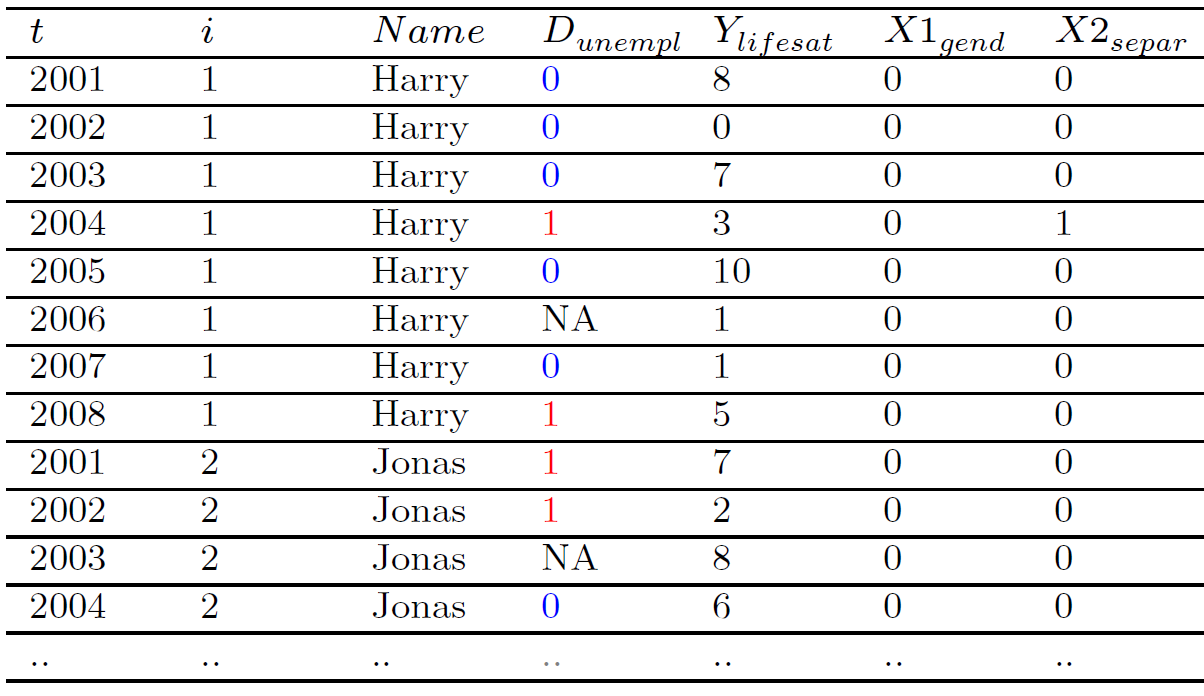

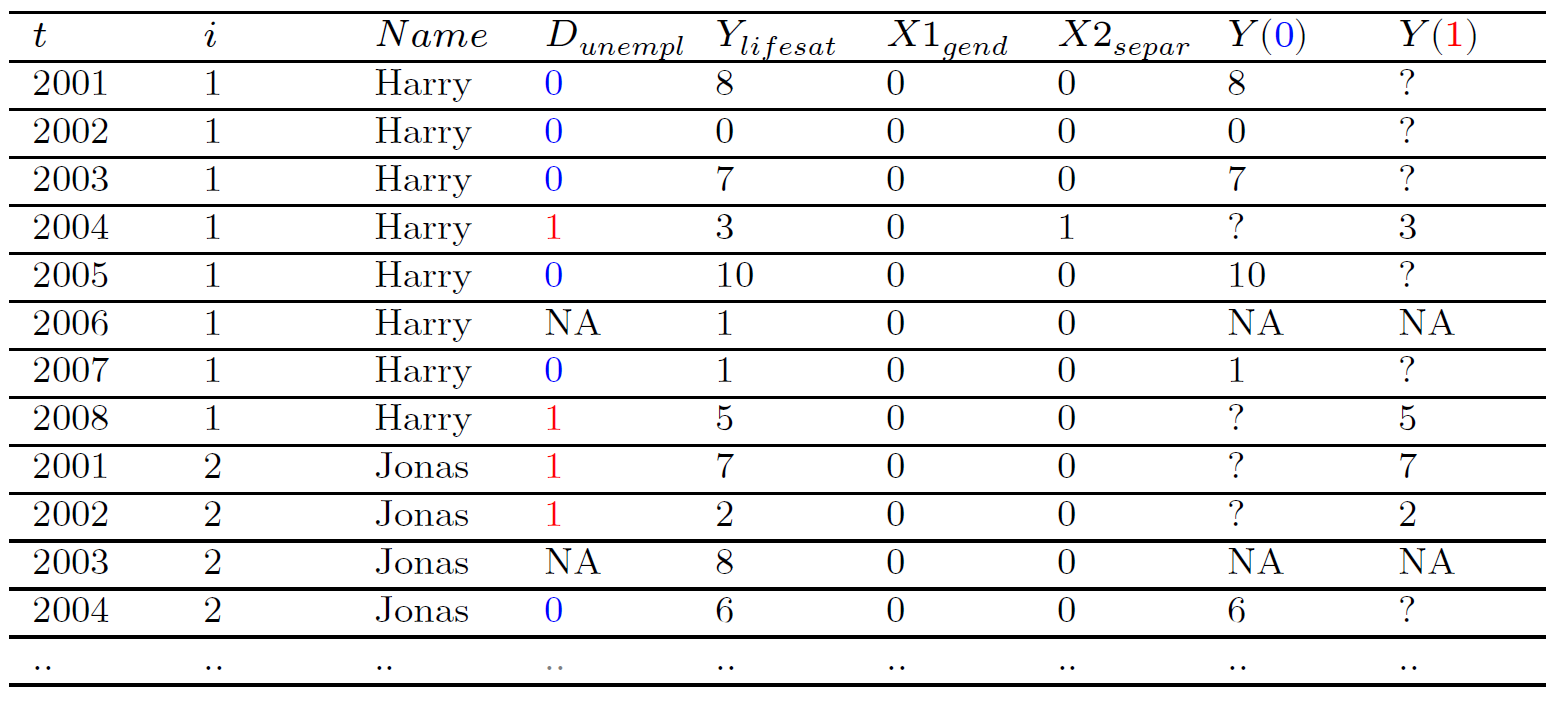

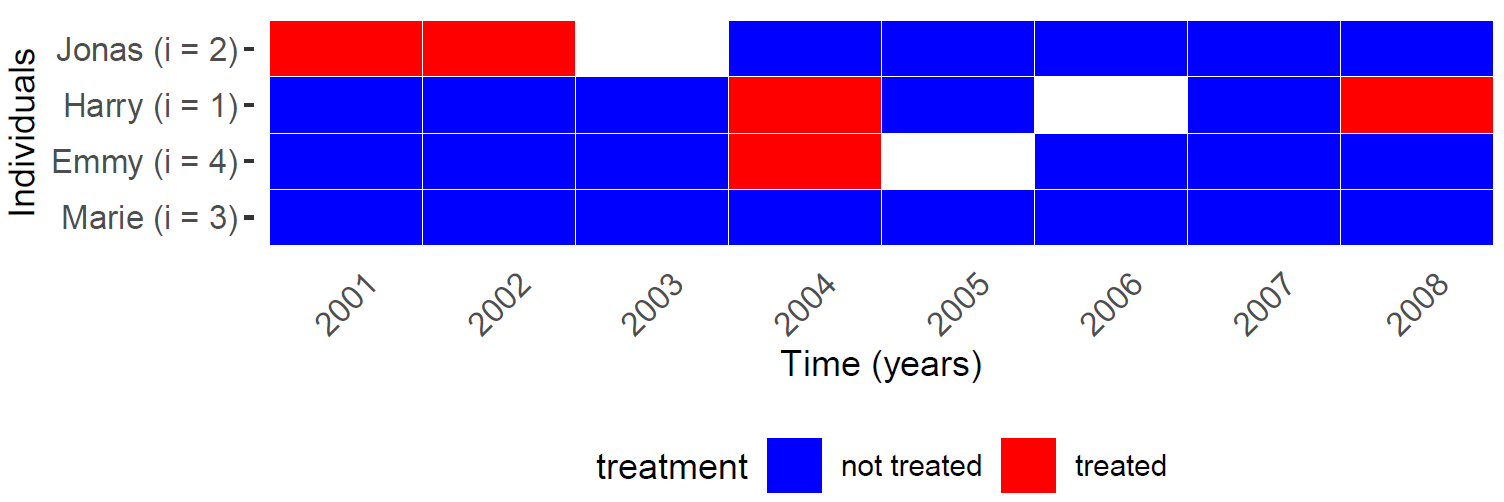

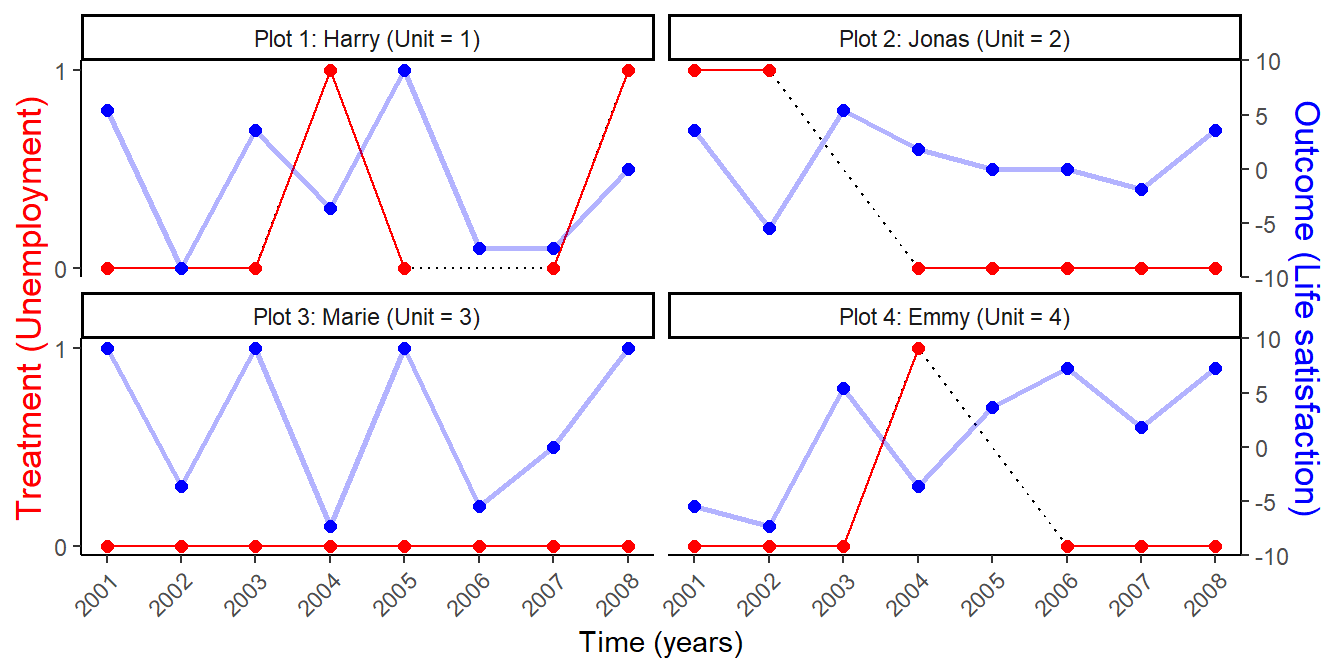

Exercise: Employment and life satisfaction

- We want to estimate the causal effect for Peter: \(\text{Life Satisfaction}_{Peter}(\color{red}{\text{Unemployed}})\) \(- \text{Life Satisfaction}_{Peter}(\color{blue}{\text{Employed}})\) at \(t = July\) (yellow point).

- Q: What is shown on the graph below?

- Q: At \(t = July\), do we observe Peter’s outcome under treatment (unemployed) or under control (employed)?

- Q: Which green measurement point would you pick as a comparison for Peter’s yellow measurement? And why?

ATE: Average Treatment Effect

- Average Treatment Effect (ATE): The average difference in the pair of potential outcomes (averaged over the entire population of interest)

- \(ATE = E[Y_{i}(1) - Y_{i}(0)]\) (time is omitted from the notation)

- Q: Which observations does that concern in the table below?

| \(Unit\) | \(D_{i} (Aspirin:Yes/No)\quad\) | \(Y_{i}(1) (Headache \mid Aspirin)\quad\) | \(Y_{i}(0) (Headache \mid NoAspirin)\) |
|---|---|---|---|
| Simon | 1 | 0 | ? |
| Julia | 1 | 1 | ? |
| Paul | 0 | ? | 1 |
| Trump | 0 | ? | 0 |
| Fabrizio | 0 | ? | 0 |
| Diego | 0 | ? | 0 |

- Again, without further assumptions we cannot compute the ATE, because the two potential outcomes are never both observed for the same unit (even with an infinite sample size)

Why move from ITE to ATE?

- \(ITE\) is unobservable

- Average Treatment Effect (\(ATE\)) = Diff. in averages

- \(ATE\) can be estimated relying on less daring assumptions (Holland 1986, 948f)

- e.g., (proper) random assignment (should) partly satisfy them (“statistical solution”)

- Important: \(ATE = E[Y_{i}(1) - Y_{i}(0)]\): is the expected value of the unit-level treatment effect under the distribution induced by sampling from the super-population (= ATE in super-population) (Imbens and Rubin 2015, 117)

- Estimating the Population ATE (PATE) \((ATE_{sp} = \tau_{sp})\) from the Sample ATE (SATE) \((ATE_{fs} = \tau_{fs})\) requires a random sample (Holland 1986, Imai 2008)

- \(_{fs}\) = finite sample, \(_{sp}\) = super population (= target population)
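The link between SATE and PATE under random sampling can be illustrated with a simulation. Unlike real data, the simulation knows both potential outcomes for every unit. All settings are assumptions chosen for illustration: the super-population size, the distribution of \(Y_{i}(0)\), the unit-level effects centered on a hypothetical -0.7, and the sample size of 500.

```python
import random
import statistics

rng = random.Random(7)  # fixed seed for reproducibility

# Simulated super-population (hypothetical values): both potential outcomes are known here,
# which is never the case with real data.
population = []
for _ in range(100_000):
    y0 = rng.gauss(5, 2)                  # potential outcome under control
    effect = rng.gauss(-0.7, 1)           # heterogeneous unit-level treatment effect
    population.append((y0 + effect, y0))  # (Y(1), Y(0))

def average_effect(units):
    """Average of the unit-level differences Y(1) - Y(0)."""
    return statistics.fmean(y1 - y0 for y1, y0 in units)

pate = average_effect(population)

# SATE in 200 independent random samples of 500 units each
sates = [average_effect(rng.sample(population, 500)) for _ in range(200)]

print(f"PATE:              {pate:.3f}")
print(f"mean of the SATEs: {statistics.fmean(sates):.3f}")
print(f"SD of the SATEs:   {statistics.stdev(sates):.3f}")
```

Individual SATEs scatter around the PATE, but on average they are close to it. A non-random sample, e.g., one that over-represents units with large effects, would break this link.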

ATE: Naive Estimate

- Naive estimate of ATE: Difference between the average observed outcomes in the treatment and control groups

- Equate \(E[Y_{i}(1)] - E[Y_{i}(0)]\) with \(E[Y_{i}|D=1] - E[Y_{i}|D=0]\)

- \(E[Y_{i}|D=1] - E[Y_{i}|D=0] = ({\color{red}{0+1}})/2 - ({\color{orange}{1+0+0+0}})/4 = 0.5 - 0.25 = 0.25\)

| \(Unit\) | \(D_{i} (Aspirin: Yes/No)\quad\) | \(Y_{i} (Headache: Yes/No)\quad\) |
|---|---|---|
| Simon | 1 | 0 |
| Julia | 1 | 1 |
| Paul | 0 | 1 |
| Trump | 0 | 0 |
| Fabrizio | 0 | 0 |
| Diego | 0 | 0 |

- Q: What is the problem with the naive estimate of the treatment effect? Can we interpret 0.25 as causal effect? Why (not)?
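The naive estimate from this slide takes a few lines of Python. The sketch below uses the six observed rows from the table above; `group_mean` is an illustrative helper. It only computes the difference in observed group means, so the question above applies to it unchanged.

```python
# Observed data from the table above: (unit, D = aspirin, Y = headache)
data = [("Simon", 1, 0), ("Julia", 1, 1), ("Paul", 0, 1),
        ("Trump", 0, 0), ("Fabrizio", 0, 0), ("Diego", 0, 0)]

def group_mean(rows, d):
    """E[Y | D = d]: average observed outcome among units with treatment status d."""
    ys = [y for _, treat, y in rows if treat == d]
    return sum(ys) / len(ys)

naive = group_mean(data, 1) - group_mean(data, 0)
print(f"E[Y|D=1] = {group_mean(data, 1):.2f}, E[Y|D=0] = {group_mean(data, 0):.2f}, naive = {naive:.2f}")
```

It reproduces 0.5 - 0.25 = 0.25, the value computed by hand above.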

ATE: Decomposition

| \(Unit\) | \(D_{i} (Aspirin: Yes/No)\quad\) | \(Y_{i} (Head.: Yes/No)\quad\) | \(Y_{i}(1) (Head. \mid YesAspirin)\quad\) | \(Y_{i}(0) (Head. \mid NoAspirin)\) |
|---|---|---|---|---|
| Simon | 1 | 0 | 0 | ? |
| Julia | 1 | 1 | 1 | ? |
| Paul | 0 | 1 | ? | 1 |
| Trump | 0 | 0 | ? | 0 |
| Fabrizio | 0 | 0 | ? | 0 |
| Diego | 0 | 0 | ? | 0 |

- ATE can be decomposed as a function of 5 quantities (e.g., Keele 2015, 4)

- \(\underbrace{E[Y_{i}(1) - Y_{i}(0)]}_{\substack{ATE}}\) = \({\color{violet}\pi}\) \((\underbrace{E[\color{red} {Y_{i}(1)|D_{i} = 1}] - E[\color{blue}{Y_{i}(0)|D_{i} = 1}]}_{\substack{ATT}})\) + \((1 - {\color{violet}\pi})(\underbrace{E[\color{green}{Y_{i}(1)|D_{i} = 0}] - E[\color{orange}{Y_{i}(0)|D_{i} = 0}]}_{\substack{ATC}})\)

- \({\color{violet}\pi}\): proportion of sample assigned to treatment (2/6 ≈ 0.33)

- e.g., \(E[\color{red} {Y_{i}(1)|D_{i} = 1}]\): Average pot. outcome under treatment, given units are in treatment condition

- Q: What do the following terms describe? \(E[\color{blue}{Y_{i}(0)|D_{i} = 1}]\); \(E[\color{green}{Y_{i}(1)|D_{i} = 0}]\); \(E[\color{orange}{Y_{i}(0)|D_{i} = 0}]\)

- Q: In your own words, which parts do we have and which parts don’t we have?

ATE: Identification Problem

- Observed (measured)

- \({\color{violet}\pi}\) using \(E[{\color{violet}{D_{i}}}]\)

- \(E[\color{red} {Y_{i}(1)|D_{i} = 1}]\) using \(E[Y_{i}\mid D_{i} = 1]\)

- \(E[\color{orange}{Y_{i}(0)|D_{i} = 0}]\) using \(E[Y_{i}\mid D_{i} = 0]\)

- Unobserved (not measured)

- \(E[\color{blue}{Y_{i}(0)|D_{i} = 1}]\): Average outcome under control \((Y_{i}(0))\), given units in treatment condition \((...|(D_{i}=1))\)

- \(E[\color{green}{Y_{i}(1)|D_{i} = 0}]\): Average outcome under treatment \((Y_{i}(1))\), given units in control condition \((...|(D_{i}=0))\)

- \(E[Y_{i}(1) - Y_{i}(0)]\) = 2/6 \(\times\) (0.5 - ?) + 4/6 \(\times\) (? - 0.25)

| | \(E[Y_{i}(1) \mid D_{i}]\) | \(E[Y_{i}(0) \mid D_{i}]\) |
|---|---|---|
| \(D = 1\) | 0.5 | ? |
| \(D = 0\) | ? | 0.25 |

- Identification Problem!

- Two quantities are unobservable

- Just as for the ITE, we must rely on assumptions to identify the ATE (i.e., to fill them in)
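A minimal Python sketch of the identification problem, using the six units from the aspirin table above. The observed pieces (\(\pi\), \(E[Y_{i} \mid D_{i} = 1]\), \(E[Y_{i} \mid D_{i} = 0]\)) are computed from the data, while the two unobserved conditional means are left as free arguments of a hypothetical helper `ate_decomposition`. Because the outcome is binary, each unknown mean must lie between 0 and 1, so plugging in the extremes shows how widely the ATE could range without further assumptions.

```python
# Observed data from the aspirin table: name -> (D_i, Y_i).
data = {
    "Simon": (1, 0),
    "Julia": (1, 1),
    "Paul": (0, 1),
    "Trump": (0, 0),
    "Fabrizio": (0, 0),
    "Diego": (0, 0),
}

treated = [y for d, y in data.values() if d == 1]
control = [y for d, y in data.values() if d == 0]

pi = len(treated) / len(data)                  # share treated: 2/6
mean_y1_treated = sum(treated) / len(treated)  # E[Y_i(1) | D_i = 1] = 0.5
mean_y0_control = sum(control) / len(control)  # E[Y_i(0) | D_i = 0] = 0.25


def ate_decomposition(mean_y0_treated, mean_y1_control):
    """ATE = pi * ATT + (1 - pi) * ATC for guessed values of the two
    unobserved terms E[Y_i(0) | D_i = 1] and E[Y_i(1) | D_i = 0]."""
    att = mean_y1_treated - mean_y0_treated
    atc = mean_y1_control - mean_y0_control
    return pi * att + (1 - pi) * atc


# Y is binary, so each unobserved mean lies in [0, 1].
lowest = ate_decomposition(mean_y0_treated=1, mean_y1_control=0)
highest = ate_decomposition(mean_y0_treated=0, mean_y1_control=1)
print(f"ATE lies somewhere in [{lowest:.3f}, {highest:.3f}]")
```

Every value in that interval is compatible with the observed data, which is why the missing quantities have to be filled in by assumption.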

Causal estimands & notation (1)

Potential outcomes: \(Y_{i}(1)\) is sometimes written as \(Y_{1i}\), \(Y_{i}^{t}\), \(y_{i}^{1}\) (Morgan and Winship 2007, 43)

ATE notation (finite vs. superpopulation, Imbens and Rubin (2015, 18))

- Finite sample: \(ATE_{fs} = \tau_{fs} = \frac{1}{N} \sum_{i=1}^{N} (Y_{i}(1) - Y_{i}(0))\)

- Superpopulation: \(ATE_{sp} = \tau_{sp} = E[Y_{i}(1) - Y_{i}(0)] = \mathbb{E}_{sp}[Y_{i}(1) - Y_{i}(0)]\)

Causal estimands & notation (2)

Often the focus is on subpopulations (Imbens and Rubin 2015, 18)

Females (covariate value): \(\tau_{fs} (f) = \frac{1}{N(f)} \sum\limits_{i: X_{i} = f}^{N} (Y_{i}(1) - Y_{i}(0))\) (Conditional ATE)

- e.g., average effect of drug only for females

ATT: \(\tau_{fs,t} = \frac{1}{N_{t}} \sum\limits_{i: D_{i} = 1}^{N} (Y_{i}(1) - Y_{i}(0))\)

- Average effect of the treatment for those who were exposed to it

- Q: How could we write the ATC?
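To make the finite-sample estimands concrete, the Python sketch below computes \(\tau_{fs}\), \(\tau_{fs,t}\) and \(\tau_{fs}(f)\) by averaging unit-level effects over different subsets of units. It assumes a hypothetical table in which both potential outcomes are known for every unit (something we never observe in practice); the units and values are invented for illustration.

```python
# Hypothetical units with BOTH potential outcomes known (never the case in
# real data). Columns: name, D_i, X_i (1 = female), Y_i(1), Y_i(0).
units = [
    ("A", 1, 1, 1, 0),
    ("B", 1, 0, 0, 0),
    ("C", 0, 1, 1, 1),
    ("D", 0, 0, 1, 0),
    ("E", 0, 1, 0, 0),
    ("F", 0, 0, 1, 1),
]


def average_effect(rows):
    """Mean of the unit-level effects Y_i(1) - Y_i(0) over the given rows."""
    effects = [y1 - y0 for _name, _d, _x, y1, y0 in rows]
    return sum(effects) / len(effects)


ate_fs = average_effect(units)                                 # all N units
att_fs = average_effect([u for u in units if u[1] == 1])       # only D_i = 1
cate_female = average_effect([u for u in units if u[2] == 1])  # only X_i = f

print(ate_fs, att_fs, cate_female)
```

The three estimands differ only in which rows enter the average.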

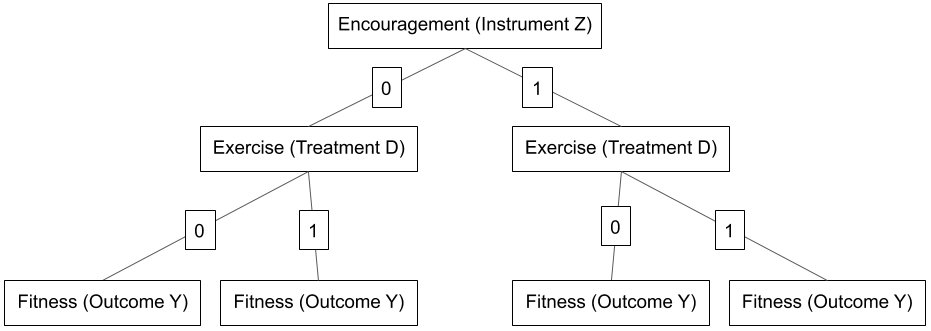

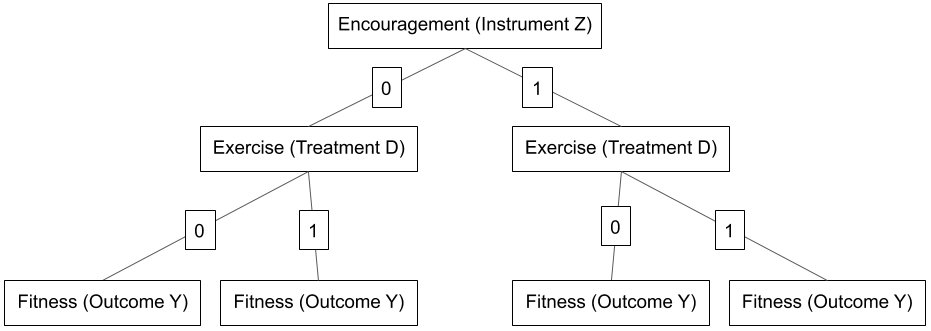

Other subpopulations: Complier Average Treatment Effect; Intent-to-Treat Effect (see overview at EGAP)

Causal estimands & notation (3): Exercise

Q: For which units in the Table below would you need to fill in the missing potential outcomes if you were interested in…

- …the average treatment effect: \(\small ATE_{fs} = \tau_{fs} = \frac{1}{N} \sum_{i=1}^{N} (Y_{i}(1) - Y_{i}(0))\)?

- …the average treatment effect on the treated: \(\small ATT_{fs} = \tau_{fs,t} = \frac{1}{N_{t}} \sum\limits_{i: D_{i} = 1}^{N} (Y_{i}(1) - Y_{i}(0))\)?

- …the conditional average treatment effect for males: \(\small ATE_{fs,m} = \tau_{fs} (m) = \frac{1}{N(m)} \sum\limits_{i: X_{i} = m}^{N} (Y_{i}(1) - Y_{i}(0))\)?

- …the conditional average treatment effect on the treated for males: \(\small ATT_{fs}(m) = \tau_{fs, t} (m) = \frac{1}{N_{t}(m)} \sum\limits_{i: D_{i} = 1, X_{i} = m}^{N} (Y_{i}(1) - Y_{i}(0))\)?

| \(Unit\) | \(D_{i}\) | \(Y_{i}(1)\) | \(Y_{i}(0)\) |
|---|---|---|---|
| Simon | 1 | 0 | ? |
| Julia | 1 | 1 | ? |
| Paul | 0 | ? | 1 |
| Trump | 0 | ? | 0 |
| Fabrizio | 0 | ? | 0 |
| Diego | 0 | ? | 0 |

(Key) Assumptions

- Often causal identification concerns defending several key assumptions (e.g., Keele 2015)

Causal ordering assumption: Written as \(D_{i} \longrightarrow Y_{i}\) (Imai’s notation)

- No reverse causality: \(D_{i}\not\longleftarrow Y_{i}\)

- No simultaneity: \(D_{i}\not\longleftrightarrow Y_{i}\)

Independence assumption (IA): also called unconfounded assignment (For other names see Imbens and Rubin 2015, 43)

Stable Unit Treatment Values Assumption (SUTVA): (1) No interference assumption & (2) Consistency assumption

- We’ll talk about the independence assumption and SUTVA now and about the other assumptions later

Assumptions: Independence Assumption (IA)

- IA = Treatment status is independent of potential outcomes \((Y_{i}(1), Y_{i}(0) \perp D_{i})\)

- i.e., assignment status unrelated to potential outcomes…

- …whether you take aspirin or not is independent of what outcome you would have under treatment/control

- Violation: e.g., experimenter knows how patients respond to aspirin and assigns accordingly

- Insight: Under IA “expectation(s) of the unobserved potential outcomes is equal to the conditional expectations of the observed outcomes conditional on treatment assignment” (Keele 2015, 5)

- IA allows us to connect unobservable potential outcomes to observable quantities in the data

- IA is linked to the “assignment mechanism” (see Imbens and Rubin 2015, 14, for a recent discussion)

- Why does the independence assumption (+ SUTVA below) identify the causal effect? (next slide)

Assumptions: Independence Assumption (IA)

- Under the IA: ATE = \(\underbrace{E[Y_{i}(1) - Y_{i}(0)]}_{\substack{unobserved}} = E[Y_{i}(1)] - E[Y_{i}(0)] = \underbrace{E[Y_{i}|D_{i} = 1] - E[Y_{i}|D_{i} = 0]}_{\substack{observed}}\)

- We can replace potential outcomes with observed outcomes

| \(Unit\) | \(D_{i}\) | \(Y_{i}\) | \(Y_{i}(1)\) | \(Y_{i}(0)\) |
|---|---|---|---|---|
| Simon | 1 | 0 | 0 | ? |
| Julia | 1 | 1 | 1 | ? |
| Paul | 0 | 1 | ? | 1 |
| Trump | 0 | 0 | ? | 0 |
| Fabrizio | 0 | 0 | ? | 0 |
| Diego | 0 | 0 | ? | 0 |

- The IA allows us to equate the expected value of the whole column \(Y_{i}(1)\), i.e. \(E[Y_{i}(1)]\) (red and green values), with the mean of the observed red values, i.e. \(E[Y_{i}|D_{i} = 1]\) (same for column \(Y_{i}(0)\)).

- \(E[Y_{i}|D_{i} = 1] \stackrel{1}{=} E[Y_{i}(0) + D_{i}(Y_{i}(1) - Y_{i}(0)) \mid D_{i} = 1] \stackrel{2}{=} E[Y_{i}(1)|D_{i} = 1] \stackrel{3}{=} E[Y_{i}(1)]\)

- See next slide for explanation of step \(\stackrel{1}{=}\), \(\stackrel{2}{=}\) and \(\stackrel{3}{=}\)

- Same logic for \(E[Y_{i}\mid D_{i} = 0] = E[Y_{i}(0)]\)

Assumptions: Independence Assumption - Steps

Step \(\stackrel{1}{=}\): In causal inference, the observed outcome \(Y_{i}\) can be expressed as a combination of potential outcomes \(Y_{i}(0)\) and \(Y_{i}(1)\) based on the treatment indicator \(D_{i}\) (Observed Outcome Definition). Specifically, \(Y_{i} = Y_{i}(0) + D_{i} (Y_{i}(1) - Y_{i}(0))\).

- This means that if the individual \(i\) does not receive the treatment (\(D_{i} = 0\)), their outcome is \(Y_{i}(0)\). If they do receive the treatment (\(D_{i} = 1\)), their outcome is \(Y_{i}(1)\).

Step \(\stackrel{2}{=}\): If \(D_{i} = 1\), the expression becomes \(Y_{i}(0) + 1\times Y_{i}(1) - 1\times Y_{i}(0)\), so \(Y_{i}(0)\) cancels out and we end up with \(E[Y_{i}(1)\mid D_{i} = 1]\)

Step \(\stackrel{3}{=}\): Because \(Y_{i}(1)\) is independent of \(D_{i}\) (independence assumption) we can replace \(E[Y_{i}(1)\mid D_{i} = 1]\) with \(E[Y_{i}(1)]\)

Longer explanation

- To estimate the ATE we would need to calculate the expected value of the differences between potential outcomes in column \(Y_{i}(1)\) and column \(Y_{i}(0)\). In other words, we would have to observe both treatment and control units in their counterfactual states (e.g., observe what the value of control units would be if they had been treated). However, for the units that were assigned to control \(D_{i} = 0\) we do not observe \(Y_{i}(1)\) and the other way round.

- Starting with the column \(Y_{i}(1)\), the independence assumption simply means that the expected value of the whole column \(E[Y_{i}(1)]\) (red and green values) can be equated with the expected value of the first two rows of the column, namely \(E[Y_{i}(1)\mid D_{i} = 1]\) (the red values). And that is what we actually observe. Hence, through this assumption there is no need to observe the missing green values any more. The same logic applies to column \(Y_{i}(0)\). The IA allows us to equate the expected value of the whole column \(E[Y_{i}(0)]\) (blue and orange values) with the orange values, i.e. \(E[Y_{i}(0)\mid D_{i} = 0]\).
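The steps above can be checked numerically. The Python sketch below simulates a hypothetical population in which both potential outcomes are known, builds the observed outcomes with the switching equation \(Y_{i} = Y_{i}(0) + D_{i}(Y_{i}(1) - Y_{i}(0))\) (Step 1), and compares the difference in means with the true ATE under two assignment rules: a coin flip (IA holds) and a rule that gives aspirin only to those whose headache it would remove (IA violated, as in the experimenter example above). The data-generating process, probabilities and function names are assumptions made for illustration.

```python
import random

random.seed(1)
N = 100_000

# Hypothetical potential outcomes (1 = headache). Y_i(0) is drawn first;
# aspirin removes the headache with probability 0.4, otherwise Y_i(1) = Y_i(0).
y0 = [1 if random.random() < 0.6 else 0 for _ in range(N)]
y1 = [0 if random.random() < 0.4 else v for v in y0]
true_ate = sum(a - b for a, b in zip(y1, y0)) / N


def observed(d, y1_i, y0_i):
    """Switching equation: Y_i = Y_i(0) + D_i * (Y_i(1) - Y_i(0))."""
    return y0_i + d * (y1_i - y0_i)


def diff_in_means(d):
    """E[Y | D = 1] - E[Y | D = 0], computed from observed outcomes only."""
    y = [observed(di, a, b) for di, a, b in zip(d, y1, y0)]
    treated = [yi for yi, di in zip(y, d) if di == 1]
    control = [yi for yi, di in zip(y, d) if di == 0]
    return sum(treated) / len(treated) - sum(control) / len(control)


# (a) Random assignment: D is independent of (Y_i(1), Y_i(0)).
d_random = [1 if random.random() < 0.5 else 0 for _ in range(N)]
# (b) Violation: only units whose headache would disappear get aspirin.
d_selected = [1 if a < b else 0 for a, b in zip(y1, y0)]

print(f"true ATE:            {true_ate:.3f}")
print(f"random assignment:   {diff_in_means(d_random):.3f}")
print(f"selected assignment: {diff_in_means(d_selected):.3f}")
```

Under random assignment the difference in observed means lands close to the true ATE; under selection on potential outcomes it does not, even though the formula is identical.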

Independence assumption & random assignment

- Q: Imagine the students in our class were randomly split into two groups, a treatment group and a control group. What would be your expectation regarding the distribution of … across the two groups?

- Observable characteristics (Gender, age, skin color)

- Unobservable characteristics (blood pressure, happiness)

- Possible responses to experimental treatment (reaction to aspirin)

- Random assignment

- Provides a “statistical solution” to the fundamental problem of causal inference (Holland 1986, 948f)

- Units randomly assigned to treatment/control have identical distributions of covariates/potential outcomes in both groups (long run!)

- “if the physical randomization is carried out correctly, then it is plausible that S [= treatment status D] is independent of \(Y_{t}\) [ \(Y_{i}(1)\) ], and \(Y_{c}\) [ \(Y_{i}(0)\) ], and all other variables over U [the super population]. This is the independence assumption.” (Holland 1986, 948).

- Random assignment induces independence between treatment status and potential outcomes

- Q: What does “in the long run” mean?
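One way to think about “in the long run” is to simulate coin-flip (Bernoulli) assignment for samples of increasing size and track how far apart the two groups are on a covariate. The Python sketch below uses a hypothetical binary covariate (e.g., being female) with a population share of 0.5; the sample sizes, seed and helper name `covariate_gap` are arbitrary choices for illustration.

```python
import random


def covariate_gap(n, rng):
    """Absolute difference in the covariate mean between treated and control
    after Bernoulli(0.5) assignment of n units."""
    x = [1 if rng.random() < 0.5 else 0 for _ in range(n)]
    d = [1 if rng.random() < 0.5 else 0 for _ in range(n)]
    treated = [xi for xi, di in zip(x, d) if di == 1]
    control = [xi for xi, di in zip(x, d) if di == 0]
    if not treated or not control:  # tiny samples can leave a group empty
        return float("nan")
    return abs(sum(treated) / len(treated) - sum(control) / len(control))


rng = random.Random(42)
for n in (10, 100, 1_000, 100_000):
    gap = covariate_gap(n, rng)
    print(f"n = {n:>7}: |mean(X | D=1) - mean(X | D=0)| = {gap:.3f}")
```

Single small samples can be quite unbalanced by chance; the gap shrinks toward zero as n grows. The same holds for unobserved characteristics and potential outcomes, which the code never looks at when assigning treatment.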

Assumptions: SUTVA

- SUTVA: Stable Unit Treatment Values Assumption

- (1) No interference & (2) Consistency: No hidden variations of treatment

- The potential outcomes for any unit do not vary with the treatments assigned to other units

- A subject’s potential outcome is not affected by other subjects’ exposure to the treatment

- Imai’s notation: \(Y_{i}(D_{1},D_{2},...,D_{n})=Y_{i}(D_{i})\)

- For each unit, there are no different forms or versions of each treatment level, which lead to different potential outcomes (Imbens and Rubin 2015, 10; Keele 2015, 5)

- Unambiguous definition of exposure, e.g. “15 min of exercise” (Keele 2015, 5)

- No hidden multiple versions/different administration of treatment

- Imai’s notation: \(Y_{i}=Y_{i}(d)\text{ whenever } D_{i}=d\)
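A small Python sketch of what the no-interference part of SUTVA rules out. The hypothetical outcome function below lets a unit's headache score depend on its own aspirin and, through an invented spillover term, on how many other people in the room are treated. Holding unit 0's own treatment fixed and changing only the others' treatments changes its outcome, so \(Y_{i}(D_{1},D_{2},...,D_{n}) \neq Y_{i}(D_{i})\).

```python
def headache_score(i, d):
    """Hypothetical potential outcome Y_i(D_1, ..., D_n) on a 0-10 scale.

    Own aspirin lowers the score by 3; in addition (an invented spillover),
    each OTHER treated person in the room lowers it by 0.5.
    """
    own = d[i]
    others_treated = sum(d) - own
    return 8 - 3 * own - 0.5 * others_treated


# Unit 0 is untreated in both scenarios; only the others' treatments differ.
few_treated = [0, 1, 0, 0, 0, 0]
many_treated = [0, 1, 1, 1, 1, 1]
print(headache_score(0, few_treated))   # 7.5
print(headache_score(0, many_treated))  # 5.5
```

Under no interference the spillover coefficient would be 0, and the score would depend on `d[i]` alone.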

Assumptions: SUTVA Exercise (1)

Insight: If we assume SUTVA (Imbens and Rubin 2015, 10), then…

- Each individual faces same number of treatment levels (2 in aspirin example)

- Potential outcomes are a function of only our individual actions (e.g., not influenced by others in this room)

Q: Imagine you all have a headache and you sit in the same room. Then we try to randomly assign aspirin to one half of you to test its effect: What would the two assumptions (No interference, No hidden variations) mean and how could they be violated in that situation? Discuss in groups!

Q: In groups, think of one more empirical example where these assumptions could be violated.

Assumptions: SUTVA Exercise (2)

- Q: Below you find examples for planned experiments. Discuss in groups of 2 or 3.

- What is the unit in these examples? How could the independence assumption and the SUTVA assumption be violated when those experiments are implemented?

- Private lessons \((D)\) → School performance \((Y)\)

- In a Mannheim school, half of the 10% worst performing pupils in each class are randomly assigned to receiving private lessons.

- In-person teaching \((D)\) → student satisfaction \((Y)\)

- To test the reception of online teaching, a university decides to randomly assign half of the seminars to in-person teaching, the other half to online teaching.

- Job-training programme \((D)\) → Unemployment \((Y)\)

- Half of Mannheim’s unemployed are randomly assigned to a job training program.

- COVID19 drug \((D)\) → survival \((Y)\)

- 50% of the COVID19 patients at a Mannheim hospital are randomly assigned a new, promising drug in a placebo trial.

Quiz

- See here.

Session 5

Randomized experiments: Ideal, lab, natural and field experiments

Intro

Questions?

Q: What is the fundamental problem of causal inference? (missing data!)

Assumptions example: Campaign Advertisement and Vote Choice (1)

- Research question: What is the effect of a campaign advertisement on an individual’s vote choice (Party A vs. B)?

- Treatment \(D_{i}\): Exposure to the campaign advertisement

- Outcome \(Y_{i}\): Individual’s vote choice.

- Causal Ordering Assumption \(D_{i} \longrightarrow Y_{i}\): implies that treatment causes outcome and not the other way around

- No reverse causality \(D_{i}\not\longleftarrow Y_{i}\): implies that an individual’s vote choice does not influence whether or not they were exposed to the campaign advertisement

- No simultaneity \(D_{i}\not\longleftrightarrow Y_{i}\): implies that treatment and outcome do not occur simultaneously, i.e., exposure to advertisement \(D\) occurs before the vote choice \(Y\) is made

- Practice: ensure this by timing exposure to the advertisement \(D\) before the vote choice \(Y\) is made, and by randomizing exposure to avoid selection bias (e.g., likely Party A voters being more exposed to the advertisement)

Assumptions example: Campaign Advertisement and Vote Choice (2)

- Independence Assumption \((Y_{i}(1), Y_{i}(0) \perp D_{i})\): states that treatment assignment is independent of potential outcomes

- i.e., exposure to the advertisement should not be correlated with other factors that might influence vote choice; this can be achieved through randomization

- Stable Unit Treatment Value Assumption (SUTVA)

- No Interference: treatment of one individual does not affect the outcomes of another individual

- e.g., one person’s exposure to the advertisement does not influence another person’s vote choice

- Consistency Assumption: For each unit, there are no different forms or versions of each treatment level, which lead to different potential outcomes (unambiguous definition of exposure)

- e.g., if an individual is exposed to the advertisement, their vote choice reflects the effect of seeing that specific advertisement which is the same for other treated individuals (analogue for individuals in control)

- SUTVA violated if individuals discuss the advertisement with each other, thereby indirectly affecting each other’s vote choices or if advertisement is different across treated individuals

Ensuring Assumptions in Practice

To ensure these assumptions hold in a real study:

- Randomization: Randomly assign individuals to either the treatment group (exposed to the advertisement) or the control group (not exposed)

- Timing Control: Ensure that the exposure to the advertisement occurs well before the vote choice is made to avoid simultaneity

- Isolation: Conduct the experiment in a way that minimizes interaction between subjects to prevent interference

- Measurement: Carefully measure the vote choice after the exposure period to ensure that the treatment effect is captured accurately.

- Treatment design: Make sure treatment/control is unambiguously defined across experimental subjects

Experimental vs. observational studies

Distinction going back to Cochran (1965) and others

Assignment mechanism: “process that determines which units receive which treatments, hence which potential outcomes are realized and thus can be observed [and which are missing]” (Imbens and Rubin 2015, 31)

Experiments (experimental studies)

- Assignment mechanism is both known and controlled by the researcher

- Q: What do we mean by “controlled by” and how does assignment in an experiment normally work?

Observational studies

- Assignment mechanism is not known to, or not under the control of, the researcher.

- e.g., with survey data we can NOT decide who gets treated (e.g., education, divorce) and who doesn’t

Field experiments

- A randomized intervention in the real world rather than in the artificial, controlled setting of a laboratory

- Examples

- Remedying Education: Evidence from Two Randomized Experiments in India (Banerjee et al. 2007)

- “two randomized experiments conducted in schools in urban India. A remedial education program hired young women to teach students lagging behind in basic literacy and numeracy skills. It increased average test scores of all children in treatment schools by 0.28 standard deviation, mostly due to large gains experienced by children at the bottom of the test-score distribution.”

- The Mark of a Criminal Record (Pager 2003)

- “The present study adopts an experimental audit approach—in which matched pairs of individuals applied for real entry‐level jobs—to formally test the degree to which a criminal record affects subsequent employment opportunities.”

- Causal effect of intergroup contact on exclusionary attitudes (Enos 2014)

- “Here, I report a randomized controlled trial that assigns repeated intergroup contact between members of different ethnic groups. The contact results in exclusionary attitudes toward the outgroup.”

- Durably reducing transphobia: A field experiment on door-to-door canvassing (Broockman and Kalla 2016)

- “we show that a single approximately 10-minute conversation encouraging actively taking the perspective of others can markedly reduce prejudice for at least 3 months. We illustrate this potential with a door-to-door canvassing intervention in South Florida targeting antitransgender prejudice”

Natural experiments

- Assumption: “a real world situation that produces haphazard [random] assignment to a treatment” (Rosenbaum 2010, 67)

- Examples

- Does indiscriminate violence increase insurgent attacks? (Lyall 2009)

- “This proposition is tested using Russian artillery fire in Chechnya (2000 to 2005) to estimate indiscriminate violence’s effect on subsequent patterns of insurgent attacks across matched pairs of similar shelled and nonshelled villages.”

- The Republicans Should Pray for Rain: Weather, Turnout, and Voting in U.S. Presidential Elections (Gomez 2007)

- Criticism

- When Natural Experiments Are Neither Natural nor Experiments (Sekhon 2012)

- Design drives questions… researcher roaming around in search of natural experiments

Exercise: Lab vs. field vs. natural experiments

- Q: What do you think are the advantages and disadvantages of lab, field and natural experiments? Discuss in groups.

Ideal Experiments & Ideal Research Designs (IRDs): Exercise

- IRD: “the study a researcher would carry out to answer a research question if there weren’t any practical, ethical or resource-related constraints” (Bauer and Landesvatter 2023)

- Research question: What is the causal effect of victimization on social trust (trust in strangers)? (Bauer 2015)

- Research question: What is the causal effect of fake news about immigrants on vote choice for the AfD? (similar to Bauer and Clemm von Hohenberg 2021)

- Q: If you had no (practical, ethical, financial) constraints what would your study look like? Develop one with your neighbour(s).

- What is the target population you want to study? (let’s take University Freiburg students)

- What is your sample?

- Where would the experiment take place?

- How would you construct the treatment?

- Which units in the sample get treated? When are they treated or not?

- How would you measure individuals’ vote choice/trust? When would you measure it? etc.

Paper: From ideal experiments to ideal research designs (IRDs) (Bauer and Landesvatter 2023)

- Review how methodologists define and advocate using ideal experiments (IEs) (Section 2)

- (Re-)introduce the concept of an ideal research design (IRD) vs. ARD (Section 3)

- Contrasting IRDs and ARDs: An example (Section 4)

- Starting from our more systematic account of IRDs, we review whether and how empirical researchers have used ideal experiments and ideal research designs in applied empirical work (Section 5)

Summary

- Randomized experiments are seen as the “gold standard”

- Researcher controls assignment of units to treatment and control

- Randomization breaks any link between covariates/potential outcomes and the treatment (independence assumption holds!)

- We know the assignment mechanism

- Bernoulli trial (coin flip): In the long run (with many units), treated and control groups will be identical on average in all respects, observable and unobservable

- Other randomization types (e.g., stratified randomization) may yield more precise causal inferences when N limited and/or potential outcomes vary with covariates

- Lab vs. field vs. natural experiments: Pros & cons

- Ideal research designs (experiments) as benchmarks

Session 6

Experiments: Analysis and checks

Retake

- Imbens and Rubin (2015) use \(W\) as letter for treatment not \(D\) (don’t get confused!)

- Q: Why don’t we use \(T\)?

- Naming: Ideally, the same concept would always carry the same name, but usage differs…

- independence assumption (e.g., Keele 2015) = unconfoundedness assumption (Imbens and Rubin 2015)

- Controlling = conditioning; Treatment effect = causal effect

- Ideal experiment example: Bauer (2015)

Randomized experiments: Analysis & checks (1)

Provided perfect randomization we can estimate causal effect by comparing outcome averages between treatment and control group (e.g., using t-tests)

- But different randomization designs may require different stat. approaches (Imbens and Rubin 2015)
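A minimal Python sketch of the standard analysis under complete randomization: estimate the ATE as the difference in outcome means and compute a Welch (unequal-variance) t statistic using only the standard library. The outcomes are simulated placeholders with an assumed true effect of 0.5; they are not data from Enos (2014) or Bauer and Clemm von Hohenberg (2021), and in practice a packaged t-test function would usually be used.

```python
import math
import random
import statistics


def diff_in_means_test(y_treated, y_control):
    """Difference in means with a Welch (unequal-variance) standard error."""
    diff = statistics.fmean(y_treated) - statistics.fmean(y_control)
    se = math.sqrt(
        statistics.variance(y_treated) / len(y_treated)
        + statistics.variance(y_control) / len(y_control)
    )
    return diff, se, diff / se


# Simulated placeholder outcomes; the true effect is set to 0.5.
rng = random.Random(7)
y_control = [rng.gauss(0.0, 1.0) for _ in range(500)]
y_treated = [rng.gauss(0.5, 1.0) for _ in range(500)]

diff, se, t = diff_in_means_test(y_treated, y_control)
print(f"estimate = {diff:.3f}, SE = {se:.3f}, t = {t:.2f}")
```

With samples this large, |t| > 1.96 corresponds approximately to p < 0.05 under the normal approximation.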

Various checks are recommended!

Examples from…

- Enos 2014 (field experiment): Is there a causal effect of intergroup contact on exclusionary attitudes?

- Bauer & Clemm von Hohenberg 2021 (survey experiment): Is there a causal effect of source characteristics (and content) on belief in and sharing of their information? (see appendix for treatment)

Randomized experiments: Analysis & checks (2)

- Various checks are recommended (2-6 also useful for non-experiments):

- (1) Did the randomization really work?

- (2) Are treatment groups really balanced in terms of covariates?

- (3) Did participants really (not) “take” the treatment?

- (4) Is the sample representative of a larger target population?

- (5) Are different participants affected differently by the treatment?

- (6) How long does the treatment effect operate? (treatment lag & decay)

Analysis & checks (1)

- (1) Did the randomization really work?

- Enos (2014): Compare sizes of treatment groups (Q: Where?)
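For a two-arm design with equal assignment probabilities, one simple way to compare group sizes is a chi-square goodness-of-fit test against a 50/50 split. The Python sketch below uses only the standard library; the counts are hypothetical and not those reported by Enos (2014). With one degree of freedom the p-value can be computed exactly as \(\text{erfc}(\sqrt{\chi^{2}/2})\); designs with more arms (e.g., \(2 \times 2 \times 2\)) would need a chi-square distribution with more degrees of freedom, for instance from a statistics package.

```python
import math


def equal_split_test(n_treated, n_control):
    """Chi-square goodness-of-fit test of two group sizes against a 50/50 split.

    Returns (chi2, p_value); with 1 degree of freedom,
    P(chi2_1 > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2)).
    """
    expected = (n_treated + n_control) / 2
    chi2 = sum((obs - expected) ** 2 / expected for obs in (n_treated, n_control))
    return chi2, math.erfc(math.sqrt(chi2 / 2))


# Hypothetical group sizes from a coin-flip assignment of 1,000 units.
chi2, p = equal_split_test(512, 488)
print(f"chi2 = {chi2:.3f}, p = {p:.3f}")
```

A large p-value is consistent with the intended 50/50 assignment; a very small one would point to a problem with the randomization procedure.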

Analysis & checks (2)

- (1) Did the randomization really work?

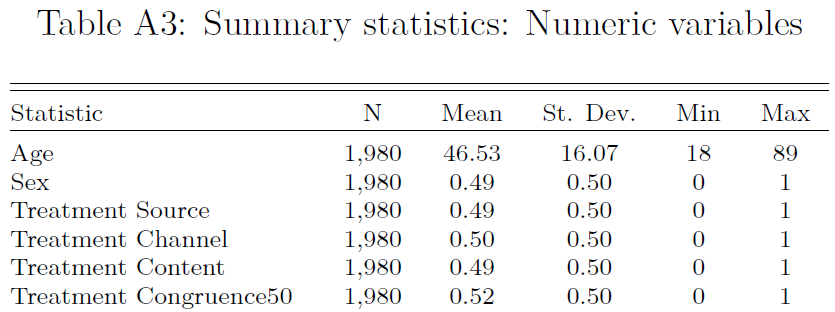

- Enos (2014): Compare sizes of treatment groups

- Randomization into 8 groups: \(2 \times 2 \times 2\) (Source \(\times\) Channel \(\times\) Content)

Analysis & checks (3)

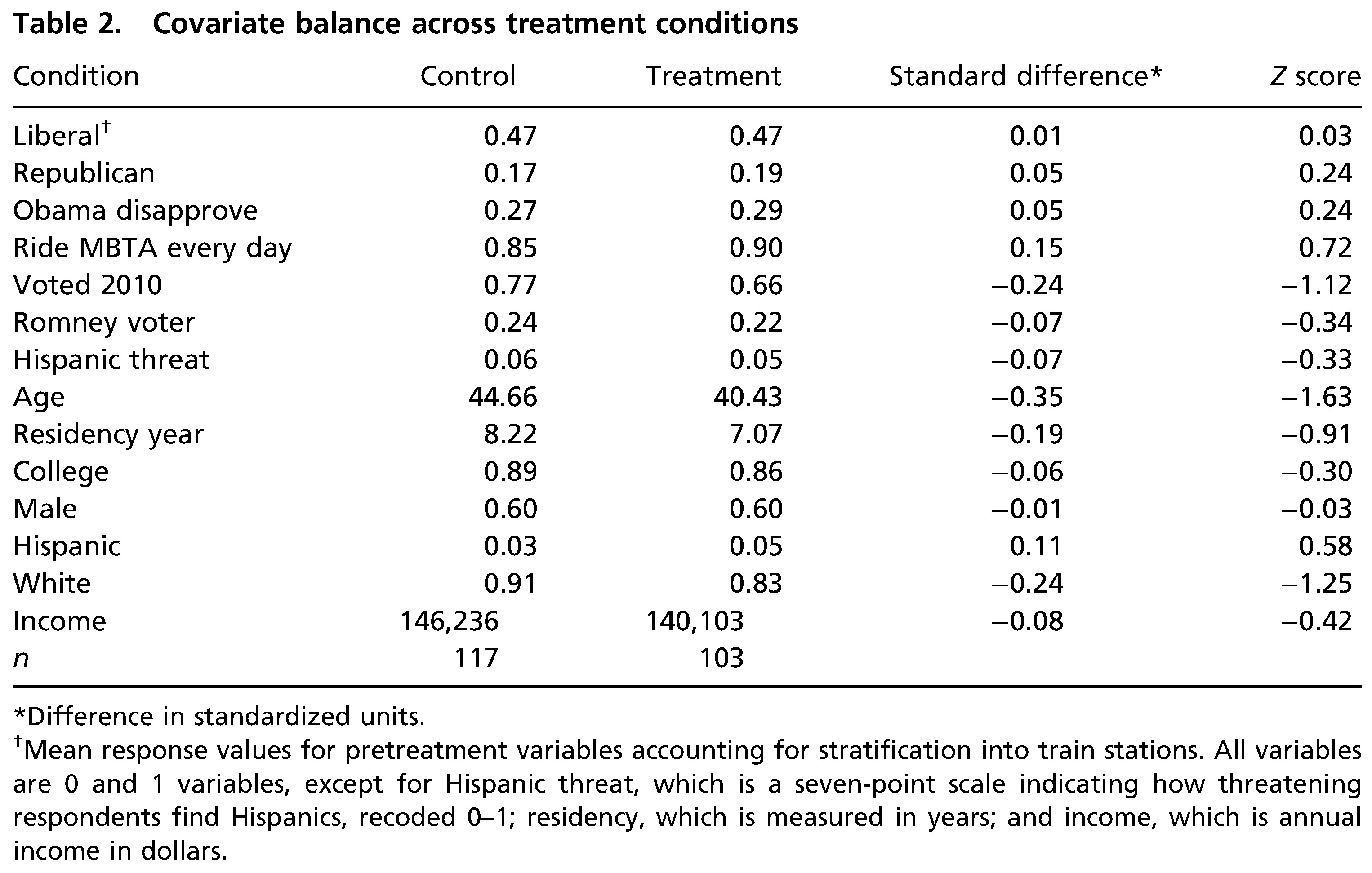

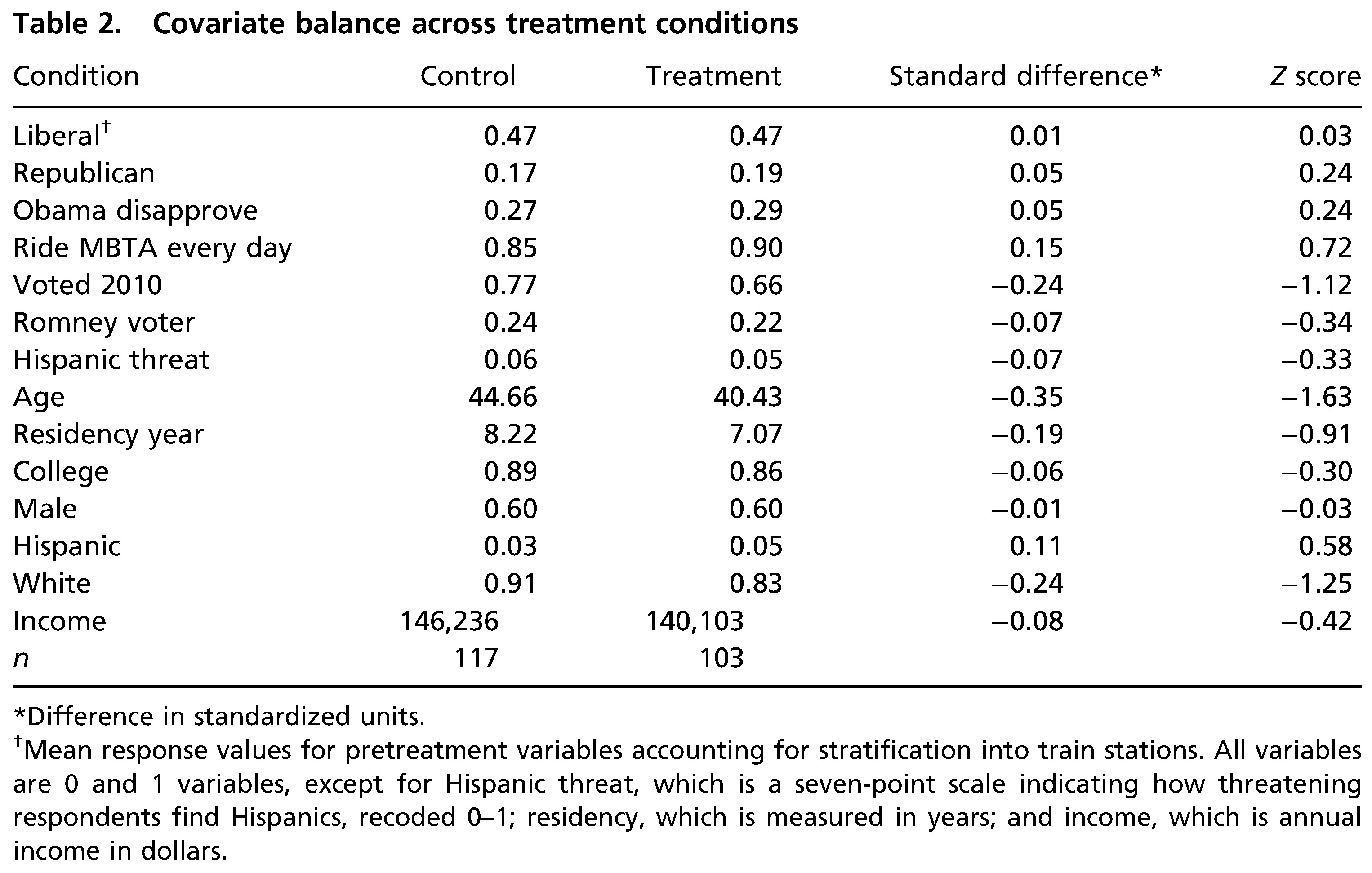

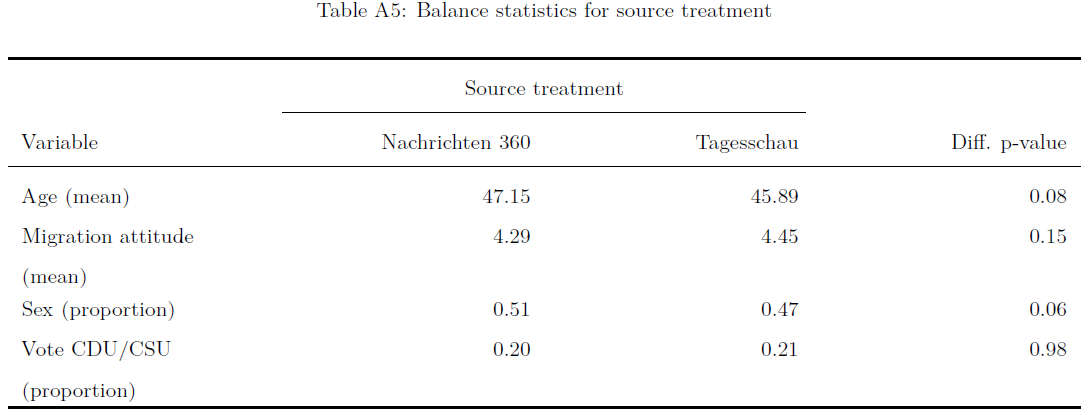

- (2) Are treatment groups really balanced?

- Bauer and Clemm von Hohenberg (2021): Compare covariate distributions across treatment groups

Analysis & checks (4)

- (2) Are treatment groups really balanced?

- Bauer and Clemm von Hohenberg (2021): Compare covariate distributions across treatment groups

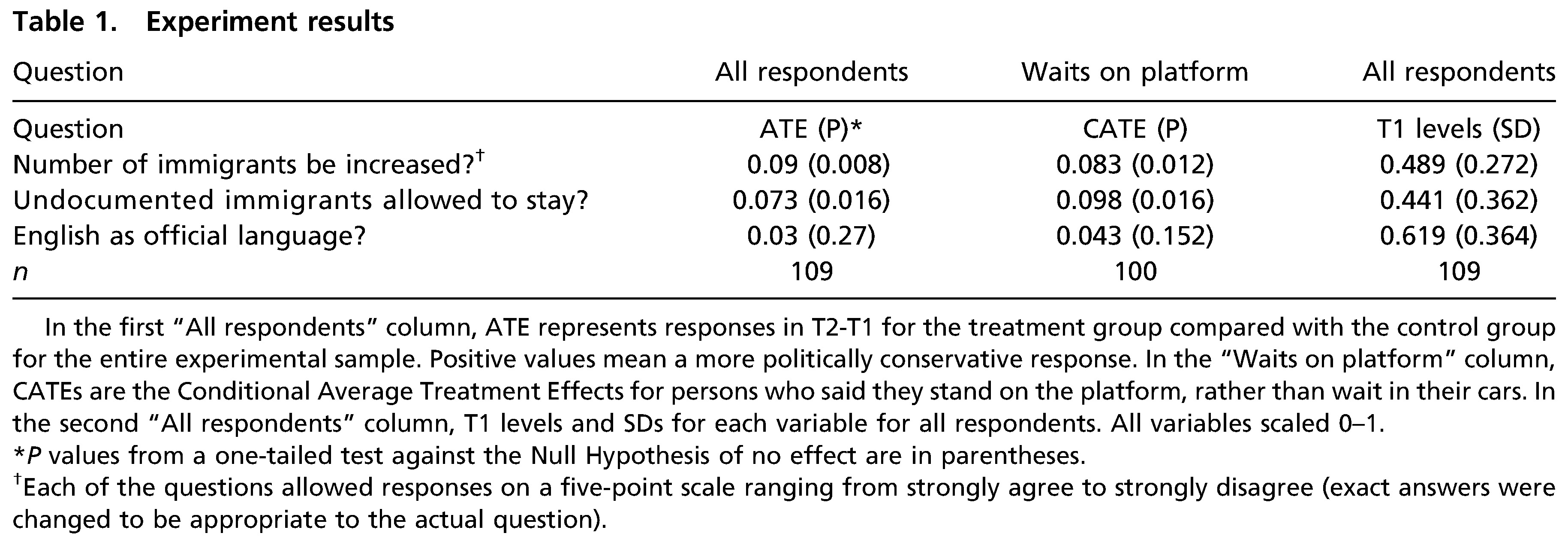
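Balance tables often report covariate means by group together with a standardized difference. A Python sketch under stated assumptions: the covariates are simulated placeholders (not the survey data), the standardized mean difference (SMD) divides by the pooled standard deviation \(\sqrt{(s_{t}^{2} + s_{c}^{2})/2}\) (one common convention), and the 0.1 threshold used to flag covariates is a rule of thumb, not a formal test.

```python
import math
import random
import statistics


def standardized_mean_difference(x_treated, x_control):
    """(mean_t - mean_c) / sqrt((var_t + var_c) / 2)."""
    pooled_sd = math.sqrt(
        (statistics.variance(x_treated) + statistics.variance(x_control)) / 2
    )
    return (statistics.fmean(x_treated) - statistics.fmean(x_control)) / pooled_sd


# Simulated placeholder covariates after coin-flip assignment of 2,000 units.
rng = random.Random(3)
people = [
    {"age": rng.gauss(45, 15), "female": int(rng.random() < 0.5),
     "treated": int(rng.random() < 0.5)}
    for _ in range(2_000)
]

for covariate in ("age", "female"):
    xt = [p[covariate] for p in people if p["treated"] == 1]
    xc = [p[covariate] for p in people if p["treated"] == 0]
    smd = standardized_mean_difference(xt, xc)
    flag = "check" if abs(smd) > 0.1 else "ok"
    print(f"{covariate:>6}: SMD = {smd:+.3f} ({flag})")
```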

Analysis & checks (5)

- (3) Did participants really (not) “take” the treatment?

- Enos (2014): Provide analysis for subsets that are more likely to have been exposed (waiting on the platform)

Analysis & checks (6)

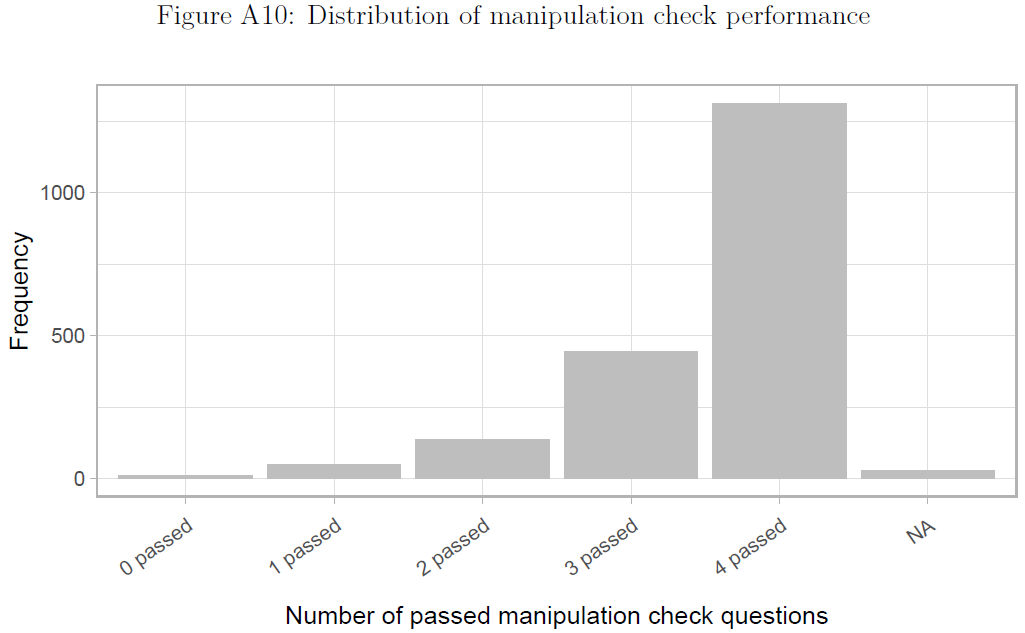

- (3) Did participants really (not) “take” the treatment?

- Bauer and Clemm von Hohenberg (2021): Build in manipulation checks testing whether participants have seen or read the treatment & estimate effects for subsets that passed the checks

Analysis & checks (7)

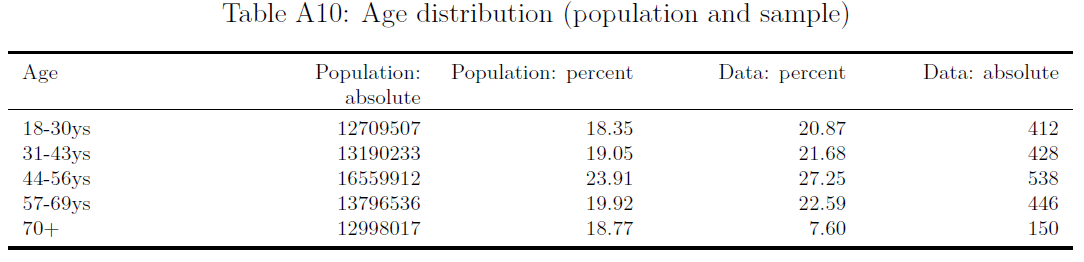

- (4) Is the sample representative of a larger target population?

- Bauer and Clemm von Hohenberg (2021): Q: How would you proceed? What can we learn from the table below?

- Enos (2014): e.g., argues that the “Census Tracts used in this experiment had a mean of just 2.8% Hispanic, making the communities tested here both demographically typical and representative of the type of community in which demographic change has not already occurred.” (p. 3700)

Analysis & checks (8)

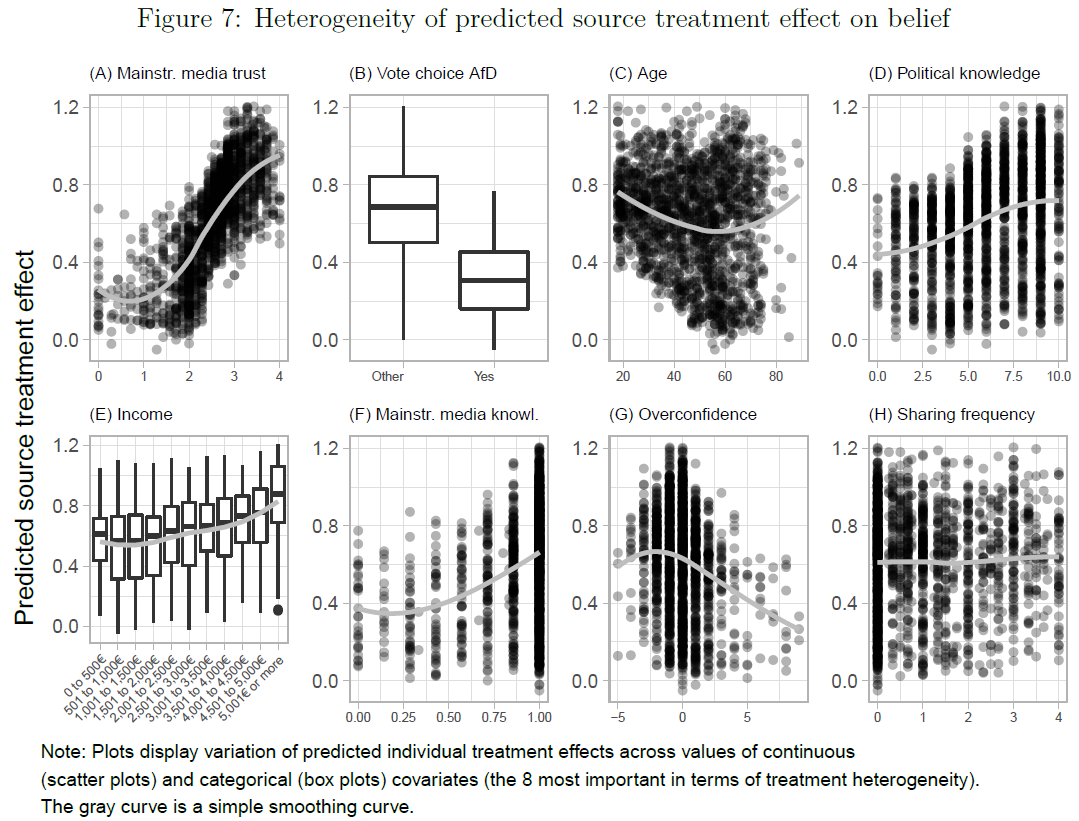

- (5) Are different participants affected differently by the treatment?

- Bauer and Clemm von Hohenberg (2021): Explore treatment heterogeneity through interactions or with ML methods
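With a binary moderator, a regression with a treatment \(\times\) moderator interaction (plus both main effects) reproduces the four cell means, so its interaction coefficient equals the difference between the subgroup-specific differences in means. The Python sketch below computes those subgroup effects directly on simulated placeholder data; the moderator, the seed and the true effects (1.0 vs. 0.2) are invented for illustration.

```python
import random
import statistics

rng = random.Random(11)


def simulate_unit():
    """Hypothetical unit: moderator M (e.g., high political interest), coin-flip D,
    outcome with a true effect of 1.0 if M = 1 and 0.2 if M = 0."""
    m = int(rng.random() < 0.5)
    d = int(rng.random() < 0.5)
    effect = 1.0 if m == 1 else 0.2
    y = rng.gauss(0, 1) + effect * d
    return m, d, y


units = [simulate_unit() for _ in range(20_000)]


def subgroup_effect(m_value):
    """Difference in means between treated and control units with M = m_value."""
    treated = [y for m, d, y in units if m == m_value and d == 1]
    control = [y for m, d, y in units if m == m_value and d == 0]
    return statistics.fmean(treated) - statistics.fmean(control)


effect_m1, effect_m0 = subgroup_effect(1), subgroup_effect(0)
print(f"effect if M=1: {effect_m1:.2f}, if M=0: {effect_m0:.2f}, "
      f"difference (interaction): {effect_m1 - effect_m0:.2f}")
```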

Analysis & checks (9)

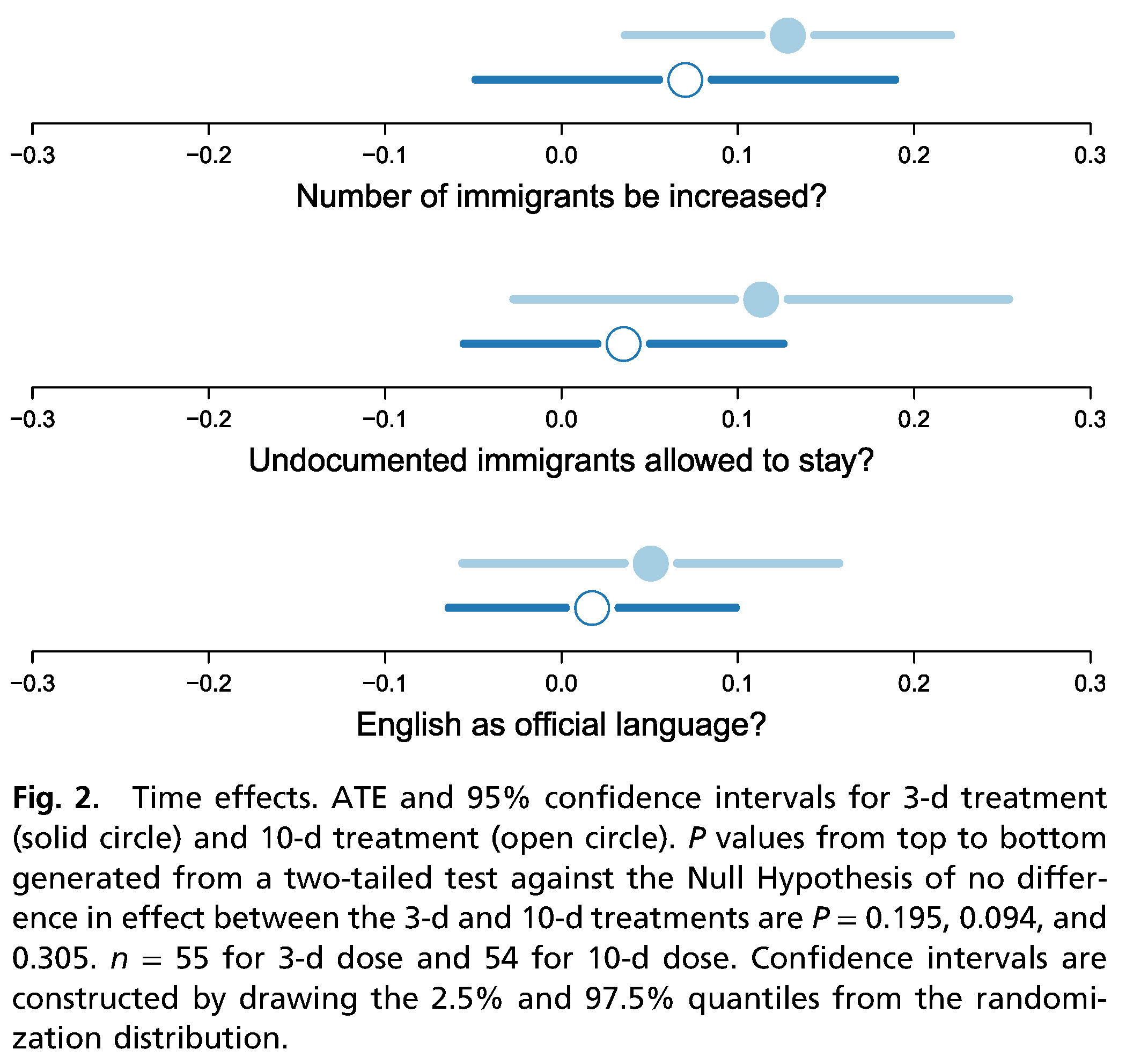

- (6) How long does the treatment effect operate? (treatment lag & decay)

- Enos (2014): Measure the outcome at different time points post-treatment

Analysis & checks (10): More checks

- Attrition (cf. Enos 2014, 3704)

- If outcome measured at two time points make sure to check whether units dropped out for second measurement

- Q: What is a possible disadvantage of measuring the outcome before the treatment?

- SUTVA violation

- No interference: Try to observe interactions or ask whether they took place or exclude observations where you suspect interference

- Consistency/no hidden variations: Try to observe or ask whether people really got/took the same treatment

- Measurement error

- Pre-test measures, e.g., pre-test survey and ask respondents whether they understood questions
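For the attrition check above, a first step is to compare dropout rates between treatment and control at the second measurement. A minimal Python sketch with a hypothetical ten-unit panel (the records are invented for illustration):

```python
from collections import Counter

# Hypothetical panel: (treated, observed_at_second_measurement) per unit.
panel = [
    (1, True), (1, True), (1, False), (1, True), (1, False),
    (0, True), (0, True), (0, True), (0, False), (0, True),
]

units_per_group = Counter(d for d, _ in panel)
dropouts_per_group = Counter(d for d, seen in panel if not seen)

for group, label in ((1, "treated"), (0, "control")):
    n_drop, n_all = dropouts_per_group[group], units_per_group[group]
    print(f"{label}: {n_drop} of {n_all} dropped out ({n_drop / n_all:.0%})")
```

Clearly different dropout rates across groups (or dropout related to covariates) suggest that the units remaining at the second measurement may no longer be comparable.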

Experimental vs. observational studies

- Q: What was the main difference between experimental (experiments) and observational studies again?

- Experiments (experimental studies)

- Assignment mechanism is both known and controlled by the researcher

- Observational studies

- Assignment mechanism is not known to, or not under the control of, the researcher

We are leaving the realm of experimental studies and entering the realm of observational studies!

Good, because many questions cannot be answered using experiments (ethical & resource constraints)!

Lab: Experimental data

- You can find the files for the lab in this folder.

- Please download the following files and store them in a directory that you use for this course (this will be your working directory).

- Lab_2_Experimental_data.html

- Lab_2_Experimental_data.qmd

- Lab2_data

Quiz

- Please do the quiz.

Session 7 & 8

Selection on Observables: Theory

Intro

Questions?

Today’s objective

- Discuss strategies when we don’t have experimental data

- Data: Cross-sectional data, i.e., variables measured once (usually at the same moment in time)

Terminology: Conditioning on vs. controlling for

Experimental vs. observational studies

Assignment mechanism: “process that determines which units receive which treatments, hence which potential outcomes are realized and thus can be observed [and which are missing]” (see Imbens and Rubin 2015, 31)

Q: What is the difference between experimental (experiments) and observational studies?

- Experiments (experimental studies include lab & field experiments)

- Assignment mechanism is both known and controlled by the researcher

- Researcher randomly assigns units to treatment and control

- We know the functional form of \(Pr(\mathbf{D}|\mathbf{X},\mathbf{Y}(0),\mathbf{Y}(1))\)!

- Observational studies (e.g., Imbens and Rubin 2015, 41)

- Assignment mechanism is not known to, or not under the control of, the researcher

- Researcher cannot randomly assign units to treatment and control

- We don’t know the functional form

- We are leaving the realm of experimental studies and entering the realm of observational studies!

Observational studies (1)

Many questions cannot be answered using experiments (Q: Any examples?)

Imbens and Rubin (2015) show that, under certain assumptions, the assignment mechanism within subpopulations of units with the same covariate values (Q?) can be interpreted as if it were a completely randomized experiment (see Imbens and Rubin 2015, 257)

- Albeit an experiment with unknown assignment probabilities for the units

- Assumptions (see also Imbens and Rubin 2015, 257, 262, 43)

- Unconfounded assignment or unconfoundedness (= conditional independence): Assignment free from dependence on the potential outcomes

- Probabilistic assignment: Probability of receiving any level of the treatment is strictly between zero and one for all units

- Individualistic assignment: Probability for unit \(i\) is essentially a function of the pre-treatment variables for unit \(i\) only, free of dependence on the values of pre-treatment variables for other units (Q: What is the difference to SUTVA?)

- The second and third assumptions (probabilistic and individualistic assignment) are often glossed over (see Imbens and Rubin 2015, 43)

Observational studies (2)

Imbens and Rubin call assignment mechanisms that fulfill these assumptions regular assignment mechanisms

Given assumptions 1-3 the probability of receiving the treatment is equal to \(e(x)=N_{t}(x)/(N_{c}(x)+N_{t}(x))\) for all units with \(X_{i}=x\) conditional on the number of treated and control units composing such a subpopulation

- \(N_{t}(x)\) or \(N_{c}(x)\) are the number of units in treatment and control groups with pre-treatment value \(X_{i}=x\)

- \(e(x)\) is also called the propensity score
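The formula for \(e(x)\) only needs counts of treated and control units within each covariate value. A Python sketch using the treatment \(D_{i}\) and covariate \(X_{i}\) of the six units from the Exercise 1 table (Simon, Julia, Paul, Sarah, Fabrizio, Diego):

```python
from collections import defaultdict

# (D_i, X_i) for the six units of Exercise 1.
units = {"Simon": (1, 0), "Julia": (1, 1), "Paul": (0, 0),
         "Sarah": (0, 1), "Fabrizio": (0, 0), "Diego": (0, 0)}

n_treated = defaultdict(int)  # N_t(x)
n_control = defaultdict(int)  # N_c(x)
for d, x in units.values():
    if d == 1:
        n_treated[x] += 1
    else:
        n_control[x] += 1

# e(x) = N_t(x) / (N_c(x) + N_t(x)) for every covariate value x in the data
propensity = {x: n_treated[x] / (n_treated[x] + n_control[x])
              for x in sorted(set(n_treated) | set(n_control))}
print(propensity)  # {0: 0.25, 1: 0.5}
```

With many distinct covariate values, most cells would contain only one or two units, and \(e(x)\) would often be exactly 0 or 1.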

We don’t know a priori assignment probabilities for units, but know that units with the same pre-treatment covariate values have same \(e(x)\), i.e., the same prob. of getting the treatment

This insight still suggests feasible strategies (e.g., focus on subsample)!

Q: What might be the problem if we have many distinct values of covariates?

Exercise 1: Conditioning on covariates (1)

- Q: Which persons would you compare (use) in the table below if you want to condition on gender, i.e., hold the value of the covariate gender constant ( \(X_{i}=1\), \(X_{i}=0\) ) and focus on the respective subsamples (subsetting!)?

| \(Unit\) | \(D_{i}\) | \(X_{i}\) | \(Y_{i}\) | \(Y_{i}(1)\) | \(Y_{i}(0)\) |
|---|---|---|---|---|---|
| Simon | 1 | 0 | 0 | 0 | ? |
| Julia | 1 | 1 | 1 | 1 | ? |
| Paul | 0 | 0 | 1 | ? | 1 |
| Sarah | 0 | 1 | 0 | ? | 0 |
| Fabrizio | 0 | 0 | 0 | ? | 0 |
| Diego | 0 | 0 | 0 | ? | 0 |

Exercise 1: Conditioning on covariates (2)

- Below we colored individuals for which \((\color{#984ea3}{X_{i} = 1})\) and \((\color{#fb9a99}{X_{i} = 0})\).

| \(Unit\) | \(D_{i}\) | \(X_{i}\) | \(Y_{i}\) | \(Y_{i}(1)\) | \(Y_{i}(0)\) |
|---|---|---|---|---|---|
| Simon | 1 | 0 | 0 | 0 | ? |
| Julia | 1 | 1 | 1 | 1 | ? |
| Paul | 0 | 0 | 1 | ? | 1 |
| Sarah | 0 | 1 | 0 | ? | 0 |
| Fabrizio | 0 | 0 | 0 | ? | 0 |
| Diego | 0 | 0 | 0 | ? | 0 |

- Q: Imagine we study the causal effect of education (MA sociology degree: Yes, no) on income (in Euros): Do you think the assumption of unconfoundedness/conditional independence is realistic when we only condition on gender?

Exercise 2: Conditioning on covariates (1)

- Q: On the left you find the joint distribution of outcome Trust (Y), treatment Victimization (D) and covariate Education (X).

- If victimization had been randomly assigned, would estimating the causal effect require conditioning on education (= holding education constant)?

- Which conditional distribution do we focus on if we were to hold Education constant at \(X_{i}=6\)? And \(X_{i}=2\)?

- You assume cond. independence/unconfoundedness for subpopulations of values of the Education variable (i.e., victimization should be as good as random). Within which partitions of the sample would you then compare victims to non-victims?

Exercise 2: Conditioning on covariates (2)

- Conditional distributions are colored in orange: \((\color{orange}{X_{i}=6})\) and \((\color{orange}{X_{i}=2})\).

- Important notion: Condition/control = filtering (Pearl et al. 2016, 8)

- when we condition on X, we filter the data into subsets based on values of X (Pearl et al. 2016, 8) [hold values of X constant]

- Subsequently, we would estimate the treatment effect in those subsets and average it (in some way)
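The filter-then-average logic can be sketched in Python with the six units from Exercise 1 (\(D_{i}\), \(X_{i}\), \(Y_{i}\)). Weighting each stratum by its share of the sample is one choice (it targets the ATE); other weights, e.g. by the number of treated units per stratum, would target other estimands such as the ATT. With one to three units per arm the numbers are purely illustrative, and they only have a causal interpretation if unconfoundedness given \(X_{i}\) holds.

```python
# Observed (name, D_i, X_i, Y_i) for the six units of Exercise 1.
data = [("Simon", 1, 0, 0), ("Julia", 1, 1, 1), ("Paul", 0, 0, 1),
        ("Sarah", 0, 1, 0), ("Fabrizio", 0, 0, 0), ("Diego", 0, 0, 0)]


def mean(values):
    return sum(values) / len(values)


stratum_effects = {}
stratum_sizes = {}
for x in sorted({row[2] for row in data}):
    stratum = [row for row in data if row[2] == x]  # filter: hold X_i = x constant
    treated = [y for _, d, _, y in stratum if d == 1]
    control = [y for _, d, _, y in stratum if d == 0]
    stratum_effects[x] = mean(treated) - mean(control)
    stratum_sizes[x] = len(stratum)

# Average the stratum-specific effects, weighting by stratum size.
n = sum(stratum_sizes.values())
ate_hat = sum(stratum_effects[x] * stratum_sizes[x] / n for x in stratum_effects)
print(stratum_effects, round(ate_hat, 3))
```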

Unconfoundedness/cond. independence assumption

- Why is the unconfoundedness/conditional independence assumption so relevant?

- Most widely used assumption in causal research (Imbens and Rubin 2015, 262)

- Often combined with other assumptions (often implicitly)

- e.g., exogeneity assumption combines unconfoundedness with functional form and constant treatment effect assumptions that are quite strong, and arguably unnecessary

- → here focus on cleaner, functional-form-free unconfoundedness assumption

- Comparison with other assumptions highlights its attractiveness

- Unconfoundedness implies that one should compare units similar in terms of pre-treatment variables (compare “like with like”)

- → has intuitive appeal and underlies many informal/formal causal inferences

- In its absence we would need additional assumptions that provide guidance on which control units would make good comparisons for particular treated units (and vice versa)

- Q: How should we select covariates for conditioning (controlling/filtering)?

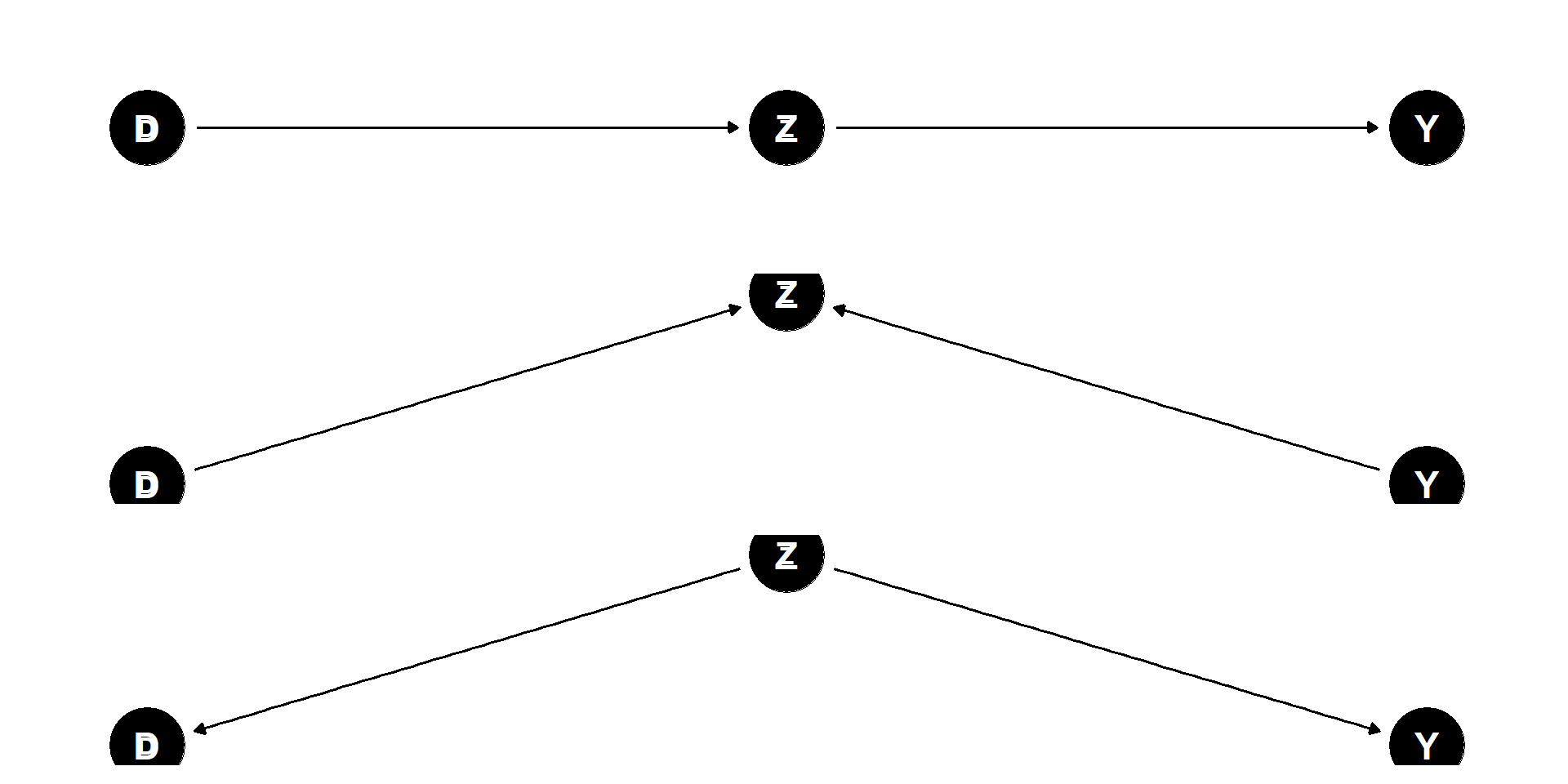

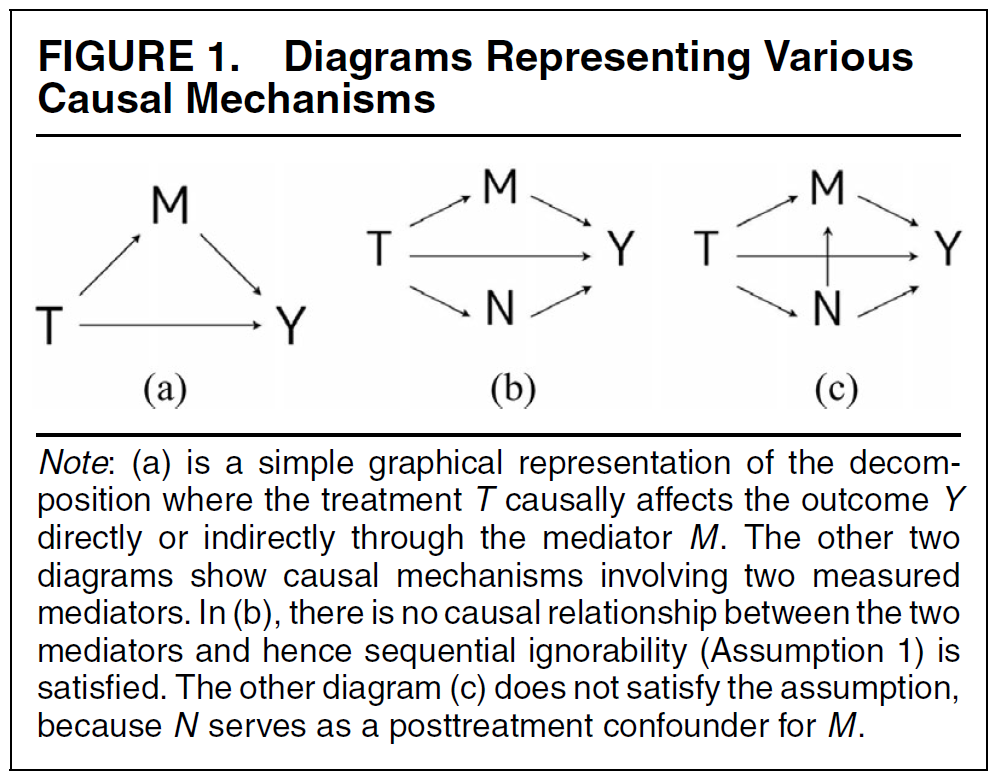

Covariate selection for conditioning & bias

Remember.. fundamental objective: Estimate true causal effect of D on Y without bias (unbiased)

Common-cause confounding bias (Elwert and Winship 2014b, 37)

- D ← X → Y

- results from failure to condition on a common cause (a confounder) of treatment and outcome

Overcontrol/post-treatment bias

- D → X → Y

- results from conditioning on a variable on a causal path between treatment and outcome (Elwert and Winship 2014b, 35–36)

Endogenous selection bias

- D → X ← Y

- Collider variable: A common outcome of D and Y

- results from conditioning on a collider (or its descendant) on a non-causal path linking treatment and outcome
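The simulation sketch below puts numbers on the first two biases. It uses Python with NumPy, and the DAG, coefficients and variable names are hypothetical. X is a confounder (D ← X → Y) and M is a post-treatment mediator (D → M → Y). The true total effect of D on Y is therefore 0.5 + 1.0 × 1.0 = 1.5. Effects are estimated with ordinary least squares.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 50_000

def coef_on_d(y, d, *covariates):
    """OLS with intercept; returns the coefficient on the treatment d."""
    design = np.column_stack([np.ones_like(y), d, *covariates])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    return beta[1]

# Hypothetical DAG: D <- X -> Y (X confounder) and D -> M -> Y (M post-treatment)
x = rng.normal(size=n)
d = (x + rng.normal(size=n) > 0).astype(float)
m = 1.0 * d + rng.normal(size=n)
y = 0.5 * d + 1.0 * m + 1.0 * x + rng.normal(size=n)
# True total effect of D on Y: 0.5 (direct) + 1.0 * 1.0 (via M) = 1.5

no_control = coef_on_d(y, d)              # confounding bias: X omitted
confounder_only = coef_on_d(y, d, x)      # close to 1.5
with_mediator = coef_on_d(y, d, x, m)     # overcontrol: only the direct part (0.5)
print(f"{no_control:.2f} {confounder_only:.2f} {with_mediator:.2f}")
```

Omitting X inflates the estimate. Adding the post-treatment M removes the part of the effect that runs through M.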

Covariates: confounding/post-treatment bias

- Example: Party identification → Vote choice (U.S. example by King 2010)

- Q: What covariates should we control for/condition on? Why or why not? And what biases may we introduce/avoid in doing so? (always indicate when X is measured)

- Possible covariates

- race

- education

- gender

- voting intentions measured five minutes before vote choice

- Q: What do we mean by “unbiased” and “bias can go in both directions”? Example?

- Q: Does it matter when the covariates are measured?

- Yes, always consider when covariates are measured (or, more precisely, when they occurred), i.e., before treatment D, between treatment D and outcome Y, or after outcome Y!

Covariates: endogenous selection bias

- Talent T, Beauty B, Hollywood success S (Elwert and Winship 2014b, 36)

- RQ: Is there a causal effect of Talent T → Beauty B?

- Assume talent and beauty are unrelated (no causal relationship)

- Assume both T and B separately cause success S: T → S ← B

- Hollywood success S is a collider variable (common outcome of T and B)

- Endogenous selection bias if conditioning on/controlling for collider (Elwert and Winship 2014b, 36)

- Given success (S = 1), i.e., conditioning on S and looking only at the subset of successful Hollywood actors:

- …knowing that a non-talented person (T = 0) is a successful actor implies that the person must be beautiful (B = 1)

- …knowing that a non-beautiful person (B = 0) is a successful actor implies that the person must be talented (T = 1)

- Within the subsets defined by Hollywood success (S = 1 or S = 0), T and B are correlated

- Conditioning on the collider (S) creates a spurious association between beauty and talent (this spurious association is the endogenous selection bias)

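Below is a simulation sketch of the Hollywood example in Python with NumPy. All numbers are assumptions. Talent and beauty are drawn independently. Success is assumed to require talent or beauty, plus some luck. Across everyone, the T-B correlation is about zero. Among the successful (S = 1), it is clearly negative.

```python
import numpy as np

rng = np.random.default_rng(7)
n = 100_000

# Hypothetical binary traits, drawn independently: no causal link between T and B
talent = rng.binomial(1, 0.3, size=n)
beauty = rng.binomial(1, 0.3, size=n)
# Success is a collider (T -> S <- B); assumed rule: talent OR beauty, plus luck
success = ((talent + beauty) >= 1) & (rng.random(n) < 0.5)

corr_all = np.corrcoef(talent, beauty)[0, 1]
corr_successful = np.corrcoef(talent[success], beauty[success])[0, 1]
print(f"all: {corr_all:.2f}  successful only (S = 1): {corr_successful:.2f}")
```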

Exercise: Covariates & bias

- Q: Discuss in groups: A friend of yours wants to investigate the causal effect of having studied (yes vs. no) at \(t_0\) on individuals’ income 20 years later (\(t_1\)). Your friend wants to control for (condition on) different covariates but is nervous because she heard that doing so might introduce different biases. She is considering the covariates below. Please indicate whether one should control for each of the following variables (“Yes” vs. “No”) in this concrete example and what kind of bias it may introduce or avoid.

- Marital status at the age of 35

- Parents’ educational level

- Piano lessons during childhood

- Intelligence test in school

- Work experience at the age of 16

- Job skill level (low vs. high) in their forties

Covariates: Summary

- Choice of covariates for conditioning

- Yes: Covariates that affect both D and Y (confounders)

- No: Covariates that lie on the path between D and Y (post-treatment variables)

- No: Covariates that are affected by both D and Y (colliders)

- Evidence: all empirical papers in the top 3 political science journals, 2010-2015

- “40% explicitly conditioned on a post treatment variable […] 27% conditioned on a variable that could plausibly be posttreatment […] 33% […] no post-treatment variables included in their analyses […] two-thirds […] that make causal claims condition on post treatment variables.” (Acharya, Blackwell, and Sen 2015, 1)

- Q: How about other disciplines?

Selection on Observables: Lab

Lab: Observational data

- In practice, we usually use a linear regression model to estimate the effect of \(D\) on \(Y\) while controlling for/conditioning on all relevant covariates \(X\)s

- …we will do this in the lab!

- You can find the files for the lab in this folder.

- Please download the following files and store them in a directory that you use for this course (this will be your working directory).

- Lab_3_SSO_Observational_data.html

- Lab_3_SSO_Observational_data.qmd

- Lab3_data.csv


Quiz

- Link: See email.

Session 9

Matching: Theory

Intro

- Questions?

- Quick wrap up of previous sessions

- RQs; Research design; Population & sample; Measurement; Data (distributions)

- Potential outcomes framework (def. of causal effect), ITE, Naive est., ATE, Assumptions: independence assumption, SUTVA

- Experimental data: Randomization; Field & natural experiments; Ideal experiments; Analytics & checks of rand. experiments

- Observational data: Observational studies (conditional independence assumption!); Conditioning (+ choice of covariates); Confounder, post-treatment and collider bias

- Today: Cross-sectional observational data & estimation strategies & matching

Strategies for Estimation

Remember: In randomized experiments we can simply compare mean outcomes between treatment and control groups

Observational studies (and data) require more refined estimation strategies

4 (+1) broad classes of strategies for estimation (Imbens and Rubin 2015, 268f)

- All four aim at estimating unbiased treatment (causal) effects

- Model-based imputation (1), weighting (2), blocking [i.e., subclassification] (3), and matching methods (4) (+ a fifth class that combines aspects of these)

Strategies (1) and (2-4) differ in that (2-4) can be implemented before seeing any outcome data

- Prevents researchers from adapting the model to fit their priors about the treatment effect

We focus on model-based imputation (1) (= regression) and matching methods (4)

Model-based imputation (1)

Theory: Impute missing potential outcomes by building a model for the missing outcomes

- Use model to predict what would have happened to a specific unit had this unit been subject to the treatment to which it was not exposed

In practice: “off-the-shelf” methods

- Typically linear models (regression) are postulated for average outcomes, without a full specification of the conditional joint potential outcome distribution (Imbens and Rubin 2015, 272f)

But model-based imputation is problematic when the covariate distributions of treated and control units are far apart!

Better: Before using regression methods, ensure balance between the covariate distributions of treatment and control
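Here is a minimal sketch of model-based imputation in Python with NumPy, on simulated data where all numbers are hypothetical. One linear model is fitted per treatment arm. Each unit's missing potential outcome is predicted from the other arm's model. The ATE is then the mean of the observed-or-imputed differences. This is one simple variant, not the full model-based approach in Imbens and Rubin (2015). Treated units are concentrated at low X, so the imputations for high-X controls rest on extrapolating the linear form. That extrapolation is only correct here because the data were generated to be linear.

```python
import numpy as np

rng = np.random.default_rng(3)
n = 5_000

# Hypothetical data: one covariate X, treatment more likely at low X
x = rng.uniform(0, 10, size=n)
d = rng.binomial(1, 1 / (1 + np.exp(x - 5)))
y0 = 2.0 + 0.5 * x + rng.normal(0, 1, size=n)   # potential outcome under control
y1 = y0 + 1.0 + 0.2 * x                         # potential outcome under treatment
y = np.where(d == 1, y1, y0)                    # only one of them is observed

def fit_line(x_obs, y_obs):
    """OLS fit of y on [1, x]; returns (intercept, slope)."""
    design = np.column_stack([np.ones_like(x_obs), x_obs])
    coef, *_ = np.linalg.lstsq(design, y_obs, rcond=None)
    return coef

# One outcome model per treatment arm
a1, b1 = fit_line(x[d == 1], y[d == 1])
a0, b0 = fit_line(x[d == 0], y[d == 0])

# Keep the observed outcome, impute the missing one from the other arm's model
y1_hat = np.where(d == 1, y, a1 + b1 * x)
y0_hat = np.where(d == 0, y, a0 + b0 * x)

ate_hat = np.mean(y1_hat - y0_hat)
true_ate = np.mean(y1 - y0)   # known only because the data are simulated
print(f"imputed ATE: {ate_hat:.2f}  true ATE: {true_ate:.2f}")
```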

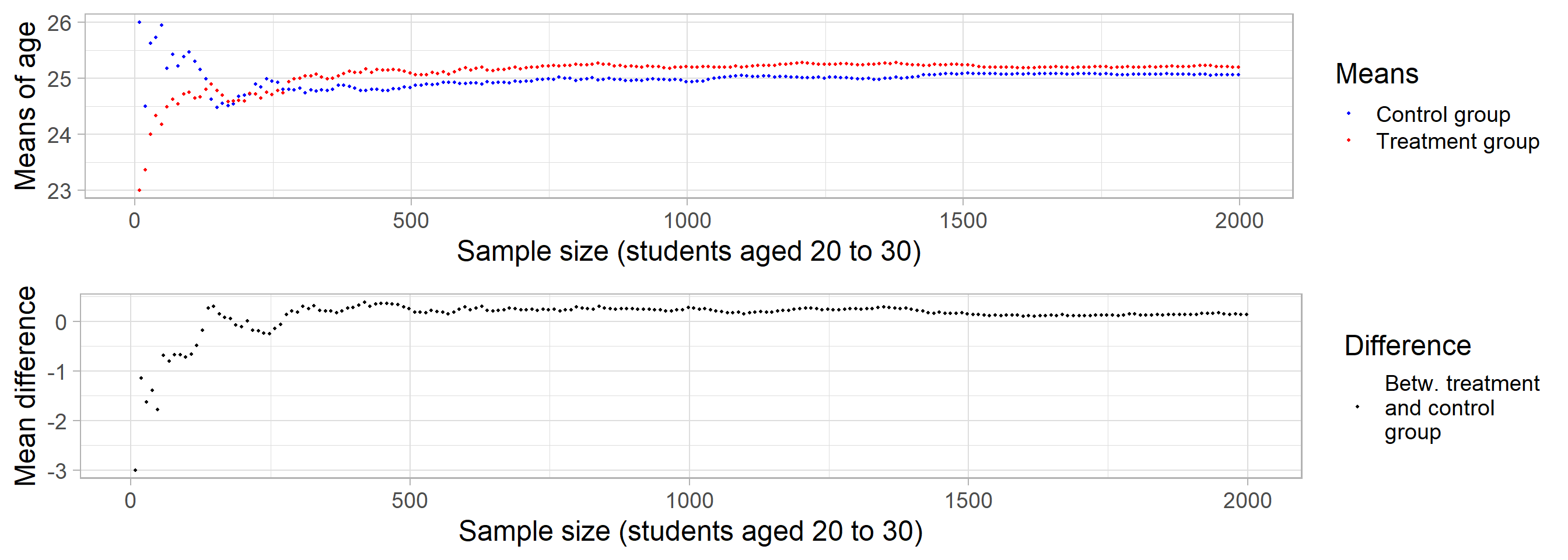

Model-based imputation (2)

- Data: \(\small N_{t}\) = 42; \(\small N_{c}\) = 150 (subset visualized)

- Education: Mean 2.81 for treated and 5.01 for control units

- Q: Is the covariate distribution of Education (X) across treatment and control balanced?

- LM: \(\small y_{i} = \underbrace{\color{magenta}{\beta_{0}} + \color{orange}{\beta _{1}} \times d_{i} + \color{orange}{\beta _{2}} \times x_{i}}_{\text{Model} = \color{green}{\widehat{y}}_{i} = \text{Predicted values}} + \underbrace{\color{red}{\varepsilon}_{i}}_{\color{red}{\text{Error}}} = \color{green}{\widehat{y}}_{i} + \color{red}{\varepsilon}_{i}\)

- Estimates: \(\small \color{magenta}{\beta_{0}}\) = 6.02; \(\small \color{orange}{\beta _{1}}\) = -0.51; \(\small \color{orange}{\beta _{2}}\) = 0.06

- Q: What do we mean by “extrapolation”?

- The model makes predictions for regions of the covariate space where we have no observations (e.g., victims with education 7 or higher)

- → Pre-processing the data makes sense!

- Prune units that have no comparable counterpart in the other group (treatment or control) to balance the data
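This worked illustration uses only the estimates reported above (β0 = 6.02, β1 = -0.51, β2 = 0.06), in plain Python. The model has no D × X interaction, so the implied effect is -0.51 at every education level. Predictions for victims with education 7 or higher come purely from the assumed linear form. The cut-off constant is taken from the slide's example of a region without observed victims and is otherwise an assumption.

```python
b0, b1, b2 = 6.02, -0.51, 0.06   # estimates as reported on the slide

def predict(d, x):
    """Predicted outcome y_hat = b0 + b1 * d + b2 * x."""
    return b0 + b1 * d + b2 * x

# Assumption taken from the slide's example: no victims with education >= 7
MAX_OBSERVED_EDU_TREATED = 6

for edu in range(0, 11):
    flag = "extrapolated" if edu > MAX_OBSERVED_EDU_TREATED else ""
    print(edu, f"victim: {predict(1, edu):.2f}", f"non-victim: {predict(0, edu):.2f}", flag)
```

For example, a victim with education 7 gets 6.02 - 0.51 + 0.06 × 7 = 5.93, although no such victim was observed.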

Matching: Basics

“broadly […] any method that aims to equate (or ”balance”) the distribution of covariates in the treated and control groups” (Stuart 2010, 2)

Goal

- Find one (or more) non-treated unit(s) for every treated unit with similar observable characteristics against whom the effect of the treatment can be assessed

- Treatment and control groups should be as similar as possible except for treatment status (covariate balance)

Approach

- Exclude/prune (or down-weight) observations without comparable units in both treatment and control
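Below is a bare-bones sketch of the goal and approach above, in Python with NumPy. It performs 1:1 nearest-neighbour matching with replacement on a single covariate, and the toy data are hypothetical. Controls that are never selected as a match (here the units with x = 4 and x = 10) are effectively pruned. The average treated-minus-match difference estimates the effect for the treated (ATT).

```python
import numpy as np

def nn_match_att(y, d, x):
    """1:1 nearest-neighbour matching on one covariate, with replacement.

    Returns the ATT estimate and a dict {treated index: matched control index}.
    """
    treated = np.flatnonzero(d == 1)
    controls = np.flatnonzero(d == 0)
    matches = {}
    for i in treated:
        # closest control in terms of x (controls may be reused)
        matches[int(i)] = int(controls[np.argmin(np.abs(x[controls] - x[i]))])
    att = np.mean([y[i] - y[j] for i, j in matches.items()])
    return float(att), matches

# Hypothetical toy data: x = education, d = treatment, y = outcome
x = np.array([1, 2, 3, 8, 1, 2, 3, 4, 9, 10])
d = np.array([1, 1, 1, 1, 0, 0, 0, 0, 0, 0])
y = np.array([5.0, 5.5, 6.0, 9.0, 6.0, 6.5, 7.0, 7.5, 10.0, 10.5])

att, matches = nn_match_att(y, d, x)
print(att, matches)
```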

Q: What tradeoff is there when it comes to pruning units (think of representativeness)?

Matching: Why?

Pure regression approach is increasingly questioned (e.g., Aronow and Samii 2015)

Matching methods (Stuart 2010, 2)

- Complementary to regression adjustment

- Reduce imbalance

- Highlight areas of covariate distribution without sufficient overlap/common support between treatment/control (avoid extrapolation)

- Straightforward diagnostics to assess performance

- Makes you think about selection

Q: If we use matching, do we still need the conditional unconfoundedness/independence assumption?

Matching: Overlap & common support I

- Q: Focusing on value combinations of education and age, are there areas of the distribution where treatment and control lack common support?

- Q: If we increase the number of variables (covariates) on which we match, does that make it more or less difficult to find matches? Exact vs. inexact matching? What might we do with a variable like age before we match?

Matching: Overlap & common support II

- Common support affects what populations we can learn about (namely the subsample for which we have common support)

- “any method would struggle to assess the causal effect of probation [Bewährung] on offenders who committed a very serious crime (e.g., terrorism) because no one sentenced for that crime would receive probation. A lack of common support arises whenever some subpopulation defined by a confounder (e.g., terrorists) contains no treated units or no untreated units (e.g., those on probation or not).” (Lundberg et al. 2021, 539-540)

- Common support problems leave three options (Lundberg et al. 2021, 539-540)

- Argue that the feasible subpopulation (with treated and control observations) is interesting in itself

- Argue that the feasible subpopulation is still informative about the target population

- Lean on a parametric model and extrapolate to what would happen in the space beyond common support
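This is a minimal sketch, in plain Python, of one way to locate the feasible subpopulation. Units are grouped into cells of education × coarsened age. A cell lies within common support only if it contains both treated and control units. The data, the cell definition and the 10-year age bins are illustrative assumptions.

```python
from collections import defaultdict

def common_support_cells(units):
    """units: list of (cell, d). Returns {cell: True if both d = 0 and d = 1 occur}."""
    groups = defaultdict(set)
    for cell, d in units:
        groups[cell].add(d)
    return {cell: statuses == {0, 1} for cell, statuses in groups.items()}

def age_group(age, width=10):
    """Coarsen age into bins of `width` years (an illustrative choice)."""
    lo = (age // width) * width
    return f"{lo}-{lo + width - 1}"

# Hypothetical units: (education, age, treatment)
raw = [(2, 23, 1), (2, 27, 0), (5, 41, 1), (5, 44, 0), (9, 62, 0), (9, 65, 0), (1, 70, 1)]
units = [((edu, age_group(age)), d) for edu, age, d in raw]

support = common_support_cells(units)
feasible = [cell for cell, ok in support.items() if ok]
print("feasible cells:", feasible)
```

Units in cells without both groups, such as education 9 and age 60-69 here, fall outside common support. Any estimate then refers to the feasible cells unless one extrapolates.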

Matching: Steps

- Select distance measure

- Exact matching (same covariate values)

- One-dimensional summary measure (e.g., propensity score, Mahalanobis distance)

- Caliper: “One strategy to avoid poor matches is to impose a caliper and only to select a match if it is within the caliper.” (Stuart 2010, 10)

- Defines the maximum distance allowed between matched units (e.g., accept someone aged between 40 and 44 as a match for someone aged 42)

- Matching method (see Sävje et al. 2016, 5, Fig. 1)

- Nearest neighbor matching (1:1 or k:1 nearest neighbor, optimal matching, replacement)

- Subclassification, full matching and weighting

- Assessing balance

- Compare covariate distribution between treatment and control

- In practice: one-dimensional measures (standardized difference in means, variance ratio, etc.)

- Repeat prior steps until balance is good

- Analysis of outcome based on matched sample (e.g., estimate regression)
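Here is a sketch of the caliper and balance-check steps in Python with NumPy, assuming a single covariate (age) and hypothetical data. The caliper of 2 years mirrors the "40 to 44 for 42" example. Matching is greedy 1:1 nearest neighbour without replacement, processing treated units in the given order. Balance is summarized by the standardized difference in means and the variance ratio. The standardized difference uses a pooled-SD convention, which is one of several in use. The treated unit aged 47 has no control within 2 years and stays unmatched, so the matched comparison no longer covers all treated units.

```python
import numpy as np

def std_mean_diff(x_t, x_c):
    """Difference in means divided by the pooled SD sqrt((var_t + var_c) / 2)."""
    pooled_sd = np.sqrt((np.var(x_t, ddof=1) + np.var(x_c, ddof=1)) / 2)
    return (np.mean(x_t) - np.mean(x_c)) / pooled_sd

def variance_ratio(x_t, x_c):
    """Treated variance over control variance (close to 1 indicates balance)."""
    return np.var(x_t, ddof=1) / np.var(x_c, ddof=1)

def caliper_match(x_t, x_c, caliper):
    """Greedy 1:1 nearest-neighbour matching without replacement.

    A treated unit stays unmatched if no available control is within the caliper.
    Returns a list of (treated index, control index) pairs.
    """
    available = list(range(len(x_c)))
    pairs = []
    for i, xi in enumerate(x_t):
        if not available:
            break
        j = min(available, key=lambda k: abs(x_c[k] - xi))
        if abs(x_c[j] - xi) <= caliper:
            pairs.append((i, j))
            available.remove(j)
    return pairs

# Hypothetical ages of treated and control units
age_t = np.array([25, 31, 42, 47, 58, 70])
age_c = np.array([24, 30, 41, 44, 52, 55, 60, 61, 62, 64, 66, 68])

pairs = caliper_match(age_t, age_c, caliper=2)
t_idx = [i for i, _ in pairs]
c_idx = [j for _, j in pairs]

smd_before = std_mean_diff(age_t, age_c)
smd_after = std_mean_diff(age_t[t_idx], age_c[c_idx])
vr_before = variance_ratio(age_t, age_c)
vr_after = variance_ratio(age_t[t_idx], age_c[c_idx])
print(f"SMD {smd_before:.2f} -> {smd_after:.2f}; VR {vr_before:.2f} -> {vr_after:.2f}")
print("unmatched treated units:", len(age_t) - len(pairs))
```

If balance were still poor, one would go back and change the distance measure, caliper or matching method, as in the "repeat" step above.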

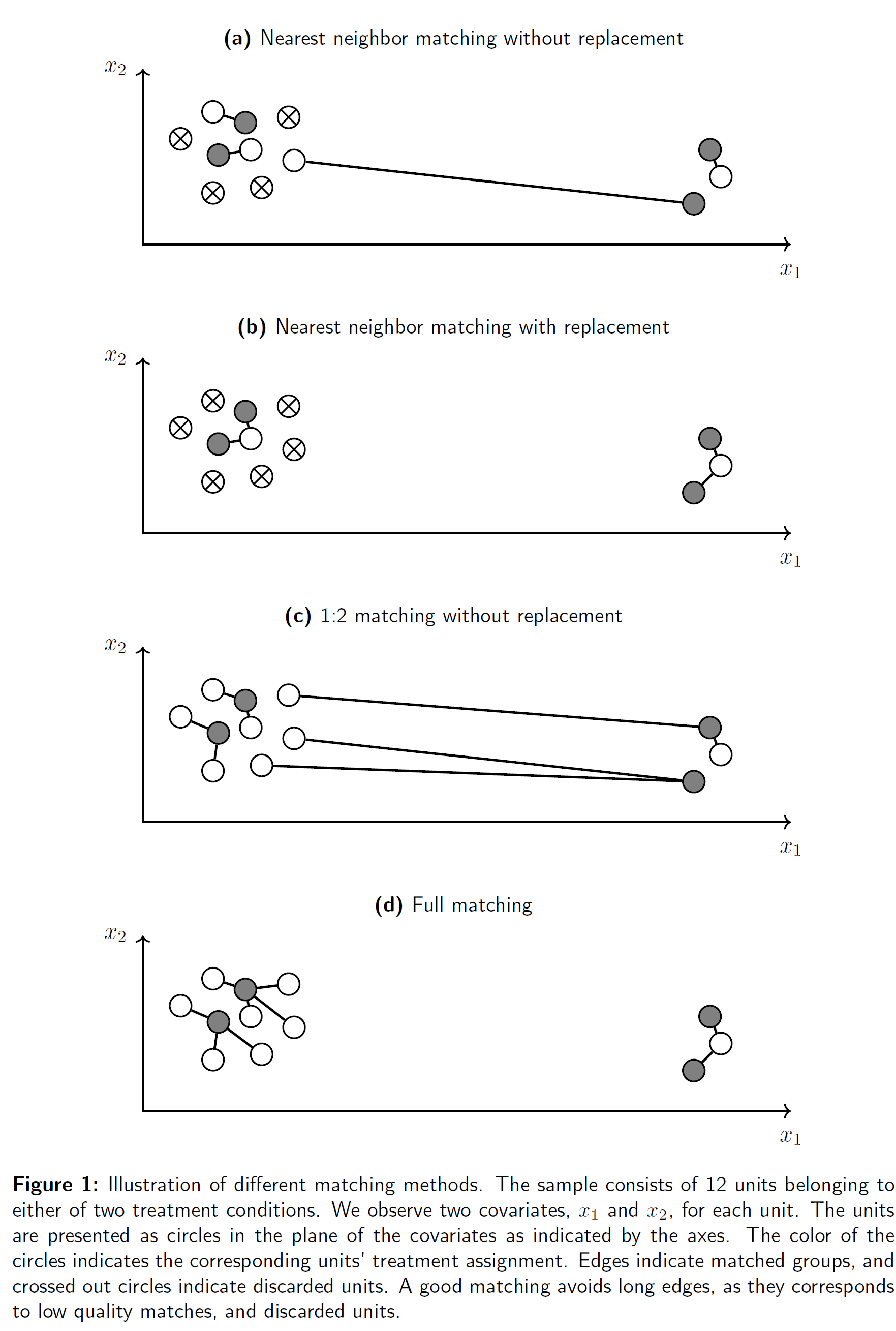

Matching: Exercise - Matching methods

- Q: Discuss the graph (Sävje, Higgins, and Sekhon 2017, fig. 1) in groups (read the description!) and explain what you see in the different panels!

- If the image is too small, right-click it to open it in a new window.

- How many dimensions/variables are shown in the graph?

- What are the white/gray/strikethrough circles?

- What are the broad differences between the 4 matching methods? And which one is preferable?

- Explain each panel.

- Does the order in which we find matches matter? Why?

Matching: Choices

- Distance measures

- In practice: Choose different methods for different variables

- e.g., numeric distance for numeric variables; exact matching for categorical variables

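The following plain-Python sketch combines distance measures. It uses exact matching on a categorical variable (gender) and absolute numeric distance on a numeric one (age). The variable names, values and the simple sum of absolute differences are illustrative assumptions. With several numeric variables on different scales, one would typically standardize them first or use a Mahalanobis distance.

```python
def mixed_distance(a, b, exact=("gender",), numeric=("age",)):
    """Hypothetical distance: infinite unless all `exact` variables agree,
    otherwise the sum of absolute differences on the `numeric` variables."""
    if any(a[v] != b[v] for v in exact):
        return float("inf")
    return sum(abs(a[v] - b[v]) for v in numeric)

# Hypothetical units: gender is matched exactly, age by numeric distance
treated = {"gender": "f", "age": 42}
controls = [
    {"gender": "m", "age": 42},   # same age, but different gender -> excluded
    {"gender": "f", "age": 45},
    {"gender": "f", "age": 38},
]

distances = [mixed_distance(treated, c) for c in controls]
best = min(range(len(controls)), key=lambda k: distances[k])
print(distances, "-> best match: control", best)
```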

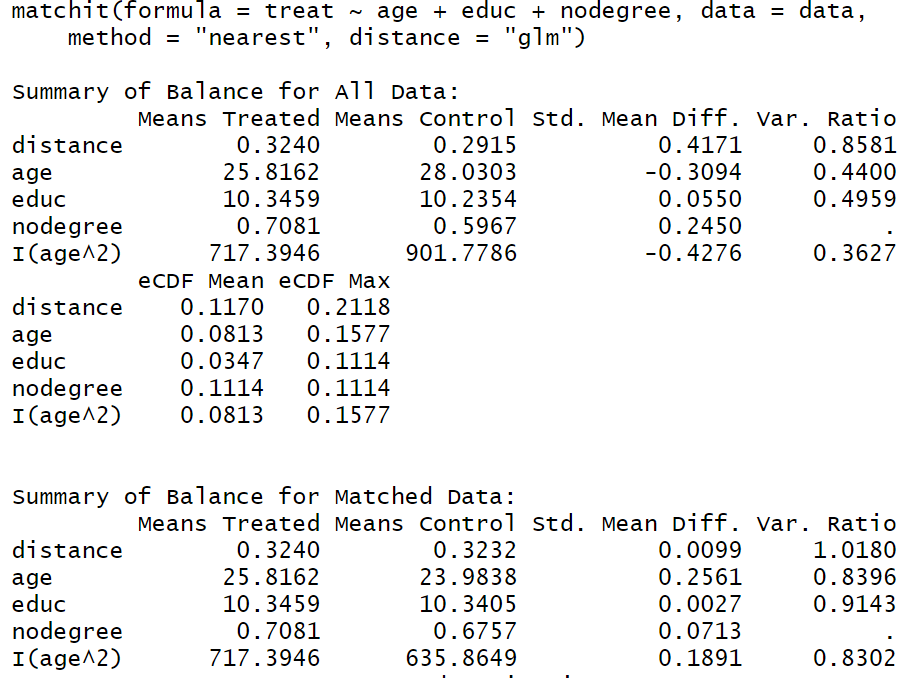

- Matching methods