Chapter 3 Regression and Correlation

3.1 Chapter Overview

We will extend our conversation on descriptive measures for quantitative variables to include the relationship between two variables.

Chapter Learning Outcomes/Objectives

- Calculate and interpret a correlation coefficient.

- Calculate and interpret a regression line.

- Use a regression line to make predictions.

3.2 Linear Equations

From your previous math classes, you should have a passing familiarity with linear equations like \(y=mx+b\). In statistics, we write these as \[y=b_0 + b_1x\] where \(b_0\) and \(b_1\) are constants, \(x\) is the independent variable, and \(y\) is the dependent variable. The graph of a linear function is always a (straight) line.

The y-intercept is \(b_0\), the value the dependent variable takes when the independent variable \(x=0\). The slope is \(b_1\), the change in \(y\) for a 1-unit change in \(x\).

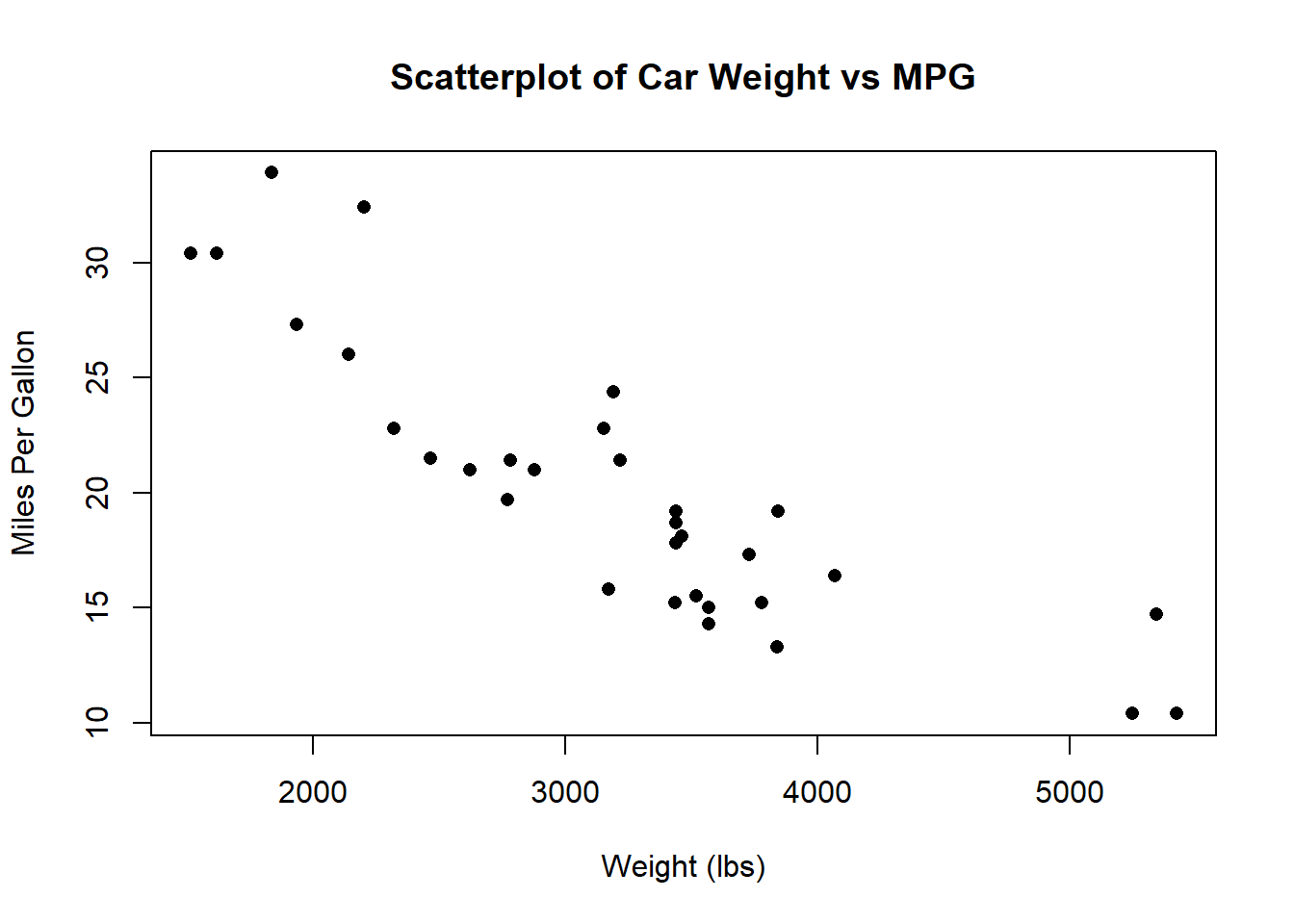

A scatterplot shows the relationship between two (numeric) variables.

At a glance, we can see that (in general) heavier cars have lower MPG. We call this type of data bivariate data (“bi” meaning “two” and “variate” meaning “random variable”). Now consider

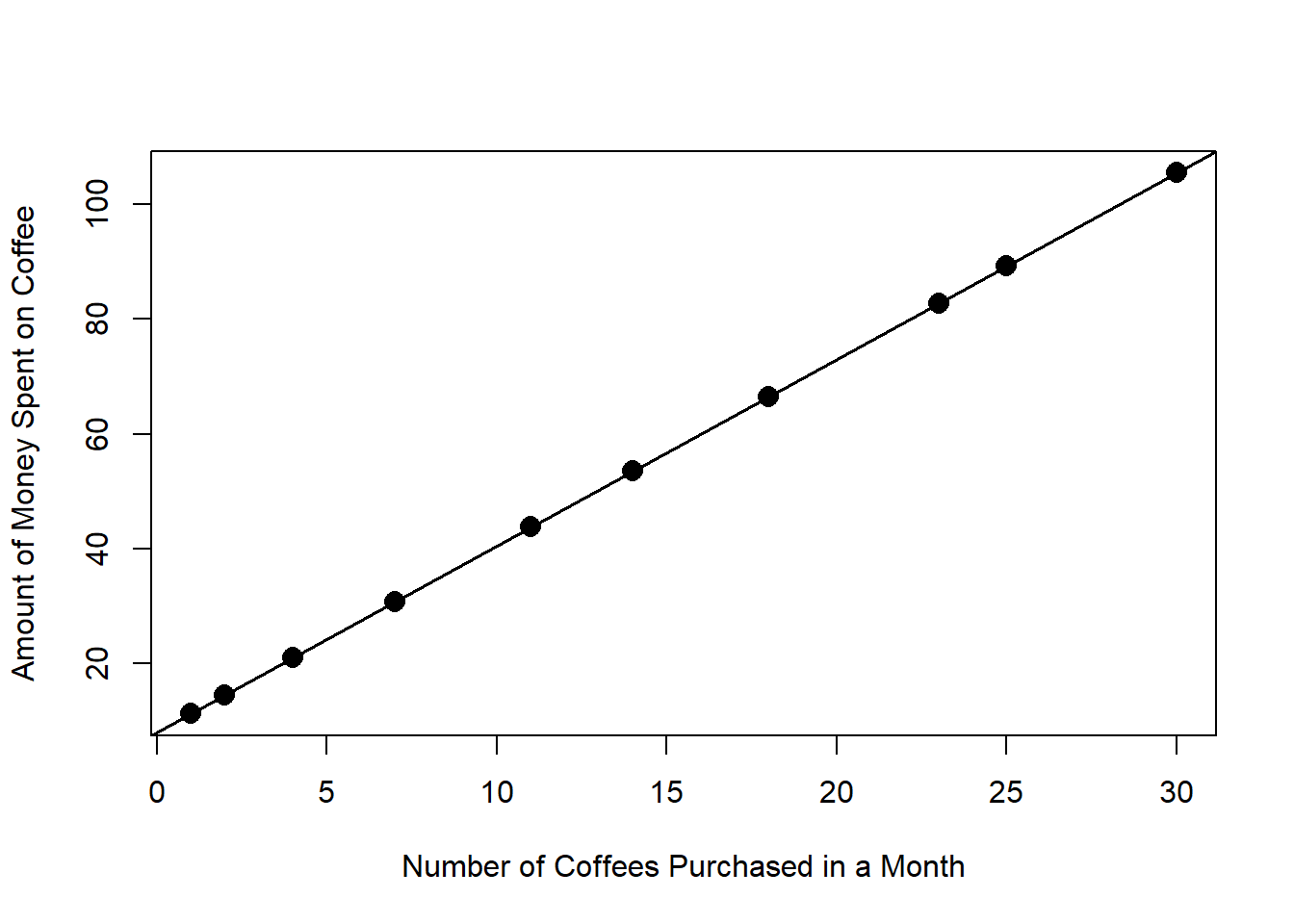

This relationship can be modeled perfectly with a straight line: \[ y = 8 + 3.25x \] Each month, this person spends $8 buying a bag of coffee for their home coffee machine. Each coffee they purchase costs $3.25. If we know the numbers of coffees they bought last month, we know exactly how much money they spent! When we can do this - model a relationship perfectly - we know the exact value of \(y\) whenever we know the value of \(x\). This is nice (we would love to be able to do this all the time!) but typically real-world data is much more complex than this.

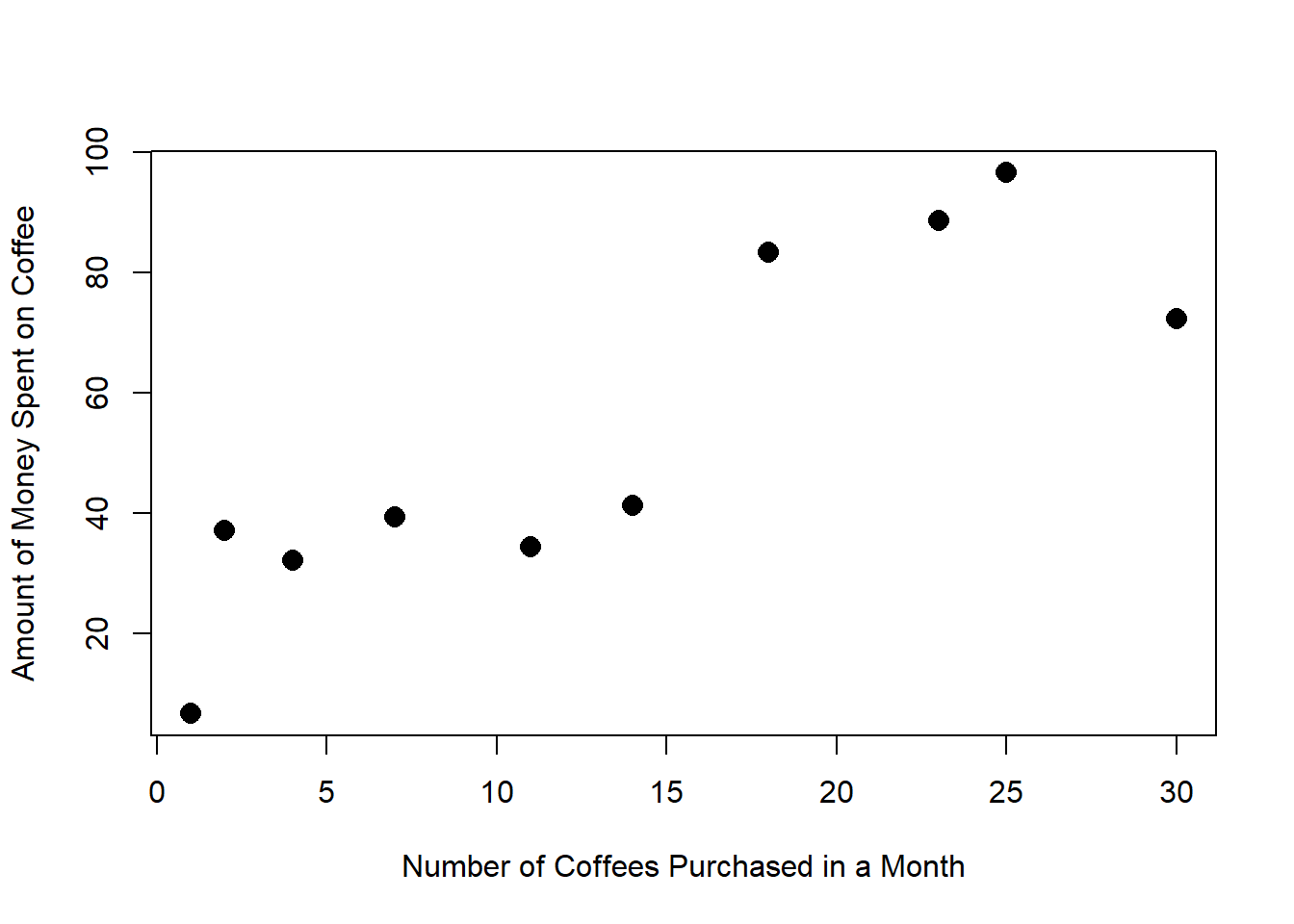

Linear regression takes the idea of fitting a line and allows the relationship to be imperfect. What if that pound of coffee didn’t always cost $8? Or the coffee drinks didn’t always cost $3.25? In this case, you might get a plot that looks something like this:

The linear regression line looks like \[y = \beta_0 + \beta_1x + \epsilon\]

- \(\beta\) is the Greek letter “beta”.

- \(\beta_0\) and \(\beta_1\) are constants.

- Error (the fact that the points don’t all line up perfectly) is represented by \(\epsilon\).

We estimate \(\beta_0\) and \(\beta_1\) using data and denote the estimated line by \[\hat{y} = b_0 + b_1x \]

- \(\hat{y}\), “y-hat”, is the estimated value of \(y\).

- \(b_0\) is the estimate for \(\beta_0\).

- \(b_1\) is the estimate for \(\beta_1\).

We drop the error term \(\epsilon\) when we estimate the constants for a regression line; we assume that the mean error is 0, so on average we can ignore this error.

We use a regression line to make predictions about \(y\) using values of \(x\). Think of this as the 2-dimensional version of a point estimate!

- \(y\) is the response variable.

- \(x\) is the predictor variable.

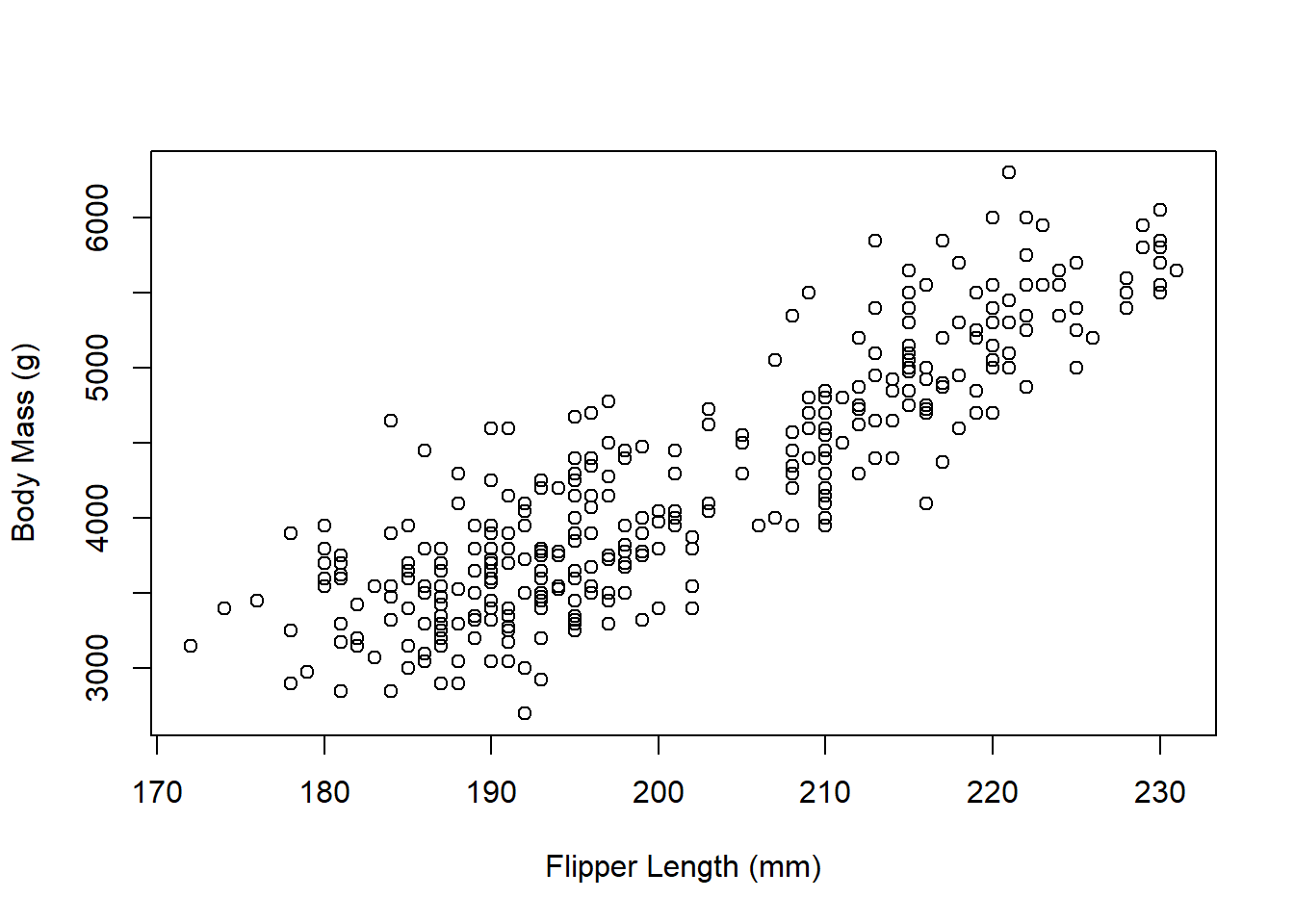

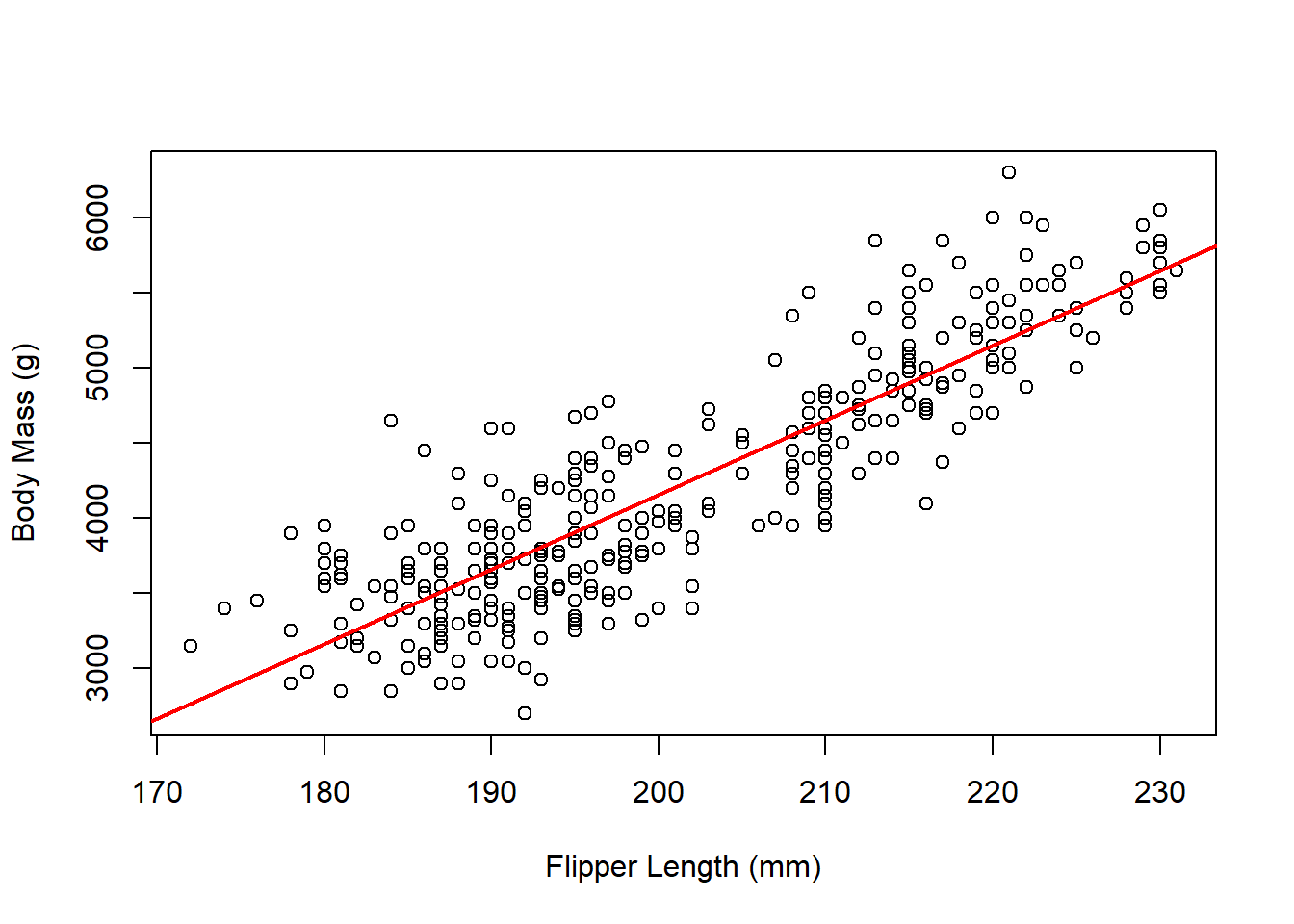

Example: Researchers took a variety of measurements on 344 adult penguins (species chinstrap, gentoo, and adelie) near Palmer Station in Antarctica.

Artwork by @allison_horst

We will consider two measurements for each penguin: their body mass (weight in grams) and flipper length (in millimeters).

Clearly, the relationship isn’t perfectly linear, but there does appear to be some kind of linear relationship (as flipper length increases, body mass also increases). We want to try to use flipper length (\(x\)) to predict body mass (\(y\)).

The regression model for these data is \[\hat{y}=-5780.83 + 49.69x\]

To predict the body mass for a penguin with a flipper length of 180cm, we just need to plug in 180 for flipper length (\(x\)): \[\hat{y}=-5780.83 + 49.69\times 180 = 3163.37\text{g}.\] Note: because the regression line is built using the data’s original units (mm for flipper length, g for body mass), the regression line will preserve those units. That means that when we plugged in a value in mm, the equation spit out a predicted value in g.

Scatterplots in R

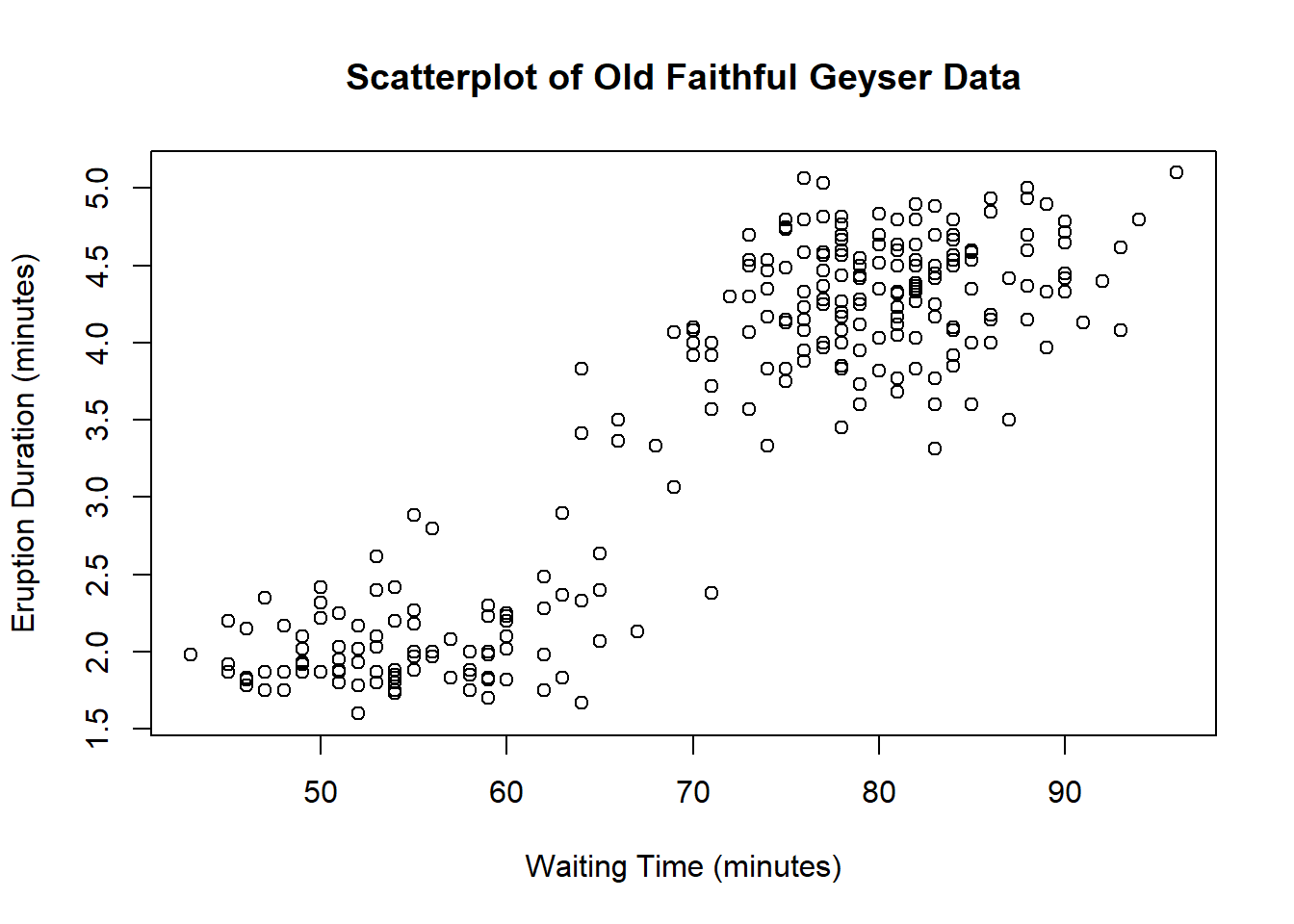

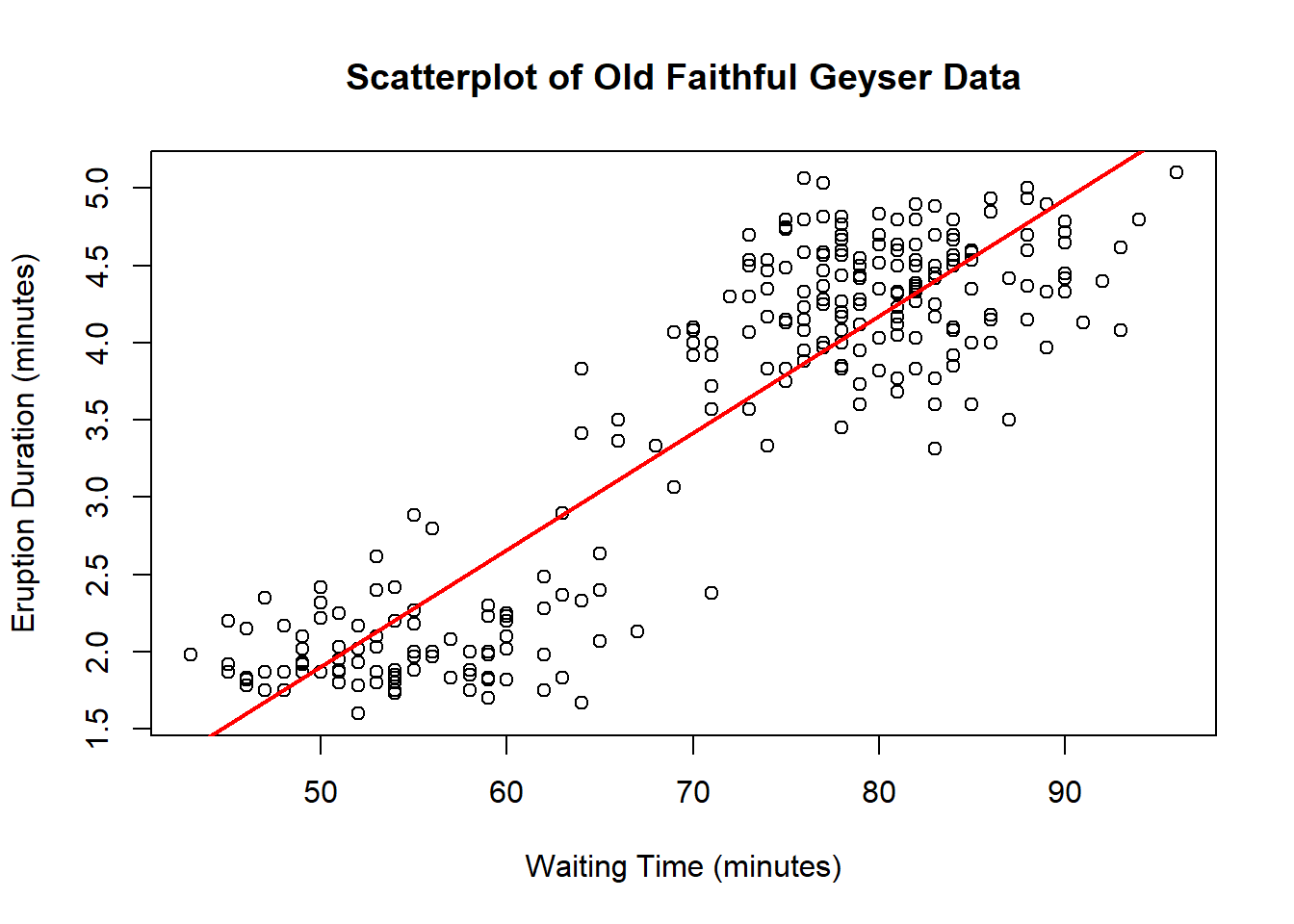

Let’s create a scatterplot from the faithful dataset in R, which

contains measurements on waiting time and eruption duration for Old

Faithful geyser in Yellowstone National Park. We can use some of the

same arguments we used with the hist and boxplot commands to give it

titles:

xis the variable I want represented on the x-axis (horizontal axis).yis the variable I want represented on the y-axis (vertical axis).mainis where I can give the plot a new title. (Make sure to put the title in quotes!)xlabis the x-axis title.ylabis the y-axis title.

data("faithful")

attach(faithful)

plot(x = waiting, y = eruptions,

main = "Scatterplot of Old Faithful Geyser Data",

xlab = "Waiting Time (minutes)",

ylab = "Eruption Duration (minutes)")

3.3 Correlation

We’ve talked about the strength of linear relationships, but it would be nice to formalize this concept. The correlation between two variables describes the strength of their linear relationship. It always takes values between \(-1\) and \(1\). We denote the correlation (or correlation coefficient) by \(R\): \[R = \frac{1}{n-1}\sum_{i=1}^n\left(\frac{x_i - \bar{x}}{s_x}\times\frac{y_i - \bar{y}}{s_y}\right)\] where \(s_x\) and \(s_y\) are the respective standard deviations for \(x\) and \(y\). The sample size \(n\) is the total number of \((x,y)\) pairs.

This is a pretty involved formula! We’ll let a computer handle this one.

Correlations

- close to \(-1\) suggest strong, negative linear relationships.

- close to \(+1\) suggest strong, positive linear relationships.

- close to \(0\) have little-to-no linear relationship.

Note: the sign of the correlation will match the sign of the slope!

- If \(R < 0\), there is a downward trend and \(b_1 < 0\).

- If \(R > 0\), there is an upward trend and \(b_1 > 0\).

- If \(R \approx 0\), there is no relationship and \(b_1 \approx 0\).

When two variables are highly correlated (\(R\) close to \(-1\) or \(1\)), we know there is a strong relationship between them, but we do not know what causes that relationship. For example, when we looked at the Palmer penguins, we noticed that an increase in flipper length related to an increase in body weight… but we have no way of knowing if flipper length causes a higher body weight (or vice versa). That is, correlation does not imply causation.

For a fun collection of spurious correlations (correlations where \(R\) is relatively high, but the variables have nothing to do with each other), check out this website.

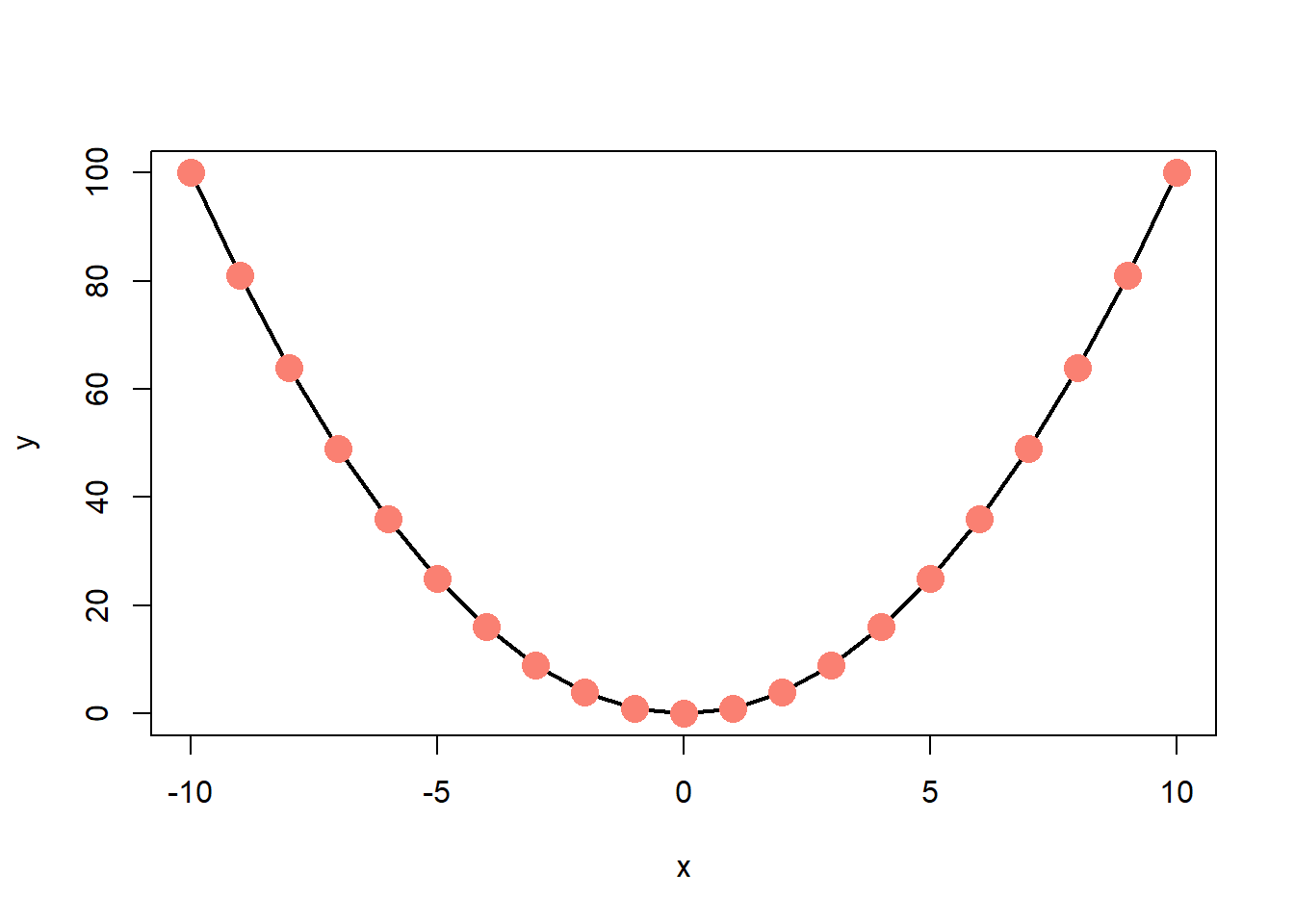

A final note: correlations only represent linear trends. Consider the following scatterplot:

Obviously there’s a strong relationship between \(x\) and \(y\). In fact, there’s a perfect relationship here: \(y = x^2\). But the correlation between \(x\) and \(y\) is 0! This is one reason why it’s important to examine the data both through visual and numeric measures.

3.4 Finding a Regression Line

Residuals are the leftover stuff (variation) in the data after accounting for model fit: \[\text{data} = \text{prediction} + \text{residual}\] Each observation has its own residual. The residual for an observation \((x,y)\) is the difference between observed (\(y\)) and predicted (\(\hat{y}\)): \[e = y - \hat{y}\] We denote the residuals by \(e\) and find \(\hat{y}\) by plugging \(x\) into the regression equation. If an observation lands above the regression line, \(e > 0\). If below, \(e < 0\).

When we estimate the parameters for the regression, our goal is to get each residual as close to 0 as possible. We might think to try minimizing \[\sum_{i=1}^n e_i = \sum_{i=1}^n (y_i - \hat{y}_i)\] but that would just give us very large negative residuals. As with the variance, we will use squares to shift the focus to magnitude: \[\begin{align} \sum_{i=1}^n e_i^2 &= \sum_{i=1}^n (y_i - \hat{y}_i)^2 \\ & = \sum_{i=1}^n [y_i - (b_0 + b_1 x_i)]^2 \end{align}\] This will allow us to shrink the residuals toward 0: the values \(b_0\) and \(b_1\) that minimize this will make up our regression line.

This is a calculus-free course, so we’ll skip the proof of the minimization part. The slope can be estimated as \[b_1 = \frac{s_y}{s_x}\times R\] and the intercept as \[b_0 = \bar{y} - b_1 \bar{x}\]

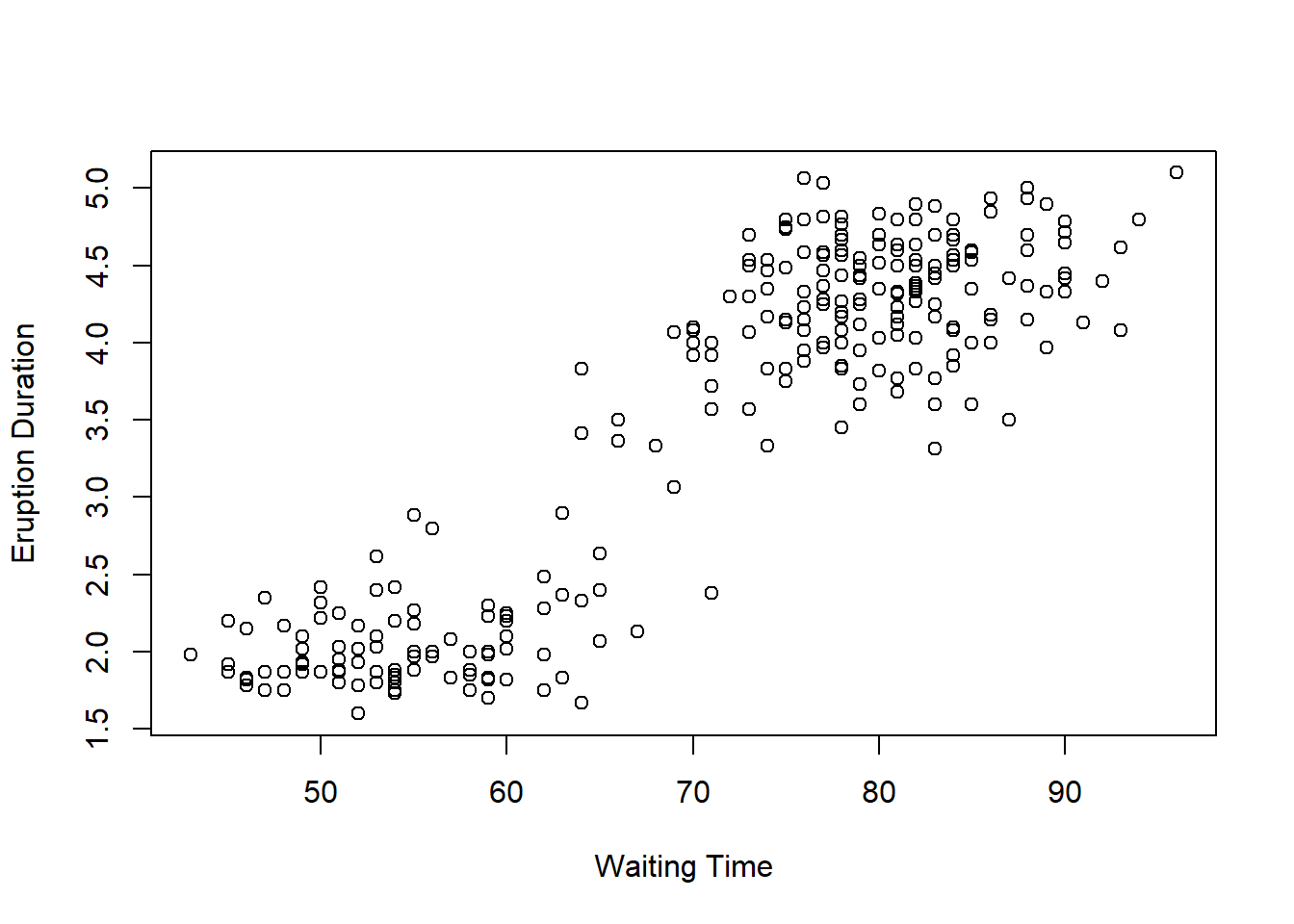

Example: Consider two measurements taken on the Old Faithful Geyser in Yellowstone National Park:

eruptions, the length of each eruption andwaiting, the time between eruptions. Each is measured in minutes.

There does appear to be some kind of linear relationship here, so we will see if we can use the wait time to predict the eruption duration. The sample statistics for these data are

waitingeruptionsmean \(\bar{x}=70.90\) \(\bar{y}=3.49\) sd \(s_x=13.60\) \(s_y=1.14\) \(R = 0.90\) Since we want to use wait time to predict eruption duration, wait time is \(x\) and eruption duration is \(y.\) Then \[b_1 = \frac{1.14}{13.60}\times 0.90 \approx 0.076 \] and \[b_0 = 3.49 - 0.076\times 70.90 \approx -1.87\] so the estimated regression line is \[\hat{y} = -1.87 + 0.076x\]

To interpret \(b_1\), the slope, we would say that for a one-minute increase in waiting time, we would predict a 0.076 minute increase in eruption duration. The intercept is a little bit trickier. Plugging in 0 for \(x\), we get a predicted eruption duration of \(-1.87\) minutes. There are two issues with this. First, a negative eruption duration doesn’t make sense… but it also doesn’t make sense to have a waiting time of 0 minutes.

3.4.1 The Coefficient of Determination

With the correlation and regression line in hand, we will add one last piece for considering the fit of a regression line. The coefficient of determination, \(R^2\), is the square of the correlation coefficient. This value tells us how much of the variability around the regression line is accounted for by the regression. An easy way to interpret this value is to assign it a letter grade. For example, if \(R^2 = 0.84\), the predictive capabilities of the regression line get a B.

Finding a Regression Line in R

To find a regression line using R, we use the command lm, which stands

for “linear model”. The necessary argument is the formula, which takes

the form y ~ x. For example, to find the regression line for the Old

Faithful geyser data with waiting time predicting eruption duration,

\[\text{eruptions} = b_0 + b_1\times\text{waiting}\] we would use

formula = eruptions ~ waiting:

lm(formula = eruptions ~ waiting)##

## Call:

## lm(formula = eruptions ~ waiting)

##

## Coefficients:

## (Intercept) waiting

## -1.87402 0.07563The lm command prints out the intercept, \(b_0\) and the slope

(waiting), \(b_1\). So the model is

\[\text{eruptions} = -1.87 + 0.08\times\text{waiting}\]

To get the coefficient of determination, we can simply find and square

the correlation coefficient. I will do this by putting the cor()

command in parentheses and squaring it by adding ^2 at the end:

(cor(x = waiting, y = eruptions))^2## [1] 0.8114608We might also be interested in adding a regression line to a

scatterplot. Now that we know how to find both separately, we can put

them together. I can add a line to a plot in R by using the command

abline. This command takes two primary arguments, along with some

optional arguments for adjusting line color and thickness:

a: the intercept (\(b_0\))b: the slope (\(b_1\))col: the line colorlwd: the line width

plot(x = waiting, y = eruptions,

main = "Scatterplot of Old Faithful Geyser Data",

xlab = "Waiting Time (minutes)",

ylab = "Eruption Duration (minutes)")

abline(summary(lm(formula = eruptions ~ waiting))$coef[,1],

col = "red", lwd = 2)

3.5 Prediction: A Cautionary Tale

It’s important to stop and think about our predictions. Sometimes, the numbers don’t make sense and it’s easy to see that there’s something wrong with the prediction. Other times, these issues are more insidious. Usually, all of these issues result from what we call extrapolation, applying a model estimate for values outside of the data’s range for \(x\). Our linear model is only an approximation, and we don’t know anything about how the relationship outside of the scope of our data!

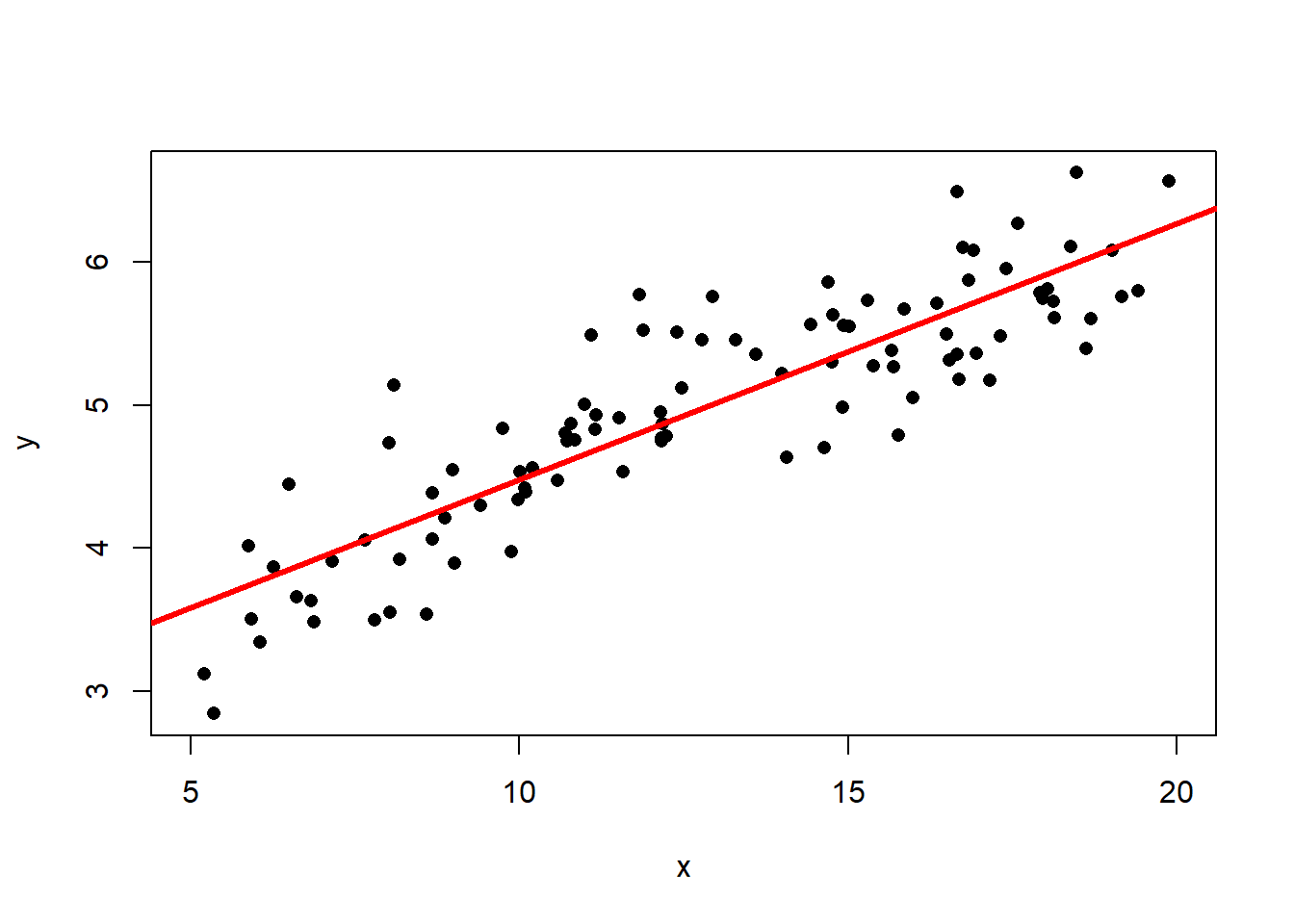

Consider the following data with the best fit line drawn on the scatterplot.

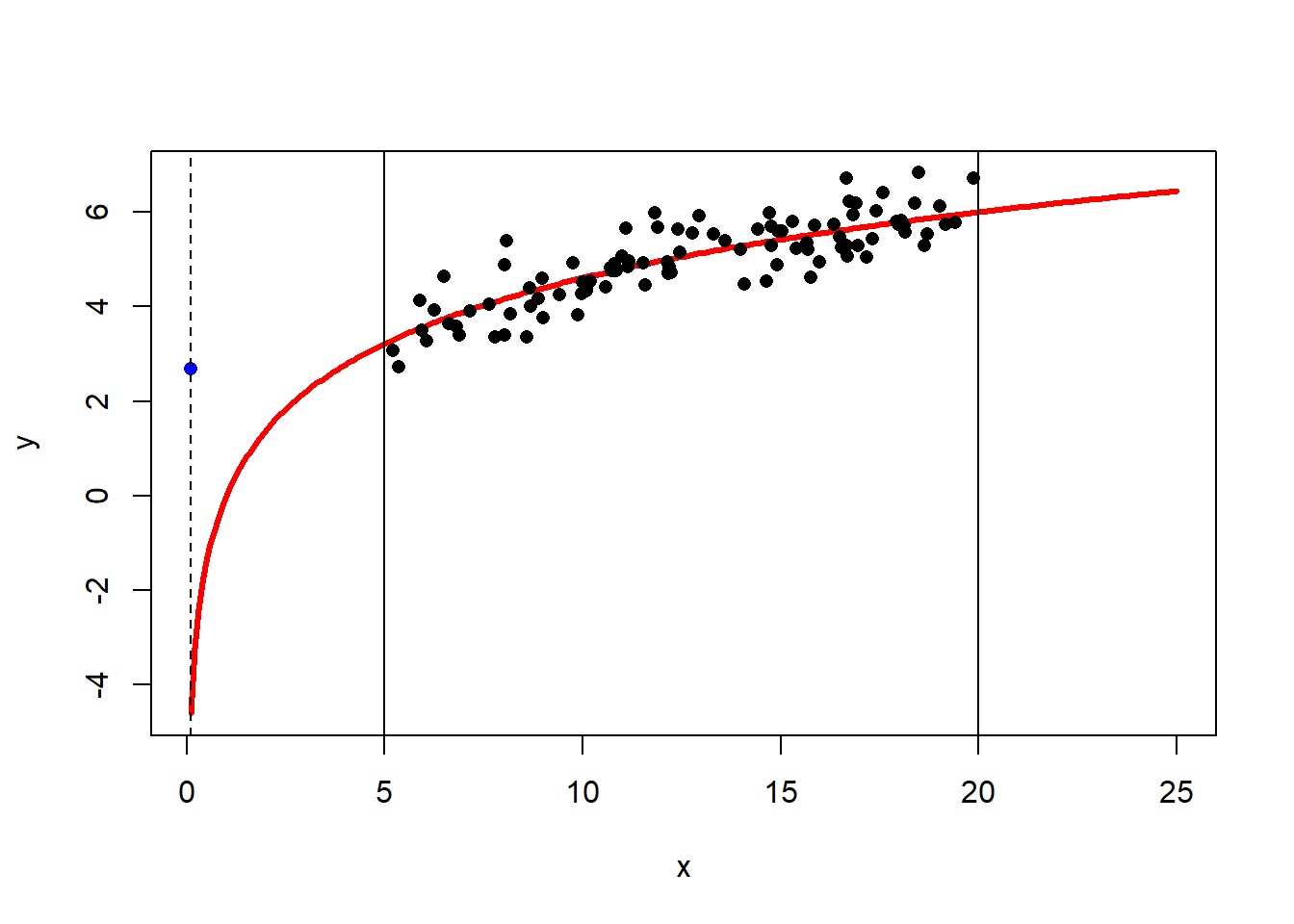

The best fit line is \[\hat{y} = 2.69 + 0.179x\] and the correlation is \(R=0.877\). Then the coefficient of determination is \(R^2 = 0.767\) (think: a C grade), so the model has decent predictive capabilities. More precisely, the model accounts for 76.7% of the variability about the regression line. Now suppose we wanted to predict the value of \(y\) when \(x=0.1\): \[\hat{y} = 2.66 + 0.181\times0.1 = 2.67\] This seems like a perfectly reasonable number… But what if I told you that I generated the data using the model \(y = 2\ln(x) + \text{random error}\)? (If you’re not familiar with the natural log, \(\ln\), don’t worry about it! You won’t need to use it.) The true (population) best-fit model would look like this:

The vertical lines at \(x=5\) and \(x=20\) show the bounds of our data. The blue dot at \(x=0.1\) is the predicted value \(\hat{y}\) based on the linear model. The dashed horizontal line helps demonstrate just how far this estimate is from the true population value! This does not mean there’s anything inherently wrong with our model. If it works well from \(x=5\) to \(x=20\), great, it’s doing its job!