Chapter 8 Raster Spatial Analysis

Raster spatial analysis is particularly important in environmental analysis, since much environmental data are continuous in nature, based on continuous measurements from instruments (like temperature, pH, air pressure, water depth, elevation, to name a few.) In the “Spatial Data and Maps” chapter, we looked at creating rasters from scratch and visualizing them. Here we’ll explore raster analytical methods, commonly working from existing information-rich rasters like elevation data, where we’ll start by looking at terrain functions.

We’ll make a lot of use of terra functions in this chapter, as this package is replacing the raster package which has been widely used. One raster package that’s probably also worth considering is the stars package.

8.1 Terrain functions

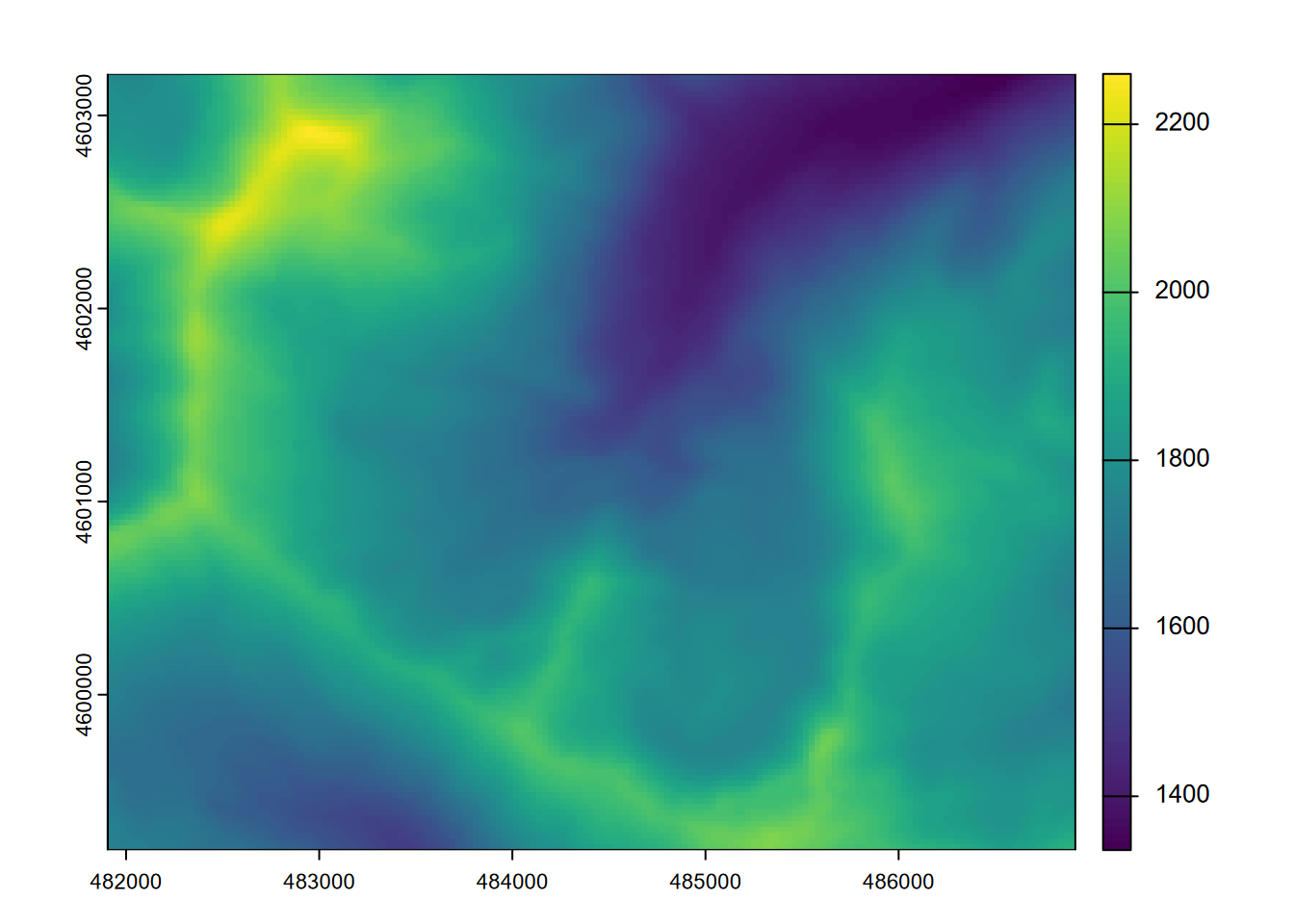

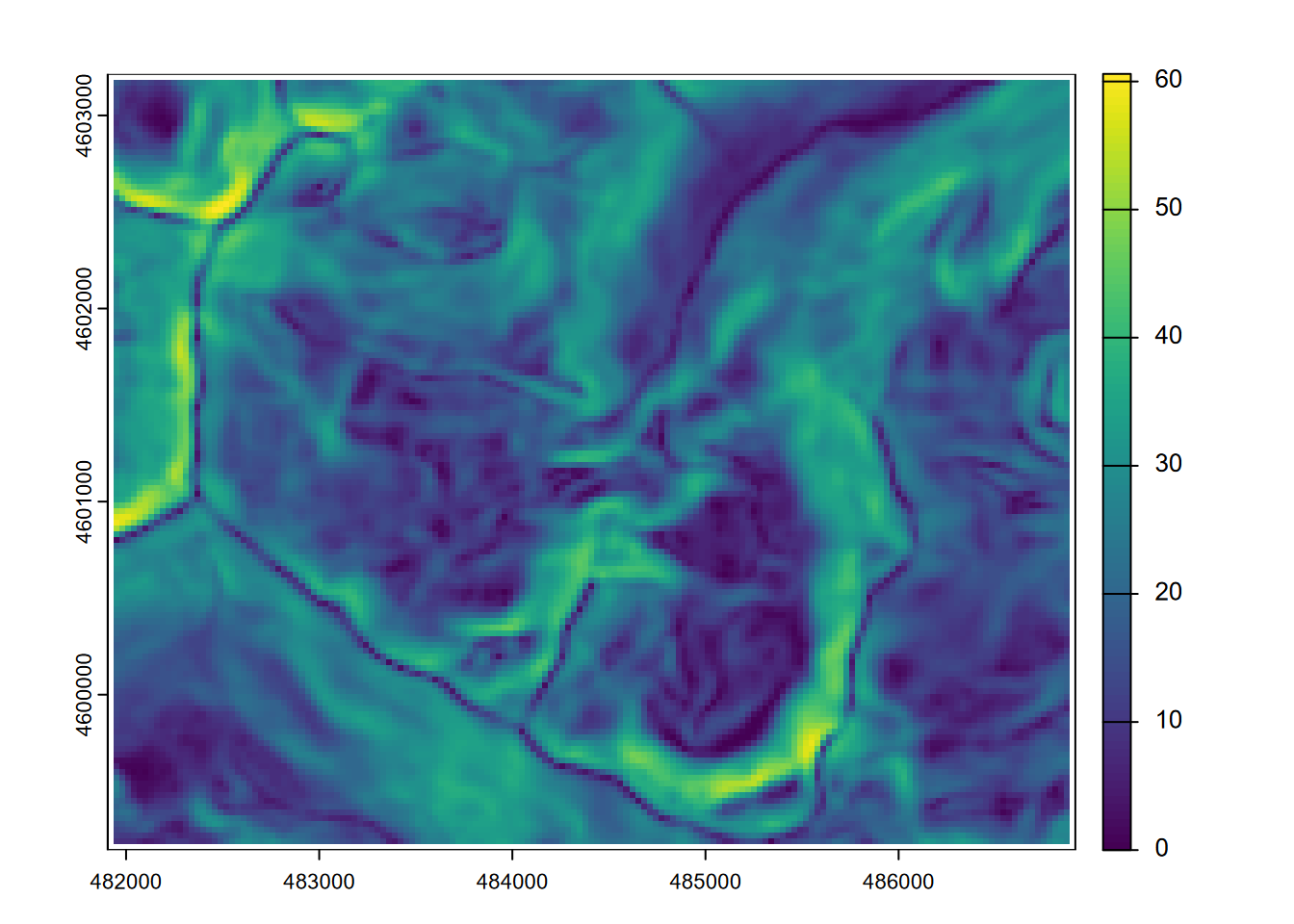

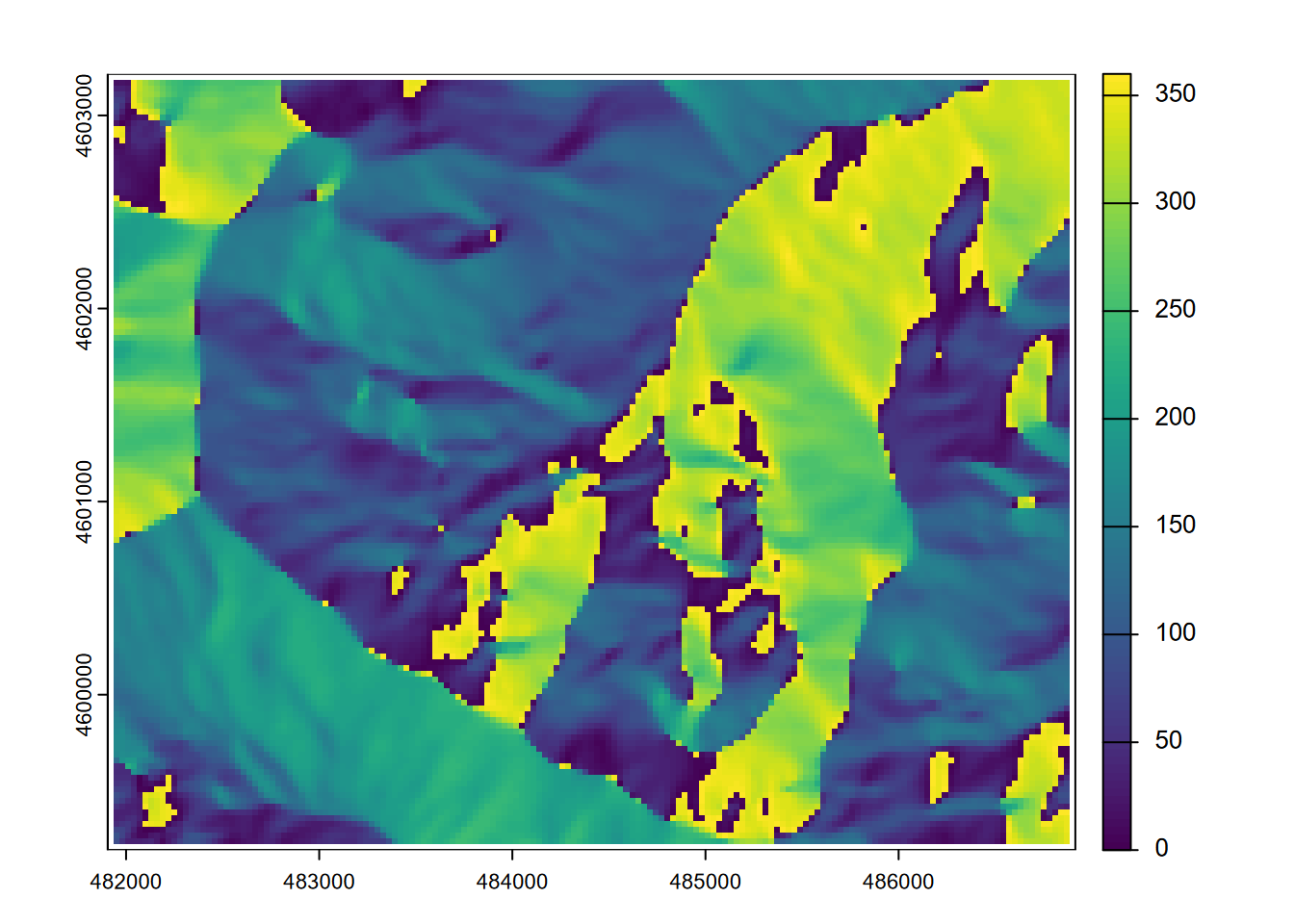

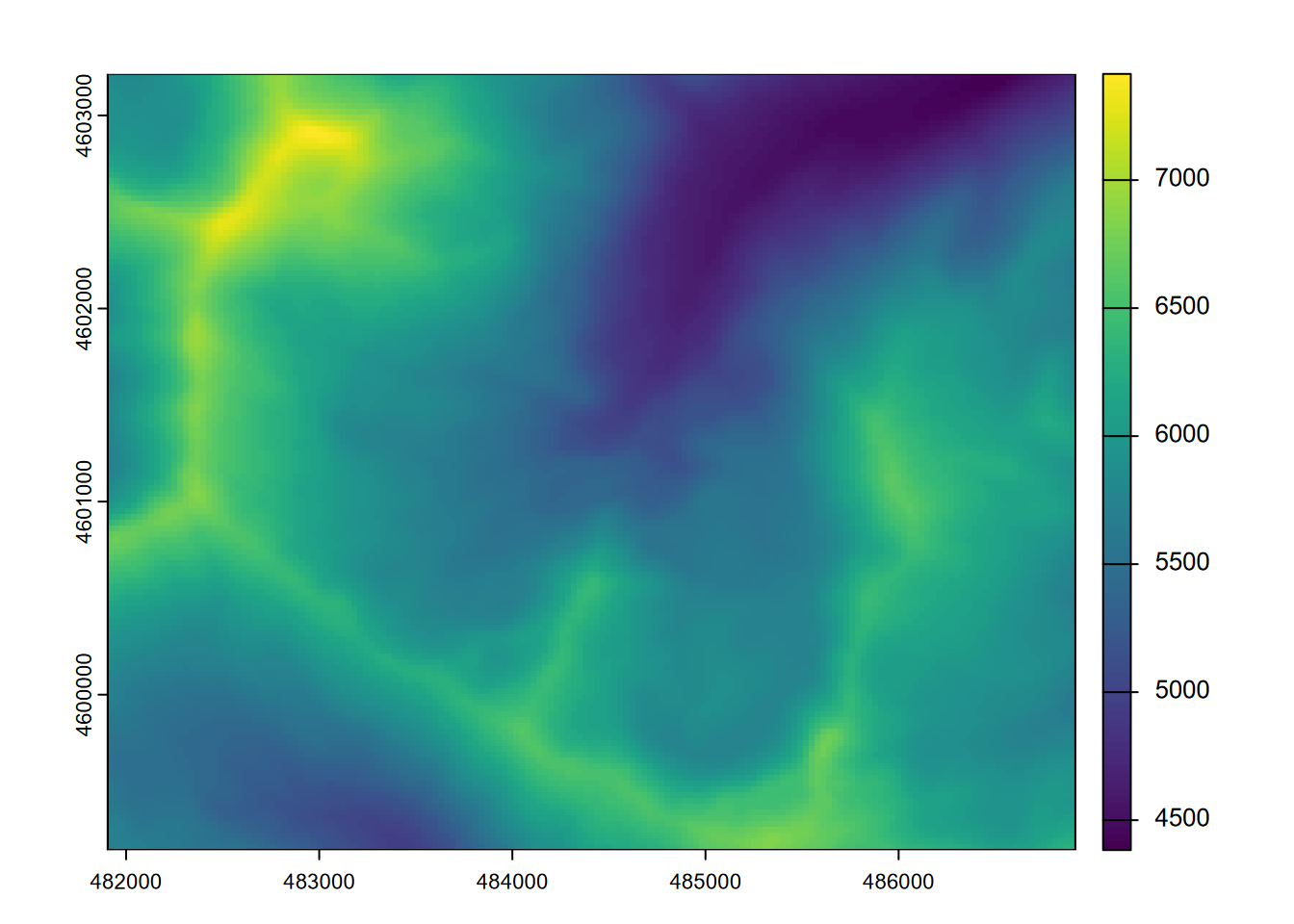

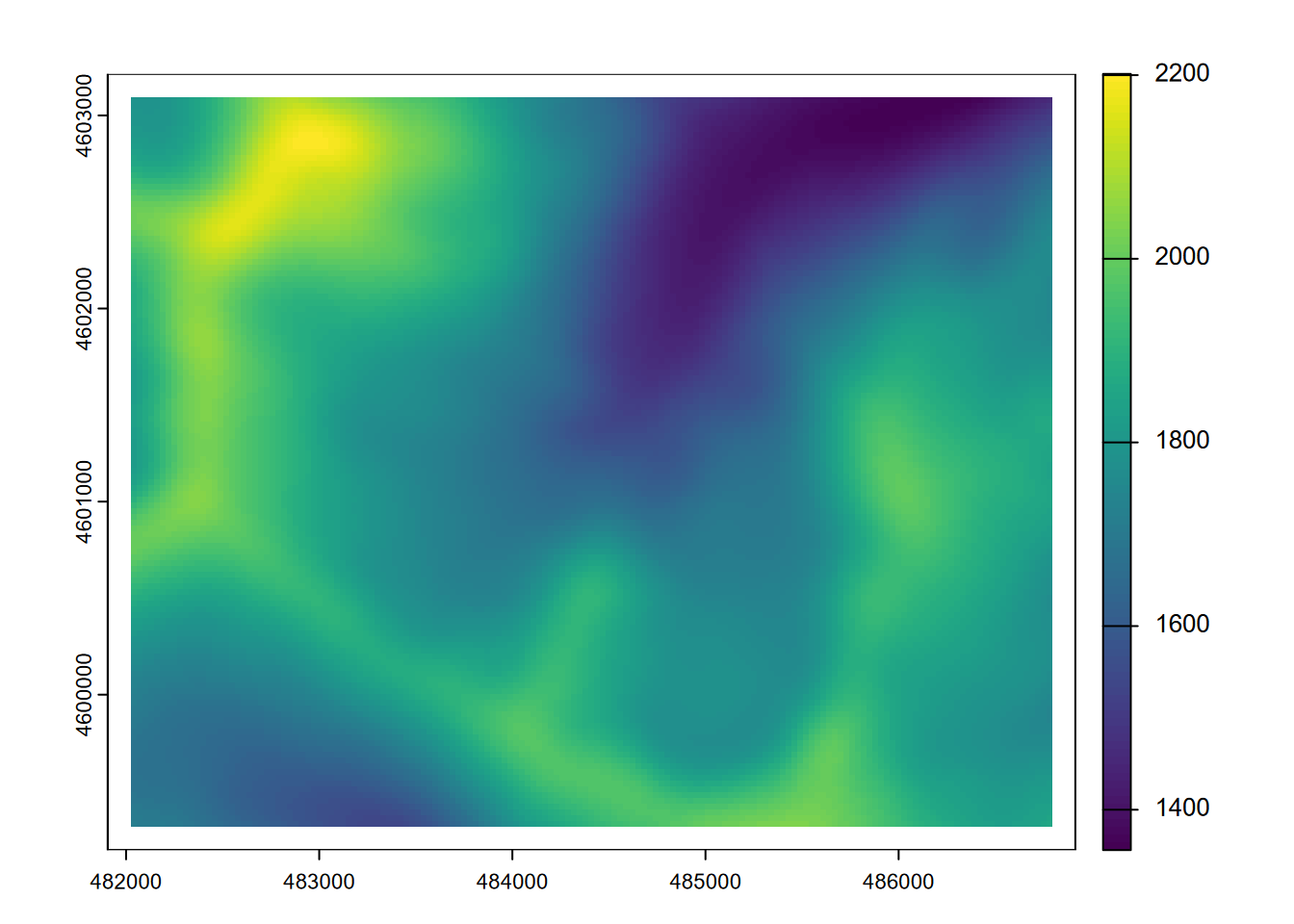

Elevation data are particularly information-rich, and a lot can be derived from them that informs us about the nature of landscapes and what drives surface hydrologic and geomorphic processes as well as biotic habitat (some slopes are drier than others, for instance). We’ll start by reading in some elevation data from the Marble Mountains of California (Figure 8.1) and use terra’s terrain function to derive slope (Figure 8.2), aspect (Figure 8.3), and hillshade rasters.

library(terra); library(igisci)

elev <- rast(ex("marbles/elev.tif"))

plot(elev)

FIGURE 8.1: Marble Mountains (California) elevation

slope <- terrain(elev, v="slope")

plot(slope)

FIGURE 8.2: Slope

aspect <- terrain(elev, v="aspect")

plot(aspect)

FIGURE 8.3: Aspect

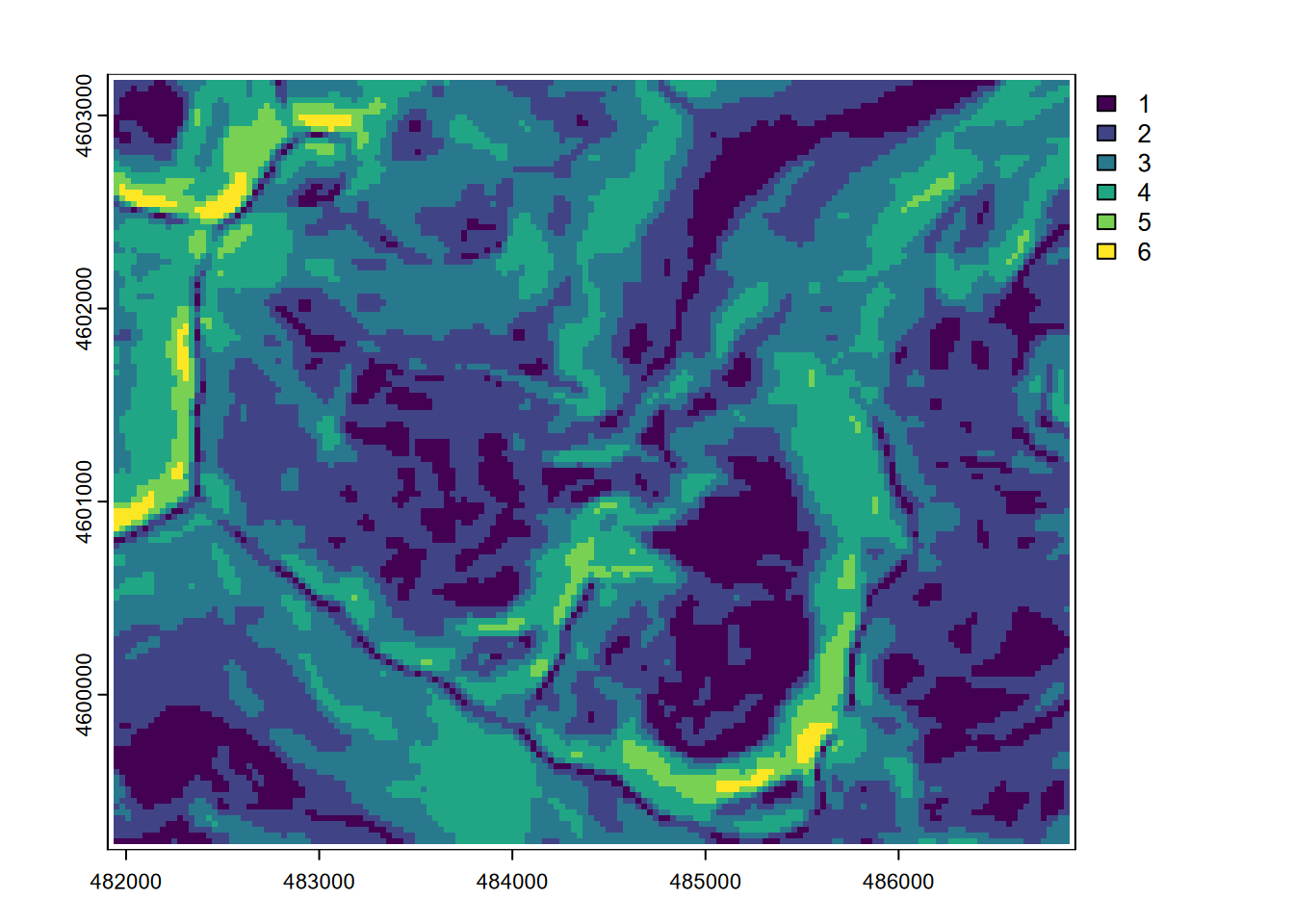

… then we’ll use terra::classify to make six discrete categorical slope classes (though the legend suggests it’s continuous) (Figure 8.4)…

slopeclasses <-matrix(c(00,10,1, 10,20,2, 20,30,3,

30,40,4, 40,50,5, 50,90,6), ncol=3, byrow=TRUE)

slopeclass <- classify(slope, rcl = slopeclasses)

plot(slopeclass)

FIGURE 8.4: Classified slopes

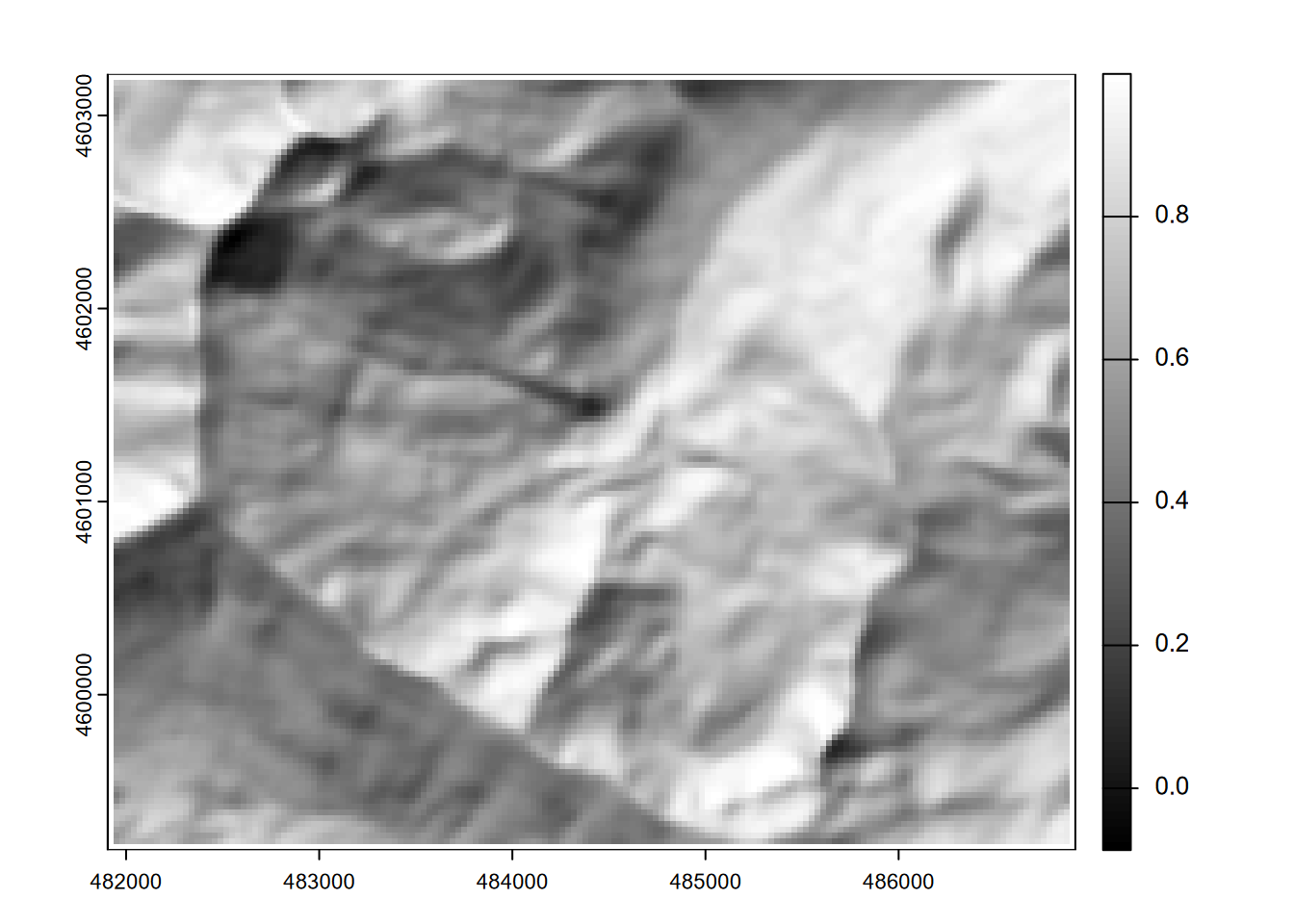

Then a hillshade effect raster with slope and aspect as inputs after converting to radians (Figure 8.5):

hillsh <- shade(slope/180*pi, aspect/180*pi, angle=40, direction=330)

plot(hillsh, col=gray(0:100/100))

FIGURE 8.5: Hillshade

8.2 Map algebra in terra

Map algebra was originally developed by Dana Tomlin in the 1970s and 1980s (Tomlin (1990)), and was the basis for his Map Analysis Package. It works by assigning raster outputs from an algebraic expression of raster inputs. Map algebra was later incorporated in Esri’s Grid and Spatial Analyst subsystems of ArcInfo and ArcGIS. Its simple and elegant syntax makes it still one of the best ways to manipulate raster data.

Let’s look at a couple of simple map algebra statements to derive some new rasters, such as converting elevation to feet (Figure 8.6).

elevft <- elev / 0.3048

plot(elevft)

FIGURE 8.6: Map algebra conversion of elevations from metres to feet

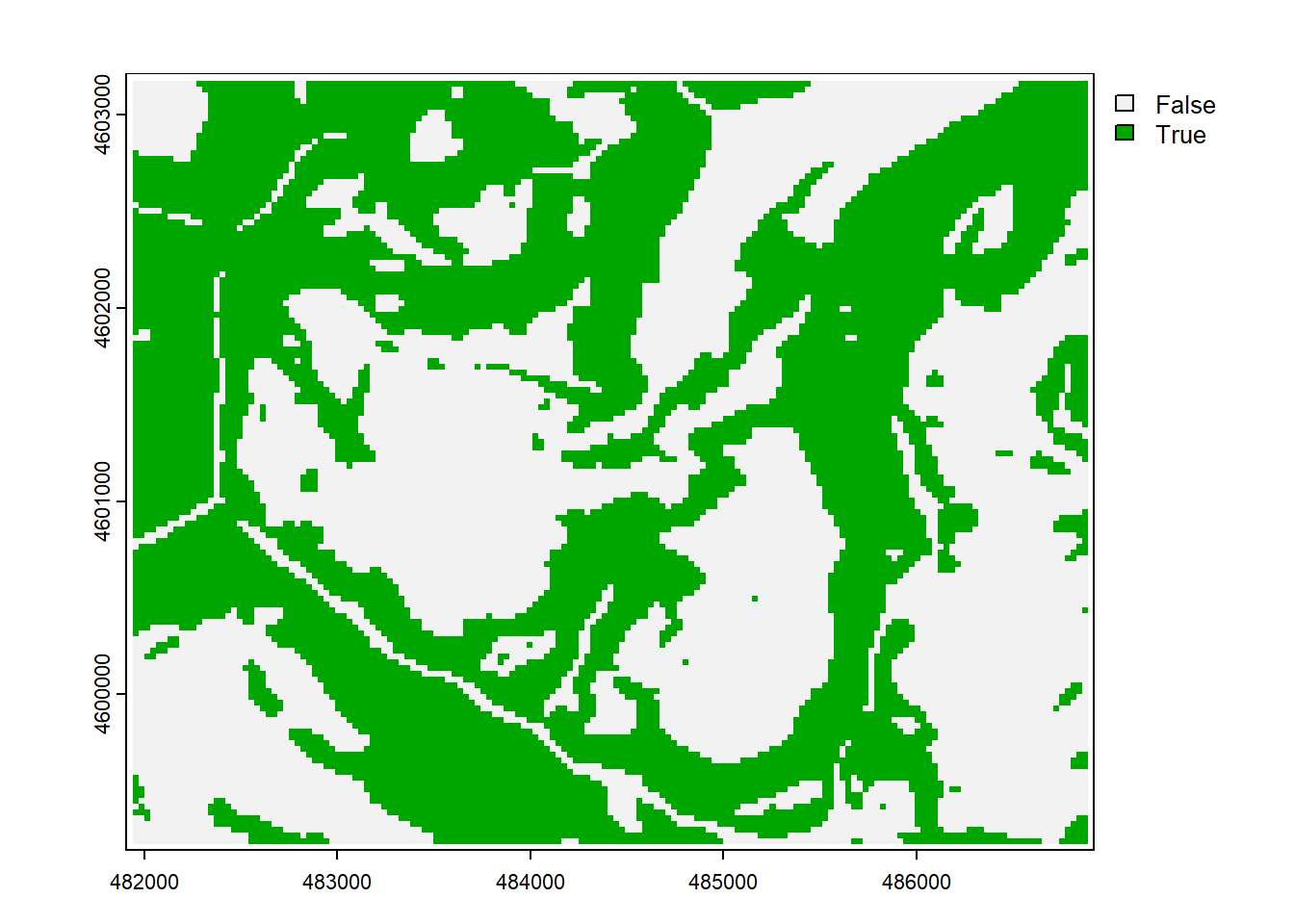

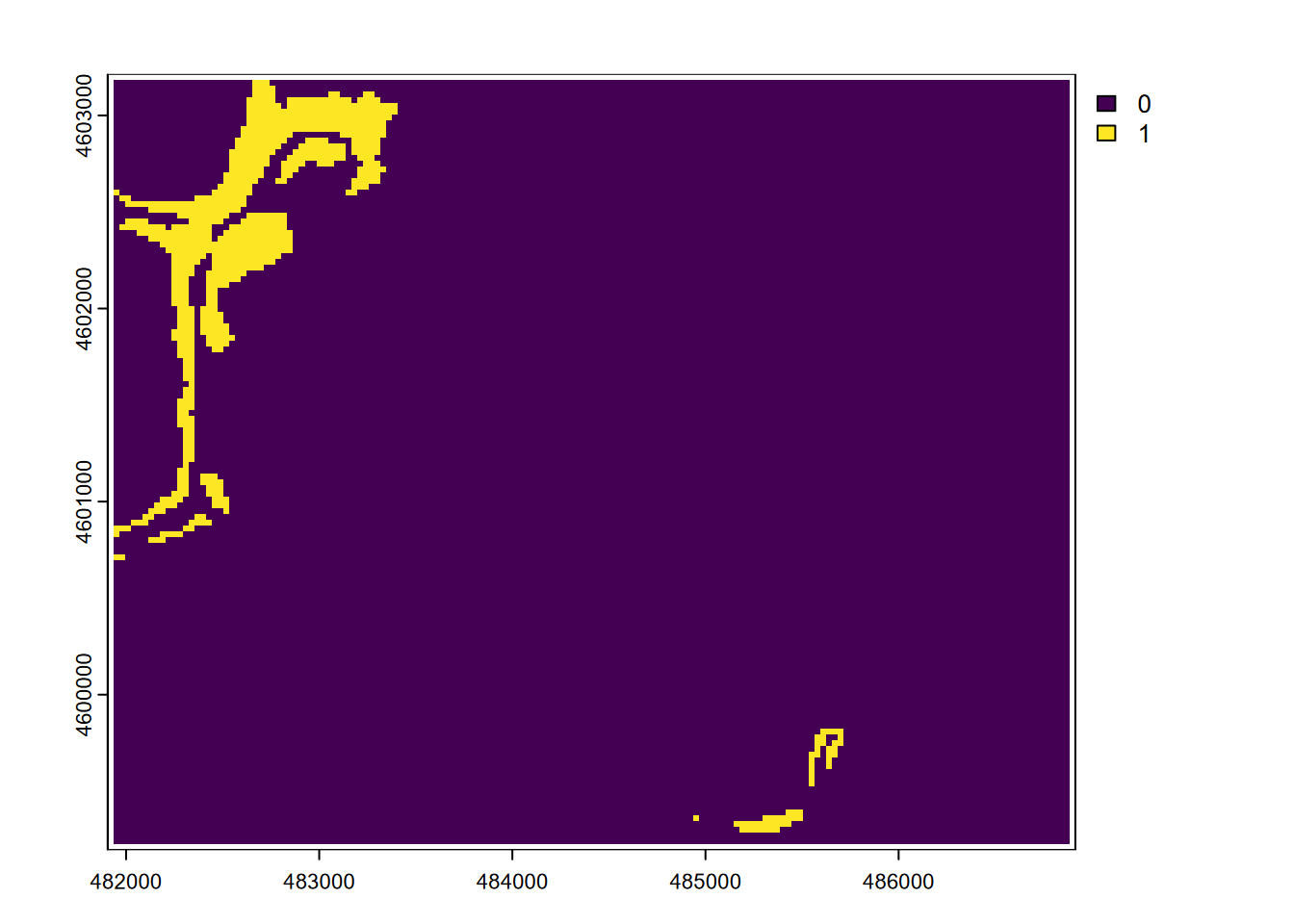

… including some that create and use Boolean (true-false) values where 1 is true and 0 is false, so answers the question “Is it steep?” (as long as we understand 1 means Yes or true) (Figure 8.7)…

steep <- slope > 20

plot(steep)

FIGURE 8.7: Boolean: slope > 20

… or Figure 8.8 that shows all areas that are steeper than 20 degrees and above 2000 m elevation.

plot(steep * (elev > 2000))

FIGURE 8.8: Boolean intersection: (slope > 20) * (elev > 2000)

You should be able to imagine that map algebra is particularly useful when applying a model equation to data to create a prediction map. For instance, later we’ll use lm() to derive linear regression parameters for predicting February temperature from elevation in the Sierra …

\[ Temperature_{prediction} = 11.88 - 0.006{elevation} \]

… which can be coded in map algebra something like the following if we have an elevation raster, to create a tempPred raster:

tempPred <- 11.88 - 0.006 * elevation8.3 Distance (and vector-raster conversion)

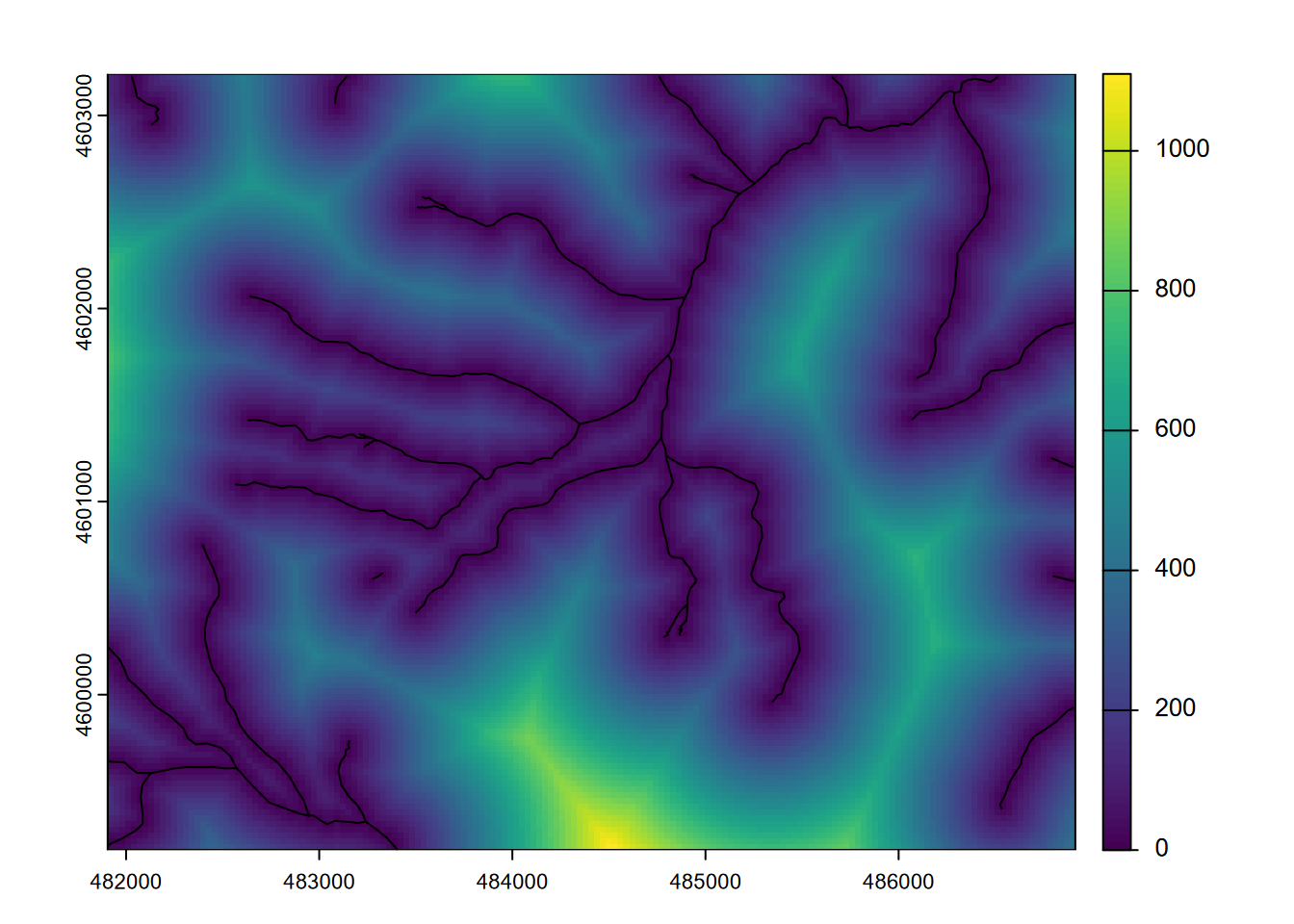

A continuous raster of distances from significant features can be very informative in environmental analysis. For instance, distance from the coast or a body of water may be an important variable for habitat analysis and ecological niche modeling. The goal of the following is to derive distances from streams as a continuous raster. It includes converting streams from a SpatVector to a SpatRaster, which is useful for defining the raster structure to populate the distance values with, and is essential since otherwise the distance function will return point-to-point distances, which we looked at in the last chapter but we don’t for the current purpose of deriving continuous rasters (Figure 8.9).

streams <- vect(ex("marbles/streams.shp"))

elev <- rast(ex("marbles/elev.tif"))

stdist <- terra::distance(rasterize(streams,elev))

plot(stdist)

lines(streams)

FIGURE 8.9: Stream distance raster

8.3.1 Using a new raster created from scratch

In the above process, we referenced elev essentially as a template to get a raster structure and crs to use. But what if we wanted to derive a distance raster and we didn’t have a raster to use as a template? We’d need to create a raster, and so we might want to use the method from the “Spatial Data and Maps” chapter earlier to do this where we specify the number of columns and rows and the extent (xmin, xmax, ymin, ymax).

Since we have an input streams features, we could look at its properties …

streams <- vect(ex("marbles/streams.shp"))

streams## class : SpatVector

## geometry : lines

## dimensions : 48, 8 (geometries, attributes)

## extent : 481903.6, 486901.9, 4599199, 4603201 (xmin, xmax, ymin, ymax)

## source : streams.shp

## coord. ref. : NAD83 / UTM zone 10N (EPSG:26910)

## names : FNODE_ TNODE_ LPOLY_ RPOLY_ LENGTH STREAMS_ STREAMS_ID

## type : <num> <num> <num> <num> <num> <num> <num>

## values : 5 6 4 4 269.3 1 1

## 2 7 2 2 153.7 2 5

## 8 1 2 2 337.9 3 36

## Shape_Leng

## <num>

## 269.3

## 153.7

## 337.9… and in code we could pull out the four extent properties with the ext method, and use that also to work out the aspect ratio and (assuming that we know we want a raster cell size of 30 m based on the crs being in UTM metres) we can come up with the parameters (with integer numbers of columns and rows) for creating the raster template.

XMIN <- ext(streams)$xmin

XMAX <- ext(streams)$xmax

YMIN <- ext(streams)$ymin

YMAX <- ext(streams)$ymax

aspectRatio <- (YMAX-YMIN)/(XMAX-XMIN)

cellSize <- 30

NCOLS <- as.integer((XMAX-XMIN)/cellSize)

NROWS <- as.integer(NCOLS * aspectRatio)Then we use those parameters to create the template, also borrowing the crs from the streams layer.

templateRas <- rast(ncol=NCOLS, nrow=NROWS,

xmin=XMIN, xmax=XMAX, ymin=YMIN, ymax=YMAX,

vals=1, crs=crs(streams))Finally we can do something similar to original example, but with the template instead of elev as reference for the vector-raster conversion, and in turn the distance raster. The result will be identical to what we saw above.

strms <- rasterize(streams,templateRas)

stdist <- terra::distance(strms)

plot(stdist)

lines(streams)Note that if you knew of a reference raster, you can also look at its properties with

elev## class : SpatRaster

## dimensions : 134, 167, 1 (nrow, ncol, nlyr)

## resolution : 30, 30 (x, y)

## extent : 481905, 486915, 4599195, 4603215 (xmin, xmax, ymin, ymax)

## coord. ref. : NAD_1983_UTM_Zone_10N (EPSG:26910)

## source : elev.tif

## name : elev

## min value : 1336

## max value : 2260and use or modify those properties to manually create a template raster, with code something like:

templateRas <- rast(ncol=167, nrow=134,

xmin=481905, xmax=486915, ymin=4599195, ymax=4603215,

vals=1, crs="EPSG:26910")The purpose here is just to show how we can create and use a raster template. How you define your raster template will depend on the extent and scale of your data, and if you have other rasters (like elev above) you want to match with, you may want to use it as a template as we did first.

Then in deriving distances, it’s useful to remember that distances can go on forever (well, on the planet they may go around and around, if we were using spherical coordinates) so that’s another reason we have to specify the raster structure we want to populate.

8.4 Extracting values

A very useful method or environmental analysis and modeling is to extract values from rasters at specific point locations. The point locations might be observations of species, soil samples, or even random points, and getting continuous (or discrete) raster observations can be very useful in a statistical analysis associated with those points. The distance from streams raster we derived earlier, or elevation, or terrain derivatives like slope and aspect might be very useful in a ecological niche model, for instance. We’ll start by using random points and use these to extract values from 4 rasters:

- elev: read in from elev.tif

- slope: created from elev with terrain

- str_dist: Euclidean distance to streams

- geol: rasterized from geology polygon features

library(igisci); library(terra)

geolshp <- vect(ex("marbles/geology.shp"))

streams <- vect(ex("marbles/streams.shp"))

elev <- rast(ex("marbles/elev.tif"))

slope <- terrain(elev, v="slope")

str_dist <- terra::distance(rasterize(streams,elev))

geol <- rasterize(geolshp,elev,field="CLASS")Note that in contrast to the other rasters, the stream distance raster ends up with no name, so we should give it a name:

names(slope)## [1] "slope"names(str_dist)## [1] "layer"names(str_dist) <- "str_dist"On the raster

namesproperty: You’ll find that many terra functions may not assign thenamesproperty you’d expect, so it’s a good idea to check withnames()and maybe set it to what we want, as we’ve just done forstr_dist. As we’ll see later with the focal statistics function, the name of the input is used even though we’ve modified it in the function result, and that may create confusion when we use it. We just saw that thedistance()function produced an empty name, and there may be others you’ll run into. For many downstream uses, the names property may not matter, but it will be important when we extract values from rasters into points where thenamesproperty is assigned to the variable created for the points.

Then we’ll create 200 random xy points within the extent of elev, and assign it the same crs.

library(sf)

x <- runif(200, min=xmin(elev), max=xmax(elev))

y <- runif(200, min=ymin(elev), max=ymax(elev))

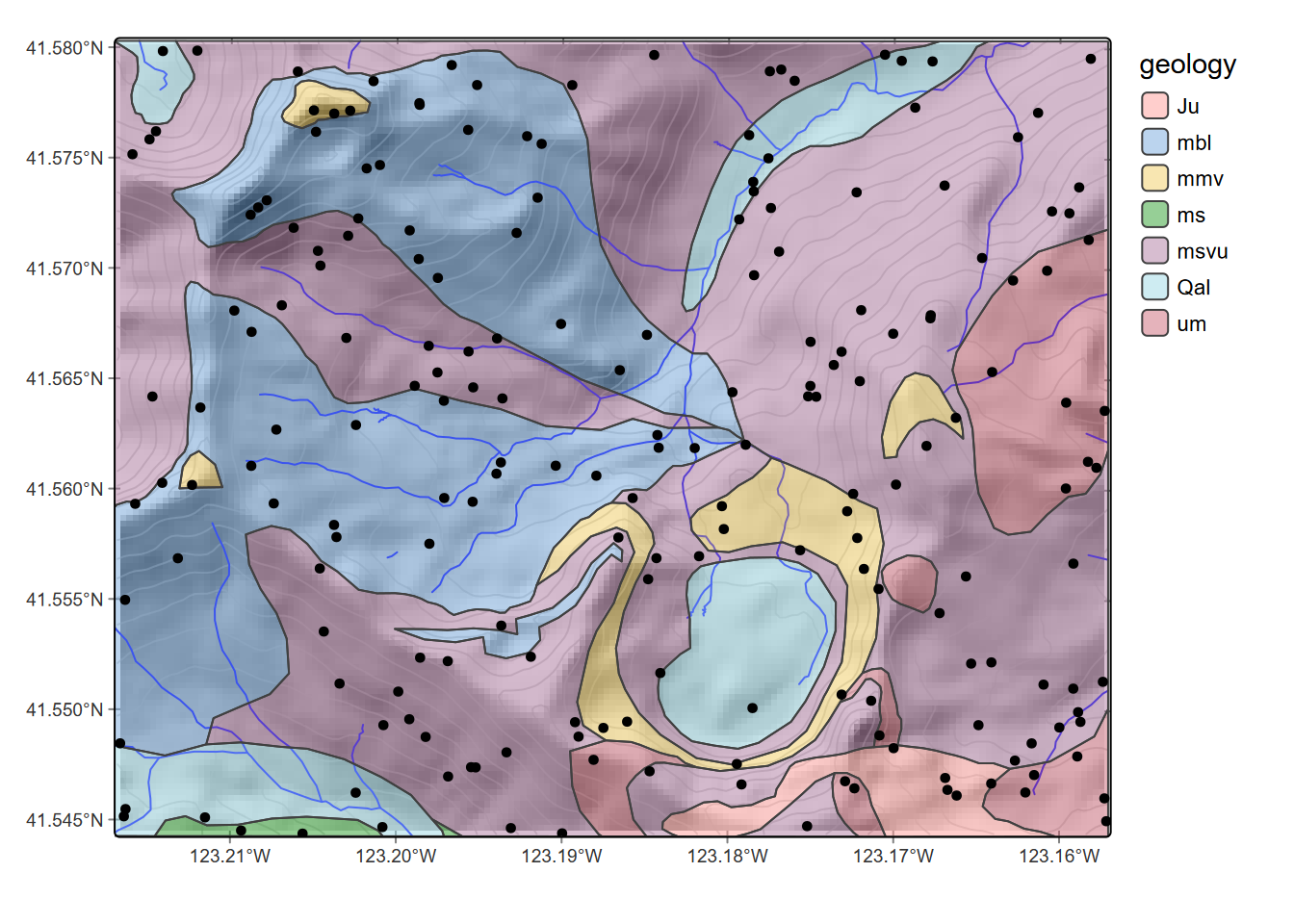

rsamps <- st_as_sf(data.frame(x,y), coords = c("x","y"), crs=crs(elev))To visualize where the random points land, we’ll map them on the geology sf, streams, and contours created from elev using default settings. The terra::as.contour function will create these as SpatVector data, which along with streams we’ll convert with sf::st_as_sf to display in ggplot (Figure 8.10).

library(tidyverse)

cont <- st_as_sf(as.contour(elev, nlevels=30))

geology <- st_read(ex("marbles/geology.shp"))ggplot() +

geom_sf(data=geology, aes(fill=CLASS)) +

geom_sf(data=cont, col="gray") +

geom_sf(data=rsamps) +

geom_sf(data=st_as_sf(streams), col="blue")

FIGURE 8.10: Random points in the Marble Valley area, Marble Mountains, California

Now we’ll extract data from each of the rasters, using an S4 version of rsamps, and then bind them together with the rsamps sf. and . We’ll have to use terra::vect and sf::st_as_sf to convert feature data to the type required by specific tools, and due to a function naming issue, we’ll need to use the package prefix with terra::extract, but otherwise the code is pretty straightforward.

rsampS4 <- vect(rsamps)

elev_ex <- terra::extract(elev, rsampS4) %>% dplyr::select(-ID)

slope_ex <- terra::extract(slope, rsampS4) %>% dplyr::select(-ID)

geol_ex <- terra::extract(geol, rsampS4) %>%

dplyr::rename(geology = CLASS) %>% dplyr::select(-ID)

strD_ex <- terra::extract(str_dist, rsampS4) %>% dplyr::select(-ID)

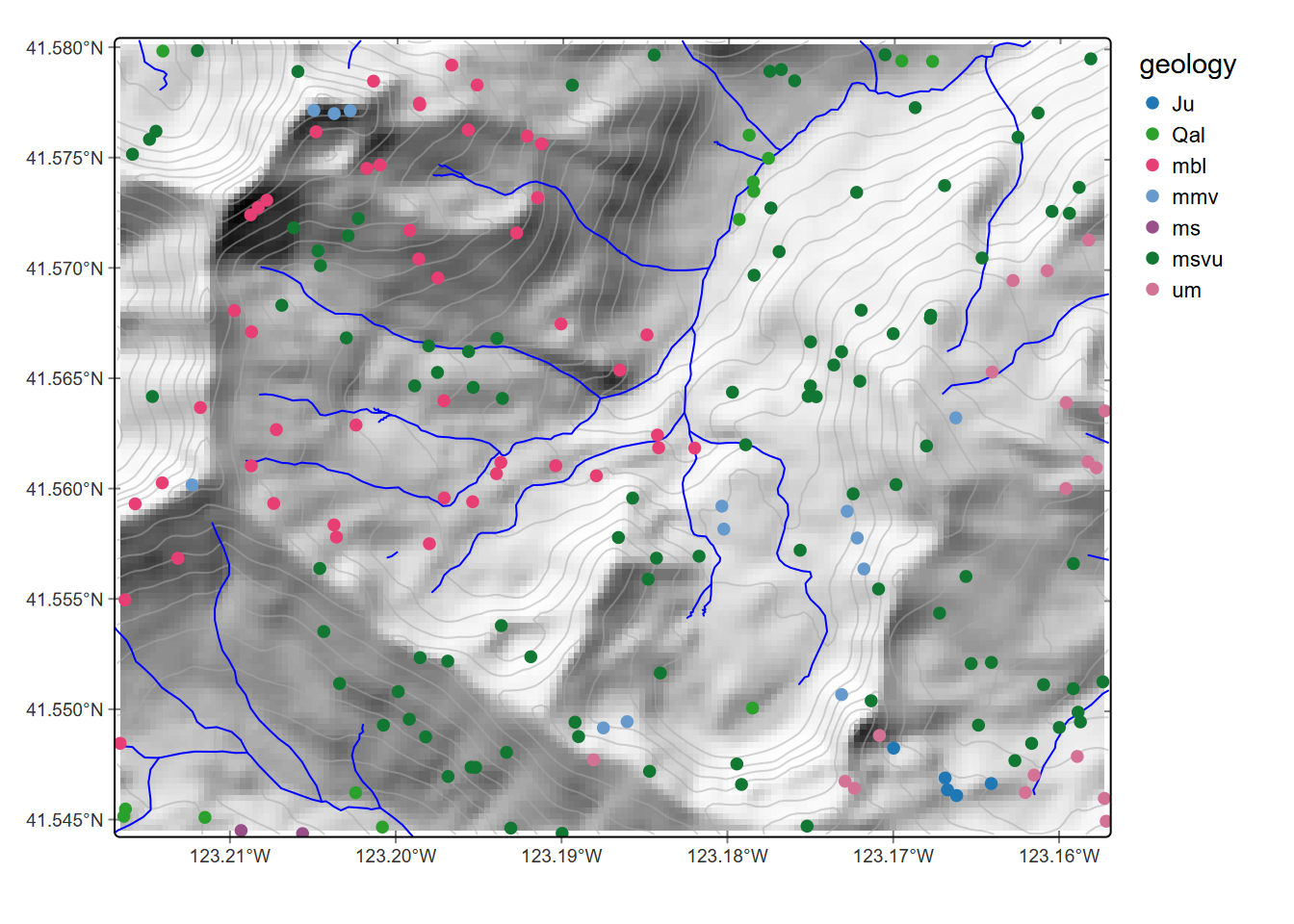

rsampsData <- bind_cols(rsamps, elev_ex, slope_ex, geol_ex, strD_ex)Then plot the map with the points colored by geology (Figure 8.11)…

ggplot() +

geom_sf(data=cont, col="gray") +

geom_sf(data=rsampsData, aes(col=geology)) +

geom_sf(data=st_as_sf(streams), col="blue")

FIGURE 8.11: Points colored by geology extracted from raster

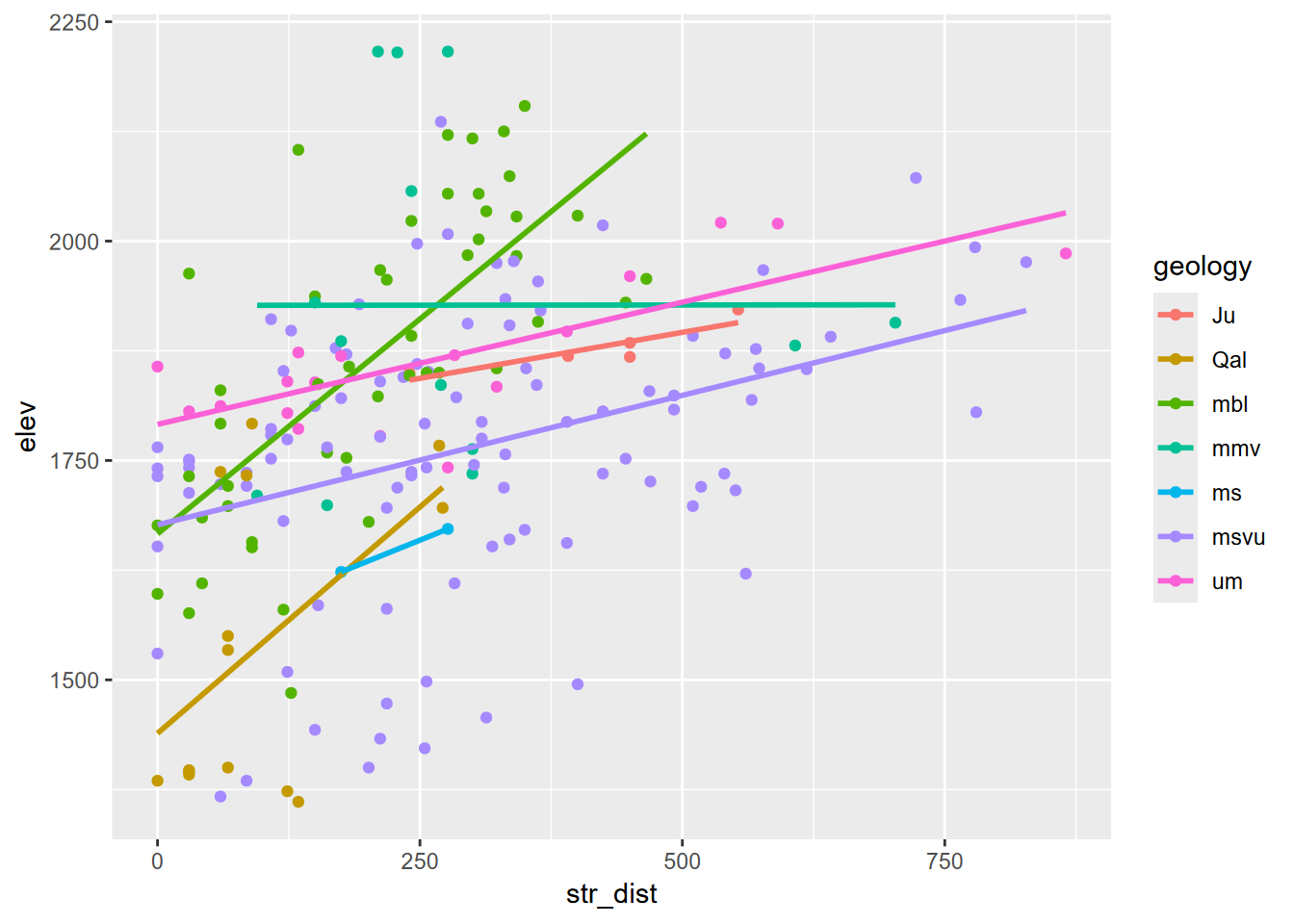

… and finally str_dist by elev, colored by geology, derived by extracting. We’ll filter out the NAs along the edge (Figure 8.12). Of course other analyses and visualizations are possible.

rsampsData %>%

filter(!is.na(geology)) %>%

ggplot(aes(x=str_dist,y=elev,col=geology)) +

geom_point() + geom_smooth(method = "lm", se=F)

FIGURE 8.12: Elevation by stream distance, colored by geology, random point extraction

Here’s a similar example, but using water sample data, which we can then use to relate to extracted raster values to look at relationships such as in Figures 8.13, 8.14 and 8.15. It’s worthwhile to check various results along the way, as we did above. Most of the code is very similar to what we used above, including dealing with naming the distance rasters.

streams <- vect(ex("marbles/streams.shp"))

trails <- vect(ex("marbles/trails.shp"))

elev <- rast(ex("marbles/elev.tif"))

geolshp <- vect(ex("marbles/geology.shp"))

sampsf <- st_read(ex("marbles/samples.shp")) %>%

dplyr::select(CATOT, MGTOT, PH, TEMP, TDS)

samples <- vect(sampsf)

strms <- rasterize(streams,elev)

tr <- rasterize(trails,elev)

geol <- rasterize(geolshp,elev,field="CLASS")

stdist <- terra::distance(strms); names(stdist) <- "stDist"

trdist <- terra::distance(tr); names(trdist) = "trDist"

slope <- terrain(elev, v="slope")

aspect <- terrain(elev, v="aspect")

elev_ex <- terra::extract(elev, samples) %>% dplyr::select(-ID)

slope_ex <- terra::extract(slope, samples) %>% dplyr::select(-ID)

aspect_ex <- terra::extract(aspect, samples) %>% dplyr::select(-ID)

geol_ex <- terra::extract(geol, samples) %>%

dplyr::rename(geology = CLASS) %>% dplyr::select(-ID)

strD_ex <- terra::extract(stdist, samples) %>% dplyr::select(-ID)

trailD_ex <- terra::extract(trdist, samples) %>% dplyr::select(-ID)

samplePts <- cbind(samples,elev_ex,slope_ex,aspect_ex,geol_ex,strD_ex,trailD_ex)

samplePtsDF <- as.data.frame(samplePts)head(samplePtsDF)## CATOT MGTOT PH TEMP TDS elev slope aspect geology stDist

## 1 0.80 0.02 7.74 15.3 0.16 1357 1.721006 123.690068 Qal 0.0000

## 2 0.83 0.04 7.88 14.8 0.16 1359 6.926249 337.833654 Qal 30.0000

## 3 0.83 0.04 7.47 15.1 0.12 1361 4.222687 106.389540 Qal 0.0000

## 4 0.63 0.03 7.54 15.8 0.17 1356 2.160789 353.659808 Qal 0.0000

## 5 0.67 0.06 7.67 14.0 0.14 1374 1.687605 81.869898 Qal 0.0000

## 6 0.70 0.04 7.35 7.0 0.16 1399 12.713997 4.236395 msvu 192.0937

## trDist

## 1 169.7056

## 2 318.9044

## 3 108.1665

## 4 241.8677

## 5 150.0000

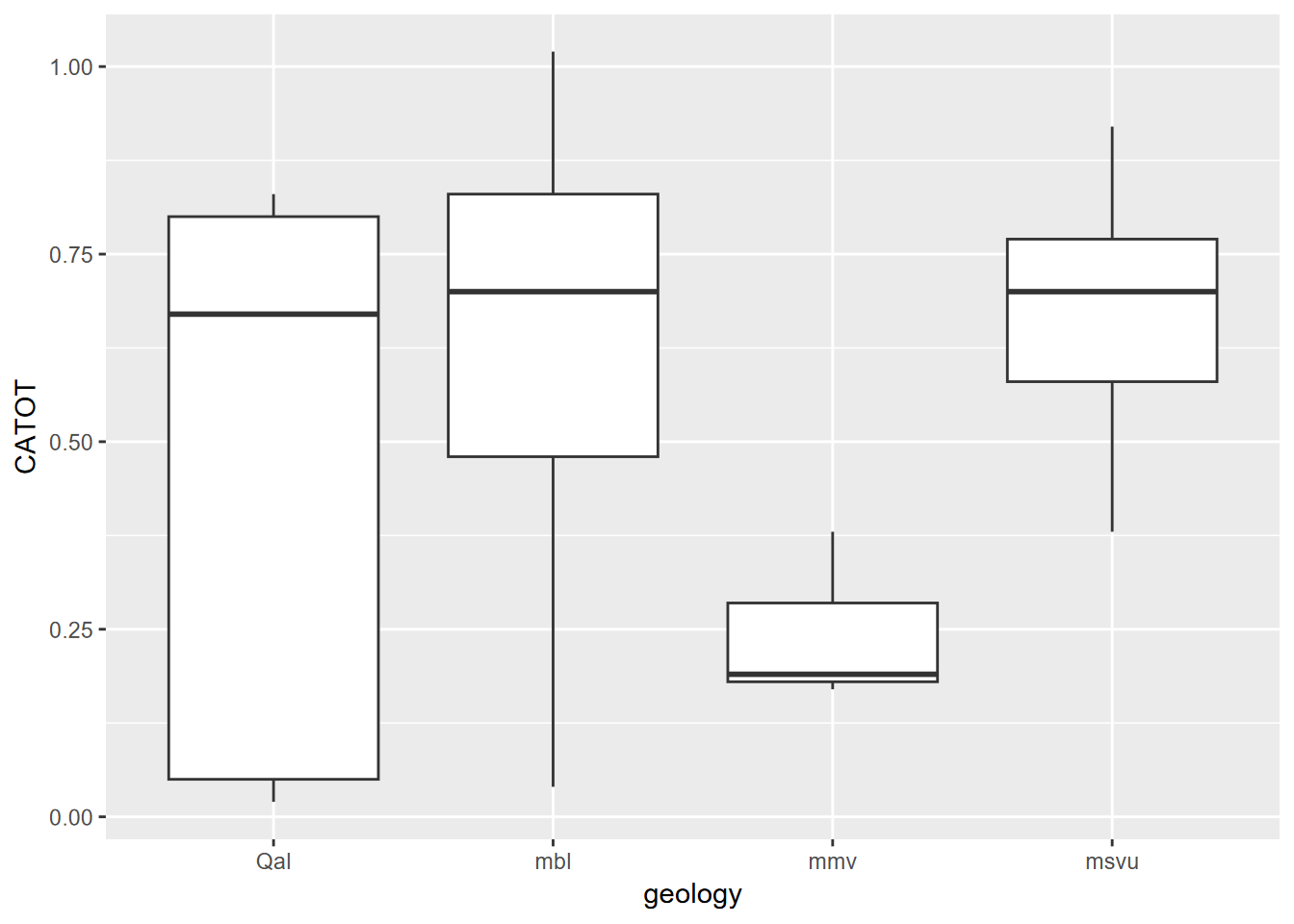

## 6 342.0526ggplot(data=samplePtsDF, aes(x=geology,y=CATOT)) + geom_boxplot()

FIGURE 8.13: Dissolved calcium carbonate grouped by geology extracted at water sample points

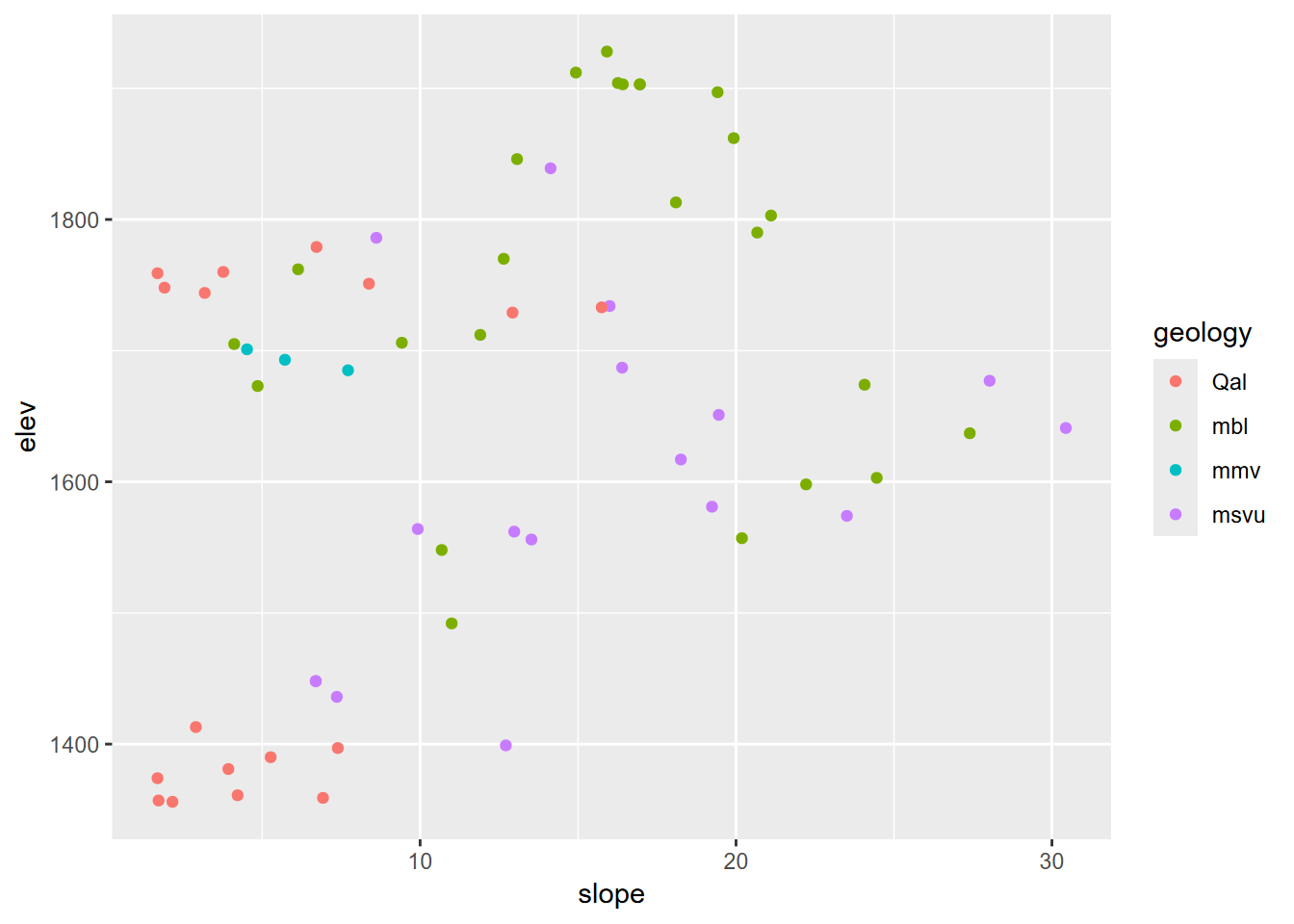

ggplot(data=samplePtsDF, aes(x=slope,y=elev,col=geology)) + geom_point()

FIGURE 8.14: Slope by elevation colored by extracted geology

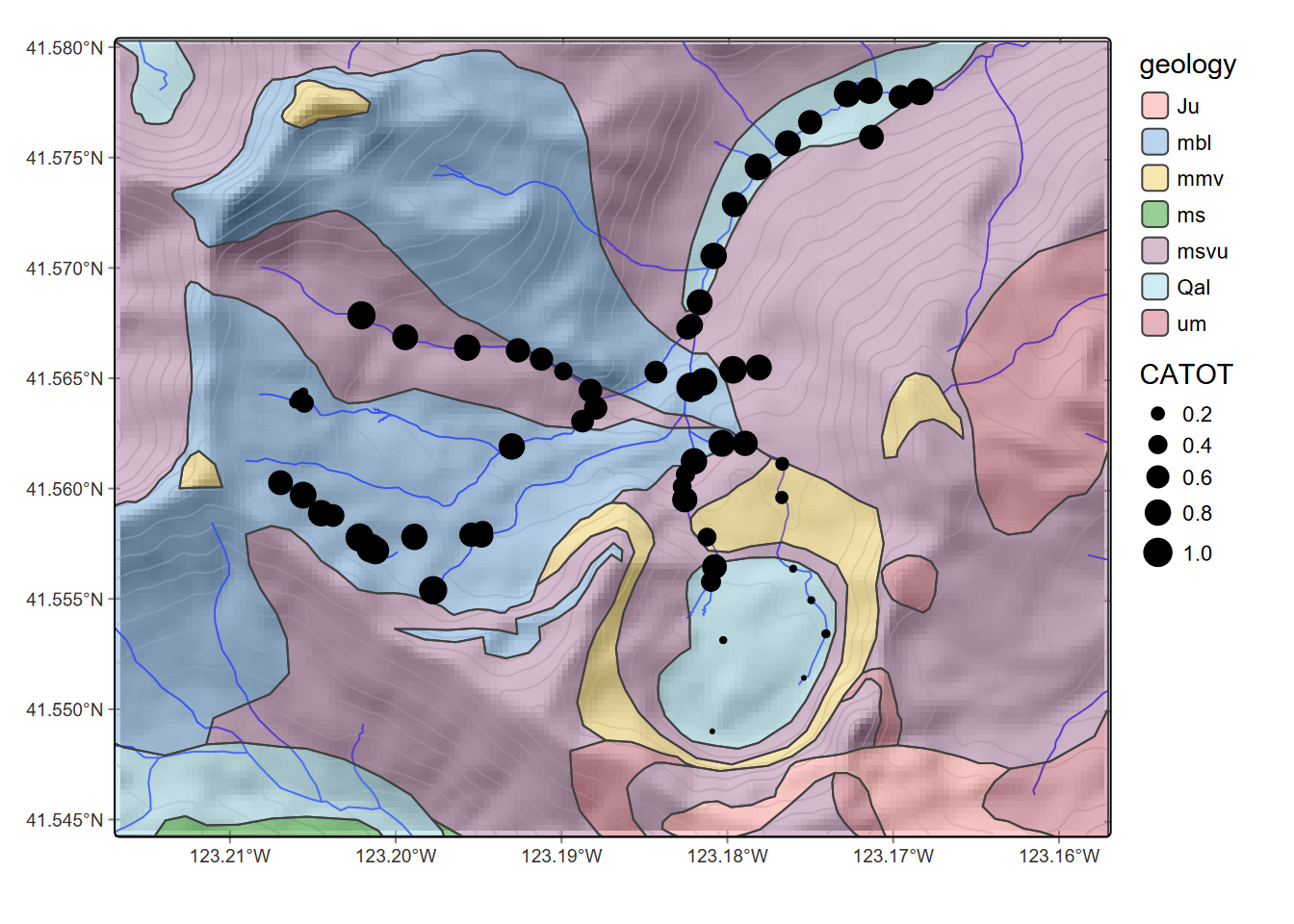

cont <- st_as_sf(as.contour(elev, nlevels=30))ggplot() +

geom_sf(data=st_as_sf(geolshp), aes(fill=CLASS)) +

geom_sf(data=cont, col="gray") +

geom_sf(data=st_as_sf(streams), col="blue") +

geom_sf(data=sampsf, aes(size=log(CATOT)))

FIGURE 8.15: Logarithm of calcium carbonate total hardness at sample points, showing geologic units

8.5 Focal statistics

Focal (or neighborhood) work with a continuous or categorical raster to pass a moving window through it, assigning the central cell with summary statistic applied to the neighborhood, which by default is a 3x3 neighborhood (w=3) centered on the cell. One of the simplest is a low-pass filter where fun="mean". This applied to a continuous raster like elevation will look very similar to the original, so we’ll apply a larger 9x9 (w=9) window so we can see the effect (Figure 8.16), which you can compare with the earlier plots of raw elevation.

elevLowPass9 <- terra::focal(elev,w=9,fun="mean")

names(elevLowPass9) <- "elevLowPass9" # otherwise gets "elev"

plot(elevLowPass9)

FIGURE 8.16: 9x9 focal mean of elevation

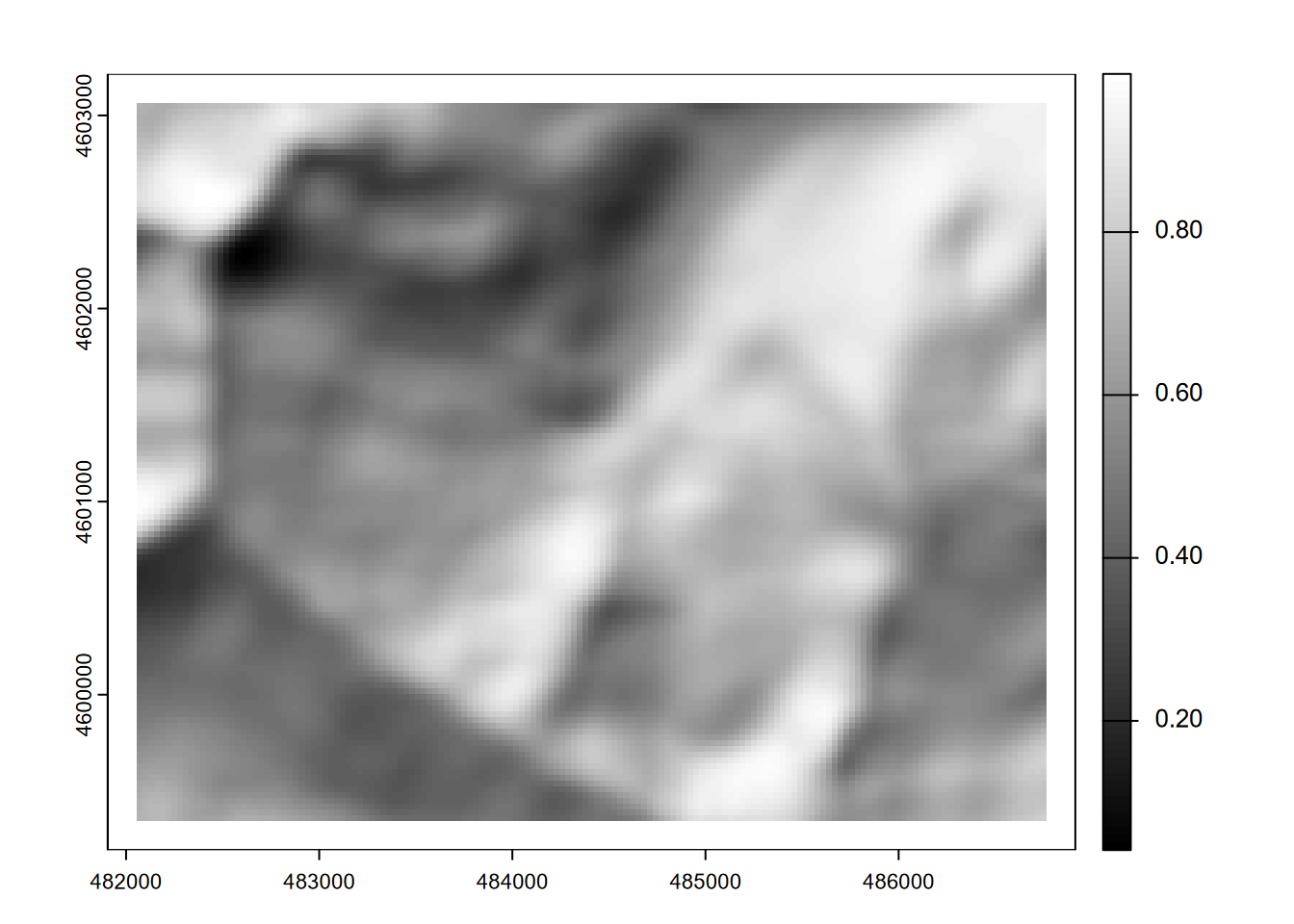

The effect is probably much more apparent in a hillshade, where the very smooth 9x9 low-pass filtered elevation will seem to create an out-of-focus hillshade (Figure 8.17).

slope9 <- terrain(elevLowPass9, v="slope")

aspect9 <- terrain(elevLowPass9, v="aspect")

plot(shade(slope9/180*pi, aspect9/180*pi, angle=40, direction=330), col=gray(0:100/100))

FIGURE 8.17: Hillshade of 9x9 focal mean of elevation

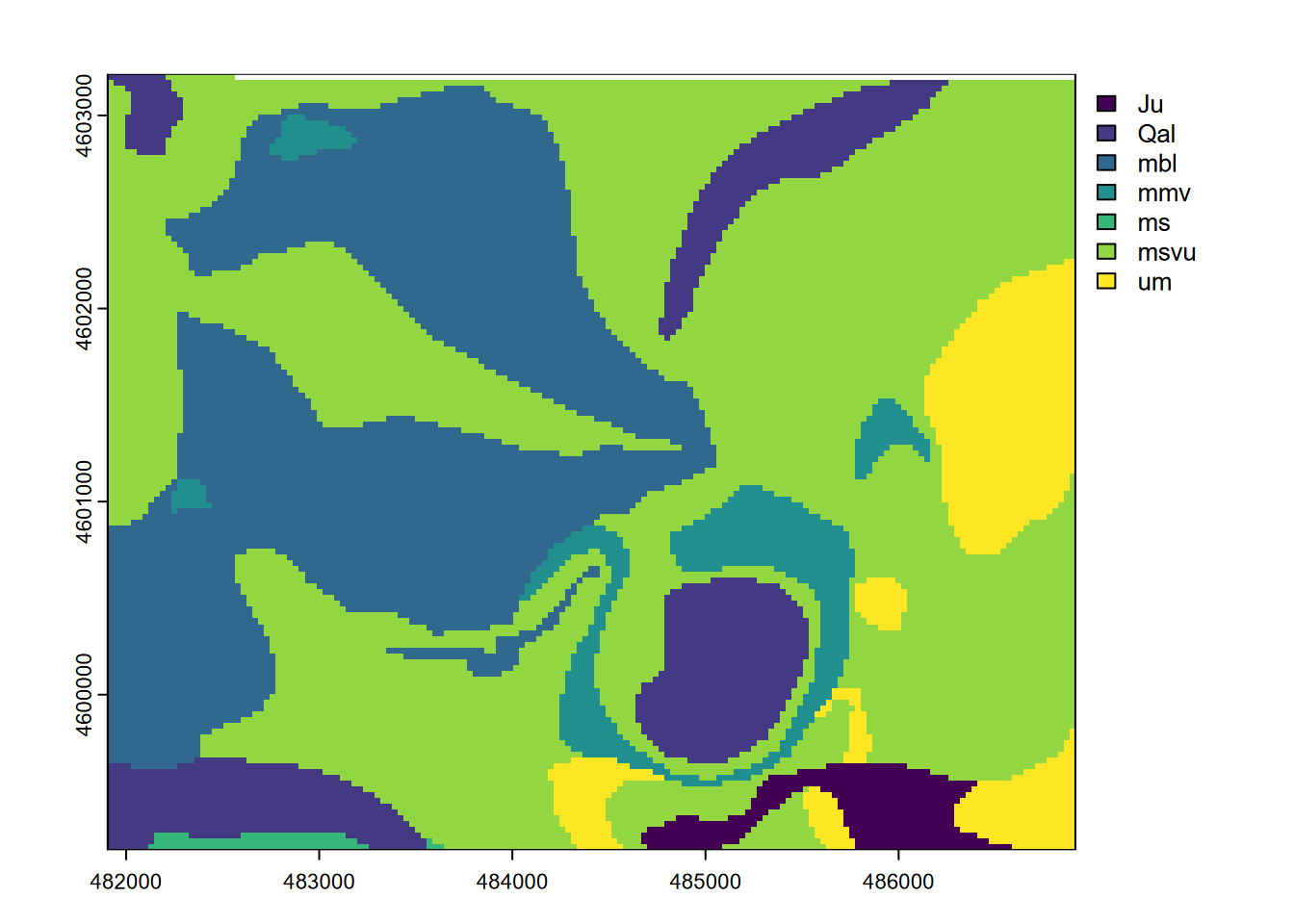

For categorical/factor data such as geology (Figure 8.18), the modal class in the neighborhood can be defined (Figure 8.19).

plot(geol)

FIGURE 8.18: Marble Mountains geology raster

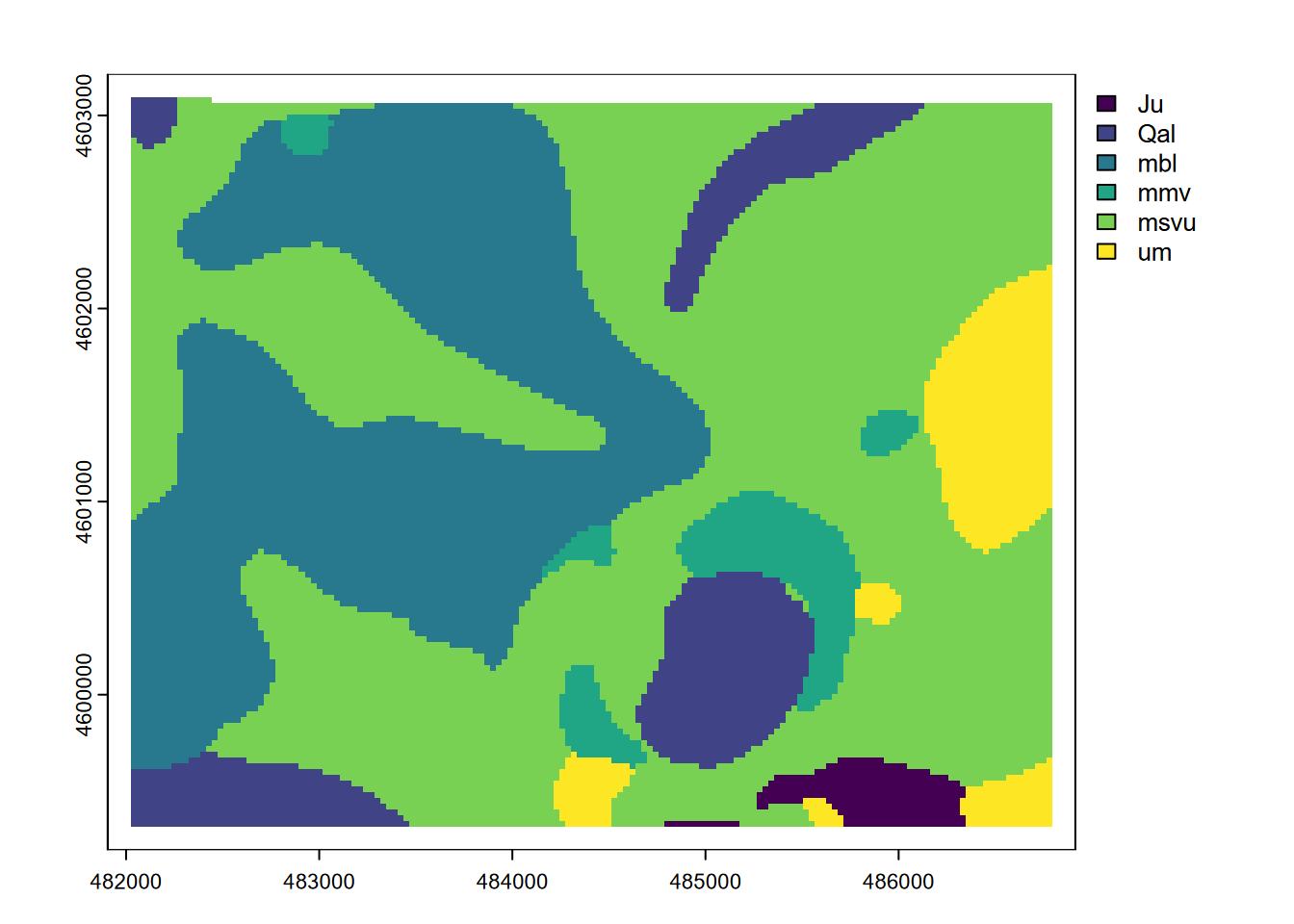

plot(terra::focal(geol,w=9,fun="modal"))

FIGURE 8.19: Modal geology in 9x9 neighborhoods

Note that while plot displayed these with a continuous legend, the modal result is going to be an integer value representing the modal class, the most common rock type in the neighborhood. This is sometimes called a majority filter. Challenge: how could we link the modes to the original character CLASS value, and produce a more useful map?

8.6 Zonal statistics

Zonal statistics let you stratify by zone, and is a lot like the grouped summary we’ve done before, but in this case the groups are connected to the input raster values by location. There’s probably a more elegant way of doing this but here are a few, that are then joined together.

meanElev <- zonal(elev,geol,"mean") %>% rename(mean=elev)

maxElev <- zonal(elev,geol,"max") %>% rename(max=elev)

minElev <- zonal(elev,geol,"min") %>% rename(min=elev)left_join(left_join(meanElev,maxElev,by="CLASS"),minElev,by="CLASS")## CLASS mean max min

## 1 Ju 1940.050 2049 1815

## 2 Qal 1622.944 1825 1350

## 3 mbl 1837.615 2223 1463

## 4 mmv 1846.186 2260 1683

## 5 ms 1636.802 1724 1535

## 6 msvu 1750.205 2136 1336

## 7 um 1860.758 2049 1719Exercises

Exercise 8.1 You can get the four values that define the extent of a raster with terra functions xmin, xmax, ymin, and ymax. Use these with the raster elev created from "marbles/elev.tif", then derive 100 uniform random x and y values with those min and max values. Use cbind to display a matrix of 100 coordinate pairs.

Exercise 8.2 Create sf points from these 100 uniform random coordinate pairs. Use tmap to display them on a base of the elevation raster.

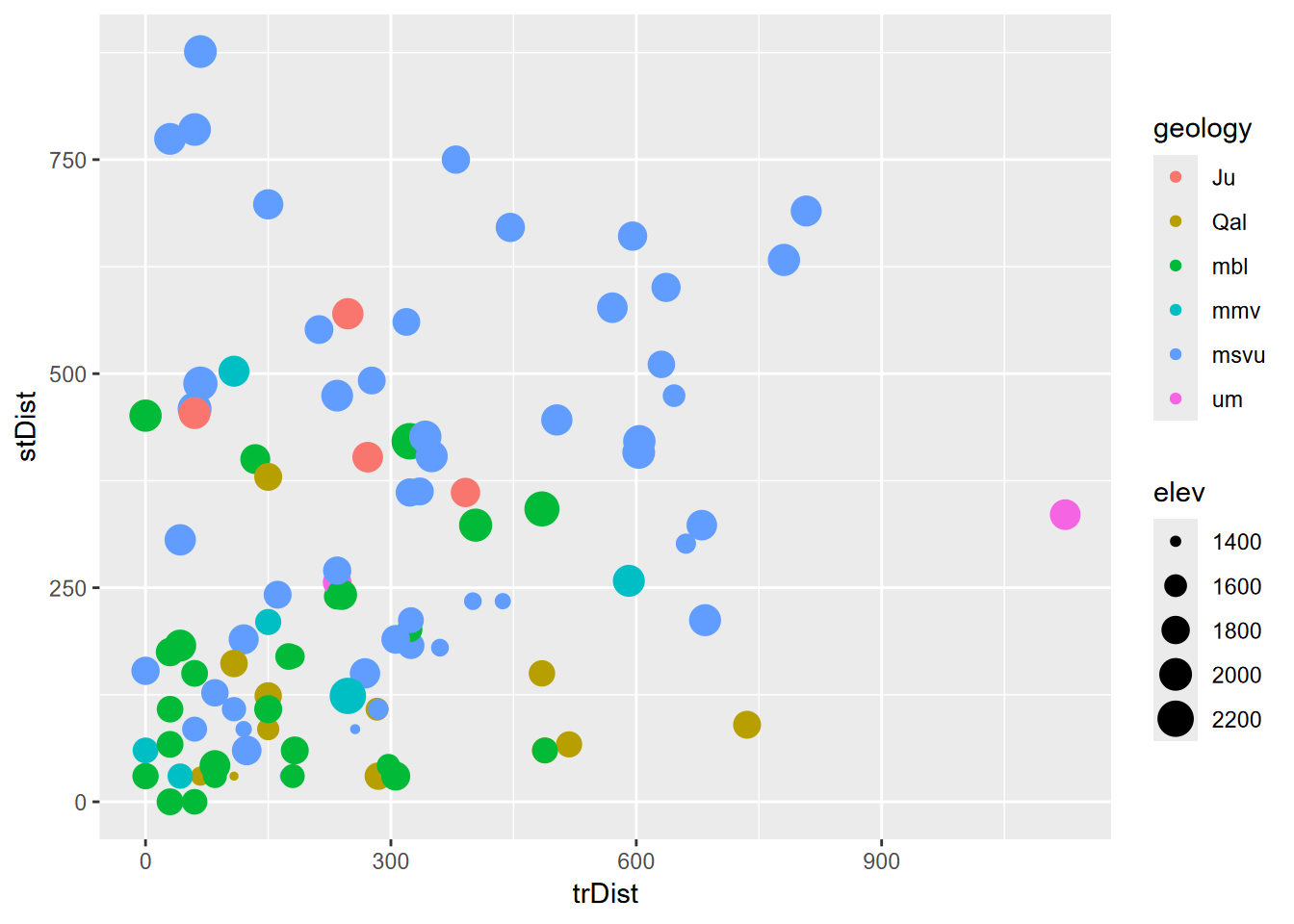

Exercise 8.3 Geology and elevation by stream and trail distance. Now use those points to extract values from stream distance, trail distance, geology, slope, aspect, and elevation, and display that sf data frame as a table, then plot trail distance (x) vs stream distance (y) colored by geology and sized by elevation (Figure 8.20).

FIGURE 8.20: Geology and elevation by stream and trail distance (goal)

Exercise 8.4 Create a slope raster from “SanPedro/dem.tif” then a “steep” raster of all slopes > 26 degrees, determined by a study of landslides to be a common landslide threshold, then display them using (palette=“Reds”, alpha=0.5, legend.show=F) along with roads “SanPedro/roads.shp” in “black”, streams “SanPedro/streams.shp” in “blue”, and watershed borders “SanPedro/SPCWatershed.shp” in “darkgreen” with lwd=2.

Exercise 8.5 Add a hillshade to that map.